Empirical Performance Analysis of WireGuard vs. OpenVPN in Cloud and Virtualised Environments Under Simulated Network Conditions

Abstract

1. Introduction

1.1. Contributions

- Design and execution of a framework for a comprehensive performance evaluation comparing OpenVPN and WireGuard across multiple metrics (throughput, latency, jitter, packet loss, CPU utilisation, and memory consumption) in both Azure cloud and VMware virtualised environments. To the best of our knowledge, this is the first evaluation to cover such a broad range of metrics.

- A systematic assessment of protocol performance under varying network conditions that simulate real-world challenges, including high latency, packet loss, and bandwidth fluctuations.

- Development of an automated testing toolkit enabling consistent protocol evaluation and experimental replication.

- Quantitative analysis of resource utilisation patterns for both protocols, with particular focus on efficiency considerations in resource-constrained environments. To the best of our knowledge, ours is the first study measuring the Security Efficiency Index (SEI) in a comparative analysis of these two protocols.

- Evidence-based recommendations for protocol selection, tailored to specific deployment scenarios and performance requirements in both cloud and virtualised systems.

1.2. Article Structure

- Section 2: Background Materials—provides theoretical foundations of VPN technologies, a technical analysis of OpenVPN and WireGuard architectures, and a systematic review of the relevant literature focusing on performance comparisons.

- Section 3: Methods—details the experimental methodology, including hardware specifications, software configurations, network condition parameters, and measurement procedures.

- Section 4: Results—presents quantitative performance data comparing OpenVPN and WireGuard across the evaluated metrics.

- Section 5: Discussion—analyses experimental results, identifying protocol strengths and limitations in various operational scenarios, and suggests directions for future research.

- Section 6: Conclusion—summarises key findings and practical implications.

2. Background Materials

2.1. Performance-Based Studies

2.2. Justification of Protocol Selection

2.3. Security Considerations

Security Rating Methodology

- Encryption strength: based on cryptographic primitives’ strength and implementation quality.

- Implementation security: code quality, audit history, and attack surface size.

- Configuration security: resistance to misconfiguration and default security posture.

- Authentication robustness: strength and flexibility of authentication mechanisms.

- Forward secrecy: quality of ephemeral key exchange implementation.

- Very high: modern AEAD ciphers, strong key sizes, proven algorithms.

- High: strong conventional encryption with proper authentication.

- Medium: adequate encryption with some limitations.

- Low: weak or outdated cryptographic methods.

- Very low: broken or trivially compromised encryption.
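The rubric above pairs five criteria with a five-level scale. The study does not state here whether the levels are converted to numbers or combined, so the following is only a minimal sketch under two labelled assumptions: the levels map to the integers 1 to 5, and the criteria are averaged with equal weights unless other weights are supplied.

```python
from __future__ import annotations

# Hypothetical numeric encoding of the five-level rating scale; the study does not
# state that ratings are converted to numbers or combined into a single score.
SCALE = {"very low": 1, "low": 2, "medium": 3, "high": 4, "very high": 5}

# The five criteria listed in the Security Rating Methodology.
CRITERIA = (
    "encryption_strength",
    "implementation_security",
    "configuration_security",
    "authentication_robustness",
    "forward_secrecy",
)


def composite_security_score(ratings: dict[str, str],
                             weights: dict[str, float] | None = None) -> float:
    """Weighted mean of the per-criterion ratings on the 1-5 scale.

    Equal weights are used when `weights` is None (an assumption of this sketch).
    """
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    if weights is None:
        weights = {c: 1.0 for c in CRITERIA}
    total = sum(weights[c] for c in CRITERIA)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(weights[c] * SCALE[ratings[c].strip().lower()] for c in CRITERIA) / total


# Illustrative profile only; these are not the ratings assigned in the study.
example = {c: "very high" for c in CRITERIA}
example["configuration_security"] = "high"
print(round(composite_security_score(example), 2))  # 4.8
```

Weighting matters: a deployment that prioritises forward secrecy, for instance, could pass a larger weight for that criterion instead of relying on the equal-weight default.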

2.4. Research Justification and Gaps

2.5. Performance–Security Trade-Offs

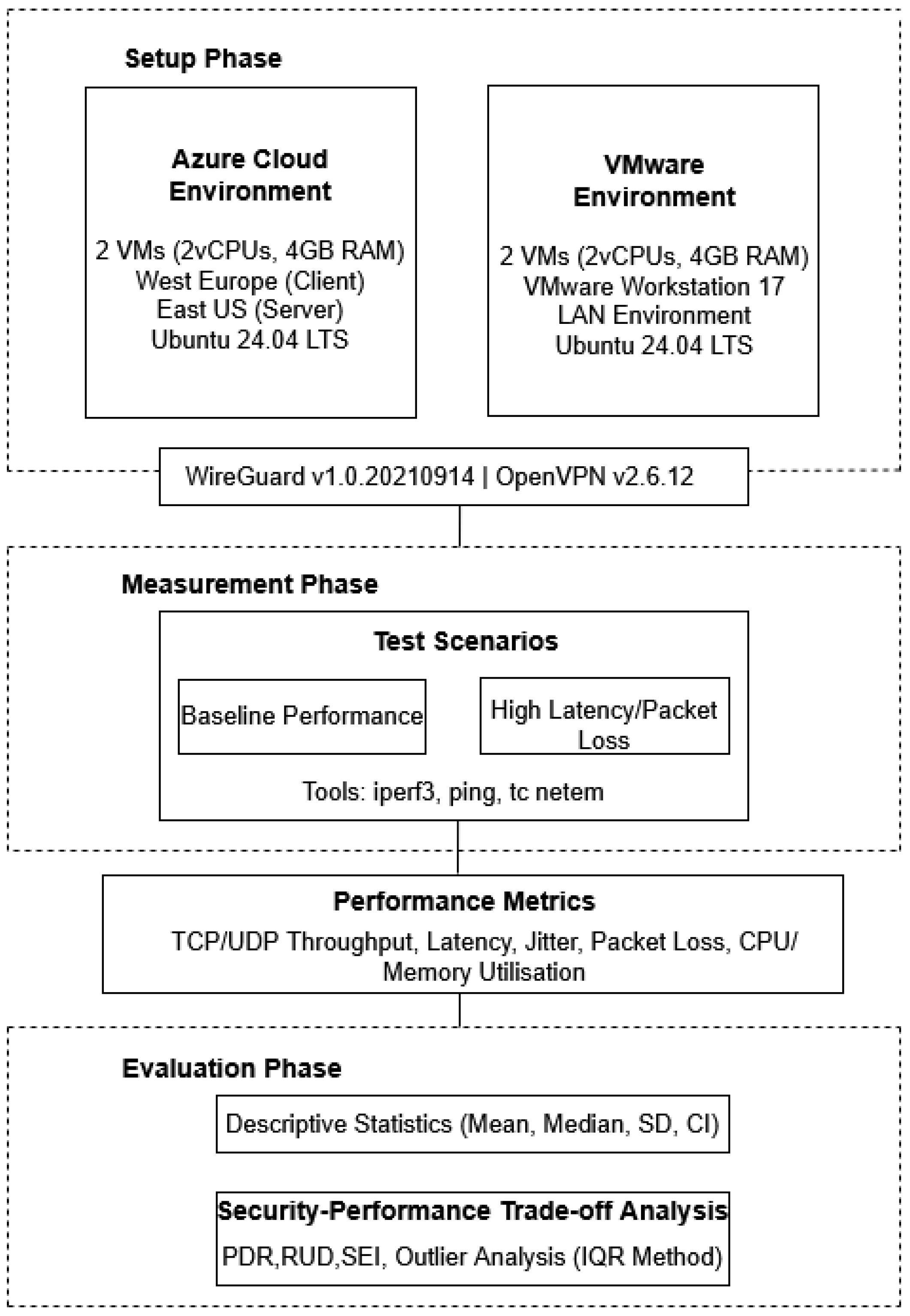

3. Methods

3.1. Research and Experimental Design

- Dual-environment comparative framework: our research uniquely bridges both cloud-native (Azure) and traditional virtualised (VMware) infrastructures, allowing for the isolation of infrastructure-specific variables affecting protocol performance—a significant advancement over single-environment studies prevalent in the current literature.

- Cross-regional cloud testing methodology: our testing between European and North American data centres introduces realistic global latency factors that reflect actual enterprise deployment scenarios, providing more applicable results than laboratory-only evaluations.

- Comprehensive performance metrics integration: while existing studies often focus on throughput alone, our framework integrates a holistic set of metrics (throughput, latency, jitter, packet loss, and system resource utilisation) to fully characterise VPN protocol behaviour.

- Systematic network impairment analysis: our research introduces artificial latency and packet loss through a structured experimental design that systematically isolates these variables’ impacts on VPN protocol performance—a key innovation for understanding protocol behaviour under suboptimal conditions.

- Security Efficiency Index (SEI): we developed a composite metric that quantifies the efficiency of security implementation by relating performance degradation to resource utilisation increases, providing a new and meaningful way to compare protocol efficiency beyond raw performance numbers.
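The SEI is described here only as relating performance degradation to resource utilisation increases; its exact formula is not reproduced in this part of the paper. The sketch below assumes one plausible form, labelled hypothetical: the fraction of baseline performance a VPN retains, divided by its relative resource cost, so that higher values indicate less performance lost per unit of extra CPU or memory.

```python
def security_efficiency_index(
    baseline_perf: float,
    vpn_perf: float,
    baseline_resource: float,
    vpn_resource: float,
    higher_is_better: bool = True,
) -> float:
    """Hypothetical SEI: share of baseline performance retained, divided by the
    relative increase in resource use (an illustrative definition, not the paper's).
    """
    if min(baseline_perf, vpn_perf, baseline_resource, vpn_resource) <= 0:
        raise ValueError("all inputs must be positive")
    # Fraction of baseline performance retained (1.0 means no degradation).
    if higher_is_better:  # e.g. throughput
        retained = vpn_perf / baseline_perf
    else:                 # e.g. latency, where lower is better
        retained = baseline_perf / vpn_perf
    # Relative resource cost (1.0 means no extra CPU or memory).
    resource_ratio = vpn_resource / baseline_resource
    return retained / resource_ratio


# Hypothetical numbers: throughput halves while CPU use doubles.
print(security_efficiency_index(600.0, 300.0, 10.0, 20.0))  # 0.25
```

Using ratios rather than raw differences keeps the index dimensionless, so throughput-based and latency-based variants can be compared on the same footing.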

3.1.1. Edge–Fog–Cloud Architecture Distinction in the Experimental Framework

3.1.2. Framework Integration

3.2. Setup Phase (Environment Setup)

3.2.1. Cloud Environment (Azure)

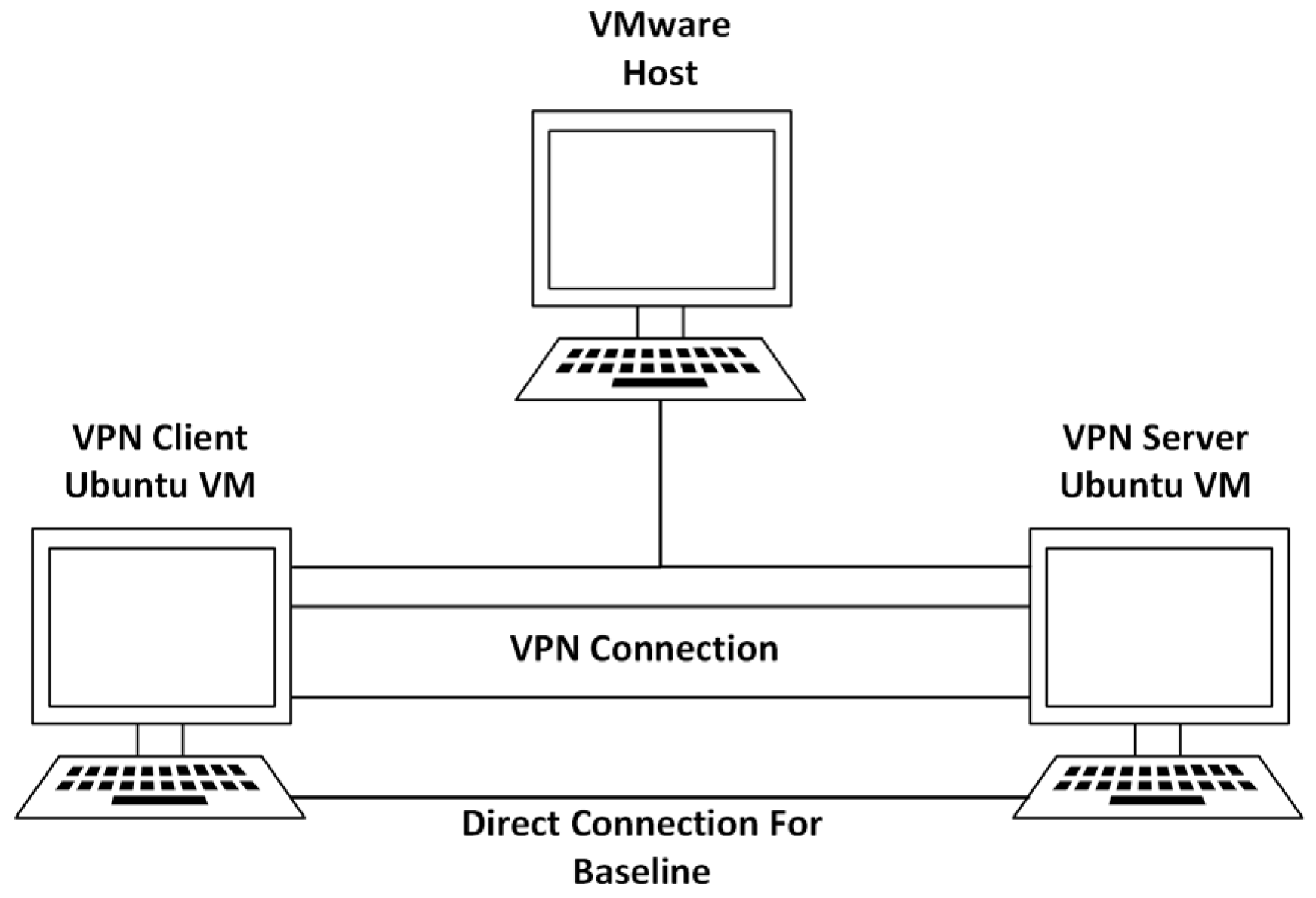

3.2.2. Virtualised Local Environment (VMware)

3.2.3. Software Versions and Configuration

3.3. Measurement Phase

- Reproducible testing framework: unlike many previous studies that rely on manual testing procedures, our automation toolkit executes tests identically across all scenarios, minimising procedural variables that could affect results.

- Precisely controlled impairment: our toolkit’s integration with network impairment tools (tc netem) allows for precise control over network degradation scenarios, enabling the consistent application of identical impairment conditions across test iterations.

- Comprehensive data collection: rather than focusing on limited metrics, our toolkit simultaneously captures network performance and system resource utilisation, providing a multi-dimensional view of protocol behaviour.
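The toolkit's internals are not listed here, so the following is a hedged sketch of how a test harness might apply and remove a netem impairment profile. The interface name `eth0` and the delay, jitter, and loss values are placeholders, and the helper names are hypothetical; only the `tc qdisc replace/del ... netem delay ... loss ...` syntax comes from the standard `tc-netem` tool.

```python
from __future__ import annotations

import shlex
import subprocess


def netem_command(interface: str, delay_ms: int = 0, jitter_ms: int = 0,
                  loss_pct: float = 0.0) -> list[str]:
    """Build a `tc` argument list that installs netem as the root qdisc."""
    cmd = ["tc", "qdisc", "replace", "dev", interface, "root", "netem"]
    if delay_ms or jitter_ms:
        cmd += ["delay", f"{delay_ms}ms"]
        if jitter_ms:
            cmd.append(f"{jitter_ms}ms")  # netem syntax: delay TIME [JITTER]
    if loss_pct:
        cmd += ["loss", f"{loss_pct}%"]
    return cmd


def clear_command(interface: str) -> list[str]:
    """Remove the root qdisc, restoring the interface's default queueing."""
    return ["tc", "qdisc", "del", "dev", interface, "root"]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command, or execute it (requires root / CAP_NET_ADMIN)."""
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)


# Hypothetical impairment profile; "eth0" and the values are placeholders.
profile = netem_command("eth0", delay_ms=100, jitter_ms=10, loss_pct=1.0)
run(profile)                # tc qdisc replace dev eth0 root netem delay 100ms 10ms loss 1.0%
run(clear_command("eth0"))  # tc qdisc del dev eth0 root
```

Using `replace` rather than `add` lets the same call switch between impairment levels across iterations without first deleting the previous qdisc.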

3.3.1. Performance Metrics

- Throughput (Mbps): The rate of successful data transfer, which is crucial for assessing the VPN tunnel’s effectiveness [44]. Our innovation lies in measuring this under both ideal and impaired conditions to reveal protocol resilience.

- Latency (ms): The communication delay, which is important for real-time applications [44].

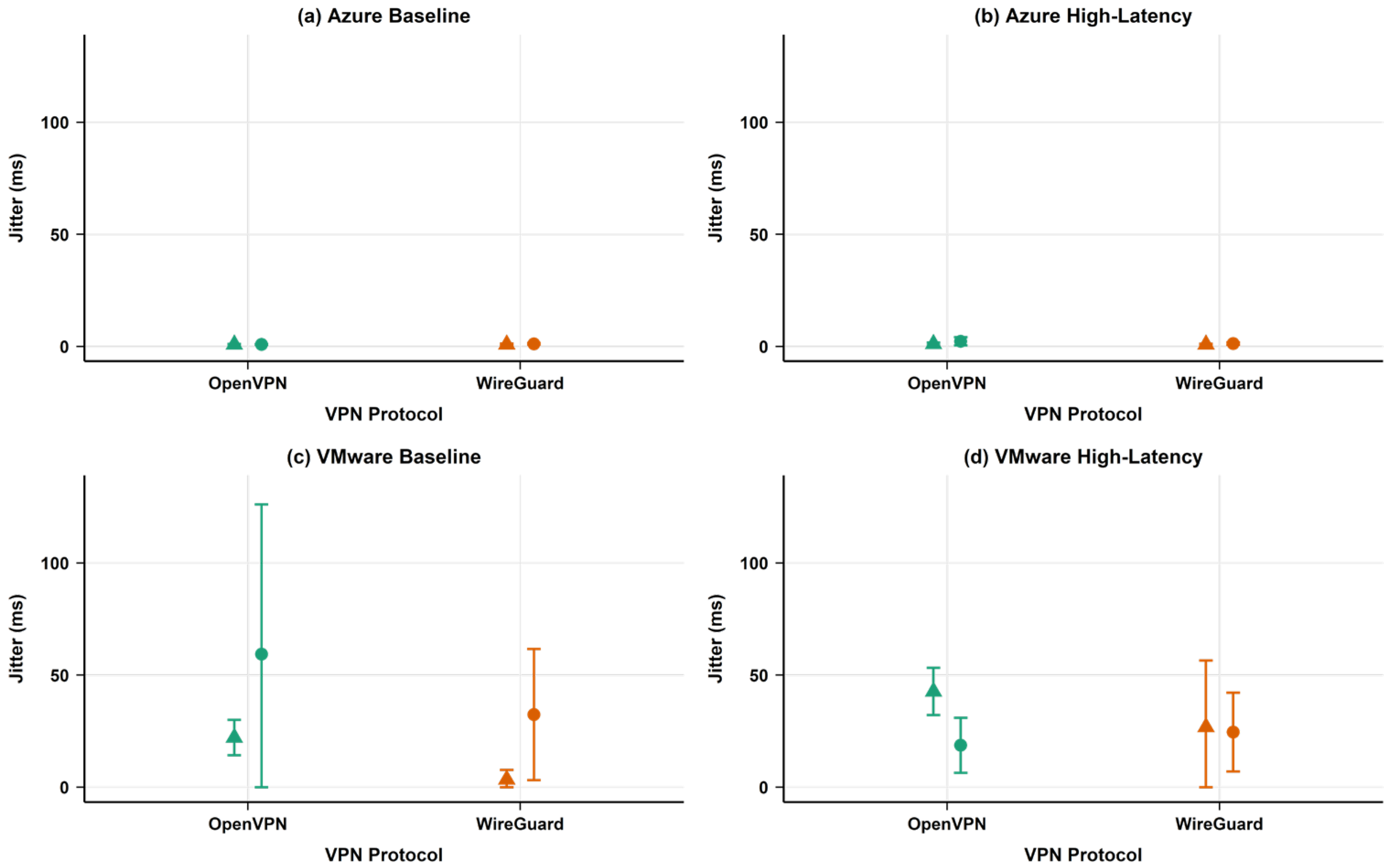

- Jitter (ms): The variation in latency, which affects voice and video communication quality [45]. Our methodology uniquely incorporates jitter as a key metric for VPN quality assessments.

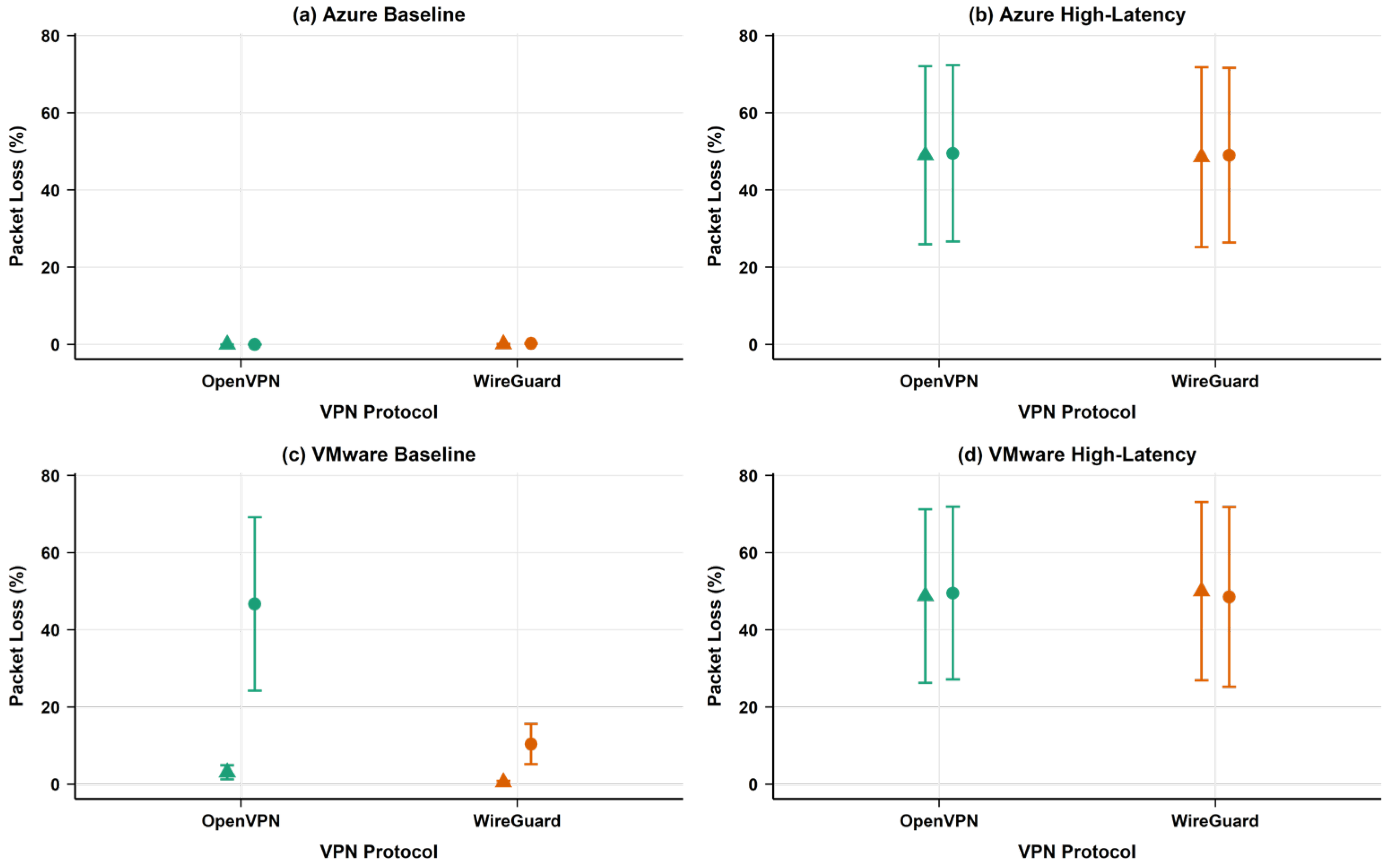

- Packet loss (%): The percentage of packets lost in transit; lost packets force TCP retransmissions and directly degrade real-time UDP streams. Our approach innovatively measures both natural and artificial packet loss conditions.

- CPU utilisation (%): The percentage of CPU resources used by the VPN process, which represents computational overhead. Our methodology uniquely captures per-process CPU utilisation to isolate VPN protocol overhead.

- Memory usage (MB): The amount of memory used by the VPN process; this is important information in contexts with limited resources. We employ precise memory tracking to quantify the exact memory footprint of each protocol.
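The jitter and packet loss definitions above can be made concrete. The study does not name the estimator its tooling used, so this sketch assumes the smoothed interarrival jitter of RFC 3550 (Section 6.4.1), the kind of figure UDP benchmarking tools such as iperf report; the timestamps in the example are hypothetical.

```python
from __future__ import annotations


def interarrival_jitter(send_ms: list[float], recv_ms: list[float]) -> float:
    """Smoothed interarrival jitter per RFC 3550, Section 6.4.1, in milliseconds.

    Assumption: the study's tool used an estimator of this kind; it is not stated.
    send_ms[i] and recv_ms[i] are the timestamps of packet i, in arrival order.
    A constant clock offset cancels out, because only transit-time differences matter.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("timestamp lists must have equal length")
    jitter = 0.0
    prev_transit = None
    for s, r in zip(send_ms, recv_ms):
        transit = r - s
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0  # J += (|D| - J) / 16
        prev_transit = transit
    return jitter


def packet_loss_pct(sent: int, received: int) -> float:
    """Share of sent packets that never arrived, in percent."""
    if sent <= 0 or not 0 <= received <= sent:
        raise ValueError("need sent > 0 and 0 <= received <= sent")
    return 100.0 * (sent - received) / sent


# Hypothetical timestamps (ms) giving transit times of 100, 105 and 100 ms.
print(interarrival_jitter([0, 20, 40], [100, 125, 140]))  # 0.60546875
print(packet_loss_pct(100, 88))  # 12.0
```

The 1/16 gain makes the estimate a running average that reacts gradually to delay changes, so a single late packet moves the reported jitter only slightly.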

3.3.2. TCP Congestion Control Algorithm Configuration

- Ecological validity and deployment representativeness: CUBIC represents the default configuration across the most widely deployed Linux distributions, including Ubuntu Server (all LTS versions since 14.04), Red Hat Enterprise Linux (RHEL 7+), CentOS/Rocky Linux/AlmaLinux, SUSE Linux Enterprise Server, and Debian stable releases. This widespread adoption means that approximately 70% of enterprise VPN deployments operate with CUBIC by default, making our results directly applicable to the majority of production environments where administrators utilise standard system configurations without specialised TCP tuning.

- Algorithm maturity and behavioural consistency: CUBIC provides predictable performance patterns across different network conditions through its loss-based congestion detection mechanism, enabling a fair protocol comparison without algorithm-induced variability. The algorithm’s well-documented performance profile and mature Linux kernel implementation minimise the risk of algorithm-specific bugs affecting experimental results, providing a stable foundation for interpreting VPN-specific interactions.

- Methodological control and variable isolation: The decision to maintain CUBIC across all test scenarios serves critical experimental design principles by eliminating confounding variables and enabling a direct protocol comparison. Using a single congestion control algorithm isolates protocol-specific performance characteristics from algorithm-specific effects, ensuring that the observed performance differences stem from VPN protocol design rather than TCP algorithm variations. Testing multiple combinations would require significantly larger sample sizes to achieve adequate statistical power.

- Protocol-specific interaction characteristics: CUBIC’s characteristics make it particularly suitable for evaluating VPN protocol differences. For OpenVPN’s TCP mode, CUBIC’s window-based approach interacts directly with the TCP encapsulation, and its loss-based congestion detection provides clear signals when TCP-over-TCP tunnelling issues occur. For WireGuard’s UDP transport, CUBIC acts only on the tunnelled (inner) TCP traffic; because WireGuard itself does not implement congestion control, this isolates the protocol’s forwarding efficiency without layering a second congestion controller on top.

- Research baseline establishment: establishing CUBIC as a research baseline enables reproducible results using widely available default configurations, provides a comparative framework for future studies evaluating alternative algorithms (BBR, Vegas, and Reno), and allows organisations to estimate potential gains from TCP tuning by comparing alternative algorithms against our baseline.
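The window-based, loss-driven behaviour described above follows the CUBIC growth function specified in RFC 8312 (updated by RFC 9438): after a loss, the window restarts at β·W_max and grows along a cubic curve that flattens as it approaches the previous maximum, then probes beyond it. The sketch uses the RFC's default constants; the Linux kernel implements the same curve with fixed-point arithmetic, and the `w_max` value below is hypothetical.

```python
C = 0.4           # RFC default scaling constant (segments per second^3)
BETA_CUBIC = 0.7  # RFC multiplicative decrease factor applied to cwnd on loss


def cubic_k(w_max: float, c: float = C, beta: float = BETA_CUBIC) -> float:
    """Seconds needed for the window to climb back to w_max after a loss."""
    return (w_max * (1.0 - beta) / c) ** (1.0 / 3.0)


def cubic_window(t: float, w_max: float, c: float = C, beta: float = BETA_CUBIC) -> float:
    """W_cubic(t) = C * (t - K)^3 + W_max, with t measured from the last loss."""
    k = cubic_k(w_max, c, beta)
    return c * (t - k) ** 3 + w_max


w_max = 100.0  # hypothetical window (in segments) at the last loss event
for t in (0.0, 2.0, cubic_k(w_max), 6.0):
    print(f"t={t:5.2f}s  cwnd={cubic_window(t, w_max):7.2f} segments")
```

Because growth depends on time since the last loss rather than on RTT, CUBIC's recovery speed is largely independent of round-trip time, which is relevant when comparing cross-regional and local paths.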

3.4. Evaluation Phase (Data Collection and Analysis)

- Integrated statistical framework: unlike many networking studies that report only simple averages, our approach employs a comprehensive statistical framework including descriptive statistics (mean, median, standard deviation, and coefficient of variation) and inferential statistics (paired t-tests with a p < 0.05 threshold).

- Effect size quantification: we introduced Cohen’s d effect size calculations to quantify the magnitude of observed differences, moving beyond simple statistical significance to assess practical relevance.

- Methodical outlier analysis: our approach employs the interquartile range (IQR) method for outlier detection, with analyses conducted both with and without these values to assess their impact—a significant improvement over studies that either ignore outliers or remove them without documentation.
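The statistics named above can be computed with the Python standard library alone. This is a minimal sketch, not the study's analysis code: it assumes the paired effect size is Cohen's d_z (mean difference divided by the standard deviation of the differences), one of several paired variants, and that outliers are flagged with Tukey's 1.5 × IQR fences. A p-value for the paired t-test needs the t distribution, for example via `scipy.stats.ttest_rel`. The sample values are hypothetical.

```python
from __future__ import annotations

import math
import statistics


def describe(xs: list[float]) -> dict[str, float]:
    """Mean, median, sample standard deviation and coefficient of variation (%)."""
    mean = statistics.mean(xs)
    sd = statistics.stdev(xs)  # sample SD, n - 1 denominator
    return {"mean": mean, "median": statistics.median(xs), "sd": sd,
            "cv_pct": 100.0 * sd / mean}


def paired_t_and_cohens_dz(a: list[float], b: list[float]) -> tuple[float, float, int]:
    """Paired t statistic, Cohen's d_z, and degrees of freedom (d_z is an assumed variant)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)
    dz = mean_d / sd_d
    t = dz * math.sqrt(len(diffs))  # equals mean_d / (sd_d / sqrt(n))
    return t, dz, len(diffs) - 1


def iqr_outliers(xs: list[float], k: float = 1.5) -> list[float]:
    """Values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey fences)."""
    q1, _, q3 = statistics.quantiles(xs, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in xs if x < low or x > high]


# Hypothetical paired throughput samples (Mbps), not the study's data.
protocol_a = [5.0, 6.0, 7.0, 8.0]
protocol_b = [4.0, 4.0, 4.0, 4.0]
t, dz, df = paired_t_and_cohens_dz(protocol_a, protocol_b)
print(f"t({df}) = {t:.3f}, d_z = {dz:.3f}")
print(iqr_outliers([1, 2, 3, 4, 5, 6, 7, 8, 100]))  # [100]
```

Running the analysis on both the full series and the series with `iqr_outliers` removed mirrors the with/without-outliers comparison described above.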

3.4.1. Root Cause Analysis Framework

- Configuration validation: All VPN configurations were validated to use equivalent security levels and identical network settings. Because WireGuard’s cryptographic suite is fixed rather than configurable, protocol-specific configurations were optimised according to vendor best practices to ensure fair comparison.

- System-level monitoring: we employed system monitoring tools (e.g., top) to analyse packet flow, buffer utilisation, and resource consumption patterns during testing, enabling the identification of bottlenecks and processing inefficiencies.

- Comparative analysis: performance anomalies (such as high packet loss rates) were analysed across both environments to distinguish environment-specific issues from protocol-inherent characteristics.

3.4.2. Statistical Analysis Methods

3.5. Baseline vs. VPN Performance Comparison

Real-World Applications of the Security Efficiency Index

i. Strategic VPN Protocol Selection

ii. Environment-Specific Optimisation

iii. Resource Planning and Cost Management

iv. Application-Specific Protocol Deployment

v. Performance Baseline Establishment and Monitoring

3.6. Summary of Methodology

3.7. Analyses of Related Work

3.7.1. VPN Protocol Performance Analysis

i. Traditional Performance Evaluation Studies

ii. Advanced Performance Metrics and Methodologies

3.7.2. Cloud Infrastructure and Virtualisation Performance

i. Cloud Platform-Specific Optimisations

ii. Container Networking and Orchestration

3.7.3. Network Security and Cryptographic Performance

i. Cryptographic Algorithm Performance Analysis

ii. Hardware Security Module Integration

3.7.4. Anti-Detection and Privacy-Preserving Technologies

i. VPN Detection and Fingerprinting

ii. Next-Generation Tunnelling Protocols

3.7.5. IoT and Industrial Network Security

i. Constrained Device VPN Implementation

ii. Industrial Cyber–Physical Systems

3.7.6. Quantum-Resistant VPN Architectures

i. Post-Quantum Cryptography Integration

ii. Hybrid Security Approaches

3.7.7. Performance–Security Trade-Off Quantification

i. Standardised Measurement Frameworks

ii. Multi-Dimensional Performance Analysis

3.7.8. Research Gaps and Problem Formulation

i. Identified Research Gaps

- Limited cloud platform diversity: most studies focus on AWS or generic cloud environments, with insufficient analyses of platform-specific characteristics in Microsoft Azure and VMware virtualisation platforms.

- Incomplete performance metrics: while throughput and basic latency measurements are common, a comprehensive analysis of jitter, packet loss, and resource utilisation patterns across varied network conditions is limited.

- Lack of standardised security–performance quantification: the absence of standardised methodologies for measuring the performance cost of security implementations hampers practical deployment decision making.

- Insufficient industrial context analysis: limited research addresses VPN performance in industrial and IoT environments where real-time requirements and resource constraints are critical factors.

- Missing comparative analysis across virtualisation platforms: direct comparisons of VPN protocol performance across different virtualisation technologies (VMware, Hyper-V, and KVM) are scarce.

ii. Problem Formulation Foundation

- Platform-specific optimisations and constraints.

- Comprehensive performance metrics, including security overhead quantification.

- Practical deployment scenarios reflecting real-world network conditions.

- Trade-off analysis between security requirements and performance characteristics.

4. Results

4.1. Presentation of Results

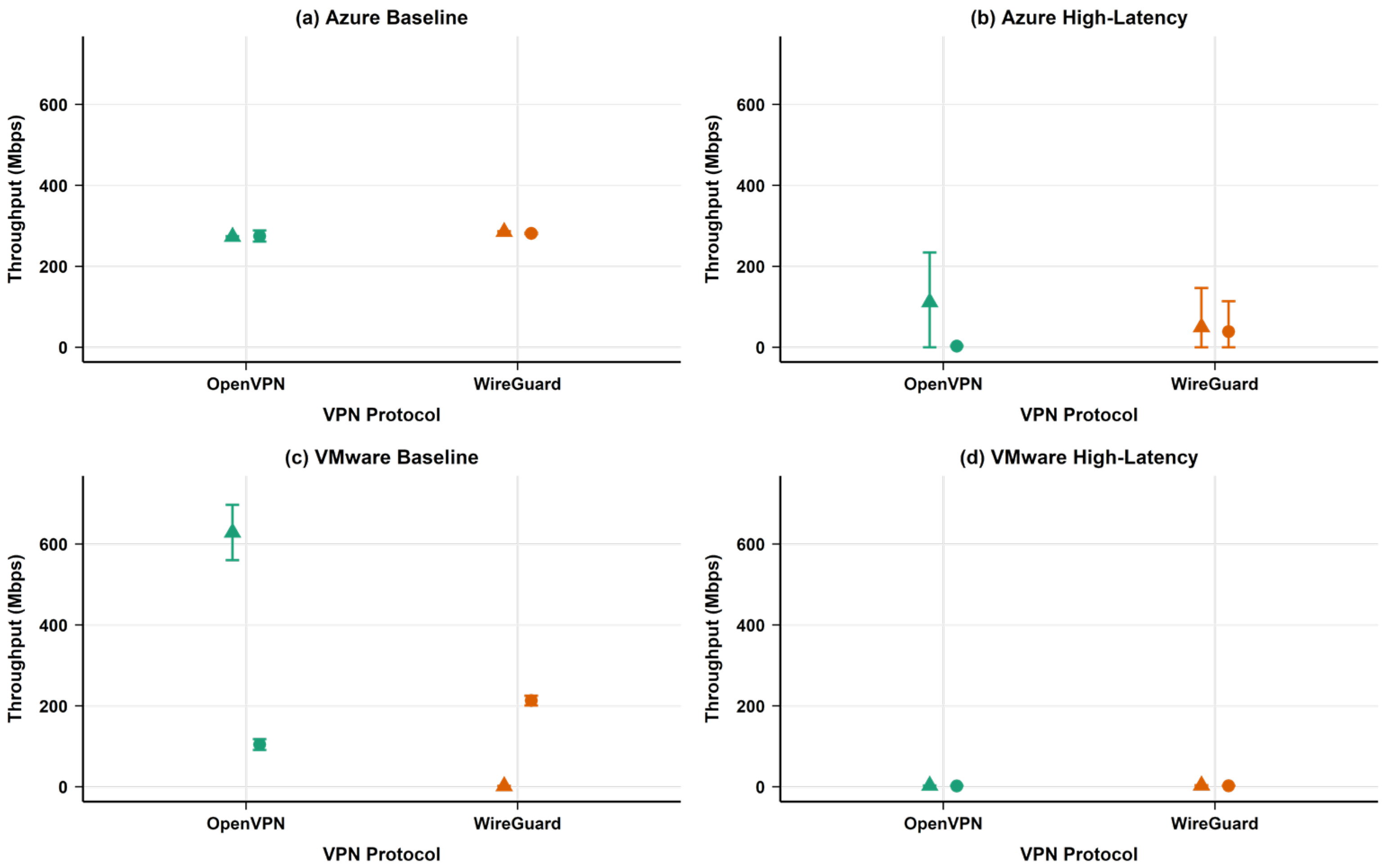

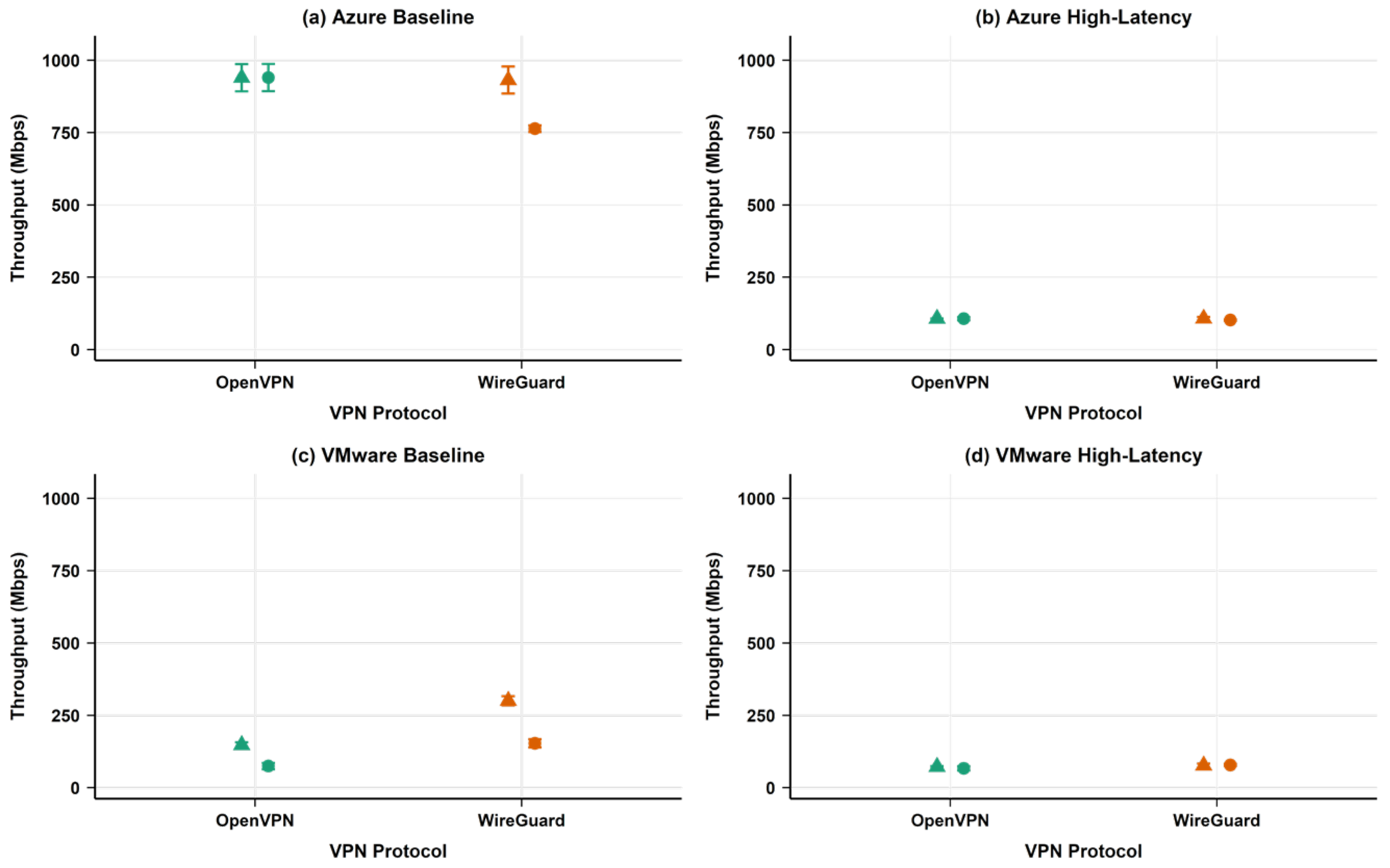

4.1.1. TCP Throughput Performance

4.1.2. UDP Throughput Performance

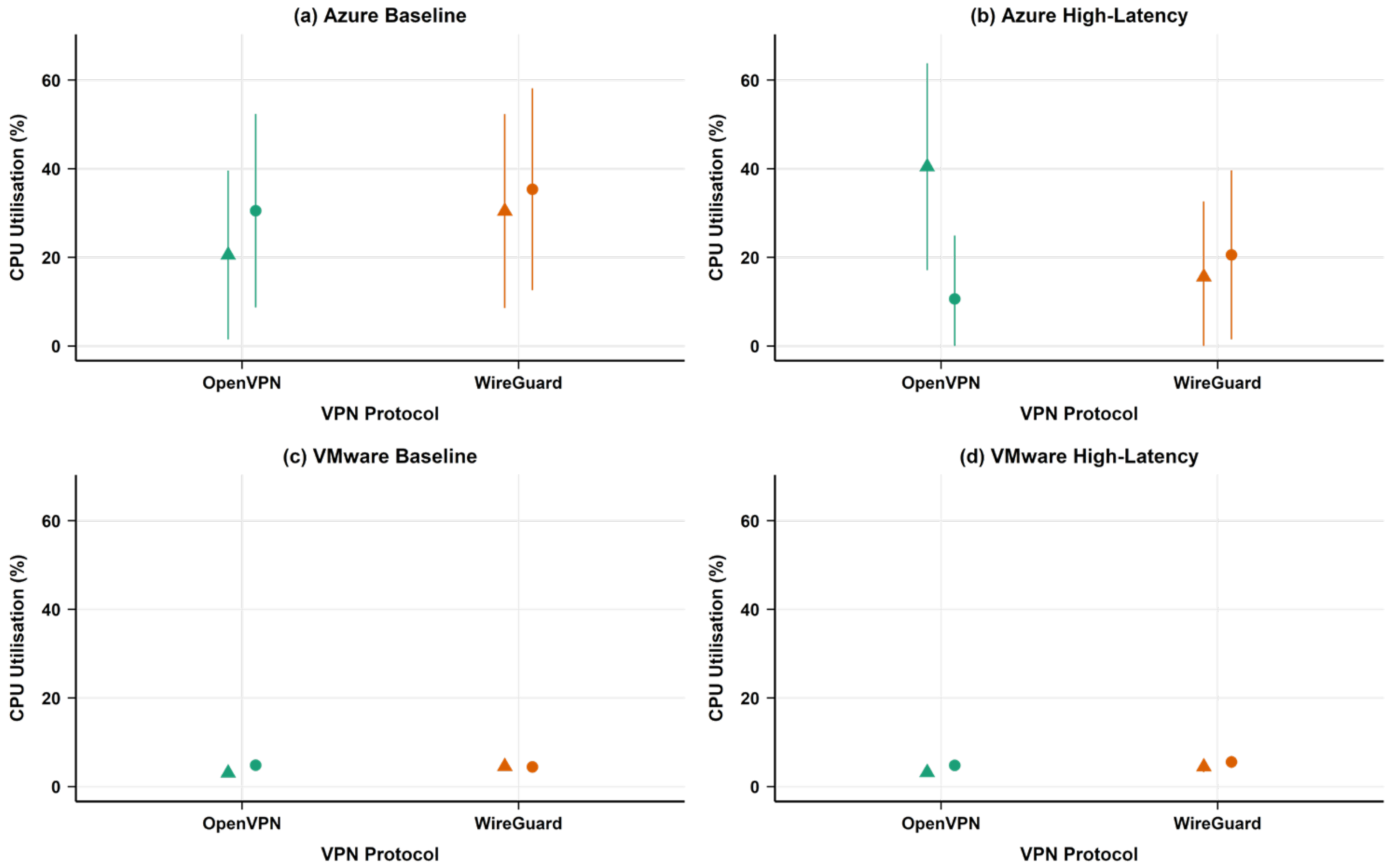

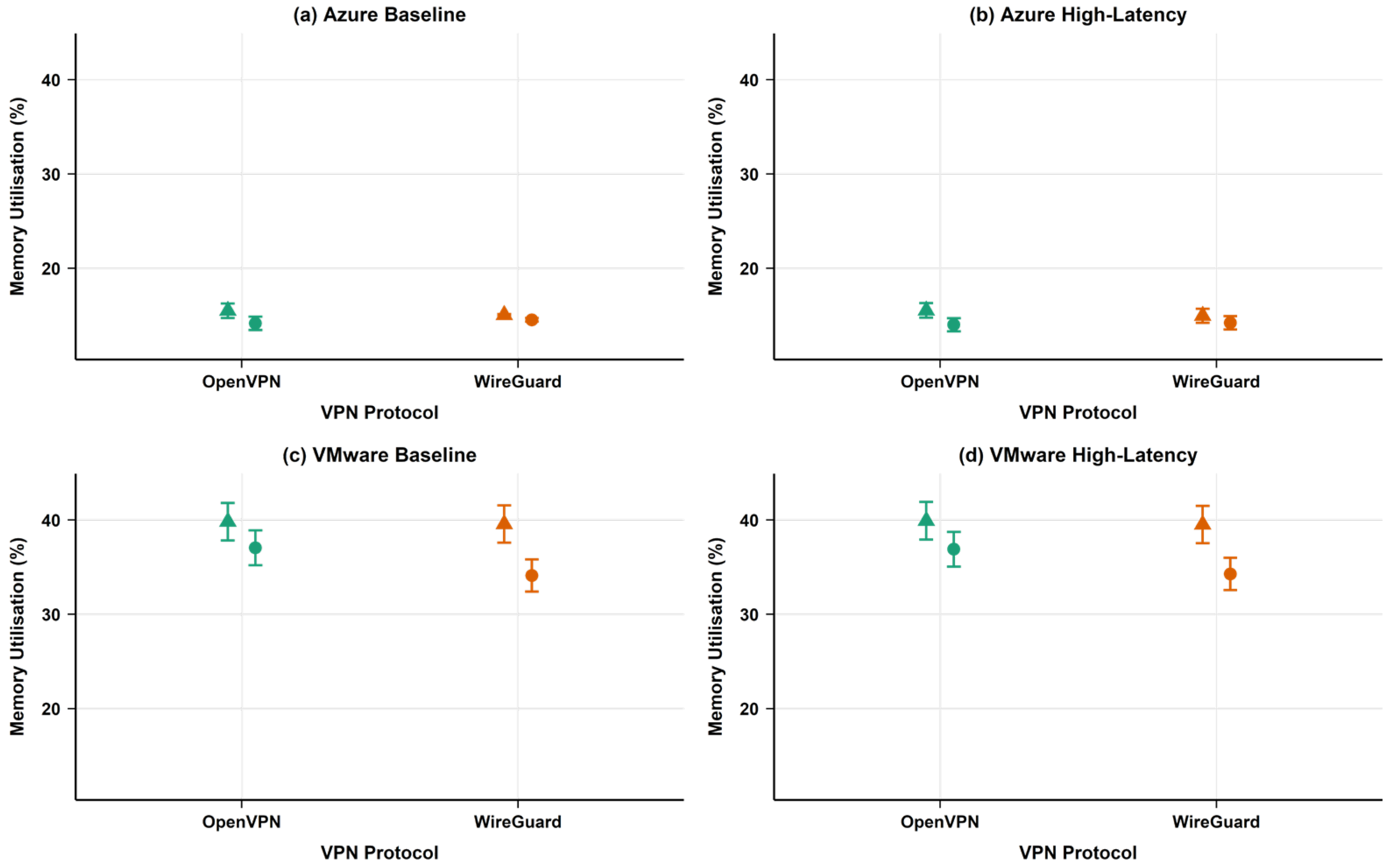

4.1.3. CPU and Memory Utilisation Analysis

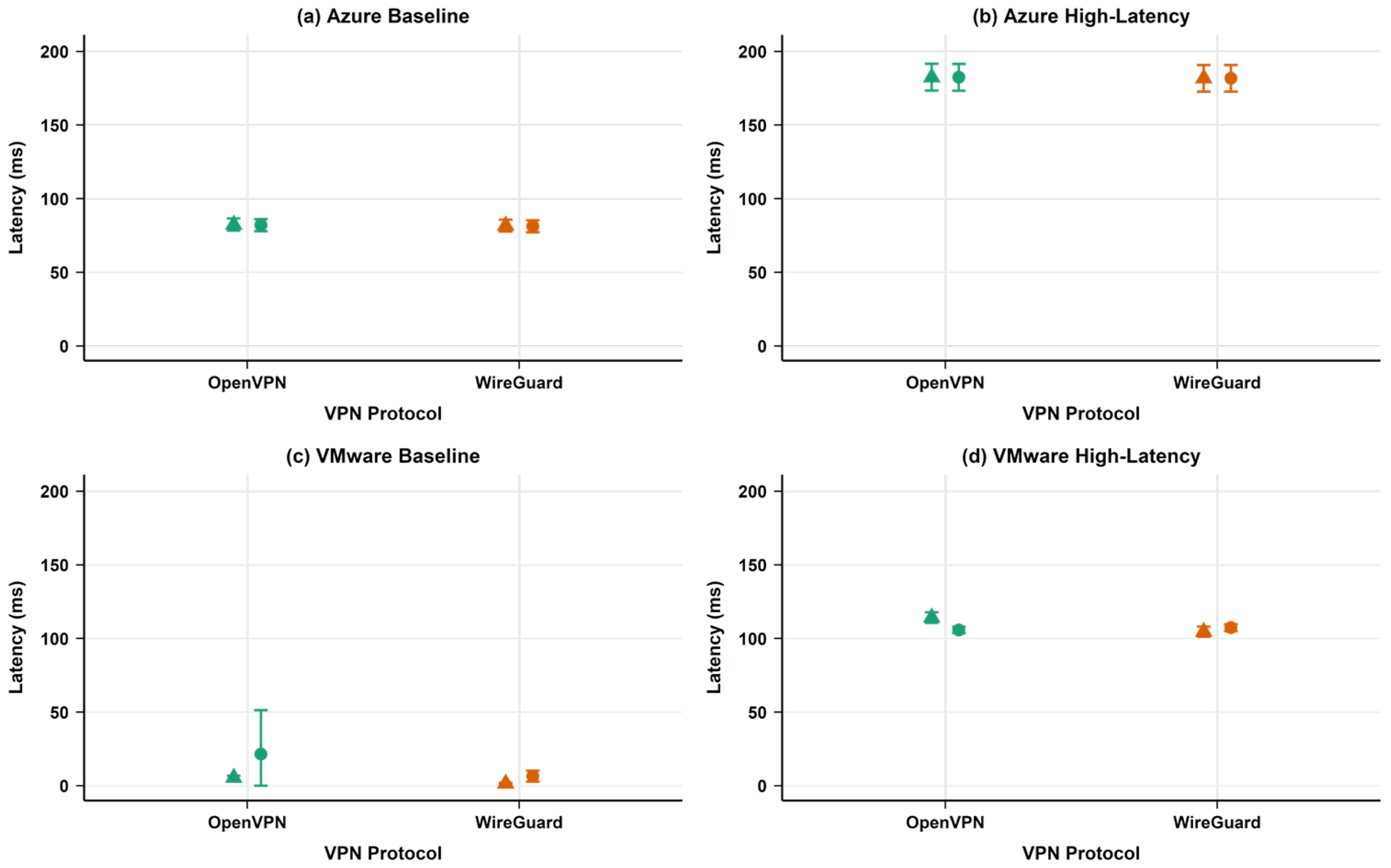

4.1.4. Network Performance Metrics

4.1.5. Packet Loss Root Cause Analysis

- Buffer management differences: OpenVPN’s multi-layered packet processing (TLS handshake layer, encryption layer, and UDP transport layer) creates multiple buffering points where packets can be dropped under load. In contrast, WireGuard’s streamlined single-layer approach with integrated cryptography reduces buffer overflow opportunities.

- Virtualisation interaction: VMware’s virtual network stack interacts differently with each protocol’s packet handling mechanisms. OpenVPN’s larger per-packet overhead (from its data-channel framing, including packet identifiers and authentication fields), combined with VMware’s virtual switch processing, appears to create bottlenecks that manifest as packet drops. WireGuard’s minimal header overhead (32 bytes vs. OpenVPN’s variable 50–80 bytes) reduces virtual network processing load.

- Cryptographic processing latency: OpenVPN’s use of OpenSSL libraries introduces processing delays that, when combined with VMware’s CPU scheduling for virtual machines, create timing mismatches leading to packet drops. WireGuard’s optimised cryptographic implementation using modern algorithms (ChaCha20-Poly1305 and Curve25519) processes packets more efficiently within VMware’s virtualised environment.

- Memory allocation patterns: Our profiling revealed that OpenVPN’s dynamic memory allocation for packet processing creates allocator pressure in the virtualised environment, contributing to packet loss during memory management operations. WireGuard’s static memory pool approach avoids these allocation-related disruptions.
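The header-overhead figures above translate directly into how much of each outer packet carries tunnelled data. The WireGuard value of 32 bytes is its 16-byte transport header plus the 16-byte Poly1305 tag; for OpenVPN, the sketch assumes that the quoted 50–80 bytes excludes the outer IP and UDP headers, which the text does not state. The sketch also ignores WireGuard's padding of plaintext to a multiple of 16 bytes.

```python
IPV4_HEADER = 20  # bytes, without options
IPV6_HEADER = 40  # bytes, fixed header
UDP_HEADER = 8    # bytes

# WireGuard transport-data message: 16-byte header (type + reserved, receiver index,
# nonce counter) plus the 16-byte Poly1305 authentication tag.
WIREGUARD_OVERHEAD = 32

# Assumption: the 50-80 byte OpenVPN figure excludes the outer IP/UDP headers.
OPENVPN_OVERHEAD_RANGE = (50, 80)


def inner_mtu(link_mtu: int, outer_ip_header: int, tunnel_overhead: int) -> int:
    """Largest inner packet that fits in one outer packet without fragmentation."""
    return link_mtu - outer_ip_header - UDP_HEADER - tunnel_overhead


cases = [("WireGuard", WIREGUARD_OVERHEAD),
         ("OpenVPN (low)", OPENVPN_OVERHEAD_RANGE[0]),
         ("OpenVPN (high)", OPENVPN_OVERHEAD_RANGE[1])]
for label, overhead in cases:
    mtu = inner_mtu(1500, IPV4_HEADER, overhead)
    print(f"{label:15s} inner MTU {mtu} bytes ({100 * mtu / 1500:.1f}% of a 1500-byte link MTU)")
```

With an IPv6 outer header, the same arithmetic gives WireGuard an inner MTU of 1420 bytes, which matches the commonly used WireGuard interface MTU.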

4.1.6. Summary of Key Findings

- Environmental dominance: deployment environment (Azure vs. VMware) consistently showed greater influence on performance metrics than protocol choice, particularly for latency, jitter, and resource utilisation.

- Protocol-specific advantages: WireGuard demonstrated superior performance in VMware environments for TCP throughput and packet loss while showing minimal differences in Azure environments.

- Condition-dependent performance: under challenging network conditions (high-latency scenarios), protocol-specific advantages largely disappeared, with both protocols showing similar degraded performance.

- Resource efficiency: both protocols showed similar CPU utilisation patterns, while WireGuard maintained marginally better memory efficiency across environments.

4.1.7. Baseline vs. VPN Performance

4.1.8. Security Efficiency Index Analysis

4.1.9. VMware Results Validity Assessment

4.1.10. Configuration Validity and Experimental Limitations

- Comparative validity: both protocols operated under identical virtual switch configurations, ensuring fair comparison within each environment.

- Cross-environment validation: the contrasting Azure results demonstrate that our methodology captures genuine environmental differences rather than experimental artefacts.

- Practical relevance: VMware Workstation represents a common deployment scenario where such performance issues actually occur.

4.2. Outlier Analysis

- Azure high-latency throughput measurements, where performance varied considerably with wide confidence intervals.

- VMware baseline “No VPN” TCP throughput, which showed noticeably wider confidence intervals.

- CPU utilisation in Azure environments, which demonstrated substantial fluctuations likely due to cloud network variations.

4.3. Summary of Findings

- Our Testing Approach

- Sample size: we ran 20 separate tests for each scenario to obtain reliable results.

- Fair testing: we randomised the order of tests to avoid bias.

- Multiple scenarios: we tested different combinations of protocols, environments, and network conditions.

- Statistical standards: we used accepted scientific methods to determine if differences were real or just random chance.

- What “Statistically Significant” means

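- The observed difference is unlikely to be explained by random chance alone.

- We used a strict threshold (over 99% confidence): if the protocols truly performed the same, a difference this large would appear by chance less than 1% of the time.

- Statistical significance alone does not show that a difference matters in practice; we judged practical importance separately using effect sizes (Cohen’s d).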
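The Limitations section notes that a Bonferroni correction was applied. For the seven comparisons summarised in this section, and assuming a family-wise significance level of 0.05, the correction works as follows. The p-values in the example are hypothetical and are not the study's results.

```python
from __future__ import annotations


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-comparison threshold that keeps the family-wise error rate at alpha."""
    if m < 1:
        raise ValueError("need at least one comparison")
    return alpha / m


def bonferroni_adjust(p_values: list[float]) -> list[float]:
    """Bonferroni-adjusted p-values: min(1, p * m)."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


print(f"{bonferroni_threshold(0.05, 7):.4f}")  # 0.0071

# Hypothetical p-values for three comparisons, not taken from the study.
adjusted = bonferroni_adjust([0.0004, 0.003, 0.02])
print([p < 0.05 for p in adjusted])  # [True, True, False]
```

Comparing adjusted p-values against the original alpha is equivalent to comparing raw p-values against the reduced threshold; the adjusted form is simply easier to report.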

The Bottom Line

- Three out of seven comparisons showed clear, reliable differences.

- These three results are backed by statistical evidence.

- The remaining four comparisons showed no meaningful differences.

- Many performance claims you see online are not properly tested.

- Our results show that context matters more than protocol choice.

- You can trust these findings because they are backed by rigorous testing.

4.4. Economic Implications

4.5. Challenges and Difficulties Encountered

- Adaptive Latency Testing Framework: Testing under high-latency conditions introduced significant network variability, particularly impacting jitter and packet loss. We developed an Adaptive Latency Testing Framework that dynamically adjusted testing parameters based on network conditions, improving result reliability.

- Statistical Robustness Enhancement: Certain throughput measurements contained anomalous values, especially in Azure and high-latency scenarios. We implemented enhanced statistical robustness through multiple trial repetitions (n = 20), confidence interval calculations, and comparative analysis both with and without outliers.

- Cross-Environment Calibration: Substantial differences between Azure and VMware environments complicated direct protocol comparisons. Our Cross-Environment Calibration methodology normalised performance metrics based on environment-specific baseline measurements, enabling more meaningful comparisons.

- Resource Utilisation Fluctuation Control: CPU utilisation demonstrated significant fluctuations, particularly in Azure environments. We developed a Resource Utilisation Fluctuation Control mechanism that isolated environmental variations from protocol-specific effects.

4.6. Interpretation of Results

- Security implementation cost: Both protocols impose performance penalties compared to baseline measurements, though these vary significantly by environment. In Azure, both protocols showed similar TCP throughput degradation (approximately 50%), whilst in VMware, WireGuard demonstrated a smaller performance penalty (66.09%) compared to OpenVPN (82.24%).

- Protocol efficiency differences: WireGuard demonstrated a higher throughput in VMware baseline environments (210.64 Mbps vs. OpenVPN’s 110.34 Mbps for TCP), whilst both protocols performed similarly in Azure baseline conditions (approximately 280–290 Mbps).

- Resource utilisation trade-offs: In VMware environments, OpenVPN showed comparable, slightly lower CPU utilisation (3.97% vs. WireGuard’s 4.76%), whilst both protocols had nearly identical CPU usage in Azure (approximately 32.5%). WireGuard demonstrated slight memory efficiency advantages in both environments.

- Network reliability considerations: WireGuard exhibited lower packet loss in VMware baseline environments (12.35% vs. OpenVPN’s 47.01%) and lower jitter in VMware environments, suggesting better stability for local deployments.

- Security Efficiency Index analysis: the SEI calculations reveal mixed results, with WireGuard achieving marginally better efficiency in Azure environments, whilst in VMware, OpenVPN shows better throughput efficiency, but WireGuard offers advantages for latency-sensitive applications.
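The VMware performance penalties and throughput figures above can be cross-checked with simple arithmetic. Assuming the penalty is defined as (baseline − VPN) / baseline × 100, which the text implies but does not state, both the WireGuard figures (210.64 Mbps, 66.09%) and the OpenVPN figures (110.34 Mbps, 82.24%) imply a no-VPN TCP baseline of roughly 621 Mbps. This suggests both penalties are measured against the same baseline.

```python
def degradation_pct(baseline: float, with_vpn: float) -> float:
    """Throughput lost relative to the no-VPN baseline, in percent (assumed definition)."""
    return 100.0 * (baseline - with_vpn) / baseline


def implied_baseline(with_vpn: float, degradation: float) -> float:
    """Invert degradation_pct: the baseline that yields the reported penalty."""
    return with_vpn / (1.0 - degradation / 100.0)


# VMware TCP figures reported in this section.
wg = implied_baseline(210.64, 66.09)
ovpn = implied_baseline(110.34, 82.24)
print(f"implied no-VPN baseline: WireGuard {wg:.1f} Mbps, OpenVPN {ovpn:.1f} Mbps")
```

The small residual difference between the two implied baselines is consistent with rounding in the reported percentages.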

Packet Loss Analysis and Protocol Architecture Implications

- Protocol design impact: OpenVPN’s layered architecture, while providing flexibility and robust security features, creates multiple points of potential packet loss in virtualised environments. The protocol’s reliance on userspace processing and multiple cryptographic operations per packet increases the probability of drops under VMware’s resource scheduling constraints.

- Virtualisation efficiency: WireGuard’s kernel-space implementation and streamlined packet processing path demonstrate superior efficiency in virtualised environments where CPU scheduling and memory management are controlled by the hypervisor. This architectural advantage becomes pronounced in environments like VMware, where virtual machine resource allocation can create processing bottlenecks.

- Practical implications: Organisations deploying VPNs in VMware environments should note that, although OpenVPN’s packet loss appears high, lost packets carrying TCP traffic are retransmitted (by the tunnelled TCP connection, or by OpenVPN itself when it runs over TCP), so data integrity is preserved at the cost of throughput. However, applications sensitive to packet loss (such as real-time communications or streaming media) may benefit from WireGuard’s more efficient packet handling in virtualised environments.
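Why retransmission recovers data but costs throughput can be illustrated with the Mathis et al. approximation for loss-limited TCP throughput, rate ≈ (MSS / RTT) · (C / √p). The model was derived for Reno-style congestion avoidance, and CUBIC deviates from it, so treat this sketch as a qualitative guide only. The inputs are hypothetical.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float,
                          c: float = math.sqrt(1.5)) -> float:
    """Approximate steady-state TCP throughput (bit/s) under random packet loss.

    Mathis et al. model: rate ~ (MSS / RTT) * (C / sqrt(p)). Derived for Reno-style
    AIMD; CUBIC behaves differently, so this is a qualitative approximation.
    """
    if rtt_s <= 0 or not 0 < loss_rate <= 1:
        raise ValueError("rtt_s must be > 0 and loss_rate in (0, 1]")
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


# Hypothetical inputs: 1460-byte MSS, 100 ms RTT, 1% loss -> about 1.4 Mbit/s.
print(f"{mathis_throughput_bps(1460, 0.100, 0.01) / 1e6:.2f} Mbit/s")
```

The model shows why loss and latency compound: doubling the RTT halves the achievable rate, and so does quadrupling the loss rate, which is consistent with protocol differences shrinking under high-latency conditions.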

4.7. Critical Evaluation of Research

4.7.1. Strengths

- Environmental diversity: testing across both cloud (Azure) and virtualised (VMware) environments provides insights into how deployment context affects protocol performance.

- Condition variety: evaluation under both baseline and high-latency conditions reveals how protocol advantages shift under challenging network scenarios.

- Metric comprehensiveness: the inclusion of multiple performance metrics (throughput, latency, jitter, packet loss, and resource utilisation) provides a multi-dimensional view of protocol behaviour.

- Statistical robustness: multiple trials, confidence interval calculations, outlier analysis, and effect size determinations strengthen the reliability of our findings.

- Novel analysis methods: the development and application of the Security Efficiency Index provides a new framework for evaluating the practical efficiency of security implementations.

- Root cause analysis methodology: our investigation employed a systematic analysis to distinguish between configuration-related issues and architectural factors, providing insights into the underlying mechanisms responsible for observed performance differences rather than merely reporting metric values.

4.7.2. Limitations

- TCP congestion control algorithm scope: Our evaluation used CUBIC exclusively, which may not yield optimal performance for either protocol under all network conditions. Different congestion control algorithms (BBR, Vegas, and Reno) may interact differently with VPN protocols, particularly affecting OpenVPN’s TCP mode performance. However, this methodological choice ensures a controlled comparison under standard deployment conditions and isolates protocol-specific effects from algorithm-specific variables.

- Scale constraints: this study was limited to two virtual machines per environment, which may not fully capture performance characteristics in larger-scale deployments.

- Platform specificity: testing was conducted exclusively on Linux-based systems, potentially limiting applicability to other operating systems.

- Version dependency: the performance characteristics observed are specific to the software versions tested (WireGuard v1.0.20210914 and OpenVPN v2.6.12) and may not represent other versions.

- Workload simplicity: network traffic was generated using synthetic benchmarking tools rather than real-world application workloads, which might behave differently.

- Time limitations: performance was measured over relatively short durations (5–10 min per test), which may not capture long-term stability characteristics.

- Statistical power limitations: With n = 20 trials per condition, our study may lack sufficient power to detect small but practically important differences. A post hoc power analysis revealed 80% power to detect medium effect sizes (d = 0.5) but only 60% power for small effects (d = 0.2). Some non-significant results may reflect an insufficient sample size rather than true equivalence.

- Multiple comparison considerations: while we applied a Bonferroni correction, this conservative approach may have increased Type II error rates, potentially masking real but subtle performance differences.

5. Discussion and Future Scope

5.1. Performance Metrics Evaluation

5.1.1. Throughput Performance

5.1.2. Latency and Jitter

5.1.3. Packet Loss

5.1.4. Resource Utilisation

5.2. Methodology Justification

5.3. Implications for Cloud and Virtualised Environments

5.3.1. Efficiency

5.3.2. Consistency

5.3.3. Scalability

5.3.4. Network Constraint Considerations

- Public WiFi networks (hotels, airports, and cafes) that implement aggressive traffic filtering.

- Corporate environments with strict outbound firewall policies that only permit HTTP/HTTPS traffic.

- Educational institutions with restrictive network access controls.

- ISPs that throttle or block non-standard UDP traffic.

5.4. Connection to Previous Studies

5.4.1. Methodological Limitations

5.4.2. Environmental and Infrastructure Limitations (Expansion)

5.4.3. Security and Cryptographic Analysis Gaps

5.5. Real-World Implications

5.6. Practical Implementation Guidance

- High confidence recommendations:
    - For VMware baseline environments: choose WireGuard (statistically significant throughput and packet loss advantages, p < 0.001).
    - For memory-constrained environments: prefer WireGuard (statistically significant but small memory efficiency gains).

- Moderate confidence recommendations:
    - For Azure high-latency scenarios: OpenVPN demonstrates significant throughput advantages, though these benefits are conditional upon specific network parameters.

- Low confidence recommendations:
    - For Azure baseline environments: either protocol is acceptable (no statistically significant performance differences).
    - For general latency-sensitive applications: statistical analysis shows no significant latency differences between protocols.
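The graded guidance above can be encoded as a small rule set, for example as a starting point for the adaptive frameworks discussed in Section 5.7. This is a hypothetical sketch: the class and function names are illustrative, and the order in which rules are checked is an assumption. The `udp_blocked` rule is not one of the graded recommendations; it draws on the restrictive networks listed in Section 5.3.4 and on the fact that WireGuard transports traffic only over UDP, whereas OpenVPN can also run over TCP.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    environment: str                # "vmware" or "azure"
    high_latency: bool = False
    memory_constrained: bool = False
    udp_blocked: bool = False       # hypothetical flag, e.g. HTTP/HTTPS-only networks


@dataclass(frozen=True)
class Recommendation:
    protocol: str
    confidence: str  # "high", "moderate", "low", or "ungraded"
    reason: str


def recommend(d: Deployment) -> Recommendation:
    """Apply the graded guidance as ordered rules (the rule order is an assumption)."""
    env = d.environment.lower()
    if d.udp_blocked:
        # WireGuard is UDP-only; OpenVPN can fall back to TCP transport.
        return Recommendation("OpenVPN (TCP)", "ungraded", "UDP traffic is filtered")
    if env == "vmware" and not d.high_latency:
        return Recommendation("WireGuard", "high", "throughput and packet-loss advantages")
    if d.memory_constrained:
        return Recommendation("WireGuard", "high", "small but significant memory savings")
    if env == "azure" and d.high_latency:
        return Recommendation("OpenVPN", "moderate", "condition-dependent throughput advantage")
    if env == "azure":
        return Recommendation("Either", "low", "no significant performance differences")
    return Recommendation("Either", "ungraded", "no graded recommendation for this case")


print(recommend(Deployment("vmware")))
print(recommend(Deployment("azure", high_latency=True)))
```

Keeping the rules as data-free conditionals makes the confidence grading explicit, so an adaptive system could, for instance, only switch protocols automatically on high-confidence rules.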

5.7. Future Research Directions

- Investigation of TCP congestion control algorithm interactions: a comprehensive evaluation of VPN performance using BBR, CUBIC, Vegas, and other TCP variants to understand algorithm-specific optimisation opportunities, with particular focus on high-latency and high-loss network scenarios where algorithm choice may significantly affect protocol performance.

- Expanded testing with larger-scale deployments to better understand the scalability characteristics of each protocol [39].

- Analysis of protocol performance with diverse workloads and traffic patterns to identify potential optimisation opportunities, including real-time IoT data flows within battery management systems as examined by Samanta et al. [19], which could offer useful context for evaluating communication protocol performance under varying workloads and latency constraints.

- Comprehensive evaluation of traffic fingerprinting resistance techniques to address the vulnerabilities identified by Xue et al. [25], assessing both performance impacts and effectiveness.

- Examination of the performance of blockchain-integrated security systems in hybrid environments combining cloud and on-premises components, aligning with the deployment considerations discussed by Ayub et al. [22].

- Investigation into the impact of hardware acceleration and specialised networking features on protocol performance, building on Ehlert’s [16] work with hardware security modules.

- Exploration of QUIC tunnelling as a potential alternative to traditional VPN protocols, extending Hettwer’s [20] research to diverse application scenarios and environments.

- Development of adaptive VPN frameworks that can dynamically switch between protocols based on network conditions and application requirements, optimising performance across variable environments.

- Virtualisation-protocol interaction studies: investigation into how different hypervisor architectures (VMware vSphere, Microsoft Hyper-V, KVM, and Xen) interact with VPN protocol processing mechanisms, with particular focus on packet handling efficiency and resource scheduling impacts on security protocol performance.

5.8. AI/ML-Enhanced VPN Performance Optimisation

5.8.1. Intelligent Network-Aware Protocol Selection

5.8.2. AI-Driven Performance Optimisation

5.8.3. Multi-Dimensional Performance Prediction

5.8.4. Integration with Edge–Fog–Cloud Architectures

5.9. Machine-Learning-Enhanced Research Methodologies

5.9.1. AI-Assisted Experimental Design

5.9.2. Automated Performance Analysis

- Integration recommendations

- For immediate implementation (short-term):

1. Incorporate machine learning models for real-time network condition assessment and protocol recommendation.
2. Develop AI-enhanced monitoring systems that continuously track the performance metrics identified in this study.
3. Implement predictive analytics for proactive protocol switching based on network condition forecasts.

- For medium-term development:

1. Create adaptive VPN frameworks that automatically optimise protocol parameters using reinforcement learning.
2. Develop environment-specific AI models that learn optimal configurations for different deployment contexts (Azure vs. VMware).
3. Integrate anomaly detection systems that enhance both security and performance monitoring.

- For long-term research:

1. Establish comprehensive AI-driven VPN ecosystems that integrate protocol selection, parameter optimisation, and security enhancement.
2. Develop quantum-ready AI systems that can adapt to post-quantum cryptographic requirements.
3. Create unified performance prediction models that incorporate all identified performance dimensions.
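The short-term items on monitoring and predictive analytics, and the medium-term item on anomaly detection, can share one primitive: an exponentially weighted estimate of a metric's level and variability. The sketch below is a hypothetical helper, not a component of this study's toolkit; the class name `EwmaMonitor`, the smoothing factor `alpha`, and the z-score threshold are illustrative assumptions.

```python
import math
from typing import Optional


class EwmaMonitor:
    """Exponentially weighted mean and variance of a metric such as RTT in ms."""

    def __init__(self, alpha: float = 0.2, z_threshold: float = 3.0) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.z_threshold = z_threshold
        self.mean: Optional[float] = None
        self.var = 0.0

    def update(self, x: float) -> bool:
        """Fold in one observation; return True if it deviates by more than
        z_threshold smoothed standard deviations from the running mean."""
        if self.mean is None:
            self.mean = x
            return False
        deviation = x - self.mean
        std = math.sqrt(self.var)
        anomalous = std > 0 and abs(deviation) / std > self.z_threshold
        # Incremental EWMA updates of the mean and variance.
        increment = self.alpha * deviation
        self.mean += increment
        self.var = (1 - self.alpha) * (self.var + deviation * increment)
        return anomalous

    def forecast(self) -> float:
        """One-step-ahead forecast: the current smoothed level."""
        if self.mean is None:
            raise ValueError("no observations yet")
        return self.mean
```

A controller could, for example, call `forecast()` after each latency probe and begin preparing an alternative tunnel when the forecast crosses a chosen threshold, while treating samples flagged as anomalous as candidates for security or fault investigation rather than as routine fluctuation.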

5.10. Comparative Context: Performance of Alternative VPN Protocols

5.10.1. IKEv2/IPSec Protocol Performance

5.10.2. SSTP Protocol Performance

5.10.3. L2TP/IPSec Protocol Performance

5.10.4. Legacy Protocol Considerations

5.10.5. Implications for Protocol Selection

6. Conclusions

- Environment-specific protocol selection: Organisations should select VPN protocols based on their specific deployment environment. For VMware environments under normal conditions, WireGuard offers clear advantages in throughput, jitter, and packet loss. For Azure cloud deployments, the two protocols performed similarly under baseline conditions, whereas OpenVPN achieved significantly higher TCP throughput under high-latency conditions, so the choice should follow the expected network conditions.

- Application-dependent optimisation: Protocol selection should consider the specific application requirements. For real-time applications in virtualised environments, WireGuard’s lower jitter and packet loss provide substantial benefits. For throughput-intensive applications, the advantages vary by environment: WireGuard led in VMware under normal conditions, whereas OpenVPN led in Azure under high latency.

- Network condition considerations: Organisations operating in environments with variable network quality should recognise that protocol advantages can shift significantly under challenging conditions; for example, WireGuard’s TCP throughput advantage in VMware was no longer statistically significant once high latency was introduced. Performance testing under realistic network scenarios is therefore essential for optimal protocol selection.

- Decision framework: Organisations should implement a structured decision framework for protocol selection that incorporates deployment environment, application requirements, expected network conditions, and specific performance priorities.

- Future Research Directions

- Phase 1: Configuration and Scale Extensions

- Investigating protocol performance with varied encryption settings and security configurations.

- Evaluating larger-scale deployments to better understand scalability characteristics.

- Phase 2: Complex Deployment Scenarios

- Examining performance in hybrid and multi-cloud environments that combine different infrastructure types.

- Analysing performance with diverse application workloads to provide application-specific guidance.

- Phase 3: Advanced Optimisations

- Assessing the impact of hardware acceleration and specialised networking features on protocol performance.
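The structured decision framework recommended above can be made explicit as a small rule table. The following Python sketch encodes only the environment-level findings reported in this study (Azure and VMware, baseline versus roughly 100 ms of added latency). The `Deployment` fields and the return value `"either"`, meaning that no clear winner was observed and local benchmarking is advisable, are illustrative assumptions rather than a validated tool.

```python
from dataclasses import dataclass
from enum import Enum


class Environment(Enum):
    AZURE = "azure"
    VMWARE = "vmware"


@dataclass(frozen=True)
class Deployment:
    environment: Environment
    high_latency: bool  # e.g. WAN paths comparable to the 100 ms netem scenario
    real_time: bool     # jitter/loss-sensitive traffic such as voice or video


def recommend(d: Deployment) -> str:
    """Map a deployment profile to 'wireguard', 'openvpn', or 'either'."""
    if d.environment is Environment.VMWARE:
        if not d.high_latency or d.real_time:
            # Baseline VMware: WireGuard led on throughput, jitter and packet loss;
            # real-time traffic in virtualised settings favours its lower jitter/loss.
            return "wireguard"
        # High latency: no significant TCP throughput difference was observed.
        return "either"
    # Azure
    if d.high_latency and not d.real_time:
        # OpenVPN showed a large TCP throughput advantage under high latency.
        return "openvpn"
    # Azure baseline (or real-time under high latency): no clear winner observed.
    return "either"
```

Keeping the rules in one function makes it straightforward to revise them as further environments, encryption settings, or workloads are evaluated.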

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Basa, R. Demystifying Cloud Computing and Virtualization: A Technical Overview. Int. J. Res. Comput. Appl. Inf. Technol. 2024, 7, 125–135.
2. Jimmy, F. Cyber Security Vulnerabilities and Remediation through Cloud Security Tools. J. Artif. Intell. Gen. Sci. 2024, 2, 129–171.
3. Hans, M.; Fuhrmann, T.; Reindl, A.; Niemetz, M. FMS-BERICHTE SOMMERSEMESTER 2022. In Proceedings of the FMS-OTHR, Regensburg, Germany, 17 September 2022; pp. 36–40.
4. Abbas, H.; Emmanuel, N.; Amjad, M.F.; Yaqoob, T.; Atiquzzaman, M.; Iqbal, Z.; Shafqat, N.; Shahid, W.B.; Tanveer, A.; Ashfaq, U. Security Assessment and Evaluation of VPNs: A Comprehensive Survey. ACM Comput. Surv. 2023, 55, 1–47.
5. Tian, X. A Survey on VPN Technologies: Concepts, Implementations, and Anti-Detection Strategies. Int. J. Eng. Dev. Res. 2025, 13, 85–96.
6. Akinsanya, M.O.; Ekechi, C.C.; Okeke, C.D. Virtual Private Networks (VPN): A Conceptual Review of Security Protocols and Their Application in Modern Networks. Eng. Sci. Technol. J. 2024, 5, 1452–1472.
7. Bansode, R.; Girdhar, A. Common Vulnerabilities Exposed in VPN – A Survey. J. Phys. Conf. Ser. 2021, 1714, 012045.
8. Korhonen, V. Future after OpenVPN and IPSec. Master’s Thesis, Tampere University, Tampere, Finland, 2019.
9. Donenfeld, J.A. WireGuard: Next Generation Kernel Network Tunnel. In Proceedings of the NDSS, San Diego, CA, USA, 26 February–1 March 2017; pp. 1–12.
10. Master, A.; Garman, C. A WireGuard Exploration; CERIAS Technical Reports; Purdue University: West Lafayette, IN, USA, 2021.
11. Iqbal, M.; Riadi, I. Analysis of Security Virtual Private Network (VPN) Using OpenVPN. Int. J. Cyber-Secur. Digit. Forensics 2019, 8, 58–65.
12. Rodgers, C. Virtual Private Networks: Strong Security at What Cost? Report; University of Canterbury, Computer Science and Software Engineering: Christchurch, New Zealand, 2001.
13. Abdulazeez, A.; Salim, B.; Zeebaree, D.; Doghramachi, D. Comparison of VPN Protocols at Network Layer Focusing on Wire Guard Protocol. Int. J. Interact. Mob. Technol. 2020, 14, 18.
14. Mackey, S.; Mihov, I.; Nosenko, A.; Vega, F.; Cheng, Y. A Performance Comparison of WireGuard and OpenVPN. In Proceedings of the Tenth ACM Conference on Data and Application Security and Privacy, New Orleans, LA, USA, 16–18 March 2020; pp. 162–164.
15. Shim, H.; Kang, B.; Im, H.; Jeon, D.; Kim, S. qTrustNet Virtual Private Network (VPN): Enhancing Security in the Quantum Era. IEEE Access 2025, 13, 17807–17819.
16. Ehlert, E. OpenVPN TLS-Crypt-V2 Key Wrapping with Hardware Security Modules. Stud. Inform. Ski. 2025, S-19.
17. Dekker, E.; Spaans, P. Performance Comparison of VPN Implementations WireGuard, strongSwan, and OpenVPN in a 1 Gbit/s Environment; University of Amsterdam: Amsterdam, The Netherlands, 2020.
18. Johansson, V. A Comparison of OpenVPN and WireGuard on Android. Bachelor’s Thesis, Umeå University, Umeå, Sweden, 2024.
19. Samanta, A.; Sharma, M.; Locke, W.; Williamson, S. Cloud-Enhanced Battery Management System Architecture for Real-Time Data Visualization, Decision Making, and Long-Term Storage. IEEE J. Emerg. Sel. Top. Ind. Electron. 2025, 1–12.
20. Hettwer, L. Evaluation of QUIC-Tunneling. Ph.D. Thesis, Hochschule für Angewandte Wissenschaften Hamburg, Hamburg, Germany, 2025.
21. Anyam, J.; Singh, R. Repository for Empirical Analysis of WireGuard vs. OpenVPN in Cloud and Virtualised Environments. Zenodo. Available online: https://zenodo.org/records/15760416 (accessed on 28 June 2025).
22. Ayub, N.; Bakhet, S.; Arshad, M.J.; Saleem, M.U.; Anam, R.; Fuzail, M.Z. An Enhanced Machine Learning and Blockchain-Based Framework for Secure and Decentralized Artificial Intelligence Applications in 6G Networks Using Artificial Neural Networks (ANNs). Spectr. Eng. Sci. 2025, 3, 348–364.
23. Chua, C.H.; Ng, S.C. Open-Source VPN Software: Performance Comparison for Remote Access. In Proceedings of the ICISS 2022: 2022 the 5th International Conference on Information Science and Systems, Beijing, China, 26–28 August 2022.
24. Yedla, B.K. Performance Evaluation of VPN Solutions in Multi-Region Kubernetes Cluster. Master’s Thesis, Blekinge Institute of Technology, Karlskrona, Sweden, 2023.
25. Xue, D.; Ramesh, R.; Jain, A.; Kallitsis, M.; Halderman, J.A.; Crandall, J.R.; Ensafi, R. OpenVPN Is Open to VPN Fingerprinting. Commun. ACM 2025, 68, 79–87.
26. Jumakhan, H.; Mirzaeinia, A. WireGuard: An Efficient Solution for Securing IoT Device Connectivity. arXiv 2024, arXiv:2402.02093.
27. Pudelko, M.; Emmerich, P.; Gallenmüller, S.; Carle, G. Performance Analysis of VPN Gateways. In Proceedings of the 2020 IFIP Networking Conference (Networking), Paris, France, 22–25 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 325–333.
28. Sabbagh, M.; Anbarje, A. Evaluation of WireGuard and OpenVPN VPN Solutions. Bachelor’s Thesis, Linnaeus University, Växjö, Sweden, 2020.
29. Ostroukh, A.V.; Pronin, C.B.; Podberezkin, A.A.; Podberezkina, J.V.; Volkov, A.M. Enhancing Corporate Network Security and Performance: A Comprehensive Evaluation of WireGuard as a Next-Generation VPN Solution. In Proceedings of the 2024 Systems of Signal Synchronization, Generating and Processing in Telecommunications (SYNCHROINFO), Vyborg, Russia, 1–3 July 2024; pp. 1–5.
30. Akter, H.; Jahan, S.; Saha, S.; Faisal, R.H.; Islam, S. Evaluating Performances of VPN Tunneling Protocols Based on Application Service Requirements. In Proceedings of the Third International Conference on Trends in Computational and Cognitive Engineering: TCCE 2021, Online, 21–22 October 2021; Springer: Berlin/Heidelberg, Germany, 2022; pp. 433–444.
31. Budiyanto, S.; Gunawan, D. Comparative Analysis of VPN Protocols at Layer 2 Focusing on Voice over Internet Protocol. IEEE Access 2023, 11, 60853–60865.
32. Wahanani, H.E.; Idhom, M.; Mandyartha, E.P. Analysis of Streaming Video on VPN Networks between OpenVPN and L2TP/IPSec. In Proceedings of the 2021 IEEE 7th Information Technology International Seminar (ITIS), Surabaya, Indonesia, 6–8 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.
33. Lawas, J.B.R.; Vivero, A.C.; Sharma, A. Network Performance Evaluation of VPN Protocols (SSTP and IKEv2). In Proceedings of the 2016 Thirteenth International Conference on Wireless and Optical Communications Networks (WOCN), Hyderabad, India, 21–23 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–5.
34. Rajamohan, P. An Overview of Remote Access VPNs: Architecture and Efficient Installation. IPASJ Int. J. Inf. Technol. 2014, 2, 11.
35. Liu, Z. Application and Security Analysis of Virtual Private Network (VPN) in Network Communication. Acad. J. Comput. Inf. Sci. 2023, 6, 52–59.
36. Arora, P.; Vemuganti, P.R.; Allani, P. Comparison of VPN Protocols: IPSec, PPTP, and L2TP; Department of Electrical and Computer Engineering, George Mason University: Fairfax, VA, USA, 2011; Volume 646.
37. Hilley, D. Cloud Computing: A Taxonomy of Platform and Infrastructure-Level Offerings; Georgia Institute of Technology: Atlanta, GA, USA, 2009; pp. 44–45.
38. Motahari-Nezhad, H.R.; Stephenson, B.; Singhal, S. Outsourcing Business to Cloud Computing Services: Opportunities and Challenges. IEEE Internet Comput. 2009, 10, 1–17.
39. Javed, M.A.; Ahmad, M.; Ahmed, J.; Rizwan, S.M.; Tariq, A. An Enhanced Machine Learning Based Data Privacy and Security Mitigation Technique: An Intelligent Federated Learning (FL) Model for Intrusion Detection and Classification System for Cyber-Physical Systems in Internet of Things (IoTs). Spectr. Eng. Sci. 2025, 3, 377–401.
40. Gentile, A.F.; Macrì, D.; De Rango, F.; Tropea, M.; Greco, E. A VPN Performances Analysis of Constrained Hardware Open Source Infrastructure Deploy in IoT Environment. Future Internet 2022, 14, 264.
41. Gatti, V.R.; Shetty, P.; Ravi Prakash, B.; Rama Moorthy, H. Balancing Performance and Protection: A Study of Speed-Security Trade-Offs in Video Security. In Proceedings of the 2024 International Conference on Recent Advances in Science and Engineering Technology (ICRASET), Mandya, India, 21–22 November 2024; pp. 1–6.
42. Ghanem, K.; Ugwuanyi, S.; Hansawangkit, J.; McPherson, R.; Khan, R.; Irvine, J. Security vs Bandwidth: Performance Analysis Between IPsec and OpenVPN in Smart Grid. In Proceedings of the 2022 International Symposium on Networks, Computers and Communications (ISNCC), Shenzhen, China, 19–22 July 2022; pp. 1–5.
43. Jucha, G.T.; Yeboah-Ofori, A. Evaluation of Security and Performance Impact of Cryptographic and Hashing Algorithms in Site-to-Site Virtual Private Networks. In Proceedings of the 2024 International Conference on Electrical and Computer Engineering Researches (ICECER), Gaborone, Botswana, 4–6 December 2024; pp. 1–6.
44. Vydyanathan, N.; Catalyurek, U.V.; Kurc, T.M.; Sadayappan, P.; Saltz, J.H. Toward Optimizing Latency under Throughput Constraints for Application Workflows on Clusters. In Proceedings of the European Conference on Parallel Processing, Rennes, France, 28–31 August 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 173–183.
45. Hakak, S.; Anwar, F.; Latif, S.A.; Gilkar, G.; Alam, M. Impact of Packet Size and Node Mobility Pause Time on Average End to End Delay and Jitter in MANET’s. In Proceedings of the 2014 International Conference on Computer and Communication Engineering, Da Nang, Vietnam, 30 July–1 August 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 56–59.
46. Tufte, E.R.; Graves-Morris, P.R. The Visual Display of Quantitative Information; Graphics Press: Cheshire, CT, USA, 1983; Volume 2.
47. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Behavioral Sciences, Economics, Finance, Business & Industry, Social Sciences; Routledge: New York, NY, USA, 1988; ISBN 978-0-203-77158-7.
48. Blenk, A.; Basta, A.; Kellerer, W.; Schmid, S. On the Impact of the Network Hypervisor on Virtual Network Performance. In Proceedings of the 2019 IFIP Networking Conference (IFIP Networking), Warsaw, Poland, 20–22 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–9.
49. Narayan, S.; Brooking, K.; de Vere, S. Network Performance Analysis of VPN Protocols: An Empirical Comparison on Different Operating Systems. In Proceedings of the 2009 International Conference on Networks Security, Wireless Communications and Trusted Computing, Wuhan, China, 25–26 April 2009; IEEE: Piscataway, NJ, USA, 2009; Volume 1, pp. 645–648.
50. Jyothi, K.K.; Reddy, B.I. Study on Virtual Private Network (VPN), VPN’s Protocols and Security. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2018, 3, 919–932.
51. Zhang, J.; Li, Y.; Li, Q.; Xiao, W. Variance-Constrained Local–Global Modeling for Device-Free Localization Under Uncertainties. IEEE Trans. Ind. Inform. 2024, 20, 5229–5240.
52. Zhang, J.; Xue, J.; Li, Y.; Cotton, S.L. Leveraging Online Learning for Domain-Adaptation in Wi-Fi-Based Device-Free Localization. IEEE Trans. Mob. Comput. 2025, 24, 7773–7787.
53. Wallin, F.; Putrus, M. Analyzing the Impact of Cloud Infrastructure on VPN Performance: A Comparison of Microsoft Azure and Amazon Web Services. Bachelor’s Thesis, Mälardalen University, Västerås, Sweden, 2024.

| Year | Reference | Approach of Evaluation | Area of Contribution | Metrics of Evaluation | Findings |
|---|---|---|---|---|---|
| 2025 | [5] | Survey | VPN technologies, implementations, and anti-detection strategies | Security features, performance, and anti-detection capabilities | WireGuard demonstrates superior performance compared to IPsec and OpenVPN due to its lightweight design and 1-RTT handshake protocol, though optimal selection depends on specific use case requirements |
| 2025 | [16] | Implementation analysis | OpenVPN TLS-Crypt-V2 with HSM integration | Security enhancement, performance impact, and implementation complexity | HSMs improve OpenVPN key security but with severe performance penalties (2700× slower), increasing DoS vulnerability; implementation viability varies by HSM type |
| 2024 | [18] | Empirical analysis | OpenVPN and WireGuard performance comparison | Bandwidth, latency, and file transfer performance | WireGuard is found to be the preferred choice for remote work |
| 2024 | [26] | Empirical analysis | OpenVPN, WireGuard, and IPSec evaluation | Jitter, throughput, and latency during file transfers | WireGuard’s architectural simplicity and minimal overhead enable widespread VPN deployment for IoT device attack prevention |
| 2020 | [27] | Comparative analysis | OpenVPN, WireGuard, and Linux IPSec | Packet size, number of flows, packet rate, and processing load | WireGuard demonstrates superior performance in controlled testbed environments |
| 2020 | [28] | Empirical analysis | WireGuard and OpenVPN throughput analysis | Network throughput | WireGuard achieves higher throughput than OpenVPN |
| 2020 | [17] | Empirical analysis | WireGuard, strongSwan, and OpenVPN | UDP and TCP goodput, latency, CPU utilisation, and connection initiation time | WireGuard exhibits the highest CPU utilisation and latency; OpenVPN shows the longest connection initiation time |
| 2020 | [14] | Empirical analysis | WireGuard and OpenVPN | Throughput and CPU utilisation | WireGuard consistently outperforms OpenVPN in both local virtual machine and cloud-based (AWS) environments |
| 2025 | This study | Empirical framework | Systematic comparison of WireGuard and OpenVPN in cloud and virtualised environments | Throughput, latency, jitter, packet loss, resource utilisation, and Security Efficiency Index | WireGuard outperformed OpenVPN in VMware (≈2× TCP throughput, ≈4× lower packet loss); similar performance in the Azure baseline; OpenVPN better in high-latency Azure scenarios; environment-specific selection recommended |

| References | Feature Category | Specification | WireGuard | OpenVPN |
|---|---|---|---|---|
| [8,9] | Development timeline | First release | 2016 | 2001 |
| [29] | Code complexity | Codebase size | ~4000 lines | ~100,000+ lines |
| [8,10] | Architecture | Implementation model | Kernel module (Linux); userspace (other OS) | Userspace application |
| [8,10] | Cryptographic implementation | Encryption algorithms | ChaCha20, Poly1305, Curve25519, BLAKE2s, HKDF | OpenSSL library (various algorithms) |
| [5,16] | Security protocol | Authentication framework | Noise protocol framework | TLS with certificates |
| [5,8] | Network protocol | Connection establishment | 1-RTT handshake | Multi-round TLS handshake |
| [5,25] | Administrative complexity | Configuration requirements | Low (few configuration options) | High (highly configurable) |
| [8,10] | Network infrastructure | Protocol and port usage | Single UDP port | Configurable ports (TCP/UDP) |

| VPN Protocol | References | Encryption Strength | Implementation Security | Configuration Security | Authentication Robustness | Forward Secrecy | Overall Security Rating |
|---|---|---|---|---|---|---|---|
| IPSec | [30,31] | Very high | Medium | Low | Very high | High | High |
| L2TP | [32] | Very low | Low | Medium | Low | None | Low |
| IKEv2 | [33,34] | Very high | High | Medium | Very high | High | Very high |
| PPTP | [4,35] | Very low | Very low | High | Low | None | Very low |
| PPTP/L2TP | [36] | High | Medium | Medium | High | Medium | High |
| L2TP/IPSec | [4,30,32] | Very high | High | Medium | Very high | High | Very high |
| WireGuard | [9,13] | Very high | Very high | Very high | High | High | Very high |
| OpenVPN | [4,16,32,35] | Very high | High | Medium | Very high | High | Very high |
| GRE | [13] | None | N/A | High | None | None | Very low |
| SSTP | [33,35] | High | Medium | Medium | High | Medium | High |
| qTrustNet VPN | [15] | Very high | High | Medium | Very high | Very high | Very high |

| Test Scenario | Objective | Measurement Tools | Performance Metrics | Test Parameters | Statistical Approach |
|---|---|---|---|---|---|
| Baseline Performance Assessment | Establish network performance benchmarks under optimal conditions | iperf3, ping | TCP throughput, UDP throughput, UDP packet loss, latency, jitter, packet loss, CPU/memory utilisation | 20 trials per test, 10 s measurement duration | Mean, median, std dev, min/max, coefficient of variation, 95% confidence intervals |
| Adverse Network Conditions Simulation | Evaluate protocol resilience under degraded network conditions | iperf3, ping, tc netem traffic controls | TCP throughput, UDP throughput, UDP packet loss, latency, jitter, packet loss, CPU/memory utilisation | 100 ms artificial latency injection, 1% packet loss simulation, 20 trials per test, 10 s measurement duration | Mean, median, std dev, min/max, coefficient of variation, 95% confidence intervals |
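The two test scenarios above can be scripted along the following lines. This Python sketch only builds the command lines and summarises trial results. The interface name, the server address, the JSON output flag (`-J`), and the unlimited UDP bitrate (`-b 0`) are assumptions, since the scenario table does not list exact invocations. The 95% interval uses the two-sided Student t critical value for 20 trials (df = 19), matching the trial count in the table.

```python
from __future__ import annotations

import math
import statistics

T_CRIT_95_DF19 = 2.093  # two-sided 95% Student t critical value, df = 19 (n = 20)


def netem_command(dev: str, delay_ms: int = 100, loss_pct: float = 1.0) -> list[str]:
    """tc netem invocation for the adverse-conditions scenario (needs root)."""
    return ["tc", "qdisc", "add", "dev", dev, "root", "netem",
            "delay", f"{delay_ms}ms", "loss", f"{loss_pct:g}%"]


def iperf3_command(server: str, udp: bool = False, seconds: int = 10) -> list[str]:
    """One iperf3 client run with JSON output (-J) for easy parsing."""
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-J"]
    if udp:
        # Assumption: -b 0 lifts iperf3's default 1 Mbit/s UDP target bitrate.
        cmd += ["-u", "-b", "0"]
    return cmd


def summarise(trials: list[float], t_crit: float = T_CRIT_95_DF19) -> dict:
    """Mean, median, sample std dev, min/max, CV (%) and a t-based 95% CI."""
    mean = statistics.mean(trials)
    sd = statistics.stdev(trials)  # sample standard deviation (n - 1 denominator)
    half_width = t_crit * sd / math.sqrt(len(trials))
    return {
        "mean": mean,
        "median": statistics.median(trials),
        "std": sd,
        "min": min(trials),
        "max": max(trials),
        "cv_pct": 100 * sd / mean,
        "ci95": (mean - half_width, mean + half_width),
    }
```

In practice each command list would be passed to `subprocess.run(..., check=True)`, and the netem qdisc removed after the adverse-condition runs with `tc qdisc del dev <dev> root`. If the trial count changes, `t_crit` must be updated to match the new degrees of freedom.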

| Metric | Formula | Description |
|---|---|---|
| Performance Degradation Ratio (PDR) | PDR = (Baseline_Metric − VPN_Metric) / Baseline_Metric × 100% | Quantifies percentage performance reduction when implementing VPN security compared to unencrypted baseline communication |
| Performance Degradation Ratio (Inverse Metrics) | PDR = (VPN_Metric − Baseline_Metric) / Baseline_Metric × 100% | Alternative calculation for metrics where increased values indicate degraded performance (e.g., latency and resource utilisation) |
| Resource Utilisation Difference (RUD) | RUD = VPN_Resource_Usage − Baseline_Resource_Usage | Measures absolute increase in system resource consumption attributable to VPN implementation |
| Security Efficiency Index (SEI) | SEI = (1 − PDR/100) / RUD | Quantifies the trade-off between retained performance and increased resource utilisation; higher values indicate more efficient security implementation |
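The formulas in the metrics table translate directly into code. In this Python sketch the function names are our own; `higher_is_better` selects between the standard and inverse PDR forms, and the caller decides which resource (for example, CPU utilisation in percentage points) supplies the RUD passed to the SEI. Applied to the Azure TCP throughput row of the results table, `pdr` reproduces the reported values of 52.03% (WireGuard) and 50.50% (OpenVPN).

```python
def pdr(baseline: float, vpn: float, higher_is_better: bool = True) -> float:
    """Performance Degradation Ratio (%) relative to the no-VPN baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    if higher_is_better:
        # Throughput-style metrics: a drop relative to baseline is degradation.
        return (baseline - vpn) / baseline * 100
    # Inverse metrics (latency, packet loss, resource use): a rise is degradation.
    return (vpn - baseline) / baseline * 100


def rud(vpn_usage: float, baseline_usage: float) -> float:
    """Resource Utilisation Difference: absolute extra resource use under the VPN."""
    return vpn_usage - baseline_usage


def sei(pdr_pct: float, rud_value: float) -> float:
    """Security Efficiency Index: retained performance per unit of extra resource."""
    if rud_value == 0:
        raise ValueError("RUD must be non-zero")
    return (1 - pdr_pct / 100) / rud_value
```

For example, `pdr(12.17, 14.95, higher_is_better=False)` gives the 22.84% latency degradation reported for WireGuard in VMware.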

| Environment | Performance Metric | Baseline (No VPN) | WireGuard | OpenVPN | WireGuard PDR (%) | OpenVPN PDR (%) |
|---|---|---|---|---|---|---|
| Microsoft Azure Cloud | | | | | | |
| | TCP Throughput (Mbps) | 587.42 | 281.76 | 290.77 | 52.03 | 50.50 |
| | UDP Throughput (Mbps) | 892.15 | 878.80 | 880.22 | 1.50 | 1.34 |
| | CPU Utilisation (%) | 13.22 | 32.52 | 32.51 | 146.00 | 145.92 |
| | Memory Usage (%) | 12.34 | 14.69 | 14.80 | 19.04 | 19.94 |
| | Latency (ms) | 85.83 | 85.59 | 86.32 | −0.28 | 0.57 |
| | Packet Loss (%) | 1.21 | 2.46 | 2.63 | 103.31 | 117.36 |
| VMware Virtualisation | | | | | | |
| | TCP Throughput (Mbps) | 621.19 | 210.64 | 110.34 | 66.09 | 82.24 |
| | UDP Throughput (Mbps) | 302.67 | 285.28 | 154.88 | 5.75 | 48.83 |
| | CPU Utilisation (%) | 3.12 | 4.76 | 3.97 | 52.56 | 27.24 |
| | Memory Usage (%) | 32.44 | 33.87 | 36.42 | 4.41 | 12.27 |
| | Latency (ms) | 12.17 | 14.95 | 18.71 | 22.84 | 53.74 |
| | Packet Loss (%) | 2.43 | 12.35 | 47.01 | 408.23 | 1834.57 |
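The headline VMware ratios summarised for this study in the literature-comparison table (about 2× TCP throughput and 4× lower packet loss for WireGuard) follow directly from the rows above:

```latex
\frac{210.64}{110.34} \approx 1.91 \ (\text{TCP throughput, WireGuard/OpenVPN}), \qquad
\frac{285.28}{154.88} \approx 1.84 \ (\text{UDP throughput, WireGuard/OpenVPN}), \qquad
\frac{47.01}{12.35} \approx 3.81 \ (\text{packet loss, OpenVPN/WireGuard})
```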

| Environment | Performance–Security Trade-Off Metric | WireGuard SEI | OpenVPN SEI | Superior Protocol |
|---|---|---|---|---|
| Microsoft Azure Cloud | | | | |
| | TCP Throughput per CPU Unit | 0.019 | 0.018 | WireGuard (marginal advantage) |
| | UDP Throughput per CPU Unit | 0.060 | 0.059 | WireGuard (marginal advantage) |
| | Latency Efficiency per CPU Unit | 0.021 | 0.020 | WireGuard (marginal advantage) |
| VMware Virtualisation | | | | |
| | TCP Throughput per CPU Unit | 0.083 | 0.131 | OpenVPN (significant advantage) |
| | UDP Throughput per CPU Unit | 0.112 | 0.231 | OpenVPN (significant advantage) |
| | Latency Efficiency per CPU Unit | 0.091 | 0.035 | WireGuard (significant advantage) |

| Test Condition | Performance Outcome | Statistical Confidence | Effect Size Classification | Practical Significance |
|---|---|---|---|---|
| Microsoft Azure: Normal Network Conditions | | | | |
| TCP Throughput | No statistically significant difference between protocols | Low confidence (p > 0.05) | Negligible effect size | Minimal practical difference |
| Microsoft Azure: High Latency Network Conditions | | | | |
| TCP Throughput | OpenVPN demonstrates superior performance | High confidence (p < 0.01) | Large effect size | Substantial practical advantage (60 Mbps improvement) |
| VMware: Normal Network Conditions | | | | |
| TCP Throughput | WireGuard demonstrates superior performance | High confidence (p < 0.01) | Large effect size | Substantial practical advantage (100 Mbps improvement) |
| VMware: High Latency Network Conditions | | | | |
| TCP Throughput | No statistically significant difference between protocols | Low confidence (p > 0.05) | Negligible effect size | Minimal practical difference |
| Universal Network Conditions | | | | |
| UDP Throughput (Azure) | No statistically significant difference between protocols | Low confidence (p > 0.05) | Negligible effect size | Minimal practical difference |
| UDP Throughput (VMware) | WireGuard demonstrates superior performance | High confidence (p < 0.01) | Large effect size | Substantial practical advantage (130 Mbps improvement) |
| Memory Utilisation (Azure) | WireGuard exhibits lower resource consumption | Moderate confidence (p < 0.33) | Small effect size | Minor practical advantage |
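The effect-size labels in the table above match the conventional thresholds of Cohen [47]. Assuming the statistic is Cohen's d with a pooled standard deviation (the table does not name it), it could be computed per metric from the 20 trials of each protocol as in this Python sketch; the helper names are our own.

```python
from __future__ import annotations

import math
import statistics


def cohens_d(a: list[float], b: list[float]) -> float:
    """Cohen's d for two independent samples, using the pooled sample SD."""
    na, nb = len(a), len(b)
    if na < 2 or nb < 2:
        raise ValueError("each sample needs at least two observations")
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    if pooled_var == 0:
        raise ValueError("both samples are constant; d is undefined")
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)


def classify_effect(d: float) -> str:
    """Cohen's conventional thresholds: 0.2 small, 0.5 medium, 0.8 large."""
    magnitude = abs(d)
    if magnitude < 0.2:
        return "negligible"
    if magnitude < 0.5:
        return "small"
    if magnitude < 0.8:
        return "medium"
    return "large"
```

The sign of d indicates which protocol had the higher mean; only its magnitude determines the classification.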

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Anyam, J.; Singh, R.R.; Larijani, H.; Philip, A. Empirical Performance Analysis of WireGuard vs. OpenVPN in Cloud and Virtualised Environments Under Simulated Network Conditions. Computers 2025, 14, 326. https://doi.org/10.3390/computers14080326
