Automated Recognition of Rock Mass Discontinuities on Vegetated High Slopes Using UAV Photogrammetry and an Improved Superpoint Transformer

Highlights

- Integrated close-range UAV photogrammetry with an improved Superpoint Transformer to segment rock and vegetation on steep vegetated slopes.

- VDVI and volumetric density features, combined with hierarchical filtering, achieved 89.5% overall accuracy and ran about 25 times faster than CANUPO; discontinuity planes and their key geometric parameters were then extracted automatically.

- Enables rapid, safe discontinuity mapping for hazardous high slopes, reducing field exposure while preserving centimetre-scale geometric detail for engineering decisions.

- Delivers orientation, spacing, persistence, and trace statistics to support slope stability evaluation, rockfall hazard screening, and digital geotechnical inventories in vegetation-covered terrain.

Abstract

1. Introduction

1.1. Point-Cloud-Based Structural Plane Extraction

1.2. Vegetation Separation and Classification in Point Clouds

1.3. Objective of This Study

2. Methodology

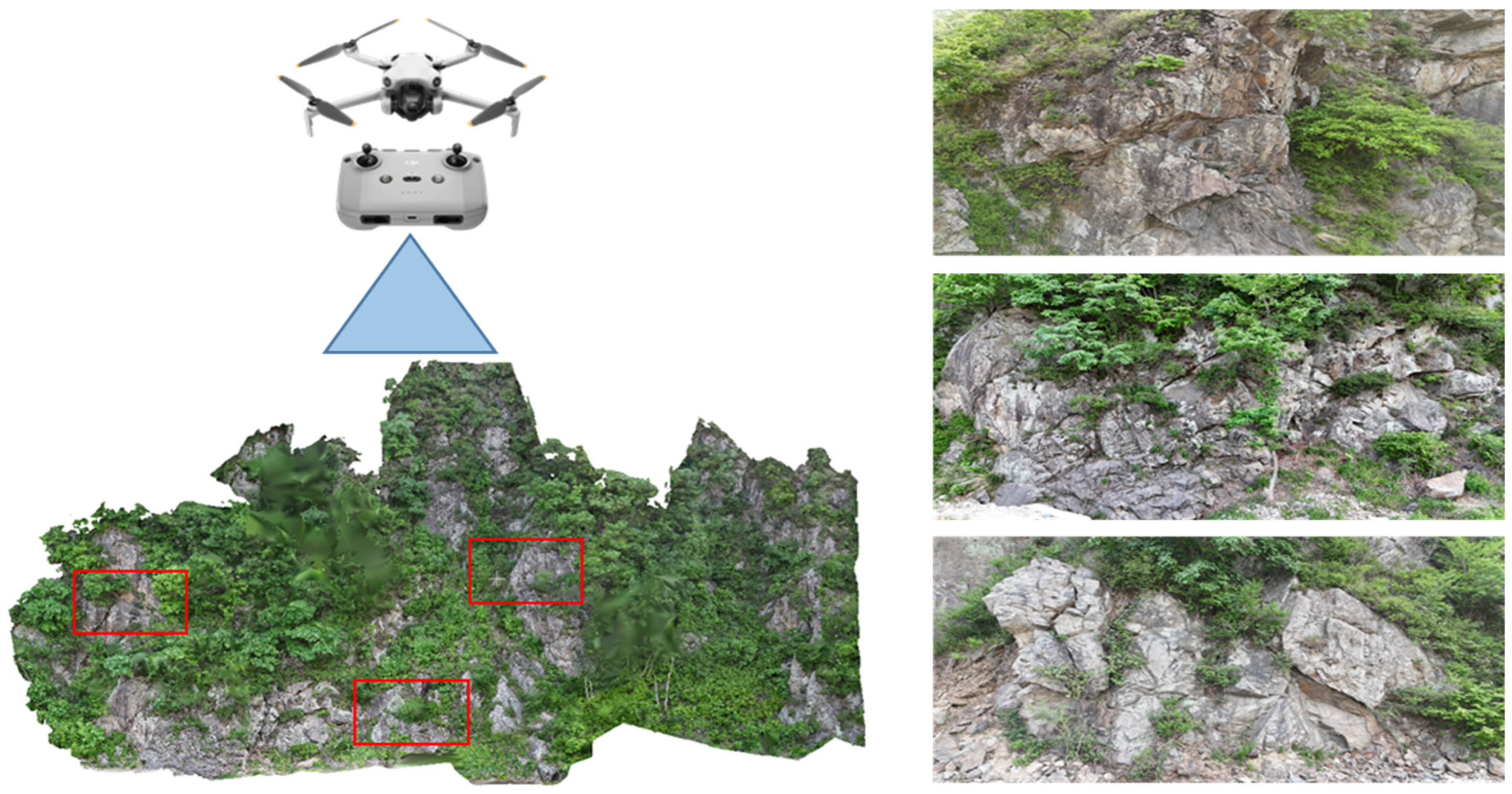

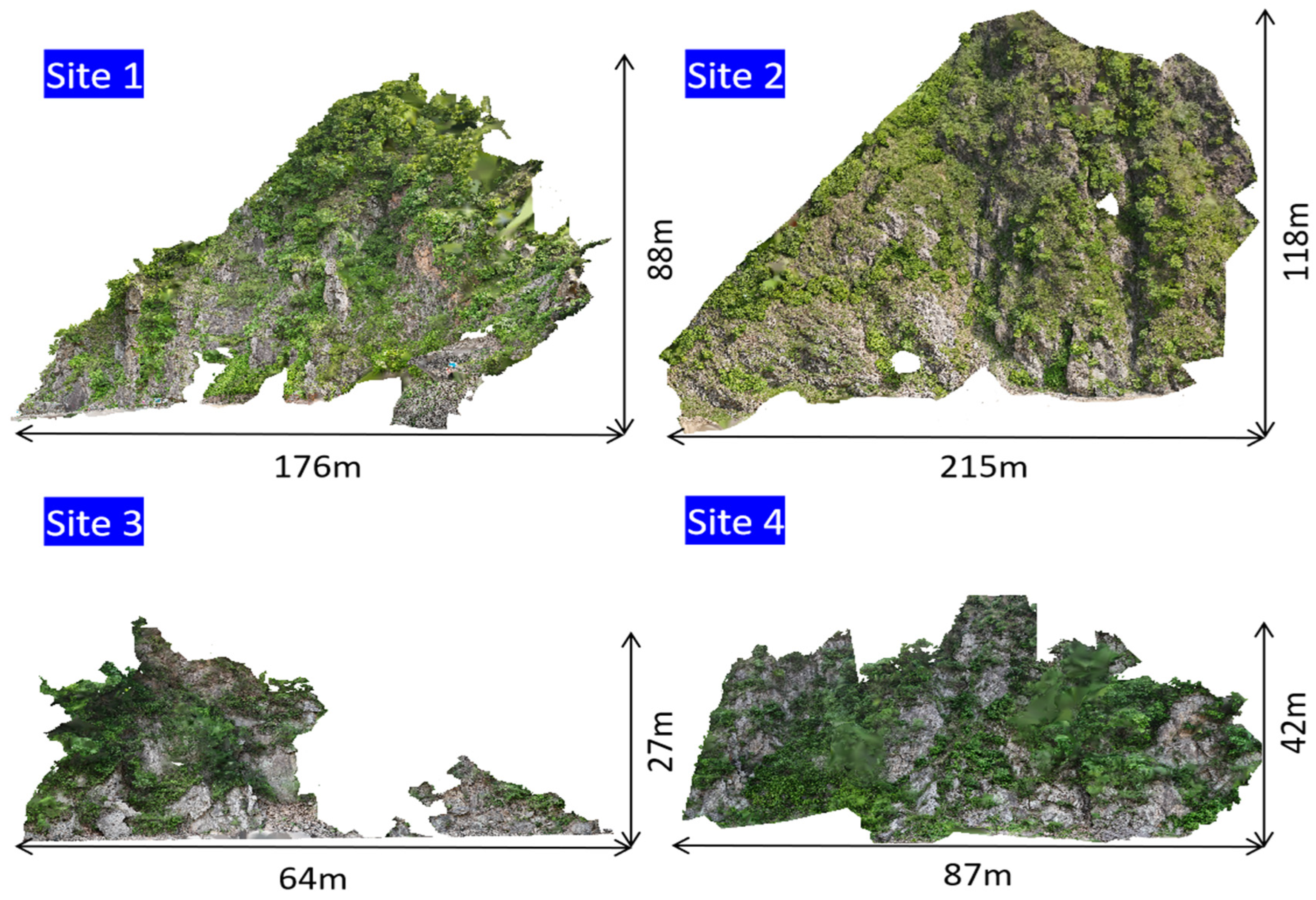

2.1. Data Acquisition

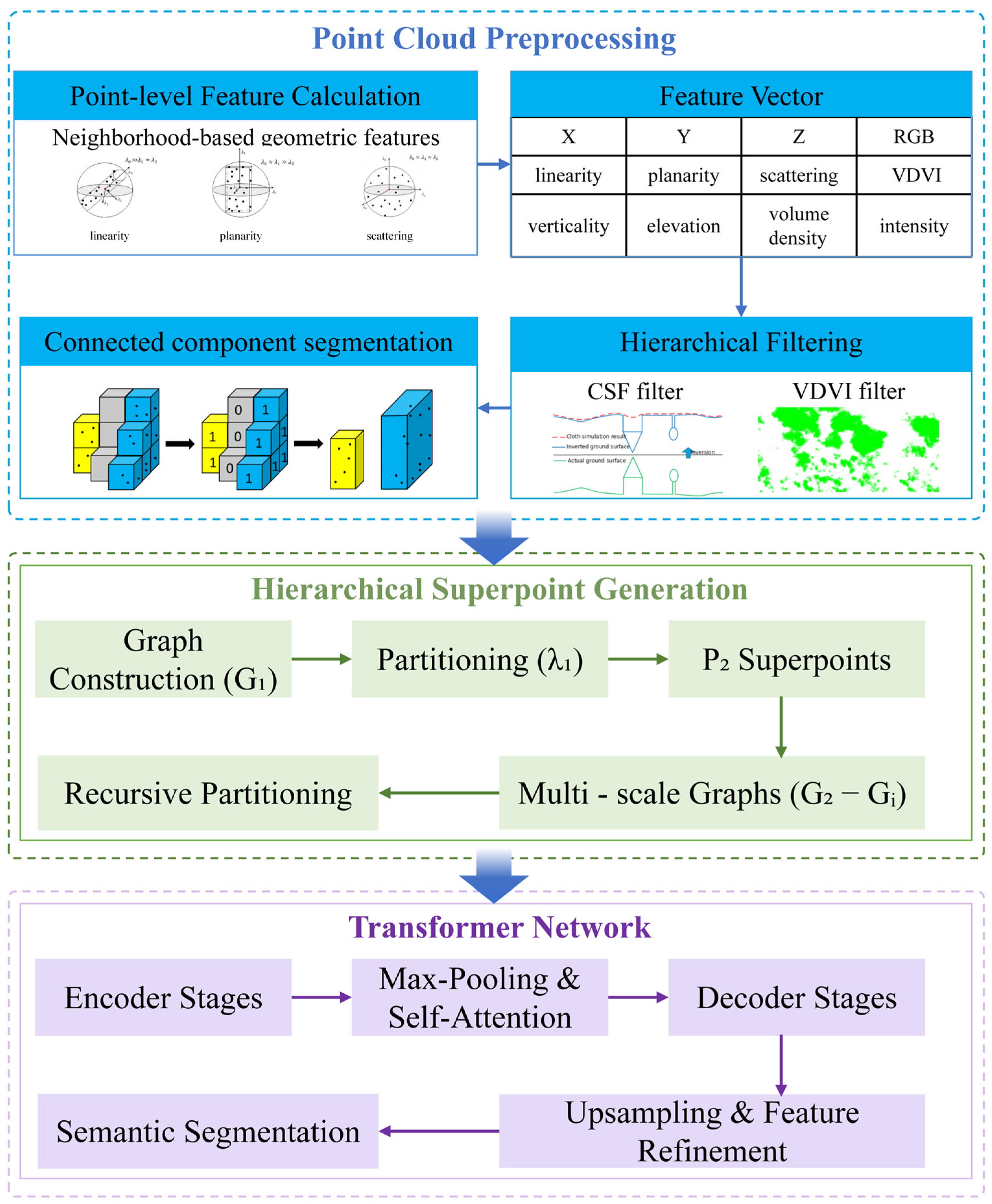

2.2. Data Preprocessing

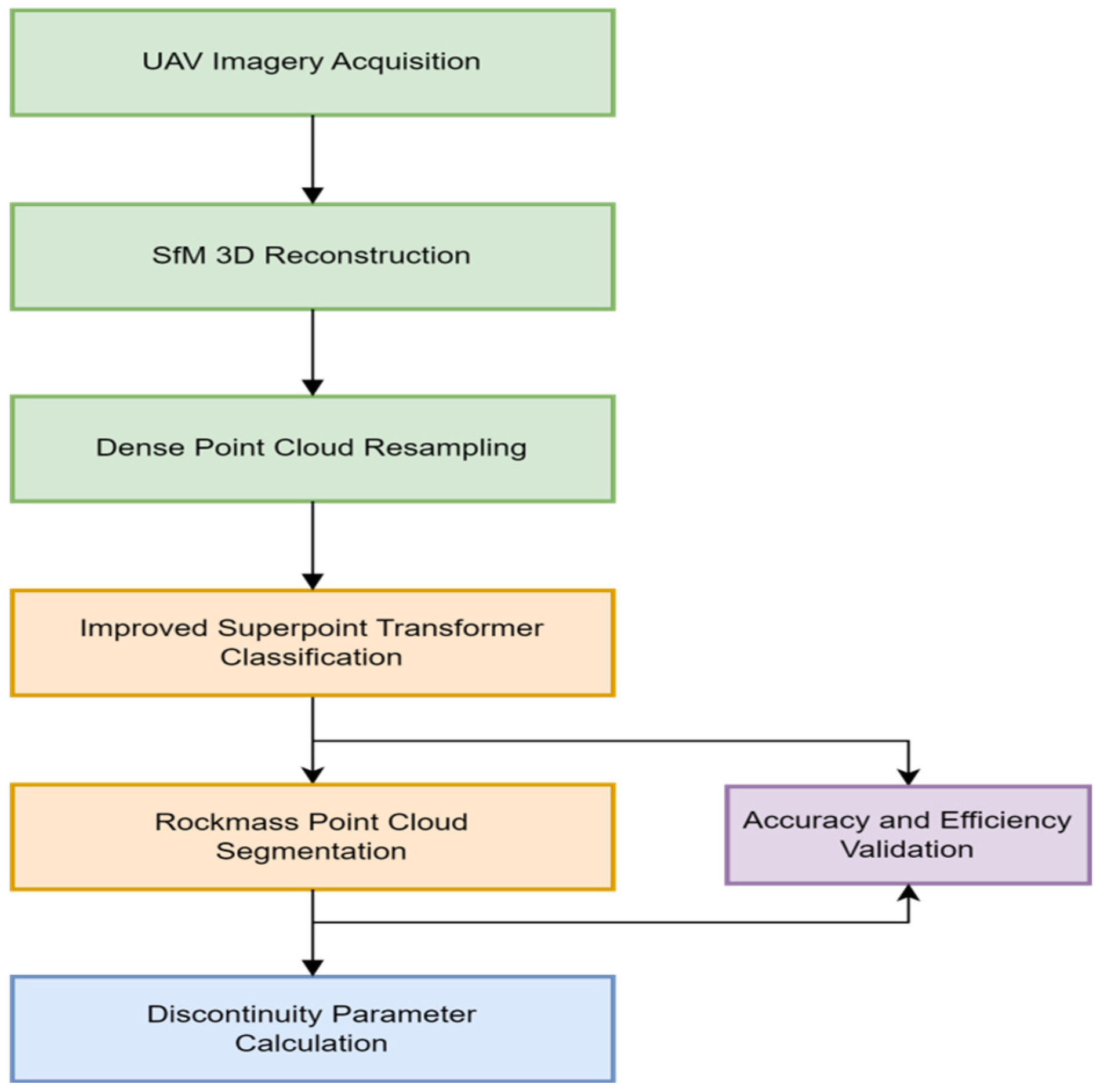

2.2.1. The Overall Workflow

2.2.2. SfM–MVS 3D Reconstruction

2.2.3. Dense Point-Cloud Export and Mesh-to-Point Resampling

2.2.4. Outlier and Noise Removal

2.3. Rock and Vegetation Point Cloud Classification

2.3.1. The SPT Algorithm

2.3.2. Improvements of the SPT Algorithm (ISPT)

- (i) Feature Augmentation

- (ii) Hierarchical Filtering

- (iii) Connected Component Segmentation
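The VDVI feature named in the highlights and in the training feature list is a colour index built from the visible bands. The following is a minimal sketch, assuming the commonly used definition VDVI = (2G − R − B) / (2G + R + B) and per-point colours stored as an (N, 3) array. The function name `vdvi` and the sample colours are illustrative and are not taken from the authors' implementation.

```python
import numpy as np


def vdvi(rgb: np.ndarray) -> np.ndarray:
    """Visible-band Difference Vegetation Index for each point.

    rgb: (N, 3) array of non-negative R, G, B values on any consistent
         scale (for example 0-255).
    Returns an (N,) array in [-1, 1]. Points with R = G = B = 0 get 0.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    numerator = 2.0 * g - r - b
    denominator = 2.0 * g + r + b
    out = np.zeros_like(denominator)
    # Divide only where the denominator is positive to avoid 0/0.
    np.divide(numerator, denominator, out=out, where=denominator > 0)
    return out


# Hypothetical sample colours, for illustration only.
colors = np.array([
    [60, 140, 50],    # leaf-like green
    [128, 128, 128],  # grey rock
    [0, 0, 0],        # black / missing colour
])
print(vdvi(colors))
```

Green-dominated points score well above zero while neutral grey rock scores zero, which is why the index is a useful extra channel alongside raw RGB.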

2.3.3. The Overall Workflow of the ISPT Algorithm

2.4. Recognition and Parameter Extraction of Rock Mass Discontinuity

2.5. Accuracy Validation

2.5.1. Reconstruction Accuracy and Model Quality Validation

- (i) Georeferencing Accuracy

- (ii) Relative Geometric Accuracy

- (iii) Point-Cloud Quality

2.5.2. Classification Accuracy Validation

3. Results

3.1. The SPT Model Training

3.2. Reconstruction Accuracy and Model Quality Results

- (i) Georeferencing Accuracy

- (ii) Relative Geometric Accuracy

- (iii) Point-Cloud Quality

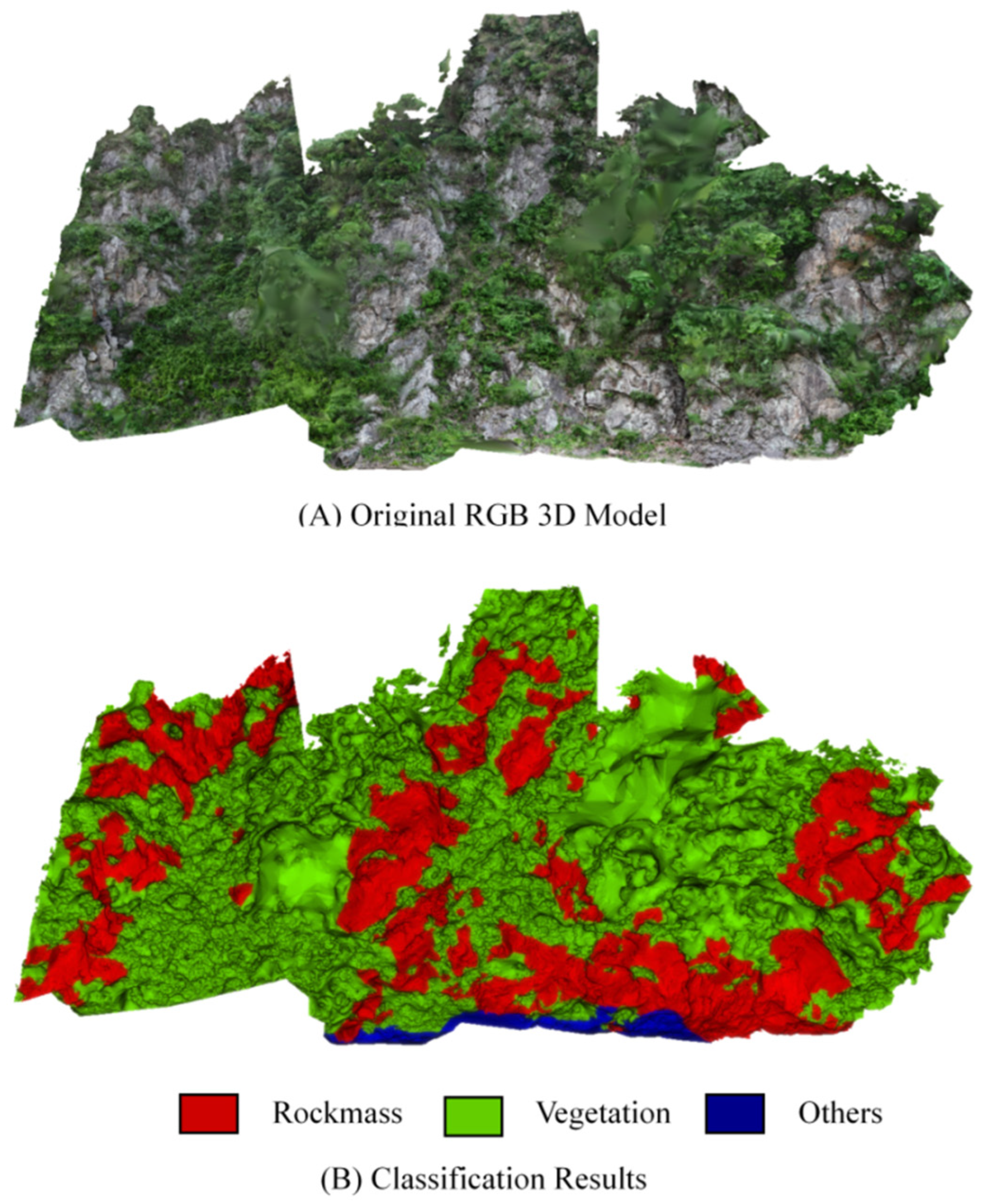

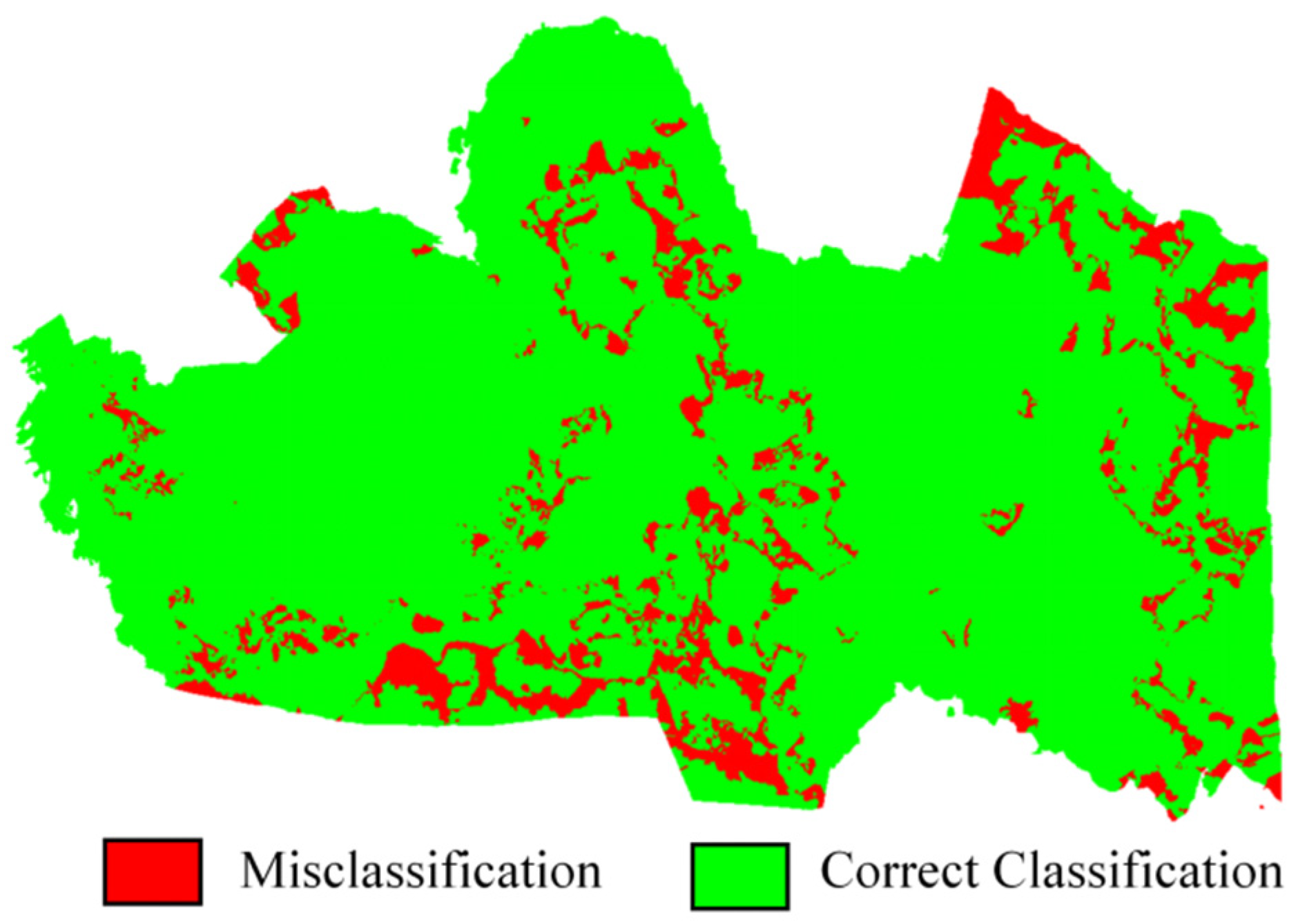

3.3. Vegetation and Rock Mass Classification Results

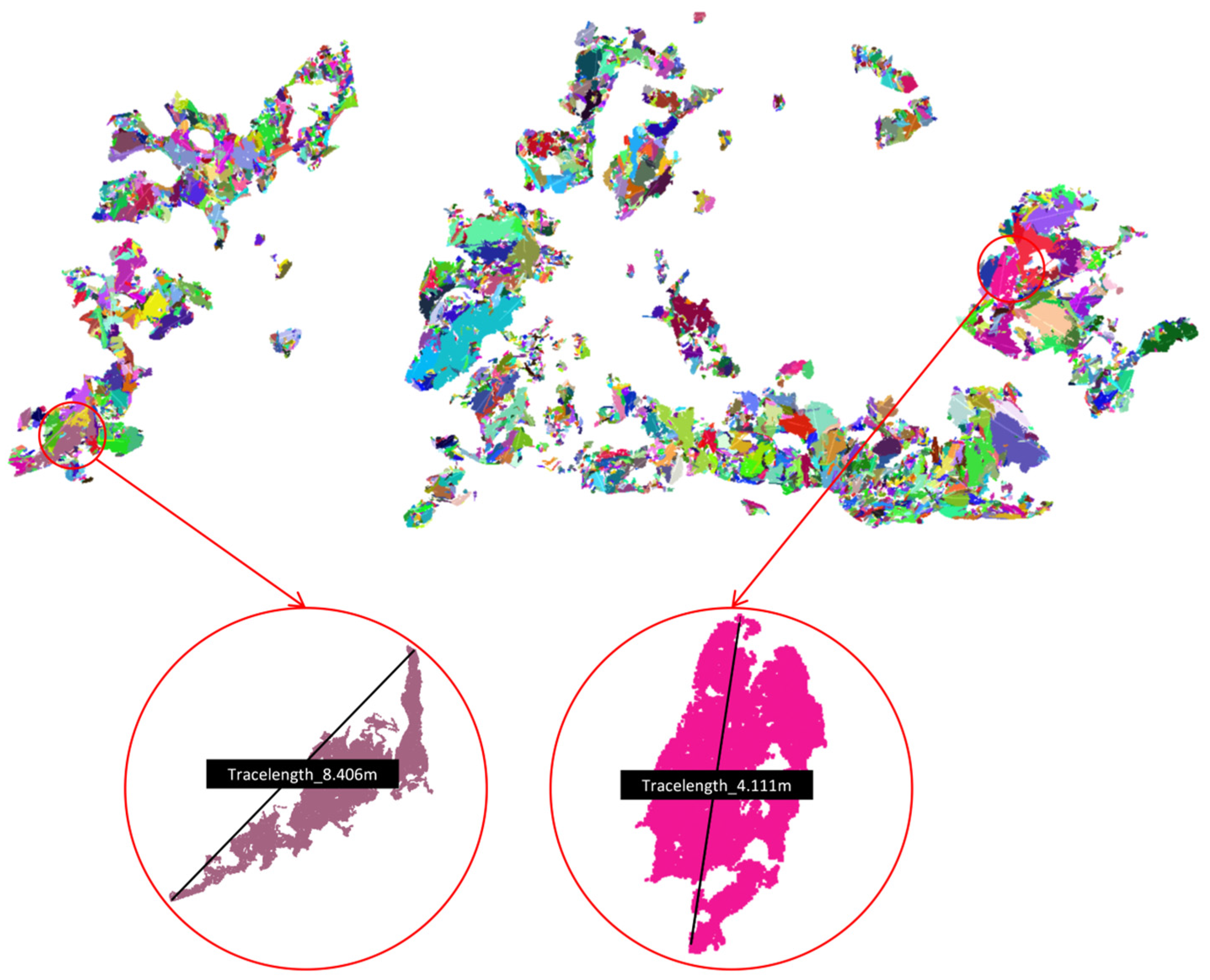

3.4. Recognition Results of Planar Surfaces in Rock Mass

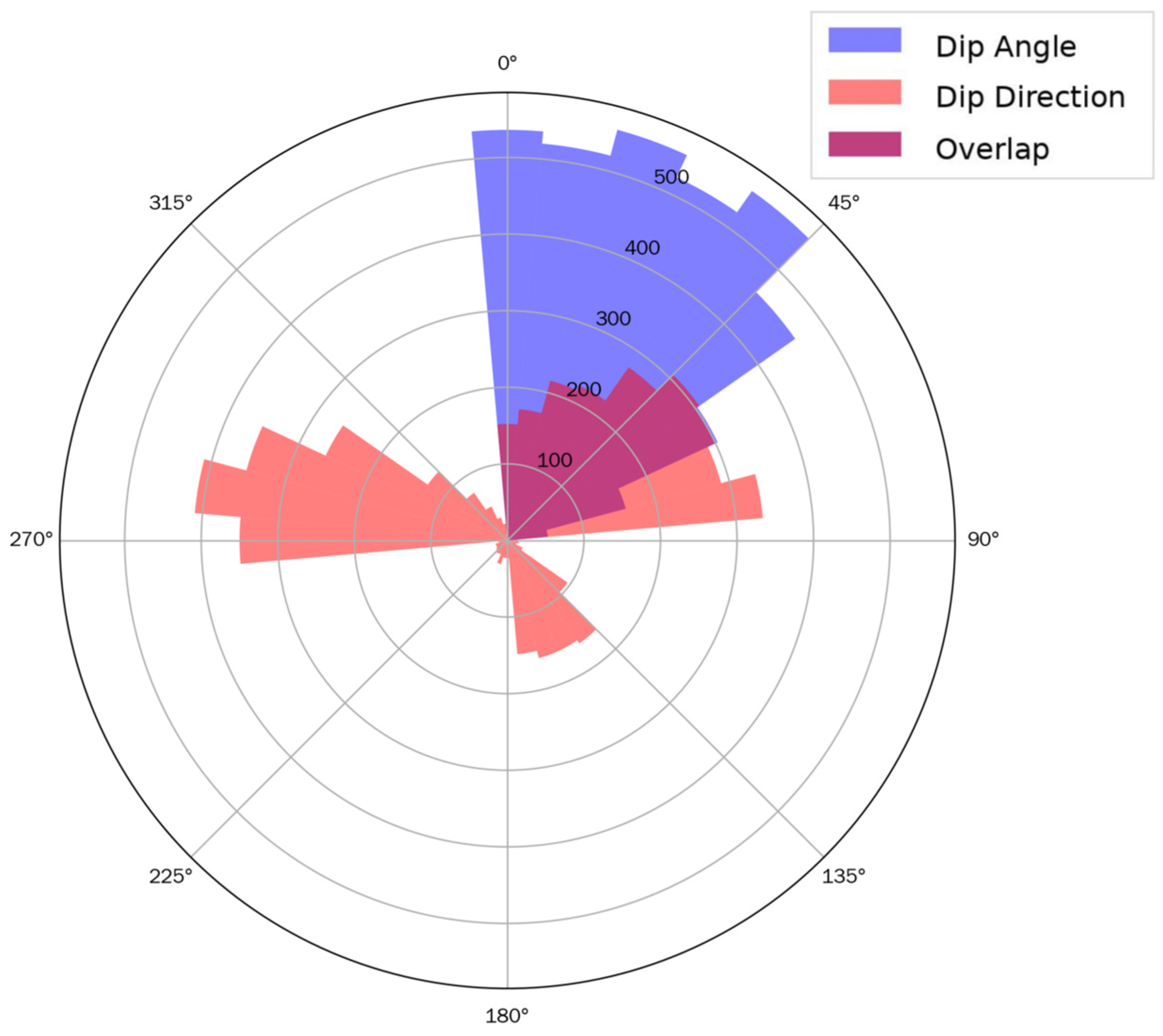

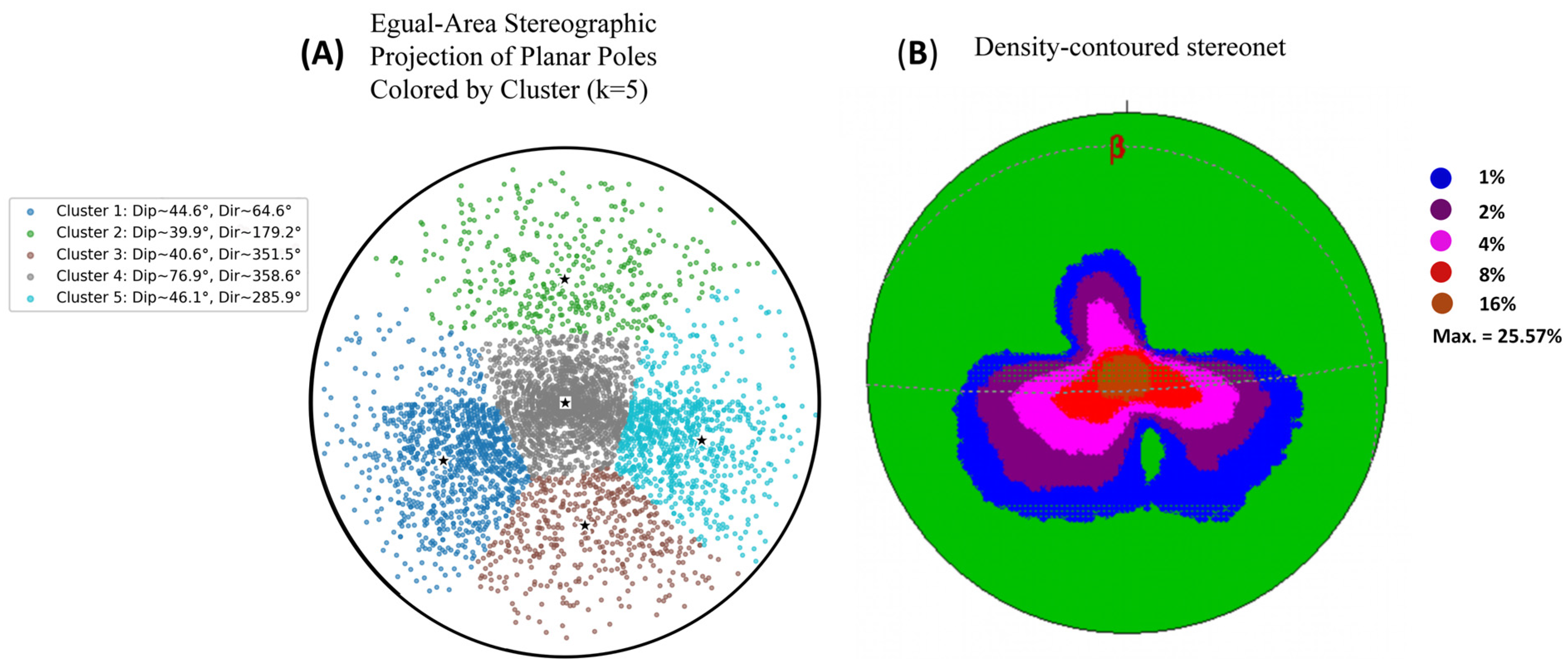

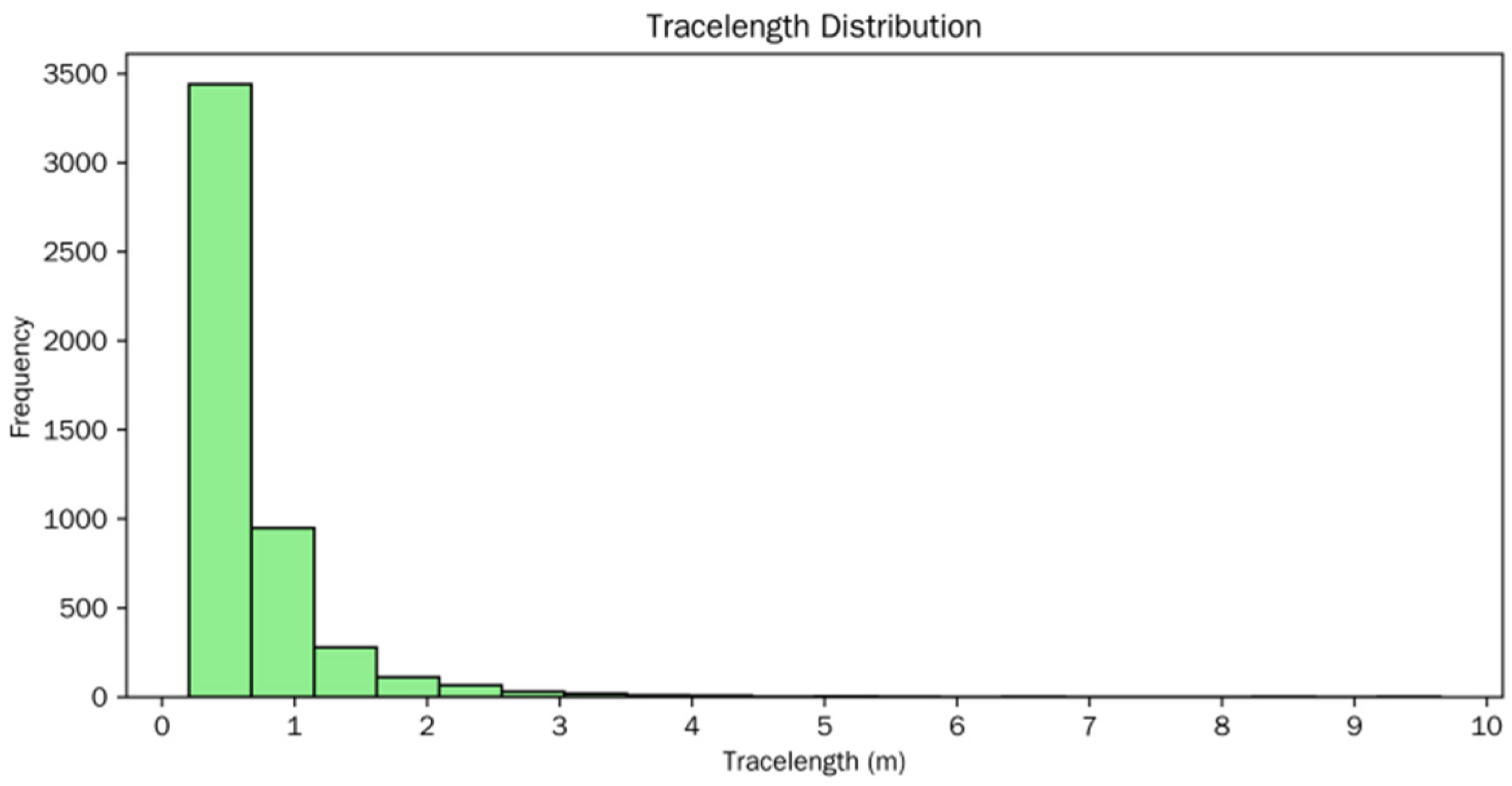

3.5. Rock Mass Structural Parameters Extraction Results

4. Discussion

4.1. Comparisons

4.2. Advantages

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Hudson, J.A.; Priest, S.D. Discontinuities and Rock Mass Geometry. Int. J. Rock Mech. Min. Sci. Geomech. Abstr. 1979, 16, 339–362.

2. Zhang, W.; Zhao, Q.; Huang, R.; Chen, J.; Xue, Y.; Xu, P. Identification of Structural Domains Considering the Size Effect of Rock Mass Discontinuities: A Case Study of an Underground Excavation in Baihetan Dam, China. Tunn. Undergr. Space Technol. 2016, 51, 75–83.

3. Sharma, V.M.; Saxena, K.R. (Eds.) In-Situ Characterization of Rocks; A. A. Balkema Publishers: Leiden, The Netherlands, 2001.

4. Li, H.; Li, X.; Li, W.; Zhang, S.; Zhou, J. Quantitative Assessment for the Rockfall Hazard in a Post-Earthquake High Rock Slope Using Terrestrial Laser Scanning. Eng. Geol. 2019, 248, 1–13.

5. Cirillo, D.; Zappa, M.; Tangari, A.C.; Brozzetti, F.; Ietto, F. Rockfall Analysis from UAV-Based Photogrammetry and 3D Models of a Cliff Area. Drones 2024, 8, 31.

6. Lovitt, J.; Rahman, M.; McDermid, G. Assessing the Value of UAV Photogrammetry for Characterizing Terrain in Complex Peatlands. Remote Sens. 2017, 9, 715.

7. Mao, Z.; Hu, S.; Wang, N.; Long, Y. Precision Evaluation and Fusion of Topographic Data Based on UAVs and TLS Surveys of a Loess Landslide. Front. Earth Sci. 2021, 9, 801293.

8. Han, X.; Yang, S.; Zhou, F.; Wang, J.; Zhou, D. An Effective Approach for Rock Mass Discontinuity Extraction Based on Terrestrial LiDAR Scanning 3D Point Clouds. IEEE Access 2017, 5, 26734–26742.

9. Liang, H.; Xiao, J.; Ying, W. A Vegetation Filtering Method for Rock Mass Point Clouds Based on Multi-Dimensionality Features and MLP. J. Univ. Chin. Acad. Sci. 2020, 37, 345–351.

10. Kong, X.; Xia, Y.; Wu, X.; Wang, Z.; Yang, K.; Yan, M.; Li, C.; Tai, H. Discontinuity Recognition and Information Extraction of High and Steep Cliff Rock Mass Based on Multi-Source Data Fusion. Appl. Sci. 2022, 12, 11258.

11. Wang, Y.; Xu, S.; Xiao, J.; Wang, F.; Wang, Y.; Liu, L. Accurate Rock-Mass Extraction From Terrestrial Laser Point Clouds via Multiscale and Multiview Convolutional Feature Representation. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4430–4443.

12. Hussain, Y.; Schlögel, R.; Innocenti, A.; Hamza, O.; Iannucci, R.; Martino, S.; Havenith, H.-B. Review on the Geophysical and UAV-Based Methods Applied to Landslides. Remote Sens. 2022, 14, 4564.

13. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ Photogrammetry: A Low-Cost, Effective Tool for Geoscience Applications. Geomorphology 2012, 179, 300–314.

14. Hao, J.; Zhang, X.; Wang, C.; Wang, H.; Wang, H. Application of UAV Digital Photogrammetry in Geological Investigation and Stability Evaluation of High-Steep Mine Rock Slope. Drones 2023, 7, 198.

15. Drews, T.; Miernik, G.; Anders, K.; Höfle, B.; Profe, J.; Emmerich, A.; Bechstädt, T. Validation of Fracture Data Recognition in Rock Masses by Automated Plane Detection in 3D Point Clouds. Int. J. Rock Mech. Min. Sci. 2018, 109, 19–31.

16. Chen, B.; Maurer, J.; Gong, W. Applications of UAV in Landslide Research: A Review. Landslides 2025, 22, 3029–3048.

17. Yang, B.; Dong, Z. Progress and Perspective of Point Cloud Intelligence. Acta Geod. Sin. 2019, 48, 1575–1585.

18. Hartwig, M.E.; Santos, G.G.D.S.D. Enhanced Discontinuity Mapping of Rock Slopes Exhibiting Distinct Structural Frameworks Using Digital Photogrammetry and UAV Imagery. Environ. Earth Sci. 2024, 83, 624.

19. Yang, L.; Li, Y.; Li, X.; Meng, Z.; Luo, H. Efficient Plane Extraction Using Normal Estimation and RANSAC from 3D Point Cloud. Comput. Stand. Interfaces 2022, 82, 103608.

20. Pola, A.; Herrera-Díaz, A.; Tinoco-Martínez, S.R.; Macias, J.L.; Soto-Rodríguez, A.N.; Soto-Herrera, A.M.; Sereno, H.; Ramón Avellán, D. Rock Characterization, UAV Photogrammetry and Use of Algorithms of Machine Learning as Tools in Mapping Discontinuities and Characterizing Rock Masses in Acoculco Caldera Complex. Bull. Eng. Geol. Environ. 2024, 83, 260.

21. Wu, S.; Wang, Q.; Zeng, Q.; Zhang, Y.; Shao, Y.; Deng, F.; Liu, Y.; Wei, W. Automatic Extraction of Outcrop Cavity Based on a Multiscale Regional Convolution Neural Network. Comput. Geosci. 2022, 160, 105038.

22. Oppikofer, T.; Jaboyedoff, M.; Pedrazzini, A.; Derron, M.; Blikra, L.H. Detailed DEM Analysis of a Rockslide Scar to Characterize the Basal Sliding Surface of Active Rockslides. J. Geophys. Res. Earth Surf. 2011, 116, F02016.

23. Xu, Q.; Ye, Z.; Liu, Q.; Dong, X.; Li, W.; Fang, S.; Guo, C. 3D Rock Structure Digital Characterization Using Airborne LiDAR and Unmanned Aerial Vehicle Techniques for Stability Analysis of a Blocky Rock Mass Slope. Remote Sens. 2022, 14, 3044.

24. Štroner, M.; Urban, R.; Suk, T. Filtering Green Vegetation Out from Colored Point Clouds of Rocky Terrains Based on Various Vegetation Indices: Comparison of Simple Statistical Methods, Support Vector Machine, and Neural Network. Remote Sens. 2023, 15, 3254.

25. Anders, N.; Valente, J.; Masselink, R.; Keesstra, S. Comparing Filtering Techniques for Removing Vegetation from UAV-Based Photogrammetric Point Clouds. Drones 2019, 3, 61.

26. Wernette, P.A. Machine Learning Vegetation Filtering of Coastal Cliff and Bluff Point Clouds. Remote Sens. 2024, 16, 2169.

27. Wang, Y.; Koo, K.-Y. Vegetation Removal on 3D Point Cloud Reconstruction of Cut-Slopes Using U-Net. Appl. Sci. 2021, 12, 395.

28. Blanco, L.; García-Sellés, D.; Guinau, M.; Zoumpekas, T.; Puig, A.; Salamó, M.; Gratacós, O.; Muñoz, J.A.; Janeras, M.; Pedraza, O. Machine Learning-Based Rockfalls Detection with 3D Point Clouds, Example in the Montserrat Massif (Spain). Remote Sens. 2022, 14, 4306.

29. Pinto, M.F.; Melo, A.G.; Honório, L.M.; Marcato, A.L.M.; Conceição, A.G.S.; Timotheo, A.O. Deep Learning Applied to Vegetation Identification and Removal Using Multidimensional Aerial Data. Sensors 2020, 20, 6187.

30. Fan, Z.; Wei, J.; Zhang, R.; Zhang, W. Tree Species Classification Based on PointNet++ and Airborne Laser Survey Point Cloud Data Enhancement. Forests 2023, 14, 1246.

31. Han, X.; Liu, C.; Zhou, Y.; Tan, K.; Dong, Z.; Yang, B. WHU-Urban3D: An Urban Scene LiDAR Point Cloud Dataset for Semantic Instance Segmentation. ISPRS J. Photogramm. Remote Sens. 2024, 209, 500–513.

32. Vivaldi, V.; Bordoni, M.; Mineo, S.; Crozi, M.; Pappalardo, G.; Meisina, C. Airborne Combined Photogrammetry—Infrared Thermography Applied to Landslide Remote Monitoring. Landslides 2023, 20, 297–313.

33. Kalinicheva, E.; Landrieu, L.; Mallet, C.; Chehata, N. Predicting Vegetation Stratum Occupancy from Airborne LiDAR Data with Deep Learning. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102863.

34. Diab, A.; Kashef, R.; Shaker, A. Deep Learning for LiDAR Point Cloud Classification in Remote Sensing. Sensors 2022, 22, 7868.

35. Šašak, J.; Gallay, M.; Kaňuk, J.; Hofierka, J.; Minár, J. Combined Use of Terrestrial Laser Scanning and UAV Photogrammetry in Mapping Alpine Terrain. Remote Sens. 2019, 11, 2154.

36. Salvini, R.; Vanneschi, C.; Coggan, J.S.; Mastrorocco, G. Evaluation of the Use of UAV Photogrammetry for Rock Discontinuity Roughness Characterization. Rock Mech. Rock Eng. 2020, 53, 3699–3720.

37. Chen, K.; Jiang, Q. Discontinuity Surface Orientation Extraction and Cluster Analysis Based on Point Cloud Data. Can. Geotech. J. 2025, 62, 1–9.

38. Zhu, J.; Xia, Y.; Wang, B.; Yang, Z.; Yang, K. Research on the Identification of Rock Mass Structural Planes and Extraction of Dominant Orientations Based on 3D Point Cloud. Appl. Sci. 2024, 14, 9985.

39. Robert, D.; Raguet, H.; Landrieu, L. Efficient 3D Semantic Segmentation with Superpoint Transformer. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 17149–17158.

40. Wang, X.; Wang, M.; Wang, S.; Wu, Y. Extraction of Vegetation Information from Visible Unmanned Aerial Vehicle Images. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2015, 31, 152–159.

41. Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An Easy-to-Use Airborne LiDAR Data Filtering Method Based on Cloth Simulation. Remote Sens. 2016, 8, 501.

42. Wang, X.; Zou, L.; Shen, X.; Ren, Y.; Qin, Y. A Region-Growing Approach for Automatic Outcrop Fracture Extraction from a Three-Dimensional Point Cloud. Comput. Geosci. 2017, 99, 100–106.

43. Brodu, N.; Lague, D. 3D Terrestrial Lidar Data Classification of Complex Natural Scenes Using a Multi-Scale Dimensionality Criterion: Applications in Geomorphology. ISPRS J. Photogramm. Remote Sens. 2012, 68, 121–134.

44. Yu, H.; Taleghani, A.D.; Al Balushi, F.; Wang, H. Machine Learning for Rock Mechanics Problems; an Insight. Front. Mech. Eng. 2022, 8, 1003170.

45. Han, X.; Chen, X.; Deng, H.; Wan, P.; Li, J. Point Cloud Deep Learning Network Based on Local Domain Multi-Level Feature. Appl. Sci. 2023, 13, 10804.

| Authors (Year) | Techniques | Advantages | Disadvantages | Application Scenarios |
|---|---|---|---|---|
| Pola et al. (2024) [20] | UAV photogrammetry + k-means on normals + RANSAC | Maps multiple discontinuity sets remotely; uses free 3D tools | Relies on quality of point cloud; requires manual ROI removal as a pre-step | Large inaccessible caldera outcrops |
| Šašak et al. (2019) [35] | SfM photogrammetry, TLS/ALS comparison | High-res DTMs/DOMs; easy deployment; low cost for steep cliffs | No direct dense cloud; vegetation occlusion; lower absolute accuracy than TLS | Coastal cliff rockfall assessment; geomorphology |
| Salvini et al. (2020) [36] | UAV-SfM point cloud vs. field/TLS validation | UAV point clouds can reliably capture joint roughness (>60 cm scales) | Less accurate for very short profiles (<60 cm); requires multiple flight heights | Quantitative joint roughness analysis |
| Chen and Jiang (2025) [37] | PCA normals + Hough + region-growing + DBSCAN | Robust normal estimation (Hough); clusters planes automatically; widely cited | Sensitive to point density and noise; complex parameter tuning | Algorithmic plane extraction from TLS/photogrammetry clouds |
| Zhu et al. (2024) [38] | Hough transform + CFSFDP clustering | Automates the number of planes; robust normal at edges; handles large slopes | Computationally intensive; accuracy depends on voting parameters; memory heavy | Highway slope joint mapping; engineering geology |
| Wang and Koo (2021) [27] | 2D image segmentation (U-Net) + 3D reprojection (point cloud) | Effectively removes vegetation for change detection; uses proven CNN models | Requires labeled training data; 2D segmentation errors project to 3D | Time-series monitoring of vegetated cut slopes |
| Fan et al. (2023) [30] | PointNet++ deep network | End-to-end learning of point features; high accuracy (~92%) | Data-hungry; requires downsampling or augmentation; slow to train | Tree species and vegetation classification from LiDAR |

| Dataset Split | Data Sample | Number of Points |
|---|---|---|
| Training Set | Site1-1 | 17,428,162 |
| Training Set | Site1-2 | 17,143,557 |
| Training Set | Site2-1 | 5,498,532 |
| Training Set | Site2-2 | 12,190,668 |
| Validation Set | Site3-1 | 1,187,707 |
| Validation Set | Site3-2 | 10,889,558 |
| Test Set | Site4-1 | 9,178,136 |
| Test Set | Site4-2 | 7,863,002 |

| Parameter | Value |
|---|---|
| Batch size | 1 |
| Epochs | 500 |
| Superpoint segmentation parameters | Voxel size: 0.03; KNN: 30; number of point feature samples within superpoints: Sample_point_min = 32, Sample_point_max = 128 |
| Point features used for training | RGB, VDVI, linearity, curvature, planarity, scattering, verticality, elevation, volumetric density |
| Horizontal edge features used for training | (1) ‘mean_off’; (2) ‘std_off’; (3) ‘mean_dist’; (4) ‘angle_source’; (5) ‘angle_target’; (6) ‘centroid_dir’; (7) ‘centroid_dist’; (8) ‘normal_angle’; (9) ‘log_length’; (10) ‘log_surface’; (11) ‘log_volume’; (12) ‘log_size’ |
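Several of the point features listed above (linearity, planarity, scattering, curvature, verticality) are usually derived from the eigenvalues of a local covariance matrix. The sketch below assumes the widely used eigenvalue-ratio definitions and takes verticality as 1 − |n_z| of the estimated normal. The exact formulas in the SPT code base may differ (for example by using square roots of eigenvalues), and `eigen_features` is a hypothetical helper name.

```python
import numpy as np


def eigen_features(neighbors: np.ndarray) -> dict:
    """Covariance-based shape features for one point neighbourhood.

    neighbors: (K, 3) array with the XYZ coordinates of the K nearest
               neighbours of a point (K = 30 in the table above).
    """
    centered = neighbors - neighbors.mean(axis=0)
    cov = centered.T @ centered / len(neighbors)
    evals, evecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
    l3, l2, l1 = np.clip(evals, 0.0, None)    # so that l1 >= l2 >= l3 >= 0
    normal = evecs[:, 0]                      # direction of least variance
    eps = 1e-12                               # guards against division by zero
    return {
        "linearity": (l1 - l2) / (l1 + eps),
        "planarity": (l2 - l3) / (l1 + eps),
        "scattering": l3 / (l1 + eps),
        "curvature": l3 / (l1 + l2 + l3 + eps),
        "verticality": 1.0 - abs(normal[2]),  # assumed definition
    }


# Illustrative input: a flat, horizontal 5 x 5 grid of points.
gx, gy = np.meshgrid(np.arange(5.0), np.arange(5.0))
patch = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
features = eigen_features(patch)
print(features)
```

For the flat patch, planarity is close to 1 while linearity, scattering and verticality are close to 0. Vegetation neighbourhoods tend toward high scattering, which is what makes these features useful for separating the two classes.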

| Metric | Site1 | Site2 | Site3 | Site4 |
|---|---|---|---|---|
| Horizontal RMSE (XY) [cm] | 2.9 | 3.7 | 2.3 | 4.8 |
| Vertical RMSE (Z) [cm] | 4.5 | 6.0 | 3.4 | 7.5 |
| 3D RMSE [cm] | 5.4 | 7.0 | 4.1 | 8.9 |
| Relative distance RMSE [cm] | 1.7 | 2.3 | 1.3 | 2.8 |
| Surface roughness [cm] | 1.3 | 1.6 | 1.0 | 1.9 |
| Coverage ratio [%] | 95 | 93 | 98 | 90 |
| Point density [pts/m²] | 950 | 870 | 1100 | 820 |
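The 3D RMSE row can be cross-checked against the horizontal and vertical rows. The sketch below assumes that the 3D value is the root sum of squares of the XY and Z components. That relation is not stated in the table itself, but the reported figures agree with it to within rounding.

```python
import math

# (horizontal RMSE, vertical RMSE, reported 3D RMSE) in cm, from the table.
rmse_cm = {
    "Site1": (2.9, 4.5, 5.4),
    "Site2": (3.7, 6.0, 7.0),
    "Site3": (2.3, 3.4, 4.1),
    "Site4": (4.8, 7.5, 8.9),
}

for site, (xy, z, reported_3d) in rmse_cm.items():
    combined = math.hypot(xy, z)  # sqrt(xy**2 + z**2)
    print(f"{site}: combined = {combined:.2f} cm, reported = {reported_3d} cm")
```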

| Accuracy Metric | Class | Score (%) |
|---|---|---|
| IoU | Vegetation | 63.8 |
| IoU | Rock | 88.4 |
| mACC | All | 72.5 |
| mIoU | All | 76.1 |
| OA | All | 89.5 |
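For reference, these metrics are normally computed from a confusion matrix. The sketch below assumes the usual definitions: per-class IoU = TP / (TP + FP + FN), mIoU as the mean of the class IoUs, OA as the fraction of correctly labelled points, and mACC as the mean per-class recall. The source does not define mACC, so that last choice is an assumption, and the confusion-matrix counts are hypothetical.

```python
import numpy as np


def segmentation_metrics(conf) -> dict:
    """Metrics from a confusion matrix with rows = true class, columns = predicted."""
    conf = np.asarray(conf, dtype=np.float64)
    tp = np.diag(conf)
    true_total = conf.sum(axis=1)   # points per ground-truth class
    pred_total = conf.sum(axis=0)   # points per predicted class
    iou = tp / (true_total + pred_total - tp)
    recall = tp / true_total
    return {
        "iou": iou,
        "miou": iou.mean(),
        "macc": recall.mean(),      # assumed definition of mACC
        "oa": tp.sum() / conf.sum(),
    }


# Hypothetical counts, class order: [vegetation, rock].
example = [[80, 20],
           [10, 890]]
metrics = segmentation_metrics(example)
print(metrics)

# The reported mIoU matches the mean of the two reported class IoUs.
reported_miou = round((63.8 + 88.4) / 2, 1)
print(reported_miou)
```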

| Cluster ID | Mean Dip Angle (°) | Mean Dip Direction (°) | Sample Count (n) |
|---|---|---|---|
| 1 | 44.6 | 64.6 (ENE) | 1045 |
| 2 | 39.9 | 179.2 (S) | 407 |
| 3 | 40.6 | 351.5 (NNW) | 480 |
| 4 | 76.9 | 358.6 (N) | 2103 |
| 5 | 46.1 | 285.9 (WNW) | 883 |
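Dip angle and dip direction such as those tabulated above are typically derived from the unit normal of each fitted plane. The sketch below shows the standard conversion, assuming a coordinate frame with x = East, y = North and z = Up. The function name `normal_to_dip` is hypothetical, and the authors' own extraction code may handle conventions differently.

```python
import math


def normal_to_dip(nx: float, ny: float, nz: float) -> tuple:
    """Convert a plane normal to (dip angle, dip direction) in degrees.

    Assumes x = East, y = North, z = Up. Dip direction is measured
    clockwise from north. For a horizontal plane the dip direction is
    undefined and 0.0 is returned.
    """
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm == 0.0:
        raise ValueError("normal vector must be non-zero")
    nx, ny, nz = nx / norm, ny / norm, nz / norm
    if nz < 0.0:
        # Flip the normal so that it points upward.
        nx, ny, nz = -nx, -ny, -nz
    dip = math.degrees(math.acos(min(1.0, nz)))
    dip_direction = math.degrees(math.atan2(nx, ny)) % 360.0
    return dip, dip_direction


# A plane that descends toward the east at 45 degrees has an upward
# normal tilted toward the east.
dip, dip_direction = normal_to_dip(1.0, 0.0, 1.0)
print(dip, dip_direction)
```

Flipping downward-pointing normals first keeps the two possible orientations of the same plane from producing dip directions that differ by 180°.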

| Method | Accuracy Metric | Score (%) |
|---|---|---|
| ISPT (proposed method) | mACC | 72.5 |
| ISPT (proposed method) | mIoU | 76.1 |
| ISPT (proposed method) | OA | 89.5 |
| CANUPO | mACC | 63.3 |
| CANUPO | mIoU | 66.5 |
| CANUPO | OA | 78.2 |
| Original SPT | mACC | 69.5 |
| Original SPT | mIoU | 73.0 |
| Original SPT | OA | 85.8 |

| Method | Processing Time (min) |
|---|---|
| ISPT (proposed method) | 6.52 |
| CANUPO | 164.28 |
| Original SPT | 5.21 |
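The "25 times faster" figure in the highlights follows from these runtimes. The short calculation below expresses each method's runtime relative to ISPT; it uses only the values in the table.

```python
# Processing times in minutes, taken from the table above.
runtime_min = {
    "ISPT (proposed method)": 6.52,
    "CANUPO": 164.28,
    "Original SPT": 5.21,
}

baseline = runtime_min["ISPT (proposed method)"]
relative = {method: minutes / baseline for method, minutes in runtime_min.items()}

for method, ratio in relative.items():
    print(f"{method}: {ratio:.2f} x the ISPT runtime")
```

CANUPO takes about 25 times as long as ISPT, while the original SPT is somewhat faster than ISPT, so the added features and filtering cost roughly a quarter more runtime than the unmodified network.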

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Wan, P.; Han, X.; Zhai, R.; Gan, X. Automated Recognition of Rock Mass Discontinuities on Vegetated High Slopes Using UAV Photogrammetry and an Improved Superpoint Transformer. Remote Sens. 2026, 18, 357. https://doi.org/10.3390/rs18020357