Highlights

What are the main findings?

- We propose HOSU, a hybrid open-vocabulary semantic segmentation (OVSS) framework that integrates distribution-aware fine-tuning, text-guided multi-level regularization, and masked feature consistency to inject DINOv2’s fine-grained spatial priors into CLIP. This enhances the segmentation of complex scene distributions and small-scale targets in UAV imagery while keeping inference CLIP-only.

- Extensive experiments across four training settings and six UAV benchmarks demonstrate that our method achieves state-of-the-art performance with significant improvements over existing approaches, while ablation studies further confirm that each module plays a key role in the overall performance gain.

What is the implication of the main finding?

- This work offers a new perspective on leveraging the complementary strengths of heterogeneous foundation models, presenting a generalizable framework that enhances the fine-grained spatial perception of vision–language models in dense prediction tasks.

- Our method unlocks open-vocabulary segmentation for UAV imagery, filling an important research gap and delivering robust generalization to unseen categories and diverse aerial scenes, with substantial practical value for real-world UAV applications such as urban planning, disaster assessment, and environmental monitoring.

Abstract

Open-vocabulary semantic segmentation (OVSS) is of critical importance for unmanned aerial vehicle (UAV) imagery, as UAV scenes are highly dynamic and characterized by diverse, unpredictable object categories. Current OVSS approaches mainly rely on the zero-shot capabilities of vision–language models (VLMs), but their image-level pretraining objectives yield ambiguous spatial relationships and coarse-grained feature representations, resulting in suboptimal performance in UAV scenes. In this work, we propose a novel hybrid framework for OVSS in UAV imagery, named HOSU, which leverages the priors of vision foundation models to unleash the potential of vision–language models in representing complex spatial distributions and capturing fine-grained details of small objects in UAV scenes. Specifically, we propose a distribution-aware fine-tuning method that aligns CLIP with DINOv2 across intra- and inter-region feature distributions, enhancing the capacity of CLIP to model complex scene semantics and capture fine-grained details critical for UAV imagery. Meanwhile, we propose a text-guided multi-level regularization mechanism that leverages the text embeddings of CLIP to impose semantic constraints on the visual features, preventing them from drifting away from the original semantic space during fine-tuning and ensuring stable vision–language correspondence. Finally, to address the pervasive occlusion in UAV imagery, we propose a mask-based feature consistency strategy that enables the model to learn stable representations that remain robust to viewpoint-induced occlusions. Extensive experiments across four training settings on six UAV datasets demonstrate that our approach consistently achieves state-of-the-art performance compared with previous methods, and comprehensive ablation studies and analyses further validate its effectiveness.

1. Introduction

The semantic segmentation [1,2,3,4,5] of unmanned aerial vehicle (UAV) imagery is the process of assigning semantic labels to every pixel in aerial scenes, enabling fine-grained parsing and structured understanding of complex environments. This task is particularly important because UAVs can capture large-scale areas with high spatial resolution, and accurate pixel-level scene understanding transforms raw data into actionable maps that support real-world applications [6,7,8,9], including urban planning, disaster response, precision agriculture, environmental monitoring, and infrastructure inspection.

With the rapid progress of deep learning, semantic segmentation techniques have achieved significant advances and greatly enhanced UAV image analysis [8,9,10,11]. However, most existing approaches are constrained to a fixed set of predefined categories established during training. This closed-set limitation substantially hinders their ability to generalize in open and dynamic environments—conditions that are common in UAV applications. As a result, the applicability of conventional closed-set segmentation models to UAV scenarios remains fundamentally limited.

The recent emergence of vision–language models (VLMs) [12,13] has shifted semantic segmentation from a closed-vocabulary paradigm to open-vocabulary semantic segmentation (OVSS) [14,15,16,17]. Unlike traditional approaches that rely on task-specific label sets and require costly pixel-wise annotation for every new concept, OVSS enables models to segment arbitrary categories merely by providing textual descriptions. This flexibility allows perception systems to continuously expand their semantic understanding without retraining, making OVSS particularly powerful for real-world environments where novel or long-tail categories frequently emerge. A growing body of research has sought to exploit the zero-shot capabilities of VLMs for this purpose. For instance, SAN [17] introduces a lightweight side network to augment CLIP with mask proposals and attention-guided recognition, SED [18] designs a hierarchical encoder–decoder with early category rejection to improve both accuracy and efficiency, and DeOP [19] proposes a one-pass decoupled framework with patch severance and classification anchor learning to boost performance. Despite these advances, most VLMs are pretrained with image-level objectives, leading to ambiguous spatial localization [20,21] and limited modeling of fine-grained structures. As a result, pixel-level segmentation remains particularly challenging in open-world conditions characterized by complex scene distributions and frequent small-scale targets, as is typical of UAV imagery.

Meanwhile, vision foundation models (VFMs) [22,23,24] such as DINOv2 [22] have shown remarkable ability to capture fine-grained and localized spatial features [25,26]. Trained with large-scale self-supervised objectives, they learn rich visual representations that preserve structural details and contextual cues, making them well-suited for tasks requiring precise spatial understanding. However, these representations are purely visual and lack inherent alignment with language, which constrains their effectiveness in open-vocabulary segmentation tasks where semantic grounding to textual concepts is essential.

Therefore, this paper aims to incorporate the powerful semantic priors of DINOv2 into the challenging task of open-world semantic segmentation of UAV imagery. To this end, we propose HOSU, a novel hybrid framework for open-vocabulary semantic segmentation of UAV imagery, which leverages DINOv2’s fine-grained spatial perception to unlock the full potential of CLIP in addressing complex scene distributions and the prevalence of small-scale targets in UAV scenarios. Specifically, we propose a distribution-aware fine-tuning strategy that aligns the CLIP visual features with DINOv2 representations across both intra-region and inter-region distributions, enabling the capture of fine-grained details within regions and the modeling of semantic distributions across regions. Meanwhile, we design a text-guided multi-level regularization mechanism that exploits the CLIP text embeddings to impose hierarchical semantic constraints on visual features, preventing them from drifting away from the original semantic space during fine-tuning and thereby ensuring stable vision–language correspondence. Finally, to mitigate the impact of incomplete target regions caused by occlusions from high-altitude viewpoints, we propose a mask-based feature consistency strategy that guides the model to learn stable and robust feature representations, thereby maintaining consistent feature distributions under occlusion. Extensive experiments across four training settings and six UAV datasets demonstrate that our approach consistently achieves state-of-the-art results, outperforming existing methods. Complementary ablation studies further confirm the effectiveness and robustness of the proposed framework.

The main contributions of this paper are as follows:

- We propose a novel hybrid framework for open-vocabulary semantic segmentation of UAV imagery, named HOSU, which leverages the priors of vision foundation models to unlock the capability of vision–language models in scene distribution perception and fine-grained feature representation.

- We propose a text-guided multi-level regularization method that leverages the CLIP text embeddings to regularize visual features, preventing their drift from the original semantic space during fine-tuning and preserving coherent correspondence between visual and textual semantics.

- We propose a mask-based feature consistency strategy to address the prevalent occlusion in UAV imagery, enabling the model to learn stable feature representations that remain robust against missing or partially visible regions.

- Extensive experiments conducted across multiple UAV benchmarks validate the effectiveness of our method, consistently demonstrating state-of-the-art performance and clear improvements over existing open-vocabulary segmentation approaches.

2. Related Work

2.1. Semantic Segmentation

Semantic segmentation is a fundamental problem in computer vision that aims to assign category labels to each pixel in an image. Since the pioneering Fully Convolutional Network (FCN) [1], numerous CNN-based methods have been proposed to enhance contextual representation. For example, dilated convolutions [2], pyramid pooling [27], and atrous spatial pyramid pooling (ASPP) [28] have been widely adopted to enlarge receptive fields and capture multi-scale context, while attention-based designs such as PSANet [29], DANet [30], and CCNet [31] further improve long-range dependency modeling. To address resolution loss in CNNs, architectures like U-Net [32] and HRNet [33] emphasize skip connections or high-resolution representations. More recently, Transformers have been introduced for segmentation, with approaches such as SegFormer [3], Segmenter [4], and Mask2Former [5] demonstrating strong global reasoning ability and unifying multiple segmentation tasks under a mask classification paradigm. Beyond standard supervised settings, alternative regimes such as few-shot [34], semi-supervised [35], and weakly supervised [36] segmentation have been explored to reduce annotation costs.

The semantic segmentation of UAV imagery faces challenges such as scale variation, complex layouts, and occlusions. Early CNN-based encoder–decoder models such as FCN [1] and U-Net [32] laid the foundation and were later extended with multi-scale feature learning [37] and spatial–channel relation modules [6]. However, the limited receptive fields of CNNs weaken their modeling of global context. To address this, some works introduced dilated convolutions [7] and global context attention [38] to capture richer semantic dependencies. More recently, transformers have shown strong global modeling ability, and recent UAV segmentation methods employ the Swin Transformer [39] as the backbone, incorporating designs such as densely connected decoders [40], dual encoders such as ST-UNet [8], and multi-scale modules [9]. Bilateral awareness networks [41] combine transformer paths with CNN texture paths, while transformer-based decoders with global–local attention [10] further improve fine-grained recognition. Overall, transformer-based methods adapt better to complex UAV scenes than CNN approaches. Despite these advances, most methods remain limited to predefined categories, highlighting the need for open-vocabulary segmentation to handle diverse and dynamic scenarios such as UAV imagery.

2.2. Open-Vocabulary Semantic Segmentation

Open-vocabulary semantic segmentation (OVSS) extends beyond closed-set recognition by enabling segmentation for arbitrary categories through vision–language pretraining [16,17,42,43]. Compared with conventional semantic segmentation that is restricted to predefined label spaces, OVSS allows models to generalize to previously unseen or rare categories simply by providing textual prompts. This scalable capability is especially beneficial for real-world scenarios where object distributions are long-tailed or continuously evolving, making OVSS a promising and practical direction for open-world visual perception. With the success of large-scale vision–language models (VLMs) such as CLIP [12] and ALIGN [13], many approaches adapt image–text alignment to pixel-level tasks. Two-stage pipelines typically decouple region generation and semantic grounding. OpenSeg identifies class-agnostic proposals and links them to text embeddings [44]. ZegFormer [42] and ZSseg [14] follow similar strategies, while OVSeg [43] fine-tunes CLIP with region–text pairs. MaskCLIP [45] refines proposals using CLIP attention maps. Although effective, such approaches often depend on external mask generators trained with limited annotations, restricting generalization. One-stage methods directly predict masks from CLIP features. ZegCLIP [15] and SAN [17] leverage CLIP embeddings without requiring external proposal networks, while FC-CLIP [46] keeps the CLIP encoder frozen to preserve zero-shot capability. CAT-Seg [16] aggregates image–text similarities into a cost volume for robust fine-tuning. Despite these advances, existing OVSS approaches often inherit spatial ambiguity and coarse feature representations from their image-level pretraining, making it difficult to model complex scene distributions and recognize small-scale objects commonly found in UAV imagery. 
To address this, our method leverages the strong prior knowledge embedded in DINOv2 to enhance vision–language models in capturing complex scene distributions and fine-grained feature representations in UAV scenarios.

2.3. Vision Foundation Models

Vision foundation models [22,23] have achieved remarkable progress across a wide range of vision tasks. DINOv2 [22] learns versatile image- and patch-level representations via self-supervised training, serving as a strong general-purpose feature extractor. The Segment Anything Model (SAM) [23], trained on over one billion masks, delivers promptable, class-agnostic segmentation with strong zero-shot transfer, and its extension Semantic-SAM [24] further introduces category supervision to increase semantic granularity. Rein [25] enhances domain generalization in semantic segmentation by fine-tuning vision foundation models such as DINOv2 and SAM, leading to significant performance improvements. Mask DINO [26] extends DINO by introducing a mask prediction branch within a shared architecture, enabling a unified, scalable, and efficient framework for detection and segmentation tasks. However, despite their impressive capabilities, these representations remain purely visual and lack intrinsic language alignment, constraining their applicability to open-vocabulary segmentation, where semantic grounding to textual concepts is essential.

3. Methods

In this paper, we propose a hybrid framework for OVSS in UAV imagery, dubbed HOSU, which leverages the semantic priors of DINOv2 to unlock the potential of CLIP for modeling complex scene distributions and capturing fine-grained features of small-scale objects, two challenges that are particularly critical in UAV scenarios. The overall framework is illustrated in Figure 1. Specifically, we propose a distribution-aware fine-tuning method that aligns CLIP features with DINOv2 across intra- and inter-region distributions, enabling CLIP to inherit DINOv2’s spatial perception and fine-grained recognition and thereby enhancing UAV scene segmentation (Section 3.1). Meanwhile, to prevent the visual features of CLIP from drifting away from the text embeddings during fine-tuning, we propose a text-guided multi-level regularization method that leverages text embeddings to impose semantic constraints on the visual features across layers, preserving alignment between visual and textual representations (Section 3.2). Finally, we propose a mask-based feature consistency strategy that enforces consistency between masked and unmasked features, enabling the model to learn stable representations that do not change significantly when target regions are partially occluded (Section 3.3). Section 3.4 presents the overall training objective and the inference pipeline of our framework.

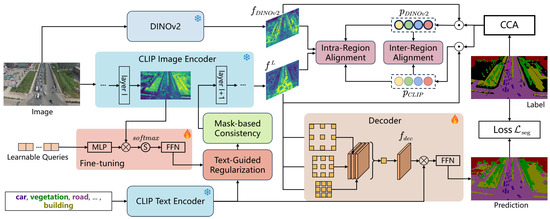

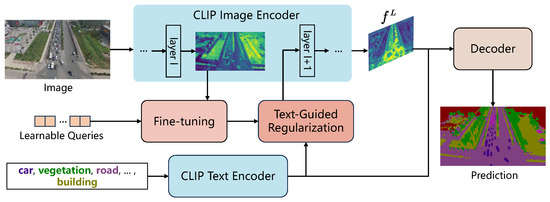

Figure 1.

An overview of our proposed framework. We introduce a set of learnable proxy queries to refine the CLIP visual features, avoiding the direct fine-tuning of its original weights that could compromise prior semantic knowledge. The refined features are guided by DINOv2 to capture both global scene structures and fine-grained object semantics through alignment across intra- and inter-region feature distributions. To prevent these refined features from drifting away from the CLIP semantic space and weakening cross-modal alignment, we design a text-guided multi-level regularization strategy that injects semantic signals from text embeddings into the visual stream at multiple depths, ensuring consistent correspondence between visual and textual representations. In addition, a mask-based consistency learning strategy is proposed to encourage stable and reliable feature representations under the partial occlusion of target regions. Finally, the reorganized CLIP features are processed with depthwise separable convolutions to capture multi-scale contextual information, and similarity matching with text embeddings is performed to generate the final prediction masks.

3.1. Distribution-Aware Fine-Tuning Method

Due to its holistic pretraining objective, CLIP often struggles to adapt to the complex spatial distributions of UAV imagery and to accurately recognize small-scale objects. In contrast, DINOv2 demonstrates strong capability in capturing localized and fine-grained spatial features. To exploit this complementarity, we propose a distribution-aware fine-tuning method that aligns CLIP features with those of DINOv2 across both intra- and inter-region distributions. Specifically, we construct region-level prototype vectors for both CLIP and DINOv2. For inter-region alignment, similarity matrices are computed from the prototypes, with the DINOv2-derived matrix supervising that of CLIP to guide global structural perception. For intra-region alignment, similarity distributions between each feature vector and its regional prototype are aligned to those of DINOv2, promoting finer semantic understanding within regions. However, directly tuning the CLIP visual encoder may disrupt its pretrained latent space and weaken its generalization ability. To prevent this, we keep the CLIP visual encoder frozen and introduce a set of learnable proxy queries that interact with its features. These queries serve as lightweight intermediaries to absorb the UAV-specific distribution shift and infuse fine-grained spatial cues from DINOv2, thereby achieving effective feature refinement without compromising the CLIP holistic semantic priors.

3.1.1. Construction of Region-Level Prototypes

The process begins with the extraction of visual features from CLIP as illustrated in Figure 1:

$$\{F^{l}\}_{l=1}^{L} = \mathcal{V}(x),$$

where $x$ denotes the input image, $\mathcal{V}$ denotes the visual encoder of CLIP, $F^{l}$ denotes the visual representation extracted from the $l$-th layer of $\mathcal{V}$, and $L$ denotes the total number of layers in the CLIP visual encoder. A set of randomly initialized learnable proxy queries $q$ is then introduced to refine these features, yielding refined feature representations $\hat{F}^{l}$ whose dimension at the $l$-th layer is denoted by $C_l$. The refinement uses an FFN consisting of a fully connected transformation followed by a non-linear activation function, and all layers share the same MLP weights. These learnable proxy queries act as semantic interfaces, enabling adaptive interaction with CLIP features to extract task-relevant semantics without disrupting the pretrained semantic priors of CLIP. Next, we perform connected component analysis (CCA) on the label $y$ of image $x$ to extract the connected region masks:

$$\{M_n\}_{n=1}^{N} = \mathrm{CCA}(y),$$

where $M_n$ denotes the mask of the $n$-th connected region and $N$ is the total number of connected regions. We then construct a prototype vector for each connected region from both CLIP and DINOv2 by extracting their final-layer visual features and applying element-wise multiplication between the region mask and the corresponding features. Here, $p_n^{c}$ and $p_n^{d}$ denote the prototype vectors of the $n$-th region constructed from CLIP and DINOv2 features, respectively; $F_c$ denotes the final-layer visual features of CLIP, with height $H_c$ and width $W_c$; and $F_d$ denotes the final-layer features of DINOv2, with height $H_d$ and width $W_d$. An adaptive downsampling operation $\mathcal{D}(\cdot)$ resizes each region mask $M_n$ to the resolution of the feature map it is multiplied with.
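The region extraction and prototype construction above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: it uses 4-connectivity for CCA, assumes the label map has already been brought to the feature resolution (standing in for the adaptive downsampling), and normalizes each prototype by the region area. The helper names `connected_regions` and `region_prototype` are hypothetical.

```python
from collections import deque


def connected_regions(labels):
    """Split a 2-D label map into binary masks, one per 4-connected same-class region."""
    h, w = len(labels), len(labels[0])
    seen = [[False] * w for _ in range(h)]
    masks = []
    for i in range(h):
        for j in range(w):
            if seen[i][j]:
                continue
            cls = labels[i][j]
            mask = [[0] * w for _ in range(h)]
            seen[i][j] = True
            queue = deque([(i, j)])
            while queue:  # breadth-first flood fill over pixels of the same class
                r, c = queue.popleft()
                mask[r][c] = 1
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < h and 0 <= nc < w and not seen[nr][nc]
                            and labels[nr][nc] == cls):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            masks.append(mask)
    return masks


def region_prototype(mask, feats):
    """Average the feature vectors feats[r][c] over the pixels where mask[r][c] == 1."""
    dim = len(feats[0][0])
    total = [0.0] * dim
    count = 0
    for r, row in enumerate(mask):
        for c, keep in enumerate(row):
            if keep:
                count += 1
                for d in range(dim):
                    total[d] += feats[r][c][d]
    return [v / count for v in total]


labels = [[0, 0, 1],
          [0, 1, 1],
          [2, 2, 1]]
feats = [[[float(r), float(c)] for c in range(3)] for r in range(3)]
masks = connected_regions(labels)
prototypes = [region_prototype(m, feats) for m in masks]
print(len(masks), prototypes[0])
```

Running CCA rather than grouping pixels by class means that two disconnected patches of the same class (for example, two separate buildings) yield two prototypes, which is presumably what lets the inter-region similarity matrix reflect the spatial layout of the scene.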

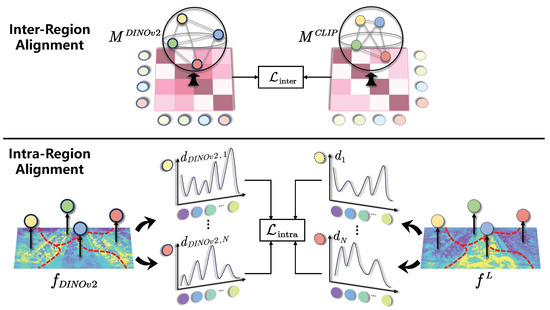

3.1.2. Enhancing Spatial Perception via Inter-Region Alignment

To enhance the capacity of CLIP for modeling complex scene distributions, we align its inter-region feature distributions with those of DINOv2 as illustrated in the upper part of Figure 2. We construct a similarity matrix from the prototype vectors of different regions to capture their pairwise relationships and reflect the overall structural distribution of the scene. This alignment encourages CLIP to better model scene-level semantic layouts and enhances its robustness in scenarios with diverse and irregular regions. Specifically, we first compute the pairwise similarities between the prototype vectors of different regions, obtaining the $N \times N$ inter-region similarity matrices $S^{c}$ and $S^{d}$ from the CLIP and DINOv2 prototypes, respectively. To encourage CLIP to better capture structural relationships across regions, we impose a consistency constraint on $S^{c}$ with respect to $S^{d}$, thereby aligning the inter-region distribution of CLIP with that of DINOv2:

$$\mathcal{L}_{\text{inter}} = \mathrm{Huber}\left(S^{c}, S^{d}\right),$$

where $\mathrm{Huber}(\cdot,\cdot)$ denotes the Huber loss, which ensures stable optimization by reducing sensitivity to outliers. The inter-region consistency loss $\mathcal{L}_{\text{inter}}$ aligns the similarity distribution of CLIP features with that of DINOv2, thereby injecting the spatial priors of DINOv2 into the CLIP feature space. Consequently, CLIP is better able to capture structural relationships among regions, which is particularly beneficial for modeling complex scene distributions in UAV imagery.
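A minimal sketch of the inter-region term follows. It assumes cosine similarity for the prototype similarity matrices (the text names cosine similarity only for the intra-region cost vectors), a Huber threshold of δ = 1, and mean reduction over all matrix entries; all function names are hypothetical.

```python
import math


def cosine(u, v, eps=1e-12):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)


def similarity_matrix(prototypes):
    """N x N matrix of pairwise cosine similarities between region prototypes."""
    return [[cosine(p, q) for q in prototypes] for p in prototypes]


def huber(x, delta=1.0):
    """Quadratic for |x| <= delta, linear beyond it."""
    ax = abs(x)
    return 0.5 * ax * ax if ax <= delta else delta * (ax - 0.5 * delta)


def inter_region_loss(protos_clip, protos_dino, delta=1.0):
    """Mean Huber loss between the CLIP and DINOv2 similarity matrices."""
    s_c = similarity_matrix(protos_clip)
    s_d = similarity_matrix(protos_dino)  # DINOv2 side acts as the target
    n = len(s_c)
    return sum(huber(s_c[i][j] - s_d[i][j], delta)
               for i in range(n) for j in range(n)) / (n * n)


# CLIP and DINOv2 prototypes may have different widths; both matrices are N x N.
clip_protos = [[1.0, 0.0], [0.0, 1.0]]
dino_protos = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
print(inter_region_loss(clip_protos, dino_protos))
```

Because the loss compares N × N similarity matrices rather than the features themselves, the CLIP and DINOv2 prototypes do not need to share a channel dimension, as the 2-D and 3-D prototypes in the example show.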

Figure 2.

A brief illustration of the proposed distribution-aware fine-tuning method. It consists of two complementary components: inter-region and intra-region semantic distribution alignment. For inter-region alignment, similarity matrices are constructed from the prototype vectors of different regions, with the matrix derived from DINOv2 prototypes used as supervision to guide CLIP features in modeling global scene structures. For intra-region alignment, similarity distributions are computed between each feature vector and its corresponding prototype within a region, and CLIP features are encouraged to match the similarity distributions of DINOv2, thereby capturing fine-grained semantics within regions.

3.1.3. Fine-Grained Representation Through Intra-Region Alignment

To further strengthen the local representations of CLIP features and improve their ability to capture small-scale objects in UAV imagery, we align their intra-region feature distributions with those of DINOv2 as illustrated at the bottom of Figure 2. For each region $R_n$, we compute the cosine similarity (Cosine) between each feature vector in the region and the region's prototype, forming a cost vector that characterizes the local distribution; this is done separately for CLIP and DINOv2. Here, $k$ indexes the features within region $R_n$, and a resize operation aligns the height and width of the CLIP and DINOv2 feature maps so that the $k$-th entries of the two cost vectors refer to the same spatial location. We then employ the KL divergence to measure the consistency between the two cost-vector distributions, thereby encouraging CLIP features to align with the fine-grained feature distributions captured by DINOv2.

By minimizing the KL divergence, CLIP features are guided to capture fine-grained local representations, thereby enhancing their ability to perceive small-scale objects that are common in UAV imagery. When combined with the inter-region loss, this design allows CLIP to inherit both the global structural awareness and the local fine-grained perception capabilities of DINOv2.
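The intra-region term can be sketched as follows for a single region. The text does not state how the cost vectors are turned into distributions, so this sketch assumes a temperature softmax (τ = 0.1, hypothetical), takes KL(DINOv2 ‖ CLIP) as the direction, and assumes the two feature maps have already been resized to a common resolution. Function names are hypothetical.

```python
import math


def cosine(u, v, eps=1e-12):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)


def softmax(xs, temperature=0.1):
    """Turn a cost vector into a probability distribution (numerically stable)."""
    m = max(xs)
    exps = [math.exp((x - m) / temperature) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def intra_region_loss(clip_feats, dino_feats, proto_clip, proto_dino, temperature=0.1):
    """clip_feats[k] and dino_feats[k] are features at the same k-th position of one region."""
    cost_clip = [cosine(f, proto_clip) for f in clip_feats]
    cost_dino = [cosine(f, proto_dino) for f in dino_feats]
    # The DINOv2 distribution is the target; CLIP is pushed towards it.
    return kl_divergence(softmax(cost_dino, temperature), softmax(cost_clip, temperature))


feats = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]  # three positions inside one region
print(intra_region_loss(feats, feats, [1.0, 0.0], [1.0, 0.0]))
print(intra_region_loss(feats, feats, [1.0, 0.0], [0.0, 1.0]))
```

Comparing distributions of similarity-to-prototype, rather than raw features, again sidesteps the channel mismatch between the two backbones and asks CLIP only to rank positions within a region the way DINOv2 does.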

3.2. Text-Guided Multi-Level Regularization

To prevent the CLIP visual features from drifting away from the original semantic space during fine-tuning—an issue that can lead to the collapse of its cross-modal alignment—we propose a text-guided multi-level regularization method as illustrated in Figure 3. This approach leverages the CLIP text embeddings to continuously inject semantic signals into visual features at multiple layers, constraining their representations to remain semantically coherent with the textual space and ensuring stable cross-modal alignment throughout fine-tuning.

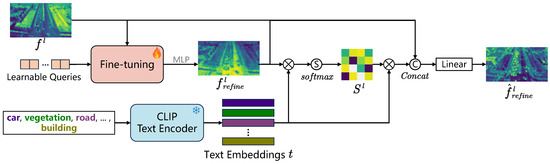

Figure 3.

A brief illustration of the proposed text-guided multi-level regularization. To prevent the CLIP visual features from drifting away from the original semantic space under the guidance of DINOv2—an issue that could collapse its cross-modal alignment—we impose semantic alignment constraints on each layer of the CLIP visual features using its own text embeddings. By continuously injecting semantic signals throughout the visual feature hierarchy during fine-tuning, this design ensures that CLIP maintains consistent correspondence between visual representations and language embeddings.

To do this, we first extract the text embeddings from the CLIP text encoder:

$$t = \{t_j\}_{j=1}^{J}, \qquad t_j = \mathcal{T}(c_j),$$

where $\mathcal{T}$ denotes the text encoder of CLIP, $c_j$ represents the text of the $j$-th class name, and $J$ is the total number of class texts. Subsequently, the text embeddings are employed to restructure the visual features across all layers. Specifically, we compute the dot product between the text embeddings $t$ and the refined CLIP visual features $\hat{F}^{l}$ of the $l$-th layer to obtain the similarity map $A^{l}$, which establishes an explicit association between the instance-level semantics of the visual features and the text embeddings. Building on this association, we leverage the similarity map $A^{l}$ together with the text embeddings $t$ to reorganize the visual features of CLIP into a representation $\tilde{F}^{l}$ that is propagated to the subsequent layers of CLIP. The reorganization combines its inputs by feature concatenation along the channel dimension (Concat), followed by a two-layer MLP with layer normalization (Linear). This design introduces multi-level semantic guidance by continuously injecting language-derived information into the visual feature reorganization process. As a result, it preserves the alignment between visual and textual representations, mitigates semantic drift during fine-tuning, and strengthens the model’s capacity to capture fine-grained feature representations and complex semantic distributions in UAV imagery.
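One possible reading of the reorganization step is sketched below for a list of spatial positions. Several details are assumptions not stated in the text and are hypothetical: the refined features and text embeddings share a width D, each row of the similarity map is softmax-normalized to weight the class embeddings into a "text context" vector, that context is what gets concatenated with the visual feature, and a single linear projection stands in for the two-layer MLP with layer normalization.

```python
import math


def softmax(xs, temperature=1.0):
    """Numerically stable softmax over one row of the similarity map."""
    m = max(xs)
    exps = [math.exp((x - m) / temperature) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def matvec(w, x):
    """Multiply matrix w (list of weight rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def reorganize(features, text_emb, proj, temperature=1.0):
    """features: per-position D-dim vectors; text_emb: J x D; proj: D x 2D weights."""
    out = []
    for f in features:
        sims = [sum(a * b for a, b in zip(f, t)) for t in text_emb]  # one row of A^l
        weights = softmax(sims, temperature)
        # Text context: class embeddings weighted by how well they match this position.
        ctx = [sum(wj * t[d] for wj, t in zip(weights, text_emb)) for d in range(len(f))]
        out.append(matvec(proj, f + ctx))  # Concat on channels, then project back to D
    return out


text_emb = [[1.0, 0.0], [0.0, 1.0]]
features = [[5.0, 0.0]]
keep_visual = [[1, 0, 0, 0], [0, 1, 0, 0]]  # hypothetical projection: pass-through
take_text = [[0, 0, 1, 0], [0, 0, 0, 1]]    # hypothetical projection: text context only
print(reorganize(features, text_emb, keep_visual))
print(reorganize(features, text_emb, take_text, temperature=0.1))
```

The two toy projections bracket what a learned projection can do: keep the visual feature untouched, or replace it with the embedding of the best-matching class. Anything in between pulls the visual feature towards the text space, which is the anti-drift effect described above.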

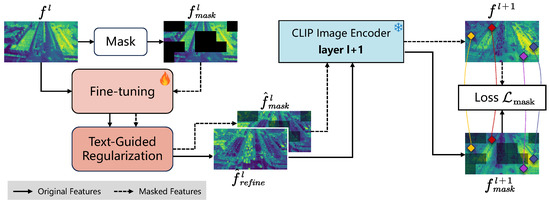

3.3. Masked Feature Consistency for Occlusion Robustness

Considering that occlusions are prevalent in UAV imagery and often cause partial loss of target features, leading to distribution shifts and unstable semantic representations, we propose a masked feature consistency strategy. This strategy deliberately masks part of the region features during training and enforces consistency with their unmasked counterparts, thereby preventing the model from over-relying on locally salient cues and encouraging it to learn more robust and occlusion-invariant semantic representations as illustrated in Figure 4. Specifically, a patch-wise mask is first generated by sampling from a uniform distribution:

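$$\mathcal{M}_{am+1:(a+1)m,\; bm+1:(b+1)m} = \left[\, v > r \,\right], \qquad v \sim \mathcal{U}(0, 1),$$

where $[\cdot]$ denotes the Iverson bracket, $m$ denotes the patch size, $r$ denotes the masking ratio, $a$ and $b$ indicate the patch indices, and an independent value $v$ is drawn for each patch. The masked features $F_m$ are then obtained by applying element-wise multiplication between the mask $\mathcal{M}$ and the original features $F$:

$$F_m = \mathcal{M} \odot F.$$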

Figure 4.

A brief illustration of the proposed masked feature consistency. A randomly sampled mask is first applied to the visual features, after which both the original and masked features are refined through the fine-tuning and text-guided regularization modules before being propagated to the next layer of the CLIP visual encoder. A consistency constraint is then enforced on the commonly unmasked regions, encouraging the model to learn stable and occlusion-invariant semantic representations.

Subsequently, these features are refined through the fine-tuning module and the text-guided regularization module and then propagated to the next layer of the CLIP visual encoder, producing two sets of features: $F^{l+1}$ from the original features and $F_m^{l+1}$ from the masked features. A consistency constraint is then imposed between these two feature sets and applied only to the regions that remain unmasked in both.

This consistency loss uses a temperature coefficient $\tau$ and is computed over the $H_l \times W_l$ spatial positions of the feature map at the $l$-th layer of the CLIP visual encoder. In practice, we apply masked feature consistency only at selected layers to avoid over-regularizing and disrupting feature representations; this preserves the integrity of the feature hierarchy while still providing sufficient consistency supervision. In this way, the representations in $F_m^{l+1}$ are supervised by their corresponding features in $F^{l+1}$, ensuring that the information retained after masking remains aligned with its unmasked counterpart. This consistency constraint encourages the model to learn stable and robust semantic representations that are invariant to occlusion, thereby reducing its sensitivity to missing local cues and enhancing generalization under occluded conditions in UAV imagery.
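The masking and consistency steps can be sketched as follows. This is a hedged illustration, not the authors' implementation: the mask uses one uniform draw per patch and keeps the patch when the draw exceeds the ratio r (as in the Iverson-bracket formulation), the consistency term is assumed to be a temperature-softened KL divergence over channels (the text gives a temperature τ but not the exact form), and the sum over kept positions is divided by H × W. All names and values are hypothetical.

```python
import math
import random


def patch_mask(height, width, patch, ratio, rng=random):
    """1 keeps a position, 0 masks it; one uniform draw per patch, kept if draw > ratio."""
    rows, cols = math.ceil(height / patch), math.ceil(width / patch)
    keep = [[1 if rng.random() > ratio else 0 for _ in range(cols)] for _ in range(rows)]
    return [[keep[h // patch][w // patch] for w in range(width)] for h in range(height)]


def apply_mask(feats, mask):
    """Element-wise product of an H x W mask with an H x W grid of channel vectors."""
    return [[[v * mask[i][j] for v in vec] for j, vec in enumerate(row)]
            for i, row in enumerate(feats)]


def softmax(xs, tau):
    m = max(xs)
    exps = [math.exp((x - m) / tau) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def consistency_loss(orig, masked, mask, tau=0.5, eps=1e-12):
    """Temperature-softened KL(orig || masked), summed over kept positions, divided by H*W."""
    h, w = len(mask), len(mask[0])
    total = 0.0
    for i in range(h):
        for j in range(w):
            if mask[i][j]:  # only positions left unmasked contribute
                p = softmax(orig[i][j], tau)  # target from the unmasked branch
                q = softmax(masked[i][j], tau)
                total += sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))
    return total / (h * w)


rng = random.Random(0)
mask = patch_mask(8, 8, patch=4, ratio=0.5, rng=rng)
feats = [[[float(i), float(j)] for j in range(8)] for i in range(8)]
masked = apply_mask(feats, mask)
print(sum(map(sum, mask)), "of 64 positions kept")
# Hypothetical next-layer outputs of the two branches at every position.
out_orig = [[[1.0, 0.0] for _ in range(8)] for _ in range(8)]
out_masked = [[[0.5, 0.5] for _ in range(8)] for _ in range(8)]
print(consistency_loss(out_orig, out_masked, mask))
```

At positions that stay unmasked, the two branches receive identical inputs, so the loss can only become nonzero after the next encoder layer has mixed in context from the masked positions. This is why the constraint is applied to the propagated features rather than to the masked input itself.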

3.4. Overall Training Objective and Inference Pipeline

Finally, we design a simple decoder consisting of multiple parallel 3 × 3 depthwise separable convolutions [47] with different dilation rates [48], followed by a 1 × 1 convolution for feature fusion as illustrated in Figure 1. At this stage, the visual features generated by CLIP are processed through three parallel 3 × 3 depthwise separable convolutions with distinct dilation rates, producing feature maps that capture complementary contextual information at multiple scales. These multi-scale feature maps are then fused by a 1 × 1 convolution to form a unified global feature representation with dimensionality matching that of the CLIP text embeddings. Finally, matrix multiplication is performed along the channel dimension to establish visual–textual correspondence, and cross-entropy loss is computed against the ground-truth labels to supervise the segmentation learning process:

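$$\mathcal{L}_{\text{seg}} = -\frac{1}{HW} \sum_{h=1}^{H} \sum_{w=1}^{W} \log \frac{\exp\left(P_{y'_{h,w},h,w}\right)}{\sum_{j=1}^{J} \exp\left(P_{j,h,w}\right)}, \qquad y' = \mathrm{Downsample}(y),$$

where $P$ denotes the prediction map obtained by matching the decoder output with the $J$ text embeddings, $H \times W$ denotes the spatial size of $P$, and Downsample denotes the downsampling operation applied to the label $y$. Accordingly, the overall training objective is formulated as

$$\mathcal{L} = \mathcal{L}_{\text{seg}} + \lambda_1 \mathcal{L}_{\text{inter}} + \lambda_2 \mathcal{L}_{\text{intra}} + \lambda_3 \mathcal{L}_{\text{cons}},$$

where $\mathcal{L}_{\text{inter}}$, $\mathcal{L}_{\text{intra}}$, and $\mathcal{L}_{\text{cons}}$ denote the inter-region alignment, intra-region alignment, and masked feature consistency losses, respectively, and $\lambda_1$, $\lambda_2$, and $\lambda_3$ are hyperparameters that balance the relative contributions of the different loss terms.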
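To see why the decoder described at the start of this subsection uses depthwise separable convolutions with several dilation rates, the arithmetic below compares weight counts and receptive extents. The channel width of 512 and the dilation rates (1, 2, 4) are hypothetical; the text does not state the values used, and biases are ignored.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights of a k x k convolution mapping c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k=3):
    """k x k depthwise filter per input channel plus a 1 x 1 pointwise projection."""
    return k * k * c_in + c_in * c_out


def effective_kernel(k, dilation):
    """Spatial extent covered by a k x k kernel with the given dilation rate."""
    return k + (k - 1) * (dilation - 1)


channels = 512          # hypothetical feature width
dilations = (1, 2, 4)   # hypothetical rates for the three parallel branches
per_branch = depthwise_separable_params(channels, channels)
print(standard_conv_params(channels, channels), per_branch)
print([effective_kernel(3, d) for d in dilations])
```

At this width, each separable branch needs roughly 8.8 times fewer weights than a standard 3 × 3 convolution, and raising the dilation rate widens the spatial context of a branch without adding any weights. This fits the stated goal of a simple decoder that still captures multi-scale context.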

During inference, as illustrated in Figure 5, the image and text are first processed by the CLIP visual and text encoders to obtain the visual features and text embeddings. These representations are then passed through the decoder to generate the prediction maps, followed by an upsampling operation. Finally, the segmentation masks are obtained by assigning each pixel to the category with the highest similarity score:

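$$\hat{y}_{h,w} = \arg\max_{j \in \{1, \dots, J\}} \mathrm{Upsample}(P)_{j,h,w},$$

where $P$ denotes the prediction map produced by the decoder, $J$ is the number of candidate class names, Upsample denotes the upsampling operation, and $h$ and $w$ represent the spatial indices of the image. Notably, DINOv2 is not involved during inference, so the deployed model consists only of CLIP with the proxy-query refinement, the text-guided regularization, and the lightweight decoder, keeping our method concise, efficient, and broadly applicable.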
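The inference rule can be illustrated on a toy feature map. This sketch assumes the decoder output already has the same width as the text embeddings and uses nearest-neighbour upsampling by an integer factor as a stand-in for Upsample (the text does not specify the interpolation mode, and bilinear is common). Function names are hypothetical.

```python
def pixel_text_scores(features, text_emb):
    """Dot product between each pixel's feature vector and every class embedding."""
    return [[[sum(a * b for a, b in zip(f, t)) for t in text_emb] for f in row]
            for row in features]


def upsample_nearest(grid, factor):
    """Nearest-neighbour upsampling of an h x w grid by an integer factor."""
    return [[grid[i // factor][j // factor] for j in range(len(grid[0]) * factor)]
            for i in range(len(grid) * factor)]


def segment(features, text_emb, factor):
    """Upsample the class scores, then label each pixel with its highest-scoring class."""
    scores = upsample_nearest(pixel_text_scores(features, text_emb), factor)
    return [[max(range(len(s)), key=s.__getitem__) for s in row] for row in scores]


features = [[[1.0, 0.0], [0.0, 1.0]]]  # 1 x 2 map of 2-D features
classes = [[1.0, 0.0], [0.0, 1.0]]     # embeddings for two class names
print(segment(features, classes, factor=2))
# Open vocabulary: a new class name only adds a row to the text embeddings.
print(segment(features, classes + [[0.2, 2.0]], factor=2))
```

The scores are upsampled before the argmax, following the order described above; with nearest-neighbour interpolation the order does not matter, but with bilinear interpolation it yields smoother class boundaries. The second call shows the open-vocabulary property: adding a class requires only a new text embedding, with no retraining.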

Figure 5.

A brief illustration of the proposed open-vocabulary framework inference pipeline. During inference, the CLIP visual features at each layer are refined by proxy queries and regularized with text embeddings before being propagated to the next layer. The final visual features are then integrated with the CLIP text embeddings through a simple decoder to establish semantic correspondence and generate prediction masks. Notably, DINOv2 features are omitted at this stage, keeping the pipeline concise, efficient, and broadly applicable.

4. Results

4.1. Experimental Setup

4.1.1. Datasets

In our experiments, we evaluate the proposed approach on a diverse set of UAV datasets encompassing both real-world and synthetic scenarios as summarized in Table 1. These datasets differ in scale, resolution, semantic categories, and scene types, thereby offering a comprehensive benchmark to assess semantic segmentation methods across different flight conditions and viewpoints.

Table 1.

Details of the pixel-level UAV datasets used in this study, including dataset links, number of categories, and the corresponding class definitions.

The SynDrone dataset [49] is a large-scale synthetic benchmark designed for UAV scene understanding in urban environments. It contains 72,000 annotated frames across eight video sequences, with 60,000 images allocated for training and 12,000 for testing. Each frame has a resolution of 1920 × 1080 pixels and is annotated with 28 semantic categories. To capture diverse perspectives, the dataset incorporates camera angles of 30°, 60°, and 90°. All data were generated using a customized version of the CARLA simulator.

The Aeroscapes dataset [50] consists of 3269 UAV-captured images at a resolution of 1280 × 720, each annotated at the pixel level. It defines 11 semantic categories, including person, vehicle, road, building, and vegetation, and focuses on aerial views of urban environments such as streets, parking lots, and parks.

The ICG Drone dataset [54] consists of high-resolution aerial imagery designed to support semantic interpretation of residential and green urban environments. It offers pixel-level annotations for 22 semantic categories covering a wide range of residential structures and objects. A high-resolution camera was used to capture images at 6000 × 4000 pixels (24 Mpx), resulting in 400 publicly available images.

The UAVid dataset [53] consists of 30 video sequences, from which 420 aerial frames are annotated at the pixel level. The dataset is split into 200 training, 70 validation, and 150 testing images, with resolutions of 3840 × 2160 or 4096 × 2160 pixels. It defines seven semantic classes, including buildings, roads, cars, trees, low vegetation, and pedestrians.

The Urban Drone Dataset (UDD) [52] contains imagery collected from multiple Chinese cities at medium to high altitudes, covering diverse urban landscapes. In our experiments, we use two labeled subsets. UDD5 includes 160 high-resolution aerial images (3840 × 2160, 4096 × 2160, 4000 × 3000) with pixel-level annotations for four classes—vegetation, building, road, and vehicle—split into 120 for training and 40 for validation. UDD6, acquired under a similar protocol, contains 141 images (106 train/35 val) annotated for five semantic categories: facade, road, vegetation, vehicle, and roof.

The Varied Drone Dataset (VDD) [51] is designed to overcome the limitations of existing UAV benchmarks in terms of scale and scene diversity. It consists of 400 high-resolution aerial images of size 4000 × 3000 pixels, divided into 280 training, 80 validation, and 40 testing samples. Each image is annotated at the pixel level for six semantic categories. The dataset covers a broad spectrum of environments, including urban, industrial, rural, and natural scenes, with diverse viewpoints and illumination conditions.

We adopt mean Intersection over Union (mIoU) across all semantic categories as the evaluation metric, with higher values reflecting better segmentation performance.
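For reference, mIoU can be computed from a confusion matrix accumulated over the whole evaluation set, as is common practice. The sketch below assumes integer label maps and an ignore index of 255. The helper names and the ignore value are assumptions, not details stated in this paper.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes, ignore_index=255):
    """(num_classes x num_classes) counts: rows are ground truth, columns are predictions."""
    valid = gt != ignore_index
    idx = num_classes * gt[valid].astype(np.int64) + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def mean_iou(conf):
    """Per-class IoU = TP / (TP + FP + FN); classes absent everywhere are left as NaN."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp
    fn = conf.sum(axis=1) - tp
    denom = tp + fp + fn
    iou = np.full(conf.shape[0], np.nan)
    np.divide(tp, denom, out=iou, where=denom > 0)
    return iou, float(np.nanmean(iou))


gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])
conf = confusion_matrix(pred, gt, num_classes=3)
iou, miou = mean_iou(conf)  # per-class IoU = [1/3, 2/3, 1/2], mIoU = 0.5
```

If you accumulate a single confusion matrix over all images before dividing, you avoid the undefined per-image IoU values that arise when a class is absent from an image.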

4.1.2. Implementation Details

Our framework is implemented on the Detectron2 codebase. CLIP [12] is adopted as the backbone, while DINOv2 [22] is incorporated as an auxiliary prior, with both kept frozen during training. DINOv2 is used solely as a training prior and is not involved during inference. The decoder, equipped with dilation rates of 1, 6, 12, and 18, together with the query embeddings, is optimized using AdamW [55]. We set the learning rate to for the decoder and for the queries, without applying weight decay to the query parameters. A linear learning rate warmup with 1.5k iterations is applied at the beginning of training. The optimizer uses default momentum coefficients . All models are trained for 60k iterations with a batch size of 4 on four NVIDIA RTX 4090 GPUs. To standardize the input, images are randomly cropped to pixels. Data augmentation follows the DACS pipeline [56], including random cropping, horizontal flipping, color jittering, and Gaussian blurring.
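The optimizer setup can be sketched in plain Python under two assumptions. First, the warmup ramps the learning-rate multiplier linearly from 0.001 (Detectron2's default `SOLVER.WARMUP_FACTOR`) to 1 over the 1.5k warmup iterations. Second, the post-warmup schedule, which is not described here, is held at a constant multiplier of 1. The base learning rates are left as arguments. `linear_warmup_factor` and `build_param_groups` are hypothetical helpers.

```python
def linear_warmup_factor(iteration, warmup_iters=1500, warmup_factor=0.001):
    """Learning-rate multiplier ramping linearly from warmup_factor to 1."""
    if iteration >= warmup_iters:
        return 1.0  # post-warmup schedule not given in the text; held constant here
    alpha = iteration / warmup_iters
    return warmup_factor * (1.0 - alpha) + alpha


def build_param_groups(decoder_params, query_params, decoder_lr, query_lr, decoder_wd):
    """Per-group settings in the list-of-dicts form accepted by torch.optim.AdamW.

    The proxy queries get no weight decay, as stated in the text.
    """
    return [
        {"params": decoder_params, "lr": decoder_lr, "weight_decay": decoder_wd},
        {"params": query_params, "lr": query_lr, "weight_decay": 0.0},
    ]


# Multiplier at the start, midway through, and after the 1.5k-iteration warmup.
factors = [linear_warmup_factor(i) for i in (0, 750, 1500, 60000)]
```

In PyTorch, pass the returned groups to `torch.optim.AdamW`, and apply `linear_warmup_factor` as the `lr_lambda` of `torch.optim.lr_scheduler.LambdaLR`, stepped once per iteration.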

4.2. Main Results

4.2.1. Quantitative Comparisons with Previous Methods

To comprehensively evaluate the effectiveness and generalization ability of our framework, we conduct experiments under four training settings using SynDrone, UDD5, VDD, and Aeroscapes, and evaluate performance across six UAV remote sensing benchmarks: VDD, UDD5, UDD6, Aeroscapes, UAVid, and ICG Drone. We compare our method against a diverse set of recent open-vocabulary segmentation approaches, including ZegFormer [42], OpenSeeD [57], SED [18], OVSeg [43], SAN [17], and CAT-Seg [16], ensuring a rigorous and fair evaluation.

As shown in Table 2, our method consistently outperforms recent open-vocabulary segmentation approaches across training configurations and evaluation datasets, supporting both its effectiveness and its generalization capability. When trained on SynDrone, which contains synthetic UAV imagery with clean textures and balanced semantic distributions, our framework still generalizes well to real-world datasets such as VDD and ICG Drone, improving on CAT-Seg by 3.1 and 3.4 mIoU, respectively. We attribute this synthetic-to-real transfer to the text-guided regularization, which preserves CLIP's high-level semantic priors and allows the model to adapt to real textures and material variations without overfitting to synthetic appearances.

Table 2.

Performance comparison between the proposed method and existing methods. Top three results are highlighted as best, second, and third, respectively.

Under the UDD5 training configuration, which consists of high-altitude orthographic UAV imagery dominated by large homogeneous structures (roads, roofs, and vegetation), HOSU achieves significant gains when tested on low-altitude oblique datasets such as Aeroscapes and UAVid. Despite drastic differences in viewpoint, scale, and object density, our framework outperforms SAN and CAT-Seg on Aeroscapes by 3.9 and 3.2 mIoU, respectively. We attribute this cross-altitude generalization to the synergy between inter-region alignment, which enhances global contextual reasoning, and intra-region alignment, which improves fine-grained local perception; together they keep the model stable under severe perspective distortion and occlusion.

When trained on VDD, which provides diverse medium-to-high-altitude imagery with a relatively limited set of broad land-cover categories (e.g., roads, buildings, and vegetation), HOSU generalizes well to datasets with richer category diversity, such as ICG Drone and Aeroscapes, attaining gains of 2.5 and 2.4 mIoU, respectively. This indicates that the model transfers from coarse, low-category training data to dense, semantically complex test domains. We attribute this capability to the text-guided semantic regularization and distributional alignment mechanisms, which jointly preserve semantic distinctiveness and transferable knowledge across levels of category granularity.

Finally, when trained on Aeroscapes, which features low-altitude oblique imagery with strong viewpoint variation, frequent occlusion, and numerous small dynamic objects, HOSU generalizes robustly to high-altitude structural datasets such as UDD6 and VDD, improving on CAT-Seg by 3.5 mIoU on VDD. Despite the clear altitude and perspective gap between these domains, the framework retains stable performance. We attribute this to two mechanisms: masked feature consistency keeps representations stable under partial visibility, and multi-scale aggregation captures both global structure and fine local detail.

These results show that HOSU generalizes across diverse UAV imaging scenarios and domain shifts. Oblique or low-altitude datasets such as Aeroscapes and UAVid feature frequent occlusion, viewpoint distortion, and dense small or dynamic targets, and conventional open-vocabulary models often struggle on them because their features fragment under partial visibility. HOSU's masked feature consistency enforces stable representations between masked and unmasked views, improving robustness to occlusion and geometric distortion. High-altitude orthographic datasets (UDD5, UDD6, and VDD) are dominated by broad homogeneous regions such as roads, roofs, and vegetation; here, HOSU's multi-scale aggregation and global semantic alignment let the model capture large-scale contextual dependencies while maintaining semantic distinctiveness. In high-resolution datasets such as ICG Drone and UAVid, where fine boundaries and detailed textures are critical, its cross-scale feature fusion and distributional alignment refine edges and enhance class separability, yielding more precise segmentation of small and intricate objects.

Taken together, HOSU generalizes robustly along four critical UAV domain dimensions: synthetic to real imagery, high-altitude (nadir) to low-altitude (oblique) views, coarse to fine-grained categories, and low-altitude (oblique) to high-altitude (nadir) transfer. The largest cross-domain gains occur for UDD5 → Aeroscapes (+3.9 mIoU over SAN and +3.2 over CAT-Seg) and Aeroscapes → VDD (+3.5 over CAT-Seg), suggesting that HOSU learns scale- and viewpoint-invariant representations that remain consistent across heterogeneous imaging geometries. Overall, these findings support the transferability, adaptability, and practicality of HOSU for UAV-based remote sensing.

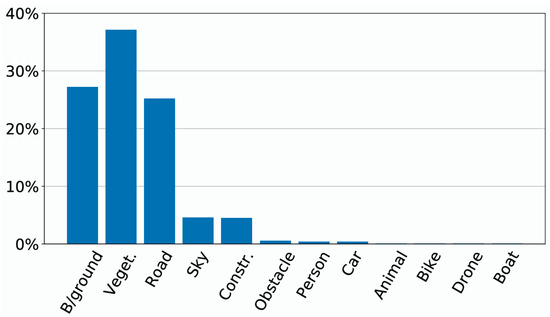

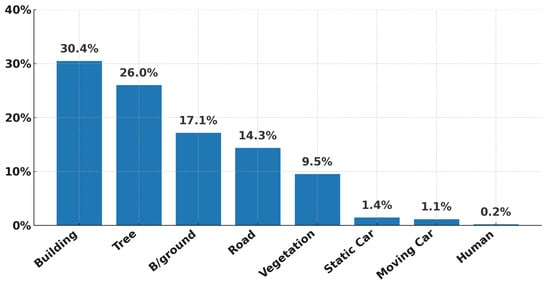

To further validate the proposed method, we compare class-wise IoU against CAT-Seg for models trained on UDD5 and tested on Aeroscapes (Table 3 and Figure 6). Our method improves nearly all categories. The largest gains appear in minority classes with extremely low pixel proportions, such as Drone (+9.8%), Obstacle (+8.0%), and Animal (+6.5%), which together account for less than 2% of all pixels. These improvements suggest that HOSU alleviates the long-tailed imbalance problem by improving recognition of small or infrequent objects rather than simply boosting dominant classes. At the same time, the dominant categories, such as Road, Vegetation, and Sky, which together make up over 85% of the dataset, are not sacrificed: our model still improves Road (+1.0%) and Vegetation (+5.2%), as well as Construction (+2.8%).

A similar trend appears for models trained on VDD and tested on UAVid (Table 4 and Figure 7). UAVid is dominated by large-area classes such as Building (30.4%) and Tree (26.0%), while rare categories such as Static Car (1.4%) and Human (0.2%) are severely underrepresented. Our method achieves notable improvements on these scarce categories, namely Static Car (+3.2%) and Human (+3.9%), while still improving common categories such as Building and Vegetation. Our framework therefore not only raises mIoU but also delivers more balanced gains across heterogeneous object categories, an essential property for UAV imagery, which is inherently affected by severe class imbalance.

Table 3.

Comparison of the class-wise IoU with training on UDD5 and testing on Aeroscapes. Red colors indicate the relative gains of our method compared to CAT-Seg for each class.

Figure 6.

Pixel distribution of the Aeroscapes dataset.

Table 4.

Comparison of the class-wise IoU with training on VDD and testing on UAVid. Red colors indicate the relative gains of our method compared to CAT-Seg for each class.

Figure 7.

Pixel distribution of the UAVid dataset.
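Pixel distributions such as those in Figures 6 and 7 can be obtained by counting labels over a dataset. The following is a minimal sketch, assuming integer label maps with 255 as the ignore value; the ignore value, the 2% threshold in the usage note, and the helper names are illustrative assumptions.

```python
import numpy as np


def pixel_distribution(label_maps, num_classes, ignore_index=255):
    """Fraction of labelled pixels per class over an iterable of (H, W) label maps."""
    counts = np.zeros(num_classes, dtype=np.int64)
    for lab in label_maps:
        valid = lab[lab != ignore_index]  # 1-D array of labelled pixels
        # A label >= num_classes lengthens the bincount and makes += fail loudly.
        counts += np.bincount(valid, minlength=num_classes)
    return counts / counts.sum()


def minority_classes(proportions, threshold):
    """Indices of classes whose pixel share falls below `threshold`."""
    return np.flatnonzero(proportions < threshold)


maps = [np.array([[0, 0, 1], [0, 2, 2]]), np.array([[0, 255], [1, 0]])]
props = pixel_distribution(maps, num_classes=3)  # shares of classes 0, 1, 2
```

On a real dataset, `minority_classes(props, 0.02)` would list the classes that each cover less than 2% of the labelled pixels.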

4.2.2. Qualitative Comparisons with Previous Methods

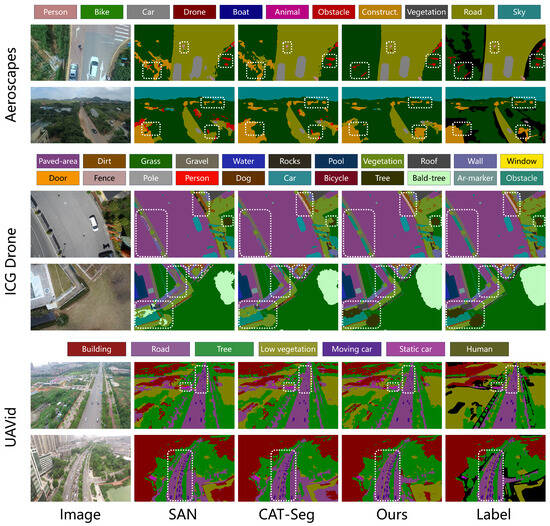

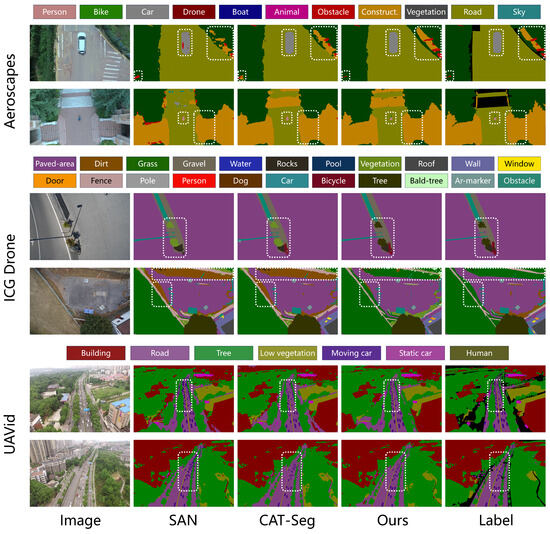

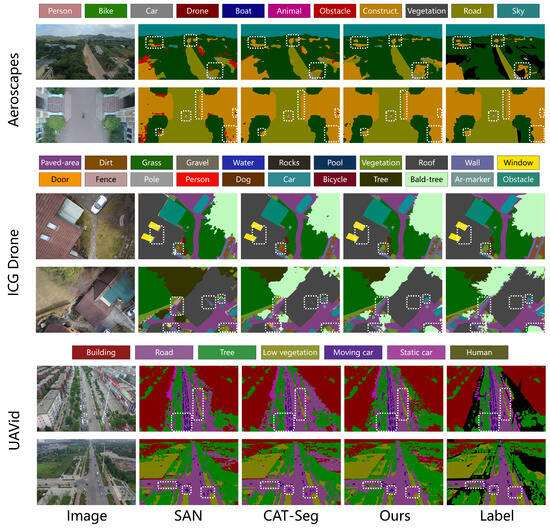

To further demonstrate the advantages of our method, we conduct qualitative comparisons with SAN and CAT-Seg under four different training settings.

Figure 8 presents qualitative results on the Aeroscapes, ICG Drone, and UAVid datasets with all models trained on SynDrone. Compared with SAN and CAT-Seg, our method generates predictions that more closely match the ground-truth labels, particularly in delineating fine-grained boundaries and preserving small-scale objects. On Aeroscapes, our framework successfully detects small obstacles and provides more accurate localization of construction regions. On ICG Drone, it achieves clearer separation among visually similar vegetation classes such as trees, grass, and shrubs. On UAVid, it identifies a greater number of small-scale vehicles with higher precision. These results demonstrate that our method offers a stronger capability to capture complex scene distributions while delivering more accurate localization and recognition of small-scale targets.

Figure 8.

Qualitative comparison with previous methods, where all models are trained on the SynDrone dataset. From left to right: input image, predictions from SAN, CAT-Seg, and our method, and the ground-truth label. White dashed boxes highlight regions where the predictions differ. The color bar above each dataset maps colors to semantic categories.

Figure 9 presents comparisons on the same three benchmarks with models trained on UDD5. Our method generates segmentation maps that align more closely with the ground truth, particularly in complex UAV scenarios. On Aeroscapes, it separates roads more clearly from surrounding vegetation and construction and localizes obstacles more precisely. On ICG Drone, it distinguishes dirt from grass more effectively, reducing the misclassifications common in SAN and CAT-Seg. On UAVid, it delineates lanes and vehicles more sharply and preserves structural consistency across dense urban regions. These results confirm the advantage of our framework in handling diverse and challenging aerial scenes.

Figure 9.

Qualitative comparison with previous methods, where all models are trained on the UDD5 dataset. From left to right: input image, predictions from SAN, CAT-Seg, and our method, and the ground-truth label. White dashed boxes highlight regions where the predictions differ. The color bar above each dataset maps colors to semantic categories.

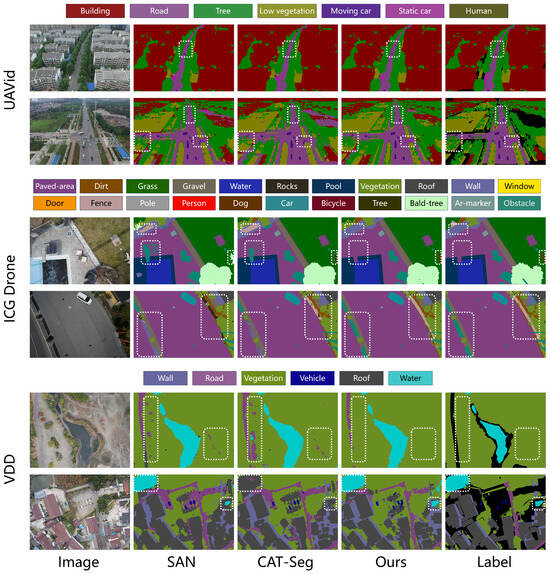

Figure 10 presents qualitative comparisons when models are trained on VDD. As shown, our framework consistently produces segmentation maps that are closer to the ground truth than baseline approaches. On Aeroscapes, it reduces boundary ambiguities between roads and vegetation and more accurately detects small objects such as bikes, which SAN and CAT-Seg often miss. On ICG Drone, it captures the structural layout of roads and buildings with greater fidelity, avoids fragmented predictions, and effectively distinguishes grass from other vegetation. On UAVid, it yields more coherent region distributions across low vegetation, trees, and roads while providing finer localization and recognition of vehicles. These results highlight the superior capability of our approach in handling both large-scale structures and small, intricate targets across diverse UAV scenes.

Figure 10.

Qualitative comparison with previous methods, where all models are trained on the VDD dataset. From left to right: input image, predictions from SAN, CAT-Seg, and our method, and the ground-truth label. White dashed boxes highlight regions where the predictions differ. The color bar above each dataset maps colors to semantic categories.

Figure 11 presents results on UAVid, ICG Drone, and VDD with all models trained on Aeroscapes. Compared to SAN and CAT-Seg, our method consistently yields predictions that better match the ground truth. On UAVid, it achieves more precise recognition of small-scale vehicles and trees, avoiding the over-smoothed outputs of baselines. On ICG Drone, it preserves the semantic structure of roads and surrounding areas more faithfully, reducing fragmented or implausible predictions. On VDD, it demonstrates stronger capability in distinguishing roads from vegetation and provides clearer delineation of water bodies, producing more coherent and reliable segmentation maps across diverse scenarios.

Figure 11.

Qualitative comparison with previous methods, where all models are trained on the Aeroscapes dataset. From left to right: input image, predictions from SAN, CAT-Seg, and our method, and the ground-truth label. White dashed boxes highlight regions where the predictions differ. The color bar above each dataset maps colors to semantic categories.

Overall, these qualitative comparisons provide compelling evidence of the effectiveness of our framework across diverse UAV scenarios. Unlike prior methods that often generate fragmented predictions or overlook small-scale structures, our approach consistently produces segmentation maps that are more coherent, accurate, and semantically consistent. This demonstrates its strong generalization ability under varying flight conditions, viewpoints, and scene distributions, while also capturing fine-grained details such as small vehicles, obstacles, and vegetation boundaries. These strengths confirm the capability of our framework to model complex scene distributions and accurately represent fine-grained semantic details.

4.3. Ablation Studies and Further Analysis

4.3.1. Ablation Study on Primary Components

Table 5 presents an ablation study under the UDD5 training setting, with evaluation on VDD, UDD6, Aeroscapes, UAVid, and ICG Drone. We progressively incorporate four components: the intra-region loss, the inter-region loss, the text-guided multi-level regularization (TG), and the masked feature consistency loss. The baseline (row 1) directly trains CLIP and generates predictions through the proposed decoder without any of these components.

Table 5.

Ablation study on the primary components of the proposed framework conducted with training on the UDD5 dataset, where TG denotes the proposed text-guided multi-level regularization.

The improvement in Row 2 indicates that the intra-region alignment loss enhances the ability of CLIP to capture fine-grained details and small objects by aligning its local feature distributions with those of DINOv2. This yields sharper boundaries and more accurate recognition of small or structurally complex targets, particularly on VDD (+1.7), Aeroscapes (+1.1), and UAVid (+0.9). Row 3 shows that the inter-region alignment loss strengthens global semantic reasoning by aligning feature distributions across semantic regions, enabling the model to capture large-scale contextual dependencies and spatial layouts. The improvement is most apparent on VDD (+1.5) and UDD6 (+1.0), where extensive homogeneous regions such as roads and roofs dominate, indicating enhanced scene-level coherence and semantic consistency. When both alignment losses are used together (Row 4), the model achieves balanced improvements across all benchmarks, indicating that local feature refinement and global reasoning are complementary: the model preserves small targets within globally consistent scene structures. Incorporating text-guided regularization (Row 5) further improves overall performance by maintaining vision–language alignment. By integrating the CLIP text embeddings into the visual stream at multiple stages, it anchors refined features within the original semantic space and prevents drift caused by distribution alignment, yielding clear gains on datasets with fine-grained categories such as Aeroscapes (+1.3), UAVid (+0.9), and ICG Drone (+1.1). Finally, adding the masked feature consistency loss (Row 6) produces consistent gains across all UAV benchmarks, especially Aeroscapes (+0.9), UAVid (+0.6), and ICG Drone (+0.8), where occlusions and partial visibility are frequent. By enforcing consistency between masked and unmasked views of the same scene, this module reduces dependence on fully visible regions and strengthens robustness to occlusion, viewpoint changes, and missing content. Overall, these components jointly enhance fine-grained recognition, global reasoning, semantic stability, and occlusion robustness, leading to better generalization across diverse UAV segmentation tasks.
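The consistency objective evaluated in Row 6 can be illustrated with a short sketch. The exact loss is not restated in this section, so its form here is an assumption: per-pixel features of the masked view are compared with those of the unmasked view by cosine distance, averaged over all positions. In an autodiff framework the unmasked view would typically act as a fixed target (stop-gradient), which NumPy does not model. `masked_consistency_loss` is a hypothetical name.

```python
import numpy as np


def masked_consistency_loss(feat_masked, feat_full, eps=1e-8):
    """Mean (1 - cosine similarity) between per-pixel features of two views.

    feat_masked, feat_full: (C, H, W) features of the masked and unmasked views.
    """
    a = feat_masked / (np.linalg.norm(feat_masked, axis=0, keepdims=True) + eps)
    b = feat_full / (np.linalg.norm(feat_full, axis=0, keepdims=True) + eps)
    return float(np.mean(1.0 - (a * b).sum(axis=0)))


rng = np.random.default_rng(0)
full = rng.normal(size=(16, 8, 8))
noisy = full + 0.1 * rng.normal(size=full.shape)  # stand-in for masked-view features
loss_same = masked_consistency_loss(full, full)   # identical views: close to 0
loss_noisy = masked_consistency_loss(noisy, full)  # small but positive
```

The loss ranges from 0 for identical per-pixel directions to 2 for opposite ones, so it penalizes features that change direction when part of the scene is hidden.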

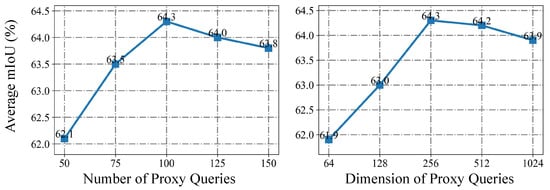

4.3.2. Effect of Proxy Query Length and Dimension

The left plot of Figure 12 illustrates the impact of the number of proxy queries. As the length increases from 50 to 100, the mIoU rises steadily from 62.1 to 64.3, suggesting that longer query sequences capture richer contextual cues. However, extending the length further to 125 or 150 slightly lowers performance (64.0 and 63.8, respectively), likely because excessive queries introduce redundancy and noise that weaken semantic understanding.

Figure 12.

Impact of proxy query length and dimension on segmentation performance trained on UDD5 and evaluated on the Aeroscapes dataset.

The right plot of Figure 12 examines the effect of query dimensionality. Increasing the dimension from 64 to 256 yields clear gains, with mIoU improving from 62.0 to 64.3, as higher-dimensional embeddings capture more discriminative details. Performance remains comparable at 512 (64.2) but drops at 1024 (63.9), suggesting that excessively high-dimensional queries introduce redundancy and raise the risk of overfitting, making the model overly sensitive to minor details.

4.3.3. Effect of Loss Weights

Table 6, Table 7 and Table 8 report the ablation study on the three loss weights that balance the intra-region, inter-region, and masked feature consistency objectives under the UDD5 training setting. For all three weights, performance peaks when the weight is set to 1, yielding mIoU scores of 72.1 on VDD, 81.2 on UDD6, 64.3 on Aeroscapes, 67.2 on UAVid, and 45.4 on ICG Drone. Reducing a weight to 0.5 or enlarging it to 1.5 or 2 leads to clear performance drops; for example, some of these settings lower the VDD score to 70.9 and the UAVid score to 66.1.

Table 6.

Ablation study on the loss weight trained on the UDD5 dataset.

Table 7.

Ablation study on the loss weight trained on the UDD5 dataset.

Table 8.

Ablation study on the loss weight trained on the UDD5 dataset.

These results indicate that small weights weaken the contribution of their respective objectives, while overly large weights overemphasize them and disturb the balance across components. Setting all three weights to 1 provides the most stable configuration among those tested, allowing the intra-region, inter-region, and masked consistency objectives to work together effectively.

4.3.4. Ablation Study on Patch Size and Mask Ratio

Table 9 reports ablation results for different patch sizes and mask ratios, trained on UDD5 and evaluated on the Aeroscapes dataset. Small and moderate patch sizes (4, 8, and 16) generally yield higher segmentation performance than large ones, with accuracy declining as the patch size grows to 32 or 64; the best result, 64.3 mIoU, is obtained with a patch size of 16 at a mask ratio of 0.3. For mask ratios, the moderate value of 0.3 consistently outperforms both the lower (0.2) and higher (0.5) settings. For instance, with a patch size of 16, the mIoU rises from 64.0 at ratio 0.2 to 64.3 at ratio 0.3 before dropping to 63.2 at ratio 0.5.

Table 9.

Ablation study of the patch size and the mask ratio of our method trained on UDD5 and evaluated on the Aeroscapes dataset.

These results indicate that moderate patch granularity and masking strength provide the best balance, enabling the model to learn robust features while avoiding excessive noise or information loss.
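Patch-level random masking with the two hyperparameters studied in Table 9 can be sketched as follows. The sketch assumes that masked pixels are filled with zeros, that the number of masked patches is the rounded product of the mask ratio and the patch count, and that the image sides are divisible by the patch size; the helper names are hypothetical.

```python
import numpy as np


def random_patch_mask(h, w, patch_size, mask_ratio, rng):
    """Boolean (h, w) mask that is True inside randomly selected square patches."""
    assert h % patch_size == 0 and w % patch_size == 0, "sides must be divisible"
    gh, gw = h // patch_size, w // patch_size
    n_masked = int(round(mask_ratio * gh * gw))
    flat = np.zeros(gh * gw, dtype=bool)
    flat[rng.choice(gh * gw, size=n_masked, replace=False)] = True
    # Expand each grid cell to a patch_size x patch_size block of pixels.
    return flat.reshape(gh, gw).repeat(patch_size, axis=0).repeat(patch_size, axis=1)


def apply_mask(image, mask):
    """Zero out masked pixels of a (C, H, W) image (zero fill is an assumption)."""
    return np.where(mask[None, :, :], 0.0, image)


rng = np.random.default_rng(0)
mask = random_patch_mask(64, 64, patch_size=16, mask_ratio=0.3, rng=rng)
masked = apply_mask(rng.normal(size=(3, 64, 64)), mask)
```

With a 64 × 64 input, patch size 16 gives a 4 × 4 grid, so a ratio of 0.3 masks round(4.8) = 5 of the 16 patches. Larger patches remove whole objects at once, which is one plausible reason the coarse settings perform worse.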

4.3.5. Ablation Study on Different Decoders

As shown in Table 10, we investigate the impact of different decoder architectures by integrating Segmenter, SegFormer, and Mask2Former into our framework under the UDD5 training setting. Our decoder achieves the highest mIoU on every evaluation dataset, indicating strong adaptability and feature integration capability. Specifically, it surpasses Mask2Former by 1.9 mIoU on VDD (72.1 vs. 70.2), 1.8 on UDD6 (81.2 vs. 79.4), 2.2 on Aeroscapes (64.3 vs. 62.1), 0.5 on UAVid (67.2 vs. 66.7), and 1.8 on ICG Drone (45.4 vs. 43.6). Similar trends hold against Segmenter and SegFormer, with gains ranging from 1.4 to 4.1 mIoU, demonstrating robust generalization across different UAV imaging conditions and scene compositions.

Table 10.

Comparison of different decoder architectures integrated into our framework under the UDD5 training setting.

These consistent improvements highlight the superiority of our decoder in aggregating multi-scale features and maintaining fine-grained structures, which are crucial for handling diverse scene layouts and densely distributed small objects in UAV imagery. This study demonstrates that the proposed decoder design effectively enhances the segmentation capability of the overall framework.

4.3.6. Inference Efficiency Comparison

As shown in Table 11, we compare the inference efficiency of different OVSS methods under the same computational setting (RTX 4090 GPU, 640 × 640 input resolution, and CLIP ViT-L/14 as the visual backbone). HOSU achieves the most favorable balance between model size and computational efficiency. It has the fewest parameters (431.15 M), fewer than OVSeg (532.61 M) and SAN (447.31 M). It also has the lowest computational complexity (1012.91 GFLOPs), reducing FLOPs by 68.5% relative to OVSeg and 49.4% relative to CAT-Seg, while attaining the highest inference throughput of 9.33 FPS, above SAN (8.55 FPS) and well above OVSeg (0.80 FPS) and CAT-Seg (2.70 FPS).

Table 11.

Inference efficiency comparison. All results are measured with a single RTX 4090 GPU. The resolution of the input image is 640 × 640. The CLIP model is ViT-L/14.

These results show that HOSU improves segmentation accuracy while also delivering higher efficiency, which makes it better suited to resource-constrained UAV scenarios.
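The relative efficiency figures follow directly from the reported numbers. The short script below computes throughput speedups and parameter reductions of HOSU using only the values quoted in the text.

```python
fps = {"HOSU": 9.33, "SAN": 8.55, "CAT-Seg": 2.70, "OVSeg": 0.80}
params_m = {"HOSU": 431.15, "SAN": 447.31, "OVSeg": 532.61}

# Throughput of HOSU relative to each baseline (ratio of frames per second).
speedup = {name: fps["HOSU"] / v for name, v in fps.items() if name != "HOSU"}
# Fractional parameter reduction of HOSU relative to each baseline.
param_cut = {name: 1 - params_m["HOSU"] / v for name, v in params_m.items() if name != "HOSU"}

for name, s in speedup.items():
    print(f"{name}: {s:.2f}x throughput")
```

By these numbers, HOSU runs roughly 3.5× faster than CAT-Seg and about 11.7× faster than OVSeg, with about 19% fewer parameters than OVSeg.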

4.3.7. Training Cost Analysis

As shown in Table 12, our method trains considerably more efficiently than existing OVSS frameworks. HOSU requires only 14.39 M trainable parameters, 10.2× fewer than OVSeg (147.23 M) and 4.9× fewer than CAT-Seg (70.27 M), which substantially reduces parameter overhead during optimization. HOSU also has the shortest training time, 10.3 h, compared with SAN (18.0 h), OVSeg (17.6 h), and CAT-Seg (12.6 h) under the same hardware configuration. Its GPU memory consumption is also lower: 83.1 GB, versus SAN (102.7 GB) and OVSeg (88.5 GB).

Table 12.

Comparisons of training cost among different OVSS methods. All results are measured with a single RTX 4090 GPU. The CLIP model is ViT-L/14.

These findings verify the practicality of HOSU by enabling scalable and resource-efficient optimization, without imposing excessive parameter burden or memory consumption.
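The training-cost ratios can be checked in the same way. The script below uses only the trainable-parameter counts and training times stated above.

```python
trainable_m = {"HOSU": 14.39, "CAT-Seg": 70.27, "OVSeg": 147.23}
train_hours = {"HOSU": 10.3, "CAT-Seg": 12.6, "OVSeg": 17.6, "SAN": 18.0}

# How many times more trainable parameters each baseline has than HOSU.
param_ratio = {k: v / trainable_m["HOSU"] for k, v in trainable_m.items() if k != "HOSU"}
# Fraction of training time saved by HOSU relative to each baseline.
time_saved = {k: 1 - train_hours["HOSU"] / v for k, v in train_hours.items() if k != "HOSU"}

for k, r in param_ratio.items():
    print(f"{k}: {r:.1f}x more trainable parameters")
```

The ratios reproduce the 10.2× (OVSeg) and 4.9× (CAT-Seg) figures, and the times correspond to roughly 43% less training time than SAN and 18% less than CAT-Seg.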

5. Conclusions

In this work, we propose a novel hybrid framework for open-vocabulary semantic segmentation of UAV imagery, named HOSU, which aims to inject the prior knowledge of DINOv2 into CLIP, enabling it to capture more fine-grained object features and enhance its perception of scene semantic distributions. Specifically, we first propose a distribution-aware fine-tuning strategy that aligns CLIP with DINOv2 across intra- and inter-region feature distributions, thereby strengthening the capacity of CLIP to model both global scene structures and local semantic details. Then, we propose a text-guided multi-level regularization mechanism that leverages the CLIP text embeddings to impose semantic constraints on visual features, ensuring stable vision–language alignment during fine-tuning. Finally, to address the occlusions commonly encountered in UAV imagery, we design a masked feature consistency strategy that encourages the model to learn robust and occlusion-invariant feature representations. Extensive experiments across four training settings and six UAV benchmarks demonstrate that HOSU consistently achieves state-of-the-art performance and delivers significant improvements over existing methods. Beyond quantitative gains, HOSU produces more coherent and semantically consistent predictions by avoiding fragmented or unreasonable outputs and aligning more closely with the underlying scene distributions. It also exhibits stronger perception and localization capabilities for small-scale targets.

Overall, this work not only fills a critical gap in open-vocabulary semantic segmentation of UAV imagery but also opens a promising avenue for effectively leveraging vision foundation models to strengthen the fine-grained perception of vision–language models. For future work, we plan to extend this framework in two key directions. First, we will investigate adapting HOSU to multispectral UAV data, which may require modality-bridging adapters or cross-modal alignment strategies to project heterogeneous spectral signals into CLIP’s joint embedding space. Second, given the strong representational robustness achieved by our approach, we intend to extend HOSU to additional aerial perception tasks—such as open-vocabulary object detection and instance segmentation—by equipping the framework with task-specific prediction heads. These future enhancements will further broaden the applicability and practical value of HOSU in real-world UAV sensing scenarios.

Author Contributions

Conceptualization, F.L. and X.W. (Xuanbin Wang); methodology, F.L. and Z.Z.; validation, F.L. and X.W. (Xuanbin Wang); formal analysis, F.L. and Z.Z.; investigation, F.L.; resources, Y.X. and X.W. (Xuan Wang); writing—original draft preparation, F.L.; writing—review and editing, F.L. and X.W. (Xuan Wang); visualization, F.L.; supervision, Y.X. and X.W. (Xuanbin Wang); project administration, F.L. and Y.X.; funding acquisition, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Young Scientists Fund of the National Natural Science Foundation of China (Grant No. 52302506), the Shaanxi Key Research and Development Program (Grant No. 2025GH-YBXM-022), the Fundamental Research Funds for the Central Universities (Grant No. G2024KY0603), and the Fundamental Research Funds for the National Key Laboratory of Unmanned Aerial Vehicle Technology (Grant No. WR202414).

Data Availability Statement

The data that support the findings of this study are all derived from publicly available datasets.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Liang-Chieh, C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A. Semantic image segmentation with deep convolutional nets and fully connected crfs. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090. [Google Scholar]

- Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 7262–7272. [Google Scholar]

- Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 1290–1299. [Google Scholar]

- Mou, L.; Hua, Y.; Zhu, X.X. Relation matters: Relational context-aware fully convolutional network for semantic segmentation of high-resolution aerial images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7557–7569.

- Li, Z.; Chen, X.; Jiang, J.; Han, Z.; Li, Z.; Fang, T.; Huo, H.; Li, Q.; Liu, M. Cascaded multiscale structure with self-smoothing atrous convolution for semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5605713.

- He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408715.

- Zhang, C.; Jiang, W.; Zhang, Y.; Wang, W.; Zhao, Q.; Wang, C. Transformer and CNN hybrid deep neural network for semantic segmentation of very-high-resolution remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408820.

- Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

- Li, X.; Cheng, Y.; Fang, Y.; Liang, H.; Xu, S. 2DSegFormer: 2-D Transformer Model for Semantic Segmentation on Aerial Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4709413.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 8748–8763.

- Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.H.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 4904–4916.

- Xu, M.; Zhang, Z.; Wei, F.; Lin, Y.; Cao, Y.; Hu, H.; Bai, X. A simple baseline for open-vocabulary semantic segmentation with pre-trained vision-language model. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 25–27 October 2022; pp. 736–753.

- Zhou, Z.; Lei, Y.; Zhang, B.; Liu, L.; Liu, Y. ZegCLIP: Towards adapting CLIP for zero-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 11175–11185.

- Cho, S.; Shin, H.; Hong, S.; Arnab, A.; Seo, P.H.; Kim, S. CAT-Seg: Cost aggregation for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 4113–4123.

- Xu, M.; Zhang, Z.; Wei, F.; Hu, H.; Bai, X. Side adapter network for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 2945–2954.

- Xie, B.; Cao, J.; Xie, J.; Khan, F.S.; Pang, Y. SED: A simple encoder-decoder for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 3426–3436.

- Han, C.; Zhong, Y.; Li, D.; Han, K.; Ma, L. Open-vocabulary semantic segmentation with decoupled one-pass network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 1086–1096.

- Yi, M.; Cui, Q.; Wu, H.; Yang, C.; Yoshie, O.; Lu, H. A simple framework for text-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7071–7080.

- Wang, Y.; Sun, R.; Luo, N.; Pan, Y.; Zhang, T. Image-to-image matching via foundation models: A new perspective for open-vocabulary semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 3952–3963.

- Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning robust visual features without supervision. arXiv 2023, arXiv:2304.07193.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4015–4026.

- Li, F.; Zhang, H.; Sun, P.; Zou, X.; Liu, S.; Li, C.; Yang, J.; Zhang, L.; Gao, J. Segment and recognize anything at any granularity. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 467–484.

- Wei, Z.; Chen, L.; Jin, Y.; Ma, X.; Liu, T.; Ling, P.; Wang, B.; Chen, H.; Zheng, J. Stronger Fewer & Superior: Harnessing Vision Foundation Models for Domain Generalized Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 28619–28630.

- Li, F.; Zhang, H.; Xu, H.; Liu, S.; Zhang, L.; Ni, L.M.; Shum, H.Y. Mask DINO: Towards a unified transformer-based framework for object detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 3041–3050.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Zhao, H.; Zhang, Y.; Liu, S.; Shi, J.; Loy, C.C.; Lin, D.; Jia, J. PSANet: Point-wise spatial attention network for scene parsing. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 267–283.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 3146–3154.

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-cross attention for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 603–612.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015): 18th International Conference, Munich, Germany, 5–9 October 2015; Part III; pp. 234–241.

- Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-resolution representations for labeling pixels and regions. arXiv 2019, arXiv:1904.04514.

- Zhang, B.; Xiao, J.; Qin, T. Self-guided and cross-guided learning for few-shot segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 8312–8321.

- Deng, J.; Lu, J.; Zhang, T. Diff3DETR: Agent-based diffusion model for semi-supervised 3D object detection. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 57–73.

- Tang, F.; Xu, Z.; Qu, Z.; Feng, W.; Jiang, X.; Ge, Z. Hunting attributes: Context prototype-aware learning for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 3324–3334.

- Zhao, W.; Du, S. Learning multiscale and deep representations for classifying remotely sensed imagery. ISPRS J. Photogramm. Remote Sens. 2016, 113, 155–165.

- He, D.; Shi, Q.; Liu, X.; Zhong, Y.; Zhang, L. Generating 2m fine-scale urban tree cover product over 34 metropolises in China based on deep context-aware sub-pixel mapping network. Int. J. Appl. Earth Obs. Geoinf. 2022, 106, 102667.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Wang, L.; Li, R.; Duan, C.; Zhang, C.; Meng, X.; Fang, S. A novel transformer based semantic segmentation scheme for fine-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6506105.

- Wang, L.; Li, R.; Wang, D.; Duan, C.; Wang, T.; Meng, X. Transformer meets convolution: A bilateral awareness network for semantic segmentation of very fine resolution urban scene images. Remote Sens. 2021, 13, 3065.

- Ding, J.; Xue, N.; Xia, G.S.; Dai, D. Decoupling zero-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 11583–11592.

- Liang, F.; Wu, B.; Dai, X.; Li, K.; Zhao, Y.; Zhang, H.; Zhang, P.; Vajda, P.; Marculescu, D. Open-vocabulary semantic segmentation with mask-adapted CLIP. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7061–7070.

- Ghiasi, G.; Gu, X.; Cui, Y.; Lin, T.Y. Scaling open-vocabulary image segmentation with image-level labels. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 25–27 October 2022; pp. 540–557.

- Zhou, C.; Loy, C.C.; Dai, B. Extract free dense labels from CLIP. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 25–27 October 2022; pp. 696–712.

- Yu, Q.; He, J.; Deng, X.; Shen, X.; Chen, L.C. Convolutions Die Hard: Open-Vocabulary Segmentation with Single Frozen Convolutional CLIP. arXiv 2023, arXiv:2308.02487.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Rizzoli, G.; Barbato, F.; Caligiuri, M.; Zanuttigh, P. SynDrone: Multi-modal UAV dataset for urban scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 2210–2220.

- Nigam, I.; Huang, C.; Ramanan, D. Ensemble knowledge transfer for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, CA, USA, 12–15 March 2018; pp. 1499–1508.

- Cai, W.; Jin, K.; Hou, J.; Guo, C.; Wu, L.; Yang, W. VDD: Varied drone dataset for semantic segmentation. J. Vis. Commun. Image Represent. 2025, 109, 104429.

- Chen, Y.; Wang, Y.; Lu, P.; Chen, Y.; Wang, G. Large-scale structure from motion with semantic constraints of aerial images. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018; pp. 347–359.

- Lyu, Y.; Vosselman, G.; Xia, G.S.; Yilmaz, A.; Yang, M.Y. UAVid: A semantic segmentation dataset for UAV imagery. ISPRS J. Photogramm. Remote Sens. 2020, 165, 108–119.

- Graz University of Technology. ICG Drone Dataset. 2023. Available online: https://fmi-data-index.github.io/semantic_drone.html (accessed on 7 June 2023).

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Tranheden, W.; Olsson, V.; Pinto, J.; Svensson, L. DACS: Domain adaptation via cross-domain mixed sampling. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 6–8 January 2021; pp. 1379–1389.

- Zhang, H.; Li, F.; Zou, X.; Liu, S.; Li, C.; Yang, J.; Zhang, L. A simple framework for open-vocabulary segmentation and detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 1020–1031.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.