Abstract

Accurate and efficient rock mass characterization is essential in geotechnical engineering, yet traditional tunnel face mapping remains time-consuming, subjective, and potentially hazardous. Recent advances in digital technologies and artificial intelligence (AI) offer opportunities for automation, but many existing solutions are hindered by slow 3D scanning, computationally intensive processing, and limited integration flexibility. This paper presents Tunnel Rapid AI Classification (TRaiC), an open-source MATLAB-based platform for rapid and automated tunnel face mapping. TRaiC integrates single-shot 360° panoramic photography, AI-powered discontinuity detection, 3D textured digital twin generation, rock mass discontinuity characterization, and Retrieval-Augmented Generation with Large Language Models (RAG-LLM) for automated geological interpretation and standardized reporting. The modular eight-stage workflow includes simplified 3D modeling, trace segmentation, 3D joint network analysis, and rock mass classification using the Rock Mass Rating (RMR), with outputs optimized for Geo-BIM integration. Initial evaluations indicate substantial reductions in processing time and expert assessment workload. By producing a lightweight yet high-fidelity digital twin, TRaiC offers computational efficiency, transparency, and reproducibility, and provides a foundation for future AI-assisted geotechnical engineering research. Its graphical user interface and well-structured open-source code make it accessible to users ranging from beginners to advanced researchers.

1. Introduction

Tunnel face mapping is an essential activity in tunnel construction, providing key information on geological conditions and structural stability. Although it is time-consuming, physically demanding, and sometimes dangerous, manual mapping is still commonly used to classify rock masses and assess stability [1,2]. Traditional methods depend heavily on the experience and judgment of field personnel, which can lead to inconsistencies and errors in geological interpretation [3,4,5,6,7,8]. In addition to these technical limitations, manual mapping exposes workers to hazardous underground environments where they may face falling rocks, unstable ground, and poor ventilation [9]. These safety risks, along with the subjective nature of geological observations, can affect the quality of support design and tunnel stability [4,10]. To overcome these challenges, the tunneling industry is moving toward advanced technologies such as digital mapping systems [4,6], AI-based analysis [10,11], photogrammetry [12,13], and automated classification methods to improve accuracy, consistency, and safety in tunnel face mapping.

Recent advancements in digital mapping technologies have significantly enhanced the capabilities of tunnel face rock mass characterization and documentation. These technologies incorporate 3D imaging, photogrammetry, and automated data processing to produce detailed and geo-referenced models of the tunnel environment [14,15,16,17]. Automated data acquisition systems generate high-resolution 3D models and integrate them into geological information systems, supporting real-time decision-making and reducing dependence on manual measurements [18]. Additionally, in mechanized tunneling, autonomous imaging units facilitate continuous documentation by capturing and processing videos into 3D models [19].

Despite these technological advances, fully integrating automated systems for tunnel face characterization remains an open challenge. While existing systems excel at data acquisition, they often require complex post-processing, the handling of large datasets, expert knowledge, and significant computing power [20,21]. Furthermore, although computer vision and AI offer promising avenues for automating geological assessments, their application is still under development [22]. A key limitation is the incomplete correlation between automatically detected features and their structural significance, which restricts the ability of such systems to make autonomous decisions [23]. Bridging this gap is critical for the future of tunnel engineering, where AI-driven workflows could enable safer, faster, and more objective tunnel face evaluations. In addition, AI models trained on face mapping data can forecast tunneling conditions and support decision-making during excavation [24].

The motivation for this research comes from the growing need for fast, accurate, and consistent tunnel face mapping to improve construction efficiency. Traditional manual geological sketching is slow, subjective, and often lacks the precision needed for modern tunneling projects [4]. When tunnel face assessments are delayed or inaccurate, they can lead to poor support design decisions and increased safety risks [25]. In response, digital tunnel face mapping systems using 3D scanning devices have been introduced to reduce human error and enable faster data collection and analysis directly at the tunnel face [14]. However, these systems still face several technical and operational challenges. Underground environmental conditions such as dust, humidity, poor lighting, surface occlusion, and limited surveying time can significantly reduce data quality [26,27]. These issues show the need for more robust and user-friendly systems that can provide more rapid and accurate data analysis to support fast decision-making.

Building on recent technological developments, this research focuses on advancing the use of 360-degree cameras for rapid, high-resolution tunnel face photography. These cameras offer advantages in speed, efficiency, and spatial coverage (the full surrounding scene is captured in a single shot), making them well suited to underground spaces [12,28]. They support 3D reconstruction of tunnel faces, enabling joint mapping and discontinuity orientation measurement with results comparable to manual observations. Beyond tunnel mapping, 360-degree imagery has also proven effective in other underground applications, such as real-time monitoring and volumetric analysis of excavated materials [16,29]. Challenges remain, particularly regarding model resolution and georeferencing, but these may be addressed through hybrid imaging techniques and GNSS-assisted solutions [12,28]. Therefore, integrating 360-degree cameras more widely into automated mapping systems has strong potential to improve documentation processes and to increase both safety and efficiency in tunneling projects.

In parallel, integrating Large Language Models (LLMs) into tunnel face mapping offers a promising opportunity to improve data analysis, documentation, and decision-making. LLMs used in frameworks such as GeoPredict-LLM and Geo-RAG have shown strong capabilities in synthesizing complex geological data by combining multimodal inputs, such as images, sensor readings, and text, into coherent insights through advanced data fusion and language understanding [30]. This is especially useful in tunnel engineering, where information is often fragmented across different formats. By framing geological prediction tasks as language problems, LLMs can enable faster and more accurate interpretation, supporting real-time lithology classification and automated reporting during construction [31,32]. Additionally, their compatibility with other technologies, including computer vision and deep learning models, can improve safety assessments, reduce costs, and make construction processes more efficient [33]. Despite concerns such as the risk of inaccurate outputs and the continued need for expert supervision [34,35], LLMs have the potential to serve as intelligent assistants in geotechnical engineering, enhancing, rather than replacing, human expertise and contributing to the future of AI-supported geological mapping.

The objective of this research is to develop a rapid digital tunnel face mapping system that combines 360-degree spherical panorama photography of the tunnel face, ceiling, and walls with AI-driven discontinuity detection, 3D trace data analysis, and LLM-based geological feature description. This integrated approach aims to improve the accuracy and efficiency of rock mass characterization and classification. The system will also incorporate a Retrieval-Augmented Generation (RAG) framework powered by LLMs to analyze historical tunnel data using engineering classification systems such as the Rock Mass Rating (RMR), enabling data-informed support recommendations and predictive analysis of future ground conditions.

Overall, the research contributes to several key areas in tunnel geotechnical engineering and digital documentation. The proposed system introduces a novel integration of 360° imaging and AI-based analysis for fast and accurate tunnel face assessment. It supports the creation of a textured digital twin of tunnel faces for visualization and 3D analysis, while automating discontinuity characterization to reduce subjectivity and improve consistency. Additionally, the RAG-LLM framework will support intelligent report generation using standardized formats and insights from historical data. To ensure practical applications and wider adoption, the research will result in an open-source software toolkit for use by both researchers and industry professionals.

2. Review of Existing Techniques

2.1. Existing Tunnel Face Mapping Methods

Traditional tunnel face mapping, often referred to as manual geo-structural mapping, involves engineering geologists or rock mechanics specialists directly observing and recording geological features at the tunnel face. This includes noting rock mass quality, jointing patterns, groundwater conditions, and other relevant features through visual inspection. The method is time-consuming and can significantly slow down construction, especially when committee-based evaluations or more detailed site assessments are required. The quality and reliability of manual mapping also depend largely on the knowledge and expertise of the personnel performing it. Environmental constraints, such as poor lighting, limited access, unstable ground, and poor ventilation (the tunnel face is the most polluted part of the tunnel), further limit the accuracy of observations, and time pressure during excavation reduces the opportunity for careful examination. As a result, manual mapping can yield inconsistent and unreliable information despite the effort required. These limitations indicate that safer, faster, and more efficient methods are needed to evaluate rock mass conditions in tunnel engineering.

To address the limitations of traditional methods, digital mapping systems using mobile devices have recently been developed to support real-time geological evaluation during construction, reducing the need for manual field assessments [4]. These systems enable rapid analysis and digital storage of geological data, helping to minimize human error and improve the consistency of results. Digital imaging technologies further enhance the clarity and objectivity of tunnel face assessments [36]. Artificial intelligence (AI) and deep learning are also transforming tunnel face analysis: instead of relying solely on a geologist’s judgment, convolutional neural networks (CNNs) can now interpret photographs of rock faces. For instance, Ref. [10] trained an EfficientNet-based CNN to predict RMR scores directly from tunnel face images. Their model “interprets the entire tunnel face image holistically” to improve objectivity, and they report that the AI predictions closely followed the evolution of field-rated RMR, reducing the subjectivity inherent in human evaluation. Similarly, Liu et al. (2024) [33] developed a hybrid Transformer-UNet network to identify rock type and weathering from rock surface images, achieving over 95% accuracy. Such neural models also shorten the lag associated with traditional laboratory testing: whereas lab-based strength tests are “complex, time-consuming, and unable to reflect real-time changes,” AI can provide near-instantaneous on-site estimates of rock strength. In summary, machine learning allows tunnel teams to make faster, data-driven decisions.

Digital tools and advanced technologies therefore contribute to safer and more efficient tunnel construction by enabling real-time decisions based on accurate information. While traditional mapping remains limited by subjectivity and environmental challenges, the integration of digital technologies offers a more effective alternative. Future research should focus on further developing these systems to increase the accuracy and reproducibility of geological assessments.

2.2. Research Gap Identification

Despite recent advances in digital tunnel face mapping, several important limitations still prevent its effective use in real tunnel construction environments. One problem is the lack of accurate, fast, and simple mapping methods that require minimal specialized expertise. Many current digital tools involve multiple complex steps, which can significantly slow down decision-making in tunneling projects and make them impractical for routine use. For example, systems based on photogrammetry or LiDAR often require long scanning times, specialized software, expert engineers, and high-performance computers, which may not always be available on site. Taken together, existing methods (both traditional and digital) still struggle to balance speed, simplicity, reduced dependence on expert interpretation, and low hardware requirements. Addressing this gap with a rapid, easy-to-use, and low-cost system that requires minimal computing support would represent a significant advance for both industry applications and research.

3. Tunnel Rapid AI Classification (TRaiC) Platform

3.1. System Architecture Overview

The developed platform is named Tunnel Rapid AI Classification (TRaiC) so that it can be easily referenced in this research and in future studies built upon it. The current release of the TRaiC package includes 120 MATLAB functions, with plans to expand as new features are developed. The platform supports a wide range of tasks, including geometric modeling, AI-based trace detection, 3D point cloud generation, discontinuity analysis and characterization, image segmentation and annotation, LLM-based geological description, and data exchange, through a modular design that allows seamless integration of general and specialized tools. The core computations are accessible through user-friendly graphical interfaces, while the source code remains fully open. Practitioners can therefore work through a straightforward interface, while researchers can explore and modify the underlying code for future developments.

3.1.1. Integration of Different Technological Components

This study implements an eight-stage processing pipeline that integrates multiple technological components to transform raw tunnel face imagery into comprehensive geological assessments. The workflow begins by defining the geometry of the tunnel excavation profile in a simple 2D drawing module, which captures and stores the fundamental geometric parameters and establishes the scale and spatial framework for all subsequent analyses. Note that, in this study, the tunnel axis (y-axis) points toward true North, so the tunnel azimuth is taken as zero. Next, the 360° spherical panorama images are converted into standardized cube face images, which provide the visual foundation for 3D visualization and automated discontinuity analysis. An AI-based trace detection step then identifies discontinuity traces within these cube faces, generating 2D trace segments that are later used to define fracture networks and structural discontinuities. To integrate the detected traces with the 3D geometry of the tunnel, a textured 3D digital twin is created. This yields a 3D RGB point cloud representation of the tunnel, bridging the gap between 2D image analysis and 3D spatial modeling.
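The zero-azimuth convention means that tunnel coordinates can be used directly as geographic coordinates. The sketch below shows how a point would be rotated into an East-North-Up (ENU) frame if the tunnel axis had a different bearing. It is illustrative only and assumes a right-handed tunnel frame with x to the right when looking along +y and z vertically up. The function name `tunnel_to_enu` is hypothetical and is not part of TRaiC.

```python
import math


def tunnel_to_enu(x, y, z, azimuth_deg=0.0):
    """Rotate a point from tunnel coordinates to East-North-Up (ENU).

    Assumed (hypothetical) tunnel frame: y along the tunnel axis, z up,
    x to the right when looking along +y, right-handed. azimuth_deg is
    the bearing of the tunnel axis, measured clockwise from true North.
    """
    a = math.radians(azimuth_deg)
    east = x * math.cos(a) + y * math.sin(a)
    north = -x * math.sin(a) + y * math.cos(a)
    return east, north, z  # vertical axis is unchanged
```

With `azimuth_deg=0` the rotation is the identity, which is why the convention above lets later orientation calculations skip this step.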

The integration will continue through the advanced analysis phase, where the 3D Traces Network Analysis algorithm will process the spatial relationships between 3D traces to identify discontinuity planes, size distribution, and the spacing of discontinuities. Simultaneously, a Face Annotation module will be employed to enable artificial intelligence to analyze the photos obtained from the tunnel face, walls, and ceiling, producing both annotated imagery and descriptive text that provides a semantic understanding of the geological conditions.
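To show how a discontinuity plane can be recovered from 3D traces, the following is a minimal sketch. It assumes that two non-parallel trace directions lying on the same joint are available. It takes their cross product as the plane normal and converts this normal to dip direction and dip in an ENU frame (x = East, y = North, z = Up), which matches the tunnel frame under the zero-azimuth convention. The function names are hypothetical, and TRaiC's actual plane-identification algorithm may differ, for example by fitting planes to many trace points.

```python
import math


def cross(u, v):
    """Cross product of two 3D vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def plane_from_two_traces(d1, d2):
    """Unit normal of the plane containing two non-parallel trace directions."""
    n = cross(d1, d2)
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        raise ValueError("trace directions are (nearly) parallel")
    return tuple(c / norm for c in n)


def dip_direction_and_dip(normal):
    """Dip direction and dip (degrees) of a plane from its normal in ENU."""
    nx, ny, nz = normal
    if nz < 0:  # work with the upward-pointing normal
        nx, ny, nz = -nx, -ny, -nz
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    dip = math.degrees(math.acos(nz / norm))
    # The horizontal projection of the upward normal points down-dip.
    dip_dir = math.degrees(math.atan2(nx, ny)) % 360.0
    return dip_dir, dip
```

For a joint dipping 45° toward the east, a northward strike trace `(0, 1, 0)` and a down-dip trace `(1, 0, -1)` yield approximately `(90.0, 45.0)`.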

The RMR Scoring module will synthesize quantitative data from the trace analysis with qualitative geological descriptions generated by AI vision to generate standardized rock mass rating tables for RMR score estimations. Finally, all collected data, images, and analyses will be integrated into a multimodal RAG-LLM-based AI system to produce a comprehensive geological and tunnel face mapping report. This integrated approach ensures that each technological component contributes specialized capabilities while maintaining data coherence through the centralized code structure, ultimately delivering a complete digital twin of the tunnel section in standard 3D format with both quantitative measurements and expert-level geo-metadata.
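The final step of the RMR table is a summation followed by a class lookup. The sketch below assumes Bieniawski's 1989 version (RMR89) with the tunnel orientation adjustments, since the text does not state which RMR edition TRaiC uses. The dictionary keys and the function name `rmr89` are hypothetical. The sketch covers only the aggregation; deriving each parameter rating from measured spacing, RQD, and so on is not shown.

```python
# Rating ranges of the five RMR89 input parameters (assumed RMR89 edition).
RANGES = {
    "strength": (0, 15),
    "rqd": (3, 20),
    "spacing": (5, 20),
    "condition": (0, 30),
    "groundwater": (0, 15),
}
# Adjustment for discontinuity orientation in tunnels (RMR89).
TUNNEL_ORIENTATION_ADJ = {
    "very favourable": 0, "favourable": -2, "fair": -5,
    "unfavourable": -10, "very unfavourable": -12,
}
# (lower bound, class, description), checked from best to worst.
RMR_CLASSES = [
    (81, "I", "Very good rock"),
    (61, "II", "Good rock"),
    (41, "III", "Fair rock"),
    (21, "IV", "Poor rock"),
    (float("-inf"), "V", "Very poor rock"),
]


def rmr89(ratings, orientation="fair"):
    """Return (basic RMR, adjusted RMR, class, description)."""
    for name, (lo, hi) in RANGES.items():
        value = ratings[name]
        if not lo <= value <= hi:
            raise ValueError(f"{name} rating {value} outside [{lo}, {hi}]")
    basic = sum(ratings[name] for name in RANGES)
    total = basic + TUNNEL_ORIENTATION_ADJ[orientation]
    for lower, cls, desc in RMR_CLASSES:
        if total >= lower:
            return basic, total, cls, desc
```

For ratings of 12, 17, 10, 20, and 10 with a "fair" orientation, the basic RMR is 69 and the adjusted value of 64 falls in class II.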

3.1.2. Central Data Structure and Overall Workflow Diagram

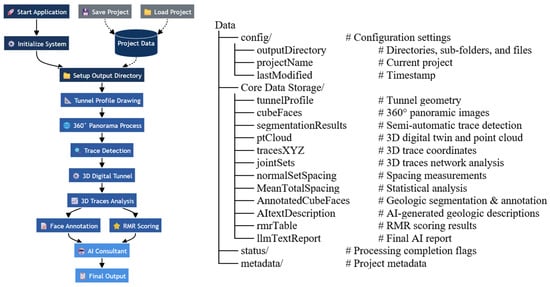

Because the long-term goal is for the system to be applied in real tunneling projects, with its results serving as input to a Geo-BIM (building information modeling) system for tunneling and geological digital data storage and management, the central data structure is organized hierarchically into four primary categories: configuration data (output directories and structures, project specification, timestamps); core data storage (tunnel profile, cube faces, 3D digital twin, point clouds, 3D traces and discontinuities, joint sets, spacing analyses, feature annotations, RMR tables, and final reports); processing status flags (Boolean indicators of each module’s completion state); and code metadata (creation date, version, contractor, and author information). This centralized approach ensures data consistency across all excavation sequences of a tunnel section. The overall workflow and data structure are shown in Figure 1.

Figure 1.

Overall workflow diagram and central data structure of the TRaiC platform showing the eight-stage processing pipeline and hierarchical data organization for 360° digital tunnel face mapping. Solid arrows indicate process flow; dashed arrows indicate data operations.
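The four-category hierarchy can be mirrored in a short sketch. In TRaiC this is a MATLAB structure; here it is expressed with Python dataclasses purely for illustration. The class name `ProjectState`, the module keys, and the helper methods are hypothetical. The eight module names follow the stages listed in Section 3.1.1 but are not TRaiC's actual field names.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical keys for the eight pipeline stages (Section 3.1.1).
MODULES = ("profile", "cubemap", "trace_detection", "digital_twin",
           "trace_network", "face_annotation", "rmr_scoring", "report")


@dataclass
class ProjectState:
    # Configuration: output directories, project specification, timestamps.
    config: dict = field(default_factory=dict)
    # Core data: profile, cube faces, twin, point clouds, traces, RMR tables, ...
    data: dict = field(default_factory=dict)
    # One Boolean completion flag per module.
    status: dict = field(default_factory=lambda: {m: False for m in MODULES})
    # Code metadata: creation date, version, contractor, author.
    metadata: dict = field(default_factory=lambda: {
        "created": datetime.now(timezone.utc).isoformat()})

    def mark_done(self, module, **outputs):
        """Store a module's outputs and set its completion flag."""
        if module not in self.status:
            raise KeyError(f"unknown module: {module}")
        self.data.update(outputs)
        self.status[module] = True

    def next_pending(self):
        """First module (in pipeline order) that has not completed yet."""
        return next((m for m in MODULES if not self.status[m]), None)
```

Keeping the completion flags next to the data lets any module check its prerequisites before running. This is the role the status flags play in Figure 1.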

3.2. Tunnel Profile Drawing and Geometric Modeling Interface

This tool enables users to generate accurate, real-scale tunnel geometries through both parametric and custom drawing approaches, supporting standard tunnel configurations including circular, rectangular (with rounded corners), D-shaped, and horseshoe profiles. It provides interactive features such as grid-based coordinate snapping, real-time shape manipulation through draggable control points, and dynamic visualization. Once the tunnel profile is designed, its coordinates are automatically exported in CSV format to the selected output directory, ensuring consistent data for the subsequent modules. The tool therefore enables rapid prototyping of tunnel geometries for engineering-grade design applications.
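As an example of a parametric profile, the sketch below builds a D-shaped section and serializes it to CSV. The section consists of vertical walls of a given height topped by a semicircular crown of radius equal to half the span. The function names, the `x,y` header, and the point ordering (from the bottom-left corner over the crown to the bottom-right corner) are assumptions and do not reflect TRaiC's actual export layout.

```python
import csv
import io
import math


def d_shaped_profile(width, wall_height, n_arc=16):
    """Points of a D-shaped profile: vertical walls plus a semicircular crown."""
    r = width / 2.0
    pts = [(-r, 0.0)]  # bottom of the left wall
    for k in range(n_arc + 1):  # crown from the left springline to the right
        theta = math.pi - math.pi * k / n_arc
        pts.append((r * math.cos(theta), wall_height + r * math.sin(theta)))
    pts.append((r, 0.0))  # bottom of the right wall
    return pts


def profile_to_csv(points):
    """Serialize profile coordinates as CSV text with an x,y header."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["x", "y"])
    writer.writerows(points)
    return buf.getvalue()
```

For a 6 m span with 2 m walls, the crown apex lies at y = 5 m. Writing the returned text to a file in the output directory gives the kind of CSV described above.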

3.3. Panoramic Image to Cubemap Conversion System

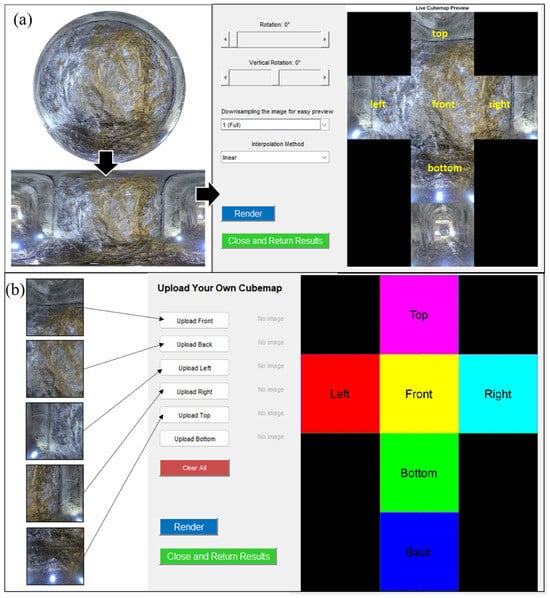

The code developed for this step converts spherical panoramic images into cubemap representations through spherical coordinate transformations and interpolation. The mathematical projections map 2:1 aspect-ratio (equirectangular) panoramic imagery onto six discrete cube faces (front, back, left, right, top, bottom). The interface provides a real-time preview and interactive rotation about both the horizontal and vertical axes, so that the tunnel face can be accurately positioned in the front face image. The rendering engine processes each cube face independently, and the rendered images are stored in a structured format for the subsequent processing steps. The user interface is shown in Figure 2a. Where a 360-degree camera is not available, users can instead capture individual images of each tunnel surface (front, back, left, right, ceiling, and floor) with a standard camera (e.g., a mobile phone) and upload them separately into the system for processing (Figure 2b).

Figure 2.

(a) User interface for converting panoramic images into six discrete cubic faces with real-time preview capabilities and interactive adjustment; (b) alternative input method for uploading individual tunnel face images captured with a standard camera.
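The core of a panorama-to-cubemap conversion is the inverse mapping from a cube face pixel to a position in the equirectangular panorama. The sketch below implements this mapping under assumed conventions: x to the right, y forward (the front face), z up; face coordinates (a, b) in [-1, 1] with b increasing downward; yaw positive to the right and pitch positive upward. The face orientation table and function names are hypothetical. A complete converter would evaluate this mapping for every face pixel and sample the panorama with, for example, bilinear interpolation, which is not shown.

```python
import math

# Ray direction for face coordinates (a, b); assumed cube orientation.
FACE_DIRS = {
    "front":  lambda a, b: (a, 1.0, -b),
    "back":   lambda a, b: (-a, -1.0, -b),
    "right":  lambda a, b: (1.0, -a, -b),
    "left":   lambda a, b: (-1.0, a, -b),
    "top":    lambda a, b: (a, b, 1.0),
    "bottom": lambda a, b: (a, -b, -1.0),
}


def rotate(d, yaw_deg, pitch_deg):
    """Apply pitch (about the x-axis), then yaw (about the z-axis)."""
    x, y, z = d
    p = math.radians(pitch_deg)
    y, z = y * math.cos(p) - z * math.sin(p), y * math.sin(p) + z * math.cos(p)
    w = math.radians(yaw_deg)
    x, y = x * math.cos(w) + y * math.sin(w), -x * math.sin(w) + y * math.cos(w)
    return x, y, z


def face_to_equirect(face, i, j, face_size, pano_w, pano_h,
                     yaw_deg=0.0, pitch_deg=0.0):
    """Panorama (col, row) sampled by pixel (i, j) of a cube face."""
    a = 2.0 * (i + 0.5) / face_size - 1.0  # column -> [-1, 1]
    b = 2.0 * (j + 0.5) / face_size - 1.0  # row -> [-1, 1], downward
    x, y, z = rotate(FACE_DIRS[face](a, b), yaw_deg, pitch_deg)
    lon = math.atan2(x, y)                  # 0 straight ahead, + to the right
    lat = math.atan2(z, math.hypot(x, y))   # + upward
    col = ((lon / (2.0 * math.pi) + 0.5) * pano_w) % pano_w
    row = (0.5 - lat / math.pi) * pano_h
    return col, row
```

The yaw and pitch arguments play the role of the interactive rotation sliders: increasing yaw makes the front face sample further to the right in the panorama.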

3.4. AI-Driven Semi-Automatic Interactive Trace Detection

Joint traces are the most important visible expression of discontinuities on tunnel faces for geological characterization (ISRM, 1978) [37]. In this research, we implement an AI-driven analysis system for processing cubemap representations of tunnel environments, designed to address the inherent challenges of accurate trace identification in complex rock mass structures. The system employs a pre-trained neural network classifier based on the DeepLabV3+ architecture, a semantic segmentation framework developed by Google that has been applied effectively to joint trace identification [38,39,40,41,42]. Following the methodology established by Lee et al. (2022) [39], the training data incorporate diverse joint configurations with varying frequencies and orientations, supplemented by noise samples collected from different geological regions to improve the classifier’s robustness in detecting faint or small discontinuities [43]. The trained classifier performs pixel-level semantic segmentation on each tunnel face image independently, generating binary masks of the detected traces.

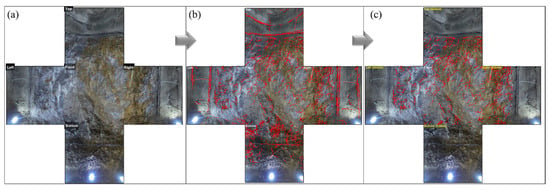

As shown in Figure 3a, the system uses a cubemap-style preview layout that mirrors the geometric relationships between the tunnel faces, facilitating intuitive spatial understanding of the 3D tunnel environment and efficient validation of the automated detection results. Although AI processing is automated, the system includes two manual supervisory steps in which users apply custom masks to the processed images to improve detection accuracy. The first step enables iterative refinement by selecting and removing incorrect trace segments (Figure 3b). The second step provides an interface for adding trace lines that the AI did not recognize. Together, these two steps help ensure that all relevant discontinuities are included and substantially improve the accuracy and reliability of trace detection in complex rock mass environments (Figure 3c).

Figure 3.

AI-driven semi-automatic interactive trace detection system: (a) cubemap-style preview interface; (b) manual supervisory interface for removing and adding incorrectly classified pixels; (c) final refined results after integrated AI processing and manual supervision.
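The "select and remove" supervisory step can be expressed as a flood fill. When the user clicks on a trace pixel, the 8-connected component containing that pixel is cleared from the binary mask. The following is a minimal sketch of this idea. It assumes that the mask is a list of lists of 0/1 values, and the function name `remove_segment` is hypothetical. The actual TRaiC interface may use brush-based or polygon masks instead.

```python
from collections import deque


def remove_segment(mask, row, col):
    """Clear the 8-connected trace component containing (row, col).

    Modifies mask in place and returns the number of pixels removed.
    """
    h, w = len(mask), len(mask[0])
    if not mask[row][col]:
        return 0  # the click did not hit a trace pixel
    queue = deque([(row, col)])
    mask[row][col] = 0
    removed = 0
    while queue:  # breadth-first flood fill
        r, c = queue.popleft()
        removed += 1
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w and mask[rr][cc]:
                    mask[rr][cc] = 0
                    queue.append((rr, cc))
    return removed
```

The complementary "add" step amounts to rasterizing a user-drawn line into the mask, for example with the Bresenham routine used later for 3D trace extraction.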

3.5. Digital Twin Development and 3D Reconstruction

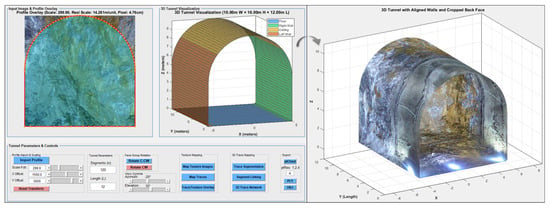

In this step, we developed a framework that integrates geometric modeling and texture mapping to create a textured 3D model of the tunnel (Figure 4). The method begins by standardizing and transforming the 2D tunnel cross-sectional profile to ensure proper scale and alignment with the reference image captured from the front face of the tunnel. It then generates a parametric 3D tunnel model by extruding the standardized profile along a user-defined excavation length with a user-defined mesh count (for computational alignment, the mesh count must be a multiple of four). Users can dynamically adjust tunnel parameters, including the mesh (or segment) count, excavation length, and face group orientations, with real-time visualization updates that allow immediate assessment of geometric modifications.

Figure 4.

The user interface for the transformation of a 2D tunnel profile to a 3D textured digital twin of the tunnel.
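The extrusion step can be illustrated by sweeping a 2D profile along the tunnel axis and connecting consecutive rings with quadrilateral faces. This sketch assumes that the profile's vertical coordinate becomes z and that the extrusion runs along +y. It also applies the stated multiple-of-four rule to the number of segments along the excavation length, which is an interpretation on our part because the text does not specify which mesh dimension the rule applies to. The function name `extrude_profile` is hypothetical.

```python
def extrude_profile(profile, length, n_segments):
    """Extrude a 2D profile [(x, z), ...] along +y into a quad mesh.

    Returns (vertices, faces); each face is a tuple of four vertex indices.
    """
    if n_segments % 4 != 0:  # assumed reading of the multiple-of-four rule
        raise ValueError("n_segments must be a multiple of four")
    vertices = []
    for k in range(n_segments + 1):  # one ring of vertices per station
        y = length * k / n_segments
        vertices.extend((x, y, z) for x, z in profile)
    n = len(profile)
    faces = []
    for k in range(n_segments):
        for i in range(n - 1):  # open profile: no face closing the invert
            a = k * n + i
            faces.append((a, a + 1, a + n + 1, a + n))
    return vertices, faces
```

The resulting vertex and face lists map directly onto the vertex and face records of an OBJ or PLY mesh.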

The textured visualization is achieved through a texture mapping technique that projects the 2D images rendered from the spherical panorama onto the corresponding tunnel surfaces. The module also supports standard export formats, including OBJ and PLY files for 3D mesh models, as well as RGB point cloud generation with configurable resolution to accommodate varying computational or visual requirements. These capabilities are important for tunnel simulation, where the combination of geometric accuracy and textural realism is essential for effective geological feature mapping.
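An RGB point cloud export reduces to writing a PLY header followed by one line per point. The sketch below writes the standard ASCII PLY layout, with float x/y/z and uchar red/green/blue vertex properties. The function name is hypothetical. TRaiC may write binary PLY or include additional properties.

```python
def point_cloud_to_ply(points, colors):
    """Serialize an RGB point cloud as ASCII PLY text."""
    if len(points) != len(colors):
        raise ValueError("points and colors must have the same length")
    lines = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    for (x, y, z), (r, g, b) in zip(points, colors):
        lines.append(f"{x:.6f} {y:.6f} {z:.6f} {int(r)} {int(g)} {int(b)}")
    return "\n".join(lines) + "\n"
```

ASCII output is convenient for inspection. For dense clouds, a binary PLY variant is considerably more compact.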

3.6. Traces 2D Segmentation

The trace analysis system performs line segment detection on the detected trace pixels in 2D images. It integrates two well-established computer vision algorithms for linear feature extraction: the Hough Transform, which uses a parameter space transformation to detect straight lines by clustering edge points, and the Line Segment Detector (LSD), which employs gradient-based analysis for precise line segment identification with sub-pixel accuracy.

3.6.1. Hough Transform Method

The Hough Transform employs parameter space transformation to detect straight lines by mapping collinear points from image space to peaks in Hough space. The implementation utilizes polar parameterization where ρ = x cos θ + y sin θ, with ρ representing the perpendicular distance from the origin to the line and θ denoting the angle of the perpendicular relative to the horizontal axis. For each edge pixel, a sinusoidal curve is generated in Hough space by varying θ from 0° to 180°, with line detection achieved through identification of local maxima corresponding to curve intersections.

The algorithm operates with configurable resolution parameters, including rho resolution of 5 pixels and theta resolution of 5 degrees as default values. Peak detection employs non-maximum suppression with a peak threshold set to 0.1 (10% of maximum intensity), maximum peak limitation of 100, and suppression neighborhood of 7 × 7 pixels. The detected peaks are subsequently converted to image coordinates with geometric constraints, including minimum line length filtering of 20 pixels and gap filling parameters of 10 pixels for segment connectivity enhancement.
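The voting and peak-selection steps described above can be sketched in plain Python. Each pixel votes for every (ρ, θ) bin with θ in [0°, 180°). Peaks are accepted in descending order of votes if they exceed the threshold fraction of the maximum vote and lie outside the suppression neighborhood of already accepted peaks. Default values follow the text. The function name is hypothetical, and, as a simplification, the suppression window does not wrap around at θ = 0°/180°. Converting peaks to finite segments (minimum length, gap filling) is not shown.

```python
import math


def hough_peaks(points, rho_res=5.0, theta_res_deg=5.0, threshold=0.1,
                max_peaks=100, nhood=7):
    """Return [(rho, theta_deg, votes), ...] for the strongest lines."""
    thetas = [k * theta_res_deg for k in range(int(round(180.0 / theta_res_deg)))]
    acc = {}  # sparse accumulator: (rho bin, theta bin) -> votes
    for x, y in points:
        for t_idx, t in enumerate(thetas):
            rad = math.radians(t)
            rho = x * math.cos(rad) + y * math.sin(rad)
            key = (int(round(rho / rho_res)), t_idx)
            acc[key] = acc.get(key, 0) + 1
    if not acc:
        return []
    min_votes = threshold * max(acc.values())
    half = nhood // 2
    peaks = []
    for (r_idx, t_idx), votes in sorted(acc.items(), key=lambda kv: -kv[1]):
        if votes < min_votes or len(peaks) >= max_peaks:
            break
        # non-maximum suppression: skip bins near an accepted peak
        if any(abs(r_idx - pr) <= half and abs(t_idx - pt) <= half
               for pr, pt, _ in peaks):
            continue
        peaks.append((r_idx, t_idx, votes))
    return [(r * rho_res, thetas[t], v) for r, t, v in peaks]
```

For 20 pixels on the vertical line x = 10 with a 1-pixel ρ resolution, the strongest peak is ρ = 10, θ = 0° with 20 votes. With the coarse 5-pixel default, neighboring θ bins can tie, which illustrates the sensitivity to resolution settings discussed below.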

3.6.2. Line Segment Detector

The LSD algorithm implements gradient-based analysis combined with region-growing techniques for precise line segment extraction. The method employs connected component analysis followed by Principal Component Analysis (PCA) for linear structure identification and geometric characterization. The detection pipeline begins with connected component analysis to identify discrete pixel clusters, followed by linearity evaluation through eigenvalue decomposition of the covariance matrix. A ratio test is applied where λ1/λ2 must exceed a 5.0 threshold for linear feature validation, with line fitting subsequently performed via Singular Value Decomposition (SVD) or linear regression fallback methods.

The algorithm operates with several configurable parameters, including a minimum line length of eight pixels, a maximum gap tolerance of five pixels, an angle tolerance of 25 degrees, minimum support points of three pixels, and a connection distance threshold of two pixels. The method achieves sub-pixel precision in endpoint determination through continuous optimization of line parameters within identified regions, providing enhanced geometric accuracy compared to parametric approaches.
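The linearity test and endpoint fitting described above can be sketched for a single connected component. The sketch computes the 2 × 2 covariance of the pixel coordinates, compares its eigenvalues against the 5.0 ratio threshold, and projects the pixels onto the principal axis to obtain the segment endpoints. It covers only the component-plus-PCA procedure described in this section. The classical LSD of von Gioi et al., which relies on level-line region growing and a-contrario validation, is not reproduced. The function name is hypothetical, and the closed-form eigen-solution replaces the SVD step.

```python
import math


def fit_linear_component(pixels, ratio_threshold=5.0, min_length=8.0,
                         min_support=3):
    """Return ((x0, y0), (x1, y1)) if a pixel cluster is line-like, else None."""
    n = len(pixels)
    if n < min_support:
        return None
    mx = sum(x for x, _ in pixels) / n
    my = sum(y for _, y in pixels) / n
    sxx = sum((x - mx) ** 2 for x, _ in pixels) / n
    syy = sum((y - my) ** 2 for _, y in pixels) / n
    sxy = sum((x - mx) * (y - my) for x, y in pixels) / n
    # Closed-form eigenvalues of the symmetric 2x2 covariance matrix.
    half_tr = (sxx + syy) / 2.0
    disc = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy ** 2)
    lam1, lam2 = half_tr + disc, half_tr - disc
    ratio = math.inf if lam2 <= 1e-12 else lam1 / lam2
    if ratio <= ratio_threshold:
        return None  # blob-like, not a line
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # principal axis
    ux, uy = math.cos(angle), math.sin(angle)
    ts = [(x - mx) * ux + (y - my) * uy for x, y in pixels]
    t0, t1 = min(ts), max(ts)
    if t1 - t0 < min_length:
        return None
    return (mx + t0 * ux, my + t0 * uy), (mx + t1 * ux, my + t1 * uy)
```

Projecting onto the fitted axis, rather than taking extreme pixels directly, is what yields continuous (sub-pixel) endpoint coordinates.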

3.6.3. Comparison of Methods

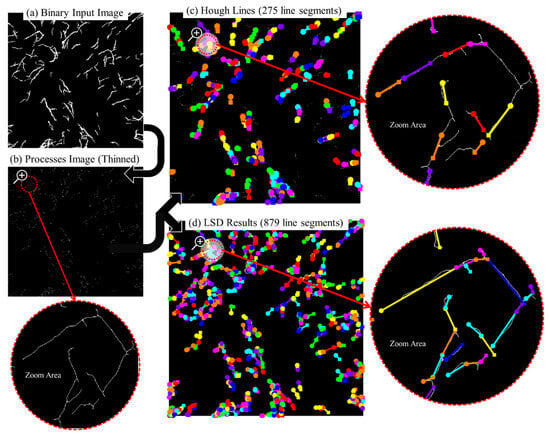

Both methods require comprehensive binary image preprocessing to optimize detection performance (Figure 5a). Common preprocessing operations include morphological skeleton extraction for line thinning to single-pixel width (Figure 5b), spur removal and area opening for artifact elimination, and optional morphological closing with disk-shaped structuring elements. Additional enhancement operations involve bridge operations for segment connectivity improvement and iterative refinement processes to maintain geometric integrity while reducing noise artifacts.

Figure 5.

Two-dimensional trace segmentation comparison: (a) original binary input; (b) preprocessed skeletal image; (c) LSD algorithm results; (d) Hough Transform results.
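To make the skeleton-extraction step concrete, the sketch below applies the Zhang-Suen thinning algorithm, a well-known two-subiteration method that reduces binary strokes to one-pixel width. It is offered as an example technique only, and the platform's own skeletonization routine may use a different algorithm. The sketch assumes a 0/1 image stored as a list of lists with a zero border. Spur removal, area opening, closing, and bridging are not shown.

```python
def zhang_suen_thin(img):
    """Thin a 0/1 image (list of lists, zero border) to one-pixel-wide lines."""
    img = [row[:] for row in img]  # work on a copy
    h, w = len(img), len(img[0])

    def neighbours(r, c):
        # P2..P9: clockwise, starting from the pixel directly above
        return [img[r - 1][c], img[r - 1][c + 1], img[r][c + 1],
                img[r + 1][c + 1], img[r + 1][c], img[r + 1][c - 1],
                img[r][c - 1], img[r - 1][c - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_clear = []
            for r in range(1, h - 1):
                for c in range(1, w - 1):
                    if not img[r][c]:
                        continue
                    p = neighbours(r, c)
                    b = sum(p)  # number of foreground neighbours
                    a = sum(1 for k in range(8)  # 0 -> 1 transitions
                            if p[k] == 0 and p[(k + 1) % 8] == 1)
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    if step == 0:
                        cond = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        cond = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and cond:
                        to_clear.append((r, c))
            for r, c in to_clear:  # delete simultaneously after the scan
                img[r][c] = 0
            changed = changed or bool(to_clear)
    return img
```

A three-pixel-thick horizontal bar is reduced to a single row of pixels, which is the single-pixel-width input that both detectors expect.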

Figure 5c,d compares the results of both methods on identical binary input data using the default parameter settings described above. The LSD algorithm (Figure 5c) detected 879 line segments and captured the trace geometry more completely and with greater geometric precision than the Hough Transform (Figure 5d), which detected only 275 line segments. However, LSD has a significantly higher computational cost due to its iterative region-growing and PCA-based analysis, making it more suitable for offline analysis where precision is prioritized over processing speed. The Hough Transform is computationally efficient and suited to real-time applications but may produce less precise results, particularly for complex configurations with fragmented or noisy structures.

Both algorithms are highly sensitive to parameter settings and require empirical optimization through systematic parameter adjustment for each dataset. Detection quality depends strongly on preprocessing quality, binary threshold selection, algorithm-specific parameters, and input image characteristics such as noise level, line thickness, and structural fragmentation. Although both methods require careful tuning to perform well across varying image conditions, in our experience LSD generally required less effort to find suitable settings.

3.7. Two-Dimensional Line-Segments Linking

This section presents a computational framework for semi-automated line segment linking, which addresses a fundamental challenge in the image processing of discontinuity networks for trace analysis. The methodology uses a multi-stage approach that combines orientation-based clustering with iterative geometric optimization to resolve the fragmentation and redundancy inherent in conventional image processing and edge detection algorithms. The framework implements threshold-based agglomerative clustering that groups line segments by angular similarity, with orientation angles calculated using arctangent functions and normalized to the range [0°, 180°) to resolve directional ambiguity. Initial processing applies angular thresholds to group segments with similar directions, followed by iterative refinement that re-evaluates cluster membership against validation criteria, optimizing angular proximity to dynamically updated centroids computed with circular statistics until convergence is reached.
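Because segment orientations are axial (θ and θ + 180° describe the same line), centroids must be computed with circular statistics. Otherwise, orientations of 179° and 1° would average to 90°. The sketch below uses the standard angle-doubling trick for the axial mean and applies it within a simple threshold-based clustering followed by reassignment to the nearest centroid until memberships stop changing. The function names, the 15° default threshold, and the exact refinement rule are assumptions and do not reproduce TRaiC's validation criteria.

```python
import math


def axial_diff(a, b):
    """Smallest difference between two orientations (degrees, period 180)."""
    d = abs(a - b) % 180.0
    return min(d, 180.0 - d)


def axial_mean(angles):
    """Circular mean of axial data: double the angles, average, halve."""
    s = sum(math.sin(math.radians(2 * a)) for a in angles)
    c = sum(math.cos(math.radians(2 * a)) for a in angles)
    return (math.degrees(math.atan2(s, c)) / 2.0) % 180.0


def cluster_orientations(angles, threshold=15.0, max_iter=50):
    """Threshold-based agglomeration followed by iterative reassignment."""
    centroids, labels = [], []
    for a in angles:  # initial pass: join the nearest cluster or start one
        dists = [axial_diff(a, c) for c in centroids]
        if dists and min(dists) <= threshold:
            k = dists.index(min(dists))
        else:
            centroids.append(a)
            k = len(centroids) - 1
        labels.append(k)
        centroids[k] = axial_mean([x for x, l in zip(angles, labels) if l == k])
    for _ in range(max_iter):  # refine until memberships stop changing
        new = [min(range(len(centroids)),
                   key=lambda k: axial_diff(a, centroids[k])) for a in angles]
        if new == labels:
            break
        labels = new
        centroids = [axial_mean([a for a, l in zip(angles, labels) if l == k])
                     if k in labels else centroids[k]
                     for k in range(len(centroids))]
    return labels, centroids
```

With a 15° threshold, orientations of 10°, 12°, and 178° form one set near 7°, and 88° and 90° form a second set.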

The segment linking pipeline consists of three main stages that systematically link related line segments using a dual-threshold (angle and distance) strategy. In the first stage, the algorithm identifies and connects collinear segments by evaluating their angular similarity and spatial proximity against specified angle and distance thresholds. The second stage performs geometric refinement, in which nearby endpoints are averaged and misaligned endpoints are projected onto their corresponding lines. In the third stage, additional linking iterations capture any remaining connections. The framework supports two processing modes: a unified mode for comprehensive analysis and a cluster-wise mode for orientation-based grouping.
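The dual-threshold linking test and the endpoint projection used in the refinement stage can be sketched as follows. Two segments are merged when their axial orientation difference and their closest-endpoint gap are both within the thresholds. The merged segment spans the two endpoints that are farthest apart. The threshold values (5° and 3 pixels) and function names are illustrative assumptions, not TRaiC's defaults.

```python
import math


def seg_angle(seg):
    """Orientation of a segment in [0, 180) degrees."""
    (x0, y0), (x1, y1) = seg
    return math.degrees(math.atan2(y1 - y0, x1 - x0)) % 180.0


def try_link(s1, s2, angle_thr=5.0, dist_thr=3.0):
    """Merge two near-collinear, nearby segments; return None otherwise."""
    d = abs(seg_angle(s1) - seg_angle(s2)) % 180.0
    if min(d, 180.0 - d) > angle_thr:
        return None
    gap = min(math.dist(p, q) for p in s1 for q in s2)
    if gap > dist_thr:
        return None
    ends = list(s1) + list(s2)
    # Keep the two endpoints that are farthest apart as the linked segment.
    return max(((p, q) for i, p in enumerate(ends) for q in ends[i + 1:]),
               key=lambda pq: math.dist(*pq))


def project_onto_line(p, seg):
    """Orthogonal projection of point p onto the infinite line through seg."""
    (x0, y0), (x1, y1) = seg
    dx, dy = x1 - x0, y1 - y0
    t = ((p[0] - x0) * dx + (p[1] - y0) * dy) / (dx * dx + dy * dy)
    return (x0 + t * dx, y0 + t * dy)
```

Applying `try_link` repeatedly over candidate pairs until no merge succeeds corresponds to the additional linking iterations of the third stage. The sensitivity to `angle_thr` and `dist_thr` noted below is visible directly in these two comparisons.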

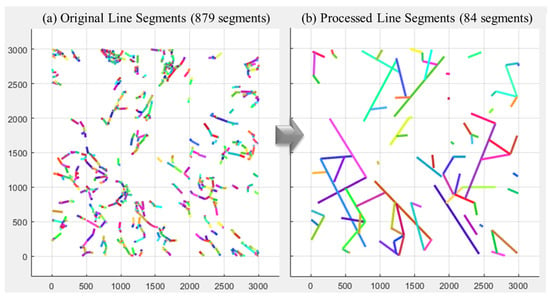

Figure 6 illustrates an example of the segment linking process: the 879 original line segments (Figure 6a) were consolidated into 84 longer linked segments (Figure 6b). Although the current algorithm performs well, the outcome is highly sensitive to threshold selection, and different settings can produce substantially different results. Because this variability propagates to the subsequent analyses performed by the software, further research and development are needed to improve the linking algorithm so that it more faithfully reflects the actual discontinuity traces in rock masses.

Figure 6.

Line segment linking results: (a) original fragmented segments; (b) final linked segments.

3.8. Three-Dimensional Trace Network Creation

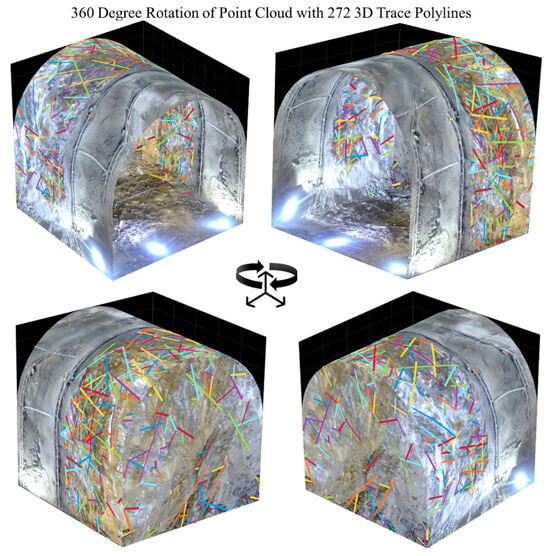

The 3D trace mapping approach presented in this research uses a novel technique that begins by generating six-channel texture representations, which combine conventional RGB pixel values with the corresponding XYZ coordinates. This establishes a direct correspondence between each 2D image pixel and its 3D position in the tunnel coordinate system. Specialized coordinate transformation algorithms then extract discontinuity traces pixel by pixel from multiple face projections: Bresenham's line algorithm [43] generates the pixel sequence along each detected trace, and a coordinate lookup retrieves the associated XYZ values from the spatial texture channels. The result is a set of detailed 3D trace polylines that preserve the geometric characteristics of each discontinuity in the tunnel coordinate system. As shown in Figure 7, the code visualizes the combined point cloud with the overlaid discontinuity trace polylines, allowing immediate visual validation of the reconstruction accuracy and supporting subsequent structural analysis of the surrounding rock mass.

Figure 7.

Three-dimensional trace network overlaid on the tunnel point cloud for spatial validation and visualization.
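The pixel-to-3D lookup can be sketched as follows. This is a minimal Python/NumPy illustration, not the TRaiC code, and it assumes a texture array of shape `(H, W, 6)` with channels 0 to 2 holding RGB and channels 3 to 5 holding X, Y, Z, with NaN marking pixels that have no geometry. The trace is a hypothetical list of integer `(x, y)` pixel vertices; the line rasterizer is the standard all-octant integer Bresenham algorithm.

```python
import numpy as np

def bresenham(x0, y0, x1, y1):
    """Integer pixels on the line from (x0, y0) to (x1, y1), all octants."""
    pixels = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        pixels.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy
    return pixels

def trace_to_3d(texture, polyline_px):
    """Map a 2D pixel polyline to an (N, 3) array of XYZ points from texture channels 3-5."""
    pts = []
    for i, ((xa, ya), (xb, yb)) in enumerate(zip(polyline_px[:-1], polyline_px[1:])):
        px = bresenham(xa, ya, xb, yb)
        if i > 0:
            px = px[1:]  # the first pixel repeats the previous segment's last pixel
        for x, y in px:
            xyz = texture[y, x, 3:6]  # row index = y, column index = x
            if np.all(np.isfinite(xyz)):  # skip pixels without reconstructed geometry
                pts.append(xyz)
    return np.array(pts)
```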

3.9. Joint Set Analysis Using TNA Code

3.9.1. Joints Visible Size Distribution

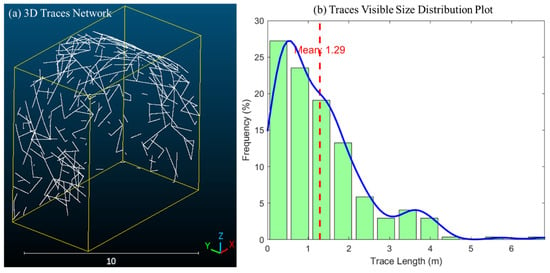

The joint size distribution is measured from digital 3D trace data acquired on the tunnel faces. As explained in the previous section, the visible discontinuity traces on the tunnel faces are detected and digitized as polylines, which serve as the input for subsequent joint measurements and analysis. Accurate measurement of the visible trace length and shape of discontinuities is important, because their size and spatial distribution are essential for a full description of the rock mass and for further analysis of discontinuity orientation and spacing. An example 3D trace network and its size distribution are shown in Figure 8a and Figure 8b, respectively.

Figure 8.

Three-dimensional trace length analysis: (a) 3D trace network with digitized discontinuity polylines; (b) size distribution histogram of joint traces showing a mean visible trace length of 1.29 m.
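The visible trace length of a digitized polyline is the sum of its 3D segment lengths. The sketch below computes lengths, their mean, and a histogram; the 0.25 m bin width and the function names are assumptions for illustration, not TRaiC settings.

```python
import numpy as np

def trace_length(polyline):
    """Visible trace length: sum of 3D segment lengths of an (N, 3) polyline."""
    p = np.asarray(polyline, dtype=float)
    return float(np.linalg.norm(np.diff(p, axis=0), axis=1).sum())

def length_summary(traces, bin_width=0.25):
    """Mean trace length and a histogram with fixed-width bins starting at 0."""
    lengths = np.array([trace_length(t) for t in traces])
    n_bins = max(1, int(np.ceil(lengths.max() / bin_width)))
    edges = np.linspace(0.0, n_bins * bin_width, n_bins + 1)
    counts, edges = np.histogram(lengths, bins=edges)
    return float(lengths.mean()), counts, edges
```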

3.9.2. Joint Orientation Measurement Strategy

This study adopted a semi-automatic 3D trace network analysis approach for recognizing joint orientations, building on the methodology developed by Mehrishal et al. (2024) [44]. This approach is well suited to characterizing rock mass discontinuities from 3D trace data and overcomes many limitations of traditional field measurements and purely 2D techniques. The methodology comprised the following key steps:

Curved Trace Identification: A curvature index is used to distinguish curved from straight traces, identifying the traces whose longitudinal curvature is sufficient to define their own discontinuity plane. Traces with a curvature index above a user-defined threshold (5% in this research) were considered curved, and their best-fitting planes were determined using principal component analysis (PCA).

Co-planar Trace Identification: For straight traces, co-planar traces are identified by spatially analyzing intersecting traces, with a tolerance threshold that accounts for the natural surface undulation of discontinuity planes and for numerical inaccuracies in real rock mass data.
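Both steps can be sketched in Python with NumPy. The PCA plane fit (normal = direction of least variance from the SVD) and the cross-product plane for two intersecting straight traces are standard; the `curvature_index` below, however, is a hypothetical proxy (maximum deviation from the end-to-end chord divided by chord length), not the index defined by Mehrishal et al. [44], and the coordinate convention (x = East, y = North, z = Up) and the 0.05 m co-planarity tolerance are assumptions.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through 3D points via PCA (SVD): returns (centroid, unit normal)."""
    p = np.asarray(points, dtype=float)
    c = p.mean(axis=0)
    _, _, vt = np.linalg.svd(p - c)
    return c, vt[-1]  # direction of least variance = plane normal

def principal_direction(points):
    """Unit direction of greatest variance (best-fit line) of a trace."""
    p = np.asarray(points, dtype=float)
    _, _, vt = np.linalg.svd(p - p.mean(axis=0))
    return vt[0]

def curvature_index(points):
    """HYPOTHETICAL proxy: max deviation from the end-to-end chord divided by chord length."""
    p = np.asarray(points, dtype=float)
    chord = p[-1] - p[0]
    length = np.linalg.norm(chord)
    u = chord / length
    rel = p - p[0]
    perp = rel - np.outer(rel @ u, u)  # component of each point normal to the chord
    return float(np.linalg.norm(perp, axis=1).max() / length)

def plane_from_two_traces(t1, t2, tol=0.05):
    """Unit normal of the plane spanned by two straight traces, or None if not co-planar within tol."""
    n = np.cross(principal_direction(t1), principal_direction(t2))
    if np.linalg.norm(n) < 1e-6:
        return None  # (near-)parallel traces do not define a unique plane
    n /= np.linalg.norm(n)
    pts = np.vstack([np.asarray(t1, dtype=float), np.asarray(t2, dtype=float)])
    dist = np.abs((pts - pts.mean(axis=0)) @ n)
    return n if dist.max() <= tol else None

def dip_and_dip_direction(n):
    """Convert a plane normal to (dip, dip direction) in degrees; x=East, y=North, z=Up."""
    n = np.asarray(n, dtype=float) / np.linalg.norm(n)
    if n[2] < 0:
        n = -n  # use the upward-pointing normal
    dip = np.degrees(np.arccos(np.clip(n[2], -1.0, 1.0)))
    dip_dir = np.degrees(np.arctan2(n[0], n[1])) % 360.0
    return float(dip), float(dip_dir)
```

In this sketch a trace with `curvature_index(trace) > 0.05` would be passed to `fit_plane`, while straight traces would be paired with intersecting neighbours through `plane_from_two_traces`.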

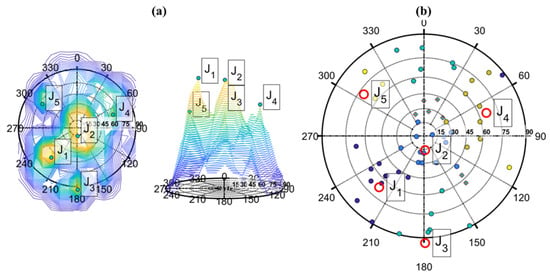

The orientations of the corresponding discontinuity planes were then measured from the identified curved and co-planar traces. To identify the principal joint sets among the derived planes, kernel density estimation on a stereonet was used to locate the local maxima of pole concentration (Figure 9a), followed by a cone-filtering approach that groups neighboring poles with similar orientations into principal joint sets (Figure 9b). This procedure provides a systematic characterization of the rock mass jointing system within the software.

Figure 9.

Joint set identification: (a) 2D and 3D representations of the kernel density of pole distributions on a stereonet; (b) principal joint sets obtained by cone-filtering of similar orientations.
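The density-peak and cone-filtering idea can be sketched as follows. The axial Fisher-type kernel `exp(kappa * (|cos θ| - 1))`, the concentration `kappa = 50`, the 15° cone, and the greedy "densest remaining pole" strategy are all assumptions chosen for illustration; TRaiC's own kernel and peak-finding procedure may differ. Poles are lower-hemisphere unit vectors with x = East, y = North, z = Up.

```python
import numpy as np

def pole_vector(dip, dip_dir):
    """Lower-hemisphere unit pole of a plane; x=East, y=North, z=Up."""
    d, a = np.radians(dip), np.radians(dip_dir)
    return np.array([-np.sin(d) * np.sin(a), -np.sin(d) * np.cos(a), -np.cos(d)])

def pole_density(poles, kappa=50.0):
    """Density at each pole: sum of axial kernels exp(kappa * (|cos theta| - 1))."""
    cos_theta = np.abs(poles @ poles.T)  # |.| treats antipodal poles as the same axis
    return np.exp(kappa * (cos_theta - 1.0)).sum(axis=1)

def cone_filter_sets(poles, n_sets=2, cone_deg=15.0, kappa=50.0):
    """Greedy extraction: densest unassigned pole becomes a set centre; poles in its cone join the set."""
    poles = np.asarray(poles, dtype=float)
    labels = np.full(len(poles), -1)  # -1 = unassigned (e.g., random joints)
    cos_cone = np.cos(np.radians(cone_deg))
    for k in range(n_sets):
        free = np.flatnonzero(labels == -1)
        if free.size == 0:
            break
        centre = poles[free[np.argmax(pole_density(poles[free], kappa))]]
        labels[free[np.abs(poles[free] @ centre) >= cos_cone]] = k
    return labels
```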

3.9.3. Joint Spacing Measurement Methodology

TRaiC implements the joint set spacing methodology developed by Mehrishal et al. (2025) [45], which builds on the 3D trace network analysis (TNA) algorithm described above. This approach aims to overcome the limitations of traditional scanline surveys by using the 3D discontinuity planes and systematically evaluating virtual scanlines under various geological assumptions. Its primary objective is a more accurate assessment of discontinuity spacing, measured as the orthogonal distance between adjacent joint planes. To address the complexity of discontinuity spacing, two types of spacing are distinguished, as established in previous research [46,47]:

Total Spacing: the distance between immediately adjacent discontinuities along a defined scanline, irrespective of joint set. It indicates the overall degree of fracturing in the rock mass and is used in empirical rock mass classification, including the RMR calculation; however, because it is measured along a single scanline, it is subject to bias and provides no information on individual discontinuity sets.

Normal Set Spacing: the distance measured along a scanline parallel to the mean normal of a joint set. In real rock outcrops, normal set spacing usually cannot be measured directly; instead, it is derived by correcting for the orientation of the scanline relative to the joint set normal. In this research, the mean normal set spacing is the average of all normal set spacings. Normal set spacing is a robust indicator of block size and distribution within the rock mass and a key input for numerical modeling and for classification parameters such as RQD [48].
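Both definitions can be illustrated with fully persistent planes and an infinite scanline. In the sketch below (hypothetical names; planes given as `(point, normal)` pairs), total spacing is the difference between consecutive crossing positions along the scanline, and apparent spacing measured along an oblique scanline is corrected to normal set spacing with the standard relation S_n = S_a |cos δ|, where δ is the angle between the scanline and the set normal.

```python
import numpy as np

def _unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def scanline_hits(planes, origin, direction):
    """Sorted positions t along an infinite scanline where it crosses fully persistent planes."""
    o, u = np.asarray(origin, dtype=float), _unit(direction)
    ts = []
    for centre, normal in planes:
        n = _unit(normal)
        denom = u @ n
        if abs(denom) < 1e-9:
            continue  # scanline parallel to the plane: no crossing
        ts.append((np.asarray(centre, dtype=float) - o) @ n / denom)
    return np.sort(np.array(ts))

def total_spacing(planes, origin, direction):
    """Distances between consecutive crossings, irrespective of joint set."""
    return np.diff(scanline_hits(planes, origin, direction))

def normal_set_spacing(apparent, scanline_dir, set_normal):
    """Correct apparent spacing to normal set spacing: S_n = S_a * |cos(delta)|."""
    return np.asarray(apparent, dtype=float) * abs(_unit(scanline_dir) @ _unit(set_normal))
```

For three horizontal planes at z = 0, 1, and 3 m, a scanline inclined at 45° measures apparent spacings of about 1.41 m and 2.83 m, which the correction maps back to 1 m and 2 m.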

To characterize the structure of the rock mass accurately, the methodology implemented in the software lets the user systematically evaluate several assumptions and conditions related to the orientation, size, and spatial distribution of discontinuities within a multi-scenario framework:

- Parallel Orientation Assumption: This assumes all discontinuities within a set are parallel to each other and aligned with the mean set orientation. While this simplifies calculations, it may not accurately reflect natural conditions.

- Original Orientation Consideration: This approach accounts for discontinuity planes in their original orientations during spacing calculations, which is more realistic but can lead to local variations in spacing.

- Fully Persistent: Discontinuities that completely penetrate the rock mass.

- Partially Persistent: Discontinuities that partially extend until they intersect a scanline passing through the nearest plane.

- Non-Persistent: Disk-shaped discontinuities that extend into the rock mass only along their visible trace on the outcrop. The spacing values derived under these three states can vary significantly.

- Virtual 3D Scanline Strategy: The methodology employs multiple infinite scanlines positioned at the centers of discontinuity planes, minimizing conventional sampling bias. This approach offers a significant improvement over traditional methods, which often require impractical scanline positioning in the field.

- Scanline Length: Virtual scanlines of infinite length, parallel to the mean set normal, are used to estimate spacing, avoiding the inaccuracies that can arise from short scanlines.

- Scanline Patterns: Two techniques are used to mitigate the imprecision caused by small sample sizes: a uniformly scattered scanline pattern and multiple scattered scanlines per plane.

- Co-planar Plane Merging: This addresses the issues of fragmented exposure by identifying and merging co-planar discontinuity planes within each joint set. This is critical because some traces might belong to a single plane but appear as separate discontinuities when exposed on different faces of outcrops. Treating these as independent planes would skew mean spacing calculations, potentially leading to near-zero spacing values.
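The non-persistent case and the virtual scanline strategy can be sketched together: each joint is modelled as a disk `(centre, normal, radius)`, a crossing only counts if it falls inside the disk, and one infinite scanline is placed through each disk centre, parallel to the set normal. The disk representation follows the description above, but the function names and the pooling of all consecutive-crossing distances into a single mean are assumptions for illustration; merging of co-planar planes is assumed to have been done beforehand.

```python
import numpy as np

def _unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def disk_hits(disks, origin, direction):
    """Sorted positions t where an infinite scanline crosses disk-shaped (non-persistent) joints."""
    o, u = np.asarray(origin, dtype=float), _unit(direction)
    ts = []
    for centre, normal, radius in disks:
        c, n = np.asarray(centre, dtype=float), _unit(normal)
        denom = u @ n
        if abs(denom) < 1e-9:
            continue  # scanline parallel to this joint
        t = (c - o) @ n / denom
        if np.linalg.norm(o + t * u - c) <= radius:  # crossing must fall inside the disk
            ts.append(t)
    return np.sort(np.array(ts))

def mean_spacing_non_persistent(disks, set_normal):
    """One virtual scanline through each disk centre, parallel to the set normal; pooled spacings."""
    spacings = []
    for centre, _, _ in disks:
        spacings.extend(np.diff(disk_hits(disks, centre, set_normal)))
    return float(np.mean(spacings)) if spacings else float("nan")
```

A disk offset laterally from a scanline is ignored under this assumption, whereas the same joint treated as fully persistent would be counted, which is one reason the persistence scenarios can yield noticeably different spacing values.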

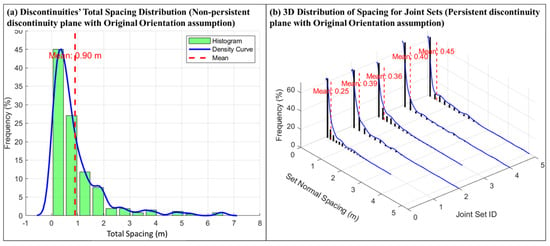

By systematically analyzing five scenarios that combine assumptions about joint orientation, persistence, and scanline positioning, the methodology provides spacing values that reflect different degrees of conservatism and geological realism, giving a more robust characterization of the jointing system within the rock mass. Figure 10a shows an example of the total spacing distribution under the assumption that discontinuity planes are non-persistent and retain their original orientations. Figure 10b presents the normal set spacing distribution assuming that joints are fully persistent within the tunnel domain, again in their original orientations. These examples illustrate how the TRaiC platform presents discontinuity spacing results.

Figure 10.

Joint spacing analysis results: (a) total spacing distribution; (b) normal set spacing distribution.

3.10. LLM-Assisted Tunnel Face Description System

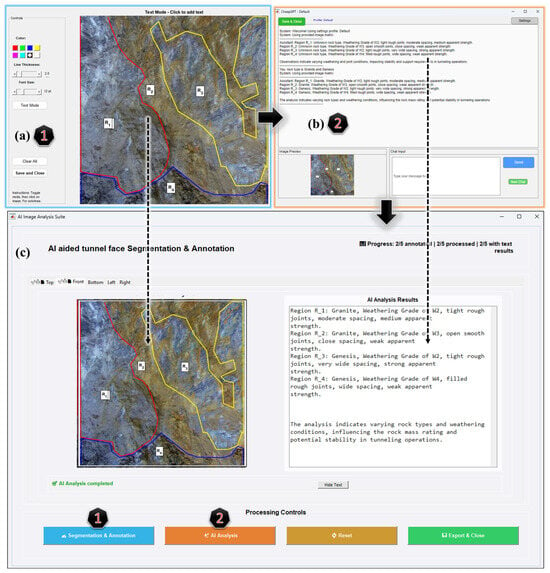

Recognizing and characterizing geological features in tunnel construction requires professional expertise. This research presents a novel hybrid methodology that combines the user's manual image segmentation and annotation with the image analysis capabilities of Large Language Models (LLMs) to produce systematic qualitative descriptions and geological interpretations of the rock mass, pairing human domain expertise with artificial intelligence for detailed analysis and interpretation.

The key innovation is a multimodal AI framework that uses large vision–language models for automatic geological interpretation. Structured prompt engineering is used to perform rock mass characterization while adhering to established geological classification systems, specifically the Rock Mass Rating (RMR) parameters, using well-known rock mechanics and geoengineering terminology. The interface handles both textual queries and image data (tunnel face images are base64-encoded for API transmission), enabling the LLM to identify rock types, weathering conditions, joint characteristics, and structural features.
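The base64 step can be illustrated with a request body in the OpenAI Chat Completions format, where an image is passed as a `data:` URL inside an `image_url` content part. This Python sketch only builds the payload (it does not send it); the function name, the default model `gpt-4o`, and the JPEG MIME type are assumptions, and the MATLAB implementation in TRaiC may structure the request differently.

```python
import base64
import json

def build_vision_request(image_bytes, prompt, model="gpt-4o", mime="image/jpeg"):
    """Chat Completions-style body with one text part and one base64-encoded image part."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
            ],
        }],
    }

# The body would be serialized with json.dumps(...) and POSTed to the API endpoint.
```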

The prompts constrain the analysis to specific geological parameters: rock type identification, weathering grade classification (W1–W5 scale), joint condition assessment, qualitative discontinuity spacing evaluation, and apparent strength determination. This keeps the results consistent with established geological classification schemes and produces output suitable for practical field applications. The methodology combines the precision of human expertise with the efficiency of LLM-based analysis and is a step forward in geological image analysis.
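One way to enforce such constraints is to ask for a JSON answer with fixed keys and reject any response that falls outside the allowed vocabulary. In the sketch below the key names, the prompt wording, and the spacing vocabulary (ISRM-style spacing descriptors) are assumptions; the document does not specify TRaiC's output schema.

```python
import json

REQUIRED = ("rock_type", "weathering_grade", "joint_condition", "spacing_class", "apparent_strength")
ALLOWED = {
    "weathering_grade": {"W1", "W2", "W3", "W4", "W5"},
    "spacing_class": {"extremely close", "very close", "close", "moderate",
                      "wide", "very wide", "extremely wide"},
}

# Hypothetical constrained prompt sent together with the annotated image
PROMPT = ("You are a tunnel geologist. Describe only the annotated region. "
          "Return JSON with keys: " + ", ".join(REQUIRED) + ". "
          "weathering_grade must be one of W1-W5.")

def validate_description(raw_text):
    """Parse the LLM reply and check required keys and controlled vocabularies."""
    data = json.loads(raw_text)  # raises ValueError on malformed JSON
    missing = [k for k in REQUIRED if k not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    for key, allowed in ALLOWED.items():
        if data[key] not in allowed:
            raise ValueError(f"{key}={data[key]!r} not in {sorted(allowed)}")
    return data
```

A rejected reply could then be re-requested or flagged for the engineer, rather than passed on to the RMR module.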

The hybrid workflow is illustrated in Figure 11. Figure 11a shows the manual annotation interface, where a tunnel engineer identifies and marks geological features of interest in the imagery of the tunnel surfaces (face, ceiling, and side walls). The interactive polygon-drawing and text-annotation interface enables precise selection of rock mass regions, allowing geological professionals to direct the AI analysis toward areas requiring detailed characterization. Figure 11b presents the image preprocessing and upload module, where annotated images are prepared for transmission through OpenAI API version 4.0, ensuring compatibility with vision–language models such as GPT-4o while preserving the image quality needed for accurate feature recognition. Figure 11c shows the geological interpretation results, including rock type identification, weathering grade classification, joint condition assessment, and structural feature analysis. The system is particularly effective for documentation and standardized report export. It can substantially reduce interpretation time compared with traditional manual assessment; however, the accuracy of the geological interpretations depends strongly on the capabilities of the underlying LLM, and systematic research is still needed to validate the results.

Figure 11.

Hybrid geological analysis workflow: (a) manual image segmentation/annotation interface; (b) image preprocessing and prompt module; (c) LLM-generated geological interpretation results.

3.11. RMR Scoring Module Overview

TRaiC currently provides a simple interface implementing Bieniawski's (1989) [49] Rock Mass Rating (RMR) system for tunnel engineering applications. The system accepts three primary input datasets: the qualitative tunnel face description results, measured joint spacing values (total spacing and normal set spacings), and joint orientation data. These inputs feed a structured parameter acquisition framework covering all six standard RMR parameters: uniaxial compressive strength (UCS), rock quality designation (RQD), joint spacing, joint condition, groundwater condition, and joint orientation adjustment. UCS, joint condition, and groundwater condition are evaluated from the rock mass descriptions produced in the previous sections. Total spacing, another basic RMR parameter, is measured directly from the 3D trace data, while the normal set spacings are used to calculate RQD from the volumetric joint count (Jv) following Palmstrom (2005) [48]. Finally, a specialized joint orientation component evaluates the spatial relationship between the joint planes and the tunnel axis and generates tunnel-specific favorability assessments with corresponding numerical adjustment factors, a modification of the standard RMR procedure.
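The spacing-to-RQD chain can be sketched as follows, using the commonly cited relations Jv = Σ 1/S_i (S_i = normal set spacing in metres, optionally plus random joints per m³) and RQD = 110 − 2.5 Jv (Palmstrom 2005), clipped to 0–100%, followed by the RMR89 rating bands for RQD. The function names are hypothetical, and TRaiC's exact implementation may differ.

```python
def volumetric_joint_count(set_spacings_m, random_joints_per_m3=0.0):
    """Jv = sum(1 / S_i) over joint sets (S_i = normal set spacing in m) plus random joints."""
    return sum(1.0 / s for s in set_spacings_m) + random_joints_per_m3

def rqd_from_jv(jv):
    """RQD = 110 - 2.5 * Jv (Palmstrom 2005), clipped to the 0-100 % range."""
    return max(0.0, min(100.0, 110.0 - 2.5 * jv))

def rmr_rqd_rating(rqd):
    """RMR89 rating bands for RQD: >=90: 20, >=75: 17, >=50: 13, >=25: 8, else 3."""
    for lower, rating in ((90, 20), (75, 17), (50, 13), (25, 8)):
        if rqd >= lower:
            return rating
    return 3
```

For two joint sets with normal set spacings of 0.20 m and 0.25 m, Jv = 9 joints/m³, RQD = 87.5%, and the RQD rating is 17.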

One limitation of the existing orientation adjustment categories is that they do not precisely define when a discontinuity should be considered perpendicular or parallel to the tunnel axis. To automate the calculation in code, this research assumes that a discontinuity is parallel to the tunnel when the acute angle between its strike and the tunnel axis is less than 35°; otherwise, it is treated as perpendicular. Table 1 presents an enhanced version of the RMR joint orientation adjustment table, after Bieniawski (1989) [49] and Moomivand (2018) [50].

Table 1.

RMR joint orientation adjustment table (after Bieniawski (1989) [49] and Moomivand (2018) [50]).
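The 35° rule stated above can be written as a short function. The sketch assumes azimuths in degrees clockwise from North and derives strike from dip direction with the right-hand rule (strike = dip direction − 90°); because strike and tunnel axis are both lines, the acute angle is taken modulo 180°. Function names are hypothetical.

```python
def strike_from_dip_direction(dip_dir):
    """Right-hand-rule strike (degrees) from dip direction."""
    return (dip_dir - 90.0) % 360.0

def acute_angle(strike, tunnel_azimuth):
    """Acute angle (0-90 degrees) between two line azimuths."""
    d = abs(strike - tunnel_azimuth) % 180.0
    return min(d, 180.0 - d)

def strike_relation(dip_dir, tunnel_azimuth, threshold=35.0):
    """'parallel' if the strike is within the threshold of the tunnel axis, else 'perpendicular'."""
    angle = acute_angle(strike_from_dip_direction(dip_dir), tunnel_azimuth)
    return ("parallel" if angle < threshold else "perpendicular"), angle
```

For a tunnel driven toward North (azimuth 0°), a joint dipping toward 120° (strike 30°) is classed as parallel, whereas one dipping toward 125° (strike exactly 35°) is classed as perpendicular.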

The code uses a real-time calculation script that recomputes the total RMR score whenever the input parameters change. Users enter the UCS, RQD, and joint spacing in editable fields (pre-calculated from the input datasets) and select the joint condition and groundwater condition from dropdown lists that correspond to established geological classifications. Results are presented with the corresponding RMR rock class (Class I to Class V) and automatically assigned engineering parameters, including stand-up time estimates for various tunnel spans and the associated cohesion. The code also generates a structured technical report containing the complete parameter assessments, scoring breakdown, project identification information, rock classification, and assessment date, formatted to professional documentation standards for direct integration with the software's geotechnical reporting module.
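The class assignment step can be sketched with the standard RMR89 class boundaries and the associated average stand-up times and cohesion ranges from Bieniawski's classification. The dictionary keys for the six parameter ratings are hypothetical; in TRaiC these values come from the GUI fields described above.

```python
# (exclusive lower bound, class, description, average stand-up time, cohesion) after RMR89
RMR_CLASSES = (
    (80, "I", "Very good rock", "20 years for 15 m span", "> 400 kPa"),
    (60, "II", "Good rock", "1 year for 10 m span", "300-400 kPa"),
    (40, "III", "Fair rock", "1 week for 5 m span", "200-300 kPa"),
    (20, "IV", "Poor rock", "10 hours for 2.5 m span", "100-200 kPa"),
    (float("-inf"), "V", "Very poor rock", "30 minutes for 1 m span", "< 100 kPa"),
)

def classify_rmr(ratings):
    """Sum the parameter ratings (orientation adjustment is negative) and look up the rock class."""
    total = sum(ratings.values())
    for lower, cls, desc, stand_up, cohesion in RMR_CLASSES:
        if total > lower:
            return {"RMR": total, "class": cls, "description": desc,
                    "stand_up_time": stand_up, "cohesion": cohesion}
```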

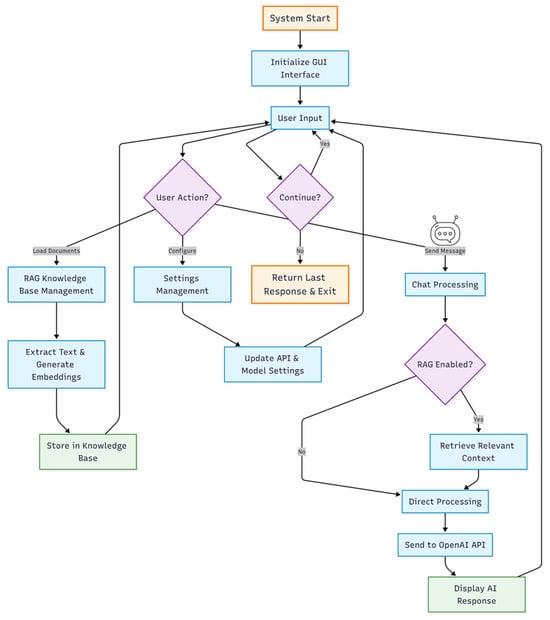

3.12. RAG-LLM Report Generation System

The RAG-LLM (Retrieval-Augmented Generation with Large Language Models) report generation system in this research is a novel approach to intelligent technical documentation in tunnel engineering. It combines the context-aware capabilities of modern language models with domain-specific knowledge retrieval to produce standardized, accurate, and comprehensive tunnel face mapping reports. By drawing on both historical data and contemporary AI capabilities, the system addresses the pressing need for rapid, consistent, standardized, and expert-level reporting in tunnel engineering. Figure 12 shows the system's operational workflow schematically.

Figure 12.

Workflow of the RAG-LLM system for generating tunnel face mapping reports.

3.12.1. Private Database Construction

Tunnel Database Curation

The RAG system is founded on a comprehensive curation process for historical tunnel face mapping data. This process involves systematically collecting and preprocessing tunnel geo-engineering documents, including past inspection reports, geological surveys, rock mass classification records, and technical standards such as RMR (Rock Mass Rating) and the ISRM suggested methods. Data quality is ensured through automated text extraction from multiple document formats (PDF, DOCX, TXT) and content validation mechanisms that keep the knowledge base consistent. For each document, the text is extracted, the content is validated, and the metadata are enriched before the document is added to the knowledge database.

Database Organization and Indexing

The knowledge database is organized hierarchically: large documents are segmented (chunked) into semantically coherent sections that preserve their contextual relationships, and documents are categorized by technical domain and relevance to tunnel reporting. Each chunk is passed through an embedding model to create a high-dimensional vector representation that captures its semantic meaning and technical context; the current version of TRaiC uses OpenAI's text-embedding-ada-002 model for this purpose. These embeddings capture semantic relationships and technical concepts, enabling similarity-based retrieval that goes beyond simple keyword matching. The vectors are stored alongside comprehensive metadata, including source information, document type, and relevance indicators. Documents are grouped into categories such as Standards, Guidelines, Historical Inspection Reports, and General Reference Materials, which simplifies access to information during report generation and enables context-sensitive retrieval.
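The chunking and metadata step can be sketched as follows. Paragraphs are packed into chunks of at most `max_chars` characters, optionally carrying an overlap from the previous chunk; each chunk keeps its source and category. The size limits, the overlap rule, and the `Chunk` fields are assumptions; the embedding call itself (text-embedding-ada-002 in TRaiC) is not shown, and a single paragraph longer than `max_chars` is kept whole in this sketch.

```python
from dataclasses import dataclass

CATEGORIES = ("Standards", "Guidelines", "Historical Inspection Reports", "General")

@dataclass
class Chunk:
    text: str
    source: str
    category: str
    index: int  # position of the chunk within its source document

def chunk_document(text, source, category, max_chars=1200, overlap=200):
    """Pack paragraphs into chunks of <= max_chars, carrying `overlap` trailing characters forward."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category}")
    paras = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, buf = [], ""
    for p in paras:
        if buf and len(buf) + len(p) + 2 > max_chars:
            chunks.append(buf)
            buf = buf[-overlap:] if overlap else ""  # keep some context for the next chunk
        buf = f"{buf}\n\n{p}" if buf else p
    if buf:
        chunks.append(buf)
    return [Chunk(c, source, category, i) for i, c in enumerate(chunks)]
```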

Data Retrieval Optimization

The retrieval framework searches the knowledge base using the cosine similarity between query embeddings and document embeddings. Configurable similarity thresholds and document retrieval limits balance relevance against computational efficiency, so that only highly relevant information is retrieved for each query and generation step. Thresholds can be adjusted to query complexity and document availability through a graphical interface for RAG settings. The system also ranks documents by a combination of relevance score, publication date, and technical authority; this multi-factor ranking can improve the quality of the retrieved context and the accuracy of the generated reports. Although the methodology has been shown to be effective, further research is still required to validate the final results.
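The threshold-plus-limit retrieval can be sketched with NumPy: compute cosine similarity between the query vector and every stored vector, keep documents above the threshold, and return at most `top_k` of them in descending order. The default values are assumptions, and the additional weighting by publication date and technical authority is not shown.

```python
import numpy as np

def retrieve(query_vec, doc_vecs, threshold=0.75, top_k=5):
    """Indices and cosine similarities of the best documents above the threshold."""
    q = np.asarray(query_vec, dtype=float)
    d = np.asarray(doc_vecs, dtype=float)
    sims = d @ q / (np.linalg.norm(d, axis=1) * np.linalg.norm(q))
    order = np.argsort(-sims)  # most similar first
    keep = [int(i) for i in order if sims[i] >= threshold][:top_k]
    return keep, sims[keep]
```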

3.12.2. LLM Integration

The LLM integration architecture is built around models selected from OpenAI's family of language models (particularly GPT-4 variants) that are optimized for technical applications and can process multimodal inputs (images and documents).

LLM customization relies on prompt engineering and model parameter adjustment. Domain-specific system prompts steer the model toward technical reporting standards, producing technically accurate and professionally formatted text. This approach remains compatible with commercial language model APIs while improving domain-specific performance through carefully crafted instruction sets and context management. Adjusting the model parameters, in turn, allows performance, cost, creativity, and response quality to be balanced for different use cases within the reporting workflow.

TRaiC uses a structured, multi-layered prompting approach that combines base system instructions, domain-specific guidance for tunnel face mapping, and contextual data retrieved through the RAG process. The approach emphasizes alignment with established reporting standards, proper citation of sources, and effective integration of relevant context. Technical reporting prompts guide the language model systematically through key stages such as condition assessment, hazard identification, and recommendations. A central component is context integration, which incorporates the retrieved document sections into the generation process while maintaining clear attribution and source transparency. This enables the LLM to draw on historical knowledge while preserving accuracy and accountability in the reporting process.
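The layering can be sketched as a function that assembles chat messages: base instructions and domain guidance form the system message, and the retrieved chunks are numbered with their sources so the model can cite them as `[n]`. The message wording and the `source`/`text` keys are hypothetical, not TRaiC's actual prompts.

```python
def build_messages(system_base, domain_guidance, retrieved, task):
    """Layered prompt: base rules + domain guidance, then numbered, attributed context and the task."""
    context = "\n\n".join(f"[{i + 1}] ({c['source']}) {c['text']}"
                          for i, c in enumerate(retrieved))
    return [
        {"role": "system", "content": f"{system_base}\n\n{domain_guidance}"},
        {"role": "user", "content": (f"Context:\n{context}\n\n"
                                     "Cite the context as [n] wherever it is used.\n\n"
                                     f"Task: {task}")},
    ]
```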

3.12.3. Data Security and Privacy Considerations

Cloud-Based vs. Local Deployment Options

Engineering projects inherently raise significant data security concerns, because they involve sensitive information and confidential project details. In the current TRaiC platform, the RAG-LLM implementation relies on cloud-based APIs, which may not be sufficiently secure for handling critical data. Recognizing this limitation, the system is designed to support alternative deployments, allowing organizations to adopt locally hosted, open-source language models that run entirely within secure environments and giving them full control over their data.

Open-Source LLM Integration Framework

The methodology offers a practical framework for deploying open-source language models, such as Llama Vision and LLaVA variants, on personal computers or secure local servers. This removes the need for external API calls and keeps data within the organization's controlled environment. The scripts for connecting the platform to local models are freely available in the https://github.com/ahmadmehri/LoLlama repository (accessed on 19 August 2025). Local deployment relies on dedicated computational infrastructure for large language model inference, while RAG functionality is maintained through locally hosted embedding models and vector databases.
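As an illustration of a local call, the sketch below builds a request for Ollama's `/api/generate` endpoint on its default port, passing the image as a base64 string in the `images` list and disabling streaming so the answer arrives in a single JSON object under `response`. This is not the code from the LoLlama repository; the model name `llava` and the timeout are assumptions, and a running Ollama server with that model pulled is required for `describe_locally` to succeed.

```python
import base64
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint

def build_local_request(image_bytes, prompt, model="llava"):
    """POST request for a local, non-streaming Ollama generation with one image."""
    payload = {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }
    return urllib.request.Request(OLLAMA_URL, data=json.dumps(payload).encode("utf-8"),
                                  headers={"Content-Type": "application/json"}, method="POST")

def describe_locally(image_bytes, prompt, model="llava", timeout=300):
    """Send the request; the image never leaves the local machine."""
    with urllib.request.urlopen(build_local_request(image_bytes, prompt, model),
                                timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```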

Security-Performance Trade-Off

Setting hardware requirements aside, moving from cloud-based to locally deployed LLMs raises key challenges related to accuracy and performance. Although open-source models show strong potential, their capabilities can differ significantly from those of advanced commercial models. A security-focused deployment therefore requires evaluation and validation to ensure that locally hosted models deliver acceptable accuracy for technical reporting. Systematic research is needed to measure the performance differences between cloud and local deployments in terms of technical accuracy, compliance with engineering standards, response consistency, and overall reliability in tunnel face reporting.

3.13. Report Generation Pipeline

The report generation module in TRaiC transforms raw analytical data into well-structured tunnel face mapping reports. It uses MATLAB's built-in template-based report generation framework to organize the rock mass analysis components, such as geological characterization, classification, hazard identification, and engineering recommendations. Each report section can be updated or expanded independently, and multiple data types (text, images, diagrams, and analytical visuals) can be combined into a cohesive document. Images are placed in context using AI-generated markers so that they support the accompanying narrative. The platform supports multiple output formats, including PDF, Word, and HTML, for easy sharing across organizational platforms. This end-to-end automation streamlines report production and helps ensure that the final documents meet the technical and professional standards required in geotechnical engineering.
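Marker-based image placement can be sketched as splitting the LLM's text at each marker and emitting an ordered list of text and image blocks. The `[[FIGURE:key]]` syntax and the figure dictionary are hypothetical; the document does not specify TRaiC's marker format. Markers whose key is unknown are silently dropped in this sketch.

```python
import re

MARKER = re.compile(r"\[\[FIGURE:([\w-]+)\]\]")  # hypothetical marker syntax

def split_report(text, figures):
    """Turn generated text with figure markers into ordered ('text' | 'image', value) blocks."""
    blocks, pos = [], 0
    for m in MARKER.finditer(text):
        blocks.append(("text", text[pos:m.start()].strip()))
        if m.group(1) in figures:  # unknown keys are dropped
            blocks.append(("image", figures[m.group(1)]))
        pos = m.end()
    blocks.append(("text", text[pos:].strip()))
    return [b for b in blocks if b[1]]  # discard empty text fragments
```

In MATLAB Report Generator, each block could then be appended to the document as a DOM object such as `mlreportgen.dom.Paragraph` or `mlreportgen.dom.Image`.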

4. Future Work and Development Roadmap

The TRaiC platform represents a significant advancement in digital tunnel face mapping. It integrates 360-degree panoramic photography, AI-aided rock joint characterization, and large language model capabilities into a unified system for rapid geo-engineering assessment. However, several critical areas still need further development before the platform can be used at full industrial scale in tunnel engineering. The following roadmap outlines the main research directions and development priorities for overcoming current limitations and supporting wider adoption in the tunnel engineering industry.

4.1. Technical Enhancement Toward Full Automation

Overall, the future development of the TRaiC platform should focus on minimizing human involvement through automation strategies and improved technical capabilities.

4.1.1. Adaptive Thresholding and Quality Assurance

The current analysis process relies on several manual interventions that introduce subjectivity and prevent full automation. A primary challenge is the sensitivity of key processes, such as trace detection, trace line segmentation, trace segment linking, and 3D discontinuity characterization, to predefined threshold values. To address this, future research should prioritize intelligent, adaptive thresholding mechanisms that adjust analysis parameters automatically. In addition, an automated quality assessment protocol is essential for validating outputs and fine-tuning thresholds without human oversight.

4.1.2. Enhanced Trace Detection Through Hybrid Approaches

Current trace detection methodologies can benefit from a more integrated approach that combines traditional computer vision techniques with AI-driven methods. The hybrid framework would enable systematic cross-validation of trace detection results. Such an approach is expected to significantly improve accuracy and reliability in identifying geological discontinuities.

4.1.3. Robust Segment Linking Algorithms

As noted in Section 3.7, the current segment-linking methodology exhibits limitations due to its dependency on user-defined thresholds for angular similarity, spatial proximity, and geometric refinement. This dependency often results in inconsistent connectivity patterns that do not accurately represent the natural fracture network. Future research should explore robust optimization algorithms capable of dynamically calibrating these parameters to produce linkage patterns that better approximate true geological structures.

4.1.4. Expansion of Rock Mass Classification Systems

In its current version, the system supports only the RMR (Rock Mass Rating) classification scheme. Expanding its functionality to include additional international standards such as the Q-System and Geological Strength Index (GSI) would enhance the platform’s adaptability across different geological settings and improve its applicability in global tunneling projects.

4.1.5. Automated Image Segmentation for Geological Feature Extraction

A significant limitation of the current LLM-Assisted Tunnel Face Description System is its reliance on manual image segmentation, which is time-consuming and susceptible to human bias. A promising future direction involves developing an automated image segmentation module using advanced computer vision algorithms. This capability would enable the system to automatically identify and delineate geological features, such as lithological units or weathering zones, within tunnel face imagery. Integrating automated segmentation into the LLM-based framework would mark a critical step toward achieving a fully automated, scalable, and efficient geological analysis solution.

4.2. Large Language Model Validation

The current implementation of LLM-based geological interpretations represents a promising advancement in automated geological assessment, but systematic validation is essential to ensure reliability in engineering applications. Future development should prioritize the creation of domain-specific parameter-tuned language models supported by extensive RAG datasets of tunnel face descriptions, geological reports, and engineering documentation, to improve accuracy in technical interpretations. This requires the establishment of comprehensive benchmarking protocols that compare LLM interpretations against expert geological assessments across diverse geological conditions and project contexts. The development of validation frameworks should include statistical measures of accuracy, consistency, and reliability, with particular attention to identifying systematic biases or limitations in automated interpretations.

Continuous learning systems would be another critical advancement. Feedback mechanisms would allow the system to learn from user corrections and improve over time through active learning. This requires a data management system that tracks user interactions, identifies patterns in corrections and final results, and automatically updates model parameters to improve future performance. In addition, explainable AI techniques would let users understand the reasoning behind automated interpretations, supporting better integration of human expertise with artificial intelligence capabilities.

4.3. Security and Privacy Enhancement

As mentioned above, the current reliance on cloud-based APIs for LLM functionality raises data security concerns for sensitive information. To meet security requirements, the current platform can incorporate a framework for deploying open-source language models on local computers; tools such as Ollama are designed for exactly this kind of local AI deployment. However, local deployment requires careful consideration of the performance trade-offs between local and cloud-based processing.

Future research should establish systematic evaluation protocols for comparing the accuracy and reliability of locally deployed models with cloud-based alternatives, with particular attention to domain-specific performance in tunnel face mapping. This includes benchmarking frameworks that assess technical accuracy, compliance with engineering standards, and response consistency. Incorporating compliance frameworks that ensure adherence to international data protection and privacy standards, as well as industry-specific security requirements, is another fundamental priority.
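Response consistency, one of the criteria above, can be quantified by submitting the same prompt repeatedly to each backend and measuring how often the answers agree. The sketch below assumes that each response has already been reduced to a categorical outcome, such as an RMR class. It reports, for each backend, the share of runs that match the most frequent answer. The backend labels and function names are hypothetical.

```python
from collections import Counter


def modal_agreement(responses):
    """Share of repeated responses that equal the most frequent response."""
    if not responses:
        raise ValueError("at least one response is required")
    # Light normalization so "III" and " iii " count as the same answer
    normalized = [r.strip().upper() for r in responses]
    _, count = Counter(normalized).most_common(1)[0]
    return count / len(normalized)


def compare_backends(runs_by_backend):
    """Map each backend name (hypothetical) to its modal agreement score."""
    return {name: modal_agreement(runs) for name, runs in runs_by_backend.items()}
```

A score of 1.0 means every repeated run gave the same class. Lower scores indicate that a backend's output varies for identical input, which is a separate concern from whether that output is correct.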

4.4. Integration and Interoperability Development

The development of comprehensive Geo-BIM frameworks would be a critical advancement for integrating TRaiC data with Building Information Modeling (BIM) systems to support digital twin development for tunnel infrastructure. Future research could focus on standardized frameworks that ensure compatibility with industry-standard data formats such as IFC and CityGML, in line with openBIM principles, to facilitate integration with existing engineering workflows. This requires data translation algorithms that convert geological assessment data into BIM-compatible formats while preserving the integrity and accuracy of the original information. Lifecycle management strategies would then support long-term data management for maintenance, monitoring, and analysis throughout the tunnel’s operational period.
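One low-level step in such a translation is expressing measured joint orientations as planes in a Cartesian model frame. The sketch below converts dip and dip direction into an upward unit normal in an East-North-Up frame (x = East, y = North, z = Up) and packs it with a plane offset into a plain record. The frame convention and the record field names are assumptions. A project-local BIM frame would need an additional rotation, and the mapping onto IFC entities is not shown.

```python
import math


def joint_normal(dip_deg: float, dip_dir_deg: float):
    """Upward unit normal of a plane from dip and dip direction in degrees.

    Assumed frame: x = East, y = North, z = Up (dip direction measured clockwise from North).
    """
    dip = math.radians(dip_deg)
    dip_dir = math.radians(dip_dir_deg)
    return (
        math.sin(dip) * math.sin(dip_dir),
        math.sin(dip) * math.cos(dip_dir),
        math.cos(dip),
    )


def plane_record(set_id: str, dip_deg: float, dip_dir_deg: float, centroid):
    """Hypothetical exchange record for a plane n . p + d = 0 through a centroid (E, N, U)."""
    n = joint_normal(dip_deg, dip_dir_deg)
    offset = -sum(ni * ci for ni, ci in zip(n, centroid))
    return {
        "set_id": set_id,
        "dip": dip_deg,
        "dip_direction": dip_dir_deg,
        "normal": n,
        "offset": offset,
    }
```

Consider a joint dipping 45° toward the east (dip direction 90°). Its normal is approximately (√0.5, 0, √0.5), which points upward and toward the dip direction, as expected. Storing the plane as a normal plus an offset keeps the record independent of any particular BIM schema.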

5. Conclusions

This study introduced the Tunnel Rapid AI Classification (TRaiC) platform, an open-source solution designed to enhance the efficiency, accuracy, and safety of tunnel face mapping and rock mass characterization. By integrating 360° panoramic imaging, AI-driven discontinuity detection, 3D digital twin generation, and Retrieval-Augmented Generation with Large Language Models, TRaiC addresses the limitations of traditional manual mapping and existing digital methods that are often labor-intensive, subjective, and computationally demanding.

The proposed eight-stage modular workflow demonstrates how advanced image processing, automated trace analysis, and intelligent report generation can streamline geotechnical assessments and facilitate rapid decision-making during tunneling operations. Unlike photogrammetric approaches, TRaiC delivers a lightweight, high-resolution textured digital twin optimized for Geo-BIM integration, ensuring both practicality and interoperability within modern engineering workflows. The open-source nature of the platform further promotes transparency, reproducibility, and collaborative research, paving the way for continuous innovation in AI-assisted geotechnical engineering.

The results indicate that, although the platform has the potential to significantly improve tunnel documentation and rock mass characterization, the current implementation has limitations and bugs that still need to be addressed. The reliance on semi-automated stages, the sensitivity to threshold settings, the pairing with cloud-based LLMs that are not secure for sensitive data, and the absence of local model deployment point to a need for future research on automation, local model deployment, and system interoperability. Extending rock mass classification beyond RMR is a further priority for the industrial transfer of the platform.

Overall, by combining AI-based methodologies with practical engineering requirements, TRaiC represents a significant step toward a fully automated, intelligent, and secure digital ecosystem for underground construction. While it lays a strong foundation for future developments in digital rock mass characterization and geotechnical decision-making systems, the current work serves primarily as a prototype rather than a production-ready solution.

6. Code Availability and Reproducibility

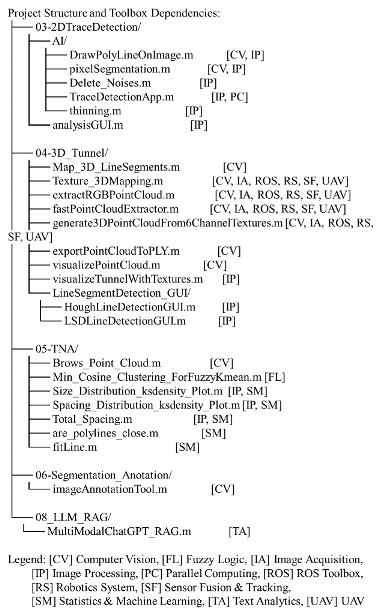

The latest version of the source code is available on GitHub (https://github.com/ahmadmehri/TRaiC-Tunnel-Rapid-ai-Classification-, accessed on 19 August 2025), together with a demonstration video of the complete workflow. The code was developed in MATLAB R2023b, and the implementation requires the following toolboxes:

- Computer Vision Toolbox (v9.0 or later);

- Fuzzy Logic Toolbox (v2.8 or later);

- Image Acquisition Toolbox (v6.0 or later);

- Image Processing Toolbox (v11.1 or later);

- Parallel Computing Toolbox (v7.2 or later);

- ROS Toolbox (v1.2 or later);

- Robotics System Toolbox (v3.0 or later);

- Sensor Fusion and Tracking Toolbox (v2.0 or later);

- Statistics and Machine Learning Toolbox (v11.7 or later);

- Text Analytics Toolbox (v1.5 or later);

- UAV Toolbox (v1.0 or later).

A dependency checker script (analyzeProjectToolboxes.m) is provided in the repository to automatically verify toolbox availability and compatibility before execution. Users can run this script to confirm that all required dependencies are installed on their system. The toolboxes are primarily used across five main modules, as illustrated in the figure above.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/rs17162891/s1, Video S1: Introduction of TRaiC software.

Author Contributions

Conceptualization, S.M. and J.-J.S.; Software, J.L.; Validation, S.M.; Formal analysis, J.K.; Investigation, S.M. and J.K.; Resources, I.-S.K.; Data curation, J.L.; Writing—original draft, Y.S.; Writing—review & editing, S.M.; Visualization, I.-S.K.; Supervision, J.-J.S.; Project administration, J.-J.S.; Funding acquisition, J.-J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Seoul National University’s international collaborative research support program, SNU OPEN WORLD (SOW), and grants from the Human Resources Development program (No. 20204010600250) and the Training Program of CCUS for the Green Growth (No. 20214000000500) by the Korea Institute of Energy Technology Evaluation and Planning (KETEP), funded by the Ministry of Trade, Industry, and Energy of the Korean Government (MOTIE).

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Materials. The codes and sample data used in this study are available in the GitHub repository: https://github.com/ahmadmehri/TRaiC-Tunnel-Rapid-ai-Classification- (accessed on 19 August 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Permatasari, R.D.; Setiawan, H.; Husein, S. Tunnel Face Mapping for Evaluate Support System Design of the Inlet Area at the Budong-Budong Dam Diversion Tunnel, West Sulawesi, Indonesia. IOP Conf. Ser. Earth Environ. Sci. 2025, 1486, 012008.

- Yusoff, I.N.; Mohamad Ismail, M.A.; Tobe, H.; Date, K.; Yokota, Y. Quantitative Granitic Weathering Assessment for Rock Mass Classification Optimization of Tunnel Face Using Image Analysis Technique. Ain Shams Eng. J. 2023, 14, 101814.

- Kim, K.-Y.; Kim, C.-Y.; Yim, S.-B.; Yun, H.-S.; Seo, Y. A Study on Problems and Improvements of Face Mapping during Tunnel Construction. J. Eng. Geol. 2006, 16, 265–273.

- Lee, H.-K.; Song, M.-K.; Jeong, Y.-O.; Lee, S.S.-W. Development of an Automatic Rock Mass Classification System Using Digital Tunnel Face Mapping. Appl. Sci. 2024, 14, 9024.

- Leng, B.; Qiu, W.-G.; Du, C. Application of Digital Image Processing to Tunnel Driving Face. Yantu Lixue/Rock Soil Mech. 2006, 27, 385–388.

- Park, S.W.; Kim, H.G.; Bae, S.W.; Kim, C.Y.; Yoo, W.K.; Lee, J.D. Development of Mobile System Based on Android for Tunnel Face Mapping. J. Eng. Geol. 2014, 24, 343–351.

- Pham, C.; Kim, B.-C.; Shin, H.-S. Deep Learning-Based Identification of Rock Discontinuities on 3D Model of Tunnel Face. Tunn. Undergr. Space Technol. 2025, 158, 106403.

- Sagong, M.; Lee, J.S.; You, K.; Kim, J.G. Digitalized Tunnel Face Mapping System (DiTFAMS) Using PDA and Wireless Network. Tunn. Undergr. Space Technol. 2006, 21, 390.

- Nilsen, B. Main Challenges for Deep Subsea Tunnels Based on Norwegian Experience. J. Korean Tunn. Undergr. Space Assoc. 2015, 17, 563–573.

- Kim, Y.; Yun, T.S. Enhanced Rock Mass Rating Prediction from Tunnel Face Imagery: A Decision-Supportive Ensemble Deep Learning Approach. Eng. Geol. 2024, 339, 107625.

- Tsuruta, R.; Utsuki, S.; Nakaya, M. Development of a System for Automatic Evaluation of the Geological Conditions of Tunnel Faces Using Artificial Intelligence and Application to a Construction Site. In IAEG/AEG Annual Meeting Proceedings, San Francisco, California, 2018-Volume 4; Shakoor, A., Cato, K., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 49–55. ISBN 978-3-319-93132-6.

- Janiszewski, M.; Torkan, M.; Uotinen, L.; Rinne, M. Rapid Photogrammetry with a 360-Degree Camera for Tunnel Mapping. Remote Sens. 2022, 14, 5494.

- Paar, G.; Mett, M.; Ortner, T.; Kup, D.; Kontrus, H. High-resolution Real-time Multipurpose Tunnel Surface 3D Rendering. Geomech. Tunn. 2022, 15, 290–297.

- Attard, L.; Debono, C.J.; Valentino, G.; Di Castro, M. Tunnel Inspection Using Photogrammetric Techniques and Image Processing: A Review. ISPRS J. Photogramm. Remote Sens. 2018, 144, 180–188.

- Gaich, A.; Pischinger, G. 3D Images for Digital Geological Mapping: Focussing on Conventional Tunnelling. Geomech. Tunn. 2016, 9, 45–51.

- Gaich, A.; Pötsch, M. 3D Images for Data Collection in Tunnelling—Applications and Latest Developments/3D-Bilder Für Die Datenerfassung Im Tunnelbau—Anwendung Und Aktuelle Entwicklungen. Geomech. Tunn. 2015, 8, 581–588.

- Leem, J.; Mehrishal, S.; Kang, I.-S.; Yoon, D.-H.; Shao, Y.; Song, J.-J.; Jung, J. Optimizing Camera Settings and Unmanned Aerial Vehicle Flight Methods for Imagery-Based 3D Reconstruction: Applications in Outcrop and Underground Rock Faces. Remote Sens. 2025, 17, 1877.

- Rabensteiner, S.; Weichenberger, F.P.; Chmelina, K. Automation and Digitalisation of the Geological Documentation in Tunnelling. Geomech. Tunn. 2022, 15, 298–304.

- Gaich, A.; Pötsch, M. 3D Images for Digital Tunnel Face Documentation at TBM Headings—Application at Koralmtunnel Lot KAT2/3D-Bilder Zur Digitalen Ortsbrustdokumentation Bei TBM-Vortrieben—Anwendung Beim Koralmtunnel Baulos KAT2. Geomech. Tunn. 2016, 9, 210–221.

- Hansen, T.F.; Erharter, G.H.; Marcher, T.; Liu, Z.; Tørresen, J. Improving face decisions in tunnelling by machine learning-based MWD analysis. Geomech. Tunn. 2022, 15, 222–231.

- Leem, J.; Kim, J.; Kang, I.-S.; Choi, J.; Song, J.-J.; Mehrishal, S.; Shao, Y. Practical Error Prediction in UAV Imagery-Based 3D Reconstruction: Assessing the Impact of Image Quality Factors. Int. J. Remote Sens. 2025, 46, 1000–1030.

- Kim, J.; Choi, J.; Mehrishal, S.; Song, J.-J. The Goodness-of-Fit of Models for Fracture Trace Length Distribution: Does the Power Law Provide a Good Fit? J. Struct. Geol. 2024, 188, 105270.

- Sjölander, A.; Belloni, V.; Ansell, A.; Nordström, E. Towards Automated Inspections of Tunnels: A Review of Optical Inspections and Autonomous Assessment of Concrete Tunnel Linings. Sensors 2023, 23, 3189.

- Allende Valdés, M.; Merello, J.P.; Cofré, P. Artificial Intelligence Technique for Geomechanical Forecasting. In Tunnels and Underground Cities: Engineering and Innovation Meet Archaeology, Architecture and Art; Peila, D., Viggiani, G., Celestino, T., Eds.; CRC Press: Boca Raton, FL, USA, 2019; pp. 1629–1636. ISBN 978-0-429-42444-1.

- Hase, R.; Mihara, Y.; Awaji, D.; Hojo, R.; Shimizu, S. Development and Applicability Assessment of a Tunnel Face Monitoring System Against Tunnel Face Collapse. In Geo-Sustainnovation for Resilient Society; Hazarika, H., Haigh, S.K., Chaudhary, B., Murai, M., Manandhar, S., Eds.; Lecture Notes in Civil Engineering; Springer Nature Singapore: Singapore, 2024; Volume 446, pp. 23–33. ISBN 978-981-9992-18-8.

- Chapman, M.A.; Min, C.; Zhang, D. Continuous Mapping of Tunnel Walls in a GNSS-Denied Environment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B3, 481–485.

- Zhang, P.; Zhao, Q.; Tannant, D.D.; Ji, T.; Zhu, H. 3D Mapping of Discontinuity Traces Using Fusion of Point Cloud and Image Data. Bull. Eng. Geol. Environ. 2019, 78, 2789–2801.

- Previtali, M.; Barazzetti, L.; Roncoroni, F.; Cao, Y.; Scaioni, M. 360° Image Orientation and Reconstruction with Camera Positions Constrained by GNSS Measurements. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, XLVIII-1/W1-2023, 411–416.

- Usman, F.; Banuar, N.; Kurniawan, T.B.; Ismail, M.N.; Othman, N.A. Integration of Spherical 360° Panoramic Virtual Tour with Assessment Data for Risk Assessment and Maintenance of Tunnel and Cavern. Appl. Mech. Mater. 2016, 858, 50–56.

- Wang, J.; Zhao, Z.; Wang, Z.J.; Da Cheng, B.; Nie, L.; Luo, W.; Yu, Z.Y.; Yuan, L.W. GeoRAG: A Question-Answering Approach from a Geographical Perspective. arXiv 2025, arXiv:2504.01458.

- Jacinto, M.; Silva, M.; Oliveira, L.; Medeiros, G.; Rodrigues, T.; Medeiros, D.; Montalvão, L.; Gonzalez, M.; De Almeida, R.V. Geologist Copilot: Generative AI Application to Support Drilling Operations with Automated and Explained Lithology Interpretation. In Proceedings of the 85th EAGE Annual Conference & Exhibition, Oslo, Norway, 10–13 June 2024; European Association of Geoscientists & Engineers: Utrecht, The Netherlands; pp. 1–5.