Abstract

Hyperspectral imagery (HSI) has demonstrated significant potential in remote sensing applications because of its abundant spectral and spatial information. However, current mainstream hyperspectral image classification models are generally characterized by high computational complexity, intricate structures, and a strong reliance on training samples, which makes it difficult to meet application demands under resource-constrained conditions. To this end, a lightweight hyperspectral image classification model inspired by bionic design, named BioLiteNet, is proposed to improve both accuracy and computational efficiency. The model is composed of two key modules: BeeSenseSelector (channel attention screening) and AffScaleConv (scale-adaptive convolutional fusion). The former mimics the selective attention mechanism observed in honeybee vision to dynamically select critical spectral channels, while the latter fuses spatial and spectral features efficiently through multi-scale depthwise separable convolution. On multiple hyperspectral benchmark datasets, BioLiteNet delivers strong classification performance at very low computational cost, and it maintains high classification accuracy even when only a small number of labeled samples is available. Specifically, it achieves overall accuracies (OA) of 90.02% ± 0.97%, 88.20% ± 5.26%, and 78.64% ± 7.13% on the Indian Pines, Pavia University, and WHU-Hi-LongKou datasets using just 5% of samples, 10% of samples, and 25 samples per class, respectively. Moreover, BioLiteNet consistently requires fewer computational resources than the comparison models. 
The results indicate that the lightweight hyperspectral image classification model proposed in this study significantly reduces the requirements for computational resources and storage while ensuring classification accuracy, making it well-suited for remote sensing applications under resource constraints. The experimental results further support these findings by demonstrating its robustness and practicality, thereby offering a novel solution for hyperspectral image classification tasks.

1. Introduction

Hyperspectral imagery (HSI), characterized by tens to hundreds of consecutive spectral bands at each pixel, enables much finer discriminative capabilities than traditional RGB or multispectral imagery. It has exhibited broad application potential in areas such as agricultural monitoring, urban management, environmental detection, and resource surveying []. Hyperspectral data is characterized by abundant spectral–spatial joint features, which offer a robust data foundation for fine-grained object recognition and classification tasks. However, significant differences exist among various hyperspectral datasets in terms of sensor types, spatial resolutions, spectral band ranges, and land cover category distributions, leading to noticeable distribution biases between datasets. These biases not only exacerbate data inconsistencies during model training and generalization but also impose higher demands on the adaptability and robustness of models when applied across different regions and sensor platforms [].

Early hyperspectral image classification methods primarily relied on conventional statistical learning techniques, such as Principal Component Analysis (PCA) [] and Linear Discriminant Analysis (LDA) [], which perform linear transformations to compress high-dimensional spectral data and enhance inter-class separability. Support Vector Machines (SVMs) construct classification hyperplanes in feature space based on the maximum margin principle, offering strong classification capabilities. In addition, non-parametric models such as K-Nearest Neighbors (KNN) and Random Forests (RFs) have also been widely applied in hyperspectral classification tasks []. These methods are generally simple in structure, computationally efficient, and offer good interpretability and deployability. However, they typically depend on hand-crafted features and make strong assumptions about data linear separability or distribution, making them less effective in handling the complex spatial structures and nonlinear spectral characteristics commonly found in hyperspectral imagery.

In recent years, the widespread adoption of deep learning in hyperspectral image (HSI) classification has led to substantial accuracy improvements across various models. However, these methods often depend on deep architectures and complex computational operations, resulting in a substantial increase in computational complexity and the number of parameters, thereby limiting their feasibility for real-time deployment in resource-constrained environments []. For example, the HybridSN model on the Indian Pines dataset comprises approximately 1.15 M trainable parameters, with a forward inference cost of approximately 416.8 M FLOPs []; DMCN contains approximately 2.77 M parameters, resulting in a computational load of approximately 3.21 billion FLOPs []. Although the latest lightweight Transformer model, ALSST, contains only 157.13 K parameters, its forward pass still incurs a computational cost of approximately 1.53 billion FLOPs [], which remains relatively high. These large model sizes and computational overheads collectively pose significant challenges to practical deployment. Hyperspectral image classification is frequently implemented on resource-constrained platforms, including unmanned aerial vehicles (UAVs), satellite ground stations, and mobile terminals, thereby imposing stringent requirements on model lightweighting and rapid deployment. In certain application scenarios (e.g., disaster monitoring and early warning of agricultural pests and diseases), classification and decision-making must be performed on-site or in near real time, with inference latency constrained to the millisecond-to-second range []. Edge devices are typically equipped with low-power CPUs or lightweight GPUs, with limited memory and storage capacity; therefore, the number of parameters and FLOPs must be minimized to support on-device execution []. 
Remote sensing platforms or drones are constrained by limited battery life and are sensitive to algorithmic energy consumption; moreover, transmitting raw hyperspectral data to the cloud is both time-consuming and bandwidth-intensive. Thus, preprocessing and classification are preferably performed locally []. These deployment demands have necessitated the development of lightweight hyperspectral classification models that balance accuracy and computational efficiency. With the growing adoption of multimodal remote sensing data fusion, including multispectral and infrared modalities, the associated models are typically required to align and fuse features from multiple modalities, thereby further increasing parameter counts and computational complexity []. Although such multimodal approaches offer improved accuracy, they demand significantly more parameters and FLOPs than their unimodal counterparts, thereby rendering deployment on resource-constrained platforms such as unmanned aerial vehicles (UAVs) and satellite ground stations nearly infeasible, and significantly limiting their potential for real-time and lightweight applications []. Therefore, it is imperative to develop more lightweight hyperspectral classification networks that balance multimodal fusion performance with the feasibility of deployment on edge devices, while promoting compact model design in terms of both parameter count and computational cost.

Although numerous lightweight approaches have been proposed to reduce model complexity, these methods still encounter significant limitations in practical edge AI deployment scenarios. For example, the HBiNet model adopts the same training ratio of 200 samples per class as HybridSN on the PaviaU dataset (approximately 5%), yet achieves an overall classification accuracy of only 81.2%, which is markedly lower than HybridSN’s 99.35% under the same training conditions. This highlights the difficulty of maintaining both accuracy and compactness in binarization methods under extreme compression []. Q-FCN (Quantized Feature Convolutional Network) achieves an inference speed of approximately 120 FPS on embedded CPUs []; however, due to its reliance on specific hardware instruction sets for computational precision, it experiences memory overflow and compatibility issues on some low-end platforms. Therefore, there is a pressing need for a hyperspectral image classification network that can operate efficiently in resource-constrained environments while maintaining high classification accuracy, and that can systematically address challenges related to architectural optimization, operator adaptation, and engineering processes in edge AI deployment.

Bionic design draws inspiration from the forms and working principles of natural organisms, applying them to engineering and technological designs to enhance system efficiency and intelligence. In the field of computer vision and signal processing, bionic design approaches have achieved repeated success. For example, underwater target detection models inspired by the lateral line system of sharks can effectively capture local frequency variations []; multi-view attention networks mimicking the head rotation mechanism of owls can accurately locate targets under low-light conditions []; and adaptive convolutional kernel designs inspired by the morphology of butterfly wings have demonstrated superior performance in multi-scale texture analysis []. Of particular interest are the visual attention mechanisms exhibited by bees in complex natural environments. Despite their highly simplified brain architecture and extremely limited neural resources, honeybees are capable of efficiently identifying small targets (e.g., specific flower species) within complex natural environments, exhibiting remarkable spatial localization and spectral discrimination capabilities []. This ability stems primarily from their highly evolved visual processing mechanisms. Rapid perception across a broad field of view and at low resolution is first achieved through their compound eye structure, enabling initial capture of the global scene and salient regions []. Subsequent local focusing on target areas is facilitated by ultraviolet-sensitive visual channels and local enhancement mechanisms within the visual center []. Ultimately, spatial and chromatic patterns at multiple scales are integrated to perform target discrimination.

Inspired by the biological mechanism characterized by rapid perception, selective attention, and discriminative decision-making, a bionic and lightweight hyperspectral image classification network, termed BioLiteNet, is proposed in this study. BioLiteNet is designed to substantially reduce model parameters and computational complexity while maintaining high classification accuracy, thereby enabling the efficient and deployable recognition and classification of hyperspectral images. As shown in Figure 1, the proposed model adopts a simplified architecture inspired by bees, yet achieves classification performance comparable to that of complex, large-scale models symbolized by birds, reflecting the bionic design philosophy of “achieving more with less”. The main contributions of this paper are as follows.

Figure 1.

Comparison between BioLiteNet and large-scale models in hyperspectral image classification: compact and efficient (the bee represents BioLiteNet, while the birds represent large-scale models).

(1) A bionically inspired lightweight hyperspectral image classification model, BioLiteNet, is proposed. To mitigate the trade-off between model accuracy and complexity in existing approaches, a bio-inspired processing framework with the capabilities of rapid perception, local focus, and discriminative output is designed, drawing inspiration from the visual system of bees. This framework focuses on the synergistic optimization between the discriminative power of key regions and perceptual efficiency, enhancing classification performance while maintaining model compactness, thereby making it particularly suitable for remote sensing tasks in resource-constrained environments.

(2) The BeeSenseSelector module is designed to emulate a biologically inspired mechanism for localized perceptual attention. To address the redundancy and inefficiency of traditional attention mechanisms when applied to high-dimensional spectral–spatial features, the module emulates the selective attention mechanism observed in honeybee vision. It guides the model to concentrate on the most discriminative regions within the spectral–spatial domain, thereby enabling the dynamic suppression of redundant information and enhancing the precision and computational efficiency of feature representation.

(3) The AffScaleConv module is proposed to enable the scale-adaptive fusion of spectral–spatial features. To address the modeling requirements arising from multi-scale spatial structures and spectrally non-uniform variations in hyperspectral images, a convolutional operator with adjustable receptive fields and localized perception capability is designed. This operator enhances the model’s capacity to capture the dependencies between spatial patterns and spectral bands. As a result, classification accuracy is significantly improved, while the lightweight structure of the model is preserved.

(4) Extensive empirical validation has been conducted on multiple hyperspectral remote sensing datasets. The experimental results demonstrate that the proposed method achieves high classification accuracy on several mainstream hyperspectral datasets, while maintaining a low parameter count and computational overhead. It outperforms multiple existing classification approaches and confirms the effectiveness of the proposed bionic structure in balancing sensing efficiency and discriminative capability, thereby exhibiting strong adaptability and deployment potential.

2. Related Work

Early hyperspectral image classification methods primarily depended on conventional machine learning algorithms such as Support Vector Machines (SVMs) [], Random Forest [], and k-Nearest Neighbors (k-NN) []. These approaches typically rely solely on pixel-level spectral features for classification and are often based on handcrafted features or prior knowledge, thereby limiting the ability to fully exploit the rich spectral information inherent in hyperspectral data []. With the rapid development of deep learning technologies, neural networks have demonstrated powerful end-to-end feature learning capabilities. Researchers have begun exploring their application in hyperspectral image classification tasks to achieve more accurate classification []. The following sections will focus on existing research.

2.1. Existing Hyperspectral Image Classification Methods

In recent years, with the development of deep learning, the convolutional neural network (CNN) has emerged as an important tool for hyperspectral image classification. Early methods, such as the Spectral–Spatial Residual Network (SSRN) [], efficiently extracted spectral–spatial features through a combination of 1D and 2D convolutions and addressed the degradation issue in deep neural networks through the use of residual connections. To further enhance feature expression capability, several approaches have proposed hybrid structures, such as HybridSN, which combines the advantages of 3D and 2D convolutions [,]. This structure retains the capability for joint spectral–spatial feature extraction while controlling network complexity. The Transformer architecture has also seen widespread use in hyperspectral image modeling. For instance, SpectralFormer employs a hierarchical spectral attention mechanism to capture inter-spectral relationships while also considering spatial structure perception [,]. Although these methods have made significant progress in accuracy, most of them involve deep architectures with a large number of parameters, which makes their deployment on resource-constrained devices difficult.

2.2. Lightweighting Techniques in Hyperspectral Classification

To achieve the efficient deployment of hyperspectral image classification models on resource-constrained platforms such as UAVs and mobile terminals, lightweight modeling has become a focal point of research in recent years. In response to the challenges posed by complex model structures and high computational overhead, various lightweight schemes have been proposed. For example, HyperLiteNet, proposed by Zhao et al., extracts spectral–spatial features quickly through a shallow parallel structure, resulting in a significant reduction in model parameters and computational complexity and making it suitable for deployment in environments with tight resource budgets and strict response-time requirements []. However, this method relies on shallow feature processing, which limits its expressive power and makes it challenging to effectively model the nonlinear relationships between complex ground objects. Li et al. designed LLFWCNN (Low-Frequency Wavelet CNN), which is based on wavelet decomposition, retains only the low-frequency components of the image for subsequent feature extraction, and combines them with a lightweight convolutional structure to achieve very low model complexity []. However, by removing high-frequency details, this approach sacrifices the ability to recognize texture boundaries and fine targets, leading to limitations in scenarios with higher accuracy demands or complex category distributions. Huang et al. proposed the Spectral–Spatial Mamba (SS-Mamba), which achieves a lightweight and computationally efficient design with a parameter count of 0.47 M and an inference speed of 10.45 ms per 100 samples [], outperforming traditional Transformers. However, its spatial branch is relatively shallow, limiting its ability to effectively model complex textures and fine-grained boundaries, which leads to reduced classification accuracy in scenarios with ambiguous category transitions or intricate spatial distributions. 
Although the feature enhancement module effectively suppresses redundant information, it lacks sufficient sensitivity to small objects and local details. Although existing lightweight methods have achieved significant advancements in parameter compression and computational efficiency, many of them are hindered by insufficient expressive power, limited context modeling, and poor adaptability to complex scenarios. In practical hyperspectral classification tasks, determining how to control computational resources while preserving the ability to accurately model and discriminate key information remains a significant challenge for current lightweight network design.

2.3. Biomimetic and Bio-Inspired Mechanisms in Hyperspectral Image Processing

Hyperspectral images (HSIs), due to their high spectral dimensionality and complex spatial structures, suffer from issues such as spectral band redundancy, low spatial information utilization, and blurred class boundaries []. These challenges make feature extraction and discrimination processes susceptible to redundant interference, thereby placing higher demands on feature selectivity and discriminative accuracy. Although traditional convolutional and attention mechanisms can enhance feature representation capabilities, they often rely on deep stacking and complex operators, which significantly increase model parameters and computational costs, making it difficult to balance lightweight design and computational efficiency. Consequently, researchers have turned their attention to biomimetic design, seeking inspiration from the efficient perception, selection, and decision-making strategies observed in nature to address the challenges of feature sparsity and redundant interference in hyperspectral data. For example, Zhou et al. proposed a band selection method based on the Improved Ant Colony Algorithm (IMACA-BS), simulating the optimal foraging behavior of ants in complex path environments and introducing pheromone updating and heuristic functions to eliminate redundant bands and select highly discriminative spectral bands. The method demonstrates superior classification performance on standard datasets, including Indian Pines, Pavia University, and Botswana, highlighting the significant role of the ant colony global search mechanism in enhancing spectral feature selection efficiency []. Furthermore, attention mechanisms, a quintessential bio-inspired strategy, have been incorporated into hyperspectral image processing to augment the model’s ability to perceive critical regions. Inspired by the human visual system, the Spectral–Spatial Attention Network (SSAN) proposed by Mei et al. 
integrates a spectral attention module constructed with Bi-RNN and a CNN-driven spatial attention module to dynamically focus on important bands in the spectral sequence, while also accounting for spatial structure perception []. The model significantly outperforms traditional convolutional models across several hyperspectral datasets, thereby validating the effectiveness of the biological attention strategy in modeling high-dimensional data. However, current methods typically integrate feature selection or attention modules with the main network in a decoupled manner and therefore lack true joint training and collaborative optimization capabilities. Taking SSAN as an example, its dual-branch architecture effectively fuses spectral and spatial information, but remains a modular assembly rather than being end-to-end optimized with the backbone, making it difficult to balance performance and efficiency in a unified system. Moreover, while the Bi-RNN-based attention mechanism enhances perceptual capability, it significantly increases parameter count and computational load. For instance, SSAN contains approximately 2.1 million parameters and requires over 25 million FLOPs on the Indian Pines dataset [], making its inference speed unsuitable for real-time or edge deployment. Nevertheless, these approaches, such as ant colony-based band selection and biologically inspired attention mechanisms, provide practical insights, highlighting the potential of deriving computational models from biological behavior and structures.

3. BioLiteNet

3.1. Overview of BioLiteNet

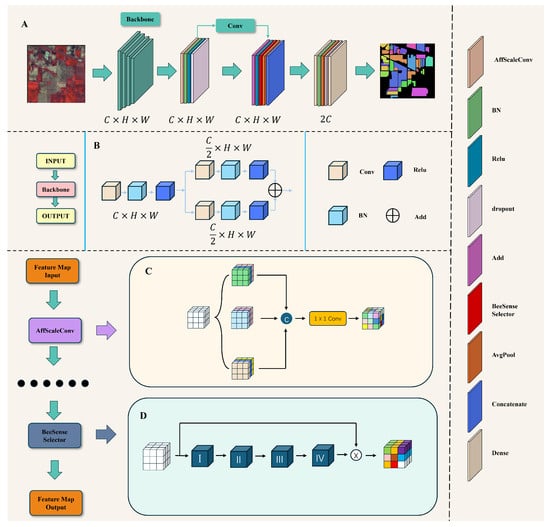

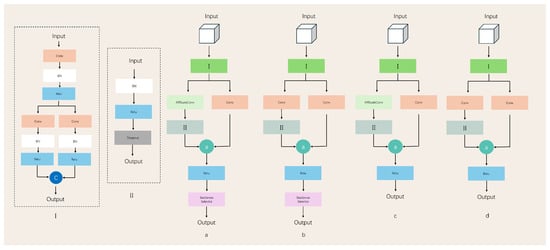

In hyperspectral image classification, the effective fusion and filtering of spectral and spatial feature information is crucial to improving the classification performance of deep learning models and reducing computational overhead [,]. To this end, this paper presents a novel lightweight network structure, BioLiteNet, designed to fully leverage the synergistic information between high-dimensional spectra and rich spatial contexts. While ensuring strong discriminative capability for different ground object categories, the model significantly reduces the number of parameters and the floating-point computation overhead. The core goal of BioLiteNet is to further reduce the reliance on large-scale training samples and multiple iterations of training while balancing classification accuracy and computational efficiency, thereby achieving efficient and robust hyperspectral image classification. Figure 2 shows a block diagram of the network structure of BioLiteNet.

Figure 2.

Overall framework of BioLiteNet. (A) The BioLiteNet structure. (B) The backbone. (C) AffScaleConv, with three parallel branches whose convolution kernel sizes differ from top to bottom. (D) BeeSenseSelector: I represents global average pooling, II represents convolution, III represents Sigmoid activation, and IV represents Top-k channel selection.

The BioLiteNet model proposed in this paper is composed of several functional modules, with the two core components being BeeSenseSelector and AffScaleConv. The BeeSenseSelector module integrates global spectral statistics and local spatial responses. Through a dynamic Top-k channel screening strategy, it simulates the way honeybees focus on key targets during sensing and adaptively selects the spectral–spatial channels with the highest discriminative power for classification, thereby suppressing redundant noise and enhancing the sparsity and effectiveness of the feature representation. The AffScaleConv module is inspired by the multi-scale perception of the honeybee visual system. It incorporates multiple parallel depthwise separable convolutional branches to capture spatial textures and spectral features at various scales, and fuses their outputs through convolution, balancing feature richness against computational efficiency.
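
The multi-branch idea behind AffScaleConv can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the kernel sizes (3, 5, 7), the use of a single shared pointwise (1 × 1) convolution for fusion, and the function names `depthwise_conv2d` and `multi_scale_fusion` are all assumptions made for illustration only.

```python
import numpy as np


def depthwise_conv2d(x, kernels):
    """Depthwise convolution with 'same' zero padding.

    x:       (C, H, W) feature map
    kernels: (C, K, K), one K x K filter per channel, K odd
    """
    c, h, w = x.shape
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c, h, w))
    for i in range(k):
        for j in range(k):
            # Each channel is filtered only by its own kernel.
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def multi_scale_fusion(x, branch_kernels, fuse_weight):
    """Parallel depthwise branches, concatenated and mixed by a 1x1 convolution.

    fuse_weight: (C_out, C * num_branches) pointwise mixing matrix (assumed).
    """
    branches = [depthwise_conv2d(x, k) for k in branch_kernels]
    stacked = np.concatenate(branches, axis=0)  # (C * num_branches, H, W)
    return np.einsum("oc,chw->ohw", fuse_weight, stacked)


rng = np.random.default_rng(0)
C, H, W = 4, 9, 9
x = rng.standard_normal((C, H, W))
kernel_sizes = (3, 5, 7)  # hypothetical scales, not taken from the paper
branch_kernels = [rng.standard_normal((C, k, k)) for k in kernel_sizes]
fuse_weight = rng.standard_normal((C, C * len(kernel_sizes)))
y = multi_scale_fusion(x, branch_kernels, fuse_weight)
print(y.shape)  # (4, 9, 9)
```

Each depthwise branch stores C·K·K weights, whereas a standard convolution of the same size would store C·C·K·K, which is where most of the parameter saving of such a design comes from.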

In the overall structure, the model takes a hyperspectral image patch as input. The original spectral dimension is first compressed to 32 channels using convolution to reduce dimensional redundancy and enhance computational efficiency, followed by BatchNorm and ReLU activation to stabilize the training process. Subsequently, a dual-path spatial feature extraction module is employed, where one path utilizes convolution to model the local spatial structure, and the other path utilizes convolution to preserve the spectral response features. The outputs are concatenated along the channel dimension to form the fused features. This stage also incorporates a residual connection, where the fused features are processed through a convolution and subsequently merged with the backbone output to enhance gradient propagation. The features are then passed into the AffScaleConv module for multi-scale enhancement and subsequently into the BeeSenseSelector module for channel-level filtering, dynamically retaining the most discriminative spectral–spatial channels. Finally, global average pooling and global max pooling are applied and their outputs are concatenated; the result is passed through a two-layer fully connected classifier with BatchNorm, ReLU, and Dropout, and a softmax layer produces the final category predictions. To validate the advantages of BioLiteNet in terms of model size and computational resource consumption, this paper conducts a comparative analysis of parameter count and FLOPs against mainstream models such as HybridSN, ViT, and DGPF. BioLiteNet leverages global average pooling and a Top-k channel selection strategy to eliminate the parameter redundancy caused by fully connected layers and large convolution kernels, while its dynamic channel pruning mechanism significantly reduces redundant computational overhead. 
For example, on the Indian Pines dataset, BioLiteNet has a parameter size of only 0.02 MB and requires approximately 0.086 M FLOPs, representing reductions of 99.1% and 99.99% compared to HybridSN, respectively. Compared with Transformer-based models like ViT and lvt, BioLiteNet achieves reductions of over three orders of magnitude in both parameter size and computational load. On the Pavia University and WHU-Hi-LongKou datasets, BioLiteNet similarly keeps FLOPs below 0.1 M, significantly lower than existing mainstream models.
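
As a rough check of the scale of this reduction, the short calculation below compares the HybridSN cost quoted in the Introduction (about 416.8 M FLOPs on Indian Pines) with the BioLiteNet figure above. It assumes that the BioLiteNet value of 0.086 is expressed in millions of FLOPs; the variable names are illustrative.

```python
import math

hybridsn_flops_m = 416.8    # HybridSN, Indian Pines, millions of FLOPs (from the text)
biolitenet_flops_m = 0.086  # BioLiteNet, Indian Pines, assumed to be millions of FLOPs

# Relative reduction and the gap expressed in orders of magnitude.
reduction = 1.0 - biolitenet_flops_m / hybridsn_flops_m
orders = math.log10(hybridsn_flops_m / biolitenet_flops_m)
print(f"reduction: {reduction:.2%}, gap: {orders:.2f} orders of magnitude")
```

Under that assumption the reduction comes out just short of 99.99%, and the gap to HybridSN exceeds three orders of magnitude.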

3.2. BeeSenseSelector

Early channel attention mechanisms have yielded significant advancements in the field of computer vision. SENet, proposed by Hu et al., aggregates spatial information through global average pooling and learns nonlinear dependencies between channels using two fully connected layers, thereby assigning adaptive weights to each channel. This approach significantly enhances the model’s expressive power and performance []. Subsequently, Wang et al. proposed a lightweight channel attention module (ECA-Net) that replaces the fully connected layers with a one-dimensional convolution, thereby enabling more efficient channel weight computation and addressing the excessive parameters and high computational cost associated with fully connected layers [,]. All these methods perform channel recalibration based on global statistical information, thereby enhancing the network’s response to key feature channels. However, hyperspectral image data are characterized by high-dimensional spectral channels and strong spectral correlations, and traditional channel attention mechanisms often overlook the complex and dynamic relationships between different bands, resulting in inadequate filtering of redundant and irrelevant spectral information []. In addition, fixed fully connected or convolutional structures incur significantly higher computational and storage overheads when dealing with hundreds of spectral channels, which impedes deployment in resource-constrained environments. Particularly in hyperspectral imagery, the spectral features of different scenes and targets vary significantly, and a fixed attention allocation strategy proves inadequate for adapting to the diverse spectral–spatial information distribution []. 
Despite operating with limited neural resources, honeybees rapidly and accurately capture the spectral and spatial information critical to survival in complex natural environments through a “selective focusing” mechanism, demonstrating efficient and dynamic attentional modulation. The honeybee visual system reduces the processing of irrelevant and redundant information through local selective focusing, thereby maximizing the utilization of effective information. This offers important insights into the design of efficient and adaptive channel selection modules. Building upon this, the BeeSenseSelector module is proposed, which mimics the selective focusing strategy of the honeybee visual system and screens key bands efficiently by dynamically adjusting the weights of spectral channels. Compared to traditional attention mechanisms, BeeSenseSelector eliminates redundant channels, reduces computational complexity, and enhances model robustness while maintaining sensitivity to critical spectral information. This makes it particularly well-suited to the high-dimensional, sparse data found in hyperspectral images. The structure of the module is illustrated in Figure 2D.
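
The parameter gap between the two attention designs discussed above can be made concrete with a small calculation. The sketch below counts only the attention weights (no biases); the reduction ratio of 16 and the kernel size of 3 are illustrative settings, and the helper names `se_params` and `eca_params` are hypothetical.

```python
def se_params(channels, reduction=16):
    """Weights in the two fully connected layers of an SE block (no biases)."""
    hidden = channels // reduction
    return channels * hidden + hidden * channels


def eca_params(kernel_size=3):
    """Weights in the single 1D convolution of an ECA block (no bias)."""
    return kernel_size


for c in (32, 64, 256):
    # SE grows quadratically with the channel count; ECA stays constant.
    print(c, se_params(c), eca_params())
```

The SE count grows with the square of the channel count, while the ECA count depends only on the kernel size, which is why the latter is attractive when many spectral channels are present.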

The BeeSenseSelector module is designed to mimic the focus selection mechanism of the honeybee visual system through dynamic channel filtering, thereby significantly reducing computational complexity while preserving discriminative power. The core process comprises three stages:

(1) Channel Scoring: Global average pooling is first applied to the input feature maps to aggregate the spatial dimensions into channel descriptor vectors. Subsequently, a convolution with Sigmoid activation is applied to the descriptor vectors, mapping them to the interval (0, 1) to obtain an importance score for each channel, which measures the discriminative contribution of each spectral–spatial channel.

(2) Top-k Channel Selection: The number of channels k to be retained for each sample is calculated from the preset retention ratio and the total number of channels. The scores and indices of the k highest-scoring channels are then obtained separately for each sample in the batch. This step simulates the attentional mechanism by which bees prioritize the most salient spectral channels in complex visual scenes. It should be noted that the Top-k operation is a discontinuous, non-differentiable hard selection: only the highest-scoring channels are passed on in the forward pass, so the gradients of unselected channels are zero during backpropagation, resulting in gradient truncation. However, gradients can still propagate backward through the computation path of the channel importance scores to the preceding convolutional weights and input features, ensuring effective parameter updates.

(3) Mask Construction and Feature Re-Weighting: The Top-k channel indices are converted into a binary mask that takes the value 1 at the selected index positions and 0 elsewhere. The mask is then expanded into a tensor matching the shape of the original feature map and multiplied element-wise with the original feature map to produce an output feature that retains only the key channel information.

By combining the above three stages, the entire operation of the BeeSenseSelector module can be summarized using the following equation:

$$Y = X \odot \mathrm{Broadcast}\Big(\mathrm{TopK}\big(\sigma(\mathrm{Conv}_{1 \times 1}(\mathrm{GAP}(X))),\, k\big)\Big)$$

where $Y$ denotes the output feature map. $\mathrm{GAP}(\cdot)$ denotes the averaging over spatial locations for each channel, yielding a channel description vector of the specified shape. $\sigma$ denotes the Sigmoid activation function, which maps the convolved channel scores to the interval $(0, 1)$, indicating the importance probability of each channel. $\mathrm{TopK}(\cdot, k)$ returns the binary mask of the $k$ highest-scoring channels, with $k = \lfloor \rho C \rfloor$, which indicates the number of channels retained out of $C$ input channels. $\rho$ represents the channel retention ratio. Broadcast denotes the extension of the channel mask into a tensor that matches the shape of the original feature map. ⊙ denotes element-wise multiplication. $X$ denotes the original input feature map.
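The three stages can be illustrated with a minimal, framework-free sketch of the forward pass for a single sample. This is not the authors' implementation: the function name `bee_sense_select`, the C × C weight matrix standing in for the 1 × 1 convolution, and the rounding rule for k (floor, with a lower bound of one channel) are assumptions made for illustration, and gradient flow is not modeled.

```python
import math


def bee_sense_select(x, weight, bias, keep_ratio):
    """Forward pass of a Top-k channel selector on one sample (illustrative).

    x          : C channels, each an H x W nested list of floats
    weight     : C x C matrix standing in for the 1 x 1 convolution (assumed shape)
    bias       : length-C list
    keep_ratio : fraction of channels to keep, in (0, 1]
    """
    num_channels = len(x)

    # (1) Channel scoring: global average pooling, then 1x1 conv + Sigmoid.
    # On a 1 x 1 spatial map, a 1x1 convolution is a matrix-vector product.
    descriptor = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in x
    ]
    scores = []
    for c in range(num_channels):
        logit = sum(w * d for w, d in zip(weight[c], descriptor)) + bias[c]
        scores.append(1.0 / (1.0 + math.exp(-logit)))

    # (2) Top-k selection (the floor and the lower bound of 1 are assumptions).
    k = max(1, math.floor(keep_ratio * num_channels))
    ranked = sorted(range(num_channels), key=lambda c: scores[c], reverse=True)
    kept = set(ranked[:k])

    # (3) Binary mask, broadcast over H x W, multiplied element-wise.
    mask = [1.0 if c in kept else 0.0 for c in range(num_channels)]
    output = [
        [[value * mask[c] for value in row] for row in x[c]]
        for c in range(num_channels)
    ]
    return output, mask, scores


# Toy input: 4 channels of size 2 x 2 holding the constant values 1..4.
x = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
output, mask, scores = bee_sense_select(x, identity, [0.0] * 4, keep_ratio=0.5)
print(mask)
```

With identity weights, the scores increase with the channel mean, so a retention ratio of 0.5 keeps the two channels with the largest means and zeroes out the other two.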

3.3. AffScaleConv

In hyperspectral image (HSI) classification tasks, the image data exhibit high continuity and correlation in both spectral and spatial dimensions, which imposes higher requirements on model design due to the high-dimensional and redundant nature of the feature representation. Specifically, the spectral dimension of hyperspectral data includes hundreds of contiguous bands, which are often strongly correlated []; the spatial dimension is characterized by the complex textures and structural features of ground objects in the image []. This dual spectral–spatial continuity renders the model highly sensitive to receptive field size, convolution kernel scale, and feature extraction strategies. If the receptive field is too small, the model may struggle to capture global semantic information, resulting in inadequate recognition of macro-structural features; if the receptive field is too large, fine-grained texture features may be overlooked, which can hinder the recognition of subtle differences. In addition, the distribution of ground objects in remote sensing scenes is complex and variable, with a large variation in the spatial textures and spectral features across different classes. As a result, a single-scale convolution operation struggles to capture both local textures and global context, which limits the discriminative capability of the model []. Therefore, the design of an effective multi-scale perception mechanism is crucial for enhancing the performance of HSI classification []. Multi-scale modeling enables the simultaneous extraction of features at different levels of granularity, thereby enhancing the model’s ability to recognize diverse ground objects []. Drawing on successful approaches in natural image processing, several multi-scale structures have been proposed. For instance, Gong et al. introduced the Multi-Scale Spectral–Spatial Convolutional Transformer (MSSSCT) model, which adopts the ASPP concept. 
This model utilizes dilated convolutions with varying dilation rates across parallel branches to significantly expand the receptive field while maintaining a relatively low parameter count. As a result, the model effectively captures multi-scale contextual information and enhances its ability to discriminate ground objects []. Furthermore, the Inception module proposed by Szegedy et al. strengthens the network by integrating multi-scale features through convolution kernels of various sizes (e.g., 1 × 1, 3 × 3, and 5 × 5) along with max pooling operations. This combination of multi-scale features improves the model’s adaptability and robustness to information spanning diverse spatial scales []. However, despite the remarkable success of these methods in natural image processing, their direct application to hyperspectral image classification still presents significant challenges. Firstly, the high-dimensional spectral channels of hyperspectral data cause the computational and storage overheads associated with multi-scale operations to escalate rapidly, resulting in significant resource consumption and making it difficult to meet the efficiency demands of practical applications []. Secondly, these methods primarily focus on spatial scales, neglecting the continuity of the spectral dimension and the joint spectral–spatial characteristics. They fail to account for the complex and dynamic correlations between spectral channels, thereby limiting the comprehensiveness and discriminative power of feature representation []. Therefore, the design of multi-scale perception modules tailored to hyperspectral data, which account for multi-scale information in both the spatial and spectral dimensions while enhancing classification performance and keeping the model lightweight, has become a key focus of current research [].

To this end, inspired by the “multi-scale perception and rapid focusing” strategy of the honeybee visual system, this paper proposes AffScaleConv, a lightweight multi-scale convolutional module designed to strengthen multi-scale feature modeling while preserving the compactness of the model. The structure of the module is shown in Figure 2C. Specifically, AffScaleConv comprises three convolutional branches with different receptive field sizes. Each branch encodes the input features at its own kernel scale to capture multi-granular information, such as local texture, regional structure, and global context. Unlike conventional multi-scale structures, AffScaleConv does not use standard convolutions but instead employs depthwise separable convolutions, which substantially reduce both the number of parameters and FLOPs. The features extracted from each scale branch are then concatenated along the channel dimension, with channel fusion and feature compression achieved via a convolution. This design mimics the process by which bees observe a target multiple times from different distances and angles during flight, ultimately converging the observations to form a unified perception. In summary, AffScaleConv realizes the structural benefits of “multi-scale acquisition, channel compression, and feature fusion,” operating efficiently while enhancing the model’s ability to distinguish between features at various scales in hyperspectral images. This is particularly evident in tasks with limited samples and fine-grained classification, where it demonstrates greater robustness and discriminative power.

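The formula is as follows:

$$Y = \mathrm{Conv}\big(\mathrm{DSConv}_{k_1}(X) \oplus \mathrm{DSConv}_{k_2}(X) \oplus \mathrm{DSConv}_{k_3}(X)\big)$$

where $Y$ denotes the output feature map. Conv denotes ordinary convolution for channel fusion. ⊕ denotes the concatenation along the channel dimension. $\mathrm{DSConv}_{k_i}$ denotes a depthwise separable convolution operation using $k_i \times k_i$ kernels, with one kernel size per branch. $X$ denotes the original input feature map.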
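The parameter savings of the depthwise separable branches can be checked with simple arithmetic. The sketch below counts weights for a three-branch block followed by a fusion convolution, once with standard convolutions and once with depthwise separable ones. The channel width (64), the kernel sizes (3, 5, 7), and the 1 × 1 fusion kernel are hypothetical values chosen for illustration and are not taken from the paper; biases are omitted.

```python
def standard_conv_params(in_ch, out_ch, kernel):
    """Weights of a standard kernel x kernel convolution (bias omitted)."""
    return kernel * kernel * in_ch * out_ch


def separable_conv_params(in_ch, out_ch, kernel):
    """Depthwise part (one kernel x kernel filter per input channel)
    plus pointwise 1x1 part (in_ch x out_ch weights)."""
    return kernel * kernel * in_ch + in_ch * out_ch


def multi_branch_params(in_ch, branch_ch, out_ch, kernels, fuse_kernel, branch_fn):
    """Parallel branches, channel concatenation, then a fusion convolution."""
    branches = sum(branch_fn(in_ch, branch_ch, k) for k in kernels)
    fusion = standard_conv_params(branch_ch * len(kernels), out_ch, fuse_kernel)
    return branches + fusion


# Hypothetical sizes: 64 channels throughout, kernels 3/5/7, 1x1 fusion.
kernels = (3, 5, 7)
standard = multi_branch_params(64, 64, 64, kernels, 1, standard_conv_params)
separable = multi_branch_params(64, 64, 64, kernels, 1, separable_conv_params)
print(standard, separable, round(standard / separable, 1))
```

Under these assumed sizes the separable variant needs roughly an order of magnitude fewer weights, and the gap widens as the kernel sizes grow, because the k × k cost is paid once per input channel rather than once per input–output channel pair.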

4. Experiment

4.1. Experimental Setup

To minimize the interference of the experimental environment on model performance evaluation and enhance the reproducibility of the results, a unified hardware setup and software configuration were employed across all experiments; the specific operating environment and parameter settings are detailed in Table 1.

Table 1.

Software and hardware parameters.

During training, the RMSprop optimizer was selected to enhance convergence speed and maintain training stability. The initial learning rate was set to 0.001, the learning rate decay factor to 0.001, the batch size to 32, and the total number of training epochs fixed at 64 to balance training efficiency with computational resource constraints. To ensure the reliability and statistical stability of the results, each experimental group was independently repeated 10 times, with the average of the 10 trials used as the final performance metric.
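Aggregating the repeated trials can be sketched as follows. The OA values are hypothetical placeholders rather than results from this study, and the use of the sample standard deviation for the spread is an assumption, since the text only specifies that the average of the 10 trials is reported.

```python
import statistics


def summarize_runs(values):
    """Return the mean and sample standard deviation, rounded to two decimals."""
    return round(statistics.mean(values), 2), round(statistics.stdev(values), 2)


# Hypothetical OA values (%) from 10 independent runs; not results from the paper.
oa_runs = [90.0, 91.0, 89.0, 90.5, 89.5, 90.0, 91.5, 88.5, 90.0, 90.0]
mean_oa, std_oa = summarize_runs(oa_runs)
print(f"OA = {mean_oa:.2f}% ± {std_oa:.2f}%")
```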

4.2. Description of the Datasets

To provide a more comprehensive overview of the composition of each dataset, the corresponding category names and sample sizes are presented in Table 2.

Table 2.

Division of the HSI datasets.

The Indian Pines dataset is a classical ground object classification dataset acquired over Northwestern Indiana, USA, by the AVIRIS sensor, which was developed and is maintained by NASA’s Jet Propulsion Laboratory (JPL) in Pasadena, California, USA. The data comprise 224 spectral bands ranging from 0.4 to 2.5 micrometers at a spatial resolution of 20 m, of which 200 active bands are retained after removing the bands heavily affected by noise. The scene mainly covers agricultural and woodland areas and contains a total of 16 ground object classes, serving as a typical testbed for evaluating the performance of hyperspectral image classification algorithms under conditions of limited samples and highly redundant features.

The Pavia University dataset was acquired by the ROSIS sensor (Reflective Optics System Imaging Spectrometer), developed by the German Aerospace Center (DLR) in Cologne, Germany. The specific model used is ROSIS-03, which has been operational since 1999. The sensor was flown over the urban area of the University of Pavia, Italy, with a high spatial resolution of 1.3 m. The image has dimensions of 610 × 340 pixels and initially contains 115 spectral bands, with 103 active bands retained after preprocessing. The dataset covers a wide range of typical urban surface features (e.g., roads, rooftops, grass, and water bodies), including a total of nine ground object classes, and is commonly used for model evaluation in hyperspectral image classification tasks in urban environments.

The WHU-Hi-LongKou dataset is one of the UAV-borne hyperspectral remote sensing datasets collected and made public by Wuhan University’s RSIDEA team, primarily used for crop classification in precision agriculture scenarios. The dataset was collected over an agricultural area of Longkou Town, Hubei Province, China, using the Headwall Nano-Hyperspec hyperspectral sensor with high spectral and spatial resolution, and contains 270 bands and nine agriculture-related ground object classes. Compared to data from traditional airborne platforms, WHU-Hi-LongKou exhibits higher data complexity and finer detail, making it suitable for evaluating the model’s generalization ability under conditions of high spatial resolution and fine category differentiation.

4.3. Evaluation Indicators

In order to comprehensively evaluate the performance of the model in hyperspectral image classification tasks, three commonly used metrics are used for quantitative analysis: average accuracy (AA), overall accuracy (OA), and Kappa coefficient (K).

Average accuracy (AA) is used to assess the model’s balance in classification across categories and is calculated as the arithmetic mean of the recall across all categories as follows:

$$\mathrm{AA} = \frac{1}{n} \sum_{i=1}^{n} \mathrm{Recall}_i$$

where $\mathrm{Recall}_i$ denotes the recall of the $i$-th class (that is, the proportion of correctly classified pixels in the class relative to the total number of pixels in that class), and $n$ is the total number of classes.

Overall accuracy (OA) represents the proportion of correctly classified samples relative to the total number of samples and is defined as follows:

$$\mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TP$ denotes the number of true positive pixels correctly classified as positive, $TN$ represents the number of true negative pixels correctly classified as negative, and $FP$ and $FN$ represent the number of false positives and false negatives, respectively.

The Kappa coefficient (K) is a statistical measure derived from the confusion matrix, used to evaluate the agreement between the model’s classification results and the ground truth after discounting the agreement expected from random classification. The formula for its calculation is as follows:

$$K = \frac{N \sum_{i=1}^{r} x_{ii} - \sum_{i=1}^{r} x_{i+} x_{+i}}{N^{2} - \sum_{i=1}^{r} x_{i+} x_{+i}}$$

where r represents the number of categories. $x_{ii}$ denotes the number of correctly classified samples of the i-th class. $x_{i+}$ and $x_{+i}$ correspond to the total values in row i and column i of the confusion matrix, respectively. N denotes the total number of pixels involved in the evaluation.
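As a worked illustration, the three metrics can be computed from a confusion matrix in a few lines. The matrix below is a hypothetical three-class example, not data from this study, and the function name `classification_metrics` is introduced only for this sketch. In the multi-class setting, OA is computed as the sum of the diagonal divided by the total number of pixels.

```python
def classification_metrics(confusion):
    """Compute OA, AA, and Kappa from a square confusion matrix.

    confusion[i][j] = number of pixels of true class i predicted as class j.
    """
    num_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    diagonal = [confusion[i][i] for i in range(num_classes)]
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(row[j] for row in confusion) for j in range(num_classes)]

    # OA: correctly classified pixels over all pixels.
    oa = sum(diagonal) / total
    # AA: mean per-class recall (classes without pixels are skipped).
    recalls = [d / r for d, r in zip(diagonal, row_sums) if r > 0]
    aa = sum(recalls) / len(recalls)
    # Kappa: observed agreement corrected for chance agreement.
    chance = sum(r * c for r, c in zip(row_sums, col_sums))
    kappa = (total * sum(diagonal) - chance) / (total * total - chance)
    return oa, aa, kappa


# Hypothetical 3-class confusion matrix (rows: ground truth, columns: prediction).
confusion = [
    [50, 2, 3],
    [5, 30, 5],
    [0, 1, 4],
]
oa, aa, kappa = classification_metrics(confusion)
print(f"OA={oa:.4f} AA={aa:.4f} Kappa={kappa:.4f}")
```

In this example AA is lower than OA because the smallest class contributes as much to AA as the largest one, which is why AA is the more informative metric on class-imbalanced scenes.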

4.4. Comparison Experiment

In order to comprehensively evaluate the performance of the proposed model, ten representative hyperspectral image classification methods were selected for comparison, encompassing traditional convolutional networks, small-sample learning networks, and the Transformer architecture, specifically HybridSN [], Yang [], DGPF-RENet [], ViT [], LeViT [], RvT [], T2T [], DiT [], HiT [], and SS-Mamba []. These models represent the current research results from different main directions in hyperspectral classification, providing valuable references for both representativeness and performance.

HybridSN is a model that integrates 3D and 2D convolutional feature extraction structures to model both spectral and spatial information, demonstrating consistent performance across several classical datasets. The Yang model improves the extraction of fine-grained spectral features by combining 2D/3D convolutions and regularization strategies. DGPF-RENet, on the other hand, introduces a residual feature extraction and global location awareness mechanism from the perspective of reducing data dependence, making it suitable for small-sample hyperspectral learning scenarios. The SS-Mamba model is built upon a linear state–space model and incorporates the Mamba architecture to enhance the modeling of long-sequence spectral features. This method adopts a dual-branch design for spectral and spatial information, capturing pixel-level spectral sequences and their local spatial context separately, thereby achieving efficient spectral–spatial joint feature extraction. With its lightweight structure and excellent sequence modeling capability, SS-Mamba demonstrates strong classification performance and computational efficiency across multiple datasets.

Among the Transformer-based methods, ViT was the first model to introduce a pure Transformer architecture for image recognition, relying on a global self-attention mechanism to perform feature encoding. LeViT builds on this by introducing locality constraints, thereby achieving a better trade-off between speed and accuracy. RvT, on the other hand, enhances Transformer’s spatial modeling capabilities by integrating a positional enhancement mechanism and a robustness component. The T2T model mimics the human visual perception process of focusing layer by layer, improving the continuity of feature expression through token-to-token operations. DiT is a deep Transformer network that strengthens model abstraction by leveraging deeper structures. The HiT model, specifically designed for hyperspectral images, integrates convolutional operations within the Transformer, combined with a spectral adaptive module to effectively capture local details and enhance spatial continuity modeling.

All comparison methods are evaluated using a unified dataset and training protocol. The remaining samples in the original dataset, excluding those used for training, are fully utilized for testing. To ensure the stability and reproducibility of the results, each experiment is independently repeated 10 times, and the average is reported as the final evaluation metric. All results are rounded to two decimal places.
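The train/test protocol can be sketched as a per-class random split in which every labeled pixel not drawn for training goes to the test set. Whether the original experiments sample per class in exactly this way is an assumption; the function name `split_labeled_pixels`, the rounding rule, and the toy label vector are hypothetical.

```python
import random
from collections import defaultdict


def split_labeled_pixels(labels, train_fraction=None, train_per_class=None, seed=0):
    """Split labeled pixel indices into training and test sets, class by class.

    labels          : class id per labeled pixel
    train_fraction  : e.g. 0.05 to draw 5% of each class (at least one pixel)
    train_per_class : e.g. 25 to draw a fixed number of pixels per class
    Exactly one of train_fraction / train_per_class must be given.
    """
    if (train_fraction is None) == (train_per_class is None):
        raise ValueError("give exactly one of train_fraction or train_per_class")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        if train_fraction is not None:
            count = max(1, round(train_fraction * len(indices)))
        else:
            count = min(train_per_class, len(indices))
        train.extend(indices[:count])
        test.extend(indices[count:])  # all remaining pixels are used for testing
    return train, test


# Hypothetical label vector: 200 pixels of class 0, 100 of class 1, 40 of class 2.
labels = [0] * 200 + [1] * 100 + [2] * 40
train, test = split_labeled_pixels(labels, train_fraction=0.05)
print(len(train), len(test))
```

Repeating the split with a different `seed` for each of the 10 runs would give independent trials whose metrics can then be averaged.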

4.5. Results and Analyses

To validate the effectiveness of the proposed model, a systematic comparison was conducted with current mainstream methods on three widely used hyperspectral image classification datasets: Indian Pines, Pavia University, and WHU-Hi-LongKou. The selected comparison methods encompass traditional convolutional networks (e.g., HybridSN and Yang), Transformer architectures (e.g., ViT, LeViT, RvT, T2T, DiT, and HiT), a representative few-shot model, DGPF-RENet, and the lightweight state–space model SS-Mamba. The experimental results are presented in Table 3, Table 4 and Table 5.

Table 3.

Results on the Indian Pines dataset.

Table 4.

Results on the Pavia University dataset.

Table 5.

Results on the WHU-Hi-LongKou dataset.

In the hyperspectral image (HSI) classification task, the effectiveness of the proposed model was evaluated through systematic comparisons with typical CNN, Transformer, lightweight network, and few-shot learning methods across multiple datasets and training sample ratios. The experimental results show that the proposed method offers clear advantages over most of the compared methods in terms of overall accuracy, stability, and small-sample adaptability, which is attributed to the bionic module structures BeeSenseSelector and AffScaleConv.

From the perspective of traditional convolutional neural network (CNN) methods, HybridSN has demonstrated consistently superior performance across multiple datasets, particularly on the Pavia University dataset. With 10% of the training samples, HybridSN achieved an overall accuracy (OA) of 99.85%, an average accuracy (AA) of 99.65%, and a Kappa coefficient of 99.80%, demonstrating strong discriminative capability in scenarios characterized by well-defined spatial structures and category boundaries. This performance is primarily attributed to the fused architecture of 3D and 2D convolutions, which effectively extracts joint spectral–spatial features and enhances the model’s capacity for spatial context modeling. In contrast, the Yang model, also based on the CNN architecture, performs poorly in several scenarios, particularly when few training samples are available. For example, on the Indian Pines dataset with 5% of the samples used for training, the OA, AA, and Kappa of the Yang model are 61.68%, 42.07%, and 55.66%, respectively, which are significantly lower than the corresponding values for HybridSN (95.86%, 88.48%, and 95.28%). This suggests that, in the absence of a multi-scale structural design, conventional 3D convolution methods exhibit limitations in spatial expression.

Transformer-based methods demonstrate unique advantages in modeling high-dimensional spectral sequences in hyperspectral imagery (HSI), owing to their exceptional ability to capture long-range dependencies. As an example, LeViT achieves an OA of 74.62% with the 25-sample setting on the WHU-Hi-LongKou dataset, which is significantly better than the standard Transformer architecture ViT (OA = 59.58%) and the shallower T2T structure (OA = 63.51%). In addition, lightweight Transformer architectures incorporating CNN modules, such as LeViT and RvT, demonstrate relatively stable performance across multiple datasets, suggesting that integrating local-awareness mechanisms into the Transformer backbone helps mitigate its limitations in spatial detail modeling. However, two major issues persist with the Transformer method as a whole: First, the architecture lacks strong constraints on local spatial continuity, which makes it challenging to accurately identify ground object classes with indistinct boundaries or complex textures. Second, the Transformer model exhibits high sensitivity to the number of training samples, resulting in substantial performance fluctuations under small sample conditions. For example, in an experiment utilizing only 5% of the samples from the Indian Pines dataset, the OA values for ViT and T2T were 38.11% and 39.40%, respectively, with both Kappa values falling below 27%.

The DGPF method for small-sample learning demonstrates strong accuracy and stability across multiple datasets. In particular, on the WHU-Hi-LongKou dataset with the 25-sample setting, its OA reaches 96.76%, its AA 96.58%, and its Kappa coefficient 95.77%, significantly outperforming all Transformer-based methods and traditional CNN models. Its advantage is most pronounced when training samples are scarce, where its small-sample learning capability is superior to that of most Transformer-based methods and traditional CNNs. However, on the Pavia University dataset, its overall accuracy (OA) and Kappa coefficient (K) were 94.79% and 93.08%, respectively, still trailing HybridSN (OA 99.85%, K 99.80%). This gap indicates that DGPF remains constrained by a relatively limited ability to model spatial details in regions with fuzzy spatial boundaries or heterogeneous mixtures of ground object classes. Although DGPF exhibits strong advantages in joint spectral–spatial feature extraction, its local spatial context modeling lacks multi-scale fusion or deep spatial convolution mechanisms, so its performance may be insufficient in scenarios involving complex spatial structures or blurred boundary transitions.

The lightweight network SS-Mamba incorporates the Mamba architecture to efficiently model spectral sequences and spatial features. Under the setting of 25 training samples on the WHU-Hi-LongKou dataset, SS-Mamba achieved an OA of 78.21% and a Kappa coefficient of 71.23%. Although this falls short of DGPF (OA 96.76%), it significantly outperforms traditional Transformer architectures such as ViT (OA 59.58%) and certain CNN-based methods. Benefiting from its state–space sequence modeling and dual-branch design, SS-Mamba demonstrates robust spectral discrimination and spatial perception capabilities in small-sample scenarios, exhibiting strong adaptability and robustness. However, SS-Mamba still shows limitations in scenes with complex boundaries or rich texture details. On the Pavia University dataset with 10% training samples, its OA and Kappa reached only 81.55% and 74.27%, respectively, which are notably lower than HybridSN and DGPF. The primary reason is that, while SS-Mamba enhances spectral sequence modeling, its spatial branch remains relatively shallow, lacking the multi-scale convolutional structures of HybridSN and the global positional awareness modules of DGPF. As a result, its discriminative power is constrained in regions with blurred category boundaries or heterogeneous mixtures.

Compared to the other models, the proposed method delivers competitive overall performance across all training settings on the three datasets. On the Indian Pines dataset, even with only 5% of training samples, the method achieved 90.02% OA and 88.56% Kappa, significantly outperforming all Transformer-based models (e.g., ViT, T2T, and DiT, which were all below 55%) and several CNN methods. With 10% of the training samples, OA increased to 95.55% and Kappa reached 94.92%, second only to HybridSN and DGPF. In the 25-sample experiment on the WHU-Hi-LongKou dataset, the method achieved 78.64% OA and 75.06% AA, outperforming all compared Transformer models and some classical CNN architectures. In the 10% sample setting on Pavia University, the method outperformed most Transformer networks, achieving 88.20% OA and 84.25% Kappa, and was surpassed only by HybridSN (99.85% OA, 99.80% Kappa) and DGPF (94.79% OA, 93.08% Kappa). It is worth noting that the recently proposed SS-Mamba method has also demonstrated a good balance of performance in hyperspectral classification tasks. Its sequence processing mechanism based on state–space modeling offers stronger spectral sequence modeling capabilities than traditional Transformers in small-sample scenarios. However, compared to the method proposed in this paper, SS-Mamba still shows certain limitations in spatial detail modeling and in classification performance on complex boundary regions. For example, under the 25-sample setting on the WHU-Hi-LongKou dataset, SS-Mamba achieved an overall accuracy (OA) and Kappa of 78.21% and 71.23%, respectively, which are close to those of our method (OA 78.64% and Kappa 71.64%). However, on the Indian Pines and Pavia University datasets, SS-Mamba’s overall performance was noticeably inferior. In particular, in the 10% sample experiment on Pavia University, SS-Mamba attained an OA of only 81.55% and a Kappa of 74.27%, both lower than our method (OA 88.20% and Kappa 84.25%). 
This reflects its limited discriminative capability in areas with complex spatial textures or ambiguous class boundaries. The gap mainly stems from the collaborative design of the BeeSenseSelector and AffScaleConv modules proposed in this paper. The BeeSenseSelector assesses channel importance via global average pooling and 1 × 1 convolution, and dynamically selects key spectral–spatial channels using a Top-k strategy. This effectively suppresses redundant information and enhances the response to discriminative features, improving the model’s ability to filter and focus on high-dimensional sparse features. The AffScaleConv module fuses spatial–spectral features under different receptive fields through multi-scale depthwise separable convolution branches, enhancing the model’s expression and discrimination capabilities for complex boundaries and fine-grained targets while maintaining parameter efficiency. Although SS-Mamba has advantages in spectral sequence modeling and lightweight design, its spatial branch structure is relatively shallow and lacks strongly constrained local spatial detail modeling strategies. This results in slightly lower classification accuracy than our method in scenarios with complex land-cover distribution and blurred boundary transitions. In summary, the model presented in this paper consistently outperforms most Transformer and classical CNN methods, as well as the lightweight network SS-Mamba, while remaining lightweight and adaptable to small-sample settings. Its performance is second only to HybridSN and DGPF, which exhibit optimal performance in specific scenarios.

It should be noted that on the WHU-Hi-LongKou dataset, BioLiteNet exhibits significantly lower classification accuracy for Class 4 (broad-leaf soybean), Class 7 (roads and buildings), and Class 8 (mixed weeds) compared to other categories. This is mainly due to the following reasons: Although Class 4 has a relatively large number of samples, its ground coverage area is small, and its spectral features are highly similar to those of Class 5 (narrow-leaf soybean), causing some confusion between these two classes. Class 7 has fewer samples (7124), and its morphological and spectral characteristics are complex and variable, making it difficult for the model to fully capture its feature distribution. Class 8 has the fewest samples (5229), with spatially scattered and diverse ground objects lacking stable spectral features, which further affects the model’s discriminative ability. Moreover, as a lightweight model, BioLiteNet performs slightly worse than more complex models such as HybridSN and DGPF in regions with ambiguous boundaries and complex class transitions. This is mainly because the hard selection mechanism in the BeeSenseSelector module may discard some edge-detail features, and the lightweight convolution structure of the AffScaleConv module limits its ability to represent complex textures and small targets, which is especially evident in high-resolution scenarios like the WHU-Hi-LongKou dataset. Nevertheless, BioLiteNet maintains stable and competitive classification performance across multiple datasets, while its parameter size is approximately 0.02 MB, and computational complexity is about 0.096 MB FLOPs, significantly lower than those of HybridSN and DGPF, demonstrating a good balance between performance and lightweight design.

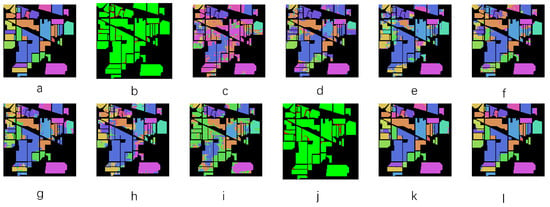

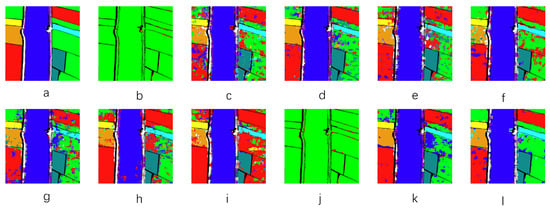

Figure 3.

Visualization of HSI classification results on the Indian Pines dataset. (a) Ground Truth; (b) HybridSN; (c) ViT; (d) LeViT; (e) DiT; (f) HiT; (g) Yang; (h) RvT; (i) T2T; (j) DGPF; (k) SS-Mamba; (l) Ours. (b,j) additionally show the distribution of misclassified pixels, where green denotes correctly classified pixels and red indicates misclassified ones.

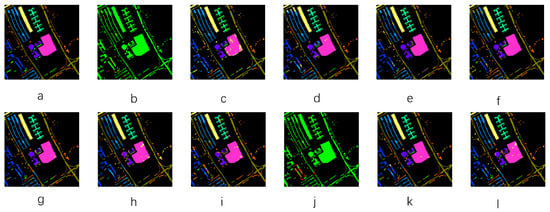

Figure 4.

Visualization of HSI classification results on the Pavia University dataset. (a) Ground Truth; (b) HybridSN; (c) ViT; (d) LeViT; (e) DiT; (f) HiT; (g) Yang; (h) RvT; (i) T2T; (j) DGPF; (k) SS-Mamba; (l) Ours. (b,j) additionally show the distribution of misclassified pixels, where green denotes correctly classified pixels and red indicates misclassified ones.

Figure 5.

Visualization of HSI classification results on the WHU-Hi-LongKou dataset. (a) Ground Truth; (b) HybridSN; (c) ViT; (d) LeViT; (e) DiT; (f) HiT; (g) Yang; (h) RvT; (i) T2T; (j) DGPF; (k) SS-Mamba; (l) Ours. (b,j) additionally show the distribution of misclassified pixels, where green denotes correctly classified pixels and red indicates misclassified ones.

Figure 3, Figure 4 and Figure 5 display the classification visualizations of different models across the three datasets: Indian Pines, Pavia University, and WHU-Hi-LongKou. Overall, HybridSN and DGPF-RENet demonstrate the clearest classification images, closely matching the ground truth labels. HybridSN effectively fuses spectral and spatial information by combining 3D and 2D convolutional architectures, better preserving the continuity of feature boundaries and category differentiation, with almost no noticeable areas of category confusion in the images. DGPF, on the other hand, maintains good classification consistency, even in scenarios with complex category distributions and sparse samples, due to its efficient low-sample modeling capability. The regional block structure remains intact, with fewer misclassified pixels, indicating its strong ability to express joint spectral–spatial features. SS-Mamba demonstrates strong spectral sequence modeling capability and regional consistency in classification visualization results. Its long-sequence feature extraction mechanism, based on a state–space model, enables it to maintain good spectral discriminative power even in scenarios with limited samples or complex class distributions. In major category regions, the classification exhibits complete block structures and high intra-class consistency, with relatively few misclassified pixels, indicating SS-Mamba’s strong stability and adaptability in spectral–spatial joint feature representation.

In contrast, the Transformer-based methods (e.g., ViT, T2T, LeViT, and HiT) generally exhibit more prominent speckled misclassification regions in the visualizations, particularly ViT and T2T. These methods suffer from blurred feature boundaries and significant category confusion due to their lack of local spatial awareness. Transformer variants that incorporate a convolutional structure, such as HiT, show slight improvement in boundary handling but still fail to fully restore the integrity of the category block structure.

The BioLiteNet model proposed in this paper also demonstrates favorable results on these datasets. The visualizations indicate that the model accurately distinguishes most of the primary class regions and maintains good spatial structure consistency, with misclassification significantly reduced compared to most Transformer methods. Furthermore, as illustrated in the confusion matrix heatmaps of Figure 6, the model achieves near-perfect classification accuracy for major categories such as Corn-notill, Soybean-mintill, and Woods on the Indian Pines dataset, demonstrating excellent capability in extracting features from large-sample classes. Simultaneously, it maintains high recognition rates for small-sample categories like Alfalfa and Oats, reflecting strong generalization performance in few-shot scenarios. For fine-grained and visually similar categories such as Grass-pasture and Grass-trees, the model effectively suppresses misclassifications, showcasing its precise local feature perception ability. On the Pavia University dataset, the model achieves extremely high classification accuracy for the dominant Meadows category, ensuring stability in large-sample classification. Even when faced with texture-complex categories like Asphalt, Trees, and Bitumen, the model maintains a low confusion rate, reflecting its powerful spatial feature extraction and contextual understanding capabilities. Additionally, for rare categories such as Painted metal sheets, the model is still able to achieve satisfactory recognition, highlighting its adaptability to small-sample scenarios. For the large-scale, complex WHU-Hi-LongKou dataset, the model continues to deliver outstanding classification performance, achieving near-perfect accuracy for major categories like Broad-leaf soybean, Water, and Rice, which validates its strong adaptability to large-scale high-resolution imagery. 
Furthermore, the model maintains precise discrimination for fine-grained categories such as Sesame and Mixed weed, demonstrating its keen sensitivity to subtle feature differences and category boundaries. Even in regions with complex backgrounds and high inter-class interference, the classification results remain clear and accurate, further showcasing the model’s superior spatial feature analysis and contextual modeling capabilities. Although its accuracy in some boundary details is not on par with that of HybridSN and DGPF, the overall classification block structure remains clear, with misclassified areas concentrated and minimal noise. This validates the synergistic advantages of the BeeSenseSelector and AffScaleConv modules in feature focusing and multi-scale fusion.

Figure 6.

Confusion matrix heatmaps of BioLiteNet on three hyperspectral datasets. (I) Indian Pines, (II) Pavia University, (III) WHU-Hi-LongKou.
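The OA, AA, and Kappa values reported throughout the experiments can all be derived from a confusion matrix like those in Figure 6. The following is a minimal sketch of that computation using the standard definitions; the function name `classification_metrics` and the toy matrix are illustrative assumptions, not taken from the paper or its code.

```python
def classification_metrics(cm):
    """Return (OA, AA, Kappa) for a square confusion matrix.

    cm[i][j] counts samples of true class i that were predicted as class j.
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n_classes))

    # Overall accuracy (OA): fraction of all samples on the diagonal.
    oa = correct / total

    # Average accuracy (AA): mean per-class recall; empty classes are skipped.
    recalls = [cm[i][i] / sum(cm[i]) for i in range(n_classes) if sum(cm[i]) > 0]
    aa = sum(recalls) / len(recalls)

    # Cohen's kappa: observed agreement corrected for chance agreement p_e.
    col_sums = [sum(cm[i][j] for i in range(n_classes)) for j in range(n_classes)]
    p_e = sum(sum(cm[k]) * col_sums[k] for k in range(n_classes)) / (total * total)
    kappa = (oa - p_e) / (1 - p_e)
    return oa, aa, kappa


# Toy 3-class matrix with made-up counts (hypothetical, not from the paper).
toy_cm = [
    [50, 2, 0],
    [3, 40, 1],
    [0, 1, 3],
]
oa, aa, kappa = classification_metrics(toy_cm)
print(f"OA={oa:.4f}  AA={aa:.4f}  Kappa={kappa:.4f}")
```

In the toy matrix the third class has only four samples, so a single error lowers AA far more than OA. This is why AA is the more informative metric for small-sample categories such as Alfalfa and Oats.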

BioLiteNet also demonstrates strong adaptability across different imaging conditions. The Indian Pines, Pavia University, and WHU-Hi-LongKou datasets exhibit significant variations in lighting, spatial resolution, and class distribution, yet BioLiteNet maintains stable classification performance across all these datasets. However, in certain edge scenarios, such as blurred object boundaries and complex category transition regions, the classification performance of BioLiteNet shows a noticeable decline compared to internal homogeneous areas, with boundary pixels prone to category confusion. This phenomenon is closely related to the trade-off between lightweight design and dominant feature extraction in the model architecture. On one hand, the BeeSenseSelector module employs a Top-k hard selection strategy, retaining only the most discriminative spectral–spatial channels. While this effectively reduces feature redundancy, it may overlook secondary features that, although weaker, are essential for capturing boundary details, thus diminishing the model’s discrimination capability in transition regions. On the other hand, although the AffScaleConv module incorporates multi-scale receptive fields, its core design is still based on lightweight depthwise separable convolutions, which limits its expressiveness in modeling complex boundary textures and small-scale objects. This limitation becomes more pronounced in high-resolution scenarios like WHU-Hi-LongKou, where category boundaries are often interwoven and exhibit fuzzy transitions. These factors collectively lead to BioLiteNet’s slightly inferior accuracy in boundary details compared to more complex models, yet it still achieves a well-balanced trade-off between lightweight efficiency and overall performance.
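The boundary limitation attributed to Top-k hard selection can be illustrated with a small sketch. It assumes, purely for illustration, that each channel is scored by its mean activation; the scoring actually used inside BeeSenseSelector is not restated in this section, and the names `mean_activation` and `top_k_channel_select` are hypothetical.

```python
def mean_activation(channel):
    """Hypothetical importance score: mean value of a 2-D channel patch."""
    values = [v for row in channel for v in row]
    return sum(values) / len(values)


def top_k_channel_select(features, scores, k):
    """Keep the k highest-scoring channels and discard the rest (hard selection).

    features: list of channels (each channel is a 2-D list here).
    scores:   one importance score per channel.
    Returns (kept_indices, kept_features) in the original channel order.
    """
    if len(scores) != len(features):
        raise ValueError("need exactly one score per channel")
    if not 0 < k <= len(features):
        raise ValueError("k must be between 1 and the number of channels")
    ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    kept = sorted(ranked[:k])
    return kept, [features[c] for c in kept]


# Four toy 2x2 channels with made-up values.
features = [
    [[0.1, 0.2], [0.1, 0.2]],      # weak response, e.g. a faint boundary cue
    [[0.9, 0.8], [0.7, 0.6]],      # strong response
    [[0.05, 0.05], [0.05, 0.05]],  # near-silent channel
    [[0.4, 0.5], [0.6, 0.5]],      # moderate response
]
scores = [mean_activation(ch) for ch in features]
kept, selected = top_k_channel_select(features, scores, k=2)
print(kept)  # indices of the two retained channels
```

Channel 0 is discarded outright even though it carries a non-zero signal. With hard selection a weak channel contributes nothing downstream, whereas a soft re-weighting would only attenuate it.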

Finally, we compared the number of parameters and the FLOPs of all models on the three datasets, using a batch size of 32; the results are given in Table 6.

Table 6.

Parameters and FLOPs of all models.

The model proposed in this study achieves strong performance across multiple benchmark datasets. More importantly, compared with existing mainstream methods, it substantially reduces the number of parameters (Params) and the computational cost (FLOPs) while maintaining competitive classification accuracy. These results suggest that the proposed method lowers model complexity without sacrificing accuracy, balancing computational overhead against classification performance and thereby meeting the dual requirements of efficiency and accuracy in practical applications.
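To make the source of these savings concrete, the sketch below compares the parameter count and multiply-accumulate operations (MACs) of a standard convolution with those of a depthwise separable convolution, the building block of AffScaleConv. The layer sizes are hypothetical and are not the configuration behind Table 6. The sketch assumes stride 1, "same" padding, and no bias, and it counts MACs; FLOP conventions differ between tools (some count one MAC as two FLOPs).

```python
def conv_cost(c_in, c_out, k, h, w):
    """Parameters and MACs of a standard k x k convolution.

    Assumes stride 1, 'same' padding (h x w output), and no bias term.
    """
    params = k * k * c_in * c_out
    macs = params * h * w  # every weight is applied once per output position
    return params, macs


def depthwise_separable_cost(c_in, c_out, k, h, w):
    """Parameters and MACs of a depthwise k x k plus pointwise 1 x 1 convolution."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    macs = params * h * w
    return params, macs


# Hypothetical layer: 32 -> 64 channels, 3 x 3 kernel, 9 x 9 spatial patch.
std_params, std_macs = conv_cost(32, 64, 3, 9, 9)
ds_params, ds_macs = depthwise_separable_cost(32, 64, 3, 9, 9)
print(std_params, std_macs)    # 18432 1492992
print(ds_params, ds_macs)      # 2336 189216
print(ds_params / std_params)  # equals 1/c_out + 1/k**2
```

For this layer the separable form needs roughly one eighth of the parameters and MACs. The ratio is 1/c_out + 1/k², so the saving grows with kernel size and output width.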

4.6. Ablation Experiments

To validate the effectiveness of the proposed method, ablation experiments were conducted on the Indian Pines dataset using 10% of the training samples; the various configurations are shown in Figure 7, and the experimental results are provided in Table 7.

Figure 7.

Ablation study configurations: (a) our full model; (b) without AffScaleConv; (c) without BeeSenseSelector; (d) without both AffScaleConv and BeeSenseSelector. (I) indicates that the input features undergo Conv, BatchNorm, and ReLU operations, followed by feature concatenation, and (II) indicates the feature maps processed by BatchNorm, ReLU, and Dropout operations.

Table 7.

Ablation study of different modules.

When both AffScaleConv and BeeSenseSelector are removed, only the base architecture remains. Without multi-scale feature extraction and channel screening, OA, AA, and Kappa degrade to varying extents, while the uncompressed redundant channels raise the number of parameters and FLOPs to 27.7 k and 131.7 k, respectively, a substantial increase in computational overhead. When only BeeSenseSelector is removed, the model still extracts cross-scale features through AffScaleConv: OA, AA, and Kappa recover to 95.16%, 84.57%, and 94.46%, respectively, and the number of parameters and FLOPs decrease to 20.9 k and 90.6 k, but the accuracy gain is limited by the remaining channel redundancy. When only AffScaleConv is removed, the channel focusing mechanism of BeeSenseSelector yields the highest accuracy of all configurations (OA 96.61%, AA 89.53%, Kappa 96.12%), but the number of parameters and FLOPs rise to 28.8 k and 133.8 k, respectively: without multi-scale feature fusion, the model has to rely on more parameters and computation to maintain its discriminative performance. With both components working together, the proposed model achieves an OA of 95.55%, an AA of 85.24%, and a Kappa of 94.92%, while keeping the number of parameters and FLOPs at 22 k and 92.6 k, respectively, achieving the best trade-off between performance and efficiency.

In summary, BeeSenseSelector and AffScaleConv are functionally complementary: the former eliminates redundant spectral bands through dynamic channel filtering, while the latter enriches feature representations through multi-scale depthwise convolution and, in doing so, significantly reduces the model's parameters and computational cost. Together, they form a lightweight and highly discriminative hyperspectral feature extraction framework.
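The trade-off described above can be quantified directly from the figures quoted in the text. The sketch below hard-codes those values (OA in percent, parameters and FLOPs in thousands) and reports how the full model differs from each ablated variant. The OA of the variant without both modules is not quoted in the text, so it is left as `None`; the helper names are illustrative.

```python
# Values quoted in the ablation discussion (Indian Pines).
ABLATION = {
    "full model":               {"oa": 95.55, "params_k": 22.0, "flops_k": 92.6},
    "without BeeSenseSelector": {"oa": 95.16, "params_k": 20.9, "flops_k": 90.6},
    "without AffScaleConv":     {"oa": 96.61, "params_k": 28.8, "flops_k": 133.8},
    "without both":             {"oa": None,  "params_k": 27.7, "flops_k": 131.7},
}


def percent_change(reference, value):
    """Relative change of value with respect to reference, in percent."""
    return (value - reference) / reference * 100.0


def compare(reference_name, other_name="full model"):
    """Cost and accuracy of `other_name` relative to `reference_name`."""
    ref, other = ABLATION[reference_name], ABLATION[other_name]
    result = {
        "params_pct": percent_change(ref["params_k"], other["params_k"]),
        "flops_pct": percent_change(ref["flops_k"], other["flops_k"]),
    }
    # The OA difference is only defined when both values are quoted in the text.
    if ref["oa"] is not None and other["oa"] is not None:
        result["oa_points"] = other["oa"] - ref["oa"]
    return result


for name in ABLATION:
    if name != "full model":
        summary = {key: round(val, 2) for key, val in compare(name).items()}
        print(f"full model vs {name}: {summary}")
```

Relative to the variant without AffScaleConv, the full model gives up about one percentage point of OA in exchange for roughly 24% fewer parameters and 31% fewer FLOPs.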

5. Conclusions

This paper presents BioLiteNet, a lightweight hyperspectral image classification model based on biomimetic design. On several hyperspectral remote sensing datasets, the model substantially reduces model size and computational overhead while maintaining high classification accuracy. Specifically, the number of parameters in BioLiteNet on the Indian Pines, Pavia University, and WHU-Hi-LongKou datasets is kept below 22 K, and forward inference requires only 92.6 K, 71.0 K, and 96.0 K FLOPs, respectively. Compared with the millions of parameters and billions of operations commonly found in mainstream CNN and Transformer architectures, BioLiteNet achieves over 99% parameter compression and reduces computational cost by several orders of magnitude, demonstrating its lightweight character and deployment potential.

The model integrates two core modules, BeeSenseSelector and AffScaleConv, which together improve classification accuracy and model efficiency. BeeSenseSelector strengthens the discriminative power of the feature representation by dynamically filtering highly discriminative spectral channels, significantly suppressing interference from redundant information. AffScaleConv introduces a multi-scale depthwise convolution mechanism that fuses spatial–spectral features across different receptive fields, enhancing the model's representational capability while keeping its structure compact. Experimental results indicate that the proposed method performs well across multiple classic hyperspectral datasets (e.g., Indian Pines, Pavia University, and WHU-Hi-LongKou), and that it retains strong generalization ability and robustness when very few training samples are available.

In comparative experiments, the proposed model not only outperforms most existing Transformer architectures and several traditional convolutional neural networks in classification accuracy but also achieves substantial reductions in both parameter count and computational complexity. On the Indian Pines dataset, the proposed method achieves an overall accuracy (OA) of 90.02% with only 5% of the samples used for training, while also maintaining high accuracy on both the Pavia University and WHU-Hi-LongKou datasets. This demonstrates the model's effectiveness and applicability in small-sample settings. The ablation experiments further confirm the contribution of both modules: BeeSenseSelector improves discriminability through channel filtering, and AffScaleConv improves the representation of spatial–spectral features through multi-scale depthwise convolution while preserving the model's lightweight structure.

Overall, the bionic lightweight hyperspectral classification model proposed in this paper strikes a favorable balance between accuracy and efficiency, offering a new approach to hyperspectral image classification in resource-constrained environments. Future research will explore the adaptability of the model in more complex remote sensing scenarios and further improve its classification performance and generalization through model enhancements.

Author Contributions

Conceptualization, B.Z. and S.C.; methodology, B.Z., S.C. and J.L.; software, J.L., Y.G. and Y.W.; validation, H.Y. and B.X.; formal analysis, B.Z., S.C. and Y.W.; investigation, J.L., Y.G. and Y.H.; resources, L.L.; data curation, J.L. and Y.W.; writing—original draft preparation, B.Z. and S.C.; writing—review and editing, L.L., Y.G. and Y.H.; visualization, B.Z. and J.L.; supervision, L.L.; project administration, L.L.; funding acquisition, L.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Training Program for Excellent Young Innovators of Changsha (Grant No. kq2209001) and the Hunan Excellent Young Scientists Fund (Grant No. 2025JJ40066).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Acknowledgments

The authors thank the anonymous reviewers and the editors for their insightful comments and helpful suggestions that helped improve the quality of our manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Li, W.; Sun, K.; Wei, J. Adapting Cross-Sensor High-Resolution Remote Sensing Imagery for Land Use Classification. Remote Sens. 2025, 17, 927.

- Uddin, M.P.; Mamun, M.A.; Hossain, M.A. PCA-based feature reduction for hyperspectral remote sensing image classification. IETE Tech. Rev. 2021, 38, 377–396.

- Li, X.; Zhang, L.; You, J. Locally weighted discriminant analysis for hyperspectral image classification. Remote Sens. 2019, 11, 109.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Li, Y.; Zhang, H.; Shen, Q. Spectral–spatial classification of hyperspectral imagery with 3D convolutional neural network. Remote Sens. 2017, 9, 67.

- Roy, S.K.; Krishna, G.; Dubey, S.R.; Chaudhuri, B.B. HybridSN: Exploring 3-D–2-D CNN feature hierarchy for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2019, 17, 277–281.

- Sun, Y.; Lei, L.; Tan, X.; Guan, D.; Wu, J.; Kuang, G. Structured graph based image regression for unsupervised multimodal change detection. ISPRS J. Photogramm. Remote Sens. 2022, 185, 16–31.

- Wang, M.; Sun, Y.; Xiang, J.; Sun, R.; Zhong, Y. Adaptive learnable spectral–spatial fusion transformer for hyperspectral image classification. Remote Sens. 2024, 16, 1912.

- De Lucia, G.; Lapegna, M.; Romano, D. Unlocking the potential of edge computing for hyperspectral image classification: An efficient low-energy strategy. Future Gener. Comput. Syst. 2023, 147, 207–218.

- Liu, J.; Xiang, J.; Jin, Y.; Liu, R.; Yan, J.; Wang, L. Boost precision agriculture with unmanned aerial vehicle remote sensing and edge intelligence: A survey. Remote Sens. 2021, 13, 4387.

- Liu, S.; Chu, R.S.; Wang, X.; Luk, W. Optimizing CNN-based hyperspectral image classification on FPGAs. In Proceedings of the International Symposium on Applied Reconfigurable Computing, Darmstadt, Germany, 9–11 April 2019; Springer: Cham, Switzerland, 2019; pp. 17–31.

- Wang, Y.; Zhang, T.; Zhao, L.; Hu, L.; Wang, Z.; Niu, Z.; Sun, X. RingMo-lite: A remote sensing multi-task lightweight network with CNN-transformer hybrid framework. arXiv 2023, arXiv:2309.09003.

- Qingyun, F.; Zhaokui, W. Cross-modality attentive feature fusion for object detection in multispectral remote sensing imagery. Pattern Recognit. 2022, 130, 108786.

- Tang, X.; Zhang, K.; Zhou, X.; Zeng, L.; Huang, S. Enhancing Binary Convolutional Neural Networks for Hyperspectral Image Classification. Remote Sens. 2024, 16, 4398.