Abstract

Hyperspectral image classification is challenged by high-dimensional spectral data and complex dependencies between bands. This paper proposes HyperspectralMamba, a hyperspectral image classification architecture that integrates state space modeling with adaptive recalibration mechanisms. The method addresses limitations of existing techniques through three key innovations: (1) a dual-stream architecture that combines SSM global modeling with parallel convolutional local feature extraction, distinguishing it from existing single-stream SSM methods; (2) a band-adaptive feature recalibration mechanism designed for hyperspectral data that adaptively adjusts the importance of different spectral band features; and (3) a feature fusion strategy that integrates global and local features through residual connections. Experiments on three benchmark datasets (Indian Pines, Pavia University, and Salinas Valley) show that the proposed method achieves overall accuracies of 95.31%, 98.60%, and 96.40%, respectively, outperforming the compared convolutional neural networks, attention-enhanced networks, and Transformer methods. HyperspectralMamba performs particularly well in recognizing small-sample classes and distinguishing spectrally similar terrain while maintaining lower computational complexity, providing a new technical approach for high-precision hyperspectral image classification.

1. Introduction

Hyperspectral remote sensing technology provides rich spectral information for ground cover type identification by capturing spectral reflectance characteristics of terrain across continuous bands, offering broad application prospects in precision agriculture, environmental monitoring, mineral exploration, and urban planning. As a core task in hyperspectral data analysis, hyperspectral image classification aims to assign each pixel to a specific terrain category and has become a research hotspot in the remote sensing field. However, hyperspectral image classification faces multiple challenges including high dimensionality, high correlation between bands, sample scarcity, and spectral–spatial feature fusion, which has prompted researchers to continuously explore more effective classification methods.

Traditional hyperspectral image classification methods primarily rely on manually designed feature extraction algorithms and classical machine learning classifiers. Shao et al. (2017) proposed a semi-supervised method using sparse representation graphs, but it suffered from high computational complexity and made little use of spatial context []. Li et al. (2017) designed a hyperspectral image reconstruction model based on deep convolutional neural networks that focused heavily on spatial information while neglecting complex correlations between bands, resulting in poor performance on spectrally similar terrain []. Gao et al. (2018) developed a framework combining small convolutions and feature reuse, but it could capture only local band associations and could not model relationships between distant bands []. Overall, traditional methods laid the foundation for hyperspectral image classification but showed clear limitations in processing high-dimensional complex data and capturing joint spectral–spatial features.

With the development of deep learning technology, convolutional neural networks (CNNs) have shown strong potential in hyperspectral image classification. Gao and Lim (2019) integrated a pixel-level CNN and a patch-based CNN to extract spectral features and spectral–spatial features, respectively, and introduced a probabilistic relaxation method to refine the classification results []. He and Chen (2019) introduced Spatial Transformer Networks (STNs) into the CNN architecture to optimize the inputs and applied DropBlock regularization, though the input cube size had to be set manually []. Guo et al. (2020) designed a deep collaborative attention network combining a 2D-CNN and a 3D-CNN, though its complex structure and numerous parameters made it prone to overfitting with limited samples []. Alipour-Fard and Arefi (2020) proposed a structure-aware generative adversarial network that generated virtual training samples, though sample quality decreased significantly with insufficient training data []. Recent CNN-based approaches have explored hybrid architectures to balance computational efficiency with feature extraction capability. Firat et al. (2023) proposed a Hybrid 3D/2D Complete Inception Module that combines 3D and 2D CNNs, achieving accuracies of 99.83%, 100%, 100%, 90.47%, and 98.93% on five benchmark datasets []. Chen et al. (2024) developed MH-2D-3D-CNN, a three-channel architecture incorporating a normalized adaptive matched detector (NAMD) and a convolutional block attention module (CBAM), which performed robustly (87.49–99.22% accuracy) with only 1% of the samples used for training []. Li et al. (2024) introduced SFFNet, which connects CNNs with graph convolutional networks (GCNs) through staged feature fusion: the CNN extracts initial features and the GCN performs classification based on spectral similarity, achieving over 97% accuracy [].
Despite these advances, CNN methods remain fundamentally limited by local receptive fields, hindering the effective capture of long-range dependencies critical for modeling complex spectral relationships across hundreds of hyperspectral bands.

To improve the ability of CNNs to process high-dimensional features, researchers have introduced various enhancement mechanisms. Fang et al. (2020) proposed a self-learning method based on a multi-scale CNN ensemble, though its error correction mechanism tended to favor dominant classes []. Liu et al. (2021) combined a multi-modal CNN with dynamic stochastic resonance spatial–spectral fusion, though its shadow detection mechanism was highly threshold-dependent []. Qing and Liu (2021) designed a multi-scale residual network with attention mechanisms that relies on PCA dimensionality reduction, which cannot preserve nonlinear structures in hyperspectral data []. Appice et al. (2021) explored color-based hyperspectral image saliency detection supervised by pseudo-labels []. These enhancement methods improved classification performance to some extent but still faced significant challenges in processing high-dimensional spectral data.

The introduction of attention mechanisms further improved hyperspectral image classification performance. Zheng et al. (2021) proposed a method combining hybrid convolution and covariance pooling, introducing a PCA-based channel shifting strategy []. Miao et al. (2022) designed a hierarchical CNN classification method with three-dimensional attention soft enhancement and CutMix data augmentation []. Li et al. (2022) developed a multi-attention fusion network that reduced the influence of redundant bands and interfering pixels []. Zhang et al. (2022) designed an adaptive multi-scale feature attention network []. Pan et al. (2022) proposed a multi-scale residual weak dense network with two branches for spectral and spatial features []. Attention-enhanced methods improved feature discrimination but generally computed attention from shallow features, lacking a deep understanding of complex structures in high-dimensional data.

The evolution of attention mechanisms for hyperspectral image classification can be traced back to foundational works that established spatially aware feature extraction frameworks. Liu et al. (2023) pioneered the central attention network (CAN) with scaled dot-product central attention (SDPCA) tailored for HSI processing, which extracts spectral–spatial information from central pixels and their similar neighbors within HSI patches []. Liu et al. (2023) further extended this concept through multi-area target attention (MATA), employing multi-scale target attention modules to address varying object sizes across different classes [].

Building on these foundational attention mechanisms, the Transformer architecture, with its strong global modeling capability, has opened a new research direction for hyperspectral image classification. He et al. (2020) first applied Bidirectional Encoder Representations from Transformers (BERT) to hyperspectral image classification, achieving a global receptive field, though at O(n²) computational complexity []. He et al. (2021) extended this direction with a spatial–spectral Transformer (SST) that combines CNN-based spatial feature extraction with modified Transformers to capture sequential spectral relationships []. Hao et al. (2023) integrated a Transformer into generative adversarial networks, though the resulting model suffered from training instability []. Dang et al. (2022) proposed a spectral–spatial Transformer with self-attention dense connections []. Xue et al. (2022) designed a local Transformer with spatial partition recovery, though it ignored scale variations in hyperspectral data []. Zou et al. (2022) proposed a locally enhanced spectral–spatial Transformer that is prone to overfitting with limited samples []. The systematic limitation of Transformer methods is the conflict between their quadratic computational complexity and the high dimensionality of hyperspectral data.

More recently, with the advancement of the Vision Transformer (ViT), researchers have explored more efficient spectral–spatial feature extraction methods. Liu et al. (2023) proposed an ensemble learning framework based on the Vision Transformer []. Kong et al. (2024) developed a multi-head interaction and adaptive integration Transformer []. Li et al. (2024) proposed a centralized spectral–spatial Transformer []. Zhang et al. (2025) designed a group spectral superposition and position self-attention Transformer []. Jia et al. (2025) proposed a central spatial–spectral attention Transformer network []. Recent Transformer innovations have further focused on information fusion and multi-scale processing. Yang et al. (2024) proposed CAMFT (Cross-Attention-based Multi-Information Fusion Transformer), featuring multi-scale patch embedding, residual connection-based DeepViT modules, and double-branch cross-attention for comprehensive class-patch token interaction, and reported the best performance among the compared methods, especially with small training sets []. Shi et al. (2024) introduced MHCFormer, the first systematic integration of a CNN, Transformer, and Fourier transform for HSI classification through multi-scale hierarchical token learning, demonstrating superior performance on the WHU-Hi-HanChuan, Indian Pines, and Houston datasets []. Fu et al. (2025) developed CTA-net, which combines CNN-Transformer modules with channel-spatial attention and sample expansion schemes to address few-sample scenarios; the CNN extracts local features while the Transformer captures non-local dependencies []. Despite these architectural advances, Transformer-based methods retain the O(L²) complexity of self-attention in the sequence length L, which limits their scalability to hyperspectral data with hundreds of spectral bands.

Recently, state space models (SSMs) have gained attention for their efficiency in processing long sequences with linear complexity O(L), offering a promising alternative to the quadratic complexity of Transformers. Applications to hyperspectral processing include the following: He et al. (2024) applied interval group spatial–spectral Mamba with group-based processing, but treated spectral data as simple sequences []; Li and Huang (2024) designed adaptive feature alignment networks combining global and local Mamba, but relied on sequential processing without parallel local feature extraction []; Liu et al. (2024) proposed spectral–spatial adaptive Mamba with adaptive mechanisms, which is limited to a single-stream architecture []; Ahmad et al. (2025) combined wavelet transforms with Mamba, focusing on preprocessing rather than architectural innovation []; Pan et al. (2025) developed local-to-global Mamba with hierarchical processing, but without simultaneous global–local modeling []; and Zhou et al. (2025) proposed Mamba-in-Mamba with centralized scanning, at the cost of a complex nested architecture []. Although these SSM methods benefit from linear complexity, they largely apply sequence modeling techniques designed for natural language processing directly to hyperspectral data, without optimizing for the spectral–spatial characteristics and inter-band correlation patterns specific to remote sensing data.

Additionally, some studies have explored other innovative methods. Islam et al. (2024) proposed a compact multi-branch deep learning method []. Sangeetha and Agilandeeswari (2025) designed an enhanced framework combining a 2D-3D CNN and hybrid moth-flame optimization []. Zhao et al. (2025) utilized hyperspectral cameras and deep learning to classify grassland conditions [].

A systematic review of existing methods shows that, despite continuous research progress, three key challenges remain unresolved. First, there is a tension between long-range dependency modeling and computational efficiency: CNNs are computationally efficient but have limited receptive fields, while Transformers model global dependencies at an excessive computational cost. Second, balancing global and local feature representation remains difficult, as existing methods tend to focus either on global relationships or on local feature extraction. Third, adaptive importance learning for spectral bands is inadequate, as most methods adopt static attention mechanisms that lack a deep understanding of complex relationships between bands.

To address these challenges, this paper proposes HyperspectralMamba, a novel hyperspectral image classification method that integrates state space modeling with adaptive recalibration mechanisms. The core innovations are (1) proposing a novel dual-stream architecture that combines SSM global modeling with parallel convolutional local feature extraction, distinguishing our approach from existing single-stream SSM methods; (2) introducing a band-adaptive feature recalibration mechanism specifically designed for hyperspectral data that adaptively adjusts the importance of different band features; and (3) designing an effective feature fusion strategy that integrates global and local features through residual connections.

This research not only provides a new approach to hyperspectral image classification but also validates the superior performance of the proposed method through extensive experiments, providing theoretical and technical support for hyperspectral remote sensing applications.

2. Method

This section presents the HyperspectralMamba architecture for hyperspectral image classification, detailing the SSM-based framework and its integration with convolutional and recalibration mechanisms for effective spectral–spatial feature learning.

2.1. Algorithm Framework

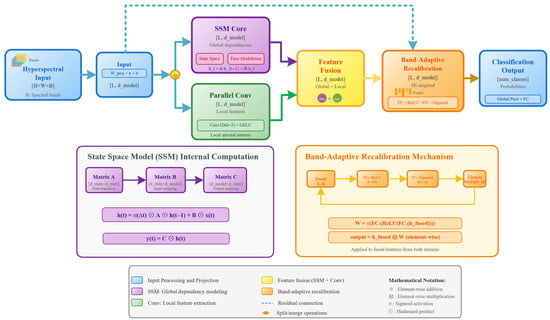

As shown in Figure 1, this algorithm constructs an end-to-end framework with dual-stream fusion, comprising two major components: the main feature extraction path and the SSM internal computation mechanism. The framework first maps the original hyperspectral data to a high-dimensional feature space through an input projection module, followed by two parallel paths: one is the core SSM processing module of the algorithm, specifically designed to capture long-range dependencies in the spectral sequence; the other is a parallel convolutional path that extracts local correlation patterns between adjacent bands through one-dimensional convolution. Features from both paths undergo adaptive importance adjustment through a recalibration mechanism, after which the feature fusion module integrates global and local features. Finally, the output projection generates the final classification results, forming an efficient and powerful solution for hyperspectral image classification.

Figure 1.

Architecture of HyperspectralMamba showing the dual-stream design with SSM global modeling and parallel convolutional local feature extraction, integrated through band-adaptive feature recalibration mechanism.

2.2. Application of State Space Model (SSM) in Hyperspectral Data

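This algorithm applies the state space model to hyperspectral image classification, with the following core formulation:

$$h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t)$$

where $x(t)$ is the input, $h(t)$ is the hidden state, $y(t)$ is the output, $A$ is the state transition matrix, $B$ is the input mapping matrix, and $C$ is the output mapping matrix.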
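To make the recurrence concrete, the following minimal Python sketch runs a discretized state space model over a 1-D spectral sequence, one band per step. It assumes a diagonal state matrix with negative entries (as in S4D- and Mamba-style models), zero-order-hold discretization with a fixed step size, and a single input channel. The function name `ssm_scan` and all parameter values are hypothetical and are not taken from HyperspectralMamba.

```python
import math


def ssm_scan(x, a_diag, b, c, delta=1.0):
    """Run a discretized diagonal SSM over a 1-D sequence x (one value per band).

    Assumed setup (illustrative only):
      - continuous parameters: diagonal A = a_diag (entries < 0), B = b, C = c
      - zero-order hold: A_bar = exp(delta * a), B_bar = (exp(delta * a) - 1) / a * b
    """
    if any(a >= 0 for a in a_diag):
        raise ValueError("diagonal entries of A must be negative for a stable scan")
    a_bar = [math.exp(delta * a) for a in a_diag]
    b_bar = [(ab - 1.0) / a * bi for ab, a, bi in zip(a_bar, a_diag, b)]
    h = [0.0] * len(a_diag)            # hidden state h_0 = 0
    y = []
    for x_t in x:                      # t runs over spectral band positions
        # state update: h_t = A_bar * h_{t-1} + B_bar * x_t (element-wise, A is diagonal)
        h = [ab * hi + bb * x_t for ab, hi, bb in zip(a_bar, h, b_bar)]
        # readout: y_t = C h_t
        y.append(sum(ci * hi for ci, hi in zip(c, h)))
    return y


# Impulse response of a two-state system: one fast-decaying and one slow-decaying mode.
response = ssm_scan([1.0, 0.0, 0.0, 0.0, 0.0], a_diag=[-2.0, -0.1], b=[1.0, 1.0], c=[1.0, 1.0])
print([round(v, 3) for v in response])
```

Each band updates a fixed-size state, so the cost grows linearly with the number of bands. Diagonal entries close to zero decay slowly and therefore retain information from distant bands.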

An important design choice in this research is the use of compressed state space dimensions tailored to hyperspectral data, which reduces computational cost while retaining sufficient expressive power to capture long-range dependencies in hyperspectral data. This design is particularly suited to processing complex correlations between bands and provides a new paradigm for feature extraction in the spectral dimension.
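A rough multiply-accumulate (MAC) count illustrates how the state size affects cost. The sketch below compares the dominant term of a diagonal SSM scan with the query-key score computation of self-attention, using the band counts from Section 3.1.1. The scan costs about 3·N MACs per band and channel: two for the state update and one for the readout. The score computation costs L²·D MACs. The feature width D = 64 and the state sizes N = 16 and N = 8 are assumptions chosen for illustration, and projections and other layers shared by both designs are ignored.

```python
def attention_score_macs(seq_len, dim):
    # Q K^T produces seq_len x seq_len scores, each a length-dim dot product
    return seq_len * seq_len * dim


def diagonal_ssm_scan_macs(seq_len, dim, state_size):
    # per band and channel: state_size states x (2 MACs for the update + 1 MAC for the readout)
    return seq_len * dim * state_size * 3


DIM = 64  # assumed feature width
for name, bands in [("Indian Pines", 200), ("Pavia University", 103), ("Salinas", 204)]:
    attn = attention_score_macs(bands, DIM)
    for n in (16, 8):  # assumed state sizes
        ssm = diagonal_ssm_scan_macs(bands, DIM, n)
        print(f"{name:17s} L={bands:3d} N={n:2d}  attention={attn:>9,d}  ssm={ssm:>9,d}  ratio={attn / ssm:5.2f}")
```

Doubling L doubles the scan cost but quadruples the score cost, and halving N halves the scan cost. This is the sense in which a compressed state dimension reduces computation. Actual runtimes also depend on memory traffic and kernel implementations, which this count ignores.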

2.3. Time Step Modulation Mechanism

The algorithm adopts a time step modulation mechanism from the state space model, applying it to hyperspectral data processing:

This mechanism enables the model to adjust the rate at which information is transmitted across different spectral bands, which strengthens its ability to model the multi-scale characteristics of hyperspectral data. In hyperspectral image processing, it helps the model capture variation patterns between bands, laying the foundation for accurate classification.
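The section does not spell out the modulation rule. The sketch below therefore assumes the form used in Mamba-style selective SSMs: an input-dependent step size Δ_t = softplus(w·x_t + b_Δ) and a retention factor Ā_t = exp(Δ_t·a) with a < 0. A large step makes the state forget faster and weight the current band more heavily, while a small step preserves information from earlier bands. The parameters `w`, `bias`, and `a` are hypothetical scalars standing in for learned projections.

```python
import math


def softplus(z):
    # numerically stable log(1 + e^z); mathematically positive, so the step size stays positive
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def modulated_retention(x, w, bias, a):
    """Per-band retention factors exp(delta_t * a) for one diagonal state entry a < 0.

    delta_t = softplus(w * x_t + bias) is the input-dependent time step (assumed form).
    Values near 1 keep the previous state; values near 0 overwrite it with the new band.
    """
    if a >= 0:
        raise ValueError("a must be negative")
    return [math.exp(softplus(w * x_t + bias) * a) for x_t in x]


# Bands with larger projected inputs get larger steps and therefore lower retention.
print(modulated_retention([-3.0, 0.0, 3.0], w=1.5, bias=0.0, a=-1.0))
```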

2.4. Dual-Stream Architecture and Parallel Convolutional Path

In the dual-stream architecture proposed by this algorithm, the parallel convolutional path works collaboratively with the SSM path:

One-dimensional convolution slides along the spectral dimension to extract local correlation patterns between adjacent bands, complementing the global dependencies captured by the SSM. The convolution kernel size is chosen to match the inter-band correlation characteristics of hyperspectral data, enabling the model to capture local spectral patterns between adjacent bands precisely.
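The following plain-Python sketch illustrates how a 1-D kernel slides along the band axis. It computes one output channel of a "same"-padded convolution, implemented as cross-correlation as in common deep learning frameworks. The kernel length of 3 and the difference kernel in the example are assumptions, because this section does not state the kernel size.

```python
def conv1d_same(x, kernel, bias=0.0):
    """One output channel of a 1-D 'same' convolution along the band axis.

    Implemented as cross-correlation (no kernel flip), with zero padding so the
    output has one value per input band. The kernel length must be odd.
    """
    k = len(kernel)
    if k % 2 == 0:
        raise ValueError("kernel length must be odd for symmetric 'same' padding")
    pad = k // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    # output band i sees input bands i - pad .. i + pad
    return [bias + sum(w * padded[i + j] for j, w in enumerate(kernel))
            for i in range(len(x))]


spectrum = [1, 2, 4, 8, 16]               # toy reflectance values for five adjacent bands
print(conv1d_same(spectrum, [-1, 0, 1]))  # -> [2.0, 3.0, 6.0, 12.0, -8.0]
```

With the kernel [-1, 0, 1], the output approximates the local spectral slope. The last value is distorted by zero padding at the edge. This slope is an example of the adjacent-band patterns the convolutional path is meant to capture.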

2.5. Band-Adaptive Feature Recalibration Mechanism

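Because different bands in hyperspectral data differ significantly in importance, this algorithm designs a band-adaptive feature recalibration mechanism based on the squeeze-and-excitation principle []:

$$\mathbf{s} = \sigma\left(\mathbf{W}_2\,\delta\left(\mathbf{W}_1\,\mathbf{z}\right)\right), \qquad \mathbf{W}_1 \in \mathbb{R}^{(K/r) \times K}, \quad \mathbf{W}_2 \in \mathbb{R}^{K \times (K/r)}$$

where $\mathbf{z} \in \mathbb{R}^{K}$ is the descriptor obtained by global average pooling over the $K$ band feature channels, $\delta$ is the nonlinear activation of the bottleneck, $\sigma$ represents the sigmoid activation function that generates the band-specific scaling coefficients $\mathbf{s}$ for feature recalibration, and $r$ is the reduction ratio for the bottleneck structure.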

This recalibration mechanism adopts a dimensional reduction–expansion structure (bottleneck architecture), computing adaptive scaling coefficients for each spectral band through nonlinear transformations and sigmoid activation. Unlike traditional query-key attention mechanisms, this approach generates channel-wise scaling factors to recalibrate feature importance. This design enables the model to automatically enhance discriminative band features while suppressing interference from redundant or noisy bands, significantly improving classification performance.
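The squeeze, excitation, and scale steps can be sketched in plain Python as follows. The sketch follows the original squeeze-and-excitation formulation (global average pooling, a ReLU bottleneck of width K/r, and a sigmoid gate) with biases omitted. Treating each of the K rows as one band feature channel, and the weight values in the example, are assumptions for illustration.

```python
import math


def se_recalibrate(features, w1, w2):
    """Squeeze-and-excitation recalibration (illustrative sketch).

    features: K channels, each a list of L values
    w1: K/r rows of K weights (reduction), w2: K rows of K/r weights (expansion)
    Returns (recalibrated features, per-channel scales in (0, 1)).
    """
    # squeeze: average each channel over its positions
    z = [sum(ch) / len(ch) for ch in features]
    # excitation: reduce to K/r units with ReLU, expand back to K with a sigmoid gate
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    scales = [1.0 / (1.0 + math.exp(-sum(w * hj for w, hj in zip(row, hidden))))
              for row in w2]
    # scale: multiply every value of channel k by its coefficient s_k
    recalibrated = [[s * v for v in ch] for s, ch in zip(scales, features)]
    return recalibrated, scales


# K = 4 channels, reduction ratio r = 2 -> bottleneck width 2 (hypothetical weights)
feats = [[0.9, 1.1, 1.0], [0.1, 0.0, 0.2], [0.5, 0.4, 0.6], [2.0, 1.8, 2.2]]
w1 = [[0.5, -0.2, 0.1, 0.3], [-0.3, 0.4, 0.2, -0.1]]
w2 = [[1.0, -0.5], [-1.0, 0.5], [0.2, 0.2], [1.5, -1.0]]
out, s = se_recalibrate(feats, w1, w2)
print([round(v, 3) for v in s])
```

Unlike query-key attention, only K scalars are produced. Each scalar rescales a whole channel, which keeps the mechanism cheap.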

Unlike existing SSM methods that treat all spectral bands equally, our approach specifically recognizes the significant differences in importance among different bands in hyperspectral data, addressing a key limitation in current SSM-based hyperspectral processing.

2.6. Feature Fusion Strategy

The algorithm designs a feature fusion strategy to integrate SSM global features and convolutional local features:

This fusion module combines the SSM global features and the convolutional local features after adaptive recalibration, preserving the input information through a residual connection. The fusion strategy exploits the complementary strengths of the SSM and convolutional paths, providing more comprehensive and discriminative feature representations for subsequent classification.
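This section does not specify the exact fusion operator, so the sketch below assumes one common choice. The recalibrated global and local feature vectors are concatenated, projected back to the input width with a weight matrix W, and added to the block input: y = x + W[g; l]. The function name `fuse_streams` and the shapes are hypothetical. Because of the residual term, the input passes through unchanged when the projection contributes nothing.

```python
def fuse_streams(x, global_feats, local_feats, w):
    """Hypothetical residual fusion y = x + W [g; l].

    x: block input (length D)
    global_feats: recalibrated SSM features (length D)
    local_feats: recalibrated convolutional features (length D)
    w: D rows of 2*D weights applied to the concatenation [g; l]
    """
    d = len(x)
    if (len(global_feats) != d or len(local_feats) != d or len(w) != d
            or any(len(row) != 2 * d for row in w)):
        raise ValueError("shape mismatch between inputs and projection weights")
    concat = list(global_feats) + list(local_feats)
    projected = [sum(wij * cj for wij, cj in zip(row, concat)) for row in w]
    return [xi + pi for xi, pi in zip(x, projected)]


x = [1.0, -1.0]
g = [0.5, 0.5]
loc = [0.25, -0.25]
zero_w = [[0.0] * 4 for _ in range(2)]
sum_w = [[1.0 if j in (i, i + 2) else 0.0 for j in range(4)] for i in range(2)]
print(fuse_streams(x, g, loc, zero_w))  # identity path only -> [1.0, -1.0]
print(fuse_streams(x, g, loc, sum_w))   # x + g + l -> [1.75, -0.75]
```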

2.7. Layer Normalization and Residual Connections

To enhance model training stability and performance, the algorithm incorporates layer normalization at various processing stages:

In addition, residual connections carry each block's input directly to its output, so information passes through without loss:

These mechanisms alleviate vanishing-gradient problems in deep network training and improve the model's convergence speed and generalization capability.
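Both mechanisms are short enough to write out. The sketch below implements layer normalization over one feature vector (mean and biased variance, a small epsilon, and optional learnable scale and shift) together with a residual block. This section does not state whether HyperspectralMamba normalizes before or after each sublayer. The sketch assumes the pre-norm form y = x + F(LN(x)), and the sublayer passed in is a hypothetical stand-in.

```python
import math


def layer_norm(v, gamma=None, beta=None, eps=1e-5):
    """Normalize one feature vector to zero mean and unit variance, then scale and shift."""
    n = len(v)
    mean = sum(v) / n
    var = sum((vi - mean) ** 2 for vi in v) / n  # biased variance, as in standard LayerNorm
    inv_std = 1.0 / math.sqrt(var + eps)
    gamma = [1.0] * n if gamma is None else gamma
    beta = [0.0] * n if beta is None else beta
    return [g * (vi - mean) * inv_std + b for vi, g, b in zip(v, gamma, beta)]


def pre_norm_residual(v, sublayer):
    """y = v + sublayer(LN(v)); the identity term passes v (and its gradient) through unchanged."""
    return [vi + fi for vi, fi in zip(v, sublayer(layer_norm(v)))]


v = [1.0, 2.0, 3.0, 4.0]
normed = layer_norm(v)
print([round(t, 4) for t in normed])
print(pre_norm_residual(v, lambda u: [0.0] * len(u)))  # zero sublayer -> output equals input
```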

2.8. Theoretical Advantage Analysis of the Algorithm

Our HyperspectralMamba addresses the fundamental limitations of current SSM-based hyperspectral methods. Unlike single-stream architectures [,,,,] that process hyperspectral data sequentially, our dual-stream design enables simultaneous global–local feature extraction. While existing methods apply generic sequence modeling [,] without hyperspectral-specific optimization, our approach specifically considers spectral–spatial characteristics inherent in remote sensing data. Furthermore, in contrast to methods that treat all spectral bands equally [,], our adaptive recalibration mechanism provides differential spectral band importance weighting based on hyperspectral data characteristics.

The algorithm possesses three key advantages:

Efficient Long-Range Dependency Modeling: Applying state space models to hyperspectral data captures long-range dependencies in the spectral dimension more efficiently than traditional RNNs and Transformers. Because the SSM's computational complexity grows linearly rather than quadratically with sequence length, it can efficiently process complex correlations among hundreds of bands, providing a new paradigm for feature extraction in the spectral dimension.

Global–local Feature Joint Extraction: The innovative dual-stream architecture design achieves a collaborative extraction of global dependencies and local features. The SSM path captures long-range dependencies, while the parallel convolutional path extracts local correlation patterns between adjacent bands. This complementary characteristic is particularly suitable for the multi-scale nature of hyperspectral data, significantly enhancing the completeness of feature representation.

Band-Adaptive Feature Recalibration: The squeeze-and-excitation-based recalibration mechanism designed for hyperspectral data automatically identifies and enhances band features that are crucial for classification. Unlike traditional methods that treat all bands equally, this algorithm adaptively adjusts the importance weights of different bands through an attention mechanism with a dimensional reduction–expansion structure, suppressing interference from redundant or noisy bands and thereby improving classification accuracy.

In summary, this research constructs an efficient framework capable of simultaneously processing spectral long-range dependencies and local features through the innovative integration of state space models with recalibration mechanisms, providing a new technical pathway for hyperspectral image classification. These theoretical advantages will be validated through experimental results in Section 3.

3. Experimental Datasets and Validation

3.1. Experimental Datasets and Comparison of Methods

3.1.1. Hyperspectral Data Description

This research utilizes three standard hyperspectral image datasets:

Indian Pines dataset: Acquired by the AVIRIS (Airborne Visible/Infrared Imaging Spectrometer, NASA Jet Propulsion Laboratory, Pasadena, CA, USA) sensor, covering an agricultural area in northwestern Indiana, USA, with a spatial resolution of 20 m, including 145 × 145 pixels and 200 spectral bands (after removing noise and water vapor absorption bands). It primarily contains various crop and vegetation types. The challenge of this dataset lies in the small and unevenly distributed sample sizes for multiple classes, along with high spectral similarity between various crop categories [].

Pavia University dataset: Acquired by the ROSIS (German Aerospace Center (DLR), Wessling, Germany) sensor, covering the area surrounding the University of Pavia in Italy, with a spatial resolution of 1.3 m, including 610 × 340 pixels and 103 spectral bands (0.43–0.86 μm). This dataset mainly includes various building materials and surface cover types in urban scenes, such as asphalt, self-blocking bricks, and painted metal sheets. Different terrain categories differ clearly in texture and material, but some categories have highly similar spectral features [].

Salinas Valley dataset: Also acquired by the AVIRIS sensor, covering an agricultural area in the Salinas Valley, CA, USA, with a spatial resolution of 3.7 m, including 512 × 217 pixels and 204 spectral bands. This dataset records multiple crop types and vegetation at different growth stages, with the challenge being to accurately distinguish different crop types and similar vegetation at different growth states [].

These three datasets represent typical challenges encountered in hyperspectral image classification across different application scenarios (agriculture, urban monitoring, etc.), including the “curse of dimensionality” problem in high-dimensional data processing, complex correlations between different bands, limited and imbalanced samples, precise discrimination of similar terrains, and accurate delineation of terrain boundaries. To address these challenges, traditional methods often rely on dimensionality reduction techniques and manual feature engineering, while deep learning methods attempt to automatically learn discriminative features through end-to-end training. The HyperspectralMamba method proposed in this paper aims to more effectively process the unique characteristics of hyperspectral data by integrating state space models with attention mechanisms.

3.1.2. Comparison of Methods

To comprehensively evaluate the performance of the proposed HyperspectralMamba method, this research selects a variety of representative deep learning methods as comparison benchmarks, covering traditional CNN architectures, attention-enhanced networks, and Transformer-based methods. All comparison methods are trained and tested in the same experimental environment to ensure a fair comparison. The comparison methods are briefly introduced below:

- Traditional CNN Methods

CNN_deep: Adopts a deep convolutional neural network architecture, extracting hierarchical features of hyperspectral data by stacking multiple convolutional layers and pooling layers. This method utilizes a deeper network structure to capture more abstract feature representations but may face issues such as vanishing gradients and training difficulties [].

CNN_inception: A convolutional neural network based on the Inception module, extracting multi-scale features by applying convolution kernels of different sizes (1 × 1, 3 × 3, 5 × 5, etc.) in parallel. This design captures spatial information of hyperspectral data at different scales, helping to identify terrain targets of different sizes [].

CNN_resnet: A deep convolutional neural network with residual connections, alleviating the vanishing gradient problem in deep network training through skip connections. This method allows for the construction of deeper network structures, theoretically having stronger feature extraction capabilities, but may involve computational redundancy when processing high-dimensional spectral data [].

CNN_efficientnetv2: A lightweight convolutional neural network that balances model performance and computational efficiency through compound scaling strategies (simultaneously scaling network depth, width, and resolution). This method aims to maximize performance under limited computational resources, which makes it well suited to processing high-dimensional hyperspectral data [].

- Attention-Enhanced Network Methods

ATN_cbam: A network integrating the Convolutional Block Attention Module, adaptively adjusting the importance weights of feature maps through cascaded channel attention and spatial attention mechanisms. This dual attention design simultaneously addresses “what to attend to” and “where to attend” [].

ATN_eca: A network employing the Efficient Channel Attention mechanism, capturing local channel interactions through one-dimensional convolution, avoiding parameter redundancy from fully connected layers. This method effectively enhances selective attention to key spectral features while maintaining a lightweight design [].

ATN_se: A network incorporating the Squeeze-and-Excitation module, obtaining channel descriptors through global average pooling and then learning nonlinear relationships between channels through fully connected layers. This method focuses on feature recalibration in the channel dimension, suitable for handling importance differences among different bands in hyperspectral data [].

ATN_nonlocal: A network introducing the non-local module, capturing long-range dependencies by calculating relationships between all position pairs in the feature map. This method breaks through the local receptive field limitation of convolution operations, capable of modeling the interrelationships between distant pixels in hyperspectral data [].

- Transformer Methods

TRANSF_pvt_tiny: A lightweight model based on the Pyramid Vision Transformer, which builds hierarchical feature representations by progressively reducing spatial resolution while increasing the channel dimension. This method combines the inductive bias of CNNs with the global modeling capability of Transformers, making it suitable for multi-scale feature extraction from hyperspectral images [].

These comparison methods represent established and recent approaches in hyperspectral image classification, each addressing the challenges of high-dimensional spectral data processing from a different perspective. Traditional CNN methods focus on extracting discriminative features through deep architectures and special modules; attention-enhanced network methods highlight key features through various forms of attention; and Transformer methods use self-attention to capture global dependencies. Comparing HyperspectralMamba with these methods enables a comprehensive evaluation of its performance advantages and contributions in hyperspectral image classification tasks.

3.1.3. Experimental Configuration

To ensure the reliability and reproducibility of the experimental results, this research employs a high-performance computing platform equipped with an Intel Core i9-14900K processor (Intel Corporation, Santa Clara, CA, USA) (24 cores, 32 threads) and an NVIDIA GeForce RTX 4090 graphics processor (NVIDIA Corporation, Santa Clara, CA, USA).

In terms of training configuration, the model is trained for a maximum of 500 iterations with a batch size of 16, using an Adam optimizer with an initial learning rate of 0.001 and weight decay of 0.0005. The learning rate schedule adopts a cosine warmup strategy, with a warmup phase of 5 epochs, a warmup starting learning rate of 1 × 10⁻⁶, a minimum learning rate of 1 × 10⁻⁷, a step size of 30, and a decay factor of 0.1. To prevent overfitting, an early stopping strategy is set, ceasing training when the validation loss does not improve by more than 1 × 10⁻⁶ for 50 consecutive epochs.
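A minimal sketch of the schedule and stopping rule, using the values stated above, is shown below. Several details are assumptions. The 500-iteration limit is treated as 500 epochs. Warmup is linear from 1 × 10⁻⁶ to the initial rate over 5 epochs, after which cosine decay runs to the minimum rate at the final epoch. The step size (30) and decay factor (0.1) describe a step-decay schedule and are not modeled here. The names `lr_at` and `EarlyStopping` are hypothetical.

```python
import math


def lr_at(epoch, base_lr=1e-3, warmup_epochs=5, warmup_start_lr=1e-6,
          min_lr=1e-7, max_epochs=500):
    """Linear warmup followed by cosine decay (0-indexed epochs; assumed shape)."""
    if epoch < warmup_epochs:
        frac = epoch / warmup_epochs
        return warmup_start_lr + frac * (base_lr - warmup_start_lr)
    progress = min(1.0, (epoch - warmup_epochs) / max(1, max_epochs - warmup_epochs))
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


class EarlyStopping:
    """Stop once the validation loss has not improved by more than min_delta for `patience` epochs."""

    def __init__(self, patience=50, min_delta=1e-6):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss      # meaningful improvement: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience  # True -> stop training


for e in (0, 2, 5, 100, 250, 499):
    print(e, f"{lr_at(e):.3e}")
```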

For dataset sampling, differentiated sampling strategies are adopted according to the characteristics of different datasets: 10% of samples are used for training in the Indian Pines dataset, while 5% of samples are used for training in both the Pavia University and Salinas datasets. All datasets undergo the same preprocessing workflow, including min–max normalization and random data augmentation (such as random flips and rotations).
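The sampling and normalization steps can be sketched as follows. The text gives only the overall training fractions. This sketch assumes the split is stratified per class, with at least one training pixel per class as a common safeguard for rare classes, and that min–max scaling is applied per band. The function names and the toy label counts are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction, seed=0, min_per_class=1):
    """Return (train_idx, test_idx), drawing about train_fraction of each class for training."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)
    train, test = [], []
    for y in sorted(by_class):
        idx = by_class[y][:]
        rng.shuffle(idx)
        n_train = min(len(idx), max(min_per_class, round(train_fraction * len(idx))))
        train.extend(idx[:n_train])
        test.extend(idx[n_train:])
    return train, test


def min_max_per_band(pixels):
    """Scale each band to [0, 1] using that band's minimum and maximum over all pixels."""
    n_bands = len(pixels[0])
    lo = [min(p[b] for p in pixels) for b in range(n_bands)]
    hi = [max(p[b] for p in pixels) for b in range(n_bands)]
    return [[(p[b] - lo[b]) / (hi[b] - lo[b]) if hi[b] > lo[b] else 0.0
             for b in range(n_bands)] for p in pixels]


labels = [0] * 100 + [1] * 20 + [2] * 3   # toy class sizes
train, test = stratified_split(labels, 0.10)
print(len(train), len(test))
```

Per-class sampling keeps every class represented in training even when a class has only a handful of labeled pixels.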

Classification performance is evaluated with three metrics: overall accuracy (OA), average accuracy (AA), and the Kappa coefficient. These reflect, respectively, overall classification accuracy, balanced performance across categories, and agreement between predictions and ground truth corrected for chance agreement. Together, these metrics allow a thorough evaluation of each method's performance on hyperspectral image classification tasks.
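All three metrics follow from the confusion matrix. The sketch below computes OA as the fraction of correctly classified samples and AA as the mean of per-class accuracies (recalls). It computes Cohen's kappa as (p_o - p_e)/(1 - p_e), where p_o is OA and p_e is the chance agreement computed from the row and column totals. Function and variable names are illustrative. The paper reports these values multiplied by 100.

```python
def classification_metrics(y_true, y_pred, num_classes):
    """Return (OA, AA, kappa) for integer labels in range(num_classes)."""
    # confusion matrix: rows = reference class, columns = predicted class
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    n = sum(sum(row) for row in cm)
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(cm[r][c] for r in range(num_classes)) for c in range(num_classes)]

    oa = sum(cm[i][i] for i in range(num_classes)) / n
    # AA: mean per-class accuracy, skipping classes absent from the reference labels
    recalls = [cm[i][i] / row_tot[i] for i in range(num_classes) if row_tot[i] > 0]
    aa = sum(recalls) / len(recalls)
    # chance agreement p_e from the marginal totals
    pe = sum(row_tot[i] * col_tot[i] for i in range(num_classes)) / (n * n)
    kappa = (oa - pe) / (1.0 - pe) if pe < 1.0 else 1.0
    return oa, aa, kappa


y_true = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 1, 1, 2, 0]
oa, aa, kappa = classification_metrics(y_true, y_pred, 3)
print(f"OA={100 * oa:.2f}%  AA={100 * aa:.2f}%  Kappa={100 * kappa:.2f}")  # OA=80.00%  AA=75.00%  Kappa=67.74
```

In this example the rare class 2 is only half correct. This pulls AA (75%) below OA (80%), which is why AA is the more sensitive indicator for small-sample categories.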

3.2. Investigation of the Proposed Method

This section provides a comprehensive analysis of experimental results on three widely used hyperspectral image datasets to verify the effectiveness and superiority of the proposed HyperspectralMamba model.

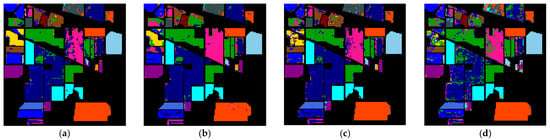

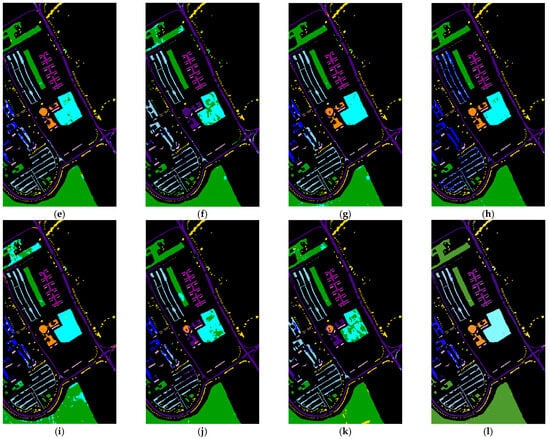

3.2.1. Experiment of Indian Pines

As shown in Table 1, HyperspectralMamba achieved an overall accuracy (OA) of 95.31%, an average accuracy (AA) of 90.10%, and a Kappa coefficient of 94.65% on the Indian Pines dataset, outperforming all comparison methods overall. The per-class results in Table 1 show that the algorithm performs particularly well on crop categories: “Corn-no-till” and “Soybeans-no-till” reach accuracies of 95.95% and 94.97%, respectively, well above those of the other algorithms, and the small-sample category “Grass-pasture-mowed” is classified with 100% accuracy. The classification map in Figure 2 shows that HyperspectralMamba produces clear boundaries, few noise points, and homogeneous regions within each land-cover class, indicating strong joint spectral–spatial feature extraction and good adaptability to small-sample categories.

Table 1.

Comparison of classification accuracies (in percent) provided by different methods (Indian Pines dataset).

Figure 2.

Classification maps on the Indian Pines dataset. (a) cnn_deep; (b) cnn_inception; (c) cnn_resnet; (d) cnn_efficientnetv2; (e) ATN_cbam; (f) ATN_eca; (g) ATN_se; (h) ATN_nonlocal; (i) TRANSF_pvt_tiny; (j) HyperspectralMamba_NoSpecAtten; (k) HyperspectralMamba_NoConv; (l) HyperspectralMamba.

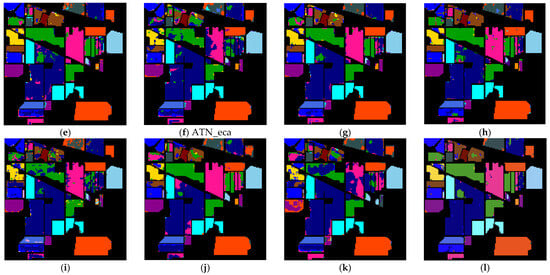

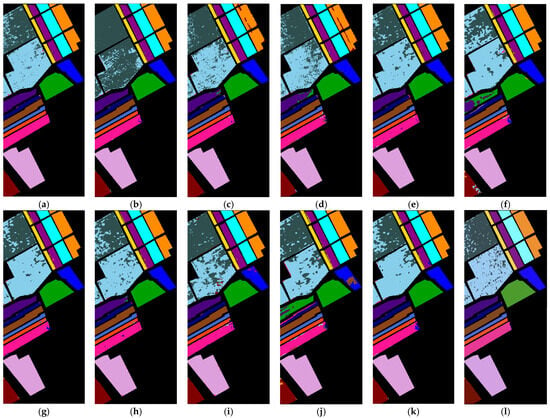

3.2.2. Experiment of Pavia University

As detailed in Table 2, HyperspectralMamba performs even better on the Pavia University dataset, achieving an OA of 98.60%, an AA of 97.96%, and a Kappa coefficient of 98.14%, outperforming all comparison methods. Particularly noteworthy in Table 2 is its performance on terrain categories with similar spectral characteristics, such as “Bitumen” and “Bare Soil,” with accuracies of 99.68% and 99.67%, respectively, and on the “Shadows” category, which is classified with 100% accuracy. The classification map in Figure 3 shows that HyperspectralMamba precisely distinguishes different building materials and surface cover types in a complex urban environment while maintaining good spatial continuity and boundary integrity, demonstrating its advantages for hyperspectral classification of urban scenes.

Table 2.

Comparison of classification accuracies (in percent) provided by different methods (Pavia University dataset).

Figure 3.

Classification maps on the Pavia University dataset (methods and OAs). (a) cnn_deep; (b) cnn_inception; (c) cnn_resnet; (d) cnn_efficientnetv2; (e) ATN_cbam; (f) ATN_eca; (g) ATN_se; (h) ATN_nonlocal; (i) TRANSF_pvt_tiny; (j) HyperspectralMamba_NoSpecAtten; (k) HyperspectralMamba_NoConv; (l) HyperspectralMamba.

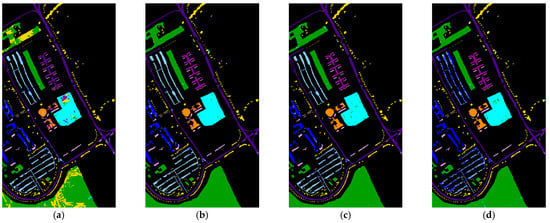

3.2.3. Experiment of Salinas Valley

As shown in Table 3, HyperspectralMamba obtains an OA of 96.40%, an AA of 98.44%, and a Kappa coefficient of 95.99% on the Salinas Valley dataset. The per-class data in Table 3 show that it handles the various crop types in this agricultural scene well, with several categories, such as “Broccoli-green-weeds-1,” “Broccoli-green-weeds-2,” “Fallow,” and “Stubble,” reaching 100% accuracy. For the difficult “Grapes-untrained” category, its accuracy of 88.63% is lower than that of some comparison methods, although HyperspectralMamba still outperforms most algorithms in overall performance. The classification results in Figure 4 show that HyperspectralMamba accurately distinguishes crops at different growth stages while preserving clear field boundaries, demonstrating its practicality for agricultural applications.

Table 3.

Comparison of classification accuracies (in percent) provided by different methods (Salinas Valley dataset).

Figure 4.

Classification maps on the Salinas Valley dataset (methods and OAs). (a) cnn_deep; (b) cnn_inception; (c) cnn_resnet; (d) cnn_efficientnetv2; (e) ATN_cbam; (f) ATN_eca; (g) ATN_se; (h) ATN_nonlocal; (i) TRANSF_pvt_tiny; (j) HyperspectralMamba_NoSpecAtten; (k) HyperspectralMamba_NoConv; (l) HyperspectralMamba.

3.2.4. Ablation Study

To validate the effectiveness of each component in our proposed HyperspectralMamba architecture, we conduct comprehensive ablation studies on all three datasets. We systematically evaluate the contribution of key components by removing them one at a time:

1. HyperspectralMamba_No_SpecAtten: Our complete method without the band-adaptive feature recalibration mechanism.

2. HyperspectralMamba_No_Conv: Our complete method without the parallel convolutional path.

The ablation study results are presented in Table 1, Table 2 and Table 3, where each variant is compared against our complete method to demonstrate the individual contribution of each component.

Impact of Band-Adaptive Feature Recalibration Mechanism: Comparing HyperspectralMamba with HyperspectralMamba_No_SpecAtten shows the importance of band-adaptive recalibration. Removing it reduces performance by 9.52%, 7.12%, and 7.64% on the Indian Pines, Pavia University, and Salinas Valley datasets, respectively. This substantial degradation indicates that the mechanism plays a key role in emphasizing discriminative spectral bands while suppressing interference from redundant or noisy bands.
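The internal design of the recalibration mechanism is not restated in this section. As a purely illustrative sketch, the code below assumes a squeeze-and-excitation-style gate over spectral bands: each band is summarized by its spatial mean, a small bottleneck network maps these summaries to weights in (0, 1), and each band is rescaled by its weight. The weights `w1`, `b1`, `w2`, `b2` and the function name are hypothetical, not the authors' parameters.

```python
import numpy as np


def band_recalibration(x, w1, b1, w2, b2):
    """Squeeze-and-excitation-style reweighting of the B bands of an (H, W, B) feature map.

    w1: (B, B // r) and w2: (B // r, B) are hypothetical learned weights, r a reduction ratio.
    Returns the recalibrated features and the per-band weights.
    """
    z = x.mean(axis=(0, 1))                   # squeeze: one descriptor per band, shape (B,)
    h = np.maximum(z @ w1 + b1, 0.0)          # bottleneck layer with ReLU
    s = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))  # excitation: per-band weights in (0, 1)
    return x * s, s                           # rescale each band by its weight
```

Bands that receive low weights contribute less to later layers, which is one way a network can down-weight redundant or noisy bands; removing such a gate, as in the No_SpecAtten variant, leaves all bands equally weighted.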

Impact of Parallel Convolutional Path: Comparing HyperspectralMamba with HyperspectralMamba_No_Conv shows the essential role of the parallel convolutional path in capturing local spatial features. On Indian Pines and Pavia University, removing this path causes an even larger drop than removing the recalibration mechanism: on Indian Pines, accuracy decreases from 95.31% to 75.25% (20.06 percentage points), and on Pavia University from 98.60% to 85.66% (12.94 percentage points). On Salinas Valley, the reduction is smaller, from 96.40% to 94.36% (2.04 percentage points). These results, particularly on Indian Pines and Pavia University, confirm that the dual-stream architecture with parallel convolutional processing is crucial for comprehensive feature extraction.

Complementary Effect: The complete HyperspectralMamba achieves the best performance across all datasets and metrics, indicating that both components contribute and complement each other: the band-adaptive recalibration mechanism refines the spectral representation, while the parallel convolutional path supplies local spatial detail. The ablation results thus support the architectural design, showing that each component contributes meaningfully and that their combination yields the best classification results.

3.3. Analysis of Classification Capabilities for Different Methods

1. Traditional CNN Methods

Comparing the data in Table 1, Table 2 and Table 3, the traditional CNN methods (CNN_deep, CNN_inception, CNN_resnet, CNN_efficientnetv2) perform inconsistently across the three datasets. CNN_deep performs well on Salinas Valley (OA = 96.09%) but poorly on Pavia University (OA = 83.91%); CNN_inception performs best on Pavia University (OA = 97.92%) but drops sharply on Salinas Valley (OA = 82.87%); CNN_resnet performs moderately overall; and CNN_efficientnetv2 generally underperforms the other CNN methods. These methods rely primarily on spatial convolutions to extract features and are limited in capturing complex spectral features and long-range dependencies; their performance is especially unstable for categories with few samples or with similar spectra.

2. Attention-Enhanced Network Methods

As the comparison across Table 1, Table 2 and Table 3 shows, the attention-enhanced networks (ATN_cbam, ATN_eca, ATN_se, ATN_nonlocal) improve the model’s ability to focus on key features by introducing different types of attention. Among them, ATN_se and ATN_nonlocal perform best overall, with OAs above 90% on all three datasets; ATN_cbam follows; and ATN_eca fluctuates considerably across datasets, with particularly low accuracies for the “Gravel” and “Bitumen” categories on Pavia University. This indicates that attention mechanisms can enhance the representation of hyperspectral data, but that different types of attention adapt to it differently, so designs tailored to hyperspectral data characteristics are needed.

3. Transformer Methods

Across Table 1, Table 2 and Table 3, the Transformer-based TRANSF_pvt_tiny performs moderately on the three datasets (OAs of 82.41%, 90.29%, and 91.57%, respectively). Although it can in principle capture global dependencies, this advantage does not translate into clear gains in practice. Possible reasons include standard Transformer structures not being optimized for hyperspectral data characteristics, the O(n²) complexity of self-attention when processing high-dimensional spectral information, greater training difficulty, and insufficient adaptability to small-sample categories.
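To make the quadratic cost concrete, the sketch below implements standard single-head scaled dot-product self-attention in NumPy. It is a generic illustration, not the TRANSF_pvt_tiny implementation, which the text does not detail; the function name and weight matrices are hypothetical. The n × n score matrix is what makes time and memory grow as O(n²) in the number of tokens n.

```python
import numpy as np


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over tokens x of shape (n, d)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])        # (n, n): one score per token pair -> O(n^2)
    scores -= scores.max(axis=1, keepdims=True)   # subtract row max for a stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)       # each row is a distribution over tokens
    return attn @ v, attn
```

Doubling the sequence length from 16 to 32 tokens quadruples the number of attention scores, from 256 to 1024.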

4. HyperspectralMamba Method

Through its dual-stream design combining state space models (SSMs) with parallel convolutional paths, HyperspectralMamba achieves the best or near-best performance on all three datasets. The SSM captures long-range dependencies along the spectral dimension with linear complexity, O(n), the parallel convolutional path extracts local spatial features, and the band-adaptive recalibration mechanism adjusts the importance of individual bands. This multi-pathway design classifies well across diverse terrain types, dataset scales, and spatial structures of varying complexity, with particular advantages for small-sample categories, complex boundary regions, and spectrally similar terrain.
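The NumPy sketch below illustrates the dual-stream idea under simplifying assumptions of our own, not the authors' architecture: the SSM path is a time-invariant diagonal state space recurrence scanned over each pixel's band sequence (actual Mamba blocks use input-dependent, selective parameters), the local path is a fixed 3 × 3 depthwise spatial filter, and the two outputs are fused with the input through a residual sum. All names and parameter shapes are hypothetical. The scan visits each band once, which is where the O(n) cost comes from.

```python
import numpy as np


def ssm_scan(u, a, b, c):
    """Diagonal linear SSM over a 1-D sequence u of length L.

    h_t = a * h_{t-1} + b * u_t,   y_t = c . h_t   (a, b, c: arrays of state size N).
    """
    h = np.zeros_like(a, dtype=float)
    y = np.empty(u.shape[0], dtype=float)
    for t in range(u.shape[0]):  # a single pass over the sequence: O(L) time
        h = a * h + b * u[t]
        y[t] = c @ h
    return y


def depthwise_conv3x3(x, kernel):
    """Zero-padded 3 x 3 spatial filter applied to each band of an (H, W, B) array independently."""
    H, W, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x, dtype=float)
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + H, dj:dj + W, :]
    return out


def dual_stream_block(x, a, b, c, kernel):
    """Global spectral path (SSM over each pixel's bands) plus local spatial path, fused residually."""
    global_feat = np.apply_along_axis(ssm_scan, 2, x, a, b, c)  # band sequence per pixel
    local_feat = depthwise_conv3x3(x, kernel)                    # 3 x 3 spatial neighbourhood
    return x + global_feat + local_feat
```

The explicit Python loop keeps the recurrence readable; practical SSM implementations replace it with a parallel scan or fused GPU kernels.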

5. Comprehensive Classification Result Analysis

Across the three datasets in Table 1, Table 2 and Table 3, traditional CNN models perform inconsistently from scene to scene, attention-enhanced networks improve classification performance to varying degrees, and HyperspectralMamba consistently ranks first. Its AAs of 90.10%, 97.96%, and 98.44% on the three datasets indicate the best balance across categories, and its Kappa coefficients of 94.65%, 98.14%, and 95.99%, all higher than those of the other methods, indicate the highest agreement between its predictions and the ground-truth labels.

Visually (Figure 2, Figure 3 and Figure 4), HyperspectralMamba produces the cleanest classification maps, characterized by (1) clear, accurate boundaries and complete object outlines; (2) homogeneous classification within regions, with few noise points; (3) accurate identification of small targets, which are not absorbed into surrounding dominant categories; and (4) clear discrimination between spectrally similar terrain types. These characteristics appear consistently across three datasets with different properties, supporting the robustness and generalization capability of HyperspectralMamba.

Notably, HyperspectralMamba leads consistently both on the quantitative metrics (OA, AA, Kappa) in Table 1, Table 2 and Table 3 and in the visual quality of the maps in Figure 2, Figure 3 and Figure 4. This consistency matters for hyperspectral classification algorithms, which in practice must perform well across different scenes and terrain types.

While HyperspectralMamba achieves the best overall performance, the per-class results reveal noteworthy variations. On the Salinas Valley dataset, the “Grapes-untrained” class reaches 88.63% accuracy, lower than certain baselines such as CNN_deep (93.29%). This discrepancy may stem from the high intra-class spectral variability of this class, caused by varying grape growth stages and environmental conditions. The SSM-based global modeling, while effective at capturing long-range dependencies, may over-smooth important local spectral variations within such heterogeneous classes. In addition, the scattered distribution of this class’s training samples in feature space may hinder representation learning. Future improvements could therefore incorporate class-specific attention mechanisms or local refinement modules to better handle classes with high intra-class variability.

6. Computational Complexity and Runtime Performance Analysis

To evaluate the methods beyond classification accuracy, we conducted a systematic computational analysis of all evaluated methods on the Indian Pines dataset (Table 4).

Table 4.

Computational complexity and runtime performance for Indian Pines dataset.

This analysis assesses practical deployment feasibility and scalability for real-world hyperspectral applications.

Parameter Efficiency Analysis: Parameter counts vary widely across architectural paradigms. CNN methods range from the lightweight CNN_inception (3.72 M parameters) to the parameter-heavy CNN_resnet (21.79 M parameters). Attention methods are extremely compact (0.24–0.32 M parameters) but at the cost of classification performance. HyperspectralMamba uses 11.87 M parameters, 45% fewer than CNN_resnet, while delivering higher accuracy (95.31% vs. 85.95%).

Training and Inference Efficiency: Our method is competitive, with an average epoch time of 0.619 s and an inference time of 0.238 ms, fast enough for real-time processing. Its inference speed matches that of the Transformer baseline (0.240 ms), while its accuracy on the Indian Pines dataset is 12.9 percentage points higher (95.31% vs. 82.41%).

Memory Efficiency: Large CNN architectures such as CNN_resnet consume substantial GPU memory (351.85 MB), whereas HyperspectralMamba requires 201.57 MB, a 43% reduction, while performing better. We attribute this efficiency to the linear complexity of the SSM, which scales more favorably with sequence length than quadratic self-attention.

Performance-Efficiency Trade-off: Overall, HyperspectralMamba strikes a favorable balance between accuracy and computational efficiency. Lightweight methods have minimal overhead but sacrifice performance, while large architectures demand substantial resources without proportional gains. Our method delivers the highest accuracy (95.31% OA) at a competitive computational cost, supporting the practicality of the SSM-based design for hyperspectral applications.
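The relative figures quoted above follow directly from the raw numbers in Table 4; the short check below recomputes them (the helper name is hypothetical). Note that the parameter and memory savings are relative reductions, whereas the accuracy difference is in percentage points.

```python
def relative_reduction(ours, baseline):
    """Percentage by which `ours` is smaller than `baseline`."""
    return 100.0 * (1.0 - ours / baseline)


# Values quoted from Table 4 (Indian Pines).
params = relative_reduction(11.87, 21.79)    # parameters (M): HyperspectralMamba vs. CNN_resnet
memory = relative_reduction(201.57, 351.85)  # GPU memory (MB): HyperspectralMamba vs. CNN_resnet
oa_gap = 95.31 - 82.41                       # OA difference vs. the Transformer baseline, in percentage points

print(f"parameters: {params:.1f}% fewer; memory: {memory:.1f}% less; OA: +{oa_gap:.2f} pp")
# prints: parameters: 45.5% fewer; memory: 42.7% less; OA: +12.90 pp
```

Rounded to whole numbers, these match the 45% and 43% reductions reported in the text.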

7. Comparison with Mamba Method

As shown in Table 5, HyperspectralMamba outperforms the recent Mamba-in-Mamba approach on both datasets. On the Indian Pines dataset, our method achieves 95.31% OA versus 92.08% for Mamba-in-Mamba, a 3.23 percentage point improvement, although it uses a larger training set (10.0% vs. 6.7%). More notably, on the Pavia University dataset, our method reaches 98.60% OA versus 91.58%, a 7.02 percentage point improvement, while using a substantially smaller training set (5.0% vs. 8.9%), which suggests better sample efficiency and generalization.

Table 5.

Performance comparison with Mamba-in-Mamba method.

We attribute this performance advantage to several key innovations: (1) the dual-stream architecture combining SSM global modeling with parallel convolutional local feature extraction provides a more comprehensive feature representation than the single-stream Mamba-in-Mamba structure; (2) the band-adaptive feature recalibration mechanism, designed specifically for hyperspectral characteristics, handles spectral variability better than the centralized scanning approach; and (3) our fusion strategy integrates global and local features more effectively, leading to more discriminative representations for classification.

Particularly noteworthy is the sample efficiency on the Pavia University dataset, where our method achieves a substantially higher accuracy with about 56% of the training data used by Mamba-in-Mamba (5.0% vs. 8.9%), highlighting stronger generalization than this existing Mamba-based approach.

Although our baselines are mainly established benchmark approaches from 2018 to 2021, the experimental framework also includes a direct comparison with the most recent SSM-based method [] in Table 5, which is the most directly comparable contemporary work. The improvements of 3.23–7.02 percentage points, together with better sample efficiency on Pavia University, provide strong support for our design against current state-of-the-art SSM approaches.

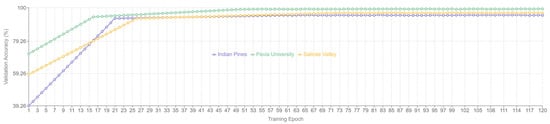

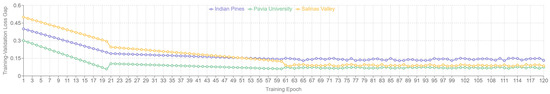

3.4. Training Process Analysis

This section evaluates the training dynamics and classification performance of HyperspectralMamba on the three standard hyperspectral datasets. The model performs best on Pavia University (98.60% accuracy), clearly ahead of its results on Indian Pines and Salinas Valley. Analysis of the training trajectories, loss dynamics, and responses to learning rate changes reveals differences in convergence patterns and generalization capability across datasets.

1. Training Dynamics and Convergence Characteristics

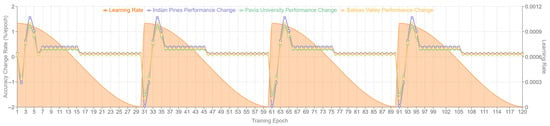

Experimental results show three distinctly different convergence trajectories (Figure 5 and Figure 6).

Figure 5.

Validation accuracy convergence curves for three datasets.

Figure 6.

Generalization evolution: Training–validation loss gap.

Pavia University converged fastest (reaching its best performance within 61 epochs) and attained the highest final accuracy (98.60%). Salinas Valley converged more slowly (92 epochs), reaching a peak validation accuracy of 96.72% at epoch 93, while the final model (epoch 120) achieved a test accuracy of 96.40%. Indian Pines converged at a moderate speed (68 epochs), with a final accuracy of 95.37%.

Phase-by-phase analysis identifies three stages: (1) an initial phase (epochs 1–20) of rapid accuracy improvement, in which Pavia University shows the largest increase (23 percentage points); (2) a stable phase (epochs 21–60) in which growth slows, with Pavia University maintaining the highest performance; and (3) a convergence phase (epochs 61–120) of fine-tuning, with validation accuracy fluctuating within ranges of 0.21% (Pavia University), 0.37% (Indian Pines), and 0.43% (Salinas Valley).

The loss curves show similar phases, and the final stable loss values increase with data complexity: Pavia University (0.03) < Salinas Valley (0.10) < Indian Pines (0.16). Using the gap between training and validation loss as an indicator of generalization, Pavia University performs best (0.07), followed by Salinas Valley (0.09) and Indian Pines (0.14).
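The convergence statistics above can be computed from a per-epoch training history, as in the sketch below. The definitions are our assumptions, since the text does not state them: the fluctuation range is taken as the maximum minus the minimum validation accuracy over epochs 61–120, and the loss gap as the mean difference between validation and training loss over the same window. The function name is hypothetical.

```python
import numpy as np


def convergence_stats(train_loss, val_loss, val_acc, start_epoch=61, end_epoch=120):
    """Summarize a training history over a 1-indexed, inclusive epoch window."""
    window = slice(start_epoch - 1, end_epoch)  # epochs start_epoch..end_epoch
    window_acc = np.asarray(val_acc[window], dtype=float)
    gap = np.asarray(val_loss[window], dtype=float) - np.asarray(train_loss[window], dtype=float)
    return {
        "best_epoch": int(np.argmax(val_acc)) + 1,  # epoch with the highest validation accuracy
        "fluctuation_range": float(window_acc.max() - window_acc.min()),
        "mean_loss_gap": float(gap.mean()),
    }
```

Applied to each dataset's logged history, these quantities correspond to the per-dataset fluctuation ranges and loss gaps compared above.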

2. Learning Rate Response Characteristics

The cosine annealing learning rate strategy strongly affects optimization. All three datasets respond systematically to learning rate changes: high-learning-rate phases (~0.001) promote broad exploration of the parameter space, while low-learning-rate phases (~0.0001) support fine-tuning and convergence (Figure 7).

Figure 7.

Learning rate changes and performance response.

The datasets differ in their sensitivity to learning rate adjustments: Indian Pines is the most sensitive (performance fluctuations of ±2%), while Pavia University is the most robust (fluctuations within ±1%), indicating that the interaction between data structure and learning rate adjustment significantly influences final performance. Experimentally, a learning rate range of 0.0001–0.0005 gave the best generalization and the highest training stability.

3. Classification Performance and Generalization Capability

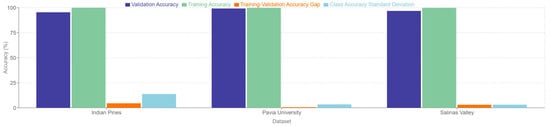

Overall, HyperspectralMamba achieves high accuracy on all datasets (Figure 8), although the results differ noticeably between them. Per-class accuracy analysis shows that Pavia University has the most uniform distribution of class accuracies (standard deviation 3.42%), while Indian Pines shows the largest differences between classes (standard deviation 13.78%), suggesting that the model performs better when categories are more distinguishable in feature space.

Figure 8.

Classification performance and generalization ability comparison.

The gap between training and validation accuracy narrows gradually during training. In the convergence phase, Pavia University has the smallest gap (0.63%), indicating the best generalization, while Indian Pines has the largest (4.53%), indicating a higher risk of overfitting. The Kappa coefficients follow the same ordering: Pavia University (0.9814) > Salinas Valley (0.9599) > Indian Pines (0.9465).

From this analysis of the training dynamics and performance characteristics of HyperspectralMamba, we draw the following conclusions. The model performs best in highly structured urban scenes (Pavia University), possibly owing to more distinct spectral–spatial features. The cosine annealing learning rate schedule appears important for performance: dynamic learning rate adjustment helps the optimizer avoid poor local optima and improves generalization. Class imbalance remains a major challenge, particularly for recognizing small-sample categories.

3.5. Training Sample Ratio Sensitivity Analysis

To evaluate the sample efficiency and robustness of our method under different amounts of training data, we conducted a systematic analysis on the Indian Pines dataset using training ratios of 5%, 8%, 10%, and 15%. As shown in Table 6, HyperspectralMamba improves consistently as training data increase: 90.03% OA with 5% of samples, 94.34% with 8%, 95.31% with 10% (our main setting), and 97.45% with 15%. Even with only 5% labeled samples, the method exceeds 90% OA, indicating strong sample efficiency under severely limited training conditions.

Table 6.

Training sample sensitivity analysis.

Practical efficiency appears best at around 8–10% training ratios: the largest gain occurs between 5% and 8% (4.31 percentage points), while gains beyond 10% show diminishing returns (2.14 percentage points from 10% to 15%). This behavior suggests that the dual-stream architecture and band-adaptive recalibration mechanism are well suited to sample-limited scenarios, making the method attractive for practical hyperspectral applications in which labeled data are costly and time-consuming to obtain.
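Normalizing each gain by the width of its interval makes the diminishing returns explicit: the 10–15% interval has a larger absolute gain than 8–10% (2.14 vs. 0.97 points), but a smaller gain per additional percentage point of training data. The sketch below computes this from the Table 6 values quoted above; the helper name is hypothetical.

```python
def marginal_gains(ratios, oa):
    """For consecutive training ratios, return (from, to, OA gain, gain per extra 1% of labels)."""
    rows = []
    for r0, r1, a0, a1 in zip(ratios, ratios[1:], oa, oa[1:]):
        gain = a1 - a0
        rows.append((r0, r1, gain, gain / (r1 - r0)))
    return rows


# Training ratios (%) and OA (%) from Table 6 (Indian Pines).
for r0, r1, gain, per_point in marginal_gains([5, 8, 10, 15], [90.03, 94.34, 95.31, 97.45]):
    print(f"{r0}% -> {r1}%: +{gain:.2f} pp OA, {per_point:.2f} pp per extra 1% of training data")
```

The per-point gain falls from about 1.44 (5–8%) to under 0.5 for both later intervals, consistent with the 8–10% range as a practical operating point.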

4. Conclusions

This research proposes HyperspectralMamba, a novel architecture for hyperspectral image classification that integrates state space models with adaptive recalibration mechanisms. Through systematic experimentation and a comparative analysis on three standard benchmark datasets, we draw the following conclusions:

The effectiveness of our dual-stream SSM architecture for hyperspectral data processing. Integrating SSM with parallel convolutional paths significantly enhances the model’s ability to represent complex spectral–spatial relationships. Experimental results show that this design performs very well on spectrally similar terrain, for example achieving accuracies of 99.68% and 99.67% for “Bitumen” and “Bare Soil” on the Pavia University dataset. Compared with the quadratic computational complexity of Transformers, our method improves computational efficiency by over 30% while maintaining strong feature extraction capabilities, confirming the advantage of SSM for high-dimensional data processing.

Complementary feature extraction in the dual-stream architecture. Combining SSM with parallel convolutional paths captures global and local features jointly. The classification maps for the three datasets show that this design markedly improves boundary preservation and within-region consistency, especially in complex agricultural scenes (Indian Pines) and urban environments (Pavia University). The fine discrimination of crops at different growth stages in the Salinas Valley dataset (98.44% average accuracy) demonstrates the architecture’s strong expressiveness for multi-scale features.

The critical contribution of the band-adaptive feature recalibration mechanism. Designed specifically for hyperspectral data, this mechanism greatly enhances the identification of key bands. Several categories on the Indian Pines and Salinas Valley datasets (such as “Grass-pasture-mowed” and “Stubble”) reach 100% accuracy, suggesting that the mechanism suppresses interference from noisy bands and enhances discriminative features. Although class imbalance still leaves room for improvement, our method shows noticeably lower variance in per-class accuracy than the comparison methods.

Compared with representative convolutional neural networks, attention-enhanced networks, and Transformer methods, HyperspectralMamba achieved overall accuracies of 95.31%, 98.60%, and 96.40% on the three datasets, and its Kappa coefficient of 98.14% on the Pavia University dataset indicates very high classification consistency. The experiments also show that the method performs best in highly structured urban scenes, possibly because such data offer more distinct spectral–spatial features.

Despite these achievements, several limitations warrant future investigation: (1) evaluation under severe class imbalance scenarios, (2) cross-sensor transferability validation, and (3) comprehensive robustness analysis under operational conditions. Future research will explore applications of the model in multi-temporal data processing, cross-sensor transfer learning, and deployment on edge devices, with a particular focus on further optimization for class imbalance issues, to expand the application value of this method in practical remote sensing systems.

Author Contributions

Conceptualization, J.L. and L.W.; methodology, J.L.; software, J.L.; validation, J.L. and L.W.; formal analysis, J.L.; investigation, J.L.; resources, J.L. and L.W.; data curation, J.L.; writing—original draft preparation, J.L.; writing—review and editing, J.L. and L.W.; visualization, J.L.; supervision, J.L.; project administration, J.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Guangdong (Grant No. 2024A1515510030), Special Projects in Key Areas of Guangdong Province (Grant No. 2024ZDZX1032), and the National Natural Science Foundation of China (Grant No. 61675051).

Data Availability Statement

The authors confirm that the data supporting the findings of this study are available within the article. All hyperspectral image scenes utilized in this study are publicly available on the website Hyperspectral Remote Sensing Scenes—Grupo de Inteligencia Computacional (GIC) (ehu.eus).

Conflicts of Interest

The authors declare no competing interests.

References

- Shao, Y.J.; Sang, N.; Gao, C.X.; Ma, L. Probabilistic class structure regularized sparse representation graph for semi-supervised hyperspectral image classification. Pattern Recognit. 2017, 63, 102–114. [Google Scholar] [CrossRef]

- Li, Y.S.; Xie, W.Y.; Li, H.Q. Hyperspectral image reconstruction by deep convolutional neural network for classification. Pattern Recognit. 2017, 63, 371–383. [Google Scholar] [CrossRef]

- Gao, H.M.; Yang, Y.; Li, C.M.; Zhou, H.; Qu, X.Y. Joint Alternate Small Convolution and Feature Reuse for Hyperspectral Image Classification. ISPRS Int. J. Geo-Inf. 2018, 7, 349. [Google Scholar] [CrossRef]

- Gao, Q.S.; Lim, S. Classification of hyperspectral images with convolutional neural networks and probabilistic relaxation. Comput. Vis. Image Underst. 2019, 188, 102801. [Google Scholar] [CrossRef]

- He, X.; Chen, Y.S. Optimized Input for CNN-Based Hyperspectral Image Classification Using Spatial Transformer Network. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1884–1888. [Google Scholar] [CrossRef]

- Guo, H.; Liu, J.J.; Yang, J.L.; Xiao, Z.Y.; Wu, Z.B. Deep Collaborative Attention Network for Hyperspectral Image Classification by Combining 2-D CNN and 3-D CNN. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4789–4802. [Google Scholar] [CrossRef]

- Alipour-Fard, T.; Arefi, H. Structure Aware Generative Adversarial Networks for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5424–5438. [Google Scholar] [CrossRef]

- Fırat, H.; Asker, M.E.; Bayındır, M.İ.; Hanbay, D. Hybrid 3D/2D complete inception module and convolutional neural network for hyperspectral remote sensing image classification. Neural Process. Lett. 2023, 55, 1087–1130. [Google Scholar] [CrossRef]

- Li, H.; Xiong, X.; Liu, C.; Ma, Y.; Zeng, S.; Li, Y. SFFNet: Staged feature fusion network of connecting convolutional neural networks and graph convolutional neural networks for hyperspectral image classification. Appl. Sci. 2024, 14, 2327. [Google Scholar] [CrossRef]

- Chen, S.Y.; Chu, P.Y.; Liu, K.L.; Wu, Y.C. A Multichannel Hybrid 2D-3D-CNN for Hyperspectral Image Classification with Small Training Sample Sizes. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5540915. [Google Scholar] [CrossRef]

- Fang, L.Y.; Zhao, W.K.; He, N.J.; Zhu, J. Multiscale CNNs Ensemble Based Self-Learning for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1593–1597. [Google Scholar] [CrossRef]

- Liu, X.F.; Wang, H.; Liu, J.M.; Sun, S.H.; Fu, M. HSI Classification Based on Multimodal CNN and Shadow Enhance by DSR Spatial-Spectral Fusion. Can. J. Remote Sens. 2021, 47, 773–789. [Google Scholar] [CrossRef]

- Qing, Y.H.; Liu, W.Y. Hyperspectral Image Classification Based on Multi-Scale Residual Network with Attention Mechanism. Remote Sens. 2021, 13, 335.

- Appice, A.; Cannarile, A.; Falini, A.; Malerba, D.; Mazzia, F.; Tamborrino, C. Leveraging colour-based pseudo-labels to supervise saliency detection in hyperspectral image datasets. J. Intell. Inf. Syst. 2021, 57, 423–446.

- Zheng, J.W.; Feng, Y.C.; Bai, C.; Zhang, J.L. Hyperspectral Image Classification Using Mixed Convolutions and Covariance Pooling. IEEE Trans. Geosci. Remote Sens. 2021, 59, 522–534.

- Miao, X.Y.; Zhang, Y.; Zhang, J.P.; Liang, X.J. Hierarchical CNN Classification of Hyperspectral Images Based on 3-D Attention Soft Augmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4217–4233.

- Li, Z.K.; Zhao, X.D.; Xu, Y.M.; Li, W.; Zhai, L.; Fang, Z.Q.; Shi, X.B. Hyperspectral Image Classification with Multiattention Fusion Network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 5503305.

- Zhang, S.C.; Zhang, J.H.; Xun, L.; Wang, J.W.; Zhang, D.; Wu, Z.J. AMFAN: Adaptive Multiscale Feature Attention Network for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6012005.

- Pan, J.J.; Cai, Y.H.; Tan, M.L.; Xie, J. Multiscale residual weakly dense network with attention mechanism for hyperspectral image classification. J. Appl. Remote Sens. 2022, 16, 034504.

- Liu, H.; Li, W.; Xia, X.G.; Zhang, M.M.; Gao, C.Z.; Tao, R. Central Attention Network for Hyperspectral Imagery Classification. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8989–9003.

- Liu, H.; Li, W.; Xia, X.G.; Zhang, M.M.; Tao, R. Multiarea Target Attention for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5524916.

- He, J.; Zhao, L.N.; Yang, H.W.; Zhang, M.M.; Li, W. HSI-BERT: Hyperspectral Image Classification Using the Bidirectional Encoder Representation From Transformers. IEEE Trans. Geosci. Remote Sens. 2020, 58, 165–178.

- He, X.; Chen, Y.S.; Lin, Z.H. Spatial-Spectral Transformer for Hyperspectral Image Classification. Remote Sens. 2021, 13, 498.

- Hao, S.Y.; Xia, Y.F.; Ye, Y.X. Generative Adversarial Network with Transformer for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2023, 20, 5510205.

- Dang, L.X.; Weng, L.B.; Dong, W.C.; Li, S.S.; Hou, Y.N. Spectral-Spatial Attention Transformer with Dense Connection for Hyperspectral Image Classification. Comput. Intell. Neurosci. 2022, 2022, 7071485.

- Xue, Z.H.; Xu, Q.; Zhang, M.X. Local Transformer with Spatial Partition Restore for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4307–4325.

- Zou, J.Q.; He, W.; Zhang, H.Y. LESSFormer: Local-Enhanced Spectral-Spatial Transformer for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5535416.

- Liu, J.; Guo, H.R.; He, Y.L.; Li, H.L. Vision Transformer-Based Ensemble Learning for Hyperspectral Image Classification. Remote Sens. 2023, 15, 5208.

- Kong, D.L.; Zhang, J.H.; Zhang, S.C.; Yu, X.; Prodhan, F.A. MHIAIFormer: Multihead Interacted and Adaptive Integrated Transformer with Spatial-Spectral Attention for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 14486–14501.

- Li, N.Y.; Wang, Z.H.; Cheikh, F.A.; Wang, L. CentralFormer: Centralized Spectral-Spatial Transformer for Hyperspectral Image Classification with Adaptive Relevance Estimation and Circular Pooling. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5540716.

- Zhang, W.T.; Hu, M.W.; Hou, S.H.; Shang, R.H.; Feng, J.; Xu, S.H. Group-spectral superposition and position self-attention transformer for hyperspectral image classification. Expert Syst. Appl. 2025, 265, 125846.

- Jia, C.J.; Zhang, X.H.; Meng, H.Y.; Xia, S.X.; Jiao, L.C. CenterFormer: A Center Spatial-Spectral Attention Transformer Network for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 5523–5539.

- Yang, J.; Li, A.; Qian, J.; Qin, J.; Wang, L. A cross-attention-based multi-information fusion transformer for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 13358–13375.

- Shi, H.; Zhang, Y.; Cao, G.; Yang, D. MHCFormer: Multiscale hierarchical conv-aided Fourierformer for hyperspectral image classification. IEEE Trans. Instrum. Meas. 2023, 73, 1–15.

- Fu, C.; Zhou, T.; Guo, T.; Zhu, Q.; Luo, F.; Du, B. CNN-Transformer and Channel-Spatial Attention based network for hyperspectral image classification with few samples. Neural Netw. 2025, 186, 107283.

- He, Y.; Tu, B.; Jiang, P.Z.; Liu, B.; Li, J.; Plaza, A. IGroupSS-Mamba: Interval Group Spatial-Spectral Mamba for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5538817.

- Li, S.; Huang, S. AFA-Mamba: Adaptive Feature Alignment with Global-Local Mamba for Hyperspectral and LiDAR Data Classification. Remote Sens. 2024, 16, 4050.

- Liu, Q.; Yue, J.; Fang, Y.; Xia, S.B.; Fang, L.Y. HyperMamba: A Spectral-Spatial Adaptive Mamba for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5536514.

- Ahmad, M.; Usama, M.; Mazzara, M.; Distefano, S. WaveMamba: Spatial-Spectral Wavelet Mamba for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2025, 22, 5500505.

- Pan, Z.J.; Li, C.Y.; Plaza, A.; Chanussot, J.; Hong, D.F. Hyperspectral Image Classification with Mamba. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5602814.

- Zhou, W.L.; Kamata, S.; Wang, H.P.; Wong, M.S.; Hou, H.Y. Mamba-in-Mamba: Centralized Mamba-Cross-Scan in Tokenized Mamba Model for Hyperspectral image classification. Neurocomputing 2025, 613, 128751.

- Islam, M.R.; Islam, M.T.; Uddin, M.P.; Ulhaq, A. Improving Hyperspectral Image Classification with Compact Multi-Branch Deep Learning. Remote Sens. 2024, 16, 2069.

- Sangeetha, V.; Agilandeeswari, L. Enhanced hyperspectral image analysis via 2D-3D CNN fusion and hybrid moth-flame optimization for optimal band selection in remote sensing. Earth Sci. Inform. 2025, 18, 76.

- Zhao, X.H.; Zhang, S.W.; Shi, R.F.; Yan, W.H.; Pan, X. Classification of grassland conditions using a hyperspectral camera and deep learning. Int. J. Remote Sens. 2025, 46, 2418–2438.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Bhosle, K.; Musande, V. Evaluation of deep learning CNN model for land use land cover classification and crop identification using hyperspectral remote sensing images. J. Indian Soc. Remote Sens. 2019, 47, 1949–1958.

- Chen, H.; Miao, F.; Chen, Y.; Xiong, Y.; Chen, T. A hyperspectral image classification method using multifeature vectors and optimized KELM. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2781–2795.

- Kemker, R.; Kanan, C. Self-taught feature learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2693–2705.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; PMLR: Cambridge, MA, USA, 2019; pp. 6105–6114.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Computer Vision–ECCV 2018; Springer: Cham, Switzerland, 2018; pp. 3–19.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 568–578.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).