Abstract

Detecting small objects in remote sensing imagery remains challenging. To address this issue, this paper introduces a novel detection framework named ACLC-Detection. Built on the YOLOv11 architecture, the detector combines attention mechanisms with lightweight convolution to improve performance. Specifically, depthwise separable convolution is introduced into both the shallow and deep convolutional layers of the backbone network. Moreover, a lightweight convolutional excitation module (CEM) is designed to capture contextual information about targets and reduce the loss of small-target information. In addition, the C3k2 modules with C3k = True in the neck fusion network are replaced by the Convolutional Attention Module with Ghost Module (CAF-GM), which both reduces model complexity and captures more useful information. The Simple Attention Module (SimAM) used in CAF-GM suppresses redundant information without adding any model parameters. Finally, the Inner-Complete Intersection over Union (Inner-CIOU) loss function is employed to improve the localization and detection of small targets. Extensive experiments on the DOTA and VisDrone2019 datasets demonstrate the advantages of the proposed model for small objects in aerial imagery.

1. Introduction

The maturation of remote sensing imaging technology means that humans can observe broader scenes from multiple perspectives. In its early days, remote sensing was used primarily to reconnoiter the positions of enemy forces. As the technology developed, it became possible to image the Earth from space, marking a new era for remote sensing. In recent years, remote sensing technology has advanced rapidly, while deep neural networks have revolutionized computer vision and strongly influenced remote sensing image analysis. With the advancement of deep learning, computers can now automatically detect and classify objects in remote sensing imagery, which has significantly expanded the practical applications of remote sensing images. For instance, convolutional neural network approaches to land use and land cover classification [1,2,3] have improved accuracy and effectiveness. Advanced algorithms identify buildings for urban planning [4,5,6] and can distinguish buildings of different types and scales. Change detection can also track environmental changes caused by deforestation and urban expansion [7]. Further applications include crop monitoring [8,9] and natural disaster monitoring [10,11]; compared with traditional methods, these approaches cover a wider range of applications and offer better detection quality and higher efficiency.

Despite the remarkable achievements of combining deep learning with remote sensing imaging, numerous challenges remain. In remote sensing images with small targets and complex backgrounds, detection accuracy is often severely limited. To address this issue, many researchers have proposed improvements in various directions. Hui et al. [12] introduced a densely connected, residual-based super-resolution component into the target detector; it restores image details lost during data augmentation, improves the clarity of small objects, and strengthens feature extraction through sub-pixel convolution combined with an information alignment strategy. This effectively counters the weakening of small-target feature representations during repeated convolution and sampling. Li et al. [13] incorporated an Efficient Channel Attention (ECA) module into their model, using the generated fusion weights to enhance target saliency and improve feature representation across scales, thereby achieving better performance on small targets. Zhang et al. [14] introduced a Small-Scale Network (SSN) module designed to preserve fine-grained details in images; it builds a more comprehensive feature representation by stacking convolutional layers with smaller kernels and adding residual connections. Hao et al. [15] introduced a dual-branch attention mechanism (C2f-CloAtt) into the backbone network of their model to strengthen the capture of local features. At the same time, a Transformer structure was integrated at the bottom of the backbone network to improve the expressiveness of the feature maps. These improvements help the model use contextual information more effectively and enhance detection performance for small targets.
However, such improvements usually come with an increase in model parameters and computational complexity, which may pose certain application challenges on platforms with limited resources or high real-time requirements.

Inspired by the research above, this paper builds an improved model, ACLC-Detection, based on YOLOv11 [16], to address the false and missed detections caused by the weak features of small targets in remote sensing images. First, to address the weak feature response of small targets, a Convolutional Excitation Module (CEM) is designed. The channel attention mechanism within the CEM emphasizes the high-frequency feature information of small targets, while spatial attention suppresses interference from complex backgrounds. This improves the capture of detailed information during feature extraction and reduces the information loss of small targets. To decrease the number of model parameters, the conventional convolution at the front end of the CEM is replaced with depthwise separable convolution. Calculations indicate that optimizing selected convolutional layers in this way substantially reduces the parameter count while preserving feature diversity. Second, to fuse high- and low-level features efficiently and achieve higher detection accuracy, a Convolutional Attention Module with Ghost Module (CAF-GM) is designed. This module generates additional (Ghost) feature maps through cheap linear transformations, retaining the details of small targets while reducing model parameters. At the end of this module, a Simple Attention Module (SimAM) dynamically suppresses background noise, improving the signal-to-noise ratio of the fused feature maps. Finally, to address the localization challenges of small targets, the Inner-CIOU loss function, an improved version of CIOU, is applied to refine bounding-box regression. Compared with the original CIOU loss function, Inner-CIOU introduces a scale factor ratio and uses auxiliary inner bounding boxes to mitigate slow convergence on small targets.

The key contributions of this study are as follows:

1. This paper designs a lightweight convolutional attention module that uses the CEM attention mechanism to strengthen the feature response to small targets and introduces depthwise separable convolution to significantly reduce the model's computational cost. This approach makes the model lighter while effectively improving the detection of small targets in remote sensing images.

2. A Convolutional Attention Module with Ghost Module (CAF-GM) is designed. This module employs the Ghost Module to compress parameters during the feature fusion stage and combines the Simple Attention Module (SimAM) to dynamically suppress background noise. This enhances the information retention and localization stability of multi-scale targets, especially small targets.

3. This paper introduces an improved CIOU loss function (Inner-CIOU) that uses an adjustable scaling factor to dynamically adjust the scale range of bounding box regression. This reduces the interference of background information with small targets, speeds up convergence, and improves detection accuracy.

2. Related Work

In this section, we focus on several topics closely related to this paper: Section 2.1 introduces YOLOv11, Section 2.2 summarizes research on lightweight convolution, and Section 2.3 reviews the development of attention mechanisms. Revisiting these frameworks lays the foundation for the subsequent work.

2.1. YOLOv11 Detection Framework

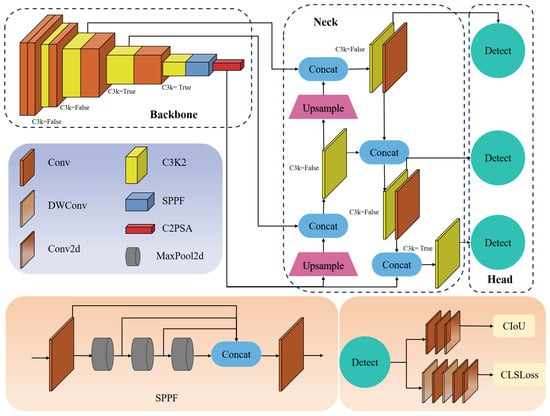

YOLOv11 was released by the Ultralytics team in 2024; its architecture is shown in Figure 1. The model consists of three main components: the backbone network, the neck (intermediate) network, and the detection head. The backbone extracts multi-level feature information from the input image; the neck integrates feature maps from different scales to enhance feature expressiveness; and the detection head outputs the final detection results, including regression and classification. In Figure 1, different modules are represented by graphics of different colors and shapes. Compared with YOLOv8, released in 2023, YOLOv11 brings significant improvements, mainly an updated overall structure and stronger generalization across a wider range of tasks. Structurally, the original C2f module has been replaced with the C3k2 module. The key idea is to replace the original large convolutional kernel with two smaller ones; since convolution operations account for most of the computational load, this substitution significantly reduces computation. The C3k2 module has two configurations, C3k = True and C3k = False, which are labeled in Figure 1; the specific configurations are illustrated in Figure 2. The C3k = True configuration, located in the deeper part of the network, enables deeper semantic feature capture and thereby increases the depth of feature extraction, whereas with C3k = False the module reduces to the original C2f module. In addition, the backbone adds a cross-stage partial module with a pyramid squeeze attention mechanism after the original Spatial Pyramid Pooling Fast (SPPF) layer.
The specific structure of the spatial pyramid module is shown in Figure 1. This attention mechanism uses convolution kernels of various sizes to extract multi-scale feature maps and a squeeze-and-excitation structure to dynamically weight channel features, thereby strengthening the information carried by key channels. With this module, detection of occluded targets improves significantly.
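The claim that two small kernels can stand in for one large kernel can be checked with simple arithmetic. The sketch below assumes the "large kernel" is a 5 × 5 convolution replaced by two stacked 3 × 3 convolutions with the same channel count C (bias ignored); these sizes and the helper names are illustrative and are not taken from the YOLOv11 source code.

```python
def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def stacked_receptive_field(kernels: list) -> int:
    """Receptive field of stride-1, undilated convolutions applied in sequence."""
    rf = 1
    for k in kernels:
        rf += k - 1
    return rf


C = 128  # illustrative channel count, not taken from YOLOv11
single_5x5 = conv_weights(5, C, C)
two_3x3 = 2 * conv_weights(3, C, C)

print(single_5x5, two_3x3)                                            # 409600 294912
print(stacked_receptive_field([5]), stacked_receptive_field([3, 3]))  # 5 5
```

The stacked pair covers the same 5 × 5 receptive field with 18/25 = 72% of the weights, and it also leaves room for an activation between the two layers.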

Figure 1.

The overall framework of YOLOv11.

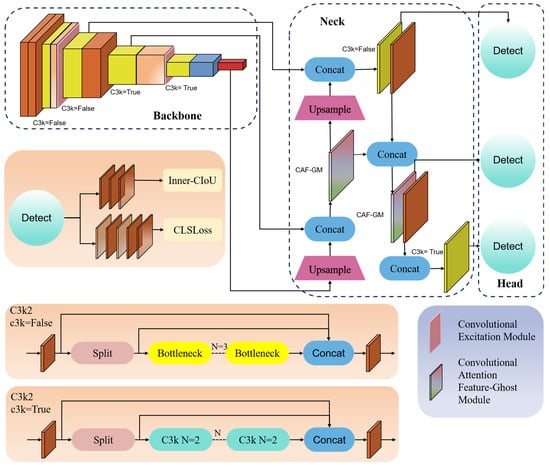

Figure 2.

The overall framework of our ACLC-Detection. Implementation of DSConv and CEM mechanism in the feature extraction network, CAF-GM module for feature enhancement in the neck network, and loss function optimization for enhanced details.

In the neck network, a dynamic weight allocation mechanism replaces the PANet + FPN architecture of YOLOv8. Its learnable parameters dynamically adjust the importance of features at different scales during fusion, which helps lower the false positive rate in complex background scenes. The detection head replaces conventional convolutional layers with depthwise separable convolutions, reducing unnecessary computation and improving detection efficiency.
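The section does not give the formula for this dynamic weight allocation. As an assumption, the sketch below uses fast normalized fusion, as popularized by BiFPN: each input scale receives a learnable scalar weight, clipped to be non-negative and normalized so that the weights sum to about one. Feature maps are flattened Python lists assumed to be already resized to a common resolution, and the weight values are hypothetical.

```python
def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """Fuse same-shape feature maps with non-negative, normalized weights.

    features: list of equal-length lists (flattened maps at a common scale)
    raw_weights: one learnable scalar per input (hypothetical values here)
    """
    if len(features) != len(raw_weights):
        raise ValueError("need one weight per input feature map")
    w = [max(0.0, x) for x in raw_weights]   # ReLU keeps every weight >= 0
    total = sum(w) + eps                     # eps avoids division by zero
    norm = [x / total for x in w]
    length = len(features[0])
    return [sum(n * f[i] for n, f in zip(norm, features)) for i in range(length)]


p4_up = [1.0, 2.0, 3.0]   # upsampled deeper feature (illustrative)
p3 = [3.0, 2.0, 1.0]      # shallower feature at the same resolution
fused = fast_normalized_fusion([p4_up, p3], raw_weights=[1.0, 3.0])
print([round(v, 3) for v in fused])   # [2.5, 2.0, 1.5]
```

Because the weights are learned, training can shift importance toward whichever scale is more reliable, which is the behavior the paragraph above attributes to the neck.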

2.2. Evolution and Utilization of Lightweight Convolution

The ongoing advancement of deep learning has made convolutional neural networks increasingly complex: deeper convolutional layers bring greater computational load and model complexity. Lightweight convolution techniques emerged to reduce both while largely maintaining detection accuracy. Depthwise separable convolution [17], first applied in the MobileNet architecture, is one of the most widely used lightweight convolutions today. It reduces computation by splitting a standard convolution into a depthwise convolution and a pointwise convolution, and it is also the main lightweight convolution used in this paper. Group convolution [18] divides the channels into groups and convolves each group independently, significantly reducing the computational burden; the grouping can also improve the model's capacity to extract diverse features. Subsequently, the ShuffleNet [19] and EfficientNet [20,21] architectures were introduced: ShuffleNet uses channel shuffling to exchange information between channel groups, and EfficientNet uses compound scaling to jointly scale network depth, width, and input resolution, further improving performance while remaining lightweight. Lightweight convolution has since diversified: hybrid convolutions that combine two lightweight convolutions, dynamic quantization, and pruning have all been implemented successfully. Meanwhile, many researchers have applied lightweight models in various fields. Mao et al. [22] applied the Efficient Channel Attention module to MBConv and proposed a new convolutional block named Efficient-MBConv, which captures cross-channel information through one-dimensional convolution, reduces model parameters, and achieves a lightweight design, which is of great significance for intelligent driving.
Tang et al. [23] developed a multidimensional fusion module from stacked multi-scale blocks and multibranch blocks based on depthwise separable convolution. By combining convolutional kernels of different sizes, the multi-scale blocks obtain multi-scale features while significantly reducing network parameters; the model was applied on mobile devices to detect breast cancer. Tang et al. [24] analyzed the cosine similarity between feature maps of different layers in the ConvNeXt model and removed redundant layers, substantially decreasing the network's parameter count and computational demands, which provides a useful reference for real-time in-vehicle monitoring systems. Li et al. [25] designed a multi-scale lightweight convolutional module (MSLConv) that splits the input feature map into two equal parts along the channel dimension: one part undergoes convolution, while the other captures long-range dependencies through a residual connection. MSLConv also combines features across scales and layers, effectively reducing the parameter count and balancing accuracy and efficiency in high-voltage circuit inspection. The Extended Convolutional Mixer (EConvMixer) introduced by Garas [26] integrates three types of convolution: dilated depthwise convolution, standard depthwise convolution, and pointwise convolution. The dilation rate lets the dilated depthwise convolution expand its receptive field without adding parameters, and because each depthwise kernel convolves a single channel, computational cost is also reduced. Pairing depthwise and pointwise filtering reduces the parameter count in the same way as depthwise separable convolution. The resulting lightweight model performs well on image super-resolution.
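To make the grouping arithmetic concrete, the sketch below counts the weights of a grouped convolution (groups = 1 is a standard convolution; groups = C_in with C_out = C_in is a depthwise convolution) and implements the ShuffleNet channel shuffle as an index permutation. String labels stand in for channel tensors, and the layer sizes are illustrative assumptions.

```python
def group_conv_weights(k, c_in, c_out, groups):
    """Weights of a k x k grouped convolution (bias ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return k * k * (c_in // groups) * c_out


def channel_shuffle(channels, groups):
    """ShuffleNet-style shuffle: view as (groups, n), transpose to (n, groups), flatten."""
    n = len(channels) // groups
    if n * groups != len(channels):
        raise ValueError("channel count must be divisible by groups")
    return [channels[g * n + i] for i in range(n) for g in range(groups)]


print(group_conv_weights(3, 64, 64, groups=1))    # 36864 (standard convolution)
print(group_conv_weights(3, 64, 64, groups=4))    # 9216
print(group_conv_weights(3, 64, 64, groups=64))   # 576 (depthwise)
print(channel_shuffle(["a0", "a1", "b0", "b1", "c0", "c1"], groups=3))
# ['a0', 'b0', 'c0', 'a1', 'b1', 'c1']
```

The shuffle is a fixed permutation rather than a random one: after it, each group of the next grouped convolution receives channels from every group of the previous one.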

2.3. Evolution and Utilization of Attention Mechanisms

Beyond object detection, artificial intelligence as a whole has advanced significantly, with the attention mechanism as a key technology. The attention mechanism imitates a biological cognitive process: in a complex environment, organisms focus on the things most relevant to their goals and ignore unimportant ones, which lets them capture key information efficiently. Attention was first applied to computer vision in the 1990s, but because it relied on manually designed features, its limitations were significant. It was not until 2014, when attention was used in machine translation, that it began to gain widespread application. The Transformer [27], which followed, is built entirely on attention. Today, attention mechanisms are not only used to extract more important information but are also becoming more lightweight. For example, the Efficient Channel Attention (ECA) [28] module uses one-dimensional convolution to model dependencies between channels, which avoids the many parameters of fully connected layers and keeps computational complexity low. The Coordinate Attention (CA) [29] module combines channel and spatial information and reduces computation by factorizing global average pooling into two one-dimensional pooling operations along the height and width directions. Researchers have also applied attention mechanisms widely with remarkable results. Zhu et al. [30] integrated a Transformer prediction head with the convolutional block attention module (CBAM) in YOLOv5, effectively improving object detection in remote sensing images. Ye et al. [31] introduced a cross-feature attention (XFA) mechanism that integrates the inductive bias of one-dimensional convolution with interactions between different features. It reduces the computational complexity of self-attention from quadratic to linear while maintaining the ability to perceive spatial context. Peng et al. [32] introduced an Attention Feature Extractor (AttFE) module into YOLOv7, using the SENet module to recalibrate feature maps in a data-driven manner, thereby retaining task-oriented features and suppressing distracting ones. Cai et al. [33] employed an Efficient Channel Attention Mechanism (ECAM) optimized with global max pooling, which enhanced the model's ability to detect small objects accurately without increasing its parameter count.
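ECA's lightweight design fits in a few lines. The sketch follows the adaptive kernel-size rule from the ECA paper (γ = 2, b = 1, rounded to an odd size) and applies a 1-D convolution across the pooled channel descriptors followed by a sigmoid. The kernel weights are fixed placeholders rather than learned values, and plain lists stand in for tensors.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1-D kernel size: |(log2 C + b) / gamma|, bumped to an odd number."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca_weights(pooled, kernel):
    """Channel weights: 1-D convolution over pooled channel descriptors, then sigmoid.

    pooled: one global-average-pooled value per channel
    kernel: odd-length 1-D weights (learned in practice; fixed here for illustration)
    """
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(pooled) + [0.0] * pad   # zero padding keeps length C
    out = []
    for c in range(len(pooled)):
        s = sum(w * padded[c + j] for j, w in enumerate(kernel))
        out.append(1.0 / (1.0 + math.exp(-s)))
    return out


print(eca_kernel_size(64), eca_kernel_size(256))   # 3 5
k = eca_kernel_size(8)
weights = eca_weights([0.1, 0.5, -0.2, 0.0, 0.3, 0.9, -0.4, 0.2], [1.0 / k] * k)
print(len(weights), k)                             # 8 3
```

The only parameters are the k kernel weights, independent of the channel count, which is why ECA avoids the fully connected layers used by SE-style attention.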

3. Materials and Methods

As shown in Figure 2, this paper optimizes the original YOLOv11 model to obtain a lighter and more efficient detection model, ACLC-Detection. Compared with the standard YOLOv11, ACLC-Detection addresses the following three problems. (1) The remote sensing images analyzed in this study are typically high-resolution, which increases the model's computational cost and makes image backgrounds more complex. The backbone network is a key stage in object detection: the completeness and effectiveness of its feature extraction shape the subsequent stages and the final results. Therefore, taking the characteristics of remote sensing images into account, this paper replaces some conventional convolutions in the backbone network with depthwise separable convolutions, which significantly reduce the computational load and help meet real-time requirements. In addition, the convolutional excitation module proposed in this paper is placed after the depthwise separable convolutions, as annotated in Figure 2. It combines channel attention and spatial attention, dynamically enhancing key target features while suppressing background information unrelated to the targets, which helps with the complex backgrounds of remote sensing images. Together, these two techniques balance efficiency and accuracy, easing resource constraints while improving robustness in complex environments. (2) The core task of the neck fusion network is to integrate the multi-scale feature maps output by the backbone network. However, high-resolution inputs still impose computational pressure, and features at different levels of the neck have different characteristics.
Shallow features are high in resolution and rich in detail but weak in semantic information, whereas deep features are semantically strong but low in resolution and lose much detail. Balancing the two is therefore particularly important. This paper designs a module named CAF-GM, shown in the neck network part of Figure 2. The Ghost convolution inside it generates additional feature maps through simple linear transformations, which makes it more sensitive to small targets. The SimAM module at the end of CAF-GM derives 3D attention weights from an energy function, assigning more weight to deep semantic information and strengthening the guidance of shallow features; it also suppresses background noise in shallow features and reduces interference. (3) Although the CIOU loss function accounts for geometric attributes such as the overlap area, the distance between center points, and the aspect ratio, it assigns the same loss weight to all samples. Because IOU is highly sensitive to small positional offsets when targets are small, this can severely reduce accuracy on small samples. In addition, the aspect-ratio term of CIOU reflects only relative differences and cannot reflect absolute scale, which is a serious disadvantage for multi-scale targets in remote sensing images. Therefore, this study adopts the Inner-CIOU loss function, which introduces auxiliary bounding boxes and a dynamic scaling mechanism so that auxiliary boxes of different scales can be used for targets of different scales. This improvement significantly enhances the localization accuracy and operational stability of the model in complex remote sensing scenes and strengthens its adaptability to variation in target size.

The three improvements made to YOLOv11 are targeted and necessary. Besides addressing the challenges posed by remote sensing images, ACLC-Detection reduces the number of parameters and the computational complexity through more lightweight modules, making real-time deployment feasible. Section 3.1, Section 3.2 and Section 3.3 explain the structure, function, and advantages of these three improvements in detail.

3.1. Lightweight Convolutional Attention Module

3.1.1. Convolutional Excitation Module (CEM)

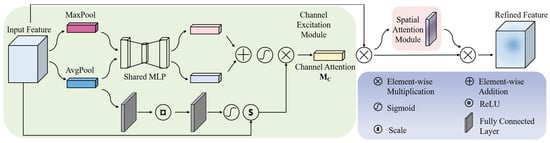

Attention mechanisms have been shown to improve the feature representation of convolutional neural networks. This paper proposes a new attention module, the Convolutional Excitation Module (CEM), whose structure is shown in Figure 3. A feature map entering the CEM passes through five branches that can run in parallel. The first and fifth branches resemble residual connections. The second branch applies global max pooling followed by a shared fully connected layer. Correspondingly, the third branch applies global average pooling followed by the same shared fully connected layer, and its result is multiplied element-wise with that of the second branch. The fourth branch applies the shared global average pooling, then a first fully connected layer that reduces the number of channels, a ReLU activation, and a second fully connected layer that restores the original channel count. Together, these branches notably enhance the extraction of information from the input feature maps. The corresponding formulas are Equations (1) and (2).

Figure 3.

The schematic diagram of the CE Module.

In Equations (1) and (2), the terms denote the input to the spatial attention module, the output of the channel excitation module, the input to the CEM, and the output of the CEM, respectively; the output of the spatial attention module is denoted analogously. The workflow is similar to that of CBAM. Equations (3) and (4) give the channel excitation module and the spatial attention mechanism, respectively.
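Because the CEM is described as following the CBAM workflow, Equations (1) and (2) plausibly take the standard CBAM form below. This is a reconstruction under that assumption, with assumed notation: $F$ is the CEM input, $M_c(F)$ the channel excitation output, $F'$ the input to the spatial attention module, $M_s(F')$ the spatial attention output, $F''$ the CEM output, and $\otimes$ element-wise multiplication, with $M_c$ broadcast along the spatial dimensions and $M_s$ along the channel dimension.

```latex
\begin{aligned}
F'  &= M_c(F) \otimes F    &&\text{(1)}\\
F'' &= M_s(F') \otimes F'  &&\text{(2)}
\end{aligned}
```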

In Equation (3), the operators are, in order, the Sigmoid activation function, element-wise multiplication, the weighting operation, the fully connected layer, and the ReLU activation function.

Specifically, the Convolutional Excitation Module (CEM) has the following advantages: (1) It efficiently combines channel attention and spatial attention. Multiplying the features output by the two dimensions element-wise enhances features, preserves critical information, and reduces background interference. (2) The global average pooling path is strengthened by a fully connected branch, and a gating mechanism composed of two fully connected layers recalibrates the channel-wise features, which effectively improves the model's representational capacity. (3) The global pooling operations add no parameters, and the first fully connected layer reduces the channel dimension, so the two-layer gate needs far fewer parameters than a single full-width layer. The subsequent activation functions and element-wise multiplications add no parameters at all. The module is therefore lightweight and well suited to deployment environments with limited resources.
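The gating branch in advantage (2) and the parameter argument in (3) can be illustrated with a squeeze-and-excitation style sketch. It covers only that branch (global average pooling, FC reduce, ReLU, FC restore, a Sigmoid gate, rescale); how the CEM merges its five branches is not specified in enough detail here to reproduce, so that part is omitted. The Sigmoid gate, the bias-free layers, the reduction ratio r, and the weight values are illustrative assumptions.

```python
import math


def channel_gate(feature, w1, w2):
    """Squeeze-and-excitation style channel recalibration.

    feature: C channels, each an H x W nested list of floats
    w1: (C // r) rows of C weights, the reducing fully connected layer
    w2: C rows of (C // r) weights, the restoring fully connected layer
    Returns the rescaled feature map and the per-channel gate values.
    """
    # Squeeze: global average pooling, one descriptor per channel, no parameters.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    # Excitation: FC (reduce) -> ReLU -> FC (restore) -> Sigmoid gate.
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w2]
    gates = [1.0 / (1.0 + math.exp(-s)) for s in logits]
    # Rescale every spatial position of channel c by gates[c].
    out = [[[g * v for v in row] for row in ch] for ch, g in zip(feature, gates)]
    return out, gates


def gate_params(c, r):
    """Weights of the two bias-free FC layers versus one C x C layer."""
    return 2 * c * (c // r), c * c


feature = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[-1.0, 1.0], [1.0, -1.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
w1 = [[0.5, 0.0, 0.0, 0.5], [0.0, 0.5, 0.5, 0.0]]        # C = 4 -> C // r = 2
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]   # 2 -> C = 4
out, gates = channel_gate(feature, w1, w2)
print([round(g, 3) for g in gates])
print(gate_params(256, 16))   # (8192, 65536)
```

For C = 256 and r = 16, the two-layer gate uses one-eighth of the weights of a single 256 × 256 layer, which is the saving point (3) refers to.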

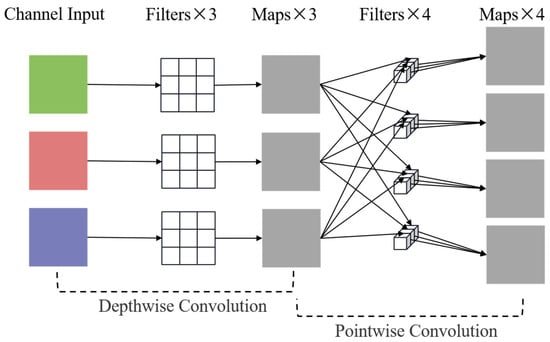

3.1.2. Lightweight Convolutional Module

A convolution processes an image with several convolution kernels and outputs features along both the spatial and channel dimensions. Its parameter count and computational load depend on the kernel size, the number of input channels, and the number of output channels, and these factors combine multiplicatively rather than additively, so optimizing conventional convolutions is essential. To balance accuracy and parameter count, this paper replaces two conventional convolutions in the model with depthwise separable convolutions. This reduces the model's parameters without significantly compromising accuracy, and the replacement is used together with the convolutional excitation module proposed in the previous section. Figure 4 illustrates the architecture of the depthwise separable convolution, which consists of two components: depthwise convolution and pointwise convolution. In depthwise convolution, a single-channel kernel is applied to each input channel separately, so the number of output channels equals the number of input channels. Because each channel is convolved independently, however, depthwise convolution cannot learn information across channels. Pointwise convolution compensates for this: it applies 1 × 1 convolutions that combine information across channels and set the number of output feature maps, enriching the extracted information. Equations (5) and (6) give the parameter count and computational load of standard convolution:

Figure 4.

The schematic diagram of the depthwise separable convolution module.

In these equations, the variables are the kernel size, the numbers of input and output channels, and the spatial size of the output feature map, and the two quantities computed are the parameter count and the computational load. In a conventional convolution, both costs grow with the product of the input and output channel counts, so wide layers quickly become expensive. Depthwise convolution assigns one single-channel kernel to each input channel, so each kernel produces a single-channel output. Pointwise convolution then uses 1 × 1 kernels, which greatly reduces the number of parameters and the computational burden. The corresponding expressions for depthwise separable convolution are given in Equations (7) and (8).
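Equations (5) through (8) presumably follow the standard MobileNet cost model. Under that assumption (bias ignored, one multiply-accumulate counted per weight per output position, kernel size $K$, channel counts $C_{in}$ and $C_{out}$, output size $H \times W$; the notation is ours), they read as follows, together with the resulting cost ratio:

```latex
\begin{aligned}
P_{std} &= K^2 C_{in} C_{out}                       &&\text{(5)}\\
F_{std} &= K^2 C_{in} C_{out} H W                   &&\text{(6)}\\
P_{ds}  &= K^2 C_{in} + C_{in} C_{out}              &&\text{(7)}\\
F_{ds}  &= K^2 C_{in} H W + C_{in} C_{out} H W      &&\text{(8)}\\
\frac{P_{ds}}{P_{std}} = \frac{F_{ds}}{F_{std}} &= \frac{1}{C_{out}} + \frac{1}{K^2}
\end{aligned}
```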

Although the formulas for depthwise separable convolution look longer, they are a sum of two small products rather than one large product: spatial filtering and channel mixing are paid for separately instead of being multiplied together. Relative to a standard convolution, both the parameter count and the computational load scale by 1/C_out + 1/K², where K is the kernel size and C_out the number of output channels, so with a 3 × 3 kernel and a relatively large number of output channels they drop to roughly one-ninth of the original. The advantage is therefore pronounced: by factorizing spatial filtering and channel combination, depthwise separable convolution reduces the parameters of the convolutional layer, speeds up computation, and lowers the computational load, which is particularly valuable on resource-constrained devices.
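The cost comparison can be checked numerically. The helper names and the layer size (3 × 3 kernel, 64 → 128 channels, 80 × 80 output) are illustrative assumptions, not the layers actually modified in ACLC-Detection; costs are counted as multiply-accumulates with bias ignored.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Parameters and multiply-accumulates of a standard k x k convolution (bias ignored)."""
    params = k * k * c_in * c_out
    return params, params * h * w


def dsconv_cost(k, c_in, c_out, h, w):
    """Depthwise k x k (one kernel per input channel) followed by pointwise 1 x 1."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    params = depthwise + pointwise
    return params, params * h * w


std = standard_conv_cost(3, 64, 128, 80, 80)
ds = dsconv_cost(3, 64, 128, 80, 80)
print(std, ds)   # (73728, 471859200) (8768, 56115200)
print(round(ds[0] / std[0], 4), round(1 / 128 + 1 / 9, 4))   # 0.1189 0.1189
```

For this layer the depthwise separable version needs about 11.9% of the parameters and multiply-accumulates, slightly more than one-ninth because of the 1/C_out term.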

3.2. Convolutional Attention Module with Ghost Module (CAF-GM)

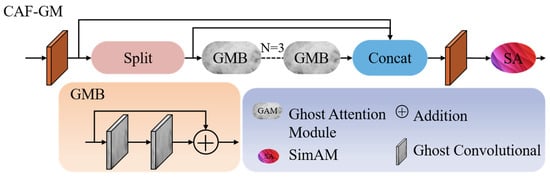

To make the feature maps in the neck network more complete, integrate more useful information, and reduce the computational burden, we propose a new module called CAF-GM and place it in the neck of the network, as illustrated in Figure 5. The module is built on the C2f framework. Feature maps entering CAF-GM first pass through a 1 × 1 convolutional layer whose number of kernels is twice the number of input channels, doubling the channel count of the output feature map and enhancing the model's representational ability. A split operation then divides the feature map into two parts: one is processed sequentially by the subsequent operations, while the other is carried by a residual connection to the concatenation. The GMB module replaces the conventional convolution in the Bottleneck module with Ghost convolution; after the split, the feature map is processed by successive GMB modules to capture deeper hierarchical information. A final conventional convolution compresses the channels of the concatenated feature map, and a SimAM module is added at the end of the module. Overall, CAF-GM is optimized in terms of both lightweight convolution and attention. Concatenating feature maps obtained through different processing paths substantially improves the model's ability to integrate multi-scale information. Using 1 × 1 convolutions to increase and then decrease the channel dimension keeps the computational load low while enhancing expressive power, and the SimAM module at the end further strengthens feature representation and suppresses redundant information.
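SimAM's parameter-free weighting can be written directly from its closed-form energy. The sketch follows the published SimAM formula: each activation t is scaled by sigmoid(1/e*), with 1/e* = (t − μ)²/(4(σ² + λ)) + 1/2 and the default λ = 1e-4. Nested Python lists stand in for tensors, and the guard for 1 × 1 maps is our own addition; the placement inside CAF-GM follows the description above rather than released code.

```python
import math


def simam_channel(channel, e_lambda=1e-4):
    """Parameter-free SimAM weighting for one H x W channel.

    Each activation t is scaled by sigmoid(1 / e*), where
    1 / e* = (t - mu)^2 / (4 * (var + lambda)) + 0.5,
    so activations far from the channel mean receive larger weights.
    """
    values = [v for row in channel for v in row]
    n = max(len(values) - 1, 1)   # variance divisor H*W - 1, guarded for 1x1 maps
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / n
    out = []
    for row in channel:
        inv_energy = [(v - mu) ** 2 / (4 * (var + e_lambda)) + 0.5 for v in row]
        out.append([v / (1.0 + math.exp(-e)) for v, e in zip(row, inv_energy)])
    return out


def simam(feature, e_lambda=1e-4):
    """Apply SimAM to each channel of a C x H x W nested list (adds no parameters)."""
    return [simam_channel(ch, e_lambda) for ch in feature]


x = [[[1.0, 1.0], [1.0, 5.0]]]   # one channel with a single salient activation
y = simam(x)
print([[round(v, 3) for v in row] for row in y[0]])
```

The salient activation receives a larger weight than its neighbors, and the only hyperparameter is λ, which is why SimAM adds no learnable parameters to CAF-GM.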

Figure 5.

The schematic diagram of the CAF-GM module.
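The channel bookkeeping of CAF-GM can be traced with a short sketch that follows the description above: 1 × 1 expansion to twice the input channels, a split into two halves, successive GMB blocks, concatenation, 1 × 1 compression, and SimAM. One point is an assumption not stated in the text: as in the standard C2f design, the output of every GMB block is assumed to be appended to the concatenation. The function name and the number of GMB blocks are also illustrative.

```python
def caf_gm_channel_trace(c_in: int, c_out: int, n_blocks: int) -> list:
    """Return (stage, in_channels, out_channels) tuples for one CAF-GM pass."""
    trace = []
    hidden = 2 * c_in                      # 1x1 conv doubles the channel count
    trace.append(("conv1x1_expand", c_in, hidden))

    half = hidden // 2
    trace.append(("split", hidden, half))  # two halves of `half` channels each
    concat_channels = 2 * half             # both halves reach the concatenation

    for i in range(n_blocks):
        # GMB keeps the channel count.
        # ASSUMPTION (C2f-style): every GMB output is also concatenated.
        trace.append((f"gmb_{i}", half, half))
        concat_channels += half

    trace.append(("concat", concat_channels, concat_channels))
    trace.append(("conv1x1_compress", concat_channels, c_out))
    trace.append(("simam", c_out, c_out))  # parameter-free, shape-preserving
    return trace


if __name__ == "__main__":
    for stage in caf_gm_channel_trace(c_in=64, c_out=64, n_blocks=2):
        print(stage)
```

Under this assumption, the final 1 × 1 convolution must compress (2 + n_blocks) × c_in channels back to c_out, which is where most of the module’s channel reduction happens.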

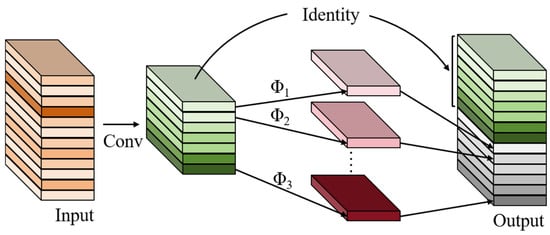

3.2.1. Ghost Module

The structure of the Ghost convolution is shown in Figure 6. The input feature map first passes through a 1 × 1 convolutional layer that reduces its dimensionality by compressing the channels. Each of the resulting channels then undergoes a simple linear transformation Φ, which here consists of a depthwise convolution, batch normalization, and an activation function, and which generates additional feature maps. The feature maps produced by these cheap operations are concatenated with those produced by the initial conventional convolution, and the result passes through batch normalization and a ReLU activation to give the output feature map. The central idea of the Ghost module is to reuse feature maps that have already been computed and derive further Ghost feature maps from them through cheap linear transformations. Unlike a traditional convolution, which produces every output channel with a full convolution, this approach generates part of the channels with inexpensive per-channel operations, which reduces parameters and computation and improves computational efficiency. The Ghost module therefore makes good use of limited computational resources and is well suited to resource-constrained platforms.

Figure 6.

The Ghost module.

For an input feature map $X$, a set of $m$ intrinsic feature maps $Y'$ is first obtained through the conventional convolution operation $f'$, as shown in Equation (9):

$$Y' = X * f' \tag{9}$$

The feature maps in $Y'$ then undergo linear transformations. Here $y'_i$ denotes the $i$-th feature map in $Y'$; passing it through the linear transformations $\Phi_{i,j}$ generates $s$ Ghost feature maps, and $y_{ij}$ denotes the $j$-th Ghost feature map generated from the $i$-th intrinsic feature map (in the original GhostNet formulation, the last transformation $\Phi_{i,s}$ is an identity mapping that preserves the intrinsic map). This linear transformation can be regarded as a reweighting or recombination of the intrinsic feature maps. The complete calculation is shown in Equation (10):

$$y_{ij} = \Phi_{i,j}\left(y'_i\right), \quad i = 1, \dots, m, \quad j = 1, \dots, s, \qquad Y = \left[y_{11}, y_{12}, \dots, y_{ms}\right] \tag{10}$$

In the equation, $Y$ denotes the final set of $n = m \cdot s$ output feature maps. In summary, the Ghost module enriches the representational capacity of the feature maps in this way while substantially reducing the model’s parameter count and computational complexity, which makes it valuable for designing lightweight, efficient networks with good generalization.
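A minimal NumPy sketch of Equations (9) and (10) with $s = 2$ is given below: a 1 × 1 primary convolution produces the intrinsic maps $Y'$, a 3 × 3 depthwise convolution plays the role of the cheap transformation $\Phi$, and the identity branch keeps $Y'$ itself. Batch normalization and activations are omitted, and the function names and sizes are illustrative assumptions rather than the ACLC-Detection implementation.

```python
import numpy as np


def pointwise_conv(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1x1 convolution: x is (C_in, H, W), w is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def depthwise_conv3x3(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """3x3 depthwise convolution (cross-correlation), stride 1, zero padding 1.

    x is (C, H, W) and k is (C, 3, 3): each channel has its own kernel.
    """
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x, dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += k[:, dy, dx][:, None, None] * xp[:, dy:dy + h, dx:dx + w]
    return out


def ghost_module(x: np.ndarray, w_primary: np.ndarray, k_cheap: np.ndarray) -> np.ndarray:
    """Ghost module with s = 2: identity branch plus one cheap depthwise branch."""
    intrinsic = pointwise_conv(x, w_primary)            # Y' = X * f', m maps
    ghosts = depthwise_conv3x3(intrinsic, k_cheap)      # y_i2 = Phi(y'_i)
    return np.concatenate([intrinsic, ghosts], axis=0)  # Y, n = 2m maps


def ghost_params(c_in: int, m: int) -> int:
    """Weights in this sketch: 1x1 primary conv plus one 3x3 kernel per intrinsic map."""
    return c_in * m + m * 9


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((64, 20, 20))
    m = 64
    y = ghost_module(x, rng.standard_normal((m, 64)), rng.standard_normal((m, 3, 3)))
    print(y.shape)  # (128, 20, 20)
    print(ghost_params(64, m), 64 * 128)  # vs. a plain 1x1 conv producing 128 maps
```

In this configuration the Ghost sketch needs 4672 weights to produce 128 maps, compared with 8192 for a plain 1 × 1 convolution with the same output width.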

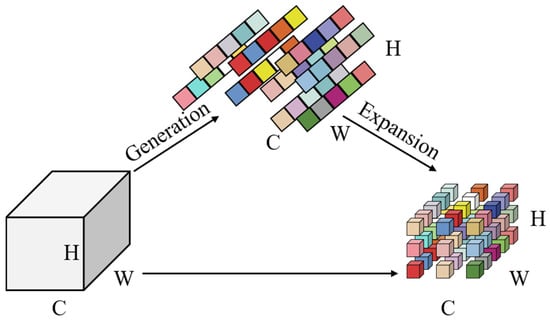

3.2.2. Simple Attention Module (SimAM)

Attention mechanisms are an effective way to improve object detection accuracy, especially in complex environments. However, most attention mechanisms substantially increase the model’s parameter count and computational cost, which makes real-time processing harder to achieve. To address this issue, a SimAM module is placed at the end of the CAF-GM module in this paper. SimAM is a lightweight, parameter-free attention module grounded in neuroscience theory; its structure is shown in Figure 7. Introducing it has two advantages. (1) It strengthens the representation of key features: SimAM derives three-dimensional (channel × height × width) weights from an energy function, which flexibly adjusts the importance of each neuron and suppresses less important information. Without it, the output multi-scale features would retain more redundant information. (2) It is lightweight: because its weights are derived analytically from the energy function rather than learned, SimAM adds no parameters and very little computation.

Figure 7.

The SimAM module.

Specifically, SimAM defines an energy function that describes the relationship between an activated target neuron and the surrounding neurons it inhibits, as shown in Equation (11):

$$e_t\left(w_t, b_t, \mathbf{y}, x_i\right) = \left(y_t - \hat{t}\right)^2 + \frac{1}{M-1}\sum_{i=1}^{M-1}\left(y_o - \hat{x}_i\right)^2 \tag{11}$$

In the equation, $e_t$ is the energy, and $w_t$ and $b_t$ are the weight and bias of the linear transformation, respectively. $y_t$ is the desired output (label) of the target neuron, $\hat{t} = w_t t + b_t$ is the output of the target neuron after the linear transformation, $y_o$ is the desired output of the other neurons, $\hat{x}_i = w_t x_i + b_t$ is the transformed output of the $i$-th other neuron, and $M = H \times W$ is the number of neurons in the channel. The two transformed outputs are linear transformations of the input features of each neuron in a single channel. To simplify the computation further, binary labels are adopted ($y_t = 1$ and $y_o = -1$) and a regularization term is added. The simplified formula is shown in Equation (12):

$$e_t\left(w_t, b_t, \mathbf{y}, x_i\right) = \frac{1}{M-1}\sum_{i=1}^{M-1}\left(-1 - \left(w_t x_i + b_t\right)\right)^2 + \left(1 - \left(w_t t + b_t\right)\right)^2 + \lambda w_t^2 \tag{12}$$

Equation (12) incorporates the binary labels, the two linear transformations, and the regularization term. $\lambda$ is the regularization coefficient, which controls model complexity, and $t$ and $x_i$ in the two linear transformations denote the target neuron and the other neurons of the input feature in a single channel, respectively. Solving this energy function yields closed-form expressions for $w_t$ and $b_t$. Assuming further that all elements in a single channel follow the same distribution, the minimal energy is given by Equation (13):

$$e_t^{*} = \frac{4\left(\hat{\sigma}^2 + \lambda\right)}{\left(t - \hat{\mu}\right)^2 + 2\hat{\sigma}^2 + 2\lambda} \tag{13}$$

Here $\hat{\mu}$ and $\hat{\sigma}^2$ are the mean and variance of all neurons in the channel. The lower the energy $e_t^{*}$, the more the target neuron differs from its surrounding neurons and the more important it is for attention, so the importance of each neuron is given by $1/e_t^{*}$. The final stage of the attention mechanism is feature refinement, in which SimAM applies multiplicative scaling to amplify critical information, as shown in Equation (14):

$$\widetilde{X} = \operatorname{sigmoid}\left(\frac{1}{E}\right) \odot X \tag{14}$$

In the equation, $\widetilde{X}$ is the enhanced feature map. The sigmoid function maps its input into the range (0, 1), restraining overly large values of $1/E$; because it is monotonic, it does not change the relative importance of the neurons. $E$ groups the minimal energies $e_t^{*}$ across all channels and spatial positions, $\odot$ denotes element-wise multiplication, and $X$ is the original input feature map. Because the importance of each neuron is computed explicitly, the method is more interpretable. Most importantly, it barely increases the model’s complexity or computational load while effectively improving performance in visual tasks.
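The following NumPy sketch mirrors the widely used reference formulation of SimAM. For each channel it computes $1/e_t^{*}$ from Equation (13) in the algebraically equivalent form $(t-\hat{\mu})^2 / (4(\hat{\sigma}^2+\lambda)) + 0.5$ and rescales the input with a sigmoid as in Equation (14). The default $\lambda = 10^{-4}$ and the use of $M - 1$ in the variance follow common reference code and are assumptions here, not settings reported for ACLC-Detection.

```python
import numpy as np


def simam(x: np.ndarray, lam: float = 1e-4) -> np.ndarray:
    """Parameter-free SimAM attention for a (C, H, W) feature map."""
    _, h, w = x.shape
    n = h * w - 1                                   # number of "other" neurons, M - 1
    mu = x.mean(axis=(1, 2), keepdims=True)         # per-channel mean
    d2 = (x - mu) ** 2                              # (t - mu)^2 for every neuron
    var = d2.sum(axis=(1, 2), keepdims=True) / n    # per-channel variance estimate
    inv_energy = d2 / (4.0 * (var + lam)) + 0.5     # 1 / e_t^*, Equation (13)
    return x * (1.0 / (1.0 + np.exp(-inv_energy)))  # sigmoid(1/E) * X, Equation (14)


def min_energy(x: np.ndarray, lam: float = 1e-4) -> np.ndarray:
    """Equation (13) written out directly, for comparison."""
    _, h, w = x.shape
    mu = x.mean(axis=(1, 2), keepdims=True)
    var = ((x - mu) ** 2).sum(axis=(1, 2), keepdims=True) / (h * w - 1)
    return 4.0 * (var + lam) / ((x - mu) ** 2 + 2.0 * var + 2.0 * lam)


if __name__ == "__main__":
    x = np.random.default_rng(0).standard_normal((16, 8, 8))
    y = simam(x)
    print(y.shape)  # (16, 8, 8): same shape, no learnable parameters
```

Because every quantity is computed from per-channel statistics, the module adds no trainable weights, which is the property the text relies on.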

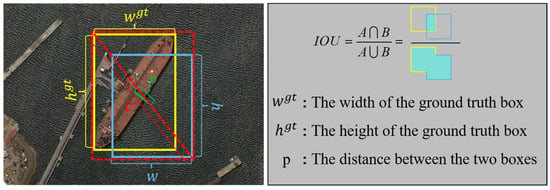

3.3. Inner Complete Intersection over Union (Inner-CIOU) Loss Function

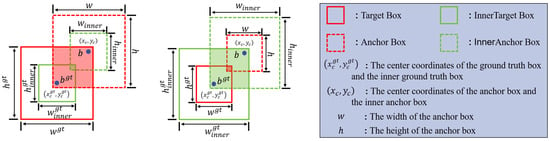

The loss function has a decisive effect on the final detection accuracy: a well-chosen loss lets the model optimize the discrepancy between predictions and ground-truth labels more effectively during training. In YOLOv11, the loss includes a bounding-box regression loss and a classification loss. The regression loss quantifies the difference between the predicted bounding boxes and the corresponding ground-truth boxes. The classification loss evaluates the discrepancy between the predicted and actual classes of a target, penalizing confident but incorrect predictions, and is typically computed with a cross-entropy loss. This paper focuses on improving the bounding-box regression loss. YOLOv11 uses CIoU for this loss; CIoU extends DIoU with a term that measures aspect-ratio consistency. The corresponding visualization is shown on the left of Figure 8: the yellow filled rectangle denotes the ground-truth box of the target, the blue filled rectangle denotes the predicted box, and the red dashed rectangle denotes the smallest enclosing rectangle that covers both. The right side of Figure 8 gives an annotated illustration of IoU. The corresponding expressions are given in Equations (15) and (16):

$$L_{CIoU} = 1 - IoU + \frac{\rho^2\left(b, b^{gt}\right)}{c^2} + \alpha v \tag{15}$$

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2 \tag{16}$$

Figure 8.

A visual representation of CIOU and IoU.

In Equation (15), $L_{CIoU}$ denotes the Complete IoU (CIoU) loss; $IoU$ is the ratio of the overlap between the ground-truth box and the predicted box to the area of their union; $\rho\left(b, b^{gt}\right)$ is the Euclidean distance between the centers $b$ and $b^{gt}$ of the predicted and ground-truth boxes, as marked in Figure 8; $c$ is the diagonal length of the smallest enclosing rectangle that contains both boxes; $\alpha = v / \left((1 - IoU) + v\right)$ is a positive trade-off weight for the $v$ term; and $v$ measures the consistency of the aspect ratios (width-to-height ratios) of the predicted and ground-truth boxes, computed as in Equation (16). In Equation (16), $h$ and $w$ are the height and width of the predicted box, and $h^{gt}$ and $w^{gt}$ are the height and width of the ground-truth box; these are also labeled in Figure 8.
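For reference, Equations (15) and (16) can be evaluated directly for two axis-aligned boxes given as (x1, y1, x2, y2). This is a plain-Python sketch; the small `eps` guarding the divisions, including the form $\alpha = v/(v - IoU + 1 + \epsilon)$, is a numerical-stability assumption similar to common implementations and is not specified in the paper.

```python
import math


def box_iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def ciou_loss(pred, gt, eps=1e-7):
    """Equations (15)-(16) for one predicted box and one ground-truth box."""
    iou = box_iou(pred, gt)
    # rho^2: squared distance between the two box centers
    rho2 = (((pred[0] + pred[2]) - (gt[0] + gt[2])) ** 2
            + ((pred[1] + pred[3]) - (gt[1] + gt[3])) ** 2) / 4
    # c^2: squared diagonal of the smallest enclosing rectangle
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # v: aspect-ratio consistency term, Equation (16)
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    w_gt, h_gt = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(w_gt / h_gt) - math.atan(w / h)) ** 2
    alpha = v / (v - iou + 1 + eps)  # trade-off weight alpha
    return 1 - iou + rho2 / c2 + alpha * v


if __name__ == "__main__":
    print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))  # identical boxes
    print(ciou_loss((0, 0, 10, 10), (5, 0, 15, 10)))  # horizontal offset
```

For two 10 × 10 boxes offset horizontally by 5 pixels, v = 0 and the loss reduces to 1 - 1/3 + 25/325, about 0.744. For a small object the same 5-pixel offset would drive the IoU to zero, which is the sensitivity that motivates Inner-CIoU.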

CIoU improves bounding-box regression accuracy by considering both the overlap region and the distance between box centers. For small targets with small bounding boxes, however, subtle changes in box shape and center position are hard to reflect in the loss, and CIoU performs unsatisfactorily, especially on high-resolution images. The improved Inner-CIoU introduces a scale factor, ratio, that sets the size of auxiliary boxes used in the loss. When ratio is greater than 1, the auxiliary boxes are enlarged accordingly, which effectively enlarges small targets during regression. This mitigates the large positional deviations caused by the small pixel footprint of small targets, and because the targets are magnified along with the auxiliary boxes, finer positional information is taken into account. The visualization of Inner-CIoU is shown in Figure 9, which contains four boxes: the target box (solid red line), the inner target box (solid green line), the anchor box (dashed red line), and the inner anchor box (dotted green line). The remaining symbols describe the parameters of these boxes, some of which are labeled on the right of Figure 9. The Inner-CIoU loss is given by Equations (17) and (18):

$$L_{Inner\text{-}CIoU} = L_{CIoU} + IoU - IoU^{inner} \tag{17}$$

$$IoU^{inner} = \frac{inter}{union} \tag{18}$$

Figure 9.

A visual representation of Inner-CIoU.

In Equation (17), $L_{CIoU}$ and $IoU$ are as defined above. $IoU^{inner}$ is the inner IoU, the degree of overlap between the scaled boxes, given by Equation (18). In Equation (18), $inter$ is the intersection of the inner ground-truth box and the inner anchor box, and $union$ is their union. These are computed as in Equations (19) and (20):

$$inter = \left(\min\left(b_r^{gt}, b_r\right) - \max\left(b_l^{gt}, b_l\right)\right) \cdot \left(\min\left(b_b^{gt}, b_b\right) - \max\left(b_t^{gt}, b_t\right)\right) \tag{19}$$

$$union = w^{gt} h^{gt} \cdot ratio^2 + w h \cdot ratio^2 - inter \tag{20}$$

In these formulas, $b_l^{gt}$, $b_r^{gt}$, $b_t^{gt}$, and $b_b^{gt}$ are the left, right, top, and bottom edges of the inner ground-truth box, and $b_l$, $b_r$, $b_t$, and $b_b$ are those of the inner anchor box. $ratio$ is the scale factor used to compute these edges from the box centers $(x_c, y_c)$ and sizes; for example, $b_l^{gt} = x_c^{gt} - w^{gt} \cdot ratio / 2$ and $b_r^{gt} = x_c^{gt} + w^{gt} \cdot ratio / 2$, with the vertical edges and the anchor-box edges obtained in the same way. Inner-CIoU keeps the characteristics of CIoU while adding its own. When the auxiliary box is smaller than the actual box, the absolute value of the gradient increases, which speeds up convergence for samples with high IoU; conversely, when the auxiliary box is larger than the actual box, regression improves for samples with low IoU. In summary, Inner-CIoU addresses the difficulties of small-object detection through scalable auxiliary boxes, the resulting reshaping of the gradient, and an adjustable scale factor.
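A minimal plain-Python sketch of Equations (17) to (20) follows, with boxes given as (x_c, y_c, w, h). The intersection is clamped at zero for non-overlapping boxes, as practical implementations do; the function names and example boxes are illustrative, and the value of $L_{CIoU}$ is passed in rather than recomputed.

```python
def inner_edges(box, ratio):
    """Left, right, top, bottom edges of the auxiliary box scaled by `ratio`."""
    xc, yc, w, h = box
    return (xc - w * ratio / 2, xc + w * ratio / 2,
            yc - h * ratio / 2, yc + h * ratio / 2)


def inner_iou(gt, anchor, ratio=1.0):
    """IoU^inner from Equations (18)-(20); boxes are (x_c, y_c, w, h)."""
    g_l, g_r, g_t, g_b = inner_edges(gt, ratio)
    a_l, a_r, a_t, a_b = inner_edges(anchor, ratio)
    # Equation (19), clamped at zero when the inner boxes do not overlap
    inter = max(0.0, min(g_r, a_r) - max(g_l, a_l)) * max(0.0, min(g_b, a_b) - max(g_t, a_t))
    # Equation (20)
    union = gt[2] * gt[3] * ratio ** 2 + anchor[2] * anchor[3] * ratio ** 2 - inter
    return inter / union


def inner_ciou_loss(l_ciou, iou, iou_inner):
    """Equation (17): L_Inner-CIoU = L_CIoU + IoU - IoU^inner."""
    return l_ciou + iou - iou_inner


if __name__ == "__main__":
    gt, anchor = (10.0, 10.0, 4.0, 4.0), (11.0, 10.0, 4.0, 4.0)
    for r in (0.5, 1.0, 2.0):
        print(r, round(inner_iou(gt, anchor, r), 4))
```

For two 4 × 4 boxes offset by one pixel, the inner IoU is about 0.33, 0.60, and 0.78 for ratio = 0.5, 1, and 2, which shows how the scale factor changes how strongly a given offset is penalized.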

4. Experimental Results

4.1. DataSet

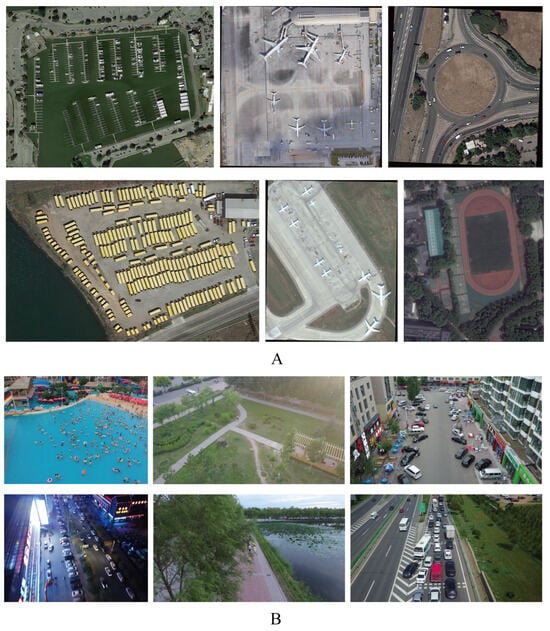

To reflect the characteristics of remote sensing imagery, such as multi-scale target distributions and dense small targets, this paper selected the DOTA1.0 [34] and VisDrone2019 [35] datasets and carried out comprehensive experiments to validate the benefits of the proposed model in handling these issues. Sample images from the two datasets are shown in Figure 10.

Figure 10.

(A,B) Sample scene images from the dataset. (A) Image from the DOTA dataset. (B) Image from the VisDrone2019 dataset.

Part A of Figure 10 shows representative scenes from the DOTA dataset. The leftmost column shows dense small targets, including ships and vehicles. The middle column shows targets of different scales, and the rightmost column shows large-sized targets. Part B displays scenes from the VisDrone2019 dataset. The leftmost column also shows densely distributed small targets, such as people and vehicles. The middle column shows occluded scenes with targets obscured by trees and buildings, and the rightmost column shows targets of different scales and orientations caused by the shooting angle.

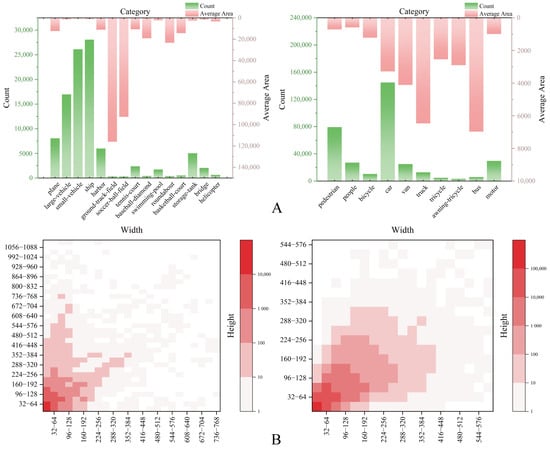

Part A of Figure 11 shows the number of instances of each target category and the mean area of their bounding boxes in the training sets of the two datasets, with DOTA on the left and VisDrone2019 on the right. In both plots, the x-axis lists the target categories, annotated below; the green y-axis on the left gives the number of instances per category, and the red y-axis on the right gives the mean bounding-box area for each category. In DOTA, large vehicles, small vehicles, and ships are the most numerous, with ships ranking first at over 27,500 instances. In contrast, targets such as ground-track-field and soccer-ball-field are far fewer, generally below 2000. The right y-axis, however, shows that the most numerous targets, vehicles and ships, have the smallest average bounding-box areas, whereas ground-track-field and soccer-ball-field, which are relatively rare, rank first and second in bounding-box pixel area. Thus vehicles and ships are numerous and small, while ground-track-field and soccer-ball-field are few and large. Helicopters are both few in number and small in average pixel area, which makes them particularly challenging to detect. Part B shows the distribution of targets in the DOTA and VisDrone2019 datasets by pixel width and height. The horizontal axis denotes pixel width and the vertical axis pixel height; darker colors indicate more targets within that width–height range.
In both datasets, the number of targets decreases stepwise as width and height increase. Elongated targets are rare, while many small targets have similar width and height.

Figure 11.

Relevant statistics of the datasets (A,B). Part (A) shows the number and average area of the various target types in the DOTA (left) and VisDrone2019 (right) datasets. Part (B) shows the distribution of targets in the DOTA (left) and VisDrone2019 (right) datasets by pixel width and height.

4.2. Experimental Setup

A stable experimental environment is required for the experiments; the configuration used in this paper is listed in Table 1. The central processing unit is an Intel Core i7-9700K with 8 cores and 8 threads. The graphics processing unit is an NVIDIA GeForce RTX 3090 Ti, whose parallel computing capability improves training efficiency. The deep learning framework is PyTorch 1.9.1, which is flexible and easy to use and supports dynamic computation graphs and automatic differentiation. CUDA 10.2 is used to exploit the computing power of the GPU, and the corresponding cuDNN 7.6.5 library accelerates convolutional neural network operations. The development environment is PyCharm 2024.2.4, which provides code editing, debugging, version control, and other functions. The programming language is Python 3.8. Together, this environment provides an efficient, stable, and user-friendly platform for the experiments.

Table 1.

Setup and training environment.

The training parameters used in this study are summarized in Table 2. The network is optimized with SGD, using a learning rate of 0.001, 200 training epochs, a momentum of 0.937, a batch size of 16, and a weight decay of 0.0005. The input image resolution is 640. To ensure a fair comparison and eliminate the influence of other factors, no data augmentation was used during training. The training, validation, and test sets were divided in a 6:2:2 ratio. The NMS threshold is set to 0.45.

Table 2.

Hyperparameter settings.
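As a compact summary, the training settings above can be collected in a configuration mapping, and a 6:2:2 split can be reproduced with a seeded shuffle. The key names, the seed, and the `split_6_2_2` helper are hypothetical illustrations; the paper does not state how its split was generated.

```python
import random

# Values from Table 2 and the text above; key names are illustrative only.
TRAIN_CONFIG = {
    "optimizer": "SGD",
    "lr": 0.001,
    "epochs": 200,
    "momentum": 0.937,
    "batch_size": 16,
    "weight_decay": 0.0005,
    "img_size": 640,
    "data_augmentation": False,
    "nms_threshold": 0.45,
}


def split_6_2_2(items, seed=0):
    """Shuffle deterministically and split into train/val/test at 6:2:2."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 6 // 10
    n_val = len(items) * 2 // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # any remainder goes to the test set
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_6_2_2(range(1000))
    print(len(train), len(val), len(test))  # 600 200 200
```

Integer arithmetic is used for the split sizes so that rounding never drops or duplicates an image.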

4.3. Evaluation Metric

To objectively assess the model’s performance, this study employs multiple evaluation metrics to compare the experimental outcomes. These metrics include precision, recall, the F1 score, average precision, and mean average precision.

Precision is the proportion of true positives among all samples predicted to be positive. The formula is shown in Equation (21):

$$P = \frac{TP}{TP + FP} \tag{21}$$

In the formula, $TP$ (true positives) is the number of instances that are actually positive and are correctly predicted as positive, and $FP$ (false positives) is the number of instances that are actually negative but are incorrectly predicted as positive.

Recall is the proportion of actual positive samples that are correctly identified as positive. It is given in Equation (22):

$$R = \frac{TP}{TP + FN} \tag{22}$$

In the formula, $FN$ (false negatives) is the number of instances that are actually positive but are incorrectly predicted as negative. Although precision and recall capture much of a model’s effectiveness, poor performance on minority classes can be masked when the data are imbalanced, and when there are many target categories, performance must be evaluated across all of them. In these cases the F1 score, AP, and mAP are more informative. The F1 score is the harmonic mean of P and R, as given in Equation (23):

$$F1 = \frac{2 \times P \times R}{P + R} \tag{23}$$

It balances precision against recall. AP (average precision) is the area under the precision–recall (PR) curve. It integrates the model’s precision over all recall levels and thus reflects detection precision more comprehensively. The formula is shown in Equation (24). mAP (mean average precision) is the mean of the AP values over all object categories, as shown in Equation (25).

$$AP = \int_0^1 P(R)\, dR \tag{24}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{25}$$

In the formulas, $N$ is the number of object categories and $AP_i$ is the average precision of the $i$-th category. In addition to these metrics, model size is measured by the number of parameters (Params), computational complexity by the number of floating-point operations (FLOPs), and inference speed by the number of frames processed per second (FPS).
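The metrics in Equations (21) to (25) can be computed as follows. This NumPy sketch derives P, R, and F1 from detection counts and computes AP as the area under the precision envelope using all-point interpolation, a common VOC-style convention. Other toolkits (for example, COCO-style 101-point interpolation) give slightly different values, so this illustrates the definitions rather than reproducing the exact evaluation code behind the reported results.

```python
import numpy as np


def precision_recall_f1(tp: int, fp: int, fn: int):
    """Equations (21)-(23) from raw detection counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0  # harmonic mean of P and R
    return p, r, f1


def average_precision(recall, precision) -> float:
    """Equation (24) by all-point interpolation; recall must be non-decreasing."""
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    # Precision envelope: make precision non-increasing as recall grows.
    mpre = np.flip(np.maximum.accumulate(np.flip(mpre)))
    # Sum rectangle areas wherever recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_average_precision(ap_per_class) -> float:
    """Equation (25): mean of the per-class AP values."""
    return float(np.mean(ap_per_class))


if __name__ == "__main__":
    print(precision_recall_f1(tp=8, fp=2, fn=4))
    ap = average_precision(np.array([0.5, 1.0]), np.array([1.0, 0.5]))
    print(ap, mean_average_precision([ap, 1.0]))
```

Note that F1 simplifies to 2TP / (2TP + FP + FN), so with 8 true positives, 2 false positives, and 4 false negatives it equals 16/22, about 0.727.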

4.4. Results and Analysis

This part presents extensive experiments in three groups: comparisons with the original model, comparisons with other models, and ablation experiments, given in Section 4.4.1, Section 4.4.2, and Section 4.4.3, respectively.

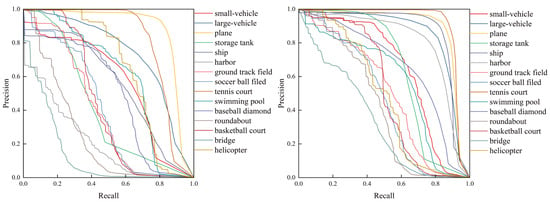

4.4.1. Comparison Experiments with the Original Model

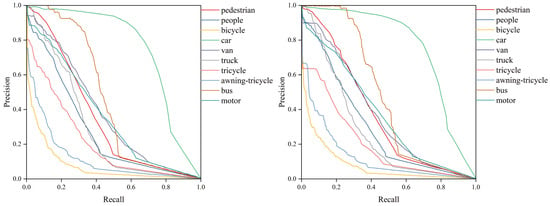

In this section, the baseline model and the enhanced model proposed in this study are compared in detail. Figure 12 contrasts the precision–recall (PR) curves obtained on the DOTA dataset: the left panel shows the original model and the right panel the improved model. The 15 target categories of DOTA are drawn as curves of different colors and labeled on the right side of each subplot; the horizontal axis is recall and the vertical axis is precision. The PR curves of the improved model lie closer to the upper-right corner, indicating a better balance between precision and recall than the original model. The improvement is most pronounced for targets such as bridges and tennis courts.

Figure 12.

Comparison of PR curves based on the DOTA dataset. The left side shows the results of the original model, while the right side shows the results of the model presented in this paper.

Figure 13 compares the two models on the VisDrone2019 dataset, using the same layout as Figure 12. Of the 10 categories, cars are detected best and bicycles worst. Referring to the VisDrone2019 category statistics in Part A of Figure 11, the final detection results appear closely related to the number and size of the targets: bicycles, which are few and small, consistently show lower detection quality. Faced with these challenges, the improved model performs better on most categories. Although the differences in Figure 13 are subtle, detailed numerical comparisons are given in Section 4.4.2, which also includes comparisons with other models.

Figure 13.

Comparison of PR curves based on the VisDrone2019 dataset. The left side shows the results of the original model, while the right side shows the results of the model presented in this paper.

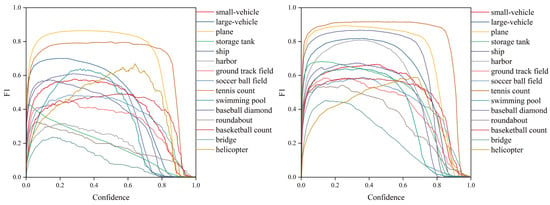

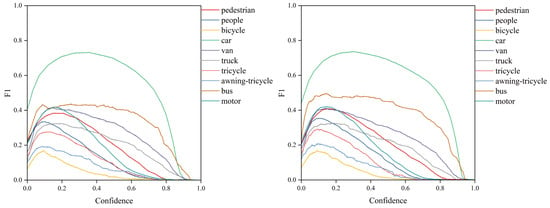

Evaluating performance on precision or recall alone is one-sided, so this study also uses the F1 score, the harmonic mean of precision and recall. Figure 14 shows the F1 curves on the DOTA dataset, with the original model on the left and the enhanced model on the right. The x-axis represents the confidence threshold. For most targets, the F1 score reaches its maximum at a confidence threshold below about 0.2. Comparing the two plots, the improved model achieves a higher F1 score and maintains its maximum over a wider range of confidence thresholds. Its curves are also smoother, with fewer local fluctuations, which suggests that the enhanced model is more robust and less sensitive to the choice of threshold.

Figure 14.

Comparison of F1 curves based on the DOTA dataset. The left side shows the results of the original model, while the right side shows the results of the model presented in this paper.

Figure 15 presents the F1 curves on the VisDrone2019 dataset, with the same layout as Figure 14. Unlike the DOTA results in Figure 14, the highest F1 scores in both subplots of Figure 15 are below 0.8, and the range of confidence thresholds over which the highest F1 score is maintained is narrower. Some categories, such as bicycle, even show sharp peaks, which again illustrates how challenging object detection on VisDrone2019 is. Nevertheless, the proposed model still performs better, as the bus and bicycle curves in the two subplots show. For bus, the enhanced model achieves a higher F1 score and maintains it over a wider range of confidence thresholds. For bicycle, the improved model reduces the peak effect and keeps the F1 score at a relatively high level over a certain range of thresholds.

Figure 15.

Comparison of F1 curves based on the VisDrone2019 dataset. The left side shows the results of the original model, while the right side shows the results of the model presented in this paper.

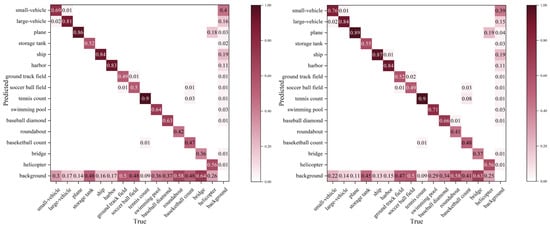

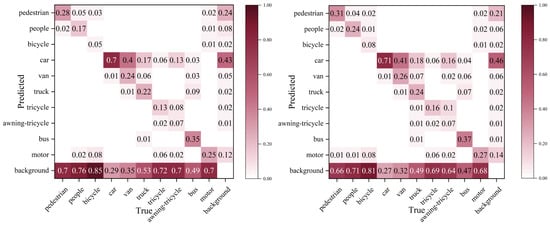

Finally, the predictions of the baseline model and of the improved model are compared with the ground truth using confusion matrices. The results on the DOTA dataset are presented in Figure 16. The horizontal axis denotes the actual classes, and the vertical axis denotes the predicted classes. The numbers in the figure are probabilities normalized by the actual number of instances of each class, and darker colors indicate higher probabilities. Ideally, every target is predicted correctly, that is, the probability that the predicted class matches the actual class is high. In the figure, this corresponds to high values and the most intense colors along the diagonal from the upper-left to the lower-right corner. In practice, some values inevitably appear off the diagonal, particularly along the edges of the matrix, indicating incorrect predictions. The goal is therefore to increase the diagonal values and decrease all others. Comparing the two matrices in Figure 16, the correct-detection probabilities of the soccer field and roundabout targets each decreased by one point, those of the tennis court and helicopter targets were unchanged, and those of all other targets increased; the error probabilities of each target also decreased.

Figure 16.

Based on the confusion matrix diagram of the DOTA dataset, the left side shows the results of the original model, while the right side shows the results of the improved model.
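The normalization described above can be sketched as follows. Counts are accumulated with predicted classes on rows and true classes on columns, and each column is divided by the number of ground-truth instances of that class, so each column sums to 1 and the diagonal holds the per-class correct-detection rate. The extra background row and column that detection toolkits typically add for missed and spurious boxes is omitted, and the helper names are hypothetical.

```python
import numpy as np


def confusion_matrix(true_labels, pred_labels, num_classes: int) -> np.ndarray:
    """Counts with rows = predicted class and columns = true class."""
    cm = np.zeros((num_classes, num_classes), dtype=float)
    for t, p in zip(true_labels, pred_labels):
        cm[p, t] += 1
    return cm


def normalize_by_true_class(cm: np.ndarray) -> np.ndarray:
    """Divide each column by its total so entries are per-true-class probabilities."""
    totals = cm.sum(axis=0, keepdims=True)
    return np.divide(cm, totals, out=np.zeros_like(cm), where=totals > 0)


if __name__ == "__main__":
    true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
    pred = [0, 0, 0, 1, 1, 1, 2, 2, 0, 2]
    cm = normalize_by_true_class(confusion_matrix(true, pred, num_classes=3))
    print(np.round(cm, 2))
    print("per-class correct-detection rate:", np.round(np.diag(cm), 2))
```

Reading the diagonal of the normalized matrix gives exactly the per-class values compared between the two models in Figure 16.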

Figure 17 presents the confusion matrices on the VisDrone2019 dataset. The results are clearly less satisfactory than those on DOTA. Ideally, the highest values should lie along the diagonal; in Figure 17, however, the borders of the matrix are more intensely colored, indicating a higher probability of false positives for certain targets. This is particularly evident for bicycles and people, which have very high false-positive rates. Although the improved model raises the correct-detection rate and lowers the false-positive rate for all targets, there is still considerable room for improvement on the VisDrone2019 dataset.

Figure 17.

Based on the confusion matrix diagram of the VisDrone2019 dataset, the left side shows the results of the original model, while the right side shows the results of the improved model.

4.4.2. Comparison Experiments with Other Models

Beyond the comparison with the original model, this subsection presents broader comparisons with other models. Both two-stage and one-stage detectors were selected, as shown in Table 3. The two-stage detectors are Fast R-CNN [36], Faster R-CNN [37], Cascade R-CNN [38], and RepPoints [39]. The one-stage detectors are YOLOv5, YOLOv7 [40], YOLOv8 [41], YOLOv10 [42], FNI-DETR [43], WDFS-DETR [44], and the original YOLOv11 model. Table 3 presents the experimental results on the DOTA dataset. The comparison metrics include the number of model parameters, computational complexity, mean average precision (mAP), and mAP under different IoU thresholds and object sizes. As the table shows, compared with the two-stage algorithms and the Transformer-based one-stage algorithms, the YOLO series has a clear advantage in both parameter count and computational complexity. The improved model leads by a wide margin in this respect, which is closely related to its use of lightweight convolution. It also makes significant progress in small-object detection. Although its ability to detect large targets declines slightly, this loss is acceptable.

Table 3.

Comparative analysis with other models on the DOTA dataset.

Table 4 presents the results on the VisDrone2019 dataset. Compared with Table 3, performance is noticeably weaker: detection performance drops substantially at every threshold and scale, and the detection accuracy for small targets is only around 20%. However, all models perform at a relatively low level on this dataset, and the proposed ACLC-Detection still maintains the lead.

Table 4.

Comparative analysis with other models on the VisDrone2019 dataset.

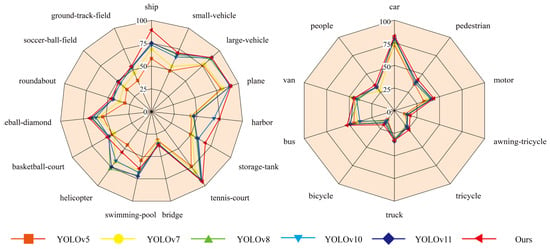

In addition to direct numerical comparison, radar charts are used for a more visual supplementary analysis, as shown in Figure 18. The left subplot shows results on the DOTA dataset and the right subplot results on the VisDrone2019 dataset. In each subplot, different colors denote different models; the models, all from the YOLO series, are listed below the charts, and the target categories of each dataset are labeled on its radar chart. The radar charts show that on DOTA the largest improvements are for small objects such as ships and vehicles. Despite the large number of categories in DOTA, the results of the different models remain relatively clearly separated. On VisDrone2019, although the enhanced model leads the other models in every category, the overall detection levels are generally low and tightly clustered. Nevertheless, the improved model still performs satisfactorily.

Figure 18.

Comparison and analysis of AP50 values. The left subplot shows the results based on the DOTA dataset, while the right subplot shows the results based on the VisDrone2019 dataset.

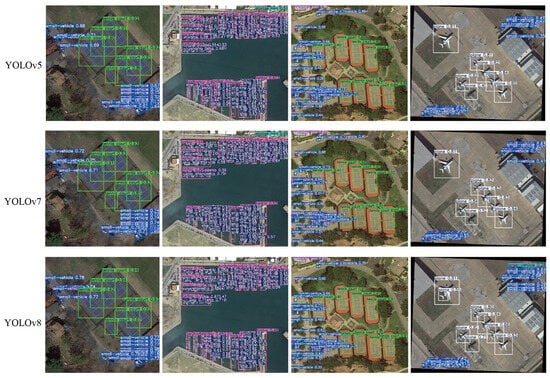

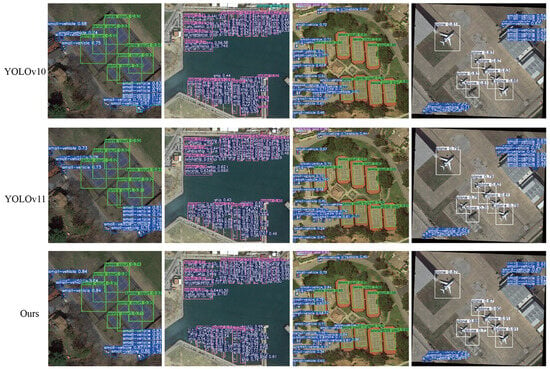

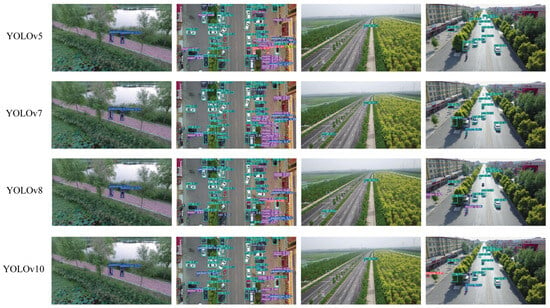

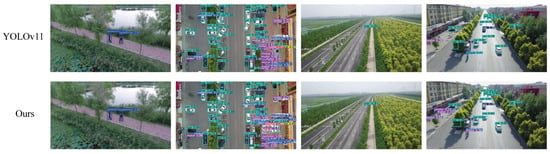

Finally, a visual comparison of detection results is presented. Figure 19 shows the visualization results on the DOTA dataset; the model corresponding to each row is labeled on its far left, and the four scenes contain dense small targets and targets of various scales. Comparing the detections of different models in the same scene, especially for small targets, shows the advantages of the ACLC-Detection model intuitively.

Figure 19.

Analysis of the visualization results based on the DOTA dataset.

Similarly, Figure 20 shows four representative scenes selected from the VisDrone2019 dataset: the first column contains small pedestrian targets, the second dense small vehicle targets, the third small vehicle targets, and the fourth vehicles whose apparent scales vary with the shooting angle. Overall, the model proposed in this study produced the best detection results in these scenes. In the third column, the YOLOv7 and YOLOv8 models mistakenly identified road signs as vehicles. In the fourth column, almost all models misidentified the grass on the left side of the image as vehicles. The small target on the far left was missed by YOLOv5 and YOLOv7, labeled as a bicycle by YOLOv10, and labeled as a motorcycle by the remaining models.

Figure 20.

Analysis of the visualization results based on the VisDrone2019 dataset.

4.4.3. Ablation Experiment

To evaluate how each enhancement affects the model's performance, ablation experiments were then conducted on the model itself. First, as shown in Table 5, each of the four methods was introduced individually on the DOTA dataset, and the resulting models were compared in terms of mAP at different scales, parameter count, computational complexity, and FPS. Because changing the dataset does not affect a model's parameters or computational complexity, the corresponding values in Table 6 are identical to those in Table 5. In Table 5, introducing lightweight depthwise separable convolution reduced the model's parameters and computational complexity and thereby increased inference speed, although the accuracy gain was small. The CEM, although lightweight, inevitably increased the parameters and computational complexity, but it effectively improved detection accuracy. Notably, introducing Inner-CIOU left the parameters and computational complexity unchanged, because the loss function is used only during training and is not part of the network for which these indicators are calculated.
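The parameter savings from depthwise separable convolution discussed above can be checked with a back-of-envelope count. The sketch below assumes bias-free layers and uses a hypothetical 3 × 3 layer with 128 input and 256 output channels, not a layer taken from ACLC-Detection. A standard convolution needs k²·C_in·C_out weights, whereas the depthwise separable version needs k²·C_in + C_in·C_out, a ratio of 1/C_out + 1/k². For stride-1 layers the multiply-add count of both variants is the weight count times the same output area, so the same ratio applies to computational cost.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def dsc_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a depthwise separable convolution (bias omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer shape, chosen only for illustration.
    c_in, c_out, k = 128, 256, 3
    std = standard_conv_params(c_in, c_out, k)
    dsc = dsc_params(c_in, c_out, k)
    print(f"standard: {std}, depthwise separable: {dsc}, ratio: {dsc / std:.3f}")
```

With this shape the script reports 294912 weights for the standard layer and 33920 for the depthwise separable one (ratio 0.115). The actual savings in the model depend on its real layer configuration.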

Table 5.

Ablation experiments based on DOTA dataset.

Table 6.

Ablation experiments based on the VisDrone2019 dataset.

Table 6, based on the VisDrone2019 dataset, shows trends in the model's metrics consistent with those in Table 5. However, because the images in VisDrone2019 have lower resolution than those in DOTA, they are processed faster, which is reflected in higher FPS values.

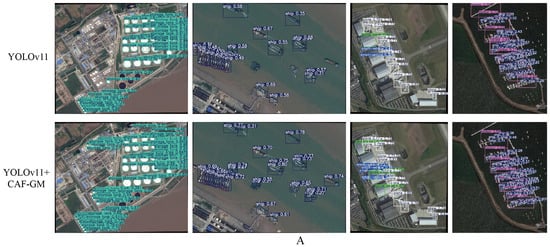

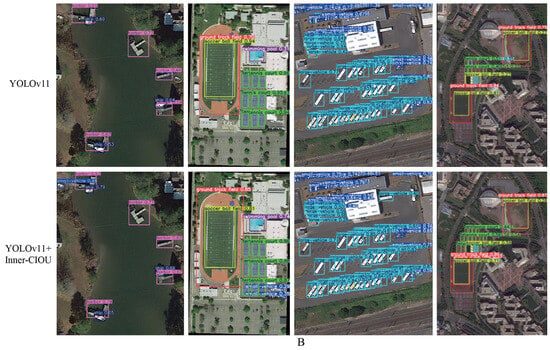

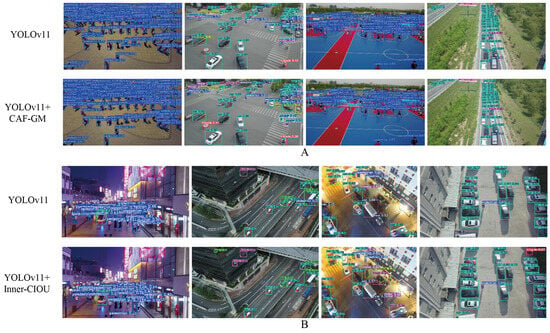

A visual comparison of the ablation results was also conducted on the DOTA dataset, as illustrated in Figure 21. Part A compares the baseline model with the model that introduces only the CAF-GM network in four scenes, and Part B compares the baseline model with the model that introduces only the Inner-CIOU loss function in four scenes. In Part A, the most noticeable improvement is that the model with the new module detects more small targets, particularly in the first and second scenes, and its detections are also more precise. In Part B, the Inner-CIOU loss function enables the model to localize small targets more accurately. For example, in the first column, the optimized bounding boxes enclose the actual targets, such as ships and ports, more tightly.

Figure 21.

Visualization results of ablation experiments on the DOTA dataset, where Part (A) shows the results with the introduction of the CAF-GM module, and Part (B) shows the results with the introduction of the Inner-CIOU loss function.

Figure 22 presents the corresponding results on the VisDrone2019 dataset, using the same experimental setup and layout as Figure 21. With either the CAF-GM module (Part A) or the Inner-CIOU loss function (Part B), the optimized model captures information about small targets more thoroughly and detects targets across multiple scales more reliably. It detects more targets and localizes them precisely among dense pedestrians, and it markedly improves detection accuracy for multi-scale vehicles.

Figure 22.

Visualization results of ablation experiments on the VisDrone2019 dataset, where Part (A) shows the results with the introduction of the CAF-GM module, and Part (B) shows the results with the introduction of the Inner-CIOU loss function.

Table 7 compares the Inner-CIOU loss function adopted in this paper with two other state-of-the-art loss functions, SIOU and DIOU-R. The comparison covers precision, recall, mAP50, frames per second (FPS), and application scenarios. Table 7 shows that, for the remote sensing images addressed in this study, Inner-CIOU performs better on small and multi-scale targets and therefore achieves the best detection-accuracy metrics. Its detection speed is higher than that of SIOU but lower than that of DIOU-R, which is designed for resource-constrained platforms with strict real-time requirements. SIOU, which is designed for targets with consistent orientation, does not achieve satisfactory detection accuracy on the DOTA dataset. In summary, among the three loss functions, Inner-CIOU delivers the best detection performance on small and multi-scale targets in remote sensing images; although it is slower than DIOU-R, its speed remains within an acceptable range.
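To make the Inner-CIOU mechanism concrete, the sketch below follows the published Inner-IoU formulation, L_Inner-CIoU = L_CIoU + IoU - IoU_inner, where IoU_inner is computed on auxiliary boxes that keep each box's center but scale its width and height by a ratio factor. Boxes are assumed to be (x_center, y_center, width, height) tuples. The function names and the default ratio of 0.8 are hypothetical choices for illustration; the exact ratio and implementation used in ACLC-Detection are not given in this section.

```python
import math
from typing import Tuple

# Assumed box convention: (x_center, y_center, width, height).
Box = Tuple[float, float, float, float]


def _corners(b: Box, scale: float = 1.0) -> Tuple[float, float, float, float]:
    """(xc, yc, w, h) -> (x1, y1, x2, y2), with w and h scaled about the center."""
    xc, yc, w, h = b
    hw, hh = w * scale / 2, h * scale / 2
    return xc - hw, yc - hh, xc + hw, yc + hh


def box_iou(pred: Box, gt: Box, scale: float = 1.0, eps: float = 1e-9) -> float:
    """IoU of two boxes; scale != 1 gives the IoU of the auxiliary (inner) boxes."""
    px1, py1, px2, py2 = _corners(pred, scale)
    gx1, gy1, gx2, gy2 = _corners(gt, scale)
    inter = max(0.0, min(px2, gx2) - max(px1, gx1)) * max(0.0, min(py2, gy2) - max(py1, gy1))
    union = (px2 - px1) * (py2 - py1) + (gx2 - gx1) * (gy2 - gy1) - inter
    return inter / (union + eps)


def ciou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    """CIoU loss: 1 - IoU + rho^2 / c^2 + alpha * v."""
    iou = box_iou(pred, gt)
    px1, py1, px2, py2 = _corners(pred)
    gx1, gy1, gx2, gy2 = _corners(gt)
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # squared center distance
    cw = max(px2, gx2) - min(px1, gx1)                      # enclosing box width
    ch = max(py2, gy2) - min(py1, gy1)                      # enclosing box height
    c2 = cw ** 2 + ch ** 2 + eps                            # squared enclosing diagonal
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v


def inner_ciou_loss(pred: Box, gt: Box, ratio: float = 0.8) -> float:
    """Inner-CIoU: L_CIoU + IoU - IoU_inner, with inner boxes scaled by `ratio`."""
    return ciou_loss(pred, gt) + box_iou(pred, gt) - box_iou(pred, gt, scale=ratio)


if __name__ == "__main__":
    gt = (50.0, 50.0, 20.0, 10.0)
    pred = (52.0, 50.0, 20.0, 10.0)  # shifted 2 px to the right
    print(f"CIoU: {ciou_loss(pred, gt):.4f}, Inner-CIoU: {inner_ciou_loss(pred, gt):.4f}")
```

For two equal-sized boxes offset by a few pixels, a ratio below 1 shrinks the auxiliary boxes, so the offset lowers IoU_inner more than IoU and the loss grows; a ratio of 1 reduces Inner-CIoU to plain CIoU.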

Table 7.

Comparison and analysis of three loss functions based on the DOTA dataset.

5. Conclusions

Overall, this paper proposes three improvement strategies that apply deep learning to the challenge of detecting small targets in remote sensing images. First, at the feature extraction stage, a lightweight convolutional design is developed to minimize the early loss of small-target information while keeping the model lightweight. It combines the low cost of depthwise separable convolution with the local enhancement of target features provided by the CEM, yielding more comprehensive and complete target details during feature extraction. Second, to let small-target features participate efficiently in recognition, the CAF-GM mechanism is introduced into the neck network. This mechanism generates additional feature maps through inexpensive operations and uses SimAM to suppress redundant background information, so that important features play a greater role. Finally, to address the difficulty of localizing small objects, the Inner-CIOU loss function is introduced. It uses a scale factor to refine how the discrepancy between predicted and ground-truth bounding boxes is measured, achieving high-quality detection of small targets. Because the improvements are based on lightweight methods, the model is better suited than the original model to mobile deployment and real-time detection. Experiments on the DOTA and VisDrone2019 datasets demonstrate that the proposed model performs excellently across multiple detection metrics.
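SimAM, described above as the part of CAF-GM that suppresses redundant background information, derives its attention weights from a per-neuron energy function rather than from learned layers, which is why it adds no parameters. The sketch below follows the publicly described SimAM formulation for a single H × W channel in pure Python: each activation t is scaled by sigmoid(1/e_t), with 1/e_t = (t - mu)² / (4(var + λ)) + 0.5. The function name `simam_channel` and the regularizer value λ = 1e-4 are assumptions for illustration, and the code is not the CAF-GM implementation itself.

```python
import math
from typing import List


def simam_channel(x: List[List[float]], e_lambda: float = 1e-4) -> List[List[float]]:
    """Apply SimAM-style weighting to one H x W feature channel (no learnable parameters)."""
    values = [v for row in x for v in row]
    if len(values) < 2:
        raise ValueError("channel must contain at least two activations")
    n = len(values) - 1                            # neurons other than the target one
    mu = sum(values) / len(values)                 # channel mean
    var = sum((v - mu) ** 2 for v in values) / n   # channel variance estimate
    out = []
    for row in x:
        new_row = []
        for t in row:
            inv_energy = (t - mu) ** 2 / (4 * (var + e_lambda)) + 0.5  # 1 / e_t
            weight = 1.0 / (1.0 + math.exp(-inv_energy))               # sigmoid
            new_row.append(t * weight)
        out.append(new_row)
    return out


if __name__ == "__main__":
    # Toy 2 x 2 channel with one distinctive activation (illustrative values).
    for row in simam_channel([[1.0, 1.0], [1.0, 5.0]]):
        print([round(v, 3) for v in row])
```

Activations that deviate strongly from the channel mean receive weights closer to 1, while typical, background-like activations are damped toward sigmoid(0.5), roughly 0.62.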

However, although both are remote sensing datasets, detection performance on VisDrone2019 is clearly lower than on DOTA. This is mainly because targets in VisDrone2019 are more similar to the background, and many are even partially hidden behind background objects. In addition, different small targets closely resemble one another, and the dataset covers a wide range of scenes under strong-light, low-light, and even nighttime conditions. Although the proposed model outperforms the compared models, these real-world challenges remain, and our next step will be a more in-depth theoretical analysis and experimental verification. We hope that in future remote sensing image detection, the model can maintain excellent detection capability even in more complex environments.

Author Contributions

Conceptualization, S.L. and F.S.; methodology, C.Y. and J.X.; software, J.D.; validation, C.Y. and S.L.; resources, F.S.; data curation, F.S.; writing—original draft preparation, S.L.; writing—review and editing, S.L.; visualization, S.L.; supervision, F.S.; project administration, J.D., Q.L. and T.Z.; funding acquisition, F.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 61671470).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tejasree, G.; Agilandeeswari, L. Land Use/Land Cover (LULC) Classification Using Deep-LSTM for Hyperspectral Images. Egypt. J. Remote Sens. Space Sci. 2024, 27, 52–68.

- Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. Joint Deep Learning for Land Cover and Land Use Classification. Remote Sens. Environ. 2019, 221, 173–187.

- Mountrakis, G.; Heydari, S.S. Effect of Intra-Year Landsat Scene Availability in Land Cover Land Use Classification in the Conterminous United States Using Deep Neural Networks. ISPRS J. Photogramm. Remote Sens. 2024, 212, 164–180.

- Jia, P.; Chen, C.; Zhang, D.; Sang, Y.; Zhang, L. Semantic Segmentation of Deep Learning Remote Sensing Images Based on Band Combination Principle: Application in Urban Planning and Land Use. Comput. Commun. 2024, 217, 97–106.

- Sun, S.; Xiong, T. Application of Remote Sensing Technology in Sustainable Urban Planning and Development. Appl. Comput. Eng. 2023, 3, 283–288.

- Morin, E.; Herrault, P.-A.; Guinard, Y.; Grandjean, F.; Bech, N. The Promising Combination of a Remote Sensing Approach and Landscape Connectivity Modelling at a Fine Scale in Urban Planning. Ecol. Indic. 2022, 139, 108930.

- Wang, S.; Wu, W.; Zheng, Z.; Li, J. CTST: CNN and Transformer-Based Spatio-Temporally Synchronized Network for Remote Sensing Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 16272–16288.

- Wang, J.; Zhang, S.; Lizaga, I.; Zhang, Y.; Ge, X.; Zhang, Z.; Zhang, W.; Huang, Q.; Hu, Z. UAS-Based Remote Sensing for Agricultural Monitoring: Current Status and Perspectives. Comput. Electron. Agric. 2024, 227, 109501.

- Wang, X.; Zeng, H.; Yang, X.; Shu, J.; Wu, Q.; Que, Y.; Yang, X.; Yi, X.; Khalil, I.; Zomaya, A.Y. Remote Sensing Revolutionizing Agriculture: Toward a New Frontier. Future Gener. Comput. Syst. 2025, 166, 107691.

- Ma, H.; Liu, Y.; Ren, Y.; Wang, D.; Yu, L.; Yu, J. Improved CNN Classification Method for Groups of Buildings Damaged by Earthquake, Based on High Resolution Remote Sensing Images. Remote Sens. 2020, 12, 260.

- Jia, S.; Chu, S.; Hou, Q.; Liu, J. Application of Remote Sensing Image Change Detection Algorithm in Extracting Damaged Buildings in Earthquake Disaster. IEEE Access 2024, 12, 149308–149319.

- Hui, Y.; Wang, J.; Li, B. DSAA-YOLO: UAV Remote Sensing Small Target Recognition Algorithm for YOLOV7 Based on Dense Residual Super-Resolution and Anchor Frame Adaptive Regression Strategy. J. King Saud Univ. Comput. Inf. Sci. 2024, 36, 101863.

- Li, J.; Zhang, Z.; Sun, H. DiffuYOLO: A Novel Method for Small Vehicle Detection in Remote Sensing Based on Diffusion Models. Alex. Eng. J. 2025, 114, 485–496.

- Zhang, P.; Liu, Y. A Small Target Detection Algorithm Based on Improved YOLOv5 in Aerial Image. PeerJ Comput. Sci. 2024, 10, e2007.

- Yi, H.; Liu, B.; Zhao, B.; Liu, E. Small Object Detection Algorithm Based on Improved YOLOv8 for Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 1734–1747.

- Li, Y.; Zhang, W.; Lv, S.; Yu, J.; Ge, D.; Guo, J.; Li, L. YOLOv11-CAFM Model in Ground Penetrating Radar Image for Pavement Distress Detection and Optimization Study. Constr. Build. Mater. 2025, 485, 141907.

- Gennari, M.; Fawcett, R.; Prisacariu, V.A. DSConv: Efficient Convolution Operator. Inst. Electr. Electron. Eng. 2019, 5148–5157.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. arXiv 2017, arXiv:1707.01083.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298.

- Mao, R.; Wu, G.; Wu, J.; Wang, X. A Lightweight Convolutional Neural Network for Road Surface Classification under Shadow Interference. Knowl. Based Syst. 2024, 306, 112761.

- Tang, Y.; Zhou, D.; Flesch, R.C.C.; Jin, T. A Multi-Input Lightweight Convolutional Neural Network for Breast Cancer Detection Considering Infrared Thermography. Expert Syst. Appl. 2025, 263, 125738.

- Tang, X.; Chen, Y.; Ma, Y.; Yang, W.; Zhou, H.; Huang, J. A Lightweight Model Combining Convolutional Neural Network and Transformer for Driver Distraction Recognition. Eng. Appl. Artif. Intell. 2024, 132, 107910.

- Lirong, L.; Hao, C.; Junwei, D.; Bing, M.; Zhijie, Z.; Zhangqin, H.; Jie, L. High-Voltage Transmission Line Inspection Based on Multi-Scale Lightweight Convolution. Measurement 2025, 251, 117193.

- Gendy, G.; Sabor, N.; He, G. Lightweight Image Super-Resolution Network Based on Extended Convolution Mixer. Eng. Appl. Artif. Intell. 2024, 133, 108069.