Abstract

Fine-tuning optimization methods for foundation models have gradually become a research hotspot owing to the development of generative pretrained transformers. However, compared to natural scene images, remote sensing images have a wide range of spatial scales, complex objects, and limited labelled samples, which pose great challenges for image interpretation. To reduce the gap between natural scene images and remote sensing images, this paper proposes a novel RLita optimization method for foundation models. Specifically, a region-level image–text alignment optimization method is proposed to represent the features of images and texts as visual and semantic representation vectors in one embedding space for better model generalization, and a parameter-efficient tuning strategy is designed to reduce computational resources. Experiments on five remote sensing datasets covering object detection, semantic segmentation, and change detection show the effectiveness of the RLita method.

1. Introduction

Image interpretation is the process of extracting, recognizing, and understanding useful information from an image. Image interpretation tasks include object detection and recognition, semantic segmentation and change detection, and so on. With the continuous development of remote sensing sensors, remote sensing image interpretation technology has received increasing attention, and has been widely applied in various practical fields such as land resources monitoring, crop monitoring and yield estimation, and forest carbon sink estimation.

With the rapid development of deep learning technology, foundation models represented by the generative pretrained transformer (GPT-4) [1,2,3,4] and the vision transformer (ViT) [5] have shown great performance in both natural language processing and computer vision. The core of foundation model training lies in autonomously learning hidden knowledge from massive data to obtain strong generalization ability and adaptability. Training optimization methods for foundation models are of great significance in enhancing performance and flexibility, reducing costs, supporting complex tasks, and improving hardware efficiency. Mainstream architectures for training foundation models include transformer decoders [6], general language models [7], and Mixture of Experts (MoE) models [8,9,10]. The transformer decoder [11], one of the most popular architectures in natural language processing in recent years, achieves deep mining and efficient use of contextual information through self-attention and cross-attention mechanisms. The General Language Model (GLM) [7] is based on autoregressive blank infilling and improves on the performance of BERT, T5, and GPT on various NLU tasks. MoE [8,9,10], as an ensemble learning technique, speeds up model training and achieves better performance by combining multiple “expert” models. These foundation models mostly focus on natural scene images and use pretrained weights obtained from natural scene images. Compared to natural scene images, remote sensing images have a wide range of spatial scales, complex objects, and limited labelled samples. Thus, directly transferring foundation model optimization methods from natural scene images to remote sensing images poses a number of problems, as shown in Figure 1.
Firstly, the high resolution and large size of remote sensing images make foundation model training and inference consume large amounts of computational resources and storage space, which leads to a high, non-negligible hardware demand. Secondly, multi-scale remote sensing objects with complex backgrounds also bring considerable difficulties to model training and optimization. In RS images of a large scene, the boundaries and background of objects can be blurred. In addition, RS images are susceptible to interference from external factors such as weather, light, clouds, and shadows, so the model needs strong generalization ability to adapt to the diversity of RS objects. Further, the scarcity of high-quality annotated data is a great challenge in the acquisition and processing of remote sensing image datasets. Annotating remote sensing images requires professional geographical knowledge and experience because of their specific characteristics. Thus, the time-consuming and labor-intensive annotation process has become an important factor constraining the training of foundation models for remote sensing images.

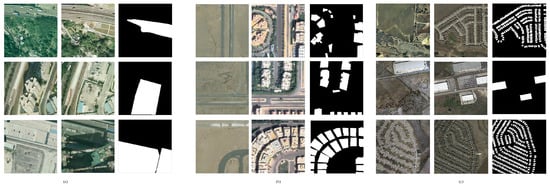

Figure 1.

Some examples of RS images. RS images have crowded scenes, containing objects with various sizes and blurred borders. These challenges increase the difficulty of precise image interpretation.

To address the above problems, this paper proposes a novel region-level image–text alignment optimization method for foundation models, designed for remote sensing object interpretation tasks. Firstly, a region-level image–text alignment optimization method is proposed to improve the generalization of the foundation model; specifically, a dual-stream network architecture consisting of an image encoder and a text encoder represents the image and text features as visual and linguistic representation vectors within the same embedding space. Secondly, a parameter-efficient tuning strategy adapted to the remote sensing domain is proposed to reduce computational resources; specifically, lightweight adaptation modules are inserted into the pretrained vision transformer (ViT) to fully exploit the model’s potential for adapting to new scenarios in downstream tasks. Moreover, a Segment Anything Model (SAM) is fine-tuned on existing annotated data to extract and annotate targets in batches, so that region-level image–text pairs can be constructed efficiently.

In summary, the contributions of this paper can be outlined as follows:

- This paper proposes a novel region-level image–text alignment method for foundation models designed for remote sensing object interpretation tasks, which consists of a multi-granularity CLIP module and a parameter-efficient tuning strategy.

- For better generalization of remote sensing objects, a region-level image–text alignment optimization module named multi-granularity CLIP is proposed.

- To reduce computational resources, a parameter-efficient tuning strategy adapted to remote sensing images is designed, namely the vision adapter (VAP) module.

- To mitigate the cost of image annotation, region-level image–text pairs are constructed based on annotated data by contrastive learning for segmenting object mask features.

The proposed method has been evaluated on remote sensing datasets, including FAIR1M [12], DIOR [13], DIOR-R [14], iSAID [15], Vaihingen [16], and LEVIR-CD [17]. Specifically, our method is applied to detection and segmentation algorithms that use Swin Transformer [18] as the backbone architecture. The approach achieves state-of-the-art (SOTA) results.

2. Related Works

2.1. Vision Pretraining for Remote Sensing

In recent years, the development of deep learning and deep neural networks has led to the emergence of specialized algorithms designed for downstream tasks in remote sensing. Notably, deep learning models have been proposed for remote sensing object detection [19,20,21,22], semantic segmentation [18,23,24], and object tracking [25,26,27]. However, these algorithms often rely on pretraining with weights from natural scene images, which may not be optimal given the unique characteristics of remote sensing images.

One challenge in remote sensing pretraining is the scarcity of high-quality annotated data. Unlike large-scale labeled datasets such as ImageNet-21K [28] or JFT-300M [29] for natural images, remote sensing lacks similar resources. Consequently, unsupervised pretraining methods are commonly employed. For example, SimCLR [30] creates positive and negative samples through data augmentations and spectral channel decompositions. This approach enables effective unsupervised pretraining using remote sensing data. Another approach involves contrastive learning; for example, Ayush et al. [31] use pairs of remote sensing images captured at the same geographical coordinates but different time points as positive samples. This method has shown promise in remote sensing scene classification and temporal analysis. However, its application to large-scale remote sensing base models remains unexplored.

Building on MAE [32] and SimMIM [33], recent advances in mask-based unsupervised pretraining have produced more robust and better-performing remote sensing base models. For instance, building upon the MAE framework, SatMAE [34] utilizes large-scale unlabeled remote sensing data for pretraining. Its downstream task performance surpasses that of contrastive-based remote sensing pretraining models. RingMo [35] employs multiple masks of varying scales for unsupervised pretraining. This series includes RingMo [35] for general tasks, RingMo-SAM [36] for instance segmentation, and RingMo-Lite [37] for lightweight deployment. Cha et al. [38] apply MAE pretraining to ViT models across different scales. Remarkably, using remote sensing data for pretraining improves the performance of ViT models of all scales compared to natural scene pretraining. Moreover, by further increasing model size and training data volume, SkySense [39] achieves additional performance gains. These remote sensing pretraining methods open up new avenues for remote sensing image analysis and future research.

2.2. Multimodal Pretraining for Remote Sensing

After CLIP [40], a series of pretraining methods for remote sensing multi-modal models have emerged one after another. RemoteCLIP [41] constructs Box-to-Caption image–text pairs using scene classification, object detection, and semantic segmentation datasets for all remote sensing data. Then RemoteCLIP utilizes these pairs for CLIP pretraining. As a result, RemoteCLIP demonstrates breakthroughs in applications like zero-shot transfer. Based on retrieval, DUCH [42] includes feature extraction and hash quantization in its training module, and applies both single-modal and multimodal contrastive losses. These help DUCH achieve significant results in both Image-to-Text Retrieval and Text-to-Image Retrieval.

Based on experience with natural scenes, Visual-Language Pretraining (VLP) has two advantages over Masked Image Modeling (MIM). The first is its ability for open-vocabulary scene recognition. ODISE [43] applies CLIP to open-scene segmentation tasks, resulting in notable performance improvements. In addition, FC-CLIP [44] proposes a one-stage framework based on CLIP, achieving better performance with fewer parameters and lower training costs than previous methods. The second is stronger scalability. VLP models exhibit stronger performance gains as pretraining data scales up, whereas MIM experiences diminishing returns with larger data sizes [45].

2.3. Parameter Efficient Fine-Tuning

With the continuous evolution of pretrained base models, their parameter counts have grown significantly. Consequently, fine-tuning these models for specific downstream tasks demands substantial computational resources. Parameter Efficient Fine-Tuning (PEFT) methods have emerged as a series of techniques that facilitate rapid model transfer, initially gaining prominence in the field of Natural Language Processing (NLP). Adapter [46] introduces trainable bottleneck structures between layers to enhance model adaptability. BitFit [47] selectively adjusts the bias terms that play pivotal roles during task adaptation. Prompt-Tuning [48] embeds learnable tokens into the input layer, providing explicit guidance to the model. LoRA [49] injects trainable rank-decomposition matrices into each attention layer of the transformer architecture. These methods also inspired the emergence of PEFT methods in the visual field.

In computer vision (CV), inspired by Prompt-Tuning, VPT [50] develops visual prompt modules and applies them to visual transformer models. IVPT [51] enhances connectivity between the prompt tokens of different layers. VQT [52] aggregates intermediate features from the visual transformer. E2VPT [53] introduces a set of learnable key-value prompts in the self-attention and input layers. Further, AdaptFormer [54] utilizes parallel adapter structures for visual tasks. LoRand [55] creates compact adapter structures using low-rank fusion, exploring their potential in object detection and image segmentation. KAAdaptation [56] further decomposes fine-tuning parameters using Kronecker products. NOAH [57] is a comprehensive approach that combines VPT, LoRA, and Adapter methods, achieving superior results.

Moreover, specialized PEFT methods cater to Visual-Language tasks, like Token Mixing [58], TaCA [59], and DPL [60]. In addition, for remote sensing tasks, AiRs [61] proposes more expressive adaptation modules and a more efficient integration strategy. These PEFT techniques significantly facilitate the practical deployment of foundational models.

3. Methodology

In this section, we introduce our proposed multi-granularity alignment method for Contrastive Language-Image Pretraining (CLIP) model pretraining and the parameter-efficient tuning (PET) technique. The region-level image–text alignment method accurately aligns image and text features to complete the pretraining of the visual language model, and makes the model more efficient for various downstream tasks, including remote sensing. The PET technique fully develops the potential of this model to adapt to downstream tasks in new scenarios.

3.1. Multi-Granularity CLIP Module

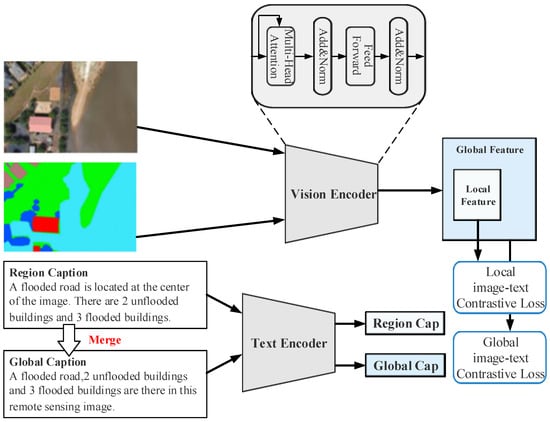

The proposed multi-granularity CLIP model is depicted in Figure 2, featuring a dual-stream network architecture consisting of an image encoder and a text encoder. Both encoders terminate with a linear layer followed by a normalization layer, designed to project the final category token into a unified dimension and normalize it, thus jointly representing the image and text features as visual and linguistic representation vectors within the same embedding space.

Figure 2.

An illustration of our proposed multi-granularity CLIP method. Global features capture high-level semantic context, while local features focus on fine-grained details of key regions. Such strategy helps this model use these capabilities across different spatial scales, thereby boosting performance in downstream tasks like classification and segmentation.

To better model relationships among salient objects in the global image, we process the images using a pretrained object-detection network, obtaining region bounding boxes and their corresponding category information. For object categories within these bounding boxes that possess attributes, we retrieve their textual descriptions. These text pieces collectively provide a finer-grained, more comprehensive, and accurate description for the process of regional contrastive learning.

The above process can be described mathematically as follows. For each global image–text pair $(I, T)$, we generate pairs $(I^{r}, T^{r})$ consisting of a bounding-box region-level image $I^{r}$, cropped from the original global image based on the bounding box coordinates from the pretrained object-detection network, and a text caption $T^{r}$ describing the objects in the cropped region. While the global description text $T$ encapsulates a complete description of the entire image, the bounding-box region description texts $T^{r}$ offer localized, more detailed descriptions, providing fine-grained textual content.

Thus, $(I, T)$ is treated as a positive sample pair, and the instances of $(I^{r}, T^{r})$ are also considered multiple positive sample pairs. All these image–text pairs are fed simultaneously into the dual-stream CLIP model’s image encoder $E_{\mathrm{img}}$ and text encoder $E_{\mathrm{txt}}$ to extract the global representations $(v^{g}, t^{g})$ and the region-based bounding-box representations $(v^{r}, t^{r})$.

This process can be described mathematically as follows in Equations (1)–(4):

$$v^{g} = E_{\mathrm{img}}(I) \tag{1}$$

$$t^{g} = E_{\mathrm{txt}}(T) \tag{2}$$

$$v^{r} = E_{\mathrm{img}}(I^{r}) \tag{3}$$

$$t^{r} = E_{\mathrm{txt}}(T^{r}) \tag{4}$$

where $v^{g}, t^{g} \in \mathbb{R}^{b \times d}$ and $v^{r}, t^{r} \in \mathbb{R}^{b \times d}$, $b$ represents the batch size, and $d$ represents the embedding dimension.

This mechanism allows the model to learn from both the holistic context and the fine-grained details present in both the visual and textual modalities, thereby improving the alignment between them.

Ultimately, the global representation pair $(v^{g}, t^{g})$ and the region representation pairs $(v^{r}, t^{r})$ are brought closer in their respective feature spaces through the use of a contrastive learning loss function, whereas other image–text pairs within the same batch are treated as negative samples and pushed away from the positive sample features. We term this entire contrastive learning process multi-granularity representation alignment. The overall loss function employed in this process using multi-granularity supervision can be described by the following Formulae (5)–(7):

$$\mathcal{L}_{g} = \mathcal{L}_{\mathrm{CLIP}}(v^{g}, t^{g}) \tag{5}$$

$$\mathcal{L}_{r} = \mathcal{L}_{\mathrm{CLIP}}(v^{r}, t^{r}) \tag{6}$$

$$\mathcal{L} = \lambda \mathcal{L}_{g} + (1 - \lambda) \mathcal{L}_{r} \tag{7}$$

where the weight $\lambda$ serves as a hyperparameter controlling the relative contribution of the global loss function $\mathcal{L}_{g}$ and the bounding-box region loss function $\mathcal{L}_{r}$, with an empirical value of 0.5 in the experiment, and $\mathcal{L}_{\mathrm{CLIP}}$ refers to the image–text contrastive loss function from the CLIP paper.
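The multi-granularity alignment described above can be illustrated with a small numerical sketch. The snippet below is a minimal pure-Python illustration, not the authors' implementation. It assumes the symmetric InfoNCE form of the CLIP image–text contrastive loss with a hypothetical temperature of 0.07, and it assumes that the global and region losses are mixed as a convex combination with weight 0.5. The function names and the toy embeddings are invented for illustration.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def clip_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE loss; row i of each list is a matching image-text pair."""
    imgs = [l2_normalize(v) for v in image_emb]
    txts = [l2_normalize(t) for t in text_emb]
    n = len(imgs)
    # logits[i][j]: cosine similarity of image i and text j, scaled by temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]

    def cross_entropy_diag(rows):
        # Cross-entropy where the correct class of row i is column i.
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)
            log_sum = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_sum - row[i]
        return total / len(rows)

    image_to_text = cross_entropy_diag(logits)
    text_to_image = cross_entropy_diag([list(col) for col in zip(*logits)])
    return 0.5 * (image_to_text + text_to_image)

def multi_granularity_loss(global_img, global_txt, region_img, region_txt, weight=0.5):
    """Assumed convex combination of the global and region-level losses."""
    loss_global = clip_loss(global_img, global_txt)
    loss_region = clip_loss(region_img, region_txt)
    return weight * loss_global + (1.0 - weight) * loss_region

# Toy embeddings: two pairs that are either perfectly aligned or swapped.
aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]
loss_good = multi_granularity_loss(aligned, aligned, aligned, aligned)
loss_bad = multi_granularity_loss(aligned, swapped, aligned, swapped)
print(loss_good < loss_bad)
```

In this toy case the aligned pairs give a loss close to zero, while the swapped pairs are penalized heavily, which is the behavior the positive and negative pair construction is meant to produce.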

We initialize the image encoder and text encoder using the pretrained weights from EVA-CLIP. Note that during inference, only the original global image–text pair is used: the visual representation is derived from the global image $I$, and the textual representation from the global description text $T$.

In summary, the global and local captions we constructed are designed to ensure the quality and consistency of the descriptions. The global description provides an overall summary, while the local description focuses on details. We also use methods such as center analysis to ensure the relevance of the local description to the global context. In terms of diversity, we use connected domain analysis and global statistics to capture the characteristics of different objects and scenes in the image, and strive to generate diverse descriptive texts.

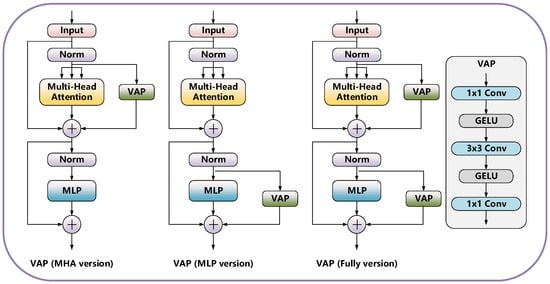

3.2. VAP Module

When migrating to downstream tasks in remote sensing, the adaptability of our multi-granularity CLIP model is significantly improved by its extensive pretraining across various granularity levels. However, the prevalent paradigm in computer vision, pretraining followed by full fine-tuning, can be computationally expensive and often makes complete fine-tuning infeasible. Thus, we employ parameter-efficient tuning (PET), inspired by parameter-efficient transfer learning (PETL) on language transformers, and insert lightweight adaptation modules into the pretrained vision transformer (ViT), aiming to fully exploit the potential of the model for adapting to new scenarios in downstream tasks.

During implementation, we freeze the pretrained parameters in the backbone of the multi-granularity CLIP model, only fine-tuning the PET module. This approach ensures that the existing knowledge learned during pretraining is well-preserved, preventing detrimental effects on the high-quality existing knowledge from subsequent transfer tasks. Simultaneously, while transferring to downstream data, we introduce learnable modules and task-specific decoders to enhance the model’s understanding of the particular task at hand.

This technique enables adaptation to downstream tasks with the introduction of only a small number of trainable parameters (less than 0.5% of the total model parameters), striking a balance between preserving the integrity of the pretrained knowledge and effectively tuning the model for specific downstream applications in remote sensing.

As shown in Figure 3, the VAP module consists of three convolutional layers: a 1 × 1 convolutional layer for channel dimension reduction, a 3 × 3 convolutional layer with an equal number of input and output channels, and another 1 × 1 convolutional layer for channel dimension expansion. Between these convolutional layers, GELU is used as the activation function. Given that ViT flattens image data into a one-dimensional token sequence, it is necessary to restore the token sequence to a two-dimensional structure before applying the convolutions. The class token is included here to mark individual images.
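The bottleneck structure described above can be sketched in plain Python to make the token reshaping and the three convolutions concrete. This is a minimal illustration under stated assumptions, not the authors' code: it assumes the class token sits at index 0 and passes through unchanged, that the 3 × 3 convolution uses zero padding, and that biases are omitted. All names (`vap_forward`, `vap_param_count`, the toy sizes) are hypothetical.

```python
import math
import random

def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def apply_gelu(grid):
    return [[[gelu(v) for v in px] for px in row] for row in grid]

def conv1x1(grid, weight):
    """Pointwise convolution; weight[o][c] maps input channel c to output channel o."""
    return [[[sum(w * x for w, x in zip(w_row, px)) for w_row in weight]
             for px in row] for row in grid]

def conv3x3(grid, weight):
    """3x3 convolution with zero padding; weight[o][c][dy][dx]."""
    h, w = len(grid), len(grid[0])
    out = []
    for y in range(h):
        out_row = []
        for x in range(w):
            px = []
            for w_o in weight:
                acc = 0.0
                for c, w_c in enumerate(w_o):
                    for dy in range(3):
                        for dx in range(3):
                            yy, xx = y + dy - 1, x + dx - 1
                            if 0 <= yy < h and 0 <= xx < w:
                                acc += w_c[dy][dx] * grid[yy][xx][c]
                px.append(acc)
            out_row.append(px)
        out.append(out_row)
    return out

def vap_forward(tokens, height, width, w_down, w_mid, w_up):
    """Restore patch tokens to a 2D grid, apply the bottleneck, flatten again."""
    cls_token, patches = tokens[0], tokens[1:]
    grid = [patches[r * width:(r + 1) * width] for r in range(height)]
    hidden = apply_gelu(conv1x1(grid, w_down))    # 1x1: reduce channels
    hidden = apply_gelu(conv3x3(hidden, w_mid))   # 3x3: same channel count
    out = conv1x1(hidden, w_up)                   # 1x1: expand channels
    return [cls_token] + [px for row in out for px in row]

def vap_param_count(dim, hidden):
    """Weights of the three convolutions (biases omitted)."""
    return dim * hidden + hidden * hidden * 9 + hidden * dim

rng = random.Random(0)
dim, hidden, height, width = 4, 2, 3, 3
tokens = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(1 + height * width)]
w_down = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(hidden)]
w_mid = [[[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
          for _ in range(hidden)] for _ in range(hidden)]
w_up = [[rng.uniform(-1, 1) for _ in range(hidden)] for _ in range(dim)]
adapted = vap_forward(tokens, height, width, w_down, w_mid, w_up)
print(len(adapted), len(adapted[1]))
```

The output keeps the input shape, so the module can be added to a transformer branch without changing the surrounding layers. For a hypothetical token dimension of 768 and a bottleneck width of 32, `vap_param_count(768, 32)` gives 58,368 weights per module, which shows why such adapters add few trainable parameters.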

Figure 3.

An illustration of our proposed VAP module, which consists of three versions: VAP (MHA version), VAP (MLP version), and VAP (full version). By inserting adapters at different locations, rapid and accurate remote sensing image interpretation can be achieved.

The parameter-efficient tuning (PET) approach involves integrating the VAP module in parallel with the multi-head self-attention module within the original multi-granularity CLIP model. Both components work together, and their computations can be described mathematically using the following Formulas (8) and (9):

In the formulation, $s$ is a hyperparameter that controls the extent of involvement of the VAP module during training; its empirical value is 0.1 in our experiments. LN denotes Layer Normalization. The VAP module resembles the residual structure found in ResNet: if the multi-head attention layers and MLP layers were removed from the model, the remaining ViT block would essentially correspond to a CNN structure within a ResNet.
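As a hedged illustration of the parallel design described above, one form consistent with the description (a VAP branch scaled by $s$ and added alongside the multi-head attention branch) is given below. The symbols $x_{\ell-1}$, $x'_{\ell}$, and $x_{\ell}$ for the input, intermediate, and output tokens of block $\ell$ are assumed notation, and feeding both branches from the same normalized input follows the common pre-norm ViT layout rather than anything stated in the text.

```latex
\begin{aligned}
x'_{\ell} &= x_{\ell-1} + \mathrm{MHA}\big(\mathrm{LN}(x_{\ell-1})\big) + s \cdot \mathrm{VAP}\big(\mathrm{LN}(x_{\ell-1})\big), \\
x_{\ell}  &= x'_{\ell} + \mathrm{MLP}\big(\mathrm{LN}(x'_{\ell})\big).
\end{aligned}
```

Under this reading, $s = 0.1$ means the adapter output is scaled to one tenth before being added to the attention output, so the frozen attention branch dominates early in training while the adapter learns a small task-specific correction.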

Through the application of the above parameter-efficient tuning (PET) technique, our model demonstrates strong generalizability in adapting to and performing tasks on downstream (remote sensing) data. It effectively leverages the pretrained knowledge while efficiently refining its parameters to cater specifically to the nuances of the new domain, ensuring robust performance across a variety of remote sensing tasks. This highlights the effectiveness of the PET strategy in enhancing the model’s adaptability without requiring extensive retraining or introducing significant computational overhead.

4. Experiments

4.1. Comparison Methods and Experiment Settings

In the experiments section of our study, a variety of methods are employed for comparison: RingMo [35], SkySense [39], RoI Transformer [21], Oriented R-CNN [62], RingMo-SAM [36], and ChangeFormer [63]. RingMo [35] is a remote sensing foundation model that leverages masked image modeling for self-supervised pretraining. SkySense [39] is a multi-modal remote sensing foundation model that integrates diverse data sources for diverse tasks. RoI Transformer [21] learns to transform region proposals for detecting arbitrarily oriented objects in aerial images. Oriented R-CNN [62] integrates rotated bounding boxes and a rotated region proposal network to detect objects with arbitrary orientations. RingMo-SAM [36] is a foundation model for multimodal remote sensing image segmentation of optical and SAR images. ChangeFormer [63] is a transformer-based Siamese network for change detection in remote sensing images. This diverse selection allows for a comprehensive evaluation of our proposed method. The code will be made publicly available at https://github.com/InfiniteProgress700/RLita (accessed on 5 May 2025).

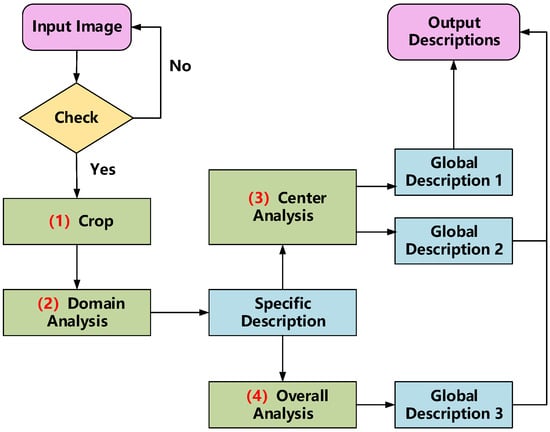

To mitigate the cost of image annotation, region-level image–text pairs are constructed based on annotated data by contrastive learning for segmenting object mask features. The pairs are built from existing annotated datasets, such as remote sensing object detection and semantic segmentation datasets, and we design specifications to generate the corresponding image descriptions. The global description of an image consists of three parts. Global Description 1 describes the terrain information in the central area, whose center coincides with the center of the original image and whose length and width are half those of the original image. Global Description 2 describes the terrain information outside the central area defined in Global Description 1. Global Description 3 describes the features of the entire image. In addition, information such as the type, location, and mask of independent objects is added to the description as local area descriptions.

The specific steps for generating descriptions using annotated data from different scenarios are shown in Figure 4.

Figure 4.

Flowchart of the description generation stage. The main steps are as follows: (1) adaptive image cropping for manageable processing, (2) connected-domain analysis for object attribute extraction, (3) center-based spatial description generation, and (4) global statistical analysis for comprehensive scene characterization.

(1) Image cropping. If the original image resolution exceeds a predefined threshold, the image and its corresponding labels are cropped into smaller slices. This step ensures that the image is manageable for further processing while maintaining crucial details of the scene.

(2) Connected domain analysis. For semantic segmentation labels, several key attributes are extracted for each connected domain, including the minimum bounding rectangle (MBR), the object category, and other relevant information. The mask of each connected domain is then compressed with run-length encoding (RLE) for efficient storage and processing. For object-detection labels, only the MBR and category information are considered, as object detection typically focuses on identifying the location and type of objects within the scene.

(3) Center analysis. Following this, location-based center descriptions are generated by analyzing the spatial relationship between the connected domains or object bounding boxes and predefined regions within the image. Specifically, the image is divided into a center area (denoted by a red dashed line) and a non-center area (denoted by a blue dashed line) in Figure 5. Descriptions are then created based on the number and type of connected domains or target boxes that intersect these regions. For example, a description could be generated such as, “A farm is located at the center of the image”, if the relevant connected domain falls within the center area. These descriptions are categorized as global descriptions 1 and 2, reflecting the relationship between object location and the image’s broader context.

(4) Global analysis. A global description is generated for the entire image, summarizing the quantity and types of objects present across the whole scene. This description is derived from statistical analysis of all connected domains in the image. If the number of objects exceeds a specific threshold, generalized terms such as “many” or “a lot of” are used to convey the relative abundance of objects. For instance, a description like, “A large number of buildings and a road are visible in this remote sensing image”, would be produced. This step corresponds to global description 3, offering a comprehensive summary of the entire image’s content.
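Steps (2) to (4) above can be sketched on a toy label mask. The following pure-Python example is only an illustration under stated assumptions: it uses 4-connectivity, treats the central area as the centered box with half the image width and height, uses a hypothetical threshold of 3 for switching to "many", and encodes runs as simple value and length pairs. The exact RLE layout, thresholds, and sentence templates used by the authors are not specified, and all function names here are invented.

```python
from collections import Counter, deque

def connected_domains(mask, background=0):
    """Find 4-connected regions; return (category, (x0, y0, x1, y1)) per region."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or mask[y][x] == background:
                continue
            category = mask[y][x]
            queue = deque([(y, x)])
            seen[y][x] = True
            x0 = x1 = x
            y0 = y1 = y
            while queue:
                cy, cx = queue.popleft()
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and mask[ny][nx] == category):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append((category, (x0, y0, x1, y1)))  # inclusive MBR
    return regions

def rle_encode(bits):
    """Run-length encode a flat sequence as [value, run_length] pairs."""
    runs = []
    for b in bits:
        if runs and runs[-1][0] == b:
            runs[-1][1] += 1
        else:
            runs.append([b, 1])
    return runs

def in_center(box, width, height):
    """True if the MBR intersects the central half-width, half-height area."""
    x0, y0, x1, y1 = box
    return (x0 < 3 * width / 4 and x1 + 1 > width / 4
            and y0 < 3 * height / 4 and y1 + 1 > height / 4)

def describe(mask, names, many_threshold=3):
    h, w = len(mask), len(mask[0])
    regions = connected_domains(mask)
    center = Counter(names[c] for c, box in regions if in_center(box, w, h))
    total = Counter(names[c] for c, _ in regions)

    def phrase(name, count):
        if count > many_threshold:
            return f"many {name}s"
        return f"{count} {name}" + ("s" if count > 1 else "")

    center_text = ", ".join(phrase(n, c) for n, c in sorted(center.items()))
    global_text = ", ".join(phrase(n, c) for n, c in sorted(total.items()))
    return {
        "center": (f"At the center of the image: {center_text}." if center
                   else "No objects at the center."),
        "global": f"This remote sensing image contains {global_text}.",
    }

names = {1: "building", 2: "road"}
mask = [[0] * 8 for _ in range(8)]
mask[0][0] = 1                      # a building in the corner
for yy in (3, 4):
    for xx in (3, 4):
        mask[yy][xx] = 1            # a building in the center
mask[7] = [2] * 8                   # a road along the bottom edge
regions = connected_domains(mask)
result = describe(mask, names)
print(result["center"])
print(result["global"])
```

A template-based generator like this is deterministic and cheap, which fits the goal of reusing existing detection and segmentation labels instead of writing captions by hand.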

Figure 5.

Example of description generation.

4.2. Experiments on Object Detection

4.2.1. Datasets and Evaluation Metrics

For object detection, the FAIR1M [12], DIOR [13], and DIOR-R [14] datasets are employed. The experiments were run on eight NVIDIA V100 GPUs with 32 GB of memory each.

FAIR1M [12]: It contains more than 1 million instances in over 15,000 images collected from various platforms, with resolutions ranging from 0.3 to 0.8 m. All objects are annotated with oriented bounding boxes (OBBs) into five categories and 37 subcategories. The five categories are airplane, vehicle, ship, road, and court, each of which consists of several fine-grained subcategories. During the experiments, the size of all images is maintained at 800 × 800 pixels. The learning rate during training is set to 0.00032, and the batch size is 4. The model is trained for 12 epochs with the MultiStepLR learning rate adjustment strategy: the learning rate is reduced once in the 7th epoch and again in the 9th epoch.

DIOR [13] and DIOR-R [14]: The DIOR dataset consists of 23,463 images distributed across 20 classes, totaling 192,472 objects. Following DIOR’s predefined partitioning scheme, 11,725 images are selected for training and 11,738 for testing. The images in the DIOR dataset typically have a width of around 800 pixels. During the experiments, the size of all images is maintained at 800 × 800 pixels, and we focus more on the results for the complex composite object categories. The DIOR-R dataset adds oriented bounding box annotations to the horizontal bounding boxes, which suits narrow remote sensing objects such as ships and bridges. The learning rate during training is set to 0.00024, and the batch size is 6. The model is trained for 12 epochs with the MultiStepLR learning rate adjustment strategy: the learning rate is reduced once in the 7th epoch and again in the 9th epoch.
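The step schedule described for both datasets can be written out explicitly. The sketch below is a plain-Python illustration rather than the training code: it assumes a decay factor of 0.1 at each milestone (the decay factor is not stated in the text) and assumes that the reduced rate applies from the start of the 7th and 9th epochs. The function name `multistep_lr` is hypothetical.

```python
def multistep_lr(base_lr, milestones, num_epochs, gamma=0.1):
    """Learning rate per epoch (epochs are 1-indexed); gamma=0.1 is an assumption."""
    schedule = []
    for epoch in range(1, num_epochs + 1):
        drops = sum(1 for m in milestones if epoch >= m)  # milestones already reached
        schedule.append(base_lr * gamma ** drops)
    return schedule

fair1m_schedule = multistep_lr(0.00032, milestones=[7, 9], num_epochs=12)
dior_schedule = multistep_lr(0.00024, milestones=[7, 9], num_epochs=12)
for epoch, lr in enumerate(fair1m_schedule, start=1):
    print(f"epoch {epoch:2d}: lr = {lr:.7f}")
```

Under these assumptions, the FAIR1M run uses 0.00032 for epochs 1 to 6, one tenth of that for epochs 7 and 8, and one hundredth for epochs 9 to 12.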

For object detection, the evaluation metrics employed are mean Average Precision (mAP) and mAP at an IoU threshold of 0.5 (mAP50). mAP averages the average precision over IoU thresholds from 0.50 to 0.95 with a step size of 0.05, providing a comprehensive assessment of detection performance across a range of overlap criteria. mAP50 evaluates the average precision at the single IoU threshold of 0.5, which is a more lenient overlap criterion than the higher thresholds included in mAP. Further, time per epoch (Time) is used to measure the training speed, and memory is used to measure the memory cost during training.
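To make the role of the IoU threshold concrete, the sketch below computes the IoU of two horizontal boxes and lists the thresholds at which a prediction would count as a match. It is a simplified illustration with hypothetical names and boxes: it covers only the matching criterion, not the full precision and recall computation, and oriented boxes such as those in FAIR1M and DIOR-R would need a rotated IoU instead.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix1, iy1 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# IoU thresholds averaged by mAP: 0.50, 0.55, ..., 0.95
thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]

def matched_thresholds(pred, gt):
    """Thresholds at which the prediction counts as a true positive."""
    value = iou(pred, gt)
    return [t for t in thresholds if value >= t]

prediction = (0, 0, 10, 10)
ground_truth = (2, 0, 12, 10)
overlap = iou(prediction, ground_truth)
hits = matched_thresholds(prediction, ground_truth)
print(round(overlap, 3), hits)
```

Here the prediction overlaps the ground truth with an IoU of 2/3, so it counts as correct for mAP50 but only for four of the ten thresholds averaged by mAP, which is why mAP is the more demanding metric.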

4.2.2. Comparison with the SOTA

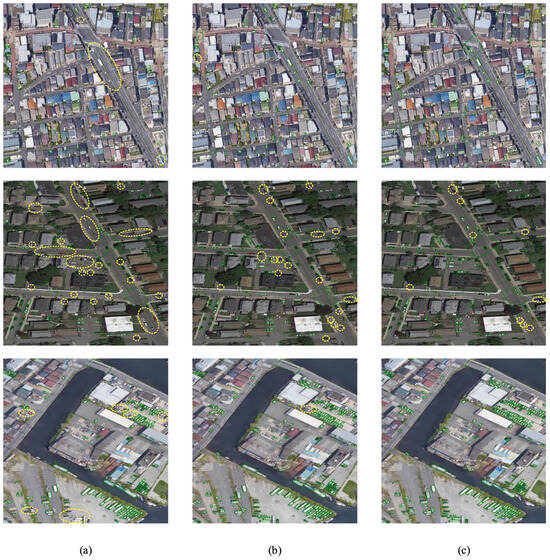

In this section, we present the experimental results of the RLita method compared with several state-of-the-art (SOTA) methods on the remote sensing datasets FAIR1M (Table 1), DIOR, and DIOR-R (Table 2). On the FAIR1M dataset, our proposed RLita method achieves a mean Average Precision (mAP) of 73.80%, a significant improvement over the SOTA methods. Specifically, RLita outperforms RingMo by 27.59%, SkySense by 19.23%, and Oriented R-CNN by 29.50%. On the DIOR dataset, RLita achieves an mAP at an IoU threshold of 0.5 (mAP50) of 85.24%, surpassing RingMo by 9.34% and SkySense by 6.51%. On the DIOR-R dataset, RLita achieves an mAP50 of 75.90%, surpassing SkySense by 1.63%. The visualization results in Figure 6 show that the compared methods achieve good results in simple scenarios, whereas our proposed method performs better in complex scenarios and for small, densely located objects.

Table 1.

Comparison with SOTA methods on FAIR1M [12].

Table 2.

Comparison with SOTA methods on DIOR [13] and DIOR-R [14].

In order to demonstrate the capability of our RLita to deal with various resolutions, imbalanced samples, and multiple scenes, we further experiment on object-detection tasks. As shown in Figure 7, our methods can accurately detect changeable objects with various resolutions and different scenes, including cars, ships, dams, and playgrounds.

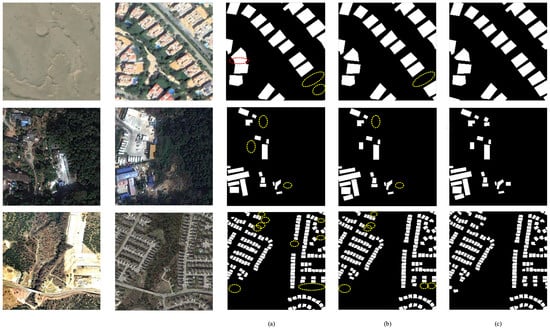

4.2.3. Ablation Experiments

A series of ablation experiments is conducted on the proposed RLita. Firstly, we compare our models with different amounts of data. As Table 3 shows, the quantitative results on the object-detection task demonstrate the effectiveness of combining the local and global image–text losses. Figure 8 shows the corresponding visualization results. As the missed detections marked by yellow circles indicate, combining local and global focus effectively improves the robustness of object detection, which helps RLita obtain higher accuracy. In Table 4, RLita achieves comparable mAP values with fewer parameters and shorter training time. To sum up, we draw several conclusions:

(1) The local image–text loss module mainly utilizes the semantic information of the surrounding environment, effectively enhancing the feature reconstruction and expression ability of the model, therefore improving the performance of object recognition.

(2) The global image–text loss module comprehensively analyzes the associated targets between adjacent and distant areas, improving the global representation ability of the model for object location.

(3) After combining the local image–text loss module and the global image–text loss module, the model has a positive effect on local and global feature representation, greatly improving the accuracy of object detection and recognition.

(4) On the FAIR1M dataset, adding the VAP (full version) module reduces the number of trainable parameters by 96% and the training time by 80% while keeping the mAP nearly unchanged (73.80% versus 74.69%), saving substantial computing resources.

(5) On the DIOR dataset, adding the VAP (full version) module reduces the number of trainable parameters by 96% and the training time by about 89% while keeping the mAP nearly unchanged (85.24% versus 85.93%), saving substantial computing resources.

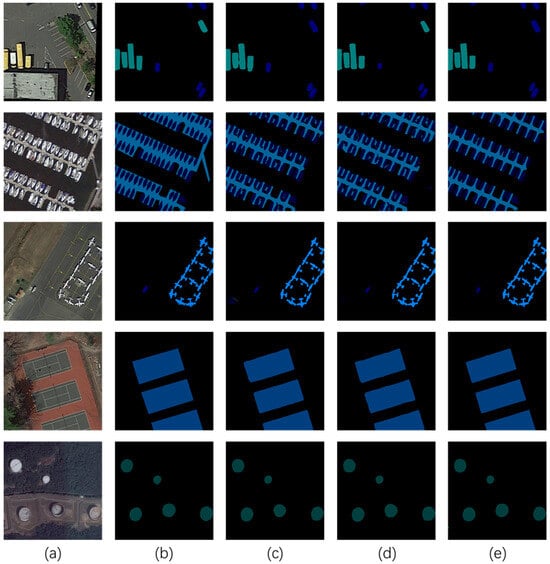

Figure 6.

Visualization results of our method and compared methods on the FAIR1M [12] dataset. (a) Images. (b) RingMo. (c) Roi Transformer. (d) Oriented RCNN. (e) RLita. Boxes of different colors represent the detection results.

Figure 7.

Visualization results of our RLita for object detection with various resolutions. (a) High resolution. (b) Middle resolution. (c) Low resolution. Boxes represent the detection results.

Table 3.

Results of region-level pretraining method in object detection experiments.

| Dataset | Local Image-Text Loss | Global Image-Text Loss | mAP (%) |
|---|---|---|---|
| FAIR1M [12] | ✓ | | 45.39 |
| FAIR1M [12] | | ✓ | 69.42 |
| FAIR1M [12] | ✓ | ✓ | 73.80 |
| DIOR [13] | ✓ | | 52.07 |
| DIOR [13] | | ✓ | 78.31 |
| DIOR [13] | ✓ | ✓ | 85.24 |

Table 4.

Results of VAP module in object detection experiments.

| Dataset | VAP | Para./FLOPs | Training Time | mAP (%) |
|---|---|---|---|---|
| FAIR1M [12] | / | 9.68 M/114 G | 234.71 h | 74.69 |
| FAIR1M [12] | MHA version | 5.36 M/95 G | 144.82 h | 74.25 |
| FAIR1M [12] | MLP version | 4.07 M/76 G | 98.60 h | 73.89 |
| FAIR1M [12] | full version | 0.38 M/76 G | 46.25 h | 73.80 |
| DIOR [13] | / | 9.68 M/114 G | 205.03 h | 85.93 |
| DIOR [13] | MHA version | 5.36 M/95 G | 118.32 h | 85.57 |
| DIOR [13] | MLP version | 4.07 M/76 G | 77.19 h | 85.31 |
| DIOR [13] | full version | 0.38 M/76 G | 21.98 h | 85.24 |
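The reduction percentages quoted in the ablation conclusions can be re-derived from the parameter counts and training times in Table 4. The following is a minimal sketch of that arithmetic; the names `TABLE_4`, `relative_reduction`, and `summarize` are hypothetical helpers introduced only for this illustration.

```python
# (trainable parameters in millions, training time in hours), copied from Table 4.
# "without_vap" is the "/" row; "vap_full" is the last VAP row of each dataset.
TABLE_4 = {
    "FAIR1M": {"without_vap": (9.68, 234.71), "vap_full": (0.38, 46.25)},
    "DIOR": {"without_vap": (9.68, 205.03), "vap_full": (0.38, 21.98)},
}


def relative_reduction(baseline: float, value: float) -> float:
    """Return how much smaller `value` is than `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - value) / baseline


def summarize(table: dict) -> dict:
    """Map each dataset to (parameter reduction %, training-time reduction %)."""
    summary = {}
    for dataset, rows in table.items():
        base_params, base_hours = rows["without_vap"]
        vap_params, vap_hours = rows["vap_full"]
        summary[dataset] = (
            round(relative_reduction(base_params, vap_params), 1),
            round(relative_reduction(base_hours, vap_hours), 1),
        )
    return summary


if __name__ == "__main__":
    for dataset, (params, hours) in summarize(TABLE_4).items():
        print(f"{dataset}: parameters -{params}%, training time -{hours}%")
```

With the Table 4 values, this gives a parameter reduction of roughly 96% on both datasets and training-time reductions of roughly 80% on FAIR1M and 89% on DIOR.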

Figure 8.

Visualization results of our method trained with different loss. Yellow circles indicate the missed detections. (a) Local image–text loss. (b) Global image–text loss. (c) Combine local with global image–text loss. Boxes represent the detection results.

4.3. Experiments on Semantic Segmentation

4.3.1. Datasets and Evaluation Metrics

For semantic segmentation, the iSAID [15] and Vaihingen [16] datasets are employed. All experiments use eight NVIDIA V100 GPUs with 32 GB of memory each.

iSAID [15]: The iSAID dataset is a semantic segmentation dataset derived from DOTA [64], containing 655,451 instances across 15 object categories; non-object pixels are designated as background. Of the 2806 images in the dataset, annotations are available for 1869, of which 1411 are used for training and 458 for testing. Image sizes range from 800 × 800 to 4000 × 13,000 pixels. In our experiments, all images are cropped into 512 × 512 pixel patches with an overlap of 128 pixels. The learning rate is set to 0.0001, the batch size is 4, and training runs for 38 epochs.

Vaihingen [16]: The Vaihingen dataset contains 33 images labelled with 6 categories (excluding background). Following common practice, 16 images are used for training and 17 for testing. In our experiments, images are cropped into 512 × 512 pixel patches with a step of 256 pixels. The learning rate is set to 0.00012, the batch size is 4, and training runs for 18 epochs.
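The cropping settings described above (512 × 512 patches with a 128-pixel overlap for iSAID, which corresponds to a stride of 384 pixels, and 256-pixel steps for Vaihingen) can be sketched as a sliding-window generator. This is a minimal illustration, not the authors' preprocessing code: the function names `crop_origins` and `crop_windows` are hypothetical, and shifting the last window back to the image edge is an assumed border policy, since the text does not state how borders are handled.

```python
def crop_origins(length: int, crop: int, stride: int) -> list[int]:
    """Start offsets of crops that cover [0, length) along one axis."""
    if crop <= 0 or stride <= 0:
        raise ValueError("crop and stride must be positive")
    if length <= crop:
        # Image is not larger than one crop; the caller would need to pad it.
        return [0]
    origins = list(range(0, length - crop + 1, stride))
    # Assumed border policy: add one final window flush with the image edge.
    if origins[-1] + crop < length:
        origins.append(length - crop)
    return origins


def crop_windows(height: int, width: int, crop: int = 512, stride: int = 384):
    """Return (top, left, bottom, right) boxes for all crops of an image."""
    return [
        (top, left, top + crop, left + crop)
        for top in crop_origins(height, crop, stride)
        for left in crop_origins(width, crop, stride)
    ]


if __name__ == "__main__":
    # iSAID-style setting: 128-pixel overlap, i.e. stride = 512 - 128 = 384.
    print(len(crop_windows(800, 800, crop=512, stride=384)))
    # Vaihingen-style setting: 256-pixel steps.
    print(len(crop_windows(1024, 1024, crop=512, stride=256)))
```

Under these assumptions, an 800 × 800 image yields four overlapping patches, and a 1024 × 1024 image cropped in 256-pixel steps yields nine.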

For semantic segmentation, the evaluation metric is the mean Intersection over Union (mIoU), the average of the per-class Intersection over Union (IoU). For each class, IoU is the ratio of the intersection area to the union area of the predicted and ground-truth segmentation masks.
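The mIoU definition above can be made concrete with a short sketch that works on flattened label maps. The function name `mean_iou` is hypothetical, and skipping classes that appear in neither the prediction nor the ground truth is an assumed convention; benchmark implementations usually accumulate the counts over the whole test set before dividing.

```python
def mean_iou(pred, target, num_classes: int) -> float:
    """Mean IoU over classes for two flat sequences of integer class ids."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and equally long")
    intersection = [0] * num_classes
    pred_count = [0] * num_classes
    target_count = [0] * num_classes
    for p, t in zip(pred, target):
        pred_count[p] += 1
        target_count[t] += 1
        if p == t:
            intersection[p] += 1
    ious = []
    for c in range(num_classes):
        union = pred_count[c] + target_count[c] - intersection[c]
        if union > 0:  # assumed convention: ignore classes absent from both maps
            ious.append(intersection[c] / union)
    return sum(ious) / len(ious)


if __name__ == "__main__":
    prediction = [0, 0, 1, 1, 2, 2]
    ground_truth = [0, 1, 1, 1, 2, 0]
    # Per-class IoU is 1/3, 2/3 and 1/2, so the mean is 0.5.
    print(mean_iou(prediction, ground_truth, num_classes=3))
```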

4.3.2. Comparison with the SOTA

The results on iSAID [15] are listed in Table 5. RLita achieves the best segmentation results on the iSAID dataset: its mIoU is 22.97 percentage points higher than that of RingMo-SAM, 13.76 points higher than that of RingMo, and 10.05 points higher than that of SkySense, indicating a significant performance advantage in the segmentation task. On the challenging Vaihingen [16] dataset, RLita reaches an mIoU of 75.44%, which shows the effectiveness and generalization of the method.

Table 5.

Comparison with SOTA methods on iSAID [15].

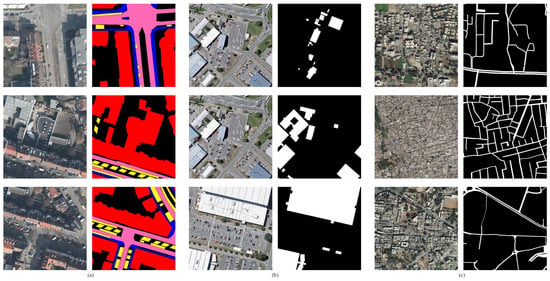

We visualize the segmentation results of our method and the compared methods on iSAID [15], as shown in Figure 9. The visualization shows that our method delineates the boundaries of ground objects more accurately.

Figure 9.

Visualization results of our method and compared methods on the iSAID [15] dataset. (a) Images. (b) Labels. (c) RingMo-SAM. (d) RingMo. (e) RLita.

To demonstrate that RLita can handle various resolutions, imbalanced samples, and multiple scenes, we conduct further semantic segmentation experiments. As shown in Figure 10, our method segments diverse objects well at different resolutions and in different downstream tasks, including road segmentation and building segmentation.

Figure 10.

Visualization results of our RLita for semantic segmentation with various resolutions. (a) High resolution. (b) Middle resolution. (c) Low resolution.

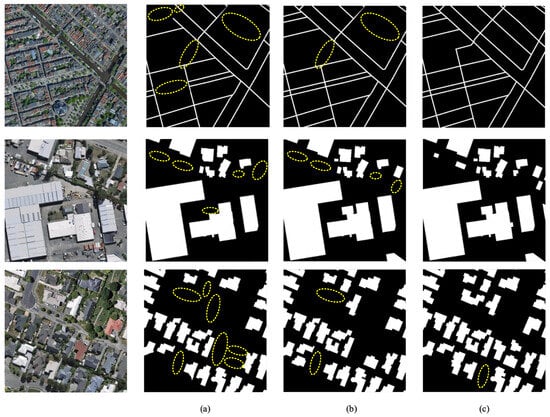

4.3.3. Ablation Experiments

We conduct a series of ablation experiments on the proposed RLita method. First, we compare models trained with different image–text loss configurations. As Table 6 shows, the quantitative results on the semantic segmentation task demonstrate the effectiveness of combining the local and global image–text losses, and Figure 11 shows the corresponding visualization results. The fusion of local and global features effectively extracts and integrates semantic information, helping RLita obtain higher mIoU values. In Table 7, RLita achieves comparable mIoU values with lower computational complexity and shorter training time. To sum up, we draw several conclusions:

(1) The local image–text loss module mainly utilizes the semantic information of the detailed surrounding environment, effectively enhancing the semantic information representation ability of the model.

(2) The global image–text loss module comprehensively analyzes the semantic information differences between adjacent and distant regions, enhancing the performance of semantic segmentation.

(3) After combining the local image–text loss module with the global image–text loss module, the model has a positive effect on the representation of local and global features, greatly improving the accuracy of semantic segmentation.

(4) On the iSAID dataset, adding the VAP (full version) module reduces the number of trainable parameters by 96% and the training time by 96% with only a small drop in mIoU (80.96% versus 82.04%), saving substantial computing resources.

(5) On the Vaihingen dataset, adding the VAP (full version) module reduces the number of trainable parameters by 96% and the training time by 91% with only a small drop in mIoU (75.44% versus 77.13%), saving substantial computing resources.

Figure 11.

Visualization results of our method trained with different losses. Yellow circles indicate the missed segmentation. (a) Local image–text loss. (b) Global image–text loss. (c) Combine local with global image–text loss.

Table 6.

Results of region-level pretraining method in semantic segmentation experiments.

| Dataset | Local Image-Text Loss | Global Image-Text Loss | mIoU (%) |
|---|---|---|---|
| iSAID [15] | ✓ | | 69.42 |
| iSAID [15] | | ✓ | 78.35 |
| iSAID [15] | ✓ | ✓ | 80.96 |
| Vaihingen [16] | ✓ | | 66.82 |
| Vaihingen [16] | | ✓ | 73.39 |
| Vaihingen [16] | ✓ | ✓ | 75.44 |

Table 7.

Results of VAP module in semantic segmentation experiments.

| Dataset | VAP | Para./FLOPs | Training Time | mIoU (%) |
|---|---|---|---|---|
| iSAID [15] | / | 7.12 M/138 G | 127.30 h | 82.04 |
| iSAID [15] | MHA version | 3.88 M/115 G | 90.68 h | 81.36 |
| iSAID [15] | MLP version | 3.01 M/92 G | 69.45 h | 81.17 |
| iSAID [15] | full version | 0.29 M/92 G | 5.29 h | 80.96 |
| Vaihingen [16] | / | 7.12 M/138 G | 203.97 h | 77.13 |
| Vaihingen [16] | MHA version | 3.88 M/115 G | 109.34 h | 76.05 |
| Vaihingen [16] | MLP version | 3.01 M/92 G | 78.36 h | 75.80 |
| Vaihingen [16] | full version | 0.29 M/92 G | 17.82 h | 75.44 |

4.4. Experiments on Change Detection

4.4.1. Datasets and Evaluation Metrics

For change detection, the LEVIR-CD [17] dataset is employed. All experiments use eight NVIDIA V100 GPUs with 32 GB of memory each.

LEVIR-CD [17]: LEVIR-CD is a large-scale remote sensing (RS) binary change-detection dataset. Its bitemporal images were collected in Texas, USA, with capture times ranging from 2002 to 2018. The dataset contains 637 pairs of very-high-resolution (VHR) RS images of 1024 × 1024 pixels with binary labels, and it focuses on building growth and building decline. In our experiments, all images are kept at 1024 × 1024 pixels. The learning rate is set to 0.00012, the batch size is 4, training runs for 150 epochs, and the warm-up ends at the 5th epoch.
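The training settings above fix the base learning rate, the number of epochs, and the end of the warm-up, but not the shape of the schedule. The sketch below shows one common reading, stated here purely as an assumption: a linear warm-up over the first five epochs followed by a constant learning rate. The function name `learning_rate` and both the linear ramp and the absence of decay are hypothetical choices, not details confirmed by the text.

```python
BASE_LR = 0.00012    # learning rate stated for LEVIR-CD
TOTAL_EPOCHS = 150   # total training epochs stated for LEVIR-CD
WARMUP_EPOCHS = 5    # the warm-up ends at the 5th epoch


def learning_rate(epoch: int) -> float:
    """Learning rate for a 1-indexed epoch under the assumed schedule."""
    if not 1 <= epoch <= TOTAL_EPOCHS:
        raise ValueError("epoch must be between 1 and TOTAL_EPOCHS")
    if epoch <= WARMUP_EPOCHS:
        # Assumed linear ramp that reaches BASE_LR at the last warm-up epoch.
        return BASE_LR * epoch / WARMUP_EPOCHS
    # Assumed constant rate afterwards; any decay policy is not specified.
    return BASE_LR


if __name__ == "__main__":
    for epoch in (1, 3, 5, 6, 150):
        print(epoch, learning_rate(epoch))
```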

For change detection, the evaluation metric is the F1 score, the harmonic mean of precision and recall. It ranges from 0 to 1 and therefore accounts for both the precision and the recall of the model.
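For reference, the F1 score is conventionally computed from the counts of true positives, false positives, and false negatives on the changed class. The following minimal sketch operates on flattened binary change masks; the function name `f1_score` and the choice to return 0 when precision or recall is undefined are assumptions made for this illustration.

```python
def f1_score(pred, target) -> float:
    """F1 of the positive (changed) class for two flat sequences of 0/1 labels."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)
    # Assumed convention: undefined precision or recall counts as 0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    predicted_change = [1, 1, 0, 0, 1, 0]
    labelled_change = [1, 0, 0, 1, 1, 0]
    # tp = 2, fp = 1, fn = 1, so precision = recall = F1 = 2/3.
    print(f1_score(predicted_change, labelled_change))
```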

4.4.2. Comparison with the SOTA

The results on LEVIR-CD are listed in Table 8. RLita achieves the best change-detection results on LEVIR-CD, with an F1 score of 94.08%, which is 3.68 percentage points higher than ChangeFormer, 2.22 points higher than RingMo, and 1.50 points higher than SkySense. This demonstrates a notable improvement over the state-of-the-art approaches.

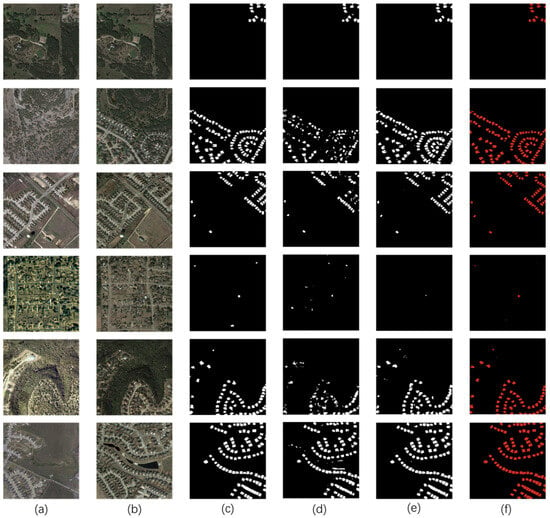

We visualize the detection results of our method and the compared methods on LEVIR-CD, as shown in Figure 12; the results for two test images are shown in Figure 12d–f. The visualization shows that our method better captures the details of the changes.

Figure 12.

Visualization results of our method and compared methods on the LEVIR-CD [17] dataset. (a) T0 Images. (b) T1 Images. (c) Labels. (d) ChangeFormer. (e) RingMo. (f) RLita.

To demonstrate that RLita can handle various resolutions, imbalanced samples, and multiple scenes, we conduct further change-detection experiments. As shown in Figure 13, our method captures changes well at different resolutions and for different objects, including roads, buildings, and vegetation.

Figure 13.

Visualization results of our RLita for change detection with various resolutions. (a) High resolution. (b) Middle resolution. (c) Low resolution.

4.4.3. Ablation Experiments

We conduct a series of ablation experiments on the proposed RLita method. First, we compare models trained with different image–text loss configurations. Table 9 shows that RLita achieves the best performance when the local and global image–text losses are combined. In Figure 14, the combined loss helps RLita segment delicate boundaries and perceive multi-scale differences, achieving high-precision change detection. In Table 10, RLita achieves comparable F1 values with lower computational complexity and shorter training time. We draw several conclusions:

(1) The local image–text loss module mainly utilizes semantic information before and after changes in the surrounding environment, effectively enhancing the ability to represent adjacent semantic information associations.

(2) The global image–text loss module comprehensively analyzes the information differences of the associated objects between distant areas, improving the global representation ability for change detection.

(3) After combining the local image–text loss module with the global image–text loss module, the model has a positive effect on the representation of local and global features, greatly improving the accuracy of change detection.

(4) On the LEVIR-CD dataset, adding the VAP (full version) module reduces the number of trainable parameters by 96% and the training time by 96% while keeping F1 nearly unchanged (94.08% versus 94.41%), saving substantial computing resources.

Figure 14.

Visualization results of our method with different training losses. Yellow circles indicate the missed segmentation. Red circles indicate the false segmentation. (a) Local image–text loss. (b) Global image–text loss. (c) Combine local with global image–text loss.

Table 8.

Comparison with methods on LEVIR-CD [17].

| Method | F1 (%) |
|---|---|
| ChangeFormer | 90.40 |
| RingMo | 91.86 |
| SkySense | 92.58 |
| Our RLita | 94.08 |

Table 9.

Results of region-level pretraining method in change-detection experiments.

| Dataset | Local Image-Text Loss | Global Image-Text Loss | F1 (%) |
|---|---|---|---|
| LEVIR-CD [17] | ✓ | | 79.36 |
| LEVIR-CD [17] | | ✓ | 91.79 |
| LEVIR-CD [17] | ✓ | ✓ | 94.08 |

Table 10.

Results of VAP module in change-detection experiments.

| Dataset | VAP | Para./FLOPs | Training Time | F1 (%) |
|---|---|---|---|---|
| LEVIR-CD [17] | / | 13.3 M/121 G | 149.33 h | 94.41 |
| LEVIR-CD [17] | MHA version | 7.94 M/100 G | 67.32 h | 94.13 |
| LEVIR-CD [17] | MLP version | 5.02 M/81 G | 44.80 h | 93.66 |
| LEVIR-CD [17] | full version | 0.52 M/81 G | 5.78 h | 94.08 |

5. Discussion

The proposed RLita framework presents a methodological advancement in optimizing foundation models for remote sensing applications through a novel region-level image-text alignment mechanism. By establishing fine-grained correspondences between localized image regions and their contextual descriptions, our model achieves enhanced cross-modal semantic coherence, leading to superior transfer learning performance across diverse downstream tasks including but not limited to object detection, semantic segmentation, and change detection.
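As a rough illustration of what aligning image regions with text in a shared embedding space involves, the following sketch computes a symmetric contrastive (InfoNCE-style) loss over matched region and text embeddings. This is an assumption for illustration only: a CLIP-style loss is swapped in here, and the function name `alignment_loss`, the temperature of 0.07, and the toy embeddings are hypothetical rather than taken from RLita.

```python
import math


def _normalize(vector):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("embedding must not be the zero vector")
    return [x / norm for x in vector]


def _diagonal_cross_entropy(logits):
    """Mean cross-entropy where the correct column for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)  # subtract the maximum for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def alignment_loss(region_embeddings, text_embeddings, temperature=0.07):
    """Symmetric contrastive loss; pair i of regions matches pair i of texts."""
    if len(region_embeddings) != len(text_embeddings) or not region_embeddings:
        raise ValueError("need the same non-zero number of regions and texts")
    regions = [_normalize(v) for v in region_embeddings]
    texts = [_normalize(v) for v in text_embeddings]
    logits = [
        [sum(a * b for a, b in zip(r, t)) / temperature for t in texts]
        for r in regions
    ]
    transposed = [list(column) for column in zip(*logits)]
    # Average the region-to-text and text-to-region directions.
    return 0.5 * (_diagonal_cross_entropy(logits) + _diagonal_cross_entropy(transposed))


if __name__ == "__main__":
    regions = [[1.0, 0.0], [0.0, 1.0]]
    matched_texts = [[1.0, 0.0], [0.0, 1.0]]
    swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
    print(alignment_loss(regions, matched_texts))  # small: pairs agree
    print(alignment_loss(regions, swapped_texts))  # large: pairs disagree
```

In this toy setting the loss is close to zero when each region embedding points in the same direction as its paired text embedding, and large when the pairs are swapped, which is the behaviour an alignment objective is meant to reward.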

Moreover, the proposed parameter-efficient tuning strategy demonstrates dual advantages: it reduces training overhead compared to full-parameter fine-tuning while maintaining competitive accuracy, and facilitates practical deployment through reduced memory footprint and accelerated inference speeds.

Current experimental validation, while demonstrating methodological efficacy, remains constrained by limited dataset diversity and scale. Future work will focus on expanding the evaluation framework to incorporate multi-source satellite imagery across different geographical regions and atmospheric conditions, thereby strengthening the validity of our findings.

6. Conclusions

This paper proposes a novel region-level image–text alignment foundation model optimization method designed for remote sensing object interpretation tasks. Specifically, this method fully exploits the characteristics of remote sensing data, designing a region-level image–text alignment optimization method which improves the generalization of the foundation model. Moreover, this paper designs a parameter-efficient tuning strategy adapted to the remote sensing domain to reduce computational resources. However, while RLita has been validated to perform excellently on transformer models of different sizes and pretraining modes, its performance on remote sensing models based on convolutional networks remains to be verified. Therefore, studying the performance of RLita on convolutional network-based remote sensing models is the next focus.

Author Contributions

Conceptualization, Q.Z. and D.W.; methodology, Q.Z. and D.W.; software, X.Y.; validation, Q.Z., D.W. and X.Y.; formal analysis, X.Y.; investigation, D.W. and X.Y.; resources, Q.Z.; data curation, Q.Z.; writing—original draft preparation, Q.Z.; writing—review and editing, Q.Z.; visualization, X.Y.; supervision, X.Y.; project administration, Q.Z.; funding acquisition, Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Radford, A.; Narasimhan, K. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://www.mikecaptain.com/resources/pdf/GPT-1.pdf (accessed on 27 April 2025).

2. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. 2019. Available online: https://storage.prod.researchhub.com/uploads/papers/2020/06/01/language-models.pdf (accessed on 27 April 2025).

3. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

4. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

5. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 3–7 May 2021.

6. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

7. Du, Z.; Qian, Y.; Liu, X.; Ding, M.; Qiu, J.; Yang, Z.; Tang, J. GLM: General Language Model Pretraining with Autoregressive Blank Infilling. arXiv 2021, arXiv:2103.10360.

8. Chowdhury, M.; Zhang, S.; Wang, M.; Liu, S.; Chen, P.Y. Patch-level Routing in Mixture-of-Experts is Provably Sample-efficient for Convolutional Neural Networks. arXiv 2023, arXiv:2306.04073.

9. Rajbhandari, S.; Li, C.; Yao, Z.; Zhang, M.; Aminabadi, R.Y.; Awan, A.A.; Rasley, J.; He, Y. DeepSpeed-MoE: Advancing Mixture-of-Experts Inference and Training to Power Next-Generation AI Scale. arXiv 2022, arXiv:2201.05596.

10. Zhang, Y.; Cai, R.; Chen, T.; Zhang, G.; Zhang, H.; Chen, P.Y.; Chang, S.; Wang, Z.; Liu, S. Robust Mixture-of-Expert Training for Convolutional Neural Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023.

11. Lin, K.; Wang, L.; Liu, Z. End-to-End Human Pose and Mesh Reconstruction with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

12. Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. FAIR1M: A benchmark dataset for fine-grained object recognition in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130.

13. Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

14. Cheng, G.; Wang, J.; Li, K.; Xie, X.; Lang, C.; Yao, Y.; Han, J. Anchor-Free Oriented Proposal Generator for Object Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

15. Waqas Zamir, S.; Arora, A.; Gupta, A.; Khan, S.; Sun, G.; Shahbaz Khan, F.; Zhu, F.; Shao, L.; Xia, G.-S.; Bai, X. iSAID: A large-scale dataset for instance segmentation in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019; pp. 28–37.

16. International Society for Photogrammetry and Remote Sensing. 2D Semantic Labeling. Available online: https://www.itc.nl/ISPRS_WGIII4/tests_datasets.html (accessed on 27 April 2025).

17. Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

18. He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

19. Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. SCRDet: Towards more robust detection for small, cluttered and rotated objects. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

20. Fu, K.; Chang, Z.; Zhang, Y.; Xu, G.; Zhang, K.; Sun, X. Rotation-aware and multi-scale convolutional neural network for object detection in remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 161, 294–308.

21. Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI transformer for oriented object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

22. Han, J.; Ding, J.; Xue, N.; Xia, G.S. ReDet: A rotation-equivariant detector for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

23. Niu, R.; Sun, X.; Tian, Y.; Diao, W.; Chen, K.; Fu, K. Hybrid multiple attention network for semantic segmentation in aerial images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–18.

24. He, Q.; Sun, X.; Yan, Z.; Fu, K. DABNet: Deformable contextual and boundary-weighted network for cloud detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

25. He, Q.; Sun, X.; Yan, Z.; Li, B.; Fu, K. Multi-object tracking in satellite videos with graph-based multitask modeling. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

26. Bao, J.; Chen, K.; Sun, X.; Zhao, L.; Diao, W.; Yan, M. SiamTHN: Siamese target highlight network for visual tracking. IEEE Trans. Circuits Syst. Video Technol. 2023.

27. Cui, Y.; Hou, B.; Wu, Q.; Ren, B.; Wang, S.; Jiao, L. Remote sensing object tracking with deep reinforcement learning under occlusion. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

28. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

29. Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting unreasonable effectiveness of data in deep learning era. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

30. Stojnic, V.; Risojevic, V. Self-supervised learning of remote sensing scene representations using contrastive multiview coding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

31. Ayush, K.; Uzkent, B.; Meng, C.; Tanmay, K.; Burke, M.; Lobell, D.; Ermon, S. Geography-aware self-supervised learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

32. He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

33. Xie, Z.; Zhang, Z.; Cao, Y.; Lin, Y.; Bao, J.; Yao, Z.; Dai, Q.; Hu, H. SimMIM: A simple framework for masked image modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

34. Cong, Y.; Khanna, S.; Meng, C.; Liu, P.; Rozi, E.; He, Y.; Burke, M.; Lobell, D.; Ermon, S. SatMAE: Pre-training transformers for temporal and multi-spectral satellite imagery. Adv. Neural Inf. Process. Syst. 2022, 35, 197–211.

35. Sun, X.; Wang, P.; Lu, W.; Zhu, Z.; Lu, X.; He, Q.; Li, J.; Rong, X.; Yang, Z.; Chang, H.; et al. RingMo: A Remote Sensing Foundation Model With Masked Image Modeling. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–22.

36. Yan, Z.; Li, J.; Li, X.; Zhou, R.; Zhang, W.; Feng, Y.; Diao, W.; Fu, K.; Sun, X. RingMo-SAM: A Foundation Model for Segment Anything in Multimodal Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

37. Wang, Y.; Zhang, T.; Zhao, L.; Hu, L.; Wang, Z.; Niu, Z.; Cheng, P.; Chen, K.; Zeng, X.; Wang, Z.; et al. RingMo-lite: A Remote Sensing Lightweight Network with CNN-Transformer Hybrid Framework. IEEE Trans. Geosci. Remote Sens. 2024.

38. Cha, K.; Seo, J.; Lee, T. A Billion-scale Foundation Model for Remote Sensing Images. arXiv 2023, arXiv:2304.05215.

39. Guo, X.; Lao, J.; Dang, B.; Zhang, Y.; Yu, L.; Ru, L.; Zhong, L.; Huang, Z.; Wu, K.; Hu, D.; et al. SkySense: A multi-modal remote sensing foundation model towards universal interpretation for earth observation imagery. arXiv 2023, arXiv:2312.10115.

40. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021.

41. Liu, F.; Chen, D.; Guan, Z.; Zhou, X.; Zhu, J.; Ye, Q.; Fu, L.; Zhou, J. RemoteCLIP: A Vision Language Foundation Model for Remote Sensing. arXiv 2023, arXiv:2306.11029.

42. Mikriukov, G.; Ravanbakhsh, M.; Demir, B. Deep unsupervised contrastive hashing for large-scale cross-modal text-image retrieval in remote sensing. arXiv 2022, arXiv:2201.08125.

43. Xu, J.; Liu, S.; Vahdat, A.; Byeon, W.; Wang, X.; De Mello, S. Open-vocabulary panoptic segmentation with text-to-image diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

44. Yu, Q.; He, J.; Deng, X.; Shen, X.; Chen, L.C. Convolutions die hard: Open-vocabulary segmentation with single frozen convolutional clip. arXiv 2023, arXiv:2308.02487.

45. El-Nouby, A.; Izacard, G.; Touvron, H.; Laptev, I.; Jegou, H.; Grave, E. Are large-scale datasets necessary for self-supervised pre-training? arXiv 2021, arXiv:2112.10740.

46. Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; Gelly, S. Parameter-efficient transfer learning for NLP. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

47. Zaken, E.B.; Ravfogel, S.; Goldberg, Y. BitFit: Simple parameter-efficient fine-tuning for transformer-based masked language-models. arXiv 2021, arXiv:2106.10199.

48. Lester, B.; Al-Rfou, R.; Constant, N. The Power of Scale for Parameter-Efficient Prompt Tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021.

49. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

50. Jia, M.; Tang, L.; Chen, B.C.; Cardie, C.; Belongie, S.; Hariharan, B.; Lim, S.N. Visual prompt tuning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–24 October 2022; Springer Nature: Cham, Switzerland, 2022.

51. Yoo, S.; Kim, E.; Jung, D.; Lee, J.; Yoon, S. Improving visual prompt tuning for self-supervised vision transformers. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023.

52. Tu, C.-H.; Mai, Z.; Chao, W.-L. Visual query tuning: Towards effective usage of intermediate representations for parameter and memory efficient transfer learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

53. Han, C.; Wang, Q.; Cui, Y.; Cao, Z.; Wang, W.; Qi, S.; Liu, D. E2VPT: An Effective and Efficient Approach for Visual Prompt Tuning. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; IEEE Computer Society: Piscataway, NJ, USA, 2023.

54. Chen, S.; Ge, C.; Tong, Z.; Wang, J.; Song, Y.; Wang, J.; Luo, P. AdaptFormer: Adapting vision transformers for scalable visual recognition. Adv. Neural Inf. Process. Syst. 2022, 35, 16664–16678.

55. Yin, D.; Yang, Y.; Wang, Z.; Yu, H.; Wei, K.; Sun, X. 1% vs 100%: Parameter-efficient low rank adapter for dense predictions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

56. He, X.; Li, C.; Zhang, P.; Yang, J.; Wang, X.E. Parameter-efficient model adaptation for vision transformers. Proc. AAAI Conf. Artif. Intell. 2023, 37, 817–825.

57. Zhang, Y.; Zhou, K.; Liu, Z. Neural prompt search. arXiv 2022, arXiv:2206.04673.

58. Liu, Y.; Xu, L.; Xiong, P.; Jin, Q. Token mixing: Parameter-efficient transfer learning from image-language to video-language. Proc. AAAI Conf. Artif. Intell. 2023, 37, 1781–1789.

59. Zhang, B.; Ge, Y.; Xu, X.; Shan, Y.; Shou, M.Z. TaCA: Upgrading your visual foundation model with task-agnostic compatible adapter. arXiv 2023, arXiv:2306.12642.

60. Xu, C.; Zhu, Y.; Zhang, G.; Shen, H.; Liao, Y.; Chen, X.; Wu, G.; Wang, L. DPL: Decoupled Prompt Learning for Vision-Language Models. arXiv 2023, arXiv:2308.10061.

61. Hu, L.; Yu, H.; Lu, W.; Yin, D.; Sun, X.; Fu, K. AiRs: Adapter in Remote Sensing for Parameter-Efficient Transfer Learning. IEEE Trans. Geosci. Remote Sens. 2024.

62. Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

63. Bandara, W.G.C.; Patel, V.M. A Transformer-Based Siamese Network for Change Detection. In Proceedings of the IGARSS 2022 - 2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 207–210.

64. Xia, G.-S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).