Abstract

This paper presents a pioneering study in the application of real-time surface landmine detection using a combination of robotics and deep learning. We introduce a novel system integrated within a demining robot, capable of detecting landmines in real time with high recall. Utilizing YOLOv8 models, we leverage both optical imaging and artificial intelligence to identify two common types of surface landmines: PFM-1 (butterfly) and PMA-2 (starfish with tripwire). Our system runs at 2 FPS on a mobile device missing at most 1.6% of targets. It demonstrates significant advancements in operational speed and autonomy, surpassing conventional methods while being compatible with other approaches like UAV. In addition to the proposed system, we release two datasets with remarkable differences in landmine and background colors, built to train and test the model performances.

1. Introduction

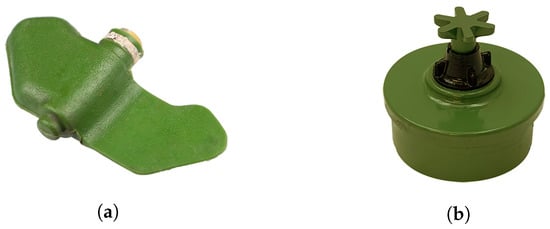

The use of landmines and other explosive ordnance is a major humanitarian problem, continuously fed by the increasing number of conflicts around the world, as reported yearly in [1]. Estimates of the land and urban territories where antipersonnel (AP) and antitank (AT) mines and other unexploded ordnances (UXOs) are buried or abandoned indicate that, with current detection and clearance technologies, it will take decades to release the land to civil use [2]. The complexity of the problem calls for sustainable solutions for detection, clearance, and victim assistance. For the detection of buried plastic and metal case AP and AT landmines, there are standard sensors based on metal detectors [3,4,5] and subsurface ground penetrating radar [6], which are also combined in dual-mode handheld equipment [7,8,9]. More recently, the inspection of large areas has been achieved by antenna arrays of microwave radar [10], optical or infrared cameras [11,12], and magnetometers [13] mounted on UAVs [14,15,16] or on robotic vehicles [17,18,19]. In addition to buried landmines, there are surficial explosive ordnances that are abandoned on the surface or deployed by cluster munition [20]. For the specific task of detecting and classifying surficial UXOs, there are electronic (sensors) and informatic (AI) technologies that can help to design autonomous systems for the detection and positioning/mapping of such threats. In particular, portable and wearable high-resolution cameras can provide video streams that can be analyzed in real time by AI-based models trained with custom data containing the targets in different environments. The present work focuses on the application of optical images and AI to the detection of visible surficial UXOs, based on a prototype system that includes a remotely controlled, sensorized robotic platform. This approach keeps the operator away from the threat, is not subject to random detection errors due to tiredness, and can work for long periods (hours), depending on the battery capacity. An important requirement considered in this research is the real-time detection of surface threats, so that the robotic platform can be maneuvered around them. The two targets selected in this work are two small plastic landmines largely found on the ground of post-conflict areas and potentially lethal for children or adults: PFM-1 (“Butterfly”) and PMA-2 (“Starfish”) landmines (see Figure 1a and Figure 1b, respectively).

Figure 1.

The two landmines taken into consideration in this study. (a) PFM-1 “Butterfly” landmine, (b) PMA-2 “Starfish” landmine.

Furthermore, the quest for more effective landmine detection solutions has led to the exploration of cutting-edge technologies, particularly in the field of deep learning. Object detection has emerged as a pivotal tool in addressing the challenges associated with identifying and localizing landmines and other unexploded ordnances (UXOs). In object detection, the primary goal is to enable machines to recognize and delineate specific objects within a given scene or image. This technology has found extensive application in diverse domains, ranging from autonomous vehicles [21] and surveillance systems [22,23] to medical imaging [24] and, more recently, humanitarian demining [25,26]. In the context of landmine detection, object detection models based on both convolutional neural networks (CNNs) and vision transformers (ViTs) have demonstrated remarkable capabilities in discerning objects from the surrounding environment. Although all of these models can process high-resolution optical images, not all can provide real-time analysis, which forces a trade-off between speed and accuracy. As discussed in Section 3.3, we chose the YOLO family for its fast processing, light weight, and portability. In Section 3.1.1, we introduce the architecture of our real-time landmine detection system integrated with the robotic platform, and further present possible integrations with additional platforms such as UAVs. To the best of our knowledge, our research represents a pioneering effort in designing a real-time, lightweight, and high-recall landmine detection system within the constraints of the resources and platforms at our disposal [19,27]. Our work fills a critical gap in landmine detection research, where real-time deployment remains a challenge. In this context, we present a practical and efficient solution for surface landmine detection with a focus on real-time applicability, expanding the possibilities for operational deployment in diverse scenarios.

2. Related Works

To contextualize the advancements in our field, we examine a diverse array of methodologies and technologies in the next sections. This includes an exploration of optical imaging, dynamic thermography, and various analytical approaches such as image processing and neural networks. While our study specifically utilizes optical imaging, neural network analysis, and real-time remote interfacing, this comparative overview serves to highlight the contributions of different techniques and provides a broad perspective on the current innovations and practices in the field.

2.1. Data

Traditional landmine detection methods have limitations, particularly in terms of speed and accuracy. In this section, we delve into the utilization of data-driven approaches, specifically optical imaging, as promising means for the real-time detection of surface landmines.

2.1.1. Optical Imaging

In recent conflicts, scatterable landmines are often used for covering large areas with a short deployable time. The detection of such surface explosive ordnances over large areas is more productive when optical sensors are mounted on UAVs. In [28], the authors propose a solution with two cameras in the VIS and NIR spectrum and the combination by data fusion. The approach is interesting and some preliminary results on the successful detection of small M14 (6 cm diameter) plastic landmines stimulate further developments. This work also points out some of the limitations of the solution for the data fusion, which are the acquisition of VIS and NIR visible images (350–1000 nm) at different times and spatial positions. Moreover, the operating distance of the UAV must be short enough (few meters) to obtain the best optical quality of the images, but the effect of the UAV motors on the vegetation can make the image fusion process more difficult as the grass is moving under the wind pressure. The image sensor is a Sequoia multispectral camera and generates a pixel resolution image with a capturing interval of 2 s. A similar sensor arrangement was also used on a UAV in [29]. This work was calibrated on a common scatterable landmine, largely used in Afghanistan, that is the PFM-1, also called the “butterfly”. The work concentrates on analyzing the detection performance for different backgrounds: grass, snow, and low vegetation. In [11], the authors focus on the same targets (PFM-1) but use thermal imaging for investigating alternative methods to VIS images that are influenced by variable environmental conditions (shade, sun light irradiance). The detection of surface explosive remnants of war (ERW) by remote sensing technologies opens the possibility of integrating different sensor types on different platforms. This concept has been investigated in a special issue [30] where optical images, radar, and LIDAR data can be generated by different platforms and correlated. 
In our work, we propose to use a commercial off-the-shelf (COTS) sensor for optical imaging, mounted on a robotic platform for landmine detection and positioning. The sensor is an iPhone 13 Pro, whose video camera and LiDAR provide data while the robot surveys an area. The acquisition of images can also be achieved with other sensors, but the choice of a COTS device can be an advantage due to its ease of replacement. The detection of surface landmines with this robot allows the definition of a clear and safe path for a robot swarm in which other robots are equipped with other sensors (radar, metal detectors, etc.) [19].

2.1.2. Dynamic Thermography

An alternative to optical images for surface landmines is based on the thermal properties of landmines, which differ from those of the scene. Early experiments on modeling the thermal response of landmines were published in [31]. The temperature gradients were analyzed during thermal transients, and the main equations of dynamic thermography based on infrared sensors were reported. Later, in [32], chaotic neural networks were applied to discriminate a plastic case PMN shallow-buried in sandy soil within an investigated area in the order of a square meter. The problem of creating a large dataset for testing the algorithm was tackled with a model simulation approach in [33]. A review of the research undertaken on infrared thermography [34] made clear the issue of discriminating shallowly buried AP mines in inhomogeneous soil with a limited false alarm rate. Moreover, the computational costs of the thermal model of the landmines and the soil were analyzed in [35]. More recently, driven by the need for fast detection methods, hyperspectral sensors installed on UAVs flying over a scene have been proposed for creating large databases, with the data streamed down over fast and efficient communication links [36]. This method was demonstrated for detecting PFM-1 landmines by using the optical and IR images acquired with flights at different times at sunset [11]. The acquisition of IR images is still a limiting factor for fast detection, but the use of AI and large databases [37] is expected to improve the performance of this method.

2.2. Analysis Approach

The methods employed for surface landmine detection can be broadly categorized into two main classes: image-processing techniques and neural network-based approaches. These methods play a pivotal role in the development of effective landmine detection systems, each offering unique capabilities and advantages. In this section, we delve into these methodologies and their respective subcategories, shedding light on their contributions and applications within the field of landmine detection.

2.2.1. Image Processing

Image processing methods have long been a cornerstone of surface landmine detection, aiding in the analysis of sensor data and the extraction of valuable information. Within this category, the earliest subcategory is thresholding, a common approach in landmine detection often used in combination with data from metal detectors and other sensors. The method involves setting a specific threshold for different types of landmines at various distances based on prior analysis. Yoo et al. [13] employed thresholding, where operators, after analyzing conditions in a training set, established thresholds for different landmine types at varying distances. Takahashi et al. [3] utilized information about soil properties and conditions, such as magnetic susceptibility, electrical conductivity, dielectric permittivity, water content, and heterogeneity, to perform thresholding analyses that proved effective across different soil conditions. A second group of techniques, algorithmic and signal processing, plays a crucial role in handling the raw data obtained from various sensors. Common methods include correlation functions, cross-correlation with simulated samples, and least mean squares (LMS) and its variants. Tesfamariam et al. [38] discussed the application of correlation functions in landmine detection, while Gonzalez-Huici et al. [39] explored cross-correlation with simulated samples. LMS methods, including 2D and 3D variants, were investigated by Dyana [40].

2.2.2. Neural Networks

Neural networks have emerged as a transformative force in landmine detection, offering powerful tools for pattern recognition and classification tasks. Within this category, early works explored statistical machine learning techniques, which gained prominence in landmine detection by providing advanced approaches to handle sensor data. Deiana et al. [41] discussed the use of statistical approaches, while Shi et al. [42] explored AdaBoost classifiers. Gader et al. [43] applied hidden Markov models in their work. Nunez-Nieto et al. [44] used logistic regression and neural network techniques (Multilayer Perceptron, MLP) to calculate the probability of the presence of buried explosives. However, their work focused on relatively larger landmines in sandy soil, which may not be representative of all scenarios. Silva et al. [45] employed a fusion approach, combining multilayer perceptron (MLP), support vector machines (SVM), decision trees (DT), and k-nearest neighbors (kNN). They achieved high accuracy; however, their experiments were based on surface images of colored landmines on sand, which might not reflect real-world conditions. More recently, deep learning has gained traction in surface landmine detection, offering the capability to automatically learn intricate patterns from sensor data. Several studies have utilized deep learning architectures to enhance the accuracy and efficiency of landmine detection. Following in the footsteps of Silva et al. [45], Pryshchenko et al. [46] adopted a fusion approach, analyzing signals with fully connected neural networks (FCNN), recurrent neural networks (RNN), gated recurrent unit (GRU), and long short-term memory (LSTM) models for ultrawideband GPR antennas. The results of these models were fused for improved performance. Baur et al. [29] employed a Faster R-CNN architecture in UAV-based thermal imaging for landmine detection, introducing the power of deep learning into this context. Bestagini et al. [47] trained an autoencoder convolutional neural network (AE-CNN) for anomaly detection using GPR data from landmine-free regions. Their model, trained exclusively on “good” scans representing soil without landmines, achieved an accuracy of 93% with minimal data pre-processing and training. It is worth noting that the training and test sets had the same distribution (both on sand) and were relatively homogeneous and free of clutter, which makes it easier to detect anomalies.

These different image processing and neural network-based methods represent the core of the techniques utilized in surface landmine detection. In the subsequent sections, we provide insights into the tools and methodologies that enable real-time applications, as well as the experimental results and discussions that arise from the implementation of these methods.

2.3. Real-Time Applications

In the context of landmine detection, real-time capabilities are paramount for practical deployment, enabling timely responses to potential threats. While there are several noteworthy works in the field of landmine detection, most of them are conducted in laboratory conditions [47], are not tested on out-of-distribution (OOD) data [13,47], consider only navigation problems [17], or are, in general, not optimized for real-time applications [29,45,46,47]. Our research is primarily motivated by the need for real-time landmine detection in diverse scenarios. Landmine detection research predominantly focuses on methods, datasets, and models, and few works prioritize real-time applicability.

3. Tools and Methods

In this section, we illustrate the tools involved in the study (the robot and the camera) in Section 3.1, as well as a more in-depth description of the proposed system integration (Section 3.1.1). Then, we describe the two important elements for a machine learning algorithm: the data (Section 3.2) and the model choice (Section 3.3).

3.1. Robot Configuration

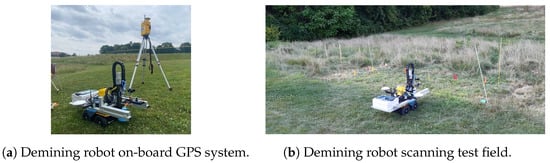

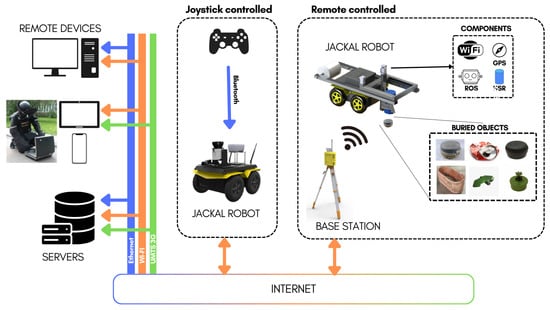

Our research is conducted in the context of the “Demining Robots” project, which involves a fleet of robots designed for various roles related to landmine detection and demining. Within this project, one of our robots, named UGO-first, plays a crucial role in the detection of surface landmines. UGO-first [19,27] is equipped with an array of sensors that make it a versatile platform for our research: LiDAR, holographic radar, accelerometers, high-precision GPS with 5 cm positional accuracy, and several cameras (RGB and depth). Some robots within our swarm are equipped with tripwire detection systems, as certain landmines (e.g., “starfish”) are connected to nearly invisible tripwires that can trigger detonation. The robot swarm functionalities are shown in Figure 2 and Figure 3. To achieve real-time detection of surface landmines, we leverage a simple RGB camera mounted on the robot.

Figure 2.

Details of demining robot. On the (left), is the robot with the on-board GPS system and off-board GPS tower. On the (right), a panoramic view of the robotic platform scanning the test field.

Figure 3.

Details of UGO-first robot and architecture schema. On the (left), is the communication schema between the robotic platform and the remote server, remote terminal, and joystick control for remote driving. On the (right), an innovative Industry 4.0 paradigm schema is applied to the demining procedure.

3.1.1. System Architecture

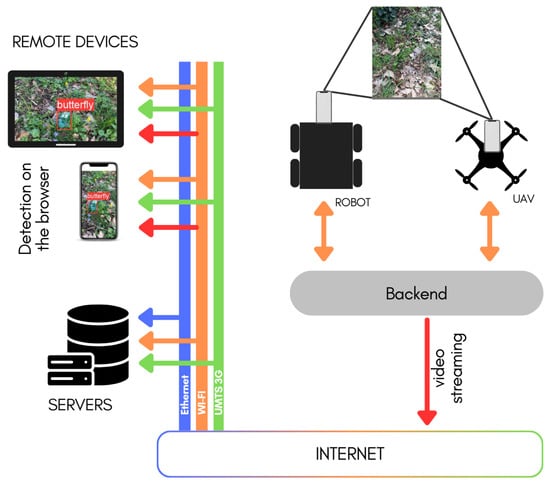

In this work, we introduce a lightweight system that can run on mobile devices controlled by remote personnel, far from the dangerous fields. The model’s run speed depends on the capability of the user’s device, as it runs entirely in the user’s browser. The system architecture is shown in Figure 4. The figure highlights the flexibility of the system, which only requires a Python backend running on the robot device and connected to the mobile camera. In our case, the sensor is an iPhone 13 Pro, whose video camera provides data while the robot surveys an area. The backend provides a video stream from the camera input and waits for the frontend to connect. The frontend downloads the YOLO ONNX weights from different sources and processes the incoming stream with the model. The image, together with the detections, is then shown on the display, as can be seen from the tablet and smartphone images on the top left of Figure 4.

Figure 4.

The proposed system architecture. The components of the camera, backend, and frontend are independent of the moving vehicle used. This flexibility makes it possible to be used both on robot (left) and UAV (right) vehicles.
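The backend role described above (serve camera frames and wait for a client to connect) can be sketched with the Python standard library alone. This is a minimal sketch, not the project's actual backend: the MJPEG-over-HTTP transport, the `frame` boundary, the default port, and the names `mjpeg_part`, `make_handler`, and `serve` are all assumptions introduced for illustration, and the frame source is left as a caller-supplied generator of JPEG bytes.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Iterable

BOUNDARY = b"frame"  # hypothetical multipart boundary name


def mjpeg_part(jpeg_bytes: bytes) -> bytes:
    """Wrap one JPEG-encoded frame as a multipart/x-mixed-replace chunk."""
    return b"".join([
        b"--", BOUNDARY, b"\r\n",
        b"Content-Type: image/jpeg\r\n",
        b"Content-Length: ", str(len(jpeg_bytes)).encode("ascii"), b"\r\n\r\n",
        jpeg_bytes, b"\r\n",
    ])


def make_handler(frames: Callable[[], Iterable[bytes]]):
    """Build a request handler that streams frames to each client that connects."""

    class StreamHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            self.send_response(200)
            self.send_header(
                "Content-Type",
                "multipart/x-mixed-replace; boundary=" + BOUNDARY.decode("ascii"),
            )
            self.end_headers()
            # Push frames for as long as the (assumed) camera source yields them.
            for jpeg in frames():
                self.wfile.write(mjpeg_part(jpeg))

    return StreamHandler


def serve(frames: Callable[[], Iterable[bytes]], port: int = 8000) -> None:
    """Block forever, waiting for a frontend to connect (port is an assumption)."""
    HTTPServer(("0.0.0.0", port), make_handler(frames)).serve_forever()
```

In such a setup the browser client would read the stream and run the detector itself, which matches the split between backend and frontend described in this section.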

3.2. Data Collection

To create a comprehensive dataset (called “SurfLandmine”) for training and testing our real-time surface landmine detection system, we collected data in various environmental conditions that simulate real-world scenarios. The collected data include examples in different conditions, such as:

- Environment: We considered both grass and gravel terrains to account for variations in surface characteristics.

- Weather: Our data collection covered different weather conditions, including cloudy, sunny, and shadowy settings, as these factors can influence image quality and landmine visibility.

- Slope: Different slope levels (high, medium, and low) were considered to assess how the angle of the camera relative to the ground affects detection performance.

- Obstacles: We accounted for the presence of obstacles, such as bushes, branches, walls, bars, trees, trunks, and rocks, which can obscure landmines in a real-world scenario.

In terms of landmine selection, we opted for two common surface landmine types: the PFM-1 (butterfly) and PMA-2 (starfish with tripwire) illustrated in Figure 1a and Figure 1b, respectively. These landmines were chosen due to their prevalence in real-world scenarios, with PFM-1 often being dropped from airplanes and PMA-2 designed for surface placement. In total, we collected 47 videos, each with an average duration of 107 s, recorded at a frame rate of 6 frames per second (FPS). Approximately 25% of the frames contained at least one landmine, resulting in a total of 6640 annotated frames out of 29,109 frames in the dataset. Additional information about the dataset is provided in Table 1 and Table 2.
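As a quick arithmetic illustration of the dataset figures quoted above, the following sketch recomputes the frame count implied by the average video duration and the share of annotated frames. It uses only the numbers stated in the text; since the duration is an average, the implied frame count is only expected to be close to the reported total. The function names are hypothetical.

```python
def implied_frame_count(videos: int, avg_duration_s: float, fps: float) -> float:
    """Frames implied by the number of videos, their average length, and the frame rate."""
    return videos * avg_duration_s * fps


def annotated_share(annotated_frames: int, total_frames: int) -> float:
    """Fraction of frames that carry at least one annotation."""
    return annotated_frames / total_frames


# Figures quoted in the text.
implied = implied_frame_count(videos=47, avg_duration_s=107, fps=6)
share = annotated_share(annotated_frames=6640, total_frames=29109)

print(f"implied frames: {implied:.0f}")
print(f"annotated share: {share:.1%}")
```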

Table 1.

Data statistics. Values are rounded averages over the 47 ITA videos (IID), and 11 USA videos (OOD).

Table 2.

Data categories. Values were calculated over the 47 ITA videos (IID), and 11 USA videos (OOD).

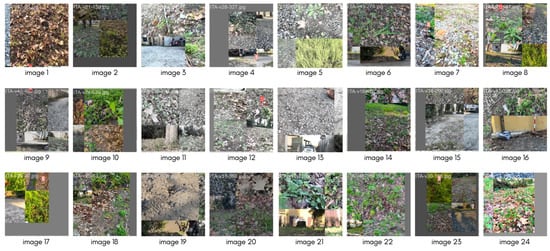

The annotation process was carried out using the computer vision annotation tool (CVAT) [48], a widely used framework for image annotation. Figure 5 shows examples of annotated frames in different conditions.

Figure 5.

Examples of annotated data using CVAT [48].

3.3. Model Selection

Given our need for real-time processing in landmine detection, we adopted a single-stage object detection method. There are two primary approaches in object detection: single-stage and multi-stage. Multi-stage methods, like the R-CNN family (R-CNN [49], Fast R-CNN [50], Faster R-CNN [51]), first propose potential object regions, then detect objects in these regions. In contrast, single-stage methods, such as YOLO [52,53], directly detect objects without a separate region proposal step.

We chose YOLOv8 [54] for its real-time performance and efficiency, crucial for detecting surface landmines. After conversion to the ONNX format, the YOLOv8 models are lightweight (approximately 3 MB for the “nano” version and 11 MB for the “small” version), enabling smooth operation on various platforms, including web browsers, smartphones, and iPads, without significantly burdening computational resources. Additionally, YOLOv8’s requirement for a smaller annotated dataset for fine-tuning suited our objectives for efficient and effective landmine detection. A more in-depth description of YOLOv8 with an architecture illustration is given in Appendix A.

4. Experimental Setup

To rigorously assess the performance of our landmine detection system, we have established a set of metrics that allow us to evaluate the precision, recall, and overall effectiveness of the model under different conditions. These metrics are crucial for ensuring that the system minimizes false negatives, as the detection of every landmine is imperative for safety reasons.

4.1. Metrics

For evaluating our object detection model, we focus on the following key metrics:

- Precision: The ratio of correctly predicted positive observations to the total predicted positives. High precision correlates with a low false positive rate.

- Recall: The ratio of correctly predicted positive events to all actual positives. Crucial for minimizing the risk of undetected landmines.

- F1 Score: The harmonic mean of Precision and Recall, a single measure that balances the two.

- Intersection over Union (IoU): Measures the accuracy of an object detector on a dataset by calculating the overlap between the predicted and actual bounding boxes.

- Mean Average Precision (mAP): Averages the precision scores across all classes and recall levels, providing an overall effectiveness measure of the model.

These metrics are calculated on a validation set that is separate from the training data to ensure an unbiased evaluation of the model’s performance. Together, they provide a quantitative framework for evaluating the object detection model, with a particular focus on minimizing false negatives, which is critical in the context of landmine detection.
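The definitions listed above can be written down compactly. The following is a minimal sketch using the standard textbook formulas for precision, recall, F1 (the harmonic mean of the two), and IoU; the function names and the `(x1, y1, x2, y2)` box convention are assumptions made for illustration, not taken from the evaluation code used in this study.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # assumed convention: (x1, y1, x2, y2)


def precision(tp: int, fp: int) -> float:
    """Correct detections over all detections."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Correct detections over all ground-truth objects."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

For a safety-critical task such as this one, recall is the quantity to watch: a false negative is a landmine that nobody is warned about, whereas a false positive only costs operator attention.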

4.2. Training

The YOLOv8 model training was conducted on a dataset named “SurfLandmine”, which we collected and annotated. The training process involved:

- Pre-trained model: The model was initially trained on the “ImageNet” dataset, which contains RGB image data and corresponding annotations of 1000 classes, gathered from the internet. This step is necessary to learn important image features such as colors, shapes, and general objects.

- Initial fine-tuning: The model was fine-tuned on the “SurfLandmine” dataset, which contains RGB video data and corresponding annotations of landmines under various conditions captured in Italy. The video data are treated as single image-data frames, and shuffled. The dataset encompasses diverse weather conditions, soil compositions, and environmental settings to provide a robust training foundation.

- Validation: A subset of the data was used to validate the model performance periodically during training, ensuring that the model generalizes well to new, unseen data.

- Augmentations: Following the YOLO augmentation settings, we employed a series of 7 augmentations, which are listed in Table 3 and described below.

Table 3. Image augmentation parameters. Not listed parameters were assigned a coefficient of 0.0, so are not used for augmenting the images.


To illustrate the augmentations used, which research has shown to play a crucial role in model performance and generalization, we report some examples in Figure 6, which shows a group of 24 images. Several augmentations overlap in these images; e.g., almost all of them are composed of multiple patches arranged as a grid. This is called “mosaic”, and it is the seventh augmentation listed in Table 3. Additionally, a shifted image is the effect of “translation”, while color distortion is the effect of the “saturation”, “value”, and “hue” augmentations. The values reported in Table 3 are the probabilities, from 0 to 1, that an augmentation is applied. As we can see, mosaic is always applied, while hue augmentation is less probable. In Figure 6, most of the augmentations are applied at the same time, producing complex layouts.

Figure 6.

Examples of advanced augmentations of YOLOv8.
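The grid layout produced by mosaic can be illustrated with a deliberately simplified sketch that only tracks what happens to the bounding boxes when four equally sized images are tiled in a 2 x 2 grid. The real YOLO mosaic also picks a random grid center and crops or rescales the tiles, which is omitted here; the function name, the tile order, and the `(x1, y1, x2, y2)` pixel convention are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed convention: (x1, y1, x2, y2) in pixels


def mosaic_boxes(tile_boxes: Sequence[Sequence[Box]], tile_w: float, tile_h: float) -> List[Box]:
    """Shift the boxes of four tiles into the coordinates of a 2 x 2 mosaic canvas.

    tile_boxes holds one list of boxes per tile, in the (assumed) order
    top-left, top-right, bottom-left, bottom-right.
    """
    if len(tile_boxes) != 4:
        raise ValueError("a 2 x 2 mosaic needs exactly four tiles")
    offsets = [(0, 0), (tile_w, 0), (0, tile_h), (tile_w, tile_h)]
    shifted: List[Box] = []
    for boxes, (dx, dy) in zip(tile_boxes, offsets):
        for x1, y1, x2, y2 in boxes:
            shifted.append((x1 + dx, y1 + dy, x2 + dx, y2 + dy))
    return shifted
```

Because each training sample then contains objects from four different scenes, a single batch mixes backgrounds, scales, and lighting conditions, which is consistent with the complex layouts visible in Figure 6.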

The initial training aimed to create a strong baseline model capable of detecting landmines with high recall in environmental conditions similar to those found in Italy.

5. Results

The YOLOv8 model’s effectiveness in landmine detection was rigorously evaluated using independent and identically distributed (IID) data as well as out of distribution (OOD) data. The results are encapsulated in the confusion matrices for the YOLOv8-nano and YOLOv8-small models, provided in Table 4 and Table 5, respectively.

Table 4.

Confusion matrix for YOLOv8-nano. In black, absolute values of landmines detection; in blue, percentages calculated horizontally, which refer, approximately, to false negatives, and in red, percentages calculated vertically, which refer to false positives.

Table 5.

Confusion matrix for YOLOv8-small. In black, absolute values of landmines detection; in blue, percentages calculated horizontally, which refer, approximately, to false negatives, and in red, percentages calculated vertically, which refer to false positives.
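The two kinds of percentages mentioned in the captions can be reproduced from raw counts as follows. This is a minimal sketch assuming that rows are ground-truth classes and columns are predicted classes; the counts in `example` are hypothetical and chosen only to make the arithmetic easy to follow, they are not the values of Table 4 or Table 5.

```python
from typing import List

Matrix = List[List[float]]


def row_percentages(matrix: Matrix) -> Matrix:
    """Normalize each row to 100%: how each ground-truth class was predicted."""
    result = []
    for row in matrix:
        total = sum(row)
        result.append([100 * v / total if total else 0.0 for v in row])
    return result


def column_percentages(matrix: Matrix) -> Matrix:
    """Normalize each column to 100%: what each predicted class really was."""
    totals = [sum(col) for col in zip(*matrix)]
    return [
        [100 * v / totals[j] if totals[j] else 0.0 for j, v in enumerate(row)]
        for row in matrix
    ]


# Hypothetical counts; rows and columns ordered butterfly, starfish, background.
example = [
    [90, 0, 10],   # true butterfly: 10 predicted as background (missed)
    [0, 80, 20],   # true starfish: 20 predicted as background (missed)
    [30, 20, 0],   # true background: 30 and 20 predicted as landmines
]

rows = row_percentages(example)
cols = column_percentages(example)
print(f"butterfly missed (row-wise): {rows[0][2]:.1f}%")
print(f"butterfly false positives (column-wise): {cols[2][0]:.1f}%")
```

Read this way, the row-wise diagonal is the per-class recall and the column-wise diagonal is the per-class precision.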

5.1. IID Data Evaluation

According to Table 4, the YOLOv8-nano model exhibited high recall in identifying “butterfly” (97.69%) and “starfish” (99.4%) mines. However, the model showed a high false positive rate (35.47%) for “background”, where non-target objects were misclassified as targets. In particular, despite the low false negative rate (2.31% and 0.59% for “butterfly” and “starfish”, respectively), which is fundamental in landmine detection applications, the model had a false positive rate of approximately 27% when detecting “butterfly” and 47% when detecting “starfish”, as shown in the “Background” row (bottom) of Table 4 by the red percentages in the “Butterfly” and “Starfish” columns, respectively.

Table 5 shows that the YOLOv8-small model performed similarly, with a slight improvement in the false positive rate (33.48%). The recall for “butterfly” and “starfish” was recorded at 97.4% and 98.85%, respectively. The false positive rate for “butterfly” was slightly reduced compared to the nano model, but it remained a concern for practical applications.

In Figure 7, we present the six scenarios derived from Table 4 and Table 5, encompassing detected landmines, missed detections, and false positive landmines. The initial images demonstrate that, despite variations in lighting, the model is capable of detecting objects both near and far. The second row of images shows instances of false positives, where shadows and specific parts of the image, which are actually background elements, are mistakenly identified as landmines, labeled as “starfish” and “butterfly”, respectively. Lastly, the third row illustrates missed detections. These account for only 1.6% of the total landmines (ground truth). Missed targets are indicated with a purple circle in the image.

Figure 7.

Example of correct (top), wrong (middle), and missing (bottom) detection for “starfish” (left) and “butterfly” (right). Detected objects are shown with a red rectangle, wrongly detected background for landmines in yellow, and missed landmines circled in purple.

5.2. Out-of-Distribution (OOD) Data Evaluation

Regarding the data collected in the USA, named Test (OOD) in Table 1 and Table 2, we observed rather different performance. A first consideration concerns the composition of the terrain. The soil is covered with grass, both long and short, and the long grass sometimes occludes the landmines. The detail is shown in Appendix B. Another difference between IID and OOD is that, in the latter, the “butterfly” appears in a different tone of green, while the “starfish” appears in both green and blue, whereas in IID it appears only in green. Although various color-changing functions were used in the augmentations (see Figure 6 and Table 3), further analysis should address additional augmentations (e.g., greyscale, or saturating the green channel, among others). Due to these difficulties, both our models struggle to detect the landmines. However, in our context, missing many of the ground truths (when filtered with an IoU threshold of 0.5) reinforces the value and difficulty of the proposed OOD dataset, confirming that it is indeed out of distribution.
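Counting missed ground truths at an IoU threshold of 0.5 can be sketched as a greedy one-to-one matching between annotated and predicted boxes. This is a simplified illustration and not the evaluation code used for the reported results: it ignores class labels and confidence ordering, and the names `box_iou` and `count_missed` as well as the `(x1, y1, x2, y2)` convention are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed convention: (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_missed(ground_truths: Sequence[Box], predictions: Sequence[Box],
                 iou_threshold: float = 0.5) -> int:
    """Number of ground-truth boxes with no unused prediction at or above the threshold."""
    unused: List[Box] = list(predictions)
    missed = 0
    for gt in ground_truths:
        # Pick the best-overlapping prediction that has not been matched yet.
        best = max(unused, key=lambda p: box_iou(gt, p), default=None)
        if best is not None and box_iou(gt, best) >= iou_threshold:
            unused.remove(best)  # each prediction can explain one ground truth only
        else:
            missed += 1
    return missed
```

A detection that overlaps a landmine only loosely, for example because long grass hides part of it, falls below the threshold and is counted as a miss under this rule.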

5.3. Summary of Findings

The detailed analysis of the models’ performance on the proposed datasets suggests that the YOLOv8 models are capable of high recall and precision within the distribution of the training data. These results were obtained running in a smartphone browser at 2 FPS (frames per second), underlining the potential of such lightweight models to run on almost any device due to their portability (3 MB for the nano model and 11 MB for the small). The results also highlight the large shift in data distribution between the IID and OOD sets, which adds value to the SurfLandmine dataset: on the OOD data, the models struggle to detect objects and show a high false negative rate. In this context, the need for continued refinement of the model is evident, potentially through methods such as targeted data augmentation, specialized training on edge cases, and further hyperparameter optimization to bolster the model’s generalization capabilities.

6. Discussion

The IID data testing demonstrates that both the “nano” and “small” YOLOv8 models have a strong capacity for detecting objects similar to those in the training set. Nonetheless, the relatively high false positive rates suggest that further optimization is required. One important result is that the model detects 98.4% of the existing objects in the test set, thus missing only 1.6%. These metrics, however, are per-frame metrics and do not take time into account. A miss rate of 1.6% therefore does not mean that a given target is “always” missed throughout a multi-frame video. As the robot and its camera move, a target missed in one frame may become visible from a different perspective in a later frame. We speculate that the false-negative rate, although already low, could be reduced further by approaching the problem from the point of view of tracking. Finally, the false positive rate of over 30%, which lowers the accuracy of our models, should not be considered a weakness. The real-time application of our model is intended as a fast attention-driver for the human operator behind the screen of the robotic platform control. The system is not intended for autonomous navigation, hence “some degree of false positives” (30%) is tolerable. Future work will focus on refining the models to improve their accuracy, particularly by reducing false positives, and on conducting thorough OOD testing to ensure the models’ robustness in diverse operational environments.
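
The multi-frame argument above can be made concrete with a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that detections in different frames are statistically independent. This is optimistic, because consecutive video frames are strongly correlated, so the numbers should be read as a best case rather than as a measured result. The function name `miss_probability` and the dwell times are hypothetical; the 1.6% per-frame miss rate and the 2 FPS frame rate are the values reported in this paper.

```python
def miss_probability(per_frame_miss: float, n_frames: int) -> float:
    """Probability that a target is missed in every one of n_frames frames.

    ASSUMPTION: misses in different frames are independent events.
    """
    if not 0.0 <= per_frame_miss <= 1.0:
        raise ValueError("per_frame_miss must be in [0, 1]")
    if n_frames < 1:
        raise ValueError("n_frames must be at least 1")
    return per_frame_miss ** n_frames


PER_FRAME_MISS = 0.016  # 1.6% per-frame miss rate on the IID test set
FPS = 2                 # frame rate reported for the smartphone browser

# Hypothetical dwell times: how long a target stays in the camera's view.
for seconds_in_view in (0.5, 1, 2):
    n = max(1, int(seconds_in_view * FPS))
    p = miss_probability(PER_FRAME_MISS, n)
    print(f"{seconds_in_view} s in view, {n} frame(s): miss probability {p:.2e}")
```

Under the independence assumption the probability of never detecting a target falls geometrically with the number of frames in which it is visible, which is the intuition behind treating the problem as one of tracking rather than single-frame detection.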

7. Conclusions

In this study, we have developed and presented a novel real-time surface landmine detection system, integrated within a demining robot. This system is distinguished by its ability to function in real-time, achieving a processing speed of 2.6 frames per second. Notably, it is designed for accessibility and ease of use, being operable via both web browsers and smartphone devices. Our system demonstrates a high recall rate, making it a significant advancement in the field of landmine detection.

To our knowledge, this is the first instance where surface landmine detection has been addressed with an emphasis on operational speed. This focus has yielded a system with extended operational duration, a notable improvement over alternative methods such as unmanned aerial vehicles (UAVs), which are generally limited by battery life.

A crucial aspect of our system is its handling of false positives, a common challenge in detection systems. While both YOLOv8-nano and YOLOv8-small models exhibit a relatively high rate of false positives, we posit that in the context of landmine detection, it is preferable to err on the side of caution. The false positives generated by our system can be quickly and efficiently assessed by a human operator using a smartphone. This approach significantly reduces the risk of overlooking actual threats, thereby minimizing potential damage to both the robot and human operators.

Author Contributions

Conceptualization, M.B. and L.C.; methodology, E.V. and M.B.; software, E.V.; validation, E.V., M.B. and L.C.; investigation, L.C.; resources, L.C.; data curation, E.V.; writing—original draft preparation, E.V., M.B. and L.C.; writing—review and editing, E.V., M.B. and L.C.; visualization, E.V. and L.C.; supervision, M.B.; project administration, L.C.; funding acquisition, L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by ASMARA, Tuscany Regional, and by NATO-SPS, grant number G5731 [55].

Data Availability Statement

Data and code to replicate our experiments can be found in the following links: “Surface Landmine Detection Dataset” at miccunifi/SULAND-Dataset (https://www.github.com/miccunifi/SULAND-Dataset, accessed on 9 February 2024) and “Real-time Surface Landmine Detection” at miccunifi/RT-SULAND (https://www.github.com/miccunifi/RT-SULAND, accessed on 9 February 2024).

Acknowledgments

The authors extend their profound gratitude to Claudia Pecorella for her diligent efforts in recording and annotating a substantial set of 47 videos pivotal for landmine detection. Her meticulous work has been instrumental in realizing the objectives of this research. We also appreciate Franklin & Marshall College (PA) USA and their significant contribution in recording 10 of these videos, which greatly enriched the final dataset and subsequently the results of this study. Special thanks are also due to the other laboratories and teams of NATO-SPS G-5731 for facilitating such collaborations and providing invaluable resources and insights. The invaluable efforts of both individuals and their respective labs have undeniably paved the way for advancements in the realm of landmine detection research. We remain indebted to their dedication and expertise.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Radar and Methodologies: | |
| --- | --- |
| ATI | Apparent Thermal Inertia |
| COTS | Commercial-Off-The-Shelf |
| DATI | Differential Apparent Thermal Inertia |
| ERW | Explosive Remnants of War |
| GPR | Ground Penetrating Radar |
| HSI | Hyperspectral Imaging |
| IRT | Infrared Thermography |
| MLP | Multi-Layer Perceptron |
| NDE | Non-Destructive Evaluation |
| NIR | Near-Infrared |
| RTK | Real-Time Kinematic |
| SAR | Synthetic Aperture Radar |
| UAV | Unmanned Aerial Vehicles |
| UWB | Ultra-Wide-Band |
| UXO | Unexploded Ordnance |

| Artificial Intelligence and deep learning: | |
| --- | --- |
| AE | AutoEncoder |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Networks |
| CNN | Convolutional Neural Networks |
| DL | Deep Learning |
| FCNN | Fully-Connected Neural Networks |
| GRU | Gated Recurrent Unit |
| LSTM | Long Short-Term Memory |
| OOD | Out Of Distribution |
| R-CNN | Region-based CNN |
| RNN | Recurrent Neural Networks |

Appendix A. YOLOv8 Details

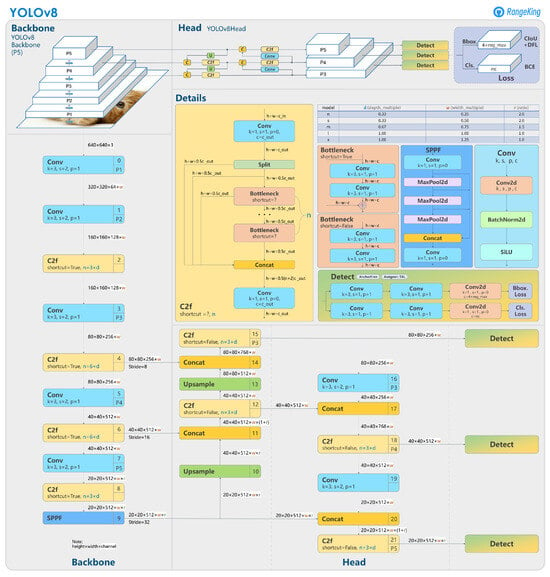

YOLOv8, developed by Ultralytics, is a state-of-the-art (SOTA) model. It builds on the success of earlier YOLO versions and adds new features and enhancements that further increase performance and versatility. Being fast, accurate, and simple to use, YOLOv8 is a strong option for a variety of object detection, image segmentation, and image classification tasks. An overview of the architecture is shown in Figure A1. Among the YOLO family, YOLOv8 includes several key features that can be summarized in four main components: (i) improved accuracy and speed compared to previous versions of YOLO; (ii) an updated CSPDarknet-style backbone built from C2f modules, which improves the model’s ability to capture high-level features; (iii) a new feature fusion module that integrates features from multiple scales; (iv) enhanced data augmentation techniques, including Mosaic and MixUp.

Figure A1. YOLOv8 architecture from MMYolo (https://github.com/open-mmlab/mmyolo/tree/main/configs/yolov8, accessed on 9 February 2024).

Appendix B. Out of Distribution

Figure A2 shows a few examples of short, medium, and long grass. These differ substantially from the training data presented in Figure 5. Although the second field may intuitively seem easier, the networks never encountered anything similar in terms of grass shape and length. It is also worth mentioning that in the Test OOD data the landmines are of a different color.

Figure A2. Examples of OOD data. From left: short, medium, long (butterfly), and long (starfish).
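
Since the landmines in the OOD data differ in color from those seen in training, Section 5 suggests additional augmentations such as greyscale conversion or saturating the green channel. The sketch below illustrates what these two transformations do to individual RGB pixels. It is a plain-Python illustration on a hypothetical three-pixel patch, not the augmentation pipeline used in this work; the function names, the gain value, and the choice of ITU-R BT.601 luma weights for the greyscale conversion are assumptions.

```python
from typing import List, Tuple

# Assumed pixel format: (R, G, B), each channel an integer in 0..255.
Pixel = Tuple[int, int, int]


def to_greyscale(pixels: List[Pixel]) -> List[Pixel]:
    """Replace each pixel by its luma (BT.601 weights) on all three channels."""
    out = []
    for r, g, b in pixels:
        y = round(0.299 * r + 0.587 * g + 0.114 * b)
        out.append((y, y, y))
    return out


def scale_green(pixels: List[Pixel], gain: float) -> List[Pixel]:
    """Multiply the green channel by `gain`, clipping at 255 (saturation)."""
    return [(r, min(255, round(g * gain)), b) for r, g, b in pixels]


# Hypothetical patch: a dark green pixel, a light grey pixel, a pure green pixel.
patch = [(40, 120, 30), (200, 200, 200), (0, 255, 0)]
print(to_greyscale(patch))
print(scale_green(patch, gain=1.5))
```

Greyscale conversion removes the color cue entirely, which would force a model to rely on shape, while scaling the green channel changes the tone of green objects and backgrounds alike. Whether either transformation actually improves OOD performance would need to be verified experimentally.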

References

1. Monitor, T. Landmine and Cluster Munition Monitor. Available online: http://www.the-monitor.org/en-gb/home.aspx (accessed on 9 February 2024).

2. Coalition, C.M. Cluster Munition Monitor 2023. Available online: http://www.the-monitor.org/media/3383234/Cluster-Munition-Monitor-2023_Web.pdf (accessed on 9 February 2024).

3. Takahashi, K.; Preetz, H.; Igel, J. Soil Properties and Performance of Landmine Detection by Metal Detector and Ground-Penetrating Radar—Soil Characterisation and Its Verification by a Field Test. J. Appl. Geophys. 2011, 73, 368–377.

4. Masunaga, S.; Nonami, K. Controlled Metal Detector Mounted on Mine Detection Robot. Int. J. Adv. Robot. Syst. 2007, 4, 26.

5. Kim, B.; Yoon, J.W.; Lee, S.e.; Han, S.H.; Kim, K. Pulse-Induction Metal Detector with Time-Domain Bucking Circuit for Landmine Detection. Electron. Lett. 2015, 51, 159–161.

6. Yarovoy, A.G.; van Genderen, P.; Ligthart, L.P. Ground Penetrating Impulse Radar for Land Mine Detection. In Proceedings of the Eighth International Conference on Ground Penetrating Radar, SPIE, Gold Coast, Australia, 23–26 May 2000; Volume 4084, pp. 856–860.

7. Furuta, K.; Ishikawa, J. (Eds.) Anti-Personnel Landmine Detection for Humanitarian Demining; Springer: London, UK, 2009.

8. Motoyuki, S. ALIS: Advanced Landmine Imaging System. Available online: http://cobalt.cneas.tohoku.ac.jp/users/sato/ALIS2.pdf (accessed on 9 February 2024).

9. Daniels, D.J. Ground Penetrating Radar for Buried Landmine and IED Detection. In Unexploded Ordnance Detection and Mitigation; Byrnes, J., Ed.; NATO Science for Peace and Security Series B: Physics and Biophysics; Springer Science & Business Media: Dordrecht, The Netherlands, 2009; pp. 89–111.

10. Giannakis, I.; Giannopoulos, A.; Warren, C. A Realistic FDTD Numerical Modeling Framework of Ground Penetrating Radar for Landmine Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 37–51.

11. Nikulin, A.; De Smet, T.S.; Baur, J.; Frazer, W.D.; Abramowitz, J.C. Detection and Identification of Remnant PFM-1 ‘Butterfly Mines’ with a UAV-Based Thermal-Imaging Protocol. Remote Sens. 2018, 10, 1672.

12. Ege, Y.; Kakilli, A.; Kılıç, O.; Çalık, H.; Çıtak, H.; Nazlıbilek, S.; Kalender, O. Performance Analysis of Techniques Used for Determining Land Mines. Int. J. Geosci. 2014, 5, 1163–1189.

13. Yoo, L.S.; Lee, J.H.; Ko, S.H.; Jung, S.K.; Lee, S.H.; Lee, Y.K. A Drone Fitted With a Magnetometer Detects Landmines. IEEE Geosci. Remote. Sens. Lett. 2020, 17, 2035–2039.

14. Colorado, J.; Perez, M.; Mondragon, I.; Mendez, D.; Parra, C.; Devia, C.; Martinez-Moritz, J.; Neira, L. An Integrated Aerial System for Landmine Detection: SDR-based Ground Penetrating Radar Onboard an Autonomous Drone. Adv. Robot. 2017, 31, 791–808.

15. Garcia-Fernandez, M.; Alvarez-Lopez, Y.; Las Heras, F. Autonomous Airborne 3D SAR Imaging System for Subsurface Sensing: UWB-GPR on Board a UAV for Landmine and IED Detection. Remote Sens. 2019, 11, 2357.

16. García Fernández, M.; Álvarez López, Y.; Arboleya Arboleya, A.; González Valdés, B.; Rodríguez Vaqueiro, Y.; Las-Heras Andrés, F.; Pino García, A. Synthetic Aperture Radar Imaging System for Landmine Detection Using a Ground Penetrating Radar on Board a Unmanned Aerial Vehicle. IEEE Access 2018, 6, 45100–45112.

17. Subramanian, L.S.; Sharath, B.L.; Dinodiya, A.; Mishra, G.K.; Kumar, G.S.; Jeevahan, J. Development of Landmine Detection Robot. AIP Conf. Proc. 2020, 2311, 050008.

18. Bartolini, A.; Bossi, L.; Capineri, L.; Falorni, P.; Bulletti, A.; Dimitri, M.; Pochanin, G.; Ruban, V.; Ogurtsova, T.; Crawford, F.; et al. Machine Vision for Obstacle Avoidance, Tripwire Detection, and Subsurface Radar Image Correction on a Robotic Vehicle for the Detection and Discrimination of Landmines. In Proceedings of the 2019 PhotonIcs & Electromagnetics Research Symposium—Spring (PIERS-Spring), Rome, Italy, 17–20 June 2019; pp. 1602–1606.

19. Crawford, F.; Bechtel, T.; Pochanin, G.; Falorni, P.; Asfar, K.; Capineri, L.; Dimitri, M. Demining Robots: Overview and Mission Strategy for Landmine Identification in the Field. In Proceedings of the 2021 11th International Workshop on Advanced Ground Penetrating Radar (IWAGPR), Valletta, Malta, 1–4 December 2021; pp. 1–4.

20. Monitor. Cluster Munition Use. 2018. Available online: http://www.the-monitor.org/en-gb/our-research/cluster-munition-use.aspx (accessed on 9 February 2024).

21. Qian, R.; Lai, X.; Li, X. 3D Object Detection for Autonomous Driving: A Survey. Pattern Recognit. 2022, 130, 108796.

22. Raiyn, J. Detection of Objects in Motion—A Survey of Video Surveillance. Adv. Internet Things 2013, 3, 73–78.

23. Luna, E.; San Miguel, J.C.; Ortego, D.; Martínez, J.M. Abandoned Object Detection in Video-Surveillance: Survey and Comparison. Sensors 2018, 18, 4290.

24. Kaur, A.; Singh, Y.; Neeru, N.; Kaur, L.; Singh, A. A Survey on Deep Learning Approaches to Medical Images and a Systematic Look up into Real-Time Object Detection. Arch. Comput. Methods Eng. 2022, 29, 2071–2111.

25. FP7. D-BOX: “Demining Tool-BOX for Humanitarian Clearing of Large Scale Area from Anti-Personal Landmines and Cluster Munitions”. Final Report. 2016. Available online: https://cordis.europa.eu/project/id/284996/reporting/en (accessed on 9 February 2024).

26. Killeen, J.; Jaupi, L.; Barrett, B. Impact Assessment of Humanitarian Demining Using Object-Based Peri-Urban Land Cover Classification and Morphological Building Detection from VHR Worldview Imagery. Remote Sens. Appl. Soc. Environ. 2022, 27, 100766.

27. Pochanin, G.; Capineri, L.; Bechtel, T.; Ruban, V.; Falorni, P.; Crawford, F.; Ogurtsova, T.; Bossi, L. Radar Systems for Landmine Detection: Invited Paper. In Proceedings of the 2020 IEEE Ukrainian Microwave Week (UkrMW), Kharkiv, Ukraine, 21–25 September 2020; pp. 1118–1122.

28. Qiu, Z.; Guo, H.; Hu, J.; Jiang, H.; Luo, C. Joint Fusion and Detection via Deep Learning in UAV-Borne Multispectral Sensing of Scatterable Landmine. Sensors 2023, 23, 5693.

29. Baur, J.; Steinberg, G.; Nikulin, A.; Chiu, K.; de Smet, T.S. Applying Deep Learning to Automate UAV-Based Detection of Scatterable Landmines. Remote Sens. 2020, 12, 859.

30. Zheng, Y.; Zhang, G.; Sun, L.; Jeon, B. Special Issue “Multi-Platform and Multi-Modal Remote Sensing Data Fusion with Advanced Deep Learning Techniques”. 2024. Available online: https://www.mdpi.com/journal/remotesensing/special_issues/48NZ9Y0J79 (accessed on 9 February 2024).

31. Schachne, M.; Van Kempen, L.; Milojevic, D.; Sahli, H.; Van Ham, P.; Acheroy, M.; Cornelis, J. Mine Detection by Means of Dynamic Thermography: Simulation and Experiments. In Proceedings of the 1998 Second International Conference on the Detection of Abandoned Land Mines (IEE Conf. Publ. No. 458), Consultant, UK, 12–14 October 1998; pp. 124–128.

32. Angelini, L.; Carlo, F.D.; Marangi, C.; Mannarelli, M.; Nardulli, G.; Pellicoro, M.; Satalino, G.; Stramaglia, S. Chaotic Neural Networks Clustering: An Application to Landmine Detection by Dynamic Infrared Imaging. Opt. Eng. 2001, 40, 2878–2884.

33. Muscio, A.; Corticelli, M. Land Mine Detection by Infrared Thermography: Reduction of Size and Duration of the Experiments. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1955–1964.

34. Thành, N.T.; Hào, D.N.; Sahli, H. Infrared Thermography for Land Mine Detection. In Augmented Vision Perception in Infrared: Algorithms and Applied Systems; Hammoud, R.I., Ed.; Advances in Pattern Recognition; Springer: London, UK, 2009; pp. 3–36.

35. Pardo, F.; López, P.; Cabello, D. Heat Transfer for NDE: Landmine Detection. In Developments in Heat Transfer; IntechOpen: London, UK, 2011.

36. Barnawi, A.; Budhiraja, I.; Kumar, K.; Kumar, N.; Alzahrani, B.; Almansour, A.; Noor, A. A Comprehensive Review on Landmine Detection Using Deep Learning Techniques in 5G Environment: Open Issues and Challenges. Neural Comput. Appl. 2022, 34, 21657–21676.

37. Tenorio-Tamayo, H.A.; Forero-Ramírez, J.C.; García, B.; Loaiza-Correa, H.; Restrepo-Girón, A.D.; Nope-Rodríguez, S.E.; Barandica-López, A.; Buitrago-Molina, J.T. Dataset of Thermographic Images for the Detection of Buried Landmines. Data Brief 2023, 49, 109443.

38. Tesfamariam, G.T.; Mali, D. Application of Advanced Background Subtraction Techniques for the Detection of Buried Plastic Landmines. Int. J. Emerg. Technol. Adv. Eng. 2014, 4, 318–323.

39. Gonzalez-Huici, M.A.; Giovanneschi, F. A Combined Strategy for Landmine Detection and Identification Using Synthetic GPR Responses. J. Appl. Geophys. 2013, 99, 154–165.

40. Dyana, A.; Rao, C.H.S.; Kuloor, R. 3D Segmentation of Ground Penetrating Radar Data for Landmine Detection. In Proceedings of the 2012 14th International Conference on Ground Penetrating Radar (GPR), Shanghai, China, 4–8 June 2012; pp. 858–863.

41. Deiana, D.; Anitori, L. Detection and Classification of Landmines Using AR Modeling of GPR Data. In Proceedings of the XIII International Conference on Ground Penetrating Radar, Lecce, Italy, 21–25 June 2010; pp. 1–5.

42. Shi, Y.; Song, Q.; Jin, T.; Zhou, Z. Landmine Detection Using Boosting Classifiers with Adaptive Feature Selection. In Proceedings of the 2011 6th International Workshop on Advanced Ground Penetrating Radar (IWAGPR), Las Vegas, NV, USA, 12–14 December 2011; pp. 1–5.

43. Gader, P.; Mystkowski, M.; Zhao, Y. Landmine Detection with Ground Penetrating Radar Using Hidden Markov Models. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1231–1244.

44. Núñez-Nieto, X.; Solla, M.; Gómez-Pérez, P.; Lorenzo, H. GPR Signal Characterization for Automated Landmine and UXO Detection Based on Machine Learning Techniques. Remote Sens. 2014, 6, 9729–9748.

45. Silva, J.S.; Guerra, I.F.L.; Bioucas-Dias, J.; Gasche, T. Landmine Detection Using Multispectral Images. IEEE Sens. J. 2019, 19, 9341–9351.

46. Pryshchenko, O.A.; Plakhtii, V.; Dumin, O.M.; Pochanin, G.P.; Ruban, V.P.; Capineri, L.; Crawford, F. Implementation of an Artificial Intelligence Approach to GPR Systems for Landmine Detection. Remote Sens. 2022, 14, 4421.

47. Bestagini, P.; Lombardi, F.; Lualdi, M.; Picetti, F.; Tubaro, S. Landmine Detection Using Autoencoders on Multipolarization GPR Volumetric Data. IEEE Trans. Geosci. Remote Sens. 2021, 59, 182–195.

48. Sekachev, B.; Manovich, N.; Zhiltsov, M.; Zhavoronkov, A.; Kalinin, D.; Hoff, B.; TOsmanov; Kruchinin, D.; Zankevich, A.; DmitriySidnev; et al. Opencv/Cvat: V1.1.0. Zenodo: Geneva, Switzerland, 2020.

49. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. arXiv 2014, arXiv:cs/1311.2524.

50. Girshick, R. Fast R-CNN. arXiv 2015, arXiv:cs/1504.08083.

51. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:cs/1506.01497.

52. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. YOLO: You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

53. Terven, J.; Cordova-Esparza, D. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and beyond. arXiv 2023, arXiv:cs/2304.00501.

54. Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://www.ultralytics.com/yolo (accessed on 9 February 2024).

55. NATO SPS. DEMINING ROBOTS. 2023. Available online: http://www.natospsdeminingrobots.com/ (accessed on 9 February 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).