Deep Learning-Based Real-Time Detection of Surface Landmines Using Optical Imaging

Abstract

1. Introduction

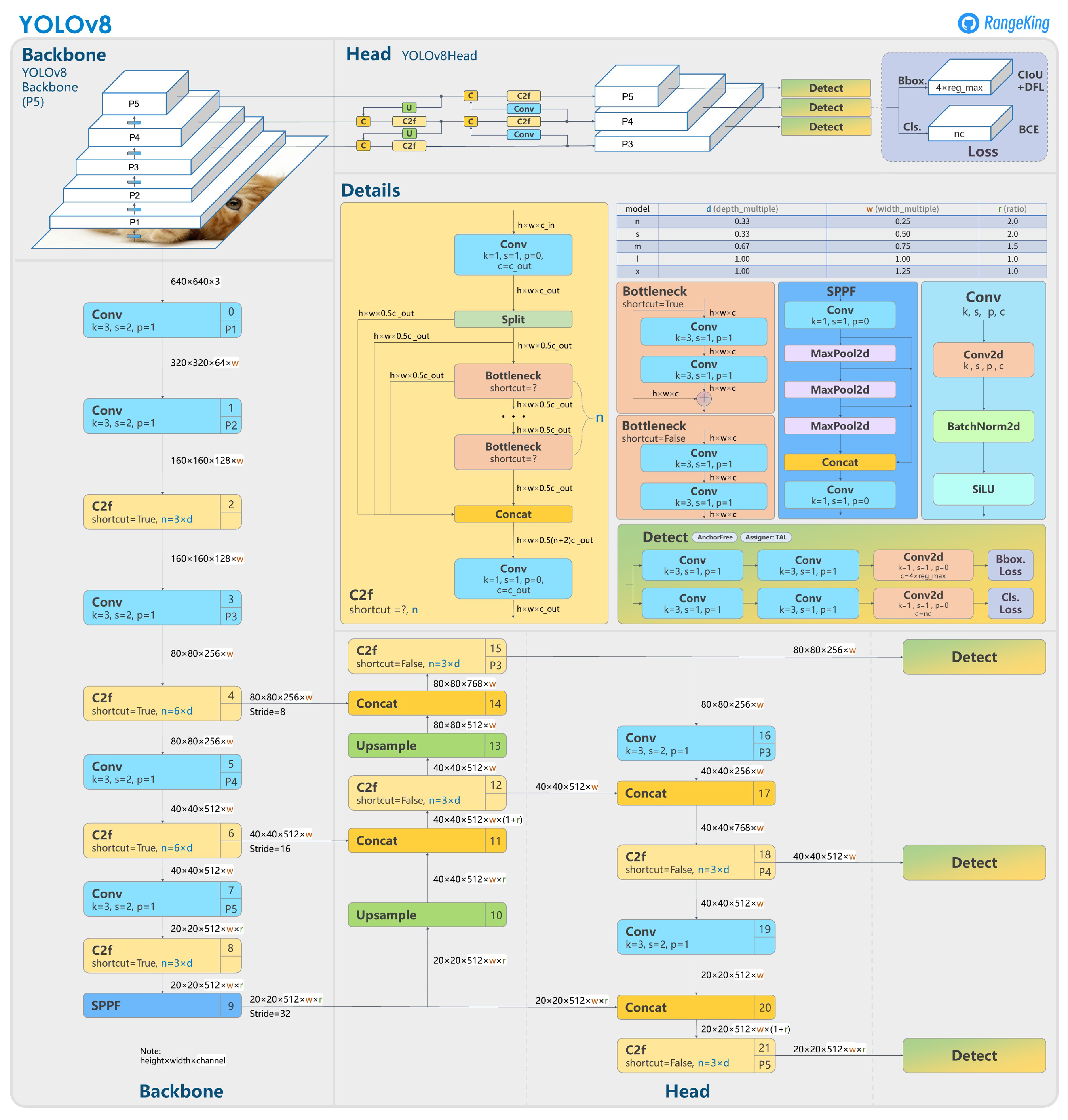

2. Related Works

2.1. Data

2.1.1. Optical Imaging

2.1.2. Dynamic Thermography

2.2. Analysis Approach

2.2.1. Image Processing

2.2.2. Neural Networks

2.3. Real-Time Applications

3. Tools and Methods

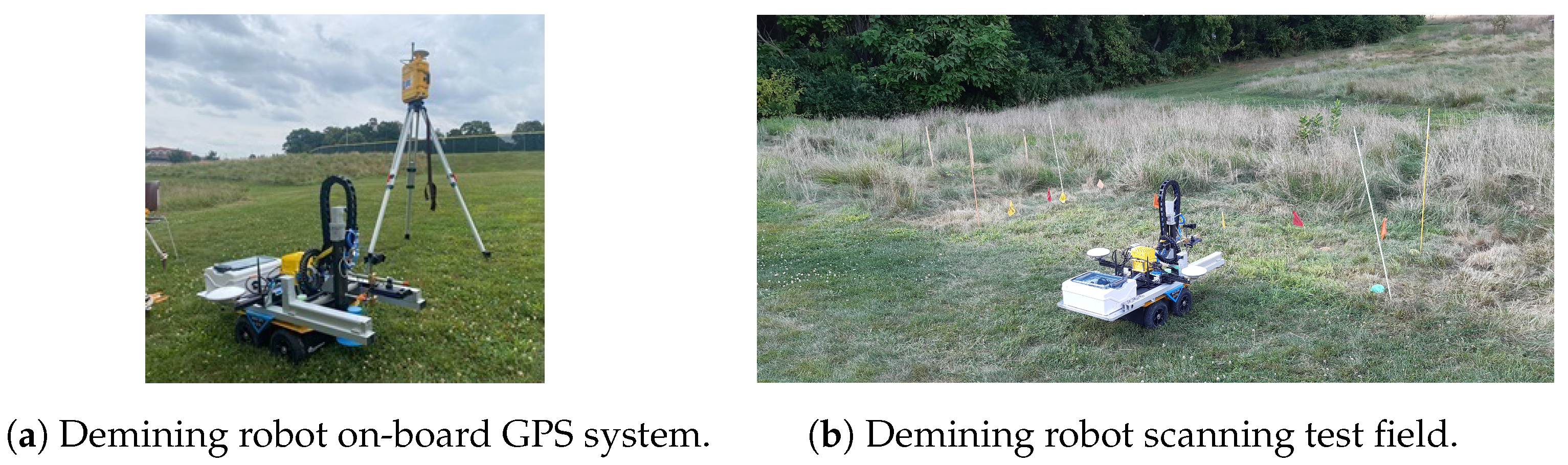

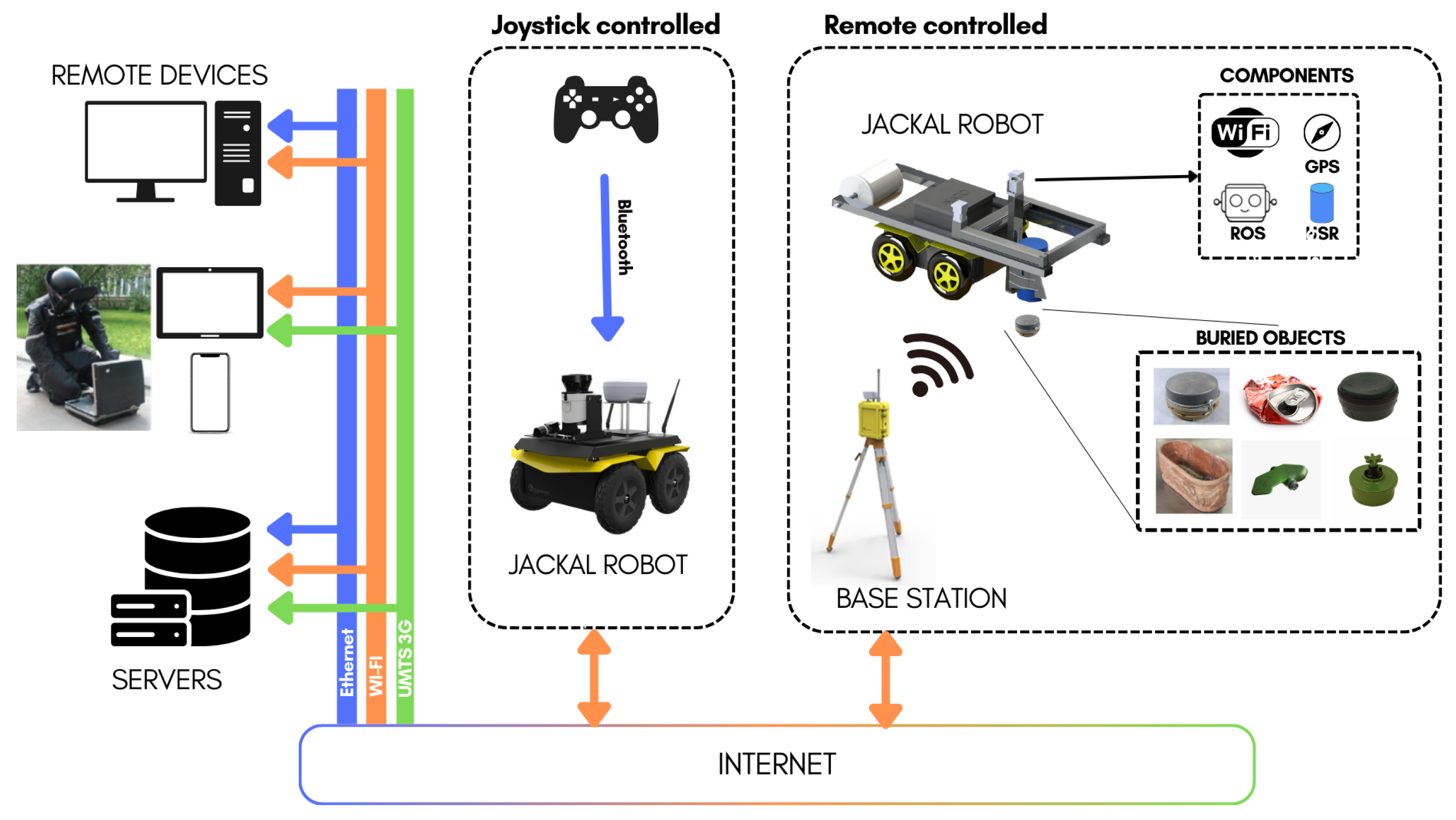

3.1. Robot Configuration

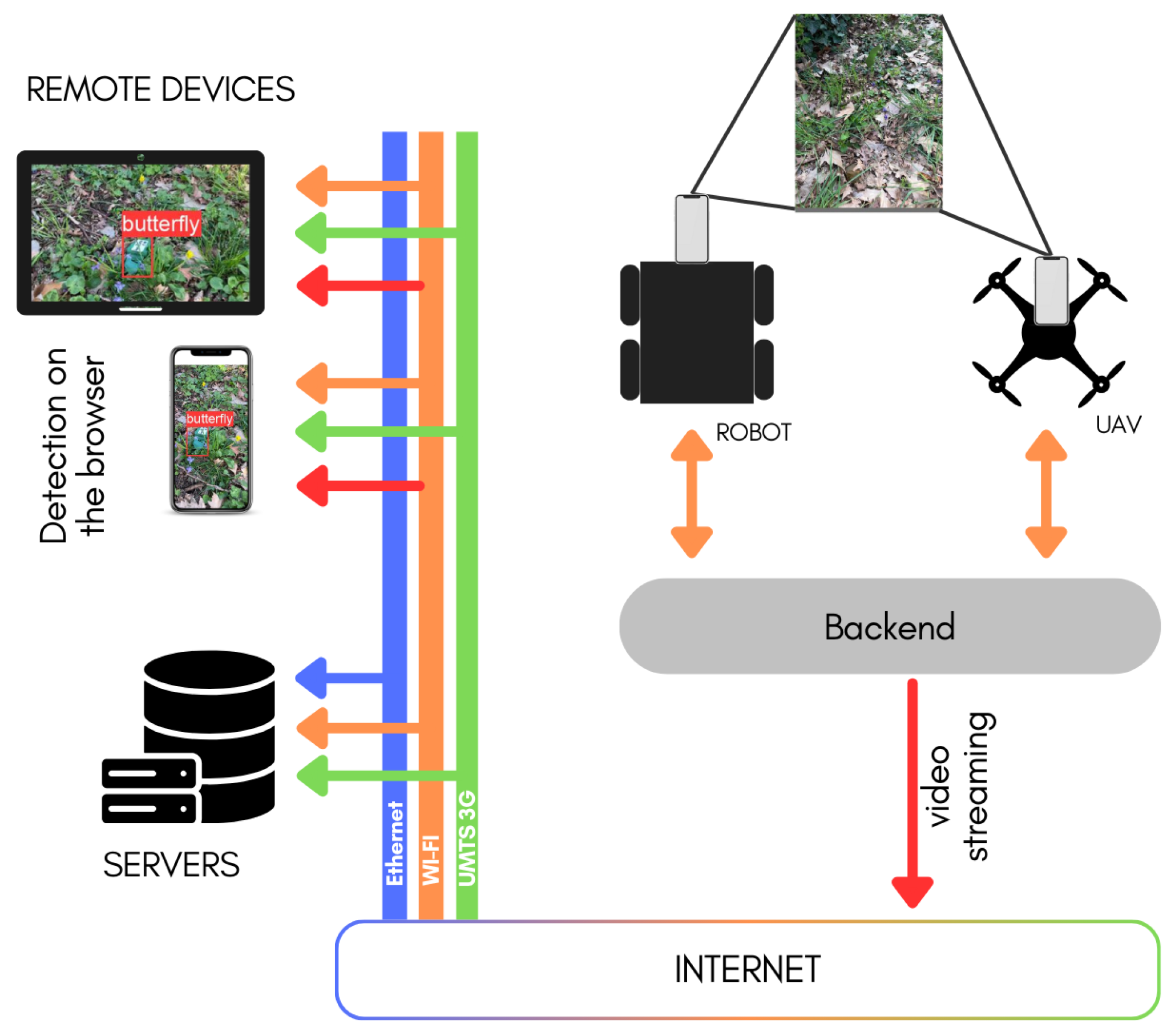

3.1.1. System Architecture

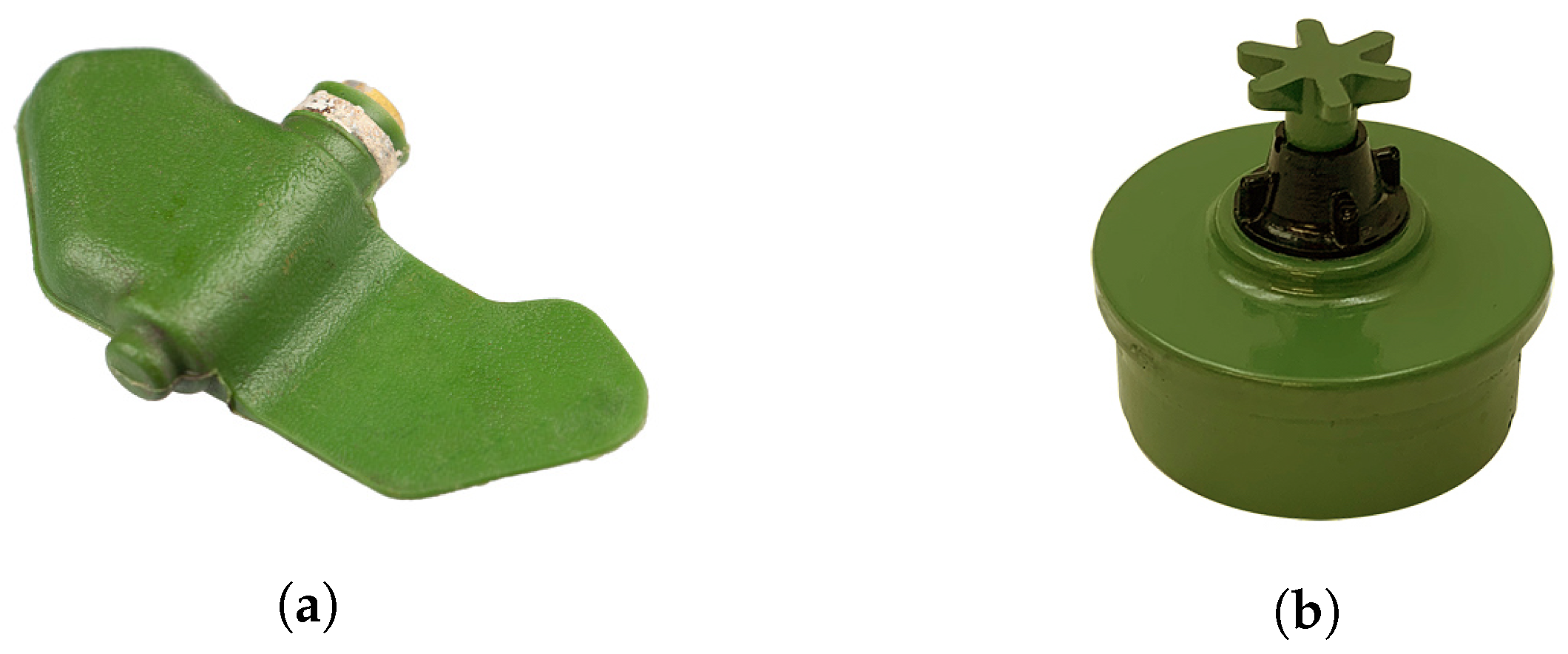

3.2. Data Collection

- Environment: We considered both grass and gravel terrains to account for variations in surface characteristics.

- Weather: Our data collection covered different weather conditions, including cloudy, sunny, and shaded settings, since lighting conditions can influence image quality and landmine visibility.

- Slope: Different slope levels (high, medium, and low) were considered to assess how the angle of the camera relative to the ground affects detection performance.

- Obstacles: We accounted for the presence of obstacles, such as bushes, branches, walls, bars, trees, trunks, and rocks, which can obscure landmines in a real-world scenario.

3.3. Model Selection

4. Experimental Setup

4.1. Metrics

- Precision: The ratio of correctly predicted positive observations to all predicted positives. High precision means few false alarms (false positives) among the detections.

- Recall: The ratio of correctly predicted positives to all actual positives. High recall is crucial for minimizing the risk of undetected landmines.

- F1 Score: The harmonic mean of Precision and Recall, summarizing the trade-off between the two in a single score.

- Intersection over Union (IoU): The area of overlap between a predicted bounding box and the corresponding ground-truth box, divided by the area of their union. It measures localization accuracy and, compared against a threshold, determines whether a detection counts as a true positive.

- Mean Average Precision (mAP): The Average Precision (the area under the precision-recall curve) of each class, averaged over all classes at a chosen IoU threshold (or over several thresholds), providing an overall measure of detection effectiveness.
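The metric definitions above can be made concrete with a short Python sketch. It assumes boxes given as (x_min, y_min, x_max, y_max) and computes Average Precision with all-point interpolation of the precision-recall curve. This is a generic illustration, not the evaluation code used in this work; toolkits such as Ultralytics may interpolate the curve differently, so values can differ slightly.

```python
from typing import Sequence, Tuple

# Axis-aligned box as (x_min, y_min, x_max, y_max), e.g. in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Overlap rectangle; width/height clamp to 0 when the boxes do not intersect.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 (their harmonic mean) from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under the precision-recall curve, all-point interpolation.

    `recalls` must be non-decreasing (one point per confidence threshold),
    with `precisions` giving the matching precision values.
    """
    r = [0.0, *recalls, 1.0]  # sentinels at recall 0 and 1
    p = [0.0, *precisions, 0.0]
    # Precision envelope: at each recall keep the best precision reachable at
    # that recall or higher, so the curve is monotonically non-increasing.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """mAP: the unweighted mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

For example, two boxes of area 4 that overlap in a 1×1 square give IoU = 1/7, and a detector with 8 true positives, 2 false positives, and 2 missed objects has precision, recall, and F1 all equal to 0.8.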

4.2. Training

- Pre-trained model: The model was initially trained on the “ImageNet” dataset, which contains RGB images gathered from the internet and corresponding annotations for 1000 classes. This step is necessary to learn general image features such as colors, shapes, and common objects.

- Initial fine-tuning: The model was fine-tuned on the “SurfLandmine” dataset, which contains RGB video data and corresponding annotations of landmines under various conditions, captured in Italy. The video frames are treated as independent images and shuffled. The dataset encompasses diverse weather conditions, soil compositions, and environmental settings to provide a robust training foundation.

- Validation: A held-out subset of the data was used to evaluate the model periodically during training, to monitor how well it generalizes to new, unseen data.

- Augmentations: Following the YOLO augmentation settings, we employed a series of 7 augmentations, which are listed in Table 3 and described below.
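The frame-level treatment of the video data described above can be sketched as follows. This is a minimal illustration, assuming the data have already been divided into training, validation, and test sets and that frames have been extracted from each video; the function `frames_as_shuffled_images` and the clip names are hypothetical and not part of the authors' pipeline.

```python
import random
from typing import Dict, List, Tuple

# One training sample: (video_id, frame_index). Both names are hypothetical.
Frame = Tuple[str, int]


def frames_as_shuffled_images(videos: Dict[str, int], seed: int = 0) -> List[Frame]:
    """Treat every frame of every video as an independent image and shuffle them.

    `videos` maps a video identifier to its number of extracted frames.
    """
    frames = [(vid, idx) for vid, n_frames in videos.items() for idx in range(n_frames)]
    rng = random.Random(seed)  # local, seeded generator for a reproducible order
    rng.shuffle(frames)
    return frames


# Example with two short, made-up clips belonging to the same split.
samples = frames_as_shuffled_images({"clip_a": 3, "clip_b": 2}, seed=42)
```

Using a local, seeded `random.Random` keeps the order reproducible without touching the global generator. In this sketch shuffling happens within a split: because neighboring frames of a video are nearly identical, shuffling before splitting could place near-duplicate frames in both the training and validation sets.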

5. Results

5.1. IID Data Evaluation

5.2. Out-of-Distribution (OOD) Data Evaluation

5.3. Summary of Findings

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Radar and Methodologies: | |
| ATI | Apparent Thermal Inertia |
| COTS | Commercial-Off-The-Shelf |
| DATI | Differential Apparent Thermal Inertia |
| ERW | Explosive Remnants of War |
| GPR | Ground Penetrating Radar |
| HSI | Hyperspectral Imaging |
| IRT | Infrared Thermography |
| MLP | Multi-Layer Perceptron |
| NDE | Non-Destructive Evaluation |
| NIR | Near-Infrared |
| RTK | Real-Time Kinematic |
| SAR | Synthetic Aperture Radar |
| UAV | Unmanned Aerial Vehicles |
| UWB | Ultra-Wide-Band |
| UXO | Unexploded Ordnance |
| Artificial Intelligence and Deep Learning: | |
| AE | AutoEncoder |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Networks |
| CNN | Convolutional Neural Networks |
| DL | Deep Learning |
| FCNN | Fully-Connected Neural Networks |
| GRU | Gated Recurrent Unit |
| LSTM | Long Short-Term Memory |
| OOD | Out-of-Distribution |
| R-CNN | Region-based CNN |
| RNN | Recurrent Neural Networks |

Appendix A. YOLOv8 Details

Appendix B. Out of Distribution

| Split | Duration (s) | Annotated (%) | Annotated Frames | Total Frames |
|---|---|---|---|---|
| Train | 112 | 25 | 154 | 669 |
| Val | 87 | 24 | 113 | 522 |
| Test (IID) | 104 | 24 | 140 | 624 |
| Test (OOD) | 74 | 80 | 352 | 443 |

| Split | Environment: Grass | Environment: Gravel | Weather: Sunny | Weather: Shadow | Weather: Cloudy | Slope: High | Slope: Medium | Slope: Low |
|---|---|---|---|---|---|---|---|---|
| Train | 26 | 8 | 15 | 12 | 7 | 11 | 1 | 22 |
| Val | 3 | 2 | 1 | 3 | 1 | 0 | 1 | 4 |
| Test (IID) | 4 | 2 | 2 | 3 | 2 | 2 | 0 | 4 |
| Test (OOD) | 11 | 0 | 8 | 1 | 2 | 3 | 2 | 6 |

| Key | Value | Description |
|---|---|---|
| hsv_h | 0.015 | image HSV-Hue augmentation (fraction) |
| hsv_s | 0.7 | image HSV-Saturation augmentation (fraction) |
| hsv_v | 0.4 | image HSV-Value augmentation (fraction) |
| translate | 0.1 | image translation (+/−fraction) |
| scale | 0.5 | image scale (+/−gain) |
| fliplr | 0.5 | image flip left-right (probability) |
| mosaic | 1.0 | image mosaic (probability) |
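The keys in the augmentation table above are Ultralytics YOLOv8 hyperparameter names, so the same settings can be passed as training overrides. The sketch below assumes the Ultralytics Python package; the dataset YAML path, the `yolov8n.pt` weights, and the epoch count in `train_with_table3` are hypothetical placeholders, not the configuration used in this work. It also shows what the `fliplr` augmentation does to YOLO-format labels.

```python
from typing import Dict, List, Tuple

# Values from the augmentation table, keyed by Ultralytics hyperparameter names.
TABLE3_AUGMENTATIONS: Dict[str, float] = {
    "hsv_h": 0.015,    # hue jitter (fraction)
    "hsv_s": 0.7,      # saturation jitter (fraction)
    "hsv_v": 0.4,      # value/brightness jitter (fraction)
    "translate": 0.1,  # translation (+/- fraction of image size)
    "scale": 0.5,      # scale (+/- gain)
    "fliplr": 0.5,     # probability of a left-right flip
    "mosaic": 1.0,     # probability of a 4-image mosaic
}

# YOLO label row: (class_id, x_center, y_center, width, height), normalized to [0, 1].
Label = Tuple[int, float, float, float, float]


def flip_labels_lr(labels: List[Label]) -> List[Label]:
    """Update YOLO-format labels after a horizontal flip of the image."""
    # Only the x-center is mirrored; box size and y-center are unchanged.
    return [(c, 1.0 - x, y, w, h) for c, x, y, w, h in labels]


def train_with_table3(data_yaml: str, weights: str = "yolov8n.pt", epochs: int = 100):
    """Hypothetical training call; needs `pip install ultralytics` and a dataset YAML."""
    from ultralytics import YOLO  # imported lazily so the rest runs without the package

    model = YOLO(weights)
    return model.train(data=data_yaml, epochs=epochs, **TABLE3_AUGMENTATIONS)
```

Keeping the settings in one dictionary makes it easy to log them alongside results and to check that exactly the seven listed augmentations are active.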

| | Butterfly | Starfish | Background |
|---|---|---|---|
| Butterfly | 254 (→ 98%, ↓ 72%) | 0 (→ 0%, ↓ 0%) | 6 (→ 2%, ↓ 85%) |
| Starfish | 0 (→ 0%, ↓ 0%) | 168 (→ 99%, ↓ 56%) | 1 (→ 1%, ↓ 15%) |
| Background | 98 (→ 42%, ↓ 28%) | 134 (→ 58%, ↓ 44%) | 0 (→ 0%, ↓ 0%) |

| | Butterfly | Starfish | Background |
|---|---|---|---|
| Butterfly | 263 (→ 97%, ↓ 75%) | 0 (→ 0%, ↓ 0%) | 7 (→ 3%, ↓ 78%) |
| Starfish | 0 (→ 0%, ↓ 0%) | 172 (→ 99%, ↓ 57%) | 2 (→ 1%, ↓ 22%) |
| Background | 89 (→ 41%, ↓ 25%) | 130 (→ 59%, ↓ 43%) | 0 (→ 0%, ↓ 0%) |
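In the confusion matrices above, the → percentages sum to 100% across each row and the ↓ percentages sum to 100% down each column. The sketch below recomputes them from the counts of the first matrix (up to rounding in the published values) and derives per-class precision and recall. It assumes, without confirmation from the text, that rows are predicted classes and columns are ground-truth classes, as in the Ultralytics confusion matrix; if the axes are the other way round, precision and recall swap.

```python
from typing import List, Tuple

# Counts copied from the first confusion matrix above.
# Assumption: rows = predicted class, columns = ground-truth class, so the
# Background row holds missed objects and the Background column holds false alarms.
CLASSES = ["Butterfly", "Starfish", "Background"]
MATRIX = [
    [254, 0, 6],
    [0, 168, 1],
    [98, 134, 0],
]


def row_percentages(m: List[List[int]]) -> List[List[float]]:
    """Each cell as a percentage of its row total (the → values)."""
    return [[100.0 * v / sum(row) if sum(row) else 0.0 for v in row] for row in m]


def column_percentages(m: List[List[int]]) -> List[List[float]]:
    """Each cell as a percentage of its column total (the ↓ values)."""
    col_sums = [sum(col) for col in zip(*m)]
    return [[100.0 * v / s if s else 0.0 for v, s in zip(row, col_sums)] for row in m]


def precision_recall(m: List[List[int]], k: int) -> Tuple[float, float]:
    """Per-class precision and recall under the rows = predicted assumption."""
    tp = m[k][k]
    predicted = sum(m[k])              # everything predicted as class k
    actual = sum(row[k] for row in m)  # every ground-truth instance of class k
    return tp / predicted, tp / actual
```

Under this assumption, Butterfly detections in the first matrix have a precision of 254/260 and a recall of 254/352, which matches the 98% (→) and 72% (↓) entries on the diagonal.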

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vivoli, E.; Bertini, M.; Capineri, L. Deep Learning-Based Real-Time Detection of Surface Landmines Using Optical Imaging. Remote Sens. 2024, 16, 677. https://doi.org/10.3390/rs16040677
