Enhancing Digital Twins with Human Movement Data: A Comparative Study of Lidar-Based Tracking Methods

Abstract

1. Introduction

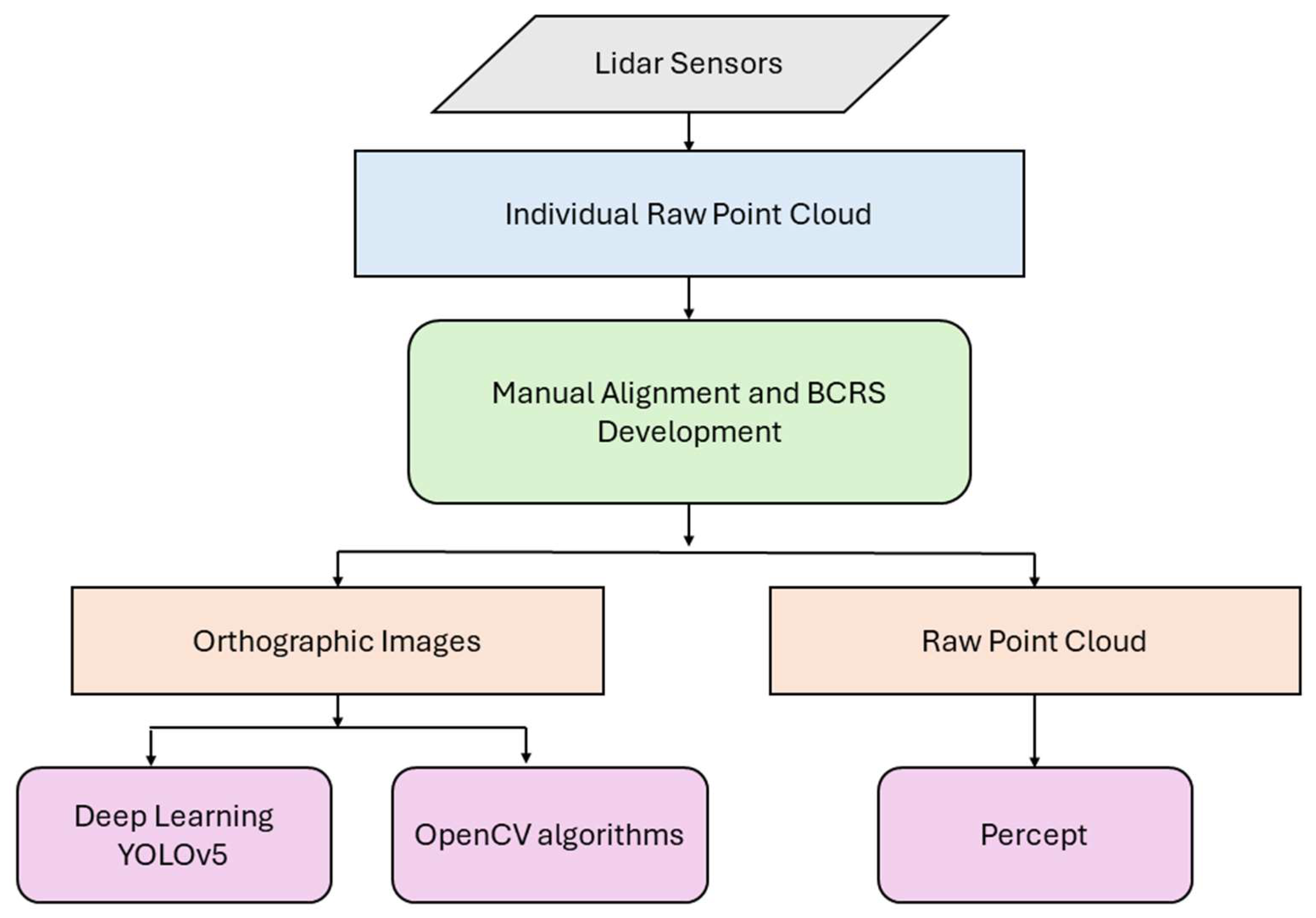

2. Materials and Methods

2.1. YOLOv5

2.2. OpenCV’s Background Subtraction and Frame Differencing

2.3. Blickfeld Percept Software

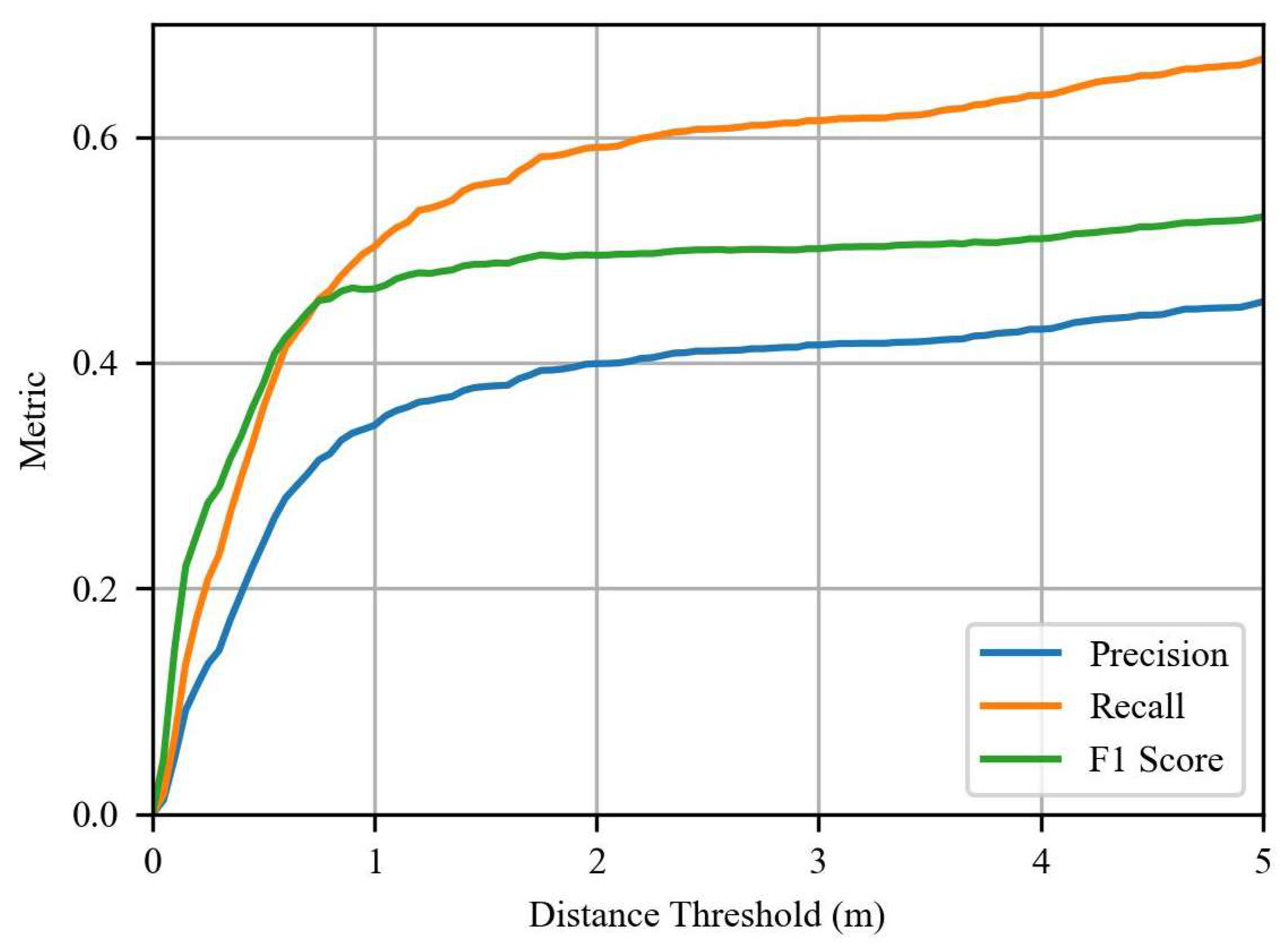

2.4. Accuracy Assessment

3. Results

3.1. Object Detection

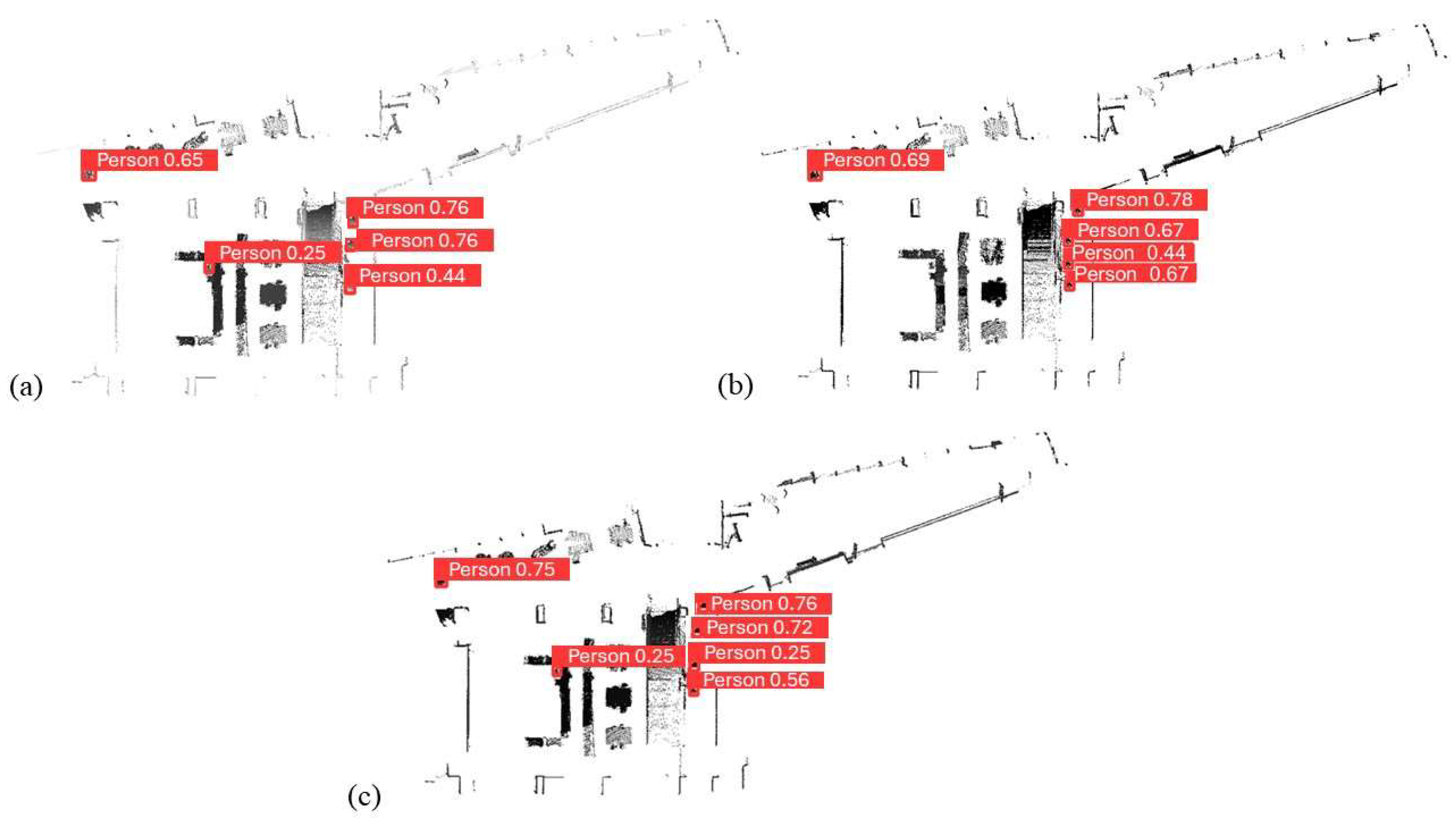

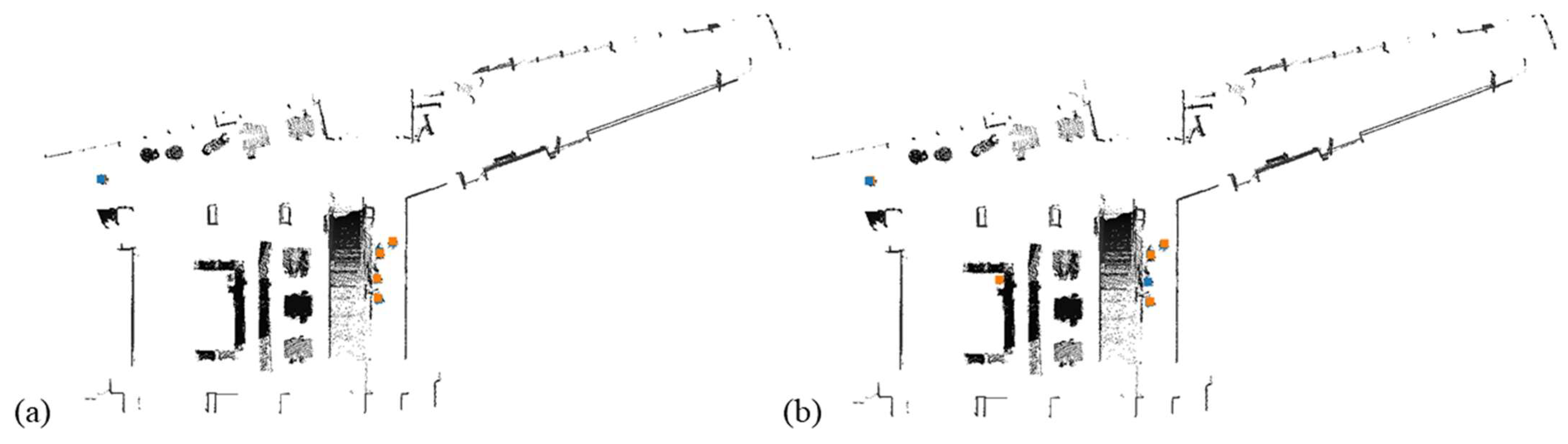

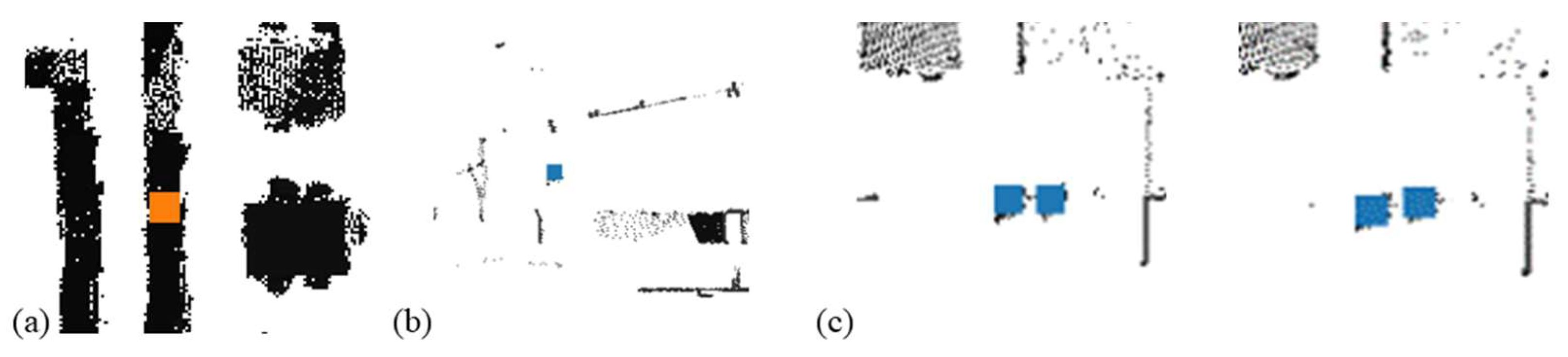

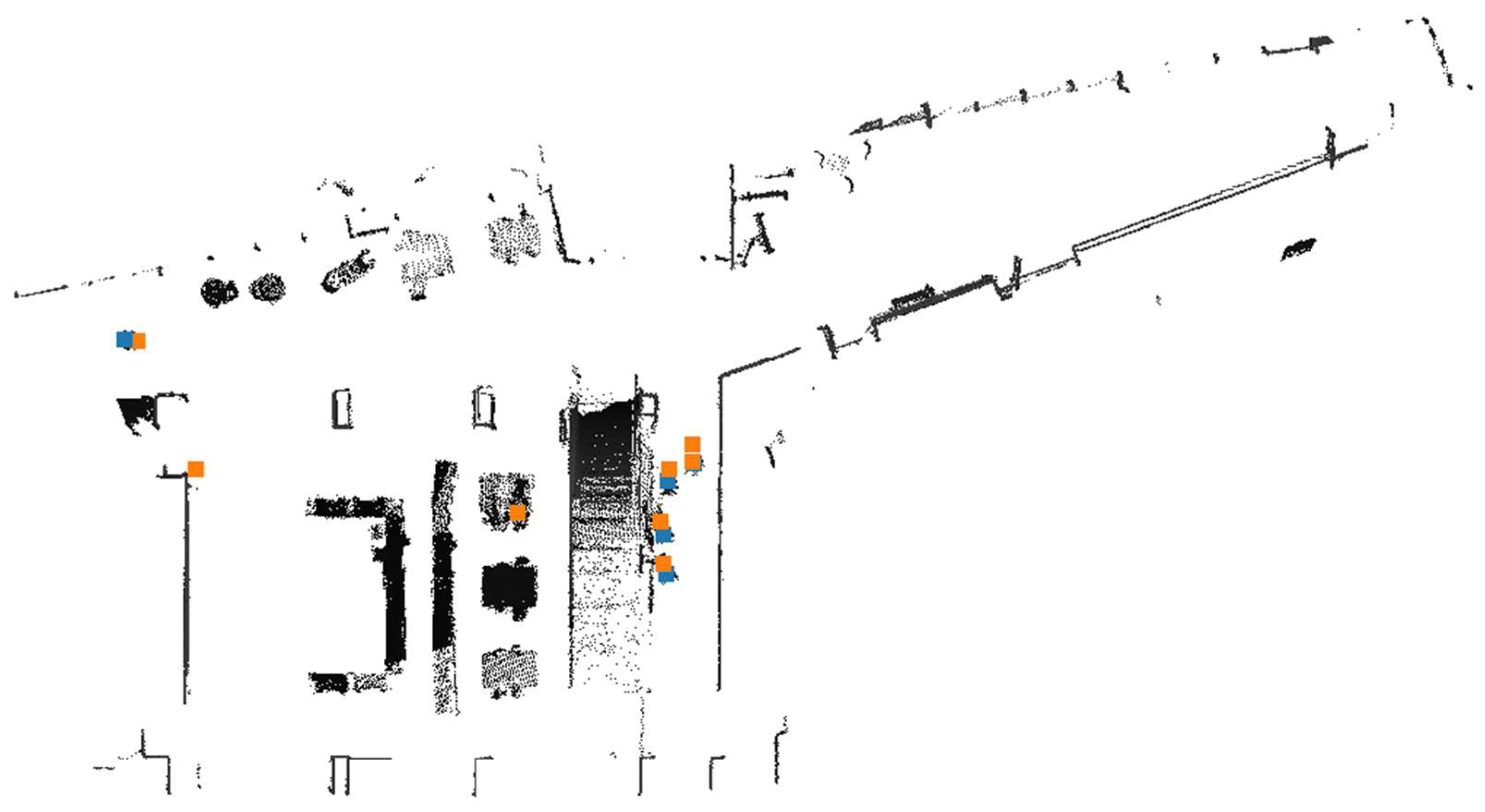

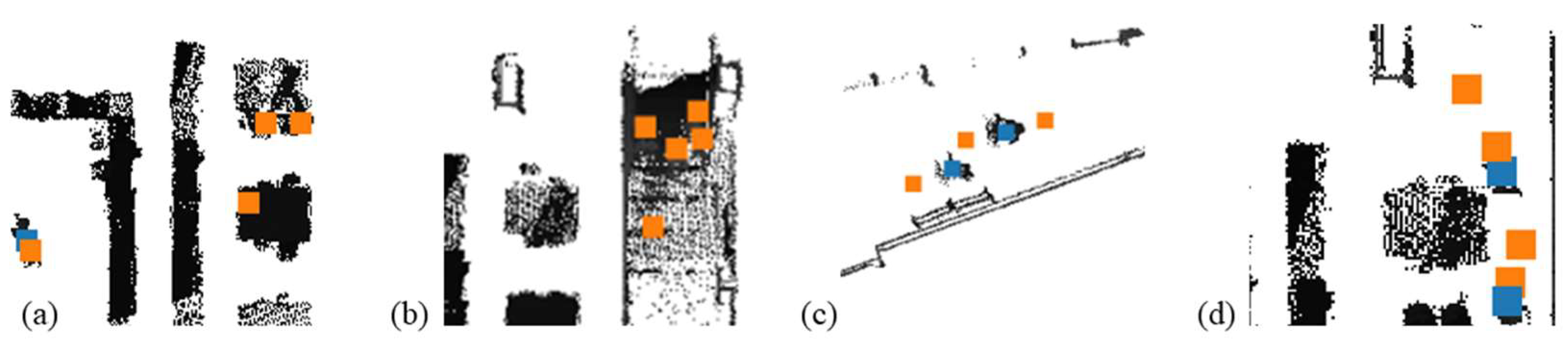

3.1.1. Deep Learning

3.1.2. OpenCV

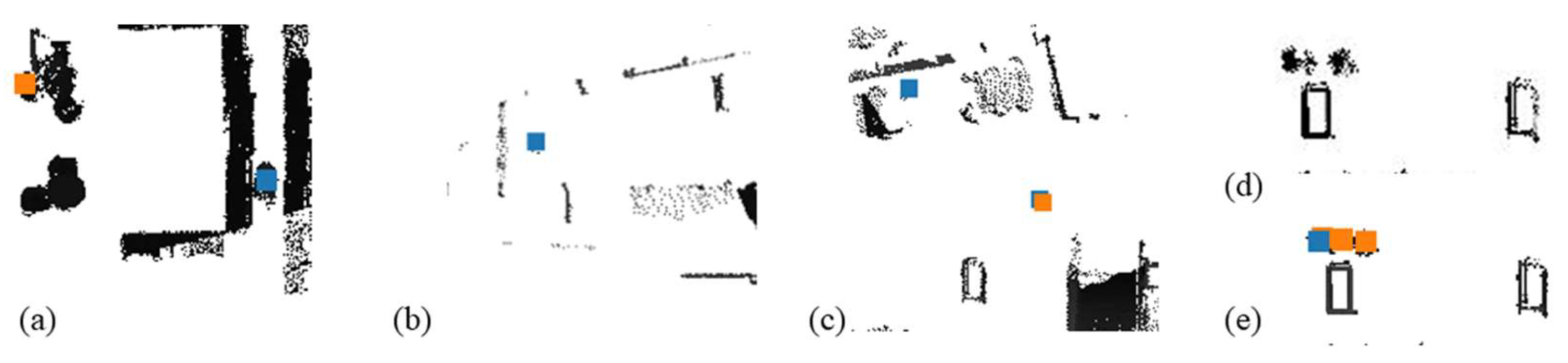

3.1.3. Blickfeld’s Percept

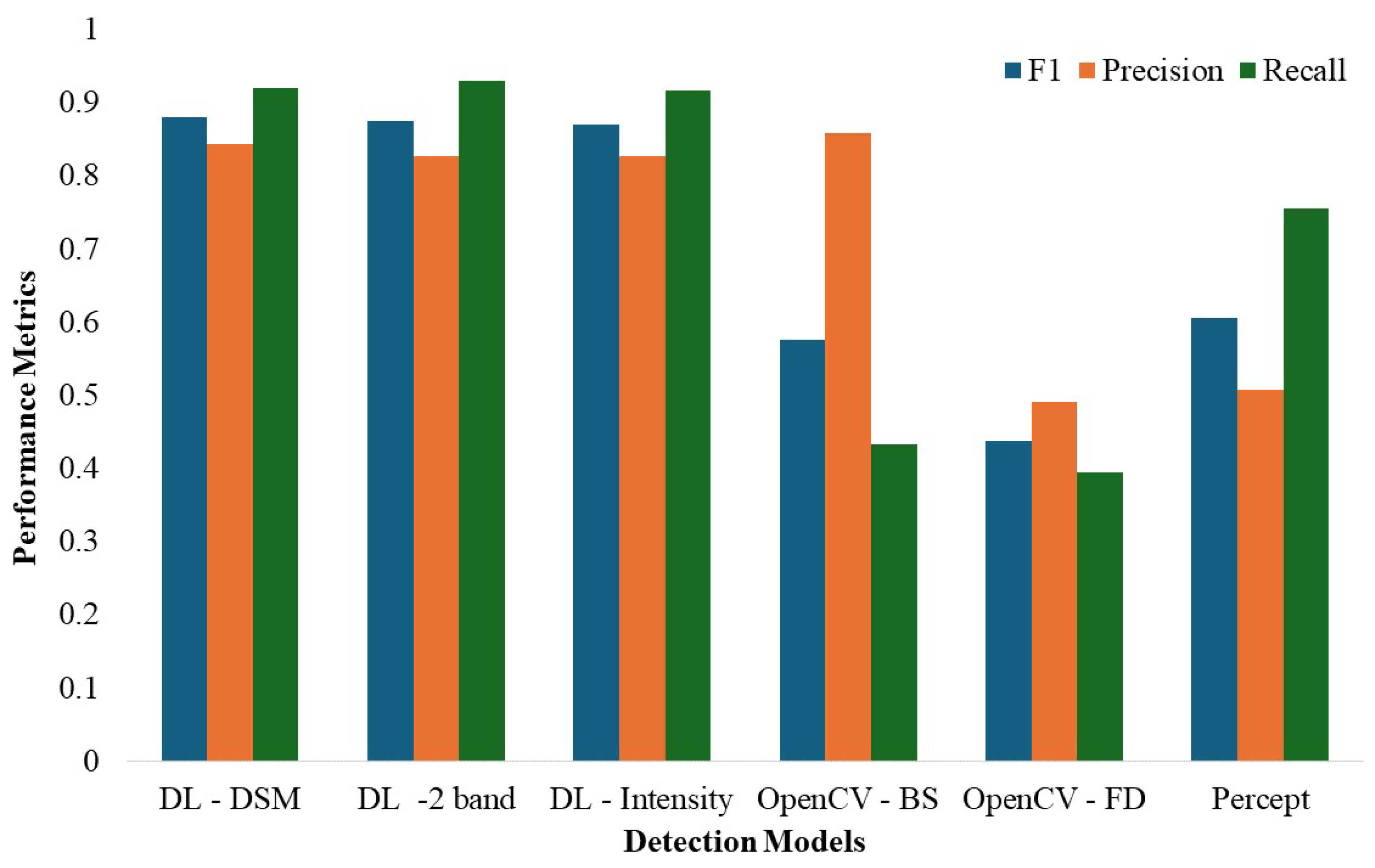

3.2. Comparison of Detection Techniques

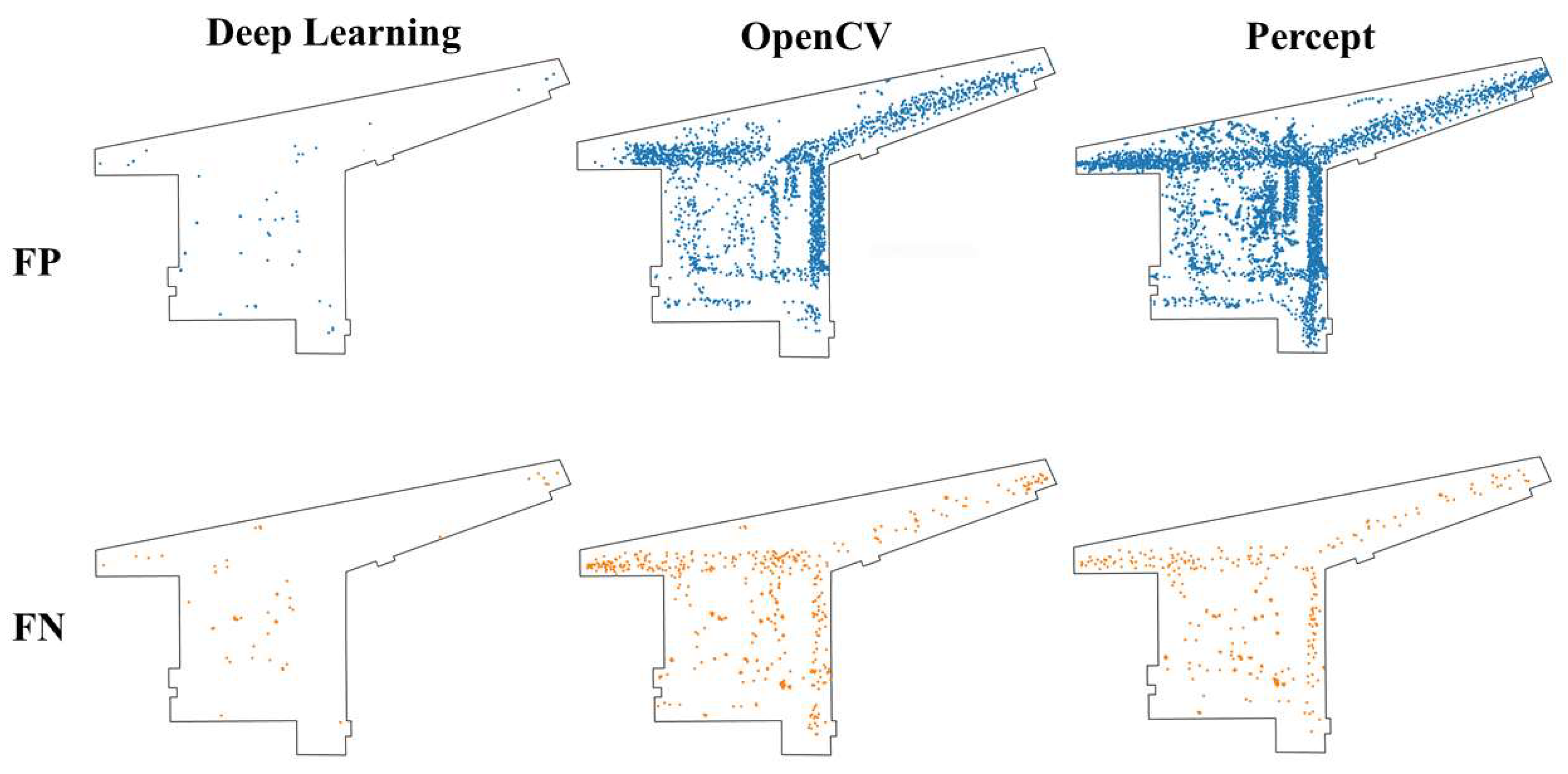

3.3. Spatial Distribution of False Positives and False Negatives

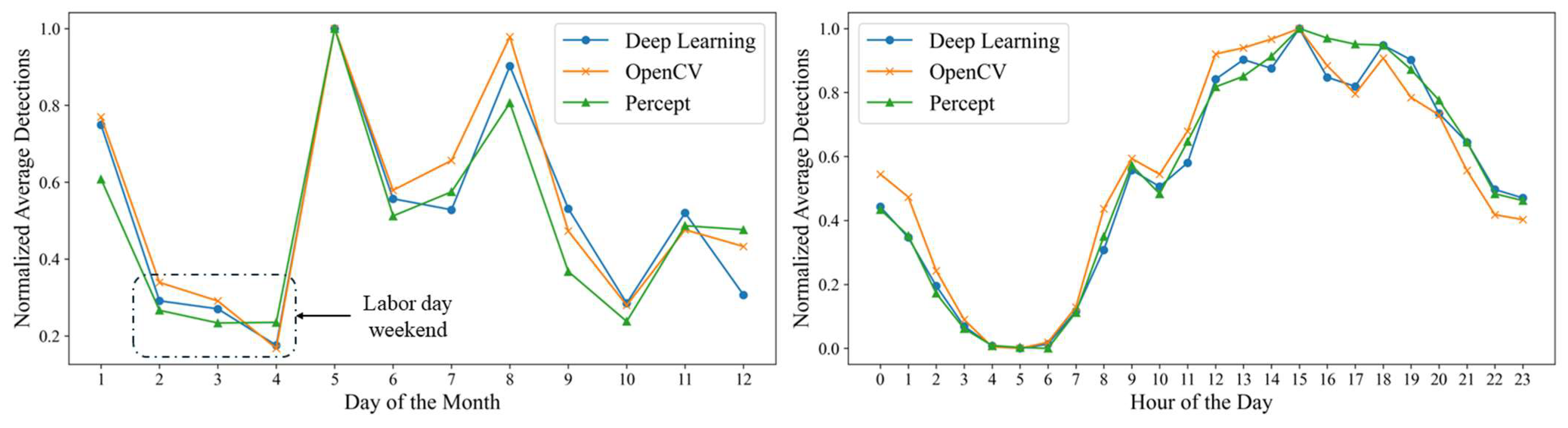

3.4. Aggregate Movement Analysis and Temporal Patterns

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Metric | Deep Learning (DSM) | Deep Learning (Intensity and DSM Combined) | Deep Learning (Intensity) | OpenCV (Background Subtraction) | OpenCV (Frame Differencing) | Blickfeld’s Percept |
|---|---|---|---|---|---|---|
| F1 | 0.879 | 0.875 | 0.869 | 0.575 | 0.438 | 0.606 |
| Precision | 0.843 | 0.826 | 0.827 | 0.858 | 0.491 | 0.507 |
| Recall | 0.919 | 0.930 | 0.916 | 0.433 | 0.395 | 0.754 |
| Sum (TP) | 1061 (91.9%) | 1074 (93.0%) | 1058 (91.6%) | 500 (43.3%) | 456 (39.5%) | 699 (60.5%) |
| Sum (FP) | 197 (17.1%) | 226 (19.6%) | 221 (19.1%) | 83 (7.2%) | 472 (40.9%) | 680 (58.9%) |
| Sum (FN) | 94 (8.1%) | 81 (7.0%) | 97 (8.4%) | 655 (56.7%) | 699 (60.5%) | 228 (19.7%) |
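The precision, recall, and F1 rows can be recomputed from the TP, FP, and FN sums using the standard definitions. The sketch below is illustrative only and is not the authors' evaluation code; the names `Counts`, `COUNTS`, `precision`, `recall`, and `f1` are hypothetical, and the counts are copied from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Detection counts for one method (hypothetical helper type)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives


def precision(c: Counts) -> float:
    """Share of detections that were correct: TP / (TP + FP)."""
    total = c.tp + c.fp
    return c.tp / total if total else 0.0


def recall(c: Counts) -> float:
    """Share of ground-truth instances that were detected: TP / (TP + FN)."""
    total = c.tp + c.fn
    return c.tp / total if total else 0.0


def f1(c: Counts) -> float:
    """Harmonic mean of precision and recall."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Sum (TP), Sum (FP), Sum (FN) copied from the table above.
COUNTS = {
    "Deep Learning (DSM)": Counts(1061, 197, 94),
    "Deep Learning (Intensity and DSM Combined)": Counts(1074, 226, 81),
    "Deep Learning (Intensity)": Counts(1058, 221, 97),
    "OpenCV (Background Subtraction)": Counts(500, 83, 655),
    "OpenCV (Frame Differencing)": Counts(456, 472, 699),
    "Blickfeld's Percept": Counts(699, 680, 228),
}

if __name__ == "__main__":
    for name, c in COUNTS.items():
        print(f"{name}: P={precision(c):.3f} R={recall(c):.3f} F1={f1(c):.3f}")
```

Rounded to three decimals, these formulas reproduce the reported precision, recall, and F1 values for all six columns.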

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Karki, S.; Pingel, T.J.; Baird, T.D.; Flack, A.; Ogle, T. Enhancing Digital Twins with Human Movement Data: A Comparative Study of Lidar-Based Tracking Methods. Remote Sens. 2024, 16, 3453. https://doi.org/10.3390/rs16183453
