Abstract

The European Space Agency’s Sentinel-1 constellation provides timely and freely available dual-polarized C-band Synthetic Aperture Radar (SAR) imagery. The launch of these and other SAR sensors has boosted the field of SAR-based flood mapping. However, flood mapping in vegetated areas remains a topic under investigation, as backscatter is the result of a complex mixture of backscattering mechanisms and strongly depends on the wave and vegetation characteristics. In this paper, we present an unsupervised object-based clustering framework capable of mapping flooding in the presence and absence of flooded vegetation based on freely and globally available data only. Based on a SAR image pair, the region of interest is segmented into objects, which are converted to a SAR-optical feature space and clustered using K-means. These clusters are then classified based on automatically determined thresholds, and the resulting classification is refined by means of several region growing post-processing steps. The final outcome discriminates between dry land, permanent water, open flooding, and flooded vegetation. Forested areas, which might hide flooding, are indicated as well. The framework is presented based on four case studies, of which two contain flooded vegetation. For the optimal parameter combination, three-class F1 scores between 0.76 and 0.91 are obtained depending on the case, and the pixel- and object-based thresholding benchmarks are outperformed. Furthermore, this framework allows an easy integration of additional data sources when these become available.

1. Introduction

Floods have been, and continue to be, the most frequently occurring of all natural disasters, causing substantial human and economic losses [1,2]. Moreover, their frequency, intensity, and impacts are expected to further increase due to climate change [3]. Insights into the occurrence and dynamics of floods are thus of paramount importance, as they contribute to emergency relief, damage assessment, and flood forecast improvement [4,5,6]. Spaceborne satellites have evolved into the preferred source of flood observations due to their synoptic view and near real-time availability. In contrast to optical sensors, Synthetic Aperture Radar (SAR) sensors allow for observations during both day and night as well as under cloudy conditions. Furthermore, water surfaces are generally clearly distinguishable from the surrounding land due to their smooth character. Indeed, SAR sensors send out microwaves to the Earth’s surface and measure the returned signal or backscatter, which depends on the roughness, structure and dielectric properties of the surface as well as on the properties of the incoming wave [7]. Smooth surfaces act as specular scatterers and typically appear dark on SAR imagery, while the surrounding rougher surfaces act as surface scatterers. Vegetation leads to predominantly volume scattering, and double-bounce scattering typically occurs in the presence of stemmy vegetation or artificial structures [8].

Throughout the past decade, several SAR sensors with varying characteristics have been put in space [9]. Especially the launch of the European Space Agency’s (ESA) Sentinel-1 constellation, providing freely available dual-polarized C-band imagery, has boosted the development of SAR-based flood mapping algorithms [10]. These are typically pixel-based and make use of thresholds to separate the dark and homogeneous flood patches from their brighter surroundings, based on a single image or image pair. Examples include automated single-scene thresholding [11,12], change detection [13,14,15], and texture-based methods [16,17]. Recent advancements consider longer time series [18,19] and fuse imagery from different sensors [20,21]. Several studies also make use of supervised machine learning methods like random forest [19,22]. A major drawback of the latter is the lack of transferability due to the absence of generalized training data, though initiatives like Sen1Floods11 [23] could overcome this barrier. However, automated flood mapping algorithms mostly focus on the retrieval of open water surfaces only. Despite their humanitarian and economic importance, floods in urban and vegetated areas remain particularly challenging to map [24]. In urban areas, double-bounce backscatter is the predominant backscattering mechanism. In case of flooding, this mechanism is enhanced and an increased intensity is expected [24]. While Mason et al. [25] suggested the use of a SAR simulator combined with a detailed digital surface model for urban flood mapping, Chini et al. [26] and Li et al. [27] obtained promising results using InSAR coherence information.

In vegetated areas, the resulting backscatter often originates from a mixture of mechanisms and strongly depends on both the incoming microwave and the vegetation characteristics. Polarization greatly influences the resulting backscatter, as the sensitivity to a specific backscattering mechanism strongly varies across polarizations. Therefore, multi-polarized imagery and deduced polarimetric parameters provide substantive information for flood mapping in vegetated and wetland areas [28,29]. Besides the polarization, the signal’s wavelength is one of the most important wave properties since it determines its penetration capacity and the size of the objects with which it interacts [8]. When sufficient penetration occurs, flooding under vegetation is expected to lead to an increased backscatter due to enhanced double-bounce effects. Based on this reasoning, L-band radar has successfully been used to map flooding under dense forest canopies [30] and monitor wetlands [31,32,33], but promising results were also obtained with C- and X-band radar for forests under leaf-off conditions [34,35]. However, especially for the latter two, the visibility and separability of flooded vegetation (FV) remain a topic under investigation.

Several studies have reported observed backscatter changes due to flooding beneath vegetation. With C-band, Hess and Melack [36] observed maximum backscatter increases of 2.3 and 5.8 dB in HV and HH polarization respectively for forest stands. Lang et al. [37] reported similar values, ranging from 1 to 5 dB, for different forest types in C-HH depending on the incidence angle. They furthermore observed an increasing backscatter difference with decreasing incidence angles, in accordance with previous research, except for very sharp incidence angles (23.5°) at which the detectability dropped sharply [37,38,39]. Long et al. [14] successfully mapped flooded marshland using a threshold of 7.7 dB on a C-HH difference image, while Tsyganskaya et al. [19] observed a backscatter increase of about 2 dB for an example FV segment with C-VV and Refice et al. [40] reported a backscatter increase of almost 5 dB in C-VV for a cluster comprising mainly flooded herbaceous vegetation. An overview of relevant findings for C- as well as X- and L-band is given by Martinis and Rieke [41]. With respect to separability, Voormansik et al. [35] reported a clear separability between flooded and non-flooded forest on C-HH imagery with an incidence angle of 24°, for leaf-off deciduous as well as coniferous and mixed forest types. On the other hand, Martinis and Rieke [41] observed a low separability between flooded and non-flooded deciduous dense forest on C-HH imagery with an incidence angle of 30–33°, significantly lower than for L- and X-band. Accordingly, Brisco et al. [42] reported significant confusion between the flooded vegetation and upland classes for C-HH, C-VV, and C-HV. However, when considering the HV/HH polarization ratio, the separability between these classes increased to excellent. Furthermore, Tsyganskaya et al. [19] successfully made use of a polarization ratio to identify FV.

A broad overview of FV classification approaches, within the context of both flood and wetland monitoring, is provided by Tsyganskaya et al. [29]. The majority of these are supervised, which limits their transferability and applicability for near real-time flood monitoring. Furthermore, most of them consider single scene backscatter intensity only. While some have demonstrated the use of interferometric coherence, both for X- [43,44] and L-band [45], the application of this information source remains uncommon. Moreover, the use of polarimetric parameters remains rather limited, despite promising results. For example, Brisco et al. [46] demonstrated the inclusion of polarimetric decomposition bands in a curvelet-based change detection framework. Plank et al. [47] combined polarimetric parameters of both quad-polarized L-band ALOS-2/PALSAR-2 and dual-polarized Sentinel-1 C-band imagery in a Wishart classification framework. This framework is unsupervised but requires the manual labeling of meaningful classes. Mainly the limited availability of quad-polarized SAR imagery seems to hamper the application of polarimetry-based approaches. Based on Sentinel-1 intensity data only, Tsyganskaya et al. [19] presented a supervised time series-based approach. Depending on the case, the normalized VV intensity or VV/VH ratio were found to be the most important features [19,48]. In a recent proof-of-concept, Refice et al. [40] have successfully combined multi-temporal C- and L-band imagery to monitor flooding in a remote vegetated area, by using a clustering-based approach. Olthof and Tolszczuk-Leclerc [49] developed a supervised machine learning approach based on dual-pol RADARSAT-2 imagery, using automatically extracted training data based on a Landsat inundation frequency product to map open water and using region growing to include flooded vegetation. Based on X-band data, Pierdicca et al. [50] presented an object-based region-growing approach capable of detecting flooded narrow-leaf crops, while Grimaldi et al. [51] applied a single scene based approach making use of probability binning and historic flood information. However, despite these and other considerable contributions, a transferable, unsupervised framework for flood mapping in vegetated areas based on freely available data only is still lacking.

In this study, we present an unsupervised, object-based clustering framework for flood mapping. This framework makes use of freely and globally available data only, is capable of easily fusing different data sources and does not require training data. Based on dual-polarized SAR and optical data, a classification into four classes, i.e., dry land, permanent water, open flood, and flooded vegetation, is made. Moreover, forested areas possibly hiding flooding are indicated. In the remainder of this paper, the full processing chain is described in detail. Finally, results for four case studies are described and discussed.

2. Materials

2.1. Study Cases

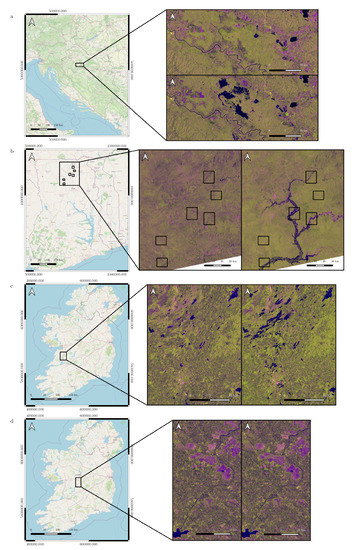

In order to illustrate the accuracy and robustness of the presented methodology, results are presented based on four study cases with varying characteristics, both in terms of flood type and vegetation cover: the Sava River in Croatia, the White Volta River in Ghana, and the Rivers Fergus and Shannon in Ireland. Of these, only the former two contain flooded vegetation areas. The location of these areas is shown in Figure 1, while descriptions are given below.

Figure 1.

Overview of the study cases and Synthetic Aperture Radar (SAR) data used in this study. The imagery for the Sava (a), Volta (b), Fergus (c), and Shannon (d) regions of interest (ROIs) is displayed in EPSG:32633, EPSG:32630, EPSG:32629, and EPSG:32629, respectively. The SAR reference and flood images are shown as (VV,VH,VV/VH) composites, with visualization limits (−20, 0), (−25, −5) and (0, 20) dB.

The Lonjsko Polje Nature Reserve is a large wetland area located along the Sava River in Croatia. The area is used as a retention basin to protect the surroundings from flooding in case of high discharge. Therefore, it experiences regular and long-lasting floods. These occur especially in late spring, due to snowmelt, but also in autumn and winter, due to intensive rain. In 2019, the area was flooded between early May and late June. Reference data were constructed based on a cloud-free Sentinel-2 image capturing the flood on 7 June 2019, 0.5 m resolution aerial imagery covering the 2016 winter floods, and hydraulic knowledge (water level time series and levee location). Forest is the dominating vegetation type, next to some wet grasslands and agricultural fields along the river and surrounding the rural settlements. A considerable fraction of the flooding is situated in the lowland forests. Given the canopy density during summer, this part of the flooding is expected to be invisible on C-band SAR imagery.

The White Volta is part of the main river system in Ghana. It emerges in Burkina Faso and discharges into Lake Volta. In August 2018, communities in Northern and Upper East regions of Ghana were affected by heavy and continuous seasonal rainfall. Additionally, excess water from the Bagre Dam, located in Burkina Faso, was spilled from the 31st of August until the 10th of September. The combination of these two events caused unprecedented flooding in many local communities along the White Volta and continued throughout September [52]. Along the river banks, mainly shrubs and herbaceous vegetation occur. Several patches of open forest can be found too. Furthermore, the region of interest (ROI) also comprises several settlements and a considerable amount of agriculture. Reference data were constructed based on a Sentinel-2 image acquired on September 19. As this image is not fully cloud-free, six cloud-free subsets were selected. These are also indicated on Figure 1. Several patches of flooded vegetation were identified.

In the winter of 2015–2016, the passage of Storm Desmond led to exceptionally high amounts of rainfall in the UK and Ireland. In Ireland, this led to groundwater flooding that lasted for several months [53]. The Copernicus Emergency Management Service (EMS) was activated and the flooding was mapped for several areas [54]. Two areas were considered in this study, i.e., the surroundings of Ennis and Corofin along the River Fergus, and the surroundings of Ballinasloe and Portumna along the River Shannon. The former was delineated by the EMS based on a COSMO-SkyMed image acquired on 16 December 2015, while the latter was delineated based on a COSMO-SkyMed image of 9 January 2016. These mappings were used as reference data. The ROI along the River Fergus comprises the city of Ennis, agricultural zones and several lakes. The vegetation is mainly herbaceous but several forest patches can be found too. The ROI along the River Shannon is less forested, although several smaller patches occur along the river. Moreover, here, mainly herbaceous vegetation occurs alongside agriculture and several peat bogs. Based on the reference data, no flooded vegetation is expected.

2.2. Data

The classification framework makes use of globally available, open source data sets only. These include Sentinel-1 imagery, Sentinel-2 imagery or the Copernicus Global Land Service (CGLS) land cover product [55], and the Shuttle Radar Topography Mission (SRTM) Digital Elevation Model (DEM). An overview of the acquisition dates or time ranges of the data sources is provided in Table 1.

Table 1.

Overview of dates/date ranges for the used Sentinel-1 (S-1) and Sentinel-2 (S-2) imagery per study area.

For each study case, a Sentinel-1 image pair, i.e., an image acquired before and one during the flood, was selected. Sentinel-1 provides C-band SAR imagery in two polarizations, i.e., VV and VH, with a spatial resolution of 10 m and a repeat frequency of six to twelve days depending on the location. In this study, the Level-1 Ground Range Detected product of Interferometric Wide swath data was used. In order to avoid distortions due to differences in viewing angle, images of the same relative orbit were selected. This selection was done manually in the present study, but could be automated too [56]. For each image, the precise orbit file was applied, thermal and border noise were removed, and a radiometric calibration to sigma0 and terrain correction were applied. Next, the images were co-registered and speckle filtered using the Lee Sigma filter (7 × 7 window). These preprocessing steps were performed with ESA’s SNAP software.

In order to include information on vegetation cover, two data sources are compared. First, Sentinel-2 imagery can provide insights on the vegetation state around the time of flooding. However, clouds often hamper optical observations, especially in rainy periods. If no cloud-free image can be found within the 3-month time range before the start of the flood, a cloud-free composite (CFC) over this time range is calculated. This CFC is obtained based on the approach of Simonetti et al. [57], by calculating the median of the image stack after cloud masking based on a classification tree. Within this study, the Level-2A product, i.e., 10 m resolution Bottom Of Atmosphere (BOA) reflectance, is used. Second, land cover (LC) products can provide a more general view on the vegetation state. They are often derived from longer image time series and the data quality thus does not depend on the presence of clouds. However, these products often have a coarser resolution and, in case of strong seasonality, the current state of the vegetation cannot be deduced. Due to its global availability, the CGLS land cover product was selected [55]. It has a resolution of 100 m and provides a discrete land cover map comprising 23 classes as well as class fractions for the ten base classes (bare ground and sparse vegetation, moss and lichens, herbaceous vegetation, shrubland, cropland, forest, built-up, snow and ice, permanent water, and seasonal water).
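The median compositing step can be illustrated with a minimal sketch. It assumes that the cloud masks have already been produced (the classification tree of [57] is not reproduced here); the function name `cloud_free_composite` and the toy reflectance values are hypothetical.

```python
from statistics import median


def cloud_free_composite(stack, cloud_masks):
    """Per-pixel median over a time stack of one band, skipping cloudy samples.

    stack: list of 2-D images (lists of rows), one per acquisition date.
    cloud_masks: same layout, True where a pixel is flagged as cloud.
    Pixels that are cloudy on every date are returned as None.
    """
    n_rows, n_cols = len(stack[0]), len(stack[0][0])
    composite = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            clear = [img[r][c] for img, mask in zip(stack, cloud_masks)
                     if not mask[r][c]]
            row.append(median(clear) if clear else None)
        composite.append(row)
    return composite


# Toy example: three dates, one row of two pixels (made-up reflectances).
stack = [[[0.10, 0.90]], [[0.12, 0.30]], [[0.80, 0.32]]]
masks = [[[False, True]], [[False, False]], [[True, False]]]
cfc = cloud_free_composite(stack, masks)
```

In this toy stack the bright values 0.90 and 0.80 are masked as cloud, so each pixel's composite value is the median of its two remaining clear observations.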

Finally, a DEM over each ROI is considered. The hydrologically conditioned elevation, derived from the SRTM and included in the HydroSHEDS product [58], is used in this study.

3. Methods

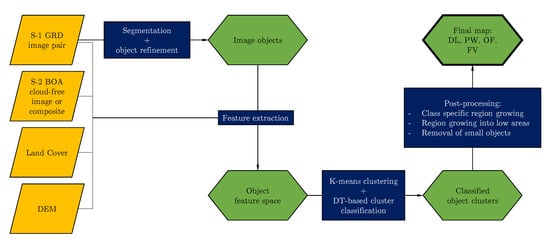

The unsupervised clustering framework is summarized in Figure 2 and comprises several steps, i.e., segmentation of the image into objects, extraction of the object feature space (FS), K-means clustering and classification, and post-processing refinement. In this section, each of these steps is described in detail.

Figure 2.

Overview of the methodological framework, resulting in a multi-class classification discriminating dry land (DL), permanent water (PW), open flood (OF), and flooded vegetation (FV).

3.1. Image Segmentation Using the Quickshift Algorithm

First, image pixels are grouped into object preliminaries using the quickshift algorithm. Quickshift is a further elaboration of mean shift, a density estimation based algorithm that finds clusters by assigning data points to nearby density modes [59]. It is based on the assumption that the feature space represents an empirical probability density function of the represented parameter, and clusters (or segments) are thus represented by dense regions. Whereas mean shift makes use of gradient descent for mode seeking, quickshift uses a kernel-based approximation. First, local density is estimated using a Gaussian kernel, with a size defined by the kernel size parameter. Given a kernel K, the density $\rho$ of a pixel p is calculated as follows,

$$\rho(p) = \sum_{(r,c) \in W_p} K\big(d_p(r,c)\big), \qquad d_p(r,c)^2 = \lVert I(r,c) - I(r_p,c_p) \rVert^2 + (r - r_p)^2 + (c - c_p)^2$$

where I is the image array; r and c are the row and column indices, respectively, running over the kernel window $W_p$; and $(r_p, c_p)$ is the center pixel. Both spatial and image color distance are thus considered for the density calculation. Next, each pixel is assigned to its nearest neighbor with a higher density. The maximum distance parameter controls the maximal size of the resulting objects. All pixels that are more than this maximum distance away from the nearest pixel with a higher density are assigned as a cluster (or segment) seed.

The quickshift algorithm is applied on a feature space comprising four bands, i.e., the VV and VH bands of the SAR image pair. The two algorithm parameters, kernel size and maximum distance, were determined empirically. In order to capture small-scale features, these parameters are set to a 7 × 7 window and a value of 4, respectively.
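The two quickshift stages described above, density estimation and linking to the nearest higher-density pixel, can be sketched in plain Python. This is an illustrative toy version, not the implementation used in the study. The function name `quickshift_labels` is hypothetical, and the mapping of a 7 × 7 window to `kernel_size=1.0` assumes the convention that the window half-width equals `ceil(3 * kernel_size)`.

```python
import math


def quickshift_labels(image, kernel_size=1.0, max_dist=4.0):
    """Toy quickshift on a small image given as rows of per-pixel band tuples.

    Returns, for every pixel, the (row, col) of the seed it is linked to.
    """
    n_rows, n_cols = len(image), len(image[0])
    half = math.ceil(3 * kernel_size)  # assumed window half-width (7 x 7 for 1.0)

    def dist2(r0, c0, r1, c1):
        # Squared joint distance: spatial offset plus band ("color") difference.
        d = (r0 - r1) ** 2 + (c0 - c1) ** 2
        return d + sum((a - b) ** 2 for a, b in zip(image[r0][c0], image[r1][c1]))

    def window(r, c):
        for rr in range(max(0, r - half), min(n_rows, r + half + 1)):
            for cc in range(max(0, c - half), min(n_cols, c + half + 1)):
                yield rr, cc

    # Stage 1: Gaussian kernel density estimate per pixel.
    density = [[sum(math.exp(-dist2(r, c, rr, cc) / (2 * kernel_size ** 2))
                    for rr, cc in window(r, c))
                for c in range(n_cols)] for r in range(n_rows)]

    # Stage 2: link each pixel to its nearest higher-density pixel within
    # max_dist; pixels without such a neighbor remain their own parent (seeds).
    parent = {}
    for r in range(n_rows):
        for c in range(n_cols):
            best, best_d = (r, c), max_dist ** 2
            for rr, cc in window(r, c):
                d = dist2(r, c, rr, cc)
                if density[rr][cc] > density[r][c] and d < best_d:
                    best, best_d = (rr, cc), d
            parent[(r, c)] = best

    def root(p):
        while parent[p] != p:
            p = parent[p]
        return p

    return [[root((r, c)) for c in range(n_cols)] for r in range(n_rows)]


# One row of six pixels with four bands each (made-up dB values):
# three bright "land" pixels followed by three dark "water" pixels.
img = [[(-8.0, -15.0, -8.0, -15.0)] * 3 + [(-20.0, -27.0, -20.0, -27.0)] * 3]
labels = quickshift_labels(img)
```

Because the band difference between the two halves far exceeds the maximum distance, no link crosses the boundary and the toy row splits into two segments, each seeded at its central pixel.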

Next, the resulting object preliminaries are refined iteratively. For each object, the difference between its mean and that of each of its neighbors is calculated for the four input bands. If this difference is below a precalculated threshold for all four bands, the objects are merged. For an object i, a neighbor j, and a band b, the threshold is determined as the minimum of the standard deviations of the considered object and neighbor for that specific band:

$$T_b = \min\left(\sigma_{b,i}, \sigma_{b,j}\right)$$

Furthermore, in order to prevent the objects from becoming too irregularly shaped, a shape constraint is added. Objects are merged only if the ratio of the perimeter P to the square root of the area A is below 12. This threshold was set on a trial-and-error basis. The object refinement constraints can thus be summarized as follows.

$$\left|\mu_{b,i} - \mu_{b,j}\right| < T_b \;\; \forall b \qquad \text{and} \qquad \frac{P}{\sqrt{A}} < 12$$
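A minimal sketch of the merge test for one pair of neighboring objects is given below. The function name `should_merge` and the dictionary layout are hypothetical, and the sketch assumes that the shape constraint is evaluated on the perimeter and area of the merged object, which the text does not state explicitly.

```python
import math


def should_merge(obj, neighbor, merged_perimeter, merged_area, max_shape=12.0):
    """Return True if two neighboring objects satisfy both merge constraints.

    obj, neighbor: dicts with per-band lists under the keys 'mean' and 'std'
    (four bands: VV and VH of the reference and flood image).
    merged_perimeter, merged_area: geometry of the candidate merged object
    (assumption: the shape constraint applies to the merged object).
    """
    for mu_a, mu_b, sd_a, sd_b in zip(obj["mean"], neighbor["mean"],
                                      obj["std"], neighbor["std"]):
        threshold = min(sd_a, sd_b)          # band-specific threshold
        if abs(mu_a - mu_b) >= threshold:    # must hold for all four bands
            return False
    return merged_perimeter / math.sqrt(merged_area) < max_shape


# Made-up object statistics in dB.
a = {"mean": [-10.0, -17.0, -10.5, -17.2], "std": [1.0, 1.2, 0.9, 1.1]}
b = {"mean": [-10.4, -17.5, -10.9, -17.8], "std": [0.8, 1.0, 1.0, 0.9]}
compact = should_merge(a, b, merged_perimeter=60.0, merged_area=200.0)
ragged = should_merge(a, b, merged_perimeter=200.0, merged_area=200.0)
```

For reference, a square has a perimeter-to-root-area ratio of 4, so a limit of 12 still admits fairly elongated objects while rejecting strongly ragged ones.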

3.2. Object-Based Clustering

In order to detect the intrinsic structure of the feature space, object-based clustering is applied. In a first phase, several clustering algorithms, including K-means, spectral clustering, and quickshift clustering, were tested. However, given its computational speed, robustness, and good results, the K-means algorithm was retained.

K-means is an iterative clustering algorithm that aims to minimize the within-cluster sum-of-squares [60]. For a predefined number of clusters k, the cluster centroids are first randomly set. Each sample is then assigned to the nearest centroid. Lastly, updated cluster centroids are obtained by taking the mean of the samples in that cluster. The latter two steps are repeated until a (local) minimum of the within-cluster sum-of-squares is found. In order to circumvent the issue of local optima, the procedure is repeated for different centroid initializations. K-means can result in a sub-optimal cluster partitioning when the clusters are of differing size or density, or have non-globular shapes. In unbalanced datasets, K-means tends to split up the larger clusters. This issue can be overcome by over-clustering the dataset and combining the subclusters of larger clusters in a later phase. In order to determine the optimal value of k and illustrate the effect of a varying k, all values between 2 and 15 were tested and compared.
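The iteration described above (random initialization, assignment, centroid update, and repetition over several initializations) can be sketched as follows. This is a generic, pure-Python illustration of Lloyd's algorithm with restarts, not the implementation used in the study; the function name `kmeans` and its parameters are hypothetical.

```python
import random


def kmeans(samples, k, n_init=10, max_iter=100, seed=0):
    """Lloyd's algorithm with several random initializations.

    samples: list of equal-length tuples. Returns (centroids, labels, inertia)
    of the run with the lowest within-cluster sum-of-squares.
    """
    rng = random.Random(seed)

    def sqdist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    best = (None, None, float("inf"))
    for _ in range(n_init):
        centroids = rng.sample(samples, k)   # random initial centroids
        labels = None
        for _ in range(max_iter):
            # Assignment step: nearest centroid for every sample.
            new_labels = [min(range(k), key=lambda j: sqdist(s, centroids[j]))
                          for s in samples]
            if new_labels == labels:         # converged to a (local) minimum
                break
            labels = new_labels
            # Update step: centroid becomes the mean of its members.
            for j in range(k):
                members = [s for s, lab in zip(samples, labels) if lab == j]
                if members:
                    centroids[j] = tuple(sum(col) / len(members)
                                         for col in zip(*members))
        inertia = sum(sqdist(s, centroids[lab]) for s, lab in zip(samples, labels))
        if inertia < best[2]:
            best = (list(centroids), labels, inertia)
    return best


# Two well-separated toy groups.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids, labels, inertia = kmeans(points, k=2)
```

Keeping the run with the lowest within-cluster sum-of-squares is what mitigates the sensitivity to the initial centroids.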

In order to include information on both the flooding and the vegetation state, K-means is run on a feature space consisting of both SAR and optical or land cover data. Several feature spaces are tested. With respect to the SAR features, the VV and VH bands of the reference and flood image can be complemented with the ratio features, where ratio stands for the ratio in linear scale or difference in log scale (dB) between the VV and VH polarization, and/or the increase features, i.e., the difference in VV, VH, or ratio between the reference and flood image. The vegetation state can be represented by the discrete land cover class, the nine land cover fractions or a selection of three or ten optical bands. The combination of these features results in 15 feature spaces, i.e., SAR, SARlc, SARlcfrac, SARo3, SARopt, SARincF, SARincFlc, SARincFlcfrac, SARincFo3, SARincFopt, SARwC, SARwClc, SARwClcfrac, SARwCo3, and SARwCopt. These feature spaces consist of different SAR and optical subspaces, which are summarized in Table 2. For example, the feature space SARwCopt combines the bands of the subspaces SAR, wC, and opt. The feature space is obtained by calculating object means for continuous bands and modes for discrete bands. The object standard deviations are also calculated for all bands. Each feature is scaled to zero mean and unit variance.
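The derived SAR features and the scaling step can be sketched as below, assuming that all inputs are object means in dB, so that the polarization ratio becomes a difference. The function names and the dictionary keys other than VVinc, VHinc, and Rinc are hypothetical.

```python
from statistics import mean, pstdev


def sar_features(vv_ref, vh_ref, vv_flood, vh_flood):
    """Ratio and increase features from object-mean backscatter in dB."""
    r_ref = vv_ref - vh_ref        # VV/VH ratio equals a difference in dB
    r_flood = vv_flood - vh_flood
    return {
        "R_ref": r_ref,
        "R_flood": r_flood,
        "VVinc": vv_flood - vv_ref,   # increase = flood minus reference
        "VHinc": vh_flood - vh_ref,
        "Rinc": r_flood - r_ref,
    }


def standardize(column):
    """Scale one feature column to zero mean and unit variance."""
    mu, sd = mean(column), pstdev(column)
    return [(x - mu) / sd if sd > 0 else 0.0 for x in column]


# Made-up object means in dB.
feats = sar_features(vv_ref=-10.0, vh_ref=-17.0, vv_flood=-6.0, vh_flood=-16.0)
z = standardize([1.0, 2.0, 3.0])
```

Standardizing each column prevents features with a large numeric range from dominating the Euclidean distances used by K-means.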

Table 2.

Overview of feature subspaces considered for object clustering.

3.3. Cluster Classification

The final classification aims to discriminate dry land (DL), permanent water (PW), open flooding (OF), and flooded vegetation (FV) if present. Given that K-means is not capable of correctly separating clusters of differing sizes, over-clustering is targeted and k is set to a value above 2. This implies that the resulting clusters still need to be classified into one of the above-mentioned classes. Clusters are classified based on their centroid. For the PW and OF classes, the VV and VH bands are considered and the thresholds are obtained by means of tiled pixel-based thresholding using the KI algorithm, as suggested by [12]. The PW and OF classes are defined as follows.

As mentioned before, flooding beneath vegetation is expected to increase backscatter intensity due to enhanced double-bounce backscattering. As VV is more sensitive to double-bounce, this increase is expected to be more pronounced in the VV band as compared to the VH band. Thus, the FV class is characterized by an increase in both VV and the VV/VH ratio, i.e., by high values of VVinc and Rinc. The thresholds TVVinc and TRinc are both set to a value of 3 dB, based on a calibration as well as reported values in literature [19,36,40]. The FV class is defined as

$$\mathrm{FV}: \quad VV_{inc} > T_{VV_{inc}} \;\wedge\; R_{inc} > T_{R_{inc}}$$
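A centroid-based classification rule along these lines can be sketched as follows. Only the FV rule (VVinc and Rinc both above 3) follows directly from the text. The PW and OF rules in this sketch are assumptions: PW is taken as water-like in both the reference and the flood image, OF as water-like in the flood image only. The threshold values of -15 and -22 dB are placeholders and not the KI-derived thresholds of the study.

```python
def classify_centroid(c, t_vv, t_vh, t_vvinc=3.0, t_rinc=3.0):
    """Assign a cluster centroid (dict of band means in dB) to a class."""
    dark_ref = c["VV_ref"] < t_vv and c["VH_ref"] < t_vh
    dark_flood = c["VV_flood"] < t_vv and c["VH_flood"] < t_vh
    if dark_ref and dark_flood:
        return "PW"   # assumed rule: water in both images
    if dark_flood:
        return "OF"   # assumed rule: water in the flood image only
    if c["VVinc"] > t_vvinc and c["Rinc"] > t_rinc:
        return "FV"   # increase in both VV and the VV/VH ratio
    return "DL"


# Hypothetical centroids; the increases match the reported SARwC-15 cluster.
fv_like = {"VV_ref": -9.0, "VH_ref": -16.0, "VV_flood": -5.9,
           "VH_flood": -17.0, "VVinc": 3.1, "Rinc": 4.1}
of_like = {"VV_ref": -9.0, "VH_ref": -16.0, "VV_flood": -19.0,
           "VH_flood": -25.0, "VVinc": -10.0, "Rinc": -3.0}
classes = [classify_centroid(c, t_vv=-15.0, t_vh=-22.0) for c in (fv_like, of_like)]
```

Since whole clusters rather than single objects are tested, an object that misses a threshold itself can still inherit the class of the cluster it belongs to.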

3.4. Post-Processing Refinement

The initial classification is further refined using contextual information. Several studies have underlined the added value of classifying pixels or objects not only based on their spectral properties, but also on their surroundings [16,47,61]. Context is integrated here by means of a region growing (RG) approach, which iteratively adds neighboring objects that satisfy a preset condition to the seed region. First, the PW, OF, and FV classes are refined. For PW and FV, the growing condition is the same as the classification rule (cf. Section 3.3). For OF, the object mean should be below the threshold in only one of the polarizations instead of the two. This way, objects that exhibit a slightly increased backscatter in one of both polarizations due to e.g., wind roughening are included too. Table 3 summarizes the growing conditions as well as the seed and source classes for PW, OF and FV. For FV for example, only DL objects adjacent to OF or FV objects are considered.
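The region growing idea can be sketched on an object adjacency graph. This is a generic illustration; the function name `region_grow`, the data layout, and the toy values are hypothetical, and the growing condition shown is the FV rule with both thresholds at 3.

```python
from collections import deque


def region_grow(labels, neighbors, seed_classes, source_classes, target, condition):
    """Iteratively relabel neighbors of the seed region that meet a condition.

    labels: dict object id -> class; neighbors: dict object id -> adjacent ids.
    Only objects currently in source_classes can be relabeled to target.
    """
    labels = dict(labels)  # work on a copy
    queue = deque(o for o, lab in labels.items() if lab in seed_classes)
    while queue:
        obj = queue.popleft()
        for nb in neighbors[obj]:
            if labels[nb] in source_classes and condition(nb):
                labels[nb] = target
                queue.append(nb)   # newly added objects can grow further
    return labels


# Toy chain of four objects: 0 - 1 - 2 - 3.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
initial = {0: "OF", 1: "DL", 2: "DL", 3: "DL"}
vv_inc = {0: -9.0, 1: 3.5, 2: 3.2, 3: 0.5}
r_inc = {0: -2.0, 1: 4.0, 2: 3.4, 3: 0.2}

grown = region_grow(
    initial, neighbors,
    seed_classes={"OF", "FV"}, source_classes={"DL"}, target="FV",
    condition=lambda o: vv_inc[o] > 3.0 and r_inc[o] > 3.0,
)
```

In the toy chain, objects 1 and 2 are added one after the other, while object 3 fails the condition and stays dry land.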

Table 3.

RG parameters for the PW, OF, and FV classes.

Next, low elevation areas adjacent to the flood are considered. Densely vegetated areas can be falsely classified as DL due to limited penetration, but can be included based on hydrologic considerations. Objects of which more than 50% of the neighbors are flooded and whose elevation is lower than the minimum elevation of the flooded neighbors are included in the OF class using an RG approach. Then, a minimal mapping unit (MMU) of 10 pixels (1000 m²) is applied. This parameter value was set empirically and is in line with reported values [47,62]. Finally, densely forested areas might hide flooding due to limited penetration. Therefore, objects whose land cover is closed forest are flagged as forested areas (FA).
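The elevation-based inclusion rule for a single object can be written as a short predicate. The function name `is_low_elevation_flood` is hypothetical, and treating OF and FV (but not PW) as "flooded" neighbors is an assumption of this sketch.

```python
def is_low_elevation_flood(obj, labels, neighbors, elevation,
                           flood_classes=("OF", "FV")):
    """True if more than 50% of the neighbors are flooded and the object
    lies below the lowest flooded neighbor."""
    nbs = neighbors[obj]
    flooded = [n for n in nbs if labels[n] in flood_classes]
    if not nbs or len(flooded) / len(nbs) <= 0.5:
        return False
    return elevation[obj] < min(elevation[n] for n in flooded)


# Toy neighborhood with made-up elevations in metres.
labels = {0: "DL", 1: "OF", 2: "OF", 3: "DL"}
neighbors = {0: [1, 2, 3]}
elevation = {0: 98.0, 1: 100.0, 2: 101.0, 3: 105.0}
include = is_low_elevation_flood(0, labels, neighbors, elevation)
```

Here two of the three neighbors are flooded and the object lies 2 m below the lowest of them, so it would be added to the OF class.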

3.5. Accuracy Assessment

The accuracy of the presented approach is assessed primarily by the F1 score, the harmonic mean of Precision and Recall. Similar to the Critical Success Index (CSI), this measure does not consider the correctly classified dry land pixels (true negatives in a binary classification), which cause an overestimation of the accuracy [63]. The F1 score is preferred here given its higher popularity for classification problems in general. It is calculated based on the number of true positives (TP), false positives (FP) and false negatives (FN), which can be retrieved from the contingency matrix.

$$F1 = \frac{2\,TP}{2\,TP + FP + FN}$$

In order to get an idea of over- and underestimations, the Precision and Recall are also calculated separately. The respective equations are

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

As the resulting classification comprises four classes, i.e., DL, PW, OF, and FV, the accuracy metrics cannot be calculated in a binary way. Therefore, a multi-class accuracy is determined as the unweighted average of the accuracies obtained for each class separately. This way, the different classes have an equal influence on the resulting accuracy and a bias due to a dominating class (often DL) is avoided. Moreover, as FV is not present in all ROIs, OF and FV are considered as one class. Thus, a 3-class F1 score is obtained based on the DL, PW, and OF + FV classes. In order to allow comparison with other studies, which typically produce a single scene or change flood map, the single (PW + OF + FV vs. DL) and change (OF + FV vs. DL + PW) accuracies are calculated too. The results are furthermore compared to a benchmark, i.e., the classifications resulting from object- and pixel-based thresholding. The thresholds were obtained by automated tile-based thresholding using the KI algorithm [12], while the PW and OF class definitions were the same as in Equation (4).
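The unweighted multi-class F1 score can be computed from a contingency matrix as sketched below. The function names and the pixel counts are made up for illustration and do not correspond to any of the study cases.

```python
def f1_per_class(confusion, classes):
    """Per-class F1 from a contingency matrix.

    confusion[i][j]: number of pixels of reference class i mapped as class j.
    """
    scores = {}
    for k, name in enumerate(classes):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                  # missed reference pixels
        fp = sum(row[k] for row in confusion) - tp   # wrongly mapped pixels
        denom = 2 * tp + fp + fn
        scores[name] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(confusion, classes):
    """Unweighted average of the per-class F1 scores."""
    scores = f1_per_class(confusion, classes)
    return sum(scores.values()) / len(scores)


# Hypothetical three-class contingency matrix (rows: reference, cols: mapped).
classes = ["DL", "PW", "OF+FV"]
confusion = [[90, 2, 8],
             [1, 18, 1],
             [9, 0, 41]]
per_class = f1_per_class(confusion, classes)
three_class_f1 = macro_f1(confusion, classes)
```

For these counts the per-class scores are 0.90, 0.90, and 0.82, giving a three-class F1 of about 0.873; each class contributes equally regardless of how many pixels it contains.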

4. Results and Discussion

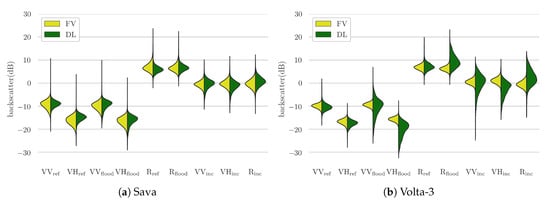

4.1. Separability of Flooded Vegetation

In order to know whether the flooded vegetation present in the ROI can be mapped at all, it is important to get an idea of the separability between this class and others. Previous studies indicated the separability between FV and OF is generally good, while significant confusion can occur between the FV and DL classes [41,42]. Figure 3 shows the class distributions of FV and DL across the SAR features for the Sava ROI and subset 3 of the Volta ROI. In the Sava ROI, deciduous forest is the predominant type of FV. As the flooding occurred in summer, limited penetration is expected. This can also be seen in Figure 3, as there is a strong overlap and similarity between the two classes for all SAR features. The flooded vegetation in Volta-3 is a mixture of shallow water, shrubs, and grassland. The resulting backscatter shows a more pronounced shift from the DL class for several SAR features. At the time of flooding, VH shows a shift towards lower backscatter values, while VV is shifted towards both higher and lower backscatter values. This observation confirms the assumption that VV is more sensitive to double-bounce, and FV thus leads to increased backscatter values. VH is less sensitive to both double-bounce and roughening of specular surfaces, resulting in a drop due to more specular reflection rather than an increase due to double-bounce. The VVinc and VHinc features show similar shifts, while the Rinc feature shows a clear increase, explained by the differences in backscatter response between VV and VH. However, a considerable overlap between the DL and FV classes remains in all features, so classifying FV by means of single feature thresholding is not possible.

Figure 3.

Violin plots demonstrating the class distributions of dry land (DL) and flooded vegetation (FV) objects across the different SAR features for the Sava ROI (a) and subset 3 of the Volta ROI (b).

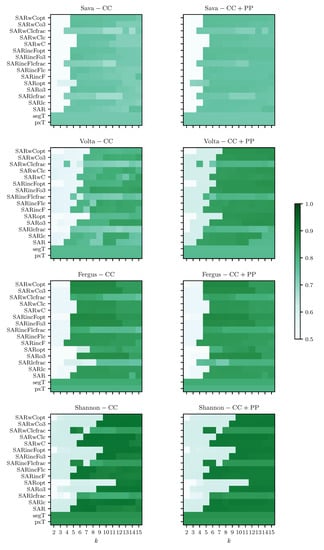

4.2. K-Means Cluster Classification

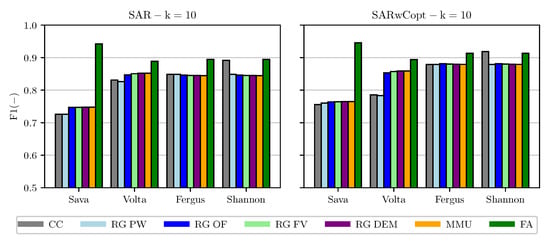

K-means clustering and cluster classification (CC) were applied on several feature spaces for a range of k values. The resulting three-class F1 scores as well as the F1 scores for the pixel- and object-based thresholding benchmark are visualized in the left column of Figure 4. For all ROIs, several FS/k combinations outperform the benchmarks. However, significant differences between the FS/k combinations exist and several trends can be identified. First of all, k values below 5 lead to poor results for all cases. As K-means has difficulties identifying clusters of varying sizes and the DL class is significantly larger than the others, over-clustering is necessary. Moreover, the optimal k value increases with an increasing number of features. The structure of the feature space indeed becomes more complex when the number of dimensions increases, and more clusters are needed to capture the full structure. Despite their differences in land cover, no significant differences concerning the optimal k value could be detected across the ROIs.

Figure 4.

Three-class F1 scores for K-means clustering and cluster classification (CC, left) and K-means clustering and cluster classification, complemented by the post-processing refinement, (CC+PP, right), using different feature spaces and a varying number of clusters k.

Considering the SAR features only, the benchmarks are outperformed for several values of k for all ROIs. As the same thresholds were used to classify the clusters, objects, and pixels, this improved accuracy underlines the added value of first grouping similar objects in clusters based on the inherent structure of the data. By doing so, objects that do not satisfy the PW or OF class definition but are similar to a group of objects that does, are nevertheless classified into this class. Therefore, issues like water roughening are circumvented. However, the accuracy for the SAR FS across k values is rather inconsistent. Other FSs lead to higher accuracies, although the optimal FS seems to depend on the ROI and the outcome is quite sensitive to the choice of k. The inclusion of the land cover fractions leads to poor results across all ROIs, due to the skewness of these features, which typically contain a lot of zero values. This feature subspace was thus found unsuited for K-means clustering without transformation, but the results were included here for completeness. In general, the SARincF and SARwC FSs complemented by three or ten optical bands perform well and the optimal k lies around 10. This k value is in line with the findings of Refice et al. [40], who initially opted for a higher number of clusters but obtained 12 distinct clusters after merging.

In order to include information on texture as well, some tests were done considering both the object means and standard deviations (results not shown). However, including standard deviation features did not improve the resulting accuracies; if anything, it lowered them. Due to the image segmentation and object refinement as well as the speckle filtering, the standard deviation, and texture in general, was found to be significantly less descriptive than the mean.

It is important to note that across all ROIs, FSs, and k values, only one cluster was classified as FV, i.e., for SARwC and k = 15 on the Volta ROI. The FV areas in both the Sava and Volta ROI were primarily classified as DL. In the former, this confusion can be attributed to the limited penetration of the C-band SAR signal. In the latter, several objects satisfy the FV class definition, but only one cluster does. The detected cluster (SARwC-15) has a mean VVinc of 3.1 and a mean Rinc of 4.1. It consists mainly of FV objects but contains 17% DL objects too. For lower values of TVVinc and TRinc, more FV clusters would be detected, but all of these would lead to a considerable number of FPs due to confusion with the DL class. The threshold values of 3 were chosen based on the considered cases as well as values reported in the literature. However, these may need some refinement based on insights from additional cases.
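The threshold check on the cluster centroids can be sketched as follows. The threshold values of 3 and the centroid of the SARwC-15 cluster are taken from the text; the assumption that a cluster is labelled FV when both increases exceed their thresholds, as well as the second centroid, are illustrative only.

```python
T_VV_INC = 3.0  # threshold on VVinc, value reported in the text
T_R_INC = 3.0   # threshold on Rinc, value reported in the text


def satisfies_fv(mean_vv_inc, mean_r_inc, t_vv_inc=T_VV_INC, t_r_inc=T_R_INC):
    """Assumed FV rule: both centroid features must exceed their thresholds."""
    return mean_vv_inc > t_vv_inc and mean_r_inc > t_r_inc


# Centroid of the detected SARwC-15 cluster (values from the text).
detected = satisfies_fv(3.1, 4.1)

# Hypothetical centroid that only passes once the VVinc threshold is lowered.
missed_at_3 = satisfies_fv(2.5, 4.0)
caught_at_2 = satisfies_fv(2.5, 4.0, t_vv_inc=2.0)
```

Lowering the thresholds admits more clusters, which is the trade-off against additional false positives described above.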

4.3. Post-Processing Refinement

The post-processing procedure comprises several steps, i.e., the refinement of the OF, PW, and FV classes using region growing, the inclusion of low elevation areas using DEM-based region growing, the removal of small flood objects, and the indication of forested areas. The three-class F1 scores obtained after applying the RG and MMU steps on all FS/k combinations are shown in the right column of Figure 4. As can be seen when comparing the two columns in this figure, the post-processing procedure improves both the accuracy and the robustness of the methodology. In particular, the sensitivity to the choice of k decreases, and the differences among FSs are reduced as well. Across all ROIs, the best results are obtained by SARwCopt with 10 clusters. However, the SAR FS yields a three-class F1 that is only 0.016 lower (0.8368 compared to 0.8532). The added value of the optical features is thus rather limited, although improvements can be obtained depending on the case. The improvement compared to the cluster classification is most pronounced for the Sava and Volta ROIs, while differences are marginal for the Fergus and Shannon ROIs. However, the initial accuracy for the latter is already high, so only marginal improvements can be obtained. Moreover, these ROIs do not contain flooded vegetation areas.
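The removal of small flood objects can be sketched on a pixel grid. This is a simplified illustration, not the object-based implementation used in the framework: it assumes a binary flood grid, 4-connectivity, and a hypothetical minimum size expressed in pixels.

```python
from collections import deque


def remove_small_flood_regions(flood, min_size):
    """Return a copy of a binary grid without 4-connected flood regions below min_size."""
    rows, cols = len(flood), len(flood[0])
    cleaned = [row[:] for row in flood]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if flood[r][c] != 1 or seen[r][c]:
                continue
            # Collect the connected region that contains (r, c) with a breadth-first search.
            region, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and flood[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Regions smaller than the minimal mapping unit are reset to non-flood.
            if len(region) < min_size:
                for y, x in region:
                    cleaned[y][x] = 0
    return cleaned


grid = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
cleaned = remove_small_flood_regions(grid, min_size=2)
```

Here the isolated pixel in the second row is dropped, while the block of four connected pixels is kept.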

Figure 5 shows the evolution of the three-class F1 score throughout the different processing steps, including forest flagging, for SAR-10 and SARwCopt-10. As can be seen here, the impact of these steps strongly depends on the ROI as well as the FS/k combination. In general, mainly RG PW and RG OF alter the classification outcome. RG FV significantly altered the classification outcome for Volta too, although the resulting change in accuracy is limited due to the small size of this class. Forest flagging also significantly increases the accuracy for all ROIs. The values shown for this step are the accuracies calculated over the non-forested areas only. The increase is most pronounced for the Sava ROI due to the high abundance of forests in this region. The high accuracy mostly indicates that the visible flooding is mapped highly accurately and underlines the inability of Sentinel-1 to map flooding under dense forest canopies. The limited impact of RG DEM is not unexpected, given the coarse resolution (both horizontally and vertically) of the SRTM DEM product. Especially in forested areas, the reliability of this product can be poor [64]. A finer resolution DEM could substantially increase the added value of this step.

Figure 5.

Three-class F1 score per processing step for clustering based on the SAR features with k = 10 (left) and based on the SARwCopt features with k = 10 (right). These steps are cluster classification (CC), region growing (RG) for the permanent water (PW), open flooding (OF) and flooded vegetation (FV) class, region growing refinement based on elevation (RG DEM), the application of a minimal mapping unit (MMU), and the indication of forested areas (FA).
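The effect of evaluating over non-forested areas only can be illustrated with a small sketch. For brevity it uses a binary flood/non-flood F1 score rather than the three-class score reported in the figure, and the pixel values are hypothetical.

```python
def f1_score(pred, ref, positive=1):
    """Binary F1 score: 2*TP / (2*TP + FP + FN)."""
    pairs = list(zip(pred, ref))
    tp = sum(p == positive and r == positive for p, r in pairs)
    fp = sum(p == positive and r != positive for p, r in pairs)
    fn = sum(p != positive and r == positive for p, r in pairs)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def f1_outside_forest(pred, ref, forest):
    """F1 score computed over pixels that are not flagged as forest."""
    kept = [(p, r) for p, r, f in zip(pred, ref, forest) if not f]
    return f1_score([p for p, _ in kept], [r for _, r in kept])


# Hypothetical pixels: two flooded pixels lie under forest and are missed.
ref = [1, 1, 1, 1, 0, 0]
pred = [1, 1, 0, 0, 0, 0]
forest = [0, 0, 1, 1, 0, 0]

f1_all = f1_score(pred, ref)
f1_masked = f1_outside_forest(pred, ref, forest)
```

In this toy case the score rises from 2/3 to 1.0 once the forested pixels are excluded, which mirrors why the increase is largest where forests are abundant.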

For each PP step, several parameter values were compared. For example, the outcome of RG OF into regions below the threshold in VV and VH vs. in VV or VH was compared. The latter led to minimal differences for Fergus, Shannon, and Sava but increased the accuracy for Volta by up to 0.10. RG DEM only has a negligible impact on the outcome. Relaxing the growing condition to the mean or maximum of neighboring heights increased the accuracy for the Volta ROI but significantly lowered the accuracy for other cases. On the other hand, the outcome proved insensitive to the fraction of flooded neighbors required. For RG FV too, several values of TVVinc and TRinc were tested. Again, a value of 3 resulted in the best trade-off between FPs and FNs, but some refinement based on additional cases might be beneficial. Furthermore, the chosen MMU value provided the best trade-off between FPs and FNs across all ROIs.
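The difference between the two growing conditions compared for RG OF can be sketched as follows. The sketch assumes a pixel grid with 4-connectivity instead of the objects used in the framework, and the backscatter values and thresholds are hypothetical.

```python
from collections import deque


def grow_region(seeds, vv, vh, t_vv, t_vh, require_both=True):
    """Grow seed pixels into neighbors that fall below the VV and/or VH threshold."""
    rows, cols = len(vv), len(vv[0])

    def eligible(y, x):
        below_vv = vv[y][x] < t_vv
        below_vh = vh[y][x] < t_vh
        return (below_vv and below_vh) if require_both else (below_vv or below_vh)

    grown = set(seeds)
    queue = deque(seeds)
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            inside = 0 <= ny < rows and 0 <= nx < cols
            if inside and (ny, nx) not in grown and eligible(ny, nx):
                grown.add((ny, nx))
                queue.append((ny, nx))
    return grown


# Hypothetical single-row scene; the first pixel is the open-flood seed.
vv = [[-20.0, -17.0, -12.0, -8.0]]
vh = [[-27.0, -20.0, -25.0, -15.0]]
seeds = [(0, 0)]

grown_and = grow_region(seeds, vv, vh, t_vv=-15.0, t_vh=-22.0, require_both=True)
grown_or = grow_region(seeds, vv, vh, t_vv=-15.0, t_vh=-22.0, require_both=False)
```

With the stricter "and" condition the seed does not grow at all, whereas the "or" condition adds the two neighboring pixels that are dark in only one polarization.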

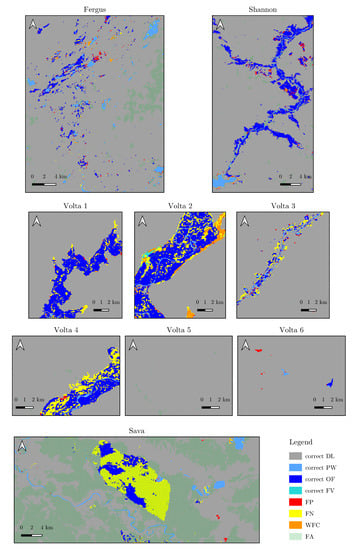

4.4. Final Flood Maps

Contingency maps for the final classifications are shown in Figure 6. In general, most of the water surfaces are mapped correctly. In the Sava ROI, a high number of false negatives (FN) occurs due to dense forest canopies, hampering the SAR signal penetration. As can be seen, almost the entire floodplain is forested. More FNs occur in the Volta subsets, especially at the flood edges. In the latter, quite some within-flood confusion (WFC) occurs too. These are areas that were mapped as PW, OF or FV but belonged to another of those three according to the reference data. These WFCs are mainly confusions between FV and OF, which can be explained by the fact that the flooded vegetation in this ROI is a mixture of shallow water and flooded vegetation and thus the backscatter signature is a mix too. For both the FNs in the Sava ROI and the WFCs in the Volta ROI, the inclusion of additional SAR features like L-band imagery could be beneficial.

Figure 6.

Contingency maps of the final classification for all ROIs. These maps discriminate between correctly classified dry land (DL), permanent water (PW), open flooding (OF), and flooded vegetation (FV), false positives (FP), false negatives (FN), within-flood confusion (WFC), and forested areas (FA).
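The contingency categories can be expressed as a simple lookup on the mapped and reference class. The sketch below follows the definition of WFC given above and assumes that FPs and FNs are defined relative to the DL class; the forested areas (FA) overlay is left out.

```python
WATER_CLASSES = {"PW", "OF", "FV"}


def contingency_category(mapped, reference):
    """Return the contingency category for one pixel or object."""
    if mapped == reference:
        return mapped   # correctly classified DL, PW, OF, or FV
    if mapped in WATER_CLASSES and reference in WATER_CLASSES:
        return "WFC"    # water class mapped, but another water class in the reference
    if mapped in WATER_CLASSES:
        return "FP"     # water class mapped where the reference is DL
    return "FN"         # DL mapped where the reference is a water class


examples = [
    contingency_category("OF", "OF"),
    contingency_category("OF", "FV"),
    contingency_category("PW", "DL"),
    contingency_category("DL", "FV"),
]
```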

In order to allow comparison with other studies, Table 4 summarizes the F1 scores when considering the flood classification as a three-class, single scene or change detection problem. The single and change F1 scores exceed the three-class value for all ROIs except for the Sava area, where the DL accuracy is significantly higher than the PW and OF values. Although these values are encouraging, they should be interpreted with care as they were calculated on relatively small reference subsets. This prevents the accuracy from being too heavily biased by the DL class, but does not allow the algorithm to be thoroughly tested for FP detection in the context of automated flood monitoring. Within the Volta ROI, one subset was selected in a flood-free area and did not result in erroneous flood detection. However, ideally, the approach would also be applied on a non-flood image pair in order to test whether seasonal backscatter changes are erroneously picked up as flooding.

Table 4.

Accuracy of CC+PP for SARwCopt-10 expressed in terms of different metrics for all ROIs.

4.5. Limitations and Future Improvements

Although the results presented in this paper illustrate the potential of the developed framework, several aspects could be improved in the future.

First of all, the considered feature space could be further extended and improved. As some confusion occurred between the OF and FV classes in the Volta ROI and the flooded forests in the Sava ROI could not be detected, the inclusion of additional SAR features could further refine the flood class definitions and help overcome these issues. Olthof and Rainville [65] and Plank et al. [47] illustrated the potential of including multiple polarizations and derived polarimetric parameters, as well as SAR imagery acquired using different wavelengths. Especially L-band imagery could significantly contribute to the framework’s capability of mapping flooding in forested areas [31,33,40]. Within the context of open source data, the upcoming NISAR mission, which is expected to be launched in 2022 and will provide L- and S-band SAR imagery, is promising [66]. Moreover, ESA is planning to include an L-band satellite, named ROSE-L, in its Copernicus program. It is part of the six high-priority candidate missions currently being studied [67]. As the Sentinel constellation provides systematic imagery with short revisit times, the inclusion of time series information could improve the classification outcome too [19]. As such, the sensitivity to the choice of the reference image could be decreased, though anomaly detection is less suited for persistent flooding. The inclusion of time series could furthermore contribute to the detection of thematic clusters as long as the weight of the flood features is maintained.

Second, the robustness of the classification thresholds could be further increased. Currently, the FV classification thresholds are set as fixed values. For the considered cases, these values provided a good trade-off between over- and underestimation. However, more cases are needed to check the general applicability of these values. Besides good reference data, a thorough investigation of the impact of incidence angle and vegetation type on the resulting backscatter for different wavelengths and polarizations, based on an experimental set-up, would provide invaluable information for the future improvement of FV classification algorithms. Furthermore, future work could investigate whether the selection of the FV threshold values could be automated, as is the case for the OF and PW thresholds. Moreover, the incidence angle dependency of both classification thresholds is a topic for further investigation.

Third, some modifications can be made to further upscale the presented framework. Given the global availability of the input data, the framework’s independence of training data and the good results for floods with varying characteristics, it has substantial potential for automated near real-time flood monitoring. However, the presented results were obtained on relatively small (around 1000 km² for Fergus/Shannon/Sava, 16,000 km² for Volta), manually delineated subsets with a significant fraction of flooding. On full scenes, the fraction of flooding is usually significantly lower and the detection of a flood cluster can be hampered, as is the case with global thresholding. Moreover, the computation time, which was 39 minutes for the Fergus ROI on an Intel E5-2660v3 (Haswell-EP @ 2.6 GHz) computation node using 4 cores and 16 GB of RAM, is expected to significantly increase with increasing image sizes. However, both issues could be overcome by detecting the core area of flooding prior to applying the clustering framework. This could be done by applying a split-based approach, as was suggested by Chini et al. [68]. Last, confusion between urban and flooded vegetation areas did not occur in the considered ROIs. However, given the similar backscatter behavior of these thematic classes, confusion could occur in other regions. This could be prevented by masking out urban areas, as was done for forested areas, e.g., based on Global Urban Footprint [69].

5. Conclusions

In this study, an unsupervised clustering-based approach that can be used for automated, near real-time flood mapping in vegetated areas based on freely available data is presented. After image segmentation, K-means clustering is applied on an object-based feature space consisting of SAR and optical features. The resulting clusters are classified based on their centroids. Finally, the classification is refined by a region growing post-processing refinement. The final outcome discriminates between dry land, permanent water, open flooding and flooded vegetation, while forested areas which might hide flooding are indicated too. Results are presented based on four case studies, of which two contain areas of flooded vegetation. The results for the ROIs without flooded vegetation illustrate the added value of the clustering approach, as the pixel- and object-based benchmarks are outperformed by the clustering framework even when considering the same SAR features. Although good results are obtained based on the SAR bands only, additional SAR and optical features lead to further improvements. Moreover, the post-processing refinement significantly increases the accuracy of the methodology and decreases the sensitivity to the parameter choice. Across all ROIs, the best result was obtained based on the SARwCopt FS using 10 clusters. For the Sava, Volta, Fergus, and Shannon ROIs, three-class F1 scores of 0.7648, 0.8588, 0.8793, and 0.9098, respectively, are obtained. The detection of flooding beneath dense forest canopies was not possible using C-band SAR and optical features only. Flooding in less densely vegetated areas could be mapped successfully, although significant confusion with the open flood class occurred. However, the clustering framework allows additional features to be integrated easily. For example, the inclusion of L-band SAR imagery could substantially improve the FV classification accuracy.
The code of this object-based flood mapping framework is available through https://github.com/h-cel/OBIAflood.

Author Contributions

L.L. developed the methodology, carried out the analysis and interpretation, and wrote the paper. N.E.C.V. and F.M.B.V.C. contributed to the critical review of the methodology as well as the content, structure and language of the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research Foundation Flanders under Project G.0179.16N.

Acknowledgments

The authors would like to thank ESA’s Copernicus program for providing the Sentinel-1 and -2 imagery, the CGLS land cover product and the EMS mapping products. We would furthermore like to thank the World Wide Fund for Nature for providing the HydroSHEDS product.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript.

| Abbreviation | Meaning |
|---|---|
| CGLS | Copernicus Global Land Service |
| DEM | Digital Elevation Model |
| DL | dry land |
| ESA | European Space Agency |
| FA | forested areas |
| FV | flooded vegetation |
| FS | feature space |
| OF | open flood |
| LC | land cover |
| MMU | minimal mapping unit |
| PW | permanent water |
| RG | region growing |
| ROI | region of interest |
| S-1 | Sentinel-1 |
| S-2 | Sentinel-2 |
| SAR | Synthetic Aperture Radar |
| SRTM | Shuttle Radar Topography Mission |

References

- Centre for Research on the Epidemiology of Disasters (CRED); United Nations Office for Disaster Risk Reduction (UNISDR). The Human Cost of Weather-Related Disasters 1995–2015. 2015. Available online: https://www.cred.be/sites/default/files/HCWRD_2015.pdf (accessed on 30 July 2020).

- Centre for Research on the Epidemiology of Disasters (CRED). Natural Disasters 2019. 2020. Available online: https://emdat.be/sites/default/files/adsr_2019.pdf (accessed on 30 July 2020).

- Milly, P.C.D.; Wetherald, R.T.; Dunne, K.; Delworth, T.L. Increasing risk of great floods in a changing climate. Nature 2002, 415, 514–517.

- Voigt, S.; Giulio-Tonolo, F.; Lyons, J.; Kučera, J.; Jones, B.; Schneiderhan, T.; Platzeck, G.; Kaku, K.; Hazarika, M.K.; Czaran, L.; et al. Global trends in satellite-based emergency mapping. Science 2016, 353, 247–252.

- Plank, S. Rapid Damage Assessment by Means of Multi-Temporal SAR—A Comprehensive Review and Outlook to Sentinel-1. Remote Sens. 2014, 6, 4870–4906.

- Van Wesemael, A.; Verhoest, N.E.C.; Lievens, H. Assessing the Value of Remote Sensing and In Situ Data for Flood Inundation Forecasts. Ph.D. Thesis, Ghent University, Ghent, Belgium, 2019.

- Woodhouse, I.H. Introduction to Microwave Remote Sensing; CRC Press: Boca Raton, FL, USA, 2005.

- Meyer, F. Spaceborne Synthetic Aperture Radar: Principles, Data Access, and Basic Processing Techniques. In The SAR Handbook: Comprehensive Methodologies for Forest Monitoring and Biomass Estimation; NASA: Washington, DC, USA, 2019; Chapter 1.

- Adeli, S.; Salehi, B.; Mahdianpari, M.; Quackenbush, L.J.; Brisco, B.; Tamiminia, H.; Shaw, S. Wetland Monitoring Using SAR Data: A Meta-Analysis and Comprehensive Review. Remote Sens. 2020, 12, 2190.

- Grimaldi, S.; Li, Y.; Pauwels, V.R.N.; Walker, J.P. Remote Sensing-Derived Water Extent and Level to Constrain Hydraulic Flood Forecasting Models: Opportunities and Challenges. Surv. Geophys. 2016, 37, 977–1034.

- Schumann, G.; Di Baldassarre, G.; Bates, P.D. The Utility of Spaceborne Radar to Render Flood Inundation Maps Based on Multialgorithm Ensembles. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2801–2807.

- Twele, A.; Cao, W.; Plank, S.; Martinis, S. Sentinel-1-based flood mapping: A fully automated processing chain. Int. J. Remote Sens. 2016, 37, 2990–3004.

- Matgen, P.; Hostache, R.; Schumann, G.; Pfister, L.; Hoffmann, L.; Savenije, H. Towards an automated SAR-based flood monitoring system: Lessons learned from two case studies. Phys. Chem. Earth Parts A/B/C 2011, 36, 241–252.

- Long, S.; Fatoyinbo, T.E.; Policelli, F. Flood extent mapping for Namibia using change detection and thresholding with SAR. Environ. Res. Lett. 2014, 9, 035002.

- Li, Y.; Martinis, S.; Plank, S.; Ludwig, R. An automatic change detection approach for rapid flood mapping in Sentinel-1 SAR data. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 123–135.

- Dasgupta, A.; Grimaldi, S.; Ramsankaran, R.; Pauwels, V.R.; Walker, J.P. Towards operational SAR-based flood mapping using neuro-fuzzy texture-based approaches. Remote Sens. Environ. 2018, 215, 313–329.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. Unsupervised Rapid Flood Mapping Using Sentinel-1 GRD SAR Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3290–3299.

- Schlaffer, S.; Chini, M.; Giustarini, L.; Matgen, P. Probabilistic mapping of flood-induced backscatter changes in SAR time series. Int. J. Appl. Earth Obs. Geoinf. 2017, 56, 77–87.

- Tsyganskaya, V.; Martinis, S.; Marzahn, P.; Ludwig, R. Detection of temporary flooded vegetation using Sentinel-1 time series data. Remote Sens. 2018, 10, 1286.

- Markert, K.N.; Chishtie, F.; Anderson, E.R.; Saah, D.; Griffin, R.E. On the merging of optical and SAR satellite imagery for surface water mapping applications. Results Phys. 2018, 9, 275–277.

- DeVries, B.; Huang, C.; Armston, J.; Huang, W.; Jones, J.W.; Lang, M.W. Rapid and robust monitoring of flood events using Sentinel-1 and Landsat data on the Google Earth Engine. Remote Sens. Environ. 2020, 240, 111664.

- Huang, W.; DeVries, B.; Huang, C.; Lang, M.; Jones, J.; Creed, I.; Carroll, M. Automated Extraction of Surface Water Extent from Sentinel-1 Data. Remote Sens. 2018, 10, 797.

- Bonafilia, D.; Tellman, B.; Anderson, T.; Issenberg, E. Sen1Floods11: A Georeferenced Dataset to Train and Test Deep Learning Flood Algorithms for Sentinel-1. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 14–19 June 2020.

- Pierdicca, N.; Pulvirenti, L.; Chini, M. Flood Mapping in Vegetated and Urban Areas and Other Challenges: Models and Methods. In Flood Monitoring through Remote Sensing; Springer International Publishing: Cham, Switzerland, 2018; pp. 135–179.

- Mason, D.C.; Dance, S.L.; Vetra-Carvalho, S.; Cloke, H.L. Robust algorithm for detecting floodwater in urban areas using synthetic aperture radar images. J. Appl. Remote Sens. 2018, 12, 1–20.

- Chini, M.; Pelich, R.; Pulvirenti, L.; Pierdicca, N.; Hostache, R.; Matgen, P. Sentinel-1 InSAR Coherence to Detect Floodwater in Urban Areas: Houston and Hurricane Harvey as a Test Case. Remote Sens. 2019, 11, 107.

- Li, Y.; Martinis, S.; Wieland, M.; Schlaffer, S.; Natsuaki, R. Urban Flood Mapping Using SAR Intensity and Interferometric Coherence via Bayesian Network Fusion. Remote Sens. 2019, 11, 2231.

- Brisco, B.; Kapfer, M.; Hirose, T.; Tedford, B.; Liu, J. Evaluation of C-band polarization diversity and polarimetry for wetland mapping. Can. J. Remote Sens. 2011, 37, 82–92.

- Tsyganskaya, V.; Martinis, S.; Marzahn, P.; Ludwig, R. SAR-based detection of flooded vegetation—A review of characteristics and approaches. Int. J. Remote Sens. 2018, 39, 2255–2293.

- Martinez, J.M.; Le Toan, T. Mapping of flood dynamics and spatial distribution of vegetation in the Amazon floodplain using multitemporal SAR data. Remote Sens. Environ. 2007, 108, 209–223.

- Pistolesi, L.I.; Ni-Meister, W.; McDonald, K.C. Mapping wetlands in the Hudson Highlands ecoregion with ALOS PALSAR: An effort to identify potential swamp forest habitat for golden-winged warblers. Wetl. Ecol. Manag. 2015, 23, 95–112.

- San Martín, L.S.; Morandeira, N.S.; Grimson, R.; Rajngewerc, M.; González, E.B.; Kandus, P. The contribution of ALOS/PALSAR-1 multi-temporal data to map permanently and temporarily flooded coastal wetlands. Int. J. Remote Sens. 2020, 41, 1582–1602.

- Evans, T.L.; Costa, M.; Telmer, K.; Silva, T.S.F. Using ALOS/PALSAR and RADARSAT-2 to Map Land Cover and Seasonal Inundation in the Brazilian Pantanal. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2010, 3, 560–575.

- Townsend, P.A. Mapping seasonal flooding in forested wetlands using multi-temporal Radarsat SAR. Photogramm. Eng. Remote Sens. 2001, 67, 857–864.

- Voormansik, K.; Praks, J.; Antropov, O.; Jagomägi, J.; Zalite, K. Flood Mapping with TerraSAR-X in Forested Regions in Estonia. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 562–577.

- Hess, L.L.; Melack, J.M. Remote sensing of vegetation and flooding on Magela Creek Floodplain (Northern Territory, Australia) with the SIR-C synthetic aperture radar. In Aquatic Biodiversity: A Celebratory Volume in Honour of Henri J. Dumont; Springer: Dordrecht, The Netherlands, 2003; pp. 65–82.

- Lang, M.W.; Townsend, P.A.; Kasischke, E.S. Influence of incidence angle on detecting flooded forests using C-HH synthetic aperture radar data. Remote Sens. Environ. 2008, 112, 3898–3907.

- Richards, J.A.; Woodgate, P.W.; Skidmore, A.K. An explanation of enhanced radar backscattering from flooded forests. Int. J. Remote Sens. 1987, 8, 1093–1100.

- Töyrä, J.; Pietroniro, A.; Martz, L.W. Multisensor Hydrologic Assessment of a Freshwater Wetland. Remote Sens. Environ. 2001, 75, 162–173.

- Refice, A.; Zingaro, M.; D’Addabbo, A.; Chini, M. Integrating C- and L-Band SAR Imagery for Detailed Flood Monitoring of Remote Vegetated Areas. Water 2020, 12, 2745.

- Martinis, S.; Rieke, C. Backscatter Analysis Using Multi-Temporal and Multi-Frequency SAR Data in the Context of Flood Mapping at River Saale, Germany. Remote Sens. 2015, 7, 7732–7752.

- Brisco, B.; Shelat, Y.; Murnaghan, K.; Montgomery, J.; Fuss, C.; Olthof, I.; Hopkinson, C.; Deschamps, A.; Poncos, V. Evaluation of C-Band SAR for Identification of Flooded Vegetation in Emergency Response Products. Can. J. Remote Sens. 2019, 45, 73–87.

- Chaabani, C.; Chini, M.; Abdelfattah, R.; Hostache, R.; Chokmani, K. Flood Mapping in a Complex Environment Using Bistatic TanDEM-X/TerraSAR-X InSAR Coherence. Remote Sens. 2018, 10, 1873.

- Pulvirenti, L.; Chini, M.; Pierdicca, N.; Boni, G. Use of SAR Data for Detecting Floodwater in Urban and Agricultural Areas: The Role of the Interferometric Coherence. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1532–1544.

- Zhang, M.; Li, Z.; Tian, B.; Zhou, J.; Zeng, J. A method for monitoring hydrological conditions beneath herbaceous wetlands using multi-temporal ALOS PALSAR coherence data. Remote Sens. Lett. 2015, 6, 618–627.

- Brisco, B.; Schmitt, A.; Murnaghan, K.; Kaya, S.; Roth, A. SAR polarimetric change detection for flooded vegetation. Int. J. Digit. Earth 2013, 6, 103–114.

- Plank, S.; Jüssi, M.; Martinis, S.; Twele, A. Mapping of flooded vegetation by means of polarimetric Sentinel-1 and ALOS-2/PALSAR-2 imagery. Int. J. Remote Sens. 2017, 38, 3831–3850.

- Tsyganskaya, V.; Martinis, S.; Marzahn, P. Flood Monitoring in Vegetated Areas Using Multitemporal Sentinel-1 Data: Impact of Time Series Features. Water 2019, 11, 1938.

- Olthof, I.; Tolszczuk-Leclerc, S. Comparing Landsat and RADARSAT for Current and Historical Dynamic Flood Mapping. Remote Sens. 2018, 10, 780.

- Pierdicca, N.; Pulvirenti, L.; Boni, G.; Squicciarino, G.; Chini, M. Mapping Flooded Vegetation Using COSMO-SkyMed: Comparison With Polarimetric and Optical Data Over Rice Fields. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2650–2662.

- Grimaldi, S.; Xu, J.; Li, Y.; Pauwels, V.R.; Walker, J.P. Flood mapping under vegetation using single SAR acquisitions. Remote Sens. Environ. 2020, 237, 111582.

- International Federation of Red Cross (IFRC); Red Crescent Societies. Ghana: Floods in Upper East Region—Emergency Plan of Action Final Report; IFRC: Geneva, Switzerland, 2019.

- Campanyà i Llovet, J.; McCormack, T.; Naughton, O. Remote Sensing for Monitoring and Mapping Karst Groundwater Flooding in the Republic of Ireland. In Proceedings of the EGU General Assembly 2020, Vienna, Austria, 4–8 May 2020; p. 18921.

- Copernicus Emergency Management Service (©2015 European Union), EMSR149.

- Buchhorn, M.; Lesiv, M.; Tsendbazar, N.E.; Herold, M.; Bertels, L.; Smets, B. Copernicus Global Land Cover Layers—Collection 2. Remote Sens. 2020, 12, 1044.

- Hostache, R.; Matgen, P.; Wagner, W. Change detection approaches for flood extent mapping: How to select the most adequate reference image from online archives? Int. J. Appl. Earth Obs. Geoinf. 2012, 19, 205–213.

- Simonetti, D.; Simonetti, E.; Szantoi, Z.; Lupi, A.; Eva, H.D. First Results From the Phenology-Based Synthesis Classifier Using Landsat 8 Imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1496–1500.

- Lehner, B.; Verdin, K.; Jarvis, A. New Global Hydrography Derived From Spaceborne Elevation Data. Eos Trans. Am. Geophys. Union 2008, 89, 93–94.

- Vedaldi, A.; Soatto, S. Quick shift and kernel methods for mode seeking. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2008; pp. 705–718.

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A k-means clustering algorithm. J. R. Stat. Soc. Ser. C Appl. Stat. 1979, 28, 100–108.

- Landuyt, L.; Van Wesemael, A.; Schumann, G.J.P.; Hostache, R.; Verhoest, N.E.C.; Van Coillie, F.M.B. Flood Mapping Based on Synthetic Aperture Radar: An Assessment of Established Approaches. IEEE Trans. Geosci. Remote Sens. 2019, 57, 722–739.

- Debusscher, B.; Van Coillie, F. Object-Based Flood Analysis Using a Graph-Based Representation. Remote Sens. 2019, 11, 1883.

- Stephens, E.; Schumann, G.; Bates, P. Problems with binary pattern measures for flood model evaluation. Hydrol. Process. 2014, 28, 4928–4937.

- Weydahl, D.J.; Sagstuen, J.; Dick, O.B.; Rønning, H. SRTM DEM accuracy assessment over vegetated areas in Norway. Int. J. Remote Sens. 2007, 28, 3513–3527.

- Olthof, I.; Rainville, T. Evaluating Simulated RADARSAT Constellation Mission (RCM) Compact Polarimetry for Open-Water and Flooded-Vegetation Wetland Mapping. Remote Sens. 2020, 12, 1476.

- Jet Propulsion Laboratory (JPL). NISAR: Mission Concept. Available online: https://nisar.jpl.nasa.gov/mission/mission-concept/ (accessed on 1 September 2020).

- Pierdicca, N.; Davidson, M.; Chini, M.; Dierking, W.; Djavidnia, S.; Haarpaintner, J.; Hajduch, G.; Laurin, G.V.; Lavalle, M.; López-Martínez, C.; et al. The Copernicus L-band SAR mission ROSE-L (Radar Observing System for Europe) (Conference Presentation). In Proceedings of the SPIE Remote Sensing—Active and Passive Microwave Remote Sensing for Environmental Monitoring, Strasbourg, France, 9–12 September 2019.

- Chini, M.; Hostache, R.; Giustarini, L.; Matgen, P. A Hierarchical Split-Based Approach for Parametric Thresholding of SAR Images: Flood Inundation as a Test Case. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6975–6988.

- Esch, T.; Bachofer, F.; Heldens, W.; Hirner, A.; Marconcini, M.; Palacios-Lopez, D.; Roth, A.; Üreyen, S.; Zeidler, J.; Dech, S.; et al. Where We Live—A Summary of the Achievements and Planned Evolution of the Global Urban Footprint. Remote Sens. 2018, 10, 895.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).