An OSM Data-Driven Method for Road-Positive Sample Creation

Abstract

1. Introduction

2. Materials and Method

2.1. Experimental Data

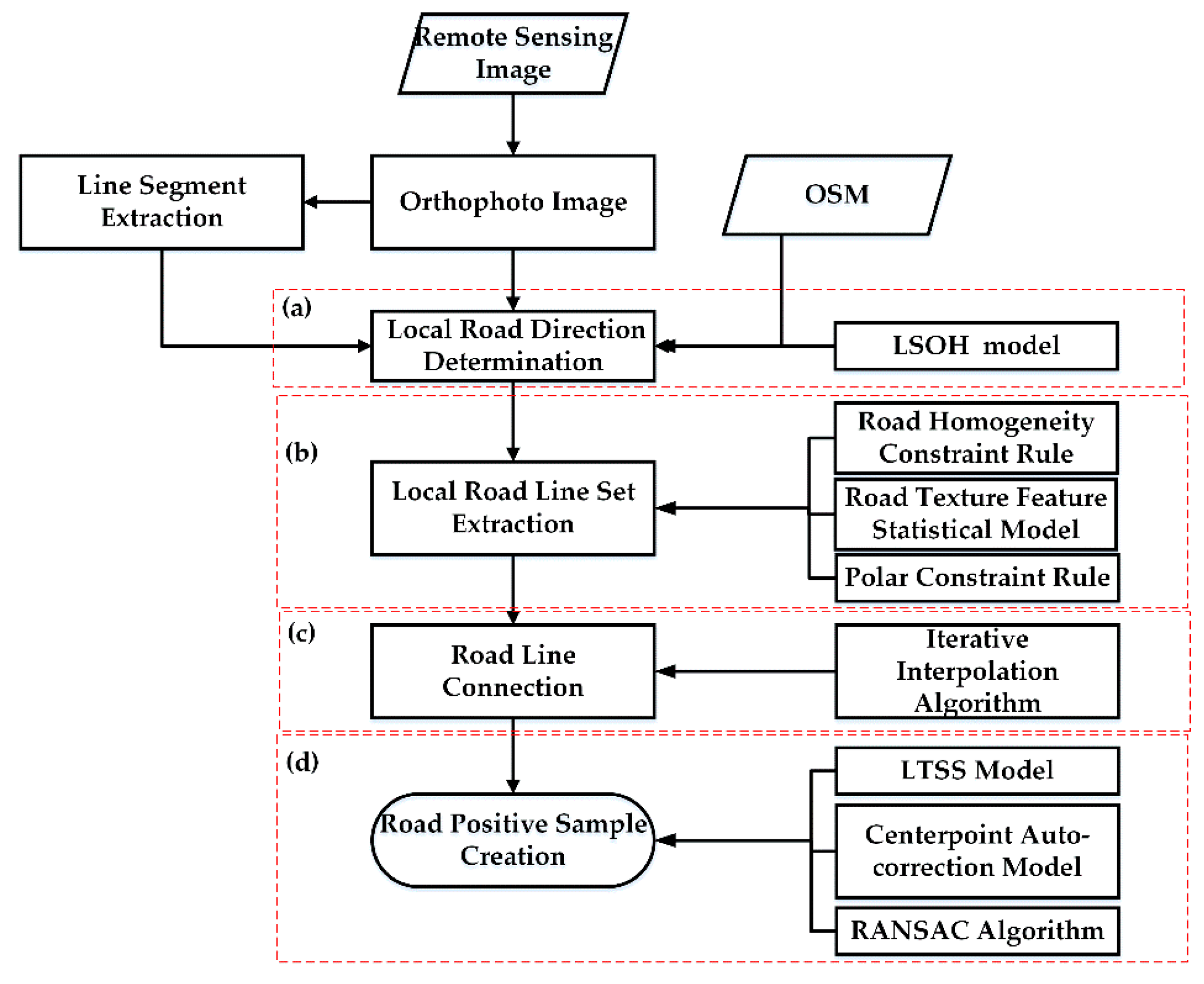

2.2. Methodology

2.2.1. Local Road Direction Determination

2.2.2. Local Road Line Set Extraction

- Local road line location selection

- Optimization of the local road line set based on the polar constraint

2.2.3. Road Line Connection

2.2.4. Road-Positive Sample Creation

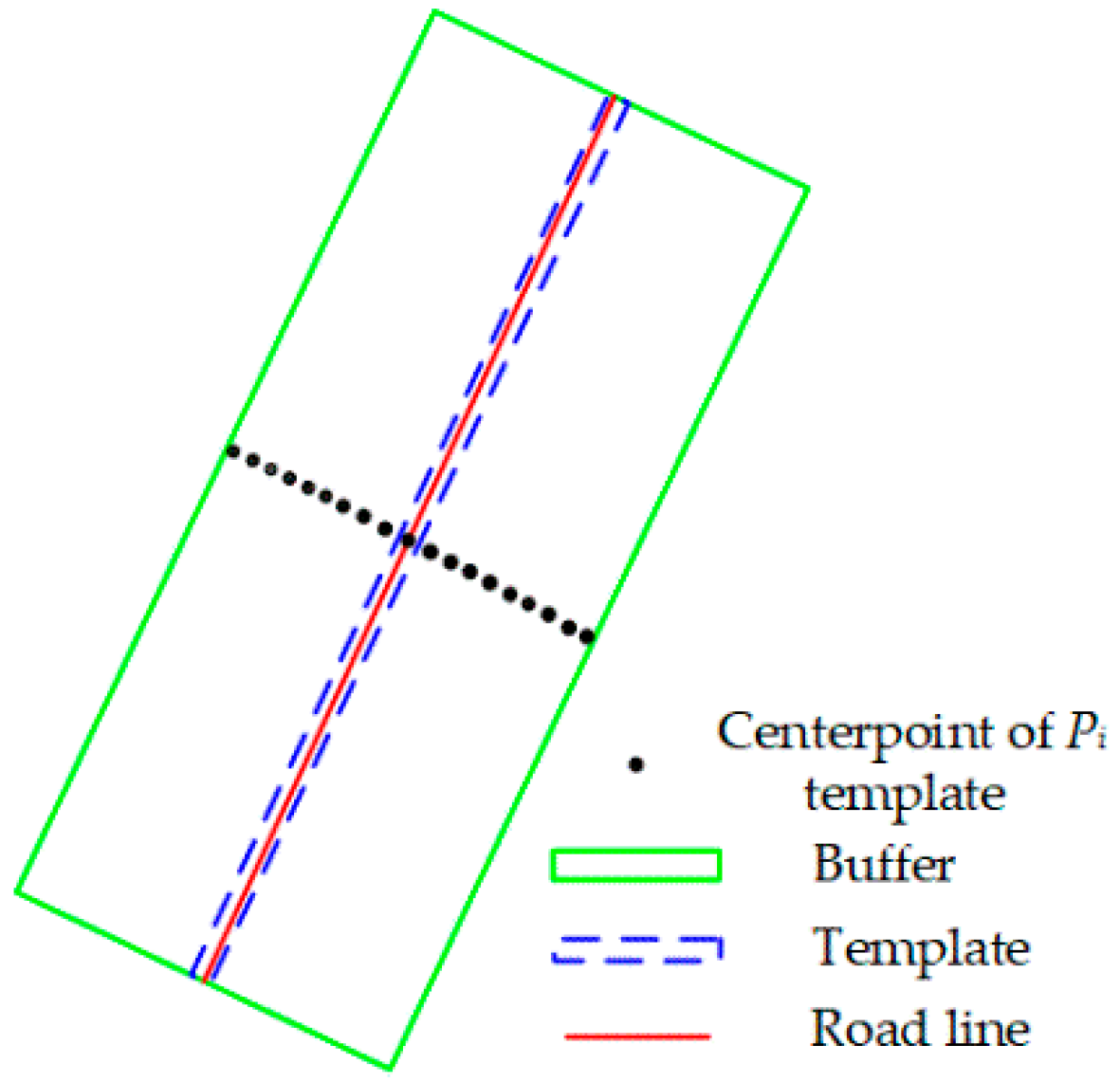

- Statistical region construction

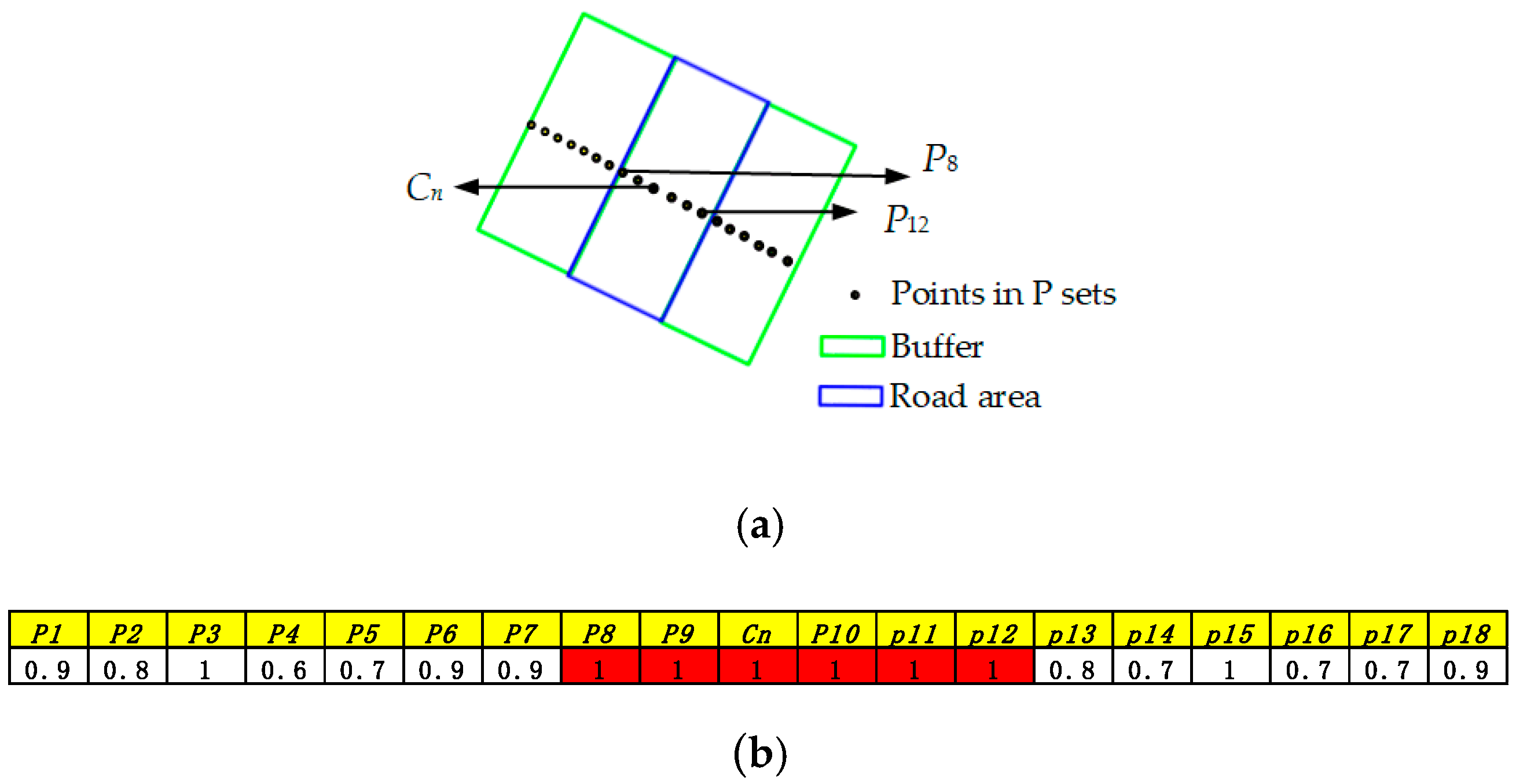

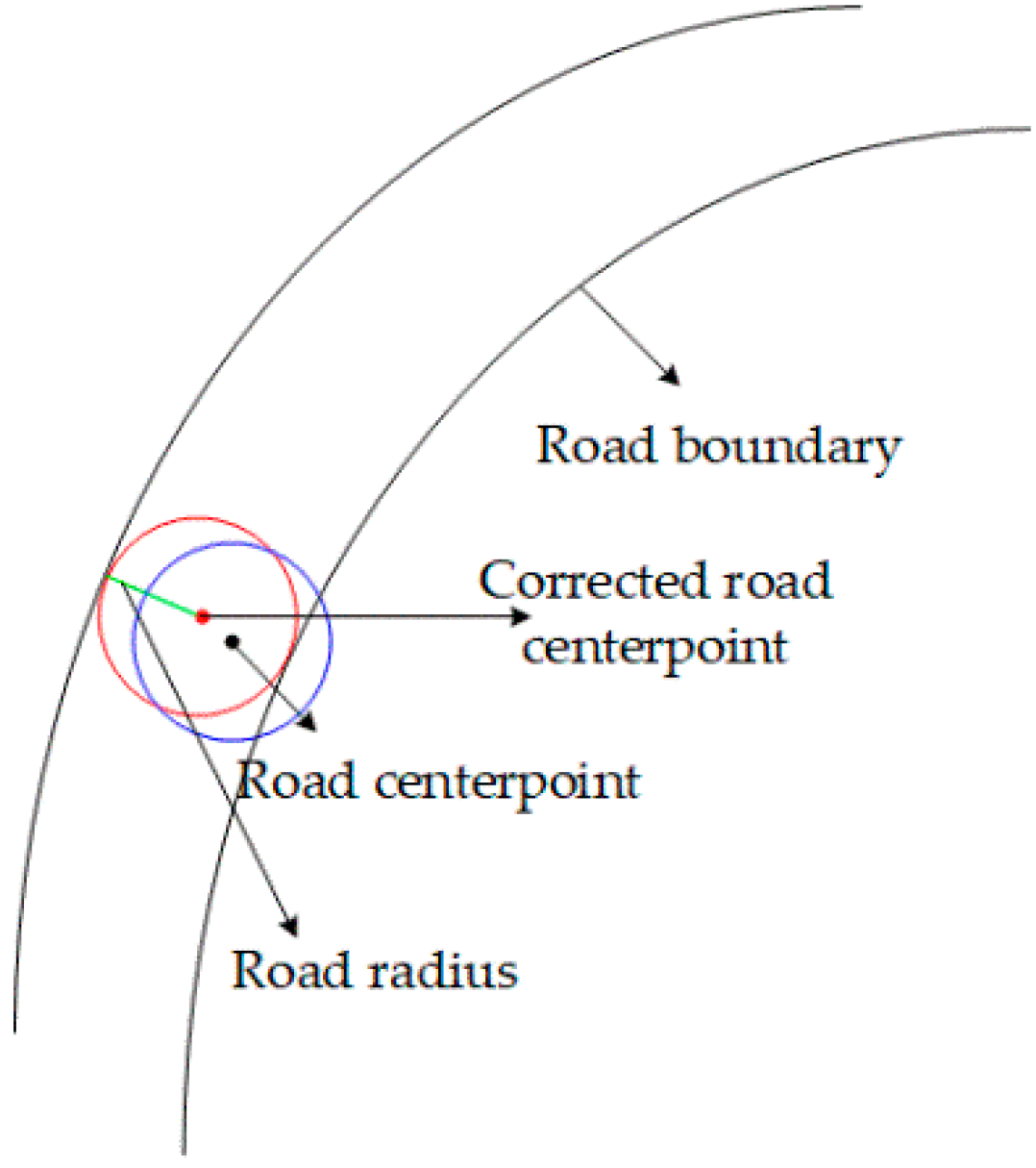

- Local road width determination

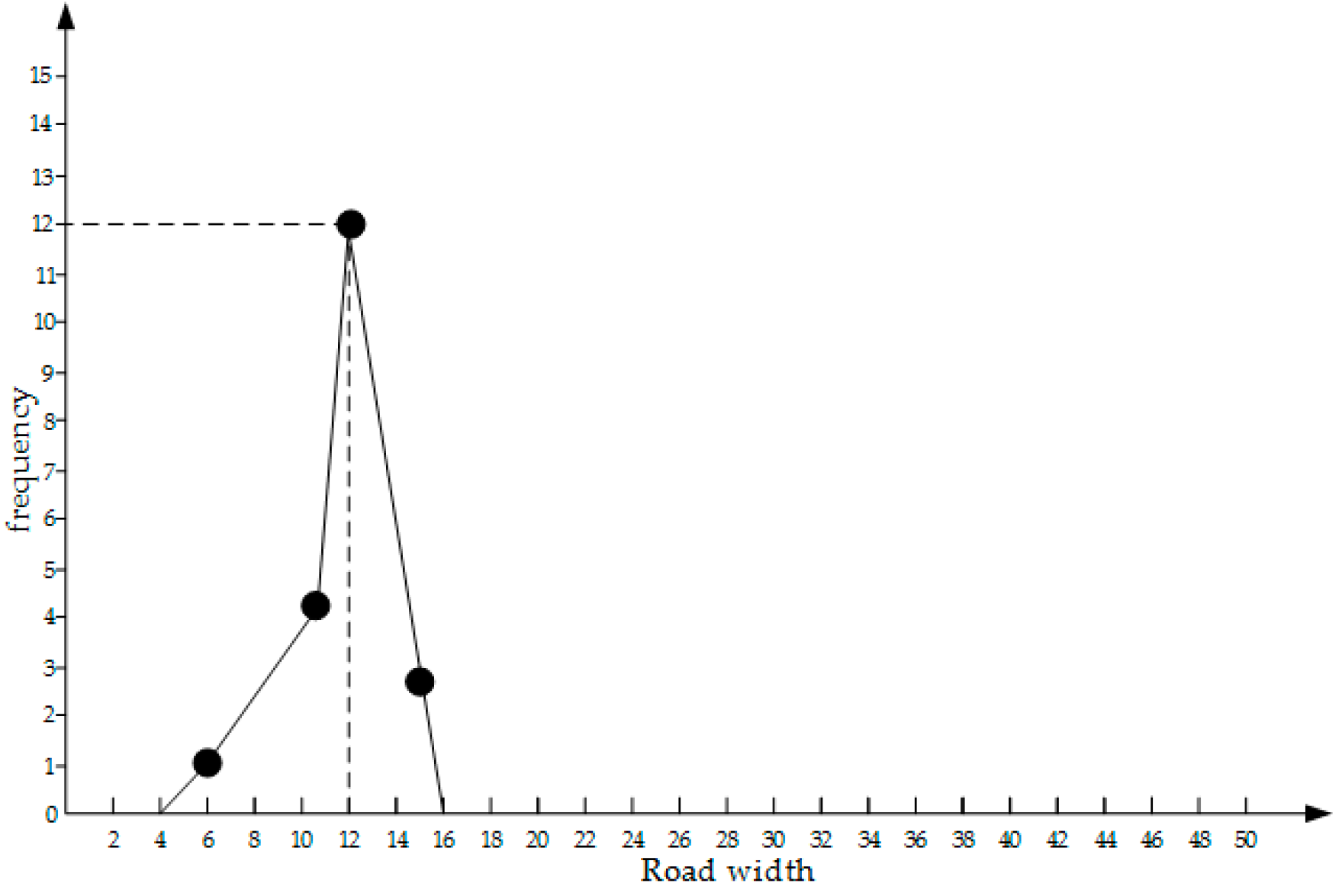

- Road width determination

3. Experimental Analysis and Evaluation

3.1. Comparison Method

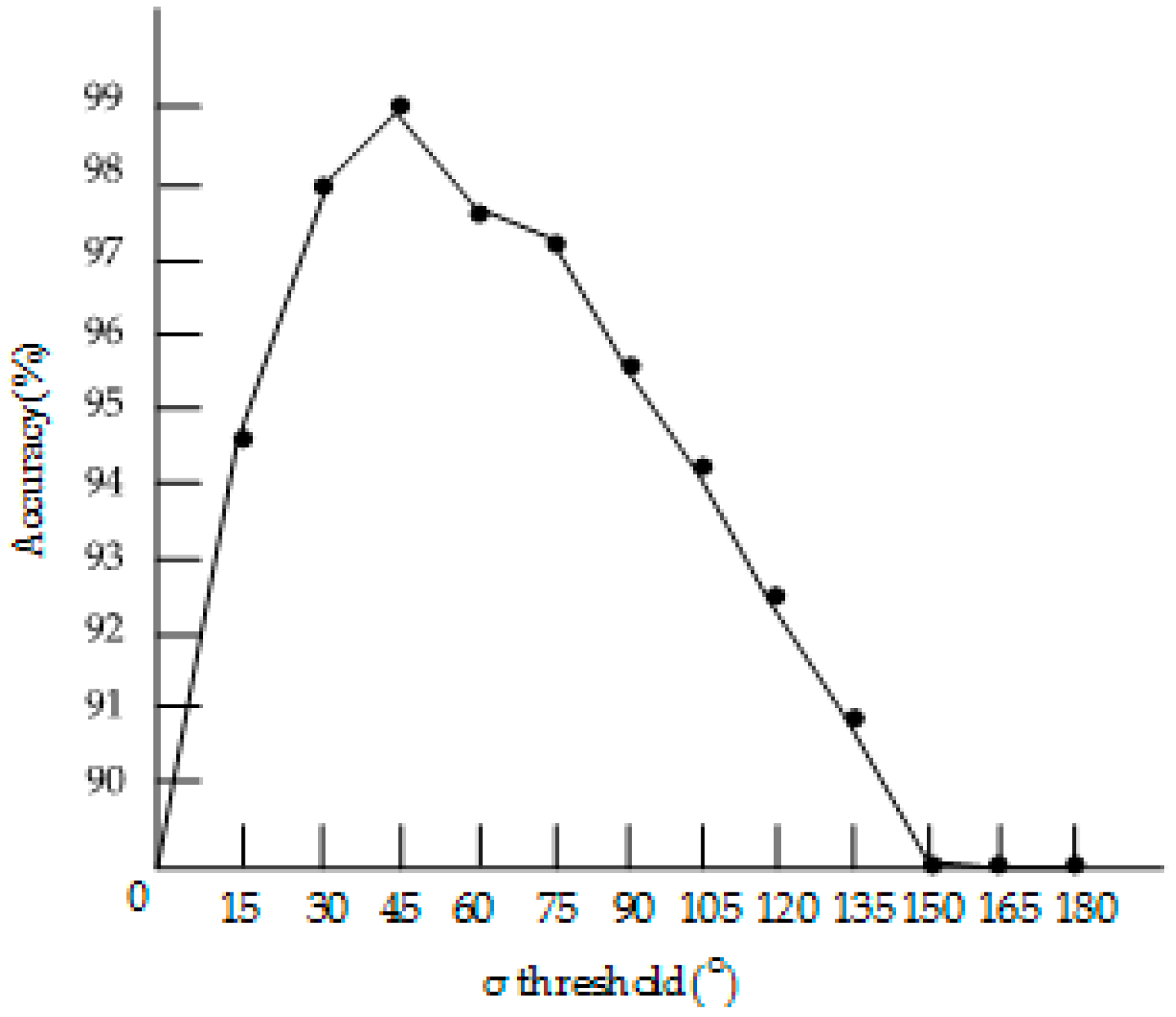

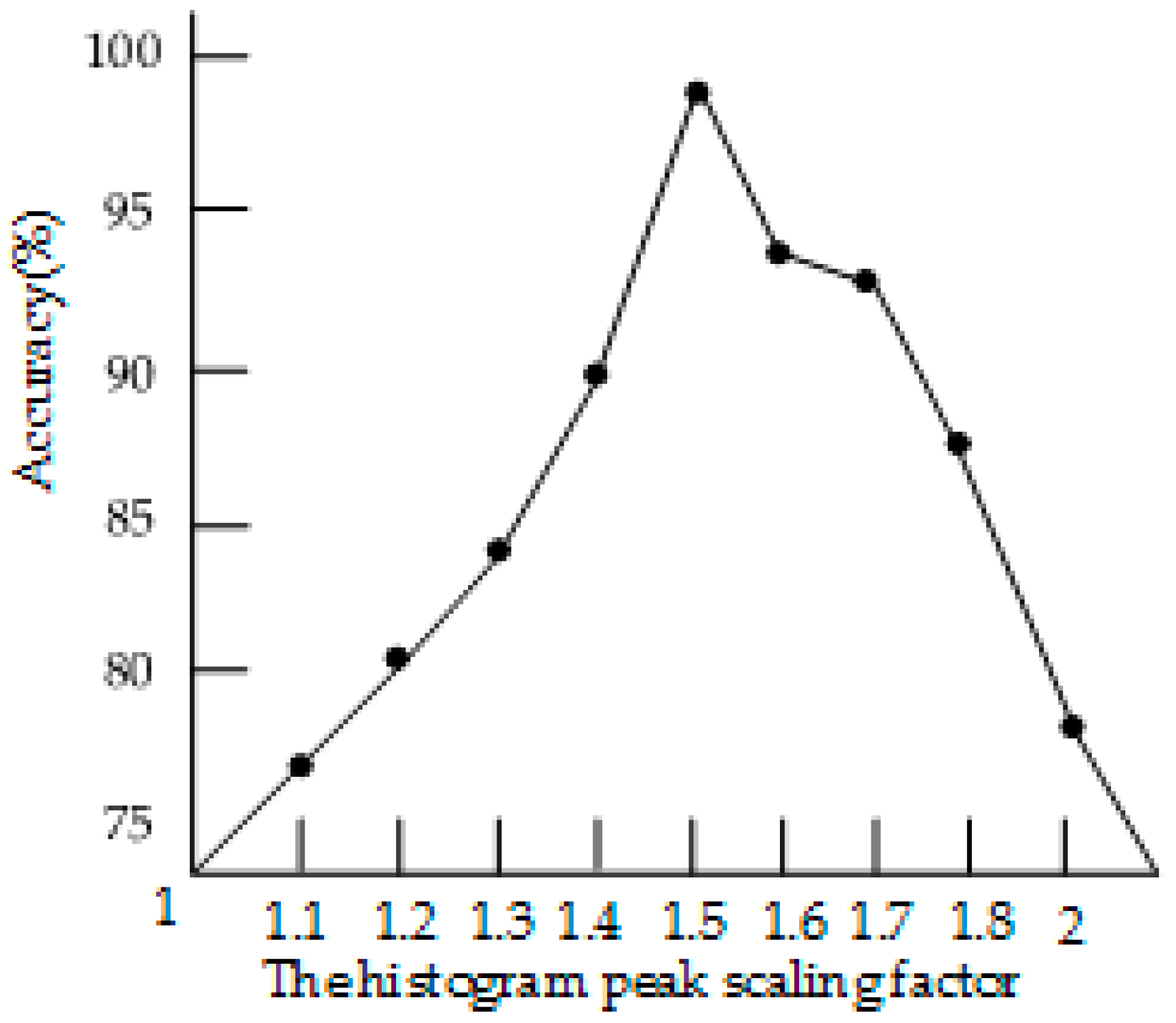

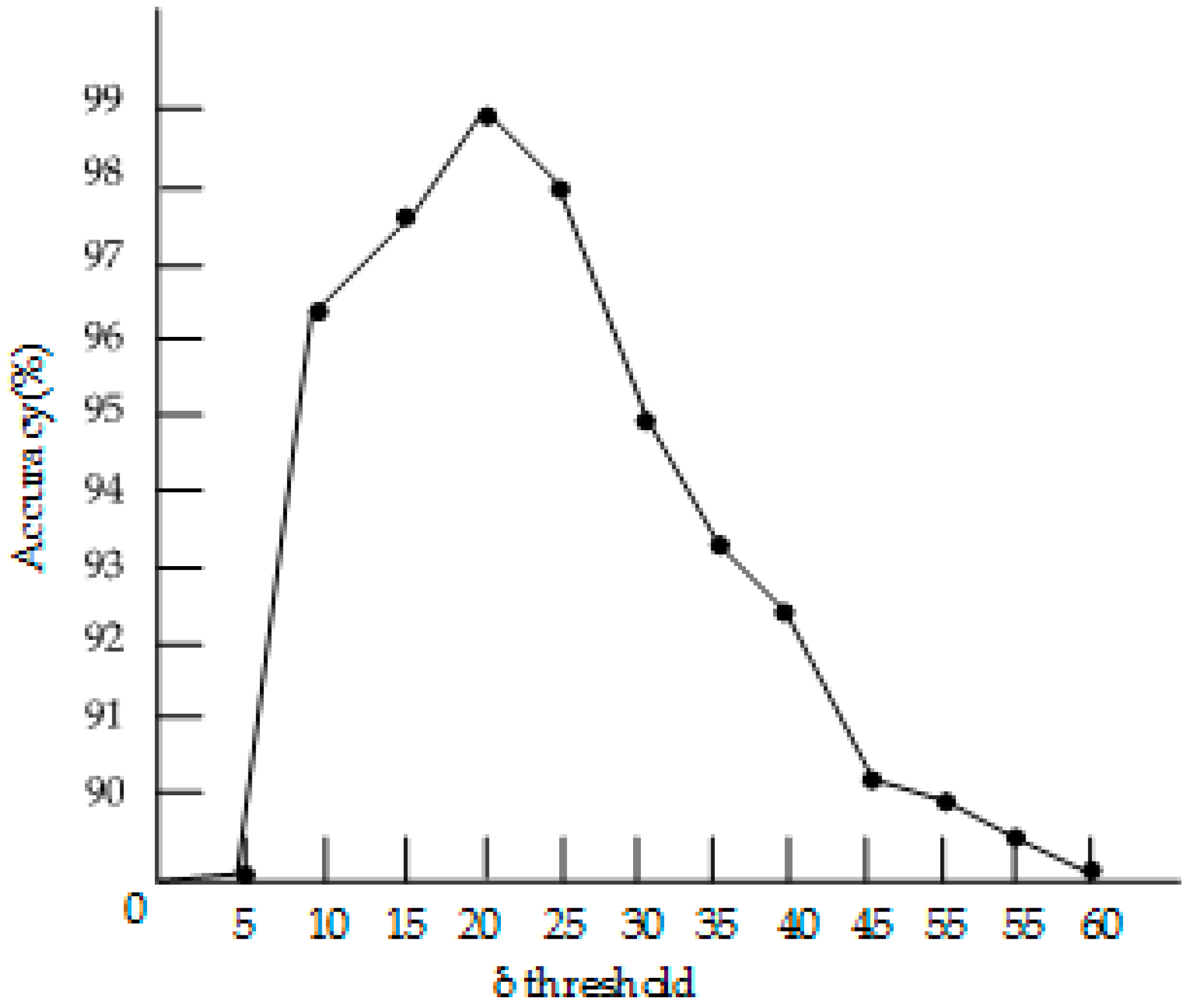

3.2. Parameter Analysis

3.3. Evaluation Index

3.4. Experimental Results and Analysis

3.4.1. Experiment 1

3.4.2. Experiment 2

3.4.3. Experiment 3

3.4.4. Experimental Analysis

4. Discussion

- (1) Effective connection between traditional methods and deep learning methods.

- (2) Enhancement of the universality of the deep learning method.

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Parameter | CNN | UNet |
|---|---|---|
| Network layers | 37 | 57 |
| Convolution kernel size | 3 | 3 |
| Pool size | 2 × 2 | 2 × 2 |
| Padding | same | same |
| Activation function | ReLU | ReLU |
| Optimization function | Adam | Adam |
| Loss function | categorical_crossentropy | categorical_crossentropy |

| Method | Completeness (%) | Correctness (%) | Quality (%) | Time (min) |
|---|---|---|---|---|
| The proposed method | 96.58 | 97.08 | 93.85 | 9 |
| CNN network | 71.34 | 89.51 | 68.85 | 366 |
| UNet network | 81.25 | 86.57 | 80.79 | 352 |

| Experiment | Completeness (%) | Correctness (%) | Quality (%) | Time (min) |
|---|---|---|---|---|
| Experiment 2 | 94.21 | 97.02 | 91.56 | 4 |
| Experiment 3 | 85.22 | 97.71 | 83.55 | 7 |
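The three percentage columns in the table above can be read as the completeness, correctness, and quality indices commonly used in road-extraction evaluation. The following is a minimal sketch under that assumption, not the authors' evaluation code: the function names and the TP/FP/FN inputs (matched, falsely extracted, and missed road length or pixels) are hypothetical. Under these definitions quality follows from the other two through 1/Q = 1/Com + 1/Cor − 1, and the Experiment 2 and Experiment 3 rows agree with that identity to two decimal places.

```python
def road_metrics(tp: float, fp: float, fn: float):
    """Return (completeness, correctness, quality) as fractions in [0, 1].

    tp: extracted road that matches the reference (assumed input)
    fp: extracted road with no match in the reference (assumed input)
    fn: reference road that was not extracted (assumed input)
    """
    completeness = tp / (tp + fn)   # share of the reference that was found
    correctness = tp / (tp + fp)    # share of the extraction that is correct
    quality = tp / (tp + fp + fn)   # combined index penalizing both error types
    return completeness, correctness, quality


def quality_from(completeness: float, correctness: float) -> float:
    """Recover quality from completeness and correctness (all fractions)."""
    return 1.0 / (1.0 / completeness + 1.0 / correctness - 1.0)


if __name__ == "__main__":
    # Values taken from the Experiment 2 and Experiment 3 rows of the table.
    for name, com, cor in [("Experiment 2", 94.21, 97.02),
                           ("Experiment 3", 85.22, 97.71)]:
        q = 100.0 * quality_from(com / 100.0, cor / 100.0)
        print(f"{name}: quality = {q:.2f} %")
```

Because quality is determined by the other two indices, the identity is also a quick consistency check when transcribing result tables.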

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dai, J.; Li, C.; Zuo, Y.; Ai, H. An OSM Data-Driven Method for Road-Positive Sample Creation. Remote Sens. 2020, 12, 3612. https://doi.org/10.3390/rs12213612
