Abstract

High spatial resolution maps of Los Angeles, California are needed to capture the heterogeneity of urban land cover while spanning the regional domain used in carbon and water cycle models. We present a simplified framework for developing a high spatial resolution map of urban vegetation cover in the Southern California Air Basin (SoCAB) with publicly available satellite imagery. This method uses Sentinel-2 (10–60 × 10–60 m) and National Agriculture Imagery Program (NAIP) (0.6 × 0.6 m) optical imagery to classify urban and non-urban areas of impervious surface, tree, grass, shrub, bare soil/non-photosynthetic vegetation, and water. Our approach was designed for Los Angeles, a geographically complex megacity characterized by diverse Mediterranean land cover and a mix of high-rise buildings and topographic features that produce strong shadow effects. We show that a combined NAIP and Sentinel-2 classification reduces misclassified shadow pixels and resolves spatially heterogeneous vegetation gradients across urban and non-urban regions in SoCAB at 0.6–10 m resolution with 85% overall accuracy and 88% weighted overall accuracy. Results from this study will enable the long-term monitoring of land cover change associated with urbanization and quantification of biospheric contributions to carbon and water cycling in cities.

1. Introduction

A major trend in human habitation is the movement towards cities. According to a 2015 report of the International Organization for Migration, 54% of the global population now lives in cities versus 30% in 1950. These trends are almost certain to continue, with 67% of the world’s population expected to live in cities by the mid 21st century. Within the United States, urban areas grew over 10% from 57.9 million acres in 2000 to 68.0 million acres in 2010.

Currently, 70% of global fossil fuel CO2 emissions are from urban sources [1], but the percentage is likely to change with urbanization, increasing human presence in cities, and changing socio-ecological and climate factors. In particular, there is growing evidence and awareness that urban environments function as ecosystems with strong feedbacks from both the altered biophysical environment and the biota to the resultant system. Recent work has shown that the urban biosphere has a substantial influence on regional carbon and water cycling [2,3]. For example, up to 20% of the excess CO2 flux in the Los Angeles basin has been attributed to biogenic emissions from Los Angeles’ irrigated landscapes, and urban/suburban water use restrictions can decrease irrigation by 6–35% in Los Angeles [4,5]. Recent studies in Boston [6] and Los Angeles [7] suggest that soil respiration is significantly higher in urban areas due to the fertilization and watering of irrigated lawns and other extensive land management practices. Furthermore, there are several well-established impacts of urban vegetation on climate, such as indirect cooling of urban areas through shading and transpiration, but these factors are heavily dependent on the type of vegetation, land management practices, and air temperature [8].

The Southern California Air Basin (SoCAB) contains both unmanaged, non-urban vegetation and heavily managed urban vegetation with different and unknown impacts on water, carbon, and climate. As cities grow and new water restrictions and carbon policies are implemented, there is an urgent need to better characterize urban ecosystems and land cover classes. We rely heavily on regional distributed land surface models to accurately hindcast and forecast carbon and water cycles. These models require land cover and land use maps that distinguish between land cover types like tree, shrub, grass, water, and impervious surface at appropriate spatial resolutions, but often ignore or misrepresent urban regions due to poorly characterized land cover. High-resolution land cover maps with specific urban vegetation classes are needed to better estimate CO2 sources and sinks within heterogeneous urban environments, detect urban land cover and land use change, and quantify changes in the hydrologic cycle.

Current categorical land cover classification maps in the SoCAB region either cover large areas with a poor spatial resolution, or small areas with a high spatial resolution. For example, the US National Land Cover Database (NLCD) covers the continental US with a spatial resolution of 30 m and classifies urban regions as different levels of development intensity, which is relatively coarse for urban applications [9]. In Los Angeles, several studies have created higher spatial resolution maps using a combination of field studies and remote sensing technology to investigate the socioenvironmental value of Los Angeles’s urban forests and other environmental impacts of urban land cover [10,11,12,13]. Field studies are critical to validating vegetation classification based on remotely sensed imagery but challenging to replicate over large areas. Thus, the region of interest for such studies has been limited to the census-defined City of Los Angeles or Los Angeles County (Figure 1), limiting their applicability for use in regional carbon and hydrologic cycle models. For example, [14] assessed 28 1-hectare plots throughout the city using high-resolution aerial imagery, QuickBird, Landsat, moderate resolution imaging spectroradiometer (MODIS), and airborne lidar to categorize species richness, tree density, and tree cover. Nowak et al. [11] used the Forest Service’s Urban Forest Effects (UFORE) model to assess 348 0.04-hectare field plots throughout the city to quantify urban tree species distribution, urban forest structure, and its effects on human health in Los Angeles. Wetherley et al. [15] used airborne visible-infrared imaging spectrometer (AVIRIS) (18 m) and AVIRIS Next Generation (AVIRIS-NG) (4 m) imagery to estimate sub-pixel fractions of urban land cover in Santa Barbara, CA with multi-endmember spectral analysis (MESMA) and evaluated the results against known field pixels, obtaining accuracies ranging between 75% and 91% for spectrally similar and dissimilar classes, respectively. The Environmental Protection Agency (EPA) EnviroAtlas project recently developed a NAIP and LiDAR-based 1 m land cover classification with 82% overall accuracy and 10 distinct land cover classes in 30 urban communities, including Los Angeles County [16].

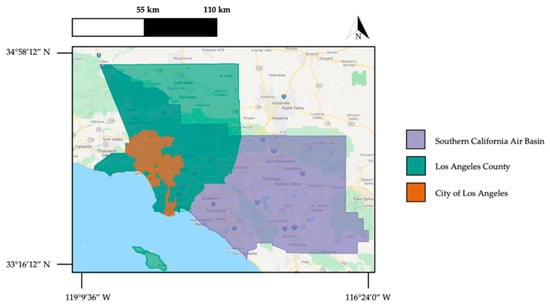

Figure 1.

Spatial relationship of SoCAB to census-designated City of Los Angeles and Los Angeles County within southern California. High-resolution land cover classification is needed across SoCAB for accurate CO2 flux inversion modeling, but available maps at an adequate resolution for flux quantification only cover the City of Los Angeles or Los Angeles County.

Outside of southern California, Erker et al. [17] used airborne imagery from the National Agriculture Imagery Program (NAIP) to classify five main classes of vegetation across the entire state of Wisconsin at 0.6 m resolution: tree/woody vegetation, grass/herbaceous vegetation, impervious surface/bare soil, water, and non-forested wetland. The final classification contained misclassifications from shade effects due to topography and heterogeneous urban environments, both of which are prevalent in SoCAB due to the presence of 16.8 million people and a substantial elevation gradient, with elevations ranging from 0 m at the Pacific Ocean to 3500 m in the San Gabriel Mountains.

Our study will use publicly available data to create a flexible framework for urban land cover mapping with land cover classes relevant to disentangling urban carbon fluxes. As a case study, we will apply this framework over the entire SoCAB domain and classify multiple land cover classes, including grass, shrubs, and forests. We will implement a novel technique for classifying urban and non-urban shadow pixels that have been a major source of uncertainty in previous remote sensing-based urban land cover classifications and provide an accuracy assessment of our classification map.

This classification effort will use a supervised random forest algorithm in Google Earth Engine (GEE) and a combination of NAIP imagery (0.6 × 0.6 m) and Sentinel-2 imagery (10 × 10 m) to classify impervious surface, tree, grass, shrub, water, and bare soil/non-photosynthetic vegetation (NPV) in heterogeneous urban and non-urban regions across SoCAB. NAIP imagery will be used to classify urban regions and Sentinel-2 imagery will be used to classify non-urban regions and shadow regions. We combine bare soil and NPV into a single NPV/bare soil class because these land cover classes have a negligible effect on the carbon cycle and are challenging to distinguish using only red-green-blue (RGB)-near infra-red (NIR) optical imagery. Our framework for both urban and non-urban land cover classification across SoCAB covers a larger region of southern California at a higher spatial resolution (0.6–10 m) than other known classifications. In addition, this classification utilizes NAIP and Sentinel-2 imagery that are widely available across the United States to map land cover types relevant to carbon and hydrological cycle modeling.

2. Materials and Methods

In the present study, we implement a modified method for vegetation classification following Erker et al. [17] to better account for shadow effects (Figure 2). The following sections describe our classification scheme in more detail, including a brief description of Sentinel-2 and NAIP imagery data (Section 2.1), preprocessing for water and shadow effects (Section 2.2), selection of training and validation data (Section 2.3) for supervised image classification (Section 2.4) using object-based classification (Section 2.5), and validation (Section 2.6). All code and documentation for our high-resolution vegetation classification are available in the Supplementary Materials section.

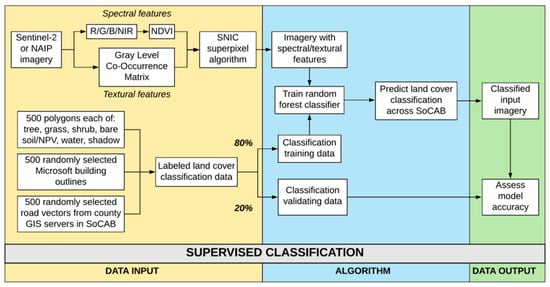

Figure 2.

Our workflow for supervised classification of urban vegetation can be implemented with multispectral Sentinel-2 or NAIP imagery using labeled training polygons from across SoCAB. This method utilizes both spectral and textural features. The classification accuracy is determined from presence-only validation data separated from the training data prior to training the random forest classifier in Google Earth Engine.

2.1. Sentinel-2 and NAIP Data

All classification efforts were completed in Google Earth Engine (GEE) (https://earthengine.google.com) in August 2019 using built-in NAIP and Level 1C Sentinel-2 data sets under the Earth Engine snippets ee.ImageCollection("USDA/NAIP/DOQQ") and ee.ImageCollection("COPERNICUS/S2"), respectively. GEE is a cloud-based platform for geospatial analysis that supports a multi-petabyte data catalogue through internet-based application programming interfaces (API) in Python and JavaScript [18]. The JavaScript API and data catalogue provided both the Sentinel-2 and NAIP imagery necessary for our classification effort and offered a fast and efficient way to classify and export the entire SoCAB spatial domain in 8 hours.

NAIP airborne imagery is delivered by the United States Department of Agriculture (USDA) Farm Service Agency to provide leaf-on imagery over the entire continental US during the growing season and is freely and publicly accessible at either a 60 cm or 1 m resolution. Like most states, California’s NAIP imagery contains four spectral bands (red, green, blue, and near-infrared) at 60 cm resolution and is re-acquired roughly every four years (2008, 2012, and 2016). The NAIP dataset’s high spatial resolution and availability over the entire country made it an ideal first step for urban vegetation classification in Los Angeles. This classification used 2016 NAIP imagery, the most recent collection available over southern California on Google Earth Engine.

Imagery from the Copernicus Sentinel-2 mission has a relatively high spatial resolution (10–60 m × 10–60 m spatial resolution and 440–2200 nm wavelength) and frequent revisit time (3–5 days in midlatitudes) for an earth-observing satellite, making it ideal for studying vegetation change. Sentinel-2 carries a 13-band multispectral instrument with blue, green, red, and near-infrared (NIR) bands (Band 2, Band 3, Band 4, and Band 8, respectively) at 10 m spatial resolution, and other bands with 20–60 m spatial resolution. This classification used Level 1C Sentinel-2 imagery, which provides top-of-atmosphere reflectance in cartographic geometry and has been radiometrically and geometrically corrected by the European Space Agency (ESA). Due to Sentinel-2’s limited operating time and availability in Google Earth Engine, only Level 1C imagery from February 2018 to December 2018 was used, rather than Level 2A Sentinel-2 imagery.

2.2. Imagery Preprocessing

2.2.1. Shadow Effects

Sentinel-2 imagery provides the advantage of frequent revisit time throughout the year as the sun angle changes, and as such, shadow position shifts and shadow effects can be minimized by calculating the median spectral signature in urban and non-urban regions of SoCAB. We used the median 2018 Sentinel-2 spectral signature per pixel, aligned with the time frame for 2016 NAIP acquisition, to produce shadow-corrected Sentinel-2 imagery as an input to the land cover classification (Figure 3). This study combined 2016 NAIP imagery and 2018 Sentinel-2 imagery because at the time of development neither 2016 Sentinel-2 imagery nor 2018 NAIP imagery for California was available on Google Earth Engine.
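The per-pixel median described above can be illustrated with a minimal sketch. This is not the Google Earth Engine code used in the study; the function name `median_composite` and the reflectance values are hypothetical and serve only to show why a median suppresses occasional shadowed (very dark) and snow- or cloud-covered (very bright) observations.

```python
from statistics import median


def median_composite(stack):
    """Per-pixel median over a time series of equally sized 2-D reflectance grids."""
    n_rows, n_cols = len(stack[0]), len(stack[0][0])
    return [
        [median(scene[r][c] for scene in stack) for c in range(n_cols)]
        for r in range(n_rows)
    ]


# Hypothetical reflectance for a 1 x 2 pixel area on five acquisition dates.
# Pixel 0 is shadowed on one date (0.02) and snow/cloud covered on another (0.90).
scenes = [
    [[0.21, 0.30]],
    [[0.02, 0.31]],
    [[0.22, 0.29]],
    [[0.90, 0.30]],
    [[0.20, 0.32]],
]

composite = median_composite(scenes)
print(composite)  # the outlying 0.02 and 0.90 values do not affect the result
```

A mean over the same five dates would be pulled toward the bright outlier, which is one reason a median is a natural choice when shadow position shifts through the year.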

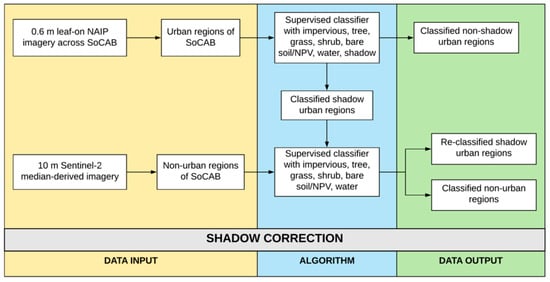

Figure 3.

Combining classified Sentinel-2 and NAIP imagery into a single data product across SoCAB enabled classification in shadow and mountainous regions, while maintaining a high spatial resolution in other urban regions.

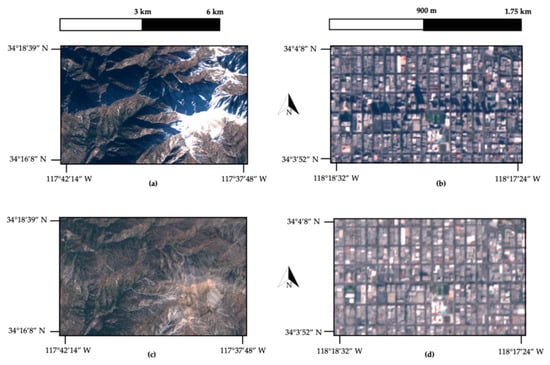

Using median-derived Sentinel-2 imagery helps to exclude both high and low spectral reflectance values that often skew NAIP training data, corresponding to snow/clouds/albedo and shadows, respectively. To demonstrate, we compare Sentinel-2 images derived from a single snapshot and from the 6-month median in urban and non-urban cases (Figure 4). Our method is effective at removing both non-urban shadows in topographically complex mountainous regions (Figure 4a,c) and urban shadows cast by tall buildings (Figure 4b,d).

Figure 4.

10 m Sentinel-2 imagery was used to improve land cover classification accuracy in non-urban areas and urban shadow-classified regions of SoCAB. Compared to a single 2018 10 m image of (a) Mt. San Antonio in Angeles National Forest and (b) Wilshire Center, Los Angeles, calculating the median per-pixel value over a 6-month period significantly reduced the effects of shadows and snow in (c) topographically complex mountainous regions and (d) areas with urban building shadows.

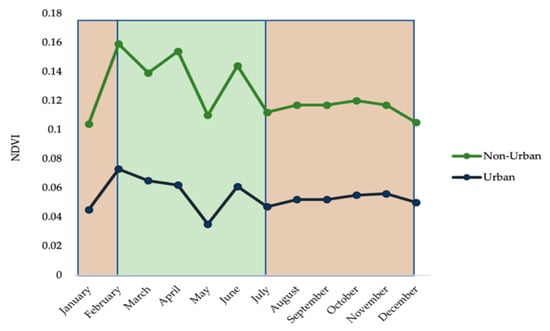

Recognizing that a pixel’s spectral signature may change substantially between leaf-on and leaf-off seasons for urban and non-urban pixels, we used the Sentinel-2 median monthly normalized difference vegetation index (NDVI) from 2015 to 2019 to define the boundaries of leaf-on and leaf-off seasons (Figure 5). Urban regions were defined as areas of SoCAB overlapping the five 2010 U.S. Census Bureau urbanized areas in Los Angeles: Camarillo, Los Angeles-Long Beach-Anaheim, Riverside-San Bernardino, Simi Valley, and Thousand Oaks, with large agricultural and undeveloped areas removed [12]. Both non-urban and urban ecosystems in SoCAB experience a leaf-on period from February to June, and a leaf-off period from July to January. A recent study of photosynthetic seasonality in California found a double peak in solar-induced fluorescence (SIF) activity in April and June due to grass and shrub ecosystems versus evergreen ecosystems, similar to our non-urban Sentinel-2 NDVI data [19]. Determining the leaf-on and leaf-off timeframes in SoCAB is important to the urban/non-urban land cover classification study because separating textural and spectral features into leaf-on and leaf-off periods effectively doubles the number of training features for our supervised classification process.

Figure 5.

Calculating the median Sentinel-2 per-pixel value across multiple months has several advantages, such as (1) significantly diminishing spectral effects from shadows, clouds, and snow across SoCAB and (2) potentially enabling observation of vegetation phenological traits between leaf-on and leaf-off periods in urban and non-urban regions of SoCAB. We chose the distinct periods to be February-June, when rising urban and non-urban NDVI indicates leaves coming on, and July-January, when NDVI decreases as leaves senesce and eventually die.
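As an illustration of the seasonal split, the sketch below computes NDVI from NIR and red reflectance and groups monthly values into the February-June leaf-on and July-January leaf-off periods stated in the text. The helper names (`ndvi`, `season`, `seasonal_medians`) and the monthly values are hypothetical, not taken from the study.

```python
from statistics import median

# February-June, per the text; every other month is treated as leaf-off.
LEAF_ON_MONTHS = {2, 3, 4, 5, 6}


def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def season(month):
    """Label a calendar month (1-12) as 'leaf-on' or 'leaf-off'."""
    if not 1 <= month <= 12:
        raise ValueError("month must be in 1..12")
    return "leaf-on" if month in LEAF_ON_MONTHS else "leaf-off"


def seasonal_medians(monthly_ndvi):
    """Median NDVI per season from a {month: ndvi} mapping."""
    groups = {"leaf-on": [], "leaf-off": []}
    for month, value in monthly_ndvi.items():
        groups[season(month)].append(value)
    return {name: median(values) for name, values in groups.items()}


# Hypothetical monthly NDVI values for one pixel (not data from the study).
example = {1: 0.30, 2: 0.35, 3: 0.45, 4: 0.50, 5: 0.48, 6: 0.40,
           7: 0.33, 8: 0.30, 9: 0.28, 10: 0.27, 11: 0.28, 12: 0.29}
print(seasonal_medians(example))
```

Computing each spectral or textural feature once per season in this way is what yields two versions of every feature for the classifier.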

2.2.2. Water Pixels

Prior to performing supervised classification on NAIP and Sentinel-2 imagery, all water pixels were masked in Google Earth Engine to reduce misclassifications due to confusion between spectrally similar water and impervious surface or shadowed pixels. The normalized difference water index (NDWI) uses green and NIR spectral bands to identify open water features in remotely sensed imagery [20]. The modified NDWI (MNDWI) uses the green and MIR spectral bands to identify open water features, particularly in regions dominated by built-up land features, by suppressing urban, vegetation, and soil noise [21]. NDWI can be calculated from both Sentinel-2 and NAIP imagery using green and NIR bands, and MNDWI can be calculated from Sentinel-2 using green and SWIR bands scaled to 20 m resolution to more accurately classify water features in urban areas [22]. A threshold approach masked all pixels with MNDWI or NDWI greater than 0.3 as water [23]. Sentinel-2 MNDWI was used to mask larger water features at a 20 m resolution, such as lakes and reservoirs, and NAIP NDWI was used to mask smaller water features at a 0.6 m resolution, such as pools.
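The index-based water mask can be sketched as follows. This assumes the standard normalized-difference forms, NDWI = (green − NIR)/(green + NIR) and MNDWI = (green − SWIR)/(green + SWIR), and the 0.3 threshold given in the text. The function names and reflectance values are hypothetical, and the sketch operates on single pixels rather than on Earth Engine images.

```python
WATER_THRESHOLD = 0.3  # threshold stated in the text


def normalized_difference(a, b):
    """(a - b) / (a + b), returning 0.0 when both bands are zero."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def ndwi(green, nir):
    """Normalized difference water index (green and NIR bands)."""
    return normalized_difference(green, nir)


def mndwi(green, swir):
    """Modified NDWI (green and SWIR bands)."""
    return normalized_difference(green, swir)


def is_water(green, nir, swir=None, threshold=WATER_THRESHOLD):
    """True if NDWI, or MNDWI when a SWIR band is available, exceeds the threshold."""
    if ndwi(green, nir) > threshold:
        return True
    return swir is not None and mndwi(green, swir) > threshold


# Hypothetical reflectance values (not data from the study).
print(is_water(green=0.10, nir=0.03))             # open water: NDWI is about 0.54
print(is_water(green=0.08, nir=0.40))             # vegetation: NDWI is negative
print(is_water(green=0.12, nir=0.10, swir=0.04))  # flagged by MNDWI only (0.5)
```

NAIP has no SWIR band, so only the NDWI branch applies to it; the optional `swir` argument mirrors the Sentinel-2 case.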

2.3. Training and Validating Data for Supervised Classification

To train and validate the land cover classification model, we relied on known GIS layers for impervious surface (roads and buildings), and hand-drawn polygons for the other classes. Building footprints were obtained as polygons from Microsoft US Building Footprints [24], which contains 8 million building footprints in SoCAB. Road centerline data were obtained as vectors from Los Angeles, Orange, Riverside, San Bernardino, Ventura, and Santa Barbara county GIS servers, then mosaicked together for a total of 630,000 road vectors. For the remaining land cover classes, we drew 500 polygons per land cover type for tree, grass, shrub, and bare soil/NPV (i.e., dormant and dead vegetation) and 200 polygons per land cover type for shadow, pool, and lake.

Training data for impervious surface classification were obtained independently for buildings and roads, then combined as one data set with 1000 randomly chosen polygons and vectors. Training data for water classification was obtained separately for pools and lakes, then combined into a single water class to reduce model complexity. The training data shapefiles for each land cover class (impervious, tree, grass, shrub, bare soil/NPV, shadow, and water) were imported to Google Earth Engine, and 5000 points were randomly selected from each class and binned as training (80%) and validating (20%) data.
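The sampling and 80/20 partition described above might look like the following sketch. The point identifiers are placeholders and the fixed random seed is an assumption added for reproducibility; the study drew its points from training polygons in Google Earth Engine rather than from a Python list.

```python
import random

CLASSES = ["impervious", "tree", "grass", "shrub", "bare soil/NPV", "shadow", "water"]
POINTS_PER_CLASS = 5000


def split_points(points, train_fraction=0.8, seed=0):
    """Shuffle a list of points and split it into (training, validation) lists."""
    rng = random.Random(seed)
    shuffled = list(points)
    rng.shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]


# Placeholder point identifiers: (class name, index within class).
samples = {name: [(name, i) for i in range(POINTS_PER_CLASS)] for name in CLASSES}
splits = {name: split_points(points) for name, points in samples.items()}

for name, (train, validate) in splits.items():
    print(name, len(train), len(validate))
```

Splitting within each class keeps every land cover class represented at the same 4000/1000 ratio in the training and validation sets.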

2.4. Supervised Image Classification with NAIP and Sentinel-2 Imagery

We performed two distinct image classifications on (1) urban areas defined by Wetherley et al. [12] and (2) non-urban and urban areas with significant shadow effects. NAIP imagery was used for the initial classification of all urban areas, and Sentinel-2 data was used to refine classification in urban areas with shadows. An index-based thresholding method was used to remove water pixels from NAIP and Sentinel-2 imagery prior to supervised classification (Section 2.2.2).

The first image classification was performed across all urban areas with NAIP imagery at 60 cm resolution using the land cover classes of impervious surface, tree, grass, bare soil/NPV, and shadow in Google Earth Engine. Regions classified as shadow were reclassified with 10 m Sentinel-2 imagery resampled to 60 cm with nearest neighbor resampling using the land cover classes of impervious, tree, grass, shrub, and bare soil/NPV. It was assumed that there were no shadows in the Sentinel-2 imagery after shadow removal and that all water pixels were masked in the MNDWI and NDWI thresholding step. The second image classification was performed across all non-urban areas with Sentinel-2 imagery at 10 m resolution using the land cover classes of impervious surface, tree, grass, shrub, and bare soil/NPV. To create the final land cover classification map over SoCAB, all non-urban pixels classified with Sentinel-2 were resampled to 60 cm using nearest neighbor resampling, and land cover maps of urban shadow, urban non-shadow, and non-urban areas were mosaicked together in Google Earth Engine. As such, our land cover classification map has a resolution of 0.6 m in urban areas and 10 m in non-urban areas of SoCAB.
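The shadow reclassification step can be sketched as a nearest-neighbor lookup from a coarse class grid into a fine one. For simplicity the sketch assumes an integer scale factor of 2 fine cells per coarse cell side, whereas the actual ratio between 10 m and 0.6 m pixels is about 16.7; the function name `fill_shadow` and the small label grids are hypothetical.

```python
def fill_shadow(fine, coarse, scale, shadow_label="shadow"):
    """Replace shadow-labeled fine cells with the class of the coarse cell covering them.

    `scale` is the number of fine cells per coarse cell side (an integer in this sketch).
    """
    return [
        [coarse[r // scale][c // scale] if label == shadow_label else label
         for c, label in enumerate(row)]
        for r, row in enumerate(fine)
    ]


# Hypothetical high-resolution classification (4 x 4) containing shadow pixels.
fine_map = [
    ["tree",   "tree",  "shadow",     "shadow"],
    ["tree",   "grass", "shadow",     "impervious"],
    ["grass",  "grass", "impervious", "impervious"],
    ["shadow", "grass", "impervious", "impervious"],
]

# Hypothetical coarse classification (2 x 2) from shadow-corrected imagery.
coarse_map = [
    ["tree",  "impervious"],
    ["grass", "impervious"],
]

merged = fill_shadow(fine_map, coarse_map, scale=2)
for row in merged:
    print(row)
```

Only the cells labeled as shadow change, so the high-resolution labels are preserved everywhere else.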

2.5. Object-Based Classification

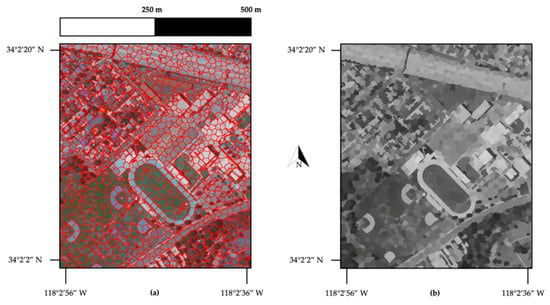

Given the high spatial resolution of NAIP imagery, we used object-based vegetation classification [25] to resolve “salt-and-pepper” effects, where individual pixels are incorrectly classified as a different class from their neighbors, thereby decreasing classification accuracy. Object-based classification uses a superpixel segmentation method to decrease computational complexity and increase classification accuracy [26]. This study implemented simple non-iterative clustering (SNIC) to identify clusters of spectrally similar pixels in Google Earth Engine. The SNIC algorithm groups neighboring pixels around a seed pixel that are spectrally similar in an RGB image [27]. At the resolution of NAIP imagery, a single SNIC superpixel typically clustered around a tree or similarly sized land cover object (Figure 6) [28].

Figure 6.

Object-based image classification significantly improves classification accuracy of high-resolution imagery in heterogeneous urban and suburban regions: (a) the SNIC algorithm identifies spectrally similar groups of neighboring pixels based on RGB values near South El Monte High School; (b) spectral and textural values within a single “superpixel” are averaged prior to being used for training data in a supervised learning algorithm.

In addition to increasing classification accuracy, implementing an object-based classification method for NAIP imagery decreased the computational resources needed to perform land cover classification across all of SoCAB. For example, there are 553 NAIP images covering all of SoCAB (17,100 square km), each with 100–150 million 60 cm pixels. At a minimum, there are 55 billion 60 cm pixels requiring land cover classification. By reducing the number of objects in a single tile to 20 million superpixels, which roughly corresponds to a new seed pixel every 5 pixels, we decreased the total number of objects to classify to 11 billion.
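The pixel counts quoted above follow from simple arithmetic, reproduced here as a check. All inputs are the figures given in the text; the lower bound of 100 million pixels per image is used for the "at a minimum" total.

```python
n_images = 553                          # NAIP images covering SoCAB
min_pixels_per_image = 100_000_000      # lower bound of 100-150 million pixels
superpixels_per_image = 20_000_000      # superpixels per image after SNIC

total_pixels = n_images * min_pixels_per_image
total_superpixels = n_images * superpixels_per_image
reduction_factor = total_pixels / total_superpixels

print(f"{total_pixels / 1e9:.1f} billion pixels")            # 55.3 billion pixels
print(f"{total_superpixels / 1e9:.1f} billion superpixels")  # 11.1 billion superpixels
print(f"{reduction_factor:.0f}x fewer objects to classify")  # 5x fewer objects to classify
```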

For classifying NAIP and Sentinel-2 imagery, we used a combination of multispectral and textural features to train a Rifle Serial Classifier with 30 ensembled decision trees, which is the Random Forest algorithm implementation in Google Earth Engine [29]. The random forest supervised machine learning model was used to classify urban land cover as impervious surface, tree, grass, bare soil/NPV, and shrub. Spectral features included red, green, blue, NIR, and NDVI. We derived textural features, commonly used to identify spatial tone relationships in images, from Google Earth Engine’s native gray-level co-occurrence matrix (GLCM) functions on the NDVI spectral band [28]. The selected textural features for this classification were contrast, entropy, correlation, and inertia, as defined in Haralick et al. [28].
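To make the textural features more concrete, the sketch below builds a symmetric, normalized gray-level co-occurrence matrix for a single horizontal offset and computes two of the named features, contrast and entropy. It is a simplified stand-in for Earth Engine's GLCM functions, not a reimplementation of them: the function names, the base-2 logarithm, and the tiny quantized NDVI grids are assumptions for illustration.

```python
from collections import Counter
from math import log2


def glcm(image, dr=0, dc=1):
    """Symmetric, normalized co-occurrence matrix as {(i, j): probability}.

    `image` is a 2-D grid of integer gray levels; (dr, dc) is the pixel offset.
    """
    counts = Counter()
    n_rows, n_cols = len(image), len(image[0])
    for r in range(n_rows):
        for c in range(n_cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < n_rows and 0 <= c2 < n_cols:
                a, b = image[r][c], image[r2][c2]
                counts[(a, b)] += 1
                counts[(b, a)] += 1  # count both directions so the matrix is symmetric
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def contrast(p):
    """Sum of p(i, j) * (i - j)^2: large when neighboring gray levels differ."""
    return sum(prob * (i - j) ** 2 for (i, j), prob in p.items())


def entropy(p):
    """-Sum of p * log2(p): large when co-occurrences are spread over many pairs."""
    return -sum(prob * log2(prob) for prob in p.values() if prob > 0)


# Hypothetical NDVI grids quantized to integer gray levels.
uniform_patch = [[1, 1], [1, 1]]       # smooth surface
checkerboard_patch = [[0, 1], [1, 0]]  # strongly alternating texture

print(contrast(glcm(uniform_patch)), entropy(glcm(uniform_patch)))
print(contrast(glcm(checkerboard_patch)), entropy(glcm(checkerboard_patch)))
```

The uniform patch gives zero contrast and zero entropy, while the alternating patch gives higher values for both, which is the kind of difference a classifier can use to separate smooth and rough canopies.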

2.6. Land Cover Classification Validation

We withheld 20% of the initial training data to perform validation of our land cover classification across SoCAB and generate three confusion matrices: (1) SoCAB classification using only NAIP, (2) SoCAB classification using only Sentinel-2, and (3) SoCAB classification using the combined NAIP/Sentinel-2 approach. Confusion matrices are frequently used to calculate the performance of a supervised classification algorithm and provide a visualization of an algorithm’s performance in terms of user’s accuracy, producer’s accuracy, and overall accuracy. The user’s accuracy refers to the classification accuracy from the point of view of the map viewer, or how frequently the predicted class is the same as the known ground features. The producer’s accuracy refers to the classification accuracy from the point of view of the map maker, or how frequently known ground features are correctly predicted as such. The overall accuracy refers to the number of features that were correctly predicted out of the total number of known features [30]. We performed a weighted accuracy assessment, using the percentage of pixels classified as each land cover class to calculate the weighted overall accuracy for each land cover map.
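The accuracy measures defined above can be computed from a confusion matrix as in the sketch below. The three-class matrix and the map fractions are hypothetical. The weighted overall accuracy is written here as the sum of each class's user's accuracy multiplied by its share of mapped pixels, which is one common area-weighted formulation and is an assumption rather than the exact calculation used in the study.

```python
def accuracy_metrics(matrix):
    """Return (user's accuracies, producer's accuracies, overall accuracy).

    matrix[i][j] is the number of validation points whose reference class is i
    and whose predicted class is j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = [matrix[i][i] for i in range(n)]
    reference_totals = [sum(matrix[i]) for i in range(n)]
    predicted_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    producers = [correct[i] / reference_totals[i] for i in range(n)]
    users = [correct[j] / predicted_totals[j] for j in range(n)]
    overall = sum(correct) / total
    return users, producers, overall


def weighted_overall_accuracy(users, map_fractions):
    """Sum of user's accuracy times the fraction of the map in each class (assumed form)."""
    return sum(u * w for u, w in zip(users, map_fractions))


# Hypothetical confusion matrix for three classes (rows: reference, columns: predicted).
confusion = [
    [90, 10, 0],
    [10, 80, 10],
    [0, 20, 80],
]
users, producers, overall = accuracy_metrics(confusion)

# Hypothetical fractions of mapped pixels in each class; they must sum to 1.
fractions = [0.5, 0.3, 0.2]
weighted = weighted_overall_accuracy(users, fractions)

print([round(u, 3) for u in users])
print([round(p, 3) for p in producers])
print(round(overall, 3), round(weighted, 3))
```

Because the weights reflect how much of the map each class occupies, a class that is both common and well classified raises the weighted figure above the unweighted one.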

3. Results

Our land cover map classified SoCAB into 6 distinct land cover classes: impervious surface, tree, grass, shrub, bare soil/NPV, and water. Our combined NAIP/Sentinel-2 map classified urban regions of SoCAB at 0.6 m resolution and non-urban regions at 10 m. This classification determined that SoCAB is 19.85% impervious surface, 18.78% tree, 9.86% grass, 32.51% shrub, 18.46% bare soil/NPV, and 0.54% water.

3.1. NAIP-Only and Sentinel-2-Only Classification

The NAIP-only classification of SoCAB at a 60 × 60 cm resolution had an overall accuracy of 78% and weighted overall accuracy of 80% (Table 1). At this resolution, our land cover map could distinguish individual trees from large shrubs at the scale of a city street. Implementing an object-based classification approach and including textural features in the training data set significantly reduced the “salt-and-pepper” classification effect that decreased the accuracy of our initial high-resolution land cover classification with only spectral features (63% overall accuracy). However, the NAIP-only method often misclassified heterogeneous urban areas and topographically complex regions of SoCAB. In urban regions of Los Angeles, shadows cast by skyscrapers and taller residential buildings were frequently misclassified as shrub and tree, leading to a high bias in the proportion of unmanaged vegetation in heavily developed regions of SoCAB. In mountainous regions, strong uncorrected shadow effects led to large regions of mountainous vegetation being misclassified as water.

Table 1.

Confusion matrix for NAIP only classification with overall accuracy of 78% and weighted overall accuracy of 80%.

The Sentinel-2-only classification of SoCAB at 10 × 10 m resolution had an overall accuracy of 87% and weighted overall accuracy of 90% (Table 2). The lower spatial resolution of Sentinel-2 imagery limited the “salt-and-pepper” classification effect due to the larger individual pixel size. In comparison to the NAIP-only classification, user’s and producer’s accuracies were within 5–30% for all land cover classes, with the exception of the producer’s accuracy of water. In non-urban regions of SoCAB, using median-derived imagery significantly reduced shadow effects from vegetation and steep terrain, and removed high reflectance surfaces including snow and clouds, thus increasing classification accuracy. In more developed urban areas within SoCAB, merely resampling the 10 × 10 m imagery via nearest neighbor resulted in misclassification of urban vegetation classes.

Table 2.

Confusion matrix for Sentinel-2 only classification with overall accuracy of 87% and weighted overall accuracy of 90%.

3.2. NAIP/Sentinel-2 Combined Classification

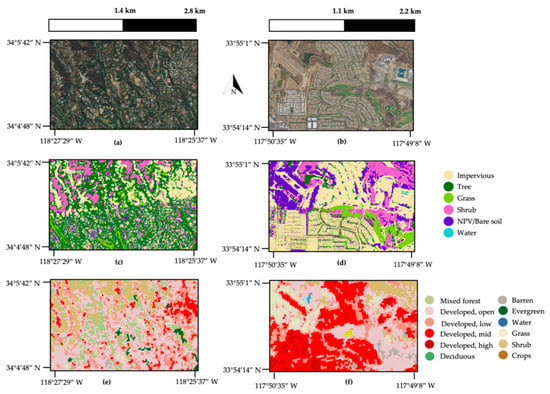

Combining NAIP and Sentinel-2 imagery for a single high-resolution shadow-corrected land cover map over SoCAB at 60 × 60 cm had an overall accuracy of 85% and a weighted overall accuracy of 88% (Table 3). Although overall accuracy is slightly lower than the Sentinel-2-only classification, the NAIP/Sentinel-2 classification maintains the higher spatial resolution necessary for characterizing heterogeneous urban vegetation in Los Angeles. Using Sentinel-2 imagery to classify non-urban regions of SoCAB and to reclassify shadowed NAIP pixels increased the overall classification accuracy to 85% compared to 78% overall accuracy with the NAIP-only classification. This classification results in a final product that has a 10 m spatial resolution in non-urban areas and a 0.6 m spatial resolution in urban areas of SoCAB (Figure 7).

Table 3.

Confusion matrix for NAIP/Sentinel-2 classification with overall accuracy of 85% and weighted overall accuracy of 88%.

Figure 7.

Our final NAIP and Sentinel-2 combined classification for SoCAB provided a significant improvement in spatial resolution over the previous NLCD classification at a high accuracy of 85%, successfully differentiating between six distinct land cover classes and minimizing the shadow effects on land cover classification at 0.6 to 10 m resolution. This classification was highly effective in urban and non-urban regions of SoCAB, using 10 m Sentinel-2 imagery and 0.6 m NAIP imagery: (a) West Hollywood, Los Angeles, California; (b) Carbon Canyon Regional Park in Brea, California. (c,d) 10 m Sentinel-2 imagery is used to classify non-urban regions and 0.6 m NAIP imagery is used to classify urban areas to increase overall accuracy. (e,f) Significant urban and suburban vegetation is visible in our classification, in comparison to mixed “low-intensity” to “medium-intensity” and shrub classification in the original NLCD classification.

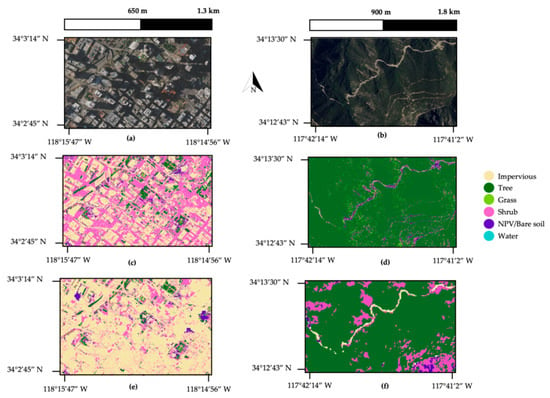

3.3. Shadow Classification with Median-Corrected Sentinel-2 Imagery

The NAIP-only method misclassified vegetation in regions impacted by shadows cast by topography, skyscrapers, and taller residential buildings (Figure 8a,b). These locations were frequently misclassified as shrub and tree, leading to unrealistic classification of unmanaged vegetation within the megacity (Figure 8c). Uncorrected shadow effects also confounded NAIP-only classification in unmanaged and non-urban mountainous regions in SoCAB, such as Angeles National Forest and the Santa Monica Mountains (Figure 8d). Time-resolved imagery from Sentinel-2 correctly identified pixels with shadows. Re-classifying known “shadow” pixels with median-corrected Sentinel-2 imagery improved overall classification accuracy by 7 percentage points (from 78% to 85%) and reduced the presence of unmanaged vegetation in downtown Los Angeles (Figure 8e,f).

Figure 8.

Our combined classification of median-derived Sentinel-2 and NAIP imagery in urban and non-urban areas significantly reduced misclassifications due to shadow effects. NAIP imagery is not ortho-corrected and shows strong shadows in (a) heavily developed downtown Los Angeles, California and (b) Angeles National Forest. Our preliminary NAIP-only classification performed poorly in these regions, strongly overclassifying impervious surface as shrub in urban regions (c) and misclassifying shrub and forest as water and impervious surface in mountainous regions (d). Reclassifying urban areas that were classified as “shadow” in our NAIP classification using median-derived Sentinel-2 imagery significantly reduced the number of impervious surface pixels that were misclassified as shrub (e). All non-urban areas were classified with Sentinel-2 imagery in the final product, significantly reducing topographic shadow effects and reducing the “salt and pepper” classification problem (f).

4. Discussion

In this study, we combined NAIP imagery with Sentinel-2 satellite imagery to create a high-resolution land cover classification map across urban and non-urban regions of SoCAB. The product of this novel combination of Sentinel-2 and NAIP imagery is the highest spatial resolution land cover classification map currently available for SoCAB. Moreover, our method as implemented in Google Earth Engine (GEE) can be replicated for any urban area of the United States, provided sufficient training data are available. Preliminary tests also show applicability of our supervised classification algorithm for classifying urban vegetation outside the United States using Sentinel-2 imagery. Our map has wide-ranging applications for climate science in southern California, including carbon flux quantification, urban land use planning, and hydrology modeling.

4.1. Supervised Classification Errors

The main source of error in our SoCAB vegetation classification map was misclassification of non-water pixels as water due to spectral similarity between water and impervious surface or shadow pixels. Trees and shrubs in mountainous regions of San Bernardino National Forest and Angeles National Forest were misclassified as water. Shadows cast by buildings, high reflectance roofs, and some high reflectance roads in desert regions of SoCAB were also misclassified as water. Although masking NAIP and Sentinel-2 imagery prior to classification with NDWI and MNDWI thresholding reduced water misclassification, an important area of future research will be to further refine water classification methods to limit misclassification in urban and topographically complex regions.
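For reference, the two water indices mentioned above are normalized band differences: NDWI = (Green − NIR)/(Green + NIR) and MNDWI = (Green − SWIR)/(Green + SWIR). The sketch below shows how a threshold on each index yields a water mask. The threshold of 0 and the reflectance values are placeholders chosen for illustration, not the values used in this study, and the function names are hypothetical. Because NAIP has no SWIR band, only the NDWI form applies to it.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b), with 0 where the denominator is 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def ndwi_water_mask(green, nir, threshold=0.0):
    """True where NDWI exceeds the (assumed) threshold."""
    return normalized_difference(green, nir) > threshold


def mndwi_water_mask(green, swir, threshold=0.0):
    """True where MNDWI exceeds the (assumed) threshold."""
    return normalized_difference(green, swir) > threshold


# Illustrative reflectances: open water, green vegetation, no-data pixel.
green = np.array([0.10, 0.30, 0.00])
nir = np.array([0.05, 0.40, 0.00])
swir = np.array([0.02, 0.35, 0.00])

ndwi_mask = ndwi_water_mask(green, nir)
mndwi_mask = mndwi_water_mask(green, swir)
```

A fixed threshold is the weak point of this approach: dark shadows and some bright roofs can fall on the water side of the threshold, which is consistent with the residual water misclassification described above.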

Another source of error was confusion between grass and trees in our classification. These results are similar to those of Erker et al. [17], who noted that trees and grass can have very similar spectra that are difficult to distinguish with four-band imagery, such as NAIP or the Sentinel-2 RGB and NIR bands. Incorporating textural features derived from gray-level co-occurrence matrices into our random forest classifier reduced this error, but tall grass and illuminated tree canopies can still appear texturally similar [17]. LiDAR has been shown to improve differentiation between grass, shrub, and tree [31], but it is not available across all of SoCAB at high spatial resolution and, in most areas, does not have repeated acquisitions like Sentinel-2 and NAIP imagery. Future work should investigate incorporating LiDAR to improve classifications.
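To make the textural features concrete, the following sketch builds a gray-level co-occurrence matrix (GLCM) for a single pixel offset and computes the Haralick contrast statistic from it. This is a simplified illustration, not the texture pipeline used in this study: the function names, the single horizontal offset, and the four gray levels are assumptions made for the example.

```python
import numpy as np


def glcm(image, levels, dr=0, dc=1):
    """Normalized co-occurrence matrix for one non-negative offset (dr, dc)."""
    image = np.asarray(image)
    rows, cols = image.shape
    # Reference pixels and their neighbours at the given offset.
    ref = image[:rows - dr, :cols - dc]
    nbr = image[dr:, dc:]
    counts = np.zeros((levels, levels), dtype=float)
    # Count how often gray level i is followed by gray level j.
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    return counts / counts.sum()


def glcm_contrast(p):
    """Haralick contrast: sum over i, j of p[i, j] * (i - j)**2."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))


# A uniform patch and a patch alternating between gray levels 0 and 3.
smooth = np.full((4, 4), 1)
rough = np.tile(np.array([0, 3, 0, 3]), (4, 1))

smooth_contrast = glcm_contrast(glcm(smooth, levels=4))
rough_contrast = glcm_contrast(glcm(rough, levels=4))
```

Contrast separates the uniform patch from the alternating one, but two different cover types with similarly rough surfaces would produce similar values, which is the limitation noted above for tall grass and illuminated tree canopies.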

Re-classifying NAIP urban shadow pixels with shadow-corrected Sentinel-2 imagery reduced misclassification of impervious surface as shrub and tree but did not fully eliminate this source of error. This is likely because the spectral and textural features within a single Sentinel-2 pixel (10 × 10 m) represent the averaged features across many (~278) 60 × 60 cm pixels, so any classification of that pixel is more prone to error [32]. Furthermore, vegetation classes are more likely to be confused at coarser resolutions because urban vegetation often occurs at spatial scales of <1 m². Lastly, the sensitivity of the classification depends in part on the training data used. The training data used for shrub were derived in the wildlands. Shrub vegetation in southern California is highly heterogeneous, consisting of shrubs of many different species and sizes, as well as bare soil and sand. Thus, the shrub training polygons drawn for our supervised classifier were also heterogeneous, containing both vegetated shrub and bare soil, leading to possible spectral and textural confusion between shrub and bare soil/NPV.
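The figure of roughly 278 fine pixels per Sentinel-2 pixel follows directly from the ratio of pixel areas, (10 m / 0.6 m)² ≈ 277.8. The short sketch below reproduces that arithmetic and illustrates the averaging effect with a simple mean. The mixing model (a plain average) and the index values are assumptions for illustration only; real sensor response is more complex.

```python
import numpy as np


def fine_pixels_per_coarse_pixel(coarse_m, fine_m):
    """Number of fine pixels (side fine_m) covering one coarse pixel (side coarse_m)."""
    return (coarse_m / fine_m) ** 2


def coarse_value(fine_values):
    """Model a coarse pixel as the plain mean of the fine values it covers."""
    return float(np.mean(fine_values))


n = fine_pixels_per_coarse_pixel(10.0, 0.6)  # about 277.8

# Hypothetical vegetation-index values: 25% tree canopy (0.8) over pavement (0.1).
patch = np.full(100, 0.1)
patch[:25] = 0.8
mixed = coarse_value(patch)  # lies between the two pure values
```

The averaged value matches neither pure class, so a classifier trained on pure examples has to assign the mixed pixel to whichever class it happens to resemble most.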

4.2. Potential for Error with Sentinel-2 and NAIP Imagery

There are some concerns with mixing 2018 Sentinel-2 imagery and 2016 NAIP imagery, as the United States Drought Monitor calculated that portions of SoCAB were in a D4 exceptional drought in April 2016 versus a D2 severe drought in April 2018. For example, there was likely less irrigated turf grass in 2016 than in 2018 due to water usage restrictions during drought years, which could confuse the distinction between bare soil/NPV and grass. The use of commercial very high resolution (VHR) time series imagery, such as that from Planet Labs or DigitalGlobe, would help resolve this issue by matching imagery time frames more closely and providing multiple years of data.

Another possible concern is geolocation mismatch between Sentinel-2 and NAIP imagery, as this classification approach combined both data sets over the same region. Based on the algorithm theoretical basis documents for NAIP and Sentinel-2 imagery, NAIP has a 1 m horizontal accuracy ground sample distance and Sentinel-2 has an absolute geolocation accuracy of better than 11 m [33,34]. We assumed that the geolocation of Sentinel-2 and NAIP imagery was accurate enough for this classification, but further work is needed in this area.

4.3. Comparison to Other Los Angeles, CA Land Cover Maps

Previous land cover classifications across SoCAB either did not cover the entire spatial domain at a high enough spatial resolution for carbon flux modeling [12,16] or covered the entire spatial domain only at a coarse resolution [9]. Google Earth Engine has been used for accurate high-resolution urban land cover classification across the globe, but no published results of such a classification for Los Angeles exist [35,36,37,38]. The overall accuracy of our combined classification, 85%, is comparable to that of the EPA EnviroAtlas project, but our classification covers a larger spatial domain and incorporates only publicly available data sets (i.e., NAIP, Sentinel-2). Wetherley et al. [12] developed an AVIRIS-derived 15 m fractional land cover classification across urban areas of Los Angeles, with accuracies ranging from 77% (impervious surface) to 94% (turfgrass) for six distinct land cover classes. Although the AVIRIS-based classification has a higher accuracy within some land cover classes (i.e., turfgrass), AVIRIS imagery is not available in many regions and covers a smaller spatial domain than our NAIP and Sentinel-2 combined classification across SoCAB.
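When comparing accuracy figures across products, it helps to recall how they are computed from a confusion matrix. The sketch below computes overall accuracy (correct samples divided by all samples) and, as one common form of weighting, an area-weighted accuracy that weights each class's user's accuracy by its mapped area fraction. The weighting scheme shown is an assumption for illustration and may differ from the weighted overall accuracy reported in this study; the confusion matrix and area fractions are invented.

```python
import numpy as np


def overall_accuracy(cm):
    """Fraction of validation samples on the diagonal of the confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    return float(np.trace(cm) / cm.sum())


def users_accuracy(cm):
    """Per-class accuracy, with rows = mapped class and columns = reference class."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)


def area_weighted_accuracy(cm, area_fractions):
    """User's accuracy averaged with (assumed) mapped-area weights."""
    w = np.asarray(area_fractions, dtype=float)
    return float(np.sum(w / w.sum() * users_accuracy(cm)))


# Invented 3-class example with 50 validation samples per mapped class.
cm = [
    [45, 3, 2],
    [5, 35, 10],
    [0, 5, 45],
]
oa = overall_accuracy(cm)                               # 125 / 150
woa = area_weighted_accuracy(cm, [0.5, 0.2, 0.3])       # 0.45 + 0.14 + 0.27
```

In this toy case the weighted figure is higher than the unweighted one because the least accurate class occupies the smallest share of the mapped area.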

To our knowledge, the NLCD database represents the only other urban vegetation classification scheme for SoCAB. The 2011 NLCD was derived from Landsat imagery by the USGS and Multi-Resolution Land Characteristics Consortium (MRLC) and spans the continental United States at a 30 m resolution, with 16 distinct land cover classes and 89% overall accuracy [39]. In heterogeneous urban centers like Los Angeles, the NLCD database reported nearly all pixels as “developed, open space” or “developed, low, mid or high intensity,” with no reference to urban vegetation (Figure 7). For example, the Black Gold Golf Course in Yorba Linda clearly appears as irrigated grass in NAIP imagery (Figure 7b) and was correctly classified as such in our Sentinel-2/NAIP classification (Figure 7d), but was classified as “developed, open space” in the NLCD classification (Figure 7f), with no reference to irrigated grass as managed urban vegetation.

While the NLCD classification covers the entire SoCAB spatial domain with a high overall accuracy, the 30 m spatial resolution is too coarse for a heterogeneous urban environment like Los Angeles and NLCD categorizations do not cover necessary land cover classes for CO2 flux quantification. We note that NAIP imagery is not available outside the continental United States and thus 60 × 60 cm classification using our combined technique is not possible. However, our results suggest that 10 × 10 m Sentinel-2 satellite imagery may be a suitable alternative to coarse classification (e.g., MODIS landcover binned into the International Geosphere-Biosphere Programme (IGBP) categorizations) for international urban land cover classification.

5. Conclusions

This study used a supervised classification approach with NAIP and Sentinel-2 imagery to classify five distinct land cover types across SoCAB at a high spatial resolution, including three classes of photosynthetically active vegetation. Our novel combination of NAIP and Sentinel-2 in heavily shadowed urban and topographically complex regions of SoCAB improved overall classification accuracy to 85% and weighted overall accuracy to 88%. Implementing object-based image classification on high-resolution NAIP imagery using SNIC both increased classification accuracy and reduced computational requirements. This urban land cover classification will enable the high-resolution monitoring of regional carbon and water cycles in southern California.

Future work should continue to advance regional carbon and water cycle science by integrating this dataset with data from other sensors (LiDAR, imaging spectroscopy, ECOSTRESS, and Landsat) that provide additional information valuable for distinguishing land cover and land use change. Specifically, further differentiation within the general “tree” classification to identify vegetation type (tree, grass, shrub) would require structure information most easily distinguished from LiDAR. Information on deciduous and evergreen vegetation across SoCAB, needed to differentiate vegetation types and how they sequester and emit carbon as a function of leaf phenology, size, and species [40], would require revisit observations (e.g., Landsat/Sentinel-2) and surface chemistry from an imaging spectrometer [41,42]. Our high-resolution land cover map, developed by incorporating two optical imagery data sets and achieving an 88% weighted overall accuracy across SoCAB, demonstrates the value of combining data from multiple remote-sensing sensors for urban land cover classification.

Supplementary Materials

The Google Earth Engine code used to produce this research and the final high-resolution urban land cover classification are available online at http://dx.doi.org/10.17632/zykyrtg36g.1.

Author Contributions

Conceptualization, N.P., R.W.C., and N.S.; methodology, R.W.C. and N.S.; software, R.W.C.; validation, R.W.C.; formal analysis, R.W.C. and V.Y.; investigation, R.W.C.; resources, N.P.; data curation, R.W.C.; writing—original draft preparation, R.W.C.; writing—review and editing, N.S., N.P., and R.W.C.; visualization, V.Y.; supervision, N.S. and N.P.; project administration, N.S.; funding acquisition, N.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by NASA OCO-2 Science Team, grant number 17-OCO2-17-0025.

Acknowledgments

The authors would also like to thank Kristal Verhulst Whitten for her mentorship and guidance at the beginning of this project. A portion of this research was carried out at the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration. Support from the Earth Science Division OCO-2 program is acknowledged. Copyright 2020. All rights reserved.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mitchell, L.E.; Lin, J.C.; Bowling, D.R.; Pataki, D.E.; Strong, C.; Schauer, A.J.; Bares, R.; Bush, S.E.; Stephens, B.B.; Mendoza, D.; et al. Long-term urban carbon dioxide observations reveal spatial and temporal dynamics related to urban characteristics and growth. Proc. Natl. Acad. Sci. USA 2018, 115, 2912–2917.

2. Hutyra, L.R.; Yoon, B.; Alberti, M. Terrestrial carbon stocks across a gradient of urbanization: A study of the Seattle, WA region. Glob. Chang. Biol. 2011, 17, 783–797.

3. Seto, K.C.; Güneralp, B.; Hutyra, L.R. Global forecasts of urban expansion to 2030 and direct impacts on biodiversity and carbon pools. Proc. Natl. Acad. Sci. USA 2012, 109, 16083–16088.

4. Mini, C.; Hogue, T.S.; Pincetl, S. Estimation of residential outdoor water use in Los Angeles, California. Landsc. Urban Plan. 2014, 127, 124–135.

5. Miller, J.; Lehman, S.; Verhulst, K.; Miller, C.; Duren, R.; Yadav, V.; Sloop, C. Large and Seasonally Varying biospheric CO2 fluxes in the Los Angeles megacity revealed by atmospheric radiocarbon. Proc. Natl. Acad. Sci. USA. under review.

6. Decina, S.M.; Hutyra, L.R.; Gately, C.K.; Getson, J.M.; Reinmann, A.B.; Short Gianotti, A.G.; Templer, P.H. Soil respiration contributes substantially to urban carbon fluxes in the greater Boston area. Environ. Pollut. 2016, 212, 433–439.

7. Crum, S.M.; Liang, L.L.; Jenerette, G.D. Landscape position influences soil respiration variability and sensitivity to physiological drivers in mixed-use lands of Southern California, USA. J. Geophys. Res. Biogeosci. 2016, 121, 2530–2543.

8. Pataki, D.E.; McCarthy, H.R.; Litvak, E.; Pincetl, S. Transpiration of urban forests in the Los Angeles metropolitan area. Ecol. Appl. 2011, 21, 661–677.

9. Homer, C.; Dewitz, J.; Yang, L.; Jin, S.; Danielson, P.; Xian, G.; Coulston, J.; Herold, N.; Wickham, J.; Megown, K. Completion of the 2011 national land cover database for the conterminous United States—Representing a decade of land cover change information. Photogramm. Eng. Remote Sens. 2015, 81, 346–354.

10. McPherson, E.G.; Simpson, J.R.; Xiao, Q.; Wu, C. Million trees Los Angeles canopy cover and benefit assessment. Landsc. Urban Plan. 2011, 99, 40–50.

11. Nowak, D.J.; Hoehn, R.E.I.; Crane, D.E.; Stevens, J.C.; Cotrone, V. Assessing Urban Forest Effects and Values, Los Angeles’ Urban Forest; USDA: Newtown Square, PA, USA, 2010.

12. Wetherley, E.B.; McFadden, J.P.; Roberts, D.A. Megacity-scale analysis of urban vegetation temperatures. Remote Sens. Environ. 2018, 213, 18–33.

13. Xiao, Q.; Ustin, S.L.; McPherson, E.G. Using AVIRIS data and multiple-masking techniques to map urban forest tree species. Int. J. Remote Sens. 2004, 25, 5637–5654.

14. Gillespie, T.W.; De Goede, J.; Aguilar, L.; Jenerette, G.D.; Fricker, G.A.; Avolio, M.L.; Pincetl, S.; Johnston, T.; Clarke, L.W.; Pataki, D.E. Predicting tree species richness in urban forests. Urban Ecosyst. 2017, 20, 839–849.

15. Wetherley, E.B.; Roberts, D.A.; McFadden, J.P. Mapping spectrally similar urban materials at sub-pixel scales. Remote Sens. Environ. 2017, 195, 170–183.

16. Pickard, B.R.; Daniel, J.; Mehaffey, M.; Jackson, L.E.; Neale, A. EnviroAtlas: A new geospatial tool to foster ecosystem services science and resource management. Ecosyst. Serv. 2015, 14, 45–55.

17. Erker, T.; Wang, L.; Lorentz, L.; Stoltman, A.; Townsend, P.A. A statewide urban tree canopy mapping method. Remote Sens. Environ. 2019, 229, 148–158.

18. Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

19. Turner, A.J.; Köhler, P.; Magney, T.S.; Frankenberg, C.; Fung, I.; Cohen, R.C. A double peak in the seasonality of California’s photosynthesis as observed from space. Biogeosciences 2020, 17, 405–422.

20. McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

21. Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

22. Du, Y.; Zhang, Y.; Ling, F.; Wang, Q.; Li, W.; Li, X. Water bodies’ mapping from Sentinel-2 imagery with Modified Normalized Difference Water Index at 10-m spatial resolution produced by sharpening the SWIR band. Remote Sens. 2016, 8, 354.

23. McFeeters, S.K. Using the normalized difference water index (NDWI) within a geographic information system to detect swimming pools for mosquito abatement: A practical approach. Remote Sens. 2013, 5, 3544–3561.

24. US Building Footprints. Available online: https://github.com/Microsoft/USBuildingFootprints (accessed on 1 July 2019).

25. Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

26. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

27. Achanta, R.; Süsstrunk, S. Superpixels and polygons using simple non-iterative clustering. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.

28. Haralick, R.M.; Dinstein, I.; Shanmugam, K. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

29. Padarian, J.; Minasny, B.; McBratney, A.B. Using Google’s cloud-based platform for digital soil mapping. Comput. Geosci. 2015, 83, 80–88.

30. Story, M.; Congalton, R.G. Accuracy assessment: A user’s perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

31. MacFaden, S.W.; O’Neil-Dunne, J.P.M.; Royar, A.R.; Lu, J.W.T.; Rundle, A.G. High-resolution tree canopy mapping for New York City using LIDAR and object-based image analysis. J. Appl. Remote Sens. 2012, 6, 063567-1.

32. Maclachlan, A.; Roberts, G.; Biggs, E.; Boruff, B. Subpixel land-cover classification for improved urban area estimates using Landsat. Int. J. Remote Sens. 2017, 38, 5763–5792.

33. Clerc, S.; Devignot, O.; Pessiot, L. Sentinel-2 L1C Data Quality Report 2020; European Space Agency: Paris, France, 2020.

34. USDA Farm Service Agency, Aerial Photography Field Office. National Agriculture Imagery Program Information Sheet 2009; USDA Farm Service Agency, Aerial Photography Field Office: Salt Lake City, UT, USA, 2009.

35. Aguilar, R.; Zurita-Milla, R.; Izquierdo-Verdiguier, E.; de By, R.A. A cloud-based multi-temporal ensemble classifier to map smallholder farming systems. Remote Sens. 2018, 10, 729.

36. Huang, H.; Chen, Y.; Clinton, N.; Wang, J.; Wang, X.; Liu, C.; Gong, P.; Yang, J.; Bai, Y.; Zheng, Y.; et al. Mapping major land cover dynamics in Beijing using all Landsat images in Google Earth Engine. Remote Sens. Environ. 2017, 202, 166–176.

37. Patel, N.N.; Angiuli, E.; Gamba, P.; Gaughan, A.; Lisini, G.; Stevens, F.R.; Tatem, A.J.; Trianni, G. Multitemporal settlement and population mapping from Landsat using Google Earth Engine. Int. J. Appl. Earth Obs. Geoinf. 2015, 35, 199–208.

38. Ravanelli, R.; Nascetti, A.; Cirigliano, R.V.; Di Rico, C.; Leuzzi, G.; Monti, P.; Crespi, M. Monitoring the impact of land cover change on surface urban heat island through Google Earth Engine: Proposal of a global methodology, first applications and problems. Remote Sens. 2018, 10, 1488.

39. Wickham, J.; Stehman, S.V.; Gass, L.; Dewitz, J.A.; Sorenson, D.G.; Granneman, B.J.; Poss, R.V.; Baer, L.A. Thematic accuracy assessment of the 2011 National Land Cover Database (NLCD). Remote Sens. Environ. 2017, 191, 328–341.

40. Mahadevan, P.; Wofsy, S.C.; Matross, D.M.; Xiao, X.; Dunn, A.L.; Lin, J.C.; Gerbig, C.; Munger, J.W.; Chow, V.Y.; Gottlieb, E.W. A satellite-based biosphere parameterization for net ecosystem CO2 exchange: Vegetation Photosynthesis and Respiration Model (VPRM). Glob. Biogeochem. Cycles 2008, 22, GB2005.

41. Asner, G.P.; Martin, R.E. Spectral and chemical analysis of tropical forests: Scaling from leaf to canopy levels. Remote Sens. Environ. 2008, 112, 3958–3970.

42. Singh, A.; Serbin, S.P.; McNeil, B.E.; Kingdon, C.C.; Townsend, P.A. Imaging spectroscopy algorithms for mapping canopy foliar chemical and morphological traits and their uncertainties. Ecol. Appl. 2015, 25, 2180–2197.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).