Abstract

Accurate and timely detection of road surface cracks plays a crucial role in ensuring sustainable infrastructure maintenance and improving road safety, particularly under complex and dynamic environmental conditions. However, existing deep learning-based detection methods often suffer from high computational overhead, limited scalability across diverse crack patterns, and insufficient robustness against complex background interference, hindering real-world deployment on resource-constrained UAV platforms. To address these challenges, this study proposes CrackLite-Net, an improved and lightweight variant of the YOLO12n architecture tailored for adaptive UAV-based road crack detection. First, a novel GhostPercepC2f backbone module is introduced, combining ghost feature generation with axis-aware attention to enhance spatial perception of crack structures while significantly reducing redundant computations and model parameters. Second, a Spatial Attention-Enhanced Feature Pyramid Network (SAFPN) is developed to perform adaptive multi-scale feature integration. By incorporating spatial attention and energy-guided filtering, SAFPN strengthens the representation of cracks with varying widths, orientations, and shapes. Third, the Selective Channel-Enhanced Cross-Stage Fusion module (SC2f) consolidates channel-wise feature dependencies using an adaptive lightweight convolution mechanism, effectively suppressing noise and improving feature discrimination in visually cluttered road scenes. Experimental evaluations on the newly constructed LCrack dataset demonstrate that CrackLite-Net achieves an mAP of 92.3% with only 2.2 M parameters, outperforming the YOLO12n baseline by 3.9 percentage points while using fewer parameters. Cross-dataset validation on RDD2022 further confirms the model’s strong generalization capability across different environments and imaging conditions. Overall, the results highlight CrackLite-Net as an effective, energy-efficient, and deployable solution for sustainable road infrastructure inspection using UAV platforms.

1. Introduction

The timely detection of road surface cracks is essential for reducing economic losses and enhancing traffic efficiency [1]. As a prevalent and increasingly severe form of pavement distress, road cracks pose a significant risk to the structural integrity and operational safety of transportation infrastructure [2]. Such defects not only compromise surface stability but may also lead to progressive structural failures, substantially increasing maintenance complexity and associated costs [3,4]. In addition to incurring considerable financial losses, road cracks have been identified as contributing factors in various traffic accidents [5]. Environmental conditions—such as temperature fluctuations, moisture, and heavy traffic loads—can accelerate the deterioration of crack-affected pavements, further undermining road safety and service performance [6]. Consequently, the adoption of advanced crack detection technologies is imperative to improve inspection accuracy and efficiency, thereby enabling the early prevention and effective mitigation of potential safety hazards and economic impacts [7,8].

Early studies on road crack detection can generally be divided into traditional approaches and machine learning-based methods. Traditional detection techniques primarily depended on manual inspection [9]. For instance, Cheng et al. [10] introduced a pavement inspection procedure in which personnel visually assessed road surface conditions while driving on highways. Although these methods are straightforward and easy to implement, they present several drawbacks, including high labor intensity, low efficiency, safety hazards, and strong subjectivity [11,12]. In response to these limitations, automated crack detection techniques—particularly those based on machine learning—have been widely adopted in the field of transportation safety to reduce human workload, improve detection accuracy, and enhance overall efficiency [13].

Early machine learning methods for road crack detection predominantly relied on handcrafted feature extraction to identify and localize cracks within images [14,15,16]. For instance, Chen et al. [17] employed a Support Vector Machine (SVM) classifier to distinguish between different crack types. Mayer et al. [18] adopted a thresholding technique that identified crack regions by applying empirically determined thresholds, effectively separating cracks from the background. Li et al. [19] introduced the Neighboring Difference Histogram Method (NDHM), which achieved precise image segmentation by optimizing threshold values globally, thereby improving detection accuracy. While traditional object detection approaches have shown effectiveness in simple scenarios [20], they are often limited by low computational efficiency and a lack of robustness in complex environments. These limitations lead to increased inspection costs and reduced reliability, making such methods insufficient for the demands of modern road maintenance [21]. With the rapid development of unmanned aerial vehicle (UAV) technology, consumer-grade drones—characterized by low cost and ease of use—have found widespread applications across various fields, offering considerable operational advantages. As a result, UAV-based computer vision approaches have emerged as a promising direction for addressing the challenges of road crack detection [22].

Deep learning has significantly advanced object detection by enabling automatic feature extraction and end-to-end learning, improving robustness and accuracy in complex and unstructured environments [23,24,25,26,27]. Existing deep learning-based object detection approaches can be broadly categorized into CNN-based detection approaches and Transformer-based detection approaches, which differ in their feature modeling strategies and receptive field mechanisms.

CNN-based object detection models, including the classical Faster R-CNN and YOLO-series detectors, rely on convolutional operations to construct local spatial features and exhibit strong translation invariance and spatial inductive bias. These architectures have been widely applied to road crack detection due to their fast inference speed and reliable performance. Xu et al. [28] applied Faster R-CNN and Mask R-CNN to road crack detection and demonstrated accurate performance in structured pavement environments. Zhou et al. [29] integrated UAV imaging with Faster R-CNN to achieve automated crack localization, verifying the feasibility of combining aerial acquisition with deep detection. Liu et al. [30] improved the Mask R-CNN architecture by optimizing the FPN design, significantly enhancing crack feature aggregation.

In one-stage detection models [31,32,33,34,35,36,37,38], Jin et al. [39] improved YOLOv3 using K-Means++ to optimize anchor size selection, addressing multi-scale variability in crack shapes. Yu et al. [40] improved YOLOv4 for complex background crack detection by refining the loss function, improving robustness against noise interference. Guo et al. [41] proposed an enhanced YOLOv5-based road damage detection method, improving detection robustness by optimizing the multi-scale feature extraction strategy and demonstrating strong performance across diverse pavement conditions. Ye et al. [42] developed an attention-enhanced YOLOv7-based detector for concrete crack recognition, effectively improving fine-grained feature perception. Zeng et al. [43] introduced YOLOv8-PD, which enhances road damage detection by refining PANet and embedding a lightweight attention module, yielding substantial improvements in accuracy and inference efficiency on real-world pavement datasets. Despite their efficiency and strong deployment adaptability, CNN-based approaches face challenges when addressing irregular crack patterns and large-scale variations due to fixed kernel geometry and limited receptive field adaptability.

In recent years, Transformer-based architectures have gained increasing attention in pavement crack detection owing to their ability to model long-range dependencies and global spatial relationships. These models overcome the intrinsic locality constraints of CNNs and achieve more comprehensive crack perception, particularly in highly complex backgrounds. Guo et al. [44] proposed a Transformer-driven detection framework that improves robustness in noisy and cluttered pavement environments, outperforming classical CNN segmentation models. Liu et al. [45] introduced CrackFormer, which incorporates self-attention and scaling-attention mechanisms to enhance boundary continuity and fine texture perception in thin or low-contrast cracks. Chen et al. [46] presented an enhanced Swin-UNet that integrates hierarchical Swin Transformer blocks, achieving superior segmentation accuracy on multiple public datasets by strengthening global-local semantic representation. Although Transformer-based models have strong global feature modeling capability, YOLO12 features significantly lower computational cost and fewer parameters, making it more suitable for UAV-based road crack detection scenarios where onboard computing resources are limited.

Despite significant progress in crack detection using deep learning, several challenges still hinder practical deployment. In real-world road inspection scenarios, cracks vary widely in scale and morphology and are often obscured by complex background elements such as stains, shadows, and textured pavement surfaces. These factors pose substantial difficulties for accurate feature extraction. Moreover, many state-of-the-art crack detection models are burdened with large parameter sizes and high computational demands, making them unsuitable for deployment on resource-constrained platforms such as UAVs. To address these challenges, this paper introduces CrackLite-Net, an improved and lightweight variant of YOLO12n designed to achieve real-time adaptive road crack detection by balancing detection accuracy with computational efficiency and robustness in complex environments. The major contributions of this paper are summarized below:

- The backbone module GhostPercepC2f combines the low-cost feature generation of the Ghost structure with an axis-aware attention mechanism. This integration enhances spatial sensitivity to crack features while maintaining a low computational footprint.

- The SAFPN (Spatial Attention-Enhanced Feature Pyramid Network) module enables adaptive multi-scale feature fusion. It incorporates inter-layer feature interaction, attention-guided enhancement, and feature flow reconstruction, improving the model’s ability to detect cracks with varying widths and lengths.

- The SC2f (Selective Channel-Enhanced Cross-Stage Feature Fusion Module) dynamically models channel-wise feature importance. It replaces fully connected layers with adaptive one-dimensional convolution, effectively emphasizing crack-relevant features and suppressing irrelevant background information. This design improves the model’s overall discriminative capability with minimal computational overhead.

- In addition, existing public road crack datasets lack high-resolution UAV imagery with detailed crack annotations, which are essential for real UAV inspection scenarios. Therefore, we constructed the LCrack dataset to address these limitations and provide more realistic and diverse samples for model training and generalization evaluation.

2. Materials and Methods

2.1. Datasets

To address the lack of high-resolution UAV-based crack datasets that reflect realistic road surface conditions in Chinese urban road environments, we constructed a new dataset named LCrack. Existing public datasets such as RDD2022 mainly focus on vehicle-mounted or ground-level perspectives, with limited support for low-altitude UAV imagery and insufficient representation of fine-grained crack morphology. Therefore, a custom dataset was required to enable accurate detection in real-world UAV inspection scenarios. The dataset was collected using a DJI Mini 4 Pro UAV in Linyi, China, during March 2025, on a clear and calm afternoon with stable lighting conditions. Images were captured at the highest resolution supported by the device, from a vertical top-down perspective at an altitude of 3–6 m. Representative UAV collection views and crack samples are provided in Figure 1.

Figure 1.

Representative dataset sample images.

A total of 5000 high-resolution UAV images were collected and manually annotated for four common types of road surface damage, including longitudinal cracks, transverse cracks, alligator cracks, and potholes. All annotations were generated in YOLO format, ensuring compatibility with mainstream object detection frameworks. The dataset was randomly divided into training, validation, and testing subsets with a ratio of 8:1:1, supporting model training, hyperparameter tuning, and objective performance evaluation. As a region-specific, high-resolution dataset, LCrack overcomes the limitations of existing public datasets in capturing fine-grained crack morphology under realistic UAV inspection scenarios, thereby providing a reliable foundation for model development and optimization.

To further assess the generalization capability of the proposed model, a supplementary experiment was conducted on the publicly available RDD2022 dataset [47], from which 6000 images were selected. RDD2022 includes road damage annotations from Japan, India, and the Czech Republic, encompassing a wide range of environmental conditions such as different lighting, weather, camera angles, and pavement materials. The selected subset includes the same four damage categories as LCrack and was carefully sampled to ensure diversity in region, surface type, and defect severity.

The RDD2022 subset was divided into training, validation, and testing sets in an 8:1:1 ratio, with 4800 images used for training, 600 for validation, and 600 for testing. This experimental configuration allows for an objective evaluation of the model’s robustness and adaptability in diverse geographic and environmental contexts beyond the scope of the LCrack dataset.
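The 8:1:1 partition described above can be sketched as follows. This is a minimal illustration, not the authors' script: the function name `split_indices`, the fixed seed, and the use of integer image indices in place of file paths are all assumptions. The LCrack counts (4000/500/500) are simply what the stated ratio implies for 5000 images.

```python
import random


def split_indices(n_images, ratios=(8, 1, 1), seed=0):
    """Shuffle image indices and cut them into train/val/test parts.

    The seed is a hypothetical choice made here for reproducibility.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    total = sum(ratios)
    n_train = n_images * ratios[0] // total
    n_val = n_images * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


lcrack_split = split_indices(5000)  # LCrack: 5000 images
rdd_split = split_indices(6000)     # RDD2022 subset: 6000 images
print([len(part) for part in lcrack_split])
print([len(part) for part in rdd_split])
```

Shuffling once with a fixed seed keeps the three subsets disjoint and lets the same partition be regenerated for later experiments.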

2.2. CrackLite-Net

2.2.1. Architecture

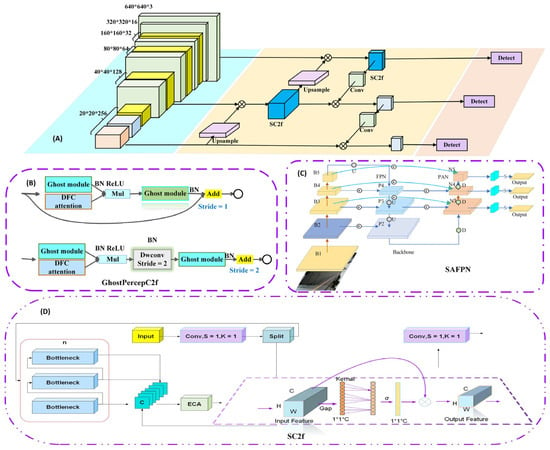

To achieve efficient and accurate detection of fine-grained crack patterns in complex road scenes, this paper proposes CrackLite-Net, a real-time lightweight network for adaptive road crack detection. The overall architecture of CrackLite-Net comprises four key components: an input module, a backbone network based on the GhostPercepC2f module for lightweight feature extraction, a feature fusion neck constructed using the Spatial Attention-Enhanced Feature Pyramid Network (SAFPN), and a selective feature enhancement module called SC2f (Selective Channel-enhanced Cross-stage Feature Fusion Module). The overall structure of CrackLite-Net is illustrated in Figure 2. Specifically, the input module receives image tensors with a shape of 630 × 630 × 3, in which 630 × 630 refers to the unified spatial resolution after preprocessing and 3 denotes the number of color channels.

Figure 2.

Architecture of the proposed CrackLite-Net. (A) Overall architecture. (B) Architecture of GhostPercepC2f. (C) Architecture of SAFPN. (D) Architecture of SC2f. Abbreviations: U = Upsample; D = Downsample; C = Concat; S = SimAM. * Indicates feature weighting.

Real-time UAV-based crack inspection presents several key challenges, including complex road surface backgrounds, irregular crack geometry, and high variability in crack scale and morphology due to different flight altitudes and imaging angles.

The proposed CrackLite-Net addresses these challenges effectively. GhostPercepC2f serves as the core of the backbone and enhances crack-related feature sensitivity by integrating low-cost Ghost operations with an efficient axis-aware attention mechanism. SAFPN is responsible for multi-scale feature aggregation and incorporates spatial attention to improve the representation of elongated or low-contrast cracks. SC2f adaptively refines inter-channel dependencies and reinforces crack-relevant features through lightweight channel modulation.

2.2.2. GhostPercepC2f

Timely and accurate detection of road surface cracks plays a vital role in road maintenance and infrastructure safety. However, crack patterns are often narrow, fragmented, and embedded in visually complex backgrounds, posing significant challenges for automated detection. Many existing deep learning models deliver high accuracy but rely on deep and computationally intensive backbones. These models often contain a large number of parameters, resulting in increased inference latency, high memory usage, and elevated energy consumption—factors that are especially problematic for real-time deployment on UAVs or edge devices.

Even in streamlined architectures such as YOLO12n, which aim to balance accuracy and efficiency, the backbone network still incurs a substantial computational burden during feature fusion and representation learning. This hinders the responsiveness required for real-time inspection and limits practical use in field conditions where resources are constrained.

To alleviate these limitations, this study introduces GhostPercepC2f, a lightweight feature extraction module specifically designed for road crack detection. This module builds upon the efficient structure of the Ghost Module while incorporating a streamlined attention mechanism that enhances spatial awareness with minimal computational overhead. GhostPercepC2f uses Ghost blocks as its foundation and augments them with a lightweight attention unit that helps the network focus on crack-related patterns more effectively.

Rather than applying a fully connected attention layer, which is computationally expensive for high-resolution images, GhostPercepC2f uses an axis-aware aggregation strategy. It performs depthwise separable convolutions along the horizontal and vertical directions independently, allowing the network to capture contextual dependencies without the burden of dense connections. Let $Z \in \mathbb{R}^{H \times W \times C}$ be the input feature map, with the token at spatial position $(h, w)$ denoted as $z_{hw}$. A full attention operation over $Z$ would require $\mathcal{O}(H^2 W^2)$ operations, which is impractical for most real-time applications. Our approach instead reduces the complexity to $\mathcal{O}(H^2 W + H W^2)$, making it significantly more efficient, as defined in Equations (1) and (2):

$$a'_{hw} = \sum_{h'=1}^{H} F^{H}_{h,h'w} \odot z_{h'w}, \quad h = 1, \dots, H, \; w = 1, \dots, W \tag{1}$$

$$a_{hw} = \sum_{w'=1}^{W} F^{W}_{w,hw'} \odot a'_{hw'}, \quad h = 1, \dots, H, \; w = 1, \dots, W \tag{2}$$

where the symbol $\odot$ denotes element-wise multiplication, and $F^{H}$ and $F^{W}$ correspond to the transformation weights along the height and width dimensions, respectively. Together they replace the learnable weights $F$ of a fully connected attention layer. The feature tensor $Z$ can be regarded as a set of $HW$ tokens, with each token denoted as $z_{hw} \in \mathbb{R}^{C}$.
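To make the efficiency argument concrete, the sketch below counts token interactions for the two strategies. It assumes that full attention lets every position interact with every other position, and that the axis-aware variant aggregates along each column and then along each row; the function names and the 20 × 20 feature-map size are illustrative choices, not values from the paper.

```python
def full_attention_cost(height, width):
    """Interactions when every position attends to every position."""
    return (height * width) ** 2


def axis_attention_cost(height, width):
    """Interactions when aggregating along columns, then along rows."""
    vertical = height * width * height   # each position mixes its column
    horizontal = height * width * width  # then mixes its row
    return vertical + horizontal


h, w = 20, 20  # hypothetical feature-map size after downsampling
full = full_attention_cost(h, w)
axis = axis_attention_cost(h, w)
print(full, axis, full / axis)
```

For a square map of side n, the ratio between the two counts is n/2, so the saving grows with resolution.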

To further reduce overhead, the module first downsamples the feature map before applying the attention mechanism, and then restores the original resolution via upsampling. This allows the model to incorporate local contextual information while preserving spatial detail—particularly useful when detecting fine cracks. The attention maps guide the network to focus on structurally meaningful regions, improving its ability to separate cracks from visually similar background patterns.

GhostPercepC2f is implemented in two variants with different strides (1 and 2). For stride 1, the attention-enhanced output is concatenated with the original input, extending the receptive field without altering resolution. For stride 2, spatial downsampling is applied through depthwise convolution, and the shortcut connection is resized accordingly to allow residual fusion via element-wise addition.

By combining efficient feature generation with targeted spatial attention, GhostPercepC2f enhances the model’s sensitivity to weak or occluded cracks while maintaining fast inference speed. This makes it a practical and effective backbone component for road inspection tasks deployed on UAVs or edge devices.

2.2.3. SAFPN

In real-world road inspection scenarios, crack detection is often challenged by varying crack lengths, irregular orientations, and fine-grained spatial characteristics under diverse lighting and surface textures. Although YOLO12 integrates FPN and PAN modules to address multi-scale issues, these structures introduce considerable computational redundancy and insufficiently capture spatial-semantic dependencies, particularly for elongated or fragmented crack patterns. To address these limitations, we propose a redesigned feature fusion architecture, termed Spatial Attention-Enhanced Feature Pyramid Network (SAFPN).

The SAFPN module is designed to enhance both the accuracy and computational efficiency of crack feature integration across multiple resolution levels. To achieve this, two key structural optimizations are implemented. First, redundant nodes that contribute minimally to feature fusion are pruned, thereby simplifying the overall network topology and reducing unnecessary computation. Second, shortcut connections are introduced between adjacent feature levels to enable more effective bidirectional flow of spatial information. These modifications not only alleviate the burden of deep feature stacking but also significantly improve the model’s ability to capture fine-grained crack patterns that are often obscured within complex and textured road surfaces. Through this streamlined yet expressive design, SAFPN facilitates more discriminative and context-aware feature representation for crack detection tasks.

Together, these structural refinements reduce model complexity and improve feature integration, ultimately enhancing crack detection performance.

To further enhance fusion adaptability across scales, SAFPN employs a layer-wise weight normalization strategy. Specifically, for each input feature level $I_i$, a learnable importance score $w_i$ is assigned and then normalized with respect to the sum of all scores across levels:

$$\hat{w}_i = \frac{w_i}{\sum_{j} w_j}$$

This operation ensures that the overall fusion remains balanced (i.e., weights sum to 1), while enabling the model to selectively emphasize feature levels that are more informative for crack representation.
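The normalization step can be illustrated with a few lines of plain Python. This is a sketch under stated assumptions: scores are clipped at zero so that the normalized weights stay non-negative, all levels are assumed to have been resized to the same shape, and the names `normalize_weights` and `fuse` are hypothetical.

```python
def normalize_weights(scores):
    """Divide each score by the sum of all scores so the weights add up to 1."""
    clipped = [max(s, 0.0) for s in scores]  # assumption: keep scores non-negative
    total = sum(clipped)
    if total == 0.0:
        # Degenerate case: fall back to uniform weights.
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in clipped]


def fuse(levels, scores):
    """Weighted sum of same-sized feature vectors, one per pyramid level."""
    weights = normalize_weights(scores)
    length = len(levels[0])
    return [
        sum(wt * level[i] for wt, level in zip(weights, levels))
        for i in range(length)
    ]


weights = normalize_weights([1.0, 2.0, 1.0])
fused = fuse([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [1.0, 2.0, 1.0])
print(weights, fused)
```

In a trained network the scores would be learnable parameters; here they are fixed numbers chosen only to show the arithmetic.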

To refine the network’s focus on critical structural cues, SAFPN incorporates a lightweight spatial attention mechanism based on an energy-based filtering principle. For each channel, the saliency of a neuron is evaluated by minimizing an energy function that quantifies the deviation of its activation from the statistical mean of the channel. The minimal energy of a neuron $t$ is defined as:

$$e_t^{*} = \frac{4(\hat{\sigma}^2 + \lambda)}{(t - \hat{\mu})^2 + 2\hat{\sigma}^2 + 2\lambda}$$

In this context, $\hat{\mu}$ represents the mean of all neurons in a channel, $\hat{\sigma}^2$ denotes their variance, and $\lambda$ is the regularization coefficient. This formulation allows the network to suppress noise-dominated channels and reinforce those highlighting structural discontinuities or edge-like patterns, which are typical indicators of road cracks.

After computing the per-channel energy response, we apply a Sigmoid activation to obtain the final attention weights:

$$\tilde{X} = \text{sigmoid}\left(\frac{1}{E}\right) \odot X$$

where $X$ represents the input feature, $\tilde{X}$ refers to the enhanced feature obtained after activation, and $\odot$ denotes element-wise multiplication. $E$ represents the energy computed above, which measures the deviation of neuron responses from their mean value. Because the reciprocal of the energy is used, responses that deviate more strongly from the channel mean receive larger weights, indicating stronger structural relevance to crack patterns. This mechanism adaptively reassigns importance without introducing additional parameters, enabling efficient yet effective enhancement of crack-sensitive features. When integrated into the YOLO12 detection neck, the SAFPN module offers improved spatial resolution awareness, more precise localization of thin cracks, and stronger suppression of irrelevant background texture, which is especially valuable under the challenging illumination and material variations present in real-world road inspection.
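The parameter-free reweighting can be sketched for a single channel as below. The sketch assumes the SimAM formulation (the "S" blocks in Figure 2), in which the Sigmoid is applied to the reciprocal of the minimal energy, so activations that deviate most from the channel mean receive the largest weights. The default `lam=1e-4` and the flat-list representation of a channel are assumptions made for illustration.

```python
import math


def simam_weights(channel, lam=1e-4):
    """Return one attention weight per activation in a single channel.

    `channel` is a flat list with at least two activations; `lam` is the
    regularization coefficient (hypothetical default).
    """
    n = len(channel) - 1
    mean = sum(channel) / len(channel)
    sq_dev = [(x - mean) ** 2 for x in channel]
    var = sum(sq_dev) / n
    weights = []
    for d in sq_dev:
        # Reciprocal of the minimal energy: d / (4 * (var + lam)) + 0.5
        inv_energy = d / (4.0 * (var + lam)) + 0.5
        weights.append(1.0 / (1.0 + math.exp(-inv_energy)))  # Sigmoid
    return weights


def simam_channel(channel, lam=1e-4):
    """Rescale each activation by its attention weight."""
    return [x * w for x, w in zip(channel, simam_weights(channel, lam))]


channel = [0.1, 0.1, 0.1, 0.1, 0.9]  # one outlier among flat responses
attention = simam_weights(channel)
enhanced = simam_channel(channel)
print(attention, enhanced)
```

Only the channel mean and variance are needed, which is why the mechanism adds no trainable parameters.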

2.2.4. SC2f

In the context of road crack detection, cracks typically appear as thin, fragmented, and low-contrast linear patterns that are easily confused with background noise such as pavement textures, stains, shadows, or debris. These interfering elements often exhibit visual characteristics similar to actual cracks, which significantly hampers detection accuracy. While the C2f module in YOLO12 leverages cross-stage connections and local context modeling to enhance spatial feature perception, it lacks sufficient capability in capturing channel-wise semantic relevance, limiting its effectiveness in highlighting crack-specific features. To address this limitation, we introduce an enhanced feature fusion module named SC2f (Selective Channel-enhanced Cross-stage Feature Fusion Module). Built upon the original C2f structure, SC2f incorporates a lightweight channel-aware mechanism that adaptively models inter-channel dependencies, enabling the network to emphasize informative crack-related features while suppressing irrelevant or redundant responses. By jointly enhancing spatial encoding and channel discrimination, SC2f strengthens the model’s robustness in detecting subtle crack patterns under complex road surface conditions.

This design improves upon conventional channel attention mechanisms by substituting the computationally intensive fully connected layers with a lightweight, adaptive one-dimensional convolution. This modification not only reduces parameter overhead but also enhances the network’s capacity to selectively model channel-wise feature importance. Within the proposed SC2f module, the channel enhancement process operates as follows:

Given an input feature map $X \in \mathbb{R}^{C \times H \times W}$, a global average pooling (GAP) operation is first applied across the spatial dimensions, producing a compact descriptor of size $C \times 1 \times 1$. This vector summarizes the global spatial activation of each channel, serving as a condensed representation of feature relevance.

An adaptive one-dimensional convolution is then performed on this vector. The kernel size is dynamically determined based on the number of channels, allowing the convolution to flexibly capture local inter-channel dependencies without introducing significant computational complexity. This step enables the module to learn refined channel relationships that are context-sensitive and scale-aware.

The convolution output is subsequently passed through a Sigmoid activation function, generating a normalized weight vector in the range $(0, 1)$. These weights encode the relative significance of each channel in the current context, enabling the network to dynamically prioritize informative features.

Finally, the original feature map $X$ is rescaled by these learned weights via channel-wise multiplication, resulting in the enhanced output $\tilde{X}$. This reweighting mechanism effectively amplifies channels associated with crack-like structures while suppressing irrelevant or misleading background responses. By embedding this selective channel modulation into SC2f, the model achieves more robust and discriminative feature representations, particularly under visually complex and noisy road surface conditions. The adaptive kernel size is determined from the channel count as:

$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{\text{odd}}$$

where $C$ denotes the number of channels, $k$ is the adaptive convolution kernel size, and $|\cdot|_{\text{odd}}$ denotes the nearest odd number. The parameter $b$ shifts the kernel size, while $\gamma$ controls the rate at which the kernel size grows with the number of channels.
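The four steps above (pooling, adaptive 1D convolution, Sigmoid, rescaling) can be sketched in plain Python. The kernel-size rule follows the common efficient-channel-attention convention with `gamma=2` and `b=1`, which is an assumption here because the text does not state the values used. The convolution kernel is passed in as fixed numbers, whereas in the actual module it would be learned.

```python
import math


def adaptive_kernel_size(channels, gamma=2, b=1):
    """Odd kernel size that grows with log2 of the channel count."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def channel_attention(feature_map, kernel):
    """Rescale channels by weights from a 1D convolution over pooled values.

    `feature_map` is a list of channels, each a flat list of activations;
    `kernel` holds odd-length 1D convolution weights (hypothetical values).
    """
    pooled = [sum(ch) / len(ch) for ch in feature_map]  # global average pooling
    pad = len(kernel) // 2
    padded = [0.0] * pad + pooled + [0.0] * pad         # zero padding
    conv = [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(pooled))
    ]
    weights = [1.0 / (1.0 + math.exp(-v)) for v in conv]  # Sigmoid
    return [[w * x for x in ch] for w, ch in zip(weights, feature_map)]


sizes = [adaptive_kernel_size(c) for c in (64, 128, 256, 512)]
rescaled = channel_attention([[1.0, 3.0], [0.0, 0.0], [2.0, 2.0]], [0.0, 1.0, 0.0])
print(sizes, rescaled)
```

Because the kernel spans only a few neighboring channels, the parameter cost is the kernel length itself rather than the square of the channel count required by fully connected layers.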

2.3. Experimental Settings

2.3.1. Experimental Environment

All experiments were conducted on a Linux platform using PyTorch 1.7.0 and Python 3.8. Training was performed on an NVIDIA RTX 4090 workstation with 24 GB of video memory. UAV image acquisition was performed using a DJI Mini 4 Pro drone. The model was trained for 150 epochs with an initial learning rate of 0.01, a momentum of 0.937, and a weight decay coefficient of 0.0005. A batch size of 32 was used to ensure training efficiency and maintain model stability.
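For reference, the reported hyperparameters can be collected in one place, as in the sketch below. The dictionary keys and the helper `steps_per_epoch` are hypothetical names, not those of any particular training framework. The training-set size of 4000 is derived from the 8:1:1 split of the 5000 LCrack images rather than stated in this section, and the optimizer is not specified in the text.

```python
import math

# Values taken from the text; key names are illustrative only.
TRAIN_CONFIG = {
    "epochs": 150,
    "batch_size": 32,
    "initial_lr": 0.01,
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "input_size": 630,  # unified spatial resolution after preprocessing
}


def steps_per_epoch(n_train_images, batch_size):
    """Number of optimizer steps needed to see every training image once."""
    return math.ceil(n_train_images / batch_size)


n_train = 4000  # derived: 80% of the 5000 LCrack images
per_epoch = steps_per_epoch(n_train, TRAIN_CONFIG["batch_size"])
total_steps = per_epoch * TRAIN_CONFIG["epochs"]
print(per_epoch, total_steps)
```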

2.3.2. Evaluation Metrics

To thoroughly assess the effectiveness of the proposed method, we adopted standard performance metrics, including precision, recall, F1-score, and mean Average Precision (mAP). These indicators are defined as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

$$\text{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $TP$, $FP$, and $FN$ denote the numbers of true positives, false positives, and false negatives, respectively, $N$ is the number of target categories, and $AP_i$ is the Average Precision of category $i$. Precision measures the proportion of correctly predicted positive instances among all predicted positives, while recall represents the proportion of true positives identified out of all actual positive cases. The F1-score is the harmonic mean of these two metrics, reflecting the trade-off between false positives and false negatives. The mean Average Precision (mAP) is calculated by averaging the Average Precision (AP) across all target categories, offering a comprehensive evaluation of the model’s detection performance. To highlight the model’s lightweight design, we also report the parameter count, which quantifies the total number of trainable weights and biases and serves as an indicator of model efficiency.
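The metric definitions translate directly into code. The sketch below uses hypothetical detection counts and per-class AP values purely for illustration. The final lines recompute an F1-score from the precision (92.4%) and recall (85.2%) reported for CrackLite-Net on LCrack in Section 3.2, as a consistency check on the harmonic-mean definition.

```python
def precision(tp, fp):
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Share of actual positives that were found."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def mean_average_precision(ap_per_class):
    """Average of the per-class Average Precision values."""
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical counts: 90 correct detections, 10 false alarms, 30 misses.
p = precision(tp=90, fp=10)
r = recall(tp=90, fn=30)
print(p, r, f1_score(p, r))

# Hypothetical per-class AP values for the four damage categories.
print(mean_average_precision([0.9, 0.8, 0.7, 0.6]))

# Consistency check using the reported precision and recall.
reported_f1 = round(f1_score(0.924, 0.852) * 100, 1)
print(reported_f1)
```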

3. Experimental Results and Analysis

3.1. Ablation Study

To clarify the contribution of each module within CrackLite-Net, three incremental configurations were constructed based on the progressive integration strategy. Method (1) introduces only the GhostPercepC2f module on top of the YOLO12n baseline to enhance lightweight feature representation. Method (2) integrates both GhostPercepC2f and SAFPN, enabling more effective multi-scale feature fusion. Method (3) corresponds to the full version of CrackLite-Net, in which GhostPercepC2f, SAFPN, and SC2f operate jointly to improve spatial and channel-wise feature discrimination. The experimental results in Table 1 show that detection performance increases consistently as modules are added, demonstrating the effectiveness of the proposed architectural components.

Table 1.

Ablation Results of the Optimized Structure.

As shown in Table 1, when only the GhostPercepC2f module was incorporated (Method (1)), the mAP increased from 88.4% to 89.8%, while the number of parameters was significantly reduced from 2.6 M to 2.1 M. This result demonstrates that GhostPercepC2f effectively decreases model complexity while simultaneously improving detection accuracy. By combining low-cost Ghost feature generation with axis-aware attention, the module enhances the network’s sensitivity to fine crack structures, making it well suited for deployment on resource-constrained platforms.

With the subsequent integration of the SAFPN module (Method (2)), the mAP further improved to 90.2%, without increasing the model size. This improvement is attributed to the attention-driven multi-scale fusion strategy of SAFPN, which strengthens the representation of crack patterns with varying widths and geometric shapes, thereby enhancing adaptability to diverse crack morphologies.

Finally, when all three modules (GhostPercepC2f, SAFPN, and SC2f) were combined (Method (3)), the mAP reached 92.3%, the highest among all configurations, with only a slight parameter increase to 2.2 M. Compared with the baseline, this corresponds to an mAP improvement of 3.9 percentage points and a reduction of approximately 15.4% in parameter count. The performance gain is mainly attributed to the SC2f module, which dynamically models inter-channel dependencies, highlights crack-relevant channels, and suppresses background interference, thereby improving feature discrimination with minimal computational cost.
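The two headline figures can be reproduced from the values quoted in this section. The short sketch below uses illustrative variable names and also shows the relative mAP gain, to make explicit that 3.9 is a difference in percentage points and not a relative change.

```python
baseline = {"map": 88.4, "params_m": 2.6}  # YOLO12n baseline
full = {"map": 92.3, "params_m": 2.2}      # Method (3), full CrackLite-Net

# Absolute gain in percentage points.
map_gain_points = round(full["map"] - baseline["map"], 1)

# Relative gain with respect to the baseline mAP.
map_gain_relative = round((full["map"] - baseline["map"]) / baseline["map"] * 100, 1)

# Relative reduction in parameter count.
param_reduction = round(
    (baseline["params_m"] - full["params_m"]) / baseline["params_m"] * 100, 1
)

print(map_gain_points, map_gain_relative, param_reduction)
```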

3.2. Comparison Experiments

This experiment evaluates both the detection accuracy and computational efficiency of the proposed CrackLite-Net in comparison with representative object detection models on the LCrack dataset, with the goal of determining whether CrackLite-Net—designed for deployment on resource-constrained platforms—can achieve competitive or superior performance while maintaining a compact model size.

Furthermore, to assess the generalization capability of the model, cross-dataset testing was conducted using the publicly available RDD2022 dataset, which contains road damage images captured under diverse lighting conditions, surface textures, and pavement environments across multiple countries. This experiment examines whether a model trained exclusively on the LCrack dataset can preserve its detection performance when applied to previously unseen domains. The results are summarized in Table 2.

Table 2.

Generalization Assessment on the LCrack and RDD2022 Datasets.

Table 2 presents the detection performance of various models on the LCrack and RDD2022 datasets, revealing notable differences in generalization capability across methods. CrackLite-Net achieves the highest overall performance on the LCrack dataset, with Precision, Recall, F1-score, and mAP reaching 92.4%, 85.2%, 88.7%, and 93.3%, respectively, markedly surpassing mainstream detectors such as YOLO12 (84.1% F1, 88.2% mAP) and RT-DETR (82.3% F1, 85.3% mAP). This indicates that the proposed model is more effective in identifying fine crack structures and low-contrast texture patterns embedded within complex pavement backgrounds.
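As a consistency check on the reported metrics, the F1-score can be recomputed from Precision and Recall using the standard harmonic-mean definition, F1 = 2PR / (P + R). The snippet below is a minimal sketch; the function name `f1_score` is chosen here for illustration, and the inputs are the CrackLite-Net Precision and Recall values quoted above for the LCrack dataset.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0  # convention: F1 is zero when both inputs are zero
    return 2 * precision * recall / (precision + recall)


# Precision and Recall (in percent) quoted for CrackLite-Net on LCrack.
f1 = f1_score(92.4, 85.2)
print(f"F1 = {f1:.1f}%")
```

The result rounds to the 88.7% quoted in the text, so the three reported values are mutually consistent.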

The results obtained on the RDD2022 dataset further support the robustness and cross-domain adaptability of CrackLite-Net. Because of the substantial domain shift between UAV and ground-level imaging, including variations in scene complexity, illumination conditions, and sensor configuration, all models exhibit performance degradation. Nevertheless, CrackLite-Net maintains the highest F1-score and mAP (58.6% and 61.2%), exceeding YOLO12 by 1.4 points in F1 and 0.4 points in mAP, RT-DETR by 3.1 and 4.0 points, and Swin-T by 4.4 and 7.6 points, respectively. These results indicate that the proposed network retains its ranking under domain shift and diverse imaging environments, although its margin over YOLO12 is small.

In addition, CrackLite-Net contains only 2.2 M parameters, the fewest among all compared models: far fewer than RT-DETR (32.0 M) and Swin-T (28.3 M), and also fewer than YOLO10 (2.7 M) and YOLO11 (2.6 M). This shows that the lightweight architecture reduces model size while preserving high accuracy, which favors real-time deployment on UAVs or edge computing platforms.

Overall, CrackLite-Net achieves an effective balance among detection accuracy, cross-domain generalization, and computational efficiency, demonstrating strong potential for real-world pavement inspection applications under diverse acquisition and operational conditions.

To further validate the performance of our method, we conducted comparative experiments with other crack detection methods (MN-YOLOv5, YOLOv8-PD, and LEE-YOLO) on the LCrack dataset. The results are shown in Table 3 below.

Table 3.

Comparative Testing of Crack Detection Methods.

As shown in Table 3, MN-YOLOv5 and YOLOv8-PD demonstrate relatively stable performance in road crack detection, with YOLOv8-PD holding a noticeable advantage in Precision and mAP. LEE-YOLO improves on both, achieving higher F1 and mAP, which reflects stronger detection consistency and feature representation capability. CrackLite-Net achieves the best overall performance of the four methods, striking a more effective balance between precision and recall, which makes it particularly suitable for detecting complex crack patterns in practical UAV-based inspection scenarios.

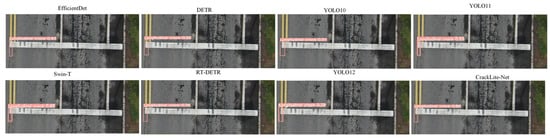

3.3. Visualization

Figure 3 presents qualitative examples of longitudinal crack detection using different models on the LCrack dataset. It can be observed that EfficientDet, DETR, YOLO10, and YOLO11 exhibit weak responses to crack boundaries and are prone to missed detections or partial structural loss under challenging conditions such as uneven illumination, pavement abrasion, and strong background texture interference. Swin-T and RT-DETR show improved performance compared to the former models, achieving more reliable recognition of the main crack region; however, they still struggle to capture the full extent of long and slender crack structures.

Figure 3.

Qualitative comparison of longitudinal crack detection on the LCrack dataset using different detection models.

In contrast, both YOLO12 and CrackLite-Net provide more accurate crack localization, with CrackLite-Net producing the most complete bounding result and the highest confidence score (0.80). This demonstrates its enhanced capability in extracting and distinguishing fine crack features under weak texture and low-contrast conditions. Such improvements are primarily attributed to the GhostPercepC2f module’s fine-grained feature enhancement and the effective multi-scale and channel-aware fusion strategies introduced by SAFPN and SC2f.

Overall, these qualitative results further validate the superior performance of CrackLite-Net on the LCrack dataset, particularly in scenarios involving complex backgrounds and subtle crack patterns.

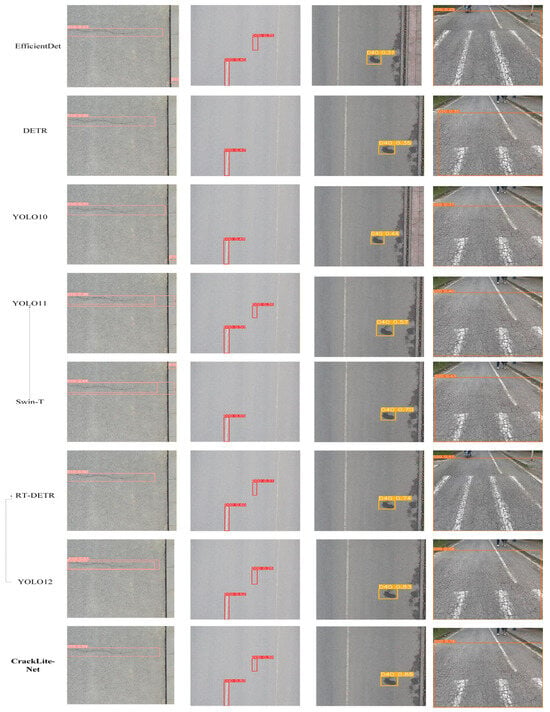

Figure 4 illustrates the qualitative detection results of various models on longitudinal crack samples from the RDD2022 dataset. It can be observed that EfficientDet, DETR, YOLO10, and YOLO11 exhibit weak responses along crack boundaries, with noticeable localization deviations and low confidence scores (all below 0.55). This indicates that these models struggle to accurately detect crack targets in low-contrast scenes with significant texture noise interference. Swin-T and RT-DETR demonstrate relatively better responses along the main crack structure and achieve higher confidence scores; however, they still suffer from incomplete detection and missing segments in fragmented crack regions.

Figure 4.

Qualitative comparison of longitudinal crack detection on the RDD2022 dataset using different detection models.

In contrast, both YOLO12 and CrackLite-Net successfully identify the complete crack structure. CrackLite-Net achieves the highest confidence score (0.80) and the most complete bounding region, demonstrating stronger robustness to uneven illumination, surface abrasion, and complex background textures. This improvement is attributed to its lightweight attention-enhanced design, which more effectively focuses on crack-related regions while suppressing background noise. These qualitative results further validate the superiority of CrackLite-Net in realistic road inspection scenarios, particularly when detecting low-contrast, fine-scale cracks under noisy and visually complex conditions.

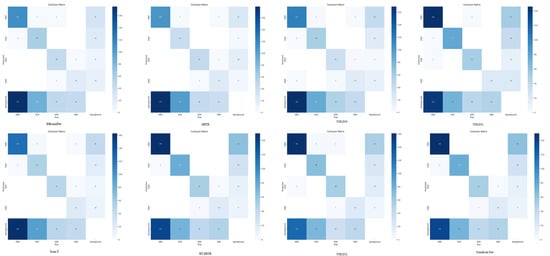

Figure 5 presents the confusion matrices of different detection models evaluated on the RDD2022 dataset, illustrating their ability to distinguish between crack categories—Longitudinal Crack, Transverse Crack, Alligator Crack, and Pothole—and background samples. Overall, CrackLite-Net achieves the highest classification accuracy across all crack categories, as indicated by the darker diagonal regions and significantly reduced off-diagonal confusion. This confirms its robustness and generalization capability under cross-domain conditions. In particular, CrackLite-Net demonstrates superior recognition performance on Alligator Crack and Pothole, while markedly decreasing misclassification with the background class, indicating its improved ability to discriminate structural crack patterns from pavement textures and noise interference.

Figure 5.

Confusion matrices of different models on the RDD2022 dataset.

In comparison, models such as EfficientDet, DETR, YOLO10, and YOLO11 exhibit evident misclassification and omission errors, especially for Transverse Crack and Pothole, where the off-diagonal cells appear noticeably darker. This suggests that these methods struggle with low-contrast and fragmented damage types and are more susceptible to variations in illumination, surface material, and background texture. Swin-T and RT-DETR achieve relatively better performance on Longitudinal Crack, yet still show significant confusion when dealing with the more complex and highly fragmented Alligator Crack category.

The superior performance of CrackLite-Net can be attributed to its architectural design: GhostPercepC2f strengthens the representation of weak structural features, SAFPN enhances multi-scale feature fusion, and SC2f effectively suppresses background noise while emphasizing crack-relevant channels, collectively improving class separability.

Overall, the confusion results demonstrate that CrackLite-Net provides more stable and discriminative classification performance in cross-domain crack recognition scenarios, particularly under conditions involving complex texture interference and large variations in crack scale.
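To make the reading of the confusion matrices concrete, the sketch below shows how per-class precision and recall can be derived from such a matrix. All counts are hypothetical placeholders invented for illustration; they are not the values behind Figure 5. The row/column convention (rows are true classes, columns are predicted classes) is also an assumption, since some toolkits plot the transpose.

```python
CLASSES = ["Longitudinal", "Transverse", "Alligator", "Pothole", "Background"]

# Hypothetical counts: matrix[i][j] = samples of true class i predicted as class j.
# The last row/column models the background class used in detection matrices.
matrix = [
    [80, 3, 2, 0, 15],
    [4, 70, 1, 0, 25],
    [3, 1, 60, 2, 34],
    [0, 0, 2, 75, 23],
    [10, 8, 12, 5, 0],
]


def per_class_metrics(cm, names):
    """Return {class name: (precision, recall)} from a square confusion matrix."""
    results = {}
    for k, name in enumerate(names):
        true_pos = cm[k][k]                     # diagonal cell
        actual = sum(cm[k])                     # row sum: all true samples of class k
        predicted = sum(row[k] for row in cm)   # column sum: all predictions of class k
        recall = true_pos / actual if actual else 0.0
        precision = true_pos / predicted if predicted else 0.0
        results[name] = (precision, recall)
    return results


metrics = per_class_metrics(matrix, CLASSES)
for name in CLASSES[:-1]:  # skip the background pseudo-class
    precision, recall = metrics[name]
    print(f"{name:<12} precision={precision:.3f} recall={recall:.3f}")
```

Under this convention, a strong diagonal corresponds to high recall, entries in the background column are missed detections, and entries in the background row are false alarms on pavement texture.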

4. Discussion

This study introduces CrackLite-Net, a lightweight and adaptive deep learning framework developed to improve the reliability of road crack detection in complex field conditions. The framework incorporates three key modules: GhostPercepC2f, which strengthens spatial feature perception while maintaining computational efficiency; SAFPN, which enables adaptive multi-scale feature fusion guided by spatial attention; and SC2f, which enhances channel selectivity to better distinguish crack-related responses from background interference. The combination of these components enables more accurate detection of cracks with diverse shapes, widths, and structural variations, while maintaining a compact network architecture suitable for real-time operation.

Experimental evaluations on the LCrack dataset show that CrackLite-Net improves mAP by 3.9 percentage points over the YOLO12n baseline, achieving 92.3% mAP with only 2.2 M parameters. Cross-dataset testing on RDD2022 further demonstrates that the model generalizes well across different pavement surfaces and imaging environments, outperforming several widely used detectors while maintaining lower computational overhead. CrackLite-Net also runs at 132 FPS on an NVIDIA RTX 4090 GPU, faster than YOLO12n (109 FPS), while maintaining superior detection accuracy, which supports its real-time capability. In addition, on the RDD2022 dataset, detection performance was lowest for alligator cracks, which can be attributed to their irregular morphology and large structural variations compared with other crack types.
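The throughput figures can be put in context with a short sketch of how frames per second are typically measured and compared. This is not the authors' benchmarking code: `measure_fps` and `dummy_infer` are hypothetical names, and the dummy workload merely stands in for a model forward pass. Only the 132 FPS and 109 FPS values come from the text.

```python
import time


def measure_fps(infer, n_warmup: int = 10, n_runs: int = 100) -> float:
    """Time repeated calls to `infer` and return the average calls per second."""
    for _ in range(n_warmup):   # warm-up runs are excluded from the timing
        infer()
    start = time.perf_counter()
    for _ in range(n_runs):
        infer()
    elapsed = time.perf_counter() - start
    return n_runs / elapsed


def dummy_infer() -> None:
    """Placeholder workload standing in for a single-image forward pass."""
    sum(i * i for i in range(1000))


fps = measure_fps(dummy_infer)
print(f"Measured throughput of the placeholder workload: {fps:.0f} calls/s")

# Comparison of the throughput values quoted in the text.
cracklite_fps, yolo12n_fps = 132, 109
speedup = cracklite_fps / yolo12n_fps
latency_cracklite_ms = 1000 / cracklite_fps
latency_yolo12n_ms = 1000 / yolo12n_fps
print(f"Speed-up: {speedup:.2f}x "
      f"({latency_cracklite_ms:.2f} ms vs {latency_yolo12n_ms:.2f} ms per frame)")
```

When timing a real model on a GPU, the device should be synchronized before each clock reading, because kernel launches are asynchronous and the measured interval would otherwise be too short.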

Despite its advantages, CrackLite-Net still faces challenges. Extremely fine cracks or cracks under heavy occlusion remain difficult to detect accurately, and background textures with crack-like patterns can lead to false alarms in low-contrast scenes. Furthermore, the current framework relies solely on single still images and does not incorporate temporal cues, which limits its ability to support continuous monitoring and deterioration trend analysis.

Future work will focus on addressing these limitations by enhancing the detection of fine-scale cracks, exploring multimodal sensing and contrastive learning strategies to improve robustness in visually complex environments, integrating temporal modeling to capture dynamic surface changes, and further reducing model size to facilitate deployment on resource-constrained UAV platforms and embedded edge devices.

5. Conclusions

In this paper, we propose CrackLite-Net, a lightweight crack detection framework tailored for UAV-based road surface inspection and sustainable transportation applications. By integrating GhostPercepC2f, SAFPN, and SC2f, the network enhances multi-scale feature representation while maintaining a compact structure. Experiments on the LCrack dataset and cross-dataset evaluation on RDD2022 verify that CrackLite-Net achieves higher accuracy, stronger generalization, and real-time performance compared with state-of-the-art detectors, demonstrating its practical value for edge deployment and automated road maintenance. Despite these advantages, the model still faces challenges in detecting extremely fine and heavily occluded cracks and may generate false positives in low-contrast textures. Future work will focus on improving robustness under complex visual conditions, incorporating temporal cues, and further optimizing the model for resource-constrained UAV platforms.

Author Contributions

Conceptualization, Y.Z. and R.P.; Formal analysis, Methodology, Software, Validation, Y.Z. and R.P.; Writing—original draft, R.P.; Writing—review and editing, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Henan Province Science and Technology Research Project (Grant No.: 252102111173).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the editors and anonymous reviewers for their helpful suggestions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zou, Q.; Cao, Y.; Li, Q.; Mao, Q.; Wang, S. CrackTree: Automatic crack detection from pavement images. Pattern Recognit. Lett. 2012, 33, 227–238.

- Wu, S.; Fang, J.; Zheng, X.; Li, X. Sample and Structure-Guided Network for Road Crack Detection. IEEE Access 2019, 7, 130032–130043.

- Cebon, D. Vehicle-Generated Road Damage: A Review. Veh. Syst. Dyn. 1989, 18, 107–150.

- Deng, L.; Zhang, A.; Guo, J.; Liu, Y. An Integrated Method for Road Crack Segmentation and Surface Feature Quantification under Complex Backgrounds. Remote Sens. 2023, 15, 1530.

- Yang, Z.; Zhang, C.; Li, G.; Xu, H. Analysis of the Impact of Different Road Conditions on Accident Severity at Highway–Rail Grade Crossings Based on Explainable Machine Learning. Symmetry 2025, 17, 147.

- Qiu, Q.; Lau, D. Real-Time Detection of Cracks in Tiled Sidewalks Using YOLO-Based Method Applied to Unmanned Aerial Vehicle (UAV) Images. Autom. Constr. 2023, 147, 104745.

- Du, Y.; Cheng, Q.; Liu, X.; Xu, J.; Yi, Y. Enhancing Road Maintenance through Cyber-Physical Integration: The LEE-YOLO Model for Drone-Assisted Pavement Crack Detection. IEEE Trans. Intell. Transp. Syst. 2025, 26, 14169–14178.

- Mohan, A.; Poobal, S. Crack Detection Using Image Processing: A Critical Review and Analysis. Alex. Eng. J. 2018, 57, 787–798.

- Li, T.; Li, G. Road Defect Identification and Location Method Based on an Improved ML-YOLO Algorithm. Sensors 2024, 24, 6783.

- Cheng, H.D.; Miyojim, M. Automatic Pavement Distress Detection System. Inf. Sci. 1998, 108, 219–240.

- Liu, F.; Liu, J.; Wang, L. Asphalt Pavement Crack Detection Based on Convolutional Neural Network and Infrared Thermography. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22145–22155.

- Bhardwaj, M.; Khan, N.U.; Baghel, V. Fuzzy C-Means Clustering Based Selective Edge Enhancement Scheme for Improved Road Crack Detection. Eng. Appl. Artif. Intell. 2024, 136, 108955.

- Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature Pyramid and Hierarchical Boosting Network for Pavement Crack Detection. IEEE Trans. Intell. Transp. Syst. 2020, 21, 1525–1535.

- Maode, Y.; Shaobo, B.; Kun, X.; Yuyao, H. Pavement Crack Detection and Analysis for High-Grade Highway. In Proceedings of the 8th International Conference on Electronic Measurement and Instruments (ICEMI 2007), Xi’an, China, 16–18 August 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 4548–4552.

- Zhou, J. Wavelet-Based Pavement Distress Detection and Evaluation. Opt. Eng. 2006, 45, 027007.

- Shi, Y.; Cui, L.; Qi, Z.; Meng, F.; Chen, Z. Automatic Road Crack Detection Using Random Structured Forests. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3434–3445.

- Chen, C.; Seo, H.; Jun, C.H.; Zhao, Y. Pavement crack detection and classification based on fusion feature of LBP and PCA with SVM. Int. J. Pavement Eng. 2021, 23, 3274–3283.

- Alam, S.Y.; Loukili, A.; Grondin, F.; Rozière, E. Use of the Digital Image Correlation and Acoustic Emission Technique to Study the Effect of Structural Size on Cracking of Reinforced Concrete. Eng. Fract. Mech. 2015, 143, 17–31.

- Kaseko, M.S.; Ritchie, S.G. A Neural Network-Based Methodology for Pavement Crack Detection and Classification. Transp. Res. Part C Emerg. Technol. 1993, 1, 275–291.

- Xu, H.; Zheng, W.; Liu, F.; Li, P.; Wang, R. Unmanned Aerial Vehicle Perspective Small Target Recognition Algorithm Based on Improved YOLOv5. Remote Sens. 2023, 15, 3583.

- Mayer, H. Fatigue Crack Growth and Threshold Measurements at Very High Frequencies. Int. Mater. Rev. 1999, 44, 1–34.

- Jiang, X.; Ma, Z.J.; Ren, W. Crack Detection from the Slope of the Mode Shape Using Complex Continuous Wavelet Transform. Comput.-Aided Civ. Infrastruct. Eng. 2012, 27, 187–201.

- Ye, X.; Wang, L.; Huang, C.; Luo, X. Wind Turbine Blade Defect Detection with a Semi-Supervised Deep Learning Framework. Eng. Appl. Artif. Intell. 2024, 136, 108908.

- Zhang, A.; Wang, K.C.; Li, B.; Yang, E.; Dai, X.; Peng, Y.; Fei, Y.; Liu, Y.; Li, J.Q.; Chen, C. Automated Pixel-Level Pavement Crack Detection on 3D Asphalt Surfaces Using a Deep-Learning Network. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 805–819.

- Wu, W.; Yin, Y.; Wang, X.; Xu, D. Face Detection with Different Scales Based on Faster R-CNN. IEEE Trans. Cybern. 2019, 49, 4017–4028.

- Zhou, K.; Lei, D.; Chun, P.J.; She, Z.; He, J.; Du, W.; Hong, M. Evaluation of BFRP Strengthening and Repairing Effects on Concrete Beams Using DIC and YOLO-v5 Object Detection Algorithm. Constr. Build. Mater. 2024, 411, 134594.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2980–2988.

- Xu, X.; Zhao, M.; Shi, P.; Ren, R.; He, X.; Wei, X.; Yang, H. Crack Detection and Comparison Study Based on Faster R-CNN and Mask R-CNN. Sensors 2022, 22, 1215.

- Zhou, Q.; Ding, S.; Qing, G.; Hu, J. UAV Vision Detection Method for Crane Surface Cracks Based on Faster R-CNN and Image Segmentation. J. Civ. Struct. Health Monit. 2022, 12, 845–855.

- Liu, Z.; Yeoh, J.K.W.; Gu, X.; Dong, Q.; Chen, Y.; Wu, W.; Wang, L.; Wang, D. Automatic pixel-level detection of vertical cracks in asphalt pavement based on GPR investigation and improved Mask R-CNN. Autom. Constr. 2023, 146, 104689.

- Li, R.; Yu, J.; Li, F.; Yang, R.; Wang, Y.; Peng, Z. Automatic Bridge Crack Detection Using Unmanned Aerial Vehicle and Faster R-CNN. Constr. Build. Mater. 2023, 362, 129659.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Xiang, X.; Wang, Z.; Qiao, Y. An Improved YOLOv5 Crack Detection Method Combined with Transformer. IEEE Sens. J. 2022, 22, 14328–14335.

- Tran, V.P.; Tran, T.S.; Lee, H.J.; Kim, K.D.; Baek, J.; Nguyen, T.T. One-Stage Detector (RetinaNet)-Based Crack Detection for Asphalt Pavements Considering Pavement Distresses and Surface Objects. J. Civ. Struct. Health Monit. 2021, 11, 205–222.

- Su, P.; Han, H.; Liu, M.; Yang, T.; Liu, S. MOD-YOLO: Rethinking the YOLO Architecture at the Level of Feature Information and Applying It to Crack Detection. Expert Syst. Appl. 2024, 237, 121346.

- Zhu, X.; Hang, X.; Gao, X.; Yang, X.; Xu, Z.; Wang, Y.; Liu, H. Research on Crack Detection Method of Wind Turbine Blade Based on a Deep Learning Method. Appl. Energy 2022, 328, 120241.

- Suhendar, H. Road Crack Detection Using YOLO-V5 and Adaptive Thresholding. J. Appl. Intell. Syst. 2023, 8, 425–431.

- Jing, Y.; Ren, Y.; Liu, Y.; Wang, D.; Yu, L. Automatic Extraction of Damaged Houses by Earthquake Based on Improved YOLOv5: A Case Study in Yangbi. Remote Sens. 2022, 14, 382.

- Huang, Z.; Wang, J.; Fu, X.; Yu, T.; Guo, Y.; Wang, R. DC-SPP-YOLO: Dense Connection and Spatial Pyramid Pooling Based YOLO for Object Detection. Inf. Sci. 2020, 522, 241–258.

- Yu, Z.; Shen, Y.; Shen, C. A Real-Time Detection Approach for Bridge Cracks Based on YOLOv4-FPM. Autom. Constr. 2021, 122, 103514.

- Guo, G.; Zhang, Z. Road Damage Detection Algorithm for Improved YOLOv5. Sci. Rep. 2022, 12, 15523.

- Ye, G.; Qu, J.; Tao, J.; Dai, W.; Mao, Y.; Jin, Q. Autonomous Surface Crack Identification of Concrete Structures Based on the YOLOv7 Algorithm. J. Build. Eng. 2023, 73, 106688.

- Zeng, J.; Zhong, H. YOLOv8-PD: An improved road damage detection algorithm based on YOLOv8n model. Sci. Rep. 2024, 14, 12052.

- Guo, F.; Qian, Y.; Liu, J.; Yu, H. Pavement Crack Detection Based on Transformer Network. Autom. Constr. 2023, 145, 104646.

- Liu, H.; Miao, X.; Mertz, C.; Xu, C.; Kong, H. CrackFormer: Transformer Network for Fine-Grained Crack Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3783–3792.

- Chen, S.; Feng, Z.; Xiao, G.; Chen, X.; Gao, C.; Zhao, M.; Yu, H. Pavement Crack Detection Based on the Improved Swin-UNet Model. Buildings 2024, 14, 1442.

- Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Sekimoto, Y. RDD2022: A Multi-National Image Dataset for Automatic Road Damage Detection. Geosci. Data J. 2024, 11, 846–862.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).