Abstract

Since service robots serving as salespersons are expected to be deployed efficiently and sustainably in retail environments, this paper explores the impacts of their interaction cues on customer experiences within small-scale self-service shops. The corresponding customer experiences are discussed in terms of fluency, comfort and likability. We analyzed customers’ shopping behaviors and designed fourteen body gestures for the robots, giving them the ability to select appropriate movements for different stages in shopping. Two experimental scenarios with and without robots were designed. For the scenario involving robots, eight cases with distinct interaction cues were implemented. Participants were recruited to measure their experiences, and statistical methods including repeated-measures ANOVA, regression analysis, etc., were used to analyze the data. The results indicate that robots solely reliant on voice interaction are unable to significantly enhance the fluency, comfort and likability effects experienced by customers. Combining a robot’s voice with the ability to imitate a human salesperson’s body movements is a feasible way to truly improve these customer experiences, and a robot’s body movements can positively influence these customer experiences in human–robot interactions (HRIs) while the use of colored light cannot. We also compiled design strategies for robot interaction cues from the perspectives of cost and controllable design. Furthermore, the relationships between fluency, comfort and likability were discussed, thereby providing meaningful insights for HRIs aimed at enhancing customer experiences.

1. Introduction

Service robots have been gradually applied in various fields in society, and are beginning to perform different activities that are typically undertaken by humans, such as customer service roles in shopping malls [1,2], educational and medical support for children and the elderly [3,4,5,6], and museum guides [7]. The adoption of service robots provides sustainable benefits from both social and economic perspectives. For example, service robots in shopping malls can act as shopkeepers or assistants at service desks to complete tasks including providing information [8,9], making deliveries [10], and distributing flyers [11]. They can relieve humans from performing repetitive and monotonous tasks, reduce long-term operational costs, and help attract customers. The role of a service robot usually consists of multiple tasks that vary by scenario. Some of a robot’s tasks need to be dealt with one by one over time, whereas others are separate tasks [12]. To complete the tasks successfully and guarantee smooth human–robot interaction (HRI), appropriate expressions for robots that are tailored to specific contexts and different tasks are quite important. Many researchers focus on the effects of interaction cues including robot behavior [1,13,14], eye gaze [15], and voice [16,17,18,19] to provide design suggestions on robot expression. These interaction cues can enhance the predictability of a robot’s behaviors, thereby facilitating greater user acceptance and enabling integration of the robot into diverse scenarios. Meanwhile, some studies consider different contexts, as these can lead to varied effectiveness and experiences in HRI [20,21,22]. Designing appropriate expressions for robots according to context can provide much better experiences for humans, improve the effectiveness of interaction and promote the sustainable application of robots.

When using robots in shopping scenarios, we cannot expect that every customer is proficient in interacting with the robot. The ability to interact with robots and the personal perceptions of robots can affect customer experiences. Some studies reveal that likeability in HRI can affect the emotions of humans [23,24,25], and different robot behaviors can draw the attention of passersby [26] and evoke positive or negative emotions in humans [13,27]. In other words, in shopping scenarios, robot behaviors could result in customers having different shopping experiences. Furthermore, when considering the detailed process in shopping, robots often need to undertake multiple sub-tasks, in which case the fluency aspect in HRI is also part of the customer experience.

In recent years, many small-scale shops have increasingly adopted the self-service mode, which involves characteristics such as the integration of surveillance cameras, no salespersons and sometimes predefined voice prompts. When customers wish to purchase goods, they select the items independently and complete the payment using their smartphones by scanning a payment code. Hence, the application of service robots in small-scale shops without salespersons is considered, and three research questions (RQs) are explored as follows.

Research question 1 (RQ1): Does the integration of a robot salesperson into a small-scale unmanned shop enhance the fluency, comfort and likeability of the customer shopping experience?

Research question 2 (RQ2): What combination of interaction cues for robots can enhance the fluency, comfort and likeability of the customer shopping experience when a robot acts as a salesperson in a small-scale unmanned shop?

We are concerned with the customers’ experiences during shopping and their feelings after shopping. Research question 3 (RQ3) is incorporated into the scope of consideration.

RQ3: Does the fluency and comfort experienced by customers during the shopping process positively affect their perception of the likeability of the shopping process?

The remainder of this paper is organized as follows. Section 2 reviews the related work. Section 3 describes the early-stage preparations, including the analysis of customer behavior in shopping and the design of robot body gestures in small-scale self-service shops. Section 4 explains the experiments. Section 5 discusses the results, presents the findings and outlines some limitations. Section 6 concludes this study.

2. Related Works

2.1. Studies of HRI in Shopping Scenarios

When service robots are deployed in shops, they are often expected to assume the role of salesperson by performing multiple tasks such as interacting with customers, assisting in product searches, and handling checkout operations. Customers’ shopping behaviors show temporal continuity. Their shopping experiences can be influenced by many factors throughout this continuous process, including the expression of the salesperson, the communication between the customer and the salesperson, and so on. All of these factors make the application of robots in shopping scenarios context-dependent. When robots serve as salespeople, it is necessary to consider how to design their expressive behaviors and interactions with humans based on context. Some studies focus on human–human interactions to propose different approaches for applying robots in shopping [28,29,30]. For instance, Liu et al. recorded voice and action data of a shopkeeper and customers in a camera shop scenario to explore proactive behaviors in robots [30]. However, some research reveals that duplicating typical human behaviors may not be the optimal choice for robots [31,32,33]. The work of Naito et al. highlighted the need to investigate robot-specific best practices for customer service and showed that a robot shopkeeper can choose direct behavior to improve customer experiences [33].

In addition, some works focus on the social tasks accomplished by robots in shopping scenarios. For example, Edirisinghe et al. explored a service robot with friendly guidance and appropriate admonishment and obtained overall positive impressions from both customers and shop staff [34]. Sabelli et al. discussed the long-term presence of the Robovie robot, which served as a mascot in a shopping mall, through a qualitative study and explored how visitors interacted with, understood and accepted it [2]. Studies of tasks such as monitoring blind regions with a robot shop clerk [35], sharing environments where humans and robots have to cross each other in narrow corridors [36] and having conversations with people on walks [37] can also provide valuable guidance for robot applications in shopping scenarios.

2.2. Customer Experiences in HRI

Customers generally have clear aims when entering a shop, and their behaviors are influenced by the aims. When engaged in a shopping scenario, robots interact with customers during service. From the customers’ perspective, the perception of a robot expression is critical to their experience. The robot expression consists of various interactive cues, through which customers understand the robot’s intention and provide appropriate feedback. For robots to be integrated into shopping scenarios, HRIs need to reach a good level of fluency to ensure customer experience. Some studies have developed fluency scales in HRI [38], and have proposed unified predictive models to generate the robot’s motion to make its intent more transparent to the human [39]. The comfort in shopping is also an important experience for customers, which may lead to continuing or withdrawing from the interaction. Some studies focus on comfortability in HRI [40,41,42,43]. For instance, Eunil Park et al. found that individuals who interacted with a robot with a similar personality were more comfortable than those who engaged with a robot with a different personality [44]. The works of Koay et al. [43] and Ball et al. [45] revealed through different experiments across various scenarios that encounters with a robot from the front are more comfortable.

The likelihood of a customer returning to the shop is influenced to some extent by their perception of the robot’s service. When customers feel that robots in shops are likable, they will accept the use of a robot within the scenario [46,47]. Likeability usually describes the positive impression that people form of others [48]. Some studies showed that likeability is one of the key concepts in HRI evaluation [14,18,49]. The work of Salem et al. indicated that the perception of likeability is impacted by the non-verbal behaviors displayed by robotic systems, and that it also results in increased future contact intentions in humans [50]. This also illustrates the necessity of a robot’s body gestures.

3. Early-Stage Preparation

3.1. Analysis of Behaviors in Shopping

The main roles of humans in shopping scenarios are salesperson and customer. When robots are deployed in shopping scenarios, the responsibilities of a robot salesperson are expected to be identical to those of a human salesperson. Hence, the behaviors of a human salesperson are analyzed. The communication behaviors between a salesperson and a customer in a small-scale shop when making a purchase are summarized and divided into four stages as follows based on the observations in our daily life. Here, the four stages are named as T1, T2, T3, and T4, respectively.

T1 (the beginning stage): Greeting behaviors between the salesperson and customers, such as conversation and hand beckoning. The start point is usually from the salesperson.

T2 (the inquiry stage): This is the stage for selecting goods. The inquiries come from the customers or the salesperson. The customers ask the salesperson about the location of the goods and other problems they may encounter in shopping. To provide customers with the information about goods, the salesperson typically combines verbal answers with guiding behaviors, such as pointing with their hands or walking toward the goods.

T3 (the transfer stage): This usually happens at the cashier desk. Customers hand items for purchase to the salesperson or place them on the cashier desk. The salesperson informs the customer of the price, and either scans the customer’s payment QR code or instructs them how to scan the merchant QR code to pay. In this stage, voice and gesture guidance are necessary.

T4 (the end stage): Customers finish the payments of goods. The salesperson talks with the customer to make sure the shopping process is complete, after which they exchange farewells. In this stage, conversations are sometimes accompanied with body gestures including nodding and waving.

The above stages are also consistent with the operation pipelines of robots in stores revealed in the studies of Okafuji et al., Song et al., and Brengman et al. [51,52,53]. For example, Song et al. conducted a long-term field experiment at a bakery store for 6 months, and illustrated the robot’s behaviors including welcoming customers, recommending products and expressing thanks to the customers when they leave [52]. Brengman et al. collected 28 h of video materials of robots deployed in a chocolate store, and illustrated the four sequential stages in the robot’s behaviors: drawing attention, gaining interest, inducing desire and making a sale [53]. The salesperson usually chooses voice and body gestures for welcoming, guiding and bidding farewell in shopping. On this basis, in small-scale self-service shops, the stages from T1 to T4 of the robot’s behaviors can be briefly summarized as follows.

T1: Vocal greetings are sometimes given to customers, and sometimes they are not.

T2: Providing information or guidance about the goods that customers intend to buy.

T3: Guiding the customers to scan the merchant QR code. The code is often placed in a visible location for easy access.

T4: Sometimes there is a robot voice prompt for the end of shopping or a farewell message.

In this study, the designs of the robot’s voices, V1, V2, V3 and V4, for different stages are respectively shown in Table 1.

Table 1.

The design of robot’s voices for different shopping stages.

3.2. Design of Robot’s Body Gestures

3.2.1. Robot Platform and Its Body Gestures

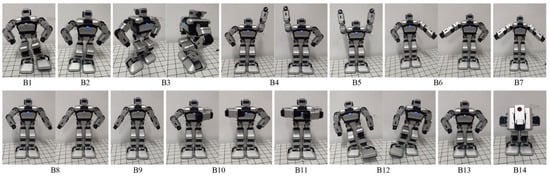

The Yanshee robot, which utilizes the open hardware platform architecture of Raspberry Pi + STM32, is used. In order to make the robot’s body gestures more in line with the body language semantics of salespeople across different shopping stages, we designed 14 body gestures for the Yanshee robot, designated as B1 to B14. The body gestures were recorded on videos, and the typical body movements are shown in Figure 1. Their corresponding descriptions are given in Table 2.

Figure 1.

The designed robot’s body gestures.

Table 2.

The descriptions of the designed robot’s body gestures.

3.2.2. Participants and Procedure

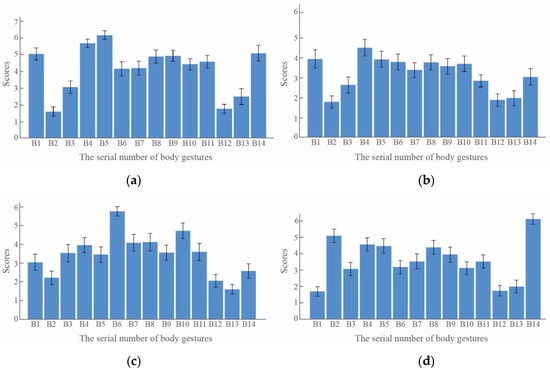

Fifty participants aged 18–30 were recruited. They were required to watch the videos of the robot’s body gestures and answer the questionnaire shown in Table 3. Before watching, to ensure that participants understood the research purpose, they were given descriptions of where and why each of the robot’s body gestures would be used. A Likert scale from 1 to 7 was chosen to assess the matching degree: the higher the score, the better the behavior matches the stage, and a score of 1 means the behavior is very inappropriate. Based on the quantitative data from the participants, the scores for the matching of the robot’s body gestures are shown in Figure 2.

Table 3.

The questionnaire about the robot’s body gestures.

Figure 2.

The scores of robot body gestures for four stages in shopping. (a) T1 (the beginning stage); (b) T2 (the inquiry stage); (c) T3 (the transfer stage); (d) T4 (the end stage).
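The rating step described above can be illustrated with a minimal sketch that summarizes 1 to 7 Likert ratings per gesture and picks the candidate with the highest mean. The function names and the toy ratings are hypothetical, and choosing by the mean alone is a simplification; the study additionally relies on a repeated-measures ANOVA.

```python
from statistics import mean, stdev


def summarize_ratings(ratings_by_gesture):
    """Return {gesture: (mean, sample SD)} for 1-7 Likert ratings."""
    summary = {}
    for gesture, ratings in ratings_by_gesture.items():
        if any(r < 1 or r > 7 for r in ratings):
            raise ValueError(f"{gesture}: ratings must lie in 1..7")
        summary[gesture] = (mean(ratings), stdev(ratings))
    return summary


def best_gesture(summary):
    """Gesture with the highest mean rating; ties go to the smaller SD."""
    return max(summary, key=lambda g: (summary[g][0], -summary[g][1]))


# Hypothetical ratings from five raters for stage T1 (not the study's data).
toy_t1 = {
    "B5": [7, 6, 6, 7, 5],
    "B2": [4, 3, 5, 4, 4],
    "B9": [2, 3, 2, 4, 3],
}
toy_summary = summarize_ratings(toy_t1)
```

Calling `best_gesture(toy_summary)` on the toy data selects the gesture whose mean rating is highest; with real data the same summary would feed the M and SD values reported for each stage.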

3.2.3. The Employed Robot’s Body Gestures

The obtained scores were analyzed by repeated-measures ANOVA, using SPSS 26.0. In stage T1, the body gesture B5 shows a significant difference from the other body gestures (p < 0.01). In stage T2, B4 does not exhibit a significant difference compared to B1 (p = 0.07), while it shows a significant difference from the other body gestures (p < 0.05). In stage T3, B6 exhibits a significant difference from the other body gestures (p < 0.001), and in stage T4, B14 also shows a significant difference from the other body gestures (p < 0.001). This indicates that B5 (M = 6.14, SD = 0.9) can be used to express greeting, B4 (M = 4.7, SD = 1.52) and B1 (M = 4.12, SD = 1.662) to guide the selection of goods, B6 (M = 5.74, SD = 0.88) to guide payment and B14 (M = 5.74, SD = 0.88) to bid farewell in shopping. Moreover, compared to B4, in addition to having a relatively lower M-value and a relatively larger SD, B1 takes a longer time to execute because the robot’s dynamic balance must be considered when it moves forwards. Therefore, B4 is adopted in T2.
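For readers unfamiliar with the test, the following is a minimal sketch of a one-way repeated-measures ANOVA F statistic, where each participant rates every gesture. It is not the SPSS procedure used in the study: it applies no sphericity correction and no post hoc comparisons, and the function name and toy scores are hypothetical.

```python
def rm_anova(scores):
    """One-way repeated-measures ANOVA.

    scores[i][j] is the rating given by subject i under condition j.
    Returns (F, df_conditions, df_error), uncorrected for sphericity.
    """
    n = len(scores)        # number of subjects
    k = len(scores[0])     # number of conditions (e.g., gestures)
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    # Partition the total sum of squares into condition, subject and error parts.
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_cond - ss_subj

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Hypothetical ratings: 4 subjects x 3 gestures (not the study's data).
toy_scores = [[6, 4, 3], [7, 5, 3], [5, 4, 2], [6, 3, 4]]
toy_f, toy_df1, toy_df2 = rm_anova(toy_scores)
```

Removing the between-subject sum of squares from the error term is what distinguishes this design from a between-groups ANOVA: stable differences in how generously each rater scores do not inflate the error variance.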

4. Experiments

4.1. Hypothesis and Scenarios

The customer experiences in unmanned small-scale shops are analyzed from two perspectives: the experience throughout the shopping process and the feelings after shopping. The former focuses on the fluency and comfort during the shopping process, while the latter considers the likeability. The corresponding details are shown in Table 4.

Table 4.

The details for customer experiences.

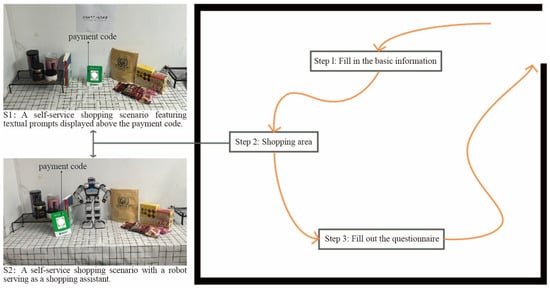

Consider the research questions mentioned in Section 1. For RQ1, a typical self-service shopping scenario and a scenario wherein the Yanshee robot serves as a salesperson are constructed. The two scenarios are named S1 and S2, respectively, and shown in Figure 3. S1 shows a self-service shopping scenario featuring textual prompts displayed above the payment code, and S2 shows a self-service shopping scenario with a robot serving as a shopping assistant. In the context of self-service, in addition to voice prompts, other interaction cues are typically absent. We proposed the first hypothesis for RQ1 and RQ2.

Figure 3.

The scenarios and the flow of participants in the experiments.

Hypothesis 1 (H1).

Robots that rely on voice as the sole cue of interaction to function as sales assistants can significantly enhance the fluency, comfort and likeability of the shopping experience in small self-service shops.

We set up interaction cues combining different channels to explore the appropriate customer–robot interaction in a small-scale self-service shop. In the scenario of a robot serving as a salesperson, we proposed the second hypothesis in four aspects.

Hypothesis 2a (H2a).

When only a single interaction cue is designed, the robot’s voice prompts show a more positive impact than the robot’s actions on customer experiences of fluency, comfort and likeability.

Hypothesis 2b (H2b).

When a new interaction cue is added to form a dual-channel combination with robot voice prompts, the addition of robot body movements can positively influence customer experiences of fluency, comfort and likeability.

Hypothesis 2c (H2c).

When a new interaction cue is added to form a dual-channel combination with robot voice prompts, the addition of the robot’s colored lights can positively influence customer experiences of fluency, comfort and likeability.

Hypothesis 2d (H2d).

The robot’s three-channel interaction cue combination can significantly enhance customer experiences of fluency, comfort and likeability compared to the dual-channel interaction cue combination.

For RQ3, according to the two scenarios, without a robot (S1) and with a robot (S2), the third hypothesis is proposed from two aspects.

Hypothesis 3a (H3a).

In the scenario without a robot, both the fluency and the comfort during the shopping positively influence the likeability for customers in small, unmanned shops.

Hypothesis 3b (H3b).

In the scenario with a robot serving as a salesperson, both the fluency and the comfort during the shopping positively influence the likeability for customers in small, unmanned shops.

4.2. Design of Interaction Cues for Robot

Customers interacting with a service robot in a shopping scenario have relatively definite intentions. They observe and listen to the robot to find the interaction cues. Different combinations of interaction cues show different levels of efficiency in expressing the robot’s intention. Many studies have revealed that, from the viewer’s perspective, perceptual judgements of the magnitude of a stimulus feature can be influenced more by its left side than by its right side [54,55,56]. This is regarded as a type of leftward bias. Currently, it is unclear whether this happens when humans observe robots in shopping scenarios. Hence, in the design of interaction cues for the Yanshee robot, the hand movements B4 and B6, illustrated in Figure 2, are designed for both the left and right hands, and are named B4_left, B4_right, B6_left and B6_right, respectively.

Although the major approach to improving HRI is to imitate the interaction mechanisms of human–human interactions [57,58], some recent studies have suggested that this is not enough and that the distinctive characteristics of robots should be utilized in HRI [28,29,30]. Hence, the characteristics of the Yanshee robot are also considered in the design of experiments. The Yanshee robot has the ability to emit different colored lights from its chest. We designed two light types, C1 and C2. For C1, the yellow light blinks to attract the customer’s attention. It is employed in the stages of T1 and T4 to welcome the customer and say goodbye. For C2, the green light being kept on in the stages of T2 and T3 expresses that the guidance process is smooth. A yellow blinking light and a solid green light are designed based on traffic signal lights. Overall, the designed interaction cues for each stage in the experiments are shown in Table 5.

Table 5.

The designed interaction cues for each stage in the experiments.
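As a rough illustration of how the per-stage cues could be organized in software, the sketch below maps each shopping stage to its voice, body gesture and light. The mapping is reconstructed from the text (V1 to V4 per stage; B5, B4, B6 and B14 for T1 to T4; C1 in T1 and T4, C2 in T2 and T3), not copied from Table 5, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageCues:
    voice: str
    gesture: str
    light: str


# Reconstructed from the prose; Table 5 itself is not reproduced here.
STAGE_CUES = {
    "T1": StageCues("V1", "B5", "C1"),   # greeting, blinking yellow light
    "T2": StageCues("V2", "B4", "C2"),   # guiding to goods, solid green light
    "T3": StageCues("V3", "B6", "C2"),   # guiding payment, solid green light
    "T4": StageCues("V4", "B14", "C1"),  # farewell, blinking yellow light
}

# Only the hand gestures B4 and B6 have left/right variants in the text.
SIDED_GESTURES = {"B4", "B6"}


def cues_for(stage, use_voice=True, body_side=None, use_light=False):
    """List the cue labels active in a stage for a given channel selection."""
    base = STAGE_CUES[stage]
    active = []
    if use_voice:
        active.append(base.voice)
    if body_side is not None:
        if body_side not in ("left", "right"):
            raise ValueError("body_side must be 'left', 'right' or None")
        gesture = base.gesture
        active.append(f"{gesture}_{body_side}" if gesture in SIDED_GESTURES else gesture)
    if use_light:
        active.append(base.light)
    return active
```

For example, `cues_for("T2", body_side="right")` describes a voice plus right-hand guidance combination in the inquiry stage, while `cues_for("T1", use_light=True)` describes voice plus light at greeting.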

Based on Table 5, the combinations of interactive cues for the Yanshee robot are designed from the perspectives of single-channel, dual-channel and three-channel in scenario S2. A total of eight combinations were designed, as shown in Table 6, and are designated as SR1 through SR8. SR1-SR3 employ different single-channel interaction cues, SR4-SR6 utilize different dual-channel combinations and SR7-SR8 incorporate different three-channel combinations.

Table 6.

The descriptions of the eight combinations of interaction cues.

4.3. Experimental Procedure

Twenty-five males (M = 23.24, SD = 1.74) and twenty-five females (M = 23.44, SD = 1.16) aged 20–26 years were recruited from Nanjing Forestry University. They were first asked for some basic information, including personal information and their history of interactions with robots. Then, they were required to enter the shopping scenarios. The participants first shopped in S1, the scenario without a robot. After finishing, they took a break, and then shopped in S2, the scenario with a robot. In scenario S2, the Yanshee robot can display eight combinations of interaction cues, which were presented to the participants in random order. After that, they were interviewed and completed questionnaires about their experiences. The questions in the questionnaire are shown in Table 7. Selection results from participants were transformed into 7-point evaluations, ranging from 1 (very weak) to 7 (very strong). The flow of participants in the experiments can be seen in Figure 3, indicated by the orange arrows. An example of the HRI process for scenario S2 is shown in Figure 4, in which the Yanshee robot displays the combination of interaction cues SR5.
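One way to realize such a procedure is sketched below: every participant starts with S1 and then meets the eight S2 conditions in an individually shuffled order. This assumes that each participant experienced all eight combinations, which the repeated-measures analysis suggests but the text does not state explicitly. The function name and the seeding scheme are hypothetical.

```python
import random

CONDITIONS = [f"SR{i}" for i in range(1, 9)]  # SR1 ... SR8


def session_plan(participant_id, seed=0):
    """Return the scenario order for one participant: S1 first, then SR1-SR8 shuffled.

    Seeding with the participant id makes each plan reproducible, so the
    order actually presented can be reconstructed during analysis.
    """
    rng = random.Random(f"{seed}:{participant_id}")
    order = CONDITIONS[:]
    rng.shuffle(order)
    return ["S1"] + order


# Plans for a hypothetical cohort of 50 participants.
plans = {pid: session_plan(pid) for pid in range(1, 51)}
```

A fully counterbalanced design such as a Latin square would control order effects more strictly than independent shuffles; the sketch only shows the simpler randomized variant.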

Table 7.

The questionnaire for customer experiences of HRI.

Figure 4.

An example of an HRI process in scenario S2 in the case of a robot with a combination of interaction cues, SR5.

4.4. Results

4.4.1. The Reliability and Validity of the Questionnaire

Cronbach’s reliability coefficients and the values of Kaiser–Meyer–Olkin (KMO) for scenarios S1 (without robot) and S2 (with robot) are shown in Table 8. All significance values of Bartlett’s test of sphericity are less than 0.001. The M-values and SDs of fluency, comfort and likeability scores across different scenarios are shown in Table 9.

Table 8.

The reliability and validity of the questionnaire.
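As a reminder of what the reliability coefficient measures, here is a minimal sketch of Cronbach’s alpha computed from item-level responses. The function name and the toy responses are hypothetical; the values in Table 8 come from the study’s own data and software.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores[i][j] is respondent i's answer to item j."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("at least two items are required")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical answers of five respondents to three 7-point items.
toy_responses = [[5, 6, 5], [3, 3, 4], [6, 7, 6], [4, 4, 4], [2, 3, 2]]
toy_alpha = cronbach_alpha(toy_responses)
```

Alpha approaches 1 when the items rise and fall together across respondents, which is why it is read as internal consistency of a scale.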

Table 9.

The M-values and SDs of fluency, comfort and likeability scores.

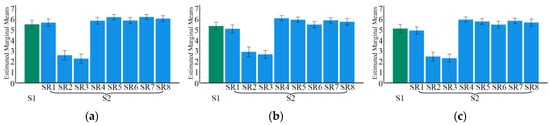

4.4.2. The Results of Repeated-Measures ANOVA

The repeated-measures ANOVA was used to analyze the obtained data. For Mauchly’s test of sphericity, p < 0.001. The results of the multivariate tests indicated significant effects for fluency (F(4.359, 213.614) = 101.050, p < 0.001, partial η² = 0.673), comfort (F(4.999, 244.974) = 69.142, p < 0.001, partial η² = 0.585) and likeability (F(4.880, 239.114) = 91.092, p < 0.001, partial η² = 0.650). The estimated marginal means for fluency, comfort and likeability are shown in Figure 5. The data for scenario S1 are plotted in green, and those for the eight conditions of scenario S2 (SR1~SR8) are plotted in blue. Based on multivariate tests, significant differences were found between scenario S1 and various conditions of scenario S2. Specifically, for fluency, S1 differed from SR2, SR3, SR5, SR7 and SR8. For comfort, S1 differed from SR2, SR3, SR4, SR5 and SR7. For likeability, S1 differed from SR2, SR3, SR4, SR5, SR7 and SR8. All p-values are less than 0.05 in these comparisons. In scenario S2, the data for conditions SR2 and SR3 differed significantly from all other conditions (all p < 0.05) for fluency, comfort and likeability. Furthermore, for fluency, significant differences were found between SR1 and both SR5 and SR7 (p < 0.01), as well as between SR7 and SR4 (p = 0.019) and SR6 (p = 0.028). For comfort, significant differences were found between SR1 and other conditions (all p < 0.05). Additionally, SR6 differed significantly from SR4 (p = 0.001), SR5 (p = 0.007), and SR7 (p = 0.026). For likeability, significant differences were found between SR1 and other conditions (all p < 0.01), as well as between SR6 and SR4 (p = 0.009) and between SR6 and SR7 (p = 0.046).
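The reported effect sizes can be cross-checked from the F statistics and degrees of freedom alone, since partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below does this for the three reported tests. Reading the fractional degrees of freedom as a sphericity correction (for example Greenhouse–Geisser) over nine conditions (S1 plus SR1 to SR8) is an assumption; the text does not name the correction.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


def sphericity_epsilon(df_effect_corrected, n_conditions):
    """Correction factor implied by a corrected df, assuming k - 1 uncorrected df."""
    return df_effect_corrected / (n_conditions - 1)


# (F, df_effect, df_error) exactly as reported in the text.
reported = {
    "fluency": (101.050, 4.359, 213.614),
    "comfort": (69.142, 4.999, 244.974),
    "likeability": (91.092, 4.880, 239.114),
}

eta = {name: partial_eta_squared(*vals) for name, vals in reported.items()}
# Assumption: nine within-subject conditions (S1 and SR1-SR8).
eps = {name: sphericity_epsilon(vals[1], 9) for name, vals in reported.items()}
# df_error / df_effect should equal n - 1 for n participants.
ratio = {name: vals[2] / vals[1] for name, vals in reported.items()}
```

Under these assumptions the recomputed effect sizes round to the reported values, and the ratio of error to effect degrees of freedom is close to 49, which matches a sample of 50 participants.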

Figure 5.

The estimated marginal means for fluency, comfort and likeability in different cases. (a) Fluency; (b) comfort; (c) likeability.

4.4.3. The Results of Regression Analysis

The correlations of fluency, comfort and likeability in the cases shown in Table 6 were analyzed by the Spearman correlation test. The results show significant correlations among the three aspects of customer experiences in both scenario S1 (without robot) and scenario S2 (with robot), with all p-values smaller than 0.001. Then, linear regression analysis was used to further analyze the data [59]. The effects of fluency and comfort on likeability in scenarios S1 (without robot) and S2 (with robot) were examined. For the models in Table 10, the p-values of the ANOVA and of the retained coefficients are smaller than 0.05. In some cases, either fluency or comfort is omitted from the regression model because it does not have a significant effect on likeability. For example, in scenario S2, the influential predictors of likeability vary across conditions. For the cases of SR4, SR5, SR6 and SR8, both fluency and comfort are positive predictors. For the cases of SR1, SR2 and SR7, only comfort shows a positive predictive influence, while fluency is excluded. For the case of SR3, only fluency shows a positive predictive influence, while comfort is excluded. All the values of R² and Durbin–Watson (D-W) in Table 10 indicate that all regression models for scenarios S1 and S2 meet the assumptions of good fit and independent errors. Furthermore, in scenario S1, the values of the standardized beta coefficients of fluency and comfort are 0.430 and 0.349, with t-values of 3.155 and 2.562 and p-values of 0.003 and 0.014, respectively. This indicates that both fluency and comfort exert positive predictive impacts on likeability in S1.
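To make the reported quantities concrete, the following sketch computes standardized beta coefficients, R² and the Durbin–Watson statistic for a two-predictor linear model of likeability on fluency and comfort. It uses the closed-form least-squares solution for two predictors rather than the statistical package used in the study, omits significance tests, and runs on hypothetical toy scores.

```python
from statistics import mean, stdev


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


def standardized_fit(fluency, comfort, likeability):
    """Return (beta_fluency, beta_comfort, R^2) for the two-predictor OLS model."""
    r_f = pearson(fluency, likeability)
    r_c = pearson(comfort, likeability)
    r_fc = pearson(fluency, comfort)
    beta_f = (r_f - r_c * r_fc) / (1 - r_fc ** 2)
    beta_c = (r_c - r_f * r_fc) / (1 - r_fc ** 2)
    return beta_f, beta_c, beta_f * r_f + beta_c * r_c


def ols_residuals(fluency, comfort, likeability):
    """Residuals of the same model, obtained by un-standardizing the betas."""
    beta_f, beta_c, _ = standardized_fit(fluency, comfort, likeability)
    s_y = stdev(likeability)
    slope_f = beta_f * s_y / stdev(fluency)
    slope_c = beta_c * s_y / stdev(comfort)
    intercept = mean(likeability) - slope_f * mean(fluency) - slope_c * mean(comfort)
    return [y - (intercept + slope_f * f + slope_c * c)
            for f, c, y in zip(fluency, comfort, likeability)]


def durbin_watson(residuals):
    """Durbin-Watson statistic; values near 2 suggest uncorrelated errors."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    return num / sum(e * e for e in residuals)


# Hypothetical 7-point scores of eight participants (not the study's data).
toy_f = [5, 6, 4, 7, 3, 6, 5, 4]
toy_c = [4, 6, 5, 6, 3, 5, 6, 3]
toy_y = [5, 6, 4, 7, 3, 6, 6, 3]
toy_beta_f, toy_beta_c, toy_r2 = standardized_fit(toy_f, toy_c, toy_y)
toy_res = ols_residuals(toy_f, toy_c, toy_y)
toy_dw = durbin_watson(toy_res)
```

A predictor whose standardized beta is not significantly different from zero would be dropped from the model, which is how a condition can end up with only fluency or only comfort as its retained predictor.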

Table 10.

The results obtained by the regression analysis.

5. Discussions

5.1. The Improvements in Fluency, Comfort and Likeability in Small-Scale Unmanned Shopping

According to the results of repeated-measures ANOVA and Table 9, there are significant differences in fluency, comfort and likeability between the scenario without a robot (S1) and the scenario with a robot (S2), with p < 0.001. The differences vary according to the combination of interaction cues employed by the robot. However, when only the voice (SR1) was utilized, no significant difference was observed compared to scenario S1. This indicates that H1 is not supported. Considering the results shown in Figure 5, in the scenario with a robot (S2), under the single-channel body movement cases (SR2 and SR3), the scores for fluency, comfort and likeability are significantly lower than those under all other cases, including S1. This indicates that H2a is supported. This is consistent with the study showing that a single visual stimulus caused some stress to users, and a single auditory stimulus was less influential than visual stimuli [60]. Furthermore, it indicates that in the absence of appropriate interaction cues, the use of robots in self-service shops does not improve the customer experience.

In addition, for fluency, the dual-channel case (voice and right-side body movement, SR5) and the three-channel cases (voice, body movements and light, SR7 and SR8) have significantly better scores than all other cases. For comfort, the dual-channel cases (voice and body movements, SR4 and SR5) and the three-channel case (voice, left-side body movements and light, SR7) have significantly better scores than all other cases. For likeability, the dual-channel cases (voice and body movements, SR4 and SR5) and the three-channel cases (voice, body movements and light, SR7 and SR8) have significantly better scores than all other cases. Moreover, with regard to fluency, comfort and likeability, no significant differences are observed among SR6, S1 and SR1. This indicates that H2c is not supported.

Based on the results mentioned above, for RQ1, voice constitutes a critical interaction cue for a robot salesperson; however, the mere presence of voice is insufficient to enhance fluency, comfort, and likeability. The interaction cue of a robot’s colored light is not essential. The improvements in fluency, comfort and likeability are primarily achieved by integrating the robot’s voice prompts with body movements that imitate a human salesperson’s behavioral patterns.

5.2. The Design of Interaction Cues for Robot Salesperson in Small-Scale Self-Service Shop

H2b is partially supported, as the combination of the robot’s left-side body movements and voice prompts has no significant effect on fluency. According to the results shown in Section 4.4.2, for comfort and likeability, the scores for the voice-only case (SR1) are significantly lower than those for the cases combining the robot’s voice and body movements (SR4 and SR5). Furthermore, no significant difference is found between the scores of SR4 and SR5. For fluency, the scores for the voice-only case (SR1) are significantly lower than those for the case combining the robot’s voice and right-side body movements (SR5), while there is no significant difference between SR1 and the case combining the robot’s voice and left-side body movements (SR4). Therefore, the robot’s body movements have positive impacts on the customer experiences of comfort and likeability, and its right-side body movements have a positive impact on the experience of fluency. This result is the part that supports H2b. Furthermore, it reveals an asymmetry in customers’ perceptions of fluency between the robot’s left-side and right-side body movements. When an individual is positioned in front of a robot, the movements of the robot’s right side correspond to the individual’s left visual field. The study of Jewell et al. revealed that healthy people usually respond to and judge stimuli that appear in the left visual field more quickly than those that appear in the right [61]. Therefore, the findings indicate that the leftward bias mentioned in Refs. [54,55,56] is evident in the perception of fluency in HRI, and they are consistent with Ref. [61].

For RQ2, the dual-channel case (robot’s voice and right-side body movements, SR5), and the three-channel case (robot’s voice, right-side body movements and color light, SR7) yield higher scores in fluency, comfort and likeability compared to the other cases. While considering the design costs, adopting the dual-channel interaction cues of the robot’s voice and its right-side body movements emerges as the optimal approach.

5.3. The Internal Relationships Among Customer Experiences of Fluency, Comfort and Likeability in Small-Scale Self-Service Shops

H3a is supported, whereas H3b is only partially supported. For RQ3, based on the analysis in Section 4.4.3, we discuss the scenarios with and without the robot separately. In the scenario without a robot (S1), fluency and comfort during the shopping process both have positive impacts on likability. The interaction between fluency and comfort was also tested and is not significant (p = 0.621), which indicates that the two factors do not jointly modify each other’s effect on likability. In the scenario with a robot (S2), not all cases result in both fluency and comfort positively influencing likability. For example, when the robot’s voice is the sole interaction cue (SR1), only comfort has a positive impact on likability.
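To make the phrase "interaction between fluency and comfort" concrete, the following is a minimal sketch of a regression of likability on fluency, comfort and their product term. It is not the analysis pipeline used in this study: the function name `fit_interaction_model`, the 1–7 rating range, the sample size and the synthetic data are all assumptions introduced purely for illustration, and the data are generated with no true interaction so that the product-term coefficient should come out close to zero.

```python
import numpy as np


def fit_interaction_model(fluency, comfort, likability):
    """Ordinary least squares fit of
    likability ~ fluency + comfort + fluency * comfort.

    Predictors are mean-centered before the product term is formed,
    which keeps the main-effect coefficients easy to interpret.
    Returns {name: (coefficient, t_statistic)}.
    """
    f = np.asarray(fluency, dtype=float)
    c = np.asarray(comfort, dtype=float)
    y = np.asarray(likability, dtype=float)

    f_centered = f - f.mean()
    c_centered = c - c.mean()

    # Design matrix: intercept, two main effects, and the product term.
    X = np.column_stack(
        [np.ones_like(f_centered), f_centered, c_centered, f_centered * c_centered]
    )

    beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)

    # Classical OLS standard errors from the residual variance.
    residuals = y - X @ beta
    dof = len(y) - X.shape[1]
    sigma2 = residuals @ residuals / dof
    std_err = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))

    names = ["intercept", "fluency", "comfort", "fluency_x_comfort"]
    return {n: (float(b), float(b / s)) for n, b, s in zip(names, beta, std_err)}


# Synthetic ratings (hypothetical, NOT data from this study):
# likability depends additively on fluency and comfort, with no interaction.
rng = np.random.default_rng(0)
n = 200
fluency = rng.uniform(1, 7, n)
comfort = rng.uniform(1, 7, n)
likability = 1.0 + 0.4 * fluency + 0.5 * comfort + rng.normal(0, 0.5, n)

result = fit_interaction_model(fluency, comfort, likability)
for name, (coef, t_stat) in result.items():
    print(f"{name:>18}: coef = {coef: .3f}, t = {t_stat: .2f}")
```

In such a model, a product-term coefficient that is statistically indistinguishable from zero corresponds to the "no interaction" outcome reported above, while significant main-effect coefficients correspond to fluency and comfort each contributing to likability on their own.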

When robots are employed as store assistants, they are expected to truly enhance the customer experience. Therefore, we discuss the two cases identified in Section 5.2, SR5 and SR7, because their interaction cues yield the highest scores in fluency, comfort and likability. In the case where the robot’s voice and right-side body movements were used (SR5), both fluency and comfort during the shopping process have positive impacts on likability. The interaction between fluency and comfort was also tested and is not significant (p = 0.719), which means that the interaction between the two factors does not affect likability in this case. In the case where the robot’s voice, right-side body movements and colored light were used (SR7), only comfort during the shopping process has a positive impact on likability. Hence, for designers, the dual-channel interaction cues consisting of the robot’s voice and right-side body movements offer two distinct avenues for enhancing likability. Meanwhile, the advantage of the three-channel interaction cues, comprising the robot’s voice, right-side body movements and colored light, is that likability depends on a single aspect, comfort, which simplifies control of the design.

5.4. Limitations

The findings of this study demonstrate the feasibility and necessity of using service robots in small-scale self-service shops, and could be used to design interactions that guide customers and provide more appropriate customer experiences. The study has three limitations. First, the experimental platform, the Yanshee robot, is a small-scale robot with 17 degrees of freedom and cannot represent the full range of robots used in real scenarios. Service robots in many public areas may be larger and have more degrees of freedom and more interactive expressions, which may affect customers’ perception. Second, because the participants in our experiments were relatively young, it is unclear whether the results can inform HRI design for all age groups of customers in small-scale self-service shops. Expanding the age range of participants may lead to more meaningful design directions. Finally, in the shopping scenario without a robot, there is no salesperson in the shop and customers simply shop without asking any questions. However, when a service robot acts as a salesperson, customers may expect it to handle more complex tasks in self-service shops, such as introducing product features and handling returns. Such expectations may also affect the corresponding customer experiences.

6. Conclusions

This paper studied the impact of service robots on customer experiences in small-scale self-service shops. We analyzed customer shopping behaviors and performed early-stage experiments to select appropriate robot body gestures for imitating salesperson behaviors and to establish self-service shopping scenarios. The Yanshee robot was then used for the subsequent experiments. Two self-service shopping scenarios were designed, one featuring a service robot and the other involving no robotic assistance. In the scenario with a robot, eight cases with different interaction cues were designed. Customer experiences were evaluated in terms of fluency, comfort and likability.

The findings of this study explain how varying interaction cues impact fluency, comfort and likability in self-service shopping environments. Furthermore, the study identifies feasible and effective combinations of interaction cues to enhance each of these customer experiences. Additionally, the intrinsic relationships among fluency, comfort and likability are examined, thereby offering valuable insights for the design of a service robot’s interaction cues. The main contributions of this study are summarized as follows:

1. A self-service shop with a voice-only robot shows no significant improvement in fluency, comfort and likability over a shop with no robot. When only the robot’s body movements are present, fluency, comfort and likability are likely to be diminished. In contrast, combining voice prompts with body movements that imitate the behavioral patterns of a human salesperson is a feasible and effective approach for deploying robots in self-service shops.

2. When a new type of interaction cue is added to the robot’s voice, adding body movements can positively influence customers’ experiences of fluency, comfort and likability, whereas adding colored light does not yield similar effects.

3. The feasible and effective interaction cue combinations include the dual-channel cues (robot’s voice prompts and right-side body movements) and the three-channel cues (robot’s voice prompts, right-side body movements and colored light). Compared to a scenario without robots, both combinations can enhance the customer experiences in terms of fluency, comfort and likability. The first combination is cost-effective and improves likability by enhancing both fluency and comfort. With the second combination, only comfort has a positive impact on likability, making it easier to control from a design perspective.

The contributions of this study provide design guidance for HRI in self-service shops aimed at improving customer experiences, thereby facilitating a shift in users’ perception of robots from mere tools to interactive companions. This guidance can help to increase the duration and frequency of human–robot interactions, enabling robots to integrate more harmoniously into society. In addition, the interaction cues designed in this study can improve the predictability and interpretability of robot behavior. These improvements can strengthen users’ acceptance of and trust in robots, promoting interaction efficiency and reducing operational costs.

Author Contributions

Conceptualization, formal analysis, methodology, data curation, writing—draft preparation, funding acquisition, W.G.; investigation, data curation, visualization, Y.T.; data curation, formal analysis, W.Z.; investigation, Y.J. and S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 52105262 and U2013602.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the College of Furnishings and Industrial Design of Nanjing Forestry University (date of approval: 18 January 2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Acknowledgments

The authors would like to express sincere gratitude to Takayuki Kanda for his valuable suggestions on this study. The authors would also like to thank all of the volunteers who took the time and effort to participate in testing.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Okafuji, Y.; Ozaki, Y.; Baba, J.; Nakanishi, J.; Ogawa, K.; Yoshikawa, Y.; Ishiguro, H. Behavioral Assessment of a Humanoid Robot When Attracting Pedestrians in a Mall. Int. J. Soc. Robot. 2022, 14, 1731–1747.

2. Sabelli, A.M.; Kanda, T. Robovie as a Mascot: A Qualitative Study for Long-Term Presence of Robots in a Shopping Mall. Int. J. Soc. Robot. 2016, 8, 211–221.

3. Fridin, M. Storytelling by a Kindergarten Social Assistive Robot: A Tool for Constructive Learning in Preschool Education. Comput. Educ. 2014, 70, 53–64.

4. Karunarathne, D.; Morales, Y.; Nomura, T.; Kanda, T.; Ishiguro, H. Will Older Adults Accept a Humanoid Robot as a Walking Partner? Int. J. Soc. Robot. 2019, 11, 343–358.

5. Manzi, F.; Peretti, G.; Dio, C.D.; Cangelosi, A.; Itakura, S.; Kanda, T.; Ishiguro, H.; Massaro, D.; Marchetti, A. A Robot Is Not Worth Another: Exploring Children’s Mental State Attribution to Different Humanoid Robots. Front. Psychol. 2020, 11, 2011.

6. Qiu, H.L.; Li, M.L.; Shu, B.Y.; Bai, B. Enhancing Hospitality Experience with Service Robots: The Mediating Role of Rapport Building. J. Hosp. Mark. Manag. 2020, 29, 247–268.

7. Lio, T.; Satake, S.; Kanda, T.; Hayashi, K.; Ferreri, F.; Hagita, N. Human-Like Guide Robot that Proactively Explains Exhibits. Int. J. Soc. Robot. 2020, 12, 549–566.

8. Gockley, R.; Bruce, A.; Forlizzi, J.; Michalowski, M.; Mundell, A.; Rosenthal, S. Designing Robots for Long-term Social Interaction. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005.

9. Gross, H.M.; Boehme, H.J.; Schroeter, C.; Mueller, S.; Bley, A. Shopbot: Progress in Developing an Interactive Mobile Shopping Assistant for Everyday Use. In Proceedings of the 2008 IEEE International Conference on Systems, Man, and Cybernetics, Nashville, TN, USA, 12–15 October 2008.

10. Mutlu, B.; Forlizzi, J. Robots in Organizations: The Role of Workflow, Social, and Environmental Factors in Human–Robot Interaction. In Proceedings of the 3rd ACM/IEEE International Conference on Human–Robot Interaction, Boston, MA, USA, 12–15 March 2008.

11. Shi, C.; Satake, S.; Kanda, T.; Ishiguro, H. A Robot that Distributes Flyers to Pedestrians in a Shopping Mall. Int. J. Soc. Robot. 2018, 10, 421–437.

12. Xiao, J.; Zhang, Z.; Terzi, S.; Anwer, N.; Eynard, B. Dynamic Task Allocations with Q-Learning Based Particle Swarm Optimization for Human-Robot Collaboration Disassembly of Electric Vehicle Battery Recycling. Comput. Ind. Eng. 2025, 204, 111133.

13. Hsieh, W.F.; Sato-Shimokawara, E.; Yamaguchi, T. Investigation of Robot Expression Style in Human-Robot Interaction. J. Robot. Mechatron. 2020, 32, 224–235.

14. Tsiourti, C.; Weiss, A.; Wac, K.; Vincze, M. Multimodal Integration of Emotional Signals from Voice, Body and Context: Effect of (In) Congruence on Emotion Recognition and Attitudes Towards Robots. Int. J. Soc. Robot. 2019, 11, 555–573.

15. Faibish, T.; Kshirsagar, A.; Hoffman, G.; Edan, Y. Human Preferences for Robot Eye Gaze in Human-to-Robot Handovers. Int. J. Soc. Robot. 2022, 14, 995–1012.

16. Mcginn, C.; Torre, I. Can you Tell the Robot by the Voice? An Exploratory Study on the Role of Voice in the Perception of Robots. In Proceedings of the 14th IEEE International Conference on Human–Robot Interaction, Portland, OR, USA, 11–14 March 2019.

17. Tahir, Y.; Dauwels, J.; Thalmann, D.; Thalmann, N.M. A User Study of a Humanoid Robot as a Social Mediator for Two-Person Conversations. Int. J. Soc. Robot. 2020, 12, 1031–1044.

18. Berzuk, J.M.; Young, J.E. More than words: A Framework for Describing Human-Robot Dialog Designs. In Proceedings of the 17th Annual ACM IEEE International Conference on Human-Robot Interaction, Pittsburgh, PA, USA, 7–10 March 2022.

19. Xiao, J.; Terzi, S. Large Language Model-Guided Graph Convolution Network Reasoning System for Complex Human-Robot Collaboration Disassembly Operations. Procedia CIRP 2025, 134, 43–48.

20. Porfirio, D.; Sauppé, A.; Albarghouthi, A.; Mutlu, B. Transforming Robot Programs Based on Social Context. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

21. Xiao, J.; Zhang, Z.; Terzi, S.; Tao, F.; Anwer, N.; Eynard, B. Multi-Scenario Digital Twin-Driven Human-Robot Collaboration Multi-Task Disassembly Process Planning Based on Dynamic Time Petri-Net and Heterogeneous Multi-Agent Double Deep Q-Learning Network. J. Manuf. Syst. 2025, 83, 284–305.

22. Onnasch, L.; Roesler, E. A Taxonomy to Structure and Analyze Human–Robot Interaction. Int. J. Soc. Robot. 2020, 13, 833–849.

23. Bartneck, C.; Kulic, D.; Croft, E.; Zoghbi, E. Measurement Instruments for the Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety of Robots. Int. J. Soc. Robot. 2009, 1, 71–81.

24. Sandoval, E.B.; Brandstatter, J.; Yalcin, U.; Bartneck, C. Robot Likeability and Reciprocity in Human Robot Interaction: Using Ultimatum Game to determinate Reciprocal Likeable Robot Strategies. Int. J. Soc. Robot. 2021, 13, 851–862.

25. Tatarian, K.; Stower, R.; Rudaz, D.; Chamoux, M.; Kappas, A.; Chetouani, M. How does Modality Matter? Investigating the Synthesis and Effects of Multi-modal Robot Behavior on Social Intelligence. Int. J. Soc. Robot. 2022, 14, 893–911.

26. Saad, E.; Neerincx, M.A.; Hindriks, K.V. Welcoming Robot Behaviors for Drawing Attention. In Proceedings of the 14th Annual ACM/IEEE International Conference on Human-Robot Interaction, Portland, OR, USA, 11–14 March 2019.

27. Erden, M.S. Emotional Postures for the Humanoid-Robot Nao. Int. J. Soc. Robot. 2013, 5, 441–456.

28. Sugiyama, O.; Kanda, T.; Imai, M.; Ishiguro, H.; Hagita, N.; Anzai, Y. Humanlike Conversation with Gestures and Verbal Cues based on A Three-layer Attention-drawing Model. Connect Sci 2006, 18, 379–402.

29. Liu, P.; Glas, D.F.; Kanda, T.; Ishiguro, H.; Hagita, H. A Model for Generating Socially-Appropriate Deictic Behaviors Towards People. Int. J. Soc. Robot. 2017, 9, 33–49.

30. Liu, P.; Glas, D.F.; Kanda, T.; Ishiguro, H. Learning Proactive Behavior for Interactive Social Robots. Auton. Robot. 2018, 42, 1067–1085.

31. Rea, D.J.; Schneider, S.; Kanda, T. Is this all you can do? Harder!: The Effects of (Im)Polite Robot Encouragement on Exercise Effort. In Proceedings of the 16th ACM/IEEE International Conference on Human-Robot Interaction, Vancouver, BC, Canada, 8–11 March 2021.

32. Ham, J.; Midden, C.J.H. A Persuasive Robot to Stimulate Energy Conservation: The Influence of Positive and Negative Social Feedback and Task Similarity on Energy-Consumption Behavior. Int. J. Soc. Robot. 2014, 6, 163–171.

33. Naito, M.; Rea, D.J.; Kanda, T. Hey Robot, Tell It to Me Straight: How Different Service Strategies Affect Human and Robot Service Outcomes. Int. J. Soc. Robot. 2023, 15, 969–982.

34. Edirisinghe, S.; Satoru, S.; Kanda, T. Field Trial of a Shopworker Robot with Friendly Guidance and Appropriate Admonishments. ACM Trans. Hum.-Robot. Interact. 2023, 12, 1–37.

35. Even, J.; Satake, S.; Kanda, T. Monitoring Blind Regions with Prior Knowledge Based Sound Localization. In Proceedings of the International Conference on Social Robotics, Seoul, Republic of Korea, 27–29 November 2019.

36. Senft, E.; Satake, S.; Kanda, T. Would You Mind Me if I Pass by You? Socially-Appropriate Behaviour for an Omni-based Social Robot in Narrow Environment. In Proceedings of the 15th ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020.

37. Sono, T.; Satake, S.; Kanda, T.; Imai, M. Walking Partner Robot Chatting about Scenery. Adv. Robot. 2019, 33, 742–755.

38. Paliga, M.; Pollak, A. Development and Validation of the Fluency in Human-Robot Interaction Scale. A Two-Wave Study on Three Perspectives of Fluency. Int. J. Hum.-Comput. Stud. 2021, 155, 102698.

39. Sheikholeslami, S.; Hart, J.W.; Chan, W.P.; Quintero, C.P.; Croft, E. Prediction and Production of Human Reaching Trajectories for Human-Robot Interaction. In Proceedings of the 13th ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018.

40. Chatterji, N.; Allen, C.; Chernova, S. Effectiveness of Robot Communication Level on Likeability, Understandability and Comfortability. In Proceedings of the 28th IEEE International Conference on Robot and Human Interactive Communication, Daejeon, Republic of Korea, 14–18 October 2019.

41. Redondo, M.E.L.; Niewiadomski, R.; Rea, F.; Incao, S.; Sandini, G.; Sciutti, A. Comfortability Analysis Under a Human–Robot Interaction Perspective. Int. J. Soc. Robot. 2024, 16, 77–103.

42. Redondo, M.E.L.; Vignolo, A.; Niewiadomski, R.; Rea, F.; Sciutti, A. Can Robots Elicit Different Comfortability Levels? In Proceedings of the 12th International Conference on Social Robotics, Porto, Portugal, 1–3 November 2020.

43. Koay, K.L.; Walters, M.L.; Dautenhahn, K. Methodological Issues Using a Comfort Level Device in Human–Robot Interactions. In Proceedings of the 14th IEEE International Workshop on Robot and Human Interactive Communication, Osaka, Japan, 13–15 July 2005.

44. Park, E.; Jin, D.; Del Pobil, A.P. The Law of Attraction in Human-Robot Interaction. Int. J. Adv. Robot. Syst. 2012, 9, 35.

45. Ball, A.; Silvera-Tawil, D.; Rye, D.; Velonaki, M. Group Comfortability When a Robot Approaches. In Proceedings of the 6th International Conference on Social Robotics, Brisbane, Queensland, Australia, 27–30 October 2014.

46. Seo, K.H.; Lee, J.H. The Impact of Service Quality on Perceived Value, Image, Satisfaction, and Revisit Intention in Robotic Restaurants for Sustainability. Sustainability 2025, 17, 7422.

47. Zhu, D.H. Effects of Robot Restaurants’ Food Quality, Service Quality and High-Tech Atmosphere Perception on Customers’ Behavioral Intentions. J. Hosp. Tour. Technol. 2022, 13, 699–714.

48. Haring, K.S.; Matsumoto, Y.; Watanabe, K. How Do People Perceive and Trust a Lifelike Robot. In Proceedings of the International Conference on Intelligent Automation & Robotics, Kuala Lumpur, Malaysia, 26–29 June 2013.

49. Maniscalco, U.; Minutolo, A.; Storniolo, P.; Esposito, M. Towards a More Anthropomorphic Interaction with Robots in Museum Settings: An Experimental Study. Robot. Auton. Syst. 2024, 171, 104561.

50. Salem, M.; Eyssel, F.; Rohlfing, K.; Kopp, S.; Joublin, F. To Err is Human(-like): Effects of Robot Gesture on Perceived Anthropomorphism and Likability. Int. J. Soc. Robot. 2013, 5, 313–323.

51. Okafuji, Y.; Song, S.; Baba, J.; Yoshikawa, Y.; Ishiguro, H. Influence of Collaborative Customer Service by Service Robots and Clerks in Bakery Stores. Front. Robot. AI 2023, 10, 1125308.

52. Song, S.; Baba, J.; Okafuji, Y.; Nakanishi, J.; Yoshikawa, Y.; Ishiguro, H. New Comer in the Bakery Store: A Long-Term Exploratory Study Toward Design of Useful Service Robot Applications. Int. J. Soc. Robot. 2024, 16, 1901–1918.

53. Brengman, M.; De Gauquier, L.; Willems, K.; Vanderborght, B. From Stopping to Shopping: An Observational Study Comparing a Humanoid Service Robot with a Tablet Service Kiosk to Attract and Convert Shoppers. J. Bus. Res. 2021, 134, 263–274.

54. Rodway, P.; Schepman, A.; Crossley, B.; Lee, J. A Leftward Perceptual Asymmetry When Judging the Attractiveness of Visual Patterns. Laterality 2019, 24, 1–25.

55. Bourne, V.J. How Are Emotions Lateralised in the Brain? Contrasting Existing Hypotheses Using the Chimeric Faces Test. Cogn. Emot. 2010, 24, 903–911.

56. Nicholls, M.E.R.; Hobson, A.; Petty, J.; Churches, O.; Thomas, N.A. The Effect of Cerebral Asymmetries and Eye Scanning on Pseudoneglect for a Visual Search Task. Brain Cogn. 2017, 111, 134–143.

57. Reeves, B.; Nass, C.I. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places. Philos. Soc. Sci. 1996, 30, 120–124.

58. Admoni, H.; Scassellati, B. Social Eye Gaze in Human–Robot Interaction: A Review. J. Hum.-Robot. Interact. 2017, 6, 25–63.

59. Ge, W.; Zhang, J.; Shi, X.; Tang, W.; Tang, W.; Qian, L. Effect of Dynamic Point Symbol Visual Coding on User Search Performance in Map-Based Visualizations. ISPRS Int. J. Geo-Inf. 2025, 14, 305.

60. Zhou, C.; Xu, B.; Xu, X.; Kaner, J. Exploring the Creation of Multi-Modal Soundscapes in the Indoor Environment: A Study of Stimulus Modality and Scene Type Affecting Physiological Recovery. J. Build. Eng. 2025, 100, 113327.

61. Jewell, G.; McCourt, M.E. Pseudoneglect: A Review and Meta-Analysis of Performance Factors in Line Bisection Tasks. Neuropsychologia 2000, 38, 93–110.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).