Abstract

Wind power plays an increasingly vital role in sustainable energy production, yet the harsh environments in which turbines operate often lead to mechanical or structural degradation. Detecting such faults early is essential to reducing maintenance expenses and extending operational lifetime. In this work, we propose a deep learning-based image classification framework designed to assess turbine condition directly from drone-acquired imagery. Unlike object detection pipelines, which require locating specific damage regions, the proposed strategy focuses on recognizing global visual cues that indicate the overall turbine state. A comprehensive comparison is performed among several lightweight and transformer-based architectures, including MobileNetV3, ResNet, EfficientNet, ConvNeXt, ShuffleNet, ViT, DeiT, and DINOv2, to identify the most suitable model for real-time deployment. The MobileNetV3-Large network achieved the best trade-off between performance and efficiency, reaching 98.9% accuracy while maintaining a compact size of 5.4 million parameters. These results highlight the capability of compact CNNs to deliver accurate and efficient turbine monitoring, paving the way for autonomous, drone-based inspection solutions at the edge.

1. Introduction

The growing global emphasis on sustainability and carbon reduction has accelerated innovation across the renewable energy landscape. Among these technologies, wind power has emerged as one of the most mature and scalable solutions for clean electricity generation [1]. The deployment of wind turbines has expanded rapidly worldwide over the past decade, contributing significantly to national and regional energy portfolios. In 2021, wind power accounted for approximately 10.2% of total electricity generation in the United States, positioning it as the second-largest source of newly installed capacity after solar energy [2,3]. Beyond its economic potential, wind energy plays a vital role in lowering greenhouse gas emissions and advancing the objectives of the Paris Agreement, which seeks to restrict global temperature rise to 1.5 °C [4].

Wind energy systems can be broadly categorized into onshore and offshore configurations [5]. Figure 1 illustrates the basic process by which kinetic wind energy is converted into electrical power and subsequently integrated into the grid. In these systems, the turbine blades rotate in response to air flow, driving a generator that initially produces Direct Current (DC). The generated DC power is then converted into Alternating Current (AC) through an inverter before being supplied to the power grid for end-user consumption [6,7].

Maintaining the operational reliability and energy efficiency of wind turbines is critical to ensuring consistent and cost-effective power generation. Exposure to fluctuating weather conditions, mechanical stress, and material fatigue can result in component degradation or complete system faults [8,9]. These issues not only increase maintenance costs but also cause unplanned outages that impact energy output. According to the European Wind Energy Association, nearly 30% of total generation costs can be attributed to maintenance and operational activities [10].

Owing to its minimal environmental footprint and scalability, wind energy continues to gain traction among industries and communities seeking cleaner power alternatives [11]. However, prolonged turbine operation under dynamic environmental conditions makes continuous monitoring indispensable. Effective inspection and diagnostic systems are therefore essential to prevent performance degradation and extend turbine lifespan [6,12].

Figure 1.

Onshore versus Offshore Wind Turbine Systems [13].

Early detection of wind turbine damage is essential for maintaining efficiency and extending system lifespan. Mechanical or structural faults such as gear issues or blade cracks can reduce power output, cause financial losses, and lead to further deterioration, like corrosion. Since turbine components are interdependent, damage to one part can impact the entire system’s performance both immediately and in the long term.

Computer vision techniques have shown strong potential for detecting damage in wind turbines by analyzing visual anomalies on turbine components. These methods leverage image processing, pattern recognition, and deep learning models to identify irregularities indicative of damage. Although effective in many cases, computer vision approaches may be constrained when used in isolation, particularly in scenarios where complementary data or diagnostic methods are necessary for a comprehensive assessment. Therefore, integrating computer vision with additional monitoring strategies can enhance the overall reliability of damage detection systems in wind turbines.

Effective monitoring and timely intervention are essential for preserving operational efficiency and extending the lifespan of wind turbines. Traditional maintenance practices often rely on manual inspection methods, which are time-consuming, labor-intensive, and costly. These limitations highlight the need for automated, scalable, and cost-efficient solutions.

To address these challenges, this work proposes the integration of Artificial Intelligence (AI) technologies, particularly deep learning-based computer vision methods, to enhance the reliability and efficiency of wind turbine maintenance. Specifically, we develop a deep learning-based image classification model designed to automatically categorize turbines into “Damaged” and “Undamaged” classes. The approach is designed for integration with drone- or robot-acquired imagery, enabling scalable and real-time damage detection in operational environments.

In this work, we propose an image classification-based approach instead of object detection, motivated by the nature of the task, where each image corresponds to a single, holistic state (e.g., normal or faulty). Unlike object detection, which requires identifying and localizing multiple objects within an image, our problem does not involve spatially distinct entities that need to be detected individually. Instead, the entire image reflects a global condition, making classification more suitable and efficient.

This choice offers several key advantages: (1) it significantly simplifies the annotation process, as only image-level labels are needed instead of precise bounding boxes; (2) classification models are computationally lighter and faster, which is ideal for real-time inference and deployment in resource-constrained environments (e.g., embedded systems or edge devices); and (3) classification is better aligned with applications where the anomaly or condition alters the overall texture, frequency, or global visual pattern of the image, rather than isolated regions.

Additionally, classification models can still provide interpretability (e.g., via attention maps or saliency visualization) without the overhead of object detection architectures. This makes our solution more scalable, easier to train, and better adapted to the target application.

The proposed classification solution can build on different families of deep learning models, such as convolutional and/or transformer architectures, to achieve the best performance. A comparative analysis is therefore conducted in this work to find the most suitable deep learning model (single or ensemble) for the damage detection step. The solution employs deep learning models to autonomously analyze images of wind turbines collected by drones or robots. By precisely identifying turbines affected by damage, the classifier facilitates proactive maintenance strategies, allowing for swift damage rectification and optimization of the overall performance and lifespan of wind energy installations.

The proposed solution is thus intended for periodic, automated checks that identify potential mechanical issues and/or structural damage on wind turbine components, helping to maintain optimal performance and minimize the impact on energy output. By detecting cracks or wear efficiently and robustly, it supports the timely repair or replacement of damaged components, which reduces energy losses. In some cases, damaged components may need replacement to restore the system’s efficiency and maximize energy production.

Despite extensive progress in the field, several challenges persist in existing research on wind turbine condition monitoring [14]:

- Heavy reliance on handcrafted or domain-specific features, which limits scalability and adaptability across different operational conditions [15].

- High computational and memory costs of existing deep models, restricting their suitability for real-time or embedded deployment [16].

- Limited use of drone-acquired visual data and the absence of domain adaptation techniques specifically designed for aerial inspection perspectives.

- Insufficient integration of multimodal signals (e.g., thermal, vibration, acoustic) for robust and comprehensive decision-making in predictive maintenance frameworks [17].

These limitations collectively motivate the development of lightweight and efficient models tailored for real-time, drone-based visual monitoring of wind turbines.

1.1. Motivations & Contributions

In this work, we propose using a drone or ground robot equipped with an RGB imaging camera, microprocessor, and independent power supply to inspect wind turbine damage, as shown in Figure 2. The system is designed to autonomously navigate around the wind farm, capturing images of each turbine for analysis. Two processing modes are considered: offline, where images are stored for post-flight analysis, and online, where captured images are processed in real time using the onboard trained model.

Figure 2.

Drone Wind Turbine Damage Detection approach that will be based on the proposed ensemble DL models.

In offline mode, the solution is divided into two steps: mid-flight and post-processing operations. During the mid-flight operation, the robotic system (i.e., drone or ground robot) navigates the wind farm and collects wind turbine images together with their corresponding locations. Post-processing begins after the robotic system has landed: the stored images are classified using the trained deep learning image classification model to detect damaged wind turbines. In online mode, by contrast, each collected image is processed live on the robotic system using the trained model. If a wind turbine is found to be damaged, the robotic system communicates this result to the application server or cloud.
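The two processing modes can be sketched in a few lines of Python. This is only an illustrative sketch: the `Capture` record, the `inspect` function, and the `classify` callable are hypothetical names introduced here, and the trained model is stood in for by any function that returns "Damaged" or "Undamaged".

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Capture:
    """Hypothetical record for one captured image and where it was taken."""
    image_id: str
    location: Tuple[float, float]


def inspect(captures: List[Capture],
            classify: Callable[[Capture], str],
            online: bool) -> List[Capture]:
    """Return the captures flagged as damaged.

    online=True : classify each image as soon as it is captured
                  (a real system would report the result immediately).
    online=False: only store images mid-flight, classify after landing.
    """
    flagged: List[Capture] = []
    stored: List[Capture] = []
    for cap in captures:
        if online:
            if classify(cap) == "Damaged":
                flagged.append(cap)  # would be sent to the server/cloud here
        else:
            stored.append(cap)       # mid-flight: collect image and location
    if not online:
        # Post-processing step, executed after the flight.
        flagged = [cap for cap in stored if classify(cap) == "Damaged"]
    return flagged
```

Both modes flag the same turbines; they differ only in when classification happens and therefore in how quickly a damaged turbine can be reported.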

The main objective of this work is to propose a global framework that can benefit from the proposed AI detection approach (machine/deep learning model) with new technologies such as drones and robots.

Therefore, the main contributions of this work are listed below:

- Dataset Collection: Collect a sufficient number of samples for each class to construct a new dataset, and apply data augmentation techniques to improve the performance of the trained model.

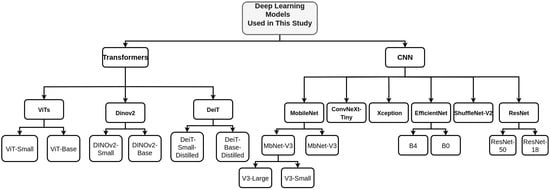

- Comparative Analysis: Conduct a comparative analysis between different deep neural network architectures (see Figure 3) with fine-tuning to identify the best damage detection classifier model(s).

Figure 3. A set of main CNN and Transformer deep learning image classifier models.

- Optimization Strategies: Investigate knowledge distillation and self-attention mechanisms to enhance lightweight Convolutional Neural Networks (CNNs), assessing their effect on performance and robustness under deployment constraints.

- Real-World Suitability: Demonstrate that lightweight, high-performing models can be effectively deployed in real-world monitoring systems, such as drones or robots, enabling timely and autonomous wind turbine inspection.

Therefore, the main contribution of this work is a comparative analysis between different deep neural network architectures with and without fine-tuning to identify the best one for damage detection and identification in wind turbines (see Table 1).

Table 1.

Key acronyms and their definitions.

1.2. Organization

The remainder of this paper is organized as follows. Section 2 provides an overview of wind energy systems, including their components, turbine types, typical damage types, and the formulation of the damage detection problem. Section 3 reviews related work on wind turbine damage detection using image-based and deep learning techniques. Section 4 introduces the proposed solution, describing the dataset, preprocessing, the deep learning models employed, and the fine-tuning strategy. Section 5 outlines the experimental setup, including data augmentation, training procedures, and enhancements such as knowledge distillation and Multi-Head Self-Attention (MHSA). Section 6 presents the evaluation metrics and a detailed comparison of model performances, along with confusion matrices and prediction visualizations. Section 7 discusses the key findings and insights drawn from the results, particularly concerning model efficiency and deployment feasibility. Finally, Section 8 concludes the paper and outlines directions for future work, including multimodal input integration and real-time deployment on UAV or robotic systems. The key acronyms used throughout this paper are summarized in Table 1.

2. Fundamentals of Wind Energy Systems

In this section, we outline the fundamentals of wind energy systems. The first part describes their structure and operating principles, while the second discusses the main damage types and detection approaches.

2.1. Structure and Operating Principles of Wind Turbines

Wind energy systems are designed to capture the kinetic motion of air and transform it into mechanical or electrical power. Electricity generation represents the main use of this conversion process, which provides a renewable and environmentally sustainable energy source capable of supporting large-scale decarbonization efforts [18].

A typical wind power plant comprises several turbines, most often horizontal-axis units equipped with three blades and mounted on tall towers to reach higher and steadier airflows [19]. Each turbine operates through a set of interdependent subsystems, including the rotor blades, drive shafts, gearbox, generator, yaw mechanism, braking system, tower, and control unit. These components together ensure efficient conversion of aerodynamic energy into usable electrical power.

When wind speed exceeds the turbine’s cut-in threshold, the aerodynamic blades begin to rotate and transfer torque to a low-speed shaft. This torque is transmitted through the gearbox, which increases the rotational speed to drive the generator effectively. The generator then produces electricity through electromagnetic induction, typically delivering power as Alternating Current (AC) for integration into the electrical grid [20].

The essential mechanical and structural parts of a modern wind turbine are summarized in Table 2 [21,22,23].

Table 2.

Main components of a wind turbine.

To maintain optimal power capture, turbines use a yaw system that reorients the nacelle toward the wind’s direction. This adjustment maximizes aerodynamic efficiency by ensuring that the blades face incoming air flow. The tower not only elevates the turbine but also provides structural rigidity for the entire assembly.

Under extreme wind conditions or during maintenance, a braking system halts the blades to prevent excessive mechanical stress. The controller monitors real-time operational variables such as wind velocity, yaw position, and electrical output and adjusts the blade pitch or yaw angle accordingly to preserve safe and efficient operation [24]. The coordinated performance of these subsystems enables reliable conversion of wind energy into electrical power [25].

2.2. Wind Turbine Damage Mechanisms

Wind turbines are subject to a wide range of mechanical and electrical stresses originating from environmental and operational factors. Variations in wind speed and direction, blade aerodynamics, rotor balance, bearing wear, and generator or gearbox integrity can each contribute to component degradation over time [26,27]. These issues generally fall into several categories that correspond to the subsystem in which the fault occurs. An overview of the most common categories is provided in Table 3.

Table 3.

Typical fault categories observed in wind turbines.

2.3. Damage Detection and Identification

The fault detection process aims to determine whether a wind turbine is operating normally or exhibiting signs of damage. Each observation is therefore classified into one of two categories: damaged or undamaged. The detection model learns to associate visual input data with the correct operational state based on labeled examples collected during training.

In practice, this mapping is achieved by training deep learning models on annotated image datasets, enabling them to automatically recognize patterns that indicate turbine faults. Once trained, the best-performing model can be deployed on drones or inspection robots to assess turbine health in real time and transmit alerts when anomalies are detected.

To ensure robust and accurate performance, various neural network architectures may be explored and fine-tuned. The evaluation relies on standard performance indicators such as accuracy, precision, recall, F1-score, and the area under the ROC curve, which collectively measure the model’s ability to distinguish between damaged and undamaged turbines while minimizing false detections. These metrics are widely adopted in the literature for assessing the reliability of classification-based fault detection systems.
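As a minimal sketch of how the threshold-based indicators above are obtained, the following Python function derives accuracy, precision, recall, and F1-score from the four confusion-matrix counts, treating "damaged" as the positive class. The function name and the example counts are illustrative assumptions, not values from this study; the area under the ROC curve is omitted because it requires per-image scores rather than counts.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute standard binary classification metrics from confusion counts.

    tp: damaged turbines predicted as damaged
    fp: undamaged turbines predicted as damaged (false alarms)
    fn: damaged turbines predicted as undamaged (missed faults)
    tn: undamaged turbines predicted as undamaged
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = binary_metrics(tp=90, fp=5, fn=10, tn=95)
```

With these hypothetical counts, accuracy is 185/200 = 0.925 and recall is 90/100 = 0.9. Recall is the quantity that penalizes missed faults, which is why it is usually reported alongside accuracy in inspection settings.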

3. Related Work

Image classification represents a fundamental task across numerous visual domains such as manufacturing, agriculture, and renewable energy. The goal is to categorize an image into predefined classes, for instance, identifying it as “faulty” or “non-faulty” in a binary setup, or into multiple defect types in a multi-class configuration. These techniques have been applied extensively in diverse sectors, for example, plant disease identification in agriculture [28], automated quality inspection in manufacturing [29], and lesion detection in medical diagnostics [30].

In the context of wind energy, early studies have explored the use of infrared thermography for identifying anomalies in turbine blades. Thermal imaging enables the detection of hotspots indicative of structural defects or material stress [31]. For instance, Han et al. [32] implemented convolutional neural networks (CNNs) to interpret thermographic data and accurately locate heat-related irregularities, thus enabling preventive maintenance. Similarly, Mostafavi et al. [33] combined thermal and vibration sensors to distinguish between normal and faulty turbine states, analyzing efficiency variations under mechanical wear or structural damage. Although thermography achieves good diagnostic precision, its deployment remains expensive due to the need for specialized imaging hardware and controlled environmental conditions.

More recent approaches rely on conventional computer vision and deep learning frameworks to automate turbine fault detection. Several works have enhanced visual data through preprocessing steps such as wavelet transformation and morphological filtering, which improve image clarity before classification. Subsequently, deep convolutional networks such as modified VGG-19 architectures have been trained to identify blade surface defects, achieving accuracies up to 87.8% with real-time inference performance [34]. Attention-based architectures such as Vision Transformers (ViTs) have also been investigated for this task [35,36]. While ViT models demonstrate strong recognition of surface impurities and outperform CNN baselines like MobileNet, VGG16, and ResNet50, their effectiveness in detecting actual structural damage is limited, largely due to restricted training datasets and the subtle visual characteristics of such faults.

In addition to conventional computer vision studies, several works have provided comprehensive overviews of condition monitoring and fault diagnosis strategies in wind turbines [14]. These reviews trace the evolution of data-driven approaches and highlight persistent challenges such as high computational cost, limited real-time applicability, and the need for more efficient diagnostic models [37]. Recent surveys have further emphasized the importance of integrating multimodal sensing and advanced learning techniques to improve reliability and scalability in wind turbine monitoring systems [38]. The proposed image-based lightweight framework aligns with these insights by enabling real-time visual assessment from drone-acquired imagery, offering a computationally efficient and practical solution for edge-based wind turbine inspection systems.

4. Proposed Solution

This section presents the complete workflow of the proposed damage classification system, including dataset characteristics, model architectures (both CNN and Vision Transformer-based), and the fine-tuning strategy employed to adapt pre-trained models to the wind turbine domain.

4.1. Datasets Description

The dataset comprises RGB images of wind turbine blades collected from two publicly available datasets: the Nordtank dataset provided by DTU Wind Energy [39] and the Roboflow Wind Turbine Faults Detection dataset [40]. The Roboflow dataset includes multi-class annotations representing various fault types but exhibits significant class imbalance. To address this issue, we merged it with the Nordtank dataset, which provides binary labels focused solely on damage detection. This integration helped to achieve a more balanced distribution across classes and improved the robustness of the training data.

The merged dataset is categorized into two classes: Undamaged, which includes blades without visible flaws, and Damaged, which includes blades with clear signs of physical deterioration such as cracks, erosion, or deformation. Both source datasets were filtered to remove irrelevant images and noise, such as images dominated by the sky or by grass near the wind turbines. In several cases, the wind turbine blades were barely visible or entirely absent from the frame. These noisy and uninformative samples were excluded to ensure the dataset contains only meaningful content for training robust and accurate models.

The collected images vary in background, quality, and lighting, with some containing noisy elements such as watermarks or text overlays. A rigorous manual cleaning process was conducted to ensure labeling consistency and remove low-quality or irrelevant samples.

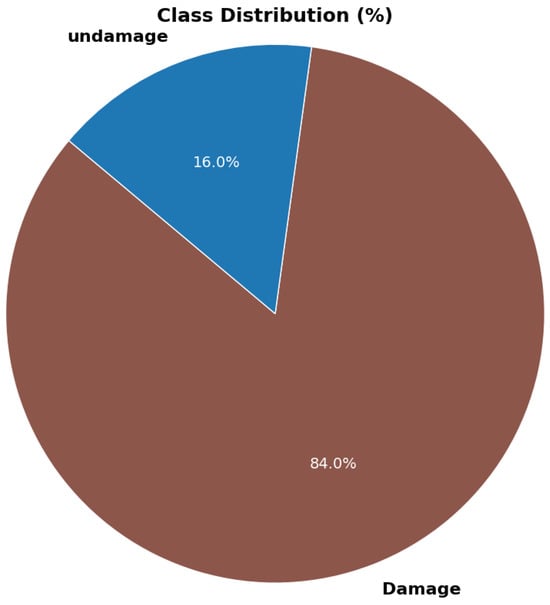

After preprocessing, the final dataset comprises 6954 images labeled as “Damaged” and 1329 images as “Undamaged”, highlighting a significant class imbalance, as illustrated in Figure 4.

Figure 4.

Class distribution of the collected dataset: “Damaged” vs. “Undamaged”.

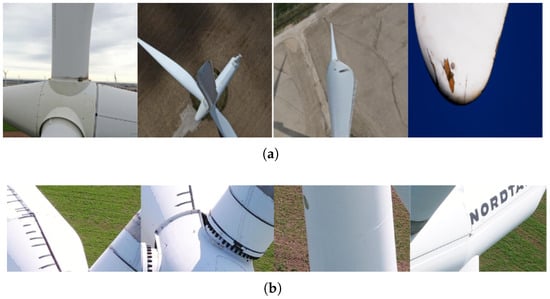

To mitigate this imbalance, we combined the multi-class Roboflow dataset with the binary Nordtank dataset, effectively enriching the minority class. Example images are provided in Figure 5, where panel (a) shows damaged blades and panel (b) shows undamaged blades. In addition, extensive data augmentation techniques, including random rotation, flipping, color jitter, and Gaussian noise, were applied during training to increase intra-class variability and prevent overfitting to the majority class. This combined strategy helped reduce the impact of class imbalance without requiring explicit re-weighting or synthetic oversampling. Future work may nonetheless investigate complementary approaches such as focal loss or adaptive class weighting to further improve robustness.
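To make the scale of the imbalance concrete, and as a hedged illustration of the class weighting mentioned as possible future work (it is not part of the reported pipeline), the sketch below computes the imbalance ratio and inverse-frequency class weights from the reported image counts. The weighting formula, total / (number of classes × class count), is one common convention and is an assumption here.

```python
# Image counts per class as reported for the final dataset.
counts = {"Damaged": 6954, "Undamaged": 1329}

total = sum(counts.values())                       # 8283 images overall
imbalance_ratio = max(counts.values()) / min(counts.values())

# Inverse-frequency weights (assumed convention): rarer classes get
# proportionally larger weights, and a balanced dataset would give 1.0 each.
class_weights = {
    label: total / (len(counts) * n) for label, n in counts.items()
}

for label, weight in class_weights.items():
    print(f"{label}: weight = {weight:.4f}")
print(f"imbalance ratio = {imbalance_ratio:.2f}")
```

Under this convention the larger class receives a weight below 1 and the smaller class a weight above 1, so that both contribute comparably to a weighted loss.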

Figure 5.

Example of wind turbine blade images showing (a) damaged and (b) undamaged conditions.

4.2. Model Definition

The classification approach leverages pre-trained deep learning models that are fine-tuned on the wind turbine image dataset. Each input image is labeled as either Damaged or Undamaged. The images are processed through the backbone of a pre-trained model to extract meaningful visual features automatically. These features are then passed through a classification head that outputs the probability of each class, allowing the model to predict whether a turbine is damaged or not.
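The last step of this pipeline, turning backbone features into class probabilities, can be sketched in plain Python. This is an illustrative sketch only: it assumes a single linear layer followed by a softmax as the classification head, and the `classify` function, the feature vector, and the weights are hypothetical placeholders rather than the trained model.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def classify(features: Sequence[float],
             weights: Sequence[Sequence[float]],
             bias: Sequence[float],
             labels: Tuple[str, ...] = ("Damaged", "Undamaged")
             ) -> Tuple[str, List[float]]:
    """Apply a linear head (one weight row per class) and return
    the predicted label together with the class probabilities."""
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(weights, bias)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs


# Hypothetical 2-dimensional feature vector and head parameters.
label, probs = classify([1.0, 2.0], [[0.5, 0.5], [-0.5, 0.0]], [0.0, 0.0])
```

In a real model the feature vector has hundreds or thousands of dimensions and the head parameters are learned during fine-tuning; the structure of the computation is the same.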

4.3. CNN & Vision Transformers (ViTs) Models

To establish a strong deep learning baseline for damage classification, we evaluated a range of Convolutional Neural Networks (CNNs) and Vision Transformer (ViT) architectures. These models were selected based on their proven performance in image recognition tasks and their adaptability to resource-constrained environments.

4.3.1. CNN Models

Several convolutional neural networks pre-trained on the ImageNet dataset [41] were explored as feature extractors. The selected models include both standard and lightweight architectures, optimized either for accuracy, computational efficiency, or low-latency inference. Table 4 provides a comparative summary of the CNN models, detailing their parameter sizes, design characteristics, strengths, and limitations.

Table 4.

Comparison of Convolutional Neural Network (CNN) Models evaluated in this study.

4.3.2. Vision Transformers (ViTs)

In parallel with CNNs, Vision Transformer architectures were assessed for their ability to capture long-range dependencies and model global context through self-attention mechanisms. ViTs process images as sequences of fixed-size patches, enabling a fundamentally different approach to visual representation learning. Table 5 summarizes the ViT models considered in this study, highlighting their configurations and suitability for fault detection tasks.

Table 5.

Comparison of Vision Transformer (ViT) Models evaluated in this study.

Together, these architectures offer complementary strengths: CNNs excel in capturing local patterns and texture, while ViTs are advantageous for learning global structure and contextual relationships. Their evaluation provides a comprehensive baseline for comparing traditional and attention-based image models in the context of damage and fault classification.

4.4. Fine-Tuning

Fine-tuning involves refining a pre-trained model through subtle adjustments to optimize its performance within a specific application domain. Following the initial training, the pre-trained model undergoes fine-tuning by unfreezing some or all of its layers and retraining them, including the classification head, using a very low learning rate. This iterative process enables the gradual adaptation of the pre-trained features to better align with the characteristics of the new dataset. Fine-tuning holds promise for delivering substantial improvements by iteratively refining the model’s learned representations to better suit the nuances and complexities of the target dataset.

5. Experimental Setup and Methodology

This section outlines the methodology used for automated wind turbine damage classification using deep learning. We describe the dataset preparation, model selection, training procedure, and evaluation metrics. Experiments were also conducted to explore the effect of knowledge distillation and the integration of Multi-Head Self-Attention (MHSA) into CNN architectures.

5.1. Data Preprocessing and Augmentation

All input images were resized to a fixed input resolution, normalized using the standard ImageNet statistics, and split into 80% for training and 20% for validation. To improve model generalization and robustness, several augmentation techniques were applied during training, as summarized in Table 6.

Table 6.

Data augmentation techniques applied during training.

To ensure robustness and reproducibility of the reported results, all experiments were conducted under a deterministic configuration with fixed random seeds controlling data shuffling, weight initialization, and GPU operations. This setup minimizes stochastic variation across runs and guarantees that model outcomes are reproducible. Repeated training under identical settings yielded negligible differences in performance metrics (standard deviation below 0.1% for both accuracy and F1-score), confirming the stability of the results. Therefore, full cross-validation was deemed unnecessary, as the outcomes remained consistent across independent executions.
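The normalization and the seeded 80/20 split can be sketched as follows. This is a minimal sketch under stated assumptions: the per-channel mean and standard deviation are the commonly used ImageNet values, the seed value 42 and the function names are hypothetical, and the split is a plain random shuffle (whether the actual split was stratified by class is not stated).

```python
import random
from typing import List, Tuple

# Commonly used ImageNet per-channel statistics (RGB, for inputs in [0, 1]).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb: Tuple[int, int, int]) -> Tuple[float, ...]:
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple((v / 255.0 - m) / s
                 for v, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))


def split_indices(n: int, train_fraction: float = 0.8,
                  seed: int = 42) -> Tuple[List[int], List[int]]:
    """Shuffle indices with a fixed seed so the split is reproducible."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    cut = int(n * train_fraction)
    return indices[:cut], indices[cut:]


# 8283 = 6954 + 1329 images in the final dataset.
train_idx, val_idx = split_indices(8283)
```

Because the shuffle uses its own seeded generator, calling `split_indices` again with the same seed returns exactly the same partition, which is the property the deterministic configuration relies on.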

5.2. Training Procedure

All models were trained using a supervised learning framework with consistent hyperparameter settings to ensure fair comparison. Recent studies have also investigated efficient training strategies aimed at reducing computational cost and training time of deep networks through intelligent data reduction and sampling methods [55].

The training configuration is summarized as follows:

- Loss Function: Cross-entropy loss was used for all classification tasks.

- Optimizer: The Adam optimizer was employed with a fixed initial learning rate.

- Learning Rate Scheduling: A Reduce-on-Plateau strategy was adopted to automatically decrease the learning rate when the validation loss plateaued.

- Batch Size: Mini-batches of 128 samples were used during training and 256 during evaluation.

- Early Stopping: Training was halted if no improvement in validation loss was observed for five consecutive epochs, to prevent overfitting.

- Epochs: Models were trained for up to 30 epochs, with checkpointing enabled based on validation accuracy improvements.

This training protocol was consistently applied across all model architectures, ensuring methodological consistency and reliable performance comparisons.
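The interaction between the Reduce-on-Plateau schedule, early stopping, and the epoch limit can be sketched as a small control loop over a sequence of validation losses. Only the five-epoch early-stopping patience and the 30-epoch limit come from the configuration above; the initial learning rate, the reduction factor, and the scheduler patience are hypothetical values chosen for illustration, and checkpointing on validation accuracy is omitted for brevity.

```python
from typing import List, Sequence, Tuple


def run_schedule(val_losses: Sequence[float],
                 lr: float = 1e-3,        # assumed initial learning rate
                 factor: float = 0.1,     # assumed LR reduction factor
                 lr_patience: int = 2,    # assumed scheduler patience
                 stop_patience: int = 5,  # early-stopping patience (epochs)
                 max_epochs: int = 30) -> List[Tuple[int, float]]:
    """Return (epoch, learning_rate) pairs for the epochs actually run."""
    best = float("inf")
    since_best = 0   # epochs since the best validation loss (early stopping)
    since_lr = 0     # stagnant epochs since the last improvement or LR cut
    history: List[Tuple[int, float]] = []
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, since_best, since_lr = loss, 0, 0
        else:
            since_best += 1
            since_lr += 1
            if since_lr > lr_patience:   # plateau detected: reduce the LR
                lr *= factor
                since_lr = 0
        history.append((epoch, lr))
        if since_best >= stop_patience:  # no improvement for 5 epochs: stop
            break
    return history


# Hypothetical validation-loss curve that stops improving after epoch 3.
history = run_schedule([0.9, 0.7, 0.6, 0.61, 0.62, 0.63, 0.64, 0.65, 0.66])
```

In this hypothetical run the learning rate is reduced once at epoch 6, and training stops at epoch 8, five epochs after the best validation loss at epoch 3.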

5.3. Knowledge Distillation

In this part, we present the knowledge distillation framework designed to transfer knowledge from high-capacity teacher networks to lightweight student models, improving their accuracy and generalization while preserving computational efficiency. We also explore the integration of self-attention mechanisms into CNN backbones to capture global contextual dependencies, along with the evaluation metrics used to assess model performance and deployment feasibility.

5.3.1. Knowledge Distillation Formulation and Implementation

To enhance the performance of lightweight convolutional neural networks, a Knowledge Distillation (KD) framework is employed. In this setup, a high-capacity model acts as a teacher, guiding the training of smaller student models by providing both hard and soft supervision.

Experiments were conducted using two different teacher architectures: MobileNetV3-Large, a lightweight yet expressive model optimized for mobile devices, and EfficientNet-B0, a well-balanced architecture known for its high accuracy-to-efficiency ratio. The student models were selected from the MobileNetV3-Small family, using width multipliers of 1.0, 0.75, and 0.50 to control model capacity.

The softened probabilities of both the teacher and the student incorporate the temperature parameter $T$, which controls the smoothness of the class distributions. The complete objective is expressed as:

$$\mathcal{L}_{KD} = (1-\alpha)\,\mathcal{L}_{CE}(y, p_s) + \alpha\,T^{2}\,\mathcal{L}_{KL}\left(p_t^{(T)} \,\|\, p_s^{(T)}\right)$$

Here, $\mathcal{L}_{CE}$ denotes the cross-entropy loss with respect to the ground-truth labels $y$, while $\mathcal{L}_{KL}$ represents the Kullback–Leibler divergence between the softened outputs of the teacher ($p_t^{(T)}$) and student ($p_s^{(T)}$) networks. The parameter $\alpha$ balances the two loss components, and $T$ denotes the temperature, set in our experiments following standard practice [53].

This distillation strategy allows student networks to benefit from the nuanced knowledge captured by more expressive teacher models. Comparative results across the two teacher configurations revealed that the choice of teacher has a measurable impact on student performance, particularly for the smallest variant (width multiplier 0.50), highlighting the sensitivity of distillation effectiveness to teacher capacity and representation power.

5.3.2. Integration of Multi-Head Self-Attention Mechanisms

To examine the impact of global contextual reasoning on damage classification performance, a multi-head self-attention (MHSA) mechanism was incorporated into selected CNN backbones. The objective was to enhance the models’ ability to capture long-range spatial dependencies that traditional convolutional operations may not fully exploit.

The integration involved reshaping the spatial feature maps produced by the final convolutional block into sequential representations. These sequences were then processed through a self-attention module capable of modeling interactions between all spatial locations. The resulting context-enriched features were subsequently aggregated and passed through a classification head to generate the final predictions.

This hybrid architecture aims to combine the strengths of CNNs, namely hierarchical feature extraction, with the global modeling capabilities of self-attention. However, experimental evaluations indicated only marginal performance gains, suggesting that for this particular classification task, CNNs may already possess sufficient representational capacity. The limited improvement implies that the added complexity of self-attention may not yield significant returns unless applied to more structurally diverse or semantically complex input data.

5.3.3. Evaluation Metrics

The performance of all deep learning models was evaluated using standard classification metrics, as summarized in Table 7. These indicators measure both the predictive accuracy and the deployment efficiency of each model.

Table 7.

Evaluation metrics and model efficiency indicators used for performance assessment.
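For reference, the classification metrics used in this study (Accuracy, Precision, Recall, and F1-Score) can be computed from a confusion matrix as sketched below. Support-weighted averaging across classes is an assumption here, since the averaging mode is not stated; under that assumption weighted recall equals accuracy by construction, which is consistent with the reported results in which the two values coincide. The confusion-matrix counts are hypothetical.

```python
def weighted_metrics(confusion):
    """Compute accuracy and support-weighted precision, recall and F1.

    `confusion[i][j]` is the number of samples of true class i
    that were predicted as class j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[c][c] for c in range(n_classes)) / total

    precision = recall = f1 = 0.0
    for c in range(n_classes):
        true_pos = confusion[c][c]
        support = sum(confusion[c])                                # true class c
        predicted = sum(confusion[r][c] for r in range(n_classes))  # predicted c
        p = true_pos / predicted if predicted else 0.0
        r = true_pos / support if support else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        weight = support / total
        precision += weight * p
        recall += weight * r
        f1 += weight * f

    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts: rows = true (damaged, undamaged), columns = predicted.
example = [[95, 5],
           [3, 97]]
metrics = weighted_metrics(example)
print(metrics)
```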

6. Experimental Results

In this section, we report the experimental results for CNN and ViT models trained for wind turbine damage classification, evaluated with Accuracy, F1-Score, Precision, and Recall. We compare baseline models against MHSA-augmented variants, analyze MobileNetV3-Large via ablations on MHSA depth and the number of attention heads, and assess knowledge distillation on MobileNetV3-Small students. We also provide confusion matrices and representative predictions for the top-performing models to contextualize the quantitative results.

6.1. Effect of MHSA Augmentation on Deep Learning Models: CNN and Vision Transformer Models

As described in Section 5.3.2, we appended an MHSA block to each backbone and compared it with the unmodified (baseline) models. Table 8 and Table 9 report the results side by side. Without MHSA, MobileNetV3-Large offers the best trade-off (98.92% accuracy) with only 5.4 M parameters, followed by EfficientNet-B0 and ResNet-18. Adding MHSA produced only marginal effects: slight improvements for a few models (e.g., ResNet-18, DeiT-Small-Distilled) and unchanged or mildly lower scores for many others, while also introducing a modest compute overhead.

Table 8.

Performance Comparison of CNN Models With and Without MHSA.

Table 9.

Performance Comparison of Vision Transformer Models With and Without MHSA.

On the other hand, Table 9 presents the performance of ViT models in their baseline form and with additional MHSA layers. Among the baseline models, DeiT-Base-Distilled achieved the highest accuracy (98.45%), albeit with a substantial number of parameters. Smaller variants such as ViT-Small offered reasonable performance with significantly lower complexity.

Augmenting ViTs with MHSA layers resulted in minimal performance changes. Given that ViTs inherently rely on self-attention mechanisms, the impact of additional MHSA was limited, typically within a ±0.3% margin, rendering most improvements statistically insignificant.

Across models, MHSA yielded at most negligible improvements (e.g., +0.13 for ResNet-18, +0.07 for DeiT-Small-Distilled); for many models it either matched baseline or reduced performance (e.g., MobileNetV3-Large and DeiT-Base-Distilled).

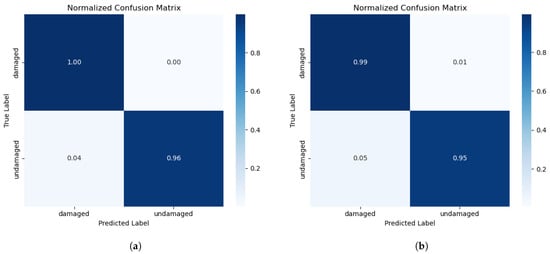

To further interpret model performance, we include confusion matrices and prediction visualizations for the two best-performing models: MobileNetV3-Large and EfficientNet-B0. These analyses provide insight into the classification behavior across damaged and undamaged classes.

Figure 6 presents the normalized confusion matrices for MobileNetV3-Large and EfficientNet-B0, displayed side by side for comparison. MobileNetV3-Large shows nearly perfect classification, with minimal confusion between classes. EfficientNet-B0 also performs strongly with slightly more misclassifications in the undamaged class.

Figure 6.

Normalized confusion matrices for the two best-performing CNN models. (a) MobileNetV3-Large. (b) EfficientNet-B0.

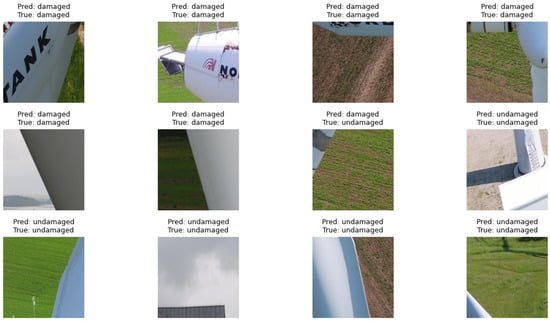

Figure 7 displays a grid of representative predictions made by the MobileNetV3-Large model. Each image is annotated with both the predicted and ground-truth label, demonstrating high model confidence and generally correct classification. It is important to note that certain types of turbine damage, especially subtle surface-level or internal structural anomalies, may not be easily perceptible to the human eye. This further underscores the importance of leveraging automated learning-based approaches, which can identify patterns and features that might be overlooked in manual inspections. In this context, minor classification errors can be attributed not necessarily to model failure but to the inherent difficulty of the task, thereby reinforcing the value of AI-assisted systems in high-risk, visually complex domains.

Figure 7.

Sample predictions from the MobileNetV3-Large model. Each image is annotated with the predicted and ground-truth class label.

6.2. MobileNetV3-Large: Analysis of MHSA Depth and Number of Heads

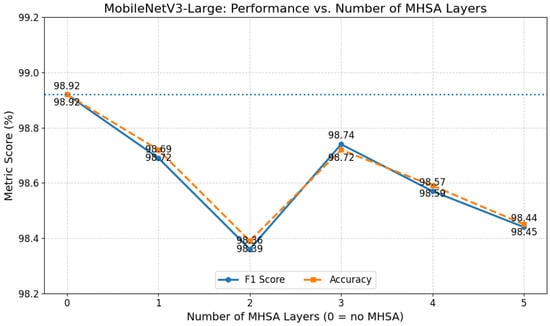

Since MobileNetV3-Large achieved the highest baseline performance, an additional ablation study was conducted to analyze the effect of MHSA architectural parameters on its accuracy and generalization. Specifically, we examined two aspects: the number of stacked MHSA layers and the number of attention heads within a single MHSA block.

Figure 8 illustrates the variation in performance as the number of MHSA layers increases from 0 (no MHSA) to 5. The baseline configuration without MHSA achieved the best results (Accuracy and F1 = 98.92%), while adding attention layers led to small, non-monotonic changes. Performance slightly decreased with two layers, recovered marginally at three, and declined again thereafter. Overall, additional attention depth produced negligible improvement, confirming that a single global representation extracted by the backbone already captures the most discriminative information needed for this task.

Figure 8.

MobileNetV3-Large performance as a function of the number of MHSA layers (0 = no MHSA). The dashed horizontal blue line indicates the baseline performance of the model without MHSA. Increasing attention depth introduces minor, inconsistent variations without improving accuracy.

We further investigated the impact of the number of attention heads within a single MHSA layer, testing configurations with 2, 4, 8, 16, 32, and 64 heads. All configurations yielded identical metrics (Accuracy = 98.72%, F1 = 0.9869, Precision = 0.9870, Recall = 0.9872), demonstrating that head multiplicity does not affect performance. This invariance occurs because the MHSA operates on a single global feature token from the CNN backbone, leaving no spatial diversity for multiple heads to leverage. Therefore, to maintain simplicity and computational efficiency, the four-head configuration is retained for all subsequent experiments.
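The head-count invariance can be reproduced with a small, dependency-free sketch of multi-head self-attention. With a single input token, the softmax over one key is always 1, so every head simply passes its slice of the value projection through and the output no longer depends on how the channels are split into heads. The random weights, the dimension `d = 8`, and the helper names are illustrative assumptions and do not correspond to the trained models.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mhsa(tokens, wq, wk, wv, wo, num_heads):
    """Multi-head self-attention over a list of d-dimensional tokens."""
    d = len(tokens[0])
    assert d % num_heads == 0, "d must be divisible by num_heads"
    dh = d // num_heads
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    outputs = []
    for i in range(len(tokens)):
        concat = []
        for h in range(num_heads):
            lo, hi = h * dh, (h + 1) * dh
            # Scaled dot-product scores of token i against every token j.
            scores = [sum(a * b for a, b in zip(q[i][lo:hi], k[j][lo:hi]))
                      / math.sqrt(dh) for j in range(len(tokens))]
            weights = softmax(scores)
            concat.extend(sum(w * v[j][c] for j, w in enumerate(weights))
                          for c in range(lo, hi))
        outputs.append(matvec(wo, concat))
    return outputs


def max_abs_diff(a, b):
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


rng = random.Random(0)
d = 8
wq, wk, wv, wo = ([[rng.uniform(-1, 1) for _ in range(d)] for _ in range(d)]
                  for _ in range(4))
single_token = [[rng.uniform(-1, 1) for _ in range(d)]]
four_tokens = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(4)]

reference = mhsa(single_token, wq, wk, wv, wo, num_heads=1)
for heads in (2, 4, 8):
    diff = max_abs_diff(reference, mhsa(single_token, wq, wk, wv, wo, heads))
    print(f"1 token, {heads} heads: max difference = {diff}")

multi_diff = max_abs_diff(mhsa(four_tokens, wq, wk, wv, wo, 1),
                          mhsa(four_tokens, wq, wk, wv, wo, 2))
print(f"4 tokens, 1 vs 2 heads: max difference = {multi_diff}")
```

With several spatial tokens the outputs do change with the number of heads, so the invariance is a property of the single-token input rather than of attention in general.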

In summary, both analyses reveal that neither increasing the MHSA depth nor the number of attention heads provides a measurable benefit for MobileNetV3-Large. This finding reinforces that lightweight CNNs already encode sufficient contextual information for effective wind turbine damage classification, and deeper or more granular attention modules add unnecessary complexity without improving accuracy.

6.3. Effect of Knowledge Distillation on MobileNetV3-Small Variants

To evaluate the impact of KD on compact models, we conducted experiments using three variants of MobileNetV3-Small: Small-100, Small-075, and Small-050. Each variant corresponds to a different width multiplier (1.0, 0.75, and 0.50, respectively). A pretrained MobileNetV3-Large model was used as the primary teacher, and training was conducted under two regimes:

- Baseline: Standard supervised training using hard ground-truth labels.

- Distillation: Training with a combination of cross-entropy loss on ground-truth labels and a Kullback–Leibler divergence loss on soft labels predicted by the teacher model.

Table 10 reports the performance of each configuration. Interestingly, only the smallest variant (MobileNetV3-Small-050) exhibited a clear benefit from knowledge distillation with MobileNetV3-Large, improving in accuracy from 97.38% to 97.85%. This improvement was consistent across all evaluation metrics, suggesting that KD effectively compensates for the limited capacity of highly constrained models.

Table 10.

Performance comparison of MobileNetV3-Small variants trained with and without knowledge distillation using different teacher models.

In contrast, the larger variants (Small-075 and Small-100) showed a marginal drop in performance when trained with KD using MobileNetV3-Large. These models may already be expressive enough to capture the task-specific patterns without additional soft supervision, and the use of soft labels might introduce unnecessary regularization, thus slightly degrading the optimization process.

To further investigate the effect of teacher architecture, we repeated the distillation process using EfficientNet-B0 as the teacher. As shown in Table 10, all student variants performed competitively. Notably, for the Small-100 and Small-050 variants, EfficientNet-B0 led to improvements over the baseline and MobileNetV3-Large-based distillation, reaffirming that teacher architecture can significantly influence KD outcomes.

Knowledge distillation produced, at best, a negligible gain for a single student (MobileNetV3-Small-050: +0.47 percentage points over baseline); for larger students, performance either remained at baseline or dropped slightly, indicating KD was not broadly beneficial under our settings.

Overall, the effectiveness of knowledge distillation is influenced by both the size of the student model and the architecture of the teacher. While MobileNetV3-Large provides the most benefit to the smallest student variant, EfficientNet-B0 delivers competitive performance, particularly for the largest student model (Small-100). These findings underscore the importance of selecting an appropriate teacher model based on the student’s capacity and the complexity of the task.

7. Discussion

The experimental results provide several important insights into the suitability of different deep learning models for wind turbine damage classification.

First, lightweight CNN architectures such as MobileNetV3-Large and EfficientNet-B0 consistently outperformed more complex Vision Transformers in both accuracy and efficiency. MobileNetV3-Large, in particular, achieved the best trade-off between performance (98.92% accuracy) and parameter count (5.4 M), making it ideal for edge deployment in drones or robotic inspection systems.

Beyond computational efficiency, the superior performance of lightweight models such as MobileNetV3-Large stems from the task’s reliance on global visual cues (texture degradation, color shifts, or edge wear) that are well captured by depthwise separable convolutions. Larger architectures, optimized for complex semantics, tend to overfit under these simpler conditions. The 1.08% misclassified samples (Section 6, Figure 7) mainly involved borderline or visually ambiguous cases, where even human judgment is uncertain.

Second, MHSA produced architecture-dependent and generally negligible effects (Table 8 and Table 9): even the few apparent gains (e.g., ResNet-18, DeiT-Small-Distilled) were marginal; for many models, results matched baseline or decreased (e.g., MobileNetV3-Large, DeiT-Base-Distilled).

Third, knowledge distillation (Table 10) yielded, at best, a minor improvement for the most capacity-constrained student (MobileNetV3-Small-050); for larger students, performance was indistinguishable from baseline or slightly worse, consistent with soft-label supervision acting as excess regularization.

From a deployment perspective, the strong performance of small, low-complexity models, which do not require advanced modifications (e.g., attention layers or distillation), is encouraging. It implies that effective wind turbine inspection systems can be built using resource-efficient models, minimizing the need for computationally expensive architectures or additional supervision.

These results also highlight the importance of matching model complexity to task difficulty. In our case, damage classification from RGB images presents relatively well-defined visual patterns, making it well-suited for compact convolutional models.

Overall, because MHSA did not confer uniform or meaningful gains and KD helped only the smallest student, and only marginally, lightweight CNNs without extra attention or distillation remain the most reliable choice for edge deployment in this task.

8. Conclusions and Future Work

To address the challenges of wind turbine monitoring, selecting the appropriate computer vision approach is crucial. In this work, an image classification approach is adopted rather than object detection, as the task involves recognizing global patterns associated with system states, making it more efficient and better suited to drone-based monitoring scenarios. Furthermore, this classification study presented a comprehensive evaluation of deep learning models for wind turbine damage detection using image data. We compared a range of convolutional and transformer-based architectures, including MobileNetV3, ResNet, EfficientNet, ViT, DeiT, and DINOv2, across key metrics such as accuracy, F1 score, and model size.

The results show that MobileNetV3-Large achieved the best overall performance, with an accuracy of 98.92% and an F1-score of 0.9892, using only 5.4 million parameters. This makes it highly suitable for real-time and edge-based deployment scenarios. Transformer models such as DeiT and ViT also performed well but required significantly more parameters without offering a substantial performance gain.

We also evaluated two enhancement techniques, MHSA and knowledge distillation, but neither yielded uniform or practically meaningful gains. MHSA delivered at best negligible, architecture-dependent improvements (notably for ResNet-18 and DeiT-Small-Distilled), while knowledge distillation provided only a small benefit for the most capacity-constrained student (MobileNetV3-Small-050). Consequently, for this task and dataset, lightweight CNNs without added attention or distillation remain the most reliable choice for edge deployment.

In conclusion, our findings highlight that lightweight CNNs, particularly MobileNetV3-Large, offer an optimal balance between accuracy and efficiency for wind turbine damage classification.

Future work will focus on expanding the damage taxonomy and integrating multimodal sensing modalities, such as thermal infrared and acoustic data, to improve robustness under diverse environmental conditions.

Additionally, efforts will be directed toward extending the current classification framework into a localization-aware detection pipeline, where object detection or attention-based region proposals can pinpoint specific damaged areas on turbine blades. Such extensions would enable more interpretable predictions and facilitate automated repair prioritization.

Finally, deploying these enhanced models on UAV or robotic platforms will be a key step toward achieving fully autonomous, real-time inspection and maintenance in operational wind farms.

Author Contributions

Conceptualization, A.H. and H.N.N.; methodology, A.H. and H.N.N.; investigation, A.H. and H.N.N.; section writing, A.H. and H.N.N.; writing—original draft preparation, A.H.; writing—review and editing, H.N.N.; visualization, A.H. and H.N.N.; supervision, H.N.N.; project administration, H.N.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kumar, Y.; Ringenberg, J.; Depuru, S.S.; Devabhaktuni, V.K.; Lee, J.W.; Nikolaidis, E.; Andersen, B.; Afjeh, A. Wind energy: Trends and enabling technologies. Renew. Sustain. Energy Rev. 2016, 53, 209–224.

- U.S. Energy Information Administration (EIA). Renewables Account for Most New U.S. Electricity Generating Capacity in 2021. Available online: https://www.eia.gov/todayinenergy/detail.php?id=46416 (accessed on 10 June 2025).

- U.S. Energy Information Administration (EIA). Electricity Generation from Wind. Available online: https://www.eia.gov/energyexplained/wind/electricity-generation-from-wind.php (accessed on 10 June 2025).

- United Nations Framework Convention on Climate Change (UNFCCC). The Paris Agreement. Available online: https://unfccc.int/process-and-meetings/the-paris-agreement (accessed on 10 June 2025).

- Heier, S. Grid Integration of Wind Energy: Onshore and Offshore Conversion Systems; John Wiley & Sons: Hoboken, NJ, USA, 2014.

- Sasinthiran, A.; Gnanasekaran, S.; Ragala, R. A review of artificial intelligence applications in wind turbine health monitoring. Int. J. Sustain. Energy 2024, 43, 2326296.

- Tripathi, S.; Tiwari, A.; Singh, D. Grid-integrated permanent magnet synchronous generator based wind energy conversion systems: A technology review. Renew. Sustain. Energy Rev. 2015, 51, 1288–1305.

- Lu, B.; Li, Y.; Wu, X.; Yang, Z. A review of recent advances in wind turbine condition monitoring and fault diagnosis. In Proceedings of the 2009 IEEE Power Electronics and Machines in Wind Applications, Lincoln, NE, USA, 24–26 June 2009; pp. 1–7.

- U.S. Department of Energy (DOE). Land-Based Wind Market Report: 2023 Edition; Technical report; U.S. Department of Energy: Washington, DC, USA, 2023.

- European Wind Energy Association (EWEA). “Wind Energy—The Facts”. 2009. Available online: https://www.wind-energy-the-facts.org/images/090522bdwetflatvia.pdf (accessed on 10 June 2025).

- Wang, S.; Wang, S. Impacts of wind energy on environment: A review. Renew. Sustain. Energy Rev. 2015, 49, 437–443.

- Wang, Y.; Liu, H.; Li, Q.; Wang, X.; Zhou, Z.; Xu, H.; Zhang, D.; Qian, P. Overview of Condition Monitoring Technology for Variable-Speed Offshore Wind Turbines. Energies 2025, 18, 1026.

- Nord-Lock Group. “Offshore Floating Wind Energy,” Nord-Lock Knowledge Blog. 2020. Available online: https://www.nord-lock.com/cs-cz/zajimavosti/knowledge/2020/offshore-floating-wind-energy/ (accessed on 13 October 2025).

- Salameh, J.P.; Cauet, S.; Etien, E.; Sakout, A.; Rambault, L. Gearbox condition monitoring in wind turbines: A review. Mech. Syst. Signal Process. 2018, 111, 251–264.

- Chen, P.; Zhang, R.; He, C.; Jin, Y.; Fan, S.; Qi, J.; Zhou, C.; Zhang, C. Progressive contrastive representation learning for defect diagnosis in aluminum disk substrates with a bio-inspired vision sensor. Expert Syst. Appl. 2025, 249, 128305.

- Tu, H.; Wang, X.; Li, Y. Handling Multi-Source Uncertainty in Accelerated Degradation Through a Wiener-Based Robust Modeling Scheme. Sensors 2025, 25, 6654.

- Qi, J.; Chen, Z.; Kong, Y.; Qin, W.; Qin, Y. Attention-guided graph isomorphism learning: A multi-task framework for fault diagnosis and remaining useful life prediction. Reliab. Eng. Syst. Saf. 2025, 250, 111209.

- Burton, T.; Jenkins, N.; Sharpe, D.; Bossanyi, E. Wind Energy Handbook; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Manwell, J.F.; McGowan, J.G.; Rogers, A.L. Wind Energy Explained: Theory, Design and Application; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- Ackermann, T. Wind Power in Power Systems; John Wiley & Sons: Hoboken, NJ, USA, 2012.

- Allal, Z.; Noura, H.N.; Vernier, F.; Salman, O.; Chahine, K. Wind turbine fault detection and identification using a two-tier machine learning framework. Intell. Syst. Appl. 2024, 22, 200372.

- Alabdali, Q.A.; Bajawi, A.M.; Fatani, A.M.; Nahhas, A.M. Review of recent advances of wind energy. Sustain. Energy 2020, 8, 12–19.

- Knudsen, H.; Nielsen, J.N. Introduction to the modelling of wind turbines. In Wind Power in Power Systems; John Wiley & Sons: Hoboken, NJ, USA, 2012; pp. 767–797.

- Kim, M.; Dalhoff, P. Yaw Systems for wind turbines—Overview of concepts, current challenges and design methods. J. Phys. Conf. Ser. 2014, 524, 012086.

- Abraham, A.; Dasari, T.; Hong, J. Effect of turbine nacelle and tower on the near wake of a utility-scale wind turbine. J. Wind Eng. Ind. Aerodyn. 2019, 193, 103981.

- Zhang, C.; Cai, X.; Rygg, A.; Molinas, M. Modeling and analysis of grid-synchronizing stability of a Type-IV wind turbine under grid faults. Int. J. Electr. Power Energy Syst. 2020, 117, 105544.

- Zhang, F.; Chen, M.; Zhu, Y.; Zhang, K.; Li, Q. A review of fault diagnosis, status prediction, and evaluation technology for wind turbines. Energies 2023, 16, 1125.

- Demilie, W.B. Plant disease detection and classification techniques: A comparative study of the performances. J. Big Data 2024, 11, 5.

- Islam, M.R.; Zamil, M.Z.H.; Rayed, M.E.; Kabir, M.M.; Mridha, M.; Nishimura, S.; Shin, J. Deep Learning and Computer Vision Techniques for Enhanced Quality Control in Manufacturing Processes. IEEE Access 2024, 12, 121449–121479.

- Wu, Q.; Huang, Y.; Wang, S.; Qi, L.; Zhang, Z.; Hou, D.; Li, H.; Zhao, S. Artificial intelligence in lung cancer screening: Detection, classification, prediction, and prognosis. Cancer Med. 2024, 13, e7140.

- Yang, B.; Zhang, L.; Zhang, W.; Ai, Y. Non-destructive testing of wind turbine blades using an infrared thermography: A review. In Proceedings of the 2013 International Conference on Materials for Renewable Energy and Environment, Chengdu, China, 19–21 August 2013; Volume 1, pp. 407–410.

- Han, Y.; Liu, T.; Li, K. Fault diagnosis of wind turbine blades under wide-weather multi-operating conditions based on multi-modal information fusion and deep learning. Struct. Health Monit. 2025. online ahead of print.

- Mostafavi, A.; Friedmann, A. Wind turbine condition monitoring dataset of Fraunhofer LBF. Sci. Data 2024, 11, 1108.

- Yang, X.; Zhang, Q.; Wang, S.; Zhao, Y. Detection of solar panel defects based on separable convolution and convolutional block attention module. Energy Sources Part A Recover. Util. Environ. Eff. 2023, 45, 7136–7149.

- Dwivedi, D.; Babu, K.V.S.M.; Yemula, P.K.; Chakraborty, P.; Pal, M. Identification of surface defects on solar pv panels and wind turbine blades using attention based deep learning model. Eng. Appl. Artif. Intell. 2024, 131, 107836.

- Mansoor, M.; Tan, X.; Mirza, A.F.; Gong, T.; Song, Z.; Irfan, M. WindDefNet: A Multi-Scale Attention-Enhanced ViT-Inception-ResNet Model for Real-Time Wind Turbine Blade Defect Detection. Machines 2025, 13, 453.

- Saci, A.; Nadour, M.; Cherroun, L.; Hafaifa, A.; Kouzou, A.; Rodriguez, J.; Abdelrahem, M. Condition Monitoring Using Digital Fault-Detection Approach for Pitch System in Wind Turbines. Energies 2024, 17, 4016.

- Al Lahham, E.; Kanaan, L.; Murad, Z.; Khalid, H.M.; Hussain, G.A.; Muyeen, S.M. Online condition monitoring and fault diagnosis in wind turbines: A comprehensive review on structure, failures, health monitoring techniques, and signal processing methods. Green Technol. Sustain. 2025, 3, 100153.

- DTU Wind Energy. Nordtank Dataset for Wind Turbine Blade Damage Detection. 2023. Available online: https://gitlab.windenergy.dtu.dk/fair-data/winddata-revamp/winddata-documentation/-/blob/master/nordtank.md (accessed on 3 July 2025).

- Bdhsn, A. Wind Turbine Faults Detection—Computer Vision Project Dataset. 2023. Available online: https://universe.roboflow.com/detectionanas/wind-turbine-faults-detection (accessed on 15 June 2025).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Koonce, B. ResNet 50. In Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Springer: Berlin/Heidelberg, Germany, 2021; pp. 63–72.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Zhao, H.; Zhang, X.; Gao, Y.; Wang, L.; Xiao, L.; Liu, S.; Huang, B.; Li, Z. Diagnostic performance of EfficientNetV2-S method for staging liver fibrosis based on multiparametric MRI. Heliyon 2024, 10, 15.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

- Ramos, L.; Casas, E.; Romero, C.; Rivas-Echeverría, F.; Morocho-Cayamcela, M.E. A study of convnext architectures for enhanced image captioning. IEEE Access 2024, 12, 13711–13728.

- Dewangkoro, H.I.; Rohmadi, A.; Yudha, E.P. Integrating Efficient Channel Attention Plus into ConvNeXt Network for Remote Sensing Image Scene Classification. In Proceedings of the 2025 International Electronics Symposium (IES), Surabaya, Indonesia, 5–7 August 2025; pp. 580–584.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International conference on Machine Learning, Virtually, 18–24 July 2021; pp. 10347–10357.

- Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. Dinov2: Learning robust visual features without supervision. arXiv 2023, arXiv:2304.07193.

- Demidovskij, A.; Tugaryov, A.; Trutnev, A.; Kazyulina, M.; Salnikov, I.; Pavlov, S. Lightweight and Elegant Data Reduction Strategies for Training Acceleration of Convolutional Neural Networks. Mathematics 2023, 11, 3120.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).