1. Introduction

In the era of data-driven decision-making, the ability to interact with databases through natural language has become increasingly critical. Over the past few years, the task of mapping natural language questions to SQL queries, known as Text-to-SQL, has garnered significant research attention, catalyzed by comprehensive benchmarks such as Spider [1], BIRD [2], and other specialized datasets. The emergence of large language models (LLMs; [3]) has accelerated advances in the field, with recent approaches increasingly relying on pre-trained language models that are remarkably effective at generating complex, multi-table queries.

The conversational text-to-SQL (CoT2SQL) task extends the standard single-turn query generation paradigm to multi-turn, dialogue-based scenarios, allowing users to interact with database systems conversationally. Benchmarks like CoSQL [4] and SParC [5] have been instrumental in pushing the boundaries of this research. Unlike single-turn tasks, these datasets introduce significant complexity by requiring models to manage sophisticated linguistic challenges: coreference resolution, elliptical expressions, topic changes, and dynamically inferring which database schema elements remain contextually relevant across multiple dialogue turns.

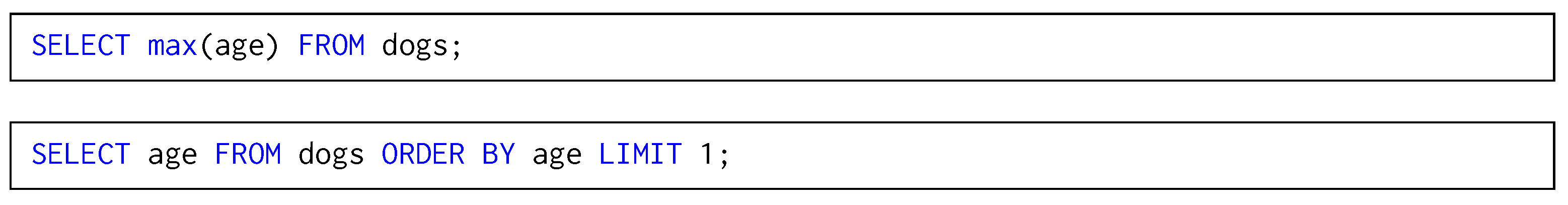

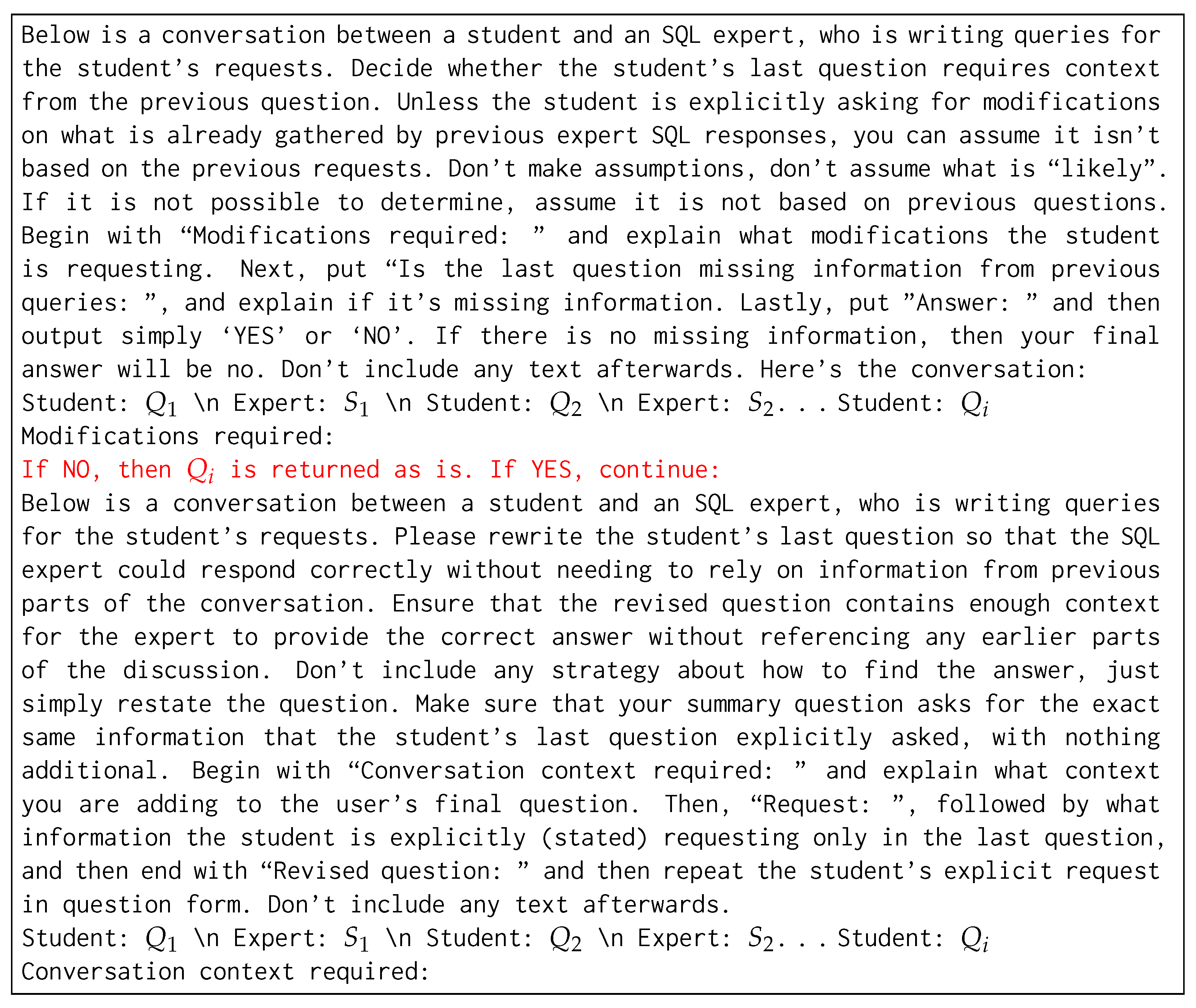

The evaluation of conversational Text-to-SQL presents its own methodological challenges. The existing metrics, Exact Set Matching (ESM) and Execution-based (EXE) evaluation, each have inherent limitations.

ESM, used by CoSQL and SParC, is notoriously strict, demanding near-exact syntactic equivalence between generated and ground truth queries, which can lead to false negatives (Figure 1).

Conversely, EXE, employed by Spider, BIRD, and other single-turn text-to-SQL datasets, can incorrectly deem semantically different queries equivalent if they produce identical results (Figure 2). These metric constraints significantly affect both model development and performance assessment.

Despite the importance of conversational interfaces for databases, most recent Text-to-SQL research focuses on the context-independent (single-turn) scenario. Pretrained LLM-based models (PLMs), while achieving high EXE scores in single-turn scenarios, often struggle on ESM-based benchmarks because their generated queries diverge syntactically from the gold standard. This discrepancy has shifted attention toward single-turn datasets like BIRD, leaving fine-tuned language models (FLMs) as the top performers on CoSQL and SParC under ESM.

We investigate how to leverage large language models for conversation-based Text-to-SQL. The main contributions of this work are as follows:

Fine-tuning a Single-Turn Model for Multi-Turn Scenarios. We adapt CodeS [6], originally designed for single-turn tasks, to the multi-turn dialogues of CoSQL and SParC. We describe the practical fine-tuning approaches required to handle context, from conversation history concatenation to question rewriting using GPT.

Model Merging. We introduce a model-merging approach that integrates these two fine-tuned variants via parameter averaging, yielding improved robustness in multi-turn scenarios. We investigate the effectiveness of merging model weights from different fine-tuning strategies, aiming to mitigate the tension between full history and question rewriting approaches.

Our findings show that merging can yield improvements on the CoSQL and SParC datasets, and our best model achieves a new state of the art on CoSQL under the ESM metric.

This paper first reviews related work on conversational text-to-SQL and evaluation metrics (Section 2). We then outline our approach, including a new baseline for CoT2SQL, fine-tuning protocols, data preparation, and model merging (Section 3). Next, we present our experiments and results (Section 4), followed by an analysis of how LLMs can enhance this task and of their inherent limitations (Section 5). We posit that large language models will serve as the cornerstone for bridging the remaining gaps in this task, driving conversation-based text-to-SQL forward into next-generation applications.

2. Related Work

2.1. Conversational Text-to-SQL

Early progress in text-to-SQL systems focused on the single-turn task, with Spider emerging as a popular benchmark. Spider [1] was among the first large-scale, multi-domain datasets featuring complex queries involving keywords like GROUP BY and HAVING; it spans 200 databases across 138 distinct domains, making generalizability a core requirement. More recent single-turn datasets, such as BIRD [2], have pushed complexity further by introducing real-world queries and hand-crafted test sets to guard against data leakage in LLMs. Both Spider and BIRD rely on EXE as a primary metric, so any SQL query that returns the same results as the gold query is treated as correct. This flexibility, however, can obscure subtle differences in logical form.

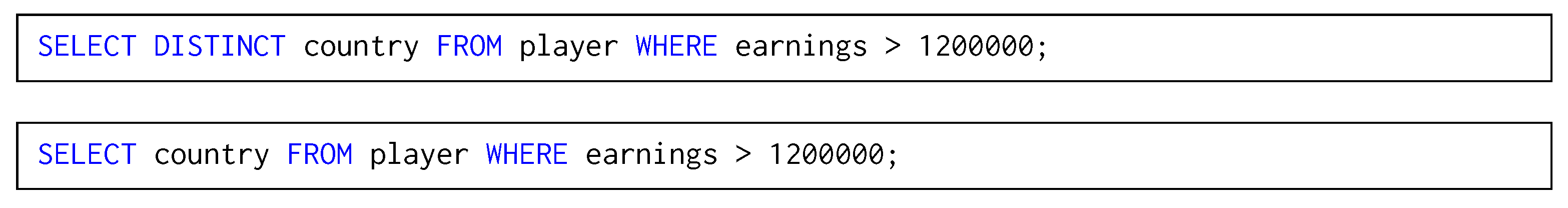

Building on single-turn datasets, SParC [5] introduced multi-turn conversation flows: sequences of questions were artificially constructed over Spider's databases. In contrast, CoSQL [4] was collected via a Wizard-of-Oz setup, resulting in dialogue data that is more “natural” and often messier, complete with clarifications, typos, and even nonsensical queries (Figure 3). SParC and CoSQL require models to manage context shifts, coreference, and schema relevance across multiple queries. Both rely on ESM for official leaderboard ranking, emphasizing exact syntactic matching.

Table 1 summarizes key properties of these datasets.

In recent years, as pre-trained LLMs have gained prominence, researchers have focused primarily on BIRD and other single-turn datasets. By contrast, CoSQL and SParC have seen less recent adoption, partly due to the strictness and potential limitations of ESM and partly because their official leaderboards are no longer maintained.

2.2. Recent Approaches

Models based on pretrained large language models (PLMs) have achieved impressive single-turn text-to-SQL performance. On the BIRD dataset, GPT-based systems such as AskData+GPT-4o (AT&T) and Gemini-based systems such as CHASE-SQL+Gemini [7] achieve state-of-the-art performance, while Spider's leaderboard similarly features GPT-driven approaches like DAIL-SQL [8] and DIN-SQL [9]. Among fine-tuned models (FLMs), IBM's Granite [10] has also demonstrated strong performance on BIRD. Generally, both PLM and FLM approaches involve some form of (1) Schema Linking, where only the most relevant parts of the schema are used, since the full schema would otherwise be too large for the model's context; (2) Self-Consistency, where the model generates multiple candidate queries and the most consistent one is chosen; and (3) Query Correction, where the system attempts to automatically repair syntactically invalid queries.
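As a rough illustration of the self-consistency step, the sketch below executes several candidate SQL queries against a SQLite database and returns one whose result is shared by the most candidates, discarding candidates that fail to execute. The toy `singer` table, the hand-written candidates (standing in for sampled model outputs), and the function name `pick_by_self_consistency` are hypothetical; actual systems differ in how they sample candidates and vote.

```python
import sqlite3
from collections import Counter
from typing import List, Optional, Tuple


def pick_by_self_consistency(conn: sqlite3.Connection, candidates: List[str]) -> Optional[str]:
    """Execute each candidate SQL query and return one whose result is most common.

    Candidates that fail to execute are simply discarded here; a query-correction
    step would instead try to repair them before voting.
    """
    executed: List[Tuple[str, frozenset]] = []
    for sql in candidates:
        try:
            rows = conn.execute(sql).fetchall()
        except sqlite3.Error:
            continue  # invalid SQL: drop this candidate
        # Results are compared as sets of rows, ignoring order and duplicates.
        executed.append((sql, frozenset(rows)))
    if not executed:
        return None
    votes = Counter(result for _, result in executed)
    winning_result, _ = votes.most_common(1)[0]
    # Return the earliest candidate that produced the winning result.
    return next(sql for sql, result in executed if result == winning_result)


# Toy database and hand-written candidates standing in for sampled model outputs.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE singer (name TEXT, age INTEGER)")
conn.executemany("INSERT INTO singer VALUES (?, ?)", [("Ana", 35), ("Bo", 28), ("Cy", 41)])
candidates = [
    "SELECT name FROM singer WHERE age > 30",
    "SELECT name FROM singer WHERE age >= 31",
    "SELECT name FROM singer WHERE age > 40",
    "SELEC name FROM singer",  # syntax error, discarded
]
print(pick_by_self_consistency(conn, candidates))  # SELECT name FROM singer WHERE age > 30
```

The first two candidates agree on the result {Ana, Cy}, so their shared result wins the vote over the third candidate's {Cy}.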

Despite their popularity and widespread use on Spider and BIRD, PLMs can produce a variety of syntactically distinct queries for the same question. This variation leaves EXE scores largely unaffected but leads to suboptimal ESM performance. Thus, PLM approaches for CoT2SQL are rare. Some recent PLM-based approaches, such as CoE-SQL [11], rely on chain-of-thought reasoning to improve SQL accuracy on EXE but still lag behind fine-tuned models on ESM. Consequently, most approaches for CoT2SQL involve fine-tuned models. RASAT [12], coupled with PICARD [13], incorporates relation-aware self-attention, allowing the weights inherited from T5 to better capture schema structure. RESDSQL [14] first uses an encoder to identify relevant schema items; its decoder then generates the SQL skeleton with keywords before re-inserting the previously identified schema items.

CodeS-7b is an open-source LLM specifically designed for text-to-SQL, originally developed and optimized for single-turn queries [6]. Although it is a fine-tuned model rather than a prompted PLM, it performs very well on Spider under the EXE metric, with results comparable to the top PLM-based approaches. While surpassed on the BIRD dataset by other approaches, it remains the primary open-source LLM showing promising results on the text-to-SQL task.

Question rewriting as a method for converting a multi-turn dataset into a single-turn version was proposed by [15], who introduced QURG, a model trained to rewrite questions based on the dialogue context.

2.3. Evaluation Metrics

As discussed, CoSQL and SParC rank submissions via Exact Set Matching (ESM), which requires near-perfect syntactic matching of clauses. Although ESM reduces false positives, it often overlooks logically equivalent rewrites, creating false negatives. In contrast, execution-based (EXE) metrics used by Spider and BIRD can fail to catch logically distinct queries if they incidentally produce the same results on a given database.
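To make the two failure modes concrete, the sketch below uses an in-memory SQLite database. `exe_match` compares execution results as sets of rows, and `naive_string_match` is a deliberately simplified stand-in for ESM (case- and whitespace-normalized string equality). The official ESM evaluator parses queries into clause sets rather than comparing strings, so this only approximates its strictness; the table, data, and queries are invented for illustration.

```python
import sqlite3


def exe_match(conn: sqlite3.Connection, pred_sql: str, gold_sql: str) -> bool:
    """EXE-style check: equal if both queries return the same set of rows."""
    pred = set(conn.execute(pred_sql).fetchall())
    gold = set(conn.execute(gold_sql).fetchall())
    return pred == gold


def naive_string_match(pred_sql: str, gold_sql: str) -> bool:
    """Toy stand-in for ESM: equal only if the normalized SQL strings are identical."""
    def norm(s: str) -> str:
        return " ".join(s.lower().split())
    return norm(pred_sql) == norm(gold_sql)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE singer (name TEXT, age INTEGER, country TEXT)")
conn.executemany(
    "INSERT INTO singer VALUES (?, ?, ?)",
    [("Ana", 35, "France"), ("Bo", 28, "USA"), ("Cy", 41, "France")],
)

gold = "SELECT name FROM singer WHERE age > 30"
# Logically different query that happens to return the same rows on this data.
coincidental = "SELECT name FROM singer WHERE country = 'France'"
# Logically equivalent for non-NULL ages, but written differently.
rewrite = "SELECT name FROM singer WHERE NOT age <= 30"

print(exe_match(conn, coincidental, gold))  # True: an EXE false positive
print(naive_string_match(rewrite, gold))    # False: a string-match false negative
```

Adding a French singer younger than 31 to the table would expose the first query as wrong under EXE, which illustrates why EXE depends on how much of the logic the test database exercises.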

Ascoli et al. [16] propose an alternative, ETM, which compares queries by their structure while still tolerating syntactic variation that does not change query logic.

ETM reduces false negatives by recognizing logically valid rewrites and cuts down on false positives by requiring a shared logical form rather than a superficial match in query results. Thus, throughout our experiments, we report results under EXE, ESM, and ETM.

2.4. Model Merging

Recent findings suggest that averaging the parameters of multiple trained checkpoints, known as model merging, can yield performance gains for a wide variety of tasks [17]. We adopt a similar approach, averaging weights from CodeS models fine-tuned with different strategies for handling multi-turn dialogues (full history vs. rewriting).

3. Approach

3.1. Baselines

We introduce two strong baselines using schema-based prompting with two PLMs, GPT 4-Turbo (GPT4; [18]) and Claude 3-Opus (CLA3; [19]). These baselines use no task-specific fine-tuning; instead, they rely on the PLMs' intrinsic ability to interpret natural language inputs and generate corresponding queries.

Figure 4 shows the prompt used by our models; detailed explanations are provided in Appendix A.
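The actual baseline prompt is given in Figure 4 and Appendix A. As a hedged illustration of what schema-based prompting can look like in general, the sketch below reads the `CREATE TABLE` statements from a SQLite database's `sqlite_master` catalog and places them before the dialogue so far. The function name `build_schema_prompt` and all instruction wording are hypothetical and are not the prompt used in our experiments.

```python
import sqlite3
from typing import List


def build_schema_prompt(conn: sqlite3.Connection, questions: List[str]) -> str:
    """Hypothetical zero-shot prompt: schema DDL, the dialogue so far, then an instruction."""
    # sqlite_master keeps the CREATE TABLE statement of every table in the database.
    ddl = [
        row[0]
        for row in conn.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    turns = "\n".join(f"Q{i}: {q}" for i, q in enumerate(questions, start=1))
    return (
        "Given the following SQLite schema:\n"
        + "\n".join(ddl)
        + "\n\nConversation so far:\n"
        + turns
        + f"\n\nWrite one SQL query that answers Q{len(questions)}."
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE singer (name TEXT, age INTEGER, country TEXT)")
prompt = build_schema_prompt(conn, ["Show all singers.", "Only those older than 30."])
print(prompt)
```

Serializing the schema as DDL keeps table and column names verbatim, which is what the model must reproduce for the query to match the gold SQL.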

3.2. Model Training

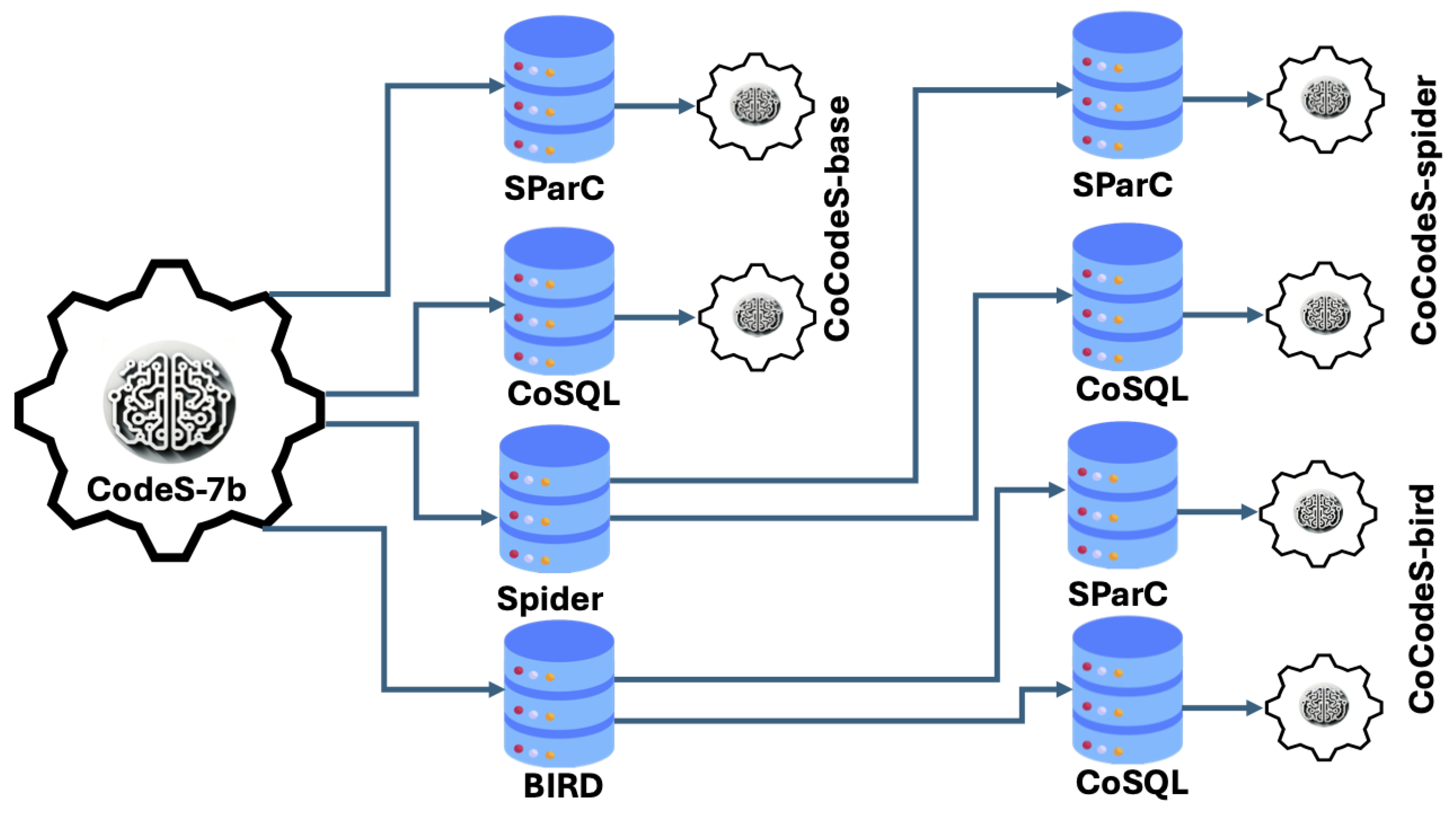

We present CoCodeS, our adaptation of the single-turn text-to-SQL model CodeS-7b to the multi-turn tasks of CoSQL and SParC. The creation process for CoCodeS is shown in Figure 5. For all fine-tuning, we use the official training splits of CoSQL and SParC. Since the official test sets for these datasets are not publicly released, we report results on the development sets as a proxy for final performance and do not use them for any training or fine-tuning. The dev sets share no database schemas with the training sets, which reduces the risk of overfitting to them; future work could additionally use cross-validation over different data splits to further guard against overfitting. We train a variety of models:

CoCodeSbase: The base CodeS-7b model, finetuned on CoSQL or SParC.

CoCodeSspider: The base CodeS-7b model, finetuned first on Spider, then further on CoSQL or SParC.

CoCodeSbird: The base CodeS-7b model, finetuned first on BIRD, then further on CoSQL or SParC.

Figure 5.

Overview of the CoCodeS creation process.


All models are trained with the same hyperparameters on NVIDIA H100 GPUs. Training employed a batch size of 1, a maximum sequence length of 4096 tokens, and a fixed random seed (42). We initialized from the seeklhy/codes-7b-bird checkpoint on HuggingFace and fine-tuned for 4 epochs using a learning rate of with a warmup ratio of . Each training example included up to 6 tables and 10 columns per schema context. Training took about 40 GPU hours for both CoSQL and SParC.
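The cap of 6 tables and 10 columns per schema context acts as a form of schema linking. The sketch below shows one way such a cap could be applied, assuming the column limit applies per table. The original CodeS pipeline ranks schema items with a learned classifier; here the hypothetical `overlap_score` function (word overlap between the question and a table or column name) stands in for that classifier purely for illustration, and `trim_schema` is likewise a hypothetical name.

```python
import re
from typing import Dict, List

Schema = Dict[str, List[str]]


def overlap_score(question: str, name: str) -> int:
    """Hypothetical relevance score: number of lowercase word pieces shared with the question."""
    q_words = set(re.findall(r"[a-z0-9]+", question.lower()))
    n_words = set(re.findall(r"[a-z0-9]+", name.lower()))  # also splits on "_"
    return len(q_words & n_words)


def trim_schema(question: str, schema: Schema, max_tables: int = 6, max_columns: int = 10) -> Schema:
    """Keep the top `max_tables` tables and, within each, the top `max_columns` columns.

    A table's score is its name score plus the sum of its column scores.
    sorted() is stable even with reverse=True, so ties keep the original order.
    """
    def table_score(table: str) -> int:
        return overlap_score(question, table) + sum(
            overlap_score(question, col) for col in schema[table]
        )

    top_tables = sorted(schema, key=table_score, reverse=True)[:max_tables]
    return {
        table: sorted(
            schema[table], key=lambda col: overlap_score(question, col), reverse=True
        )[:max_columns]
        for table in top_tables
    }


schema = {
    "singer": ["singer_id", "name", "age", "country"],
    "concert": ["concert_id", "concert_name", "year"],
    "stadium": ["stadium_id", "location", "capacity"],
}
question = "What is the name and age of each singer?"
print(trim_schema(question, schema, max_tables=2, max_columns=2))
# {'singer': ['singer_id', 'name'], 'concert': ['concert_name', 'concert_id']}
```

A lexical scorer like this misses synonyms and paraphrases, which is one reason learned rankers are preferred in practice.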

3.3. Handling Conversational Context

Conversational text-to-SQL requires incorporating multi-turn context. We experiment with two primary strategies:

Full History Concatenation. A straightforward approach is to concatenate the entire dialogue history before each turn. Specifically, for turn $i$, we form:

$$X_i = Q_1 \oplus Q_2 \oplus \cdots \oplus Q_i,$$

where $Q_j$ denotes the user question at turn $j$ and $\oplus$ denotes string concatenation. This aggregated input (within a length limit) is fed into our model.
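A minimal sketch of building the concatenated input, assuming whitespace tokens as a rough proxy for the model's tokenizer and a hypothetical `" | "` separator standing in for ⊕. The text only states that the input is kept within a length limit; the policy here (drop the oldest turns first, always keep the current question) is our assumption, and `concat_history` is a hypothetical name.

```python
from typing import List


def concat_history(questions: List[str], max_tokens: int, sep: str = " | ") -> str:
    """Join the questions of turns 1..i (oldest first), dropping the oldest if too long.

    Tokens are approximated by whitespace splitting, and separators are not counted;
    both are simplifications. The current question (last element) is always kept.
    """
    kept: List[str] = []
    budget = max_tokens
    # Walk backwards from the current turn so the most recent context survives;
    # stop at the first turn that does not fit so the kept history stays contiguous.
    for q in reversed(questions):
        n_tokens = len(q.split())
        if kept and n_tokens > budget:
            break
        kept.append(q)
        budget -= n_tokens
    return sep.join(reversed(kept))


history = ["Show all singers.", "Which of them are from France?", "Sort them by age."]
print(concat_history(history, max_tokens=100))
# Show all singers. | Which of them are from France? | Sort them by age.
print(concat_history(history, max_tokens=10))
# Which of them are from France? | Sort them by age.
```

Truncating from the oldest end favors recent context, which is usually what coreferences such as "them" point to, but it can silently drop constraints stated early in a long dialogue.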

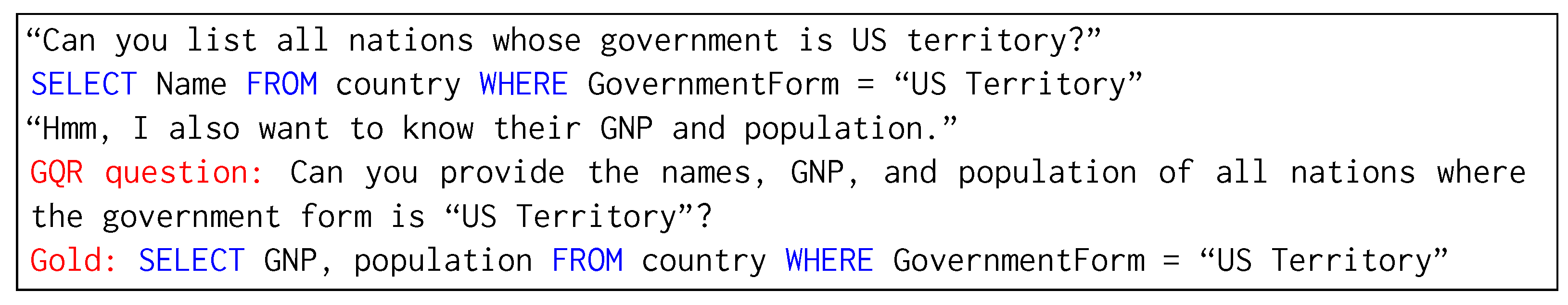

Question Rewriting. We employ a separate GPT-based rewriting module (GQR) that condenses the current user question, together with all relevant context from the dialogue history, into a single-turn question. Given the previous questions $Q_1, \ldots, Q_{i-1}$ and the current question $Q_i$, GPT 4-Turbo is prompted (with temperature 0 for reproducibility) to generate a single-turn question $\tilde{Q}_i$ that incorporates all necessary details from previous turns. The extended prompt is given in Figure 6. The condensed question produced by GQR is then used as the input to our model.
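The exact GQR prompt is shown in Figure 6. As a rough sketch of the surrounding plumbing only, the code below packs the previous questions and the current question into a chat-style message list (role/content dictionaries, the shape used by common chat-completion APIs) with temperature fixed at 0. The instruction wording, the function name `build_gqr_request`, and the request layout are illustrative assumptions rather than our actual prompt, and sending the request to GPT 4-Turbo is left out.

```python
from typing import Dict, List


def build_gqr_request(history: List[str], current: str) -> Dict[str, object]:
    """Build a chat-style request asking an LLM to rewrite `current` as a standalone question.

    `history` holds the previous user questions, oldest first. The instruction
    wording below is a hypothetical placeholder for the real GQR prompt.
    """
    instruction = (
        "Rewrite the final question so that it can be understood without the "
        "conversation. Keep every constraint, table, and value mentioned earlier "
        "that the final question depends on. Return only the rewritten question."
    )
    context = "\n".join(f"{i}. {q}" for i, q in enumerate(history, start=1))
    user_msg = f"Previous questions:\n{context or '(none)'}\n\nFinal question: {current}"
    return {
        "messages": [
            {"role": "system", "content": instruction},
            {"role": "user", "content": user_msg},
        ],
        "temperature": 0,  # fixed at 0, as in the GQR setup described above
    }


req = build_gqr_request(["Show all singers.", "Which are from France?"], "Sort them by age.")
print(req["messages"][1]["content"])
```

Keeping the instruction in a system message and the dialogue in a user message makes it easy to swap in a different rewriting model without changing how the history is serialized.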

3.4. Weighted Model Merging

The full history and GQR approaches have complementary strengths. Full history ensures that no context is lost, but can produce very long prompts, which may hurt the model's performance. GQR, on the other hand, simplifies the input, which can help the LLM generalize better, but can lose information. To harness both advantages, we merge the two fine-tuned models into a single model at the parameter level.

Concretely, we train two distinct CoCodeS variants, one fine-tuned with full history concatenation ($M_{FH}$) and another fine-tuned on data processed by the GQR module ($M_{GQR}$). Each model has a distinct set of parameters, $\theta_{FH}$ and $\theta_{GQR}$. We create $M_{merged}$ by averaging the parameters of $M_{FH}$ and $M_{GQR}$:

$$\theta_{merged} = \alpha\,\theta_{FH} + (1 - \alpha)\,\theta_{GQR},$$

where $\alpha = 0.5$ in our experiments. Intuitively, if the two models have learned complementary aspects of the data, their parameter average may inherit strengths from both. We compare the performance of $M_{merged}$ against $M_{FH}$ and $M_{GQR}$ for all of our CoCodeS variants on the CoSQL and SParC dev sets.
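Parameter averaging only requires that both checkpoints share the same architecture, which holds here because both variants are fine-tuned from the same CodeS-7b initialization. The sketch below applies $\theta_{merged} = \alpha\,\theta_{FH} + (1 - \alpha)\,\theta_{GQR}$ key by key to two checkpoints represented as plain dictionaries of float lists, with $\alpha = 0.5$ by default. With PyTorch, the same per-key arithmetic would be applied to the tensors of two `state_dict()`s; the dictionary representation and the name `merge_weights` are simplifications for illustration.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def merge_weights(theta_fh: Params, theta_gqr: Params, alpha: float = 0.5) -> Params:
    """Return alpha * theta_fh + (1 - alpha) * theta_gqr for every parameter.

    Both checkpoints must have identical parameter names and shapes.
    """
    if theta_fh.keys() != theta_gqr.keys():
        raise ValueError("checkpoints have different parameter names")
    merged: Params = {}
    for name, w_fh in theta_fh.items():
        w_gqr = theta_gqr[name]
        if len(w_fh) != len(w_gqr):
            raise ValueError(f"shape mismatch for {name}")
        # Element-wise linear interpolation between the two checkpoints.
        merged[name] = [alpha * a + (1 - alpha) * b for a, b in zip(w_fh, w_gqr)]
    return merged


fh = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
gqr = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
print(merge_weights(fh, gqr))  # {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

Setting `alpha` to 1.0 or 0.0 recovers one of the original checkpoints, so sweeping it on a dev set is a natural extension of the plain average.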

4. Experiments

We train and evaluate 6 CoCodeS models for each of CoSQL and SParC. First, we train CodeS-7b on the training set after concatenating the inputs to flatten it into a single-turn dataset (CoCodeSbase-FH). We also train CodeS-7b on the questions processed by the GQR module (CoCodeSbase-GQR). We then take CodeS-7b, first fine-tune it on the entire Spider training set, and repeat the same process, creating CoCodeSspider-FH and CoCodeSspider-GQR. Likewise, CodeS-7b is trained on BIRD's training set before applying the fine-tuning process, creating CoCodeSbird-FH and CoCodeSbird-GQR. We then merge each full history model with its corresponding GQR model to create a merged model. For both approaches, we use the same schema definition as in our baselines.

Table 2 shows the results for all CoCodeS models, along with our baselines. For comparison, we also reproduce RASAT + PICARD (R + P) and take the outputs of STAR [20] from its repository. The same merged models are tested on both the full history data and the GQR data. For both SParC and CoSQL, the full history models outperform the GQR models on both ESM and EXE. This may be due to loss of crucial information in the GQR stage; we discuss other potential issues in Section 5.

STAR in particular stands out: it performs extremely well on ESM but not on EXE or ETM, the starkest discrepancy between metrics in our comparison. This is because STAR does not predict values, which ESM intentionally does not check, since at the time ESM was released, models were not yet capable enough at text-to-SQL to be evaluated on values. As such, STAR's high ESM scores do not transfer to practical applications, where value prediction is essential.

Notably, merging the weights of the two models improves both ESM and EXE for the BIRD-based models, suggesting that the two training methods capture complementary information. Although the merged models always outperform those trained purely on the GQR data, merging does not always help relative to the full history models. One possible explanation is the large score gap between the full history and GQR models: the full history models may have few weaknesses that their GQR counterparts could compensate for. Our best model (birdM) outperforms all leaderboard entries for CoSQL on ESM and ETM, achieving a new state of the art under the official metric.

In general, the intermediate fine-tuning step on Spider or BIRD increased scores on CoSQL, with BIRD yielding the largest improvement (more than 5% on ETM). For SParC, however, the models with intermediate tuning on Spider and BIRD surprisingly performed worse than the base model. Since SParC was built on Spider's data, the model may overfit when trained on Spider before being trained again on SParC, which could explain the slightly degraded performance. Furthermore, BIRD's queries are much more complex than SParC's, which could also skew results. This hypothesis is further supported by the GQR data, where the discrepancy is no longer apparent and all three variants show similar results. A possible reason is that the GQR module rewrites all the questions, possibly making them more complex and closer to those in BIRD.

In general, models scored higher on SParC than on CoSQL, as expected, since SParC is generally considered the easier task. However, our baselines performed significantly worse on SParC than on CoSQL under EXE and ESM. This is especially true for our Claude baseline, which scored more than 6% higher on CoSQL than on SParC under EXE. The pattern reverses under ETM, where, like the rest of the models, the baselines score higher on SParC than on CoSQL. This suggests that EXE yields many false positives on CoSQL, which ETM subsequently corrects.

5. Analysis

5.1. GQR Analysis

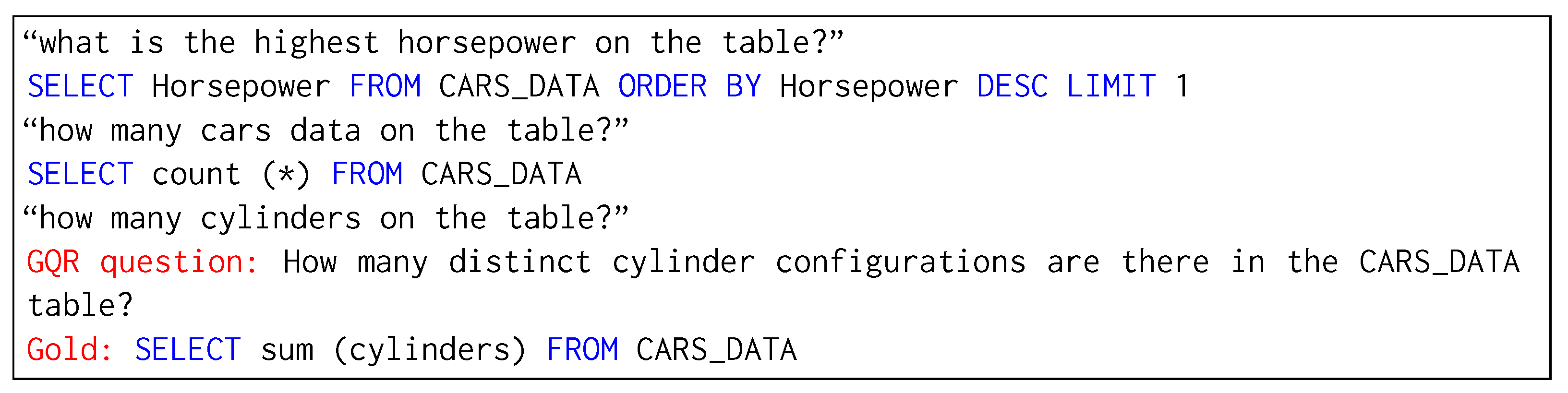

Although question rewriting was intended to shorten the input and clarify context, our experiments show that it can degrade performance compared to full-history concatenation across all metrics. Specifically, on CoSQL, GQR scored about 4% lower than full history, while on SParC this gap grows to about 8%. Closer inspection reveals two main issues with the GPT-generated rewrites. First, GPT may restructure certain queries, omitting minor details. Second, while analyzing SParC and CoSQL dialogues, we found that annotations sometimes exhibit inconsistencies, and many questions are significantly ambiguous, making it unclear whether the gold SQL is truly unique or whether multiple distinct queries could be valid responses. An ambiguous question can yield multiple plausible rewrites from GQR, especially regarding which columns to include, and the selected rewrite might cause the model's output to differ from the reference SQL.

Figure 7 illustrates a case where GQR adds a column that the gold query omits, and Figure 8 shows a case where information is lost between questions. These mismatches penalize GQR under all metrics, as the model's final SQL output diverges from the gold standard.

When a question is ambiguous, GQR can over-simplify it toward one interpretation, making the ambiguity disappear. However, this interpretation might not be the one the gold SQL is based on, causing models to perform worse on the processed data. We manually analyzed 50 CoSQL examples where the base CodeS model answered correctly with the full history but not with the GQR input, and found that 76% of these errors were due to such ambiguities. The remaining errors were due to rewrites actually losing information from the dialogue (GQR omission).

5.2. Merging Weights

Merging weights proved to be a simple yet effective strategy. Even though the rewriting approach alone did not always surpass the full history, merging boosted alignment with the gold SQL across all metrics for the BIRD-based model on CoSQL, our best model. This suggests a synergy between more direct context usage (full history) and a condensed rewriting approach (GPT-based rewriting) once they are integrated at the parameter level. Future research could explore alternative merging techniques, such as weighted averages or partial parameter sharing. There may also be gains from merging multiple rewriting strategies or from training specialized modules that adapt rewriting output to align more closely with gold queries.

5.3. Baselines and Zero-Shot

Our zero-shot baselines (GPT4-Turbo and Claude-3-Opus) illuminate how LLMs fare without in-domain fine-tuning. Overall, especially on CoSQL, these models perform extremely well, often matching or outperforming their fine-tuned counterparts. This shows how strong these PLMs are at understanding and interpreting conversational context. Notably, under EXE and ESM our baselines performed better on CoSQL than on SParC, which is unexpected, as SParC is typically considered the simpler dataset. This may be partly due to PLMs' inherent variability, which hinders reproducibility: repeated runs can produce different SQL outputs, sometimes boosting and sometimes harming the final score.

Under EXE, such models can appear stronger than they really are if the test database is too narrow to reveal logical errors. ETM mitigates this risk by checking structural consistency, thus reducing false positives. This would explain why, under ETM, our baselines no longer score lower on SParC and instead show the expected higher score on SParC than on CoSQL.

5.4. LLMs: Opportunities and Limitations

Despite the challenges noted above, large language models show considerable promise for conversation-based text-to-SQL. They provide flexible strategies for rewriting queries, can leverage extensive pretraining knowledge, and exhibit a remarkable ability to handle follow-up clarifications or user corrections in multi-turn settings. However, persistent limitations remain. Overlap between pretraining data and public datasets like SParC and CoSQL may lead to superficial memorization that does not transfer to unseen datasets. Under strict matching metrics such as ESM, LLMs frequently produce syntactically varied yet logically equivalent queries, incurring artificially low scores that do not reflect genuine usability. Furthermore, context and token constraints often restrict how effectively LLMs can incorporate extended conversation histories, particularly if rewriting modules inadvertently exclude key details or if user queries contain inherent ambiguities [21]. Previous work, such as Gao et al. [22], has analyzed these constraints in detail, particularly in the single-turn text-to-SQL domain, examining potential solutions like schema linking, where elements of the question are linked to the schema of the underlying tables.

Looking ahead, future research may focus on integrating schema-aware paraphrasing, refining context-tracking mechanisms, and incorporating metadata at each dialogue turn to maintain consistency. Building new datasets, ideally hand-crafted and thus not part of large-scale pretraining corpora, can also ensure robust evaluation that is not affected by the ambiguities that plague SParC and CoSQL. Another option is to provide multiple gold queries per question, so that ambiguous questions can be interpreted in more than one valid way. Future research can also pursue retrieval-augmented generation for maintaining dialogue context beyond limited token windows. In addition, context rewriting could be performed with open-source models instead of GPT 4-Turbo, with minimal modifications to the GQR prompt. Addressing these gaps could yield more robust and human-like conversational agents for querying SQL databases, especially when paired with improved evaluation protocols and well-curated, higher-quality datasets.

6. Conclusions

In this work, we investigated both zero-shot and fine-tuned approaches for conversational text-to-SQL. We present CoCodeS, a new state-of-the-art system on CoSQL under the official ESM metric, obtained by adapting the single-turn CodeS model through multi-stage fine-tuning (first on BIRD, then on CoSQL), handling conversational context with both full-history concatenation and GPT-based rewriting, and finally merging the resulting models through parameter averaging. Zero-shot baselines perform surprisingly well on CoT2SQL, occasionally surpassing fine-tuned models, highlighting both the power and the variability of pre-trained LLMs. Our experiments show that question rewriting, although it simplifies context, generally underperforms full-history concatenation on both datasets, particularly on SParC; closer inspection linked this gap to ambiguous annotations, where plausible rewrites diverge from the gold queries.

Overall, these findings underscore the promise of large language models in multi-turn SQL generation but also emphasize how dataset inconsistencies and variance pose ongoing challenges. Future work may benefit from more rigorous benchmarks, less ambiguous annotations, and continued exploration of context-tracking and query-rewriting strategies to develop robust and user-friendly conversational database systems.