Abstract

For many years, the OSI model was the most common network model. In ad hoc networks, with their dynamic topology and difficult radio communication conditions, there is a gradual shift away from the classical OSI network model, with its clear delineation of layers (physical, channel, network, transport, application), toward the cross-layer approach. The layers of the network model in ad hoc networks strongly influence each other. Thus, the cross-layer approach can improve the performance of an ad hoc network by jointly designing protocols through the interaction and collaborative optimization of multiple layers. Existing classifications of cross-layer methods are overly complicated because they are based on the whole range of possible layer combinations, regardless of their importance. In this work, we review cross-layer methods for ad hoc networks, propose a new and practical classification of these methods, and outline future research directions in their development. The proposed classification can help to simplify goal-oriented cross-layer protocol development.

1. Introduction

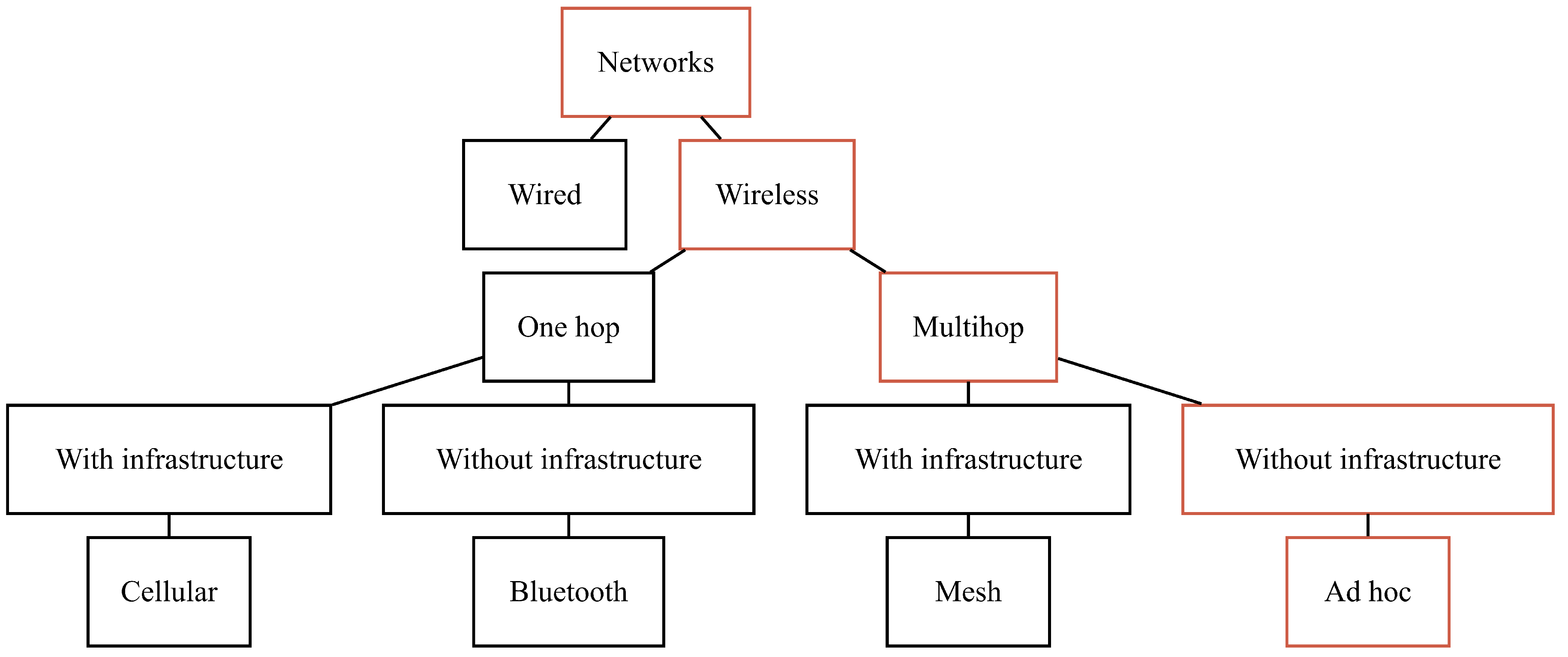

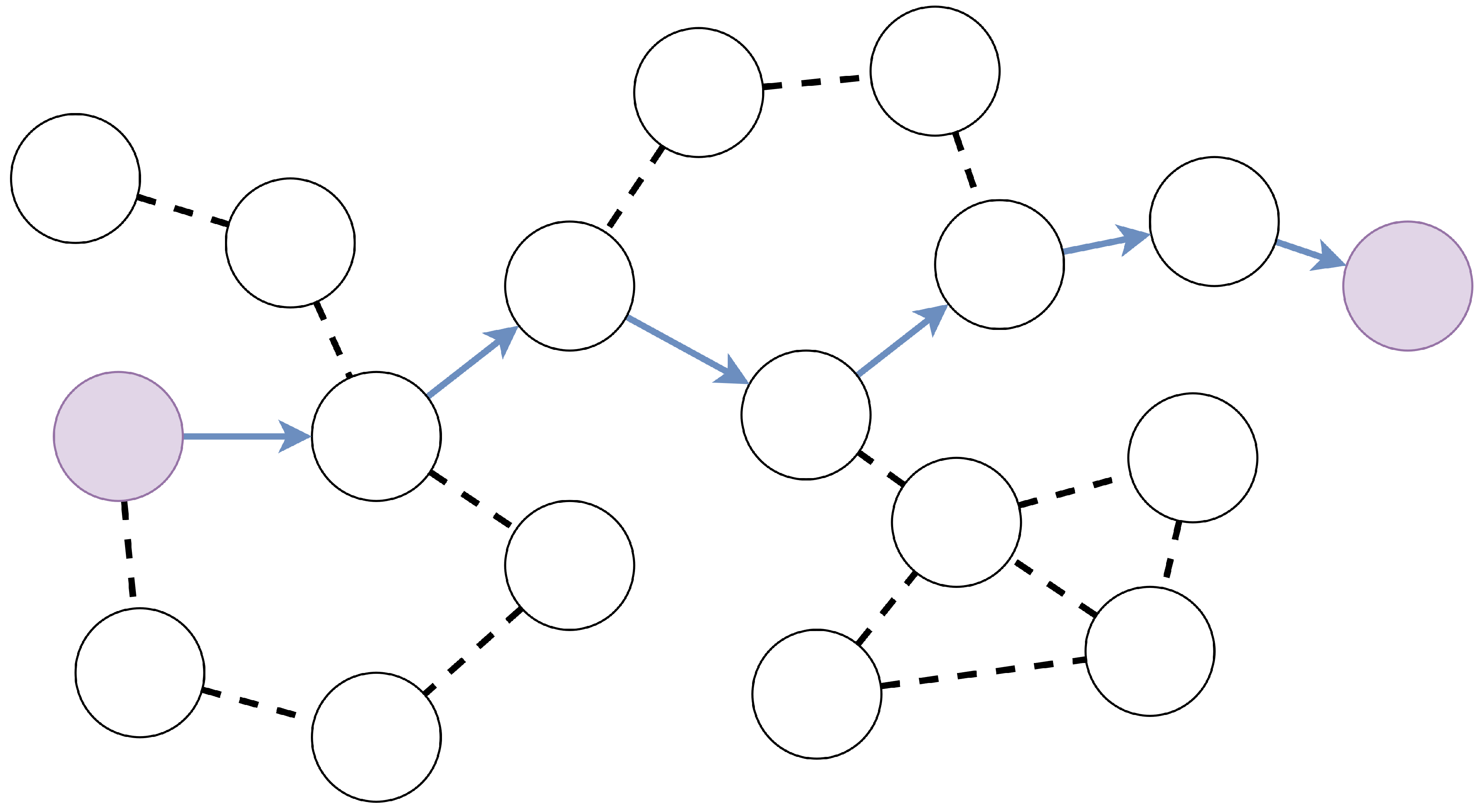

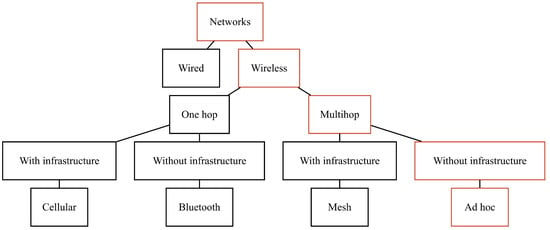

In this work, we present a classification of cross-layer methods in ad hoc networks and review the methods themselves. An ad hoc network is a network of mobile radio nodes with no infrastructure: no gateways, access points, base stations, etc. (Figure 1 and Figure 2). The classic, well-established approach to network protocols is to split them into OSI model layers. This approach is very effective for wired networks with reliable communication channels, where the performance of protocols at one layer does not depend on the state of other layers. In a mobile ad hoc network with dynamic topology (and radio channels exposed to noise, interference, lack of line of sight, etc.), isolating the layers leads to suboptimal network operation. Using cross-layer methods in the development of ad hoc network protocols allows ad hoc networks to approach optimal performance. Hereafter, cross-layer methods are understood as methods without layer isolation, with intensive exchange of information between layers, and with joint layer management.

Figure 1.

Network classification.

Figure 2.

Ad hoc network.

Ad hoc networks have the following properties [1]:

- Self-organization. Nodes organize themselves into networks and manage the network independently and in a distributed manner, without centralized intervention. Each node acts as both a user and a router [1].

- Peer network. Nodes of ad hoc networks are equal and perform the same functions [1].

- Limited resources. Ad hoc networks usually consist of portable devices with low computing power, memory, and battery charge [1].

- Network robustness. The failure of any network node almost never affects the performance of the network as a whole, unlike the failure of the central router of classical networks [2,3].

- Dynamic topology. The network nodes move relative to each other. As a result, node connections and routes between nodes appear and disappear [2,3].

- Limited, constantly changing capacity of radio lines. Radio lines are susceptible to interference from neighboring nodes, external noise, multipath fading, and the Doppler effect [4].

- Shared channel resource. The lack of dedicated channel resources (the contention of nodes over channel resources) leads to interference and packet collisions, which reduces network bandwidth [4].

- Vulnerability to attacks. In classical networks, all data from a subnet passes through one powerful firewall, so an attack from outside the network is directed only at the firewall. In an ad hoc network, each node is equally vulnerable to attacks from outside [5].

- Large amount of service data for routing. Because of the dynamic topology, ad hoc networks must send more service information (about line states, etc.) than static networks in order to find routes [4].

- Frequent network fragmentation. Network mobility can cause the ad hoc network to be divided into isolated subnets [4].

- Complexity of providing QoS. The poorly predictable instability of radio lines, interference of nodes, frequent route breaks, and delay in finding a new route all make the task of providing QoS in ad hoc networks very difficult [4].

Ad hoc networks are often used in the following areas [1]:

- Military use. It is difficult to deploy a cellular network in a warzone, but an ad hoc network can be deployed almost instantly.

- Rescue and search operations. In the event of a disaster, the local infrastructure is destroyed, and in the case of search operations in sparsely populated areas, the infrastructure initially does not exist. The rapid deployment of an ad hoc network allows immediate rescue and search operations.

- Distributed file sharing. During a meeting, such as a conference, one can quickly deploy a network to share files and presentations.

The International Organization for Standardization (ISO) created Subcommittee 16 (SC16) in 1977 to develop an architecture for standard protocols. In 1979, the OSI model [6] was created. The OSI model consists of seven layers: physical layer, channel layer, network layer, transport layer, session layer, presentation layer, and application layer. The task of the OSI model is to group similar communication functions into logical layers. Each layer can communicate only with the adjacent layers directly above and below it. However, with the development of the TCP protocol at the transport layer and the IP protocol at the network layer, the TCP/IP model became dominant; it consists of five layers: application layer, transport layer, network layer, channel layer, and physical layer [6,7,8]. The layered architecture works very well in wired networks with stable connections and quasi-permanent topology. Isolating the layers allows protocols to be developed modularly, independently of other layers. This speeds up development and improves network performance. It is possible because events at different layers have little impact on each other.

In ad hoc networks with dynamic topology and difficult radio communications conditions, events at different layers may influence each other significantly. Therefore, to achieve high performance of an ad hoc network, one should not use an architecture with isolated layers [9,10,11].

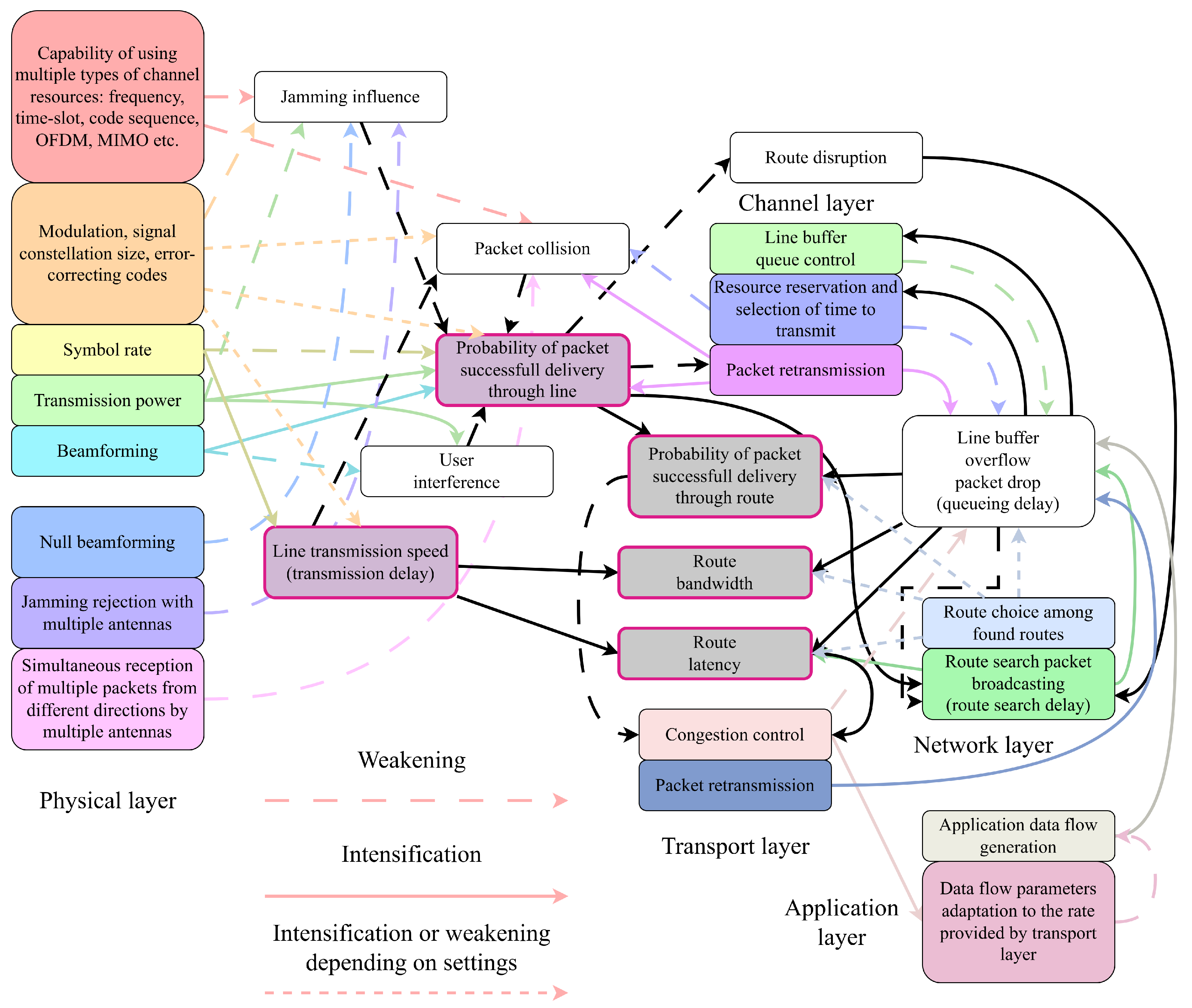

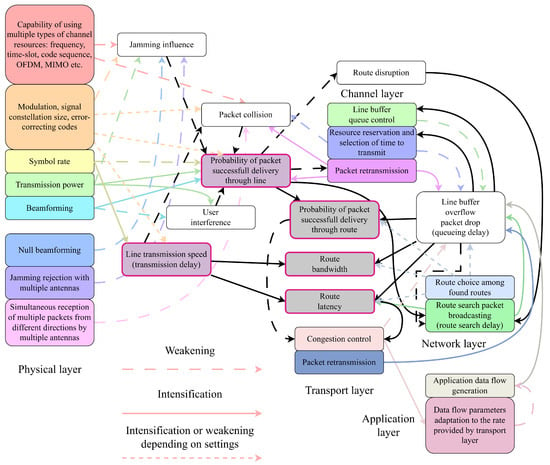

During the analysis, we constructed a graph of the interdependence and mutual influence of the OSI layers (Figure 3). Almost all influence between layers is indirect, mediated by intermediate events. The only direct influence is transport layer congestion control, which forces the application layer to slow down transmission.

Figure 3.

The interdependence of OSI layers in an ad hoc network.

In Figure 3, we can trace, for example, the following circular indirect influence between layers, which forms a positive feedback loop around a high packet corruption rate. To guarantee a high probability of packet delivery, the packet must either be retransmitted multiple times at the channel layer or be transmitted at higher power at the physical layer. Increased transmission power or multiple retransmissions can cause excessive interference to other nodes, which lowers the probability of successful packet delivery. At the network layer, the current route ceases to exist because of the low probability of successful packet delivery, so a new route discovery must be started by broadcasting route request packets, and these route request packets cause even more interference.
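The loop described above can be encoded as a small directed graph of events and checked for cycles. This is a minimal Python sketch: the event labels are our own hypothetical names, "high packet corruption" and "low probability of successful delivery" are merged into one event, and only the edges named in this paragraph are included, not the full graph of Figure 3.

```python
# Events from the feedback loop above (labels are hypothetical), with edges
# "event -> events it causes". Only edges named in the text are included.
INFLUENCES = {
    "packet corruption": ["retransmissions (channel)", "power increase (physical)",
                          "route break (network)"],
    "retransmissions (channel)": ["interference"],
    "power increase (physical)": ["interference"],
    "route break (network)": ["route request broadcast (network)"],
    "route request broadcast (network)": ["interference"],
    "interference": ["packet corruption"],
}


def find_cycle(graph, start):
    """Depth-first search for one directed cycle through `start`; returns it or None."""
    stack = [(start, [start])]
    while stack:
        node, path = stack.pop()
        for nxt in graph.get(node, []):
            if nxt == start:
                return path + [start]
            if nxt not in path:
                stack.append((nxt, path + [nxt]))
    return None


print(" -> ".join(find_cycle(INFLUENCES, "packet corruption")))
```

Any cycle found this way is a candidate positive feedback loop: each pass around it adds interference, which in turn raises corruption.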

Cross-layer methods strive to improve the performance of ad hoc networks by jointly developing protocols that use the interaction of multiple layers [12,13,14]. Compared to the layered OSI approach, the cross-layer approach allows layers to interact, reading and changing the parameters of other layers [15,16,17,18]. Cross-layer methods emerged from the desire to ensure the interaction and joint optimization of multiple layers [19]. For example, the physical layer can select the data rate, power, and coding to meet application layer requirements. Cross-layer design is also indispensable for increasing battery lifetime (minimizing energy consumption) [20,21] and for optimizing end-to-end latency for real-time applications [22,23], since energy consumption and latency depend on all layers.

The theoretical justification of the effectiveness of cross-layer methods is given in one of the first works on network coding [24]. In network coding, intermediate nodes combine (encode) the packets they receive instead of only storing and forwarding them. Reference [24] shows that performing only routing at intermediate nodes results in suboptimal network performance.
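The standard butterfly-network example illustrates why coding at intermediate nodes can beat pure routing. This Python sketch assumes two sources holding packets a and b, two sinks that each want both packets, and a shared middle link that carries one packet per use; whether [24] uses exactly this topology is not stated here.

```python
def butterfly(a, b, coding):
    """One use of every link in the butterfly network.

    Source 1 holds packet a and source 2 holds packet b; sink t1 hears source 1
    directly, sink t2 hears source 2 directly, and both also hear the single
    shared middle link, which carries one packet per use.
    Returns the sets of packets each sink can obtain."""
    middle = a ^ b if coding else a   # pure routing must forward one packet (here a)
    if coding:
        t1 = {a, a ^ middle}          # a XOR (a XOR b) = b
        t2 = {b, b ^ middle}          # b XOR (a XOR b) = a
    else:
        t1 = {a, middle}              # only a: b never reaches t1
        t2 = {b, middle}              # a and b
    return t1, t2


a, b = 0b1010, 0b0110
print(butterfly(a, b, coding=False))
print(butterfly(a, b, coding=True))
```

With routing, one sink always misses a packet in a single round; with the XOR-coded middle link, both sinks recover both packets.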

Cross-layer interactions can be subdivided into two large categories [25]:

- Provision of information. Adjacent and non-adjacent layers share information through interfaces or through a common database.

- Layer integration. Layer separation disappears, and all layers except maybe the application layer are merged into one.

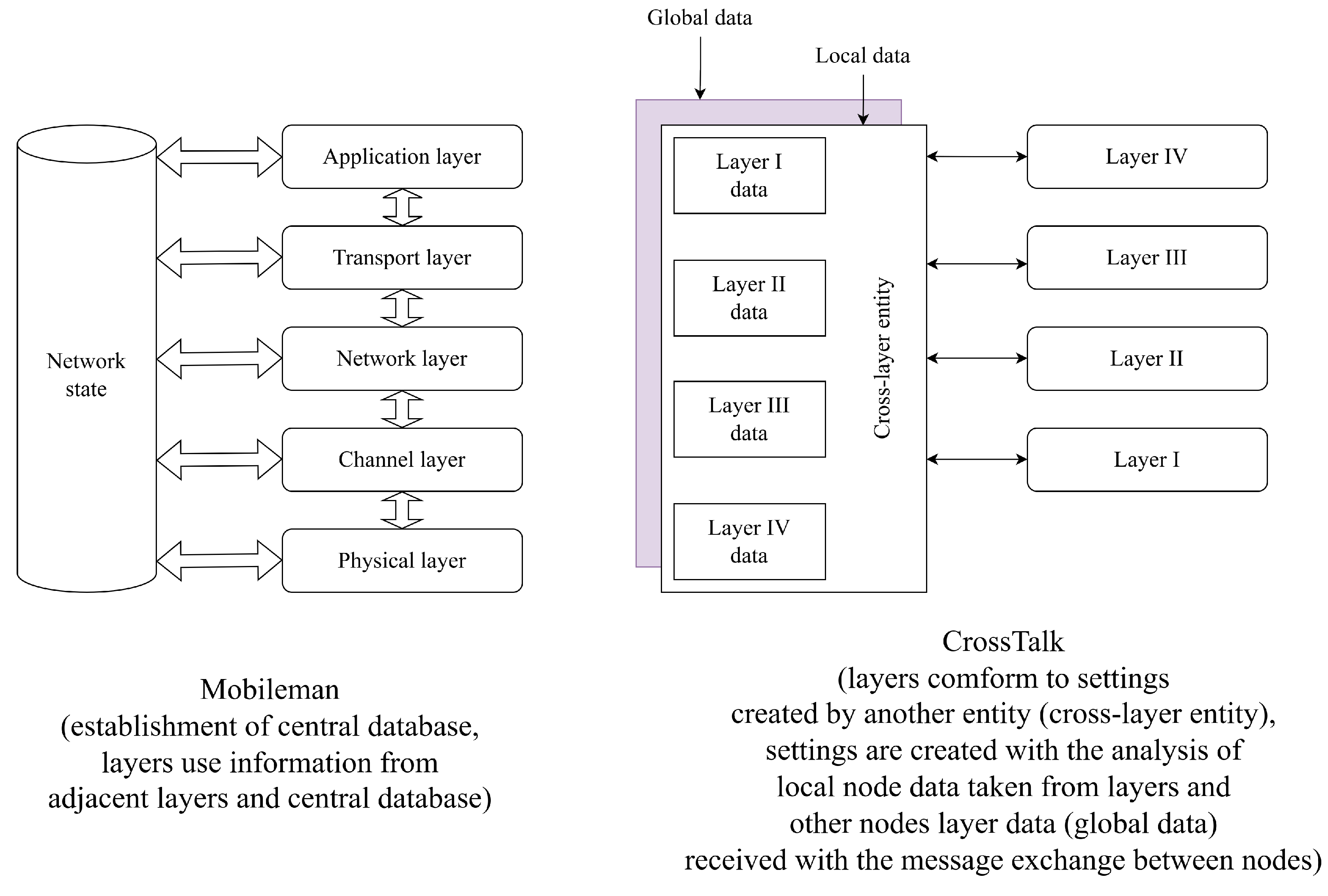

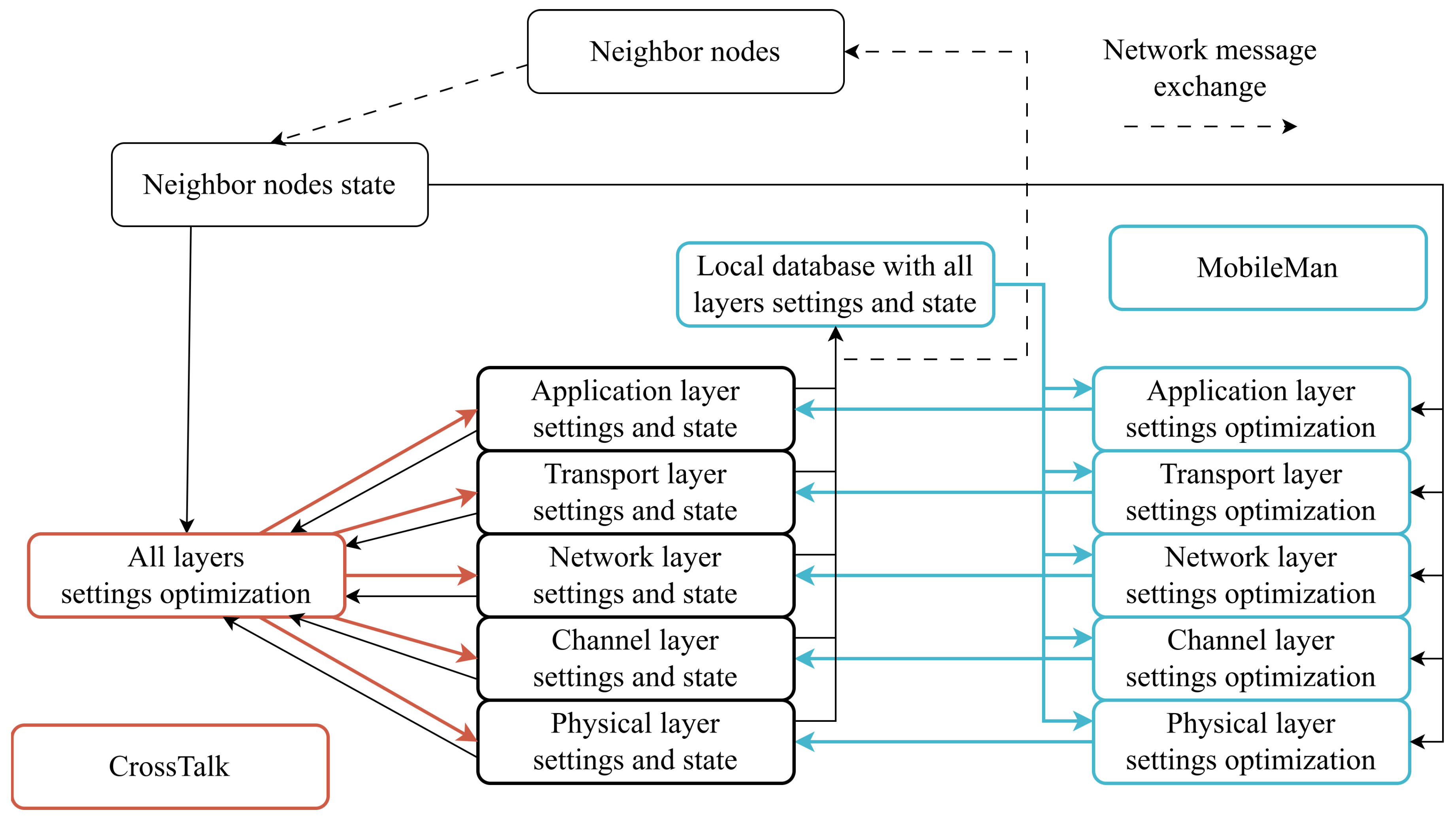

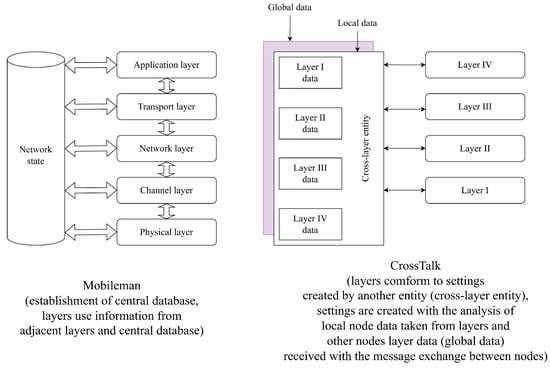

There are two main architectures for cross-layer methods (Figure 4): “MobileMan” [26] and “CrossTalk” [27].

Figure 4.

Two main cross-layer architectures: “MobileMan” and “CrossTalk”.

The “MobileMan” architecture [26] preserves the original layer architecture (Figure 4). Its main contribution is a central database that collects all network state data, which individual layers can then use. “MobileMan” belongs to the “provision of information” kind of cross-layer interaction.
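To make the “provision of information” style concrete, here is a minimal Python sketch of a MobileMan-like shared repository. The class names, the key `"phy.snr_db"`, and the retry rule are hypothetical, not part of [26]: layers publish their state to the repository, and each layer adjusts only its own parameters from what it reads.

```python
from collections import defaultdict


class NetworkStatus:
    """Hypothetical shared repository of network state (MobileMan-style).
    Layers publish their own state and read or subscribe to other layers' state;
    each layer still tunes only its own parameters."""

    def __init__(self):
        self._data = {}
        self._subscribers = defaultdict(list)

    def publish(self, key, value):
        self._data[key] = value
        for callback in self._subscribers[key]:
            callback(value)

    def read(self, key, default=None):
        return self._data.get(key, default)

    def subscribe(self, key, callback):
        self._subscribers[key].append(callback)


class ChannelLayer:
    """Example consumer: adapts its own retry limit to link quality published
    by the physical layer (the threshold and limits are illustrative)."""

    def __init__(self, status):
        self.retry_limit = 7
        status.subscribe("phy.snr_db", self.on_snr)

    def on_snr(self, snr_db):
        # Fewer retries on a poor link, so that a likely-corrupted packet is not
        # retransmitted many times and does not add interference.
        self.retry_limit = 2 if snr_db < 5.0 else 7


status = NetworkStatus()
mac = ChannelLayer(status)
status.publish("phy.snr_db", 3.0)   # physical layer reports a poor link
print(mac.retry_limit)
```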

The “CrossTalk” architecture [27] introduces the notions of global network state and local node state (Figure 4). Global information is collected from messages sent by neighboring nodes. An important difference from the “MobileMan” architecture is that in “CrossTalk”, the cross-layer entity controls all layers, whereas in “MobileMan”, the layers tune themselves separately using each other’s information. “CrossTalk” belongs to the “layer integration” kind of cross-layer interaction.
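By contrast, a CrossTalk-like entity decides for all layers at once. In this hypothetical Python sketch, the global state is reduced to the average channel load reported by neighbors, and a single policy sets physical, channel, and network layer parameters together; the parameter names, values, and policy are illustrative assumptions, not taken from [27].

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class LayerConfig:
    tx_power_dbm: float = 20.0     # physical layer
    retry_limit: int = 7           # channel layer
    route_refresh_s: float = 5.0   # network layer


@dataclass
class CrossTalkEntity:
    """Hypothetical cross-layer entity that sets the parameters of all layers
    from local node state and a global view built from neighbors' messages."""
    local: dict = field(default_factory=dict)
    neighbor_reports: list = field(default_factory=list)
    config: LayerConfig = field(default_factory=LayerConfig)

    def receive_report(self, report):
        self.neighbor_reports.append(report)

    def global_load(self):
        # Global state estimate: mean channel load reported by neighbors.
        if not self.neighbor_reports:
            return 0.0
        return mean(r["load"] for r in self.neighbor_reports)

    def decide(self):
        # Illustrative policy: if the neighborhood is busier than this node,
        # lower power and retries and refresh routes less often, to reduce
        # interference and service traffic; otherwise use the defaults.
        if self.global_load() > self.local.get("load", 0.0):
            self.config = LayerConfig(tx_power_dbm=14.0, retry_limit=3, route_refresh_s=10.0)
        else:
            self.config = LayerConfig()
        return self.config


node = CrossTalkEntity(local={"load": 0.2})
node.receive_report({"load": 0.6})
node.receive_report({"load": 0.8})
print(node.decide())
```

The design difference from the MobileMan sketch is where the decision lives: here no layer adapts itself; one entity writes a complete configuration for every layer.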

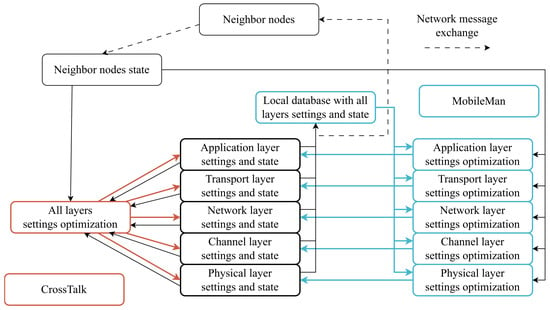

Cross-layer methods based on the “MobileMan” and “CrossTalk” architectures can be presented as the iterative optimization of multiple-layer configurations [11] to meet the QoS requirements of data flows (Figure 5).

Figure 5.

Cross-layer methods as the iterative optimization of multiple-layer configuration.
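The loop of Figure 5 can be sketched as coordinate descent: re-tune one layer at a time while the others stay fixed, and repeat until no layer changes. In this Python toy, every number (per-attempt success probabilities, route lengths and qualities, the QoS target, and the penalty weight) is hypothetical; the point is only that re-tuning one layer can make a different setting at another layer preferable.

```python
# Toy model (all numbers hypothetical): each layer exposes one tunable parameter.
OPTIONS = {
    "power_dbm": [10, 15, 20],    # physical layer
    "retries": [1, 2, 3, 4],      # channel layer: max transmission attempts per hop
    "route": ["A", "B"],          # network layer
}
BASE_SUCCESS = {10: 0.70, 15: 0.85, 20: 0.93}   # per-attempt success on a clean link
ROUTES = {"A": (2, 0.8), "B": (3, 1.0)}          # (hop count, link-quality factor)
TARGET_DELIVERY = 0.95                            # QoS requirement of the flow


def evaluate(cfg):
    """Return (end-to-end delivery probability, expected energy in mW-attempts)."""
    hops, quality = ROUTES[cfg["route"]]
    s = BASE_SUCCESS[cfg["power_dbm"]] * quality
    hop_delivery = 1 - (1 - s) ** cfg["retries"]
    expected_attempts = hop_delivery / s          # mean of a truncated geometric law
    power_mw = 10 ** (cfg["power_dbm"] / 10)
    return hop_delivery ** hops, hops * expected_attempts * power_mw


def cost(cfg):
    delivery, energy = evaluate(cfg)
    return energy + 1000 * max(0.0, TARGET_DELIVERY - delivery)  # QoS penalty


def optimize(cfg):
    """Coordinate descent: re-tune one layer at a time until nothing changes."""
    cfg = dict(cfg)
    changed = True
    while changed:
        changed = False
        for param, choices in OPTIONS.items():
            best = min(choices, key=lambda v: cost({**cfg, param: v}))
            if best != cfg[param]:
                cfg[param], changed = best, True
    return cfg


best = optimize({"power_dbm": 10, "retries": 1, "route": "A"})
print(best, evaluate(best))
```

From this start, the first pass raises power to 15 dBm and retries to 4 to approach the delivery target; the second pass lowers power back to 10 dBm and switches to the longer but cleaner route B, which meets the target at lower energy. Coordinate descent only guarantees a configuration that no single-layer change improves, not a global optimum.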

The development of cross-layer methods faces the following difficulties [11,28,29,30,31,32]:

- Cross-layer method parameter selection. One of the fundamental questions of cross-layer methods is which layer parameters should be used to make decisions about network operation. Physical layer parameters are very important to consider, but so are application layer parameters. For example, the received signal strength indicator (RSSI) is a good indicator of signal power, but it does not take noise and interference into account. The signal-to-noise ratio (SNR) accounts for the noise level but still ignores interference. The signal-to-interference-plus-noise ratio (SINR) is much more useful because it simultaneously accounts for signal strength, noise, and interference. Using the SNR [33], one can estimate the proximity of nodes (i.e., whether the nodes are within each other’s radio range). Queue occupancy information is available at the channel layer. In addition to these parameters, parameters that describe data flows are also used. As shown in [34,35], another approach is to use composite parameters obtained by combining many other parameters.

- Layer interdependency and the complexity of independent development. As noted in [9], cross-layer protocol design is essentially a violation of the OSI model in which interlinked layers interact. Modifying and improving a highly interconnected system is difficult. Therefore, achieving modularity is one of the most difficult tasks in developing cross-layer methods.

- Overly complex interaction between layers. The flow of information between the different layers creates unforeseen relationships between layers, reducing the efficiency of the entire system. Therefore, it is necessary to choose the interaction between layers very carefully.
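The difference between the three link-quality indicators is easy to see numerically. This Python sketch treats RSSI simply as the received signal power, as the text does (real radios often report total received power), and uses made-up power values in milliwatts.

```python
import math


def to_db(ratio):
    return 10 * math.log10(ratio)


def link_indicators(signal_mw, noise_mw, interferers_mw):
    """Return (RSSI in dBm, SNR in dB, SINR in dB) for one received signal."""
    rssi_dbm = to_db(signal_mw)                                   # signal power only
    snr_db = to_db(signal_mw / noise_mw)                          # ignores interference
    sinr_db = to_db(signal_mw / (noise_mw + sum(interferers_mw)))
    return rssi_dbm, snr_db, sinr_db


# -60 dBm signal, -90 dBm noise, two -70 dBm interferers (hypothetical values).
print(link_indicators(1e-6, 1e-9, [1e-7, 1e-7]))
```

Here the SNR is 30 dB but the SINR is only about 7 dB, which is exactly the situation in which an SNR-based decision would overestimate link quality.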

2. Related Works

In this section, we survey existing reviews of cross-layer methods in ad hoc networks (Table 1).

Table 1.

Review publications on cross-layer methods in ad hoc networks.

Early reviews of cross-layer methods in ad hoc networks examined possible design approaches rather than existing methods.

Srivastava et al. [9] introduced one of the first classifications for cross-layer method development approaches. The classification consists of four approaches: the creation of new interfaces between layers, the merging of adjacent layers, the development of fixed lower layers without creating new interfaces, and the holistic optimization of all layers. Ren et al. [36] approached the development of cross-layer methods by dividing them into three types: the use of event signaling between layers, the joint optimization of layer subsets, and the holistic optimization of all layers.

More recent reviews describe and classify the methods themselves. References [37,41] classify methods according to the layer combinations they use. Publications [28,38,39,40] focus not on all cross-layer methods but on those centered on a single layer: cross-layer routing protocols, cross-layer transport protocols, and cross-layer video streaming applications. Trivedi et al. [38] consider routing protocols for vehicular ad hoc networks and divide them into two types: those that use composite metrics combining physical and channel layer metrics, and those based on the joint operation of the network and channel layers. Awang et al. [28] deal with cross-layer routing protocols in ad hoc networks; the authors tabulate the reviewed methods together with the metrics and layers each method uses. Goswami et al. [40] focus exclusively on the transport layer (modifications of the TCP protocol) and on its interaction with other layers to improve transport protocol performance. Mantzouratos et al. [39] focus on cross-layer methods that implement video streaming applications in ad hoc networks and categorize the methods according to the layers they use. The categories and the share of methods in each are as follows: all layers except the network and transport layers, 1%; all layers (holistic approach), 9%; all layers except the application layer, 40%; the application, network, and channel layers, 50%.

Every method for network control has a main goal or set of goals to achieve:

- Line throughput. Line throughput is the volume of successfully delivered data through the line per unit of time.

- Energy consumption. The less energy a node spends per successfully transmitted unit of information, the longer its battery life and the longer the network stays connected.

- Line packet error rate. Line packet error rate is the fraction of packets corrupted on the line. For a given line bandwidth (the line transmission rate), a higher packet error rate means a lower line throughput.

- Line latency. Line latency is the sum of multiple delays: queueing delay, propagation delay, transmission delay, etc.

- Route throughput. Route throughput is the minimum throughput among the lines of the route.

- Route packet error rate. Route packet error rate is the complement of the product of the probabilities of successful packet delivery on the lines of the route: PER_route = 1 − (1 − PER_1) × (1 − PER_2) × ... × (1 − PER_n).

- Route stability. Route stability is how long all lines of the route continue to exist and remain within the desired range of parameters.

- Route latency. Route latency is the sum of line latencies inside the route.

- Interference. Interference occurs when multiple nodes transmit simultaneously and the total power received from nodes other than the sender corrupts the received packets. Low interference is not harmful, whereas high interference causes packet collisions.

- Data overhead. Data overhead is the volume of service data. For example, the more route request packets the routing protocol sends, the lower the throughput.
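The per-line and per-route goals above compose in a simple way. This Python sketch assumes that line throughput is approximately rate × (1 − PER), that per-line losses are independent, and that route latency adds up over lines; the numbers in the example are hypothetical.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Line:
    rate_bps: float     # line bandwidth (transmission rate)
    per: float          # line packet error rate
    latency_s: float    # queueing + propagation + transmission delay

    @property
    def throughput_bps(self):
        # Delivered volume per unit time, ignoring retransmission overhead.
        return self.rate_bps * (1 - self.per)


def route_metrics(lines):
    """Combine per-line metrics into route metrics."""
    return {
        "throughput_bps": min(l.throughput_bps for l in lines),   # bottleneck line
        "per": 1 - prod(1 - l.per for l in lines),                 # any line may fail
        "latency_s": sum(l.latency_s for l in lines),              # delays add up
    }


route = [Line(6e6, 0.1, 0.002), Line(12e6, 0.2, 0.003), Line(2e6, 0.0, 0.001)]
print(route_metrics(route))
```

In this example, the third line has no errors but is the bottleneck (2 Mbit/s), while the route PER (0.28) is dominated by the first two lines, so different goals can point to different lines to improve.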

These goals are usually achieved through an intermediate goal: designing a specific mechanism, for example, a channel access method or a routing protocol.

In our work, we propose a new classification of cross-layer methods in ad hoc networks based not on a specific combination of layers used but on the intermediate goal pursued with the help of multiple layers.

To date, practical implementations of ad hoc networks based on the cross-layer paradigm are almost nonexistent. Some of the activities closest to practical implementation are DARPA (Defense Advanced Research Projects Agency) research [42] and various press releases and policies, such as the European Union policy for developing ad hoc networks for automated vehicles [43] and mesh internet in rural Africa [44]. Cross-layer ad hoc network control is still mostly under theoretical development. The known classifications of cross-layer methods are method-based, which indicates that the development of new cross-layer methods focuses mainly on the methodology itself. We hope that our work will facilitate and spur practical implementations of ad hoc networks that use the cross-layer paradigm to its full potential.

In the following review, methods are grouped by their intermediate goals and discussed in the corresponding sections.

3. Cross-Layer Method Publications Review

3.1. One Layer

Most cross-layer methods focus on one layer, which interacts with other layers to optimize its own operation.

3.1.1. Physical Layer

Cross-layer methods focused on the physical layer use multi-element antenna systems to optimize antenna beamforming and space-time coding in order to minimize interference between users and maximize bandwidth (Table 2). These methods use data about neighboring nodes from the link layer and data about data flows from the network layer.

Table 2.

Cross-layer methods concentrated on the physical layer.

Zorzi et al. [45] consider the design of channel layer protocols for ad hoc networks whose nodes have multiple antenna elements (MIMO technology). Network nodes are capable of forming directional antenna patterns, rejecting interference, and applying MIMO space-time coding to signals. With single omnidirectional antennas, a node sends a transmission request packet, and the target node responds with a permission packet. All other nodes that have received the request or permission packet refrain from using the radio channel for the time required to transfer the packet between the two nodes. If the receiving node can use the radio channel, it grants permission to receive the packet. With multiple antennas and independent transceivers, a node can receive multiple packets at the same time if the senders are sufficiently spatially separated, and when transmitting, it can form its beam so as to reduce interference. As a result, transmission request packets become advisory in nature and are used to assess the needs of data streams. Therefore, the authors conclude that the interaction of the physical and channel layers is necessary.
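The omnidirectional request/permission exchange described above (similar in spirit to IEEE 802.11 RTS/CTS with virtual carrier sensing) can be sketched as follows. The node model and timing are simplified, hypothetical assumptions; the multi-antenna case of [45], in which requests become advisory, is not modeled.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    defer_until: float = 0.0   # time until which the node must stay silent

    def is_free(self, now):
        return now >= self.defer_until


def handshake(sender, receiver, overhearers, now, exchange_s):
    """Request/permission exchange for single omnidirectional antennas.

    Returns True if the receiver grants permission. Every node that overheard the
    request or the permission then defers until the exchange is over."""
    if not (sender.is_free(now) and receiver.is_free(now)):
        return False                      # no request sent / no permission granted
    end = now + exchange_s
    for node in overhearers:
        node.defer_until = max(node.defer_until, end)
    return True


a, b, c = Node("A"), Node("B"), Node("C")
print(handshake(a, b, [c], now=0.0, exchange_s=5.0))
print(c.is_free(3.0), c.is_free(5.0))
```

With a single omnidirectional antenna, every overhearing node is silenced for the whole exchange; this blanket deferral is what multiple antennas with spatial separation make unnecessary.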

Hamdaoui et al. [46] propose a way to maximize bandwidth using MIMO. The work uses the interaction of the physical, channel, and network layers. Based on knowledge of the data streams passing through the nodes, each node communicates with its neighbors to compute, in a distributed manner, the complex weights of its antenna elements. These weights jointly place nulls in the antenna patterns and allow packets from several nodes to be received simultaneously, depending on the data flows to be transmitted to the nodes.
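For a single node, placing a null toward one transmitter while keeping unit gain toward another reduces to solving a small linear system for the antenna weights. This Python sketch assumes a two-element array with half-wavelength spacing and known directions of arrival; it illustrates classical null steering only, not the distributed, flow-aware weight computation of [46].

```python
import cmath
import math


def steering(theta_deg):
    """Steering vector of a 2-element array with half-wavelength spacing."""
    phase = -math.pi * math.sin(math.radians(theta_deg))
    return (1 + 0j, cmath.exp(1j * phase))


def null_steering_weights(desired_deg, null_deg):
    """Weights (w0, w1) with w^H a(desired) = 1 and w^H a(null) = 0."""
    d, n = steering(desired_deg), steering(null_deg)
    # Conjugated weights c = conj(w) solve c0*d0 + c1*d1 = 1 and c0*n0 + c1*n1 = 0
    # (Cramer's rule for the 2x2 system).
    det = d[0] * n[1] - d[1] * n[0]
    c0, c1 = n[1] / det, -n[0] / det
    return (c0.conjugate(), c1.conjugate())


def gain(w, theta_deg):
    """Magnitude of the array response w^H a(theta)."""
    a = steering(theta_deg)
    return abs(w[0].conjugate() * a[0] + w[1].conjugate() * a[1])


w = null_steering_weights(desired_deg=0, null_deg=30)
print(gain(w, 0), gain(w, 30))
```

With N elements, the same idea allows up to N − 1 nulls, which is why the number of simultaneously suppressed or received neighbors is bounded by the antenna count.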

3.1.2. Channel Layer

Cross-layer methods concentrated on the channel layer consist of resource reservation, random access, and cooperative transmission methods.

- Channel layer (resource reservation)

In works focused on resource reservation at the channel layer (Table 3), the channel layer rarely uses physical layer data and mainly uses network layer data [47]. The network layer reports on the data streams passing through the nodes. On the basis of these data, the channel layer decides which resources to reserve. When the network is split into clusters, route data help to maximize the number of dedicated channels for intra-cluster communication; in a tree-like network, where nodes transmit data to a single data collector node, the route tree allows disjoint channel resources to be allocated to the previous and next links. Resource reservation by the channel layer based on information from the network layer increases network capacity and route stability and reduces power consumption.

Table 3.

Cross-layer methods concentrated on channel layer resource reservation.

Gong et al. [48] propose allocating channels to nodes with the help of the routing protocol in such a way as to minimize interference among nodes along the route. The proposed channel allocation method increases bandwidth compared with channel allocation based on local node information.

In their subsequent work, Gong et al. [49] suggest reserving channels using information about network layer routes. The proposed protocol uses fewer service messages and provides more bandwidth than using only channel layer information.

De Renesse et al. [50] suggest that the channel layer should use the network layer information about the routes passing through the node and, on the basis of this information, pre-reserve bandwidth, minimizing the probability of packet collision. The network layer, in turn, searches for routes based on available reserved bandwidth.

Mansoor et al. [47] divide ad hoc networks into clusters, which the nodes form themselves using a distributed algorithm. Channel access time in each cluster is organized as a synchronized sequence of superframes. A superframe consists of four periods: the beacon period, the spectrum sensing period, the neighbor discovery period, and the data transmission period. In the beacon period, the cluster head sends synchronization information and the resources allocated to the cluster. Clustering is carried out so as to simultaneously minimize the number of clusters and allocate the maximum number of free frequency channels for intra-cluster communication. Cluster formation and channel allocation rely on the joint operation of the physical and channel layers. The routing algorithm, in turn, prefers cluster heads when selecting routes, as links with them are more stable. The ad hoc network in question has primary users, to whom a certain channel resource is assigned, and secondary users, who may use that resource when they find it idle. When a secondary user discovers that the primary user has started using the resource, the secondary user must switch to another free resource, and the switching takes time. The routing protocol looks for paths with the least delay, which is estimated from link layer data. The delay consists of the queueing delay, the delay of switching to a new channel resource, and the time spent waiting for a resource to become available.
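A least-delay route search over such link delays can be sketched with Dijkstra's algorithm. The delay values are hypothetical, Dijkstra's algorithm stands in for the routing protocol of [47] (which we do not reproduce), and the preference for cluster heads is not modeled.

```python
import heapq


def link_delay(queue_s, switch_s, wait_s):
    """Link delay: queueing + channel switching + waiting for a free resource."""
    return queue_s + switch_s + wait_s


def least_delay_route(links, src, dst):
    """Dijkstra over a directed graph {node: {neighbor: delay_s}}; returns (delay, path)."""
    heap = [(0.0, src, [src])]
    visited = set()
    while heap:
        delay, node, path = heapq.heappop(heap)
        if node == dst:
            return delay, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, d in links.get(node, {}).items():
            if nxt not in visited:
                heapq.heappush(heap, (delay + d, nxt, path + [nxt]))
    return float("inf"), []


links = {
    "S": {"A": link_delay(0.002, 0.0, 0.001),     # no channel switch needed
          "B": link_delay(0.001, 0.010, 0.0)},    # primary user forces a switch
    "A": {"D": link_delay(0.003, 0.0, 0.002)},
    "B": {"D": link_delay(0.001, 0.0, 0.0)},
}
print(least_delay_route(links, "S", "D"))
```

Although the first hop via B has the shortest queue, the channel-switching delay makes the route through A faster end to end, which is the kind of trade-off a queue-only metric would miss.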

Raj et al. [51] propose a cross-layer channel assignment and routing algorithm for cognitive radio ad hoc networks that provides stable paths. A novel metric, the probability of successful transmission over a channel, is derived from channel statistics, the sensing periodicity of secondary users, time-varying channel quality, and the time-varying set of idle channels at the physical layer. Furthermore, the number of available channels and their characteristics are considered when selecting the next-hop node, with the aim of avoiding one-hop neighbors located in the primary user's transmission range. The primary user is the user to whom resources are granted but who does not use them all the time; secondary users attempt to use these resources when they are free.

In their subsequent work, Mansoor et al. [52] offer a cross-layer approach that minimizes power consumption in UAV networks. The approach uses the network and channel layers. The network layer uses the AODV protocol for routing, the cluster head is selected using Glowworm Swarm Optimization (GSO), and the Cooperative MAC protocol is used at the channel layer. Using AODV, the cluster head finds routes to all nodes and builds a node tree. It then assigns TDMA slots to the other nodes along the tree so as to minimize packet interference and collisions, which lowers power consumption because fewer packets have to be retransmitted.
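One simple way to assign TDMA slots along a node tree while avoiding collisions is greedy coloring: visit nodes breadth-first from the cluster head and give each node the lowest slot not used by any node within two hops, so that neither neighbors nor hidden terminals share a slot. This Python sketch is our illustrative stand-in; [52] does not necessarily use this rule, and the topology is hypothetical.

```python
from collections import deque


def bfs_order(tree, root):
    """Nodes of the routing tree in breadth-first order from the cluster head."""
    order, queue = [], deque([root])
    while queue:
        node = queue.popleft()
        order.append(node)
        queue.extend(tree.get(node, []))
    return order


def assign_slots(tree, root, neighbors):
    """Greedy TDMA slot assignment: lowest slot not used within two hops."""
    slots = {}
    for node in bfs_order(tree, root):
        one_hop = set(neighbors.get(node, ()))
        two_hop = set().union(*(neighbors.get(n, ()) for n in one_hop)) - {node}
        taken = {slots[n] for n in one_hop | two_hop if n in slots}
        slot = 0
        while slot in taken:
            slot += 1
        slots[node] = slot
    return slots


# Cluster head H; radio neighborhoods (symmetric) and the routing tree built from them.
neighbors = {"H": ["A", "D"], "A": ["H", "B"], "B": ["A", "C"], "C": ["B"], "D": ["H"]}
tree = {"H": ["A", "D"], "A": ["B"], "B": ["C"]}
print(assign_slots(tree, "H", neighbors))
```

Nodes D and B end up sharing a slot because they are three hops apart, so spatial reuse keeps the frame short, which in turn shortens waiting times.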

- Channel layer (random access)

In works focused on optimizing channel random access (Table 4), the channel layer uses data from the physical and network layers. The physical layer evaluates the channel state and reports it to the channel layer. If the channel state is poor, the channel layer does not transmit the packet, as the packet is likely to be corrupted and would further interfere with other users. The channel layer can also tell the physical layer what transmission power to use, trading off a high probability of successful packet delivery against high interference for other users.
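The power decision described above can be sketched as choosing the lowest available power level that still reaches a target SNR, and deferring the packet when even the maximum level is insufficient. This Python sketch assumes the physical layer reports a path-loss estimate and the noise floor; the target SNR and the power levels are hypothetical.

```python
def choose_tx_power(path_loss_db, noise_dbm, target_snr_db, power_levels_dbm):
    """Return the lowest power level (dBm) that meets target_snr_db, or None to defer.

    Received SNR in dB = transmit power - path loss - noise floor."""
    for p in sorted(power_levels_dbm):
        if p - path_loss_db - noise_dbm >= target_snr_db:
            return p        # lowest sufficient power limits interference to others
    return None             # channel too poor even at maximum power: hold the packet


levels = [0, 5, 10, 15, 20]
print(choose_tx_power(100, -95, 10, levels))
print(choose_tx_power(110, -95, 10, levels))
```

With 100 dB path loss and a −95 dBm noise floor, reaching 10 dB SNR requires 15 dBm, so the sketch returns 15; with 110 dB path loss it returns None and the packet is held.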

Table 4.

Cross-layer methods concentrated on channel layer random access.

Toumpis et al. [53] analyze the mutual influence of power control, queue types, routing protocols, and channel access protocols. The authors found that the effectiveness of the CSMA/CA channel layer protocol depends strongly on the routing protocol and that the two channel layer protocols they propose outperform CSMA/CA. The first protocol performs joint channel access control and power control, providing higher throughput and energy efficiency. The second protocol sacrifices energy efficiency for more bandwidth.

Pham et al. [54] propose a cross-layer approach in which the physical layer shares the channel state with the other layers. If the channel state is bad, the upper layers decide not to transmit a packet, as transmission would waste energy, cause interference, and require waiting for an acknowledgment. The approach is applicable to Rayleigh fading channels, in which the level of future fading can be predicted from previous measurements. The authors developed a fading prediction algorithm based on Markov chains and examined in detail the interaction of the physical and channel layers. They derived theoretical formulas for bandwidth, packet processing rate, packet loss probability, and average packet delay in Rayleigh channels. The proposed approach increases network capacity and reduces energy consumption.
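A minimal version of Markov-chain fading prediction uses a two-state (good/bad) chain in the style of the Gilbert-Elliott model: estimate transition probabilities from past channel states and transmit only if the predicted probability of a good next slot is high enough. This Python sketch is an illustration under that assumption; the predictor in [54] may use more states, and the 0.7 threshold is hypothetical.

```python
def estimate_transitions(states):
    """Estimate P(next = "good" | current state) from a history of "good"/"bad" states."""
    counts = {(a, b): 0 for a in ("good", "bad") for b in ("good", "bad")}
    for prev, nxt in zip(states, states[1:]):
        counts[(prev, nxt)] += 1
    probs = {}
    for s in ("good", "bad"):
        total = counts[(s, "good")] + counts[(s, "bad")]
        probs[s] = counts[(s, "good")] / total if total else 0.5  # no data: uninformed
    return probs


def should_transmit(history, threshold=0.7):
    """Transmit only if the predicted probability of a good next slot is high enough."""
    p_good_next = estimate_transitions(history)[history[-1]]
    return p_good_next >= threshold


history = ["good", "good", "good", "bad", "bad", "good", "good", "bad", "bad", "bad"]
print(estimate_transitions(history), should_transmit(history))
```

Because fading is correlated in time, the current state carries information about the next one; here the channel is in a bad state with a 0.25 chance of recovering, so the sketch holds the packet.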

- Channel layer (cooperative transmission)

In works oriented toward cooperative transmission (Table 5), the channel layer interacts with the network and physical layers. Cooperative transmission relies on the fact that a data packet sent by the sending node is received not only by the receiving node but also by several other nodes (usually called auxiliary nodes). The receiving node sends an acknowledgment packet. If no acknowledgment is sent, one of the nodes that received the data packet and whose channel to the recipient is less attenuated forwards the packet to the recipient. The difficulty of cooperative transmission lies in forming cooperative transmission groups correctly and in deciding when cooperative transmission is more efficient and when it causes interference and is less reliable than ordinary transmission. Interaction with the physical layer is used to obtain information about channel fading and to set the signal-code structures for packet transmission by the sending and auxiliary nodes. The network layer provides information about the direction of data flows, which allows groups of nodes to be selected effectively for cooperative transmission.
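The forwarding decision can be sketched as follows: if the recipient sent no acknowledgment, the auxiliary node that decoded the packet and has the strongest channel to the recipient retransmits it. This Python sketch assumes channel gains are known from the physical layer; the decision whether cooperation is worthwhile at all, and group formation from network layer data, are not modeled.

```python
def cooperative_forward(ack_received, helpers):
    """Pick the auxiliary node that retransmits when no acknowledgment was sent.

    helpers maps helper id -> (decoded_ok, channel_gain_to_recipient).
    Returns None if no retransmission is needed or no helper holds the packet."""
    if ack_received:
        return None
    candidates = {h: g for h, (ok, g) in helpers.items() if ok}
    return max(candidates, key=candidates.get) if candidates else None


helpers = {"H1": (True, 0.4), "H2": (False, 0.9), "H3": (True, 0.7)}
print(cooperative_forward(False, helpers))
```

H2 has the best channel but did not decode the packet, so H3 is chosen; in a real protocol, this choice also has to be agreed on quickly (for example, through timers) to avoid several helpers transmitting at once.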

Table 5.

Cross-layer methods concentrated on channel layer cooperative transmission.

Dai et al. [55] propose the interaction of the channel and physical layers in cooperative transmission. In this work, nodes are assumed to have orthogonal communication channels (frequency, time slot, etc.). When a packet is transmitted, it is received by the recipient node and by nodes that can participate in cooperative transmission. The authors propose that auxiliary nodes automatically forward the packet if they do not receive an acknowledgment packet within some time. Only nodes that share communication channels with the recipient node are used for cooperative forwarding.

Liu et al. [56] propose a protocol in which the sender first encodes the packet with an error-correcting code that allows half of the packet bits to be recovered. The sender then sends half of the packet to the helper node and the other half to the recipient node. The helper node restores the second half of the packet, re-encodes it, and sends it to the recipient node. Because the packet is split into two halves, the halves can be transmitted at different rates depending on the channel state. For cooperative transmission, the channel layer uses physical layer information about the channel state and the available signal-code structures.

Dong et al. [57] propose cooperative beamforming (virtual antenna with the help of multiple nodes). For cooperative beamforming, the physical and channel layers of the nodes interact. Nodes in the neighborhood use time division multiple access (TDMA) to broadcast data packets. Then, nodes that participate in the cooperative beamforming transmit the packets to recipients. To form a virtual antenna, node coordinates and time synchronization are required.

Aguilar et al. [58] make the channel layer use signal strength information from the physical layer to decide whether to use cooperative transmission. Cooperative transmission groups are formed based on route data from the network layer.

Ding et al. [59] use network layer data about routes to form cooperative transmission groups. At the same time, at the physical layer, the control of transmission power is optimized based on data from the channel layer about cooperative transmission groups.

Wang et al. [60] propose cooperative transmission using network coding of packets. While the helper node re-sends a data packet to a recipient, it cannot send its own packets. To solve this problem, the authors use network coding: the helper node creates a combined packet from its own packet and the packet intended for the recipient node. From the corrupted packet received from the sender and the combined packet from the helper node, the receiving node recovers both the sender's packet and the helper's packet. As a result, network bandwidth increases and latency decreases.

3.1.3. Network Layer

Cross-layer methods concentrated on the network layer consist of cross-layer routing protocols and cross-layer routing metrics, which can be used to turn a one-layer routing protocol into a cross-layer routing protocol.

- Network layer (routing metrics)

Cross-layer routing metrics are composite metrics based on metrics collected from multiple layers (Table 6). Cross-layer routing metrics are used for existing routing protocols, turning routing protocols into cross-layer routing protocols.

Table 6.

Cross-layer methods concentrated on network layer routing metrics.

Park et al. [61] propose a route reliability metric as the probability of successful packet delivery across the route. Successful packet delivery rate calculation is based on the received packets’ signal-to-noise ratio from the physical layer.
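A minimal sketch of such a route reliability metric follows. It assumes BPSK over an AWGN channel (bit error rate 0.5·erfc(√SNR), with the measured SNR interpreted as Eb/N0), independent bit errors, and independent lines; the SNR-to-delivery mapping used by Park et al. may differ, and the function names and the default packet size are hypothetical.

```python
import math


def link_delivery_prob(snr_db: float, packet_bits: int) -> float:
    """Probability that a packet crosses one line without bit errors.

    Assumes BPSK over AWGN, SNR interpreted as Eb/N0, independent bit errors.
    """
    snr = 10 ** (snr_db / 10)              # convert dB to a linear ratio
    ber = 0.5 * math.erfc(math.sqrt(snr))  # BPSK bit error rate
    return (1.0 - ber) ** packet_bits


def route_reliability(snrs_db: list[float], packet_bits: int = 8000) -> float:
    """Route reliability: product of per-line delivery probabilities."""
    prob = 1.0
    for snr_db in snrs_db:
        prob *= link_delivery_prob(snr_db, packet_bits)
    return prob
```

Because the metric is a product, a single weak line dominates it: a route with one 3 dB line scores far below a route of equal length whose lines are all at 10 dB.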

Draves et al. [62] propose two metrics: expected transmission time (ETT) and weighted cumulative expected transmission time (WCETT). ETT improves on the expected transmission count (ETX) metric [74] (the expected number of transmissions, including retransmissions, needed to deliver a packet over a line; the ETX of a route is the sum over its lines) by accounting for the transmission speed: the ETT of a line is its ETX multiplied by the ratio of the packet size to the transmission speed. WCETT improves on ETT by penalizing routes in which several lines use the same channel resources (information obtained from the channel layer), which reduces interference between the hops of the same route. However, this metric does not account for interference from other routes.
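The three metrics can be sketched as follows, using the usual definitions: ETX = 1/(d_f · d_r) from the forward and reverse delivery ratios, ETT = ETX · S/B for packet size S and transmission speed B, and WCETT = (1 − β)·ΣETT_i + β·max_j X_j, where X_j is the summed ETT of the hops on channel j and β in [0, 1] is a tunable weight. The function names and the default β are illustrative choices.

```python
def etx(d_f: float, d_r: float) -> float:
    """Expected transmission count of a line from forward/reverse delivery ratios."""
    return 1.0 / (d_f * d_r)


def ett(etx_value: float, packet_bits: float, rate_bps: float) -> float:
    """Expected transmission time: ETX scaled by the time to send one packet."""
    return etx_value * packet_bits / rate_bps


def wcett(hops: list[tuple[float, int]], beta: float = 0.5) -> float:
    """WCETT of a route given an (ETT, channel) pair for each hop.

    (1 - beta) * total ETT + beta * largest per-channel ETT sum; the second
    term grows when many hops share one channel and so interfere.
    """
    total = sum(t for t, _ in hops)
    per_channel: dict[int, float] = {}
    for t, channel in hops:
        per_channel[channel] = per_channel.get(channel, 0.0) + t
    return (1 - beta) * total + beta * max(per_channel.values())
```

For two hops with equal ETT, a route that switches channels (for example, `wcett([(1.0, 1), (1.0, 2)])`, which gives 1.5) scores better than one that stays on a single channel (`wcett([(1.0, 1), (1.0, 1)])`, which gives 2.0).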

Yang et al. [63] propose the metric of interference and channel-switching (MIC). The MIC metric improves the WCETT metric by accounting for interference from other routes. The metric uses the number of adjacent nodes for each line of the route to evaluate interference from other routes.

The IEEE 802.11s standard [64] uses the airtime link metric (ALM), which measures the time required to deliver a test frame of 8224 bits from the sender to the receiver. To compute it, the network layer uses the following channel layer data: the number of bits in the frame, the line transmission speed, the frame error probability, the channel access delay, and the size of the frame service fields.
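A sketch of the airtime computation: the channel access overhead, the protocol (service field) overhead, and the frame transmission time are added and then divided by the probability of error-free delivery, i.e. (O_ca + O_p + B_t/r) / (1 − e_f). The parameter names are illustrative, and the overhead values in the usage note are illustrative inputs rather than values quoted from the standard.

```python
def airtime_us(rate_mbps: float, frame_error_prob: float,
               access_overhead_us: float, protocol_overhead_us: float,
               test_frame_bits: int = 8224) -> float:
    """Airtime cost of a line in microseconds.

    (channel access overhead + protocol overhead + frame bits / rate),
    divided by the probability that the frame arrives without error.
    Bits divided by Mbit/s conveniently yields microseconds.
    """
    if not 0.0 <= frame_error_prob < 1.0:
        raise ValueError("frame error probability must be in [0, 1)")
    tx_time_us = test_frame_bits / rate_mbps
    total_us = access_overhead_us + protocol_overhead_us + tx_time_us
    return total_us / (1.0 - frame_error_prob)
```

For example, with overheads of 75 µs and 110 µs, a 54 Mb/s line with a frame error probability of 0.3 (about 482 µs) still costs less than an error-free 24 Mb/s line (about 528 µs). This shows how the metric trades transmission speed against retransmissions.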

Ramachandran et al. [65] propose the metrics for estimating the probability of route packet loss, the probability of line packet loss, and the remaining battery charge of the node from the power of the received signal.

Oh et al. [66] propose a metric defined as the ratio of the time taken to transmit a packet to the sum of the transmission time and the time taken to wait for the channel to be free. If this metric is small, the channel is less congested, and the line has a low transmission delay; in this case, the line is more suitable for real-time applications.

Hieu et al. [67] offer a route metric for ad hoc networks whose radio links support multiple transmission rates. The metric takes into account the maximum allowable transmission rate and the number of packet retransmissions. Selecting the route with the lowest metric yields a high-bandwidth route and avoids low-bandwidth routes. A small metric value also means that the route has few intermediate nodes. The metric is computed from the number of packet retransmissions reported by the channel layer and the available line transmission speeds reported by the physical layer.

Katsaros et al. [34] use a weighted combination of signal-to-noise ratio and packet loss probability data from the channel layer to estimate line reliability.

Mucchi et al. [68] propose a metric, called the network intersection metric, that takes into account node mobility, line load, and radio line fading. It uses the average packet waiting time in the queue, the average packet transmission time, and a packet loss probability estimate based on the signal-to-noise ratio, packet length, modulation, and error-correcting code.

Ahmed et al. [69] use a line reliability metric based on queue size, number of hops, distance, and the relative speed between the sender and receiver nodes.

Bhatia et al. [70] offer a path stability metric based on queue length, signal-to-noise ratio, and packet loss probability. Queue length indicates the probability of packet drops due to line buffer overflow; packet loss probability reflects interference; and the signal-to-noise ratio reflects distance and node mobility (as a node moves away, the signal-to-noise ratio drops). Combining the three metrics into one makes it possible to estimate the stability of both lines and routes.

Zent et al. [71] present a metric based on delay, bandwidth, and geographic direction.

Jagadeesan et al. [72] propose a metric based on the residual energy of the node and channel occupancy.

Qureshi et al. [73] derive a theoretical estimate of route energy consumption based on the probability of packet loss, the number of retransmissions, and the number of route hops. The authors also propose an algorithm that calculates the transmission power of all route nodes so as to minimize the energy spent for a given packet delivery probability.
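A simple estimate of this kind can be sketched under the following assumptions: losses are independent across attempts, each hop allows at most `max_retx` retransmissions, and every attempt costs the same energy. This is a generic model for illustration, not necessarily the derivation of Qureshi et al.; the function names are hypothetical.

```python
def expected_attempts(loss_prob: float, max_retx: int) -> float:
    """Expected transmissions on one hop with at most max_retx retransmissions.

    Attempt k + 1 happens only if the first k attempts failed, so
    E[N] = sum of loss_prob**k for k = 0 .. max_retx.
    """
    return sum(loss_prob ** k for k in range(max_retx + 1))


def hop_delivery_prob(loss_prob: float, max_retx: int) -> float:
    """Probability that at least one of the max_retx + 1 attempts succeeds."""
    return 1.0 - loss_prob ** (max_retx + 1)


def route_energy_and_delivery(loss_probs: list[float], max_retx: int,
                              energy_per_tx: float) -> tuple[float, float]:
    """Expected route energy (units of energy_per_tx) and delivery probability."""
    energy = 0.0
    reach = 1.0  # probability that the packet reaches the current hop at all
    for p in loss_probs:
        # A hop spends energy only if the packet survived the earlier hops.
        energy += reach * energy_per_tx * expected_attempts(p, max_retx)
        reach *= hop_delivery_prob(p, max_retx)
    return energy, reach
```

Raising `max_retx` increases the delivery probability at the cost of more expected energy. A power control algorithm can exploit this trade-off by lowering the per-hop loss probability instead.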

- Network layer (routing protocols)

A single-layer routing protocol in an ad hoc network that uses probing packets can only learn which nodes a probing packet passed through and the packet's travel time, which estimates the delay of the discovered route (Table 7). As a result, such a protocol can select routes only by delay and hop count. Cross-layer routing protocols can instead use the cross-layer metrics described above or collect information from different layers directly.

Table 7.

Cross-layer methods concentrated on network layer routing protocols.

Canales et al. [75] offer a routing protocol that uses data about free time slots from the channel layer to reduce interference. As a route request packet is forwarded, each hop checks whether non-interfering time slots can be reserved on the previous and next hops; if not, the request packet is dropped.
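A greedy sketch of such a per-hop check follows. It assumes that each node knows its own free slots, that a line needs a slot free at both of its endpoints, and that a slot conflicts with the slots already reserved on the previous `interference_hops` hops of the route. The function and parameter names are hypothetical, and the published protocol may use a different interference model.

```python
from typing import Optional


def reserve_slots(free_slots: list[set[int]],
                  interference_hops: int = 1) -> Optional[list[int]]:
    """Reserve one time slot per hop along a route, or return None to drop it.

    free_slots[i] is the set of free slots at the i-th node of the route.
    """
    if interference_hops < 0:
        raise ValueError("interference_hops must be non-negative")
    reserved: list[int] = []
    for k in range(len(free_slots) - 1):
        # The slot must be free at both ends of the line (node k -> node k + 1).
        candidates = free_slots[k] & free_slots[k + 1]
        # It must also differ from the slots of the preceding hops in range.
        recent = reserved[max(0, len(reserved) - interference_hops):]
        candidates -= set(recent)
        if not candidates:
            return None  # no non-interfering slot: the route request is dropped
        reserved.append(min(candidates))  # greedy choice: lowest free slot
    return reserved
```

Being greedy, this check may drop a request that a smarter slot assignment could have satisfied. A greedy rule is nevertheless a natural fit for hop-by-hop forwarding, where each node decides with local information only.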

Amel et al. [76] propose a modification of the AODV routing protocol in which, instead of the hop count metric, the delivery probability is estimated from the signal-to-noise ratio obtained from the physical layer.

Chen et al. [77] suggest an improvement to the AOMDV routing protocol that finds routes using a weighted function of the line buffer queue size and the residual energy of the node.

Madhanmohan et al. [78] propose a modification of the AODV protocol in which a route request carries information about the power needed to transmit the packet along the route. If a line of the route cannot support the specified power, the route request is not forwarded over that line.

Weng et al. [79] propose a cross-layer protocol spanning the channel and network layers that minimizes energy consumption in the network. The routing protocol uses data from the channel layer to find paths with minimal power consumption for packet transmission. Energy consumption is estimated from the probability of successful packet delivery over a line, the number of packet collisions, the residual energy of the nodes, and the number of packets transferred. The channel layer protocol gives a larger share of the channel to nodes that are more likely to transmit packets successfully.

Attada et al. [80] propose an improvement of the DYMO routing protocol based on channel resource reservation. When resources are reserved, physical layer parameters such as transmission power, transmission speed, signal constellation size, and error-correcting code type are requested. Reserving resources based on physical layer parameters rather than on line capacity alone increases network capacity.

Ahmed et al. [81] propose using the signal-to-noise ratio to select the next node to which a route request message is forwarded: only nodes whose signal-to-noise ratio is at least the minimum allowable value are considered. A high signal-to-noise ratio implies that the node is close and is likely to stay within radio range for a relatively long time.

Chander et al. [82] offer a multicast routing protocol. The protocol uses physical, channel, and network layer information to form a multicast tree. The following data is used: signal strength, fading estimation, line lifetime, residual node energy, and cost of updating the multicast tree. Cross-layer interaction allows the routing protocol to find stable, slow-changing multicast route trees.

Safari et al. [83] propose an enhancement of the AODV routing protocol called cross-layer adaptive fuzzy-based ad hoc on-demand distance vector (CLAF-AODV). The method employs two-level fuzzy logic and a cross-layer design to select the nodes that should participate in broadcasting with a higher probability, considering parameters from the first three layers of the open systems interconnection (OSI) model to achieve quality of service, stability, and adaptability. To make a decision, it considers not only the quality of the node and the network density around it but also the path that the broadcast packet traveled to reach the node. Compared with the standard AODV and the fixed probability AODV (FP-AODV) routing protocols, the proposed protocol reduces the number of broadcast packets and significantly improves throughput, packet loss, interference, and average energy consumption.

3.1.4. Transport Layer

The transport layer is responsible for congestion control. A transport layer protocol detects overload by measuring the inter-arrival time of delivery confirmation packets or by noticing that confirmation packets are missing. When congestion is detected, the transport layer lowers its sending rate. This congestion estimate assumes that every loss is caused by line buffer overflow. In ad hoc networks, however, packets can also be lost due to line noise and packet collisions. Slowing down does not reduce such losses, so the transport layer keeps slowing down to its minimum rate and underutilizes the network capacity. To avoid this problem, cross-layer transport protocols use data from the channel and network layers to determine the cause of packet loss (Table 8).
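The core idea can be sketched as a congestion window update that reacts to the loss cause reported by the lower layers. Only losses attributed to line buffer overflow trigger the multiplicative decrease; radio errors and route breaks do not. The `LossCause` enumeration and the functions below are a hypothetical interface, not the API of any of the protocols in Table 8.

```python
from enum import Enum, auto


class LossCause(Enum):
    """Loss causes that lower layers could report (hypothetical interface)."""
    BUFFER_OVERFLOW = auto()  # channel layer: line buffer overflow, i.e. congestion
    RADIO_ERROR = auto()      # channel layer: noise or collision on the radio line
    ROUTE_BREAK = auto()      # network layer: route lost because a node moved


def on_loss(cwnd: float, cause: LossCause, min_cwnd: float = 1.0) -> float:
    """Congestion window (in segments) after a loss whose cause is known."""
    if cause is LossCause.BUFFER_OVERFLOW:
        # Genuine congestion: multiplicative decrease, as in standard TCP.
        return max(min_cwnd, cwnd / 2)
    # Radio errors and route breaks are not congestion: keep the window and
    # only retransmit (after a new route is found, for ROUTE_BREAK).
    return cwnd


def on_ack(cwnd: float) -> float:
    """Additive increase: about one segment per round-trip time."""
    return cwnd + 1.0 / cwnd
```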

Table 8.

Cross-layer methods concentrated on transport layer protocols.

Yu et al. [84] propose an adaptation of the TCP protocol to ad hoc networks. When delivery confirmation packets do not arrive, TCP assumes that packets were dropped due to line congestion rather than due to radio line noise or route disruption caused by node movement; as a result, TCP lowers its transmission rate and underutilizes the network's free capacity. To avoid this, the channel layer reports the packet loss to TCP so that the packet is retransmitted from the TCP cache of adjacent nodes. This allows TCP to avoid unnecessary congestion control and use the full available line capacity.

Kliazovich et al. [85] modify the TCP protocol to take into account channel layer data: the delay and the capacity of the line. These data allow more accurate congestion estimates than the inter-arrival time of delivery confirmation packets alone.

Nahm et al. [86] propose a TCP modification that addresses cascading network overload. The cascade begins when the transport protocol overloads one of the route lines; the channel layer detects the line overload and reports a line break to the routing protocol; the routing protocol then starts searching for a new route, flooding the network with route request messages. The authors therefore propose a transport layer congestion control mechanism that reduces the likelihood of congestion caused by the search for new routes: the TCP window grows by fractional increments, and the network layer is explicitly notified that the route is not broken.

Chang et al. [87] offer transport and network layer protocols that use network event information. The network events are route and connection failures, network packet reception errors, line buffer overflow at the channel layer, and long channel access time. The transport protocol reacts as follows. When a connection is severed, it continues to transmit data packets and asks the network layer to find a new route. If a line packet transmission fails, it retransmits the packet without waiting for a delivery confirmation packet, which will never be sent. When a packet is discarded due to line buffer overflow, or when the channel access time is long, it triggers the congestion control mechanism. Each node maintains an overload counter, which increases when a buffer overflow or long channel access time event occurs and decreases when the event disappears. Routing uses both the hop count and this overload metric.

Alhosainy et al. [88] use multipath routing and optimize network throughput by selecting, at the transport layer, the total rate for a set of routes and its distribution among these routes, depending on the packet collision probability. The optimization problem is formulated as a dual decomposition problem.

Sharma et al. [89] propose improvements to the MPTCP transport protocol. To avoid triggering congestion control on every packet loss, the transport protocol uses an estimate of the route delay variance and the average number of line retransmissions on the routes. As a result, it can distinguish packet drops due to line buffer overflow from packet corruption on radio lines.

3.1.5. Application Layer

Cross-layer methods concentrated on the application layer consist of overlay network methods and applications.

- Application layer (overlay network)

An overlay network is a collection of nodes and the services they provide (e.g., file sharing). An overlay network is implemented by applications. The overlay network has its own routing and neighbor discovery. However, the problem is that the overlay network topology and routes in it may not correspond to the physical network: neighboring nodes in the overlay network may be very far away from each other, and short routes in the overlay network may be very long in the underlying physical network. Therefore, cross-layer overlay networks use routing protocols to collect information about the overlay network. As a result, information about both the physical network and the overlay network is collected simultaneously, hence minimizing the amount of service information sent out by the overlay network (Table 9).

Table 9.

Cross-layer methods concentrated on application layer overlay networks.

Beylot et al. [90] make the peer-to-peer application layer protocol “Gnutella”, designed for conventional networks, interact with the network layer, since peer discovery is the same task as route discovery. Application layer service data are added to the service data of the network layer routing protocol. As a result, peer discovery through the routing protocol succeeds more often than with the peer discovery protocol of “Gnutella” itself.

Works [91,93] suggest interaction with the OLSR routing protocol without specifying an overlay network protocol.

Delmastro et al. [92] propose the adaptation of the “Pastry” protocol to ad hoc networks.

Boukerche et al. [94] propose an adaptation of the peer-to-peer (P2P) network “Gnutella” to ad hoc networks by utilizing node location information and information from the network layer.

Kuo et al. [95] propose a P2P overlay network that interacts with the network and physical layers. To detect disconnections from virtual nodes, the application layer receives route disconnection messages from the network layer and signal-to-noise ratio values from the physical layer. A gradual decrease in the signal-to-noise ratio indicates that a virtual node of the P2P network is about to disconnect, and the P2P nodes then update the virtual network topology. Furthermore, P2P nodes may all appear connected to each other through routes that share common physical nodes: the virtual network looks fully connected, but the routes redundantly pass through the same physical nodes. Route information from the network layer helps avoid this false full connectivity.
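One way to turn a "gradual decrease in the signal-to-noise ratio" into an early disconnection warning is to fit a least-squares line to recent SNR samples and extrapolate when it will cross a minimum usable SNR. The sketch below uses this linear-trend predictor as an assumption; Kuo et al. may use a different rule, and the function name and threshold are hypothetical.

```python
def time_to_threshold(times: list[float], snrs_db: list[float],
                      threshold_db: float):
    """Predict how long until the SNR falls below threshold_db.

    Fits a least-squares line to the (time, SNR) samples. Returns None if the
    SNR is not decreasing and 0.0 if the fitted SNR is already below threshold.
    """
    n = len(times)
    if n < 2 or n != len(snrs_db):
        raise ValueError("need at least two paired samples")
    mean_t = sum(times) / n
    mean_s = sum(snrs_db) / n
    cov = sum((t - mean_t) * (s - mean_s) for t, s in zip(times, snrs_db))
    var = sum((t - mean_t) ** 2 for t in times)
    if var == 0:
        raise ValueError("sample times must not all be equal")
    slope = cov / var
    intercept = mean_s - slope * mean_t
    if slope >= 0:
        return None  # SNR stable or improving: no disconnection predicted
    crossing = (threshold_db - intercept) / slope
    return max(0.0, crossing - times[-1])
```

A P2P node could, for instance, start updating the virtual topology once the predicted time falls below a few seconds, instead of waiting for the route to break.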

- Application layer (applications)

Few cross-layer applications adapt to other layers, because application layer data are usually treated as input data for the other layers (Table 10).

Table 10.

Cross-layer methods concentrated on application layer applications.

In vehicular ad hoc networks, an application that disseminates information about moving vehicles can adjust how often it sends information according to the rate at which the network state changes (information from the other four layers), which reflects vehicle trajectories and distribution density. This avoids network congestion and ensures that the most critical information is delivered.

Puthal et al. [96] propose a congestion control mechanism for vehicular ad hoc networks in which, depending on the estimated congestion level, the application layer determines the number of messages to send and the size of the sliding window for congestion detection. In this way, the application layer also takes over the congestion control task of the transport layer. The congestion level is estimated from multi-layer information about channel occupancy, queue occupancy, the number of neighboring nodes, and transmission speed.

3.2. Multiple Layers

Cross-layer methods that optimize the operation of multiple layers fall into two groups: methods in which an external entity controls and optimizes multiple layers, and methods in which the layers remain independent and share information with each other.

3.2.1. External Entity Multiple Layers Control and Optimization

External entities can control and optimize multiple layers. This approach is consistent with (inherited from) the “CrossTalk” architecture [27]. External entity control is divided into fuzzy logic-based methods and dual decomposition optimization-based methods.

- External entity multiple layers control and optimization (fuzzy logic)

Fuzzy logic-based cross-layer methods use a set of rules on how to transform a set of input metrics from different layers into output metrics for tuning the layers. The input metrics are converted into classes depending on how the metrics value space is partitioned into ranges. The output of the fuzzy logic-based system is the classes of metrics, which are converted into parameter values. Most of the conversion rules in publications are chosen empirically by the authors (Table 11).
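The following sketch shows the mechanism on a toy controller with two inputs (SNR in dB and line delay in ms) and one output (a transmit power adjustment in dB). It uses triangular membership functions, the minimum operator for AND, and weighted-average (zero-order Sugeno) defuzzification, which is one common design among several. The classes, ranges, and rules are hypothetical and were chosen empirically for illustration only, as is typical in the publications.

```python
def tri(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership: 1 at b, falling linearly to 0 at a and c."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical rules: (SNR class, delay class or None, power change in dB).
RULES = [
    ("low", None, +3.0),
    ("medium", "long", +1.0),
    ("medium", "short", 0.0),
    ("high", None, -3.0),
]


def power_adjustment_db(snr_db: float, delay_ms: float) -> float:
    """Fuzzify the inputs, fire the rules, and defuzzify by weighted average."""
    # Clamp the inputs to their ranges so that the edge classes act as shoulders.
    snr = min(max(snr_db, 0.0), 30.0)
    delay = min(max(delay_ms, 0.0), 100.0)
    snr_mf = {"low": tri(snr, 0, 0, 15), "medium": tri(snr, 0, 15, 30),
              "high": tri(snr, 15, 30, 30)}
    delay_mf = {"short": tri(delay, 0, 0, 100), "long": tri(delay, 0, 100, 100)}
    num = den = 0.0
    for snr_cls, delay_cls, out in RULES:
        weight = snr_mf[snr_cls]
        if delay_cls is not None:
            weight = min(weight, delay_mf[delay_cls])  # fuzzy AND
        num += weight * out
        den += weight
    return num / den if den > 0 else 0.0
```

Between the class centers, the output changes smoothly. For example, the adjustment at 5 dB lies between the "low" (+3 dB) and "medium" rule outputs rather than jumping from one to the other.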

Table 11.

Cross-layer methods concentrated on multiple-layer optimization with fuzzy logic.

Xia et al. [97] propose the use of information from physical, channel, and application layers (vehicle traffic speed to account for signal fading due to the Doppler effect, average line transmission delay, probability of successful packet delivery in the line) as inputs to the fuzzy logic system. The system outputs correction factors to specify the type of modulation and error-correcting codes, transmission power, maximum number of retransmissions, and rate of packet stream creation at the application layer.

Li et al. [98] propose a generalized method for evaluating the usefulness of nodes when selecting the next node for forwarding. The authors use fuzzy logic. Each node may have many metrics, but the variance of a metric across the candidate next-hop nodes differs from metric to metric. If a metric does not differ much between nodes, less attention can be paid to it. The metrics, normalized with respect to the mathematical expectation of their variance, are fed into the fuzzy logic evaluator, which outputs the metric weights. The weighted sum of the metrics yields the node's forwarding utility, and the node with the maximum utility is selected as the next node. The proposed method is suitable for metrics of all layers.
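The weighting idea can be sketched without the fuzzy evaluator. In the code below, the fuzzy step is replaced by a simple proportional rule, stated plainly here as a substitution: each metric's weight is proportional to its coefficient of variation (standard deviation divided by mean) across the candidates. A metric on which all candidates agree therefore gets zero weight. The function name is hypothetical, and metrics are assumed positive and oriented so that larger is better.

```python
import statistics


def select_next_hop(candidates: dict[str, dict[str, float]]) -> str:
    """Pick the candidate node with the highest weighted utility.

    candidates maps node id -> {metric name: value}; every metric is assumed
    positive and oriented so that larger is better.
    """
    names = list(next(iter(candidates.values())))
    weights = {}
    for m in names:
        values = [c[m] for c in candidates.values()]
        mean = statistics.fmean(values)
        # Coefficient of variation: metrics that barely differ get little weight.
        weights[m] = statistics.pstdev(values) / mean if mean else 0.0
    total = sum(weights.values()) or 1.0
    maxima = {m: max(c[m] for c in candidates.values()) for m in names}

    def utility(c: dict[str, float]) -> float:
        # Normalize each metric by its best value, then apply the weights.
        return sum(weights[m] / total * c[m] / maxima[m] for m in names if maxima[m])

    return max(candidates, key=lambda node: utility(candidates[node]))
```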

Hasan et al. [99] propose a cross-layer approach based on fuzzy logic. The fuzzy logic evaluator takes the end-to-end transport layer delay, the application layer packet delivery probability, and the physical layer transmission speed as inputs, and controls parameters such as the transmission power and signal-code constructions of the physical layer, the number of channel layer retransmissions, the number of network layer route hops, and the application layer transmission rate. Each of the three input parameters takes one of three values (low, medium, and high). As a result, nine combinations of parameter values are obtained, and these nine values are matched with rules that set the output parameters in nine different ranges.

- External entity multiple layers control and optimization (dual decomposition)

Dual decomposition can be used to find the optimal network operating parameters. The optimization constraints are the data flow volumes between pairs of nodes, the available signal-code constructions of the transceivers and their transmission speeds, and the parameters of MIMO antennas. The goal of the optimization is to select routes and distribute flows along them, and to set the transmission power, transmission rate, signal-code constructions of transmitters, MIMO space-time coding parameters, and channel access time (Table 12).
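To make the idea concrete, the code below solves the textbook network utility maximization example (logarithmic utilities, fixed routes, line capacity constraints) by dual decomposition. Each flow solves its own sub-problem given the line prices (Lagrange multipliers), and each line updates its price with a projected subgradient step. This example is far simpler than the formulations in [100,101,102], which also include power, scheduling, and MIMO parameters. The step size, iteration count, and rate cap are arbitrary choices.

```python
def dual_decomposition(routes: list[list[int]], capacity: list[float],
                       step: float = 0.01, iters: int = 20000,
                       x_max: float = 10.0) -> tuple[list[float], list[float]]:
    """Maximize sum(log x_s) subject to per-line capacity via price iteration.

    routes[s] lists the line indices used by flow s; capacity[l] is the
    capacity of line l. Returns the flow rates and the line prices.
    """
    prices = [1.0] * len(capacity)
    rates = [0.0] * len(routes)
    for _ in range(iters):
        # Flow sub-problems: maximize log(x) - x * path_price, so x = 1 / path_price.
        for s, path in enumerate(routes):
            path_price = sum(prices[l] for l in path)
            rates[s] = min(x_max, 1.0 / path_price) if path_price > 0 else x_max
        # Line sub-problems: raise the price of overloaded lines, lower it otherwise,
        # and project onto non-negative prices.
        for l, cap in enumerate(capacity):
            load = sum(rates[s] for s, path in enumerate(routes) if l in path)
            prices[l] = max(0.0, prices[l] + step * (load - cap))
    return rates, prices
```

For three flows over two lines, where flow 0 uses line 0, flow 1 uses both lines, and flow 2 uses line 1, with capacities 1 and 2 (`dual_decomposition([[0], [0, 1], [1]], [1.0, 2.0])`), the rates approach the optimum 1/√3, 1 − 1/√3, and 1 + 1/√3. The two-hop flow receives the smallest rate because it pays the price of both lines.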

Table 12.

Cross-layer methods concentrated on multiple-layer optimization with dual decomposition.

Liu et al. [100] propose a cross-layer optimization of ad hoc MIMO network throughput. Route selection, bandwidth allocation for each packet transmission, and transmission power for each MIMO complex-matrix channel are optimized simultaneously at the network, channel, and physical layers. The optimization problem is decomposed into two sub-problems: one for the network layer and one for the physical and link layers together. The two sub-problems are then solved by the cutting-plane method and the subgradient method.

Cammarano et al. [101] propose an optimization of ad hoc network operation parameters with cognitive radio to maximize the network throughput through dual decomposition. The transmission rate at the transport layer, the routes at the network layer, and the channel access time at the channel layer are used together to optimize network throughput.

Teng et al. [102] propose the optimization of network performance at all five layers through vertical decomposition of the optimization problem. The paper uses the Lagrange multiplier method and derives a partial Lagrangian formula that takes into account the network’s target function and constraints from all five layers. Using dual decomposition, the complex optimization problem is decomposed into three simple problems with control parameters moved iteratively between the three problems. Solving the three optimization problems is computationally challenging, so the authors proposed a simplified heuristic to solve the three problems.

3.2.2. Independent Layers and Information Sharing

The layers can stay independent, optimizing their performance based on the information that the layers share with each other. This approach is consistent with the “MobileMan” architecture [26] (Table 13).

Table 13.

Cross-layer methods with independent layer optimization.

Yuen et al. [103] propose that the physical layer use the channel layer's resource reservation packets to estimate the channel state and send a reply, so that the sender can choose an appropriate signal-code construction based on this estimate. The network layer obtains information about reserved resources and signal-code constructions from the corresponding layers to choose routes.

Wu et al. [104] address the problem of allocating physical and channel layer resources to maximize throughput for multicast data streams. The result is a set of achievable trade-offs between throughput and energy efficiency. The physical layer resource is transmission speed, and the channel layer resource is time slots. The network layer translates the data flow requirements between sender and receiver into link resource requirements. The purpose of this work is to minimize line congestion and energy consumption in a bandwidth- and energy-constrained environment.

Setton et al. [105] were among the first to propose a cross-layer approach for low-latency video streaming. Depending on the signal-to-noise ratio, the authors select the optimal packet size, signal constellation type, and symbol rate. Joint use of the channel and network layers solves the problem of allocating link resources according to the flows along the routes. Smart transmission scheduling at the transport layer can reduce the probability of packet loss. The application layer can adaptively select the video streaming rate according to route congestion.

Shah et al. [106] propose a cross-layer protocol in which the channel and network layers cooperate, for ad hoc networks with high link asymmetry. Such networks contain both high-power and low-power nodes. A high-power node can send a packet to a low-power node, but the low-power node cannot reply because of its low transmission power; this is link asymmetry. Link asymmetry becomes a problem when low-power nodes send transmission request packets: the high-power nodes do not receive them and therefore interfere with the transmissions of low-power nodes. To solve this problem, network layer routing information is used. The transmission request packet is forwarded not only to neighboring nodes but also several hops further, depending on the power difference between the nodes; it is forwarded only to nodes with which there is an asymmetric link. In turn, the routing protocol learns the lists of neighboring nodes from the channel layer and creates reverse routes, so that communication can be bidirectional despite link asymmetry.

Huang et al. [107] propose a cross-layer approach that controls the transmission power, signal constellation size, and channel access time depending on the packet transmission rate, QoS requirements, power constraints, and channel state. The authors adopt a power control algorithm with adaptive SINR, which helps to deal with the divergence problem of traditional power control and facilitates distributed scheduling. After resolving the “transmit–receive collision”, the authors simplify scheduling by performing it automatically in the power control phase.

Jakllari et al. [108] consider a cross-layer approach using virtual MISO, in which multiple nodes jointly form a virtual antenna system and transmit information to a single node. The group consists of nodes within radio range of each other. When one node of a virtual MISO group receives a packet, it distributes the packet to the other nodes in the group, and the group then sends the packet to the next recipient. Sending to the group may cause more interference, but the gains from the virtual antenna system can outweigh it. The authors state that throughput can increase by a factor of 1.5, delivery delay can decrease by up to 75 percent, and the number of attempts to find new routes after link breaks can decrease by up to 60 percent. The cross-layer approach works as follows. At the network layer, routes are first found by a conventional routing protocol; the found route is then checked for MISO links, and if route nodes belong to MISO groups, the route is rebuilt using this information, potentially with fewer hops and more stable links thanks to the spatial diversity of MISO. The physical layer learns from the channel layer that the transmitter is part of a virtual antenna system.

Yu et al. [109] propose jointly optimizing the data flow rate at the transport layer and the packet transmission probability at the channel layer: the channel layer decides whether to transmit a packet with a probability that depends on the channel load.

Alshbatat et al. [13] optimize UAV network parameters depending on the altitude and angle of the drones. UAVs can alternately use an omnidirectional antenna and a narrow-beam directional antenna. A narrow-beam directional antenna is needed when the UAV is at high altitude and the link budget does not allow wide-beam antennas. Each UAV has two directional antennas with main lobe steering (one for communication downward, in the geometric sense, and one for communication upward) and two omnidirectional antennas. The UAVs also use GPS and an inertial navigation system to orient the antennas. The UAVs exchange their coordinates and spatial orientations, so that the antennas can be pointed toward the receivers. The authors use the OLSR routing protocol for omnidirectional antennas and a modified OLSR protocol for directional antennas, in which the routing protocol learns the properties of the directional antennas and the coordinates and orientations of the nodes.

Pham et al. [110] propose optimizing link delay and power consumption through joint congestion control at the transport layer, queuing control at the channel layer, and transmission speed and power control at the physical layer. Control is performed on different time scales: very frequently at the physical layer, since signal fading can change rapidly; at intervals proportional to the channel packet duration at the channel layer; and at intervals of seconds for congestion control at the transport layer. Routing is not considered in the paper.

Xie et al. [111] propose a cross-layer approach for joint routing, dynamic spectrum allocation, packet transmission scheduling, and power control in ad hoc networks with cognitive radio, minimizing interference between users while guaranteeing the signal-to-noise ratio.

Aljubayri et al. [112] offer joint multipath routing and congestion control to maximize bandwidth and reduce queuing delay. A global optimization algorithm is proposed, in which all nodes take into account the data of the entire network, and a distributed optimization algorithm is proposed, in which nodes take into account only the data from neighboring nodes.

4. Discussion

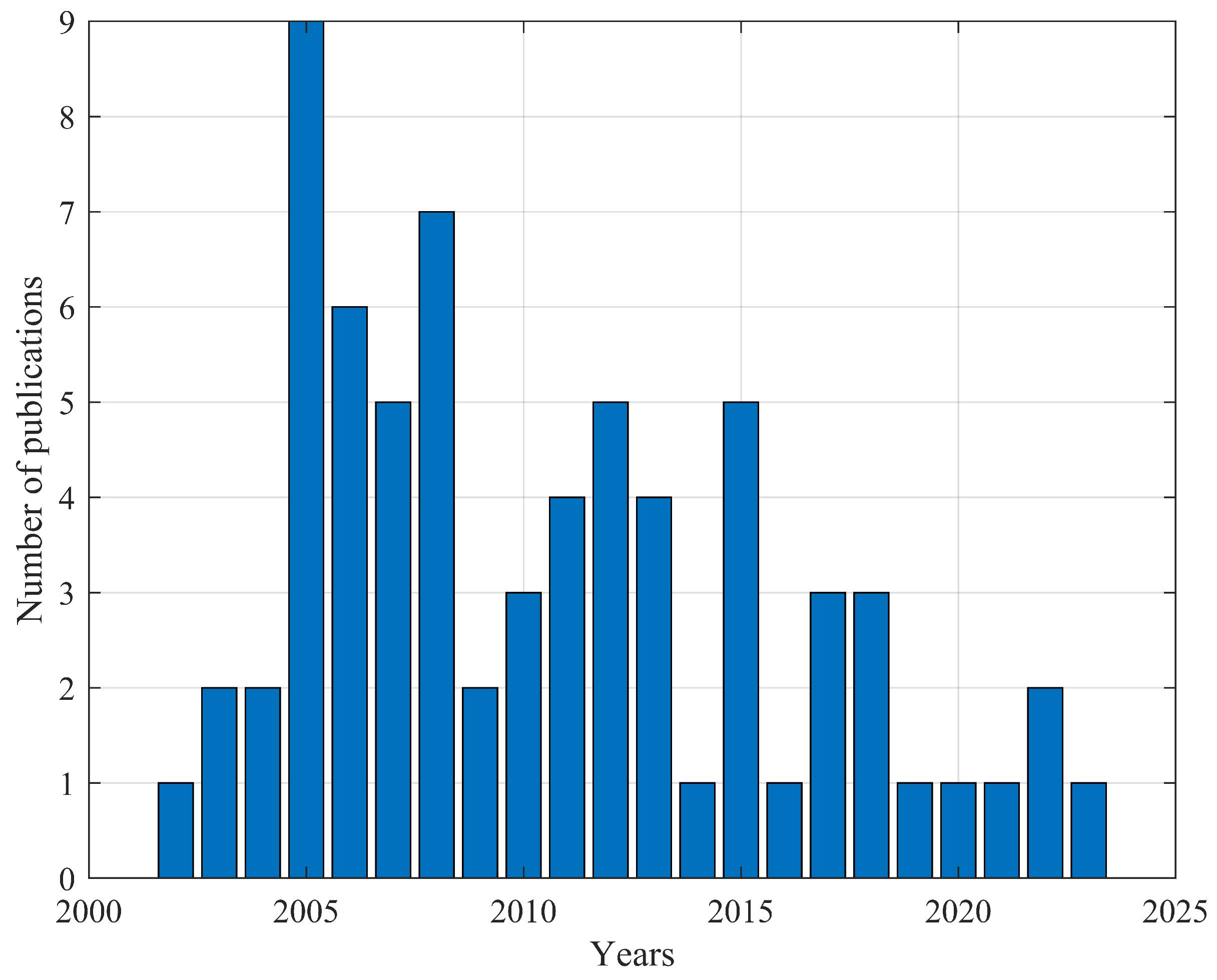

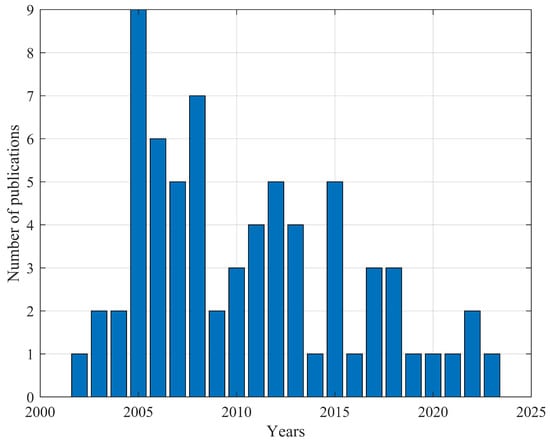

A total of 69 publications from 2002 to 2023 were reviewed. Their distribution by year is shown as a histogram (Figure 6).

Figure 6.

Cross-layer methods for ad hoc networks publication activity.

In the reviewed publications, cross-layer methods use different combinations of OSI model layers. Using the frequent pattern growth (FPG) algorithm for association rule mining [113], we calculated how often different layer combinations occur in the reviewed publications (Table 14). Cross-layer methods most commonly use the channel, network, and physical layers. This is expected: ad hoc networks cannot exist without the first three layers, which therefore have the greatest influence on them.
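The quantity reported in Table 14 is the support of each layer combination, that is, the fraction of publications that use it. The sketch below computes supports by brute-force enumeration of subsets rather than with FP-growth. For a few dozen publications with at most five layers each, this yields the same frequent itemsets that FP-growth finds for the same threshold. The example input is hypothetical and is not the data of the reviewed publications.

```python
from collections import Counter
from itertools import combinations


def itemset_support(transactions: list[set[str]],
                    min_support: float = 0.0) -> dict[tuple[str, ...], float]:
    """Relative frequency of every layer combination in the transactions.

    Each transaction is the set of layers used by one publication; combinations
    are returned as sorted tuples with support of at least min_support.
    """
    counts: Counter = Counter()
    for items in transactions:
        unique = sorted(set(items))
        for k in range(1, len(unique) + 1):
            for combo in combinations(unique, k):
                counts[combo] += 1
    n = len(transactions)
    return {combo: c / n for combo, c in counts.items() if c / n >= min_support}


# Hypothetical example: the layers used by four publications.
papers = [{"physical", "channel", "network"}, {"channel", "network"},
          {"network", "transport"}, {"physical", "channel"}]
support = itemset_support(papers)
```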

Table 14.

Layer usage frequency in reviewed publications.

Applying FPG to the goals of the methods in the reviewed publications yields the results in Table 15. Optimization of line parameters is more widespread than optimization of route parameters, mainly because optimizing route parameters requires a more holistic view of the network, which is harder to obtain and incurs more service data overhead than neighborhood knowledge. The two most popular parameters are line throughput and energy consumption, whose frequencies sum to more than half (0.57). Throughput is popular because all other line parameters influence it, so optimizing line throughput implicitly optimizes them as well. Route parameters, in turn, depend on line parameters and on energy consumption: if a node's battery is depleted, the route through that node is disrupted.

Table 15.

Goal frequency of the methods in the reviewed publications.

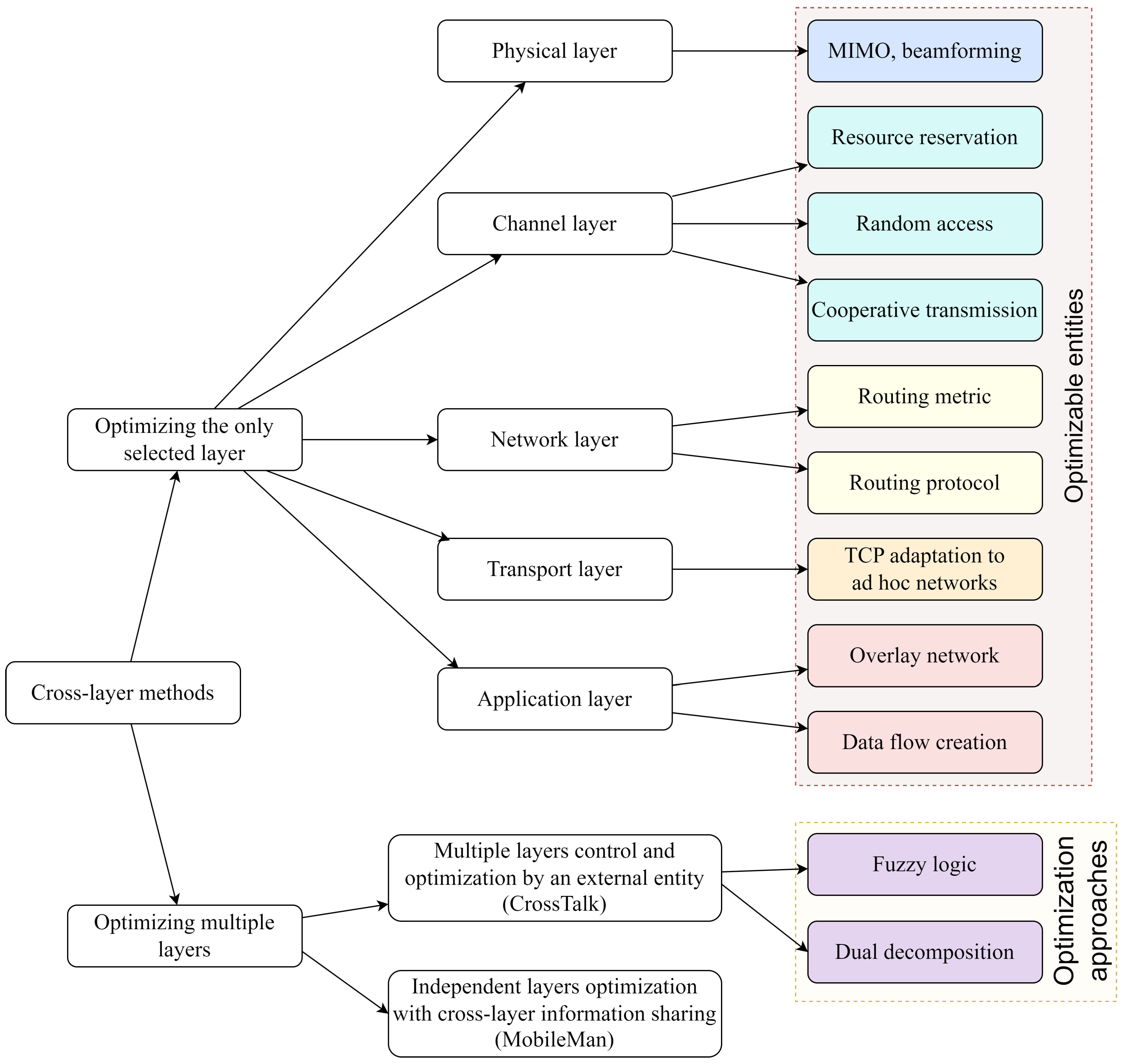

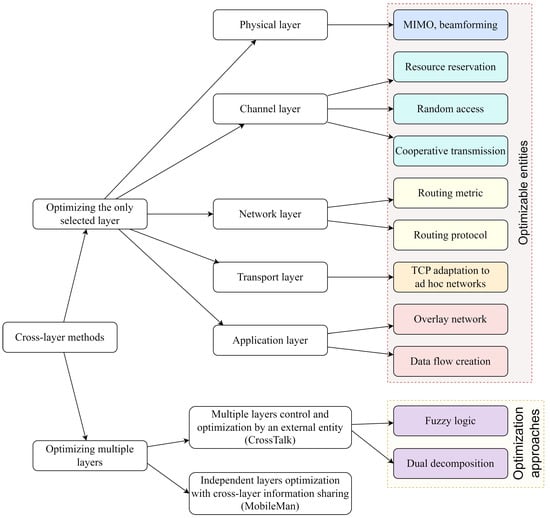

The reviewed works show that cross-layer methods fall into two broad categories: methods that focus on one layer while using information from other layers, and methods that jointly optimize multiple layers. Within these two categories, ten goals of the cross-layer approach can be identified:

- MIMO, beamforming.

- Resource reservation.

- Random channel access.

- Cooperative transmission.

- Routing metrics.

- Routing protocols.

- TCP adaptation to ad hoc networks.

- Overlay network.

- Application data flow adaptation to network state.

- Optimization of multiple layers working simultaneously.

In previous works [9,28,36,37,38,39,40,41], cross-layer methods were classified by the combinations of layers they use. Such a classification has to enumerate every layer combination, and it poorly reflects the essence of cross-layer methods. Instead, we derived a new classification of cross-layer methods in ad hoc networks based on the purpose for which multiple layers are used (Figure 7). The proposed classification can help to simplify goal-oriented cross-layer protocol development.

Figure 7.

Classification of cross-layer methods for ad hoc networks.

The overall objectives of network control protocols are always throughput, latency, and packet error rate. These objectives remain invariant regardless of the number of layers involved in the optimization process, but they can be achieved in a variety of ways. The proposed classification helps to systematize these ways.

When a new cross-layer protocol is developed, there is usually a specific goal to achieve (for example, a routing protocol or a channel access protocol). Once published protocols have been categorized with the proposed classification, Figure 7 helps to select the publications on which research into a new cross-layer protocol can be based. The proposed classification is goal-based, unlike the usual combination-based classifications, which may be ambiguous. For example, consider a cross-layer method that uses the physical, channel, and network layers. Knowing only this combination, the method could equally be a channel access protocol, a routing protocol, or a protocol with independent layers and information sharing. In contrast, the proposed classification states explicitly what the protocol is designed to do, which makes it more useful. The proposed classification also helped us to identify the ten problems, listed above, that can be solved using a cross-layer approach; previously, these problems were solved within the confines of the single corresponding layer.
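
To make the contrast concrete, the sketch below encodes only the hierarchy stated for Figure 7 in Section 5 (two groups; the one-layer group split by layer, the multiple-layer group split into an external control entity and independent layers with information sharing) and classifies three hypothetical methods that all use the physical, channel, and network layers. The method names and their assigned goals are illustrative assumptions.

```python
# Goal-based classification, encoding only the hierarchy described in Section 5.
CLASSIFICATION = {
    "one-layer optimization using other layers": [
        "physical", "channel", "network", "transport", "application",
    ],
    "joint optimization of multiple layers": [
        "external control entity (CrossTalk-like)",
        "independent layers with information sharing (MobileMan-like)",
    ],
}


def classify(goal):
    """Return (group, subgroup) for a method's primary goal."""
    for group, subgroups in CLASSIFICATION.items():
        if goal in subgroups:
            return group, goal
    raise ValueError(f"unknown goal: {goal!r}")


# Three hypothetical methods: same layer combination, different goals.
same_layers = {"physical", "channel", "network"}
methods = {
    "method A": (same_layers, "channel"),  # a channel access protocol
    "method B": (same_layers, "network"),  # a routing protocol
    "method C": (same_layers, "independent layers with information sharing (MobileMan-like)"),
}

combination_keys = {name: frozenset(layers) for name, (layers, _) in methods.items()}
goal_keys = {name: classify(goal) for name, (_, goal) in methods.items()}

print(len(set(combination_keys.values())))  # 1: one combination-based category
print(len(set(goal_keys.values())))         # 3: three distinct goal-based categories
```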

5. Conclusions

The overall objectives of network control protocols are always throughput, latency, and packet error rate. These objectives remain invariant regardless of the number of layers involved in the optimization process. At the same time, these objectives can be achieved in a variety of ways. This variety is very wide, so a useful classification is needed. Existing surveys classify cross-layer ad hoc network control methods only by the layers involved. This classification is simple but of limited use, because the combination of involved layers gives no information about the goals of the considered methods.

We have introduced a new classification of cross-layer methods in ad hoc networks that is based not on the combination of OSI layers used but on the main goal of each cross-layer method. Cross-layer methods can be divided into two large groups: first, methods that optimize the tasks of one layer using information from other layers, and second, methods for the collaborative optimization of multiple layers (Figure 7). The one-layer optimization methods are further divided by the layer they optimize: physical, channel, network, transport, and application. The multiple-layer optimization methods are divided into, first, optimization of the layers by a separate independent entity (this approach corresponds to the “CrossTalk” architecture [27]), and second, independent optimization of the layers by themselves, but with information sharing (this approach corresponds to the “MobileMan” architecture [26]).

The proposed classification helps to systematize cross-layer ad hoc network control methods by their purpose. Thus, the proposed classification is goal-based rather than morphology-based.

Most cross-layer methods use the first three layers of the OSI model. The network, channel, and physical layers form the basis of ad hoc networks, which cannot exist without them. Whether data flows can be delivered at all, and whether their delivery requirements are met, depends mostly on these bottom three layers. Studies of cross-layer methods for the upper layers are rare. Optimization of the application layer itself does not matter here, as this layer creates the data streams for the network to deliver; data from the application layer should be treated as an input parameter of cross-layer methods. The transport layer is responsible for delivery guarantees and congestion control, but delivery can be confirmed at the application layer, and congestion can be avoided by the routing protocol (network layer) choosing paths with the least busy lines.

6. Future Directions

There is a lack of application-centric cross-layer methods in which applications perceive the network state, communicate with each other within one node, and generate data flows at the required rate or at a rate as close as possible to the onset of network congestion. The transport layer is responsible for congestion control, but congestion control is usually not QoS-sensitive: multiple applications are given equal access to the available bandwidth. Therefore, application-level congestion control can meet QoS requirements better than other layers or, at least, recognize that QoS requirements cannot be met.
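
A minimal sketch of the contrast, assuming a hypothetical node with a known available bandwidth and per-application minimum rates and priorities: the QoS-agnostic baseline gives every application an equal share, while the application-level allocation grants minimum rates in priority order and explicitly reports the applications whose requirements cannot be met. All names and numbers are illustrative.

```python
def equal_share(capacity_mbps, demands):
    # QoS-agnostic baseline: every application gets the same share.
    share = capacity_mbps / len(demands)
    return {app: share for app in demands}


def qos_allocation(capacity_mbps, demands):
    """Grant minimum rates in priority order (0 = most important).

    demands maps application -> (priority, min_rate_mbps). Applications whose
    minimum cannot be met get 0 and are reported instead of silently degraded.
    Returns (allocation, unmet applications, leftover capacity).
    """
    allocation, unmet = {}, []
    remaining = capacity_mbps
    for app, (_, min_rate) in sorted(demands.items(), key=lambda kv: kv[1][0]):
        if min_rate <= remaining:
            allocation[app] = min_rate
            remaining -= min_rate
        else:
            allocation[app] = 0.0
            unmet.append(app)
    return allocation, unmet, remaining


# Hypothetical applications on one node: (priority, minimum rate in Mbit/s).
demands = {"voice": (0, 0.5), "video": (1, 4.0), "file": (2, 3.0)}
print(equal_share(6.0, demands))
print(qos_allocation(6.0, demands))
```

With 6 Mbit/s available, the equal share gives the video flow 2 Mbit/s, below its 4 Mbit/s minimum, whereas the priority-based allocation satisfies voice and video and flags the file transfer as unmet.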

Cross-layer methods focused on routing protocols that select routes based on physical layer antenna characteristics (beamforming capabilities, number of antenna sectors, etc.) are still underdeveloped. Quite a few routing protocols consider antenna properties, but, to the authors' knowledge, only abstractly: none of them use antenna pattern data (the dependence of antenna gain on elevation and azimuth angles). Aljumaily et al. [114] consider ad hoc networks with random beamforming. Nodes steer beams in random directions, eventually scanning the entire angular space, and receive reply packets with some probability. Routes are constructed based on the probability that a line exists. Biomo et al. [115] propose a routing algorithm for ad hoc networks with sector antennas. Nodes in such a network can transmit or receive simultaneously in multiple sectors without intersector interference, so sectors are effectively treated as additional lines compared with a single omnidirectional antenna. Lahsen et al. [116] use Q-learning and ant colony optimization to jointly select the beam and transmission power. Their routing protocol uses the ratio of a node's number of beams to its number of neighbors; nodes with larger ratios are preferred during route construction because their lines suffer less interference. Rana et al. [117] propose a route request scheme that addresses the impossibility of receiving route replies from all directions when directional antennas are used.
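
Routing on line existence probabilities can be sketched as a most-probable-path search. Assuming (as a simplification not stated in [114]) that lines exist independently, the probability of a route is the product of its line probabilities, and maximizing that product is equivalent to minimizing the sum of -log p; since every such weight is non-negative, standard Dijkstra search applies. The function name and the dictionary graph format are hypothetical.

```python
import heapq
import math


def most_probable_route(links, src, dst):
    """links: node -> {neighbor: probability that the line exists, 0 < p <= 1}.

    Returns (path, route probability), or (None, 0.0) if dst is unreachable.
    """
    dist = {src: 0.0}  # accumulated -log(probability)
    prev = {}
    heap = [(0.0, src)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue
        visited.add(u)
        if u == dst:
            break
        for v, p in links.get(u, {}).items():
            nd = d - math.log(p)  # product of probabilities -> sum of -log(p)
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if dst not in visited:
        return None, 0.0
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    path.reverse()
    return path, math.exp(-dist[dst])


links = {"A": {"B": 0.9, "C": 0.5}, "B": {"D": 0.8}, "C": {"D": 0.99}}
path, p = most_probable_route(links, "A", "D")
print(path, round(p, 3))  # ['A', 'B', 'D'] 0.72
```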

The interference caused by a packet transmission can be estimated from the antenna pattern and the transmission power. Choosing routes in which directive antennas transmit with a suitable antenna pattern at each hop can minimize network interference and packet collisions and increase network capacity. Multicast routes in particular can benefit from selecting the antenna transmission pattern, because multicast routes are trees: for a node with multiple children, an omnidirectional pattern may be preferable, whereas for a node with a single child, a directive pattern may be preferable.
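
A minimal sketch of such an estimate for one node of a multicast tree, under loudly simplified assumptions: a log-distance path loss model (40 dB at 1 m, exponent 3), an idealized 60° sector pattern with 10 dBi main-lobe and -10 dBi side-lobe gain, azimuth only (no elevation), 20 dBm transmission power, and a -80 dBm receiver sensitivity. Among the omnidirectional pattern and a sector aimed at each child, the code keeps the patterns that reach every child and picks the one that leaks the least power to non-intended nodes. All parameter values and function names are illustrative, not taken from the reviewed publications.

```python
import math


def path_loss_db(distance_m, exponent=3.0, pl0_db=40.0):
    # Assumed log-distance model: pl0_db at 1 m plus 10 * exponent * log10(d).
    return pl0_db + 10.0 * exponent * math.log10(distance_m)


def omni_gain_db(azimuth_rad):
    return 0.0  # isotropic in azimuth


def sector_gain_db(boresight_rad, beamwidth_rad=math.radians(60), main_db=10.0, side_db=-10.0):
    # Idealized sector pattern: flat main lobe inside the beam, flat side lobe outside.
    def gain(azimuth_rad):
        offset = (azimuth_rad - boresight_rad + math.pi) % (2 * math.pi) - math.pi
        return main_db if abs(offset) <= beamwidth_rad / 2 else side_db
    return gain


def rx_power_dbm(tx, rx, pattern, p_tx_dbm):
    dx, dy = rx[0] - tx[0], rx[1] - tx[1]
    return p_tx_dbm + pattern(math.atan2(dy, dx)) - path_loss_db(math.hypot(dx, dy))


def choose_pattern(tx, children, others, p_tx_dbm=20.0, sensitivity_dbm=-80.0):
    """Return (pattern name, interference in mW at non-intended nodes) or None."""
    candidates = {"omni": omni_gain_db}
    for i, (cx, cy) in enumerate(children):
        candidates[f"sector->child{i}"] = sector_gain_db(math.atan2(cy - tx[1], cx - tx[0]))
    best = None
    for name, pattern in candidates.items():
        if any(rx_power_dbm(tx, c, pattern, p_tx_dbm) < sensitivity_dbm for c in children):
            continue  # this pattern would leave some child unreached
        # Interference is summed in linear units (mW), not in dB.
        leak_mw = sum(10 ** (rx_power_dbm(tx, o, pattern, p_tx_dbm) / 10) for o in others)
        if best is None or leak_mw < best[1]:
            best = (name, leak_mw)
    return best


print(choose_pattern((0, 0), [(50, 0)], [(0, 50), (-50, 0)])[0])
print(choose_pattern((0, 0), [(50, 0), (0, 50), (-50, 0)], [(0, -50)])[0])
```

In the first call, a single child is best served by the sector aimed at it; in the second, children in three directions can all be reached only with the omnidirectional pattern.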

Note that thorough research has been conducted on the potential capacity of ad hoc networks with directive antennas, such as sector and beamforming antennas [118,119,120], on the development of channel layer protocols [55,56,57,58,59,60,121,122,123,124], and on fast neighborhood discovery protocols for directive antennas [125,126]. However, channel layer protocols optimize line performance, not flow performance.

Furthermore, cross-layer methods focused on routing protocols rarely use flow rate estimates from the application or transport layers. If the flow rate is low, the routing protocol can be lenient in choosing the route, because a low-rate flow is unlikely to cause network congestion. The opposite holds for high-bandwidth flows, for which the route must be chosen carefully.
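
The idea can be sketched as a hypothetical rate-aware route selection policy, assuming the routing protocol knows each candidate route's per-line residual capacity and receives a flow rate estimate from an upper layer. The 0.5 Mbit/s threshold separating low-rate from high-rate flows, the route format, and the function names are illustrative assumptions.

```python
def bottleneck_mbps(route):
    # Residual capacity of a route is limited by its most loaded line.
    return min(route["residual_mbps"])


def select_route(routes, flow_rate_mbps, low_rate_threshold_mbps=0.5):
    """Return the chosen route dict, or None if no route can carry the flow."""
    feasible = [r for r in routes if bottleneck_mbps(r) >= flow_rate_mbps]
    if not feasible:
        return None  # admitting the flow would congest every candidate route
    if flow_rate_mbps < low_rate_threshold_mbps:
        # Low-rate flow: unlikely to cause congestion, so take the fewest hops.
        return min(feasible, key=lambda r: len(r["residual_mbps"]))
    # High-rate flow: keep the most headroom on the bottleneck line.
    return max(feasible, key=bottleneck_mbps)


routes = [
    {"name": "short", "residual_mbps": [1.0, 2.0]},
    {"name": "long", "residual_mbps": [6.0, 5.0, 7.0]},
]
print(select_route(routes, 0.2)["name"], select_route(routes, 3.0)["name"])
```

A 0.2 Mbit/s flow takes the two-hop route, while a 3 Mbit/s flow, for which the two-hop route's 1 Mbit/s bottleneck is insufficient, is steered to the three-hop route.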

Cross-layer methods in which an external entity controls and optimizes multiple layers using fuzzy logic lack a theoretical basis: to our knowledge, in all such publications, the fuzzy rules are chosen empirically. Automated heuristics for creating fuzzy rule sets are much needed. Beyond dual decomposition, many other heuristic optimization methods have not yet been applied to multiple-layer control.
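
For reference, a minimal sketch of dual decomposition on the textbook network utility maximization problem: maximize the sum of w_s log x_s over source rates x_s, subject to each link's load not exceeding its capacity. Link prices (dual variables) are updated by a projected subgradient step, and each source independently picks the rate that maximizes its utility minus the price of its route. This illustrates the decomposition technique only; it is not a method from the reviewed publications, and the step size, rate cap, and iteration count are assumptions. In cross-layer formulations, link capacities that depend on lower-layer decisions (e.g., power allocation) can be handled as further subproblems coordinated by the same prices.

```python
def dual_decomposition(routes, capacity, weights, step=0.1, iters=1000, x_max=10.0):
    """routes[s]: list of link indices used by source s.

    Returns (source rates, link prices) after `iters` dual iterations.
    """
    prices = [1.0] * len(capacity)
    rates = [0.0] * len(routes)
    for _ in range(iters):
        # Source subproblem: argmax_x w*log(x) - x*q gives x = w / q (capped).
        for s, links in enumerate(routes):
            q = sum(prices[l] for l in links)
            rates[s] = min(x_max, weights[s] / q) if q > 0 else x_max
        # Link subproblem: projected subgradient step on each dual variable.
        for l, c in enumerate(capacity):
            load = sum(rates[s] for s, links in enumerate(routes) if l in links)
            prices[l] = max(0.0, prices[l] + step * (load - c))
    return rates, prices


# Two unit-capacity links; source 0 crosses both, sources 1 and 2 use one each.
rates, prices = dual_decomposition([[0, 1], [0], [1]], [1.0, 1.0], [1.0, 1.0, 1.0])
print([round(x, 3) for x in rates], [round(p, 3) for p in prices])
```

For this topology the rates approach the proportionally fair allocation 1/3, 2/3, 2/3, with both link prices near 1.5.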

In cross-layer methods with independent layers and information sharing, the layers are independent, but information sharing creates implicit interconnections between them. Some of these interconnections may destabilize all the layers. Therefore, research is needed on what information layers should share and how often it should be updated.
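
The instability risk can be illustrated with a hypothetical toy model, not taken from the reviewed publications: layer A steers a parameter x toward a target using a measurement shared by layer B that is `delay` update periods old, x[k+1] = x[k] - gain (x[k - delay] - target). For this recurrence the loop converges only if 0 < gain < 2 sin(π / (2(2·delay + 1))), which equals 2 for fresh information but drops to about 0.28 when the information is five periods old, so a gain that works with fresh information can diverge with stale information.

```python
def simulate(gain, delay, steps=200, target=1.0, initial=0.0):
    """Toy loop: layer A corrects x using a value of x shared `delay` steps ago.

    Returns the error trajectory x[k] - target.
    """
    history = [initial] * (delay + 1)  # assume x was constant before the start
    for _ in range(steps):
        stale = history[-1 - delay]  # x[k - delay], the shared (possibly old) value
        history.append(history[-1] - gain * (stale - target))
    return [x - target for x in history]


fresh = simulate(gain=0.5, delay=0)
stale = simulate(gain=0.5, delay=5)
print(abs(fresh[-1]), max(abs(e) for e in stale[-50:]))
```

With gain 0.5, the error vanishes when the shared value is fresh and grows in an oscillating fashion when it is five steps old.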

Furthermore, almost all cross-layer methods depend on information exchange between network nodes. To our knowledge, no research yet addresses how to determine what data should be sent between nodes and how often, depending on the state of the network and data flows, its rate of change, and the underlying network control methods.
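
One simple heuristic in this direction is a send-on-delta rule (a sketch under stated assumptions, not an answer to the open question): a node broadcasts a shared value only when it has changed by more than a threshold since its last broadcast, so the update rate automatically follows the value's rate of change. Choosing which values to share and how to set the threshold for a given network control method remains the open problem described above. The function name and threshold are hypothetical.

```python
def event_triggered_updates(samples, threshold):
    """Return indices of samples that trigger an update message.

    A value is broadcast only if it differs from the last broadcast value
    by more than `threshold`; the first sample is always broadcast.
    """
    sent = []
    last = None
    for k, value in enumerate(samples):
        if last is None or abs(value - last) > threshold:
            sent.append(k)
            last = value
    return sent


slow = [10.0, 10.4, 10.8, 11.2, 11.6, 12.0]  # slowly changing metric
fast = [10.0, 12.0, 9.0, 13.0, 8.0, 14.0]    # rapidly changing metric
print(event_triggered_updates(slow, 1.0), event_triggered_updates(fast, 1.0))
```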

Author Contributions

Conceptualization, V.I. and M.T.; methodology, V.I.; software, V.I.; validation, V.I.; investigation, V.I.; data curation, V.I.; writing—original draft preparation, V.I. and M.T.; writing—review and editing, V.I. and M.T.; visualization, V.I.; supervision, M.T.; project administration, M.T.; funding acquisition, M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All the numerical data analyzed in the paper is presented in the text.

Acknowledgments

The authors thank Andrey E. Schegolev for his valuable insights.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ALM | Airtime Link Metric |
| AODV | Ad-hoc On-demand Distance Vector |
| AOMDV | Ad-hoc On-demand Multipath Distance Vector |
| CLAF-AODV | Adaptive Fuzzy-based Ad Hoc On-Demand Distance Vector |
| CSMA/CA | Carrier Sense Multiple Access with Collision Avoidance |
| DARPA | Defense Advanced Research Projects Agency |
| DYMO | Dynamic MANET On-demand |
| ETT | Expected Transmission Time |
| ETX | Expected Transmission Count |
| FP-AODV | Fixed Probability AODV |
| FPG | Frequent Pattern Growth |
| GSO | Glowworm Swarm Optimization |
| IP | Internet Protocol |
| ISO | International Organization for Standardization |
| MAC | Medium Access Control |
| MIC | Metric of Interference and Channel-switching |
| MIMO | Multiple-Input Multiple-Output |
| MISO | Multiple-Input Single-Output |
| MPTCP | Multipath Transmission Control Protocol |
| OLSR | Optimized Link State Routing |
| OSI | Open Systems Interconnection |
| P2P | Peer-to-Peer |
| QoS | Quality of Service |
| RSSI | Received Signal Strength Indicator |
| SINR | Signal-to-Interference-plus-Noise Ratio |
| SNR | Signal-to-Noise Ratio |
| TCP | Transmission Control Protocol |
| TDMA | Time Division Multiple Access |
| UAV | Unmanned Aerial Vehicle |
| WCETT | Weighted Cumulative Expected Transmission Time |

References

- Raza, N.; Umar Aftab, M.; Qasim Akbar, M.; Ashraf, O.; Irfan, M. Mobile ad-hoc networks applications and its challenges. Commun. Netw. 2016, 8, 131–136.

- Kirubasri, G.; Maheswari, U.; Venkatesh, R. A survey on hierarchical cluster based routing protocols for wireless multimedia sensor networks. J. Converg. Inf. Technol. 2014, 9, 19.

- Sennan, S.; Somula, R.; Luhach, A.K.; Deverajan, G.G.; Alnumay, W.; Jhanjhi, N.; Ghosh, U.; Sharma, P. Energy efficient optimal parent selection based routing protocol for Internet of Things using firefly optimization algorithm. Trans. Emerg. Telecommun. Technol. 2021, 32, e4171.

- Zemrane, H.; Baddi, Y.; Hasbi, A. Mobile adhoc networks for intelligent transportation system: Comparative analysis of the routing protocols. Procedia Comput. Sci. 2019, 160, 758–765.

- Pandey, M.A. Introduction to mobile ad hoc network. Int. J. Sci. Res. Publ. 2015, 5, 1–6.

- Zimmermann, H. OSI reference model-the ISO model of architecture for open systems interconnection. IEEE Trans. Commun. 1980, 28, 425–432.

- Goldsmith, A. Wireless Communications; Cambridge University Press: Cambridge, UK, 2005.

- Stallings, W. Wireless Communications & Networks; Pearson Education India: Delhi, India, 2009.

- Srivastava, V.; Motani, M. Cross-layer design: A survey and the road ahead. IEEE Commun. Mag. 2005, 43, 112–119.

- Conti, M.; Maselli, G.; Turi, G.; Giordano, S. Cross-layering in mobile ad hoc network design. Computer 2004, 37, 48–51.

- Jurdak, R. Wireless Ad Hoc and Sensor Networks: A Cross-Layer Design Perspective; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007.