Abstract

When a natural or human disaster occurs, time is critical and often of vital importance. Data from the incident area containing the information to guide search and rescue (SAR) operations and improve intervention effectiveness should be collected as quickly as possible and with the highest accuracy possible. Nowadays, rescuers are assisted by different robots able to fly, climb or crawl, and with different sensors and wireless communication means. However, the heterogeneity of devices and data together with the strong low-delay requirements cause these technologies not yet to be used at their highest potential. Cloud and Edge technologies have shown the capability to offer support to the Internet of Things (IoT), complementing it with additional resources and functionalities. Nonetheless, building a continuum from the IoT to the edge and to the cloud is still an open challenge. SAR operations would benefit strongly from such a continuum. Distributed applications and advanced resource orchestration solutions over the continuum in combination with proper software stacks reaching out to the edge of the network may enhance the response time and effective intervention for SAR operation. The challenges for SAR operations, the technologies, and solutions for the cloud-to-edge-to-IoT continuum will be discussed in this paper.

1. Introduction

When a natural or human disaster occurs, the first 72 hours are particularly critical to locate and rescue victims [1]. Although advanced technological solutions are being investigated by researchers and industry for search and rescue (SAR) operations, rescue teams and first responders still suffer from limited situational awareness in an emergency. The main reason for this is a generalized lack of modern and integrated digital communication and technologies. Relying only on direct visual or verbal communication is indeed the root cause of limited situational awareness. First responders have very limited, sparse, non-integrated ways of receiving information about the evolution of an emergency and its response (e.g., team members and threat locations). A real-time visual representation of the emergency response context would greatly improve their decision accuracy and confidence in the field. “The greatest need for cutting-edge technology, across disciplines, is for devices that provide information to the first responders in real-time” [2].

Mobile robot teams, possibly comprising robots with heterogeneous sensing capabilities, such as cameras, infrared cameras, hyperspectral cameras, light detection and ranging (LiDAR) and radio detection and ranging (RADAR) sensors, and heterogeneous mobility capabilities (land, air), have the potential of scaling up first responders’ situational awareness [3]. They are, therefore, an important asset in the response to catastrophic incidents, such as wildfires, urban fires, landslides, and earthquakes, as they also offer the possibility to generate 3D maps of a disaster scene with the use of cameras and sensors. However, in many cases, robots used by first responders are remotely teleoperated, or they can operate autonomously only in scenarios with good global positioning system (GPS) coverage. Furthermore, 3D maps cannot be provided in low-visibility scenarios, such as when smoke is present, in which remote operation is also very complicated. Operating robots in scenarios such as large indoor fires requires the robots to be able to navigate autonomously in environments with smoke and/or where GPS is not available.

Other advanced technologies, such as artificial intelligence (AI) and computer vision are also gaining momentum in SAR operations to fully exploit the information available through cameras and sensors and by this enable smart decision-making and enhanced mission control. On the other hand, these technologies are often very resource-demanding in time and computation. Therefore, the computational power in the cloud and at the edge of the infrastructure has shown great potential to strongly support them. Edge computing is where devices with embedded computing and “hyper-converged” infrastructures integrate and virtualize key components of information technology (IT) infrastructure such as storage, networking and computing. It is also the first step towards a computing continuum that spans from network-connected devices to remote clouds. To leverage this continuum, the seamless integration of capabilities and services is required. Advanced connectivity techniques such as 5G (fifth generation) mobile networks and software-defined networking (SDN) offer unified access to the edge and cloud from anywhere. Workloads can potentially run wherever it makes the most sense for the application to run. At the same time, the different environments along the continuum will work together to provide the right resources for the task at hand. The range of applications and workloads with unique cost, connectivity, performance, and security requirements will demand a continuum of computing and analysis at every step of the topology, from the edge to the cloud, with new approaches to orchestration, management, and security being required.

In this context, there are several open research and technological questions that need to be addressed. On the one side, there is a need to reduce the computational and storage load on the physical device to perform timely actions, but simply offloading to the edge may not improve performance, as the edge also has limited resources. On the other side, how to use all the technologies and devices available to improve situational awareness for first responders is nontrivial due to heterogeneity in communication protocols, semantics and data formats (e.g., generating a collaborative map of the area using the information from drones and robots considering that data fusion from heterogeneous data sources is a challenging task). In this paper, we will investigate how the cloud-to-edge-to-IoT continuum can support and enable post-disaster SAR operations, and we will propose solutions for the challenges and technological/research questions introduced above. Indeed, distributed applications paired with advanced resource orchestration solutions over the continuum reaching out to the edge of the network can enhance the response time and effective intervention for SAR operation [4,5,6]. The challenges will be discussed, and the possible solutions will be described as part of the Horizon Europe NEPHELE project (NEPHELE project website: https://nephele-project.eu/ (accessed on 19 January 2023)). This project proposes a lightweight software stack and synergetic meta-orchestration framework for the next-generation compute continuum ranging from the IoT to the remote cloud, which perfectly matches the needs of SAR operations.

The paper is organized as follows. Section 2 reports the related work on the main related technologies in cloud computing, sensor networks, the Internet of Things (IoT), robotic applications and cloud robotics. Section 3 describes the reference use case for SAR operations, with its requirements. Section 4 describes the proposed solutions based on the approach followed in the NEPHELE project. Section 5 concludes the paper.

2. Related Work

In this section, we will go over the main technologies that we believe can and should be considered to best cope with SAR scenarios and enhance the situational awareness of first responders.

2.1. Cloud and Edge Robotics for SAR

The idea of utilizing a “remote brain” for robots can be traced back to the 90s [7,8]. Since then, attempts to adopt cloud technologies in the robotics field have seen a constantly growing momentum. The benefits deriving from the integration of networked robots and cloud computing are, among others, powerful computation, storage, and communication resources available in the cloud which can support the information sharing and processing for robotic applications. The possibility of remotely controlling robotic systems further reduces costs for the deployment, monitoring, diagnostics, and orchestration of any robotic application. This, in turn, allows for building lightweight, low-cost, and smarter robots, as the main computation and communication burden is brought to the cloud. Since 2010, when the cloud robotics term first appeared, several projects (e.g., RoboEarth [9], KnowRob [10], DAVinci [11], Robobrain [12]) investigated the field, pushing forward both research and products to appear on the market. Companies started investing in the field as they recognized the large potential of cloud robotics. This led to the first open-source cloud robotics frameworks appearing in recent years [13]. An example of this is the solution from Rapyuta Robotics (Available online: https://www.rapyuta-robotics.com/ (accessed on 19 January 2023)). Similarly, commercial solutions for developers have seen the light such as Formant (Available online: https://formant.io/ (accessed on 19 January 2023)), Robolaunch (Available online: https://www.robolaunch.io/ (accessed on 19 January 2023)) and Amazon’s Robomaker [14]. Additionally, for key features such as navigation and localization in indoor environments, commercial products exist on the market. Adopting commercial solutions, however, leads to additional costs that might threaten the success of offering a low-cost product/service to the market. 
Open source offers customizable solutions with production and maintenance costs kept low, whereas ad hoc designed solutions allow us to deploy customized technology solutions in terms of services and features envisioned for the final product. With the advances in edge [15] and fog computing [16] in the last few years, robotics has seen an even greater potential as higher bandwidth and lower latencies can be achieved [17]. With computing and storage resources at the edge of the infrastructure, applications can be executed closer to the robotic hardware which generally improves the performance [17] and enables advanced applications that pure cloud-based robotics would not be able to support [18,19,20].

Multiple research and development (R&D) projects have investigated the adoption of robotic solutions in SAR operations. For instance, the SHERPA project [21] developed a mixed ground and aerial robotic platform with human–robot interaction to support search and rescue activities in real-world hostile environments; RESPOND-A [22] developed holistic solutions by bringing together 5G wireless communications, augmented and virtual reality, autonomous robot and unmanned aerial vehicle coordination, intelligent wearable sensors and smart monitoring, geovisual analytics and immersive geospatial data analysis, passive and active localization and tracking, and interactive multi-view 360-degrees video streaming. These are two examples of several projects that have investigated and demonstrated the use of robotic applications in SAR and disaster scenarios. Whereas the adoption of cloud and edge robotics for SAR has gained interest in recent years [20,23,24], how the cloud and edge computing frameworks can support SAR operations at their best is still an open challenge. Nonetheless, we believe that the cloud-to-edge-to-IoT continuum can strongly support and enable the widespread use of cloud robotics in a large set of application domains.

2.2. Cloud Continuum

By the term cloud continuum, we mean the edge computing extension of cloud services towards the network boundaries [25], reaching out to end devices (i.e., the IoT devices). Edge computing has proven to be a valid paradigm to bring cloud computation and data storage closer to the data source of applications. On the one hand, edge computing provides better performance for delay-sensitive applications, whereas, on the other hand, applications with a high computational cost have access to more processing resources distributed along the continuum. This continuum reaches out to the IoT devices as sensors are the source of massive amounts of data that are challenging for infrastructure, data analysis, and storage. The Cloud-Edge-IoT computing continuum has been explored in various areas of research. For instance, it has been investigated to provide services and applications for autonomous vehicles [26], smart cities [27], UAV cooperative schemes [28] and Industry 4.0 [29], where most of the data require real-time processing using machine learning and artificial intelligence.

One of the main challenges of the cloud continuum is the orchestration between the components of the system, which includes computational resources, learning models, algorithms, networking capabilities and edge/IoT device mobility. Context awareness is required in the continuum to decide when and where to store data and perform computation [30]. For IoT environments, context information is generated by processing raw sensor data and is used to make decisions that reduce energy consumption, overall latency and message overload [31], achieve a more efficient data transmission rate in large-scale scenarios using caching [32], and place storage and processing across the continuum [33,34]. The mobility-aware multi-objective IoT application placement (mMAPO) method [25] proposes an optimization model for the application placement across the continuum considering the edge device mobility to optimize the completion time, energy consumption and economic cost. Task offloading from ground IoT devices to UAV (unmanned aerial vehicle) cooperative systems to deploy edge servers is explored in [35], where authors also present an energy allocation strategy to maximize long-term performance.
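To make the placement trade-off concrete, the following minimal sketch scores candidate nodes along the continuum by a weighted sum of completion time, energy consumption and economic cost, and picks the cheapest one. It is not the mMAPO method of [25]; it is a simple scalarization for illustration, and the `Node` fields, the `NODES` values and the weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """A candidate execution site (all figures are made-up examples)."""
    name: str
    cpu_gops: float          # processing speed, giga-operations per second
    rtt_s: float             # round-trip time from the data source, seconds
    energy_j_per_gop: float  # energy spent per giga-operation
    cost_per_gop: float      # economic cost per giga-operation


# Hypothetical three-tier continuum: on-device, edge server, remote cloud.
NODES = [
    Node("device", cpu_gops=1.0, rtt_s=0.0, energy_j_per_gop=2.0, cost_per_gop=0.0),
    Node("edge", cpu_gops=10.0, rtt_s=0.02, energy_j_per_gop=0.5, cost_per_gop=0.01),
    Node("cloud", cpu_gops=100.0, rtt_s=0.2, energy_j_per_gop=0.2, cost_per_gop=0.05),
]


def score(node, task_gop, weights):
    """Weighted sum of completion time, energy and cost for one task."""
    w_time, w_energy, w_cost = weights
    completion_s = node.rtt_s + task_gop / node.cpu_gops
    energy_j = node.energy_j_per_gop * task_gop
    cost = node.cost_per_gop * task_gop
    return w_time * completion_s + w_energy * energy_j + w_cost * cost


def place(nodes, task_gop, weights):
    """Return the node with the lowest weighted score."""
    return min(nodes, key=lambda n: score(n, task_gop, weights))
```

With time-only weights `(1, 0, 0)`, a small task lands on the edge because the cloud round trip dominates, whereas a large task lands in the cloud because its processing speed dominates. Since the three objectives have different units, the weights in a real system would also have to normalize them.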

The use of the cloud continuum for SAR operations has the potential to extend the capabilities and resources available and by this enhance the situational awareness of first responders. The orchestration process of the different systems, including robots and rescue teams, is a challenging aspect that might obtain support from models from industry, health and military systems [36].

2.3. Sensor Networks and IoT for SAR Operations

Sensor networks have seen broad application in areas such as healthcare, transportation, logistics, farming, and home automation, but even more in search and rescue operations. Sensors capture signals from the physical world and increase the information available to make decisions. The IoT as an extension of the sensor network includes any type of device and system such as vehicles, drones, machines, robots, human wearables or cameras with a unique identifier that can gather, exchange and process data without explicit human intervention. The use of IoT systems has been widely explored to extend the capabilities of SAR teams in disaster situations. Drone and UAV capabilities allow access to remote areas in less time, deploy communications systems, improve area visibility and transport light cargo if necessary [37]. Acoustic source location based on azimuth estimation and distance was proposed to estimate the victim’s location [38,39]. Image/video resources in drone-based systems are also used for automatic person detection by applying deep convolutional neural networks and image processing tools [40]. SARDO [41] is a drone-based location system that allows locating victims by locating mobile phones in the area without any modification to the mobile phones or infrastructure support.

Collaborative multi-robots have a high impact on SAR operations, providing real-time mapping and monitoring of the area [42]. The multi-robot systems in SAR operation have been explored for urban [43], maritime [44] and wilderness [45] operational environments. Several types of robots are involved in multi-robot systems such as UAVs, unmanned surface vehicles (USVs), unmanned ground vehicles (UGVs) or unmanned underwater vehicles (UUVs). Serpentine robots are useful in narrow and complex spaces [46]. The snake robot proposed in [47] has a gripper module to remove small objects and a camera to move the eyes and look in all directions without moving the body. There are also more flexible and robust ground units with long-term autonomy and the capacity to carry aerial robots to extend the coverage area using an integrated vision system [48]. Maritime SAR operations are supported by UAVs, UUVs and USVs, where the UUV includes seafloor pressure sensors, hydrophones for tsunami detection and a sensor for measuring water conditions such as temperature, the concentration of different chemicals, depth, etc. [49].

Other fundamental elements in SAR operations are personal devices such as smartphones and wearables [23]. One of the constraints during a disaster is infrastructure failure where electricity is off or communications equipment is destroyed, damaged or saturated. COPE is a solution to exploit the multi-network feature of mobile devices to provide an alert messages delivery system based on smartphones [50]. COPE considers several energy levels to use the technologies available in smartphones nowadays (Bluetooth, Wi-Fi, cellular) for alert diffusion in the disaster area. Drones are used to collect emergency messages from smartphones and take the messages to areas where there is connectivity. This approach is explored in [51] to propose a collaborative data collection protocol that organizes wireless devices in multiple tiers by targeting fair energy consumption in the whole network, thereby extending the network lifetime. Wearables are also important in SAR operations because they can monitor the vital signs such as temperature, heart rate, respiration rate and blood pressure of the victims and rescuers [51]. This information can be collected by the rescuer or the robot-based system to determine the victim’s status and provide a more convenient treatment depending on the conditions [52].

SAR dogs have also been involved in disaster events to locate victims even in limited vision and sound scenarios. A wearable computing system for SAR dogs is proposed in [53], where the dog uses a deep learning-assisted system in a wearable device. The system incorporates inertial sensors, such as a three-axial accelerometer and gyroscope, and a wearable microphone. The computational system collects audio and motion signals, processes the sensor signals, communicates the critical messages using the available network and determines the victim’s location based on the dog’s location. Incorporating IoT systems into SAR operations increases rescue teams’ capabilities, saving time by receiving information in real time. The development of autonomous systems allows the integration of machines precisely and reduces the risks for victims and rescuers.

2.4. Situation Awareness and Perception with Mobile Robots

Mobile robot teams with heterogeneous sensors have the potential of scaling up first responders’ situational awareness. Being able to cover wide areas, these are an important asset in the response to catastrophic incidents, such as wildfires, urban fires, landslides, and earthquakes. In their essence, robot teams make possible two key dimensions of situation awareness: space distribution and time distribution [54]. Whereas the former means the possibility of multiple robots simultaneously perceiving different (far) locations of the area of interest, the latter arises from the fact that multiple robots, possibly endowed with complementary sensor modalities, can visit a location at different time instants, thus coping with the environment’s evolution over time, i.e., with dynamic environments. Together with space and time distribution, robot teams also potentially provide efficiency, reliability, robustness and specialization [55]; efficiency because they allow building models of wide areas in less time, which is of utmost importance to provide situational awareness within response missions to catastrophic scenarios; reliability and robustness because the failure of individual robots in the team does not necessarily compromise the overall mission success; specialization because robots have complementary, i.e., heterogeneous, sensory and mobility capabilities, thus increasing the team’s total utility.

Although distributed robot teams potentially allow for persistent, long-term perception in wide areas, i.e., they allow for cooperative perception, fulfilling this potential requires solving two fundamental scientific sub-problems [56]: (i) data integration for building and updating a consistent unified view of the situation, eventually over a large time span; (ii) multi-robot coordination to optimize the information gain in active perception [57,58]. The former sub-problem deals with space and time distribution and involves data fusion, i.e., merging partial perceptual models of individual robots into a globally consistent perceptual model, so that robots can update percepts in a spot previously visited by other robotic teammates. The latter sub-problem involves the use of partial information contained in the global perceptual model to decide where either a robot or the robot team should go next to acquire novel or more recent data to augment or update the current perceptual model, thus closing the loop between sensing and actuation. In order to optimize the collective performance and take full advantage of space distribution provided by the multi-robot system, active perception requires action coordination among robots, which usually is based on sharing some coordination data among robots (e.g., state information). In both sub-problems, devising distributed solutions that do not rely on a central point of failure [55] is a key requirement to operate in the wild, e.g., in forestry environments or in large urban areas, so that adequate resiliency and robustness are attained in harsh operational conditions, including individual robots’ hardware failures and communication outages.
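As an illustration of the first sub-problem (merging partial perceptual models into a globally consistent one), the sketch below fuses occupancy grids from several robots cell by cell in log-odds form. This is one common textbook approach, not a method taken from the cited works, and it rests on strong assumptions: the grids are already aligned in a common frame, the robots' observations are independent, and every cell probability lies strictly between 0 and 1. The function names are hypothetical.

```python
import math


def to_log_odds(p):
    """Convert an occupancy probability in (0, 1) to log-odds."""
    return math.log(p / (1.0 - p))


def to_prob(log_odds):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def fuse_grids(grids, prior=0.5):
    """Fuse aligned occupancy grids (lists of rows) into one grid.

    Each robot's evidence is added in log-odds space relative to the
    shared prior, so a cell a robot has not observed (value == prior)
    contributes nothing to the fused estimate.
    """
    rows, cols = len(grids[0]), len(grids[0][0])
    prior_l = to_log_odds(prior)
    fused = [[prior_l] * cols for _ in range(rows)]
    for grid in grids:
        for r in range(rows):
            for c in range(cols):
                fused[r][c] += to_log_odds(grid[r][c]) - prior_l
    return [[to_prob(l) for l in row] for row in fused]
```

For example, if two robots each report a cell as occupied with probability 0.8, the fused probability rises to 16/17 (about 0.94), whereas a cell seen by only one robot keeps that robot's estimate. A distributed variant would exchange such grids between neighbors rather than collecting them at a central node.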

Cooperative perception has been studied in specific testbeds [55] or several robotics application domains, such as long-term security and care services in man-made environments [57], monitoring environmental properties [58,59,60,61,62,63], the atmospheric dispersion of pollutants monitoring [64], precision agriculture [65,66] or forest fire detection and monitoring [67,68]. However, the research problem has been only partially and sparsely solved (i.e., usually tackling only a specific dimension of cooperative perception), and it is still essentially an open research problem. Especially in field robotic applications, including robotics to aid first responders in catastrophic incidents, which involve a much wider diversity of robots in terms of sensory capabilities and mobility and strict time response constraints, a more thorough and extensive treatment is needed to encompass all the dimensions of the cooperative perception problem, thus being able to fulfill the requirements posed by those complex real scenarios.

2.5. Simultaneous Localization and Mapping

Operating robots in scenarios such as large indoor fires or collapsed buildings requires the robots to be able to navigate in environments with smoke and/or where GPS is not available. This involves using simultaneous localization and mapping (SLAM) techniques. SLAM consists of the concurrent construction of a model of the environment (the map that can be used also for navigation) and the estimation of the position of the robot moving within it and is considered a fundamental problem for robots to become truly autonomous. As such, over the years, a large variety of SLAM approaches have been developed, with methods based on different sensors (camera, LiDAR, RADAR, etc.), new data representations and consequently new types of maps [69,70]. Similarly, various estimation techniques have emerged inside the SLAM field. Nonetheless, it is still an actively researched problem in robotics, so much so that the robotics research community is only now working towards designing benchmarks and mechanisms to compare different SLAM implementations. In recent years, there has been particularly intense research into VSLAM (visual SLAM) [71]. Specifically, the focus is on using primarily visual (camera) sensors to allow robots to track and keep local maps of their relative positions also in indoor environments (where GPS-based navigation fails). Extracting key features from images, the robot can determine where it is in the local environment by comparing features to a database of images taken of the environment during prior passes by the robot. As this latter procedure quickly becomes the bottleneck for its applicability, solutions are being explored to offload the processing to the cloud [72] and to the edge [56]. Additionally, in this context, we believe the cloud-to-edge-to-IoT continuum will sustain these advanced technologies.
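The feature comparison step described above can be sketched as a brute-force nearest-neighbor search with a ratio test that rejects ambiguous matches. This is a simplified illustration, not the pipeline of any cited VSLAM system: descriptors are plain tuples of floats, the function name and the `ratio` value of 0.75 are assumptions, and the database must hold at least two descriptors.

```python
import math


def match_features(query, database, ratio=0.75):
    """Match each query descriptor to its nearest database descriptor.

    A match is kept only if the nearest neighbor is clearly closer than
    the second nearest (ratio test). Returns (query_idx, database_idx)
    pairs.
    """
    if len(database) < 2:
        raise ValueError("database needs at least two descriptors")
    matches = []
    for qi, q in enumerate(query):
        # Distance from this query descriptor to every stored descriptor.
        dists = sorted((math.dist(q, d), di) for di, d in enumerate(database))
        (best_dist, best_idx), (second_dist, _) = dists[0], dists[1]
        if best_dist < ratio * second_dist:
            matches.append((qi, best_idx))
    return matches
```

The cost grows with the product of the number of query features and the number of stored features, which suggests why this step becomes a bottleneck on a robot as the image database grows and why it is a natural candidate for offloading.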

2.6. ROS Applications

With robots coming out of research labs to interact with the real world in several domains comes the realization that robots are but one of the many components of “robotic applications”. The distributed nature of such systems (i.e., robots, edge, cloud, mobile devices), the inherent complexity of the underlying technologies (e.g., networking, artificial intelligence—AI, specialized processors, sensors and actuators, robotic fleets) and the related development practices (e.g., continuous integration/continuous development, containerization, simulation, hardware-in-the-loop testing) pose high entry barriers for any actor wishing to develop such applications. With new features and robotic capabilities being constantly developed in all main five elements of modern advanced robotics (i.e., perception, modeling, cognition, behavior and control) and AI solutions expanding what is possible each day, software updates will become frequent across all involved devices, requiring development and operation (DevOps) practices to be adapted to the specific nature of robotic applications and their lifecycle (e.g., mobile robots, frequent disconnections, over-the-air updates, safety).

ROS (robot operating system) is an open-source framework for writing robotic software that was conceived with the specific purpose of fostering collaboration. In its relatively short existence (slightly more than ten years) it has managed to become the de facto standard framework for robotic software. The ROS ecosystem now consists of tens of thousands of users worldwide, working in domains ranging from tabletop hobby projects to large industrial automation systems (see Figure 1).

Figure 1.

The ROS ecosystem is composed of multiple elements: (i) plumbing: a message-passing system for communication; (ii) tools: a set of development tools to accelerate and support application development; (iii) capabilities: drivers, algorithms, user interfaces as building blocks for applications; (iv) community: a large, diverse, and global community of students, hobbyists and multinational corporations and government agencies.

Open Robotics (Available online: https://www.openrobotics.org (accessed on 19 January 2023)) sponsors the development of ROS and aims to generally “support the development, distribution, and adoption of open-source software for use in robotics research, education, and product development”. Currently, ROS is the most widely used framework for creating generic and universal robotics applications. It aims to create complex and robust robot behaviors across the widest possible variety of robotic platforms. ROS achieves that by allowing the reuse of robotics software packages and creating a hardware-agnostic abstraction layer. This significantly lowers the entry threshold for non-robotic-field developers who want to develop robotic applications.

On the other hand, from the architectural point of view, ROS still treats the robot as a central point of the system and relies on local computation. This limitation makes the task of creating large-scale and advanced robotics applications much harder to achieve. The current state of cloud platforms does not support the actual robotic application needs off the shelf [13,14,15,16,17]. These needs include bi-directional data flow, multi-process applications and exposing sockets outside the cloud environment. These hurdles make cloud platforms less accessible for robotics application developers. By creating a cloud-to-edge-to-IoT continuum that provides for the needs of such robotic environments, we can lower the hurdle for an application developer to use or extend robots’ capabilities.

3. Challenges for Risk Assessment and Mission Control in SAR Operations in Post-Disaster Scenarios

When a natural or human disaster occurs, the main objective is to rescue as many victims as possible in the shortest possible time. To this aim, the rescue team needs to (1) locate and identify victims, (2) assess the victims’ injuries and (3) assess the damages and comprehend the remaining risks to prioritize rescue operations. All these actions are complementary and require a different part of the data collected in the area. On the data coming from sensors, cameras and other devices, image recognition, AI-powered decision-making, path planning and other technological solutions can be implemented to support the rescue teams in enhancing their situational awareness. Today, only part of the available data can be collected, and robots, although a great support, are not fully autonomous and just act as relays to the rescuers. The main technical challenges are linked to the heterogeneity of devices and strict time requirements. Data should be filtered and processed at different levels of the continuum to guarantee short delays while maintaining full knowledge of the situation. Devices are heterogeneous in terms of CPU, memory, sensors and energy capacities. Some of the hardware and software components are very specific to the situation (use-case specific), whereas others are common to multiple scenarios. Different complementary applications can be run on top of the same devices but exploit different sets of data, potentially incomplete. The network is dynamic because of link fluctuations, the energy depletion of devices and device mobility (which can also be exploited when controllable).

The high-level goal for this scenario is to enhance situational awareness for first responders. Sensor data fusion built on ROS can help provide precise 3D representations of emergency scenarios in real time, integrating the inputs from multiple sensors, pieces of equipment and actors. Furthermore, collecting and visually presenting aggregated and processed data from heterogeneous devices and prioritizing selected information based on the scenario is an additional objective. All the information that is being collected should improve the efficiency of decision-making and responses and increase safety and coordination.

Robotic platforms have features that are highly appreciated by first responders, such as the possibility to generate 3D maps of a disaster scene in a short time. Open-source technologies (i.e., ROS) offer the tools to aggregate sensor data from different coordinate frameworks. To achieve this, precise localization and mapping solutions in disaster scenarios are needed, together with advanced sensor data fusion algorithms. The envisaged real-time situation awareness is only possible through substantial research advancement with respect to the state of the art in localization, mapping, and cooperative perception in emergency environments. The ability to provide information from a single specialized device (e.g., drone streaming) has been demonstrated, whereas correctly integrating multiple heterogeneous moving data sources with imprecise localization in real-time is still an open challenge. We can summarize the main technical requirements and challenges as follows:

- Dynamic multi-robot mapping and fleet management: the coordination, monitoring and optimization of the task allocation for mobile robots that work together in building a map of unknown environments;

- Computer vision for information extraction: AI and computer vision enable people detection, position detection and localization from image and video data;

- Smart data filtering/aggregation/compression: a large amount of data is collected from sensors, robots, and cameras in the intervention area for several services (e.g., map building, scene and action replay). Some of these data can be filtered, and others can be downsampled or aggregated before being sent to the edge/cloud. Smart policies should also be defined to tackle the high degree of data heterogeneity;

- Device Management: some application functionalities can be pre-deployed on the devices or at the edge. The device management should also enable bootstrapping and self-configuration, support hardware heterogeneity and guarantee the self-healing of software components;

- Orchestration of software components: given the SAR application graph, a dynamic placement of software components should be enabled based on service requirements and resource availability. This will require performance and resource monitoring at the various levels of the continuum and dynamic component redeployment;

- Low latency communication: communication networks to/from disaster areas towards the edge and cloud should guarantee low delays for a fast response in locating and rescuing people under mobility conditions and possible disconnections.
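The filtering and aggregation requirement in the list above can be illustrated with two simple policies that a device might apply before sending data to the edge. This is a minimal sketch, not a policy defined by the paper: the function names `deadband_filter` and `aggregate_windows`, the threshold and the window size are all assumptions chosen for illustration.

```python
from statistics import mean


def deadband_filter(readings, threshold):
    """Keep a reading only if it differs from the last kept one by more than threshold."""
    kept = []
    for value in readings:
        if not kept or abs(value - kept[-1]) > threshold:
            kept.append(value)
    return kept


def aggregate_windows(readings, window):
    """Replace each block of `window` consecutive readings with its mean."""
    return [mean(readings[i:i + window]) for i in range(0, len(readings), window)]


# Hypothetical temperature trace from one sensor (degrees Celsius).
trace = [20.0, 20.1, 20.2, 21.0, 21.05, 25.0]
filtered = deadband_filter(trace, threshold=0.5)   # only significant changes survive
averaged = aggregate_windows([1, 2, 3, 4, 5], 2)   # pairwise means, last block is shorter
```

The first policy suits slowly varying signals where only changes matter, whereas the second trades temporal resolution for a fixed reduction in volume. Which one applies would depend on the data type, which is where the heterogeneity mentioned above comes in.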

4. NEPHELE Project as Enabler for SAR Operations

NEPHELE (NEPHELE project website: https://nephele-project.eu/ (accessed on 19 January 2023)) is a research and innovation action (RIA) project funded by the Horizon Europe program under the topic "Future European platforms for the Edge: Meta Operating Systems" for the duration of three years (September 2022–August 2025). Its vision is to enable the efficient, reliable and secure end-to-end orchestration of hyper-distributed applications over a programmable infrastructure that spans the compute continuum from IoT to edge to cloud. In doing this, it aims at removing the existing openness and interoperability barriers in the convergence of IoT technologies with cloud and edge computing orchestration platforms and introducing automation and decentralized intelligence mechanisms powered by 5G and distributed AI technologies. To reach this overall objective, the NEPHELE project aims to introduce two core innovations, namely:

- An IoT and edge computing software stack for leveraging the virtualization of IoT devices at the edge part of the infrastructure and supporting openness and interoperability aspects in a device-independent way. Through this software stack, the management of a wide range of IoT devices and platforms can be realized in a unified way, avoiding the usage of middleware platforms, whereas edge computing functionalities can be offered on demand to efficiently support IoT applications’ operations. The concept of the virtual object (VO) is introduced, where the VO is considered the virtual counterpart of an IoT device. The VO significantly extends the notion of a digital twin as it provides a set of abstractions for managing any type of IoT device through a virtualized instance while augmenting the supported functionalities through the hosting of a multi-layer software stack, called a virtual object stack (VOStack). The VOStack is specifically conceived to provide VOs with edge computing and IoT functions, such as, among others, distributed data management and analysis based on machine learning (ML) and digital twinning techniques, authorization, security and trust based on security protocols and blockchain mechanisms, autonomic networking and time-triggered IoT functions taking advantage of ad hoc group management techniques, service discovery and load balancing mechanisms. Furthermore, IoT functions similar to the ones usually supported by digital twins will be offered by the VOStack;

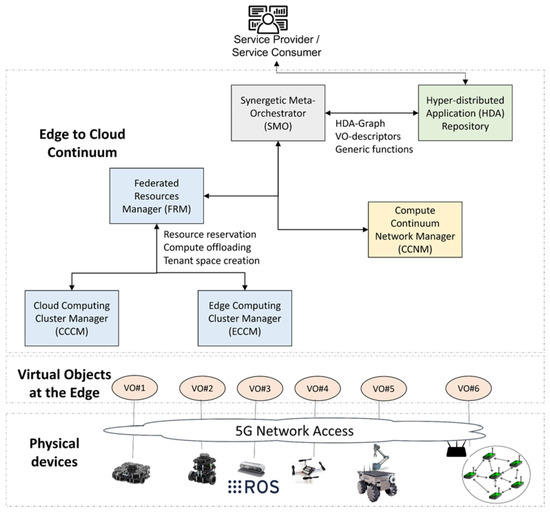

- A synergetic meta-orchestration framework for managing the coordination between cloud and edge computing orchestration platforms, through high-level scheduling supervision and definition. Technological advances in the areas of 5G and beyond networks, AI and cybersecurity are going to be considered and integrated as additional pluggable systems in the proposed synergetic meta-orchestration framework. To support modularity, openness and interoperability with emerging orchestration platforms and IoT technologies, a microservices-based approach is adopted where cloud-native applications are represented in the form of an application graph. The application graph is composed of independently deployable application components that can be orchestrated. These include application components that can be deployed at the cloud or the edge part of the continuum, VOs and IoT-specific virtualized functions that are offered by the VOs. Each component in the application graph is also accompanied by a sidecar, based on a service-mesh approach, for supporting generic/supportive functions that can be activated on demand. The meta-orchestrator is responsible for activating the appropriate orchestration modules to efficiently manage the deployment of the application components across the continuum. It includes a set of modules for federated resources management, the control of cloud and edge computing cluster managers, end-to-end network management across the continuum and AI-assisted orchestration. The interplay among VOs and IoT devices will allow functions to be exploited even at the device level in a flexible and opportunistic fashion. The synergetic meta-orchestrator (SMO) interacts with a set of further components for both computational resources management (the federated resources manager, FRM) and network management across the continuum, by taking advantage of emerging network technologies. The SMO makes use of the hyper-distributed applications (HDA) repository, where a set of application graphs, application components, virtualized IoT functions and VOs are made available to/by application developers (see Figure 2).

Figure 2. NEPHELE’s high-level architecture. Three layers are foreseen: a physical devices layer, with all the IoT devices (e.g., robots, drones, and sensors) connected over a wireless network to the platform; a virtual objects layer at the edge, with the virtual representation of the physical devices as a VO; edge-to-cloud continuum with a set of logic blocks for cloud and networking resource management and the orchestration of the application components.

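To make the notion of an application graph more concrete, the following sketch models components, their optional sidecar functions and the links between them. It is only an illustrative data structure: the class names, the `kind` labels and the example component names are assumptions and do not reflect the actual NEPHELE descriptor format.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    kind: str  # "app", "vo" or "iot_function" (labels assumed for this sketch)
    sidecar_functions: list = field(default_factory=list)  # activated on demand


@dataclass
class ApplicationGraph:
    components: dict = field(default_factory=dict)
    links: list = field(default_factory=list)  # directed (source, target) pairs

    def add(self, component):
        self.components[component.name] = component

    def connect(self, source, target):
        if source not in self.components or target not in self.components:
            raise KeyError("both endpoints must be registered components")
        self.links.append((source, target))

    def consumers_of(self, source):
        """Names of the components that receive data from `source`."""
        return [dst for src, dst in self.links if src == source]


# Hypothetical fragment of a SAR application graph.
graph = ApplicationGraph()
graph.add(Component("drone-vo", "vo", ["authorization"]))
graph.add(Component("map-merging", "app"))
graph.add(Component("dashboard", "app", ["telemetry"]))
graph.connect("drone-vo", "map-merging")
graph.connect("map-merging", "dashboard")
```

Keeping each component independently addressable in the graph is what would let an orchestrator place or redeploy it without touching the others.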

The NEPHELE outcomes are going to be demonstrated, validated and evaluated in a set of use cases across various vertical industries, including areas such as disaster management as presented in this paper, logistic operations in ports, energy management in smart buildings and remote healthcare services.

4.1. The Search and Rescue Use Case in NEPHELE

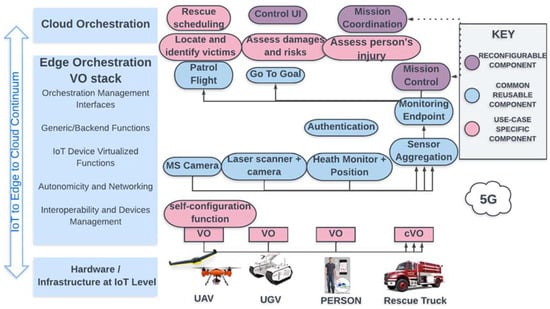

For the specific use case discussed in this paper, we foresee a service provider which defines the logic of a SAR application to be deployed and executed over the NEPHELE platform. The application logic is represented as an HDA graph which will be available on the NEPHELE HDA repository (see Figure 2). The application logic will define the high-level goal and the key performance indicator (KPI) requirements for the application. To deploy and run the HDA represented by the graph, some input parameters will be given, such as the time, zone, and area to be covered. The application graph will foresee the use of one or more VOs as representatives of IoT devices such as robots or sensors and one or more generic functions to support the application (see Figure 3). The latter will support the SAR operations with movement, sensing and mapping capabilities and may be provided through the service mesh approach that enables managed, observable and secure communication across the involved microservices. The VO description required by the SAR HDA graph to be deployed at the edge of the infrastructure will also be available on the NEPHELE repository. Figure 3 also highlights the different levels of the VOStack and their matching to the SAR application components/microservices.

Figure 3. VOStack mapping to SAR application scenarios. Hyper-distributed applications are formed of multiple components that are use-case specific, generic or reconfigurable. These components are placed on different levels of the IoT-to-edge-to-cloud continuum and, based on their functionalities, are part of the different layers of the VOStack.

We foresee a service consumer (e.g., a fire brigade) owning a set of physical devices (robots, drones and sensors). These devices are ready to be used with some basic software components running and connected to a local network, e.g., a 5G access point. As for the software components already deployed on the robots, we foresee the ROS environment correctly set up, with some basic ROS components already running. The sensors can be either pre-deployed in the area or carried by firefighter personnel. When a SAR mission is started, the service consumer connects to the NEPHELE HDA repository, looks for the HDA to deploy and provides the required input data. Then, the following operations will have to be initiated by the NEPHELE platform (see Figure 2 for reference of the building blocks):

- The synergetic meta-orchestrator (SMO) receives the HDA graph, the set of parameters for the specific instance of the SAR application, the VO descriptors needed for the application and a descriptor of the supportive functions to be deployed in the continuum. The supportive functions are provided by the VOStack and can be, for instance, risk assessments, mission control with task prioritization and optimized planning, health monitoring based on AI and computer vision, predictions of dangerous events, the localization and identification of victims, and so on. The SMO will interact with the federated resources manager and the compute continuum network manager to deploy the networking, computing and storage resources over the continuum according to the requirements derived from the HDA graph, the VO descriptors and the input parameters given by the service consumer;

- The federated resources manager (FRM) will ensure that the application components are deployed either on the edge or on the cloud based on the computational and storage resources needed for the application components and the overall resource availability. For instance, large amounts of data used to replay some actions from robots paired with depth images of the surroundings (e.g., using rosbags) can be stored on the remote cloud. On the other hand, maps to be navigated by the robot could be stored at the edge for further action planning. Similarly, computation can be performed on the edge for identifying imminent danger situations or planning a robotic arm movement so that low delay is guaranteed, whereas complex mission optimization and prioritization computations can be performed in the cloud, and the needed resources should be allocated. The FRM will produce a deployment plan that will be provided to the compute continuum network manager;

- The cloud computing cluster manager (CCCM) is responsible for the cloud deployments and interaction with the edge computing cluster manager (ECCM) (e.g., reserve resources, create tenant spaces at the edge and compute offloading mechanisms);

- The edge computing cluster manager (ECCM) is responsible for the edge deployments, providing feedback on the application component and resource status; it receives inputs for compute offloading. Moreover, the ECCM will orchestrate the VOs that are part of the HDA graph and synchronize the device updates from IoT devices to edge nodes and vice versa;

- The compute continuum network manager (CCNM) will receive the deployment plan from the FRM to set up the network resources needed by the different application components for end-to-end network connectivity and to meet the networking requirements of the application across the compute continuum. Exploiting 5G technologies, the output of the CCNM will be a network slice based on the bandwidth requirements of each robotic device. Each network slice will have to meet the QoS requirements and service level agreements for the given application;

- Once the VOs are deployed, a southbound interface for VO-to-IoT device interactions will be used to interoperate with the physical devices (i.e., robots, drones and sensor gateways). The VO will have knowledge of how to communicate with the IoT devices, as this will be stored and available on the VO storage. We assume the IoT devices to be up and running with their basic services and to be connected to the network;

- Physical robots and sensor networks will communicate with each other through the corresponding VOs using a peer interface, whereas the application components that use the data streams from the VOs will use the northbound interface. Application components such as map merging, decision-making, health monitoring, etc., will interact with the VOs to exchange relevant information;

- The deployed VOs will use the northbound interface to interact with the orchestrator for monitoring and scaling requests when, for instance, more robots are needed to cover a given area.
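The placement decision described in the steps above can be sketched as a simple greedy rule. This is not the algorithm used by the FRM, which the paper does not specify; it is a minimal illustration under assumed inputs. The classes `ComponentRequest` and `Site`, the function `plan_deployment` and all numeric values are hypothetical. Components with the tightest delay bounds are placed first, and each goes to the most distant site that still meets its bound, so that scarce edge capacity is kept for latency-critical work.

```python
from dataclasses import dataclass


@dataclass
class ComponentRequest:
    name: str
    cpu_cores: float
    max_latency_ms: float  # delay bound required by the component


@dataclass
class Site:
    name: str
    free_cpu_cores: float
    latency_ms: float  # assumed round-trip delay from the incident area


def plan_deployment(requests, sites):
    """Return {component name: site name, or None if no site is feasible}."""
    free = {site.name: site.free_cpu_cores for site in sites}
    plan = {}
    for req in sorted(requests, key=lambda r: r.max_latency_ms):
        feasible = [s for s in sites
                    if s.latency_ms <= req.max_latency_ms
                    and free[s.name] >= req.cpu_cores]
        if not feasible:
            plan[req.name] = None  # would trigger scaling or renegotiation
            continue
        chosen = max(feasible, key=lambda s: s.latency_ms)
        free[chosen.name] -= req.cpu_cores
        plan[req.name] = chosen.name
    return plan


sites = [Site("edge", 4, 10), Site("cloud", 64, 80)]
requests = [
    ComponentRequest("danger-detection", 2, 20),
    ComponentRequest("arm-planning", 2, 30),
    ComponentRequest("video-analysis", 2, 50),
    ComponentRequest("mission-optimization", 16, 500),
]
plan = plan_deployment(requests, sites)
```

In this example the edge fills up with the two most delay-sensitive components, so the video analysis component finds no feasible site. In a dynamic setting this is the kind of condition that monitoring would report back and that would lead to a scaling request or a redeployment.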

The SAR HDA application will have a classic three-tier architecture with a presentation tier, an application tier and a data tier all implemented with a service mesh approach for the on-demand activation of generic/supportive functions for the hyper-distributed application.

Presentation tier: the application will foresee a frontend for visualization and mission control by the end-user. A mission-specific dashboard will provide real-time situational awareness (i.e., a 3D map with the location of robots, rescue team members, victims and threats) to support well-informed, confident decisions. The dashboard integrates data coming from heterogeneous sensors and equipment (e.g., drones, mobile robots). It will be remotely accessible through a web browser or a graphical user interface (GUI) and enable the service consumer to interact with the application tier to take mission decisions, trigger tasks that have been suggested by the automated application tier and analyze historic data for further information collection and situational awareness.

Application tier: the inputs and requests coming from the presentation tier are collected and the application components are activated to execute mission tasks. All application components for supporting the application logic are foreseen and run on the different layers of the continuum. As an example, localization functions and camera streaming will run directly on the robots/drones. Three-dimensional SLAM solutions and video analysis will run on the edge in cases where the IoT devices (i.e., robots and drones) do not have the required resources. Other more advanced and computationally demanding functions and components will instead run on the cloud (or edge) through VO-supportive functions. Examples of these are AI algorithms for mission control, risk assessment, danger prediction, optimization problems for path planning, and so on. In all of these components, new data can be produced, and old data can be accessed from the data tier.

Data tier: this foresees a storage element for storing processed images or historical data about the SAR mission. The data produced by the IoT devices (drones, robots, sensors) will be compressed, downsampled and/or secured before being stored for future use by the application tier. These functions on the data will be running, if possible, on the drones and robots themselves, to reduce the data transmissions. Data analysis and complex information extraction will be offered by support functions from the VO. The data can be either stored on the VO data storage or on remotely distributed storage.
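The downsampling and compression steps of the data tier can be illustrated with the Python standard library. This is a minimal sketch: the helper names `downsample`, `pack` and `unpack`, the JSON encoding and the sample structure are assumptions made for the example, and securing the data (e.g., encryption) is left out.

```python
import json
import zlib


def downsample(samples, step):
    """Keep every `step`-th sample."""
    return samples[::step]


def pack(samples):
    """Serialize samples to compact JSON and compress them with zlib."""
    raw = json.dumps(samples, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw, 9)


def unpack(blob):
    """Inverse of `pack`."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Hypothetical telemetry: 100 readings taken on board a robot.
samples = [{"t": i, "temp": 20.0} for i in range(100)]
reduced = downsample(samples, 10)  # 10 readings remain
blob = pack(reduced)               # bytes ready to be transmitted or stored
restored = unpack(blob)
```

Running these steps on the robot or drone itself, as the text suggests, reduces what has to cross the wireless link. The cost is extra computation and energy on a constrained device, which is one reason the placement of such functions needs to be decided per device.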

4.2. NEPHELE’s Added Value

The implementation of the described use case will demonstrate several benefits obtained with the NEPHELE innovation and research activities. Most importantly, they will help in coping with the identified challenges for the SAR operations and take important steps in meeting the overall high-level goal of improving situational awareness for first responders in cases of natural or human disasters. The benefits deriving from the solutions proposed in NEPHELE can be summarized in the following points.

- Reduced delay in time-critical missions: by exploiting compute and storage resources at the edge of the networks, with the possibility of the dynamic adaptation of the application components deployment over the continuum, lower delays will be expected for computationally demanding tasks on a large amount of data. This will be of high importance as it will strongly enhance first responders’ effectiveness and security in their operations;

- Efficient data management: the large amount of data collected by sensors, drones and robots will be filtered, compressed and analyzed by exploiting supportive functions made available by the VOStack in NEPHELE. Only a subset of the produced data will be stored for future reuse based on data importance. This will reduce the bandwidth needed for communications from the incident area to the application layers that introduce intelligence into the application, thereby reducing the communication delay and the risk of starvation in terms of networking resources;

- Robot fleet management and trajectory optimization: exploiting the IoT-to-edge-to-cloud compute continuum, smart decisions will be taken and advanced algorithms will be provided for optimal robot and drone trajectory planning in multi-robot environments. Solutions will rely on AI techniques able to learn from what robot fleets see in their environment and enable semantic navigation with time-optimized trajectories;

- Rescue operations prioritization: AI techniques and optimization algorithms can elaborate the high amount of data and information collected from the intervention area to support rescue teams in giving priorities to the intervention tasks. The compute continuum will enable computationally heavy and complex decisions in a dynamic environment where risk prediction and assessment, victims’ health monitoring and victim identification may produce new information continuously and new decisions should be triggered;

- HW-agnostic deployment: the introduction of the VO concept and the multilevel meta-orchestration open the way to device-independent deployment and bootstrapping using generic HW. Different software components of an HDA can be deployed at every level of the IoT-to-edge-to-cloud continuum, which reduces the HW requirements (e.g., in computation and storage) at the IoT level for enabling a given application;

- AI for computer vision and image processing: advanced AI algorithms can be deployed as part of the supportive functions made available through the VOStack innovation from NEPHELE. These can then be enabled on demand and deployed over the compute continuum for image analysis and computer vision to locate and identify victims and perform risk assessments and predictions;

- End-to-end security: IoT devices and HDA users will benefit from the security and authentication, authorization, and accounting (AAA) functionalities offered by the NEPHELE framework. These functions will be offered as support functions for the VOs representing the IoT devices and will help in controlling access to the services, authorization, enforcing policies and identifying users and devices;

- Optimal network resource orchestration: based on the HDA requirements, an optimized network resource allocation policy will be enforced over the IoT-to-edge-to-cloud continuum. Here, the experience in network slicing and software-defined networking (SDN) will be exploited to be able to support time-critical applications such as the SAR operations presented in this paper.
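As a simple illustration of allocating network resources from per-device requirements, the sketch below grants every device its requested bandwidth when the link capacity allows it and scales all requests proportionally otherwise. The function `allocate_slices`, the device names and the figures are hypothetical, and proportional scaling is only one possible policy; it is not the allocation policy of NEPHELE, and a real slicing system would also account for priorities and QoS guarantees.

```python
def allocate_slices(demands_mbps, capacity_mbps):
    """Return the bandwidth granted to each device, in Mbit/s.

    All demands are granted in full if they fit within the capacity;
    otherwise each demand is scaled by the same factor.
    """
    total = sum(demands_mbps.values())
    if total <= capacity_mbps:
        return dict(demands_mbps)
    scale = capacity_mbps / total
    return {device: demand * scale for device, demand in demands_mbps.items()}


demands = {"drone": 40, "ugv": 20}
uncongested = allocate_slices(demands, capacity_mbps=100)  # demands fit
congested = allocate_slices(demands, capacity_mbps=30)     # demands are halved
```

For time-critical applications, equal scaling would likely be replaced by a policy that protects the most delay-sensitive streams first.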

To summarize, the NEPHELE framework will enable and support the integration of different technologies and solutions over the cloud-to-edge-to-IoT continuum. Combining all these elements into a single framework represents a significant advance for applications based on the cloud-to-edge-to-IoT continuum. Better performance and enhanced situational awareness in SAR operations are paired with advanced technological solutions offering smart decision-making and optimization techniques for mission control and robotic applications in mobile environments.

5. Conclusions

This paper has discussed how the cloud-to-edge-to-IoT compute continuum can support SAR operations in cases of natural and human disasters. Augmented computing, networking, and storage resources from the “remote brain” in the edge/cloud can strongly enhance the situational awareness of the first responders. An analysis of current challenges with respect to the technology used in SAR operations has been presented with an overview of advanced solutions that may be adopted in these scenarios. The NEPHELE project and its main concepts were introduced as an enabler for cloud/edge robotics applications with low delay requirements and mission control to enhance the situational awareness of first responders. With the proposed solutions, network, storage, and computation resources can be dynamically allocated through advanced techniques such as network slicing. The orchestration and smart placement of application components exploiting AI models will enable adaptation to the current status and to dynamic factors. The VOStack in the NEPHELE project will enable data to be processed effectively and efficiently with supportive functions tailored to the specific use-case requirements.

Our future work will consist of the implementation of a hyper-distributed application that demonstrates the benefits described in the paper for a post-earthquake scenario in a port. In such a scenario, we can imagine that the network infrastructure is down, the map of the port is not reliable due to collapsed infrastructure and buildings and several hazards (e.g., containers with dangerous materials or at risk of collapsing) pose a high risk to the SAR operations. It will be an ROS application for multiple robots and drones that enables the dynamic mapping of an unknown area. AI and computer vision models will be used for object detection and victim identification in the area and to update the map of the post-disaster scenario. Advanced data aggregation solutions will be investigated, including consensus-based solutions as proposed, e.g., in [73], to extract information from a wide set of different sources in an efficient and effective manner. The extracted information will be used for mission control purposes, for priority definition in the SAR tasks and for the assessment of risks and the health of victims. For integration with the NEPHELE framework, virtualization techniques and cloud-native technologies will be adopted.

Author Contributions

Conceptualization, L.M., G.T. and N.M.; writing—original draft preparation, L.M. and A.A.; writing—review and editing, L.M., A.A., G.T. and N.M.; funding acquisition, L.M., N.M. and G.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Union’s Horizon Europe research and innovation program under grant agreement No 101070487. Views and opinions expressed are however those of the authors only and do not necessarily reflect those of the European Union. The European Union cannot be held responsible for them.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ochoa, S.F.; Santos, R. Human-Centric Wireless Sensor Networks to Improve Information Availability during Urban Search and Rescue Activities. Inf. Fusion 2015, 22, 71–84. [Google Scholar] [CrossRef]

- Choong, Y.Y.; Dawkins, S.T.; Furman, S.M.; Greene, K.; Prettyman, S.S.; Theofanos, M.F. Voices of First Responders—Identifying Public Safety Communication Problems: Findings from User-Centered Interviews; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2018; Volume 1. [Google Scholar]

- Saffre, F.; Hildmann, H.; Karvonen, H.; Lind, T. Self-swarming for multi-robot systems deployed for situational awareness. In New Developments and Environmental Applications of Drones; Springer: Cham, Switzerland, 2022; pp. 51–72. [Google Scholar]

- Queralta, J.P.; Raitoharju, J.; Gia, T.N.; Passalis, N.; Westerlund, T. Autosos: Towards multi-uav systems supporting maritime search and rescue with lightweight ai and edge computing. arXiv 2020, arXiv:2005.03409. [Google Scholar]

- Al-Khafajiy, M.; Baker, T.; Hussien, A.; Cotgrave, A. UAV and fog computing for IoE-based systems: A case study on environment disasters prediction and recovery plans. In Unmanned Aerial Vehicles in Smart Cities; Springer: Cham, Switzerland, 2020; pp. 133–152. [Google Scholar]

- Alsamhi, S.H.; Almalki, F.A.; AL-Dois, H.; Shvetsov, A.V.; Ansari, M.S.; Hawbani, A.; Gupta, S.K.; Lee, B. Multi-Drone Edge Intelligence and SAR Smart Wearable Devices for Emergency Communication. Wirel. Commun. Mob. Comput. 2021, 1–12. [Google Scholar] [CrossRef]

- Goldberg, K.; Siegwart, R. Beyond Webcams: An Introduction to Online Robots; MIT Press: Cambridge, MA, USA, 2002. [Google Scholar]

- Inaba, M.; Kagami, S.; Kanehiro, F.; Hoshino, Y.; Inoue, H. A Platform for Robotics Research Based on the Remote-Brained Robot Approach. Int. J. Robot. Res. 2000, 19, 933–954. [Google Scholar] [CrossRef]

- Waibel, M.; Beetz, M.; Civera, J.; D’Andrea, R.; Elfring, J.; Gálvez-López, D.; Haussermann, K.; Janssen, R.; Montiel, J.; Perzylo, A.; et al. Roboearth. IEEE Robot. Autom. Mag. 2011, 18, 69–82. [Google Scholar]

- Tenorth, M.; Beetz, M. KnowRob: A knowledge processing infrastructure for cognition-enabled robots. Int. J. Robot. Res. 2013, 32, 566–590. [Google Scholar] [CrossRef]

- Arumugam, R.; Enti, V.R.; Bingbing, L.; Xiaojun, W.; Baskaran, K.; Kong, F.F.; Kumar, A.S.; Meng, K.D.; Kit, G.W. DAvinCi: A Cloud Computing Framework for Service Robots. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 3084–3089. [Google Scholar]

- Saxena, A.; Jain, A.; Sener, O.; Jami, A.; Misra, D.K.; Koppula, H.S. Robobrain: Large-scale Knowledge Engine for Robots. arXiv 2014, arXiv:1412.0691. [Google Scholar]

- Ichnowski, J.; Chen, K.; Dharmarajan, K.; Adebola, S.; Danielczuk, M.; Mayoral-Vilches, V.; Zhan, H.; Xu, D.; Kubiatowicz, J.; Stoica, I.; et al. FogROS 2: An Adaptive and Extensible Platform for Cloud and Fog Robotics Using ROS 2. arXiv 2022, arXiv:2205.09778. [Google Scholar]

- Amazon RoboMaker. Available online: https://aws.amazon.com/robomaker/ (accessed on 29 November 2018).

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646. [Google Scholar] [CrossRef]

- Mouradian, C.; Naboulsi, D.; Yangui, S.; Glitho, R.H.; Morrow, M.J.; Polakos, P.A. A Comprehensive Survey on Fog Computing: State-of-the-Art and Research Challenges. IEEE Commun. Surv. Tutor. 2017, 20, 416–464. [Google Scholar]

- Groshev, M.; Baldoni, G.; Cominardi, L.; De la Oliva, A.; Gazda, R. Edge Robotics: Are We Ready? An Experimental Evaluation of Current Vision and Future Directions. Digit. Commun. Netw. 2022; in press. [Google Scholar] [CrossRef]

- Huang, P.; Zeng, L.; Chen, X.; Luo, K.; Zhou, Z.; Yu, S. Edge Robotics: Edge-Computing-Accelerated Multi-Robot Simultaneous Localization and Mapping. IEEE Internet Things J. 2022, 9, 14087–14102. [Google Scholar] [CrossRef]

- Xu, J.; Cao, H.; Li, D.; Huang, K.; Qian, H.; Shangguan, L.; Yang, Z. Edge Assisted Mobile Semantic Visual SLAM. In Proceedings of the IEEE INFOCOM 2020—IEEE Conference on Computer Communications, Toronto, ON, Canada, 6–9 July 2020; pp. 1828–1837. [Google Scholar]

- McEnroe, P.; Wang, S.; Liyanage, M. A Survey on the Convergence of Edge Computing and AI for UAVs: Opportunities and Challenges. IEEE Internet Things J. 2022, 9, 15435–15459. [Google Scholar] [CrossRef]

- SHERPA. Available online: http://www.sherpa-fp7-project.eu/ (accessed on 19 January 2023).

- RESPOND-A. Available online: https://robotnik.eu/projects/respond-a-en/ (accessed on 19 January 2023).

- Delmerico, J.; Mintchev, S.; Giusti, A.; Gromov, B.; Melo, K.; Horvat, T.; Cadena, C.; Hutter, M.; Ijspeert, A.; Floreano, D.; et al. The Current State and Future Outlook of Rescue Robotics. J. Field Robot. 2019, 36, 1171–1191. [Google Scholar] [CrossRef]

- Bravo-Arrabal, J.; Toscano-Moreno, M.; Fernandez-Lozano, J.; Mandow, A.; Gomez-Ruiz, A.J.; García-Cerezo, A. The Internet of Cooperative Agents Architecture (X-IoCA) for Robots, Hybrid Sensor Networks, and MEC Centers in Complex Environments: A Search and Rescue Case Study. Sensors 2021, 21, 7843. [Google Scholar] [CrossRef] [PubMed]

- Kimovski, D.; Mehran, N.; Kerth, C.E.; Prodan, R. Mobility-Aware IoT Applications Placement in the Cloud Edge Continuum. IEEE Trans. Serv. Comput. 2022, 15, 3358–3371. [Google Scholar] [CrossRef]

- Peltonen, E.; Sojan, A.; Paivarinta, T. Towards Real-time Learning for Edge-Cloud Continuum with Vehicular Computing. In Proceedings of the 2021 IEEE 7th World Forum on Internet of Things (WF-IoT), New Orleans, LA, USA, 14 June–31 July 2021; pp. 921–926. [Google Scholar]

- Mygdalis, V.; Carnevale, L.; Martinez-De-Dios, J.R.; Shutin, D.; Aiello, G.; Villari, M.; Pitas, I. OTE: Optimal Trustworthy EdgeAI Solutions for Smart Cities. In Proceedings of the 2022 22nd IEEE International Symposium on Cluster, Cloud and Internet Computing (CCGrid), Taormina, Italy, 16–19 May 2022; pp. 842–850. [Google Scholar]

- Hu, X.; Wong, K.; Zhang, Y. Wireless-Powered Edge Computing with Cooperative UAV: Task, Time Scheduling and Trajectory Design. IEEE Trans. Wirel. Commun. 2020, 19, 8083–8098. [Google Scholar] [CrossRef]

- Bacchiani, L.; De Palma, G.; Sciullo, L.; Bravetti, M.; Di Felice, M.; Gabbrielli, M.; Zavattaro, G.; Della Penna, R. Low-Latency Anomaly Detection on the Edge-Cloud Continuum for Industry 4.0 Applications: The SEAWALL Case Study. IEEE Internet Things Mag. 2022, 5, 32–37. [Google Scholar] [CrossRef]

- Wang, N.; Varghese, B. Context-aware distribution of fog applications using deep reinforcement learning. J. Netw. Comput. Appl. 2022, 203, 103354–103368. [Google Scholar] [CrossRef]

- Dobrescu, R.; Merezeanu, D.; Mocanu, S. Context-aware control and monitoring system with IoT and cloud support. Comput. Electron. Agric. 2019, 160, 91–99. [Google Scholar] [CrossRef]

- Zhao, X.; Yuan, P.; Li, H.; Tang, S. Collaborative Edge Caching in Context-Aware Device-to-Device Networks. IEEE Trans. Veh. Technol. 2018, 67, 9583–9596. [Google Scholar] [CrossRef]

- Tran, T.X.; Hajisami, A.; Pandey, P.; Pompili, D. Collaborative Mobile Edge Computing in 5G Networks: New Paradigms, Scenarios, and Challenges. IEEE Commun. Mag. 2017, 55, 54–61. [Google Scholar] [CrossRef]

- Lee, J.; Lee, J. Hierarchical Mobile Edge Computing Architecture Based on Context Awareness. Appl. Sci. 2018, 8, 1160. [Google Scholar] [CrossRef]

- Cheng, Z.; Gao, Z.; Liwang, M.; Huang, L.; Du, X.; Guizani, M. Intelligent Task Offloading and Energy Allocation in the UAV-Aided Mobile Edge-Cloud Continuum. IEEE Netw. 2021, 35, 42–49. [Google Scholar] [CrossRef]

- Rosenberger, P.; Gerhard, D. Context-awareness in Industrial Applications: Definition, Classification and Use Case. In Proceedings of the 51st Conference on Manufacturing Systems (CIRP), Stockholm, Sweden, 16–18 May 2018; pp. 1172–1177. [Google Scholar]

- Waharte, S.; Trigoni, N. Supporting Search and Rescue Operations with UAVs. In Proceedings of the 2010 International Conference on Emerging Security Technologies, Canterbury, UK, 6–7 September 2010; pp. 142–147.

- Sibanyoni, S.V.; Ramotsoela, D.T.; Silva, B.J.; Hancke, G.P. A 2-D Acoustic Source Localization System for Drones in Search and Rescue Missions. IEEE Sens. J. 2018, 19, 332–341.

- Manamperi, W.; Abhayapala, T.D.; Zhang, J.; Samarasinghe, P.N. Drone Audition: Sound Source Localization Using On-Board Microphones. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 508–519.

- Sambolek, S.; Ivasic-Kos, M. Automatic Person Detection in Search and Rescue Operations Using Deep CNN Detectors. IEEE Access 2021, 9, 37905–37922.

- Albanese, A.; Sciancalepore, V.; Costa-Perez, X. SARDO: An Automated Search-and-Rescue Drone-Based Solution for Victims Localization. IEEE Trans. Mob. Comput. 2021, 21, 3312–3325.

- Queralta, J.P.; Taipalmaa, J.; Can Pullinen, B.; Sarker, V.K.; Nguyen Gia, T.; Tenhunen, H.; Gabbouj, M.; Raitoharju, J.; Westerlund, T. Collaborative Multi-Robot Search and Rescue: Planning, Coordination, Perception, and Active Vision. IEEE Access 2020, 8, 191617–191643.

- Chen, X.; Zhang, H.; Lu, H.; Xiao, J.; Qiu, Q.; Li, Y. Robust SLAM System Based on Monocular Vision and LiDAR for Robotic Urban Search and Rescue. In Proceedings of the 2017 IEEE International Symposium on Safety, Security and Rescue Robotics (SSRR), Shanghai, China, 11–13 October 2017; pp. 41–47.

- Murphy, R.; Dreger, K.; Newsome, S.; Rodocker, J.; Slaughter, B.; Smith, R.; Steimle, E.; Kimura, T.; Makabe, K.; Kon, K.; et al. Marine Heterogeneous Multi-Robot Systems at the Great Eastern Japan Tsunami Recovery. J. Field Robot. 2012, 29, 819–831.

- Silvagni, M.; Tonoli, A.; Zenerino, E.; Chiaberge, M. Multipurpose UAV for search and rescue operations in mountain avalanche events. Geomat. Nat. Hazards Risk 2016, 8, 18–33.

- Konyo, M. Impact-TRC Thin Serpentine Robot Platform for Urban Search and Rescue. In Disaster Robotics; Springer: Cham, Switzerland, 2019; pp. 25–76.

- Han, S.; Chon, S.; Kim, J.; Seo, J.; Shin, D.G.; Park, S.; Kim, J.T.; Kim, J.; Jin, M.; Cho, J. Snake Robot Gripper Module for Search and Rescue in Narrow Spaces. IEEE Robot. Autom. Lett. 2022, 7, 1667–1673.

- Liu, K.; Zhou, X.; Zhao, B.; Ou, H.; Chen, B.M. An Integrated Visual System for Unmanned Aerial Vehicles Following Ground Vehicles: Simulations and Experiments. In Proceedings of the 2022 IEEE 17th International Conference on Control & Automation (ICCA), Naples, Italy, 27–30 June 2022; pp. 593–598.

- Jorge, V.A.M.; Granada, R.; Maidana, R.G.; Jurak, D.A.; Heck, G.; Negreiros, A.P.F.; dos Santos, D.H.; Gonçalves, L.M.G.; Amory, A.M. A Survey on Unmanned Surface Vehicles for Disaster Robotics: Main Challenges and Directions. Sensors 2019, 19, 702.

- Mezghani, F.; Mitton, N. Opportunistic disaster recovery. Internet Technol. Lett. 2018, 1, e29.

- Mezghani, F.; Kortoci, P.; Mitton, N.; Di Francesco, M. A Multi-tier Communication Scheme for Drone-assisted Disaster Recovery Scenarios. In Proceedings of the 2019 IEEE 30th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Istanbul, Turkey, 8–11 September 2019; pp. 1–7.

- Jeong, I.C.; Bychkov, D.; Searson, P.C. Wearable Devices for Precision Medicine and Health State Monitoring. IEEE Trans. Biomed. Eng. 2018, 66, 1242–1258.

- Kasnesis, P.; Doulgerakis, V.; Uzunidis, D.; Kogias, D.; Funcia, S.; González, M.; Giannousis, C.; Patrikakis, C. Deep Learning Empowered Wearable-Based Behavior Recognition for Search and Rescue Dogs. Sensors 2022, 22, 993.

- Arkin, R.; Balch, T. Cooperative Multiagent Robotic Systems. In Artificial Intelligence and Mobile Robots; Kortenkamp, D., Bonasso, R.P., Murphy, R., Eds.; MIT Press: Cambridge, MA, USA, 1998.

- Rocha, R.; Dias, J.; Carvalho, A. Cooperative multi-robot systems: A study of vision-based 3-D mapping using information theory. Robot. Auton. Syst. 2005, 53, 282–311.

- Singh, A.; Krause, A.; Guestrin, C.; Kaiser, W.J. Efficient Informative Sensing using Multiple Robots. J. Artif. Intell. Res. 2009, 34, 707–755.

- Schmid, L.M.; Pantic, M.; Khanna, R.; Ott, L.; Siegwart, R.; Nieto, J. An Efficient Sampling-Based Method for Online Informative Path Planning in Unknown Environments. IEEE Robot. Autom. Lett. 2020, 5, 1500–1507.

- Fung, N.; Rogers, J.; Nieto, C.; Christensen, H.; Kemna, S.; Sukhatme, G. Coordinating Multi-Robot Systems Through Environment Partitioning for Adaptive Informative Sampling. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- Hawes, N.; Burbridge, C.; Jovan, F.; Kunze, L.; Lacerda, B.; Mudrova, L.; Young, J.; Wyatt, J.; Hebesberger, D.; Kortner, T.; et al. The STRANDS Project: Long-Term Autonomy in Everyday Environments. IEEE Robot. Autom. Mag. 2017, 24, 146–156.

- Singh, A.; Krause, A.; Guestrin, C.; Kaiser, W.; Batalin, M. Efficient Planning of Informative Paths for Multiple Robots. In Proceedings of the 20th International Joint Conference on Artificial Intelligence, Hyderabad, India, 6–12 January 2007.

- Ma, K.; Ma, Z.; Liu, L.; Sukhatme, G.S. Multi-robot Informative and Adaptive Planning for Persistent Environmental Monitoring. In Proceedings of the 13th International Symposium on Distributed Autonomous Robotic Systems, DARS, Montbéliard, France, 28–30 November 2016.

- Manjanna, S.; Dudek, G. Data-driven selective sampling for marine vehicles using multi-scale paths. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017.

- Salam, T.; Hsieh, M.A. Adaptive Sampling and Reduced-Order Modeling of Dynamic Processes by Robot Teams. IEEE Robot. Autom. Lett. 2019, 4, 477–484.

- Euler, J.; Von Stryk, O. Optimized Vehicle-Specific Trajectories for Cooperative Process Estimation by Sensor-Equipped UAVs. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017.

- Gonzalez-De-Santos, P.; Ribeiro, A.; Fernandez-Quintanilla, C.; Lopez-Granados, F.; Brandstoetter, M.; Tomic, S.; Pedrazzi, S.; Peruzzi, A.; Pajares, G.; Kaplanis, G.; et al. Fleets of robots for environmentally-safe pest control in agriculture. Precis. Agric. 2016, 18, 574–614.

- Tourrette, T.; Deremetz, M.; Naud, O.; Lenain, R.; Laneurit, J.; De Rudnicki, V. Close Coordination of Mobile Robots Using Radio Beacons: A New Concept Aimed at Smart Spraying in Agriculture. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7727–7734.

- Merino, L.; Caballero, F.; Martinez-de-Dios, J.R.; Maza, I.; Ollero, A. An Unmanned Aerial System for Automatic Forest Fire Monitoring and Measurement. J. Intell. Robot. Syst. 2012, 65, 533–548.

- Haksar, R.N.; Trimpe, S.; Schwager, M. Spatial Scheduling of Informative Meetings for Multi-Agent Persistent Coverage. IEEE Robot. Autom. Lett. 2020, 5, 3027–3034.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Bresson, G.; Alsayed, Z.; Yu, L.; Glaser, S. Simultaneous Localization and Mapping: A Survey of Current Trends in Autonomous Driving. IEEE Trans. Intell. Veh. 2017, 2, 194–220.

- De Jesus, K.J.; Kobs, H.J.; Cukla, A.R.; De Souza Leite Cuadros, M.A.; Tello Gamarra, D.F. Comparison of Visual SLAM Algorithms ORB-SLAM2, RTAB-Map and SPTAM in Internal and External Environments with ROS. In Proceedings of the 2021 Latin American Robotics Symposium (LARS), 2021 Brazilian Symposium on Robotics (SBR), and 2021 Workshop on Robotics in Education (WRE), Natal, Brazil, 11–15 October 2021.

- Benavidez, P.; Muppidi, M.; Rad, P.; Prevost, J.J.; Jamshidi, M.; Brown, L. Cloud-based Real Time Robotic Visual SLAM. In Proceedings of the 2015 Annual IEEE Systems Conference (SysCon), Vancouver, BC, Canada, 13–16 April 2015.

- Wu, J.; Wang, S.; Chiclana, F.; Herrera-Viedma, E. Two-Fold Personalized Feedback Mechanism for Social Network Consensus by Uninorm Interval Trust Propagation. IEEE Trans. Cybern. 2022, 52, 11081–11092.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).