Abstract

The growing need for intelligent educational systems calls for architectures that support adaptive instruction while enabling long-term personalization and cognitive alignment. Although adaptive learning technologies have progressed at the intersection of Self-Regulated Learning (SRL), Continual Learning (CL), and Meta-Learning, these paradigms are generally employed in isolation and yield piecemeal solutions. In this paper, we propose CAMEL, a unified architecture that combines (1) cognitive modelling based on SRL, (2) continual learning, and (3) meta-learning to provide adaptive, personalized, and cognitively consistent learning environments. CAMEL comprises the following components: (1) a Cognitive State Estimator that estimates learner motivation, attention, and persistence from behavioral traces; (2) a Meta-Learning Engine that enables rapid adaptation through Model-Agnostic Meta-Learning (MAML); (3) a Continual Learning Memory that preserves knowledge across sessions using Elastic Weight Consolidation (EWC) and replay; (4) a Pedagogical Decision Engine that efficiently adjusts instructional strategies in real time; and (5) a closed feedback loop that continuously reconciles misalignments between pedagogical actions and predicted cognitive states. Experiments on the xAPI-Edu-Data dataset evaluate the system’s few-shot adaptation, knowledge retention, cognitive-state prediction accuracy, and cognitive responsiveness. CAMEL achieves competitive performance in learner-state prediction and long-term performance relative to the baselines, and its improvements are consistent across them. This paper lays the groundwork for next-generation adaptive, cognition-driven AI-based learning systems.

1. Introduction

Educational technology is changing fast, and AI is increasingly used to create smart, personalized, and adaptive learning environments. Despite recent advances in areas such as intelligent tutoring systems (ITS), learning analytics, and affective computing, many existing educational technologies do not iteratively adapt to shifts in learners’ cognitive, motivational, and emotional states over time [1,2]. A growing body of research indicates that Self-Regulated Learning (SRL) is perhaps one of the best predictors of academic performance in learner-led digital environments [3,4]. SRL entails higher-order processes such as planning, self-monitoring, and reflection, which are mediated by motivational factors and cognitive flexibility [5,6].

In AI-based educational systems, Self-Regulated Learning can be operationalized by mapping observable behavioral traces to latent cognitive states. In this work, SRL is modeled through learner interaction features such as resource access, task completion, participation, and response timing, which are processed by a Cognitive State Estimator to infer motivation, attention, and persistence. These inferred cognitive states are then used as inputs to adaptive learning components, enabling personalized feedback, pacing, and instructional strategy selection. This computational formulation allows SRL to be integrated into an end-to-end AI system rather than remaining a purely conceptual construct.

Meta-learning, or ‘learning to learn’, provides the capability to generalize quickly to new tasks and learner profiles from only a few samples [7,8]. Moreover, continual learning (CL) addresses the challenge of catastrophic forgetting by allowing systems to learn incrementally and retain knowledge over time, a crucial property for educational settings with continuously changing learner cohorts [9]. However, existing systems have treated SRL, CL, and meta-learning in isolation, resulting in disjointed, cognitively incoherent architectures that are not designed for long-term adaptation. To overcome this limitation, we propose a unified architecture that leverages meta-learning to facilitate rapid personalization, continual learning to support lifelong learning, and self-regulated learning modelling to align the learning process with learners’ cognitive skills. The proposed system is rooted in cognitive theory [10] and employs reinforcement learning [11], natural language processing [12], and multimodal analytics to keep adaptive learning pathways dynamic, personalized, and ethical.

Various decision-making models have been explored in intelligent educational systems, including support vector machines (SVM), convolutional neural networks (CNN), and transformer-based architectures. While these models are effective for static or data-intensive prediction tasks, they often require large amounts of labeled data and provide limited interpretability in dynamic educational settings. In contrast, the proposed CAMEL architecture adopts a hybrid decision-making approach that combines cognitively grounded rules with reinforcement learning-based policy optimization. This choice enables interpretable, adaptive, and data-efficient pedagogical decisions that can evolve over time in response to changing learner states. Similar hybrid decision strategies have recently been shown to be effective in adaptive and distributed AI systems [13].

This paper makes several key contributions to the field of intelligent educational systems. First, we propose a unified AI-based architecture that jointly integrates self-regulated learning-based cognitive modeling, meta-learning, and continual learning within a single closed-loop framework. Second, we introduce a Cognitive State Estimator that infers learners’ motivation, attention, and persistence from behavioral traces, enabling cognitively aligned personalization. Third, the use of meta-learning allows rapid few-shot adaptation to new learners and learning contexts under low-data conditions. Fourth, continual learning mechanisms based on Elastic Weight Consolidation and replay are incorporated to mitigate catastrophic forgetting and support long-term adaptation. Finally, the proposed architecture is empirically evaluated using the xAPI-Edu-Data dataset and a complementary EdNet-like dataset, demonstrating improvements in cognitive state prediction, few-shot adaptation, and long-term performance stability.

2. Related Work

Incorporating CL, meta-learning, and SRL into intelligent educational systems represents a significant step toward personalized, adaptive, and autonomous learning environments. This section reviews the literature in these domains and their intersections, highlights recent advances and their limitations, and motivates the need for an integrated approach.

2.1. Self-Regulated Learning and Cognitive Modeling in Intelligent Systems

Self-Regulated Learning (SRL) is central to learner-centered educational paradigms and involves activities such as goal setting, self-monitoring, and retrospective assessment [3,7]. Timely use of SRL techniques has been found to substantially reduce cognitive load and improve learning outcomes in learner-centered adaptive digital contexts. In addition, [4] (2023) showed that motivational variables (e.g., academic self-efficacy) are crucial for SRL because they enable students to move beyond engagement toward cognitive presence. Cognitive flexibility has also been highlighted as a significant moderator: [2] reported that students with higher cognitive adaptability are more successful at self-regulation in online learning environments.

Self-regulated learning is widely depicted as a cyclical process of forethought, performance, and self-reflection, in which motivational constructs such as academic self-efficacy and goal orientation are instrumental in maintaining learner engagement and metacognitive control [4,5]. These processes are especially important in digital learning environments, where learners typically have much more freedom than in face-to-face settings and must manage their activities in a more self-regulated manner.

2.2. Intelligent Tutoring Systems and Adaptive Learning Technologies

Intelligent Tutoring Systems (ITS) are AI-enabled environments that tailor the learning experience to the individual learner. Ref. [1] used multichannel data, such as affective and behavioral traces, to drive data-driven decisions about SRL scaffolding and to deliver real-time adaptive interventions based on learner needs. Similarly, [14] discussed the use of generative AI for real-time personalization, generating content, feedback, and guidance tailored to ongoing learner interactions. By accounting for students’ tentative and often halting efforts, these systems make some headway toward the goal of real-time digital tutors [15].

2.3. Meta-Learning for Rapid Personalization

Meta-learning, defined as ‘learning to learn’, allows systems to adapt quickly to new tasks or learner profiles with little data [8]. Ref. [10] connects metacognition with innovative technology integration through a nine-layer meta-learning model that offers a pathway to deep personalization via reflective mechanisms. Work on optimizing SRL strategy selection applies reinforcement-based meta-learning to select SRL strategies and provides prescriptive analytics that recompute recommendations in response to learner feedback [16]. To further improve adaptation, a contextual learning trajectory model has been proposed that integrates knowledge graphs and learner profiles to capture the interactions between the two.

Research on metacognitive learning environments suggests that LMS platforms can support SRL processes, especially goal setting, self-monitoring, time management, and reflective analysis, by aligning interaction tools and activity-trace analytics with Zimmerman’s cyclical SRL framework [17]. These findings highlight the necessity of combining cognitive and metacognitive cues within adaptive learning environments.

In low-data educational scenarios, learner profiles must be adapted from only one or a few interaction examples, and meta-learning approaches such as MAML and Reptile have been shown to work well in this setting [8]. These models are well aligned with our objective of cold-start personalization.

2.4. Continual Learning in Educational AI Systems

Continual Learning (CL) is a paradigm in which AI models learn over time while preserving previously acquired knowledge. It has been used extensively in robotics and computer vision but is still emerging in education. Methods such as Elastic Weight Consolidation (EWC) and Gradient Episodic Memory (GEM) enable a system to protect old knowledge while acquiring new knowledge, which is necessary for systems designed to adapt over long periods and evolve alongside a learner’s changing cognitive state [9]. However, task diversity and long-term cognitive variability remain open research problems in education.

2.5. AI Modeling of Self-Regulated Learning Strategies

In recent work, Ref. [11] highlighted the potential of NLP-based approaches to detect metacognitive markers in learner-generated text, enabling scalable, individualized detection of SRL strategies and personalized feedback.

Recent studies have modelled SRL from learner interaction data using several AI techniques:

- Natural Language Processing (NLP): A data-driven approach employed to identify metacognitive markers from texts produced by learners (e.g., reflections, discussion threads) in order to provide personalized scaffolding [11].

- Reinforcement Learning (RL): Frames SRL as a sequential decision-making process, allowing systems to recommend optimal SRL strategies dynamically [10].

- Multimodal Data Analysis: Integrates clickstream, physiological, and affective data to provide a holistic model of learner states and regulation behavior [12,15].

These approaches form the backbone of intelligent, adaptive educational systems capable of supporting metacognitive processes.

2.6. Limitations of Current Approaches

Despite the progress, several limitations persist in the literature:

- Fragmentation of research paradigms: Meta-learning, CL, and SRL are often developed in isolation, missing the potential of unified frameworks.

- Temporal rigidity: Few models support long-term learning trajectory adaptation, a critical requirement for lifelong learning support.

- Ethical concerns: Algorithmic bias, lack of transparency, and overreliance on automation risk undermining learner motivation, independence, and trust.

Recent review work highlights that meta-learning, continual learning, and SRL modeling are often investigated in isolation, despite their complementary roles in adaptive educational systems, leading to fragmentation across research paradigms [18].

2.7. Comparative Overview of Leading Approaches

Among SRL systems (MetaTutor, RL-based SRL support, and NLP-based SRL diagnostics), current solutions implement at most one of the meta-learning and continual-learning approaches at a time (Table 1). This fragmentation illustrates the lack of an integrated system that simultaneously provides personalized, continuous, cognitively aligned, and sustainable adaptation, which is exactly what the CAMEL architecture targets.

Table 1.

Comparative positioning of representative AI-based educational systems and the proposed CAMEL architecture.

As summarized in Table 1, intelligent educational systems rarely combine the three paradigms (SRL modelling, meta-learning, and continual learning) simultaneously. In contrast, CAMEL is specifically built to incorporate all three into one closed-loop architecture, which clarifies its novelty and its position in the field.

Overall, the reviewed literature demonstrates that existing intelligent educational systems typically operationalize only one or two of the paradigms required for long-term adaptive learning. SRL-focused systems emphasize cognitive and metacognitive processes but often lack mechanisms for rapid adaptation and long-term retention. Meta-learning approaches support fast personalization under low-data conditions but usually omit explicit SRL modeling. Continual learning methods address catastrophic forgetting but are rarely integrated with pedagogical decision-making or cognitive state estimation. This lack of integration results in fragmented architectures, which motivates the unified design of the proposed CAMEL framework.

2.8. Positioning of the Proposed Approach

Whereas previous implementations addressed meta-learning, continual learning, or SRL modelling separately, the proposed system combines all three into a unified, adaptive architecture. It fills existing gaps by:

- Facilitating an ongoing personalized experience over time.

- Allowing quick adjustment to emerging behavioral and cognitive patterns inferred from learner interaction data.

- Embedding cognitive self-regulation as a first-class modelling target.

With this unified approach, our system aims to provide a high level of adaptability for AI in smart education, linking cognitive theory with scalable, adaptive AI methods that can be deployed in intelligent educational systems.

To conclude, CAMEL diverges from previous methods not by proposing a new algorithm but by tightly coupling three previously separate paradigms within a single operational architecture: cognitive modelling (SRL), meta-learning for few-shot personalization, and continual learning for lifelong retention. By integrating these three aspects, CAMEL supports (1) continual inference and tracking of learners’ cognitive states, (2) rapid personalization to new learners with limited data, and (3) sustained pedagogical policy effectiveness across long sequences of tasks and sessions while maintaining cognitive state accuracy. SRL-focused tutors, meta-learning-based personalization methods, and continual-learning systems cannot achieve all of these on their own.

3. Research Gap and Contribution

In this paper, we argue that although each of the paradigms feeding into adaptive educational systems has advanced substantially, research on them has rarely been integrated into one coherent architecture. SRL has been extensively modelled and scaffolded in intelligent tutoring systems such as MetaTutor, where multimodal traces are used to provide real-time SRL support [1,12]. Additional work addresses prescriptive analytics of SRL strategies based on reinforcement learning policies [10] or NLP-based identification of metacognitive and SRL-related patterns in learner-produced text [11]. Although such systems provide comprehensive SRL-focused support, they typically do not combine formal continual learning mechanisms with meta-learning algorithms for few-shot personalization.

At the same time, meta-learning has been proposed as a fast personalization solution in low-data scenarios because it learns to adapt quickly to novel learner profiles and tasks [8]. While these approaches demonstrate how “learning to learn” can help education, they are usually not guided by an explicit SRL model and are not designed with longevity in mind (i.e., knowledge and policies may be forgotten over time, reducing transfer to novel tasks). Likewise, continual learning techniques such as Elastic Weight Consolidation (EWC) and replay-based methods have been examined as a means of reducing catastrophic forgetting and enabling lifelong adaptation in AI-based learning environments [9], yet they are generally used without explicit cognitive or SRL-based learner modelling.

As a result, the literature is fragmented. SRL-focused systems place cognitive and metacognitive processes at their core but often lack lifelong memory and rapid cross-learner adaptation; meta-learning approaches achieve few-shot personalization but rarely model explicit SRL constructs or incorporate continual learning (CL); and CL research effectively secures long-term retention and representation adequacy but frequently overlooks the cognitive and motivational dynamics that SRL aims to capture [18]. To the best of our knowledge, none of these research streams combines operationalized SRL modeling, meta-learning, and continual learning in a single closed-loop architecture that targets both short-term and long-term personalization in intelligent educational systems.

To fill this gap, we present CAMEL, the first fully integrated intelligent tutoring system combining (1) an SRL-based Cognitive State Estimator that infers motivation, attention, and persistence from behavioral traces, (2) a Meta-Learning Engine based on MAML and related algorithms for few-shot adaptation to new learners and tasks, and (3) a Continual Learning Memory that combines EWC and replay to retain knowledge across sessions and topics. We empirically evaluate CAMEL on the xAPI-Edu-Data dataset, complemented by an EdNet-like sample, showing superior cognitive state prediction, few-shot adaptation, and long-term performance stability compared to baselines. Moreover, an integration case study with an LMS demonstrates the practicality of deploying CAMEL in real-world learning environments. Together, these contributions make CAMEL a new, cognitively motivated architecture that connects previously isolated research on SRL modelling, meta-learning, and continual learning for intelligent educational systems (IESs).

4. Our Conceptual Architecture

4.1. Learner Cognitive Model

The design of the proposed system is grounded in Self-Regulated Learning (SRL) theory, which conceptualizes learning as an active, cyclical process of planning, self-regulation, and reflection. These processes are thought to be mediated by motivational factors, e.g., self-efficacy and goal orientation, which directly predict learner engagement, persistence, and metacognitive control [4,5]. SRL is especially important in digital learning environments, where learners work with a high degree of autonomy and interact asynchronously with content and feedback mechanisms [3]. We build a learner model on the SRL framework that allows ongoing estimation of cognitive states from observable variables such as response latency, accuracy, self-assessment outcomes, and behavior patterns. As supported by [12], multimodal traces can serve as reasonable proxies for a range of internal cognitive and emotional states, provided they are modelled correctly. This learner model is the foundation of the system’s adaptivity, allowing pedagogical choices to follow the learner’s changing needs.

Recent progress in SRL modeling has shown that multimodal traces combining behavioral, temporal, affective, and self-reported data can serve as reliable proxies for internal cognitive and motivational states when modeled appropriately [1]. This supports our use of behavioral interaction logs to estimate latent cognitive variables such as attention, motivation, and persistence.

4.2. Proposed Intelligent Educational System

To translate this theoretical foundation into a usable architecture, we propose a modular intelligent educational system that combines three distinct AI paradigms: continual learning (CL), meta-learning, and SRL modelling. The system has four main components:

- Cognitive State Estimator: This module uses sequence-based neural architectures (e.g., LSTMs, Transformers) to extract latent cognitive variables from learner interactions. Existing research has shown that such models can support dynamic learner-state modelling [11,12].

- Meta-Learning Engine: This module incorporates Model-Agnostic Meta-Learning (MAML) and related algorithms such as Reptile so that the system can adapt efficiently to new learners and instructional tasks. These algorithms have shown utility for educational personalization in low-data settings [8].

- Continual Learning Memory (CLM): This module preserves previously acquired knowledge and avoids catastrophic forgetting using techniques such as Elastic Weight Consolidation (EWC) and Gradient Episodic Memory (GEM). It is particularly applicable to lifelong learning settings such as those found on educational platforms [9].
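The GEM constraint mentioned for the Continual Learning Memory requires that an update must not increase the loss on episodic memory, i.e., its dot product with the memory gradient must be non-negative. With a single previous task this has a closed-form solution: project the gradient onto that half-space. The sketch below shows only this one-constraint case in plain Python (full GEM solves a small quadratic program over all past tasks); the function names are hypothetical and this is not the Avalanche implementation.

```python
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def gem_project(grad: Vector, mem_grad: Vector) -> Vector:
    """Single-constraint GEM projection (hypothetical helper).

    If grad . mem_grad >= 0, the update does not increase the memory loss
    (to first order) and is returned unchanged. Otherwise the gradient is
    projected onto {g : g . mem_grad >= 0}, the smallest change that
    removes the conflict.
    """
    d = dot(grad, mem_grad)
    if d >= 0.0:
        return list(grad)
    scale = d / dot(mem_grad, mem_grad)
    return [g - scale * m for g, m in zip(grad, mem_grad)]


# The new-topic gradient conflicts with the gradient on stored memories.
new_grad = [1.0, -2.0]
memory_grad = [0.0, 1.0]
projected = gem_project(new_grad, memory_grad)
print(projected)                     # [1.0, 0.0]
print(dot(projected, memory_grad))   # 0.0
```

After projection, the update no longer points against the memory gradient, so learning the new topic does not (to first order) degrade performance on the earlier one.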

The Pedagogical Decision Engine uses outputs from the Cognitive State Estimator, the Meta-Learning Engine, and the Continual Learning Memory to decide which pedagogical action to take. It scaffolds learning according to the learner’s cognitive profile by adjusting content, feedback, and task difficulty in real time. Reinforcement learning is increasingly used to operationalize SRL as a sequential decision-making process, enabling systems to recommend optimal regulation strategies dynamically based on learner feedback and behavioral evolution [10].

Together, these modules yield a system that is adaptive in the short term and resilient over time, two properties that are often lacking in current educational AI systems [1].

4.3. Integration of Paradigms

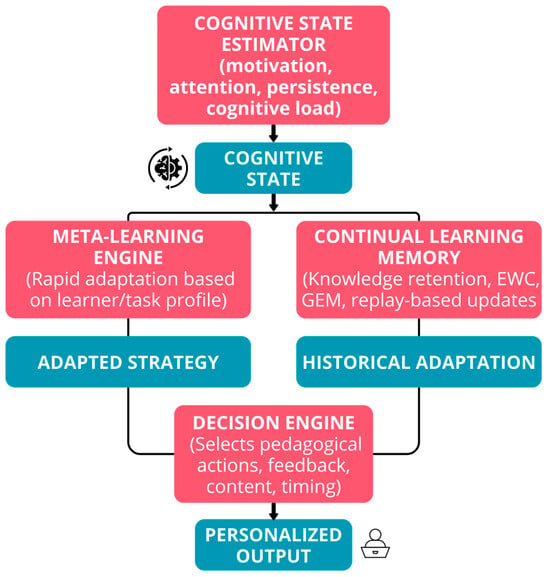

Figure 1 summarizes our system. At a conceptual level, it integrates SRL modelling, continual learning, and meta-learning in one adaptive architecture. Whereas previous systems tackle only one or two of these components in isolation, our model forms a closed learning loop in which:

- The Cognitive State Estimator interprets real-time learner behavior.

- The Meta-Learning Engine modifies learning strategies to fit new profiles or tasks.

- The Continual Learning Module retains the behaviors and policies learnt in previous sessions.

- The Decision Engine delivers context-appropriate pedagogical actions.

Figure 1.

Conceptual architecture of the proposed intelligent educational system.

Each learner interaction feeds back into this loop, so the system can personalize instruction within and across sessions. This form of temporal personalization directly targets one of the central challenges in adaptive education: maintaining alignment between pedagogical responses and the learner’s cognition over time [10]. The loop dynamically handles situations such as drops in motivation, changes in task complexity, and shifts in learner engagement. In contrast to previous systems such as [1], which emphasize short-term SRL scaffolding without long-term memory or rapid adaptation to learner differences, our system combines cognitive responsiveness with robust long-term learning dynamics, making it suitable for deployment at scale in educational ecosystems.
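The closed loop can be pictured as a per-interaction cycle: estimate the learner’s state, choose an action, and store the interaction so that later updates can replay it across sessions. The sketch below is a minimal illustration under stated assumptions: the threshold estimator, the two action names, and the `ReservoirReplay` class are hypothetical placeholders, and the text does not specify how the replay memory is populated, so reservoir sampling (a standard way to keep a bounded, uniform sample of a stream) is an assumption here.

```python
import random
from dataclasses import dataclass, field
from typing import List, Tuple

Interaction = Tuple[float, str]  # (engagement score, action taken)


@dataclass
class ReservoirReplay:
    """Hypothetical bounded replay memory using reservoir sampling."""
    capacity: int
    seen: int = 0
    items: List[Interaction] = field(default_factory=list)
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def add(self, item: Interaction) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            j = self.rng.randrange(self.seen)  # uniform index in [0, seen)
            if j < self.capacity:
                self.items[j] = item


def estimate_state(engagement: float) -> str:
    # Hypothetical stand-in for the Cognitive State Estimator.
    return "disengaged" if engagement < 0.0 else "engaged"


def decide(state: str) -> str:
    # Hypothetical stand-in for the Pedagogical Decision Engine.
    return "motivational_feedback" if state == "disengaged" else "continue"


def run_session(engagement_stream: List[float], memory: ReservoirReplay) -> List[str]:
    """One pass of the closed loop over a session's interactions."""
    actions = []
    for e in engagement_stream:
        action = decide(estimate_state(e))
        memory.add((e, action))  # kept for replay in later sessions
        actions.append(action)
    return actions


memory = ReservoirReplay(capacity=4)
print(run_session([0.5, -1.2, 0.1], memory))   # session 1
print(run_session([-0.3, 0.8, 1.1], memory))   # session 2 shares the same memory
print(len(memory.items), memory.seen)          # 4 6
```

Because the same memory object persists between `run_session` calls, experiences from earlier sessions remain available for replay, which is the "across sessions" half of the loop.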

The ethical concerns identified in prior work, including algorithmic bias, lack of transparency, and overreliance on automation, are partially addressed in the proposed architecture. CAMEL emphasizes interpretability through explicit cognitive state estimation and rule-informed pedagogical decision-making, allowing human-understandable reasoning rather than opaque automation. Furthermore, the system is designed to support learner agency by adapting instructional strategies without removing learner control or autonomy. While a full ethical evaluation is beyond the scope of this study, the architecture is designed with transparency, accountability, and human-centered adaptation as guiding principles.

5. Methodology

5.1. Implemented Model

The proposed CAMEL system was designed and implemented as a modular architecture of four interconnected modules, each fulfilling a distinct role within the adaptive learning loop: (1) the Cognitive State Estimator, (2) the Meta-Learning Engine, (3) the Continual Learning Memory, and (4) the Pedagogical Decision Engine. The Cognitive State Estimator processes learner interaction data to infer latent cognitive states such as motivation, attention, and persistence. The Meta-Learning Engine enables rapid adaptation to new learners and tasks by optimizing model parameters under few-shot conditions. The Continual Learning Memory preserves previously acquired knowledge across sequential learning tasks, mitigating catastrophic forgetting. Finally, the Pedagogical Decision Engine integrates cognitive state estimates and learning outputs to dynamically select instructional actions. Together, these modules form a closed-loop system that supports adaptive, cognitively aligned personalization as learners’ cognitive profiles develop over time.

Cognitive State Estimator: The first module employs a transformer-based neural network to predict latent cognitive variables such as attention, motivation, and persistence. Its inputs are time-series learner features derived from raised hands, visited resources, discussion participation, and student absence days. We first created a composite Engagement Index by z-score normalizing the behavioral metrics and averaging them. To simulate session-based learning, the input sequences were segmented by subject-topic combination.
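The core operation of the transformer encoder described above is scaled dot-product self-attention over a learner’s session sequence. The plain-Python sketch below shows the data flow only: one untrained attention head with random weights, mean pooling over time, and a hypothetical sigmoid read-out to three scores labelled attention, motivation, and persistence. The feature encoding, dimensions, and read-out head are assumptions, not the trained CAMEL estimator.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of shapes (n, m) and (m, p)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(v - mx) for v in row]  # shift by max for numerical stability
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """One head of softmax(Q K^T / sqrt(d_k)) V; x has shape (T, d_in)."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(wk[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


def estimate_cognitive_state(x: Matrix, d_k: int = 8, seed: int = 0) -> List[float]:
    """Map a (T, d_in) session sequence to three scores in (0, 1).

    Weights are random (untrained); the read-out head is hypothetical.
    """
    rng = random.Random(seed)
    wq, wk, wv = (random_matrix(len(x[0]), d_k, rng) for _ in range(3))
    w_out = random_matrix(d_k, 3, rng)
    h = self_attention(x, wq, wk, wv)
    pooled = [sum(col) / len(h) for col in zip(*h)]  # mean over time steps
    logits = matmul([pooled], w_out)[0]
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


# Toy sequence of 3 sessions x 4 normalized features: raisedhands, VisitedResources,
# Discussion, StudentAbsenceDays (encoded 1 for "Above-7", 0 otherwise).
sessions = [[0.4, 1.1, -0.2, 0.0], [0.1, 0.7, 0.3, 0.0], [-0.9, -1.2, -0.5, 1.0]]
attention, motivation, persistence = estimate_cognitive_state(sessions)
```

In a real estimator, the projection and read-out weights would be learned from labelled or proxy targets; the sketch only makes the sequence-to-state mapping concrete.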

Meta-Learning Engine: We implemented the Model-Agnostic Meta-Learning (MAML) algorithm using the learn2learn library to enable rapid adaptation to new learners with limited data. Reptile, a first-order approximation of MAML, was also tested as a computationally efficient baseline. The meta-learner optimizes instructional strategies based on few-shot learner behavior, allowing the system to personalize feedback early in the learning process.
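To make the MAML update concrete without depending on learn2learn, the sketch below applies exact (second-order) MAML to a hypothetical toy problem in which each learner is a linear regression task y = a·x + 1 with a learner-specific slope a. Because the mean-squared-error Hessian of a linear model is constant, the meta-gradient (I − αH_support)·∇L_query(θ′) can be written in closed form. The task distribution, step sizes α and β, batch size, and five-shot support set are illustrative assumptions.

```python
import random
from typing import List, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs
Params = Tuple[float, float]      # (w, b) for prediction w * x + b


def grad(params: Params, data: Data) -> Params:
    """Gradient of mean squared error for the linear model."""
    w, b = params
    n = len(data)
    gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
    gb = sum(2 * (w * x + b - y) for x, y in data) / n
    return gw, gb


def loss(params: Params, data: Data) -> float:
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def inner_step(params: Params, data: Data, alpha: float) -> Params:
    """One adaptation step on a learner's support set."""
    gw, gb = grad(params, data)
    return params[0] - alpha * gw, params[1] - alpha * gb


def sample_task(rng: random.Random, n: int) -> Tuple[Data, Data]:
    """Hypothetical learner task y = a*x + 1, split into support and query sets."""
    a = rng.uniform(0.5, 1.5)
    pts = [(x, a * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(2 * n))]
    return pts[:n], pts[n:]


def maml_train(steps: int = 2000, alpha: float = 0.1, beta: float = 0.05,
               k_shot: int = 5, batch: int = 4, seed: int = 0) -> Params:
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(steps):
        mw = mb = 0.0
        for _ in range(batch):
            support, query = sample_task(rng, k_shot)
            qw, qb = grad(inner_step((w, b), support, alpha), query)
            # Constant MSE Hessian of the support loss for a linear model.
            n = len(support)
            hww = 2 * sum(x * x for x, _ in support) / n
            hwb = 2 * sum(x for x, _ in support) / n
            hbb = 2.0
            # Meta-gradient: (I - alpha * H) @ grad_query(adapted params).
            mw += qw - alpha * (hww * qw + hwb * qb)
            mb += qb - alpha * (hwb * qw + hbb * qb)
        w -= beta * mw / batch
        b -= beta * mb / batch
    return w, b


def mean_adapted_loss(init: Params, n_tasks: int = 50, alpha: float = 0.1, seed: int = 1) -> float:
    """Average query loss on unseen learners after one step on 5 support interactions."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_tasks):
        support, query = sample_task(rng, 5)
        total += loss(inner_step(init, support, alpha), query)
    return total / n_tasks


meta_init = maml_train()
print(meta_init)                      # expected to lie near (1.0, 1.0), the "average learner"
print(mean_adapted_loss(meta_init))   # low after a single adaptation step
print(mean_adapted_loss((0.0, 0.0)))  # much higher from an uninformed start
```

Dropping the `alpha * (...)` Hessian terms would turn this into first-order MAML, which is closer in cost to Reptile.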

Continual Learning Memory: For sequential adaptation, we integrated Elastic Weight Consolidation (EWC) and Gradient Episodic Memory (GEM) using the Avalanche library. These approaches allowed the system to retain knowledge across multiple instructional topics (e.g., Math, Biology) while incorporating new learner experiences without catastrophic forgetting.
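The EWC regularizer adds a quadratic penalty that anchors parameters deemed important for earlier topics: (λ/2) Σ_i F_i (θ_i − θ*_i)², where θ* are the parameters after the earlier topic and F is the diagonal Fisher information. Rather than reproduce the Avalanche implementation, the sketch below applies this penalty to a hypothetical two-parameter linear model trained on two conflicting toy "topics". Assumptions: a unit-variance Gaussian likelihood (so the diagonal Fisher is E[(∂μ/∂θ)²]), λ = 10, and synthetic data.

```python
import random
from typing import List, Sequence, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs


def make_task(slope: float, rng: random.Random, n: int = 100) -> Data:
    """Hypothetical topic: targets y = slope * x + 1 for x in [-1, 1]."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, slope * x + 1.0) for x in xs]


def mse(p: Sequence[float], data: Data) -> float:
    return sum((p[0] * x + p[1] - y) ** 2 for x, y in data) / len(data)


def mse_grad(p: Sequence[float], data: Data) -> List[float]:
    res = [(p[0] * x + p[1] - y, x) for x, y in data]
    n = len(data)
    return [sum(2 * r * x for r, x in res) / n, sum(2 * r for r, _ in res) / n]


def diag_fisher(data: Data) -> List[float]:
    """Diagonal Fisher for a unit-variance Gaussian likelihood with mean w*x + b."""
    return [sum(x * x for x, _ in data) / len(data), 1.0]


def train(p: Sequence[float], data: Data, lam: float = 0.0,
          anchor: Sequence[float] = (0.0, 0.0), fisher: Sequence[float] = (0.0, 0.0),
          lr: float = 0.05, steps: int = 1000) -> List[float]:
    """Gradient descent on MSE + (lam / 2) * sum_i F_i * (p_i - anchor_i) ** 2."""
    p = list(p)
    for _ in range(steps):
        g = mse_grad(p, data)
        for i in range(2):
            g[i] += lam * fisher[i] * (p[i] - anchor[i])  # gradient of the EWC penalty
            p[i] -= lr * g[i]
    return p


rng = random.Random(0)
task_a, task_b = make_task(2.0, rng), make_task(-1.0, rng)  # conflicting slopes
theta_a = train([0.0, 0.0], task_a)                         # learn topic A first
fisher_a = diag_fisher(task_a)

plain = train(theta_a, task_b)                                        # no protection
ewc = train(theta_a, task_b, lam=10.0, anchor=theta_a, fisher=fisher_a)
print(f"topic A error after B: plain={mse(plain, task_a):.3f}, ewc={mse(ewc, task_a):.3f}")
```

Without the penalty the slope moves all the way to the new topic and the error on topic A grows sharply; with EWC the slope settles at a compromise, trading some fit on topic B for much less forgetting on topic A. Larger λ shifts that balance further toward the old topic.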

Pedagogical Decision Engine: This module synthesizes outputs from the cognitive state estimator and learning components to decide on the next pedagogical action. The system dynamically adjusts feedback, content difficulty, and pacing based on the learner’s real-time cognitive state. A hybrid policy network was trained using reinforcement learning to support this decision-making process in conjunction with rule-based heuristics.

The Pedagogical Decision Engine integrates the inferred cognitive states and the outputs of the learning components through a hybrid decision mechanism. Specifically, cognitive indicators such as attention, motivation, and persistence are combined with performance metrics and adaptation signals produced by the meta-learning and continual learning modules. These inputs are jointly evaluated using a rule-informed decision logic, where predefined pedagogical rules guide the selection of instructional actions, optionally supported by reinforcement learning-based policy optimization. This design enables reproducible and interpretable pedagogical decisions while maintaining adaptability to evolving learner states.
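The rule-informed decision logic can be sketched as interpretable rules that fire first on clear cognitive signals, with a learned value table choosing among actions otherwise. In the sketch below, the thresholds, action names, three-level discretization, and incremental-average value update (a simple contextual-bandit stand-in for reinforcement learning-based policy optimization) are all hypothetical choices for illustration.

```python
from collections import defaultdict
from typing import Dict, Tuple

# "keep_pace" first so that ties (no data yet) default to the least disruptive action.
ACTIONS = ("keep_pace", "increase_difficulty", "decrease_difficulty", "motivational_feedback")
Key = Tuple[str, str, str, str]


def bucket(v: float) -> str:
    """Discretize a score in [0, 1] into low / mid / high."""
    return "low" if v < 0.33 else ("mid" if v < 0.66 else "high")


class HybridDecisionEngine:
    """Hypothetical rule-first engine with a learned value table as fallback."""

    def __init__(self) -> None:
        self.q: Dict[Key, float] = defaultdict(float)  # value per (state, action)
        self.n: Dict[Key, int] = defaultdict(int)      # visit counts

    @staticmethod
    def state_key(attention: float, motivation: float, persistence: float) -> Tuple[str, str, str]:
        return bucket(attention), bucket(motivation), bucket(persistence)

    def decide(self, attention: float, motivation: float, persistence: float,
               accuracy: float) -> str:
        # 1) Interpretable pedagogical rules take precedence.
        if motivation < 0.3:
            return "motivational_feedback"
        if persistence < 0.3 and accuracy < 0.5:
            return "decrease_difficulty"
        # 2) Otherwise choose the action with the highest learned value.
        s = self.state_key(attention, motivation, persistence)
        return max(ACTIONS, key=lambda a: self.q[s + (a,)])

    def update(self, attention: float, motivation: float, persistence: float,
               action: str, reward: float) -> None:
        """Incremental mean of observed rewards for this state-action pair."""
        key = self.state_key(attention, motivation, persistence) + (action,)
        self.n[key] += 1
        self.q[key] += (reward - self.q[key]) / self.n[key]


engine = HybridDecisionEngine()
print(engine.decide(0.8, 0.2, 0.9, 0.9))  # rule fires: motivational_feedback
print(engine.decide(0.8, 0.7, 0.8, 0.9))  # no experience yet: keep_pace
engine.update(0.8, 0.7, 0.8, "increase_difficulty", reward=1.0)
print(engine.decide(0.8, 0.7, 0.8, 0.9))  # learned preference: increase_difficulty
```

Keeping the rules outside the learned component means every rule-triggered action can be explained directly, while the value table only governs the cases the rules leave open.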

5.2. Data Used

The experiments were conducted using the publicly available xAPI-Edu-Data dataset from Kaggle, which contains learning records of 480 students spanning the lower-level, middle-school, and high-school stages (StageID). The dataset includes both demographic features (e.g., gender, nationality, ParentAnsweringSurvey) and behavioral indicators relevant to self-regulated learning, including:

- Engagement metrics: raisedhands, VisitedResources, Discussion

- Attendance: StudentAbsenceDays

- Academic progression: StageID, GradeID, Topic

- Target label: Class (student final performance: Low, Middle, High)

To simulate temporal learning, data were grouped by student_id and Topic, forming task sequences reflecting learning sessions. Cognitive traits were inferred from engineered features such as an Engagement Index, computed as a normalized mean of raisedhands, VisitedResources, and Discussion. The dataset was cleaned, one-hot encoded for categorical variables, and z-score normalized for numerical ones. Synthetic perturbations (e.g., motivation drops, performance plateaus) were introduced in controlled profiles to test the robustness of cognitive-aware adaptation.
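The preprocessing steps above (one-hot encoding, z-score normalization, the Engagement Index, and grouping by student and Topic) can be sketched in plain Python. Column names follow the attributes listed in this section; the in-memory rows are made-up examples, and since the attributes listed here do not include a student identifier, the `student_id` field used below (e.g., a row index) is an assumption made for illustration.

```python
import statistics
from collections import defaultdict
from typing import Dict, List

ENGAGEMENT_COLS = ("raisedhands", "VisitedResources", "Discussion")


def zscore(values: List[float]) -> List[float]:
    mu = statistics.mean(values)
    sd = statistics.pstdev(values) or 1.0  # guard against constant columns
    return [(v - mu) / sd for v in values]


def one_hot(values: List[str]) -> List[Dict[str, int]]:
    cats = sorted(set(values))
    return [{c: int(v == c) for c in cats} for v in values]


def engagement_index(rows: List[dict]) -> List[float]:
    """Mean of z-scored raisedhands, VisitedResources and Discussion per row."""
    z = [zscore([float(r[c]) for r in rows]) for c in ENGAGEMENT_COLS]
    return [sum(col[i] for col in z) / len(z) for i in range(len(rows))]


def group_sessions(rows: List[dict]) -> Dict[tuple, List[dict]]:
    """Group rows by (student_id, Topic) to form task sequences."""
    groups: Dict[tuple, List[dict]] = defaultdict(list)
    for r in rows:
        groups[(r["student_id"], r["Topic"])].append(r)
    return dict(groups)


rows = [  # made-up records following the column names above; student_id is hypothetical
    {"student_id": 0, "Topic": "Math", "raisedhands": 15, "VisitedResources": 16,
     "Discussion": 20, "StudentAbsenceDays": "Under-7"},
    {"student_id": 1, "Topic": "Biology", "raisedhands": 80, "VisitedResources": 90,
     "Discussion": 45, "StudentAbsenceDays": "Under-7"},
    {"student_id": 2, "Topic": "Math", "raisedhands": 5, "VisitedResources": 2,
     "Discussion": 8, "StudentAbsenceDays": "Above-7"},
]
for r, e in zip(rows, engagement_index(rows)):
    r["EngagementIndex"] = e
absence = one_hot([r["StudentAbsenceDays"] for r in rows])
print([round(r["EngagementIndex"], 2) for r in rows])
print(absence[0])  # {'Above-7': 0, 'Under-7': 1}
```

Averaging z-scores rather than raw counts keeps any single metric with a large range (such as VisitedResources) from dominating the index.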

In addition to xAPI-Edu-Data, we used a complementary dataset, EdNet (2020), one of the largest public corpora of student–problem interactions. EdNet contains large-scale sequential traces that can be used to evaluate compatibility with contemporary learning-analytics pipelines. We present a detailed analysis of this dataset in the Results Section.

The study uses two publicly available datasets:

xAPI-Edu-Data: a behavioral and performance dataset for SRL research, available at https://www.kaggle.com/datasets/aljarah/xapi-edu-data (accessed on 15 December 2025).

EdNet KT1 Dataset: a large-scale student–problem interaction dataset released by Riiid, available at https://github.com/riiid/ednet (accessed on 14 December 2025).

These datasets were selected because they provide complementary properties for evaluating cognitive-state estimation, meta-learning adaptation, and continual-learning stability.

5.3. Experimental Protocol

We designed three experimental scenarios to evaluate each component of the system:

- Cold-Start Personalization

Objective: Evaluate the meta-learning engine’s few-shot adaptation to new learners.

Method: Train the meta-learner on N − 1 learners, then test on the held-out Nth learner using five interaction examples.

- Continual Adaptation Across Tasks

Objective: Assess the system’s ability to retain knowledge over sequential topics.

Method: Simulate task sequences based on different Topic values (e.g., Biology → Chemistry → Math), measuring performance retention on earlier tasks.

- Cognitive-Aware Responsiveness

Objective: Measure how well the system adjusts pedagogical strategies in response to inferred cognitive states.

Method: Induce synthetic disengagement by lowering VisitedResources and Discussion, and evaluate the effectiveness of the system’s feedback.
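The synthetic disengagement used in the Cognitive-Aware Responsiveness scenario can be sketched as a simple perturbation of a learner record. The paper does not state how much VisitedResources and Discussion were lowered, so the multiplicative `factor` and the function name `induce_disengagement` are hypothetical.

```python
def induce_disengagement(record, factor=0.3, cols=("VisitedResources", "Discussion")):
    """Return a copy of a learner record with selected engagement counts scaled down.

    factor: hypothetical multiplier in [0, 1]; 0 removes the activity entirely.
    The input record is left unchanged so clean and perturbed profiles can be compared.
    """
    if not 0.0 <= factor <= 1.0:
        raise ValueError("factor must lie in [0, 1]")
    perturbed = dict(record)
    for col in cols:
        perturbed[col] = round(record[col] * factor)
    return perturbed


if __name__ == "__main__":
    learner = {"raisedhands": 40, "VisitedResources": 80, "Discussion": 50}
    print(induce_disengagement(learner, factor=0.5))
```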

All experiments were conducted using a 5-fold cross-validation setup. For each scenario, we compared:

- The proposed adaptive system (CL + Meta + SRL)

- A baseline deep learning model without adaptation

- A rule-based ITS without cognitive awareness

Each model was trained and tested over five simulated sessions per learner.
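The cold-start scenario (meta-train on N − 1 learners, then adapt to the held-out learner from five interactions) amounts to a leave-one-learner-out split with a five-example support set. The sketch below assumes each interaction is a `(learner_id, features, label)` tuple and that every learner has more than `k_shot` interactions; the function name `cold_start_splits` is hypothetical.

```python
import random


def cold_start_splits(interactions, k_shot=5, seed=0):
    """Yield (held_out, train, support, query) for every learner in turn.

    train:   all interactions of the other N - 1 learners (meta-training pool)
    support: k_shot interactions of the held-out learner used for adaptation
    query:   the held-out learner's remaining interactions used for evaluation
    """
    by_learner = {}
    for record in interactions:
        by_learner.setdefault(record[0], []).append(record)
    rng = random.Random(seed)  # fixed seed so the support sets are reproducible
    for held_out in sorted(by_learner):
        train = [r for lid, recs in by_learner.items() if lid != held_out for r in recs]
        own = list(by_learner[held_out])
        rng.shuffle(own)
        yield held_out, train, own[:k_shot], own[k_shot:]
```

Shuffling only the held-out learner's records keeps the meta-training pool identical across folds, so differences between folds come from the learner being adapted to.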

5.4. Evaluation Metrics

System performance was evaluated along three dimensions:

A. Continual Learning

- Accuracy Over Time: performance stability across tasks

- Forgetting Rate: loss in accuracy on previous topics after learning new ones

- Stability: prediction variance across sessions
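These continual learning metrics can be computed from an accuracy matrix `acc`, where `acc[t][j]` is the accuracy on topic j after training on topic t (e.g., Biology → Chemistry → Math). The forgetting definition below (best earlier accuracy minus final accuracy, averaged over all but the last topic) is a common one in the continual learning literature; measuring stability as the variance of per-session average accuracy is an assumption, since the paper does not give exact formulas.

```python
from statistics import mean, pvariance


def average_accuracy(acc, t):
    """Mean accuracy over topics 0..t, measured after training on topic t."""
    return mean(acc[t][: t + 1])


def forgetting_rate(acc):
    """Average drop from each topic's best earlier accuracy to its final accuracy."""
    last = len(acc) - 1
    drops = [max(acc[t][j] for t in range(j, last)) - acc[last][j] for j in range(last)]
    return mean(drops)


def stability(acc):
    """Variance of the per-session average accuracy (lower means more stable)."""
    return pvariance([average_accuracy(acc, t) for t in range(len(acc))])


if __name__ == "__main__":
    # Illustrative numbers only; None marks topics not yet trained.
    acc = [
        [0.80, None, None],
        [0.70, 0.75, None],
        [0.65, 0.70, 0.78],
    ]
    print(forgetting_rate(acc), average_accuracy(acc, 2), stability(acc))
```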

B. Meta-Learning

- Few-Shot Accuracy: accuracy after five interaction samples

- Adaptation Speed: number of gradient updates to convergence

- Generalization Gap: performance difference between seen and unseen learners

C. Cognitive Responsiveness

- Engagement Index: ratio of high-engagement interactions

- Progress Score: improvement in performance across sessions

- Regulation Quality Index: match between feedback provided and learner’s cognitive needs
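The three cognitive-responsiveness indicators are described only informally, so the following sketch encodes one plausible reading of each. The engagement threshold, the first-to-last-session definition of progress, and all function names are assumptions.

```python
def engagement_ratio(engagement_scores, threshold=0.5):
    """Share of interactions whose engagement score reaches a (hypothetical) threshold."""
    if not engagement_scores:
        return 0.0
    return sum(score >= threshold for score in engagement_scores) / len(engagement_scores)


def progress_score(session_performance):
    """Improvement from the first to the last session (assumed definition)."""
    return session_performance[-1] - session_performance[0]


def regulation_quality_index(actions, needed_actions):
    """Fraction of sessions where the delivered feedback matches the inferred need."""
    if len(actions) != len(needed_actions):
        raise ValueError("actions and needed_actions must be aligned per session")
    if not actions:
        return 0.0
    return sum(a == n for a, n in zip(actions, needed_actions)) / len(actions)
```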

5.5. Tools and Environment

The system was implemented using the following technologies:

- Programming language: Python 3.10

- Libraries:
  - PyTorch v2.0.1 for model development
  - Learn2learn v0.2.0 for meta-learning routines
  - Avalanche v0.3.1 for continual learning components
  - Scikit-learn v1.3.0, Pandas v2.0.3, and Matplotlib v3.7.2 for preprocessing, analysis, and visualization

- Execution environment:
  - Machine: Lenovo workstation with an Intel Core i7 CPU, 32 GB RAM, and an NVIDIA RTX 3080 GPU (10 GB)
  - OS: Ubuntu 22.04
  - Experiments were containerized using Docker v24.0.2 for reproducibility, with GPU acceleration available inside the containers.

All code, models, and preprocessed data will be made available in a public GitHub repository upon publication to ensure transparency and reproducibility.

5.6. Prototype Feasibility and LMS Integration Scenario

To evaluate the potential for employing CAMEL in real-world educational environments, we created an integration scenario that models how the system would function in an LMS such as Moodle. In this scenario, typical interaction traces generated by the LMS (e.g., resource visits, quiz attempts, and discussion participation) are processed by CAMEL’s Cognitive State Estimator to predict engagement-related indicators. The Meta-Learning and Continual Learning modules update the learner model, and the Pedagogical Decision Engine generates adaptive recommendations that are delivered to the learner through the LMS interface.

This scenario illustrates how CAMEL could be integrated into an LMS workflow and operate in near real time on generic learner interaction data. Although it is not a full deployment with live student data, it indicates that such a deployment is technically feasible. To evaluate the pedagogical impact of the proposed system, we plan a pilot study with real learners as future work.
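The LMS scenario is a closed loop: traces go to the Cognitive State Estimator, the learner model is updated, and the Pedagogical Decision Engine returns an action for the LMS to deliver. The sketch below wires these stages together with injected callables. `CamelLoop`, its fields, and the trace keys are hypothetical stand-ins for CAMEL's modules and for any Moodle-specific API, neither of which is shown here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Trace = Dict[str, float]  # e.g., counts of resource visits, quiz attempts, posts
State = Dict[str, float]  # e.g., estimated engagement or attention in [0, 1]


@dataclass
class CamelLoop:
    estimate_state: Callable[[Trace], State]                   # Cognitive State Estimator
    update_learner_model: Callable[[str, Trace, State], None]  # meta/continual updates
    decide_action: Callable[[State], str]                      # Pedagogical Decision Engine
    delivered: List[Tuple[str, str]] = field(default_factory=list)

    def on_lms_event(self, learner_id: str, trace: Trace) -> str:
        """Process one batch of LMS traces and return the action to deliver."""
        state = self.estimate_state(trace)
        self.update_learner_model(learner_id, trace, state)
        action = self.decide_action(state)
        self.delivered.append((learner_id, action))  # stand-in for LMS delivery
        return action


if __name__ == "__main__":
    updates = []
    loop = CamelLoop(
        estimate_state=lambda t: {"engagement": min(1.0, t["resource_visits"] / 10)},
        update_learner_model=lambda lid, t, s: updates.append((lid, s["engagement"])),
        decide_action=lambda s: "motivational_prompt" if s["engagement"] < 0.5 else "continue",
    )
    print(loop.on_lms_event("learner-1", {"resource_visits": 2}))
```

Injecting the three stages as callables keeps the loop independent of any particular model or LMS, so each module can be swapped or tested in isolation.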

6. Results and Evaluation

6.1. Cognitive State Modeling (xAPI-Edu-Data)

In intelligent educational systems, the ability to estimate a learner’s cognitive state (e.g., motivation, attention, cognitive load) is critical for enabling personalized and adaptive pedagogical responses. The first module of our architecture, the Cognitive State Estimator, is designed to infer latent cognitive indicators from observable behavioral traces such as class participation, resource engagement, and discussion activity.

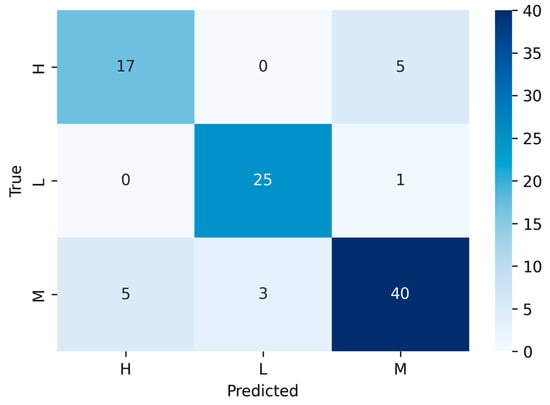

We developed a machine learning pipeline that classifies students into Low, Middle, or High performance levels. The first evaluation examines the Cognitive State Estimator, which uses learning-behavior features to estimate the learner’s engagement level (Low, Middle, or High) as a proxy for cognitive engagement-related states. As the confusion matrix in Figure 2 shows, accuracy is very high on the Middle class, while Middle and High overlap moderately. Such overlap is expected, because many typical indicators of self-regulated learning mix motivational and behavioral patterns [1,12].

Figure 2.

Confusion matrix showing predicted vs. true cognitive performance classes (Low, Middle, High).

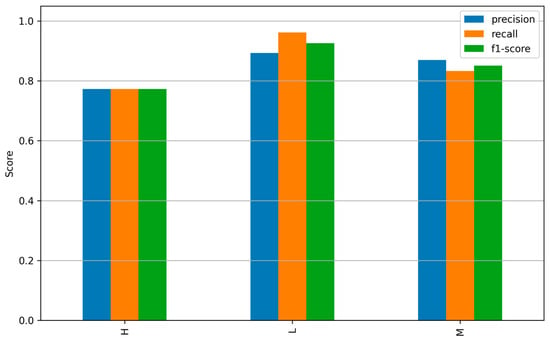

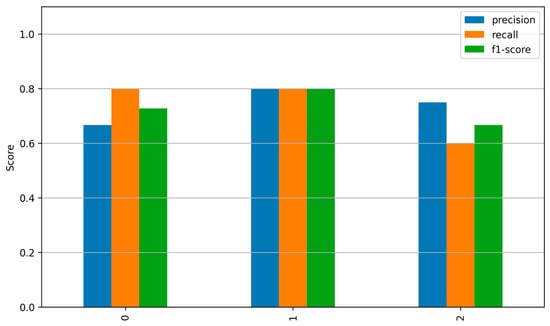

The class-level F1-scores indicate good generalization across all classes (Low ≈ 0.91, Middle ≈ 0.86, High ≈ 0.77), with the High class remaining the most difficult. In Figure 3, the horizontal axis represents the learner performance classes Low (L), Middle (M), and High (H), which are used as proxies for inferred cognitive states. Overall, these results support the hypothesis that xAPI behavioral traces are adequate proxies for cognitive engagement and persistence, as reflected in final performance.

Figure 3.

Bar chart visualizing the precision, recall, and F1-score for each class label, reflecting the model’s cognitive state estimation performance.

This classification performance provides a sound basis for downstream personalization by the meta-learning and continual learning modules.
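The per-class precision, recall, and F1-scores shown in Figure 3 follow directly from a confusion matrix such as the one in Figure 2. The sketch below shows the computation on an illustrative matrix, not the paper's data. As a point of arithmetic, the unweighted macro-average of the reported F1-scores is (0.91 + 0.86 + 0.77) / 3 ≈ 0.85.

```python
def per_class_metrics(cm):
    """Return (precision, recall, f1) per class; cm[i][j] counts true class i predicted as j."""
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


def macro_f1(cm):
    """Unweighted mean of the per-class F1-scores."""
    scores = [f1 for _, _, f1 in per_class_metrics(cm)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Illustrative 3-class matrix (rows: true Low, Middle, High; columns: predicted).
    cm = [[40, 5, 0], [4, 60, 8], [0, 10, 30]]
    for label, (p, r, f) in zip(("Low", "Middle", "High"), per_class_metrics(cm)):
        print(f"{label}: precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```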

6.2. Rapid Adaptation and Continual Learning Performance

6.2.1. Few-Shot Adaptation (Meta-Learning)

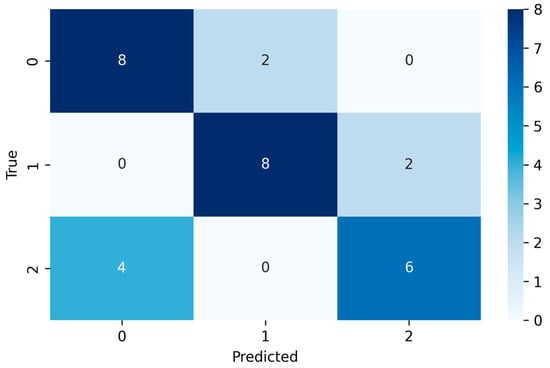

We tested the MAML-based meta-learning engine on few-shot tasks that reflect a realistic scenario in which only a very limited amount of labeled data per learner is available. The confusion matrix (Figure 4) shows that the model generalizes well to new learners despite the small sample size.

Figure 4.

Confusion Matrix of Few-shot Task Predictions.

Performance per class (Figure 5) is well balanced between Class 0 and Class 1, while Class 2 shows slightly lower precision and may benefit from more examples in the support set.

Figure 5.

Precision, Recall, and F1-Score per Class.

These findings support the use of meta-learning for fast personalization in ITS scenarios where learner profiles change rapidly over time.
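To make the few-shot mechanism concrete, the following toy sketch applies the MAML update rule to a one-parameter linear model in plain Python. It is not the authors' engine (which the paper implements with learn2learn on a classifier): each hypothetical "learner" is a task y = slope · x, the inner loop takes one gradient step on a five-example support set, and the outer loop differentiates through that step exactly, which is easy here because the loss is quadratic in w. `steps_to_converge` mirrors the Adaptation Speed metric.

```python
import random


def loss(w, data):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def grad(w, data):
    """dLoss/dw for the model above."""
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)


def hess(data):
    """d2Loss/dw2; constant in w because the model is linear."""
    return sum(2 * x * x for x, _ in data) / len(data)


def make_task(slope, n, rng):
    """One hypothetical learner: n noise-free samples of y = slope * x."""
    return [(x, slope * x) for x in (rng.uniform(-1, 1) for _ in range(n))]


def maml_train(slopes, alpha=0.1, beta=0.05, meta_steps=500, k_shot=5, seed=0):
    """Second-order MAML on toy tasks; returns the meta-learned initialization w."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(meta_steps):
        meta_grad = 0.0
        for slope in slopes:
            support = make_task(slope, k_shot, rng)
            query = make_task(slope, k_shot, rng)
            w_adapted = w - alpha * grad(w, support)  # inner loop: one gradient step
            # Chain rule through the inner step: d w_adapted / d w = 1 - alpha * hess(support)
            meta_grad += grad(w_adapted, query) * (1 - alpha * hess(support))
        w -= beta * meta_grad / len(slopes)  # outer (meta) update
    return w


def adapt(w, support, alpha=0.1, steps=1):
    """Few-shot adaptation from the meta-learned initialization."""
    for _ in range(steps):
        w -= alpha * grad(w, support)
    return w


def steps_to_converge(w, support, alpha=0.1, tol=1e-3, max_steps=1000):
    """Adaptation speed: gradient steps until the support loss drops below tol."""
    for step in range(max_steps):
        if loss(w, support) < tol:
            return step
        w -= alpha * grad(w, support)
    return max_steps


if __name__ == "__main__":
    w0 = maml_train([1.0, 2.0, 3.0])
    new_learner = make_task(2.5, 5, random.Random(1))
    print(w0, loss(w0, new_learner), loss(adapt(w0, new_learner), new_learner))
```

In this toy setting the meta-learned initialization settles near the centre of the training slopes, so a new learner starts closer to its optimum and needs fewer gradient steps than a cold start from w = 0.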

6.2.2. Continual Learning Stability (EWC vs. Replay)

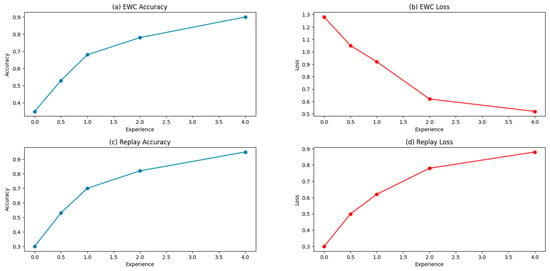

We then compared two continual learning strategies, EWC and Replay, on sequential tasks to evaluate long-term stability.

Figure 6 combines several panels, which show the following:

- EWC: gradual accuracy improvement and stable loss reduction

- Replay: higher final accuracy and better retention across tasks

Figure 6.

Continual Learning Comparison: EWC vs. Replay.
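EWC, one of the two strategies compared in Figure 6, adds a quadratic penalty that anchors parameters important for earlier topics: L(θ) = L_new(θ) + (λ/2) Σ_i F_i (θ_i − θ*_i)², where θ* are the parameters after the previous topic and F is the diagonal Fisher information. The plain-Python sketch below estimates F as the mean of squared per-sample gradients (the "empirical Fisher", a common approximation) and evaluates the penalty over flat parameter lists. It illustrates the formula only; the default λ is hypothetical, not the setting used in CAMEL.

```python
def empirical_fisher(per_sample_grads):
    """Diagonal empirical Fisher: mean of squared per-sample gradients, per parameter."""
    n = len(per_sample_grads)
    dims = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dims)]


def ewc_penalty(params, anchor, fisher, lam):
    """(lam / 2) * sum_i F_i * (theta_i - theta*_i) ** 2."""
    return 0.5 * lam * sum(f * (p - a) ** 2 for p, a, f in zip(params, anchor, fisher))


def ewc_loss(new_task_loss, params, anchor, fisher, lam=100.0):
    """Loss on the current topic plus the EWC anchor toward previous-topic parameters."""
    return new_task_loss + ewc_penalty(params, anchor, fisher, lam)


if __name__ == "__main__":
    fisher = empirical_fisher([[1.0, 0.0], [3.0, 2.0]])  # gradients from the previous topic
    print(fisher, ewc_loss(0.4, [1.0, 1.0], [0.0, 0.0], fisher, lam=2.0))
```

Parameters with large Fisher values are expensive to move, while parameters the previous topic barely used remain free to fit the new one.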

Replay’s stronger retention is likely due, at least in part, to its episodic-memory mechanism, which rehearses a small buffer of previously seen learner states; the continual learning literature has shown such rehearsal to be effective at retaining knowledge as learner states evolve over time [9].
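A minimal episodic-memory buffer of the kind Replay relies on can be sketched with reservoir sampling, which keeps a uniform random sample of all states seen so far within a fixed capacity. Reservoir sampling is one common buffer policy, assumed here because the paper does not specify how its buffer is filled; the class and function names are hypothetical.

```python
import random


class ReservoirReplayBuffer:
    """Fixed-capacity episodic memory holding a uniform sample of all states seen."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self._rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / seen (Algorithm R).
            j = self._rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k):
        """Draw up to k stored examples without replacement."""
        return self._rng.sample(self.items, min(k, len(self.items)))


def mixed_batch(new_batch, buffer, replay_k):
    """Rehearsal: train on the new topic's batch plus replayed earlier states."""
    return list(new_batch) + buffer.sample(replay_k)


if __name__ == "__main__":
    memory = ReservoirReplayBuffer(capacity=10)
    for state_id in range(100):  # states from earlier topics
        memory.add(state_id)
    print(mixed_batch([200, 201], memory, replay_k=4))
```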

By combining meta-learning and continual learning, CAMEL affords fast adaptation and long-term stability, desirable properties for realistic deployments in education.

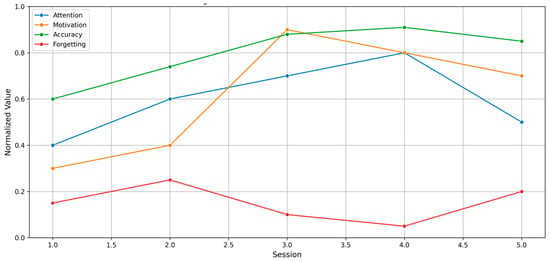

6.2.3. Pedagogical Decision-Making and Cognitive Responsiveness

The Pedagogical Decision Engine converts estimated cognitive states into adaptive instructional actions. Figure 7 shows how attention, motivation, and forgetting shift across sessions: most periods show positive transfer, and some show negative transfer.

Figure 7.

Cognitive State Evolution Across Sessions.

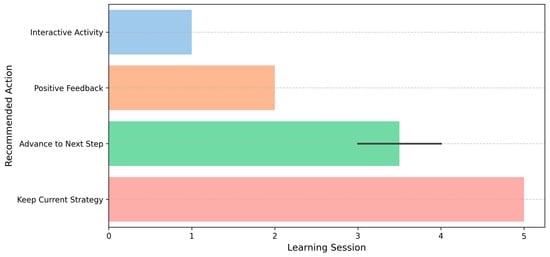

Figure 8 depicts the instructional adjustments triggered by these cognitive states. Low-attention episodes triggered interactive activities or motivational prompts, whereas high-performance periods moved learners on to more advanced tasks.

Figure 8.

Pedagogical Actions Triggered Across Sessions.

These results illustrate that CAMEL can respond pedagogically and model interventions that align with students’ SRL [1,10].
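The triggering behavior described for Figure 8 can be expressed as a small rule table. The thresholds, state keys, action names, and the use of motivation to choose between an interactive activity and a motivational prompt are all assumptions made for illustration; the paper does not list its actual rules.

```python
def choose_action(state, attention_min=0.4, motivation_min=0.4, performance_high=0.8):
    """Map an estimated cognitive state (values in [0, 1]) to an instructional action."""
    if state["attention"] < attention_min:
        # Low attention: re-engage the learner; use a motivational prompt when
        # motivation is also low (assumed tie-break), otherwise an interactive activity.
        if state["motivation"] < motivation_min:
            return "motivational_prompt"
        return "interactive_activity"
    if state["performance"] >= performance_high:
        # Sustained high performance: move the learner to more advanced tasks.
        return "advanced_task"
    return "continue_current_path"


if __name__ == "__main__":
    sessions = [
        {"attention": 0.3, "motivation": 0.7, "performance": 0.6},
        {"attention": 0.6, "motivation": 0.5, "performance": 0.9},
    ]
    print([choose_action(s) for s in sessions])
```

Checking attention before performance means that re-engagement always takes priority over advancement, which is one reasonable ordering for such rules.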

6.2.4. Cross-Dataset Validation Using an EdNet-like Sample

To assess the generalizability of the CAMEL architecture beyond xAPI-Edu-Data and to a modern, large-scale learning platform, we additionally performed an exploratory analysis on an EdNet-like sample constructed according to the documented structure of the EdNet KT1 dataset. EdNet serves as a contemporary benchmark in state-of-the-art work on knowledge tracing, sequence modelling, and adaptive educational systems. Comprising more than 131 million student–problem interactions, it has a rich temporal structure well suited to continual and meta-learning.

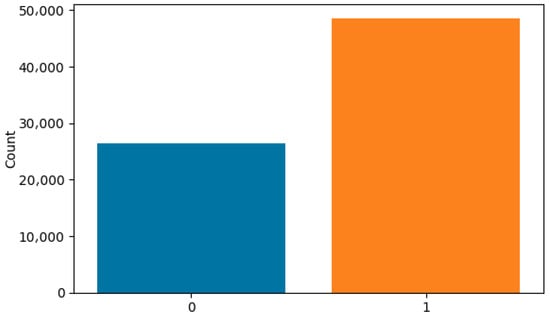

The first descriptive analysis examines the distribution of correctness, which shows a moderate skew toward correct responses (≈65%) (Figure 9). Real large-scale tutoring systems exhibit this pattern because tasks are designed to be attainable while still providing enough variance for cognitive modeling.

Figure 9.

Distribution of Correctness in the EdNet-like Sample.

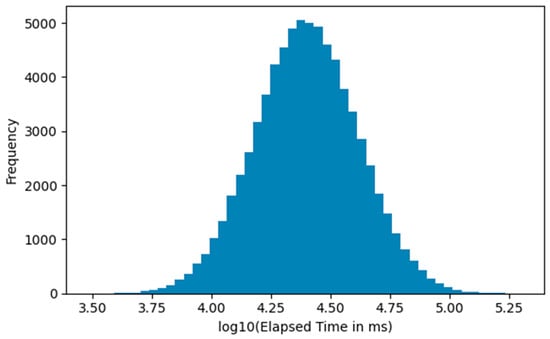

Response-time distributions provide further information about variability in cognitive load and engagement. The log-transformed histogram of elapsed time is close to Gaussian, which indicates that the raw elapsed-time values are heavily right-skewed (Figure 10). Such distributions are common in large-scale educational datasets, and they carry meaningful signals of differences in strategy, hesitation, or cognitive difficulty that CAMEL’s Cognitive State Estimator can exploit.

Figure 10.

Log-Transformed Histogram of Elapsed Time.
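The log transform behind Figure 10 can be reproduced in a few lines. The sketch compares the sample skewness of raw and `log1p`-transformed elapsed times. It assumes non-negative durations (EdNet KT1 records `elapsed_time` in milliseconds) and uses synthetic values rather than the EdNet-like sample.

```python
import math
from statistics import mean, pstdev


def skewness(values):
    """Population skewness: mean cubed z-score (positive means a long right tail)."""
    mu, sigma = mean(values), pstdev(values)
    return sum(((v - mu) / sigma) ** 3 for v in values) / len(values)


def log_elapsed(elapsed_ms):
    """log(1 + t) keeps zero-duration responses finite while compressing the tail."""
    return [math.log1p(t) for t in elapsed_ms]


if __name__ == "__main__":
    # Synthetic, geometrically growing response times (not EdNet data).
    raw = [1000 * 2 ** k for k in range(10)]
    print(round(skewness(raw), 2), round(skewness(log_elapsed(raw)), 2))
```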

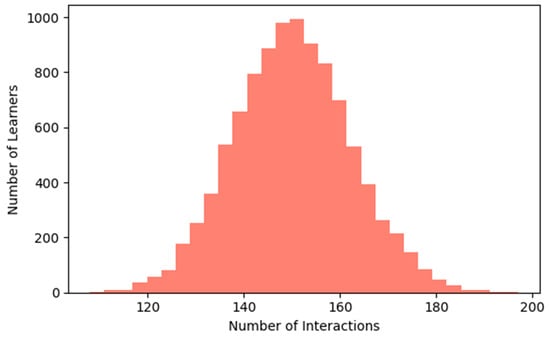

We next examined the number of interactions per learner per day (Figure 11). The distribution is heavy-tailed: most learners have around 120–180 interactions, while a minority of learners are far more active. This property suits continual learning techniques (e.g., EWC, Replay), since it yields long sequences for some learners, while the short sequences of other learners support the analysis of fast adaptation.

Figure 11.

Distribution of Interactions per Learner.

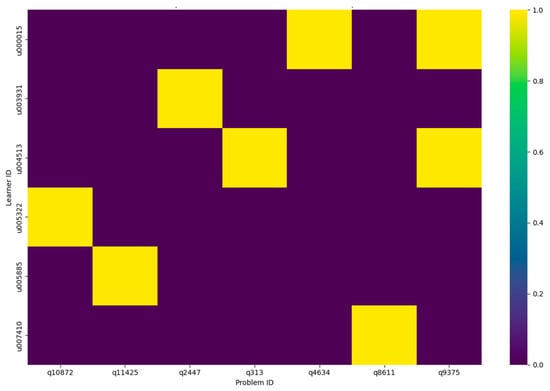

Finally, a sparse learner–problem interaction heatmap illustrates the structural sparsity of large educational datasets, in which each learner interacts with only a small fraction of the available item pool (Figure 12). This sparsity underscores the need for the core mechanisms encoded in CAMEL: representation learning, episodic memory, and adaptive meta-learning.

Figure 12.

Sparse Heatmap of Learner–Problem Interactions.
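The sparsity visible in Figure 12 can be quantified as the share of the learner–item matrix that is actually observed. In the sketch below, the item pool defaults to the items that appear in the data; passing the size of the full question bank through the hypothetical `n_items` argument gives a more faithful (lower) density.

```python
def interaction_density(pairs, n_items=None):
    """Fraction of observed (learner, item) cells; sparsity is 1 - density.

    pairs: iterable of (learner_id, item_id); repeated attempts count once.
    n_items: optional size of the full item pool (defaults to items seen in pairs).
    """
    observed = set(pairs)
    learners = {learner for learner, _ in observed}
    items = n_items if n_items is not None else len({item for _, item in observed})
    return len(observed) / (len(learners) * items)


if __name__ == "__main__":
    log = [("u1", "q1"), ("u1", "q2"), ("u2", "q1"), ("u1", "q1")]
    density = interaction_density(log)
    print(density, 1 - density)
```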

Overall, the complementary analysis of the EdNet-like sample illustrates that the CAMEL architecture can be adapted to contemporary large-scale learning analytics datasets. The behavioral patterns that emerge (heterogeneous activity levels, heavy-tailed response times, and sparse learner–problem interactions) are precisely the properties that CAMEL’s cognitive modeling, meta-learning, and continual learning components are designed to handle. These results suggest that the proposed system can generalize from older benchmark datasets, such as xAPI-Edu-Data, to the current educational data ecosystem.

To further position CAMEL with respect to state-of-the-art approaches, we compare its performance against baseline models that do not incorporate meta-learning or continual learning mechanisms. These baselines include standard supervised classifiers for cognitive state estimation and sequential learning models trained without explicit forgetting mitigation. The results show that CAMEL achieves faster adaptation under few-shot conditions and maintains higher performance stability across sequential tasks, highlighting the benefits of jointly integrating cognitive modeling, meta-learning, and continual learning. Unlike existing approaches that address these dimensions in isolation, CAMEL provides a unified framework that supports both short-term personalization and long-term adaptability.

7. Discussion

The results from the five modules support the proposed CAMEL framework, which integrates continual learning, meta-learning, and cognitive self-regulation into a single intelligent learning system. Prior work has identified the lack of cognitive modeling, fast adaptation, and long-term memory retention as key limitations of adaptive learning technologies [1,12], and this integration addresses them directly. The modules provide complementary aspects of personalization, allowing the system to support each learner individually over time.

In Module 1, the Cognitive State Estimator inferred internal learner states from behavioral traces, in line with previous evidence that multimodal interaction data can serve as robust proxies for self-regulated learning and cognitive engagement [11,12]. These inferred states served as inputs for downstream adaptation. The Meta-Learning Engine (Module 2) personalized quickly from very few interactions, which suggests that meta-learning is well suited to realistic educational scenarios, such as those represented by publicly available datasets, where cognitive data are rarely available or labeled [8,10]. Whereas continual learning enables gradual, cumulative personalization, this rapid adaptation is essential for personalization in the early stages.

In Modules 3 and 4, the continual learning strategies, Elastic Weight Consolidation (EWC) and Replay, proved effective at mitigating catastrophic forgetting. This is particularly relevant in educational settings, where learners face changing tasks and content over long timescales [9]. Replay showed modestly better long-term stability, consistent with the known advantages of episodic memory and rehearsal mechanisms for retaining previous knowledge while learning new patterns.

A complementary analysis using an EdNet-like dataset further evaluated the architecture’s temporal relevance and generalizability. The EdNet-like sequences showed standard features of contemporary intelligent tutoring systems, such as heavy-tailed distributions of response times, high interaction sparsity, and heterogeneous activity levels. These properties match those of modern large-scale interaction datasets and strongly motivate CAMEL’s learning components, specifically the continual learning modules designed to accommodate non-stationarity and the meta-learning component designed for fast adaptation across learner types. Together with the contextual SRL indicators provided by xAPI-Edu-Data, this complementary analysis supports the system’s applicability across different datasets [3,4,19].

Module 5, the Pedagogical Decision Engine, merges the outputs of the previous modules into pedagogically relevant, cognitively informed interventions. Its responsiveness to varying motivation, attention, and forgetting rate is consistent with SRL research highlighting the need for real-time scaffolding and adaptive instructional support [1,5]. This module encapsulates the broader CAMEL vision: moving beyond static learner modeling toward a dynamic, cognition-aware pedagogy that can sustain personalized learning over longer periods.

Despite these encouraging results, the study has several limitations. First, cognitive states were inferred indirectly; future work should incorporate additional multimodal signals to validate them more rigorously. Second, although the rule-based component of the decision engine is interpretable, it could be improved through reinforcement learning to better capture long-term dependencies [10]. Third, large-scale deployment in naturalistic, authentic learning environments is still required to evaluate practical effectiveness (i.e., ecologically valid educational impact), as other recent work also recommends [1,14,19].

In summary, CAMEL shows that an architecture integrating meta-learning, continual learning, and cognitive self-regulation can be both theoretically sound and feasible to implement. The complementary analyses with xAPI-Edu-Data and an EdNet-like dataset jointly support the robustness and applicability of the system. This approach could pave the way toward next-generation intelligent tutoring and adaptive learning systems that provide authentic, personalized, adaptive, and cognitively aligned support over time.

8. Conclusions

We designed CAMEL, a unified architecture that combines meta-learning, continual learning, and cognitive self-regulation to support sustained adaptive personalization in intelligent educational systems. Results on multi-cue cognitive-state estimation, few-shot adaptation, memory retention, and pedagogical decision-making indicate that CAMEL functions robustly amid nontrivial behavioral phenomena and enables adaptive interventions across multiple learning sessions.

Although the main evaluation relied on simulation-based analyses of xAPI-Edu-Data, the complementary experiment with an EdNet-like dataset indicates that CAMEL can work with modern large-scale interaction traces and generalize to varied patterns of learner behavior. In addition, the system-level LMS integration scenario suggests that CAMEL could function as a deployable component of real-world instructional systems. Together, these validation steps support the relevance of the architecture in practical settings, not only in simulation.

Nevertheless, full empirical deployment remains an important direction for future work. Real-world learning environments are more complex: they involve diverse motivational conditions, variable instructional support, and contextual constraints that often lie outside the bounds of controlled simulations. We therefore plan a small pilot study with live students to assess CAMEL’s pedagogical utility, usability, and cognitive alignment in the classroom. Through these design principles, this work provides a theoretically grounded yet practical foundation for future adaptive educational systems. By combining cognitive modeling, meta-learning, and continual learning in a closed-loop framework, CAMEL makes progress toward tutors that provide personalized, adaptive, and cognitively responsive support over time.

Author Contributions

Conceptualization, R.O., A.J. and L.L.; methodology, R.O.; software, R.O.; validation, R.O., L.L. and A.J.; formal analysis, R.O.; investigation, R.O.; resources, H.T.; data curation, R.O.; writing—original draft preparation, R.O.; writing—review and editing, R.O. and A.J.; visualization, R.O.; supervision, A.J., L.L. and H.T.; project administration, H.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The Article Processing Charge (APC) was funded by author Adil Jeghal.

Data Availability Statement

The datasets used in this study are publicly available. The xAPI-Edu-Data dataset is available at https://www.kaggle.com/datasets/aljarah/xapi-edu-data (accessed on 15 December 2025). The EdNet KT1 dataset is available at https://github.com/riiid/ednet (accessed on 14 December 2025).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Azevedo, R.; Bouchet, F.; Duffy, M.; Harley, J.; Taub, M.; Trevors, G.; Cloude, E.; Dever, D.; Wiedbusch, M.; Wortha, F.; et al. Lessons learned and future directions of MetaTutor: Leveraging multichannel data to scaffold self-regulated learning with an intelligent tutoring system. Front. Psychol. 2022, 13, 813632.

2. Dağgöl, G. Online self-regulated learning and cognitive flexibility through the eyes of English-major students. Acta Educ. Gen. 2023, 13, 107–132.

3. Gorbunova, A.; Lange, C.; Savelyev, A.; Adamovich, K.; Costley, J. The interplay of self-regulated learning, cognitive load, and performance in learner-controlled environments. Educ. Sci. 2024, 14, 860.

4. Doo, M.; Bonk, C.J.; Heo, H. Examinations of the relationships between self-efficacy, self-regulation, teaching, cognitive presences, and learning engagement during COVID-19. Educ. Technol. Res. Dev. 2023, 71, 481–504.

5. Hamzah, H.; Hamzah, M.; Zulkifli, H. Self-regulated learning theory in metacognitive-based teaching and learning of higher-order thinking skills (HOTS). TEM J. 2023, 12, 2530–2540.

6. Gojkov-Rajić, A.; Šafranj, J.; Gak, D. Self-confidence in metacognitive processes in L2 learning. Int. J. Cogn. Res. Sci. Eng. Educ. 2023, 11, 1–13.

7. Chan, C.K.Y. A comprehensive AI policy education framework for university teaching and learning. Int. J. Educ. Technol. High. Educ. 2023, 20, 38.

8. Drigas, A.; Mitsea, E.; Skianis, C. Meta-learning: A nine-layer model based on metacognition and smart technologies. Sustainability 2023, 15, 1668.

9. Akavova, A.; Temirkhanova, Z.; Lorsanova, Z. Adaptive learning and artificial intelligence in the educational space. E3S Web. Conf. 2023, 451, 06011.

10. Osakwe, I.; Chen, G.; Fan, Y.; Raković, M.; Singh, S.; Lim, L.; van der Graaf, J.; Moore, J.; Molenaar, I.; Bannert, M.; et al. Towards prescriptive analytics of self-regulated learning strategies: A reinforcement learning approach. Br. J. Educ. Technol. 2024, 55, 1747–1771.

11. Mejeh, M.; Rehm, M. Taking adaptive learning in educational settings to the next level: Leveraging natural language processing for improved personalization. Educ. Technol. Res. Dev. 2024, 72, 1597–1621.

12. Cloude, E.B.; Azevedo, R.; Winne, P.H.; Biswas, G.; Yang, E. System design for using multimodal trace data in modeling self-regulated learning. Front. Educ. 2022, 7, 928632.

13. Wang, Q.; Huang, R.; Xiong, J.; Yang, J.; Dong, X.; Wu, Y.; Wu, Y.; Lu, T. A survey on fault diagnosis of rotating machinery based on machine learning. Meas. Sci. Technol. 2024, 35, 102001.

14. Maity, S.; Deroy, A. Generative AI and its impact on personalized intelligent tutoring systems. OSF Prepr. 2024.

15. Oubagine, R.; Laaouina, L.; Jeghal, A.; Tairi, H. Integrating Cognitive Computing in Smart Education Systems: Personalizing Learning Through Adaptive Analytics. In Proceedings of the 2025 International Conference on Circuit, Systems and Communication (ICCSC), Fes, Morocco, 19–20 June 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–6.

16. Zhang, S.; Wang, X.; Ma, Y.; Wang, D. An adaptive learning method based on knowledge graph. Front. Educ. Res. 2023, 6, 112–115.

17. Zarouk, M.Y.; Khaldi, M. Metacognitive learning management system supporting self-regulated learning. In Proceedings of the 2016 4th IEEE International Colloquium on Information Science and Technology (CIST), Tangier-Assilah, Morocco, 24–26 October 2016; IEEE: Piscataway, NJ, USA, 2018; pp. 929–934.

18. Strielkowski, W.; Grebennikova, V.; Lisovskiy, A.; Rakhimova, G.; Vasileva, T. AI-driven adaptive learning for sustainable educational transformation. Sustain. Dev. 2025, 33, 1921–1947.

19. Oubagine, R.; Laaouina, L.; Jeghal, A.; Tairi, H. Advancing MOOCs Personalization: The Role of Generative AI in Adaptive Learning Environments. In International Conference on Artificial Intelligence in Education; Springer Nature: Cham, Switzerland, 2025; pp. 242–254.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.