Abstract

The estimation of leakage faults in the evaporation tubes of supercharged boilers is crucial for ensuring the safe and stable operation of the central steam system. However, evaporation tube leakage faults exhibit strong temporal dependency, strong coupling among monitoring parameters, and noise interference. Moreover, the large number of monitoring parameters (approximately 140) makes it challenging to extract spatiotemporal features, decouple features, and establish a mapping between the high-dimensional monitoring parameters and the leakage, rendering the precise quantitative estimation of evaporation tube leakage extremely difficult. To address these issues, this study proposes a novel deep learning framework (LSTM-CNN–attention) that combines a Long Short-Term Memory (LSTM) network with a dual-pathway spatial feature extraction structure (ACNN) consisting of parallel attention-mechanism (attention) and one-dimensional convolutional neural network (1D-CNN) pathways. The temporal embeddings generated by the LSTM are processed by the dual-branch ACNN, in which the 1D-CNN captures local spatial features and the attention mechanism models global significance, yielding decoupled representations that prevent cross-modal interference. The framework is implemented on a simulated supercharged boiler and validated with datasets covering three operating conditions and 15 statuses. It achieves an average diagnostic accuracy (ADA) above 99%, an average estimation accuracy (AEA) above 90%, and a maximum relative estimation error (MREE) below 20%. Even at a signal-to-noise ratio (SNR) of −4 dB, the ADA remains above 90% and the AEA above 80%. The framework establishes a strong correlation between leakage and multiple characteristic parameters, moving beyond traditional threshold-based diagnostics to enable the early quantitative assessment of evaporator tube leakage.

1. Introduction

The steam power system features mature technology and high reliability, playing a crucial role in power generation, marine power, and other fields. As the heart of the steam power system, the supercharged boiler supplies energy to the power system in the form of steam [1], and its evaporator tubes are the critical steam-generating equipment. In recent years, increasing attention has been paid to supercharged boiler evaporator tubes. Analyses of heat flux phenomena in supercharged boiler evaporators have shown that numerous factors can lead to tube rupture and damage [2]. Because evaporation tube leakage faults can cause serious harm to the steam system, they have received extensive attention [3,4,5,6]. Studies of boiler evaporator tube leakage have focused on its causes, including overheating, corrosion, and wear, in an effort to prevent leakage faults in advance [7]. However, because the evaporator tubes operate in a harsh environment, the evolution of their failure process is difficult to observe. The main cause of evaporator tube leakage is the formation of a differential oxygen concentration cell. This leads to continuous corrosion and thinning of the steel tube and, ultimately, to perforation and leakage under the pressure of the high-temperature internal medium [8]. As a result, such leakage is difficult to detect and measure in time. Evaporator tube leakage in supercharged boilers poses critical risks, including unplanned shutdowns, furnace explosions, degraded steam quality, and collateral equipment damage resulting from incorrect handling or a delayed response. Despite these risks, this failure mode remains significantly underexplored in existing diagnostic research. It is therefore essential to accurately estimate the leakage of the evaporation tubes.

The study of fault diagnosis technology has thrived, yielding numerous achievements across fields that safeguard equipment operation. Traditional methods, such as those based on expert experience [9,10], models [11,12], and signal processing [13,14], work well for simple, structured equipment. However, precise boundary conditions are difficult to establish under the variable and complex operating conditions of evaporation pipelines. Sensor-based monitoring has opened new paths for fault diagnosis by tracking key parameters and the complex conditions of evaporation pipelines [15,16]. However, the complex layout and harsh environment of evaporation pipelines impede effective sensor-based monitoring, so fault analysis often relies on the monitoring parameters of connected equipment [17,18]. Analyzing these parameters requires feature engineering to identify fault-indicative key parameters [19], which is difficult given the multitude of highly coupled parameters. Data-based intelligent fault diagnosis methods for evaporation pipelines, which rely on sensor-monitored parameters, offer new approaches to end-to-end diagnosis [20,21,22]. These methods enable models to learn fault features automatically, enhancing diagnostic efficiency and accuracy [1]. Moreover, quantitative severity estimation is a critical post-diagnosis step that informs rational maintenance strategies [23]. However, existing research has paid insufficient methodological attention to quantitative severity estimation.

Preliminary investigations into fault severity estimation have been reported, albeit with limited scope and technical maturity. For instance, models based on the root mean square (RMS) of vibration signals estimate fault severity [24], and prior knowledge enables small-sample, cross-gear, dimensional domain estimation [25]. Mohamed-Amine Babay et al. achieved promising results in predicting hydrogen production from variables such as solar radiation and environmental factors, using machine learning methods including Random Forest and Multi-Layer Perceptron (MLP) [26]. In a study of turn-to-turn short circuits in wind turbines, LSTM achieved 97% accuracy in fault severity estimation [27], and LSTM with attention also performed well for cable fault severity estimation [28]. However, current estimation methods often target only a few variables, which limits their application to evaporation tube leakage faults involving many spatial variables. In particular, these variables exhibit strong temporal dependency, strong coupling among monitoring parameters, and noise interference. Together, these properties impede the establishment of the intricate nonlinear mapping between multivariate inputs and the evaporation tube leakage magnitude.

Techniques such as LSTM, CNN, and attention have opened new ways to extract spatiotemporal features, decouple features, and map high-dimensional monitoring parameters to leakage. Wang et al. used LSTM for fault diagnosis of a small-scale supercharged water reactor, achieving 92.06% average accuracy [29]. LSTM can handle time-dependent data and capture temporal fault features. However, it struggles to extract the spatial features of the multidimensional variables associated with evaporation tube leakage. CNN can extract local fault features from variables and excels in multivariable scenarios [30]. Fang et al. proposed a Bayesian-optimized CNN-LSTM for turbine fault diagnosis with over 90% accuracy [31]. CNN-LSTM can extract temporal and local fault features. However, different leakage levels have different data distributions, which requires extracting global, fault-relevant spatial features; an attention mechanism may achieve this goal [32]. The CNN-LSTM-SA model extracted spatiotemporal features from oil-well production data more comprehensively [33]. CNN-LSTM–attention combines the strengths of CNN-LSTM with the ability of attention to enhance global feature extraction. However, the CNN-LSTM–attention architecture connects its components sequentially. The CNN first extracts local spatial features, the LSTM then captures the temporal dependencies of these local spatial features, and attention finally adjusts the global spatiotemporal features. This design tends to cause feature entanglement and fails to achieve deep feature decoupling of the input variables. Consequently, it cannot establish a comprehensive mapping between spatiotemporal fault features and leakage. The architecture therefore needs to be restructured to enable holistic spatiotemporal feature extraction and deep feature decoupling of the input variables.

Integrating LSTM and ACNN (1D-CNN and attention) within a rational framework is therefore expected to fully extract spatiotemporal features and decouple the features of evaporation tube leakage faults. This would establish a mapping between high-dimensional monitoring parameters and leakage. Accordingly, this paper proposes the LSTM-CNN–attention framework to estimate evaporation tube leakage in supercharged boilers.

2. Methodology

2.1. LSTM Theory

Hochreiter et al. proposed the Long Short-Term Memory (LSTM) neural network [34]. LSTM is an enhanced recurrent neural network (RNN) that incorporates a gating mechanism to mitigate the vanishing and exploding gradient problems common in RNNs. This allows LSTM to effectively extract temporal features from time series data [35].

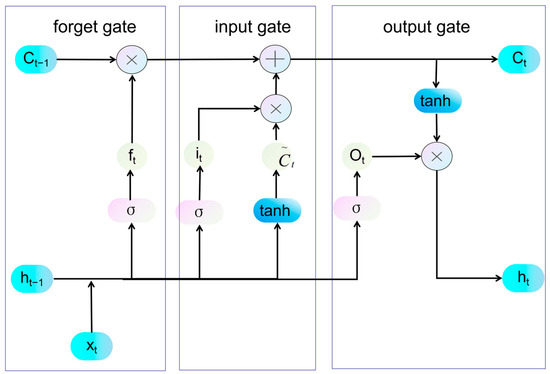

Figure 1 shows the LSTM structure. Its core components are three gates (the forget gate, the input gate, and the output gate) and two types of memory units: the short-term unit (hidden state) and the long-term unit (cell state).

Figure 1.

Structure of an LSTM module.

Forget gate: This gate determines how much of the long-term memory (cell state) from the previous time step is discarded, so that only useful information is retained.

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

Here, $f_t$ decides which information is forgotten, $h_{t-1}$ is the previous step's short-term memory state, and $x_t$ is the current step's input. $W_f$ (weight matrix) and $b_f$ (bias term) are learnable parameters. $\sigma$ is the sigmoid function, which outputs a value between 0 and 1:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

Input gate: This gate controls which information from the previous step's short-term memory and the current step's input can enter the current memory cell. It thereby prevents irrelevant information from entering the long-term memory cell.

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

Here, $i_t$ selects the components of the previous step's short-term information and the current step's input that update the memory cell. $W_i$ and $b_i$ are the weight matrix and bias term of this update.

$C_t^{*}$ is the candidate vector that carries new information; its inflow into the current cell is controlled by the input gate $i_t$. Its values range from −1 to 1, so it can represent both positive and negative updates. $W_c$ is the weight matrix, and $b_c$ is the bias term for updating this potential information.

$$C_t^{*} = \tanh\left(W_c \cdot [h_{t-1}, x_t] + b_c\right)$$

The tanh function produces outputs ranging from −1 to 1:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

The long-term memory cell at the current time step combines information from the previous long-term memory cell with new information to form a comprehensive information unit:

$$C_t = f_t \odot C_{t-1} + i_t \odot C_t^{*}$$

where $\odot$ denotes element-wise multiplication. $C_t$ is then passed to the memory cell of the next time step.

Output gate: This gate regulates the information that enters the short-term memory cell at the current step. It decides the final output information, serving as the ultimate checkpoint for the data.

$$O_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

where $O_t$ is a synthesized selection vector with values between 0 and 1. $W_o$ is the weight matrix relating the synthesized information to the current short-term memory output, and $b_o$ is the corresponding bias term.

The short-term memory cell, also called the output unit, is the information unit that is finally output:

$$h_t = O_t \odot \tanh(C_t)$$

It selects the most important part of the current step's information as the output.

The weight matrices $W_f$, $W_i$, $W_c$, and $W_o$ and the bias terms $b_f$, $b_i$, $b_c$, and $b_o$ are shared across all time steps of an LSTM layer. Training an LSTM therefore means adjusting these parameters to fit the specific task.

With its three-gate mechanism, LSTM carries information across the time steps of a sequence. It can therefore effectively process evaporator tube leakage monitoring parameters with strong temporal dependencies and extract critical temporal features. Its strength lies in temporal modeling, in particular in detecting fault-related patterns with time-specific characteristics.
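To make the gate equations concrete, the following is a minimal, dependency-free Python sketch of one LSTM time step and its unrolling over a window. It assumes the common convention that each gate's weight matrix acts on the concatenation [h_{t−1}, x_t]. The names `lstm_step`, `lstm_forward`, and the `params` dictionary layout are illustrative and are not taken from the authors' implementation.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function, output in (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step: forget gate, input gate, candidate, output gate.

    params maps "W_f", "W_i", "W_c", "W_o" to (hidden x (hidden + inputs))
    matrices and "b_f", "b_i", "b_c", "b_o" to bias vectors of length hidden.
    """
    z = list(h_prev) + list(x_t)  # concatenation [h_{t-1}, x_t]

    def layer(name, act):
        pre = matvec(params["W_" + name], z)
        return [act(p + b) for p, b in zip(pre, params["b_" + name])]

    f_t = layer("f", sigmoid)       # forget gate f_t
    i_t = layer("i", sigmoid)       # input gate i_t
    c_cand = layer("c", math.tanh)  # candidate vector C_t*
    o_t = layer("o", sigmoid)       # output gate O_t
    c_t = [f * c + i * cc for f, c, i, cc in zip(f_t, c_prev, i_t, c_cand)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


def lstm_forward(sequence, hidden_size, params):
    """Unroll over a window; the same params are reused at every time step."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x_t in sequence:
        h, c = lstm_step(x_t, h, c, params)
    return h, c
```

`lstm_forward` reuses a single `params` dictionary at every step. The sketch therefore also illustrates that an LSTM layer shares its weights across time steps.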

2.2. CNN Theory

The convolutional neural network (CNN) emerged when LeCun utilized the backpropagation (BP) network to develop LeNet-5, which is a classic CNN architecture [36]. CNNs are regularized feedforward neural networks that leverage convolution operations and pooling to automatically extract hierarchical features from data. They exhibit high fault tolerance and robustness [31].

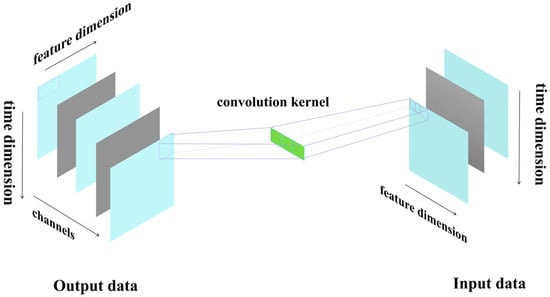

The CNN structure consists of three key parts: the convolutional layer, the pooling layer, and the activation function layer. The convolutional layer is the fundamental component, and Figure 2 shows how it works.

Figure 2.

The fundamentals of the convolutional layer.

Convolution operation: A convolution kernel slides over the input data and, at each position, sums the weighted values to extract local features.

$$Y(i,j) = \sum_{m}\sum_{n} X(m+i,\, n+j)\, k(m,n)$$

Here, $i$ is the time position and $j$ is the variable position. $(m, n)$ are the row and column indices of the convolution kernel, and $k(m, n)$ is the kernel value at position $(m, n)$. $X(m + i, n + j)$ is the input element covered by kernel position $(m, n)$ when the kernel is placed at position $(i, j)$. Finally, $Y(i, j)$ is the output value at position $(i, j)$ after the convolution.
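The convolution formula can be checked with a short, dependency-free sketch. Like the formula, and like the convolution layers of common deep learning frameworks, it does not flip the kernel (strictly, it computes a cross-correlation). It assumes no padding and a stride of one; the function name `conv2d_valid` is illustrative.

```python
def conv2d_valid(X, k):
    """Compute Y(i, j) = sum_m sum_n X(m + i, n + j) * k(m, n).

    X: input as a list of rows (time positions) of columns (variables).
    k: kernel as a list of rows. No padding and stride 1, so the output has
    (rows - kernel_rows + 1) x (cols - kernel_cols + 1) entries.
    """
    rows, cols = len(X), len(X[0])
    k_rows, k_cols = len(k), len(k[0])
    Y = []
    for i in range(rows - k_rows + 1):
        Y.append([
            sum(X[m + i][n + j] * k[m][n]
                for m in range(k_rows) for n in range(k_cols))
            for j in range(cols - k_cols + 1)
        ])
    return Y
```

For example, take X = [[1, 2, 3], [4, 5, 6], [7, 8, 9]] and the diagonal kernel [[1, 0], [0, 1]]. Each output sums two diagonal neighbours, giving [[6, 8], [12, 14]].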

Pooling operation: Pooling down-samples the input data, reducing the data volume while retaining key features. Max-pooling selects the maximum value within the pooling window, which emphasizes the most prominent features and makes less obvious fault features more distinct. Max-pooling is chosen in this study because the fault features are somewhat similar, and it helps to highlight the less apparent fault characteristics.

Max-pooling uses a (k, k) pooling window: for each output position (i, j), it takes the maximum value of Y within the corresponding (k, k) window.
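The following is a minimal sketch of (k, k) max-pooling. The text does not state the pooling stride, so this sketch assumes non-overlapping windows (stride k). It also drops trailing rows or columns that do not fill a whole window. `max_pool2d` is an illustrative name.

```python
def max_pool2d(Y, k):
    """Non-overlapping (k, k) max-pooling with stride k (an assumption).

    Y: feature map as a list of rows. Rows/columns that do not fill a
    complete window at the bottom or right edge are discarded.
    """
    pooled = []
    for i in range(0, len(Y) - k + 1, k):
        pooled.append([
            max(Y[i + a][j + b] for a in range(k) for b in range(k))
            for j in range(0, len(Y[0]) - k + 1, k)
        ])
    return pooled
```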

Common activation functions include Sigmoid, tanh, and ReLU. This study uses the ReLU activation function, which is widely used in CNN models because of its fast convergence and simple gradient calculation. The ReLU function is expressed as follows:

$$f(x_i) = \max(0, x_i)$$

That is, $f(x_i)$ equals $x_i$ when $x_i > 0$ and 0 otherwise.

A 1D-CNN extracts features along the variable-space dimension. It uses multiple convolutional filters that perform dot-product operations on the input data to extract local information from the current data slice [37]. A 1D-CNN therefore effectively extracts local spatial features, which enables it to process the high-dimensional monitoring parameters of evaporator tube leakage while reducing dimensionality.
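The following sketch shows the 1D case. A set of filters slides along the variable axis of one feature vector (for example, the LSTM outputs for one window), and each filter output passes through ReLU. The filter values, the stride of one, and the function names are assumptions for illustration only.

```python
def relu(x):
    """ReLU: x if x > 0, else 0."""
    return x if x > 0 else 0.0


def conv1d_relu(features, kernels, biases):
    """Slide each 1D filter along the variable axis of one feature vector.

    features: values for n variables (e.g. one window's LSTM outputs).
    kernels:  list of filters, each a list of w weights.
    biases:   one bias per filter.
    Returns one ReLU-activated feature map per filter, each of length
    n - w + 1 (no padding, stride 1).
    """
    maps = []
    for kernel, bias in zip(kernels, biases):
        w = len(kernel)
        maps.append([
            relu(sum(kernel[t] * features[j + t] for t in range(w)) + bias)
            for j in range(len(features) - w + 1)
        ])
    return maps
```

A difference filter such as [1, −1] on an increasing sequence yields only negative sums, which ReLU zeroes. An averaging filter such as [0.5, 0.5] passes through unchanged.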

2.3. Attention Mechanism Theory

The scaled dot-product attention mechanism used here was introduced by Ashish Vaswani, Noam Shazeer, Niki Parmar, and others [38]. It dynamically assigns weights based on the relationships between elements, which helps capture long-range dependencies and extract global features. Attention determines the importance of information through feature weighting [39].

For an input sequence X = {x1, x2, x3, …, xn}, attention first applies linear transformations to generate a query Q, a key K, and a value V (K = V), calculated as follows:

$$Q = XW_Q, \quad K = XW_K, \quad V = XW_V$$

where $W_Q$, $W_K$, and $W_V$ are learnable weight matrices. The attention score matrix $S$ represents the degree of relevance between elements. The scores are divided by the scaling factor $\sqrt{d_k}$, which keeps them from growing with the dimension and prevents the softmax from saturating, a cause of vanishing gradients. $S$ is calculated as follows:

$$S = \frac{QK^{\top}}{\sqrt{d_k}}$$

where $d_k$ is the dimension of the key vectors. The attention scores $S$ are then normalized with the softmax function to obtain the attention weights. Finally, these weights are multiplied by $V$ to give the output:

$$\tilde{S} = \mathrm{softmax}(S), \qquad \mathrm{Attention}(Q, K, V) = \tilde{S}\,V$$

where $\tilde{S}$ is the result of normalizing $S$.

The attention mechanism dynamically assigns weights to temporal steps and spatial features, enabling a task-specific information focus. By integrating the global context, it selectively filters irrelevant data while concentrating on salient features during complex data processing. This capability is critical for precise leakage estimation.
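The three attention equations can be traced in a small, dependency-free sketch. Each row of X is one element of the input sequence, and all names are illustrative. Passing the same matrix as `W_K` and `W_V` reproduces the K = V setting described for this attention mechanism.

```python
import math


def matmul(A, B):
    """Matrix product of A (n x m) and B (m x p), both lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def softmax(row):
    """Softmax with max-subtraction for numerical stability."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(X, W_Q, W_K, W_V):
    """Return (S_tilde V, S_tilde) with S = Q K^T / sqrt(d_k)."""
    Q, K, V = matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)
    d_k = len(K[0])
    S = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, transpose(K))]
    S_tilde = [softmax(row) for row in S]  # each row sums to 1
    return matmul(S_tilde, V), S_tilde
```

With an all-zero `W_Q`, every score is equal, so each row of the weights is uniform and the output is the mean of the value rows. This is a quick sanity check of the softmax normalization.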

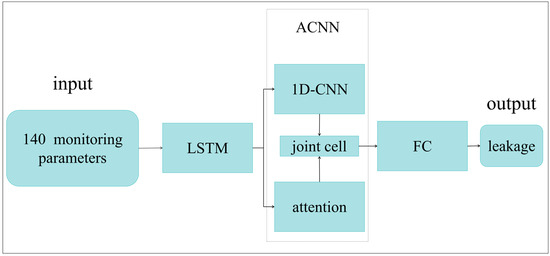

2.4. LSTM-CNN–Attention Framework for Leakage Estimation

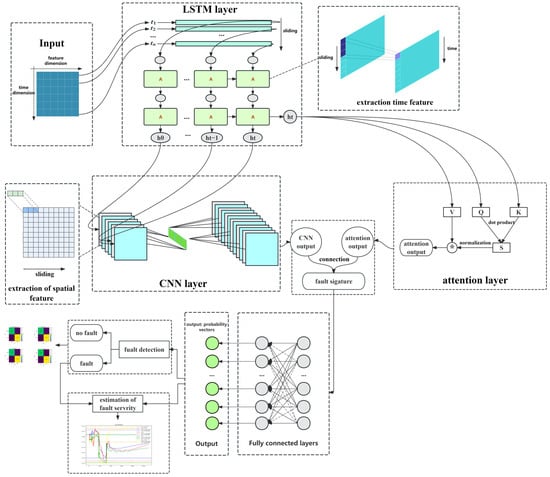

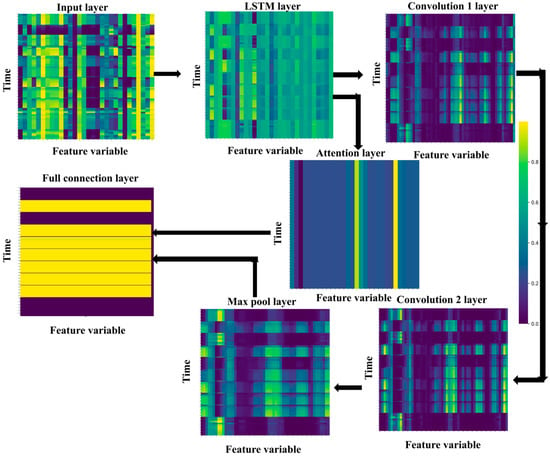

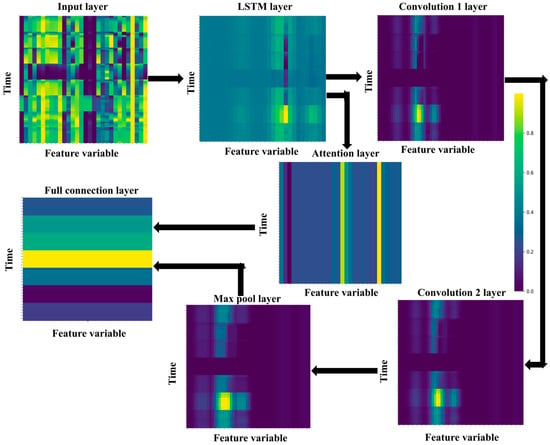

Building on existing methods, this paper proposes an LSTM-CNN–attention framework for evaporator tube leakage estimation, whose schematic is shown in Figures 3 and 4. The input data first undergo sliding-window preprocessing to enhance the temporal fault features of each variable. They then pass through the LSTM layer, which extracts the time-based fault features of each variable. The LSTM outputs are subsequently reshaped and fed to the heterogeneous dual-channel ACNN module, which integrates the following:

Figure 3.

Structure of an LSTM-CNN–attention framework.

Figure 4.

The structure of an LSTM-CNN-attention module.

(1) A 1D-CNN submodule with two convolutional layers that extracts local fault-variable features, followed by max-pooling for feature refinement;

(2) An attention mechanism that quantifies spatial variable-fault correlations to derive global spatial fault features.

The concatenated outputs of both channels are fed into a fully connected layer that aggregates the spatiotemporal fault representations and generates the final estimate: a fault probability for diagnosis or a leakage value for severity estimation.

This study builds two models on the same framework, differing only in the loss function used during training. Binary cross-entropy (BCE) is used for fault diagnosis and mean squared error (MSE) for fault severity estimation. The fault diagnosis model is applied first, and the severity estimation model is then applied to the data diagnosed as faulty.
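The two-stage use of one framework can be sketched as follows. The two models differ only in the training objective (BCE or MSE). At inference, the severity model is called only for samples that the diagnosis model flags as faulty. The 0.5 decision threshold and the `diagnose` and `estimate` callables are hypothetical stand-ins for the trained models, since the text does not specify them.

```python
import math


def bce_loss(probs, labels, eps=1e-7):
    """Binary cross-entropy for the diagnosis model (labels are 0 or 1)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


def mse_loss(preds, targets):
    """Mean squared error for the severity (leakage) estimation model."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)


def two_stage_inference(samples, diagnose, estimate, threshold=0.5):
    """Diagnose first; estimate leakage only for samples flagged as faulty."""
    results = []
    for s in samples:
        if diagnose(s) >= threshold:
            results.append(estimate(s))
        else:
            results.append(0.0)  # judged fault-free: no leakage estimate
    return results
```

Keeping the framework fixed and swapping only the loss means the two models share the same architecture, while each output layer is interpreted differently (probability versus leakage value).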

The LSTM-CNN–attention architecture achieves robust feature decoupling through multi-modal feature extraction. Specifically, the LSTM captures the temporal features of each variable, while the dual-channel ACNN extracts comprehensive spatial features: the 1D-CNN acquires localized spatial patterns, and attention derives global spatial characteristics. This explicit functional segregation prevents feature entanglement. Furthermore, the architecture achieves precise leakage estimation from high-dimensional inputs through staged processing: temporal modeling (LSTM), multidimensional spatial feature fusion (ACNN), and nonlinear mapping (FC).

This lightweight deep learning architecture offers three distinct advantages. Reduced computational complexity: compared with transformer structures, it incurs significantly lower computational overhead. Inherent multi-scale adaptability: it can adapt to multi-scale data without explicit scale alignment, offering greater flexibility than multi-head attention mechanisms. Multidimensional feature extraction: it extracts features across multiple dimensions, which enables it to model complex nonlinear relationships and, ultimately, to accurately predict unseen leakage levels.

The architecture also exhibits strong noise resistance under noisy real-world operating conditions, for three key reasons. LSTM-based temporal denoising: the LSTM inherently smooths features along the temporal dimension, which preliminarily reduces noise interference over time. Translation invariance of the 1D-CNN: this invariance inherently mitigates the impact of noise on local features. Attention-based dynamic reweighting: the attention mechanism dynamically reweights the LSTM-processed features, whose noise has already been reduced, based on the global context. Together, these mechanisms form a dual-channel, two-stage noise suppression framework built on the LSTM and CNN pathways.

In summary, the LSTM extracts the temporal features of leakage faults, the CNN extracts the local spatial features of the fault parameters, and attention reweights features to obtain their global spatial features. Together, these components allow LSTM-CNN–attention to fully capture spatiotemporal fault features. Through this triple-layer spatiotemporal modeling mechanism, the framework addresses the critical challenge of evaporator tube leakage quantification. It captures the spatiotemporal features of high-dimensional monitoring parameters, decouples strongly correlated variables, and establishes complex nonlinear mappings between the inputs and leakage. This enables accurate estimation of the evaporator tube leakage magnitude.

3. Fault Simulation Experiment and Data Preprocessing

3.1. Introduction to Supercharged Boiler Evaporator Tubes

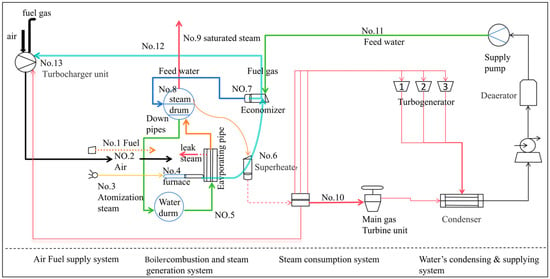

The supercharged boiler system consists of four subsystems: air supply, combustion, steam, and condensate feed water (Figure 5). In the steam generation process, condensate water is sent by the feed-water pump to the economizer for preheating and then enters the steam drum. Fuel burns in the furnace to release heat, which vaporizes the water in the evaporator tubes. The steam passes through the superheater and drives the turbine, where its energy is converted into mechanical energy. Unvaporized water returns to the steam drum for recirculation [12].

Figure 5.

Schematic flow diagram of the system and evaporator (simplified).

Water absorbs heat in the evaporator tubes and vaporizes into steam. The evaporator tubes consist of many thin seamless steel tubes, whose thin walls facilitate heat transfer. Heat is transferred by radiation and convection. The side near the furnace receives heat by both convection and radiation, whereas the side far from the furnace relies mainly on radiation.

3.2. An Introduction to the Boiler Simulation Model

Data on evaporation tube leakage faults in supercharged boilers are usually obtained from simulation models. Collecting actual fault data is costly and carries safety risks, and actual faults occur too rarely to yield abundant data for analysis. This study therefore uses an existing supercharged boiler simulation model to acquire rich leakage fault data.

Our research team has published multiple papers addressing the fidelity of the model [12,16,17,18]. In these papers, a comprehensive thermodynamic system simulation model was established. Its parameters were validated against measured data from actual installations, ensuring that its fidelity relative to the actual thermodynamic system meets both static and dynamic requirements. The steady-state error of key parameters in the supercharged boiler simulation model used in this paper is below 2%, and the dynamic error is below 5%. Our research group has modeled and analyzed both transient conditions (load increase and decrease) and fault conditions (various fault types and severities). Eighteen representative parameters, including the superheated steam temperature and air supply rate, were selected and tested using seven distinct fault test cases. The results demonstrate that the evaporator tube leakage model of this supercharged boiler agrees with combustion mechanisms and empirical data on leakage faults. This indicates that the modeled transient events accurately represent real-world fault scenarios. The accuracy of the model has been verified, and it meets the requirements of this study on evaporation tube leakage faults [12].

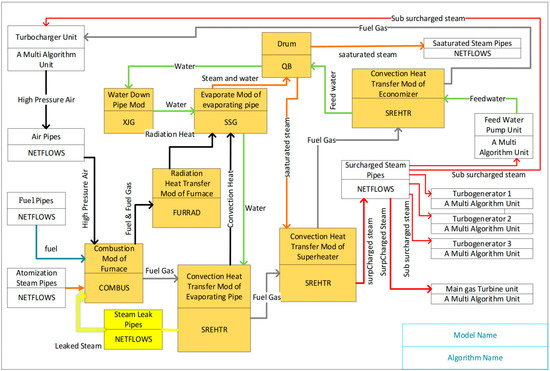

The supercharged boiler simulation model used in the previous study was built with MINIS. Because that study focused on evaporator tube leakage faults, a boiler fault model was established and the relevant modules of the steam leakage model were improved. The module connections are shown in Figure 6 [12]. A steam leakage pipeline module was built on the fluid network principle, connecting the evaporator tube and furnace modules (see for combustion model improvement). This model has been verified by simulation [12].

Figure 6.

A dynamic model of the steam power unit.

3.3. Fault Simulation Experiment of Boiler Evaporator Tubes

3.3.1. Experimental Scheme and Experimental Procedures

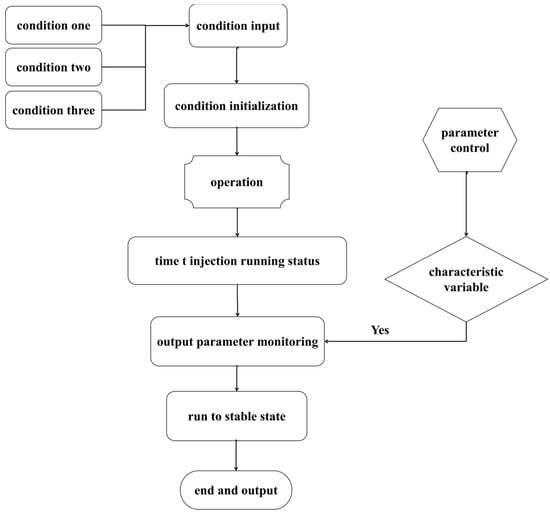

The leakage of high-temperature, high-pressure steam from evaporator tubes directly affects equipment performance. Understanding this leakage is therefore essential for operators to evaluate equipment operability and plan maintenance. In supercharged boilers, different evaporator tube leaks affect the system in different ways and require different response strategies, so the experiment introduced a series of simulated leakage faults. Li et al. [12] found that load changes often resemble minor leaks, which complicates leak identification. This study therefore included load-increase conditions as the fault-free comparison group. Evaporator tube pressures differ among operating conditions, so the same operating state shows large distribution differences across conditions. Three groups of fault simulation tests under different conditions were therefore conducted, following the process shown in Figure 7. Each of the three operating conditions contains 13 operating states. After the model had run for a time t, each operating state was injected, and the run ended when the system reached a stable state. An output variable monitoring unit was used to determine whether each output variable was characteristic, and 140 characteristic variables were finally selected.

Figure 7.

Simulation experiment on the leakage fault of evaporation tubes in supercharged boilers.

The fault settings and labels for evaporator tube leakage in the supercharged boiler are shown in Table 1. Operating conditions 1, 2, and 3 correspond to supercharged boiler loads of 20%, 30%, and 40%, respectively. Stable operation means operation at the original load with no load increase and no evaporator tube leakage. Load increase means raising the load from the original load. Evaporator tube leakage means setting the corresponding leakage at the original load without a load increase. In this study, the leakage labels are set to the actual leakage values multiplied by 10, because the leakage levels range only from 0.001 to 0.05. For trace leakage levels (i.e., micro-leaks), using the unscaled actual values directly as labels would distort the average estimated accuracy (AEA) and the maximum relative error of estimation (MREE). This distortion arises because both metrics are computed from relative errors. When the label values are very small, the relative error becomes highly sensitive, and the resulting accuracy assessment misrepresents the model's actual predictive performance.
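The sensitivity of relative-error metrics to small labels can be illustrated with a hypothetical fixed absolute error of 0.0005 applied to labels across the stated leakage range. This sketch computes only a plain relative error. The exact AEA and MREE definitions belong to Section 3.5 and are not assumed here.

```python
def relative_error(pred, label):
    """Relative error |pred - label| / |label|; label must be non-zero."""
    return abs(pred - label) / abs(label)


ABS_ERR = 0.0005  # hypothetical absolute estimation error, same for every label

for label in (0.001, 0.01, 0.05):
    rel = relative_error(label + ABS_ERR, label)
    print(f"label={label}: relative error = {rel:.1%}")
```

The same absolute error gives a relative error of about 50% at a label of 0.001 but only about 1% at 0.05. This is the sensitivity that the label scaling is meant to address.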

Table 1.

Test setup for evaporation tube leakage in supercharged boilers.

3.3.2. Fault Simulation Test Result

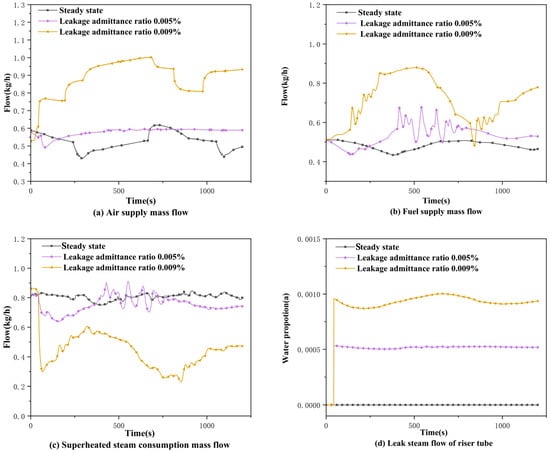

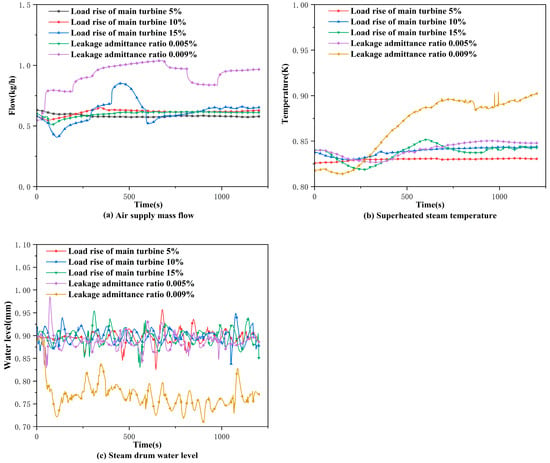

Fault simulation tests on the evaporator tubes of the supercharged boiler yielded a series of data reflecting both fault conditions and normal operation. Figure 8 shows how various monitoring parameters change over time under operating condition 2, both during stable operation and with an evaporator tube leakage of 0.005%. Figure 9 shows the trends of some monitoring parameters over time under the same operating condition 2. It covers load increases of 5%, 10%, and 15% and evaporator tube leakages of 0.005% and 0.009%.

Figure 8.

Air supply mass flow, fuel supply, leak steam mass flow of riser tubes, and superheated steam consumption mass flow.

Figure 9.

Air supply flow, superheated steam temperature, and steam drum water level.

Figure 8d shows that the leakage under normal load disturbance is zero and that the leakage flow increases significantly with leakage size. Furthermore, Figures 8 and 9 show that the trends of these parameters are consistent with those of the actual supercharged boiler. The simulation remains highly accurate even for trace leakage [12].

The evaporator tube leakage simulation results show that leakage fault operation differs greatly from stable operation but closely resembles load-increase operation. Most characteristic parameters are hard to distinguish: their dynamic responses resemble those under load increase, and the main parameters still tend toward equilibrium [12]. Diagnosing evaporator tube leaks, especially micro-leaks, is therefore difficult.

3.4. Fault Data Preprocessing

After the evaporator tube leak fault test data are obtained, they must be normalized, processed with a sliding window, labeled, and divided into training and test sets.

3.4.1. Normalization

Each of the three operating-condition datasets is normalized separately to enhance the model's ability to capture the temporal features of the fault. Min-max normalization is used:

$$X_i^{\mathrm{norm}} = \frac{X_i - \min(X)}{\max(X) - \min(X)}$$

where $X_i^{\mathrm{norm}}$ is the normalized value of the i-th data point in a dataset and $X_i$ is its original value. $\min(X)$ and $\max(X)$ are the minimum and maximum values in the dataset, respectively.
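The following is a minimal sketch of the normalization. It assumes that min(X) and max(X) are taken per variable within one operating-condition dataset, which is usual for multivariate monitoring data, although the text does not say so explicitly. Constant variables are mapped to 0 to avoid division by zero, which is also an assumption.

```python
def min_max_normalize(dataset):
    """Column-wise min-max scaling of one operating-condition dataset.

    dataset: list of rows (time steps), each a list of variable values.
    Each variable is scaled to [0, 1] using its own minimum and maximum;
    constant variables become 0.0 (assumed convention).
    """
    columns = list(zip(*dataset))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(x - lo) / (hi - lo) if hi > lo else 0.0
         for x, lo, hi in zip(row, lows, highs)]
        for row in dataset
    ]
```

Calling the function once per operating-condition dataset, rather than on the pooled data, matches the separate normalization described above.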

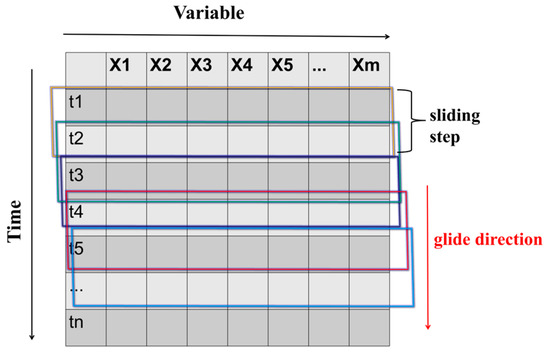

3.4.2. Sliding-Window Processing

Sliding-window processing is a technique for time series data that divides long sequences into fixed-length segments, simplifying feature extraction and analysis [40]. As shown in Figure 10, setting the sliding step to one makes this method particularly effective for diagnosing faults quickly and reducing diagnosis latency. Sliding-window processing is applied to each of the three normalized datasets, and within each dataset to every operating state, using a window size of 20 and a step size of one.

Figure 10.

Sliding-window processing.

The window size is set to 20 s, determined by the minimum fault injection time in the experimental setup. To reflect real operating conditions, fault injection times are randomly generated within the range of 20–100 s. Setting the window size to the minimum injection time (20 s) ensures that the windows capture both pre-fault operating data and the transition from normal operation to the fault condition. The step length is set to one so that the LSTM can capture the temporal evolution of each parameter when extracting time series features. A larger step size could obscure critical transitions within the time series.
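The following is a minimal sketch of the windowing with the stated window size of 20 and step of one. It should be applied to each operating state's series separately, so that no window straddles two runs. The function name is illustrative, and one sample per second is assumed, so that 20 samples correspond to 20 s.

```python
def sliding_windows(series, window=20, step=1):
    """Split a (time x variables) series into overlapping fixed-length windows.

    series: list of rows, one per time step (assumed 1 sample per second).
    Returns len(series) - window + 1 windows when step == 1; a series
    shorter than the window yields no windows.
    """
    return [series[start:start + window]
            for start in range(0, len(series) - window + 1, step)]
```

With a step of one, consecutive windows differ by a single time step. A fault transition is therefore seen at every possible position inside the window, which is the motivation given above for not using a larger step.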

3.4.3. Setting Labels for Fault Data

After normalization and sliding-window processing, we labeled the data for each operating condition as shown in Table 2. Data recorded while an evaporator tube leak fault was present were labeled one; otherwise, they were labeled zero. The fault severity label was set to the leakage amount multiplied by 10 to avoid potential distortion during leakage estimation. During the initial 20 s, operation remained stable and the fault label was zero; once the fault was introduced after this period, the fault label changed to one.
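The labeling rule can be sketched per simulated run as below. The fault label is zero before fault injection and one afterwards, and the severity label is the leakage amount multiplied by 10, as stated in the text. How a window that straddles the injection time is labeled is not specified here, so the sketch assumes, hypothetically, that each window takes the labels of its last time step; `make_window_labels` and its parameters are illustrative names.

```python
import numpy as np


def make_window_labels(n_steps, fault_start, leakage, window=20, step=1):
    """Return per-window (fault_label, severity_label) arrays for one run.

    fault_start: index of the first faulty time step, or None for a
    fault-free run. Assumption: each window is labeled by its last step.
    """
    t = np.arange(n_steps)
    if fault_start is None:
        fault = np.zeros(n_steps, dtype=int)
    else:
        fault = (t >= fault_start).astype(int)
    severity = fault * leakage * 10.0  # severity label = leakage x 10
    # Last time step of each window produced with the same window/step.
    window_ends = np.arange(window - 1, n_steps, step)
    return fault[window_ends], severity[window_ends]
```

The number of labels equals the number of windows produced with the same window size and step, so labels and windows line up one-to-one.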

Table 2.

The diagnosis model and estimation label setting.

3.4.4. Set Division

The datasets of all three operating conditions were divided into training and test sets according to Table 3: each operating-condition dataset was split into one training set and three test sets. Each value in the table represents the proportion of the corresponding operating state's data assigned to that training or test set.

Table 3.

Training set and test set partition.

Test set 1 was designed to illustrate the performance of the fault diagnosis and fault severity estimation methods proposed in this paper for known evaporator tube leakage fault magnitudes. In contrast, test sets 2 and 3 were aimed at demonstrating the performance of this framework for unseen evaporator tube leakage magnitudes.

3.5. Evaluation Metric Selection and Refinement

3.5.1. Average Diagnosis Accuracy (ADA) to Evaluate Leakage Fault Diagnosis

S0 is the number of fault-free samples, C0 is the number of those samples correctly judged fault-free by the model, S1 is the number of faulty samples, and C1 is the number of those samples correctly judged faulty by the model. The ADA reflects the model's overall ability to classify whether a fault is present; the higher the value, the better the model's fault diagnosis ability.
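The ADA equation itself is not reproduced in this text. The sketch below assumes it is the pooled accuracy (C0 + C1)/(S0 + S1) × 100%, and also shows a class-balanced alternative (the mean of C0/S0 and C1/S1) in case "average" refers to averaging over the two classes. All function names are hypothetical, and predicted labels are assumed to come from thresholding the BCE model's output probability at 0.5.

```python
import numpy as np


def diagnosis_counts(y_true, prob, threshold=0.5):
    """Return S0, C0, S1, C1 from true labels and predicted fault probabilities."""
    y_true = np.asarray(y_true).astype(int)
    y_pred = (np.asarray(prob) >= threshold).astype(int)  # assumed 0.5 threshold
    s0 = int(np.sum(y_true == 0))
    s1 = int(np.sum(y_true == 1))
    c0 = int(np.sum((y_true == 0) & (y_pred == 0)))  # correctly judged fault-free
    c1 = int(np.sum((y_true == 1) & (y_pred == 1)))  # correctly judged faulty
    return s0, c0, s1, c1


def ada_pooled(y_true, prob):
    """Assumed ADA: correctly classified samples over all samples, in %."""
    s0, c0, s1, c1 = diagnosis_counts(y_true, prob)
    return 100.0 * (c0 + c1) / (s0 + s1)


def ada_class_balanced(y_true, prob):
    """Alternative reading: mean of the per-class accuracies, in %."""
    s0, c0, s1, c1 = diagnosis_counts(y_true, prob)
    return 100.0 * 0.5 * (c0 / s0 + c1 / s1)
```

When fault-free and faulty sample counts are similar, the two readings give nearly the same value; they diverge when one class dominates the test set.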

3.5.2. Average Estimation Accuracy (AEA) to Evaluate Leakage Fault Estimation

Here, $x_i^{\mathrm{pred}}$ is the fault severity value estimated by the model for the i-th time window, $x_i^{\mathrm{true}}$ is the true fault severity value, and n is the total number of time windows in the sample. The formula computes the estimation error for each time window, averages these errors, and subtracts the average from 100% to obtain the model's accuracy. A higher value indicates more accurate fault severity estimation.
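A sketch of the AEA calculation follows, under the assumption (not confirmed by the text shown here) that the per-window error is the absolute relative error |x_pred - x_true| / x_true, which is what makes subtracting the mean from 100% meaningful. It also assumes every true severity is nonzero, which holds if estimation is evaluated only on faulty windows; `average_estimation_accuracy` is a hypothetical name.

```python
import numpy as np


def average_estimation_accuracy(x_pred, x_true):
    """Assumed AEA: 100% minus the mean absolute relative error over n windows.

    x_pred, x_true: estimated and true fault severity per time window.
    Assumes every x_true is nonzero (faulty windows only).
    """
    x_pred = np.asarray(x_pred, dtype=float)
    x_true = np.asarray(x_true, dtype=float)
    rel_err = np.abs(x_pred - x_true) / np.abs(x_true)  # per-window relative error
    return 100.0 * (1.0 - rel_err.mean())
```

For example, estimates of 1.1, 0.9, and 1.0 against a true severity of 1.0 give relative errors of 10%, 10%, and 0%, so the AEA is about 93.3%.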

3.5.3. Maximum Relative Error of Estimation (MREE) to Evaluate Leakage Fault Estimation

The maximum relative error of estimation (MREE) is the largest relative deviation of the model's fault severity estimates across all time windows:

$$\mathrm{MREE} = \max_{1 \le i \le n} \frac{\left| x_i^{\mathrm{pred}} - x_i^{\mathrm{true}} \right|}{x_i^{\mathrm{true}}} \times 100\%$$

It measures the worst-case deviation of the estimated fault severity. A smaller MREE indicates less deviation in the model's estimates and thus a more accurate and reliable estimate of fault severity.
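The MREE and the 20% relative-error threshold used in the result figures of Section 4 can be checked with a few lines of NumPy. This is a sketch: the function names and the `threshold` argument are illustrative, and true severities are again assumed to be nonzero.

```python
import numpy as np


def relative_errors(x_pred, x_true):
    """Per-window absolute relative error |x_pred - x_true| / x_true."""
    x_pred = np.asarray(x_pred, dtype=float)
    x_true = np.asarray(x_true, dtype=float)
    return np.abs(x_pred - x_true) / np.abs(x_true)


def mree(x_pred, x_true):
    """Maximum relative error of estimation over all windows, in %."""
    return 100.0 * relative_errors(x_pred, x_true).max()


def all_within(x_pred, x_true, threshold=0.20):
    """True if every window's relative error is at or below the threshold."""
    return bool(np.all(relative_errors(x_pred, x_true) <= threshold))
```

Since the MREE is a maximum, a single outlying window determines it, which is why it complements the averaged AEA.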

4. Results and Discussion

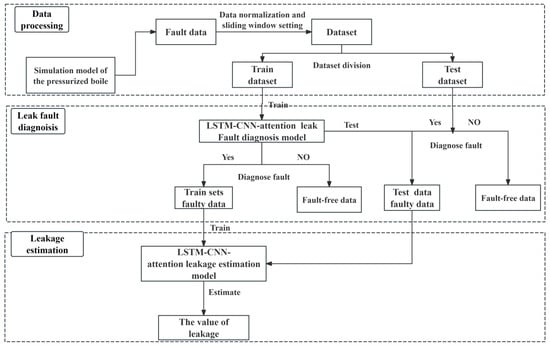

Figure 11 shows the implementation roadmap for diagnosing evaporator tube leaks and estimating leakage with the proposed LSTM-CNN–attention method. The roadmap has three parts: data acquisition and processing, fault diagnosis, and fault severity estimation. First, evaporator tube leakage fault simulation tests are carried out to obtain experimental data, which are then preprocessed. Next, the leakage fault diagnosis model is trained on the training set and validated on the test set. Finally, the training-set samples that the diagnosis model identifies as faulty are used to train the leakage estimation model, which is then validated on the test set.
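The two-stage structure of the roadmap can be sketched as follows: the diagnosis model gates which windows reach the estimation model, both when building the estimation training set and at inference. Here `diagnose` and `estimate` stand for the trained models and are hypothetical callables; the 0.5 decision threshold is an assumption, and dividing by 10 simply undoes the ×10 severity scaling from Section 3.4.3.

```python
import numpy as np


def select_estimation_training_set(windows, severity, diagnose, threshold=0.5):
    """Keep only training windows that the diagnosis model flags as faulty."""
    probs = np.array([diagnose(w) for w in windows])
    keep = probs >= threshold
    return windows[keep], severity[keep]


def cascade_predict(windows, diagnose, estimate, threshold=0.5):
    """Diagnose every window; estimate leakage only where a fault is detected.

    Returns a boolean fault mask and a leakage array (0 for windows judged
    fault-free). The estimator is assumed to output the x10 severity label.
    """
    probs = np.array([diagnose(w) for w in windows])
    is_fault = probs >= threshold
    leakage = np.zeros(len(windows))
    for i in np.flatnonzero(is_fault):
        leakage[i] = estimate(windows[i]) / 10.0  # severity -> leakage
    return is_fault, leakage
```

Gating the estimator this way means a missed diagnosis produces a leakage estimate of zero, which is why the text treats accurate diagnosis as a prerequisite for estimation.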

Figure 11.

Technical roadmap for fault diagnosis and estimation of fault severity.

For comparison, we selected several common fault diagnosis frameworks: LSTM–attention, CNN–attention, and CNN-LSTM–attention. Because accurate fault identification is a prerequisite for estimating fault severity, the performance of LSTM-CNN–attention in diagnosing evaporator tube leakage faults must also be examined. This study therefore used four frameworks to diagnose leakage faults and estimate leakage. The LSTM method can achieve 98% accuracy in identifying weak faults in micro-turbine blades, showing high accuracy in minor-fault recognition [41]. The CNN can attain over 99% accuracy in identifying crack and shaft-eccentricity faults in a rotor system under different signal-to-noise ratios [42]. Yang et al. proposed a CNN-LSTM method that performs well on the TEP and TPFP datasets, and adding an adaptive adversarial domain enables fault diagnosis on unlabeled data [43]. These three frameworks were enhanced with an attention mechanism, yielding LSTM–attention, CNN–attention, and CNN-LSTM–attention [44,45,46]. In the subsequent comparative investigations, these three frameworks, selected as high-performance baselines, are rigorously compared against the proposed LSTM-CNN–attention framework.

The hyperparameter settings for LSTM–attention are based on reference [47], those for CNN–attention on reference [48], and those for CNN-LSTM–attention on reference [33]; all of these settings were further fine-tuned. Table 4 lists the hyperparameter settings of the proposed LSTM-CNN–attention framework for leakage estimation.

Table 4.

Parameter setting of LSTM-CNN–attention.

To keep the comparison consistent, the hyperparameters of the reference architectures were initialized from the settings of the proposed LSTM-CNN–attention architecture. To give each architecture its best performance, each reference architecture was then fine-tuned through hyperparameter optimization starting from these baseline settings (the hyperparameters of the proposed architecture were themselves the result of optimization). The other three architectures were thus tested with their own optimized hyperparameter configurations, so that the comparison with the proposed architecture is fair.

In the subsequent experiments, this framework is used for leakage fault diagnosis and estimation. Because the method detects a leakage fault before estimating its magnitude, accurate diagnosis of evaporator tube leakage is a fundamental prerequisite for precise leakage quantification. We compare the novel LSTM-CNN–attention method with the other methods in terms of diagnosing evaporator tube leakage faults, estimating leakage, adapting to operating conditions, and resisting noise. The experiments cover diagnosis and estimation performance on different test sets under the same operating condition, on different operating-condition datasets, and at different noise levels.

In addition, for the fault leakage estimation experiments in this paper, each experimental group was repeated ten times, and the reported results represent the average value obtained from these repetitions to mitigate the effects of randomness and ensure reliable results.

4.1. Performance of the Model Under Different Test Sets in Operating Condition 2

Accurate leak fault diagnosis is essential for estimating leakage. Given that data distributions change with leakage and gathering data for all levels of severity is challenging in practice, it is important to investigate the diagnostic and predictive ability of the leakage estimation framework for both known and unseen levels of leakage.

The leakage estimation model used the mean squared error (MSE) loss function, and the fault diagnosis model used the binary cross-entropy (BCE) loss function. Both models were trained on the training set of the operating condition 2 dataset and validated on test sets 1, 2, and 3.
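For reference, the two loss functions can be written in NumPy as below. This is a generic sketch of the standard definitions (mean over samples, with probabilities clipped to avoid log(0)), not the training code used in the study.

```python
import numpy as np


def bce_loss(y_true, p, eps=1e-7):
    """Binary cross-entropy for the diagnosis model (labels 0/1, probabilities p)."""
    y_true = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p)))


def mse_loss(y_true, y_pred):
    """Mean squared error for the leakage (severity) estimation model."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_pred - y_true) ** 2))
```

BCE suits the binary fault/no-fault output, while MSE penalizes large severity errors quadratically, which matches a continuous regression target.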

Figures 12 and 13 show how this method extracts the fault features of supercharged boiler evaporator tubes in the fault diagnosis stage and the fault severity estimation stage, respectively. Both figures visualize, as heatmaps, the feature extraction process of models trained on the condition 2 dataset.

Figure 12.

Feature extraction for fault diagnosis process.

Figure 13.

Feature extraction for fault severity estimation process.

These feature heatmaps provide an indirect interpretation of the feature extraction process and illustrate deep feature decoupling. Figures 12 and 13 explain, in four stages, why this architecture achieves deep feature decoupling:

- (1) Stage 1: Strong Initial Coupling: As shown in the input layer, the multidimensional input variables (with an actual dimensionality of 140; only key strongly coupled parameters are visualized) exhibit highly complex interdependencies across both variable and temporal dimensions, manifesting strong coupling (darker shades indicate stronger coupling).

- (2) Stage 2: Reduced Temporal Coupling: The LSTM layer demonstrates the model's capacity for temporal feature decoupling. After processing by the LSTM, the coupling between time windows (stride = 20) is reduced (evident from distinct color variations along the time axis for certain variables), though significant coupling among variables persists.

- (3) Stage 3: Reduced Local Spatial Coupling and Reduced Global Spatial Coupling: The max pooling layer (following the CNN operations) reveals local spatial feature decoupling. The CNN layer enhances the distinguishability of local variables, leading to clearer feature separation within local regions. The attention mechanism performs global feature selection, reducing coupling among critical features. Key features exhibit distinct separation across the global variable dimension.

- (4) Stage 4: Evident Spatiotemporal Feature Decoupling: Decoupled features from the CNN and self-attention mechanisms are concatenated. Subsequent processing through fully connected layers enables effective fault classification and leakage quantification, as evidenced by the well-separated outputs in the final layer.

Collectively, Figure 12 and Figure 13 indirectly illustrate the framework’s capability for deep feature decoupling.
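To make the four stages concrete, the following NumPy sketch traces one 20 × 140 input window through an untrained, randomly initialized version of the dual-branch design: an LSTM produces temporal embeddings, a 1D convolution with ReLU and max pooling forms the local branch, scaled dot-product self-attention forms the global branch, and the two outputs are concatenated before the output layer. All sizes (32 hidden units, 16 filters, kernel 3, pool 2, key size 16) are placeholders rather than the Table 4 settings, the convolution is assumed to slide along the time axis, and one pass shows both a sigmoid diagnosis head and a linear estimation head only to illustrate shapes; in the study, the diagnosis and estimation models are trained separately.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(x, hidden, rng):
    """Single-layer LSTM over x of shape (T, F); returns all hidden states (T, hidden)."""
    T, F = x.shape
    W = rng.normal(0.0, 0.1, (4 * hidden, F + hidden))  # stacked input/forget/output/cell weights
    b = np.zeros(4 * hidden)
    h, c = np.zeros(hidden), np.zeros(hidden)
    states = np.empty((T, hidden))
    for t in range(T):
        z = W @ np.concatenate([x[t], h]) + b
        i, f, o, g = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # cell state update
        h = sigmoid(o) * np.tanh(c)                   # hidden state
        states[t] = h
    return states


def conv_branch(h, n_filters, kernel, pool, rng):
    """Local branch: valid 1D convolution along time, ReLU, non-overlapping max pooling."""
    T, C = h.shape
    W = rng.normal(0.0, 0.1, (n_filters, kernel, C))
    L = T - kernel + 1
    conv = np.empty((L, n_filters))
    for t in range(L):
        conv[t] = np.tensordot(W, h[t:t + kernel], axes=([1, 2], [0, 1]))
    conv = np.maximum(conv, 0.0)
    Lp = L // pool
    pooled = conv[:Lp * pool].reshape(Lp, pool, n_filters).max(axis=1)
    return pooled.ravel()


def attention_branch(h, d_k, rng):
    """Global branch: scaled dot-product self-attention over time steps, mean-pooled."""
    d = h.shape[1]
    Wq, Wk, Wv = (rng.normal(0.0, 0.1, (d, d_k)) for _ in range(3))
    Q, K, V = h @ Wq, h @ Wk, h @ Wv
    scores = Q @ K.T / np.sqrt(d_k)
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability for softmax
    A = np.exp(scores)
    A /= A.sum(axis=1, keepdims=True)
    return (A @ V).mean(axis=0)


def lstm_cnn_attention_forward(x, rng):
    h = lstm_forward(x, hidden=32, rng=rng)               # temporal embeddings (20, 32)
    local_feats = conv_branch(h, 16, 3, 2, rng)           # (9 * 16,) = (144,)
    global_feats = attention_branch(h, 16, rng)           # (16,)
    fused = np.concatenate([local_feats, global_feats])   # (160,)
    w_cls = rng.normal(0.0, 0.1, fused.size)
    w_reg = rng.normal(0.0, 0.1, fused.size)
    fault_prob = float(sigmoid(w_cls @ fused))            # diagnosis head (BCE)
    severity = float(w_reg @ fused)                       # estimation head (MSE)
    return fused, fault_prob, severity
```

Keeping the two branches parallel, rather than stacking attention after the convolution, means the local and global features are computed from the same LSTM embeddings and only meet at the concatenation, which is the separation the stage-by-stage heatmaps are meant to illustrate.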

4.1.1. Diagnostic and Estimation Results for Test Set 1

Table 5 shows the results of the average diagnostic accuracy (ADA), average estimation accuracy (AEA), and maximum relative error of estimation (MREE) of the model on test set 1.

Table 5.

Different frameworks for leakage diagnosis and estimation performance on test set 1.

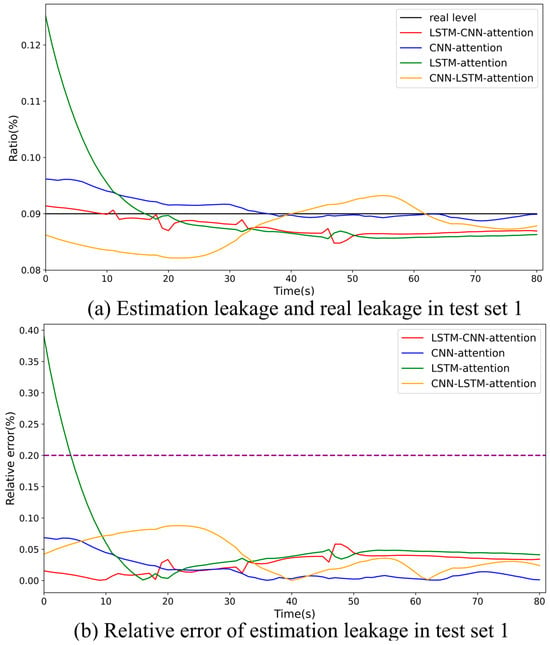

As Table 5 shows, LSTM-CNN–attention has the highest ADA for supercharged boiler leaks, at 99.33%. Its AEA is only 0.47% lower than that of CNN–attention, and its MREE is just 4.96%. The other three frameworks also diagnose and estimate fault severity with reasonable accuracy. Because accurate fault diagnosis is the prerequisite for estimation, LSTM-CNN–attention performs best on test set 1 for diagnosing and estimating the known leakages.

Figure 14a displays the estimated leakage from the evaporator tube based on test set 1. Figure 14b illustrates the relative error results for the leakage estimations at various moments on test set 1. The dotted line indicates a relative error threshold of 20%. Typically, the relative error for severity estimations should be below this 20% limit.

Figure 14.

A distribution diagram of the estimated leakage of the evaporator tube and the relative error of the estimated leakage on test set 1.

The LSTM-CNN–attention framework is the most accurate and stable option for estimating known leakages. Figure 14a shows that the estimated leakages from the LSTM-CNN–attention, CNN–attention, and CNN-LSTM–attention models are closer to the true value of 0.09 in terms of both range and trend. Figure 14b indicates that the LSTM-CNN–attention model can maintain a relative estimation error within 5% for more stable leakage estimations.

Overall, LSTM-CNN–attention performs best on test set 1 for diagnosis and is the most accurate and stable method for estimating known leakage.

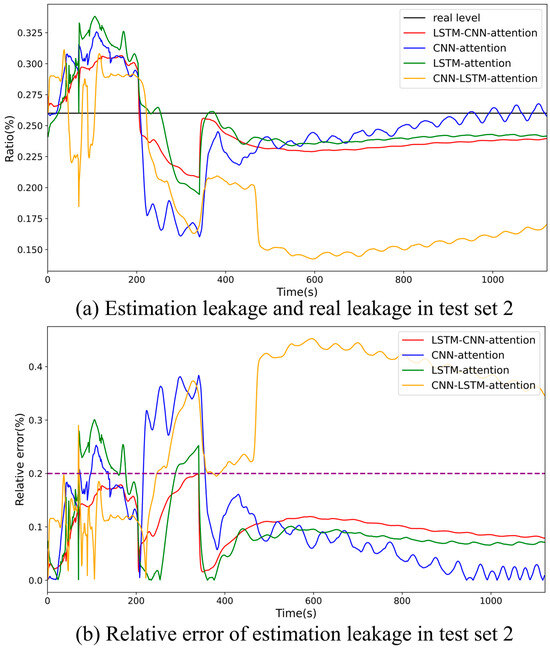

4.1.2. Diagnostic and Estimation Results for Test Set 2

Table 6 shows the results of the average diagnostic accuracy (ADA), average estimation accuracy (AEA), and maximum relative error of estimation (MREE) of the model on test set 2. Figure 15a displays the estimated leakage from the evaporator tube based on test set 2. Figure 15b illustrates the relative error results for the leakage estimations at various moments on test set 2.

Table 6.

Different frameworks for leakage diagnosis and estimation performance on test set 2.

Figure 15.

A distribution diagram of the estimated leakage of the evaporator tube and the relative error of the estimated leakage on test set 2.

The LSTM-CNN–attention method shows the highest accuracy in diagnosing and estimating unseen leakage on test set 2. As Table 6 shows, it achieves an ADA of 99.93%, the highest AEA (90.28%), and an MREE of 18.89%.

As depicted in Figure 15a, the distributions of the estimated values of LSTM-CNN–attention, LSTM–attention, and CNN–attention are all close to the actual leakage. However, some of the estimated values of CNN–attention deviate significantly. In Figure 15b, only the relative estimation error of LSTM-CNN–attention can consistently remain within 20%, maintaining a stable estimation ability. Therefore, the LSTM-CNN–attention framework shows the best performance in estimating the unseen evaporator tube leakage on test set 2.

Overall, LSTM-CNN–attention is the best framework on test set 2 and the most accurate and stable method for the unseen leakage estimation.

4.1.3. Diagnostic and Estimation Results for Test Set 3

Table 7 shows the results of the average diagnostic accuracy (ADA), average estimation accuracy (AEA), and maximum relative error of estimation (MREE) of the model on test set 3. Figure 16a displays the estimated leakage from the evaporator tube based on test set 3. Figure 16b illustrates the relative error results for the leakage estimations at various moments on test set 3.

Table 7.

Different frameworks for leakage diagnosis and estimation performance on test set 3.

Figure 16.

A distribution diagram of the estimated leakage of the evaporator tube and the relative error of the estimated leakage on test set 3.

Table 7 shows that LSTM-CNN–attention has the highest ADA for supercharged boiler leaks, at 99.56%, as well as the highest AEA (94.74%) and the lowest MREE (7.29%). On test set 3, LSTM-CNN–attention is therefore the most accurate in diagnosing and estimating the unseen leakage.

Figure 16a shows that the estimated values of LSTM-CNN–attention and LSTM–attention are closer to the real leakage. Figure 16b shows that their relative estimation errors are below 20%, so they are more stable in estimating leakage.

Overall, LSTM-CNN–attention is the best framework on test set 3. LSTM-CNN–attention and LSTM–attention are the most accurate and stable methods for the unseen leakage estimation.

Under operating condition 2, the LSTM-CNN–attention framework precisely identifies evaporator tube leakage and accurately estimates its magnitude, outperforming three existing deep learning architectures. Its high fault diagnosis accuracy indicates robust spatiotemporal feature extraction, while its low average and maximum relative estimation errors indicate that it effectively learns the complex nonlinear mapping from high-dimensional inputs to leakage magnitude. However, because these results were obtained under a single operating condition, the following sections investigate the framework's performance across diverse operational scenarios.

4.2. The Cross-Conditional Performance Evaluation of the Frameworks

We need to examine the performance of the frameworks under different operating conditions. Given the significant differences in data distribution across these conditions and considering that diverse scenarios can arise during the operation of a supercharged boiler, the model must be capable of handling datasets from different situations.

The fault diagnosis and fault severity estimation models use the same parameter settings as in the previous experiments. We trained both models on the training sets of operating conditions 1 and 3 and, after training, evaluated them using the test set 2 data division. Operating condition 2 is not repeated here because it was already evaluated in Section 4.1.

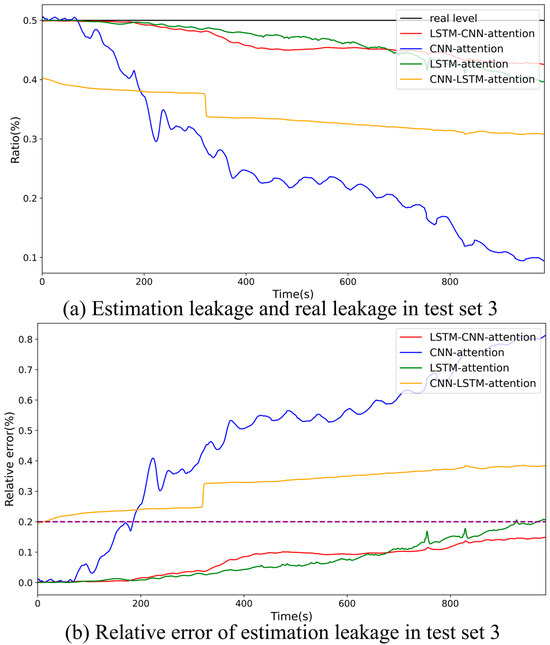

4.2.1. Diagnostic and Estimation Results on Test Set 2 for the Dataset of Operating Condition 1

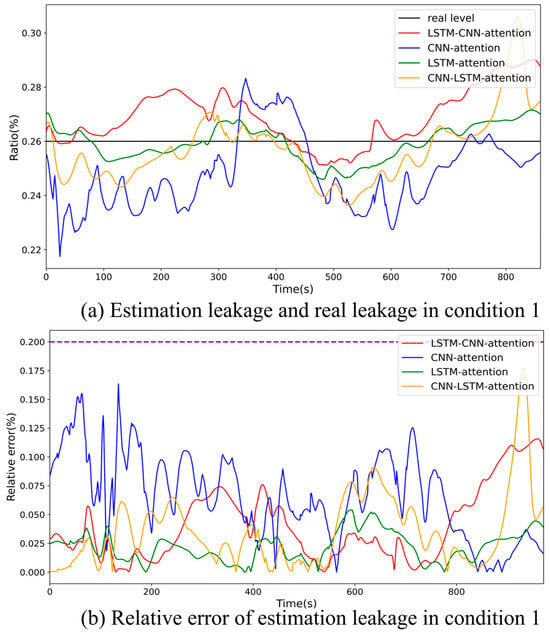

Table 8 shows the results of the average diagnostic accuracy (ADA), average estimation accuracy (AEA), and maximum relative error of estimation (MREE) of the model on operating condition 1. Figure 17a displays the estimated leakage from the evaporator tube based on operating condition 1. Figure 17b illustrates the relative error results for the leakage estimations at various moments on operating condition 1.

Table 8.

Different frameworks for leakage diagnosis and estimation performance on operating condition 1.

Figure 17.

A distribution diagram of the estimated leakage of the evaporator tube and the relative error of the estimated leakage on operating condition 1.

Table 8 indicates that under operating condition 1, the ADAs of all four frameworks exceed 99% and their AEAs all exceed 90%. LSTM–attention has the highest AEA; its ADA is only 0.17% lower than that of LSTM-CNN–attention, and its MREE is 4.92%. LSTM-CNN–attention has the highest ADA, the second-highest AEA (96.62%), and the second-lowest MREE (9.48%). Thus, LSTM–attention performs best and LSTM-CNN–attention second-best under operating condition 1.

As can be seen from Figure 17a, the estimated value distributions of LSTM–attention, CNN-LSTM–attention, and LSTM-CNN–attention are all close to the actual leakage volume. Figure 17b shows that the relative estimation errors of the four methods can all be controlled within 20%. The relative estimation errors of LSTM–attention and LSTM-CNN–attention remain within 10%. This indicates that under operating condition 1, LSTM–attention has the highest accuracy in diagnosing and estimating the leakage of the supercharged boiler evaporator tube.

Overall, LSTM–attention is an accurate framework for leakage diagnosis and estimation and the most stable framework for unseen leakage estimation under operating condition 1. LSTM-CNN–attention also achieves very high diagnosis and estimation accuracy and is likewise very stable for unseen leakage estimation under this condition.

4.2.2. Diagnostic and Estimation Results on Test Set 2 for the Dataset of Operating Condition 3

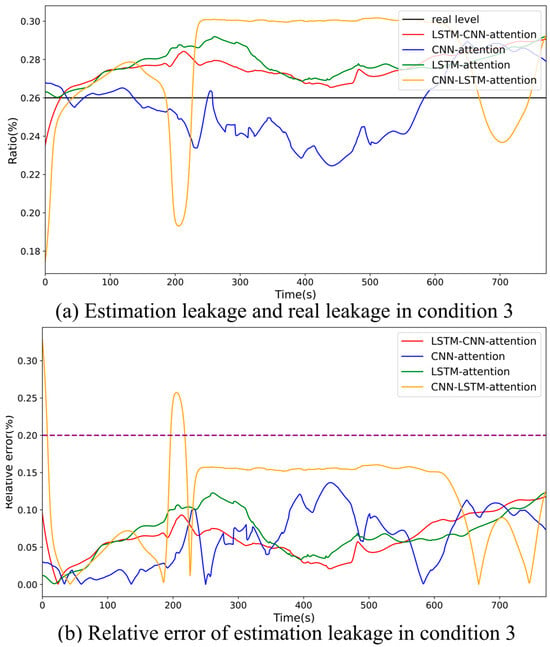

Table 9 shows the results of the average diagnostic accuracy (ADA), average estimation accuracy (AEA), and maximum relative error of estimation (MREE) of the model on operating condition 3. Figure 18a displays the estimated leakage from the evaporator tube based on operating condition 3. Figure 18b illustrates the relative error results for the leakage estimations at various moments on operating condition 3.

Table 9.

Different frameworks for leakage diagnosis and estimation performance on operating condition 3.

Figure 18.

A distribution diagram of the estimated leakage of the evaporator tube and the relative error of the estimated leakage on operating condition 3.

Table 9 indicates that under operating condition 3, all four methods achieve an ADA over 99% and an AEA above 85%. LSTM-CNN–attention tops the group, with an ADA of 99.99% and AEA of 93.89%, along with the smallest MREE of 11.73%.

LSTM-CNN–attention is the most accurate and stable method for unseen leakage estimation under operating condition 3. Figure 18a shows that the estimated value distributions of LSTM–attention, CNN–attention, and LSTM-CNN–attention are closest to the actual leakage volume. Figure 18b reveals that the relative estimation errors of the four methods stay within 20%, except for some of those from CNN-LSTM–attention. LSTM-CNN–attention gives the most stable estimates, with a comparatively low error distribution. Hence, LSTM-CNN–attention is more accurate and stable in diagnosing and estimating evaporator tube leakage under operating condition 3.

Both LSTM-CNN–attention and LSTM–attention, whose results are very close under operating conditions 1, 2, and 3, adapt well to different boiler operating conditions for evaporator tube leakage. Because ship boiler operating conditions vary during actual voyages, such adaptability is crucial. Although both methods adapt well to changing operating conditions, LSTM-CNN–attention shows better predictive capability. LSTM-CNN–attention, combining better predictive capability with operating-condition adaptability, is therefore more suitable for diagnosing boiler evaporator tube leakage and estimating leakage.

4.3. The Impact of Noise on the Models

We next explore the impact of noise on the models. Because the dataset used in this study comes from simulation experiments, it contains minimal noise. However, data acquired by supercharged boiler sensors during actual operation typically contain a certain level of noise, so the model must be robust to noise.

In marine boilers, the noise level varies among hull types. The noise picked up by boiler sensors is commonly Gaussian white noise. The signal-to-noise ratio is calculated as follows:

$$\mathrm{SNR} = 10\log_{10}\left(\frac{P_s}{P_n}\right)$$

Here, SNR is the signal-to-noise ratio in decibels, and $P_s$ and $P_n$ denote the signal power and noise power, respectively; a lower SNR corresponds to a higher noise power. For this experiment, a series of SNR values ranging from −7 dB up to the noise-free condition was set. During ship navigation, the SNR of signals received by supercharged boiler sensors typically ranges from −3 dB to 3 dB. The operating condition 2 dataset was chosen, and test set 2 was used to evaluate how noise influences the models. Three metrics quantified this impact: ADA, AEA, and MREE.
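Noise at a prescribed SNR can be injected by inverting SNR = 10 log10(Ps/Pn) for the noise power. The sketch below assumes the signal power is computed per monitoring parameter as the mean squared value over the series and that the noise is zero-mean Gaussian; whether noise is added before or after normalization is not stated here, and `add_white_noise` is a hypothetical name.

```python
import numpy as np


def add_white_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian white noise to a (T, n_params) array at snr_db.

    Per parameter: Ps = mean(x^2), Pn = Ps / 10^(snr_db / 10), noise ~ N(0, Pn).
    """
    signal = np.asarray(signal, dtype=float)
    p_signal = np.mean(signal ** 2, axis=0)
    p_noise = p_signal / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, 1.0, signal.shape) * np.sqrt(p_noise)
    return signal + noise
```

At an SNR of −4 dB, the noise power is 10^0.4, or about 2.5 times the signal power, which illustrates why that level counts as intense interference.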

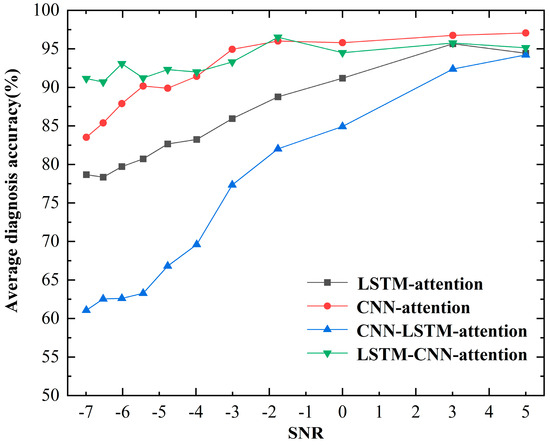

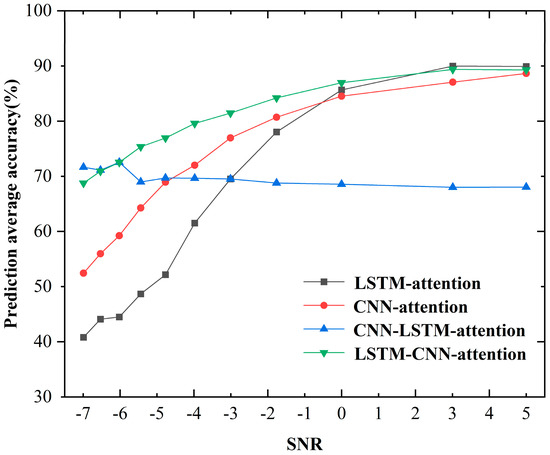

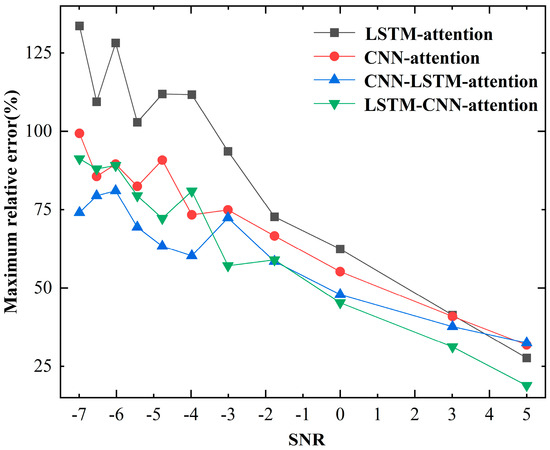

Figures 19, 20, and 21 show how the average diagnostic accuracy (ADA), the average estimation accuracy (AEA), and the maximum relative error of estimation (MREE), respectively, change with SNR.

Figure 19.

The change in the average diagnostic accuracy (ADA) with SNR.

Figure 20.

The change in the average estimation accuracy (AEA) with SNR.

Figure 21.

The change in the maximum relative error of estimation (MREE) with SNR.

LSTM-CNN–attention has the strongest noise immunity in diagnosing evaporator tube leak faults. As Figure 19 shows, as noise power increases and the SNR falls, the ADA of all four methods trends downward. However, even at an SNR of −7 dB, LSTM-CNN–attention sustains an accuracy of 90%, outperforming the other three methods.

LSTM-CNN–attention also has the strongest noise immunity in estimating evaporator tube leakage. Figure 20 shows that LSTM-CNN–attention not only achieves a relatively high accuracy but also shows low sensitivity to noise when estimating fault severity. Although noise affected CNN-LSTM–attention the least, its AEA consistently remained below 80%. The other three methods lose accuracy as noise increases, but they achieve higher accuracies under noise-free conditions. When the SNR exceeds −4 dB, LSTM-CNN–attention maintains an AEA above 80%.

LSTM-CNN–attention has the strongest noise immunity within the realistic noise range, with the smallest peak estimation error. As depicted in Figure 21, when the SNR is greater than −4 dB, LSTM-CNN–attention exhibits the smallest MREE, which remains relatively low. When the SNR drops below −4 dB, CNN-LSTM–attention attains the smallest MREE. However, an SNR of −4 dB already represents intense noise interference in actual operation; during the actual operation of a marine vessel, the SNR of the supercharged boiler typically ranges from −3 dB to 4 dB.

The LSTM-CNN–attention framework shows robust noise resistance for leak fault diagnosis and leakage estimation of the boiler evaporator tube, making it well suited to real-world engineering scenarios. Taken together, the experimental results show that LSTM-CNN–attention combines high diagnostic and estimation accuracies with low sensitivity to noise. At an extreme SNR of approximately −4 dB, it maintains an average diagnostic accuracy above 90% and an average estimation accuracy above 80%.

Although LSTM–attention adapts well to diverse operating conditions, it lags behind in predictive capability and noise resistance. Compared with the three alternative frameworks, the LSTM-CNN–attention architecture shows superior predictive capability, strong operating-condition adaptability, and the best noise immunity, making it the best-performing of the four frameworks for evaporator tube leakage magnitude estimation.

5. Conclusions

We proposed a novel LSTM-CNN–attention framework. By comprehensively extracting spatiotemporal fault features and establishing complex nonlinear mappings between high-dimensional input variables and leakage magnitude, this architecture achieves the precise estimation of evaporator tube leakage. The results are as follows:

- (1) LSTM-CNN–attention has an excellent predictive capability for mapping high-dimensional monitoring parameters to leakage. On the three test sets of evaporation tube leakage in supercharged boilers, the average estimation accuracy (AEA) reached 97.25%, 90.28%, and 94.74%, respectively, showing strong predictive capability for unseen leakage magnitudes as well.

- (2) The LSTM-CNN–attention method adapts to different operating conditions. Under all three operating conditions, its ADA exceeded 99%, its AEA exceeded 90%, and its MREE remained within 20%. It can therefore diagnose faults and estimate fault severity even when data distributions differ significantly between operating conditions.

- (3) LSTM-CNN–attention has excellent noise resistance. Compared with LSTM–attention and CNN–attention under different noise conditions, LSTM-CNN–attention had the highest ADA and the highest AEA. This makes it suitable for diagnosing evaporation tube leaks and estimating their severity in supercharged boilers during actual ship navigation, where the data are noisy.

The novel LSTM-CNN–attention framework can be used to estimate evaporation tube leakage in supercharged boilers, providing operators with reference information on evaporation tube leak faults so that they can make reasonable decisions and keep steam power generation safe and stable. This research targets only the single fault of evaporation tube leakage in supercharged boilers; in future work, the method can be extended to multiple fault types in supercharged boilers. Our research team will also continue working toward the industrial implementation of leak fault diagnosis for supercharged boiler evaporator tubes.

Author Contributions

Y.X.: software, writing—original draft, writing—review and editing, formal analysis, visualization and methodology. D.L.: supervision, conceptualization, investigation and writing—review and editing. Y.S.: supervision and writing—review and editing. S.X.: resources and validation. J.W.: data curation. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the scientific and industrial research projects, China (202501161716160001); comprehensive research projects, China (2025102040); and the Natural Science Foundation of Hubei Province, China (2022CFB872).

Data Availability Statement

The data used in this study are confidential.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Ozawa, M. Introduction to boilers. In Advances in Power Boilers; Elsevier: Amsterdam, The Netherlands, 2021; pp. 57–106.

- Grądziel, S. Analysis of thermal and flow phenomena in natural circulation boiler evaporator. Energy 2019, 172, 881–891.

- Alobaid, F.; Mertens, N.; Starkloff, R.; Lanz, T.; Heinze, C.; Epple, B. Progress in dynamic simulation of thermal power plants. Prog. Energy Combust. Sci. 2017, 59, 79–162.

- Haghighat-Shishavan, B.; Firouzi-Nerbin, H.; Nazarian-Samani, M.; Ashtari, P.; Nasirpouri, F. Failure analysis of a superheater tube ruptured in a power plant boiler: Main causes and preventive strategies. Eng. Fail. Anal. 2019, 98, 131–140.

- Xu, H.L. The Qualitative Reasoning Analysis of Boiler’s Four Tubes Leakage “Based on QSIM”. Master’s Thesis, North China Electric Power University, Beijing, China, 2014; p. 58. Available online: https://xueshu.baidu.com/usercenter/paper/show?paperid=1bfc1a3097749c9c6a8c9fb2c550218a&site=xueshu_se (accessed on 16 June 2025).

- Wang, N.S. Simulation Research and Intelligent Fault Diagnosis for Boiler Four Tube Leakage Faults. Master’s Thesis, North China Electric Power University, Beijing, China, 2014; p. 49.

- Hu, W.; Xue, S.; Gao, H.; He, Q.; Deng, R.; He, S.; Xu, M.; Li, Z. Leakage failure analysis on water wall pipes of an ultra-supercritical boiler. Eng. Fail. Anal. 2023, 154, 107670.

- Xue, S.; Guo, R.; Hu, F.; Ding, K.; Liu, L.; Zheng, L.; Yang, T. Analysis of the causes of leakages and preventive strategies of boiler water-wall tubes in a thermal power plant. Eng. Fail. Anal. 2020, 110, 104381.

- Duda, P.; Felkowski, Ł.; Dobrzański, J. An analysis of an incident during the renovation work of a power boiler superheater. Eng. Fail. Anal. 2015, 57, 248–253.

- Başhan, V.; Demirel, H. Application of fuzzy dematel technique to assess most common critical operational faults of marine boilers. Politek. Derg. 2019, 22, 545–555.

- Trojan, M. Modeling of a steam boiler operation using the boiler nonlinear mathematical model. Energy 2019, 175, 1194–1208.

- Li, D.; Xia, S.; Geng, J.; Meng, F.; Chen, Y.; Zhu, G. Discriminability Analysis of Characterization Parameters in Micro-Leakage of Turbocharged Boiler’s Evaporation Tube. Energies 2022, 15, 8636.

- Indrawan, N.; Shadle, L.J.; Breault, R.W.; Panday, R.; Chitnis, U.K. Data analytics for leak detection in a subcritical boiler. Energy 2021, 220, 119667.

- Lu, C.; Zeng, J.; Luo, S.; Cai, J. Detection and isolation of incipiently developing fault using Wasserstein distance. Processes 2022, 10, 1081.

- Xie, X.; Wang, J.; Han, Y.; Li, W. Knowledge Graph-Based In-Context Learning for Advanced Fault Diagnosis in Sensor Networks. Sensors 2024, 24, 8086.

- Li, D.; Cheng, G.; Xu, W.; Zhan, G. Optimization Method of Operating Characteristics in Improving Supercharged Boiler System. Mar. Electr. Electron. Eng. 2017, 37, 72–76.

- Li, D.; Geng, J.; Cheng, G.; Li, R.; Liu, X. Modular modeling method and experimental research of marine main steam turbines. Ship Sci. Technol. 2015, 37, 79–82.

- Geng, J.; Cheng, G.; Li, D.; Sun, L.; Geng, G. Research on modular modeling method for fluid network simulation. Ship Sci. Technol. 2015, 37, 128–131.

- Vorwerk-Handing, G.; Martin, G.; Kirchner, E. Integration of measurement functions in existing systems–retrofitting as basis for digitalization. In DS 91: Proceedings of NordDesign 2018, Linköping, Sweden, 14–17 August 2018; The Design Society; Technische Universität Darmstadt: Darmstadt, Germany, 2018.

- Cai, B.; Hao, K.; Wang, Z.; Yang, C.; Kong, X.; Liu, Z.; Ji, R.; Liu, Y. Data-driven early fault diagnostic methodology of permanent magnet synchronous motor. Expert Syst. Appl. 2021, 177, 115000.

- Lei, Y.; He, Z.; Zi, Y. Application of an intelligent classification method to mechanical fault diagnosis. Expert Syst. Appl. 2009, 36, 9941–9948.

- Khalid, S.; Hwang, H.; Kim, H.S. Real-world data-driven machine-learning-based optimal sensor selection approach for equipment fault detection in a thermal power plant. Mathematics 2021, 9, 2814.

- Sun, T.; Yu, G.; Gao, M.; Zhao, L.; Bai, C.; Yang, W. Fault diagnosis methods based on machine learning and its applications for wind turbines: A review. IEEE Access 2021, 9, 147481–147511.

- Randall, R.B. Vibration-Based Condition Monitoring: Industrial, Automotive and Aerospace Applications; John Wiley & Sons: Hoboken, NJ, USA, 2021.

- Matania, O.; Bachar, L.; Khemani, V.; Das, D.; Azarian, M.H.; Bortman, J. One-fault-shot learning for fault severity estimation of gears that addresses differences between simulation and experimental signals and transfer function effects. Adv. Eng. Inform. 2023, 56, 101945.

- Babay, M.A.; Adar, M.; Chebak, A.; Mabrouki, M. Forecasting green hydrogen production: An assessment of renewable energy systems using deep learning and statistical methods. Fuel 2025, 381, 133496.

- Yan, J.; Senemmar, S.; Zhang, J. Inter-turn Short Circuit Fault Diagnosis and Severity Estimation for Wind Turbine Generators. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2024; Volume 2767, p. 032021.

- Kim, H.; Lee, H.; Kim, S.; Kim, S.W. Attention recurrent neural network-based severity estimation method for early-stage fault diagnosis in robot harness cable. Sensors 2023, 23, 5299.

- Wang, P.; Zhang, J.; Wan, J.; Wu, S. A fault diagnosis method for small supercharged water reactors based on long short-term memory networks. Energy 2022, 239, 122298.

- Jiao, J.; Zhao, M.; Lin, J.; Liang, K. A comprehensive review on convolutional neural network in machine fault diagnosis. Neurocomputing 2020, 417, 36–63.

- Dao, F.; Zeng, Y.; Qian, J. Fault diagnosis of hydro-turbine via the incorporation of bayesian algorithm optimized CNN-LSTM neural network. Energy 2024, 290, 130326.

- Ren, H.; Liu, S.; Wei, F.; Qiu, B.; Zhao, D. A novel two-stream multi-head self-attention convolutional neural network for bearing fault diagnosis. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2024, 238, 5393–5405.

- Pan, S.; Yang, B.; Wang, S.; Guo, Z.; Wang, L.; Liu, J.; Wu, S. Oil well production prediction based on CNN-LSTM model with self-attention mechanism. Energy 2023, 284, 128701.

- Liu, Q.; Liang, T.; Huang, Z.; Dinavahi, V. Real-time FPGA-based hardware neural network for fault detection and isolation in more electric aircraft. IEEE Access 2019, 7, 159831–159841.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

- Yao, G.; Lei, T.; Zhong, J. A review of convolutional-neural-network-based action recognition. Pattern Recognit. Lett. 2019, 118, 14–22.

- Geng, Z.; Wu, X.; Shi, Y.; Fomel, S. Deep learning for relative geologic time and seismic horizons. Geophysics 2020, 85, WA87–WA100.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 2017. Available online: https://proceedings.neurips.cc/paper_files/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html (accessed on 16 June 2025).

- Yang, L.; Wang, S.; Chen, X.; Chen, W.; Saad, O.M.; Zhou, X.; Pham, N.; Geng, Z.; Fomel, S.; Chen, Y. High-fidelity permeability and porosity prediction using deep learning with the self-attention mechanism. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 3429–3443.

- Jiao, Z.; Ma, J.; Zhao, X.; Zhang, K.; Li, S. A methodology for state of health estimation of battery using short-time working condition aging data. J. Energy Storage 2024, 82, 110480.

- Zhang, J.; Chen, Y.; Li, N.; Zhai, J.; Han, Q.; Hou, Z. A weak fault identification method of micro-turbine blade based on sound pressure signal with LSTM networks. Aerosp. Sci. Technol. 2023, 136, 108226.

- Zhao, W.; Hua, C.; Dong, D.; Ouyang, H. A novel method for identifying crack and shaft misalignment faults in rotor systems under noisy environments based on CNN. Sensors 2019, 19, 5158.

- Zhi, Z.; Liu, L.; Liu, D.; Hu, C. Fault detection of the harmonic reducer based on CNN-LSTM with a novel denoising algorithm. IEEE Sens. J. 2021, 22, 2572–2581.

- Ma, S.; Ding, W.; Zheng, Y.; Zhou, L.; Yan, Z.; Xu, J. Edge-cloud collaboration-driven predictive planning based on LSTM-attention for wastewater treatment. Comput. Ind. Eng. 2024, 195, 110425.

- Tan, J.; Ding, J.; Li, J.; Han, L.; Cui, K.; Li, Y.; Wang, X.; Hong, Y.; Zhang, Z. Advanced Dynamic Monitoring and Precision Analysis of Soil Salinity in Cotton Fields Using CNN-Attention and UAV Multispectral Imaging Integration. Land Degrad. Dev. 2025, 36, 3472–3489.

- Shi, M.; Yang, B.; Chen, R.; Ye, D. Logging curve prediction method based on CNN-LSTM-attention. Earth Sci. Inform. 2022, 15, 2119–2131.

- Liu, J.; Tang, X.; Guan, X. Grain protein function prediction based on self-attention mechanism and bidirectional LSTM. Brief. Bioinform. 2023, 24, bbac493.

- Wu, H.; Xu, X.A.; Xin, C.; Liu, Y.; Rao, R.; Li, Z.; Zhang, D.; Wu, Y.; Han, S. Intelligent fault diagnosis for triboelectric nanogenerators via a novel deep learning framework. Expert Syst. Appl. 2023, 226, 120244.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).