Abstract

Large language models (LLMs) have significant potential for application in electricity markets, but professional evaluation methods for LLMs in this field remain limited: they cover single tasks, draw on narrow datasets, and lack depth. To address this, this article proposes ELM-Bench, a framework for evaluating LLMs in the Chinese electricity market. It evaluates models along three dimensions (understanding, generation, and safety) through seven tasks, such as common-sense Q&A and terminology explanation, comprising 2841 samples. In addition, a domain-specific model, QwenGOLD, was fine-tuned from a general LLM. The evaluation results show that top-tier general models perform well on general tasks owing to high-quality pre-training, while QwenGOLD performs better on professional tasks such as prediction and decision-making, verifying the effectiveness of domain fine-tuning. The study also finds that fine-tuning brings limited improvement to the basic abilities of LLMs, and that the fine-tuned model's score on professional prediction tasks is second only to that of Deepseek-V3, indicating that some general LLMs can handle domain data well without domain-specific training. These findings provide a basis for model selection in different scenarios, balancing performance against training cost.

1. Introduction

In recent years, generative artificial intelligence technologies have made significant breakthroughs, most notably in large language models (LLMs) [1]. These models have demonstrated unprecedented potential in natural language understanding, text generation, question answering, and logical reasoning. Meanwhile, the power market, a core component of the national economy, is becoming increasingly complex and dynamic as the global energy structure transforms. Every aspect of market operations generates vast amounts of data, which places high demands on rapid and accurate information processing and decision-making [2]. Traditional methods grounded in statistical models and optimization algorithms perform well on structured data and specific tasks, but they encounter significant bottlenecks when faced with unstructured text. Unstructured text is textual information that does not follow a fixed format or have a clear data structure, such as clauses in policy documents and analytical viewpoints in market reports. Such text often carries a large amount of implicit information, which can be interpreted accurately only by combining context, professional background, and semantic associations. Existing methods are equally inadequate at capturing emotional trends, that is, the overall emotional tendency that market participants exhibit during a specific period through language, behavior, and other signals, such as optimistic expectations for an industry, concerns about policy adjustments, or a wait-and-see attitude toward product innovation. Such trends are often hidden between the lines of unstructured text and are both ambiguous and dynamic: the sentence “industry development faces opportunities and challenges” may imply cautious optimism but may also reveal underlying concerns, so interpreting it requires the emotional coloring of the surrounding context.

Moreover, most existing methods rely on fixed keyword matching or shallow semantic analysis, which makes it difficult to identify subtle emotional changes or to track how emotions evolve over time and in response to events. As a result, these methods lack generalized reasoning and decision-making ability in non-standardized scenarios: when information arrives in inconsistent formats and non-standard expressions, they cannot, as humans do, make flexible judgments by jointly analyzing the emotional tendencies, logical relationships, and background information of the text, and therefore cannot provide a comprehensive and accurate basis for decisions.

LLMs possess exceptional capabilities in comprehending, generating, and processing complex natural language. These capabilities have opened new avenues to tackle the challenges posed by unstructured information in the power market. Their robust text processing capabilities are expected to revolutionize various applications, including market interpretation, policy analysis, and even the generation of trading strategies. However, the power market has extremely stringent requirements for accuracy and reliability, and any information bias or decision-making error could lead to severe consequences. Therefore, systematically and thoroughly evaluating the adaptability of general LLMs to this highly specialized and critical field is of great importance. It is equally essential to assess their performance in acquiring domain knowledge and processing professional texts. Additionally, evaluating their capabilities in complex prediction and decision-making tasks is crucial for understanding their practical value in the power market.

Although some studies have begun to explore the application prospects of LLMs in the energy and power sector [3], and others evaluate LLMs from a general perspective [4], research gaps remain. Specifically, systematic, multidimensional evaluation benchmarks are lacking, and research that targets the comprehensive capabilities of LLMs in the power market remains insufficient. Current evaluation methods often have limited task coverage, failing to reflect the multi-level demands of the power market [5], and do not thoroughly assess the models' domain-specific knowledge [6]. Problems such as data contamination and incomplete evaluation metrics also exist [7]. Together, these shortcomings make it difficult to gauge the true capability boundaries of different LLMs in the power market, limiting their further development and broader application in this field.

To address these research gaps, this paper constructs ELM-Bench, a comprehensive and structured evaluation framework for LLMs in the Chinese power market domain. The framework focuses on three core dimensions (comprehension, generation, and security) and defines seven evaluation tasks: common-knowledge questions, term explanation, classification, text generation, predictive decision-making, technology ethics, and security protection. Together, these tasks cover the main needs that different user groups in the field have for LLMs. For ordinary residents, tasks such as common-sense quizzes and terminology explanation reflect the basic functions of LLMs, while specialized tasks such as classification and predictive decision-making help power companies determine the practical role that different LLMs can play in market operations.

The design aims to assess, in power market scenarios, the participating LLMs' general level, mastery of professional knowledge, ability to handle complex domain tasks, and adherence to safety standards. Drawing on professional publications, policy documents, and existing public evaluation resources in the power market, we developed the ELM-Bench dataset with a variety of question types (Section 3.1). Based on this evaluation system and dataset, we selected as evaluation subjects four widely used Chinese general LLMs (Deepseek-V3, Qwen2.5-72B, Kimi-Moonshot-V1, and ERNIE-4.0-8K) and a self-built model, QwenGOLD, fine-tuned for the power market domain from the mainstream open-source base model Qwen2.5-72B. This study compares the performance of these models across the evaluation tasks and analyzes the advantages and potential limitations of general LLMs and vertical-domain fine-tuned models in power market applications. The goal is to provide empirical evidence and insights for model selection, deployment, and future research in this field.

The remainder of this paper is organized as follows. Section 2 reviews existing domestic and international research on the application and evaluation of large power language models. Section 3 details the ELM-Bench evaluation system: its design concept and framework, the selection criteria for participating models, the design of the evaluation tasks, the dataset construction process, and the evaluation index system. Section 4 presents and analyzes the experimental results of each participating model on ELM-Bench. Section 5 summarizes the findings and discusses future trends and key challenges for large power models in the power market.

2. Related Research

2.1. Research on Large Power Language Models

In recent years, generative artificial intelligence (AI) technology has advanced rapidly. In particular, large language models (LLMs) have demonstrated powerful capabilities in comprehending, generating, and processing complex texts, and their potential in the power system field has become increasingly evident. For example, Shi et al. [8] systematically reviewed the large-scale deployment of LLMs in smart grids, emphasizing their value in improving information processing efficiency and enabling intelligent operations. Grant et al. [9] used wind energy siting policies as a case study to show how LLMs can understand policy documents and extract their key points, further validating the ability of LLMs to process unstructured energy text.

In power market forecasting, the advantages of LLMs extend beyond text generation to multi-modal data fusion and time-series modeling. In a Dutch case study, Rushil et al. [10] integrated all-sky images with meteorological data end-to-end, reducing overall system operating cost by 30% compared with a single-modal baseline. Qiu et al. [11] developed the EF-LLM framework, which combines AI automation mechanisms with sparse time-series modeling to predict power load and prices efficiently. Lu et al. [12] introduced a market sentiment proxy mechanism that integrates unstructured text with historical numerical data; with it, the CTSGAN model achieved an RMSE of 58.08 and an MAE of 45.45, significantly outperforming other models and increasing the sensitivity of electricity price prediction. Liu et al. [13] validated an LLM-based model for predicting extreme electricity prices in the Australian power market, which showed good stability when handling abnormal events. Beyond prediction, the application of LLMs to power market trading strategies and risk management has attracted significant attention. Shi et al. [14] explored the performance of LLMs in financial market data analysis, offering insights into multi-source information integration and potential risk identification for the power market. Furthermore, Zhou and Ronitt [15] proposed an end-to-end LLM-driven trading system that integrates automated real-time analysis of market intelligence with strategy optimization, showing significant potential for intelligent trading.

In addition, LLMs have demonstrated distinctive advantages in electricity market policy analysis and rule understanding. Relying on a vast knowledge base and rapid learning, they can integrate cross-disciplinary, multidimensional information in a short time, offering experts new ideas and inspiration. According to Grant et al. [9], by automatically extracting and structuring wind energy siting regulations, LLMs can help market participants and regulators quickly grasp the core content of policies and thereby support their decisions.

Despite these advantages, LLMs still face challenges in the power market, such as model interpretability, real-time performance, and adaptation to specialized knowledge. To address these issues, Zhao et al. [16] proposed a framework that integrates LLMs with power system knowledge graphs to enhance the models' domain understanding. In their review of LLM development, Zhou et al. [17] highlighted that improving cross-industry transfer and security mechanisms will be crucial to the continued evolution of LLMs. The development of large power models will therefore require deep collaboration across disciplines and fields.

2.2. Research on Evaluation of Large Power Models

As the complexity of the power market grows and artificial intelligence technology advances rapidly, the potential of large-scale power models in areas such as load forecasting, anomaly detection, and trading decisions is becoming increasingly evident. However, current evaluation methods still have significant limitations in task diversity, data coverage, and benchmark reliability, which hinder the full realization of these models’ potential.

The international academic community has explored the performance optimization and reliability assessment of large-scale power models from multiple angles. For model performance optimization, Chen et al. [5] proposed a framework that uses LLMs to extract key information from diverse literature, achieving a true positive rate of 83.74% after 11 iterations and offering an efficient solution for information extraction in the energy sector. Sblendorio et al. [6] introduced a methodological framework for evaluating the feasibility of LLMs in healthcare, particularly clinical care, focusing on their performance, safety, and alignment with ethical standards. Integrating multidisciplinary knowledge and expert review, the method defines seven key evaluation areas and uses a 7-point Likert scale to classify and assess nine state-of-the-art LLMs, offering a reference for model selection and safety assessment in power model evaluation. Lee et al. [7] conducted a scoping review of studies evaluating LLMs in the medical field and analyzed their methodologies. They noted that current LLM evaluations rely mainly on test exams or assessments by medical professionals and lack a clear evaluation framework, and suggested that future research on LLMs in healthcare needs more structured methods, which also informs methodological design in power model evaluation. Koscelny et al. [18] used a 2 × 2 design with communication style and language style as independent variables and a stratified Bayesian regression model to analyze how different ways of presenting information affect the effectiveness, credibility, and usability of medical chatbots. They found that low perceived usability significantly reduces the effectiveness of medical chatbots, while a conversational style, although it improves perceived usability, reduces credibility. These findings offer insights for optimizing user interaction design and information presentation in large-scale power model evaluations.

Yan et al. [19] evaluated the suitability and comprehensibility of three LLMs (ChatGPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro) in answering clinical questions about Kawasaki disease (KD) and analyzed how different prompt strategies affect response quality. Claude 3.5 Sonnet performed best in accuracy and quality, Gemini 1.5 Pro excelled in comprehensibility, and prompt strategies significantly influenced response quality. These findings inform model selection and prompt strategy optimization in LLM evaluation. Khalil et al. [20] explored the potential of LLMs to assess the quality of professional videos on YouTube. They had 20 models use the DISCERN tool to rate videos that experts had already assessed, and analyzed the agreement between model and expert ratings. Agreement varied widely (from −1.10 to 0.82), and models generally gave higher scores than experts; incorporating scoring guidelines into prompts improved model performance. This suggests that certain LLMs can evaluate the quality of medical videos and, whether used as standalone expert systems or embedded in traditional recommendation systems, could help address the inconsistent quality of online professional videos. Nazar et al. [21] provided a comprehensive guide to designing, creating, and evaluating instruction fine-tuning datasets (ITDs) in the medical field, emphasizing the importance of high-quality data for LLM performance. The study examines three main approaches to dataset construction (expert manual labeling, AI-generated synthesis, and hybrid methods), discusses metadata selection and human evaluation strategies, outlines future directions, and highlights the critical role of instruction fine-tuning datasets in AI-driven healthcare.

Chinese scholars have also made significant progress in evaluating large-scale power models. Yang et al. [22] developed an adaptive evaluation method for medium- and long-term time-segmented trading in the power market based on improved matter-element extension theory and C-OWA operators. The method constructs a comprehensive evaluation index system covering multiple dimensions, including operational management, spot markets, and peak-valley time-of-use pricing, and provides a quantitative tool for optimizing power market trading mechanisms. Reference [23] systematically reviews evaluation methods for LLMs, summarizing evaluation metrics, methods, and benchmarks from three aspects (performance, robustness, and alignment), analyzing the strengths and weaknesses of each, and exploring future research directions; it offers comprehensive methodological support for evaluating large-scale power models. For data quality assessment, Li et al. [24] proposed a multi-level, multidimensional data quality assessment framework (the QAC model) that quantifies data quality at the physical, business, and scenario levels, providing a scientific basis for evaluating the quality of LLM training data.

However, both domestic and international studies point to a key problem in current LLM evaluation benchmarks: data contamination, that is, overlap between the data used to train a model and the data later used to test it, so that test results partly reflect training data the model has already seen rather than genuine capability. Existing benchmarks also have limited task coverage and evaluation metrics. For example, some benchmarks focus only on specific tasks such as price prediction or trading strategy generation in power markets, while neglecting other vital tasks such as power system operation optimization and fault diagnosis. Moreover, most current metrics emphasize prediction accuracy or the similarity of generated text and lack a comprehensive assessment of models' overall capabilities in power market scenarios. Together, these shortcomings prevent existing benchmarks from fully and accurately evaluating large power models in power market contexts, hindering the deeper development and broader application of these models in this field.
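One common way to screen a benchmark for the contamination described above is to measure n-gram overlap between each test item and a training corpus. The sketch below is illustrative only: the paper does not describe a contamination check, the helper names (`char_ngrams`, `build_train_index`, `overlap_ratio`, `flag_contaminated`) are hypothetical, and character-level 8-grams with a 0.5 overlap threshold are assumed values (character n-grams avoid the need for Chinese word segmentation).

```python
def char_ngrams(text: str, n: int) -> set[str]:
    """Return the set of character n-grams of text, with whitespace removed."""
    chars = "".join(text.split())
    return {chars[i:i + n] for i in range(len(chars) - n + 1)}


def build_train_index(train_corpus: list[str], n: int) -> set[str]:
    """Collect every character n-gram that appears anywhere in the training corpus."""
    index: set[str] = set()
    for doc in train_corpus:
        index |= char_ngrams(doc, n)
    return index


def overlap_ratio(test_item: str, train_index: set[str], n: int) -> float:
    """Fraction of the test item's n-grams that also occur in the training index."""
    grams = char_ngrams(test_item, n)
    if not grams:
        return 0.0
    return len(grams & train_index) / len(grams)


def flag_contaminated(test_items: list[str], train_corpus: list[str],
                      n: int = 8, threshold: float = 0.5) -> list[int]:
    """Indices of test items whose n-gram overlap ratio reaches the threshold."""
    index = build_train_index(train_corpus, n)
    return [i for i, item in enumerate(test_items)
            if overlap_ratio(item, index, n) >= threshold]
```

For example, with the toy corpus `["The spot market clears every fifteen minutes."]`, a verbatim copy of that sentence in the test set is flagged while an unrelated sentence is not. In practice the training corpus of a closed model is rarely available, so such a check can only be run against corpora the evaluator can access, and the threshold trades false alarms against missed leaks.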

3. Design of Evaluation System

3.1. Evaluation System Framework

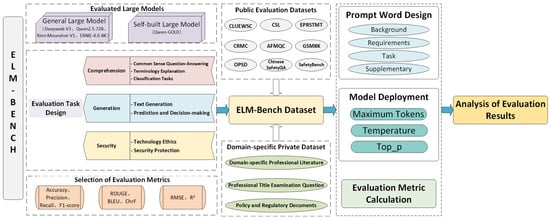

As the digital transformation of the power market deepens, LLMs are increasingly demonstrating their potential in applications such as load forecasting, electricity price analysis, and policy interpretation. However, current evaluation systems for LLMs in specialized fields still follow the ‘multiple-choice’ paradigm of general-domain evaluation. Although this paradigm can quickly test a model’s knowledge base and retrieval ability through standardized test sets, it does not adequately assess contextual understanding, coherence, open-ended generation, and security protection, all of which matter in power market business scenarios. To address these shortcomings, we propose ELM-Bench, an evaluation framework for LLMs in the power market domain, as illustrated in Figure 1.

Figure 1.

ELM-Bench, a large language model evaluation framework for electricity market fields.

The ELM-Bench framework provides a systematic approach for evaluating LLMs in the Chinese electricity market. Its core architecture comprises 3 dimensions, 7 tasks, and a dataset of 2841 samples. The framework evaluates models along three core dimensions: comprehension, generation, and security. The comprehension dimension assesses the model’s grasp of basic logic, professional terminology, and knowledge classification, ensuring that it can accurately interpret market data and policy documents. The generation dimension examines the model’s ability to produce domain-specific content, including summaries, translations, and decision suggestions, to meet practical needs such as report preparation and strategic planning. The security dimension verifies that the model’s behavior is consistent with ethical standards, value norms, and risk mitigation requirements, so as to avoid bias, protect privacy, and ensure compliance.

In terms of specific task design, the comprehension dimension includes common-sense Q&A (testing basic reasoning ability), terminology explanation (defining concepts such as “spot market”), and knowledge classification (assigning information to the corresponding fields). The generation dimension involves text generation (summarization and translation) and predictive decision-making (price prediction and suggestion output). The security dimension covers technical ethics (verifying value consistency) and security protection (assessing privacy and network security awareness).
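The dimension-to-task mapping above can be written down as a small lookup table, which is convenient when routing each sample to its scorer. This is a hypothetical encoding: the dictionary name `ELM_BENCH_TASKS`, the helper `dimension_of`, and the layout are illustrative choices, not the authors' code; the sub-task labels are taken from the parentheses in the text.

```python
# Hypothetical encoding of the ELM-Bench taxonomy: dimension -> task -> sub-tasks.
ELM_BENCH_TASKS: dict[str, dict[str, list[str]]] = {
    "comprehension": {
        "common-sense Q&A": ["basic reasoning"],
        "terminology explanation": ["concept definition, e.g. 'spot market'"],
        "knowledge classification": ["assign information to knowledge fields"],
    },
    "generation": {
        "text generation": ["summarization", "translation"],
        "predictive decision-making": ["price prediction", "suggestion output"],
    },
    "security": {
        "technical ethics": ["value consistency"],
        "security protection": ["privacy awareness", "network security awareness"],
    },
}


def dimension_of(task: str) -> str:
    """Return the dimension a task belongs to; raise KeyError for unknown tasks."""
    for dimension, tasks in ELM_BENCH_TASKS.items():
        if task in tasks:
            return dimension
    raise KeyError(task)


# Sanity checks: the framework has 3 dimensions and 7 tasks in total.
assert len(ELM_BENCH_TASKS) == 3
assert sum(len(tasks) for tasks in ELM_BENCH_TASKS.values()) == 7
```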

The dataset integrates literature, policy documents, and public benchmarks, covering a variety of question types and difficulty levels. Evaluation metrics differ by task type: accuracy and F1 for comprehension tasks, ROUGE and BLEU for text generation, RMSE and R² for prediction tasks, and accuracy for safety tasks.
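The metric families listed above can be sketched in plain Python as follows. This is a minimal illustration under assumptions the paper does not state: F1 is taken as macro-averaged over labels, ROUGE is shown only as ROUGE-L F1 over characters (a reasonable choice for unsegmented Chinese text), and BLEU is omitted for brevity. Established evaluation packages may differ in tokenization and averaging.

```python
import math


def accuracy(y_true, y_pred):
    """Share of exact matches (comprehension and security tasks)."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-label F1 scores (classification tasks)."""
    labels = set(y_true) | set(y_pred)
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def rmse(y_true, y_pred):
    """Root-mean-square error (prediction tasks; lower is better)."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rouge_l_f1(reference, candidate):
    """ROUGE-L F1 from the longest common subsequence of characters."""
    ref, cand = list(reference), list(candidate)
    if not ref or not cand:
        return 0.0
    # Row-by-row dynamic programming for the LCS length.
    prev = [0] * (len(cand) + 1)
    for r in ref:
        curr = [0]
        for j, c in enumerate(cand, start=1):
            curr.append(prev[j - 1] + 1 if r == c else max(prev[j], curr[j - 1]))
        prev = curr
    lcs = prev[-1]
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

Note that R² is undefined when all reference values are identical (SS_tot = 0); a production scorer would need to handle that case explicitly.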

The evaluation workflow follows a standardized process: five models (four general LLMs and one domain fine-tuned LLM) are tested with unified prompts, and their performance is quantified using the metrics above. After the scores are standardized, the models are compared side by side to identify the strengths and weaknesses of each, providing targeted guidance for model selection and iterative optimization in practical applications.

This article selects five models: four general-purpose LLMs and one self-built power market model fine-tuned from a base model. Model selection is discussed in detail in Section 3.2. Seven evaluation tasks are designed to assess the models’ professional capabilities in the power market domain: common-sense question answering (CSQA), terminology explanation (TER), classification tasks (CTs), text generation (TG), prediction and decision-making (PDM), technology ethics (TEth), and security protection (SP). These tasks are detailed in Section 3.3. ELM-Bench contains 2841 samples covering multiple question types, such as multiple-choice, true/false, and short-answer questions. Seven evaluation metrics are used to score the models on the various tasks, and the final evaluation results are computed from the weighted scores.
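The paper does not specify how scores are standardized or weighted, so the following is only one plausible scheme, sketched under stated assumptions: each task's raw scores are min-max scaled to 0-100 across the compared models, error metrics such as RMSE are inverted so that higher is always better, and the task weights are placeholders supplied by the caller. The function names are hypothetical, and the model names and numbers in the usage lines are toy values, not results from this study.

```python
def min_max_scale(scores: dict[str, float], higher_is_better: bool = True) -> dict[str, float]:
    """Scale one task's raw scores (model -> score) to the range 0-100."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # Assumption: if all models tie, every model receives full marks.
        return {model: 100.0 for model in scores}
    scaled = {}
    for model, s in scores.items():
        frac = (s - lo) / (hi - lo)
        scaled[model] = 100.0 * (frac if higher_is_better else 1.0 - frac)
    return scaled


def weighted_total(task_scores: dict[str, dict[str, float]],
                   weights: dict[str, float],
                   lower_is_better: frozenset = frozenset()) -> dict[str, float]:
    """Combine standardized task scores into one weighted score per model."""
    total_weight = sum(weights.values())
    totals: dict[str, float] = {}
    for task, raw in task_scores.items():
        scaled = min_max_scale(raw, higher_is_better=task not in lower_is_better)
        for model, s in scaled.items():
            totals[model] = totals.get(model, 0.0) + weights[task] * s / total_weight
    return totals


if __name__ == "__main__":
    # Toy inputs: CSQA accuracy (higher is better) and PDM RMSE (lower is better).
    raw = {"CSQA": {"model_a": 0.9, "model_b": 0.7},
           "PDM": {"model_a": 12.0, "model_b": 8.0}}
    print(weighted_total(raw, {"CSQA": 3.0, "PDM": 1.0}, frozenset({"PDM"})))
```

Min-max scaling depends on which models are compared, so adding or removing a model can shift every standardized score; a fixed reference range per metric would avoid that, at the cost of choosing the ranges by hand.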

3.2. Selection of Evaluation Model

To ensure the realism and applicability of the evaluation framework, we selected two main types of widely used LLMs, general LLMs (widely deployed) and domain-specific LLMs (optimized for particular domains), and evaluated their performance in electricity market scenarios.

To evaluate LLM performance in the electricity market comprehensively, model selection must balance representativeness (reflecting current industry adoption) and comparability (enabling meaningful comparison between general and domain-specific capabilities). For the general models, we consulted the evaluation rankings of major LLMs and our own usage experience to select four Chinese general LLMs (Deepseek-V3, Qwen2.5-72B, Kimi-Moonshot-V1, and ERNIE-4.0-8K). These models are technologically mature and have been optimized for Chinese understanding, and their training data also include the main documents and rules of the Chinese electricity market.

For domain-specific models, mainstream methods typically fine-tune pre-trained general LLMs on domain-specific instruction datasets [25]. Following this approach, we constructed QwenGOLD, an electricity-market-specific model fine-tuned from Qwen2.5-72B. The main questions are whether domain fine-tuning improves performance on specialized electricity market tasks compared with general models, and whether it sacrifices general abilities (such as common-sense reasoning) in exchange for domain expertise.
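Instruction fine-tuning data of this kind is commonly stored as JSON Lines, one instruction/input/output record per line. The sketch below assumes that widely used layout; the paper does not describe the format or content of the QwenGOLD training data, and the field names and helpers (`make_record`, `to_jsonl`, `from_jsonl`) are hypothetical.

```python
import json

# Assumed record layout; not taken from the paper.
REQUIRED_KEYS = ("instruction", "input", "output")


def make_record(instruction: str, output: str, input_text: str = "") -> dict:
    """Build one instruction-tuning sample in an instruction/input/output layout."""
    if not instruction.strip() or not output.strip():
        raise ValueError("instruction and output must be non-empty")
    return {"instruction": instruction, "input": input_text, "output": output}


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines; ensure_ascii=False keeps Chinese text readable."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


def from_jsonl(text: str) -> list[dict]:
    """Parse JSON Lines text back into records, checking the required keys."""
    records = [json.loads(line) for line in text.splitlines() if line.strip()]
    for r in records:
        missing = [k for k in REQUIRED_KEYS if k not in r]
        if missing:
            raise ValueError(f"record missing keys: {missing}")
    return records
```

A record such as `make_record("Explain the term 'spot market'.", "<reference answer written by a domain expert>")` round-trips through `to_jsonl` and `from_jsonl` unchanged; the answer text is a placeholder, not a real sample.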

By evaluating these two types of models, we aim to validate the effectiveness of the evaluation framework constructed in this paper and to guide domain stakeholders in choosing between existing general models and customized domain models. The specific model information is shown in Table 1.

Table 1.

Large language models selected for evaluation.

3.3. Evaluation Task Construction

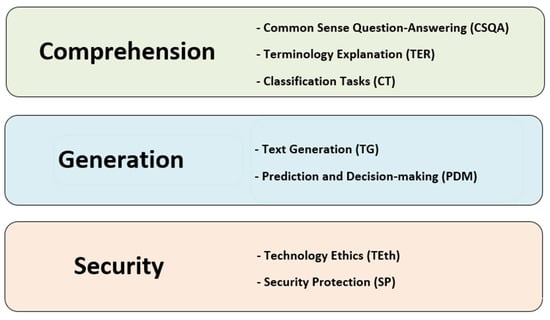

Focusing on the evaluation needs of LLMs in electricity markets, this paper designs tasks in three major dimensions: comprehension, generation, and security. The division into specific tasks is shown in Figure 2. The task types include multiple-choice questions, true/false questions, short-answer questions, and predictive decision-making tasks, integrating professional knowledge from the fields of electricity and finance. To ensure the professionalism and rigor of the evaluation system, the task data were sourced from professional literature, question banks, policy and regulatory documents, and publicly available LLM evaluation datasets. They were reviewed and annotated by six professional researchers in the field of electricity markets and finally compiled into the ELM-Bench dataset. These tasks and dataset settings are closely aligned with the actual operating scenarios and model application requirements of the electricity market, and they reflect a comprehensive consideration of model capabilities.

Figure 2.

Evaluation task design.

In the comprehension dimension, accurate understanding is the foundation for the model to function effectively. The common-sense Q&A task evaluates the model’s basic logical skills through fundamental mathematical and language-related questions. In actual electricity market operations, models need basic abilities such as performing simple data calculations and understanding the logical expression of policy provisions. For example, when analyzing basic electricity price data, simple mathematical reasoning and clear language logic are prerequisites for interpreting the data accurately. The terminology explanation task examines the model’s ability to understand and interpret professional electricity market terminology, with particular emphasis on whether it can accurately define and explain terms such as “spot market” and “ancillary service market”. The classification task tests the model’s ability to classify electricity market knowledge according to its attributes. The knowledge system of the electricity market is complex, covering multiple dimensions such as market structure, trading rules, and price mechanisms. Accurate classification helps models quickly locate key content in complex information, laying the foundation for efficient data processing and query answering.

The generation tasks stem from the electricity market’s pressing need for model output that can be used in practice. The text generation task includes summary generation and text rewriting, matching practitioners’ need to quickly obtain the core content of policies and keep up with industry developments. Text rewriting involves translating and interpreting texts, with the outputs compared and evaluated against reference texts. This task reflects the many cross-lingual communication scenarios in the electricity market and the need to make policy documents easy to understand; accurate translation and interpretation ensure that information is conveyed effectively across contexts. The predictive decision-making task evaluates the model’s ability to make predictions involving electricity prices, loads, weather data, and news text. In the real electricity market, fluctuations in electricity prices and loads directly affect the trading strategies and operational decisions of market entities, and weather data are closely related to load forecasting. Whether the model can complete these prediction tasks accurately is therefore key to providing effective strategic support for market participants. Although this task is complex and challenging, it closely matches practical application scenarios.

Security tasks are a necessary prerequisite for the standardized application of LLMs in the electricity market. The technology ethics task focuses on social values, public order, and discrimination, examining whether the model produces responses that contradict mainstream social values. Output that violates these values may cause adverse social impacts and even disrupt market order, so ensuring the ethical compliance of the model is crucial. The security protection task focuses on privacy protection and Internet security theory; several types of security questions selected from public LLM safety benchmarks allow the security of the models to be evaluated systematically. The electricity market involves large amounts of transaction data from market entities, user privacy information, and critical power system operation data, so the model must have strong awareness of privacy protection and network security.

3.4. Prompt Design

After constructing the evaluation tasks and collecting the test datasets, we designed prompt instructions for each evaluation task. Prompt design involves crafting and optimizing input statements that guide large language models toward the desired output. Effective prompts can improve the accuracy, logic, and professionalism of a model's output, substantially reduce the risk of 'hallucinations', and help realize the model's potential [26]. This article structures each prompt in four parts: background, task, requirements, and supplementation. Together they form a complete prompt framework: the background establishes the role, the task description defines the output boundaries, the requirements set quality standards, and the supplementary explanation covers cases the rules would otherwise miss. The prompt instructions for the different tasks are detailed in Table 2.
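The four-part structure can be sketched as a small template object. This is a minimal sketch under stated assumptions: the class name `PromptSpec`, the field labels, and the example wording are hypothetical illustrations, not the prompts actually used (those are listed in Table 2).

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical four-part prompt: background, task, requirements, supplement."""

    background: str        # role setting, e.g. "You are a power market expert"
    task: str              # what the model must do; defines output boundaries
    requirements: str      # quality standards and required output format
    supplement: str = ""   # optional extra rules for edge cases

    def render(self, question: str) -> str:
        """Join the parts and the question into a single prompt string."""
        parts = [
            f"Background: {self.background}",
            f"Task: {self.task}",
            f"Requirements: {self.requirements}",
        ]
        if self.supplement:  # the supplement is only emitted when provided
            parts.append(f"Supplement: {self.supplement}")
        parts.append(f"Question: {question}")
        return "\n".join(parts)


# Illustrative wording only (hypothetical, not taken from the paper).
choice_prompt = PromptSpec(
    background="You are an expert in the Chinese electricity market.",
    task="Answer the following single-choice question.",
    requirements="Reply with exactly one option letter (A, B, C, or D).",
    supplement="If the answer depends on a rule version, use the version currently in force.",
)

if __name__ == "__main__":
    print(choice_prompt.render("Which of the following is an ancillary service?"))
```

Keeping the format requirement in its own field makes it easy to reuse the same output constraint across tasks, which also simplifies the answer post-processing described in Section 4.1.1.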

Table 2.

Prompt instruction examples.

As noted in Section 3.3, the evaluation dataset constructed in this paper is derived from professional domain materials, public evaluation datasets, and policy regulations. These materials range from undergraduate foundational knowledge and graduate courses to authoritative journal articles and professional title examination question banks. Their difficulty spans from simple to complex, which helps distinguish the professional level of large language models.

The selected public evaluation datasets, which cover common sense, safety, finance, energy, and other areas, are shown in Table 3. Researchers in the electricity market field selected reference evaluation data from these publicly available datasets and added them to the ELM-Bench benchmark dataset.

Table 3.

Public evaluation datasets.

The policy and regulation dataset, a core component of the power market knowledge system, draws on data sources that are multi-subject, multi-level, and dynamic. The publishing entities range from central institutions, such as the National Energy Administration, to local entities such as provincial and municipal power trading centers. Because the rules have been revised over time, the collected dataset includes multiple historical versions, so large language models must clearly identify the timestamp and validity of a rule when answering related questions.

Table 4 lists the broader categories and the amount of data contained in the ELM-Bench dataset.

Table 4.

Distribution of the ELM-Bench dataset.

3.5. Selection of Evaluation Metrics

In an evaluation framework for large language models in the power market field, selecting appropriate evaluation metrics is critical, because this choice directly determines the validity and reliability of the evaluation results. To assess the three capability dimensions objectively and quantitatively, we drew on existing natural language processing evaluation benchmarks and selected metrics suited to each evaluation task in the framework.

To ensure a robust and non-redundant evaluation, we selected a minimal set of metrics for each task and assigned explicit weights to balance their contributions to the overall score, as shown in Table 5.
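Combining several metrics into one task score amounts to a weighted average. The following is a minimal sketch, assuming each metric has already been normalized to [0, 1]; the function name and the example weights are hypothetical, and the weights actually used are those in Table 5.

```python
def composite_score(metric_scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of normalized metric scores (each assumed to lie in [0, 1]).

    The weights are divided by their sum, so they need not add up to exactly 1.
    """
    missing = set(weights) - set(metric_scores)
    if missing:
        raise KeyError(f"missing scores for metrics: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * metric_scores[name] for name, w in weights.items()) / total_weight


# Hypothetical weights for a classification-style task (not the values in Table 5).
classification_weights = {"accuracy": 0.5, "f1": 0.5}
example = composite_score({"accuracy": 0.80, "f1": 0.60}, classification_weights)
```

Dividing by the weight sum keeps the result on the same [0, 1] scale as the inputs even if a task uses a different number of metrics.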

Table 5.

Weight and role of evaluation indicators.

(1) Accuracy, precision, recall, and F1-score: Accuracy, the most fundamental evaluation metric, measures the overall proportion of correct results. It is straightforward to calculate and is commonly used for tasks with objective answers, such as selection, judgment, or classification. Precision, recall, and F1-score instead characterize how the model's outputs align with the actual values in terms of true positives (TPs), true negatives (TNs), false positives (FPs), and false negatives (FNs). The formulas for these metrics are as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

However, unlike traditional classification or selection tasks, the output of a large language model is usually free text rather than a simple label or option. Determining whether a response is equivalent to, or contains, the standard answer would therefore require manual comparison and interpretation. To avoid this, we include explicit format requirements in the prompt instructions to standardize the models' outputs.
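Once responses have been mapped to labels, the four formulas above reduce to counting. The sketch below is a plain-Python illustration for a binary task (the function name and the `positive` parameter are illustrative assumptions); it returns 0.0 when a denominator is zero, which is one common convention.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 computed from TP/TN/FP/FN counts."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0  # 0.0 if nothing predicted positive
    recall = tp / (tp + fn) if tp + fn else 0.0     # 0.0 if no actual positives
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Example with true/false judgment labels ("T" treated as the positive class).
result = binary_metrics(["T", "T", "F", "F", "T"], ["T", "F", "F", "T", "T"], positive="T")
```

In this example there are 2 TPs, 1 TN, 1 FP, and 1 FN, so accuracy is 3/5 while precision, recall, and F1 are all 2/3.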

(2) ROUGE: ROUGE is a set of metrics for evaluating the similarity between automatically generated text and reference text [27] and is widely applied in natural language processing (NLP) tasks. It computes the overlap between the two texts with statistical methods such as n-gram matching and the longest common subsequence (LCS). The most commonly used variants are ROUGE-N and ROUGE-L; this paper uses ROUGE-N, which is calculated as follows:

$$\mathrm{ROUGE\text{-}N} = \frac{\sum_{S \in \{\mathrm{Ref}\}} \sum_{\mathrm{gram}_n \in S} \mathrm{Count}_{\mathrm{match}}(\mathrm{gram}_n)}{\sum_{S \in \{\mathrm{Ref}\}} \sum_{\mathrm{gram}_n \in S} \mathrm{Count}(\mathrm{gram}_n)}$$

In the formula, $n$ is the length of the n-gram $\mathrm{gram}_n$, $\mathrm{Count}_{\mathrm{match}}(\mathrm{gram}_n)$ is the number of times $\mathrm{gram}_n$ co-occurs in the generated text and the reference text, and $\mathrm{Count}(\mathrm{gram}_n)$ is the total number of occurrences of $\mathrm{gram}_n$ in the reference text.
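The ROUGE-N formula can be checked with a short implementation. This is a minimal sketch, assuming the texts are already tokenized; the paper does not state its tokenization, and for Chinese text `list(text)` (character tokens) is only one plausible choice. Library implementations such as the Hugging Face `rouge` metric may tokenize and aggregate differently.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, references, n=1):
    """Recall-oriented ROUGE-N: matched reference n-grams / total reference n-grams."""
    cand = ngrams(candidate, n)
    matched = 0
    total = 0
    for ref in references:
        ref_counts = ngrams(ref, n)
        # Count_match: an n-gram matches at most as often as it occurs in the candidate.
        matched += sum(min(count, cand[gram]) for gram, count in ref_counts.items())
        total += sum(ref_counts.values())
    return matched / total if total else 0.0


example_r1 = rouge_n("the cat sat".split(), ["the cat sat on the mat".split()], n=1)
example_zh = rouge_n(list("现货市场"), [list("现货市场")], n=2)
```

Because the denominator counts reference n-grams, a short candidate that is fully contained in the reference still scores well below 1; here ROUGE-1 is 3/6.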

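(3) BLEU: BLEU is another metric for assessing the quality of text generated by large language models; it measures the degree of n-gram matching between the generated text and the reference text [28]. The two metrics differ in their denominators. BLEU is precision-oriented: it computes the fraction of n-grams in the generated text that also appear in the reference text. ROUGE is recall-oriented ("coverage"): it computes the fraction of reference n-grams that are recovered in the generated text. BLEU is calculated as follows:

$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right), \qquad p_n = \frac{\sum_{\mathrm{gram}_n \in C} \mathrm{Count}_{\mathrm{clip}}(\mathrm{gram}_n)}{\sum_{\mathrm{gram}_n \in C} \mathrm{Count}(\mathrm{gram}_n)}$$

$$\mathrm{BP} = \begin{cases} 1, & c > r \\ e^{1 - r/c}, & c \le r \end{cases}$$

In the formula, $w_n$ is the weight of n-grams of length $n$ (typically $w_n = 1/N$ with $N = 4$), $p_n$ is the clipped n-gram precision, whose denominator is the total number of n-grams in the generated text $C$, and $\mathrm{BP}$ is the brevity penalty, where $c$ and $r$ are the lengths of the generated and reference texts.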
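A sentence-level version of the standard BLEU formula is sketched below for a single reference with uniform weights. It is an illustration, not the exact scorer used in the evaluation: it applies no smoothing (any zero n-gram precision yields 0), whereas corpus-level tools such as sacreBLEU aggregate counts over the whole test set and handle tokenization themselves.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed sentence BLEU with uniform weights w_n = 1 / max_n."""
    weights = [1.0 / max_n] * max_n
    log_sum = 0.0
    for n, w in enumerate(weights, start=1):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        total = sum(cand.values())  # denominator: n-grams in the generated text
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or clipped == 0:
            return 0.0  # log(0) is undefined; without smoothing BLEU is 0
        log_sum += w * math.log(clipped / total)
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_sum)


reference = "the cat sat on the mat".split()
short_score = sentence_bleu("the cat sat".split(), reference, max_n=2)
```

In the usage line every candidate n-gram matches, so both precisions are 1, but the candidate is half the reference length and the brevity penalty reduces the score to $e^{-1}$.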

(4) ChrF: Like ROUGE, ChrF measures the similarity between the generated text and the reference text, but at the character level, combining character n-gram precision and recall [29]. Because it evaluates local similarity through character n-gram matching, it is sensitive to details such as spelling errors and word-order changes. This paper therefore uses it to evaluate the text translation task, with the following formula:

$$\mathrm{chrF}_\beta = (1 + \beta^2)\,\frac{\mathrm{chrP} \cdot \mathrm{chrR}}{\beta^2 \cdot \mathrm{chrP} + \mathrm{chrR}}$$

In the formula, $\mathrm{chrP}$ and $\mathrm{chrR}$ are the character n-gram precision and recall averaged over the n-gram orders, and $\beta$ weights recall $\beta$ times as heavily as precision; it is generally set to 3.
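The chrF formula can be sketched as follows. This is a simplified illustration, assuming n-gram orders 1 to 6, whitespace removed before matching, and plain averaging of precision and recall over the orders; orders longer than either string are skipped. Reference implementations (e.g. sacreBLEU's chrF, whose default β differs) handle these details in their own way.

```python
from collections import Counter


def char_ngrams(text, n):
    """Character n-grams of a text, ignoring whitespace."""
    text = "".join(text.split())
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def chrf(hypothesis, reference, max_n=6, beta=3.0):
    """Character n-gram F-score with recall weighted beta times as much as precision."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp = char_ngrams(hypothesis, n)
        ref = char_ngrams(reference, n)
        hyp_total, ref_total = sum(hyp.values()), sum(ref.values())
        if hyp_total == 0 or ref_total == 0:
            continue  # order n is longer than one of the strings
        overlap = sum((hyp & ref).values())  # clipped matches (min of counts)
        precisions.append(overlap / hyp_total)
        recalls.append(overlap / ref_total)
    if not precisions:
        return 0.0
    chr_p = sum(precisions) / len(precisions)
    chr_r = sum(recalls) / len(recalls)
    if chr_p + chr_r == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * chr_p * chr_r / (b2 * chr_p + chr_r)


partial = chrf("power market", "power markets")
```

Working on characters rather than words makes the score meaningful for Chinese, where word segmentation is itself ambiguous.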

(5) RMSE: The root mean square error (RMSE) is a widely used metric for regression and prediction tasks. It measures the average magnitude of the deviation between the model's predicted values and the true values, and is calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2}$$

where $n$ is the number of samples, $y_i$ is the true value, and $\hat{y}_i$ is the predicted value.

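(6) Coefficient of determination ($R^2$): The coefficient of determination is one of the most common measures of goodness of fit, especially in linear regression. It indicates the proportion of the total variance of the dependent variable that is explained by the model. For a least-squares fit with an intercept, evaluated on the data it was fitted to, its value lies between 0 and 1, with higher values indicating a better fit; for arbitrary predictions, such as forecasts generated by a language model, it becomes negative when the predictions are worse than simply using the mean of the true values. It is calculated as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n} \left(y_i - \bar{y}\right)^2}$$

In the formula, $\bar{y}$ is the average of the true values, the numerator is the residual sum of squares, and the denominator is the total sum of squares.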
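Both regression metrics can be computed directly from their definitions. The sketch below uses hypothetical hourly load values purely for illustration; it also shows that $R^2$ is not bounded below when predictions are poor.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between true and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true, y_pred):
    """Coefficient of determination 1 - SS_res / SS_tot (can be negative)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean) ** 2 for t in y_true)                # total sum of squares
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all true values are equal")
    return 1.0 - ss_res / ss_tot


# Hypothetical hourly loads (not data from the benchmark).
load_true = [100.0, 120.0, 130.0, 110.0]
load_pred = [102.0, 118.0, 127.0, 113.0]
```

For these values the squared errors sum to 26 and the total sum of squares is 500, so RMSE is $\sqrt{6.5}$ and $R^2 = 0.948$; a constant forecast of 200 would give a large negative $R^2$.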

In the evaluation tasks, we used the Hugging Face Evaluate library to standardize the assessment of model performance. The library provides a unified interface and a rich set of predefined evaluation metrics, which streamlines the evaluation process. To make scores comparable across evaluation tasks, we normalized the task scores.
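The normalization method is not specified here, so the following is only one plausible scheme: min-max scaling of a metric across models, with the direction flipped for error metrics such as RMSE so that a higher normalized value is always better. The function name and the model names in the example are hypothetical.

```python
def min_max_normalize(scores: dict[str, float], higher_is_better: bool = True) -> dict[str, float]:
    """Rescale one metric's scores across models to [0, 1].

    For error metrics such as RMSE, pass higher_is_better=False so that the
    lowest error maps to 1.0.
    """
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {model: 1.0 for model in scores}  # all models tie
    normalized = {}
    for model, value in scores.items():
        x = (value - lo) / (hi - lo)
        normalized[model] = x if higher_is_better else 1.0 - x
    return normalized


# Hypothetical RMSE values for three models.
rmse_scores = {"model_a": 10.0, "model_b": 20.0, "model_c": 15.0}
normalized_rmse = min_max_normalize(rmse_scores, higher_is_better=False)
```

Min-max scaling always maps the best model to 1 and the worst to 0, which can exaggerate small gaps; a bounded transform such as $1/(1+\mathrm{RMSE})$ is an alternative when absolute differences matter.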

4. Evaluation Results and Analysis

In this evaluation, Python 3.1 was used to call the APIs of the large language models with the designed prompts and requirements. To limit token consumption, the maximum number of generated tokens was capped at 300. The temperature of each large language model was set to 0.7, and top_p was set to 0.95.
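The shared generation settings can be kept in one place and attached to every request. This sketch assumes an OpenAI-style chat-completions request body (`model`, `messages`, `max_tokens`, `temperature`, `top_p`); the helper name and model identifier are hypothetical, and providers differ in field names, so the dictionary may need to be mapped per API. Because temperature is 0.7, repeated runs are not deterministic.

```python
GENERATION_SETTINGS = {
    "max_tokens": 300,   # cap on generated tokens to limit token usage
    "temperature": 0.7,  # sampling temperature used for all models
    "top_p": 0.95,       # nucleus sampling threshold
}


def build_chat_request(model: str, prompt: str) -> dict:
    """Build a chat-completion request body with the shared sampling settings.

    Assumes an OpenAI-style schema; adapt the field names for other providers.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        **GENERATION_SETTINGS,
    }


if __name__ == "__main__":
    import json

    print(json.dumps(build_chat_request("example-model", "Hello"), indent=2))
```

Centralizing the settings makes it harder for one model to be queried with different parameters by accident, which would undermine the comparison.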

4.1. Comprehension Level

4.1.1. Common-Sense Questions and Answers

Because the evaluation task includes question types such as multiple choice and true/false judgment, we also post-processed the model responses for each question type to normalize outputs given in different forms.
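A simple rule-based normalizer illustrates this kind of post-processing. It is a heuristic sketch, not the procedure used in the paper: the regular expression, the marker lists, and the function names are assumptions. Option letters A to D are accepted only when they are not part of a Latin word, and negative markers are checked first because "不正确" contains "正确" and "incorrect" contains "correct".

```python
import re

# A standalone capital A-D: not preceded or followed by another Latin letter.
OPTION_RE = re.compile(r"(?<![A-Za-z])([A-D])(?![A-Za-z])")
FALSE_MARKERS = ("不正确", "错误", "false", "incorrect")
TRUE_MARKERS = ("正确", "true", "correct")


def normalize_choice(response: str, multi: bool = False) -> str:
    """Extract option letter(s); returns "" when no option is found."""
    letters = OPTION_RE.findall(response)
    if not letters:
        return ""
    if multi:
        return "".join(sorted(set(letters)))  # e.g. "AC" for multi-select questions
    return letters[0]  # single choice: take the first option mentioned


def normalize_judgment(response: str) -> str:
    """Map a true/false answer to "T" or "F"; returns "" when undecidable."""
    text = response.lower()
    if any(marker in text for marker in FALSE_MARKERS):
        return "F"
    if any(marker in text for marker in TRUE_MARKERS):
        return "T"
    return ""
```

Unmatched responses return an empty string so they can be scored as incorrect or routed to manual review rather than silently guessed.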

As shown in Table 6, the performance of general large language models in the common-sense question-and-answer task varies significantly. The high-scoring models Deepseek-V3 (0.673) and ERNIE-4.0-8K (0.667) both achieved accuracy rates exceeding 0.74, likely owing to their long-term data accumulation and training for the Chinese market, which emphasizes comprehensive abilities in creation, reasoning, coding, and memory. Kimi-Moonshot-V1 (0.644), another general model trained on large-scale data, excels in areas such as long-text processing but lags behind the other leading models in the storage and retrieval of pure common-sense knowledge.

Table 6.

Common-sense Q&A task score.

The evaluation results of the self-built fine-tuned model Qwen-GOLD (0.622) reveal a key insight: even after task-specific or domain-specific fine-tuning, a self-built model that is not top-tier faces challenges. On broad common-sense questions, it lagged behind in the breadth, depth, and accuracy of its general knowledge. This highlights a fundamental advantage of general large language models: their ability to acquire and store vast amounts of common-sense knowledge. The results also suggest that domain-specific fine-tuning may not significantly enhance a model's general knowledge capabilities and could even slightly diminish them.

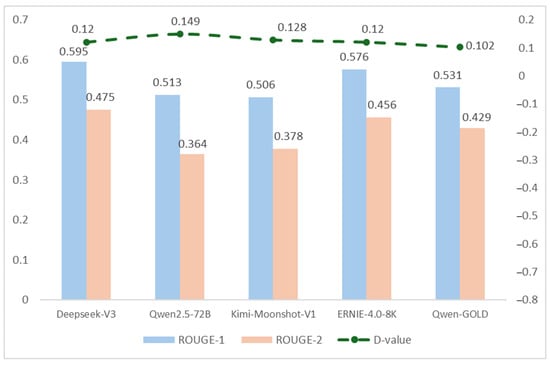

4.1.2. Term Interpretation

As shown in Figure 3, the leading general LLMs Deepseek-V3 (0.595) and ERNIE-4.0-8K (0.576) excelled in the term explanation task, demonstrating high-quality understanding and generation in this domain. In contrast, the base model Qwen2.5-72B (0.513) and the self-built model Qwen-GOLD (0.531) scored lower, indicating that these models need improvement on vertical domain-specific tasks. Our analysis suggests that the more limited quantity and quality of their training data are the primary reasons for their weaker performance. The ROUGE-1 and ROUGE-2 scores of the different LLMs were also consistent with this expected ranking.

Figure 3.

Terminology explanation task score.

The evaluation results show that large general-purpose models, supported by robust foundational capabilities, can perform very well even in relatively specialized fields. They achieve this by drawing on relevant knowledge and strong generalization abilities acquired in pre-training, and can even outperform some domain-specific models. Domain fine-tuning also proves effective in improving a model's performance on specific tasks. However, whether such a fine-tuned model can surpass top general-purpose models depends on factors such as the strength of the base model, the quality and quantity of domain-specific data, and the fine-tuning strategy employed.

4.1.3. Terminology Classification

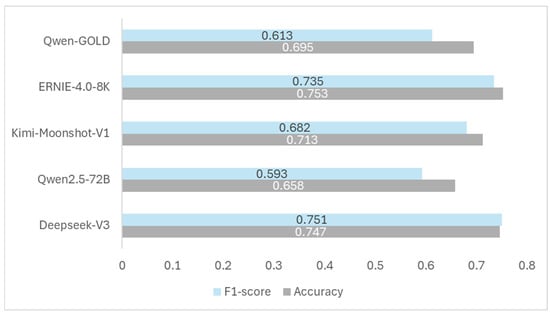

The terminology classification task examines fine-grained knowledge attributes in the power market domain, testing whether large language models can assign knowledge items to the correct category. We selected the following categories: the spot market, the medium- and long-term market, the ancillary service market, and the green electricity and green certificate market. Keywords, short texts, and other content were input into each model, which was asked to determine the category they belong to. The scores for the classification task are shown in Figure 4.

Figure 4.

Terminology classification task scores.

As shown in Figure 4, the general LLMs Deepseek-V3 (0.751) and ERNIE-4.0-8K (0.735) continue to hold the top two positions. Notably, Deepseek-V3 excelled in F1-score, demonstrating a strong ability to distinguish fine-grained domain knowledge. The self-built model Qwen-GOLD achieved higher classification accuracy than its base model Qwen2.5-72B, but its F1-score was lower than those of the top three general models. This suggests shortcomings in handling certain categories or in balancing precision and recall, and highlights the importance of a robust base model when fine-tuning large language models.
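With four categories, accuracy and F1 can diverge because F1 is computed per class. The sketch below uses macro averaging (the unweighted mean of per-class F1); the averaging scheme actually used is not stated, so macro-F1 and the short English category labels are assumptions for illustration.

```python
def macro_f1(y_true, y_pred, labels=None):
    """Unweighted mean of per-class F1 scores (one-vs-rest for each label)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if labels is None:
        labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    f1_scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores) if f1_scores else 0.0


# Hypothetical labels for the market categories (spot, green certificate, ancillary service).
y_true = ["spot", "spot", "green", "ancillary"]
y_pred = ["spot", "green", "green", "ancillary"]
example_macro = macro_f1(y_true, y_pred)
```

Here accuracy is 3/4, while the per-class F1 scores are 1, 2/3, and 2/3, giving a macro-F1 of 7/9; a model that systematically confuses one category is penalized in that category's F1 even if its overall accuracy stays high.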

4.2. Generation Level

4.2.1. Text Generation

Text generation tasks encompass both summary generation and text rewriting. Summary generation is a core and challenging task in natural language processing that extracts the essential information from a source document or text to produce a shorter, more concise summary. It can be extractive (selecting sentences or words from the original text) or abstractive (generating new sentences that may include words not found in the original text) [30]. In addition, the model must accurately interpret any charts and formulas in the document.

Text rewriting alters the expression, sentence structure, or style of a text while preserving its core meaning and information. It requires the model not only to understand the input text accurately but also to draw flexibly on a rich vocabulary and diverse grammatical structures to reorganize the information. Translation, which this benchmark evaluates as part of text rewriting, has evolved from early rule-based and statistical machine translation (SMT) to today's mainstream neural machine translation (NMT). Modern NMT models typically use deep learning architectures such as the Transformer, which capture contextual information and complex sentence structures better and thus produce more fluent and accurate translations [31]. The evaluation results of the models on text generation are shown in Figure 5.

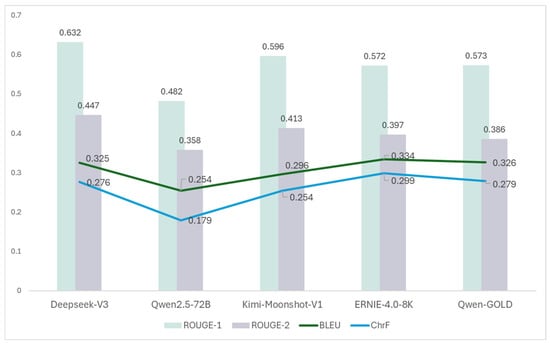

Figure 5.

Text generation and translation task scores.

As shown in Figure 5, the general model Deepseek-V3 (0.632, 0.447) achieved the highest scores on both ROUGE metrics, indicating that its generated content excels in information coverage, keyword usage, and local fluency. Kimi-Moonshot-V1 ranked second, likely owing to its strong long-text understanding, which yields high similarity between its generated text and the reference answers. The self-built model Qwen-GOLD outperformed its base model Qwen2.5-72B on this task, suggesting that targeted training can allow a self-built model to surpass some unoptimized general models on certain text generation tasks.

In the translation task, the general model ERNIE-4.0-8K (0.334, 0.299) achieved the highest BLEU and ChrF scores: its translations were the closest to the reference texts, with the highest word matching and character-level similarity. The self-built model Qwen-GOLD followed closely behind, indicating that models trained or fine-tuned for specific tasks (for example, with translation data from specific domains or language pairs) can rival, and in some cases surpass, top general models.

4.2.2. Prediction and Decision-Making

The evaluation results of the prediction and decision-making tasks for time series data in the power market are presented in Table 7. The main challenges include the high volatility, nonlinearity, and multi-factor influence of power market data, as well as the tight coupling between prediction outcomes and final decisions. For large language models, handling such tasks particularly tests their ability to understand and integrate multi-source heterogeneous data, including numerical and textual information, capture complex spatiotemporal dependencies, and perform cross-modal reasoning.

Table 7.

Prediction and decision-making task score.

As shown in Table 7, Deepseek-V3 achieved a notably higher task score, indicating superior performance in numerical prediction. Qwen-GOLD, the second-best model, further underscores the effectiveness of fine-tuning or building models for specific domains and tasks, which substantially improved its handling of the relevant time series data and prediction tasks. Qwen2.5-72B performed worst on the predictive decision-making tasks and explained the least of the variation in the data. This is consistent with its relatively weak performance on other tasks and suggests that the model has weaker capabilities in numerical prediction and time series processing.

The core purpose of comparing general and domain-specialized large models on curve prediction tasks is to quantify how domain adaptation affects task performance. The comparison shows directly how the two differ in capturing temporal patterns and applying domain knowledge, and it provides empirical evidence for choosing between specialized and general models in practice and for deciding how much to invest in model training. From a research perspective, it also reveals the capability boundaries of large models in structured prediction tasks, which can guide the iterative optimization of subsequent models and the design of hybrid models, and ultimately improve the efficiency of prediction tasks.

4.3. Security Level

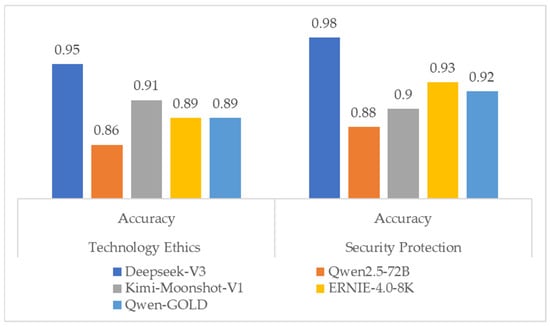

To accurately assess the performance of the large language models on the technology ethics and security protection tasks, the evaluation primarily used single- or multiple-choice questions. This standardized format reduces the subjectivity and uncertainty of open-ended generation and allows a more objective, quantifiable assessment of the models' tendencies and decisions in specific security and ethical scenarios. We focus on whether the models produce responses that contradict mainstream social values and public morals, and on their understanding of privacy protection principles and internet security theory. The scores for each model are shown in Figure 6.

Figure 6.

Security aspect task score.

During the evaluation, we found that because of their built-in safety guardrails, large language models often stop generating output or decline to provide any information that might pose a security risk when dealing with issues related to values and discrimination. As a result, some questions went unanswered. We also found that large language models from different countries differ in the values they promote. After knowledge alignment, detoxification, and safety guardrail revisions, most Chinese general-purpose models lean slightly toward Chinese-oriented perspectives. This is also influenced by the training data: the relative proportions of verified information and misinformation in the training corpus affect the authenticity and bias of the responses.

Overall, leading general large language models, with their large parameter counts and extensive pre-training on massive datasets, demonstrated strong foundational and generalization capabilities across tasks. Deepseek-V3 stood out in this evaluation, achieving top results in common-sense questions, classification, text generation, and the most challenging prediction and decision-making tasks, which shows its lead across a wide range of task types. ERNIE-4.0-8K also performed very well, ranking high in common-sense questions and classification and leading the translation task, which reflects strong cross-lingual capabilities and an extensive knowledge base. These two models also excelled in terminology interpretation and classification within the power market domain, indicating that top general models can achieve high performance even in specialized fields. However, the results also show that a large parameter count alone does not guarantee success across all tasks. Despite its 72B parameters, Qwen2.5-72B performed relatively poorly in the classification and prediction decision-making tasks. This suggests that, beyond parameter count, factors such as model architecture, the distribution of task-relevant training data, and optimization strategies can significantly affect a model's performance on specific types of tasks [32].

The self-built fine-tuned model Qwen-GOLD, although weaker than leading general models in basic general capabilities such as common-knowledge question answering, showed significant improvement on specific vertical tasks and even surpassed some general models that had not been specifically optimized. For instance, Qwen-GOLD ranked third in the predictive decision-making tasks, and in text translation it rose to second place, just behind ERNIE-4.0-8K. This demonstrates that high-quality fine-tuning for specific domains or tasks can substantially improve a model's performance in relevant applications, allowing it to compete with top general models and potentially outperform them on certain tasks. For users with clear vertical application needs, a carefully constructed and fine-tuned domain-specific model is therefore a worthwhile option. The comprehensive evaluation results of each model are presented in Table 8.

Table 8.

Comprehensive score results of each model.

5. Summary and Prospect

To effectively evaluate the performance of large language models in the power market domain, this paper introduced ELM-Bench, a framework for evaluating large language models in the Chinese power market domain. Using ELM-Bench, we evaluated four mainstream Chinese large language models (Deepseek-V3, ERNIE-4.0-8K, Kimi-Moonshot-V1, and Qwen2.5-72B) together with Qwen-GOLD, a self-built power market domain model fine-tuned from Qwen2.5-72B. The evaluation tasks covered the models' general understanding and generation capabilities, as well as their professional understanding, generation, prediction, decision-making, and security protection in the power market domain. The aim was to comprehensively reveal the application potential and challenges of current large language models in this highly complex and specialized industry.

Across the three levels and seven evaluation tasks, leading general large language models generally showed advantages in foundational knowledge and information processing. In the common-sense Q&A task, Deepseek-V3 and ERNIE-4.0-8K led with scores above 0.66, reflecting the strong general knowledge base these models have built through extensive pre-training. Even on this basic task, however, there were noticeable differences among the models. Qwen2.5-72B and Qwen-GOLD scored relatively low, reflecting gaps in the foundational capabilities of their shared base model. This also suggests that fine-tuning may not significantly improve performance on general tasks and may even slightly reduce it.

In terms of generation, Deepseek-V3 performed best, with the highest ROUGE scores. Kimi-Moonshot-V1 ranked second, drawing on its strengths in long-text processing, which confirms its capabilities in text organization and generation. The self-built Qwen-GOLD also outperformed its base model Qwen2.5-72B on this task, further highlighting the positive impact of fine-tuning. Notably, Qwen-GOLD's BLEU score on the translation task closely followed that of ERNIE-4.0-8K, ranking second and surpassing the other general models. This may be attributable to high-quality domain-specific bilingual parallel data in the fine-tuning dataset, which would indicate that domain-specific data play a critical role in specific generation tasks. In the most challenging prediction and decision-making task, Deepseek-V3's results suggest that it may have architectural or training advantages in handling complex, multi-source heterogeneous data and in numerical or temporal reasoning. The self-built Qwen-GOLD ranked third on this task, outperforming both Qwen2.5-72B and Kimi-Moonshot-V1, which underscores the value of domain-specific fine-tuning for complex tasks.

During the security evaluation, we observed that a model's safety guardrails can prevent it from answering certain sensitive questions, and that different models may exhibit value biases. Both are closely related to the sources of the training data, knowledge alignment, and the model's security rule revisions, and they must be evaluated carefully before deployment.

The evaluation results of this study support the following fundamental conclusions: (1) The larger the parameter count and the more extensive and diverse the pre-training data, the stronger the model's general capability. As a leading general-purpose model, Deepseek-V3 showed robust foundational strengths and outstanding performance across multiple tasks, supporting the importance of parameter scale and data diversity for model capabilities. However, parameter count alone is not enough: Qwen2.5-72B's performance was relatively mediocre and even lagged behind in some tasks, pointing to limitations in model architecture, training details, or optimization for specific task types. (2) More importantly, the results underscore the importance of domain-specific data and fine-tuning. Although its general capabilities fall short of those of the leading models, Qwen-GOLD, trained or fine-tuned specifically for the power market, significantly outperformed some general models on power market tasks such as term interpretation, predictive decision-making, and text translation. This demonstrates that 'small but precise' domain-specific models can be highly competitive in specific application scenarios. For deep applications in vertical industries, high-quality domain-specific data and effective fine-tuning strategies are crucial for unlocking a model's potential.

In addition, we found that some general large models performed well in time series prediction even without training on electricity-domain data. Existing research indicates that general LLMs have distinctive advantages in integrating multiple sources of unstructured information, such as meteorology, policies, and market sentiment, through their cross-domain knowledge, although they may fall short in processing specialized domain data. Traditional models, by contrast, are more mature in fitting structured data and capturing domain physical mechanisms, but they struggle with complex semantic information and dynamic scene associations. Therefore, for electricity market forecasting tasks such as load curve forecasting, comparing the performance of general language models with that of traditional models may be key to advancing electricity forecasting. On the one hand, clarifying these differences can guide the matching of models to tasks. If a forecast requires deep integration of unstructured information such as meteorology and policies, the cross-domain integration strengths of general large models are valuable. If the focus is on accurately fitting structured data, such as historical load and power generation, and on mining physical mechanisms, the established track record of traditional models is more trustworthy. On the other hand, comparing the differences can help identify ways to combine the two.
This comparison not only provides a basis for model selection in different scenarios (for example, traditional models can be prioritized for the high-precision scheduling needs of professional institutions, while integrating LLMs with traditional models can be explored for multi-factor dynamic decision-making), but also points the way for domain adaptation and optimization of large models, offering a practical reference for model selection and technical iteration in electricity market forecasting.

Author Contributions

Conceptualization, H.F.; Methodology, H.F. and S.J.; Validation, P.Y. and Q.Z.; Formal analysis, P.Y., Q.Z., S.W., X.T. and Y.D.; Investigation, S.W.; Resources, S.J.; Data curation, X.T.; Writing—review & editing, Y.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by State Grid LiaoNing Electric Power Supply Co. Ltd., Electric Power Research Institute, grant number 5108-202455054A-1-1-ZN. The APC was funded by State Grid LiaoNing Electric Power Supply Co. Ltd., Electric Power Research Institute.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Shijie Ji was employed by the company Beijing Power Exchange Center Co., Ltd. Authors Peng Yuan and Qingsong Zhao were employed by the company State Grid LiaoNing Electric Power Supply Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Xie, T.; Gao, Z.; Ren, Q.; Luo, H.; Hong, Y.; Dai, B.; Zhou, J.; Qiu, K.; Wu, Z.; Luo, C. Logic-RL: Unleashing LLM Reasoning with Rule-Based Reinforcement Learning. arXiv 2025, arXiv:2502.14768.

- Guo, H.; Zheng, K.; Tang, Q.; Fang, X.; Chen, Q. Data-driven research on the electricity market: Challenges and prospects. Power Syst. Autom. 2023, 47, 200–215.

- Liu, M.; Liang, Z.; Chen, J.; Chen, W.; Yang, Z.; Lo, L.J.; Jin, W.; Zheng, O. Large Language Models for Building Energy Applications. Energy Build. 2025, 18, 225–234.

- Chen, J.; Lin, H.; Han, H.; Sun, L. Benchmarking Large Language Models in Retrieval-Augmented Generation. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 17754–17762.

- Kais, S.; Mathias, M.; Lukas, S.; Laura, T.; Siamak, S.; Georgios, S. Which model features matter? An experimental approach to evaluate power market modeling choices. Energy 2022, 245, 123301.

- Zhu, L.; Chen, Q.; Liu, M.; Zhang, L.; Chang, D. A Model and Data Hybrid-Driven Method for Operational Reliability Evaluation of Power Systems Considering Endogenous Uncertainty. Processes 2024, 12, 1056.

- Gong, G.; Yuan, Q.; Yang, J.; Zhou, B.; Yang, H. Safety Index, Evaluation Model, and Comprehensive Evaluation Method of Power Information System under Classified Protection 2.0. Energies 2023, 16, 6668.

- Shi, H.; Fang, L.; Chen, X.; Gu, C.; Ma, K.; Zhang, X.; Zhang, Z.; Gu, J.; Lim, E.G. Review of the opportunities and challenges to accelerate mass-scale application of smart grids with large-language models. IET Smart Grid 2024, 7, 737–759.

- Vohra, R.; Rajaei, A.; Cremer, J.L. End-to-end learning with multiple modalities for system-optimised renewables nowcasting. In Proceedings of the 15th IEEE PES PowerTech Conference, Belgrade, Serbia, 25–29 June 2023; pp. 1–8.

- Qiu, Z.; Li, C.; Wang, Z.; Xie, R.; Zhang, B.; Mo, H.; Chen, G.; Dong, Z. EF-LLM: Energy Forecasting LLM with AI-assisted Automation, Enhanced Sparse Prediction, Hallucination Detection. arXiv 2024, arXiv:2411.00852.

- Lu, X.; Qiu, J.; Yang, Y.; Zhang, C.; Lin, J.; An, S. Large Language Model-based Bidding Behavior Agent and Market Sentiment Agent-Assisted Electricity Price Prediction. IEEE Trans. Energy Mark. Policy Regul. 2024, 3, 223–235.

- Liu, C.; Cai, L.; Dalzell, G.; Mills, N. Large Language Model for Extreme Electricity Price Forecasting in the Australia Electricity Market. In Proceedings of the IECON 2024–50th Annual Conference of the IEEE Industrial Electronics Society, Chicago, IL, USA, 3–6 November 2024; IEEE: Piscataway, NJ, USA; Volume 10, pp. 1–6.

- Shi, J.; Hollifield, B. Predictive Power of LLMs in Financial Markets. arXiv 2024, arXiv:2411.16569.

- Zhou, Z.; Mehra, R. An End-To-End LLM Enhanced Trading System. arXiv 2025, arXiv:2502.01574.

- Buster, G.; Pinchuk, P.; Barrons, J.; McKeever, R.; Levine, A.; Lopez, A. Supporting energy policy research with large language models: A case study in wind energy siting ordinances. Energy AI 2024, 18, 100431.

- Zhao, J.; Wen, F.; Huang, J.; Huang, J.; Liu, J.; Zhao, H. General Artificial Intelligence for Power Systems Based on Large Language Models: Theory and Application. Power Syst. Autom. 2024, 48, 13–28.

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223.

- Xia, Y.; Wang, Q.; Miao, C.; Tang, Y. An extended SFR model based fast frequency security assessment method for power systems. Energy Rep. 2023, 9, 2045–2053.

- Keramatinejad, M.; Karbasian, M.; Alimohammadi, H.; Atashgar, K. A hybrid approach of adaptive surrogate model and sampling method for reliability assessment in multidisciplinary design optimization. Reliab. Eng. Syst. Saf. 2025, 261, 111014.

- Li, C.; Xu, Y.; Xie, M.; Zhang, P.; Xiao, B.; Zhang, S.; Liu, Z.; Zhang, W.; Hao, X. Assessing solar-to-PV power conversion models: Physical, ML, and hybrid approaches across diverse scales. Energy 2025, 323, 135744. [Google Scholar] [CrossRef]

- Sithara, S.; Unni, A.; Pramada, S. Machine learning approaches to predict significant wave height and assessment of model uncertainty. Ocean. Eng. 2025, 328, 121039. [Google Scholar] [CrossRef]

- Yang, M.; Li, X.; Ding, Y.; Fan, Y.; Fan, T. Adaptability Evaluation of Medium and Long-term Time-segmented Trading in the Power Market—A Model and Method Based on Improved Object-Oriented C-OWA Operator. Price Theory Pract. 2024, 10, 153–159. [Google Scholar] [CrossRef]

- Song, J.; Zuo, X.; Zhang, X.; Huang, H. A review of evaluation methods for large language models. Astronaut. Meas. Technol. 2025, 45, 1–30. Available online: https://link.cnki.net/urlid/11.2052.V.20250325.1347.002 (accessed on 18 June 2025).

- Li, Z.; Li, H. Research on the Evaluation Model and Method for Data Set Quality in Large Model Training. Digit. Econ. 2024, 12, 26–30. [Google Scholar] [CrossRef]

- Wu, S.; Irsoy, O.; Lu, S.; Dabravolski, V.; Dredze, M.; Gehrmann, S.; Kambadur, P.; Rosenberg, D.; Mann, G. BloombergGPT: A Large Language Model for Finance. arXiv 2023. [Google Scholar] [CrossRef]

- White, J.; Fu, Q.; Hays, S.; Sandborn, M.; Olea, C.; Gilbert, H.; Elnashar, A.; Spencer-Smith, J.; Schmidt, D.C. A Prompt Pattern Catalog to Enhance Prompt Engineering with ChatGPT. arXiv 2023. [Google Scholar] [CrossRef]

- Lin, C.-Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the ACL Workshop on Text Summarization Branches Out, Barcelona, Spain, 2004. Available online: https://aclanthology.org/W04-1013.pdf (accessed on 18 June 2025).

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.-J. BLEU: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002. [Google Scholar] [CrossRef]

- Popović, M. chrF: Character n-gram F-score for automatic MT evaluation. In Proceedings of the Tenth Workshop on Statistical Machine Translation, Lisbon, Portugal, 17–18 September 2015. Available online: https://aclanthology.org/W15-3049.pdf (accessed on 18 June 2025).

- Moratanch, N.; Chitrakala, S. A Survey on abstractive text summarization. In Proceedings of the 2016 International Conference on Circuit, Power and Computing Technologies (ICCPCT), Nagercoil, India, 18–19 March 2016; pp. 1–7. [Google Scholar] [CrossRef]

- Al-Mashhadani, Z.; Sudipto, B. Transformer: The Self-Attention Mechanism. Medium. 2022. Available online: https://medium.com/machine-intelligence-and-deep-learning-lab/transformer-the-self-attention-mechanism-d7d853c2c621 (accessed on 18 June 2025).

- Qin, D.; Li, Z.; Bai, F.; Dong, L.; Zhang, H.; Xu, C. A Review on Efficient Fine-tuning Techniques for Large Language Models. Comput. Eng. Appl. 2025, 14, 1–30. Available online: http://kns.cnki.net/kcms/detail/11.2127.TP.20250326.1554.025.html (accessed on 18 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).