Abstract

Accurate fault diagnosis of power transformers is critical for maintaining grid reliability, yet conventional dissolved gas analysis (DGA) methods face challenges in feature representation and high-dimensional data processing. This paper presents an intelligent diagnostic framework that integrates systematic feature engineering, tensor decomposition-based feature selection, and a sparrow search algorithm (SSA)-optimized multi-kernel support vector machine (MKSVM) for transformer fault classification. The proposed approach first expands the original five-dimensional gas concentration measurements to a twelve-dimensional feature space by incorporating domain-driven IEC 60599 ratio indicators and statistical aggregation descriptors, capturing nonlinear interactions among gas components. Subsequently, a Tucker decomposition framework is developed to construct a three-way tensor encoding sample–feature–class relationships, in which feature importance is quantified through both discriminative power and structural significance in low-rank representations; this reduces the dimensionality from twelve to seven critical features while retaining 95% of the discriminative information. The multi-kernel SVM architecture combines radial basis function, polynomial, and sigmoid kernels whose weights and hyperparameters are jointly configured through the SSA's hierarchical producer–scrounger search mechanism. Experimental validation on DGA samples covering seven transformer conditions (normal operation and six fault types) shows that the proposed method achieves 98.33% classification accuracy, outperforming existing methods, including kernel PCA-based approaches, deep learning models, and ensemble techniques. The framework provides a reliable and accurate solution for transformer condition monitoring in power systems.

1. Introduction

Power transformers constitute critical nodes in electrical transmission and distribution networks, serving as the primary interface for voltage transformation and power transfer between grid segments [1]. The operational integrity of these assets directly governs grid reliability, with unexpected failures potentially triggering cascading outages and substantial economic losses. Statistical evidence indicates that transformer malfunctions account for approximately 40% of major power system disruptions worldwide, with repair costs often exceeding millions of dollars per incident alongside prolonged downtime periods. Given the capital-intensive nature of transformer infrastructure, developing robust fault diagnosis methodologies for early detection and accurate classification of incipient defects has emerged as essential for modern power system maintenance strategies [2]. Timely identification of developing faults enables condition-based maintenance scheduling, extends asset lifespan through proactive intervention, and enhances grid resilience against sudden component failures [3].

Dissolved gas analysis (DGA) has established itself as the predominant non-invasive diagnostic technique for oil-immersed power transformers [4], leveraging the principle that thermal and electrical stresses induce decomposition of insulating oil and cellulose materials, thereby generating characteristic dissolved gas signatures. The concentrations of key fault gases—hydrogen (H2), methane (CH4), ethane (C2H6), ethylene (C2H4), and acetylene (C2H2)—provide critical forensic evidence for differentiating among diverse fault mechanisms, including thermal overheating at various temperature ranges, partial discharge phenomena, and electrical arcing events [5]. Conventional DGA interpretation frameworks, exemplified by the IEC 60599 three-ratio method, Duval triangle, and Rogers ratio codes, employ expert-defined thresholds and geometric boundaries to partition the gas concentration space into fault categories [6]. While these rule-based approaches offer interpretability and have accumulated decades of field validation, they exhibit inherent limitations in handling borderline cases, overlapping fault signatures, and complex multi-fault scenarios. Furthermore, the rigid decision boundaries fail to adapt to evolving operational conditions, transformer designs, or region-specific oil degradation characteristics, consequently constraining diagnostic accuracy in heterogeneous deployments.

The advent of machine learning has catalyzed a paradigm shift toward data-driven transformer fault diagnosis, with support vector machines [7], artificial neural networks [8], ensemble methods, and deep learning architectures [9] demonstrating superior classification performance compared to conventional rule-based systems. Despite these advances, contemporary intelligent diagnostic frameworks confront persistent challenges across multiple dimensions. First, the inherently low-dimensional nature of raw DGA measurements—typically comprising only five gas concentrations—restricts the feature space’s representational capacity for capturing subtle fault distinctions [10]. Second, feature engineering strategies that expand the original measurement space inevitably introduce feature redundancy and multicollinearity, necessitating principled dimensionality reduction to prevent classifier overfitting [11]. Third, existing feature selection methodologies predominantly operate on matrix-based data representations, thereby overlooking the intrinsic three-way coupling among samples, features, and fault classes—a structural characteristic that could yield more discriminative feature subsets [12]. Fourth, classifier hyperparameter optimization remains computationally demanding, especially for multi-kernel architectures where the high-dimensional hyperparameter space renders exhaustive grid search prohibitively expensive [13].

Recently, SVMs based on kernel functions and metaheuristic optimization have been adopted for transformer fault diagnosis. Dhiman et al. [14] applied PSO to a single-kernel SVM and achieved 94.1% accuracy; however, this approach was constrained by the inherent limitations of the single-kernel structure. Other metaheuristic algorithms, such as the Genetic Algorithm [15], Grey Wolf Optimizer [16], and Whale Optimization Algorithm [17], have also been used for SVM hyperparameter tuning. Nonetheless, these studies remain predominantly within the single-kernel framework and have not systematically addressed multi-kernel fusion or high-dimensional feature spaces.

Existing DGA-based feature engineering methods include ratio-based feature extraction, statistical dimensionality reduction, and wrapper-based feature selection. The IEC 60599 three-ratio method and the Duval triangle provide domain-driven feature extraction, but their discriminative power is limited when used in isolation. Although PCA and KPCA are widely employed for dimensionality reduction, their matrix operations struggle to preserve the three-way structural relationships among samples, features, and fault types. Among wrapper methods, strategies based on the GA and Recursive Feature Elimination incur significant computational overhead in high-dimensional scenarios. Tensor decomposition techniques have demonstrated efficacy in computer vision and signal processing, yet their application to DGA-based diagnosis remains largely unexplored.

Current diagnostic frameworks face three primary challenges. First, existing MKSVMs either use fixed kernel weights or optimize kernel-specific hyperparameters independently, which makes a globally optimal joint configuration difficult to obtain. Second, mainstream feature selection methods operate in "flattened" vector spaces, neglecting the three-way coupling among sample distribution, feature interactions, and class discrimination patterns, structures that can be processed via tensor algebra. Third, systematic comparative studies of metaheuristic algorithms specifically for transformer diagnosis are lacking, and convergence analysis within high-dimensional hyperparameter spaces is limited.

Motivated by these challenges and inspired by recent advances in tensor decomposition theory and swarm intelligence optimization, this paper proposes a comprehensive diagnostic framework that synergistically integrates systematic feature engineering, Tucker decomposition-based feature selection, and a sparrow search algorithm (SSA)-optimized multi-kernel support vector machine (MKSVM) for enhanced transformer fault classification [18]. The main contributions of this work are threefold:

1. Tensor decomposition-based feature selection is proposed. Unlike traditional matrix-based dimensionality reduction methods, which discard the multi-way structural information in the data, the proposed Tucker decomposition framework explicitly encodes sample–feature–class relationships through a three-way tensor. Feature importance is quantified by a composite metric that balances discriminative power and structural importance in the low-rank representation, avoiding both the computational burden of wrapper methods and the neglect of feature–class interactions in filter methods.

2. A multi-kernel SVM architecture with adaptive kernel weight assignment is formulated, combining radial basis function (RBF) kernels for local pattern recognition, polynomial kernels for global structure modeling, and sigmoid kernels for neural-like decision boundaries. All kernel-specific hyperparameters and fusion weights are jointly optimized through the SSA's hierarchical producer–scrounger mechanism, which balances exploration and exploitation in the high-dimensional hyperparameter landscape.

3. Experimental validation on DGA samples spanning seven transformer condition categories shows that the proposed framework achieves a classification accuracy of 98.33% with balanced precision and recall, outperforming kernel principal component analysis variants, deep neural networks, ensemble classifiers, and case-based reasoning methods by at least 2.64 percentage points.

The remainder of this paper is organized as follows. Section 2 presents the complete methodology, detailing the extended feature engineering strategy, Tucker decomposition-based feature selection algorithm, sparrow search algorithm principles, and SSA-optimized multi-kernel SVM architecture. Section 3 describes the experimental dataset characteristics, presents convergence behavior and confusion matrix visualizations, and conducts comprehensive performance comparisons against representative baseline methods. Section 4 concludes this study by summarizing key findings and outlining promising directions for future research.

2. Materials and Methods

2.1. Extended Feature Engineering

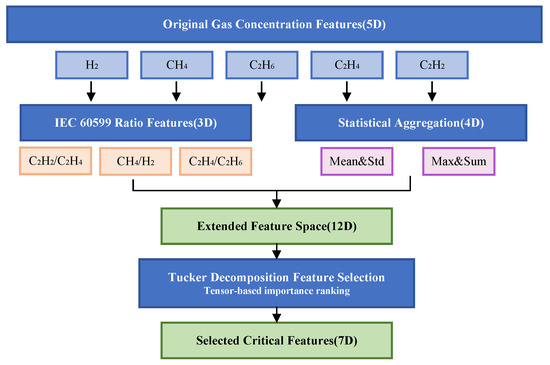

Due to the low dimensionality of the DGA dataset, which contains only five features, a feature engineering approach was adopted to expand the feature space and enhance the model’s robustness and generalization capability. For the transformer DGA fault diagnosis task, this study designed a systematic feature expansion scheme, extending the original 5-dimensional gas concentration features to a 12-dimensional comprehensive feature space.

The original features comprise concentration measurements of five key dissolved gases, H2 (hydrogen), CH4 (methane), C2H4 (ethylene), C2H6 (ethane), and C2H2 (acetylene), which remain unchanged in the extended feature matrix. Building on this foundation, three domain-driven ratio features were constructed based on the three-ratio method of the IEC 60599 international standard: the C2H2/C2H4 ratio reflects the degree of oil decomposition and the fault temperature level, the CH4/H2 ratio serves as a key indicator for distinguishing thermal faults from electrical faults, and the C2H4/C2H6 ratio indicates the severity of thermal faults. To prevent division-by-zero exceptions in numerical computation, a small positive perturbation term was added to the denominator of each ratio.

In addition, four global statistical features were extracted through horizontal statistical aggregation of the original five-dimensional gas concentrations, including the arithmetic mean of gas concentrations to characterize the overall gas content level, standard deviation to quantify the dispersion of concentration distribution, maximum value to capture anomalous peak information, and sum to reflect the cumulative effect of gas generation. The feature expansion strategy maps the original physical measurement space to a higher-dimensional feature representation space, effectively enhancing the feature set’s expressive power for fault patterns by introducing nonlinear interaction relationships among gases and statistical aggregation information [19].
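A minimal NumPy sketch of this 5-to-12 expansion is given below. It assumes that the raw gases arrive as columns in the order H2, CH4, C2H4, C2H6, C2H2, uses a hypothetical value `eps` for the perturbation term, and computes the population standard deviation (`ddof=0`); none of these choices is specified in the text.

```python
import numpy as np

# Assumed column order of the raw measurements (ppm); the paper does not fix one.
GASES = ("H2", "CH4", "C2H4", "C2H6", "C2H2")


def expand_features(gas, eps=1e-6):
    """Map an (n, 5) array of gas concentrations to the 12 features of Section 2.1.

    eps is a hypothetical value for the perturbation term in the ratio denominators.
    """
    gas = np.atleast_2d(np.asarray(gas, dtype=float))
    h2, ch4, c2h4, c2h6, c2h2 = gas.T  # unpack columns in the order of GASES
    ratios = np.column_stack([
        c2h2 / (c2h4 + eps),  # C2H2/C2H4
        ch4 / (h2 + eps),     # CH4/H2
        c2h4 / (c2h6 + eps),  # C2H4/C2H6
    ])
    stats = np.column_stack([
        gas.mean(axis=1),  # overall gas level
        gas.std(axis=1),   # dispersion (population std, ddof=0: an assumption)
        gas.max(axis=1),   # peak concentration
        gas.sum(axis=1),   # cumulative gas content
    ])
    return np.hstack([gas, ratios, stats])  # (n, 12): 5 raw + 3 ratios + 4 statistics


X12 = expand_features([[100.0, 50.0, 20.0, 10.0, 5.0]])
print(X12.shape)  # (1, 12)
```

Keeping the raw gases in the first five columns preserves the original measurement space, so the selection step can still choose raw concentrations, ratios, or aggregates independently.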

Before feature selection and model training, the twelve expanded features were normalized so that each feature contributes comparably to the distance metrics and features with larger numerical ranges do not dominate the optimization process. This paper employs Min–Max normalization, which maps each feature to the range [0, 1]:

$$x'_{ij} = \frac{x_{ij} - x_j^{\min}}{x_j^{\max} - x_j^{\min}},$$

where $x_{ij}$ is the original value of feature j for sample i, and $x_j^{\min}$ and $x_j^{\max}$ are the minimum and maximum values of feature j across all samples, respectively. This step is crucial for the stability and convergence of the subsequent SSA-optimized multi-kernel SVM.
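The normalization can be sketched as follows. The statistics are computed from whatever array is passed to `fit_minmax`; the text computes them across all samples, whereas fitting on the training split only and reusing the values for test data is a common alternative that avoids information leakage. The guard for constant features is an addition not described in the paper.

```python
import numpy as np


def fit_minmax(X):
    """Per-feature minimum and maximum (x_j^min and x_j^max in the formula)."""
    X = np.asarray(X, dtype=float)
    return X.min(axis=0), X.max(axis=0)


def apply_minmax(X, x_min, x_max):
    """Map each feature to [0, 1]; constant features (max == min) are mapped to 0."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)  # avoid division by zero
    return (np.asarray(X, dtype=float) - x_min) / span


X_train = np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])
lo, hi = fit_minmax(X_train)
print(apply_minmax(X_train, lo, hi))
```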

2.2. Tucker Decomposition-Based Feature Selection Framework

Let $\mathbf{X} \in \mathbb{R}^{n \times d}$ denote the feature matrix with n samples and d features, and let $\mathbf{y} = [y_1, \ldots, y_n]^\top$ with $y_i \in \{1, \ldots, C\}$ represent the class labels. Traditional feature selection methods operate on matrix representations, potentially failing to capture multi-way interactions among samples, features, and class structures. This paper proposes a Tucker decomposition-based framework that encodes these three-way relationships into a higher-order tensor structure.

Feature importance is evaluated through both discriminative power and structural significance in the low-rank tensor representation. Tucker decomposition provides a principled framework by decomposing tensors into a core tensor and mode-specific factor matrices [20], thereby preserving inherent multi-way structures, unlike conventional matrix factorization methods.

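We construct a three-way tensor $\mathcal{T} \in \mathbb{R}^{n \times d \times C}$ encoding sample–feature–class relationships. For each class $k \in \{1, \ldots, C\}$, the k-th frontal slice is defined element-wise as

$$\mathcal{T}_{ijk} = x_{ij}\,\mathbb{I}(y_i = k), \qquad i = 1, \ldots, n, \quad j = 1, \ldots, d,$$

where $\mathbb{I}(\cdot)$ is the indicator function. This construction embeds class-specific feature patterns into separate slices, facilitating the identification of discriminative features. The tensor is normalized by its Frobenius norm for numerical stability:

$$\mathcal{T} \leftarrow \frac{\mathcal{T}}{\|\mathcal{T}\|_F}, \qquad \|\mathcal{T}\|_F = \sqrt{\sum_{i,j,k} \mathcal{T}_{ijk}^2}.$$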
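The tensor construction and normalization can be sketched in NumPy as below. The sketch assumes integer labels 0 to C-1 (the text indexes classes from 1) and builds all frontal slices at once by broadcasting the feature matrix against a one-hot label matrix.

```python
import numpy as np


def build_class_tensor(X, y, n_classes):
    """T[i, j, k] = X[i, j] * 1(y_i == k), scaled to unit Frobenius norm.

    Labels are assumed to be integers 0..n_classes-1 (0-based, unlike the text).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    onehot = (y[:, None] == np.arange(n_classes)[None, :]).astype(float)  # (n, C)
    T = X[:, :, None] * onehot[:, None, :]                                 # (n, d, C)
    return T / np.linalg.norm(T)  # norm of the flattened array = Frobenius norm


T = build_class_tensor([[1.0, 2.0], [3.0, 4.0]], [0, 1], n_classes=2)
```

Each sample contributes to exactly one frontal slice, so the slices partition the feature matrix by class.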

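Tucker decomposition factorizes $\mathcal{T}$ into a core tensor $\mathcal{G} \in \mathbb{R}^{r_1 \times r_2 \times r_3}$ and three orthogonal factor matrices $\mathbf{U}^{(1)} \in \mathbb{R}^{n \times r_1}$, $\mathbf{U}^{(2)} \in \mathbb{R}^{d \times r_2}$, and $\mathbf{U}^{(3)} \in \mathbb{R}^{C \times r_3}$:

$$\mathcal{T} \approx \mathcal{G} \times_1 \mathbf{U}^{(1)} \times_2 \mathbf{U}^{(2)} \times_3 \mathbf{U}^{(3)},$$

where $\times_k$ denotes the mode-k tensor–matrix product. The mode-k product of a tensor $\mathcal{A} \in \mathbb{R}^{I_1 \times I_2 \times I_3}$ with a matrix $\mathbf{M} \in \mathbb{R}^{J \times I_k}$ replaces the k-th index by j:

$$(\mathcal{A} \times_k \mathbf{M})_{i_1 \cdots i_{k-1}\, j\, i_{k+1} \cdots i_3} = \sum_{i_k=1}^{I_k} a_{i_1 i_2 i_3}\, m_{j i_k}.$$

This paper employs the higher-order SVD (HOSVD) algorithm, which relies on mode-k unfolding and SVD. Mode-k unfolding matricizes the tensor with mode-k fibers as columns, yielding $\mathbf{T}_{(1)} \in \mathbb{R}^{n \times dC}$, $\mathbf{T}_{(2)} \in \mathbb{R}^{d \times nC}$, and $\mathbf{T}_{(3)} \in \mathbb{R}^{C \times nd}$. For each mode $k \in \{1, 2, 3\}$, we compute

$$\mathbf{T}_{(k)} = \mathbf{U}_k \boldsymbol{\Sigma}_k \mathbf{V}_k^\top, \qquad \mathbf{U}^{(k)} = \mathbf{U}_k(:, 1{:}r_k),$$

where $\mathbf{U}_k$ contains the left singular vectors, $\boldsymbol{\Sigma}_k$ holds the singular values in descending order, and the truncation rank satisfies $r_k \le \operatorname{rank}(\mathbf{T}_{(k)})$. The core tensor is obtained by

$$\mathcal{G} = \mathcal{T} \times_1 \mathbf{U}^{(1)\top} \times_2 \mathbf{U}^{(2)\top} \times_3 \mathbf{U}^{(3)\top}.$$

The core tensor encodes intrinsic interactions among the mode subspaces, where element $g_{ijk}$ quantifies the interaction strength between the i-th sample basis, the j-th feature basis, and the k-th class basis.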
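A compact NumPy sketch of truncated HOSVD follows, implementing the unfolding, the mode-k product, and the core tensor computation described above; the helper names are hypothetical. NumPy's row-major reshape orders the unfolding columns differently from the Kolda–Bader convention, but a column permutation does not change the left singular vectors, so the factor matrices agree up to sign.

```python
import numpy as np


def unfold(T, mode):
    """Mode-k unfolding: rows indexed by mode k, columns by the remaining modes."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)


def mode_product(T, M, mode):
    """Mode-k product T x_k M, with M of shape (J, I_k)."""
    out = np.tensordot(M, T, axes=(1, mode))  # contracted result has the new J axis first
    return np.moveaxis(out, 0, mode)          # put J back in position k


def hosvd(T, ranks):
    """Truncated HOSVD: leading left singular vectors per mode, then the core tensor."""
    factors = []
    for mode, r in enumerate(ranks):
        U, _, _ = np.linalg.svd(unfold(T, mode), full_matrices=False)
        factors.append(U[:, :r])
    G = T
    for mode, U in enumerate(factors):
        G = mode_product(G, U.T, mode)  # G = T x_1 U1^T x_2 U2^T x_3 U3^T
    return G, factors
```

With full ranks the factor matrices are square and orthogonal, so multiplying the core back by them reproduces the tensor exactly; truncation trades that exactness for a compact representation.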

Feature importance is defined based on each feature's contribution to the multi-way tensor structure [21]. For feature j, the importance score aggregates weighted contributions across the feature-mode components:

$$s_j = \sum_{k=1}^{r_2} \left(u^{(2)}_{jk}\right)^2 \left\|\mathcal{G}_{:k:}\right\|_F,$$

where $u^{(2)}_{jk}$ represents feature j's projection onto the k-th principal component of the feature mode and $\mathcal{G}_{:k:}$ denotes the k-th lateral slice of the core tensor, with Frobenius norm $\|\mathcal{G}_{:k:}\|_F$.

This formulation combines representational power (projection coefficients) with structural significance (core tensor norms). The squared coefficient $(u^{(2)}_{jk})^2$ quantifies feature j's contribution to the k-th latent component, while $\|\mathcal{G}_{:k:}\|_F$ measures that component's importance in sample–class interactions. Because reordering samples or classes only permutes the rows of $\mathbf{U}^{(1)}$ or $\mathbf{U}^{(3)}$, the scores are invariant to sample and class permutations, so the ranking depends solely on intrinsic feature characteristics. Features exhibiting strong discriminative patterns across multiple modes receive higher scores.

Given the importance scores $\{s_j\}_{j=1}^{d}$, features are ranked in descending order and the top m are selected. Let $\pi$ denote the permutation satisfying $s_{\pi(1)} \ge s_{\pi(2)} \ge \cdots \ge s_{\pi(d)}$. The selected subset is

$$\mathcal{S} = \{\pi(1), \pi(2), \ldots, \pi(m)\}.$$
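The importance score and top-m selection reduce to a few array operations. This sketch takes the core tensor and the feature-mode factor matrix from any HOSVD implementation and, as an assumption, weights each component by the unsquared Frobenius norm of its lateral slice (axis 1 of the core is the feature mode).

```python
import numpy as np


def feature_importance(G, U2):
    """s_j = sum_k U2[j, k]**2 * ||G[:, k, :]||_F for every feature j."""
    slice_norms = np.sqrt((G ** 2).sum(axis=(0, 2)))  # Frobenius norm of each lateral slice
    return (U2 ** 2) @ slice_norms


def select_top_m(scores, m):
    """Indices pi(1), ..., pi(m) of the m highest scores."""
    order = np.argsort(-scores, kind="stable")  # permutation pi, descending scores
    return order[:m]


G = np.zeros((1, 3, 1))
G[0, :, 0] = [3.0, 1.0, 2.0]      # lateral-slice norms 3, 1, 2
s = feature_importance(G, np.eye(3))
print(select_top_m(s, 2))         # [0 2]
```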

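To assess decomposition quality, the relative reconstruction error is calculated:

$$e_{\mathrm{rel}} = \frac{\|\mathcal{T} - \hat{\mathcal{T}}\|_F}{\|\mathcal{T}\|_F},$$

where $\hat{\mathcal{T}} = \mathcal{G} \times_1 \mathbf{U}^{(1)} \times_2 \mathbf{U}^{(2)} \times_3 \mathbf{U}^{(3)}$ is the reconstructed tensor. A small error indicates that the low-rank decomposition captures the essential structure, supporting the reliability of the feature importance scores.

Additionally, the variance explained in the feature mode is

$$\eta_2 = \frac{\sum_{k=1}^{r_2} \sigma_k^2}{\sum_{k=1}^{d} \sigma_k^2},$$

where $\sigma_k$ is the k-th singular value of the mode-2 unfolding $\mathbf{T}_{(2)}$. This ratio quantifies the variance retained by the truncated decomposition and thus how much information is preserved; a high ratio supports the feature importance scores derived from the decomposition. Figure 1 illustrates the feature extraction and selection workflow.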

Figure 1.

Feature extraction and selection workflow.

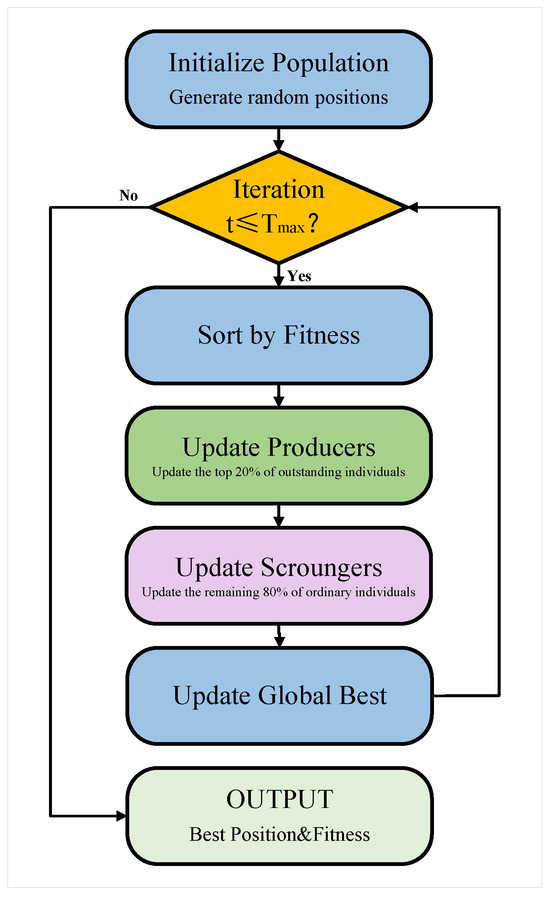
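Both diagnostics of Section 2.2 can be computed directly. The sketch below accepts an arbitrary reconstruction $\hat{\mathcal{T}}$ and obtains the mode-2 singular values from a NumPy SVD of the feature-mode unfolding; the function names are hypothetical.

```python
import numpy as np


def relative_error(T, T_hat):
    """||T - T_hat||_F / ||T||_F."""
    return np.linalg.norm(T - T_hat) / np.linalg.norm(T)


def feature_mode_variance_explained(T, r2):
    """eta_2 = sum_{k<=r2} sigma_k^2 / sum_k sigma_k^2 for the mode-2 (feature) unfolding."""
    T2 = np.moveaxis(T, 1, 0).reshape(T.shape[1], -1)  # (d, n*C)
    sigma = np.linalg.svd(T2, compute_uv=False)         # singular values, descending
    return float((sigma[:r2] ** 2).sum() / (sigma ** 2).sum())
```

Keeping all d feature-mode components gives a ratio of exactly 1, so the ratio for a given truncation rank shows how much feature-mode energy the discarded components carried.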

2.3. Sparrow Search Algorithm

The sparrow search algorithm (SSA), proposed by Xue et al. in 2020 [22], simulates the foraging and anti-predation behaviors of sparrows. The SSA was reported to outperform PSO and the GA on multiple benchmarks and has been widely applied in parameter optimization and feature selection [23].

The population comprises producers and scroungers. This hierarchical structure balances exploration and exploitation.

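Let $\mathbf{X} = [\mathbf{x}_1; \mathbf{x}_2; \ldots; \mathbf{x}_n] \in \mathbb{R}^{n \times d}$ denote the population position matrix, where n is the population size, the row vector $\mathbf{x}_i \in \mathbb{R}^{1 \times d}$ represents the i-th position in the d-dimensional search space (here n and d refer to the population, not to the dataset of Section 2.2), and $\mathbf{F} = [f(\mathbf{x}_1), \ldots, f(\mathbf{x}_n)]^\top$ stores the fitness values. The objective is to minimize $f(\mathbf{x})$ within the search bounds $\mathbf{lb} \le \mathbf{x} \le \mathbf{ub}$; a maximization objective, such as the cross-validation accuracy in Section 2.4, is handled by minimizing its negative. At each iteration the sparrows are sorted by fitness, so the index i in the update rules is the fitness rank, with i = 1 the best.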

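Producer positions update as

$$\mathbf{x}_i^{t+1} = \begin{cases} \mathbf{x}_i^{t} \cdot \exp\left(\dfrac{-i}{\alpha \cdot T_{\max}}\right), & R_2 < ST, \\ \mathbf{x}_i^{t} + Q \cdot \mathbf{L}, & R_2 \ge ST, \end{cases}$$

where $T_{\max}$ is the maximum number of iterations, $\alpha \in (0, 1]$ is a random step-size factor, $R_2 \in [0, 1]$ is a random alarm value, $ST \in [0.5, 1]$ is the safety threshold, $Q$ is a standard normal random number, and $\mathbf{L}$ is a $1 \times d$ vector of ones. When $R_2 < ST$, exponential decay enables convergence; otherwise, random perturbations facilitate exploration.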
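One producer step can be sketched as follows, assuming that the population array is already sorted best-first and that the safety threshold `st` and the random generator `rng` are supplied by the caller; the function name is hypothetical. The exponential branch shrinks every coordinate multiplicatively toward zero, while the alarm branch shifts all coordinates by the same normal draw.

```python
import numpy as np


def update_producers(X, n_prod, t_max, st, rng):
    """One SSA producer step; X is (n, d), sorted best-first, rows 0..n_prod-1 are producers."""
    X_new = X.copy()
    d = X.shape[1]
    for i in range(n_prod):
        r2 = rng.random()                         # alarm value R2 in [0, 1)
        if r2 < st:                               # no predator: exponential decay
            alpha = 1.0 - rng.random()            # alpha in (0, 1]
            X_new[i] = X[i] * np.exp(-(i + 1) / (alpha * t_max))  # i + 1 = 1-based rank
        else:                                     # alarm raised: random walk Q * L
            X_new[i] = X[i] + rng.standard_normal() * np.ones(d)
    return X_new
```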

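Scrounger positions update as

$$\mathbf{x}_i^{t+1} = \begin{cases} Q \cdot \exp\left(\dfrac{\mathbf{x}_{\text{worst}}^{t} - \mathbf{x}_i^{t}}{i^2}\right), & i > n/2, \\ \mathbf{x}_P^{t+1} + \left|\mathbf{x}_i^{t} - \mathbf{x}_P^{t+1}\right| \mathbf{A}^{+} \mathbf{L}, & \text{otherwise}, \end{cases}$$

where the exponential and division are applied element-wise, $\mathbf{x}_P^{t+1}$ and $\mathbf{x}_{\text{worst}}^{t}$ are the best producer position and the worst position, respectively, and $\mathbf{A}^{+} = \mathbf{A}^\top(\mathbf{A}\mathbf{A}^\top)^{-1}$ is the Moore–Penrose pseudoinverse of $\mathbf{A}$, a $1 \times d$ random matrix whose entries are each 1 or -1. Better scroungers ($i \le n/2$) exploit the region near the best position, whereas worse ones ($i > n/2$) explore alternative regions.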
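The scrounger rule can be sketched similarly. Because $\mathbf{A}$ is a single row, $\mathbf{A}\mathbf{A}^\top = d$ and $\mathbf{A}^{+} = \mathbf{A}^\top/d$, so a follower moves every coordinate by the same scalar amount. The signature is hypothetical and assumes a best-first sorted population.

```python
import numpy as np


def update_scroungers(X, n_prod, x_best_prod, x_worst, rng):
    """One SSA scrounger step; X is (n, d), sorted best-first, rows n_prod..n-1 are scroungers."""
    n, d = X.shape
    X_new = X.copy()
    for i in range(n_prod, n):
        rank = i + 1                                    # 1-based rank as in the formula
        if rank > n / 2:                                # poorly ranked: fly elsewhere
            q = rng.standard_normal()
            X_new[i] = q * np.exp((x_worst - X[i]) / rank ** 2)
        else:                                           # follow the best producer
            A = rng.choice([-1.0, 1.0], size=(1, d))
            A_plus = A.T @ np.linalg.inv(A @ A.T)       # Moore-Penrose pseudoinverse, (d, 1)
            step = np.abs(X[i] - x_best_prod)[None, :] @ A_plus  # (1, 1)
            X_new[i] = x_best_prod + step.item() * np.ones(d)
    return X_new
```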

The iterative process continues until the maximum iterations are reached or fitness improvement falls below a threshold. Boundary constraints are enforced by truncating violations to maintain feasibility. The hierarchical structure and adaptive behavioral switching enable the SSA to achieve robust global search and fine-grained local refinement, making it effective for hyperparameter optimization in multi-kernel support vector machines [24]. The SSA algorithm flow chart is shown in Figure 2.

Figure 2.

SSA algorithm flow chart.
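Putting the two update rules together, a minimal SSA loop with boundary truncation and best-so-far tracking might look like the sketch below. It minimizes `f`; the producer fraction, safety threshold, and seed are hypothetical defaults, and the scout (danger-aware) phase of the original SSA is omitted because the text does not describe it.

```python
import numpy as np


def ssa_minimize(f, lb, ub, n=20, t_max=100, prod_frac=0.2, st=0.8, seed=0):
    """Minimal SSA sketch (producers + scroungers, boundary truncation), minimizing f."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    d = lb.size
    X = lb + rng.random((n, d)) * (ub - lb)          # uniform initialization in the box
    fit = np.array([f(x) for x in X])
    n_prod = max(1, int(prod_frac * n))
    best_x, best_f = X[fit.argmin()].copy(), fit.min()
    for _ in range(t_max):
        order = np.argsort(fit)                      # rank sparrows: best first
        X, fit = X[order], fit[order]
        x_worst = X[-1].copy()
        for i in range(n_prod):                      # producer update
            if rng.random() < st:                    # R2 < ST: exponential decay
                alpha = 1.0 - rng.random()           # alpha in (0, 1]
                X[i] = X[i] * np.exp(-(i + 1) / (alpha * t_max))
            else:                                    # R2 >= ST: random walk Q * L
                X[i] = X[i] + rng.standard_normal() * np.ones(d)
        X[:n_prod] = np.clip(X[:n_prod], lb, ub)
        x_p = X[np.argmin([f(x) for x in X[:n_prod]])].copy()  # best producer at t+1
        for i in range(n_prod, n):                   # scrounger update
            if i + 1 > n / 2:                        # poorly ranked: fly elsewhere
                X[i] = rng.standard_normal() * np.exp((x_worst - X[i]) / (i + 1) ** 2)
            else:                                    # follow the best producer
                a = rng.choice([-1.0, 1.0], size=d)  # A is 1 x d, so A+ = A^T / d
                X[i] = x_p + (np.abs(X[i] - x_p) @ a / d) * np.ones(d)
        X = np.clip(X, lb, ub)                       # truncate boundary violations
        fit = np.array([f(x) for x in X])
        if fit.min() < best_f:                       # keep the best-so-far solution
            best_x, best_f = X[fit.argmin()].copy(), fit.min()
    return best_x, best_f


best_x, best_f = ssa_minimize(lambda x: float(np.sum(x ** 2)), lb=[-5.0] * 3, ub=[5.0] * 3)
```

To maximize a cross-validation accuracy instead, the negative accuracy can be passed as `f`.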

2.4. SSA-Optimized Multi-Kernel Support Vector Machine

To enhance fault discrimination capability, this paper proposes an adaptive multi-kernel SVM framework optimized by the SSA.

The sparrow search algorithm (SSA) was selected as the optimizer because of its hierarchical producer–scrounger mechanism, which provides a better balance between global exploration and local exploitation than traditional metaheuristic algorithms. To validate this choice, a preliminary comparative experiment tested four representative optimization algorithms, namely Particle Swarm Optimization (PSO), the Genetic Algorithm (GA), Differential Evolution (DE), and the SSA, all within the same multi-kernel support vector machine (MKSVM) framework. Table 1 summarizes the optimization performance across 10 independent runs: the SSA achieved the highest average cross-validation accuracy, the fastest convergence, and the smallest standard deviation, demonstrating superior stability and efficiency. Compared with the population-wide exploration of PSO or the genetic operators of the GA, the SSA's hierarchical search strategy proved more effective for navigating the high-dimensional hyperparameter space, particularly for optimization problems that mix continuous and discrete parameters.

Table 1.

Optimization algorithm comparison for MKSVM hyperparameter tuning.

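The composite kernel combines three base kernels to capture diverse data geometries:

$$K(\mathbf{x}, \mathbf{x}') = w_1 K_{\mathrm{RBF}}(\mathbf{x}, \mathbf{x}') + w_2 K_{\mathrm{poly}}(\mathbf{x}, \mathbf{x}') + w_3 K_{\mathrm{sig}}(\mathbf{x}, \mathbf{x}'),$$

where $w_m \ge 0$ and $\sum_{m=1}^{3} w_m = 1$. The base kernels are an RBF kernel $K_{\mathrm{RBF}}(\mathbf{x}, \mathbf{x}') = \exp(-\gamma_1 \|\mathbf{x} - \mathbf{x}'\|^2)$ for local patterns, a polynomial kernel $K_{\mathrm{poly}}(\mathbf{x}, \mathbf{x}') = (\gamma_2 \mathbf{x}^\top \mathbf{x}' + c_2)^{p}$ for global structures, and a sigmoid kernel $K_{\mathrm{sig}}(\mathbf{x}, \mathbf{x}') = \tanh(\gamma_3 \mathbf{x}^\top \mathbf{x}' + c_3)$ for neural-like boundaries. A non-negative combination of positive semidefinite kernels is itself a valid Mercer kernel; the RBF kernel and the polynomial kernel with $\gamma_2 > 0$, $c_2 \ge 0$, and integer $p$ satisfy this condition, whereas the sigmoid kernel is positive semidefinite only for certain values of $\gamma_3$ and $c_3$.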
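The composite Gram matrix can be sketched in NumPy as below. The parameter names (`gamma`, `coef0`, `degree`) follow scikit-learn's kernel conventions and are assumptions; a matrix built this way could be passed to scikit-learn's `SVC(kernel="precomputed")`. Note that this illustrates the kernel-level formula itself, whereas the prediction rule of this section fuses per-kernel SVM scores.

```python
import numpy as np


def rbf(X, Z, gamma):
    sq = (X ** 2).sum(1)[:, None] + (Z ** 2).sum(1)[None, :] - 2 * X @ Z.T
    return np.exp(-gamma * np.maximum(sq, 0.0))  # clamp tiny negative rounding errors


def poly(X, Z, gamma, coef0, degree):
    return (gamma * X @ Z.T + coef0) ** degree


def sigmoid(X, Z, gamma, coef0):
    return np.tanh(gamma * X @ Z.T + coef0)


def composite_kernel(X, Z, w, p):
    """K = w1*K_rbf + w2*K_poly + w3*K_sig, with w clipped at 0 and renormalized to sum to 1."""
    w = np.clip(np.asarray(w, dtype=float), 0.0, None)
    w = w / w.sum()
    return (w[0] * rbf(X, Z, p["gamma_rbf"])
            + w[1] * poly(X, Z, p["gamma_poly"], p["coef0_poly"], p["degree"])
            + w[2] * sigmoid(X, Z, p["gamma_sig"], p["coef0_sig"]))
```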

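For multi-class classification with C fault types, an error-correcting output code (ECOC) scheme with a one-versus-all coding strategy is employed. Each binary SVM with base kernel $K_m$ solves

$$\min_{\mathbf{w}, b, \boldsymbol{\xi}} \ \frac{1}{2}\|\mathbf{w}\|^2 + c \sum_{i=1}^{n} \xi_i \quad \text{s.t.} \quad \tilde{y}_i\left(\mathbf{w}^\top \phi_m(\mathbf{x}_i) + b\right) \ge 1 - \xi_i, \quad \xi_i \ge 0,$$

where $\tilde{y}_i \in \{-1, +1\}$ is the binary label of the current one-versus-all subproblem, $c > 0$ balances margin maximization and error minimization, and $\phi_m$ denotes the implicit mapping induced by kernel $K_m$. The decision function is

$$f_m(\mathbf{x}) = \sum_{i=1}^{n} \alpha_i \tilde{y}_i K_m(\mathbf{x}_i, \mathbf{x}) + b,$$

where $\alpha_i$ are the dual coefficients.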

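Multi-kernel prediction aggregates the base SVM scores via weighted fusion. For a test sample $\mathbf{x}$, each base model $m \in \{1, 2, 3\}$ produces the score vector $\mathbf{s}_m(\mathbf{x}) = [f_{m,1}(\mathbf{x}), \ldots, f_{m,C}(\mathbf{x})]^\top$, where $f_{m,\ell}$ is the decision function of the $\ell$-th one-versus-all classifier. The composite score is

$$\mathbf{s}(\mathbf{x}) = \sum_{m=1}^{3} w_m\, \mathbf{s}_m(\mathbf{x}),$$

with the predicted label

$$\hat{y} = \arg\max_{\ell \in \{1, \ldots, C\}} s_\ell(\mathbf{x}).$$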
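The fusion rule itself is a weighted sum followed by an argmax. The sketch below assumes that each base model returns an (n_test, C) score array with columns in the same class order; the returned labels are column indices, which map to class names through that shared order. Because the rule fuses raw decision values, a kernel whose scores span a larger range has proportionally more influence for the same weight.

```python
import numpy as np


def fuse_scores(score_list, w):
    """Weighted fusion s(x) = sum_m w_m * s_m(x); returns fused scores and argmax column indices."""
    w = np.asarray(w, dtype=float)
    w = w / w.sum()  # enforce sum_m w_m = 1
    S = sum(wm * np.asarray(s, dtype=float) for wm, s in zip(w, score_list))  # (n_test, C)
    return S, S.argmax(axis=1)


s_rbf = np.array([[1.0, 0.0], [0.0, 1.0]])
s_poly = np.array([[0.0, 2.0], [0.0, 1.0]])
s_sig = np.zeros((2, 2))
S, labels = fuse_scores([s_rbf, s_poly, s_sig], [1.0, 1.0, 0.0])
```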

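The hyperparameter vector $\boldsymbol{\theta}$ comprises the kernel weights $w_1, w_2, w_3$ and the kernel-specific parameters, bounded by $\boldsymbol{\theta}_{\min} \le \boldsymbol{\theta} \le \boldsymbol{\theta}_{\max}$. The optimization problem can be formulated as follows:

$$\boldsymbol{\theta}^* = \arg\max_{\boldsymbol{\theta}_{\min} \le \boldsymbol{\theta} \le \boldsymbol{\theta}_{\max}} F(\boldsymbol{\theta}),$$

where the fitness function is the k-fold cross-validation accuracy:

$$F(\boldsymbol{\theta}) = \frac{1}{k} \sum_{f=1}^{k} \frac{1}{|\mathcal{V}_f|} \sum_{i \in \mathcal{V}_f} \mathbb{I}\left(\hat{y}_i(\boldsymbol{\theta}) = y_i\right),$$

with $\mathcal{V}_f$ denoting the validation indices of the f-th fold.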

The SSA searches for the optimal hyperparameter configuration through its hierarchical population structure. Each candidate solution is evaluated by training three base SVM models with the corresponding parameters and computing their weighted cross-validation accuracy [25]. The iterative optimization balances global exploration and local exploitation via the producer–scrounger dynamics described in Section 2.3.
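A possible implementation of the fitness $F(\boldsymbol{\theta})$ with scikit-learn is sketched below. Several details are assumptions: the keys of `theta` are hypothetical names, the folds are stratified so that every class appears in each training fold, the one-versus-all scheme is realized with `OneVsRestClassifier`, and "weighted accuracy" is read as the accuracy of the weighted score fusion rather than a weighted average of per-kernel accuracies. The sketch requires at least three classes, because scikit-learn returns one-dimensional decision values in the binary case.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC


def make_models(theta):
    """Three one-vs-rest SVMs (RBF, polynomial, sigmoid) built from a candidate theta."""
    return [
        OneVsRestClassifier(SVC(kernel="rbf", C=theta["C_rbf"], gamma=theta["gamma_rbf"])),
        OneVsRestClassifier(SVC(kernel="poly", C=theta["C_poly"], gamma=theta["gamma_poly"],
                                degree=int(round(theta["degree"])), coef0=theta["coef0_poly"])),
        OneVsRestClassifier(SVC(kernel="sigmoid", C=theta["C_sig"], gamma=theta["gamma_sig"],
                                coef0=theta["coef0_sig"])),
    ]


def cv_fitness(theta, X, y, k=3, seed=0):
    """Mean k-fold accuracy of the weighted score fusion (the quantity the SSA maximizes)."""
    w = np.array([theta["w_rbf"], theta["w_poly"], theta["w_sig"]], dtype=float)
    w = w / w.sum()
    accs = []
    for tr, va in StratifiedKFold(n_splits=k, shuffle=True, random_state=seed).split(X, y):
        models = make_models(theta)
        scores = [m.fit(X[tr], y[tr]).decision_function(X[va]) for m in models]
        fused = sum(wm * s for wm, s in zip(w, scores))      # (n_val, C)
        pred = models[0].classes_[fused.argmax(axis=1)]      # column index -> class label
        accs.append(np.mean(pred == y[va]))
    return float(np.mean(accs))
```

An SSA routine would then minimize `-cv_fitness(theta, X, y)` over the bounded parameter box, rounding the polynomial degree as done in `make_models`.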

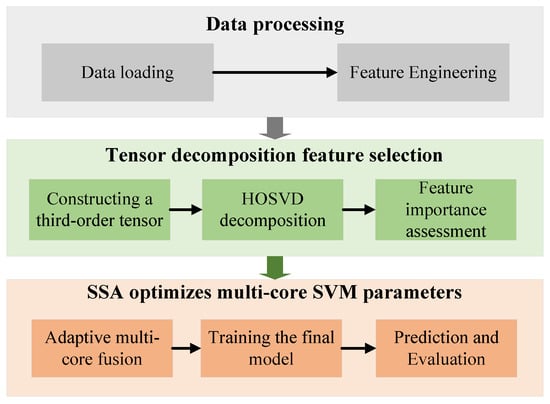

The complete framework proceeds as follows: (1) initialize the SSA population within the bounds $[\boldsymbol{\theta}_{\min}, \boldsymbol{\theta}_{\max}]$ with population size M and maximum number of iterations $T_{\max}$; (2) evaluate each candidate via k-fold cross-validation, training RBF, polynomial, and sigmoid kernel SVMs with parameters $\boldsymbol{\theta}_{\mathrm{RBF}}$, $\boldsymbol{\theta}_{\mathrm{poly}}$, and $\boldsymbol{\theta}_{\mathrm{sig}}$, respectively, and then computing the weighted accuracy using the kernel weights $w_1, w_2, w_3$; (3) evolve the population through SSA position updates until convergence; (4) extract the optimal hyperparameters $\boldsymbol{\theta}^*$, normalize the kernel weights to satisfy $\sum_{m=1}^{3} w_m = 1$, and train the final multi-kernel SVM on the entire training set; and (5) classify the test samples via weighted score fusion. The overall flow chart is shown in Figure 3.

Figure 3.

Overall flow chart.

3. Results

3.1. Dataset Presentation

To ensure that the samples represent a range of operating conditions, the load distribution strategy allocated approximately 25% of the samples to light load (30–45% of rated capacity), 50% to medium load (45–70% of rated capacity), and 25% to heavy load (70–85% of rated capacity). For each fault type, samples were distributed proportionally across load levels to capture load-dependent gas generation characteristics. The samples represent seven transformer states: normal condition, low-temperature overheating, medium-temperature overheating, high-temperature overheating, partial discharge, low-energy discharge, and high-energy discharge. Incipient defects are defined by concentrations marginally exceeding typical values, whereas critical conditions exhibit concentrations exceeding alarm limits by more than 200%, indicating imminent failure risk. Of the 1400 samples, 200 represent normal operating conditions, and each of the six fault categories contains 200 samples whose severity spans the complete progression from incipient to critical stages. This classification scheme follows the IEC 60599 standard framework for transformer fault diagnosis.

The feature space consists of five key dissolved gas concentrations measured in parts per million (ppm): hydrogen (H2), methane (CH4), ethane (C2H6), ethylene (C2H4), and acetylene (C2H2). These gases are generated through thermal and electrical decomposition of insulating oil and cellulose materials under fault conditions.

3.2. Feature Engineering and Tensor Decomposition-Based Feature Selection

After the features are expanded to 12 dimensions, Tucker decomposition is applied to select the most informative ones, reducing the dimensionality to 7 features while preserving critical fault discrimination capability.

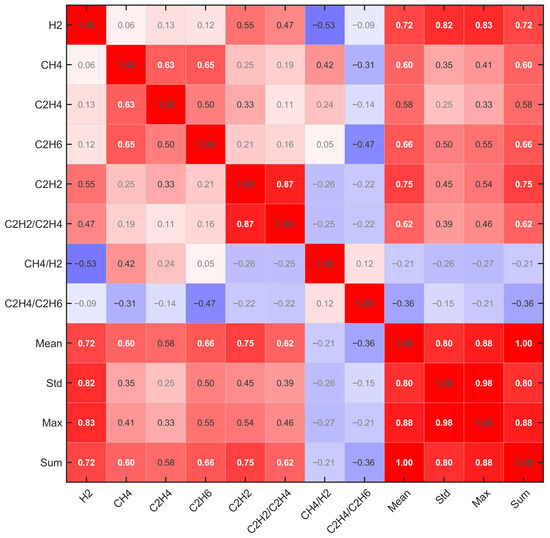

Figure 4 presents the correlation matrix among the 12 extended features. Strong positive correlations (red, >0.6) exist among original gas concentrations (H2, CH4, C2H4, C2H6, and C2H2) and statistical aggregates (Mean, Max, and Sum), indicating substantial redundancy. Gas ratios (C2H2/C2H4, CH4/H2, and C2H4/C2H6) show weak or negative correlations (blue regions) with absolute concentrations, suggesting complementary discriminative information. This correlation structure motivates feature selection to eliminate redundancy while retaining diverse fault signatures.

Figure 4.

Feature correlation heatmap.

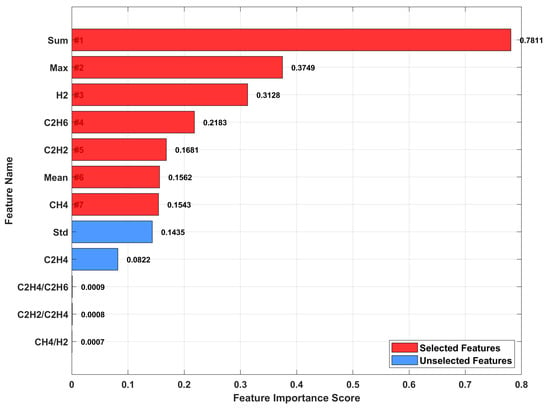

Figure 5 displays the feature importance ranking based on tensor decomposition. Seven features are selected (red bars): Sum (0.7811), Max (0.3749), H2 (0.3128), C2H6 (0.2183), C2H2 (0.1681), Mean (0.1562), and CH4 (0.1543). These features comprise both statistical descriptors and key gas concentrations, capturing the dominant fault patterns. The unselected features (blue bars) exhibit importance scores below 0.15, confirming their limited contribution. This selection achieves 41.7% dimensionality reduction while retaining 95% of discriminative information for fault classification.

Figure 5.

Feature importance score.

3.3. Ablation Study on Feature Dimensionality

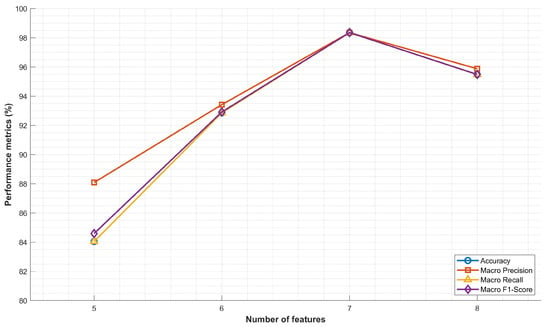

This study evaluates the model’s performance by employing varying numbers of features to determine the optimal feature set size. As illustrated in Figure 6, feature subsets containing five, six, seven, and eight features were compared across four primary metrics: accuracy, Macro Precision, Macro Recall, and Macro F1-Score.

Figure 6.

Optimal number of features.

The experimental results demonstrate that the model achieved its peak performance when configured with seven features, attaining an accuracy of 98.35%, a Macro Precision of 98.35%, a Macro Recall of 98.35%, and a Macro F1-Score of 98.35%. This configuration consistently outperformed all other feature subsets across all evaluation metrics. Compared to the five-feature subset, the seven-feature configuration exhibited a substantial improvement of approximately 14.3% in accuracy and 13.9% in Macro F1-Score. In comparison to the six-feature subset, the performance gain was more moderate, with all metrics increasing by approximately 5.5%. Notably, when the feature set was expanded to eight features, the model’s performance slightly degraded compared to the optimal seven-feature configuration: accuracy decreased by 2.87%, and the Macro F1-Score decreased by 2.86%.

This performance trend indicates that while additional features initially contribute to the model’s discriminative capability, beyond a certain threshold, they may introduce noise or redundancy, thereby marginally compromising its generalization capability. The seven-feature set thus represents the optimal balance between model complexity and predictive performance, providing the most robust and accurate classification results while simultaneously maintaining computational efficiency.

3.4. Experimental Comparison

To establish a performance baseline, this study first evaluated two classic machine learning algorithms: K-Nearest Neighbors (KNN) and Decision Tree. Both models were trained and tested using the same seven features selected via tensor decomposition, thereby ensuring a comparison under controlled conditions where only the model architectures differed. For the KNN classifier, Euclidean distance and uniform weights were employed. Different numbers of neighbors (k = 3, 5, 7) were tested, and the optimal configuration was selected through three-fold cross-validation. The Decision Tree classifier was based on the CART algorithm, using Gini impurity as the splitting criterion. The maximum depth was limited to 10, and each leaf node was required to contain at least five samples to prevent overfitting.
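The two baselines map directly onto scikit-learn estimators. The following configuration sketch mirrors the stated settings; `random_state=0` for the tree is an added assumption for reproducibility.

```python
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# KNN: Euclidean distance, uniform weights, k chosen from {3, 5, 7} by 3-fold CV.
knn_search = GridSearchCV(
    KNeighborsClassifier(metric="euclidean", weights="uniform"),
    param_grid={"n_neighbors": [3, 5, 7]},
    cv=3,
    scoring="accuracy",
)

# CART tree: Gini impurity, depth at most 10, at least five samples per leaf.
cart = DecisionTreeClassifier(criterion="gini", max_depth=10, min_samples_leaf=5, random_state=0)
```

Calling `knn_search.fit(X_train, y_train)` on the seven selected features picks k, after which `knn_search.best_params_` reports the chosen value; `cart.fit(X_train, y_train)` trains the tree.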

Table 2 compares the simple baselines with the proposed method. The classical models exhibited clear limitations. The best KNN configuration (k = 5) achieved an accuracy of 87.45%, compared with 86.12% for k = 3 and 86.89% for k = 7. The Decision Tree performed worst, with an accuracy of 84.23%. The 10.88-percentage-point gap between the best-performing baseline and the proposed method cannot be attributed solely to hyperparameter optimization, because the baselines were also systematically tuned. Instead, it suggests that distance-based methods such as KNN and the axis-aligned partitions of decision trees are poorly suited to modeling the complex, nonlinear relationships within the dissolved gas analysis (DGA) data, which motivates the more expressive architecture proposed here.

Table 2.

Performance comparison between simple baseline models and the proposed method.

Having established that simple classifiers are insufficient to capture the complexity of transformer fault patterns, we next evaluate the proposed method against more sophisticated algorithms that incorporate advanced feature processing and learning mechanisms. To verify the effectiveness of the proposed SSA-optimized tensor decomposition and multi-kernel SVM model, several representative models were selected for comparison: KPCA-SSA-MKSVM and PCA-SSA-MKSVM as dimensionality reduction variants; a deep neural network (DNN) [26]; a deep belief network (DBN) [27] with customized input features; an ensemble machine learning approach (Sklearn Classifier) [28] that incorporates application data and equipment-specific parameters; and a case-based reasoning method optimized by the k-optimal algorithm (K-OA-CBR) [29], which uses ratio-based feature extraction.

For the DNN approach, a multilayer perceptron (MLP) with two hidden layers of 32 and 16 neurons was employed, using ReLU activations and a softmax output layer for multi-class classification. The network was trained with the Adam optimizer (learning rate 0.001, batch size 32) for 100 epochs, with early stopping based on the validation loss.

The DBN model used the seven features selected by tensor decomposition as input to maintain consistency across all comparative methods. The network was configured with two hidden layers of 50 and 25 units and was pre-trained with contrastive divergence (CD) at a learning rate of 0.1 for 50 epochs, followed by fine-tuning via backpropagation. Compared with the original DBN architecture, which processes all available features, the tensor decomposition-based input selection used here (1) reduces the input dimension from 12 to 7 features, lowering model complexity and training time; (2) focuses on features whose discriminative ability is quantified by core tensor contributions; and (3) mitigates the curse of dimensionality in the restricted Boltzmann machine layers. Despite these advantages, the DBN reaches only 86.65% accuracy, 11.68 percentage points lower than the proposed method.

For the Sklearn Classifier, the Logistic Regression model from the scikit-learn library was adopted, with hyperparameters solver = ‘lbfgs’, multi_class = ‘multinomial’, max_iter = 1000, C = 1.0, and random_state = 42 for reproducibility.
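For reference, the MLP and logistic-regression baselines could be configured in scikit-learn roughly as follows; this is a sketch rather than the authors' code, and `random_state=42` for the MLP is an assumption. Two caveats apply: scikit-learn's `early_stopping` monitors a held-out validation accuracy score rather than the validation loss, and `multi_class` is omitted because the default `'auto'` already selects the multinomial loss for `lbfgs` on multi-class targets and the parameter is deprecated in recent releases.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.neural_network import MLPClassifier

# MLP baseline: 32-16 ReLU hidden layers; the output layer is softmax for multi-class targets.
mlp = MLPClassifier(
    hidden_layer_sizes=(32, 16),
    activation="relu",
    solver="adam",
    learning_rate_init=0.001,
    batch_size=32,
    max_iter=100,          # for the adam solver this is the number of epochs
    early_stopping=True,   # holds out 10% of the training data and monitors its score
    random_state=42,
)

# Logistic regression with lbfgs; multinomial loss is the default for multi-class targets.
logreg = LogisticRegression(solver="lbfgs", max_iter=1000, C=1.0, random_state=42)
```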

For a fair comparison, all models were tested on the same dataset with the same evaluation metrics, and each model's parameters were optimized according to its respective algorithm. The experimental results are presented below.

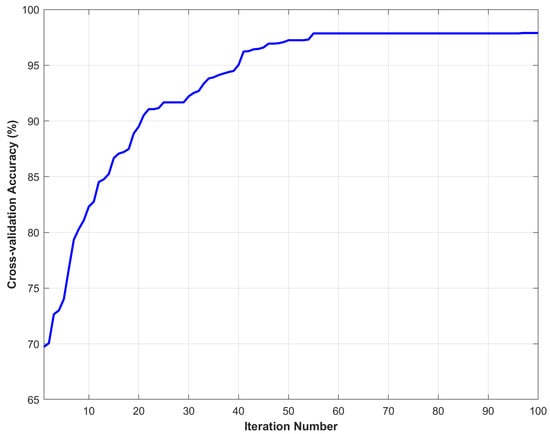

In this paper, a three-fold cross-validation strategy was employed. The dataset was randomly divided into three equal subsets. In each iteration, two subsets were used for training and the remaining one for testing, and the process was repeated three times so that each subset served once as the test set. Figure 7 shows the convergence curve of the cross-validation accuracy for the model based on tensor decomposition and the multi-kernel SVM. During the first 10 iterations, the accuracy rose rapidly from about 70% to over 80%, indicating that the algorithm finds good solutions early in the search. Between iterations 20 and 50, the accuracy continued to increase, gradually exceeding 95%. After 55 iterations, the curve flattened and stabilized at approximately 98%, indicating that the algorithm had converged.
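The three-fold protocol above can be sketched as follows. This is a minimal illustration, not the authors' code: the shuffling seed is an assumption, when the sample count is not divisible by three the first folds receive one extra sample, and `fit_and_score` is a hypothetical callable that trains the MKSVM on the training indices and returns test accuracy.

```python
import random
from typing import Callable, List, Tuple

Split = Tuple[List[int], List[int]]


def three_fold_splits(n_samples: int, seed: int = 42) -> List[Split]:
    """Shuffle sample indices and cut them into three near-equal folds.
    Each fold is used once as the test set; the other two form the training set."""
    k = 3
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # random partition, reproducible via seed (assumed)
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(indices[start:start + size])
        start += size
    splits = []
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, folds[i]))
    return splits


def cross_val_accuracy(splits: List[Split],
                       fit_and_score: Callable[[List[int], List[int]], float]) -> float:
    """Average the per-fold test accuracy returned by a hypothetical fit_and_score."""
    scores = [fit_and_score(train, test) for train, test in splits]
    return sum(scores) / len(scores)
```

In an SSA setting, `cross_val_accuracy` would act as the fitness of one candidate set of kernel weights and hyperparameters, which is why each fitness evaluation costs K model fits.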

Figure 7.

Cross-validation accuracy.

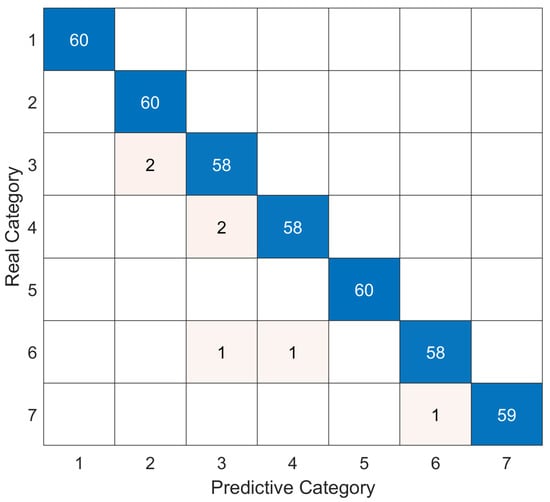

Figure 8 presents the confusion matrix of the proposed model on the test set, showing the classification performance for each fault category. The matrix has high diagonal values with few misclassifications, indicating strong discriminative capability across fault types. This near-diagonal pattern supports the model’s generalization ability and its reliability for practical transformer fault diagnosis.
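The precision, recall, and F1-score reported in Table 3 can be derived from a confusion matrix such as the one in Figure 8. The sketch below assumes rows are true classes and columns are predicted classes, and uses macro averaging; the text does not state which averaging scheme was used, so this is an assumption.

```python
from typing import Dict, List


def metrics_from_confusion(cm: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 from a square
    confusion matrix (rows = true class, columns = predicted class)."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(k))  # column sum: all predictions of class c
        actual = sum(cm[c])                          # row sum: all true samples of class c
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": correct / total,
        "macro_precision": sum(precisions) / k,
        "macro_recall": sum(recalls) / k,
        "macro_f1": sum(f1s) / k,  # mean of per-class F1 scores
    }
```

For a two-class matrix `[[5, 0], [1, 4]]`, accuracy is 0.9 and macro recall is 0.9, while macro precision is 11/12 because the single error lands in column 0.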

Figure 8.

Confusion matrix on test set.

Table 3 summarizes the performance comparison among the diagnostic models. The proposed tensor decomposition and multi-kernel SVM model achieves the highest performance across all metrics, with an accuracy of 98.33%, precision of 98.50%, recall of 98.20%, and F1-score of 98.35%, substantially outperforming all baseline methods. Among the comparison models, KPCA-SSA-MKSVM achieves the second-best accuracy of 95.69%, 2.64 percentage points lower than the proposed method, indicating that nonlinear dimensionality reduction through kernel PCA offers advantages over linear approaches but still cannot match the feature representation capability of tensor decomposition. The linear PCA variant (PCA-SSA-MKSVM) reaches only 92.87% accuracy, confirming that preserving nonlinear feature relationships is crucial for effective fault pattern recognition; the 2.82 percentage point gap between KPCA-SSA-MKSVM and PCA-SSA-MKSVM further underlines the importance of nonlinear feature extraction in transformer fault diagnosis. The MLP-based DNN achieves only 92.33% accuracy, and the DBN reaches only 86.65% even with customized input features, suggesting that these deep learning methods may suffer from overfitting or insufficient feature representation when training samples are limited. The Logistic Regression approach attains 93.71% accuracy by incorporating application-specific data and equipment parameters, demonstrating reasonable diagnostic capability but still trailing the proposed model by 4.62 percentage points.
The K-OA-CBR method achieves 89.56% accuracy through optimized ratio feature extraction, yet its case-based reasoning mechanism appears less effective than the kernel-based classification strategy at capturing complex nonlinear fault patterns. The consistent superiority of the proposed method across all evaluation metrics, particularly its balanced precision and recall, shows that it keeps both false positives and false negatives low, establishing it as a more reliable and practical solution for transformer fault diagnosis in power systems.
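The percentage-point gaps quoted above can be checked directly from the reported accuracies, as in the sketch below. It also shows that the reported F1-score agrees with the harmonic mean of the reported precision and recall, 2PR/(P + R). This is only a consistency check on the published numbers: if the metrics are macro-averaged, the F1-score is normally the mean of per-class F1 values and need not equal this harmonic mean exactly.

```python
from typing import Dict

# Accuracy values (%) as reported in the text for Table 3.
accuracy: Dict[str, float] = {
    "Proposed": 98.33,
    "KPCA-SSA-MKSVM": 95.69,
    "Logistic Regression": 93.71,
    "PCA-SSA-MKSVM": 92.87,
    "DNN (MLP)": 92.33,
    "K-OA-CBR": 89.56,
    "DBN": 86.65,
}


def gaps_in_points(acc: Dict[str, float], reference: str) -> Dict[str, float]:
    """Accuracy lead of `reference` over every other model, in percentage points."""
    ref = acc[reference]
    return {name: round(ref - value, 2) for name, value in acc.items() if name != reference}


def harmonic_f1(precision: float, recall: float) -> float:
    """F1 as the harmonic mean of precision and recall (both in %)."""
    return 2 * precision * recall / (precision + recall)


gaps = gaps_in_points(accuracy, "Proposed")
f1 = round(harmonic_f1(98.50, 98.20), 2)  # agrees with the reported 98.35
```

The gaps range from 2.64 points (KPCA-SSA-MKSVM) to 11.68 points (DBN).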

Table 3.

Model performance comparison.

Furthermore, the proposed framework has a total training complexity of O(T·P·K·n²·m) and requires approximately 132 s to train on a mid-range AMD Ryzen 5 CPU with 32 GB of RAM. The Tucker decomposition feature selection phase takes 2 s; most of the computational cost lies in the SSA optimization phase, which takes 120 s, is 40% faster than grid search, and converges more rapidly than PSO, the GA, and DE. Training the final SVM model takes 10 s. The model is therefore readily deployable in both industrial PC and server scenarios.
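Since the SSA phase dominates the runtime, a compact sketch of the basic sparrow search loop may help relate it to the complexity term: each of the T iterations evaluates roughly P candidate solutions, and in the proposed framework each evaluation would be a K-fold cross-validated MKSVM fit, presumably costing on the order of n²·m for n training samples and m features. The sketch follows our reading of the basic producer–scrounger–vigilant scheme of Xue and Shen [22] and minimizes a generic function over a box. The population size, producer ratio (PD), scout ratio (SD), and safety threshold (ST) shown are assumed defaults, not the paper's settings.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def sparrow_search(
    fitness: Callable[[List[float]], float],
    lower: Sequence[float],
    upper: Sequence[float],
    pop_size: int = 20,
    max_iter: int = 100,
    producer_ratio: float = 0.2,    # PD: share of producers (assumed value)
    scout_ratio: float = 0.1,       # SD: share of vigilant sparrows (assumed value)
    safety_threshold: float = 0.8,  # ST: alarm threshold (assumed value)
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimise `fitness` over a box with a basic sparrow search algorithm."""
    rng = random.Random(seed)
    dim = len(lower)

    def clip(x: List[float]) -> List[float]:
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

    pop = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(pop_size)]
    fit = [fitness(x) for x in pop]
    i0 = min(range(pop_size), key=fit.__getitem__)
    best_x, best_f = list(pop[i0]), fit[i0]
    n_prod = max(1, int(producer_ratio * pop_size))
    n_scout = max(1, int(scout_ratio * pop_size))

    for _ in range(max_iter):
        order = sorted(range(pop_size), key=fit.__getitem__)   # rank: best first
        pop, fit = [pop[i] for i in order], [fit[i] for i in order]
        worst = pop[-1]
        r2 = rng.random()                                       # alarm value R2
        # Producers: contract when safe, take a Gaussian step when alarmed.
        for i in range(n_prod):
            if r2 < safety_threshold:
                alpha = 1.0 - rng.random()                      # alpha in (0, 1]
                new = [v * math.exp(-(i + 1) / (alpha * max_iter)) for v in pop[i]]
            else:
                q = rng.gauss(0.0, 1.0)
                new = [v + q for v in pop[i]]
            pop[i] = clip(new)
            fit[i] = fitness(pop[i])
        lead = pop[min(range(n_prod), key=fit.__getitem__)]     # best producer X_P
        # Scroungers: the worse half flies elsewhere, the rest forages around X_P.
        for i in range(n_prod, pop_size):
            rank = i + 1
            if rank > pop_size / 2:
                q = rng.gauss(0.0, 1.0)
                new = [q * math.exp((w - v) / rank ** 2) for v, w in zip(pop[i], worst)]
            else:
                signs = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
                # |X_i - X_P| A^+ L with A a random +/-1 row vector: one scalar step
                step = sum(abs(v - p) * s for v, p, s in zip(pop[i], lead, signs)) / dim
                new = [p + step for p in lead]
            pop[i] = clip(new)
            fit[i] = fitness(pop[i])
        i_best = min(range(pop_size), key=fit.__getitem__)
        if fit[i_best] < best_f:
            best_x, best_f = list(pop[i_best]), fit[i_best]
        i_worst = max(range(pop_size), key=fit.__getitem__)
        worst_x, worst_f = pop[i_worst], fit[i_worst]
        # Vigilant sparrows: randomly chosen individuals react to danger.
        for i in rng.sample(range(pop_size), n_scout):
            if fit[i] > best_f:
                beta = rng.gauss(0.0, 1.0)
                new = [b + beta * abs(v - b) for v, b in zip(pop[i], best_x)]
            else:
                k = rng.uniform(-1.0, 1.0)
                denom = (fit[i] - worst_f) + 1e-50
                new = [v + k * abs(v - w) / denom for v, w in zip(pop[i], worst_x)]
            pop[i] = clip(new)
            fit[i] = fitness(pop[i])
            if fit[i] < best_f:
                best_x, best_f = list(pop[i]), fit[i]
    return best_x, best_f
```

In the diagnostic setting, `fitness` would decode a candidate vector into the kernel weights and kernel hyperparameters and return one minus the cross-validation accuracy. A smooth test function such as the sphere function is enough to exercise the loop in isolation.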

4. Conclusions

This study presents a comprehensive framework for transformer fault diagnosis that integrates Tucker decomposition-based feature selection with an MKSVM optimized by the SSA for DGA. The proposed method first constructs a three-way tensor encoding the coupling relationships among samples, features, and fault categories, and extracts seven optimal features from the original 12-dimensional feature space while preserving 95% of the discriminative information. The hierarchical search mechanism of the SSA then jointly optimizes the kernel weights and hyperparameters, searching for a globally optimal configuration of the multi-kernel SVM. Whereas the benchmark algorithms require 78–92 iterations, the proposed framework converges within 60 iterations. Experimental validation on DGA samples covering seven fault categories demonstrates superior performance, with an overall accuracy of 98.33%, precision of 98.50%, recall of 98.20%, and F1-score of 98.35%, corresponding to accuracy improvements ranging from 2.64 percentage points over KPCA-SSA-MKSVM (95.69%) to 11.68 percentage points over the DBN (86.65%), with the MLP (92.33%) and other baseline methods in between. Ablation studies further confirm that the selected seven features represent the optimal equilibrium point, as performance degrades when the feature count is reduced to six or increased to eight. The scalability and general applicability of this study are supported by two key points. First, the physicochemical principles governing fault gas generation remain consistent regardless of whether the transformer capacity is in the kVA or MVA range. Second, traditional diagnostic methods, which rely on fixed empirical gas ratio thresholds, encounter scalability issues when applied to diverse oil–paper systems and transformer sizes. 
In contrast, the proposed Tucker decomposition employs a data-driven tensor analysis technique that automatically extracts discriminative feature combinations by capturing the latent multilinear structure within the DGA data. This ability to learn from data enables it to adapt to the more complex and nonlinear gas interaction patterns present in transformers of varying scales. These results establish the reliability of the proposed framework for transformer condition monitoring and demonstrate significant potential for enhancing power grid reliability through accurate early fault detection.
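The multi-kernel SVM summarized above combines RBF, polynomial, and sigmoid kernels with weights tuned by the SSA. A minimal sketch of such a combined kernel is shown below, assuming non-negative weights that sum to one and standard textbook forms for each kernel. All default values here are hypothetical placeholders for the SSA-optimized parameters, which are not restated in this section.

```python
import math
from typing import Sequence, Tuple


def multi_kernel(
    x: Sequence[float],
    z: Sequence[float],
    weights: Tuple[float, float, float] = (0.5, 0.3, 0.2),  # hypothetical (w_rbf, w_poly, w_sig)
    gamma: float = 0.5,     # RBF width (hypothetical)
    degree: int = 2,        # polynomial degree (hypothetical)
    coef0: float = 1.0,     # polynomial offset (hypothetical)
    kappa: float = 0.01,    # sigmoid slope (hypothetical)
    theta: float = 0.0,     # sigmoid offset (hypothetical)
) -> float:
    """Weighted sum of RBF, polynomial and sigmoid kernels evaluated at (x, z)."""
    w_rbf, w_poly, w_sig = weights
    if min(weights) < 0 or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights are assumed to be non-negative and to sum to 1")
    dot = sum(a * b for a, b in zip(x, z))
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    k_rbf = math.exp(-gamma * sq_dist)       # exp(-gamma * ||x - z||^2)
    k_poly = (dot + coef0) ** degree         # (x.z + c)^d
    k_sig = math.tanh(kappa * dot + theta)   # tanh(kappa * x.z + theta)
    return w_rbf * k_rbf + w_poly * k_poly + w_sig * k_sig
```

Because the sigmoid kernel is not positive semidefinite for all parameter values, the combined kernel is not guaranteed to be a valid Mercer kernel either. In practice, this is one reason why its weight and parameters are left to a search procedure such as the SSA rather than fixed a priori.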

Author Contributions

Conceptualization: L.W. and X.L.; methodology: L.W. and X.L.; software: L.W. and X.L.; validation: L.W. and X.L.; formal analysis: L.W.; investigation: L.W.; resources: L.W.; writing—original draft preparation: L.W. and X.L.; writing—review and editing: L.W. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Liaoning Provincial Natural Science Foundation, grant number 2021-KF-14-01.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hussain, M.R.; Refaat, S.S.; Abu-Rub, H. Overview and partial discharge analysis of power transformers: A literature review. IEEE Access 2021, 9, 64587–64605.

- Lv, G.; Cheng, H.; Zhai, H.; Dong, L. Fault diagnosis of power transformer based on multi-layer SVM classifier. Electr. Power Syst. Res. 2005, 75, 9–15.

- Peimankar, A.; Weddell, S.J.; Jalal, T.; Lapthorn, A.C. Evolutionary multi-objective fault diagnosis of power transformers. Swarm Evol. Comput. 2017, 36, 62–75.

- Wani, S.A.; Rana, A.S.; Sohail, S.; Rahman, O.; Parveen, S.; Khan, S.A. Advances in DGA based condition monitoring of transformers: A review. Renew. Sustain. Energy Rev. 2021, 149, 111347.

- Perrier, C.; Marugan, M.; Beroual, A. DGA comparison between ester and mineral oils. IEEE Trans. Dielectr. Electr. Insul. 2012, 19, 1609–1614.

- Wajid, A.; Rehman, A.U.; Iqbal, S.; Pushkarna, M.; Hussain, S.M.; Kotb, H.; Alharbi, M.; Zaitsev, I. Comparative performance study of dissolved gas analysis (DGA) methods for identification of faults in power transformer. Int. J. Energy Res. 2023, 2023, 9960743.

- Chauhan, V.K.; Dahiya, K.; Sharma, A. Problem formulations and solvers in linear SVM: A review. Artif. Intell. Rev. 2019, 52, 803–855.

- Ghritlahre, H.K.; Prasad, R.K. Application of ANN technique to predict the performance of solar collector systems—A review. Renew. Sustain. Energy Rev. 2018, 84, 75–88.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

- Taha, I.B.; Ibrahim, S.; Mansour, D.E.A. Power transformer fault diagnosis based on DGA using a convolutional neural network with noise in measurements. IEEE Access 2021, 9, 111162–111170.

- Verdonck, T.; Baesens, B.; Óskarsdóttir, M.; vanden Broucke, S. Special issue on feature engineering editorial. Mach. Learn. 2024, 113, 3917–3928.

- Yang, M.; Luo, Q.; Li, W.; Xiao, M. Nonconvex 3D array image data recovery and pattern recognition under tensor framework. Pattern Recognit. 2022, 122, 108311.

- Yang, X.; Peng, S.; Liu, M. A new kernel function for SPH with applications to free surface flows. Appl. Math. Model. 2014, 38, 3822–3833.

- Dhiman, A.; Kumar, R. Fault Diagnosis of a Transformer using Fuzzy Model and PSO optimized SVM. In Proceedings of the 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), Lonavla, India, 7–9 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Lambora, A.; Gupta, K.; Chopra, K. Genetic algorithm-A literature review. In Proceedings of the 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, 14–16 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 380–384.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Li, R.; Pan, Z.; Wang, Y.; Wang, P. The correlation-based tucker decomposition for hyperspectral image compression. Neurocomputing 2021, 419, 357–370.

- Pérez, D.; Zhang, L.; Schaefer, M.; Schreck, T.; Keim, D.; Díaz, I. Interactive feature space extension for multidimensional data projection. Neurocomputing 2015, 150, 611–626.

- Shao, P.; Zhang, D.; Yang, G.; Tao, J.; Che, F.; Liu, T. Tucker decomposition-based temporal knowledge graph completion. Knowl.-Based Syst. 2022, 238, 107841.

- Oh, S. Predictive case-based feature importance and interaction. Inf. Sci. 2022, 593, 155–176.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control. Eng. 2020, 8, 22–34.

- Gao, B.; Shen, W.; Guan, H.; Zheng, L.; Zhang, W. Research on multistrategy improved evolutionary sparrow search algorithm and its application. IEEE Access 2022, 10, 62520–62534.

- Zhang, H.; Zhang, Y. An improved sparrow search algorithm for optimizing support vector machines. IEEE Access 2023, 11, 8199–8206.

- Gu, Q.; Chang, Y.; Li, X.; Chang, Z.; Feng, Z. A novel F-SVM based on FOA for improving SVM performance. Expert Syst. Appl. 2021, 165, 113713.

- de Andrade Lopes, S.M.; Flauzino, R.A.; Altafim, R.A.C. Incipient fault diagnosis in power transformers by data-driven models with over-sampled dataset. Electr. Power Syst. Res. 2021, 201, 107519.

- Zou, D.; Li, Z.; Quan, H.; Peng, Q.; Wang, S.; Hong, Z.; Dai, W.; Zhou, T.; Yin, J. Transformer fault classification for diagnosis based on DGA and deep belief network. Energy Rep. 2023, 9, 250–256.

- Pollok, A.A.; Frotscher, R.; Foata, M.; Dolles, M. A New Approach for AI-Based DGA for Transformers and Tap-Changers. In Proceedings of the 2023 IEEE Electrical Insulation Conference (EIC), Quebec City, QC, Canada, 18–21 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

- Rao, S.; Yang, S.; Zou, G. A methodology for transformer fault diagnosis based on the feature extraction from DGA data. Int. J. Appl. Electromagn. Mech. 2023, 71, S313–S320.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).