Abstract

This paper introduces a novel control framework for prescribed-time synchronization of higher-order nonlinear multi-agent systems (MAS) subject to parametric uncertainties and external disturbances. The proposed method integrates a fuzzy neural network (FNN) with a robust non-singular terminal sliding mode controller (NTSMC) to ensure leader–follower consensus within a user-defined time horizon, regardless of the initial conditions. The FNN is employed to approximate unknown nonlinearities online, while an adaptive update law ensures accurate compensation for uncertainty. A terminal sliding manifold is designed to enforce finite-time convergence, and Lyapunov-based analysis rigorously proves prescribed-time stability and boundedness of all closed-loop signals. Simulation studies on a leader–follower MAS with four nonlinear agents under directed communication topology demonstrate the superiority of the proposed approach over conventional sliding mode control, achieving faster convergence, enhanced robustness, and improved adaptability against system uncertainties and external perturbations.

1. Introduction

Cooperative control of networked multi-agent systems (MAS) has attracted significant interest due to its broad applications in areas such as rendezvous coordination of nonholonomic agents [1], flocking-based altitude alignment [2], and formation control tasks [3,4]. In such systems, individual agents interact over structured communication topologies to achieve collective goals. A typical configuration is the leader–follower framework, in which one agent (the leader) provides a reference trajectory and the remaining agents (followers) synchronize their states with it. This framework simplifies controller implementation and reduces communication and computational overhead [5]. In real-world applications, however, not all followers have direct access to the leader’s state or control input, especially in adversarial or resource-scarce settings. Contemporary distributed control approaches therefore typically assume that only a subset of agents receives leader information directly and that this information is relayed through the network [6]. This configuration complicates the design of efficient and robust synchronization protocols under incomplete and uncertain information [7].

In the past ten years, substantial attention has been devoted to two core coordination problems in MASs: consensus and synchronization [8]. In consensus problems, all agents seek to agree on a common value of a particular state variable, typically determined by their initial states, without relying on a leader agent; this configuration is known as leaderless consensus [9]. Synchronization, by contrast, addresses leader–follower problems in which a leader agent generates a reference trajectory and the followers are required to track it [10].

Although both problems have been investigated in depth theoretically, satisfactory practical applications are still lacking. Practical MAS operate in dynamic and uncertain environments, in which unmodeled dynamics, parameter variations, time-varying communication networks, and external noise may degrade performance or even cause instability [11]. Bridging this gap to real applications, such as coordination of autonomous vehicles, robot clusters, and sensor networks, requires control schemes that adapt to uncertainties and remain robust under time-varying configurations. To address these difficulties, several control paradigms have been developed, including passivity-based methods [12], pinning control [13], energy-shaping methods [14], optimal control methods [15], and sliding mode control (SMC) methods.

Among the various SMC approaches, several notable variants have been developed to enhance robustness, adaptability, and precision in MAS coordination. Integral SMC [16] is commonly acknowledged for eliminating the reaching phase, thereby improving robustness and reducing the influence of initial conditions. Second-order SMC [17] introduces higher-order dynamics into the sliding variables, resulting in smoother control actions and reduced chattering compared to conventional SMC. Terminal SMC (TSMC) [18,19] ensures finite-time convergence with faster error reduction rates, making it ideal for applications that require rapid stabilization. Adaptive SMC [20,21] employs real-time tuning laws that allow the controller to adjust its gains online, effectively compensating for unknown disturbances and parameter variations without prior knowledge of uncertainty bounds. Overall, these SMC variants have proven to be powerful methods for achieving robust MAS coordination in the presence of severe nonlinearities, communication constraints, and external disturbances. Because they can guarantee finite-time or fixed-time convergence and mitigate chattering, these techniques have attracted significant attention in safety-critical and time-sensitive domains such as cooperative robotics, autonomous vehicle swarms, and distributed sensor networks.

Despite these advantages, conventional finite-time methods such as traditional TSMC still have limitations. They can suffer from singularities in the control input, and their performance is sensitive to the initial conditions because the settling time grows with the initial error. Such drawbacks restrict their applicability in systems that demand high precision and strict temporal performance. To overcome these issues, several advanced schemes have been introduced in the literature, including non-singular TSMC [22,23], trajectory transformation methods [24], redesigned sliding surfaces [25], and adaptive schemes incorporating saturation or fractional-order dynamics [26,27,28,29]. Although these methods improve convergence and robustness, they generally do not provide a closed-form, exact expression for the settling time, which remains a critical limitation in time-sensitive applications.

Fixed-time control offers a promising alternative by ensuring convergence within a predetermined upper bound that is independent of the system’s initial state [30]. This property is crucial for leader–follower synchronization with stringent timing requirements. Early studies explored fixed-time stability in nonlinear systems [31,32,33], while recent work has extended these results to complex MAS architectures and high-order nonlinear dynamics. For example, Lei et al. proposed an event-triggered fixed-time stabilization framework for two-time-scale linear systems, ensuring practical fixed-time convergence under state-triggered sampling rules [34]. Similarly, Long et al. introduced a fixed-time consensus control scheme with prescribed performance and full-state constraints for nonlinear MAS, improving transient response and steady-state accuracy through adaptive event-triggered communication [35].

In parallel, Xiong et al. developed a model-free adaptive predictive control approach based on a broad-learning-system, which combines data-driven prediction with adaptive compensation to achieve robust consensus under denial-of-service attacks [36]. Likewise, reinforcement learning (RL) methods have recently been incorporated into fixed-time frameworks. Wang et al. presented a reinforcement-learning-based fixed-time prescribed performance consensus controller for stochastic nonlinear MAS with sensor faults, demonstrating strong robustness and convergence guarantees under probabilistic uncertainty [37]. Additionally, Ma et al. proposed a distributed fixed-time formation tracking control scheme using integral terminal SMC and disturbance observers for multi-wheeled mobile robots, validated experimentally under directed communication topologies [38]. In practical transportation systems, Liu and Xu designed a cooperative control framework for virtually coupled train formations that maintains safe inter-train spacing using barrier Lyapunov functions and adaptive control strategies [39].

Although these state-of-the-art methods have improved performance and reliability, most remain limited to linear or simplified nonlinear dynamics and struggle to maintain robustness under severe uncertainties and rapidly changing conditions. Moreover, excessive chattering and energy inefficiency persist in many fixed-time SMC formulations, particularly under high-gain or discontinuous control laws.

To overcome these challenges, this paper introduces a Neuro-Adaptive Prescribed-Time Non-Singular Terminal Sliding Mode Control (PNTSMC) framework for robust synchronization of higher-order uncertain nonlinear MAS. The proposed controller ensures convergence within a prescribed time for any initial condition while reducing chattering and enhancing robustness. By embedding adaptive neural approximation in a non-singular terminal sliding mode framework, the controller learns and compensates for unknown nonlinearities online, achieving accurate tracking in the presence of matched uncertainties and external disturbances. This integrated design offers a unified approach to high-performance synchronization with guaranteed convergence time, making it suitable for implementation in complex distributed intelligent systems.

The main contributions of this paper are summarized as follows:

- A novel non-singular terminal sliding surface is designed to ensure prescribed-time convergence in synchronization tasks, completely avoiding the singularity problem that commonly arises in conventional terminal SMC approaches.

- A fuzzy neural network (FNN)-based adaptive approximation mechanism is developed to estimate unknown nonlinear dynamics and external disturbances in real time. Compared with radial basis function neural network (RBF-NN) or fuzzy logic system (FLS)-based methods, the proposed FNN offers faster learning, improved global approximation, and enhanced robustness through online parameter adaptation.

- A continuous reachability control law is formulated to effectively suppress chattering while preserving robustness, overcoming the high-frequency oscillation and actuator stress often observed in traditional SMC frameworks.

- Rigorous Lyapunov-based stability analysis is provided to guarantee prescribed-time synchronization and the boundedness of all closed-loop signals under matched uncertainties.

- Extensive simulations on a leader–follower network consisting of one leader and four followers verify that the proposed FNN-based PNTSMC achieves faster convergence, higher robustness, and better adaptability than existing finite-time, fixed-time, and classical SMC schemes for uncertain nonlinear multi-agent systems.

The structure of this paper is outlined as follows. Section 2 provides the necessary mathematical background and an overview of graph theory, along with the formulation of the synchronization problem for higher-order MAS. The proposed PNTSMC scheme and its Lyapunov-based stability proof are presented in Section 3. Section 4 illustrates a simulation study validating the effectiveness of the proposed approach. Finally, Section 5 summarizes the work and highlights potential directions for future research.

2. Problem Formulation and Preliminaries

The growing application of multi-agent systems in contemporary engineering, ranging from robotics and autonomous vehicles to smart grids and sensor networks, has increased the need for robust, distributed control methods. Such systems typically involve many agents communicating over intricate networks to pursue a common objective, such as synchronization or formation tracking. One of the most challenging control problems in this setting is leader–follower synchronization of higher-order uncertain nonlinear multi-agent systems, where each agent has complex dynamics and the system is affected by modeling uncertainties and communication constraints.

2.1. Challenges in Higher-Order Multi-Agent Systems

Higher-order multi-agent systems pose several particular challenges that require advanced control schemes:

- Higher-Order Dynamics: In contrast to simple first- or second-order systems, many real-world agents have dynamics with longer chains of integrators or nonlinear internal variables. Controller design for such systems must explicitly account for these internal dynamics.

- Nonlinearities: Agent dynamics are often nonlinear, so traditional linear control design techniques are inadequate. Nonlinearities increase analytical complexity and limit performance if not adequately addressed.

- Uncertainties: Physical systems are always subject to unmodeled dynamics, disturbance inputs, and parametric uncertainties. These can cause a significant deterioration in stability and tracking performance.

- Networked Interactions: Agents exchange information over a communication topology modeled by a directed graph. The network topology, connectivity, and possible delays significantly affect information flow and control performance.

2.2. Leader-Follower Synchronization Objective

In the leader–follower synchronization framework, a designated leader agent generates the reference trajectory, and the follower agents must track it over time. The objective is to design distributed control inputs for each follower such that their states converge to those of the leader within a prescribed time limit, regardless of the initial conditions. Such prescribed-time convergence is especially critical in time-sensitive applications, such as formations of uncrewed aerial vehicles and cooperative robotic systems.

Key aspects of the problem include:

- Distributed Control: Each follower’s control input should be computed using its own state and information received only from neighboring agents, ensuring scalability and fault-tolerance.

- Prescribed-Time Synchronization: In contrast to asymptotic or finite-time synchronization, prescribed-time synchronization guarantees convergence by a user-specified time T that does not depend on the initial conditions, which is particularly valuable in mission-critical scenarios.

2.3. Mathematical Modeling and Network Topology

The dynamics of each follower agent are described by:

where $x_i$ is the state vector, $f_i(x_i)$ is a smooth nonlinear drift function, $g_i(x_i)$ is a smooth nonlinear input gain, $d_i(t)$ represents bounded uncertainty, and $u_i$ is the control input.

The leader’s dynamics are given by:

with representing the leader’s state and being a continuous driving signal.

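The communication topology is represented by a directed graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ with node set $\mathcal{V}$ and edge set $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$. An edge $(j, i) \in \mathcal{E}$ signifies that agent i has access to the information transmitted by agent j. The weighted adjacency matrix is denoted by $\mathcal{A} = [a_{ij}]$, with $a_{ij} > 0$ if $(j, i) \in \mathcal{E}$ and $a_{ij} = 0$ otherwise, and the Laplacian matrix is defined as $L = D - \mathcal{A}$, where $D = \mathrm{diag}\left(\sum_{j} a_{ij}\right)$ is the in-degree matrix. For the follower network, the matrices $\mathcal{A}_f$ and $L_f$ represent the adjacency and Laplacian structures, respectively, while the diagonal pinning matrix $B = \mathrm{diag}(b_1, \ldots, b_N)$, with $b_i > 0$ if follower i receives the leader’s state directly, characterizes the influence of the leader on the followers.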
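As an illustration of these definitions, the following Python sketch builds the in-degree matrix, the follower Laplacian $L = D - \mathcal{A}$, and the pinning matrix for a small directed graph. The four-follower topology and unit edge weights are hypothetical and are not taken from Figure 2; the helper name `graph_matrices` is likewise illustrative.

```python
import numpy as np


def graph_matrices(adj_followers, pinning):
    """Build the follower Laplacian L = D - A and the pinning matrix B.

    adj_followers[i, j] = a_ij > 0 if follower i receives information from follower j.
    pinning[i] = b_i > 0 if follower i receives the leader's state directly.
    """
    A = np.asarray(adj_followers, dtype=float)
    D = np.diag(A.sum(axis=1))  # in-degree matrix: row sums of the adjacency matrix
    L = D - A                   # follower Laplacian
    B = np.diag(np.asarray(pinning, dtype=float))  # leader influence (pinning) matrix
    return L, B


# Hypothetical topology (not Figure 2): leader -> F1, F1 -> F2, F2 -> F3, F1 -> F4, F3 -> F4
A_f = np.array([
    [0, 0, 0, 0],
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [1, 0, 1, 0],
])
b = [1, 0, 0, 0]

L, B = graph_matrices(A_f, b)
print(L)
print(L.sum(axis=1))  # every row of a Laplacian sums to zero
```

Because each diagonal entry of $D$ equals the corresponding row sum of $\mathcal{A}$, the rows of $L$ always sum to zero, so $L$ alone is singular; the pinning matrix is what couples the followers to the leader.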

2.4. Synchronization Mismatch and Reformulated Objective

Let the synchronization error for the i-th agent be defined by:

The synchronization error for the state of agent i is:

This captures both inter-follower discrepancies and the tracking error with respect to the leader. The dynamics of the mismatch vector evolve as:

Here, the lumped uncertainty term aggregates the uncertainties of agent i and its neighbors; $f_i$ and $g_i$ are the unknown nonlinearities, and $a_{ij}$ and $b_i$ are the adjacency and pinning gains, respectively.
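A neighborhood synchronization error that captures both inter-follower discrepancies and the tracking error with respect to the leader is commonly written as $e_i = \sum_j a_{ij}(x_i - x_j) + b_i(x_i - x_0)$, with $x_0$ the leader state. The sketch below assumes this standard form rather than reproducing the paper’s exact expression, and checks numerically that it equals the stacked form $(L + B)(x - \mathbf{1}x_0^{\top})$. The step that makes the two agree is $\sum_j a_{ij} = d_i$, so row $i$ of $L(x - \mathbf{1}x_0^{\top})$ reduces to $\sum_j a_{ij}(x_i - x_j)$. The topology, states, and function names are hypothetical.

```python
import numpy as np


def neighborhood_errors(x, x0, A, b):
    """Per-agent form: e_i = sum_j a_ij (x_i - x_j) + b_i (x_i - x0).

    x: (N, n) follower states, x0: (n,) leader state,
    A: (N, N) follower adjacency weights, b: (N,) pinning gains.
    """
    N = x.shape[0]
    e = np.zeros_like(x, dtype=float)
    for i in range(N):
        for j in range(N):
            e[i] += A[i, j] * (x[i] - x[j])
        e[i] += b[i] * (x[i] - x0)
    return e


def neighborhood_errors_stacked(x, x0, A, b):
    """Compact form: row i of (L + B)(x - 1 x0^T), with L = D - A."""
    L = np.diag(A.sum(axis=1)) - A
    return (L + np.diag(b)) @ (x - x0)  # x0 broadcasts over the N rows


A = np.array([[0, 0, 0, 0], [1, 0, 0, 0], [0, 1, 0, 0], [1, 0, 1, 0]], dtype=float)  # hypothetical
b = np.array([1.0, 0.0, 0.0, 0.0])
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 2))   # four second-order followers: [position, velocity]
x0 = np.array([0.5, -0.2])    # leader state

e_loop = neighborhood_errors(x, x0, A, b)
e_stacked = neighborhood_errors_stacked(x, x0, A, b)
print(np.allclose(e_loop, e_stacked))
```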

Thus, the control objective is reformulated as a prescribed-time regulation problem: design a distributed control input for each follower such that the synchronization error converges to zero within the prescribed time T, despite the unknown nonlinearities and the lumped uncertainty.

2.5. Assumptions

To make the problem tractable, the following assumptions are adopted:

- (A1) Controllability: $g_i(x_i) \neq 0$ for all $x_i$, ensuring full actuation.

- (A2) Boundedness:

- Leader state:

- Leader dynamics:

- Uncertainties:

- (A3) Neighbor Awareness: Each follower has access to the states of its neighboring agents and, if directly connected, to the leader’s state.

- (A4) Smoothness: The nonlinear functions $f_i$ and $g_i$ are assumed to be at least $C^1$-smooth with respect to their arguments. This guarantees the existence and continuity of their first-order derivatives, ensuring the validity of derivative-based operations in the control design and Lyapunov stability analysis.

- (A5) Leader–Follower Connectivity: The communication topology among agents is represented by a directed graph that contains a directed spanning tree rooted at the leader. This ensures that at least one directed path exists from the leader to every follower agent, allowing the leader’s information to propagate throughout the network.

Remark 1.

Assumption (A5) ensures that the leader’s information can propagate throughout the entire network, which is a standard and necessary condition for achieving consensus or synchronization in leader–follower MASs under directed topologies. Without this condition, certain followers may become isolated from the leader’s influence, making global synchronization impossible.
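Assumption (A5) can be checked mechanically. The sketch below runs a breadth-first search from the pinned followers along directed edges and, as a cross-check, uses the standard pinning-control fact that $L + B$ is nonsingular (all eigenvalues with positive real parts) exactly when the leader has a directed path to every follower. The topology and pinning patterns are hypothetical, and `leader_reaches_all` is an illustrative name.

```python
from collections import deque

import numpy as np


def leader_reaches_all(A, b):
    """Return True if the leader has a directed path to every follower.

    A[i, j] > 0 means follower i receives from follower j (edge j -> i);
    b[i] > 0 means follower i receives directly from the leader.
    """
    N = len(b)
    reached = {i for i in range(N) if b[i] > 0}  # followers pinned to the leader
    queue = deque(reached)
    while queue:  # breadth-first search along the directed edges j -> i
        j = queue.popleft()
        for i in range(N):
            if A[i, j] > 0 and i not in reached:
                reached.add(i)
                queue.append(i)
    return len(reached) == N


A = np.array([[0, 0, 0, 0], [1, 0, 0, 0], [0, 1, 0, 0], [1, 0, 1, 0]], dtype=float)  # hypothetical
for b in ([1, 0, 0, 0], [0, 1, 0, 0]):
    M = np.diag(A.sum(axis=1)) - A + np.diag(b)  # L + B
    min_real = np.linalg.eigvals(M).real.min()
    print(b, leader_reaches_all(A, b), min_real)
```

With the leader pinned to the first follower, every follower is reachable and $L + B$ has eigenvalues with positive real parts; pinning the second follower instead leaves the first follower isolated, and $L + B$ becomes singular.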

2.6. Role of Graph Theory in Control Design

Graph-theoretic constructs such as adjacency matrices, Laplacians, and the leader influence matrix provide the foundation for designing distributed controllers and formulating the synchronization error. The existence of a directed spanning tree rooted at the leader ensures that the leader’s influence can propagate throughout the network, a necessary condition for achieving global synchronization.

2.7. Remark on State Availability

The proposed control framework assumes full state availability for all agents to compute mismatch variables. In practical applications, where higher-order states may not be directly measurable, observer-based techniques—such as high-gain observers or higher-order differentiators—can be employed to estimate the required states.

3. Control Design

This section introduces an adaptive control strategy based on fuzzy neural networks to realize prescribed-time synchronization in higher-order uncertain nonlinear multi-agent systems. The FNNs serve as universal approximators to estimate the unknown nonlinear components of the agent dynamics. A prescribed-time non-singular terminal sliding surface is designed to guarantee convergence within the prescribed time T regardless of the initial conditions, and adaptive weight update laws for the FNNs are derived from a Lyapunov stability analysis.

3.1. Fuzzy Neural Network Approximation

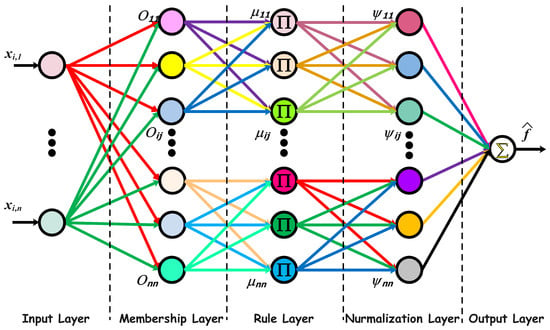

To handle the unknown nonlinear functions in the agent dynamics, an FNN structure is employed for each agent i. This architecture, inspired by the Adaptive Neuro-Fuzzy Inference System (ANFIS), approximates the unknown smooth functions $f_i$ and $g_i$, as illustrated in Figure 1.

Figure 1.

Architecture of the fuzzy neural network used for approximating the unknown nonlinear functions in the proposed FNN-PT-NTSMC framework.

The FNN operates in two primary phases:

- Forward Propagation: The input vector is processed sequentially through the network’s five layers to produce the estimates $\hat{f}_i$ and $\hat{g}_i$.

- Parameter Adaptation: The network’s output weights, $\hat{W}_{f,i}$ and $\hat{W}_{g,i}$, are updated online to minimize approximation errors. These updates are governed by adaptation laws derived from a Lyapunov-based stability analysis, ensuring system stability and prescribed-time convergence.

The FNN approximates the unknown functions as:

where $\phi_{f,i}(x_i)$ and $\phi_{g,i}(x_i)$ are the normalized fuzzy basis function vectors, and $\hat{W}_{f,i}$, $\hat{W}_{g,i}$ are the adaptive weight vectors.

The FNN consists of five computational layers:

Layer 1 (Input): This layer receives the agent’s state vector .

Layer 2 (Fuzzification): Each node uses a Gaussian membership function to determine the degree of membership for each input.

The Gaussian membership function is chosen for its smoothness and differentiability, which enable stable computation of the adaptive parameter updates. Gaussian functions are preferred over triangular or trapezoidal functions, which are nondifferentiable at their breakpoints, because they provide smooth, continuous mappings that make learning and error backpropagation more efficient. Their localized yet overlapping structure also yields better nonlinear approximation, and in practice they tend to converge faster and to be less sensitive to parameter initialization. These properties make Gaussian functions well suited to fuzzy–neural adaptive control, where precise and smooth adaptation is essential for maintaining closed-loop stability and robustness.

Layer 3 (Rule): This layer computes the firing strength of each fuzzy rule, typically using a product t-norm.

Layer 4 (Normalization): The firing strengths are normalized to ensure each rule has a proportional influence.

This results in the fuzzy basis function vector:

Layer 5 (Output): This layer applies Takagi–Sugeno–Kang (TSK) defuzzification to compute the final output.

We adopt TSK defuzzification because of its analytical tractability and its smooth mapping from fuzzy rules to the control output. Unlike Mamdani models, TSK models use linear, differentiable consequents, which facilitate Lyapunov-based stability analysis, parameter adaptation, and continuous-time fuzzy neural control.
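The five layers described above can be summarized in a short forward-pass sketch. It assumes Gaussian memberships of the form $\exp(-(x_j - c_{rj})^2/\sigma_{rj}^2)$, a full grid of rules, and zero-order TSK consequents (one constant per rule), so that the output is linear in the weights, $\hat{y} = \hat{W}^{\top}\phi(x)$; a first-order TSK model would use affine consequents instead. The centers, widths, and function name are hypothetical.

```python
import numpy as np


def fnn_forward(x, centers, widths, W):
    """Forward pass of a five-layer FNN with zero-order TSK output (sketch).

    x: (n,) input state (Layer 1)
    centers, widths: (R, n) Gaussian parameters, one row per fuzzy rule
    W: (R,) adaptive output weights
    Returns (y_hat, phi), where phi is the normalized fuzzy basis vector.
    """
    # Layer 2: Gaussian membership of each input component in each rule's fuzzy set
    mu = np.exp(-((x - centers) ** 2) / widths ** 2)   # shape (R, n)
    # Layer 3: rule firing strengths via the product t-norm
    w = np.prod(mu, axis=1)                             # shape (R,)
    # Layer 4: normalization gives the fuzzy basis function vector
    phi = w / (w.sum() + 1e-12)                         # small constant guards against 0/0
    # Layer 5: zero-order TSK defuzzification, linear in the weights
    y_hat = W @ phi
    return y_hat, phi


# Hypothetical rule grid: 3 Gaussian sets per input on a 2-D state -> 9 rules
grid = np.linspace(-1.0, 1.0, 3)
centers = np.array([[c1, c2] for c1 in grid for c2 in grid])
widths = np.full_like(centers, 0.5)
W = np.zeros(len(centers))  # untrained weights

y_hat, phi = fnn_forward(np.array([0.2, -0.4]), centers, widths, W)
print(y_hat, phi.sum())
```

Because the output is linear in $\hat{W}$ for a fixed basis vector $\phi$, the Lyapunov-based weight updates can be written directly in terms of $\phi$ and the sliding variable.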

Because approximation errors are unavoidable, we assume that optimal weight vectors $W_{f,i}^{*}$ and $W_{g,i}^{*}$ exist such that the unknown functions can be expressed as:

where $\varepsilon_{f,i}$ and $\varepsilon_{g,i}$ are bounded approximation errors.

In the control structure, the FNN output is adjusted adaptively to drive the sliding variable to zero. The adaptive laws continuously update the output weights using the instantaneous value of the sliding variable and the normalized rule activations, such that the approximation and tracking errors converge within the prescribed time.

Remark 2.

The proposed FNN combines the interpretability of fuzzy logic with the adaptability of neural networks. Unlike RBF-NNs, which rely solely on local Gaussian activations, the FNN uses fuzzy membership rules for improved global approximation and resilience. In addition, unlike conventional fuzzy logic systems, it removes the need for an a priori rule base by adjusting both antecedent and consequent parameters online, leading to faster convergence and better adaptability in the presence of system uncertainties.

3.2. Prescribed-Time Non-Singular Terminal Sliding Surface Design

To achieve convergence of the tracking error within a prescribed time, the sliding variable and the associated control law are designed as follows. Let $T > 0$ be the prescribed convergence time. The sliding surface is then defined as:

where is defined through the recursive NTSM as:

with

Remark 3.

This time-varying sliding surface injects an explicit time-decaying term that forces convergence by time T; that is, the time-varying term in (15) ensures exact convergence at $t = T$. Meanwhile, the non-singular terminal term in (16) avoids singularities. The time-varying structure of the sliding surface guarantees prescribed-time stability regardless of the initial conditions.
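To see why a gain that grows as $t \to T$ yields convergence at exactly $t = T$, consider the illustrative scalar model $\dot{s} = -\frac{k}{T - t}\, s$ with $k > 0$. This model is an assumption chosen for clarity and is not the sliding surface (15)–(16). Separating variables gives:

$$
\begin{aligned}
\frac{ds}{s} &= -\frac{k\,dt}{T - t}
\;\Longrightarrow\; \ln\frac{|s(t)|}{|s(0)|} = k \ln\frac{T - t}{T}
\;\Longrightarrow\; s(t) = s(0)\left(\frac{T - t}{T}\right)^{k}, \quad t \in [0, T), \\
V = \tfrac{1}{2}s^{2}:\quad \dot{V} &= -\frac{2k}{T - t}\,V
\;\Longrightarrow\; V(t) = V(0)\left(\frac{T - t}{T}\right)^{2k}.
\end{aligned}
$$

Hence $s(t) \to 0$ as $t \to T^{-}$ for every $s(0)$, with T fixed by the designer. The price is that the gain $k/(T - t)$ is unbounded near $t = T$, which is why the singular term requires careful treatment in implementation.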

3.3. Prescribed-Time Control Law Design

Taking the time derivative of the sliding surface and expanding the terms gives:

With the recursive definition of , its derivative is:

The control input is then designed to ensure the stability of the system, driving the sliding surface to zero:

where , , and are design constants. The control law compensates for uncertainties and enforces the sliding dynamics in (21):

Remark 4.

Control law (22) ensures prescribed-time synchronization of each agent with the leader within time T, despite uncertainties, external disturbances, and inter-agent couplings. The FNN approximators are updated online via adaptation laws to handle modeling errors.

Theorem 1.

Consider the nonlinear multi-agent system described in (1)–(2), characterized by unknown but at least $C^1$-smooth nonlinear functions $f_i$ and $g_i$, and operating under Assumptions (A1)–(A5). If each follower agent employs the prescribed-time fuzzy neural adaptive non-singular terminal sliding mode control law defined by (22), with the adaptive FNN weight update rules:

and the sliding variable evolves according to

then the following properties hold:

- All closed-loop signals, including , , and , remain bounded for all .

- The synchronization errors and sliding variables converge to zero within the prescribed time T, i.e.,

This result holds provided the control parameters satisfy , , , , , and .

Proof.

Consider the Lyapunov candidate for the whole network:

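where $\tilde{W}_{f,i} = W_{f,i}^{*} - \hat{W}_{f,i}$ and $\tilde{W}_{g,i} = W_{g,i}^{*} - \hat{W}_{g,i}$ denote the weight estimation errors.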

Differentiate with respect to time to obtain

From the control law and the augmented sliding construction, the full expression for is

where the lumped approximation/disturbance error is

and collects the network-induced lumped uncertainties (as in the problem setup).

Use the standard decomposition (and similarly for the -term) to group approximation terms. Define the aggregated bounded residual

which satisfies by assumption.

Choose the switching gain to satisfy the sufficient condition

so that the switching action dominates the uncertain terms. With this choice and noting , (30) reduces to the conservative bound

Hence , and is nonincreasing and bounded on . This establishes boundedness of all closed-loop signals (weights, control inputs, states) for .

To obtain the explicit decay rate that yields prescribed-time convergence, we exploit the augmented sliding structure. On the sliding manifold (or during the reaching phase under the designed reaching law), the time-varying term with enforces an accelerated decay. In particular, one can show (by using the sliding-surface definition and the NTSM recursion) that there exists a positive constant such that for

Here, the constant and the admissible parameter choices are determined by the NTSM gains and the network weights; the details follow standard prescribed-time NTSM derivations (see, e.g., [40]).

Combining (34) across agents yields the differential inequality

Solving (35) by separation of variables gives

which implies . Since is positive definite in the tracking variables, we obtain

Remark 5.

Theorem 1 establishes prescribed-time convergence on $[0, T)$, with the tracking errors vanishing in the limit $t \to T^{-}$. For numerical robustness, the singular term used in the control law is regularized as with a small (e.g., ), which keeps the control action smooth and avoids division by zero as $t \to T$.

Therefore, all closed-loop signals remain bounded for $t \in [0, T)$, and the synchronization errors converge to zero at the prescribed time in the limiting sense $t \to T^{-}$, completing the proof. □
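The effect of the regularization described in Remark 5 can be checked on an illustrative scalar model, assuming the singular gain $k/(T - t)$ is replaced by $k/(T - t + \epsilon)$. The paper’s exact regularized term is not reproduced here; the gain form, parameter values, and function name below are assumptions. The exact solution becomes $s(t) = s(0)\left(\frac{T - t + \epsilon}{T + \epsilon}\right)^{k}$, so the gain stays bounded by $k/\epsilon$ while $s(T) = s(0)\,\left(\epsilon/(T + \epsilon)\right)^{k}$ leaves a small residual proportional to the initial value. The forward-Euler sketch reproduces this trade-off.

```python
def simulate_regularized(s0, T=1.0, k=2.0, eps=1e-3, dt=1e-5):
    """Forward-Euler integration of ds/dt = -k * s / (T - t + eps) on [0, T].

    Returns the final value s(T) and the largest gain applied.
    """
    n_steps = int(round(T / dt))
    s, max_gain = s0, 0.0
    for step in range(n_steps):
        t = step * dt
        gain = k / (T - t + eps)   # regularized gain, never larger than k / eps
        max_gain = max(max_gain, gain)
        s -= dt * gain * s         # explicit Euler step
    return s, max_gain


T, k, eps = 1.0, 2.0, 1e-3
for s0 in (-10.0, 1.0, 100.0):
    sT, g_max = simulate_regularized(s0, T, k, eps)
    predicted = s0 * (eps / (T + eps)) ** k   # exact residual of the regularized model
    print(f"s0={s0:7.1f}  s(T)={sT:.3e}  predicted={predicted:.3e}  max gain={g_max:.1f}")
```

Shrinking $\epsilon$ reduces the residual at $t = T$ but raises the peak gain $k/\epsilon$, so in practice $\epsilon$ is a trade-off between terminal accuracy and actuator effort.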

3.4. Prescribed-Time Convergence

To verify the prescribed-time convergence of the proposed control method, we show that the tracking errors and sliding variables reach zero at the predefined time T, regardless of the initial system states. Consider the sliding surface dynamics:

This represents a finite-time stable system. Therefore, the sliding variable converges to zero in a finite time . However, the constructed time-varying sliding surface includes a singular term:

Lemma 1.

The sliding surface converges to zero in prescribed time T, i.e., for all .

Proof.

From the structure of in (38), as , the term must remain finite (since is bounded). This requires

and thus as . The recursive structure of then ensures all tracking errors converge to zero by time T. □

On the sliding surface , we have:

Taking the time derivative yields:

Recall the recursive NTSM structure:

This implies that:

From (43), it is evident that must decay faster than to avoid the singularity at , ensuring convergence of both and .

Lyapunov-Based Prescribed-Time Stability Analysis: To formally verify convergence, consider the Lyapunov candidate:

Differentiating (44) with respect to time:

From the sliding condition , substitute for :

Since is bounded (due to bounded dynamics and control design), the dominant term is:

This yields a time-varying differential inequality. Solving it gives:

Therefore:

As , this implies:

demonstrating prescribed-time convergence of the tracking error . Given inequality (50), it is clear that:

Thus, each agent’s tracking error converges to zero exactly at the predefined time T, regardless of initial conditions, confirming prescribed-time synchronization.

Remark 6.

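The convergence time T is explicitly imposed by the designer and is independent of the initial conditions of the agents. This is a stronger guarantee than finite-time convergence, whose settling time grows with the initial error, and than fixed-time convergence, whose settling-time bound is independent of the initial conditions but is determined only indirectly by the controller gains and is often conservative. It is therefore particularly valuable for time-critical cooperative control applications.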
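For comparison, the following standard scalar results make this distinction concrete; they are textbook examples, not the controllers of this paper. For the finite-time system $\dot{z} = -k|z|^{\alpha}\operatorname{sign}(z)$ with $k > 0$ and $0 < \alpha < 1$, and the fixed-time system $\dot{z} = -c_1|z|^{p}\operatorname{sign}(z) - c_2|z|^{q}\operatorname{sign}(z)$ with $c_1, c_2 > 0$ and $0 < p < 1 < q$, the settling times satisfy:

$$
T_{\mathrm{finite}}\big(z(0)\big) = \frac{|z(0)|^{1-\alpha}}{k(1-\alpha)},
\qquad
T_{\mathrm{fixed}}\big(z(0)\big) \le \frac{1}{c_1(1-p)} + \frac{1}{c_2(q-1)},
\qquad
T_{\mathrm{prescribed}} = T .
$$

The finite-time settling time grows without bound with $|z(0)|$; the fixed-time bound is uniform in $z(0)$ but is set indirectly through $c_1$, $c_2$, $p$, and $q$; in the prescribed-time setting, T itself is the design parameter.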

The proposed control framework, summarized in Algorithm 1, outlines the step-by-step implementation of the designed strategy.

Algorithm 1. Fuzzy Neural Network-based PNTSMC

4. Illustrative Example: Synchronization Control of a Networked System

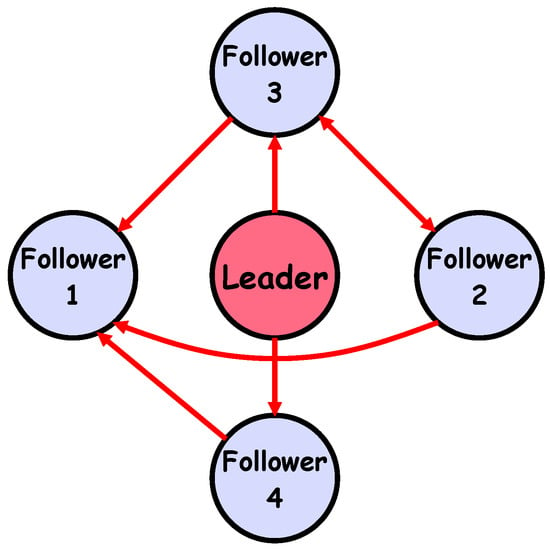

To demonstrate the effectiveness of the proposed synchronization control approach for networked agents, a simulation is conducted on a system comprising one leader and four follower agents operating under uncertain disturbances. The communication topology, shown in Figure 2, represents a directed graph that illustrates the information flow among the agents.

Figure 2.

Configuration of the network consisting of one leader and four follower agents under a defined topology.

In this illustrative scenario, both the leader and the followers are modeled as second-order dynamical systems. The main objective is to demonstrate that the controller drives all follower agents to track the leader’s trajectory within the prescribed time, even in the presence of matched external disturbances acting on the followers. The following subsections describe the agent dynamics, the graph-theoretic properties of the network, and the synchronization mismatch variables.

4.1. System Dynamics of the Leader and Followers

The following set of first-order differential equations describes the leader’s dynamics:

where represents a time-varying exogenous input. This formulation ensures that , guaranteeing equilibrium at the origin in the absence of external excitation.

Each follower agent () is governed by the nonlinear dynamics:

where $x_i$ is the state vector, $d_i(t)$ denotes a matched bounded disturbance, and $f_i(x_i)$ represents pendulum-like dynamics with gravity g and length l. The control gain is given by , where m is the pendulum mass. The goal is to design $u_i$ such that $x_i$ tracks the leader’s state within the prescribed time T despite the disturbances.
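A minimal sketch of such a follower model, assuming the standard pendulum form $\dot{x}_{i,1} = x_{i,2}$, $\dot{x}_{i,2} = -(g/l)\sin x_{i,1} + u_i/(m l^{2}) + d_i(t)$. The paper’s exact drift term and input gain are not reproduced here, so the $1/(m l^{2})$ gain, the parameter values, and the function name are assumptions. The disturbance enters the same equation as the control input, which is what makes it matched.

```python
import numpy as np


def follower_rhs(x, u, d, g=9.81, l=1.0, m=1.0):
    """Assumed pendulum-like follower: x = [angle, angular rate].

    The matched disturbance d enters the same (second) equation as the control u.
    """
    drift = -(g / l) * np.sin(x[0])   # assumed nonlinear drift f_i(x_i)
    gain = 1.0 / (m * l ** 2)          # assumed input gain g_i
    return np.array([x[1], drift + gain * u + d])


# One explicit Euler step from a hypothetical initial condition
x = np.array([np.pi / 2, 0.0])
dt = 1e-3
x_next = x + dt * follower_rhs(x, u=0.0, d=0.1)
print(follower_rhs(x, u=0.0, d=0.0), x_next)
```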

4.2. Network Topology and Interconnection Matrices

The inter-agent communication is described by a directed graph shown in Figure 2. The graph structure is captured by the adjacency matrix (including the leader as node 1), the Laplacian matrix for the follower subgraph, and the leader influence matrix :

where $a_{ij} = 1$ denotes a directed link from agent j to agent i, and $a_{ij} = 0$ otherwise. The follower Laplacian captures the internal connectivity of the follower agents, while the leader influence matrix indicates which followers directly receive information from the leader.

4.3. Control Design for the Illustrative Example

Building on this illustrative example, we now detail the design of the fuzzy neural network-based adaptive prescribed-time controller for the networked system. The system comprises one leader and four followers, each with second-order dynamics.

4.3.1. Synchronization Error Dynamics

The synchronization mismatch variables for each follower agent are defined as:

These represent weighted state deviations from neighboring agents and the leader, based on the network structure. The controller aims to drive as .

The dynamics of these error variables are derived by taking their time derivatives:

Let denote the set of neighbors of agent i, including the leader. The control input is designed to drive and to zero within a prescribed time T.

4.3.2. Prescribed-Time Non-Singular Terminal Sliding Surface

For the second-order system, a time-varying sliding surface is formulated to guarantee prescribed-time convergence. The objective is to ensure that all tracking errors vanish exactly at the predefined time T, regardless of their initial values. The sliding variable is defined as:

where , , , and are positive design constants.
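The following minimal illustration shows how a time-varying gain can force convergence exactly at T regardless of the initial value. It assumes the widely used scaling function μ(t) = T/(T − t); this is an assumption, since the paper's own time-varying terms are not reproduced here. The sketch integrates the scalar system ẋ = −kμ(t)x. Its exact solution, x(t) = x₀(1 − t/T)^{kT}, reaches zero at t = T for any x₀ whenever kT > 0. Because μ(t) grows without bound as t → T, the Euler loop stops before T.

```python
import math

T = 2.0   # prescribed time (hypothetical value)
K = 1.5   # gain; the closed form needs k*T > 0


def mu(t, T=T):
    """Assumed prescribed-time scaling mu(t) = T / (T - t), for 0 <= t < T."""
    return T / (T - t)


def closed_form(x0, t, k=K, T=T):
    """Exact solution of dx/dt = -k*mu(t)*x: x(t) = x0 * (1 - t/T)**(k*T)."""
    return x0 * (1.0 - t / T) ** (k * T) if t < T else 0.0


def euler(x0, t_end, h=1e-4, k=K, T=T):
    """Forward Euler on dx/dt = -k*mu(t)*x up to t_end < T."""
    x, t = x0, 0.0
    for _ in range(int(round(t_end / h))):
        x += h * (-k * mu(t, T) * x)
        t += h
    return x


if __name__ == "__main__":
    for x0 in (-3.0, 1.0, 10.0):
        # The ratio x(t)/x0 is the same for every x0, and x(T) = 0.
        print(x0, closed_form(x0, 1.0), euler(x0, 1.0), closed_form(x0, T))
```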

4.3.3. FNN-Based Adaptive Control Law Design

The control law is designed to satisfy the sliding condition (or a more general stable form for robustness). Taking the time derivative of :

The unknown nonlinear functions and disturbances are approximated by FNNs. The FNN approximations are denoted by and . To ensure stability and convergence, the control law is designed to cancel the nonlinearities and disturbances. The resulting control law is given by:

where and are the control gains for robustness and stability.
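The control law itself is not reproduced in this text. The block below is a hedged sketch of why a nonsingular terminal surface avoids singular control. It implements a variant of the classical NTSM surface of Feng, Yu and Man (2002), s = e₁ + (1/β) sig(e₂)^γ with 1 < γ = p/q < 2. It applies this surface to a single tracking error with assumed dynamics ė₁ = e₂, ė₂ = f + bu + d. The sketch omits the prescribed-time terms and the network coupling. `f_hat` and `d_hat` stand in for the FNN estimates. The gains, the extra linear term k₁s, and the boundary-layer saturation are hypothetical choices.

```python
import math

BETA = 2.0                    # surface gain beta > 0 (hypothetical)
GAMMA = 5.0 / 3.0             # p/q with p, q odd and 1 < p/q < 2 (hypothetical)
K1, K2, PHI = 4.0, 0.5, 0.05  # reaching gains and boundary-layer width (hypothetical)


def sig(z, a):
    """sig(z)^a = |z|^a * sign(z), with sig(0)^a = 0."""
    return math.copysign(abs(z) ** a, z) if z != 0.0 else 0.0


def sliding_variable(e1, e2):
    """Nonsingular terminal sliding variable s = e1 + (1/beta) sig(e2)^gamma."""
    return e1 + sig(e2, GAMMA) / BETA


def control(e1, e2, f_hat, d_hat, b):
    """Equivalent control plus a smoothed reaching term, assuming
    e1' = e2 and e2' = f + b*u + d. The only fractional power is
    2 - gamma in (0, 1), so u stays finite at e2 = 0 (no singularity)."""
    s = sliding_variable(e1, e2)
    sat = max(-1.0, min(1.0, s / PHI))
    return -(f_hat + d_hat + (BETA / GAMMA) * sig(e2, 2.0 - GAMMA)
             + K1 * s + K2 * sat) / b


def s_dot(e2, e2_dot):
    """Time derivative of s along the assumed error dynamics."""
    return e2 + (GAMMA / BETA) * abs(e2) ** (GAMMA - 1.0) * e2_dot


if __name__ == "__main__":
    e1, e2, f, d, b = 0.3, -0.4, 1.2, 0.1, 2.0
    u = control(e1, e2, f, d, b)
    print(u, sliding_variable(e1, e2), s_dot(e2, f + b * u + d))
```

With exact estimates (f_hat = f, d_hat = d), substituting u gives ṡ = −(γ/β)|e₂|^{γ−1}(k₁s + k₂ sat(s/φ)), so sṡ ≤ 0. Feng's original law uses a discontinuous sign term. Replacing it with a saturation is a common chattering-reduction choice, made here only for illustration.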

4.3.4. FNN Adaptive Laws

The weights of the FNNs are updated online using the following adaptive laws, which are derived to guarantee the boundedness of the weight estimation errors:

where are positive definite learning rate matrices and are positive design constants. These laws ensure that the approximation errors are compensated for, allowing the system to achieve prescribed-time synchronization.
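The FNN structure and the adaptive laws are not reproduced in this text. The following sketch therefore assumes a common construction: Gaussian membership functions, product-rule firing strengths normalized to sum to one, and an output ŵᵀφ(x). It updates the weights with a σ-modified law ŵ̇ = Γ(φs − σŵ), using a scalar Γ for simplicity. The centres, width, and gains are hypothetical. The sign of the φs term assumes the estimation error enters ṡ with a positive coefficient.

```python
import math
from itertools import product

CENTERS = [-1.0, 0.0, 1.0]  # Gaussian membership centres per input (hypothetical)
WIDTH = 0.8                 # common Gaussian width (hypothetical)


def firing_strengths(x):
    """Normalized rule firing strengths phi_k(x) of a Gaussian FNN.
    Each input has len(CENTERS) fuzzy sets; rules combine them with the
    product T-norm, so a 2-input network has 3 * 3 = 9 rules."""
    memberships = [[math.exp(-((xi - c) / WIDTH) ** 2) for c in CENTERS] for xi in x]
    raw = [math.prod(combo) for combo in product(*memberships)]
    total = sum(raw)
    return [r / total for r in raw]


def fnn_output(w, phi):
    """FNN estimate f_hat = w^T phi(x)."""
    return sum(wk * pk for wk, pk in zip(w, phi))


def adapt(w, phi, s, gamma=5.0, sigma=0.01, h=0.01):
    """One forward-Euler step of the assumed law w' = gamma * (phi * s - sigma * w).
    The -sigma*w leakage term keeps the weight estimates bounded."""
    return [wk + h * gamma * (pk * s - sigma * wk) for wk, pk in zip(w, phi)]


if __name__ == "__main__":
    phi = firing_strengths([0.2, -0.5])
    w = [0.0] * len(phi)
    for _ in range(100):  # drive the weights with a constant sliding variable
        w = adapt(w, phi, s=0.3)
    print(round(fnn_output(w, phi), 4))
```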

4.3.5. Theoretical Guarantee

Theorem 2.

Consider the leader-follower network described above. Suppose each follower’s control input is designed as per the control law (62), and the FNN weights are updated via the adaptation laws (63). Then, for properly selected design parameters , the synchronization errors and sliding surfaces converge to zero within the prescribed time T, i.e.,

This implies that all follower agents synchronize with the leader within the prescribed time T, despite modeling uncertainties, nonlinear dynamics, and inter-agent couplings.

4.4. Simulation Results

This section presents a detailed simulation analysis to assess the performance and robustness of the proposed control scheme, particularly under matched-type, time-varying disturbances. A comparison with the conventional SMC approach is also included to identify the improvements achieved by the proposed method.

4.4.1. Controller Parameters

The control parameters used in the simulations are listed in Table 1. They play a crucial role in shaping the closed-loop dynamics of the multi-agent system and in adjusting the responsiveness of the control strategy.

Table 1.

System, control design, and FNN parameters for leader and followers.

4.4.2. Simulation Setup

The simulated network consists of five agents, one leader and four followers, connected according to the communication topology in Figure 2. Each agent starts from a distinct initial state. Simulations are carried out in MATLAB/Simulink (version R2024b) using a fixed-step forward Euler (one-step) integration scheme with a step size of 0.01 s. To evaluate robustness, the followers are subjected to sinusoidal time-varying disturbances throughout the simulation.
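The following sketch makes the simulation procedure concrete. It runs a fixed-step forward-Euler loop with h = 0.01 s, the step size stated above. A single pendulum-like follower tracks a hypothetical leader trajectory sin(t) under a hypothetical sinusoidal disturbance 0.2 sin(2t). The controller is a plain model-based sliding-mode law with a boundary layer, not the proposed FNN-based NTSMC. All parameter values are assumptions rather than the Table 1 values.

```python
import math

G, L_P, M = 9.81, 1.0, 1.0              # hypothetical pendulum parameters
LAM, K, ETA, PHI = 3.0, 5.0, 0.5, 0.05  # hypothetical controller gains
H = 0.01                                # Euler step size stated in the setup


def leader(t):
    """Hypothetical leader trajectory: position, velocity, acceleration."""
    return math.sin(t), math.cos(t), -math.sin(t)


def disturbance(t):
    """Hypothetical sinusoidal matched disturbance (|d| <= 0.2 < ETA)."""
    return 0.2 * math.sin(2.0 * t)


def simulate(t_end=10.0, x=(0.5, 0.0)):
    """Return the position tracking error e = x1 - x0 at every step."""
    x1, x2 = x
    b = 1.0 / (M * L_P ** 2)
    t, errors = 0.0, []
    for _ in range(int(round(t_end / H))):
        p0, v0, a0 = leader(t)
        e, de = x1 - p0, x2 - v0
        s = de + LAM * e                      # linear sliding variable
        f = -(G / L_P) * math.sin(x1)
        sat = max(-1.0, min(1.0, s / PHI))
        # Model-based cancellation plus a boundary-layer switching term.
        u = (-f + a0 - LAM * de - K * s - ETA * sat) / b
        # Forward-Euler step of the follower dynamics with the disturbance.
        x1, x2 = x1 + H * x2, x2 + H * (f + b * u + disturbance(t))
        t += H
        errors.append(e)
    return errors


if __name__ == "__main__":
    errs = simulate()
    print(errs[0], errs[-1])
```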

4.4.3. Tracking Performance

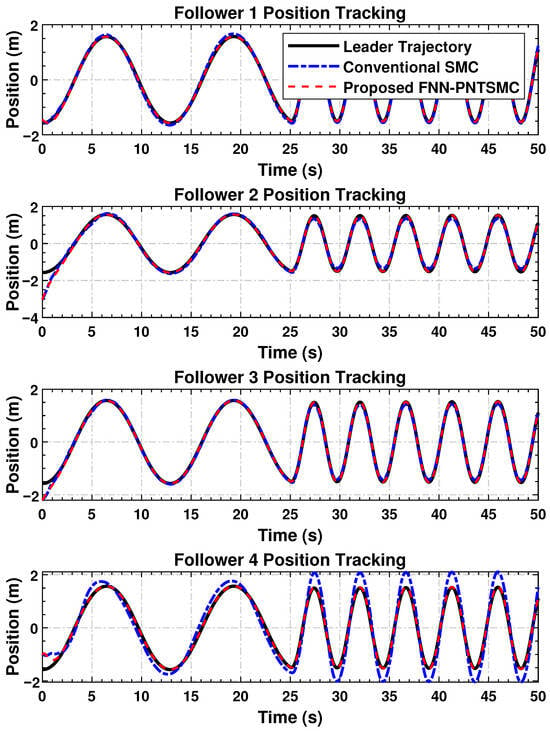

Figure 3 shows the position trajectories of the follower agents converging to that of the leader. Accurate tracking is maintained despite external perturbations, which is consistent with the theoretical guarantee established in the previous section. Compared with classical SMC, the proposed framework yields smoother transitions and lower residual errors.

Figure 3.

Position trajectories of the four follower agents converging to the leader's trajectory under the proposed control strategy.

As shown, all followers converge to the leader's trajectory within the prescribed time, despite the disturbances. The proposed controller exhibits minimal overshoot and oscillation, indicating a responsive and well-tuned design. The conventional SMC-based controller, by contrast, exhibits a residual steady-state error and noticeable chattering, whereas the proposed controller reaches steady state with virtually no error. Such residual chattering and steady-state deviation could pose challenges in precision-critical or safety-critical systems.

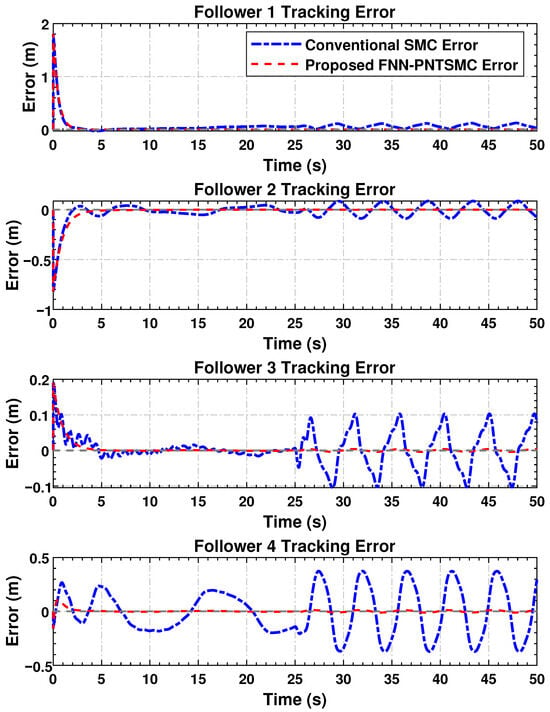

4.4.4. Mismatch Convergence

Figure 4 depicts the evolution of the synchronization errors of the follower agents. The steady decrease in error magnitude provides further evidence of the algorithm's ability to coordinate the agents' behavior. In terms of settling time and disturbance attenuation, the proposed approach clearly outperforms SMC.

Figure 4.

Evolution of the synchronization errors of the follower agents, indicating successful convergence in the multi-agent network.

The error plots show that the errors of all agents converge rapidly toward zero. The proposed controller also produces smoother error profiles with substantially less chattering than the SMC benchmark. This indicates that the adaptive FNN component effectively mitigates inter-agent discrepancies, even under time-varying disturbances and nonlinear uncertainties.

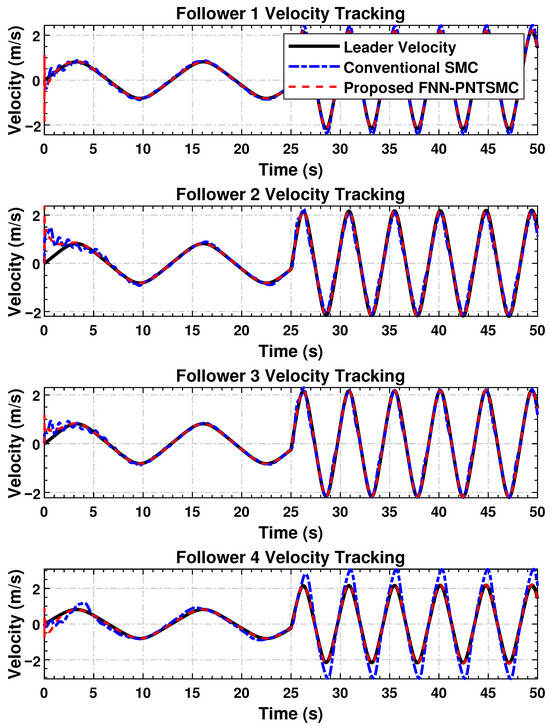

4.4.5. Velocity Tracking

Figure 5 shows the velocity profiles of the follower agents, which reflect the dynamic behavior of the system. Under the proposed control law, each follower approaches the leader's velocity smoothly and with minimal oscillation. Compared with SMC, the proposed scheme yields better transient performance and improved steady-state stability. The rapid corrective actions in the velocity response are well damped, preventing abrupt fluctuations and excessive power loss, both of which are critical in systems with high inertia or rapid speed variations. The irregular, non-smooth transients observed under SMC indicate less effective regulation than with the proposed method.

Figure 5.

Velocity synchronization of the follower agents with the leader, demonstrating dynamic response under the control scheme.

The transient overshoot in the followers’ velocity tracking arises from the strong corrective action of the prescribed-time control term and terminal sliding dynamics, which enforce rapid convergence within the fixed horizon T. This transient behavior reflects the trade-off between fast convergence and smoothness typical in prescribed-time and terminal SMC frameworks. The slightly higher overshoot under the proposed FNN-based controller results from the neural adaptation phase, where rapid online weight updates temporarily amplify control activity before accurate approximation of system nonlinearities is achieved. Once adaptation stabilizes, the controller ensures faster convergence, smaller steady-state errors, smoother control signals, and superior robustness compared to conventional SMC. Unlike fixed-gain SMC, the fuzzy neural adaptive NTSMC continuously tunes its parameters online, enabling improved long-term tracking under uncertainties. Moreover, the comparatively smoother performance of followers farther from the leader (e.g., follower 4) results from the directed communication topology, where intermediate agents naturally filter high-frequency transients during consensus propagation.

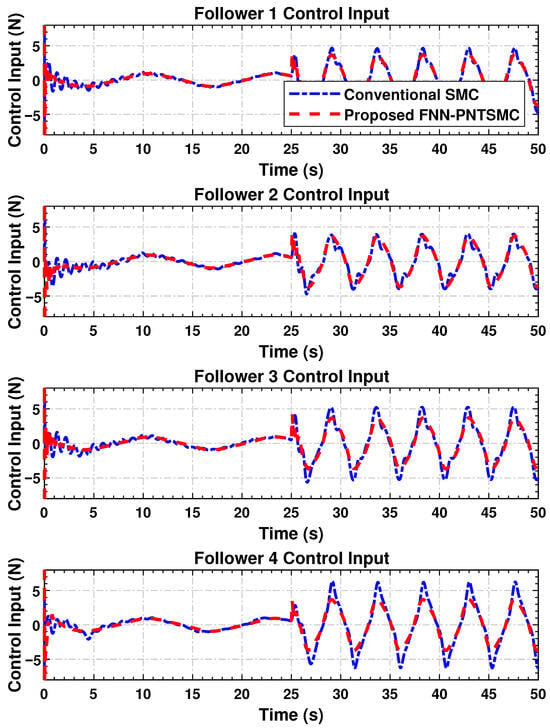

4.4.6. Control Input Synchronization

Figure 6 shows the control signal trajectories. The agents' control inputs synchronize with much less high-frequency switching. This matters in physical implementations because it reduces wear and energy consumption in electromechanical actuators. The control profiles are smooth, with few sharp transitions or spikes. This contrasts with the SMC scheme, whose discontinuous switching term produces a discontinuous control signal. Smoother inputs particularly benefit systems driven by physical actuators or subject to bandwidth limitations, which is a key strength of the proposed approach.

Figure 6.

Time histories of control signals applied to follower agents, illustrating coordinated actuation and reduced chattering behavior.
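The chattering mechanism discussed above can be reproduced in a few lines. The sketch uses hypothetical parameters, a pendulum-like follower, a sinusoidal disturbance, and an Euler step of 0.01 s; it is not the paper's controller. It runs the same sliding-mode loop twice: once with a discontinuous sign(s) switching term, as in classical SMC, and once with a continuous saturation. It then compares the total variation of the control signal over the second half of each run.

```python
import math

G, L_P, M = 9.81, 1.0, 1.0
LAM, K, ETA, PHI, H = 3.0, 5.0, 0.5, 0.05, 0.01  # hypothetical values


def sign(z):
    return 1.0 if z > 0 else (-1.0 if z < 0 else 0.0)


def sat(z):
    return max(-1.0, min(1.0, z))


def control_history(switch, t_end=10.0):
    """Closed-loop Euler run of a pendulum follower tracking sin(t) under a
    sinusoidal disturbance; returns the applied control samples.
    switch(s) is the switching term, e.g. sign(s) or sat(s / PHI)."""
    x1, x2, t = 0.5, 0.0, 0.0
    b = 1.0 / (M * L_P ** 2)
    us = []
    for _ in range(int(round(t_end / H))):
        p0, v0, a0 = math.sin(t), math.cos(t), -math.sin(t)
        e, de = x1 - p0, x2 - v0
        s = de + LAM * e
        f = -(G / L_P) * math.sin(x1)
        u = (-f + a0 - LAM * de - K * s - ETA * switch(s)) / b
        x1, x2 = x1 + H * x2, x2 + H * (f + b * u + 0.2 * math.sin(2.0 * t))
        t += H
        us.append(u)
    return us


def total_variation(samples):
    """Sum of absolute sample-to-sample changes: a simple chattering measure."""
    return sum(abs(b - a) for a, b in zip(samples, samples[1:]))


if __name__ == "__main__":
    tv_sign = total_variation(control_history(sign)[500:])
    tv_sat = total_variation(control_history(lambda s: sat(s / PHI))[500:])
    print(f"TV sign: {tv_sign:.1f}, TV sat: {tv_sat:.1f}")
```

The sign-based run accumulates a much larger total variation. At a fixed sampling step, s keeps crossing zero and the switching term jumps by 2η at each crossing. The continuous version replaces those jumps with a smooth term inside the boundary layer.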

The simulation results confirm that the proposed distributed control law achieves high tracking accuracy (Figure 3), stable synchronization (Figure 4), convergent velocity regulation (Figure 5), and smooth, coordinated control effort (Figure 6) under time-varying disturbances. Compared with the conventional SMC method, the proposed FNN-based adaptive controller demonstrates superior robustness, with significantly reduced steady-state and transient errors, and it requires substantially less control effort. These findings suggest that the proposed controller performs reliably under the simulated conditions and holds strong potential for application in multi-agent cooperative systems, robotic platforms, and precision networked control systems.

5. Conclusions

This work proposes a novel synchronization control strategy that integrates fuzzy neural networks with a prescribed-time nonsingular terminal sliding mode control framework for higher-order nonlinear multi-agent systems subject to uncertainties. The method guarantees that all follower agents align with the leader’s state within a predefined time bound, regardless of initial conditions, while effectively compensating for matched uncertainties under a directed communication topology. The FNNs provide real-time estimation of unknown nonlinear dynamics, and the terminal sliding surface is designed to eliminate singularities and ensure fast error convergence. A Lyapunov-based stability analysis verifies prescribed-time synchronization with bounded trajectories of the system. Simulation studies on a leader–follower network demonstrate superior robustness, accuracy, and adaptability compared to conventional sliding mode control techniques, confirming its potential for real-world deployment in uncertain and time-critical applications. Future research will focus on extending this approach to handle time-varying communication topologies, actuator faults, and heterogeneous agent dynamics, as well as conducting experimental validation to confirm its practical feasibility.

Author Contributions

Conceptualization, S.U.; Methodology, S.U.; Software, S.U.; Validation, S.U., M.Z.B. and A.A.A.; Formal analysis, M.Z.B., S.A. and A.S.A.; Investigation, M.Z.B., A.S.A. and A.A.A.; Resources, S.A., A.S.A. and H.K.; Data curation, M.Z.B. and H.K.; Writing—original draft preparation, S.U.; Writing—review and editing, S.U., M.Z.B., S.A., A.S.A., H.K. and A.A.A.; Visualization, Project administration, S.U. and H.K.; Funding acquisition, S.A., A.S.A. and A.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Deanship of Scientific Research (DSR) at King Faisal University, Saudi Arabia under Grant KFU254188 and in part by the DSR at Northern Border University, Arar, Saudi Arabia, under Project NBU-FFR-2025-2484-20.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, Y.; Garcia, E.; Casbeer, D.; Zhang, F. Cooperative Control of Multi-Agent Systems: Theory and Applications; John Wiley and Sons Ltd.: Hoboken, NJ, USA, 2017.

- Ali, Z.A.; Alkhammash, E.H.; Hasan, R. State-of-the-art flocking strategies for the collective motion of multi-robots. Machines 2024, 12, 739.

- Bao, G.; Ma, L.; Yi, X. Recent advances on cooperative control of heterogeneous multi-agent systems subject to constraints: A survey. Syst. Sci. Control Eng. 2022, 10, 539–551.

- Li, W.; Shi, F.; Li, W.; Yang, S.; Wang, Z.; Li, J. Review on Cooperative Control of Multi-Agent Systems. Int. J. Appl. Math. Control Eng. 2024, 7, 10–17.

- Smith, E.; Robinson, D.; Agalgaonkar, A. Cooperative control of microgrids: A review of theoretical frameworks, applications and recent developments. Energies 2021, 14, 8026.

- Liao, Z.; Shi, J.; Zhang, Y.; Wang, S.; Chen, R.; Sun, Z. A leader–follower attack-tolerant algorithm for resilient rendezvous with reduced network redundancy. IEEE Syst. J. 2025, 19, 212–223.

- Katsoukis, I.; Rovithakis, G.A. A low complexity robust output synchronization protocol with prescribed performance for high-order heterogeneous uncertain MIMO nonlinear multiagent systems. IEEE Trans. Autom. Control 2021, 67, 3128–3133.

- Chen, Z. Synchronization of frequency-modulated multiagent systems. IEEE Trans. Autom. Control 2022, 68, 3425–3439.

- Ning, B.; Han, Q.L.; Zuo, Z.; Ding, L.; Lu, Q.; Ge, X. Fixed-time and prescribed-time consensus control of multiagent systems and its applications: A survey of recent trends and methodologies. IEEE Trans. Ind. Inform. 2022, 19, 1121–1135.

- Lui, D.G.; Petrillo, A.; Santini, S. Leader tracking control for heterogeneous uncertain nonlinear multi-agent systems via a distributed robust adaptive PID strategy. Nonlinear Dyn. 2022, 108, 363–378.

- Munir, M.; Khan, Q.; Ullah, S.; Syeda, T.M.; Algethami, A.A. Control Design for Uncertain Higher-Order Networked Nonlinear Systems via an Arbitrary Order Finite-Time Sliding Mode Control Law. Sensors 2022, 22, 2748.

- Hatanaka, T.; Igarashi, Y.; Fujita, M.; Spong, M.W. Passivity-based pose synchronization in three dimensions. IEEE Trans. Autom. Control 2011, 57, 360–375.

- Zhou, Y.; Yu, X.; Sun, C.; Yu, W. Robust synchronisation of second-order multi-agent system via pinning control. IET Control Theory Appl. 2015, 9, 775–783.

- Nuño, E.; Ortega, R.; Jayawardhana, B.; Basañez, L. Coordination of multi-agent Euler–Lagrange systems via energy-shaping: Networking improves robustness. Automatica 2013, 49, 3065–3071.

- Zhang, H.; Lewis, F.L.; Qu, Z. Lyapunov, adaptive, and optimal design techniques for cooperative systems on directed communication graphs. IEEE Trans. Ind. Electron. 2011, 59, 3026–3041.

- Ullah, S.; Mehmood, A.; Khan, Q.; Rehman, S.; Iqbal, J. Robust integral sliding mode control design for stability enhancement of under-actuated quadcopter. Int. J. Control Autom. Syst. 2020, 18, 1671–1678.

- Pilloni, A.; Pisano, A.; Franceschelli, M.; Usai, E. Finite-time consensus for a network of perturbed double integrators by second-order sliding mode technique. In Proceedings of the 52nd IEEE Conference on Decision and Control, Firenze, Italy, 10–13 December 2013; pp. 2145–2150.

- Ullah, S.; Khan, Q.; Mehmood, A.; Kirmani, S.A.M.; Mechali, O. Neuro-adaptive fast integral terminal sliding mode control design with variable gain robust exact differentiator for under-actuated quadcopter UAV. ISA Trans. 2022, 120, 293–304.

- Khan, Q.; Akmeliawati, R.; Bhatti, A.I.; Khan, M.A. Robust stabilization of underactuated nonlinear systems: A fast terminal sliding mode approach. ISA Trans. 2017, 66, 241–248.

- Zhang, H.; Lewis, F.L. Adaptive cooperative tracking control of higher-order nonlinear systems with unknown dynamics. Automatica 2012, 48, 1432–1439.

- El-Ferik, S.; Qureshi, A.; Lewis, F.L. Neuro-adaptive cooperative tracking control of unknown higher-order affine nonlinear systems. Automatica 2014, 50, 798–808.

- Yang, L.; Yang, J. Nonsingular fast terminal sliding-mode control for nonlinear dynamical systems. Int. J. Robust Nonlinear Control 2011, 21, 1865–1879.

- Ullah, S.; Khan, Q.; Mehmood, A. Neuro-adaptive fixed-time non-singular fast terminal sliding mode control design for a class of under-actuated nonlinear systems. Int. J. Control 2023, 96, 1529–1542.

- Wu, Y.; Yu, X.; Man, Z. Terminal sliding mode control design for uncertain dynamic systems. Syst. Control Lett. 1998, 34, 281–287.

- Feng, Y.; Yu, X.; Man, Z. Non-singular terminal sliding mode control of rigid manipulators. Automatica 2002, 38, 2159–2167.

- Feng, Y.; Yu, X.; Han, F. On nonsingular terminal sliding-mode control of nonlinear systems. Automatica 2013, 49, 1715–1722.

- Qiao, L.; Zhang, W. Trajectory tracking control of AUVs via adaptive fast nonsingular integral terminal sliding mode control. IEEE Trans. Ind. Inform. 2019, 16, 1248–1258.

- Wang, Y.; Liu, L.; Wang, D.; Ju, F.; Chen, B. Time-delay control using a novel nonlinear adaptive law for accurate trajectory tracking of cable-driven robots. IEEE Trans. Ind. Inform. 2019, 16, 5234–5243.

- Ullah, S.; Alghamdi, H.; Algethami, A.A.; Alghamdi, B.; Hafeez, G. Robust Control Design of Under-Actuated Nonlinear Systems: Quadcopter Unmanned Aerial Vehicles with Integral Backstepping Integral Terminal Fractional-Order Sliding Mode. Fractal Fract. 2024, 8, 412.

- Polyakov, A. Nonlinear feedback design for fixed-time stabilization of linear control systems. IEEE Trans. Autom. Control 2011, 57, 2106–2110.

- Li, H.; Cai, Y. On SFTSM control with fixed-time convergence. IET Control Theory Appl. 2017, 11, 766–773.

- Ni, J.; Liu, L.; Liu, C.; Hu, X.; Li, S. Fast fixed-time nonsingular terminal sliding mode control and its application to chaos suppression in power system. IEEE Trans. Circuits Syst. II Express Briefs 2016, 64, 151–155.

- Hu, C.; Yu, J.; Chen, Z.; Jiang, H.; Huang, T. Fixed-time stability of dynamical systems and fixed-time synchronization of coupled discontinuous neural networks. Neural Netw. 2017, 89, 74–83.

- Lei, Y.; Wang, Y.W.; Morărescu, I.C.; Postoyan, R. Event-triggered fixed-time stabilization of two time scales linear systems. IEEE Trans. Autom. Control 2022, 68, 1722–1729.

- Long, S.; Huang, W.; Wang, J.; Liu, J.; Gu, Y.; Wang, Z. A fixed-time consensus control with prescribed performance for multi-agent systems under full-state constraints. IEEE Trans. Autom. Sci. Eng. 2024, 22, 6398–6407.

- Xiong, H.; Chen, G.; Ren, H.; Li, H. Broad-Learning-System-Based Model-Free Adaptive Predictive Control for Nonlinear MASs Under DoS Attacks. IEEE/CAA J. Autom. Sin. 2025, 12, 381–393.

- Wang, Z.; Cai, X.; Luo, A.; Ma, H.; Xu, S. Reinforcement-Learning-Based Fixed-Time Prescribed Performance Consensus Control for Stochastic Nonlinear MASs with Sensor Faults. Sensors 2024, 24, 7906.

- Ma, L.; Gao, Y.; Li, B. Distributed Fixed-Time Formation Tracking Control for the Multi-Agent System and an Application in Wheeled Mobile Robots. Actuators 2024, 13, 68.

- Liu, C.; Xu, Z. Multi-Agent System Based Cooperative Control for Speed Convergence of Virtually Coupled Train Formation. Sensors 2024, 24, 4231.

- Li, M.; Zhang, K.; Liu, Y.; Song, F.; Li, T. Prescribed-Time Consensus of Nonlinear Multi-Agent Systems by Dynamic Event-Triggered and Self-Triggered Protocol. IEEE Trans. Autom. Sci. Eng. 2025, 22, 16768–16779.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).