Abstract

Atrial Fibrillation (AF) is a common yet often undiagnosed cardiac arrhythmia with serious clinical consequences, including increased risk of stroke, heart failure, and mortality. In this work, we present a novel Embedded Edge system performing real-time AF detection on a low-power Microcontroller Unit (MCU). Rather than relying on full Electrocardiogram (ECG) waveforms or cloud-based analytics, our method extracts Heart Rate Variability (HRV) features from RR-Interval (RRI) and performs classification using a compact Long Short-Term Memory (LSTM) model optimized for embedded deployment. We achieved an overall classification accuracy of 98.46% while maintaining a minimal resource footprint: inference on the target MCU completes in 143 ± 0 ms and consumes 3532 ± 6 μJ per inference. This low power consumption for local inference makes it feasible to strategically keep wireless communication OFF, activating it only to transmit an alert upon AF detection, thereby reinforcing privacy and enabling long-term battery life. Our results demonstrate the feasibility of performing clinically meaningful AF monitoring directly on constrained edge devices, enabling energy-efficient, privacy-preserving, and scalable screening outside traditional clinical settings. This work contributes to the growing field of personalised and decentralised cardiac care, showing that Artificial Intelligence (AI)-driven diagnostics can be both technically practical and clinically relevant when implemented at the edge.

1. Introduction

Atrial Fibrillation (AF) is the most prevalent sustained cardiac arrhythmia, affecting an estimated 59 million individuals globally as of 2019 [1]. It is characterised by rapid and disorganised atrial electrical activity that disrupts coordinated atrial contraction, thereby promoting the stasis of blood flow, particularly in the left atrial appendage. This contributes to a substantially elevated risk of thromboembolic events, most notably ischemic stroke, for which AF increases the risk fivefold [2,3]. Moreover, AF is associated with a broader spectrum of adverse clinical outcomes, including heart failure [4], cognitive impairment [5], reduced quality of life [6], and increased all-cause mortality [7,8]. Despite its clinical significance, timely AF diagnosis remains challenging. A considerable proportion of AF cases are asymptomatic or manifest paroxysmally; hence, such cases are difficult to detect during routine clinical encounters [9]. This delay is particularly problematic, as early initiation of evidence-based treatments, such as anticoagulation therapy, rate or rhythm control, and lifestyle changes, has been shown to significantly reduce morbidity and mortality [10]. These challenges underscore the imperative for continuous, accessible, and reliable AF monitoring modalities that extend beyond conventional clinical settings.

Remote monitoring technologies are seen by many as promising tools for improving AF detection rates, particularly for paroxysmal and silent AF [11,12,13]. Wearable devices and portable biosensors facilitate the longitudinal acquisition of physiological data in real-world environments, enabling proactive intervention and management. However, many current solutions rely on cloud-based analytics [14], which introduce several critical limitations: increased latency, dependence on uninterrupted wireless connectivity, elevated energy consumption due to frequent data transmission, and concerns related to data privacy and regulatory compliance [15]. To mitigate these constraints, a shift toward edge computing is necessary. The “edge” is often defined as a continuum of processing locations; while the network edge refers to computing near the base station, our focus is on the embedded edge or micro edge: the processing power located directly on the end-device (the wearable sensor). Deploying edge Artificial Intelligence (AI) models at this tier allows for real-time signal processing, drastically reduces energy expenditure by minimising raw data transmission, and bolsters user privacy through localised inference on resource-constrained Microcontroller Units (MCUs) [16,17].

In this work, we propose a novel embedded edge AF detection system that performs real-time classification entirely on a low-power embedded platform. Our approach represents a classic TinyML application: the computationally expensive model training occurs in the cloud, while the resulting lightweight model is deployed to the MCU for local execution. Our method uses Heart Rate Variability (HRV) features derived from RR-Intervals (RRIs) as the primary input, rather than raw Electrocardiogram (ECG) waveforms. We demonstrate that this abstraction significantly reduces the computational burden and memory footprint while retaining sufficient discriminatory power for arrhythmia detection. The demonstrator is based on the design and implementation of a compact Long Short-Term Memory (LSTM) model optimised for embedded deployment. The model achieved a classification Accuracy (ACC) of 98.46%. Critically, we quantified the resource footprint of this architecture: inference on the selected MCU completes in approximately 143 ± 0 ms and draws an average current of 13.72 ± 0.02 mA at 1.8 V, corresponding to an energy consumption of 3532 ± 6 μJ per inference. This low energy cost for inference enables a key architectural advantage: the wireless uplink can be strategically kept OFF during continuous monitoring and activated only to transmit a high-priority alert upon AF detection, thereby conserving energy and maximising the battery life. We validate the proposed architecture through detailed empirical measurements on a representative MCU platform, demonstrating the feasibility of sustainable continuous AF monitoring via intelligent edge processing. This contribution advances the development of energy-efficient, privacy-preserving, and clinically meaningful wearable diagnostics for cardiac rhythm disorders. 
It also aligns with emerging trends in personalised and decentralised healthcare, where AI-driven insights are delivered directly to the user with minimal latency and infrastructural demands.

The manuscript is structured as follows: Section 2 introduces the materials and methods used to implement and validate the LSTM model on an MCU, and outlines the measurement setup used to establish the power requirements during inference. Section 3 details the model performance results and the implementation-related measurements. In Section 4, we compare and contrast these results with the performance reported in related work; Section 4.1 includes a system architecture sketch clarifying the roles of the MCU (micro edge), the wireless link, and the cloud. The paper concludes with Section 5.

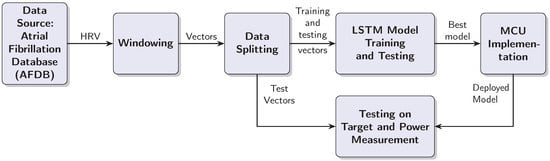

2. Materials and Methods

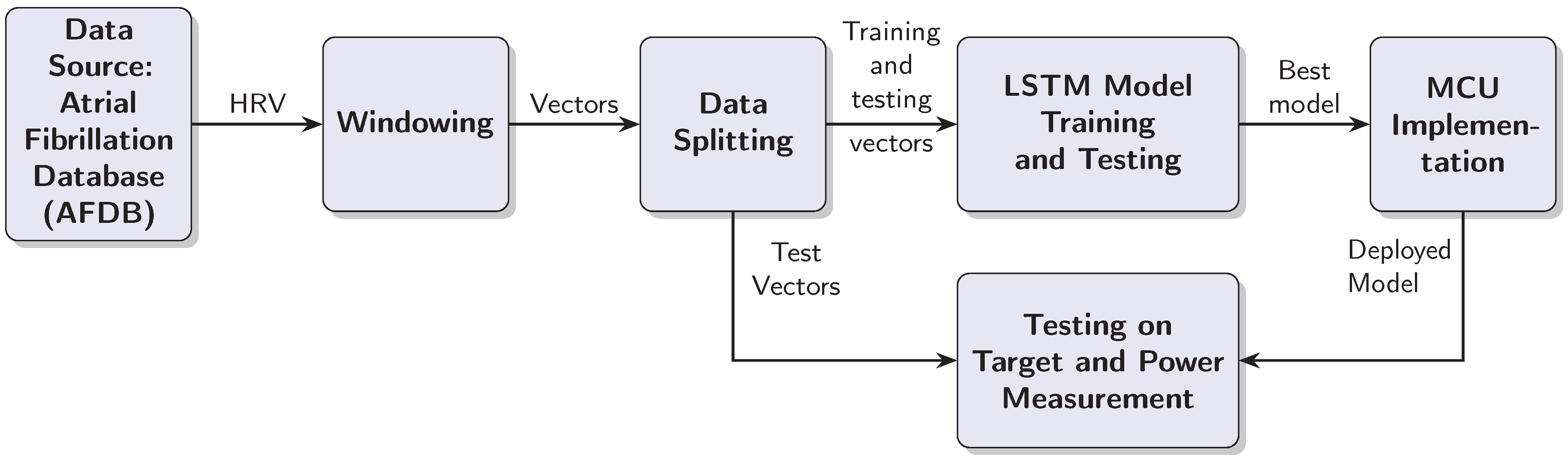

This section outlines the steps taken to test the LSTM-based AF detection model on an MCU target. The design process is iterative, because the MCU target places restrictions on the model complexity and the window length. Furthermore, the practical implementation must be capable of processing RRIs in real time, which means processing a test vector in less than half a second. As a consequence of these requirements, we had to balance the classification performance on one side against the computational complexity of the model and the inference time on the other. The design process resulted in a linear data processing chain, which is documented in Figure 1. The individual blocks are explained in the text below.

Figure 1.

Block diagram of the AF detection system development and deployment process.

2.1. Data Source

The AF detection model was developed with HRV data from the MIT-BIH AF Database [18,19]. The collection comprises 23 ten-hour, two-lead ECG Holter recordings (sampling rate: 250 Hz) from distinct subjects. Expert cardiologists from Beth Israel Hospital (Boston, MA, USA) provided beat-level and rhythm-level annotations, including AF markers and R-peak positions. For this study, the R-peak annotations were used to derive the RRI sequence for HRV analysis.

To validate the model’s generalisation capability on unseen, less-curated data from a separate cohort, we used the Long-Term AF Database (LTAFDB) [18,20]. The LTAFDB consists of 84 long-term ECG recordings (typically 24 to 25 h each) from subjects with paroxysmal or sustained AF. The beat and rhythm annotations used to generate the RRI vectors were produced by medical experts from Northwestern University (633 Clark St, Evanston, IL 60208, USA).
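Deriving the RRI sequence amounts to differencing consecutive R-peak positions and dividing by the sampling rate, RRI_k = (r_{k+1} − r_k)/f_s with f_s = 250 Hz. The Python sketch below illustrates this step together with one possible way of transferring rhythm annotations to individual intervals. It assumes the annotations are already loaded as plain arrays (for example with a PhysioNet annotation reader), that AF episodes carry the rhythm code `(AFIB` following the PhysioNet rhythm-annotation convention, and that each interval inherits the rhythm active at its closing R-peak. The function names are hypothetical, and this is not necessarily the exact rule used to build the reported dataset.

```python
import numpy as np

FS_HZ = 250  # sampling rate of the MIT-BIH AF Database recordings

def rr_intervals(r_peak_samples, fs=FS_HZ):
    """RR intervals in seconds from R-peak sample indices."""
    peaks = np.asarray(r_peak_samples, dtype=np.int64)
    return np.diff(peaks) / fs

def interval_labels(r_peak_samples, rhythm_starts, rhythm_codes, af_code="(AFIB"):
    """Label each RR interval 1 (AF) or 0 (non-AF).

    rhythm_starts: sorted sample indices at which each rhythm episode begins.
    rhythm_codes:  rhythm code of each episode, e.g. "(N" or "(AFIB".
    Each interval takes the rhythm active at its closing R-peak (an assumption).
    """
    closing = np.asarray(r_peak_samples)[1:]
    episode = np.searchsorted(rhythm_starts, closing, side="right") - 1
    # Peaks before the first rhythm annotation inherit the first episode's code.
    episode = np.clip(episode, 0, len(rhythm_codes) - 1)
    return np.array([int(rhythm_codes[e] == af_code) for e in episode])

peaks = [0, 200, 400, 550, 700]
print(rr_intervals(peaks))                                # [0.8 0.8 0.6 0.6]
print(interval_labels(peaks, [0, 450], ["(N", "(AFIB"]))  # [0 0 1 1]
```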

2.2. Windowing

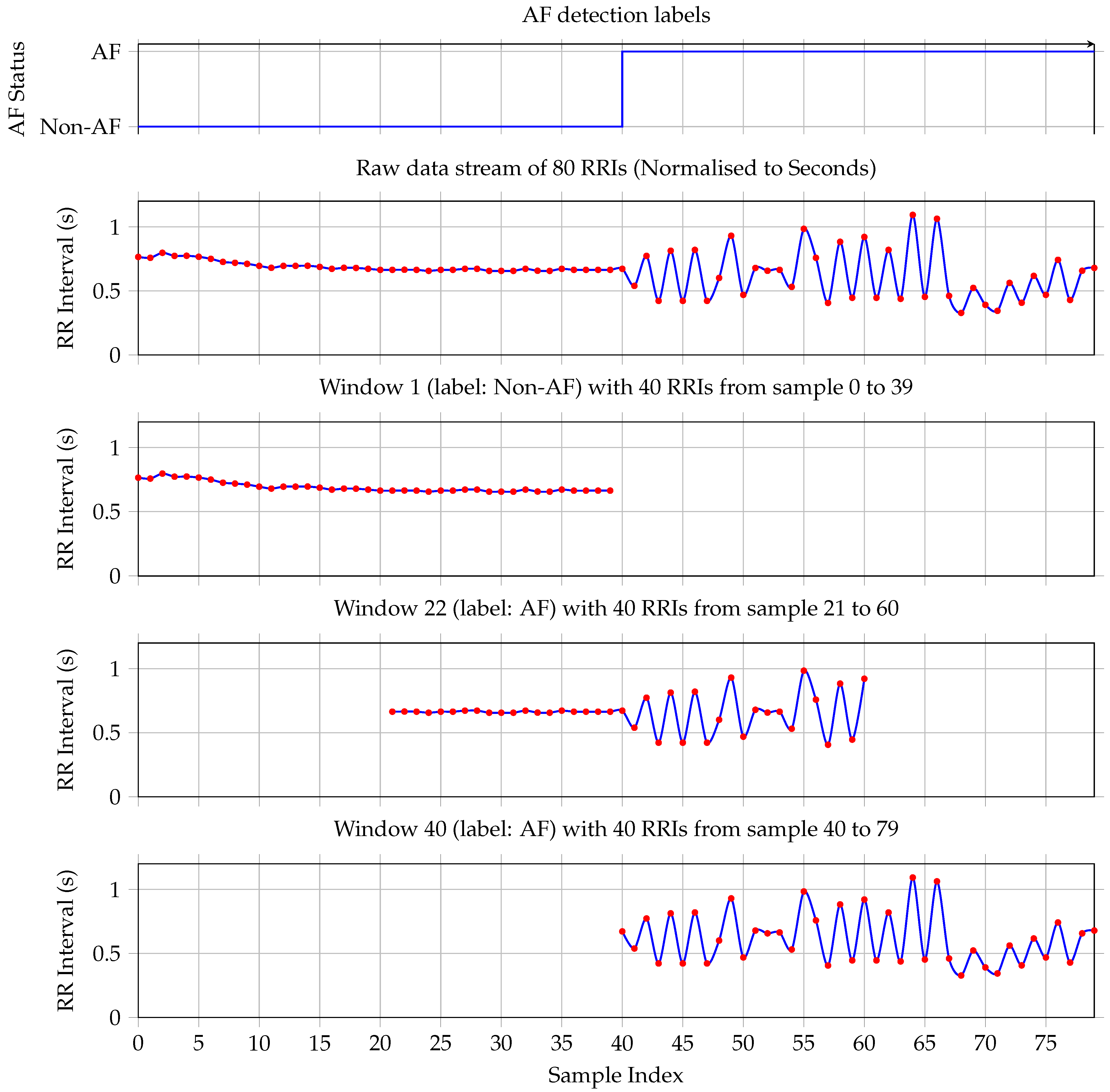

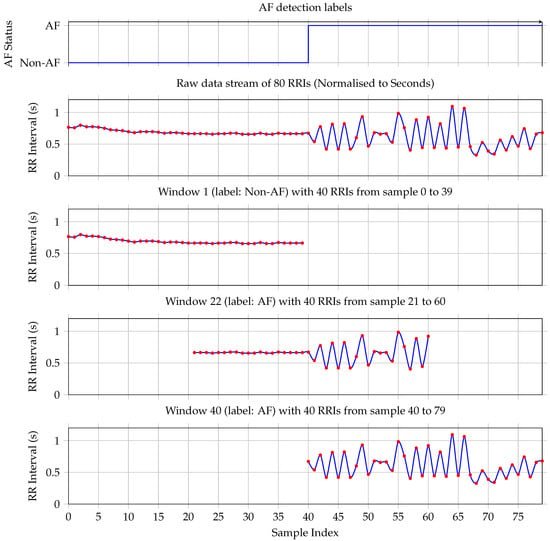

Data from 18 subjects were used for model development and evaluation. Preprocessing started with the segmentation of RRI sequences using a sliding window approach, as illustrated in Figure 2. Two parameters govern this process: a fixed window length of 40 RRIs and a step size of 1, resulting in a one-step sliding window technique that generates overlapping training and testing samples. Appendix A provides a short discussion and justification for selecting a window length of 40 RRIs.

Figure 2.

The first plot shows the ground truth: each RRI is labeled as either non-AF or AF. The plot shows a transition from non-AF to AF at RRI sample 40. The second plot shows the corresponding RRI stream. The third plot shows Window 1, which contains only non-AF samples; therefore, the whole window is labeled non-AF. Plot four shows Window 22, which is taken at the transition from non-AF to AF. It contains at least 20 RRIs labeled as AF; hence, the complete window is labeled as AF. Plot five documents Window 40, which contains only RRIs labeled as AF. Hence, Window 40 is labeled as AF.

Each window is assigned a binary label based on the proportion of AF beats it contains. Specifically, if a given window includes 20 or more AF-labeled beats (i.e., at least 50% of the window), it is labeled as AF; otherwise, it is labeled as non-AF. This threshold is selected to align with the minimum clinical duration criteria for AF diagnosis. Given a typical heart rate, a 40-RRI window approximates the 30 s minimum duration required for an AF episode to be considered clinically significant [6,19]. The 50% threshold ensures balanced sensitivity to paroxysmal onset/offset while maintaining training robustness.
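As a quick arithmetic check of the window-duration argument, the time spanned by a window of N_RRI intervals depends on the heart rate (HR). Taking 80 bpm as the "typical" rate (our assumption; the text does not specify one):

$$
T_{\text{win}} \approx N_{\text{RRI}} \cdot \frac{60\ \text{s}}{\mathrm{HR}} = 40 \cdot \frac{60\ \text{s}}{80\ \text{bpm}} = 30\ \text{s}
$$

At 60 bpm the same window spans about 40 s, and at 100 bpm about 24 s.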

To illustrate this procedure, consider the 80 RRIs shown in the second plot of Figure 2. These intervals yield 41 overlapping windows. The first window, comprising the first 40 RRIs, is depicted in the third plot; it contains no AF-labeled beats and is therefore classified as non-AF, consistent with the ground truth shown in the first plot. The fourth plot illustrates the first window that meets the AF threshold: it contains exactly 20 AF beats and is therefore labeled as AF. Finally, the last plot shows the 41st window, which consists entirely of AF-labeled RRIs and is likewise labeled as AF.
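The windowing and labelling rule can be expressed compactly with NumPy's `sliding_window_view` (available since NumPy 1.20). This is a minimal sketch, assuming per-beat AF labels are available as a 0/1 array aligned with the RRI stream; the function name `make_windows` is illustrative. For the synthetic 80-RRI stream in the usage lines, AF is assumed to begin exactly at the 41st RRI, so the first AF-labelled window is the one starting at the 21st RRI.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

WINDOW = 40        # RRIs per window
STEP = 1           # one-step sliding window
AF_MIN_BEATS = 20  # >= 20 AF beats (50% of the window) -> window labelled AF

def make_windows(rri, beat_is_af, window=WINDOW, step=STEP, af_min=AF_MIN_BEATS):
    """Return (X, y): overlapping RRI windows and their binary AF labels."""
    rri = np.asarray(rri, dtype=np.float32)
    beat_is_af = np.asarray(beat_is_af, dtype=np.int32)
    x = sliding_window_view(rri, window)[::step]  # (n_windows, window)
    af_counts = sliding_window_view(beat_is_af, window)[::step].sum(axis=1)
    y = (af_counts >= af_min).astype(np.int32)
    return x, y

# Synthetic stream: 40 non-AF RRIs followed by 40 AF RRIs
rri = np.full(80, 0.8)
beat_is_af = np.r_[np.zeros(40), np.ones(40)]
x, y = make_windows(rri, beat_is_af)
print(x.shape, int(y.sum()))   # (41, 40) 21
```

Because the step is one RRI, a stream of L intervals always produces L − 40 + 1 windows, which is why 80 RRIs give 41 windows.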

This windowing strategy ensures dense temporal coverage and supports the robust training of sequence-based classifiers by preserving the beat-wise temporal structure while allowing for smooth transitions between AF and non-AF states.

2.3. Data Splitting

The windowing process resulted in a set of 1,127,641 labeled RRI vectors. The dataset was randomly partitioned into two disjoint subsets: 75% of the data (845,730 vectors) were allocated for training, and the remaining 25% (281,911 vectors) were used for testing.
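A minimal NumPy sketch of the random partition follows. A training fraction of 0.75 reproduces the reported subset sizes of 845,730 and 281,911 exactly; the seed and the function name are illustrative assumptions, and the split is performed at the window level as the text describes.

```python
import numpy as np

def random_split(n_samples, train_fraction=0.75, seed=0):
    """Randomly partition sample indices into disjoint training and test sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(n_samples * train_fraction)
    return order[:n_train], order[n_train:]

train_idx, test_idx = random_split(1_127_641)
print(len(train_idx), len(test_idx))   # 845730 281911
```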

2.4. Model Training and Testing

Our AF detection system utilises a Bidirectional-LSTM model [21], the architecture of which is detailed in Table 1. The model is designed to process the 40-sample RRI input vectors. The core of the model consists of two parallel LSTM layers (Layers 2a and 2b), operating in forward and backward directions, respectively, each producing an output of 40 features. These recurrent layers are followed by a Global 1D Max Pooling layer (Layer 3) to reduce the dimensionality and extract the significant features. A fully connected layer with ReLU activation (Layer 4) then processes these features. The subsequent Dropout layer (Layer 5) was included for regularisation, in order to prevent overfitting during training. The final output is generated by a sigmoid-activated fully connected layer (Layer 6), yielding a single probability score for AF detection. The total number of trainable parameters for the model is 17,561.

Table 1.

Layer-wise architecture for the 40 × 64 bidirectional-LSTM model.
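To make the data flow of Table 1 concrete, the NumPy sketch below mirrors the inference-time forward pass of that layer sequence: a 40 × 1 RRI input, forward and backward LSTMs whose states are concatenated, global 1D max pooling, a Dense layer with ReLU, and a sigmoid output (Dropout is inactive at inference). The hidden size `H = 32` per direction and dense width `D = 64` are hypothetical placeholders; they give 12,929 parameters rather than the reported 17,561, so the sizes are illustrative only. Weights are random, the gate order follows the Keras i, f, c, o layout, and this is neither the trained model nor the code generated for the MCU.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x, W, U, b):
    """One LSTM direction over x of shape (T, F); returns hidden states (T, H).

    W: (F, 4H), U: (H, 4H), b: (4H,); gates ordered i, f, c, o as in Keras.
    """
    H = U.shape[0]
    h, c = np.zeros(H), np.zeros(H)
    states = []
    for x_t in x:
        z = x_t @ W + h @ U + b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])
        c = f * c + i * g
        h = o * np.tanh(c)
        states.append(h)
    return np.stack(states)

def bilstm_classifier(x, p):
    """Inference-time forward pass; Dropout is the identity at inference."""
    fwd = lstm_forward(x, *p["fwd"])
    bwd = lstm_forward(x[::-1], *p["bwd"])[::-1]          # re-align backward states in time
    seq = np.concatenate([fwd, bwd], axis=1)              # (T, 2H)
    pooled = seq.max(axis=0)                              # global 1D max pooling
    hidden = np.maximum(0.0, pooled @ p["W1"] + p["b1"])  # Dense + ReLU
    return float(sigmoid(hidden @ p["W2"] + p["b2"])[0])  # Dense + sigmoid -> P(AF)

def random_params(F=1, H=32, D=64, seed=0):
    """Random weights with hypothetical sizes H (per direction) and D (dense)."""
    rng = np.random.default_rng(seed)
    def lstm():
        return (rng.normal(0, 0.1, (F, 4 * H)), rng.normal(0, 0.1, (H, 4 * H)), np.zeros(4 * H))
    return {"fwd": lstm(), "bwd": lstm(),
            "W1": rng.normal(0, 0.1, (2 * H, D)), "b1": np.zeros(D),
            "W2": rng.normal(0, 0.1, (D, 1)), "b2": np.zeros(1)}

def count_params(p):
    """Total number of weights and biases in the parameter dictionary."""
    arrays = [*p["fwd"], *p["bwd"], p["W1"], p["b1"], p["W2"], p["b2"]]
    return sum(a.size for a in arrays)

params = random_params()
window = np.random.default_rng(1).normal(0.8, 0.1, size=(40, 1))  # 40 synthetic RRIs (s)
print(f"P(AF) = {bilstm_classifier(window, params):.3f}, parameters = {count_params(params)}")
```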

The model was developed in Python (v3.12) using the Keras Application Programming Interface (API) of the TensorFlow framework (v2.20.0). The model was trained on the designated training vector set. During training, the model’s parameters were optimised to minimise a binary cross-entropy loss function using the Adam optimiser [22]. Early stopping was implemented to prevent overfitting. Upon completion of training, the model with the best performance on the validation set was selected for implementation testing on the MCU target and for external validation on the LTAFDB (see Section 3.2.2).
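The text names the loss and the early-stopping strategy but not the early-stopping settings. The sketch below spells out both mechanisms in plain NumPy; the `patience` and `min_delta` values are hypothetical. In Keras, the same roles are played by `tf.keras.losses.BinaryCrossentropy` and `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)`; this is an illustration of the logic, not the authors' training script.

```python
import numpy as np

def binary_cross_entropy(y_true, p_pred, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted P(AF)."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

class EarlyStopping:
    """Stop when the monitored loss has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.wait = 0

    def step(self, epoch, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience

stopper = EarlyStopping(patience=3)
val_losses = [0.5, 0.4, 0.41, 0.42, 0.43]
stop_epoch = next(e for e, loss in enumerate(val_losses) if stopper.step(e, loss))
print(stop_epoch, stopper.best_epoch)   # 4 1
```

Keeping the weights from `best_epoch` (rather than the last epoch) corresponds to selecting "the model with the best performance on the validation set".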

Our empirical analysis (Section 3.2.3 and Section 3.3) focuses on quantifying the inference energy on the embedded edge (MCU), thereby validating the feasibility of the power-saving, energy-gated system design outlined in Section 4.1.

2.5. MCU Implementation

For the practical implementation of the AF detection model, we used the STM32L475VGT6 device [23], an ultra-low-power microcontroller based on the Arm Cortex-M4 32-bit RISC core [24] operating at frequencies of up to 80 MHz [25]. The Cortex-M4 core features a single-precision Floating-Point Unit (FPU), which supports all Arm single-precision data-processing instructions and data types. It also implements a full set of Digital Signal Processing (DSP) instructions and a memory protection unit, which enhances application security. The device has 1 MB of flash memory and 128 KB of Static Random Access Memory (SRAM).
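A back-of-the-envelope check shows that the weights fit comfortably in flash: storing the 17,561 trainable parameters takes about 70 kB as 32-bit floats, or about 17.6 kB if quantised to 8-bit integers. Whether the deployed model was quantised is not stated, so both cases are hypothetical. The estimate covers weights only and ignores activation buffers (held in SRAM), runtime code, and any overhead added by the code generator.

```python
N_PARAMS = 17_561          # trainable parameters reported for the model
FLASH_BYTES = 1024 * 1024  # 1 MB of on-chip flash
BYTES_PER_WEIGHT = {"float32": 4, "int8": 1}

def weight_bytes(n_params, dtype):
    """Storage for the weights alone: no activations, runtime, or metadata."""
    return n_params * BYTES_PER_WEIGHT[dtype]

for dtype in BYTES_PER_WEIGHT:
    size = weight_bytes(N_PARAMS, dtype)
    print(f"{dtype}: {size} bytes = {100 * size / FLASH_BYTES:.1f}% of flash")
# float32: 70244 bytes = 6.7% of flash
# int8: 17561 bytes = 1.7% of flash
```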

2.6. Testing on Target and Power Measurement

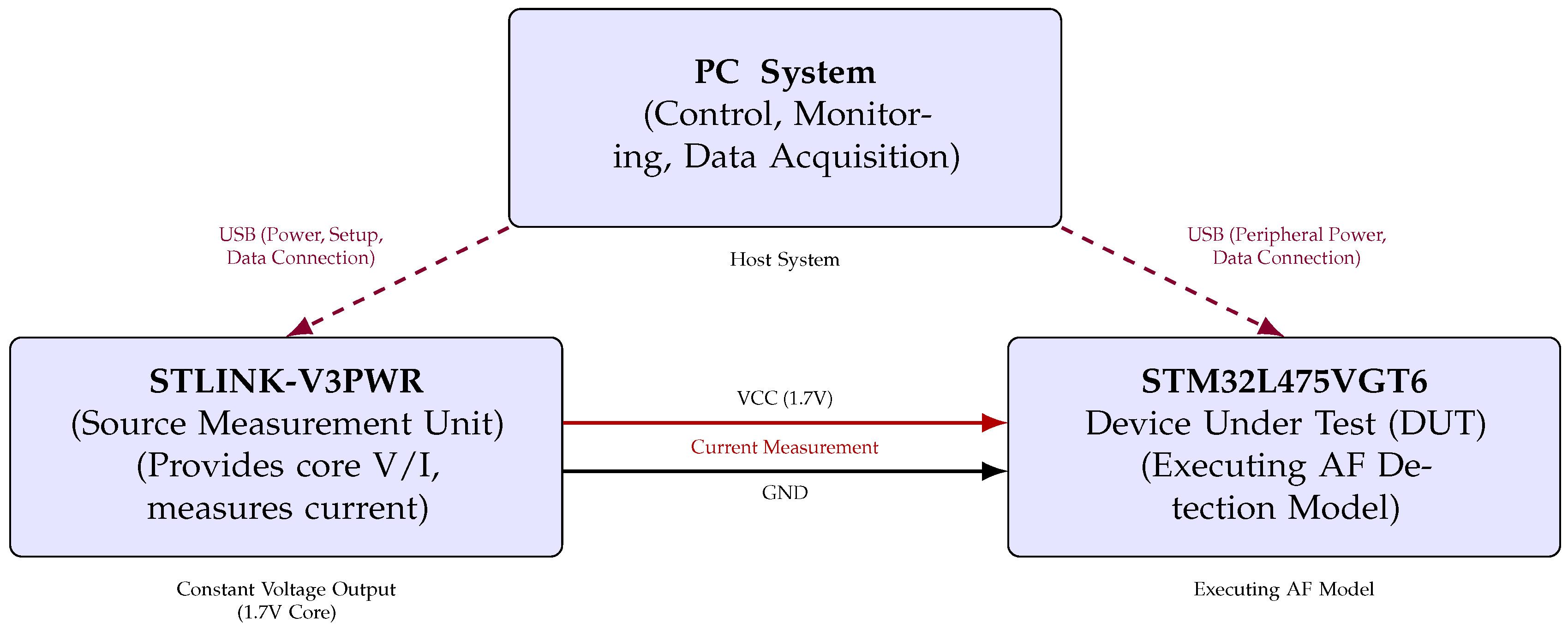

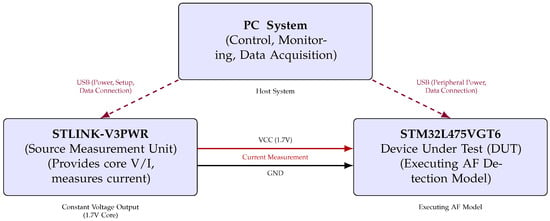

To evaluate the model’s performance on the target hardware and to quantify its energy and latency characteristics during inference, a dedicated testbench was constructed. This setup is composed of three primary components: a host Personal Computer (PC), the STM32L475VGT6 MCU serving as the Device Under Test (DUT), and the STLINK-V3PWR source measurement unit, as illustrated in Figure 3.

Figure 3.

Functional block diagram of hardware setup for STM32L475VGT6 AF detection model energy measurement (triangular layout).

The host PC served as the central controller, managing test execution through a bidirectional serial interface with the MCU. It also triggered and synchronised power measurements via a dedicated USB connection to the STLINK-V3PWR module. The DUT was powered via the source measurement unit, which provided a constant supply voltage of 1.8 V to the STM32L475VGT6 and simultaneously measured the current drawn during inference.

To ensure the reliability and statistical robustness of our energy and timing metrics, the measurements were performed through a set of repeated runs. Specifically, we conducted 30 independent power measurement trials (N = 30). In each trial, the MCU performed 20 consecutive model inferences. The inference time, power consumption, and energy per inference were recorded for each trial. This methodology allowed us to establish the mean and variance of the key parameters, providing a statistically sound measure of the system’s performance.

Each test run was initiated by triggering the power measurement sequence on the STLINK-V3PWR, followed by the activation of model inference on the MCU. This controlled process enabled the precise measurement of the inference latency and the instantaneous power consumption. The STM32 device executed the AF detection model entirely on-chip, allowing assessment under realistic embedded deployment conditions.

The triangular layout in Figure 3 depicts the connections between the components, highlighting the power delivery, current sensing paths, and data communication channels. This configuration ensured high temporal resolution in power measurement, critical for accurately quantifying the energy efficiency of edge-based AI inference.
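The mean ± spread values reported in Section 3 for the N = 30 trials of 20 inferences each can be computed with the standard library alone. This sketch assumes the spread is the sample standard deviation of the per-trial means; the text does not specify which dispersion measure is used, and the function name is illustrative.

```python
import statistics
from typing import Sequence

def summarise_trials(trials: Sequence[Sequence[float]]) -> tuple[float, float]:
    """Mean and sample standard deviation of per-trial means.

    trials: N sequences, each holding the per-inference values of one trial
    (e.g. 20 inference times in ms). Requires N >= 2.
    """
    trial_means = [statistics.fmean(t) for t in trials]
    return statistics.fmean(trial_means), statistics.stdev(trial_means)

# 30 trials x 20 inferences with identical timings, as observed for latency
mean_ms, sd_ms = summarise_trials([[143.0] * 20 for _ in range(30)])
print(f"{mean_ms:.0f} ± {sd_ms:.0f} ms")   # 143 ± 0 ms
```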

3. Results

3.1. Baseline Performance on PC

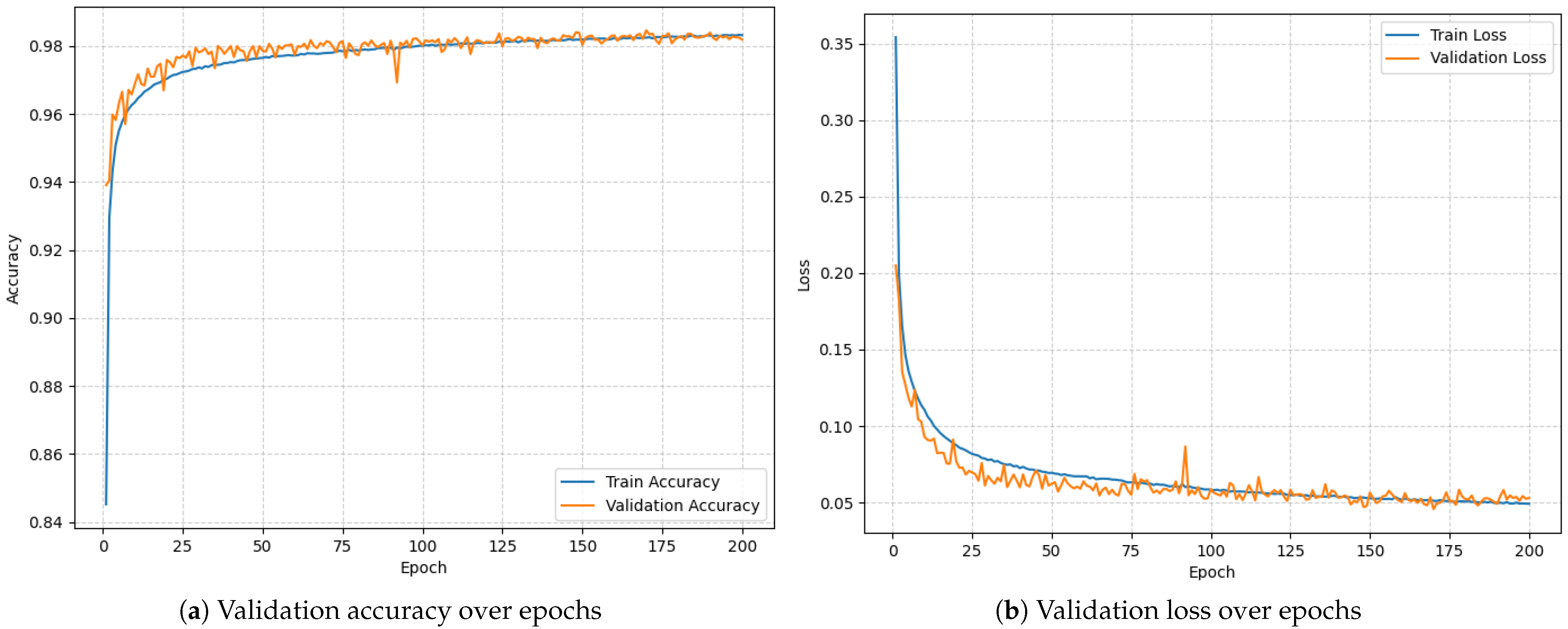

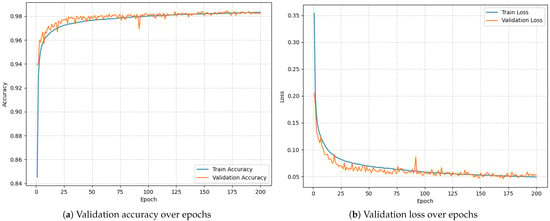

To establish a performance benchmark, the LSTM model was first trained and evaluated on a PC using preprocessed RRI data. The model was trained for 200 epochs using a binary cross-entropy loss function. Figure 4 documents the training process. The final validation results were as follows:

Figure 4.

Model training over 200 epochs.

- Validation Accuracy: 98.46%;

- Validation Loss: 0.0456.

These results confirm that the model demonstrates strong discriminative ability for AF detection under ideal (non-constrained) computing conditions, providing a robust reference point for subsequent evaluation on embedded hardware.

3.2. Embedded Performance on STM32 Microcontroller

The trained model was converted using the X-CUBE-AI framework and deployed to the STM32L475VGT6 microcontroller for real-time inference. The same validation dataset was used to compare performance metrics between the PC and embedded platforms.

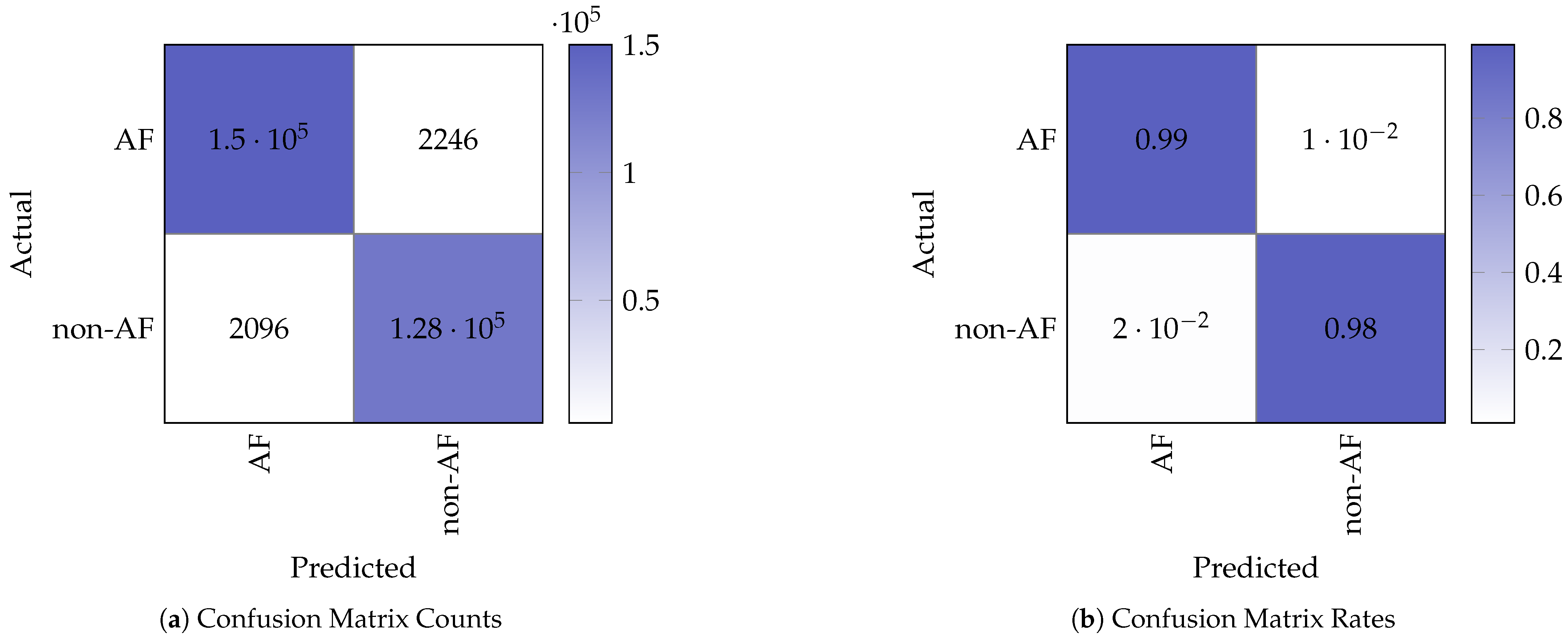

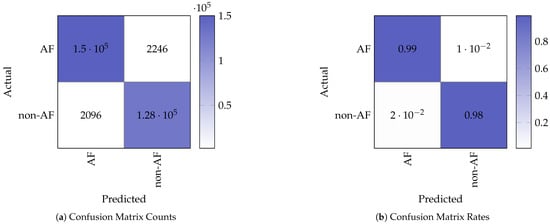

3.2.1. Confusion Matrix Analysis

Figure 5 shows the confusion matrix counts and corresponding normalised rates obtained from testing on the STM32. The confusion matrix indicates the following performance values for the binary (non-AF and AF) classification:

Figure 5.

Confusion matrix representation of the model test results.

- True Positives (TP): 127,589;

- False Negatives (FN): 2096;

- False Positives (FP): 2246;

- True Negatives (TN): 149,980.

From the performance values, we computed the following performance metrics:

These metrics demonstrate that the model retains strong classification performance when deployed on the embedded device, with minimal degradation in AF detection performance compared to the baseline model running on the PC.
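For reference, the standard binary-classification metrics follow directly from the four confusion-matrix counts, with AF as the positive class. The short sketch below computes them; with the counts above it yields an accuracy of 98.46%, matching the value reported for the baseline.

```python
def binary_metrics(tp, fn, fp, tn):
    """Standard binary-classification metrics with AF as the positive class."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),   # recall, true-positive rate
        "specificity": tn / (tn + fp),   # true-negative rate
        "precision": tp / (tp + fp),     # positive predictive value
        "f1": 2 * tp / (2 * tp + fp + fn),
    }

metrics = binary_metrics(tp=127_589, fn=2_096, fp=2_246, tn=149_980)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```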

3.2.2. External Validation with the LTAFDB

To assess the model’s generalisation capability on a fully independent dataset, we tested the trained model on 8400 random vectors from the LTAFDB (100 vectors from each of the 84 subjects). The performance on this external, unseen dataset was as follows:

- Accuracy: 94.44%;

- Evaluation Loss (Leval): 0.2495.

The accuracy of 94.44% on the LTAFDB confirms the model’s generalisation to a different, less-curated patient cohort than the one used for training. The evaluation loss is reported as a standard metric quantifying the discrepancy between the model’s predicted probabilities and the ground-truth labels, reflecting the confidence and fidelity of the model’s predictions on the external data.
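Drawing a fixed number of windows per subject prevents any single long recording from dominating the external test set. The following is a minimal sketch, assuming the LTAFDB windows are already grouped per subject in a dictionary (a hypothetical data layout) and that sampling is done without replacement, which the text does not state.

```python
import numpy as np

def sample_per_subject(windows_by_subject, n_per_subject=100, seed=0):
    """Draw n windows (without replacement) from every subject and pool them.

    windows_by_subject: {subject_id: (X, y)} with X of shape (n_windows, 40).
    """
    rng = np.random.default_rng(seed)
    xs, ys = [], []
    for subject in sorted(windows_by_subject):
        x, y = windows_by_subject[subject]
        idx = rng.choice(len(x), size=n_per_subject, replace=False)
        xs.append(x[idx])
        ys.append(y[idx])
    return np.concatenate(xs), np.concatenate(ys)

# 84 synthetic subjects with 500 windows each
fake = {s: (np.zeros((500, 40)), np.zeros(500)) for s in range(84)}
x_ext, y_ext = sample_per_subject(fake)
print(x_ext.shape)   # (8400, 40)
```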

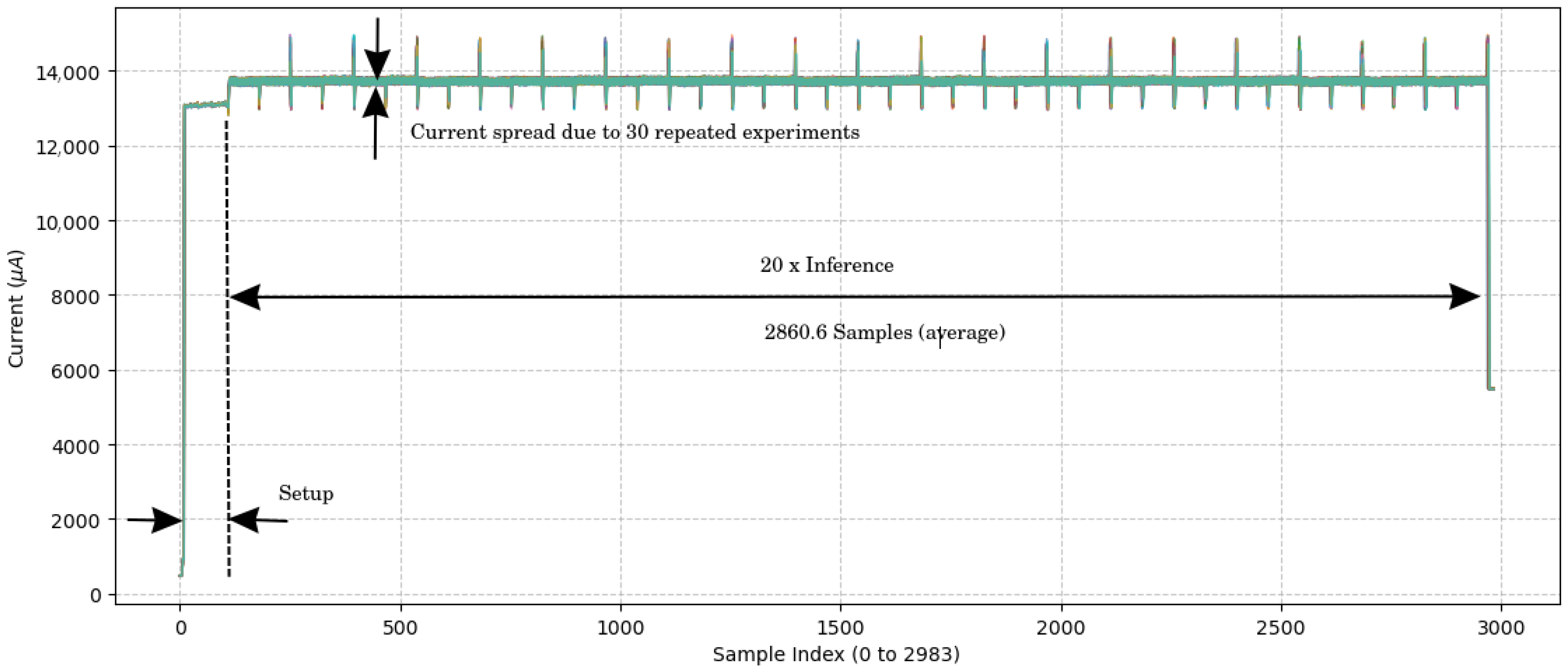

3.2.3. Inference Time and Power Efficiency

Real-time inference timing was measured using the on-board TIM16 hardware timer. The model inference time for a window of 40 RRIs was approximately 143 ± 0 ms. This corresponds to a theoretical maximum of ≈7 inferences per second, or ≈419 inferences per minute, which comfortably exceeds the real-time AF monitoring requirement of one inference per heartbeat.
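The throughput figures follow directly from the measured latency. The second line adds a duty-cycle estimate d (the fraction of time the MCU spends on inference when running one inference per beat) at an assumed worst-case heart rate of 180 bpm, which is our assumption rather than a value from the measurements:

$$
f_{\max} = \frac{1}{t_{\text{inf}}} = \frac{1}{0.143\ \text{s}} \approx 6.99\ \text{s}^{-1} \approx 419.6\ \text{min}^{-1}
$$

$$
d = \frac{\mathrm{HR}}{60\ \text{s}} \cdot t_{\text{inf}} = \frac{180}{60\ \text{s}} \cdot 0.143\ \text{s} \approx 0.43
$$

Even at this rate, the MCU would be busy with inference for less than half of the time.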

3.3. Power and Energy Consumption

A key objective of this work was to develop the project into a wearable low-power system able to operate continuously over long periods. To ensure that the device can be used without frequent recharging, the power consumption was tracked at various stages in the system.

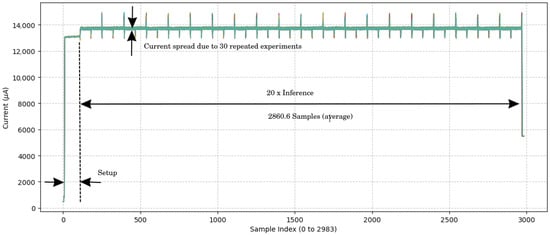

Power consumption was measured using an STLINK-V3PWR [26] during inference. Figure 6 documents the measurement results. The current during inference was 13.72 ± 0.02 mA at a supply voltage of 1.8 V. Multiplying the average current by the voltage gives the average power consumption: 13.72 ± 0.02 mA × 1.8 V = 24.70 ± 0.04 mW. This low power consumption conserves battery charge and enables prolonged operation, making it well suited to a wearable device. Given the inference time of 143 ± 0 ms, the energy per inference is the average power multiplied by the inference time: 24.70 ± 0.04 mW × 143 ± 0 ms = 3532 ± 6 μJ.

Figure 6.

Current measurement over time samples. The figure results from an overlay of 30 experiment results. The sample period is 1 ms, which results from a 1 kHz sampling frequency for the current measurement. No variance in the inference time was observed.
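Because the current trace in Figure 6 is sampled at 1 kHz, the energy of one inference can also be obtained by integrating the sampled current directly, E = V · Σ I_k · Δt. The sketch below assumes the samples covering exactly one inference have already been isolated from the trace. A constant synthetic trace equal to the reported mean current stands in for the measured (non-constant) one, so it reproduces the 3532 μJ figure only by construction.

```python
import numpy as np

def inference_energy_uj(current_ma, supply_v=1.8, fs_hz=1000.0):
    """Energy in microjoules from current samples spanning one inference.

    Rectangle-rule integration: E = V * sum(I_k) * dt.
    """
    current_a = np.asarray(current_ma, dtype=float) * 1e-3  # mA -> A
    dt_s = 1.0 / fs_hz
    return supply_v * current_a.sum() * dt_s * 1e6           # J -> uJ

# Synthetic stand-in: constant 13.72 mA for 143 samples (143 ms at 1 kHz)
trace = np.full(143, 13.72)
print(round(inference_energy_uj(trace)))   # 3532
```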

4. Discussion

This study demonstrates the successful design, implementation, and validation of an LSTM-based AF detection system on a resource-constrained microcontroller. The primary contribution lies not in achieving the absolute highest classification accuracy reported in the literature but in architecting a solution that is practical, energy-efficient, and suitable for continuous real-world monitoring. Our approach uses HRV features to perform all inference directly on the edge, addressing the critical limitations of cloud-dependent systems, namely latency, connectivity, power consumption, and data privacy. The results, an accuracy of 98.46%, a 143 ± 0 ms inference time, and a power consumption of 24.70 ± 0.04 mW, balance clinical utility and technical feasibility for wearable diagnostic devices.

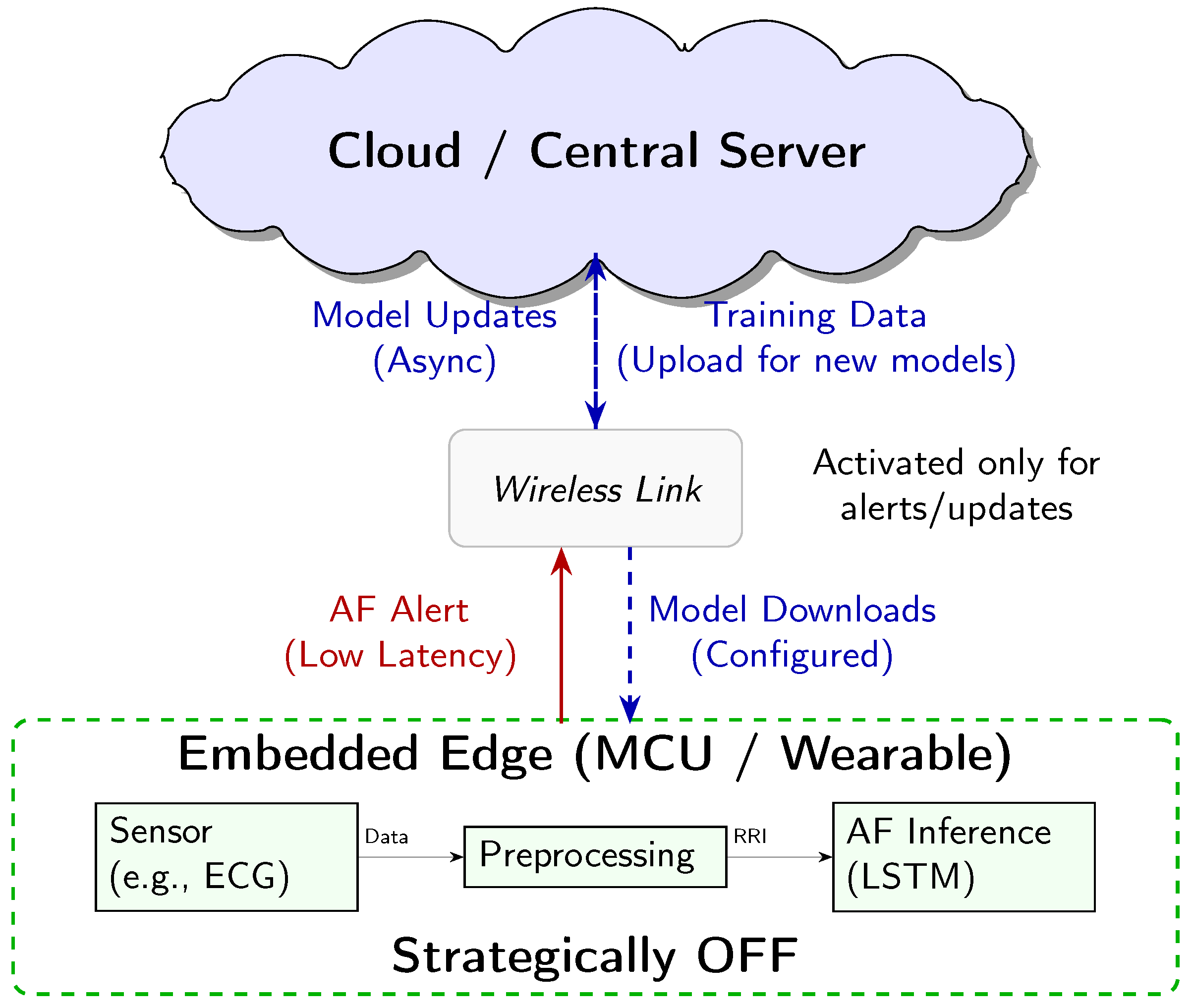

4.1. Architectural Context: Realising AF Monitoring on the Embedded Edge

The core contribution of this work lies in demonstrating the energy efficiency of AF inference directly on a resource-constrained MCU, a paradigm known as embedded edge AI or TinyML. This approach enables a practical, sustainable system architecture, which we clarify here to define our use of “edge” and the intended role of the entire system.

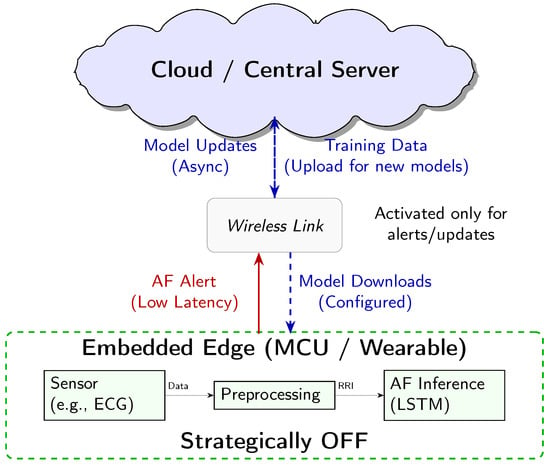

The “edge” is interpreted here as the micro edge, the end device itself. Our system is designed for an energy-optimised three-tiered architecture (Figure 7), where each component has distinct power-managed roles:

Figure 7.

Conceptual three-tier system architecture for AF detection. The embedded edge MCU performs continuous low-power inference. The Cloud handles heavy-duty tasks like model training and storage. The Wireless link acts as a communication gate, only activated for low-latency alerts and asynchronous model updates.

1. Embedded Edge (Low-Power MCU): This tier, the focus of our empirical analysis, performs continuous, real-time AF classification. The measured energy consumption of 3532 ± 6 μJ per inference is the key enabling factor for the overall system’s efficiency.

2. Cloud/Central Server (Training and Updates): This high-resource environment is used for computationally intensive tasks, namely LSTM model training and long-term data storage. The finalised model is deployed to the MCU, and model updates are delivered asynchronously.

3. Wireless Link (Energy-Gated Uplink): The role of the wireless link (e.g., Bluetooth Low Energy) is strictly minimised to conserve power. Data transmission is the single largest energy sink in a wearable system. Therefore, the link is designed to be kept OFF during routine monitoring and only activated upon positive AF detection by the MCU. This enables the transmission of a time-stamped, low-latency alert packet, drastically reducing the communication overhead.
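The energy-gated uplink described for the wireless-link tier reduces to a simple control loop. In this sketch, `classify` stands in for on-device inference and `send_alert` for a hypothetical radio routine that wakes the link, transmits one alert, and powers it down again; both are assumptions, and the toy classifier in the usage lines is for illustration only.

```python
import statistics
from typing import Callable, Iterable, Sequence

def monitor(windows: Iterable[Sequence[float]],
            classify: Callable[[Sequence[float]], float],
            send_alert: Callable[[int], None],
            threshold: float = 0.5) -> int:
    """Energy-gated loop: every window is classified locally; the radio is
    used only when a window is classified as AF. Returns the alert count."""
    alerts = 0
    for index, window in enumerate(windows):
        if classify(window) >= threshold:  # AF detected on-device
            send_alert(index)              # only moment the radio is powered
            alerts += 1
    return alerts

def toy_classify(window: Sequence[float]) -> float:
    """Toy stand-in for the LSTM: flags windows with a large RRI spread."""
    return 1.0 if statistics.pstdev(window) > 0.1 else 0.0

sent: list[int] = []
windows = [[0.8] * 40, [0.5, 1.0] * 20, [0.8] * 40]
alerts = monitor(windows, toy_classify, sent.append)
print(alerts, sent)   # 1 [1]
```

Because consecutive windows overlap by 39 RRIs, a sustained AF episode would trigger an alert on almost every beat; a deployment would likely add a hold-off period between alerts, which the sketch omits.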

Crucially, while our empirical measurements focused on quantifying the energy cost of the embedded edge inference engine, this three-tier model represents the necessary clinically relevant deployment scenario, enabled by our highly efficient local processing. This design minimises the latency for critical diagnosis, while maximising the energy conservation and user privacy by keeping the raw physiological data localised.

4.2. Preprocessing and Real-World Applicability

The feasibility of any wearable AI system depends on the entire data chain, including sensor-based data acquisition and preprocessing (noise filtering, R-peak detection), as shown in Figure 7. These processing steps are known sources of computational burden and vulnerability to artifacts (e.g., motion). However, the primary scope of this manuscript is centered on demonstrating the technical and energy-cost viability of the AI inference engine itself. Specifically, we isolate and quantify the resource usage of the LSTM model deployed on a resource-constrained MCU, which represents the most computationally demanding and novel component of the system.

Our design choice is based on the pragmatic assumption that our solution will be integrated as an intelligent decision support module within existing or commercially mature HRV monitoring platforms. In such a scenario, we offer the following:

1. Decoupling the Front-End: we treat the input to our model as the preprocessed RRI sequence, effectively offloading the well-understood tasks of signal capture, noise reduction, and R-peak detection to the existing front-end sensor solution.

2. Integration Potential: this approach means our energy-efficient AF classification module can be “dropped into” an existing HRV data pipeline, simplifying the challenge for system integrators and providing a core technological advancement with minimal additional computational or energy burden.

Therefore, while the full sensor-to-cloud chain is essential for a final commercial product, a detailed hardware-specific analysis is beyond the scope of this paper, which focuses on AI optimisation and embedded deployment. Our aim is to provide a foundational assessment of our AI system’s feasibility, which is the necessary step before committing to expensive and hardware-specific full-system integration.

4.3. Performance in the Context of Practical Application

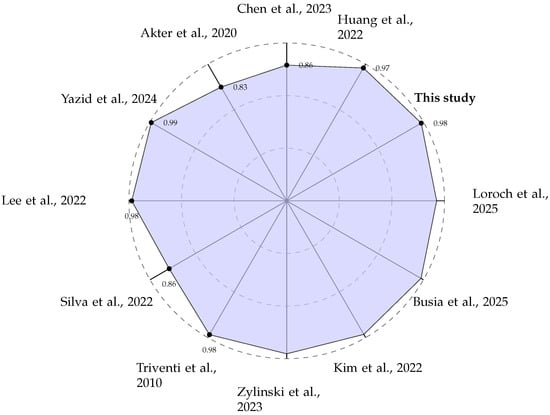

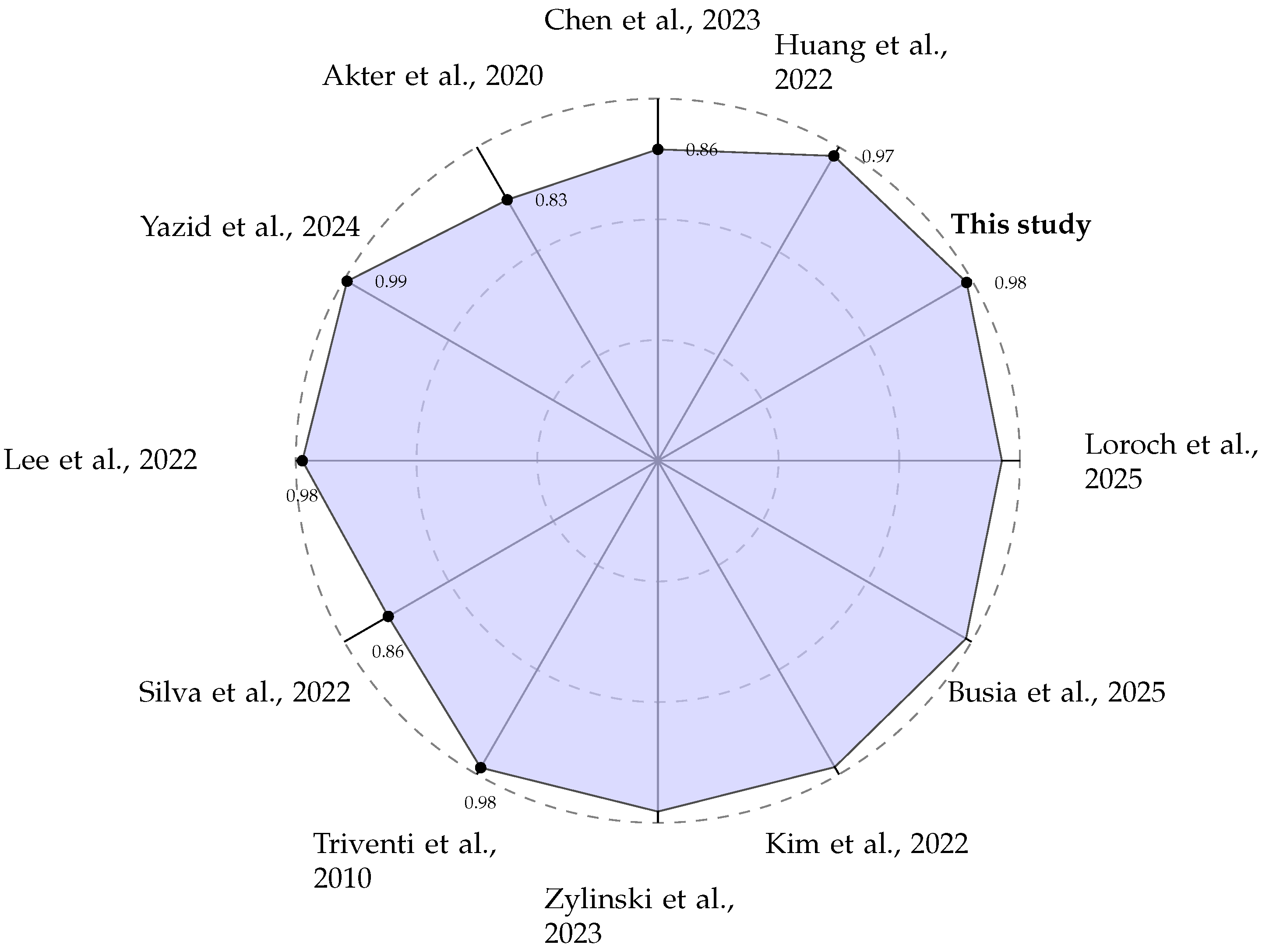

A direct comparison of performance metrics, as summarised in Table 2 and visualised in Figure 8, shows that our system is competitive with other state-of-the-art edge AF detection methods. While some studies, such as Yazid et al. [27], report slightly higher accuracy (99.13%), it is crucial to analyse the methodologies used to generate these results. We adopted a sliding window approach for creating training and testing data segments, as documented in Section 2.2. This method is intentionally designed to mimic a real-world scenario where the device has no prior knowledge of the underlying cardiac rhythm. The window moves continuously along the signal, creating data segments that may contain purely normal beats, purely AF beats, or, critically, a mixture of both, particularly at the onset or termination of a paroxysmal AF episode.

Table 2.

Performance comparison with state-of-the-art MCU based edge AI systems for AF detection.

This approach contrasts sharply with methodologies that use “clean” or pre-selected data segments. For instance, Yazid et al. [27] explicitly state that segments containing both AF and non-AF beats were discarded. Similarly, other high-performing models often rely on datasets where the signal windows are curated to contain only one type of rhythm [29]. While this preprocessing simplifies the classification task and can artificially inflate the reported accuracy, it does not reflect the challenges of continuous unsupervised monitoring. A model trained on such “pure” vectors may perform poorly when faced with the transitional or ambiguous signal patterns common in a clinical setting. Therefore, our ACC of 98.46% was established on a more challenging and realistic test set. Arguably, this represents a more reliable indicator of the system’s practical utility.

Furthermore, the performance cannot be assessed on accuracy alone. For an edge device intended for long-term use, inference time and the energy-accuracy trade-off are important parameters as well. Our system achieves a rapid 143 ± 0 ms inference time, enabling near-real-time feedback. More importantly, the power draw of 24.70 mW (translating to just 3532 ± 6 μJ per inference) is significantly lower than that reported by Yazid et al. [27] (89.1 mW), indicating superior energy efficiency and the potential for longer battery life in a wearable form factor.

Figure 8.

Radar plot comparing the reported classification ACC (in %) of various edge-based AF detection systems [27,28,29,30,31,32,33,34,35,36,38].


This 3532 ± 6 μJ per inference result positions our chosen LSTM architecture as a benchmark point on the energy–accuracy landscape (the Pareto front). While we did not perform a full hyperparameter search across model sizes (e.g., 32 to 128 hidden units) to determine the absolute global optimum, the comparison in Table 2 demonstrates a superior energy-to-accuracy ratio in the context of the published edge implementations. Our software-based solution on a general-purpose MCU was deliberately chosen for its balance of performance, cost, and adaptability. This stands in contrast to System on Chip (SoC) methodologies where AI models are implemented directly in logic circuits [39,40,41,42]. While these hardware implementations can yield extremely fast inference times, they involve a critical trade-off. If implemented in fixed silicon (ASICs), the model functionality becomes permanent, preventing updates or improvements without a costly hardware redesign. Alternatively, reconfigurable hardware such as Field Programmable Gate Arrays (FPGAs) allows for model updates [40], but these devices are typically more expensive and power-hungry than general-purpose MCUs. Our approach prioritises flexibility and low power, making it a more practical choice for scalable and maintainable wearable health solutions.

4.4. Limitations and Future Work

Despite the promising results, this study has several limitations that provide clear directions for future research. Our validation was performed on a well-established public dataset but did not employ a k-fold cross-validation scheme. Future work should incorporate a 10-fold cross-validation methodology to ensure the model’s robustness and to provide a more comprehensive assessment of its generalisation capabilities. Moreover, testing the system on more diverse, multi-center datasets, including those with a wider range of signal qualities and patient demographics, will be required to demonstrate its clinical readiness.

The current implementation runs on a general-purpose MCU without a dedicated neural processing unit. While this demonstrates the model’s efficiency on common hardware, future iterations could explore deployment on MCUs equipped with AI accelerators. Such hardware could further reduce the inference time and power consumption, potentially enabling the use of more complex models for enhanced diagnostic accuracy or the classification of multiple arrhythmia types without compromising battery life.

A significant avenue for future enhancement is the incorporation of Explainable AI (XAI) [43]. For any diagnostic support tool to gain clinical acceptance, it must not be a “black box”. Clinicians need to trust and understand the basis of an AI-driven recommendation. Future research should focus on implementing lightweight XAI techniques, such as Local Interpretable Model-agnostic Explanations (LIME) or Shapley Additive Explanations (SHAP) adapted for embedded systems. These methods could highlight which specific HRV features or beat-to-beat intervals were most influential in a given AF classification, providing valuable interpretable feedback that could aid in clinical decision-making.

Another promising direction is the expansion towards multimodal signal analysis. While HRV is a powerful indicator for AF, its specificity can be limited by artifacts or other arrhythmias. Future systems could integrate data from other sensors, such as Photoplethysmogram (PPG) or accelerometers, as proposed by emerging platforms like BioBoard [44]. Fusing data from multiple sources could create a more robust system capable of distinguishing true AF from motion artifacts and potentially classifying other conditions, thereby increasing the overall diagnostic value of the wearable device.

Finally, we envision the evolution of such edge devices within a broader healthcare ecosystem [45]. The role of AI at the edge of the network is not necessarily to provide a definitive diagnosis, which remains the responsibility of clinicians, but to act as an intelligent gatekeeper or triage system. The primary goal is therefore to maximise sensitivity, so that as few potential AF events as possible are missed. The specificity requirement can be relaxed, because a suspected event merely triggers an alert prompting the user to seek clinical confirmation, or an automatic transmission of a short, relevant ECG snippet to a healthcare provider. This approach aligns with the goal of edge computing: minimising data transmission and power consumption by communicating and acting only on data deemed significant. This “high-sensitivity” screening model represents the most pragmatic and impactful application of AF detection on the edge.
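The sensitivity-first operating point described above amounts to choosing a lower decision threshold on the classifier output. The sketch below, assuming the model emits a per-window AF score in [0, 1], picks the highest threshold that still reaches a target sensitivity on labelled validation windows and reports the specificity that remains. The function names, the 0.99 default target, and the toy scores are hypothetical; the paper does not specify how its decision threshold is set.

```python
import math
from typing import Sequence, Tuple


def threshold_for_sensitivity(scores: Sequence[float], labels: Sequence[int],
                              target: float = 0.99) -> float:
    """Highest threshold t such that flagging score >= t as AF reaches at least
    `target` sensitivity on labelled (validation) windows."""
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    af_scores = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not af_scores:
        raise ValueError("need at least one AF-labelled window")
    # Number of AF windows that must be caught; round() guards against float noise.
    needed = max(1, math.ceil(round(target * len(af_scores), 9)))
    return af_scores[needed - 1]


def sensitivity_specificity(scores: Sequence[float], labels: Sequence[int],
                            t: float) -> Tuple[float, float]:
    """Sensitivity and specificity when windows with score >= t are flagged as AF."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < t)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < t)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= t)
    sens = tp / (tp + fn) if tp + fn else float("nan")
    spec = tn / (tn + fp) if tn + fp else float("nan")
    return sens, spec


if __name__ == "__main__":
    # Toy validation scores: label 1 = AF window, 0 = non-AF window.
    scores = [0.95, 0.90, 0.60, 0.30, 0.80, 0.40, 0.20, 0.10]
    labels = [1, 1, 1, 1, 0, 0, 0, 0]
    for target in (1.0, 0.75):
        t = threshold_for_sensitivity(scores, labels, target)
        print(target, t, sensitivity_specificity(scores, labels, t))
```

On this toy set, demanding full sensitivity lowers the threshold from 0.60 to 0.30 and drops specificity from 0.75 to 0.50. That is exactly the trade the screening model accepts, since false alarms are resolved by clinical confirmation. The threshold should be fixed on validation data, never on the test set.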

5. Conclusions

This study presents a practical and energy-efficient approach to AF detection using a lightweight LSTM model deployed on a resource-constrained MCU. Rather than focusing solely on achieving the highest theoretical accuracy, we have prioritised a design that balances clinical utility, real-time responsiveness, and power efficiency—key requirements for continuous monitoring in wearable devices.

By using HRV features and optimising the model for Embedded Edge inference, we demonstrate that high accuracy (98.46%) and low latency (143 ± 0 ms) are achievable. Crucially, the ultra-low energy consumption of 3532 ± 6 μJ per inference validates our proposed energy-gated architectural strategy. This approach minimises energy expenditure by performing continuous AF screening locally and keeping the wireless communication link OFF, activating it only to transmit a critical alert. It thereby avoids the high power consumption, latency, and privacy risks associated with cloud offloading.
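The energy figure can be put in context with a short back-of-the-envelope calculation. The sketch below converts the 3532 μJ per inference reported in the Abstract into an average inference power, assuming one inference per 40-beat RRI window. The heart rate of 75 bpm, the non-overlapping windows, and the helper names are illustrative assumptions, and the result covers inference only, excluding ECG acquisition, R-peak detection, sleep current, and the occasional alert transmission.

```python
SECONDS_PER_DAY = 86_400


def inference_power_uw(energy_uj: float, window_beats: int,
                       heart_rate_bpm: float, overlap: float = 0.0) -> float:
    """Average power (uW) spent on inference alone when one inference is run per
    RRI window. `overlap` is the fraction of beats shared by consecutive windows."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    new_beats = window_beats * (1.0 - overlap)      # beats between inferences
    period_s = new_beats * 60.0 / heart_rate_bpm    # seconds between inferences
    return energy_uj / period_s                     # uJ per second = uW


def inference_energy_per_day_j(power_uw: float) -> float:
    """Energy per day (J) for a given average power in uW."""
    return power_uw * SECONDS_PER_DAY / 1e6         # uW x s -> uJ -> J


if __name__ == "__main__":
    # Hypothetical operating point: 75 bpm, non-overlapping 40-beat windows.
    p = inference_power_uw(3532, 40, 75)
    print(f"{p:.1f} uW, {inference_energy_per_day_j(p):.2f} J/day")
```

Under these assumptions an inference runs every 32 s, giving roughly 110 μW and about 9.5 J per day for inference; a 50% window overlap would double both figures.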

Our findings show that real-world conditions, such as signal transitions and mixed-rhythm segments, can be effectively handled by the proposed system, making it robust to practical deployment challenges. The solution stands out for its adaptability, scalability, and ability to operate on general-purpose MCUs, avoiding the rigidity and cost associated with fixed-function hardware accelerators.

This work affirms the viability of performing clinically relevant AF detection at the micro edge. It offers a blueprint for developing intelligent wearable cardiac monitoring solutions that are not only technically feasible but also designed for long-term energy-efficient operation in real-world environments. By achieving this high standard of embedded edge AI performance, we have laid the foundation for scalable personalised cardiovascular care, enabling intelligent screening systems to proactively support patients and clinicians in both home and clinical settings.

Author Contributions

Corresponding author, O.F.; Conceptualisation, O.F.; Writing, O.F.; Software implementation, Y.A.; Writing points, Y.A.; Concept, Y.A.; Investigation, Y.A.; Proof reading, N.L. and N.P.; Provisioning, N.L. and N.P.; Editing, N.L., Y.P. and N.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The AF detection model was developed using the public ‘MIT-BIH AF Database’, available at https://physionet.org/content/afdb/1.0.0/ (accessed on 20 October 2025). It was validated with the public ‘Long Term AF Database’, available at https://physionet.org/content/ltafdb/1.0.0/ (accessed on 20 October 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACC | Accuracy |
| AF | Atrial Fibrillation |
| AFDB | Atrial Fibrillation Database |
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| DSP | Digital Signal Processing |
| DUT | Device Under Test |
| ECG | Electrocardiogram |
| FPGA | Field Programmable Gate Array |
| HRV | Heart Rate Variability |
| LIME | Local Interpretable Model-agnostic Explanations |
| LSTM | Long Short-Term Memory |
| MCU | Microcontroller Unit |
| PC | Personal Computer |
| PPG | Photoplethysmogram |
| RRI | RR-Interval |
| SHAP | Shapley Additive Explanations |
| SoC | System on Chip |
| SRAM | Static Random Access Memory |
| XAI | Explainable AI |

Appendix A. Analysis of RRI Window Length

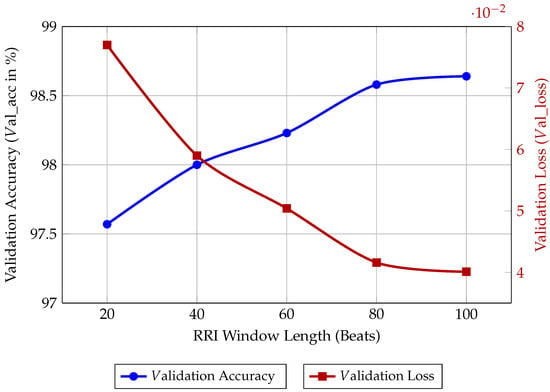

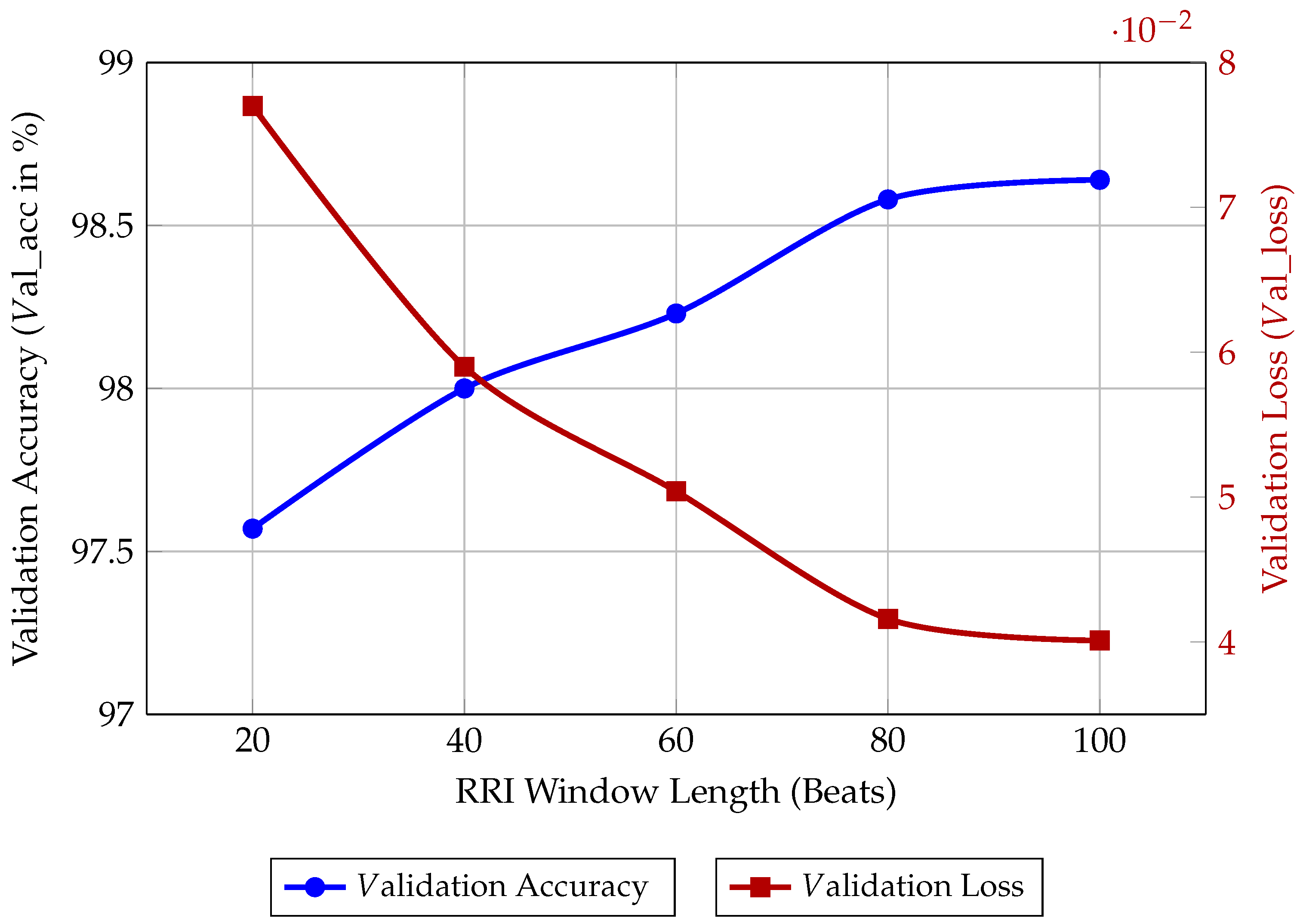

To substantiate the selection of the 40-beat RRI window length for our Bi-LSTM model, we performed an ablation study on the input sequence length. The window length is a critical hyperparameter that directly governs the balance between diagnostic performance, memory footprint, and computational cost on an MCU.

We trained and evaluated the fixed 40 × 64 Bi-LSTM architecture using five different window lengths (L = 20, 40, 60, 80, and 100 beats) for 50 epochs each. The results, presented in Table A1 and visualised in Figure A1, illustrate the following trade-offs:

- Performance Saturation: As the window length increases, the model’s accuracy improves, reflecting the benefit of capturing longer temporal dependencies in the RRI signal. However, the gain in val_acc from L = 80 to L = 100 is marginal (0.06 percentage points), indicating diminishing returns.

- Computational Cost: Longer windows require more recurrent operations per inference run, which translates directly into higher inference latency and energy consumption.

- Memory Footprint: The memory required to store the LSTM hidden states and process the input buffer scales linearly with the window length (L). For L ≥ 60, the model approaches the available RAM ceiling of the STM32L475VGT6 after accounting for the operating system and peripheral drivers.
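The linear dependence on L can be checked with a simple operation count. The sketch below counts multiply-accumulate (MAC) operations for a single Bi-LSTM layer using the standard LSTM gate equations, together with the activations needed if the layer returns its full output sequence. It assumes, for illustration only, 64 hidden units per direction and one RRI value per time step; biases, activation functions, any further layers, and framework overhead are ignored, so absolute numbers will differ from the deployed model.

```python
def lstm_macs_per_step(input_dim: int, hidden: int) -> int:
    """Multiply-accumulates for one LSTM time step: four gates, each a
    hidden x (input_dim + hidden) matrix-vector product. Biases and the
    element-wise sigmoid/tanh operations are ignored."""
    return 4 * hidden * (input_dim + hidden)


def bilstm_macs(window_len: int, input_dim: int = 1, hidden: int = 64) -> int:
    """A bidirectional layer runs one forward and one backward pass over the window."""
    return 2 * window_len * lstm_macs_per_step(input_dim, hidden)


def bilstm_output_floats(window_len: int, hidden: int = 64) -> int:
    """Activations held if the layer returns its full output sequence
    (forward and backward hidden states concatenated at every time step)."""
    return 2 * hidden * window_len


if __name__ == "__main__":
    ref = bilstm_macs(40)
    for L in (20, 40, 60, 80, 100):
        print(L, bilstm_macs(L), f"{bilstm_macs(L) / ref:.1f}x",
              bilstm_output_floats(L) * 4, "bytes (float32)")
```

Doubling L doubles both quantities, whereas the weight count, 4·H·(D + H) + 4·H per direction for H hidden units and D input features, does not depend on L at all. A longer window therefore costs latency, energy, and working RAM rather than model size.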

Table A1.

Performance vs. RRI window length.

| RRI window length, L (beats) | Validation accuracy, Val_ACC (%) | Validation loss, Val_Loss |
|---|---|---|
| 20 | 97.57 | 0.0770 |
| 40 | 98.00 | 0.0590 |
| 60 | 98.23 | 0.0504 |
| 80 | 98.58 | 0.0416 |
| 100 | 98.64 | 0.0401 |

Figure A1.

Model performance vs. RRI window length based on 40 epochs of training.

The 40-beat window was ultimately chosen because it delivers a competitive val_acc of 98.00%, only 0.64 percentage points below the 100-beat window, while requiring less than half of that window's memory and computation and staying clear of the RAM ceiling reached for L ≥ 60. This choice strikes the balance between accuracy and energy efficiency needed for continuous long-term wearable monitoring.
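The trade-off can be tabulated directly from Table A1. The Python sketch below lists, for each window length, the accuracy difference relative to the 40-beat window, the gain over the next-shorter window, and the relative recurrent compute under the linear-scaling assumption described in the bullet points above. `TABLE_A1` copies the reported values; `tradeoff` is a hypothetical helper.

```python
# Validation accuracy (%) per RRI window length L (beats), taken from Table A1.
TABLE_A1 = {20: 97.57, 40: 98.00, 60: 98.23, 80: 98.58, 100: 98.64}


def tradeoff(table, reference=40):
    """Per window length: accuracy change vs the reference window (percentage
    points), gain over the next-shorter window, and relative recurrent compute,
    assuming compute grows linearly with L."""
    rows, prev = [], None
    for L in sorted(table):
        delta_ref = round(table[L] - table[reference], 2)
        step_gain = None if prev is None else round(table[L] - table[prev], 2)
        rows.append((L, delta_ref, step_gain, L / reference))
        prev = L
    return rows


if __name__ == "__main__":
    for L, d_ref, d_step, cost in tradeoff(TABLE_A1):
        print(f"L={L:3d}  vs 40: {d_ref:+.2f} pp  step gain: {d_step}  compute: {cost:.1f}x")
```

Doubling the window to 80 beats buys 0.58 percentage points at twice the recurrent compute, and the step from 80 to 100 beats adds only 0.06 points.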

References

1. Lippi, G.; Sanchis-Gomar, F.; Cervellin, G. Global epidemiology of atrial fibrillation: An increasing epidemic and public health challenge. Int. J. Stroke 2021, 16, 217–221.

2. Wolf, P.A.; Abbott, R.D.; Kannel, W.B. Atrial fibrillation as an independent risk factor for stroke: The Framingham Study. Stroke 1991, 22, 983–988.

3. Chugh, S.S.; Havmoeller, R.; Narayanan, K.; Singh, D.; Rienstra, M.; Benjamin, E.J.; Gillum, R.F.; Kim, Y.H.; McAnulty, J.H., Jr.; Zheng, Z.J. Worldwide epidemiology of atrial fibrillation: A Global Burden of Disease 2010 Study. Circulation 2014, 129, 837–847.

4. Kalantarian, S.; Ay, H.; Gollub, R.L.; Lee, H.; Retzepi, K.; Mansour, M.; Ruskin, J.N. Association between atrial fibrillation and silent cerebral infarctions: A systematic review and meta-analysis. Ann. Intern. Med. 2014, 161, 650–658.

5. Chung, S.C.; Rossor, M.; Torralbo, A.; Ytsma, C.; Fitzpatrick, N.K.; Denaxas, S.; Providencia, R. Cognitive impairment and dementia in atrial fibrillation: A population study of 4.3 million individuals. JACC Adv. 2023, 2, 100655.

6. Karnik, A.A.; Saczynski, J.S.; Chung, J.J.; Gurwitz, J.H.; Bamgbade, B.A.; Paul, T.J.; Lessard, D.M.; McManus, D.D.; Helm, R.H. Cognitive impairment, age, quality of life, and treatment strategy for atrial fibrillation in older adults: The SAGE-AF study. J. Am. Geriatr. Soc. 2022, 70, 2818–2826.

7. Chen, Y.Y.; Lin, Y.J.; Hsieh, Y.C.; Chien, K.L.; Lin, C.H.; Chung, F.P.; Chen, S.A. Atrial fibrillation as a contributor to the mortality in patients with dementia: A nationwide cohort study. Front. Cardiovasc. Med. 2023, 10, 1082795.

8. Lip, G.Y.; Nieuwlaat, R.; Pisters, R.; Lane, D.A.; Crijns, H.J. Refining clinical risk stratification for predicting stroke and thromboembolism in atrial fibrillation using a novel risk factor-based approach: The euro heart survey on atrial fibrillation. Chest 2010, 137, 263–272.

9. Hindricks, G.; Potpara, T.; Dagres, N.; Arbelo, E.; Bax, J.J.; Blomström-Lundqvist, C.; Boriani, G.; Castella, M.; Dan, G.A.; Dilaveris, P.E. 2020 ESC Guidelines for the diagnosis and management of atrial fibrillation developed in collaboration with the European Association for Cardio-Thoracic Surgery (EACTS) The Task Force for the diagnosis and management of atrial fibrillation of the European Society of Cardiology (ESC) Developed with the special contribution of the European Heart Rhythm Association (EHRA) of the ESC. Eur. Heart J. 2021, 42, 373–498.

10. Joglar, J.A.; Chung, M.K.; Armbruster, A.L.; Benjamin, E.J.; Chyou, J.Y.; Cronin, E.M.; Deswal, A.; Eckhardt, L.L.; Goldberger, Z.D.; Gopinathannair, R. 2023 ACC/AHA/ACCP/HRS guideline for the diagnosis and management of atrial fibrillation: A report of the American College of Cardiology/American Heart Association Joint Committee on Clinical Practice Guidelines. J. Am. Coll. Cardiol. 2024, 83, 109–279.

11. Biersteker, T.E.; Schalij, M.J.; Treskes, R.W. Impact of mobile health devices for the detection of atrial fibrillation: Systematic review. JMIR mHealth uHealth 2021, 9, e26161.

12. Steinhubl, S.R.; Muse, E.D.; Topol, E.J. The emerging field of mobile health. Sci. Transl. Med. 2015, 7, 283rv3.

13. Faust, O.; Shenfield, A.; Kareem, M.; San, T.R.; Fujita, H.; Acharya, U.R. Automated detection of atrial fibrillation using long short-term memory network with RR interval signals. Comput. Biol. Med. 2018, 102, 327–335.

14. Lee, S.; Zheng, G.; Koh, J.; Li, H.; Xu, Z.; Cho, S.P.; Im, S.I.; Braverman, V.; Jeong, I.c. Optimizing beat-wise input for arrhythmia detection using 1-D convolutional neural networks: A real-world ECG study. Comput. Methods Programs Biomed. 2025, 269, 108898.

15. Islam, S.; Islam, M.R.; Sanjid-E-Elahi; Abedin, M.A.; Dökeroğlu, T.; Rahman, M. Recent advances in the tools and techniques for AI-aided diagnosis of atrial fibrillation. Biophys. Rev. 2025, 6, 011301.

16. Rancea, A.; Anghel, I.; Cioara, T. Edge computing in healthcare: Innovations, opportunities, and challenges. Future Internet 2024, 16, 329.

17. Saha, S.S.; Sandha, S.S.; Srivastava, M. Machine learning for microcontroller-class hardware: A review. IEEE Sens. J. 2022, 22, 21362–21390.

18. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

19. Faust, O.; Hong, W.; Loh, H.W.; Xu, S.; Tan, R.S.; Chakraborty, S.; Barua, P.D.; Molinari, F.; Acharya, U.R. Heart rate variability for medical decision support systems: A review. Comput. Biol. Med. 2022, 145, 105407.

20. Petrutiu, S.; Sahakian, A.V.; Swiryn, S. Abrupt changes in fibrillatory wave characteristics at the termination of paroxysmal atrial fibrillation in humans. Europace 2007, 9, 466–470.

21. Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

22. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

23. STMicroelectronics. STM32L475xx, STM32L485xx—Ultra-Low-Power Microcontrollers with FPU Arm Cortex-M4 MCU with 1 Mbyte of Flash, 120 MHz CPU, LPTIM; Datasheet DS10920, Revision 7; STMicroelectronics: Geneva, Switzerland, 2022; Available online: https://www.st.com/resource/en/application_note/an4865-lowpower-timer-lptim-applicative-use-cases-on-stm32-mcus-and-mpus-stmicroelectronics.pdf (accessed on 20 October 2025).

24. Arm. Cortex-M4 Processor Technical Reference Manual; Revision r0p1; Arm: Cambridge, UK, 2017; Available online: https://developer.arm.com/documentation/ddi0439/latest (accessed on 20 October 2025).

25. STMicroelectronics. STM32L475xx Datasheet—Ultra-Low-Power Arm Cortex-M4 32-bit MCU+FPU, 100 DMIPS, up to 1 MB Flash, 128 KB SRAM, USB OTG FS, LCD, ext. SMPS; DS11927; STMicroelectronics: Geneva, Switzerland, 2023.

26. STMicroelectronics. ST-LINK/V3PWR in-Circuit Debugger/Programmer for STM8 and STM32, with Advanced Power Features; User Manual UM2812, Revision 2; STMicroelectronics: Geneva, Switzerland, 2023; Available online: https://www.st.com/resource/en/user_manual/um1075-stlinkv2-incircuit-debuggerprogrammer-for-stm8-and-stm32-stmicroelectronics.pdf (accessed on 20 October 2025).

27. Yazid, M.; Rahman, M.A.; Nuryani, N.; Aripriharta. Atrial Fibrillation Detection From Electrocardiogram Signal on Low Power Microcontroller. IEEE Access 2024, 12, 91590–91604.

28. Triventi, M.; Calcagnini, G.; Censi, F.; Mattei, E.; Mele, F.; Bartolini, P. Clinical Validation of an Algorithm for Automatic Detection of Atrial Fibrillation from Single Lead ECG. In Proceedings of the XII Mediterranean Conference on Medical and Biological Engineering and Computing, Chalkidiki, Greece, 27–30 May 2010; Bamidis, P.D., Pallikarakis, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 168–171.

29. Lee, K.S.; Park, H.J.; Kim, J.E.; Kim, H.J.; Chon, S.; Kim, S.; Jang, J.; Kim, J.K.; Jang, S.; Gil, Y.; et al. Compressed Deep Learning to Classify Arrhythmia in an Embedded Wearable Device. Sensors 2022, 22, 1776.

30. Huang, W.; Zhang, Y.; Wan, S. A sorting fuzzy min-max model in an embedded system for atrial fibrillation detection. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2022, 18, 126.

31. Silva, G.V.; Lima, M.D.; Filho, J.A.; Rovai, M.J. Atrial Fibrillation and Sinus Rhythm Detection Using TinyML (Embedded Machine Learning). In Proceedings of the Latin American Conference on Biomedical Engineering, Florianópolis, Brazil, 24–28 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 633–644.

32. Chen, J.; Jiang, M.; Zhang, X.; da Silva, D.S.; de Albuquerque, V.H.C.; Wu, W. Implementing ultra-lightweight co-inference model in ubiquitous edge device for atrial fibrillation detection. Expert Syst. Appl. 2023, 216, 119407.

33. Akter, M.; Islam, N.; Ahad, A.; Chowdhury, M.A.; Apurba, F.F.; Khan, R. An Embedded System for Real-Time Atrial Fibrillation Diagnosis Using a Multimodal Approach to ECG Data. Eng 2024, 5, 2728–2751.

34. Żyliński, M.; Nassibi, A.; Mandic, D. Design and Implementation of an Atrial Fibrillation Detection Algorithm on the ARM Cortex-M4 Microcontroller. Sensors 2023, 23, 7521.

35. Kim, S.; Chon, S.; Kim, J.K.; Kim, J.; Gil, Y.; Jung, S. Lightweight Convolutional Neural Network for Real-Time Arrhythmia Classification on Low-Power Wearable Electrocardiograph. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; Volume 2022, pp. 1915–1918.

36. Busia, P.; Scrugli, M.A.; Jung, V.J.B.; Benini, L.; Meloni, P. A Tiny Transformer for Low-Power Arrhythmia Classification on Microcontrollers. IEEE Trans. Biomed. Circuits Syst. 2025, 19, 142–152.

37. Fajardo, C.A.; Parra, A.S.; Castellanos-Parada, T.V. Lightweight Deep Learning for Atrial Fibrillation Detection: Efficient Models for Wearable Devices. Ing. Investig. 2025, 45, e114530.

38. Loroch, D.; Feldmann, J.; Rybalkin, V.; Wehn, N. Low-power, Energy-efficient, Cardiologist-level Atrial Fibrillation Detection for Wearable Devices. arXiv 2025, arXiv:2508.13181.

39. Chen, C.; Ma, C.; Xing, Y.; Li, Z.; Gao, H.; Zhang, X.; Yang, C.; Liu, C.; Li, J. An atrial fibrillation detection system based on machine learning algorithm with mix-domain features and hardware acceleration. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Mexico City, Mexico, 1–5 November 2021; pp. 1423–1426.

40. Żyliński, M.; Nassibi, A.; Rakhmatulin, I.; Malik, A.; Papavassiliou, C.M.; Mandic, D.P. Deployment of Artificial Intelligence Models on Edge Devices: A Tutorial Brief. IEEE Trans. Circuits Syst. II Express Briefs 2024, 71, 1738–1743.

41. Lim, H.W.; Hau, Y.W.; Othman, M.A.; Lim, C.W. Embedded system-on-chip design of atrial fibrillation classifier. In Proceedings of the 2017 International SoC Design Conference (ISOCC), Seoul, Republic of Korea, 5–8 November 2017; pp. 90–91.

42. Hau, Y.; Lim, H.; Lim, C.; Kasim, S. P204 Automated detection of atrial fibrillation based on stationary wavelet transform and artificial neural network targeted for embedded system-on-chip technology. Eur. Heart J. 2020, 41, ehz872-075.

43. Gertych, A.; Faust, O. AI explainability and bias propagation in medical decision support. Comput. Methods Programs Biomed. 2024, 257, 108465.

44. Nassibi, A.; Zylinski, M.; Hammour, G.; Malik, A.; Davies, H.J.; Williams, I.; Papavassiliou, C.; Mandic, D. BioBoard: A multimodal physiological sensing platform for wearables. TechRxiv 2025.

45. Faust, O.; Ciaccio, E.J.; Acharya, U.R. A review of atrial fibrillation detection methods as a service. Int. J. Environ. Res. Public Health 2020, 17, 3093.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).