Abstract

Recently, the development of smartphone apps has enabled a wide range of services related to wood supply chain management, supporting decision-making and narrowing the digital divide in this business. This study examined the performance of Tree Scanner (TS), a LiDAR-based smartphone app prototype integrating advanced algorithms, in estimating log volume and providing instant volume data through direct digital measurement. Digital log measurements were conducted by two researchers, each of whom performed two repetitions; in addition to accuracy, the study evaluated measurement-time efficiency. The results indicate strong agreement between the standard (manual) and digital volume estimates, with R² > 0.98 and a low RMSE (0.0668 m³), as well as intra- and inter-user consistency. Moreover, the app showed significant potential for productivity improvement (38%), with digital measurements taking a median time of 21 s per log compared to 29 s per log for manual measurements. Its ease of use and integration of several key functionalities, such as Bluetooth transfer, remote server services, automatic species identification, instant volume estimates, compatibility with RFID tags and wood anatomy checking devices, and documentation of the geographic location of measurements, make the Tree Scanner app a useful tool for integration into wood traceability systems.

Keywords:

Tree Scanner; LiDAR sensor; log; volumes; digital measurements; manual measurement; traceability

1. Introduction

The forestry sector, like the rest of the primary sector, is facing novel and demanding challenges, including the climate crisis, an aging workforce, and globalization. Other factors, such as property fragmentation and technological development, further hinder forest management. The rapid advancement of information and communication technologies (ICTs) has fundamentally changed people’s knowledge and information behaviors, a change that stems from the unprecedented level of connectivity characterizing people’s information environments [].

Technological development presents both obstacles and opportunities for addressing these challenges. The transformation of a highly traditional context, such as the forestry sector, through the introduction of digital systems can lead to undesired consequences []. Typical barriers to the adoption of ICT solutions in rural areas include a lack of connectivity, as well as users’ and stakeholders’ fear and suspicion toward technology []. On the one hand, establishing stable connections has never been easier than it is today []; on the other hand, the aging human capital in rural communities may hinder their ability to embrace the benefits of ICT, unlike younger individuals, who are drawn to robust digitalization []. This disparity contributes to widening the digital divide [].

In recent years, the rapid spread of mobile phones has provided opportunities to reach farmers, who are often remote, dispersed, and poorly served, by overcoming barriers of space and social standing. Mobile phones offer services related to banking, payments, and commerce; the ability to gather information in the form of images, audio recordings, graphics, videos, and text; and access to marketplaces and social networking platforms []. In the agroforestry sector, the number of decision support tools available to farmers as smartphone apps has been steadily increasing. For instance, in India, 25 apps were used across various farming-related sectors, including horticulture, livestock management, farm operations, irrigation monitoring, soil health assessment, and agricultural marketing []. These tools range from equipment optimization (e.g., manufacturer-to-consumer instructions) to RFID management of livestock [], and from global dairy herd management [,] to improvements in agricultural sustainability and agronomic decision-making [].

Equipped with high-quality RGB cameras, depth sensors, and LiDAR scanners, smartphones are becoming practical tools for close-range photogrammetry and remote sensing. Mobile devices have been effectively used to measure attributes such as diameter at breast height (DBH), tree height, basal area (BA), volume, and tree position. They utilize both passive and active sensing techniques, thus having the potential to improve the accuracy and efficiency of forest inventories. Tree attributes can be estimated either through indirect measurements via the post-processing of datasets or by direct methods using apps for mobile devices [].

Significant advancements in forestry applications have included the use of general-purpose or dedicated apps for measuring the main biometrics of trees and logs and comparing them with those obtained through manual methods [,,]. While these apps are useful, they have several important shortcomings: they do not provide instant volume estimates in the field, lack integration with other technologies such as RFID (radio frequency identification) tags, and lack other essential functionalities, such as sourcing information, automatic data storage, and documentation of the geographic location, that are necessary for traceability in modern management systems [,]. For effective digitalization in forestry, mobile apps must meet several key requirements: they must perform accurately, be time-efficient, be able to store, process, and transfer digital data in real time, and, most importantly, integrate with other wood traceability technologies [].

Considering these requirements, the Sintetic project [] set out to develop the Tree Scanner app as a comprehensive tool for single-item identification, sourcing, measuring, and traceability. In addition to estimating the volume of logs using a smartphone-embedded LiDAR sensor (real-time point cloud scanning), identifying log species, and documenting geographic location, the app is equipped with other essential functionalities. These include Bluetooth connectivity with the HITMAN acoustic tool for detecting wood stiffness and RFID tag readers that uniquely identify each piece of wood. Testing an app during the prototyping phase is an essential step in product development, as it allows functionality, accuracy, and practical use to be assessed [].

The aim of this study was to evaluate the performance of the Tree Scanner app (TS) as a digital measurement tool during its prototyping phase for log volume estimation by (i) assessing its accuracy compared to manual measurements, (ii) evaluating the internal consistency of the app’s estimates by considering two data sampling densities (0.1 and 0.5 m), (iii) assessing the agreement and intra-rater reliability of log volume estimates by repeating measurements by the same user, (iv) assessing the agreement and inter-rater reliability of log volume estimates by having two different users repeat the measurements, and (v) comparing the time efficiency of the app with that of manual log measurements.

2. Materials and Methods

2.1. App Description and Data Used in This Study

Tree Scanner (hereafter referred to as TS) is a smartphone-based application currently under development as part of the Sintetic (Single Item Identification for Forest Production, Protection, and Management) project []. The goal of this project is to harness the potential of digital technologies in supply chains that are still largely reliant on manual methods. To enhance its effectiveness, TS is being developed specifically for iPhone platforms, which incorporate a vertical-cavity short-range LiDAR sensor [].

In summary, TS is designed to integrate various workflows that support critical functions within the supply chain, such as wood sourcing and traceability. The app enables users to measure the biometrics of trees and logs, process and save results on local devices, and transfer and store geo-referenced data in dedicated databases using state-of-the-art remote server connection technologies. For log measurement, it allows users to create measurement instances, take reference diameters at the ends of logs using deep learning models to recognize their profiles, and scan the logs using dedicated sensors.

The version used in this study was released in July 2024 and was tested via TestFlight (version 3.51, https://testflight.apple.com/join/IdnPkbIo, accessed on 15 September 2025), a tool developed by Apple (Cupertino, CA, USA) to facilitate the testing of app beta versions. In addition to estimating timber volume by applying intelligent sensors and algorithms to recognize features such as diameter, length, and tree species, the app provides the functionalities outlined in the Introduction: Bluetooth connectivity with the HITMAN acoustic tool for detecting wood stiffness and an RFID tag reader to uniquely identify every tree or piece of wood. The TS app (version 0.7, Umeå, Sweden) allows users to collect, transfer, and store important sourcing, traceability, and quality information related to individual trees and wood pieces.

Volume estimates were derived by implementing a Random Sample Consensus (RANSAC) algorithm [], which operates on data collected in the form of LiDAR point clouds. The version tested in this study included two data-processing workflows, each producing a separate volume estimate from the same scan. For each scanned log, the app automatically produced two datasets: one using measurements every 0.5 m along the log (hereafter the first data-processing workflow) and the other using measurements every 0.1 m (hereafter the second data-processing workflow). These two spacings are hereafter referred to as sampling densities; comparing the two volume estimates obtained from the same scan allowed us to assess the effect of sampling density on measurement reliability. Sampled diameters, log lengths, species, geographic locations, and timestamps were stored as .json files.
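The internals of the TS algorithm are not described here, so the following is only a minimal sketch of how RANSAC can fit a circle to one cross-sectional slice of a log point cloud. It assumes the slice points have already been projected onto a plane perpendicular to the log axis; the function names, the 5 mm inlier tolerance, and the iteration count are illustrative assumptions, not TS parameters.

```python
import math
import random


def circle_from_three_points(p1, p2, p3):
    """Return (cx, cy, r) of the circle through three 2D points, or None if collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        return None  # collinear (or repeated) points define no unique circle
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy, math.hypot(x1 - cx, y1 - cy)


def ransac_circle(points, n_iter=200, tol=0.005, seed=0):
    """Fit a circle to 2D slice points (metres) with RANSAC.

    Repeatedly fits a circle to three random points and keeps the candidate with
    the most inliers (points within `tol` metres of the circle).
    Returns ((cx, cy, r), inlier_count).
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    best, best_count = None, -1
    for _ in range(n_iter):
        model = circle_from_three_points(*rng.sample(points, 3))
        if model is None:
            continue
        cx, cy, r = model
        count = sum(1 for x, y in points if abs(math.hypot(x - cx, y - cy) - r) <= tol)
        if count > best_count:
            best, best_count = model, count
    return best, best_count


if __name__ == "__main__":
    # Synthetic slice: 60 points on a circle of radius 0.14 m plus 10 collinear outliers.
    pts = [(0.14 * math.cos(2 * math.pi * k / 60), 0.14 * math.sin(2 * math.pi * k / 60))
           for k in range(60)]
    pts += [(0.30 + 0.01 * k, -0.20) for k in range(10)]
    (cx, cy, r), n_in = ransac_circle(pts)
    print(f"diameter = {2 * r:.3f} m, inliers = {n_in}")
```

A practical implementation would typically refine the best circle by least squares on its inliers and repeat the fit at each sampled position along the log; both steps are omitted here for brevity.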

This study was designed to control the factors that could potentially influence or compromise the test results. To avoid any bias that processing data on the dedicated server might introduce into the measurement time estimates, the data were processed and stored directly on the mobile devices used for measurements, namely two iPhone 13 Pro Max smartphones on which the TestFlight and TS (version 0.7) apps had been installed in advance. The data, saved as .json files, were later extracted and stored in a Microsoft Excel worksheet.

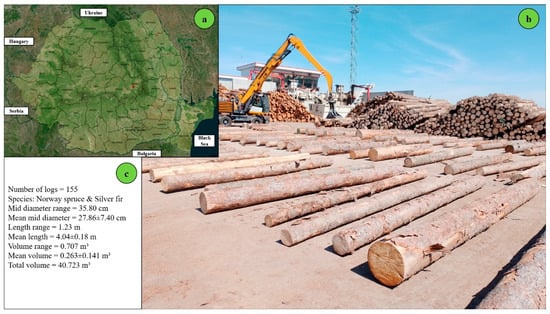

2.2. Location of the Study

The site setting and the arrangement of logs were chosen to limit background noise as much as possible, because understory vegetation or grass would create artifacts in the collected point clouds or obstructions on the ground during measurement, which, in turn, could affect the app’s computational workflow and the time-consumption measurements. The species group was also controlled by including only coniferous logs, to limit the number of variables. In forest operations, comparative time studies require control over factors such as ground slope, ground condition, and piece size, so that differences in estimates caused only by the technology used can be detected with a high degree of certainty. Accordingly, the study location was selected to meet all these requirements except for log size, because some variability in this feature was required for the reliability component of this study. This study was conducted in the log yard of a major wood processing company based in Romania, designated by the red dot in Figure 1a. The company handles material sourced from both domestic and foreign forests and typically works with short logs 3 to 4 m in length. A batch of logs representing part of the sample is illustrated in Figure 1b, while descriptive statistics of the sample, including volume estimates based on Huber’s formula, are provided in Figure 1c.

Figure 1.

Location of the study area and characteristics of the sample used in this study: (a) national-level map showing the study site (red dot); (b) sample logs used for measurement; (c) summary statistics of the sample, with volume estimates based on Huber’s formula.

The sample under study consisted of 155 logs, with a total estimated volume of about 41 m³ and wide variability in size. The logs were placed on the ground approximately 1 m apart (Figure 1b). The ground was flat, paved with concrete, and cleaned before the experiment. The diameters measured at the midpoint ranged from 13 to 49 cm, with an average of 28 cm, while the mean length was close to 4 m. For detailed statistics on the biometric characteristics of the logs, see Table S1 and Figure S1 (Supplementary Materials). The sky was mostly clear during field data collection.

2.3. Experimental Design and Data Collection

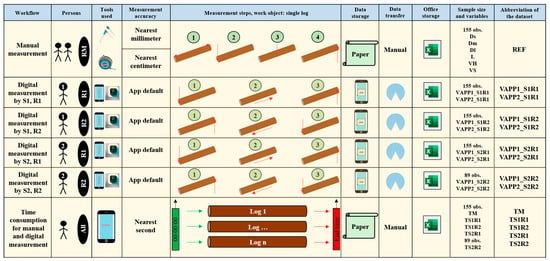

The design of this study was primarily comparative, and the data were collected using both manual and digital measurements. Two researchers, hereafter referred to as Subject 1 (S1) and Subject 2 (S2), measured the logs as described in Figure 2. Both subjects conducted the measurement tasks in two replications. The order of log measurement remained consistent, irrespective of the subject or replication. In the first replication (R1), the logs were approached from one end, while in the second replication (R2), the logs were approached from the opposite end. S1 took measurements of all 155 logs in both replications, while S2 measured all logs in R1 and the last 89 logs in R2 due to space and production flow constraints in the factory.

Figure 2.

Description of the testing workflows implemented in the field.

For manual measurements (Figure 2), variables included the diameter at the small end (Ds), diameter at the middle (Dm), diameter at the large end (Dl), and log length (L). Volume estimates were calculated using Huber’s (VH) and Smalian’s (VS) formulae. Digital measurements were collected through the app at two sampling densities, yielding VAPP1 (digital volume estimate based on a sampling density of 0.5 m) and VAPP2 (digital volume estimate based on a sampling density of 0.1 m). Time consumption was recorded for both methods: TM denotes the cycle time of manual measurement, while TS1R1, TS1R2, TS2R1, and TS2R2 denote the cycle times of digital measurement for Subject 1 during replications 1 and 2 and for Subject 2 during replications 1 and 2, respectively.
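For reference, Huber’s formula multiplies the mid-length cross-sectional area by the length, V_H = (π/4)·Dm²·L, and Smalian’s formula averages the two end cross-sectional areas, V_S = (π/4)·((Ds² + Dl²)/2)·L. The sketch below assumes diameters in centimetres and length in metres, returning volume in cubic metres; the function names are hypothetical.

```python
import math


def cross_section_area_m2(d_cm):
    """Area (m^2) of a circular cross-section with diameter d_cm in centimetres."""
    d_m = d_cm / 100.0
    return math.pi / 4.0 * d_m ** 2


def huber_volume(dm_cm, length_m):
    """Huber's formula: V_H = A(Dm) * L, using the mid-length cross-section."""
    return cross_section_area_m2(dm_cm) * length_m


def smalian_volume(ds_cm, dl_cm, length_m):
    """Smalian's formula: V_S = (A(Ds) + A(Dl)) / 2 * L, averaging the end cross-sections."""
    return (cross_section_area_m2(ds_cm) + cross_section_area_m2(dl_cm)) / 2.0 * length_m


if __name__ == "__main__":
    # Hypothetical log near the sample means (Dm = 28 cm, L = 4 m).
    print(f"VH = {huber_volume(28, 4.0):.5f} m3")
    print(f"VS = {smalian_volume(24, 33, 4.0):.5f} m3")
```

For a perfect cylinder (Ds = Dm = Dl) both formulae return the same volume; they diverge as the shape of the log departs from the geometric form each formula assumes.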

Overall, six main workflows were implemented. The first workflow (REF, Figure 2) involved manually measuring the logs to obtain the large-end diameter (Dl), small-end diameter (Ds), diameter at the middle (Dm), and log length (L). This workflow was conducted once per log, starting from the same end of the log. The collected data were recorded on paper sheets and then manually transferred to a Microsoft Excel worksheet.

Digital measurement workflows were implemented as illustrated in Figure 2. For each subject and replication, the TS app was used to detect the diameter at the first end of the log. The subject then moved along the log while scanning and concluded the task by detecting the diameter at the second end. In all digital measurement workflows, subjects moved along the log at a constant pace, although movement speed varied between subjects. After each measurement, data were processed and saved locally, then transferred to a personal computer via AirDrop at the end of the field test. For each subject and replication, two datasets were created and saved as Microsoft Excel files, corresponding to estimates based on the algorithm’s sampling density of 0.5 m (VAPP1) and 0.1 m (VAPP2), respectively. This resulted in eight datasets, named according to sampling density (VAPP), subject (S), and replication (R), as shown in Figure 2. Although the digital volume estimates were recorded with a precision of more than ten digits, they were rounded to five decimal places in the database for consistency.
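How the app turns sampled diameters into VAPP1 and VAPP2 is not documented here. One common approach, assumed below purely for illustration, treats each interval between consecutive sampled diameters as a section and sums Smalian section volumes, so that a 0.5 m spacing behaves like VAPP1 and a 0.1 m spacing like VAPP2. The function name and the linear-taper test log are hypothetical; only the rounding to five decimal places mirrors the text.

```python
import math


def sectional_volume(diameter_at, length_m, step_m):
    """Sum Smalian-type section volumes (m^3) from diameters sampled along a log.

    diameter_at: callable returning the diameter (m) at distance x (m) from one end.
    step_m: spacing between sampled diameters, e.g. 0.5 (VAPP1-like) or 0.1 (VAPP2-like).
    The final section is shortened when length_m is not a multiple of step_m.
    """
    n = max(1, math.ceil(length_m / step_m - 1e-9))  # number of sections
    positions = [i * step_m for i in range(n)] + [length_m]
    volume = 0.0
    for a, b in zip(positions, positions[1:]):
        area_a = math.pi / 4.0 * diameter_at(a) ** 2
        area_b = math.pi / 4.0 * diameter_at(b) ** 2
        volume += (area_a + area_b) / 2.0 * (b - a)  # Smalian volume of one section
    return volume


if __name__ == "__main__":
    # Hypothetical 4 m log tapering linearly from 32 cm to 24 cm (a conical frustum).
    def taper(x):
        return 0.32 - 0.02 * x

    exact = math.pi * 4.0 / 12.0 * (0.32 ** 2 + 0.32 * 0.24 + 0.24 ** 2)
    for step in (0.5, 0.1):
        v = sectional_volume(taper, 4.0, step)
        print(f"step {step} m: {round(v, 5)} m3 (exact frustum {round(exact, 5)} m3)")
```

For a linearly tapering section of length h, the Smalian estimate exceeds the exact frustum volume by π·h·(ΔD)²/24, so the finer 0.1 m spacing converges on the exact value; on smooth logs the two spacings therefore differ only slightly.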

Time consumption for manual (TM) and digital (TS1R1, TS1R2, TS2R1, TS2R2) log measurements was assessed using the snap-back chronometry method []. The chronometer was set to zero before measuring each log. For manual measurements, the team verbally communicated their intention to start, at which point the chronometer was activated. This communication always occurred after the team arrived at a given log. Upon completing the measurement of a log, they verbally indicated the end of the process, the chronometer was stopped, and the time was recorded on a paper sheet. A similar procedure was followed to collect time consumption for digital measurements. Each subject communicated verbally their intention to start the measurement process and used the app interface to initiate it, at which point the chronometer was activated. Once a measurement was completed, including data processing and saving on the smartphone, the subject verbally indicated the end of the process, and the chronometer was stopped. All time measurements were recorded to the nearest second by a researcher using the chronometer app on an iPhone 13 Pro Max.

For clarity, digital volume estimates are labeled VAPPk_SiRj, where k = 1 and k = 2 denote the algorithm sampling densities of 0.5 m and 0.1 m, respectively, i denotes the subject (1 or 2), and j denotes the replication (1 or 2). For example, VAPP1_S1R1 is the volume estimate using the 0.5 m sampling density for data collected by Subject 1 during replication 1, and VAPP2_S2R2 is the volume estimate using the 0.1 m sampling density for data collected by Subject 2 during replication 2. In addition, C denotes the slope of the regression through the origin, and R² denotes the coefficient of determination.

2.4. Data Processing

Data processing steps were primarily supported by Microsoft Excel. In the first step, all data were paired according to the log identification number. Volume estimates produced for each subject, replication, and sampling density were manually extracted from the .json files and entered into the database. Among other variables, the final database contained paired data for all variables described in Figure 2. Manually collected biometric data were used to compute the volume of each log based on Huber’s (VH) and Smalian’s (VS) formulae. The time consumption of digital measurements (TS1R1, TS1R2, TS2R1, TS2R2) was averaged for each log, resulting in an estimate (AMT) rounded to the nearest second. Subsequently, all data were prepared for statistical analysis according to the objectives of this study. This step included organizing data into separate sheets to meet the requirements of each statistical workflow, as described in Figures S2–S7 (see Supplementary Materials).
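The extraction and pairing described above were done manually in Excel. A scripted alternative might look like the sketch below. The JSON field names (`log_id`, `volume_05`, `duration_s`) are hypothetical, since the structure of the TS export files is not described here; the averaging of cycle times into AMT follows the text.

```python
import json
from statistics import mean


def load_records(json_texts):
    """Parse exported JSON documents (hypothetical schema) into a dict keyed by log ID."""
    records = {}
    for text in json_texts:
        rec = json.loads(text)
        records[rec["log_id"]] = rec  # "log_id" is an assumed field name
    return records


def pair_by_log(reference, digital):
    """Return (log_id, reference_value, digital_value) for logs present in both dicts."""
    return [(k, reference[k], digital[k]) for k in sorted(reference) if k in digital]


def average_time_per_log(runs):
    """Average digital cycle times (s) across subject/replication runs, per log (AMT).

    runs: list of dicts mapping log_id -> cycle time in seconds. A log missing from a
    run (e.g., S2 measured only 89 logs in R2) is averaged over the runs that include it.
    Results are rounded half up to the nearest second, as Excel's ROUND does for
    positive values (Python's round() would use round-half-to-even instead).
    """
    all_ids = set().union(*runs)
    amt = {}
    for log_id in sorted(all_ids):
        times = [run[log_id] for run in runs if log_id in run]
        amt[log_id] = int(mean(times) + 0.5)
    return amt


if __name__ == "__main__":
    # Made-up exports for two logs; field names and values are illustrative only.
    exports = ['{"log_id": 1, "volume_05": 0.26312, "duration_s": 20}',
               '{"log_id": 2, "volume_05": 0.18870, "duration_s": 23}']
    s1r1 = {k: r["duration_s"] for k, r in load_records(exports).items()}
    s1r2 = {1: 21, 2: 22}
    print(average_time_per_log([s1r1, s1r2]))
```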

2.5. Data Analysis

Data analysis was structured into separate workflows (Figures S2–S7, Supplementary Materials) designed in accordance with the objectives of this study. Descriptive statistics, including those showing the distribution of data (Table S1 and Figure S2, Supplementary Materials), were developed to characterize the REF dataset. All variables were subjected to a normality check using the Shapiro–Wilk test []. Subsequently, relevant statistical descriptors such as the minimum, maximum, mean, standard deviation, and median values were estimated.

For the first four objectives of this study, the statistical workflows were similar: the paired data were checked for heteroscedasticity using the Breusch–Pagan and White tests [,] (Figures S3–S6, Supplementary Materials), trends in the data were assessed using regression through the origin [], agreement was evaluated using Bland and Altman’s method [], and the magnitude of differences was characterized using bias (hereafter Bias [,]), mean absolute error (hereafter MAE []), and root mean squared error (hereafter RMSE [,]). The statistical workflow for the accuracy assessment against manual measurements (objective i) diverged slightly: the Bland–Altman agreement assessment was omitted because of the large number of comparisons required and the limited space of this paper. However, trends in the data and error metrics were computed and reported for all eight possible comparisons.
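Regression through the origin reduces to the estimator C = Σxy / Σx², and the Breusch–Pagan idea can be expressed as an auxiliary regression of squared residuals on the predictor. The sketch below uses Koenker’s studentized form of the test (LM = n·R² of the auxiliary regression, compared with a χ² distribution with one degree of freedom) and the uncentered R² usually reported for no-intercept models; whether the Real Statistics add-in uses exactly these variants is an assumption. Note that the uncentered R² of a no-intercept model is not directly comparable with the usual centered R².

```python
import math


def rto_fit(x, y):
    """Regression through the origin y = C*x; returns (C, uncentered R^2)."""
    sxy = sum(a * b for a, b in zip(x, y))
    sxx = sum(a * a for a in x)
    c = sxy / sxx
    sse = sum((b - c * a) ** 2 for a, b in zip(x, y))
    syy = sum(b * b for b in y)  # uncentered total sum of squares
    return c, 1.0 - sse / syy


def ols_r2(x, y):
    """Centered R^2 of a simple least-squares line y = a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy) if sxx > 0 and syy > 0 else 0.0


def breusch_pagan_koenker(x, y):
    """Koenker's studentized Breusch-Pagan test for the RTO model of y on x.

    Regresses squared residuals on x; LM = n * R^2 ~ chi-square(1) under homoscedasticity.
    Returns (LM, p_value).
    """
    c, _ = rto_fit(x, y)
    e2 = [(b - c * a) ** 2 for a, b in zip(x, y)]
    lm = len(x) * ols_r2(x, e2)
    p = math.erfc(math.sqrt(lm / 2.0))  # survival function of chi-square with 1 df
    return lm, p
```

A small p-value indicates that the spread of the residuals changes with the predictor, which in paired volume data usually means that differences grow with log size.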

For the last objective of this study, the compared variables (TM and AMT) were first checked for normality using the Shapiro–Wilk test. Next, the potential effects of log biometrics on time consumption were evaluated for both variables using ordinary least squares regression, with the log biometrics taken from the manual measurements. Because the data failed the normality test, the nonparametric Mann–Whitney U test [,,] was then used to determine whether time consumption differed significantly between the measurement methods. Descriptive statistics for this workflow were reported in the same way as those characterizing the manually measured log biometrics.
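The Mann–Whitney comparison and its effect size can be reproduced with standard-library code. The sketch below uses the large-sample normal approximation with a tie correction (relevant here, because times recorded to the nearest second produce many ties) and reports r = |z| / √N, a common effect-size convention for this test. It is not the Real Statistics implementation, and omitting the continuity correction is an assumption.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # positions i..j (0-based) share the mean rank
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney(a, b):
    """Two-sided Mann-Whitney U test (normal approximation with tie correction).

    Returns (U, z, p, r) where U is computed for sample a and r = |z| / sqrt(n1 + n2).
    """
    n1, n2 = len(a), len(b)
    n = n1 + n2
    pooled = list(a) + list(b)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2.0
    mu = n1 * n2 / 2.0
    # Tie correction: sum of (t^3 - t) over groups of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u1 - mu) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    return u1, z, p, abs(z) / math.sqrt(n)
```

Applied to the TM and AMT columns, the function returns U, z, the two-sided p-value, and the effect size r.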

Statistical tests and the development of descriptive statistics were conducted using the Real Statistics add-in for Excel []. Trends analyzed included those checked by regression through the origin as well as those utilizing ordinary least squares regression, all performed using the functionalities of Microsoft Excel. The results were primarily presented in tabular form, highlighting the main features and interpretations of the regression equations, and were accompanied by scatterplots displaying the data against the identity (1:1) lines. Where relevant, differences between the datasets were included in the trend scatterplots.

Bland–Altman analysis was performed using the computational and scatterplot functionalities of Microsoft Excel. Error (difference) metrics were estimated using standard formulas applied to data organized in advance for each statistical workflow; for instance, signed, absolute, and squared differences between each pair of compared variables were computed beforehand. For convenience, where relevant, these metrics were included in the Bland–Altman plots and reported alongside the upper and lower limits of agreement, which were based on two standard deviations of the differences.
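The Bland–Altman quantities and error metrics reduce to a few sums over the paired differences. The sketch assumes ΔV = compared − reference, so a negative Bias means the compared method yields lower volumes, and sets the limits of agreement at Bias ± 2 SD of the differences, following the two-standard-deviation convention stated above (many studies use 1.96 SD instead). The function name and dictionary keys are illustrative.

```python
import math
from statistics import stdev


def bland_altman(reference, compared):
    """Return Bland-Altman points and summary metrics for paired volume estimates.

    Differences are taken as compared - reference; limits of agreement use
    Bias +/- 2 sample standard deviations of the differences.
    """
    diffs = [c - r for r, c in zip(reference, compared)]
    means = [(r + c) / 2.0 for r, c in zip(reference, compared)]
    n = len(diffs)
    bias = sum(diffs) / n
    sd = stdev(diffs)
    return {
        "points": list(zip(means, diffs)),  # x = Mean V, y = ΔV for the scatterplot
        "Bias": bias,
        "MAE": sum(abs(d) for d in diffs) / n,
        "RMSE": math.sqrt(sum(d * d for d in diffs) / n),
        "ULOA": bias + 2.0 * sd,
        "LLOA": bias - 2.0 * sd,
    }
```

Because RMSE squares each difference before averaging, it exceeds MAE noticeably only when a few large differences are present, which is why the two are reported together.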

3. Results

3.1. Log Biometrics Based on Manual Measurement

The dataset obtained through manual measurement consisted of 155 observations (logs) and was characterized by high variability in the biometrics, particularly in the estimated volumes. The coefficients of variation computed for Ds, Dl, and Dm were similar, ranging from 27% to 29%. By contrast, log length (L) showed reduced variability, with a coefficient of variation of 5%. The volume estimates had coefficients of variation ranging from 54% to 57%. Table S1 (Supplementary Materials) presents detailed descriptive statistics of the REF dataset, indicating that volume estimates based on Huber’s formula ranged from approximately 0.05 to 0.76 m³, while those based on Smalian’s formula ranged from about 0.07 to 0.96 m³. The mean values of the two estimates were identical (0.263 m³), and the median values were similar (0.233 m³ for VH and 0.235 m³ for VS). All variables failed the normality test, although some were close to a normal distribution (Figure S1, Supplementary Materials). The heteroscedasticity tests indicated that the data were heteroscedastic (Table S2, Supplementary Materials). Moreover, the trend analysis (Figure S9, Supplementary Materials), together with the Bland–Altman plot and error metrics (Figure S10, Supplementary Materials), indicates a high level of agreement between the VH and VS estimates.

3.2. Trends, Agreement, and Differences in the Algorithm’s Estimates

Table 1 summarizes the slopes (C) and coefficients of determination (R²) of the trend models developed using regression through the origin, for both the actual values and the differences between them. The heteroscedasticity tests indicated that most of the data were heteroscedastic, which may suggest the presence of proportional bias (see Table S3, Supplementary Materials). The slopes (C) and coefficients of determination (R²) for the actual values demonstrate a high level of agreement, as both were close to 1. In regression through the origin, a slope close to 1 suggests that the data points closely align with the identity line (see Figures S11–S14, Supplementary Materials), whereas a coefficient of determination equal to 1 indicates that 100% of the variability in the dependent variable is explained by the independent variable.

Table 1.

Summary statistics of trends in data between the algorithm’s estimates.

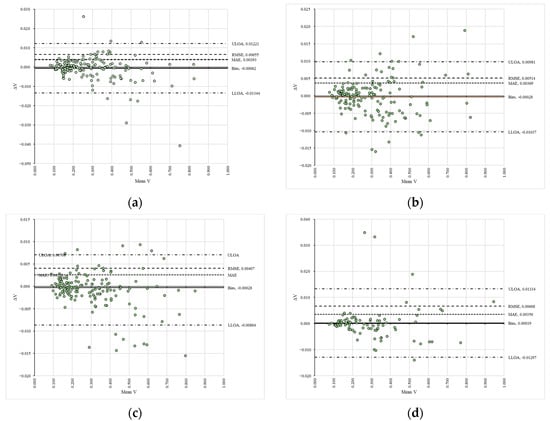

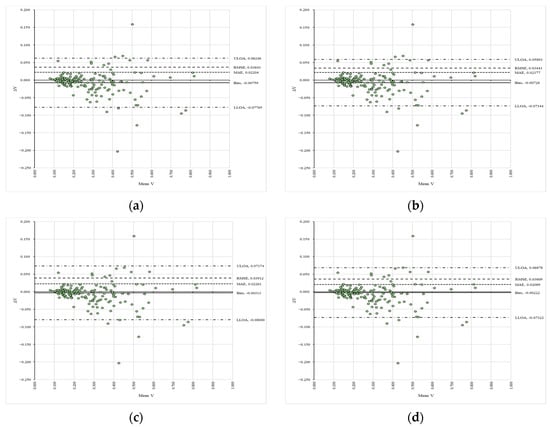

The regression models developed for the differences reveal two key points: the slope was consistently close to zero, indicating a flat trend line (i.e., no systematic change in the differences), and the coefficients of determination were low, indicating that little of the variability was explained (Table 1). These trends are clearly observable in Figures S11–S14 (Supplementary Materials), which show a general lack of significant differences in the data. Similarly, the Bland–Altman plots in Figure 3 indicate a high degree of agreement between the estimates, as the data points fall within narrow limits of agreement.

Figure 3.

Bland–Altman plots of the compared variables for the algorithm’s estimates: (a) comparison between VAPP1_S1R1 and VAPP2_S1R1; (b) comparison between VAPP1_S1R2 and VAPP2_S1R2; (c) comparison between VAPP1_S2R1 and VAPP2_S2R1; (d) comparison between VAPP1_S2R2 and VAPP2_S2R2.

In these plots (Figure 3), the signed differences (ΔV) between the reference datasets (VAPP1_S1R1, VAPP1_S1R2, VAPP1_S2R1, and VAPP1_S2R2 in panels (a) to (d), respectively) and the compared datasets (VAPP2_S1R1, VAPP2_S1R2, VAPP2_S2R1, and VAPP2_S2R2, respectively) are plotted against their mean values (Mean V) within the upper (ULOA) and lower (LLOA) limits of agreement, together with the fixed bias (Bias), mean absolute error (MAE), and root mean squared error (RMSE). The error metrics indicate a very low systematic bias (Bias = −0.00062 to 0.00019), along with low mean absolute error (MAE = 0.00258 to 0.00393) and root mean squared error (RMSE = 0.00407 to 0.00668), the latter suggesting the absence of large-magnitude differences between the datasets.

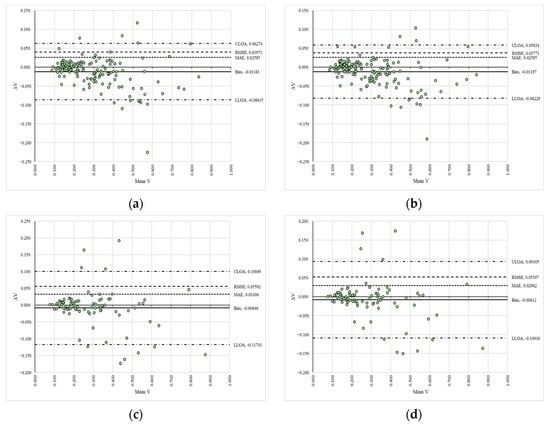

3.3. Trends, Agreement, and Differences in the Replicates

The results on the trends, agreement, and differences between replicates are summarized as in Section 3.2. Table 2 presents the slopes (C) and coefficients of determination (R²), while Figure 4 displays the Bland–Altman plots along with the error metrics. Panels (a) to (d) show the signed differences (ΔV) between the reference datasets (VAPP1_S1R1, VAPP2_S1R1, VAPP1_S2R1, and VAPP2_S2R1, respectively) and the compared datasets (VAPP1_S1R2, VAPP2_S1R2, VAPP1_S2R2, and VAPP2_S2R2, respectively), plotted against their mean values (Mean V) within the upper (ULOA) and lower (LLOA) limits of agreement, together with the fixed bias (Bias), mean absolute error (MAE), and root mean squared error (RMSE).

Table 2.

Summary statistics of trends in data between the replicates.

Figure 4.

Bland–Altman plots of the compared variables between replicates: (a) comparison between VAPP1_S1R1 and VAPP1_S1R2; (b) comparison between VAPP2_S1R1 and VAPP2_S1R2; (c) comparison between VAPP1_S2R1 and VAPP1_S2R2; (d) comparison between VAPP2_S2R1 and VAPP2_S2R2.

Table S4 (Supplementary Materials) provides the results of the heteroscedasticity tests, and Figures S15–S18 (Supplementary Materials) illustrate the trends in the data. The slopes (C) and coefficients of determination (R²) for the actual values indicate good agreement, as both were close to 1. However, their deviations from unity were greater than those in Section 3.2 because of higher dispersion in the data (see Figures S15–S18, Supplementary Materials). This is consistent with the Bland–Altman results, particularly the error metrics, which indicate a higher systematic bias (Bias = −0.00759 to 0.00222) as well as higher mean absolute error (MAE) and root mean squared error (RMSE).

In contrast to the results reported in Section 3.2, the heteroscedasticity tests indicated homoscedasticity for the pairs VAPP1_S2R1 vs. VAPP1_S2R2 and VAPP2_S2R1 vs. VAPP2_S2R2 (Table S4, Supplementary Materials), suggesting no proportional bias between these measurements. However, these results were based on a smaller sample of only 89 observations.

3.4. Trends, Agreement, and Differences Between the Raters

Regarding inter-rater consistency, Table 3 summarizes the slopes (C) and coefficients of determination (R²), while Figure 5 presents the Bland–Altman plots alongside the error metrics. Panels (a) to (d) show the signed differences (ΔV) between the estimates of Subject 1 and Subject 2, plotted against their mean values (Mean V) within the upper (ULOA) and lower (LLOA) limits of agreement, together with the fixed bias (Bias), mean absolute error (MAE), and root mean squared error (RMSE). Table S5 (Supplementary Materials) details the results of the heteroscedasticity tests, and Figures S19–S22 (Supplementary Materials) illustrate the trends in the data. As shown in Table 3, the slopes and coefficients of determination deviated most from unity for the inter-rater datasets. All data considered in this workflow were heteroscedastic (Table S5, Supplementary Materials). The systematic bias was generally larger (Figure 5), and the mean absolute error (MAE) was slightly higher than for intra-rater consistency. Furthermore, the root mean squared error (RMSE) suggested larger-magnitude differences, particularly for the last two compared datasets (Figure 5).

Table 3.

Summary statistics of trends in data between raters.

Figure 5.

Bland–Altman plots of the compared variables between the raters (Subject 1 versus Subject 2), panels (a) to (d).

3.5. Agreement Between Digital and Manual Data

When the digital estimates were compared with the manually based reference data, the developed models exhibited varying slopes and coefficients of determination (Table 4). The slopes (C) were similar for the same subject and replication across the two sampling densities, and the fixed bias (Bias), mean absolute error (MAE), and root mean squared error (RMSE) behaved similarly (Table 5). The scatterplot of the compared datasets is shown in Figure S23 (Supplementary Materials).

Table 4.

Summary statistics of trends in digital data against the manually based estimates.

Table 5.

Error metrics of the digital data when compared with manually based estimates.

3.6. Time Efficiency

The time-consumption data did not meet the normality assumption; therefore, the median was used to characterize the central tendency, and the nonparametric Mann–Whitney test was used to compare the datasets. The main descriptive statistics of the time-consumption data are presented in Table 6, and the trends in time-consumption variation are illustrated in Figure S24 (Supplementary Materials). Based on the medians (29 s vs. 21 s per log), manual measurement took about 8 s longer per log. Additionally, the coefficient of variation (Table 6) indicated greater dispersion in the manual time-consumption data than in the digital data, a finding further supported by the distributions shown in Figure S24, which consistently indicate greater stability in the digital measurement data.
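The time comparison rests on medians, coefficients of variation, and a productivity gain. A minimal sketch follows, assuming that the coefficient of variation is the sample standard deviation divided by the mean and that the productivity gain is the relative increase in logs measured per unit time, i.e., (median manual time / median digital time − 1) × 100. With the medians of 29 s and 21 s per log reported in this study, this definition yields about 38%, consistent with the reported gain. The function names and the cycle times below are hypothetical.

```python
from statistics import mean, median, stdev


def describe_times(times_s):
    """Median (s per log) and coefficient of variation (%) of cycle times."""
    return {
        "median": median(times_s),
        "cv_pct": 100 * stdev(times_s) / mean(times_s),  # sample SD / mean
    }


def productivity_gain_pct(median_manual_s, median_digital_s):
    """Relative increase in logs measured per unit time (productivity = 1 / time per log)."""
    return 100 * (median_manual_s / median_digital_s - 1)


# Hypothetical cycle times (s per log), not data from this study.
manual = [25, 29, 31, 40, 22]
digital = [20, 21, 23, 19, 22]
print(describe_times(manual), describe_times(digital))
print(round(productivity_gain_pct(29, 21), 1))  # medians reported in this study -> 38.1
```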

Table 6.

Descriptive statistics of time consumption data.

The Mann–Whitney test indicated a highly significant difference between the analyzed variables (p < 0.00001, α = 0.05), supported by a large effect size (r = 0.82). Based on the descriptive statistics presented in Table 6, the productivity gain of digital measurement is estimated at about 38% relative to manual measurement.
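The Mann–Whitney comparison and its effect size can be sketched with the Python standard library as follows. This version uses the normal approximation with a tie correction and no continuity correction, and it computes the effect size as r = |z|/√N, where N is the total number of observations; the text does not specify these details, so they are assumptions. Exact small-sample p-values would require a different procedure, and the function names are hypothetical.

```python
from math import erfc, sqrt


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of positions i..j, 1-based
        i = j + 1
    return ranks


def mann_whitney(a, b):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U, z, p, r), where r = |z| / sqrt(n1 + n2) is the effect size.
    """
    n1, n2 = len(a), len(b)
    n = n1 + n2
    pooled = list(a) + list(b)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2   # U statistic for sample a
    u = min(u1, n1 * n2 - u1)                  # conventionally reported U
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var <= 0:
        raise ValueError("all observations are tied")
    z = (u1 - n1 * n2 / 2) / sqrt(var)         # no continuity correction
    p = erfc(abs(z) / sqrt(2))                 # two-sided normal p-value
    r = abs(z) / sqrt(n)
    return u, z, p, r


# Hypothetical cycle times (s per log), not data from this study.
print(mann_whitney([29, 31, 27, 35, 26, 30], [21, 20, 22, 23, 19, 21]))
```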

4. Discussion

This study evaluated the performance of the Tree Scanner (TS) app, a LiDAR-based smartphone tool, during its prototyping phase for log volume estimation. The findings are promising, indicating the app's potential as an accurate, reliable, and efficient alternative to traditional manual methods, although several considerations and limitations remain. The TS app estimated log volumes with a high degree of accuracy relative to the manual reference volumes computed using Huber's formula. The strong agreement, evidenced by R2 values consistently exceeding 0.98, a low overall RMSE (below 0.067 m3), and only minor systematic underestimation (Bias approx. −0.0417 m3), positions the TS app favorably against other mobile solutions. For instance, Niță and Borz [] reported that their tested app, based on the RANSAC [] and Poisson Surface Reconstruction [] algorithms, exhibited proportional bias, particularly for logs outside a specific size range (0.25 to 0.40 m3). The TS app, which relies on direct LiDAR scanning of single logs, did not show such pronounced bias across the log sizes in our sample, in line with the general accuracy expectations for LiDAR-based measurements [,]. This direct scanning approach contrasts with methods that rely on the geometric assumptions inherent in many manual formulae [,] or on extensive post-processing. Previous work with LiDAR-equipped iPhones (Borz et al. []) also found high accuracy (95–99%) for individual log diameter and length measurements, corroborating the base capability of the sensor.
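The manual reference volumes rely on Huber's formula (VH), and Smalian's formula (VS) is reported in the Supplementary Materials. The standard textbook forms are sketched below. The input units (diameters in cm, length in m) are an assumption, and the sketch does not address whether diameters were taken over or under bark.

```python
from math import pi


def huber_volume(dm_cm, length_m):
    """Huber's formula: cross-sectional area at mid-length times log length (m3)."""
    dm = dm_cm / 100  # cm -> m (input units are an assumption)
    return pi / 4 * dm ** 2 * length_m


def smalian_volume(ds_cm, dl_cm, length_m):
    """Smalian's formula: mean of the two end cross-sectional areas times log length (m3)."""
    ds, dl = ds_cm / 100, dl_cm / 100
    return pi / 8 * (ds ** 2 + dl ** 2) * length_m


# Hypothetical log: 4 m long, 26/30/34 cm at the small end/middle/large end.
print(round(huber_volume(30, 4.0), 4), round(smalian_volume(26, 34, 4.0), 4))
```

For a cylinder (equal end and mid diameters) both formulas return the same volume; for a 4 m log with a 30 cm mid-diameter, Huber's formula gives π/4 × 0.30² × 4 ≈ 0.283 m3.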

However, this study did not specifically test the LiDAR sensor's ability to accurately capture very-small-diameter logs or highly complex geometries []. Several studies show that LiDAR technology has inherent limitations in capturing very fine details [,,]. Additionally, the current study used sampling densities of 0.1 m and 0.5 m along the log. While both yielded accurate results, the 0.1 m interval provides more data points for shape reconstruction, which could be beneficial for more irregularly shaped logs. Moreover, the measurements were conducted under optimal conditions: on a flat, paved, and clean log yard, with the logs spaced apart. Real-world forest operations present significant challenges [], including uneven terrain, dense understory and slash that can create artifacts in point clouds [,], varying weather conditions, and mud or debris on logs [,,]. Therefore, the robust performance observed under the controlled conditions of this study needs to be validated in typical forest environments.

Moreover, an important characteristic of any measurement tool is its internal consistency [,]. The TS app demonstrated excellent internal consistency, with R2 values > 0.99 and RMSE values < 0.00407 m3 when comparing volume estimates derived from the 0.1 m and 0.5 m sampling densities. This indicates that the app’s underlying algorithms produce highly similar results regardless of the chosen sampling intensity within the tested range [,,]. While the 0.5 m sampling density might be slightly faster in data acquisition, the 0.1 m density captures more detailed surface information. The choice between these may depend on the trade-off between speed and the need for higher-resolution data, especially for logs with significant taper or irregularities []. This consistency is vital, as it suggests that users can expect predictable behavior from the app when adjusting this setting, although further study is needed to determine if one interval consistently yields results closer to ground truth across a wider variety of log forms.
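To illustrate why the 0.1 m and 0.5 m settings can yield very similar volumes on regular logs, the sketch below sums Huber-type sections of a chosen length along a diameter profile. This generic sectional method is a stand-in chosen for illustration; the TS app's actual reconstruction algorithm is not described in this section, and the taper profile is hypothetical.

```python
from math import pi


def sectional_volume(diameter_at, length_m, interval_m):
    """Sum Huber-type sections of length interval_m along a log (m3).

    diameter_at(z) gives the diameter (m) at distance z (m) from the large end;
    each section uses its mid-point diameter, and the last section is shortened
    so that the sections cover exactly length_m.
    """
    volume, z = 0.0, 0.0
    while z < length_m - 1e-9:
        h = min(interval_m, length_m - z)   # section length
        d = diameter_at(z + h / 2)          # mid-section diameter
        volume += pi / 4 * d ** 2 * h
        z += h
    return volume


def tapered(z):
    """Hypothetical profile: linear taper from 0.40 m to 0.30 m over a 4 m log."""
    return 0.40 - 0.025 * z


exact = pi / 12 * 4.0 * (0.40 ** 2 + 0.40 * 0.30 + 0.30 ** 2)  # frustum volume
v01 = sectional_volume(tapered, 4.0, 0.1)
v05 = sectional_volume(tapered, 4.0, 0.5)
print(f"0.1 m: {v01:.5f} m3, 0.5 m: {v05:.5f} m3, exact: {exact:.5f} m3")
```

For a smooth, linearly tapered log, both intervals fall within a fraction of a litre (about 0.04 L at 0.5 m) of the exact frustum volume; the gap would widen for logs with abrupt irregularities, which is where a finer interval is expected to matter.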

Intra-rater reliability, which measures the consistency of measurements taken by the same user on the same objects at different times [], was also high for the TS app (R2 > 0.99 between replications by the same subject). This high consistency is a significant advantage over manual measurements, where slight variations in caliper placement or tape-measure reading can introduce variability between repeated measurements by the same individual [,,], especially if the exact point of diameter sampling is not precisely replicated [,,]. The app's structured scanning process, which captures data at predefined intervals along the log (0.1 m or 0.5 m), likely contributes to this stability by standardizing data capture between replications by the same user. This suggests that a trained user can repeatedly achieve similar results, enhancing the credibility of the collected data. Forkuo and Borz [] also reported good reliability for LiDAR-based log measurements, underscoring the potential of this technology.

Similarly, inter-rater reliability, which measures the consistency of results obtained by different users measuring the same objects [,], showed strong agreement, with R2 values between 0.9773 and 0.9898. Although slightly lower than the intra-rater values, as is common, these values indicate that the TS app largely mitigates the influence of individual operator technique. In manual measurements, differences in how operators handle tools, interpret measurement points, and round values can lead to greater discrepancies [,]. The app's guided workflow and automated data capture appear to reduce such operator-specific variability. Forkuo and Borz [] similarly found that user experience could influence reliability but that LiDAR apps generally offer good inter-rater consistency. The TS app's performance suggests that different trained operators can use it reliably, with minimal variation in outcomes attributable to personal scanning technique [,].

In addition, the TS app showed a substantial improvement in time efficiency, with digital measurements requiring a median of 21 s per log compared with 29 s for manual measurements, an estimated productivity gain of approximately 38%. Such efficiency gains are important in large-scale forest operations, where thousands of logs may need to be measured [,]. The Mann–Whitney U test, chosen because the time data were not normally distributed, confirmed a highly significant difference in time consumption (p < 0.0001).

Furthermore, the coefficient of variation for time consumption was considerably lower for digital measurements (10.22%) than for manual measurements (25.19%), indicating a more stable and predictable workflow when using the TS app. Traditional manual methods often require a team of at least two, sometimes three, individuals (e.g., for positioning, measuring, and recording) [,,], whereas the TS app can be operated by a single person. Regarding other technologies, Borz and Proto [] reported that measuring the diameter and length of single logs took 1.5 min with a different smartphone app and 19 s with a mobile laser scanner, although the latter is a more specialized and costly device. The TS app's performance for full volume estimation therefore appears competitive, particularly for a smartphone-based solution. Under the tested conditions, this study found no evidence that the faster digital measurement compromised accuracy.

Several strengths of the Tree Scanner (TS) app emerged from the evaluation conducted during the prototyping phase. One of the most notable is its accuracy, reflected in a high level of agreement with traditional manual methods. Additionally, the app exhibited strong intra- and inter-rater reliability, reinforcing its dependability across repeated measurements and different users. Another significant advantage of the TS app is its time efficiency: the app substantially reduced both the measurement time required per log and the variability in operational time. This improvement indicates that the app can streamline the log measurement process, providing faster results without sacrificing quality. The design of the TS app also prioritizes ease of use and single-operator functionality. It is designed for straightforward operation by one person, thereby reducing labor requirements compared with manual crews. This feature enhances the app's practicality and accessibility for users in the field. Furthermore, the TS app delivers direct on-device volume estimation: users receive instant volume data in the field, unlike apps that only capture raw data for later post-processing, which allows for immediate decision-making. In terms of data management, the app offers standardized data capture: the combination of LiDAR scanning and algorithmic processing minimizes the subjectivity and human error often associated with manual measurements [], leading to more consistent results. Moreover, this smartphone-based solution is promising for practical individual log measurement due to its fast measurement process and lower acquisition costs [] compared with professional LiDAR scanners. Finally, the app shows significant potential for integration with other technologies [,]. It includes functionalities such as Bluetooth connectivity for devices like the HITMAN acoustic tool and RFID readers, and it supports remote server services and species identification features, although these functionalities were not tested in this study. Such capabilities are essential for modern wood traceability systems, as highlighted in previous studies [,].

Despite these promising results, this study has limitations, some of which are inherent to LiDAR-based scanning [,,], and, as a prototype, the app has areas that require further development. First, this study was conducted in a controlled environment, specifically, in a log yard under optimal conditions. The performance of the app in more challenging forest environments, characterized by uneven terrain, poor lighting, occlusions, debris on logs, and adverse weather, has yet to be determined, particularly in terms of accuracy; this is a critical next step for future research. While the principles of ensuring minimal background noise and a clear line of sight during scanning [,,] were upheld in this study, they may not be attainable under forest conditions. Second, the variability of the sampled logs was limited. Although the sample included a range of log sizes, it was restricted to coniferous species from a single location. To fully assess the app's effectiveness, future testing should encompass diverse species, including hardwoods, and logs with more pronounced irregularities such as sweep, crook, and butt flare. Future studies should also clarify the app's performance in more challenging settings, for example, when obtaining individual volume measurements among densely packed logs [,]. Third, several functionalities within the app remain untested. Key features designed for enhanced traceability and data management, such as Bluetooth device integration, automatic species identification, and remote server synchronization, were not assessed in this study, and understanding their practical utility and performance is essential for the app's overall effectiveness. Fourth, user acceptance and training pose limitations: the measurements were performed by researchers who were already familiar with the app. Further investigation is needed to assess user acceptance, ease of learning, and the performance of forestry professionals with varying levels of digital literacy []. Lastly, while this study tested sampling densities of 0.1 m and 0.5 m, a broader range of densities may need to be explored to find an optimal balance between accuracy, processing time, and data storage for various log types.

To build on these findings, future research should prioritize field testing under realistic operational conditions. Expanding the range of tree species and log characteristics, evaluating all integrated functionalities, and conducting user acceptance studies will be essential for the continued development of the TS app.

5. Conclusions

This study aimed to evaluate the performance of the prototype Tree Scanner (TS) app, a smartphone application that uses the device's LiDAR sensor, for direct log volume estimation. The results demonstrate that the TS app is a promising digital measurement tool, exhibiting strong agreement with conventional manual methods while offering a significant improvement in time efficiency. The app maintained consistent accuracy and high reliability across two sampling densities (0.1 m and 0.5 m) and between different users. The TS app represents a notable advancement by providing instant volume estimates directly on a mobile device, streamlining field data collection. Its ease of use by a single operator and its integrated features for Bluetooth device connectivity, remote server access, and potential species identification position it as a valuable component for enhancing forest operations and for integration into comprehensive wood traceability systems. However, as a prototype evaluated under controlled conditions, it requires further research. Future work must focus on assessing the TS app's performance and robustness in diverse and challenging real-world forest environments and across a wider variety of tree species and log irregularities. Additionally, the practical utility of its extended functionalities and its acceptance by the broader forestry workforce, particularly those with limited experience with digital tools, need thorough investigation. Addressing these aspects will be essential for realizing the full potential of this smartphone-based technology in modern forestry.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/s25185847/s1, Figure S1: QQ-Plots and the relative frequency of biometric data taken by manual measurement. Legend: Panels on the left show the QQ-Plots of the diameter at the small end (Ds, Panel a), diameter at the large end (Dl, Panel c), diameter at the middle (Dm, Panel e), volume estimated by Huber’s formula (VH, Panel g), and volume estimated by Smalian’s formula (VS, Panel i); panels on the right show the relative frequencies in data using histograms for the diameter at the small end (Ds, Panel b), diameter at the large end (Dl, Panel d), diameter at the middle (Dm, Panel f), volume estimated by Huber’s formula (VH, Panel h), and volume estimated by Smalian’s formula (VS, Panel j). Note: Bin widths (and hence the number of bins) were computed using the Freedman–Diaconis rule, which is based on the interquartile range and the number of observations in the sample; data on log length are not shown in the figure, but they had a bimodal distribution with modes at about 3.1 and 4.1 m in length; Figure S2: Statistical workflow implemented to characterize the data collected manually. Legend: Ds—diameter at the small end, Dm—diameter at the middle, Dl—diameter at the large end, L—log length, VH—volume estimated by Huber’s formula, VS—volume estimated by Smalian’s formula. Note: All the variables of the REF dataset (Ds, Dm, Dl, and L), as well as those derived from them (VH, VS), were subjected to a data normality test (Shapiro–Wilk); based on the outcomes of the test, the relevant descriptive statistics were estimated (see also Table S1 and Figure S1); Figure S3: Statistical workflow implemented to characterize the consistency of estimates due to the algorithm’s sampling densities.
Legend: VAPP1_S1R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 1, VAPP2_S1R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 1, VAPP1_S1R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 2, VAPP2_S1R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 2, VAPP1_S2R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 1, VAPP2_S2R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 1, VAPP1_S2R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 2, VAPP2_S2R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 2. Note: All the pairs of variables were subjected to heteroscedasticity tests (Breusch–Pagan, White). Conventionally, the data from the sampling density of 0.5 m was taken as a reference to check the agreement of estimates by the Bland–Altman method, as well as to compute the relevant difference metrics (Bias, MAE, RMSE). Regression through the origin (RTO) was used to see the trends in data, where the independent variables were those based on a sampling density of 0.5 m. Note that the subject and replication were kept the same in all comparisons; Figure S4: Statistical workflow implemented to characterize the consistency of estimates when considering different replications of the same subject.
Legend: VAPP1_S1R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 1, VAPP2_S1R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 1, VAPP1_S1R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 2, VAPP2_S1R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 2, VAPP1_S2R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 1, VAPP2_S2R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 1, VAPP1_S2R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 2, VAPP2_S2R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 2. Note: All the pairs of variables were subjected to heteroscedasticity tests (Breusch–Pagan, White). Conventionally, the data from the sampling density of 0.5 m was taken as a reference to check the agreement in estimates by the Bland–Altman method, as well as to compute the relevant difference metrics (Bias, MAE, RMSE). Regression through the origin (RTO) was used to see the trends in data, where the independent variables were those based on a sampling density of 0.5 m. Note that the sampling density and the subject were kept the same in all comparisons; Figure S5: Statistical workflow implemented to characterize the inter-rater consistency of estimates.
Legend: VAPP1_S1R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 1, VAPP2_S1R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 1, VAPP1_S1R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 2, VAPP2_S1R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 2, VAPP1_S2R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 1, VAPP2_S2R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 1, VAPP1_S2R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 2, VAPP2_S2R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 2. Note: All the pairs of variables were subjected to heteroscedasticity tests (Breusch–Pagan, White). Conventionally, the data from the sampling density of 0.5 m was taken as a reference to check the agreement in estimates by the Bland–Altman method, as well as to compute the relevant difference metrics (Bias, MAE, RMSE). Regression through the origin (RTO) was used to see the trends in data, where the independent variables were those based on a sampling density of 0.5 m. Note that the sampling density and the replication were kept the same in all comparisons; Figure S6: Statistical workflow implemented to characterize the agreement between digital and manual estimates.
Legend: Ds—diameter at the small end, Dm—diameter at the middle, Dl—diameter at the large end, L—log length, VH—volume estimated by Huber’s formula, VS—volume estimated by Smalian’s formula, VAPP1_S1R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 1, VAPP2_S1R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 1, VAPP1_S1R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 1 during replication 2, VAPP2_S1R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 1 during replication 2, VAPP1_S2R1—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 1, VAPP2_S2R1—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 1, VAPP1_S2R2—volume estimate using the algorithm sampling density of 0.5 m for data collected by Subject 2 during replication 2, VAPP2_S2R2—volume estimate using the algorithm sampling density of 0.1 m for data collected by Subject 2 during replication 2. Note: All the pairs of variables were subjected to heteroscedasticity tests (Breusch–Pagan, White). Conventionally, VH was taken as the independent variable in the regression through the origin (RTO), which was used to see the trends in data and as a reference to estimate the differences in the Bias, MAE, and RMSE metrics. Note that all the possible comparisons were taken into account; Figure S7: Statistical workflow implemented to compare the methods’ time efficiency. 
Legend: TM—cycle time of manual measurement, TS1R1—cycle time of digital measurement taken by Subject 1 during replication 1, TS1R2—cycle time of digital measurement taken by Subject 1 during replication 2, TS2R1—cycle time of digital measurement taken by Subject 2 during replication 1, TS2R2—cycle time of digital measurement taken by Subject 2 during replication 2, AMT—average digital measurement cycle time. Note: AMT was computed as the mean of TS1R1, TS1R2, TS2R1, and TS2R2, rounded to the nearest second. All the variables were subjected to data normality tests (Shapiro–Wilk) to decide what statistics could be used to characterize the central tendency as well as to select the right type of statistical comparison test; Figure S8: Frequency of differences between VH and VS plotted against a normal density curve; Figure S9: Trend of VS (green dots) and of the differences between the estimates (ΔV, brown dots) as a function of VH, plotted against the identity (1:1) line shown as the black dashed line. Legend: VH—volume estimated by Huber’s formula, VS—volume estimated by Smalian’s formula, ΔV—signed difference between VH and VS. Note: The sample contained 155 logs; Figure S10: Bland–Altman plot showing the agreement of estimates between VH and VS. Legend: ΔV—signed differences between volume estimates, Mean V—average value of volume estimates, ULOA—upper limit of agreement, RMSE—root mean squared error, MAE—mean absolute error, Bias—bias, LLOA—lower limit of agreement. Note: There was good agreement when considering two standard deviations to compute the limits of agreement; the Bias was close to zero, meaning that there was low systematic bias. However, the mean absolute error was close to 0.02, and some observations large in magnitude led to an RMSE value of 0.03; Figure S11: Scatterplot of VAPP1_S1R1 against VAPP2_S1R1.
Legend: Green dots stand for the paired values of VAPP1_S1R1 and VAPP2_S1R1 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP1_S1R1 and VAPP2_S1R1. Note: The sample contained 155 observations; Figure S12: Scatterplot of VAPP1_S1R2 against VAPP2_S1R2. Legend: Green dots stand for the paired values of VAPP1_S1R2 and VAPP2_S1R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP1_S1R2 and VAPP2_S1R2. Note: The sample contained 155 observations; Figure S13: Scatterplot of VAPP1_S2R1 against VAPP2_S2R1. Legend: Green dots stand for the paired values of VAPP1_S2R1 and VAPP2_S2R1 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed differences between VAPP1_S2R1 and VAPP2_S2R1. Note: The sample contained 155 observations; Figure S14: Scatterplot of VAPP1_S2R2 against VAPP2_S2R2. Legend: Green dots stand for the paired values of VAPP1_S2R2 and VAPP2_S2R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed differences between VAPP1_S2R2 and VAPP2_S2R2. Note: The sample contained 86 observations; Figure S15: Scatterplot of VAPP1_S1R1 against VAPP1_S1R2. Legend: Green dots stand for the paired values of VAPP1_S1R1 and VAPP1_S1R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP1_S1R1 and VAPP1_S1R2. Note: The sample contained 155 observations; Figure S16: Scatterplot of VAPP2_S1R1 against VAPP2_S1R2. Legend: Green dots stand for the paired values of VAPP2_S1R1 and VAPP2_S1R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP2_S1R1 and VAPP2_S1R2.
Note: The sample contained 155 observations; Figure S17: Scatterplot of VAPP1_S2R1 against VAPP1_S2R2. Legend: Green dots stand for the paired values of VAPP1_S2R1 and VAPP1_S2R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP1_S2R1 and VAPP1_S2R2. Note: The sample contained 89 observations; Figure S18: Scatterplot of VAPP2_S2R1 against VAPP2_S2R2. Legend: Green dots stand for the paired values of VAPP2_S2R1 and VAPP2_S2R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP2_S2R1 and VAPP2_S2R2. Note: The sample contained 89 observations; Figure S19: Scatterplot of VAPP1_S1R1 against VAPP1_S2R1. Legend: Green dots stand for the paired values of VAPP1_S1R1 and VAPP1_S2R1 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP1_S1R1 and VAPP1_S2R1. Note: The sample contained 155 observations; Figure S20: Scatterplot of VAPP2_S1R1 against VAPP2_S2R1. Legend: Green dots stand for the paired values of VAPP2_S1R1 and VAPP2_S2R1 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP2_S1R1 and VAPP2_S2R1. Note: The sample contained 155 observations; Figure S21: Scatterplot of VAPP1_S1R2 against VAPP1_S2R2. Legend: Green dots stand for the paired values of VAPP1_S1R2 and VAPP1_S2R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP1_S1R2 and VAPP1_S2R2. Note: The sample contained 89 observations; Figure S22: Scatterplot of VAPP2_S1R2 against VAPP2_S2R2.
Legend: Green dots stand for the paired values of VAPP2_S1R2 and VAPP2_S2R2 and are plotted against the identity (1:1) line shown as the black dashed line; brown dots stand for the signed difference between VAPP2_S1R2 and VAPP2_S2R2. Note: The sample contained 89 observations; Figure S23: Scatterplot of VAPP variables against VH. Note: Shapes of various colors stand for the paired values of the eight datasets (see the figure legend) against the VH estimates and are plotted against the identity (1:1) line shown as the black dashed line. Note: The sample contained 89 observations when using the VAPP1_S2R2 and VAPP2_S2R2 datasets; Figure S24: Dependence of time consumption for manual (TM) and digital (AMT) measurements on variability of log biometrics collected manually. Legend: TM—cycle time for manual measurement, AMT—cycle time for digital measurement, Ds—diameter at the small end, Dl—diameter at the large end, Dm—diameter at the middle, L—log length, VH—volume estimated by Huber’s formula; Table S1: Summary statistics of log biometric data taken by manual measurement; Table S2: Results of heteroscedasticity tests; Table S3: Results of heteroscedasticity tests; Table S4: Results of heteroscedasticity tests; Table S5: Results of heteroscedasticity tests.
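The Freedman–Diaconis rule mentioned in the note to Figure S1 sets the histogram bin width to h = 2·IQR·n^(−1/3). A minimal sketch follows; the quartile method (the default 'exclusive' method of Python's statistics.quantiles) is an assumption, since software packages differ slightly in how they compute the IQR, and the sample diameters are hypothetical.

```python
from math import ceil
from statistics import quantiles


def freedman_diaconis(data):
    """Return (bin_width, n_bins) using h = 2 * IQR * n^(-1/3)."""
    n = len(data)
    q1, _, q3 = quantiles(data, n=4)   # default 'exclusive' method (assumption)
    width = 2 * (q3 - q1) / n ** (1 / 3)
    if width == 0:
        return 0.0, 1                  # degenerate case: zero IQR, use a single bin
    return width, max(1, ceil((max(data) - min(data)) / width))


# Hypothetical small-end diameters (cm), not data from this study.
ds = [18, 21, 22, 24, 25, 27, 28, 30, 33, 36, 41]
print(freedman_diaconis(ds))
```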

Author Contributions

Conceptualization, S.A.B.; Methodology, G.P., C.N. and S.A.B.; Software, G.P.; Validation, M.E., G.O.F., C.N. and S.A.B.; Formal analysis, M.E. and G.O.F.; Investigation, M.E. and G.O.F.; Resources, G.P., C.N. and S.A.B.; Data curation, M.E. and G.O.F.; Writing—original draft, M.E., G.O.F., G.P., C.N. and S.A.B.; Writing—review & editing, M.E., G.O.F., G.P., C.N. and S.A.B.; Visualization, M.E., G.P., C.N. and S.A.B.; Supervision, S.A.B.; Project administration, G.P., C.N. and S.A.B.; Funding acquisition, G.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the EU’s Sintetic project. The Sintetic project has received funding from the European Union’s Horizon Europe research and innovation program under grant agreement No. 101082051. More information about the project can be found at: https://sinteticproject.eu/ (accessed on 15 September 2025).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors gratefully acknowledge HS Timber Productions S.R.L. for its support during data collection. The authors also thank the Department of Forest Engineering, Forest Management Planning and Terrestrial Measurements for providing several of the instruments required to conduct this study. Special thanks go to Jenny Magali Morocho Toaza, who helped with data collection.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Digitalisation in Europe—2024 Edition. Available online: https://ec.europa.eu/eurostat/web/interactive-publications/digitalisation-2024 (accessed on 8 July 2025).

- Rijswijk, K.; Klerkx, L.; Bacco, M.; Bartolini, F.; Bulten, E.; Debruyne, L.; Dessein, J.; Scotti, I.; Brunori, G. Digital transformation of agriculture and rural areas: A socio-cyber-physical system framework to support responsibilisation. J. Rural Stud. 2021, 85, 79–90.

- Ferrari, A.; Bacco, M.; Gaber, K.; Jedlitschka, A.; Hess, S.; Kaipainen, J.; Koltsida, P.; Toli, E.; Brunori, G. Drivers, barriers and impacts of digitalisation in rural areas from the viewpoint of experts. Inf. Softw. Technol. 2022, 145, 106816.

- Király, K.; Dunai, L.; Calado, L.; Kocsis, A.B. Demountable shear connectors–constructional details and push-out tests. ce/papers 2023, 6, 53–58.

- Bonke, V.; Fecke, W.; Michels, M.; Musshoff, O. Willingness to pay for smartphone apps facilitating sustainable crop protection. Agron. Sustain. Dev. 2018, 38, 51.

- DESIRA. Digitisation: Economic and Social Impacts on Rural Areas. Available online: https://desira2020.agr.unipi.it/ (accessed on 8 July 2025).

- Baumüller, H. The little we know: An exploratory literature review on the utility of mobile phone-enabled services for smallholder farmers. J. Int. Dev. 2018, 30, 134–154.

- Sivakumar, S.; Bijoshkumar, G.; Rajasekharan, A.; Panicker, V.; Paramasivam, S.; Manivasagam, V.S.; Manalil, S. Evaluating the expediency of smartphone applications for Indian farmers and other stakeholders. AgriEngineering 2022, 4, 656–673.

- Nyakonda, T.; Tsietsi, M.; Terzoli, A.; Dlodlo, N. An RFID flock management system for rural areas. In Proceedings of the 2019 Open Innovations (OI), Cape Town, South Africa, 2–4 October 2019; IEEE: New York, NY, USA, 2019; pp. 78–82.

- Delgado, F.J.; Delgado, J.; González-Crespo, J.; Cava, R.; Ramírez, R. High-pressure processing of a raw milk cheese improved its food safety maintaining the sensory quality. Food Sci. Technol. Int. 2013, 19, 493–501.

- Michels, M.; Bonke, V.; Musshoff, O. Understanding the adoption of smartphone apps in dairy herd management. J. Dairy Sci. 2019, 102, 9422–9434.

- Eichler Inwood, S.E.; Dale, V.H. State of apps targeting management for sustainability of agricultural landscapes. A review. Agron. Sustain. Dev. 2019, 39, 8.

- Magnuson, R.; Erfanifard, Y.; Kulicki, M.; Gasica, T.A.; Tangwa, E.; Mielcarek, M.; Stereńczak, K. Mobile Devices in Forest Mensuration: A Review of Technologies and Methods in Single Tree Measurements. Remote Sens. 2024, 16, 3570.

- Borz, S.A.; Morocho Toaza, J.M.; Forkuo, G.O.; Marcu, M.V. Potential of Measure app in estimating log biometrics: A comparison with conventional log measurement. Forests 2022, 13, 1028.

- Niţă, M.D.; Borz, S.A. Accuracy of a Smartphone-based freeware solution and two shape reconstruction algorithms in log volume measurements. Comput. Electron. Agric. 2023, 205, 107653.

- Tatsumi, S.; Yamaguchi, K.; Furuya, N. ForestScanner: A mobile application for measuring and mapping trees with LiDAR-equipped iPhone and iPad. Methods Ecol. Evol. 2023, 14, 1603–1609.

- Häkli, J.; Sirkka, A.; Jaakkola, K.; Puntanen, V.; Nummila, K. Challenges and Possibilities of RFID in the Forest Industry. In Radio Frequency Identification from System to Applications; IntechOpen: London, UK, 2013.

- He, Z.; Turner, P. A systematic review on technologies and industry 4.0 in the forest supply chain: A framework identifying challenges and opportunities. Logistics 2021, 5, 88.

- Figorilli, S.; Antonucci, F.; Costa, C.; Pallottino, F.; Raso, L.; Castiglione, M.; Pinci, E.; Menesatti, P. A blockchain implementation prototype for the electronic open source traceability of wood along the whole supply chain. Sensors 2018, 18, 3133.

- SINTETIC: Harnessing the Digital Revolution in the Forest-Based Sector. Available online: https://sinteticproject.eu/ (accessed on 30 April 2025).

- Ulrich, K.T.; Eppinger, S.D. Product Design and Development, 6th ed.; McGraw-Hill: New York, NY, USA, 2016.

- Apple Developer Documentation, n.d. Capturing Depth Using the LiDAR Camera. Available online: https://developer.apple.com/documentation/avfoundation/capturing-depth-using-the-lidar-camera (accessed on 30 April 2025).

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Björheden, R.; Thompson, M.A. An international nomenclature for forest work study. In Proceedings of the IUFRO 1995 S3.04 Subject Area: 20th World Congress, Caring for the Forest: Research in a Changing World, Tampere, Finland, 6–12 August 1995; Miscellaneous Report 422; University of Maine: Orono, ME, USA, 2000; pp. 190–215.

- Shapiro, S.S.; Wilk, M.B. An analysis of variance test for normality (complete samples). Biometrika 1965, 52, 591–611.

- Breusch, T.S.; Pagan, A.R. A simple test for heteroscedasticity and random coefficient variation. Econometrica 1979, 47, 1287–1294.

- White, H. A heteroskedasticity-consistent covariance matrix estimator and a direct test for heteroskedasticity. Econometrica 1980, 48, 817–838.

- Eisenhauer, J.G. Regression through the origin. Teach. Stat. 2003, 25, 76–80.

- Bland, J.M.; Altman, D.G. Measuring agreement in method comparison studies. Stat. Methods Med. Res. 1999, 8, 135–160.

- Heckman, J.; Ichimura, H.; Smith, J.; Todd, P. Characterizing selection bias using experimental data. Econometrica 1998, 66, 1017–1098.

- Ross, M.G.; Russ, C.; Costello, M.; Hollinger, A.; Lennon, N.J.; Hegarty, R.; Nusbaum, C.; Jaffe, D.B. Characterizing and measuring bias in sequence data. Genome Biol. 2013, 14, R51.

- Willmott, C.J.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82.

- Chai, T.; Draxler, R.R. Root mean square error (RMSE) or mean absolute error (MAE)? Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 2014, 7, 1247–1250.

- Karunasingha, D.S.K. Root mean square error or mean absolute error? Use their ratio as well. Inf. Sci. 2022, 585, 609–629.

- Mann, H.B.; Whitney, D.R. On a test of whether one of two random variables is stochastically larger than the other. Ann. Math. Stat. 1947, 18, 50–60.

- Bergmann, R.; Ludbrook, J.; Spooren, W.P. Different outcomes of the Wilcoxon-Mann-Whitney test from different statistics packages. Am. Stat. 2000, 54, 72–77.

- MacFarland, T.W.; Yates, J.M. Introduction to Nonparametric Statistics for the Biological Sciences Using R; Springer: Cham, Switzerland, 2016; pp. 103–132.

- Zaiontz, C. Real Statistics Resource Pack for Excel. 2025. Available online: https://real-statistics.com/ (accessed on 2 April 2025).

- Kazhdan, M.; Chuang, M.; Rusinkiewicz, S.; Hoppe, H. Poisson surface reconstruction with envelope constraints. Comput. Graph. Forum 2020, 39, 173–182.

- de Miguel-Díez, F.; Reder, S.; Wallor, E.; Bahr, H.; Blasko, L.; Mund, J.P.; Cremer, T. Further application of using a personal laser scanner and simultaneous localization and mapping technology to estimate the log’s volume and its comparison with traditional methods. Int. J. Appl. Earth Obs. Geoinf. 2022, 109, 102779.

- Purfürst, T.; de Miguel-Díez, F.; Berendt, F.; Engler, B.; Cremer, T. Comparison of Wood Stack Volume Determination between Manual, Photo-Optical, iPad-LiDAR and Handheld-LiDAR Based Measurement Methods. iForest-Biogeosci. For. 2023, 16, 243.

- Fonweban, J.N. Effect of log formula, log length and method of measurement on the accuracy of volume estimates for three tropical timber species in Cameroon. Commonw. For. Rev. 1997, 76, 114–120.

- Ahmad, S.S.S.; Mushar, S.H.M.; Shari, N.H.Z.; Kasmin, F. A Comparative study of log volume estimation by using statistical method. Educ. J. Sci. Math. Technol. 2020, 7, 22–28.

- Xu, M.; Chen, S.; Xu, S.; Mu, B.; Ma, Y.; Wu, J.; Zhao, Y. An accurate handheld device to measure log diameter and volume using machine vision technique. Comput. Electron. Agric. 2024, 224, 109130.

- Gollob, C.; Ritter, T.; Kraßnitzer, R.; Tockner, A.; Nothdurft, A. Measurement of forest inventory parameters with Apple iPad Pro and integrated LiDAR sensor. Remote Sens. 2021, 13, 3129.

- Kärhä, K.; Nurmela, S.; Karvonen, H.; Kivinen, V.P.; Melkas, T.; Nieminen, M. Estimating the accuracy and time consumption of a mobile machine vision application in measuring timber stacks. Comput. Electron. Agric. 2019, 158, 167–182.

- Gollob, C.; Ritter, T.; Nothdurft, A. Forest inventory with long range and high-speed personal laser scanning (PLS) and simultaneous localization and mapping (SLAM) technology. Remote Sens. 2020, 12, 1509.

- Shao, J.; Lin, Y.C.; Wingren, C.; Shin, S.Y.; Fei, W.; Carpenter, J.; Habib, A.; Fei, S. Large-scale inventory in natural forests with mobile LiDAR point clouds. Sci. Remote Sens. 2024, 10, 100168.

- Moskalik, T.; Tymendorf, Ł.; van der Saar, J.; Trzciński, G. Methods of wood volume determining and its implications for forest transport. Sensors 2022, 22, 6028.

- Ferketich, S. Internal consistency estimates of reliability. Res. Nurs. Health 1990, 13, 437–440.

- Koo, T.K.; Li, M.Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 2016, 15, 155–163.

- Tavakol, M.; Dennick, R. Making sense of Cronbach’s alpha. Int. J. Med. Educ. 2011, 2, 53–55.

- Strandgard, M. Evaluation of manual log measurement errors and its implications on harvester log measurement accuracy. Int. J. For. Eng. 2009, 20, 9–16.

- Gwet, K.L. Intrarater reliability. Wiley Encycl. Clin. Trials 2008, 4, 473–485.

- Söderberg, J.; Wallerman, J.; Persson, H.J.; Ståhl, G. Sources of error in manual forest inventory measurements. Scand. J. For. Res. 2015, 30, 611–620.

- Bate, L.J.; Torgersen, T.R.; Wisdom, M.J.; Garton, E.O. Biased estimation of forest log characteristics using intersect diameters. For. Ecol. Manag. 2009, 258, 635–640.

- Forkuo, G.O.; Borz, S.A. Intra- and Inter-Rater Reliability in Log Volume Estimation Based on Lidar Data and Shape Reconstruction Algorithms: A Case Study on Poplar Logs. SSRN 4948247. 2024. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4948247 (accessed on 15 September 2025).

- Gwet, K.L. Handbook of Inter-Rater Reliability: The Definitive Guide to Measuring the Extent of Agreement Among Raters; Advanced Analytics, LLC: Oxford, MS, USA, 2014.

- Kottner, J.; Audigé, L.; Brorson, S.; Donner, A.; Gajewski, B.J.; Hróbjartsson, A.; Roberts, C.; Shoukri, M.; Streiner, D.L. Guidelines for reporting reliability and agreement studies (GRRAS) were proposed. J. Clin. Epidemiol. 2011, 64, 96–106.

- Di Stefano, F.; Chiappini, S.; Gorreja, A.; Balestra, M.; Pierdicca, R. Mobile 3D scan LiDAR: A literature review. Geomat. Nat. Hazards Risk 2021, 12, 2387–2429.

- Raj, T.; Hanim Hashim, F.; Baseri Huddin, A.; Ibrahim, M.F.; Hussain, A. A survey on LiDAR scanning mechanisms. Electronics 2020, 9, 741.

- Nurminen, T.; Korpunen, H.; Uusitalo, J. Time consumption of timber measurement and quality assessment in roadside scaling. Silva Fenn. 2006, 40, 533–547.

- Spinelli, R.; Magagnotti, N.; Schweier, J.; O’Neal, J.; Kanzian, C.; Kühmaier, M. Time consumption and productivity of mechanized and manual log-making in forest operations. Comput. Electron. Agric. 2016, 128, 154–162.

- Borz, S.A.; Proto, A.R. Application and accuracy of smart technologies for measurements of roundwood: Evaluation of time consumption and efficiency. Comput. Electron. Agric. 2022, 197, 106990.

- Moik, L.; Gollob, C.; Ofner-Graff, T.; Sarkleti, V.; Ritter, T.; Tockner, A.; Witzmann, S.; Kraßnitzer, R.; Stampfer, K.; Nothdurft, A. Measurement of sawlog stacks on an individual log basis using LiDAR. Comput. Electron. Agric. 2025, 237, 110493.

- Costa, C.; Figorilli, S.; Proto, A.R.; Colle, G.; Sperandio, G.; Gallo, P.; Antonucci, F.; Pallottino, F.; Menesatti, P. Digital stereovision system for dendrometry, georeferencing and data management. Biosyst. Eng. 2018, 174, 126–133.

- Michels, M.; Fecke, W.; Feil, J.H.; Musshoff, O.; Pigisch, J.; Krone, S. Smartphone adoption and use in agriculture: Empirical evidence from Germany. Precis. Agric. 2020, 21, 403–425.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.