CrossInteraction: Multi-Modal Interaction and Alignment Strategy for 3D Perception

Abstract

1. Introduction

2. Related Work

2.1. Camera-Based 3D Perception

2.2. LiDAR-Based 3D Perception

2.3. Multi-Modal Fusion-Based 3D Perception Methods

3. Method

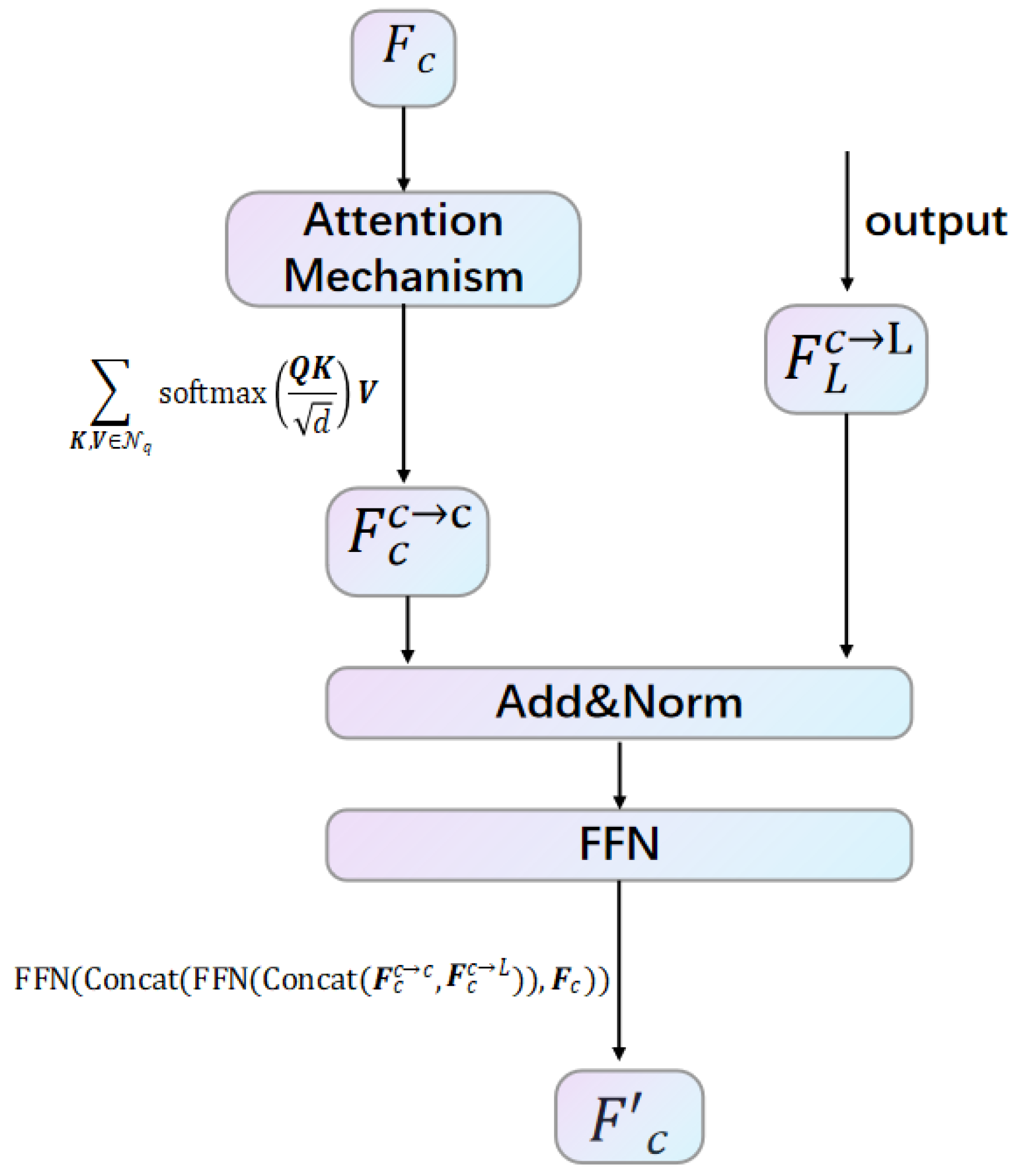

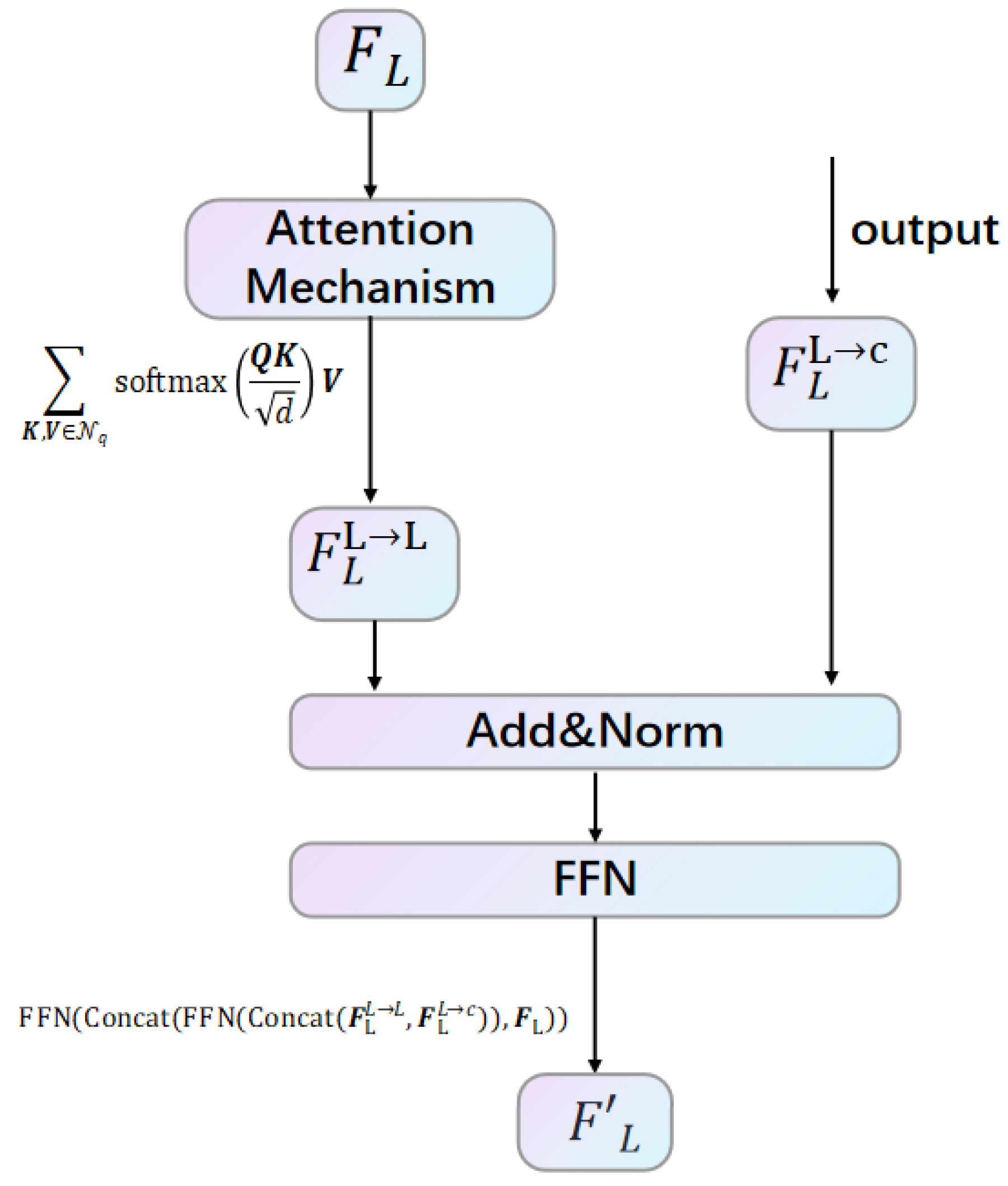

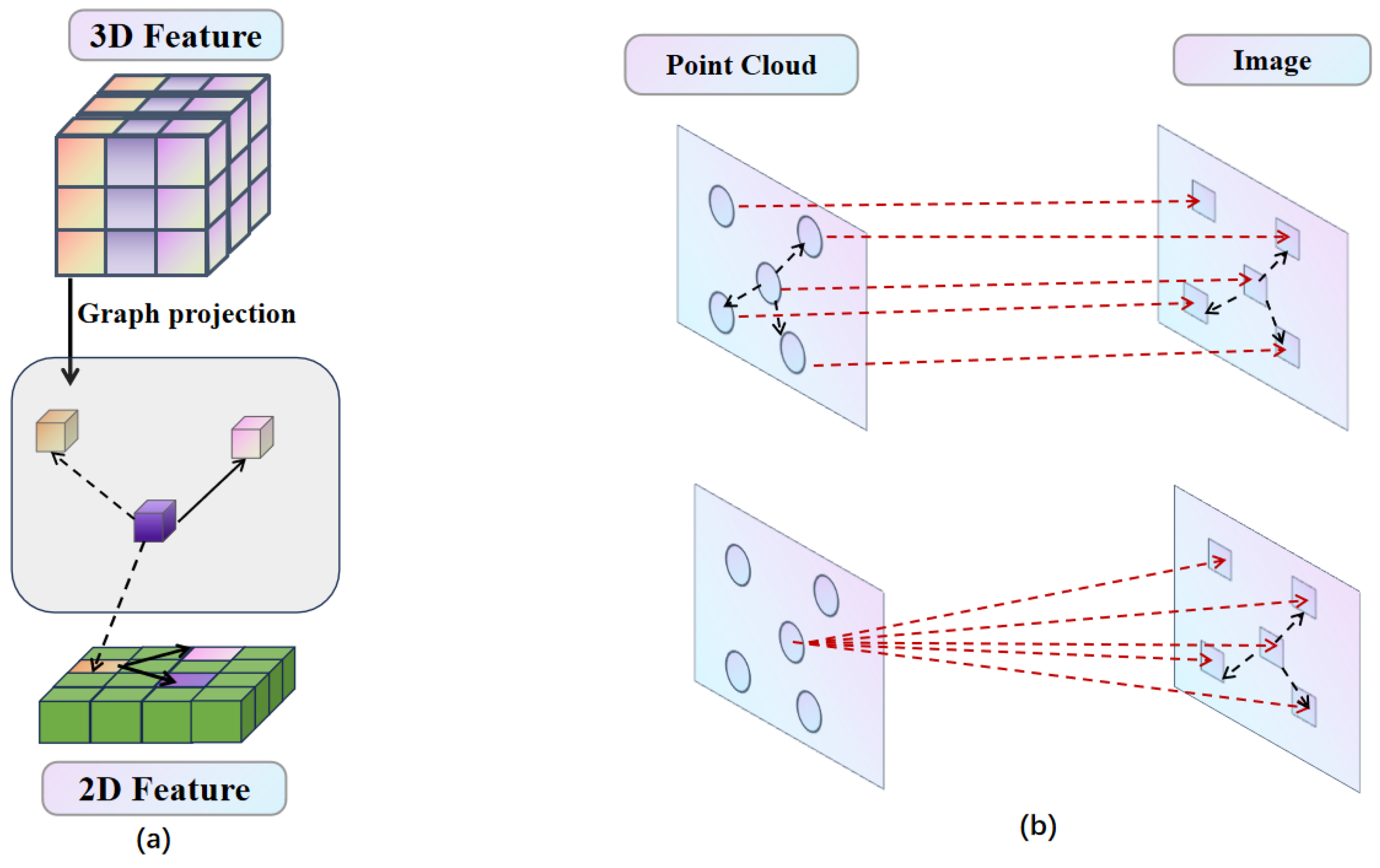

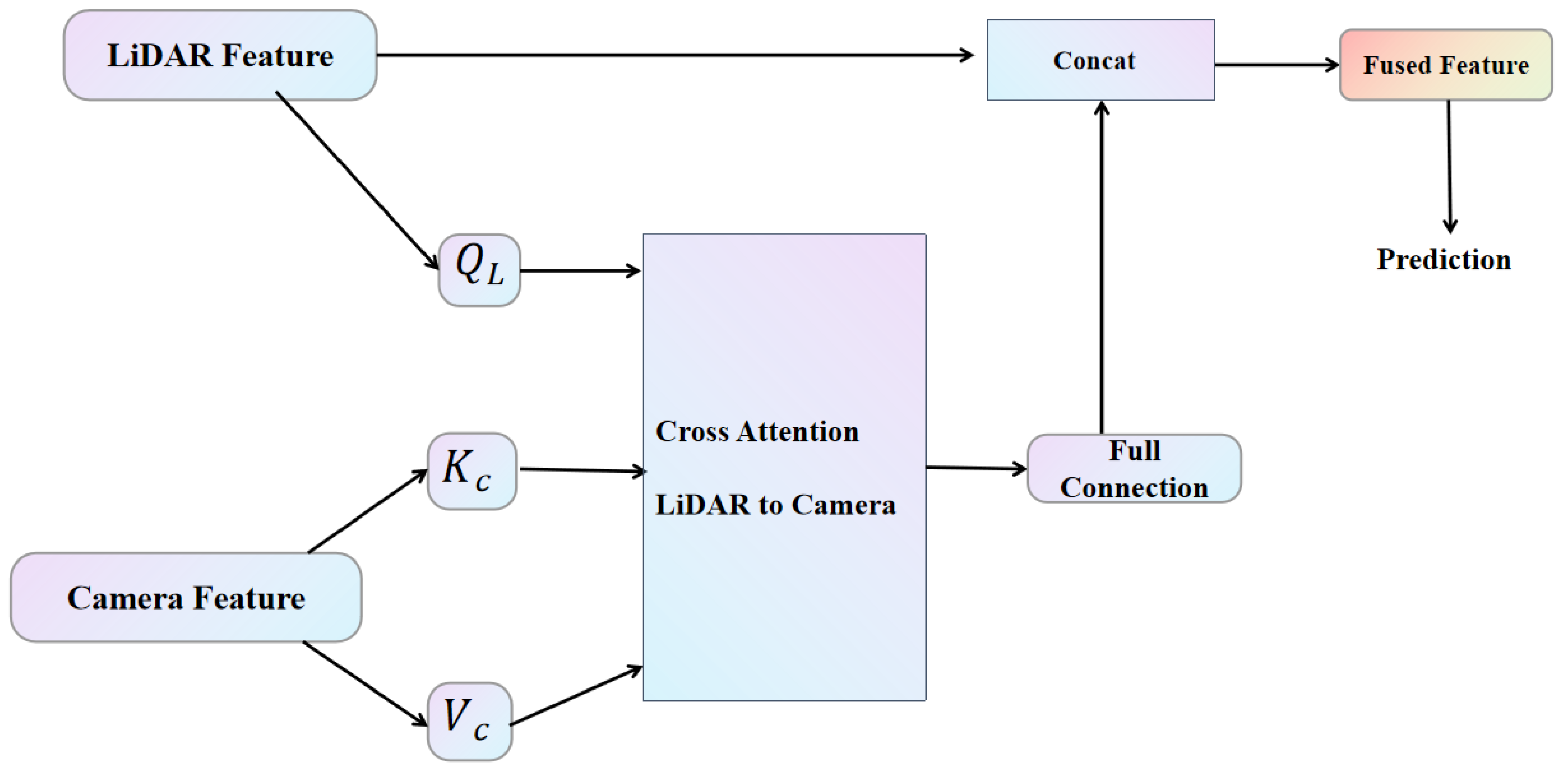

3.1. Interaction Encoder

3.2. Fusion Encoder

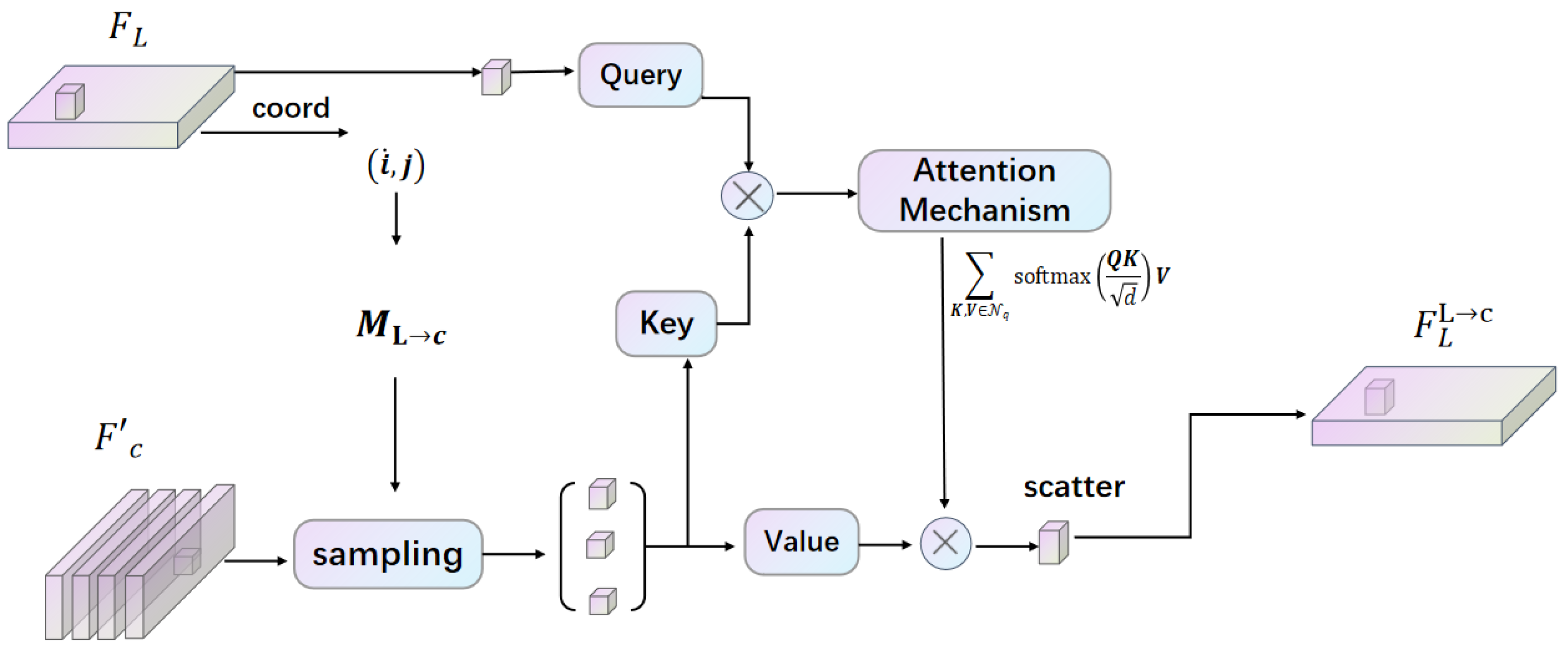

3.2.1. Feature Alignment

3.2.2. Feature Fusion

4. Experiments

4.1. Introduction of Dataset

4.2. Implementation Details

4.3. Results and Analysis

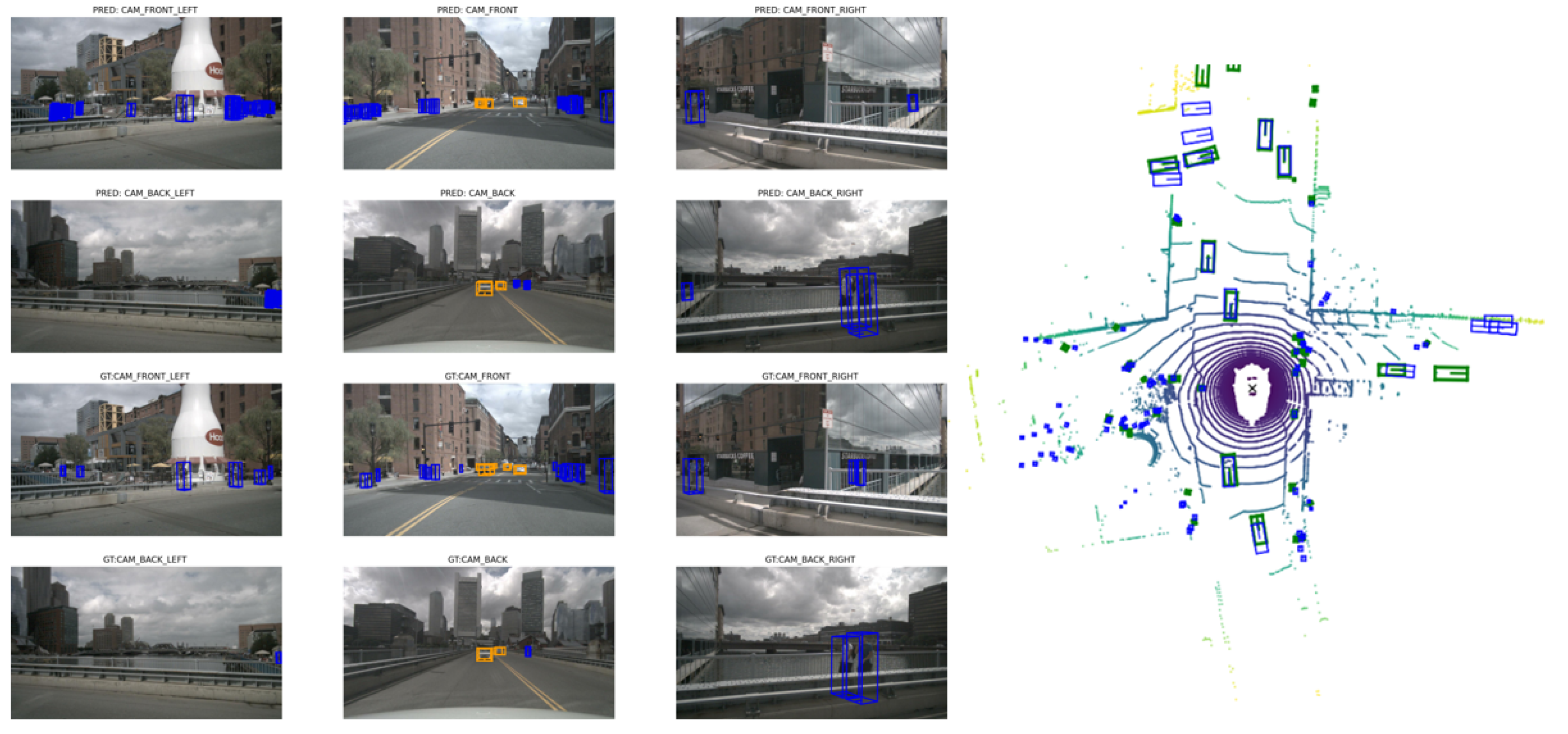

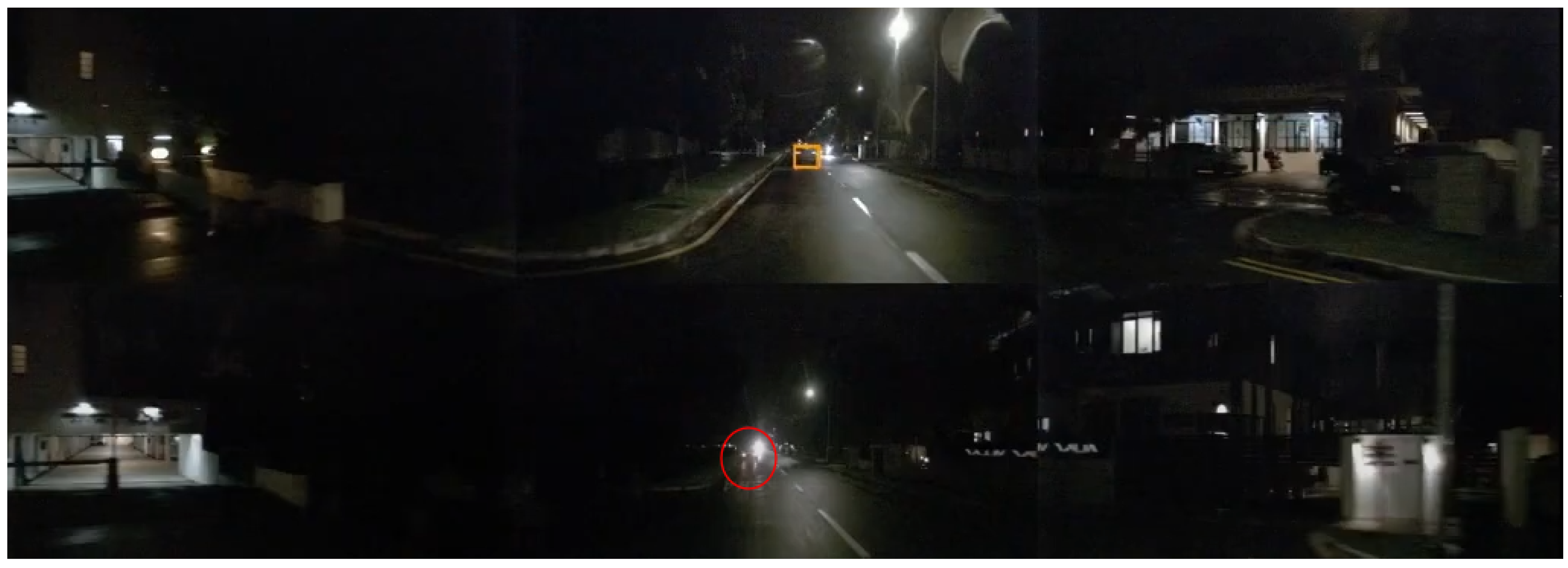

4.4. Visualizations

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tang, Y.; He, H.; Wang, Y.; Mao, Z.; Wang, H. Multi-modality 3D object detection in autonomous driving: A review. Neurocomputing 2023, 553, 126587.
2. Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4603–4611.
3. Yin, T.; Zhou, X.; Krähenbühl, P. Multimodal virtual point 3D detection. In Proceedings of the NIPS ’21: 35th International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 6–14 December 2021; pp. 16494–16507.
4. Li, Y.; Yu, A.W.; Meng, T.; Caine, B.; Ngiam, J.; Peng, D.; Shen, J.; Lu, Y.; Zhou, D.; Le, Q.V.; et al. DeepFusion: LiDAR-camera deep fusion for multi-modal 3D object detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17161–17170.
5. Liu, Z.; Tang, H.; Amini, A.; Yang, X.; Mao, H.; Rus, D.L.; Han, S. BEVFusion: Multi-task multi-sensor fusion with unified bird’s-eye view representation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 2774–2781.
6. Yang, Z.; Chen, J.; Miao, Z.; Li, W.; Zhu, X.; Zhang, L. DeepInteraction: 3D object detection via modality interaction. In Proceedings of the NIPS ’22: 36th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; pp. 1992–2005.
7. Philion, J.; Fidler, S. Lift, splat, shoot: Encoding images from arbitrary camera rigs by implicitly unprojecting to 3D. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23 August 2020; pp. 194–210.
8. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the NIPS ’17: 31st International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 4–9 December 2017; pp. 6000–6010.
9. Roddick, T.; Kendall, A.; Cipolla, R. Orthographic feature transform for monocular 3D object detection. arXiv 2018, arXiv:1811.08188.
10. Huang, J.; Huang, G.; Zhu, Z.; Ye, Y.; Du, D. BEVDet: High-performance multi-camera 3D object detection in bird-eye-view. arXiv 2021, arXiv:2112.11790.
11. Huang, J.; Huang, G. BEVDet4D: Exploit temporal cues in multi-camera 3D object detection. arXiv 2022, arXiv:2203.17054.
12. Li, Y.; Ge, Z.; Yu, G.; Yang, J.; Wang, Z.; Shi, Y.; Sun, J.; Li, Z. BEVDepth: Acquisition of reliable depth for multi-view 3D object detection. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence and Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence and Thirteenth Symposium on Educational Advances in Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 1477–1485.
13. Li, Y.; Bao, H.; Ge, Z.; Yang, J.; Sun, J.; Li, Z. BEVStereo: Enhancing depth estimation in multi-view 3D object detection with temporal stereo. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence and Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence and Thirteenth Symposium on Educational Advances in Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 1486–1494.
14. Wang, Y.; Guizilini, V.C.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. DETR3D: 3D object detection from multi-view images via 3D-to-2D queries. In Proceedings of the Conference on Robot Learning, PMLR 2022, Auckland, New Zealand, 14–18 December 2022; pp. 180–191.
15. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end Object Detection with Transformers; Springer International Publishing: Cham, Switzerland, 2020.
16. Liu, Y.; Wang, T.; Zhang, X.; Sun, J. PETR: Position Embedding Transformation for Multi-View 3D Object Detection; Springer International Publishing: Cham, Switzerland, 2022.
17. Li, Z.; Wang, W.; Li, H.; Xie, E.; Sima, C.; Lu, T.; Qiao, Y.; Dai, J. BEVFormer: Learning Bird’s-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers; Springer International Publishing: Cham, Switzerland, 2022.
18. Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.
19. Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.
20. Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.
21. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12689–12697.
22. Yin, T.; Zhou, X.; Krähenbühl, P. Center-based 3D object detection and tracking. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11779–11788.
23. Wang, C.; Ma, C.; Zhu, M.; Yang, X. PointAugmenting: Cross-Modal Augmentation for 3D Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11789–11798.
24. Yao, J.; Zhou, J.; Wang, Y.; Gao, Z.; Hu, W. Infrastructure-assisted 3D detection networks based on camera-LiDAR early fusion strategy. Neurocomputing 2024, 600, 128180.
25. Bai, X.; Hu, Z.; Zhu, X.; Huang, Q.; Chen, Y.; Fu, H.; Tai, C.-L. TransFusion: Robust LiDAR-camera fusion for 3D object detection with transformers. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1080–1089.
26. Liang, M.; Yang, B.; Wang, S.; Urtasun, R. Deep Continuous Fusion for Multi-Sensor 3D Object Detection; Springer International Publishing: Cham, Switzerland, 2018.
27. He, W.; Deng, Z.; Ye, Y.; Pan, P. ConCs-Fusion: A Context Clustering-Based Radar and Camera Fusion for Three-Dimensional Object Detection. Remote Sens. 2023, 15, 5130.
28. Wei, M.; Li, J.; Kang, H.; Huang, Y.; Lu, J.-G. BEV-CFKT: A LiDAR-camera cross-modality-interaction fusion and knowledge transfer framework with transformer for BEV 3D object detection. Neurocomputing 2024, 582, 127527.
29. Song, Z.; Zhang, G.; Liu, L.; Yang, L.; Xu, S.; Jia, C.; Jia, F.; Wang, L. RoboFusion: Towards robust multi-modal 3D object detection via SAM. arXiv 2024, arXiv:2401.03907.
30. Song, Z.; Yang, L.; Xu, S.; Liu, L.; Xu, D.; Jia, C.; Jia, F.; Wang, L. GraphBEV: Towards Robust BEV Feature Alignment for Multi-Modal 3D Object Detection; Springer International Publishing: Cham, Switzerland, 2024.
31. Li, Y.; Huang, B.; Chen, Z.; Cui, Y.; Liang, F.; Shen, M.; Liu, F.; Xie, E.; Sheng, L.; Ouyang, W.; et al. Fast-BEV: A Fast and Strong Bird’s-Eye View Perception Baseline. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 8665–8679.
32. Li, K.; Zhang, T.; Peng, K.C.; Wang, G. PF3Det: A Prompted Foundation Feature Assisted Visual LiDAR 3D Detector. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 3778–3787.
33. Pang, S.; Morris, D.; Radha, H. CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 10386–10393.
34. Ku, J.; Harakeh, A.; Waslander, S.L. In Defense of Classical Image Processing: Fast Depth Completion on the CPU. In Proceedings of the 2018 15th Conference on Computer and Robot Vision (CRV), Toronto, ON, Canada, 8–10 May 2018; pp. 16–22.
35. Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. VoxelNeXt: Fully Sparse VoxelNet for 3D Object Detection and Tracking. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 21674–21683.
36. Teng, S.; Li, L.; Li, Y.; Hu, X.; Li, L.; Ai, Y.; Chen, L. FusionPlanner: A multi-task motion planner for mining trucks via multi-sensor fusion. Mech. Syst. Sig. Process. 2024, 208, 111051.
37. Liao, B.; Chen, S.; Jiang, B.; Cheng, T.; Zhang, Q.; Liu, W.; Huang, C.; Wang, X. Lane graph as path: Continuity-preserving path-wise modeling for online lane graph construction. In Proceedings of the Computer Vision–ECCV 2024: 18th European Conference, Milan, Italy, 29 September–4 October 2024; Part XLIV, pp. 334–351.
38. Xie, E.; Yu, Z.; Zhou, D.; Philion, J.; Anandkumar, A.; Fidler, S.; Luo, P.; Alvarez, J.M. M2BEV: Multi-camera joint 3D detection and segmentation with unified birds-eye view representation. arXiv 2022, arXiv:2204.05088.
39. Xu, S.; Zhou, D.; Fang, J.; Yin, J.; Bin, Z.; Zhang, L. FusionPainting: Multimodal Fusion with Adaptive Attention for 3D Object Detection. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 3047–3054.
40. Chen, Z.; Li, Z.; Zhang, S.; Fang, L.; Jiang, Q.; Zhao, F.; Zhou, B.; Zhao, H. AutoAlign: Pixel-instance feature aggregation for multi-modal 3D object detection. arXiv 2022, arXiv:2201.06493.
41. Chen, X.; Zhang, T.; Wang, Y.; Wang, Y.; Zhao, H. FUTR3D: A unified sensor fusion framework for 3D detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 172–181.
42. Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. CvT: Introducing convolutions to vision transformers. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 22–31.

| Method | Modality | mAP (test) | NDS (test) | mAP (val) | NDS (val) | MACs (G) | Latency (ms) |
|---|---|---|---|---|---|---|---|
| BEVDet [10] | C | 42.2 † | 48.2 † | – | – | – | – |
| M2BEV [38] | C | 42.9 | 47.4 | 41.7 | 47.0 | – | – |
| BEVFormer [17] | C | 44.5 | 53.5 | 41.6 | 51.7 | – | – |
| BEVDet4D [11] | C | 45.1 † | 56.9 † | – | – | – | – |
| PointPillars [21] | L | – | – | 52.3 | 61.3 | 65.5 | 34.4 |
| SECOND [19] | L | 52.8 | 63.3 | 52.6 | 63.0 | 85.0 | 69.8 |
| CenterPoint [22] | L | 60.3 | 67.3 | 59.6 | 66.8 | 153.5 | 80.7 |
| PointPainting [2] | C+L | – | – | 65.8 | 69.6 | 370.0 | 185.8 |
| PointAugmenting [23] | C+L | 66.8 † | 71.0 † | – | – | 408.5 | 234.4 |
| MVP [3] | C+L | 66.4 | 70.5 | 66.1 | 70.0 | 371.7 | 187.1 |
| FusionPainting [39] | C+L | 68.1 | 71.6 | 66.5 | 70.7 | – | – |
| AutoAlign [40] | C+L | – | – | 66.6 | 71.1 | – | – |
| FUTR3D [41] | C+L | – | – | 64.5 | 68.3 | 1069.0 | 321.4 |
| TransFusion [25] | C+L | 68.9 | 71.6 | 67.5 | 71.3 | 485.8 | 156.6 |
| BEVFusion [5] | C+L | 70.2 | 72.9 | 68.5 | 71.4 | 253.2 | 119.2 |
| VoxelNeXt [35] | C+L | 70.3 | 72.2 | 68.6 | 71.7 | 777.3 | 234.2 |
| FusionPlanner [36] | C+L | 69.5 | 71.9 | 67.2 | 70.3 | 459.4 | 210.4 |
| LaneGAP [37] | C+L | 70.2 | 71.4 | 68.2 | 70.9 | 542.2 | 220.1 |
| Ours | C+L | 72.1 | 73.8 | 70.3 | 72.2 | 249.0 | 110.9 |
| VoxelNeXt [35] | C+L | 74.4 ‡ | 75.4 ‡ | – | – | – | – |
| FusionPlanner [36] | C+L | 74.6 ‡ | 75.9 ‡ | – | – | – | – |
| LaneGAP [37] | C+L | 74.2 ‡ | 76.0 ‡ | – | – | – | – |
| BEVFusion [5] | C+L | 75.0 ‡ | 76.1 ‡ | 73.7 ‡ | 74.9 ‡ | – | – |
| Ours | C+L | 76.3 ‡ | 77.4 ‡ | 74.2 ‡ | 76.3 ‡ | – | – |

| Method | Modality | Drivable | Ped. Cross. | Walkway | Stop Line | Carpark | Divider | Mean |
|---|---|---|---|---|---|---|---|---|
| OFT [9] | C | 74.0 | 35.3 | 45.9 | 27.5 | 35.9 | 33.9 | 42.1 |
| LSS [7] | C | 75.4 | 38.8 | 46.3 | 30.3 | 39.1 | 36.5 | 44.4 |
| CVT [42] | C | 74.3 | 36.8 | 39.9 | 25.8 | 35.0 | 29.4 | 40.2 |
| M2BEV [38] | C | 77.2 | – | – | – | – | 40.5 | – |
| PointPillars [21] | L | 72.0 | 43.1 | 53.1 | 29.7 | 27.7 | 37.5 | 43.8 |
| CenterPoint [22] | L | 75.6 | 48.4 | 57.5 | 36.5 | 31.7 | 41.9 | 48.6 |
| PointPainting [2] | C+L | 75.9 | 48.5 | 57.1 | 36.9 | 34.5 | 41.9 | 49.1 |
| BEVFusion [5] | C+L | 85.5 | 60.5 | 67.6 | 52.0 | 57.0 | 53.7 | 62.7 |
| VoxelNeXt [35] | C+L | 85.4 | 57.2 | 63.4 | 53.7 | 55.9 | 54.7 | 61.7 |
| LaneGAP [37] | C+L | 82.2 | 50.4 | 59.0 | 52.1 | 54.6 | 52.0 | 58.4 |
| Ours | C+L | 88.7 | 62.4 | 68.2 | 53.9 | 59.8 | 54.9 | 64.7 |
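
The Mean column in the segmentation table above appears to be the unweighted arithmetic mean of the six per-class values. This is an inference from the numbers, not something the table states. A minimal Python sketch that checks it for three rows copied from the table (the dictionary and function names are illustrative):

```python
# Per-class values copied from the segmentation table, in the column order
# Drivable, Ped. Cross., Walkway, Stop Line, Carpark, Divider.
per_class_iou = {
    "LSS [7]": [75.4, 38.8, 46.3, 30.3, 39.1, 36.5],
    "BEVFusion [5]": [85.5, 60.5, 67.6, 52.0, 57.0, 53.7],
    "VoxelNeXt [35]": [85.4, 57.2, 63.4, 53.7, 55.9, 54.7],
}


def mean_iou(values):
    """Unweighted mean over classes (assumed definition of the Mean column)."""
    return sum(values) / len(values)


# Round to one decimal place, matching the precision used in the table.
mean_by_method = {name: round(mean_iou(v), 1) for name, v in per_class_iou.items()}

for name, value in mean_by_method.items():
    print(f"{name}: {value}")
```

Under this assumption the three computed means agree with the Mean entries reported for these rows.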

| Method | Modality | Sunny mAP | Sunny mIoU | Rainy mAP | Rainy mIoU | Day mAP | Day mIoU | Night mAP | Night mIoU |
|---|---|---|---|---|---|---|---|---|---|
| CenterPoint [22] | L | 62.9 | 50.7 | 59.2 | 42.3 | 62.8 | 48.9 | 35.4 | 37.0 |
| BEVDet [10] | C | 32.9 | 59.0 | 33.7 | 50.5 | 33.7 | 57.4 | 13.5 | 30.8 |
| MVP [3] | C+L | 65.9 (+3.0) | 51.0 (−8.0) | 66.3 (+7.1) | 42.9 (−7.6) | 66.3 (+3.5) | 49.2 (−8.2) | 38.4 (+3.0) | 37.5 (+6.7) |
| BEVFusion [5] | C+L | 68.2 (+5.3) | 65.6 (+6.6) | 69.9 (+10.7) | 55.9 (+5.4) | 68.5 (+5.7) | 63.1 (+5.7) | 42.8 (+7.4) | 43.6 (+12.8) |
| Ours | C+L | 69.2 (+6.3) | 68.9 (+9.9) | 72.0 (+12.8) | 58.3 (+7.6) | 70.9 (+8.1) | 65.2 (+7.8) | 44.2 (+8.8) | 47.9 (+17.1) |
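
The parenthesised values in the table above seem to be differences against the single-modality rows: mAP relative to CenterPoint (LiDAR only) and mIoU relative to BEVDet (camera only). That reading is an assumption drawn from the arithmetic rather than from any caption. The Python sketch below reproduces the BEVFusion row under it (all variable names are illustrative):

```python
# Baseline values copied from the table.
lidar_map = {"Sunny": 62.9, "Rainy": 59.2, "Day": 62.8, "Night": 35.4}    # CenterPoint mAP
camera_miou = {"Sunny": 59.0, "Rainy": 50.5, "Day": 57.4, "Night": 30.8}  # BEVDet mIoU

# BEVFusion (mAP, mIoU) per condition, copied from the table.
bevfusion = {
    "Sunny": (68.2, 65.6),
    "Rainy": (69.9, 55.9),
    "Day": (68.5, 63.1),
    "Night": (42.8, 43.6),
}


def deltas(fused):
    """Return {condition: (mAP gain, mIoU gain)} over the assumed baselines."""
    return {
        cond: (
            round(m_ap - lidar_map[cond], 1),
            round(m_iou - camera_miou[cond], 1),
        )
        for cond, (m_ap, m_iou) in fused.items()
    }


bevfusion_deltas = deltas(bevfusion)
for cond, (d_map, d_miou) in bevfusion_deltas.items():
    print(f"{cond}: mAP {d_map:+.1f}, mIoU {d_miou:+.1f}")
```

For this row, the computed gains agree with every parenthesised entry in the table.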

| Modality | mAP | NDS | mIoU |
|---|---|---|---|
| L | 58.4 | 66.7 | 57.1 |
| C+L | 72.1 | 72.2 | 64.7 |

| (Meters) | mAP | NDS | mIoU |
|---|---|---|---|
| 0.075 | 72.1 | 72.2 | 64.7 |
| 0.1 | 67.5 | 71.5 | 64.3 |
| 0.125 | 67.2 | 70.5 | 64.4 |

| Backbone | mAP | NDS | mIoU |
|---|---|---|---|
| ResNet50 | 67.1 | 68.7 | 60.2 |
| SwinT (freeze) | 66.3 | 71.2 | 54.0 |
| SwinT | 72.1 | 72.2 | 64.7 |

| | mAP | NDS |
|---|---|---|
| 1 | 66.3 | 70.6 |
| 2 | 67.5 | 71.4 |
| 3 | 69.8 | 72.7 |

| | mAP | NDS |
|---|---|---|
| | 68.3 | 70.5 |
| | 69.5 | 71.9 |
| + | 72.1 | 72.2 |

| Method | mAP (nuScenes→Waymo) | mAP (Waymo→nuScenes) |
|---|---|---|
| BEVFusion [5] | 67.3 | 47.5 |
| Ours | 72.1 | 56.9 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, W.; Liu, X.; Ding, Y. CrossInteraction: Multi-Modal Interaction and Alignment Strategy for 3D Perception. Sensors 2025, 25, 5775. https://doi.org/10.3390/s25185775
