Abstract

The construction of knowledge graphs in cyber threat intelligence (CTI) critically relies on automated entity–relation extraction. However, sequence tagging-based methods for joint entity–relation extraction are affected by the order-dependency problem. As a result, overlapping relations are handled ineffectively. To address this limitation, a parallel, ensemble-prediction–based model is proposed for joint entity–relation extraction in CTI. The joint extraction task is reformulated as an ensemble prediction problem. A joint network that combines Bidirectional Encoder Representations from Transformers (BERT) with a Bidirectional Gated Recurrent Unit (BiGRU) is constructed to capture deep contextual features in sentences. An ensemble prediction module and a triad representation of entity–relation facts are designed for joint extraction. A non-autoregressive decoder is employed to generate relation triad sets in parallel, thereby avoiding unnecessary sequential constraints during decoding. In the threat intelligence domain, labeled data are scarce and manual annotation is costly. To mitigate these constraints, the SecCti dataset is constructed by leveraging ChatGPT’s small-sample learning capability for labeling and augmentation. This approach reduces annotation costs effectively. Experimental results show a 4.6% absolute F1 improvement over the baseline on joint entity–relation extraction for threat intelligence concerning Advanced Persistent Threats (APTs) and cybercrime activities.

1. Introduction

With the rapid development of internet technology, cybersecurity threats have become increasingly complex and diverse. Advanced Persistent Threats (APTs), ransomware, distributed denial-of-service (DDoS) attacks, and other emerging attack types pose serious risks to the critical infrastructures of enterprises and nations [1,2]. In this context, cyber threat intelligence (CTI) has been recognized as a key component of cyber defense and capability enhancement [3]. CTI is intended to provide timely and actionable strategies by collecting, analyzing, and sharing dynamic threat information. Nevertheless, the accurate mining and analysis of high-value intelligence from large volumes of CTI reports remain significant challenges for cybersecurity professionals [4].

The core of CTI knowledge mining lies in the accurate extraction and analysis of extensive information. This information includes attacker behaviors, vulnerabilities and exploits, defender actions, attack tools, and the organizational relationships behind adversaries. The effective management of such intelligence is essential for informed defense decision-making [5]. Knowledge graphs describe concepts, entities, and inter-entity relations in a structured form that aligns more closely with human cognition [6]. By leveraging knowledge graph-based information extraction techniques, large volumes of threat intelligence can be organized and interpreted more effectively. The primary tasks of information extraction are Named Entity Recognition (NER) and relation extraction (RE), whose objective is to identify entities and the relations expressed among them in text.

In the early stage of development, entity and relation extraction were primarily based on expert-crafted pattern-matching rules [7] and statistical machine learning methods [8]. However, these approaches depended on hand-engineered rules and features, which limited domain adaptability. With advances in artificial intelligence, rapid developments in Natural Language Processing (NLP) and deep learning have substantially advanced entity and relation extraction. Pipeline architectures were adopted for Named Entity Recognition (NER). In particular, deep models combining Recurrent Neural Networks (RNNs) with Conditional Random Fields (CRFs) were proposed [9]. These models captured contextual dependencies more effectively and improved NER accuracy.

After NER, attention-based Graph Neural Network (GNN) models were introduced for relation extraction [10]. The full dependency tree was used as input. Soft pruning was applied to automatically learn and retain informative substructures relevant to the relation extraction task. However, pipeline extraction suffers from error propagation: mistakes in upstream NER directly degrade downstream relation extraction accuracy.

To mitigate error propagation, information extraction has recently been modeled as a sequence-to-sequence (Seq2Seq) joint entity–relation extraction problem [11,12]. End-to-end attention mechanisms were introduced to capture word-level dependencies. Outputs were generated as sequences of tokens. However, performance degraded on complex sentences and long documents, and triples with overlapping entities were handled poorly.

To address the shortcomings of prior methods, a joint entity–relation extraction approach for cyber threat intelligence is proposed. The task is reformulated as an ensemble-prediction problem within a parallel framework. A joint network that combines BERT (Bidirectional Encoder Representations from Transformers) [13] and BiGRU (Bidirectional Gated Recurrent Unit) [14] is constructed to capture deep contextual features in sentences. An ensemble prediction module and an entity–relation triple representation are designed for joint extraction. A non-autoregressive decoder is employed to generate sets of relation triples in parallel, thereby avoiding unnecessary ordering constraints during sequence generation.

The main contributions of this work are summarized below:

- A joint entity–relation extraction model for CTI is proposed. CTI triples are generated in parallel to mitigate the sequential-dependency issue inherent to sequence-labeling methods.

- A cost-effective CTI dataset, SecCti, is constructed. ChatGPT’s small-sample capability is leveraged via Q&A prompts for data labeling and augmentation, and all annotations are verified by security professionals, substantially reducing manual labeling costs.

- Improved robustness to overlapping-entity triples is demonstrated. An absolute F1 score of 89.1% is achieved in the corresponding evaluations.

2. Related Work

Information extraction from CTI reports primarily involves Named Entity Recognition (NER) and relation extraction (RE). Deep learning–based approaches are typically categorized into pipeline extraction and joint extraction.

In pipeline extraction, NER is performed first, followed by RE based on the recognized entities. The performance of RE is therefore highly dependent on NER quality. Gao et al. [15] proposed an NER model that combines a data-driven deep learning approach with a knowledge-driven dictionary method to construct dictionary features, improving the recognition of rare entities and complex words. Wang et al. [16] developed a neural unit, GARU, to integrate features from Graph Neural Networks (GNNs) and Recurrent Neural Networks (RNNs), alleviating fuzzy interactions between heterogeneous networks. To address data sparsity in CTI, Liu et al. [17] proposed a semantic enhancement method that encodes and aggregates compositional, morphological, and lexical features of input tokens to retrieve semantically similar words from a cybersecurity corpus. Sarhan et al. [18] devised a CTI knowledge-graph framework in which NER models are first trained to recognize entities, and relation triples generated by Open Information Extraction (OIE) are subsequently labeled. Although these methods perform well on standalone NER, RE performance remains constrained by NER quality, leading to error propagation.

Joint extraction aims to identify both entities and relations directly from CTI text, improving accuracy and efficiency in cyber threat monitoring and response. Because relation triples within a sentence may share overlapping entities, several joint models have been proposed. Yuan et al. [19] introduced a Relation-Specific Attention Network (RSAN) to jointly extract relations in sentences. RSAN combines relation-based attention with a gating mechanism over a Bidirectional Long Short-Term Memory (BiLSTM) network to produce relation-guided sentence representations, reducing redundant operations observed in pipeline methods. Building on relation-based attention, Guo et al. [11] formulated CTI entity–relation joint extraction as a multi-sequence labeling problem. Text features were extracted with a Bidirectional Gated Recurrent Unit (BiGRU), and decoding was performed using BiGRU and a Conditional Random Field (CRF). Zuo et al. [20] developed an end-to-end sequential labeling model based on BERT-att-BiLSTM-CRF for joint extraction, and knowledge triples were finally obtained using entity–relation matching rules. Ahmed et al. [12] improved upon Zuo et al. [20] by employing an attention-based RoBERTa-BiGRU-CRF model for sequential labeling, mitigating limitations of classical pipeline techniques. Despite these advances, traditional sequence-labeling approaches still struggle with overlapping entities due to order constraints and thus suffer from order dependency.

Recently, the non-autoregressive decoder (NAD) has been widely explored for natural language tasks [21]. Sui et al. [22] formulated joint entity–relation extraction as a set-prediction problem, relieving the model of the sequential burden of predicting multiple triples. Jun Yu et al. [23] applied an NAD to the aspect-level sentiment triad extraction (ASTE) task. The encoding layer used MPNet (proposed by Microsoft), and dependencies among predicted tokens were modeled via Permutation Language Modeling (PLM). Combined with the non-autoregressive decoder, this design achieved state-of-the-art results on ASTE. Yanfei Peng et al. [24] proposed an end-to-end model with a dual ensemble-prediction network to decode triples (termed “ternary groups” in their work). Entity pairs and relations were decoded sequentially, and interactions were strengthened through parameter sharing across the two ensemble-prediction networks. Additionally, entity filters were designed to tighten subject–object connections and to discard triples with low subject/object confidence.

3. Materials and Methods

In this study, unstructured cybersecurity threat intelligence in PDF format was collected from relevant sources. The data were cleaned and preprocessed to obtain sentence-level text. Relation triples present in the sentences were then manually annotated to serve as inputs to the model. The joint entity–relation extraction model consists of two core modules. First, sentences are encoded with a joint BERT–BiGRU encoder to capture sequence dependencies and to provide rich contextual information for the non-autoregressive decoder (NAD). Second, the NAD is used to generate predicted triples in parallel. The model is optimized with a bipartite matching loss, ensuring the best alignment between predicted triples and gold triples.

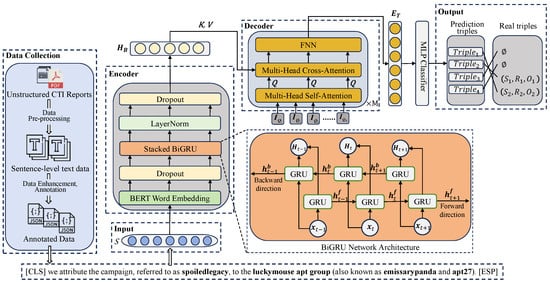

The method architecture of this paper is shown in Figure 1, where the sentence is the input to the model with length l (containing [CLS] and [SEP]), and the set of relation triples in the sentence is , where m is the number of triples, and E and R are the set of entities and the set of relations, respectively; the specific examples are shown in Table 1. There exist two kinds of cases: one is the ordinary example, where there is no overlapping entity in the triple, and the other is the overlapped example, where the the sentence is more complex and there are multiple overlapping entities. A Bidirectional Gated Recurrent Unit (BiGRU) network input vector denotes the word embedding vector of after passing through the BERT layer; vector denotes the word feature representation of after passing through a layer of BiGRU encoding; vector denotes the output of the encoder; and denotes the learnable embeddings, which are used as initialization inputs to the decoder, where N is the number of learnable embeddings and d is the initial dimension of the BERT word embeddings. denotes the output of the decoder. denotes the final prediction of the obtained relational triple; the final goal of the entity–relationship joint extraction model is to extract the set of relational triples T present in the sentence S.

Figure 1.

The methodological architecture of this paper.

Table 1.

Example of threat intelligence information.

The overall architecture is shown in Figure 1. A sentence is denoted by with length l (including [CLS] and [SEP]). The set of relation triples is defined as , where m is the number of triples, and E and R denote the entity and relation sets, respectively. Examples are provided in Table 1. Two cases are considered. In the ordinary case, no entity overlap occurs within a triple. In the overlapped case, the sentence is more complex and contains multiple overlapping entities. For the encoder, is the BERT embedding of . The vector is the contextual representation after a BiGRU layer, and is the encoder output. Learnable embeddings are used to initialize the decoder, where N is the number of learnable embeddings and d is the BERT embedding dimension. The decoder output is . The final prediction of relation triples is denoted by . The objective of the joint entity–relation extraction model is to recover the set T of relation triples present in S.

3.1. Entity Relationship Category Design

A relatively mature system of core concepts for cyber threat intelligence (CTI) and security elements has been established [25,26,27,28]. Based on this body of knowledge and the CTI corpus collected in this study, eleven entity categories were defined: operating system (OS), malware (MAL), tool (TOO), threat actor (THR), campaign (CAM), vulnerability (VUL), mitigation (MIT), attack technique (ATT), group (GRO), consequence (CON), and organization (ORG), as explained in Table 2. The following relations were considered: Target, Attributed_to, Mitigates, HasVulnerability, Uses, Includes, Exploits, Associates, Causes, and Alias_of. In total, thirty-two refined entity–relation categories were obtained by enumerating valid entity-pair types, as shown in Table 3.

Table 2.

Entity Categories.

Table 3.

Relationship categories.

3.2. Data Enhancement

The scarcity of data, compounded by the extensive array of relationship categories, has given rise to a distinct challenge: the inadequate annotation of data. To address this challenge, the few-shot learning capabilities of large language models were used, with ChatGPT (version GPT-4o) [29] being used to implement a structured data augmentation process [30,31,32].

- Initial Dataset: We started with 1051 basic samples.

- Data augmentation Process: During data augmentation, an instruction-based rewriting process was constructed around each base sample to increase corpus diversity and robustness. For each sentence, a refined prompt was injected: “Original sentence. Please provide six additional sentences on the same theme that remain similar to the original. The generated sentences must be in English. You may expand content and internal relations using Wikipedia and verified facts, and add new content related to the theme. Entities and relations in the rewritten sentences should not change substantially. All generated sentences must be in lowercase.”To balance efficiency and quality, the OpenAI API with the “deepseek” model was initially employed for batch generation to achieve high throughput. However, the outputs did not meet downstream requirements. Considering the cost–quality trade-off, a switch was made to the web-based GPT-4o for manual, interactive rewriting and quality control. Instructions were entered sequentially. Low-level specifications (all-lowercase text and syntactic fluency) were verified. Consistency of entities and relations was validated. Newly introduced facts were rapidly fact-checked to remove semantic drift and hallucinatory samples.This procedure markedly improved semantic fidelity and trainability while maintaining controllable generation speed. As a result, enhanced corpora with a higher signal-to-noise ratio were produced for subsequent model training. Representative generated sentences are shown in Table 4.

Table 4. Comparison of raw and GPT-generated data.

Table 4. Comparison of raw and GPT-generated data. - Quality Assurance: For quality assurance, a semi-automated quality-control scheme was adopted under resource constraints and in the absence of external security reviewers. The scheme was centered on manual verification by researchers with cybersecurity expertise. Each generated sample was comprehensively reviewed and corrected to ensure syntactic fluency, clarity of meaning, and factual accuracy.Because the dataset primarily consists of text and entity–relation structures, traditional semantic-similarity measures (e.g., cosine similarity) were deemed inapplicable. An automated screening strategy based on “entity–relation preservation” was therefore developed. A predefined, manually annotated entity–relation dataset was used as the baseline. Normalized definitions of relations were provided to the model, and entity–relation annotations were applied to newly generated sentences. The annotated relations of generated sentences were then compared one-to-one with those of the original samples.If core entities and their relations remained unchanged, a sample was deemed semantically consistent and retained. If relation drift or entity mismatch was detected, the sample was flagged as non-compliant and excluded. Structural consistency served as the primary quality metric. Manual review supplemented this metric to correct potential model-annotation errors and factual issues, thereby ensuring semantic fidelity and factual reliability without relying on traditional similarity metrics.

- Final Dataset: After enhancement and filtering, we obtained a total of 4741 samples, including the original 1051 samples and 3690 generated samples.

3.3. Coding Layer Design for Joint BERT and BiGRU

The primary objective of this layer is to extract context-aware information for each token in a sentence. A Transformer-based bidirectional encoder, BERT, is employed to produce word embeddings [13]. To incorporate threat-intelligence-specific domain knowledge, the embeddings from the BERT layer are further processed by a BiGRU to obtain deep contextual features of the sentence [14].

Given an input sentence , where is the t-th word in the sentence, in order to better obtain the contextual information of each sequence in the sentence, the pre-trained model BERT is loaded to generate the word embedding vectors, and the outputs are the context-aware word embeddings , , where l stands for is the length of the sentence (including [CLS] and [SEP]), and d is the number of hidden units of the BERT model. The specific formula is shown in (1) below:

To improve model generalization, a BiGRU was employed to extract deep context-aware features for each token. BiGRU extends GRU and operates as a bidirectional Recurrent Neural Network. It is designed to mitigate the long-range dependency limitations of traditional RNNs.

A BiGRU comprises two GRU directions. The forward GRU processes the input from left to right, and the backward GRU processes the input from right to left. Using a BiGRU rather than a unidirectional GRU enables the simultaneous capture of past and future context at each time step, thereby improving sequence feature modeling efficiency. Compared with LSTM, GRU uses two gates—the update gate and the reset gate. This design reduces the number of parameters and improves computational efficiency.

The word embedding vector E output from the BERT layer is used as an input to the BiGRU network, and the semantic features of the sentence are further extracted to obtain new sequence vectors , , where the output of BiGRU is spliced from the hidden states of the forward and backward GRUs to capture the contextual information of the sequence more comprehensively. The formulas of BiGRU are shown in (2)–(4) below:

where is the feature representation of the t-th input token, is the hidden state of the (t − 1)-th input token, is the hidden state of the (t+1)-th input token, represents the hidden state of the forward GRU unit, represents the hidden state of the backward GRU, and represents the final result of the current sequence after one layer of BiGRU network.

3.4. Parallel Decoding Layer Design

Following [22], a non-autoregressive decoder (NAD) is employed, which formulates joint entity–relation extraction as an ensemble prediction problem. Unlike autoregressive decoders that condition on previously generated outputs, the NAD uses maskless self-attention to access information at all sequence positions simultaneously. Triples are therefore generated in parallel, which markedly improves efficiency. For each sentence, the decoder outputs a set of N fixed-size predicted triples. The value of N is set greater than the maximum number of triples present in the sentence. The query vector is initialized with N shared, learnable embeddings and is computed by Equation (5).

The core of the non-autoregressive decoder consists of M Transformer blocks. Each block comprises three sublayers: multi-head self-attention, multi-head cross-attention, and a feed-forward network. The multi-head self-attention sublayer models intra-triple dependencies. Its output projection is used as the query (Q) for the multi-head cross-attention sublayer. The encoder outputs provide the keys (K) and values (V) for that cross-attention, thereby fusing sentence-level information. The computations of the multi-head cross-attention sublayer are given by Equations (6)–(8).

is the output of the previous multi-head self-attention sublayer, and is the output of the encoder. , , , are trainable parameters, h is the number of attention heads, is the dimensional size of the key vector. The score can be scaled by dividing by , which helps to enhance the stability of the gradient. The multi-head self-attention sublayer has the same formulas (6)–(8), unlike the multi-head cross self-attention sublayer, whose Q, K, and V are initialized learnable embeddings. With the non-autoregressive decoder, the N ternary queries are converted into N output embeddings, which are denoted as .

Finally, the output embedding is inputted into the MLP network to decode the entities and relations independently, respectively, and the MLP network predicts to get the relation label , as well as the start position and end position of the head and tail entities , , , and by the softmax classifier. The specific equations are shown in (9), (10)–(13) below:

where and are the learnable parameters and is the output of BiGRU encoder based on BERT to obtain the final prediction triples as .

3.5. Loss Function

For model optimization, the Bipartite Matching Loss (BMLoss) was employed [22]. Optimal alignments between predicted and gold triples were obtained with the Hungarian algorithm. The loss comprises relation classification and entity position prediction, both computed via negative log-likelihood.

4. Experiments

4.1. Datasets

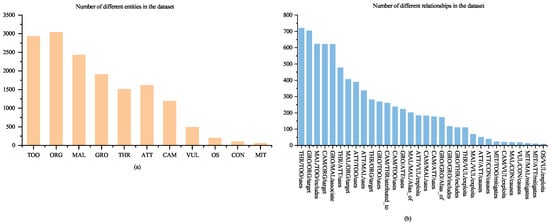

This study collects a total of 1489 publicly available security reports related to APT organizations and cybercriminal activities [33], which cover organizations or operations active from 2008 to July 2024, from which 36 high-quality reports are filtered based on the keyword density of cybersecurity terminology [12]. These reports are mainly related to APT organizations and malware families, such as APT37, APT10, and Andromeda. After the data cleaning and preprocessing work, a brand new dataset SecCti is constructed, and a total of 4741 sentences are obtained. A total of 15,474 entities and 7699 relationships are labeled, and the distribution of the number of entities and relationships is shown in Figure 2. The data is divided into training set, evaluation set, and test set according to an 8:1:1 ratio. The pattern of overlapping relationship triples in the dataset is shown in Table 5.

Figure 2.

Distribution of the number of entities and relationships: (a) Number of different entities in dataset; (b) Number of different relationship in the dataset.

Table 5.

Triples overlap mode.

4.2. Experimental Setup

- Implementation Details: In this study, PyTorch (version 2.7.0) was used to create the experimental models and all experiments were conducted using NVIDIA GeForce RTX 4090 Ti. In the BERT-based BiGRU sentence encoder used in this paper, the BERT word embedding contains 100M parameters, the initial dimension of the word embedding representation is 768, the dimension of the BiGRU is 256, and 3 layers of BiGRU are set up with an initial learning rate of 0.00002. The number of non-autoregressive decoder layers is set up as 3 layers with an initial learning rate of 0.00005, and a total of 100 rounds of training are performed. The coding layer discard rate is set to 0.5 to prevent the model from overfitting during training.

- Evaluation Metrics: In this paper, standard micro-average precision (Precision), micro-average recall (Recall), and micro-average F1 (F1) score are used to evaluate the performance of the model. A triple is considered to be correctly extracted when and only when its relation type and two entities are correctly matched.

4.3. Results and Analysis

4.3.1. Baseline Model

CyberRel [11]: A multiple-sequence labeling model for the joint extraction of cybersecurity conceptual entity–relationships is used. Separate sequences of tags are generated for different relationships containing information about the entities involved, as well as the subject and object of the relationship. BERT-BiLSTM-CRF [20]: the end-to-end sequential annotation model of BERT-att-BiLSTM-CRF is used to realize the joint extraction of entities and relations, and finally the knowledge triples are extracted according to the rules for matching entities and relations. CyberEntRel [12]: utilizes the attention-based RoBERTa-BiGRU-CRF model for sequential labeling. After matching the most appropriate relationship for two predicted entities, the relationship triples are extracted using the relationship matching technique.

4.3.2. Comparison Experiments

In order to validate the effectiveness of the model, the performance of this paper is compared with three baseline models. As shown in Table 6, the model proposed in this paper outperforms the other methods on both the overall relationship triple extraction (All) and the overlapping entity relationship triple extraction (Overlap) tasks. Compared with the state-of-the-art CyberEntRel method, the F1 scores of the two tasks are improved by 4.6% and 9.6%, respectively.

Table 6.

Experimental results of different models.

The performance improvement is mainly due to two aspects: first, the BiGRU encoder is able to capture deep contextual information effectively; second, the non-autoregressive decoder adopts a mask-free self-attention mechanism, which can process all the positional information in parallel and generate the set of relational triples directly. In addition, a high recall rate is ensured by setting an adequate number of ternary queries, which enables the model to achieve a more balanced performance in terms of both precision and recall.

It is worth noting that, in the domain-specific task of this paper, better results were achieved using the BiGRU network with fewer parameters and simpler structure than BiLSTM. This may be due to the fact that on smaller and noisy datasets, the simplified structure of BiGRU helps to reduce the risk of overfitting while improving the computational efficiency.

4.3.3. Ablation Experiments

The importance of the BiGRU and the effect of decoder depth were assessed via ablation experiments. Results are reported in Table 7. BiGRU depths of 1, 2, 3, 4, 5, and 6 layers were evaluated. For depths below three, model capacity and expressive power increased with depth. With three BiGRU layers, the model achieved the best performance: the F1 score improved by 5.6% relative to a model without BiGRU, demonstrating the effectiveness of the BiGRU subnetwork. Beyond three layers, performance declined. The degradation is attributed to overfitting to training noise and unnecessary complexity introduced by deeper architectures. Decoder depth was analyzed in the same manner. When the number of decoder layers was below three, performance improved with additional layers. The highest F1 score was obtained at three layers, after which performance decreased. Although the non-autoregressive decoder supports parallel decoding, excessive depth may prevent the full utilization of contextual information, leading to diminishing returns.

Table 7.

Ablation experiment results.

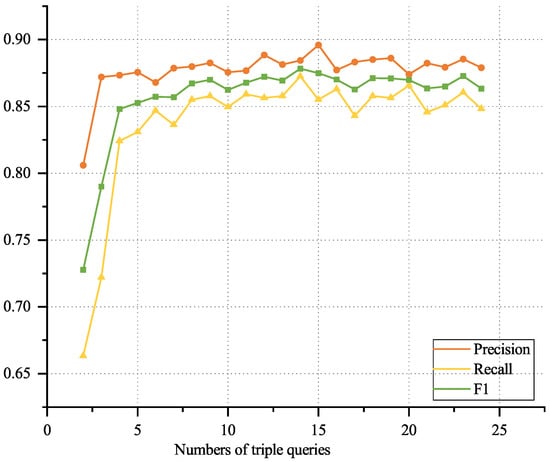

4.3.4. Hyperparametric Experiments

For this dataset, the effect of varying numbers of triple queries (2–24) on model performance was evaluated, as shown in Figure 3. Performance improved as the number of queries increased up to 14, at which point the highest F1 score was achieved. Beyond 14 queries, performance gradually decreased, likely due to interference from excessive invalid triple candidates.

Figure 3.

Variation of model performance with different numbers of triple queries.

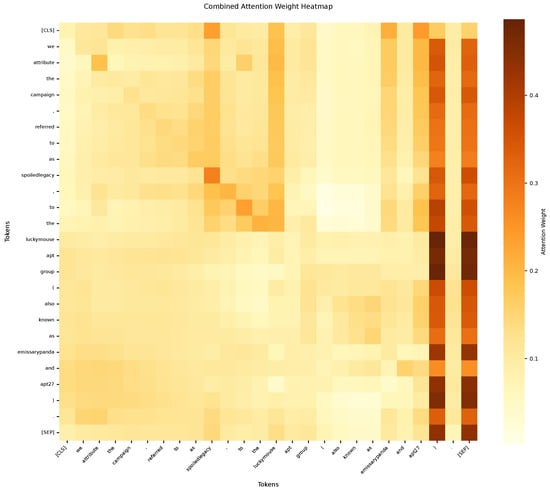

4.3.5. Visual Analysis

To provide an intuitive view of the model’s mechanism, the attention distributions of the encoder and decoder were visualized. As shown in Figure 4, the encoder—comprising BERT and BiGRU—accurately identifies key entity tokens such as “spoiledlegacy,” “APT27,” and “EmissaryPanda.” Clear attention focus regions are also formed around threat-intelligence entities such as “LuckyMouse,” “APT,” and “group.” These patterns indicate that task-relevant semantic features have been effectively captured by the model.

Figure 4.

Heat map of encoder attention weights.

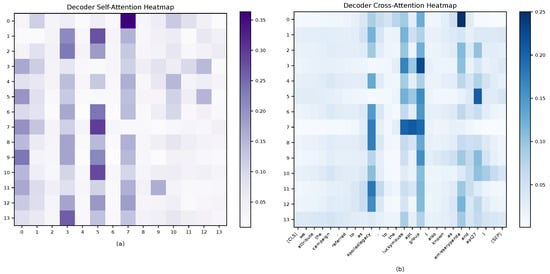

Figure 5 presents the decoder’s self-attention and cross-attention distributions. In the self-attention heat map (Figure 5a), query positions 3, 5, and 7 form a clear high-attention region. Diagonal weights are generally low, indicating information sharing across query vectors. In the cross-attention heat map (Figure 5b), distinct entity focuses are observed. Query 3 attends to “LuckyMouse APT group” and “EmissaryPanda.” Query 5 attends to “LuckyMouse APT group” and “APT27.” Query 7 attends to “LuckyMouse APT group” and “SpoiledLegacy.” These patterns suggest that overlapping relations are handled efficiently. Different query vectors focus on different entity pairs in parallel while sharing key entity information.

Figure 5.

Heat map of decoder self-attention and cross-attention weights: (a) Self-attention weights between decoder queries; (b) Cross-attention weights from decoder queries to input entities.

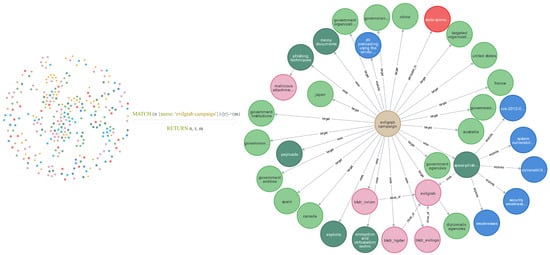

4.3.6. Case Study

The cyber threat intelligence (CTI) extracted in this study was imported into a Neo4j graph database. The database contains 2552 entities and 2680 relations. A partial view of the CTI knowledge graph is shown in Figure 6. Information related to the EvilGrab campaign was queried using Cypher to obtain detailed context. The results indicate that the EvilGrab campaign primarily targets countries such as China and Canada, along with their governmental organizations. The campaign employs harpoon-style network attacks using the EvilGrab malware and primarily exploits vulnerabilities such as CVE-2012-0158. These details provide multi-dimensional insight into the attack. Based on this information, security analysts can specify more effective defensive strategies against cyberattacks.

Figure 6.

Example of cyber threat intelligence knowledge graph.

5. Conclusions and Future Work

In this study, a joint extraction method for CTI entity–relation triples based on parallel ensemble prediction was proposed. A BERT–BiGRU encoder was combined with a non-autoregressive decoder to capture deep context and to generate triples in parallel. Competitive performance was achieved on SecCti, with clear gains on overlapping triples. Building on these results, key advantages, limitations, comparisons, and future directions are outlined below:

- Advantages: First, order-dependency and exposure-bias issues are mitigated by set-style, non-autoregressive decoding. Parallel decoding improves efficiency on inputs with multiple triples. A BiGRU layer provides lightweight sequential inductive bias atop BERT, benefiting noisy CTI text.

- Limitations: Nevertheless, several challenges were identified. Performance is sensitive to the number of query slots; excessive slots yield invalid candidates and may reduce F1. Deeper encoders/decoders risk overfitting, requiring careful depth and regularization. Accurate span localization remains critical, and domain drift and label noise can affect robustness. Moreover, the present study comprised a total of 36 reports, 4741 sentences, and 7699 relationships. It is acknowledged that the current body of evidence is limited in terms of both sources and scale, a factor which may have a bearing on the universality of the findings across institutions and time periods.

- Comparison: In relation to prior approaches, error propagation is reduced via joint decoding compared with pipeline NER→RE. Relative to joint sequence-labeling baselines (e.g., BERT-BiLSTM-CRF-style), overlapping triples are handled more effectively and decoding is faster, though sequence-labeling can be simpler for single-triple cases.

- Future work: Emphasis will be placed on document-level Chinese CTI, adaptive query allocation, boundary-aware targeting, noise-aware training, and enhanced Neo4j governance. In addition, as resources increase, the scope of evaluation will be expanded by introducing CTI reports from more institutions and time periods to improve source diversity, and the automation of end-to-end CTI knowledge graph construction based on large models will be explored.

Author Contributions

Conceptualization, H.W. and S.Z.; methodology, S.Z.; validation, S.Z., H.W., and J.S.; formal analysis, Q.L.; investigation, Z.W.; resources, H.W.; data curation, S.Z.; writing—original draft preparation, S.Z.; writing—review and editing, H.W.; visualization, J.S.; supervision, H.W. and Z.W.; project administration, Q.L.; funding acquisition, H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the following research projects: Guangxi Zhuang Autonomous Region Natural Science Foundation Project “Research on Key Technologies for Privacy Protection in Cross-Domain Government Data Flow” (No. 2025GXNSFHA069193); Guangxi Zhuang Autonomous Region Education Department Project (No. 2025KY0343); Guangxi Zhuang Autonomous Region Key R&D Program Project “Research and Development of Active Defense-Based Intelligent Network Security Operation Service Platform” (No. GuiKe AB24010309).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| DDoS | Distributed Denial-of-Service |

| BERT | Bidirectional Encoder Representations from Transformers |

| RNN | Recurrent Neural Network |

| CRF | Conditional Random Field |

| BiGRU | Bidirectional Gated Recurrent Unit |

| BiLSTM | Bidirectional Long Short-Term Memory |

| ChatGPT | Chat Generative Pre-trained Transformer |

| NAD | Non-autoregressive Decoder |

| APT | Advanced Persistent Threats |

| NER | Named Entity Recognition |

| RE | Relation Extraction |

| NLP | Natural Language Processing |

| MLP | Multilayer Perceptron |

References

- Sharma, A.; Gupta, B.B.; Singh, A.K.; Saraswat, V.K. Multi-dimensional Hybrid Bayesian Belief Network Based Approach for APT Malware Detection in Various Systems. In International Conference on Cyber Security, Privacy and Networking (ICSPN 2022). ICSPN 2021; Lecture Notes in Networks and Systems; Springer: Berlin/Heidelberg, Germany, 2023; Volume 599. [Google Scholar] [CrossRef]

- Jain, G.; Rani, N. Awareness Learning Analysis of Malware and Ransomware in Bitcoin. In Proceedings of the First International Conference on Computing, Communications, and Cyber-Security (IC4S 2019); Lecture Notes in Networks and Systems; Springer: Singapore, 2020; Volume 121. [Google Scholar] [CrossRef]

- What Is Cyber Threat Intelligence? 2025 Threat Intelligence Report, 04 March 2025. Available online: https://www.crowdstrike.com/cybersecurity-101/threat-intelligence/ (accessed on 12 August 2025).

- Llorens, A. 5 Best Practices to Get More from Threat Intelligence, ThreatQuo tient, Inc., 26 October 2021. Available online: https://www.threatq.com/5-best-practices-more-threat-intelligence/ (accessed on 12 August 2025).

- Ainslie, S.; Thompson, D.; Maynard, S.; Ahmad, A. Cyber-threat intelligence for security decision-making: A review and research agenda for practice. Comput. Secur. 2023, 132, 103352. [Google Scholar] [CrossRef]

- Zhao, X.; Jiang, R.; Han, Y.; Li, A.; Peng, Z. A survey on cybersecurity knowledge graph con struction. Comput. Secur. 2024, 136, 103524. [Google Scholar] [CrossRef]

- Liao, X.; Yuan, K.; Wang, X.; Li, Z.; Xing, L.; Beyah, R. Acing the IOC Game: Toward Automatic Discovery and Analysis of Open-Source Cyber Threat Intelligence. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (CCS ’16); Association for Computing Machinery: New York, NY, USA, 2016; pp. 755–766. [Google Scholar] [CrossRef]

- Ritter, A.; Wright, E.; Casey, W.; Mitchell, T. Weakly Supervised Extraction of Computer Security Events from Twitter. In Proceedings of the 24th International Conference on World Wide Web (WWW ’15); International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, CHE: Geneva, Switzerland, 2015; pp. 896–905. [Google Scholar] [CrossRef]

- Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991. [Google Scholar] [CrossRef]

- Guo, Z.; Zhang, Y.; Lu, W. Attention guided graph convolutional networks for relation extraction. arXiv 2019, arXiv:1906.07510. [Google Scholar]

- Guo, Y.; Liu, Z.; Huang, C.; Liu, J.; Jing, W.; Wang, Z.; Wang, Y. CyberRel: Joint entity and relation extraction for cybersecurity concepts. In Proceedings of the Information and Communications Security: 23rd International Conference, ICICS 2021, Chongqing, China, 19–21 November 2021; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 447–463. [Google Scholar]

- Ahmed, K.; Khurshid, S.K.; Hina, S. CyberEntRel: Joint extraction of cyber entities and relations using deep learning. Comput. Secur. 2024, 136, 103579. [Google Scholar] [CrossRef]

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019. [Google Scholar]

- Zhang, J.; Liu, F.; Xu, W.; Yu, H. Feature Fusion Text Classification Model Combining CNN and BiGRU with Multi-Attention Mechanism. Future Internet 2019, 11, 237. [Google Scholar] [CrossRef]

- Gao, C.; Zhang, X.; Liu, H. Data and knowledge-driven named entity recognition for cyber security. Cybersecurity 2021, 4, 9. [Google Scholar] [CrossRef]

- Wang, X.; Liu, J. A novel feature integration and entity boundary detection for named entity recognition in cybersecurity. Knowl.-Based Syst. 2023, 260, 110114. [Google Scholar] [CrossRef]

- Liu, P.; Li, H.; Wang, Z.; Liu, J.; Ren, Y.; Zhu, H. Multi-features based semantic augmentation networks for named entity recognition in threat intelligence. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; IEEE: Piscataway Township, NJ, USA, 2022; pp. 1557–1563. [Google Scholar]

- Sarhan, I.; Spruit, M. Open-cykg: An open cyber threat intelligence knowledge graph. Knowl.-Based Syst. 2021, 233, 107524. [Google Scholar] [CrossRef]

- Yuan, Y.; Zhou, X.; Pan, S.; Zhu, Q.; Song, Z.; Guo, L. A relation-specific attention network for joint entity and relation extraction. In International Joint Conference on Artificial Intelligence; ACM: Montreal, QC, USA, 2021; pp. 4054–4060. [Google Scholar]

- Zuo, J.; Gao, Y.; Li, X.; Yuan, J. An end-to-end entity and relation joint extraction model for cyber threat intelligence. In Proceedings of the 2022 7th International Conference on Big Data Analytics (ICBDA), Guangzhou, China, 4–6 March 2022; IEEE: Piscataway Township, NJ, USA, 2022; pp. 204–209. [Google Scholar]

- Guo, P.; Xiao, Y.; Li, J.; Zhang, M. RenewNAT: Renewing potential translation for non autoregressive transformer. In Proceedings of the AAAI Conference on Artificial Intelligence; AAAI Press: Washington, DC, USA, 2023; pp. 12854–12862. [Google Scholar]

- Sui, D.; Zeng, X.; Chen, Y.; Liu, K.; Zhao, J. Joint Entity and Relation Extraction With Set Prediction Networks. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 12784–12795. [Google Scholar] [CrossRef] [PubMed]

- Yu, J.; Guo, Y.; Ruan, Q.-M. Aspect-level sentiment triple extraction based on set prediction. J. Chin. Inf. Process. 2024, 38, 147–157. [Google Scholar]

- Peng, Y.-F.; Wang, R.-H.; Zhang, R.-S. Entity relationship joint extraction model based on dual set prediction network. J. Front. Comput. Sci. 2023, 17, 1690–1699. [Google Scholar]

- STIX. 2022. Available online: https://oasis-open.github.io/cti-documentation/stix/intro (accessed on 12 August 2025).

- Iannacone, M.; Bohn, S.; Nakamura, G.; Gerth, J.; Huffer, K.; Bridges, R.; Ferragut, E.; Goodall, J. Developing an ontology for cyber security knowledge graphs. In Proceedings of the 10th Annual Cyber and Information Security Research Conference; ACM: New York, NY, USA, 2015; pp. 1–4. [Google Scholar]

- Syed, Z.; Padia, A.; Finin, T.; Joshi, A. UCO: A unified cybersecurity ontology. In Proceedings of the Workshops at the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–13 February 2016; AAAI Press: Washington, DC, USA, 2016. [Google Scholar]

- Simmonds, A.; Sandilands, P.; van Ekert, L. An ontology for network security attacks. In Applied Computing; Springer: Berlin/Heidelberg, Germany, 2004; pp. 317–323. [Google Scholar]

- OpenAI. ChatGPT-Blog. 2023. Available online: https://openai.com/blog/chatgpt (accessed on 12 August 2025).

- Hu, Y.; Zou, F.; Han, J.; Sun, X.; Wang, Y. Llm-tikg: Threat intelligence knowledge graph construction utilizing large language model. Comput. Secur. 2024, 145, 103999. [Google Scholar] [CrossRef]

- Møller, A.G.; Dalsgaard, J.A.; Pera, A.; Aiello, L.M. Isaprompt and a few samples all you need? Using GPT-4 for data augmentation in low-resource classification tasks. arXiv 2023, arXiv:2304.13861. [Google Scholar]

- Puri, R.S.; Mishra, S.; Parmar, M.; Baral, C. How many data samples is an additional instruction worth? arXiv 2022, arXiv:2203.09161. [Google Scholar]

- CyberMonitor: Apt&Cybercriminals Campaign Collection. 2021. Available online: https://github.com/CyberMonitor/APT_CyberCriminal_Campagin_Collections (accessed on 12 August 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).