1. Introduction

The increasing adoption of electric vehicles (EVs) and stationary energy storage systems has intensified research on accurate and efficient estimation of the state of charge of lithium-ion batteries, which is essential for battery management systems to ensure safety, performance, and longevity. SOC estimation is a complex problem due to the nonlinear dynamics of battery behavior under varying environmental and load conditions.

Accurate estimation of the state of charge in medium- and high-voltage batteries is essential to ensure the performance and safety of electric vehicles. However, the nonlinear and dynamic characteristics of batteries make this task particularly challenging under varying operating conditions, as noted by Plett et al. [

1]. A first group of studies adopts model-based approaches that rely on physical representations of battery dynamics, primarily using Kalman filters and equivalent circuit models (ECMs). In the study by Manríquez et al. [

2], long short-term memory (LSTM) neural networks were proposed to model these temporal complexities due to their ability to capture long-term dependencies in sequential data. Furthermore, optimizing LSTM models using genetic algorithms (GA) has been shown to improve estimation accuracy.

The study presented by Lee et al. [

3] focuses on the estimation of the SOC by combining Gaussian processes with Bayesian filters. Two methods are proposed: a Gaussian process-assisted unscented Kalman filter (GP-UKF) and a Gaussian process-assisted particle filter (GP-PF). In both approaches, the Gaussian process regression (GPR) is trained to predict the battery voltage and dynamically update the covariance matrices of the respective filters. Using driving cycle data from UDDS and HWFET, the authors demonstrate significant improvements: the GP-UKF reduced the estimation error by up to 60% and uncertainty by approximately 80% compared to a classical UKF, while the GP-PF increased estimation accuracy by 58% and reduced uncertainty by 97% relative to a standard particle filter.

The study conducted by Shi et al. [

4] use simulated data based on a lithium-ion battery equivalent circuit model featuring a second-order RC network. The proposed methodology includes parameter identification via a bias-compensated recursive least squares algorithm with adaptive forgetting factor, and the development of a novel estimator: the Improved Adaptive Square-Root Cubature Kalman Filter (IASRCKF). This estimator dynamically adjusts the error statistics window length to mitigate the impact of noise. The results demonstrate outstanding performance, with RMSE and MAE values of 0.18% and 0.15%, respectively—representing improvements of 40% and 44.4% over the standard ASRCKF approach. Additionally, robustness analyses under varying initial SOC values, current and voltage offsets, and different measurement noise covariances confirm the accuracy, robustness, and computational efficiency of the proposed method.

A second group of studies employs data-driven approaches based on conventional machine learning techniques, including multiple linear regression, decision trees, and random forests, often trained on real-time or historical battery data.

A study by Chandran et al. [

5] employs machine learning algorithms to analyze and estimate the SOC, optimizing performance parameters through error analysis. The results indicate that artificial neural networks (ANN) and Gaussian process regression (GPR) are the most effective techniques, with the ANN achieving an MSE of 0.0004 and an RMSE of 0.00170, and the GPR yielding an MSE of 0.023 and an RMSE of 0.04118. These results highlight the superior accuracy of ANN and GPR in SoC prediction tasks.

In a related study, Amin et al. [

6] tackled the challenge of estimating the range of electric motorcycles, a task complicated by the limited technical information provided by manufacturers. They developed an application that collects data on user behavior, weather, road conditions, and vehicle performance history. Several cloud-based machine learning algorithms were tested, with the support vector machine (SVM) model achieving the best results—yielding a mean absolute error of 150 m, which improved to 130 m after system optimization.

A study by Jain et al. [

7] addresses the need for accurate prediction of SOC and driving range to reduce range anxiety in electric vehicles. Real-world trip data collected from electric scooters in India were used to train machine learning models, including k-nearest neighbors (KNN) and ensemble learning methods, combined with stochastic segmentation and Bayesian optimization. The best-performing models achieved prediction accuracies of 99.71% on the first validation trip and 98.04% on the second.

Pranav et al. [

8] proposed a comparative machine learning algorithm for SOC estimation in electric vehicles, evaluating various approaches and highlighting the effectiveness of GPR. This study, published in Scientific Reports, trained models including SVM, neural networks, ensemble methods, and GPR using extensive real-world driving data collected from diverse drivers and conditions. Through statistical metrics applied to this field data, the study evaluated the SOC prediction accuracy, demonstrating that GPR achieved the highest accuracy with the lowest prediction errors, outperforming all other tested techniques. The authors concluded that GPR offers a reliable framework capable of modeling the complex relationship between real-time driving data and SOC, thereby improving SOC monitoring in electric vehicles under variable operating conditions.

A study presented by Ali et al. [

9] focuses on battery-powered wireless sensor networks (WSNs) and the implementation of GPR in low-resource embedded systems. The proposed method is an adaptive SOC estimation approach using GPR trained with laboratory data under varying temperature conditions (5 °C to 45 °C) for three types of batteries (lithium-ion, NiMH, and Li-Poly). The GPR model employs an RBF kernel tuned via hyperparameter optimization, using voltage, capacity, and temperature as input features. Compared to other methods (e.g., polynomial regression and SVM) under the same conditions, the GPR model achieved mean absolute errors of approximately 2.0–2.5% and an RMSE of 0.30 in SOC prediction. Furthermore, the study demonstrates the feasibility of deploying this model on an ARM Cortex-M4 microcontroller, enabling real-time SOC estimation in WSN nodes. These results highlight the efficiency and lightweight nature of GPR for SOC estimation in IoT devices with constrained resources.

A third group of works leverages deep learning architectures such as LSTM, ANN, and CNN to capture temporal and nonlinear battery behaviors. LSTM networks have demonstrated strong potential for modeling the nonlinear behavior of battery systems. For instance, Bouktif et al. [

10] developed an LSTM-based model optimized with genetic algorithms to identify time lags and optimal layer configurations. Several baseline machine learning models were evaluated, and the best features were selected using both filter-based and wrapper-based methods. Using electricity consumption data from the metropolitan area of France, the LSTM model achieved high accuracy and stability, with consistently low mean absolute error (MAE) and root mean square error (RMSE). The model achieved an RMSE of 0.61% for short-term forecasts and an average of 0.56% for medium-term forecasts, demonstrating that recurrent neural networks—specifically LSTM—are efficient and reliable tools for managing electric demand forecasting in smart grids.

A study by George et al. [

11] addresses range anxiety in electric vehicles by implementing an electric vehicle model in MATLAB/Simulink and applying deep learning techniques to develop a neural network-based range prediction system. Their results showed that driving style, environmental conditions, battery parameters, and auxiliary loads significantly affect the vehicle’s range. Among the models tested, the bidirectional LSTM (BiLSTM) network achieved the lowest prediction error, with an RMSE of 0.029 km.

In a work by Oka et al. [

12], an LSTM-based battery emulator was developed to predict the charging and discharging behavior of lithium-ion batteries (LIBs). The model was trained using both simulated data (via Dualfoil) and experimental data to forecast voltage profiles under galvanostatic conditions. The emulator demonstrated high accuracy, achieving coefficients of determination (

) of 0.98 with simulation data and 0.97 with experimental data.

Tian et al. [

13] proposed a deep neural network (DNN) model for SOC estimation using voltage and charging current data sampled every 10 min. To enhance robustness against noise and sporadic errors, the approach was integrated with a Kalman filter. The methodology achieved SOC estimation errors below 2.03%, and reduced the mean squared error (MSE) to 0.385%, even under significant disturbances. Moreover, the model was adapted through transfer learning for various battery types and aging conditions, resulting in estimation errors below 3.146% for aged batteries and 2.315% for other battery chemistries.

A study by Tiwary et al. [

14] investigated the influence of driving style on electric vehicle range estimation. The model incorporated three primary variables—speed, acceleration/deceleration, and battery SOC—along with driving data from four specific routes. An LSTM neural network was employed to capture temporal dependencies in driver behavior, and the model was optimized using the Adam algorithm with dropout regularization to prevent overfitting. The network achieved high predictive accuracy, with errors below 1% across all evaluated routes.

Kumar et al. [

15] addressed the importance of accurately and reliably estimating the SOC of lithium-ion batteries to improve their performance, lifespan, and safety. Using voltage, current, and temperature data—collected under controlled thermal conditions (0 °C and 10 °C)—they applied machine learning and deep learning techniques based on artificial neural networks. The models achieved mean absolute errors (MAE) ranging from 0.0030 to 0.0035 and mean squared errors (MSE) between 0.0043 and 0.0047.

In a work by Gupta et al. [

16], an electric vehicle was modeled and simulated in MATLAB/Simulink R2024b under various driving cycles. The resulting data were used to train a neural network for SoC estimation, initially using the coulomb counting method. After optimization with 40 neurons, the neural network achieved estimation errors below 1% for the Worldwide Harmonized Light Vehicles Test Procedure (WLTP-3) and below 3% for the Los Angeles 1992 (LA92) driving cycle.

A distinct set of studies explores Gaussian process regression (GPR)-based methods, which model uncertainty and nonlinearities in SoC estimation, incorporating factors such as battery expansion characteristics and thermal variability.

A study conducted by Yi et al. [

17] investigates a novel SOC estimation method by leveraging physical changes in the battery. The work analyzes the variation in thickness (mechanical expansion) of Li-ion cells during charge/discharge cycles and its correlation with SOC. Based on this phenomenon, a GPR model is proposed, which uses both cell expansion and voltage characteristics as input features to predict SOC. Experimental results reveal very high accuracy: the maximum error does not exceed 0.0076 (dimensionless), and the RMSE remains around 0.0018 under constant charge/discharge conditions across various current rates. This approach demonstrates how GPR can incorporate mechanical indicators (expansion) to enhance SOC estimation in next-generation battery technologies.

An article presented by Hossain et al. [

18] explores SOC estimation using GPR with a focus on thermal variability. Recognizing that battery dynamics are highly temperature-dependent, the study trains a GPR model using experimental data across a wide range of thermal conditions, from −10 °C to 25 °C. The resulting GPR model is capable of accurately predicting SOC throughout this range, demonstrating a global RMSE below 0.02 and a maximum absolute error under 0.1 across all tested temperatures. These results confirm that the model effectively captures the influence of temperature on battery behavior. Compared to other approaches, GPR proved to be more resilient to temperature fluctuations, maintaining reliable SOC estimations where traditional methods would suffer from degraded accuracy. This contribution is critical for optimizing battery management in applications with significant thermal variations (e.g., cold starts or hot climates), thereby enhancing the safety and efficiency of the BMS.

A study conducted by Gok et al. [

19] addresses the challenge of accurately estimating the SOC of lithium-ion batteries under dynamic conditions and sub-zero temperatures, where internal resistance and usable capacity vary significantly. Real-world data were collected, including discharge rates from 0.2C to 2C, ambient temperature profiles ranging from 25 °C to −10 °C, battery surface temperature at five points, and voltage measurements, replicating scenarios based on the NEDC driving cycle. Two machine learning techniques were applied: NN and GPR, aimed at predicting SOC under complex operating conditions. Experimental results showed determination coefficients between 0.98 and nearly 1.00 across all scenarios. NN achieved higher accuracy and lower computational time under simple conditions, whereas GPR outperformed in complex nonlinear situations. Additionally, a reduction of up to 18.51% in discharged capacity was observed when sub-zero temperatures were combined with high discharge rates, underscoring the relevance of advanced SOC estimation techniques for demanding real-world applications.

A study by Bhattacharya et al. [

20] addresses the challenge of accurately estimating the SOC in lithium-ion batteries, overcoming the limitations of static, temperature-sensitive models trained solely on laboratory data. A diverse dataset encompassing eight global driving cycles was employed, enabling the capture of a wide range of real-world conditions in electric vehicles. The proposed methodology integrates a generative model based on a stochastic variational Gaussian process (SVGP) with a deep neural network featuring incremental learning capabilities, forming an adaptive dual-model online learning system. This approach allows for progressively improved SOC estimation as new data become available, effectively addressing thermal variability. Experimental results demonstrate a substantial accuracy improvement, with the mean squared error reduced from 9 to 4 and the coefficient of determination increased by 90%, confirming the robustness and adaptability of the system.

Finally, several comprehensive or hybrid studies integrate multiple methodologies, offering comparative evaluations or extending SOC and SOH estimation beyond lithium-ion chemistries, such as sodium-based batteries. For instance, in the study by Xiang et al. [

21], experimental tests were conducted on commercial sodium-ion batteries (SIBs) using a third-order equivalent circuit model, which was optimized through a particle swarm optimization algorithm for accurate SoC estimation. Three model-based approaches were evaluated: the extended Kalman filter (EKF), the unscented Kalman filter (UKF), and the particle filter (PF). Among them, the UKF demonstrated the highest accuracy, with mean absolute error and mean squared error values below 1.2% and 1.6%, respectively.

Wu et al. [

22] report a SOC estimation method that incorporates GPR within an adaptive unscented Kalman filter. In this approach, GPR is employed to model the nonlinear relationship between measured parameters (current, voltage, temperature) and the SOC, and its predictions are fed into the UKF to enhance online SOC estimation. In comparative tests, the GPR-based UKF demonstrated high prediction accuracy, maintaining SOC error below 1% under various loading conditions. The inclusion of GPR allowed the filter to adapt more effectively to system variations without the need to re-identify internal models, suggesting a promising framework for battery management systems where both precision and robustness are critical.

In this study, the term medium- and high-voltage lithium-ion batteries refers to battery systems with nominal voltages exceeding 60 V and up to 400 V, commonly found in electric motorcycles, hybrid electric vehicles (HEVs), and electric cars. These packs consist of multiple lithium-ion cells connected in series and/or parallel, resulting in higher energy storage capacity and power output compared to typical low-voltage commercial battery packs (e.g., 12–48 V). Unlike low-voltage lithium batteries used in consumer electronics or light electric vehicles, medium- and high-voltage batteries operate under more demanding thermal, electrical, and safety constraints. Therefore, SOC estimation for these systems requires robust, accurate, and scalable algorithms capable of handling rapid transients and voltage nonlinearities. The distinction is essential to contextualize the relevance and applicability of the proposed estimation approach under realistic high-voltage EV operating conditions.

Research Gap, Problem Statement, and Objectives

While numerous studies report state-of-charge estimation accuracies exceeding 98%, such performance figures should be interpreted with caution. First, many of these works rely on highly controlled datasets that do not reflect the full variability of real-world driving scenarios, such as rapid temperature fluctuations or mixed urban/highway profiles. Second, dataset sizes are often relatively small (typically comprising only a few hundred cycles), which increases the risk of overfitting, particularly when complex models such as LSTM networks are employed. Third, methodological details are sometimes insufficiently reported, making it difficult to assess the true generalization capabilities of the proposed models.

Therefore, although these high reported accuracies demonstrate the potential of advanced machine learning techniques, they may overstate real-world performance under the practical constraints of battery management systems, where sensor noise, battery degradation, and hardware limitations play a significant role.

Consequently, a clear gap exists between the idealized, upper-bound performance typically presented in the literature and the practical accuracy achievable in an embedded BMS within an electric vehicle powertrain. The problem addressed in this work is twofold: The development of an SOC estimator that consistently maintains acceptable accuracy (above 90%) across dynamically varying load profiles, thermal drift, and cell ageing. Ensuring that the model’s computational and memory footprint fits within the constraints of typical embedded BMS hardware, without compromising prediction quality. To address this gap, the present study pursues the following objectives:

Design and implement a hybrid LSTM + GA framework capable of automating the hyperparameter optimization process for SOC prediction;

Compare the hybrid model’s performance in terms of prediction accuracy, convergence speed, and computational cost against two baselines: a conventional LSTM model and a multiple linear regression (MLR) estimator;

Validate all models using a comprehensive dataset that includes urban and highway driving cycles, temperature fluctuations, and cell aging profiles—realistically representing the operational conditions of electric vehicles;

Assess the feasibility of deploying the proposed estimator on embedded battery management system (BMS) platforms by evaluating memory usage, training and inference time, and expected energy consumption under fixed-point arithmetic implementation.

The present study develops and evaluates an adaptable monitoring system for medium- and high-voltage electric propulsion systems. This approach enables real-time measurement of key parameters, including the state of charge of the test vehicle’s battery. The system integrates machine learning techniques to provide accurate SOC estimations. Experimental tests were conducted in Latacunga, Ecuador, using standardized driving cycles such as the New European Driving Cycle (NEDC), the Worldwide Harmonized Light Vehicles Test Procedure (WLTP), and custom-designed routes. Data acquisition is carried out via sensors and visualized in real-time using a central microprocessor. Unlike previous research focused on electric vehicle batteries in four-wheeled vehicles operating on flat terrain, this study adapts its methodology to electric motorcycles operating in mountainous environments. It introduces the use of accelerator angle sensors and applies genetic algorithms to enhance energy management and battery reliability.

The remainder of the article is organized as follows:

Section 2 details the materials and methods used for data collection, including standardized driving cycles.

Section 3 describes the implementation of the proposed monitoring system, outlining the electronic signal flow from acquisition to visualization.

Section 4 presents the experimental results and performance metrics of the evaluated models.

Section 5 discusses and analyzes these results in comparison with related studies. Finally,

Section 6 provides the conclusions and outlines potential improvements for future iterations of the prototype.

2. Materials and Methods

This section outlines the methodologies, techniques, and resources used to estimate the SOC in medium- and high-voltage batteries employed in electric propulsion systems for both two- and four-wheeled vehicles. Accurate SOC estimation is essential for preventing operational risks such as overcharging or deep discharging, which can degrade battery cells and reduce the vehicle’s driving range—a critical factor in the widespread adoption of electric mobility. In addition, electric propulsion systems are subject to operational challenges such as thermal variability, battery aging, and highly dynamic charge/discharge cycles. These conditions require adaptive models capable of integrating real-time data to ensure robust and reliable SOC estimation [

23].

Prior to SOC estimation, the state of health of each cell must be determined by measuring its remaining capacity and internal resistance through controlled cycling tests. These SoH metrics are used to calibrate the SOC prediction model, as battery degradation introduces significant bias when estimates rely solely on voltage and current readings.

In this study, the EBC-A40L battery tester was used to assess the actual capacity and health of the LiFePO4 cells (3.2 V, 50 Ah). This equipment enables controlled charge–discharge cycles under standardized conditions (ambient temperature: 25 ± 2 °C; discharge rate: 0.5C). The cell is first fully charged to 3.65 V using a constant current–constant voltage (CC–CV) profile and then discharged to 2.5 V while recording the discharged capacity.

The measured capacity is compared to the nominal value to calculate the SoH using Equation (

1).

In addition, the EBC-A40L monitors critical parameters such as the voltage curve during discharge, internal resistance, and thermal stability, enabling the identification of accelerated degradation or electrochemical inhomogeneities. This method ensures accurate and reproducible estimation of SoH, which is essential for predicting remaining battery life and validating the reliability of SOC estimation models in real-world automotive applications.

2.1. LSTM Neural Networks

A long short-term memory (LSTM) neural network is a type of recurrent neural network (RNN) specifically designed to process sequential data and capture long-term dependencies. Unlike traditional RNNs, LSTMs incorporate memory cells along with gate mechanisms—namely the input, output, and forget gates—that allow the model to selectively retain or discard information over time. This architectural design enables LSTMs to effectively model complex temporal dynamics and mitigate issues such as vanishing gradients. As a result, they are particularly well-suited for tasks involving time-series forecasting, sequential sensor data, and natural language processing, where learning from long-term context is essential [

24].

The forget gate determines which information in long-term memory should be discarded. Equation (

2) uses a sigmoid function to produce a value between 0 and 1, indicating the degree of forgetting. The weight

adjusts the importance of the previous

and current inputs

, while the bias

compensates for possible deviations.

The input gate (Equation (

3)) regulates the new information incorporated into the memory. It uses a sigmoid function that evaluates the inputs, weights

, and bias

. Its output, which ranges from 0 to 1, indicates the proportion of relevant data that should be added to the cell state.

Equation (

4) generates a proposal of new information for the cell state. It uses the hyperbolic tangent function to transform the inputs

with weights

and bias

. Its output is a filtered representation between

and 1 of the incoming data, which is used if the input gate allows:

The cell state (Equation (

5)) combines the retained information with the new data. This operation multiplies the previous state

by the forget gate output

and adds the candidate content

weighted by the input gate

. The result is an updated memory that reflects both experience and new operating conditions:

Equation (

6) defines the gate that determines how much information from the cell state should be transmitted to the next time step or the output layer. Its output

modulates the influence of the current state

on the final result:

The hidden state (Equation (

7)) represents the intermediate output of the LSTM node. It is obtained by multiplying the output gate output

by the hyperbolic tangent of the cell state

. This combination enables the network to retain relevant information while attenuating extreme values:

Equation (

8) for the predictive output transforms the hidden state

into an estimate. It uses an activation function—such as sigmoid—to scale values within the required bounds. The weights

W and bias

b define how each feature influences the final prediction:

Optimization algorithms, such as Adam or root mean square propagation (RMSProp), iteratively adjust the LSTM weights to reduce the mean squared error.

Multiscale Feature Alignment and SoH Decoupling

To address the coexistence of variables with different temporal dynamics, a two-stage preprocessing and feature alignment approach was implemented.

First, high-frequency signals such as voltage, current, acceleration, and throttle angle were collected at a rate of 2.6 Hz (every ∼0.385 s). In contrast, the state of health (SoH) was considered a slowly varying parameter, typically changing over much longer periods (daily or hourly). To integrate this disparity,

SoH values were interpolated to remain constant over fixed one-minute intervals (pseudo-static behavior),

A dual-input architecture was evaluated, where SoH acted as a contextual feature concatenated only at the input gate of the LSTM and not recurrently propagated.

Second, all inputs were normalized individually using a time-aware z-score method, ensuring that variables with different magnitudes and sampling rates did not dominate the training loss.

This design ensures the robustness of the LSTM against temporal mismatches and avoids overfitting to spurious short-term correlations with slow-changing parameters.

2.2. Genetic Algorithms

Genetic algorithms (GAs) are optimization techniques inspired by the principles of biological evolution. They employ mechanisms such as selection, crossover, and mutation to search for optimal or near-optimal solutions to complex problems. When applied to the optimization of LSTM neural networks, GAs aim to enhance model performance by tuning hyperparameters—such as the number of layers, the number of neurons per layer, and the learning rate—or, in some cases, by optimizing the network’s weights [

25]. Through iterative evaluation of candidate configurations and selective reproduction of the best-performing solutions, GAs efficiently explore a vast and nonlinear search space. This approach improves the predictive capabilities of LSTM networks, particularly in applications involving time-series forecasting and other sequential data modeling tasks [

26].

GAs employ various mathematical operators to optimize LSTM networks. The fitness function (Equation (

9)) evaluates how good a solution (i.e., an individual) is for the problem at hand. In this context,

it represents the actual value,

denotes the value predicted by the network, and

n is the number of samples. Typically, in optimizing LSTM networks, the fitness function is based on the prediction error, such as the mean squared error.

Equation (

10) selects the best solutions to generate the next generation. A common method is the roulette wheel selection.

is the selection probability of each individual,

is the fitness value of the individual, and

N is the population size:

Through Equation (

11), the parameters of two individuals (parents) are combined to produce new offspring.

is a random coefficient between 0 and 1. Uniform crossover is often used:

In Equation (

12), some parameters of an individual are randomly changed to explore new solutions.

is a random value within a predefined range:

The population is replaced by a combination of the best individuals (elitism) and the new offspring generated by crossover and mutation. In Equation (

13), the algorithm stops when a stopping criterion (

is a small threshold) is met, such as reaching a maximum number of iterations or achieving a minimal improvement in the fitness function [

27].

2.3. Mathematical Formalization of LSTM Network Optimization Using Genetic Algorithms

To optimize the LSTM network architecture, a genetic algorithm was implemented to automatically select key hyperparameters, including the number of hidden units, learning rate, batch size, and dropout rate. The following GA parameters were used: a population size of 30 individuals, a maximum of 50 generations, a crossover probability of 0.8, and a mutation probability of 0.1. These values were chosen based on preliminary sensitivity analysis and aligned with recommendations from related works (Bouktif et al. [

10]; Kumar et al. [

15]).

A smaller population (<20) resulted in premature convergence and limited diversity, while larger populations (>50) significantly increased computation time without substantial accuracy gain. The number of generations (50) provided a trade-off between exploration and convergence stability. The crossover rate (0.8) ensures effective combination of candidate solutions, while the mutation rate (0.1) introduces sufficient variability to avoid local minima. A binary encoding scheme was used to represent the candidate solutions, where each chromosome encodes a unique combination of LSTM hyperparameters. The fitness function was defined as the inverse of the RMSE on the validation dataset, ensuring that the GA prioritizes configurations that yield better SOC estimation accuracy.

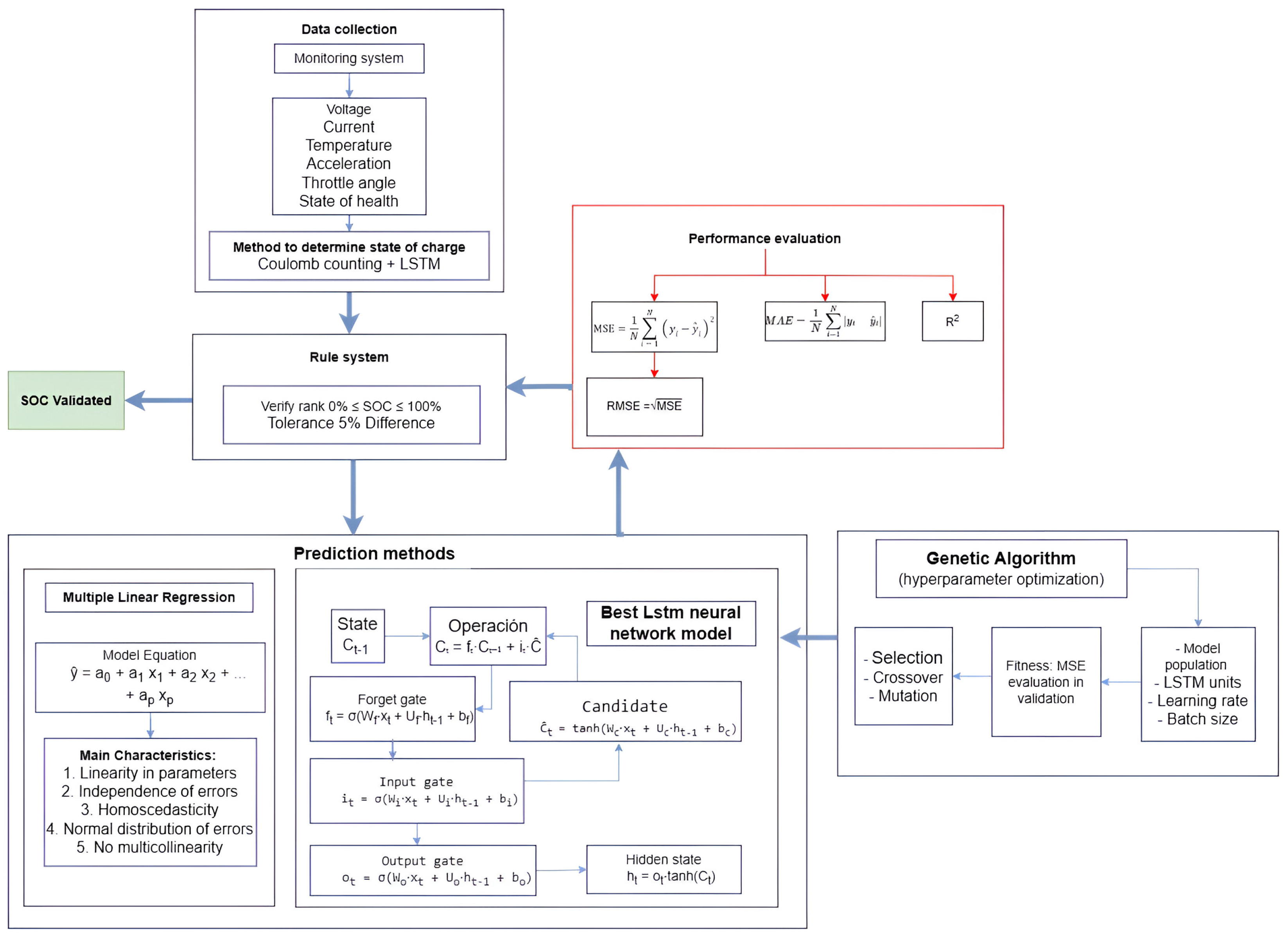

As shown in

Figure 1, the optimization process begins with the generation of an initial population of hyperparameter vectors for the LSTM model. Each vector is used to train the network, and its fitness is evaluated on a validation dataset. In each generation, parent vectors are selected based on a probability proportional to their fitness. These selected individuals are then combined using crossover operations and undergo random mutations to produce offspring. The fitness of the offspring is re-evaluated, and a new population is formed by preserving an elite subset of the best-performing individuals and complementing them with the newly generated offspring. This evolutionary cycle—comprising selection, crossover, mutation, and replacement—continues until a predefined number of generations is reached or no further improvement in fitness is observed. The hyperparameter vector with the highest fitness score is then selected as the optimal configuration for the LSTM network [

28].

2.3.1. Hyperparameter Vector

The hyperparameter vector (Equation (

14)) in LSTM networks optimized with genetic algorithms groups key parameters into three categories:

Architectural hyperparameters (e.g., number of LSTM layers , neurons per layer , and auxiliary components such as dropout rate or dense layer size ),

Training hyperparameters (e.g., learning rate , batch size , and sequence length , which defines the input time window), and

Optimization-specific parameters (e.g., mutation rate and crossover strategy ).

The latter is critical for guiding the exploration and exploitation of the genetic algorithm within the search space. This vector determines the model’s capacity, its convergence during training, and its adaptability to specific tasks, such as time series forecasting [

29].

2.3.2. Evaluation Function (Fitness)

For each individual

h, we train the LSTM using the corresponding hyperparameters and evaluate the MSE on a validation set. The fitness function is defined by Equation (

15), where the negative sign is used to convert the minimization of the MSE into a maximization of the fitness [

30].

2.3.3. Roulette Wheel Selection

In genetic algorithms, this method is used to select individuals from a population

according to their fitness

F, by assigning selection probabilities proportional to their performance. In Equation (

16),

represents the fitness function of individual

, and

is the total fitness of the population.

denotes the probability of selecting the

i-th individual. This mechanism favors individuals with higher fitness, replicating the “survival of the fittest” principle to generate the next generation of candidate solutions [

31].

2.3.4. Uniform Crossover

Uniform crossover is a genetic operator used in evolutionary algorithms to combine two parent vectors,

and

, by generating a child whose genes are randomly selected from each parent. In Equation (

17), for each

k-th gene of the child

, a value

is sampled from a uniform distribution

: if

, the gene is inherited from the first parent,

; otherwise, from the second parent,

. This method ensures a random and independent mixing of hyperparameters, promoting diversity in the population by avoiding bias toward specific segments of the parents, thereby facilitating a balanced exploration of the search space [

32].

2.3.5. Gaussian Mutation

Gaussian mutation is an operator in genetic algorithms that introduces variability in an individual’s genes through random perturbations based on a normal distribution. For each gene

, with mutation probability

, Equation (

18) is applied, where

represents the original value of the

k-th gene, and

is the mutated gene value after perturbation.

denotes the mutation amplitude, which controls the standard deviation of the noise (i.e., the magnitude of the variation).

is a standard normal random variable (mean 0, standard deviation 1) that generates random fluctuations around the original value. This method allows controlled exploration of the search space, favoring small adjustments (due to the high probability density of

near zero) while maintaining genetic diversity. The mutation probability

regulates the frequency of mutations, balancing exploration and exploitation [

33].

2.3.6. Population Update

Population update in genetic algorithms defines how the new generation (

) is formed by combining the best individuals from the current population (

) with the newly generated offspring. According to Equation (

19),

E represents the number of elite individuals (i.e., the best solutions from

), preserved through elitism to ensure that optimal solutions are not lost.

N denotes the total population size, where

corresponds to the offspring created using genetic operators (crossover, mutation). The set

refers to the

E individuals with the highest fitness in

, and offspring represents the set of

new individuals generated through parent selection and recombination. This strategy balances the exploitation of existing solutions (elitism) with the exploration of new ones (offspring), ensuring convergence toward optimal solutions without compromising genetic diversity [

34].

2.3.7. Stopping Criterion

The stopping criterion in genetic algorithms determines when to terminate the evolutionary process based on two conditions:

Reaching a maximum number of generations , a predefined parameter that limits the execution to avoid excessive computational costs; or

Absence of improvement in the best fitness value of the population, , where is the fitness function of individual over G consecutive generations, indicating algorithm convergence.

Here,

G acts as a patience threshold: if there is no increase in the maximum fitness after

G iterations, it is assumed that no better solutions will be found. This mechanism balances search space exploration and computational efficiency, avoiding redundant executions [

35].

2.4. Multiple Linear Regression

The multiple linear regression model assumes that the dependent variable can be expressed as a linear combination of the independent variables plus an error term. It is defined by Equation (

20), where

is the constant term,

are the coefficients that determine the influence of each variable

, and

is the error or residual, assumed to follow a normal distribution [

36]:

To determine the values of

, the mean squared error (MSE) is minimized over the training dataset using Equation (

21). The cost function to be minimized is the following:

The analytical solution is obtained by solving the normal equations (Equation (

22)).

X is the design matrix (each row is a sample and each column is a variable, including a column of ones for the intercept), and

is the response vector:

In the Python (3.12.11) programming environment, the scikit-learn library handles this calculation using the fit() method of the LinearRegression object. As a data preprocessing step before training the model, the features are normalized using StandardScaler. This involves transforming each variable

x according to Equation (

23). Here,

is the mean and

the standard deviation of the variable. This transformation ensures that all variables share a similar scale, improving the numerical stability of the training process:

The train_test_split function splits the dataset into a training set (used to fit the model) and a test set (used to evaluate the model’s predictive capability). During training and validation, the model’s coefficients are calculated by minimizing the sum of squared errors on the training set.

Once the model is trained, it makes predictions

on the test set (Equation (

24)):

The model’s performance is then evaluated using various metrics that quantify how far, on average, the predictions of the model are from the actual values [

37].

Mean squared error (MSE): Equation (

25) is the average of the squared errors between the actual and predicted values, heavily penalizing significant errors.

Root mean squared error (RMSE): Equation (

26) is the square root of the MSE, allowing the error to be interpreted in the same units as the original variable.

Mean absolute error (MAE): Equation (

27) is the average of the absolute differences between the actual and predicted values, providing a direct measure of the mean error.

2.5. Materials

In this study, a real-time monitoring system is employed, equipped with sensors for temperature (°C), current (A), voltage (V), acceleration (m/s2), and accelerator angle (°), as well as an integrated visualization interface for displaying data during testing. The information from these independent variables is recorded to enable accurate estimation of the state of charge.

Standardized driving cycles—including the New European Driving Cycle (NEDC) and the Worldwide Harmonized Light Vehicle Test Procedure (WLTP)—along with custom routes (e.g., inter-campus travel scenarios), are used to capture realistic operating conditions. The resulting SOC data are stored in a time-series format to build a historical dataset, which is then used to train both the LSTM neural network and the multiple linear regression model.

This dataset integrates data collected under various driving conditions, supporting effective hyperparameter optimization. The computational infrastructure processes this information by leveraging the temporal prediction capabilities of the LSTM network in combination with the interpretability and precision of the MLR model, with the goal of developing a robust hybrid estimator for SOC prediction.

There are various approaches for estimating the state of charge in electric vehicle batteries. The open-circuit voltage (OCV) method is based on the empirical relationship between SOC and resting voltage. It provides good accuracy under steady-state conditions but requires long pauses for measurement. Coulomb counting integrates the charge/discharge current and is simple and fast in real time, but it accumulates errors due to drift and capacity variations. Equivalent circuit models (RC networks with Kalman filters) incorporate electrical dynamics and compensate for sensor noise, improving stability under varying conditions. Artificial intelligence methods (neural networks, LSTM, SVM) learn nonlinear patterns and capture thermal and aging effects, although they require large amounts of data and computational power for training. Finally, hybrid approaches that combine two or more techniques (Coulomb counting and LSTM) integrate the speed and simplicity of some methods with the error correction and adaptability of others, achieving the best trade-off between accuracy, robustness to dynamic changes, and implementation cost.

In this study, a hybrid method is used to calculate SOC that integrates two complementary approaches. On one hand, Coulomb counting calculates the variation in SOC based on the integration of the actual current supplied to or drawn from the battery, adjusted by a Coulombic efficiency factor. On the other hand, an LSTM model is trained on time series of current, voltage, temperature, acceleration, throttle angle, and battery state-of-health data, enabling it to capture nonlinearities and thermal dynamics of the system under real operating conditions.

As shown in Equation (

28), at each time instant

t, the values of

and

are obtained and combined through a weighting factor

, either fixed or adaptively chosen between 0.5 and 0.9. This fusion leverages the short-term accuracy and speed of Coulomb counting with the LSTM’s capacity to correct accumulated errors and respond to dynamic events such as accelerations or thermal changes. The result is a more accurate and robust SOC estimate throughout the entire operating cycle.

In Equation (

28), the parameter

governs the contribution of each estimator in the fusion process:

The selection of

was based on a cross-validation strategy using empirical data from five driving cycles. To improve estimation accuracy and account for varying reliability of each estimator under different load conditions,

was adjusted dynamically according to current intensity and LSTM stability, as shown in

Table 1.

This adaptive strategy allows the model to rely more on CC in low-current regions where drift is minimal, use a balanced fusion in moderate regimes, favor LSTM in high-transient regions where CC becomes less accurate due to integration drift. The thresholds were derived empirically to minimize RMSE over a validation dataset. Future work may explore reinforcement learning or Kalman-based dynamic weighting.

The dataset used in this study is derived from extensive experimental trials in which key parameters of an electric vehicle battery are continuously monitored. State of charge values are recorded at non-uniform time intervals, and each evaluated driving cycle is represented as an independent time series consisting of 5213 data instances for prediction purposes.

The dataset includes a dedicated SOC column that displays a nonlinear, monotonically decreasing trend, with gradually diminishing values that correspond to the battery’s discharge behavior. Relevant features for training the LSTM network include characteristic discharge patterns with fluctuations associated with variations in load, operating conditions, or user behavior. Raw sensor measurements are initially recorded with a precision of three decimal places. To reduce statistical noise near the limit of instrument resolution, all signals are preprocessed using a five-sample moving average filter. Subsequently, the data are quantized to two decimal places before model training. This procedure ensures that the LSTM network captures true battery dynamics rather than high-frequency fluctuations introduced by measurement noise.

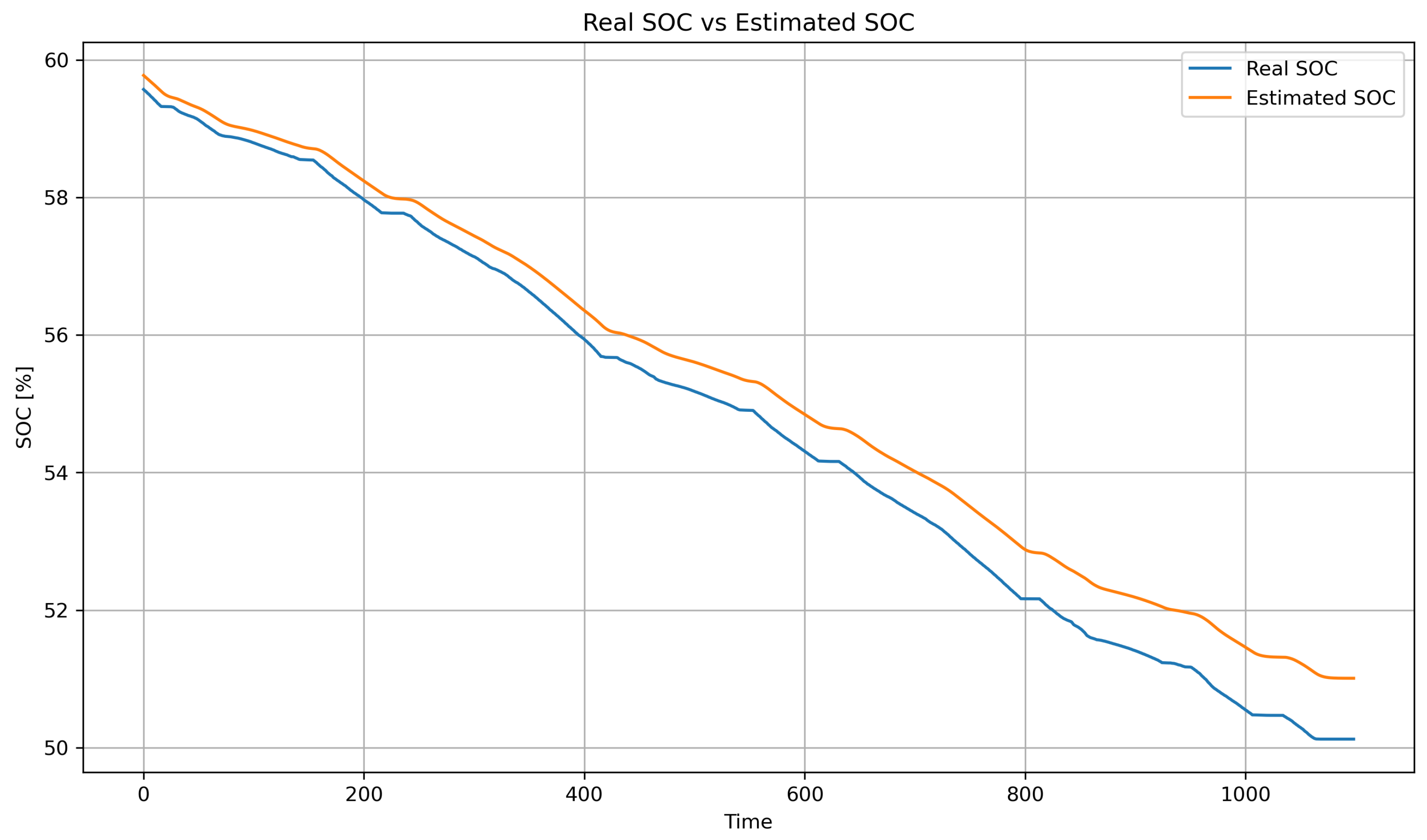

Figure 2 shows the real SOC curve (blue line) and the SOC estimated by the hybrid method (orange line), based on Coulomb counting combined with LSTM. Both follow the same downward trend, confirming the estimator’s reliability. The hybrid method accurately reproduces the global discharge slope (from approximately 93% to 72%) due to the contribution of Coulomb counting, while the small oscillations captured reflect the LSTM’s ability to correct for dynamic variations (such as current, temperature, and acceleration). With an RMSE of 0.48% and an MAE of 0.36%, the model achieves an error below 1%, making it suitable for real-time energy management. The weighting factor

enables the model to combine long-term stability with the correction of short-term deviations.

In the analysis of SOC in electric vehicle batteries, autocorrelation plots are essential tools for examining how variables correlate over time. These plots enable the identification of temporal patterns, dependencies, or noise within the data—factors that are critical for the development of accurate SOC prediction models. Autocorrelation analysis reveals whether past values of a variable significantly influence its future values, thus providing key insights for optimizing battery management algorithms and improving the predictive performance of time-series models.

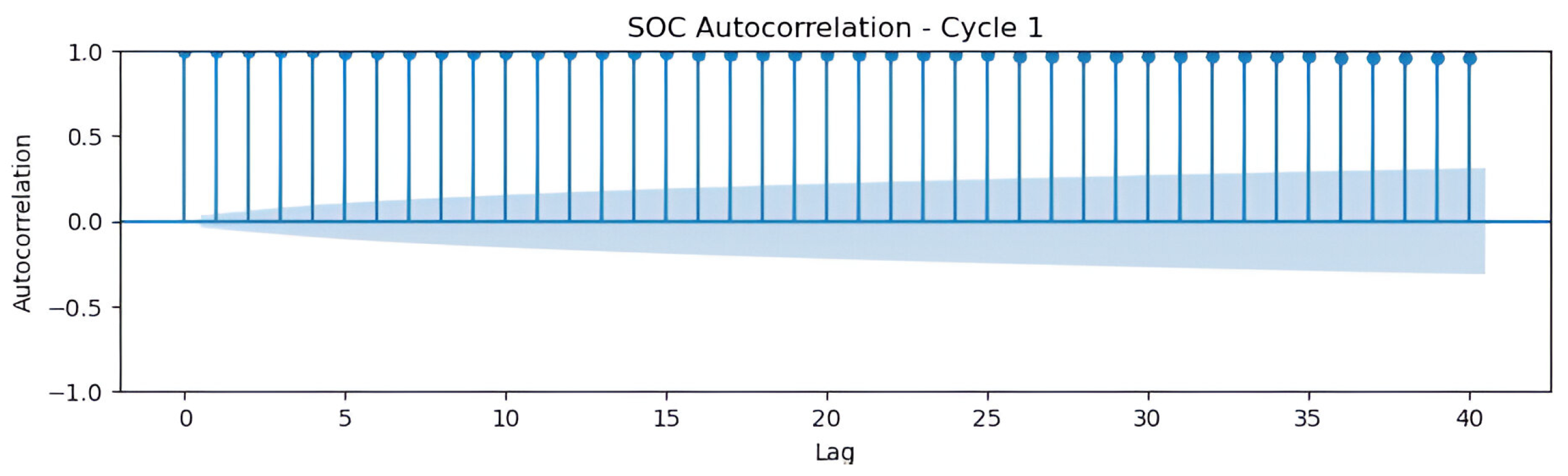

In

Figure 3, the autocorrelation function (ACF) graph of SOC in cycle 1 reveals a complex temporal structure, with significant autocorrelations at lags 0, 5, 15, 20, and 40. These findings highlight a slow decay, suggesting a trend, and recurring peaks that may indicate seasonal patterns. The unusual value of 1.0 at lag 40 might signal strong seasonality, requiring additional validation. These patterns justify the use of integrated autoregressive moving average (ARIMA) or seasonal ARIMA models, with autoregressive components (

for lags 5 and 15) and seasonality (

), combined with differencing to ensure stationarity. It is advisable to analyze the time series in its temporal domain to rule out anomalies and perform stationarity tests—such as Dickey–Fuller—to optimize predictive modeling.

2.6. Data Preprocessing Justification

To ensure robust signal conditioning prior to model training, this study applied two preprocessing techniques: a moving average filter and numerical quantization. Their configurations were selected based on a quantitative analysis of their impact on signal fidelity and noise attenuation.

2.6.1. Moving Average Filter

We tested window sizes of 3, 5, 7, and 9 samples on raw voltage, current, and acceleration signals. As summarized in

Table 2, a five-sample window achieved the best trade-off between noise suppression and temporal accuracy. Specifically, this configuration yielded a low RMSE while preserving key dynamic transitions in the signals. For instance, the RMSE for the current signal increased from 1.20 A (window = 3) to 2.25 A (window = 9), indicating that larger windows overly smoothed transient behavior.

A visual comparison (

Figure 4) confirms that the five-sample window effectively suppresses sensor noise without significantly distorting sharp variations, such as acceleration bursts or regenerative braking events.

2.6.2. Quantization Analysis

To reduce statistical noise near the sensor resolution limit, we evaluated the effect of rounding all signal values to 1, 2, and 3 decimal places (

Table 3). We computed the signal-to-quantization-noise ratio (SQNR) for each case. Results showed that rounding to two decimals preserved high SQNR values (>54 dB for current) and introduced no measurable degradation in voltage or acceleration signals (SQNR →

∞). In contrast, one-decimal quantization caused a significant SQNR drop, indicating information loss in sensitive variables.

Hence, quantization to two decimals was selected to ensure numerical stability during model training while retaining essential signal detail.

2.6.3. Dataset Description and Statistical Summary

As shown in

Table 4 the dataset used in this study comprises a total of 5213 time-series samples, collected from real-world testing under five distinct driving scenarios.

Each sample corresponds to a 0.385-s interval, resulting in a total coverage time of approximately 33.4 min.

Table 5 summarizes the main descriptive statistics of the variables used in model training:

2.7. OCV–SOC Curve Fitting

To model the relationship between open circuit voltage and state of charge, a 6th-degree polynomial fitting method was employed. Experimental OCV data were obtained under static relaxation conditions at an ambient temperature of 25 °C. After each discharge or charge step, the battery was allowed to rest until voltage stabilization (typically 1 h), and the corresponding SOC was recorded.

The resulting OCV–SOC dataset was fitted using the following polynomial expression (Equation (

29)).

where

x is the SOC (0–1), and

are the fitted coefficients derived via least squares regression.

This approach enables smooth interpolation across the entire SOC range and avoids the stepwise discontinuities associated with traditional lookup tables.

Temperature Consideration: The OCV–SoC model was developed under nominal conditions (25 °C), and the thermal effect on OCV was not modeled. Although this assumption holds in controlled environments, the influence of temperature on OCV may become significant at low temperatures. This limitation is acknowledged and will be addressed in future work using temperature-dependent OCV profiles.

3. Proposed Monitoring System

This section presents a system designed to monitor key parameters of medium- and high-voltage batteries in hybrid and electric vehicles. The system captures and analyzes voltage, current, temperature, and accelerator angle through sensors embedded in the electric propulsion system to calculate the SOC. It performs continuous monitoring to ensure adaptability under challenging driving conditions, such as the mountainous and rainy terrain of Ecuador’s Sierra region.

The proposed system integrates high-precision sensors with intelligent prediction algorithms that collect and process data to deliver accurate SOC estimations. By doing so, it reduces the errors typically associated with generalized methods for estimating SOC and driving range in electric vehicles. The model explicitly considers environmental and operational factors, such as temperature, discharge rates, and driver acceleration behavior to support more efficient energy management strategies.

The monitoring system features a modular architecture composed of high-precision sensors, a microcontroller, and actuators for real-time data visualization and storage. In addition, the software layer employs machine learning techniques and predictive models to estimate the SOC of medium- and high-voltage batteries based on the data acquired from the system hardware.

3.1. Hardware

The hardware component consists of a direct current (DC) circuit in which the power battery is connected to the load devices through a protection circuit that ensures stable and safe operation. Sensor data are transmitted wirelessly to the control board via dedicated communication links.

Figure 5 illustrates the electronic schematic of the motherboard where the monitoring sensors are installed. This diagram shows the interconnections between the sensors, microcontroller, and actuators. The motherboard collects data from the sensors, records the information, and uses it to calculate the battery’s state of charge.

Among the integrated sensors is the voltage and current sensor (5), specifically the PZEM-017 DC sensor, which is designed for simultaneous voltage and current measurement in electric propulsion systems. Its operation is based on detecting voltage differentials and monitoring current flow through the circuit, sending the data to an RS485 communication module for digital processing. This sensor is manufactured by Jeanoko Co., Ltd., located in Shenzhen, China, a company specializing in energy instrumentation and monitoring.

The PZEM-017 is essential for monitoring medium- and high-voltage batteries, as it enables real-time analysis of energy consumption and supports accurate SOC estimation. Its measurement range spans from 0.05 to 300 V with a resolution of 0.01 V, and from 0.02 to 300 A for current, with a resolution of 0.01 A.

The DS1820 digital temperature sensor (3), manufactured by Maxim Integrated headquartered in San Jose, CA, USA (now part of Analog Devices, Wilmington, MA, USA), is widely recognized for its reliability and ease of integration in electronic thermal monitoring systems. It operates on a single-wire bus protocol, which simplifies wiring and enhances system scalability—particularly valuable in complex configurations such as battery packs. With an accuracy of ±0.5 °C and typical operating voltages of 3.3 V or 5 V, the DS1820 provides stable and accurate thermal measurements, making it well-suited for temperature monitoring in electric vehicle power systems.

The 100K potentiometer (2), commonly used for throttle position measurement, is a standard component in analog control systems and is supplied by established manufacturers such as ALPS Alpine Co., Ltd., based in Tokyo, Japan. It operates within a voltage range of 3.3 V to 5 V. The device functions based on resistance variation as the throttle is actuated, generating an analog voltage proportional to the rotation angle. This signal is then digitized by an analog-to-digital converter (ADC), allowing the system to accurately interpret the driver’s power demand.

The MPU6050 accelerometer (12) is a six-degree-of-freedom inertial measurement unit (IMU) developed by InvenSense Inc., headquartered in San Jose, CA, USA (currently part of TDK Corporation, Tokyo, Japan). It combines a three-axis accelerometer with a three-axis gyroscope, enabling simultaneous measurement of linear acceleration and angular velocity (tilt or yaw). The MPU6050 operates with a supply voltage between 3 V and 5 V and communicates via an I2C interface, providing updated measurements at approximately 1000 ms intervals. Its high sensitivity and responsiveness to dynamic changes make it an excellent choice for applications requiring real-time vehicle stability monitoring under various operating conditions.

The microcontroller (4) operates at a clock frequency of 16 MHz and processes both analog inputs (voltage, current, and temperature) and digital inputs (acceleration and throttle angle). Temperature and throttle position sensors are connected via a 1-wire bus, facilitating simplified integration. Voltage and current measurements are routed through a differential-to-binary interface and transmitted over a 1-wire bus to the microcontroller’s digital input. A six-degrees-of-freedom (6-DoF) inertial measurement unit—combining a three-axis accelerometer and a three-axis gyroscope—monitors dynamic vehicle behavior and is connected through a three-wire bus. Vehicle speed is measured using a Hall effect sensor, and a microSD storage module is used to log data locally.

A 5 V regulated power supply is provided by an LM7805 voltage regulator, sourced from Texas Instruments Inc., headquartered in Dallas, TX, USA, protected by a resettable fuse. RC filters with a cutoff frequency of 10 Hz are applied to analog inputs to suppress noise. Galvanic isolation between high-voltage sensors and the battery is achieved using optocouplers, ensuring system safety.

Sensor calibration is performed to ensure reliable and accurate data acquisition. Voltage sensors are calibrated using an adjustable DC power supply and a Trisco DA830 digital multimeter, manufactured by Trisco Technologies Corp., based in Taichung City, Taiwan, compensating for deviations across the operating range. Current sensors are calibrated similarly, using a precision current source and a reference ammeter to verify measurements throughout their full scale. Temperature sensors are calibrated using a Goyojo GW192 thermal imaging camera, manufactured by Goyojo Technology Co., Ltd., located in Shenzhen, China, and a reference thermometer, validating sensor outputs at multiple controlled temperature points. Throttle position sensors are calibrated using a stable voltage source and a digital multimeter to ensure accurate angular voltage correlation.

Finally, a 5-inch Nextion display is employed as a real-time visualization panel to present the SOC results. Through serial communication, the outputs from both predictive algorithms are integrated into the display to provide adaptive estimations under dynamic driving conditions. A computer program is embedded within the virtual interface to display key parameters related to the state of medium- and high-voltage batteries powering the electric traction system. The monitoring system was deployed on a test vehicle—a Super Soco electric motorcycle—for on-road testing and training data acquisition. SOC values from the medium-voltage battery were collected using standardized driving cycles, as detailed in

Section 2.

3.2. Sensor Calibration and Reliability Assurance

To ensure accurate data acquisition, all embedded sensors were calibrated using laboratory-grade reference instruments prior to data collection.

A Fluke 87V True RMS multimeter was used as the reference for voltage and current measurements. It offers a DC voltage accuracy of digit and a DC current accuracy of . Temperature calibration was conducted using a FLIR E5-XT thermal imaging camera, which provides a thermal sensitivity of <0.1 °C at 30 °C and an absolute accuracy of ±2 °C or of reading, whichever is greater.

Calibration involved applying different load profiles (no-load, partial load, and full load) and recording sensor readings in parallel with the reference devices. For temperature, thermal gradients were induced using forced air and heat sources to cover the expected operating range. To mitigate long-term sensor drift, a three-point recalibration protocol was applied every 30 days. Additionally, offset correction routines were implemented in firmware using idle-condition data to continuously recalibrate zero-current and zero-voltage baselines.

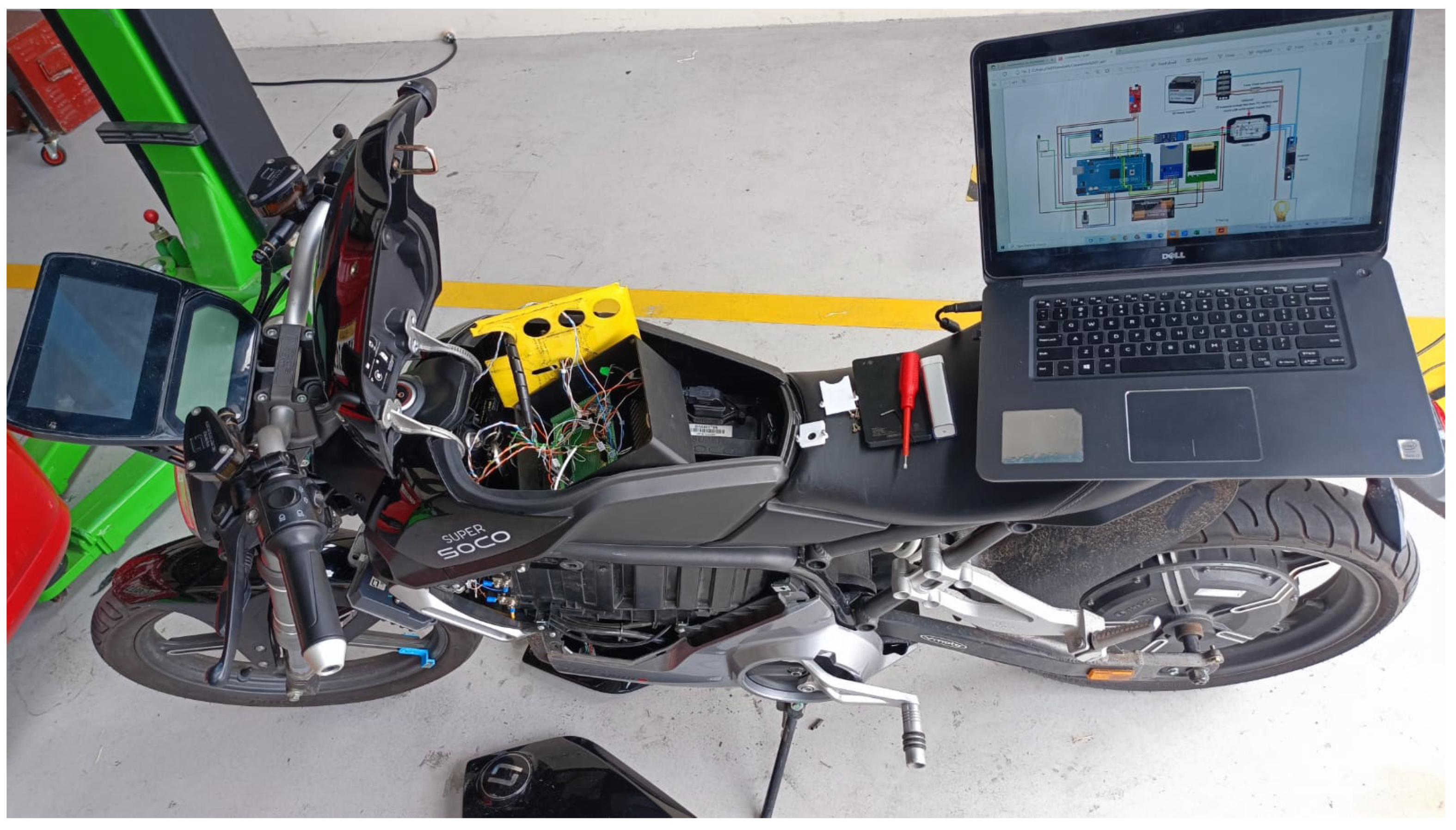

An experimental platform was implemented on a Super Soco electric motorcycle to perform real-time data acquisition and evaluate the accuracy of the proposed SOC estimation models. The vehicle was modified to integrate a custom monitoring system composed of various sensors and a microcontroller-based control unit.

Figure 6 shows the modified motorcycle with the seat lifted, exposing the main components of the system. The control board (microcontroller) is installed beneath the seat, connected to the following sensors: a module for simultaneous voltage and current measurements, a digital temperature sensor, a throttle potentiometer, an inertial measurement unit, and a Hall-effect sensor for wheel speed measurement. All signals are routed to the control board through shielded cables to minimize electromagnetic interference during operation.

A laptop is placed on the rear seat to run the real-time monitoring software, which displays the system’s schematic and records the captured data. This setup allows for full supervision of sensor signals during on-road testing under standardized and custom driving cycles. The platform enables validation of both machine learning models—LSTM + GA and multiple linear regression—by capturing and comparing actual versus predicted SOC under varying conditions of load, terrain, and driving style.

3.3. Software

The software architecture integrates two predictive models: an LSTM neural network optimized using genetic algorithms, designed to capture temporal dependencies, and a MLR model to account for linear relationships among variables such as temperature, voltage, and current. Both algorithms update the estimated driving range every 0.385 s, combining high precision (at the sensor resolution level) with computational efficiency for dynamic operating environments.

Sensor signals are continuously fed into a regression system to capture operating variables in real time. The process begins with the acquisition of raw sensor data. If the reading is valid, the data are integrated into the software for interpretation and processing. These values are then displayed in real time on the visualization panel and stored on a microSD card via serial communication. In the event of an invalid or corrupted reading, the system discards the incoming signals and retains the last valid data stored in the memory unit. Finally, the validated signals are encoded in hexadecimal format for interpretation by the microcontroller using the implemented programming language.

Figure 7 illustrates the software architecture of the integrated system designed to predict the state of charge in medium- and high-voltage batteries for electric vehicles. The system combines an LSTM neural network for predictive modeling and a MLR model to enhance accuracy based on the historical state of charge, denoted as

. Both models operate in parallel to provide robust and adaptive SOC estimation.

The MLR model includes operating parameters such as voltage, current, and temperature, producing an (MLR). Meanwhile, the LSTM model structures its prediction through dynamic computations: the “state computer” observes the influence of noise and feeds the saturation of the cell into the hidden-layer output. The “output computer” then determines the hidden-layer output, yielding (LSTM).

This technique chains recurrent regressors with input computers () and employs forget gate mechanisms to capture temporal dependencies. Genetic optimization is used to adjust hyperparameters (LSTM units, learning rate, and batch size) within a broad search space, using the mean squared error as the fitness function.

3.4. Long Short-Term Memory (LSTM) Neural Networks Optimized via Genetic Algorithms

As described in

Section 2, this research employs long short-term memory neural networks optimized using genetic algorithms (GA). The LSTM network equations are defined in Equations (

2)–(

7), while the genetic algorithm-based optimization process is detailed in Equations (

9)–(

13). The LSTM network processes the time-series data corresponding to the SOC of medium- and high-voltage batteries using cells composed of three gates—forget, input, and output—and a cell state. Mathematically, an LSTM cell performs the following operations at each time step

t.

This approach leverages the capacity of LSTM networks to model sequential data, while employing genetic algorithms to optimize critical hyperparameters such as the number of LSTM units, learning rate, and batch size. The model was trained using a dataset obtained from actual measurements of the WLTP urban driving cycle. Prior to training, the data were normalized to promote convergence and structured into sequences with five time steps to match the LSTM input format.

The LSTM network architecture incorporates advanced features, including optional bidirectional layers to capture temporal dependencies in both forward and backward directions, multiple LSTM layers with decreasing numbers of units to reduce computational redundancy, and an adjustable dropout mechanism to mitigate overfitting. The framework is flexible, allowing customization of time window lengths (ranging from 5 to 30 steps) and batch sizes (ranging from 8 to 128) to accommodate sequences of varying length and complexity.

Hyperparameter optimization is performed using genetic algorithms, with a search space encompassing seven parameters: number of LSTM layers (1–3), number of units in the first layer (16–128), dropout rate (0–0.5), use of bidirectionality (binary), learning rate (0.0001–0.01), time step window, and batch size. A population size of 30 individuals and 40 generations are employed to thoroughly explore the solution space. Custom genetic operators are implemented to ensure an effective balance between exploration and exploitation. Combined with temporal cross-validation and a fixed random seed for reproducibility, this strategy enhances both the robustness and generalization capability of the model.

Model validation was performed using a 70–30% training–testing data split. Performance was evaluated using standard metrics, including MSE, RMSE, MAE, and the coefficient of determination (). To prevent overfitting, an early stopping mechanism based on validation loss was employed during training. The synergy between the LSTM network responsible for capturing temporal patterns and genetic algorithm based optimization used for fine tuning hyperparameters enabled accurate SOC prediction in electric vehicles. This hybrid approach demonstrated strong performance under both standardized WLTP driving cycles and custom-defined test routes.

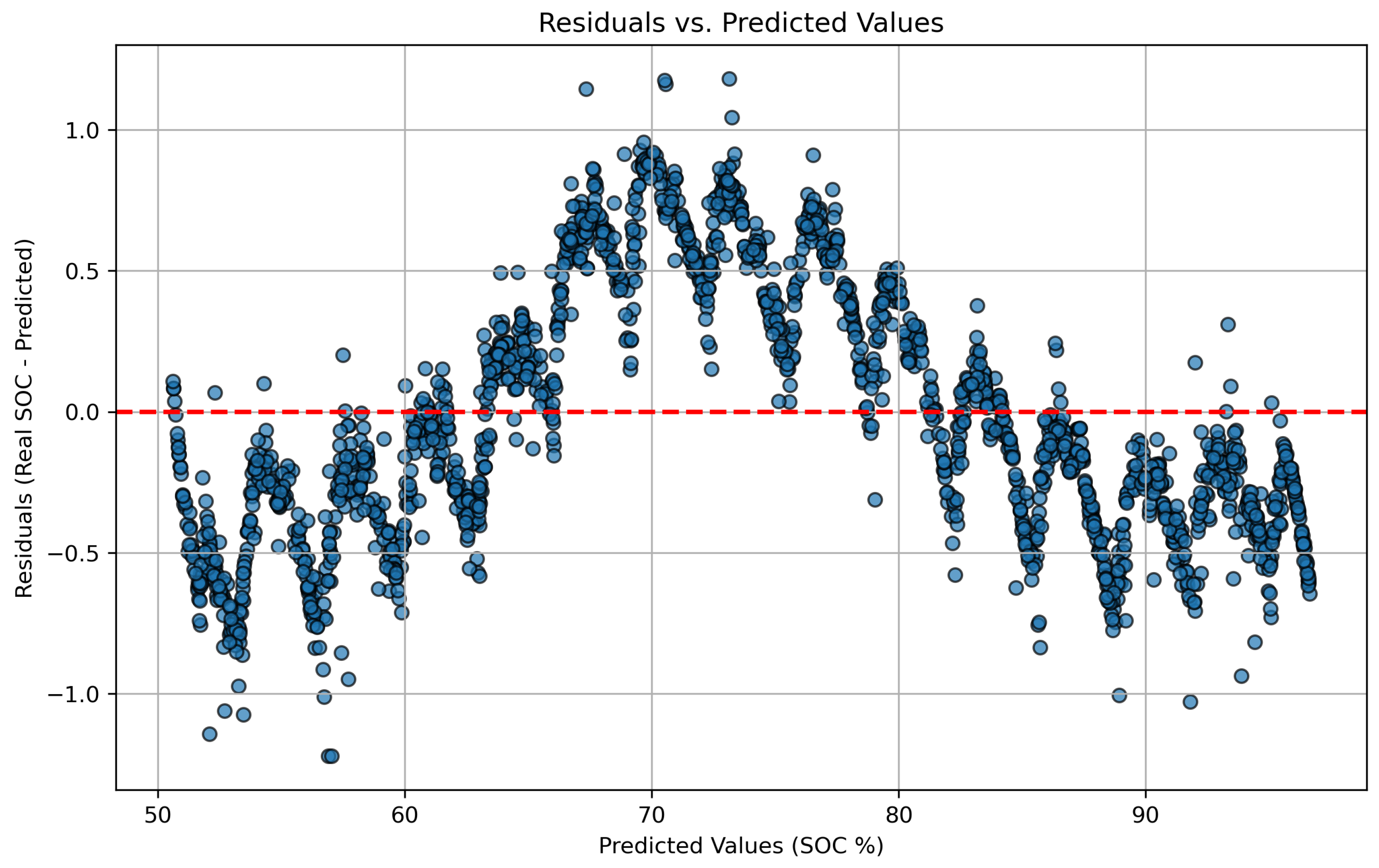

3.5. Multiple Linear Regression Model Formulation

This study also implements a multiple linear regression model to estimate the state of charge of a battery based on operational data. The model’s performance is assessed using standard evaluation metrics, including mean squared error, mean absolute error, and root mean squared error. In addition, scatter plots and correlation matrices are presented to support interpretation and assess the model’s predictive effectiveness. The dataset obtained from road tests serves as the training input for the application of Equations (

14)–(

21), which relate SOC to the selected independent variables.

The MLR model estimates SOC using temporal data on current, voltage, and time. The dataset is partitioned into training (70%) and testing (30%) subsets, with a fixed random state to ensure reproducibility. The model is trained and evaluated using MSE, RMSE, MAE, and the coefficient of determination (), highlighting its explanatory capability. Additionally, the model includes visualization tools for comparing actual vs. predicted values, analyzing residuals, and displaying the correlation structure among input features. Robustness is further assessed through five-fold cross-validation, which computes the average MSE across folds.

The hyperparameters used in this methodology include a test size of 0.3, indicating that 30% of the data are allocated for testing and 70% for training. A random state of 42 is applied to ensure consistent data splitting. The number of cross-validation folds is set to 5, and the negative mean squared error is used as the scoring metric during cross-validation.

3.6. Rule System

As shown in

Figure 7, the flowchart of the SOC monitoring and prediction system for electric vehicle batteries includes a rule-based validation module that plays a critical role in verifying the plausibility of model outputs. This system ensures that both the actual and predicted SOC values fall within the acceptable range of 0% to 100%, with an allowable deviation of up to 5% between the two. The rule engine serves as a safety filter, validating the results generated by both the multiple linear regression and LSTM models to ensure consistency with the physical constraints of the battery.

If any predicted SOC value lies outside the valid range, such as negative values, values exceeding 100%, or significant disagreement between the models, the system automatically triggers an alert. This functionality enables early intervention to prevent battery degradation, incorrect energy management decisions, or errors in vehicle range estimation.

The rule-based system also defines several critical thresholds to enhance operational safety and diagnostic reliability:

A maximum allowable deviation of 5% between actual and predicted SOC values,

A defined safe operating SOC range between 20% and 95%,

A threshold for anomalous rates of change exceeding 2% per minute.

Alarms are triggered when these limits are exceeded, when performance metrics indicate high error (e.g., MSE > 0.2 or MAE > 3%), or when model overfitting is detected (defined as a difference greater than 0.25 between training and test sets).

Automated corrective actions include: (i) model recalibration after three critical alarms within one hour, (ii) immediate operator notification if the SoC exits the safe range, and (iii) detailed logging of all anomalies for post-analysis and model refinement.

The ±5% criterion was selected based on the following considerations:

In commercial battery management systems (BMS), this margin is widely accepted as a practical trade-off between accuracy and operational safety. It accounts for typical sensor noise, cell-to-cell variability, and system tolerance, while minimizing the occurrence of false alarms.

Benchmark studies in the SOC estimation literature report acceptable tolerances ranging from 3% to 7% for electric vehicle (EV) applications. A 5% threshold ensures compliance with these standards, preserving battery longevity and user safety without imposing unrealistic precision demands.

The threshold aligns with the effective resolution of the measurement system used in this study (based on sensors with an accuracy of ±0.5–1%) while also incorporating a margin for modeling uncertainties. Reducing the threshold further would offer limited practical benefit and could increase the likelihood of triggering unnecessary alerts.

4. Results

Accurate estimation of the state of charge in medium- and high-voltage battery systems remains a critical challenge for ensuring the efficient and safe operation of electric vehicles. In this study, LSTM neural networks optimized using genetic algorithms are employed to predict SOC based on key variables related to battery performance. This hybrid approach leverages the sequential modeling capabilities of LSTM networks, while the genetic algorithm is used to fine-tune essential hyperparameters, including the number of LSTM units, learning rate, and batch size.

The training dataset consists of samples collected from standardized driving cycles, such as WLTP and NEDC, as well as a custom driving route designed to reflect real-world conditions. The historical SOC of the test vehicle’s battery was obtained through extensive road testing and is used as the ground truth target for the supervised learning models developed in this work.

4.1. Experimental Setup

Two types of lithium-ion battery packs were used in the experimental validation:

Medium-voltage battery: A 60 V, 40 Ah NCM lithium-ion pack extracted from a Super Soco electric motorcycle (manufactured by Vmoto Soco Group, Perth, Australia). It consists of 16 cells in series and was selected for its accessibility, manageable voltage level, and suitability for real-time onboard data acquisition.

High-voltage battery: A 240 V, 6.5 Ah pack (64 cells) repurposed from a second-generation Kia Niro EV (manufactured by Kia Corporation, Seoul, Republic of Korea). This battery was used for testing the model’s generalization under WLTP and NEDC cycles in a controlled laboratory environment.

The data acquisition system was built around an Arduino Mega 2560 microcontroller equipped with INA219 voltage/current sensors, a GPS module, a throttle position sensor (0–5 V analog input), a Bosch MPU6050 accelerometer and an I2C temperature sensor. The SOC reported by the original BMS was recorded for reference.

Sampling Configuration: All signals were sampled at a frequency of 2.6 Hz (approximately every 0.385 s) and stored on a microSD card in CSV format for offline processing. The acquisition included the following signals:

Battery voltage (V),

Battery current (A),

Battery surface temperature (°C),

GPS-based vehicle speed (km/h),

Throttle angle (0–100%),

Longitudinal acceleration (m/s2).

Data were collected during predefined driving cycles (NEDC and WLTP) and a custom inter-campus route. Before each test, sensors were calibrated using laboratory references (see

Section 3.2). This detailed configuration ensures the reproducibility of the measurements and the validity of the SOC estimation results.

4.2. Experimental Protocols for Dynamic and Static Testing

The SOC estimation models were validated using two complementary experimental approaches: dynamic tests with an electric motorcycle and static discharge tests with high-voltage batteries, each following NEDC and WLTP driving cycle standards.

4.2.1. Dynamic Tests: Super Soco Electric Motorcycle

Dynamic tests were carried out using a Super Soco electric motorcycle equipped with GPS, current and voltage sensors, temperature probes, and throttle position sensors. These tests were conducted at the Universidad de las Fuerzas Armadas ESPE—Campus Belisario Quevedo.

Urban NEDC cycle: Distance of 11 km, speed range of 0–34 km/h, estimated test duration of 20 min.

Extra-urban NEDC cycle: Distance of 11 km, speed range of 0–120 km/h, estimated duration of 10–20 min.

WLTP urban cycle: Distance of 23.25 km, maximum speed of 46.5 km/h, average duration 30 min.

WLTP extra-urban cycle: Distance of 23.26 km, speed range 0–120 km/h, average duration 20–30 min.

Each test was performed twice, under dry conditions, average ambient temperatures between 21 °C and 26 °C, and low wind. The terrain was asphalted and level. Data was acquired at 2.6 Hz.

4.2.2. Static Battery Testing: High-Voltage Pack

A 240 V, 7 Ah high-voltage lithium-ion pack was tested in a laboratory environment using a programmable DC electronic load. The battery was subjected to current profiles that emulate WLTP and NEDC cycles. No driver was required; tests were executed on a stand in zero-load mechanical condition (no real torque).

Five test repetitions were conducted for each profile.

The system monitored voltage, current, temperature, SOC, and acceleration.

Motor rotation was registered from 0 to 600 wheel revolutions, with no physical traction load.

These controlled tests allowed repeatable capture of battery response under pseudo-realistic profiles and ensured safe characterization of the SOC estimation model.

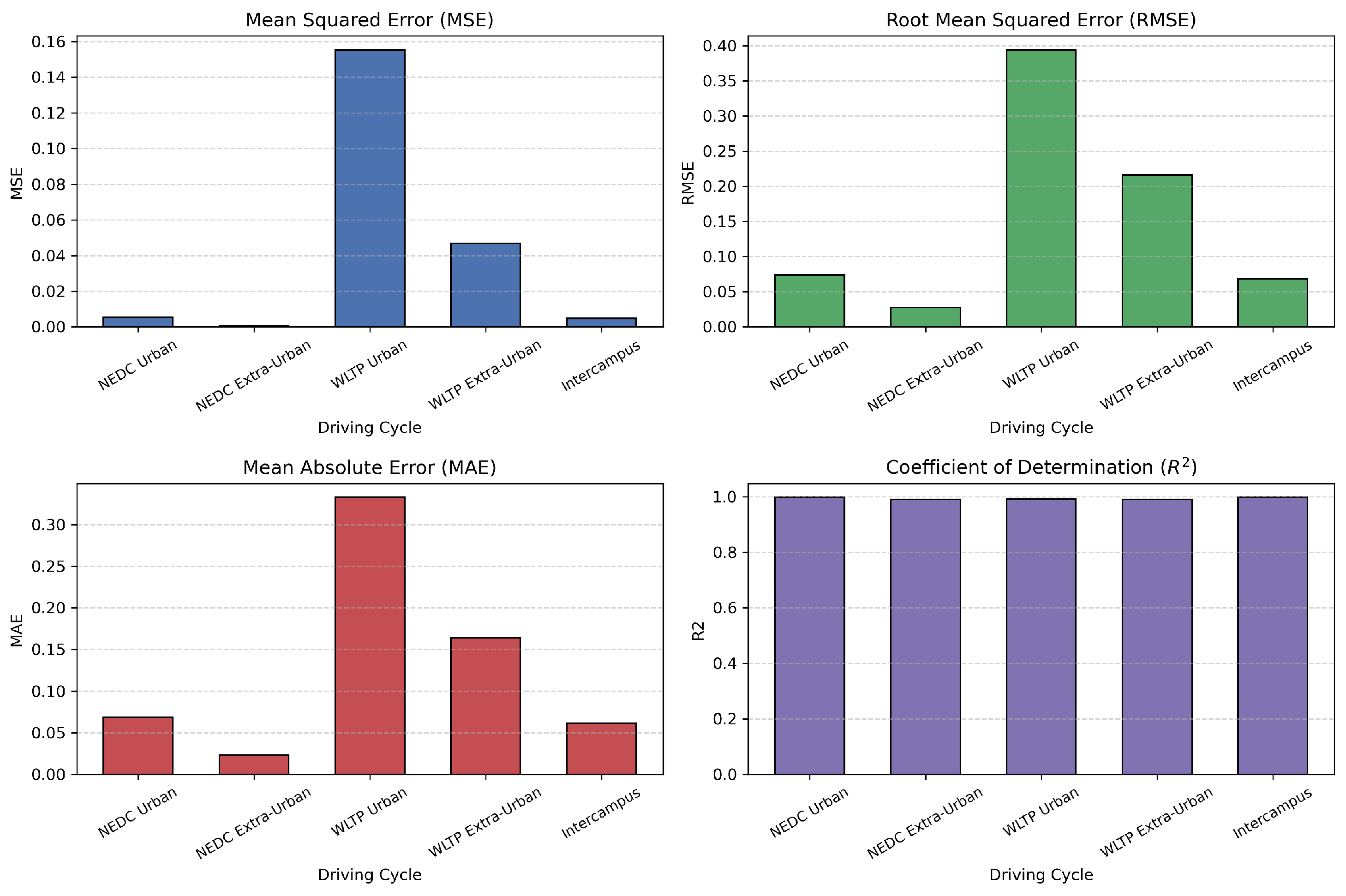

4.3. SOC Prediction for Each Driving Cycle

This study presents a comparative analysis of state-of-charge estimation results for an electric motorcycle subjected to five distinct driving profiles. These include: (i) the NEDC urban cycle, (ii) the NEDC extra-urban cycle, (iii) the WLTP urban cycle, (iv) the WLTP extra-urban cycle, and (v) a custom driving cycle simulating inter-campus travel between the two locations of the Universidad de las Fuerzas Armadas ESPE in Latacunga.

For each scenario, temporal curves comparing predicted vs. actual SOC values are provided, along with quantitative performance metrics (MSE, RMSE, MAE and ) generated by the LSTM model optimized through a genetic algorithm.

This analysis underscores how variations in speed, acceleration, and battery operating conditions across different driving cycles impact the accuracy of state-of-charge estimation. It enables the identification of conditions under which the predictive algorithm exhibits enhanced robustness, as well as scenarios where refinements to the battery management system (BMS) may be required to improve SOC prediction performance.

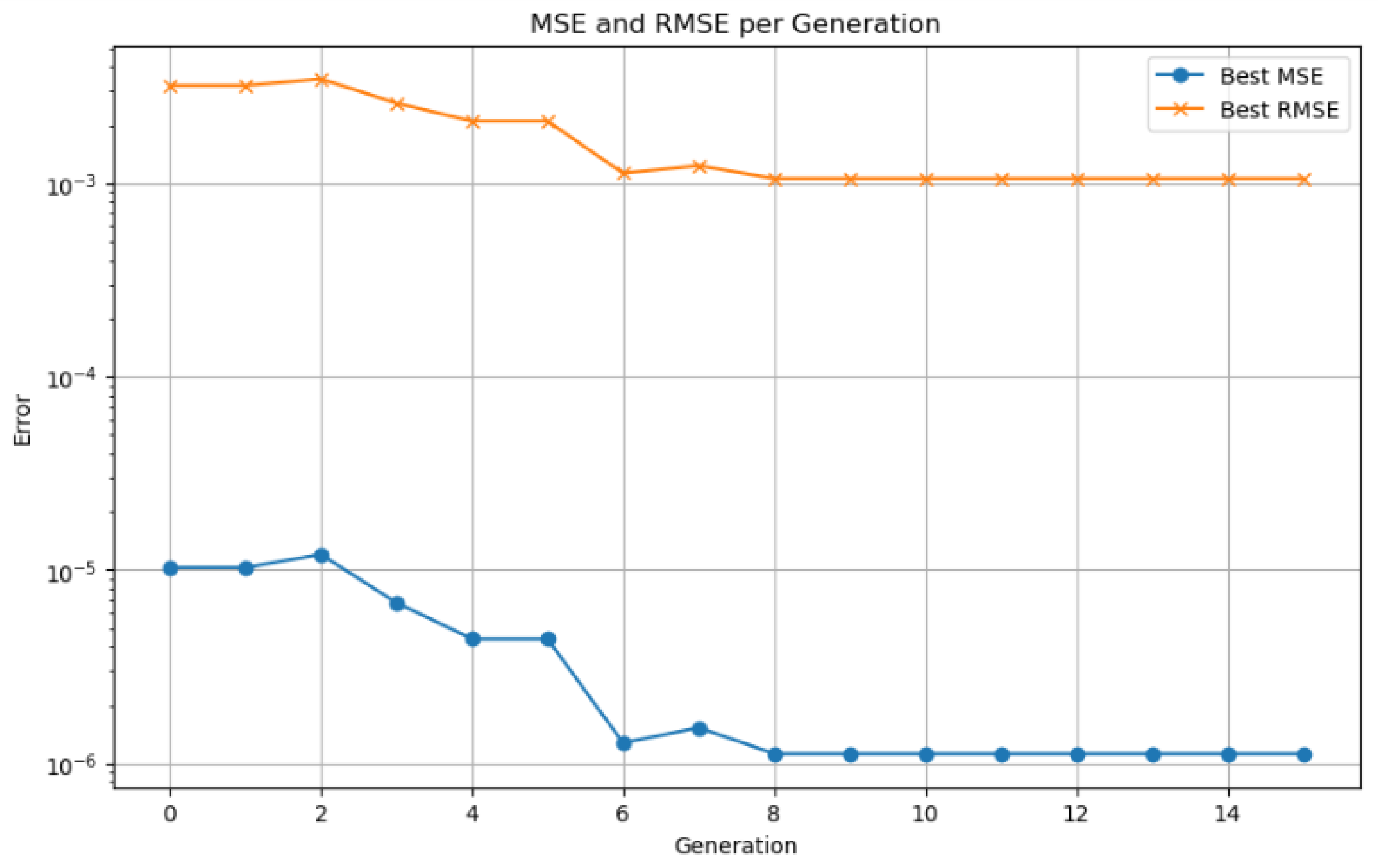

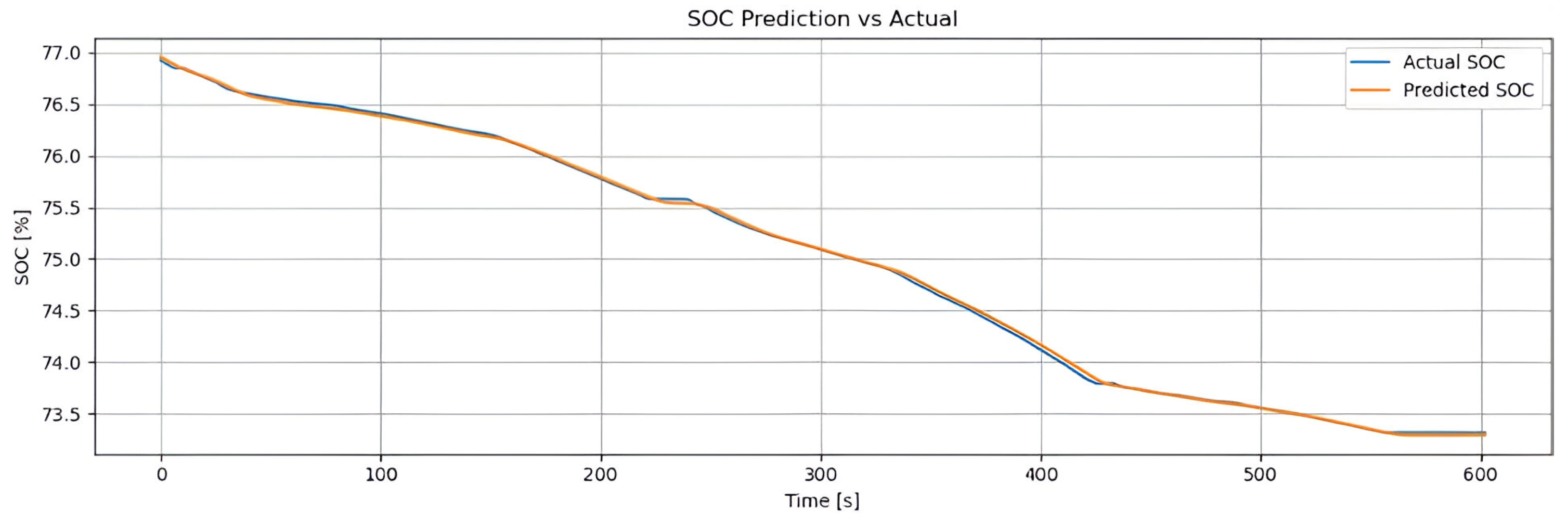

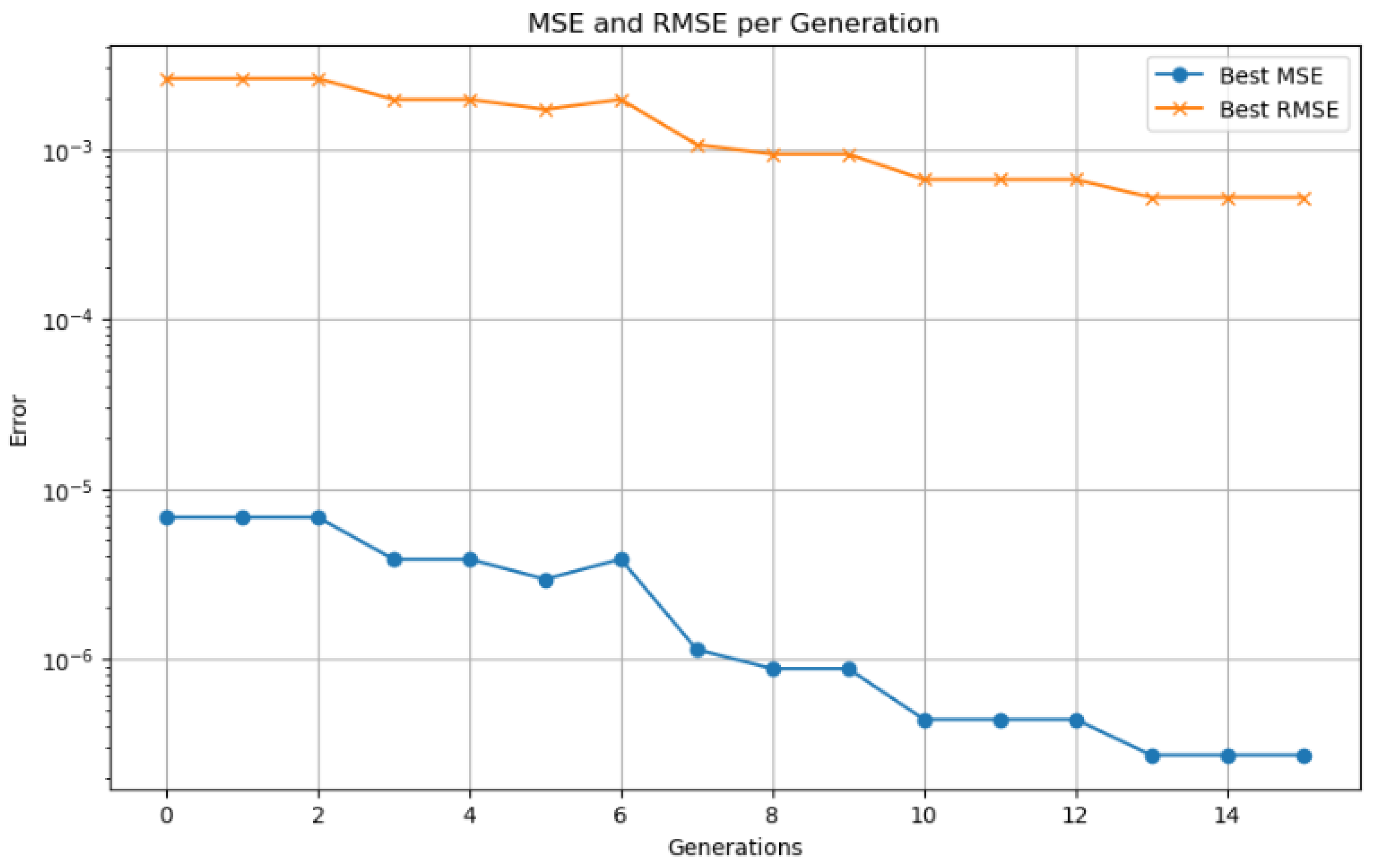

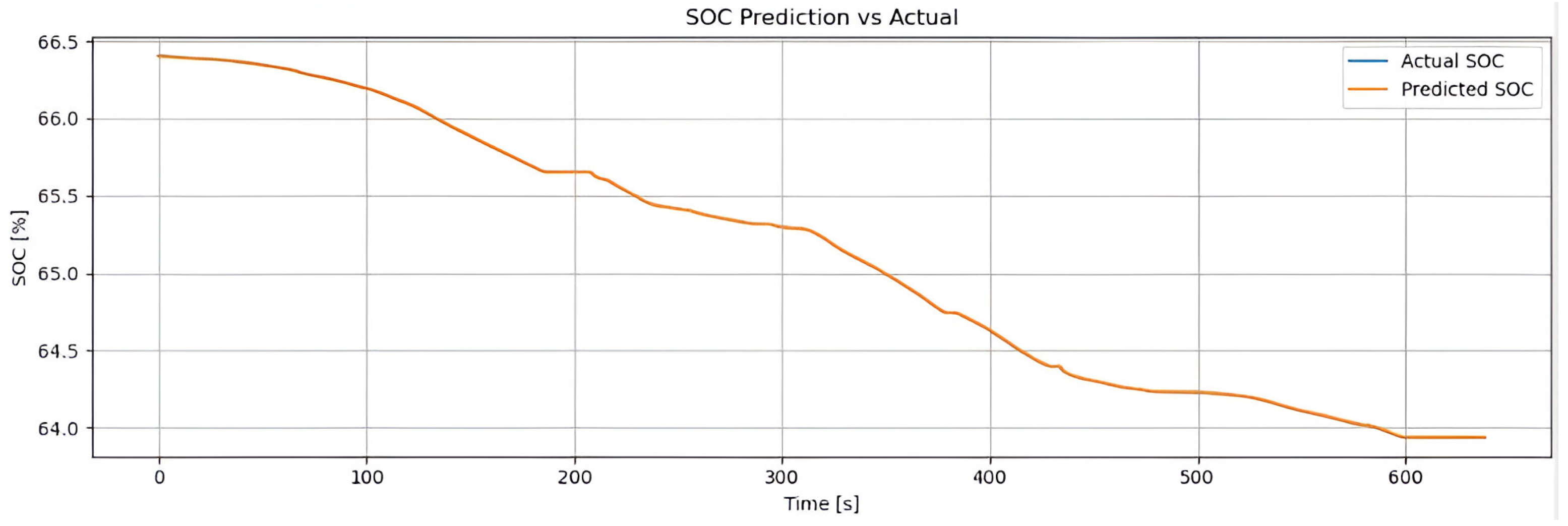

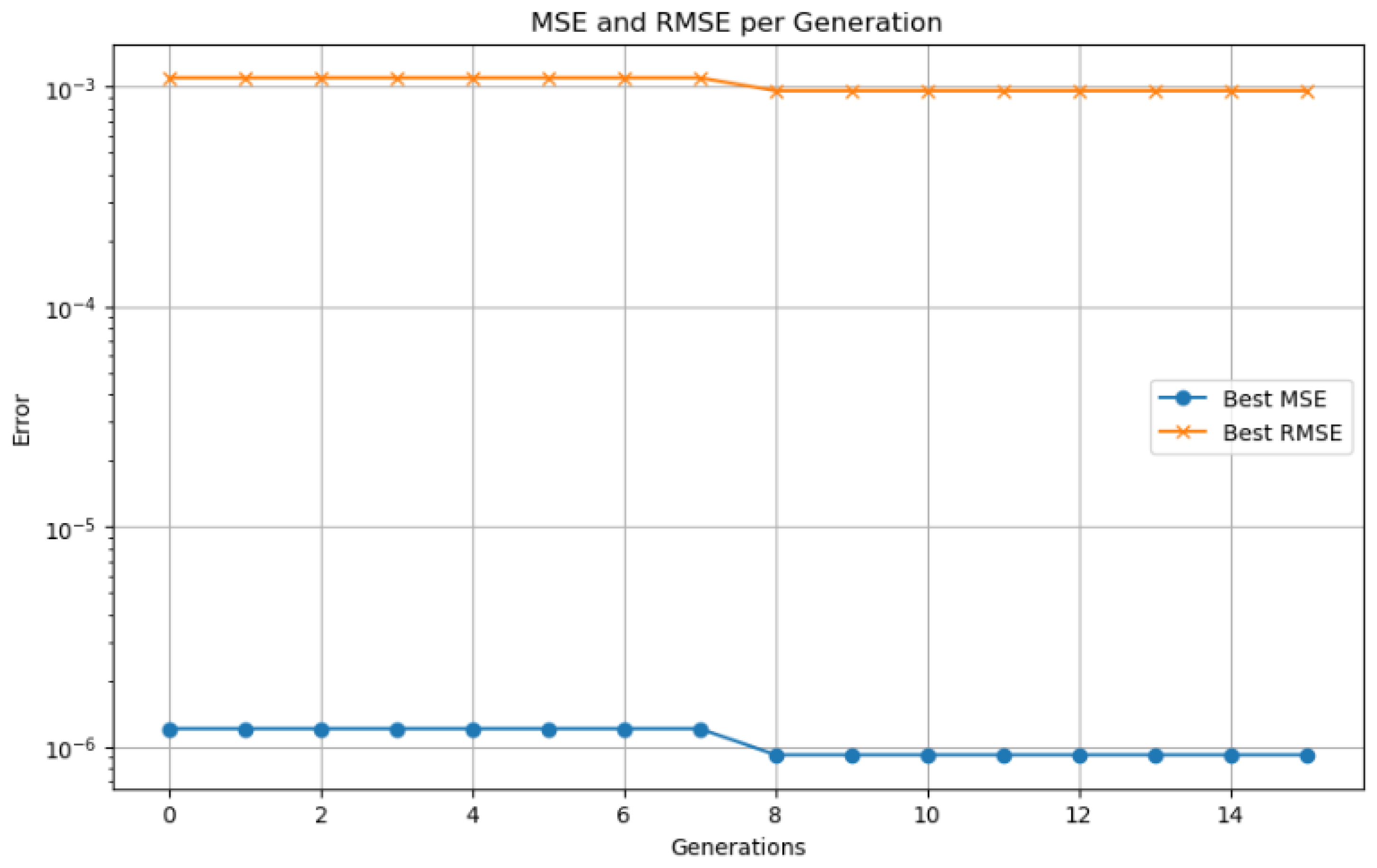

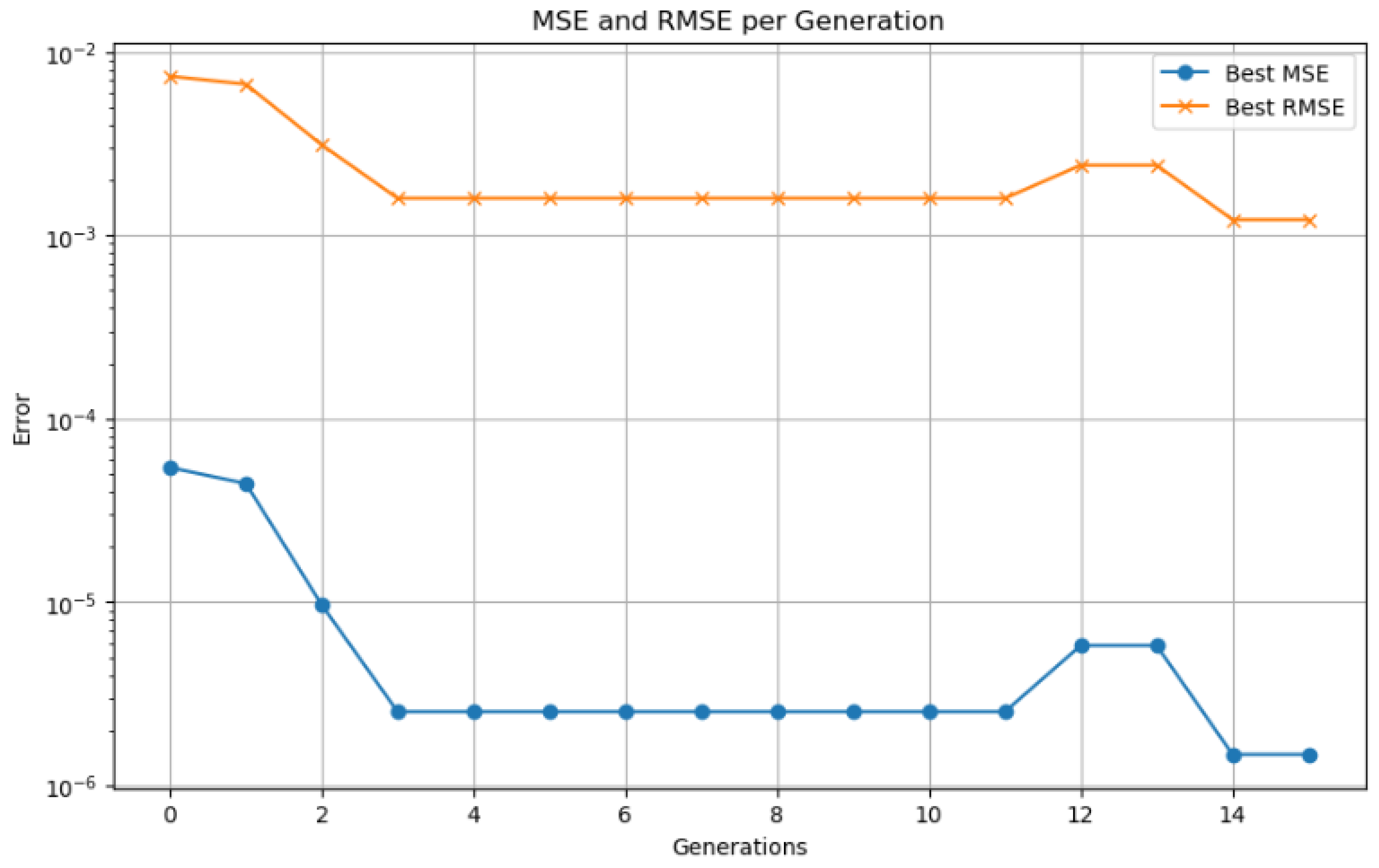

The LSTM hybrid model, optimized using a genetic algorithm, demonstrated rapid convergence. The scaled MSE was reduced from

in the initial generation to

by the sixth generation. This optimization resulted in an almost perfect alignment between the predicted and actual SOC curves during the NEDC urban cycle, as illustrated in

Figure 8. In this chart, each point along the X-axis represents a generation of the genetic algorithm. A generation includes the selection, crossover, and mutation steps used to produce a new population of hyperparameter vectors, followed by the evaluation of an LSTM model trained with each of them.

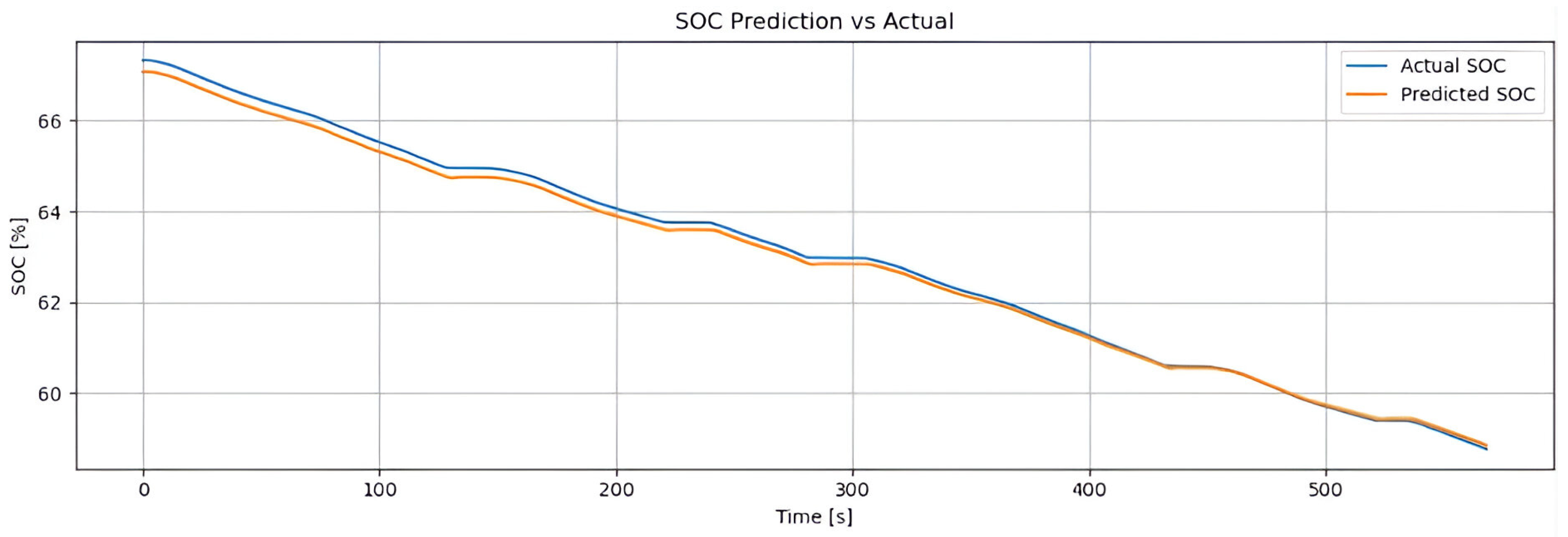

In absolute terms, the model achieved an MSE of 0.0054, an RMSE of 0.0736, a MAE of 0.0685, and a coefficient of determination of , reflecting its high precision and reliability in SOC estimation tasks under real-world urban driving conditions.

Figure 9 illustrates that the SOC curve predicted by the model (orange line) closely tracks the actual SOC evolution (blue line) throughout the NEDC urban cycle. The minimal discrepancies demonstrate the model’s exceptional tracking accuracy. This near-perfect alignment confirms that the hyperparameters optimized via the genetic algorithm enabled the LSTM model to accurately learn and replicate the battery’s discharge behavior.