Proof of Concept and Validation of Single-Camera AI-Assisted Live Thumb Motion Capture

Abstract

1. Introduction

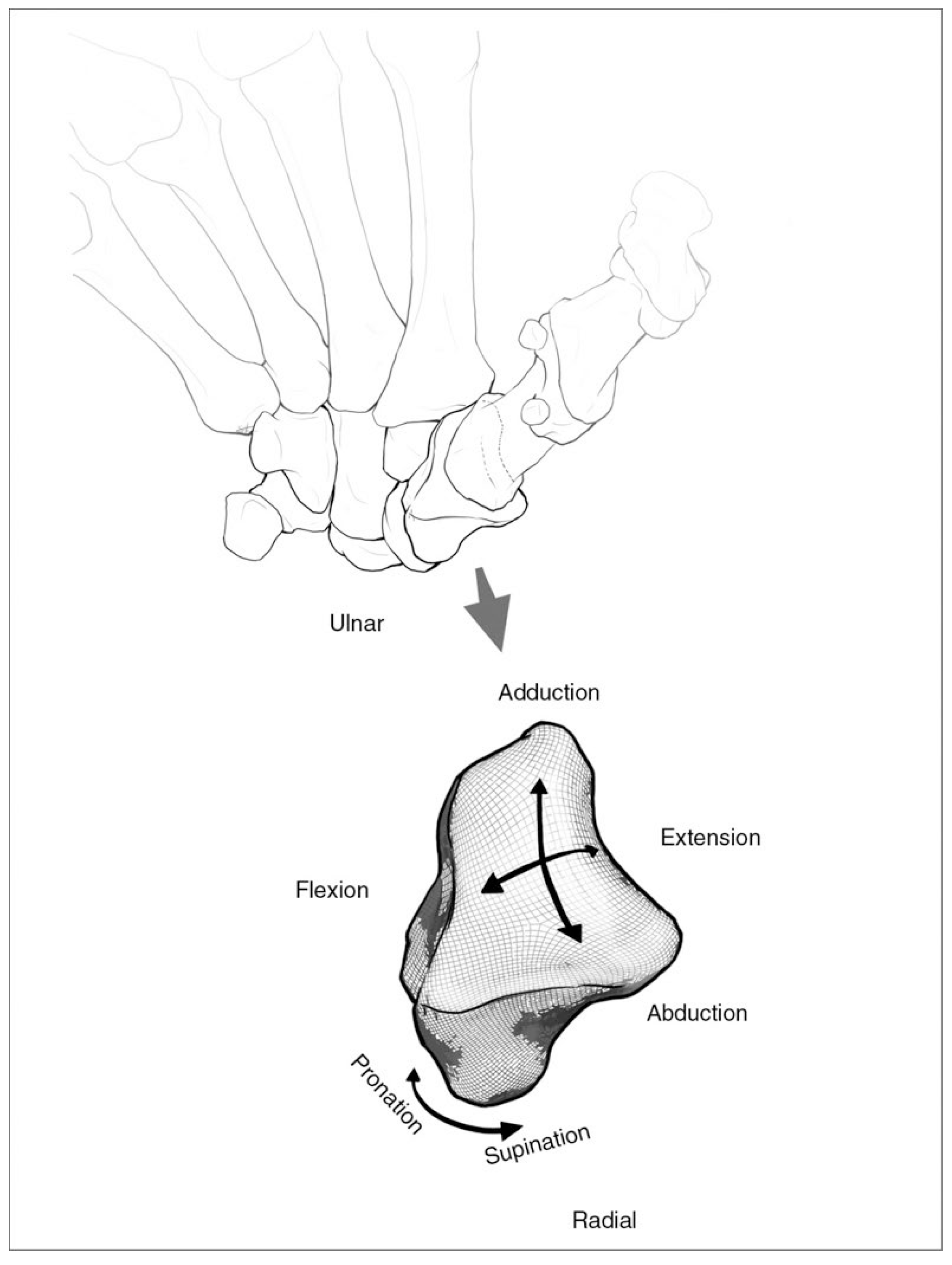

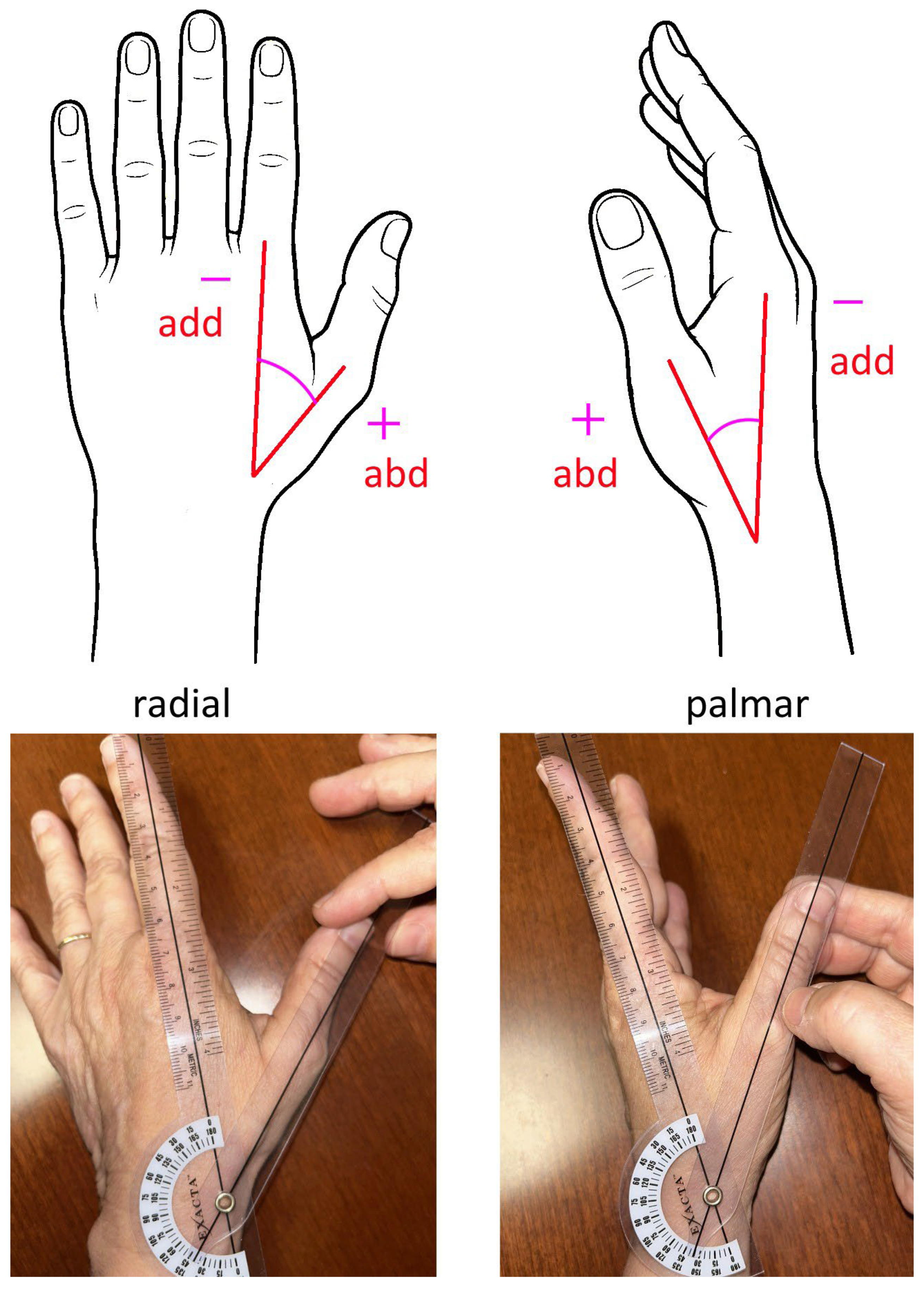

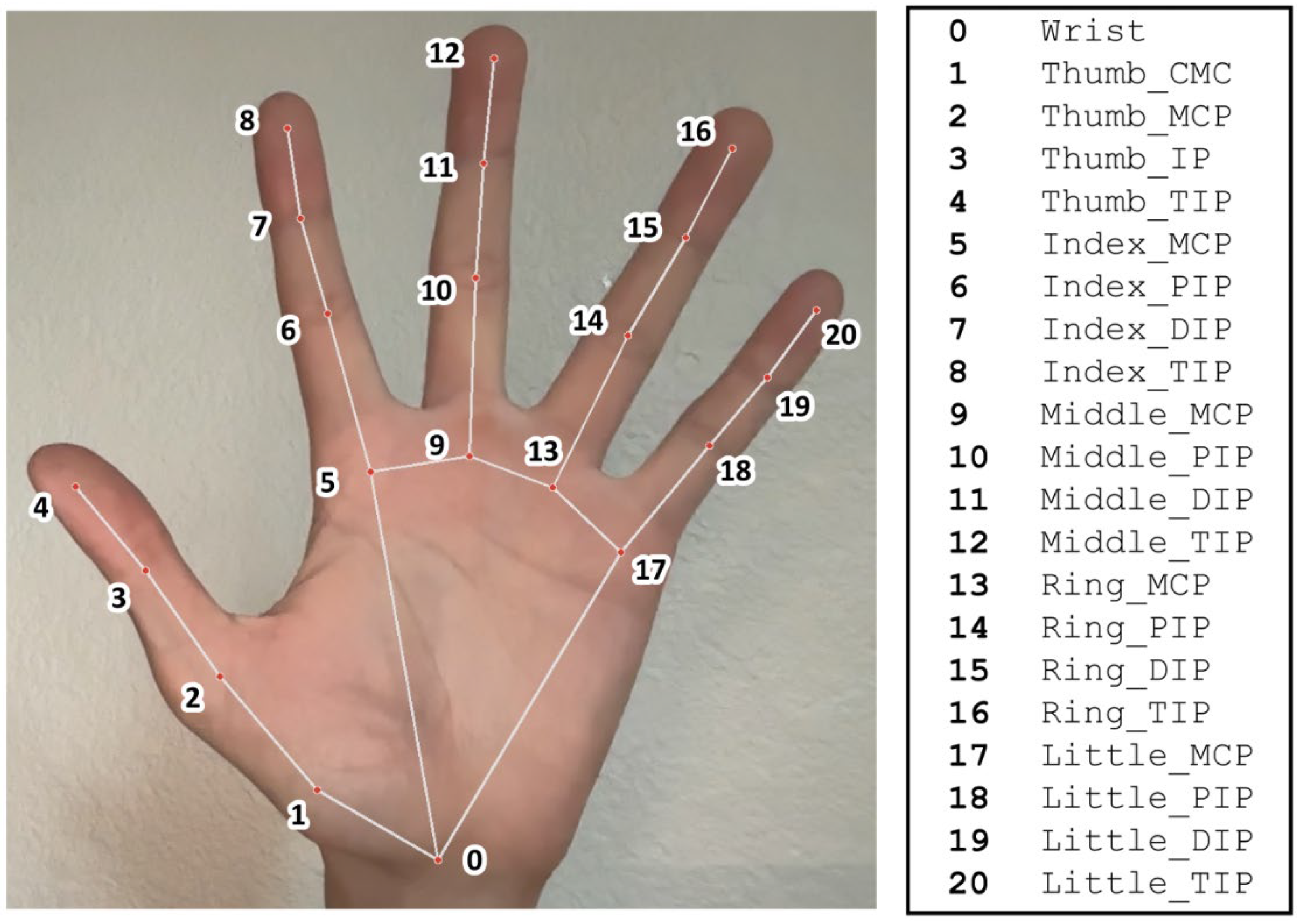

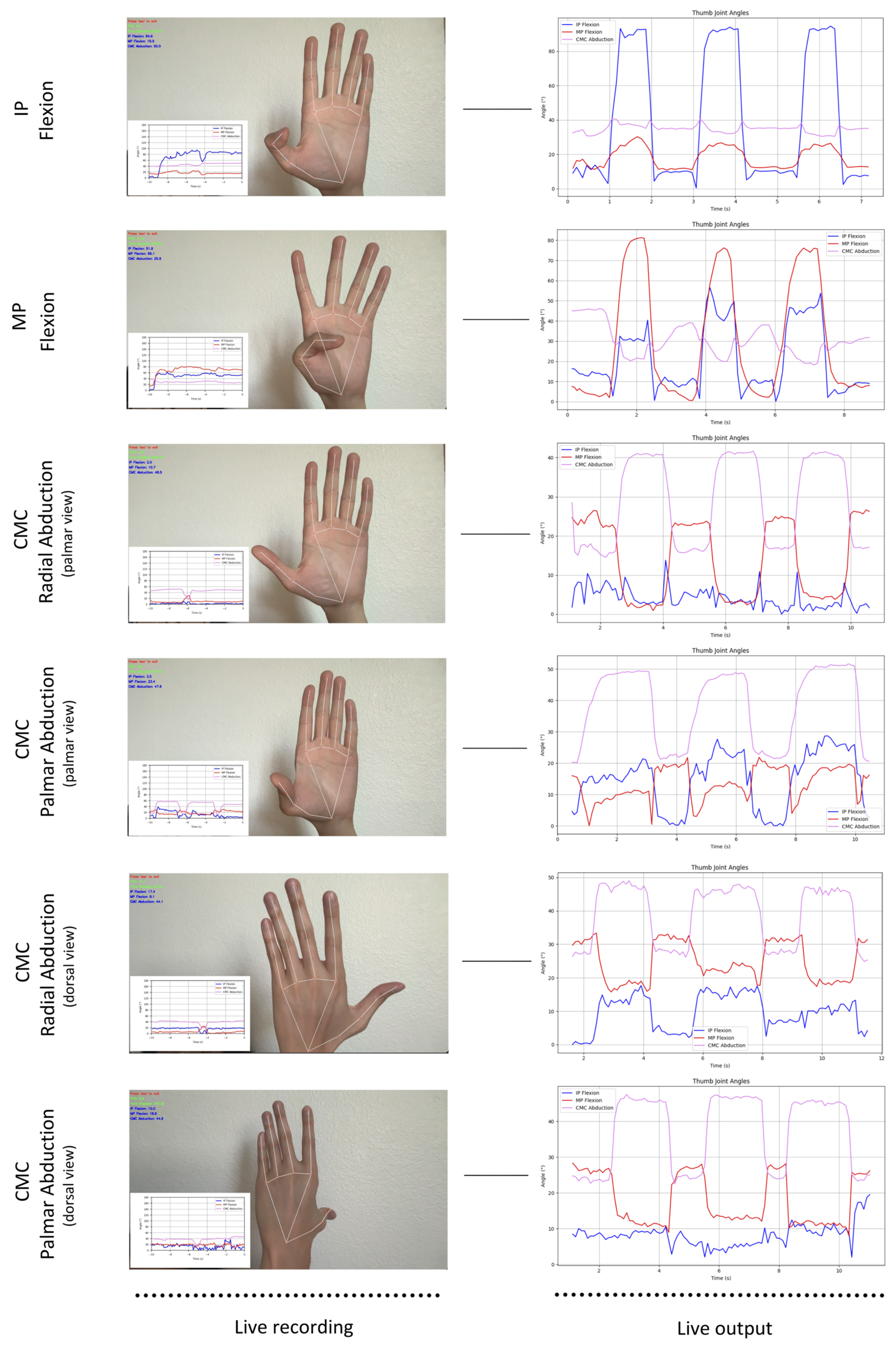

2. Materials and Methods

- (1) Thumb IP flexion/extension × 3, palm facing the camera;
- (2) Thumb MP flexion/extension × 3, palm facing the camera;
- (3) Thumb CMC radial abduction/adduction × 3, palm facing the camera;
- (4) Thumb CMC radial abduction/adduction × 3, dorsum facing the camera;
- (5) Thumb CMC palmar abduction/adduction × 3, palm facing the camera;
- (6) Thumb CMC palmar abduction/adduction × 3, dorsum facing the camera.
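Each task above is performed three times, and a range of motion (ROM) has to be derived from the recorded joint angles. The following is a minimal sketch of one way to do this, assuming that each repetition is available as a list of per-frame angles in degrees and that the ROM of a task is the average of the per-repetition ROMs. The function names, the averaging step, and the sample values are illustrative assumptions, not the procedure used in the study.

```python
from statistics import mean

def range_of_motion(angles):
    """ROM of one repetition: largest minus smallest joint angle (degrees)."""
    return max(angles) - min(angles)

def task_rom(repetitions):
    """Average ROM over the repetitions of one task (assumed aggregation)."""
    return mean([range_of_motion(rep) for rep in repetitions])

# Hypothetical per-frame CMC radial angles (degrees) for three repetitions.
reps = [
    [21.0, 35.5, 51.0, 36.0, 21.5],
    [20.5, 40.0, 52.0, 22.0],
    [21.0, 50.5, 21.0],
]
print(round(task_rom(reps), 2))
```

Taking the maximum minus the minimum per repetition keeps the sketch independent of frame rate and of where each repetition starts.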

3. Results

3.1. Participants

3.2. Motion Capture Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IP | Interphalangeal |
| MP | Metacarpophalangeal |
| CMC | Carpometacarpal |
| ROM | Range of motion |
| ICC | Intraclass correlation coefficient |
| PCC | Pearson correlation coefficient |
| CI | Confidence interval |
| OA | Osteoarthritis |

| Parameter Name | Parameter Description | Live Motion Capture Measurement | Manual Goniometry Measurement |
|---|---|---|---|
| IP | IP ROM, palmar view | Angle between 2, 3, 4 | Angle between dorsal midline of distal and proximal phalanx |
| MP | MP ROM, palmar view | Angle between 1, 2, 3 | Angle between dorsal midline of proximal phalanx and 1st metacarpal |
| CMC_r_add_p | CMC radial adduction, palmar view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_r_add_d | CMC radial adduction, dorsal view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_r_abd_p | CMC radial abduction, palmar view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_r_abd_d | CMC radial abduction, dorsal view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_r_p | CMC radial ROM, palmar view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_r_d | CMC radial ROM, dorsal view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_p_add_p | CMC palmar adduction, palmar view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_p_add_d | CMC palmar adduction, dorsal view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_p_abd_p | CMC palmar abduction, palmar view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_p_abd_d | CMC palmar abduction, dorsal view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_p_p | CMC palmar ROM, palmar view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
| CMC_p_d | CMC palmar ROM, dorsal view | Subtended angle between extended vectors 1, 2 and 0, 9 | Angle between dorsal midline of 1st and 2nd metacarpal |
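The two motion-capture measurements in the table, an angle defined by three points (for example 2, 3, 4) and a subtended angle between the vectors through points 1, 2 and 0, 9, can be sketched with basic vector geometry. The code below is an illustration under stated assumptions: the points are taken to be 2D image coordinates, the coordinates in the example are hypothetical, and the function names are invented here. Whether the reported joint value is this interior angle or its supplement is not stated in the table, so the sketch returns the plain angle between the vectors.

```python
import math

def vector_angle(u, v):
    """Unsigned angle in degrees between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos))

def joint_angle(a, b, c):
    """Angle at the middle point b formed by the points a, b, c."""
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    return vector_angle(ba, bc)

def subtended_angle(p1, p2, p0, p9):
    """Angle between the vector p1->p2 and the vector p0->p9."""
    v12 = [q - p for p, q in zip(p1, p2)]
    v09 = [q - p for p, q in zip(p0, p9)]
    return vector_angle(v12, v09)

# Hypothetical 2D coordinates keyed by the point indices used in the table.
points = {0: (0.0, 0.0), 1: (1.0, 1.0), 2: (2.0, 2.0),
          3: (3.0, 2.0), 4: (4.0, 1.0), 9: (0.0, 4.0)}

ip_angle = joint_angle(points[2], points[3], points[4])
cmc_angle = subtended_angle(points[1], points[2], points[0], points[9])
print(round(ip_angle, 1), round(cmc_angle, 1))
```

Because the subtended angle only compares directions, the two vectors do not need to share an origin, which matches the "extended vectors" wording in the table.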

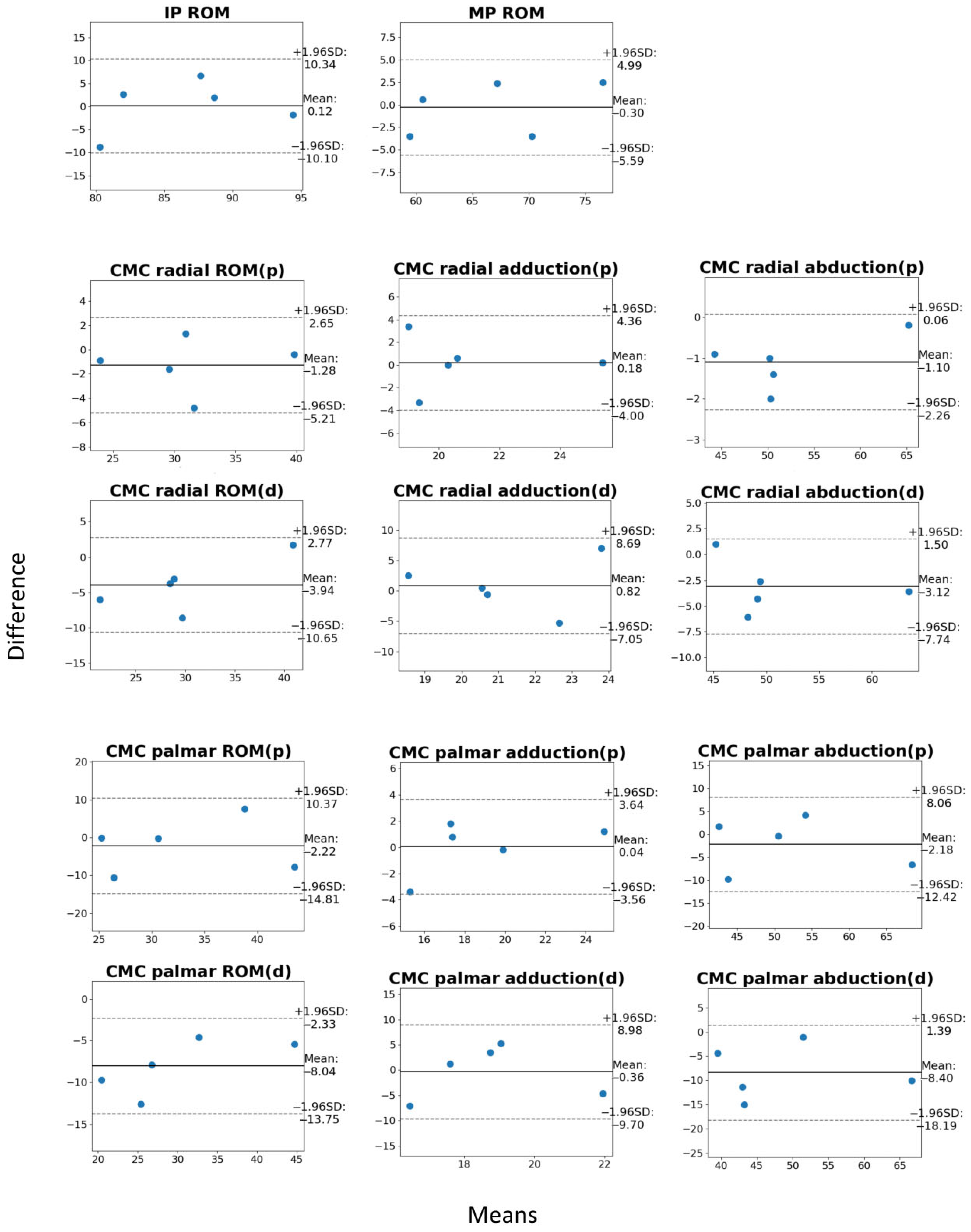

| Parameter Name | Motion Capture (°, mean ± SD) | Manual Goniometry (°, mean ± SD) | Mean Error (°, mean ± SD) | 95% CI of Mean Error (°) |
|---|---|---|---|---|
| IP | 86.7 ± 3.5 | 86.5 ± 0.9 | 0.12 ± 3.70 | (−4.07, 4.31) |
| MP | 66.7 ± 2.3 | 67.0 ± 1.3 | −0.30 ± 3.18 | (−3.91, 3.31) |
| CMC_r_add_p | 21.0 ± 2.0 | 20.8 ± 1.4 * | 0.18 ± 2.73 | (−2.91, 3.28) |
| CMC_r_add_d | 21.7 ± 1.3 | 20.8 ± 1.4 | 0.82 ± 2.16 | (−1.63, 3.27) |
| CMC_r_abd_p | 51.6 ± 1.4 | 52.7 ± 1.2 * | −1.10 ± 1.82 | (−3.16, 0.96) |
| CMC_r_abd_d | 49.5 ± 1.1 | 52.7 ± 1.2 | −3.12 ± 1.73 | (−5.08, −1.16) |
| CMC_r_p | 30.5 ± 2.6 | 31.8 ± 1.9 * | −1.28 ± 3.35 | (−5.06, 2.51) |
| CMC_r_d | 27.9 ± 1.8 | 31.8 ± 1.9 | −3.94 ± 2.80 | (−7.11, −0.76) |
| CMC_p_add_p | 19.0 ± 2.3 | 18.9 ± 1.1 * | 0.04 ± 2.72 | (−3.04, 3.12) |
| CMC_p_add_d | 18.6 ± 2.4 | 18.9 ± 1.1 | −0.36 ± 2.69 | (−3.40, 2.68) |
| CMC_p_abd_p | 50.8 ± 1.1 | 53.0 ± 1.6 * | −2.18 ± 2.02 | (−4.47, 0.11) |
| CMC_p_abd_d | 44.6 ± 2.0 | 53.0 ± 1.6 | −8.40 ± 2.81 | (−11.58, −5.22) |
| CMC_p_p | 31.8 ± 2.8 | 34.0 ± 2.0 * | −2.22 ± 3.52 | (−6.20, 1.76) |
| CMC_p_d | 26.0 ± 3.5 | 34.0 ± 2.0 | −8.04 ± 4.12 | (−12.70, −3.38) |
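The error columns in the table compare motion capture against manual goniometry, with negative values where motion capture reads lower. A minimal sketch of such a summary follows, assuming paired measurements, the sign convention error = motion capture − goniometry, and a normal-approximation interval. The interval formula, the `z` parameter, and the sample values are assumptions for illustration; the table does not state which interval method or sample size the authors used.

```python
import math
from statistics import mean, stdev

def error_summary(capture, goniometry, z=1.96):
    """Mean error, sample SD, and an approximate 95% CI of the mean error.

    Errors are capture minus goniometry, so a negative mean indicates that
    motion capture reads lower than the goniometer.
    """
    errors = [c - g for c, g in zip(capture, goniometry)]
    m = mean(errors)
    sd = stdev(errors)
    half_width = z * sd / math.sqrt(len(errors))
    return m, sd, (m - half_width, m + half_width)

# Hypothetical paired ROM values in degrees.
capture = [10.0, 12.0, 14.0]
goniometry = [9.0, 12.0, 12.0]
m, sd, ci = error_summary(capture, goniometry)
print(round(m, 2), round(sd, 2), tuple(round(x, 2) for x in ci))
```

An interval that excludes zero, as in several dorsal-view rows of the table, points to a systematic offset between the two methods rather than random scatter.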

| Subject | Intraclass Correlation Coefficient | Pearson Correlation Coefficient |
|---|---|---|
| 1 | 0.966 | 0.977 |
| 2 | 0.958 | 0.977 |
| 3 | 0.970 | 0.989 |
| 4 | 0.962 | 0.973 |
| 5 | 0.953 | 0.967 |
| Total | 0.974 | 0.974 |
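The two agreement statistics in the table answer slightly different questions: the PCC measures linear association, while an absolute-agreement ICC also penalizes a constant offset between methods. The sketch below computes both for paired measurements. It assumes the two-way random-effects, absolute-agreement, single-measure form often written ICC(2,1); the table does not say which ICC form the authors used, and the function names and sample data are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def icc_2_1(rows):
    """ICC(2,1) for a table with one row per target and one column per method."""
    n, k = len(rows), len(rows[0])
    grand = sum(sum(r) for r in rows) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(r[j] for r in rows) / n for j in range(k)]
    ss_total = sum((v - grand) ** 2 for r in rows for v in r)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_err = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)              # between-target mean square
    msc = ss_cols / (k - 1)              # between-method mean square
    mse = ss_err / ((n - 1) * (k - 1))   # residual mean square
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)

# Hypothetical paired angles (degrees): goniometry reads 1 degree higher throughout.
capture = [1.0, 2.0, 3.0]
goniometry = [2.0, 3.0, 4.0]
pairs = [list(p) for p in zip(capture, goniometry)]
print(round(pearson(capture, goniometry), 3), round(icc_2_1(pairs), 3))
```

In this example the correlation is perfect while the ICC is lower, because the constant offset between the methods counts against absolute agreement.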

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dinh, H.G.; Zhou, J.Y.; Benmira, A.; Kenney, D.E.; Ladd, A.L. Proof of Concept and Validation of Single-Camera AI-Assisted Live Thumb Motion Capture. Sensors 2025, 25, 4633. https://doi.org/10.3390/s25154633
