Abstract

Accurate time-series forecasting plays a vital role in sensor-driven applications such as energy monitoring, traffic flow prediction, and environmental sensing. While most existing approaches focus on extracting local patterns from historical observations, they often overlook the global temporal information embedded in timestamps. However, this information represents a valuable yet underutilized aspect of sensor-based data that can significantly enhance forecasting performance. In this paper, we propose a novel timestamp-guided knowledge distillation framework (TKDF), which integrates both historical and timestamp information through mutual learning between heterogeneous prediction branches to improve forecasting robustness. The framework comprises two complementary branches: a Backbone Model that captures local dependencies from historical sequences, and a Timestamp Mapper that learns global temporal patterns encoded in timestamp features. To enhance information transfer and reduce representational redundancy, a self-distillation mechanism is introduced within the Timestamp Mapper. Extensive experiments on multiple real-world sensor datasets—covering electricity consumption, traffic flow, and meteorological measurements—demonstrate that the TKDF consistently improves the performance of mainstream forecasting models.

1. Introduction

Time-series forecasting plays a vital role in various sensor-based application, including transportation [,,], energy [], and weather []. Since time series consist of sequential data collected at fixed time intervals, capturing both local and global dependencies is crucial for accurate prediction. Recently, neural networks have realized promising achievements owing to their strong data fitting and automatic learning capabilities. However, most of the existing studies primarily focus on local observations within historical windows, underestimating abundant global information contained in the timestamp.

Deep learning approaches, such as Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and Transformers, have been extensively validated for their capability to automatically capture temporal dependencies [,,]. Nevertheless, most of existing methods adopt a sequence-to-sequence prediction paradigm, where future sequences are predicted based on continuous historical sequences. These works typically focus on historical observations within local predefined windows, while overlooking the significant role of timestamps in forecasting. In fact, the timestamp contains substantial global and seasonal information, especially in sensor-generated time series where periodicity and external environmental patterns are often prominent.

For each timestamp, temporal features such as the month, day, weekday, hour, minute, and second can be extracted, all of which are highly relevant to accurate prediction. For example, traffic volume collected from roadside sensors often differs between weekdays and weekends, as well as between peak and off-peak hours, reflecting distinct temporal patterns. Owing to their rich informational content, timestamps have the potential to provide robust global guidance for forecasting models and can even independently generate prediction outputs.

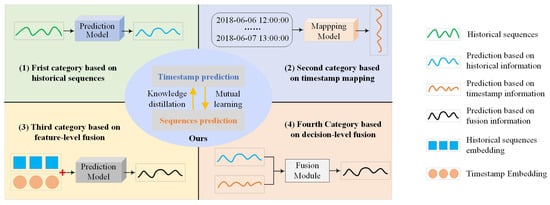

Through a systematic review of state-of-the-art methods, existing works can be categorized into four groups based on how timestamps are utilized. As shown in Figure 1, the first category primarily concentrates on historical sequential observations, completely overlooking timestamps, such as Decomposition-Linear (DLinear) []. A significant portion of the existing works fall into this category. Instead of relying on historical sequences, the second category uses only the multiple temporal features contained in the timestamp, such as the Multi-Feature Spatial–Temporal fusion Network (MFSTN) []. For example, traffic volumes can be approximately estimated based on factors such as weather, holidays, day of the week, and peak hours. The third category, such as Informer [], begins to consider timestamps by summing temporal embeddings with data embeddings. These intricate correlations prompt networks to extract information from more intuitive observations. The fourth category handles the historical sequence and timestamp independently through different models and combines two outputs using fusion weights, such as in the Global–Local Adaptive Fusion Framework (GLAFF) [].

Figure 1.

A comparison between our method and existing approaches.

Despite the remarkable progress of existing models in time-series forecasting, the rich semantic information embedded in timestamps has not been fully exploited []. First, many approaches rely heavily on historical observations within fixed-size windows, which limits their ability to capture long-term seasonal or global patterns. Second, although timestamp features contain rich temporal semantics, they are often processed in overly simplistic ways (e.g., through summation with data embeddings), failing to unleash their potential in guiding predictions. For instance, Informer attempts to incorporate timestamp information by summing timestamp embeddings with data embeddings; the GLAFF processes the historical sequence and timestamp independently through separate models and then combines the outputs via fusion weights. However, these approaches fail to enable mutual learning between different models, which restricts their ability to fully capture the complementary knowledge within temporal data.

To overcome these limitations, we leverage knowledge distillation to facilitate the collaboration between heterogeneous models. This approach allows models to extract complementary temporal knowledge—local patterns from historical sequences and global patterns from timestamps. Through mutual guidance, these models can distill and integrate complementary information, leading to improved forecasting accuracy and robustness.

In this paper, we propose a timestamp-guided knowledge distillation framework (TKDF) that facilitates effective knowledge transfer among heterogeneous prediction models, aiming to enhance both robustness and predictive accuracy. This framework consists of a Backbone Model, which can be any sequence-based prediction model designed to capture local dependencies within a specific historical window. Additionally, a Timestamp Mapper is introduced, specifically designed to capture global dependencies by individually mapping timestamps to observation values. Unlike traditional methods, our approach integrates both local and global dependencies through mutual learning, which encourages the above two prediction branches to learn from one another, fostering a more comprehensive understanding. Notably, we incorporate self-distillation in the Timestamp Mapper to facilitate the knowledge transfer between deeper and shallower layers, thus enhancing the model’s capability. The contributions of this paper are summarized as follows:

- (1)

- We propose a novel temporal knowledge distillation framework consisting of two prediction branches that learn from each other. The framework is highly flexibility: the Backbone Model can be any sequence-based prediction model, and the Timestamp Mapper serves as a plug-and-play component that seamlessly collaborates with the Backbone Model.

- (2)

- We design a unique Timestamp Mapper with self-distillation. This self-distillation facilitates knowledge transfer from deeper to shallower layers within the same model, which is beneficial to enhancing its capacity to learn from broader contextual information in the timestamps.

- (3)

- Experiments are conducted using several real-world datasets collected from sensor-based systems to demonstrate the effectiveness of the proposed model.

The subsequent sections of this paper are organized as follows: Section 2 reviews the related work on knowledge distillation and time-series prediction. Section 3 describes the overall design and details of the proposed framework. Section 4 presents the experimental results, demonstrating the effectiveness of our approach in comparison to existing methods. Section 5 concludes the paper.

2. Related Work

This section reviews the relevant literature about knowledge distillation, and time-series prediction, with a focus on the underlying technical principles and their associated applications.

2.1. Knowledge Distillation

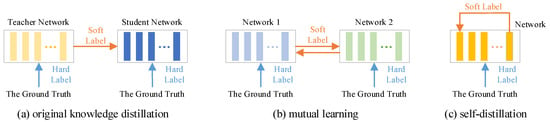

The original concept of knowledge distillation, proposed by Hinton et al., involves transferring knowledge from a complex teacher model with strong learning capacity to a simpler student model, thereby forming a teacher–student network []. The student model, typically lightweight, learns from both the ground truth (as the hard label) and the teacher’s output (as the soft label). By mimicking the behavior of the teacher through soft label supervision, the student model can achieve comparable performance while significantly reducing computational and memory overhead. Due to its superior performance, knowledge distillation has been successfully applied in the field of time-series prediction. For instance, Ma et al. proposed a multi-stage catalytic distillation framework for time-series forecasting which leverages knowledge distillation to facilitate efficient knowledge transfer in edge computing environments []. However, it may be challenging for a simpler student model to absorb all the knowledge from a complex teacher model. To address this issue, researchers have introduced several variations of the original knowledge distillation framework, such as mutual learning and self-distillation, as illustrated in Figure 2.

Figure 2.

The original knowledge distillation framework and its main variations.

Mutual learning is a collaborative learning paradigm that enables multiple models to exchange knowledge, facilitating bidirectional interaction during the training process. This approach disrupts the traditional teacher–student relationship, allowing models to serve as both teachers and students to one another. Such interaction promotes knowledge sharing, ultimately improving prediction accuracy and enhancing the model’s generalization capability. For example, Wu et al. proposed a mutual learning module comprising multiple network branches, where interleaved multi-task supervision was employed to significantly boost performance []. Li et al. applied mutual learning to better capture complex correlations in traffic flow data and improve forecasting accuracy [].

Self-distillation is a specialized form of knowledge distillation where a single network simultaneously functions as both teacher and student. Typically, the deeper layers of the network are used to supervise the shallower ones, as their outputs encode more complex and informative representations. This enables the shallower layers to acquire higher-level knowledge. Self-distillation facilitates self-optimization without the need for an external teacher model, thereby significantly reducing computational overhead and inference cost. For example, Kim et al. introduced a method called progressive self-knowledge distillation, applicable to any supervised learning task, which enhances prediction performance and generalization by distilling a model’s own knowledge []. Similarly, Ji et al. proposed a self-distillation mechanism during the pre-training phase, which can be regarded as an implicit regularization technique that transfers knowledge within the model itself [].

2.2. Time-Series Prediction

As a significant practical challenge, time-series forecasting has attracted wide attention []. Time-series forecasting approaches have evolved into four categories: traditional statistics-based methods, machine learning-based methods, deep learning-based methods, and other emerging methods. The earliest methods based on traditional statistics, such as the auto-regressive integrated moving average (ARIMA) model [], rely on temporal smoothness assumptions and are difficult to apply in practice [,]. Subsequently, machine learning methods, such as Support Vector Regression (SVR), became widely adopted but require large amounts of data for manual feature extraction, limiting their capacity []. Ensemble learning paradigms, such as bagging and boosting, have been widely adopted in time-series forecasting tasks to improve predictive performance by aggregating multiple weak learners []. With the development of deep learning, neural networks, including RNN-based, CNN-based, Transformer-based, and other architectures, have demonstrated satisfactory prediction performance. Among them, RNN-based methods capture temporal dependencies in time-series data dynamically through their recurrent structure [,,], with examples including Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU). Unfortunately, this architecture often suffers from the vanishing gradient problem when handling long sequences. Meanwhile, CNN-based methods rely on convolutional kernels to perform local operations [], capturing detailed information within their limited receptive fields. This makes it difficult to capture long-term dependencies and multiple repetitive patterns using traditional convolution. To alleviate this problem, Wu et al. proposed TimesNet [], which transforms 1D inputs into 2D space and employs 2D convolution to capture multiple periodicities. Unlike RNNs and CNNs, Transformer-based methods utilize self-attention mechanisms [], allowing them to effectively capture long-range dependencies. However, the self-attention mechanism has quadratic complexity with respect to sequence length, making it computationally expensive for extremely long sequences. To address this, Zhou et al. proposed Informer [], which introduces a ProbSparse self-attention mechanism that reduces both computational complexity and memory usage. As a recent variant, the iTransformer model [] repurposes the traditional Transformer architecture by embedding time series from different channels into variate tokens which are then processed by the attention mechanism. Recently, other emerging methods have also demonstrated promising performance. Zeng et al. proposed a lightweight model, named DLinear [], which employs only simple linear operations to accelerate both training and inference speed. With the rapid advancement of artificial intelligence, large language models (LLMs) have recently demonstrated remarkable performance in time-series forecasting []. Despite strong capabilities, LLMs incur high deployment and inference costs, making them less suitable for resource-constrained or low-latency prediction scenarios.

In terms of timestamps, most existing methods focus on local observations within historical windows, treating timestamps merely as auxiliary information whose global significance remains largely underestimated. For example, DLinear completely overlooks timestamps. Informer and TimesNet incorporate timestamps by adding their embeddings to position and data embeddings. iTransformer embeds timestamp features separately into variate tokens, which may compromise the physical significance of timestamps. To fully exploit timestamps, we construct heterogeneous prediction branches that independently process historical sequences and timestamps, and then integrate both local and global temporal knowledge through mutual learning to generate the final predictions.

3. Methods

We propose a timestamp-guided knowledge distillation framework that can efficiently integrate the local and global temporal dependencies from historical sequences and timestamp information. In time-series forecasting, given the historical sequence of channels within time steps , our goal is to predict the future sequence in subsequent time steps . In addition to sequential observations, we extract several timestamp features to provide global information, such as month, day, weekday, hour, minute, and second. For example, for the timestamp “2018-06-02 12:00:00” at the sampling moment , its features can be expressed as . Unlike observations, both the historical timestamps within the predefined window and the future timestamps within the prediction window are known. Therefore, we can incorporate the local observations and global timestamps to provide robustness prediction for the values of .

3.1. Overview

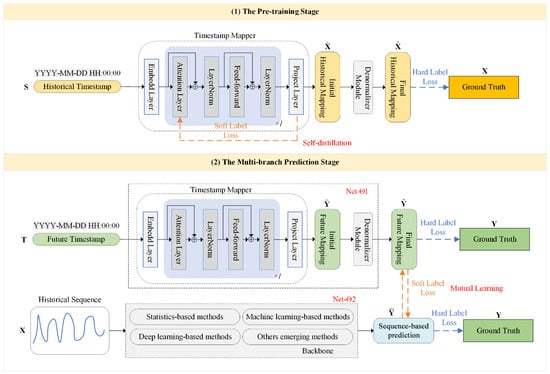

The proposed timestamp-guided knowledge distillation framework is illustrated in Figure 3, comprising two primary components: Timestamp Mapper and Backbone Model. Our framework offers highly flexibility: the Backbone Model can be any sequence-based model that convers historical sequences into future sequences, while the Timestamp Mapper serves as a plug-and-play component that maps timestamp features to observation values.

Figure 3.

An overview of the proposed timestamp-guided knowledge distillation framework.

During the pre-training stage, the capacity of the Timestamp Mapper is enhanced through self-distillation, enabling it to learn the relationship between timestamps and observations. Since both historical observations and timestamps are available, the Timestamp Mapper can transform historical timestamps into the final historical mapping , aligning them with corresponding observations . Self-distillation feeds deep feature representations back to the shallow layers, allowing the model to acquire global temporal contextual knowledge more effectively.

During the multi-branch prediction stage, the well-trained Timestamp Mapper further maps future timestamps to the final future mapping , while the Backbone Model offers the future prediction based on the historical sequence . Both prediction results are aligned with the ground truth . Mutual learning facilitates two heterogeneous prediction branches to interact with each other, enabling them to exchange complementary temporal knowledge. In this way, the proposed framework integrates the local observations and global timestamps to provide accurate and robust predictions.

3.2. Timestamp Mapper

As depicted in Figure 3, the Timestamp Mapper utilizes the Transformer encoder structure, which consists of an embedding layer, attention blocks, and a projection layer. Initially, each timestamp is embedded to describe its properties, then passed through attention blocks for correlation calculation, and processed by a projection layer to obtain the initial mappings. The initial historical and future mappings are denoted as and , respectively, conforming to standard distribution. Subsequently, the Denormalizer Module separately inverse normalizes the initial mappings and to produce the final mappings and , thereby alleviating the impact of data drift.

The Timestamp Mapper can sufficiently extract the global temporal information embedded in timestamps. Specifically, the procedure of transforming historical timestamps into an initial mapping is succinctly outlined as follows:

where denotes the intermediate representation output by the i-th network layer, and represents the dimension of the intermediate output. The attention blocks are stacked in layers to capture the high-level temporal information embedded within the timestamps. The primary computation process for the i-th attention block is delineated as follows:

where represents the common normalization layer and denotes the feedforward network. indicates the multi-head self-attention mechanism, where represent the query, key, and value matrix, respectively. To simplify implementation, both the embedding and projection layers consist of a single linear layer. Additionally, a dropout mechanism is adopted to alleviate overfitting problems and enhance the network’s generalization. Similarly, the process of transforming future timestamps into an initial mapping mirrors the aforementioned procedure, achieved by simply substituting and in Equations (1)–(3) with and , respectively.

To alleviate the impact of data drift, the conventional inverse normalization approach considers mean and variance to measure the distribution deviations. However, this approach is sensitive to extreme values and lacks robustness when the observations contain anomalies. Instead of relying on the mean and standard deviation, the Denormalizer Module uses median and quantile ranges to enhance the robustness of the Timestamp Mapper against anomalies. This procedure can be succinctly expressed as follows:

where and denote the median of the initial mapping and the actual observation , respectively. Similarly, and represent the quantile range (the distance between the quantile and the quantile) of the initial mapping and the actual observation . When , the quantile range corresponds to the interquartile range (IQR3). This process not only helps alleviate the problem of data drift, but also makes the distribution of the final mappings more consistent with the actual observations.

3.3. Self-Distillation Phase

As shown in Figure 3, self-distillation is incorporated into the Timestamp Mapper during the pre-training stage, enabling it to acquire higher-level contextual knowledge more effectively. According to the relevant definitions, the knowledge distillation loss function consists of hard label loss and soft label loss. This self-distillation process can be represented as follows:

where is the weight hyperparameter, and , respectively, represent the hard label loss and soft label loss of self-distillation. During the pre-training stage, the Timestamp Mapper maps historical timestamps to initial historical mappings , which are then transformed into final historical mappings through inverse normalization, followed by alignment with the corresponding observations . Therefore, hard label loss can be expressed as follows:

where is the distance function, which is used to measure the distance between the final historical mappings and actual observations . Additionally, the soft label loss can be expressed as follows:

where and represent the first and last layer of the Timestamp Mapper, respectively. In this way, the deep feature representations are fed back to the shallow layers, allowing the attention blocks to focus on more crucial information. The total self-distillation loss function is as follows:

3.4. Mutual Learning Phase

Mutual learning involves two student networks learning from each other, as illustrated in the multi-branch prediction stage in Figure 3. Two neural networks, and , have both hard label loss and soft label loss. As shown in Figure 3, the Timestamp Mapper is denoted as , whose mutual learning loss function can be expressed as follows:

where is the weight hyperparameter. and , respectively, represent the hard label loss and soft label loss of , whose calculation process is as follows:

where is used to measure the distance between the final future mapping and the ground truth , and is used to measure the distance between the final future mapping and the sequence-based prediction . Similarly, the Backbone Model is denoted as , whose mutual learning loss function is as follows:

where and , respectively, represent the hard label loss and soft label loss of , whose calculation process is as follows:

where is used to measure the sequence-based prediction and the ground truth , and is used to measure the distance between the sequence-based prediction and the final future mapping . Mutual guidance between heterogeneous branches allows them to distill and integrate complementary knowledge, thereby improving prediction accuracy and robustness.

Overall, we employ the Timestamp Mapper and Backbone Model to extract the local and global temporal knowledge and enhance the accuracy and robustness of prediction. Algorithm 1 presents the overall process of the prediction algorithm in the TKDF.

| Algorithm 1: Prediction algorithm process in TKDF—TKDF for Time-Series Prediction |

| Input: Historical timestamp , future timestamp , historical sequence |

| Output: Future sequence |

| Hyperparameter: Learning rate , weight |

| 1. Initialization: Network and Network |

| 2. [The pre-training stage]: utilize to predict |

| 3. for do: |

| 4. Calculate self-distillation loss: |

| 5. Update parameters of the timestamp mapper: |

| 6. end for |

| 7. [The Multi-branch Prediction Stage]: utilize and to predict |

| 8. for do: |

| 9. Calculate mutual learning loss of network : |

| 10. Calculate mutual learning loss of network : |

| 11. Update parameters of network : |

| 12. Update parameters of network : |

| 13. Update the prediction result |

| 14. End for |

4. Experiments

To verify the effectiveness of the proposed framework, three types of experiments are conducted to address the following research questions:

RQ1: Compared with the existing models for time-series prediction, does the TKDF achieve improved predictive performance?

RQ2: What are the appropriate values for the key hyperparameters?

RQ3: How do the learning strategies and individual components adopted in the TKDF contribute to predictive performance?

4.1. Datasets

The performance of the proposed method is evaluated on seven real-world benchmark datasets collected from sensor-based systems, including Electricity, Traffic, Weather, and four ETT datasets. These datasets are publicly available and have been widely used for performance evaluation []. Table 1 summarizes the statistics of these datasets. The basic information of these datasets is as follows.

Table 1.

The statistics of each dataset. Channel indicates the number of variates in each dataset. Length refers to the total number of time points. Frequency denotes the sampling interval.

- Electricity comprises hourly electricity consumption data from 321 customers, collected between 2012 and 2014.

- Traffic contains hourly road occupancy rates collected by 862 sensors installed on freeways in the San Francisco Bay Area, covering the period from 2015 to 2016.

- Weather contains 21 meteorological variables recorded every 10 min at stations across Germany in 2020.

- ETT records the oil temperature and load characteristics of two power transformers from 2016 to 2018, with measurements collected at two different resolutions (15 min and 1 h) for each transformer, resulting in four datasets: ETTh1, ETTh2, ETTm1, and ETTm2.

We follow the standard segmentation protocol by chronologically dividing each dataset into training, validation, and testing sets to prevent information leakage. The segmentation ratio is set to 6:2:2. The Adam optimizer is employed to train the model. The batch size and learning rate are set to 32 and 0.001, respectively. The number of training epochs is fixed at 50. Specifically, the length of the history window is set to 96 for the Electricity, Traffic, Weather, and all four ETT datasets, while the prediction length is varied among {96, 192, 336, 720}. The number of attention blocks in the Timestamp Mapper is set to 2, and the dropout rate is set to 0.1. The quantile used in the Denormalizer Module is configured to 0.75.

4.2. Backbone Model

To demonstrate the effectiveness of our framework, we select several existing mainstream networks to serve as the Backbone Model, including the Transformer-based Informer (2021) [] and iTransformer (2024) [], the linear-based DLinear (2023) [], and the convolution-based TimesNet (2023) []. All aforementioned models represent previous state-of-the-art methods for time-series forecasting tasks. (1) Informer leverages the ProbSparse attention mechanism to efficiently handle exceedingly long input sequences. It also incorporates a generative decoder to alleviate error accumulation inherent in autoregressive forecasting methods. (2) DLinear employs decomposition-enhanced linear networks to achieve competitive forecasting performance. (3) TimesNet captures two-dimensional dependencies by transforming one-dimensional time series into a collection of two-dimensional tensors. It leverages multiple periodicities to embed intra-periodic and inter-periodic variations along the columns and rows of each tensor, respectively. (4) iTransformer embeds each individual channel into a token processed by the attention mechanism, facilitating the capture of inter-channel multivariate correlations. A feedforward network is then applied to each token to learn nonlinear series representations.

As described in Section 2, these models encompass three different strategies to handle timestamps in forecasting techniques, namely summation (Informer, TimesNet), concatenation (iTransformer), and omission (DLinear).

4.3. Evaluation Metrics

The Mean Square Error (MSE) and Mean Absolute Error (MAE) are used to evaluate the prediction performance of the proposed method. The smaller the value of the metrics, the better the predictive performance of the model.

4.4. Experiment 1: Prediction Performance of the Proposed Framework

Aiming to answer RQ1, the TKDF is compared with various mainstream baselines across different architectures. The experimental results are shown in Table 2.

Table 2.

The forecasting errors on multivariate datasets for the TKDF and mainstream baselines.

In Table 2, lower values indicate better predictive performance. The best results for each setting are highlighted in bold. Across most datasets and all prediction horizons, the TKDF significantly enhances the performance of nearly all baseline models, demonstrating strong generalization capability. This demonstrates that the TKDF effectively integrates global information from timestamps and local information from historical windows, thereby enhancing the robustness and predictive capability of mainstream forecasting models. This makes the TKDF well suited for long-range and ultra-long-range time-series forecasting.

On the Traffic dataset, the TKDF is significantly superior to TimesNet, DLinear, and Informer, with particularly obvious improvements at steps 336 and 720 (e.g., MAE decreases from 0.437 to 0.341). It is shown that the TKDF can better capture the temporal patterns in complex and dynamic traffic data. On the ETT datasets, the TKDF consistently achieves the best performance in both MSE and MAE for long-horizon predictions (e.g., horizon 720), indicating its superior ability to model long-term dependencies. On the Electricity and Weather datasets, although the performance gains are relatively modest, the TKDF still maintains a leading position. This indicates that the TKDF can provide stable advantages even on datasets characterized by strong periodicity and relatively stable patterns.

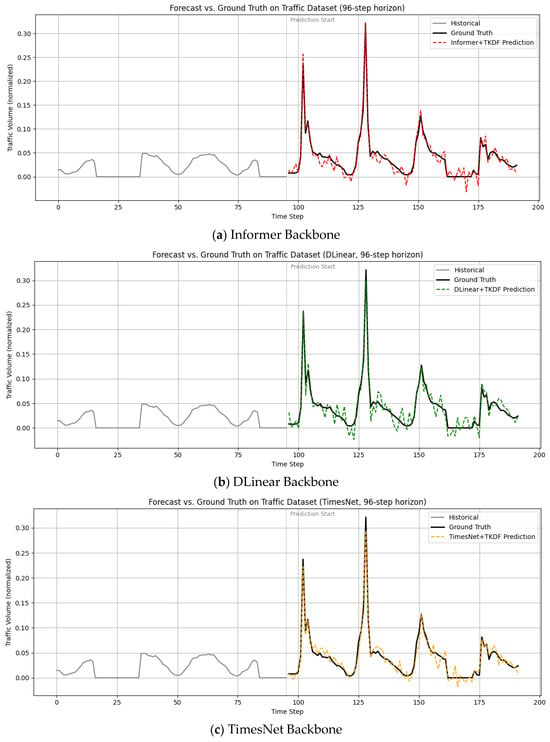

Figure 4a–d illustrate the prediction performance of the four backbone networks of the TKDF. In Figure 4, historical data are marked in gray. The Traffic dataset records traffic volume in hourly granularity. We deliberately selected data with clear periodic patterns and used working-day data (marked in gray) as historical input to predict weekend traffic peaks. The results demonstrate that our method can effectively capture periodicity and provide accurate forecasting. Because the TKDF fully leverages the global information embedded in timestamps, it effectively captures temporal correlations and periodic patterns, enabling accurate prediction of both peak and off-peak values. It can be observed that iTransformer achieves the best prediction performance, demonstrating that the proposed TKDF is also effective when applied to state-of-the-art models.

Figure 4.

Prediction results of mainstream baseline models enhanced by TKDF.

4.5. Experiment 2: Parameter Determination

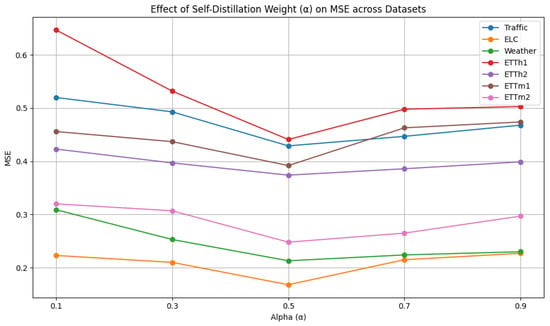

To address RQ2, we conduct a comprehensive hyperparameter analysis. It is worth noting that, in theory, the TKDF introduces the two most important core hyperparameters, the self-distillation weight and the mutual learning weight . To comprehensively compare the different parameter configurations, we also explore the quantile of the Denormalizer Module, the number of attention blocks, and the dropout proportion in the Timestamp Mapper. Since iTransformer represents the previous state of the art in time-series forecasting, we implement our method using it as the backbone. The history window length is fixed at 96, while the prediction window length is set to 192. The experimental results are depicted below.

As shown in Figure 5, the self-distillation weight is selected from {0.1, 0.3, 0.5, 0.7, 0.9}. We analyze the influence of different self-distillation weights. When approaches 1, no soft label supervision is involved, meaning that the model relies solely on hard labels. In this case, it cannot benefit from deep semantic representations generated by the model itself and ultimately degenerates into a standard supervised learning paradigm. When approaches 0, the model relies solely on soft labels, resulting in reduced robustness due to potential overfitting to internal representations. Therefore, the results demonstrate that moderate values (e.g., ) yield the best performance, suggesting that both soft and hard labels contribute to improved predictive performance.

Figure 5.

Hyperparameter analysis of self-distillation weight.

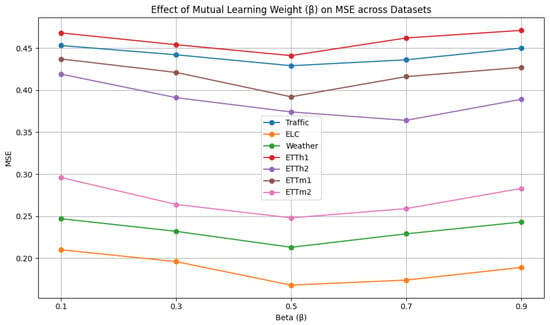

As shown in Figure 6, the mutual learning weight is selected from {0.1, 0.3, 0.5, 0.7, 0.9}. We analyze the influence of different mutual learning weights. The model exhibits the worst performance when or . A high value of indicates that the weight assigned to the mutual learning loss is insufficient, weakening the knowledge exchange between networks and consequently degrading the prediction performance. On the contrary, a low value of indicates that the mutual learning loss is overemphasized, while the supervision from the ground truth (hard labels) is underweighted, which significantly compromises the model’s predictive performance. When , both networks contribute complementary information, and the model achieves its best predictive performance.

Figure 6.

Hyperparameter analysis of mutual learning weight.

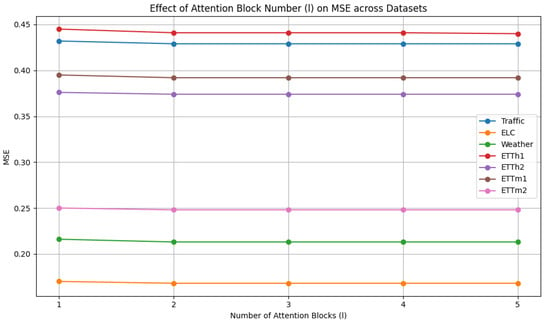

As shown in Figure 7, the number of attention blocks is selected from {1, 2, 3, 4, 5}. The impact of the number of attention blocks on performance can be observed. Overall, the model exhibits low sensitivity to changes in layer depth, with two or three layers generally enough to achieve strong performance. However, increasing the network depth also leads to higher computational costs and greater difficulty in convergence. Considering training cost and model performance, we choose 2 as the number of attention blocks.

Figure 7.

Hyperparameter analysis of attention block number.

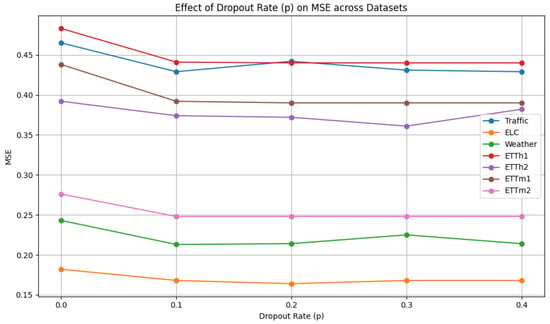

As shown in Figure 8, the dropout proportion is selected from {0.0, 0.1, 0.2, 0.3, 0.4}. We can observe the change trend of dropout proportion . Most datasets perform better at a lower dropout rate (such as 0.1 or 0.2), indicating that an excessively high dropout rate may introduce excessive information loss. To simplify the parameter selection challenge, we adopt a dropout proportion of 0.1.

Figure 8.

Hyperparameter analysis of dropout rate.

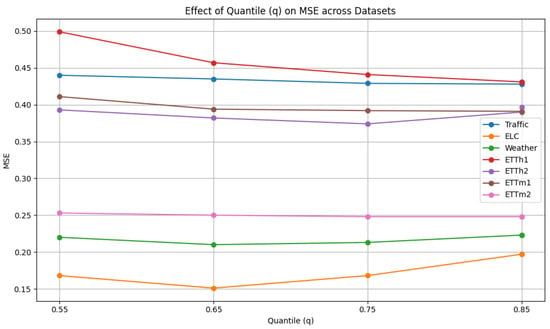

As shown Figure 9, the quantile is selected from {0.55, 0.65, 0.75, 0.85, 0.95}. The quantile parameter in the Denormalizer Module enables the TKDF to better adapt to data drift, particularly in the presence of anomalies within the sliding window. However, higher quantile values may cause the TKDF to become overly sensitive to magnitude variations, reducing its robustness to outliers. In contrast, lower quantiles may limit the TKDF’s ability to capture distributional changes, thereby impairing its adaptability to data drift. We can observe that the performance around is better on almost all datasets, supporting the rationality of setting .

Figure 9.

Hyperparameter analysis of quantile.

4.6. Experiment 3: Ablation Experiments

To validate the effectiveness of the different learning strategies and core components, a series of ablation experiments are conducted below. We implement our proposed framework and its several variants using the iTransformer as the Backbone Model. The results of the ablation studies conducted on three representative benchmark datasets are summarized in Table 3.

Table 3.

Ablation study on TKDF and its variants.

Without self-distillation, the self-distillation mechanism within the Timestamp Mapper is removed, disabling the internal knowledge transfer between deep and shallow layers. As a result, the model exhibits a moderate performance drop across datasets, indicating that self-distillation plays a supportive role in enhancing the representation capacity of the Timestamp Mapper. This mechanism helps lower layers to incorporate richer temporal context from deeper layers, thereby improving the model’s generalization ability, especially when the timestamp information is complex or weakly periodic.

Without mutual learning, the mutual learning strategy between the Timestamp Mapper and the Backbone Model is disabled. Each component makes predictions independently without exchanging soft label guidance, equivalent to without the Backbone Model and without the Timestamp Mapper. This modification results in a noticeable degradation in performance, highlighting the importance of cross-branch collaboration. Compared to self-distillation, mutual learning leads to a more significant enhancement in predictive accuracy, as it facilitates bidirectional knowledge exchange between components, thereby capturing both local and global temporal dependencies more effectively.

Without the backbone, the Backbone Model is entirely removed from the TKDF, and predictions are made solely based on timestamps. Surprisingly, the TKDF still achieves competitive performance using only the Timestamp Mapper, highlighting its ability to effectively capture global temporal patterns embedded in the timestamps. This observation aligns with human cognitive processes when predicting traffic states, where we often do not rely on large amounts of historical data but instead make reasonably accurate predictions based on temporal features such as whether it is a weekend or peak hour. This fully demonstrates that the rich semantic information in timestamps can support independent prediction.

Without the Timestamp Mapper, the stacked attention blocks are replaced with MLP networks of the equivalent size. After this substitution, the TKDF exhibits a noticeable decline in performance, indicating that the model struggles to capture temporal dependencies among timestamps without the self-attention mechanism.

Without the quantile, the Denormalizer Module is replaced with conventional inverse normalization. The experimental results demonstrate that our proposed design is more effective. When the historical window contains anomalies, conventional normalization methods often produce inaccurate distribution estimates.

5. Discussion

The proposed timestamp-guided knowledge distillation framework (TKDF) has demonstrated significant improvements in forecasting robustness and accuracy across various sensor-based time-series datasets. In this section, we discuss the strengths and potential limitations of the framework, highlight its differences from existing models, and analyze its computational complexity.

5.1. Strengths and Innovations

The TKDF introduces a dual-branch architecture with a Backbone Model for capturing local dependencies and a Timestamp Mapper for modeling global temporal semantics. The mutual learning mechanism between these two branches enables effective integration of complementary information. Furthermore, the incorporation of self-distillation in the Timestamp Mapper allows for enhanced extraction of timestamp semantics, improving the generalization capability across different datasets.

5.2. Comparison with Existing Models

Unlike the GLAFF, which treats historical sequences and timestamp features independently and fuses their outputs with fixed weights, the TKDF allows for collaborative knowledge exchange between the two branches during training. This design facilitates deeper integration of local and global temporal patterns. Compared to the MFSTN, which directly maps timestamp features to predictions and neglects historical sequences, the TKDF leverages both components synergistically, ensuring richer temporal context awareness.

5.3. Computational Complexity Analysis

The TKDF is designed to balance accuracy and efficiency. By limiting the number of attention blocks to two and adopting lightweight components for both the Backbone Model and Timestamp Mapper, the framework maintains a manageable parameter size and training time. Preliminary observations indicate that increasing attention depth beyond two provides no noticeable improvement in accuracy while introducing additional computational overhead. This trade-off was a key consideration in finalizing the architecture.

5.4. Limitations and Future Work

One limitation of the TKDF is its reliance on pre-training for the Timestamp Mapper, which could increase the overall training time in large-scale applications. Additionally, while the TKDF has shown robustness across multiple datasets, its performance on highly irregular or sparse time-series data requires further investigation. Future work will explore alternative fusion strategies, adaptive attention mechanisms, and more efficient distillation techniques to address these challenges.

6. Conclusions

In this paper, we propose a novel timestamp-guided knowledge distillation framework (TKDF) that integrates both local and global temporal dependencies through a multi-branch mutual learning architecture. Specifically, the TKDF consists of two heterogeneous prediction branches: a Backbone Model that captures local dependencies from historical sequential data, and a Timestamp Mapper designed to model global dependencies inherent in timestamps. The two branches interact through mutual learning, allowing for bidirectional knowledge transfer via soft label supervision. Furthermore, self-distillation is incorporated into the Timestamp Mapper to enhance its capacity by transferring deep representations to shallower layers. Extensive experiments on multiple sensor-based datasets demonstrate that the TKDF significantly boosts the performance of mainstream forecasting models, particularly in capturing periodic patterns, improving robustness, and handling long-range dependencies. On the Traffic dataset, for example, the TKDF is significantly superior to TimesNet, DLinear, and Informer, with particularly obvious improvements at steps 336 and 720 (e.g., MAE decreases from 0.437 to 0.341).

Author Contributions

Conceptualization, J.Y.; methodology, J.Y.; formal analysis, H.L. (Honghui Li); data curation, Y.B. (Yanhui Bai) and J.L.; validation and provision of technical standards compliance review, Y.B. (Yang Bai); writing—original draft, J.Y.; project administration, H.L. (Hairui Lv); writing—review and editing, H.L. (Honghui Li) and Y.B. (Yanhui Bai); supervision, H.L. (Honghui Li); funding acquisition, H.L. (Honghui Li). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Major Scientific Project “Unveiling and Leading” of Ordos City, grant number JBGS-2023-002.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data can be provided upon request.

Conflicts of Interest

Authors Hairui Lv and Yang Bai are employed by the company Inner Mongolia High Tech Holdings Co., Ltd. Author Honghui Li is partially supported by a collaborative project between Beijing Jiaotong University and the company Inner Mongolia High Tech Holdings Co., Ltd. The authors declare no additional commercial or financial conflicts of interest beyond this acknowledged project funding.

References

- Zhang, J.; Zheng, Y.; Qi, D. Deep Spatio-Temporal Residual Networks for Citywide Crowd Flows Prediction. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Liu, Y.; Yu, J.J.Q.; Kang, J.; Niyato, D.; Zhang, S. Privacy-Preserving Traffic Flow Prediction: A Federated Learning Approach. IEEE Internet Things J. 2020, 7, 7751–7763. [Google Scholar] [CrossRef]

- Zhang, L.; Zhu, J.; Jin, B.; Wei, X. Multiview Spatial-Temporal Meta-Learning for Multivariate Time Series Forecasting. Sensors 2024, 24, 4473. [Google Scholar] [CrossRef] [PubMed]

- Pinto, T.; Praça, I.; Vale, Z.; Silva, J. Ensemble Learning for Electricity Consumption Forecasting in Office Buildings. Neurocomputing 2021, 423, 747–755. [Google Scholar] [CrossRef]

- Du, S.; Li, T.; Yang, Y.; Horng, S.J. Deep Air Quality Forecasting Using Hybrid Deep Learning Framework. IEEE T. Knowl. Data En. 2019, 33, 2412–2424. [Google Scholar] [CrossRef]

- He, Q.Q.; Siu, S.W.I.; Si, Y.W. Instance-based Deep Transfer Learning with Attention for Stock Movement Prediction. Appl. Intell. 2023, 53, 6887–6908. [Google Scholar] [CrossRef]

- Liang, P.P.; Zadeh, A.; Morency, L.P. Foundations & Trends in Multimodal Machine Learning: Principles, Challenges, and Open Questions. ACM Comput. Surv. 2024, 56, 1–42. [Google Scholar] [CrossRef]

- Yin, X.; Wu, G.; Wei, J.; Shen, Y.; Qi, H.; Yin, B. Deep Learning on Traffic Prediction: Methods, Analysis, and Future Directions. IEEE Trans. Intell. Transp. Syst. 2022, 23, 3054840. [Google Scholar] [CrossRef]

- Zeng, A.; Chen, M.; Zhang, L.; Xu, Q. Are Transformers Effective for Time Series Forecasting? In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023.

- Yan, J.; Li, H.; Zhang, D.; Bai, Y.; Xu, Y.; Han, C. A Multi-feature Spatial–temporal Fusion Network for Traffic Flow Prediction. Sci. Rep. 2024, 14, 14264. [Google Scholar] [CrossRef] [PubMed]

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021. [Google Scholar]

- Wang, C.; Qi, Q.; Wang, J.; Sun, H.; Zhuang, Z.; Wu, J.; Liao, J. Rethinking the Power of Timestamps for Robust Time Series Forecasting: A Global-Local Fusion Perspective. In Proceedings of the Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024. [Google Scholar]

- Pereira, L.M.; Salazar, A.; Vergara, L.A. Comparative Analysis of Early and Late Fusion for the Multimodal Two-Class Problem. IEEE Access 2023, 11, 84283–84300. [Google Scholar] [CrossRef]

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531. [Google Scholar] [PubMed]

- Ma, R.; Zhang, C.; Kang, Y.; Wang, X.; Qiu, C. MCD: Multi-Stage Catalytic Distillation for Time Series Forecasting. In Proceedings of the International Conference on Database Systems for Advanced Applications, Gifu, Japan, 2–5 July 2024. [Google Scholar]

- Wu, R.; Feng, M.; Guan, W.; Wang, D.; Lu, H.; Ding, E. A Mutual Learning Method for Salient Object Detection with Intertwined Multi-Supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, California, CA, USA, 16–20 June 2019. [Google Scholar]

- Li, Y.; Li, P.; Yan, D.; Liu, Z. Deep Knowledge Distillation: A Self-Mutual Learning Framework for Traffic Prediction. Expert Syst. Appl. 2024, 252, 124138. [Google Scholar] [CrossRef]

- Kim, K.; Ji, B.M.; Yoon, D.; Hwang, S. Self-knowledge Distillation with Progressive Refinement of Targets. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montréal, QC, Canada, 11–17 October 2021. [Google Scholar]

- Ji, J.; Yu, F.; Lei, M. Self-supervised Spatiotemporal Graph Neural Networks with Self-Distillation for Traffic Prediction. IEEE T. Intell. Transp. 2022, 24, 1580–1593. [Google Scholar] [CrossRef]

- Li, W.; Law, K.L.E. Deep learning models for time series forecasting: A review. IEEE Access 2024, 12, 92306–92327. [Google Scholar] [CrossRef]

- Ariyo, A.A.; Adewumi, A.O.; Ayo, C.K. Stock Price Prediction Using the ARIMA Model. In Proceedings of the International Conference on Computer Modelling and Simulation, Cambridge, UK, 26–28 March 2014. [Google Scholar]

- Ghaderpour, E.; Dadkhah, H.; Dabiri, H.; Bozzano, F.; Scarascia Mugnozza, G.; Mazzanti, P. Precipitation Time Series Analysis and Forecasting for Italian Regions. Eng. Proc. 2023, 39, 23. [Google Scholar]

- Puri, C.; Kooijman, G.; Vanrumste, B.; Luca, S. Forecasting Time Series in Healthcare with Gaussian Processes and Dynamic Time Warping Based Subset Selection. IEEE J. Biomed. Health 2022, 26, 6126–6137. [Google Scholar] [CrossRef] [PubMed]

- Agarap, A.F.M. A Neural Network Architecture Combining Gated Recurrent Unit (GRU) and Support Vector Machine (SVM) for Intrusion Detection in Network Traffic Data. In Proceedings of the ACM International Conference Proceeding Series, Macau, China, 26–28 February 2018. [Google Scholar]

- Bouhali, A.; Zeroual, A.; Harrou, F. Enhancing Traffic Flow Prediction with Machine Learning Models: A Comparative Study. In Proceedings of the 2024 International Conference of the African Federation of Operational Research Societies, Tlemcen, Algeria, 3–5 November 2024. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. arXiv 2018, arXiv:1707.01926. [Google Scholar] [CrossRef]

- Jia, Y.; Lin, Y.; Hao, X.; Lin, Y.; Guo, S.; Wan, H. WITRAN: Water-Wave Information Transmission and Recurrent Acceleration Network for Long-Range Time Series Forecasting. In Proceedings of the Annual Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023. [Google Scholar]

- Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A Temporal Graph Convolutional Network for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3848–3858. [Google Scholar] [CrossRef]

- Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; Long, M. TimesNet: Temporal 2D-Variation Modeling for General Time Series Analysis. In Proceedings of the International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Liu, Y.; Hu, T.; Zhang, H.; Wu, H.; Wang, S.; Ma, L.; Long, M. iTransformer: Inverted Transformers Are Effective for Time Series Forecasting. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Kaushik, S.; Choudhury, A.; Sheron, P.K.; Dasgupta, N.; Natarajan, S.; Pickett, L.A.; Dutt, V. AI in Healthcare: Time-Series Forecasting Using Statistical, Neural, and Ensemble Architectures. Front. Big Data 2020, 3, 4. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).