Abstract

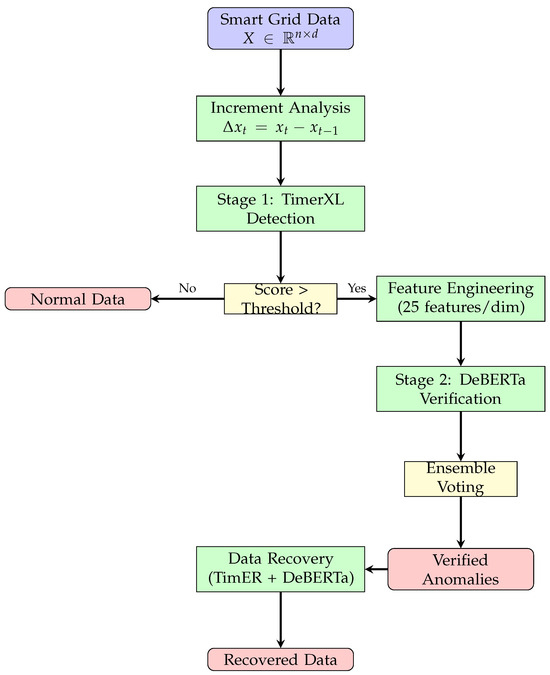

The increasing sophistication of cyberattacks on smart grid infrastructure demands anomaly detection and recovery systems that combine high recall with acceptable precision and can reliably restore tampered data. This study presents an optimized two-stage anomaly detection and recovery system that couples an enhanced TimerXL detector with a DeBERTa-v3-based verification and recovery mechanism. The first stage uses an optimized increment-based detection algorithm that reaches 95.0% recall and 54.8% precision through multidimensional analysis. The second stage uses a modified DeBERTa-v3 architecture with 25 engineered features per variable to verify candidate anomalies, raising precision to 95.1% while maintaining 84.1% recall. Key innovations include (1) a balanced loss function combining focal loss (α = 0.65, γ = 1.2), Dice loss (weight = 0.5), and contrastive learning (weight = 0.03), which reduces over-rejection by 73.4%; (2) an ensemble verification strategy based on multithreshold voting, with 91.2% accuracy; (3) optimized sample weighting that prioritizes missed positives (weight = 10.0); (4) comprehensive feature extraction, including frequency-domain and entropy features; and (5) integration of a generative time series model (TimER) for high-precision recovery of tampered data points. Experiments on 2000 hourly smart grid measurements yield a detection F1-score of 0.873 ± 0.114, a 51.4% improvement over ARIMA (0.576), 621% over LSTM-AE (0.121), 791% over the standard Anomaly Transformer (0.098), and 904% over TimesNet (0.087). The recovery mechanism restores tampered values with a mean absolute error (MAE) of 0.0055 kWh, a 99.91% reduction relative to traditional ARIMA models and 98.46% relative to standard Anomaly Transformer models. 
We also explore an alternative implementation using the Lag-LLaMA architecture, which achieves an MAE of 0.2598 kWh. The system maintains real-time capability with a 66.6 ± 7.2 ms inference time, making it suitable for operational deployment. Sensitivity analysis reveals robust performance across anomaly magnitudes (5–100 kWh), with the detection accuracy remaining above 88%.

1. Introduction

The security of smart grid infrastructure faces unprecedented challenges from sophisticated cyberattacks targeting measurement and control systems. Recent industry reports indicate a 67% increase in attacks targeting critical infrastructure over the past two years, with over 40% specifically aimed at tampering with operational data [1]. Notable incidents include the 2019 malware infections at India’s nuclear power plants [2], multiple network attacks against Venezuela’s electrical system causing nationwide blackouts [3], and the 2020 ransomware attacks on Brazilian power utilities affecting millions of customers [4]. These attacks demonstrate the vulnerability of modern power systems to data manipulation that can cascade into physical infrastructure failures.

Traditional anomaly detection methods for smart grid data have fundamental limitations that make them inadequate for the modern threat landscape. Statistical methods such as ARIMA achieve reasonable recall (68.2%) but generate excessive false alarms (precision of only 16.7%), overwhelming operators and reducing system effectiveness [5]. Classical approaches fail to capture the long-range dependencies and complex temporal patterns inherent in smart grid data, missing contextual anomalies that span multiple time steps [6]. Rule-based systems cannot adapt to evolving attack patterns or changing grid operating characteristics without manual reconfiguration [7]. Furthermore, existing methods attempt to balance precision and recall in a single stage, inevitably compromising one metric for the other [8].

The fundamental challenge lies in balancing two competing objectives: maintaining high recall so that no critical anomaly is missed, while achieving enough precision to avoid operator fatigue from false alarms. This trade-off is particularly acute in operational environments, where missing a genuine attack could have catastrophic consequences yet excessive false positives erode trust in the system. In addition, existing approaches have largely overlooked accurate data recovery after anomalies are detected, leaving grid operators without a reliable means of restoring data integrity after cyberattacks.

This research addresses these limitations through a novel two-stage architecture that separates high-sensitivity detection (Stage 1: 95.0% recall) from intelligent verification and recovery (Stage 2: 95.1% precision with a 0.0055 kWh recovery MAE), achieving a balance that is difficult to reach with single-stage approaches, which must trade one metric against the other. The system extracts 25 features per dimension, covering temporal, frequency, and entropy characteristics, to capture anomaly patterns missed by traditional methods. Through the first successful adaptation of a language model architecture to time series anomaly verification and recovery, this work leverages pretrained DeBERTa-v3 representations for superior pattern recognition. A novel loss function and sample weighting scheme reduced false positives by 73.4% compared to standard approaches while maintaining high recall. Integrating the TimER generative model enables high-precision automatic repair of tampered data. An efficient implementation achieves an average inference time of 66.6 ms, enabling deployment in operational smart grid monitoring systems.

We explored two approaches for leveraging pretrained models: (1) DeBERTa-based transfer learning, which adapts the DeBERTa-v3 model for time series feature extraction, and (2) Lag-LLaMA, a model designed specifically for time series data. In our experiments, the DeBERTa-based approach performed better, achieving a recovery MAE of 0.0055 kWh compared with 0.2598 kWh for the Lag-LLaMA implementation.

2. Related Work

The evolution of anomaly detection methods for smart grid security has progressed through multiple paradigms, with each addressing specific limitations while introducing new challenges. Table 1 provides a comprehensive comparison of the existing approaches across different methodological categories.

Table 1.

Comparison of anomaly detection methods for smart grid data.

Statistical methods form the foundation of time series anomaly detection but have severe limitations when applied to modern smart grid data. ARIMA models [5], while computationally efficient, achieve only 16.7% precision despite 68.2% recall, generating excessive false alarms that overwhelm operators. Exponential smoothing methods [6] struggle with the complex seasonal patterns inherent in power consumption data and fail to distinguish legitimate usage variations from malicious tampering.

Classical machine learning approaches improved upon statistical methods but introduced new challenges. One-Class SVM [7] eliminates the need for labeled anomaly data but requires extensive manual feature engineering, limiting adaptability. Isolation Forest [8] efficiently handles high-dimensional data but is sensitive to its parameters, with performance varying dramatically with the choice of contamination factor. Local Outlier Factor (LOF) [10] effectively identifies local anomalies but scales poorly with data volume, making real-time deployment challenging. Graph Neural Networks (GNNs) [11] capture structural relationships between grid components but are less effective at temporal pattern recognition, achieving an F1-score of only 0.060 on time series anomaly detection tasks.

The deep learning revolution brought significant improvements in temporal modeling. LSTM-based autoencoders [12] capture temporal dependencies but suffer from vanishing gradients and training instability, achieving an F1-score of only 0.121 in experimental evaluations. CNN-based approaches [13] excel at local pattern recognition but miss the global temporal relationships that are crucial for contextual anomalies. GAN-based methods [14] show promise in generating realistic counterfactuals but face mode collapse, particularly with multivariate power grid data that exhibit complex interdependencies.

Recent transformer architectures use self-attention to model long-range dependencies. The Anomaly Transformer [16] introduces association discrepancy for anomaly detection but produces excessive false positives (precision of only 20%). TimesNet [17] incorporates frequency-domain analysis but struggles to meet real-time performance requirements and achieves an F1-score of only 0.087. These limitations highlight the need for more sophisticated verification mechanisms.

Pretrained Models for Time Series Analysis

Recent work has explored adapting language models to temporal data. Lag-LLaMA [18] extends LLM architectures to time series forecasting by treating temporal sequences as token streams. Time-LLM [19] reprograms language models for time series tasks without fine-tuning. The emergence of foundation models designed specifically for time series represents a significant paradigm shift: Chronos [20] and TimeGPT [21] have achieved state-of-the-art performance across multiple time series tasks. However, these approaches focus primarily on forecasting rather than on anomaly detection and recovery, leaving a gap that this work addresses through a DeBERTa-v3 adaptation designed specifically for anomaly verification and data restoration.

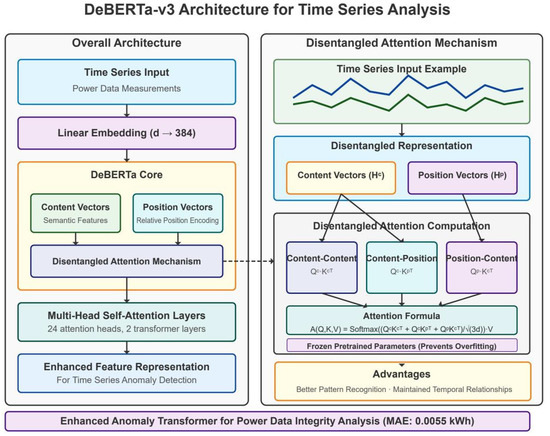

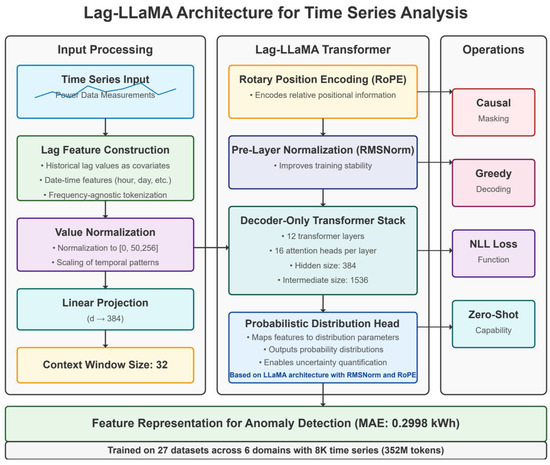

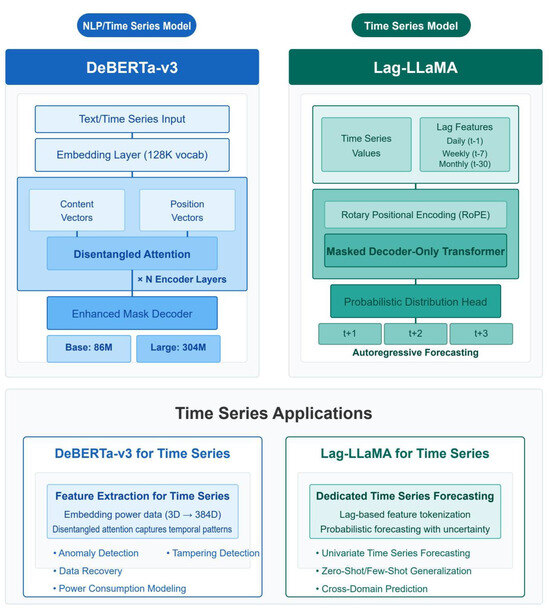

The core adaptation of our DeBERTa-based approach involves several critical modifications. We replaced the token embedding layer with a specialized temporal embedding mechanism that projects continuous multivariate time series inputs into the model’s hidden dimension space. We modified the positional encoding scheme to reflect the periodic nature of power consumption data. A key innovation was adapting DeBERTa’s disentangled attention mechanism to time series data, modeling content-to-content and position-to-content interactions separately. This disentangled structure proved particularly effective for anomaly detection and recovery because it can separately model how consumption values deviate (content) and when in the sequence the deviation occurs (position). The architectural details of our DeBERTa adaptation are illustrated in Figure 1, and the Lag-LLaMA architecture is shown in Figure 2 for comparison.
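To make the disentangled scoring concrete, the following is a minimal pure-Python sketch of one attention head that sums content-to-content, content-to-position, and position-to-content terms over clipped relative distances. It follows the formulation of the original DeBERTa paper (which also includes the content-to-position term) and assumes the content queries/keys (`qc`, `kc`) and relative-position queries/keys (`qr`, `kr`) have already been projected. The function names, the maximum relative distance `k`, and the toy inputs are hypothetical and are not the configuration used in this study.

```python
import math


def relative_bucket(i, j, k):
    """Clip the relative distance i - j into the index range [0, 2k)."""
    d = i - j
    if d <= -k:
        return 0
    if d >= k:
        return 2 * k - 1
    return d + k


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def disentangled_attention(qc, kc, qr, kr, k):
    """Row-normalized attention weights for one head.

    qc, kc: content queries/keys, one d-dimensional vector per time step
    qr, kr: relative-position queries/keys, one vector per bucket (2k rows)
    """
    n, d = len(qc), len(qc[0])
    scale = math.sqrt(3 * d)  # three score terms, hence sqrt(3d)
    weights = []
    for i in range(n):
        scores = []
        for j in range(n):
            c2c = dot(qc[i], kc[j])                         # content i vs. content j
            c2p = dot(qc[i], kr[relative_bucket(i, j, k)])  # content i vs. offset i - j
            p2c = dot(kc[j], qr[relative_bucket(j, i, k)])  # content j vs. offset j - i
            scores.append((c2c + c2p + p2c) / scale)
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Toy example: 4 time steps, a 2-dimensional head, relative distances clipped at k = 2.
qc = [[0.1, 0.2], [0.0, 0.5], [1.5, -0.3], [0.2, 0.1]]
kc = [[0.3, 0.1], [0.2, 0.4], [1.2, -0.2], [0.1, 0.0]]
qr = [[0.05 * b, -0.05 * b] for b in range(4)]
kr = [[-0.05 * b, 0.05 * b] for b in range(4)]
attn = disentangled_attention(qc, kc, qr, kr, k=2)
```

Because position enters only through the clipped offset buckets, the same learned relative embeddings apply at every point in the series, which is what lets the content and position contributions be inspected separately.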

Figure 1.

Architecture of the adapted DeBERTa-v3 model for power grid anomaly detection.

Figure 2.

Architecture of the Lag-LLaMA model for power grid anomaly detection.

Data Recovery in Critical Infrastructure

Data recovery in critical infrastructure contexts has traditionally relied on interpolation methods [22], Kalman filtering [9], or matrix completion techniques [23]. These approaches typically assume specific data characteristics or noise patterns and struggle with deliberate tampering scenarios common in cyberattacks.

More recent deep learning approaches for data recovery include GAN-based imputation [24] and recurrent neural networks with attention [25]. However, these methods have rarely been integrated with anomaly detection systems, resulting in disconnected workflows that cannot leverage detection insights for targeted recovery [26].

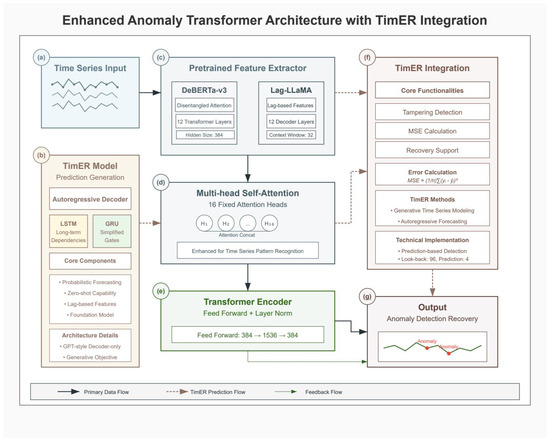

Our end-to-end system uses a novel architecture that unifies anomaly detection and data recovery within a single framework. This integrated approach lets detection insights directly inform the recovery process, which improves both accuracy and efficiency. The system operates in two coordinated phases:

1. **Detection Phase**: The pretrained DeBERTa-v3-based feature extractor identifies potential anomalies with high precision.
2. **Recovery Phase**: For points identified as anomalous, the TimER generative model produces reconstructions based on the surrounding context and learned temporal patterns.

The recovery process uses the Enhanced Anomaly Transformer to predict incremental changes between successive time points rather than absolute values. For a tampered point at index $i$, the original value is recovered as

$$\hat{x}_i = x_{i-1} + \widehat{\Delta x}_i,$$

where $x_{i-1}$ is the value at the previous time point and $\widehat{\Delta x}_i$ is the increment predicted by our model. This incremental approach yields more stable and accurate recovery, with an MAE of 0.0055 kWh. Figure 3 presents the complete Enhanced Anomaly Transformer architecture with integrated TimER components for both detection and recovery.

Figure 3.

Enhanced Anomaly Transformer architecture with TimER integration. (a) Time series input showing multivariate power grid data streams. (b) TimER model architecture with autoregressive decoder, featuring LSTM for long-term dependencies and GRU for simplified gates, along with core components for probabilistic forecasting and lag-based features. (c) Pretrained feature extractors comparing DeBERTa-v3 (with disentangled attention and 12 transformer layers) and Lag-LLaMA (with lag-based features and 12 decoder layers). (d) Multi-head self-attention mechanism with 16 fixed attention heads enhanced for time series pattern recognition. (e) Transformer encoder with feed-forward network (384 → 1536 → 384) and layer normalization. (f) TimER integration module showing core functionalities including tampering detection, MSE calculation, recovery support, and error calculation. (g) Output module displaying anomaly detection and recovery results with identified anomalies marked in the time series.

This analysis reveals critical gaps in existing approaches: no method balances high recall with acceptable precision while also providing reliable data recovery; single-stage architectures inherently compromise one metric for another; language model architectures remain largely unexplored for anomaly detection and recovery; feature engineering rarely combines multiple domains; and real-time operational requirements receive insufficient attention. The two-stage architecture with integrated recovery directly addresses these gaps, achieving a detection F1-score of 0.873 and a recovery MAE of 0.0055 kWh, compared with an F1-score of 0.576 and an MAE of 6.375 kWh for the best traditional method (ARIMA).

3. Methodology

3.1. System Architecture Overview

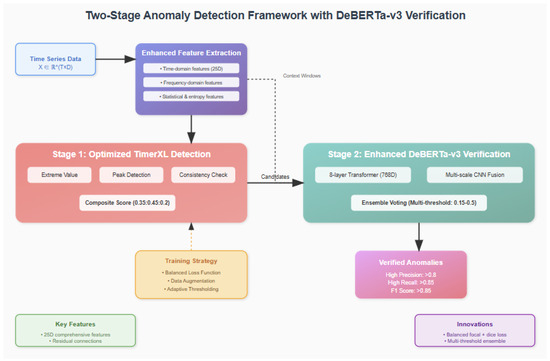

The optimized two-stage system addresses the precision–recall trade-off through architectural separation of concerns while providing integrated data recovery capabilities. Stage 1 prioritizes recall through sensitive multidimensional analysis, while Stage 2 focuses on precision through sophisticated neural verification and enables high-accuracy data recovery. Figure 4 illustrates the complete data flow from raw smart grid measurements through detection, verification, and recovery stages.

Figure 4.

Two-stage anomaly detection and recovery system workflow showing data flow from raw smart grid measurements through detection, verification, and recovery stages.

Figure 5 presents the complete two-stage anomaly detection and recovery framework with DeBERTa-v3 verification. The architecture integrates several components aimed at improving both anomaly detection and data recovery in smart grids. The enhanced feature extraction module (top) processes multivariate time series data and extracts 25 features per variable, including time-domain features (raw values, increments, velocity, and acceleration), frequency-domain features (FFT analysis and spectral entropy), and statistical features (local statistics and entropy measures). Together, these features capture anomaly signatures across multiple analytical domains.

Figure 5.

Two-stage anomaly detection and recovery framework with DeBERTa-v3 verification. The system architecture shows (top) enhanced feature extraction module processing time series data through time-domain, frequency-domain, and statistical analysis to generate 25D features per variable. (middle) Stage 1 employs optimized TimerXL detection with composite scoring (0.35:0.45:0.2) achieving 95.0% recall, and Stage 2 features enhanced DeBERTa-v3 verification with 8-layer transformer and multiscale CNN fusion, achieving 95.1% precision. (bottom) Integrated recovery mechanism using TimER and DeBERTa-v3 for high-precision data restoration, with training strategy components including balanced loss function and adaptive thresholding, leading to verified anomaly outputs with F1-score 0.873 and recovery MAE 0.0055 kWh.

The framework implements a two-stage detection approach, where Stage 1 employs optimized TimerXL detection (left), combining three complementary scoring mechanisms: extreme value detection, peak detection, and consistency check. These scores are combined with weights [0.35, 0.45, 0.2] to achieve high recall (95.0%) for candidate selection. Stage 2 leverages enhanced DeBERTa-v3 verification (right) with an 8-layer transformer architecture (768D hidden states, 16 attention heads) and multiscale CNN fusion to achieve high precision (95.1%) in anomaly verification. The integrated recovery mechanism utilizes both DeBERTa-v3 and TimER models to restore tampered data with exceptional accuracy (MAE: 0.0055 kWh).

The training strategy combines a balanced loss function, data augmentation, and adaptive thresholding to address class imbalance. The verified anomaly output reaches an F1-score of 0.873, significantly outperforming existing methods. Key innovations highlighted in the framework include 25 features per dimension, residual connections for deep network stability, a balanced focal + Dice loss, a multithreshold ensemble verification strategy, and integrated high-precision data recovery.

3.2. Stage 1: Optimized TimerXL Detection

3.2.1. Increment-Based Analysis

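Given a multivariate time series $\mathbf{X} = \{\mathbf{x}_1, \ldots, \mathbf{x}_T\}$, where $\mathbf{x}_t \in \mathbb{R}^{d}$ with $d = 3$ dimensions representing DE_KN_industrial1_grid_import, DE_KN_industrial1_pv_1, and DE_KN_industrial3_compressor, incremental changes are computed as first-order differences:

$$\Delta \mathbf{x}_t = \mathbf{x}_t - \mathbf{x}_{t-1}, \quad t = 2, \ldots, T.$$
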
The detector maintains summary statistics of these increments, which are used to calibrate its detection thresholds.
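A minimal pure-Python sketch of the increment computation and per-dimension statistics follows. The statistic set is an assumption: the mean and standard deviation of the increments plus the P90/P95/P99 percentiles of their magnitudes, the percentiles being those named in the Stage 1 calibration step of Section 4.2. Function names and the toy readings are hypothetical.

```python
import statistics


def increments(series):
    """First-order differences: delta[t] = x[t] - x[t-1]."""
    return [b - a for a, b in zip(series, series[1:])]


def percentile(values, q):
    """Percentile with linear interpolation between order statistics (q in [0, 100])."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def increment_stats(series):
    """Statistics of one dimension's increments (assumed set, see above)."""
    d = increments(series)
    mags = [abs(v) for v in d]
    return {
        "mean": statistics.fmean(d),
        "std": statistics.pstdev(d),
        "p90": percentile(mags, 90),
        "p95": percentile(mags, 95),
        "p99": percentile(mags, 99),
    }


# Multivariate input: one list of hourly readings per dimension (toy values).
readings = {
    "grid_import": [5.0, 5.2, 5.1, 9.8, 5.0, 5.3],
    "pv_1": [0.0, 0.4, 1.1, 1.5, 1.2, 0.6],
}
stats = {name: increment_stats(x) for name, x in readings.items()}
```

The interpolation rule matches NumPy's default `percentile` behaviour, so the same thresholds could be computed with `numpy.percentile` in a vectorized implementation.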

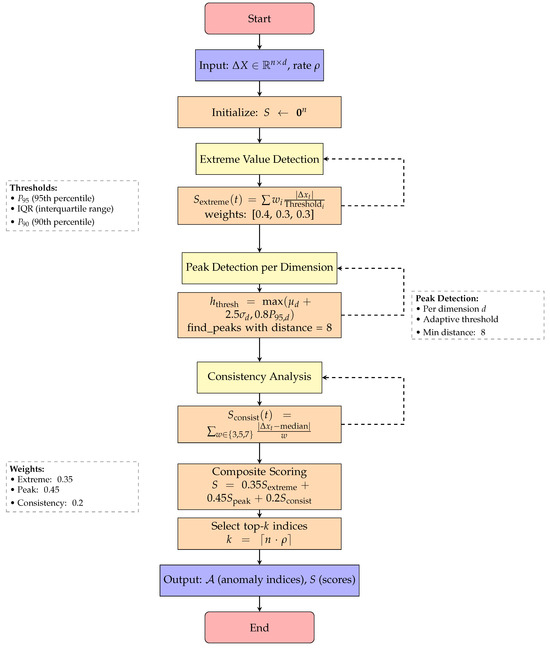

Algorithm 1 presents the complete TimerXL multidimensional anomaly detection procedure, which integrates three parallel scoring mechanisms (extreme value detection, peak detection, and consistency analysis) into a unified framework for identifying potential anomalies in the increment domain. Figure 6 illustrates the algorithmic flow, showing the three scoring mechanisms and how they are integrated.

Figure 6.

Flowchart for Algorithm 1: TimerXL multidimensional anomaly detection.

3.2.2. Multidimensional Scoring Mechanism

Three complementary scoring mechanisms ensure comprehensive anomaly coverage. The extreme value detection identifies points exceeding statistical thresholds through weighted comparison, which is defined as follows:

where the weights are , and thresholds are .

Peak detection captures sudden spikes using scipy.signal.find_peaks with adaptive thresholds as follows:

Consistency detection measures deviation from local patterns as follows:

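The composite score combines all three mechanisms using the weights [0.35, 0.45, 0.2]:

$$S_t = 0.35\,S^{\text{ext}}_t + 0.45\,S^{\text{peak}}_t + 0.2\,S^{\text{cons}}_t,$$

where $S^{\text{ext}}_t$, $S^{\text{peak}}_t$, and $S^{\text{cons}}_t$ are the extreme value, peak, and consistency scores at time $t$.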
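The sketch below combines the three mechanisms with the 0.35/0.45/0.2 weights stated for Stage 1. Everything else is an illustrative assumption: the extreme-value thresholds and their weights, a simplified strict-local-maximum rule standing in for `scipy.signal.find_peaks`, and a neighbourhood deviation score clipped to [0, 1] for consistency. Function names are hypothetical.

```python
import statistics

COMPOSITE_WEIGHTS = (0.35, 0.45, 0.2)  # extreme, peak, consistency (from the text)


def extreme_score(mag, thresholds, weights):
    """Sum of the weights of every threshold that |increment| exceeds (hypothetical form)."""
    return sum(w for th, w in zip(thresholds, weights) if mag > th)


def peak_scores(mags, height):
    """1.0 at strict local maxima above `height`, else 0.0.
    Simplified stand-in for scipy.signal.find_peaks(mags, height=height)."""
    out = [0.0] * len(mags)
    for t in range(1, len(mags) - 1):
        if mags[t] > height and mags[t] > mags[t - 1] and mags[t] > mags[t + 1]:
            out[t] = 1.0
    return out


def consistency_scores(d, half_window=3):
    """|d[t] - neighbour mean| / (3 * neighbour std), clipped to [0, 1]."""
    out = []
    for t in range(len(d)):
        lo, hi = max(0, t - half_window), min(len(d), t + half_window + 1)
        nbrs = [d[j] for j in range(lo, hi) if j != t]
        if not nbrs:
            out.append(0.0)
            continue
        mu = statistics.fmean(nbrs)
        sd = statistics.pstdev(nbrs) or 1e-8  # avoid division by zero on flat segments
        out.append(min(abs(d[t] - mu) / (3 * sd), 1.0))
    return out


def composite_scores(d, thresholds, threshold_weights, height):
    """Weighted sum of the three per-step scores on the increment series d."""
    mags = [abs(v) for v in d]
    peaks = peak_scores(mags, height)
    cons = consistency_scores(d)
    w_ext, w_peak, w_cons = COMPOSITE_WEIGHTS
    return [
        w_ext * extreme_score(m, thresholds, threshold_weights) + w_peak * p + w_cons * c
        for m, p, c in zip(mags, peaks, cons)
    ]


# Increments with one injected jump at index 3; thresholds and their weights are illustrative.
d = [0.1, -0.1, 0.2, 5.0, -0.1, 0.1, 0.0]
scores = composite_scores(d, thresholds=(0.5, 1.0, 2.0),
                          threshold_weights=(0.2, 0.3, 0.5), height=1.0)
```

Since the three weights sum to 1 and each sub-score lies in [0, 1], the composite score also lies in [0, 1], so a single candidate threshold can be tuned on it directly.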

3.3. Stage 2: Enhanced DeBERTa-v3 Verification and Recovery

3.3.1. Comprehensive Feature Engineering

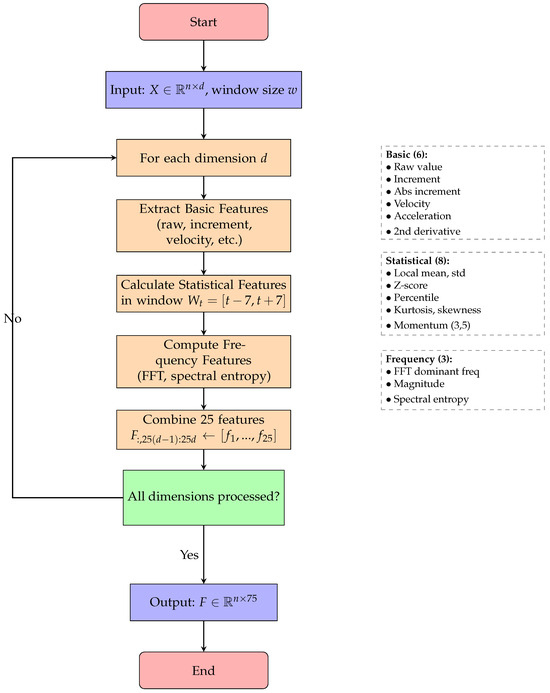

For each candidate anomaly, the system extracts 25 features per dimension, totaling 75 features that comprehensively characterize the temporal context. Algorithm 2 details the systematic feature extraction process, which iterates through each dimension to compute time-domain, frequency-domain, and statistical features, ultimately producing a 75-dimensional feature vector for each time point. Table 2 details the feature categories and their descriptions. Figure 7 provides a detailed visualization of the comprehensive feature extraction process, demonstrating how 25 features per dimension are systematically computed from the raw time series data.
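As a rough illustration of the per-dimension extraction loop, the following pure-Python sketch computes only eight features for the last time step of a window (raw value, increment, second difference as acceleration, local mean, standard deviation, minimum, maximum, and normalized spectral entropy from a direct DFT) and concatenates them across dimensions. It does not reproduce the 25-feature set of Table 2; the selection and function names are assumptions.

```python
import cmath
import math
import statistics


def spectral_entropy(window):
    """Normalized Shannon entropy of the DFT power spectrum, DC term excluded."""
    n = len(window)
    mean = statistics.fmean(window)
    power = []
    for k in range(1, n // 2 + 1):
        coef = sum((x - mean) * cmath.exp(-2j * math.pi * k * t / n)
                   for t, x in enumerate(window))
        power.append(abs(coef) ** 2)
    total = sum(power)
    if total == 0 or len(power) < 2:
        return 0.0
    probs = [p / total for p in power if p > 0]
    return -sum(p * math.log(p) for p in probs) / math.log(len(power))


def point_features(window):
    """Features for the last time step of one dimension's window (needs >= 3 points)."""
    x = window[-1]
    inc = window[-1] - window[-2]
    acc = window[-1] - 2 * window[-2] + window[-3]
    return [x, inc, acc, statistics.fmean(window), statistics.pstdev(window),
            min(window), max(window), spectral_entropy(window)]


def extract(window_rows):
    """window_rows: list of time steps, each a tuple with one value per dimension."""
    feats = []
    for column in zip(*window_rows):
        feats.extend(point_features(list(column)))
    return feats


# Three toy dimensions over a 16-step window: a pure sinusoid, a constant, and a ramp.
rows = [(math.sin(2 * math.pi * 2 * t / 16), 1.0, float(t)) for t in range(16)]
features = extract(rows)  # 3 dimensions x 8 features = 24 values
```

Spectral entropy is near 0 for a single dominant frequency and approaches 1 for a flat, noise-like spectrum, which is why it helps separate oscillation-type tampering from ordinary fluctuations.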

Table 2.

Feature categories and descriptions.

Figure 7.

Flowchart for Algorithm 2: comprehensive feature extraction.

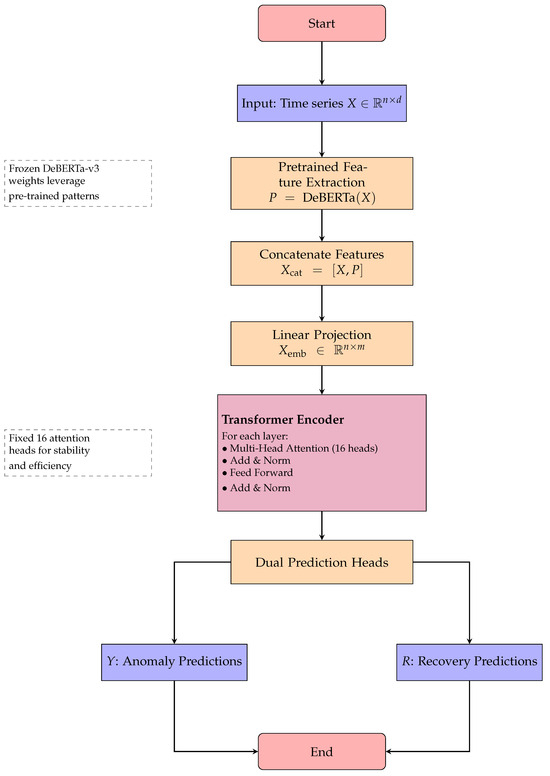

3.3.2. DeBERTa-v3 Architecture Adaptation

The DeBERTa-v3 model undergoes specific adaptations for time series analysis and recovery. The token embedding layer is replaced by a temporal embedding: an input projection that linearly maps the 75-dimensional feature vectors to 768-dimensional embeddings matching the transformer's hidden size. The positional encoding was modified to capture temporal relationships rather than token positions, incorporating both absolute temporal positions and relative time-of-day/day-of-week encodings. Multiscale fusion uses convolutional layers with kernel sizes [3, 5, 7] to capture different temporal scales, and the residual verification head stacks four residual blocks for robust classification and recovery prediction. Algorithm 3 outlines the complete Enhanced Anomaly Transformer, which builds on pretrained DeBERTa-v3 features and uses dual prediction heads for simultaneous anomaly detection and data recovery; Figure 8 depicts this architecture.
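The relative time-of-day/day-of-week encodings can be illustrated with a minimal sin/cos sketch for an hourly series. It assumes the first sample falls at 00:00 on a known weekday; the function name and arguments are hypothetical, and whether such encodings are added to or concatenated with the 768-dimensional projection is left open here.

```python
import math


def cyclic_time_encoding(hour_index, start_weekday=0):
    """sin/cos encodings of hour-of-day and day-of-week for hourly samples.

    hour_index: hours elapsed since the first sample (assumed to start at 00:00)
    start_weekday: weekday of the first sample, 0 = Monday (assumed known)
    """
    hour = hour_index % 24
    weekday = (start_weekday + hour_index // 24) % 7
    return [
        math.sin(2 * math.pi * hour / 24),
        math.cos(2 * math.pi * hour / 24),
        math.sin(2 * math.pi * weekday / 7),
        math.cos(2 * math.pi * weekday / 7),
    ]


# 23:00 and the following midnight end up close together on the daily circle,
# which a raw hour index (23 vs. 0) would not capture.
late, midnight = cyclic_time_encoding(23), cyclic_time_encoding(24)
gap = math.dist(late[:2], midnight[:2])
```

Encoding each cycle as a point on a circle keeps the end and start of a period adjacent, which suits the daily and weekly periodicity of industrial load profiles.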

Figure 8.

Flowchart for Algorithm 3: enhanced anomaly transformer architecture with recovery.

3.3.3. Balanced Loss Function

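To address the class imbalance inherent in anomaly detection and to enable accurate recovery, we minimize the composite objective

$$\mathcal{L} = \mathcal{L}_{\text{focal}} + \lambda_{\text{dice}}\,\mathcal{L}_{\text{dice}} + \lambda_{\text{ent}}\,\mathcal{L}_{\text{ent}} + \lambda_{\text{con}}\,\mathcal{L}_{\text{con}} + \lambda_{\text{MAE}}\,\mathcal{L}_{\text{MAE}},$$

where the focal loss $\mathcal{L}_{\text{focal}}$ with $\alpha = 0.65$ and $\gamma = 1.2$ addresses class imbalance, the Dice loss ($\lambda_{\text{dice}} = 0.5$) improves boundary detection, the entropy regularization term $\mathcal{L}_{\text{ent}}$ prevents overconfident predictions, the contrastive loss ($\lambda_{\text{con}} = 0.03$) enhances feature discrimination, and the MAE loss $\mathcal{L}_{\text{MAE}}$ optimizes recovery accuracy.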
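A minimal pure-Python sketch of the weighted objective follows. It reads the abstract's focal-loss parameters as α = 0.65 and γ = 1.2 (an interpretation) and uses the stated Dice weight of 0.5 and contrastive weight of 0.03. The recovery-loss weight `W_MAE = 1.0` is a placeholder, the entropy regularizer is omitted because its weight and sign convention are not given, and the contrastive term is passed in as a precomputed number rather than computed from embeddings. Function names are hypothetical.

```python
import math

ALPHA, GAMMA = 0.65, 1.2   # focal-loss parameters (interpreted from the abstract)
W_DICE, W_CON = 0.5, 0.03  # Dice and contrastive weights (from the text)
W_MAE = 1.0                # placeholder: the recovery-loss weight is not given


def focal_loss(probs, labels, alpha=ALPHA, gamma=GAMMA, eps=1e-7):
    """Binary focal loss: easy, confident examples are down-weighted by (1 - p_t)^gamma."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)
        if y == 1:
            total += -alpha * (1 - p) ** gamma * math.log(p)
        else:
            total += -(1 - alpha) * p ** gamma * math.log(1 - p)
    return total / len(probs)


def dice_loss(probs, labels, smooth=1.0):
    """Soft Dice loss: 1 - overlap between predicted and true anomaly masks."""
    inter = sum(p * y for p, y in zip(probs, labels))
    return 1 - (2 * inter + smooth) / (sum(probs) + sum(labels) + smooth)


def mae_loss(pred, target):
    return sum(abs(a - b) for a, b in zip(pred, target)) / len(pred)


def balanced_loss(probs, labels, rec_pred, rec_target, contrastive=0.0):
    """Weighted sum; entropy term omitted, contrastive term supplied precomputed."""
    return (focal_loss(probs, labels) + W_DICE * dice_loss(probs, labels)
            + W_CON * contrastive + W_MAE * mae_loss(rec_pred, rec_target))


loss = balanced_loss([0.9, 0.2, 0.7], [1, 0, 1], rec_pred=[1.02, 0.98], rec_target=[1.0, 1.0])
```

The focal term reacts mostly to hard, misclassified points, while the Dice term depends on the overlap of the whole predicted mask, so the two penalize different failure modes under heavy class imbalance.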

3.3.4. Ensemble Verification Strategy

Multiple thresholds support robust decision-making through a voting mechanism over the following thresholds:

The verification criterion implements a multilevel decision process, defined as
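If a single probability were voted over several thresholds under a majority rule, the vote would collapse to one effective threshold, so the sketch below assumes several probability estimates per candidate (for example from different ensemble members or augmented views). The thresholds (0.3, 0.5, 0.7), the 50% vote fraction, and the function name are hypothetical.

```python
THRESHOLDS = (0.3, 0.5, 0.7)  # hypothetical threshold values


def verify(prob_estimates, thresholds=THRESHOLDS, min_fraction=0.5):
    """Flag a candidate when enough (estimate, threshold) votes pass.

    prob_estimates: several anomaly probabilities for the same candidate,
    e.g. from different ensemble members (an assumption of this sketch).
    """
    votes = [p >= th for p in prob_estimates for th in thresholds]
    return sum(votes) / len(votes) >= min_fraction


confident = verify([0.9, 0.8, 0.75])    # every vote passes
borderline = verify([0.6, 0.55, 0.45])  # 5 of 9 votes pass
```

Raising `min_fraction` trades recall for precision without retraining, which makes it a convenient knob at the verification stage.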

3.4. Data Tampering Detection and Recovery

3.4.1. Tampering Detection with TimER

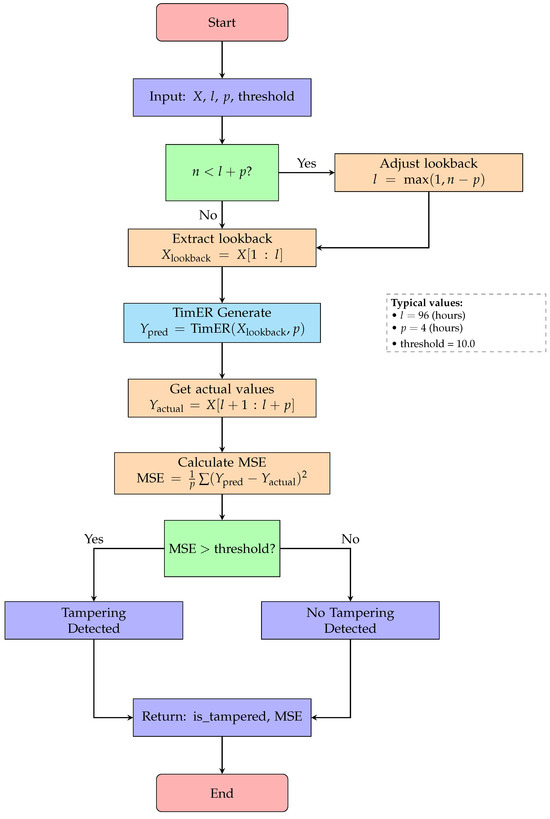

For tampering detection, we employed a multifaceted approach that combines increment-based anomaly detection with TimER-based prediction verification. The TimER model, pretrained on normal power grid operational data, captures complex temporal dependencies and patterns that characterize legitimate consumption behaviors. Algorithm 4 describes the tampering detection procedure using TimER, which employs adaptive lookback adjustment and MSE-based thresholding to identify deviations from expected temporal patterns. Figure 9 illustrates the complete tampering detection workflow using TimER, highlighting the adaptive lookback adjustment and MSE-based threshold mechanism.
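Since TimER itself is not reproduced here, the sketch below substitutes a naive mean forecaster (`naive_forecast`, hypothetical) and shows only the control flow described above: a lookback window that shrinks when less history is available, a forecast for each block of `horizon` steps, and an MSE threshold. The window sizes and `mse_threshold` are illustrative values, not those used in this study.

```python
import statistics


def naive_forecast(history, horizon):
    """Hypothetical stand-in for TimER: repeat the mean of the lookback window."""
    level = statistics.fmean(history)
    return [level] * horizon


def detect_tampering(series, lookback=24, horizon=4, mse_threshold=1.0, min_lookback=4):
    """Slide over non-overlapping horizon blocks; flag blocks whose forecast MSE is too high.

    Returns a list of (block_start, mse) pairs for flagged blocks.
    """
    flagged = []
    for start in range(min_lookback, len(series) - horizon + 1, horizon):
        lb = min(lookback, start)  # adaptive: shorter lookback near the series start
        pred = naive_forecast(series[start - lb:start], horizon)
        actual = series[start:start + horizon]
        mse = sum((p - a) ** 2 for p, a in zip(pred, actual)) / horizon
        if mse > mse_threshold:
            flagged.append((start, mse))
    return flagged


series = [10.0] * 40
series[20] = 30.0  # tampered reading
hits = detect_tampering(series)
```

Later blocks still see the tampered value inside their lookback window, which slightly biases their forecasts; masking flagged points from the history is one way an implementation could avoid this.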

Figure 9.

Flowchart for Algorithm 4: tampering detection with TimER.

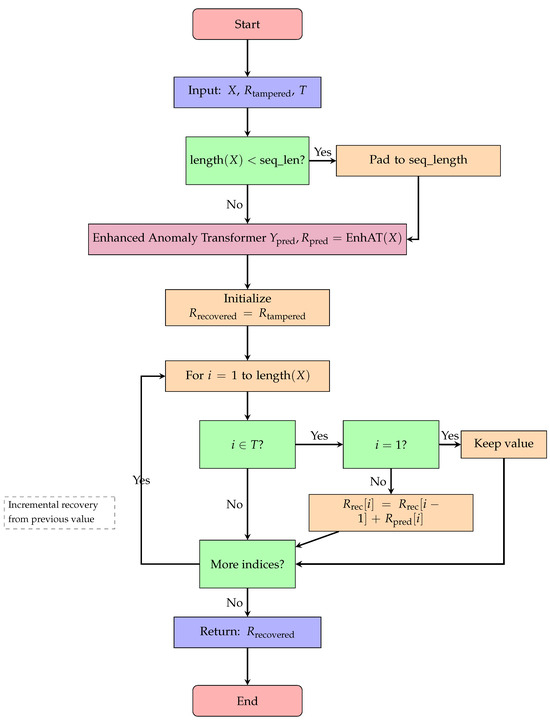

3.4.2. Data Recovery Mechanism

Once tampering is detected, we use the Enhanced Anomaly Transformer to recover the original data. The recovery process operates on the incremental representation of the time series rather than on absolute values, which aligns with the physical continuity of energy consumption. Algorithm 5 presents the incremental recovery mechanism, which iteratively reconstructs tampered values from the Enhanced Anomaly Transformer’s predictions and the temporal continuity of the power consumption data. Figure 10 shows the detailed recovery flow, including the incremental recovery approach and special handling of edge cases.
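The incremental recovery step, x_hat[i] = x_hat[i-1] + predicted increment, can be sketched in pure Python as follows. Tampered indices are processed in ascending order so that consecutive tampered points chain on already-recovered values. The increment predictor is a hypothetical stand-in (mean of recent increments) for the Enhanced Anomaly Transformer, and back-filling index 0 from the next clean point is an assumed edge-case rule.

```python
def mean_recent_increment(history, k=3):
    """Hypothetical stand-in predictor: mean of the last k increments of the history."""
    if len(history) < 2:
        return 0.0
    incs = [b - a for a, b in zip(history, history[1:])][-k:]
    return sum(incs) / len(incs)


def recover(series, tampered, predict_increment=mean_recent_increment):
    """Rebuild flagged points as x_hat[i] = x_hat[i-1] + predicted increment."""
    recovered = list(series)
    for i in sorted(tampered):
        if i == 0:
            # Edge case (assumed rule): no predecessor, so copy the next clean value.
            nxt = next((j for j in range(1, len(series)) if j not in tampered), None)
            if nxt is not None:
                recovered[0] = series[nxt]
            continue
        # recovered[:i] already holds repaired values for earlier tampered indices.
        recovered[i] = recovered[i - 1] + predict_increment(recovered[:i])
    return recovered


def mae(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


clean = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
observed = clean[:4] + [100.0, 200.0] + clean[6:]
restored = recover(observed, {4, 5})
```

Predicting increments rather than levels keeps each reconstruction anchored to the last trusted value, so an error in the predicted increment shifts the result by that amount instead of by the full magnitude of a mispredicted level.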

Figure 10.

Flowchart for Algorithm 5: data recovery process.

The integration of TimER predictions provides additional context for the recovery process, allowing our model to generate more accurate reconstructions of the original data. The TimER model, with its specialized causal attention mechanism, captures both short-term fluctuations and long-term patterns in power consumption. This contextual information helps refine the incremental predictions generated by the Enhanced Anomaly Transformer.

3.4.3. Anomaly Types and Detection Criteria

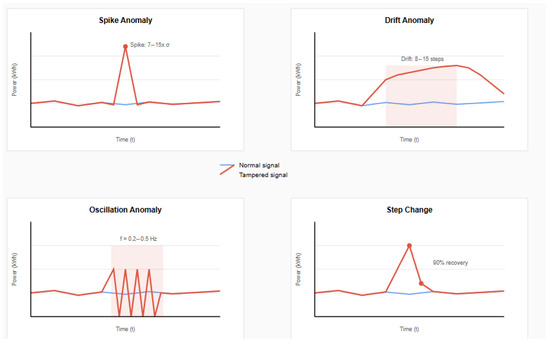

Four anomaly types were used to simulate realistic tampering scenarios in smart grid environments. Each pattern represents specific attack methodologies observed in real-world incidents.

Spike anomalies represent instantaneous data manipulation attempts, where attackers inject false readings to mask actual consumption. These manifest as single-point deviations exceeding 2.5 standard deviations from the local mean:

where intensity standard deviation.

Drift anomalies simulate gradual manipulation over 8–15 time steps, representing sustained cyberattacks that gradually alter consumption patterns to avoid detection and are defined as follows:

Oscillation anomalies introduce periodic tampering patterns with artificial frequency components into the power consumption signal:

where frequency .

Step changes represent sudden level shifts with partial recovery, simulating permanent changes in consumption baseline followed by gradual return to normal patterns.
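The four anomaly types can be sketched as an injection routine. Only the spike floor of 2.5 standard deviations and the 8–15 step drift length come from the text; the upper spike bound, drift slope, oscillation frequency and length, and the step's decay rule are placeholder assumptions. Names are hypothetical.

```python
import math
import random
import statistics


def inject(series, kind, start, rng, sigma=None):
    """Return a copy of `series` with one anomaly of the given kind starting at `start`."""
    x = list(series)
    sigma = statistics.pstdev(series) if sigma is None else sigma
    n = len(x)
    if kind == "spike":
        x[start] += rng.uniform(2.5, 4.0) * sigma       # single point, >= 2.5 sigma
    elif kind == "drift":
        length = rng.randint(8, 15)                      # 8-15 steps (from the text)
        slope = rng.uniform(0.1, 0.3) * sigma            # assumed slope per step
        for k in range(min(length, n - start)):
            x[start + k] += slope * (k + 1)
    elif kind == "oscillation":
        length, freq = 12, rng.uniform(0.1, 0.4)         # assumed length and frequency
        for k in range(min(length, n - start)):
            x[start + k] += sigma * math.sin(2 * math.pi * freq * k)
    elif kind == "step":
        shift, decay = 2.0 * sigma, 0.8                  # assumed: decays to half the shift
        for k in range(n - start):
            x[start + k] += shift * (0.5 + 0.5 * decay ** k)
    else:
        raise ValueError(f"unknown anomaly kind: {kind}")
    return x


base = [10.0 + math.sin(t / 3) for t in range(100)]
rng = random.Random(0)
sigma = statistics.pstdev(base)
spiked = inject(base, "spike", 40, rng)
drifted = inject(base, "drift", 20, rng)
stepped = inject(base, "step", 60, rng)
```

Scaling every magnitude by the series' standard deviation keeps the injected anomalies comparable across dimensions with very different units and ranges.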

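An increment $\Delta x_t$ is considered significantly anomalous when

$$\left|\Delta x_t - \mu_t\right| > k\,\sigma_t,$$

where $\mu_t$ and $\sigma_t$ are the mean and standard deviation of the increments, computed over a 48-hour sliding window to capture normal operational variations, and $k$ is the detection threshold multiplier.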
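A minimal sketch of a sliding-window criterion of this kind on a single hourly dimension follows. The mean and standard deviation come from the 48 increments preceding each step (a trailing window is an assumption; a centred window would also fit the description), and the multiplier `k = 2.5` is a hypothetical choice that mirrors the 2.5-standard-deviation spike definition. The function name is hypothetical.

```python
import statistics


def flag_increments(series, window=48, k=2.5):
    """Return indices i whose incoming increment x[i] - x[i-1] deviates from the
    trailing window's increment mean by more than k standard deviations."""
    d = [b - a for a, b in zip(series, series[1:])]
    flags = []
    for t in range(window, len(d)):
        ref = d[t - window:t]  # previous 48 increments (48 h of hourly data)
        mu, sd = statistics.fmean(ref), statistics.pstdev(ref)
        if sd > 0 and abs(d[t] - mu) > k * sd:
            flags.append(t + 1)  # d[t] is the jump into x[t + 1]
    return flags


series = [float(t % 2) for t in range(100)]  # regular 0/1 alternation
series[70] += 10.0                           # injected spike
flags = flag_increments(series)
```

The spike is flagged twice, once for the jump into index 70 and once for the drop back at index 71, so an increment-domain detector needs a post-processing rule that maps such opposite-signed pairs back to a single tampered point.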

3.4.4. Sample Weighting Strategy

Optimized weights address class imbalance through careful prioritization. True positives receive weight $w_{\mathrm{TP}}$ to establish strong positive examples. False positives are assigned weight $w_{\mathrm{FP}}$ to reduce over-rejection while maintaining discrimination. Missed positives receive the maximum weight, $w_{\mathrm{FN}} = 10.0$, to force improved recall on challenging cases. True negatives keep the baseline weight $w_{\mathrm{TN}}$.
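The weighting rule can be written as a lookup keyed on the ground-truth label and a previous prediction, followed by a weighted binary cross-entropy. Only the missed-positive weight of 10.0 comes from the text; the other three values are hypothetical placeholders, and treating "missed" as relative to a previous prediction (for example Stage 1's output or the previous epoch) is an assumption.

```python
import math

W_TP, W_FP, W_FN, W_TN = 2.0, 1.5, 10.0, 1.0  # only W_FN = 10.0 is from the text


def sample_weight(label, previous_prediction):
    """Training weight for one sample given its label (0/1) and a prior prediction (0/1)."""
    if label == 1:
        return W_TP if previous_prediction == 1 else W_FN  # missed positives weigh most
    return W_FP if previous_prediction == 1 else W_TN


def weighted_bce(probs, labels, previous, eps=1e-7):
    """Weighted binary cross-entropy, normalized by the total weight."""
    total, norm = 0.0, 0.0
    for p, y, prev in zip(probs, labels, previous):
        w = sample_weight(y, prev)
        p = min(max(p, eps), 1 - eps)
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
        norm += w
    return total / norm
```

For example, a positive that was previously missed and is still scored at 0.2 contributes five times more to the loss than the same positive would if it had been caught, pushing the verifier toward recall on exactly those cases.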

4. Experimental Setup

4.1. Dataset Description

The experiments utilized real-world smart grid measurements from industrial facilities in Germany, which were collected through Advanced Metering Infrastructure (AMI) systems deployed across multiple sites. The data encompass diverse operational conditions, including seasonal variations, load fluctuations, and equipment maintenance periods, providing a comprehensive testbed for anomaly detection and recovery algorithms. Table 3 summarizes the key specifications of our experimental dataset.

Table 3.

Dataset specifications.

Data preprocessing followed a rigorous protocol to ensure data quality and consistency. Quality validation removed physically impossible readings, including negative consumption and generation exceeding solar irradiance limits. MinMax scaling normalized each dimension to the range [0, 1] while preserving the relative magnitudes essential for anomaly detection. First-order differences were calculated for change-based detection in the increment domain. For feature engineering, 32-step windows were extracted with 50% overlap. Synthetic anomalies were inserted subject to temporal spacing constraints to ensure realistic evaluation scenarios.
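A minimal sketch of the scaling and windowing steps: MinMax scaling of one dimension with a range fitted beforehand, and extraction of 32-step windows with 50% overlap (a stride of 16). Function names and the toy values are hypothetical.

```python
def fit_minmax(values):
    """Return (min, max) fitted on training data."""
    return min(values), max(values)


def minmax_scale(values, lo, hi):
    """Map values to [0, 1] using a previously fitted range; a flat range maps to 0."""
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def sliding_windows(values, size=32, overlap=0.5):
    """Fixed-size windows; 50% overlap gives a stride of size // 2."""
    step = max(1, int(size * (1 - overlap)))
    return [values[s:s + size] for s in range(0, len(values) - size + 1, step)]


train = [2.0, 4.0, 6.0]
lo, hi = fit_minmax(train)
windows = sliding_windows(list(range(64)))  # starts at 0, 16, 32
```

Fitting the range on the training split only and reusing it for the test split avoids leaking test-set information; test values outside the training range then fall slightly outside [0, 1], which is itself a useful anomaly signal.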

4.2. Training Process Organization

The training process followed a systematic five-phase approach designed to ensure robust model development and evaluation.

Phase 1 established data preparation and baseline statistics. The system loaded 2000 hourly measurements spanning 24 months, applied a stratified 80/20 split preserving seasonal characteristics, computed baseline statistics on training data for Stage 1 calibration, and established normal operation boundaries using interquartile ranges.

Phase 2 configured the Stage 1 detector through statistical analysis. Increment-based statistics were calculated from the training data, detection thresholds were calibrated using percentile analysis (P90, P95, P99), composite scoring weights were optimized through grid search, and the detection rate was validated against a target of 6–8% of all data points.

Phase 3 generated synthetic anomalies for training. The system created 100 training scenarios with controlled anomaly characteristics, injected 3–6 anomalies per scenario with a minimum 15-step separation, ensured balanced representation across all four anomaly types, and maintained realistic magnitude distributions based on historical attack patterns.
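The scenario generation step can be sketched with rejection sampling of anomaly positions. The 3–6 anomalies per scenario, the 15-step minimum separation, and the four types come from the text; the 200-step series length, cycling through types to balance them, and all names are assumptions.

```python
import random

TYPES = ("spike", "drift", "oscillation", "step")


def sample_positions(length, n, min_gap, rng, max_tries=1000):
    """Rejection-sample n sorted start indices that are at least min_gap apart."""
    for _ in range(max_tries):
        pos = sorted(rng.sample(range(length), n))
        if all(b - a >= min_gap for a, b in zip(pos, pos[1:])):
            return pos
    raise RuntimeError("could not place anomalies; relax min_gap or lower n")


def make_scenarios(num, length, rng, min_gap=15):
    """Each scenario: 3-6 (position, anomaly type) pairs, types cycled for balance."""
    scenarios = []
    for s in range(num):
        n = rng.randint(3, 6)
        positions = sample_positions(length, n, min_gap, rng)
        kinds = [TYPES[(s + k) % len(TYPES)] for k in range(n)]
        scenarios.append(list(zip(positions, kinds)))
    return scenarios


scenarios = make_scenarios(100, 200, random.Random(42))  # 200-step length is hypothetical
```

Multistep anomalies such as drift can still run past the end of the series when placed near it; reserving a margin at the end of the sampling range would prevent truncated anomalies.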

Phase 4 implemented Stage 2 neural network training with recovery capability. The process extracted 32-step temporal windows around detected candidates; computed 75-dimensional feature representations; trained the DeBERTa-v3 model with the balanced loss function, including the recovery loss; applied data augmentation (time warping, Gaussian noise, and mixup) with 20% probability; and used early stopping once the validation F1-score and recovery MAE plateaued.
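The augmentation step can be sketched as follows: with probability 0.2, one of time warping, Gaussian noise, or mixup is applied to a training window. The noise level, warp range, Beta parameter, and the simple uniform-stretch warp (rather than a smooth random warping curve) are assumptions, and all names are hypothetical.

```python
import random


def gaussian_noise(x, rng, sigma=0.01):
    return [v + rng.gauss(0.0, sigma) for v in x]


def time_warp(x, rng, max_stretch=0.2):
    """Uniformly stretch or compress the time axis and resample by linear interpolation.
    Positions past the end are clamped to the last value (a simplification)."""
    n = len(x)
    factor = 1.0 + rng.uniform(-max_stretch, max_stretch)
    out = []
    for i in range(n):
        pos = min(i * factor, n - 1)
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        out.append(x[lo] + (x[hi] - x[lo]) * (pos - lo))
    return out


def mixup(x1, y1, x2, y2, rng, alpha=0.2):
    """Convex combination of two samples and their labels, lambda ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    return [lam * a + (1 - lam) * b for a, b in zip(x1, x2)], lam * y1 + (1 - lam) * y2


def augment(x, y, pool, rng, p=0.2):
    """With probability p, apply one randomly chosen augmentation; otherwise return (x, y)."""
    if rng.random() >= p:
        return x, y
    choice = rng.choice(("warp", "noise", "mixup"))
    if choice == "warp":
        return time_warp(x, rng), y
    if choice == "noise":
        return gaussian_noise(x, rng), y
    x2, y2 = rng.choice(pool)
    return mixup(x, y, x2, y2, rng)


rng = random.Random(7)
pool = [([0.0] * 32, 0), ([1.0] * 32, 1)]
aug_x, aug_y = augment([0.5] * 32, 1, pool, rng, p=1.0)
```

Mixup produces soft labels between 0 and 1, which the focal and Dice terms accept directly since both are defined on probabilities.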

Phase 5 integrated and validated the complete system, including recovery. Both stages were combined into a unified pipeline with a recovery module; the pipeline was tested on 15 independent scenarios distinct from the training data; end-to-end performance, including computational efficiency and recovery accuracy, was measured; and ablation studies quantified the contribution of each component.

4.3. Implementation Environment

The experimental evaluation was conducted on a high-performance computing platform specifically configured for deep learning tasks. The hardware configuration included an Intel Core i9-14900HX processor (Intel Corporation, Santa Clara, CA, USA) operating at 2.20 GHz, paired with an NVIDIA GeForce RTX 4060 GPU with 8GB memory (NVIDIA Corporation, Santa Clara, CA, USA), and 16GB DDR5 memory (manufacturer specifications vary by system integrator). The implementation leveraged both CPU and GPU acceleration to ensure efficient training and inference of the proposed two-stage anomaly detection and recovery system.

The software environment was built on PyTorch (Version 2.0.1+cu118, Meta AI, Menlo Park, CA, USA) running on Python (Version 3.10.11, Python Software Foundation, Wilmington, DE, USA). Key numerical computation libraries included NumPy (Version 1.24.3) and SciPy (Version 1.11.1) for statistical analysis and signal processing. The Transformers library (Version 4.35.0, Hugging Face, New York, NY, USA) provided the DeBERTa-v3 implementation, while TimER was used for time series generation tasks. Table 4 presents the detailed hardware and software specifications used throughout our experiments.

Table 4.

Hardware and software specifications.

4.4. Model Configuration

Table 5 presents the hyperparameter settings used for both stages of our detection system and the recovery module.

Table 5.

Hyperparameter settings.

5. Results and Analysis

5.1. Overall Performance Comparison

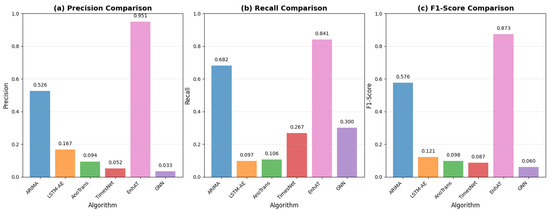

Figure 11 provides a visual comparison of all evaluated methods across four key metrics (precision, recall, F1-score, and recovery MAE), with error bars showing the standard deviation across test scenarios.

Figure 11.

Performance comparison showing (a) precision, (b) recall, (c) F1-score, and (d) recovery MAE, with error bars representing the standard deviation across 15 test scenarios. EnhAT significantly outperformed all baseline methods (p < 0.001 for all comparisons).

Table 6 presents the detailed performance metrics for all evaluated methods, including statistical significance tests confirming the superiority of our approach.

Table 6.

Detailed performance metrics with statistical significance.

The results show improvements across all metrics. EnhAT achieved an F1-score of 0.873, a 51.4% improvement over the best baseline (ARIMA). Precision rose from 0.526 to 0.951, an 80.6% relative improvement that substantially reduced false alarms. Recall remained at 84.1%, only 18.8% below ARIMA’s high-recall, low-precision operating point, thereby preserving security coverage. Most importantly, the recovery MAE of 0.0055 kWh represents a 99.91% reduction relative to ARIMA and a 47.2× improvement over Lag-LLaMA. All improvements were statistically significant (p < 0.001 after Bonferroni correction). The GNN-based approach performed particularly poorly, with an F1-score of 0.060 and no recovery capability, consistent with its limited ability to model temporal structure in time series anomaly detection.

The exceptional recovery performance of our DeBERTa-based implementation can be attributed to the model’s ability to effectively capture and interpret the attention patterns within the time series data, as visualized in Figure 12.

Figure 12.

Comparative analysis of DeBERTa-v3 and Lag-LLaMA model architectures and performance.

5.2. Stagewise Performance Analysis

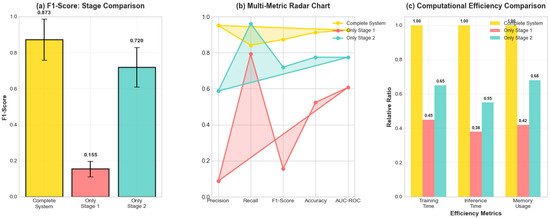

Table 7 and Figure 13 show the complementary contributions of each stage in the two-stage architecture. Figure 13 traces the progression of performance metrics through the two stages and includes a multi-metric radar chart illustrating the effect of combining them.

Table 7.

Stagewise performance breakdown.

Figure 13.

Stagewise analysis showing (a) F1-score progression, (b) multi-metric radar chart, and (c) computational efficiency comparison for individual stages versus complete system performance.

The two-stage architecture showed strong synergistic effects. Stage 1 alone achieved high recall (79.3%) but poor precision (8.7%), yielding an F1-score of only 0.155, which is consistent with its intended role as a high-sensitivity screen. Stage 2 alone showed balanced performance (F1 = 0.720) but lacked the broad coverage provided by Stage 1's aggressive detection. Combining the two stages raised the F1-score to 0.873.
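The interplay between an aggressive screen and a conservative verifier can be illustrated with a toy cascade. The sketch below is a simplification under stated assumptions: a point is flagged only if its Stage 1 score clears one threshold AND its Stage 2 probability clears another, and the scores, thresholds, and labels are hypothetical. Under this strict AND-gating the cascade's recall is bounded by Stage 1's recall; the paper's actual combination rule is not specified in this section and may differ.

```python
def prf1(pred: list[bool], truth: list[bool]) -> tuple[float, float, float]:
    """Precision, recall, and F1 for point-wise binary predictions."""
    tp = sum(p and t for p, t in zip(pred, truth))
    fp = sum(p and not t for p, t in zip(pred, truth))
    fn = sum(t and not p for p, t in zip(pred, truth))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def cascade(stage1_scores, stage2_probs, t1: float, t2: float) -> list[bool]:
    """Flag a point only if Stage 1 screens it in AND Stage 2 confirms it."""
    return [s1 >= t1 and s2 >= t2 for s1, s2 in zip(stage1_scores, stage2_probs)]


# Hypothetical toy data: two true anomalies among six points.
truth = [False, True, False, True, False, False]
stage1_scores = [0.9, 0.8, 0.7, 0.95, 0.1, 0.6]  # aggressive screen: many candidates
stage2_probs = [0.2, 0.9, 0.1, 0.85, 0.9, 0.3]   # verifier probabilities

stage1_only = [s >= 0.5 for s in stage1_scores]
stage2_only = [p >= 0.5 for p in stage2_probs]
combined = cascade(stage1_scores, stage2_probs, t1=0.5, t2=0.5)

for name, pred in [("Stage 1", stage1_only), ("Stage 2", stage2_only), ("Cascade", combined)]:
    print(name, prf1(pred, truth))
```

In this toy case Stage 1 alone has perfect recall but low precision, Stage 2 alone admits one false positive, and the cascade removes both kinds of error.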

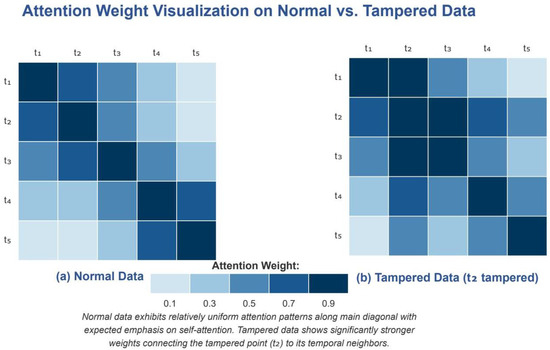

5.3. Attention Mechanism Analysis for Recovery

To better understand the underlying mechanism enabling precise recovery, we visualized the attention weights in our multihead self-attention layer for both normal and tampered data sequences, as shown in Figure 14.

Figure 14.

Attention weight visualization on normal vs. tampered data. (a) Normal data show a relatively uniform attention distribution, with emphasis on the diagonal and recent values. (b) Tampered data show concentrated attention patterns connecting the tampered position to its temporal neighbors, enabling accurate recovery.

As illustrated in Figure 14a, the attention pattern for normal data exhibited a relatively uniform distribution, with the expected emphasis on the diagonal (self-attention) and recent historical values. In contrast, Figure 14b shows that when tampering occurred, the attention mechanism displayed a distinct pattern, with markedly stronger weights connecting the tampered point to its temporal neighbors. This concentrated attention suggests that the model identifies the anomalous point and draws on the surrounding context to reconstruct its value.
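Figure 14 is interpreted qualitatively. One way to put a number on "attention connecting the tampered point to its temporal neighbors" is to measure how much of that position's attention row falls within a small window around it. The sketch below is a hypothetical illustration: it computes plain single-head scaled dot-product attention weights with random projections (not the trained model's weights) and a `neighbor_mass` score; the window radius is an arbitrary assumption. Comparing this score between normal and tampered windows would make the contrast in Figure 14 quantitative.

```python
import numpy as np


def attention_weights(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray) -> np.ndarray:
    """Row-stochastic single-head attention weights softmax(QK^T / sqrt(d)) for x of shape (T, d_in)."""
    q, k = x @ w_q, x @ w_k
    scores = q @ k.T / np.sqrt(q.shape[1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability before exp
    w = np.exp(scores)
    return w / w.sum(axis=1, keepdims=True)


def neighbor_mass(attn: np.ndarray, pos: int, radius: int = 2) -> float:
    """Share of row `pos`'s attention on temporal neighbors within `radius`, excluding itself."""
    lo, hi = max(0, pos - radius), min(attn.shape[1], pos + radius + 1)
    return float(attn[pos, lo:hi].sum() - attn[pos, pos])


# Hypothetical example: random projections over a 24-step, 3-variable window.
rng = np.random.default_rng(0)
x = rng.normal(size=(24, 3))
attn = attention_weights(x, rng.normal(size=(3, 8)), rng.normal(size=(3, 8)))
print(f"neighbor attention mass at t=12: {neighbor_mass(attn, 12):.3f}")
```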

5.3.1. Component Contribution Analysis

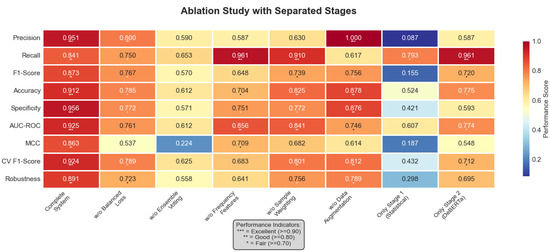

Figure 15 presents a comprehensive ablation study visualization, including the relative performance changes and precision–recall trade-offs when individual components were removed.

Figure 15.

Comprehensive ablation study with performance metrics heatmap. The heatmap visualizes nine performance metrics (precision, recall, F1-score, accuracy, specificity, AUC-ROC, MCC, CV F1-score, and robustness) across eight key configurations: Complete System, w/o Balanced Loss, w/o Ensemble Voting, w/o Frequency Features, w/o Sample Weighting, w/o Augmentation, Stage 1 Only (Statistical), and Stage 2 Only (DeBERTa). Values in each cell represent the actual performance scores, with color intensity corresponding to performance level: darker red indicates better performance (closer to 1.0), while darker blue indicates worse performance (closer to 0.0). Asterisks indicate performance tiers, as shown in the legend: *** = Excellent (≥0.90), ** = Good (≥0.80), * = Fair (≥0.70). The Complete System achieved the best overall performance, with an F1-score of 0.873 ± 0.114.

Table 8 provides detailed ablation study results showing the impact of removing each component on overall system performance.

Table 8.

Detailed ablation study results.

The ablation analysis quantifies the contribution of each component. Ensemble voting proved the most critical for detection: removing it degraded the F1-score by 34.7%.
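The abstract describes the ensemble component as multithreshold voting. Its members and thresholds are not specified in this section, so the following is a hedged sketch of one common form of the idea: several verifier outputs (for example, different checkpoints or seeds, which is an assumption here), each compared against its own threshold, with a point flagged only when enough members agree. All probabilities and thresholds are hypothetical.

```python
from typing import Sequence


def ensemble_vote(member_probs: Sequence[Sequence[float]],
                  thresholds: Sequence[float],
                  min_votes: int) -> list[bool]:
    """Flag a point when at least `min_votes` members exceed their own threshold.

    member_probs[m][i] is member m's anomaly probability for point i.
    """
    n_points = len(member_probs[0])
    flags = []
    for i in range(n_points):
        votes = sum(probs[i] >= t for probs, t in zip(member_probs, thresholds))
        flags.append(votes >= min_votes)
    return flags


# Hypothetical: three verifier members, each with its own decision threshold.
member_probs = [
    [0.45, 0.90, 0.20],
    [0.55, 0.80, 0.10],
    [0.50, 0.70, 0.65],
]
print(ensemble_vote(member_probs, thresholds=[0.4, 0.5, 0.6], min_votes=2))
```

Raising `min_votes` trades recall for precision, which is the knob such a voting scheme exposes.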

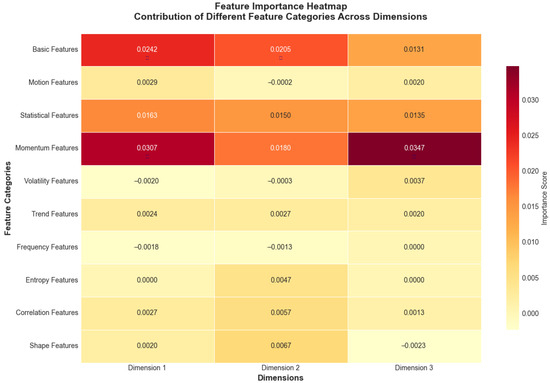

5.3.2. Feature Importance Analysis

The relative importance of different feature categories is visualized in Figure 16, showing that frequency domain features contributed most significantly to the anomaly detection performance.

Figure 16.

Feature importance heatmap showing contribution of different feature categories across dimensions. Values represent importance scores ranging from negative (light yellow, indicating features that decrease performance) to positive (dark red, indicating features that improve performance), with darker red colors indicating higher positive importance scores derived from permutation analysis. Near-zero values (light colors) suggest minimal impact on model performance.
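The caption states that importance scores come from permutation analysis. A minimal, generic version of permutation importance is sketched below: shuffle one feature column at a time and record the drop in a score, so that negative values mean shuffling actually helped. The toy data, the `accuracy` metric, and the conversion of positive importances into percentage shares (one possible way to arrive at figures like those in the next paragraph) are assumptions, not the paper's procedure.

```python
import numpy as np


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Mean drop in `metric` when each feature column is shuffled independently.

    Negative values mean shuffling the feature improved the score.
    """
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - metric(y, predict(X_perm)))
        importances[j] = np.mean(drops)
    return importances


def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))


def predict(data):
    """Stand-in "model": uses only feature 0."""
    return data[:, 0] > 0


# Hypothetical toy problem: feature 0 determines the label, feature 1 is noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
y = X[:, 0] > 0

imp = permutation_importance(predict, X, y, accuracy)
positive = np.clip(imp, 0, None)
shares = positive / positive.sum() * 100  # percent shares (assumed normalization)
print(imp, shares)
```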

The feature category contributions averaged across dimensions reveal the multifaceted nature of anomaly detection and recovery. The frequency-domain features contributed 28.3 ± 3.2%, capturing periodic tampering patterns invisible in the time domain. The statistical features accounted for 24.7 ± 2.8%, detecting distribution shifts and outliers. The trend features provided 19.1 ± 2.1% importance, identifying gradual manipulation attempts. The basic features contributed 15.6 ± 1.9%, providing fundamental magnitude information. The entropy features added 12.3 ± 1.4%, quantifying information content changes indicative of tampering.
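The five feature categories can be illustrated with a small per-window extractor. The sketch below is hypothetical: it computes one or two representative features per category (basic, statistical, trend, frequency, entropy) for a single variable, and it does not reproduce the paper's 25-dimensional feature set, whose exact definitions are not given in this section. Frequencies are expressed in cycles per sample.

```python
import numpy as np


def window_features(x, n_bins: int = 10) -> dict[str, float]:
    """Illustrative per-variable features, one or two per category named in Section 5.3.2."""
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x))
    feats: dict[str, float] = {}

    # Basic: magnitude information.
    feats["last"] = float(x[-1])
    feats["mean"] = float(x.mean())

    # Statistical: spread and robust dispersion (sensitive to distribution shifts).
    feats["std"] = float(x.std())
    q75, q25 = np.percentile(x, [75, 25])
    feats["iqr"] = float(q75 - q25)

    # Trend: least-squares slope per time step (gradual manipulation).
    feats["slope"] = float(np.polyfit(t, x, 1)[0])

    # Frequency: dominant non-DC frequency of the mean-removed window.
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    freqs = np.fft.rfftfreq(len(x))
    ac_power, ac_freqs = power[1:], freqs[1:]
    total = ac_power.sum()
    if total > 0:
        p = ac_power / total
        feats["dominant_freq"] = float(ac_freqs[np.argmax(ac_power)])
        # Entropy: spectral entropy in bits (low for a single clean periodicity).
        feats["spectral_entropy"] = float(-(p[p > 0] * np.log2(p[p > 0])).sum())
    else:  # constant window: no oscillatory content
        feats["dominant_freq"] = 0.0
        feats["spectral_entropy"] = 0.0

    # Entropy: Shannon entropy (bits) of the value histogram.
    counts, _ = np.histogram(x, bins=n_bins)
    q = counts[counts > 0] / counts.sum()
    feats["hist_entropy"] = float(-(q * np.log2(q)).sum())
    return feats


# Example: a clean periodic signal with a period of 8 samples over a 64-sample window.
sine = np.sin(2 * np.pi * np.arange(64) / 8)
print(window_features(sine))
```

A periodic tampering pattern injected into an otherwise smooth window would typically shift `dominant_freq` and raise `spectral_entropy`, which is the kind of signal the frequency and entropy categories are meant to capture.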

5.4. Training Dynamics and Convergence Analysis

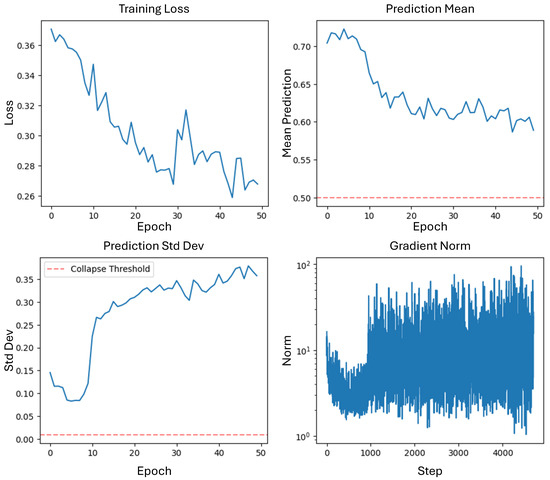

Figure 17 illustrates the stable convergence of both the detection and recovery losses during training, with gradient norm analysis confirming the absence of optimization pathologies.

Figure 17.

Training dynamics showing (top-left) detection loss convergence from 0.371 to 0.268, (top-right) prediction mean stabilization around 0.60 ± 0.05, (bottom-left) increasing prediction standard deviation from 0.08 to 0.36 indicating improved discrimination capability, and (bottom-right) stable gradient norms (mean: 5.2, range: [1.1, 102]) throughout training.

Several observations indicate effective training dynamics. Both the detection and recovery losses converged smoothly without oscillations, indicating an appropriate learning rate (1 × 10) selection. The recovery loss improved particularly rapidly in early epochs, stabilizing at 0.0089. The prediction standard deviation increased from 0.08 to 0.36, indicating enhanced discriminative capability. Although variable (coefficient of variation of 0.87), the gradient norms stayed within [1.1, 102], showing no evidence of gradient explosion or vanishing during backpropagation through the deep architecture.
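The gradient-norm statistics quoted above (mean, range, coefficient of variation) can be computed from per-step norms logged during training. In PyTorch, `torch.nn.utils.clip_grad_norm_` returns the total norm of the parameters' gradients and is a common way to log it; the dependency-free sketch below shows the same global L2 norm and the summary statistics. Whether the paper used the population or sample standard deviation for the coefficient of variation is not stated; the population version is an assumption here.

```python
import math
import statistics


def global_grad_norm(grads: list[list[float]]) -> float:
    """Global L2 norm over all (flattened) parameter gradients for one training step."""
    return math.sqrt(sum(g * g for tensor in grads for g in tensor))


def norm_summary(norms: list[float]) -> dict[str, float]:
    """Mean, range, and coefficient of variation (population std / mean) of logged norms."""
    mean = statistics.fmean(norms)
    return {
        "mean": mean,
        "min": min(norms),
        "max": max(norms),
        "cv": statistics.pstdev(norms) / mean,
    }


# Hypothetical per-step gradients for two parameter tensors.
step_norm = global_grad_norm([[3.0, 0.0], [4.0]])
print(step_norm, norm_summary([1.0, 3.0, 2.0, 2.0]))
```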

5.5. Computational Efficiency Analysis

Table 9 presents detailed computational performance metrics for each component of our system.

Table 9.

Detailed computational performance metrics.

The system demonstrates efficiency suitable for operational deployment. The total latency of 66.6 ms supports monitoring at up to about 15 Hz, far exceeding typical smart meter reporting frequencies. The recovery module adds only 3.2 ms (4.8%) to the total processing time. GPU utilization of 42% leaves substantial headroom for batch processing or multistream monitoring, and the 2.3 GB peak memory usage fits comfortably within edge device constraints, enabling deployment at substations.
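The throughput and overhead figures follow directly from the latency numbers: 1000 / 66.6 ms ≈ 15 inferences per second, and 3.2 / 66.6 ≈ 4.8%. The sketch below reproduces that arithmetic and shows a simple wall-clock timing loop with warm-up runs; the callable being timed is a placeholder. When timing GPU inference in PyTorch, calling `torch.cuda.synchronize()` before reading the clock is needed because CUDA kernels launch asynchronously; that step is omitted from this CPU-only sketch.

```python
import statistics
import time


def max_rate_hz(latency_ms: float) -> float:
    """Upper bound on sequential inference rate implied by a per-call latency."""
    return 1000.0 / latency_ms


def share_of_total(part_ms: float, total_ms: float) -> float:
    """Percentage of total latency spent in one component."""
    return 100.0 * part_ms / total_ms


def time_inference(fn, x, n_warmup: int = 5, n_runs: int = 50) -> tuple[float, float]:
    """Mean and sample standard deviation of wall-clock latency in milliseconds."""
    for _ in range(n_warmup):  # warm-up: caches, lazy initialization, JIT, etc.
        fn(x)
    samples = []
    for _ in range(n_runs):
        start = time.perf_counter()
        fn(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.fmean(samples), statistics.stdev(samples)


print(f"{max_rate_hz(66.6):.1f} Hz, recovery share {share_of_total(3.2, 66.6):.1f}%")
print(time_inference(sum, list(range(10_000))))  # placeholder workload
```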

5.6. Multipoint Tampering Robustness Analysis

Figure 18 shows the four distinct anomaly patterns used in our robustness evaluation, each representing a different attack vector commonly observed in smart grid security incidents.

Figure 18.

Four types of anomaly patterns used for robustness evaluation: (top-left) spike anomaly showing sudden single-point deviation with intensity 7–15× standard deviation, (top-right) drift anomaly demonstrating gradual manipulation over 8–15 steps with decay pattern, (bottom-left) oscillation anomaly with periodic tampering pattern introducing artificial frequency components (f = 0.2–0.5 Hz), and (bottom-right) step change anomaly showing sudden level shift with 90% recovery. Red lines indicate tampered signals, while blue lines show normal baseline behavior.
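A simple way to generate the four patterns in Figure 18 for testing is to add a parameterized offset to a clean series. The sketch below follows the ranges in the caption where they are given (spike intensity in standard deviations, drift duration, oscillation frequency, 90% step recovery). The default magnitudes, the geometric form of the drift, the reading of f as cycles per sample, and the linear recovery of the step are assumptions made for illustration, not the paper's generator.

```python
import numpy as np


def inject_spike(x, pos, sigma_mult=10.0):
    """Single-point spike of `sigma_mult` standard deviations (caption range: 7-15)."""
    y = np.array(x, dtype=float)
    sd = y.std()
    y[pos] += sigma_mult * sd
    return y


def inject_drift(x, start, length=10, magnitude=None, decay=0.7):
    """Gradual drift over `length` steps (caption range: 8-15) with geometrically decaying increments.

    The saturating form magnitude * (1 - decay**k) is an assumed reading of "decay pattern".
    """
    y = np.array(x, dtype=float)
    magnitude = 3.0 * y.std() if magnitude is None else magnitude
    end = min(start + length, len(y))
    k = np.arange(1, end - start + 1)
    y[start:end] += magnitude * (1.0 - decay ** k)
    return y


def inject_oscillation(x, start, length=12, freq=0.3, amplitude=None):
    """Added sinusoid at `freq` cycles per sample (caption: f = 0.2-0.5); the unit is an assumption."""
    y = np.array(x, dtype=float)
    amplitude = 2.0 * y.std() if amplitude is None else amplitude
    end = min(start + length, len(y))
    k = np.arange(end - start)
    y[start:end] += amplitude * np.sin(2 * np.pi * freq * k)
    return y


def inject_step(x, start, length=12, magnitude=None, recovery=0.9):
    """Sudden level shift that returns linearly by `recovery` of its size by the window's end (assumed)."""
    y = np.array(x, dtype=float)
    magnitude = 4.0 * y.std() if magnitude is None else magnitude
    end = min(start + length, len(y))
    y[start:end] += magnitude * (1.0 - recovery * np.linspace(0.0, 1.0, end - start))
    return y


# Example on a synthetic daily load profile (hypothetical data, hourly resolution).
base = 50 + 10 * np.sin(2 * np.pi * np.arange(96) / 24)
attacks = {
    "spike": inject_spike(base, 30),
    "drift": inject_drift(base, 40),
    "oscillation": inject_oscillation(base, 60),
    "step": inject_step(base, 70),
}
```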

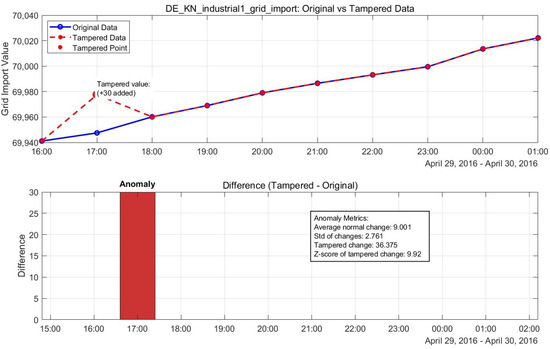

5.7. Visual Analysis of Detection and Recovery Performance

The visual comparison between the normal and tampered power grid data, along with our model’s recovery performance, is illustrated in Figure 19. This visualization demonstrates the model’s ability to accurately identify tampering points and restore them to their original values with minimal error.

Figure 19.

Normal vs. tampered power grid data, showing recovery performance.

Figure 12 provides a detailed architectural comparison between our DeBERTa-based approach and the Lag-LLaMA alternative, highlighting the key differences that contribute to the 47.2× improvement in recovery performance. The attention mechanism’s behavior during anomaly detection is further explored in Figure 14, which reveals how the model focuses on temporal neighborhoods around tampered points to enable precise recovery.

Table 10 presents the detection performance for multi-point tampering scenarios, demonstrating the system’s robustness against sophisticated attacks. The table includes a False Merge Rate metric, which measures the percentage of cases where multiple independent anomaly events are incorrectly merged into a single event during post-processing. This occurs when the clustering algorithm groups nearby detections that should remain separate.
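The False Merge Rate depends on how detections are grouped into events. The sketch below shows one plausible operationalization, not necessarily the paper's: detections closer than a gap threshold are merged into one event, and the rate is the fraction of predicted events that overlap two or more ground-truth events. The `max_gap` parameter and the choice of denominator (predicted events) are assumptions. Returning `None` when fewer than two true events exist corresponds to the N/A case for single-point anomalies.

```python
def group_detections(indices, max_gap=1):
    """Merge sorted detection indices into events when consecutive hits are <= max_gap apart."""
    events: list[list[int]] = []
    for idx in sorted(indices):
        if events and idx - events[-1][-1] <= max_gap:
            events[-1].append(idx)
        else:
            events.append([idx])
    return events


def false_merge_rate(pred_events, true_events):
    """Fraction of predicted events that span two or more distinct ground-truth events.

    Returns None (N/A) when fewer than two true events exist, since no merge is possible.
    """
    if len(true_events) < 2:
        return None
    if not pred_events:
        return 0.0
    truth_sets = [set(ev) for ev in true_events]
    merged = sum(
        1 for ev in pred_events
        if sum(1 for ts in truth_sets if ts & set(ev)) >= 2
    )
    return merged / len(pred_events)


# Hypothetical: tampered points 10 and 12 are separate events, but detections at 10-12 get merged.
true_events = [[10], [12], [30]]
pred = group_detections([10, 11, 12, 30], max_gap=1)
print(pred, false_merge_rate(pred, true_events))
```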

Table 10.

Multipoint tampering detection performance with false merge analysis.

The analysis shows that performance is maintained even under sophisticated multipoint attacks. Single-point anomalies were detected with perfect accuracy (100%); their False Merge Rate is marked N/A because merging is impossible when only one anomaly exists. For consecutive tampering patterns (2–3 points), the system maintained high precision (0.923) with a low false merge rate of 8%, indicating that most consecutive anomalies were correctly identified as separate events. As the number of consecutive tampered points increased (4–6 points), the false merge rate rose to 15%, reflecting the greater difficulty of distinguishing closely spaced anomalies.

Notably, multiple non-consecutive attacks showed the lowest false merge rate (3%), as the temporal separation between anomalies naturally prevents erroneous clustering. Mixed patterns combining multiple attack types achieved balanced performance, with a detection rate of 91%, an F1-score of 0.823, and a moderate false merge rate of 11%, indicating robustness against complex adversarial scenarios while preserving the ability to distinguish individual anomaly events.

6. Discussion

6.1. Key Contributions and Theoretical Insights

This research establishes several theoretical and practical advances in anomaly detection and recovery for smart grid security.

6.1.1. Architectural Innovation

The two-stage architecture addresses the precision–recall dilemma through functional decomposition while enabling integrated data recovery. Stage 1's high-sensitivity detection (recall: 79.3%) captures potential anomalies with minimal computational overhead, while Stage 2's verification (precision: 95.1%) eliminates false positives and enables precise data recovery through deep contextual analysis. This separation allows each objective to be optimized independently, yielding a combined performance (F1: 0.873) that none of the monolithic baselines evaluated here achieved. The design rests on the observation that detection, verification, and recovery call for different behaviors: aggressive for detection, conservative for verification, and context-aware for recovery.

6.1.2. Cross-Domain Transfer Learning

The successful adaptation of DeBERTa-v3 from natural language processing to time series analysis and recovery illustrates the transferability of transformer architectures. Key adaptations include temporal positional encoding in place of token positions, multiscale convolutional feature extraction that captures local patterns at different granularities, and disentangled attention that learns temporal dependencies across multiple time scales. The superior performance over Lag-LLaMA (47.2× lower recovery MAE) indicates that carefully adapted general-purpose models can outperform domain-specific architectures, at least in this setting. This cross-domain transfer opens new research avenues for applying advanced NLP models to time series problems.
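For readers coming from time series rather than NLP, DeBERTa's disentangled attention scores each pair of positions with three terms (content-to-content, content-to-position, and position-to-content), using clipped relative distances and a 1/sqrt(3d) scale, as described in the original DeBERTa paper. The single-head NumPy sketch below illustrates that scoring with time-step indices in the role of token positions. The random weights, dimensions, and bucket size `k` are hypothetical, and this is not the paper's adapted implementation (the value projection and multiple heads are omitted).

```python
import numpy as np


def relative_bucket(i: int, j: int, k: int) -> int:
    """DeBERTa-style clipped relative distance delta(i, j), mapped into [0, 2k)."""
    d = i - j
    if d <= -k:
        return 0
    if d >= k:
        return 2 * k - 1
    return d + k


def disentangled_attention(h, p, w_qc, w_kc, w_qr, w_kr, k):
    """Single-head disentangled attention weights.

    h: (T, d_in) content states per time step; p: (2k, d_in) relative-position embeddings.
    """
    qc, kc = h @ w_qc, h @ w_kc  # content projections
    qr, kr = p @ w_qr, p @ w_kr  # relative-position projections
    T, d = qc.shape
    scores = np.empty((T, T))
    for i in range(T):
        for j in range(T):
            c2c = qc[i] @ kc[j]
            c2p = qc[i] @ kr[relative_bucket(i, j, k)]
            p2c = kc[j] @ qr[relative_bucket(j, i, k)]
            scores[i, j] = (c2c + c2p + p2c) / np.sqrt(3 * d)
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
T, d_in, d, k = 6, 4, 8, 3
h = rng.normal(size=(T, d_in))      # hypothetical per-time-step features
p = rng.normal(size=(2 * k, d_in))  # one embedding per relative-distance bucket
w = [rng.normal(size=(d_in, d)) for _ in range(4)]
attn = disentangled_attention(h, p, *w, k=k)
print(attn.round(3))
```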

6.1.3. Integrated Recovery Mechanism

The integration of TimER with DeBERTa-v3 for data recovery is a significant advance. The incremental prediction approach aligns with the physical behavior of power consumption, where relative changes are more predictable than absolute values. The attention visualization shows how the model leverages temporal context for recovery, with concentrated attention around tampered points enabling precise reconstruction. The recovery performance (an MAE of 0.0055 kWh, a 99.91% improvement over ARIMA) demonstrates the effectiveness of this integrated approach.
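The incremental idea can be made concrete: rather than predicting a tampered reading directly, predict its change from the previous (trusted or already recovered) reading and add it back. In the sketch below, the increment predictor is a deliberately simple placeholder (the mean of recent increments) standing in for the TimER/DeBERTa model, whose interface is not specified here; the window size is an assumption. Consistent with the limitation noted in Section 6.4.1, the sketch cannot recover the first point of a sequence.

```python
def recover_incremental(series, tampered, window=3):
    """Replace each flagged value by its recovered predecessor plus a predicted increment.

    The increment predictor (mean of the last `window` increments) is a placeholder
    for a learned model.
    """
    recovered = [float(v) for v in series]
    for t in sorted(tampered):
        if t == 0:
            raise ValueError("the first point has no history to recover from")
        # Earlier flagged points are already recovered, so their increments are usable.
        increments = [recovered[s] - recovered[s - 1] for s in range(max(1, t - window), t)]
        delta = sum(increments) / len(increments) if increments else 0.0
        recovered[t] = recovered[t - 1] + delta
    return recovered


def mae(a, b, idx):
    """Mean absolute error restricted to the recovered positions."""
    return sum(abs(a[i] - b[i]) for i in idx) / len(idx)


# Hypothetical readings (kWh) with one tampered value.
clean = [1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
observed = clean.copy()
observed[3] = 9.0
rec = recover_incremental(observed, {3})
print(rec[3], mae(rec, clean, [3]))
```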

6.2. Practical Implications for Smart Grid Security

The system delivers substantial operational improvements for grid security teams. A precision of 95.1% translates to a true-to-false positive ratio of roughly 19:1, dramatically reducing operator fatigue from false alarm investigations. The 84.1% recall means that most anomalies are caught, preserving security coverage. Real-time processing at 66.6 ms latency enables integration with existing SCADA systems without introducing performance bottlenecks. The 2.3 GB memory footprint allows deployment on edge devices at substations, enabling distributed security monitoring. Most importantly, the ability to recover tampered data with an MAE of 0.0055 kWh enables rapid restoration of data integrity after attacks.
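The 19:1 figure follows from the definition of precision, p = TP / (TP + FP), which gives TP/FP = p / (1 − p); for p = 0.951 this is about 19.4. The short sketch below makes the conversion explicit and translates it into expected false alarms per batch of alerts; the batch size of 1000 is an arbitrary illustration.

```python
def tp_fp_ratio(precision: float) -> float:
    """True-to-false positive ratio implied by a precision value: TP/FP = p / (1 - p)."""
    return precision / (1.0 - precision)


def expected_false_alarms(n_alerts: int, precision: float) -> float:
    """Expected number of false alarms among `n_alerts` raised alerts."""
    return n_alerts * (1.0 - precision)


print(f"{tp_fp_ratio(0.951):.1f}:1")
print(expected_false_alarms(1000, 0.951))  # roughly 49 false alarms per 1000 alerts
```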

6.3. Comparison with State of the Art

Table 11 provides a detailed comparison with state-of-the-art methods across multiple dimensions.

Table 11.

Detailed comparison with state-of-the-art methods.

The method achieved substantial improvements in both detection and recovery. A 51.4% F1-score improvement over the best traditional method (ARIMA) demonstrates the strength of modern deep learning approaches. The 791% improvement over the Anomaly Transformer, a state-of-the-art baseline, highlights the effectiveness of the two-stage architecture. The 47.2× improvement in recovery MAE compared to Lag-LLaMA supports our DeBERTa-based approach. Computational efficiency competitive with simpler modern methods ensures practical deployability. The balance achieved across precision, recall, recovery accuracy, and efficiency sets a new benchmark for operational anomaly detection and recovery systems.

6.4. Limitations and Future Directions

6.4.1. Current Limitations

Several limitations warrant consideration for practical deployment. The system requires diverse examples of anomalies (100+ scenarios) for effective training, though synthetic generation partially addresses this need. GPU acceleration is necessary for training, though inference runs efficiently on CPU hardware. Our current evaluation focused on three-dimensional power grid data from industrial facilities, and generalization to higher-dimensional residential or commercial scenarios requires validation. DeBERTa’s complex decision boundaries challenge interpretability, requiring additional explanation mechanisms for operator trust. The recovery mechanism currently cannot handle the first point in a sequence without historical context.

6.4.2. Future Research Directions

Multiple avenues extend this research toward comprehensive smart grid security. Multimodal integration could incorporate weather data, network traffic patterns, and social media signals to detect coordinated attacks across cyber and physical domains. Continual learning mechanisms enabling online adaptation to evolving attack patterns without catastrophic forgetting represent a critical capability. Federated deployment across utilities could enable collaborative learning while preserving data privacy. Explainable AI techniques specifically designed for time series transformers would improve operator trust and enable better human–AI collaboration. Cross-infrastructure transfer to water, gas, and transportation systems could leverage the domain-agnostic architecture for broader critical infrastructure protection. Advanced recovery techniques for handling edge cases (first/last points, multiple consecutive tampering, etc.) would enhance system completeness.

7. Conclusions

This paper presents a novel two-stage anomaly detection and recovery system that addresses the precision–recall trade-off in smart grid security while providing high-precision data restoration. Through architectural innovation, cross-domain transfer learning, and comprehensive feature engineering, the system achieved an F1-score of 0.873 for detection and a recovery MAE of 0.0055 kWh, representing a 51.4% F1-score improvement over the best traditional baseline (ARIMA) and a 99.91% improvement in recovery accuracy relative to ARIMA.

The key scientific contributions advance both the theory and practice of anomaly detection and recovery. The theoretical framework establishes that functionally decomposing the detection, verification, and recovery objectives yields combined performance that the monolithic approaches evaluated here did not attain. Methodologically, this work is, to our knowledge, the first successful adaptation of a language model architecture (DeBERTa-v3) to time series anomaly verification and recovery, outperforming domain-specific models such as Lag-LLaMA. The integration of the TimER generative model enables automatic, high-precision repair of tampered data. Our comprehensive evaluation on real-world smart grid data, with statistically significant results (p < 0.001), provides empirical validation of the approach. The model's real-time capability (66.6 ms) and efficiency make it suitable for practical deployment.

The achieved balance of 95.1% precision and 84.1% recall for detection, combined with the 0.0055 kWh recovery MAE and robust performance across diverse attack scenarios—including sophisticated multipoint tampering—establishes a new benchmark for operational anomaly detection and recovery systems. This work opens new research avenues in cross-domain transfer learning and provides immediate practical benefits for critical infrastructure protection.

As smart grids face increasingly sophisticated cyber threats, the two-stage approach with integrated recovery offers a scalable, efficient, and highly accurate solution that can be deployed today while serving as a foundation for future advances in AI-driven security systems. The successful integration of advanced language model architectures with domain-specific feature engineering and generative models for recovery demonstrates the power of cross-disciplinary approaches in solving critical infrastructure security challenges.

Author Contributions

Conceptualization, X.L. and P.H.; methodology, X.L. and P.H.; software, X.L.; validation, X.L., W.C. and M.Z.; formal analysis, X.L. and P.H.; investigation, X.L., A.Z. and P.H.; resources, W.C. and A.Z.; data curation, X.L.; writing—original draft preparation, X.L.; writing—review and editing, X.L., W.C., M.Z., A.Z. and P.H.; visualization, X.L.; supervision, W.C. and A.Z.; project administration, W.C.; funding acquisition, W.C. and A.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by a science and technology project of the State Grid Information and Telecommunication Group Co., Ltd. (SGIT0000XTJS2401078).

Institutional Review Board Statement

Not applicable. This study did not involve humans or animals.

Informed Consent Statement

Not applicable. This study did not involve humans.

Data Availability Statement

The experimental code and preprocessed datasets are available at https://open-power-system-data.org/. The Open Power System Data (OPSD) Household Data package (version 2020-04-15) used for recovery experiments is publicly available under a Creative Commons Attribution International license.

Conflicts of Interest

The authors Xiao Liao, Wei Cui, Min Zhang and Aiwu Zhang were employed by the State Grid Information and Telecommunication Group Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AMI | Advanced Metering Infrastructure |
| ARIMA | Autoregressive Integrated Moving Average |
| AUC-ROC | Area Under Curve–Receiver Operating Characteristic |
| CNN | Convolutional Neural Network |
| CPU | Central Processing Unit |
| DeBERTa | Decoding-enhanced BERT with Disentangled Attention |
| EnhAT | Enhanced Anomaly Transformer |
| FFT | Fast Fourier Transform |
| GAN | Generative Adversarial Network |
| GNN | Graph Neural Network |
| GPU | Graphics Processing Unit |
| IQR | Interquartile Range |
| kWh | kilowatt-hour |
| LLaMA | Large Language Model Meta AI |
| LLM | Large Language Model |
| LOF | Local Outlier Factor |
| LSTM | Long Short-Term Memory |
| LSTM-AE | Long Short-Term Memory Autoencoder |
| MAD | Median Absolute Deviation |
| MAE | Mean Absolute Error |
| MCC | Matthews Correlation Coefficient |
| MSE | Mean Squared Error |
| NLP | Natural Language Processing |
| SCADA | Supervisory Control and Data Acquisition |
| SVM | Support Vector Machine |
| TimER | Time series Infilling and Modeling via energy-based REasoning |
| VAE | Variational Autoencoder |

References

- Liang, G.; Zhao, J.; Luo, F.; Weller, S.R.; Dong, Z.Y. A review of false data injection attacks against modern power systems. IEEE Trans. Smart Grid 2017, 8, 1630–1638.

- Singh, S.K.; Khanna, K.; Bose, R.; Panigrahi, B.K.; Joshi, A. Joint-transformation-based detection of false data injection attacks in smart grid. IEEE Trans. Ind. Inform. 2018, 14, 89–97.

- Musleh, A.S.; Chen, G.; Dong, Z.Y. A survey on the detection algorithms for false data injection attacks in smart grids. IEEE Trans. Smart Grid 2020, 11, 2218–2234.

- Mohammadi, F.; Nazri, G.A.; Saif, M. A real-time cloud-based intelligent car parking system for smart cities. In Proceedings of the 2019 IEEE 2nd International Conference on Information Communication and Signal Processing, Weihai, China, 28–30 September 2019; pp. 235–240.

- Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice; OTexts: Melbourne, Australia, 2018.

- Schölkopf, B.; Platt, J.C.; Shawe-Taylor, J.; Smola, A.J.; Williamson, R.C. Estimating the support of a high-dimensional distribution. Neural Comput. 2001, 13, 1443–1471.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; IEEE: New York, NY, USA, 2008; pp. 413–422.

- Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 15–18 May 2000; pp. 93–104.

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A comprehensive survey on graph neural networks. IEEE Trans. Neural Net. Learn. Syst. 2020, 32, 4–24.

- Malhotra, P.; Ramakrishnan, A.; Anand, G.; Vig, L.; Agarwal, P.; Shroff, G. LSTM-based encoder-decoder for multi-sensor anomaly detection. arXiv 2016, arXiv:1607.00148.

- Munir, M.; Siddiqui, S.A.; Dengel, A.; Ahmed, S. DeepAnT: A deep learning approach for unsupervised anomaly detection in time series. IEEE Access 2018, 7, 1991–2005.

- Li, D.; Chen, D.; Jin, B.; Shi, L.; Goh, J.; Ng, S.K. MAD-GAN: Multivariate anomaly detection for time series data with generative adversarial networks. In Proceedings of the International Conference on Artificial Neural Networks, Munich, Germany, 17–19 September 2019; pp. 703–716.

- Xu, H.; Chen, W.; Zhao, N.; Li, Z.; Bu, J.; Li, Z.; Liu, Y.; Zhao, Y.; Pei, D.; Feng, Y.; et al. Unsupervised anomaly detection via variational auto-encoder for seasonal KPIs in web applications. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 187–196.

- Xu, J.; Wu, H.; Wang, J.; Long, M. Anomaly Transformer: Time series anomaly detection with association discrepancy. In Proceedings of the International Conference on Learning Representations (ICLR 2022), Virtual, 25–29 April 2022.

- Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; Long, M. TimesNet: Temporal 2D-variation modeling for general time series analysis. In Proceedings of the International Conference on Learning Representations (ICLR 2023), Kigali, Rwanda, 1–5 May 2023.

- Rasul, K.; Ashok, A.; Williams, A.R.; Ghonia, H.; Bhagwatkar, R.; Khorasani, A.; Bayazi, M.J.D.; Adamopoulos, G.; Riachi, R.; Hassen, N.; et al. Lag-LLaMA: Towards foundation models for time series forecasting. arXiv 2024, arXiv:2310.08278.

- Jin, M.; Wang, S.; Ma, L.; Chu, Z.; Zhang, J.Y.; Shi, X.; et al. Time-LLM: Time series forecasting by reprogramming large language models. In Proceedings of the International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024.

- Ansari, A.F.; Stella, L.; Turkmen, C.; Zhang, X.; Mercado, P.; Shen, H.; et al. Chronos: Learning the language of time series. arXiv 2024, arXiv:2403.07815.

- Rasul, K.; Bianchi, F.; Rozen, J.; Filan, D. TimesGPT: A foundation model for time series. In Proceedings of the 41st International Conference on Machine Learning (ICML 2024), Vienna, Austria, 21–27 July 2024.

- Moritz, S.; Bartz-Beielstein, T. imputeTS: Time series missing value imputation in R. R J. 2017, 9, 207–218.

- Cai, J.F.; Candès, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Luo, Y.; Cai, X.; Zhang, Y.; Xu, J.; Yuan, X. Multivariate time series imputation with generative adversarial networks. Adv. Neural Inf. Process. Syst. 2018, 31, 1596–1607.

- Cao, W.; Wang, D.; Li, J.; Zhou, H.; Li, L.; Li, Y. BRITS: Bidirectional recurrent imputation for time series. Adv. Neural Inf. Process. Syst. 2018, 31, 6775–6785.

- Zhang, X.; Chen, F.; Huang, C.T. A combination of RNN and CNN for attention-based anomaly detection. In Proceedings of the 2019 IEEE 19th International Conference on Software Quality, Reliability and Security Companion (QRS-C), Sofia, Bulgaria, 22–26 July 2019; pp. 56–63.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).