Abstract

Precipitation intensity estimation is a critical issue in the analysis of weather conditions. Most existing approaches focus on building complex models to extract rain streaks, and efficiently estimating precipitation intensity from surveillance cameras remains challenging. This study proposes a convolutional neural network, the signal filtering convolutional neural network (SF-CNN), to estimate precipitation intensity from surveillance images. The SF-CNN has two main blocks, the signal filtering block (SF block) and the gradually decreasing dimension block (GDD block), which extract features for precipitation intensity estimation. SF blocks, which apply a filtering operation, are placed at different stages of the SF-CNN to remove noise from the features that contain rain streak information. The GDD block repeatedly applies a convolutional operation paired with an activation function to reduce the dimension of the features. Our main contributions are (1) an SF block that models the signal filtering process and effectively removes useless signals and (2) a procedure that gradually decreases the feature dimension while learning and retaining the information in the features. Experiments on a self-collected dataset of 9394 rainy images with six precipitation intensity levels demonstrate the effectiveness of the proposed approach against popular convolutional neural networks. To the best of our knowledge, the self-collected dataset is the largest dataset of surveillance infrared images for precipitation intensity monitoring.

1. Introduction

Understanding weather conditions has become increasingly critical because of dramatic changes in the global climate, and it has been discussed for decades; precipitation intensity is an important part of this issue. Precipitation intensity estimation is a fundamental technology underlying various applications, such as farming, weather forecasting, and climate simulation. Moreover, abnormal precipitation intensity can cause disasters, such as floods and droughts, that threaten human life and property and damage the environment.

Various studies have been dedicated to measuring precipitation intensity, and they can be classified into three categories based on their data sources: gauge-based [1,2], radar-based [3,4], and satellite-based [5,6] approaches. The rain gauge is the earliest and most widely used device for measuring precipitation intensity. A rain gauge collects rainwater in a container and measures the rainfall manually or automatically. Various types of rain gauges have been developed to estimate precipitation intensity, but they have drawbacks. For example, a rain gauge must be installed upright, perpendicular to the horizontal plane, on flat ground. Moreover, a rain gauge only records rainfall at its installation site, so expanding coverage requires building more gauges, which demands a large budget.

Radar-based approaches emit radio waves into the sky and receive the waves reflected back from various objects. Researchers analyze the reflected waves to obtain weather information, such as the moisture content in the air and the probability of rainfall. Radar-based approaches have broader monitoring coverage than gauge-based approaches, but their accuracy depends on the reflected waves, which are vulnerable to external interference, such as terrain and reflections from non-weather objects.

Satellite-based approaches use various sensors to obtain visible, infrared, and microwave information for analyzing precipitation over large areas. However, visible and infrared data only provide information from the tops of clouds, which is weakly correlated with rainfall. Microwave signals penetrate clouds and carry information from beneath them; therefore, some studies use microwaves to analyze precipitation. However, satellites have the drawbacks of a low sampling rate and low spatial resolution in the visible, infrared, and microwave bands.

In addition, surveillance cameras are another kind of sampling equipment that has been widely installed and used in various areas, such as traffic monitoring [7], home care [8], and security monitoring [9]. These studies focus on understanding scene content, including static and dynamic objects. One challenge in analyzing scene content is the weather: rain streaks degrade the quality of monitoring images and distort objects of interest, reducing the performance of outdoor vision surveillance systems. Therefore, many researchers focus on removing rain streaks from monitoring images or surveillance videos using popular convolutional neural networks [10,11]. These methods can detect and remove rain streaks, but they do not analyze or provide information on precipitation intensity.

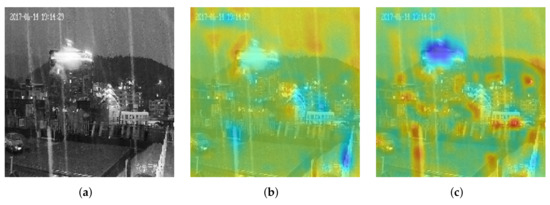

In recent years, many countries have faced the problem of heavy precipitation, so an efficient approach for estimating precipitation intensity in cities is needed. Figure 1 shows examples of rainy images captured by infrared cameras at various weather stations; Figure 1a,b show drizzle and moderate rain with 0.7 mm and 2.7 mm of precipitation, respectively. As Figure 1 shows, rain streaks are visible in infrared images, and their appearance is related to precipitation intensity. This study uses infrared surveillance cameras as data sampling devices and designs a new framework, the signal filtering convolutional neural network (SF-CNN), which describes the features of precipitation through signal filtering and dimensional transformation of the infrared image to estimate precipitation intensity in the city. Using surveillance cameras as data collection devices provides higher spatial sampling resolution than existing methods. Moreover, the SF-CNN achieves better results than other popular networks. To the best of our knowledge, no precipitation intensity dataset of surveillance-based infrared images has been created to date, and this study is the first to use surveillance-based optical images for precipitation intensity estimation.

Figure 1.

Example of the self-collected precipitation intensity dataset. (a) Drizzle, (b) moderate rain.

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 introduces the proposed SF-CNN for precipitation intensity estimation, including the signal filtering block (SF block), the gradually decreasing dimensional block (GDD block), and the overall network structure. Experimental results, a discussion, and conclusions are presented in Section 4, Section 5, and Section 6, respectively.

2. Related Works

Precipitation intensity estimation is essential for climate, hydrological, and weather forecasting. Commonly used methods can be classified into direct rainfall measurement and indirect rainfall estimation.

Among direct rainfall measurement approaches, rain gauges, including the tipping bucket [12,13], weighing rain gauge [14,15], and siphon rain gauge [16], are widely used to measure precipitation intensity directly. A rain gauge comprises three components: a water receiver (the funnel), a water storage tube (the outer tube), and a water container. It collects precipitation in the container and measures it directly by weighing or by setting thresholds. Because the gauge is in direct contact with precipitation, these container-based methods play a critical role in measuring precipitation intensity [17]. Rain gauges are a direct and accurate way to obtain precipitation [18] and can provide high-precision measurements of precipitation intensity at a single sampling point [19]. However, they suffer from low sampling density, residual water, and water evaporation.

A rain gauge must be installed in an open field and protected from movement and external forces, so building a dense, well-configured rain gauge network is very costly. The sampling density directly affects the accuracy of precipitation estimation [20], and sparse sampling causes the measured values to lose spatial representativeness [21]. Researchers have used interpolation [22] to fill in missing values in the spatial distribution, but interpolation produces significant errors over large areas because there are few sampling points [23]. In addition, because a rain gauge uses a container to receive and measure precipitation, it cannot avoid residual water and evaporation, whether readings are taken manually or automatically. Therefore, more studies consider approaches that obtain precipitation information indirectly, such as weather radars, satellite monitoring, and surveillance monitoring, to estimate precipitation intensity.

Weather radars and satellite monitoring have become essential approaches for large-scale precipitation estimation because rain gauges have limited spatial coverage and low spatial representativeness. A weather radar retrieves rain intensity through the relationship between the reflectivity Z and the rainfall intensity R (the Z–R relationship), quickly providing real-time precipitation over the study area. Compared with a rain gauge, a radar can obtain higher temporal and spatial resolution and a wider measurement range by adjusting its beam width and emission frequency. However, the Z–R conversion varies with rainfall type [24], which directly affects the accuracy of the radar; determining a reasonable Z–R relationship is therefore a scientific problem when using a weather radar. In addition, radar-based precipitation estimation is susceptible to factors such as non-weather echoes, radar beam anomalies, and signal attenuation [25], which can cause differences between radar-measured values and actual precipitation.
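To make the dependence on the Z–R relationship concrete, the sketch below inverts the common power-law form $Z = aR^b$ for a reflectivity given in dBZ. The coefficient pairs are assumptions not taken from this study: $a = 200, b = 1.6$ is the classic Marshall–Palmer relation, and $a = 300, b = 1.4$ is the convective relation commonly used with WSR-88D radars. The function name `rain_rate_from_dbz` is hypothetical.

```python
import math

# Assumed coefficient pairs (a, b) for Z = a * R**b; not taken from this study.
MARSHALL_PALMER = (200.0, 1.6)
CONVECTIVE = (300.0, 1.4)


def rain_rate_from_dbz(dbz: float, a: float, b: float) -> float:
    """Invert Z = a * R**b, with Z in mm^6/m^3 and R in mm/h."""
    z_linear = 10.0 ** (dbz / 10.0)  # convert dBZ to linear reflectivity
    return (z_linear / a) ** (1.0 / b)


if __name__ == "__main__":
    for name, (a, b) in [("Marshall-Palmer", MARSHALL_PALMER), ("convective", CONVECTIVE)]:
        for dbz in (40.0, 50.0):
            print(f"{name}: {dbz:.0f} dBZ -> {rain_rate_from_dbz(dbz, a, b):.1f} mm/h")
```

The same echo maps to different rain rates under the two assumed relations (about 11.5 vs. 12.2 mm/h at 40 dBZ, and about 48.6 vs. 63.4 mm/h at 50 dBZ), which is why the choice of Z–R relationship directly affects radar accuracy.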

In addition, the satellite is a vital sampling device for precipitation intensity measurement, with global spatial coverage. Satellite precipitation monitoring mainly consists of infrared (IR) observations from geosynchronous orbit (GEO) satellites, passive microwave (PMW) observations from low Earth orbit (LEO) satellites, and combinations of IR and PMW [20]. IR precipitation estimation relates cloud properties, such as the cloud's thickness and cloud-top temperature (brightness), to the probability and intensity of precipitation [26]: the lower the cloud-top temperature (the brighter the cloud), the stronger the precipitation [27]. However, the relationship between precipitation and cloud-top temperature is indirect, which introduces errors in the estimated precipitation intensity. Unlike infrared signals, the microwave signals received by PMW sensors penetrate clouds and come from hydrometeors, so PMW sensors can directly measure hydrometeors in the atmosphere [28] and estimate precipitation more accurately [29]. However, satellite images cannot provide direct measurements of precipitation intensity. Moreover, microwave sampling has low temporal resolution and large sampling errors for short-term rainfall estimation. Therefore, studies have combined the merits of infrared and microwave information for precipitation intensity estimation [5]. Furthermore, researchers relate the remote sensing images provided by satellites to precipitation information by assigning a precipitation value to each pixel, thereby quantifying precipitation in the satellite image [30]. The quantitative results therefore depend on the quality of this assignment (classification).
Machine learning methods, such as random forests [31] and convolutional neural networks (CNNs) [32,33], are effective classification techniques for image-based precipitation intensity classification. The information provided by radar and satellite devices is suitable for large-scale precipitation estimation but is poorly suited to measuring precipitation in small, specific areas. Moreover, satellites and radars provide precipitation information at long intervals and cannot be used for extreme precipitation monitoring and nowcasting, which require high timeliness.

In recent years, monitoring cameras have been widely installed and used in various fields, such as traffic, safety, and disaster prevention, and they can serve as sampling devices for indirect precipitation intensity estimation. Early studies of precipitation intensity estimation from monitoring images used image processing techniques, such as foreground extraction [34], morphological component analysis [35], and matrix decomposition [36], to extract rain streaks and to remove or identify them in the images. Subsequent studies estimated precipitation intensity using counting-based methods [37] or by training identification models such as neural networks (NN) [38] and support vector machines (SVM) [39]. These studies use computer vision techniques to classify monitoring images for precipitation intensity estimation.

In addition, deep convolutional neural networks (DCNNs) have been widely used in various image classification topics, such as faces [40], vehicles [41], and birds [42], and have significantly improved classification accuracy. Researchers combined the stacked denoising auto-encoder (SDAE) [43] with a fully connected structure to construct the PERSIANN-SDAE model, which uses bispectral information, including infrared and water vapor, to estimate precipitation intensity [32]. However, the PERSIANN-SDAE model cannot efficiently extract local spatial changes from IR data. Therefore, subsequent work used CNNs to extract information from individual pixels and from the relationships between pixels in the bispectral data to estimate precipitation intensity [44].

However, precipitation intensity estimation still has several open issues: (1) there is no surveillance-based precipitation intensity dataset, although such data could effectively increase spatial resolution; (2) the objects analyzed in the literature have distinct shapes, unlike the targets in precipitation intensity classification (rain streaks); and (3) previous studies on precipitation intensity estimation have low temporal and spatial resolutions. Therefore, we design the SF-CNN, which extracts features after applying a signal filtering operation and learns low-dimensional features for precipitation intensity estimation. Moreover, we construct a dataset of surveillance-based infrared images with six precipitation levels.

3. Signal Filtering Convolutional Neural Network

This study designed several components that form the signal filtering convolutional neural network (SF-CNN), an effective identification model for precipitation intensity estimation. The SF-CNN treats the signal as a composition of useful and useless information. Moreover, the SF-CNN progressively decreases the feature dimension to reduce computational cost. This section introduces, in order, the signal filtering block (SF block), the gradually decreasing dimension block (GDD block), and the proposed SF-CNN framework.

3.1. Signal Filtering Block

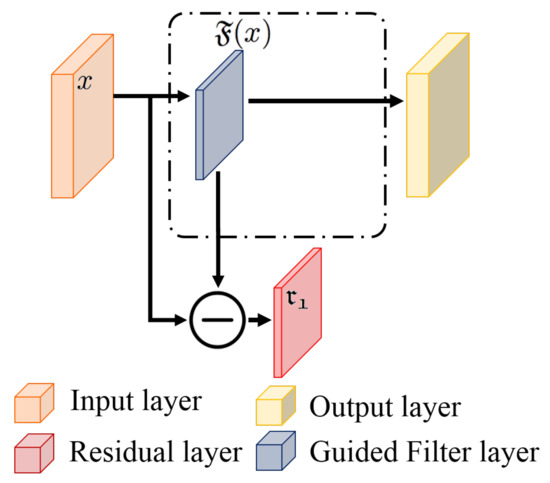

An image can be considered a composite signal that contains useless information, such as noise. Noise degrades image quality and weakens the characteristics of the objects of interest. This study used a filtering operation to form the signal filtering block (SF block), which effectively removes noise from surveillance-based infrared images and provides signals without redundant information. The structure of the SF block is shown in Figure 2.

Figure 2.

The proposed signal filtering block.

In the SF block, this study used a guided filter, an effective edge-preserving filtering technique, as the signal filtering operation to remove noise from the analyzed images. The filtering procedure can be regarded as a signal decomposition whose components sum back to the original signal. Therefore, for each decomposition (filtering) procedure, we took x as the input and generated a filtered signal and a residual signal as outputs:

$$x = G(x) + r, \qquad r = x - G(x),$$

where $G(\cdot)$ is the guided filter [45,46], which removes noise while preserving the gradient characteristics of the input x, and $r$ is the residual signal of x, which can be regarded as noise.

This study took the terms $x$ and $G(x)$ as the inputs and discarded the last term, $r$, which was regarded as noise, to construct the signal filtering convolutional neural network.
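A minimal, dependency-free sketch of the decomposition $x = G(x) + r$ follows. It assumes the standard guided filter of [46] in its self-guided form (the input serves as its own guide) on a single-channel image stored as nested lists; the `radius` and `eps` defaults and all function names are hypothetical choices for illustration, not the SF block's actual configuration.

```python
from typing import List, Tuple

Image = List[List[float]]


def box_mean(img: Image, radius: int) -> Image:
    """Mean over a (2r+1)x(2r+1) window, clipped at the borders, via an integral image."""
    h, w = len(img), len(img[0])
    integral = [[0.0] * (w + 1) for _ in range(h + 1)]  # integral[i][j] = sum of img[:i][:j]
    for i in range(h):
        row_sum = 0.0
        for j in range(w):
            row_sum += img[i][j]
            integral[i + 1][j + 1] = integral[i][j + 1] + row_sum
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        i0, i1 = max(0, i - radius), min(h, i + radius + 1)
        for j in range(w):
            j0, j1 = max(0, j - radius), min(w, j + radius + 1)
            total = integral[i1][j1] - integral[i0][j1] - integral[i1][j0] + integral[i0][j0]
            out[i][j] = total / ((i1 - i0) * (j1 - j0))
    return out


def guided_filter_self(x: Image, radius: int = 2, eps: float = 1e-2) -> Image:
    """Self-guided filter (guide = input): q = mean(a) * x + mean(b)."""
    h, w = len(x), len(x[0])
    mean_x = box_mean(x, radius)
    mean_xx = box_mean([[v * v for v in row] for row in x], radius)
    a = [[0.0] * w for _ in range(h)]
    b = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            var = mean_xx[i][j] - mean_x[i][j] ** 2
            a[i][j] = var / (var + eps)  # ~0 in flat windows, ~1 across strong structures
            b[i][j] = (1.0 - a[i][j]) * mean_x[i][j]
    mean_a, mean_b = box_mean(a, radius), box_mean(b, radius)
    return [[mean_a[i][j] * x[i][j] + mean_b[i][j] for j in range(w)] for i in range(h)]


def decompose(x: Image, radius: int = 2, eps: float = 1e-2) -> Tuple[Image, Image]:
    """Split x into the filtered term G(x) and the residual r = x - G(x)."""
    g = guided_filter_self(x, radius, eps)
    r = [[x[i][j] - g[i][j] for j in range(len(x[0]))] for i in range(len(x))]
    return g, r
```

By construction, `g` and `r` sum back to the input (up to floating-point rounding), flat regions pass through unchanged, and a high-variance structure such as a vertical streak is largely kept in `g` rather than pushed into the residual.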

3.2. Gradually Decreasing Dimensional Block

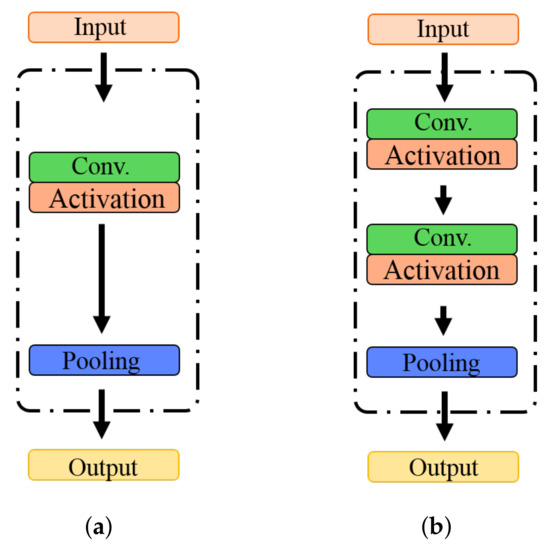

An efficient mechanism for integrating feature maps from different convolutional layers is crucial when constructing convolutional neural networks. The transformation layer is a commonly used component that consists of one convolutional layer with an activation function and a pooling layer to reduce the dimension of the feature maps, as shown in Figure 3a. The transformation layer reduces dimensionality and introduces nonlinearity, but the nonlinearity it provides is limited, and it has many parameters and a high computational cost. Therefore, this study designed a gradually decreasing dimensional block (GDD block) with three components, two convolutional layers with activation functions and one pooling layer, as shown in Figure 3b.

Figure 3.

The transformation blocks. (a) Standard transformation, (b) the proposed gradually decreasing dimensional block (GDD block).

In the GDD block, this study applied two convolutional layers, each followed by an activation function, to gradually decrease the dimensionality of the feature maps, followed by pooling:

$$y = \mathcal{P}\big(\mathcal{H}_2(\mathcal{H}_1(x))\big),$$

where $x$ is the feature map from the previous layers, $\mathcal{H}_1(\cdot)$ and $\mathcal{H}_2(\cdot)$ are operators that each consist of a convolutional operation and an activation function, and $\mathcal{P}(\cdot)$ is the pooling operation. Applying two activation functions adds nonlinearity, while the two convolutions reduce the dimensionality of the feature maps step by step. Moreover, this study used a max-pooling layer after the two convolutional layers to decrease the size of the feature maps, reducing the computational cost of the network.
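The sketch below illustrates the GDD pattern $y = \mathcal{P}(\mathcal{H}_2(\mathcal{H}_1(x)))$ on a tensor stored as nested lists of shape [channels][height][width]. Several details are assumptions not given in the text: each $\mathcal{H}$ is taken to be a 1×1 convolution followed by ReLU, the channel count is halved at each step, pooling is 2×2 max pooling with stride 2, and the weights are random placeholders rather than learned values. All function names are hypothetical.

```python
import random
from typing import List, Tuple

Tensor3 = List[List[List[float]]]  # [channels][height][width]


def conv1x1_relu(x: Tensor3, weights: List[List[float]], bias: List[float]) -> Tensor3:
    """One H step (assumed form): a 1x1 convolution mixes channels per pixel, then ReLU."""
    c_in, height, width = len(x), len(x[0]), len(x[0][0])
    out = []
    for w_k, b_k in zip(weights, bias):  # one output channel per weight row
        assert len(w_k) == c_in
        out.append([[max(0.0, sum(w_k[c] * x[c][i][j] for c in range(c_in)) + b_k)
                     for j in range(width)] for i in range(height)])
    return out


def max_pool2x2(x: Tensor3) -> Tensor3:
    """P step: 2x2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    return [[[max(ch[i][j], ch[i][j + 1], ch[i + 1][j], ch[i + 1][j + 1])
              for j in range(0, len(ch[0]) - 1, 2)]
             for i in range(0, len(ch) - 1, 2)] for ch in x]


def random_layer(c_in: int, c_out: int, rng: random.Random) -> Tuple[List[List[float]], List[float]]:
    """Placeholder weights; a trained network would learn these."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(c_in)] for _ in range(c_out)], [0.0] * c_out


def gdd_block(x: Tensor3, rng: random.Random) -> Tensor3:
    """y = P(H2(H1(x))) with an assumed C -> C/2 -> C/4 channel schedule."""
    c = len(x)
    w1, b1 = random_layer(c, c // 2, rng)
    w2, b2 = random_layer(c // 2, c // 4, rng)
    return max_pool2x2(conv1x1_relu(conv1x1_relu(x, w1, b1), w2, b2))
```

For a 16-channel 8×8 input, this sketch returns a 4-channel 4×4 output: the two convolutions reduce the channels in two steps, each followed by a nonlinearity, and the final pooling halves the spatial size.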

3.3. Network Structure

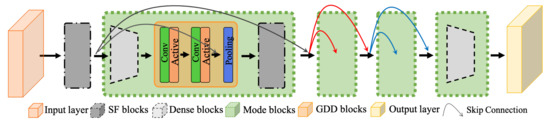

The proposed signal filtering convolutional neural network (SF-CNN) considered the decomposed signals for precipitation intensity estimation. As shown in Figure 4, it had one input layer, four signal filtering blocks, four mode blocks, and one classification layer.

Figure 4.

The proposed signal filtering convolutional neural network (SF-CNN).

In the SF-CNN, the input images were resized to a fixed input size. Each mode block $\mathcal{B}_j$, where j indexes the mode blocks in the SF-CNN, consisted of two components: convolutional layers and a gradually decreasing dimensional block. In each mode block, $\mathcal{B}_j$ first applied a convolutional operation to the decomposed term $d_j$ and generated a set of feature maps $F_j = \{f_j^1, \dots, f_j^K\}$, where K is the number of feature maps. Each feature map was expressed as:

$$f_j^k = \sigma\left(\sum_{p=1}^{P} W_j^{k,p} * d_j^p + b_j^k\right), \qquad k = 1, \dots, K,$$

where $d_j = \{d_j^1, \dots, d_j^P\}$ is the jth decomposed term, which has P maps; $W_j^{k,p}$ and $b_j^k$ are the weights and bias of $f_j^k$; $*$ denotes convolution; and $\sigma(\cdot)$ is the activation function. Then, we applied the GDD block with dense local connectivity to the following layers. The GDD block was expressed as follows:

$$Z_j = \mathcal{C}_2\big(\mathcal{C}_1([d_j, F_j])\big),$$

where $Z_j$ is the output of the GDD block in the mode block, $[\cdot,\cdot]$ is the concatenation operator that concatenates the outputs of multiple previous layers, and $\mathcal{C}_1(\cdot)$ and $\mathcal{C}_2(\cdot)$ are convolutional layers, each followed by an activation function. Next, we adopted a pooling layer to reduce the size of the feature maps:

$$M_j = \mathrm{MaxPool}(Z_j),$$

where $M_j$ is the output of the jth mode block, a set of d feature maps, and $\mathrm{MaxPool}(\cdot)$ is max pooling with a stride of two, which reduces the spatial size of the feature maps by a factor of two.
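The per-map convolution $f_j^k = \sigma(\sum_{p} W_j^{k,p} * d_j^p + b_j^k)$ and the channel-wise concatenation used for dense connectivity can be sketched as follows. This is an illustration under assumptions: convolution is implemented as "valid" cross-correlation (what CNN frameworks actually compute), $\sigma$ is taken to be ReLU, and the kernel values and the 3×3 size in the test are hypothetical, since the kernel sizes are not reported here. Function names are hypothetical as well.

```python
from typing import List

Map = List[List[float]]


def correlate_valid(img: Map, kernel: Map) -> Map:
    """2D 'valid' cross-correlation of one input map with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(kernel[u][v] * img[i + u][j + v] for u in range(kh) for v in range(kw))
             for j in range(out_w)] for i in range(out_h)]


def feature_map(d: List[Map], kernels: List[Map], bias: float) -> Map:
    """f^k = ReLU(sum_p W^{k,p} * d^p + b^k): one kernel per input map, summed, then activated."""
    assert len(d) == len(kernels), "one kernel per input map"
    acc: Map = []
    for d_p, k_p in zip(d, kernels):
        r = correlate_valid(d_p, k_p)
        acc = r if not acc else [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(acc, r)]
    return [[max(0.0, v + bias) for v in row] for row in acc]


def concat_channels(*groups: List[Map]) -> List[Map]:
    """Channel-wise concatenation [.,.]: the channel count grows to the sum of the inputs."""
    return [m for group in groups for m in group]
```

Concatenating a P-map input with K new feature maps yields P + K maps, which is why the GDD block's convolutions are needed to bring the channel count back down.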

4. Experimental Results

This section first presents the self-collected dataset for precipitation intensity estimation and the evaluation metrics used in the experiments. Next, the proposed SF-CNN is compared with several popular methods. Finally, the performance of the SF-CNN without each proposed component is reported to demonstrate the effectiveness of these components. All networks were trained using a momentum optimizer with ReLU activation functions, a batch size of 16, 400 epochs, and a learning rate of 0.0001. The number following a method name denotes its number of layers.
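The training settings above can be collected into a small configuration, and the momentum update can be sketched as follows. The momentum coefficient of 0.9 is an assumption (only the use of a momentum optimizer is reported), and the update follows the common heavy-ball convention $v \leftarrow \mu v + g$, $w \leftarrow w - \eta v$, which is the convention PyTorch's `torch.optim.SGD` uses. `TrainConfig` and `momentum_step` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class TrainConfig:
    batch_size: int = 16         # reported in the text
    epochs: int = 400            # reported in the text
    learning_rate: float = 1e-4  # reported in the text
    momentum: float = 0.9        # ASSUMPTION: not reported in the text


def momentum_step(w: List[float], v: List[float], grad: List[float],
                  cfg: TrainConfig) -> Tuple[List[float], List[float]]:
    """One heavy-ball step: v <- mu * v + g, then w <- w - lr * v."""
    v = [cfg.momentum * vi + gi for vi, gi in zip(v, grad)]
    w = [wi - cfg.learning_rate * vi for wi, vi in zip(w, v)]
    return w, v
```

On a toy quadratic $f(w) = \sum_i w_i^2$ (gradient $2w$), repeated steps with this configuration shrink both weights toward zero without changing their signs.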

4.1. Experimental Environments and Benchmarks

This study collected precipitation images captured by infrared cameras at eight weather stations and classified them into six precipitation intensity levels (referred to as benchmarks) according to the grade of precipitation standard (GB/T 28592-2012). The precipitation grades and the dataset are summarized in Table 1. As shown in Table 1, the precipitation intensity levels are scattered rain, drizzle, moderate rain, heavy rain, rainstorm, and large rainstorm, based on hourly rainfall. The dataset contains 9394 images in total, of which scattered rain, drizzle, and moderate rain account for 94.27%. This study used 6594 images for training and 2800 for testing, a training-to-testing ratio of approximately 7:3, following [47,48].
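As a quick check of the split and class balance, using only the counts stated above:

$$\frac{6594}{9394} \approx 0.702, \qquad \frac{2800}{9394} \approx 0.298, \qquad 0.9427 \times 9394 \approx 8856.$$

Roughly 8856 images therefore belong to scattered rain, drizzle, and moderate rain, leaving only about 538 images for heavy rain, rainstorm, and large rainstorm combined; the split is close to 7:3, and the three heaviest levels are strongly underrepresented.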

Table 1.

Dataset of precipitation intensity.

Moreover, this study used the precision metric and the Kappa metric ($\kappa$) to evaluate the performance of each network. The precision metric was calculated according to the following equation:

$$\mathrm{Precision} = \frac{TP}{TP + FP},$$

where $TP$ and $FP$ are the true and false positives of a precipitation intensity level. The $\kappa$ was calculated as follows:

$$\kappa = \frac{N \sum_{i=1}^{C} x_{ii} - \sum_{i=1}^{C} x_{i+}\, x_{+i}}{N^2 - \sum_{i=1}^{C} x_{i+}\, x_{+i}},$$

where C is the number of precipitation intensity levels, N is the total number of testing images, $x_{ii}$ is the number of images that belong to the ith precipitation intensity and are classified as the ith precipitation intensity, $x_{i+}$ is the number of testing images for the ith precipitation intensity, and $x_{+i}$ is the number of images classified as the ith precipitation intensity.
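Both metrics can be computed from a confusion matrix, as sketched below. The sketch follows the definitions above, with rows as true levels and columns as predicted levels, so $x_{i+}$ is a row sum and $x_{+i}$ is a column sum. The function names are hypothetical, and returning 0.0 precision for a level that is never predicted is a convention chosen here, not one stated in the text.

```python
from typing import List, Sequence

Matrix = List[List[int]]


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> Matrix:
    """m[i][j] = number of images of level i predicted as level j."""
    m = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m


def per_class_precision(m: Matrix) -> List[float]:
    """TP / (TP + FP) per predicted level (column); 0.0 if the level is never predicted."""
    c = len(m)
    out = []
    for i in range(c):
        predicted_i = sum(m[r][i] for r in range(c))  # TP + FP for level i
        out.append(m[i][i] / predicted_i if predicted_i else 0.0)
    return out


def cohen_kappa(m: Matrix) -> float:
    """kappa = (N * sum x_ii - sum x_i+ x_+i) / (N^2 - sum x_i+ x_+i)."""
    c = len(m)
    n = sum(sum(row) for row in m)
    agreement = sum(m[i][i] for i in range(c))
    chance = sum(sum(m[i]) * sum(m[r][i] for r in range(c)) for i in range(c))
    return (n * agreement - chance) / (n * n - chance)
```

For example, with true levels `[0, 0, 1, 1, 2, 2]` and predictions `[0, 1, 1, 1, 2, 0]`, four of the six images are correct, the chance term is 12, and $\kappa = (6 \cdot 4 - 12)/(36 - 12) = 0.5$.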

4.2. Experimental Analysis on Various Networks

This study compares the SF-CNN with eight popular CNN methods to evaluate its performance: four classic CNNs (VGG [49], Inception [50,51], the ResNet series [52], and the DenseNet series [53]) and four recent CNNs (the DCNet series [54], NTS [55], DCL [56], and HRNet [57]).

Table 2 summarizes the quantitative comparison results, with the best result for each benchmark and each metric marked in bold. ResNet-101 and DCNet-101 had the best precision for the large rainstorm benchmark, and DenseNet-63 and DenseNet-169 had the best precision for the rainstorm benchmark; the proposed SF-CNNs had the best results in all benchmarks. Moreover, SF-CNN-169 had the best overall precision and κ, and SF-CNN-63 had fewer parameters. The overall precision and κ of SF-CNN-169 were 2.28% and 0.0360 higher, respectively, than those of ResNet-101, the second-best method, showing that it improves the precision of precipitation intensity estimation over the compared methods. The overall precision and κ of SF-CNN-63 were 1.82% and 0.0285 higher, respectively, than those of ResNet-101.

Table 2.

Quantitative comparison results.

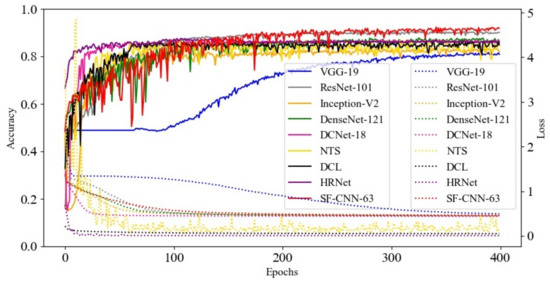

In addition, Figure 5 presents the learning curves of various networks to show how the training loss and testing accuracy evolved. Except for the proposed SF-CNNs, Figure 5 includes, for each network series, the network with the best overall precision. SF-CNN-63 was compared with the other methods because it had the fewest parameters and similar precision among the SF-CNNs. In Figure 5, the loss of each method decreased to a low level without rebounding, and the accuracy of each method increased gradually without sudden drops during training. Therefore, no overfitting was observed during model training. VGG-19 had the slowest convergence and the worst classification accuracy; the other compared methods converged quickly, and their accuracy rose rapidly at the beginning of training, but their best performance did not exceed that of SF-CNN-63 after 300 epochs. Although SF-CNN-63 converged slowly and its accuracy was unstable at the beginning of training, its training stabilized and reached good classification accuracy after 300 epochs.

Figure 5.

The learning curve of various networks.

The SF-CNN series had the best overall precision and κ and the best classification performance across all precipitation intensity levels. Moreover, the SF-CNN series had the fewest parameters compared with the state-of-the-art methods.

4.3. Ablation Experimental Analysis of the Proposed Network

The proposed SF-CNN contains the gradually decreasing dimensional block (GDD block) and the signal filtering block (SF block). To verify the contribution of each block, this study modified SF-CNN-63 to create SF-CNN-63-W-GDD and SF-CNN-63-W-SF, which are SF-CNN-63 without the GDD blocks and without the SF blocks, respectively. In other words, SF-CNN-63-W-GDD contains only the SF blocks and was used to verify the performance of the SF blocks, and SF-CNN-63-W-SF contains only the GDD blocks and was used to verify the performance of the GDD blocks.

Table 3 reports the comparison results in terms of precision, κ, and the number of parameters (Params). As shown in Table 3, even with the GDD or SF blocks removed from SF-CNN-63, the resulting models' precision was 4.32% and 0.39% higher than that of DenseNet-63, respectively, their κ was 0.0674 and 0.0039 higher, respectively, and they had fewer parameters than DenseNet-63. Moreover, SF-CNN-63-W-SF and SF-CNN-63 had the same number of parameters because the parameter count depends only on the neurons (convolutional kernels), and the SF block contains no convolutional kernels. Although SF-CNN-63-W-GDD and SF-CNN-63-W-SF performed well compared with DenseNet-63, the complete model, SF-CNN-63, had the best performance on every metric.

Table 3.

The quantitative results without using the proposed blocks.

5. Discussion

5.1. Analysis of the GDD Block

The gradually decreasing dimension (GDD) block applies two convolutional operations in sequence, each followed by an activation function. Reducing the dimensionality of the feature maps effectively reduces the number of parameters [50,53]. Moreover, gradually decreasing the dimension also integrates information across channels [58], adding nonlinearity that improves the expressive ability of the network.

In more detail, the convolution operation reduced the number of channels, which had been increased by the concatenation operation, and thus decreased the computational cost. The convolution operation is a linear operation that combines information across channels while reducing the channel dimension. This study applied two convolution operations in sequence because reducing many channels at once would cause information loss. In addition, an activation function was applied after each convolutional operation to add nonlinearity. A network built only from stacked convolution operations can be transformed into a single-layer convolution through a matrix transformation; the activation function, which maps features through a nonlinear function, gives the multiple layers of a neural network practical meaning and strengthens the learning ability of the model [2,59,60].
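The claim that stacked convolutions without activations collapse into a single convolution can be checked at a single pixel, where a 1×1 convolution is simply a matrix acting on the channel vector. The sketch below uses hypothetical weights: two linear layers compose into the single matrix $W_2 W_1$, while inserting ReLU between them breaks this equivalence.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(a: Matrix, x: Vector) -> Vector:
    """Apply a channel-mixing matrix (a 1x1 convolution at one pixel)."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Compose two linear maps so that matvec(matmul(a, b), x) == matvec(a, matvec(b, x))."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


# Hypothetical weights: 2 -> 3 -> 2 channels.
W1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 1.0]]
W2 = [[1.0, 1.0, 1.0], [2.0, 0.0, -1.0]]
x = [1.0, 3.0]

stacked_linear = matvec(W2, matvec(W1, x))      # two layers, no activation
single_layer = matvec(matmul(W2, W1), x)        # one merged layer
stacked_relu = matvec(W2, relu(matvec(W1, x)))  # two layers with ReLU in between
```

Here the merged layer gives `[6.5, -6.0]`, exactly matching the two linear layers, whereas the ReLU version gives `[8.5, -2.0]`, which no single linear layer built from `W1` and `W2` reproduces for this input.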

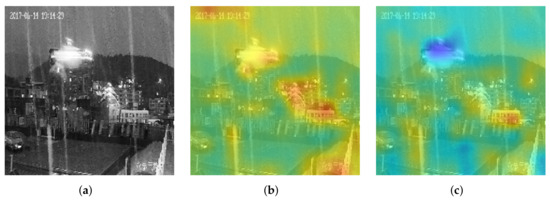

In addition, we used a skip connection structure in the GDD block to fuse the features extracted by the SF block with those produced by the two convolution operations. Because the features filtered by the guided filter are reused [53,61], the fused features keep the network focused on rain patterns throughout the convolution process, which improves the accuracy of precipitation estimation. This study visualized heatmaps of the features extracted by the second GDD block, as shown in Figure 6.

Figure 6.

The visualization of skip connection in the second GDD block. (a) Original image, (b) before, (c) after.

In Figure 6, Figure 6a–c show the input image, its heatmap before the skip connection, and its heatmap after the skip connection, respectively. In Figure 6b,c, darker colors indicate larger values. Comparing Figure 6b,c shows that the network focused on the rain pattern after reusing the features filtered by the guided filter; in Figure 6c, the rain streaks had higher values than the rest of the input image.

Finally, this study used a max-pooling layer after the two convolutional layers to decrease the size of the feature maps, further reducing the computational cost of the network. The total numbers of parameters are shown in Table 2: the proposed SF-CNN had the fewest parameters among the compared methods of the same depth.

5.2. Analysis of the SF Block

In precipitation intensity estimation, noise is an essential issue in the analyzed images: it blurs the rain streaks in the infrared image and reduces estimation accuracy. This study used guided image filtering [46] to suppress noise and background information. The guided image filter (1) behaves like a mean filter, suppressing noise and background information, where the variance within the local window is small, and (2) preserves the rain streak (foreground) information where the variance is large.
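The two strategies above follow from the standard formulation of guided image filtering [46], which fits a local linear model in every window $\omega_k$. Assuming the self-guided configuration (guide image equal to the input $I$), with window mean $\mu_k$, window variance $\sigma_k^2$, and regularization parameter $\epsilon$:

$$a_k = \frac{\sigma_k^2}{\sigma_k^2 + \epsilon}, \qquad b_k = (1 - a_k)\,\mu_k, \qquad q_i = \bar{a}_i I_i + \bar{b}_i,$$

where $\bar{a}_i$ and $\bar{b}_i$ average $a_k$ and $b_k$ over all windows that contain pixel $i$. When $\sigma_k^2 \ll \epsilon$, $a_k \approx 0$ and the output approaches the local mean (strategy 1); when $\sigma_k^2 \gg \epsilon$, $a_k \approx 1$ and the output approaches the input (strategy 2). For example, with a hypothetical $\epsilon = 0.01$, a flat background window with $\sigma_k^2 = 0.0001$ gives $a_k \approx 0.0099$, whereas a window crossing a bright streak with $\sigma_k^2 = 0.16$ gives $a_k \approx 0.94$.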

This study visualized the effects of the SF block, as shown in Figure 7, where Figure 7a–c show the input image, its heatmap before the guided filter, and its heatmap after the guided filter, respectively. In Figure 7b, before the guided filter was applied, the network paid more attention to the background than to the rain streaks, for example, to the brightly lit building at the top of the mountain. Comparing Figure 7b,c shows that the guided filter efficiently suppressed the background information and retained the rain streak information, making the characteristics of the rain streaks more prominent.

Figure 7.

The visualization of the guided filter in the second SF block. (a) Original image, (b) before, (c) after.

Precipitation images contain more background information than rain streak information, so the background can mislead the network during training. Therefore, this study added a signal filtering block, which applies the guided filter, before each dense block so that the network stays focused on the rain streaks and avoids interference from background information during training.

5.3. Characteristic of the DNN’s Black Box

In traditional machine learning, designers hand-craft image features according to the characteristics of the images and build the classifier on an explicit mathematical model; therefore, traditional machine learning approaches are interpretable. Deep convolutional neural networks evolved from neural networks, which are themselves well-defined mathematical models. However, they cannot explain why the features they learn describe the input data so effectively. Hence, many researchers regard the DNN as a black-box technology: it is difficult to express, in mathematical form, why specific features and decision boundaries are formed [62]. The relationship between DNNs and interpretability resembles that between the steam engine and thermodynamics, where the technological invention came first and the scientific theory followed. The DNN is thus called a “black box” mainly because it still lacks a fundamental theoretical basis.

Researchers divide the interpretability of DNNs into two types: intrinsic interpretability and post hoc interpretability [63,64]. Intrinsic interpretability is built into the structure of the model itself. Post hoc interpretability, by contrast, explains the decisions of an already trained model in actual applications [65], and visualization is a widely used approach for it [66]. Visualization methods display the weights of the convolution kernels, the features of the convolutional layers, and the regions that the model attends to. They help researchers understand the features extracted at different layers of the DNN. This study adopted post hoc interpretability (the visualization method) and related it to the structure of the proposed model to understand how the model reaches its predictions.
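The text does not name the visualization method that produced the heatmaps in Figure 7. As an assumption, the sketch below follows Grad-CAM [66]. Grad-CAM weights each channel of a convolutional layer by the spatial average of the class-score gradient, then keeps the positive part of the weighted sum of channel maps. The gradients would normally come from a deep learning framework's automatic differentiation, so here they are simply passed in as arrays. The function name `grad_cam` is illustrative.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM-style heatmap for one convolutional layer.

    activations, gradients: arrays of shape (K, H, W); gradients hold
    d(class score) / d(activations). Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))              # one importance weight per channel
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum of the channel maps
    cam = np.maximum(cam, 0.0)                         # keep only positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: channel 0 fires top-left and helps the class; channel 1 fires bottom-right and hurts it.
acts = np.zeros((2, 4, 4))
acts[0, :2, :2] = 1.0
acts[1, 2:, 2:] = 1.0
grads = np.stack([np.ones((4, 4)), -np.ones((4, 4))])
heatmap = grad_cam(acts, grads)
```

In practice the (H, W) map is upsampled to the input resolution and overlaid on the image, which yields heatmaps of the kind shown in Figure 7.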

5.4. Limitation and Outlook

This study designed the SF block around a guided filter layer that filters out the noise (the residual layer). However, the residual layer may also contain valuable information. Signal decomposition techniques [67] could therefore be considered in future work to reuse the information in the residual layer. Moreover, the magnitude of the filtered signal would be smaller than that of the original signal, so signal enhancement techniques, such as spatial and channel attention in CNNs [68,69], should be considered for these filtered signals.
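Channel attention is one of the enhancement directions mentioned above, and a squeeze-and-excitation (SE) block [68] illustrates it. The following is a forward-pass-only NumPy sketch. The function name, the reduction ratio r = 4 and the random weights are placeholders for what would be learned parameters in a real network. It is not part of SF-CNN.

```python
import numpy as np


def squeeze_excitation(x, w1, b1, w2, b2):
    """Forward pass of a squeeze-and-excitation (SE) block on one (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r) are the two fully connected layers,
    where r is the channel-reduction ratio.
    """
    z = x.mean(axis=(1, 2))                     # squeeze: global average pooling -> (C,)
    s = np.maximum(w1 @ z + b1, 0.0)            # excitation, FC + ReLU -> (C // r,)
    g = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))    # FC + sigmoid -> one gate in (0, 1) per channel
    return x * g[:, None, None]                 # rescale every channel by its gate


# Hypothetical usage: random weights stand in for learned ones.
rng = np.random.default_rng(0)
C, r = 8, 4
x = rng.random((C, 5, 5))
y = squeeze_excitation(x,
                       rng.standard_normal((C // r, C)), np.zeros(C // r),
                       rng.standard_normal((C, C // r)), np.zeros(C))
```

The sigmoid gates lie in (0, 1), so an SE block on its own re-weights channels relative to one another rather than amplifying them. Restoring absolute magnitude would need an additional mechanism, for example a learnable scaling factor.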

This study used surveillance-based infrared images captured at eight weather stations but did not consider the temporal and spatial correlations among them [70,71]. In future work, the study could be extended to analyze the relationships between sampling points and to predict precipitation intensity [72,73].

6. Conclusions

This study proposed a new CNN model, the SF-CNN, for precipitation intensity estimation. The idea behind the SF-CNN is to decompose signals inside the network with a filtering operation. To this end, this study designed a signal filtering block (SF block) and a gradually decreasing dimension block (GDD block). The SF block used a guided filter to remove the noise from the feature maps, treating the noise as the residual signal. The GDD block reduced the dimensionality of the feature maps gradually rather than in a single step. The SF block removed noise both at the beginning of the network and throughout the rest of it. The GDD block integrated the information of the feature maps, increased the nonlinearity of the representation, and effectively reduced the number of parameters.
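The GDD block is described here only at a high level. A minimal NumPy sketch of the idea follows, under explicit assumptions that this section does not confirm. Each step is a 1×1 convolution followed by ReLU. The channel count halves at every step (256 → 128 → 64 → 32). The weights are random rather than learned. The names `conv1x1` and `gdd_sketch` are hypothetical.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1x1 convolution as a per-pixel linear map over channels. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def gdd_sketch(x: np.ndarray, widths: list, rng: np.random.Generator):
    """Hypothetical GDD-style stack of (1x1 conv, ReLU) pairs that shrink the channel count step by step.

    Returns the output feature map and the number of parameters used.
    """
    assert x.shape[0] == widths[0], "input channels must match the first width"
    n_params = 0
    for c_in, c_out in zip(widths[:-1], widths[1:]):
        w = rng.standard_normal((c_out, c_in)) * np.sqrt(2.0 / c_in)  # He-style init (arbitrary here)
        b = np.zeros(c_out)
        x = np.maximum(conv1x1(x, w, b), 0.0)   # convolution followed by its activation
        n_params += w.size + b.size
    return x, n_params


rng = np.random.default_rng(0)
y, n_params = gdd_sketch(rng.random((256, 8, 8)), [256, 128, 64, 32], rng)
```

Each pair adds a nonlinearity while the width shrinks. With these illustrative widths the stack uses 43,232 parameters. The actual kernel sizes and widths of the GDD block are not given in this section.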

In the experiments, this study analyzed various network factors of the proposed SF-CNN and selected the best configuration for comparison with popular methods. On the self-collected precipitation dataset, the proposed model achieved the best overall precision and evaluation metrics and had the fewest parameters.

Author Contributions

Conceptualization, C.-W.L.; methodology, C.-W.L.; validation, C.-W.L., X.H., M.L. and S.H.; investigation, C.-W.L., X.H., M.L. and S.H.; writing—original draft preparation, C.-W.L.; writing—review and editing, C.-W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Youth Program of Humanities and Social Sciences Foundation, Ministry of Education of China, under Grant 18YJCZH093; in part by the China Postdoctoral Science Foundation under Grant 2018M632565; in part by the Channel Post-Doctoral Exchange Funding Scheme; and in part by the Natural Science Foundation of Fujian Province under Grant 2021J01128.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Acknowledgments

The authors would like to thank Lai Shaojun from the Fuzhou Meteorological Bureau, Fuzhou, China, and Jinfu Liu from Fujian Agriculture and Forestry University, for their assistance with the collection of the data for our dataset.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Xie, P.; Chen, M.; Yang, S.; Yatagai, A.; Hayasaka, T.; Fukushima, Y.; Liu, C. A gauge-based analysis of daily precipitation over East Asia. J. Hydrometeorol. 2007, 8, 607–626.

- Chen, M.; Shi, W.; Xie, P.; Silva, V.B.; Kousky, V.E.; Wayne Higgins, R.; Janowiak, J.E. Assessing objective techniques for gauge-based analyses of global daily precipitation. J. Geophys. Res. Atmos. 2008, 113.

- Wilson, J.W.; Brandes, E.A. Radar measurement of rainfall—A summary. Bull. Am. Meteorol. Soc. 1979, 60, 1048–1060.

- Germann, U.; Galli, G.; Boscacci, M.; Bolliger, M. Radar precipitation measurement in a mountainous region. Q. J. R. Meteorol. Soc. 2006, 132, 1669–1692.

- Ebert, E.E.; Janowiak, J.E.; Kidd, C. Comparison of near-real-time precipitation estimates from satellite observations and numerical models. Bull. Am. Meteorol. Soc. 2007, 88, 47–64.

- Tian, Y.; Peters-Lidard, C.D.; Eylander, J.B.; Joyce, R.J.; Huffman, G.J.; Adler, R.F.; Hsu, K.L.; Turk, F.J.; Garcia, M.; Zeng, J. Component analysis of errors in satellite-based precipitation estimates. J. Geophys. Res. Atmos. 2009, 114.

- Tang, Y.; Zhang, C.; Gu, R.; Li, P.; Yang, B. Vehicle detection and recognition for intelligent traffic surveillance system. Multimed. Tools Appl. 2017, 76, 5817–5832.

- Liu, L.; Stroulia, E.; Nikolaidis, I.; Miguel-Cruz, A.; Rincon, A.R. Smart homes and home health monitoring technologies for older adults: A systematic review. Int. J. Med. Inform. 2016, 91, 44–59.

- Yuan, P.H.; Yang, K.F.; Tsai, W.H. Real-time security monitoring around a video surveillance vehicle with a pair of two-camera omni-imaging devices. IEEE Trans. Veh. Technol. 2011, 60, 3603–3614.

- Yang, W.; Tan, R.T.; Feng, J.; Liu, J.; Guo, Z.; Yan, S. Deep joint rain detection and removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1357–1366.

- Fu, X.; Huang, J.; Ding, X.; Liao, Y.; Paisley, J. Clearing the skies: A deep network architecture for single-image rain removal. IEEE Trans. Image Process. 2017, 26, 2944–2956.

- Shedekar, V.S.; King, K.W.; Fausey, N.R.; Soboyejo, A.B.; Harmel, R.D.; Brown, L.C. Assessment of measurement errors and dynamic calibration methods for three different tipping bucket rain gauges. Atmos. Res. 2016, 178, 445–458.

- Niemczynowicz, J. The dynamic calibration of tipping-bucket raingauges. Hydrol. Res. 1986, 17, 203–214.

- Tang, H.; Kuang, L.; Shi, P. Design of a high precision weighing rain gauge based on WSN. Meas. Control Technol. 2014, 33, 200–204.

- Knechtl, V.; Caseri, M.; Lumpert, F.; Hotz, C.; Sigg, C. Detecting temperature induced spurious precipitation in a weighing rain gauge. Meteorol. Z. 2019, 28, 215–224.

- Al-Wagdany, A. Inconsistency in rainfall characteristics estimated from records of different rain gauges. Arab. J. Geosci. 2016, 9, 410.

- Grimes, D.; Pardo-Iguzquiza, E.; Bonifacio, R. Optimal areal rainfall estimation using raingauges and satellite data. J. Hydrol. 1999, 222, 93–108.

- Gat, J.R.; Airey, P.L. Stable water isotopes in the atmosphere/biosphere/lithosphere interface: Scaling-up from the local to continental scale, under humid and dry conditions. Glob. Planet. Chang. 2006, 51, 25–33.

- Yan, J.; Bárdossy, A.; Hörning, S.; Tao, T. Conditional simulation of surface rainfall fields using modified phase annealing. Hydrol. Earth Syst. Sci. 2020, 24, 2287–2301.

- Suseno, D.P.Y.; Yamada, T.J. Simulating flash floods using geostationary satellite-based rainfall estimation coupled with a land surface model. Hydrology 2020, 7, 9.

- Sevruk, B. Regional dependency of precipitation-altitude relationship in the Swiss Alps. In Climatic Change at High Elevation Sites; Springer: Dordrecht, The Netherlands, 1997; pp. 123–137.

- Barnes, S.L. A technique for maximizing details in numerical weather map analysis. J. Appl. Meteorol. Climatol. 1964, 3, 396–409.

- Foehn, A.; Hernández, J.G.; Schaefli, B.; De Cesare, G. Spatial interpolation of precipitation from multiple rain gauge networks and weather radar data for operational applications in Alpine catchments. J. Hydrol. 2018, 563, 1092–1110.

- Ryzhkov, A.; Zrnic, D. Radar polarimetry at S, C, and X bands: Comparative analysis and operational implications. In Proceedings of the 32nd Conference on Radar Meteorology, Norman, OK, USA, 22–29 October 2005.

- Huang, H.; Zhao, K.; Zhang, G.; Hu, D.; Yang, Z. Optimized raindrop size distribution retrieval and quantitative rainfall estimation from polarimetric radar. J. Hydrol. 2020, 580, 124248.

- Bellerby, T.; Todd, M.; Kniveton, D.; Kidd, C. Rainfall estimation from a combination of TRMM precipitation radar and GOES multispectral satellite imagery through the use of an artificial neural network. J. Appl. Meteorol. 2000, 39, 2115–2128.

- Behrangi, A.; Hsu, K.L.; Imam, B.; Sorooshian, S.; Kuligowski, R.J. Evaluating the utility of multispectral information in delineating the areal extent of precipitation. J. Hydrometeorol. 2009, 10, 684–700.

- Hong, Y.; Hsu, K.L.; Sorooshian, S.; Gao, X. Precipitation estimation from remotely sensed imagery using an artificial neural network cloud classification system. J. Appl. Meteorol. 2004, 43, 1834–1853.

- Kummerow, C.; Olson, W.S.; Giglio, L. A simplified scheme for obtaining precipitation and vertical hydrometeor profiles from passive microwave sensors. IEEE Trans. Geosci. Remote Sens. 1996, 34, 1213–1232.

- Lazri, M.; Labadi, K.; Brucker, J.M.; Ameur, S. Improving satellite rainfall estimation from MSG data in Northern Algeria by using a multi-classifier model based on machine learning. J. Hydrol. 2020, 584, 124705.

- Ouallouche, F.; Lazri, M.; Ameur, S. Improvement of rainfall estimation from MSG data using Random Forests classification and regression. Atmos. Res. 2018, 211, 62–72.

- Tao, Y.; Hsu, K.; Ihler, A.; Gao, X.; Sorooshian, S. A two-stage deep neural network framework for precipitation estimation from bispectral satellite information. J. Hydrometeorol. 2018, 19, 393–408.

- Wang, C.; Xu, J.; Tang, G.; Yang, Y.; Hong, Y. Infrared precipitation estimation using convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8612–8625.

- Bossu, J.; Hautiere, N.; Tarel, J.P. Rain or snow detection in image sequences through use of a histogram of orientation of streaks. Int. J. Comput. Vis. 2011, 93, 348–367.

- Kang, L.W.; Lin, C.W.; Fu, Y.H. Automatic single-image-based rain streaks removal via image decomposition. IEEE Trans. Image Process. 2011, 21, 1742–1755.

- Kim, J.H.; Sim, J.Y.; Kim, C.S. Video deraining and desnowing using temporal correlation and low-rank matrix completion. IEEE Trans. Image Process. 2015, 24, 2658–2670.

- Sawant, S.; Ghonge, P. Estimation of rain drop analysis using image processing. Int. J. Sci. Res. 2015, 4, 1981–1986.

- Hsieh, C.W.; Chi, P.W.; Chen, C.Y.; Weng, C.J.; Wang, L. Automatic Precipitation Measurement Based on Raindrop Imaging and Artificial Intelligence. IEEE Trans. Geosci. Remote Sens. 2019, 57, 10276–10284.

- Roser, M.; Moosmann, F. Classification of weather situations on single color images. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 798–803.

- Zhang, S.; Chi, C.; Lei, Z.; Li, S.Z. RefineFace: Refinement neural network for high performance face detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4008–4020.

- Li, X.; Yu, L.; Chang, D.; Ma, Z.; Cao, J. Dual cross-entropy loss for small-sample fine-grained vehicle classification. IEEE Trans. Veh. Technol. 2019, 68, 4204–4212.

- LeBien, J.; Zhong, M.; Campos-Cerqueira, M.; Velev, J.P.; Dodhia, R.; Ferres, J.L.; Aide, T.M. A pipeline for identification of bird and frog species in tropical soundscape recordings using a convolutional neural network. Ecol. Inform. 2020, 59, 101113.

- Hossain, M.; Rekabdar, B.; Louis, S.J.; Dascalu, S. Forecasting the weather of Nevada: A deep learning approach. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–6.

- Sadeghi, M.; Asanjan, A.A.; Faridzad, M.; Nguyen, P.; Hsu, K.; Sorooshian, S.; Braithwaite, D. PERSIANN-CNN: Precipitation estimation from remotely sensed information using artificial neural networks–convolutional neural networks. J. Hydrometeorol. 2019, 20, 2273–2289.

- He, K.; Sun, J.; Tang, X. Guided image filtering. In Proceedings of the European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 1–14.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

- Wang, L.; Li, D.; Zhu, Y.; Tian, L.; Shan, Y. Dual super-resolution learning for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3774–3783.

- Bae, K.I.; Park, J.; Lee, J.; Lee, Y.; Lim, C. Flower classification with modified multimodal convolutional neural networks. Expert Syst. Appl. 2020, 159, 113455.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Liu, W.; Liu, Z.; Yu, Z.; Dai, B.; Lin, R.; Wang, Y.; Rehg, J.M.; Song, L. Decoupled Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2771–2779.

- Yang, Z.; Luo, T.; Wang, D.; Hu, Z.; Gao, J.; Wang, L. Learning to navigate for fine-grained classification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 420–435.

- Chen, Y.; Bai, Y.; Zhang, W.; Mei, T. Destruction and construction learning for fine-grained image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5157–5166.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Zheng, Q.; Yang, M.; Tian, X.; Wang, X.; Wang, D. Rethinking the Role of Activation Functions in Deep Convolutional Neural Networks for Image Classification. Eng. Lett. 2020, 28, 80–92.

- Kuo, C.C.J. Understanding convolutional neural networks with a mathematical model. J. Vis. Commun. Image Represent. 2016, 41, 406–413.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: Redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans. Med. Imaging 2019, 39, 1856–1867.

- Carvalho, D.V.; Pereira, E.M.; Cardoso, J.S. Machine learning interpretability: A survey on methods and metrics. Electronics 2019, 8, 832.

- Du, M.; Liu, N.; Hu, X. Techniques for interpretable machine learning. Commun. ACM 2019, 63, 68–77.

- Buhrmester, V.; Münch, D.; Arens, M. Analysis of explainers of black box deep neural networks for computer vision: A survey. Mach. Learn. Knowl. Extr. 2021, 3, 48.

- Xie, N.; Ras, G.; van Gerven, M.; Doran, D. Explainable deep learning: A field guide for the uninitiated. arXiv 2020, arXiv:2004.14545.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Qin, S.; Zhong, Y.M. A new envelope algorithm of Hilbert–Huang transform. Mech. Syst. Signal Process. 2006, 20, 1941–1952.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Baigorria, G.A.; Jones, J.W.; O’Brien, J.J. Understanding rainfall spatial variability in southeast USA at different timescales. Int. J. Climatol. A J. R. Meteorol. Soc. 2007, 27, 749–760.

- Razmkhah, H.; AkhoundAli, A.M.; Radmanesh, F.; Saghafian, B. Evaluation of rainfall spatial correlation effect on rainfall-runoff modeling uncertainty, considering 2-copula. Arab. J. Geosci. 2016, 9, 323.

- Wu, K.; Shen, Y.; Wang, S. 3D convolutional neural network for regional precipitation nowcasting. J. Image Signal Process. 2018, 7, 200–212.

- Ayzel, G.; Scheffer, T.; Heistermann, M. RainNet v1.0: A convolutional neural network for radar-based precipitation nowcasting. Geosci. Model Dev. 2020, 13, 2631–2644.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).