Abstract

The goal of an automatic parking system is to park a vehicle in a specified space automatically. Such a system mainly comprises parking space recognition, parking space matching, and trajectory generation. Although automatic parking has developed considerably, it remains challenging because of parking space recognition errors and the difficulty of generating trajectories when the vehicle's initial state is not parallel to the parking space. In this study, the authors propose a multi-sensor information ensemble for parking space recognition and an adaptive trajectory generation method that is robust to nonparallel initial states. Both simulation and real vehicle experiments show that the proposed method improves the performance of the automatic parking system.

1. Introduction

Automatic parking systems (APS), one of the main functions of advanced driver assistance systems (ADAS) and autonomous driving, are a key technology for transportation and traffic management [1,2,3]. They draw on the Internet of Things (IoT) [4] and artificial intelligence [5]. In addition, with increasing computing power, multi-sensor fusion technology has contributed greatly to smart parking systems.

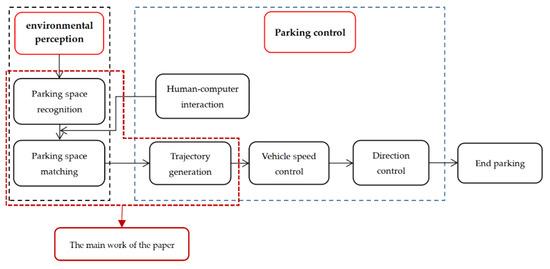

An automatic parking system consists of parking space recognition, parking space matching, trajectory generation [6,7,8], and vehicle control [9]. Parking space recognition [10,11] is one of the crucial components of an APS and is commonly based on machine vision. Following recognition, parking space matching [12,13] provides the position of the target parking space, which determines the precision of trajectory generation. Trajectory generation [14,15] requires vehicle dynamics and obstacle avoidance models to produce a feasible parking trajectory.

2. Related Works

This section introduces the related works that motivate this study, covering parking space recognition, parking space matching, trajectory generation, and vehicle control.

Jung et al. [16] describe monocular vision-based recognition of parking slot markings for an automatic parking assist system. Hsu et al. [17] propose an APS configuration comprising sensor information fusion, position estimation, path planning, and a tracking algorithm, which achieves good maneuvering performance. Ma et al. [18] develop an automatic parking system based on parking scene recognition. It uses machine vision and pattern recognition to recognize vertical parking scenarios, plans a reasonable parking path, develops a path tracking control strategy to improve vehicle control automation, and outlines a technology road map toward highly intelligent automatic parking. Liu et al. [19] study positioning accuracy and stability, which are crucial for automatic parking systems. To address low precision and poor stability, they propose an adaptive, robust four-wheel calculation positioning method based on the local outlier factor detection algorithm, in which data anomalies of the four wheel speedometers are adaptively diagnosed and compensated. A preview correction method is used for parking path planning in [20]; it can detect curvature outliers in the parking path and correct them in advance to produce a reasonable parking trajectory. A Dijkstra-based scheme that accounts for dynamic influencing factors [21] is proposed to resolve lane occupancy caused by parking. Zhao et al. [22] designed an indoor automatic parking system based on indoor positioning and navigation technology. A power-aware path planning algorithm was proposed in [23]; it determines the best parking place, calculates the best path, and can greatly reduce power consumption.
Considering the possibility of collision between the car body and obstacles in the parking space and the need for a continuous parking path, a Bezier curve is used in [24] to fit the automatic parking trajectory. A trajectory calculation method combining an improved genetic algorithm with a time-enhanced A* algorithm [25] addresses path planning in high-density parking lots and improves both driving distance and safety. In [26], parallel line pairs are extracted from the around view monitor (AVM) image to detect separating lines, which are then paired according to the geometric constraints of parking spaces to generate candidate parking spaces. The candidates are confirmed by using line and corner features to identify their entrances and ultrasonic sensors to classify them. In [27], a detection method based on a deep convolutional neural network is proposed, and the largest dataset in the field of parking space recognition is established to cope with the many unpredictable factors affecting parking spaces. A parking space detection method based on directional entrance line regression and classification with a deep convolutional neural network [28] can detect parking spaces of different shapes from different angles. The parking space marking detection method based on the geometric features of parking spaces [29] mainly consists of separating line detection and parking space entrance detection, and it identifies typical vertical and parallel rectangular parking spaces with high accuracy.

Most existing methods apply only when the initial state of the vehicle is parallel to the target space, and they can generate only a fixed-point trajectory [30]. Moreover, narrow parking areas and limited parking points are serious problems for APS. To overcome these limitations, we design an automatic parking system that achieves precise parking with different initial vehicle states (parallel or nonparallel to the target parking space). The primary objective of this study is to develop a novel APS for engineering practice that overcomes the aforementioned shortcomings. This paper adopts the idea of data fusion and designs a multi-sensor data fusion method. Parking space matching uses visual positioning, and the image distortion caused by the optical lens is corrected experimentally. Trajectory calculation uses a traditional geometric method because of its high computational efficiency. The main contributions of this paper are as follows: (I) multi-sensor information is fused to recognize the parking space, which enhances recognition performance and supports parking space matching; (II) a self-adaptive trajectory generation method is proposed that handles both parallel and nonparallel initial states. Simulation and real vehicle experiments are conducted to validate the method. With the proposed scheme, the probability of correct parking space matching reaches 94%, the maximum matching error is only 5 cm, and the parking success rate reaches 90%.

The remainder of this paper is organized as follows. Section 2 introduces related works. Section 3 describes the proposed method in detail. Section 4 describes the simulation and real vehicle experiments. Section 5 presents the experimental results and discusses them in detail. Section 6 provides concluding remarks.

3. Automatic Parking System

3.1. Multi-Sensor Information Ensemble-Based Parking Space Recognition

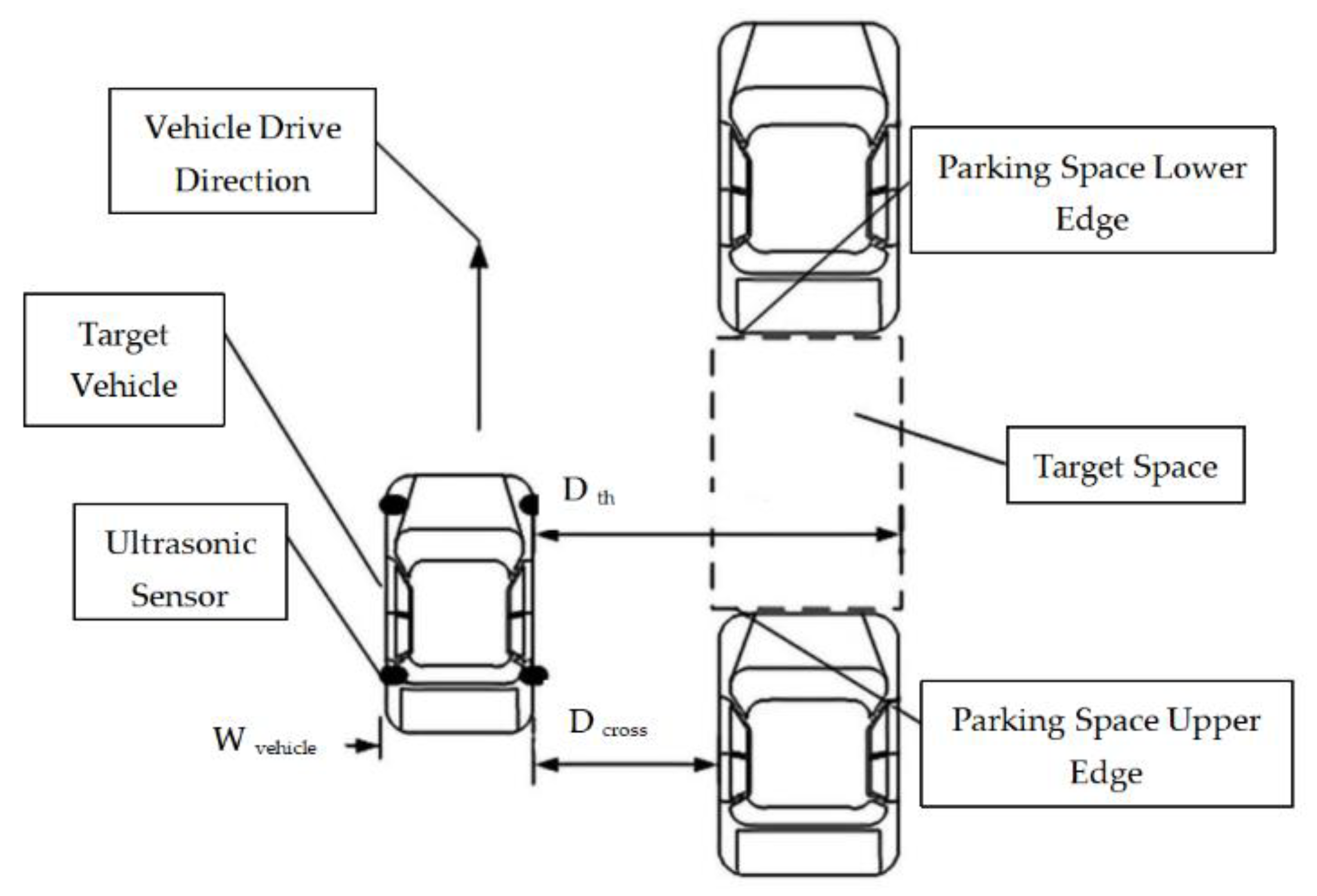

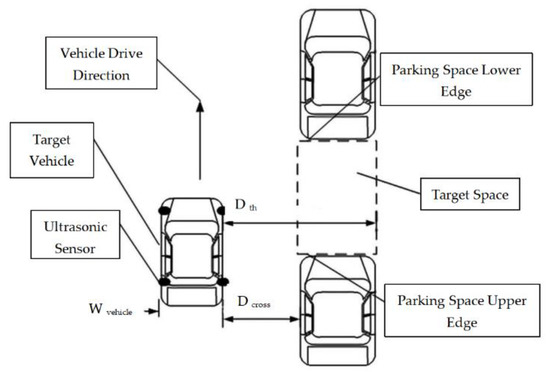

Parking space recognition, the first step of an automatic parking system, depends heavily on the information collected by sensors such as ultrasonic sensors and cameras, and a multi-sensor information ensemble can improve recognition performance. A parking space is bounded by an upper edge and a lower edge. Ultrasonic sensors installed on the right-hand side of the vehicle are used for edge detection. The installed ultrasonic sensor model is LGCB1000-18GM-D1/D2-V15, with a detection range of 70–1000 mm. A schematic diagram of a parallel parking spot is shown in Figure 1.

Figure 1.

Schematic diagram of a parallel parking spot.

The ultrasonic sensors scan a range that includes the target parking space, and the collected data are smoothed by filtering. The distance between the upper and lower edges is then measured, together with the displacement of the vehicle relative to the target parking spot. Finally, the data measured by the two sensors are fused by the data fusion algorithm proposed in this paper.

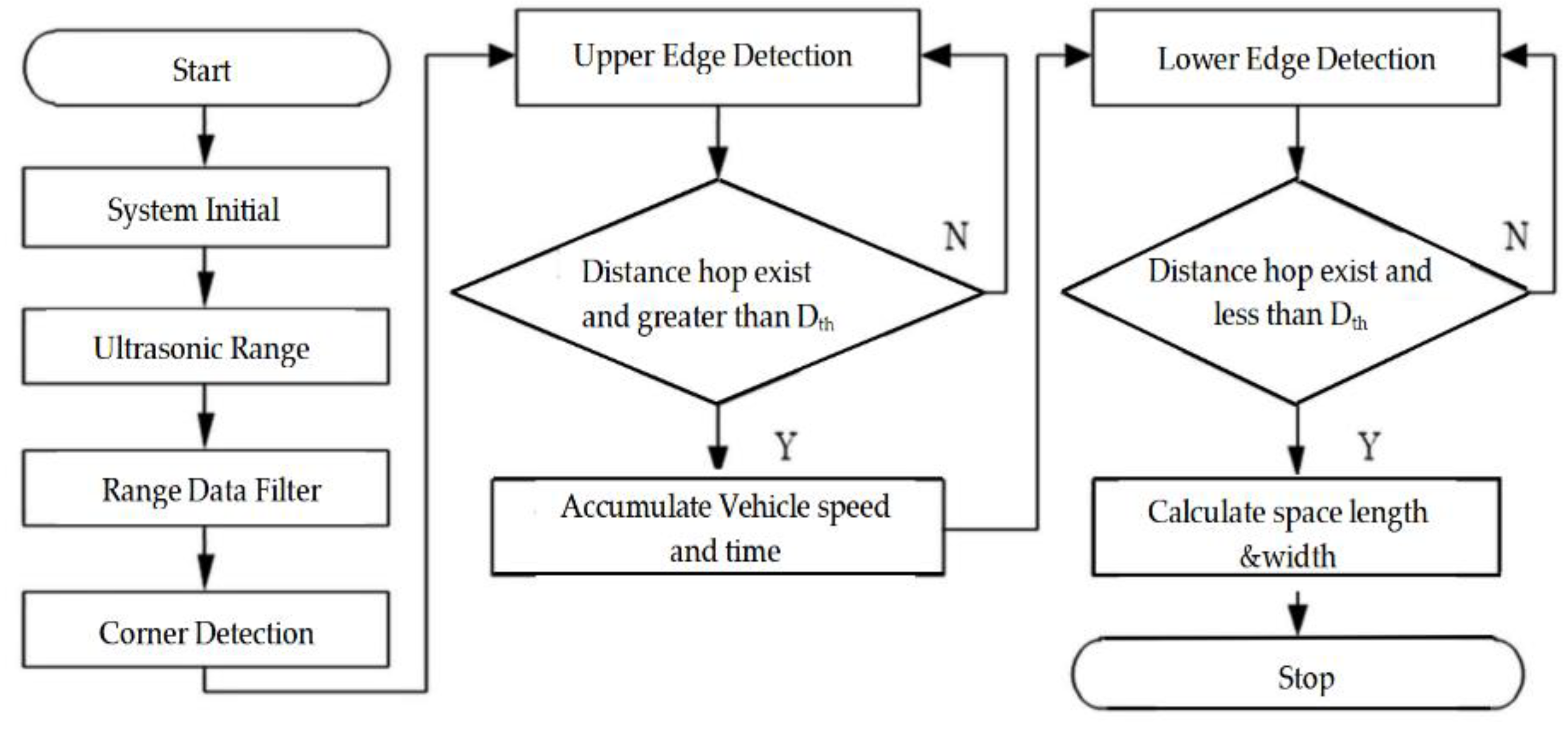

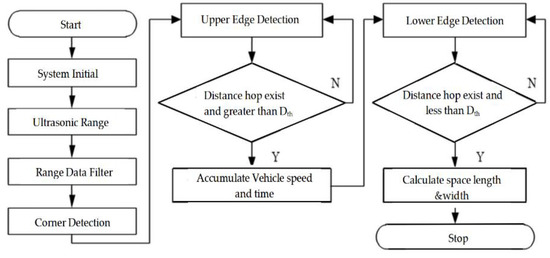

Parking spot detection is central to parking space recognition. We design a parking spot detection algorithm consisting of ultrasonic range determination, edge detection, and spot length calculation, as shown in Figure 2. A polling-driven mechanism is used to trigger the sensors: one sensor is first triggered to emit ultrasonic waves with a fixed cycle, and once it receives the echo, the system polls the next channel for the other sensor.

Figure 2.

The structure of parking spot detection algorithm.

This process is repeated cyclically. The time of flight (ToF) is captured by the time capture register of the micro control unit (MCU), and the measured distance is obtained as follows:

$$d=\frac{c\,t}{2}$$

where $c$ is the speed of sound in air and $t$ is the travel time.
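A minimal sketch of the polling scheme and the ToF conversion, assuming a speed of sound of 343 m/s (air at roughly 20 °C) and using the 70–1000 mm rated range of the installed sensor as the validity window. The callables passed to the hypothetical `poll_sensors` helper stand in for firing a transducer and reading the MCU capture register; they are placeholders, not a real driver API.

```python
from typing import Callable, Iterator, Optional, Sequence, Tuple

SPEED_OF_SOUND_M_S = 343.0             # assumed: dry air at roughly 20 °C
MIN_RANGE_M, MAX_RANGE_M = 0.07, 1.0   # rated range of the installed sensor


def tof_to_distance(travel_time_s: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Convert a round-trip time of flight into a one-way distance, d = c * t / 2."""
    return c * travel_time_s / 2.0


def poll_sensors(
    channels: Sequence[Callable[[], Optional[float]]], n_cycles: int
) -> Iterator[Tuple[int, Optional[float]]]:
    """Fire one channel per cycle in round-robin order (hypothetical helper).

    Each callable stands in for "trigger the transducer and read the capture
    register" and returns the echo time in seconds, or None on timeout.
    """
    for cycle in range(n_cycles):
        ch = cycle % len(channels)
        t = channels[ch]()
        if t is None:                      # no echo: report it and move to the next channel
            yield ch, None
            continue
        d = tof_to_distance(t)
        # Readings outside the rated range are treated as invalid.
        yield ch, (d if MIN_RANGE_M <= d <= MAX_RANGE_M else None)


# Usage with two fake channels: a 4 ms echo is in range, a 10 ms echo is not.
readings = list(poll_sensors([lambda: 0.004, lambda: 0.01], 4))
```

Firing only one transducer at a time is a common way to keep two ultrasonic sensors from receiving each other's echoes.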

Edge detection, which is crucial for the accuracy of parking spot detection, comprises upper edge detection and lower edge detection. For parallel parking, the distance hop threshold, which includes the hop thresholds of the upper and lower edges, is calculated from the ultrasonic sensor threshold and the cross range between the vehicle and the parked vehicles adjacent to the target parking spot. The threshold is determined by the width of the vehicle and the maximum range of the ultrasonic sensor.

The edge thresholds are expressed as follows:

where and .

The spot length is calculated from the driving speed and time:

$$L=\sum_{k=1}^{N}V(k)\,T$$

where $N$ is the cumulative number of vehicle displacements; $V(k)$ is the driving speed in cycle $k$; and $T$ is the cycle time.
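The edge detection and spot length steps can be sketched as follows. The hop thresholds `hop_up` and `hop_down` are hypothetical inputs here; in the paper they are derived from the vehicle width, the cross range, and the sensor's maximum range, and that derivation is not reproduced. The function marks the upper edge at the first rising distance hop, the lower edge at the next falling hop, and sums V(k)·T over the cycles in between.

```python
from typing import Optional, Sequence


def spot_length(
    distances: Sequence[float],
    speeds: Sequence[float],
    cycle_time: float,
    hop_up: float,
    hop_down: float,
) -> Optional[float]:
    """Length of a free spot from lateral range readings (hypothetical helper).

    distances[k] and speeds[k] are the filtered range [m] and vehicle speed
    [m/s] in cycle k. The upper edge is the first rise larger than hop_up;
    the lower edge is the next drop larger than hop_down. Returns None if
    no complete spot is seen.
    """
    start = None
    for k in range(1, len(distances)):
        jump = distances[k] - distances[k - 1]
        if start is None and jump > hop_up:
            start = k                          # upper edge detected
        elif start is not None and -jump > hop_down:
            # Lower edge detected: accumulate V(k) * T over the spot.
            return sum(v * cycle_time for v in speeds[start:k])
    return None


# Usage: parked cars read at 0.5 m, free space read as 1.0 m for 10 cycles.
dist = [0.5] * 5 + [1.0] * 10 + [0.5] * 5
length = spot_length(dist, [1.0] * len(dist), cycle_time=0.1, hop_up=0.3, hop_down=0.3)
```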

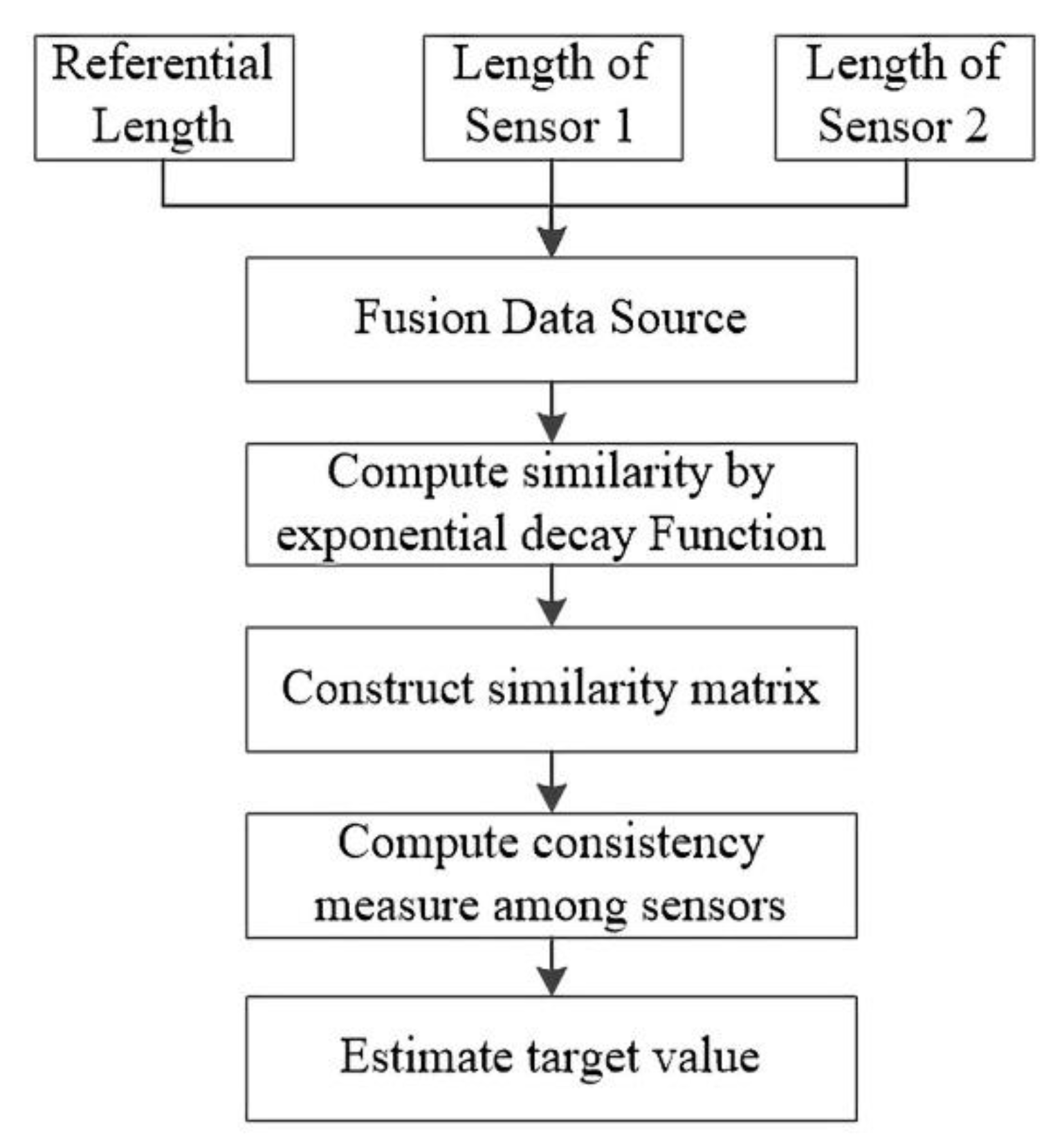

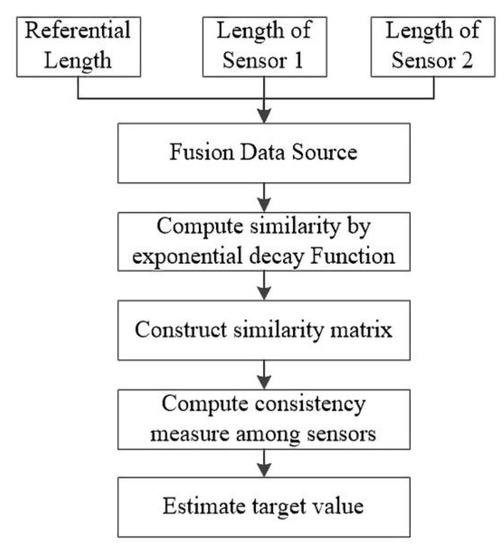

In multi-sensor information ensemble, a similarity-model-based fusion method is proposed. Compared with other methods such as Dempster–Shafer (D-S) theory [31], Bayesian theory [32], the Kalman filter [33], and optimal statistical decision [34], the proposed method is suitable for situations where prior knowledge is not available. In our method, multiple sensors measure the same object, and their measurements are independent of each other. The ensemble process is divided into several phases, as shown in Figure 3. The data sources for the ensemble are the referential spot length and the values measured by the two sensors. Because sensor measurements are affected by the environment, Formula (5) is used to compensate the ultrasonic measurements: the referential spot length is calculated as the difference between the average of the two sensor measurements and the corrected error, which improves the accuracy of the data ensemble.

$$L_{\mathrm{ref}}=\frac{L_1+L_2}{2}-\Delta L \tag{5}$$

where $L_1$ and $L_2$ are the lengths measured by sensors 1 and 2, respectively, and $\Delta L$ is the corrected error computed using the multiple linear regression model.

Figure 3.

Flowchart on multi-sensor information ensemble.

To quantify the similarity among the sensors at a particular moment, an exponential decay function (EDF) [18] is employed to calculate the similarity and construct the similarity matrix. The conventional EDF is expressed as follows:

$$s_{ij}(k)=e^{-\alpha\left|x_i(k)-x_j(k)\right|}$$

where $\alpha$ is a hyper-parameter; $s_{ij}(k)$ is the similarity between the observed values of the $i$th and $j$th sensors; $e$ is the base of the natural logarithm; and $x_i(k)$ denotes the value measured by the $i$th sensor at time $k$. If $x_i(k)$ and $x_j(k)$ differ considerably, the similarity between the $i$th and $j$th sensors is weak. In real-world applications, parking spot detection must run in real time on an embedded system. To this end, we define a new EDF that avoids setting the empirical hyper-parameter manually and simplifies the calculation.

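where $n$ is the number of fused data sources; in our study, $n = 3$: the referential length, the data of sensor 1, and the data of sensor 2. The similarity matrix at moment $k$, whose entry $s_{ij}(k)$ is the similarity between the $i$th and $j$th sources, can be expressed as follows:

$$\mathbf{S}(k)=\begin{bmatrix} s_{11}(k) & s_{12}(k) & \cdots & s_{1n}(k)\\ s_{21}(k) & s_{22}(k) & \cdots & s_{2n}(k)\\ \vdots & \vdots & \ddots & \vdots\\ s_{n1}(k) & s_{n2}(k) & \cdots & s_{nn}(k) \end{bmatrix} \tag{8}$$

The matrix collects the pairwise similarities among the sources, and each row indicates the degree of support that one source receives from all sources. The row sum $D_i(k)=\sum_{j=1}^{n}s_{ij}(k)$ indicates the consistency between the $i$th source and the others: the higher the value, the more consistent the source. Therefore, the consistency measurement is defined as the assessment criterion:

where $r_i(k)$ is the proximity degree between the $i$th sensor and all the sensors (including the $i$th sensor itself) at moment $k$, computed from the row sum $D_i(k)$ of the matrix in Equation (8). Target estimation considers only the consistency measurement at the current observation time. The target fusion value at time $k$ is expressed as follows:

$$\hat{x}(k)=\sum_{i=1}^{n}\frac{r_i(k)}{R(k)}\,x_i(k),\qquad R(k)=\sum_{i=1}^{n}r_i(k)$$

where $x_i(k)$ is the value of the $i$th source at time $k$, and $R(k)$ is the sum of the proximity degrees of all the sensors at time $k$.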
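The fusion steps can be sketched in Python. This sketch assumes the conventional EDF with a hand-chosen `alpha` (the authors' parameter-free EDF is not reproduced here) and assumes that each source's proximity degree equals its row sum in the similarity matrix; both are simplifications. The hypothetical `referential_length` helper follows the description of Formula (5): the mean of the two sensor lengths minus the corrected error.

```python
import numpy as np


def referential_length(l1: float, l2: float, corrected_error: float) -> float:
    """Mean of the two sensor lengths minus the regression-corrected error."""
    return (l1 + l2) / 2.0 - corrected_error


def similarity_fusion(values, alpha: float = 50.0) -> float:
    """Consistency-weighted fusion of n readings of the same quantity.

    Assumptions: conventional EDF s_ij = exp(-alpha * |x_i - x_j|), and the
    proximity degree r_i is taken as the row sum of the similarity matrix.
    """
    x = np.asarray(values, dtype=float)
    S = np.exp(-alpha * np.abs(x[:, None] - x[None, :]))  # n x n, diagonal = 1
    r = S.sum(axis=1)                                     # support for each source
    weights = r / r.sum()                                 # r_i / R
    return float(weights @ x)


# Usage with hypothetical readings [m] and a hypothetical corrected error.
l1, l2 = 6.42, 6.40
sources = [referential_length(l1, l2, corrected_error=0.04), l1, l2]
fused = similarity_fusion(sources)
```

A reading that disagrees with the other two receives a smaller row sum and therefore a smaller weight, so the fused value is pulled toward the consistent pair rather than toward the plain mean.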

3.2. Parking Space Matching

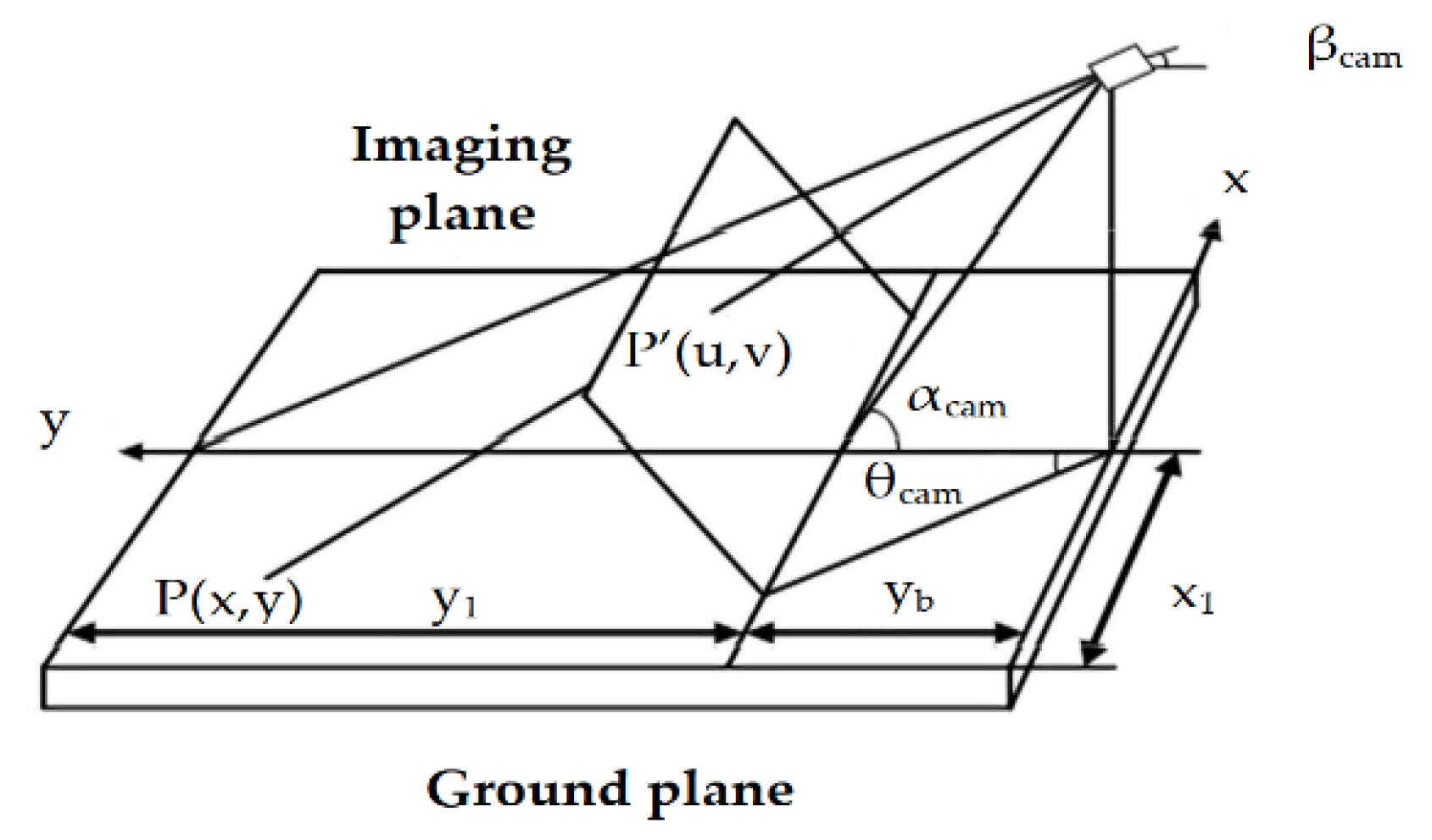

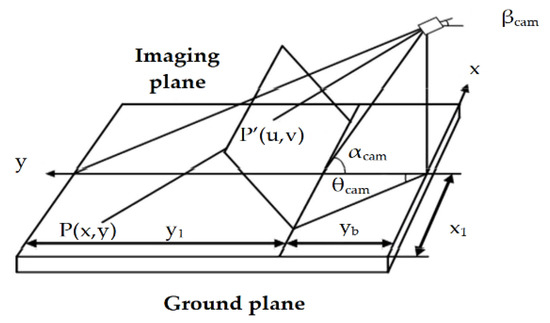

The proposed parking space matching method uses image information collected by a wide-angle camera installed at the rear of the vehicle. The purposes of parking space matching are to confirm the target parking space and to determine the target parking position and its distance from the vehicle [35,36]. The camera has a 120° viewing angle, a resolution of 640 × 480, and an MT9V136 chip. Instead of an expensive positioning system such as GPS or inertial navigation, we propose a convenient and accurate image ranging method. A common monocular camera solves the object localization problem with the geometric imaging model of the camera, shown in Figure 4, which relates the coordinates of an object in the world coordinate system to its coordinates in the image coordinate system, given the width and height of the image. From the camera imaging geometry, we obtain

where the result is the distance between the target point and the camera, and $h_{cam}$ is the height of the camera above the ground. This method works well for distances of 0.5–3.0 m. However, parking requires a positioning range of at least 0.5–9.0 m. In this study, image coordinates and their mapped points in world coordinates are measured, and their relationships are derived from the measured data. The vertical image coordinate is inversely proportional to the corresponding longitudinal distance in the world coordinate system, whereas the horizontal image coordinate has a proportional relationship with the lateral distance. The conversion between image coordinates and world coordinates is given by Equation (12)

where the scale term represents the maximum horizontal distance in world coordinates that corresponds to a given image row. The captured images are 640 × 480 pixels, and horizontal positions are measured relative to the central axis of the image. The location data of the camera are listed in Table 1.
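A sketch of how such an image-to-ground mapping could be calibrated from measured point pairs. It assumes the inverse-proportional model takes the form distance = a / (v − v_h) + b with a known horizon row v_h, and that the lateral distance scales linearly with the pixel offset from the center column; these functional forms and all helper names are illustrative assumptions, not the authors' Equation (12).

```python
import numpy as np


def fit_inverse_row_model(v_rows, distances, v_h):
    """Least-squares fit of distance = a / (v - v_h) + b for a known horizon row v_h.

    Substituting z = 1 / (v - v_h) makes the model linear in z.
    """
    z = 1.0 / (np.asarray(v_rows, dtype=float) - v_h)
    a, b = np.polyfit(z, np.asarray(distances, dtype=float), 1)
    return a, b


def longitudinal_distance(v, a, b, v_h):
    """Longitudinal ground distance for image row v (rows below the horizon)."""
    return a / (v - v_h) + b


def lateral_distance(u, u0, half_width_px, x_max_at_row):
    """Lateral distance proportional to the pixel offset from the center column."""
    return (u - u0) / half_width_px * x_max_at_row


# Usage with synthetic calibration pairs for a 640 x 480 image.
rows = np.array([250, 300, 350, 400, 479])
measured = 300.0 / (rows - 200.0) + 0.1      # made-up "ground truth" [m]
a, b = fit_inverse_row_model(rows, measured, v_h=200.0)
```

Linearizing through z keeps the fit to a single `np.polyfit` call; if the horizon row were also unknown, a nonlinear least-squares fit would be needed instead.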

Figure 4.

Schematic diagram of a parking spot.

Table 1.

Location data of the camera.

In this manner, a virtual space with the same size as a parking space and with positioning capability can be established in the image, and the virtual and real spaces can be matched by translation and rotation. Figure 5 shows the matching results.
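Matching by translation and rotation amounts to a 2D rigid alignment. One standard way to estimate it from corresponding corners of the virtual and detected parking spaces is the SVD-based Kabsch (orthogonal Procrustes) solution sketched below. The text does not state which estimator is used, so this is an assumed choice, and the corner correspondences are assumed known.

```python
import numpy as np


def rigid_match_2d(src, dst):
    """Rotation R and translation t minimizing sum ||R @ p_i + t - q_i||^2 (Kabsch).

    src, dst: (n, 2) arrays of corresponding points, n >= 2.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)                 # 2 x 2 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))        # guard against a reflection
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = cd - R @ cs
    return R, t


# Usage: a 2.4 m x 6.0 m virtual space vs. hypothetical detected corners.
virtual = np.array([[0.0, 0.0], [2.4, 0.0], [2.4, 6.0], [0.0, 6.0]])
ang = np.radians(10.0)
R_true = np.array([[np.cos(ang), -np.sin(ang)], [np.sin(ang), np.cos(ang)]])
detected = virtual @ R_true.T + np.array([1.5, 3.0])
R, t = rigid_match_2d(virtual, detected)
yaw_deg = np.degrees(np.arctan2(R[1, 0], R[0, 0]))
```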

Figure 5.

Matching of vertical and parallel parking spaces: (a) Matching on vertical parking space, (b) Matching on parallel parking space.

3.3. Self-Adaptive Trajectory Generation for Vehicle Nonparallel/Parallel Initial State

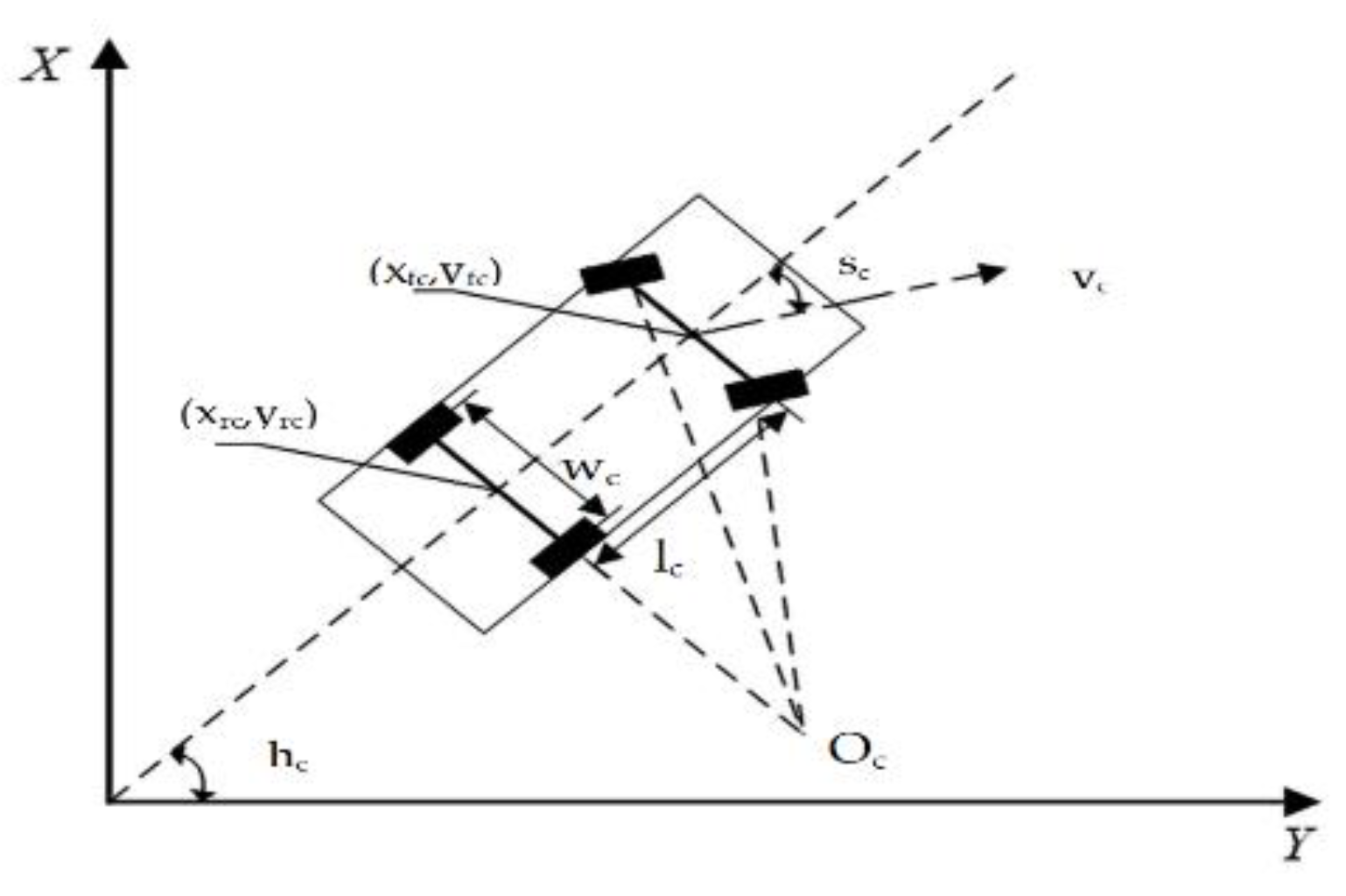

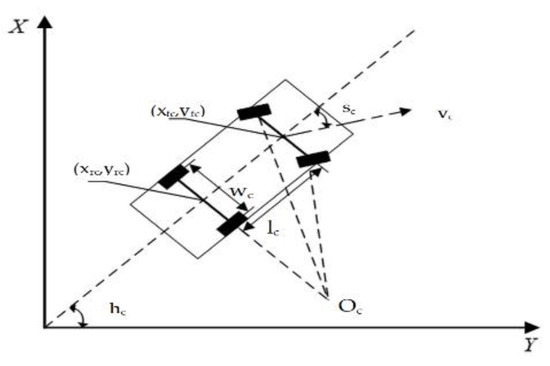

To develop an automatic parking system, the direction of motion and the trajectory of the vehicle must be known [37]. To this end, a vehicle kinematics model is established. Because the automatic parking system operates at low speed, the model neglects sliding and lateral slip. The proposed vehicle kinematics model is shown in Figure 6.

Figure 6.

Kinematics model of vehicle.

The kinematic equations of the vehicle are expressed as:

$$\dot{x}=v\cos\theta,\qquad \dot{y}=v\sin\theta,\qquad \dot{\theta}=\frac{v\tan\varphi}{L}$$

where $\theta$ is the angle between the horizontal axis and the longitudinal axis of the vehicle; $\varphi$ is the angle between the front wheel and the longitudinal axis of the vehicle; $x$ and $y$ are the abscissa and ordinate of the rear axle center, respectively; and $v$ and $L$ are the moving speed and wheelbase of the vehicle, respectively.
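The kinematic model can be stepped numerically. The sketch below uses forward-Euler integration (an assumed choice of integrator) and a hypothetical 2.7 m wheelbase, and it checks a property that follows from the model: with a constant steering angle, the rear axle center moves on a circle of radius L / tan φ, which is why parking trajectories can be assembled from circular arcs.

```python
import math


def kinematic_step(x, y, theta, v, phi, wheelbase, dt):
    """One forward-Euler step of the rear-axle kinematic model."""
    return (
        x + v * math.cos(theta) * dt,
        y + v * math.sin(theta) * dt,
        theta + v * math.tan(phi) / wheelbase * dt,
    )


def turning_radius(wheelbase, phi):
    """Radius of the circle traced by the rear axle center at constant phi != 0."""
    return wheelbase / math.tan(phi)


# Usage: 2.7 m wheelbase, 0.5 rad steering, 1 m/s for 1 s.
L, phi, dt = 2.7, 0.5, 1e-3
x, y, theta = 0.0, 0.0, 0.0
for _ in range(1000):
    x, y, theta = kinematic_step(x, y, theta, 1.0, phi, L, dt)
R = turning_radius(L, phi)
# Starting at the origin heading along +x and turning left, the center is (0, R).
radial_error = abs(math.hypot(x, y - R) - R)
```

A negative `v` models reversing, which traces the same circle in the opposite direction.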

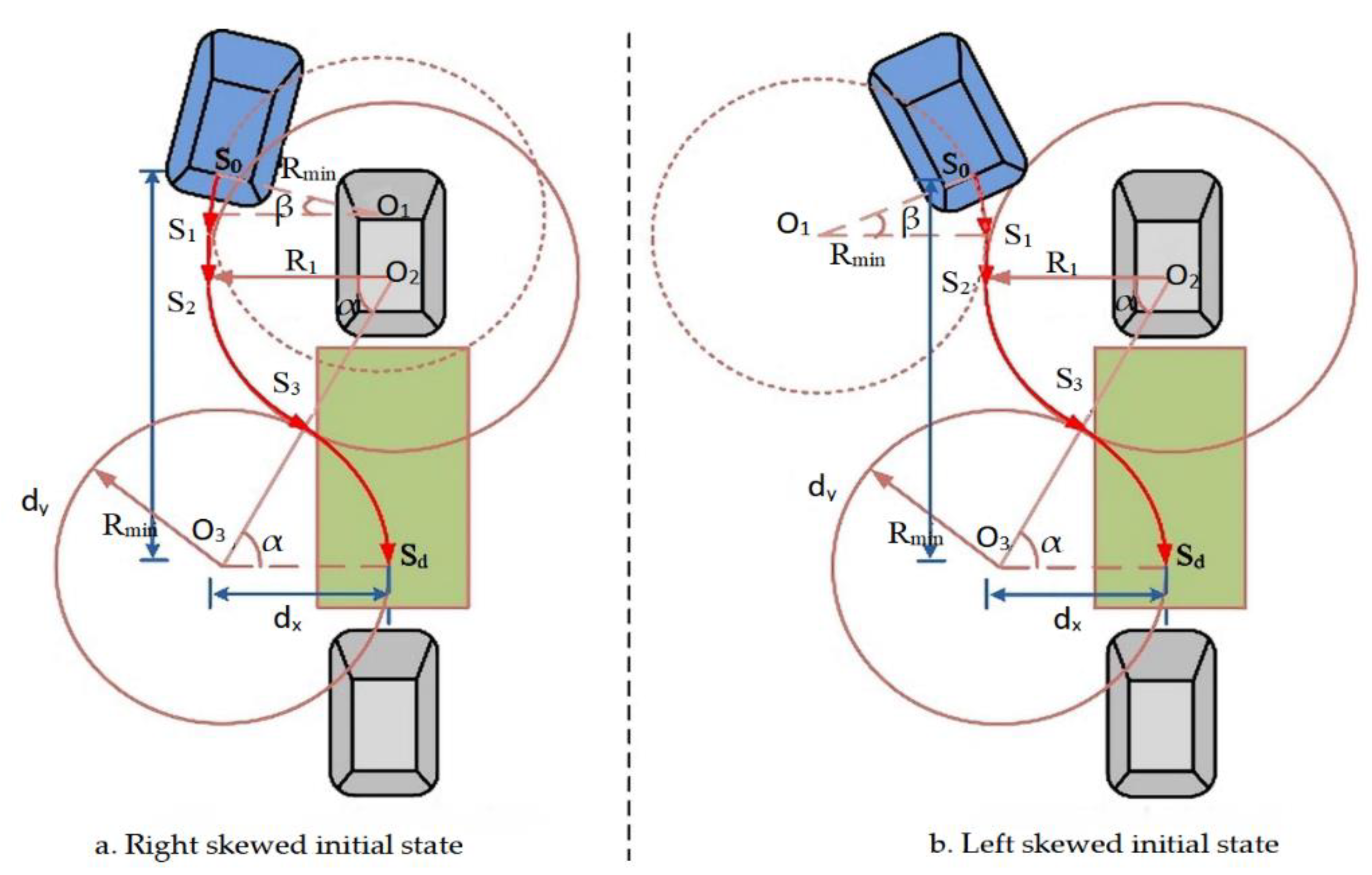

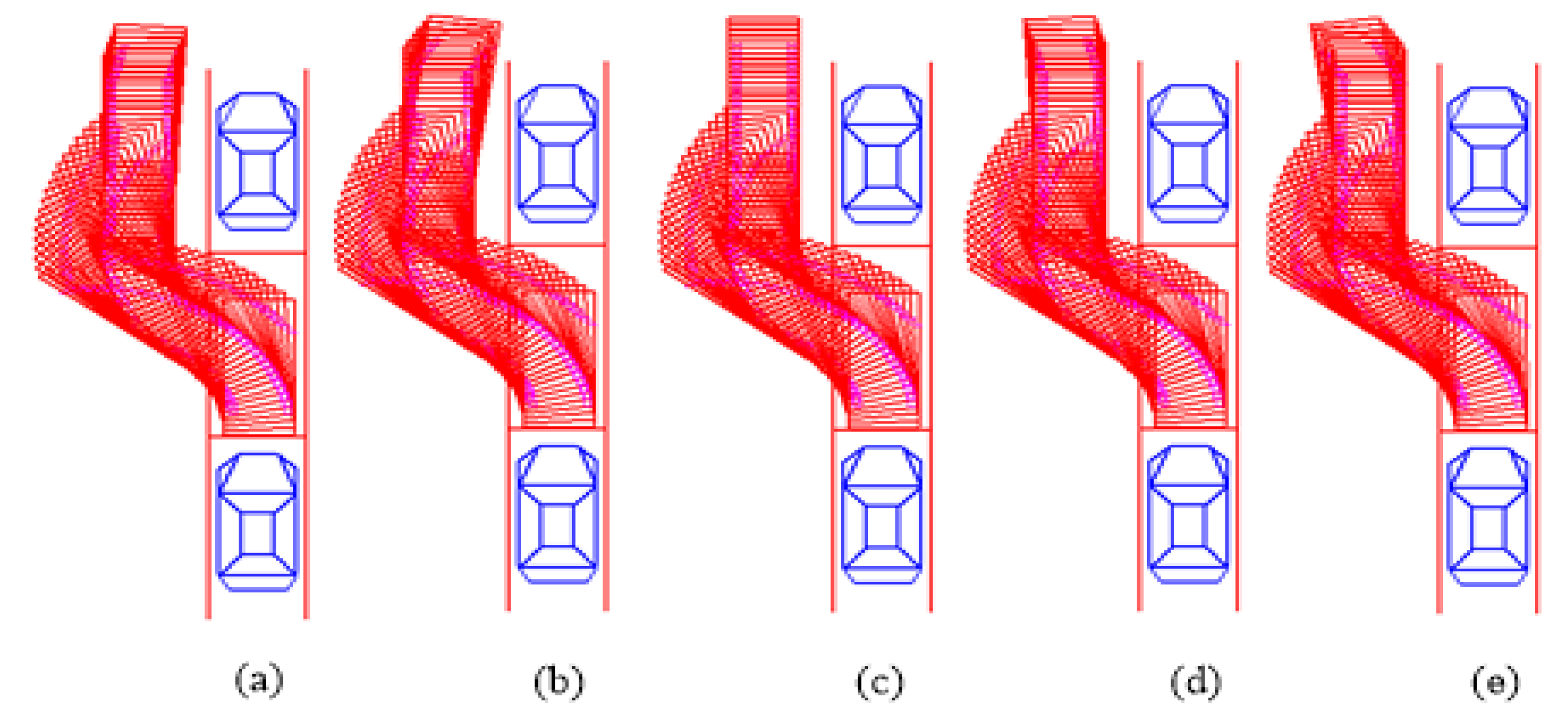

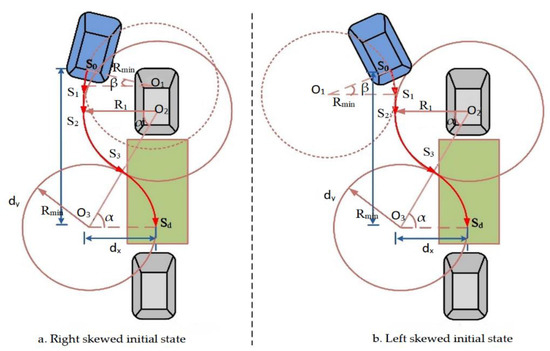

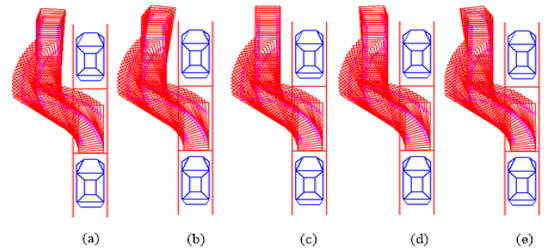

From the kinematics model, the trajectory can be composed of several tangent arc sections. Typically, the trajectory is generated by the minimum radius method, in which it consists of two arcs with the vehicle's minimum turning radius. However, this method requires the vehicle body to be parallel to the parking space in the initial state. To overcome this limitation, we propose a new trajectory generation method that handles both parallel and nonparallel initial states. As shown in Figure 7, the rear axle center coordinates represent the entire vehicle trajectory, and each position of the vehicle can be calculated from the vehicle geometry and the current steering angle. The parking trajectory runs from the initial position of the vehicle to the target position and consists of several arcs and a straight segment. Because the generated radii differ from the minimum radius, the arcs have unequal radii.

Figure 7.

Schematic diagram of nonparallel initial state parking reference trajectory.

In actual parking, the initial state of a vehicle is usually nonparallel to the parking space; it is skewed either to the right or to the left, as shown in Figure 7. We consider these two conditions separately.

In the right-skewed condition (Figure 7a), the car first turns left about the first circle center with the first radius. When the rear axle center reaches the first tangent point, the car moves straight to the next point. It then turns right about the second circle center with the second radius and holds this steering until it reaches the third point. Finally, the car turns left about the third circle center with the third radius until it reaches the target point. From the geometry of the parking maneuver, the relationship between the circles can be expressed as follows:

where and are the horizontal and vertical distances of the parking space obtained from the ultrasonic sensors, respectively; is the initial attitude angle of the vehicle.

Then, and can be calculated as follows:

The trajectory for the right skewed initial state can be generated as follows:

The left-skewed condition (Figure 7b) is similar to the right-skewed condition. The corresponding relationship between the circles for the left-skewed condition can be expressed as follows:

The trajectory for the left skewed initial state can be generated as follows:

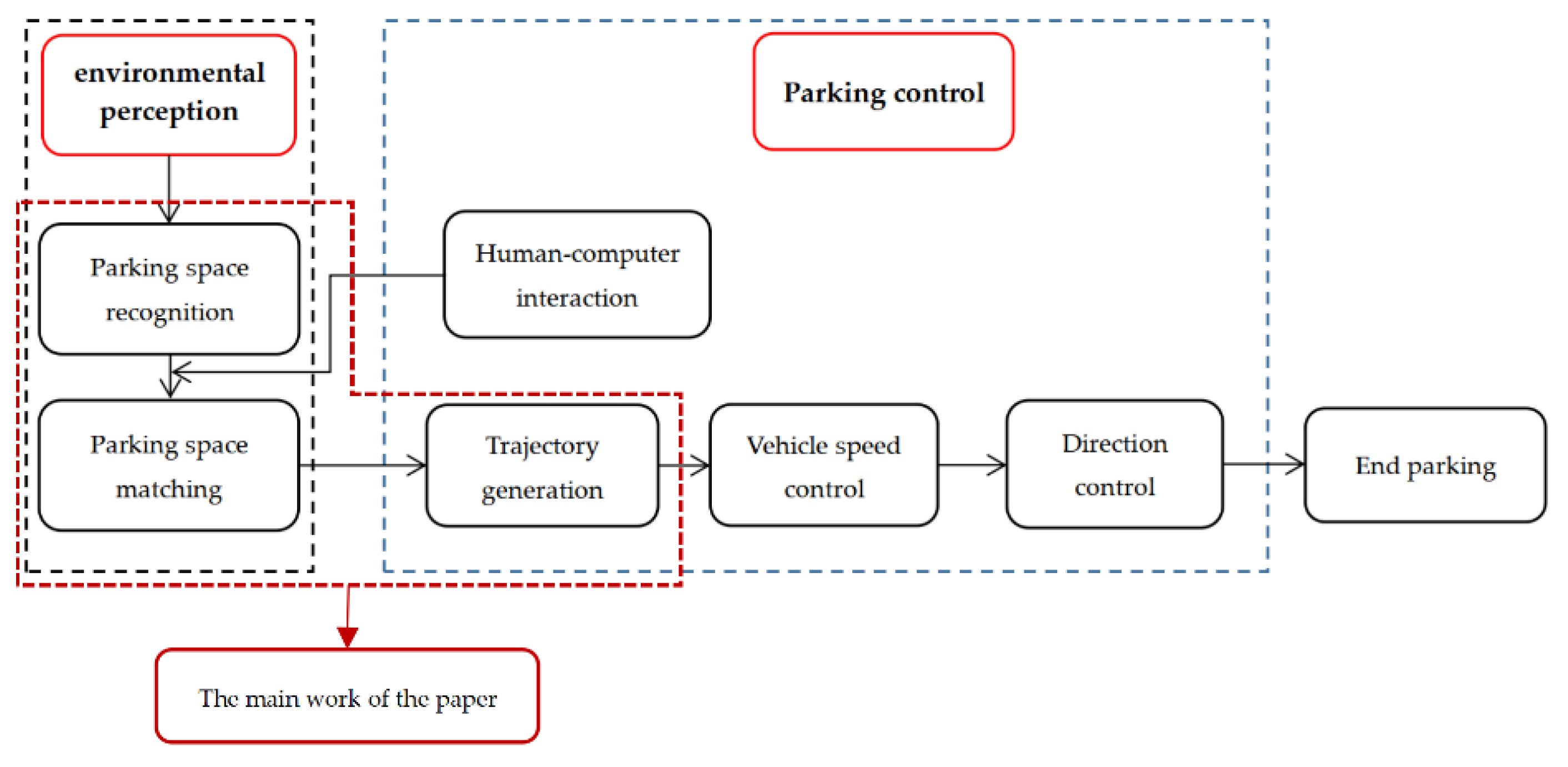
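To check a candidate arc–line–arc–arc maneuver like the ones described for Figure 7, the segments can be chained pose by pose. The hypothetical helper below integrates each circular arc or straight segment exactly for a given signed curvature and signed arc length (negative for reversing). It does not solve for the radii; those come from the geometric relations of the chosen skew case, and the segment values in the example are made up for illustration.

```python
import math
from typing import Iterable, Tuple

Pose = Tuple[float, float, float]  # (x, y, heading) of the rear axle center


def advance(pose: Pose, curvature: float, s: float) -> Pose:
    """Exact motion along a circular arc (curvature != 0) or a line (curvature == 0).

    s is the signed arc length; negative s means the vehicle is reversing.
    Positive curvature turns left when driving forward.
    """
    x, y, th = pose
    if abs(curvature) < 1e-12:
        return x + s * math.cos(th), y + s * math.sin(th), th
    th1 = th + curvature * s
    return (
        x + (math.sin(th1) - math.sin(th)) / curvature,
        y - (math.cos(th1) - math.cos(th)) / curvature,
        th1,
    )


def chain(pose: Pose, segments: Iterable[Tuple[float, float]]) -> Pose:
    """Apply (curvature, arc_length) segments in order and return the final pose."""
    for curvature, s in segments:
        pose = advance(pose, curvature, s)
    return pose


# Usage: start skewed by 10 degrees; the last arc length is chosen so that the
# total heading change cancels the skew and the car ends parallel to the space.
theta0 = math.radians(10.0)
k = 1 / 5.0
s4 = -(theta0 + k * -1.0 + (-k) * -4.0) / k
final = chain((0.0, 0.0, theta0), [(k, -1.0), (0.0, -1.5), (-k, -4.0), (k, s4)])
```

Because each segment is integrated in closed form, the end pose carries no discretization error, which makes the helper convenient for checking clearances at segment joints.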

3.4. The Workflow of the Automatic Parking System

The previous sections introduced the individual components of the automatic parking system; the complete system requires them to work together. First, the ultrasonic sensors and cameras obtain the parking space coordinates, the parking space size, and the obstacle locations; the system then locks the matched parking space and generates a suitable parking trajectory. During parking, the vehicle control system generates steering angle and vehicle speed control signals using a fuzzy control algorithm [38,39], transmits them to the electric power assist system and the vehicle speed control system, and ends the parking process when the vehicle reaches the end of the target trajectory. For convenience, the driver can view the parking space image information and select the desired parking space on the human–computer interaction interface. The workflow of the entire automatic parking system is shown in Figure 8.
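To illustrate the kind of fuzzy controller cited in [38,39], the sketch below implements a zero-order Sugeno inference with three rules on a single input, the lateral tracking error. The input, membership functions, rule outputs, and sign convention (positive error meaning the vehicle is left of the reference path, positive steering meaning a left turn) are hypothetical; the authors' actual rule base is not given here.

```python
def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def fuzzy_steer(lateral_error: float, max_steer: float = 0.5, span: float = 0.5) -> float:
    """Zero-order Sugeno fuzzy inference on the lateral error [m] -> steering [rad].

    Hypothetical rules: error NEGATIVE -> +max_steer, error ZERO -> 0,
    error POSITIVE -> -max_steer.
    """
    e = lateral_error
    mu_neg = clamp(-e / span, 0.0, 1.0)          # left shoulder set
    mu_zero = max(0.0, 1.0 - abs(e) / span)      # triangle centered on zero
    mu_pos = clamp(e / span, 0.0, 1.0)           # right shoulder set
    weights = (mu_neg, mu_zero, mu_pos)
    outputs = (max_steer, 0.0, -max_steer)
    # Weighted-average defuzzification; the weights never all vanish with these sets.
    return sum(w * u for w, u in zip(weights, outputs)) / sum(weights)
```

With only three rules the controller reduces to a saturated proportional law; adding a heading-error input and a larger rule table is what makes the fuzzy control surface genuinely nonlinear.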

Figure 8.

The workflow of the automatic parking system.

4. Vehicle Testing

4.1. Simulation Model-Based Experiment

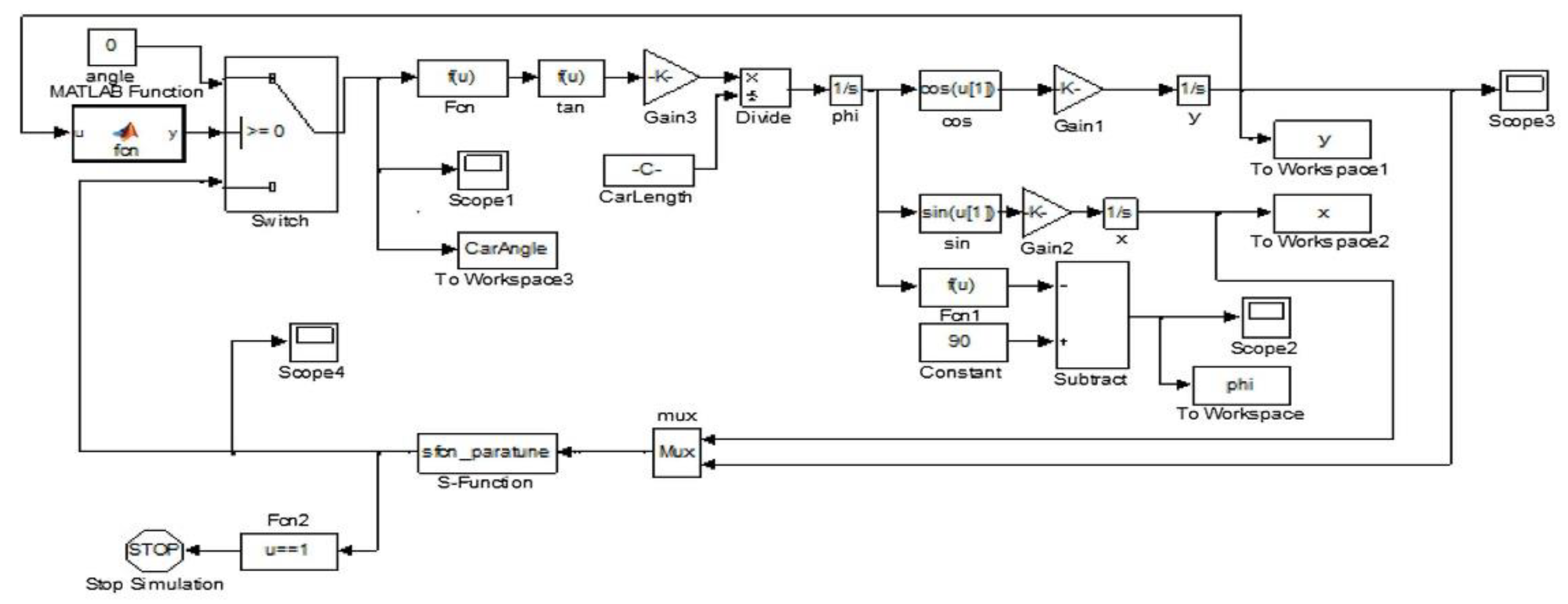

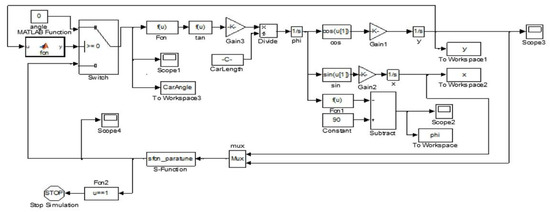

To evaluate the proposed automatic parking system, a simulation model is built in Simulink. The simulation is conducted under nonparallel initial state conditions using the actual parameters of the test vehicle, which are listed in Table 2.

Table 2.

Simulation parameters [mm].

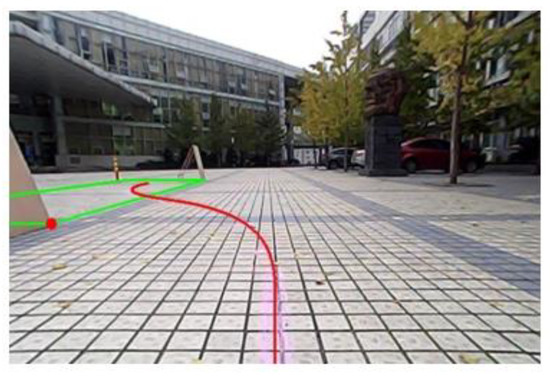

The simulation model, which is mainly used to evaluate trajectory generation, is shown in Figure 9. Figure 10a–d shows the simulation results for four different initial attitude angles of the vehicle. The results show that the proposed trajectory generation method satisfies the parking requirements with a nonparallel initial state.

Figure 9.

Simulation model of the automatic parking system in Simulink.

Figure 10.

Parking simulation results under nonparallel initial state conditions: (a–e) simulation results for different initial conditions.

4.2. Real Vehicle Experiment

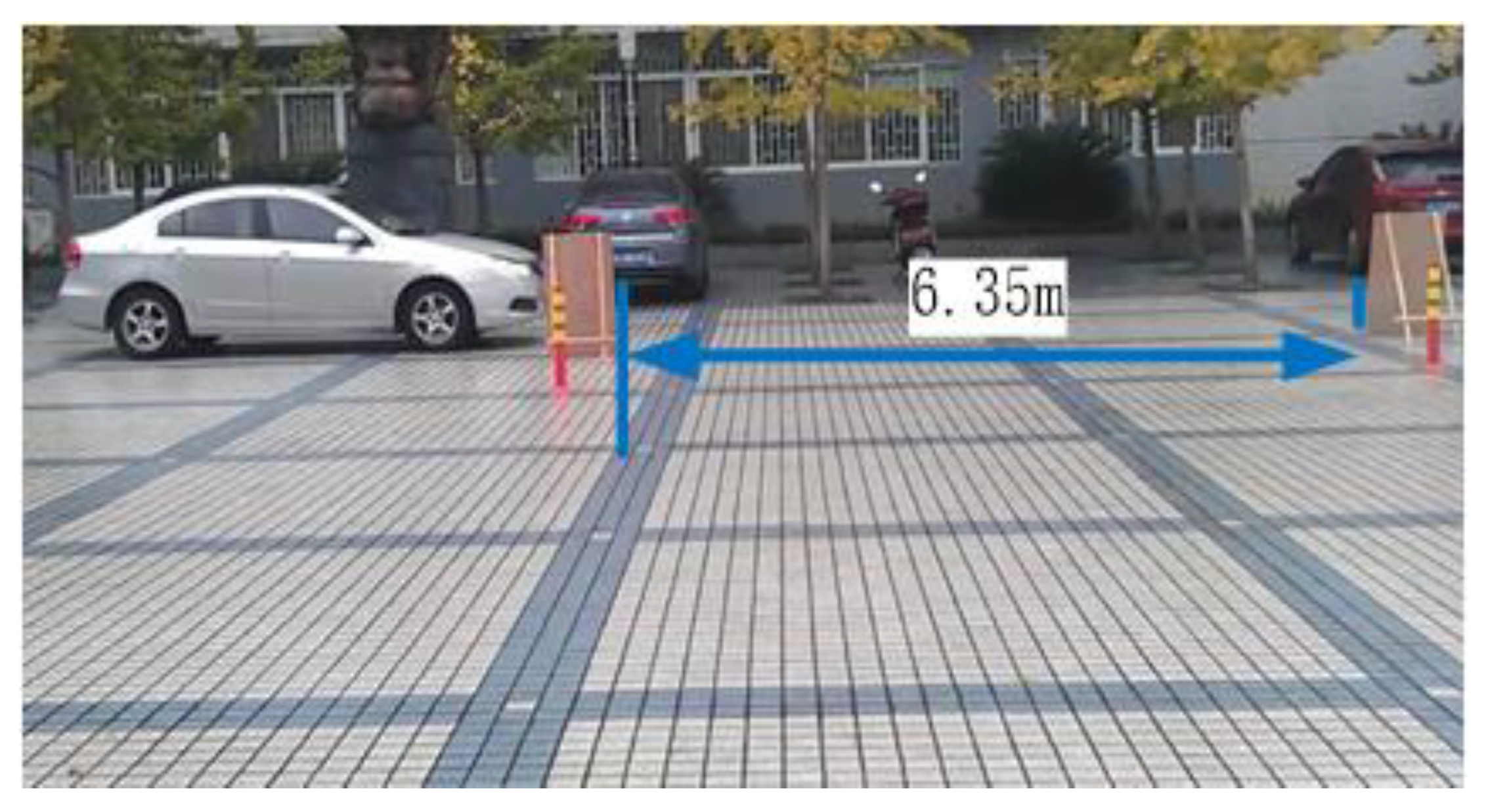

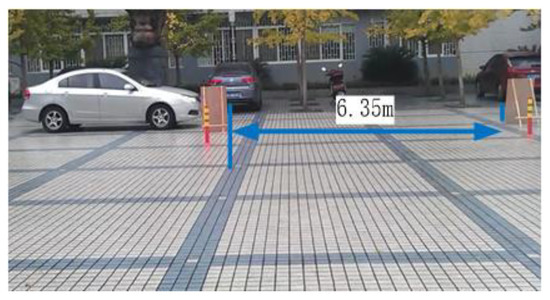

In Equation (5), the corrected error, which is used to obtain the referential target value in the data fusion step, is related to the recognition error. It is mainly affected by two factors: driving speed and cross range. To evaluate these two factors, all experiments are conducted in the virtual environment shown in Figure 11, where the ultrasonic sensors are mounted on the vehicle 70 cm above the ground. The stakes not only mark out the parking spot but also represent other parked vehicles; if the test vehicle strikes a stake, the trial is counted as a failure.

Figure 11.

Virtual parallel parking environment.

We first evaluate driving speed and cross range independently, using both a single sensor and the average of the two sensors. To exclude the influence of other factors, the sensors are kept stable throughout the experiments.

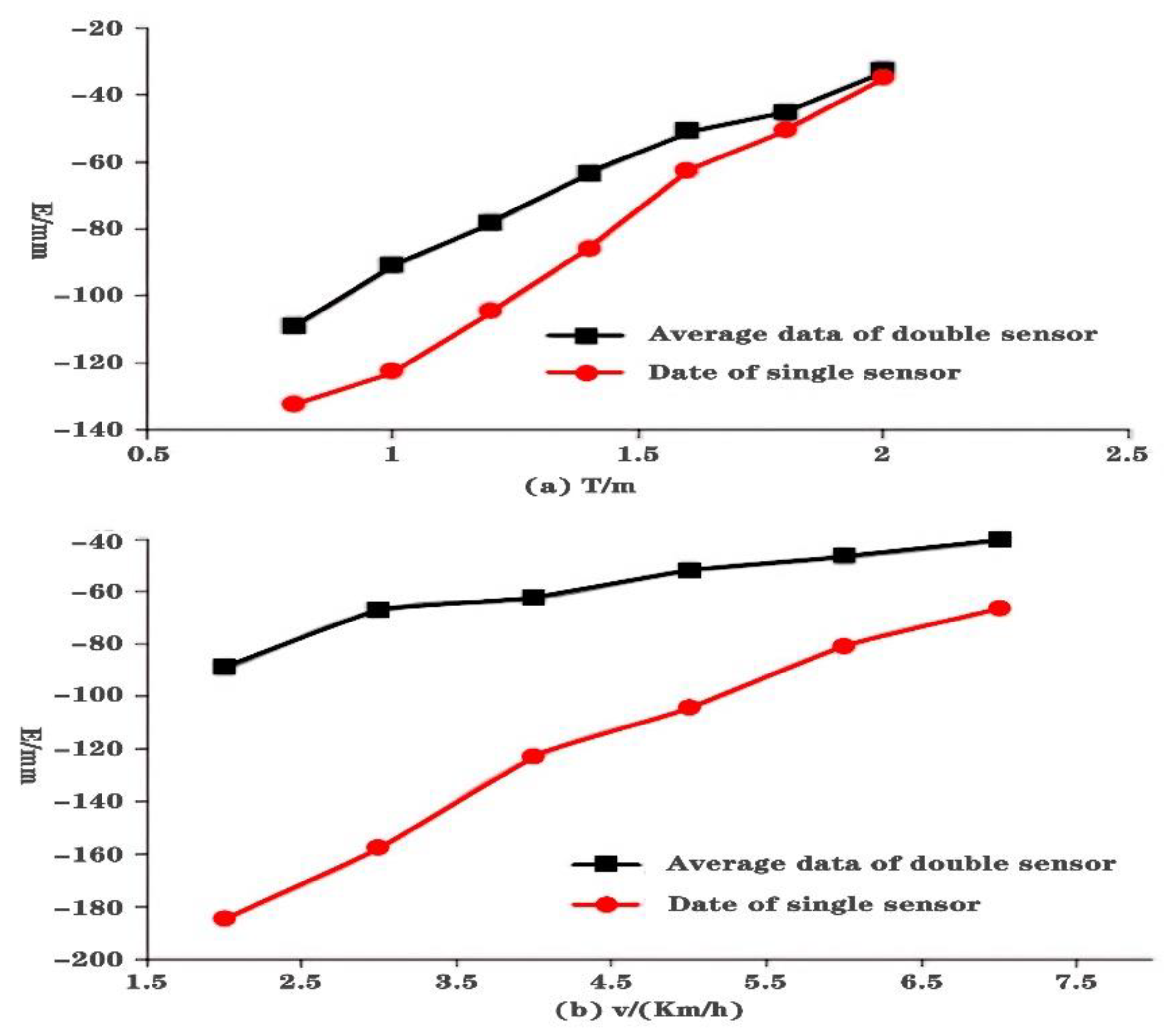

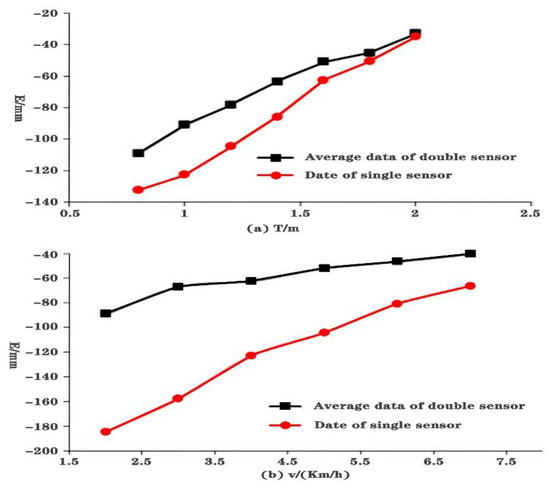

First, with the driving speed kept at 5 km/h and the target parking space length at 6.35 m, seven different cross ranges (0.8 m, 1.0 m, 1.2 m, 1.4 m, 1.6 m, 1.8 m, and 2.0 m) between the target parking space and the vehicle are tested. Each condition is repeated 10 times, and the average error over the 10 trials is taken as the final error for each distance. Figure 12a shows the results for the single sensor and for the average of the two sensors; the two curves have similar shapes.

Figure 12.

Results on single factor experiment: (a) Results on fixed driving speed, (b) Results on fixed cross range.

Then, the cross range is kept at 1.0 m and the target parking space length at 6.35 m, and six different driving speeds (2 km/h, 3 km/h, 4 km/h, 5 km/h, 6 km/h, and 7 km/h) are tested. Each speed is repeated 10 times, and the average error over the 10 trials is taken as the final error for each driving speed. Figure 12b shows the results for the single sensor and for the average of the two sensors. For both methods, the recognition error is approximately linear in driving speed.

Figure 12 shows that both driving speed and cross range have a nearly linear influence on the parking spot length error. Combining the linear influence of the two factors, we formulate a linear error-correction formula.

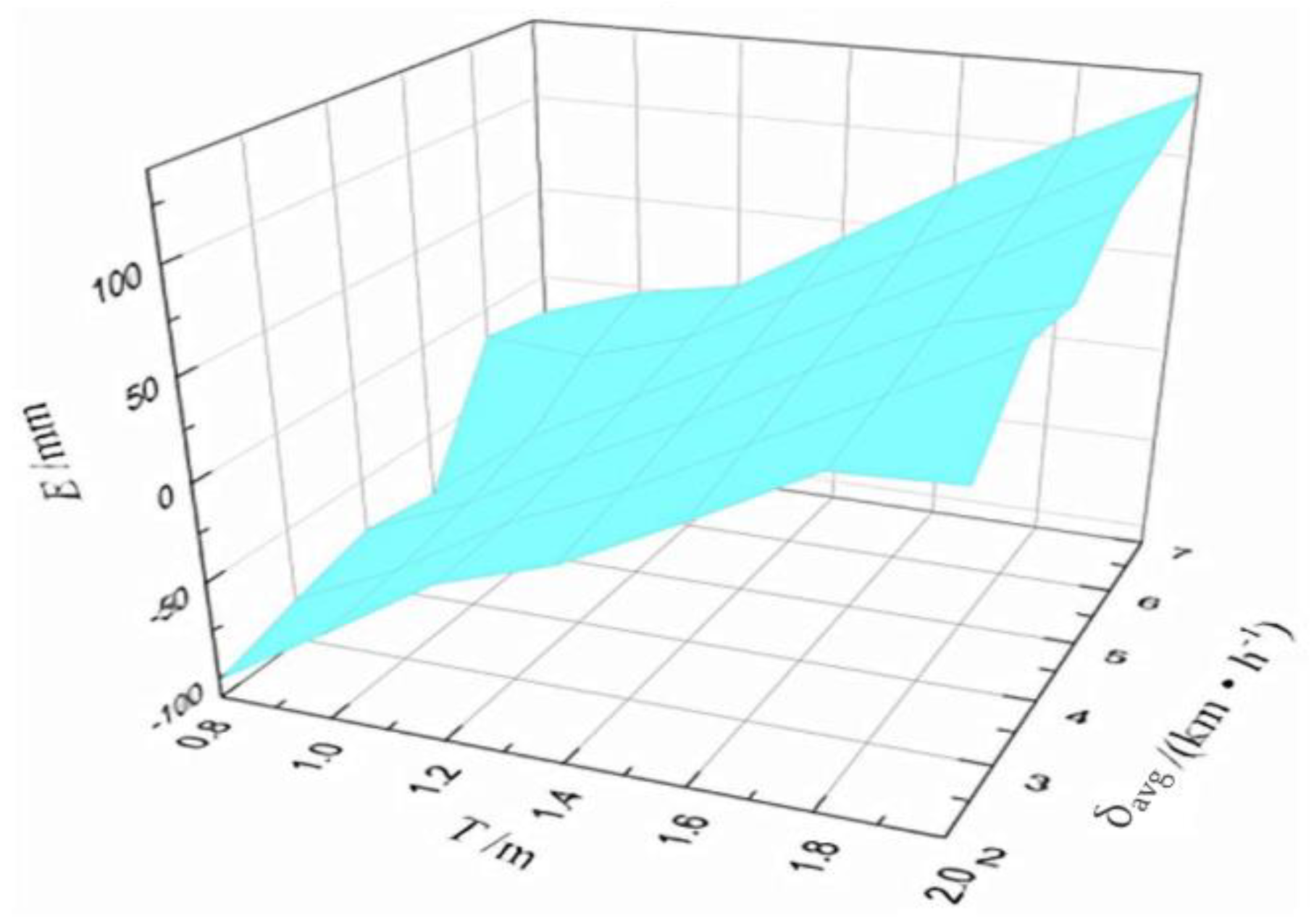

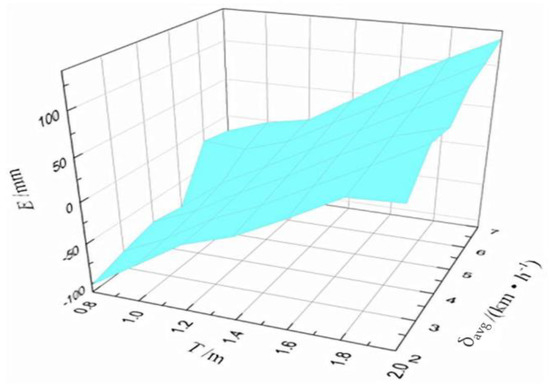

For the combined-factor experiment, we take the average error of the two sensors as the final measured value. The multilevel design includes seven cross ranges (0.8 m, 1.0 m, 1.2 m, 1.4 m, 1.6 m, 1.8 m, and 2.0 m) and six driving speeds (2 km/h, 3 km/h, 4 km/h, 5 km/h, 6 km/h, and 7 km/h), giving 42 experimental groups. Each group is repeated three times, and the average value is calculated. From these results, the 3D error surface shown in Figure 13 is obtained, in which T denotes the cross range and the other two axes denote the driving speed and the average error of the two sensors.

Figure 13.

Error curved surface of orthogonal data.

Sensor measurements are usually affected by external conditions, so each sensor requires a correction equation. Multiple regression models [40], which can account for surrounding environmental factors, have been used to establish such correction formulas. Based on the experimental results, the multiple linear regression model is given as follows:

$$\Delta L=b_0+b_1T+b_2V \tag{20}$$

where $\Delta L$ is the measured average error of the two sensors; $T$ and $V$ are the horizontal distance (cross range) and the driving speed, respectively; and $b_0$, $b_1$, and $b_2$ are the fitted regression coefficients. To keep the error correction consistent with the experiment design, $T$ is the average cross range measured at the upper and lower edges of the target space, and $V$ is the average driving speed while passing the target space.

To verify the validity of the regression formula, we apply an F-test and present the results in Table 3. At a significance level of 0.05, the F-value of Equation (20) is 311.3356, which exceeds the critical value $F_{0.05}$; hence, the regression function is significant at the 0.05 level. Moreover, the residual standard deviation $\sigma$ is 0.016294, so $2\sigma$ is 0.032588. Therefore, about 95% of the deviations of the detected errors lie within 0.032588 m of this regression function, which satisfies the experimental requirements.

Table 3.

Test of multiple linear regression model.
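The regression fit and F-test can be reproduced with ordinary least squares. The sketch below builds the 7 × 6 design grid used in the experiments, but the error data are synthetic, generated from made-up coefficients plus noise, so its outputs are not the values reported in Table 3. It returns the coefficients, the overall F statistic with (2, n − 3) degrees of freedom, and the residual standard deviation σ used for the 2σ band.

```python
import numpy as np


def fit_error_model(T, V, err):
    """OLS fit of err = b0 + b1*T + b2*V with the overall regression F-test.

    Returns (coefficients, F statistic, residual standard deviation).
    """
    T, V, err = (np.asarray(a, dtype=float) for a in (T, V, err))
    X = np.column_stack([np.ones_like(T), T, V])
    beta, *_ = np.linalg.lstsq(X, err, rcond=None)
    fitted = X @ beta
    n, p = err.size, 2                                 # observations, predictors
    sse = float(np.sum((err - fitted) ** 2))           # residual sum of squares
    ssr = float(np.sum((fitted - err.mean()) ** 2))    # regression sum of squares
    mse = sse / (n - p - 1)
    F = (ssr / p) / mse
    return beta, F, mse ** 0.5


# Synthetic data on the 7 x 6 grid (cross range [m] x speed [km/h]).
Tg, Vg = np.meshgrid(np.linspace(0.8, 2.0, 7), np.arange(2.0, 8.0))
T, V = Tg.ravel(), Vg.ravel()
rng = np.random.default_rng(0)
err = 0.01 + 0.02 * T + 0.01 * V + rng.normal(0.0, 0.005, T.size)  # made-up model
beta, F, sigma = fit_error_model(T, V, err)
band_95 = 2 * sigma   # approximate 95% band, as in the 2-sigma criterion
```

The F statistic is then compared with the tabulated critical value for (2, 39) degrees of freedom at the chosen significance level.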

5. Experimental Results and Discussion

5.1. Analysis and Comparison

We next evaluate the parking space recognition success rate in the simulated parking environment shown in Figure 11, comparing our multi-sensor information ensemble method with a single sensor and with the average of the two sensors. Here, the cross range is the average distance measured at the edges of the target space, and the driving speed is the average speed recorded while driving past the target space.

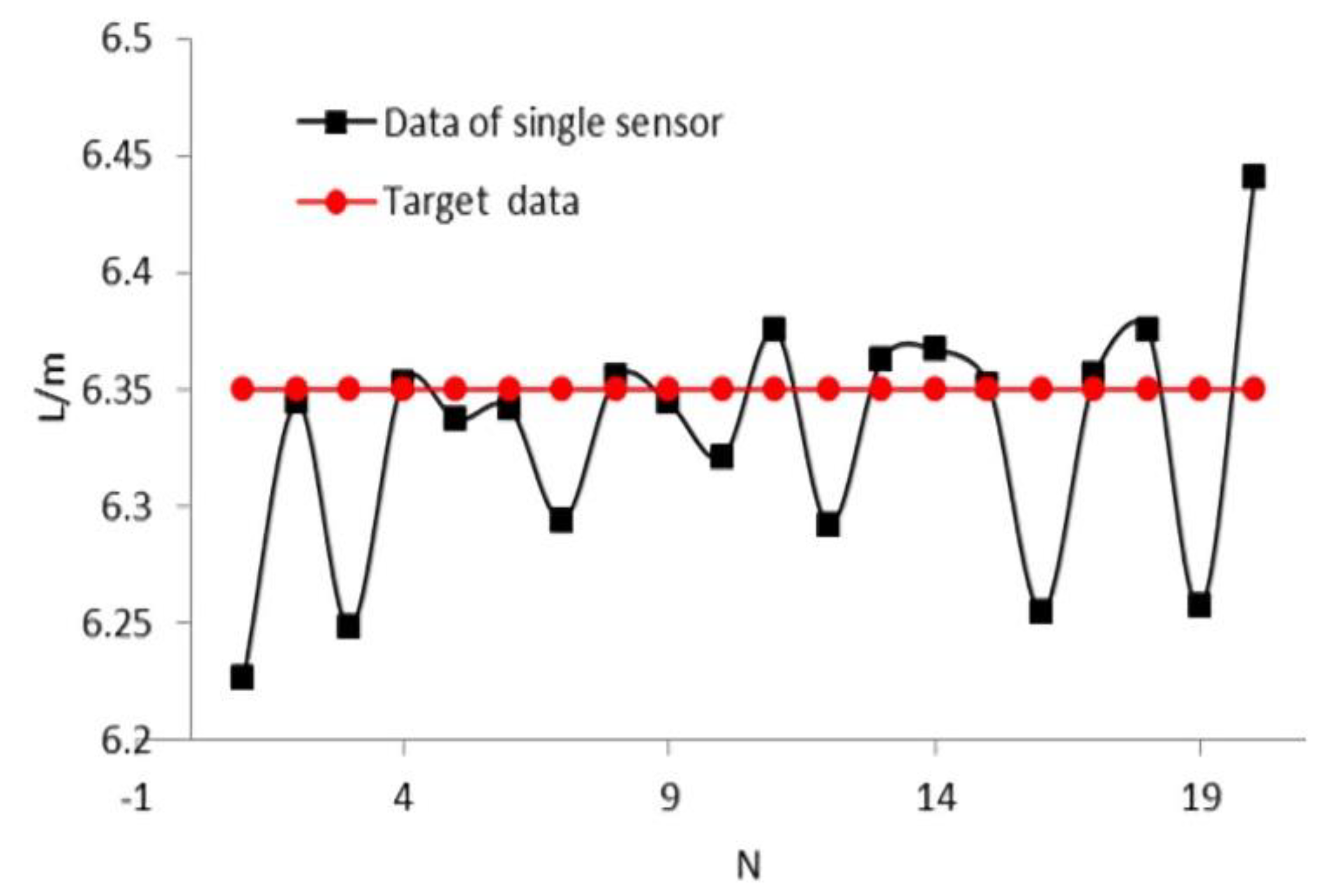

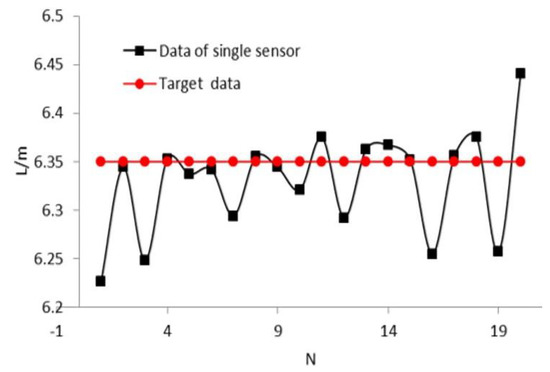

The experimental results of the single sensor application are shown in Figure 14, where the x-axis and y-axis represent the experiment number and the measured length of the target space, respectively. Nine groups of results are larger than the target value (6.35 m). The recognition success rate is approximately 45%, and the maximum recognition error is about 15 cm.

Figure 14.

Experimental results of single sensor application.

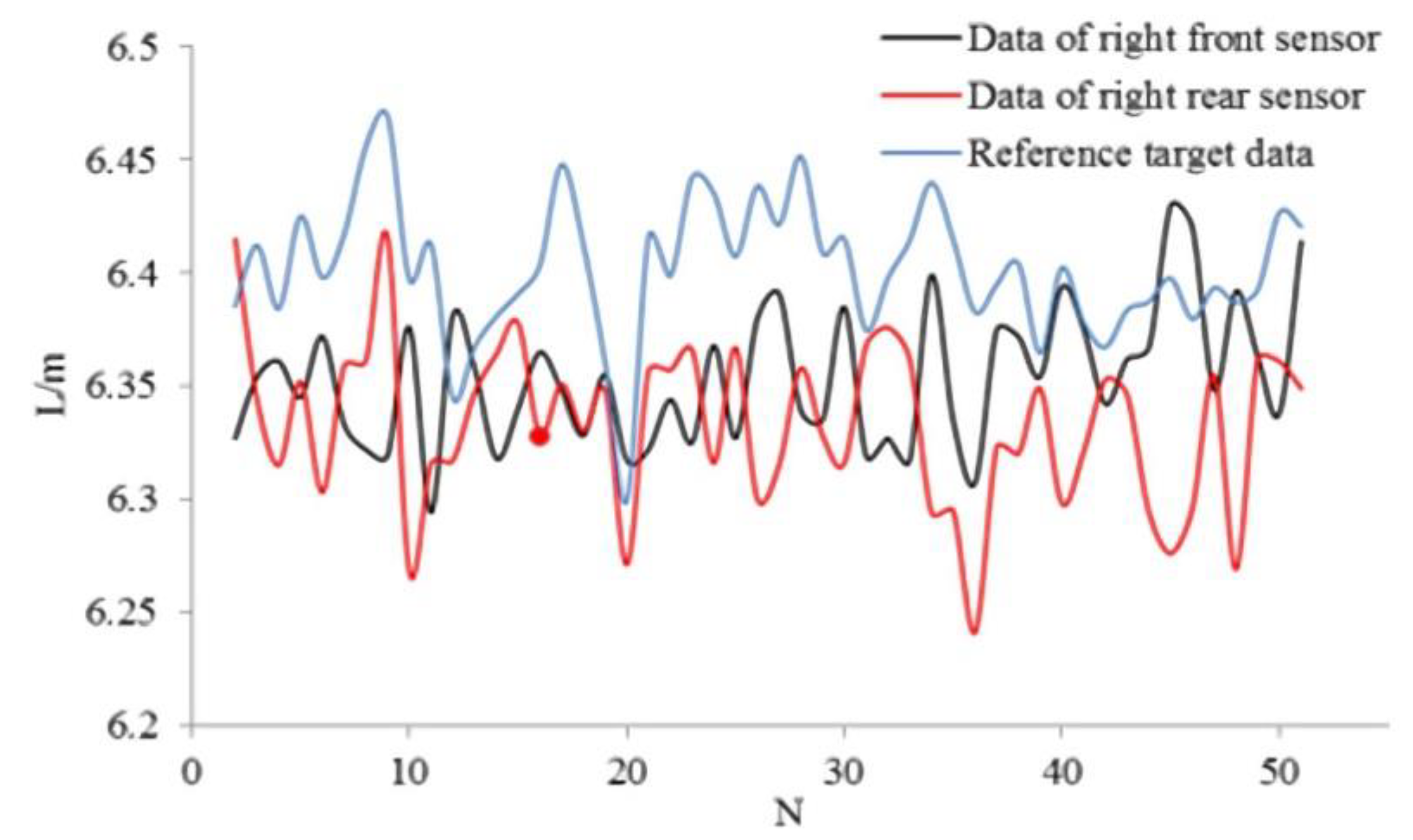

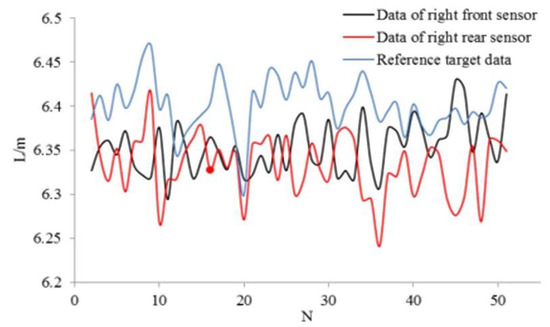

In the double-sensor experiments, the ranging sensors are located on the right-hand side of the vehicle, and the driver keeps the speed and cross range relatively stable. The experimental procedure is the same as for the single sensor. Figure 15 shows the measured data and the reference data obtained by Equation (20).

Figure 15.

Recognition data of two sensors and reference target data.

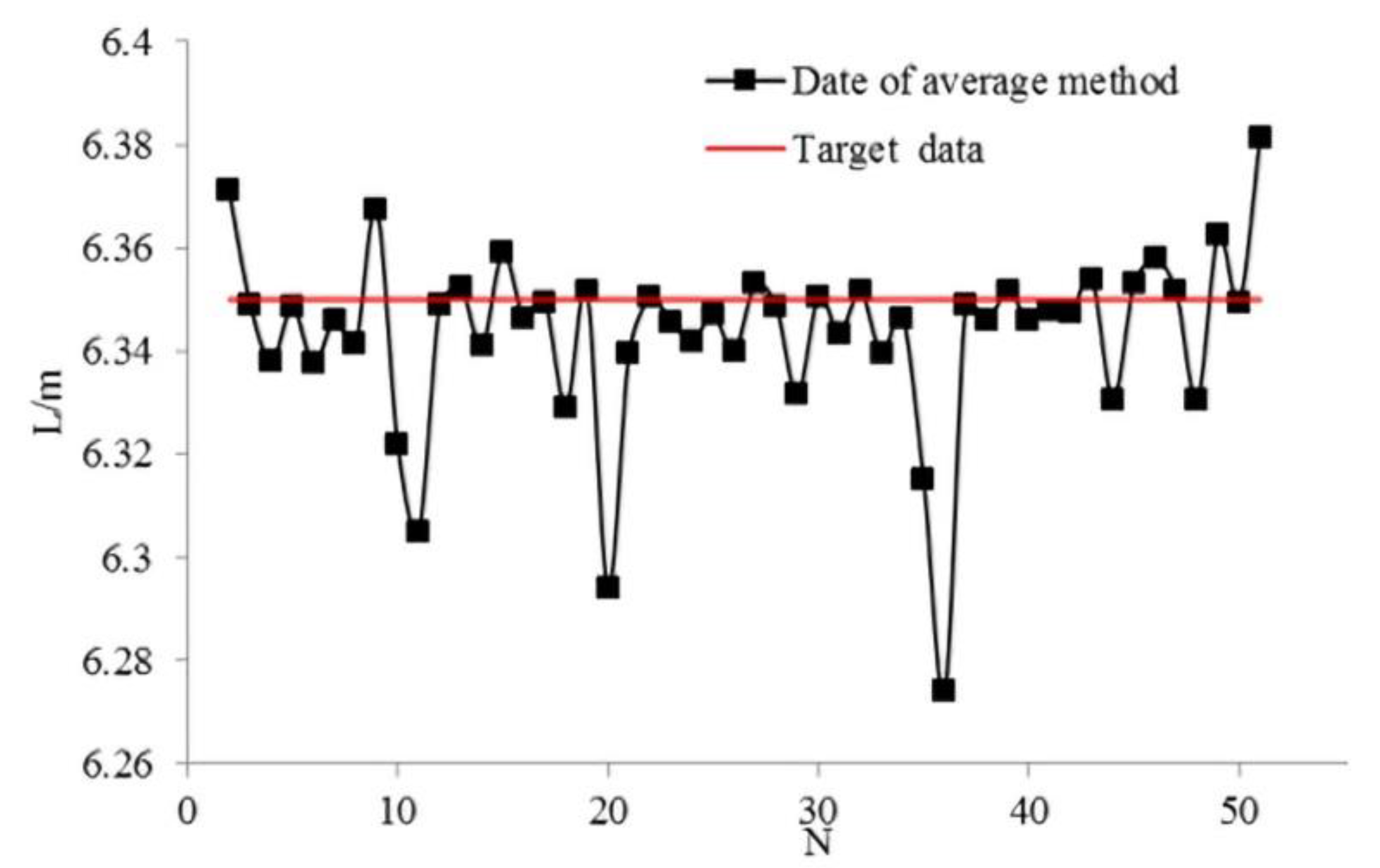

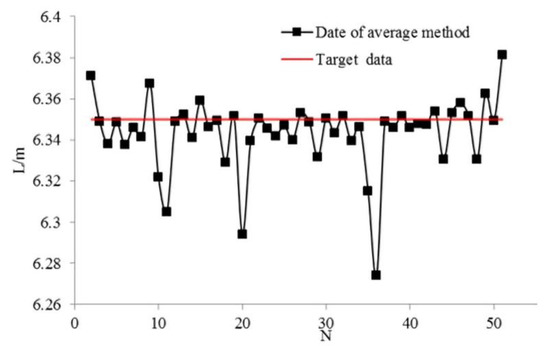

Figure 16 shows the average of the double-sensor data in Figure 15; the x-axis and y-axis represent the experiment number and the measured data, respectively. As shown in Figure 16, 32 groups of results are above the expected target value of 6.35 m. The success rate of the averaging method is 64%, and the recognition error is within 9 cm, which is better than the single sensor method.

Figure 16.

Results of average of double sensors method.

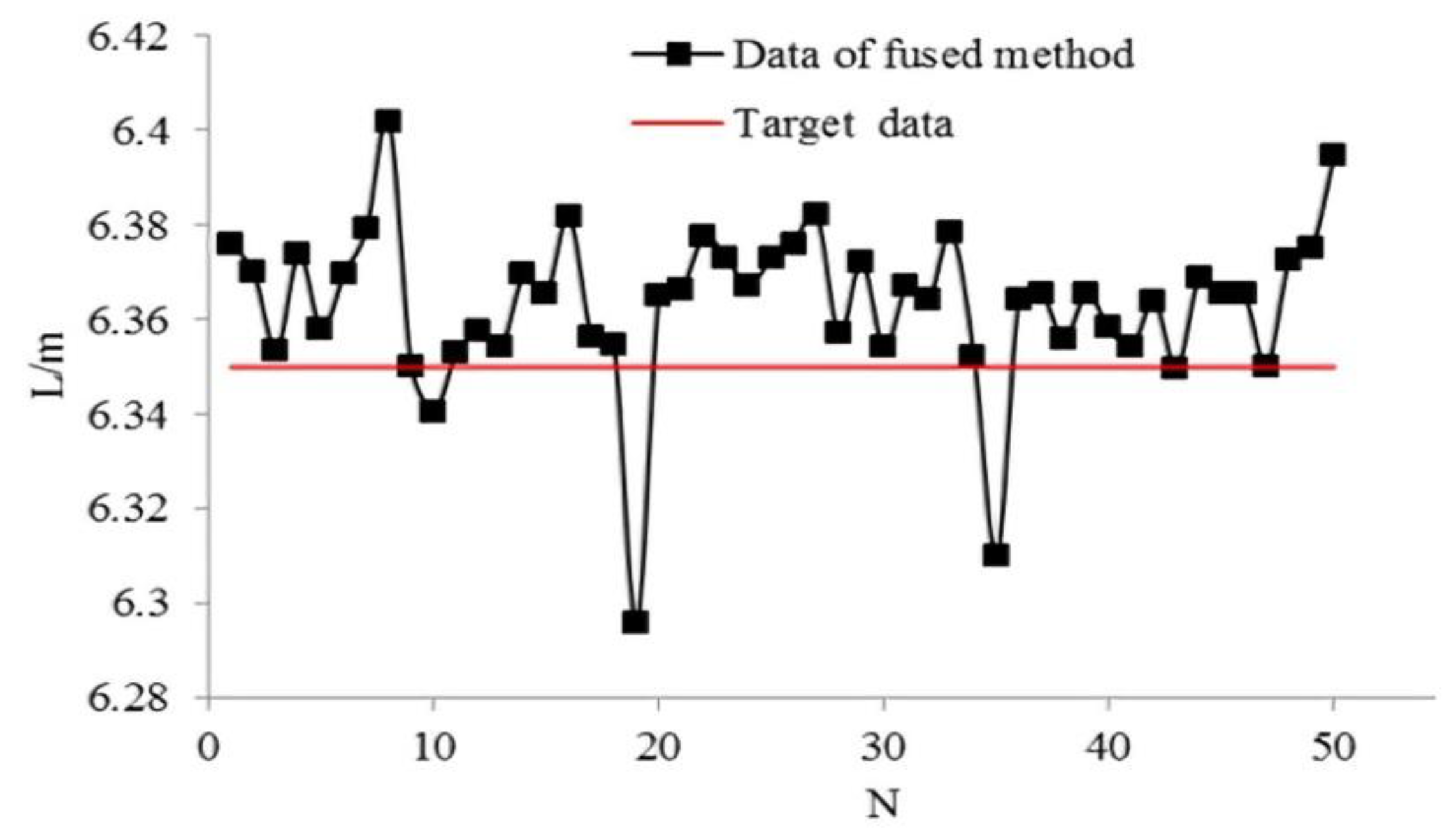

The results of our multi-sensor information ensemble method are shown in Figure 17, where the x-axis and y-axis represent the experiment number and the measured data, respectively. As shown in Figure 17, 47 groups of results are greater than the expected value of 6.35 m. The success rate of the multi-sensor information ensemble method is 94%, and the recognition error is within 5 cm, so the proposed method outperforms both the single sensor and the double-sensor averaging methods. The experimental results are summarized in Table 4.

Figure 17.

Results of multi-sensor information ensemble.

Table 4.

Comparison results on parking space recognition.

The comparison results show that our proposed multi-sensor information ensemble method increases the success rate and reduces the recognition error in parking space recognition.

5.2. Parking Space Matching and Final Auto-Parking Test Results

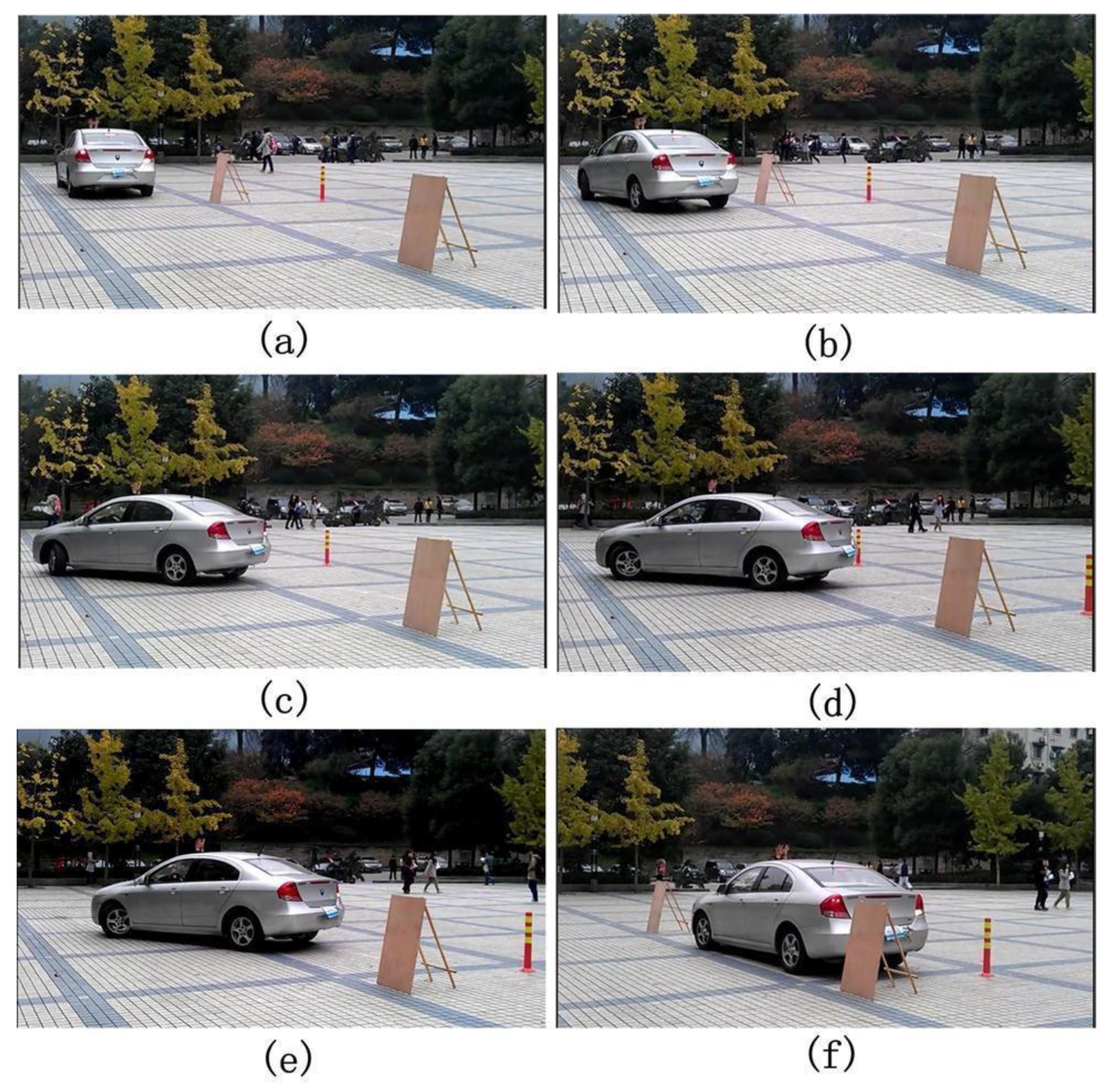

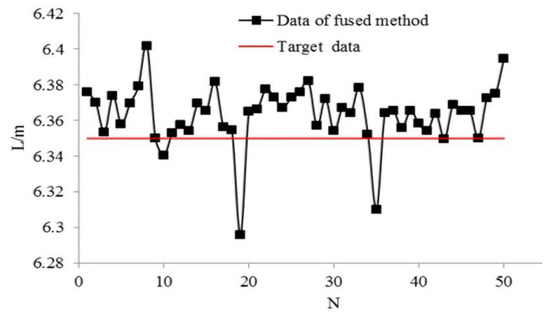

For the automatic parking system testing, the parking space recognition, parking space matching, and trajectory generation algorithm are combined. The whole process is shown in Figure 18.

Figure 18.

Parking process using proposed automatic parking system. (a–f) are the actual vehicle test results with different initial conditions

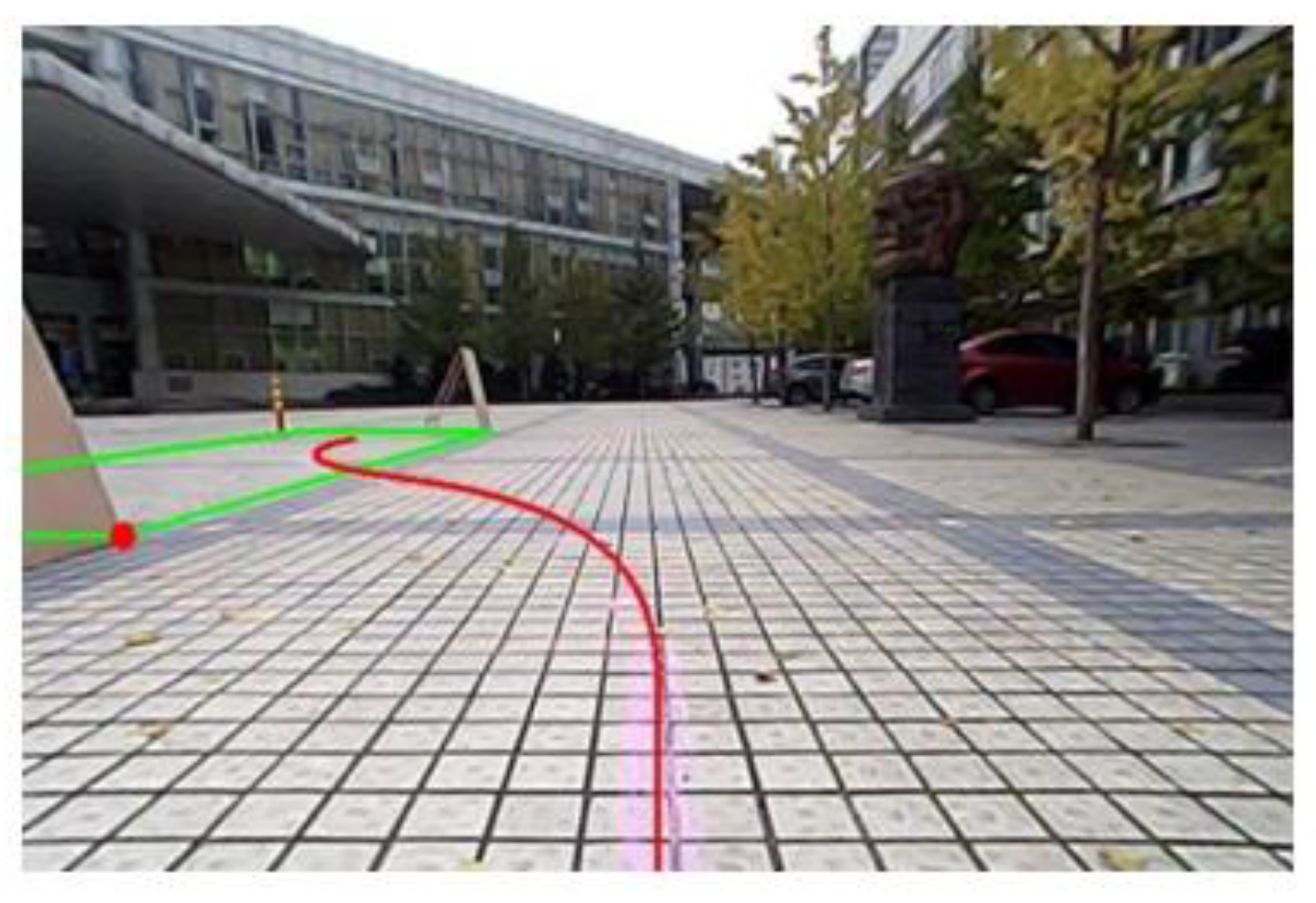

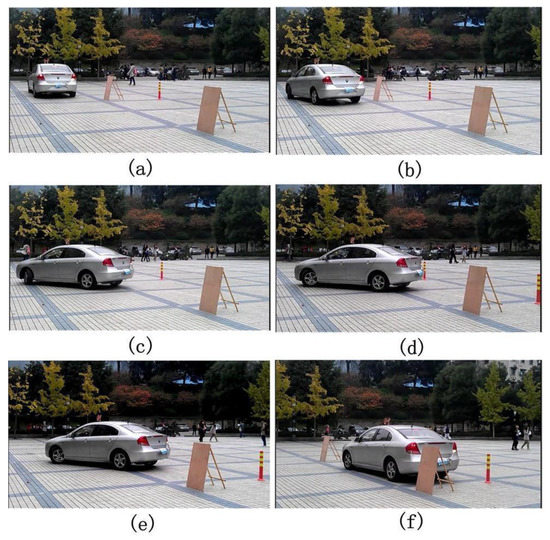

While the vehicle is parking, the image sensor recognizes the parking space, and the virtual space is then established by the parking space matching algorithm. Several parking space matching tests are performed with different initial vehicle states. The matching result is shown in Figure 19.

Figure 19.

Parking space matching and trajectory generation.

The longitudinal and horizontal distances between the vehicle and the parking space affect trajectory generation. If the parking space size is fixed, its length and width are known, so only the coordinates of the red reference point in Figure 18 need to be determined; the longitudinal and horizontal distances between the vehicle and the parking space are then calculated from this point. We measure the reference point 10 times and compare the actual coordinates with the measured results in Table 5. The errors are within 4 cm and 5 cm in the two coordinate directions, which satisfies the parking requirement.

Table 5.

Range data of reference points.

The parking success rate directly reflects the quality of the trajectory generation algorithm. After parking is finished, the effectiveness of the generated trajectory can be judged from the attitude angle of the vehicle: if no collision occurs during parking and the final attitude angle of the vehicle lies within the prescribed range, the parking is considered successful. From the numerous parking experiments, 10 results are randomly selected and shown in Table 6. The left, right, front, and rear columns give the distances from the vehicle to the respective boundaries of the parking space after parking.

In Table 6, the initial attitude angle of the vehicle is within . Parking succeeds when the angle is within , whereas it may fail when the initial angle is . The results show that the proposed automatic parking algorithms are effective and avoid collisions during parking: the first nine experiments, in which the attitude angle is within , are successful with no collisions. Only one experiment is unsuccessful, because its attitude angle is about . Therefore, the parking success rate of the proposed method is 90%.

Table 6. Parking results under nonparallel initial state and unequal radii conditions.
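The reported success rate is the fraction of successful trials among the ten selected experiments. The symbols here are introduced for illustration only:

$$
P_{\mathrm{success}} = \frac{N_{\mathrm{success}}}{N_{\mathrm{trials}}} = \frac{9}{10} = 90\%
$$

With only ten trials, each individual trial moves the rate by 10 percentage points.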

6. Conclusions

This paper has developed an approach to automatic parking based on parking space recognition, parking space matching, and trajectory generation, which addresses drawbacks of existing traditional methods and improves parking performance. Specifically, we propose a multi-sensor information ensemble algorithm for parking space recognition, apply a linear mapping to match the parking space, and develop a trajectory generation algorithm for a vehicle whose initial state is not parallel to the parking space. Simulation and real vehicle experiments demonstrate the parking accuracy of the proposed approach. In detail, the success rate of parking space recognition reaches 94% and its identification error is within cm. When the initial angle of the vehicle is within and the length and width of the parking space are 6 m and 2.4 m, respectively, the parking success rate reaches 90%.

Author Contributions

Formal analysis, K.C.; Investigation, K.C. and Y.L.; Methodology, J.Z.; Project administration, C.P., K.C. and M.L.; Writing—review & editing, K.C. and Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the National Key Research and Development Program of China (2018YFB0106100).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bibri, S.E. The anatomy of the data-driven smart sustainable city: Instrumentation, datafication, computerization and related applications. J. Big Data 2019, 6, 59.

- Bibri, S.E.; Krogstie, J. The emerging data–driven Smart City and its innovative applied solutions for sustainability: The cases of London and Barcelona. Energy Inform. 2020, 3.

- Aslinezhad, M.; Malekijavan, A.; Abbasi, P. ANN-assisted robust GPS/INS information fusion to bridge GPS outage. J. Wirel. Commun. Netw. 2020, 2020, 1–18.

- Park, J.S.; Park, J.H. Future Trends of IoT, 5G Mobile Networks, and AI: Challenges, Opportunities and Solutions. J. Inf. Process. Syst. 2020, 16, 743–749.

- Park, J.S.; Park, J.H. Advanced Technologies in Blockchain, Machine Learning, and Big Data. J. Form. Process. Syst. 2020, 16, 239–245.

- Mendiratta, S.; Dey, D.; Sona, D.R. Automatic car parking system with visual indicator along with IoT. In Proceedings of the 2017 International Conference on Microelectronic Devices, Circuits and Systems (ICMDCS), Vellore, India, 10–12 August 2017; pp. 1–3.

- Nasreen, M.; Iyer, M.; Jayakumar, E.P.; Bindiya, T.S. Automobile Safety and Automatic Parking System using Sensors and Conventional Wireless Networks. In Proceedings of the 2018 IEEE 3rd International Conference on Computing, Communication and Security (ICCCS), Kathmandu, Nepal, 25–27 October 2018; pp. 51–55.

- Aswini, R.; Archana, T. Automatic Car Parking System Using Raspberry-Pi with Cloud Storage Environment. In Proceedings of the 2019 IEEE International Conference on System, Computation, Automation and Networking (ICSCAN), Pondicherry, India, 29–30 March 2019; pp. 1–5.

- Lee, D.; Kim, K.-S.; Kim, S. Controller Design of an Electric Power Steering System. IEEE Trans. Control. Syst. Technol. 2018, 26, 748–755.

- Park, W.-J.; Kim, B.-S.; Seo, D.-E.; Kim, D.-S.; Lee, K.-H. Parking space detection using ultrasonic sensor in parking assistance system. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 1039–1044.

- Han, S.J.; Choi, J. Parking Space Recognition for Autonomous Valet Parking Using Height and Salient Line Probability Maps. ETRI J. 2015, 37, 1220–1230.

- Kim, K.; Seol, S.; Kong, S.-H. High-speed train navigation system based on multi-sensor data fusion and map matching algorithm. Int. J. Control. Autom. Syst. 2015, 13, 503–512.

- Kim, O.T.T.; Tri, N.D.; Nguyen, V.D.; Tran, N.H.; Hong, C.S. A shared parking model in vehicular network using fog and cloud environment. In Proceedings of the 2015 17th Asia-Pacific Network Operations and Management Symposium (APNOMS), Busan, Korea, 19–21 August 2015; pp. 321–326.

- Nina, Y.; Liang, H. Trajectory planning method and simulation research of parallel parking. Electron. Meas. Technol. 2011, 34, 42–45.

- Hehn, M.; D’Andrea, R. Real-time trajectory generation for quadrocopters. IEEE Trans. Robot. 2015, 31, 877–892.

- Jung, H.G.; Kim, D.S.; Yoon, P.J.; Kim, J. Parking slot markings recognition for automatic parking assist system. In Proceedings of the 2006 IEEE Intelligent Vehicles Symposium, Meguro-ku, Japan, 13–15 June 2006; pp. 106–113.

- Hsu, T.-H.; Liu, J.-F.; Yu, P.-N.; Lee, W.-S.; Hsu, J.-S. Development of an automatic parking system for vehicle. In Proceedings of the 2008 IEEE Vehicle Power and Propulsion Conference, Harbin, China, 3–5 September 2008; pp. 1–6.

- Ma, S.; Jiang, H.; Han, M.; Xie, J.; Li, C. Research on Automatic Parking Systems Based on Parking Scene Recognition. IEEE Access 2017, 5, 21901–21917.

- Liu, H.; Luo, S.; Lu, J. Method for Adaptive Robust Four-Wheel Localization and Application in Automatic Parking Systems. IEEE Sens. J. 2019, 15, 10644–10653.

- Li, C.; Jiang, H.; Ma, S.; Jiang, S.; Li, Y. Automatic Parking Path Planning and Tracking Control Research for Intelligent Vehicles. Appl. Sci. 2020, 10, 9100.

- Yu, L.; Jiang, H.; Hua, L.; Yu, H. Anti-Congestion Route Planning Scheme Based on Dijkstra Algorithm for Automatic Valet Parking System. Appl. Sci. 2019, 9, 5016.

- Zhao, J.; Wu, Q.; Chen, J.; Huang, Y. Parking, Intelligent Parking System. In Proceedings of the 2019 IEEE 4th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chengdu, China, 20–22 December 2019; Volume 1, pp. 2262–2267.

- Al-A’Abed, M.; Majali, T.; Abu Omar, S.; Alnawaiseh, A.; Al-Ayyoub, M.; Jararweh, Y. Building a prototype for power-aware automatic parking system. In Proceedings of the 2015 3rd International Renewable and Sustainable Energy Conference (IRSEC), Ouarzazate, Morocco, 10–13 December 2015; pp. 1–6.

- Liang, Z.; Zheng, G.; Li, J. Automatic parking path optimization based on Bezier curve fitting. In Proceedings of the 2012 IEEE International Conference on Automation and Logistics, Zhengzhou, China, 15–17 August 2012; pp. 583–587.

- Chen, G.; Hou, J.; Dong, J.; Li, Z.; Gu, S.; Zhang, B.; Yu, J.; Knoll, A. Multi-Objective Scheduling Strategy with Genetic Algorithm and Time Enhanced A* Planning for Autonomous Parking Robotics in High-Density Unmanned Parking Lots. IEEE/ASME Trans. Mechatron. 2020, 1.

- Suhr, J.K.; Jung, H.G. A Universal Vacant Parking Slot Recognition System Using Sensors Mounted on Off-the-Shelf Vehicles. Sensors 2018, 18, 1213.

- Zhang, L.; Huang, J.; Li, X.; Xiong, L. Vision-based Parking-slot Detection: A DCNN-based Approach and A Large-scale Benchmark Dataset. IEEE Trans. Image Process. 2018, 27, 5350–5364.

- Li, W.; Cao, H.; Liao, J.; Xia, J.; Cao, L.; Knoll, A. Parking Slot Detection on Around-View Images Using DCNN. Front. Neurorobotics 2020, 14.

- Li, Q.; Lin, C.; Zhao, Y. Geometric Features-Based Parking Slot Detection. Sensors 2018, 18, 2821.

- Vorobieva, H.; Glaser, S.; Minoiu-Enache, N.; Mammar, S. Automatic parallel parking in tiny spots: Path planning and control. IEEE Trans. Intell. Transp. Syst. 2014, 16, 396–410.

- Ye, F.; Chen, J.; Li, Y. Improvement of DS evidence theory for multi-sensor conflicting information. Symmetry 2017, 9, 69.

- Maritz, J.S.; Lwin, T. Empirical Bayes Methods; Routledge: New York, NY, USA, 2018.

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter; Academic Press, Inc.: New York, NY, USA, 1995; pp. 41–95.

- Raiffa, H.; Schlaifer, R. Applied Statistical Decision Theory; Harvard Business School Publications: Brighton, MA, USA, 1961.

- Grimmett, H.; Buerki, M.; Paz, L.; Pinies, P.; Furgale, P.; Posner, I.; Newman, P. Integrating metric and semantic maps for vision-only automated parking. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2159–2166.

- Juneja, S.; Kochar, S.; Dhiman, S. Intelligent Algorithm for Automatic Multistoried Parking System Using Image Processing with Vehicle Tracking and Monitoring from Different Locations in the Building. In Sensors and Image Processing; Springer: Singapore, 2018; pp. 73–84.

- Wahab, M.F.B.; Moe, A.L.; Abu, A.B.; Yaacob, Z.B.; Legowo, A. Development of automated parallel parking system in small mobile vehicle. ARPN J. Eng. Appl. Sci. 2015, 10, 7107–7112.

- Xiong, B.; Qu Shiru, Q. Intelligent Vehicle’s Path Tracking Based on Fuzzy Control. J. Transp. Syst. Eng. Inf. Technol. 2010, 10, 70.

- Lin, H.; Hu, Y.; Chen, A. Research on Control System of Intelligent Car based on Self-Adaptive Fuzzy Control. Comput. Meas. Control 2011, 19, 78. (In Chinese)

- Wang, W.-C.V.; Lung, S.-C.C.; Liu, C.-H. Application of Machine Learning for the in-Field Correction of a PM2.5 Low-Cost Sensor Network. Sensors 2020, 20, 5002.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).