Abstract

Automating the identification of human brain stimuli during head movements could be a significant step forward for human–computer interaction (HCI), with important applications for severely impaired people and for robotics. In this paper, a neural network-based identification technique is presented to recognize, from EEG signals, the participant’s head yaw rotations when they are subjected to a visual stimulus. The goal is to identify an input-output function between the brain electrical activity and the head movement triggered by switching on/off a light on the participant’s left/right hand side. The identification process is based on the Levenberg–Marquardt backpropagation algorithm. The results obtained on ten participants, spanning more than two hours of experiments, show the ability of the proposed approach to identify the brain electrical stimulus associated with head turning. A first analysis is performed on the EEG signals of each experiment for each participant. The prediction accuracy is demonstrated by a significant correlation when training and test trials come from the same file, which, in the best case, reaches r = 0.98 with MSE = 0.02. In a second analysis, the input-output function trained on the EEG signals of one participant is tested on the EEG signals of other participants. In this case, the low correlation coefficients show that the classifier’s performance decreases when it is trained and tested on different subjects.

1. Introduction

In human–computer interaction (HCI), the design and application of brain–computer interfaces (BCIs) are among the most challenging research activities. BCI technologies aim at converting the electrical brain signals produced by human mental activity into control commands for external devices such as robot systems [1]. Recently, the scientific literature has shown specific interest in identifying human cognitive reactions caused by the perception of a specific environment or by an adaptive HCI [2]. Reviews on BCI and HCI can be found in Mühl et al. [3] and Tan and Nijholt [4].

The essential stages of a BCI application are the acquisition of brain activity signals, preprocessing, feature extraction, classification, and feedback.

Brain signal acquisition may be realized by different devices such as Electroencephalography (EEG), Magnetoencephalography (MEG), Electrocorticography (ECoG), or functional near infrared spectroscopy (fNIRS) [5]. Preprocessing consists of cleaning the input data of noise (called artifacts), while the feature extraction phase deals with selecting, from the input signals, the most relevant features required to discriminate the data according to the specific classification [6]. Classification is the central element of the BCI: it refers to the identification of the correct translation algorithm, which converts the extracted signal features into control commands for the devices according to the user’s intention.

From the signal acquisition viewpoint, EEG is the most widely used technique: it is non-invasive, cheap, and portable, and it assures a good spatial and temporal resolution [7]. As stated in the literature [8], however, the acquisition of EEG signals through hair remains a critical issue.

Electroencephalography (EEG)-based biometric recognition systems have been used in a large range of clinical and research applications [9] such as interpreting humans’ emotional states [10], monitoring participants’ alertness or fatigue [11], checking memory workload [12], evaluating participants’ fear when subjected to unpredictable acoustic or visual external stimuli [13], or diagnosing generic brain disorders [14].

Significant literature concerning EEG signal analysis versus visual-motor tasks is available. Perspectives are particularly relevant in rehabilitation engineering. A pertinent recent example of EEG analysis application in robotics and rehabilitation engineering is provided in Randazzo et al. [15], where the authors tested on nine participants how an exoskeleton, coupled with a BCI, can elicit EEG brain patterns typical of natural hand motions.

Apart from this, cognitive activities related to motor movements have been observed in EEG following both actually executed and imagined actions [16,17]. Comparing neural signals provided by actual or imaginary movements, most papers concluded that the brain activities are similar [18]. In literature, a significant correlation between head movements and visual stimuli has been proven [19].

In order to realize an EEG-based BCI, adopting a classifier to interpret EEG-signals and implement a control system is necessary. In fact, according to the recorded EEG pattern and the classification phase, EEG may be used as input for the control interface in order to command external devices. As demonstrated in literature, the quality of the classifier, which has to extract the meaningful data from the brain signals, represents the crucial point to obtain a robust BCI [20].

The best-known techniques used for EEG signal classification in motor-imagery BCI applications are support vector machine (SVM), linear discriminant analysis (LDA), multi-layer perceptron (MLP), random forest (RF), and convolutional neural network (CNN) classifiers. In Narayan [21], SVM outperformed LDA and MLP in recognizing left-hand and right-hand movements, with 98.8% classification accuracy. Bressan et al. [22] demonstrated the superiority of the CNN with respect to LDA and RF for the classification of different fine hand movements. In Antoniou et al. [23], the RF algorithm outperformed K-NN, MLP, and SVM in the classification of eye movements used in an EEG-based control system for driving an electromechanical wheelchair. In Zero et al. [24], a time delay neural network (TDNN) classification model was implemented to classify the driver’s EEG signals when the driver has to rotate the steering wheel to perform a right or a left turn during a driving task in a simulated environment.

More generally, almost 25% of recent classification algorithms for neural cortical recordings were based on Artificial Neural Networks (ANNs) [25], which have been intensively applied to EEG classification [26]. An interesting application is presented in Craig and Nguyen [27], where the authors proposed an ANN classifier for mental commands with the purpose of enhancing the control of a head-movement controlled power wheelchair for patients with chronic spinal cord injury. The authors obtained an average accuracy rate of 82.4%, but they also noticed that the classifier performed worse than expected when applied to a new subject, and that customizing the classifier by an adaptive training technique increased the quality of prediction. Besides, researchers have used ANNs for motor imagery classification of hand [28] or foot movements [29], as well as for eye blinking detection [30]. A review of classification algorithms for EEG-based BCIs is given in Lotte et al. [31].

This paper focuses on an objective that is original, with respect to the literature on body movements, in the context of EEG signal classifiers. Although this work adopts a traditional ANN classifier, the main novelty lies in the scope of the application: we explore the recognition, from EEG brain activity, of yaw head rotations directed toward a light target, in order to support driving tasks in different applications, such as the control of an autonomous vehicle, a wheelchair, or a robot in general.

In detail, this work is about “using brain electrical activities to recognize head movements in human subjects.” Input data are EEG signals collected from a set of 10 participants. Left or right head positions in response to an external visual stimulus represent the output data for the experiments. The main purpose of the proposed approach is to define and verify the effectiveness of the BCI system in identifying an input-output function between EEG signals and the different head positions. Section 2 introduces the BCI architecture used for the experiments, while Section 3 shows the results of different training and testing scenarios. Section 4 briefly reports the conclusions.

2. Materials and Methods

2.1. System Architecture

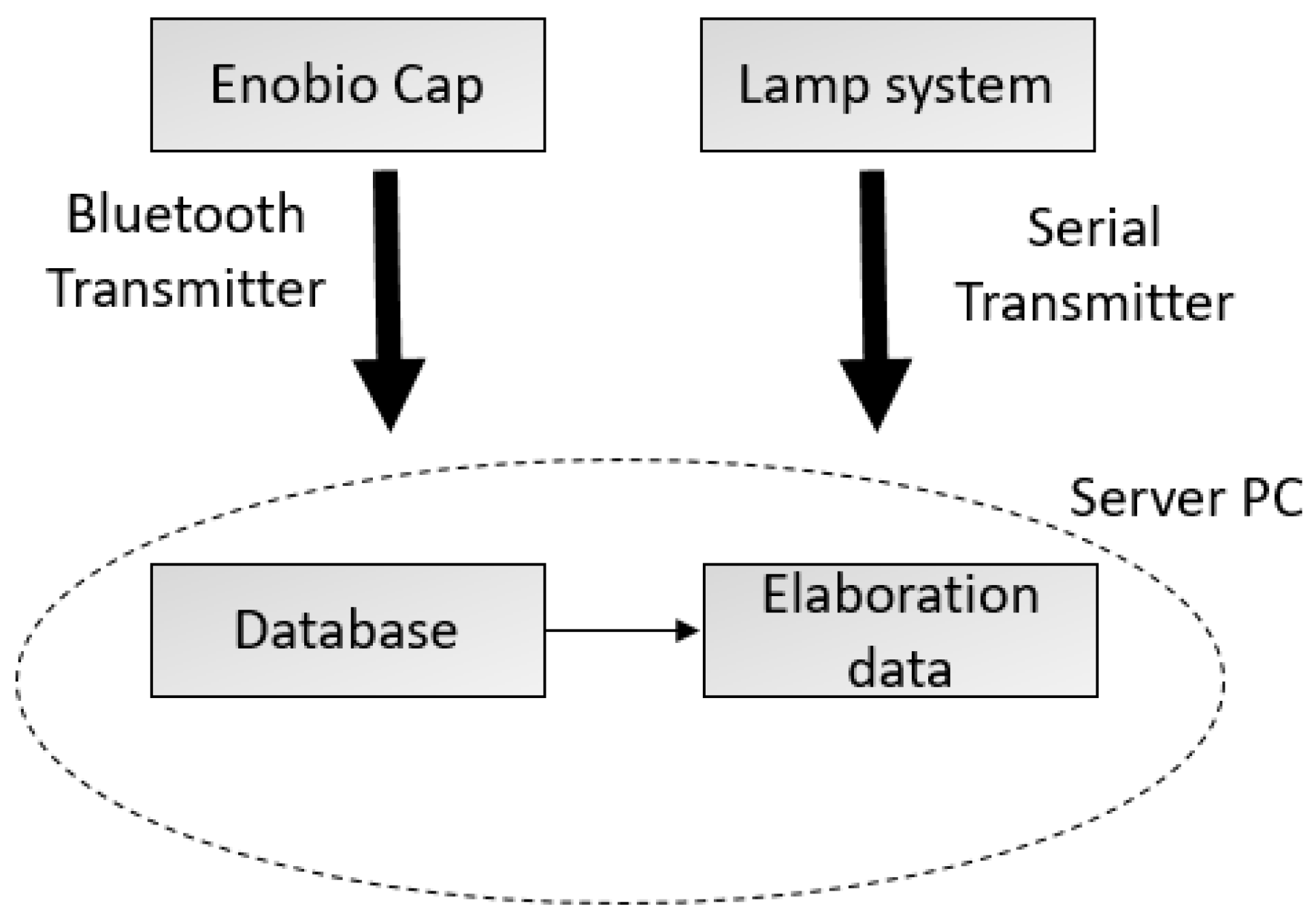

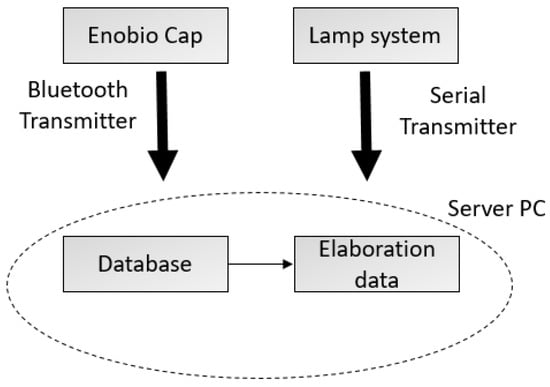

The architecture of the system used for the experiments consists of two interacting sub-systems: (1) a basic lamp system in charge of generating visual stimuli, and (2) an Enobio® EEG systems cap by Neuroelectrics (Cambridge, MA, USA) for EEG signal acquisition. The two subsystems can communicate with a PC server through a serial port and a Bluetooth connector, respectively.

2.1.1. Lamp System

The lamp system’s main components are a Raspberry Pi 3 control unit (Cambridge, UK) and two LED lamps. The PC server hosts a Python application, which randomly sends an input to the Raspberry unit through the serial cable. The Raspberry unit hosts another Python application, which receives the commands to switch the lamps on/off. Figure 1 shows the system architecture.

Figure 1.

System architecture.

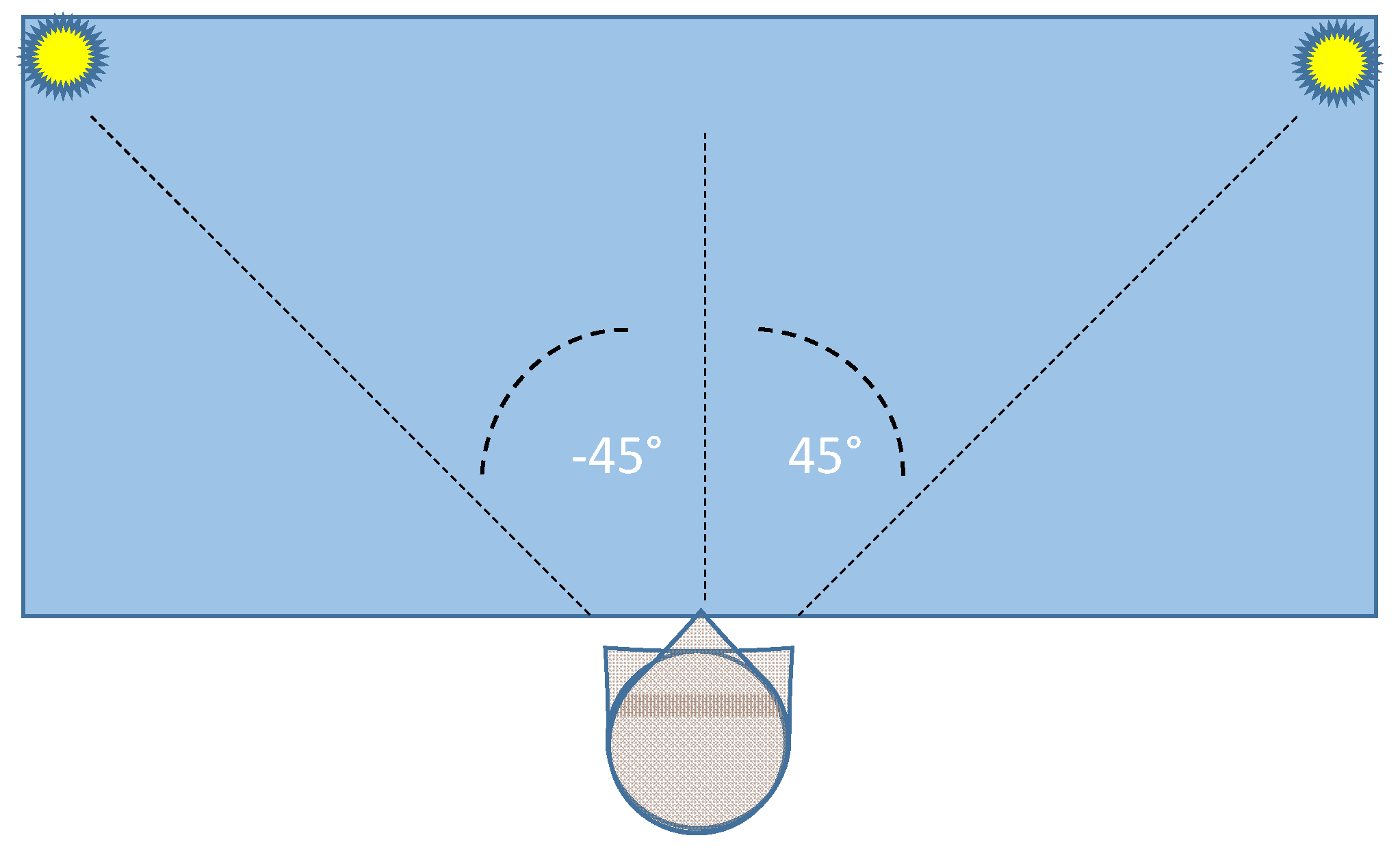

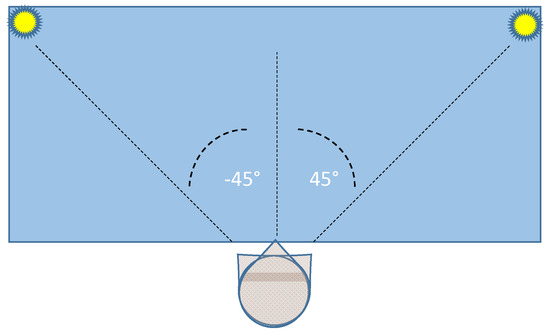

The two lamps are positioned at the extreme sides of a table (size: 1.3 × 0.6 m), allowing a typical head rotation (yaw angle) over a −45°/45° range. Figure 2 shows a top view of the experimental setup.

Figure 2.

Top view of the layout of the experimental setup.

2.1.2. EEG Enobio Cap

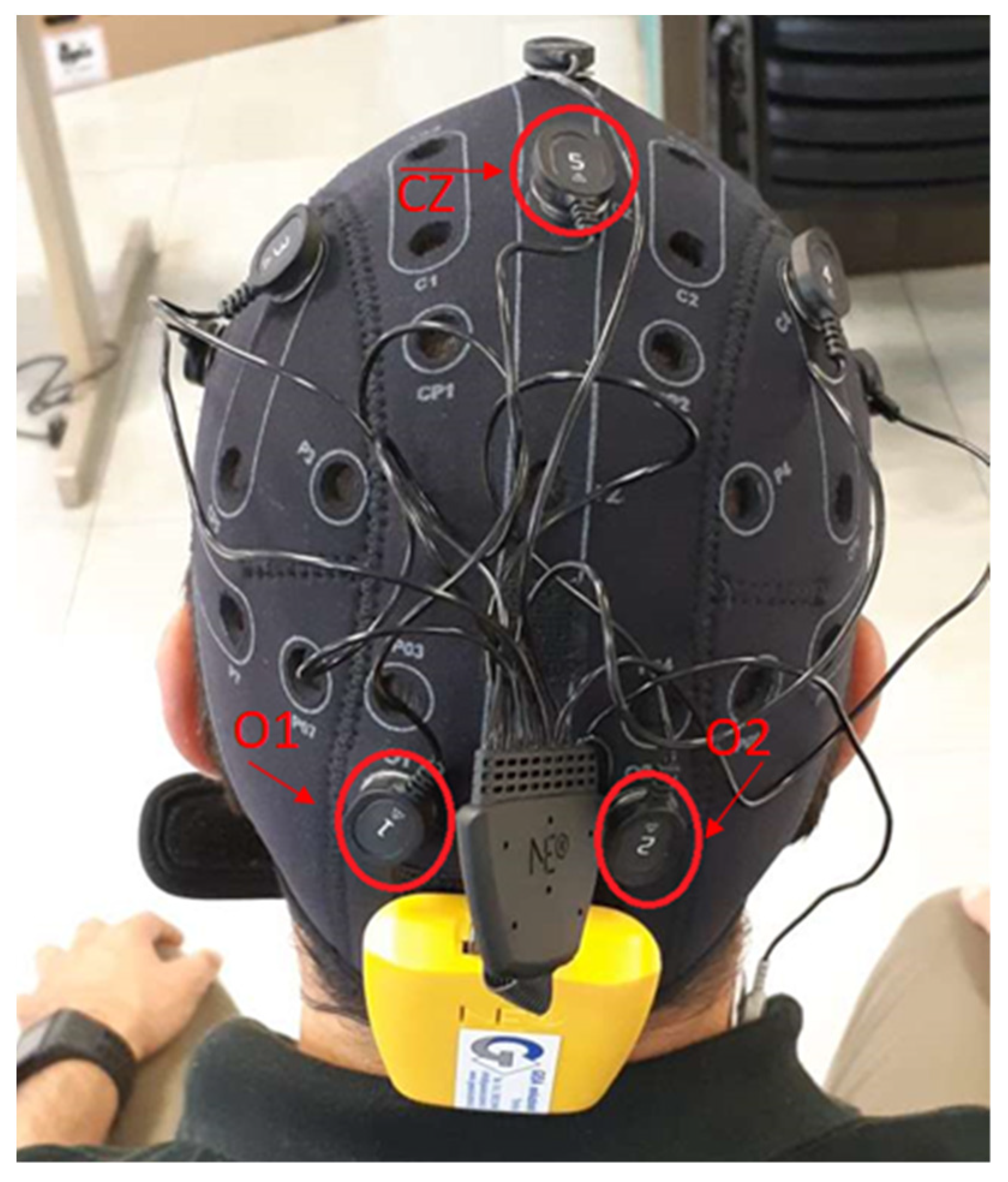

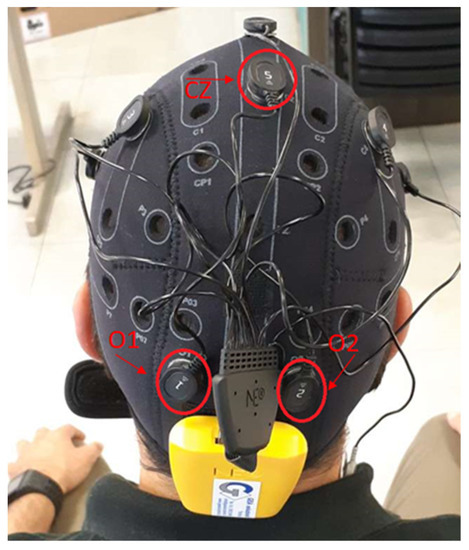

The sensors connected to this cap can monitor EEG signals at a 500 Hz sampling frequency. The Enobio cap works on eight different channels. In order to decrease the artifacts due to muscular activity, the EEG system is equipped with two additional electrodes used to apply a differential filtering to the EEG signals. These two electrodes are positioned in a hairless area of the head (usually behind the ears, by the neck). In the proposed experiments, we focus on three channels labeled O1, O2, and Cz, according to the international 10/20 system. The first two are positioned in the occipital lobe; the other in the parietal one (Figure 3). The reason for this choice is that the signals coming from the occipital lobe are commonly associated with visual processing [32], while the signals coming from the parietal lobe are related to body movement activities. In addition, a good correlation between occipital and centroparietal areas improves visual motor performance identification [33].

Figure 3.

EEG Enobio Cap.

The positioning of the electrodes on the head plays a fundamental role in the quality of the data acquisition. For example, using gel may improve the quality of the EEG signal. However, the main target of this work is to verify the feasibility of EEG monitoring in working conditions, where any preparatory action on the workers, however limited, should be avoided. For this reason, no gel was used in the sensor positioning phase.

2.2. Simulation Description

During data acquisition, the participant sits in front of the table and wears the EEG Enobio cap, assisted by the operator who checks the electrodes position. Each participant is expected to move his/her head left or right towards the lamp, which is randomly switched on by the Raspberry unit. The lamp stays on for a variable period of time (between six and nine seconds). After turning off, the lamp stays inactive for five seconds. The test participant is expected to move his or her head back to the starting position following the lamp turning off.
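The timing of the visual stimuli described above can be sketched as a small schedule generator. This is only an illustration, not the authors' application: the function name `make_schedule`, the 300 s session length (taken from the 5 min tests), the uniform draw of the on-time, and the assumption that a session starts with both lamps off are all choices made here.

```python
import random

def make_schedule(total_s=300.0, on_range=(6.0, 9.0), off_s=5.0, seed=None):
    """Return a list of (t_on, t_off, side) lamp events within total_s seconds."""
    rng = random.Random(seed)
    events = []
    t = off_s  # assumption: the session starts with both lamps off
    while True:
        duration = rng.uniform(*on_range)      # lamp stays on for 6 to 9 s
        if t + duration > total_s:
            break                              # next event would exceed the session
        side = rng.choice(("left", "right"))   # lamp is picked at random
        events.append((t, t + duration, side))
        t += duration + off_s                  # 5 s pause before the next lamp
    return events

schedule = make_schedule(seed=0)
```

Each tuple gives the switch-on time, the switch-off time, and the lamp side, so the same list could drive the lamps and label the head positions.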

2.3. Data Processing and Analysis

2.3.1. Pre-Processing Data

During EEG monitoring, the presence of artifacts and noise in the acquired data was one of the main problems we had to face. Exogenous and endogenous noise can significantly affect the reliability of the acquired data. Several types of artifacts have been described in the literature [34], including ocular, muscle, cardiac, and extrinsic artifacts.

In order to limit artifacts, we worked as follows:

- Muscle artifacts were intrinsically limited in the EEG signal acquisition system thanks to the two differential electrodes embodied in Enobio Cap.

- Extrinsic artifacts were limited by proper filtering and normalization of the EEG signals. Specifically, we applied a band-stop (notch) filter between 49 and 51 Hz in order to eliminate the power-line noise [35].

- In addition, in order to remove linear trends, the overall signal was passed through a high-pass filter cutting frequencies lower than 1 Hz.
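The two filtering steps listed above can be sketched with two second-order IIR sections. The source does not state which filter design was used, so the choices here are assumptions: the standard "audio EQ cookbook" biquad formulas, a notch centered at 50 Hz with Q = 25 (rejecting roughly 49 to 51 Hz), and a Butterworth-like high-pass at 1 Hz. The helper names are hypothetical.

```python
import math

FS = 500.0  # sampling frequency in Hz, as stated for the EEG cap

def biquad(b, a, x):
    """Apply a second-order IIR filter (direct form I); a[0] must be 1."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y

def notch_coeffs(f0, q, fs):
    """Notch (band-stop) biquad centered at f0 with quality factor q."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = [1 / a0, -2 * math.cos(w0) / a0, 1 / a0]
    a = [1.0, -2 * math.cos(w0) / a0, (1 - alpha) / a0]
    return b, a

def highpass_coeffs(fc, fs, q=1 / math.sqrt(2)):
    """Second-order high-pass biquad with cutoff fc."""
    w0 = 2 * math.pi * fc / fs
    alpha = math.sin(w0) / (2 * q)
    c = math.cos(w0)
    a0 = 1 + alpha
    b = [(1 + c) / (2 * a0), -(1 + c) / a0, (1 + c) / (2 * a0)]
    a = [1.0, -2 * c / a0, (1 - alpha) / a0]
    return b, a

def clean(x):
    """Remove 50 Hz mains noise, then slow drifts below about 1 Hz."""
    x = biquad(*notch_coeffs(50.0, 25.0, FS), x)
    return biquad(*highpass_coeffs(1.0, FS), x)
```

Once the initial transient has died out, a pure 50 Hz tone or a constant offset passed through `clean` is strongly attenuated, while a 10 Hz component is left almost unchanged.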

The resulting signals, whose unit of measure is µV, were amplified by a factor of 10^5 and limited to the range between −1 and 1, in order to enhance the precision of the subsequent signal analysis. The head positions were coded as follows: −1 for the left position, 1 for right, and 0 for forward. The participants were asked to move their head at a normal speed, avoiding sudden movements. Thus, the transition from one position to another (e.g., left to forward) was linearly smoothed using a moving average computed on a window of 300 samples (i.e., a duration of 0.6 s).
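A minimal sketch of the scaling, limiting, and label smoothing just described is given below. The gain is left as a parameter, and the moving average is assumed to be a trailing one that uses a shorter window for the first samples; the source does not specify either detail, and the function names are illustrative.

```python
def scale_and_clip(samples, gain):
    """Amplify raw samples by `gain`, then limit them to the range [-1, 1]."""
    return [max(-1.0, min(1.0, gain * s)) for s in samples]

def moving_average(values, window):
    """Trailing moving average; the first samples use a shorter window."""
    out, total = [], 0.0
    for i, v in enumerate(values):
        total += v
        if i >= window:
            total -= values[i - window]   # drop the sample leaving the window
        out.append(total / min(i + 1, window))
    return out

# Head position coded as 0 (forward) -> 1 (right) -> 0 (forward) at 500 Hz.
labels = [0] * 600 + [1] * 1200 + [0] * 600
smooth = moving_average(labels, 300)      # 300 samples = 0.6 s
```

With a 300-sample window, each step between two coded positions becomes a linear ramp lasting 0.6 s, which mimics a head turn at normal speed.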

2.3.2. Input Output Data Analysis

The goal of the tests is to find a direct input-output function that is able to relate a certain number of EEG samples to the corresponding value of the head position. This is challenging since, as stated in the literature, time variance [36] and sensitivity to different participants’ reactions [37] are well-known obstacles.

Specifically, the goal is to identify a non-linear input-output function $f$ that takes as input 10 consecutive EEG samples extracted from O1, O2, and Cz (each sample, hereinafter defined as $u(t)$, is a 3-component vector sampled at instant $t$) and returns the value of the head position in the sample just following the EEG samples (hereinafter defined as $y(t+1)$).

The non-linear function $f$ between input and output must be identified so that the values $\hat{y}(t+1)$ resulting from Equation (1):

$$\hat{y}(t+1) = f\big(u(t), u(t-1), \ldots, u(t-9)\big) \tag{1}$$

minimize the mean squared error (MSE) between the $\hat{y}$ and $y$ values, where the squared error computed on one prediction is given by:

$$\mathrm{MSE} = \big(\hat{y}(t+1) - y(t+1)\big)^2 \tag{2}$$

To make the predictions less sensitive to input noise, the predicted values are averaged with a moving mean over the 300 preceding samples, that is:

$$\bar{y}(t+1) = \frac{1}{300} \sum_{i=0}^{299} \hat{y}(t+1-i) \tag{3}$$

The reliability of the identified function $f$ is evaluated against two key performance indexes, the MSE and the Pearson correlation coefficient $r$, as reported below:

$$r = \frac{\operatorname{cov}(\bar{y}, y)}{\sigma_{\bar{y}}\,\sigma_{y}} \tag{4}$$

where:

- $\sigma_{\bar{y}}$ and $\sigma_{y}$ are the standard deviations of $\bar{y}$ and $y$;

- $\operatorname{cov}(\bar{y}, y)$ is the covariance of $\bar{y}$ and $y$.
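The construction of the input-output pairs and the two performance indexes can be sketched as follows. This is an illustrative outline under stated assumptions, not the authors' Matlab code: each window flattens 10 consecutive 3-channel samples into 30 inputs, the target is the head position of the following sample, and the function names are hypothetical.

```python
import math

def make_windows(eeg, head, n_lags=10):
    """Pair n_lags consecutive 3-channel samples with the next head position."""
    inputs, targets = [], []
    for t in range(n_lags - 1, len(eeg) - 1):
        window = eeg[t - n_lags + 1 : t + 1]        # samples t-9 .. t
        inputs.append([v for sample in window for v in sample])
        targets.append(head[t + 1])                 # head position at t+1
    return inputs, targets

def mse(pred, actual):
    """Mean squared error between predicted and actual values."""
    return sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(actual)

def pearson_r(pred, actual):
    """Pearson correlation: covariance divided by the two standard deviations."""
    n = len(actual)
    mp, ma = sum(pred) / n, sum(actual) / n
    cov = sum((p - mp) * (a - ma) for p, a in zip(pred, actual))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    sa = math.sqrt(sum((a - ma) ** 2 for a in actual))
    return cov / (sp * sa)   # the 1/n factors cancel out
```

A 5 min file sampled at 500 Hz would yield about 150,000 such pairs; `pearson_r` is undefined when either series is constant, which a real pipeline would need to guard against.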

The non-linear input-output function was identified by an ANN with 10 neurons in the hidden layer. The identification process is based on the Levenberg–Marquardt backpropagation algorithm [38,39], implemented in Matlab® software version R2020b (Natick, MA, USA). The training process converged after an average of 87 steps (about 45 s) on a common Dell laptop with an Intel i5-3360M CPU, 2.8 GHz, 8 GB (Austin, TX, USA).
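For reference, the Levenberg–Marquardt weight update is usually written in the textbook form below. The symbols are introduced here and are not taken from the source: $\mathbf{w}_k$ is the weight vector at step $k$, $\mathbf{e}$ the vector of prediction errors, $\mathbf{J}$ the Jacobian of the errors with respect to the weights, and $\mu$ a damping factor.

```latex
\mathbf{w}_{k+1} = \mathbf{w}_k
  - \left( \mathbf{J}^{\top}\mathbf{J} + \mu \mathbf{I} \right)^{-1}
    \mathbf{J}^{\top}\mathbf{e}
```

For small $\mu$ the step approaches a Gauss–Newton step, while for large $\mu$ it approaches a short gradient-descent step; $\mu$ is typically decreased after a step that reduces the error and increased otherwise.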

3. Results

3.1. Data Set

The trials involved 10 participants: one woman (P1) and nine men (P2–P10), aged 25 to 60, with no known history of neurological abnormalities.

All participants except P5 are right-handed. P2 and P4 are hairless. For two participants, namely P1 and P2, 10 different experiments were recorded; for P10, two experiments were recorded, while for the others, namely P3–P9, only one experiment was recorded. All tests were 5 min long. Table 1 shows the main characteristics of the files.

Table 1.

The files used to identify the function f.

From left to right, the columns show: participant ID; file ID; the number of samples in each file; time elapsed from participant’s first trial; occurrences percentage related to the three coded head positions (1 R (right), 0 F (forward), and −1 L (left)).

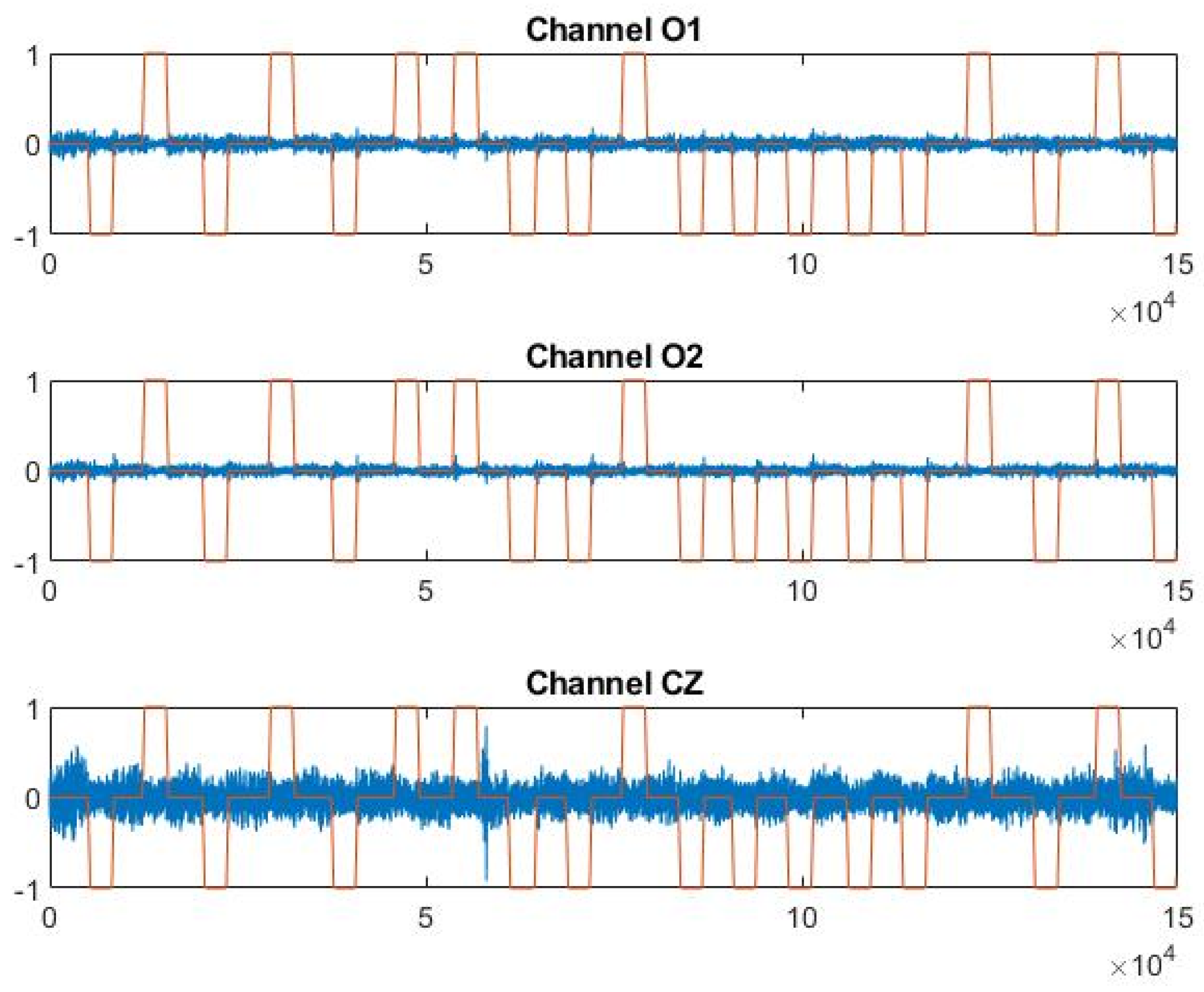

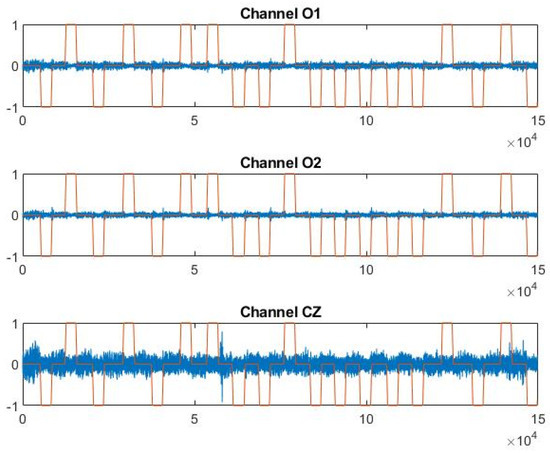

As an example, Figure 4 shows the trend of the three EEG channels versus the head movement output signal for file P4F1, filtered and normalized as described in Section 2.3.1.

Figure 4.

Trend of channels O1, O2, and CZ vs. the output signal in file P4 F1.

3.2. First Analysis. Identification of the Function f on the First Half File and Verification on the Second Half

Each file was divided into two equal parts; we named the first the “training set” and the second the “test set.” The training sets always include samples related to the three possible positions (R, F, L). The results on the test set can be further classified according to the ranges of r values reported in Table 2 [40].

Table 2.

Threshold values to evaluate correlation performance.

Table 3 shows the performance indexes on the test set. Of the 29 files, only two (P1F8 and P1F10) show a moderate correlation; all the others show a strong one.
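The half-file split and the grading of r values can be sketched in a few lines. The cut-offs used here (0.3 and 0.5) are an assumption based on Cohen's conventional thresholds [40], since the values of Table 2 are not reproduced in the text; treating negative correlations as weak is also a choice made for this sketch.

```python
def split_halves(samples):
    """First half for training, second half for testing."""
    mid = len(samples) // 2
    return samples[:mid], samples[mid:]

def correlation_class(r):
    """Grade a correlation coefficient (assumed cut-offs: 0.3 and 0.5)."""
    if r >= 0.5:
        return "strong"
    if r >= 0.3:
        return "moderate"
    return "weak"   # includes negative values, which are useless for prediction

train, test = split_halves(list(range(10)))
```

Under these assumed cut-offs, r = 0.98 and r = 0.82 are graded as strong and r = 0.38 as moderate.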

Table 3.

Prediction performances by the first analysis.

Table 4 and Table 5 report r and MSE values produced by extracting the functions from the 10 different tests on P1 (rows) and applying them to each test for the same subject (columns). Table 6 and Table 7 report the same data produced from P2 tests.

Table 4.

r Values (P1).

Table 5.

MSE Values (P1).

Table 6.

r Values (P2).

Table 7.

MSE Values (P2).

The cells in the tables are grayed according to the classification given in Table 2 (white = strong correlation; gray = moderate correlation; dark gray = weak correlation).

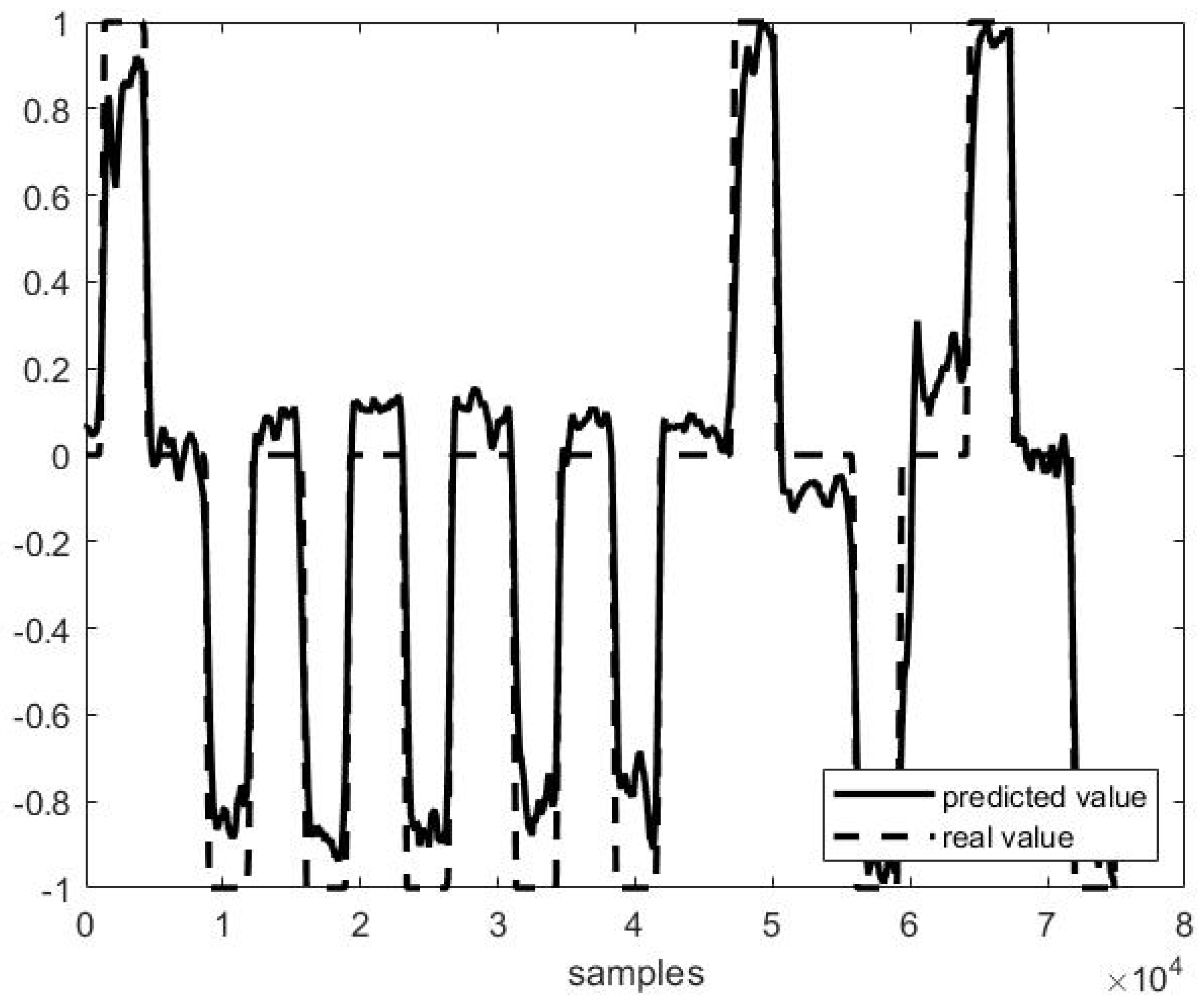

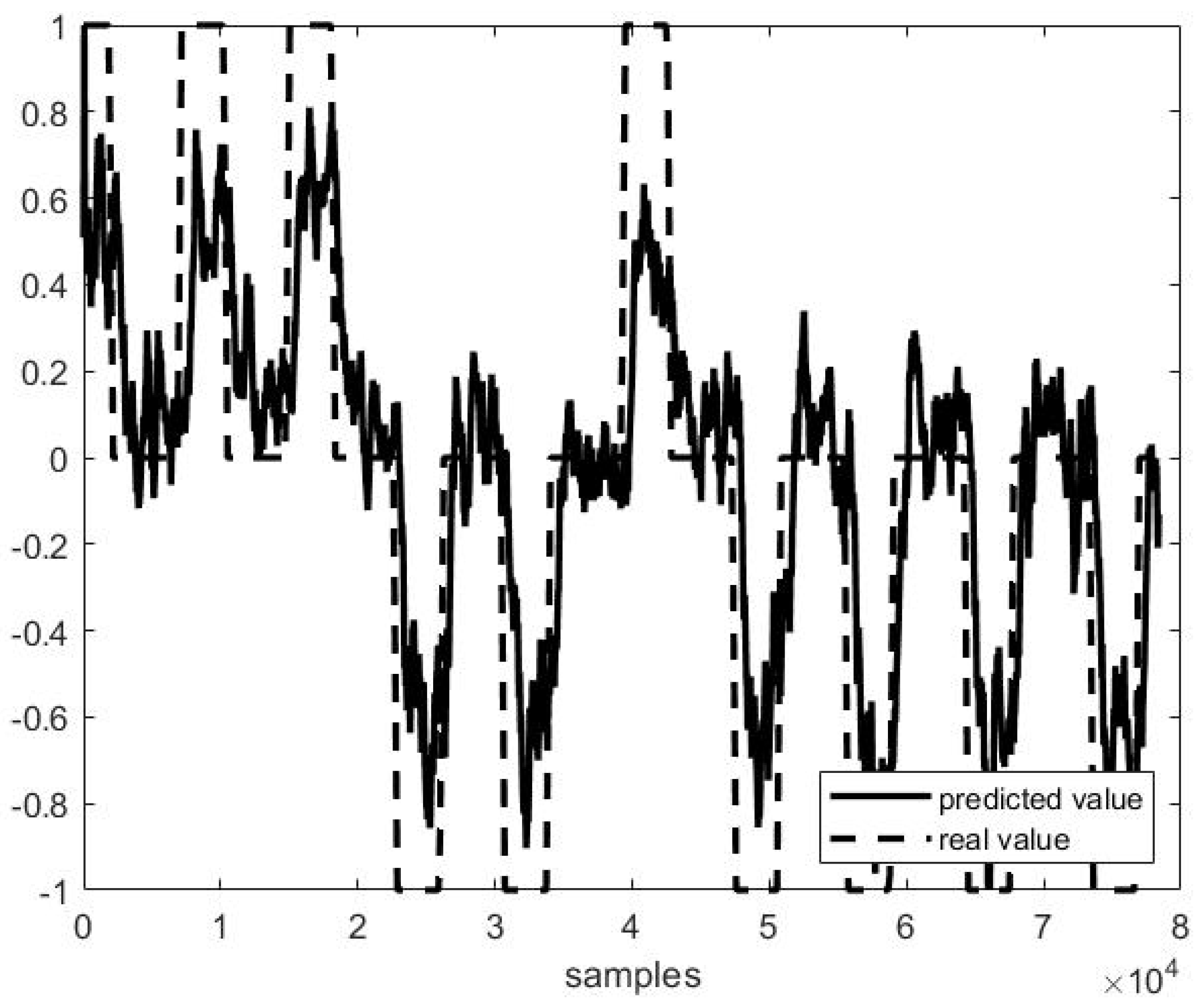

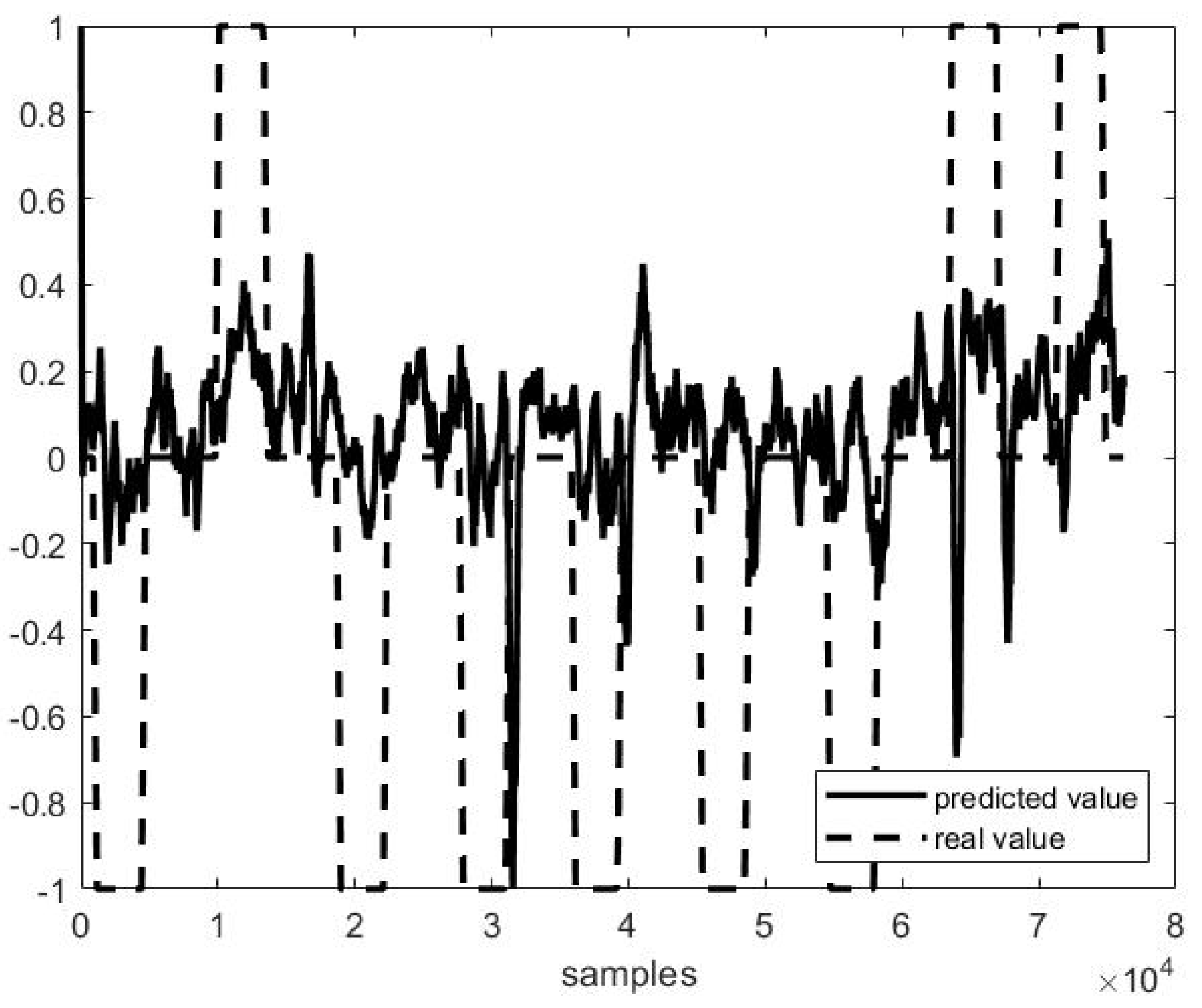

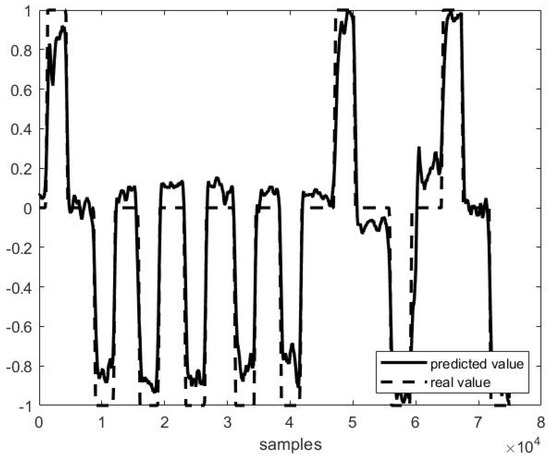

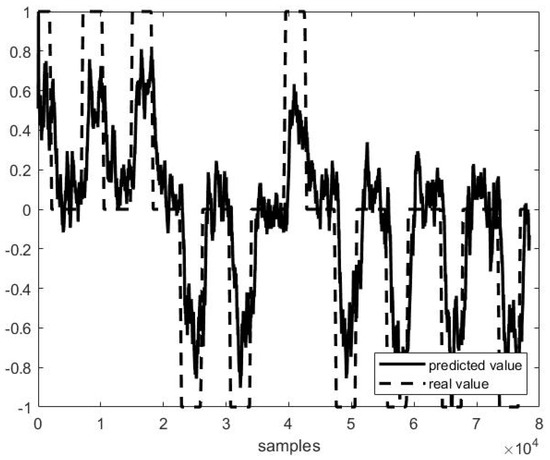

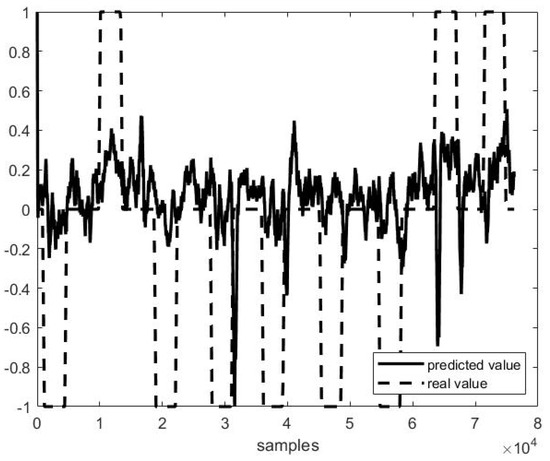

As an example, Figure 5, Figure 6 and Figure 7 show the trend of the predictions against the actual head positions in three different cases.

Figure 5.

Predicted vs. actual values in P4 F1 testing (r = 0.98 and MSE = 0.02).

Figure 6.

Predicted vs. actual values in the second half of file P3 F1 (r = 0.82 and MSE = 0.31).

Figure 7.

Predicted vs. actual values in P1 F10 testing (r = 0.38 and MSE = 0.38).

3.3. Second Analysis. Identification of the Function f on One Participant’s Overall Data and Verification on All Participants’ Overall Data

In this approach, all the files recorded for each participant were used together to train the ANN, and each resulting function was then tested on the data of every participant. Since the values on the diagonal (see Table 8 and Table 9) are obtained by identifying and verifying the function on the same data, they show a strong correlation in this analysis too. On the other hand, as expected, testing one subject’s function f on another subject’s data returns very low correlation coefficient values, close to zero. Just one case contradicts this statement: the function identified on P1 performs well (r = 0.52, MSE = 0.38) on the P3 data. This exception is most likely fortuitous, although it is curious to note that P1 is P2’s mother.
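The train-on-one, test-on-all procedure behind Table 8 and Table 9 can be outlined generically. The sketch below is not the authors' implementation: `fit` and `score` are placeholders for the ANN training and for the r or MSE computation, and the toy data only illustrate the shape of the resulting matrix.

```python
def cross_evaluate(datasets, fit, score):
    """Train on each participant and score on every participant.

    datasets: dict mapping participant ID -> data
    fit(data) -> model; score(model, data) -> float
    Returns a nested dict: results[train_id][test_id].
    """
    results = {}
    for train_id, train_data in datasets.items():
        model = fit(train_data)
        results[train_id] = {
            test_id: score(model, test_data)
            for test_id, test_data in datasets.items()
        }
    return results

# Toy stand-ins: the "model" is the mean value, the score is the MSE.
toy = {"P1": [1.0, 1.2, 0.8], "P2": [-1.0, -0.9, -1.1]}
fit_mean = lambda data: sum(data) / len(data)
score_mse = lambda m, data: sum((v - m) ** 2 for v in data) / len(data)
results = cross_evaluate(toy, fit_mean, score_mse)
```

The diagonal entries `results[p][p]` correspond to identifying and verifying on the same data, while the off-diagonal entries measure how well one participant's function transfers to another.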

Table 8.

r values in the second analysis.

Table 9.

MSE values in the second analysis.

4. Conclusions

The main contribution of this paper is to address an issue that the literature concerning BCI has paid little attention to: the identification of human head movements (yaw rotation) by EEG signals.

Systems of this kind are starting to appear in commercial products at the prototype level. For example, they will be used more and more in the automotive context, in proprietary systems that will, however, mostly be based on ANN applications. Thus, it is hard for the scientific community to be fully aware of the current state-of-the-art prototypes. In our opinion, it is important to share experimental results on these subjects.

Concerning the head yaw rotation studied in this work, from the trials performed on ten different participants, spanning more than two hours of experiments, it seems clear that, under some specific limitations, this goal is achievable.

Specifically, once a proper function has been identified over a short period of time (a couple of minutes for each participant), it can predict head positions with relevant accuracy for the remaining minutes. This accuracy (MSE < 0.35 and r > 0.5, p < 0.01) was obtained in 26 out of 28 tested files. Once the function is identified for a single file, it generally shows good results on files involving the same participant on the same day.

However, the results obtained in the different analyses showed that EEG signals are time variant and that files recorded within a short time interval may be useful to generate a classifier for human head movements following visual stimuli. As a matter of fact, the correlation appears to be time dependent or, more likely, quite susceptible to the sensors’ positioning. Besides, a further result of the study, which may represent a drawback but also an important finding, is that the correlation clearly depends on the specific participant: a classifier trained on one subject cannot predict the head positions of another. This may be a disadvantage in the implementation of the EEG classifier, because the classifier seems to be significantly different for each subject, which precludes an acceptable level of generalization. However, further studies should verify this when the classifier is identified on a group of several different subjects.

Other important remarks concern the reliability of the EEG data acquisition, which seems to be extremely dependent on the adherence of the electrodes to the scalp. In the proposed study cases, the two hairless participants achieved better performance in the tests, suggesting that the quality of the predictions is closely related to the quality of data collection.

Future developments will address several topics. First, since in the trials reported in this paper EEG is affected both by electrical and illumination stimuli, further efforts should be devoted to separating these two aspects. Secondly, further EEG signal analysis should be performed to outline input-output relations for specific frequency bands.

Author Contributions

Conceptualization, R.S., E.Z.; methodology, E.Z., C.B., R.S.; software, E.Z.; validation, E.Z.; writing—original draft preparation, C.B.; writing—review and editing, C.B.; supervision, R.S. However, the efforts in this work by all authors can be considered equal. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all individual participants involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to restrictions present in the informed consent.

Acknowledgments

Research partially supported by Eni S.p.A.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Nourmohammadi, A.; Jafari, M.; Zander, T.O. A survey on unmanned aerial vehicle remote control using brain–computer interface. IEEE Trans. Hum.-Mach. Syst. 2018, 48, 337–348.

2. Zhang, J.; Yin, Z.; Wang, R. Recognition of Mental Workload Levels Under Complex Human–Machine Collaboration by Using Physiological Features and Adaptive Support Vector Machines. IEEE Trans. Hum.-Mach. Syst. 2015, 45, 200–214.

3. Mühl, C.; Allison, B.; Nijholt, A.; Chanel, G. A survey of affective brain computer interfaces: Principles, state-of-the-art, and challenges. Brain-Comput. Interfaces 2014, 1, 66–84.

4. Tan, D.; Nijholt, A. Brain-Computer Interfaces and Human-Computer Interaction. In Brain-Computer Interfaces; Tan, D., Nijholt, A., Eds.; Human-Computer Interaction Series; Springer: London, UK, 2010.

5. Saha, S.; Mamun, K.A.; Ahmed, K.I.U.; Mostafa, R.; Naik, G.R.; Darvishi, S.; Khandoker, A.H.; Baumert, M. Progress in Brain Computer Interface: Challenges and Potentials. Front. Syst. Neurosci. 2021, 15, 4.

6. Di Flumeri, G.; Aricò, P.; Borghini, G.; Sciaraffa, N.; Di Florio, A.; Babiloni, F. The dry revolution: Evaluation of three different EEG dry electrode types in terms of signal spectral features, mental states classification and usability. Sensors 2019, 19, 1365.

7. Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of Skin Disease Using Deep Learning Neural Networks with MobileNet V2 and LSTM. Sensors 2021, 21, 2852.

8. Chi, Y.M.; Jung, T.; Cauwenberghs, G. Dry-Contact and Noncontact Biopotential Electrodes: Methodological Review. IEEE Rev. Biomed. Eng. 2010, 3, 106–119.

9. Ali, F.; El-Sappagh, S.; Islam, S.R.; Kwak, D.; Ali, A.; Imran, M.; Kwak, K.S. A smart healthcare monitoring system for heart disease prediction based on ensemble deep learning and feature fusion. Inf. Fusion 2020, 63, 208–222.

10. Stikic, M.; Johnson, R.R.; Tan, V.; Berka, C. EEG-based classification of positive and negative affective states. Brain-Comput. Interfaces 2014, 1, 99–112.

11. Monteiro, T.G.; Skourup, C.; Zhang, H. Using EEG for Mental Fatigue Assessment: A Comprehensive Look into the Current State of the Art. IEEE Trans. Hum.-Mach. Syst. 2019, 49, 599–610.

12. Wang, S.; Gwizdka, J.; Chaovalitwongse, W.A. Using wireless EEG signals to assess memory workload in the n-back task. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 424–435.

13. Zero, E.; Bersani, C.; Zero, L.; Sacile, R. Towards real-time monitoring of fear in driving sessions. IFAC-PapersOnLine 2019, 52, 299–304.

14. Borhani, S.; Abiri, R.; Jiang, Y.; Berger, T.; Zhao, X. Brain connectivity evaluation during selective attention using EEG-based brain-computer interface. Brain-Comput. Interfaces 2019, 6, 25–35.

15. Randazzo, L.; Iturrate, I.; Perdikis, S.; Millán, J.D.R. mano: A wearable hand exoskeleton for activities of daily living and neurorehabilitation. IEEE Robot. Autom. Lett. 2017, 3, 500–507.

16. Sleight, J.; Pillai, P.; Mohan, S. Classification of executed and imagined motor movement EEG signals. Ann. Arbor Univ. Mich. 2009, 110, 2009.

17. Liao, J.J.; Luo, J.J.; Yang, T.; So, R.Q.; Chua, M.C. Effects of local and global spatial patterns in EEG motor-imagery classification using convolutional neural network. Brain-Comput. Interfaces 2020, 7, 47–56.

18. Athanasiou, A.; Chatzitheodorou, E.; Kalogianni, K.; Lithari, C.; Moulos, I.; Bamidis, P.D. Comparing sensorimotor cortex activation during actual and imaginary movement. In XII Mediterranean Conference on Medical and Biological Engineering and Computing; Springer: Berlin/Heidelberg, Germany, 2010; pp. 111–114.

19. Li, B.J.; Bailenson, J.N.; Pines, A.; Greenleaf, W.J.; Williams, L.M. A public database of immersive VR videos with corresponding ratings of arousal, valence, and correlations between head movements and self report measures. Front. Psychol. 2017, 8, 2116.

20. Ilyas, M.Z.; Saad, P.; Ahmad, M.I.; Ghani, A.R. Classification of EEG signals for brain-computer interface applications: Performance comparison. In Proceedings of the 2016 International Conference on Robotics, Automation and Sciences (ICORAS), Melaka, Malaysia, 5–6 November 2016; pp. 1–4.

21. Narayan, Y. Motor-Imagery EEG Signals Classification using SVM, MLP and LDA Classifiers. Turk. J. Comput. Math. Educ. 2021, 12, 3339–3344.

22. Bressan, G.; Cisotto, G.; Müller-Putz, G.R.; Wriessnegger, S.C. Deep learning-based classification of fine hand movements from low frequency EEG. Future Internet 2021, 13, 103.

23. Antoniou, E.; Bozios, P.; Christou, V.; Tzimourta, K.D.; Kalafatakis, K.; G Tsipouras, M.; Giannakeas, N.; Tzallas, A.T. EEG-Based Eye Movement Recognition Using the Brain–Computer Interface and Random Forests. Sensors 2021, 21, 2339.

24. Zero, E.; Bersani, C.; Sacile, R. EEG Based BCI System for Driver’s Arm Movements Identification. In Proceedings of the Automation, Robotics & Communications for Industry 4.0, Chamonix-Mont-Blanc, France, 3–5 February 2021; Volume 77.

25. Bashashati, A.; Fatourechi, M.; Ward, R.K.; Birch, G.E. A survey of signal processing algorithms in brain–computer interfaces based on electrical brain signals. J. Neural Eng. 2007, 4, R32.

26. Kawala-Sterniuk, A.; Browarska, N.; Al-Bakri, A.; Pelc, M.; Zygarlicki, J.; Sidikova, M.; Martinek, R.; Gorzelanczyk, E.J. Summary of over Fifty Years with Brain-Computer Interfaces—A Review. Brain Sci. 2021, 11, 43.

27. Craig, D.A.; Nguyen, H.T. Adaptive EEG Thought Pattern Classifier for Advanced Wheelchair Control. In Proceedings of the 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 2544–2547.

28. Alazrai, R.; Abuhijleh, M.; Alwanni, H.; Daoud, M.I. A Deep Learning Framework for Decoding Motor Imagery Tasks of the Same Hand Using EEG Signals. IEEE Access 2019, 7, 109612–109627.

29. Tariq, M.; Trivailo, P.M.; Simic, M. Mu-Beta event-related (de) synchronization and EEG classification of left-right foot dorsiflexion kinaesthetic motor imagery for BCI. PLoS ONE 2020, 15, e0230184.

30. Chambayil, B.; Singla, R.; Jha, R. EEG eye blink classification using neural network. In Proceedings of the World Congress on Engineering, London, UK, 30 June–2 July 2010; Volume 1, pp. 2–5.

31. Lotte, F.; Congedo, M.; Lécuyer, A.; Lamarche, F.; Arnaldi, B. A review of classification algorithms for EEG-based brain–computer interfaces. J. Neural Eng. 2007, 4, R1.

32. Müller, V.; Lutzenberger, W.; Preißl, H.; Pulvermüller, F.; Birbaumer, N. Complexity of visual stimuli and non-linear EEG dynamics in humans. Cogn. Brain Res. 2003, 16, 104–110.

33. Rilk, A.J.; Soekadar, S.R.; Sauseng, P.; Plewnia, C. Alpha coherence predicts accuracy during a visuomotor tracking task. Neuropsychologia 2011, 49, 3704–3709.

34. Jiang, X.; Bian, G.B.; Tian, Z. Removal of artifacts from EEG signals: A review. Sensors 2019, 19, 987.

35. Singh, V.; Veer, K.; Sharma, R.; Kumar, S. Comparative study of FIR and IIR filters for the removal of 50 Hz noise from EEG signal. Int. J. Biomed. Eng. Technol. 2016, 22, 250–257.

36. Sakkalis, V. Review of advanced techniques for the estimation of brain connectivity measured with EEG/MEG. Comput. Biol. Med. 2011, 41, 1110–1117.

37. Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 2019, 16, 011001.

38. Sapna, S.; Tamilarasi, A.; Kumar, M.P. Backpropagation learning algorithm based on Levenberg Marquardt Algorithm. Comp. Sci. Inform. Technol. 2012, 2, 393–398.

39. Lv, C.; Xing, Y.; Zhang, J.; Na, X.; Li, Y.; Liu, T.; Cao, D.; Wang, F.Y. Levenberg–Marquardt backpropagation training of multilayer neural networks for state estimation of a safety-critical cyber-physical system. IEEE Trans. Ind. Inform. 2017, 14, 3436–3446.

40. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Academic Press: Cambridge, MA, USA, 2013.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).