- Article

Key Features to Distinguish Between Human- and AI-Generated Texts: Perspectives from University Professors

- Georgios P. Georgiou

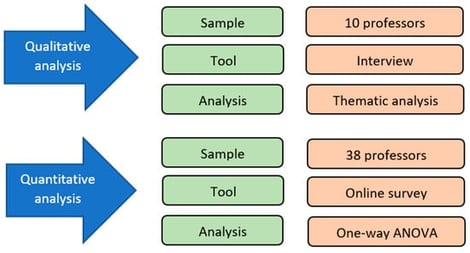

This study provides direct evidence from university professors’ experiences regarding the key features they use to identify artificial intelligence (AI)–generated texts and ranks these features by their perceived importance. The research was conducted in two phases. In Phase 1, online interviews were used to identify the most salient features professors reported using to detect AI-generated texts. In Phase 2, an online survey asked professors to rate the extent to which each identified feature contributes to the successful detection of AI-generated text. The interview data yielded seven features that professors reported using when they suspected a text was AI-generated. Survey ratings varied across features, with hallucinated facts or explanations, nonexistent sources, and the absence of language errors receiving the highest mean ratings in this sample. The use of difficult words received the lowest mean rating. These results have important pedagogical implications, as they can inform the development of more effective detection tools and guide the design of academic integrity policies and instructional strategies to address the challenges posed by AI-generated content.

2 February 2026