Abstract

The release of the large language model-based chatbot ChatGPT 3.5 in November 2022 has drawn considerable attention to artificial intelligence, and not only among the general public. From the perspective of higher education, ChatGPT challenges various learning and assessment formats because it significantly reduces the effectiveness of their learning and assessment functions. In particular, ChatGPT might be applied to formats that require learners to generate text, such as bachelor theses or student research papers. Accordingly, the research question arises to what extent writing a bachelor thesis is still a valid learning and assessment format. In this exploratory study, the first author was therefore asked to write his bachelor’s thesis using ChatGPT. To trace the impact of ChatGPT methodically, an autoethnographic approach was used. First, all considerations on the potential use of ChatGPT were documented in logs, and second, all ChatGPT chats were logged. Both logs and chat histories were analyzed and are presented along with the recommendations for students regarding the use of ChatGPT suggested by a common framework. In conclusion, ChatGPT is beneficial for thesis writing during various activities, such as brainstorming, structuring, and text revision. However, limitations arise, e.g., in referencing. ChatGPT therefore requires continuous validation of the generated outcomes, which in turn fosters learning. Currently, ChatGPT is valued as a beneficial tool in thesis writing. However, writing a conclusive thesis still requires the learner’s meaningful engagement. Accordingly, writing a thesis is still a valid learning and assessment format. With further releases of ChatGPT, an increase in capabilities is to be expected, and the research question needs to be reevaluated from time to time.

Keywords:

AIEd; artificial intelligence; academic writing; large language models; education; ChatGPT

1. Introduction

In November 2022, the large language model (LLM)-based chatbot ChatGPT was released and received a lot of public attention due to its enormous capabilities. ChatGPT is an example of an increasingly powerful artificial intelligence (AI) tool that allows opening up new fields of application [1]. Higher education (HE), with numerous teaching and assessment formats, also provides numerous opportunities for AI tools. For example, ChatGPT may be implemented in new teaching formats that specifically align the learning objectives to the student’s capabilities and support individual learning processes with accompanying personalized feedback [2,3]. Additionally, the use of AI tools may have a disruptive impact on assessment formats in HE, as the learning and assessment functionality of the formats is at least reduced, if not lost [4,5,6,7,8]. For instance, if an essay is written entirely by ChatGPT, the student’s writing skills will not be encouraged to the level intended. Also, when answers to exam questions are generated by students using ChatGPT, there is an assessment of ChatGPT’s “knowledge” rather than of the student’s understanding. In conclusion, there is a demand for adaptation of existing teaching and assessment formats to the capability of current AI tools. In addition, organizational guidelines must ensure the responsible use of AI in HE [9].

As a chatbot, ChatGPT is well suited to teaching and assessment formats that are mostly based on generating text. These formats include theses, such as bachelor theses and student research papers. For theses, the use of ChatGPT cannot be excluded in the same way as for, e.g., proctored or oral exams. Accordingly, it is important to answer the research question regarding the impact ChatGPT might have on the assessment and learning functionality of theses. Thus, the first author was assigned to write his bachelor thesis using ChatGPT wherever possible and meaningful. Based on an autoethnographic approach, the impact of the AI tool on text-heavy formats of HE is to be identified. Accordingly, the next section presents the state of the literature, leading from AI in education in general toward the writing of theses with the help of LLM-based AI tools. Section 3 describes the methods and the general findings of the bachelor thesis. The autoethnographic results are described in Section 4. Finally, in the subsequent two sections, the results are discussed, and the conclusions are drawn.

2. Literature Review

Particularly in education, new “artificial intelligence tools for education (AIEd)” [10] (p. 11) are being pushed forward in a dedicated field of research [11]. The primary tasks of AIEd are to provide support in HE [10,11], as well as to teach “digital literacy” [12] (p. 32). In the following, we will discuss cornerstones in the field.

2.1. AIEd Classification

AIEd may be classified by different approaches. For example, de Witt et al. [4] described the relevance of AIEd based on the importance of learning analytics (LA) in the purposeful optimization of everyday HE life. This involves using data collection and analysis to advance to a higher level of comprehension of teaching and learning. Subsequently, based on the interaction between the user and AI tools, the respective capabilities should be combined to exploit the potential of AIEd.

Based on their systematic literature review, Zawacki-Richter et al. [3] classified AIEd according to the most frequently named fields of application in everyday HE life. They distinguished between applications that relate to the administrative level of HE, direct use within teaching formats, the organization of studies, and evaluation and monitoring. In contrast, Baker and Smith [10] referred to a classification of AIEd based on system-, teaching- and learning-oriented applications. System-oriented applications focus on the provision of efficient infrastructure in study organization and administration [10]. This includes automation of enrollment and admission processes [3], as well as the automated identification and analysis of data regarding teaching and learning [12]. Teaching-oriented applications focus on instructors; accordingly, new teaching formats may be developed that contribute to an individualization of teaching [10,11]. Thus, lectures might evolve into a “consultation meeting” in which instructors interact with learners by providing “advice and assistance” [13] (p. 262). This is flanked by a change in the instructor’s role to that of a learning facilitator, as AIEd partially takes over the teaching [11,13,14]. Learning-oriented applications, on the other hand, focus on the individual needs of learners. Here, the focus is on adapting learning objectives to the competencies of the learners by providing location- and time-independent support with personalized feedback [2,3,11,15]. In addition, AIEd can be used specifically to assist with exam preparation, saving significant time for the learner and maximizing their academic success [5,7,8].

2.2. Acceptance

Besides the accessibility and identification of suitable starting points for AIEd, acceptance by learners is a key factor. Stützer [16] investigated the acceptance of AIEd at four German universities. With a survey, interdisciplinary influences, already-tapped individual experiences in interacting with AIEd, and further support provided by AI were considered. The results showed that most learners considered the interdisciplinary use of AIEd as a possibility for the integration of digital technologies in HE. In this regard, a correlation was found between acceptance and the research field of the survey participants. Members of scientific study programs showed a higher level of acceptance. In particular, dialog and assistance systems were appreciated by the students. With regard to the risks of using AIEd, opinions were diverse, ranging from no concerns to uncertainty due to unpredictable side effects. Also, in line with the literature [11,13,17], students preferred the accompanying contact with instructors. Stützer [16] stated that the acceptance of AI tools increases with purposeful use in addition to performance and usefulness.

2.3. Influencing Factors

Two influencing factors for AIEd, in particular, can be identified. First, the COVID-19 pandemic and ongoing digitalization efforts at universities have encouraged the digitization of teaching [16]. Also, learners are accessing external technologies on their own when digital infrastructure is not provided. In some cases, resources provided by HE institutions were rejected [7,13]. In this context, AIEd are also increasingly used outside of any control and in an environment that is not didactically visible [18]. Second, the progressive development of AI adapted to teaching [1] creates access to a wide range of assistance systems, which are increasingly being actively integrated into HE [19].

2.4. Potentials of LLMs in HE

ChatGPT is based on an LLM. LLMs are a subfield of generative AI and are capable of understanding, processing, and generating natural language. Based on Baker and Smith [10], the starting points of LLM in HE are described in the following. Besides identifying application areas, the capabilities of ChatGPT are also discussed. Overall, LLMs may be used qualitatively in HE [13] and contribute to the elevation of the educational potential of learners [20] (p. 342) by individualizing and personalizing learning [2,3,10].

2.4.1. System-Oriented Potentials

The system-oriented use of LLMs is based on improving organizational conditions via automated data collection [21], for example, by taking over routine student administration tasks [10,22]. In addition, LLMs may act as facilitators of communication between students and administrators [3,22]. On the other hand, as a universal tool, LLMs may take over the functions of, e.g., search engines and language translators and—in general—facilitate everyday work [23].

2.4.2. Teaching-Oriented Potentials

Students may be supported within lectures by intensifying interactions during learning [24]. In addition, more discussions may be encouraged, and more feedback may be integrated as a supportive element in teaching [24]. Beyond the course, LLMs may contribute to new teaching methods [17,24]. Further, LLMs may support instructors’ research activities away from teaching, e.g., as a tool for literature reviews [25], and support the preparation of scientific papers [5,7,8,17].

2.4.3. Learning-Oriented Potentials

In HE, LLMs focus on “Intelligent Tutoring Systems” (ITS) [3], which develop new topics interactively with learners and react flexibly to their needs [7,26]. In this regard, LLMs are to be understood as time- and location-independent tools that enrich learners’ daily HE lives flexibly [27,28]. Continuing, LLMs emphasize an experimental approach to experiences in contrast to conventional methods [24]. By intensifying the interaction between learners and digital tools, learning outcomes are improved [17]. On the other hand, LLMs may be used in assessments [29] and simulations of exams [8]. Here, the focus is on the preparation of text-oriented coursework, such as essays and student research papers [7,8,17,23,24,30,31].

2.5. Integration into Everyday HE Life

The availability of AI tools, such as LLMs [29], in HE teaching [1] creates the necessity to integrate them in HE. Thus, numerous studies already presented the impact of AI tools on teaching and learning using pilot implementations [3,18,32,33,34]. An integration of LLMs in HE teaching might comprise the following steps.

2.5.1. Perception

A prior requirement for the use of LLMs in HE is an awareness of LLMs’ potential, as well as the willingness to change [16]. Important are opportunities that allow for independent application of LLMs in individual learning environments and subsequent reflection [16]. According to Witt and Rampelt [4] (p. 10), the avoidance of “technology determinism in teaching and learning” is the foundation for exploiting potentials in line with HE goals. Accordingly, an identification of available resources [14,35] and possible applications of LLMs is required for implementation [4,9]. Guidelines for handling LLM-generated content are required, e.g., how LLM-generated content is declared and what monitoring methods are available on the part of teaching [9].

2.5.2. Accumulation

The second step is the accumulation of LLM usage in teaching. For the most part, digital technologies, including LLMs, are currently not actively integrated into teaching but only insufficiently interspersed [14]. Pilot deployments of LLM are needed to evaluate and measure performance in actual teaching as well [36]. Specifically, no coordination and adjustments should be made [36] to prevent a “devaluation of classroom teaching” [13] (p. 249), which insufficiently takes advantage of the potential.

2.5.3. Integration

The third step, integration, builds on the alignment of teaching formats with LLMs [12]. This may lead to new formats that are not only supplemented with LLMs but actively use their potential for teaching [12]. As a result, teaching formats also serve to deepen what has been learned [36], in which instructors moderate the teaching content and assist learners within their individual learning phase in applying LLMs [2]. A key aspect is reaching acceptance toward LLMs [16,21]. Accordingly, a purposeful integration of LLMs that unites the views of instructors and learners might be beneficial [37].

2.6. Challenges

The meaningful use of LLMs in HE faces not only technical difficulties but also didactic and organizational challenges [38]. Consequently, problems such as discrimination by LLMs in the sense of manipulation of data sets [39] highlight the need for legal frameworks and data protection regulations in handling LLMs [14,40]. Further, ensuring inclusive use of LLMs in education is gaining importance [33]. The inclusive use of LLMs requires the alignment of monetary, ethical, legal, social, and didactic factors [41]. In addition, LLM-specific literacy skills must be ensured [42], and the responsibility of users [43] must be emphasized. Building on this, new assessment regulations that consider the use of LLMs in teaching formats [9,10] should be integrated.

2.7. Curricular Implications

For the integration of LLM into HE, embedding LLM-specific literacy meta-skills as learning objectives into curricula is mandatory [4,12]. Accordingly, a curriculum analysis may identify modules suitable for the integration of LLMs [12]. Building on the integration in existing modules, new modules may then be provided to teach LLM-specific meta-skills [14].

2.8. Chatbots

With the advent of ChatGPT at the latest, chatbots have become a technology to be considered in higher education. For example, Wu et al. [44] concluded from a meta-analysis that chatbots have a large significant effect on learning outcomes, especially in HE and short-running learning activities. Accordingly, Guo et al. [45] used the example of chatbot-assisted in-class debates to show that chatbots generate new learning activities. Hwang and Chang [46], however, found that chatbots have been predominantly used in guided learning activities and that more diverse instructional design integration is needed. In a systematic scoping review, Yan et al. [47] identified nine categories in which LLMs can help automate learning activities: profiling/labeling, detection, grading, teaching support, prediction, knowledge representation, feedback, content generation, and recommendation. From the perspective of educators, there are no objections related to technology acceptance [48]. Nevertheless, there are significant unresolved ethical issues, such as a lack of reproducibility and transparency and insufficient privacy measures [47].

Collectively, the literature review shows that AIEd has emerged as a serious cornerstone of HE, although it is still considered a field to be developed [49], and that LLMs, in particular, may have a significant impact on teaching. Accordingly, the subsequent investigation of the potential of the LLM-based ChatGPT is an important step toward aligning the HE-relevant regulations.

3. Materials and Methods

The exploratory study is based on a bachelor thesis, written by the first author from February to June 2023 and supervised by the two other authors. A bachelor thesis is a scientific assignment of several months that students of bachelor’s degree programs in European education systems, among others, do at the end of their studies in order to demonstrate their ability to carry out scientific work under the guidance of HE lecturers. The objective of the bachelor thesis was to identify the potentials and challenges of ChatGPT for HE based on the study course of environmental engineering as a reference. Thus, the use of ChatGPT in university teaching was considered in general, and whenever a specific subject-related reference was found to be helpful for the methodology used, the environmental engineering study course was taken as an example. For instance, this was conducted for the practice study presented in Section 3.2.1 and for the assessment model described in Section 3.2.2. In the second meta-level assignment part, the use of ChatGPT for the development of this bachelor thesis was documented and analyzed. Neither ChatGPT nor other AI-assisted tools were used to write this article.

3.1. Bachelor Thesis

Methodically, the bachelor thesis was divided into four sections.

- Literature Review. The theoretical foundation was built on literature research, which allowed the outline of the importance of LLM in teaching (see Section 2).

- Practice Study. A practical study, i.e., the application of ChatGPT (3.5, GPT-3) to a final exam, was used to assess the performance of ChatGPT.

- Evaluation Model. The third step was to develop a simplified evaluation model for the suitability of an LLM to support the learning performance of a teaching–learning activity. The goal of such an evaluation model was to identify teaching–learning activities that particularly benefit from the availability of LLMs.

- Autoethnography. Autoethnography was to be used to document all decisions to use ChatGPT, as well as all ChatGPT chat history created during the development of the bachelor thesis. In general, autoethnography is defined as a research method that aims at describing and analyzing personal experiences to understand mostly cultural phenomena, often in a broader sense [50]. Applications include, amongst others, the experience of studying abroad during an epidemic [51] or learning practices in multiplayer online games [52]. Accordingly, autoethnography is regarded in this study as a valid method for capturing the potential uses of ChatGPT. By documenting decisions on the use of ChatGPT, the results and experiences during its use, and the evaluations of the results, estimations of the implications of using ChatGPT are enabled.

3.2. Results

3.2.1. Practice Study

A final exam of the course Urban Engineering Water consisting of open questions was answered via ChatGPT. The answers to the one-hour exam with 18 questions were graded by the regular instructor of the course. In the first iteration, 51% of the points were obtained based on a rigorous assessment, leading to a passed exam. This was followed by a second iteration in which ChatGPT was instructed to pay particular attention to those three books on which the course was based. The responses scored 61% of the points, using the same assessment scheme as before. The assessing instructor, who is to be described as quite demanding regarding digital tools in HE, was appreciatively surprised by ChatGPT’s performance. Thus, it could be shown that ChatGPT might be used to pass a final exam. Admittedly, this study is slightly theoretical, as the large-scale use of ChatGPT required in a proctored exam is challenging to achieve.

3.2.2. Assessment Model

The purpose of the multi-criteria assessment model—developed on the theoretical foundations of multicriteria decision analysis (MCDA) [53,54,55]—was to provide a measurement of the suitability of ChatGPT to support teaching formats. The objective was to provide a simple multi-criteria assessment model that could roughly assess the usefulness of ChatGPT for the learning effectiveness of a teaching format. Based on a literature review, the model was discussed and specified by the authors in an interactive session. It is vital to stress that this model is only a measure of the extent to which the learning functionality of a teaching format is enhanced by ChatGPT, but not—as described above—what effect ChatGPT has on the assessment functionality of a teaching format.

We chose interactivity (or What is the regular level of interactivity of the teaching format?) as a criterion because interactivity is important for high learning effectiveness [56] and is promoted by discussion and reflection of the learners [57]. According to Van Laer and Elen [58], interactivity may be seen as a foundation for so-called blended learning environments, which drive the design of effective teaching formats [59]. ChatGPT is considered to bring interactivity to teaching formats from the students’ perspective and thus to be conducive to learning outcomes.

Feedback (or What is the regular level of feedback given to the student within the teaching format?) was chosen as a criterion because formative feedback, in addition to individualizing teaching formats [56], also supports student learning within blended learning environments [58], which, according to Trigwell and Prosser [60], impacts learning outcomes. Again, we see ChatGPT as a tool that may support the learning process via feedback.

We applied values for these two criteria to prevalent teaching formats and determined the suitability of ChatGPT to support learning in these teaching formats (Table 1). Methodologically, the author team rated each of the two criteria in an interactive session on a three-level scale: high (3), medium (2), and low (1). For the suitability of ChatGPT, the mean of both criteria was calculated, rounded, and inverted. For example, for a thesis, interactivity is to be considered low, since it is individual work. Feedback is likewise to be estimated as low, since here, too, predominantly individual work takes place and actual feedback always has to be actively requested by the learner, for example, in consultations with advisers. On average, this results in a value of 1, which inverts to a value of 3 (high) for the suitability of ChatGPT to support learning while developing a thesis, because ChatGPT might remedy this shortcoming. We argue that this rating reflects reality well. In our assessment, ChatGPT can be seen as very helpful for learning during a thesis due to features such as structuring and summarizing text. In this assessment, we assume that the results of ChatGPT are critically reflected on by the learners and thus foster learning.
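The scoring rule described above can be illustrated with a minimal Python sketch. The function and variable names are hypothetical and not taken from the thesis. The text does not state how ties such as a mean of 1.5 are rounded, so rounding half up is an assumption made here; the inversion is written as 4 minus the rounded mean, which maps 1 to 3, 2 to 2, and 3 to 1 on the three-level scale.

```python
import math
from statistics import mean

# Rating scale from the text: low = 1, medium = 2, high = 3.
SCALE = {"low": 1, "medium": 2, "high": 3}
LABELS = {value: label for label, value in SCALE.items()}


def round_half_up(value: float) -> int:
    """Round to the nearest integer, with .5 rounded up.

    Assumption: the source does not specify the tie-breaking rule.
    """
    return math.floor(value + 0.5)


def chatgpt_suitability(interactivity: str, feedback: str) -> str:
    """Mean of both criteria, rounded, then inverted on the 1-3 scale."""
    average = mean([SCALE[interactivity], SCALE[feedback]])
    rounded = round_half_up(average)
    inverted = 4 - rounded  # 1 -> 3, 2 -> 2, 3 -> 1
    return LABELS[inverted]


# Thesis example from the text: both criteria are low, so suitability is high.
print(chatgpt_suitability("low", "low"))  # prints "high"
```

With the assumed tie rule, a format rated low on one criterion and medium on the other has a mean of 1.5, which rounds to 2 and therefore yields a medium suitability. A different rounding convention would change only such tie cases.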

Table 1.

Suitability of prevalent teaching formats to foster learning based on ChatGPT support.

3.3. Autoethnography

All considerations regarding the use of ChatGPT were documented in autoethnographic logs [50,61,62,63], regardless of whether ChatGPT was finally used or not. Similarly, all chat histories with ChatGPT were retained. In total, five log entries (ca. 5000 words total) as well as 15 chat histories (ca. 1200 words total) were available for the following analysis. In addition, the log entries were supplemented by a posteriori reflections of the first author. According to a rough estimate by the first author, the final bachelor thesis is based

- textually, to 1%, on texts generated by ChatGPT (measure: ratio of word-for-word adoption of ChatGPT text compared to the complete text),

- structurally, to 15%, on items suggested by ChatGPT (measure: structuring elements, such as headings and lists),

- ideationally, to 10%, on ideas suggested by ChatGPT (measure: ideas that are explicated in the thesis).

The autoethnographic data were aligned with the nine recommendations for learners—as the autoethnographic data has been collected by a learner—by Gimpel et al. [9]. Gimpel et al. [9] is a guideline for instructors and learners for handling ChatGPT and other LLMs, which was published in March 2023 as a result of the collaboration of a panel of experts from HE. These nine recommendations were chosen by us because they foster constructive use of ChatGPT and therefore, in our view, might be a benchmark for ChatGPT use.

4. Autoethnographic Experiences

In the following, the autoethnographic data are presented according to the recommendations of Gimpel et al. [9]. For each recommendation, a description of the recommendation itself is given first, followed by selected autoethnographic experiences made during the writing of the bachelor thesis, and finally, a summary that strives to abstract the ChatGPT experience into generally applicable findings. Where the first-person singular is used, the text is written from the perspective of the first author, who is the author of the autoethnographic data.

4.1. Respect the Law and Examination Regulations

Recommendation. This recommendation indicates that learners have to observe applicable laws and regulations and, where appropriate, label AI-generated content, including the information provided.

My experiences. Essential for the use of ChatGPT in the development of the bachelor thesis was the orientation toward applicable guidelines for the handling of artificially generated content. Here, the focus was on a self-responsible use of ChatGPT under consideration of prevailing regulation frameworks, such as the examination regulations of the HE institution. These, as well as further modalities concerning the development of theses, had to be found out and implemented during the development of the thesis.

In addition, given the technical capabilities and limitations of ChatGPT, such as in generating outcomes, plausibility had to be checked. Since I had already successfully taken all courses of my study program at the time of the release of ChatGPT in November 2022, this was my first application of ChatGPT. My hope was that ChatGPT would help me to work more effectively in a more structured way and that ChatGPT could handle some chore work, e.g., during the literature research and the writing. A review of the study regulations revealed that they did not contain any rules on artificially generated content. Also, no information in this regard was given in the preparatory course Scientific Work. Accordingly, I assumed that AI-generated outcomes could be used when marked as third-party content. In addition, I was explicitly advised by the other supervising authors to use ChatGPT for writing the bachelor thesis and to document the results of the interactions between me and the AI. This request was also documented in the assignment of the bachelor thesis.

Summary. There was an understanding of relevant regulations, but it was apparent that the HE institutions’ regulatory framework did not fully account for the use of AI, including ChatGPT. By documenting the use of ChatGPT, its involvement was made transparent. However, it is important to note that this use of ChatGPT for research needs to be considered an exception, and therefore these findings may not be applicable to all theses. Moving forward, it may be beneficial to make documenting the use of ChatGPT, such as by including all chat histories, standard practice.

4.2. Reflect on Your Learning Goals

Recommendation. This recommendation indicates that interactions with ChatGPT need to be carried out in a structured way. First, the learning goals must be clear. The learning goals determine the information that ChatGPT should provide. The information requested results in chat commands (prompts) to ChatGPT. Finally, the outcomes generated have to be checked for plausibility. In summary, the achievement of domain-specific learning goals requires the mastery of a variety of ChatGPT-related meta-skills, such as digital literacy and critical thinking.

My experiences. When developing the bachelor thesis, the learning goals are rather to be considered as information goals. Primarily, it is important to find information that can be processed in the bachelor thesis; secondarily, it is also important to learn in terms of internalizing new knowledge. Accordingly, the main goal was the completion of the bachelor’s thesis. Nevertheless, knowledge goals were also defined, such as a literature review, the development of an assessment model, and the conduct of a study. In addition, autoethnographic experiences about incorporating ChatGPT in developing the bachelor thesis were to be documented. Here, details such as the structure and handling of interactions with ChatGPT were developed during the writing process.

I swiftly developed the following process structure:

- Awareness of learning objectives: I had to be aware of my learning goals in order to supply ChatGPT with the necessary information. This includes the context (writing a bachelor thesis), the prompts used (e.g., generating a chapter outline), and the expected outcome of the interaction (e.g., an outline).

- Development of prompts: Based on the learning goals and the expected outcomes, such as an outline or the generation of ideas via brainstorming, prompts had to be developed first. These were to guide ChatGPT to specifically generate the expected outcomes.

- Content validation: Afterward, the outcomes had to be checked to see if they provided the expected information. In addition, a technical review (validation) had to be performed. On the one hand, the validation was based on my knowledge (self-expertise) and an assessment of the applicability of the outcome based on external, easily accessible resources, such as Wikipedia. On the other hand, validation often also required in-depth research, such as searching for other sources (e.g., articles or books).

- Reflection on the learning goals: Reflecting on the outcomes again raised my awareness of the learning goals. This revealed whether valid outcomes were generated by ChatGPT. These reflected newly learned knowledge, whereas unsuitable content was discarded. If necessary, consideration was given to adjusting the context, the prompts used, or even the interaction goal. Content validation provided learning in both positive and negative cases.

For example, I used ChatGPT to request 10 references about the use of AI in HE (see Table 2). Here, I pursued the goal of matching the previous results of my research and generating suggestions and keywords for further literature research. By instructing ChatGPT to refer to literature reviews, technical reports, and meta-studies, I specified my prompts for obtaining scientifically relevant references.

Table 2.

Literature research.

ChatGPT reliably returned 10 references. I started the validation process with author verification via Google Scholar author search to confirm the existence of the named authors and their subject specialization. Subsequently, I checked the named journals and conferences for their existence, discipline, and year of publication. Article titles were searched via Google Scholar and Scopus, both in full and in sections. Finally, I validated discoverable references based on abstracts and conclusions. This revealed that 6 out of 10 references were presented as literature reviews, technical reports, or meta-studies. However, it turned out that about half of the authors existed but were not active in the relevant research areas (AI, IT, computer science, education). In addition, only one of the 10 references was immediately available under the stated title and authors, and one more was available under a slightly different title. Upon review, 9 of 10 references proved to be implausible. As a result, I weighed the benefits of ChatGPT for the further literature search and decided not to use ChatGPT for this purpose anymore due to mostly invalid outcomes.
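The validation steps described above can be thought of as a checklist applied to each suggested reference. The following Python sketch is only an illustration of that idea: the class, its field names, and the rule that a reference counts as plausible only if every check passes are assumptions, since the text does not define a formal plausibility criterion. The sample records are hypothetical and do not reproduce the data of the study.

```python
from dataclasses import dataclass


@dataclass
class ReferenceCheck:
    """Outcome of the manual checks for one ChatGPT-suggested reference."""

    authors_exist: bool      # author search, e.g., via Google Scholar
    authors_in_field: bool   # AI, IT, computer science, or education
    venue_exists: bool       # named journal or conference can be found
    title_found: bool        # title found in full or in sections

    def is_plausible(self) -> bool:
        # Assumed rule: a reference is plausible only if all checks pass.
        return all(
            (
                self.authors_exist,
                self.authors_in_field,
                self.venue_exists,
                self.title_found,
            )
        )


def count_plausible(checks: list[ReferenceCheck]) -> int:
    """Count the references that pass every check."""
    return sum(check.is_plausible() for check in checks)


# Hypothetical records for illustration only; not the data of the study.
sample = [
    ReferenceCheck(True, True, True, True),
    ReferenceCheck(True, False, True, False),
    ReferenceCheck(False, False, True, False),
]
print(f"{count_plausible(sample)} of {len(sample)} references plausible")
```

Recording each check separately makes it visible why a reference was rejected, for example because the authors exist but do not work in a relevant field.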

Summary. The use of ChatGPT requires a clear structure that emanates from the learning objectives. Further, the development of suitable prompts and the validation of the outcomes are of particular importance. Using ChatGPT in the literature review made evident that ChatGPT did not achieve the learning objective (provision of relevant references) despite the iterative development of prompts. However, ChatGPT was beneficial in the generation of further keywords for the research process.

4.3. Use ChatGPT as a Writing Partner

Recommendation. This recommendation indicates that ChatGPT may serve as a writing partner for various writing-related activities, such as brainstorming, text structuring, and writing. In doing so, the acquisition of digital competencies is necessary. These competencies include the purposeful development of prompts as well as the validation of the results. Further, users need to be aware of the limitations of ChatGPT.

My Experiences. When working with ChatGPT as a writing partner, brainstorming interactions were mainly used. From these, about 10% of the bachelor thesis resulted from suggestions for text structuring and the evaluation of text sections. Here, I interacted with ChatGPT without any concrete expectations about the usefulness of the generated structure. This reservation was grounded in my experience with ChatGPT during the literature research (see Section 4.2), where I became aware that the outcomes generally need to be questioned critically. Nevertheless, I hoped to be able to adopt the generated chapter structure for my thesis, at least in parts. As a result, I prompted ChatGPT to structure a chapter about the definition and functionality of ChatGPT. To obtain an answer as clear and simple as possible, I limited ChatGPT to the output of bullet points (see Table 3). I also did this to adapt ChatGPT to my previous brainstorming outcomes. The focus was to ensure that I had freedom in the further development of the chapter (selection of topics, prioritization, form of presentation) and did not simply have to adopt an outline that already seemed logical in itself without critical validation and my own suggestions.

Table 3.

Chapter structuring.

ChatGPT generated the expected structure and suggested including a thematic introduction as well as the basics of the GPT architecture (see Table 3). I had not considered a more detailed description of the GPT architecture until then. Nevertheless, I found the idea suitable and worked out an appropriate structure. Here, I specifically explained the concept behind the GPT architecture. Furthermore, points 3, 4, and 5 coincided with my outline of the chapter about the definition and functionality of ChatGPT. Accordingly, I began to include these as subchapters in my thesis as well.

In developing text and chapter structures, the focus was on reviewing already developed texts. For example, I asked ChatGPT whether the given text section on ChatGPT’s capabilities and limitations addressed all relevant aspects. In relation to this, I had already read a large number of studies, some of which relied on very different data. Therefore, I decided to take an approach from other studies on ChatGPT and survey ChatGPT about itself to obtain accurate information about ChatGPT-specific issues. In this way, I hoped to obtain confirmation of my texts via ChatGPT. I wanted to obtain an overview and not a lengthy output from ChatGPT. Accordingly, I limited ChatGPT to the generation of five key points.

According to the evaluation of ChatGPT (see Table 4), and contrary to my assumption, my argumentation considered all key points. In addition, five aspects highlighted the relevance of my reasoning. Because ChatGPT stated some of its own capabilities and limitations, my confidence was raised that ChatGPT seemed to be familiar with the subject matter and that the assessment generated was conclusive. At this point, I realized that regardless of whether it comes from a human or a machine, a conclusive and, above all, positive assessment of one’s own performance generates confidence for the further work process. As a result, I perceived that certain interactions with ChatGPT triggered an emotional process. Subsequently, I trusted the expertise of ChatGPT and finished the work on the chapter with a clear conscience.

Table 4.

Evaluation of the argumentation structure.

In addition, ChatGPT helped with the formal review of already written sections. The goals of the review included the writing style, the technical terms, and the elimination of filler words. Accordingly, I asked ChatGPT to evaluate a section of the conclusion of my bachelor thesis regarding an objective writing style and to remove filler words. I refrained from a full evaluation because it bothered me to submit several pages to ChatGPT compared to a smaller section. In addition, I could not refer to appropriate guidelines or experience reports that guided such a processing of data. In this regard, I hoped that ChatGPT would issue an improved version of my text section that was more pointed and used technical terms. Accordingly, I asked it to replace colloquial expressions with technical terms and phrases in a more academic style. The generated text served as a template for me to make selective changes at my own discretion. This was carried out because I was convinced that my expertise would allow me to make higher-quality changes manually than if I had simply applied the ChatGPT outcomes. In addition, I assumed that I would be able to make statements about the quality of the entire conclusion on the basis of the template.

From ChatGPT’s response, I inferred that I could still make changes regarding my writing style to improve the quality of my conclusion. I was also curious about the changes ChatGPT had made and whether I might learn any new technical terms. After comparing both texts, I integrated a few new technical terms into my work at my discretion, i.e., I could adapt the basic vocabulary of my work based on ChatGPT. In doing so, it was important to me that I personally identified with the text and did not simply adopt generated content. As a result, I looked at the generated section of ChatGPT (see Table 5) very critically. While I trusted ChatGPT’s expertise regarding writing style, I was not always sure of the changes made by ChatGPT. Unlike ChatGPT, I had finally developed a context and made thematic prioritizations based on experience from many hours of work and over 120 pages written. On the other hand, ChatGPT generated a new version based on a small section of text, which in some places reflected the meaning of the content but changed prioritizations and context.

Table 5.

Writing style evaluation.

Summary. In conclusion, ChatGPT could be used as a versatile writing partner. Further, the relevance of building digital literacy skills in prompt development became apparent. Particularly via specifications regarding the length, structure, and content of sections to be generated, individual adjustments could be made. These facilitated the work process with ChatGPT. In addition, it became obvious that awareness of ChatGPT’s limitations was conducive to a critical reflection of the results: The limitations sharpened the view of which responses of ChatGPT in particular need to be reflected upon, such as in Table 5. It was important to interact with ChatGPT without the expectation of perfect outcomes to avoid demotivating frustration. As a result, confidence in the generated content of ChatGPT could be built up, which, on one hand, accelerated the work process and, on the other hand, gave more security.

4.4. Use ChatGPT as a Learning Partner

Recommendation. This recommendation indicates that ChatGPT may be used systematically as a learning partner. This possible use of ChatGPT has already been addressed in the evaluation model of the bachelor thesis. On one hand, ChatGPT acts as a tutor, which moderates new learning content for the user. Therefore, adaptations of the learning content may be made according to the user’s cognitive abilities, competencies, and needs. On the other hand, the user can access feedback via ChatGPT independent of time and place in order to actively support the learning process even outside of formal learning activities.

My Experiences. During my work, I used ChatGPT for brainstorming. I hoped to identify relevant aspects of my work with less mental effort. Again, I had no expectations regarding the usefulness of ChatGPT’s outcomes for my work. Also, comparisons with ideas I had already developed were mostly missing. However, I needed to validate the outcomes generated by ChatGPT, an activity that led to a learning process. Thus, I challenged ChatGPT to demonstrate a possible integration of AI in HE exclusively based on three to five aspects (see Table 6). I deliberately limited ChatGPT in this way. After all, I wanted to stimulate my thoughts with new suggestions, but without replacing them with prefabricated content or influencing them too much. Therefore, it was important for me to decide about the further procedure based on my own thinking. Otherwise, I mostly felt strongly limited by already existing outcomes of ChatGPT, so that no full-fledged brainstorming could take place on my part.

Table 6.

Brainstorming.

As a result, I realized that ChatGPT had taken over part of the visualization of my thought process. This made brainstorming easier without existing outcomes influencing my thoughts too much or taking the decision about further work away from me. On the other hand, I was surprised that ChatGPT had mapped quite a complex process using such simple cues. On this occasion, I was impressed by the power of ChatGPT. Based on the five generated aspects (see Table 6), I worked out an integration process of AI into HE, in which I combined two of the mentioned aspects into one each. In conclusion, I was able to develop my own representation of the integration, which was based on three aspects (1. Perception, 2. Enrichment, and 3. Integration).

Further, the interactions with ChatGPT as a learning partner also served the preparation of the colloquium of the bachelor thesis. Thus, I presented a summary of my bachelor thesis to ChatGPT to have it evaluated once again regarding its thematic relevance for a non-specialist audience, since my two supervisors were interested in the work not as AI experts but as instructors who are interested in integrating ChatGPT into their courses.

Thereby, I enjoyed the easy accessibility of ChatGPT and interacted with it independent of location during a train ride. In doing so, I obtained an assessment from ChatGPT in a short time, which allowed me to rank the quality of my summary. The request was driven mainly by my interest in what such an assessment by ChatGPT would look like. In addition, I hoped to gain confidence about my work from ChatGPT’s feedback.

ChatGPT pointed out the strengths of my summary. I was surprised that ChatGPT had generated unprompted stars that visualized the rating (see Table 7). The stars delighted me, similar to good grades in high school. In addition, ChatGPT made it possible to prepare my content independent of location and time.

Table 7.

Evaluation of thematic relevance.

Summary. ChatGPT proved to be usable as a learning partner through the low-threshold provision of feedback independent of time and place. Also, the contents of the bachelor thesis could be summarized and moderated. The ever-necessary validation of the ChatGPT outcomes was another basis for learning. Furthermore, ChatGPT supported brainstorming by generating structured outcomes, e.g., by keywords. Additionally, ChatGPT’s evaluation of texts contributed to learning experiences, as did surprising parts of the outcomes.

4.5. Iterate and Converse with ChatGPT

Recommendation. This recommendation indicates that by iterating with ChatGPT, i.e., repeatedly adjusting the prompt after evaluating the results and re-entering the adjusted prompt, an optimization of outcomes may take place. Here, unspecific answers from ChatGPT can be made more specific by improved prompts. In addition, via reinforcement learning and learning analytics methods, ChatGPT may adapt future content generation to the user’s “preferences”.

My Experiences. By iterating with ChatGPT, outcomes may be adapted to personal preferences and requirements—e.g., the scientific writing style of a bachelor thesis. On one hand, ChatGPT’s outcomes may be adapted by concretizing the prompt. Thus, besides the writing styles available to ChatGPT, grammatical preferences or forms of presentation preferred by the user, such as the answering of questions in bullet points, can be specified. The idea for this came from a YouTube video from 2023 [64], which I had picked out in preparation for working with ChatGPT. This video suggested, amongst others, enriching the prompts for ChatGPT with as much information as possible, such as context, length, and the writing style to be used. As a result, I had the idea to ask ChatGPT about the writing styles available to provide my prompts with more precise information (see Table 8).

Table 8.

Inquiring about writing styles.

ChatGPT generated a total of 12 writing styles, which I could use for a more precise design of prompts for my scientific work. In addition, a hint was generated, which pointed out the contextual use of different writing styles. Here, I was surprised by the variety of styles available to ChatGPT.

As a result, I checked the effect of the writing styles in generating a text section about the perception of AI in German HE. In preparation, I checked the meaning of writing styles 3 (argumentative) and 7 (explorative). I assumed that the focus of the outcome would change depending on the writing style. Specifying an argumentative style focused on presenting different views and rationales of the student and faculty respondents about the use of AI in HE. In contrast, an explorative style focused on presenting an overview of the topic, which was not yet complete due to its topicality. The generated section resembled an introduction, which outlined the topic of AI in HE and selectively outlined opinions for and against its use. As a result, the specification of different writing styles influenced the context of the text section to be generated.

Here, I noticed that about half of the writing style designations do not constitute a writing style as such but rather a research method. For example, writing styles 7, 8, and 9 are more likely to represent a research method. Nevertheless, this observation might indicate that ChatGPT is not only aware of various research methods but is also capable of adapting the outcomes to the characteristics of research methods.

Describing another example, I noticed that in the course of longer interactions (10–20 questions), ChatGPT automatically adjusted the outcomes to my preferences. Thus, ChatGPT started to respond using bullet points. Further, content from past interactions was involved. For example, ChatGPT used an interaction about the potential uses of AI in HE to generate a list of curricular challenges of the AI integration process (see Table 9).

Table 9.

Conversation with ChatGPT.

ChatGPT listed 12 aspects with a short explanation in both interactions. Here, ChatGPT created a list unprompted for question 1, but only for (Chat1_Prompt16_Thema2) was this explicitly requested. I had the impression of having seen the headings before in a similar form. Accordingly, I checked my chat history for potential influences, such as previous prompts, similar prompts, or duplication of prompts. I noticed headings that were identical to those of my last prompt. I concluded that the answers to prompts on a comparable topic within the subject area of AI in HE are influenced by previous questions and their outcomes. Thus, ChatGPT generated matching curricular challenges based on the named uses of AI in HE. Here, the headings of the enumerations were congruent; only the explanations belonged to a different prompt. At this point, I recalled my literature review. Some studies reported that increasing iteration and the use of contextual questions on ChatGPT trigger the reinforcement learning method. Here, ChatGPT can identify the user’s requirements during the chat and take them into account when generating future outcomes, drawing on previous instructions [7,24,30].

These session-related adjustments captured my attention. Thus, Table 10 presents an exemplary chat history of 20 successive interactions. For each interaction, the prompts used are categorized according to their interaction goal (here: brainstorming, structuring, overview) and their topic. A remark describes the result of each interaction. In addition to the length and conciseness of the outcomes, the fulfillment of the interaction goal is also described. Here, in the case of Success, the interaction goal was achieved, and the result was satisfactory. In the case of a Cancel (Table 10, Prompt # 10–12), the result was not satisfactory, so the interaction and further iterations were discontinued. In this case, the overall goal was to develop a thematic overview, which was generated in sufficient form regardless of whether the output was too long or too short, making further interactions obsolete. Overall, Table 10 provides an overview of the development of prompts. Such developments can be very time-consuming and lengthy, and the outcomes are not always successful.

Table 10.

Prompt iteration.
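
A chat log of the kind summarized in Table 10 could also be kept in a structured form that makes later analysis easier. The following is a minimal sketch in Python, assuming hypothetical field names (`chat`, `prompt`, `goal`, `topic`, `status`, `remark`) and placeholder entries; it does not reproduce the actual log or the contents of Table 10.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    """One logged prompt; all field names are illustrative assumptions."""
    chat: int          # number of the chat session
    prompt: int        # running number of the prompt within the chat
    goal: str          # interaction goal, e.g. "brainstorming"
    topic: str         # topic of the prompt
    status: str        # "Success" or "Cancel"
    remark: str = ""   # short note on length or conciseness of the outcome

    def label(self) -> str:
        # Hypothetical identifier scheme for cross-referencing a prompt.
        return f"Chat{self.chat}_Prompt{self.prompt}"


def tally_by_status(log):
    """Count how many interactions ended in each status."""
    return Counter(entry.status for entry in log)


# Placeholder entries for illustration only (not data from the study).
log = [
    Interaction(1, 1, "brainstorming", "topic A", "Success", "concise"),
    Interaction(1, 2, "structuring", "topic A", "Cancel", "too long"),
    Interaction(1, 3, "overview", "topic B", "Success"),
]

print(tally_by_status(log))
print([entry.label() for entry in log if entry.status == "Cancel"])
```

Keeping the interaction goal and the status as separate fields would allow cancelled iterations to be filtered per goal without rereading the full chat history.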

Summary. Entire chat histories based on prompt iterations with ChatGPT demonstrated that the definition of the work goal and the structuring of prompts are highly relevant for the outcomes. It has also been shown that iterations allowed the outcomes to be aligned with the work goal. For example, prompt characteristics (see Table 8) may be requested, which specify the design options of prompts. As a by-product of prompt iterations, the user’s requirements (e.g., form of presentation, text composition, and writing style) are incorporated over time as preferences in the outcomes of ChatGPT in an automated manner, and the need for further specification is eliminated.

4.6. Summarize Learning Material with ChatGPT

Recommendation. This recommendation indicates that ChatGPT may be used to summarize learning content in a more concise manner. Text sections can be reduced to their essential core in a short time. Thus, a targeted adaptation of the learning content to the individual needs of the user supports learning.

My Experiences. The contents of the bachelor thesis are regarded herein as learning material. I used ChatGPT for the extraction of the contents of scientific articles. This was beneficial, especially during the literature review. Specifically, I provided selected parts of an article, such as the abstract, to ChatGPT for generating a summary (see Table 11). Thereby, I hoped to be able to judge the suitability of a study regarding aspects such as relevance, method, topic, and findings in a short time. This judgment was based on my own assessment of the abstract and on the summary generated by ChatGPT. I also provided ChatGPT with additional text sections besides the abstract to summarize long studies and to clarify passages that were technically complex for me. Here, I limited ChatGPT to the generation of five key points because I wanted the contents of the study to be presented clearly. For example, I created the prompts for a systematic review by Zawacki-Richter et al. [3] to summarize the abstract in five bullet points. In this case, there were no comprehension problems; rather, I wanted to check whether this way of reviewing references was meaningful for me.

Table 11.

Study summarization.

ChatGPT generated, as requested, a summary of five key points (see Table 12). In addition to my own assessment of the abstract, I was able to use the key points for developing an overview of the article for the bachelor thesis. Here, I was impressed by the effectiveness of ChatGPT in summarizing text sections in only a few key points. As a result, ChatGPT supported me in developing a meaningful bibliography using reference manager software (Zotero). I was able to annotate each article with notes on its main statements based on the outcomes from ChatGPT. This greatly facilitated my writing, as I created folders in the reference manager software for each chapter of my bachelor’s thesis. Afterward, I restructured and rephrased the notes. Finally, the references used in the process were inserted.

Table 12.

Conclusion summarization.

Apart from the literature research, ChatGPT helped me to summarize the content of the bachelor thesis during writing. I used ChatGPT to create summaries of my texts to include all relevant aspects in the conclusion of a chapter. This approach also gave me an advantage in the later selection of contents for my colloquium, in which I mostly referred to my conclusions from single chapters of my thesis. In doing so, I instructed ChatGPT to summarize the conclusions I had gathered in bullet point form. This approach was used for the literature review, feasibility study, evaluation model, and autoethnography, which were the most effort-consuming chapters. ChatGPT helped me not to miss any aspect due to a careless mistake.

ChatGPT generated, as requested, a summary in five bullet points. These formed the relevant summary of the chapter after my review of my conclusion. As a result, I was able to use ChatGPT in the development of the presentation slides for my colloquium to summarize the content of three chapters in a few bullet points. Here, the focus was on adapting the contents of my bachelor thesis with the help of ChatGPT in order to then organize them for the preparation of the colloquium.

Summary. The chats with ChatGPT demonstrated that learning content may be efficiently summarized in a short time. ChatGPT is able to prepare learning content according to the user’s needs and adapt it for further work. Also, by employing suitable prompts, learning content can be adapted to the individual work process. However, it appears necessary to organize the contents of each chapter outside of ChatGPT to summarize them. As a result, ChatGPT is merely a tool that does not take over the preparation of learning content but requires the skills of the user to prepare the content meaningfully.

4.7. Boost Coding with ChatGPT

Recommendation. This recommendation indicates that ChatGPT may also be used for programming. Here, assistance may be given, programming templates may be recommended, and also errors might be identified and fixed.

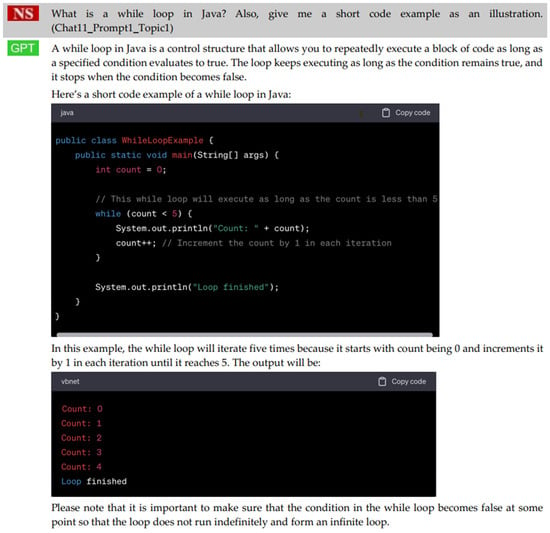

My Experiences. During my research on ChatGPT, I came across its capabilities for generating program code and wanted to explore them myself—even though it was not part of my bachelor thesis. Here, I remembered a lecture in a computer science course about the function of while loops in the programming language Java. Thus, I refreshed my knowledge about while loops and asked ChatGPT for an explanation and a small programming example. Here, I was especially curious about the comprehensibility of the code example because, in the course, Java programming appeared complicated to me.

ChatGPT generated a small section about the definition and functionality of while loops (see Figure 1). The code example was also understandable for me, although computer science has always been a minor subject for me. I was surprised that ChatGPT was able to prepare a topic that was previously not very clear to me in a comprehensible way.

Figure 1.

Learning code by interest.

Based on this good experience, I planned to spend more time on programming in my spare time and to learn the basics of the programming language Python. Thus, I requested ChatGPT to generate a mini curriculum for me, which structured theoretical and practical learning content according to a pedagogical order. In addition, appropriate content, questions, and programming tests were to be provided so that I could acquire theoretical and practical skills and check my knowledge level.

ChatGPT generated a total of 12 lessons with three suggestions for learning materials each (see Table 13). In addition, a total of four self-tests and four practical exercises were generated for assessing the knowledge after the completion of every third lesson. As a result, I tried the learning contents of the first lesson to judge the applicability of the curriculum generated.

Table 13.

Learning code by ChatGPT.

So, as part of the first lesson, I asked ChatGPT about the meaning of the term programming. ChatGPT provided an overview of the definition, functionality, and meaning of programming in computer science. Since I was educated as an environmental engineer, questions remained open; I iterated, driven by curiosity about the impact of programming in industry during the last 20 years and which areas could be influenced by it substantially. As a result, ChatGPT generated a text more than one screen long, which referred to the increasing digitalization process of the industry—Industry 4.0—and presented new professional fields opened up by it. In conclusion, there was a longer chat with ChatGPT in which I worked out the topic according to my own interest on the basis of the generated curriculum and let ChatGPT prepare and structure the content and visualize it by generating code examples (see Figure 1).

Although I had no competencies in programming with Python, I considered the generated curriculum coherent. From my perspective, all the essential content for acquiring a basic knowledge of programming with Python was addressed. In addition, I was excited by ChatGPT’s suggestion of tackling a larger programming project as a final exercise. Overall, based on my experience with the first lesson, I could imagine continuing to learn Python following the curriculum generated by ChatGPT. Further, I was impressed by the option to repeat lessons multiple times if required and by the option to ask for feedback (see Section 4.4. Use ChatGPT as a learning partner). I was satisfied that I could validate ChatGPT’s outcomes using a Python interpreter without much mental effort.
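
Validating generated code with an interpreter can be as simple as running it against a few cases whose results are known in advance. The sketch below is an illustration in Python and not code taken from the chats: `sum_up_to` is a hypothetical beginner exercise built around a while loop, and the assertions stand in for the manual check described above.

```python
def sum_up_to(n: int) -> int:
    """Return 1 + 2 + ... + n using a while loop (hypothetical exercise)."""
    total = 0
    i = 1
    while i <= n:      # repeat as long as the condition holds
        total += i
        i += 1         # without this step the loop would never end
    return total


# Checks with results that are easy to verify by hand.
assert sum_up_to(0) == 0                   # loop body never runs
assert sum_up_to(1) == 1
assert sum_up_to(4) == 10                  # 1 + 2 + 3 + 4
assert sum_up_to(100) == 100 * 101 // 2    # closed-form cross-check

print("all checks passed")
```

If an assertion fails, the interpreter reports the failing line, which gives a concrete starting point for a follow-up prompt.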

Summary. ChatGPT offers various options in the field of programming. On one hand, ChatGPT can be used as a tutor to teach learner-oriented competencies in the field of programming. Thereby, the user has many options, e.g., guided by a curriculum or interactively as a learning partner, to actively design his learning process with ChatGPT. In addition, ChatGPT might be used for troubleshooting: ChatGPT can generate targeted suggestions that allow the user to go his own creative ways at any time when programming, which is not restricted by the specifications of a curriculum.

4.8. Beware of Risks When Using ChatGPT

Recommendation. This recommendation points out that interaction with ChatGPT is associated with risks. In addition to false statements, ethically questionable, e.g., discriminatory, content can also be generated. As a result, there is a need for awareness of applicable guidelines in the use of ChatGPT. Furthermore, in the context of preparing scientific papers, artificially generated content must be clearly declared as such and checked for plausibility.

My Experiences. An awareness of guidelines for handling artificially generated content during thesis writing was crucial (see Section 4.1). The documentation of interactions can contribute to a transparent use of ChatGPT. For example, chat logs might be provided via attachments. Nevertheless, the use of AI, especially LLMs like ChatGPT, is not yet anchored in the regulatory framework of the HEI.

Especially when working with ChatGPT as a research tool (see Section 4.2), the focus was on applicable guidelines of the HEI. However, due to the novelty of ChatGPT, no guidelines existed. As a result, I worked out a reference style for artificially generated content derived from the Harvard citation style to ensure traceability, which is a criterion of scientific quality of the bachelor thesis. In addition, the validation of ChatGPT outcomes during the literature review revealed that almost none of the generated references actually existed.

Awareness of the risks of using ChatGPT also impacted the writing process (see Section 4.3). It became apparent that knowledge about the limitations of ChatGPT could support the validation of ChatGPT’s outcomes. Accordingly, I validated content that was susceptible to flaws with higher priority.

Summary. In conclusion, working with ChatGPT resulted in a reflective handling of ChatGPT’s outcomes. Furthermore, based on the risk of erroneous statements and the lack of guidelines in dealing with artificially generated content in the preparation of theses, the interaction with ChatGPT was focused on selected areas of application, such as brainstorming or structuring (see Table 10). Also, the creation of chat logs for the purpose of documenting the use of ChatGPT turned out to be useful, especially for analyzing purposes of the bachelor thesis. For occasional use, the documentation of ChatGPT’s user interface is beneficial.

4.9. Read This Checklist before Using ChatGPT

Recommendation. This recommendation indicates that a checklist increases the quality of using ChatGPT. Via prompt templates, prompt parameters, and evaluation criteria, the interaction with ChatGPT can be intensified. At the same time, the user’s own responsibility for the implementation of given regulations is brought to the fore.

My Experiences. The discussion of the recommendations in the previous sections shows that almost all recommendations were relevant to the bachelor thesis. Studying the recommendations beforehand contributed to a more purposeful use of ChatGPT and accelerated the work process.

Thus, due to the non-existing guidelines at the HEI, the handling of artificially generated content was based on agreement with the supervisors of the bachelor thesis (see Section 4.1). Also, a referencing of ChatGPT outcomes in Harvard style was developed. In addition, chat logs captured interactions in a traceable manner. The focus was on the development of a working structure that, starting from the definition of the learning goal, provided orientation for the use of ChatGPT as a writing and learning partner (see Section 4.2).

Summary. Using ChatGPT when writing a bachelor thesis requires skills regarding the use of ChatGPT. The acquisition of such skills is facilitated by the use of this checklist.

5. Discussion

The implementation of ChatGPT in learning activities is an innovation that changes learning processes and has disruptive potential for certain learning activities, i.e., these learning activities become obsolete as the learning functionality of the activity is eliminated. On the other hand, ChatGPT also opens new opportunities; for example, it may be used for different activities that previously had to be accomplished without any assistance from a digital tool, such as brainstorming, structuring, and text revision. Accordingly, ChatGPT is also helpful in the development of longer texts, as the experience described here in writing a bachelor thesis demonstrates. Although it could be evidenced that ChatGPT is able to pass knowledge-oriented exams, ChatGPT currently is to be seen as a tool and not as a disruptive game changer when writing theses. Limitations, such as referencing non-existent sources, suggested that ChatGPT on its own is currently not capable of producing a bachelor’s thesis of acceptable quality.

It should be noted that the use of ChatGPT calls for meta-skills [65,66]. In particular, technical literacy skills specific to ChatGPT are required, above all prompt engineering, i.e., the ability to design questions and commands (prompts) to ChatGPT in a manner that ChatGPT responds with the expected outcomes. Furthermore, information literacy is demanded from users via the continuous monitoring of the output of ChatGPT for plausibility and quality. While the ChatGPT-specific technical literacy skills are to be learned additionally, the information literacy skills are considered to be beneficial for other activities as well.

When dealing with ChatGPT, prompt engineering in particular was practiced. Furthermore, ChatGPT had to be integrated into individual work processes, which changed the work process compared to writing tasks conducted previously. On the one hand, time could be saved by generating information and texts; on the other hand, time had to be spent on validating the ChatGPT outcomes. Due to the validation, the work processes described here appeared to be more time-consuming overall. However, the lack of experience and the research context itself, which required documentation, may also have contributed to the time needed. Additional effort also arises from integrating ChatGPT outcomes with manually generated content.

In addition to the meta-skills required for ChatGPT operation, there might also be other learning outcomes. The writing of a bachelor thesis has both a learning functionality, i.e., the writer learns during the writing, and an assessment functionality, i.e., the completed thesis represents a measure of the writer’s knowledge and skills. Whether these functionalities are preserved when ChatGPT is used certainly depends on the examinee’s approach. In the present case, the examinee is considered to be highly ambitious and conscientious. Due to what he perceives as the continuous need for validation, the learning functionality is inherent, in particular because ChatGPT acts as a learning partner, as described above. The assessment functionality does not seem to be diminished since, due to the accompanying learning processes, the bachelor thesis represents a real measure of the knowledge of the examinee. On the other hand, less ambitious writers might use ChatGPT purely for structure and text generation, bypassing validation to a large extent and resulting in poor learning outcomes. In that case, it depends on the conscientiousness of the examiners to what extent the non-validated content of the bachelor thesis is identified and the assessment functionality is preserved.

One of the limitations of the study is the small sample size, i.e., only the experiences from writing one bachelor thesis were described. Besides a dependency on the individual commitment of the writer, there might also be a dependency on the topic of the thesis.

6. Conclusions

Digital tools that integrate artificial intelligence are increasingly common in formal education environments. A milestone was the release of the ChatGPT chatbot in November 2022. ChatGPT is based on a large language model (LLM) capable of interpreting and generating text. The free availability of ChatGPT limits the effectiveness of text-heavy teaching formats in particular with regard to learning and assessment. Accordingly, the question is raised of how ChatGPT affects the writing of theses. For evaluation purposes, the use of ChatGPT was explicitly allowed for the writing of a bachelor thesis wherever it would be useful. Accompanying this, an autoethnographic log of ChatGPT usage was created. Based on the analysis of this log, we were able to identify various potentials of ChatGPT, such as structuring, brainstorming, and text revision. Among the challenges of its use is the permanent requirement of validation, which at the same time has to be seen as a trigger for learning. Overall, we found that for the case at hand, a research-heavy thesis written by a highly committed student, ChatGPT did not lead to a reduction in thesis-typical learning and assessment functionality. An increase in productivity seems likely. However, because performance improvements in ChatGPT are expected (though yet to be demonstrated with further versions), our findings might have limited durability. A re-evaluation should take place at regular intervals to determine the extent to which the assessment functionality is maintained. ChatGPT and, correspondingly, LLMs are to be regarded as valuable digital learning tools, but they require the review and reconceptualization of teaching formats at regular intervals.

Author Contributions

Conceptualization, N.S. and H.S.; methodology, N.S. and N.S.; validation, H.S.; investigation, N.S.; resources, H.S. and N.S.; data curation, N.S.; writing—original draft preparation, N.S. and H.S.; writing—review and editing, H.S.; visualization, H.S. and N.S.; supervision, E.K. and H.S.; project administration, E.K. and H.S.; funding acquisition, E.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schmoll, T.; Löffel, J.; Falkemeier, G. Künstliche Intelligenz in der Hochschullehre. In Lehrexperimente der Hochschulbildung. Didaktische Innovationen aus den Fachdisziplinen; no. 2, vollständig überarbeitete und erweiterte Auflage; wbv: Bielefeld, Germany, 2019; pp. 117–122. [Google Scholar] [CrossRef]

- Alexander, B.; Ashford-Rowe, K.; Barajas-Murph, N.; Dobbin, G.; Knott, J.; McCormack, M.; Pomerantz, J.; Seilhamer, R.; Weber, N. EDUCAUSE Horizon Report: 2019 Higher Education Edition; Horizon Report; Educause: Louisville, CO, USA, 2019; Available online: https://library.educause.edu/-/media/files/library/2019/4/2019horizonreport.pdf (accessed on 23 February 2023).

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education—Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39. [Google Scholar] [CrossRef]

- de Witt, C.; Rampelt, F. Künstliche Intelligenz in der Hochschulbildung Whitepaper; KI-Campus: Berlin, Germany, 2020; pp. 1–59. [Google Scholar] [CrossRef]

- Gao, C.A.; Howard, F.M.; Markov, N.S.; Dyer, E.C.; Ramesh, S.; Luo, Y.; Pearson, A.T. Comparing scientific abstracts generated by ChatGPT to original abstracts using an artificial intelligence output detector, plagiarism detector, and blinded human reviewers. Sci. Commun. Educ. 2022; preprint. [Google Scholar] [CrossRef]

- King, M.R.; Chatgpt. A Conversation on Artificial Intelligence, Chatbots, and Plagiarism in Higher Education. Cell. Mol. Bioeng. 2023, 16, 1–2. [Google Scholar] [CrossRef] [PubMed]

- Susnjak, T. ChatGPT: The End of Online Exam Integrity? arXiv 2022, arXiv:2212.09292. Available online: http://arxiv.org/abs/2212.09292 (accessed on 4 February 2023).

- Zhai, X. ChatGPT User Experience: Implications for Education. SSRN J. 2022, 1–18. [Google Scholar] [CrossRef]

- Gimpel, H.; Hall, K.; Decker, S.; Eymann, T.; Lämmermann, L.; Maedche, A.; Röglinger, M.; Ruiner, C.; Schoch, M.; Schoop, M.; et al. Unlocking the Power of Generative AI Models and Systems such as GPT-4 and ChatGPT for Higher Education A Guide for Students and Lecturers; University of Hohenheim: Stuttgart, Germany, 2023. [Google Scholar] [CrossRef]

- Baker, T.; Smith, L. Educ-AI-tion Rebooted? Exploring the future of artificial intelligence in schools and colleges. Nesta Found. 2019, pp. 1–56. Available online: https://media.nesta.org.uk/documents/Future_of_AI_and_education_v5_WEB.pdf (accessed on 26 January 2023).

- Luckin, R.; Holmes, W.; Forcier, L.; Griffiths, M. Intelligence Unleashed. An argument for AI in Education; Pearson: London, UK, 2016; pp. 1–61. [Google Scholar]

- Wannemacher, K.; Bodmann, L. Künstliche Intelligenz an den Hochschulen—Potenziale und Herausforderungen in Forschung, Studium und Lehre sowie Curriculumentwicklung. In Hochschulforum Digitalisierung; Arbeitspapier Nr. 59; Hochschulforum Digitalisierung: Berlin, Germany, 2021; pp. 1–66. [Google Scholar]

- Handke, J. Digitale Hochschullehre—Vom einfachen Integrationsmodell zur Künstlichen Intelligenz. In Hochschule der Zukunft: Beiträge zur zukunftsorientierten Gestaltung von Hochschulen; Dittler, U., Kreidl, C., Eds.; Springer VS: Wiesbaden, Germany, 2018; pp. 249–263. [Google Scholar] [CrossRef]

- Hochschulforum Digitalisierung. The Digital Turn—Auf dem Weg zur Hochschulbildung im Digitalen Zeitalter; Abschlussbericht Arbeitspapier Nr. 28; Hochschulforum Digitalisierung: Berlin, Germany, 2016; Available online: https://hochschulforumdigitalisierung.de/sites/default/files/dateien/HFD_Abschlussbericht_Kurzfassung.pdf (accessed on 24 January 2023).

- Hobert, S.; Berens, F. Einsatz von Chatbot-basierten Lernsystemen in der Hochschullehre-Einblicke in die Implementierung zweier Pedagogical Conversational Agents. In DELFI 2019; Konert, J., Pinkwart, N., Eds.; Gesellschaft für Informatik e.V.: Bonn, Germany, 2019; pp. 297–298. [Google Scholar] [CrossRef]

- Stützer, C.M. Künstliche Intelligenz in der Hochschullehre: Empirische Untersuchungen zur KI-Akzeptanz von Studierenden an (sächsischen) Hochschulen; Technische Universität Dresden: Dresden, Germany, 2022. [Google Scholar] [CrossRef]

- Qadir, J. Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education. TechRxiv, 2022; preprint. [Google Scholar] [CrossRef]

- Lensing, K. Künstliche Intelligenz im Lehr-Lernlabor. In Künstliche Intelligenz im Lehr-Lernlabor; wbv Media: Bielefeld, Germany, 2020; pp. 1–22. [Google Scholar] [CrossRef]

- Shirouzu, H. How AI Technology is Helping to Transform Education in Japan. IBM Customer Stories: As Told by Our Customers. Available online: https://www.ibm.com/blogs/client-voices/how-ai-is-helping-transform-education-in-japan/ (accessed on 22 January 2023).

- Fürst, R.A. Zukunftsagenda und 10 Thesen zur Digitalen Bildung in Deutschland. In Digitale Bildung und Künstliche Intelligenz in Deutschland; AKAD University Edition; Fürst, R.A., Ed.; Springer: Wiesbaden, Germany, 2020; pp. 301–347. [Google Scholar] [CrossRef]

- Kieslich, K.; Lünich, M.; Marcinkowski, F.; Starke, C. Hochschule der Zukunft—Einstellungen von Studierenden Gegenüber Künstlicher Intelligenz an der Hochschule; Institute for Internet and Democracy (Précis), Heinrich-Heine-Universität Düsseldorf: Düsseldorf, Germany, 2019; Available online: https://www.researchgate.net/publication/336588629_Hochschule_der_Zukunft_-_Einstellungen_von_Studierenden_gegenuber_Kunstlicher_Intelligenz_an_der_Hochschule (accessed on 27 February 2023).

- Alshater, M. Exploring the Role of Artificial Intelligence in Enhancing Academic Performance: A Case Study of ChatGPT. SSRN J. 2022, 1–22. [Google Scholar] [CrossRef]

- Aljanabi, M.; Ghazi, M.; Ali, A.H.; Abed, S.A.; Gpt, C. ChatGpt: Open Possibilities. Iraqi J. Comput. Sci. Math. 2023, 4, 62–64. [Google Scholar]

- Rudolph, J.; Tan, S.; Tan, S. ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? J. Appl. Learn. Teach. 2023, 6, 342–363. [Google Scholar] [CrossRef]

- Aydın, Ö.; Karaarslan, E. OpenAI ChatGPT Generated Literature Review: Digital Twin in Healthcare. SSRN J. 2022, 2, 22–31. [Google Scholar] [CrossRef]

- Azaria, A. ChatGPT Usage and Limitations. Open Science Framework. 2022. preprint. Available online: https://hal.science/hal-03913837v1/document (accessed on 4 February 2023).

- Frieder, S.; Pinchetti, L.; Chevalier, A.; Griffiths, R.-R.; Salvatori, T.; Lukasiewicz, T.; Petersen, P.C.; Berner, J. Mathematical Capabilities of ChatGPT. arXiv 2023, arXiv:2301.13867. Available online: http://arxiv.org/abs/2301.13867 (accessed on 28 February 2023).

- Guo, B.; Zhang, X.; Wang, Z.; Jiang, M.; Nie, J.; Ding, Y.; Yue, J.; Wu, Y. How Close is ChatGPT to Human Experts? Comparison Corpus, Evaluation, and Detection. arXiv 2023, arXiv:2301.07597. Available online: http://arxiv.org/abs/2301.07597 (accessed on 18 January 2023).

- OpenAI. GPT-4 Technical Report. arXiv 2023. [Google Scholar] [CrossRef]

- Ventayen, R.J.M. OpenAI ChatGPT Generated Results: Similarity Index of Artificial Intelligence-Based Contents. Adv. Intell. Syst. Comput. 2023, 1–6. [Google Scholar] [CrossRef]

- Yeadon, W.; Inyang, O.-O.; Mizouri, A.; Peach, A.; Testrow, C. The Death of the Short-Form Physics Essay in the Coming AI Revolution. arXiv 2022, arXiv:2212.11661. Available online: http://arxiv.org/abs/2212.11661 (accessed on 6 February 2023).

- Keller, B.; Baleis, J.; Starke, C.; Marcinkowski, F. Machine Learning and Artificial Intelligence in Higher Education: A State-of-the-Art Report on the German University Landscape. Heinrich-Heine-Univ. Düsseldorf. 2019, pp. 1–31. Available online: https://www.sozwiss.hhu.de/fileadmin/redaktion/Fakultaeten/Philosophische_Fakultaet/Sozialwissenschaften/Kommunikations-_und_Medienwissenschaft_I/Dateien/Keller_et_al.__2019__-_AI_in_Higher_Education.pdf (accessed on 30 January 2023).

- Pedro, F.; Subosa, M.; Rivas, A.; Valverde, P. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development; UNESCO: Paris, France, 2019; pp. 1–48. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000366994 (accessed on 23 January 2023).

- Seufert, S.; Guggemos, J.; Sonderegger, S. Digitale Transformation der Hochschullehre: Augmentationsstrategien für den Einsatz von Data Analytics und Künstlicher Intelligenz. Z. Für Hochschulentwicklung 2020, 15, 81–101. [Google Scholar] [CrossRef]

- Kreutzer, R.T.; Sirrenberg, M. Anwendungsfelder der Künstlichen Intelligenz—Best Practices. In Künstliche Intelligenz Verstehen; no. Arbeitspapier Nr. 15; Springer Gabler: Wiesbaden, Germany, 2019; pp. 107–270. [Google Scholar] [CrossRef]

- Wannemacher, K.; Tercanli, H.; Jungermann, I.; Scholz, J.; von Villiez, A. Digitale Lernszenarien im Hochschulbereich; Hochschulforum Digitalisierung: Berlin, Germany, 2016; pp. 1–115. [Google Scholar]

- Blumentritt, M.; Schwinger, D.; Markgraf, D. Lernpartnerschaften—Eine vergleichende Erhebung des Rollenverständnisses von Lernenden und Lehrenden im digitalen Studienprozess. In Digitale Bildung und Künstliche Intelligenz in Deutschland; AKAD University Edition; Fürst, R.A., Ed.; Springer: Wiesbaden, Germany, 2020; pp. 475–499. [Google Scholar] [CrossRef]

- Rensing, C. Informatik und Bildungstechnologie. In Handbuch Bildungstechnologie; Niegemann, H., Weinberger, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; pp. 585–603. [Google Scholar] [CrossRef]

- Dunkelau, J.; Leuschel, M. Fairness-Aware Machine Learning; Universität Düsseldorf: Düsseldorf, Germany, 2020; pp. 1–60. [Google Scholar]