Abstract

The rapid proliferation of metaverse platforms with heterogeneous architectures, functionalities, and purposes poses a significant challenge for informed technology selection. Consequently, there is a need for structured evaluation approaches that enable comparison based on functional and non-functional attributes relevant to specific application contexts. The objective of this study was to propose a model for evaluating the quality of metaverse-type platforms based on a hybridization of the aspects defined in the ISO/IEC 25000 family of standards, a maturity model extracted from recent literature, and the Metagon metaverse characterization typology. The proposed model operationalizes 35 evaluation attributes grouped into seven categories, enabling a comprehensive assessment of metaverse platforms. Using this model, 23 metaverse platforms were evaluated through a hierarchical ranking strategy with tolerance. The results show that platforms such as Decentraland and Roblox achieve the highest levels of maturity (ML5), although open-architecture platforms demonstrated superior structural robustness in comparative tie-breakers. The results provide a taxonomy of characteristics refined and validated by experts and used in the evaluation of the analyzed platforms, resulting in a reproducible classification that enables systematic comparison across different application contexts. The discussion presents the basis for future studies focused on the evaluation of specific categories, such as educational, therapeutic, or social interaction platforms.

1. Introduction

Metaverses integrate technologies such as virtual reality, augmented reality, and mixed reality to create interactive and persistent digital environments whose use and potential application in educational contexts have generated growing academic and commercial interest [1]. Accordingly, their application in the field of education has been the subject of multiple studies [1,2]. A systematic review and bibliometric analysis reports a recent surge in research on the use of virtual reality (VR) in education [3], highlighting the leading role of China and the United States, the diversity of topics addressed, and the capacity of VR to improve or reinvent teaching processes. More recent systematic reviews corroborate this trend [4], specifically addressing the growing body of evidence on the integration of immersive technologies to improve learning outcomes in primary education.

The learning objective of these environments goes beyond that of a conventional course or learning activity: they seek to offer students a different experience, an environment in which they can consolidate the development of skills through an authentic training process [5].

The metaverse has positioned itself as an immersive digital space that combines augmented reality (AR), virtual reality (VR), artificial intelligence, and collaborative 3D environments and supports the following educational applications: (a) providing immersive and experiential learning through interaction with realistic virtual environments, facilitating the understanding of abstract or complex content and the simulation of real scenarios without the risks or costs of the physical world [6]; (b) enabling personalized and adaptable learning paths by adjusting difficulty, pace, and resources to students’ needs, thereby supporting inclusive education for diverse abilities and learning styles [7,8]; (c) promoting collaboration and networking through synchronous and asynchronous interactions in shared virtual spaces, supporting collaborative work and the development of digital communication and global citizenship skills [9]; (d) facilitating didactic and pedagogical innovation by enabling the use of active methodologies and the creation of virtual laboratories, digital campuses, immersive museums, and interactive classrooms [10]; (e) fostering the development of digital and 21st century skills, including critical thinking, creativity, problem solving, digital collaboration, and technological literacy [11]; and (f) promoting the democratization of access to knowledge and educational equity by enabling high-level educational experiences for learners in remote or resource-limited contexts [12]. In summary, the metaverse not only expands the boundaries of the traditional classroom but also redefines the way people learn and teach [13,14]. Its importance lies in the possibility of offering more immersive, inclusive, collaborative, and innovative experiences that develop key skills for the challenges of today’s and tomorrow’s society, making education more motivating for students, increasing their engagement, and reducing dropout rates [15,16,17].

Toward the middle of the 20th century, the so-called Third Industrial Revolution, or Digital Revolution, led to the emergence of spaces in which individuals can receive multimedia signals that enable new forms of symbolic interaction. In its initial stage, this transformation made it possible to create digitally generated environments, known as virtual worlds, thus laying the foundations for the development of virtual reality (VR) [18,19]. Augmented reality subsequently made it possible to superimpose digital objects on physical-world scenes in real time [20]; when interaction with physical and digital objects becomes indistinguishable, the result is considered mixed reality. The still-theoretical space in which virtual worlds, augmented reality interaction spaces, and mixed reality spaces (collectively, extended reality) converge is known as the metaverse [21]. It is characterized as immersive, persistent, decentralized, having few limitations or blurred boundaries, and oriented toward enriching social experiences [10,22]. Within the metaverse, there may be virtual worlds entirely dedicated to education, known as Virtual World Learning Environments (VWLE).

Technologies developed on the path toward the metaverse include devices that reproduce fully digital scenes on a screen very close to the eyes; translucent devices that let the user see the surrounding environment while introducing digital elements; suits with sensors and actuators; three-dimensional immersion scenarios; and software systems that enable the reification of virtual worlds, augmented reality, and mixed reality in collaborative environments. These systems connect users, most of whom are represented by digital entities called avatars, through networks, mainly the Internet [23,24]. VR was conceived as a technology capable of transforming computers from simple processing tools into authentic generators of unrestricted realities, enabling the creation of arbitrary environments for individualized and affective instruction [25]. Dede [26] proposed that virtual worlds represent a new vision in which students no longer interact with the phenomenon but rather shape the nature of how they experience their physical and social context.

In 2021, capitalizing on the effects of the pandemic, Meta (then Facebook) presented a utopian vision of a unified, immersive metaverse deployed in the cloud, where millions of users would connect to have social experiences. Because of Facebook’s high market penetration, the idea attracted the attention of the mass media, and over the past three years, major conglomerates such as Meta, Alphabet, Microsoft, NVIDIA, Epic Games, and Roblox have invested significantly to create or improve the necessary hardware and software infrastructure. During those years, a boom began in which digital objects were traded at high prices in the form of Non-Fungible Tokens (NFTs) and, perhaps because of the novelty of the experiment, thousands of people began buying digital land without yet understanding what it meant, hoping to secure a place in this new Noah’s Ark [27,28].

Then came the explosion of generative artificial intelligence. The spotlight and investments shifted to another field, and the metaverse, lacking real traction and facing social, economic, and technological complications, entered a slowdown, advancing at a slower pace without losing sight of its future vision [29]. Like certain cities that decline when the industry supporting them closes, the platforms that emerged during the boom years now look abandoned, having failed to retain the millions of promised users. Nevertheless, the sector transformed rather than disappeared. Online game creators strengthened their platforms through the constant support of the gaming community, hundreds of applications continue to be developed and are available in software stores, VR devices have improved, and many researchers, seeing the potential impact of the metaverse, are focusing their efforts on capitalizing on it in different areas such as education [30]. It is clear, however, that technology, the economy, and society are not yet ready to support the metaverse. The industry currently faces significant challenges, including severe fragmentation and a lack of interoperability, which is compounded by a proliferation of non-standardized devices, high technological volatility, software products that often fail to progress beyond the prototype stage, commercial promises, and suggestive but not definitive impacts on education [31,32].

Despite its expansion, the metaverse remains an evolving concept. Research shows that the metaverse technology platform, far from being a unified environment, is a collection of hardware and software elements that, despite the efforts of organizations such as the Metaverse Standards Forum, lacks standards that define the characteristics of its components, facilitate interoperability, and provide a fully immersive experience.

These limitations not only hinder adoption but also impede implementation in educational contexts. One of the main challenges today lies in the proliferation of metaverse platforms with diverse characteristics, architectures, and purposes, which complicates the task of making informed technology choices. Given this diversity, a systematic approach is required to compare platforms based on functional and non-functional attributes relevant to specific application contexts. Furthermore, although proposals such as OpenUSD (an open-source standard whose code is publicly available, allowing for collaborative modification and distribution) exist, the ecosystem is still closed, characterized by proprietary protocols that lead to a lack of traction in achieving a metaverse with an acceptable degree of functionality and reliability.

This study proposes and validates a maturity level assessment model (ML1–ML5) for 35 attributes of virtual world platforms. The aim is to estimate and compare the maturity status of 23 platforms across seven categories (technical, identity, content/economy, interoperability, governance, literacy/support, and accessibility/inclusion) by providing (i) operational rules for rating each attribute; (ii) formal inter-rater reliability (ICC); (iii) a reproducible hierarchical ranking model; and (iv) a traceable statistical pipeline (PCA/k-means with explicit selection criteria). Specifically, the study pursued two objectives:

- To propose a quality assessment model based on (a) the ISO/IEC 25000 family of standards [33], (b) the maturity model proposed by Weinberger and Gross [34], and (c) the Metagon typology of metaverses by Schöbel et al. [35].

- To analyze a set of 23 metaverses from Schultz’s [36] list, using the proposed quality assessment model.

To frame the contribution of this study, the proposed quality assessment model integrates three complementary conceptual foundations. First, the ISO/IEC 25010 [37] standard provides the reference framework for defining and organizing functional and non-functional quality characteristics relevant to virtual world platforms. Second, a metaverse maturity model is incorporated to support the assessment of platforms across progressive levels of development (ML1–ML5), enabling the estimation and comparison of maturity status rather than isolated quality attributes. Third, the Metagon typology is used to contextualize the evaluation by characterizing platforms according to their purpose and technological orientation, ensuring that quality attributes are interpreted in relation to distinct application contexts. The integration of these perspectives enables a systematic and multidimensional comparative analysis of platforms.

While existing evaluation frameworks for virtual worlds and metaverse platforms typically rely on single aggregation strategies, fixed maturity models, or descriptive taxonomies, the proposed model advances the state of the art through a hybrid, operational, and testable evaluation pipeline. Unlike prior approaches that focus either on conceptual maturity levels or on isolated quality dimensions, this work integrates geometric aggregation (radar-area analysis), statistical latent modeling (PCA-based synthesis), and structural typology (k-means clustering) within a unified and reproducible framework.

The novelty of the proposed model lies not merely in combining methods, but in their complementary operational roles: radar geometry captures global magnitude of maturity, PCA reveals latent multivariate structure and trade-offs, and clustering introduces a discrete, comparative typology of platform profiles. This hybridization enables both continuous scoring and categorical interpretation, which are rarely addressed simultaneously in existing frameworks.

2. Materials and Methods

This study was developed using a research design organized into two sequential phases that respond to the two main objectives. The first phase focused on building a quality assessment model for metaverses, using the Design Science Research (DSR) approach. The second phase consisted of the empirical application of this model to a set of 23 metaverse platforms using an expert-based quantitative evaluation to validate its usefulness and produce a comparative analysis.

2.1. Phase 1. Design and Development of the Hybrid Quality Assessment Model (Integrating Multiple Evaluation Dimensions and Expert Judgment)

To develop the central artifact of this research (the evaluation model), an approach based on DSR was adopted, as its objective is the creation and evaluation of innovative artifacts that solve practical problems while maintaining a high level of scientific rigor [38]. The process was divided into the five stages described below.

2.1.1. Problem Identification and Motivation

The starting point was the identification of a significant gap in the literature: the absence of a standardized, multidimensional framework for evaluating the quality of metaverse platforms. While several models exist for evaluating the quality of traditional software and conceptual frameworks for describing metaverses, no integrated model has been proposed that combines the technical perspective of product quality with the dimensions of maturity and typological characterization specific to these immersive environments. This gap makes it difficult for developers, investors, and end users to make informed decisions.

2.1.2. Review and Selection of Foundational Artifacts

A systematic review of the literature was conducted to identify theoretical frameworks that could serve as pillars for the new model. Based on this, three frameworks were selected for their relevance and complementarity:

- ISO/IEC 25000 family of standards (SQuaRE): This family was adopted as the base framework because it is the international standard for software product quality assessment. SQuaRE provides a hierarchical, robust, and validated quality model with characteristics (e.g., functional suitability, usability, reliability, security) and sub-characteristics that offer a solid and generic basis for evaluation (ISO/IEC 25010:2011 [37]).

- Metaverse Maturity Model [34]: This model was integrated to incorporate the evolutionary dimension; it allows platforms to be classified according to their degree of development toward a fully realized metaverse. This approach considers that quality attributes vary depending on the maturity of the platform.

- Typology for Characterizing Metaverses [35]: This typology was used to contextualize the assessment; it classifies metaverses according to their purpose (e.g., social, gaming, business) and their underlying technological characteristics. This makes the assessment context-sensitive, recognizing that not all platforms pursue the same goals, nor should they be judged by the same priority criteria.

2.1.3. Hybrid Artifact Design

At this stage, the three selected frameworks were synthesized. The Hybrid Quality Assessment Model for Metaverses was designed through a conceptual relationship analysis that aligned the characteristics of SQuaRE with the maturity levels and typologies of the metaverse. The result was a multidimensional artifact that defines:

- Evaluation Processes: Structured steps to guide the evaluator from data collection to the issuance of results.

- Comparison Matrices: Structures that cross-reference quality characteristics with metaverse typologies to weigh the relevance of each attribute according to the context.

- Quality Criteria Matrices: A unified set of characteristics, sub-characteristics, and quality indicators specific to metaverses at each maturity level (ML1–ML5). Although the evaluation criteria were defined to be as technology-agnostic as practicable, a moderate risk of obsolescence affecting 14 attributes was identified, attributable to their dependency on hardware components or software standards with short life cycles. Consequently, the continued validity of these evaluation criteria should be periodically reviewed at intervals of 6 to 12 months.

- Measurement and Analysis Mechanisms: Rating scales and methods for calculating total and partial quality scores.

Table 1 summarizes the interpretation of the maturity levels (ML1–ML5) across the seven evaluation categories of the proposed model. Detailed criteria, indicators, and decision rules used to assign maturity levels to individual attributes are reported in the Supplementary Materials.

Table 1.

Interpretation of maturity levels (ML1–ML5) by evaluation category.

2.1.4. Expert Implementation and Validation of the Model

Once designed, the model was implemented as a set of templates and instrumental guides. To ensure its content validity and applicability, it underwent a validation process by expert judgment. A panel of three experts was formed (see Table 2). Using a structured questionnaire (see Appendix A), the experts evaluated the relevance, consistency, clarity, and applicability of each component of the model. The results were analyzed using Aiken’s V coefficient, supplemented by a qualitative assessment to refine and consolidate the final version of the artifact.
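For reference, Aiken’s V for a single item is V = S / [n(c − 1)], where S is the sum over experts of each rating minus the lowest scale value, n is the number of experts, and c is the number of scale categories. The minimal sketch below assumes a 1–4 rating scale purely for illustration; the actual scale of the questionnaire in Appendix A is not restated in this section, and the function name is hypothetical.

```python
def aikens_v(ratings, low=1, high=4):
    """Aiken's V for one item: V = sum(r - low) / (n * (c - 1)).

    `low` and `high` are the endpoints of the rating scale; the 1-4 default
    is an illustrative assumption, not the study's documented scale.
    """
    n = len(ratings)
    c = high - low + 1  # number of scale categories
    if n == 0:
        raise ValueError("at least one rating is required")
    if any(r < low or r > high for r in ratings):
        raise ValueError("rating outside the scale")
    s = sum(r - low for r in ratings)  # distance of each rating from the scale floor
    return s / (n * (c - 1))


# Three experts rating the relevance of one model component (hypothetical values).
print(round(aikens_v([3, 4, 4]), 3))  # 0.889
```

V ranges from 0 (all experts chose the lowest category) to 1 (all chose the highest), which is why it is usually read against a minimum acceptable value for small panels such as this three-expert one.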

Table 2.

Profile of domain experts involved in the evaluation process.

2.1.5. Evaluation and Refinement of the Method

A pilot test of the model was carried out by applying the evaluation process to two metaverses. This evaluation cycle made it possible to verify the internal consistency of the model, the feasibility of the data collection process, and the usefulness of the results generated, and it led to minor adjustments to the wording of some indicators and to the evaluation protocol.

2.2. Phase 2. Empirical Application and Comparative Analysis

To address the second objective and demonstrate the practical usefulness of the model, it was systematically applied to a broad sample of platforms.

2.2.1. Case Selection

A set of 23 metaverses was evaluated based on Schultz’s sectoral analysis [36]. This sample was selected for its relevance and representativeness, covering platforms across distinct levels of maturity (from emerging to established), types (gaming, social, business, content creation), and technological architectures (e.g., systems integrating Virtual Reality (VR), Augmented Reality (AR), Extended Reality (XR), and Mixed Reality (MR)). Additional inclusion criteria included search engine visibility (Google and DuckDuckGo), the size and activity of the user base—evidenced by active users or engagement in discussion channels such as Discord or Reddit—user interaction dynamics, and the availability of platform updates released within the year preceding this comparative analysis.

2.2.2. Data Collection

A multi-source secondary data collection protocol was established to obtain the information necessary for the evaluation. Sources included:

- Official documentation and white papers from the platforms.

- Technical data sheets and code repositories (when available).

- Academic publications and market reports analyzing these platforms.

- Technical reviews from specialized media and user community analyses.

The evaluation of each metaverse was conducted independently by three researchers using the model templates. Inter-rater reliability was assessed on these independent ratings, and discrepancies were subsequently resolved through discussion and consensus.

2.2.3. Hierarchical Ranking Procedure

To avoid the multicollinearity issues inherent in summing latent variables with observed metrics, a lexicographic hierarchical strategy with tolerance was adopted. This method gives first priority to the latent structural maturity of the platform (Cluster Value) and, within each structural tier, uses functional performance (Radar Area) as the main differentiator. To account for measurement variability, however, a tolerance threshold was introduced, within which the latent robustness score breaks ties.

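Let $P = \{p_1, \ldots, p_{23}\}$ be the set of platforms. The ranking order $\succ$ is defined such that, for any two platforms $p_a, p_b \in P$:

$$
p_a \succ p_b \iff
\begin{cases}
T_{p_a} > T_{p_b}, & \text{or} \\
T_{p_a} = T_{p_b} \;\wedge\; A_{p_a} - A_{p_b} > \tau, & \text{or} \\
T_{p_a} = T_{p_b} \;\wedge\; \lvert A_{p_a} - A_{p_b} \rvert \le \tau \;\wedge\; S_{p_a} > S_{p_b}
\end{cases}
$$

where

$T_p$: Structural Tier (0–5), derived from K-Means clustering on principal components.

$A_p$: Functional Performance (%), derived from the radar chart area.

$S_p$: Latent Robustness Score (PCA Score), representing structural consistency.

$\tau$: Tolerance Threshold, set at 5.0%.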

A threshold of 5% was selected to account for feature measurement variability. Within this margin, functional differences are considered negligible, and priority is given to the platform with superior latent structural robustness (i.e., the higher PCA score). This ensures that architecturally solid platforms are favored over those with superficial feature bloat in cases of functional parity.
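The three-level rule (structural tier first, then radar area beyond the tolerance, then PCA score) can be sketched as a pairwise comparator for Python’s `functools.cmp_to_key`. This is an illustrative sketch, not the authors’ code: the `Platform` record, the platform names and all values are hypothetical, and the tolerance is assumed to be an absolute difference in percentage points of radar-area compliance.

```python
from dataclasses import dataclass
from functools import cmp_to_key


@dataclass
class Platform:
    name: str
    tier: int          # structural tier from the k-means cluster value (0-5)
    area: float        # radar-area compliance, in percent
    pca_score: float   # latent robustness score (PCA synthetic indicator)


def compare(a, b, tau=5.0):
    """Return a negative number if `a` ranks above `b`, positive if below."""
    # 1. A higher structural tier always wins.
    if a.tier != b.tier:
        return -1 if a.tier > b.tier else 1
    # 2. Within a tier, a radar-area gap larger than tau decides.
    diff = a.area - b.area
    if abs(diff) > tau:
        return -1 if diff > 0 else 1
    # 3. Functional parity (|diff| <= tau): the higher PCA score wins.
    if a.pca_score != b.pca_score:
        return -1 if a.pca_score > b.pca_score else 1
    return 0


def rank(platforms, tau=5.0):
    return sorted(platforms, key=cmp_to_key(lambda a, b: compare(a, b, tau)))


demo = [
    Platform("A", 5, 60.0, 1.0),
    Platform("B", 5, 58.0, 2.0),   # within 5 points of A, higher PCA score
    Platform("C", 5, 40.0, 3.0),
    Platform("D", 4, 90.0, 5.0),   # lower tier, so ranked last despite its area
]
print([p.name for p in rank(demo)])  # ['B', 'A', 'C', 'D']
```

One design caveat: a tolerance band makes the relation non-transitive in general. For example, areas of 50%, 54%, and 58% with decreasing PCA scores form a cycle, because each neighbouring pair is decided by the PCA score while the outer pair is decided by area. When such cycles occur, a comparison sort still returns an order, but that order can depend on the input sequence.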

2.2.4. PCA-Based Synthetic Indicator

Principal Component Analysis (PCA) was employed to extract a latent representation of the multidimensional maturity space. PCA was applied to the covariance matrix of the ordinal maturity levels (ML1–ML5 mapped to 1–5) after mean centering.

The PCA score for platform $p$ was computed as:

$$
S_p = \sum_{j=1}^{5} w_j \, z_{pj}
$$

where:

- $z_{pj}$ is the score of platform $p$ on the $j$-th principal component,

- $w_j$ is the proportion of variance explained by component $j$.

The first five components were retained, jointly explaining approximately 82% of the total variance, which provides a balance between parsimony and information preservation.
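A minimal NumPy sketch of this synthetic indicator follows. It eigendecomposes the covariance matrix of the mean-centered maturity levels and returns, for each platform, the sum of its scores on the retained components weighted by each component’s proportion of explained variance. Two details are assumptions not stated in the text. First, because PCA component signs are arbitrary, a weighted sum of scores is only meaningful once a sign convention is fixed; here each component is oriented so that its loadings sum to a non-negative value. Second, `numpy.linalg.eigh` is used instead of scikit-learn. The input matrix is synthetic placeholder data.

```python
import numpy as np


def pca_synthetic_score(levels, n_components=5):
    """Variance-weighted PCA score: sum over retained components of w_j * z_pj.

    `levels` is a (platforms x attributes) matrix of maturity levels 1-5.
    Returns (scores, w), where w holds the explained-variance proportions.
    """
    X = np.asarray(levels, dtype=float)
    Xc = X - X.mean(axis=0)                     # mean centering
    cov = np.cov(Xc, rowvar=False)              # attribute covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)      # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]           # sort components by variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Assumed sign convention: orient each component so that its loadings
    # sum to a non-negative value (component signs are otherwise arbitrary).
    signs = np.where(eigvecs.sum(axis=0) < 0, -1.0, 1.0)
    eigvecs = eigvecs * signs
    w = eigvals[:n_components] / eigvals.sum()  # proportion of variance explained
    Z = Xc @ eigvecs[:, :n_components]          # component scores z_pj
    return Z @ w, w


rng = np.random.default_rng(0)
levels = rng.integers(1, 6, size=(23, 35))  # synthetic placeholder, not the study's matrix
scores, w = pca_synthetic_score(levels)
print(scores.shape, w.round(3))
```

Because the scores are computed from centered data, the synthetic indicator has zero mean across platforms, so it is best read as a relative position rather than an absolute quality level.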

3. Results

3.1. Results of Objective 1

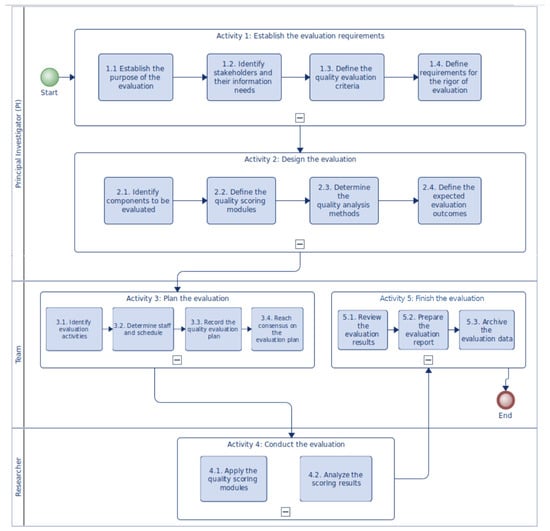

An evaluation model was defined with the components shown in Figure 1.

Figure 1.

ArchiMate view of the fundamental components of the evaluation model. Source: Own elaboration.

The Assessment Process component defines a general process for assessment in accordance with ISO/IEC 25040 [39] (see Figure 2).

Figure 2.

Metaverse Platform Evaluation Process. Source: Own elaboration, based on the ISO/IEC 25040 standard.

The Metaverse Evaluation Purpose Statement articulates the following need: considering the current lack of standardization, the heterogeneity of platforms, and the technological market shift driven by artificial intelligence, it is imperative to assess both functional and non-functional characteristics. This study aims to provide a benchmark tool designed to systematize the quality assessment process of metaverse platforms.

The Stakeholder and Needs matrices identify researchers in ICT integration in specific domains as stakeholders. Their need for information is related to the comprehensive characterization of metaverse platforms.

The Category Matrix component was defined by hybridizing the Metagon typology [35] for the characterization of metaverses, the metaverse maturity model proposed by Weinberger and Gross [34], the taxonomy of metaverse characteristics by Sadeghi-Niaraki et al. [40], and the Systems and software Quality Requirements and Evaluation (SQuaRE) standard.

The Rigor Requirements Matrix specifies the minimum rigor requirements that must be taken into consideration when conducting evaluations. These aspects must be understood and adopted by the entire group of researchers through training workshops and continuous monitoring.

The Target Entities component states that the functional components and official/community documentation of metaverse platforms are the entities to be evaluated. In particular, quality indicators for five maturity levels (ML1–ML5) are evaluated for 35 attributes, grouped into the categories of Technical Aspects, Identity and Representation, Content and Economy, Interoperability, Governance and Accountability, Literacy and Support, and Accessibility and Inclusion.

The functional components are those available in a production environment, accessed from user interfaces or application programming interfaces (APIs). The documentation artifacts are those defined in the matrix of primary and secondary sources. These matrices were constructed by experts considering official metaverse portals, technical documentation, available functionalities, artifacts published by developers, specialized articles, user reviews, technical forums, and third-party reports (see File S4 of the Supplementary Material, [41]).

The Quality Assessment Module was defined using a multi-criteria approach with three assessment modules. The first module, with a descriptive scope, was based on the matrix of attributes derived from the work of Schultz [36] (see File S1 of the dataset, [41]). The second module, which employed a predominantly quantitative method, utilized the criteria-based rating module adapted from the metaverse maturity model proposed by Weinberger and Gross [34]. This module defines five levels, each with membership criteria (see File S2 of the Supplementary Material, [41]). The third module, a quantitative approach, is based on the quality indicators for each of the maturity levels (see Files S3 and S5 of the Supplementary Material, [41]).

For the Quality Analysis Methods component, a staged inferential approach was adopted. First, three evaluators independently assigned maturity levels (ML1–ML5) using the indicator-based scoring rules and source matrices. Inter-rater reliability was assessed on the independent, pre-consensus ratings using complementary statistics. Global reliability across the three evaluators was estimated using the intraclass correlation coefficient (ICC), adopting a two-way random-effects model with absolute agreement and average measures (ICC(2,k)). Individual cases exhibiting a maximum divergence greater than one maturity level were examined in structured consensus workshops. The resulting adjudicated matrix was subsequently used for quantitative aggregation and multivariate analysis.
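The ICC(2,k) statistic named above can be computed from the two-way ANOVA mean squares as ICC(2,k) = (MS_R − MS_E) / (MS_R + (MS_C − MS_E)/n), where MS_R, MS_C, and MS_E are the between-target, between-rater, and residual mean squares and n is the number of targets. The sketch below is a plain NumPy implementation of this Shrout and Fleiss formula, not the authors’ code, and it omits the confidence interval, which requires F-distribution quantiles. It is checked against the classic six-target, four-judge example from Shrout and Fleiss (1979).

```python
import numpy as np


def icc_2k(ratings):
    """ICC(2,k): two-way random effects, absolute agreement, average measures.

    `ratings` has shape (n_targets, k_raters); in this study a target would be
    one platform-attribute pair and each column one evaluator.
    """
    Y = np.asarray(ratings, dtype=float)
    n, k = Y.shape
    grand = Y.mean()
    ss_rows = k * np.sum((Y.mean(axis=1) - grand) ** 2)    # between targets
    ss_cols = n * np.sum((Y.mean(axis=0) - grand) ** 2)    # between raters
    ss_err = np.sum((Y - grand) ** 2) - ss_rows - ss_cols  # residual
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (msc - mse) / n)


# Worked example from Shrout and Fleiss (1979): 6 targets rated by 4 judges.
example = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
           [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
print(round(icc_2k(example), 2))  # 0.62
```

Applied to a 805 × 3 matrix of independent, pre-consensus maturity levels, this is the quantity reported in the results.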

The second stage determines the quality of each platform using the geometric performance method, also known as the “Area-Based Aggregation Method” or “Radar Chart Area Method,” used in the Metagon typology [35]. The quality level of a metaverse platform is represented in a radar chart in which each axis corresponds to an evaluated attribute, and the score obtained for each attribute (between 0 and 5) becomes a vertex of a closed polygon. The surface area of the polygon is proposed to represent the multidimensional quality of the evaluated platform: the larger the area, the closer the platform is to the ideal, defined as maturity level 5 (ML5) in all dimensions. While this metric provides a valuable summary of performance, it is sensitive to the arbitrary ordering of the axes. Therefore, to prevent classification artifacts derived from axial arrangement, the evaluation model triangulates this geometric indicator in the third stage by comparing the platforms with three complementary approaches: k-means cluster analysis, Principal Component Analysis (PCA), and the geometric Radar Area method.
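The area computation, and its sensitivity to axis order, can be illustrated with a short sketch. It assumes equally spaced axes and expresses compliance as the polygon’s area relative to the ideal ML5 polygon; this normalization is an assumption about how the percentages in Table 8 were obtained. Each pair of adjacent axes spans a triangle of area 0.5 · r_i · r_{i+1} · sin(2π/n), so the sine factor cancels in the ratio.

```python
import math


def radar_compliance(scores, max_level=5):
    """Radar-polygon area as a percentage of the ideal (all-ML5) polygon.

    Axes are assumed equally spaced, so each adjacent pair of radii
    (r_i, r_{i+1}) spans a triangle of area 0.5 * r_i * r_{i+1} * sin(2*pi/n).
    """
    n = len(scores)
    if n < 3:
        raise ValueError("a radar polygon needs at least three axes")
    wedge = 0.5 * math.sin(2 * math.pi / n)
    area = wedge * sum(scores[i] * scores[(i + 1) % n] for i in range(n))
    ideal = wedge * n * max_level ** 2
    return 100.0 * area / ideal


# Same multiset of scores, two axis orders, two different areas.
print(radar_compliance([5, 5, 0, 0]))  # 25.0
print(radar_compliance([5, 0, 5, 0]))  # 0.0
```

The two calls use identical attribute scores and differ only in axis order, which is precisely the artifact that motivates triangulating the radar area with PCA and clustering.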

The Evaluation Expected Products Matrix proposes a set of artifacts that allow the results to be validated, the maturity level of each platform to be determined, and the quality of the different platforms to be compared (see Table 3).

Table 3.

Quality Categories Matrix.

3.2. Results of Objective 2

In order to evaluate the platforms, several activities were conducted to ensure methodological rigor (see Table 4). These activities are aligned with those defined in the proposed model (see Table 5) and with the set of expected deliverables (see Table 6).

Table 4.

Rigor Requirements Matrix.

Table 5.

Activities performed in the evaluation process.

Table 6.

Expected Products Matrix.

Twenty-three metaverse platforms were selected for the study, including solutions provided by manufacturers of VR, AR, or XR devices (see Table 7).

Table 7.

Metaverse platforms considered in the study.

Each platform was evaluated by triangulating sources and researchers, yielding an evaluation matrix with comments for each platform.

As an illustrative example, the evaluation process of the OpenSimulator platform is presented. To simplify the explanation, only the assessment of the Dynamic Interactivity attribute is reported. This attribute is one of the nine attributes belonging to the Technical Aspects category. Overall, a total of 35 attributes were evaluated for each platform.

During the researchers’ training session, a conceptual alignment was carried out to establish a shared understanding of what Dynamic Interactivity entails. This step was necessary because one evaluator initially regarded the term as pleonastic, whereas the analytical framework of the study distinguishes interactivity as the mere capability for bidirectional communication, without necessarily characterizing the quality, richness, or complexity of such interactions. Once consensus on the concept was reached, the criteria associated with each maturity level were explained and clarified.

For this specific attribute, a set of elements was defined to operationalize concepts with an inherent degree of ambiguity, such as simple navigation, noticeable latency, dynamic simulation, complex interaction, and real-time transformation. Subsequently, each evaluator interacted independently with the platform and assessed the attribute by progressing sequentially through the maturity levels in ascending order. A maturity level was assigned only when all criteria corresponding to that level were fully satisfied, and the evaluation process concluded when a level could no longer be assigned.
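The ascending rule, under which a level is assigned only if all of its criteria hold and evaluation stops at the first level that fails, can be expressed as a small function. The dictionary layout, in which each level maps to a list of criterion outcomes, and the choice to return 0 when ML1 is not met (consistent with the 0–5 radar scale) are illustrative assumptions. The checklist values are hypothetical.

```python
def assign_level(criteria_by_level):
    """Highest maturity level reached by ascending, all-criteria-met evaluation.

    `criteria_by_level` maps a level (1-5) to a list of booleans, one per
    criterion of that level. Returns 0 if ML1 is not fully satisfied.
    """
    level = 0
    for ml in range(1, 6):
        checks = criteria_by_level.get(ml, [])
        if not checks or not all(checks):
            break  # stop at the first level that cannot be assigned
        level = ml
    return level


# Hypothetical checklist for one attribute: ML1-ML3 met, one ML4 criterion fails.
example = {1: [True], 2: [True, True], 3: [True, True], 4: [True, False], 5: [True]}
print(assign_level(example))  # 3
```

Note that ML5 is never examined in this example even though its single criterion is marked as met, which mirrors the stopping rule described above.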

For the selected attribute, Evaluators 1 and 2 assigned a maturity level of ML4, while Evaluator 3 assigned ML3. As full consensus was not achieved, the final maturity level was determined by simple majority, given that the maximum divergence between ratings was limited to one level. Had the divergence exceeded this threshold, a dedicated consensus session would have been required.
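The adjudication rule for a single platform–attribute pair can be sketched as follows. The function name and the explicit strict-majority check are illustrative, and a return value of `None` stands for cases that must be escalated to a consensus session.

```python
from collections import Counter


def adjudicate(ratings, max_divergence=1):
    """Final level from independent ratings, or None if a consensus session is needed.

    When the ratings differ by at most `max_divergence` levels, the most
    frequent level is taken as the final maturity level.
    """
    if max(ratings) - min(ratings) > max_divergence:
        return None  # divergence too large: escalate to a consensus workshop
    level, count = Counter(ratings).most_common(1)[0]
    if count <= len(ratings) / 2:
        return None  # no strict majority (cannot occur with 3 raters and divergence <= 1)
    return level


print(adjudicate([4, 4, 3]))  # 4
print(adjudicate([4, 2, 3]))  # None
```

The first call reproduces the Dynamic Interactivity case for OpenSimulator (ML4, ML4, ML3). With three evaluators and a divergence of at most one level, the ratings take at most two adjacent values, so a majority always exists.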

The inter-rater reliability analysis yielded an ICC(2,k) for absolute agreement of 0.763, with a 95% confidence interval of [0.733, 0.790], indicating good reliability among the evaluators. The narrow confidence interval reflects the stability of the estimate given the large number of evaluated targets (n = 805, i.e., 23 platforms × 35 attributes). Inter-rater reliability was assessed on the original evaluation matrices produced independently by the experts, prior to any consensus process. Although approximately 21% of the evaluated targets exhibited discrepancies greater than one maturity level between evaluators, these discrepancies were intentionally preserved for the ICC computation in order to assess the intrinsic reliability of the evaluation instrument. Consensus workshops were then conducted to review these cases and generate an aggregate matrix for subsequent quantitative analysis (see File S6 of the Supplementary Material [51]).

Based on these matrices, a radar chart was generated for each platform and the area it encloses was calculated (see Figure 3).
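
The section does not give the area formula. One common approach, assumed here, treats the radar chart as a polygon whose vertices lie on n equally spaced axes at a radius equal to the maturity level. The area is then the sum of n triangles: A = 0.5 · sin(2π/n) · Σ r_i · r_(i+1), with the last axis paired with the first. Expressing A as a percentage of the polygon obtained with every attribute at ML5 is also an assumption. It was chosen because the segmentation thresholds that follow are stated as percent compliance.

```python
import numpy as np


def radar_area(levels, max_level: int = 5) -> tuple[float, float]:
    """Area of a radar-chart polygon and its share of the maximum polygon.

    `levels` are the maturity levels plotted on equally spaced axes, in
    plotting order. Each pair of adjacent axes forms a triangle with area
    0.5 * r_i * r_(i+1) * sin(2*pi/n).
    """
    r = np.asarray(levels, dtype=float)
    n = r.size
    wedge = 0.5 * np.sin(2 * np.pi / n)
    # np.roll pairs each axis with the next one and closes the loop.
    area = wedge * np.sum(r * np.roll(r, -1))
    max_area = wedge * n * max_level ** 2  # every attribute at ML5
    return area, 100 * area / max_area


area, compliance = radar_area([5] * 35)
print(f"{compliance:.2f}%")  # 100.00%
```

Unlike a mean score, the polygon area depends on the order of the axes and grows quadratically with the levels. Keeping a fixed attribute order across platforms is therefore what makes the areas comparable.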

Figure 3.

Radar Charts of Maturity Levels by Attribute.

Python 3 scripts using libraries such as numpy, pandas, and scikit-learn were used to analyze the classification results. The first step was to obtain the sorted table of radar chart areas (see Table 8). The platforms were then segmented using the quintiles of these areas, which classifies their relative quality as Very High (compliance above 53.31%), High (compliance between 43.29% and 53.31%), Medium (compliance between 25.16% and 43.29%), Low (compliance between 22.47% and 25.16%), or Very Low (compliance below 22.47%).
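
The quintile segmentation can be sketched with NumPy as below. The sketch assumes NumPy's default linear interpolation for the 20th, 40th, 60th, and 80th percentiles, and it assigns a value that exactly equals a threshold to the upper segment. The text does not specify either choice. Because the per-platform areas in Table 8 are not reproduced in this section, the example classifies a few illustrative values against the thresholds reported above instead of recomputing them.

```python
import numpy as np

LABELS = ["Very Low", "Low", "Medium", "High", "Very High"]


def quintile_thresholds(compliance) -> np.ndarray:
    """20th, 40th, 60th and 80th percentiles of the compliance values."""
    return np.quantile(np.asarray(compliance, dtype=float), [0.2, 0.4, 0.6, 0.8])


def classify(compliance, thresholds) -> list[str]:
    """Map each compliance value to one of five quality segments.

    np.digitize returns i such that thresholds[i-1] <= value < thresholds[i],
    so a value equal to a threshold falls into the upper segment.
    """
    idx = np.digitize(np.asarray(compliance, dtype=float), thresholds)
    return [LABELS[i] for i in idx]


# Thresholds reported in the text (percent compliance).
reported = np.array([22.47, 25.16, 43.29, 53.31])
print(classify([60.0, 50.0, 30.0, 24.0, 10.0], reported))
# ['Very High', 'High', 'Medium', 'Low', 'Very Low']
```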

Table 8.

Analysis by Area of the Radar Chart.

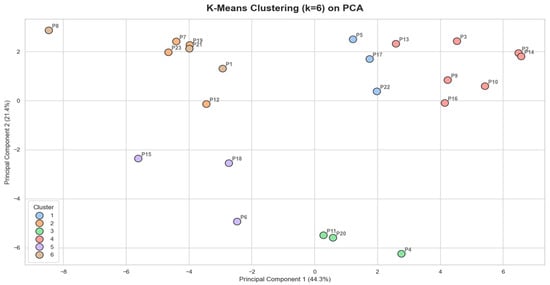

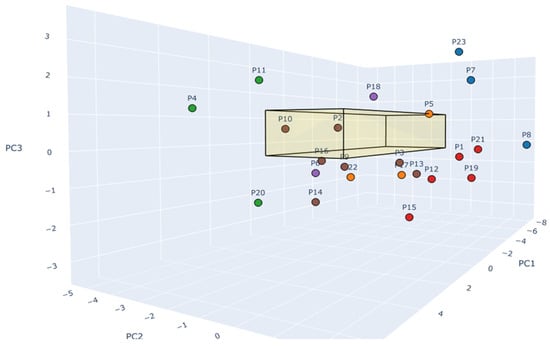

This initial classification was complemented by a cluster analysis. The analysis began with dimensionality reduction using principal component analysis (PCA) (see Figure 4), followed by unsupervised clustering with the K-means algorithm (see Figures 5 and 6). Based on the scree plot, the first five principal components were retained, which together explained 82% of the total variance. The loading matrices of the attributes on each principal component were then generated and given to three experts, who reached consensus on a name for each cluster. The relevance assigned to each cluster was mapped to a numerical value that acts as a discriminant (see Table 9).
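
The text names the libraries but not the settings used. The following minimal scikit-learn sketch rests on several assumptions. PCA is applied to the unscaled 23 × 35 matrix of consensus levels, and K-means runs on the five retained component scores. The choice of k = 6 is inferred from the cluster numbering, which goes up to Cluster 6, and is not stated explicitly. Random placeholder data stands in for the real matrix (File S6), so the printed numbers do not reproduce Figures 4 to 6 or Table 9. Standardizing the attributes first (e.g., with `StandardScaler`) is a common alternative, and the text does not say which option was used.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
# Placeholder data: 23 platforms x 35 attributes, maturity levels 1-5.
# Replace with the consensus evaluation matrix to reproduce the analysis.
levels = rng.integers(1, 6, size=(23, 35))

# Dimensionality reduction: keep the first five components, as in the text.
pca = PCA(n_components=5)
scores = pca.fit_transform(levels)   # platform coordinates on PC1-PC5
loadings = pca.components_           # (5 x 35) attribute loadings per component
explained = pca.explained_variance_ratio_.cumsum()

# Unsupervised clustering on the component scores (k = 6 is an assumption).
kmeans = KMeans(n_clusters=6, n_init=10, random_state=0)
labels = kmeans.fit_predict(scores)

# Attributes with the largest absolute loading on PC2: the kind of
# loading table handed to the experts for naming the clusters.
top_pc2 = np.argsort(-np.abs(loadings[1]))[:3]

# Platforms in the first octant (positive PC1, PC2 and PC3 scores).
first_octant = np.where((scores[:, :3] > 0).all(axis=1))[0]

print(f"Cumulative variance (5 PCs): {explained[-1]:.2f}")
print("Cluster sizes:", np.bincount(labels))
print("Top PC2 attribute indices:", top_pc2)
print("First-octant platforms:", first_octant)
```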

Figure 4.

PCA Scree Plot. Source: The authors.

Figure 5.

Cluster graph on principal components PC1 and PC2. Source: The authors.

Figure 6.

Clusters Obtained by K-Means on Principal Components PC1, PC2, and PC3. Source: The authors.

Table 9.

Identified Clusters.

Decentraland (P2), Overte (P10), Engage VR (P3), and Frame VR (P5) lie in the first octant of the three-dimensional plot of PC1, PC2, and PC3; that is, they score positively on all three components. Cluster 6 (Value 5), the reference cluster, includes Decentraland (P2), OpenSimulator (P9), Overte (P10), Roblox (P14), Second Life (P16), and Webaverse (P22).

Notably, open-source platforms (Overte, OpenSimulator, Webaverse) consistently rank at high maturity levels, suggesting that open code favors scalability and infrastructural evolution. In contrast, closed commercial platforms such as Horizon Worlds or vTime XR show limitations in interoperability and accessibility, which restrict their educational applicability.

Finally, a quality classification of the platforms was defined, resulting in a general assessment matrix; Table 10 presents the final ranking. The hierarchical model segments the ecosystem into distinct maturity tiers. Platforms in Cluster 6 (Relevance Value 5), such as Decentraland and Overte, form the ‘Benchmark Tier’, which is characterized by robust architectures. Within this tier, Roblox leads in functional performance (Area = 52.76%) over Decentraland (Area = 50.98%).

Table 10.

Metaverse Platforms Quality Assessment.

4. Discussion

4.1. Discussion of the Results of Objective 1

The first objective of this study was to propose a model for evaluating the quality of metaverses. Unlike previous studies that focused on lists of functionalities, this study validated a model for evaluating metaverse platforms that integrates three approaches: the ISO/IEC 25010 software quality standard, the Weinberger and Gross [34] maturity model, and the Metagon typology by Schöbel et al. [35]. This hybridization provides a multidimensional assessment that comprehensively covers technical aspects (e.g., system scalability), governance attributes (e.g., policy development), and user experience (e.g., accessibility and inclusion).

The model thus contributes both multidimensional integration and methodological robustness, in line with emerging approaches that promote more holistic and adaptive assessments in immersive technological environments [52].

Recent studies highlight the importance of considering quality of experience, user perception, and economic context when evaluating metaverse services. For example, Du et al. [53] propose a consumer-based economics framework that integrates quality of experience and perceived value into the service offering. Lin et al. [54] developed the Metaverse Announcer User Experience (MAUE) model to identify the factors that affect the quality of user experience and to adjust configuration settings across different parameters to the preferences of most users. These studies reinforce the relevance of incorporating subjective and experiential dimensions into the proposed model, consistent with the inclusion of attributes such as accessibility and inclusion.

Specifically in education, the metaverse has been highlighted as a resource capable of transforming learning. In teaching and learning contexts, immersive platforms allow for the co-creation of scenarios and real-time feedback, although they also raise challenges related to privacy and ethics [55]. In addition, a metaverse-based framework has been proposed to improve the reliability, accuracy, and legitimacy of educational assessment processes through technologies such as VR, AR, and blockchain [56]. These contributions support the relevance of considering the pedagogical dimension, which the model links to governance and technical quality.

Some studies warn that the metaverse, in its current state, is not yet fully consolidated and carries technological, ethical, social, and environmental risks [1]. The evolving regulatory landscape calls for governance attributes, such as policy development and sustainability, to be included in assessment models, which supports the incorporation of this dimension into the proposed model.

4.2. Discussion of the Results of Objective 2

Applying the evaluation model to 23 platforms revealed that the virtual worlds ecosystem is highly fragmented and heterogeneous, with very disparate levels of maturity and quality. This situation confirms the findings of Allam et al. [31] and Jagatheesaperumal et al. [32], who warn that the development of the metaverse does not follow a linear pattern of evolution but instead responds to particular interests, divergent business models, and differentiated technological capabilities. The results of the radar chart area analysis and clustering provide, to the authors’ knowledge, the first empirical and systematized overview of this ecosystem, moving beyond the descriptive approaches currently found in the literature.

The hierarchical ranking with a 5% tolerance threshold provided notable insights into the trade-off between functionality and structure. By prioritizing the PCA score in cases of functional proximity (area difference < 5%), the model exposed the structural superiority of open-architecture platforms.

For instance, Decentraland ranked above Roblox in the Benchmark Tier (Cluster 6), and OpenSimulator ranked above Engage VR. Although the commercial platforms offered slightly broader out-of-the-box functionality, the open-architecture alternatives demonstrated considerably higher latent robustness (PCA scores > 0.80). Similarly, in Cluster 2, Vircadia rose to the top of its tier despite having a smaller functional area than Bigscreen. These results support the premise that, for sustainable metaverse adoption, architectural consistency and openness (reflected in high PCA loadings) are critical quality differentiators that outweigh marginal gains in feature quantity.
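
The tolerance-based tie-break can be made concrete with a short sketch. Several details are assumptions rather than statements from the text. The 5% threshold is read as 5 percentage points of radar-chart area. Near-ties are grouped relative to the highest-area platform of each group, because a pairwise "within 5%" rule is not transitive and therefore needs some grouping convention. The PCA score is taken as a precomputed input. The platform names and values are hypothetical and are not taken from Table 10.

```python
from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    relevance: int    # cluster relevance value (higher = better tier)
    area: float       # radar-chart compliance, in percent
    pca_score: float  # latent robustness score used as tie-breaker


def hierarchical_rank(platforms: list[Platform], tolerance: float = 5.0) -> list[Platform]:
    """Rank by tier, then by area, letting the PCA score decide near-ties.

    Within a tier, platforms are sorted by area. Consecutive platforms whose
    area lies within `tolerance` points of the group's leader form a near-tie
    group, which is then re-ordered by PCA score.
    """
    ranked: list[Platform] = []
    for relevance in sorted({p.relevance for p in platforms}, reverse=True):
        tier = sorted((p for p in platforms if p.relevance == relevance),
                      key=lambda p: p.area, reverse=True)
        start = 0
        while start < len(tier):
            end = start + 1
            while end < len(tier) and tier[start].area - tier[end].area < tolerance:
                end += 1
            ranked.extend(sorted(tier[start:end], key=lambda p: p.pca_score, reverse=True))
            start = end
    return ranked


# Hypothetical values for illustration only.
sample = [
    Platform("X", relevance=5, area=52.0, pca_score=0.70),
    Platform("Y", relevance=5, area=50.0, pca_score=0.85),
    Platform("Z", relevance=5, area=40.0, pca_score=0.95),
    Platform("W", relevance=4, area=60.0, pca_score=0.99),
]
print([p.name for p in hierarchical_rank(sample)])  # ['Y', 'X', 'Z', 'W']
```

Here Y overtakes X because their areas differ by only two points and Y has the higher PCA score. W stays last despite the largest area because tier membership takes precedence over area.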

Cluster 6, called “Mature and robust platforms,” contains virtual worlds such as Decentraland, Roblox, Second Life, and Overte. Notably, the group includes platforms with very different business models and architectures: centralized and commercial (Roblox), decentralized and blockchain-based (Decentraland), and open source (Overte). This result suggests that maturity is not determined by a single type of architecture or economic model. Instead, it appears to depend on the ability to sustain continuous development across multiple dimensions of quality (functionality, interoperability, security, user experience). This supports the idea that the evolution of the metaverse depends more on the comprehensive consolidation of the ecosystem than on specific design decisions. It also expands upon the work of Dwivedi et al. [1], who established that adoption depends on social and cultural factors rather than exclusively on technical ones.

In contrast, the existence of groups such as Cluster 5 (Value 0) (“Lagging or experimental platforms”) and Cluster 1 (Value 1) (“Platforms with rigid or closed architecture”) indicates that a substantial portion of metaverses do not progress beyond the prototype stage or remain limited to very specific application niches [57]. These platforms present clear barriers in terms of scalability, accessibility, and interoperability, which limit their potential for mass adoption [58]. This finding is consistent with the work of Lee et al. [59], who describe how most metaverses remain in the technological validation stage rather than achieving commercial or educational consolidation. In this sense, the results suggest a risk of permanent fragmentation, in which only a small subset of platforms may achieve sustainable development over time [60].

Cluster 3 (Value 4) (“Advanced platforms with technological integration”) is particularly relevant to the discussion, as it is characterized by high scores on Principal Component 2. This component is mainly associated with the attributes API Integration (loading 0.3457) and Data and Identity Portability (loading 0.3027). Interoperability is therefore the main differentiating factor for this group, which reinforces recent studies that identify data integration and mobility as a critical condition for the development of sustainable virtual ecosystems [61,62]. The evidence from this study suggests that the platforms with the greatest potential are those that not only offer attractive immersive experiences but also connect seamlessly with other digital environments, preserving the continuity of digital identity and the flow of information.

Finally, although the metaverse has been recognized as one of the technologies with the greatest disruptive potential today, the results of this study indicate that its use for educational purposes remains marginal. Most of the platforms analyzed prioritize entertainment, commerce, or social interaction and offer no advanced features explicitly geared toward learning. This finding is consistent with the conclusion of Hwang and Chien [5], who argue that most educators remain unaware of the characteristics and full potential of the metaverse in educational settings. Consequently, a gap exists between technological advancement and its appropriation in education. Closing it requires models of curricular integration and teacher training that allow the metaverse to be leveraged as a setting for educational innovation and immersive learning [63]. In summary, the comparative analysis confirms the fragmented nature of the virtual worlds ecosystem and highlights key determinants of platform maturity, including interoperability, scalability, and continuity of the user experience.

4.3. Limitations of the Present Study

The validity of this study should be considered in light of certain limitations. The first is the inherent role of expert judgment in the evaluation process. Although a rigorous consensus process among the researchers was employed, assigning maturity levels across 35 attributes necessarily involved expert judgment. Future implementations could benefit from more detailed rubrics and from evaluators with more diverse professional profiles (e.g., teachers without technical training). The second is the inherent volatility of the metaverse ecosystem. The assessment is a snapshot taken at a specific point in time (2024–2025). Platforms classified as “emerging” could mature rapidly, while others might be discontinued. However, the model is designed for reusability, which allows future longitudinal analyses to address this limitation. Finally, the scope of the analysis was limited to accessible platforms. Platforms with high-cost barriers or business-only access were excluded, which may bias the representativeness of the sample.

4.4. Future Lines of Research

This work opens several avenues for future research. First, context-weighted evaluations could be conducted by adjusting the weights of the attribute categories to specific contexts (e.g., K-12 education, psychological therapy, corporate collaboration) to generate context-specific rankings. Second, qualitative and quantitative studies with end users could correlate the evaluation results with users’ perceptions of usability, effectiveness, and satisfaction, which would contribute to the further validation of the model. Third, replicating the evaluation annually would make it possible to track the evolution of individual platforms and of the ecosystem as a whole, identifying trends and maturity trajectories. Fourth, the model could be expanded with new attributes as technology evolves, such as native integration with generative AI or sovereign digital identity frameworks.

Although the proposed model was applied at a general platform level, several of its dimensions, such as governance, identity management, accessibility, and persistence, are relevant to future analyses of specific educational use cases. For example, platforms with mature governance and identity mechanisms may better support sustained learning communities, while accessibility and interoperability are essential for inclusive educational initiatives. These considerations suggest that the model can serve as a foundation for more targeted evaluations in subsequent studies.

Building on this potential, the proposed model is structured to support the derivation of domain-specific weighting schemes without modifying its core architecture. Given its compatibility with the SQuaRE framework and its formulation as a metamodel, domain adaptation can be addressed systematically by defining a set of domain-specific use cases. These use cases act as a reference framework for assessing the suitability and relevance of the existing evaluation categories, attributes, and maturity indicators, and they serve to determine whether each element of the general model is applicable, meaningful, or non-essential for the domain under consideration. Based on this analysis, domain-specific weighting profiles can be established by assigning relative importance to the selected attributes and indicators, while the overall structure of the model remains unchanged.

For instance, educational scenarios typically emphasize accessibility, collaborative interaction, and pedagogical affordances; therapeutic contexts prioritize usability, privacy, emotional safety, and system stability; and industrial applications focus on scalability, interoperability, performance efficiency, and security. This approach enables the model to function as a stable and reusable evaluation framework, supporting multiple domain-specific assessment instances in a consistent and comparable manner. Accordingly, future research can focus on formally defining and empirically validating such domain-specific weighting profiles, extending the applicability of the model while preserving its methodological coherence and alignment with international quality evaluation standards.
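
A domain-specific weighting profile could be applied as a weighted mean on top of the unchanged maturity ratings. The sketch below is a hypothetical illustration. The attribute keys loosely echo the emphases listed above and are not the model's actual attribute IDs, and the weights are invented for the example. A weight of zero marks an attribute that has been judged non-essential for a domain.

```python
def weighted_compliance(levels: dict[str, int], weights: dict[str, float],
                        max_level: int = 5) -> float:
    """Weighted maturity score as a percentage of the maximum attainable.

    `levels` maps attribute IDs to maturity levels (1-5); `weights` maps the
    same IDs to domain-specific importance. A weight of 0 marks an attribute
    as non-essential for the domain; attributes missing from `weights` also
    get 0.
    """
    total_weight = sum(weights.get(attr, 0.0) for attr in levels)
    if total_weight <= 0:
        raise ValueError("at least one attribute needs a positive weight")
    weighted_sum = sum(weights.get(attr, 0.0) * lvl for attr, lvl in levels.items())
    return 100 * weighted_sum / (total_weight * max_level)


# Hypothetical platform ratings and two hypothetical weighting profiles.
platform = {"accessibility": 4, "collaboration": 5, "scalability": 2, "privacy": 3}
education = {"accessibility": 3, "collaboration": 3, "scalability": 1, "privacy": 1}
industry = {"accessibility": 1, "collaboration": 1, "scalability": 3, "privacy": 2}
print(round(weighted_compliance(platform, education), 1))  # 80.0
print(round(weighted_compliance(platform, industry), 1))   # 60.0
```

The same ratings score 80.0% under the education-oriented profile and 60.0% under the industry-oriented one. A single evaluation can therefore yield different context-specific rankings without any change to the model's structure.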

5. Conclusions

As the metaverse transitions from speculation to practical application, rigorous evaluation frameworks become critical. This study addressed the need for a systematic method to evaluate the quality of metaverse platforms in a fragmented and rapidly evolving market. To this end, a robust and multidimensional quality assessment model was developed and validated. This model, which hybridizes the ISO/IEC 25010 standard, a maturity model, and the Metagon typology, constitutes the main contribution of the study. It offers a valuable tool for academics, developers, and educators to analyze and compare platforms in an informed manner, thereby moving beyond purely descriptive reviews.

The quality and maturity of existing metaverse platforms vary considerably. The evaluation of 23 platforms reveals that a select group (e.g., Decentraland, Overte, Resonite, and Roblox) demonstrates high maturity across multiple dimensions. A significant portion of the market, however, consists of niche, experimental, or limited-functionality solutions that are not yet sufficiently developed or suitable for widespread adoption.

There is no single “best” metaverse platform; rather, the optimal selection is context-dependent. Our analysis demonstrates that a platform with a high overall rating is not necessarily the superior option for all purposes. The proposed model breaks down quality into specific categories whose attributes can be reconfigured, thereby facilitating technology selection that is aligned with specific needs.

Supplementary Materials

Files S1–S6 can be downloaded from the following link: https://doi.org/10.6084/m9.figshare.29821580.v3 (accessed on 30 November 2025).

Author Contributions

Conceptualization, F.S.-D., J.M.-N. and P.C.; methodology, F.S.-D., J.M.-N., P.C. and Y.L.-A.; software, J.M.-N., P.C. and G.R.; validation, F.S.-D., Y.L.-A., G.R. and A.C.; formal analysis, F.S.-D., J.M.-N. and P.C.; investigation, F.S.-D., P.C., M.B.-Q. and A.C.; resources, F.S.-D. and M.B.-Q.; data curation, J.M.-N., P.C. and G.R.; writing—original draft preparation, F.S.-D., P.C., Y.L.-A. and A.C.; writing—review and editing, Y.L.-A., G.R. and M.B.-Q.; visualization, P.C., Y.L.-A. and A.C.; supervision, F.S.-D., Y.L.-A. and M.B.-Q.; project administration, F.S.-D. and M.B.-Q.; funding acquisition, F.S.-D. and M.B.-Q. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the National Fund for Scientific and Technological Development (Fondecyt Regular), number 1231136, of the National Agency for Research and Development, Chile (ANID-Chile).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of Universidad Católica de la Santísima Concepción (Fondecyt Nº1231136) on 14 April 2023.

Informed Consent Statement

Informed consent was obtained from all participants involved in the study.

Data Availability Statement

The data is available at the following link [Dataset. https://doi.org/10.6084/m9.figshare.29821580.v3 (accessed on 30 November 2025)]. Any additional information can be provided by the lead author of this study.

Acknowledgments

We thank the Consolidated Research Group “Research and Innovation Group in Socioemotional Learning, Well-Being and Mental Health to Foster Thriving” (THRIVE4ALL), UCSC, and Universidad de La Sabana (Group Technologies for Academia—Proventus, Project EDUPHD-20-2022) for the support received in the preparation of this article.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| VW | Virtual World |
| VWLE | Virtual World Learning Environments |
| AI | Artificial Intelligence |
| APIs | Application Programming Interfaces |
| AR | Augmented Reality |
| MR | Mixed Reality |
| VR | Virtual Reality |
| XR | Extended Reality |
| DSR | Design Science Research |
| ICT | Information and Communication Technologies |
| ISO | International Organization for Standardization |
| IEC | International Electrotechnical Commission |
| NFTs | Non-Fungible Tokens |
| UGC | User-Generated Content |
| SQuaRE | Software Quality Assessment and Requirements |

Appendix A. Validation Questionnaire by Expert Judges

Title of the Instrument: Checklist for Determining the Maturity Level of Virtual Worlds.

Objective of the Questionnaire: To evaluate the content validity of the items (attributes) that make up the checklist. Your expert opinion is essential to ensure that each attribute is relevant, clear, and pertinent to the maturity level it is intended to measure.

Expert Judge Information

| Field | Response |
|---|---|
| Name | |
| Area of Expertise | |
| Years of Experience | |

Instructions

Below is a series of key assessment attributes, organized by category and maturity level (ML1 to ML5). For each attribute, please rate the following three criteria using the scales provided:

- Relevance (R): How essential is this attribute in defining the specified maturity level?

- Clarity (C): Is the attribute description clear, concise, and easy to understand?

- Pertinence (P): Do you consider the attribute to be correctly placed at this maturity level (e.g., ML1), or does it belong to another (lower or higher) level?

Evaluation Scales

| Relevance (R) | Clarity (C) | Pertinence of the Level (P) |
|---|---|---|
| 1 = Not relevant | 1 = Unclear | 1 = Incorrect (belongs to another level) |
| 2 = Not very relevant | 2 = Clear | 2 = Adequate |
| 3 = Relevant | 3 = Very clear | |
| 4 = Very relevant | | |

Quality Attributes Assessment Questionnaire

| ID | Main Attribute | Key Attribute Description | Level | R | C | P | Comment |
|---|---|---|---|---|---|---|---|
| AT1.1 | System Scalability | Supports ≤ 10 concurrent users without failure | ML1 | | | | |
| AT1.2 | System Scalability | There is no load balancing or fault tolerance | ML1 | | | | |
| AT1.3 | System Scalability | Maximum CPU usage > 95% in basic tests | ML1 | | | | |
| AT2.1 | System Scalability | Supports up to 50 users with limited stability | ML2 | | | | |
| AT2.2 | System Scalability | Basic balancing without dynamic monitoring | ML2 | | | | |
| IR1.1 | Representation of the Person | Default generic rendering only | ML1 | | | | |
| IR1.2 | Representation of the Person | No avatar customization option | ML1 | | | | |
| CE1.1 | Imaginative Creation | There are no creation or design tools | ML1 | | | | |
| GO1.1 | Policy Development | There are no documented policies | ML1 | | | | |
| AS1.1 | Educational Resources | Total absence of educational resources | ML1 | | | | |

References

- Dwivedi, Y.K.; Hughes, L.; Baabdullah, A.M.; Ribeiro-Navarrete, S.; Giannakis, M.; Al-Debei, M.M.; Dennehy, D.; Metri, B.; Buhalis, D.; Cheung, C.M.; et al. Metaverse beyond the hype: Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2022, 71, 102642.

- López-Belmonte, J.; Pozo-Sánchez, S.; Moreno-Guerrero, A.; Lampropoulos, G. Metaverse in education: A systematic review. Rev. Educ. Dist. 2023, 23, 1–25.

- Rojas-Sánchez, M.; Palos-Sánchez, P.; Folgado-Fernández, J. Systematic literature review and bibliometric analysis on virtual reality and education. Educ. Inf. Technol. 2023, 28, 155–192.

- Sandoval-Henríquez, F.; Sáez-Delgado, F.; Badilla-Quintana, M.G. Systematic review on the integration of immersive technologies to improve learning in primary education. J. Comput. Educ. 2025, 12, 477–502.

- Hwang, G.-J.; Chien, S.-Y. Definition, roles, and potential research issues of the metaverse in education: An artificial intelligence perspective. Comput. Educ. Artif. Intell. 2022, 3, 100082.

- Onu, P.; Pradhan, A.; Mbohwa, C. Potential to use metaverse for future teaching and learning. Educ. Inf. Technol. 2024, 29, 8893–8924.

- Shu, X.; Gu, X. An empirical study of A smart education model enabled by the edu-metaverse to enhance better learning outcomes for students. Systems 2023, 11, 75.

- Yeganeh, L.; Fenty, N.; Chen, Y.; Simpson, A.; Hatami, M. The future of education: A multi-layered metaverse classroom model for immersive and inclusive learning. Future Internet 2025, 17, 63.

- Jovanović, A.; Milosavljević, A. VoRtex Metaverse platform for gamified collaborative learning. Electronics 2022, 11, 317.

- Zhang, X.; Chen, Y.; Hu, L.; Wang, Y. The metaverse in education: Definition, framework, features, potential applications, challenges, and future research topics. Front. Psychol. 2022, 13, 1016300.

- Ermağan, E. 21st Century Skills and Language Education: Metaverse Technologies and Turkish Education as a Case. Int. J. Mod. Educ. Stud. 2025, 9, 414–433.

- Vaz, E. The Role of the Metaverse and the Geographical Dimension of Knowledge Management. In Regional Knowledge Economies; Springer Nature: Cham, Switzerland, 2024; pp. 23–40.

- Göçen, A. Metaverse in the context of education. Int. J. West. Black Sea Soc. Humanit. Sci. 2022, 6, 98–122.

- Kye, B.; Han, N.; Kim, E.; Park, Y.; Jo, S. Educational applications of metaverse: Possibilities and limitations. J. Educ. Eval. Health Prof. 2021, 18, 32.

- Al Yakin, A.; Seraj, P. Impact of metaverse technology on student engagement and academic performance: The mediating role of learning motivation. Int. J. Comput. Inf. Manuf. 2023, 3, 10–18.

- Çelik, F.; Baturay, M. The effect of metaverse on L2 vocabulary learning, retention, student engagement, presence, and community feeling. BMC Psychol. 2024, 12, 58.

- De Felice, F.; Petrillo, A.; Iovine, G.; Salzano, C.; Baffo, I. How does the metaverse shape education? A systematic literature review. Appl. Sci. 2023, 13, 5682.

- Bell, M. Toward a Definition of “Virtual Worlds”. J. Virtual Worlds Res. 2008, 1, 1–5.

- Girvan, C. What is a virtual world? Definition and classification. Educ. Technol. Res. Dev. 2018, 66, 1087–1100.

- INTEL. Demystifying the Virtual Reality Landscape. Available online: https://www.intel.la/content/www/xl/es/tech-tips-and-tricks/virtual-reality-vs-augmented-reality.html (accessed on 30 November 2025).

- Ng, D. What is the metaverse? Definitions, technologies and the community of inquiry. Australas. J. Educ. Technol. 2022, 38, 190–205.

- Cheng, S. Metaverse. In Metaverse: Concept, Content and Context; Springer Nature: Cham, Switzerland, 2023; pp. 1–23.

- Dionisio, J.D.; Burns, W.G., III; Gilbert, R. 3D virtual worlds and the metaverse: Current status and future possibilities. ACM Comput. Surv. 2013, 45, 34.

- Xu, M.; Ng, W.; Lim, W.; Kang, J.; Xiong, Z.; Niyato, D.; Yang, Q.; Shen, X.S.; Miao, C. A full dive into realizing the edge-enabled metaverse: Visions, enabling technologies, and challenges. IEEE Commun. Surv. Tutor. 2022, 25, 656–700.

- Bricken, W. Learning in Virtual Reality. In Proceedings of the 1990 ACM SIGGRAPH Symposium on Interactive 3D Graphics, San Diego, CA, USA, 25–28 March 1990; pp. 177–184. Available online: https://eric.ed.gov/?id=ED359950 (accessed on 28 November 2025).

- Dede, C. The evolution of constructivist learning environments: Immersion in distributed, virtual worlds. Educ. Technol. 1995, 35, 46–52. Available online: https://www.jstor.org/stable/44428298 (accessed on 28 November 2025).

- Kaddoura, S.; Al Husseiny, F. The rising trend of Metaverse in education: Challenges, opportunities, and ethical considerations. PeerJ Comput. Sci. 2023, 9, e1252.

- Wang, H.; Ning, H.; Lin, Y.; Wang, W.; Dhelim, S.; Farha, F.; Ding, J.; Daneshmand, M. A survey on the metaverse: The state-of-the-art, technologies, applications, and challenges. IEEE Internet Things J. 2023, 10, 14671–14688.

- Yıldız, T. The Future of Digital Education: Artificial Intelligence, the Metaverse, and the Transformation of Education. Sosyoloji Derg. 2024, 44, 969–988.

- McClarty, K.; Orr, A.; Frey, P.; Dolan, R.; Vassileva, V.; McVay, A. A literature review of gaming in education. Gaming Educ. 2012, 1, 1–35. Available online: https://surl.lu/mxzrry (accessed on 28 November 2025).

- Allam, Z.; Sharifi, A.; Bibri, S.; Jones, D.; Krogstie, J. The metaverse as a virtual form of smart cities: Opportunities and challenges for environmental, economic, and social sustainability in urban futures. Smart Cities 2022, 5, 771–801.

- Jagatheesaperumal, S.; Ahmad, K.; Al-Fuqaha, A.; Qadir, J. Advancing education through extended reality and internet of everything enabled metaverses: Applications, challenges, and open issues. IEEE Trans. Learn. Technol. 2024, 17, 1120–1139.

- ISO/IEC 25000:2014; Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Guide to SQuaRE. International Organization for Standardization: Geneva, Switzerland, 2014.

- Weinberger, M.; Gross, D. A metaverse maturity model. Glob. J. Comput. Sci. Technol. 2023, 22, 39–45. Available online: https://surl.li/cdevrd (accessed on 28 November 2025).

- Schöbel, S.; Karatas, J.; Tingelhoff, F.; Leimeister, J.M. Not everything is a metaverse?! A Practitioners Perspective on Characterizing Metaverse Platforms. In Proceedings of the Hawaii International Conference of System Science (HICSS), Maui, HI, USA, 3–6 January 2023.

- Schultz, R. Comparison Chart of 15 Social VR Platforms. Available online: https://ryanschultz.com (accessed on 30 November 2025).

- ISO/IEC 25010:2011; Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—System and Software Quality Models. International Organization for Standardization: Geneva, Switzerland, 2011.

- Hevner, A.R.; March, S.T.; Park, J.; Ram, S. Design science in information systems research. MIS Q. 2004, 28, 75–105.

- ISO/IEC 25040:2011; Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Evaluation Process. International Organization for Standardization: Geneva, Switzerland, 2011.

- Sadeghi-Niaraki, A.; Rahimi, F.; Binti Azlan, N.; Song, H.; Ali, F.; Choi, S.-M. Groundbreaking taxonomy of metaverse characteristics. Artif. Intell. Rev. 2025, 58, 244. [Google Scholar] [CrossRef]

- Creswell, J.W.; Creswell, J.D. Research design: Qualitative, Quantitative, and Mixed Methods Approaches, 5th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2018; Available online: https://surl.li/rtqenb (accessed on 28 November 2025).

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis, 8th ed.; Pearson: Harlow, UK, 2019. [Google Scholar]

- Gravetter, F.J.; Forzano, L.-A. Research Methods for the Behavioral Sciences, 6th ed.; Cengage: Boston, MA, USA, 2018. [Google Scholar]

- Patton, M.Q. Qualitative Research & Evaluation Methods, 4th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2015. [Google Scholar]

- Flick, U. An Introduction to Qualitative Research, 6th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2018. [Google Scholar]

- Shadish, W.R.; Cook, T.D.; Campbell, D.T. Experimental and Quasi-Experimental Designs for Generalized Causal Inference; Houghton Mifflin: Boston, MA, USA, 2002; Available online: https://psycnet.apa.org/record/2002-17373-000 (accessed on 28 November 2025).

- Miles, M.B.; Huberman, A.M.; Saldaña, J. Qualitative Data Analysis: A Methods Sourcebook, 4th ed.; SAGE Publications: Thousand Oaks, CA, USA, 2019.

- American Psychological Association. Publication Manual of the American Psychological Association, 7th ed.; APA: Washington, DC, USA, 2020.

- World Medical Association. World Medical Association Declaration of Helsinki: Ethical principles for medical research involving human subjects. JAMA 2013, 310, 2191–2194.

- Open Science Collaboration. Estimating the reproducibility of psychological science. Science 2015, 349, aac4716.

- Sáez-Delgado, F.; Coronado Sánchez, P.C. Metaverse Platforms—SQuaRE Based Assesment; Figshare Dataset: London, UK, 2025.

- Adhini, N.; Prasad, C. Perceptions and drivers of the metaverse adoption: A mixed-methods study. Int. J. Consum. Stud. 2024, 48, e13069.

- Du, H.; Ma, B.; Niyato, D.; Kang, J.; Xiong, Z.; Yang, Z. Rethinking quality of experience for metaverse services: A consumer-based economics perspective. IEEE Netw. 2023, 37, 255–263.

- Lin, Z.; Duan, H.; Li, J.; Sun, X.; Cai, W. MetaCast: A Self-Driven Metaverse Announcer Architecture Based on Quality of Experience Evaluation Model. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 6756–6764.

- Sinha, E. ‘Co-creating’ experiential learning in the metaverse-extending the Kolb’s learning cycle and identifying potential challenges. Int. J. Manag. Educ. 2023, 21, 100875.

- Zi, L.; Cong, X. Metaverse Solutions for Educational Evaluation. Electronics 2024, 13, 1017.

- Zhou, Q.; Wang, B.; Mayer, I. Understanding the social construction of the metaverse with Q methodology. Technol. Forecast. Soc. Change 2024, 208, 123716.

- Hamdan, I.K.A.; Aziguli, W.; Zhang, D.; Alhakeem, B. Barriers to Adopting Metaverse Technology in Manufacturing Firms. J. Manuf. Eng. 2024, 19, 146–162.

- Lee, J.; Kim, Y. Sustainable educational metaverse content and system based on deep learning for enhancing learner immersion. Sustainability 2023, 15, 12663.

- De Giovanni, P. Sustainability of the Metaverse: A transition to Industry 5.0. Sustainability 2023, 15, 6079.

- Al-Kfairy, M.; Alomari, A.; Al-Bashayreh, M.; Alfandi, O.; Tubishat, M. Unveiling the Metaverse: A survey of user perceptions and the impact of usability, social influence and interoperability. Heliyon 2024, 10, e31413.

- Ruijue, Z. Theoretical Challenges and Solutions for Data Interconnectivity and Interoperability in the Metaverse Context. J. Jishou Univ. (Soc. Sci. Ed.) 2025, 46, 37. Available online: https://skxb.jsu.edu.cn/EN/Y2025/V46/I1/37 (accessed on 28 November 2025).

- Mitra, S. Metaverse: A potential virtual-physical ecosystem for innovative blended education and training. J. Metaverse 2023, 3, 66–72.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.