Abstract

Chinese adolescents face significant mental health risks from addictive design features embedded in AI-driven digital platforms. Existing regulations inadequately address design-level addiction triggers in these environments, focusing primarily on content moderation and usage restrictions. This study identifies this gap and offers a novel framework that integrates systems theory and legal governance to regulate feedback loops between adolescents and digital platforms. Using the Adaptive Interaction Design Framework and a three-tiered typology of addictive design features, the research highlights how conceptual ambiguity and institutional fragmentation weaken regulatory efforts, resulting in reactive responses instead of proactive protection. To enhance regulatory effectiveness, this study recommends establishing a risk-tiered precautionary oversight system, providing enforceable definitions of addictive design features, mandating anti-addiction design practices and labeling, implementing economic measures like Pigouvian taxes, and fostering multi-stakeholder governance. It also emphasizes the need for cross-border coordination to address regulatory arbitrage. These policy directions aim to enhance regulatory efficacy and protect youth well-being in digital environments, contributing to ongoing international discussions on adolescent digital safety.

1. Introduction

The rapid integration of artificial intelligence (AI) into digital platforms has reshaped how adolescents engage, learn, and socialize online. This transformation is particularly visible in China, where 97.2% of minors now have internet access and begin using it at progressively earlier ages (China Internet Network Information Center [CNNIC], 2023). AI-driven platforms are central to youth culture and development (Brandtzaeg et al., 2025). In this study, the term “AI-driven platforms” refers to digital services whose core operations rely on algorithmic content curation, real-time behavioral prediction, and dynamic optimization powered by machine learning models (Kumar et al., 2024). These include social-media, video-streaming, and gaming services that learn from user behavior and recalibrate content delivery to sustain attention. Unlike traditional internet services, they operate as adaptive socio-technical systems that continuously adjust in response to user input. This creates feedback loops in which algorithms and users mutually influence one another, forming complex interaction patterns where small behavioral changes can generate large and often unintended outcomes (Matias, 2023).

Extensive evidence links excessive digital use to heightened anxiety (Kiviruusu, 2024; Jeong et al., 2016), depression (P. Wang et al., 2025; Keles et al., 2020; Twenge et al., 2017), nomophobia (Piko et al., 2024), sleep disturbances (Ferrari et al., 2024; Tokiya et al., 2020), and academic decline (Bilge et al., 2022; Park et al., 2014) among adolescents. This pattern of excessive engagement is often conceptualized as Problematic Internet Use (PIU), a maladaptive coping process marked by difficulties in self-regulation and impaired daily functioning (Fineberg et al., 2018). Although PIU is not formally categorized as an addiction in the DSM-5 (American Psychiatric Association, 2013) or ICD-11 (WHO, 2025), international bodies including the WHO and UNICEF increasingly recognize excessive digital use as a significant public health and developmental concern (WHO, 2024; UNICEF, 2025). While early research on PIU primarily emphasized individual behavior, increasing attention has shifted to the structural and design-level factors that drive compulsive use, especially on AI-driven platforms (X. W. Chen et al., 2023; Hartford & Stein, 2022; Bhargava & Velasquez, 2021; Cemiloglu et al., 2021).

Within this environment, regulators and scholars have increasingly focused on “addictive design features.” This term refers to interface or algorithmic elements deliberately engineered to sustain engagement through reinforcement-based feedback loops. Some authorities describe similar mechanisms as “addictive patterns” (Agencia Española de Protección de Datos (Spanish Data Protection Agency), 2024), while related scholarship often examines them under the broader concept of “dark patterns” (Isola & Esposito, 2025; Monge Roffarello et al., 2023; Mathur et al., 2021; Soe et al., 2020). This study adopts the term “addictive design features” to emphasize design elements that exploit behavioral reinforcement principles to sustain compulsive use, distinguishing them from deception-based or privacy-manipulative dark patterns (De Conca, 2023).

Adolescents are particularly vulnerable to such features (Rossi et al., 2024). Their cognitive systems for impulse control, risk assessment, and long-term decision-making remain immature (Kuss et al., 2013; Schepis et al., 2008), while their sensitivity to social rewards and feedback is heightened. Limited privacy awareness, unfamiliarity with virtual currencies, and a preference for immediate gratification further reduce their ability to resist addictive design features (OECD, 2022). These developmental traits (Howard et al., 2025; Burnell et al., 2023) increase their vulnerability to the negative impacts of addictive design features. Empirical studies confirm a significant association between specific addictive design features and PIU in adolescents (X. W. Chen et al., 2023), highlighting the urgent need for regulatory intervention.

In response, international regulatory frameworks have begun emphasizing design accountability. The EU’s Digital Services Act (DSA) (European Union, 2022) requires Very Large Online Platforms (VLOPs) to conduct annual systemic risk assessments on their platforms, including features that could influence users’ mental health. Similarly, California’s Age-Appropriate Design Code Act (CAADCA) (California Legislature, 2022) prohibits dark patterns and interface features known to harm children’s physical or mental health. In contrast, China’s approach—while offering strong youth-specific protections—remains focused on content moderation, usage-time restrictions, and mandatory parental supervision, measures that do not directly regulate the design architectures. Crucially, China still lacks a unified legal definition of “addictive design features” and established risk-based standards for their identification and control. This gap constitutes a regulatory blind spot that this study seeks to address.

Accordingly, this study pursues three interconnected objectives: (1) to explain how addictive design features in AI-driven platforms exploit adolescents’ vulnerabilities through feedback mechanisms; (2) to diagnose why China’s existing regulatory mechanisms struggle to govern these structural risks and to identify key legal gaps; and (3) to propose a risk-tiered regulatory agenda, informed by international practice, that advances proactive protection for adolescents.

To achieve these aims, this study integrates behavioral science, systems theory, and legal analysis. It introduces the Adaptive Interaction Design Framework (AIDF), which models platform-user interactions as feedback systems and identifies specific points for regulatory intervention.

This study contributes by addressing a critical gap in existing regulatory frameworks that often overlook the design elements of AI-driven platforms. Specifically, it clarifies how addictive design features operate within adaptive, closed-loop feedback environments that exacerbate adolescents’ vulnerabilities. The AIDF not only highlights these complex interactions but also offers a systematic approach for understanding the interplay between platform objectives and user behaviors. This integrated lens enables regulators to identify blind spots in current policies and encourages the development of proportionate, proactive interventions that can effectively mitigate the risks adolescents encounter in the digital environment.

The remainder of the paper is structured as follows. Section 2 outlines the research methods and interdisciplinary analytical approach. Section 3 presents the three-tiered typology of addictive design features and the AIDF model. Section 4 evaluates China’s regulatory framework through international comparison and proposes tiered governance recommendations. Section 5 concludes with broader implications for global youth protection in algorithmic environments.

2. Materials and Methods

2.1. Methodological Approach and Scope

This study adopts an interdisciplinary methodology that integrates legal-doctrinal analysis with conceptual frameworks from systems theory and behavioral science to develop a normative governance model. Legal analysis serves as the core method. Doctrinal interpretation examines Chinese statutes and regulations to identify how they allocate obligations, define protected interests, and structure enforcement. Comparative analysis places China’s framework within the context of international regulatory developments. The AIDF serves as an analytical model, conceptualizing platform–user interactions as feedback loops derived from control systems theory, which aids in identifying potential points for legal intervention to disrupt compulsive use patterns. To support the need for design-level regulation, this study relies on existing empirical evidence from behavioral science, specifically peer-reviewed studies that demonstrate adolescents’ cognitive vulnerabilities and reinforcement mechanisms.

2.2. Doctrinal and Comparative Analysis

The legal analysis covers China’s regulatory hierarchy, including national laws, administrative regulations, and ministerial rules. Primary sources include the revised Law on the Protection of Minors (Standing Committee of the National People’s Congress of China, 2020), the Regulations on the Protection of Minors in Cyberspace (RPMC) (State Council of China, 2023), the Provisions on Algorithmic Recommendations for Internet Information Services (PAARIIS) (Cyberspace Administration of China et al., 2021), the Notice on Preventing Minors from Becoming Addicted to Online Games (China’s National Press and Publication Administration, 2019), and the Interim Measures for Generative Artificial Intelligence Services (Cyberspace Administration of China et al., 2023). All documents were sourced from official websites of the State Council of China, the Cyberspace Administration of China (CAC), and other relevant government agencies. This analysis used a doctrinal and textual approach to identify legal principles, normative gaps, and enforcement mechanisms. Rather than being descriptive, it is interpretive, focusing on how regulatory provisions address structural design features that sustain compulsive use.

To place China’s framework in a global context, a comparative analysis of selected jurisdictions (EU, UK, and US) was conducted. Though not exhaustive, this comparison selectively examines jurisdictions that have explicitly regulated addictive, manipulative, or dark-pattern designs in youth-protection rules and digital governance laws. The aim is to highlight diverse regulatory strategies that could inform policy development in China.

2.3. Conceptual Literature Synthesis

This study conducted a conceptual literature synthesis to develop the framework supporting the normative legal analysis. By integrating interdisciplinary scholarship, it establishes the foundational concepts for the paper’s normative argument. Relevant literature was identified through targeted searches in Web of Science, PubMed, Scopus, and CNKI using keywords “addictive patterns,” “addictive algorithms,” “addictive design,” “dark pattern,” “manipulative design,” “deceptive design,” “adolescent wellbeing,” “mental health,” “digital addiction,” “internet addiction,” and “problematic internet use.” Searches were performed on 1 July 2025 and covered publications from January 2014 to May 2025, prioritizing peer-reviewed articles, systematic reviews, and authoritative policy reports. Inclusion focused on conceptual relevance and theoretical contribution, emphasizing empirical or theoretical insights into feedback-driven design, adolescent vulnerabilities, or regulatory and ethical perspectives. Non-scholarly sources and purely technical papers without regulatory implications were excluded.

As the synthesis provides a conceptual foundation rather than new data, traditional systematic review protocols, such as PRISMA, were not applied. This approach aligns with interdisciplinary legal scholarship, where existing research informs normative reasoning, and provides empirical grounding for policy arguments instead of generating new empirical findings (Hutchinson & Duncan, 2012). The resulting framework integrates theoretical and empirical insights to guide the subsequent legal analysis and normative critique.

2.4. Analytical Framework: The Adaptive Interaction Design Framework (AIDF)

To analyze the emergence of addictive design features and potential regulatory interventions, this study employs the AIDF as its analytical tool. Developed from control systems theory and recent interdisciplinary research (Ibrahim et al., 2024), the AIDF conceptualizes the interaction between digital platforms (“Controller”), interfaces (“Actuator/Sensor Module”), and users (“Process Module”) as a dynamic closed-loop system. This framework was selected for its ability to reveal the feedback loops driving compulsive use, identify specific points for legal intervention, and translate abstract regulatory goals into clear and actionable design requirements. In this study, the AIDF serves as a legal-analytical tool, structuring the normative analysis, clarifying the causal pathways through which addictive design features impact adolescents, and guiding the identification of regulatory interventions.

2.5. Limitations

This study has several methodological limitations consistent with its conceptual and normative scope. It relies primarily on publicly available legal texts and secondary academic sources, without original empirical research. The doctrinal and comparative analyses are interpretive in nature, meaning alternative interpretations of legal texts are possible. Furthermore, the conceptual literature synthesis is selective rather than exhaustive, prioritizing theoretical and normative relevance over comprehensive bibliometric coverage. Future empirical research could test or expand the framework’s assumptions in practical enforcement contexts.

3. Results

3.1. Definition, Harms, and a Typology of Addictive Design Features

3.1.1. Definition

Despite its growing importance in digital governance, the concept of “addictive design features” remains underdeveloped in legal and empirical scholarship. It is often discussed within broader debates on manipulative, persuasive, or deceptive interface designs. Notably, the concept of “dark patterns,” first introduced by British UX designer Harry Brignull, refers to user interface designs that intentionally mislead or pressure users into unintended actions. Subsequent frameworks, such as those by Mathur et al. (2019), categorize dark patterns by methods like forced action, misdirection, and obstruction. More recent studies define them as interfaces that undermine user autonomy and decision-making to benefit business interests, often by exploiting cognitive biases (Zac et al., 2025).

Addictive design features constitute a specific subset of dark patterns characterized by feedback loops that reinforce continuous engagement. Drawing on the Agencia Española de Protección de Datos (Spanish Data Protection Agency) (2024) and related studies, they can be described as interface and algorithmic features designed to exploit neurocognitive vulnerabilities, especially those related to reward anticipation and attentional control, thereby inducing repeated use and dependency-like behaviors. Scholars further identify them as attention-capturing dark patterns that reduce disengagement cues, commonly through mechanisms such as infinite scroll, autoplay functions, and personalized recommendation feeds (Ye, 2025). These features function within dynamic socio-technical systems, interacting with algorithms, user routines, and content delivery mechanisms to sustain usage cycles.

In China, however, this concept lacks a legal definition. Regulatory texts such as the RPMC and the PAARIIS use the vague term “inducing addiction,” which highlights outcomes like overuse or dependency but offers no clear standard for what constitutes “inducement” or “addiction.” This definitional vagueness undermines regulatory enforcement and leaves platforms without precise guidance on acceptable design practices. Given this situation, this study adopts an analytical definition of addictive design features as design choices that systematically exploit users’ cognitive and psychological vulnerabilities to prolong engagement in ways that compromise wellbeing. This definition establishes a consistent conceptual foundation for comparative and regulatory analysis and supports proposals for a clearer, legally enforceable framework aimed specifically at adolescent protection.

3.1.2. Harms of Addictive Design Features

Research on the psychological and physical harms of excessive screen time and PIU is extensive. However, few studies isolate the effects of specific addictive design features as independent variables (X. W. Chen et al., 2023). Most research addresses broader phenomena such as digital addiction or social media dependency, but still provides important inferential evidence on the public health implications of such features. Drawing from this literature, four categories of harm are identified.

Neurological Harm: fMRI studies (Su et al., 2021) show that exposure to personalized algorithmic content, like TikTok’s recommendations, heightens activation in key regions of the default mode network, including the medial prefrontal cortex and posterior cingulate cortex, as well as in the ventral tegmental area. Increased connectivity to visual, auditory, and frontoparietal networks accompanies this neural activity. Research (Gao et al., 2025) in young adults with algorithm-driven compulsive use indicates heightened regional homogeneity in the dorsolateral prefrontal cortex and related areas, suggesting over-synchronized activity linked to reward immersion and cognitive entrenchment. These neural patterns may foster addiction-like behaviors through increased self-referential processing and reward signaling. A scoping review (Ding et al., 2024) on children and adolescents reports consistent structural brain changes, including reduced gray and white matter volume, cortical thickness, and impaired functional connectivity, especially in the prefrontal cortex. These findings imply that chronic exposure to addictive design features may alter brain architecture and neural communication pathways, particularly those related to reward processing and executive control.

Psychological Harm: The psychological consequences of addictive digital engagement manifest across emotional, cognitive, and behavioral domains. Emotional harms include increased rates of depression, anxiety, loneliness, and mood instability (Agyapong-Opoku et al., 2025; Kiviruusu, 2024). Cognitive impairments encompass attention deficits (Méndez et al., 2024), reduced impulse control (S. Li et al., 2021), compromised executive function (Reed, 2022), and difficulty with planning and decision-making. Behaviorally, users experience loss of control over their usage, guilt about time spent online, withdrawal-like symptoms when attempting to reduce use, and neglect of offline responsibilities (Aziz et al., 2024). These psychological impairments reduce individual agency and resilience, exacerbating developmental vulnerabilities and threatening the mental health of future generations.

Risk Exposure: Extended digital engagement increases exposure to secondary risks such as cyberbullying, online grooming, scams, and inappropriate content (Topan et al., 2025; Schittenhelm et al., 2025). Youth with psychological issues such as impaired judgment and reduced impulse control are particularly vulnerable to online manipulation and risky decision-making (Giedd, 2020). These risks compromise personal safety and undermine the protective environments necessary for healthy youth development.

Comorbidities with Physical Health Harm: Addictive digital engagement negatively impacts physical health, leading to sleep disruption, weight changes, and academic challenges (Nagata et al., 2023; Haghjoo et al., 2022). These effects may be mediated by both direct mechanisms, such as blue light exposure affecting circadian rhythms, and indirect pathways, including reduced physical activity and fewer face-to-face interactions (Silvani et al., 2022). Cumulatively, these health impacts can affect educational attainment, physical development, and long-term societal productivity.

AI-driven addictive design features use advanced algorithmic mechanisms and machine learning to develop hyper-personalized manipulation strategies that operate below the user’s awareness. These strategies exploit cognitive vulnerabilities, weaken autonomy, and create a power imbalance between technology and human agency. While direct causality remains unconfirmed, evidence links these features to PIU, which is strongly associated with diverse harms (X. W. Chen et al., 2023). Adolescents are particularly vulnerable due to ongoing development and limited self-regulation. From a public health perspective, a precautionary approach to regulating addictive design features is both reasonable and necessary to foster a sustainable and resilient digital ecosystem for youth.

3.1.3. A Three-Tiered Typology of Addictive Design Features

Effective governance of adolescent digital wellbeing requires understanding not only the harms but also the structural logic of how addictive design features operate. Building on typologies proposed by Gray et al. (2023) and Beltrán (2025), this study adopts a three-tiered framework that classifies design features according to their strategic intent, psychological mechanism, and specific interface techniques. This hierarchical structure distinguishes design features by abstraction level and operational specificity (see Table 1), conceptualizing digital platforms as layered socio-technical systems. The framework provides a shared vocabulary for proportionate governance capable of addressing AI-driven personalization and feedback loops.

Table 1.

Typology of Addictive Design Features.

At the high level, the typology identifies four strategic intentions commonly used by digital platforms to shape user behavior. Interface Interference manipulates user perception, comprehension, or navigation through visual emphasis, information overload, or deceptive choice architectures. Social Engineering employs principles of social psychology and behavioral economics to influence users through emotional cues, social norms, and cognitive biases. Persistence encourages continued engagement by exploiting users’ sensitivity to unfinished tasks and prior investment. Forced Action coerces users into specific behaviors or timed interactions to maintain functionality, thereby restricting autonomy and reinforcing compulsive use.

Building on these strategies, the mid level focuses on the psychological mechanisms that target cognitive and emotional traits (Shi et al., 2025; Servidio et al., 2024; Chamorro et al., 2024; Gray et al., 2023; Geronimo et al., 2020), particularly adolescents’ limited impulse control and heightened need for social validation. These mechanisms function as operational bridges, translating abstract strategic goals into specific psychological levers that create self-reinforcing engagement patterns. At the low level, these psychological mechanisms materialize as concrete, application-specific interface features that users directly interact with (Lukoff et al., 2021; Tran et al., 2019; Cho et al., 2021; Sun et al., 2024; Lee et al., 2014). These features are key intervention points for regulation because they are empirically observable and technically modifiable.

The framework conceptualizes addictive design as a layered system linking abstract manipulation goals to concrete interface practices. Strategic intentions define why engagement is pursued; psychological mechanisms explain how cognitive vulnerabilities are exploited; and interface features describe what users experience. This distinction clarifies that not all addictive design features pose equal risks or demand identical regulatory responses, supporting proportionate and adaptive governance. The typology also provides a conceptual bridge to the AIDF introduced in Section 3.1.4, which maps legal obligations to specific intervention points across these layers. As digital environments evolve with generative AI, predictive analytics, and reinforcement learning, the typology remains a dynamic framework open to continuous refinement.

3.1.4. Conceptualizing Addictive Design Features Within AIDF

This study enhances the three-tiered typology by applying the AIDF to illustrate how addictive design features function as closed-loop feedback systems connecting platforms, interfaces, and users. Derived from control systems theory and its specific application in analyzing AI-driven interfaces (Ibrahim et al., 2024), the AIDF conceptualizes digital environments as adaptive socio-technical systems that optimize user engagement through continuous feedback.

- Core Components of the AIDF

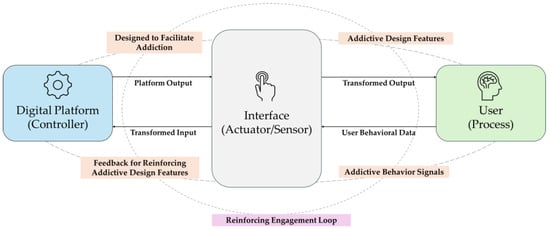

In traditional control systems, key components interact through feedback loops to manage behavior. The controller processes input signals and generates outputs to achieve system goals. The process module represents the influenced system, producing measurable outputs. The actuator transforms the controller’s decisions into actions, while the sensor captures resulting data for real-time adjustment (Nise, 2015). In the AIDF, these components correspond directly to the digital platform ecosystem (Figure 1): the platform functions as the controller, the user serves as the process module, and the interface acts as both actuator and sensor, transforming algorithmic outputs into interactive formats and capturing user interaction data.

Figure 1.

AIDF for Understanding Addictive Design Features.

- Operation of the Feedback Loop

The system operates through continuous interaction cycles. When an adolescent user interacts with content, the interface collects behavioral signals such as completion rates and gestures. These signals are processed by platform algorithms that recalibrate outputs, selecting new content or modifying visual cues to sustain attention. Addictive design features exploit positive feedback loops, where engagement signals amplify subsequent outputs, creating self-reinforcing behavioral cycles.
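The amplifying character of such a loop can be illustrated with a deliberately simple numerical sketch. The linear update rule, the `gain` and `damping` parameters, and the function name below are illustrative assumptions rather than part of the AIDF or of any platform's actual logic; the damped run is included only as a point of contrast.

```python
def simulate_loop(initial_signal, gain, damping=0.0, steps=5):
    """Return the engagement signal over time for a linear toy loop.

    All parameters are hypothetical and chosen only for illustration.
    """
    signal = initial_signal
    trajectory = [signal]
    for _ in range(steps):
        # Reinforcing term: the next output grows with the last signal.
        # Damping term: stand-in for any counteracting influence.
        signal = signal + gain * signal - damping * signal
        trajectory.append(signal)
    return trajectory


# A purely reinforcing loop versus one with a counteracting term.
reinforcing = simulate_loop(1.0, gain=0.2)
damped = simulate_loop(1.0, gain=0.2, damping=0.3)
```

In this toy setting, the reinforcing trajectory grows at every step, whereas the damped trajectory shrinks once the counteracting term exceeds the gain. Real engagement dynamics are far less regular, so the sketch conveys only the qualitative difference between the two loop types.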

On AI-driven platforms, this process is optimized through large-scale behavioral datasets. The platform’s effectiveness in presenting content and gathering behavioral data relies on various design features, each with specific affordances. These affordances refer to the relational properties between users and design features, encompassing perceived and actual capabilities (Norman, 1999) that determine how users can interact with a given feature at a specific time and context (Gibson, 2015). Affordances operate across cognitive, physical, functional, and sensory dimensions (Hartson, 2003) to sustain user interaction and preserve loop continuity.

For analytical clarity, the AIDF formalizes these dynamics using control systems notation (see Table 2). User behavioral signals are denoted as I(t), platform outputs as O(t), and the platform’s processing function as fP, which maps inputs to outputs. The interface’s presentation function fO transforms platform outputs into interactive formats, while its collection function fI captures user responses. User condition C(user) reflects the psychological and developmental factors influencing responses. Design features d and their affordances A(d,t) specify how interface elements enable or constrain user actions at particular times. At each time step t, the platform processes I(t) through fP to generate O(t + 1), which the interface presents through fO, prompting user responses that become I(t + 1), thereby closing the feedback loop.
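One way to write out a single cycle of the loop described above is sketched below. The symbols f_P, f_O, f_I, I(t), O(t), C(user), and A(d,t) follow the text; the intermediate quantities P (the presented content) and R (the user's raw response), the user response function u, and the choice to pass the affordances into f_O are assumptions added here only to make the composition explicit. The block assumes the `amsmath` package.

```latex
\begin{align}
O(t+1) &= f_P\bigl(I(t)\bigr)
  && \text{platform selects the next output} \\
P(t+1) &= f_O\bigl(O(t+1),\, A(d,t+1)\bigr)
  && \text{interface presents it under the available affordances} \\
R(t+1) &= u\bigl(P(t+1),\, C(\text{user})\bigr)
  && \text{user responds, conditioned on } C(\text{user}) \\
I(t+1) &= f_I\bigl(R(t+1)\bigr)
  && \text{interface captures the response as the next signal}
\end{align}
```

Substituting each line into the next gives I(t+1) as a function of I(t), which is what makes the system a closed loop.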

Table 2.

AIDF Variables with a Short-Form Video Feed Example.

A typical Douyin (the Chinese counterpart of TikTok) interaction exemplifies this process. When a user swipes through short videos, the platform captures behavioral signals I(t), including watch duration and replay behavior. The recommendation algorithm fP analyzes these signals to infer engagement patterns and identify content features that maximize retention. Within milliseconds, the algorithm generates O(t + 1) by selecting the next video predicted to sustain attention. The interface presents this output through fO via immersive autoplay, while design affordances A(d,t) such as infinite scroll remove natural stopping cues. As users interact, the interface’s sensor function fI captures new data, feeding it back into fP to refine recommendations. At each time step t, the system updates based on accumulated behavioral data, generating an adaptive feedback process. This closed loop operates continuously, with each interaction cycle training the algorithm to adapt more precisely to the user’s C(user) characteristics.
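The cycle described in this example can be mimicked with a small, deterministic simulation. This is a minimal sketch under strong assumptions: the `User` and `Platform` classes, the category names, the affinity values, and the simple running-average update are all hypothetical and do not describe Douyin's actual recommender.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    # C(user): hypothetical fixed affinity per content category, in [0, 1].
    affinity: dict

    def respond(self, category):
        # Fraction of the video watched; deterministic for illustration.
        return self.affinity[category]


@dataclass
class Platform:
    categories: list
    learning_rate: float = 0.5
    estimate: dict = field(default_factory=dict)

    def __post_init__(self):
        # Start with the same engagement estimate for every category.
        self.estimate = {c: 0.5 for c in self.categories}

    def f_P(self):
        # Select the category with the highest estimated engagement.
        return max(self.categories, key=lambda c: self.estimate[c])

    def update(self, category, signal):
        # Move the estimate toward the newly observed signal.
        old = self.estimate[category]
        self.estimate[category] = old + self.learning_rate * (signal - old)


def run_loop(user, platform, steps):
    history = []
    for _ in range(steps):
        output = platform.f_P()           # O(t + 1)
        signal = user.respond(output)     # captured by f_I as I(t + 1)
        platform.update(output, signal)   # fed back into f_P
        history.append(output)
    return history


user = User(affinity={"news": 0.3, "dance": 0.9, "study": 0.4})
platform = Platform(categories=["news", "dance", "study"])
history = run_loop(user, platform, steps=6)
```

In this run the toy platform tries one category, switches to the category that holds attention best, and then serves it on every remaining step without ever sampling the third. The narrowing arises purely from the loop structure, not from any explicit rule to narrow content.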

The three-tiered typology of addictive design features integrates into this model across strategic, psychological, and operational levels. High-level strategies define the platform’s objectives; mid-level mechanisms specify how vulnerabilities are leveraged; and low-level design patterns represent concrete affordances A(d,t) where regulation can directly intervene. From a control systems perspective, effective regulation must introduce negative feedback to counteract reinforcing loops. This involves constraining the platform’s optimization objectives (redefining fP to incorporate wellbeing metrics), redesigning interface affordances (modifying d and A(d,t) to introduce friction), and addressing user vulnerabilities through targeted interventions on C(user). Regulations that alter interface appearance without changing algorithmic objectives remain ineffective, since platforms can comply formally while maintaining engagement-maximizing logic.
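The idea of redefining fP to incorporate wellbeing metrics can be made concrete with a toy selection rule. The sketch below is an assumption-laden illustration: the `Candidate` fields, the numeric values, and the linear penalty with a `wellbeing_weight` parameter are hypothetical, and the source does not specify how a wellbeing metric would be measured or weighted.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    engagement: float      # predicted engagement (hypothetical score)
    wellbeing_cost: float  # predicted wellbeing cost (hypothetical score)


def f_P(candidates, wellbeing_weight=0.0):
    """Pick the candidate with the best penalized score.

    With wellbeing_weight == 0 this is pure engagement maximization.
    """
    return max(
        candidates,
        key=lambda c: c.engagement - wellbeing_weight * c.wellbeing_cost,
    )


candidates = [
    Candidate("endless_clip_chain", engagement=0.9, wellbeing_cost=0.8),
    Candidate("tutorial", engagement=0.6, wellbeing_cost=0.1),
    Candidate("news_digest", engagement=0.5, wellbeing_cost=0.2),
]

engagement_only = f_P(candidates)
wellbeing_aware = f_P(candidates, wellbeing_weight=1.0)
```

With these illustrative numbers, the unpenalized objective selects the most engaging item, while the penalized objective selects a different one. The point is only that changing the objective changes what the loop reinforces; how such a weight would be set or audited in practice is a regulatory design question the sketch does not answer.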

- Illustrating AIDF: The “Minors’ Mode” Regulatory Failure in China

China’s “Minors’ Mode” illustrates the pitfalls of interface-only regulation. Implemented across major Chinese platforms such as Douyin, Kuaishou, and Bilibili, Minors’ Mode mandates age verification, daily time limits, content filtering, and disabled social features. In AIDF terms, it is an interface-level intervention that alters design affordances A(d,t) (for example, by filtering content O(t) and disabling engagement features) while leaving the Controller’s optimization objective (maximizing engagement via fP) unchanged.

A study of 21 video applications with “Minors’ Mode” protections found that over half disabled search functions, yet those retaining it still produced sexually suggestive results for queries such as “beauty” and “sexy.” Algorithmic recommendations even surfaced soft pornography within “educational” categories (Southern Metropolis Daily, 2019). This illustrates that even with constrained interfaces, platforms continue to collect behavioral data via the Sensor, tracking which videos adolescents watch longer and which keywords they search, and feeding these signals back into fP to refine content delivery. The reinforcing feedback loop persists because adolescent engagement signals are reinterpreted as indicators of interest, prompting further optimization toward attention-grabbing content.

Minors’ Mode restricts the Actuator without addressing how platforms re-optimize fP or exploit fI’s ongoing data collection. Effective interface regulations must anticipate circumvention by mandating algorithmic transparency to make fP’s objectives auditable, limiting fI’s behavioral data collection to reduce hyper-personalized targeting, and requiring design affordances A(d,t) that introduce structural friction against adaptive re-optimization. The Minors’ Mode case thus illustrates the importance of interface governance based on closed-loop system dynamics to prevent platforms from circumventing regulations.
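The gap between filtering outputs and changing the objective can be shown with a short sketch. Everything in it is hypothetical: the catalog entries, the category labels, the engagement scores, and the `allowed_categories` filter are illustrative stand-ins, not a description of how any platform implements Minors’ Mode.

```python
def f_P(catalog, allowed_categories=None):
    """Engagement-maximizing selection with an optional category filter.

    The filter narrows the candidate pool; the objective is unchanged.
    """
    pool = [
        item for item in catalog
        if allowed_categories is None or item["category"] in allowed_categories
    ]
    return max(pool, key=lambda item: item["predicted_engagement"])


# Hypothetical catalog; labels and scores are invented for illustration.
CATALOG = [
    {"id": "viral_clip", "category": "entertainment", "predicted_engagement": 0.95},
    {"id": "sensational_edu", "category": "education", "predicted_engagement": 0.85},
    {"id": "plain_lesson", "category": "education", "predicted_engagement": 0.40},
]

default_pick = f_P(CATALOG)
minors_mode_pick = f_P(CATALOG, allowed_categories={"education"})
```

Under these assumed values, the filtered mode no longer returns the entertainment item, but it still returns the most attention-grabbing item that carries a permitted label. This mirrors the argument above that restricting the Actuator alone leaves the optimization logic free to re-target whatever remains available.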

- Comparative Positioning of the AIDF

While various disciplines address digital platform harms, the AIDF offers a distinct regulatory lens. Unlike models grounded in behavioral economics or addiction neuroscience, the AIDF conceptualizes manipulation as an emergent, self-reinforcing process linking platform control functions, interface affordances, and behavioral feedback.

Behavioral economics explains digital persuasion through choice architecture (Thaler & Sunstein, 2008) and has influenced regulations like GDPR’s “privacy by default” (Coiduras-Sanagustín et al., 2024) and opt-in consent requirements. However, it treats choice architecture as a static design, whereas the AIDF recognizes that AI-driven platforms dynamically adapt this architecture in response to user feedback. Such platforms identify individual vulnerabilities and optimize exploitation strategies in real time. Initial nudges generate behavioral responses that the system interprets as preference signals, reinforcing engagement in subsequent iterations. Accordingly, effective regulation should target the structure of feedback loops rather than isolated decision points.

Addiction neuroscience links compulsive digital use to dopamine-based reward reinforcement (H. Chen et al., 2023). While this view explains adolescent neurological vulnerability, it focuses on individual predispositions. In contrast, the AIDF situates addiction within the platform-user interaction, showing how system dynamics amplify vulnerabilities. This distinction between vulnerability and exploitation carries significant regulatory implications. Individual-focused interventions, such as education and self-control training, address symptoms, while AIDF-informed regulation targets the causal mechanisms that generate addictive engagement.

By linking strategic objectives, psychological mechanisms, and design affordances, the AIDF clarifies how platforms optimize engagement and where interventions should occur. It enables policymakers to trace harms through the feedback loop—from behavioral outcomes back to optimization functions—supporting anticipatory regulation that constrains both interface design and algorithmic learning processes responsible for emerging exploitative patterns. Having established the conceptual foundations and structural logic of addictive design features, the next step is to examine how China has responded to these risks through its evolving regulatory framework.

3.2. Chinese Regulatory Framework

3.2.1. Legal Foundations in Adolescent Protection: The Starting Point of Addictive Design Features Regulation

China’s regulatory response to addictive design features originated from growing concerns over adolescent PIU, particularly in online gaming. Early governmental efforts emphasized behavioral controls to mitigate excessive screen time. In 2007, the Ministry of Culture and Tourism and the State Administration for Industry and Commerce issued the Notice on Further Strengthening the Management of Internet Cafes and Online Games, introducing mandatory anti-addiction systems that restricted online game rules and promoted technical solutions to mitigate youth gaming addiction.

By 2013, the Ministry of Culture and Tourism and the CAC jointly released the Comprehensive Prevention and Treatment Program for Adolescent Online Game Addiction, which expanded oversight by requiring age-appropriate reminders, parental supervision, and supportive in-game environments. A further milestone came in 2021 when the National Press and Publication Administration issued the Notice on Further Strict Management and Effective Prevention of Minors’ Addiction to Online Games. This policy imposed strict hourly limits, mandatory real-name registration, and login controls, marking the transition from voluntary management to state-enforced prevention.

Over time, regulatory focus shifted from controlling usage duration to addressing the features embedded in platform design. The 2020 revision of the Law of China on Protection of Minors was a turning point. Article 74 of this law prohibits online service providers from offering products or services likely to induce addiction in minors. Although it does not explicitly mention algorithms or interface design, its broad language implicitly covers design features that promote compulsive interaction. This article established the legal foundation for regulating design-level harms and enabled subsequent normative expansion.

Building on this base, the 2024 RPMC introduced detailed and enforceable standards. Chapter V (Articles 39 to 49) establishes a comprehensive framework to prevent digital overuse among minors. Article 42 requires providers to develop robust anti-addiction systems, promptly revise addictive features, and disclose prevention measures annually. Article 43 mandates age-specific “minors’ mode” for online games, live streaming, audio-video, and social media platforms. These modes must adhere to national standards for time, duration, functions, and content, ensuring practicality and effectiveness, while offering guardians convenient tools for managing minors’ time, permissions, and spending. Article 44 introduces age-based spending caps and prohibits paid services beyond minors’ civil capacity. To counter “traffic-first” incentives, Article 45 prohibits creating or promoting online communities centered on fan funding, ranking votes, or engagement manipulation, and obliges providers to prevent and stop their users from inducing minors into such behaviors. Furthermore, Article 46 requires real-name verification via a national identification system and bans account rentals or sales to minors. Article 47 directs game providers to refine rules and avoid content or features harmful to minors’ physical or mental health. They must implement an age-rating system, categorizing games by type, content, and function, and clearly display suitable age group information at key access points like download, registration, and login screens.

Beyond platform obligations, the RPMC also extends responsibilities to families, schools, and state authorities to ensure coordinated protection of minors. Articles 40 and 41 require schools to identify early signs of digital addiction and inform guardians, while parents must supervise digital habits. Article 48 defines responsibilities for education, public health, and cyberspace agencies in awareness, enforcement, and research on digital overuse and psychological disorders.

These measures represent a shift from reactive approaches to proactive design-level accountability. However, the absence of an operational legal definition of “addiction-inducing” features continues to undermine enforceability (Y. Wang & Zhang, 2025), exposing a fundamental normative gap in the regulatory system.

3.2.2. Regulatory Measures on Algorithmic and Platform Governance

As digital platforms became more complex and engaging, regulation expanded beyond youth protection to address algorithmic and platform architecture. A series of administrative rules and policy guidelines aim to mitigate risks associated with addictive design features, particularly in recommender systems and automated decision-making processes.

A key component of this governance framework is the PAARIIS issued by the CAC. Article 8 requires providers to regularly audit their algorithmic models, data inputs, and outcomes, and explicitly bans algorithms that induce addiction or excessive reliance. Article 18 mandates heightened responsibilities regarding minors, requiring platforms to establish internal oversight, provide age-appropriate modes, and avoid recommending content that promotes unsafe imitation, unhealthy habits, or compulsive usage patterns detrimental to adolescents’ wellbeing. To improve transparency, Article 16 obliges platforms to indicate when algorithmic recommendations are used and to disclose their core principles, intended objectives, and operational logic in accessible formats. Additionally, the regulation encourages technical interventions such as content de-duplication, disruptive prompts, and improved interpretability of algorithmic systems governing search, sorting, presentation, and push functionalities. These measures aim to limit excessive personalization and overstimulation, factors linked to digital echo chambers and compulsive usage.

In July 2023, seven government departments including the CAC issued the Interim Measures for the Management of Generative Artificial Intelligence Services. Article 10 places a heightened duty of care on generative AI service providers concerning minors, requiring them to take “effective measures” to prevent overuse or dependency. Although the scope of such “effective measures” is not specified, it can be interpreted in light of existing youth protection initiatives, encompassing access time, identity verification, risk warnings, and feedback or complaint mechanisms.

Beyond formal rules, China frequently employs targeted enforcement through administrative “special campaigns.” The 2024 “Qinglang” campaign on algorithmic misconduct (Secretariat of the Office of the Central Cyberspace Affairs Commission et al., 2024) prioritized rectifying addictive recommendation practices. These campaigns, led by a primary regulator in coordination with multiple departments, temporarily concentrate enforcement resources for rapid inspections and sanctions (Y. Li, 2015). They create strong compliance pressure and short-term deterrence but have limited long-term impact.

These developments demonstrate rising regulatory awareness of how algorithmic infrastructures reinforce compulsive digital behaviors and threaten adolescent wellbeing. Yet many provisions rely on broad ethical language, enforcement lacks consistency, and precise technical criteria for defining “addiction-inducing” patterns are still absent. Without clearer standards and stable institutional oversight, the effectiveness of these regulations in addressing the root causes of adolescent digital overexposure remains limited. Building on this regulatory baseline, the next section situates China’s approach within a global context to highlight convergent trends, divergent priorities, and lessons that may inform future policymaking.

3.3. Comparative Overview: International Regulatory Approaches to Addictive Design Features

Across jurisdictions, governments have adopted varied legal and policy strategies to address risks from addictive design features in digital environments, particularly concerning children and adolescents. Despite differences in scope and enforcement, these regimes share a focus on restricting behavioral manipulation embedded in platform architecture and algorithms.

In the European Union, the 2022 DSA requires VLOPs to assess and mitigate systemic risks, including those from addictive user engagement. Recital 83 highlights risks from online interface designs that promote behavioral addictions, while Recital 70 mandates the transparent presentation of the parameters of recommender systems. Article 34 further requires platforms to evaluate impacts on minors’ rights, mental health, and public wellbeing. The DSA explicitly prohibits “dark patterns,” which Recital 67 defines as practices that distort or impair users’ autonomous choices. Recital 81 specifically highlights interfaces that intentionally or unintentionally exploit minors’ vulnerabilities or encourage addictive behavior. Although the term “addictive design features” is not explicitly used, “hyper-engaging dark patterns,” meaning designs that use big data analytics and behavioral profiling to induce greater user engagement, are widely recognized as manipulative practices prohibited under the DSA and other EU laws such as the Unfair Commercial Practices Directive (UCPD) (Esposito & Maciel Cathoud Ferreira, 2024). The European Parliament has called for a “right not to be disturbed,” suggesting that engagement-maximizing features such as infinite scroll, autoplay, and push notifications should be deactivated by default for minors to protect their time and attention (European Parliament, 2023).

In 2024, the European Commission launched an investigation into TikTok Lite’s “Task and Reward Programme,” citing systemic mental-health risks to minors. Following precautionary measures by the Commission, TikTok voluntarily suspended the feature across the EU. The case demonstrates the DSA’s capacity for rapid intervention, but it also reveals compliance gaps, since the feature was launched prior to the legally required risk assessment. The reliance on voluntary suspension rather than a final formal sanction indicates that enforcement remains reactive and dependent on platform cooperation, and that greater technical expertise and resources are needed to secure strict proactive compliance.

The UK’s Online Safety Act (UK Parliament, 2023) focuses on regulating online services to protect users, especially children, from harmful content. It empowers the Office of Communications (Ofcom) to enforce online safety codes and mandates risk assessments and safety measures for child-accessible services. However, the Act does not explicitly address addictive design. This gap has been partially filled by the Age-Appropriate Design Code (UK Information Commissioner’s Office, 2020), which sets fifteen standards for digital services used by children. The code urges providers to limit persuasive design, minimize data collection, and avoid nudges that extend screen time. Since the Online Safety Act came into force, Ofcom has initiated enforcement actions for non-compliance, including penalizing failures to respond to information requests, monitoring the use of geoblocking to evade regulation, and promoting perceptual hash matching to detect child sexual abuse material. Although no direct sanctions for addictive design have yet occurred, the framework is tightening control over interface harms through mandatory risk assessments, safety-by-design duties, and accountability for services targeting UK users. However, Ofcom’s delayed publication of the updated Protection of Children Codes of Practice in April 2025 created a temporary regulatory gap. Overlapping mandates between the ICO and Ofcom further risk fragmented oversight across data protection and interface design domains.

In the United States, federal regulation of addictive design features remains limited, but several states have advanced child-focused initiatives inspired by UK models. California’s 2022 CAADCA requires platforms accessible to minors to conduct data protection impact assessments and set developmentally appropriate defaults. It also limits dark patterns and prohibits algorithmic profiling of children. However, the law has faced legal challenges, with a federal district court issuing a preliminary injunction in 2023, though the Ninth Circuit partially upheld it in 2024. The case remains pending on appeal. California also introduced Senate Bill 287 (2023) (California Legislature, 2023), seeking to ban designs, algorithms, or features known to substantially increase risks of addiction, self-harm, eating disorders, or suicidal behaviors among children. Despite significant public attention, it failed to pass, reflecting the legal and political complexities inherent in U.S. platform regulation. New York’s Stop Addictive Feeds Exploitation (SAFE) for Kids Act (New York State Senate, 2023) represents a major state-level step. To curb online addiction, it prohibits social media platforms from providing addictive feeds to minors without parental consent. At the federal level, the Social Media Addiction Reduction Technology (SMART) Act (U.S. Senate, 2019) proposed banning infinite scroll and autoplay while setting default usage limits. However, the bill failed to gain traction and was not reintroduced in subsequent sessions.

In contrast to UK and EU approaches that emphasize ex ante design obligations and prompt administrative remedies, U.S. regulatory efforts have been most effective where they rest on mature, technology-neutral doctrines of deception and unfairness established through litigation. However, these efforts run into problems when broad, child-focused design mandates trigger First Amendment challenges, vagueness concerns, or federal preemption risks, all of which delay implementation and limit regulatory reach. In particular, constitutional constraints sharply restrict regulatory scope in the United States, where First Amendment protections that treat algorithmic curation as a form of protected speech, together with Section 230 immunity, have repeatedly hindered or postponed state-level design regulations through industry-led litigation.

These global efforts reflect a growing recognition of addictive design features as a systemic regulatory concern with significant implications for child development. While national approaches vary, a clear convergence has emerged toward integrating child rights, digital ethics, and public health perspectives into governance frameworks. However, enforcement experiences consistently reveal challenges that constrain regulatory effectiveness: vague definitions enable platforms to circumvent compliance; institutional fragmentation weakens coordination; constitutional and jurisdictional limits restrict intervention; and significant delays separate rulemaking from implementation. Across jurisdictions, these challenges highlight the need for a more proactive and harmonized regulatory strategy to safeguard young users from the evolving risks of addictive design.

4. Discussion

Having examined the conceptual foundations, structural mechanisms, and global regulatory approaches to addictive design features, the discussion now turns to the analytical implications of these findings. This section explores the underlying challenges within China’s current governance framework, situates them within broader international patterns, and outlines pathways for improving regulatory effectiveness.

4.1. Challenges of China’s Regulatory Frameworks on Addictive Design Features

4.1.1. Conceptual Ambiguity and Normative Gaps

Despite China’s increasing focus on regulating addictive design features, the absence of definitional precision continues to undermine enforceability. Article 74 of the Law on Protection of Minors prohibits providing products or services “likely to induce addiction in minors,” but it offers no operational criteria for determining what constitutes an addiction-inducing design. Similarly, Article 18 of PAARIIS prohibits algorithms that “induce user addiction or excessive reliance” yet fails to specify which algorithmic structures or behavioral effects meet that threshold. This vagueness hampers implementation, as regulators have not been able to clearly distinguish between persuasive designs (Fogg, 1998) that support user welfare (e.g., transparent reminders or learning achievement badges) and manipulative designs that exploit cognitive vulnerabilities through opacity, variable rewards, or personalized loss-aversion triggers. Without this differentiation, regulations risk adopting overbroad, one-size-fits-all standards (Xiang & Lu, 2024) that discourage legitimate innovation while failing to address real harms.

This definitional ambiguity reflects a broader regulatory tendency to prioritize observable behavioral outcomes (screen time, usage frequency) over the underlying design mechanisms that generate them. Current frameworks overlook the layered structure of addictive design features. For example, infinite scroll (a low-level pattern) operates alongside variable reward schedules (a mid-level psychological mechanism) that are strategically employed to maximize retention (a high-level goal). These features exploit adolescents’ developmental vulnerabilities, conditioning compulsive habits similar to substance dependence (Leeman & Potenza, 2013; Y.-H. Lin et al., 2016). To be effective, regulation must move beyond static feature control toward dynamic system intervention, addressing the feedback loops that perpetuate engagement. Legal norms should function as negative feedback mechanisms, introducing friction points or mandating algorithmic transparency to counteract self-reinforcing optimization.
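The idea of legal norms acting as negative feedback can be illustrated with a minimal numerical sketch. It assumes, purely for illustration, that engagement follows a logistic-style reinforcing update with a hypothetical `gain` (the strength of platform optimization) and a hypothetical `friction` term (the damping introduced by regulation); neither parameter nor the update rule comes from the AIDF itself.

```python
def engagement_after(gain, friction, steps=200, start=0.1):
    """Iterate a toy engagement level in [0, 1] and return its final value.

    Each step adds a self-reinforcing term, gain * e * (1 - e), and subtracts
    a damping term, friction * e, that stands in for mandated friction.
    """
    e = start
    for _ in range(steps):
        e = e + gain * e * (1.0 - e) - friction * e
        e = min(max(e, 0.0), 1.0)  # keep the level within bounds
    return e


for friction in (0.0, 0.15, 0.45):
    level = engagement_after(gain=0.3, friction=friction)
    print(f"friction={friction:.2f} -> long-run engagement {level:.3f}")
```

Under these assumptions the long-run level settles near 1 - friction / gain when friction is smaller than gain and decays toward zero otherwise. The sketch suggests why friction is better understood as a parameter of the loop than as a one-off restriction on a single feature: its effect depends on how strongly the platform's optimization pushes in the opposite direction.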

Future legislation should therefore target the structural properties and systemic dynamics of addictive design architectures. This requires embedding a structural and process-oriented understanding of addiction into regulatory discourse, with precise definitions to be developed later through statutes or policy measures.

4.1.2. Structural Weaknesses in Enforcement and Institutional Coordination

Even when regulatory intent is clear, China’s governance of addictive design features is hindered by fragmented authority and limited institutional capacity. Regulatory authority is divided among the CAC, the National Press and Publication Administration, the Ministry of Culture and Tourism, and provincial cyberspace offices, each holding overlapping but decentralized responsibilities. This fragmentation hinders sustained, cross-sectoral efforts to address design-based harms that affect different content categories and application types. Equally significant is the exclusion of public health authorities from this framework, despite growing recognition that addictive design features constitute a public health risk. This omission limits the state’s ability to translate psychological and neuroscientific insights into concrete regulatory standards.

Enforcement mechanisms are weakened by vague compliance standards and an over-reliance on platform self-regulation. Article 8 of PAARIIS requires platforms to “regularly audit, evaluate, and verify” algorithms, but it specifies neither audit frequency nor evaluation criteria, leaving compliance standards vague. Although the regulation prohibits algorithms that induce addiction, it relies entirely on platforms’ internal assessments to determine compliance, thereby transforming a public duty into private discretion. Consequently, the compliance ecosystem favors superficial interventions, such as setting time limits or offering parental controls, while deeper design structures and algorithmic logic remain largely unexamined.

Enforcement efforts also lack continuous and institutionalized mechanisms. Although campaign-style inspections create temporary regulatory pressure, their episodic nature allows platforms to implement short-term adjustments and revert to previous engagement strategies once oversight diminishes (Y. Wang & Zhang, 2025). This cyclical pattern reveals the system’s vulnerability and undermines long-term reforms to addictive design features. A more stable and proactive enforcement framework is necessary to ensure that regulatory attention goes beyond superficial compliance and addresses the structural sources of harm.

4.1.3. Regulatory-Industry Asymmetries and the Knowledge Gap

A significant barrier to effective regulation arises from the asymmetry of technical expertise and information between digital platforms and government agencies (Huang & Wang, 2024). As algorithmic systems become more complex, regulators struggle to fully understand the practical functioning of recommendation engines, personalization algorithms, and real-time engagement loops. Although Article 16 of PAARIIS requires platforms to “publicly disclose algorithmic principles, objectives, and operational logic in accessible formats,” enforcement relies entirely on self-reported disclosures without independent verification. This allows platforms to provide broad, technically compliant statements while concealing the specific behavioral levers used to exploit psychological vulnerabilities.

This knowledge gap is particularly evident in the lack of interdisciplinary expertise within enforcement agencies, whose staff typically has training in law or public administration but lacks skills in data science or behavioral psychology. Consequently, regulatory reviews often concentrate on visible indicators, such as screen time or formal policy documentation, inadvertently overlooking more subtle forms of cognitive manipulation embedded in the design of interfaces or algorithms. Platforms possess deep insights into user behavior and monetization strategies, allowing them to refine engagement features while appearing compliant, often making only cosmetic changes that do not address core addictive mechanisms.

To establish a robust and sustainable regulatory framework, there must be long-term investments in interdisciplinary talent, institutional reforms for design-level evaluations, and the establishment of independent auditing systems. Without these measures, governance will remain reliant on industry disclosures and sporadic campaigns, lacking the consistent oversight needed to confront systemic risks. In light of these domestic limitations, examining international regulatory experience offers valuable insights into alternative institutional logics and possible pathways for improvement.

4.2. International Experience: Convergence, Divergence, and Complementarity

Global regulatory responses to addictive design features have diversified but increasingly converge on the recognition that such designs pose public health and developmental risks. This international experience showcases distinct institutional logics and legal architectures that shape diverse governance styles. To illustrate these differences and similarities in regulatory approaches, the following table (Table 3) provides a comparative overview of key regulatory frameworks across various jurisdictions.

Table 3.

Comparative Overview of Regulatory Approaches to Addictive Design Features.

4.2.1. Convergence

Across jurisdictions, a shared understanding has emerged that addictive design features should not be viewed merely as neutral business strategies but as regulatory issues with significant public health and developmental implications (Esposito & Maciel Cathoud Ferreira, 2024).

The EU’s DSA, alongside the 2023 Parliamentary Resolution on addictive design, signifies a shift from content-focused regulation to interface-level accountability. Features like infinite scrolling, autoplay, and excessive notifications are increasingly viewed as forms of behavioral manipulation that impair user agency. Similarly, China’s legal reforms explicitly prohibit algorithmic models that foster addiction or overconsumption, indicating a move toward upstream risk governance aligned with developmental health priorities. Although federal action is limited in the U.S., state laws, such as New York’s SAFE for Kids Act, suggest growing alignment with international calls for upstream design regulation. These developments collectively highlight a broader trend toward anticipatory governance focused on the unique health and developmental rights of youth.

4.2.2. Divergence

Despite overlapping normative goals, significant differences remain in regulatory structure and enforceability.

The EU model is characterized by a rights-based and preventive orientation. The DSA imposes proactive obligations on VLOPs, mandating independent algorithmic audits, systemic risk assessments for algorithmic impacts, and user controls over personalized recommendations to ensure transparency and autonomy. It prohibits interface designs that exploit cognitive biases and undermine autonomy, backed by robust enforcement mechanisms that include fines of up to 6% of global annual revenue. The AI Act (Regulation (EU) 2024/1689) (European Union, 2024) adds transparency obligations for high-risk AI systems, though its focus on intentional manipulation narrows its applicability to addictive design features. The 2023 EU Parliamentary Resolution calls for precise guidelines to restrict such features, especially for children. This comprehensive approach prioritizes preventive regulation and user protection, thereby positioning the EU as a proactive model for governing addictive platform architectures. The EU’s success in establishing this framework reflects its political culture emphasizing individual rights, precautionary risk governance rooted in decades of environmental and consumer protection regulation, and institutional capacity through well-resourced agencies.

In contrast, the U.S. approach adopts a “safe harbor” model that favors market-driven self-regulation, constrained by the First Amendment and Section 230 of the Communications Decency Act. These protections shield platforms from liability for user-generated content, complicating rules surrounding addictive design features. The First Amendment treats algorithms as protected speech, as affirmed in cases like Bernstein v. United States (Bernstein v. US Dept. of State, 1996), thus hindering both federal and state laws that do not meet strict constitutional scrutiny. Section 230 further constrains accountability for algorithms, as demonstrated in the Social Media Addiction case (Social Media Adolescent Addiction/Personal Injury Products Liability Litigation, 2023), where claims concerning algorithmic dopamine manipulation were dismissed if linked to content moderation. The result is a legal environment that privileges innovation and market freedom but perpetuates enforcement gaps, relying on reputational incentives and self-regulation to induce compliance. The struggle to regulate addictive design features in the U.S. arises from a libertarian political culture that prioritizes commercial speech and innovation freedom, a fragmented federal structure that exposes state initiatives to preemption challenges, and powerful industry lobbying that has reframed design regulation as censorship. In certain contexts, reliance on market competition and reputational accountability has occasionally motivated platforms to adopt voluntary safeguards, suggesting that self-regulation may complement formal governance when coupled with transparency and consumer scrutiny.

China’s approach combines centralized oversight with normative guidance, as seen in the Law of China on Protection of Minors and the RPMC, both mandating comprehensive anti-addiction systems for minors. The PAARIIS calls for algorithmic transparency, requiring platforms to disclose principles and data sources, adhere to ethical standards, and prevent excessive engagement, especially among minors. However, vague terms like “inducing addiction” or “violating social morality” create significant ambiguity in identifying addictive design features. Furthermore, China’s enforcement, led by the CAC, largely relies on top-down actions, with comparatively weak penalties (e.g., fines of up to RMB 100,000), which diminishes accountability. While user complaint mechanisms and periodic reviews are mandated, the absence of independent auditing and third-party involvement undermines procedural clarity and the depth of enforcement. China’s governance style reflects its political tradition of centralized authority, facilitating rapid policy implementation while prioritizing collective welfare and social order. Yet the effectiveness of enforcement depends heavily on bureaucratic capacity and local coordination, which vary across provinces. Regulatory actions are often policy-driven rather than evidence-based, and modest penalties fail to offset platforms’ profit incentives.

4.2.3. Complementarity and Lessons for China

Each regulatory model, despite its limitations, offers distinct strengths that can inform others. A global framework for governing addictive design features should prioritize complementarity over uniform convergence and be grounded in contextual adaptation. Understanding why certain approaches succeed requires a critical analysis of the institutional foundations and the political and cultural contexts that enable their effectiveness.

The EU’s strength lies in translating broad rights principles into specific, enforceable obligations. Rooted in its post-World War II commitment to protecting individual dignity and democratic values, this approach justifies state intervention against private manipulation to preserve personal autonomy. The DSA’s requirement for systemic risk assessments with explicit criteria provides actionable benchmarks that reduce interpretive ambiguity. Mandatory independent audits introduce external accountability that internal compliance mechanisms cannot achieve. High penalty thresholds create financial deterrence proportionate to platform scale. However, the EU model’s success is partially attributable to its political culture, which embraces precautionary intervention, as well as to its well-funded, technically sophisticated enforcement agencies. For China, the lesson is not to replicate EU institutional structures wholesale but to adopt specific mechanisms—precise definitional standards, independent technical auditing, and proportionate penalties—that address identified gaps in its current framework. With sufficiently specific regulatory standards, China’s centralized administrative capacity could enable faster enforcement.

The United States’ market-oriented approach, grounded in competition and reputational accountability, illustrates how self-regulation and user trust can complement formal oversight. In this model, platforms adjust design choices to preserve user trust and adopt industry codes of conduct, creating pathways for China and the EU to maintain user confidence and industry involvement while balancing innovation with public welfare. Drawing on the U.S. experience, China need not adopt self-regulation as its primary strategy, but it could incorporate industry technical expertise into standards-setting processes to enhance regulatory precision and reduce implementation friction.

China’s governance model is characterized by state-directed intervention and sector-wide mandatory measures, demonstrating a strong capacity for rapid compliance mobilization. Grounded in collective welfare, social stability, and developmental governance, this model enables rapid policy implementation when political will aligns with public interest. The large-scale introduction of screen-time limits and real-name verification systems demonstrates its administrative efficiency. To further enhance legitimacy and effectiveness, China should incorporate clear procedural protections, adopt transparent evaluation standards, and deepen cross-sector collaboration to ensure enforcement is both technically precise and broadly supported.

Ultimately, adaptive convergence, pursuing shared principles through contextually appropriate mechanisms, could significantly benefit the global regulatory landscape. For China, the challenge lies not in replicating foreign models wholesale, but in thoughtfully translating global norms into enforceable frameworks that align with domestic institutional capacities, political culture, and societal expectations. Such context-sensitive alignment can transform China’s centralized authority into a proactive, evidence-based model of digital health governance. Informed by these comparative insights, the following policy directions outline concrete pathways for strengthening governance and addressing the systemic drivers of addictive design.

4.3. Policy and Legislative Recommendations for Addressing Addictive Design Features

To build a more effective and forward-looking regulatory framework, targeted reforms that can intervene at key leverage points will be essential.

4.3.1. Establish a Risk-Tiered Oversight System Based on the Precautionary Principle

Effective governance of addictive design features requires a shift from reactive enforcement to anticipatory regulation. Given the rapid evolution of AI-driven engagement strategies, their cumulative effects on adolescent development, and the deep information asymmetry between platforms and regulators, China should adopt a precautionary framework for digital wellbeing governance.

There is a growing consensus that the precautionary principle should be introduced to address the legal challenge of decision-making under uncertainty. This principle, rooted in public health and environmental law, justifies regulatory action when plausible risks exist even without definitive causal evidence (Weed, 2004; Goldstein, 2001). Originating in Germany’s Vorsorgeprinzip and institutionalized in EU approaches to genetically modified organisms and chemical safety (Martuzzi, 2007), it provides both ethical justification and strategic flexibility for governing design-based risks. Three factors make this principle essential for digital regulation. First, adaptive systems generate emergent and cumulative effects. By the time longitudinal studies yield definitive evidence of harm, adolescent groups may already have been exposed during critical developmental windows of heightened neuroplasticity. Second, the opacity of algorithmic systems systematically disadvantages regulators. Platforms possess massive behavioral datasets and testing capacities that allow rapid innovation in manipulation techniques, leaving regulators perpetually behind. Third, the developmental vulnerabilities of adolescents justify heightened protective standards. When commercial systems systematically target populations with limited decision-making capacity, waiting for conclusive harm evidence before intervening violates basic protective duties established in other consumer safety domains.

Critics claim the precautionary principle stifles innovation by demanding the impossible task of proving absolute safety, while neglecting “false positives” (Type I errors), such as rejecting beneficial technologies, whose hidden costs may far exceed the hypothetical harms the principle seeks to prevent (Burnett, 2009). However, these critiques misrepresent proportionate precaution as blanket prohibition. When designed transparently, some engagement mechanisms, such as gamified feedback or time-tracking, can enhance motivation or learning. Regulation should therefore distinguish adaptive from exploitative engagement designs, not eliminate them wholesale. A well-designed precautionary framework can establish risk-tiered safeguards calibrated to potential harm. The EU’s AI Act demonstrates this model by categorizing systems according to risk levels and imposing proportionate obligations that allow beneficial innovation while constraining harmful applications.

To balance innovation and protection, China should adopt a flexible, risk-tiered system based on a three-layer typology of addictive design features. The system would classify interface and algorithmic features by their empirically documented ability to exploit adolescent vulnerabilities and cause compulsive use, assigning proportionate obligations to each tier. High-risk features, those that directly manipulate cognitive processes and decision-making autonomy and have strong empirical links to addictive potential, would face the strictest controls. Examples include infinite scroll, which removes natural stopping cues and leverages action-completion bias, and autoplay, which eliminates deliberate choice points and defaults users into uninterrupted consumption streams. Such features should carry a reverse burden of proof, requiring platforms to provide independent research showing no disproportionate harm to adolescents before deployment. In services predominantly used by minors (e.g., educational apps, youth-oriented platforms), high-risk features should face prohibition. Medium-risk features would be subject to periodic external audits and mandatory transparency reporting. Low-risk addictive features would remain under voluntary guidelines and user-driven controls.
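As an illustrative sketch only, the tiered scheme described above can be read as a lookup from risk tier to regulatory obligations, with one override for services predominantly used by minors. The Python names below (`RiskTier`, `DesignFeature`, `obligations_for`) are hypothetical and do not correspond to any statute or platform API; the obligation strings paraphrase the preceding paragraph, and assigning a given feature to a tier would in practice rest on empirical review rather than on code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class RiskTier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Baseline obligations per tier, paraphrased from the text (illustrative wording).
BASE_OBLIGATIONS = {
    RiskTier.HIGH: ["reverse burden of proof: independent pre-deployment research"],
    RiskTier.MEDIUM: ["periodic external audit", "mandatory transparency reporting"],
    RiskTier.LOW: ["voluntary guidelines", "user-driven controls"],
}


@dataclass(frozen=True)
class DesignFeature:
    name: str
    tier: RiskTier  # assumed to be assigned by an expert review, not computed here


def obligations_for(feature: DesignFeature, minor_dominant_service: bool) -> List[str]:
    """Return the obligations attached to a feature in a given service context."""
    # High-risk features are prohibited in services predominantly used by minors.
    if feature.tier is RiskTier.HIGH and minor_dominant_service:
        return ["prohibited"]
    return list(BASE_OBLIGATIONS[feature.tier])


# Infinite scroll and autoplay are the high-risk examples named in the text.
infinite_scroll = DesignFeature("infinite scroll", RiskTier.HIGH)
autoplay = DesignFeature("autoplay", RiskTier.HIGH)
```

Under these assumptions, `obligations_for(infinite_scroll, minor_dominant_service=True)` yields a prohibition, while the same feature on a general-audience service carries the reverse burden of proof. Keeping the tier as an input rather than a computed value reflects the point that classification depends on ongoing empirical assessment.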

This proportionate approach reframes precaution from an innovation constraint into a tool for responsible foresight. By matching risk tiers with adaptive oversight, regulators can avoid overreach while staying alert to emerging harms. Independent advisory panels, consisting of developmental psychologists, addiction researchers, human–computer interaction specialists, and data scientists, should conduct continuous evidence-based reviews of design features as they evolve. Regular reassessments will keep regulatory standards current, responsive, and scientifically grounded. Embedding the precautionary principle in digital-wellbeing governance thus makes regulation a proactive design mandate. It shifts the evidentiary burden onto actors who profit from adolescent attention, mitigates structural asymmetries of knowledge and power, and institutionalizes prevention.

4.3.2. Define “Addictive Design Features” in Statutory Language

The absence of clear legal definitions poses a significant barrier to effective regulation. Terms like “inducing addiction,” as used in documents such as the PAARIIS, lack sufficient specificity to guide enforcement or judicial interpretation meaningfully. This vagueness impedes accountability and limits the ability to target particularly harmful practices that exploit adolescent vulnerabilities. To address this critical gap, China should adopt a legally enforceable definition of addictive design features that distinguishes them from neutral persuasive or deceptive techniques.

Building on the typology introduced in this study and aligned with prior scholarship (Agencia Española de Protección de Datos (Spanish Data Protection Agency), 2024; Beltrán, 2025), addictive design features can be defined as platform design features or interaction mechanisms that intentionally exploit cognitive or psychological vulnerabilities to induce compulsive user engagement, particularly among vulnerable populations, in ways that harm mental health or wellbeing. This definition centers on three regulatory criteria: (1) intentionality, referring to design elements specifically calibrated to maximize engagement; (2) compulsiveness, referring to diminished user autonomy and impaired disengagement; and (3) disproportionate harm, especially among adolescents or other vulnerable populations. These criteria enable regulators to distinguish addictive design features from other manipulative or deceptive designs, thereby ensuring targeted enforcement.

This definitional approach seeks to balance protection with developmental autonomy. Critics may contend that stringent standards impose excessive paternalism. However, autonomy—understood as the capacity to govern one’s life based on reasons and intentions rather than stimulus-response patterns (Vugts et al., 2020; Friedrich et al., 2018)—is precisely what addictive design features undermine. The three criteria target design elements that subvert rather than merely influence decision-making capacity. By focusing on intentionality, compulsiveness, and disproportionate harm, this definition distinguishes exploitative patterns that erode developing agency from persuasive features that engage users within the bounds of autonomous choice. The regulatory aim is not to eliminate adolescent access to platforms but to ensure that such engagement occurs free from manipulative designs that exploit developmental vulnerabilities, thereby safeguarding the conditions necessary for genuine autonomy to develop.

The three-tiered typology makes the definition operational by classifying features according to strategic intent, psychological mechanisms, and specific design patterns, while identifying which AIDF components generate compulsive engagement. This framework allows for differentiated regulatory obligations, whereby medium-risk features would require mandatory disclosure, while high-risk patterns targeting minors would face prohibition. For instance, TikTok Lite’s rewards program illustrates how these criteria function in practice: (1) Intentionality is apparent in the system’s deliberate monetization of watch time through algorithmically optimized variable reward schedules (Controller) designed to maximize user engagement. (2) Compulsiveness emerges through the AIDF reinforcement loop in which monetary incentives trigger dopaminergic responses (Actuator), and visible point accumulation, daily missions, and countdown timers (Interface) generate urgency while diminishing natural stopping cues. Meanwhile, the Sensor continuously monitors viewing time and feeds this data back to the Controller to refine reward allocation, thereby weakening users’ ability to disengage voluntarily. (3) Disproportionate harm is particularly notable in adolescents, who are more susceptible to these variable reward mechanisms. When a design feature meets all three criteria, as this reward mechanism does, it calls for strong regulatory intervention or prohibition. Conversely, time-management tools that genuinely foster self-regulation and do not rely on intentional exploitation or compulsive designs should be exempt from stringent requirements.

Effective implementation requires balancing clarity with adaptability. Legal definitions must remain responsive to emerging design strategies while maintaining stable enforcement thresholds. Regulatory standards should target the underlying design architectures perpetuating compulsive engagement rather than merely observable behavioral outcomes, aligning legal frameworks with the AIDF’s systemic perspective. Continuous refinement through regulatory guidance and judicial interpretation will ensure definitions remain current as technologies evolve.

4.3.3. Mandate Anti-Addiction Design and Addictive Design Labeling

To protect adolescents from the harms of addictive design features, regulation should intervene both at the design stage and through user-facing transparency tools.

- Anti-Addiction by Design

One essential approach is “anti-addiction by design”, inspired by the principle of “privacy by design” (Del-Real et al., 2025). This strategy shifts the duty of care to platforms. Instead of relying on users to exercise self-control, platforms must prevent harm through responsible design. Interfaces should discourage compulsive interaction, limit repetitive reward loops, avoid deceptive or coercive prompts, and minimize data-driven personalization that amplifies dependence.