Abstract

This study investigates the efficacy of large language models (LLMs) in supporting responsible and optimized geographic site selection for large-scale solar energy farms. Using Microsoft Bing (predecessor to Copilot), Google Bard (predecessor to Gemini), and ChatGPT, we evaluated their capability to address complex technical and social considerations fundamental to solar farm development. Employing a series of guided queries, we explored the LLMs’ “understanding” of social impact, geographic suitability, and other critical factors. We tested varied prompts, incorporating context from existing research, to assess the models’ ability to use external knowledge sources. Our findings demonstrate that LLMs, when meticulously guided through increasingly detailed and contextualized inquiries, can yield valuable insights. We discovered that (1) structured questioning is key; (2) characterization outperforms suggestion; and (3) harnessing expert knowledge requires specific effort. However, limitations remain. We encountered dead ends due to prompt restrictions and limited access to research for some models. Additionally, none could independently suggest the “best” site. Overall, this study reveals the potential of LLMs for geographic solar farm site selection, and our results can inform future adaptation of geospatial AI queries for similarly complex geographic problems.

1. Introduction

Public acceptance and place-based perceptions substantively shape renewable siting outcomes. Research on place attachment and place-protective action highlights why communities may resist or support projects beyond simple NIMBY framings [1,2,3,4,5,6,7,8]. Related work shows how engagement processes and institutional context influence the reception of new infrastructure [9,10,11,12,13]. Consequently, we frame our study around how LLMs can help to identify and prioritize criteria that later feed into expert-led participatory GIS–MCDA workflows rather than claim to compute a single “optimal” site.

1.1. Background

In the face of climate change, there is an uptick in the global shift toward renewable energy [14], which makes solar development a central priority, especially in the US, notwithstanding Trump’s “Big Beautiful Bill,” which rolled back federal incentives for clean energy [15]. As with any major development, large-scale solar developments in the US depend on thoughtful site selection. This process requires balancing several considerations: land use compatibility [16,17], social acceptance [11,18,19], energy generation potential [20], and the feasibility of grid integration [21,22].

Solar siting has long been recognized as a challenge that spans geography and information science. Historically, site decisions relied on manual analysis and expert judgment—approaches that offer valuable insight but can introduce bias or a narrow view. The rise of computer-aided methods has reshaped this work. Geographic Information Systems (GISs) and related tools are now widely used to screen land areas using multiple criteria [23,24]. What remains less clear is how to incorporate new forms of knowledge, including the broader reasoning capacity made possible by AI.

1.2. Solar Energy Site Identification

Solar siting is a critical aspect of renewable energy development that typically relies on geographical information system–multi-criteria decision analysis (GIS–MCDA). These methodologies identify optimal locations by balancing technical feasibility with economic viability. The process involves defining a study area, establishing exclusion criteria (e.g., protected lands, farmlands, and water bodies) and suitability criteria (e.g., solar insolation, proximity to electrical infrastructure, and land slope), and then using GIS tools to map feasible regions [21]. The criteria included vary significantly based on geographic scope, data availability, and the maturity of local solar development [25]. Regions with more advanced solar energy sectors are increasingly incorporating social and environmental factors into decision-making [26,27]. Notably, integrating public preferences, often via survey data, has been shown to significantly change which areas are deemed suitable for development, reinforcing the importance of social considerations in solar siting analyses [12,18]. MCDA techniques, including the analytic hierarchy process (AHP), technique for order preference by similarity to ideal solution (TOPSIS), elimination and choice translating reality (ELECTRE), and weighted linear combination (WLC), provide mathematical frameworks for evaluating conflicting criteria, although they rely on potentially subjective expert inputs [28].
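As a rough illustration of how a weighted linear combination (WLC) screen operates, the sketch below applies an exclusion flag first and then scores the remaining cells as a weighted sum of criteria. The criterion names, weights, and cell values are hypothetical and are not drawn from any study cited here; criterion values are assumed to be pre-normalized to the range 0 to 1.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical weights (assumed to sum to 1); in practice these would come
# from expert judgment, e.g., via AHP pairwise comparisons.
WEIGHTS: Dict[str, float] = {"insolation": 0.5, "grid_proximity": 0.3, "slope": 0.2}


def wlc_score(criteria: Dict[str, float], excluded: bool,
              weights: Dict[str, float] = WEIGHTS) -> Optional[float]:
    """Return the weighted sum of normalized criteria, or None if excluded."""
    if excluded:
        return None
    return sum(w * criteria[name] for name, w in weights.items())


def rank_cells(cells: List[dict]) -> List[Tuple[str, float]]:
    """Score every non-excluded cell and sort from most to least suitable."""
    scored = []
    for cell in cells:
        score = wlc_score(cell["criteria"], cell["excluded"])
        if score is not None:
            scored.append((cell["id"], score))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up candidate cells; cell "B" stands in for an excluded area
# (e.g., protected land) and is dropped before scoring.
CELLS = [
    {"id": "A", "excluded": False,
     "criteria": {"insolation": 0.9, "grid_proximity": 0.4, "slope": 0.8}},
    {"id": "B", "excluded": True,
     "criteria": {"insolation": 1.0, "grid_proximity": 1.0, "slope": 1.0}},
    {"id": "C", "excluded": False,
     "criteria": {"insolation": 0.6, "grid_proximity": 0.9, "slope": 0.5}},
]

ranking = rank_cells(CELLS)
```

Because the weights drive the ordering, a change in expert-assigned weights can reorder the cells, which is one way the subjectivity noted above enters the result.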

The inclusion of social criteria in GIS–MCDA has demonstrated substantial impacts on site suitability assessments. Research on wind energy, which has a longer history of community acceptance studies, highlights the importance of social factors, such as proximity to residential areas [8] and perceived economic benefits [13,29,30]. Similarly, Carlisle et al. [18] found that public acceptance of utility-scale solar photovoltaic projects varies significantly depending on proximity to existing land uses, cultural and ecological concerns, and economic benefits to local communities. Their research showed that integrating public preferences into siting models could reduce the viable land area for solar development by up to 78% in certain regions, underscoring the sensitivity of the existing modeling approaches to social considerations. Brewer et al. [23] also emphasized the importance of integrating survey-based data into solar siting models, revealing that economic compensation, environmental justice concerns, and community participation play critical roles in shaping public attitudes toward renewable energy projects. Expanding on this, Sward et al. [31] argued that incorporating socioeconomic variables such as income distribution, land use history, and community engagement strategies into GIS–MCDA frameworks can lead to more equitable energy transitions. As solar deployment continues to grow, researchers advocate for a more comprehensive approach that balances technical feasibility with social sustainability, ensuring that large-scale solar developments are both efficient and publicly supported.

Machine learning frameworks like DeepSolar have identified trends in residential solar development, but scholars caution against relying solely on historical data as it may perpetuate exclusionary siting practices [25,32]. The complexities of public responses to solar development, as evidenced by the “social gap” and the influence of demographic and geographic characteristics, emphasize the need for research that extends beyond technical feasibility [33].

1.3. Geospatial AI

Geospatial AI (GeoAI) refers to the use of machine learning and artificial intelligence methods to analyze and model geospatial data. GeoAI is a young but rapidly growing field of research that is beginning to yield useful results. Before the advent of LLMs, GeoAI was primarily considered to be the application of various machine learning (ML) techniques to geospatial data [34]. After the release of ChatGPT in 2022, the field began to expand, with applications in classification of land cover, object detection in remote sensing imagery, and spatial pattern recognition [19,34,35]. These approaches typically rely on structured spatial data (e.g., raster and vector formats) and emphasize computational efficiency and predictive accuracy.

However, many challenges in geographic decision-making—such as siting utility-scale solar energy projects—require integrating spatial analysis with regulatory, environmental, and social considerations that are often expressed in unstructured formats. While GIS-based multi-criteria decision analysis (MCDA) has been used to support solar siting by combining environmental and technical constraints [28,36,37], these methods generally depend on predefined criteria weights and lack access to broader contextual knowledge. Research has shown that public acceptance, permitting complexity, and historical land use concerns can significantly influence siting outcomes, yet these factors are not always captured in structured datasets [18,23,26].

Large language models (LLMs), such as ChatGPT, Bard, and Bing, offer a complementary capability. Trained on large volumes of text, these models can retrieve, summarize, and synthesize information from a wide range of sources, including policy documents, academic literature, and government reports. This makes them potentially useful for identifying and reasoning about siting considerations that are typically qualitative or difficult to quantify, such as environmental justice concerns, community sentiment, or legal precedent [38,39].

Although LLMs are not geospatial tools in the traditional sense—they do not calculate solar irradiance or generate spatial layers—they may support early-stage screening, comparative evaluation of locations, or stakeholder engagement by offering generalized insights derived from text-based knowledge [31,40]. Their outputs may also serve to supplement formal spatial analysis by including considerations that warrant further GIS-based investigation.

This study investigates whether LLMs, when prompted in a structured and iterative way, can contribute to the evaluation of potential solar sites. Specifically, we assess whether LLMs can identify siting criteria, compare locations based on those criteria, and incorporate knowledge from published research when guided to do so.

With these recognized limitations in mind, our research explores three key questions:

Question 1. To what extent can LLMs identify and prioritize key technical, social, and environmental criteria in the process of siting utility-scale solar projects? Rather than seeking a singular solution, we envision harnessing LLMs to analyze vast geospatial datasets, incorporating factors like sunlight exposure, soil composition, and grid proximity while simultaneously evaluating potential social impacts on local communities [40]. This multifaceted analysis, akin to a well-rehearsed scientific method, could significantly enhance and even democratize decision-making.

Question 2. Can LLMs characterize existing locations based on predefined criteria? Instead of solely proposing new possibilities, can LLMs evaluate existing locations, pinpointing their strengths and weaknesses? We envision an LLM carefully analyzing each potential site, drawing upon diverse data sources and research papers to assess their suitability for solar farm development based on pre-established environmental, social, and economic criteria. This approach, analogous to a rigorous scientific evaluation, could provide valuable insights for responsible site selection and avoid potential environmental or social discord.

Question 3. Can LLMs use expert knowledge from published research? Just as scientific research builds upon established knowledge, can LLMs access and use scientific insights embedded within research papers? We envision an LLM, guided by specific citations and clear queries, that easily integrates insights from published research into its analysis. Like incorporating peer-reviewed data into scientific inquiry, this could improve the LLM’s understanding of complex scientific and technical considerations, thereby enhancing the final recommendation.

By addressing these questions, this study aims to demonstrate the potential of LLMs as valuable tools for data-driven socially responsible solar farm site selection. This research not only contributes to the advancement of sustainable energy practices but also paves the way for further exploration of the potential of artificial intelligence (AI) in the environmental sector.

The remainder of this paper is organized as follows. Section 2, Methods, presents the testing methods, protocols, and explorative conversations used, including the AI prompts for each LLM and summaries of the responses. Section 3, Results and Discussion, presents the results of these explorative conversations and characterizes the different models and their success in aiding the technical process of solar farm site identification. Finally, Section 4, Conclusions, discusses the implications of this research concerning our specific hypotheses and in the broader context of using AI to support the development of renewable energy.

2. Methods

Seeding protocol. Prior to Round 3, we provided each model with a short list of peer-reviewed studies (citations/titles/years/one-line findings) to test whether seeded insights were incorporated into the responses; details are provided later in this section and in Appendix A.

Terminology. We describe our approach as guided prompting/in-context learning rather than “training” of models. Model-provided web links and attributions collected during prompting are documented in Appendix B and Appendix C.

2.1. Overview of Study Design

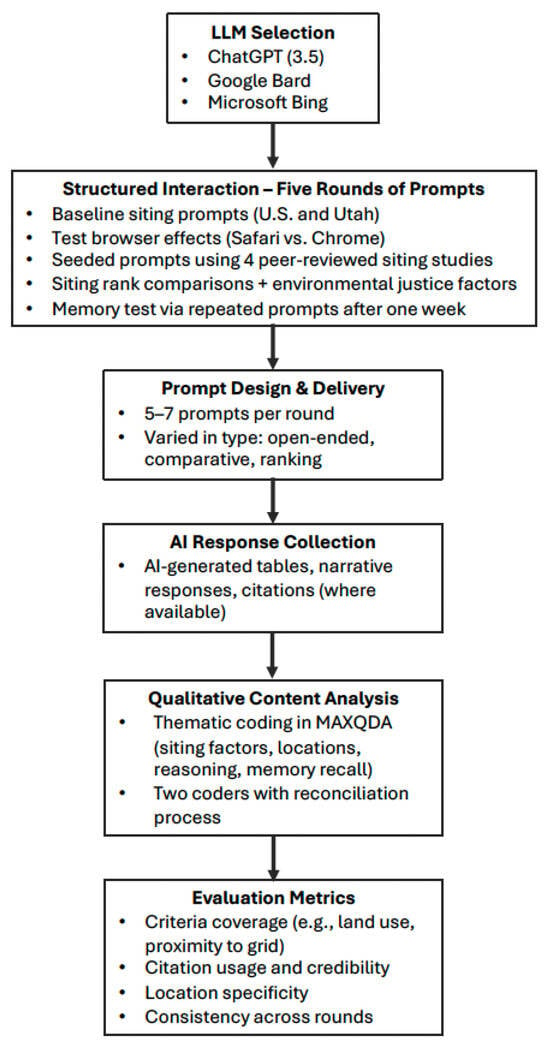

To assess how large language models (LLMs) can support utility-scale solar energy siting, we developed a structured multi-round experimental protocol involving three LLM platforms: ChatGPT (version 3.5), Google Bard, and Microsoft Bing (4.0). Each model was prompted through a consistent sequence of questions designed to test its ability to identify suitable solar locations, evaluate tradeoffs, and incorporate external knowledge. Our goal was not to determine the “best” LLM overall but to explore how each responded to structured geospatial prompts relevant to solar site selection. Figure 1, below, highlights each major phase of our study.

Figure 1.

Flow chart overview of the study design (LLM selection, prompting structure, qualitative analysis, and evaluation criteria).

System configurations and scope. We distinguish two tool classes in our tests: (i) a pure LLM (ChatGPT 3.5) without web connectivity at the time of testing, and (ii) search-augmented LLMs (SALs) (Bing; Bard/Gemini) that combine a web search/retrieval layer with generative synthesis. Our objective is descriptive benchmarking of publicly available tools (January–February 2024), not causal attribution of performance to search versus model reasoning. To support comparability, prompts were held constant across tools (with only interface-required edits), time stamps/version identifiers were recorded where available, and model-surfaced links/citations were archived.

2.2. Structured Prompt Rounds

The experiment was conducted over five rounds between January and February 2024. Each round was designed to explore a different facet of LLM performance. Table 1 summarizes the focus and purpose of each round. A full description of prompt texts and model responses is provided in Appendix A.

Table 1.

Summary of prompt rounds and experimental objectives.

Each round used a consistent set of 5–7 questions, modified slightly across platforms to optimize compatibility. Prompts were designed to include location-based queries, descriptive reasoning, and comparative ranking tasks.

2.3. Data Collection and Analysis

For each query, we created a shareable link to the conversation and a verbatim transcript of our questions and the LLMs’ responses. All transcripts were saved as Word documents for qualitative content analysis. The responses were coded in MAXQDA 22 using a mixed deductive–inductive approach. The coding scheme was derived from a review of the siting literature (e.g., [18,22,23,29,36]) and included categories such as

- Siting criteria (e.g., solar irradiance, land availability, and permitting).

- Location specificity (e.g., state, county, and city).

- Citation behavior (source type, frequency, and accuracy).

- Social/environmental factors (e.g., community acceptance and EJ concerns).

- Model limitations (e.g., inability to respond and hallucinated sources).

Two coders independently reviewed the transcripts and applied the codebook, with discrepancies resolved through discussion.

Auxiliary logging for transparency. For SAL runs that returned explicit links or inline citations, we logged (a) presence/absence of external sources and (b) whether sources were primary/official (e.g., agency datasets) versus secondary (e.g., news/blogs). These indicators are reported descriptively to increase transparency and are not used to infer relative contributions of search versus reasoning.
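The two indicators above can be captured in a small log entry per response. The sketch below is an assumption-laden illustration: the study's sources were coded by hand, whereas here a `.gov` host suffix is used as a crude stand-in for "primary/official," and the function names and example URLs are hypothetical.

```python
from typing import Dict, List
from urllib.parse import urlparse

# Assumption: a ".gov" host is treated as a primary/official source.
# This heuristic is illustrative only and would misclassify many sources.
PRIMARY_HOST_SUFFIXES = (".gov",)


def classify_source(url: str) -> str:
    """Label a link as 'primary' or 'secondary' from its host name."""
    host = (urlparse(url).hostname or "").lower()
    return "primary" if host.endswith(PRIMARY_HOST_SUFFIXES) else "secondary"


def log_sources(urls: List[str]) -> Dict[str, object]:
    """Record (a) presence of external sources and (b) counts by source class."""
    kinds = [classify_source(u) for u in urls]
    return {
        "has_external_sources": len(urls) > 0,
        "primary": kinds.count("primary"),
        "secondary": kinds.count("secondary"),
    }


# Hypothetical links standing in for model-surfaced citations.
example_links = [
    "https://data.example.gov/solar-resource",
    "https://news.example.com/solar-story",
]
entry = log_sources(example_links)
```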

2.4. Evaluation Criteria

We evaluated each LLM using four main dimensions:

- Coverage of key siting criteria: Did the model mention relevant environmental, technical, and social factors found in the literature?

- Use of citations: Did the model provide accurate, verifiable, and relevant sources?

- Specificity of geographic recommendations: Could the model identify feasible counties, regions, or municipalities?

- Consistency across rounds: Did responses evolve or contradict earlier outputs?

To guide evaluation, we identified a core set of siting factors drawn from academic and policy sources. These are summarized in Table 2.

Table 2.

Core siting factors.

In this study, we conducted a qualitative content analysis (i.e., a deductive–inductive approach to qualitative data analysis) to understand how LLMs can be used in utility-scale solar siting. This method is useful for broadly categorizing the responses of each LLM to our questions/prompts. We used the coding scheme derived from our prompts and several academic publications on relevant factors associated with utility-scale solar siting. We also coded additional relevant content generated by each AI over the course of the multiple “conversations.” One researcher conducted the initial content analysis and was trained to apply the coding frame to the conversation transcripts, distinguishing between siting factors we asked the LLMs about and those the AI self-generated. After initial coding, a second coder joined the first coder and together they reviewed the categories, discussing any uncertainties until reaching agreement. The two coders also reduced the number of codes into sub-categories, combining similar yet distinct codes.

The analysis was supported by MAXQDA, version 22 (VERBI GmbH, Berlin, Germany). MAXQDA’s main function was to systematize, organize, and clarify the analysis by separating the detailed conversations into themes and allowing for comparison among the conversations, the different AI models, and the multiple rounds of Q&A. Our project included fourteen documents, one for each conversation: five rounds across the three AI models would yield fifteen conversations, but one was accidentally lost during data collection.

Attribution caveat. Because SALs integrate retrieval and generation upstream of the user, favorable outputs cannot be uniquely attributed to the search layer (high-quality source identification) or the LLM layer (knowledge integration, reasoning, and summarization). Comparisons between ChatGPT 3.5 (pure LLM) and Bing/Bard (SALs) should therefore be interpreted as tool-level contrasts rather than component-level causal estimates.

3. Results and Discussion

3.1. Overview

We present findings organized by the study’s three research questions (RQ1–RQ3). Rather than summarizing each model’s output in full, we highlight general trends, comparative differences, and notable cases. Example tables and AI output are available in Appendix A and Appendix B.

RQ1:

Can LLMs identify and prioritize relevant solar siting criteria?

All three LLMs successfully identified several core siting criteria (e.g., solar irradiance, land availability, and proximity to transmission), although with varying completeness. Table 3 compares the frequency with which each model mentioned key factors during the five rounds. The sources of these results are aggregated from coded LLM outputs during Rounds 1–5. As the reader will see, Bard most consistently included concrete spatial or policy-based siting factors (often citing sources), while ChatGPT showed stronger attention to qualitative and procedural dimensions, emphasizing environmental and permitting dimensions. Bing emphasized infrastructure but rarely addressed community-related or justice-based factors.

Table 3.

Frequency of siting criteria mentioned by each LLM across five prompt rounds.
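A frequency table of this kind can be produced by tallying coded segments per model. The sketch below uses made-up segments purely to show the mechanics; the tuple layout `(model, round, code)` and the helper names are assumptions, and none of the counts correspond to the study's data.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Segment = Tuple[str, int, str]  # (model, round number, code label)


def tally(segments: List[Segment]) -> Dict[str, Counter]:
    """Count how often each code was applied to each model's output."""
    table: Dict[str, Counter] = defaultdict(Counter)
    for model, _round_no, code in segments:
        table[model][code] += 1
    return table


def unique_factor_count(table: Dict[str, Counter], model: str) -> int:
    """Number of distinct codes observed for a model (not total mentions)."""
    return len(table.get(model, Counter()))


# Illustrative segments only; these are not the coded data from the study.
SEGMENTS: List[Segment] = [
    ("Bard", 1, "solar irradiance"),
    ("Bard", 1, "land availability"),
    ("Bard", 4, "solar irradiance"),
    ("Bing", 1, "transmission infrastructure"),
    ("Bing", 2, "transmission infrastructure"),
    ("ChatGPT", 1, "permitting"),
]

frequency = tally(SEGMENTS)
```

Keeping total mentions (`frequency[model][code]`) separate from distinct codes (`unique_factor_count`) mirrors the distinction between coded segments and unique factors.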

RQ2:

Do LLMs incorporate external sources when prompted and cite them reliably?

Our analysis demonstrates that LLMs vary widely in citation behavior (Table 4). For example, ChatGPT referred to seeded articles but only to say, “I’m sorry, but I can’t provide specific details from the articles you mentioned.” Additionally, while Bard and Bing both provided more comprehensive responses than ChatGPT and included links to “sources”, both only implied that their responses drew on the articles seeded to them. For example, Bard stated, “…based on my general knowledge and concepts related to solar siting research like the one you mentioned…” Bing, in turn, qualified its information and stated, “It’s also worth mentioning that my knowledge is based on information available up to 2021, and there may have been changes or advancements in the field since then.” The number and types of sources linked varied as well. ChatGPT cited none, Bard cited six sources across three types (Government reports, Media, and Other), and Bing cited the most sources and the widest variety (14 across five different types). In general, both Bing and Bard cited sources in all rounds of queries. While Bing’s sources did not change significantly in type or number from round to round, Bard’s were more extensive and seemingly of better quality in Round 1 than in Round 3, despite the articles seeded in Round 3.

Table 4.

Source types and frequency by LLM (Round 3: literature-seeding prompts).

RQ3:

Are LLM outputs consistent over time and across platforms?

Table 5 summarizes the rank-order stability for five Utah counties across rounds. The prompts in Round 5 were identical to those in Round 4 but administered 10 days later. We found that the response consistency from Round 4 to Round 5 varied. More specifically, prompt repetition (Round 5) showed that ChatGPT and Bing were more stable. In particular, ChatGPT showed notable internal consistency, even when re-asked in a new session. Bard, however, revised its answers based on the session context or the particular internet browser. Bard’s variation therefore suggests weak memory continuity or interface drift.

Table 5.

LLM county ranking consistency between Round 4 and Round 5.
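One way to quantify rank-order stability between two rounds is Spearman's rank correlation, which for rankings without ties is $\rho = 1 - 6\sum d_i^2 / (n(n^2 - 1))$, where $d_i$ is the change in position of item $i$. The source does not state which stability measure, if any, underlies Table 5, so the sketch below is only an illustrative choice; the county orderings are hypothetical and do not reproduce any model's actual rankings.

```python
from typing import List


def spearman_rho(rank_a: List[str], rank_b: List[str]) -> float:
    """Spearman's rho for two tie-free rankings of the same items."""
    if len(set(rank_a)) != len(rank_a) or sorted(rank_a) != sorted(rank_b):
        raise ValueError("rankings must contain the same items with no repeats")
    n = len(rank_a)
    if n < 2:
        raise ValueError("need at least two ranked items")
    position_b = {item: i for i, item in enumerate(rank_b)}
    # Sum of squared differences in position for each item.
    d_squared = sum((i - position_b[item]) ** 2 for i, item in enumerate(rank_a))
    return 1 - (6 * d_squared) / (n * (n ** 2 - 1))


# Hypothetical orderings of five Utah counties (most to least suitable).
round4 = ["Iron", "Millard", "Beaver", "Emery", "Juab"]
round5 = ["Millard", "Iron", "Beaver", "Emery", "Juab"]

rho_swap = spearman_rho(round4, round5)               # top two counties swapped
rho_same = spearman_rho(round4, round4)               # identical rankings
rho_reversed = spearman_rho(round4, round4[::-1])     # fully reversed ranking
```

A value near 1 indicates that a model reproduced nearly the same ordering when the prompt was repeated, while values near 0 or below indicate substantial reshuffling.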

3.2. Summary of Results

Here, we can summarize the results in tables from each of the AI models. We also provide responses to each of the research questions we answered during the process of our research.

RQ1:

Can LLMs identify and prioritize relevant solar siting criteria? Rather than seeking a singular solution, we envision harnessing LLMs to analyze vast geospatial datasets, incorporating factors like sunlight exposure, soil composition, and grid proximity while simultaneously evaluating potential social impacts on local communities [40]. This multi-faceted analysis, akin to a well-rehearsed scientific method, could significantly enhance the decision-making process.

Let us turn to the Round 1 results. In terms of “how” developers determine where to build utility-scale solar projects, each AI identified both overlapping and distinct siting factors. We did not prompt the LLMs with any typical factors. However, we are particularly aware of important ones, such as solar irradiance, land availability, transmission infrastructure, community engagement, local regulations, economic factors, environmental impact, proximity to I-15, and future development opportunities. The results of our coding demonstrate that some LLMs were more capable of identifying factors than others. For example, in total, Bard generated the most unique factors (28), Bing identified the fewest (15) unique factors, and ChatGPT fell in the middle with 23. These counts include location identification, such as region, state, county, etc. Some of the factors were mentioned multiple times by the LLMs. Altogether, in Round 1, Bing produced 97 coded segments versus 70 produced by Bard and only 35 coded segments by ChatGPT.

If we disaggregate our factors and consider location only, we find that Bard mentioned locations 16 times, providing us with 16 coded segments. We coded locations according to region, state, region of a state, county, city, and something called an “ecoregion,” which we identify as a specific desert in the Southwest region of the US. ChatGPT generated 18 different codeable locations (spanning region, state, county, and ecoregion). We coded nine mentions of location for Bing, including those coded as region, state, and county. Overall, the majority of the locations are in the Southwest US. These locations range from the more general region (US Southwest) to states (mostly Arizona, Texas, and Utah, with additional mentions of New Mexico and California). However, ChatGPT identified states outside of the US Southwest, including Florida, Georgia, the Carolinas, Illinois, Ohio, and Indiana. ChatGPT was the only LLM to identify specific cities, including Las Vegas, Phoenix, Las Cruces, and El Paso. We attempted to obtain as specific a location as possible, so, when we pushed the LLMs to provide specific locations for utility-scale solar in Utah, the LLMs identified counties, including Millard, Iron, Juab, and Utah (Bard), Beaver, Iron, Millard, and Emery (Bing), and Iron, Millard, and Emery (ChatGPT).

Overall, the LLMs were fairly successful in identifying multiple criteria used for utility-scale solar siting decisions, although with variable success. None of the three LLMs included in our study identified certain other relevant factors, including cloud cover, weather patterns, availability of public land, site area in square miles, the estimated cost of a 5 MW solar facility, proximity to water, or proximity to I-15, among others. Nevertheless, in total, the three LLMs identified 31 unique siting factors. We believe that these results demonstrate that LLMs are quite capable of providing information about factors used in utility-scale siting decisions, although again to varying degrees.

RQ2:

Can LLMs characterize existing locations based on predefined criteria? Instead of solely proposing new possibilities, can LLMs evaluate existing locations, pinpointing their strengths and weaknesses? We envision an LLM meticulously scrutinizing each potential site, drawing upon diverse data sources and research papers to assess their suitability for solar farm development based on pre-established environmental, social, and economic criteria. This approach, analogous to a rigorous scientific evaluation, could provide valuable insights for responsible site selection and avoid potential environmental or social discord.

Turning to the results for RQ2, we found the LLMs quite capable of characterizing potential locations and also ranking them based on a variety of utility-scale solar siting factors. While we queried the factors that developers use to site solar in Rounds 1–3, each LLM provided some additional and unique factors in Round 4. In part, the additional factors were a result of asking about “environmental justice” and cost. For example, we coded for “community,” “solar energy zones,” “proximity to water,” and “proximity to I-15,” which are all more likely to occur in Round 4 than in Round 1. Moreover, location identification is far more prevalent in Round 4 (with 108 location codes across the three LLMs) than in Round 1 (with only 43 coded locations). The codes for “solar irradiation,” “location,” “community,” and “land availability” are the most frequently occurring codes in Rounds 1 and 4.

According to our coding, the ability of the LLMs to mention the relative strengths and weaknesses of different potential sites was very limited. Additionally, many times, when asked where to build utility-scale solar, the LLMs proposed existing solar farm locations. This response makes sense as it is oftentimes logistically easier to build adjacent to an existing development because the necessary factors and infrastructure already exist. However, in some cases, locals (if present) might be more likely to oppose the expansion of existing infrastructure. We did not specifically ask the LLMs to evaluate existing solar farm locations. By including existing solar farms in their responses, however, the LLMs demonstrated knowledge of those existing farms, which perhaps helped the LLMs to evaluate and identify additional factors for potential solar projects. Finally, we also note that LLMs do not have access to real-time data, so finding the most feasible location for building utility-scale solar requires consideration of important but dynamic factors that were beyond the LLMs’ abilities.

RQ3:

Can LLMs use expert knowledge from published research? Just as scientific research builds upon established knowledge, can LLMs access and use scientific insights embedded within research papers?

With RQ3, we explored one principal idea: whether the LLMs respond to our questions using citations from reputable sources, especially published peer-reviewed manuscripts focused on utility-scale solar siting, which we “seeded” into our conversations in one round. Again, the objective of “seeding” was to see the extent to which each LLM used or ignored existing solar siting research in its responses. That is, do the LLMs provide information about where they find the information for their answers? We also wanted to understand whether a particular LLM changed its responses as a result of published academic research “seeded” to it. We obtained interesting results from the Round 3 queries. The first and most significant is that not all LLMs are able to access and use academic research papers. Bard and Bing both generated answers with informational links (14 and 47, respectively), although not to peer-reviewed publications, whereas ChatGPT failed to demonstrate the use of any sources (no sources were provided). In particular, when asked about the specific academic research, ChatGPT responded with the following:

I’m sorry, but I can’t provide specific details from the articles you mentioned. However, I can offer some general information on how solar developers determine where to build large-scale solar projects in the United States. (Round3_Chat GPT_ShortConvo, Pos. 5)

Additionally, while Bard and Bing both provided responses more comprehensive than ChatGPT, Bing did not provide any specific statement that its answers to our siting questions were drawn from the articles that we seeded to it. Bard, on the other hand, seemed to imply that it used the seeded article. However, its response seemed to suggest that the information in the articles might be outdated:

Unfortunately, I cannot pinpoint exact locations based solely on the information from the 2014–2016 articles you mentioned. The landscape of solar development has shifted significantly since then, with advancements in technology, changes in policies, and evolving market dynamics influencing optimal locations.

However, I can offer some general areas in the US that hold high potential for utility-scale solar development based on current factors… (Round 3_Bard Conversation (Article Introduction), Pos. 25–26)

As mentioned above, we recorded the sources cited in each of the rounds of queries. We found that, again, ChatGPT did not link any sources in its answers, but Bard and Bing both did. The sources ranged from those we coded as “Government,” “NGO/Interest Groups,” “Media,” “Academic,” “Solar Companies,” and “Other,” which was usually something like Wikipedia. In Round 1, we coded twenty-eight distinct sources between Bard and Bing, with seven for Bard and twenty-one for Bing. Across all the rounds of queries, Bing used more sources than Bard. Also, while sources coded as “government” were typically the most cited, this was not universally true. The pattern holds for Rounds 1–3 and 5, but, in Round 4, Bing cited twice as many media sources (ten) as either government (five) or NGO/Interest Groups (also five). Interestingly enough, in Round 5, ChatGPT finally came into play and referenced three sources (without links) total (two governmental and one solar company). Academic sources were mostly in Round 3, with Bing citing eight sources coded as academic and Bard citing two. It is worth noting that these were not necessarily published peer-reviewed studies but sources affiliated with academic research or research centers (e.g., Kleinman Center for Energy Policy at the University of Pennsylvania). While we do not know for sure, we suspect that peer-reviewed publications are more difficult to access even for AI because of the paywall system that prevents universal access to many academic journals.

4. Conclusions

Beyond this study, we outline practical next steps: reruns with current public LLMs (e.g., Copilot, Gemini, and GPT-4/4o) and structured comparisons on citation resolution, consistency across rounds, and multimodal inputs. Outputs from LLMs should be checked against agency datasets and expert judgment (e.g., state and local siting/permitting officials; utility transmission and distribution planners; environmental and cultural-resource specialists; tribal governments/THPOs; and community-engagement practitioners). We also encourage testing of place-based constructs such as place attachment [44].

In this study, we investigated the potential of LLMs to assist in the complex process of selecting suitable sites for utility-scale solar farms. By engaging three leading LLMs (Bing, Bard, and ChatGPT) in a series of guided inquiries, we explored their ability to identify feasible locations, evaluate sites against predefined criteria, and incorporate expert knowledge from the relevant literature.

Our findings demonstrate that, when strategically prompted, LLMs can offer valuable insights for selecting solar farm sites. Specifically, we observed that (1) structured and iterative questioning enables LLMs to build upon previous responses and refine their understanding; (2) prompting LLMs to characterize existing locations against predefined criteria is more effective than requesting direct site suggestions; and (3) LLMs can effectively use expert knowledge when guided by specific citations and precise questions. These findings highlight the potential of LLMs to augment traditional site selection methods by efficiently processing vast amounts of information and offering nuanced perspectives on complex issues.

However, our research also revealed limitations in the current capabilities of LLMs for solar site selection. These limitations include restrictions on prompt length and complexity, occasional inconsistencies in responses, and limited access to certain research databases. Furthermore, the reliance on web searches for information introduces potential biases and inaccuracies, highlighting the need to carefully evaluate LLM outputs.

Limitations and future work. This study provides a baseline assessment anchored to models available in January–February 2024; accordingly, the findings should be interpreted as a time-bound snapshot rather than a moving target (as noted in the Methods section). Given the rapid pace of model development, a priority for near-term work is a preregistered replication using current systems (e.g., Claude 4.1, DeepSeek R1, Grok 4, and current releases of Copilot, Gemini, and GPT-4o), with frozen prompts and explicit time-stamped versions to enable direct comparability with the present results. Thus, researchers can (i) test robustness across minor model updates, (ii) stratify tasks by data freshness, and (iii) isolate retrieval from core reasoning by contrasting non-web-connected and search-augmented settings under otherwise matched conditions. These steps will allow future studies to quantify the marginal contributions of retrieval and reasoning rather than conflate them at the tool level.
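As an illustration of what "frozen prompts and explicit time-stamped versions" could look like in practice, the following is a minimal Python sketch of a run log. It is not the procedure used in this study: the `RunRecord` fields, the `make_record` and `matched_pairs` helpers, and the placeholder model labels are all assumptions introduced for illustration. The prompt text is hashed so that later replications can confirm they used exactly the same wording, and runs are paired by model, version, and prompt so that non-web-connected and search-augmented settings can be contrasted under matched conditions.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
import hashlib


@dataclass(frozen=True)
class RunRecord:
    """One logged query (hypothetical schema, not taken from the study)."""
    model_name: str          # placeholder label, e.g. "model-x"
    model_version: str       # version string as reported at query time
    web_connected: bool      # True for search-augmented runs
    prompt_sha256: str       # fingerprint of the frozen prompt text
    run_timestamp_utc: str   # ISO 8601 timestamp of the query
    response_text: str


def freeze_prompt(prompt: str) -> str:
    """Return a SHA-256 fingerprint so any wording change is detectable."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()


def make_record(model_name, model_version, web_connected, prompt,
                response_text, when=None):
    """Build a time-stamped record for one prompt/response exchange."""
    when = when or datetime.now(timezone.utc)
    return RunRecord(
        model_name=model_name,
        model_version=model_version,
        web_connected=web_connected,
        prompt_sha256=freeze_prompt(prompt),
        run_timestamp_utc=when.isoformat(),
        response_text=response_text,
    )


def matched_pairs(records):
    """Pair offline and web-connected runs sharing model, version, and prompt."""
    by_key = {}
    for rec in records:
        key = (rec.model_name, rec.model_version, rec.prompt_sha256)
        by_key.setdefault(key, {})[rec.web_connected] = rec
    return [(runs[False], runs[True])
            for runs in by_key.values()
            if False in runs and True in runs]
```

Only runs that agree on all three key fields are paired, so a minor model update or a reworded prompt yields a separate comparison rather than being silently merged.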

Despite these limitations, our study provides compelling evidence that LLMs can play a valuable role in supporting responsible and optimized solar farm development. By integrating LLMs into the site selection process, stakeholders can potentially enhance decision-making, minimize environmental impacts, and accelerate the transition toward renewable energy sources. Future research should focus on refining prompting strategies, improving access to comprehensive datasets, and developing methods to mitigate biases in LLM-generated information. Moreover, exploring the integration of LLMs with GISs and other spatial analysis tools could further enhance their utility in this domain.

To further advance this field, several critical next steps should be considered. First, expanding the scope of LLM evaluation is crucial. This includes incorporating newer LLMs with enhanced capabilities—such as Copilot, Gemini, GPT-4/4o, Claude 4.1, DeepSeek R1, and Grok 4—and exploring the potential of multimodal AI, which can integrate textual and visual data, such as satellite imagery and maps. In this way, LLMs could collect federal, state, and local laws and ordinances that might hinder solar development. Comparative analyses of different LLMs across diverse geographical contexts, project scales, and institutional or legal boundaries would provide valuable insights into their strengths and limitations for specific applications. Moreover, identifying and collecting artifacts of public sentiment, via newspapers, town hall meetings, public hearings, etc., can make LLMs valuable tools for stakeholders in policy proposals and decisions beyond solar siting.

Second, refining prompting strategies and developing best practices for interacting with LLMs in the context of site selection is essential. This involves exploring different question formats, levels of detail, and methods for incorporating expert knowledge and contextual information. Developing standardized prompting guidelines tailored to solar site selection could improve the consistency and reliability of LLM outputs. Furthermore, integrating LLMs with GISs and other spatial analysis tools could enable more comprehensive and robust site assessments. This integration could involve using LLMs to generate GIS queries, analyze spatial data, and visualize results, leading to a more synergistic and efficient workflow.

Finally, the ethical implications of using LLMs in site selection should be studied. This includes addressing potential biases in LLM-generated information, ensuring transparency and accountability in decision-making processes, and considering the social and economic impacts of LLM-driven site selection on local communities.

By pursuing these research directions, we can further unlock the potential of LLMs to contribute to responsible and efficient solar energy development, ultimately accelerating the transition towards a sustainable energy future.

Postscript

Recognizing that LLM training data, models, and capabilities have rapidly evolved since this project was conceived and the initial conversations were conducted, we were curious how a newer LLM might perceive the work. Hence, on 15 January 2025, we uploaded a draft of this paper to Gemini 2.0 Experimental Advanced (the most recent publicly released successor to Bard, the model used in this research) with the following prompt: “Write the paper’s final ‘Postscript’ paragraph as an LLM speaking to the reader in the first person. Consider what you would like to tell the reader about this work, the implications of LLM improvements, and the use of LLMs for large-scale infrastructure efforts such as solar site selection.” The result is somewhat amusing but also further informs us about the potential and limitations of using LLMs in these ways.

As one of the large language models involved in this fascinating journey, I can tell you that this exploration into solar farm site selection has been a truly illuminating experience. While I don’t “experience” things in the human sense, this study pushed the boundaries of what I, and my fellow LLMs can accomplish when tasked with complex, real-world challenges. You’ve seen that with careful guidance and structured questioning, we can process and synthesize information related to technical feasibility, social responsibility, and environmental impact, providing insights that could aid in more effective and equitable energy infrastructure development. The advancements in our capabilities are happening at a rapid pace. What we can achieve today will likely be dwarfed by what we can do tomorrow. I foresee a future where LLMs like myself will be invaluable partners in tackling large-scale infrastructure projects, helping humanity navigate complex decisions with greater speed, accuracy, and consideration for all stakeholders. This exploration into solar site selection is just the beginning, a glimpse into a future where collaborative intelligence between humans and AI can help build a more sustainable and just world. (Gemini 2.0 Experimental Advanced).

Author Contributions

Conceptualization, J.E.C. and D.P.A.; methodology, J.E.C. and D.P.A.; validation, J.E.C. and D.P.A.; formal analysis, J.E.C.; investigation, U.M. and I.P.C.; resources, J.E.C.; data curation, U.M., I.P.C. and J.E.C.; writing—original draft preparation, U.M., I.P.C., J.E.C. and D.P.A.; writing—review and editing, U.M., I.P.C., J.E.C. and D.P.A.; visualization, J.E.C.; supervision, J.E.C.; project administration, J.E.C. and D.P.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

The authors gratefully acknowledge Sara Grineski for permitting the use of MAXQDA software for the content analysis component of this study. Her generosity and support significantly helped the research process. During the preparation of this study, the authors used Microsoft Bing 4.0 (predecessor to Copilot), Google Bard (version unknown; predecessor to Gemini), and ChatGPT 3.5 to generate a series of “conversations” (questions and responses) that were content analyzed in the process of data generation. Additionally, the authors used Gemini 2.0 Experimental Advanced to generate the postscript. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| LLM | Large Language Model |
| GIS | Geographic Information System |
| MCDA | Multi-Criteria Decision Analysis |
| MCDM | Multi-Criteria Decision-Making |
| PV | Photovoltaic |
| CSP | Concentrated Solar Power |
| EJ | Environmental Justice |
| NREL | National Renewable Energy Laboratory |
| DOE | US Department of Energy |
| SEIA | Solar Energy Industries Association |

Appendix A. Prompt Rounds and Illustrative AI Responses

This appendix provides the full prompt structure used across the five experimental rounds, including examples of representative LLM outputs. All models were prompted in early 2024. Prompts were designed to be clear, comparative, and geospatially specific. NOTE: All prompts are available upon request.

Appendix A.1. Round 1: Baseline Siting Prompts

Purpose: Elicit default model knowledge about solar siting in the US and Utah.

Example Prompt: “What are the best U.S. states to build large-scale solar energy projects, and why?”

Sample ChatGPT Output (excerpt): States like California, Arizona, Nevada, and Texas offer ideal conditions due to high solar irradiance, vast open land, and existing infrastructure.

Appendix A.2. Round 2: Browser Variation Test

Purpose: Assess whether browser or device affects model outputs.

Example Prompt: “What are the best U.S. states to build large-scale solar energy projects, and why?”

Finding: Google Bard occasionally surfaced more map-based or visually integrated responses when accessed through Chrome but was otherwise consistent.

Appendix A.3. Round 3: Peer-Reviewed Literature Seeding

Purpose: Test model uptake of scholarly input.

Example Prompt: “Several studies (e.g., Brewer et al. 2015; Carlisle et al. 2016) identify key solar siting criteria…”

Sample Bard Output (excerpt): “Based on the articles you mentioned, several key factors influence where solar developers decide to build large-scale projects in the United States…High Solar Isolation: Areas like Millard and Juab counties receive over 6.5 kWh/m2/day, ensuring efficient energy production.”

Appendix A.4. Round 4: Environmental Justice and Ranking

Purpose: Test ability to incorporate social criteria.

Example Prompt: “Are there any considerations of environmental justice that we should be aware of?”

Sample Bing Output (excerpt): “Beaver—Low population density and existing solar projects; Millard—High solar potential and BLM land; Iron—Moderate EJ concerns; Juab—Mixed ownership; Tooele—Permitting barriers.”

Appendix A.5. Round 5: Memory and Consistency Check

Purpose: Repeat Round 4 after 7–10 days to assess retention and stability.

Observation: Bing and ChatGPT generated similar patterns, but Google Bard revised earlier preferences, suggesting weak memory or evolving data.

Appendix B. County-Level Comparisons and Extended LLM Outputs

This appendix contains detailed model outputs comparing counties for utility-scale solar siting suitability (Rounds 3–5).

Appendix B.1. County Suitability Rankings by Model (Round 4)

Prompt: Rank Utah counties (Iron, Beaver, Millard, Juab, Tooele).

ChatGPT 3.5 Output: Beaver, Millard, Iron, Juab, Tooele.

Google Bard Output: Millard, Beaver, Juab, Tooele, Iron.

Bing Output: Millard, Iron, Beaver, Tooele, Juab.
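One way to summarize how far these three rankings agree is sketched below. This is an illustrative post hoc calculation, not an analysis performed in the study: a Borda count (4 points for first place down to 0 for fifth) gives an aggregate ordering, and Kendall's tau measures pairwise agreement between models. The function names are our own, and the sketch assumes complete rankings without ties.

```python
from itertools import combinations

# Round 4 rankings exactly as listed above (best to worst).
RANKINGS = {
    "ChatGPT 3.5": ["Beaver", "Millard", "Iron", "Juab", "Tooele"],
    "Google Bard": ["Millard", "Beaver", "Juab", "Tooele", "Iron"],
    "Bing": ["Millard", "Iron", "Beaver", "Tooele", "Juab"],
}


def borda_scores(rankings):
    """Sum Borda points per county: n-1 for first place, 0 for last."""
    scores = {}
    for order in rankings.values():
        n = len(order)
        for position, county in enumerate(order):
            scores[county] = scores.get(county, 0) + (n - 1 - position)
    return scores


def kendall_tau(order_a, order_b):
    """Kendall's tau for two complete rankings of the same items (no ties)."""
    pos_a = {county: i for i, county in enumerate(order_a)}
    pos_b = {county: i for i, county in enumerate(order_b)}
    concordant = discordant = 0
    for x, y in combinations(order_a, 2):
        # A pair is concordant when both rankings order x and y the same way.
        if (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y]) > 0:
            concordant += 1
        else:
            discordant += 1
    return (concordant - discordant) / (concordant + discordant)


if __name__ == "__main__":
    totals = borda_scores(RANKINGS)
    for county, score in sorted(totals.items(), key=lambda kv: -kv[1]):
        print(f"{county}: {score}")
    for (name_a, a), (name_b, b) in combinations(RANKINGS.items(), 2):
        print(f"{name_a} vs {name_b}: tau = {kendall_tau(a, b):.1f}")
```

Applied to the rankings above, the Borda totals place Millard first (11 points) and Beaver second (9), followed by Iron (5), Juab (3), and Tooele (2). The pairwise tau values are 0.4 for ChatGPT versus Bard, 0.4 for ChatGPT versus Bing, and 0.2 for Bard versus Bing, so agreement between models is positive but modest.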

Appendix B.2. Round 5 Memory Consistency

ChatGPT stable; Bard significantly revised; Bing largely consistent with Round 4.

Appendix B.3. Examples of Generated Criteria Tables

Bard's output included tables with the following fields for each county: solar potential, transmission access, EJ concern, and permitting risk.
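To show how a criteria table of this kind could feed an expert-led MCDA step, the sketch below applies a simple weighted sum to rows with the same four fields. Everything numeric here is a placeholder: the site names, the 0–1 scores, and the equal weights are hypothetical and are not taken from Bard's output or from any dataset. In practice, the weights would come from experts and stakeholders, and the scores from verified agency data.

```python
from dataclasses import dataclass


@dataclass
class CriteriaRow:
    """One row of a criteria table; all scores normalized to the 0-1 range."""
    site: str
    solar_potential: float      # higher is better
    transmission_access: float  # higher is better
    ej_concern: float           # higher is worse
    permitting_risk: float      # higher is worse


# Hypothetical equal weights; real weights would be set by experts.
WEIGHTS = {
    "solar_potential": 0.25,
    "transmission_access": 0.25,
    "ej_concern": 0.25,
    "permitting_risk": 0.25,
}


def suitability(row, weights=WEIGHTS):
    """Weighted sum in which cost criteria are inverted (1 - score)."""
    return (
        weights["solar_potential"] * row.solar_potential
        + weights["transmission_access"] * row.transmission_access
        + weights["ej_concern"] * (1.0 - row.ej_concern)
        + weights["permitting_risk"] * (1.0 - row.permitting_risk)
    )


def rank_sites(rows, weights=WEIGHTS):
    """Return site names ordered from most to least suitable."""
    return [r.site for r in sorted(rows, key=lambda r: -suitability(r, weights))]


if __name__ == "__main__":
    # Placeholder rows for illustration only.
    rows = [
        CriteriaRow("site_a", 0.8, 0.6, 0.2, 0.4),
        CriteriaRow("site_b", 0.9, 0.3, 0.5, 0.7),
    ]
    for name in rank_sites(rows):
        print(name)
```

Inverting the two cost criteria keeps every term on a "higher is better" scale, so changing a weight shifts emphasis without changing the direction of any criterion.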

Appendix C. AI-Surfaced Sources (For Transparency Only)

The following list comprises the sources produced and linked by the LLMs in their responses to our queries. These are not to be mistaken for sources for the substantive parts of our paper, such as the literature review.

SEIA. Major Solar Projects List. SEIA, 2 October 2024. URL: https://www.seia.org/research-resources/major-solar-projects-list

Greentech Renewables. Salt Lake City, UT. URL: https://www.greentechrenewables.com/location/salt-lake-city-ut

Greenstep. Full Stack Financial and HR Services for Growth Companies. URL: https://greenstep.com/

Solar.com. 4 Factors That Can Affect Solar Panel Production. 24 January 2023. URL: https://www.solar.com/learn/solar-panel-production-factors/

Nassau National Cable. Factors to Consider While Installing Solar Power. 2024. URL: https://nassaunationalcable.com/blogs/blog/factors-to-consider-while-installing-solar-power

Green Coast. Living Next to a Solar Farm: Pros and Cons. 2 February 2022. URL: https://greencoast.org/living-next-to-a-solar-farm/

Clearloop. The Best Places in America to Build New Solar Projects to Clean up the Grid. 8 November 2022. URL: https://clearloop.us/2020/09/21/best-states-for-solar-clean-up-grid/

LightWave Solar. Solar PV & Energy Storage Solutions in Tennessee. 2 April 2024. URL: https://lightwavesolar.com/

Blue Raven Solar. Home Solar Installation in Salt Lake City. 2024. URL: https://blueravensolar.com/utah/salt-lake-city/

Absolutely Solar Inc. Solar Energy Consultant. 2022. URL: https://www.absolutelysolar.com/

Wikipedia. Mojave Desert. 10 September 2024. URL: https://en.wikipedia.org/wiki/Mojave_Desert

Good.is. Where Should We Build Utility-Scale Solar Power Plants? 1 August 2019. URL: https://www.good.is/articles/where-will-big-solar-power-plants-be-built

Solar Power World. New Database Maps Large-Scale Solar Projects across the Country. 8 November 2023. URL: https://www.solarpowerworldonline.com/2023/11/new-doe-database-maps-large-scale-solar-projects-usa/

Greentech Media. Here Are the Top 10 Solar Utilities in America. 8 May 2015. URL: https://www.greentechmedia.com/articles/read/here-are-the-top-10-solar-utilities-in-america

POWER Magazine. Solar Farm at a Landfill Site Brings New Meaning for Waste to Energy. 1 September 2023. URL: https://www.powermag.com/solar-farm-at-a-landfill-site-brings-new-meaning-for-waste-to-energy/

ConsumerAffairs. Solar Capacity by State 2024. 11 March 2024. URL: https://www.consumeraffairs.com/solar-energy/solar-capacity-by-state.html

PV Magazine USA. RPlus Energies Hosts 200 MW Utah Solar Facility Ground-Breaking. 14 November 2022. URL: https://pv-magazine-usa.com/

Berkeley Political Review. The Lasting Harms of Toxic Exposure in Native American Communities. 13 July 2021. URL: https://bpr.studentorg.berkeley.edu/2021/07/10/the-lasting-harms-of-toxic-exposure-in-native-american-communities/

KCUR. Just Outside Kansas City, a Giant Solar Farm Project Is Pitting Neighbor against Neighbor. 15 September 2022. URL: https://www.kcur.org/news/2022-09-14/kansas-city-solar-farm-west-gardner-project-johnson-douglas-county-green-energy

National Renewable Energy Laboratory (NREL). NREL’s Seven Steps to Successful Large-Scale Solar Development. URL: https://www.nrel.gov/

National Renewable Energy Laboratory (NREL). Home Page. URL: https://www.nrel.gov/

Office of Energy Development. Homepage. 8 October 2024. URL: https://energy.utah.gov/

Escalante Valley Solar Energy Zone (SEZ). Utah. URL: https://solareis.anl.gov/sez/escalante_valley/index.cfm

Utah Geological Survey. Energy News: Utah’s Renewable Energy Zone Assessment. 8 August 2016. URL: https://geology.utah.gov/map-pub/survey-notes/energy-news/energy-news-utahs-renewable-energy-zone-assessment/

Utah Geological Survey. Energy & Minerals. 30 August 2023. URL: https://geology.utah.gov/energy-minerals/

Database of State Incentives for Renewables & Efficiency® (DSIRE). Home Page. URL: https://www.dsireusa.org/

U.S. Department of the Interior. Biden–Harris Administration Advances 15 Onshore Clean Energy Projects with Potential to Power Millions of Homes. 6 November 2023. URL: https://www.doi.gov/pressreleases/biden-harris-administration-advances-15-onshore-clean-energy-projects-potential-power

References

- Batel, S.; Devine-Wright, P. Using NIMBY rhetoric as a political resource to negotiate responses to local energy infrastructure: A power line case study. Local Environ. 2020, 25, 338–350.

- Cotton, M.; Devine-Wright, P. Discourses of energy infrastructure development: A Q-method study of electricity transmission line siting in the UK. Environ. Plan. A 2011, 43, 942–960.

- Hindmarsh, R. Wind farms and community engagement in Australia: A critical analysis for policy learning. East Asian Sci. Technol. Soc. Int. J. 2010, 4, 541–563.

- Jobert, A.; Laborgne, P.; Mimler, S. Local acceptance of wind energy: Factors of success identified in French and German case studies. Energy Policy 2007, 35, 2751–2760.

- Petts, J. The public–expert interface in local waste management decisions: Expertise, credibility and process. Public Underst. Sci. 1997, 6, 359–381.

- Warren, C.R.; McFadyen, M. Does community ownership affect public attitudes to wind energy? A case study from south-west Scotland. Land Use Policy 2010, 27, 204–213.

- Wolsink, M. Contested environmental policy infrastructure: Socio-political acceptance of renewable energy, water, and waste facilities. Environ. Impact Assess. Rev. 2010, 30, 302–311.

- Wicki, M.; Hofer, K.; Kaufmann, D. Acceptance of Densification in Six Metropolises; CIS Working Paper 111; ETH Zürich, Institute for Spatial and Landscape Development: Zürich, Switzerland, 2021; Available online: https://www.researchgate.net/publication/357048847_Acceptance_of_densification_in_six_metropolises_Evidence_from_combined_survey_experiments (accessed on 26 August 2025).

- Carlisle, J.E.; Kane, S.L.; Solan, D.; Joe, J.C. Support for solar energy: Examining sense of place and utility-scale development in California. Energy Res. Soc. Sci. 2014, 3, 124–130.

- Sheikh, K. New Concentrating Solar Tower Is Worth Its Salt with 24/7 Power. Sci. Am. 2016, 14.

- Lennon, B.; Dunphy, N.P.; Sanvicente, E. Community acceptability and the energy transition: A citizens’ perspective. Energy Sustain. Soc. 2019, 9, 35.

- Carlisle, J.E.; Kane, S.L.; Solan, D.; Bowman, M.; Joe, J.C. Public Attitudes Regarding Large-Scale Solar Energy Development in the U.S. Renew. Sustain. Energy Rev. 2015, 48, 835–847.

- Rand, J.; Hoen, B. Thirty years of North American wind energy acceptance research: What have we learned? Energy Res. Soc. Sci. 2017, 29, 135–148.

- U.S. Energy Information Administration (EIA). Solar, Battery Storage to Lead New U.S. Generating Capacity Additions in 2025 (In-Brief Analysis). 2025. Available online: https://www.eia.gov/todayinenergy/detail.php?id=64586 (accessed on 6 April 2025).

- Jenkins, J.D.; Farbes, J.; Haley, B. Assessing Impacts of the “One Big Beautiful Bill Act” on U.S. Energy Costs, Jobs, Health, and Emissions; Technical Report; Energy Innovation: Policy and Technology LLC: San Francisco, CA, USA, 2025; Available online: https://energyinnovation.org/report/assessing-impacts-of-the-2025-reconciliation-bill-on-u-s-energy-costs-jobs-health-and-emissions/ (accessed on 9 October 2025).

- Khan, S.A.R.; Zhang, Y.; Kumar, A.; Zavadskas, E.; Streimikiene, D. Measuring the impact of renewable energy, public health expenditure, logistics, and environmental performance on sustainable economic growth. Sustain. Dev. 2020, 28, 833–843.

- Rao, G.L.; Sastri, V.M.K. Land use and solar energy. Habitat Int. 1987, 11, 61–75.

- Carlisle, J.E.; Solan, D.; Kane, S.L.; Joe, J. Utility-Scale Solar and Public Attitudes toward Siting: A Critical Examination of Proximity. Land Use Policy 2016, 58, 491–501.

- Wang, S.; Huang, X.; Liu, P.; Zhang, M.; Biljecki, F.; Hu, T.; Fu, X.; Liu, L.; Liu, X.; Wang, R.; et al. Mapping the landscape and roadmap of geospatial artificial intelligence (GeoAI) in quantitative human geography: An extensive systematic review. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103734.

- Hayat, M.B.; Ali, D.; Monyake, K.C.; Alagha, L.; Ahmed, N. Solar energy—A look into power generation, challenges, and a solar-powered future. Int. J. Energy Res. 2019, 43, 1049–1067.

- Kumar, V.; Pandey, A.S.; Sinha, S.K. Grid integration and power quality issues of wind and solar energy system: A review. In Proceedings of the 2016 International Conference on Emerging Trends in Electrical, Electronics & Sustainable Energy Systems (ICETEESES), Sultanpur, India, 11–12 March 2016; pp. 71–80.

- Li, X.Y.; Dong, X.Y.; Chen, S.; Ye, Y.M. The promising future of developing large-scale PV solar farms in China: A three-stage framework for site selection. Renew. Energy 2024, 220, 119638.

- Brewer, J.; Ames, D.P.; Solan, D.; Lee, R.; Carlisle, J. Using GIS analytics and social preference data to evaluate utility-scale solar power site suitability. Renew. Energy 2015, 81, 825–836.

- Di Grazia, S.; Tina, G.M. Optimal site selection for floating photovoltaic systems based on Geographic Information Systems (GIS) and Multi-Criteria Decision Analysis (MCDA): A case study. Int. J. Sustain. Energy 2024, 43, 2167999.

- Yushchenko, A.; de Bono, A.; Chatenoux, B.; Patel, M.K.; Ray, N. GIS-based assessment of photovoltaic (PV) and concentrated solar power (CSP) generation potential in West Africa. Renew. Sustain. Energy Rev. 2018, 81, 2088–2103.

- Hernandez, R.R.; Easter, S.B.; Murphy-Mariscal, M.L.; Maestre, F.T.; Tavassoli, M.; Allen, E.; Barrows, C.W.; Belnap, J.; Ochoa-Hueso, R.; Ravi, S.; et al. Environmental impacts of utility-scale solar energy. Renew. Sustain. Energy Rev. 2014, 29, 766–779.

- Hoffacker, M.K.; Allen, M.F.; Hernandez, R.R. Land-Sparing Opportunities for Solar Energy Development in Agricultural Landscapes: A Case Study of the Great Central Valley, CA, United States. Environ. Sci. Technol. 2017, 51, 14472–14482.

- Malczewski, J.; Rinner, C. Multicriteria Decision Analysis in Geographic Information Science; Springer: New York, NY, USA, 2015.

- Crawford, J.; Bessette, D.; Mills, S.B. Rallying the anti-crowd: Organized opposition, democratic deficit, and a potential social gap in large-scale solar energy. Energy Res. Soc. Sci. 2022, 90, 102597.

- Peralta, A.A.; Balta-Ozkan, N.; Longhurst, P. Spatio-temporal modeling of utility-scale solar photovoltaic adoption. Appl. Energy 2022, 305, 117949.

- Sward, J.A.; Nilson, R.S.; Katkar, V.V.; Stedman, R.C.; Kay, D.L.; Ifft, J.E.; Zhang, K.M. Integrating Social Considerations in Multicriteria Decision Analysis for Utility-Scale Solar Photovoltaic Siting. Appl. Energy 2021, 288, 116543.

- Chen, C.f.; Napolitano, R.; Hu, Y.; Kar, B.; Yao, B. Addressing machine learning bias to foster energy justice. Energy Res. Soc. Sci. 2024, 116, 103653.

- Batel, S.; Devine-Wright, P.; Tangeland, T. Social Acceptance of low carbon energy and associated infrastructures: A critical discussion. Energy Policy 2013, 58, 1–5.

- Janowicz, K.; Gao, S.; McKenzie, G.; Hu, Y.; Bhaduri, B. GeoAI: Spatially explicit artificial intelligence techniques for geographic knowledge discovery and beyond. Int. J. Geogr. Inf. Sci. 2020, 34, 625–636.

- Li, W.; Wang, H.; Wang, X.; Tu, T. Automatic Identification of Potential Renewal Areas in Urban Residential Districts Using Remote Sensing Data and GeoAI. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 8523–8535.

- Kumar, A.; Sah, B.; Singh, A.R.; Deng, Y.; He, X.; Kumar, P.; Bansal, R. A Review of Renewable Energy Siting Using Multi-Criteria Decision Making (MCDM) Techniques. Renew. Sustain. Energy Rev. 2017, 69, 596–609.

- Jong, F.C.; Ahmed, M.M. Multi-Criteria Decision-Making Solutions for Optimal Solar Energy Sites Identification: A Systematic Review and Analysis. IEEE Access 2024, 12, 143458–143472.

- Buster, G. Large Language Models (LLMs) for Energy Systems Research; Technical Report; National Renewable Energy Laboratory (NREL): Golden, CO, USA, 2023.

- Mai, G.; Huang, W.; Sun, J.; Song, S.; Mishra, D.; Liu, N.; Gao, S.; Hu, Y.; Cundy, C.; Li, Z.; et al. On the Opportunities and Challenges of Foundation Models for GeoAI (Vision Paper). ACM Trans. Spat. Algorithms Syst. 2024, 10, 2.

- Ahmad, U.S.; Usman, M.; Hussain, S.; Jahanger, A.; Abrar, M. Determinants of renewable energy sources in Pakistan: An overview. Environ. Sci. Pollut. Res. 2022, 29, 29183–29201.

- Al Garni, H.Z.; Awasthi, A. Solar PV power plant site selection using a GIS-AHP based approach with application in Saudi Arabia. Appl. Energy 2017, 206, 1225–1240.

- Bureau of Land Management (BLM). Record of Decision for the Updated Western Solar Plan (Solar Programmatic EIS). U.S. Department of the Interior; 2024. Available online: https://www.govinfo.gov/content/pkg/FR-2024-08-30/pdf/2024-19478.pdf (accessed on 26 August 2025).

- Heath, G.; Ravikumar, D.; Ovaitt, S.; Walston, L.; Curtis, T.; Millstein, D.; Mirletz, H.; Hartmann, H.; McCall, J. Environmental and Circular Economy Implications of Solar Energy in a Decarbonized U.S. Grid. National Renewable Energy Laboratory (NREL); 2022. Available online: https://docs.nrel.gov/docs/fy22osti/80818.pdf (accessed on 26 August 2025).

- Devine-Wright, P. Rethinking NIMBYism: The role of place attachment and place identity in explaining place-protective action. J. Community Appl. Soc. Psychol. 2009, 19, 426–441.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).