Abstract

This paper introduces a parameter-efficient transformer-based model designed for scientific literature classification. By optimizing the transformer architecture, the proposed model significantly reduces memory usage, training time, inference time, and the carbon footprint associated with large language models. The proposed approach is evaluated against various deep learning models and demonstrates superior performance in classifying scientific literature. Comprehensive experiments conducted on datasets from Web of Science, ArXiv, Nature, Springer, and Wiley reveal that the proposed model’s multi-headed attention mechanism and enhanced embeddings contribute to its high accuracy and efficiency, making it a robust solution for text classification tasks.

1. Introduction

The scientific community has witnessed an unprecedented surge in the volume of published literature, with millions of articles disseminated annually across myriad platforms. As of recent estimates, over 2.5 million scientific articles are published each year across more than 30,000 peer-reviewed journals globally [1,2]. In addition to journal articles, the proliferation of scientific conferences contributes significantly to the literature pool, with thousands of conferences generating substantial numbers of proceedings and papers annually. The academic sector also plays a crucial role, with universities around the world producing a vast number of theses and dissertations each year. For instance, in 2018 alone, the United States saw the publication of approximately 60,000 doctoral dissertations [3]. This exponential growth in scientific publications is facilitated by advances in digital technology and open access initiatives, further underscoring the dynamic and expansive nature of modern scientific research. The usefulness of such a large amount of information depends on how it is automatically organized and grouped into various subjects, domains, and themes. Text classification is a crucial tool for organizing, managing, and retrieving textual data repositories.

Classic machine learning algorithms have long been applied to text classification, but limitations inherent to them, such as the need for manual feature engineering, have restricted their use. To address this, deep learning models based on convolutional neural networks (CNNs) [4,5,6,7,8] and recurrent neural networks (RNNs) [9,10] have been proposed.

More recently, transformer-based models [11,12,13,14,15,16] have been applied to text categorization, where they outperform simpler models. These gains, however, come with larger and more complex models. Such complexity is necessary for satisfactory results on sequence-to-sequence tasks, but it is poorly suited to relatively easy tasks such as text classification.

1.1. Research Questions Addressed in This Paper

- How do different text classification models compare in terms of performance for scientific literature classification (SLC)?
  - This paper evaluates the performance of various text classification models, including CNN, RNN, and transformer-based models across multiple datasets, such as WOS, ArXiv, Nature, Springer, and Wiley.
  - Detailed performance metrics are provided, which highlight the strengths and weaknesses of each model.
  - The results indicate that sBERT (small BERT) outperforms other models in accuracy and robustness across diverse datasets.

- What are the limitations of existing CNN and RNN models in handling text classification tasks?
  - This sets the stage for introducing transformer-based models that address these limitations through mechanisms such as self-attention.

- How can parameter efficiency be achieved while maintaining high performance?
  - The proposed sBERT model is designed to utilize a multi-headed attention mechanism and hybrid embeddings to capture global context efficiently.
  - The paper details the architecture of sBERT, emphasizing its parameter efficiency compared to other transformer-based models.
  - It requires only 15.7 MB of memory and achieves rapid inference times, demonstrating a significant reduction in computational resources without compromising performance.

1.2. Paper Outline

2. Related Work

In this section, we summarize the relevant literature on various approaches to text classification. The review covers works published between 2015 and 2022 to provide a comprehensive overview of recent advances in this field.

2.1. Convolutional Neural Networks for Sentence Classification

The foundational work of Kim [4] demonstrated the effectiveness of convolutional neural networks (CNNs) for sentence classification, illustrating significant improvements in text classification tasks by employing simple yet powerful CNN architectures. Building upon this, Zhang et al. [17] introduced the Multi-Group Norm Constraint CNN (MGNC-CNN), which leveraged multiple word embeddings to enhance sentence classification performance. The utility of CNNs in combining contextual information was further explored by Wu et al. [18], who integrated self-attention mechanisms with CNNs to improve text classification accuracy.

2.2. Advances in CNN Architectures

Several studies have proposed advancements in CNN architectures for text classification. Zhang et al. [19] introduced character-level convolutional networks, highlighting their ability to handle texts at a granular level and outperforming traditional word-level models. Similarly, Conneau and Schwenk [20] developed very deep convolutional networks, emphasizing the importance of depth in CNNs for capturing intricate text patterns. Johnson and Zhang [21] compared shallow word-level CNNs and deep character-level CNNs, demonstrating that deep character-level models achieve superior performance in text categorization tasks. More recently, Wang et al. [22] combined n-gram techniques with CNNs to enhance short text classification, while Soni et al. [23] introduced TextConvoNet, a robust CNN-based architecture specifically designed for text classification.

2.3. Word Embeddings and Contextual Information

The role of word embeddings in enhancing sentence classification has been a focal point in many studies. Mandelbaum and Shalev [24] explored various word embeddings and their effectiveness in sentence classification tasks. Senarath and Thayasivam [25] further advanced this by employing multiple word-embedding models for implicit emotion classification in tweets. Additionally, the combination of recurrent neural networks (RNNs) and CNNs with attention mechanisms, as proposed by Liu et al. [9], demonstrated significant improvements in sentence representation and classification.

2.4. Hierarchical and Attention-Based Models

The introduction of hierarchical and attention-based models has significantly influenced sentence classification methodologies. Yang et al. [26] proposed Hierarchical Attention Networks (HANs), which effectively captured the hierarchical structure of documents for better classification. Zhou et al. [27] extended this approach by using attention-based LSTM networks for cross-lingual sentiment classification. Furthermore, Bahdanau et al. [28] introduced the attention mechanism in neural machine translation, which has since been widely adopted in various text classification models.

2.5. Hybrid Models and Domain-Specific Applications

Hybrid models that combine CNNs with other neural network architectures have also shown promising results. Hassan and Mahmood [29] developed a deep-learning convolutional recurrent model that took advantage of the strengths of CNN and RNN for sentence classification. In domain-specific applications, Gonçalves et al. [30] utilized deep learning approaches for classifying scientific abstracts, while Jin and Szolovits [31] proposed hierarchical neural networks for sequential sentence classification in medical scientific abstracts. Yang and Emmert-Streib [32] introduced a CNN specifically designed for multi-label text classification of electronic health records.

2.6. Transformer-Based Models

The advent of transformer-based models has revolutionized text classification. Devlin et al. [11] introduced BERT, a pre-trained deep bidirectional transformer, which set new benchmarks in various NLP tasks. Subsequent models, such as RoBERTa by Liu et al. [14], ALBERT by Lan et al. [15], and XLNet by Yang et al. [13], further optimized the BERT architecture for improved performance. More recent innovations include ConvBERT by Jiang et al. [33], which incorporated dynamic convolution into the BERT architecture, and ELECTRA by Clark et al. [34], which proposed a new pretraining method for text encoders. DeBERTa by He et al. [35] and its subsequent versions [36] have continued to push the boundaries of what is achievable with transformer-based models in text classification.

The reviewed literature illustrates significant progress in text classification methodologies and the broader context of scientific publishing (Table 1). This period has seen the evolution of deep learning techniques, particularly CNNs and transformer-based models, which have dramatically improved the accuracy and efficiency of text classification systems.

Table 1.

Comparison of different models based on their main features and limitations.

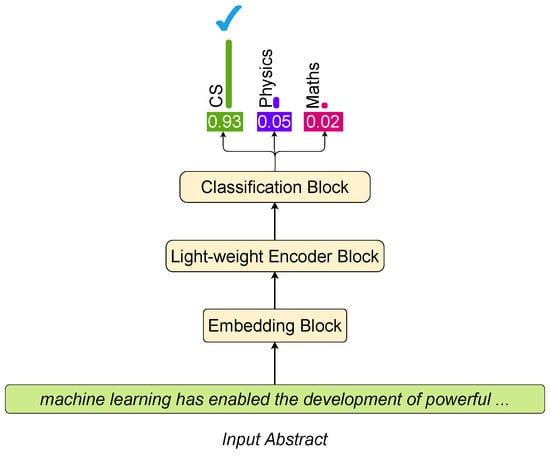

This paper presents sBERT, a multi-headed attention model for text classification that optimizes the transformer architecture for efficiency, reducing memory use, training and inference times, and the carbon footprint associated with large language models. Applied to classifying scientific literature, sBERT outperforms previous methods and various deep learning models. Figure 1 illustrates its classification process for an abstract.

Figure 1.

Example showing how the proposed model is used to classify a single input abstract.

3. Proposed Model

3.1. Overview

The proposed model is motivated by the successful application of transformer-based models such as BERT [11] to tasks that require natural language understanding. However, these models have large parameter spaces, and in our experiments we found that simpler models can perform well on text classification. For example, BERT [11] uses an embedding size of 768 to represent each input token in the text. It also uses 12 transformer blocks, each of which uses 12 attention heads. These design choices are suboptimal for text classification and lead to parameter inefficiency and a very large model (BERT base has 108 M parameters). Such a large model is useful for more complex NLP tasks but inefficient for text classification. For comparison, the model architectures and corresponding parameter space sizes are provided in Table 2.

Table 2.

Transformer-based models with their parameter size, layers, attention heads, and hidden size.

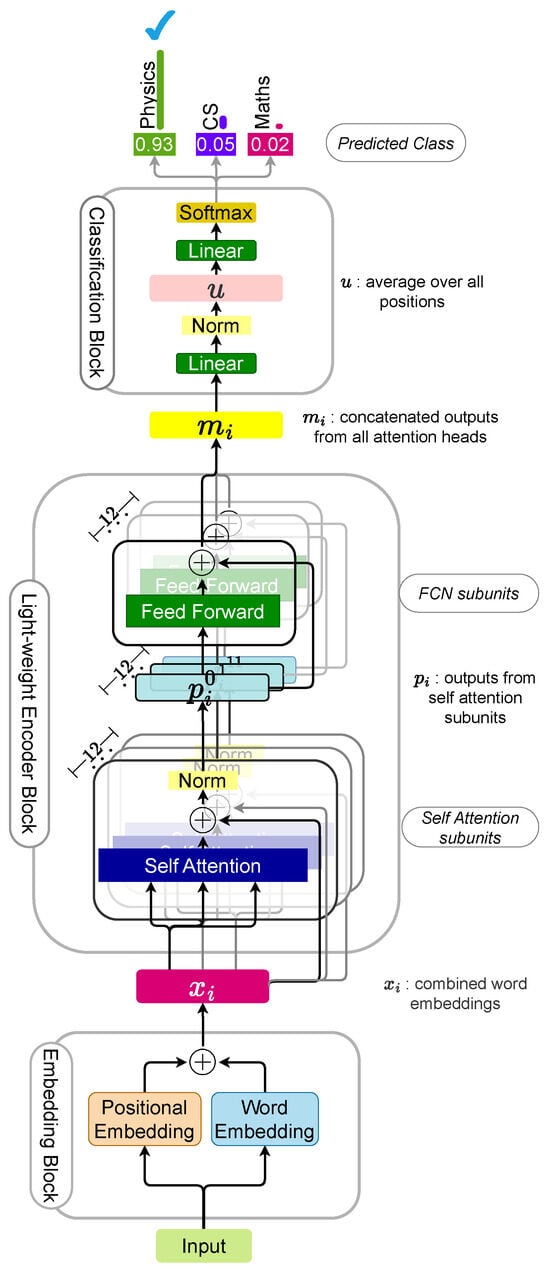

Figure 2 provides an overview of the proposed approach. The input text is subjected to an embedding block that generates input to a lightweight encoder block. The encoded input is then used for classification using the classification block. The subsequent sections provide detailed descriptions of the blocks.

Figure 2.

Proposed model at a conceptual level.

3.2. Detailed Description

The following subsections provide an in-depth description of the different steps involved in the proposed approach.

3.2.1. Embedding Block

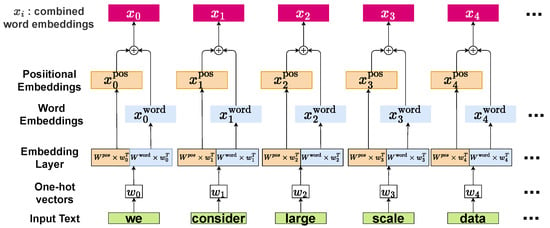

The embedding block reduces the dimensionality of the input text and encodes the semantics and positional information of the words. This enriched and compact input representation serves to reduce computational requirements and improve performance. sBERT employs a hybrid embedding comprising a word embedding and a positional embedding. This is depicted in Figure 3.

Figure 3.

Combined word and positional embeddings.

The weights in the word embedding layer are initialized using GloVe [41] and refined during training. The layer outputs the word vectors as a linear projection of the input words (Equation (1)).

$$w_i = x_i W_e \tag{1}$$

In Equation (1), $w_i$ denotes the output word vector corresponding to the ith position in the input, $x_i$. $W_e \in \mathbb{R}^{|V| \times d}$ denotes the word embedding matrix. Here, $|V|$ is the vocabulary size and $d$ is the dimensionality of word embedding vectors. The order and position of different input words is encoded by the positional embedding layer. This layer outputs a vector by transformation using a weight matrix (Equation (2)).

$$p_k = W_p(k, \cdot) \tag{2}$$

Here, $p_k$ is the positional embedding vector corresponding to the kth word in the input, $x_k$, i.e., the kth row of $W_p$. $W_p$ denotes the weight matrix for the positional embedding layer. This matrix is initialized by weights calculated as a function of the position of a token in the input. A pair of even and odd positions in a row of the embedding matrix corresponding to the kth word, i.e., $W_p(k, 2i)$ and $W_p(k, 2i+1)$, is calculated using Equation (3) and Equation (4), respectively. Here, $0 \le i < d/2$.

$$W_p(k, 2i) = \sin\left(\frac{k}{n^{2i/d}}\right) \tag{3}$$

$$W_p(k, 2i+1) = \cos\left(\frac{k}{n^{2i/d}}\right) \tag{4}$$

Here, d is the intended embedding dimensionality, and n in the denominator is used to manage frequencies across the dimensions of the embeddings. By using a large value such as 10,000 for n, the frequency spectrum is spread to ensure that the embeddings can capture patterns that occur over different sequence lengths. The outputs of the two embeddings are summed to obtain an output vector. This creates a word embedding enriched with position information (Equation (5)).

$$e_i = w_i + p_i \tag{5}$$

Here, $e_i \in \mathbb{R}^{d}$ represents the new hybrid embedded word vector, and $d$ is the dimensionality of the word embedding vector.
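The embedding step described above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: the function names, the toy sizes, and the random stand-in for GloVe-initialized word vectors are assumptions, and the embedding dimensionality is assumed to be even.

```python
import math
import random

def positional_matrix(max_len, d, n=10000.0):
    """Sinusoidal table: even columns use sin, odd columns use cos."""
    table = [[0.0] * d for _ in range(max_len)]
    for k in range(max_len):
        for i in range(d // 2):
            angle = k / (n ** (2 * i / d))
            table[k][2 * i] = math.sin(angle)
            table[k][2 * i + 1] = math.cos(angle)
    return table

def hybrid_embedding(token_ids, word_table, pos_table):
    """Sum the word vector and the positional vector at each position."""
    return [
        [w + p for w, p in zip(word_table[tok], pos_table[k])]
        for k, tok in enumerate(token_ids)
    ]

# Toy sizes for illustration; random values stand in for GloVe vectors.
random.seed(0)
d, vocab_size, max_len = 8, 20, 5
word_table = [[random.uniform(-0.1, 0.1) for _ in range(d)]
              for _ in range(vocab_size)]
pos_table = positional_matrix(max_len, d)
embedded = hybrid_embedding([3, 7, 7, 1], word_table, pos_table)
```

Because the positional vector differs at every position, the two occurrences of token 7 receive different hybrid vectors even though their word vectors are identical.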

3.2.2. Query, Key, and Value Projections

To prepare the word representations for input to the encoder block (Figure 2), we use three linear transformation layers (query, key, and value) on the combined word vectors. Equations (6)–(8) depict these. The three projections are shown in Figure 3.

$$q_i = e_i W^Q \tag{6}$$

Here, $q_i$ denotes the query vector for the input word $x_i$, $e_i$ denotes the hybrid word vector obtained from the embedding block, and $W^Q$ represents the weight matrix associated with generating query vectors. Also, $W^Q \in \mathbb{R}^{d \times d_q}$ and $d_q$ is the dimensionality of the query vector.

$$k_i = e_i W^K \tag{7}$$

Here, $k_i$ denotes the key vector for the input word $x_i$, and $W^K$ represents the weight matrix associated with generating key vectors. Also, $W^K \in \mathbb{R}^{d \times d_k}$.

$$v_i = e_i W^V \tag{8}$$

Here, $v_i$ denotes the value vector for the input word $x_i$, and $W^V$ represents the weight matrix associated with generating value vectors. Also, $W^V \in \mathbb{R}^{d \times d_v}$.
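As a rough sketch of the three projections, the snippet below multiplies a matrix of embedded positions by three separate weight matrices. The helper names, the random weights, and the toy dimensions are assumptions made for illustration only.

```python
import random

def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def random_matrix(rows, cols, rng):
    """Small random weights; a stand-in for learned parameters."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
n_tokens, d, d_k = 4, 8, 6             # toy sizes, chosen only for illustration
E = random_matrix(n_tokens, d, rng)    # stand-in for the hybrid embeddings
W_q = random_matrix(d, d_k, rng)       # query weights
W_k = random_matrix(d, d_k, rng)       # key weights
W_v = random_matrix(d, d_k, rng)       # value weights

# One row per input position in each projected matrix.
Q, K, V = matmul(E, W_q), matmul(E, W_k), matmul(E, W_v)
```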

3.2.3. Self-Attention

The purpose of the self-attention mechanism is to obtain a context-aware representation of the input words. This helps detect long-range dependencies within the input, which in turn improves model performance. The following subsections describe the self-attention mechanism employed by the proposed model to enhance word representation and hence the overall model performance.

Overview

To represent a sequence of words, self-attention connects various positions of the sequence. If the same word is surrounded, in two different instances, by different words, humans understand it differently. By self-attention, we mean attending to other words in the context when interpreting each word in a text sample.

Regularities within a natural language such as sentence structure, grammar, and semantics associated with each word (word embedding vectors) cause a model with an attention mechanism built into its architecture to learn to attend to important words within the text. Attention is learned because it is rewarding for the task that the model is trained on. Training the model on a task that requires language understanding such as text classification improves this attention mechanism. This is because training improves contextual representation (one that attends to other areas of the text). Since the contextual representation is calculated using self-attention, the representation can only be improved by improving the attention mechanism itself.

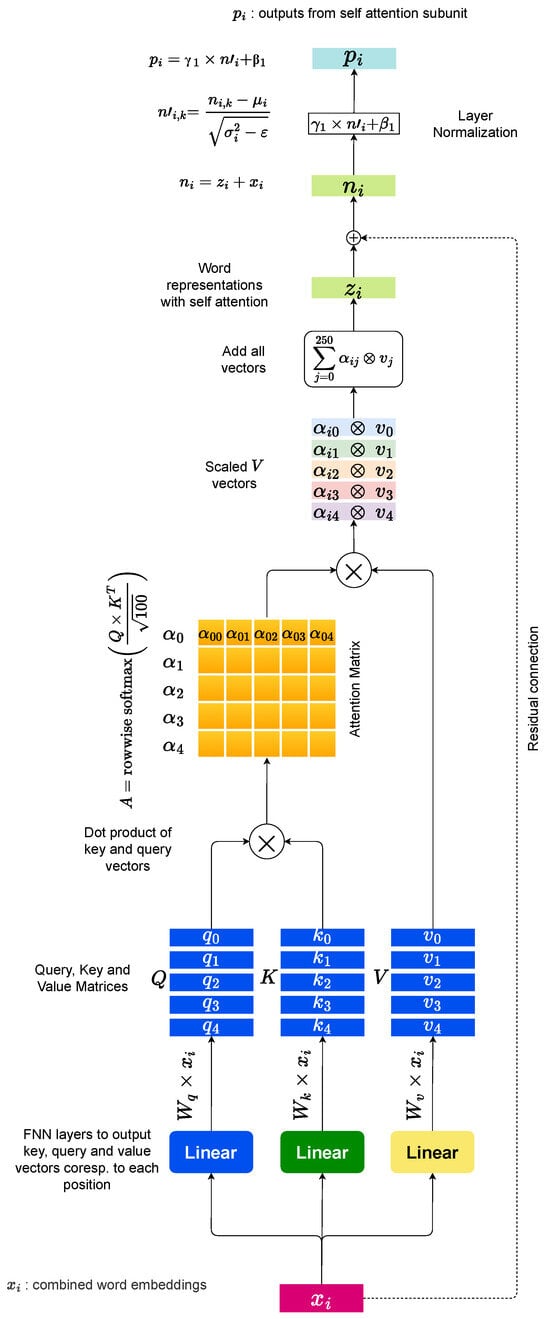

Self-Attention Mechanism

The encoder block generates an attention-based representation that can focus on specific information from a large context. The attention score for a key word when generating a representation for a given query word is calculated by scaling the dot product of the query vector and key vector (of dimensionality $d_k$). This is represented in Equation (9).

$$\mathrm{score}(q, k) = \frac{q \cdot k}{\sqrt{d_k}} \tag{9}$$

In Equation (10), $q_i$ denotes the query vector, and $k_j$ is a key vector whose attention score against $q_i$ is being determined, while $d_k$ is the dimensionality of key vectors. The three projections (query, key, and value) corresponding to each input word are calculated by using three linear transformations. To obtain the attention scores for each token (query word) in the input, its dot product with all the words (key words) in the input is calculated. The dot product is calculated between the query vector representation of the query word and the key vector representation of the key word. Scaling of the dot product is achieved by dividing it by the square root of the dimensionality of the key vectors to obtain $s_{ij}$ (the attention score for $q_i$ against each $k_j$, as shown in Equation (10)). Scores for each query are subjected to a soft-max normalization to obtain the attention vector $a_i$, as shown in Equation (11).

$$s_{ij} = \frac{q_i \cdot k_j}{\sqrt{d_k}} \tag{10}$$

$$a_{ij} = \frac{\exp(s_{ij})}{\sum_{l} \exp(s_{il})} \tag{11}$$

Here, $a_{ij}$ denotes the attention score for the ith word against the jth word. The above operations can be combined into a single matrix operation that calculates an attention matrix A, in which the ith row represents the attention score vector for the word at the ith position in the input (Equation (12)).

$$A = \frac{Q K^{\top}}{\sqrt{d_k}} \tag{12}$$

Here, Q is the query matrix, in which the ith row denotes the ith query word; similarly, K denotes the key matrix, in which the ith row denotes the ith key word. The ith row in the matrix A in Equation (12) denotes the scaled dot product of the query word $q_i$ with every key word $k_j$. Each row of A is subjected to soft-max to calculate $a_i$, which makes the sum of all attention weights equal to one, i.e., $\sum_{j} a_{ij} = 1$. To reduce the effect of dot products growing large in value, which pushes the soft-max function into flat regions, the dot products are scaled by the fraction $1/\sqrt{d_k}$, where $d_k$ is the dimensionality of the key vector. The above steps are shown in Figure 4. Next, each value vector $v_j$ is scaled by the attention weight $a_{ij}$. To obtain the attention-enriched word representation for the ith position in the input ($z_i$), we scale the value vectors and sum them (Equation (13)). Figure 4 shows this diagrammatically.

$$z_i = \sum_{j} a_{ij} \otimes v_j \tag{13}$$

Here, ⊗ represents the scaling operation.

Figure 4.

Applying the self-attention sub-unit of the encoder block to an embedded input position i (Figure 3). A single attention head has been shown for simplicity.
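The single-head computation described in this section can be sketched as below: scaled dot products, a row-wise soft-max, and a weighted sum of value vectors. The function names and the tiny hand-written inputs are illustrative assumptions; a practical model would use a tensor library instead of nested lists.

```python
import math

def softmax(scores):
    """Numerically stable soft-max over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(Q, K, V):
    """Return (attention weights, attended vectors) for one head."""
    d_k = len(K[0])
    weights, outputs = [], []
    for q in Q:
        # Scaled dot product of this query with every key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        a_i = softmax(scores)
        # Weighted sum of the value vectors.
        z_i = [sum(a * v[c] for a, v in zip(a_i, V)) for c in range(len(V[0]))]
        weights.append(a_i)
        outputs.append(z_i)
    return weights, outputs

Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
A, Z = self_attention(Q, K, V)
```

In this toy input each query matches its own key best, so each output vector is pulled toward the value vector at the same position while still mixing in the other one.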

3.2.4. Residual Connections

Residual connections [42] improve the performance of the proposed model by mitigating vanishing gradients, easing optimization, encouraging feature reuse, and letting each layer focus on learning the residual, i.e., the challenging part of the mapping. Depending on the weights, repeated nonlinear activations can cause gradients to explode or vanish. Skip connections provide a direct path through the network along which gradients can also flow backward.

The outputs calculated by the attention mechanism, $z_i$, are added to the inputs of the encoder block, $e_i$, to obtain $r_i$, the vectors used in the subsequent layer normalization step (Equation (14)).

$$r_i = z_i + e_i \tag{14}$$

3.2.5. Layer Normalization

sBERT employs layer normalization to improve model performance by stabilizing training, reducing sensitivity to initialization, improving generalization, and facilitating faster convergence, thereby reducing the training time [43]. We first calculate , the mean, and , the variance of each input word vector , as shown in Equations (15) and (16).

Here, K represents the dimensionality of the input which, in our case, is equal to the .

Each of the K features of the word is subtracted by the mean, and the difference is divided by the square root of the standard deviation calculated above. A very small number is added to the standard deviation for numerical stability. In our experiments, we use a value of 0.001 for . This is shown in Equation (17).

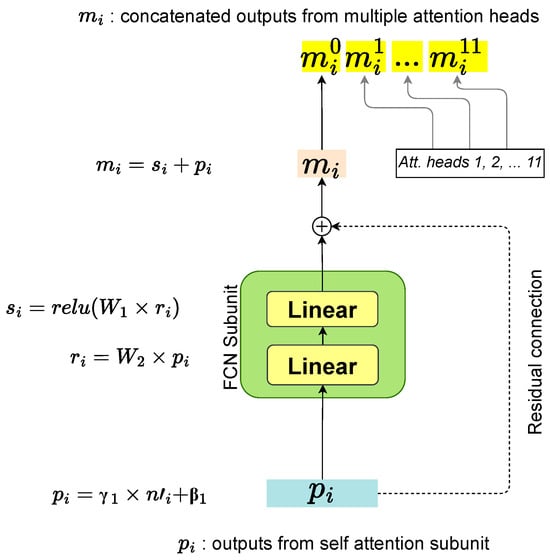

As the final step in layer normalization, we scale the normalized vector by a factor of and shift its value by , as shown in Equation (18) and depicted in Figure 5.

All of the above four steps in layer normalization can be represented as shown in Equation (19).
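The residual addition and the four layer normalization steps can be sketched for a single position as follows. The function names and input values are illustrative assumptions; the stability constant of 0.001 is the value reported in the text, and the scale and shift are set to 1 and 0 here only to keep the example easy to check.

```python
import math

def add_residual(sublayer_out, sublayer_in):
    """Element-wise sum of a sub-layer's output and its input."""
    return [o + i for o, i in zip(sublayer_out, sublayer_in)]

def layer_norm(x, gamma, beta, eps=0.001):
    """Normalize one vector to zero mean and unit variance, then scale and shift."""
    k = len(x)
    mean = sum(x) / k
    var = sum((v - mean) ** 2 for v in x) / k
    return [g * (v - mean) / math.sqrt(var + eps) + b
            for v, g, b in zip(x, gamma, beta)]

x_in = [0.5, -1.0, 2.0, 0.0]      # input to the attention sub-unit
z = [0.1, 0.3, -0.2, 0.4]         # attention output for the same position
r = add_residual(z, x_in)
y = layer_norm(r, gamma=[1.0] * 4, beta=[0.0] * 4)
```

With unit scale and zero shift, the normalized vector has zero mean and a variance slightly below one, the small shortfall being caused by the stability constant.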

Figure 5.

Applying an FCNN (fully connected neural network) sub-unit of the encoder block to the outputs of the self-attention sub-unit (Figure 4). The superscript of the output vectors denotes the attention head index (0).

3.2.6. FCNN (Fully Connected Neural Network)

Two linear transformation layers are applied to the normalized output (Equations (20) and (21)). The fully connected layers in the model help capture and model intricate relationships within the data, leading to improved performance. Additionally, the model can extract features at different levels of granularity.

Figure 5 shows the application of the FCNN to the layer normalized outputs obtained by adding scaled value projections according to the attention vector corresponding to the position.
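A two-layer fully connected sub-unit of this kind might look like the sketch below. The text does not state which activation sits between the two layers, so the ReLU used here is an assumption, as are the function names, weights, and layer sizes.

```python
def dense(x, W, b):
    """y = xW + b for a single vector x; W has shape (in_dim x out_dim)."""
    return [sum(xi * wi for xi, wi in zip(x, col)) + bj
            for col, bj in zip(zip(*W), b)]

def relu(x):
    return [max(0.0, v) for v in x]

def fcnn(x, W1, b1, W2, b2):
    # ReLU between the two layers is an assumption, not taken from the paper.
    return dense(relu(dense(x, W1, b1)), W2, b2)

W1 = [[1.0, -1.0, 0.5], [0.0, 2.0, -0.5]]    # 2 inputs -> 3 hidden units
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # 3 hidden units -> 2 outputs
b2 = [0.1, -0.1]
out = fcnn([1.0, 2.0], W1, b1, W2, b2)
```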

3.2.7. Concatenating Outputs from Multiple Attention Heads

To generate a consolidated representation from multiple attention heads for each word, we concatenate the vectors obtained from all attention heads (Figure 5).

3.2.8. Residual Connections

3.2.9. Combining the Representations Obtained from All Attention Heads

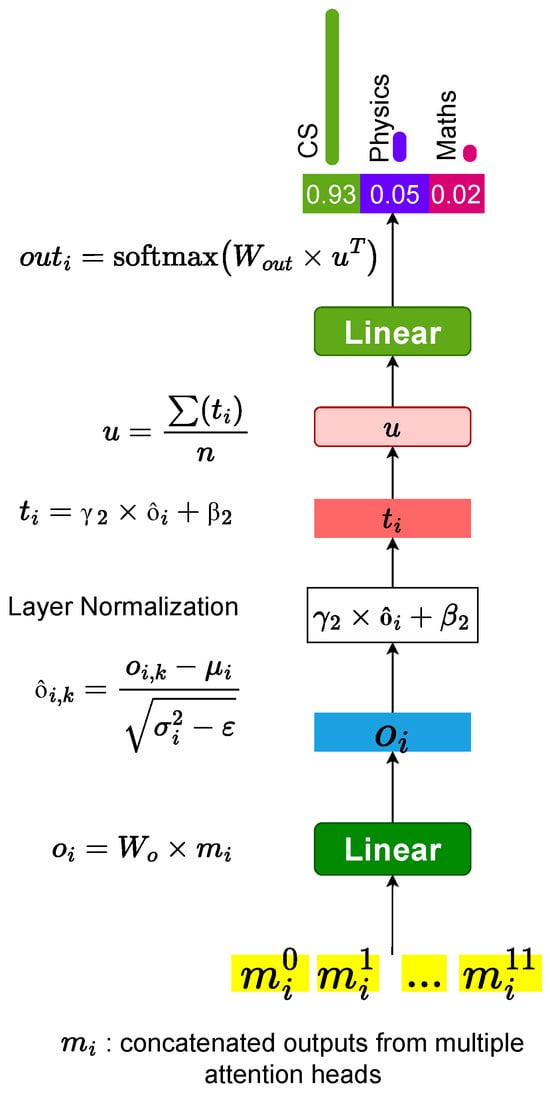

Outputs from multiple attention heads are consolidated by employing a linear transformation layer on the concatenated token representations obtained from all heads. This is shown in Equation (23) and illustrated in Figure 6.

Figure 6.

Obtaining a single vector representation for each position by transforming the concatenated attention outputs from 12 attention heads (Figure 5) using a fully connected layer. This representation is then used for classification after normalization and averaging over positions.
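The concatenation of per-head outputs and the subsequent linear consolidation can be sketched as follows. Two toy heads are used instead of twelve, and the function names and weight matrix are hypothetical values chosen so the result is easy to verify by hand.

```python
def concat_heads(head_outputs):
    """head_outputs[h][i] is the vector for position i produced by head h."""
    n_positions = len(head_outputs[0])
    return [
        [v for head in head_outputs for v in head[i]]
        for i in range(n_positions)
    ]

def project(rows, W):
    """Apply the same linear map W (in_dim x out_dim) to every position."""
    return [[sum(x * w for x, w in zip(row, col)) for col in zip(*W)]
            for row in rows]

# Two toy heads, two positions, two features per head.
heads = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[5.0, 6.0], [7.0, 8.0]],
]
concatenated = concat_heads(heads)    # 2 positions x 4 features
W_o = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]   # 4 -> 2
combined = project(concatenated, W_o)
```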

3.2.10. Classification

Finally, we average all (n) positions ($h_i$) to compute the vector $\bar{h}$ that represents the entire text, as shown in Equation (25). Averaging over all positions helps improve classification performance by capturing global context, reducing positional bias, enhancing robustness to input variations, and enhancing semantic understanding.

$$\bar{h} = \frac{1}{n} \sum_{i=1}^{n} h_i \tag{25}$$

A soft-max-activated dense layer of neurons is used to output classification probabilities (Equations (26) and (27)).

$$\hat{y} = \mathrm{softmax}\left(\bar{h}\,W_o\right) \tag{26}$$

Here, $W_o$ represents the weight matrix of the output layer, and

$$\mathrm{softmax}(u)_j = \frac{\exp(u_j)}{\sum_{m=1}^{C} \exp(u_m)} \tag{27}$$

where $j = 1, \ldots, C$ and $C$ is the number of classes. Figure 6 depicts the soft-max classification step.
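The classification step, averaging over positions followed by a soft-max output layer, can be sketched as below. The function names, the bias-free output layer, and the toy weights are assumptions made for illustration.

```python
import math

def mean_pool(rows):
    """Average the position vectors feature by feature."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def softmax(u):
    """Numerically stable soft-max."""
    m = max(u)
    exps = [math.exp(v - m) for v in u]
    s = sum(exps)
    return [e / s for e in exps]

def classify(rows, W_out):
    """Return class probabilities for one text given its position vectors."""
    pooled = mean_pool(rows)
    logits = [sum(p * w for p, w in zip(pooled, col)) for col in zip(*W_out)]
    return softmax(logits)

positions = [[1.0, 0.0], [3.0, 2.0]]             # 2 positions x 2 features
W_out = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]]      # 2 features -> 3 classes
probs = classify(positions, W_out)
predicted = max(range(len(probs)), key=probs.__getitem__)
```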

4. Datasets and Experiments

The proposed model was applied to eight different datasets summarized in Table 3. For comparison, seven other deep-learning-based text classification models were also tested on the task. We ran a grid search to find the best-performing combination of hyperparameters. The grid search was run on eight datasets, and five-fold cross-validation was used to determine the best configuration for each dataset. The configuration described above showed the best performance for most datasets. The following subsections describe the datasets and the experimental setup used in the study.

Table 3.

Summary of datasets used.

4.1. Datasets

Ten different datasets comprising abstracts of research papers were used in the study. In addition to the three Web of Science (WOS) datasets [7], we created seven new SLC datasets for the experiments. The following subsections describe the datasets.

4.1.1. WOS

The WOS datasets [7] consist of paper abstracts from 7 categories and 134 subcategories.

4.1.2. ArXiv

The dataset was gathered from ArXiv [44], an online preprint repository. It includes works in mathematical finance, electrical engineering, math, quantitative biology, physics, statistics, economics, astronomy, and computer science. There are 7 categories and 146 subcategories.

4.1.3. Nature

The dataset contains 49,782 abstracts from Nature [45], and it is divided into 8 categories and 102 subcategories.

4.1.4. Springer

This dataset contains 116,230 abstracts from Springer [46], which are divided into 24 categories and 117 subcategories. A subset (SPR-50317) consisting of the largest 6 categories was also created.

4.1.5. Wiley

The dataset contains 179,953 samples and was obtained from Wiley [47]. It contains 74 categories and 494 subcategories. A subset (WIL-30628) consisting of the largest 6 categories was also created.

4.1.6. COR233962

COR233962 has 233,962 abstracts divided into 6 categories. It was obtained from the repository made available by Cornell University [48].

The datasets differ in domains, sample sizes for training and testing, average words and characters per sample, and vocabulary size. These factors can affect the performance of text classification models. Generally, larger and more diverse datasets enhance model performance. Table 3 outlines the datasets used in this study.

4.2. Experimental Setup

Experiments for the study were performed on a hardware configuration that uses an Intel® Xeon® CPU @ 2.30 GHz, 12 GB of RAM, and approximately 358 GB of free disk space (Table 4). It also uses an NVIDIA Tesla T4 GPU. Table 5 lists the training times of different models. Code for the proposed model can be found online (Code: https://github.com/munziratcoding/sBERT, accessed on 11 July 2024). The following subsections present a detailed account of the experimental setup.

Table 4.

System configuration.

Table 5.

Training times for sBERT on different datasets.

4.2.1. Data Acquisition

To acquire some of the datasets, an HTTP request was sent to retrieve the required data, and the HTML content was retrieved using the HTTP library. The lxml library was then used to parse the HTML content and extract the abstracts and categories. The BeautifulSoup library was used to transform the data into a Pandas dataframe that was stored as a CSV file for further processing and analysis.

The datasets were sourced from individual repositories, ensuring consistency in class distribution between samples and their respective sources. In instances where certain classes contained an insufficient number of samples, exacerbating class imbalance, such classes were omitted from the datasets. Additionally, the variation in the number of classes within the created datasets aimed to enhance diversity.

4.2.2. Data Cleaning and Preprocessing

Special characters were filtered out from the abstracts, and tokenization (vocabulary size of 20,000) was performed. The input was restricted to the length of 250 tokens. This was achieved by padding and truncation.
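One possible way to implement this preprocessing is sketched below. The vocabulary size of 20,000 and the sequence length of 250 come from the text; the lower-casing, the regular expression, the reserved ids for padding and unknown tokens, and the function names are assumptions.

```python
import re
from collections import Counter

PAD, UNK = 0, 1   # reserved ids; this numbering is an assumption

def clean(text):
    """Lower-case and replace anything that is not a letter, digit, or space."""
    return re.sub(r"[^a-z0-9\s]", " ", text.lower())

def build_vocab(texts, vocab_size=20000):
    """Map the most frequent tokens to ids starting at 2."""
    counts = Counter(tok for t in texts for tok in clean(t).split())
    most_common = counts.most_common(vocab_size - 2)
    return {tok: i + 2 for i, (tok, _) in enumerate(most_common)}

def encode(text, vocab, max_len=250):
    """Convert text to ids, truncating or padding to exactly max_len."""
    ids = [vocab.get(tok, UNK) for tok in clean(text).split()][:max_len]
    return ids + [PAD] * (max_len - len(ids))

abstracts = ["Deep learning for text classification!",
             "Text classification with CNNs."]
vocab = build_vocab(abstracts)
encoded = encode(abstracts[0], vocab, max_len=8)
```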

4.2.3. Data Splitting for Training, Validation, and Testing

The datasets were randomly shuffled to remove ordering bias and then split into training and testing subsets using an 80:20 ratio. The training subsets were used for model training, while the test subsets were used for performance evaluation.
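A shuffle followed by an 80:20 split can be sketched as follows. The fixed seed, the function name, and the synthetic samples are assumptions added so the example is reproducible.

```python
import random

def shuffle_split(samples, train_fraction=0.8, seed=42):
    """Shuffle a copy of the samples, then cut it into train and test parts."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Synthetic (abstract, label) pairs used only for illustration.
data = [(f"abstract {i}", i % 3) for i in range(100)]
train, test = shuffle_split(data)
```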

4.2.4. Hyperparameter Selection

For hyperparameter selection for sBERT, we ran a grid search over ranges of hyperparameters, such as the number of attention heads [1–12], the number of encoder blocks [1–12], the embedding size [100–300], and the size of the fully connected neural network layers [32–512]. The configuration discussed in the proposed model showed optimal performance across most datasets.
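The enumeration behind such a grid search can be sketched as below. The ranges match those reported in the text, but the specific candidate values inside each range, the dictionary keys, and the toy scoring function (which stands in for mean cross-validation accuracy) are assumptions.

```python
from itertools import product

# Candidate values inside the reported ranges; the step sizes are assumed.
grid = {
    "attention_heads": [1, 4, 8, 12],
    "encoder_blocks": [1, 2, 6, 12],
    "embedding_size": [100, 200, 300],
    "fc_units": [32, 128, 512],
}

def configurations(grid):
    """Yield every combination of hyperparameter values as a dict."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))

def grid_search(grid, score_fn):
    """Return the configuration with the highest score."""
    return max(configurations(grid), key=score_fn)

def toy_score(cfg):
    # Placeholder for the mean five-fold cross-validation accuracy.
    return -abs(cfg["embedding_size"] - 100) - cfg["encoder_blocks"]

best = grid_search(grid, toy_score)
```

In practice the scoring function would train and validate a model for each configuration, which is why the number of candidate values per hyperparameter is usually kept small.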

4.2.5. Training Details

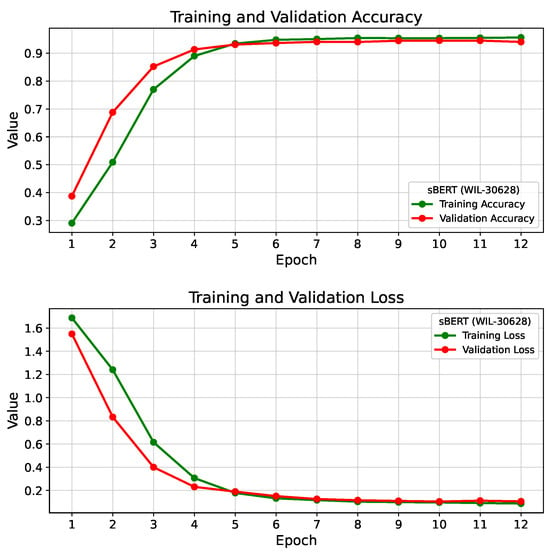

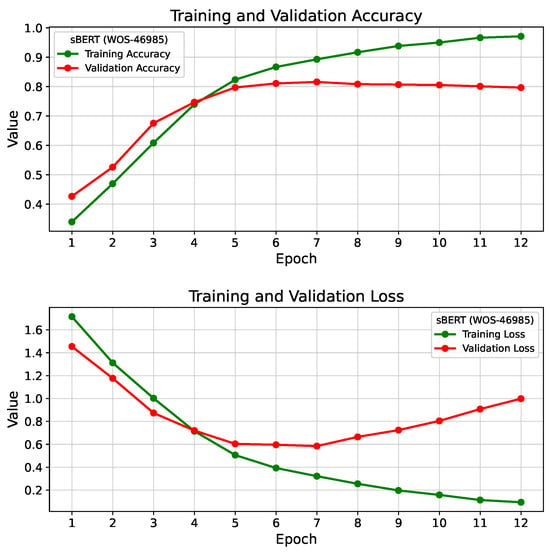

The Adam optimizer with a learning rate of was employed to train sBERT. A batch size of 16 and 100 epochs with early stopping was used. Figure 7 and Figure 8 show the training graphs obtained during training sBERT on the WOS-46985 and COR-233962 datasets. Training times on different datasets for sBERT are listed in Table 5.

Figure 7.

Training graphs for sBERT on the WIL-30628 dataset.

Figure 8.

Training graphs for sBERT on the WOS-46985 dataset.

4.2.6. Performance Metric

Classification accuracy percentage, a measure of the percentage of correctly classified instances in a dataset, was employed. It is defined as the ratio of the number of correctly classified instances to the total number of instances in the dataset, multiplied by 100. Classification accuracy can be described mathematically as shown in Equation (28):

$$\text{Accuracy (\%)} = \frac{\text{number of correctly classified instances}}{\text{total number of instances}} \times 100 \tag{28}$$
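The metric can be computed with a few lines of Python. The function name and the sample labels below are illustrative.

```python
def accuracy_percent(y_true, y_pred):
    """Percentage of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)

# Three of the four predictions match, giving 75%.
score = accuracy_percent([0, 1, 2, 2], [0, 1, 1, 2])
```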

5. Results and Discussion

We evaluate the performance of sBERT against several other deep-learning text classification techniques using classification accuracy percentages. Section 5.1 contrasts the parameter space sizes of different transformer-based models with the proposed model. In Section 5.2, we compare different models based on their carbon emissions. Section 5.3 explores the classification accuracy outcomes for various datasets. Finally, in Section 5.4, we present the results of hypothesis testing.

5.1. Parameter Space Comparison

Table 2 shows a comparison of various language models based on their parameter space size, which is an important consideration when selecting a model for a specific task.

As described in Table 2, BERT base, XLNet, and RoBERTa base all have 12 transformer layers, with varying hidden sizes, attention heads, and number of parameters. Models such as BERT large have 24 encoder layers, with larger hidden sizes and more attention heads than their base counterparts, resulting in significantly larger parameter spaces. BERT large has 340 million parameters, while RoBERTa large has 355 million parameters. DistilBERT and ALBERT are both designed to be smaller and more efficient versions of BERT. DistilBERT has only six transformer layers, resulting in a smaller parameter space of 66 million parameters. ALBERT has 24 transformer layers like BERT large, but cross-layer parameter sharing and a factorized embedding matrix reduce its parameter space to only 18 million parameters.

sBERT uses a single lightweight encoder block, a hidden size of 100, and 12 attention heads. This ensures a very small parameter space of million. sBERT’s parameter efficiency makes it optimal for applications involving text classification tasks. This also makes sBERT more suitable for low-resource applications and reduces the carbon footprint associated with training and fine-tuning more complex models (Table 6).

Table 6.

Energy consumption and carbon emission of various models.

5.2. Carbon Emissions Comparison

The carbon emissions for training a deep learning model largely depend on the model’s complexity and size, including the number of parameters and layers, which directly influence computational demands. Larger, more intricate models require more processing power and memory, leading to higher energy consumption. Additionally, the duration of training, dictated by the number of epochs and iterations, significantly impacts overall energy use. Efficient software implementation and optimization algorithms can mitigate some of these demands, but ultimately, more complex and sizable models inherently consume more energy, contributing to greater carbon emissions. Table 6 compares different models in terms of their carbon emissions. Measurements have been taken for training the models for 20 epochs in a training environment with the specifications in Table 4.

Carbon Emissions Calculation

- Power Consumption (Watts): Power consumption is the total power used by the hardware resources (CPU, GPU, and RAM) during model training. It can be calculated using Equation (29):

  $$P_{total} = P_{CPU} + P_{GPU} + P_{RAM} \tag{29}$$

  where $P_{CPU}$, $P_{GPU}$, and $P_{RAM}$ represent the power consumption of the CPU, GPU, and RAM, respectively.

- Energy Consumption (kWh): Energy consumption is the total amount of energy consumed over a period of time. It is given by Equation (30),

  $$E = \frac{P_{total} \times t}{1000} \tag{30}$$

  where t is the duration of the model training in hours and the division by 1000 converts watt-hours to kilowatt-hours.

- Carbon Emission (kg CO2): The carbon emission is calculated by multiplying the energy consumption by the carbon intensity of the electricity grid. The carbon emission can be calculated using Equation (31),

  $$C = E \times CI \tag{31}$$

  where $CI$ is the carbon intensity factor in kg CO2 per kWh.
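The three-step calculation above can be sketched as follows. The function name and all numeric inputs are illustrative assumptions, not measurements from the paper; power is taken in watts, time in hours, and carbon intensity in kg CO2 per kWh.

```python
def carbon_emission_kg(p_cpu_w, p_gpu_w, p_ram_w, hours, intensity_kg_per_kwh):
    """Return (energy in kWh, emission in kg CO2) for one training run."""
    total_power_w = p_cpu_w + p_gpu_w + p_ram_w
    energy_kwh = total_power_w * hours / 1000.0   # watt-hours -> kWh
    return energy_kwh, energy_kwh * intensity_kg_per_kwh

# Illustrative numbers only; they are not taken from the paper.
energy, emission = carbon_emission_kg(
    50.0, 70.0, 5.0, hours=2.0, intensity_kg_per_kwh=0.4
)
```

Under these assumed inputs, 125 W sustained for 2 hours corresponds to 0.25 kWh and 0.1 kg of CO2.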

5.3. Performance Comparison

In this section, we report evaluation results for various models on the WOS datasets and the other seven datasets.

5.3.1. WOS Datasets

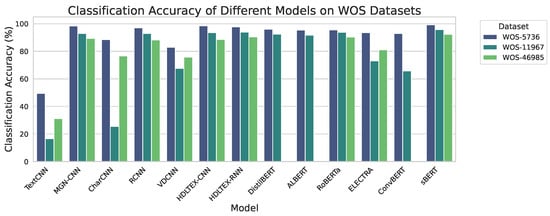

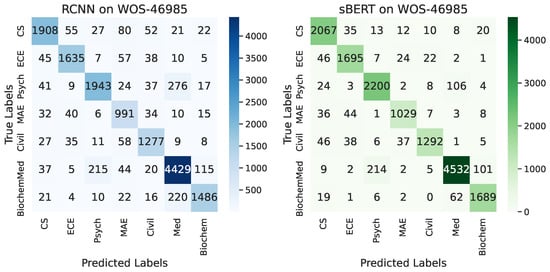

Table 7 lists the classification accuracy (%) of the different models on the three WOS datasets. Figure 9 presents the results graphically. Figure 10 shows the confusion matrices of RCNN and the proposed model on the largest of the WOS datasets, WOS-46985. Table 8 and Table 9 list the Precision, Recall, and F1 scores for the seven classes in the two confusion matrices.

Table 7.

Results (classification accuracy %) on the WOS datasets.

Figure 9.

Graph showing classification accuracy (percentage) of different models on the WOS datasets.

Table 8.

Precision, Recall, and F1 scores for RCNN on WOS-46985 (Figure 10).

Table 9.

Precision, Recall, and F1 scores for sBERT on WOS-46985 (Figure 10).
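Per-class Precision, Recall, and F1 scores such as those in Tables 8 and 9 can be derived directly from a confusion matrix. The sketch below illustrates the calculation under the assumption that rows hold the true classes and columns the predicted classes; the 3-class matrix is hypothetical and does not reproduce Figure 10.

```python
def per_class_metrics(cm):
    """Return a list of (precision, recall, f1) tuples, one per class.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(cm[k]) - tp                       # true k, predicted class differs
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return metrics


# Hypothetical 3-class confusion matrix, for illustration only.
cm = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]
metrics = per_class_metrics(cm)
for label, (p, r, f) in enumerate(metrics):
    print(f"class {label}: precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```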

5.3.2. Discussion

The experimental results offer insight into the performance of the text classification models across the three datasets: WOS-5736, WOS-11967, and WOS-46985. Among the models assessed, TextCNN performed modestly, reaching its highest accuracy of 49.46% on WOS-5736. Its weak performance across all three datasets suggests limitations in capturing intricate relationships within the data. In contrast, the Multi-Group Norm Constraint CNN (MGN-CNN) performed better across the datasets, most notably on WOS-5736, with an accuracy of 98.41%.

The Character-level CNN (CharCNN) displayed moderate performance, with its highest accuracy of 88.48% on WOS-5736. This may be attributed to its ability to exploit character-level information, which suits datasets where such details are pivotal. The Recurrent CNN (RCNN), by comparison, showed robust performance across the three datasets, suggesting that it captures both local and sequential patterns in the data.

The Very Deep CNN (VDCNN) reached its highest accuracy of 82.9% on WOS-5736. However, deep models like VDCNN often entail higher computational requirements. The hybrid models, HDLTEX-CNN and HDLTEX-RNN, demonstrated strong performance, particularly on WOS-5736 and WOS-11967.

Most notably, the proposed model, sBERT, consistently outperformed the other models across the datasets, achieving its highest accuracy of 99.21% on WOS-5736. This performance can be attributed to the multi-headed attention mechanism, which enables sBERT to focus on the most salient portions of the text. Moreover, word embeddings enriched with position information help capture semantic relationships and context, a vital aspect of text classification tasks.
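To make the role of attention concrete, the following is a minimal sketch of scaled dot-product attention, the standard building block of multi-headed attention. It is a generic illustration, not the sBERT implementation: a multi-headed layer would run several such attentions in parallel on learned projections of the input and concatenate the results, and those projections are omitted here. The toy token vectors are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns (outputs, weights); weights[i][j] is how strongly query i
    attends to key j, and each row of weights sums to 1.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        )
    return outputs, weights


# Toy 2-dimensional token vectors (hypothetical), used as Q, K, and V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(x, x, x)
for i, row in enumerate(w):
    print(f"token {i} attends with weights {[round(a, 3) for a in row]}")
```

Tokens whose key vectors align with a query receive larger weights, which is the sense in which attention lets a model emphasize the most salient parts of the input.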

5.3.3. Other Datasets

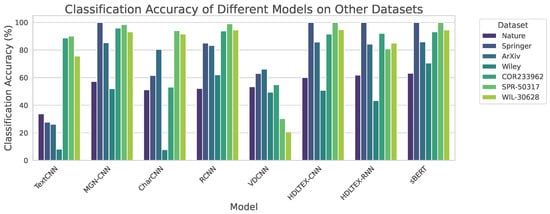

Table 10 lists the classification accuracy (%) of the models on the Nature, Springer, ArXiv, Wiley, and CornellArXiv datasets, and Figure 11 presents the results graphically. Figure 10 shows confusion matrices for sBERT and RCNN on the WOS-46985 dataset.

Table 10.

Classification accuracy (%) comparison on other datasets.

Figure 11.

Graph showing classification accuracy (percentage) of different models on the other datasets.

5.3.4. Discussion

The experimental results present a comprehensive evaluation of various text classification models applied across the five distinct datasets: Nature, Springer, ArXiv, Wiley, and CornellArXiv.

TextCNN, the first model considered, shows variable performance across the datasets and generally low accuracy. It struggles most on the Wiley dataset, attaining an accuracy of 8.15%, but performs considerably better on the COR233962 dataset, reaching 85.06%. This variation suggests that TextCNN is sensitive to dataset characteristics.

Conversely, the Multi-Group Norm Constraint CNN (MGN-CNN) showcases consistent and robust performance across all datasets, with notable accuracy percentages of 100% on Springer and 85.35% on ArXiv.

The Character-level CNN (CharCNN) exhibits moderate performance, with its highest accuracy of 80.53% on the ArXiv dataset. However, it struggles on the Wiley dataset, where it attains an accuracy of 7.82%. These results may be attributed to CharCNN’s reliance on character-level information, which may be less informative in certain datasets.

The Recurrent CNN (RCNN) achieves competitive accuracy percentages across datasets, notably reaching 85% on Springer and 83.32% on ArXiv. Its recurrent architecture enables it to effectively capture sequential patterns in the data, which contributes to its adaptability.

The Very Deep CNN (VDCNN) reaches an accuracy of 63% on Springer and 66.11% on ArXiv, well below the best-performing models on both datasets. These results suggest that additional model depth does not benefit every dataset.

The models HDLTEX-CNN and HDLTEX-RNN perform well across most datasets, with notable accuracy percentages. These models effectively leverage CNN and RNN architectures, showcasing their adaptability and utility in various text classification scenarios.

The proposed model, sBERT, performs well on all datasets, achieving the highest accuracy on Springer (100%) and on ArXiv (85.94%). This versatility can be attributed to its multi-headed attention-based architecture and its hybrid word and positional embeddings, and it suggests that the model is robust and generalizes across diverse domains and dataset sizes. sBERT’s parameter efficiency is particularly important for reducing the computational resources and carbon footprint associated with large-scale language models. The proposed model requires just 15.7 MB of memory and takes 0.06 s per prediction (averaged over inference times for 100 samples) in the training environment (described in Section 4.2).
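An average inference time of this kind can be measured with a simple timing harness. The sketch below is an assumption about how such a measurement might be set up, not the authors' benchmarking code; `dummy_predict` is a hypothetical stand-in for a trained classifier, and the sample text is made up.

```python
import time


def mean_inference_seconds(predict, samples):
    """Average wall-clock seconds per call of predict(sample)."""
    if not samples:
        raise ValueError("samples must be non-empty")
    start = time.perf_counter()
    for sample in samples:
        predict(sample)
    return (time.perf_counter() - start) / len(samples)


def dummy_predict(text):
    """Hypothetical stand-in for a model's predict function."""
    return len(text.split()) % 7


# 100 samples, mirroring the sample count reported in the text.
samples = ["a short abstract about graph neural networks"] * 100
avg = mean_inference_seconds(dummy_predict, samples)
print(f"{avg:.6f} s per sample")
```

Timings obtained this way depend on the hardware and on warm-up effects, so they are only comparable across models when measured in the same environment.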

5.4. Hypothesis Testing

Hypothesis testing was performed to compare sBERT with other models using the cross-validation method. For example, comparison with the RCNN model on the WOS-5736 dataset yielded the following results.

5.4.1. Accuracy Measurements of Compared Models

The accuracy measurements of the two models for the 10 splits in cross-validation are presented in Table 11.

Table 11.

Accuracy measurements of RCNN and sBERT for the 10 splits in cross-validation.

5.4.2. Paired t-Test

- t-statistic:

- p-value:

The t-statistic is computed as

$$t = \frac{\bar{d}}{s_d / \sqrt{n}}$$

where

$\bar{d}$ denotes the average difference between paired observations;

$s_d$ denotes the standard deviation of the differences;

$n$ is the number of pairs.
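The paired t-statistic can be computed directly from the per-split accuracies of the two models. The following is a minimal sketch using only the Python standard library; the accuracy values are hypothetical and are not the values in Table 11, and the differences are assumed to be taken as RCNN minus sBERT, so a negative statistic means sBERT scored higher.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Return (t, degrees_of_freedom) for two paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    d_bar = statistics.mean(diffs)   # average paired difference
    s_d = statistics.stdev(diffs)    # sample standard deviation (n - 1 denominator)
    if s_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    return d_bar / (s_d / math.sqrt(n)), n - 1


# Hypothetical per-split accuracies, for illustration only.
rcnn_acc = [0.90, 0.91, 0.89, 0.92, 0.90]
sbert_acc = [0.95, 0.97, 0.94, 0.96, 0.97]
t, dof = paired_t_statistic(rcnn_acc, sbert_acc)
print(f"t = {t:.3f}, degrees of freedom = {dof}")
```

The p-value then follows from the t-distribution with n − 1 degrees of freedom. If SciPy is available, `scipy.stats.ttest_rel(rcnn_acc, sbert_acc)` returns both the statistic and the two-sided p-value.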

5.4.3. Interpretation of the Results

The paired t-test is used to decide if there is a statistically significant difference between the means of two related groups (in this case, the classification accuracy of sBERT and RCNN across the different splits).

- t-statistic: The t-statistic of is a measure of the difference between the two groups relative to the variability observed within the groups. The large negative value shows that the accuracy of sBERT is significantly different from that of RCNN.

- p-value: The p-value of is much lower than the significance level threshold (0.05), suggesting that the difference in classification accuracy between sBERT and RCNN is statistically significant. This indicates that there is strong evidence to reject the null hypothesis (there is no difference in performance between the two models).

The low p-value in the test provides strong evidence against the null hypothesis, indicating that the observed difference in classification accuracy is highly unlikely to be due to random chance.

6. Conclusions and Future Work

In this study, we proposed sBERT, a parameter-efficient transformer model tailored for the classification of scientific literature. Through extensive experiments on multiple datasets, sBERT has been shown to outperform traditional models in both accuracy and efficiency. Our findings highlight the advantages of the multi-headed attention mechanism and optimized embeddings used in sBERT. Furthermore, the reductions in memory use, training and inference times, and carbon footprint emphasize the model’s efficiency and environmental benefits. Future work will explore the application of sBERT to other text classification domains and further optimize its architecture for even greater performance.

Author Contributions

Conceptualization, M.M.A., M.A.W. and V.P.; methodology, M.M.A.; software, M.M.A.; validation, M.M.A., M.A.W., and V.P.; formal analysis, M.M.A.; investigation, M.M.A.; resources, M.M.A.; data curation, M.M.A.; writing—original draft preparation, M.M.A.; writing—review and editing, M.M.A., M.A.W. and V.P.; visualization, M.M.A.; supervision, M.A.W. and V.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets used in the study can be found online (Datasets: https://data.mendeley.com/datasets/9rw3vkcfy4/6, https://www.kaggle.com/datasets/Cornell-University/arxiv, accessed on 11 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).