Abstract

Three-dimensional time-of-flight (ToF) sensors are increasingly used for human pose and gesture recognition. This paper explores the application of low-resolution 3D ToF sensors to detecting fall events in indoor environments. We present a novel retrospective fall confirmation approach based on XGBoost that integrates fall posture data from distance snapshots with suspected fall trajectories. Our experimental results demonstrate strong detection performance, including improved accuracy and response time compared to traditional methods, highlighting the value of combining the posture-change history recovered from stored sensor data with judgments based on real-time ranging data.

1. Introduction

Falls among the elderly population represent a significant public health concern. A substantial percentage of elderly people experience falls annually, and many of these incidents result in severe injuries, including fractures and head trauma [1,2,3].

The critical nature of these statistics underscores the importance of prompt fall detection, particularly for elderly individuals and those with medical conditions that increase fall risk. While various fall detection systems exist, automated detection mechanisms have emerged as essential components of contemporary elderly care solutions [4,5,6]. Although wearable device-based fall detection systems have been widely implemented, contactless fall detection utilizing remote sensors offers distinct advantages and has increasingly become the preferred approach [7,8].

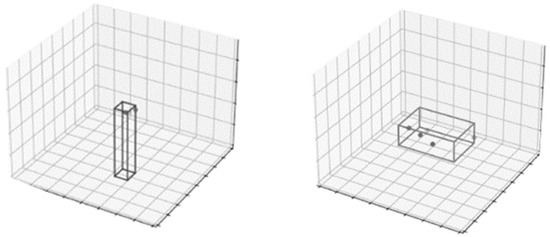

Three-dimensional time-of-flight (ToF) sensors present a promising solution for real-time monitoring of spatial positioning and postural changes [9,10,11,12]. These sensors can effectively differentiate between various human postures, such as standing, sitting, and lying positions. As illustrated in Figure 1, when viewed through a low-resolution (8 × 8 zones) ToF sensor [13], a standing person appears as a vertical rectangular pattern (Figure 1 left), while a fallen person presents as a horizontal pattern (Figure 1 right).

Figure 1.

Posture detection using an 8 × 8 multi-zone ToF sensor.

However, low-resolution ToF sensors face significant challenges in distinguishing between genuine fall events and other scenarios where a person might intentionally assume a horizontal position, such as during maintenance activities. These situations can trigger false alarms, potentially compromising the system’s reliability.

This paper presents a novel approach to differentiate between actual fall events and intentional horizontal positioning, thereby reducing false-positive rates in low-resolution ToF sensor-based fall detection systems.

2. Methods

2.1. System Configuration and Data Acquisition

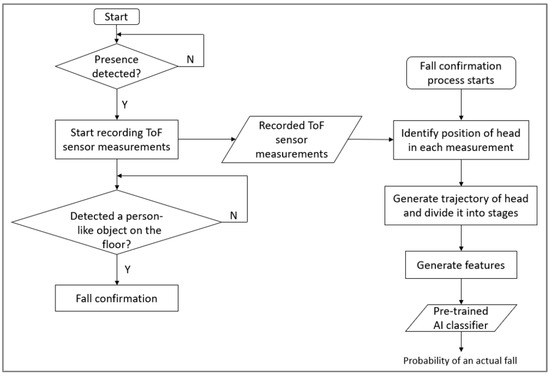

The proposed system uses a ceiling-mounted multi-zone time-of-flight (ToF) sensor configured to monitor human motion and posture within an enclosed space (e.g., a bathroom) while preserving privacy. The sensor’s positioning enables comprehensive spatial monitoring. Upon detecting human presence, the system starts a continuous rolling measurement buffer that retains the most recent measurements (e.g., the last 20 s). While recording, the system also monitors for potential fall events by identifying person-sized objects at floor level. When such an object is detected, the system activates the fall confirmation process. The complete system workflow is illustrated in Figure 2.

Figure 2.

Workflow of fall detection system using ToF sensor.
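The buffering and trigger steps of this workflow can be sketched as follows. This is a minimal illustration, not the authors' implementation: the 20 s length and 10 Hz rate come from the text, while the background-subtraction step, the noise margin, the floor band, the minimum zone count, and all function names are assumptions introduced here.

```python
from collections import deque

FRAME_RATE_HZ = 10      # frame rate reported for the sensor in Section 2.4.1
BUFFER_SECONDS = 20     # example buffer length given in the text
NOISE_MM = 80           # assumed: ignore height differences below this
FLOOR_BAND_MM = 500     # assumed: "floor level" means at most this high
MIN_ZONES = 4           # assumed: fewest zones a lying person would cover


class RollingBuffer:
    """Holds only the most recent frames; older frames drop off automatically."""

    def __init__(self, seconds=BUFFER_SECONDS, rate_hz=FRAME_RATE_HZ):
        self.frames = deque(maxlen=seconds * rate_hz)

    def push(self, frame):
        self.frames.append(frame)

    def snapshot(self):
        """Return the buffered frames, oldest first, for retrospective analysis."""
        return list(self.frames)


def floor_level_object(frame, background):
    """Return True if a person-sized object lies near the floor.

    frame and background are 8 x 8 grids of distances in mm; background is
    the empty-room reading, so background - frame approximates object height.
    """
    low = tall = 0
    for row, bg_row in zip(frame, background):
        for dist, bg in zip(row, bg_row):
            height = bg - dist
            if height > FLOOR_BAND_MM:
                tall += 1       # something upright is in view
            elif height > NOISE_MM:
                low += 1        # zone occupied close to the floor
    return tall == 0 and low >= MIN_ZONES
```

When `floor_level_object` returns True, the frames returned by `snapshot()` would be handed to the confirmation stage, which is what makes the analysis retrospective.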

2.2. Fall Detection Algorithm

The fall confirmation process comprises several sequential analytical stages, detailed below.

2.2.1. Head Trajectory Analysis

The system first extracts head position data from the recorded measurements to generate a comprehensive trajectory. Head position is determined through a dual-criteria approach:

- When the highest moving point exceeds a set height threshold (e.g., 1 m), it is designated as the head position.

- Below this height threshold, the system identifies the head as the point furthest from the detected foot position (defined as the lowest body contact point with the floor).

The resultant trajectory is analyzed from its apex (highest head position) to its nadir (lowest head position).
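A minimal sketch of the dual-criteria head selection and the apex-to-nadir trimming is given below. The point layout `(x, y, z)` with `z` as height above the floor, the use of 3D Euclidean distance from the foot, and the reading of "nadir" as the lowest point after the apex are assumptions made for illustration; the function names are hypothetical.

```python
import math

HEIGHT_THRESHOLD_MM = 1000  # the 1 m example threshold from the text


def head_position(points, threshold_mm=HEIGHT_THRESHOLD_MM):
    """Pick the head from one frame's moving points.

    points: list of (x, y, z) in mm, where z is height above the floor
    (an assumed layout, derived from the raw ranging data beforehand).
    """
    if not points:
        raise ValueError("no moving points in frame")
    top = max(points, key=lambda p: p[2])
    if top[2] > threshold_mm:
        return top                                   # criterion 1: highest point
    foot = min(points, key=lambda p: p[2])           # lowest point stands in for the foot
    return max(points, key=lambda p: math.dist(p, foot))  # criterion 2: furthest from foot


def apex_to_nadir(heads):
    """Trim a head trajectory to the span from its highest point to the
    lowest point that follows it (assumed reading of apex and nadir)."""
    apex = max(range(len(heads)), key=lambda i: heads[i][2])
    nadir = min(range(apex, len(heads)), key=lambda i: heads[i][2])
    return heads[apex:nadir + 1]
```

For example, an upright subject whose highest point is at 1.7 m is handled by the first criterion, while a subject lying on the floor falls through to the second.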

2.2.2. Trajectory Segmentation

The complete trajectory is divided into several distinct stages (e.g., five) based on head height variations. This segmentation enables detailed analysis of the falling motion’s characteristics at different phases.
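One possible segmentation rule is sketched below: the height drop between apex and nadir is split into equal bands, and each sample is assigned to the band its head height falls in. Equal-height bands, the sample layout `(t, x, y, z)`, and the rule that a brief upward bounce never moves a sample back to an earlier stage are all assumptions; the text only states that stages are based on head height variations.

```python
N_STAGES = 5  # example stage count from the text


def segment_by_height(trajectory, n_stages=N_STAGES):
    """Split an apex-to-nadir trajectory into stages of equal height drop.

    trajectory: list of (t, x, y, z) samples in time order; the first sample
    is the apex and the last is the nadir (assumed layout).
    """
    z_top, z_bottom = trajectory[0][3], trajectory[-1][3]
    band = (z_top - z_bottom) / n_stages
    if band <= 0:
        raise ValueError("trajectory must descend from apex to nadir")
    stages = [[] for _ in range(n_stages)]
    current = 0
    for sample in trajectory:
        idx = int((z_top - sample[3]) / band)
        idx = max(0, min(n_stages - 1, idx))   # clamp; the nadir lands in the last stage
        current = max(current, idx)            # never step back to an earlier stage
        stages[current].append(sample)
    return stages
```

With a 10 Hz frame rate a fast fall may skip a band entirely, so a stage can come back empty; downstream code would need a policy for that case.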

2.2.3. Feature Extraction

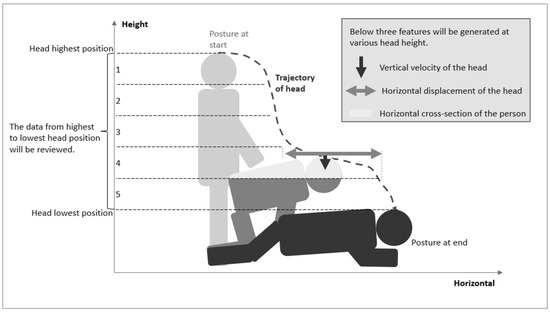

For each identified stage, the system calculates three key biomechanical features, as illustrated in Figure 3:

Figure 3.

Feature extraction for each identified stage in fall detection.

- Vertical Velocity (Vv): The head’s vertical velocity at stage termination.

- Horizontal Displacement (Dh): The cumulative horizontal distance traversed during each stage.

- Cross-sectional Occupation (Co): The number of sensor zones occupied by the subject at stage conclusion.

These parameters were selected based on their high correlation with human postural dynamics and activity patterns.
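The three features could be computed per stage along the following lines. This is a sketch under stated assumptions: the `Sample` record, the finite-difference estimate of velocity from the last two samples, and the choice to start each stage's horizontal path from the previous stage's final sample are illustrative and are not taken from the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    t: float      # timestamp, s
    x: float      # head position, mm
    y: float
    z: float      # head height above the floor, mm
    zones: int    # sensor zones occupied by the subject in this frame


def stage_features(stage, prev=None):
    """Return (Vv, Dh, Co) for one stage.

    stage: non-empty list of Sample in time order.
    prev:  last Sample of the preceding stage, if any (assumed convention).
    """
    if not stage:
        raise ValueError("stage has no samples")
    path = ([prev] if prev is not None else []) + list(stage)
    last = path[-1]
    if len(path) >= 2:
        before = path[-2]
        vv = (last.z - before.z) / (last.t - before.t)   # mm/s, negative when falling
    else:
        vv = 0.0
    # Sum of horizontal steps between consecutive samples, in mm.
    dh = sum(math.hypot(b.x - a.x, b.y - a.y) for a, b in zip(path, path[1:]))
    co = last.zones                                      # occupation at stage end
    return vv, dh, co
```

With five stages this yields 15 values per event, a fixed-length description of the descent that does not depend on how long the fall took.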

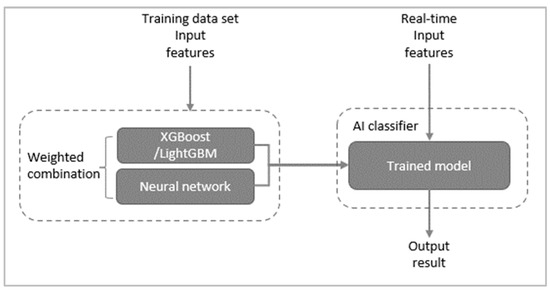

2.3. Classification System

The extracted features are processed through an ensemble-based artificial intelligence classifier, as illustrated in Figure 4. The system employs a hybrid approach combining the following:

Figure 4.

Hybrid AI classifier architecture.

- Tree-based models (XGBoost/LightGBM) for decision boundary optimization.

- Neural network components for logistic regression analysis.

This complementary architecture leverages the strengths of both approaches, particularly suitable for spatial–temporal data that do not conform to traditional time-series patterns.
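The paper does not state how the two components are combined. Purely as an illustration, the sketch below flattens the per-stage features into one vector and averages the fall probabilities of two models (soft voting). The averaging rule, the 0.5 threshold, and the stand-in model callables are assumptions; in practice each callable would wrap a trained tree-based model or a trained logistic-regression component.

```python
N_STAGES = 5  # example stage count from Section 2.2.2


def flatten_features(per_stage):
    """Turn [(Vv, Dh, Co), ...] for each stage into one flat feature vector."""
    if len(per_stage) != N_STAGES:
        raise ValueError(f"expected {N_STAGES} stages, got {len(per_stage)}")
    return [float(value) for feats in per_stage for value in feats]


def soft_vote(x, models, threshold=0.5):
    """Average the fall probabilities of several models (assumed combination rule).

    models: callables mapping a feature vector to a probability in [0, 1].
    Returns (mean probability, is_fall).
    """
    probs = [model(x) for model in models]
    p = sum(probs) / len(probs)
    return p, p >= threshold


# Hypothetical stand-ins for the trained components.
tree_model = lambda x: 0.9
logistic_model = lambda x: 0.7

x = flatten_features([(-200.0, 50.0, 5)] * N_STAGES)
p_fall, is_fall = soft_vote(x, [tree_model, logistic_model])
```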

2.4. Experimental Validation

2.4.1. Experimental Setup

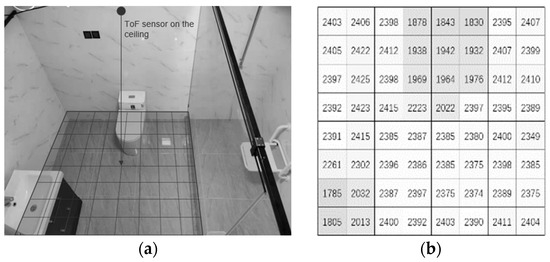

The experimental apparatus consisted of a multi-zone time-of-flight (ToF) sensor mounted on the bathroom ceiling in a downward-facing orientation, as illustrated in Figure 5a. The sensor features an 8 × 8 array configuration, capable of simultaneously capturing 64 discrete ranging measurements per frame. The system operates at a temporal resolution of 10 Hz (10 frames per second), providing continuous spatial monitoring of the environment.

Figure 5.

(a) Experimental setup with ceiling-mounted ToF sensor; (b) a representative single-frame output of the ToF sensor.

Figure 5b shows a representative single-frame output from the sensor, where each cell value represents the measured distance in millimeters from the sensor to the detected floor. Darker shades indicate smaller distances, and lighter shades indicate larger distances. These high-precision ranging data enable detailed spatial mapping of the monitored area.

2.4.2. Experimental Protocol

The experimental validation was conducted using a comprehensive dataset with the following specifications:

Data Composition:

- Total Sample Size: 80 events.

- Distribution: 40 genuine fall incidents, 40 intentional lying-down events.

Validation Methodology:

- Data Partitioning: 80% training set, 20% test set.

- Cross-validation: Multiple randomized partitioning iterations to ensure statistical robustness and generalizability of results.

This experimental protocol was designed to ensure thorough validation of the system’s fall detection capabilities while minimizing potential biases in the dataset.
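The partitioning scheme can be sketched as a repeated stratified hold-out. With 40 events per class and a 20% test fraction, each split holds out 8 events per class, which matches the per-category test size reported with Table 1. Stratification, the number of iterations, the seed, and the `evaluate` callback are assumptions; the paper only states that multiple randomized partitions were used.

```python
import random


def stratified_split(labels, test_fraction=0.2, rng=None):
    """Split indices into train and test, keeping the class balance in both."""
    rng = rng or random.Random()
    by_class = {}
    for i, label in enumerate(labels):
        by_class.setdefault(label, []).append(i)
    train, test = [], []
    for indices in by_class.values():
        shuffled = indices[:]
        rng.shuffle(shuffled)
        n_test = round(len(shuffled) * test_fraction)
        test.extend(shuffled[:n_test])
        train.extend(shuffled[n_test:])
    return train, test


def repeated_holdout(labels, evaluate, n_iter=10, seed=0):
    """Average the accuracy from evaluate(train_idx, test_idx) over random splits.

    evaluate is a hypothetical callback that trains on train_idx and returns
    the accuracy on test_idx; n_iter=10 is an assumed iteration count.
    """
    rng = random.Random(seed)
    scores = [evaluate(*stratified_split(labels, rng=rng)) for _ in range(n_iter)]
    return sum(scores) / len(scores)


labels = [1] * 40 + [0] * 40          # 40 falls, 40 intentional lying-down events
train_idx, test_idx = stratified_split(labels, rng=random.Random(1))
```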

3. Results and Discussions

3.1. Results

3.1.1. Overall Performance Metrics

The system achieved the following performance metrics:

- Overall accuracy: 98.41%.

- Error rate: 1.59%.
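The two figures are complementary. Taking falls as the positive class (an assumed convention, not stated in the paper) and writing $TP$, $TN$, $FP$, and $FN$ for the confusion-matrix counts:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \text{Error rate} = 1 - \text{Accuracy} = 100\% - 98.41\% = 1.59\%.
$$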

3.1.2. Detailed Performance Analysis

Table 1 presents the classification performance matrix. The classification results can be broken down as follows. All values represent average numbers across test sets (out of 8 samples per category).

Table 1.

Classification performance matrix.

The accuracy of 98.41% was obtained after parameter optimization, in which the key adjustments were mainly feature-weighting modifications:

- Increased weights for vertical velocity in stages 4 and 5.

- Enhanced emphasis on horizontal cross-sectional occupation patterns.

3.2. Discussions

3.2.1. System Performance and Advantages

The experimental results demonstrate several significant advantages of our proposed system. The achieved 98.41% accuracy in distinguishing between falls and intentional lying-down events demonstrates robust performance across varied scenarios.

Moreover, unlike traditional velocity-based detection methods, this method is triggered by detecting a person who is already on the ground, so it is less affected by the speed of the fall. This is particularly valuable for elderly individuals who may experience gradual falls due to weakness.

The system also eliminates the traditional waiting period for fall confirmation: classification takes place immediately once a person is detected at floor level.

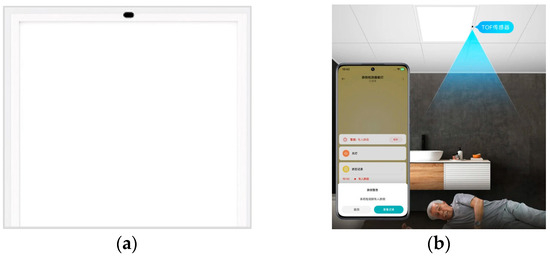

3.2.2. Product Implementation: Smart Fall Detection Panel Lamp

Based on our architecture, we developed an integrated monitoring solution in the form of a panel lamp with an integrated ToF sensor, as illustrated in Figure 6. Figure 6b illustrates a fall detection scenario involving an elderly person. The mobile application interface displays an alert in Chinese indicating that the system has successfully detected a fall event. Such a lighting product addresses the need for fall detection at home while maintaining privacy.

Figure 6.

(a) A panel lamp integrated with a ToF sensor; (b) an example application scenario of the panel lamp with the ToF sensor.

Compared with standalone sensors, this dual-function approach allows for effortless integration into the existing room infrastructure: there is no need to install and power the sensor separately. The ToF sensor’s placement within the lighting fixture provides optimal coverage of the indoor area.

3.2.3. Limitations and Future Work

While the system shows promising results, several areas warrant further investigation. In terms of environmental factors, this includes studies on the performance in varying room configurations and the effects of obstacles in the monitoring area. Future work could focus on addressing these limitations and expanding the system’s capabilities for broader applications in healthcare settings.

4. Conclusions

This paper presents a novel fall detection system utilizing low-resolution ToF sensors and a retrospective confirmation approach. Our experimental results demonstrate exceptional accuracy (98.41%) in distinguishing between genuine falls and intentional lying-down events, addressing a critical challenge in elderly care monitoring systems. The proposed method’s key innovation lies in its retrospective analysis of fall trajectories and posture data, enabling more reliable detection compared to traditional velocity-based approaches. This is particularly valuable for detecting gradual falls common among elderly individuals.

While the current implementation shows robust performance, future research should address environmental variables such as room configurations. The success of this system suggests significant potential for improving elderly care monitoring while maintaining privacy, potentially reducing the severity of fall-related injuries through prompt detection and response.

Author Contributions

Conceptualization, G.W. (Gongming Wei) and G.W. (Gang Wang); methodology, Y.W., S.L., J.Q. and G.W. (Gongming Wei); software, Y.W. and J.Q.; validation, Y.W. and J.Q.; writing—original draft preparation, Y.W.; writing—review and editing, Y.W. and G.W. (Gongming Wei); supervision, G.W. (Gang Wang); project administration, G.W. (Gang Wang). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors are employed by Signify. The authors declare no conflicts of interest.

References

- Bergen, G.; Stevens, M.R.; Burns, E.R. Falls and Fall Injuries Among Adults Aged ≥65 Years—United States, 2014. Morb. Mortal. Wkly. Rep. 2016, 65, 993–998.

- Jager, T.E.; Weiss, H.B.; Coben, J.H.; Pepe, P.E. Traumatic brain injuries evaluated in U.S. emergency departments, 1992–1994. Acad. Emerg. Med. 2000, 7, 134–140.

- James, S.L.; Lucchesi, L.R.; Bisignano, C.; Castle, C.D.; Dingels, Z.V.; Fox, J.T.; Hamilton, E.B.; Henry, N.J.; Krohn, K.J.; Liu, Z.; et al. The global burden of falls: Global, regional and national estimates of morbidity and mortality from the global burden of disease study 2017. Inj. Prev. 2020, 26, i3–i11.

- Majumder, S.; Aghayi, E.; Noferesti, M.; Memarzadeh-Tehran, H.; Mondal, T.; Pang, Z.; Deen, M.J. Smart homes for elderly healthcare—Recent advances and research challenges. Sensors 2017, 17, 2496.

- Ramachandran, A.; Karuppiah, A. A survey on recent advances in wearable fall detection systems. Biomed Res. Int. 2020, 2020, 2167160–2167217.

- Pierleoni, P.; Belli, A.; Palma, L.; Pellegrini, M.; Pernini, L.; Valenti, S. A High Reliability Wearable Device for Elderly Fall Detection. IEEE Sens. J. 2015, 15, 4544–4553.

- Chaudhuri, S.; Thompson, H.; Demiris, G. Fall Detection Devices and their use with Older Adults: A Systematic Review. J. Geriatr. Phys. Ther. 2014, 37, 178–196.

- Nahian, M.J.A.; Raju, M.H.; Tasnim, Z.; Mahmud, M.; Ahad, M.A.R.; Kaiser, M.S. Contactless fall detection for the elderly. In Contactless Human Activity Analysis; Springer: Cham, Switzerland, 2021; pp. 203–235.

- Diraco, G.; Leone, A.; Siciliano, P. Human posture recognition with a time-of-flight 3D sensor for in-home applications. Expert Syst. Appl. 2013, 40, 744–751.

- Lu, H.; Tuzikas, A.; Radke, R.J. A zone-level occupancy counting system for commercial office spaces using low-resolution time-of-flight sensors. Energy Build. 2021, 252, 111390.

- Plank, H.; Egger, T.; Steffan, C.; Steger, C.; Holweg, G.; Druml, N. High-performance indoor positioning and pose estimation with time-of-flight 3D imaging. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017; pp. 1–8.

- Diraco, G.; Leone, A.; Siciliano, P. In-home hierarchical posture classification with a time-of-flight 3D sensor. Gait Posture 2014, 39, 182–187.

- Martin, F.; Mellot, P.; Caley, A.; Rae, B.; Campbell, C.; Hall, D.; Pellegrini, S. An all-in-one 64-zone SPAD-based direct-time-of-flight ranging sensor with embedded illumination. In Proceedings of the 2021 IEEE Sensors, Sydney, Australia, 31 October–3 November 2021; pp. 1–4.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).