1. Introduction

With the rapid development of global manufacturing and related technology, companies are constantly improving production efficiency, product quality, and environmental sustainability. Intelligent manufacturing has become essential, using technologies such as artificial intelligence (AI), the Internet of Things (IoT), and robotic arms. Tiny machine learning (TinyML) is an emerging technology, with the potential to run ML models on low-power devices. These low-power devices, often called edge devices, can process data directly on the device [1].

As the manufacturing industry continues to develop, the role of automation technology in improving production efficiency and product quality has become increasingly significant. The demand for intelligent equipment is increasing, especially in a highly competitive market environment. However, many robotic arms applied to manufacturing production lines are of limited use, due to outdated technology and a lack of advanced automated optical inspection (AOI) capabilities. This limitation restricts their flexibility when carrying out precise operations and handling complex production tasks. For many companies, completely upgrading the equipment is not feasible because of the high cost. TinyML offers an alternative: companies that have used TinyML technology in their production lines for over two years have improved their production efficiency and reduced their maintenance costs, with TinyML successfully applied to anomaly detection and image recognition [2].

Although TinyML has yet to be widely adopted in the manufacturing industry, it has great potential. This technology allows edge devices to directly process and analyze data and provides an efficient and energy-saving solution for manufacturing environments that require real-time responses. Particularly in resource-constrained situations, TinyML effectively handles data processing and reduces the dependence on central processing units (CPUs), lowering energy consumption and facilitating the automation and intelligence of production processes.

Robotic arms play a key role in enhancing production efficiency and product quality, due to their precision and flexibility. They have been widely used as automation equipment in the manufacturing industry. By integrating TinyML technology, robotic arms can perform object recognition, classification, and manipulation precisely, without relying on cloud computing or large data centers [2]. Tsai demonstrated the use of this technology using real-time auditory recognition, which was successfully applied in bolt loosening detection involving robotic arms [2]. TinyML technology expands the application scope of robotic arms in manufacturing by improving production efficiency, reducing energy consumption, and promoting resource reutilization.

Therefore, we integrated TinyML technology into robotic arms for efficient multi-object recognition. The feasibility and benefits of this innovative technology were assessed to reveal the potential of TinyML in enhancing the level of automation and intelligence in manufacturing. The results enable new strategies to be developed for the process transformation of the manufacturing industry. We developed a low-cost and low-power image recognition system and deployed it on edge devices to apply TinyML in production lines and provide an upgrade solution to existing technology. TinyML technology and additional cameras enabled the real-time identification and positioning of target objects in an automatic positioning system. By integrating data from surrounding sensors, the system calculated the optimal grasping position, thereby enhancing the operational precision and flexibility of the robotic arms.

In this study, we designed and implemented a reliable image recognition system, using a low-cost camera mounted on the robotic arm, to achieve automatic positioning functionality. Image processing algorithms were developed using TinyML edge devices for the classification of various types of objects. Sensor data were used to accurately calculate the optimal grasping position and improve the operational precision of the robotic arm. A Socket communication-based system was used to provide stable and real-time control of the robotic arm to enhance the automation and accuracy of the production process.

Using the developed system, the performance of existing manufacturing equipment was enhanced through technological innovation, even in resource-limited conditions. Modern communication technologies and machine learning algorithms were used to upgrade the equipment. The outcomes of this research provide a basis for the development of the manufacturing industry.

In this article, the research background, motivation, and objectives are introduced in the first section. Section 2 provides a detailed explanation of the system design concept, the hardware and software used, and the data recording methods. The results and analysis are presented in Section 3, explaining the development process of the image recognition model and the real-time control of the robotic arm using Socket and Modbus communication technology. Test data and performance evaluation results are also provided. Section 4 of this article provides the conclusion.

2. Methodology

The architecture and overall system workflow are illustrated in Figure 1. The hardware includes sensors, edge devices, a Raspberry Pi (Raspberry Pi Foundation, Cambridge, United Kingdom), and a robotic arm. During the preparation phase, all the hardware components are checked for correct function and activation. During the starting phase, the object recognition model and initial images are prepared, while the edge devices are simultaneously activated to capture images and provide detection feedback. The Raspberry Pi is used to control subsequent processes, acquiring data from distance sensors via the general-purpose input/output (GPIO) connector to determine the height of the gripper. The system processes the images captured by the edge devices for object recognition and calculates the grasping path. Once the path calculation is completed, the robotic arm automatically grasps objects, while its operations are recorded to ensure regular operation is maintained. After each grasp, the object’s position, category, and grasping time are recorded in the database. When all the objects along the path are grasped, the system re-detects them, and the data in the database are visualized.

During the process, the system continuously monitors the environment using sensors installed at specific locations to detect potential hazards. For example, excessive rotation angles beyond set limits, abnormal vibrations of the robotic arm, or excessively high ambient temperatures are detected by the system. This ensures workplace safety and the stable operation of the system.

2.1. Hardware

In this study, Socket and Modbus communication technologies are used to control the robotic arms. Socket communication, built on standardized network protocols, provides stable and reliable data transmission, enabling the system to command the robotic arm for precise operations in real time after identifying and positioning objects. Modbus is a widely used industrial automation protocol with a simple and efficient design for connecting industrial equipment. By integrating these two communication technologies, the system interacts effectively with various sensors and actuators, allowing precise and flexible control of the robotic arm. This hybrid communication approach enhances the performance of robotic arms and provides an economical upgrade solution for the manufacturing industry.
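As an illustration of Socket-based control, the following minimal sketch formats a motion command and sends it over a TCP socket. It assumes a Universal Robots controller that accepts URScript text on its secondary interface (port 30002); the function names, the default acceleration and velocity, and the pose values are hypothetical and are not taken from the system described here.

```python
import socket


def format_movel(pose, accel=0.5, vel=0.1):
    """Build a URScript movel command for a pose (x, y, z in m; rx, ry, rz in rad).

    The default accel/vel values are illustrative assumptions.
    """
    if len(pose) != 6:
        raise ValueError("pose must have six elements: x, y, z, rx, ry, rz")
    coords = ", ".join(f"{v:.4f}" for v in pose)
    return f"movel(p[{coords}], a={accel}, v={vel})\n"


def send_command(host, command, port=30002, timeout=2.0):
    """Open a TCP connection and send one command string (assumed port)."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(command.encode("ascii"))


# Formatting only; send_command needs a reachable controller.
print(format_movel([0.1, -0.2, 0.3, 0, 3.1416, 0]), end="")
```

Keeping the formatting separate from the network call lets the command text be checked without a robot attached.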

The SparkFun Edge development board, Apollo3 Blue (SparkFun, Boulder, CO, USA), is used as the edge device, primarily due to its low power consumption design and its compatibility with TinyML optimization. The ultra-low power characteristics of the Apollo3 Blue microcontroller limit its maximum power consumption to only 0.6 watts, making it particularly appropriate for battery-powered devices. The development board has built-in support for TensorFlow Lite, allowing it to run small machine learning models efficiently. Even in resource-constrained environments, real-time object recognition and inference are enabled. The specifications of the SparkFun Edge development board are presented in Table 1.

2.2. Software

The object recognition model is compressed into a smaller model and deployed on a Raspberry Pi for efficient and low-power operation. We combined TensorFlow and Edge Impulse for model training and compression [3]. TensorFlow is used for model training, while Edge Impulse is used for model optimization, generating a file format for edge devices [4]. The deep learning capabilities of TensorFlow and the optimization features of Edge Impulse for edge devices are seamlessly integrated.

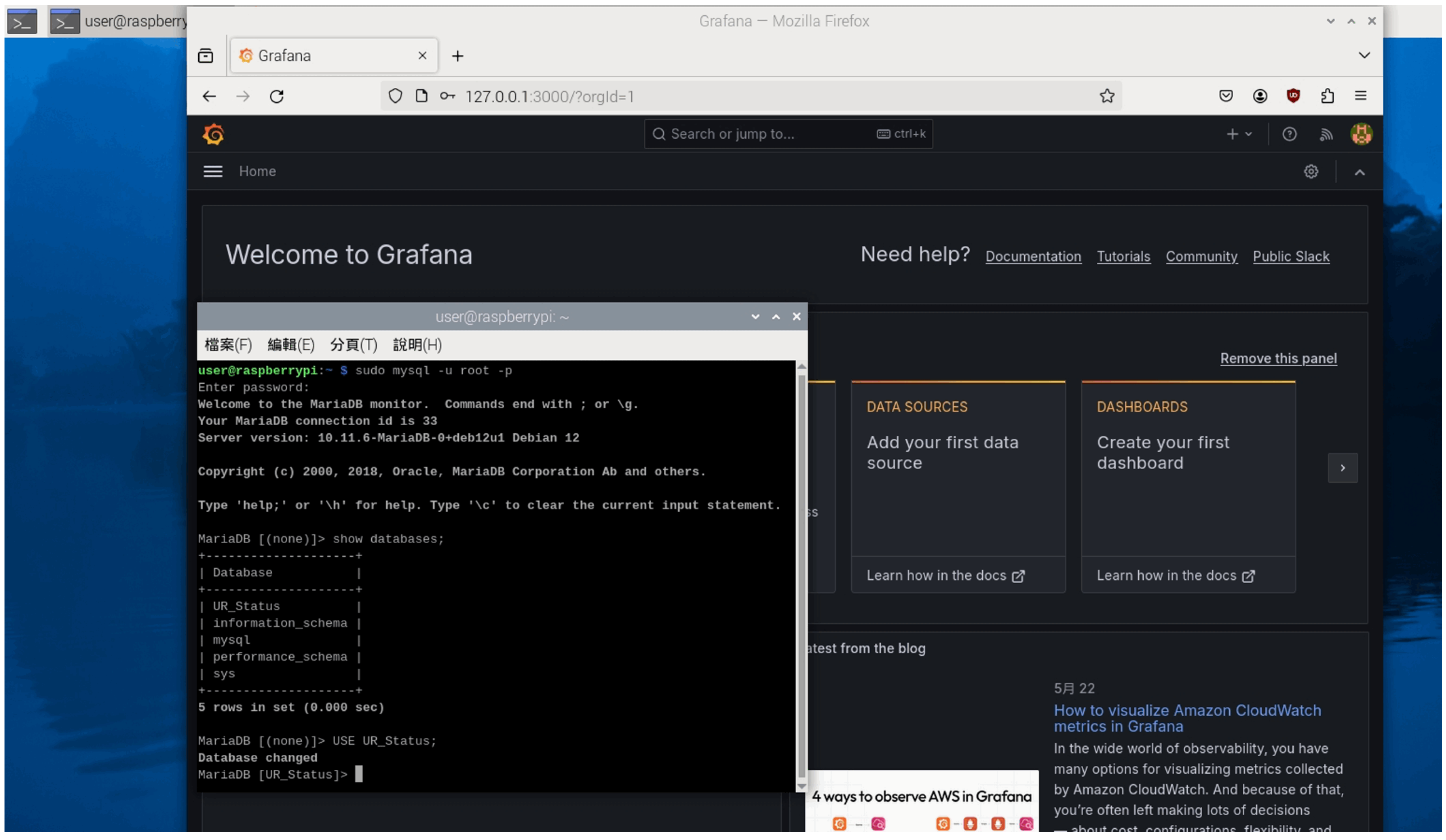

During model training, a deep neural network (DNN) is used as it has strong learning capabilities and efficient computational performance, which are appropriate for precise object recognition and classification. After model training, the model is converted into a format for efficient execution on the resource-limited Raspberry Pi device, using the quantization and optimization tools provided by Edge Impulse. The data on the system operation are recorded in a database. MariaDB is used because it is lightweight, efficient, and stable; it replaces Microsoft SQL Server.
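The idea behind the quantization step can be illustrated with a generic affine int8 scheme. This is a sketch of the general technique only; the internal pipeline of Edge Impulse is not described here, and the function names below are hypothetical.

```python
def quantize_int8(values):
    """Affine-quantize floats to int8 codes; returns (codes, scale, zero_point)."""
    lo = min(min(values), 0.0)  # the representable range must include zero
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(c - zero_point) * scale for c in codes]


weights = [-1.0, 0.0, 0.5, 1.0]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)
```

Each weight is stored in one byte instead of four, at the cost of a reconstruction error bounded by roughly one quantization step (`scale`).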

Figure 2 illustrates an instance when the MariaDB and Grafana v.10.3.0 servers run simultaneously.

3. Results and Discussion

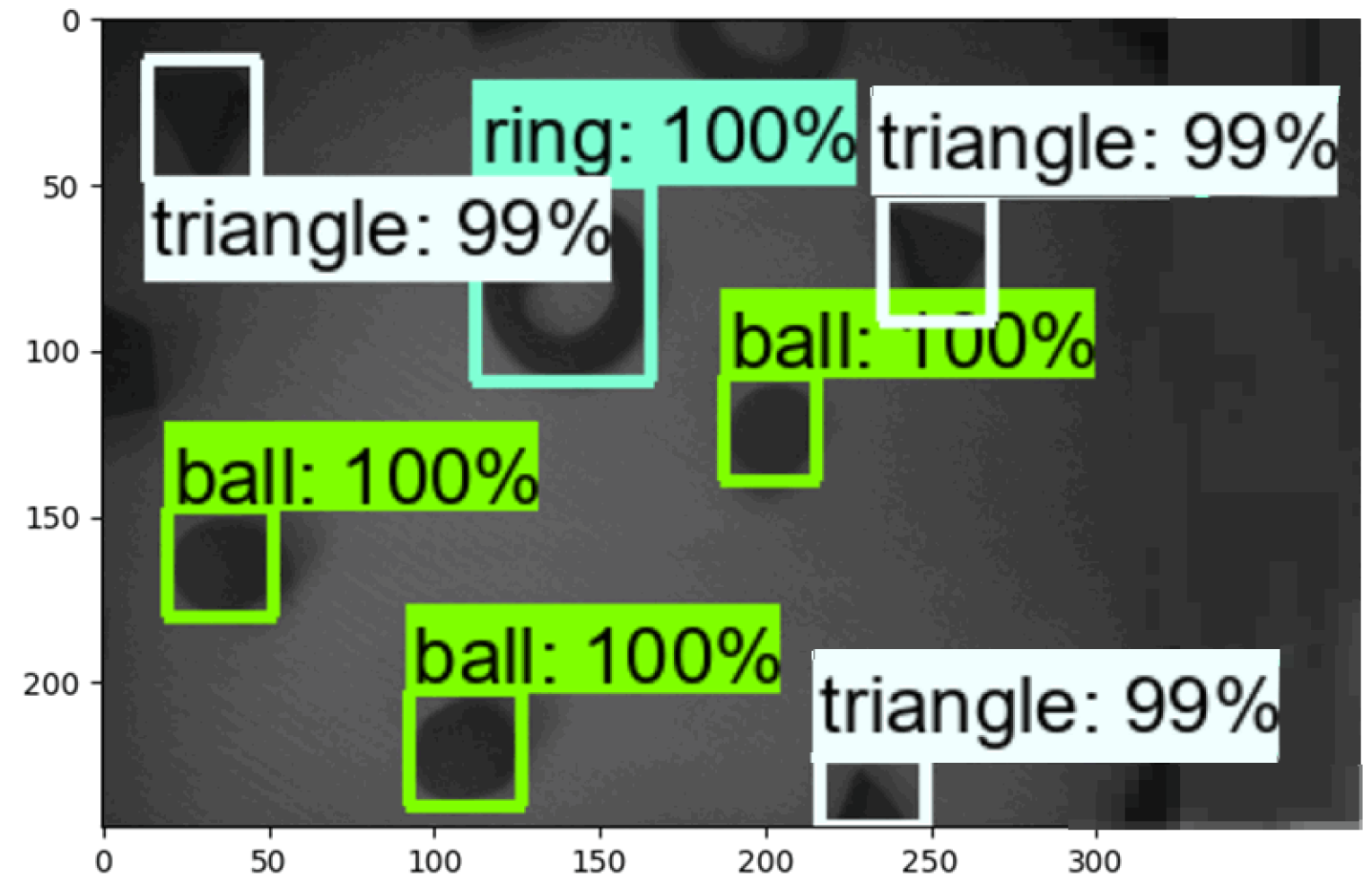

Multi-sensor fusion and TinyML are used for the intelligent control of robotic arms in this study. Using them requires the definition of a machine learning model. To establish an object recognition model, we define objects to simulate a robotic arm’s operation. Four shapes are used: a sphere (ball), a cube (cube), a ring (ring), and a triangle (triangle). Functions are then designed to randomly reposition the objects across the working platform and capture images. Random displacements are applied along the X, Y, and Z axes and to the rotation of the six-axis movable joints, within ranges of −80 to +200 mm, −150 to +150 mm, −100 to 0 mm, and −90 to +90°, respectively. A total of 690 images were captured as the dataset and labeled accordingly.
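The random repositioning described above can be sketched as follows. The ranges are those stated in the text; the uniform distribution, the key names, and the function name are assumptions made for illustration.

```python
import random

# Displacement ranges from the text: X, Y, Z in mm, rotation in degrees.
RANGES = {
    "x_mm": (-80.0, 200.0),
    "y_mm": (-150.0, 150.0),
    "z_mm": (-100.0, 0.0),
    "rot_deg": (-90.0, 90.0),
}


def sample_offset(rng):
    """Draw one random offset, uniformly within each range (assumed distribution)."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}


rng = random.Random(0)  # fixed seed so the sequence is reproducible
offsets = [sample_offset(rng) for _ in range(5)]
```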

The images were labeled using Roboflow, which offers various training and export formats and allows for the post-processing of images. It simulates images captured with different image sizes, brightnesses, rotation angles, scaling, and masking. The images were augmented in Roboflow through image resizing, brightness adjustment, and rotation with automatic label adjustment. The system randomly assigned 90% of the images (621) to the training set and 10% (69) to the test set. After post-processing, the number of training set images increased from 621 to 1848, which were then used for model training. The results on the performance of the trained model are shown in Figure 3.
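The 90/10 split arithmetic can be checked with a short sketch. The split in this study was performed by Roboflow; the function below is a hypothetical stand-in that only reproduces the counts.

```python
import random


def split_dataset(items, test_fraction=0.1, seed=0):
    """Shuffle the items and return (train, test) lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * test_fraction)
    return items[n_test:], items[:n_test]


# 690 placeholder file names standing in for the captured images.
train, test = split_dataset(f"img_{i:03d}.jpg" for i in range(690))
print(len(train), len(test))  # 621 69
```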

Modbus communication technology is used to read the real-time status of the robotic arm and write the control parameters. A Modbus client is used to connect the robotic arm and periodically read the data on the joint angles and speed. This is highly useful for monitoring the operational status of the robotic arm and performing error diagnosis. Once a Modbus connection is established, the status information of the robotic arm is obtained by reading the values of specific registers. For example, the position of a particular joint is monitored to check whether a specific action has been completed.
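Reading register values over Modbus TCP can be sketched at the frame level as below. The frame layout follows the Modbus TCP specification (MBAP header plus a function code 0x03 "read holding registers" request); the start address, unit identifier, and function names are hypothetical, and the actual register map must be taken from the robot controller's documentation.

```python
import struct

JOINT_ANGLE_START = 270  # hypothetical start address; check the register map


def build_read_request(transaction_id, unit_id, start_address, count):
    """Build a Modbus TCP 'read holding registers' (0x03) request frame."""
    pdu = struct.pack(">BHH", 0x03, start_address, count)
    # MBAP header: transaction id, protocol id (0), remaining length, unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_response(frame):
    """Return the unsigned 16-bit register values carried in a response frame."""
    _tid, _pid, _length, _unit, function, byte_count = struct.unpack(
        ">HHHBBB", frame[:9]
    )
    if function & 0x80:
        # In an exception response, the byte after the function code is the code.
        raise IOError(f"Modbus exception code {byte_count}")
    return list(struct.unpack(f">{byte_count // 2}H", frame[9:9 + byte_count]))


request = build_read_request(1, 255, JOINT_ANGLE_START, 6)
```

The request bytes would be written to a TCP socket connected to the Modbus server (conventionally port 502), and the reply passed to `parse_read_response`.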

3.1. Experiment Results

The MariaDB database is used to record the system’s operational history. Grafana is used to visualize the database data to monitor the visual recognition results and the operational status of the robotic arm. Before generating visual charts with Grafana, the relevant database parameters are configured by installing the database software, setting up user profiles, creating databases, and creating tables. Subsequently, the Grafana and database connection settings are configured in regard to the database address, connection port, user credentials, target database, and tables. Then, visualization charts are created based on the desired data fields. The fields recorded in the visual recognition results database include the timestamp when recognition is completed, the object name, and the object’s relative coordinates based on its origin. The recorded database tables are shown in Figure 4.
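The recorded fields can be illustrated with a small table definition. This sketch uses Python's built-in `sqlite3` module as a stand-in for MariaDB so that it runs without a server; the table name, column names, and two-coordinate layout are assumptions, not the schema used in this study.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: timestamp, object name, and relative coordinates.
SCHEMA = """
CREATE TABLE IF NOT EXISTS recognition_result (
    id INTEGER PRIMARY KEY,
    recognized_at TEXT NOT NULL,
    object_name TEXT NOT NULL,
    x_mm REAL NOT NULL,
    y_mm REAL NOT NULL
)
"""


def record_result(conn, object_name, x_mm, y_mm, when=None):
    """Insert one recognition result, timestamped at completion."""
    when = when or datetime.now(timezone.utc)
    conn.execute(
        "INSERT INTO recognition_result (recognized_at, object_name, x_mm, y_mm) "
        "VALUES (?, ?, ?, ?)",
        (when.isoformat(), object_name, x_mm, y_mm),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for the MariaDB server
conn.execute(SCHEMA)
record_result(conn, "cube", 12.5, -40.0)
```

A Grafana panel would then query such a table by its timestamp column to plot recognition events over time.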

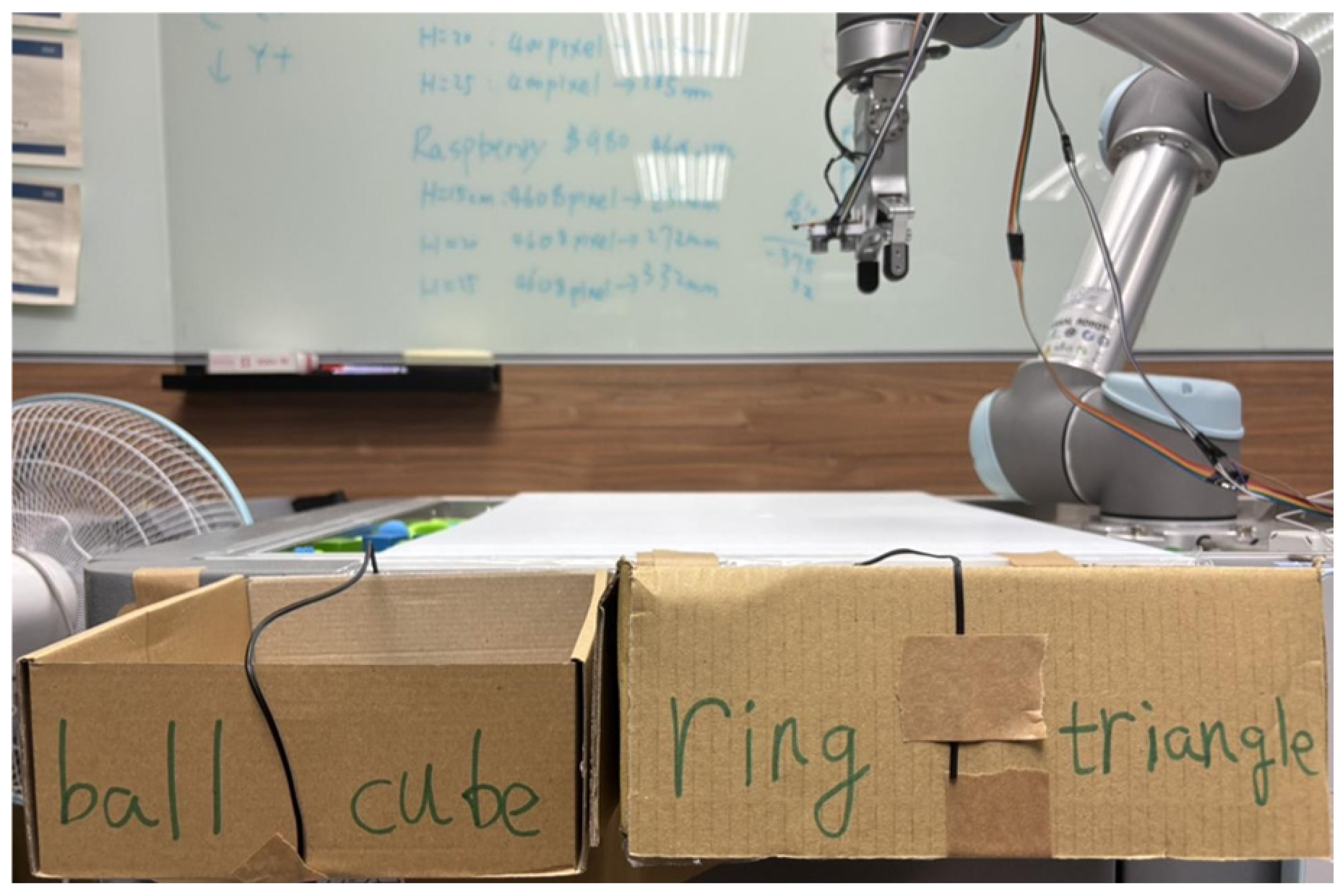

We designed an experimental procedure in which one, two, three, four, and five objects are placed sequentially for testing. The experiment is conducted to assess various aspects of the process, including the robotic arm connection time, initial image loading time, model loading time, movement path, object type and quantity, register status, and grasping time. The initial image refers to the state of the platform when no objects are present and is used to determine whether materials are placed correctly (Figure 5). For the object classification functionality, we set up an object placement area beside the working platform. Due to limited space, two cardboard boxes are used to create four compartments. From left to right, the compartments are designated for the following object categories: sphere, cube, ring, and triangle.

The movement path refers to the path taken from the starting position to grasp each object, including the order of operations. The register status refers to the six-axis movement state of the robotic arm, obtained through Modbus communication. Both the register status and grasping time are visualized using Grafana charts. The register status is continuously retrieved through Modbus communication, every 0.5 s after the system starts, and is recorded in the “UR_Status_axis” table.
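The periodic status recording can be sketched as a simple polling loop. The reader and store callables are hypothetical placeholders for the Modbus read and the insert into the “UR_Status_axis” table; only the 0.5 s interval is taken from the text.

```python
import time


def poll_status(read_axes, store, interval_s=0.5, samples=None,
                sleep=time.sleep, clock=time.time):
    """Call read_axes() every interval_s seconds and pass the result to store().

    Runs forever when samples is None; otherwise stops after that many reads.
    """
    taken = 0
    while samples is None or taken < samples:
        store(clock(), read_axes())
        taken += 1
        sleep(interval_s)


# Example with a fake reader that returns six axis values.
rows = []
poll_status(lambda: [0, 0, 0, 0, 0, 0],
            lambda t, axes: rows.append((t, axes)),
            samples=3, sleep=lambda s: None)
```

Because the loop sleeps after each read, the effective period is 0.5 s plus the read time; a deadline-based schedule would be needed if strict timing matters.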

3.2. Explanation of the Experimental Outcomes

The results of the recognition and grasping process, along with the outcomes, are summarized in Table 2. In the table, the experiment run numbers correspond to the number of objects, and the number after the “-” in each run number represents the multiplier mode code. The average recognition time for the model is 7.6 s. For the target objects designed in this research, the recognition accuracy reached 99% for all the objects, except for one which shows an accuracy of 91.75%. The average grasping time per attempt is 14.6 s, reflecting the stability of the robotic arm during operation. Insignificant variations are observed, depending on the size of the object.

4. Conclusions

We enhanced the multi-object recognition capabilities of robotic arms by running a lightweight Edge Impulse model on resource-constrained, low-power edge devices for real-time image processing and multi-object recognition using TinyML. This enabled the robotic arm to accurately identify and manipulate various objects on the production line, improving production efficiency and operational flexibility. The developed system shows high recognition accuracy and speed, highlighting the potential of TinyML technology to enhance the intelligence of robotic arms.

TinyML technology demonstrates significant advantages in terms of saving computational resources and energy. The lightweight models developed operate efficiently on low-power edge devices and perform complex computational tasks without relying on large data centers. TinyML also simplifies the model deployment process, allowing developers to apply machine learning models in real-world production environments more quickly and conveniently. The Edge Impulse platform quickly converts the trained models into lightweight formats and deploys them onto the edge devices and the Raspberry Pi. This process accelerates model deployment and ensures the efficient operation of the models in resource-constrained environments. Rapid deployment enables the system to adapt quickly to changes in the production environment and to update the models promptly in response to new production demands, thereby enhancing the system’s flexibility and adaptability.

The experimental results demonstrate the successful application of multi-object recognition and grasping using the UR5 robotic arm with various sensors and TinyML technology for intelligent control. The average model recognition time was 7.6 s, with a recognition accuracy of 99% for all objects except one, which reached 91.75%. Each grasping action took 14.6 s on average, demonstrating the stability of the robotic arm. The results of this research highlight the potential of TinyML technology in enhancing the intelligence and operational efficiency of robotic arms. The system provides a basis for the intelligent upgrade of the manufacturing process. The feasibility of machine learning models for application in low-power edge devices was verified, laying a practical foundation for further integration of machine learning and automation technologies. Through the application of these technologies, more flexible and efficient production systems can be developed, advancing the manufacturing industry towards greater intelligence and automation.

Author Contributions

Conceptualization, N.-Z.H.; methodology, B.-A.L.; software, B.-A.L.; validation, H.-L.H. and Y.-Y.W.; formal analysis, Y.-X.L.; investigation, C.-C.L.; resources, P.-H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data are unavailable due to privacy or ethical restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Moore, M.R.; Buckner, M.A. Learning-from-signals on edge devices. IEEE Instrum. Meas. Mag. 2012, 15, 40–44.

2. Tsai, P.-C. Research on Robotic Arm with Real-Time Acoustic Recognition for Bolt Loosening Detection. Master’s Thesis, National Central University, Taoyuan, Taiwan, 2023.

3. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

4. Warden, P.; Situnayake, D. TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers; O’Reilly Media: Sebastopol, CA, USA, 2019.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).