Abstract

Human Action Recognition is a critical and challenging problem in computer vision applications, especially video surveillance. State-of-the-art classifiers proposed for this problem are computationally expensive to train and require very large amounts of data. In this paper, we address the scarcity of data and computational resources for surveillance datasets with transfer learning. We fine-tune the Inflated 3D CNN (I3D) model and the SlowFast network model to automatically extract features from surveillance videos in the SPHAR dataset and classify them into their respective action classes. Because both models use 3D convolutions, this approach captures the spatiotemporal nature of videos. Fine-tuning is performed by replacing the last classification (dense) layer of each network with one that matches the number of classes in our newly constructed dataset. Finally, we compare the performance of both fine-tuned networks using accuracy as the metric and find that the I3D model performs better for our use case.

1. Introduction

Human Activity Recognition (HAR) is the task of identifying human activities in a video from the specific movements or actions performed by the people present in it. HAR has a myriad of applications, including healthcare monitoring, assisted living, surveillance and robotics. Military bases that require constant surveillance currently rely on human operators, who are subject to fatigue and may therefore fail to detect activities that pose a threat to the base. Surveillance systems that automatically recognize and classify human activities could broaden the area under surveillance, lessen the reliance on human resources and reduce human error. Recognizing human activities in video data is difficult, as most videos are affected by background clutter, poor lighting and subject occlusion. Although HAR is a heavily researched problem with applications in automated military surveillance, existing systems struggle to detect complex movements, movements that involve multiple persons, and interactions of a person with the environment or with objects. These systems also have high computational complexity and memory requirements, in addition to needing large amounts of training data.

The motivation for Human Action Recognition is grounded in the analysis of surveillance videos. Surveillance systems have proved to be important assets in banks, law enforcement, and commercial and military facilities. Until now, most HAR models have focused on recognizing and understanding general day-to-day activities in videos recorded from a human perspective rather than from a surveillance camera perspective. Nevertheless, these pre-trained models can be leveraged and fine-tuned to detect human motion in surveillance videos, addressing the shortcomings posed by the high computational complexity of CNN models, hazy backgrounds, perspective changes and varying lighting conditions.

In this paper, we analyze how the computational cost of training CNNs from scratch can be reduced by fine-tuning pre-trained networks, namely the Inflated 3D model (I3D) [1] and SlowFast networks [2], and we compare their performance on the SPHAR dataset. The I3D model inflates the filters and pooling kernels of a 2D ConvNet into 3D, adding a temporal dimension so that the network can exploit the spatiotemporal features of videos for action recognition. SlowFast networks combine a slow pathway that captures spatial information with a fast pathway that captures motion and temporal information; their authors reported state-of-the-art accuracy on the Kinetics dataset [3], so we chose to fine-tune this model for our purpose. Just as the authors of I3D [1] analyzed the transfer behavior of their model after pre-training, we study the transfer behavior of these two state-of-the-art (SOTA) models and compare their performance on the SPHAR dataset. Figure 1 shows examples of human actions such as walking, sitting and running.

Figure 1.

Example frames in our subset of SPHAR dataset.

Due to the scarcity of annotated data for surveillance video action recognition, we fine-tuned the I3D and SlowFast models, which were pre-trained on the large, well-annotated Kinetics [3] dataset, so that they could make better predictions on surveillance data. The results suggest that satisfactory performance can be obtained through fine-tuning even for problems where data availability is extremely low.

2. Related Works

In their review, Islam et al. [4] summarized the role of CNN-based deep neural networks in HAR. They categorized the reviewed works into four categories according to the input devices used for data collection: vision devices, smartphones, radar and multi-modal sensing devices. They compared each of the 10 reviewed works in terms of performance, strengths, weaknesses and the CNN hyperparameters used. They concluded that single, atomic movements are recognized and classified very accurately by current systems, whereas these systems fail on complex activities in real-life circumstances, partly due to the lack of datasets involving such complex movements. They also highlighted the lack of datasets with group activity information, as opposed to single-person activity, such as standing in queues or playing group sports. Further, they noted the computational expense of CNN-based approaches, the lack of standardization and issues of data privacy, among other challenges. Finally, they proposed future research directions, including predicting future activity data by incorporating contextual information, using generative models to address class imbalance, building versatile HAR systems, and standardizing the representation of relevant data in open datasets.

Study [6] presents TL-HAR, a transfer learning framework for HAR that consists of three phases: pre-training on a generic dataset to adjust the weights, data preprocessing and augmentation, and finally recognition by transfer learning. Within this framework, the authors present five architectures, both one-stream and two-stream, in conjunction with LSTM. They found that the Two-Stream-TL-Temporal framework outperforms the compared SOTA models on the UCF101 dataset. This study highlights the benefit of combined architectures over standalone architectures and recommends the use of transfer learning.

In many use cases, networks must be trained on small datasets. Study [5] proposes an internal transfer learning (ITL) framework that uses a 3D CNN to extract spatial and temporal features from videos for classification; through sub-data classification, it greatly improves the performance of networks trained on small datasets. The proposed system achieves approximately 98.2% and 100% accuracy on the KTH and Weizmann datasets, respectively. Since ITL improves performance compared with models trained from scratch, this opens pathways to applying it to more challenging datasets.

Our problem involves transferring learned parameters to a dataset that differs from the ones our base models were pre-trained on; thus, study [6] is particularly relevant for its contributions in applying transfer learning to sign language recognition. The authors used the inflated two-stream 3D ConvNet but relied only on RGB video data, with no dependency on optical flow or depth data. Their method improved accuracy for networks transferred from action recognition.

Paper [7] presents a hybrid classifier that combines a Support Vector Machine (SVM) and K-nearest neighbors (KNN) with a pre-trained convolutional neural network (CNN) architecture. The authors concluded that hybrid classifiers improve the accuracy of recognition systems compared with single classifiers. Their approach works well with RGB images and reports accuracies of 98.15% and 91.47% on the KTH and UCF Sports datasets, respectively. However, it depends on extracting single image frames from videos and may therefore ignore temporal information.

Along these lines, S. Chakraborty et al. [8] proposed transfer learning approaches for HAR in still images, for situations where temporal information may be unavailable. They fine-tuned CNNs such as VGG and ResNets and applied data augmentation to compensate for the scarcity of data. They validated their models on the Stanford 40 and PPMI datasets and reported benchmark results. Their work could be extended to video action recognition by inflating the 2D CNNs used, but it struggles to extract features and distinguish subjects against noisy backgrounds.

A. Manaf and S. Singh [9] were the first to test the SPHAR dataset with their proposed model. As the dataset contains real-world surveillance camera footage with varying background lighting conditions, it is suited to complex action recognition. They present a hybrid of a 2D CNN and stacked parallel bidirectional LSTMs to recognize actions in prolonged sequences. They also train and validate their model on the UCF101 dataset and report a 94.5% validation accuracy. This work is notable because it pioneers the use of the SPHAR dataset for surveillance video HAR.

The authors of [10] reported that SlowFast, a 3D CNN, produces better results in action recognition than a 2D CNN. They employed transfer learning from action recognition to enhance anomaly detection in surveillance videos and reported promising results on the CitySCENE benchmarks. The Surveillance Perspective Human Action Recognition (SPHAR) dataset was built to encourage research in action recognition. Its videos have been collated from several benchmark datasets, including UCF-Crime, UCF-Aerial and VIRAT [11], among others, all shot from the mounting angle of a surveillance camera under varying lighting conditions.

GluonCV [13] provides a custom data loader to load, preprocess and augment video data, and various architectures of the I3D and SlowFast models are described in the GluonCV study [14]. In [15], a trained SVM classifier identifies an optimal hyperplane in a higher-dimensional feature space to classify unseen test samples. Chowdhary and Acharjya [16] proposed an intuitionistic possibilistic fuzzy c-means clustering algorithm for segmenting mammograms and detecting abnormal images. Batool et al. [17] proposed depth-sensor-based action recognition with a modified K-ary entropy classifier, which bridges the gaps among the intra-substructure nodes of a tree through entropy-based clustering. Wei et al. [18] proposed a cross-modality dual attention fusion (CMDA) module to exchange spatiotemporal information between the two pathways of a two-stream SlowFast network. Xiao et al. [19] proposed DropPathway, a regularization strategy that randomly drops the audio pathway during training, to tackle the training difficulties arising from the different learning dynamics of the audio and visual modalities. Zeng et al. [20] proposed a new localization loss function with a penalty term based on the Lance and Williams distance.

3. Methodology

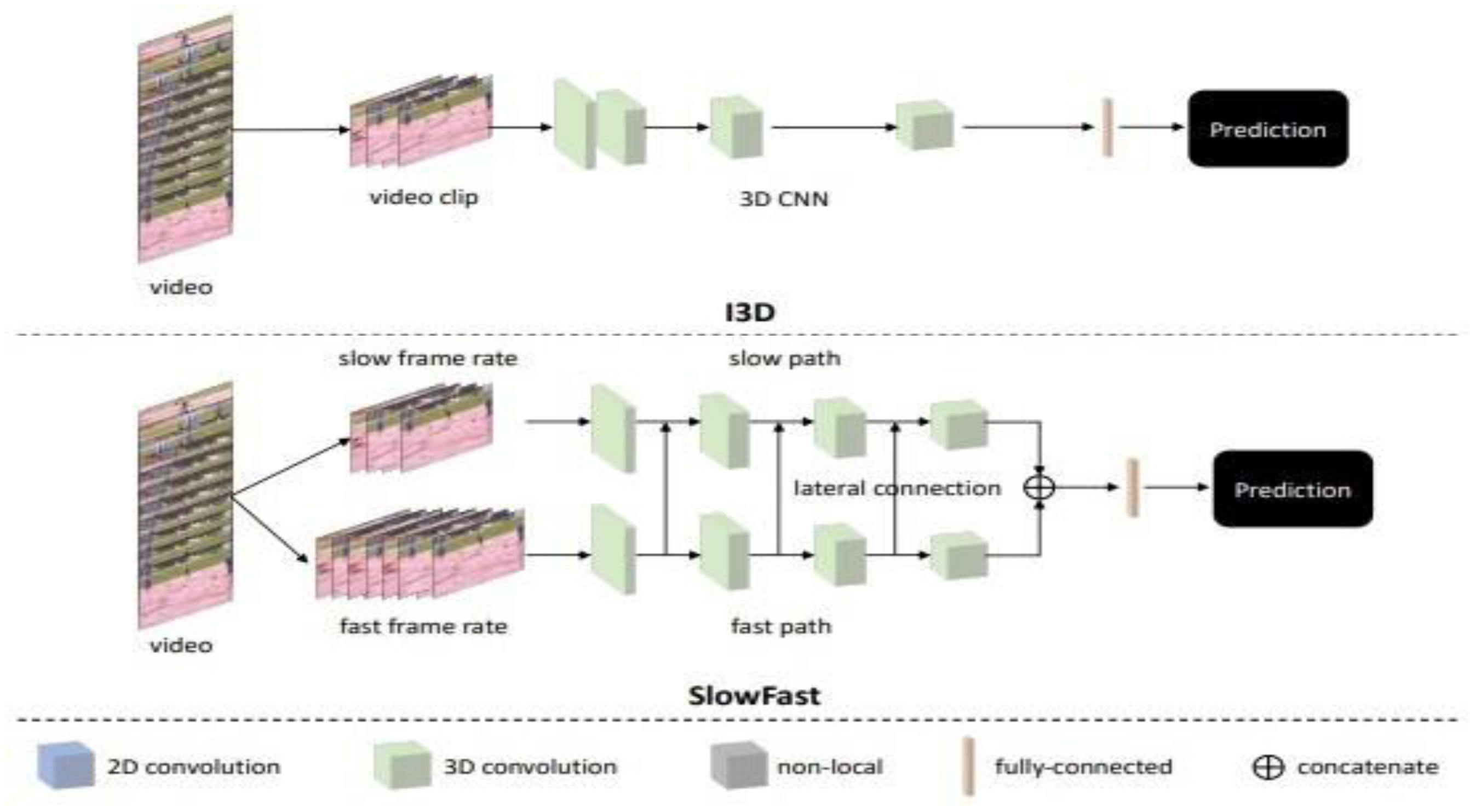

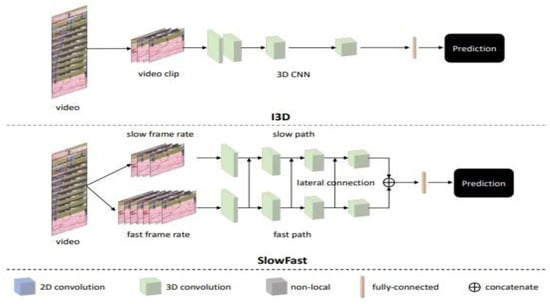

In this paper, we chose to study two widely used, well-established pre-trained models that achieved the best results on benchmark datasets such as Kinetics [3,10]; we then fine-tuned them on the SPHAR dataset and compared their performance. Figure 2 shows the architectures of the I3D and SlowFast models used for human action recognition.

Figure 2.

Architectures of the I3D and SlowFast models, as illustrated in the GluonCV study [14].

3.1. The I3D Model

The first model we chose to understand and evaluate was the I3D model proposed in the paper 'Quo Vadis, Action Recognition?' [1]. Its authors point out that video data differ from single images in that they contain a temporal element, so models need to account for this additional dimension through 3D processing. To take the time dimension into account, they converted a 2D CNN into its 3D counterpart to extract spatiotemporal features from videos. For the conversion, they adopted three main strategies:

- (i) Inflating 2D CNNs into 3D: 2D filters and pooling kernels, which are typically square (N × N), are inflated to cubic N × N × N kernels.

- (ii) Bootstrapping 3D filters from 2D filters: An image can be converted into a static ("boring") video by repeating it T times. The pre-trained 2D filter weights are repeated N times along the time axis and rescaled by dividing by N, so that the response of the 3D filter on the boring video equals the response of the 2D filter on the original image. As a result, the outputs of the pointwise non-linearities and of the average- and max-pooling layers are the same as in the 2D network.

- (iii) Pacing receptive-field growth in space, time and network depth: The authors found that the optimal temporal receptive field depends on the frame rate and image dimensions, so not all I3D kernels are symmetric in space and time.
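Strategy (ii) can be checked numerically. The following is a minimal sketch, assuming single-channel kernels stored as nested Python lists; it is not the original I3D or GluonCV code. It inflates a 2D kernel, applies it to a "boring" video made of one repeated image, and confirms that the 3D response equals the 2D response on the image.

```python
import math


def inflate_kernel(kernel_2d, n):
    """Repeat a 2D kernel n times along a new time axis and divide every weight by n."""
    return [[[w / n for w in row] for row in kernel_2d] for _ in range(n)]


def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation (no padding, stride 1) of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def conv3d_valid(video, kernel_3d):
    """'Valid' 3D cross-correlation: sum the per-frame 2D responses inside each time window."""
    kt = len(kernel_3d)
    outputs = []
    for t in range(len(video) - kt + 1):
        maps = [conv2d_valid(video[t + dt], kernel_3d[dt]) for dt in range(kt)]
        # Element-wise sum of the kt per-frame response maps.
        outputs.append([[sum(vals) for vals in zip(*rows)] for rows in zip(*maps)])
    return outputs


if __name__ == "__main__":
    image = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0], [2.0, 1.0, 1.0]]
    kernel_2d = [[1.0, -1.0], [0.5, 2.0]]
    n = 3  # temporal extent of the inflated kernel
    boring_video = [image] * n  # the same image repeated n times
    response_2d = conv2d_valid(image, kernel_2d)
    response_3d = conv3d_valid(boring_video, inflate_kernel(kernel_2d, n))[0]
    same = all(
        math.isclose(a, b, abs_tol=1e-9)
        for row_a, row_b in zip(response_2d, response_3d)
        for a, b in zip(row_a, row_b)
    )
    print(response_2d, same)  # [[1.0, 8.5], [2.0, 0.5]] True
```

Dividing by N is what keeps the two responses equal: each of the N repeated frames contributes one copy of the 2D response, scaled by 1/N.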

The authors applied these inflation techniques to InceptionV1 [12], which reported SOTA results on ImageNet, to develop the I3D network. In the two-stream version, one I3D network is trained on RGB inputs and another on optical-flow inputs, and their predictions are averaged at test time.
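The test-time averaging of the two streams is a simple late fusion. The sketch below assumes that each stream outputs per-class softmax probabilities; the score values in the example are hypothetical.

```python
def two_stream_average(rgb_probs, flow_probs):
    """Late fusion: average the class scores of the RGB and optical-flow networks."""
    if len(rgb_probs) != len(flow_probs):
        raise ValueError("both streams must score the same classes")
    fused = [(a + b) / 2.0 for a, b in zip(rgb_probs, flow_probs)]
    # Predicted class = index of the highest fused score.
    predicted = max(range(len(fused)), key=lambda k: fused[k])
    return fused, predicted


if __name__ == "__main__":
    rgb = [0.6, 0.3, 0.1]   # hypothetical softmax output of the RGB I3D
    flow = [0.2, 0.7, 0.1]  # hypothetical softmax output of the flow I3D
    fused, predicted = two_stream_average(rgb, flow)
    print(predicted)  # 1: the flow stream's confidence tips the decision
```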


3.2. SlowFast Networks

The second architecture we chose to fine-tune is the SlowFast network, a state-of-the-art architecture for video understanding. Its basic idea is the use of two pathways: a slow pathway that operates at a low frame rate to capture spatial semantics, and a fast pathway that operates at a high frame rate to capture motion information. Both pathways take raw video as input and extract spatial and temporal information, but at different temporal rates. The authors also introduced three methods for fusing the features extracted by the fast pathway into the slow pathway through lateral connections: time-to-channel, time-strided sampling and time-strided convolution. This architecture achieved SOTA results on both the Kinetics-400 and Kinetics-600 datasets, reporting 79% accuracy on the former.
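The three fusion methods differ in how they reshape the fast-pathway features to match the slow pathway. The shape arithmetic below follows the {T, S², C} notation of the SlowFast paper [2], written as (T, H, W, C) tuples. It is a sketch under stated assumptions: the example sizes (4 slow frames, 56 × 56 maps, 64 channels) are hypothetical, α = 8 and β = 1/8 are the paper's default values rather than settings confirmed for our GluonCV models, and the temporal padding of the strided convolution is assumed.

```python
def fast_shape(t_slow, size, c_slow, alpha, beta_inv):
    """Fast-pathway feature shape (T, H, W, C): alpha x more frames, beta_inv x fewer channels."""
    return (alpha * t_slow, size, size, c_slow // beta_inv)


def time_to_channel(fast, alpha):
    """TtoC: pack every alpha consecutive frames into the channel dimension."""
    t, h, w, c = fast
    return (t // alpha, h, w, c * alpha)


def time_strided_sampling(fast, alpha):
    """T-sample: keep one of every alpha frames."""
    t, h, w, c = fast
    return (t // alpha, h, w, c)


def time_strided_conv(fast, alpha, kernel_t=5):
    """T-conv: kernel_t x 1 x 1 3D convolution, 2*C output channels, temporal stride alpha.

    Assumes temporal zero-padding of kernel_t // 2 frames on each side.
    """
    t, h, w, c = fast
    pad = kernel_t // 2
    t_out = (t + 2 * pad - kernel_t) // alpha + 1
    return (t_out, h, w, 2 * c)


if __name__ == "__main__":
    alpha, beta_inv = 8, 8  # SlowFast paper defaults (alpha = 8, beta = 1/8)
    fast = fast_shape(t_slow=4, size=56, c_slow=64, alpha=alpha, beta_inv=beta_inv)
    print(fast)                                # (32, 56, 56, 8)
    print(time_to_channel(fast, alpha))        # (4, 56, 56, 64)
    print(time_strided_sampling(fast, alpha))  # (4, 56, 56, 8)
    print(time_strided_conv(fast, alpha))      # (4, 56, 56, 16)
```

All three methods bring the fast features back to the slow pathway's temporal length (4 frames here), so they can be fused with the slow features.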

3.3. Core Methodology

Our core methodology consisted of the following steps:

- (1) Data processing: We formulated the surveillance action recognition problem as a multi-class classification problem with 3 activities: sitting, walking and running. We chose walking and running because they are similar movements, so the models must extract fine-grained features to differentiate between them, and sitting because it is clearly distinguishable from both. The clips were cropped spatiotemporally so that each contains a single action at any given time. We chose videos with a frame rate of 30 fps that were 2–5 s long, to take advantage of the atomicity of each action, and applied a combination of transforms to the training videos, specified by the output size, the scale ratios for cropping, and the per-channel mean subtracted from and standard deviation divided into each frame. We used GluonCV's [13] custom data loader to load, preprocess and augment the data.
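For video, random augmentations must be drawn once per clip and applied identically to every frame; otherwise, the crop would jitter from frame to frame and create artificial motion. The plain-Python sketch below illustrates this, together with per-channel normalization. It is not GluonCV's transform code: it omits resizing and multi-scale cropping with scale ratios, and the mean and standard deviation values are hypothetical ImageNet-style statistics.

```python
import random

# Hypothetical per-channel statistics (ImageNet-style values).
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def augment_clip(clip, crop_h, crop_w, rng):
    """Apply the SAME random crop and horizontal flip to every frame of a clip.

    clip: list of frames; each frame is a list of rows of (r, g, b) tuples in [0, 1].
    Drawing the random parameters once per clip keeps the frames temporally consistent.
    """
    height, width = len(clip[0]), len(clip[0][0])
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    flip = rng.random() < 0.5
    out = []
    for frame in clip:
        rows = [row[left:left + crop_w] for row in frame[top:top + crop_h]]
        if flip:
            rows = [row[::-1] for row in rows]
        out.append(rows)
    return out


def normalize_clip(clip, mean=MEAN, std=STD):
    """Subtract the per-channel mean from every pixel and divide by the per-channel std."""
    return [
        [[tuple((px[ch] - mean[ch]) / std[ch] for ch in range(3)) for px in row] for row in frame]
        for frame in clip
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    frame = [[(x / 4, y / 4, 0.5) for x in range(4)] for y in range(4)]  # toy 4 x 4 frame
    clip = [frame] * 3  # three identical frames
    processed = normalize_clip(augment_clip(clip, 2, 2, rng))
    print(len(processed), len(processed[0]), len(processed[0][0]))  # 3 2 2
```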

We first split the dataset into training, validation and test sets. General data augmentation techniques such as random cropping, flipping and rotation are then applied to the training data, which increases its variability and makes the models more robust. The frames are then preprocessed by resizing them and normalizing their values to a fixed range.
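Because the dataset is small, the split should keep every class equally represented. The sketch below splits each class separately, using the per-class counts reported in Section 4 (20 training and 10 test videos per class). The file names are hypothetical, the function is illustrative rather than the GluonCV loader, and a validation subset could be carved out of the training portion in the same way.

```python
import random


def stratified_split(videos_by_class, n_train, n_test, seed=0):
    """Split every class separately so that each class keeps the same train/test counts.

    videos_by_class: dict mapping class name -> list of video identifiers.
    Returns (train, test) as lists of (video_id, label) pairs.
    """
    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(videos_by_class):
        videos = list(videos_by_class[label])
        if len(videos) < n_train + n_test:
            raise ValueError(f"class {label!r} has only {len(videos)} videos")
        rng.shuffle(videos)
        train += [(v, label) for v in videos[:n_train]]
        test += [(v, label) for v in videos[n_train:n_train + n_test]]
    return train, test


if __name__ == "__main__":
    # Hypothetical file names: 30 clips for each of the three classes.
    data = {c: [f"{c}_{i:02d}.mp4" for i in range(30)] for c in ("sitting", "walking", "running")}
    train, test = stratified_split(data, n_train=20, n_test=10)
    print(len(train), len(test))  # 60 30
```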

Next, we used the GluonCV API to train and evaluate our models, tuning hyperparameters such as the number of epochs and the learning rate to improve their performance. Finally, we evaluated the performance of our models on the test set.

Using GluonCV's data loader helped us to improve the quality of our input data and the performance of our models. These results provide experimental evidence that may benefit other researchers in the Human Action Recognition domain.

- (2) Model training and tuning: After preprocessing and loading the data, we built the classification models using the I3D [1] and SlowFast [2] models from GluonCV's model zoo. These models are pre-trained on large video datasets, which allows them to learn general features of a wide range of human actions and motions. We then fine-tuned them on the available surveillance data using transfer learning.

We chose Stochastic Gradient Descent (SGD) as our optimizer because it is a simple and efficient algorithm that scales to large video datasets. We chose the softmax cross-entropy loss as our loss function because it measures the discrepancy between the predicted class probabilities and the ground-truth labels.
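The loss and the optimizer interact through a simple gradient: for softmax cross-entropy, the derivative with respect to logit k is p_k minus 1 for the true class and p_k otherwise. The sketch below shows one plain SGD update of a hypothetical linear classification head. It omits momentum, weight decay and mini-batching, which the actual GluonCV training setup may include.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(logits, label):
    """Negative log-probability assigned to the ground-truth class."""
    return -math.log(softmax(logits)[label])


def sgd_step(weights, features, label, lr):
    """One plain SGD update of a linear head (one weight row per class).

    dL/dz_k = p_k - 1[k == label] and dL/dW[k][j] = dL/dz_k * x_j.
    Returns the updated weights and the loss before the update.
    """
    logits = [sum(w * x for w, x in zip(row, features)) for row in weights]
    probs = softmax(logits)
    new_weights = []
    for k, row in enumerate(weights):
        grad_z = probs[k] - (1.0 if k == label else 0.0)
        new_weights.append([w - lr * grad_z * x for w, x in zip(row, features)])
    return new_weights, softmax_cross_entropy(logits, label)


if __name__ == "__main__":
    weights = [[0.0, 0.0] for _ in range(3)]  # 3 classes, 2 toy features
    features, label = [1.0, 2.0], 0
    weights, loss_before = sgd_step(weights, features, label, lr=0.1)
    _, loss_after = sgd_step(weights, features, label, lr=0.1)
    print(loss_after < loss_before)  # True
```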

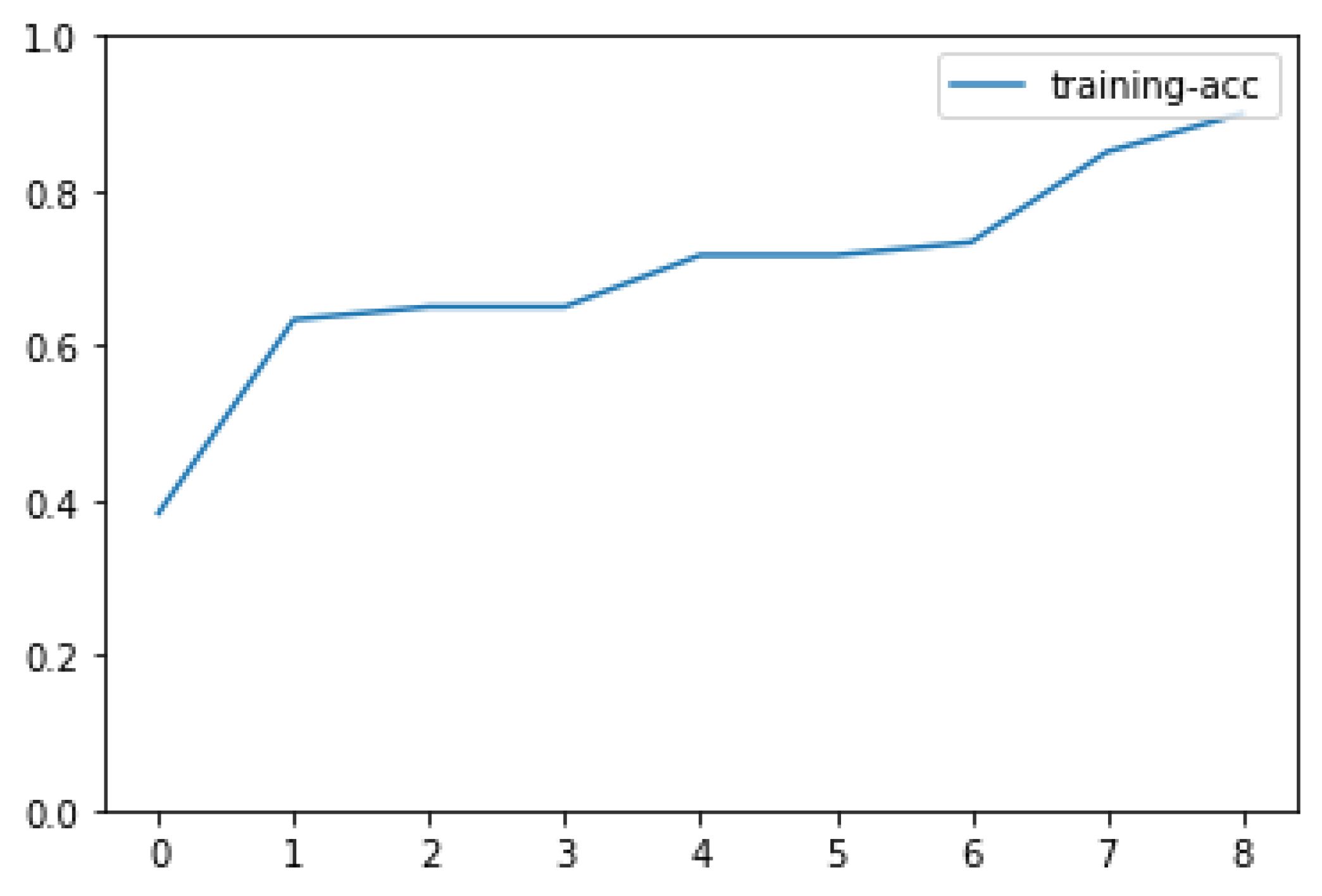

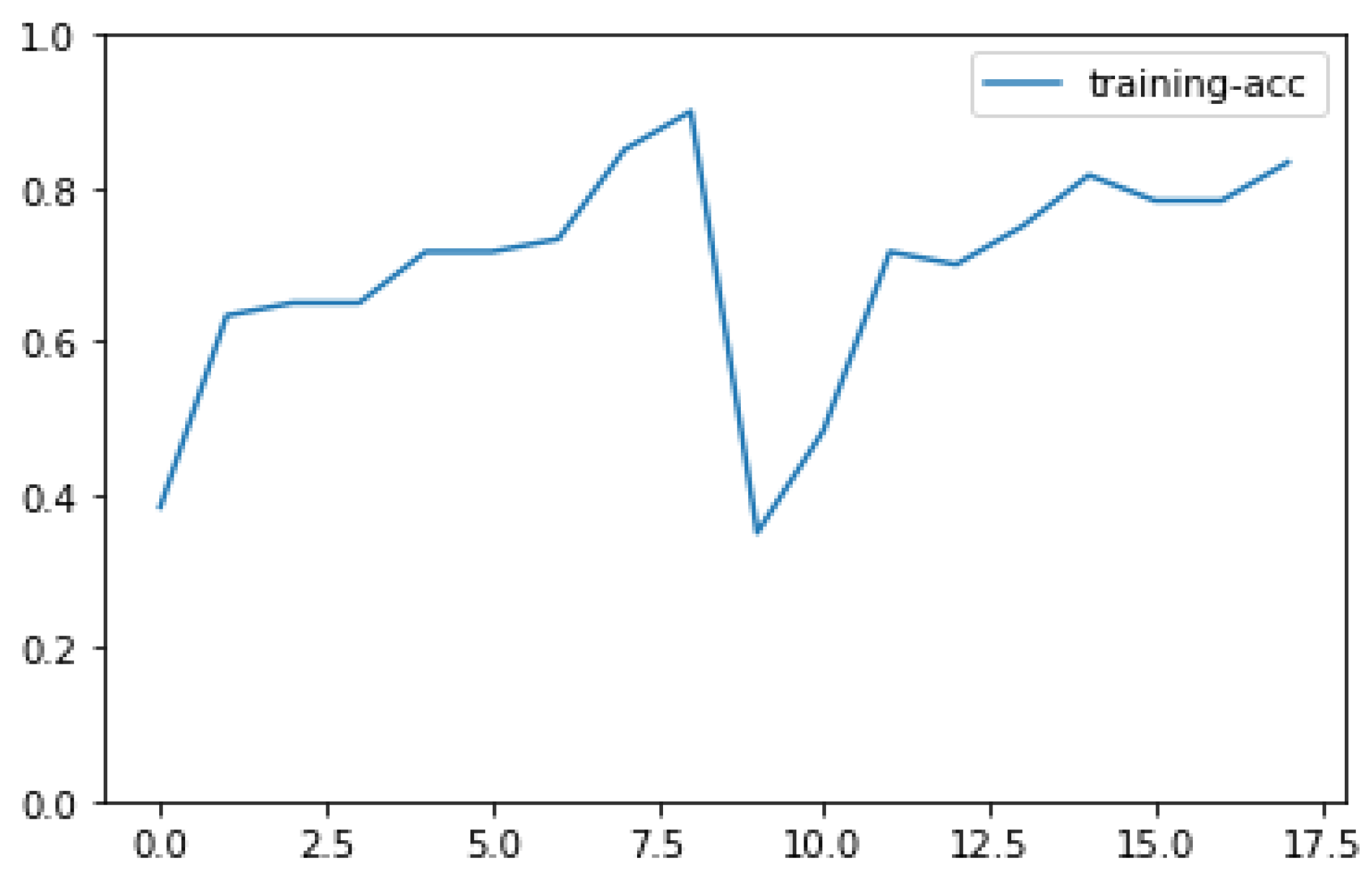

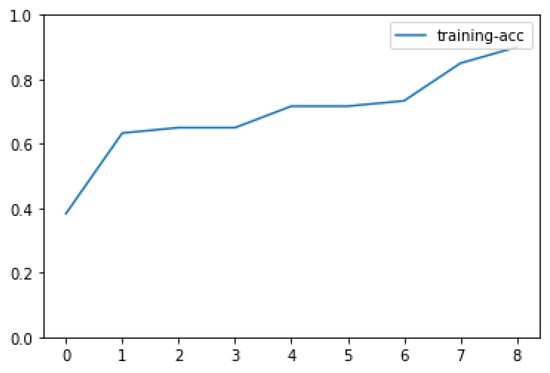

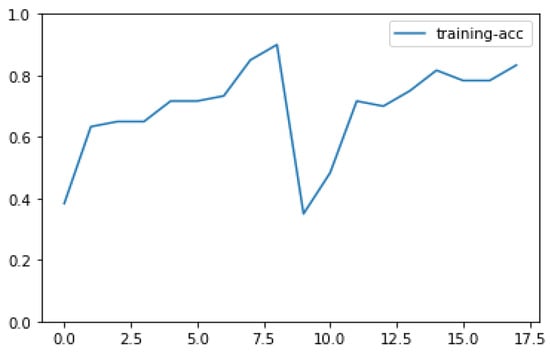

We trained both the fine-tuned I3D model and the fine-tuned SlowFast network for 9 epochs with a batch size of 32. An epoch is one complete pass of the model over the training dataset. Training for more epochs can improve accuracy, but it also increases training time and, on a small dataset, raises the risk of overfitting. We chose 9 epochs to strike a balance between performance and training time. The batch size is the number of samples used to update the model's parameters in each iteration. A larger batch size generally leads to faster training but requires more memory; we chose a batch size of 32 as a compromise between training speed and memory usage. Figures 3 and 4 show the training accuracy obtained by both models.
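With a dataset this small, the epoch and batch-size settings translate into very few parameter updates. The arithmetic below assumes 60 training clips (3 classes × 20 training videos per class, as reported in Section 4). Whether the last incomplete batch was kept or discarded is not stated, so both cases are shown.

```python
import math


def iterations_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of parameter updates in one pass over the training set."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


train_clips = 3 * 20  # 3 classes x 20 training videos per class
batch_size = 32
epochs = 9
per_epoch = iterations_per_epoch(train_clips, batch_size)
print(per_epoch, per_epoch * epochs)  # 2 18
print(iterations_per_epoch(train_clips, batch_size, drop_last=True) * epochs)  # 9
```

If the last batch is kept, each epoch consists of one full batch of 32 clips and one partial batch of 28 clips.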

Figure 3.

Training accuracy plot for the fine-tuned I3D model with 9 epochs.

Figure 4.

Training accuracy plot for the fine-tuned SlowFast Network model with 9 epochs.

We replaced the output (final dense) layer of each network with a new layer that has 3 outputs, corresponding to our classes. This layer was trained from scratch, while the other layers were initialized with the parameters of the source models (I3D and SlowFast) and fine-tuned.
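The head replacement can be illustrated without any deep learning framework. In this sketch, parameters are stored in a plain dictionary and the parameter names (for example, the "fc." prefix) are hypothetical; GluonCV provides its own mechanism for this, which the sketch does not reproduce. All pre-trained parameters are kept except the 400-way Kinetics-400 classifier, which is re-created with 3 outputs.

```python
import random


def replace_head(pretrained, head_prefix, num_classes, feat_dim, seed=0):
    """Keep every pretrained parameter except the final dense layer, which is re-created.

    pretrained: dict mapping parameter name -> nested lists of floats (hypothetical layout).
    head_prefix: name prefix of the final dense layer (hypothetical, e.g. "fc.").
    """
    rng = random.Random(seed)
    params = {name: value for name, value in pretrained.items() if not name.startswith(head_prefix)}
    # New num_classes x feat_dim classifier: small random weights, zero bias.
    params[head_prefix + "weight"] = [
        [rng.gauss(0.0, 0.01) for _ in range(feat_dim)] for _ in range(num_classes)
    ]
    params[head_prefix + "bias"] = [0.0] * num_classes
    return params


if __name__ == "__main__":
    feat_dim = 4  # toy feature size; real backbones produce much larger feature vectors
    kinetics_model = {
        "backbone.conv1.weight": [[0.1, -0.2], [0.3, 0.4]],
        "fc.weight": [[0.0] * feat_dim for _ in range(400)],  # 400 Kinetics-400 classes
        "fc.bias": [0.0] * 400,
    }
    sphar_model = replace_head(kinetics_model, "fc.", num_classes=3, feat_dim=feat_dim)
    print(len(sphar_model["fc.weight"]), len(sphar_model["fc.bias"]))  # 3 3
```

During fine-tuning, both the new head and the copied backbone parameters are then updated.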

We evaluated both the I3D and SlowFast models on the test set and found that the I3D model performed better than the SlowFast model at recognizing actions in videos. A possible explanation is that the I3D model has a larger receptive field, which may allow it to capture more fast, dynamic information in the videos.

- (3) Comparison of performance: Fine-tuning the I3D and SlowFast networks pre-trained on Kinetics-400 enabled us to find the best parameters for action recognition, particularly for surveillance perspective videos; the parameters derived included the number of frames, the sampling stride and the number of segments. The I3D model takes video segments of 3 frames as input, whereas we input 64 frames into the SlowFast network because of its different strides: the slow pathway has a stride of 16 and the fast pathway a stride of 2, so the fast pathway takes 64/2 = 32 frames and the slow pathway 64/16 = 4 frames, for a total of 36 frames. Apart from these operational differences, both models achieve comparable performance when accuracy is used as the comparison metric, as illustrated in the training accuracy plots (Figures 3 and 4) and summarized in Tables 1 and 2.
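The frame counts above follow from strided sampling of the 64-frame clip. The sketch assumes that sampling starts at frame 0; the actual start offset chosen by the GluonCV loader may differ, but the counts do not depend on it.

```python
def sample_indices(num_frames, stride, offset=0):
    """Indices of the frames kept when sampling every `stride`-th frame."""
    return list(range(offset, num_frames, stride))


clip_len = 64
slow = sample_indices(clip_len, 16)  # slow pathway: stride 16
fast = sample_indices(clip_len, 2)   # fast pathway: stride 2
print(len(slow), len(fast), len(slow) + len(fast))  # 4 32 36
print(slow)  # [0, 16, 32, 48]
print(16 // 2)  # 8: the fast pathway samples 8x more frames than the slow pathway
```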

Table 1. Comparison of Performance of both the Fine-Tuned Models with Accuracy values.


Table 2. Comparison of performance of both the fine-tuned models with precision and recall.

4. Results

We fine-tuned and optimized two pre-trained models, I3D and SlowFast, on a dataset of 90 videos, with 20 videos per class for training and 10 videos per class for testing. The I3D model achieved a training accuracy of 90% and a testing accuracy of 76.67%, whereas the SlowFast model achieved a training accuracy of 83.33% and a testing accuracy of 70%. The I3D model achieved a precision of 0.70 and a recall of 0.8571, whereas the SlowFast model achieved a precision of 0.80 and a recall of 0.8333; the I3D model therefore has slightly higher recall, and the SlowFast model higher precision.
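The reported accuracies are consistent with the split sizes (60 training and 30 test clips). The correct-prediction counts in the sketch below are inferred from the percentages rather than reported, and the code reproduces 90.00%, 76.67%, 83.33% and 70.00%. The paper does not state how precision and recall were averaged over the three classes; macro-averaging is shown only as one common assumption, applied to a hypothetical confusion matrix.

```python
def accuracy_percent(correct, total):
    """Accuracy as a percentage."""
    return 100.0 * correct / total


def macro_precision_recall(confusion):
    """Macro-averaged precision and recall from a square confusion matrix.

    confusion[i][j] = number of clips whose true class is i and predicted class is j.
    """
    n = len(confusion)
    precisions, recalls = [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                           # row sum
        precisions.append(tp / predicted_k if predicted_k else 0.0)
        recalls.append(tp / actual_k if actual_k else 0.0)
    return sum(precisions) / n, sum(recalls) / n


if __name__ == "__main__":
    # Correct-prediction counts inferred from the reported percentages (not reported directly).
    for name, correct, total in [
        ("I3D train", 54, 60), ("I3D test", 23, 30),
        ("SlowFast train", 50, 60), ("SlowFast test", 21, 30),
    ]:
        print(f"{name}: {accuracy_percent(correct, total):.2f}%")
    # Hypothetical 3-class confusion matrix (sitting, walking, running), for illustration only.
    print(macro_precision_recall([[10, 0, 0], [0, 8, 2], [0, 3, 7]]))
```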

Hence, the results suggest that fine-tuning the I3D and SlowFast models can yield good action recognition performance even when only very limited data are available. The SlowFast model achieved higher precision (0.80 vs. 0.70), whereas the I3D model achieved higher recall (0.8571 vs. 0.8333) and higher accuracy than the SlowFast model.

5. Conclusions

This study of the SlowFast and I3D networks for action recognition in surveillance videos shows that both models can achieve good performance, with the I3D network scoring higher in accuracy and recall and the SlowFast network scoring higher in precision. The best network will vary with the use case and application.

Beyond the metrics summarized here, the choice of network should also take into account the size of the dataset, the available computational resources and the expected accuracy.

If the dataset is small, the I3D network may be the better choice, as it generalized better to unseen data in our experiments. In terms of accuracy, the I3D network may also be preferable. In terms of computational resources, the SlowFast network can be more computationally expensive than the I3D network, so if computational resources are limited, the I3D network may be the better choice.

These findings suggest that transfer learning can improve the performance of Human Action Recognition models, especially on surveillance videos. Other pre-trained networks, such as C3D and ResNet3D, should also be explored for Human Action Recognition in surveillance video applications.

Overall, our study provides valuable insights into the use of transfer learning for action recognition in surveillance videos. We believe that our findings will be of interest to researchers and practitioners working in this area.

Author Contributions

Study design, T.G., N.W. and N.K.; supervision, R.J.K. and V.K.B.; manuscript writing, T.G. and N.W.; reading and approving the final manuscript, T.G., N.W., N.K., R.J.K. and V.K.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data were obtained from the publicly available SPHAR benchmark dataset repository on GitHub, as cited.

Acknowledgments

Thanks to the Manipal Academy of Higher Education for their support in attending the conference.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. Slowfast networks for video recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6202–6211.

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; et al. The kinetics human action video dataset. arXiv 2017, arXiv:1705.06950.

- Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges and future prospects. Comput. Biol. Med. 2022, 149, 106060.

- Wang, T.; Chen, Y.; Zhang, M.; Chen, J.; Snoussi, H. Internal transfer learning for improving performance in human action recognition for small datasets. IEEE Access 2017, 5, 17627–17633.

- Abdulazeem, Y.; Balaha, H.M.; Bahgat, W.M.; Badawy, M. Human Action Recognition Based on Transfer Learning Approach. IEEE Access 2021, 9, 82058–82069.

- Sargano, A.B.; Wang, X.; Angelov, P.; Habib, Z. Human action recognition using transfer learning with deep representations. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 463–469.

- Chakraborty, S.; Mondal, R.; Singh, P.K.; Sarkar, R.; Bhattacharjee, D. Transfer learning with fine tuning for human action recognition from still images. Multimed. Tools Appl. 2021, 80, 20547–20578.

- Manaf, A.; Singh, S. A novel hybridization model for human activity recognition using stacked parallel lstms with 2d-cnn for feature extraction. In Proceedings of the 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kharagpur, India, 6–8 July 2021; pp. 1–7.

- Liu, K.; Zhu, M.; Fu, H.; Ma, H.; Chua, T.S. Enhancing Anomaly Detection in Surveillance Videos with Transfer Learning from Action Recognition; Association for Computing Machinery: New York, NY, USA, 2020; pp. 4664–4668.

- Oh, S.; Hoogs, A.; Perera, A.; Cuntoor, N.; Chen, C.C.; Lee, J.T.; Mukherjee, S.; Aggarwal, J.K.; Lee, H.; Davis, L.; et al. A large-scale benchmark dataset for event recognition in surveillance video. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 3153–3160.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Guo, J.; He, H.; He, T.; Lausen, L.; Li, M.; Lin, H.; Shi, X.; Wang, C.; Xie, J.; Zha, S.; et al. Gluoncv and gluonnlp: Deep learning in computer vision and natural language processing. J. Mach. Learn. Res. 2020, 21, 1–7.

- Zhu, Y.; Li, X.; Liu, C.; Zolfaghari, M.; Xiong, Y.; Wu, C.; Zhang, Z.; Tighe, J.; Manmatha, R.; Li, M. A comprehensive study of deep video action recognition. arXiv 2020, arXiv:2012.06567.

- Maurya, R.; Singh, N.; Jindal, T.; Pathak, V.K.; Dutta, M.K. Computer-aided automatic transfer learning based approach for analysing the effect of high-frequency EMF radiation on brain. Multimed. Tools Appl. 2022, 81, 13713–13729.

- Chowdhary, C.L.; Acharjya, D.P. Segmentation of Mammograms Using a Novel Intuitionistic Possibilistic Fuzzy C-Mean Clustering Algorithm. In Nature Inspired Computing; Springer: Singapore, 2018; Volume 652.

- Batool, M.; Alotaibi, S.S.; Alatiyyah, M.H.; Alnowaiser, K.; Aljuaid, H.; Jalal, A.; Park, J. Depth Sensors-Based Action Recognition Using a Modified K-Ary Entropy Classifier. IEEE Access 2023, 11, 58578–58595.

- Wei, D.; Tian, Y.; Wei, L.; Zhong, H.; Chen, S.; Pu, S.; Lu, H. Efficient dual attention SlowFast networks for video action recognition. Comput. Vis. Image Underst. 2022, 222, 103484.

- Xiao, F.; Lee, Y.J.; Grauman, K.; Malik, J.; Feichtenhofer, C. Audiovisual SlowFast Networks for Video Recognition. arXiv 2020, arXiv:2001.08740.

- Zeng, W.; Huang, J.; Zhang, W.; Nan, H.; Fu, Z. SlowFast Action Recognition Algorithm Based on Faster and More Accurate Detectors. Electronics 2022, 11, 3770.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).