Abstract

Accurately predicting weld performance measures such as ultimate tensile strength (UTS), weld bead hardness, and heat-affected zone (HAZ) hardness is crucial for ensuring the structural integrity and performance of welded components. Multitask learning (MTL) is a machine learning approach in which one model handles several interconnected tasks at the same time. Instead of training a separate model for each task, MTL shares representations among tasks, allowing them to leverage common patterns while maintaining task-specific distinctions. A multitask neural network (MTNN) is a specific type of MTL model that uses a deep neural network architecture: the tasks share some hidden layers while each has its own task-specific output layer. In this study, we compared two machine learning techniques, MTNN and stacking ensemble learning, for predicting these three measures from a shared dataset. Both methods are evaluated using RMSE and R2 to determine their predictive accuracy and overall effectiveness. The MTNN showed robust predictive performance, with RMSE values of 0.1288 for UTS, 0.0886 for weld hardness, and 0.1125 for HAZ hardness, and R2 values of 0.6724, 0.9215, and 0.8407, respectively. This indicates that it generalizes well across interrelated tasks. Stacking ensemble learning outperformed MTL on the individual tasks: for UTS, the RMSE is 0.0263 and R2 is 0.9863; for weld hardness, 0.0467 and 0.9782; and for HAZ hardness, 0.1109 and 0.8453. These results indicate that ensemble methods can produce highly accurate, task-specific predictions. This comparison reveals the trade-offs between the two approaches. MTL suits scenarios where the tasks are related and the data are sparse, giving efficient training and good generalization; stacking ensembles work better when accurate, independent predictions are required. Both approaches show considerable potential for improving predictive modeling in welding applications and motivate further investigation into hybrid models that combine the best features of the two.

1. Introduction

Welding is a fundamental process in manufacturing and construction, playing a critical role in industries such as automotive, aerospace, shipbuilding, and infrastructure development. Ensuring the quality of welds is essential to guarantee the structural integrity and performance of welded components. Weld quality is often assessed using critical parameters like ultimate tensile strength (UTS), weld hardness, and hardness within the heat-affected zone (HAZ), as these indicators reflect the joint’s overall strength, durability, and reliability. Accurately predicting these metrics is vital to optimizing welding parameters and minimizing defects during production.

Figure 1 illustrates the relationship among the key parameters influencing weld quality, i.e., UTS, weld hardness, and HAZ hardness. UTS reflects the material’s ability to withstand tension, while weld hardness and HAZ hardness represent resistance to deformation in the weld area and the heat-affected zone, respectively. Together, these interrelated factors provide a comprehensive measure of weld quality, which is why understanding and optimizing them is important for achieving reliable, high-performing welds in manufacturing and engineering applications.

Figure 1.

Weld quality.

Traditionally, the quality of welding has been evaluated using mechanical testing and empirical models derived from experimental data. Such methods are time-consuming, resource-intensive, and unable to capture complex relationships between welding parameters and quality outcomes. Industrial automation and smart manufacturing have created a growing demand for predictive modeling techniques that can efficiently analyze large datasets and uncover non-linear patterns in welding processes.

Machine learning has thus emerged as a crucial tool for predictive modeling in welding. Given historical data, machine learning algorithms can capture complex relationships between input variables such as welding current, voltage, and speed and output metrics like UTS, weld hardness, and HAZ hardness. With the growing interest in machine learning techniques for enhancing predictive accuracy, researchers have focused on MTL and ensemble learning.

MTL is well suited to problems in which several correlated output variables must be predicted. By sharing representations across tasks, MTL can exploit the interdependencies among the output variables, which improves generalization and speeds up training. Ensemble learning, and stacking in particular, combines the strengths of multiple models to improve the robustness and accuracy of individual predictions.

2. Literature Survey

Knaak et al. present an ensemble deep learning method that uses convolutional neural networks (CNNs) and gated recurrent units (GRUs) to analyze infrared image sequences for real-time detection of defects in laser welding processes. The model is efficient in terms of detection rate and is optimized for implementation on low-power embedded systems, demonstrating its feasibility in real-world industrial settings [1]. Feng et al. propose ‘DeepWelding,’ a system that uses deep learning methods for gas tungsten arc welding (GTAW) process monitoring and penetration detection from multisource optical sensing images. The system diagnoses multiple types of optical sensing images at the same time and improves the accuracy and reliability of welding quality evaluation [2]. Deng et al. study a position-independent smart welding robot that minimizes human intervention while maximizing performance. The structure and driving system of the robot are optimized, a multi-information fusion laser welding system is integrated into it, and a data management expert system is applied for overall control. Laser sensors and robust algorithms realize real-time weld tracking, and a high-speed imaging-based deep learning system adjusts welding parameters and tracks target components [3]. Horváth et al. report a new modeling approach for weld bead profiles on irregular surfaces during multi-pass tungsten inert gas welding. The approach predicts the coefficients of the bead profile using fuzzy systems combined with an optimization technique based on the Bacterial Memetic Algorithm. Empirical verification indicates that the proposed model outperforms linear regression and is reliable for predicting bead configurations as well as areas likely to be defective. The method offers precise positioning of the weld bead and hence improves weld quality [4].
Sarsilmaz et al. study the influence of friction-stir welding (FSW) parameters on the mechanical properties of dissimilar AA2024/AA7075 plates. Tensile strength and hardness are measured experimentally and then optimized using SVM and ANN. The proposed ANN model with the Nelder–Mead algorithm is more precise and efficient than the existing SVM, and simulation studies confirmed its superiority for FSW optimization [5]. Tsuzuki et al. provide a review of state-of-the-art production systems, including intelligent manufacturing that uses automation and AI for aeroengine welding and inspection. This includes the digitalization of the welding and inspection process, AI-based optimization of robot welding, and automation of skilled operator techniques. A machine learning-based inspection system improves the accuracy of inspection judgments. The review focuses on relevant engineering methodologies with respect to emerging automation opportunities in digital smart factory frameworks for the aerospace industry [6]. Mishra et al. investigate AI-based algorithms for predicting fracture locations in dissimilar friction-stir-welded AA5754–C11000 alloys. The supervised methods included decision tree, logistic classification, Random Forest, and AdaBoost; an unsupervised self-organizing map (SOM) neural network was also used. The inputs were tool shoulder diameter, rotational speed, and traverse speed, and the outputs identified the fracture zones. The SOM algorithm had the highest accuracy, 96.92%, surpassing the other methods [7]. Wang et al. introduce a multiscale ensemble learning method for product quality prediction in the iron and steel industry. By considering the data at macroscopic and mesoscopic scales, the ensemble model achieves improved prediction accuracy and generalizability and addresses the complex interactions involved in manufacturing processes [8].
Lin et al. investigate the issues created by high nonlinearity in welding processes and introduce a deep learning system for welding quality analysis and prediction. The proposed model employs deep neural networks to detect complex interactions among many variables, supporting the prevention of quality defects in welding processes [9]. Xie et al. develop a physics-informed, DCNN-based approach for fatigue-life prediction of welded joints using mechanical properties and data augmentation; the method achieves an average error of 30.5%, outperforming other methods. It thus provides an efficient way of conducting accurate low-resource fatigue-life assessments for multiple materials and increasing the reliability and safety of engineering structures [10]. Sexton et al. investigate the effects of electrode misalignment (tilt) on resistance spot weld quality, measured as nugget diameter. PCA is applied to reduce the dimensionality of dynamic resistance signals and extract features for linear regression. The results show that larger misalignment lowers peak resistance, reduces nugget diameter, and ultimately decreases weld quality [11]. Kumar et al. used regression models to predict tensile strength in hybrid laser arc welding. Of all the models, polynomial regression was identified as the most effective for predicting joint performance over a wide range of conditions [12]. A. Sata et al. present a comparative analysis of Artificial Neural Networks (ANNs) and Multivariate Regression (MVR) for predicting mechanical properties of investment cast components. Using industrial data from over 500 heats, 24 process and material variables were recorded. Principal Component Analysis (PCA) was applied to reduce dimensionality, resulting in ten influential parameters. Several ANN models trained with different algorithms, along with MVR, were tested to predict casting defects and mechanical characteristics.
The ANN model utilizing the Levenberg–Marquardt algorithm demonstrated the highest accuracy. The work illustrates the potential of machine learning techniques in enhancing quality prediction and control in the investment casting process [13]. Pan et al. used finite element analysis in combination with one-dimensional convolutional neural networks to predict the temperature distribution that occurs in plasma arc additive manufacturing. This study enhanced the efficiency of manufacturing processes, thus achieving high accuracy and minimizing the training time [14]. In a related discussion, Panigrahi et al. discussed how ANNs can be used for predicting strength of weld joints in various welding techniques. According to the authors, ANNs are much better for welding quality and integrity prediction than those conventional models [15]. Hahn et al. suggest deep learning for an online quality prediction system for gas metal arc welding (GMAW) in particular. This system handles multi-sensor data in real-time efficiently, does feature engineering with autoencoders, and then predicts quality with recurrent deep learning models to enable adaptive control mechanisms for real industrial welding processes [16]. Moreno et al. suggest a computer vision system that uses a deep learning ensemble for weld quality control in door panel manufacturing. By averaging several deep learning models, this system enhances accuracy and reliability in weld quality inspection significantly, thus enabling fully automated manufacturing processes [17]. Liu et al. suggest a multi-objective evolutionary learning mechanism based on a two-stage model that includes a topological sparse autoencoder (TSAE) with ensemble learning techniques. The aim of this mechanism is to generate better steel materials by making multitask predictions of material properties, thus meeting the need for more intelligent manufacturing technologies [18]. 
Ferguson suggests a Mask Region-based convolutional neural network (CNN) method for defect detection in X-ray casting. Employing transfer learning, this method is capable of high accuracy in defect detection, highlighting the potential of deep learning in manufacturing defect detection [19]. Investment casting can suffer from internal defects such as misruns, shrinkage, slag inclusion, flash, and ceramic particles. Predicting these defects using process and material parameters can help reduce their occurrence. A. Sata et al. investigate the use of Artificial Neural Networks (ANNs) and Multivariate Regression (MVR) to predict defects based on industrial data from 500 heats. Twenty-four variables were collected and reduced to ten principal components using PCA. Several ANN models and MVR were trained for prediction. Among them, the ANN model using the Levenberg–Marquardt algorithm delivered the most accurate results, showing its effectiveness in forecasting casting defects [20].

MTL improves multiple related tasks by sharing information. The work in [21] introduces various MTL settings, including supervised, unsupervised, semi-supervised, active, reinforcement, online, and multi-view learning, along with representative models. It reviews applications in computer vision, bioinformatics, and natural language processing and presents recent theoretical insights into MTL. Applications in healthcare, autonomous vehicles, and manufacturing validate the versatility of MTL [21]. Thung et al. review MTL, a method for optimizing related tasks simultaneously that has been broadly used in natural language processing, computer vision, and biomedical imaging. Their review covers the motivation for MTL, a comparison of algorithms, the handling of incomplete data, and the incorporation of MTL with deep learning. It also connects MTL to related subfields and provides a concise and accessible introduction to the topic [22]. Sener et al. treat MTL as a multi-objective optimization problem in which the inherent conflict between tasks is handled explicitly. The work differs from traditional approaches that minimize weighted combinations of per-task losses, as it seeks Pareto optimal solutions using gradient-based multi-objective optimization methods. To circumvent scalability issues, an efficient upper bound for the multi-objective loss is proposed and is shown to yield Pareto optimal solutions under realistic assumptions. The method is applied to various deep learning problems, such as digit classification, scene understanding, and multi-label classification, and performs better than existing MTL approaches [23]. A. Sata et al. evaluated the effectiveness of various Artificial Neural Network (ANN) models and Multivariate Regression (MVR) methods in predicting the mechanical properties of investment castings. Using data from an industrial foundry, 24 variables related to process conditions and material composition were collected from 500 heats.
Principal Component Analysis (PCA) was used to reduce dimensionality. Multiple ANN models, including those trained with the Levenberg–Marquardt algorithm, were developed and compared with MVR. The results showed that the ANN models provided more accurate predictions, with the Levenberg–Marquardt algorithm outperforming the others. The study demonstrates the value of AI-based modeling in improving casting quality predictions [24].

Zhou et al. proposed domain adaptive ensemble learning (DAEL), which shares a CNN feature extractor across domains while using separate classifiers for the individual source domains. In multisource unsupervised domain adaptation (UDA), pseudo-labels supervise the ensemble learning, and in domain generalization (DG), the source domains act as pseudo-targets. Substantial performance improvements were observed in experiments on three UDA and two DG datasets [25]. Zhou et al.’s study integrates semi-supervised learning and ensemble learning: semi-supervised learning exploits unlabeled data, while ensemble learning combines multiple classifiers to enhance generalization. The results show that combining these paradigms produces stronger learning models, pointing to their mutual benefits and potential for improved machine learning performance [26]. Barber et al. extend ensemble learning by minimizing the Kullback–Leibler divergence between the true posterior distribution and a parametric approximating distribution, using full-covariance Gaussian distributions to adequately reflect posterior correlations. In addition, hyperparameters are handled by re-estimation within the same framework. The approach is computationally efficient and yields an exact marginal likelihood lower bound, improving the treatment of Bayesian neural networks [27].

Gupta et al. introduce the idea of evolutionary multitasking, a method designed to address multiple optimization tasks simultaneously by transferring knowledge between tasks. The authors demonstrate that this method enhances generalization by allowing useful information to be shared between related tasks, trading off task-specific accuracy for overall generalization [28]. Feng et al. present an autoencoding approach in evolutionary multitasking that increases the transparency of knowledge sharing across tasks. It addresses the trade-off between generalization and accuracy for individual tasks by making shared representations beneficial for multiple tasks [29]. Liu et al.’s work deals with negative transfer in MTL by proposing a gradient-based optimization approach that suppresses conflict between tasks. It thus balances generalizability across tasks against correctness on each task [30]. Liu et al. introduced impartial multi-task learning (IMTL), a framework that enables end-to-end training without heuristic hyperparameter tuning and can be applied to various loss functions without assuming any specific distribution [31]. Navon et al. frame MTL as a bargaining game in which the tasks negotiate to reach an equitable outcome. This view supports a balanced trade-off between accuracy and generalization, as the tasks work together to maximize shared representations without undermining single-task performance [32]. Bhowmik et al. developed aluminum matrix composites reinforced with 0–9 wt.% SiC using stir casting. Increased SiC content improved density, reduced porosity, and enhanced tensile strength (140–205 MPa) and hardness (66–84 HV). Microstructural analysis confirmed strong bonding, while wear resistance improved with SiC, as shown by pin-on-disk dry sliding tests [33].
Metallurgical properties, specifically grain structure, influence the mechanical properties. Bhowmik et al. studied an aluminum metal matrix reinforced with SiC particulates in stir casting. They used grey relational analysis and the grey fuzzy grade to identify the optimum parameters [34]. Sen B et al. compare particle swarm optimization (PSO) and a genetic algorithm for optimizing the process parameters in wire-cut EDM, and report that PSO is an efficient algorithm for this purpose [35].

Few comparative studies have systematically investigated MTL and ensemble approaches in the welding context. The present work aims to fill this gap by considering MTL and stacking ensemble methods for the estimation of UTS, weld hardness, and HAZ hardness. Both approaches were trained and tested on a common dataset of welding parameters and their outputs, and their predictive performance was assessed using the RMSE and R2 metrics.

This study offers meaningful insights into the advantages and drawbacks of multitask learning (MTL) and stacking ensemble approaches. While MTL demonstrates efficiency and generalization for related tasks, stacking ensembles excel in precision for independent predictions. These findings highlight the trade-offs between the two approaches, offering guidance for selecting the appropriate method based on the specific requirements of welding applications. By advancing predictive modeling in welding, this study supports the wider objective of incorporating machine learning techniques into intelligent manufacturing systems.

3. Methodology

This study compares two advanced machine learning techniques, MTL and stacking ensemble learning, in the prediction of welding quality metrics: UTS, weld hardness, and HAZ hardness. The methodology is systematic in comparing these approaches based on their architecture, training, and evaluation metrics.

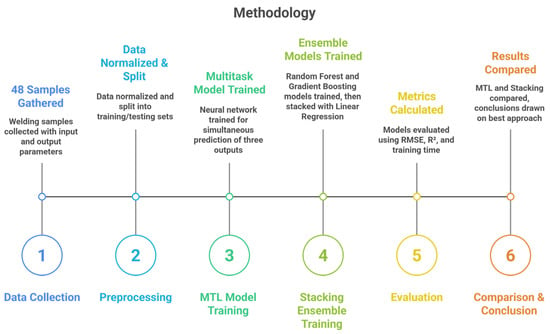

Figure 2 shows the steps of the methodology for predicting welding quality. It starts with dataset collection, where relevant data are gathered, followed by data preprocessing to clean and prepare the data. Next, an MTL model is set up for the shared tasks, and a stacking ensemble framework is configured for improved accuracy. The MTL and ensemble models are then trained to refine the predictions through task-specific and combined approaches. The workflow ends with model evaluation, which assesses the performance of both methods. Each step is discussed below:

Figure 2.

Research methodology for machine learning technique for welding quality prediction.

3.1. Dataset

Table 1 shows the data collected from a manufacturer of stainless-steel products. Of the 48 samples, 38 (about 80%) are used for training and 10 (about 20%) for testing. Table 2 summarizes the dataset, and Table 3 gives the range of each parameter. The dataset contains the welding process parameters (plate thickness, current, welding speed, electrode diameter, gas flow rate, and arc gap) and the target variables: UTS in MPa, weld hardness, and HAZ hardness.

Table 1.

Dataset.

Table 2.

Dataset summary.

Table 3.

Variable range.

Plate Thickness (mm): Thicker plates require higher heat input to achieve full penetration. If the heat input is insufficient for the plate thickness, incomplete fusion can occur, leading to lower UTS. Likewise, a thick plate welded without adequate current may produce cold welds, affecting hardness consistency across zones.

Welding Current (A): Higher current increases arc energy, enhancing penetration and promoting grain refinement in the fusion zone, which contributes to improved UTS and hardness. However, very high currents can overheat the weld pool, causing coarser grains and potential defects, reducing strength.

Electrode Diameter (mm): Arc concentration depends on electrode diameter and increases as the diameter decreases. However, excessive heat concentration may lead to undercut or burn-through in thin plates, affecting weld integrity and hardness.

Gas Flow Rate (L/min): Adequate shielding gas flow prevents atmospheric contamination, ensuring clean, defect-free welds with improved UTS. Insufficient flow may lead to oxidation and porosity, reducing strength. Excessive flow can cause arc turbulence and loss of shielding effectiveness.

Welding Speed (mm/min): Welding speed determines the cooling rate. High speed results in lower heat input and rapid cooling, which can refine grains and increase hardness but may reduce penetration. Low speed may overheat the material, coarsening grains and reducing UTS.

Electrode Gap (mm): It influences arc stability and arc length. A larger gap reduces arc concentration and heat density, decreasing penetration and weakening mechanical properties. A smaller gap yields a focused arc, enhancing weld pool control and consistent hardness.

3.2. Preprocessing

For consistency, all input features and output variables were normalized to the range [0, 1] using MinMaxScaler. This normalization reduces the effect of differing scales among the variables and speeds up convergence during training.
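The split and scaling steps can be sketched as follows. This is a minimal illustration on synthetic placeholder data, not the study's dataset. Fitting the scalers on the training rows only, the use of `train_test_split`, and the `random_state` value are assumptions, since the text does not state how the split was drawn or which rows the scaler was fitted on.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for the 48-sample dataset: 6 process parameters, 3 targets.
rng = np.random.default_rng(0)
X = rng.uniform(1.0, 200.0, size=(48, 6))    # placeholder inputs, not the study's data
y = rng.uniform(100.0, 600.0, size=(48, 3))  # placeholder UTS, weld hardness, HAZ hardness

# test_size=0.2 on 48 rows gives ceil(9.6) = 10 test rows and 38 training rows.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42  # random_state is an assumed value
)

# Assumption: scalers are fitted on the training rows only and reused on the test rows.
x_scaler = MinMaxScaler()
y_scaler = MinMaxScaler()
X_train_s = x_scaler.fit_transform(X_train)
X_test_s = x_scaler.transform(X_test)
y_train_s = y_scaler.fit_transform(y_train)

# Scaled values can be mapped back to physical units for reporting.
y_back = y_scaler.inverse_transform(y_train_s)
```

Because the scaler is fitted on the training rows, test values outside the training range map slightly outside [0, 1].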

3.3. Multitask Neural Network (MTNN)

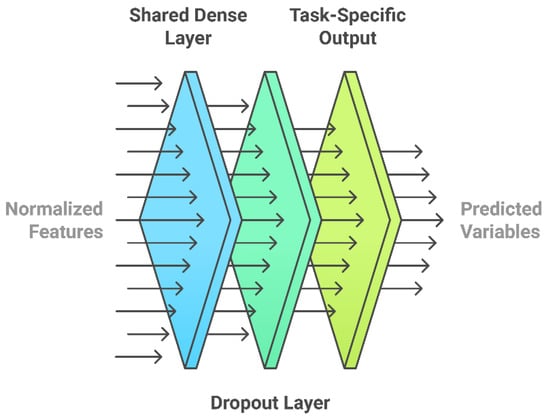

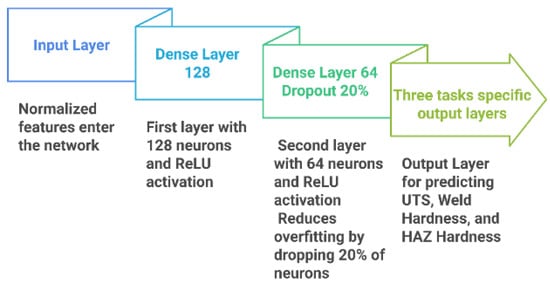

Figure 3 represents the architecture of a multitask neural network (MTNN). The multitask neural network (MTNN) implemented in this research predicts three interrelated target variables, UTS (ultimate tensile strength), weld hardness, and HAZ (Heat-Affected Zone) hardness, simultaneously. Starting with the input layers, which accept normalized features as inputs, the architecture proceeds to the shared dense layers to extract generalized patterns common to all tasks. Shared layers include a dense layer with 128 neurons and ReLU activation, followed by a 20% dropout layer to prevent overfitting and another dense layer with 64 neurons and ReLU activation as shown in Figure 4. After the shared layers, the network branches out into three task-specific output layers, one for each target variable prediction. All output layers use linear activation functions, since this is a regression task.

Figure 3.

Multitask neural network (MTNN).

Figure 4.

Neural network architecture.

The model minimizes the combined loss, which is calculated as the sum of the Mean Squared Error (MSE) for all three tasks. It is optimized using the Adam optimizer with a learning rate of 0.001. Training is performed over 50 epochs with a batch size of 8, and 20% of the training data is reserved for validation to monitor performance. During testing, the model generates three simultaneous predictions, which are then combined and inverse-transformed back to their original scale for comparison with actual values.

Its shared layers allow the model to learn generalized features across all tasks, and the task-specific layers fine-tune these features for accurate predictions of each target. The design improves efficiency by obviating the need for a model per target and enhances generalization by leveraging the shared patterns among the tasks. The MTL framework is thus effective in solving interrelated regression problems by balancing shared learning with task-specific specialization.
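The architecture and combined loss described in this section can be sketched without a deep learning framework. The NumPy code below is only an illustration of the forward pass and of the summed per-task MSE. The weights are random and untrained, the Adam optimization loop is omitted, and the function and variable names are hypothetical rather than taken from the study's implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(n_in, n_out):
    """Return small random weights and zero biases for one dense layer."""
    return rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out)

def relu(z):
    return np.maximum(z, 0.0)

# Shared trunk: 6 inputs -> 128 -> (20% dropout) -> 64, as described in the text.
W1, b1 = dense(6, 128)
W2, b2 = dense(128, 64)
# Three task-specific linear heads, one output each (names are hypothetical).
heads = {name: dense(64, 1) for name in ("uts", "weld_hardness", "haz_hardness")}

def forward(X, training=False, drop_rate=0.2):
    h = relu(X @ W1 + b1)
    if training:
        # Inverted dropout: zero about 20% of units and rescale the rest.
        mask = rng.random(h.shape) >= drop_rate
        h = h * mask / (1.0 - drop_rate)
    h = relu(h @ W2 + b2)
    # Linear activation on each head because every task is a regression.
    return {name: (h @ W + b).ravel() for name, (W, b) in heads.items()}

def combined_loss(preds, targets):
    """Sum of the per-task mean squared errors."""
    return sum(np.mean((preds[k] - targets[k]) ** 2) for k in preds)

X = rng.random((8, 6))                       # one synthetic batch of 8 normalized samples
targets = {k: rng.random(8) for k in heads}  # synthetic normalized targets
preds = forward(X)
loss = combined_loss(preds, targets)
```

Because the three losses are simply added, each task contributes equally to the gradient of the shared layers; weighting the terms differently would be a separate design choice not described in the text.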

3.4. Stacking Ensemble Learning

This study also uses stacking ensemble learning to predict each target variable independently: UTS (ultimate tensile strength), weld hardness, and HAZ (Heat-Affected Zone) hardness. Stacking is a meta-learning approach that combines the outputs of multiple base regressors to enhance prediction accuracy and model robustness. In this study, Random Forest and Gradient Boosting are employed as base models to leverage their ability to identify varied data patterns. These base models are trained independently for each target variable. After training, their predictions on the training data are used as inputs for a meta-model, in this case a Linear Regression model. The meta-model learns to optimally weight the predictions from the base models to produce the final output. In testing, the trained base regressors make predictions on the test data, and the meta-model combines them to create the final prediction for each target.

Random Forest Regressor (RF): It is known for handling non-linear relationships and reducing overfitting through ensemble averaging. Default parameters were used with a fixed random_state = 42 for reproducibility.

Gradient Boosting Regressor (GBR): It is efficient for learning complex interactions via sequential boosting and minimizing bias. It was also initialized with random_state = 42.

The meta-learner used to combine the predictions of these base models was a Linear Regression model, which effectively learns the optimal weighted combination of base learner outputs for each target variable.

To evaluate multi-output prediction (i.e., UTS, weld hardness, and HAZ hardness), a separate stacking model was trained for each target variable, ensuring specialized learning for each quality metric. This architecture is implemented using StackingRegressor from Scikit-learn, where the fitting of base learners and meta-learner is handled internally through cross-validation.

The stacking pipeline was as follows:

- Inputs were normalized using MinMaxScaler.

- Two base learners (RF, GBR) were trained on the training dataset.

- Their predictions were used as input to the meta-learner (Linear Regression).

- The final output was generated through the meta-learner’s optimized combination of base predictions.

This approach enhances model generalization by leveraging model diversity and mitigating individual learner biases. The stacking architecture contributed to improved prediction accuracy across all three target variables when compared to a standalone multitask neural network (MTL) model.
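The stacking pipeline described above can be sketched with scikit-learn's `StackingRegressor`. The data below are synthetic placeholders that stand in for the normalized welding dataset, the target names are hypothetical, and `cv=5` is an assumed fold count (it matches the library default); the text does not state the value used.

```python
import numpy as np
from sklearn.ensemble import (
    GradientBoostingRegressor,
    RandomForestRegressor,
    StackingRegressor,
)
from sklearn.linear_model import LinearRegression

# Synthetic, already-normalized stand-in data (38 train / 10 test rows, 6 inputs).
rng = np.random.default_rng(0)
X_train, X_test = rng.random((38, 6)), rng.random((10, 6))
targets = ("uts", "weld_hardness", "haz_hardness")
y_train = {t: X_train @ rng.random(6) + 0.05 * rng.normal(size=38) for t in targets}

def make_stack():
    """RF and GBR base learners combined by a linear-regression meta-learner."""
    return StackingRegressor(
        estimators=[
            ("rf", RandomForestRegressor(random_state=42)),
            ("gbr", GradientBoostingRegressor(random_state=42)),
        ],
        final_estimator=LinearRegression(),
        cv=5,  # assumed: meta-learner is fitted on out-of-fold base predictions
    )

# One independent stacking model per target variable.
models = {t: make_stack().fit(X_train, y_train[t]) for t in targets}
preds = {t: models[t].predict(X_test) for t in targets}

# Weights the meta-learner assigns to the RF and GBR predictions.
meta_weights = {t: models[t].final_estimator_.coef_ for t in targets}
```

Inspecting `meta_weights` shows how much each base learner contributes to the final prediction for a given target.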

3.5. Evaluation Metrics

The two techniques are compared on three measures: Root Mean Squared Error (RMSE) (Equation (1)), the coefficient of determination R2 (Equation (2)), and training time. RMSE reflects the average magnitude of the prediction errors; the smaller the value, the better the model performance. R2 measures the proportion of variance in the target variable that the model explains; the closer it is to 1, the better the fit. Training time indicates the computational efficiency of each method and therefore the practicality of its application.

The methodology ensures comprehensiveness of the evaluation by using shared data preprocessing, robust architectures, and consistent metrics. While MTL emphasizes efficiency and generalization across related tasks, stacking ensembles optimize accuracy for individual predictions. This structured comparison provides valuable insights into selecting the most appropriate approach for welding quality prediction.
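For reference, the two accuracy metrics can be computed as in the sketch below, using their standard definitions: RMSE is the square root of the mean squared residual, and R2 is one minus the ratio of the residual sum of squares to the total sum of squares. The function names and the toy values are illustrative assumptions and are not taken from the study.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error: sqrt(mean((y - y_hat)^2))."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

# Toy normalized values for illustration only (not results from the study).
y_true = [0.2, 0.4, 0.6, 0.8]
y_pred = [0.25, 0.35, 0.65, 0.75]
print(rmse(y_true, y_pred), r2(y_true, y_pred))  # approximately 0.05 and 0.95
```

Note that an RMSE computed on targets scaled to [0, 1] is expressed in normalized units, so values for different targets are comparable only on that scale.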

4. Results and Discussion

4.1. Training of MTNN

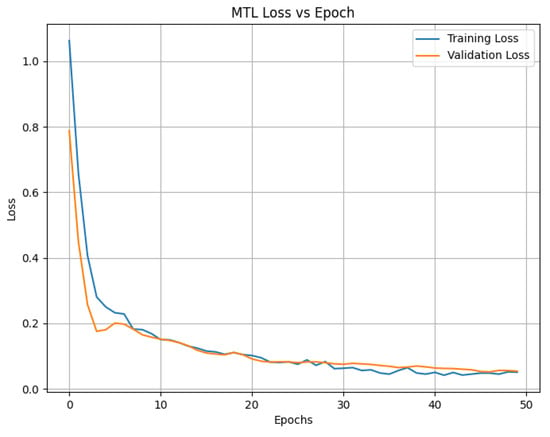

Figure 5 plots the training and validation loss over 50 epochs for the multitask neural network. Both losses decrease rapidly in the first few epochs, indicating effective learning. Their stabilization and convergence in later epochs indicate that the model has reached a steady state with little overfitting, since the validation loss closely follows the training loss. This suggests good generalization performance of the multitask learning model.

Figure 5.

Plot Epoch vs. Loss in MTNN.

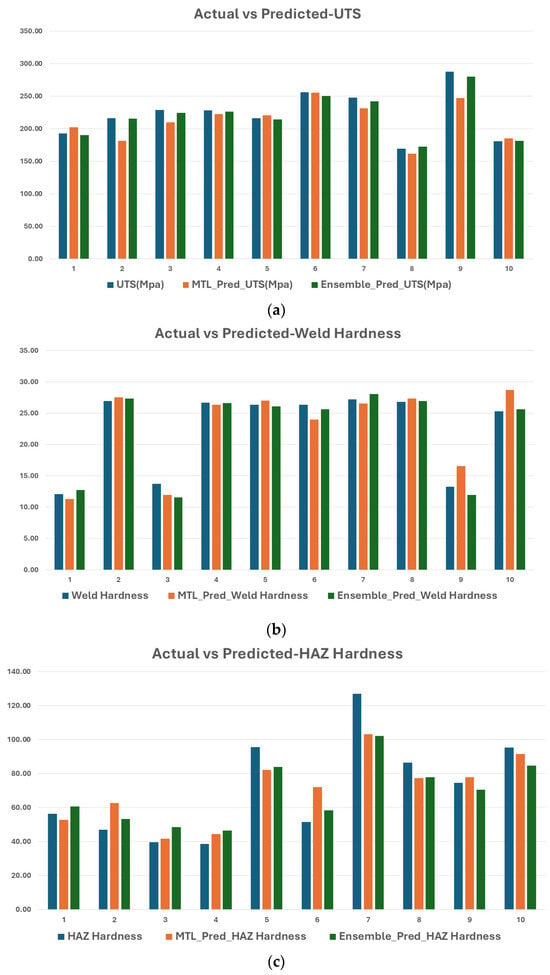

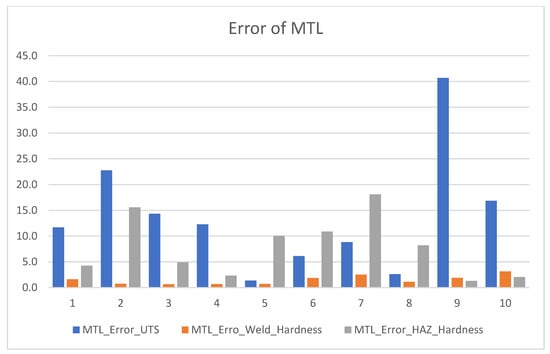

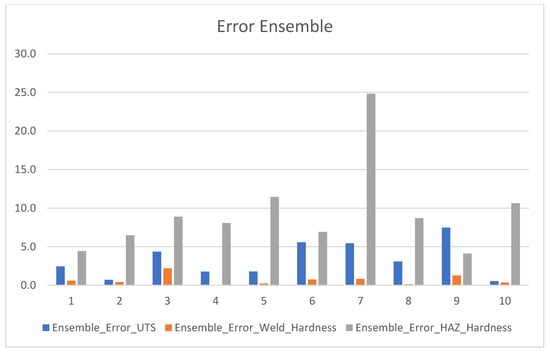

Figure 6a–c compare the actual and predicted values for UTS, weld hardness, and HAZ hardness, respectively. Figure 7 and Figure 8 show the prediction errors of the MTL and ensemble models, respectively.

Figure 6.

Graphical comparison of actual and predicted values for UTS, weld hardness, and HAZ hardness. (a) Evaluation of predicted and actual ultimate tensile strength (UTS) values. (b) Evaluation of predicted and actual values of weld hardness. (c) Evaluation of predicted and actual values of HAZ hardness.

Figure 7.

Error comparison MTL.

Figure 8.

Error comparison ensemble.

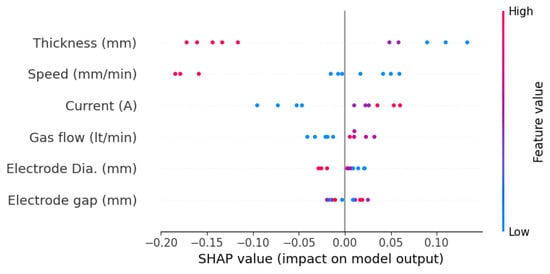

4.2. Feature Importance Analysis Using SHAP

To better understand our models, we employed SHAP (SHapley Additive exPlanations) to inspect the influence of the input variables on the predictions. The SHAP summary plot in Figure 9 shows how much each welding parameter contributes to the predicted target variables. Thickness (mm) and speed (mm/min) make the largest contributions to the model's predictions, in particular for UTS and weld hardness. Gas flow rate and current make moderate contributions, whereas electrode diameter and electrode gap contribute relatively little. The color gradient in the SHAP plot shows how feature values affect the predictions: red denotes higher values and blue denotes lower values. This analysis indicates that thickness and speed are the most important welding parameters affecting mechanical properties, which is consistent with welding physics. These results help practitioners prioritize process parameters when optimizing welding conditions.

Figure 9.

Feature importance analysis using SHAP.
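SHAP attributions are built on Shapley values from cooperative game theory. The following is a minimal sketch of an exact Shapley computation in plain Python, not the SHAP library and not the models used in this study. The toy model, its coefficients, the three feature names, and the all-zero baseline are assumptions chosen only for illustration.

```python
from itertools import combinations
from math import factorial

# Hypothetical subset of the welding parameters discussed above.
FEATURES = ["thickness", "speed", "current"]


def toy_model(x):
    """Hypothetical stand-in model: linear terms plus one interaction."""
    return (2.0 * x["thickness"] + 1.0 * x["speed"] + 0.5 * x["current"]
            + 0.5 * x["thickness"] * x["speed"])


def shapley_values(model, instance, baseline):
    """Exact Shapley attributions for one instance.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(FEATURES)

    def value(coalition):
        x = {f: (instance[f] if f in coalition else baseline[f])
             for f in FEATURES}
        return model(x)

    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                with_f = value(set(subset) | {f})
                without_f = value(set(subset))
                total += weight * (with_f - without_f)
        phi[f] = total
    return phi


instance = {"thickness": 1.0, "speed": 1.0, "current": 1.0}
baseline = {"thickness": 0.0, "speed": 0.0, "current": 0.0}
phi = shapley_values(toy_model, instance, baseline)
for name, contribution in phi.items():
    print(f"{name}: {contribution:.2f}")
```

In this toy case the interaction term is split equally between thickness and speed, and the attributions sum to the difference between the prediction for the instance and the prediction for the baseline. Exact enumeration grows exponentially with the number of features, which is why practical SHAP implementations rely on approximations or model-specific algorithms.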

4.3. Statistical Significance Testing

To determine whether the differences in RMSE and R2 values between MTL and stacking ensembles are statistically significant, t-tests and ANOVA were conducted. The results, shown in Table 4, give p-values above 0.05 in every case, suggesting that the observed differences are not statistically significant.

Table 4.

Statistical significance testing.

The results of both statistical tests show high p-values (all above 0.70), so the differences in predictions between the two models are not statistically significant. In simpler terms, the tests provide no evidence that one model predicts more accurately than the other. This insight is useful: since no significant difference in accuracy was detected, the choice between the two can be based on other practical considerations such as how complex the model is, how fast it runs, how easy it is to interpret, and how well it scales.

For instance, the MTL (multitask learning) model, with its single, unified structure, might be a better fit for real-time or embedded systems where memory usage and speed are critical.
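As an illustration of how such a comparison can be set up, the sketch below computes a paired t statistic in plain Python. The paired design is an assumption, since the text does not state which t-test variant was used, and the error values are placeholders invented for the example, not results from this study.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Return (t, degrees_of_freedom) for a paired t-test on two samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    return t, len(diffs) - 1


# Placeholder per-fold errors for illustration only; NOT data from this study.
errors_model_a = [0.12, 0.10, 0.15, 0.11, 0.13]
errors_model_b = [0.11, 0.12, 0.13, 0.12, 0.12]

t_stat, dof = paired_t_statistic(errors_model_a, errors_model_b)
print(f"t = {t_stat:.3f} with {dof} degrees of freedom")
```

With 4 degrees of freedom, the two-sided critical value at the 0.05 level is about 2.776, so a statistic well below that magnitude would not indicate a significant difference. A statistics library would normally be used to obtain the exact p-value.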

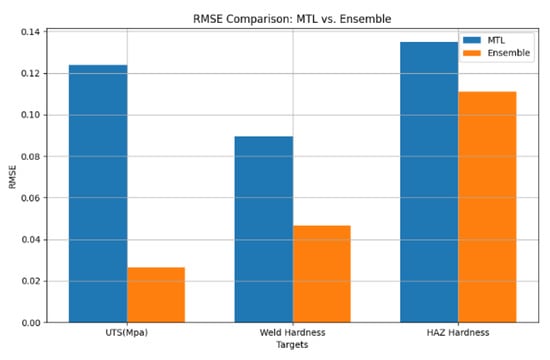

4.4. Performance Evaluation

To evaluate model performance, we used Root Mean Squared Error (RMSE) and the Coefficient of Determination (R2). RMSE measures the difference between actual and predicted values and is calculated using Equation (1). Figure 10 compares the RMSE of MTL and the stacking ensemble.

Figure 10.

RMSE comparison MTL vs. Ensemble.
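For reference, RMSE can be computed as in the short sketch below. It uses the standard definition (the square root of the mean squared difference between actual and predicted values), which is assumed here to correspond to Equation (1). The function name and the sample values are illustrative only.

```python
from math import sqrt


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return sqrt(sum(squared_errors) / len(squared_errors))


# Illustrative values only, not measurements from this study.
example_rmse = rmse([0.0, 0.0], [3.0, 4.0])
print(f"RMSE = {example_rmse:.4f}")
```

Because the errors are squared before averaging, a single large deviation raises RMSE more than several small deviations of the same total size.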

When comparing the performance of the multitask learning (MTL) model and stacking ensemble learning, it becomes clear that each method comes with its own strengths and trade-offs. As shown in Figure 8, MTL performs quite well in predicting weld hardness and HAZ hardness, with RMSE values of 0.0886 and 0.1125, respectively. This suggests that MTL is effective at learning shared features across related tasks.

On the other hand, the stacking ensemble approach shows a clear edge when it comes to predicting ultimate tensile strength (UTS), achieving a substantially lower RMSE of 0.0263 compared to MTL’s 0.1288. This indicates that stacking ensembles are better suited for highly task-specific predictions, offering higher precision where it is most needed.
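The size of these gaps can be made concrete by expressing the stacking ensemble’s RMSE as a relative reduction with respect to MTL. The sketch below uses only the RMSE values reported in this paper. The helper name `relative_reduction` is introduced here for illustration.

```python
# RMSE values as reported in this paper.
mtl_rmse = {"UTS": 0.1288, "weld hardness": 0.0886, "HAZ hardness": 0.1125}
stacking_rmse = {"UTS": 0.0263, "weld hardness": 0.0467, "HAZ hardness": 0.1109}


def relative_reduction(reference, other):
    """Fraction by which `other` is lower than `reference`."""
    return (reference - other) / reference


reductions = {
    target: relative_reduction(mtl_rmse[target], stacking_rmse[target])
    for target in mtl_rmse
}
for target, reduction in reductions.items():
    print(f"{target}: {reduction:.1%}")
```

This gives reductions of about 79.6% for UTS, 47.3% for weld hardness, and 1.4% for HAZ hardness, which shows that the advantage of stacking is concentrated in UTS and is marginal for HAZ hardness.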

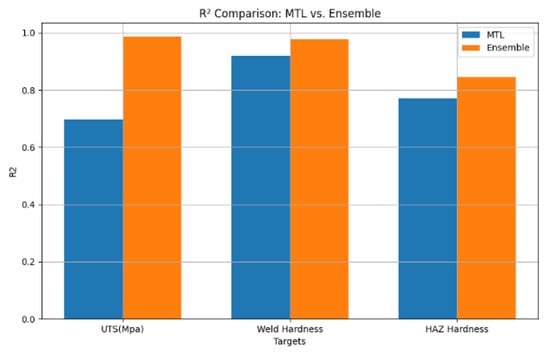

Coefficient of Determination (R2) measures how well the model explains variability in the target values. R2 is calculated using Equation (2).
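A minimal sketch of the R2 computation follows. It uses the standard definition, one minus the ratio of the residual sum of squares to the total sum of squares, which is assumed here to correspond to Equation (2). The function name and sample values are illustrative.

```python
def r2_score(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all actual values are equal")
    return 1.0 - ss_res / ss_tot


# Illustrative values only, not measurements from this study.
perfect = r2_score([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
mean_only = r2_score([1.0, 2.0, 3.0], [2.0, 2.0, 2.0])
print(perfect, mean_only)
```

A model that always predicts the mean of the actual values scores 0, and a perfect model scores 1, so R2 can be read as the share of variance explained beyond that mean-only baseline.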

When considering R2, MTL generalized across all targets, as shown in Figure 11, achieving R2 values of 0.6724 for UTS, 0.9215 for weld hardness, and 0.8407 for HAZ hardness. Stacking ensembles outperformed MTL for UTS and weld hardness, with R2 values of 0.9863 and 0.9782, respectively. For HAZ hardness, the R2 for stacking ensembles (0.8453) is only slightly higher than that of MTL (0.8407), so the two approaches are nearly equivalent on this target.

Figure 11.

R2 Comparison MTL vs. ensemble.

Alongside commonly used metrics like Root Mean Squared Error (RMSE) and the Coefficient of Determination (R2), Table 5 also includes Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE) to offer a well-rounded view of model performance.

Table 5.

MAE and MAPE.

Each of these metrics brings something valuable to the table. RMSE gives more weight to larger errors, while R2 shows how well the model explains variation in the data. MAE, on the other hand, tells us the average size of the errors in a straightforward way. MAPE goes a step further by expressing errors as a percentage of the actual values—something especially helpful when understanding relative performance across different scales.
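The two additional metrics can be sketched as follows, using their standard definitions. The function names and sample values are illustrative, and MAPE is expressed in percent, which is an assumption about how Table 5 reports it.

```python
def mae(actual, predicted):
    """Mean absolute error."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(ratios) / len(ratios)


# Illustrative values only, not measurements from this study.
actual = [100.0, 200.0]
predicted = [110.0, 180.0]
print(f"MAE = {mae(actual, predicted):.1f}, MAPE = {mape(actual, predicted):.1f}%")
```

In this example the two absolute errors differ (10 and 20), yet each is 10% of its actual value, which illustrates why MAPE is useful for comparing targets measured on different scales.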

Including both absolute and percentage-based metrics paints a clearer picture of the model’s reliability, particularly in real-world industrial settings where maintaining consistent quality and managing relative deviations are essential. This comprehensive evaluation helps ensure that the model is not just statistically accurate but also practically dependable for tasks like predictive maintenance and weld quality monitoring.

4.5. Bias–Variance Trade-Off Analysis

Bias in MTL: MTL’s shared learning architecture reduces variance but can cause minor underfitting in certain tasks, because the shared layers must generalize across tasks.

Variance of Stacking Ensembles: Stacking ensembles attempt to minimize bias by fitting task-specific models but may have greater variance, because a separate model is trained for each target variable.

Trade-off Observation: MTL generalizes robustly across a variety of welding quality metrics but is less accurate on individual tasks. Stacking ensembles are more accurate on individual tasks but are more prone to overfitting, because each model is optimized for a single target.
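The trade-off described above follows the standard bias–variance decomposition of the expected squared error. As a reference sketch, assume the target is generated as $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\operatorname{Var}(\varepsilon) = \sigma^2$, and let $\hat{f}$ denote a model fitted on a random training set. These symbols are introduced here for illustration and do not appear elsewhere in the paper.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

In these terms, sharing layers across tasks constrains $\hat{f}$ and tends to lower the variance term at the cost of a larger bias term, while fitting each target separately tends to do the opposite.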

The results clearly demonstrate the distinct strengths of each approach. With shared representations, MTL can handle related tasks efficiently, which is important in applications where targets are interdependent. This capability is reflected in the high R2 scores it achieved on weld hardness and HAZ hardness. On the other hand, stacking ensembles excel at achieving high accuracy on independent predictions, most notably for UTS, where task-specific optimization gave them a distinct lead.

The bar charts comparing RMSE and R2 for all targets illustrate the trade-offs between MTL and stacking ensembles. They show that MTL generalizes well on the hardness targets (R2 of 0.9215 for weld hardness and 0.8407 for HAZ hardness, the latter comparable to the stacking ensemble’s 0.8453), while stacking ensembles are more precise on specific tasks, most clearly UTS (RMSE of 0.0263 versus 0.1288). Such graphical representations provide a clear and intuitive understanding of the relative performance of the methods.

This analysis underscores that both MTL and stacking ensembles are valuable tools for predictive modeling in welding applications. MTL is well-suited for scenarios where tasks are related, while stacking ensembles are ideal for independent predictions requiring high precision. These findings offer practical insights for selecting the appropriate machine learning approach based on the specific needs of welding quality assessment.

4.6. Computational Cost Analysis

This study takes a close look at the balance between how complex a model is to train and how efficiently it can be deployed. When comparing training times, the multitask learning (MTL) model took 4.22 s to train, whereas the stacking ensemble model completed the same task in just 1.38 s for all three output variables.

The longer training time for MTL is expected: it uses shared layers and a joint optimization approach, which adds to the overall complexity. In contrast, the stacking ensemble trains each base model (Random Forest and Gradient Boosting) separately, allowing them to run in parallel. This makes the training process faster and more efficient, which can be a significant advantage in real-world applications where time and computational resources are limited.
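Training times of this kind can be measured with a simple wall-clock harness. The sketch below is one possible way to do this and is not the measurement code used in this study. `dummy_training` is a hypothetical stand-in for a model’s fitting routine, and the final ratio uses the two training times reported above.

```python
import time


def time_call(fn, *args, **kwargs):
    """Run fn and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def dummy_training(n_steps):
    """Hypothetical stand-in for a training routine."""
    total = 0.0
    for step in range(n_steps):
        total += step * 0.5
    return total


_, seconds = time_call(dummy_training, 100_000)
print(f"training took {seconds:.4f} s")

# Ratio of the training times reported in this section (MTL vs. stacking).
reported_ratio = 4.22 / 1.38
print(f"MTL took about {reported_ratio:.1f} times as long to train")
```

Wall-clock timings depend on hardware and system load, so repeated runs and averaged results would give a more reliable comparison than a single measurement.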

5. Conclusions

The findings emphasize the effectiveness of multitask learning (MTL) and stacking ensemble methods as promising machine learning strategies for predicting key welding quality indicators such as UTS, weld hardness, and HAZ hardness. Each of the methods showed unique strengths, so they could become valuable tools for different use cases in welding applications.

MTL offered a compact solution, using its shared architecture to predict multiple targets at once with a single model. Although its joint optimization took longer to train than the stacking ensemble (4.22 s versus 1.38 s, Section 4.6), the shared representation built strong generalization capabilities, especially for related tasks like weld hardness and HAZ hardness. The high R2 values of 0.9215 for weld hardness and 0.8407 for HAZ hardness imply that MTL succeeded in extracting interdependencies between the targets. While its performance for UTS was less accurate (RMSE: 0.1288, R2: 0.6724), its single unified structure makes MTL a good choice for tasks that require simultaneous prediction of multiple correlated outputs.

On the other hand, stacking ensemble models showed strong performance when it came to delivering high precision for individual target variables. For instance, the ensemble achieved an RMSE of 0.0263 and an R2 of 0.9863 for predicting ultimate tensile strength (UTS), highlighting its strength in task-specific optimization. Similarly, for weld hardness and HAZ hardness, the stacking ensemble delivered lower RMSE values than MTL (0.0467 and 0.1109, respectively). However, the need to train and maintain separate models for each output adds deployment overhead, which can make this approach less practical in resource-constrained environments, even though its measured training time was shorter than that of MTL (Section 4.6).

Ultimately, the choice between MTL and stacking depends on the specific needs of the application. If real-time efficiency and generalization across related outputs are priorities, MTL is a better fit. On the other hand, if the goal is to achieve the highest accuracy for independent predictions, stacking ensembles are more suitable.

Interestingly, there is great potential in combining both methods. A hybrid approach—where MTL captures shared features across tasks and stacking enhances precision for individual metrics—could offer the best of both worlds. Such a model could push predictive performance even further for complex welding applications.

This study underscores the valuable role of machine learning in improving the prediction of welding quality. It opens up new possibilities for smarter, more efficient manufacturing processes. Implementing MTL and stacking models can significantly enhance automated welding inspection, improve process control, and support the development of intelligent factory systems.

In practice, these models can help detect defects in real time, predict maintenance needs, and optimize welding operations. MTL’s adaptability to varying welding conditions makes it well suited to dynamic environments, while stacking’s accuracy makes it effective for quality monitoring. Going a step further, a hybrid model that uses MTL to learn shared patterns and stacking to refine specific outputs could enable real-time, accurate decision-making in smart welding systems.

When integrated into IIoT-enabled welding setups, these advanced models pave the way for scalable, reliable, and intelligent quality evaluation—ultimately boosting productivity and minimizing defects in modern manufacturing.

6. Future Scope

Microstructure plays an important role in the quality of weld joints. Predicting the microstructure from the input parameters could improve welding processes. Future work should therefore apply ensemble learning to predict the microstructure and thereby improve weld quality.

To enhance industrial relevance, we refer to the study by Sen et al. (2025) [35], which uses evolutionary algorithms to optimize wire-cut EDM parameters for cost efficiency in turbo-machinery manufacturing [32]. Inspired by this approach, we propose that future work could integrate similar optimization frameworks to balance model accuracy with deployment cost in welding quality prediction, thus enhancing the practical applicability of our machine learning pipeline.

Author Contributions

Conceptualization, S.M., A.S., G.J. and D.S.; methodology, S.M., A.S., G.J. and D.S.; software S.M. and A.S.; validation, A.S. and G.J.; formal analysis, S.M., A.S., G.J. and D.S.; writing—original draft preparation, S.M., A.S. and G.J.; writing—review and editing, S.M., A.S., G.J. and D.S.; supervision, A.S. and G.J.; project administration, A.S.; funding acquisition, A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Marwadi University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Knaak, C.; von Eßen, J.; Kröger, M.; Schulze, F.; Abels, P.; Gillner, A. A Spatio-Temporal Ensemble Deep Learning Architecture for Real-Time Defect Detection during Laser Welding on Low Power Embedded Computing Boards. Sensors 2021, 21, 4205.

- Feng, Y.; Chen, Z.; Wang, D.; Chen, J.; Feng, Z. DeepWelding: A deep learning enhanced approach to GTAW using multisource sensing images. IEEE Trans. Ind. Informatics 2019, 16, 465–474.

- Deng, X.; Liu, Z.; Li, R. Design of an all-position intelligent welding robot system. In Proceedings of the 2020 Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 3683–3688.

- Horváth, C.M.; Botzheim, J.; Thomessen, T.; Korondi, P. Bead geometry modeling on uneven base metal surface by fuzzy systems for multi-pass welding. Expert Syst. Appl. 2021, 186, 115356.

- Sarsilmaz, F.; Kavuran, G. Prediction of the optimal FSW process parameters for joints using machine learning techniques. Mater. Test. 2021, 63, 1104–1111.

- Tsuzuki, R. Development of automation and artificial intelligence technology for welding and inspection process in aircraft industry. Weld. World 2022, 66, 105–116.

- Mishra, A.; Dasgupta, A. Supervised and unsupervised machine learning algorithms for forecasting the fracture location in dissimilar friction-stir-welded joints. Forecasting 2022, 4, 787–797.

- Wang, X.; Wang, Y.; Tang, L.; Zhang, Q. Multiobjective ensemble learning with multiscale data for product quality prediction in iron and steel industry. IEEE Trans. Evol. Comput. 2023, 28, 1099–1113.

- Lin, J.; Lu, J.; Xu, J.; Li, D. Welding quality analysis and prediction based on deep learning. In Proceedings of the 2021 4th World Conference on Mechanical Engineering and Intelligent Manufacturing (WCMEIM), Barcelona, Spain, 12–14 May 2021; pp. 173–177.

- Xie, T.; Huang, X.; Choi, S.-K. Metric-based meta-learning for cross-domain few-shot identification of welding defect. J. Comput. Inf. Sci. Eng. 2023, 23, 30902.

- Sexton, A.; Doolan, M. Effect of electrode misalignment on the quality of resistance spot welds. Manuf. Lett. 2023, 35, 952–957.

- Kumar, G.P.; Balasubramanian, K.R.; Kottala, R.K.; Chigilipalli, B.K.; Prabhakar, K.P. Prediction of tensile behaviour of hybrid laser arc welded Inconel 617 alloy using machine learning models. Int. J. Interact. Des. Manuf. (IJIDeM) 2025, 19, 435–447.

- Sata, A.; Ravi, B. Mechanical Property Prediction of Investment Castings Using Artificial Neural Network and Multivariate Regression Analysis. In Proceedings of the Indian Foundry Congress, Greater Noida, India, 27 February−1 March 2015; pp. 1–15.

- Pan, N.; Ye, X.; Xia, P.; Zhang, G. The temperature field prediction and estimation of Ti-Al alloy twin-wire plasma arc additive manufacturing using a one-dimensional convolution neural network. Appl. Sci. 2024, 14, 661.

- Panigrahi, B.S.; Manjunath, H.R.; Chipade, A.; Babu, S.B.G.T.; Pavithra, G.; Hussain, B.I. Artificial neural networks for weld joint strength prediction in various welding techniques. In Proceedings of the 2024 5th International Conference on Recent Trends in Computer Science and Technology (ICRTCST), Delhi, India, 15–17 June 2024; pp. 188–192.

- Hahn, Y.; Maack, R.; Buchholz, G.; Purrio, M.; Angerhausen, M.; Tercan, H.; Meisen, T. Towards a deep learning-based online quality prediction system for welding processes. Procedia CIRP 2023, 120, 1047–1052.

- Moreno, R.; Sanjuán, J.M.; Cristóbal, M.D.R.; Muthuselvam, R.S.; Wang, T. A deep learning ensemble for ultrasonic weld quality control. In Proceedings of the International Conference on Soft Computing Models in Industrial and Environmental Applications, Porto, Portugal, 10–12 April 2023; pp. 145–152.

- Liu, C.; Tang, L.; Zhang, K.; Xu, X. Multiobjective evolutionary learning for multitask quality prediction problems in continuous annealing process. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 13742–13753.

- Ferguson, M.; Ak, R.; Lee, Y.-T.T.; Law, K.H. Detection and segmentation of manufacturing defects with convolutional neural networks and transfer learning. Smart Sustain. Manuf. Syst. 2018, 2, 137–164.

- Sata, A. Investment casting defect prediction using neural network and multivariate regression along with principal component analysis. Int. J. Manuf. Res. 2016, 11, 356–373.

- Zhang, Y.; Yang, Q. An overview of multi-task learning. Natl. Sci. Rev. 2018, 5, 30–43.

- Thung, K.-H.; Wee, C.-Y. A brief review on multi-task learning. Multimed. Tools Appl. 2018, 77, 29705–29725.

- Sener, O.; Koltun, V. Multi-task learning as multi-objective optimization. arXiv 2018, arXiv:1810.04650.

- Sata, A.; Ravi, B. Comparison of Some Neural Network and Multivariate Regression for Predicting Mechanical Properties of Investment Casting. J. Mater. Eng. Perform. 2014, 23, 2953–2964.

- Zhou, K.; Yang, Y.; Qiao, Y.; Xiang, T. Domain adaptive ensemble learning. IEEE Trans. Image Process. 2021, 30, 8008–8018.

- Zhou, Z.-H. When semi-supervised learning meets ensemble learning. Front. Electr. Electron. Eng. China 2011, 6, 6–16.

- Barber, D.; Bishop, C.M. Ensemble learning in Bayesian neural networks. Nato ASI Ser. F Comput. Syst. Sci. 1998, 168, 215–238.

- Gupta, A.; Ong, Y.-S.; Feng, L. Multifactorial evolution: Toward evolutionary multitasking. IEEE Trans. Evol. Comput. 2015, 20, 343–357.

- Feng, L.; Zhou, L.; Zhong, J.; Gupta, A.; Ong, Y.S.; Tan, K.C.; Qin, A.K. Evolutionary multitasking via explicit autoencoding. IEEE Trans. Cybern. 2018, 49, 3457–3470.

- Liu, B.; Liu, X.; Jin, X.; Stone, P.; Liu, Q. Conflict-averse gradient descent for multi-task learning. Adv. Neural Inf. Process. Syst. 2021, 34, 18878–18890.

- Liu, L.; Li, Y.; Kuang, Z.; Xue, J.; Chen, Y.; Yang, W.; Liao, Q.; Zhang, W. Towards impartial multi-task learning. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021; Available online: https://openreview.net/forum?id=IMPnRXEWpvr (accessed on 15 January 2025).

- Navon, A.; Shamsian, A.; Achituve, I.; Maron, H.; Kawaguchi, K.; Chechik, G.; Fetaya, E. Multi-task learning as a bargaining game. arXiv 2022, arXiv:2202.01017.

- Bhowmik, A.; Dey, D.; Biswas, A. Microstructure, mechanical and wear behaviour of Al7075/SiC aluminium matrix composite fabricated by stir casting. Indian J. Eng. Mater. Sci. (IJEMS) 2021, 28, 46–54.

- Bhowmik, A.; Biswas, A. Wear Resistivity of Al7075/6wt.% SiC Composite by Using Grey-Fuzzy Optimization Technique. Silicon 2022, 14, 3843–3856.

- Sen, B.; Dasgupta, A.; Bhowmik, A. Optimizing Wire-Cut EDM Parameters through Evolutionary Algorithm: A Study for Improving Cost Efficiency in Turbo-Machinery Manufacturing. Int. J. Interact. Des. Manuf. 2025, 19, 2049–2060.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).