Abstract

Dense video captioning involves identifying, localizing, and describing multiple events within a video. Capturing temporal and contextual dependencies between events is essential for generating coherent and accurate captions. To this end, we propose Dense Video Captioning with Dual Contextual, Semantical, and Linguistic Modules (DVC-DCSL), a novel dense video captioning model that integrates contextual, semantic, and linguistic modules. The proposed approach employs two uni-directional LSTMs (forward and backward) to generate distinct captions for each event. A caption selection mechanism then processes these outputs to determine the final caption. In addition, contextual alignment is improved by incorporating visual and textual features from previous video segments into the captioning module, ensuring smoother narrative transitions. Comprehensive experiments conducted on the ActivityNet dataset demonstrate that DVC-DCSL increases the METEOR score from 11.28 to 12.71, a relative improvement of about 12.7% over state-of-the-art models in the field of dense video captioning. These results highlight the effectiveness of the proposed approach in improving dense video captioning quality through contextual and linguistic integration.

1. Introduction

Videos offer a valuable and captivating source of information. However, the process of analyzing and extracting meaningful insights from a video can be time-consuming and labor-intensive. In applications such as surveillance log generation and query-based video retrieval systems, this challenge becomes even more critical. Video summarization addresses this issue by reducing manual effort and accelerating video analysis. Video summaries fall into two main categories: textual summaries and visual summaries. Visual summaries are frequently employed for television recaps and sports highlights, which entail the extraction of key frames or segments to generate brief video clips. In contrast, textual summaries generate descriptive narratives of crucial visual frames. Video captioning, a subset of textual summarization, focuses on producing coherent and contextually relevant textual descriptions for videos, aiding in content understanding and retrieval.

Typically, traditional video captioning models produce one or more sentences that provide a thorough description of the video’s content. Traditional models encounter challenges in accurately identifying and describing real-world videos due to the presence of overlapping events.

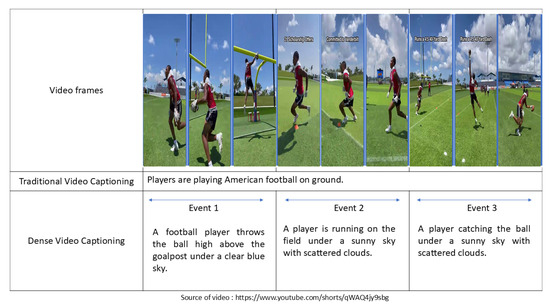

Dense video captioning (DVC) offers a solution to the limitations of conventional video captioning methods by enabling the analysis of videos containing multiple events. Unlike traditional approaches that generate a single, generic caption for an entire video, DVC is capable of identifying, segmenting, and distinguishing between overlapping or sequential events. Once these events are detected, a captioning module produces a detailed description for each one. As shown in Figure 1, conventional models summarize the video as a whole, whereas DVC provides fine-grained captions for individual events. This automated approach to event detection and caption generation has numerous practical applications, such as video summarization [1,2,3], content-based video retrieval [4], query-based video segment localization [5,6], visual assistance for the visually impaired [7], and the creation of instructional videos [8].

Figure 1.

This image demonstrates the differences between traditional and dense video captioning. Dense video captioning creates a distinct, context-aware caption for each segment of the video, allowing for more accurate and detailed video interpretation than traditional models that only produce a single global description. Source of video: https://www.youtube.com/shorts/qWAQ4jy9sbg (accessed on 20 April 2025).

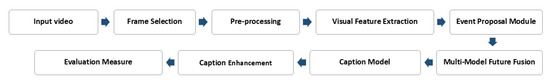

Figure 2 illustrates the procedure of the DVC pipeline. The process begins by sampling frames from the video, either at fixed temporal intervals ($\delta$) or through random selection. Learning-based methods, such as PickNet [9] or reinforcement learning techniques [10], can automate this frame selection process. Once the frames are selected, the pre-processing step divides the data into training, validation, and testing sets and performs essential pre-processing operations, such as resizing each frame to a fixed width and height and applying data augmentation, before proceeding to feature extraction. To capture spatiotemporal information, models like Convolution 3D (C3D), Temporal CNN, or visual transformers are applied to the pre-processed video frames in the visual feature extraction step. These encoded visual features are then processed by a sequential encoder, which segments the video into distinct activity proposals, each represented as an individual video clip, in the event proposal module step. In the multi-modal feature fusion stage, the extracted activity clips are combined with supplementary information such as Mel Frequency Cepstral Coefficients (MFCCs) from the audio stream, C3D features, and relevant keywords. The enriched encoded features are forwarded into sequential models such as LSTM, GRU, or transformer-based architectures to produce event-level captions. Techniques such as reinforcement learning, semantic attention, generative adversarial networks (GANs), and large language models (LLMs) can be employed to enhance the captioning module. The quality of the generated captions is typically evaluated using either standard video captioning metrics such as BLEU [11], METEOR [12], ROUGE [13], SPICE [14], CIDEr [15], and WMD [16], or dense video captioning metrics such as SODA [17], which compare the output to human-generated references.
Furthermore, some studies incorporate human evaluation to judge the relevance, fluency, and overall coherence of captions, offering a more holistic view of the system’s effectiveness.

Figure 2.

The block diagram of the dense video captioning procedure is illustrated in the image above.

The DVC framework primarily consists of two key components: the proposal module and the captioning module. The proposal module detects and identifies all events within a video, while the captioning module generates a detailed description for each event. The overall performance of the DVC model depends on accurately detecting activity proposals and the subsequent generation of meaningful captions. Victor Escorcia et al. introduced the sliding window technique in their temporal action detection approach [18], which was a widely used method for proposal detection. This technique involves moving a fixed-size window across the video timeline to classify segments as either activity or background. However, its performance is constrained by the need to predefine the window size and to process the video through multiple passes. To address these limitations, Shyamal Buch et al. developed Single Stream Temporal Action Proposals (SST), which processes the video in a single pass and predicts event proposals at each time step t using k distinct temporal offsets [19]. Once proposals are generated, the captioning module utilizes sequential models to generate fluent and contextually rich descriptions for each event segment.

Numerous studies have implemented either visual transformer models or C3D-LSTM architectures for extracting spatiotemporal features from videos. Both methods improve the understanding of current events by incorporating contextual information from preceding and succeeding segments. Although transformer-based visual models generally yield higher accuracy, their substantial computational requirements pose challenges for deployment on resource-constrained devices such as smartphones or cameras. The captioning module receives these extracted spatiotemporal features and uses them to create a description for every event. To convert visual input into coherent captions, researchers have explored both transformer-based architectures and recurrent neural networks (RNNs) in captioning modules. Transformer-based models have demonstrated superior performance in generating more accurate and contextually rich event descriptions in comparison to their RNN counterparts. However, integrating visual features derived from a C3D-LSTM-based proposal module into a transformer-based captioning architecture poses several challenges. One of the primary challenges is the necessity of explicitly integrating positional encodings into the C3D-LSTM features prior to their processing by the transformer. This process demands additional computational resources, as both feature types must be projected into a common space. To avoid this projection step, researchers have begun employing end-to-end transformer-based models for dense video captioning. This approach, in turn, requires a substantial amount of computational resources, which limits its use in real-time scenarios.

This challenge motivates us to develop a practical solution that describes each event accurately and in a contextually relevant manner. In the era of transformers and large language models (LLMs), the proposed model deliberately opts for a uni-directional LSTM approach for dense video captioning. Transformer-based models have demonstrated remarkable performance; however, they frequently incur substantial computational overhead. In contrast, LSTMs require less processing power and have a proven history of handling sequential data, which makes them a strong choice when resources are limited. Furthermore, incorporating C3D features into transformer-based models typically requires an additional embedding module, which adds to the computational complexity of the model. The proposed LSTM-based framework integrates these features directly, resulting in an efficient and practical solution for dense video captioning.

The proposed solution makes the following contributions: (i) The proposed captioning module integrates two independent uni-directional LSTMs, one processing the video sequence forward and the other backward, to generate two candidate captions for each video clip. This dual-directional approach leverages the chronological flow of events from both perspectives, ultimately enhancing caption quality. (ii) A caption selection method is proposed that uses entropy-based measures to evaluate the candidate captions and selects the caption that demonstrates the highest semantic and contextual consistency. (iii) Because the proposal and captioning modules operate on individual detected events, each event is treated in isolation, which leads to a lack of alignment between sequentially generated captions. To overcome this limitation, the proposed captioning module incorporates a dedicated linguistic and contextual sub-module that integrates features from preceding events and their captions, thereby modeling the narrative flow across events. This ensures that the generated captions are contextually coherent and linguistically aligned throughout the video. In short, this research aims to improve the captioning module and caption refinement component of the dense video captioning pipeline, as depicted in Figure 2.

The remainder of this paper is organized as follows: Section 2 reviews the literature on dense video captioning techniques. Section 3 presents the proposed model in detail. Section 4 examines the results obtained by the proposed model and compares them with those of leading dense video captioning algorithms. Section 5 concludes the paper and identifies directions for future research.

2. Literature Review of Dense Video Captioning Methods

DVC involves identifying and localizing temporal activity proposals within a video and generating descriptive sentences for each detected event. This section provides a comprehensive review of the key literature in the field of dense video captioning.

A foundational work in this field was introduced by Ranjay Krishna et al. in 2017 [20]. The model extracts C3D features from input videos and employs a temporal proposal generation module known as Deep Action Proposals (DAPs). The DAPs generate K candidate proposals at each time step, each representing a potential activity. These proposed events are fed into the captioning module, which utilizes attention-based LSTMs and incorporates contextual information from both preceding and succeeding proposals to generate event-specific captions. The main drawback of this model is that DAPs rely on the sliding window concept, which increases the computational cost.

In order to circumvent the constraints of DAPs, Jingwen Wang et al. implemented two SST models to extract temporal event proposals in both forward and backward directions [21]. A two-layer LSTM model with context gates is implemented by the captioning module. A regression-based proposal module was introduced by Yehao Li et al. [22], where the captioning module utilizes reinforcement learning to generate appropriate captions. In the following years, several advancements were made in the DVC domain. Notable contributions include the integration of R-C3D for joint event detection and description [23], the combination of SST and GRU networks for streamlined captioning [24], the use of graph-based partitioning and summarization (GPaS) to capture relationships between events [25], and the design of an event sequence generation network [26]. Recognizing that only a subset of video frames may be sufficient to accurately represent an event, Maitreya Suin et al. proposed an efficient dense video captioning framework that leverages this insight to reduce computational cost while maintaining descriptive quality [10].

With the advent of transformer architectures, several researchers have adopted them to enhance caption generation. Luowei Zhou et al. pioneered transformer-based architectures specifically designed for dense video captioning (DVC) [27]. Departing from the traditional C3D approach, they employed a visual transformer to extract spatiotemporal features. Their model incorporates two distinct decoders: a proposal decoder and a captioning decoder. The proposal decoder interprets frame-level visual features to generate temporal event proposals, while the captioning decoder converts these proposals into distinguishable event-specific captions. Expanding on this approach, Iashin et al. proposed a multi-modal transformer-based DVC model that integrates multiple modalities: I3D features for visual content, VGGish for audio feature extraction [28,29], GloVe embeddings for capturing linguistic semantics [30,31], and a speech model for additional audio–linguistic context [32]. Later, some researchers adopted parallel decoding strategies to simultaneously detect and describe events, significantly accelerating the output generation process [33,34,35].

Subsequently, Nayyer Aafaq et al. introduced the ViSE (Visual–Semantic Embedding) framework, which projects visual features extracted using 2D CNNs and encoded captions represented via weighted n-grams into a shared semantic space, thereby incorporating linguistic knowledge into the caption generation process [36]. Deng et al. proposed an unconventional reverse approach in which event descriptions are generated prior to event localization in the video [37]. To enhance the proposal module, Wanhyu Choi et al. decomposed the task into two subtasks: boundary detection and event count estimation [38]. Antoine Yang et al. improved both modules by utilizing time tokens during training [39]. A hierarchical temporal–spatial summary and a multi-perspective attention mechanism were employed by Yiwei Wei et al. to develop a Multi-Perspective Perception (MPP) network that produces dense captions [40]. To address the challenge of long video sequences, Xingyi Zhou et al. introduced Streaming DVC, which integrates a clustering-based memory module for efficient processing of extended video content [41]. Minkuk et al. incorporated an external knowledge memory block along with cross-attention to generate meaningful captions [42]. Hao Wu et al. utilized a large language model to optimize the boundaries of the proposed events and enrich the captions [43]. The TL-DVC model used an encoder–decoder-based transformer model to generate captions at the event level and an LLM-based model to generate captions at the video level [44].

The ActivityNet dataset remains a widely adopted benchmark for evaluating dense video captioning (DVC) systems due to its extensive annotations of temporal event segments and corresponding captions. Although most DVC models have utilized this dataset in a supervised setting, some research has explored alternative paradigms such as weakly supervised and unsupervised learning approaches. In contrast to supervised learning, which relies on explicit event boundaries and paired captions, weakly supervised methods are capable of learning from loosely labeled or unsegmented video data. Weakly supervised models can infer key temporal regions and generate relevant descriptions without requiring event-level localization in the dataset. Some researchers have proposed weakly supervised dense video captioning models that emphasize aligning sentences with corresponding video events through sentence localization techniques [45,46,47,48]. Rahman et al. proposed a weakly supervised multi-modal DVC framework that combined visual, audio, and linguistic inputs for event understanding and caption generation [47]. To improve the model’s comprehension of video content and the quality of the generated captions, audio signals were processed using methods such as Mel-Frequency Cepstral Coefficients (MFCCs), Constant-Q Transform (CQT), and SoundNet [49]. Furthermore, Valter Estevam et al. introduced a method that uses unsupervised learning to predict soft labels on short clips and generate visual sentences [50]. This approach demonstrates that the performance of a model can be significantly improved in the absence of detailed labels by utilizing multiple modalities.

As mentioned before, the proposal and captioning modules are essential for generating contextually accurate and informative captions in dense video captioning. To identify temporal activity segments, models have implemented a variety of techniques, such as sliding window-based approaches [20], Single Stream Temporal Action Proposals (SST) networks [21,24], regression-based methods [22,23], and transformer-driven architectures [21,27,30,36]. Capturing the complete context of an event often requires understanding its surrounding temporal information. To address this, some models incorporate contextual cues from neighboring events, both preceding and succeeding, by using attention mechanisms [20] or employing bi-directional SST models [21,24], thereby enhancing the coherence and relevance of the generated captions.

Many existing models prioritize the capture of long-range temporal dependencies, often at the expense of semantic depth, contextual relevance, or linguistic coherence. For instance, the method described in [21] improves visual features by integrating data from preceding and subsequent proposals, which are then processed by an LSTM network with a gating mechanism to generate event descriptions. Although it is effective in modeling temporal context, it does not incorporate linguistic and contextual comprehension during the caption generation process. Conversely, the model proposed in [22] emphasizes the generation of linguistically rich captions through reinforcement learning but does not leverage information from surrounding events, resulting in limited contextual awareness. These limitations highlight the need for a novel captioning module that effectively balances semantic, contextual, and linguistic components while maintaining computational efficiency, particularly when compared to transformer-based models.

The proposed model leverages both past and future event proposals to more accurately define the visual features of the current event. These features are then passed through two separate uni-directional LSTMs to generate captions in forward and backward temporal directions. To further enhance the quality of the generated captions, a caption selection mechanism along with a contextual–linguistic module is integrated into the architecture, resulting in a more coherent and semantically rich captioning process.

Table 1 presents a detailed comparison of various dense video captioning models developed over the years. It highlights the primary components of each model, such as the visual feature extractor, event localization strategy, captioning architecture, and loss functions employed. The table also captures the shift from LSTM-based methods to transformer-based approaches, reflecting the progression of techniques in the field. This comprehensive summary enables researchers to better understand the trends and innovations in dense video captioning.

Table 1.

Comparative survey of dense video captioning methods using deep learning. This table extends the results originally presented in [44].

3. Context-Aware Dense Video Captioning with Linguistic Modules and a Caption Selection Method

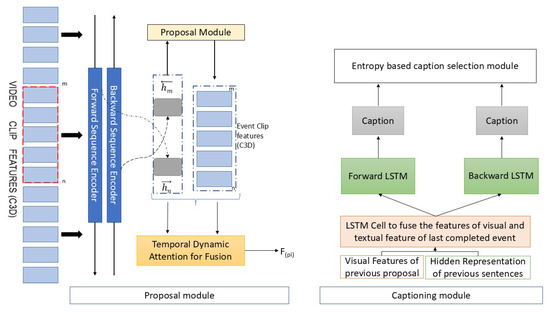

The dense video captioning model proposed in this work, referred to as DVC-DCSL, comprises two core components: a proposal module and a captioning module (Algorithm 1). Accurate interpretation of a current event in a video often relies on contextual cues from both preceding and succeeding events. To address this, the proposal module in DVC-DCSL captures features from both past and future segments to enrich the representation of the current event. For event localization, the model adopts the temporal activity proposal module introduced in [21] and integrates it with a novel captioning architecture designed to enhance descriptive accuracy and contextual relevance. Figure 3 presents the block diagram of the proposed DVC-DCSL model. In the event proposal module, spatiotemporal features are extracted from the input video using the Convolution 3D (C3D) model. These features are then processed by two sequence encoders, one operating in the forward direction and the other in the backward direction, both implemented using LSTM networks to capture temporal dependencies in both directions. The output of these LSTMs is used to classify segments as either event proposals or background. The detected event proposals, along with their associated C3D features, are forwarded to the captioning module. This module employs two uni-directional LSTM networks, one for each temporal direction, to generate candidate captions. A caption selection mechanism evaluates these candidates and selects the most contextually appropriate caption. Furthermore, the model incorporates contextual cues from previous event proposals by integrating both visual and linguistic features, resulting in a more coherent and relevant final output.

| Algorithm 1 Context-Aware Dense Video Captioning with Linguistic Modules and a Caption Selection Method | |
|---|---|
| Input: $X = \{x_1, x_2, \ldots, x_N\}$ | ▹ N = total frames |
| Input: $V = \{v_1, v_2, \ldots, v_T\}$ | ▹ $T = N/\delta$ |
| Input: E = word embedding vector | |
| Input: $\delta = 16$ | |
| Output: $R = \{(p_i, c_i)\}$ | |
| $v_i$: C3D features of video V for $\delta$ frames | ▹ i = 1, 2, …, T |
| $p_i$: n events (P) from the video | ▹ i = 1, 2, …, n |
| $c_i$: n captions (c) from the video | ▹ i = 1, 2, …, n |
| List of captions for event (p) in the video | |
| Proposals generated at time step t | |
| Confidence scores of the proposals at time step t | |
| Hidden vector of the selected event at time step m, where m is the start time | |
| Hidden vector of the selected event at time step n, where n is the end time | |
| C3D features + hidden vectors of the event | |
| C3D features of the selected event | |
| Word vector in the forward direction | |
| Word vector in the backward direction | |
| Feature vector (visual + textual features of the previous caption) | |

Figure 3.

The figure demonstrates the core architectural components of our proposed DVC-DCSL framework.

3.1. Event Proposal Module

The event proposal module aims to detect temporal segments within a video and classify them as either events or background activity. A video is a collection of N sequential frames, represented as $X = \{x_1, x_2, \ldots, x_N\}$. To capture spatiotemporal information, the Convolution 3D (C3D) network [51] is employed, which processes the video using a fixed temporal window of 16 frames ($\delta = 16$). This results in the division of the video into multiple temporal segments, also referred to as time steps, given by $T = N/\delta$. Across these time steps, the C3D visual features are represented as $V = \{v_1, v_2, \ldots, v_T\}$, where each $v_i$ corresponds to the features derived from a 16-frame segment. Principal Component Analysis (PCA) is used to compress the extracted features to 500 dimensions in order to decrease dimensionality and improve computational efficiency.
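As a rough illustration of the segmentation step, the sketch below splits a video of N frames into non-overlapping 16-frame windows, one per time step. The function name `split_into_segments` and the choice to drop trailing frames that do not fill a complete window are assumptions made for this example. C3D feature extraction and PCA compression are not shown.

```python
def split_into_segments(num_frames, delta=16):
    """Return (start, end) frame-index pairs, end exclusive, one per time step."""
    if delta <= 0:
        raise ValueError("delta must be positive")
    # Number of time steps T = N / delta; leftover frames are dropped (assumption).
    num_steps = num_frames // delta
    return [(t * delta, (t + 1) * delta) for t in range(num_steps)]


segments = split_into_segments(num_frames=100, delta=16)
print(len(segments))              # number of time steps T
print(segments[0], segments[-1])  # first and last frame windows
```

Each returned window would then be passed to the C3D network to obtain one feature vector per time step.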

The extracted visual features (C3D features) are input into forward- and backward-directional LSTM models to sequentially encode them in both directions. This bidirectional encoding captures dependencies from both past and future segments, enabling a more complete representation of the current event. The forward and backward LSTM models process visual feature sequences and accumulate contextual information over time. The hidden state of the forward LSTM at each time step, denoted as $\overrightarrow{h}_t$, encodes the temporal dynamics of visual input up to time t.

At each time step t, the hidden representation $\overrightarrow{h}_t$ is partitioned into K candidate event proposals using a set of predefined anchor lengths. Each proposal is denoted as $\overrightarrow{p}_t^{\,i}$, where $i = 1, 2, \ldots, K$. In the forward pass, each proposal starts at $t - l_i$ and ends at t, with $\{l_i\}_{i=1}^{K}$ representing the lengths of the K proposals. While all proposals terminate at the same time step t, they differ in their start points in the forward direction. The hidden states of each proposal are subsequently analyzed by independent binary classifiers to produce confidence scores. The confidence score of each classifier indicates the classifier’s certainty regarding the classification of the encoded features as either event or background. K confidence scores are obtained by passing each of the K proposals through independent classifiers. These confidence scores are obtained by employing a fully connected neural network:

$$\overrightarrow{C}_t = \sigma\left(W_c \overrightarrow{h}_t + b_c\right) \tag{1}$$

Equation (1) shows how the sigmoid activation function is used to compute the confidence score for each event proposal. $\sigma$ represents the sigmoid nonlinearity. $W_c$ is the weight matrix used to compute the confidence score through the sigmoid function, while $b_c$ is the corresponding bias term. $\overrightarrow{C}_t$ represents the confidence score vector for all proposals at time step t. The weight matrix ($W_c$) and bias ($b_c$) are shared across all time steps. The resulting confidence score is $\overrightarrow{C}_t = \{\overrightarrow{c}_t^{\,i}\}_{i=1}^{K}$, where $\overrightarrow{c}_t^{\,i}$ represents the probability of the i-th of the K event proposals. We will later combine this proposal confidence score with the caption confidence score to eliminate irrelevant event–caption pairs.
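The anchor-based proposal step and the sigmoid scoring described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `forward_proposals`, `confidence_scores`, `h_t`, `W_c`, and `b_c` are hypothetical, the toy weights are arbitrary, and skipping anchors that would start before the beginning of the video is an assumption.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward_proposals(t, anchor_lengths):
    """Candidate proposals (start, end) that all end at time step t."""
    # Anchors reaching before the start of the video are skipped (assumption).
    return [(t - l, t) for l in anchor_lengths if t - l >= 0]


def confidence_scores(hidden, weights, bias):
    """One sigmoid score per anchor, computed as sigmoid(W h + b)."""
    scores = []
    for row, b in zip(weights, bias):
        logit = sum(w * h for w, h in zip(row, hidden)) + b
        scores.append(sigmoid(logit))
    return scores


# Toy values: hidden size 3, K = 2 anchors.
h_t = [0.5, -1.0, 2.0]
W_c = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.5]]
b_c = [0.0, -0.5]
print(forward_proposals(t=10, anchor_lengths=[2, 4, 16]))
print(confidence_scores(h_t, W_c, b_c))
```

Because the same weights and bias are reused at every time step, the scoring function takes only the current hidden state as its time-dependent input.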

Similarly, visual information is encoded across all time steps by the backward-directional LSTM, starting from the final frame and moving in reverse sequence. By reversing the video sequence, it generates K proposals at each time step, represented as $\{\overleftarrow{p}_t^{\,i}\}_{i=1}^{K}$, along with K corresponding confidence scores, $\overleftarrow{C}_t = \{\overleftarrow{c}_t^{\,i}\}_{i=1}^{K}$.

The forward and backward LSTMs collectively produce a number of proposals, denoted as $N_p$. To refine these results, proposals with low confidence scores (<50%) are eliminated, while the remaining proposal scores from both directions are combined by multiplication. The cumulative confidence score is represented by the following equation:

$$C_p = \left\{\overrightarrow{c}_i \times \overleftarrow{c}_i\right\}_{i=1}^{N_p} \tag{2}$$

In the above equation, the variable $N_p$ refers to the total count of event proposals obtained through both forward and backward passes of the LSTM. Proposals with cumulative confidence scores above a predefined threshold (set at 0.8 tIoU) are selected as input to the captioning module, allowing it to focus on high-quality event segments.
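The filtering and multiplicative fusion of forward and backward scores can be sketched as follows. The function name `fuse_proposals` is hypothetical, and two simplifications are assumptions made for this example: proposals from the two passes are matched by identical (start, end) spans, and the selection threshold is applied directly to the fused score.

```python
def fuse_proposals(forward_scores, backward_scores, min_conf=0.5, threshold=0.8):
    """Combine per-direction confidences for proposals scored in both passes.

    forward_scores and backward_scores map (start, end) -> confidence in [0, 1].
    """
    fused = {}
    for span, c_fwd in forward_scores.items():
        c_bwd = backward_scores.get(span)
        if c_bwd is None:
            continue  # proposal not scored by the backward pass
        if c_fwd < min_conf or c_bwd < min_conf:
            continue  # drop low-confidence proposals (< 50%)
        fused[span] = c_fwd * c_bwd  # multiplicative fusion
    # Keep only proposals whose fused score is above the threshold.
    return {span: c for span, c in fused.items() if c > threshold}


fwd = {(0, 5): 0.95, (3, 9): 0.90, (7, 12): 0.40}
bwd = {(0, 5): 0.90, (3, 9): 0.60, (7, 12): 0.99}
print(fuse_proposals(fwd, bwd))
```

Multiplying the two scores rewards proposals that both directions agree on: a span that is confident in only one direction ends up with a low fused score.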

The context vectors $(\overrightarrow{h}_n, \overleftarrow{h}_m)$ represent the hidden states of the proposal model, where $\overrightarrow{h}_n$ corresponds to the forward-directional LSTM and $\overleftarrow{h}_m$ corresponds to the backward-directional LSTM. m and n denote the start and end time steps of the detected event proposal p. Rather than directly passing these context vectors to the captioning module, the context vectors are fused with the original C3D features of the event proposal, $\hat{V}$. The visual feature vector in the forward direction for proposal p is defined in the following equation:

$$\overrightarrow{F}(p) = f\left(\overrightarrow{h}_n, \overleftarrow{h}_m, \hat{V} = \{v_i\}_{i=m}^{n}, H_{t-1}\right) \tag{3}$$

In Equation (3), $\hat{V}$ represents the C3D features of the detected event. $\{v_i\}_{i=m}^{n}$ represents the C3D features of an event that starts at time step m and ends at time step n. $\overrightarrow{h}_n$ represents the context vector in the forward direction, while $\overleftarrow{h}_m$ represents the context vector in the backward direction. All these features, along with the previous hidden state of the LSTM ($H_{t-1}$), are fed into the captioning module. Temporal dynamic attention is applied to identify key visual features (C3D) using the context vectors, helping to recognize important frames. The integration of context vectors with C3D features enhances the model’s ability to understand the event’s context more effectively. The relevance score at each time step t is calculated using the following equation:

$$z_i^t = W_a^{\top} \tanh\left(W_v v_i + W_h \left[\overrightarrow{h}_n, \overleftarrow{h}_m\right] + W_H H_{t-1}\right) \tag{4}$$

In Equation (4), $v_i$ represents the C3D feature of a specific time step within the proposal. The value of i starts from zero. $[\cdot, \cdot]$ signifies vector concatenation, and $H_{t-1}$ is the hidden representation of the previous time step. $W_a$, $W_v$, $W_h$, and $W_H$ are weight matrices. Softmax normalization is used to compute the weight ($\alpha_i^t$) of the visual features ($v_i$):

$$\alpha_i^t = \frac{\exp\left(z_i^t\right)}{\sum_{k=0}^{p-1} \exp\left(z_k^t\right)} \tag{5}$$

where $p = n - m + 1$ signifies the event proposal’s length. The attended visual feature ($\tilde{v}^t$) is obtained by calculating a weighted sum:

$$\tilde{v}^t = \sum_{i=0}^{p-1} \alpha_i^t v_i \tag{6}$$

The relevance score ($z_i^t$) is crucial in determining the original visual features that best align with the context vectors ($\overrightarrow{h}_n, \overleftarrow{h}_m$). The event is comprehensively understood through the aggregation of visual features and context vectors, denoted as $\overrightarrow{F}(p)$. Thus, the final input to the LSTM node of the captioning module is as follows:

$$\overrightarrow{F}(p) = \left[\tilde{v}^t, \overrightarrow{h}_n, \overleftarrow{h}_m\right] \tag{7}$$

In the above equation, the forward-direction feature vector $\overrightarrow{F}(p)$ is constructed by combining the attended C3D visual features with the contextual information encoded in the forward and backward LSTM hidden states, represented as $[\overrightarrow{h}_n, \overleftarrow{h}_m]$. Similarly, the feature vector in the backward direction ($\overleftarrow{F}(p)$) is obtained using the same approach. The only difference is that n is considered the start timestamp, while m is considered the end timestamp.
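The attention steps above (relevance score, softmax weights, weighted sum, and concatenation with the context vectors) can be sketched in plain Python. This is an illustrative sketch under stated assumptions rather than the authors' code: the function and parameter names (`attend`, `W_v`, `W_h`, `W_H`, `w_a`) are hypothetical, no bias term is used, and the toy dimensions are far smaller than real C3D features.

```python
import math


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def attend(features, context, h_prev, W_v, W_h, W_H, w_a):
    """Attention over one proposal's C3D features.

    features: list of p feature vectors; context: concatenated forward and
    backward hidden states; h_prev: previous caption-LSTM hidden state.
    """
    ctx_term = matvec(W_h, context)
    prev_term = matvec(W_H, h_prev)
    scores = []
    for v in features:
        vis_term = matvec(W_v, v)
        hidden = [math.tanh(a + b + c) for a, b, c in zip(vis_term, ctx_term, prev_term)]
        scores.append(sum(w * x for w, x in zip(w_a, hidden)))  # relevance score
    # Softmax over the p time steps (shifted by the max for numerical stability).
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(features[0])
    attended = [sum(w * v[d] for w, v in zip(weights, features)) for d in range(dim)]
    return weights, attended + context  # attended features concatenated with context


# Toy setup: p = 2 steps, feature dim 2, context dim 2, hidden dim 1, attention dim 1.
feats = [[1.0, 0.0], [3.0, 2.0]]
ctx = [0.5, -0.5]
h_prev = [0.1]
zeros = [[0.0, 0.0]]
weights, fused = attend(feats, ctx, h_prev, W_v=zeros, W_h=zeros, W_H=[[0.0]], w_a=[1.0])
print(weights)  # all-zero weight matrices give uniform attention
print(fused)
```

With all-zero weight matrices every relevance score is equal, so the attended feature reduces to the plain average of the proposal's features. Learned weights shift the attention toward the time steps that best match the context.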

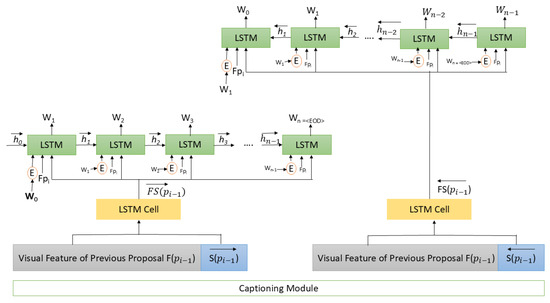

3.2. Caption Module with Semantic and Linguistic Model

The captioning module generates corresponding descriptions by accepting the visual feature vectors for each event. Figure 4 illustrates the block diagram of the captioning module in the proposed model. Two uni-directional LSTMs are used to generate captions in both the forward and backward directions. Initially, visual and textual features are merged using the LSTM layer. The captioning module then utilizes this merged representation as an input feature to enhance its understanding of the overall video context. The LSTM node in the captioning module accepts the text embedding vector, integrates the visual features from the context vector and C3D, and combines the visual–textual feature vector from the previous event. Before embedding the textual information, we apply forward (right-side) and backward (left-side) padding. To ensure contextual, semantic, and linguistic accuracy in event descriptions, it is crucial to incorporate information from past and future events. Additionally, we use the caption from the preceding event to maintain coherence, ensuring that the generated caption aligns with the overall narrative of the video.
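The two padding schemes mentioned above can be illustrated with a small helper. The function name `pad_tokens`, the `<pad>` token, and the truncation of over-long captions are assumptions made for this sketch. Whether the backward branch also reverses the token order is not specified here, so no reversal is applied.

```python
def pad_tokens(tokens, max_len, pad="<pad>", side="right"):
    """Pad (or truncate) a token list to max_len on the chosen side."""
    tokens = tokens[:max_len]
    filler = [pad] * (max_len - len(tokens))
    return tokens + filler if side == "right" else filler + tokens


caption = ["a", "man", "is", "surfing"]
forward_input = pad_tokens(caption, 6, side="right")  # forward (right-side) padding
backward_input = pad_tokens(caption, 6, side="left")  # backward (left-side) padding
print(forward_input)
print(backward_input)
```

Right-side padding keeps the first word at a fixed position, while left-side padding keeps the last word at a fixed position.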

Figure 4.

The diagram illustrates the caption module of DVC-DCSL.

To improve the model’s contextual and semantic understanding across events of the same video, we introduce a fusion vector that combines the previous event’s feature with the hidden representation of its caption. An LSTM layer merges these two feature vectors efficiently. By integrating visual and textual features from the preceding event, the model gains a better understanding of the current event and of the video’s narrative while generating the caption for the current timestamp. This leads to captions that are more semantically rich and better aligned with the video’s narrative. The fused vector, together with the current event feature and the embedding vectors, is fed into the two uni-directional LSTMs in the captioning module. The same process is applied in the backward direction to ensure consistency and coherence in the generated captions: the current event feature is fed into the captioning module along with the previous event’s feature and the hidden representation of its caption. This approach results in two captions, one generated using the forward-direction features and another using the backward-direction features. Thus, a caption selection method is required to determine the most appropriate caption.

3.3. Caption Selection Module

Our proposed captioning module generates two captions, one in the forward direction and another in the reverse direction. A common strategy for final caption selection is to aggregate word probabilities and choose the caption with the highest cumulative score. However, this approach struggles with captions of varying lengths. To address this limitation, we employ log-based and mean-based methods for final caption selection. Low-probability words tend to contribute little to sentence quality, and high-probability words—such as stop words—offer limited contextual information. Therefore, focusing extensively on these word categories is unnecessary. The log-based method uses information entropy to prioritize words with intermediate probabilities, ensuring a balanced selection of meaningful words. Meanwhile, the mean-based approach mitigates the influence of sentence length, providing a more consistent evaluation. The formulas for both methods are presented below:

Here, the per-word likelihoods are the probabilities of selecting each word of the caption in the forward and backward directions, conditioned on the corresponding forward and backward visual features. The aggregated scores summarize the probabilities of all words in the caption generated for the proposal, and the caption lengths (word counts) are those produced by the forward and backward modules, respectively. Using these methods, we calculate the score and choose the caption with the highest score.

To summarize the caption selection process, two confidence-based strategies are employed. In the entropy-based method, the normalized entropy is calculated for both forward and backward captions using the predicted word probabilities. The caption with the lower entropy score—indicating higher confidence—is selected. Alternatively, in the average-probability-based method, the mean of the predicted word probabilities is computed across all time steps for both captions. The caption with the higher average probability is selected, as it indicates more consistent confidence in word prediction. In both methods, only one of the two captions (forward or backward) is retained as the final output based on its confidence score.
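The two selection strategies summarized above can be sketched as follows. This is an illustration under stated assumptions: the normalized entropy is taken to be the average of -p log p over the words of a caption, the mean-based score is the average word probability, and the selection direction (lower entropy, higher mean) follows the summary paragraph. The function names and the example captions and probabilities are hypothetical.

```python
import math

def normalized_entropy(word_probs):
    """Average of -p * log(p) over the words of one caption (assumed form)."""
    total = -sum(p * math.log(p) for p in word_probs if p > 0.0)
    return total / len(word_probs)

def mean_probability(word_probs):
    """Average predicted word probability; independent of caption length."""
    return sum(word_probs) / len(word_probs)

def select_caption(forward, backward, method="mean"):
    """Return the retained (caption, word_probs) pair.

    forward and backward are (caption, word_probs) tuples.
    """
    if method == "entropy":
        # Lower normalized entropy is treated as higher confidence.
        return min(forward, backward, key=lambda c: normalized_entropy(c[1]))
    if method == "mean":
        # Higher average probability is treated as higher confidence.
        return max(forward, backward, key=lambda c: mean_probability(c[1]))
    raise ValueError("method must be 'entropy' or 'mean'")

# Hypothetical captions with per-word probabilities.
fwd = ("a man plays guitar", [0.9, 0.8, 0.95, 0.9])
bwd = ("man is holding a guitar", [0.5, 0.4, 0.6, 0.5, 0.6])
chosen_by_mean = select_caption(fwd, bwd, method="mean")
chosen_by_entropy = select_caption(fwd, bwd, method="entropy")
```

Because both scores are divided by the caption length, a longer caption is not penalized simply for containing more words.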

Individual confidence scores are computed for both the proposal and captioning stages in order to identify the most pertinent event–caption pairs. The confidence score of the event proposal module reflects the likelihood that a segment is accurately localized and classified as an event. The captioning module assigns a confidence score that indicates the quality of the generated description. The selection process is guided by the final confidence score, which is the sum of these two scores. During inference, the final output is composed of the top n event–caption combinations with the highest combined scores.
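The ranking step described above can be sketched in a few lines. The dictionary keys, the function name `top_event_captions`, and the example scores are hypothetical and serve only to show how the summed confidence orders the event–caption pairs.

```python
def top_event_captions(candidates, n):
    """Rank event-caption pairs by the sum of their two confidence scores."""
    ranked = sorted(
        candidates,
        key=lambda c: c["proposal_score"] + c["caption_score"],
        reverse=True,
    )
    return ranked[:n]

# Hypothetical candidates for one video.
candidates = [
    {"segment": (0.0, 12.5), "caption": "a man tunes a guitar",
     "proposal_score": 0.9, "caption_score": 0.6},
    {"segment": (10.0, 30.0), "caption": "he plays a song",
     "proposal_score": 0.7, "caption_score": 0.9},
    {"segment": (28.0, 35.0), "caption": "the crowd claps",
     "proposal_score": 0.4, "caption_score": 0.5},
]
best_two = top_event_captions(candidates, n=2)
```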

3.4. Loss Function

As discussed earlier, the DVC framework comprises two primary phases: event proposal generation and caption generation. The proposal module is trained with a weighted multi-class categorical cross-entropy loss. This loss is computed by evaluating the alignment between predicted proposal segments and ground truth annotations based on the temporal Intersection over Union (tIoU) metric. To facilitate this, the durations of all annotated event segments are collected and clustered into 128 groups, which correspond to the predefined number of proposal anchors K. Each training instance is then associated with a ground truth label sequence in which the label at each time step is a binary vector of dimension K. The k-th entry of this vector is set to 1 if the corresponding proposal achieves a tIoU score of at least 0.5 with the ground truth; otherwise, it is set to 0. The following equation is used to calculate the proposal loss at time t for video V with ground truth (y):

In the preceding formulation, two weights are assigned to negative and positive samples, respectively, based on their distribution within the dataset. The loss compares the predicted confidence score for the j-th proposal at time step t with the corresponding ground truth label. Loss functions are calculated separately for the forward and backward sequences, and gradients are propagated in both directions to allow for joint optimization of the entire training process.
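A weighted cross-entropy over the K proposal anchors at a single time step can be sketched as below. This is one plausible reading of the description, not the paper's exact formula: it uses a single scalar weight for positive samples and another for negative samples (the paper may use per-anchor weights), and the name `proposal_loss` and its parameters are assumptions.

```python
import math

def proposal_loss(confidences, labels, w_pos=1.0, w_neg=1.0, eps=1e-12):
    """Weighted binary cross-entropy summed over the K anchors of one time step.

    confidences: predicted confidence scores in [0, 1], one per anchor.
    labels: binary ground truth labels (1 if tIoU >= 0.5, else 0).
    """
    loss = 0.0
    for c, y in zip(confidences, labels):
        c = min(max(c, eps), 1.0 - eps)  # clamp so that log() stays finite
        loss -= w_pos * y * math.log(c) + w_neg * (1 - y) * math.log(1.0 - c)
    return loss

# Hypothetical scores for K = 4 anchors at one time step.
scores = [0.9, 0.2, 0.6, 0.1]
targets = [1, 0, 1, 0]
step_loss = proposal_loss(scores, targets, w_pos=2.0, w_neg=1.0)
```

Raising `w_pos` relative to `w_neg` makes missed positive anchors more costly, which is the usual motivation for weighting when positives are rare.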

Proposals that have a tIoU greater than 0.8 are considered valid and are forwarded to the captioning module. The captioning loss for each of the forward and backward directions is computed as the sum of the negative log-likelihoods corresponding to the ground truth words in a sentence of length M. This loss measures the discrepancy between the predicted word probabilities and the actual words and is mathematically expressed as follows:

where the i-th term corresponds to the i-th word in the ground truth sentence for both forward and backward directions. The overall training objective, represented by the total loss L, combines the proposal loss with the forward and backward captioning losses:

where the weighting factor (set to 0.5 by default) balances the respective contributions of the proposal loss and the captioning loss in the overall objective function.
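The captioning loss and the combined objective can be sketched as follows. The negative log-likelihood follows the description directly. The way the weighting factor enters the total loss is an assumption: the sketch scales the proposal loss by the factor and adds the sum of the forward and backward captioning losses, which is one common form and may differ from the paper's exact equation. All function and parameter names are hypothetical.

```python
import math

def caption_nll(gt_word_probs):
    """Sum of negative log-likelihoods of the M ground truth words.

    gt_word_probs: probability the model assigned to each ground truth word.
    """
    return -sum(math.log(p) for p in gt_word_probs)

def total_loss(proposal_loss, caption_loss_fwd, caption_loss_bwd, lam=0.5):
    """Assumed combination: lam * proposal loss + forward and backward caption losses."""
    return lam * proposal_loss + (caption_loss_fwd + caption_loss_bwd)

# Hypothetical probabilities assigned to the ground truth words.
loss_fwd = caption_nll([0.9, 0.7, 0.8])
loss_bwd = caption_nll([0.6, 0.8, 0.5])
objective = total_loss(proposal_loss=1.2, caption_loss_fwd=loss_fwd,
                       caption_loss_bwd=loss_bwd, lam=0.5)
```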

4. Results and Implementation

Real-world videos frequently feature multiple overlapping activities that are executed by distinct actors. It is imperative that datasets contain precise temporal annotations and corresponding textual descriptions in order to facilitate effective dense video captioning. Ranjay Krishna et al. introduced the ActivityNet Captions dataset to satisfy this requirement, and it has since become a benchmark resource in dense video captioning research [20]. This dataset is divided into training, validation, and testing splits and comprises approximately 20,000 videos, including both trimmed and untrimmed versions. The precise temporal localization is made possible by the clearly defined start and end times of each annotated event. The dataset comprises approximately 100,000 captions that are extracted from 180 s videos. Each video contains an average of 3.65 temporally aligned sentences. The length of each sentence is approximately 13.46 words. ActivityNet Captions is extensively employed in the development and evaluation of DVC models due to its detailed labeling and richness.

The proposal module was pre-trained separately for five epochs to improve initial parameter learning, prior to the joint end-to-end training of the full model. The Adam optimizer was used during training, with an initial learning rate of 0.001, which was gradually reduced as the training progressed. The C3D network was employed in its pre-trained state for feature extraction, utilizing transfer learning to capture spatiotemporal representations from the video input. Several tIoU thresholds (0.5, 0.7, 0.8, and 0.9) were investigated during the generation of event proposals, and the F1 score was used as the criterion for selecting the most effective threshold for the dataset.
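The threshold search described above relies on two quantities, the tIoU between segments and an F1 score per threshold, which can be sketched as below. The matching rule is an assumption: a proposal counts as correct if it overlaps any ground truth segment at or above the threshold, and a ground truth segment counts as recalled if any proposal does the same. The function names and example segments are hypothetical.

```python
def tiou(seg_a, seg_b):
    """Temporal Intersection over Union of two (start, end) segments."""
    inter = max(0.0, min(seg_a[1], seg_b[1]) - max(seg_a[0], seg_b[0]))
    union = (seg_a[1] - seg_a[0]) + (seg_b[1] - seg_b[0]) - inter
    return inter / union if union > 0 else 0.0

def f1_at_threshold(proposals, ground_truth, threshold):
    """F1 of proposals against ground truth segments at one tIoU threshold."""
    if not proposals or not ground_truth:
        return 0.0
    hit_props = sum(any(tiou(p, g) >= threshold for g in ground_truth)
                    for p in proposals)
    hit_gt = sum(any(tiou(p, g) >= threshold for p in proposals)
                 for g in ground_truth)
    precision = hit_props / len(proposals)
    recall = hit_gt / len(ground_truth)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical segments in seconds.
proposals = [(0.0, 10.0), (20.0, 30.0)]
ground_truth = [(0.0, 9.0), (50.0, 60.0)]
f1_by_threshold = {t: f1_at_threshold(proposals, ground_truth, t)
                   for t in (0.5, 0.7, 0.8, 0.95)}
```

Comparing the values in `f1_by_threshold` is the kind of comparison that would guide the choice of a threshold.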

The dense video captioning model is assessed using widely accepted metrics, including BLEU [11], METEOR [12], CIDEr [15], and ROUGE [13]. In addition, the SODA metric [17], which was recently introduced for dense video captioning tasks, provides a more specialized evaluation. Nevertheless, its limited use within the research community makes it difficult to make fair comparisons with existing baseline models. BLEU is a precision-oriented metric that quantifies the overlap of n-grams between the reference and generated captions. In contrast, ROUGE is recall-oriented and evaluates the comprehensiveness of the generated content. METEOR is regarded as more consistent than BLEU, particularly for datasets that contain a single reference caption, because it can match stems, synonyms, and paraphrases. CIDEr applies TF-IDF weighting to n-gram matches and captures the degree of consensus between machine-generated captions and human annotations, which in turn reflects the alignment between visual and textual modalities.
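The difference between a precision-oriented and a recall-oriented n-gram measure can be illustrated with a simplified sketch. It is not an implementation of BLEU or ROUGE: it omits BLEU's brevity penalty and the geometric mean over n-gram orders, as well as ROUGE's longest-common-subsequence variants, and it handles a single reference only. The function names and example sentences are hypothetical.

```python
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def overlap_counts(candidate, reference, n):
    """Clipped n-gram overlap plus the n-gram totals of both sentences."""
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    return overlap, sum(cand.values()), sum(ref.values())

def ngram_precision(candidate, reference, n=1):
    """Share of candidate n-grams found in the reference (BLEU-style view)."""
    overlap, cand_total, _ = overlap_counts(candidate, reference, n)
    return overlap / cand_total if cand_total else 0.0

def ngram_recall(candidate, reference, n=1):
    """Share of reference n-grams covered by the candidate (ROUGE-style view)."""
    overlap, _, ref_total = overlap_counts(candidate, reference, n)
    return overlap / ref_total if ref_total else 0.0

generated = "a man plays a guitar"
reference = "a man is playing a guitar on stage"
precision = ngram_precision(generated, reference, n=1)
recall = ngram_recall(generated, reference, n=1)
```

In this example the short generated caption scores well on precision but covers only half of the reference unigrams, which is why the two views are usually reported together.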

A comparative evaluation of the proposed DVC-DCSL model and its variants with leading dense video captioning approaches on the ActivityNet Captions dataset is shown in Table 2. Standard metrics, such as BLEU, METEOR, ROUGE, and CIDEr, are employed to evaluate the performance, with higher values indicating better captioning quality. An initial variant, DVC-CSL, was introduced as part of the ablation analysis. This variant uses a single-LSTM framework in which a unified context vector is created by merging visual features from both preceding and succeeding events. This allows a single caption to be generated per event without the need for a caption selection mechanism. In contrast, the improved version, DVC-DCSL, consists of two distinct uni-directional LSTM networks: one processes the sequence in the forward direction, while the other processes it in the backward direction. This architecture enhances temporal modeling by generating a caption independently from each directional LSTM. Each LSTM output then passes through its own softmax layer, which produces a probability distribution over the vocabulary for caption generation. L2 regularization is applied to mitigate overfitting.

Table 2.

Assessment of DVC models on the ActivityNet Captions dataset. Higher scores indicate better performance. Notation: +MC denotes multiple captions, +R denotes regularization added to the base model, +E denotes entropy-based caption selection, and +M denotes mean-based caption selection. * The METEOR and CIDEr scores were obtained from [41], as Vid2Seq does not provide them.

The model’s ability to capture a more comprehensive temporal context is enhanced by the dual-LSTM design, which leads to an improvement in the quality of the captions. Specifically, it achieves a 4.3% increase in METEOR score compared to the baseline model [21], which is significantly greater than the 1.46% improvement achieved by the single-LSTM variant, DVC-CSL. These results substantiate the dual-LSTM structure’s efficacy and establish a robust foundation for future improvements. In comparison to numerous existing dense video captioning methods, the model exhibits competitive performance in METEOR scores, with only a few exceptions. Additionally, the integration of a caption selection module improves performance by selecting more precise and contextually appropriate words, resulting in an overall improvement in caption quality.

Later, a caption selection module was introduced to replace the earlier method that relied on concatenating word probability vectors. Two strategies were implemented for caption selection: an entropy-based method (DVC-DCSL +R+MC+E) and a mean-based method (DVC-DCSL +R+MC+M). These approaches allowed the model to select more appropriate words, resulting in a 7.5% improvement in the METEOR score compared to the baseline version. During the development of the overall framework, the potential of integrating a large language model (LLM) was also explored to improve coherence in the generated captions. However, LLMs often introduce hallucinated content that is not grounded in the visual input. To address this concern, a linguistic module was designed to refine the generated captions. This module helps ensure that the captions remain faithful to the visual content and that linguistic consistency is maintained across adjacent event captions, leading to a more coherent and context-aware narrative. To produce better-aligned captions across events, we feed the visual and caption feature vectors of the previous event into the model, which helps it follow the narrative of the video. Our models with caption selection and linguistic modules, DVC-DCSL R+MC+M+L and DVC-DCSL R+MC+E+L, demonstrated a 12% improvement in the METEOR score relative to current state-of-the-art dense video captioning models. This result indicates that the model captures both the visual and the narrative aspects of the video and can describe consecutive events as a continuous story. In this way, our model generates captions that are contextually, semantically, and linguistically aligned.

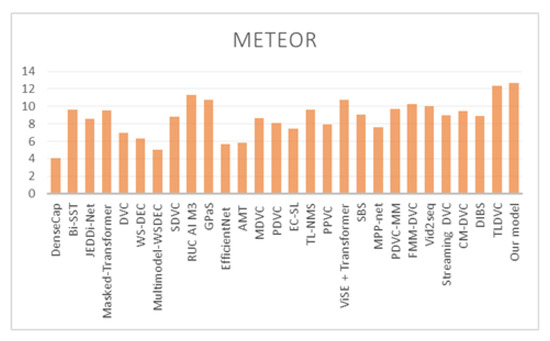

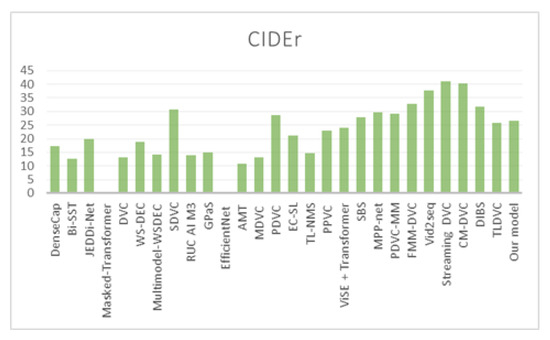

The mean-based caption selection method showed improved performance as sentence length increased, particularly for extended n-gram matches. While our model achieved significant gains in the CIDEr score compared to the baseline model [21], further evaluation is required using transformer-based visual feature extraction models. Figure 5 and Figure 6 compare the proposed model (DVC-DCSL) with other state-of-the-art dense video captioning models on the METEOR and CIDEr metrics, respectively. A higher METEOR score indicates better synonym matching and overall caption quality. The CIDEr score evaluates the consensus between generated and reference captions based on TF-IDF weighting. Although the results demonstrate that the DVC-DCSL model outperforms existing approaches, challenges remain in achieving higher CIDEr scores. This indicates that enhancing the alignment between visual and textual features is a promising direction for improving caption generation in future research.

Figure 5.

METEOR score comparison of DVC-DCSL with existing state-of-the-art dense video captioning models.

Figure 6.

CIDEr score comparison of DVC-DCSL with existing state-of-the-art dense video captioning models.

While several recent lightweight video-language models such as SmolVLM2 [54] have gained popularity due to their compactness and efficiency, it is important to note that these models are designed primarily for frame-level or single-shot video understanding tasks. In contrast, dense video captioning involves the dual challenge of temporally localizing multiple events and generating semantically rich captions for each event segment. Therefore, direct comparisons between our model and such lightweight architectures are not appropriate, as the underlying objectives, input processing paradigms, and evaluation criteria differ substantially. Our comparisons are instead aligned with the current state-of-the-art in dense video captioning, ensuring relevance and task-specific fairness.

Despite the improvements introduced by our model, certain limitations remain. The performance of the dense video captioning system is closely tied to the accuracy of both the proposal generation and captioning modules. One common failure case occurs when two distinct events have identical or nearly overlapping start and end timestamps. In such scenarios, the model struggles to differentiate between the events, leading to merged or missing captions. This highlights the need for more temporally precise event proposal mechanisms that can better handle fine-grained distinctions between adjacent or overlapping activities. Future work could explore adaptive proposal strategies or attention-based refinement modules to improve event boundary detection and reduce such failures. In future work, we plan to explore end-to-end transformer-based frameworks that incorporate LLMs with quantization techniques for efficiency, while maintaining visual grounding.

5. Conclusions and Future Direction

This paper presents a novel methodology for dense video captioning, termed Dense Video Captioning with Dual Contextual, Semantical, and Linguistic Modules (DVC-DCSL). By leveraging contextual, semantic, and linguistic information from preceding and subsequent events, the proposed model effectively generates coherent and accurate event descriptions. Rather than solely focusing on dual uni-directional LSTMs, our approach emphasizes the integration of visual and textual features from adjacent events to enhance contextual understanding. Additionally, the entropy- or mean-based caption selection mechanism ensures that the generated captions are both contextually and linguistically aligned across events. The proposed method outperformed the existing state-of-the-art model by 12% on the ActivityNet dataset, improving the METEOR score from 11.28 to 12.71. These findings highlight the importance of contextual information in enhancing video captioning accuracy. Future research can evaluate the model on datasets containing parallel activities, such as sports videos. Further optimization of the proposal module, particularly for capturing long-range dependencies, may enhance performance. While the DVC-DCSL model has proven effective for recorded videos, adapting it for streaming video remains an important direction. This would enable real-time event detection and caption generation, making the model more applicable to dynamic real-world scenarios, such as log generation from CCTV footage.

Author Contributions

Conceptualization, D.B. and P.T.; methodology, D.B.; software, D.B.; validation, D.B. and P.T.; formal analysis, D.B.; investigation, D.B.; resources, D.B.; data curation, D.B.; writing—original draft preparation, D.B.; writing—review and editing, D.B.; visualization, D.B.; supervision, P.T.; project administration, D.B. and P.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available at https://cs.stanford.edu/people/ranjaykrishna/densevid (accessed on 20 April 2025).

Acknowledgments

We thank the CSE Department, School of Technology, Institute of Technology, Nirma University, Ahmedabad, for helping us carry out this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kim, J.; Rohrbach, A.; Darrell, T.; Canny, J.; Akata, Z. Textual Explanations for Self-Driving Vehicles. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 577–593.

- Potapov, D.; Douze, M.; Harchaoui, Z.; Schmid, C. Category-specific video summarization. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 540–555.

- Dinh, Q.M.; Ho, M.K.; Dang, A.Q.; Tran, H.P. Trafficvlm: A controllable visual language model for traffic video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 7134–7143.

- Yang, H.; Meinel, C. Content based lecture video retrieval using speech and video text information. IEEE Trans. Learn. Technol. 2014, 7, 142–154.

- Anne Hendricks, L.; Wang, O.; Shechtman, E.; Sivic, J.; Darrell, T.; Russell, B. Localizing moments in video with natural language. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 22–25 July 2017; pp. 5803–5812.

- Aggarwal, A.; Chauhan, A.; Kumar, D.; Mittal, M.; Roy, S.; Kim, T. Video Caption Based Searching Using End-to-End Dense Captioning and Sentence Embeddings. Symmetry 2020, 12, 992.

- Wu, S.; Wieland, J.; Farivar, O.; Schiller, J. Automatic Alt-text: Computer-generated Image Descriptions for Blind Users on a Social Network Service. In Proceedings of the CSCW, Portland, OR, USA, 25 February–1 March 2017; pp. 1180–1192.

- Shi, B.; Ji, L.; Liang, Y.; Duan, N.; Chen, P.; Niu, Z.; Zhou, M. Dense Procedure Captioning in Narrated Instructional Videos. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 6382–6391.

- Chen, Y.; Wang, S.; Zhang, W.; Huang, Q. Less is more: Picking informative frames for video captioning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 358–373.

- Suin, M.; Rajagopalan, A. An Efficient Framework for Dense Video Captioning. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020; pp. 12039–12046.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic And Extrinsic Evaluation Measures For Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29–30 June 2005; pp. 65–72.

- Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; Association for Computational Linguistics: Stroudsburg, PA, USA, 2004.

- Anderson, P.; Fernando, B.; Johnson, M.; Gould, S. Spice: Semantic propositional image caption evaluation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 382–398.

- Vedantam, R.; Lawrence Zitnick, C.; Parikh, D. Cider: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

- Kusner, M.; Sun, Y.; Kolkin, N.; Weinberger, K. From word embeddings to document distances. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 957–966.

- Fujita, S.; Hirao, T.; Kamigaito, H.; Okumura, M.; Nagata, M. SODA: Story Oriented Dense Video Captioning Evaluation Framework. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part VI 16; Springer: Cham, Switzerland, 2020; pp. 517–531.

- Escorcia, V.; Heilbron, F.C.; Niebles, J.C.; Ghanem, B. Daps: Deep action proposals for action understanding. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 768–784.

- Buch, S.; Escorcia, V.; Shen, C.; Ghanem, B.; Carlos Niebles, J. Sst: Single-stream temporal action proposals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 2911–2920.

- Krishna, R.; Hata, K.; Ren, F.; Fei-Fei, L.; Niebles, J.C. Dense-Captioning Events in Videos. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 706–715.

- Wang, J.; Jiang, W.; Ma, L.; Liu, W.; Xu, Y. Bidirectional Attentive Fusion with Context Gating for Dense Video Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7190–7198.

- Li, Y.; Yao, T.; Pan, Y.; Chao, H.; Mei, T. Jointly Localizing and Describing Events for Dense Video Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7492–7500.

- Xu, H.; Li, B.; Ramanishka, V.; Sigal, L.; Saenko, K. Joint event detection and description in continuous video streams. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 396–405.

- Mun, J.; Yang, L.; Ren, Z.; Xu, N.; Han, B. Streamlined Dense Video Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6588–6597.

- Zhang, Z.; Xu, D.; Ouyang, W.; Zhou, L. Dense Video Captioning using Graph-based Sentence Summarization. IEEE Trans. Multimed. 2020, 23, 1799–1810.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 652–660.

- Zhou, L.; Zhou, Y.; Corso, J.J.; Socher, R.; Xiong, C. End-to-End Dense Video Captioning with Masked Transformer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8739–8748.

- Hershey, S.; Chaudhuri, S.; Ellis, D.P.; Gemmeke, J.F.; Jansen, A.; Moore, R.C.; Plakal, M.; Platt, D.; Saurous, R.A.; Seybold, B.; et al. CNN architectures for large-scale audio classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 131–135.

- Huang, X.; Chan, K.H.; Wu, W.; Sheng, H.; Ke, W. Fusion of multi-modal features to enhance dense video caption. Sensors 2023, 23, 5565.

- Iashin, V.; Rahtu, E. A Better Use of Audio-Visual Cues: Dense Video Captioning with Bi-modal Transformer. arXiv 2020, arXiv:2005.08271.

- Yu, Z.; Han, N. Accelerated masked transformer for dense video captioning. Neurocomputing 2021, 445, 72–80.

- Iashin, V.; Rahtu, E. Multi-modal Dense Video Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 958–959.

- Wang, T.; Zhang, R.; Lu, Z.; Zheng, F.; Cheng, R.; Luo, P. End-to-end dense video captioning with parallel decoding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 6847–6857.

- Choi, W.; Chen, J.; Yoon, J. Parallel Pathway Dense Video Captioning With Deformable Transformer. IEEE Access 2022, 10, 129899–129910.

- Huang, X.; Chan, K.H.; Ke, W.; Sheng, H. Parallel dense video caption generation with multi-modal features. Mathematics 2023, 11, 3685.

- Aafaq, N.; Mian, A.S.; Akhtar, N.; Liu, W.; Shah, M. Dense Video Captioning with Early Linguistic Information Fusion. IEEE Trans. Multimed. 2022, 25, 2309–2322.

- Deng, C.; Chen, S.; Chen, D.; He, Y.; Wu, Q. Sketch, ground, and refine: Top-down dense video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 234–243.

- Choi, W.; Chen, J.; Yoon, J. Step by step: A gradual approach for dense video captioning. IEEE Access 2023, 11, 51949–51959.

- Yang, A.; Nagrani, A.; Seo, P.H.; Miech, A.; Pont-Tuset, J.; Laptev, I.; Sivic, J.; Schmid, C. Vid2seq: Large-scale pretraining of a visual language model for dense video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 10714–10726.

- Wei, Y.; Yuan, S.; Chen, M.; Shen, X.; Wang, L.; Shen, L.; Yan, Z. MPP-net: Multi-perspective perception network for dense video captioning. Neurocomputing 2023, 552, 126523.

- Zhou, X.; Arnab, A.; Buch, S.; Yan, S.; Myers, A.; Xiong, X.; Nagrani, A.; Schmid, C. Streaming dense video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 18243–18252.

- Kim, M.; Kim, H.B.; Moon, J.; Choi, J.; Kim, S.T. Do You Remember? Dense Video Captioning with Cross-Modal Memory Retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 13894–13904.

- Wu, H.; Liu, H.; Qiao, Y.; Sun, X. DIBS: Enhancing Dense Video Captioning with Unlabeled Videos via Pseudo Boundary Enrichment and Online Refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 18699–18708.

- Bhatt, D.; Thakkar, P. Improving Narrative Coherence in Dense Video Captioning through Transformer and Large Language Models. J. Innov. Image Process. 2025, 7, 333–361.

- Shen, Z.; Li, J.; Su, Z.; Li, M.; Chen, Y.; Jiang, Y.G.; Xue, X. Weakly supervised dense video captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; Volume 2.

- Duan, X.; Huang, W.; Gan, C.; Wang, J.; Zhu, W.; Huang, J. Weakly Supervised Dense Event Captioning in Videos. arXiv 2018, arXiv:1812.03849.

- Rahman, T.; Xu, B.; Sigal, L. Watch, listen and tell: Multi-modal weakly supervised dense event captioning. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8907–8916.

- Chen, S.; Jiang, Y.G. Towards bridging event captioner and sentence localizer for weakly supervised dense event captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8425–8435.

- Aytar, Y.; Vondrick, C.; Torralba, A. Soundnet: Learning sound representations from unlabeled video. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 892–900.

- Estevam, V.; Laroca, R.; Pedrini, H.; Menotti, D. Dense video captioning using unsupervised semantic information. arXiv 2021, arXiv:2112.08455.

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231.

- Song, Y.; Chen, S.; Zhao, Y.; Jin, Q. Team RUC_AIM3 Technical Report at Activitynet 2020 Task 2: Exploring Sequential Events Detection for Dense Video Captioning. arXiv 2020, arXiv:2006.07896.

- Wang, T.; Zheng, H.; Yu, M.; Tian, Q.; Hu, H. Event-Centric Hierarchical Representation for Dense Video Captioning. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1890–1900.

- Marafioti, A.; Zohar, O.; Farré, M.; Noyan, M.; Bakouch, E.; Cuenca, P.; Zakka, C.; Allal, L.B.; Lozhkov, A.; Tazi, N.; et al. Smolvlm: Redefining small and efficient multimodal models. arXiv 2025, arXiv:2504.05299.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).