1. Introduction

As industrial systems increase in complexity and autonomy, accurately perceiving and responding to emergent risks becomes a central concern in safety engineering [1,2]. Traditional process control systems (PCSs), which monitor and regulate chemical, mechanical, or energy-intensive operations, are often embedded in highly structured environments [3]. These systems rely heavily on deterministic logic, hardwired sensors, and human oversight to detect abnormal conditions and prevent catastrophic failures [4]. Despite technological advancements, PCSs still depend on human-in-the-loop mechanisms for hazard identification and intervention, which are prone to limitations such as alarm flooding, misinterpretation, and delayed response [5]. Moreover, the interaction between automated systems and human operators creates new risks, such as mode confusion [6], automation surprise [7], and even conflicts between humans and automation [8,9].

Why do conflicts arise, particularly when the system is augmented with artificial intelligence (AI) capabilities? One fundamental reason is that process systems and intelligent machines lack the situational awareness that humans inherently possess [10]. More precisely, situational awareness enables a system to “know what is happening” within its operational context [11,12]. Advancing this capability requires a deeper investigation into risk perception, which would allow machines to “know risk” [13]. In this study, risk perception takes two forms: (1) human risk perception, the cognitive process by which individuals recognize and assess potential hazards; and (2) system-based risk perception, a system’s ability to detect and respond to risks using sensor data and programmed or learned logic.

Recent developments in the aviation and automotive industries have demonstrated progress in machine risk perception [14,15]; however, they have also introduced new challenges, including catastrophic events that warrant further study [16,17]. Autonomous vehicles (AVs) represent a new frontier of cyber–physical systems characterized by probabilistic sensing, machine learning (ML)-based perception, and decision-making in open and unpredictable environments [18,19]. Unlike PCSs, AVs leverage multi-modal sensor fusion, including light detection and ranging (LiDAR), radar, and vision, to construct real-time environmental models and initiate preemptive actions such as emergency braking [20,21]. While these systems demonstrate promising capabilities, they also exhibit critical vulnerabilities, particularly in edge cases where they fail to generalize learned behaviors or to coordinate effectively with human drivers [22,23].

Generally, risk perception in PCSs follows a top-down, rule-based approach, whereas AVs increasingly rely on adaptive, data-driven models. Neither paradigm is immune to failure. Prior studies have shown that misalignment between system design and human cognitive models often contributes to catastrophic events [24,25]. As process safety researchers, the authors observe growing interest in integrating AI-based risk perception and control strategies into PCSs, an area in which AVs have already made significant strides. This contrast raises a timely question: what lessons can the PCS field learn from AVs about risk perception?

To date, no systematic study has compared the two domains from the perspective of risk perception and human–AI interaction. Our motivation, therefore, is to draw cross-domain insights by analyzing real-world failure cases from both. By identifying shared vulnerabilities and contrasting architectural paradigms, we aim to contribute a novel comparative methodology and actionable design recommendations for future AI-integrated industrial systems.

To guide this investigation, the study poses three key research questions:

- i. How do risk perception mechanisms differ between PCSs and AVs?
- ii. What are the typical failure patterns in human–AI interaction?
- iii. How can insights from PCS and AV failures inform safer intelligent system design?

This study therefore presents a comparative analysis of 60 real-world accident reports, 30 each from the PCS and AV domains. Using a standardized data extraction template supported by Generative Pre-trained Transformer (GPT)-assisted processing, the study benchmarks risk triggers, perception modalities, decision mechanisms, and human–AI interaction failures. By systematically contrasting the two risk perception paradigms, the paper seeks to uncover shared vulnerabilities and cross-domain lessons that can inform the development of more robust, interpretable, and human-aligned safety systems.

2. Methodology

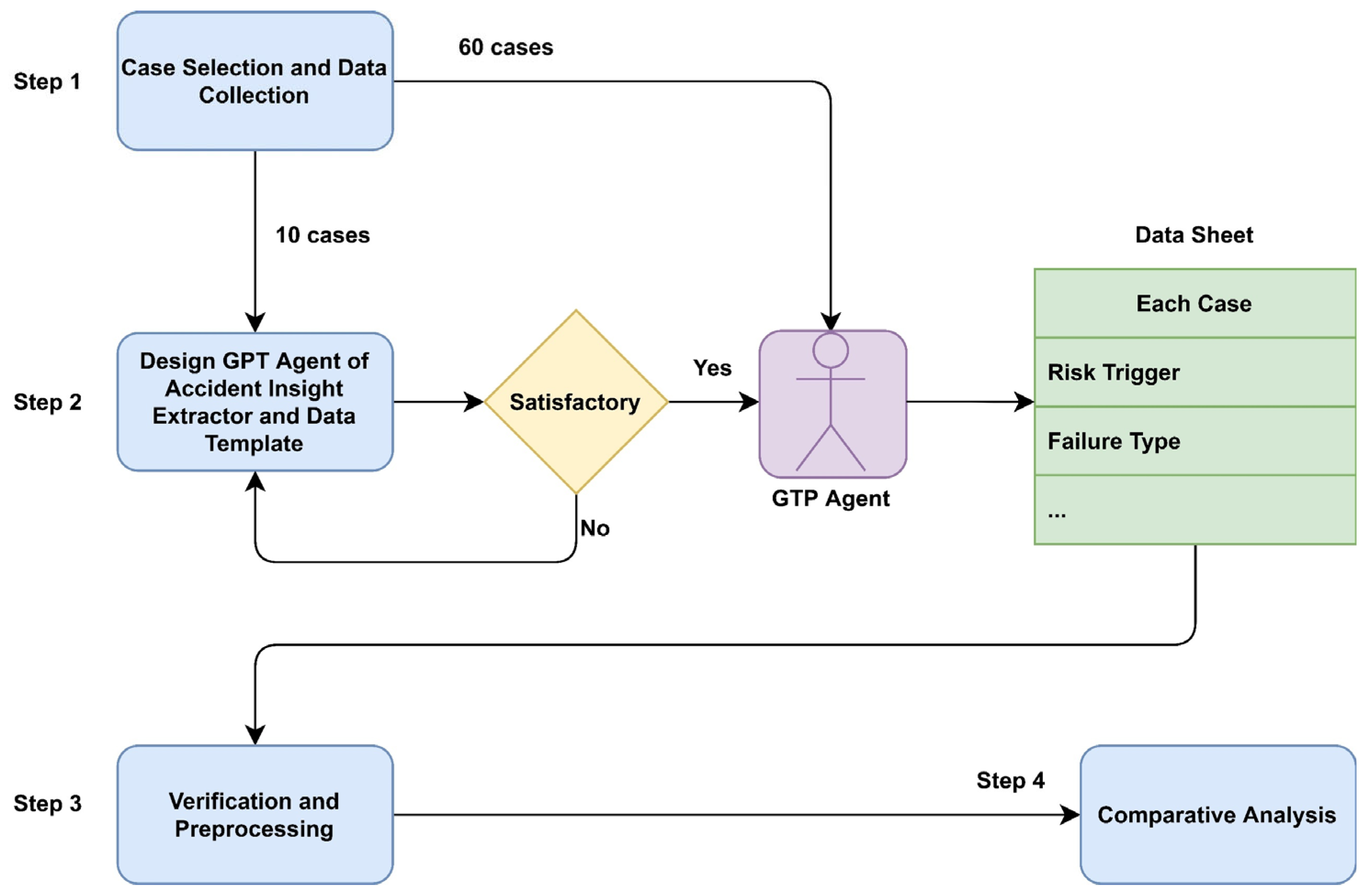

The methodology of this study involves systematic data collection, structured extraction using a standardized template, GPT-assisted processing, and quantitative and qualitative cross-domain analysis. The research framework is shown in Figure 1, and the details are presented in the following subsections.

Briefly, the framework illustrates the study’s methodological workflow. It begins with the selection of 70 real-world accident reports, which are divided by stratified sampling into a development set and an analysis set. The GPT-assisted Accident Insight Extractor is then applied to each report using a standardized template. The extracted data undergo manual verification and preprocessing before being analyzed through quantitative benchmarking and qualitative synthesis to compare risk perception mechanisms across the PCS and AV domains.

2.1. Case Selection and Data Collection

To ensure empirical rigor and representativeness, this study collected 70 real-world incident reports, evenly distributed across two critical domains: 35 from PCSs and 35 from AVs. A stratified random sampling approach was then used to divide them into two distinct groups, of which the second (60 reports) forms the primary dataset for this work.

Group 1 (10 cases in total): This group comprises five randomly selected cases from each domain, specifically curated to support the iterative design and evaluation of a GPT-assisted data extraction agent.

Group 2 (60 cases in total): The remaining 60 cases, comprising 30 from each domain, constitute Group 2 and serve as the primary dataset for the subsequent comparative research and statistical analysis.
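The split into Group 1 and Group 2 can be sketched as a per-domain random draw. This is a minimal illustration, assuming each report is a record tagged with its domain; the function name, placeholder report IDs, and seed are hypothetical, and the sketch stratifies only by domain, not by the additional criteria (automation level, failure type, causal factors) described below.

```python
import random
from collections import defaultdict

def stratified_split(reports, dev_per_domain=5, seed=2025):
    """Split reports into Group 1 (development) and Group 2 (analysis),
    drawing the same number of development cases at random from each domain."""
    by_domain = defaultdict(list)
    for report in reports:
        by_domain[report["domain"]].append(report)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    group1, group2 = [], []
    for domain in sorted(by_domain):  # iterate domains in a fixed order
        cases = list(by_domain[domain])
        rng.shuffle(cases)
        group1.extend(cases[:dev_per_domain])
        group2.extend(cases[dev_per_domain:])
    return group1, group2

# Hypothetical placeholder IDs standing in for the 35 CSB and 35 NTSB reports.
reports = [{"id": f"PCS-{i:02d}", "domain": "PCS"} for i in range(35)]
reports += [{"id": f"AV-{i:02d}", "domain": "AV"} for i in range(35)]
group1, group2 = stratified_split(reports)
print(len(group1), len(group2))  # 10 60
```

Drawing the development cases separately within each domain guarantees the five-and-five balance of Group 1, which a single draw over all 70 reports would not.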

For the data sources, PCS cases were obtained from official U.S. Chemical Safety Board (CSB) investigation reports, available at https://www.csb.gov/investigations/ (accessed on 1 May 2025), which offer comprehensive technical narratives and root cause analyses. Specifically, we focused on the 30 most recent finalized investigations, with final reports released between 18 September 2017 and 1 May 2025. The PCS cases reflect systems using traditional automation rather than AI. Although these reports do not involve AI technologies, the interaction patterns they document offer a foundation for projecting future human–AI dynamics in industrial environments.

For the AV domain, cases were selected from formal investigation reports issued by the National Transportation Safety Board (NTSB), available at https://www.ntsb.gov/investigations/AccidentReports/Pages/Reports.aspx (accessed on 1 May 2025). As with the PCS cases, the AV accident reports were selected from the period 2017 to 2025. The AV cases span automation levels L2 (partial automation), L3 (conditional automation), and L4 (high automation); definitions of these levels are available on the NTSB website. AV case availability is constrained by limited public reporting and proprietary data, so 30 PCS cases were selected to match the AV sample and keep the analysis balanced. Although the sample size is modest, it enables a structured, cross-domain comparison.

All selected cases met a consistent set of inclusion criteria to ensure analytical depth and consistency. Each report was required to contain sufficient technical detail to permit structured extraction, including a documented sequence of events, descriptions of the instrumentation (e.g., sensors) and system behavior, and accounts of human interaction or oversight. Furthermore, each report had to explicitly identify the incident’s root causes or contributing factors. The selection process was guided by the goal of capturing diversity across several key dimensions: failure types (e.g., sensor malfunctions, algorithmic errors, and interface design flaws), operational environments (industrial settings for PCSs vs. urban or roadway conditions for AVs), and safety outcomes ranging from near-misses to catastrophic failures.

In summary, to ensure diversity and relevance, we employed stratified random sampling based on three criteria: (1) automation level, (2) failure type, and (3) diversity in causal factors. This stratification allowed for balanced coverage of varied operational conditions and failure patterns across both domains. Sampling was conducted from publicly available investigation reports published between 2017 and 2025 by the CSB (for PCS cases) and the NTSB (for AV cases). Only reports with sufficient technical detail, clear causal descriptions, and structured findings were included.

2.2. Development of a Data Extraction Agent and Template

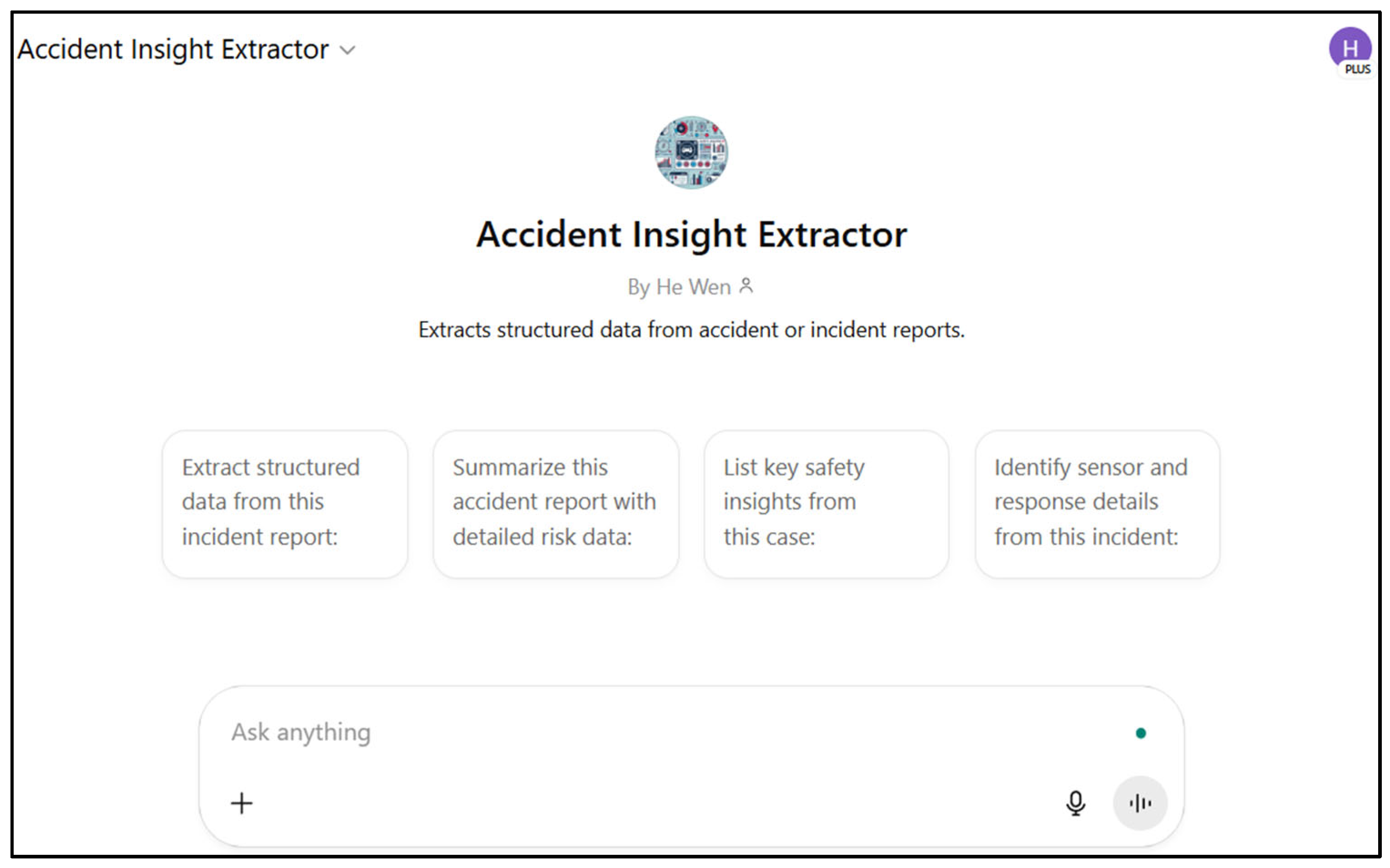

In this step, a GPT-assisted data extraction agent, the Accident Insight Extractor, was designed and deployed as a custom GPT using the instruction-based configuration interface of the ChatGPT (GPT-4o) GPT Store. To ensure the reliability and consistency of the extracted data, we developed and iteratively refined the agent on a development set of 10 accident reports (Group 1, five from each domain). These reports were selected to capture a range of failure types, sensor configurations, and human–AI interactions.

Discrepancies or ambiguities were flagged during each iteration, and the prompt structure and parsing logic were adjusted to improve the agent’s performance. This iterative, human-in-the-loop approach allowed us to refine the agent’s reliability before applying it to the full dataset. Quantitative performance metrics such as precision and recall were not applicable because of the narrative variability of the source documents; instead, qualitative validation confirmed that the extracted fields aligned with expert interpretations and were consistent across cases.

Figure 2 shows the interface of the GPT agent developed for this study. The tool processes narrative accident reports and outputs structured data aligned with the defined extraction template (Table 1), enabling consistent capture of technical, sensor, response, and human interaction features.

Table 1 presents the standardized data extraction template for incident report information. An example of the output is provided in Appendix A, with identifiable commercial details redacted to protect sensitive and copyrighted content.
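Because the agent itself was configured through instructions rather than code, the following is only a sketch of how the template could be represented for the human verification step. The field names are transcribed from the Appendix A example; the Python identifiers, the all-string types, and the `missing_fields` helper are hypothetical choices, not part of the authors’ tool.

```python
from dataclasses import dataclass, fields

@dataclass
class ExtractionRecord:
    """One accident report in the extraction template (field names follow Appendix A)."""
    case_id: str
    domain: str                      # e.g., "PCS" or "AV"
    incident_date: str
    source: str                      # e.g., "CSB" or "NTSB"
    incident_summary: str
    risk_trigger_type: str           # e.g., "Internal" or "External"
    risk_trigger_detail: str
    sensor_type_and_modalities: str
    sensor_frequency_and_fusion: str
    risk_detection_method: str
    detection_latency: str
    interpretation_logic: str
    system_prediction: str
    system_response: str
    response_latency: str
    failure_classification: str
    human_role: str
    human_intervention_effective: str
    safety_outcome: str
    design_weakness_identified: str

def missing_fields(raw: dict) -> list:
    """Return template fields that are absent or blank in a raw extraction output,
    so a human reviewer knows which fields to check against the original report."""
    return [f.name for f in fields(ExtractionRecord)
            if not str(raw.get(f.name, "")).strip()]

# A hypothetical, partially filled agent output.
raw = {"case_id": "2019-X-X-XX", "domain": "PCS", "risk_trigger_type": "Internal"}
print(len(missing_fields(raw)))  # 17
```

Once `missing_fields` returns an empty list, the output can be loaded as `ExtractionRecord(**raw)`, which also rejects any unexpected field names.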

Although a unified extraction template (Table 1) was used for all cases to ensure consistency, we acknowledge structural differences between CSB (PCS) and NTSB (AV) reports. Moreover, while GPT-assisted extraction enables efficient and structured analysis of unstructured reports, it has limitations: the model’s outputs are sensitive to variations in narrative structure, and it may interpret ambiguous descriptions inconsistently. All extracted data were therefore reviewed and validated by human coders to ensure accuracy, making the extraction process a hybrid of manual review and automated GPT-based analysis. Complete accident reports were parsed to ensure completeness and contextual fidelity. Where available, supporting elements such as direct quotes, time stamps, or system diagrams were appended to the dataset to enhance data validity and provide explanatory anchors for subsequent thematic and statistical analysis.

2.3. Verification and Preprocessing

To ensure the reliability and analytical utility of the extracted data, all outputs generated by the GPT-assisted extraction agent underwent systematic verification through manual cross-checking. This validation process involved reviewing each coded field against the original report content to confirm semantic accuracy, contextual fidelity, and completeness. Inconsistencies, ambiguities, or omissions were flagged and corrected through iterative review.

Following verification, a series of manual preprocessing steps was applied to standardize the dataset and prepare it for quantitative and qualitative analysis. These steps included normalizing terminology across cases to ensure consistent categorical labels (e.g., risk trigger types and failure classifications), annotating ambiguous or context-dependent events to improve interpretability, and aligning data formats (such as dates, durations, and categorical encodings) for compatibility with statistical and visual analysis tools.
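Terminology normalization of this kind can be sketched as a lookup from free-text variants to canonical labels, with anything unmatched flagged for manual annotation. The canonical labels below are the failure categories named in Section 3.4; the synonym table and the `normalize_label` function are hypothetical illustrations, since the study performed this step manually.

```python
import re

# Hypothetical synonym table: free-text variants -> canonical failure labels.
CANONICAL_FAILURE_LABELS = {
    "overreliance": "over-reliance on automation",
    "over-reliance": "over-reliance on automation",
    "automation complacency": "over-reliance on automation",
    "mode confusion": "mode confusion",
    "mode error": "mode confusion",
    "passive monitoring": "passive monitoring",
    "inattentive monitoring": "passive monitoring",
    "operator error": "human error",
    "human error": "human error",
}

def normalize_label(raw_label: str) -> str:
    """Map a free-text label to a canonical category; flag unknowns for manual annotation."""
    key = re.sub(r"\s+", " ", raw_label.strip().lower())  # collapse case and spacing
    for variant, canonical in CANONICAL_FAILURE_LABELS.items():
        if variant in key:  # substring match tolerates extra context words
            return canonical
    return "UNRESOLVED: " + raw_label.strip()

print(normalize_label("  Operator   Error "))          # human error
print(normalize_label("Mode error during handover"))  # mode confusion
print(normalize_label("Sensor drift"))                # UNRESOLVED: Sensor drift
```

Returning an explicit `UNRESOLVED` marker, rather than guessing a category, keeps ambiguous cases visible for the human annotation step described above.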

In addition, the PCS cases examined do not involve deployed AI systems. Nonetheless, a consistent analytical structure is maintained to compare human–automation interaction failures in PCSs with those involving AI in AVs. This approach supports the exploration of projected human–AI collaboration challenges as PCSs advance.

Lastly, all structured outputs generated by the GPT-assisted extraction agent were manually reviewed against the original investigation reports to ensure consistency and accuracy. Although we did not implement formal double-blind coding, the structured nature of the GPT-generated outputs minimized ambiguity and variability across cases. The first author conducted all data extraction and preprocessing to maintain uniform interpretation and improve workflow efficiency. To ensure oversight and methodological rigor, the two co-authors independently verified field alignment and provided expert feedback. This collaborative review process was an informal but targeted validation step to ensure semantic fidelity and analytical consistency.

2.4. Comparative Analysis

To comprehensively evaluate the distinctions in risk perception between PCSs and AVs, the study employed a dual-track analytical strategy that integrates quantitative benchmarking and qualitative thematic synthesis.

The quantitative component focused on four key indicators extracted from 60 validated incident cases (30 from each domain): (1) the number of cases associated with each distinct risk trigger, (2) the percentage of cases in the most frequent failure classification, (3) the percentage of cases involving human intervention, and (4) the effectiveness rate of those interventions. These metrics were derived directly from the structured extraction template and tabulated to identify notable differences between the two domains.
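The four indicators can be computed per domain with simple counting. The sketch below assumes simplified records with hypothetical keys (`trigger`, `failure`, `intervened`, `effective`) and defines effectiveness as fully effective interventions divided by all interventions; both the schema and that definition are assumptions, and the four toy records are invented, not taken from the dataset.

```python
from collections import Counter

def domain_indicators(records, domain):
    """Compute the four benchmarking indicators for one domain (assumes it has at least one case)."""
    cases = [r for r in records if r["domain"] == domain]
    n = len(cases)
    failures = Counter(r["failure"] for r in cases)
    top_failure, top_count = failures.most_common(1)[0]
    intervened = [r for r in cases if r["intervened"]]
    fully_effective = [r for r in intervened if r["effective"] == "Yes"]
    return {
        "cases_per_trigger": Counter(r["trigger"] for r in cases),          # indicator (1)
        "top_failure": top_failure,
        "top_failure_pct": 100 * top_count / n,                             # indicator (2)
        "intervention_pct": 100 * len(intervened) / n,                      # indicator (3)
        "effectiveness_pct": (100 * len(fully_effective) / len(intervened)  # indicator (4)
                              if intervened else 0.0),
    }

# Four toy AV records (hypothetical, not taken from the dataset).
FIELDS = ("domain", "trigger", "failure", "intervened", "effective")
toy = [dict(zip(FIELDS, row)) for row in [
    ("AV", "pedestrian/cyclist", "over-reliance on automation", True, "No"),
    ("AV", "other vehicle", "over-reliance on automation", True, "Yes"),
    ("AV", "pedestrian/cyclist", "mode confusion", False, "N/A"),
    ("AV", "sensor misperception", "passive monitoring", True, "Partial"),
]]
print(domain_indicators(toy, "AV"))
```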

Complementing the numerical analysis, a qualitative thematic analysis was conducted to interpret underlying patterns and contextual factors contributing to system failures. Insights and conclusions are drawn from the summaries, comparisons, and discussions.

3. Results and Discussion

3.1. Risk Triggers and Sources

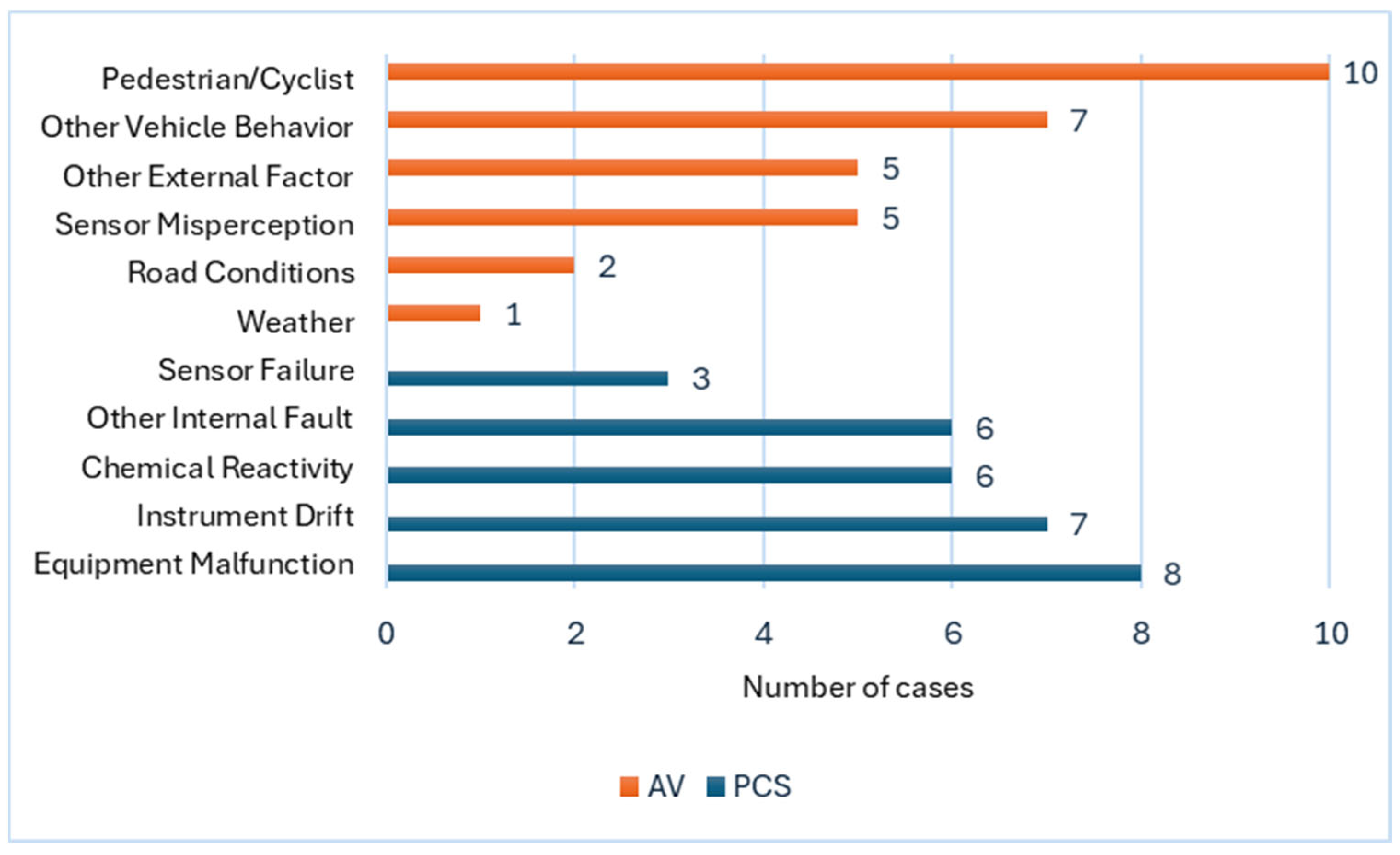

A comparative analysis of 30 PCS cases and 30 AV cases was conducted following the methodology described in Section 2. The results reveal distinct patterns in the types and sources of risk triggers.

Figure 3 presents the frequency of each trigger type across the two domains.

Specifically, risks in PCSs are predominantly initiated by internal technical anomalies, including system/equipment degradation, instrumentation drift, and chemical reactivity. These systems operate in structured and deterministic environments, which allows for precise specification of normal operating parameters.

In contrast, AVs exhibit a risk profile dominated by external, dynamic, and uncertain factors. The most frequent triggers involve interactions with pedestrians and cyclists (10 cases) and unpredictable behavior of other vehicles (7 cases). Notably, sensor misperception (the misclassification or omission of environmental cues by AI-based perception systems) emerged in five cases.

Thus, PCSs and AVs illustrate a clear divergence in risk typology:

- i. Primary Risk Source: internal system faults (PCS) vs. external environment (AV).
- ii. Environment Type: constrained and controlled (PCS) vs. open and variable (AV).
- iii. System Flexibility: low, rule-based (PCS) vs. medium to high, adaptive learning (AV).

3.2. Risk Perception Paradigms

When risk triggers occur, the risk perception paradigms of PCSs and AVs show distinctive features. Table 2 summarizes the key differences in how PCSs and AVs perceive risk, based on the extracted case data and on wording and keyword-frequency analysis of the 60 accident reports. These features reflect contrasting architectural approaches, deterministic in PCSs and probabilistic in AVs, and lay the groundwork for understanding subsequent failures.
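A keyword-frequency analysis of this kind can be sketched as whole-word counting over the report texts of each domain. The keyword list, the letters-only tokenization, and the two sample sentences are hypothetical; the study does not specify its vocabulary or tokenization rules.

```python
import re
from collections import Counter

# Hypothetical vocabulary contrasting deterministic (PCS) and probabilistic (AV) wording.
KEYWORDS = {"alarm", "interlock", "threshold", "setpoint",
            "lidar", "radar", "camera", "classification", "prediction"}

def keyword_frequencies(texts, keywords=KEYWORDS):
    """Count case-insensitive whole-word occurrences of each keyword across report texts."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z]+", text.lower())  # letters only; hyphens split words
        counts.update(t for t in tokens if t in keywords)
    return counts

pcs_texts = ["The high-temperature alarm sounded, but the interlock had been bypassed."]
av_texts = ["Camera and radar returns led to an incorrect object classification."]
print(keyword_frequencies(pcs_texts))  # Counter({'alarm': 1, 'interlock': 1})
print(keyword_frequencies(av_texts))
```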

It can be inferred that PCS architecture relies on deterministic frameworks built around low-frequency, single-modal sensors such as temperature, pressure, and flow transmitters. Sensor integration is primarily threshold-driven, with logic-based interlocks and alarms serving as the primary detection mechanism. Our case analysis confirms this consistency: 100% of PCS cases referenced rule-based detection methods, and the sensor descriptions overwhelmingly involved standard industrial instrumentation with hardwired logic. Correspondingly, sensor fusion in PCSs is minimal, typically relying on fixed analog or digital logic rather than adaptive algorithms.
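To make the threshold-driven logic concrete, the sketch below models a single transmitter with an alarm setpoint and a higher, latched trip setpoint. The class name, the setpoints (e.g., 80 and 95 for a temperature in °C), and the reset rule are hypothetical illustrations of the deterministic pattern described above, not logic taken from any CSB case.

```python
from dataclasses import dataclass

@dataclass
class ThresholdInterlock:
    """Deterministic single-sensor logic: alarm at one setpoint, latched trip at a higher one."""
    alarm_setpoint: float
    trip_setpoint: float
    tripped: bool = False  # once tripped, stays tripped until an operator resets it

    def evaluate(self, reading: float) -> str:
        if self.tripped or reading >= self.trip_setpoint:
            self.tripped = True
            return "TRIP"   # e.g., close the feed valve or initiate shutdown
        if reading >= self.alarm_setpoint:
            return "ALARM"  # annunciate to the operator; no automatic action
        return "NORMAL"

    def reset(self, reading: float) -> bool:
        """Manual reset is permitted only once the reading is back below the alarm setpoint."""
        if reading < self.alarm_setpoint:
            self.tripped = False
        return not self.tripped

temp = ThresholdInterlock(alarm_setpoint=80.0, trip_setpoint=95.0)
states = [temp.evaluate(x) for x in (70.0, 85.0, 96.0, 75.0)]
print(states)  # ['NORMAL', 'ALARM', 'TRIP', 'TRIP']
```

Every output is fully traceable to a fixed setpoint, which is the transparency that PCS logic offers; the cost is that a deviation not captured by any single threshold goes undetected.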

In contrast, AVs employ multi-modal, high-frequency sensor suites, prominently featuring LiDAR, radar, and camera arrays. The data are fused using probabilistic models and machine learning algorithms to support dynamic risk interpretation in unstructured environments. Our data show that all AV cases referenced AI-based or probabilistic detection methods, with rich sensor fusion configurations. Unlike PCSs, the AV architecture supports real-time environmental modeling and behavior prediction, yet often lacks interpretability.
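By contrast, probabilistic fusion can be illustrated with a simple log-odds (naive Bayes) combination of per-sensor obstacle probabilities. This textbook technique is chosen only for illustration, assuming conditionally independent sensors that share a prior of 0.1; the sensor names and values are hypothetical, and production AV stacks use far more elaborate tracking and fusion pipelines.

```python
import math

def logit(p: float) -> float:
    return math.log(p / (1.0 - p))

def fuse_detections(sensor_probs: dict, prior: float = 0.1) -> float:
    """Fuse per-sensor obstacle probabilities via log-odds, assuming conditionally
    independent sensors that each started from the same prior."""
    fused = logit(prior)
    for p in sensor_probs.values():
        p = min(max(p, 1e-6), 1 - 1e-6)    # clamp to avoid infinite log-odds
        fused += logit(p) - logit(prior)   # add each sensor's evidence relative to the prior
    return 1.0 / (1.0 + math.exp(-fused))

p = fuse_detections({"lidar": 0.7, "radar": 0.6, "camera": 0.2})
print(round(p, 3))  # 0.986
```

Even the camera’s low score of 0.2 raises the fused estimate, because it sits above the 0.1 prior. Behavior like this is mathematically sound yet hard for a human supervisor to anticipate, which echoes the interpretability gap noted above.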

3.3. Risk Decision and Response Mechanisms

Connected with risk perception, the mechanisms by which systems interpret risk, predict outcomes, and initiate responses are critical to operational safety. The analysis of 60 incident cases reveals substantial differences in how PCSs and AVs approach decision-making and response under risk. Building on the differences in perception, Table 3 compares how the two system types interpret detected risks and respond. These dimensions are essential for understanding the interplay between system design and safety outcomes, especially in time-critical scenarios.

Typically, PCSs exhibit structured and transparent decision logic, primarily based on rule-based interpretations and deterministic safety functions. Many cases referenced manual or predefined logic-based interpretation, often supported by safety interlocks or alarms. Approximately half of the PCS cases involved automated system responses, such as emergency shutdowns, and over 50% recorded immediate response latency, indicating timely intervention in controlled environments. Predictive mechanisms were largely absent, aligning with PCSs’ reliance on fixed-threshold logic and predefined fault scenarios.

In contrast, AV systems demonstrated higher complexity but lower transparency in risk interpretation and response execution. Many AV cases lacked explicit descriptions of interpretation logic, reflecting the use of black-box AI models whose internal decision processes are not readily observable. System prediction was either unmentioned or classified as absent in most cases, despite the AV domain’s reliance on real-time environmental modeling.

3.4. Human–AI Interaction Failures

Given that most cases involve both human and automated (including AI) elements, our findings suggest that failures frequently stem from complex interactions between human operators and automated systems. Table 4 summarizes the failure types in the two domains; the majority of them involve human-related contributions. Categories such as human error, over-reliance on automation, passive monitoring, and mode confusion account for approximately 76.7% of PCS failures and 96.7% of AV failures.
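As an arithmetic cross-check, the reported percentages can be converted back into case counts. This assumes a denominator of 30 cases per domain and that each case is counted once under a single category, both of which are assumptions about how Table 4 was tabulated; the helper function is hypothetical.

```python
def implied_case_counts(reported_pct, n_cases=30):
    """Back-calculate the whole number of cases behind each reported percentage,
    checking that the count reproduces the percentage to one decimal place."""
    counts = {}
    for label, pct in reported_pct.items():
        count = round(pct / 100 * n_cases)
        if abs(100 * count / n_cases - pct) >= 0.05:
            raise ValueError(f"{label}: {pct}% does not match a whole number of {n_cases} cases")
        counts[label] = count
    return counts

print(implied_case_counts({"PCS human-related": 76.7, "AV human-related": 96.7,
                           "PCS mode confusion": 13.3, "AV mode confusion": 10.0}))
# {'PCS human-related': 23, 'AV human-related': 29, 'PCS mode confusion': 4, 'AV mode confusion': 3}
```

Under these assumptions, the gap between the domains amounts to six cases out of 30, and the mode confusion rates differ by a single case.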

Although AVs exhibit a higher incidence of cognitive failures overall, mode confusion is an exception. Its observed rates, 13.3% in PCS and 10% in AV cases, reflect similar underlying challenges despite contextual differences. In PCSs, confusion arises during transitions between manual and automated control, where process parameters are less predictable. In AVs, it typically results from unclear automation boundaries, even though driving operations are generally simpler than PCS operations. Although user roles vary, both figures indicate a comparable cognitive vulnerability in which users misjudge system status, leading to delayed or unsafe responses. Figure 4 presents a comparative breakdown of the failure types observed in PCS and AV cases, offering insight into the dominant failure patterns unique to each domain.

Human involvement thus continues to play a pivotal, yet frequently problematic, role in risk events. We therefore further analyzed the human role in interaction, as shown in Table 5.

PCS environments depend heavily on active human participation, especially in operational (40.0%) and supervisory (23.3%) functions. In contrast, AV systems adopt an automation-first paradigm (53.3% of AV cases assign the human a supervisory/oversight role), reserving human input for exceptional circumstances or emergency takeovers. This inversion creates a role-responsibility mismatch that limits intervention success: PCS operators have more authority but face information overload, whereas AV users have less authority but are expected to react under uncertainty. This structural paradox increases susceptibility to failure in both domains.

We then analyzed the effectiveness of human intervention (Table 6). Both domains exhibit low effectiveness: only 6.7% of PCS and 13.3% of AV interventions were entirely successful, and only temporarily so, since every case analyzed is drawn from an accident report. The majority of interventions were either ineffective or only partially effective. Figure 5 illustrates this large proportion of ineffective interventions and highlights differences in how human actions influence safety outcomes in each context. This trend suggests that failure is often due not to human incompetence but to interface limitations, delayed cueing, or incomplete system understanding.

Lastly, intervention latency is a revealing proxy for system usability. PCS operators, familiar with alarm hierarchies, are more likely to respond quickly. AV drivers, by contrast, often react late or not at all, either because of disengagement or a lack of clear system feedback. Thus, the limited time available for intervention emerges as a critical factor in the escalation of incidents into full accidents.

4. Discussion and Recommendations

A forward-looking goal drives this study: to use insights from the more mature AV domain to inform the evolving risk landscape of PCSs. Although our PCS cases do not yet involve deployed AI systems, the patterns of human–automation interaction observed in these environments offer critical foresight into potential human–AI dynamics. By applying structured comparative analysis, enabled through GPT-assisted extraction, this work provides a novel framework for bridging two traditionally separate fields. Our findings suggest that principles such as multi-modal sensing, dynamic risk modeling, and human-centered feedback design from AV systems could meaningfully enhance process safety engineering.

Tables 2–6 provide a multifaceted comparison of PCS and AV systems. To consolidate these comparisons, Table 7 highlights key differences between PCS and AV systems across risk triggers, failures, and human intervention.

Moreover, a key insight is the prevalence of complex failures in human–AI interaction scenarios, many of which were previously attributed simplistically to either human error or system faults. These interaction failures, such as mode confusion, passive monitoring, or over-reliance on automation, are multifaceted and demand a refined classification framework. These findings point to urgent design improvements and significant prospects for cross-domain learning, especially from high-stakes domains where human–automation interaction has long been researched and refined, such as nuclear operations, aviation, and healthcare. The next generation of AI system design, which emphasizes robustness, transparency, and user-centered control, might benefit from incorporating knowledge from these disciplines. To enhance practical relevance for PCSs, we offer the following domain-specific recommendations:

- (1) Implement predictive, multi-modal diagnostics to detect complex deviations beyond fixed alarm thresholds, inspired by AV sensor fusion and risk modeling.
- (2) Redesign alarm systems for clarity and priority, minimizing cognitive overload through intuitive alerts and actionable signal grouping.
- (3) Strengthen engineered safeguards by reducing reliance on operator procedures and incorporating physical interlocks and automated fail-safes.
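Recommendation (2) can be illustrated by collapsing an alarm flood into one summary entry per process unit, ordered by urgency. This is a minimal sketch: the tags, units, priority scheme, and grouping rule are hypothetical, and full alarm management practice (for example, rationalization under ANSI/ISA-18.2) covers much more than display grouping.

```python
from dataclasses import dataclass
from itertools import groupby

@dataclass(frozen=True)
class Alarm:
    tag: str        # instrument tag, e.g., "PI-102 HI" (hypothetical)
    unit: str       # process unit the instrument belongs to
    priority: int   # 1 = most urgent
    time_s: float   # seconds since the first alarm of the episode

def group_alarms(alarms):
    """Collapse an alarm flood into one summary entry per process unit.

    Each entry keeps the unit's most urgent priority, its earliest alarm (a candidate
    initiating event), and the number of follow-on alarms folded into the summary."""
    summaries = []
    for unit, items in groupby(sorted(alarms, key=lambda a: a.unit), key=lambda a: a.unit):
        items = list(items)
        first = min(items, key=lambda a: a.time_s)
        summaries.append({"unit": unit,
                          "priority": min(a.priority for a in items),
                          "first_alarm": first.tag,
                          "first_time_s": first.time_s,
                          "folded": len(items) - 1})
    # Most urgent unit first; ties broken by which unit alarmed earliest.
    return sorted(summaries, key=lambda s: (s["priority"], s["first_time_s"]))

flood = [Alarm("TI-101 HI", "Reactor", 2, 0.0),
         Alarm("PI-102 HI", "Reactor", 1, 4.0),
         Alarm("FI-103 LO", "Reactor", 2, 5.0),
         Alarm("LI-201 LO", "Feed tank", 3, 6.0)]
for entry in group_alarms(flood):
    print(entry)
```

Surfacing the earliest alarm in each unit, rather than the loudest, points the operator toward the likely initiating event instead of its downstream consequences.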

For AVs, the following recommendations, informed by PCS practice, may be considered:

- (1) Incorporate deterministic fallback logic for handling edge cases where probabilistic reasoning may fail.
- (2) Improve driver re-engagement mechanisms, including progressive alerting, multi-modal feedback (visual, auditory, and haptic), and clear handover cues.
- (3) Enhance interface transparency by surfacing system intent, risk confidence levels, and real-time decision rationale.
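Recommendations (1) and (2) can be combined in a small supervisory rule: low perception confidence opens a takeover request, alerts escalate through visual, auditory, and haptic channels, and an ignored request ends in a rule-based controlled stop. The confidence threshold and timings below are hypothetical and uncalibrated, and the logic is an illustration, not any manufacturer’s design.

```python
from enum import Enum
from typing import Optional

class Action(Enum):
    CONTINUE = "continue automated driving"
    VISUAL_ALERT = "visual takeover request"
    AUDITORY_ALERT = "visual + auditory alert"
    HAPTIC_ALERT = "visual + auditory + haptic alert"
    DRIVER_IN_CONTROL = "driver has taken over"
    MINIMAL_RISK_STOP = "deterministic fallback: controlled stop"

def supervise(confidence: float, seconds_since_request: Optional[float], driver_engaged: bool,
              min_confidence: float = 0.8, auditory_after_s: float = 2.0,
              haptic_after_s: float = 4.0, stop_after_s: float = 6.0) -> Action:
    """Escalate a takeover request step by step; fall back to a rule-based stop if ignored.

    seconds_since_request is None while no takeover request is pending."""
    if seconds_since_request is None:
        # Low perception confidence opens a takeover request; otherwise keep driving.
        return Action.CONTINUE if confidence >= min_confidence else Action.VISUAL_ALERT
    if driver_engaged:
        return Action.DRIVER_IN_CONTROL
    # Fixed time thresholds, independent of the learned perception stack.
    if seconds_since_request >= stop_after_s:
        return Action.MINIMAL_RISK_STOP
    if seconds_since_request >= haptic_after_s:
        return Action.HAPTIC_ALERT
    if seconds_since_request >= auditory_after_s:
        return Action.AUDITORY_ALERT
    return Action.VISUAL_ALERT

for t in (0.0, 2.5, 4.5, 6.5):
    print(t, supervise(0.5, t, driver_engaged=False).name)
```

Once a request is pending, the escalation no longer depends on the probabilistic confidence score, so the fallback behaves predictably in precisely the edge cases where that score is least trustworthy.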

Furthermore, our findings suggest a need to modernize regulatory and certification standards for AI-integrated systems. In AVs, system opacity and poor handover performance call for enforceable rules on transparency, driver alerts, and override protocols. In PCSs, alarm fatigue and cognitive overload highlight the need for mandated human factors assessments and adaptive alarm design. These insights support a shift toward system-level, human-centered certification frameworks grounded in real-world failure patterns.

Moreover, we acknowledge that the PCSs in our dataset do not feature AI-based automation. Our goal was not to equate them with AI-enabled systems but to use them as historical references that highlight persistent human–automation interaction issues. Future work in both the PCS and AV domains should include simulation-based validation, human-in-the-loop testing, and expert assessment to refine these insights and operationalize them in high-risk environments.

5. Conclusions

This study presents a comparative analysis of 60 real-world accident cases from process control systems and autonomous vehicles, focusing on risk perception and human–AI interaction. Despite differing technological architectures, both domains exhibit significant vulnerabilities in how risks are sensed, interpreted, and managed. PCS failures often stem from rigid rule-based models and operator overload, while probabilistic perception systems and disengaged human supervision shape AV failures.

A key finding is that many incidents result not from purely technical faults but from complex human–automation interaction failures, such as over-reliance, delayed intervention, and mode confusion. These shared patterns highlight the need for hybrid risk perception frameworks integrating deterministic safety with adaptive intelligence and more human-centered design strategies to improve system transparency and intervention effectiveness across current automation and prospective AI-enabled domains.

Due to the limitations of this comparative analysis, future research should focus on (1) developing standardized taxonomies of human–AI failure modes; (2) designing interpretable models of machine risk perception; (3) conducting empirical studies on dynamic role allocation in human–AI teams; and (4) creating cross-domain simulation frameworks to evaluate hybrid risk perception during real-time operations. These efforts are essential for improving transparency, accountability, and resilience in increasingly automated safety-critical systems.

Author Contributions

Conceptualization, H.W., Z.S. and R.A.; methodology, H.W., Z.S. and R.A.; software, H.W.; validation, H.W., Z.S. and R.A.; formal analysis, H.W.; investigation, H.W.; resources, H.W., Z.S. and R.A.; data curation, H.W.; writing—original draft preparation, H.W.; writing—review and editing, Z.S. and R.A.; visualization, H.W.; supervision, Z.S.; project administration, H.W.; funding acquisition, Z.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Some or all data, models, or code that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| AV | Autonomous Vehicle |
| CSB | Chemical Safety Board |
| GPT | Generative Pre-trained Transformer |
| LiDAR | Light Detection and Ranging |
| ML | Machine Learning |
| NTSB | National Transportation Safety Board |
| PCS | Process Control System |

Appendix A

This appendix provides a complete example of the output generated by our GPT-assisted extraction agent for one representative accident case to demonstrate the structure and richness of the extracted data. The output follows the standardized template described in Table 1 of the main text; a screenshot is shown in Figure A1, and the extracted fields are listed below.

Figure A1. Screenshot of the output example.


The extracted information is as follows:

Case ID: 2019-X-X-XX

Domain: Process Control System (PCS)/Chemical Manufacturing

Incident Date: XX, 2019

Source: U.S. Chemical Safety and Hazard Investigation Board (CSB)

Incident Summary: A fatal explosion occurred at XX in XX during a batch chemical mixing operation. An operator mistakenly added 10% potassium hydroxide (KOH), an incompatible base, into a batch tank containing XL 10 (a silicon hydride-based polymer) and TD 6/12 Blend. The chemical reaction released hydrogen gas, which ignited, causing an explosion that killed four employees and destroyed the production facility.

Risk Trigger Type: Internal

Risk Trigger Detail: Inadvertent mixing of incompatible chemicals (10% KOH with XL 10), leading to hydrogen gas generation.

Sensor Type and Modalities: No hydrogen or flammable gas detection system was operational. Failed catalytic gas detectors had previously been trialed.

Sensor Frequency and Fusion: Not applicable (no functional system in place).

Risk Detection Method: Manual recognition by operators; no automated detection.

Detection Latency: Several minutes (gas was released but not recognized due to a lack of detectors).

Interpretation Logic: Human reasoning based on visible process upset (foaming, fog).

System Prediction: No predictive mechanism in place.

System Response: Manual ventilation and evacuation were attempted; they were incomplete due to rapid escalation.

Response Latency: Insufficient time was available to complete the evacuation; an explosion occurred during the response.

Failure Classification: Response failure (inadequate detection and delayed/incomplete evacuation); perception failure (no system to sense hydrogen).

Human Role: Manual batch operation, recognition of abnormal conditions, and attempted emergency actions.

Human Intervention Effective: No; human intervention did not prevent the explosion.

Safety Outcome: Fatalities (4), total destruction of production building, and offsite damage.

Design Weakness Identified:

Inadequate hazard analysis and process safety system.

Similar appearance of incompatible chemical drums.

Absence of effective gas detection and alarm systems.

Weak safety culture and reliance on procedural safeguards rather than engineering controls.
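As a minimal sketch, assuming the agent's plain-text output uses exactly the layout shown above (one "Label: value" entry per line, with the design weaknesses listed on the lines after an empty "Design Weakness Identified:" label), such a record could be loaded into a typed structure as follows. The names `ExtractionRecord`, `parse_record`, `FIELD_KEYS`, and the snake_case keys are hypothetical illustrations and are not part of the extraction pipeline described in the main text.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical mapping from the field labels printed in the example record
# to snake_case keys; the labels themselves are copied from this appendix.
FIELD_KEYS: Dict[str, str] = {
    "Case ID": "case_id",
    "Domain": "domain",
    "Incident Date": "incident_date",
    "Source": "source",
    "Incident Summary": "incident_summary",
    "Risk Trigger Type": "risk_trigger_type",
    "Risk Trigger Detail": "risk_trigger_detail",
    "Sensor Type and Modalities": "sensor_type_and_modalities",
    "Sensor Frequency and Fusion": "sensor_frequency_and_fusion",
    "Risk Detection Method": "risk_detection_method",
    "Detection Latency": "detection_latency",
    "Interpretation Logic": "interpretation_logic",
    "System Prediction": "system_prediction",
    "System Response": "system_response",
    "Response Latency": "response_latency",
    "Failure Classification": "failure_classification",
    "Human Role": "human_role",
    "Human Intervention Effective": "human_intervention_effective",
    "Safety Outcome": "safety_outcome",
}

# The one field whose value is a list of items on the following lines.
LIST_LABEL = "Design Weakness Identified"


@dataclass
class ExtractionRecord:
    """One accident case in a hypothetical structured form of the template."""
    fields: Dict[str, str] = field(default_factory=dict)
    design_weaknesses: List[str] = field(default_factory=list)


def parse_record(text: str) -> ExtractionRecord:
    """Parse 'Label: value' lines; unlabeled lines after LIST_LABEL become items."""
    record = ExtractionRecord()
    in_list = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank lines separate entries and carry no data
        label, sep, value = line.partition(":")
        if sep and label in FIELD_KEYS:
            record.fields[FIELD_KEYS[label]] = value.strip()
            in_list = False  # a new scalar field ends any open list
        elif sep and label == LIST_LABEL:
            in_list = True  # following unlabeled lines are weakness items
        elif in_list:
            record.design_weaknesses.append(line)
        else:
            raise ValueError(f"Unrecognized line: {line!r}")
    return record


if __name__ == "__main__":
    sample = """Case ID: 2019-X-X-XX
Risk Trigger Type: Internal

Design Weakness Identified:

Absence of effective gas detection and alarm systems.
"""
    rec = parse_record(sample)
    print(rec.fields["risk_trigger_type"])  # Internal
    print(rec.design_weaknesses)  # ['Absence of effective gas detection and alarm systems.']
```

In this sketch, unrecognized labeled lines raise an error instead of being silently dropped, so any drift between the agent's output and the template surfaces early rather than producing incomplete records.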

References

1. Khan, F.; Amyotte, P.; Adedigba, S. Process safety concerns in process system digitalization. Educ. Chem. Eng. 2021, 34, 33–46.

2. Amin, M.T.; Khan, F.; Amyotte, P. A bibliometric review of process safety and risk analysis. Process Saf. Environ. Prot. 2019, 126, 366–381.

3. Simkoff, J.M.; Lejarza, F.; Kelley, M.T.; Tsay, C.; Baldea, M. Process Control and Energy Efficiency. Annu. Rev. Chem. Biomol. Eng. 2020, 11, 423–445.

4. Leveson, N.G. Engineering a Safer World: Systems Thinking Applied to Safety; The MIT Press: Cambridge, MA, USA, 2012.

5. Alauddin, M.; Khan, F.; Imtiaz, S.; Ahmed, S. A Bibliometric Review and Analysis of Data-Driven Fault Detection and Diagnosis Methods for Process Systems. Ind. Eng. Chem. Res. 2018, 57, 10719–10735.

6. Bredereke, J.; Lankenau, A. A rigorous view of mode confusion. In Proceedings of the International Conference on Computer Safety, Reliability, and Security, Catania, Italy, 10–13 September 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 19–31.

7. Sarter, N.B.; Woods, D.D.; Billings, C.E. Automation Surprises. In Handbook of Human Factors and Ergonomics; Salvendy, G., Ed.; Wiley: Hoboken, NJ, USA, 1997; Available online: https://www.researchgate.net/publication/270960170 (accessed on 1 May 2025).

8. Wen, H.; Amin, M.T.; Khan, F.; Ahmed, S.; Imtiaz, S.; Pistikopoulos, S. A methodology to assess human-automated system conflict from safety perspective. Comput. Chem. Eng. 2022, 165, 107939.

9. Arunthavanathan, R.; Sajid, Z.; Khan, F.; Pistikopoulos, E. Artificial intelligence—Human intelligence conflict and its impact on process system safety. Digit. Chem. Eng. 2024, 11, 100151.

10. Kaber, D.B.; Endsley, M.R. Team situation awareness for process control safety and performance. Process Saf. Prog. 1998, 17, 43–48.

11. Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors 1995, 37, 32–64.

12. Stanton, N.A.; Salmon, M.; Walker, G.H.; Salas, E.; Hancock, P.A. State-of-science: Situation awareness in individuals, teams and systems. Ergonomics 2017, 60, 449–466.

13. Siegrist, M.; Árvai, J. Risk Perception: Reflections on 40 Years of Research. Risk Anal. 2020, 40, 2191–2206.

14. Liu, Y.; Liu, C.; Liu, J. V2X Perception Algorithms for Intelligent Driving: A Survey. In CICTP; ASCE Library: Mankato, MN, USA, 2024; pp. 544–553.

15. Demir, G.; Moslem, S.; Duleba, S. Artificial Intelligence in Aviation Safety: Systematic Review and Biometric Analysis. Int. J. Comput. Intell. Syst. 2024, 17, 279.

16. DeFazio, P.A.; Larsen, R. Final Committee Report—The Design, Development & Certification of the Boeing 737 MAX, the U.S. House Committee on Transportation and Infrastructure, Subcommittee on Aviation; House Committee on Transportation and Infrastructure: Washington, DC, USA, 2020.

17. Favarò, F.M.; Nader, N.; Eurich, S.O.; Tripp, M.; Varadaraju, N. Examining accident reports involving autonomous vehicles in California. PLoS ONE 2017, 12, e0184952.

18. Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixão, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816.

19. Paden, B.; Čáp, M.; Yong, S.Z.; Yershov, D.; Frazzoli, E. A Survey of Motion Planning and Control Techniques for Self-Driving Urban Vehicles. IEEE Trans. Intell. Veh. 2016, 1, 33–55.

20. Wang, J.; Zhang, L.; Huang, Y.; Zhao, J. Safety of Autonomous Vehicles. J. Adv. Transp. 2020, 2020, 8867757.

21. Huang, T.; Liu, J.; Zhou, X.; Nguyen, D.C.; Azghadi, M.R.; Xia, Y.; Han, Q.-L.; Sun, S. V2X cooperative perception for autonomous driving: Recent advances and challenges. arXiv 2023, arXiv:2310.03525.

22. Banks, V.A.; Plant, K.L.; Stanton, N.A. Driver error or designer error: Using the Perceptual Cycle Model to explore the circumstances surrounding the fatal Tesla crash on 7th May 2016. Saf. Sci. 2018, 108, 278–285.

23. Douglas, K. Autopilot and algorithms: Accidents, errors, and the current need for human oversight. J. Clin. Sleep Med. 2025, 16, 1651–1652.

24. Alvarenga, M.A.B.; Melo, P.F.F.E. A review of the cognitive basis for human reliability analysis. Prog. Nucl. Energy 2019, 117, 103050.

25. Ogle, R.A.; Morrison, D.; Carpenter, A.R. The relationship between automation complexity and operator error. J. Hazard. Mater. 2008, 159, 135–141.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).