Abstract

Embedded vision systems play a crucial role in the advancement of intelligent transportation by supporting real-time perception tasks such as traffic sign recognition and lane detection. Despite significant progress, their performance remains sensitive to environmental variability, computational constraints, and scene complexity. This review examines the current state of the art in embedded vision approaches used for the detection and classification of traffic signs and lane markings. The literature is structured around three main stages, localization, detection, and recognition, highlighting how visual features like color, geometry, and road edges are processed through both traditional and learning-based methods. A major contribution of this work is the introduction of a practical taxonomy that organizes recognition techniques according to their computational load and real-time applicability in embedded contexts. In addition, the paper presents a critical synthesis of existing limitations, with attention to sensor fusion challenges, dataset diversity, and deployment in real-world conditions. By adopting the SALSA methodology, the review follows a transparent and systematic selection process, ensuring reproducibility and clarity. The study concludes by identifying specific research directions aimed at improving the robustness, scalability, and interpretability of embedded vision systems. These contributions position the review as a structured reference for researchers working on intelligent driving technologies and next-generation driver assistance systems. The findings are expected to inform future implementations of embedded vision systems in real-world driving environments.

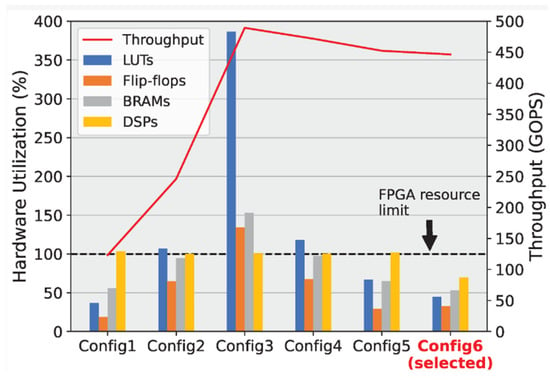

1. Introduction

Road safety has been growing in the last few years, due to road traffic that can be interpreted as exponentially evolving [1,2]. The thing that can guarantee the safety of all road users is the proposal of an intelligent in-vehicle system (ITS) that can be feasibly used in the future for Smart Cities [3,4]. Smart roads also play a key role by integrating technologies that support automation, connectivity, and energy-efficient traffic management. Nonetheless, recent studies [5] have highlighted existing uncertainties regarding their real impact on road safety and traffic operations.

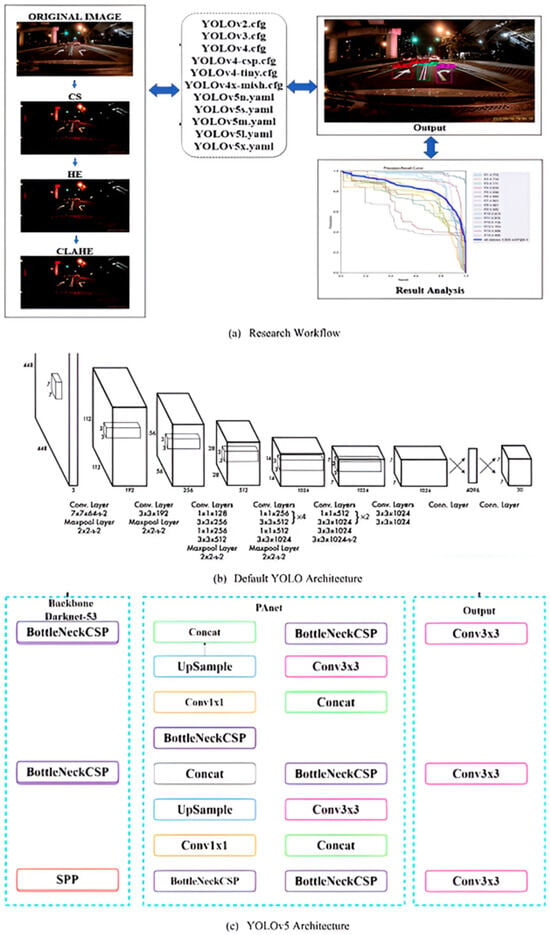

This system has the objective of identifying and recognizing traffic road signs, as well as road edges, to assist the vehicle’s driver, as this is a major problem in the analysis of road scenes [6]. Several driver assistance systems have been proposed and implemented, and until now, research has continued to optimize traffic sign detection and recognition [7], as well as road lane detection [8]. Most approaches can be divided into three phases: localization, detection, and recognition (classification) [9,10,11,12]. Apparently, the first two phases are complementary, and most authors summarize them into only two categories: detection [13] and recognition for traffic signs [14,15] and road lanes [16,17,18]. The detection phase is very important, because it is used to detect all the signs and lanes of the road in order to eliminate as many objects as possible that may appear in the image, allowing us to reduce the false positive rate. Even if there is false detection in the detection phase, the performance will be corrected in the recognition phase, as this is performed in an intelligent way, using artificial intelligence for signs [19,20,21,22,23] and lanes [24,25,26].

Intelligent Transport Systems (ITSs) play a crucial role in the evolution of modern transportation, integrating advanced technologies to enhance safety, efficiency, and user comfort. For example, one study explored research and development programs for intelligent vehicles across the globe, highlighting emerging trends in this field. Another paper [27] explored the application of intelligent systems to optimize urban mobility and security through IoT-based innovations. It proposed models for smart traffic lights, parking systems, and vehicle theft detection, leveraging technologies like sensors, Raspberry Pi processors, and cloud databases. These systems dynamically adjust traffic signals based on density, monitor parking space availability, and provide real-time theft alerts. The research highlights the role of intelligent systems in improving traffic flow, enhancing parking experiences, and ensuring vehicle security. This research [28] investigates the role of unmanned aerial vehicles (UAVs) in optimizing the movement of emergency medical vehicles in Smart Cities. Using a hybrid Cascade-ResNet model, the study analyzed video data to detect congestion and unusual activity, facilitating faster and more efficient route planning. UAVs, functioning as IoT edge devices, enhanced traffic management and public safety through real-time insights. The approach demonstrated a 2.5% improvement in accuracy over comparable methods, showcasing its practical utility in urban emergency response systems. Moreover, research on embedded systems for autonomous vehicles has emphasized the importance of artificial intelligence in real-time decision-making [29], enabling vehicles to dynamically analyze their environment and the context in which they operate. These technological advancements highlight the growing importance of ITSs in developing safer and smarter vehicles [30], addressing the challenges of modern urban environments.

The evolution of visual perception in autonomous vehicles has progressed from rule-based methods and handcrafted features (e.g., Hough Transform, SVM) to modern deep learning architectures such as CNNs and Vision Transformers. Each generation has improved accuracy, generalizations, and robustness in complex road environments. Recent developments include end-to-end models that integrate perception, reasoning, and control in unified frameworks. This historical trajectory underscores the growing importance of AI-driven vision in automotive systems.

This paper undertakes an in-depth review of embedded vision systems tailored to the detection and recognition of road signs and lane markings, which are critical for enhancing road safety. By analyzing existing studies, it highlights both the strengths and limitations of current approaches, with a focus on their practical implementation under diverse conditions. The primary aim is to underscore the role of these systems in reducing accidents and improving traffic flow while addressing challenges such as environmental variability and computational efficiency. To add clarity and originality, the review intends to identify research gaps and suggest potential advancements, positioning itself as a resource for developing more effective vision-based solutions in modern transportation systems. Including a distinct problem statement in the Introduction further emphasizes the paper’s objectives and its contribution to the field. Also, a comprehensive summary of modern, real-time techniques utilized for both detecting and classifying traffic signs and road markings is provided. In this discourse, we delineate the methodologies for locating and detecting road signage and boundaries into a tripartite framework:

- Colorimetric Techniques: This division includes strategies that implement segmentation processes for the purpose of localization.

- Geometrical Approaches: This segment comprises methods that utilize geometric principles.

- Learning-Based Algorithms: This category encompasses techniques that incorporate learning paradigms for identification and classification tasks.

The categorization of recognition methodologies within this study bifurcates into two distinct streams:

- Feature-Based Methods: This category encompasses techniques wherein attributes are meticulously traced by domain experts.

- Deep Learning Approaches: This classification pertains to methods that employ deep learning algorithms for feature extraction and pattern recognition tasks [31].

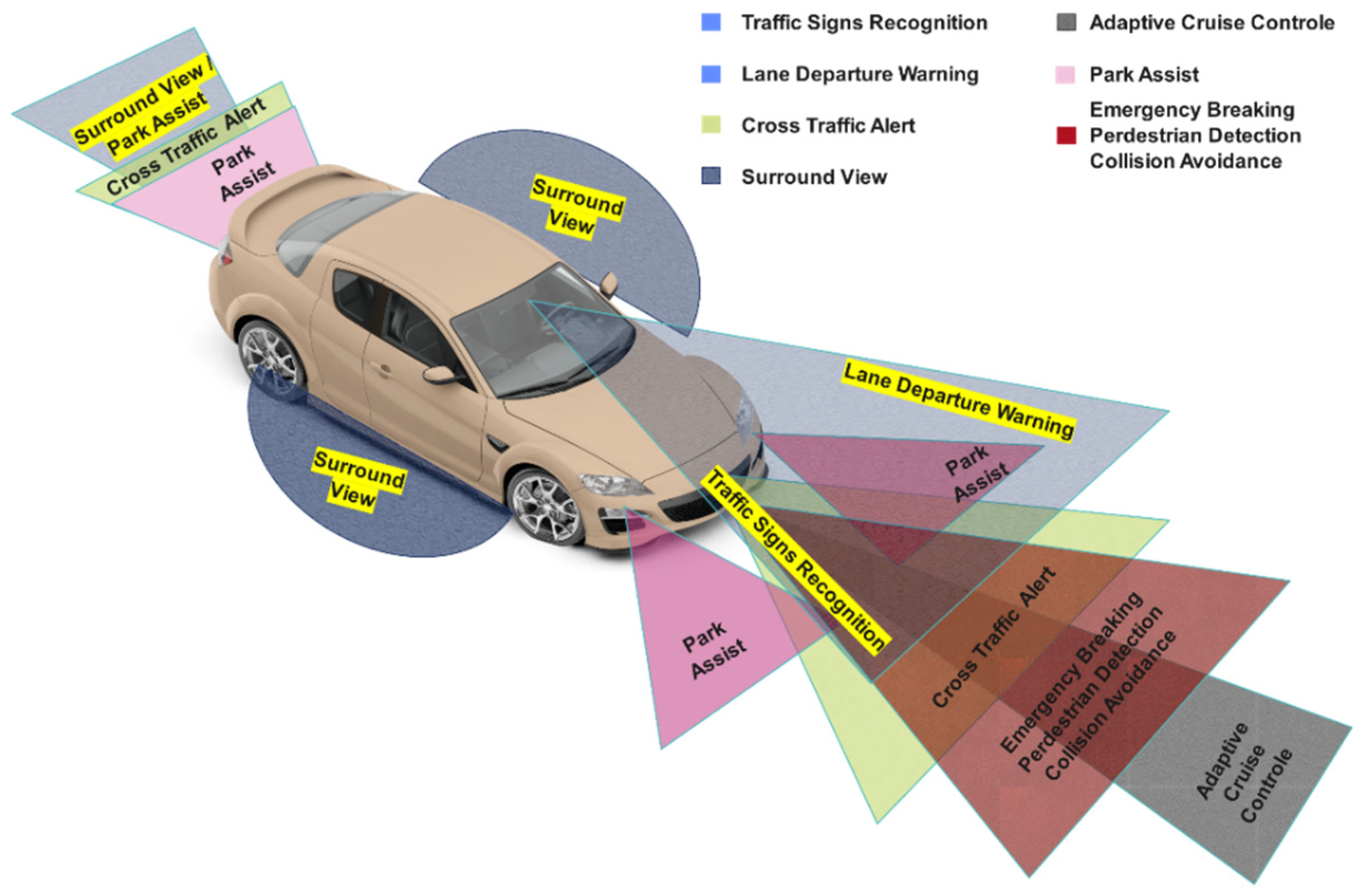

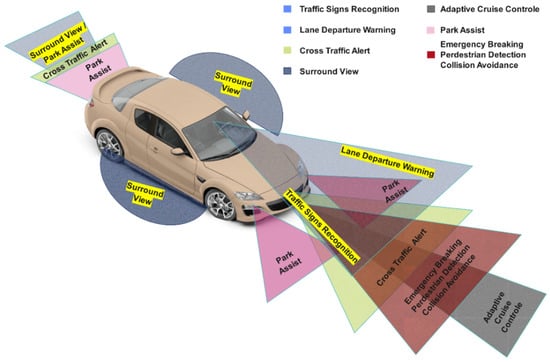

Embedded vision systems are quintessentially integrated within a paradigm known as advanced driver assistance systems (ADASs), depicted in Figure 1 [32]. These systems harness sensorial input, predominantly from cameras, to assimilate environmental data. Subsequently, they synthesize decisions that align with both the perceived information and predefined objectives, thereby influencing the environment through iterative actions. This cyclical process persists until the attainment of the designated goal [33]. Specifically, within autonomous vehicular technology, the perception component is pivotal for obstacle detection including other vehicles, pedestrians, and miscellaneous objects, as well as for infrastructure recognition and self-localization within the milieu. It is customary to categorize perception systems into distinct classes based on their functional attributes and sensor modalities.

Figure 1.

Driver assistance systems: the circle of safety.

Road signs and road lanes play a vital role in allowing traffic to develop under very good conditions (speed, safety), but we would nevertheless like to remind you that

- -

- Sign supports must not encroach on the left and right lane and must be positioned as far as possible from surfaces accessible to vehicles.

- -

- Supports for gantries, jibs, etc., must generally be isolated by safety guardrails.

The role of road signs is to

- To make road traffic safer and easier.

- To remind you of certain traffic regulations.

- To indicate and remind the various special regulations.

- To provide information about the road user.

To structure this comprehensive review and guide the reader through its analytical goals, the following research questions are addressed:

- RQ1: What are the most efficient embedded vision techniques for road element detection?

- RQ2: What are the current limitations of these systems under real-world constraints?

- RQ3: Which unexplored areas can lead to safer, more scalable ADAS integration?

- RQ4: What types of processors are most commonly used in embedded vision systems for traffic sign and lane detection, and how do they impact system performance under real-time constraints?

The objective of this manuscript is to furnish a comprehensive survey delineating the myriad methodologies employed in the detection and recognition of traffic signs and lane markings within the realm of embedded vision systems. Section 2 delineates the categorization of signage into horizontal and vertical typologies. Section 3 proffers an exhaustive overview of the extant systems prevalent within the automotive sector, accompanied by a systematic critique. Section 4 scrutinizes the prevalent Image Processing (IM) and Artificial Intelligence (AI) [34] techniques utilized for the localization, detection, and recognition of traffic elements, analyzing the most ubiquitously adopted methodologies. Section 5 deliberates on extensive empirical experimentation pertaining to detection and recognition processes. Lastly, Section 6 encapsulates the findings and implications of the study.

2. Materials and Methods

To ensure clarity, focus, and scientific rigor of this review, our primary aim is to assist researchers working specifically on the development of embedded vision systems in the automotive sector, with a particular emphasis on road user safety. Rather than addressing a general audience, this paper is intended as a research-oriented contribution, offering both a structured overview of existing techniques and a critical discussion of ongoing challenges and future opportunities. To structure this narrative review, we followed the SALSA framework (Search, Appraisal, Synthesis, and Analysis) (Table 1). This methodology not only helps narrow the scope of relevant studies but also highlights technical advances, gaps, and implementation barriers within real-world embedded automotive systems.

Table 1.

Overview of the SALSA methodology applied in this review.

2.1. Types of Signs

Several techniques or identification methods can be applied for the detection and recognition of road signs and road markings to extract and identify them in an urban environment, for example, using image processing techniques, computer vision, and artificial intelligence. An embedded vision system allows changing the information with a large number of devices on the road:

- ❖

- Road infrastructure server;

- ❖

- From one vehicle to another.

In the context of this inquiry, we commence with a succinct exposition on the typologies of road signs employed for the regulation of vehicular flow. The taxonomy of these signs is bifurcated into two categories: vertical signs, which are predominantly utilized for conveying regulations, warnings, and guidance in a perpendicular orientation to the road; and horizontal signs, which are marked directly on the road surface to delineate traffic lanes, convey directional information, and provide other operational instructions [34,35,36].

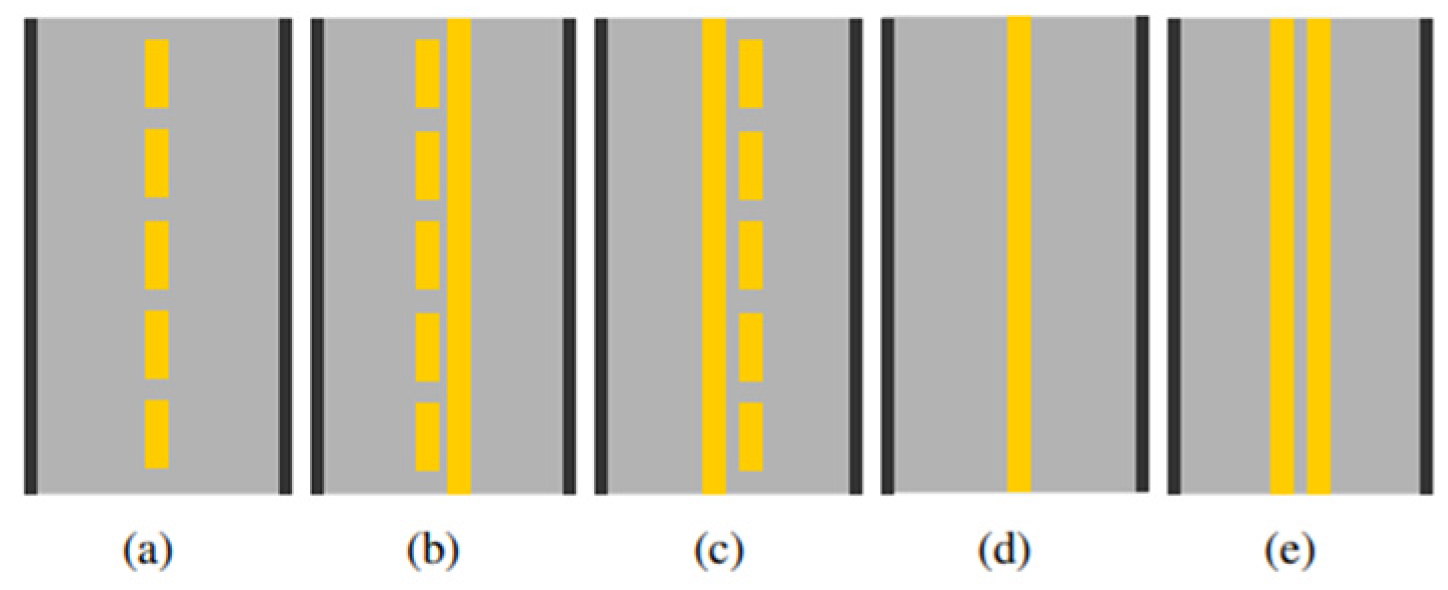

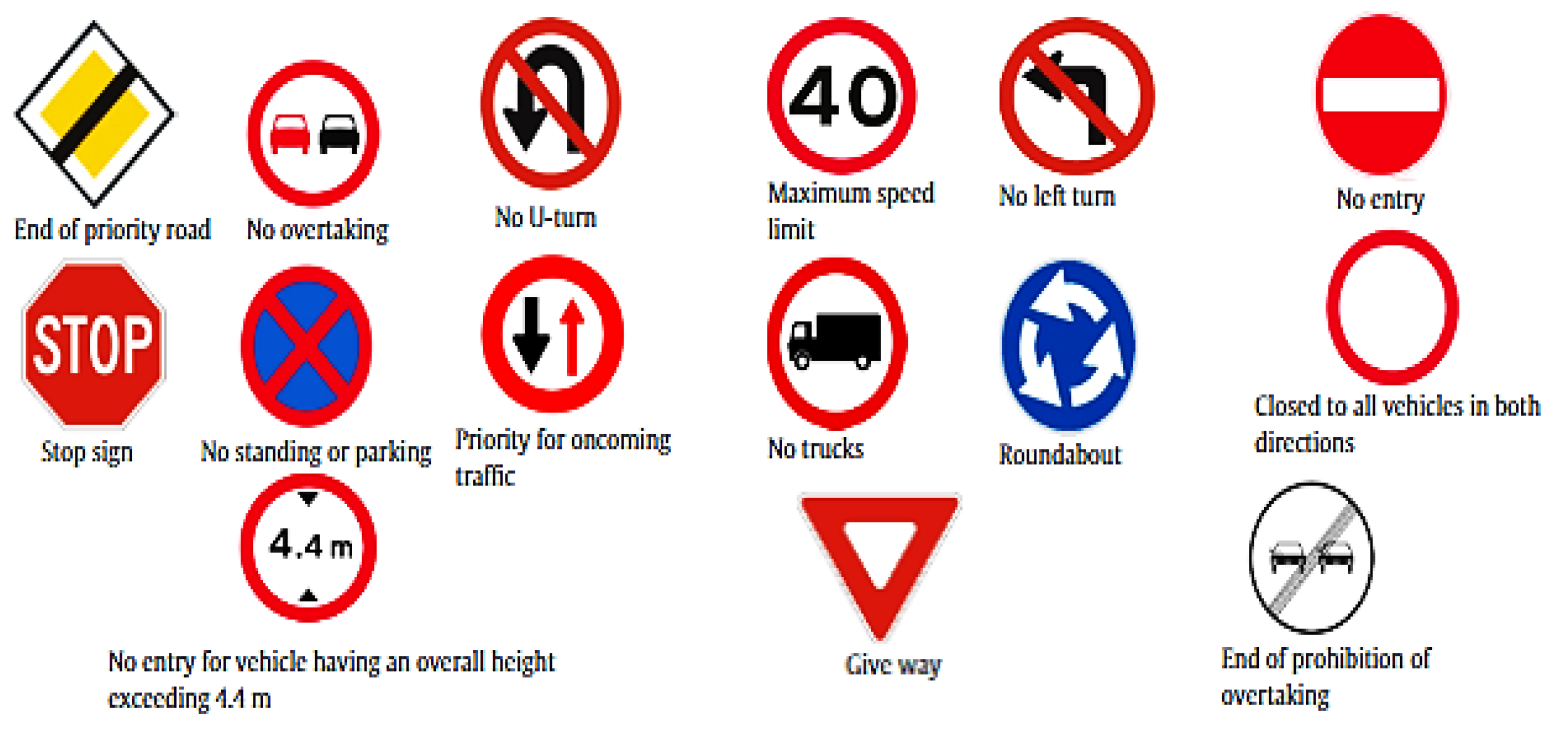

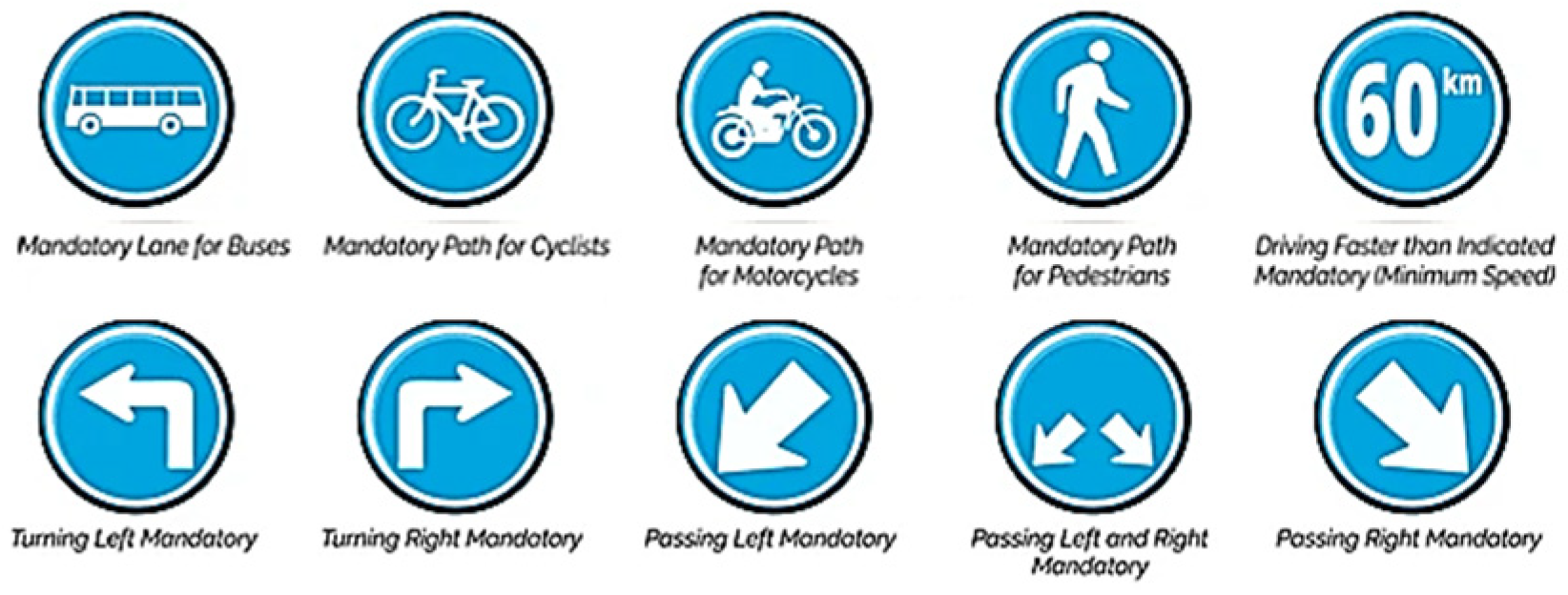

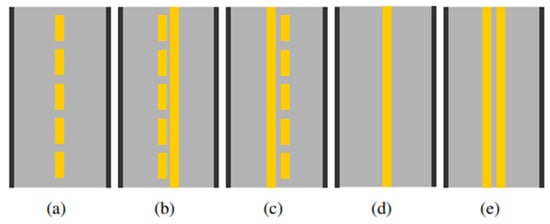

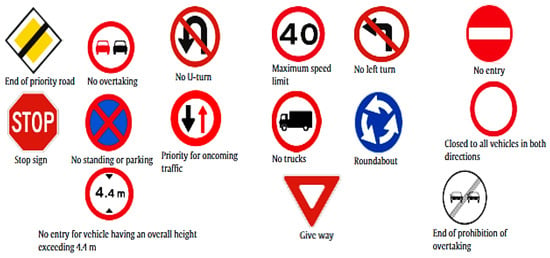

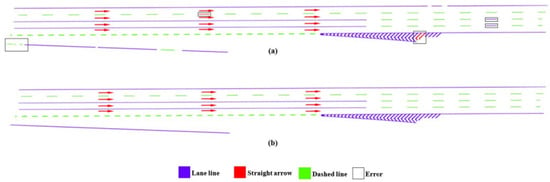

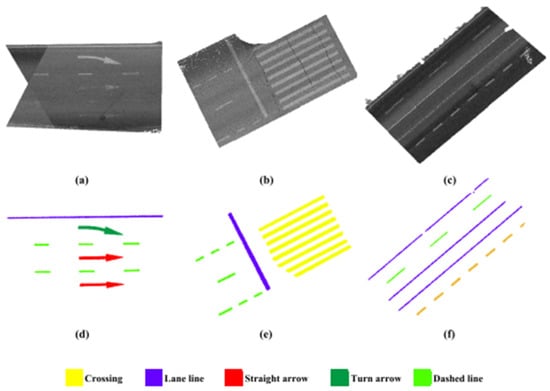

Road signs and markings are key elements for safe and structured traffic flow. Horizontal markings such as dashed, solid, dashed–solid, and solid–dashed lines define lane boundaries and dictate overtaking or lane-changing rules (Figure 2). Dashed lines allow movement between lanes, while solid or double solid lines indicate restrictions or prohibitions. Vertical road signs (Figure 3, Figure 4, Figure 5 and Figure 6) provide regulatory, warning, informational, and mandatory messages to drivers. These include circular prohibition signs, triangular warning signs, rectangular information signs, and round blue obligation signs, all of which follow standardized shapes and colors to support quick recognition [37,38,39].

Figure 2.

Varieties of lane markings corresponding to categories (a–e): (a) dashed, (b) dashed–solid (dashed to solid), (c) solid–dashed (solid to dashed), (d) single solid, (e) and double solid.

Figure 3.

Examples of regulatory signs.

Figure 4.

Examples of warning signs.

Figure 5.

Signs of the information.

Figure 6.

Signals of obligation.

Both horizontal and vertical signs are essential visual cues interpreted by embedded vision systems in modern vehicles. Their standardization enables accurate real-time detection, which is crucial for advanced driver assistance systems (ADASs) and autonomous driving functions. Recognizing these signals helps systems navigate roads, enforce safety rules, and interact effectively with the traffic environment. The upcoming section introduces commonly used optical character detection and recognition techniques in such embedded vision applications.

According to what was explained before, generally, a road sign can have a triangular, square, or rectangular shape. It should be noted that the style or shape of road signs is standardized. Based on these characteristics, we propose, in the subsequent section, the most commonly employed techniques in systems for optical character detection and recognition.

2.2. The Different Systems Existing in the Automotive Industry

Nowadays, there are many traffic sign recognition systems in the automotive sector. Currently, there are some car brands that have already been equipped with these TSR systems. Below is an example of a TSR application in a car (Figure 7).

Figure 7.

Examples of TSR application in a car.

- a.

- Advantages of TSR Systems

- Facilitate accurate traffic sign readings;

- Ensure interrupted performance;

- Provide complete speed solutions (avoid accidents);

- Ability to encrypt image data with other systems;

- Reads all types of infrared and non-reflective infrared plates.

- b.

- Limitations of TSR Systems

- A road sign outside the camera’s detection zone will not be detected.

- The systems operate only within the limits of the system and assist the driver.

- The driver must remain attentive while driving and remains fully responsible for their actions.

When it comes to TSR systems integrated in vehicles, there are two types of TSR technology applications:

- Passive application: consists of informing the driver by means of pictograms or sounds that they are entering a zone that has a new limit indicated by a traffic light. In this case, it will be the driver’s decision whether they obey the signal or not.

- Active application: consists of automatically intervening in the car when it detects a sign. For example, if the driver is driving at an excessive speed and the TSR system detects a stop sign but the car does not interpret that the driver intends to stop, the command to brake will be sent directly to the car to avoid a possible accident.

2.3. Horizontal Signs: Road Lanes

The utilization of image processing in the design and development of embedded vision systems for the detection and recognition of traffic signs and road markings is a critical aspect of modern vehicular technology. These systems integrate advanced algorithms that analyze visual data to identify and interpret traffic signs and lane information, thereby enhancing the safety and efficiency of navigation in both driver-assisted and autonomous vehicles [40]. The sophistication of these systems lies in their ability to process complex visual inputs with high accuracy and speed, contributing significantly to the advancement of intelligent transportation systems. Image processing techniques are fundamental to the development of embedded vision systems in the automotive sector [41]. These techniques enable vehicles to interpret and understand the environment around them, which is crucial for both driver assistance and autonomous driving applications. Here is a deeper look into their essential role:

- Enhanced Perception of Safety: Image processing algorithms are key to enhancing a vehicle’s perception of its surroundings. They process visual data from cameras to detect objects, lanes, signs, and pedestrians, which is vital for safety features like collision avoidance and lane-keeping assistance [34,42].

- Real-time processing: Embedded vision systems must process and analyze visual data in real time to be effective. Image processing techniques allow for the quick interpretation of data, enabling immediate responses to dynamic road conditions [43].

- Machine Learning Integration: The integration of machine learning with image processing has led to more accurate and adaptive vision systems. These systems can learn from vast amounts of data, improving their ability to recognize and respond to various traffic scenarios over time [44].

- Reduced Computational Load: Advanced image processing techniques help in reducing the computational load on embedded systems. By preprocessing visual data and extracting relevant features, these systems can operate efficiently without compromising on speed or accuracy.

- Sensor Fusion: Although camera-based vision systems are fundamental in enabling perception for autonomous vehicles, they exhibit several inherent limitations. Environmental conditions such as fog, shadows, glare, or heavy rain can reduce the reliability of image-based detection. Moreover, vision alone, especially from monocular cameras, fails to provide accurate depth information, which is critical for tasks like distance estimation and obstacle avoidance. In contrast, sensors like LiDAR and radar offer more consistent depth measurements and greater resilience to weather variations, though they may lack the resolution and semantic detail provided by visual sensors. By combining complementary sensor modalities, sensor fusion techniques significantly enhance perception reliability. As demonstrated in [45], fusing visual data with LiDAR or radar improves detection confidence, reduces false positives, and strengthens decision-making, particularly in edge-case scenarios [46].

In summary, image processing is a cornerstone of modern automotive technology, enabling vehicles to see and interpret the world with precision and intelligence. As the automotive industry continues to evolve towards autonomous driving, the role of image processing in embedded vision systems becomes increasingly significant.

2.4. Harnessing Artificial Intelligence in the Automotive Sector

Artificial intelligence (AI) refers to a range of technologies enabling the simulation of intelligent behavior and the automated execution of perception, comprehension, and decision-making tasks. These methodologies primarily encompass mathematics (particularly statistics), computer science, electronics, cognitive science, and neuroscience. Consequently, artificial intelligence has developed very strongly for more than 10 years, with an acceleration in the last 5 years, to allow uses such as [15,47,48]

- Visual perception: object recognition or scene description.

- Understanding of written or spoken natural language: automatic translation, automatic production of press articles, and sentiment analysis.

- Automatic analysis by “understanding” a query and returning relevant results, even if the result does not contain the words of the query.

- Autonomous decision-making for ADASs and autonomous vehicles.

AI currently requires considerable data and computational resources to learn efficiently. Research is now developing techniques to reduce energy consumption and limit the need for data [49] and other techniques to allow a solution to be generalized to multiple uses or to make AI robust to a single disruptive event.

- a.

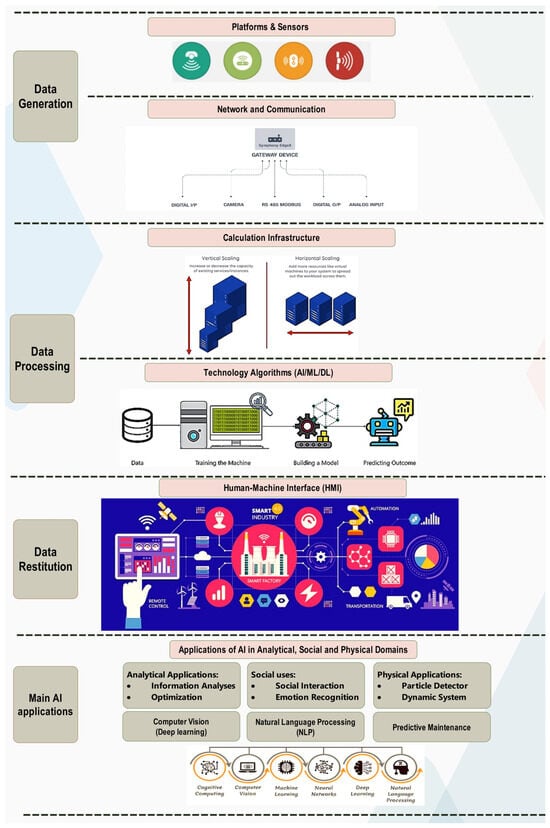

- Exploring Artificial Intelligence Technologies

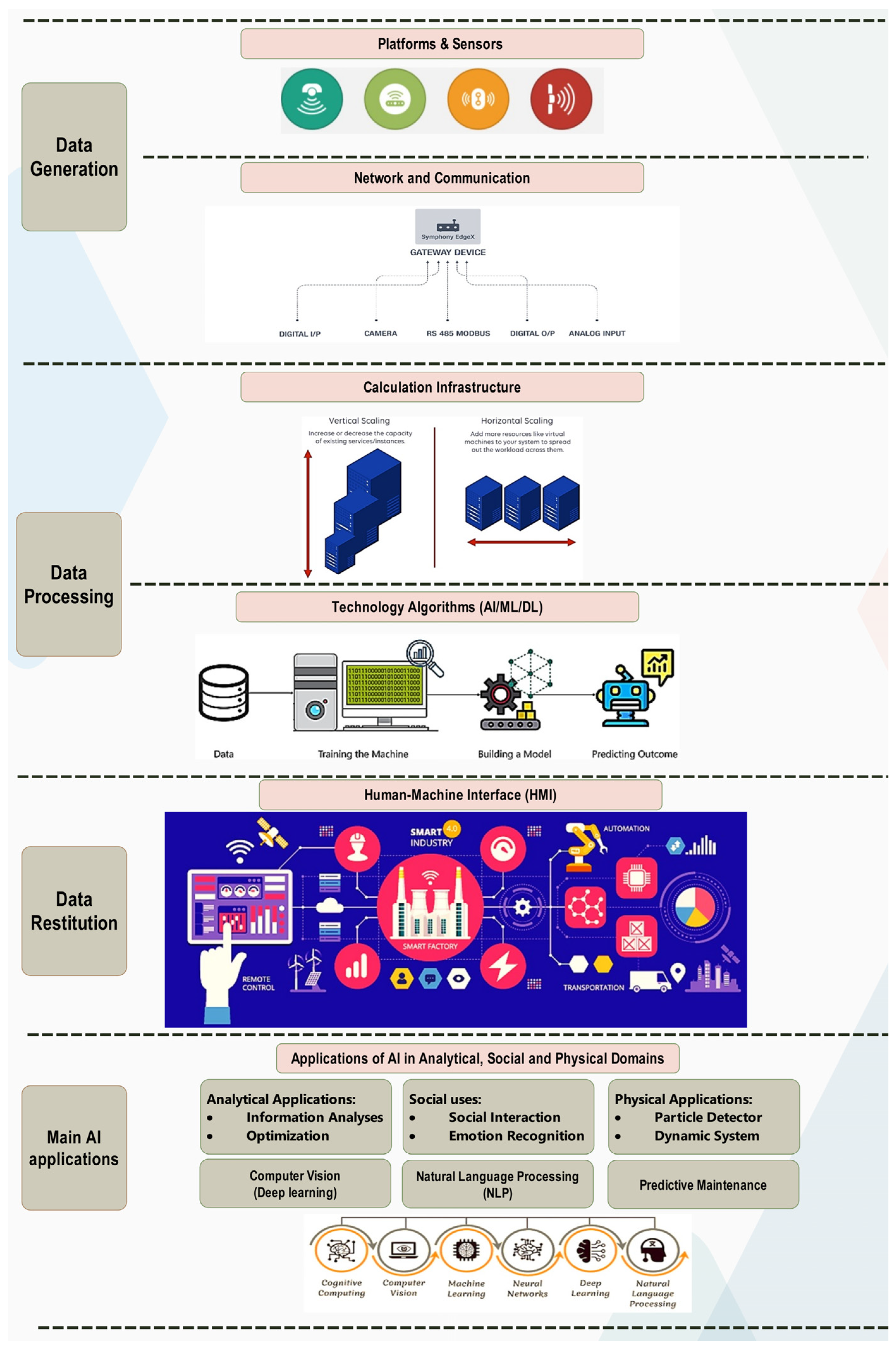

Even though artificial intelligence is mainly associated with mathematical disciplines and algorithmic techniques, it also includes other aspects to support comprehensive use, as shown in Figure 8. The main building blocks of an AI system are digital platforms (user data) or sensor infrastructure [45,50] (machine or environmental data) to generate regular data/events flow. This infrastructure consists of networks of sensors strategically deployed to capture and transmit data from the physical world to computational systems for analysis and interpretation [51,52,53]. A communication network allows the collection of data/events used by the AI. This data must be sufficiently representative of the use case we are trying to address. By taking these special considerations into account and implementing robust data collection strategies, organizations can ensure that the data captured by sensor infrastructure is sufficiently representative of the specific use case, enabling more accurate information to be obtained and informed decisions to be made [54].

Figure 8.

AI technology bricks.

A hyperscale computing infrastructure stores and exploits the data streams in a reasonable timeframe. Globally, hyperscale IT infrastructure [55] represents a paradigm shift in data center design and operation, enabling organizations to efficiently and cost-effectively support the growing demands of the digital enterprise, cloud computing, big data analytics, and emerging technologies such as AI/ML [56]. Artificial intelligence algorithmic technologies (machine learning, deep learning, neural networks, etc.), a performance measurement, an error measurement, and a set of reference events for learning are required. These performance measurement techniques are essential for assessing the effectiveness and reliability of AI algorithms in different tasks and domains. By carefully choosing appropriate metrics and methodologies, researchers and practitioners can gain valuable insights into algorithm performance, identify areas for improvement, and make informed decisions about algorithm selection and deployment [57].

A simple man/machine interface of the platform types accessible via mobile or computer for “decision support”-type uses or an advanced interface (drone, robot, autonomous vehicle) for “autonomous decision”-type uses is the element that associates an algorithmic technology with a sectorial use [58].

- b.

- The usefulness of AI for embedded vision systems in the automotive sector

The application of AI in embedded vision systems is pivotal for the progression towards fully autonomous vehicles, as it allows for the interpretation of complex visual data, ensuring safety and reliability of diverse driving conditions. Moreover, AI models and methods have been systematically reviewed for their application in automotive manufacturing, highlighting the potential and applicability of AI for automotive original equipment manufacturers (OEMs) and suppliers [47]. Furthermore, advancements in computer vision algorithms and remote sensing data fusion techniques contribute significantly to the automotive sector by enhancing visual perception and navigation systems [59]. In the realm of automotive technology, AI-driven embedded vision systems are pivotal for the evolution of vehicles that are not only autonomous but also cognizant of safety and operational efficiency. The burgeoning corpus of scholarly articles attests to the critical role and swift progression of AI in this domain. The recent literature has elucidated the integration of deep learning with autonomous vehicle systems (AVSs), significantly impacting road safety and the future of transportation [60].

These advancements are particularly noteworthy in the context of sensor fusion cost reduction and the amalgamation of top-tier solutions to address uncertainties on the road. The systematic review of AVS employing deep learning spans a decade, focusing on RGB (Red, Green, and Blue) camera vision and its application in various vehicular functions, from perception analysis to augmented reality-based head-up displays [61]. Furthermore, the digitalization of the automotive sector accelerates the convergence of perception, computing, connectivity, and data fusion for Electric, Connected, Autonomous, and Shared (ECAS) vehicles. This convergence fosters cutting-edge computing paradigms with embedded cognitive capabilities, propelling vehicles towards sustainable green mobility through intelligent functions and automotive digital infrastructure [62].

The challenges of embedding computer vision in automotive safety systems are manifold, yet the continuous innovation in AI methodologies and virtual validation and testing is paving the way for more advanced and reliable ECAS architectures [63]. As vehicles become increasingly intelligent and interconnected, functioning as edge microservers on wheels, the embedded AI facilitates a myriad of autonomous capabilities, enhancing the overall driving experience and safety [62]. In essence, AI for embedded vision systems is not merely a technological enhancement but a transformative force driving the automotive sector towards an era of intelligent mobility. The commitment to research and development in this field is crucial for realizing the full potential of AI in automotive applications, ensuring that the vehicles of tomorrow are equipped to navigate the complexities of real-world driving environments.

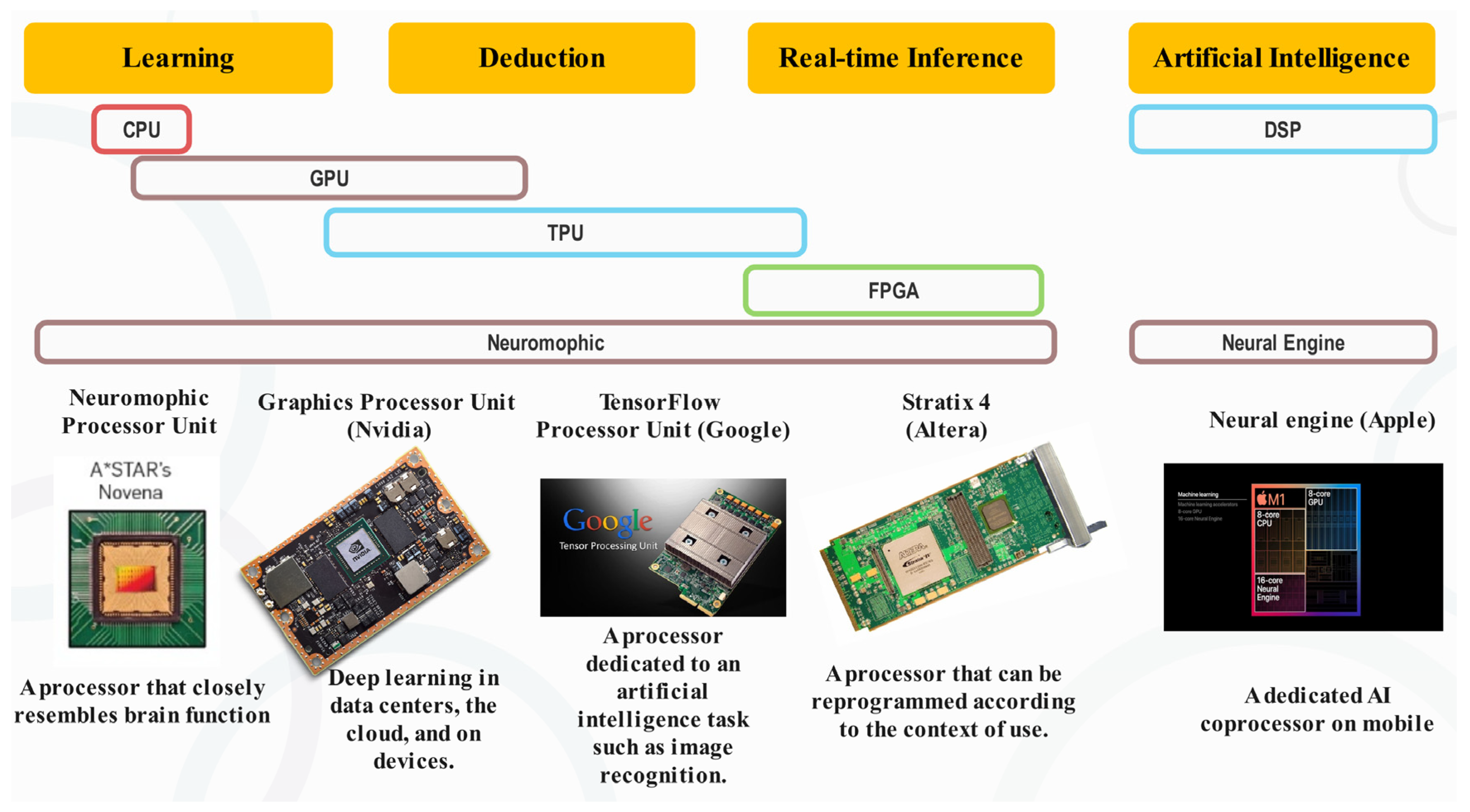

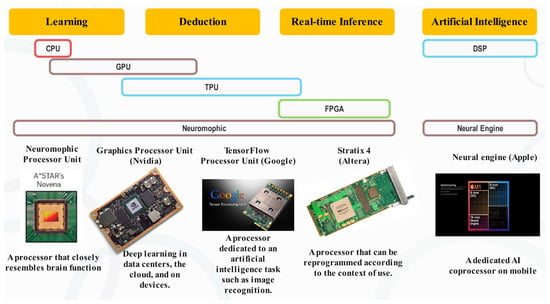

Furthermore, the following diagram (Figure 9) shows the evolution of processor sales by economic sector over the last few years. The industrial, tertiary (including financial services), and commercial sectors are the three sectors that consume the most chips. This number is growing overall in all sectors, apart from the economic crisis of 2008. GPU-based processors (led by NVIDIA) currently dominate the AI gas pedal market. Other technological approaches are still in the research and development stage. Just like the GPU, which took a decade to become established, solutions should be available by 2020/2025. They focus on solving specific AI problems, once the learning phase is over, such as the inference phase (FPGA) [57,58] or the use of constrained consumption (QUALCOMM) on mobile devices.

Figure 9.

AI usage of processors according to their architecture.

2.5. Vision Transformers in Object Detection and Tracking

Vision Transformers (ViTs) have recently emerged as strong alternatives to CNN-based (convolution neural network) approaches for object detection and tracking, particularly in autonomous driving scenarios. Unlike convolutional models, ViTs utilize self-attention mechanisms to capture the global context, which improves detection performance in complex visual scenes. However, their computational demands often limit their use in embedded systems. To address this, recent works have focused on designing lightweight and hybrid models. For example, MobileViT integrates convolutions with transformer blocks to reduce latency while maintaining accuracy, showing promising results in mobile and real-time platforms [64]. Similarly, DeViT introduces a modular design that partitions the model across low-power devices for efficient inference without significant accuracy degradation [65]. Fast-COS, introduced by [66], proposes reparametrized attention tailored for driving scenes, outperforming traditional models like YOLO and FCOS in terms of speed and throughput on edge devices. Moreover, Lai-Dang highlighted the importance of software–hardware co-design and model compression in deploying ViTs under strict resource constraints [67]. These developments underline a key trade-off: ViTs offer better detection accuracy, but only recent innovations have made their deployment feasible on embedded platforms. A comparative discussion of these models is essential to reflect the current state of the art and guide future research.

3. Related Work

This section is divided by subheadings. It should provide a concise and precise description of the experimental results, their interpretation, and the experimental conclusions that can be drawn.

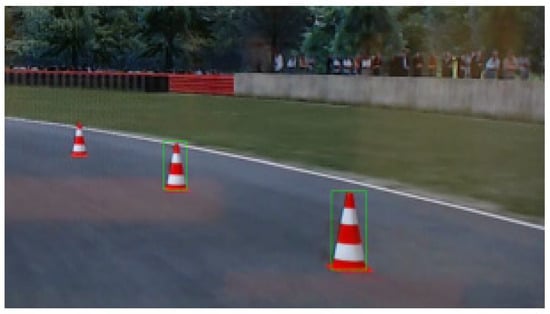

The proposal of an embedded vision system should efficiently and accurately detect and recognize traffic signs, while also robustly identifying road edges. These systems play a vital role in functions such as driver assistance systems [68], pedestrian detection, lane departure warning, traffic sign recognition, and autonomous driving. By leveraging AI algorithms and advanced image processing techniques, these systems can accurately detect and recognize objects, pedestrians, road signs, and lane markings, providing invaluable insights to both drivers and autonomous driving systems. As automotive manufacturers continue to invest in the research and development of embedded vision technologies, we can expect further advancements that will drive the future of transportation towards safer, smarter, and more efficient vehicles.

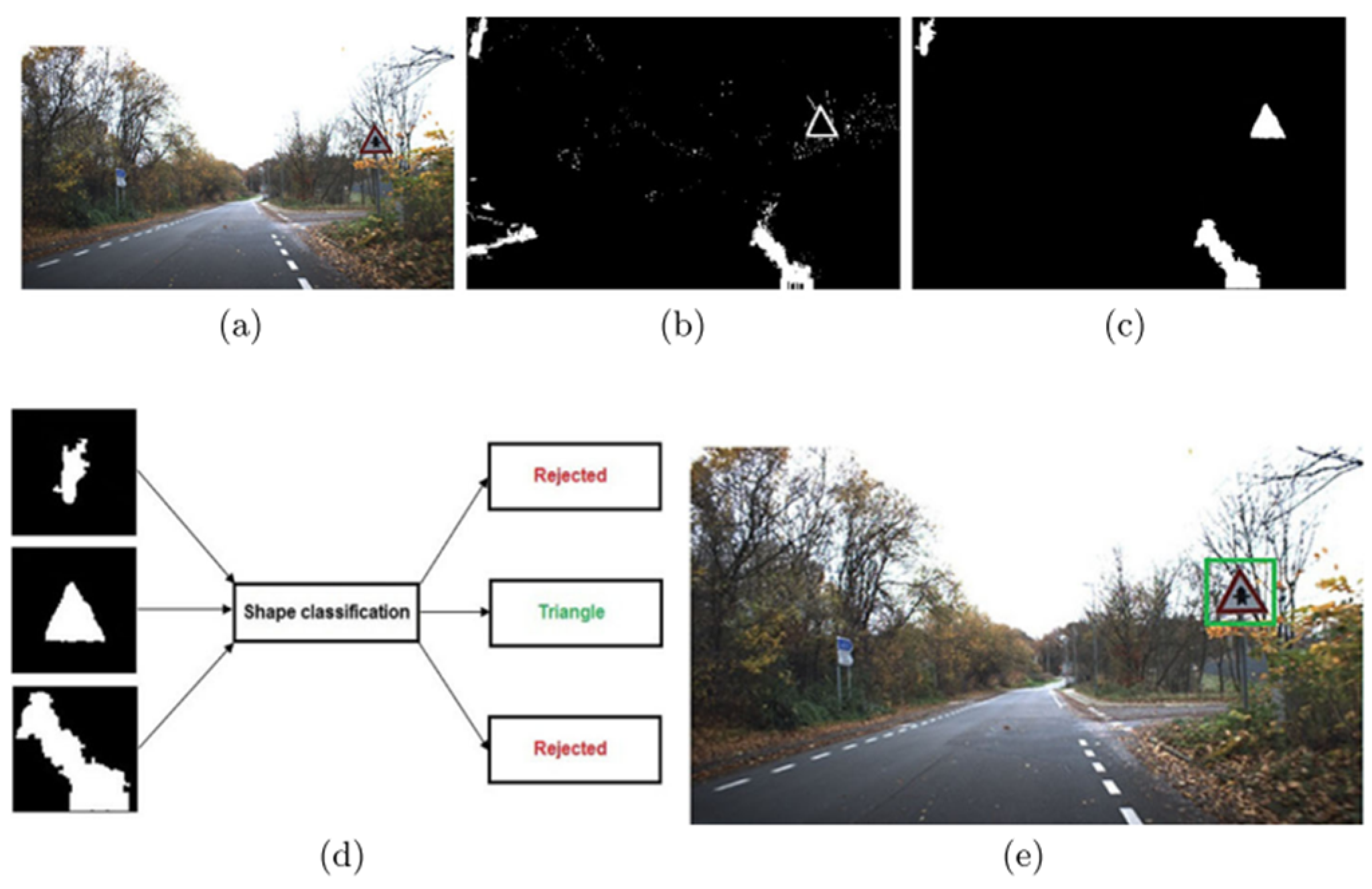

Traffic signs are characterized by regions of interest that can identify them in road traffic (color and geometric shape), followed by the recognition or classification of the detected signs according to the type of their pictograms. For road edges, detection is based on a model of the road, followed by the recognition or estimation of the steering angle of wheels according to the scenario (left, middle, or right), in order to assist the driver during the conduction period.

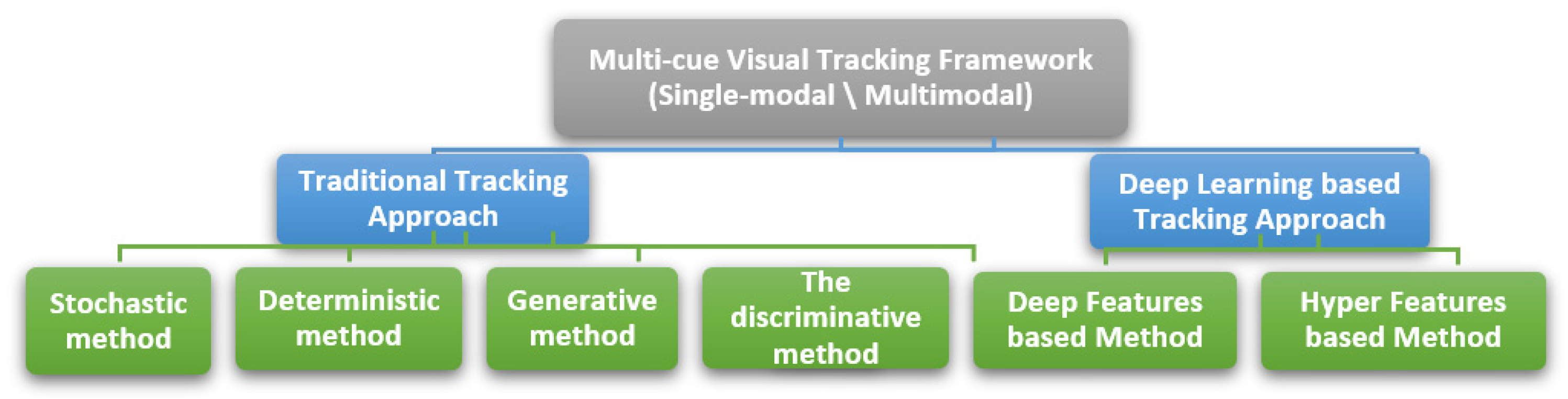

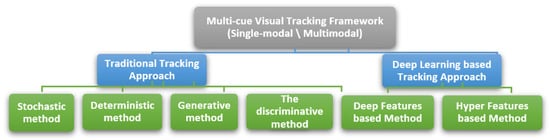

A Multi-Cue Visual Tracking Framework refers to a system in computer vision designed to track objects using a combination of visual cues, which can originate from a single modality or multiple sources. Single-modal tracking relies solely on one type of input, typically visual data, while multimodal tracking integrates various inputs such as depth, motion, or thermal information to enhance robustness. These frameworks aim to improve accuracy and adaptability by incorporating features like color, shape, texture, and motion.

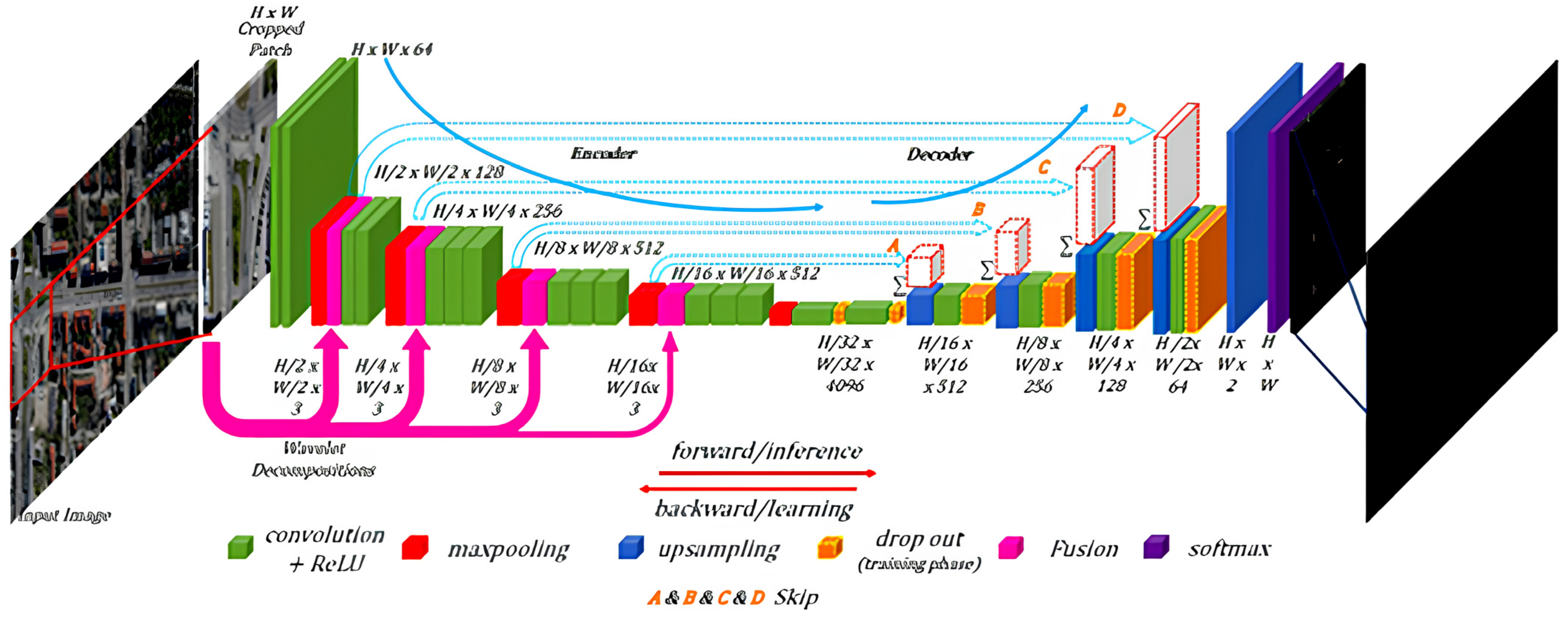

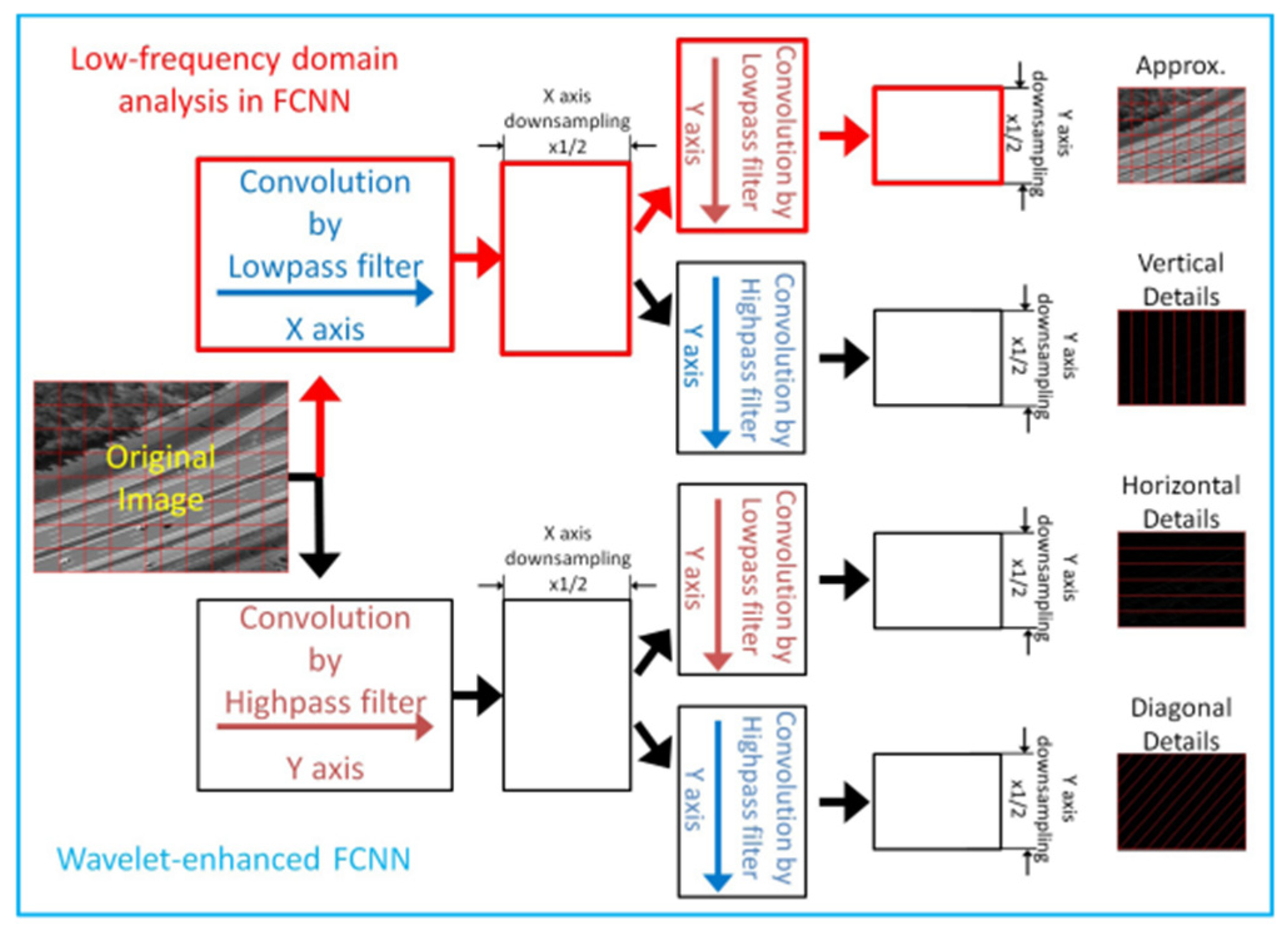

As illustrated in Figure 10, visual tracking methods are generally divided into two major categories: traditional tracking approaches and deep learning-based approaches. Traditional methods include stochastic, deterministic, generative, and discriminative strategies, which are typically based on handcrafted features and fixed models. In contrast, deep learning-based methods, including those that utilize deep or hyper features, offer more flexible and data-driven solutions that adapt to complex environments and object variations. This comparison highlights the evolution from rule-based models to learning-based systems, reflecting a broader trend in the field toward higher accuracy and scalability. Implementation of these frameworks involves algorithms for feature extraction, object representation, and model updating, often leveraging modern machine learning techniques. Ongoing research continues to explore more efficient and reliable tracking solutions, particularly for use in real-time, dynamic settings such as autonomous vehicles, surveillance, and interactive systems.

Figure 10.

Object tracking methods.

3.1. Traditional Tracking Approach

The traditional tracking approach in computer vision relies on predefined rules and manually designed features for object tracking in a video. It involves extracting features like color, texture, or edges, creating a model or template for object representation, and estimating motion using methods like optical flow or template matching. Matching and localization are performed across frames, often employing correlation-based techniques. Filtering methods, such as Kalman filters, are used for accuracy improvement [69].

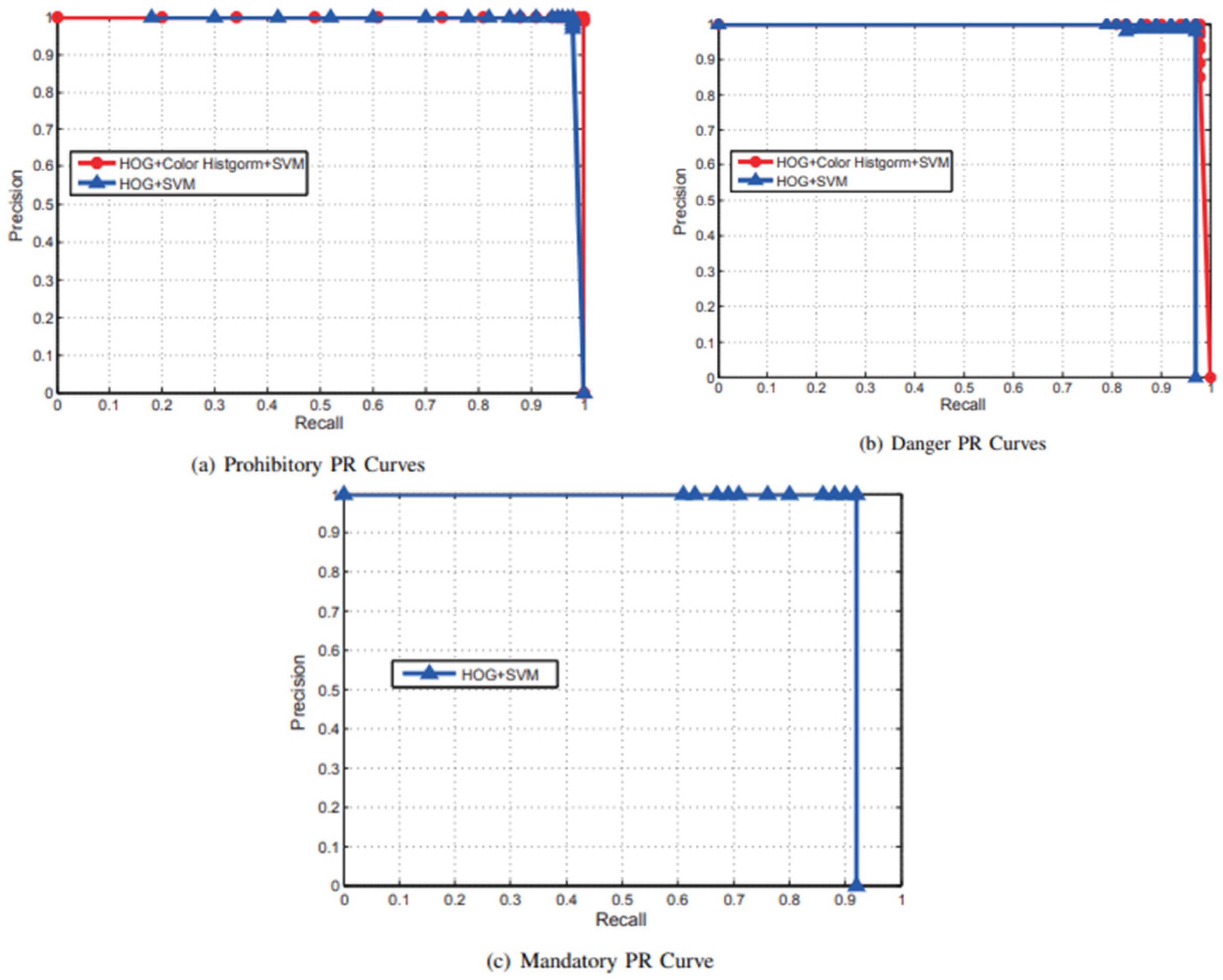

Each tracking method offers distinct strengths (Table 2): deterministic approaches ensure stability and precision in structured tasks, while stochastic methods enhance adaptability under uncertainty. Generative models enable synthetic data creation by learning data distributions, supporting augmentation and representation learning.

Table 2.

Two-dimensional tracking approaches and their mathematical models.

The discriminative methods excel in classification by learning decision boundaries, but require labeling data. The choice of method depends on the task complexity, data variability, and system objectives.

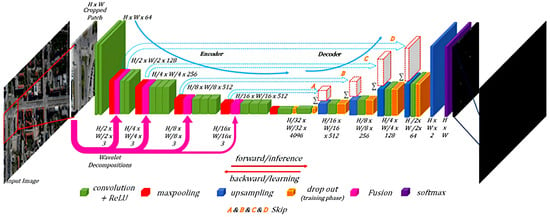

3.2. Deep Leaming Based Tracking Approach

In tracking objects in video sequences, a deep learning-based approach employs neural networks, particularly deep ones, to enhance tracking performance. This methodology leverages convolutional neural networks (CNNs) for extracting hierarchical features, Siamese networks for discrimination between target objects and backgrounds, and recurrent neural networks (RNNs) or Long Short-Term Memory (LSTM) networks to capture temporal dependencies. Object detection networks like region-based CNNs (R-CNN) or You Only Look Once (YOLO) are integrated for simultaneous detection and tracking. Online learning and fine-tuning strategies allow adaptation during tracking. Tracking-by-detection methods use pre-trained object detectors, and data augmentation techniques enhance generalizations. Challenges include addressing occlusions, scale, and pose variations and maintaining real-time performance. Some approaches strive for end-to-end learning, directly processing raw input frames to object tracks without explicit feature engineering. These methods showcase improved accuracy, especially in scenarios with complex motions and appearance variations, evolving with advancements in neural network architectures and training strategies.

This Table 3 summarizes advanced tracking strategies leveraging deep learning and hybrid features. Deep and hyper features improve robustness by combining semantic and handcrafted cues, while transformer-based models offer global attention mechanisms for superior context modeling. These methods significantly enhance accuracy and adaptability in complex visual environments.

Table 3.

Deep feature extraction methods in visual tracking.

3.3. Three-Dimensional Perception and Structured Scene Understanding

Current research in intelligent transportation systems increasingly leverages 3D perception and structured scene understanding to complement traditional 2D approaches. In railway applications, RailVoxelDet introduces a lightweight voxel-based LiDAR pipeline optimized for long-range object detection with competitive inference speed and computational efficiency [81,82]. Similarly, Rail-PillarNet builds upon PointPillars with a parallel attention encoder and transfer learning enhancements to detect foreign objects effectively without substantial parameter increase.

Beyond railway scenarios, Bird’s-Eye-View (BEV) representations have become a standard for structuring multi-sensor data. Frameworks like BEVFormer learn unified BEV features via spatio-temporal transformers, effectively fusing camera and LiDAR data into a robust 3D scene understanding model [83]. End-to-end fusion models such as BevDrive integrate cameras–LiDAR fusion directly into motion planning via BEV constructs, demonstrating the value of perception planning synergy [84].

Another emerging area is non-destructive measurement and interpretable diagnostics, especially relevant in safety-critical systems. For example, methods using physical constraints-guided quadratic neural networks enable interpretable fault diagnosis without needing fault samples, enhancing reliability through explainability, a trend gaining traction for embedding perception into maintenance workflows. To provide a concise overview of the mathematical foundations supporting recent 3D perception methods, Table 4 summarizes representative approaches, their core equations, and key references.

Table 4.

Representative mathematical models in 3D perception and structured scene understanding.

Overall, these approaches reinforce the role of 3D perception as a necessary complement to traditional 2D methods, providing a more comprehensive perspective for intelligent transportation systems.

3.4. Dataset Description

Both traditional tracking methods and deep learning-based approaches fundamentally rely on comprehensive datasets of images and lane markings to achieve reliable performance. Traditional techniques, which utilize handcrafted features like edges and colors, require diverse image datasets to handle variations in lighting, weather, and road conditions. Likewise, deep learning methods demand well-annotated datasets for training and testing, allowing the models to learn and generalize effectively across different scenarios. To ensure robust real-world applications, datasets must encompass a wide range of environments, including challenging conditions like occlusions or extreme weather. Without such data, neither strategy can perform reliably or adapt to dynamic situations. These datasets cater to various aspects of the automotive sector, including lane detection, geolocation, traffic sign recognition, and driver behavior analysis, making them vital for advancing intelligent transportation systems.

3.4.1. German Traffic Sign Recognition Benchmark (GTSRB)

The GTSRB dataset is essential for training and evaluating automatic traffic sign classification algorithms, particularly for advanced driver assistance systems (ADASs) and autonomous vehicles [87]. GTSRB contains 43 classes of German traffic signs, with over 50,000 annotated images. The images vary significantly in terms of perspective, lighting, resolution, and environmental conditions (e.g., blur, shadows, and distortions). These characteristics make the dataset ideal for testing the robustness of models in real-world scenarios.

3.4.2. LISA Traffic Sign Dataset

This dataset is used to train models capable of recognizing American traffic signs, even in complex environments with variations in perspective, occlusions, and lighting conditions.

The LISA datatest contains over 6600 images with 7855 annotations covering 47 classes of signs [88]. The images are captured in urban and suburban environments. Each sign is annotated with detailed information about its position, size, and type, making it a valuable resource for training and testing visual recognition systems.

3.4.3. Cityscapes Dataset

Cityscapes is widely used for semantic segmentation of urban scenes, a crucial task for autonomous navigation and understanding complex road environments [89]. This dataset features thirty annotated classes, including eight related to traffic signs and road markings. It offers 5000 finely annotated images and 20,000 images with simplified annotations, captured in 50 European cities. The annotations include pedestrians, vehicles, signs, and other urban elements, enabling detailed interpretation of traffic scenarios.

3.4.4. TuSimple Lane Detection Dataset

This dataset is specifically designed for lane detection tasks, which are essential for lane-keeping systems and autonomous driving applications.

TuSimple provides 6408 annotated road images, primarily of highways. The annotations include precise lane line positions captured under various conditions (e.g., daylight and twilight). This dataset presents realistic challenges for developing reliable lane detection algorithms [90].

3.4.5. CULane Dataset

CULane is suited for studying road markings in complex urban environments, such as intersections and poorly marked roads. With approximately 133,000 annotated images, this dataset covers diverse scenarios, including roads bordered by vegetation, intersections, and unmarked areas [91]. The detailed annotations help identify both straight and curved lanes, making it ideal for navigation studies in real-world environments.

3.4.6. U.S. Traffic Signs Dataset

This dataset is critical for recognizing traffic signs specific to the United States, offering a wide range of scenarios to test the robustness of recognition models [92]. It includes several thousand annotated images representing over 100 classes of traffic signs. The images show variations in lighting, perspective, and weather conditions, reflecting real-life situations.

3.4.7. Traffic Sign Dataset—Classification

This dataset enables the study of traffic signs in European environments, providing data tailored to regional diversity of robust models. It contains thousands of annotated images across 58 classes of signs [93]. The images capture varied environments, from rural areas to large cities, under different weather conditions.

3.4.8. Caltech Pedestrian Dataset

This dataset is crucial for pedestrian detection, a fundamental task for ensuring the safety of autonomous driving systems in urban environments.

The dataset contains approximately 250,000 annotated images capturing pedestrians under diverse conditions, including varying lighting and crowd density [94]. These annotations help train models to detect pedestrians accurately and efficiently.

3.4.9. KITTI Dataset

The KITTI dataset is widely recognized in the autonomous driving community as a foundational benchmark for evaluating both visual and geometric perception algorithms. Collected using a real-world vehicle equipped with stereo cameras, a rotating LiDAR scanner, GPS, and inertial sensors, KITTI captures diverse urban and highway scenes with high precision. It includes ground truth annotations for object detection, lane estimation, optical flow, and depth prediction, enabling robust cross-modal training and validation of perception systems [95]. Its multimodal structure makes it particularly valuable for the development of end-to-end models that integrate both spatial and semantic understanding. Recent advancements in this area include Vision–Language Models (VLM) and Vision–Language–Action (VLA) frameworks, which unify perception and decision-making by leveraging aligned visual and textual data. These architectures enhance interpretability and improve decision robustness in complex urban scenarios [96].

3.4.10. Malaysia Roads Dataset

This dataset is designed for recognizing road markings and signs specific to Malaysian roads. It contains thousands of annotated images featuring road markings and signs unique to the region. This data is crucial for adapting visual recognition systems to local requirements [97].

3.4.11. STS Dataset (Simulated Traffic Sign Dataset)

The STS dataset is designed for training and testing algorithms for traffic sign recognition in simulated and real-world environments. This dataset includes a large number of traffic sign images generated through simulation tools to represent diverse conditions [98]. It focuses on various weather conditions, lighting scenarios, and perspectives. STS datasets are particularly useful for augmenting training datasets where real-world data is limited.

3.4.12. Belgian Traffic Sign Classification Dataset

The BTSC dataset is used for traffic sign classification tasks, specifically focusing on Belgian traffic signs. It supports the development of systems that recognize diverse regional traffic signs [99]. The dataset contains more than 10,000 images of 62 classes of Belgian traffic signs, including regulatory, warning, and informational signs. The images cover varying conditions such as partial occlusions, different angles, and lighting variations, making it highly suitable for robust model training.

3.4.13. Driver Inattention and Traffic Sign Dataset

The DITS dataset focuses on driver behavior analysis in relation to traffic sign recognition. It is useful for evaluating driver attention and alert systems. DITS includes synchronized data of driver behavior, traffic signs, and vehicle performance [100]. The dataset contains recordings of traffic sign encounters in various driving conditions, including challenging scenarios like night driving or adverse weather. It helps in developing systems that detect driver inattention and generate warnings when signs are ignored.

3.4.14. Text-Based Traffic Sign Dataset in Chinese and English (TTSDCE) Dataset

The Traffic Guide Panel dataset includes thousands of images focused on highway guide panels with English text, serving as a benchmark for text-based traffic sign recognition. Due to limited availability of annotated training data, the method relies on a self-collected dataset named TTSDCE. This dataset contains 1800 images featuring traffic signs in Chinese and English, collected from various sources such as cameras and online images. It demonstrates flexibility in multilingual scenarios and is expected to be released for research in the future.

3.4.15. Comprehensive Analysis and Comparison of Automotive Datasets

Table 5 offers an in-depth comparison of various automotive datasets, each tailored to specific research and application needs in the field of intelligent transportation systems. The datasets vary widely in terms of category, image volume, class diversity, and annotation types, reflecting their unique purposes. For instance, the GTSRB dataset and LISA datatest are focused on traffic sign recognition, boasting 43 and 47 classes, respectively, making them ideal for developing models that classify diverse road signs. In contrast, datasets like CULane and TuSimple prioritize lane detection tasks, with thousands of annotated images capturing road lane points under challenging conditions such as occlusions and poor lighting. The Cityscapes datatest stands out for its pixel-level annotations across 30 classes, making it a prime choice for urban scene segmentation in autonomous navigation.

Table 5.

Comparison of automotive datatests.

The KITTI datatest and Caltech Pedestrian datatest diversify the landscape by targeting multiple vision tasks, including 3D bounding box annotations and pedestrian detection, respectively. While some datasets, such as the GNSS datatest, offer geospatial metadata crucial for localization, others like the STS datatest and BTSC datatest excel in providing robust traffic sign classification data. Additionally, datasets such as Malaysia Roads and Tunisian Road Signs are particularly valuable for region-specific research, ensuring adaptability across varying environments. The inclusion of details like resolution, annotation type, and metadata availability further highlights the datasets’ tailored applications. Datasets offering augmentation options, such as GTSRB, present an edge by simulating real-world complexities, whereas proprietary datasets like U.S. Traffic Signs and DITS underline the importance of accessible data-sharing initiatives in advancing the field. The Traffic Guide Panel dataset and the TTSDCE dataset are essential for advancing text-based traffic sign detection and recognition, particularly in multilingual contexts.

Each dataset, while addressing unique challenges, collectively forms a comprehensive foundation for developing innovative solutions in intelligent transportation, showcasing a balance between global applicability and specialized focus areas. This diversity ensures that researchers can choose the most appropriate datasets for their specific needs, whether the task involves recognizing a multitude of traffic signs, detecting lanes in varied conditions, or analyzing driver behavior in response to environmental cues.

Beyond the technical details summarized in Table 5, the comparison should also account for differences in licensing and commercial restrictions, as well as the strategies and costs of adapting models trained on synthetic data to real-world driving scenarios.

4. Results and Discussions

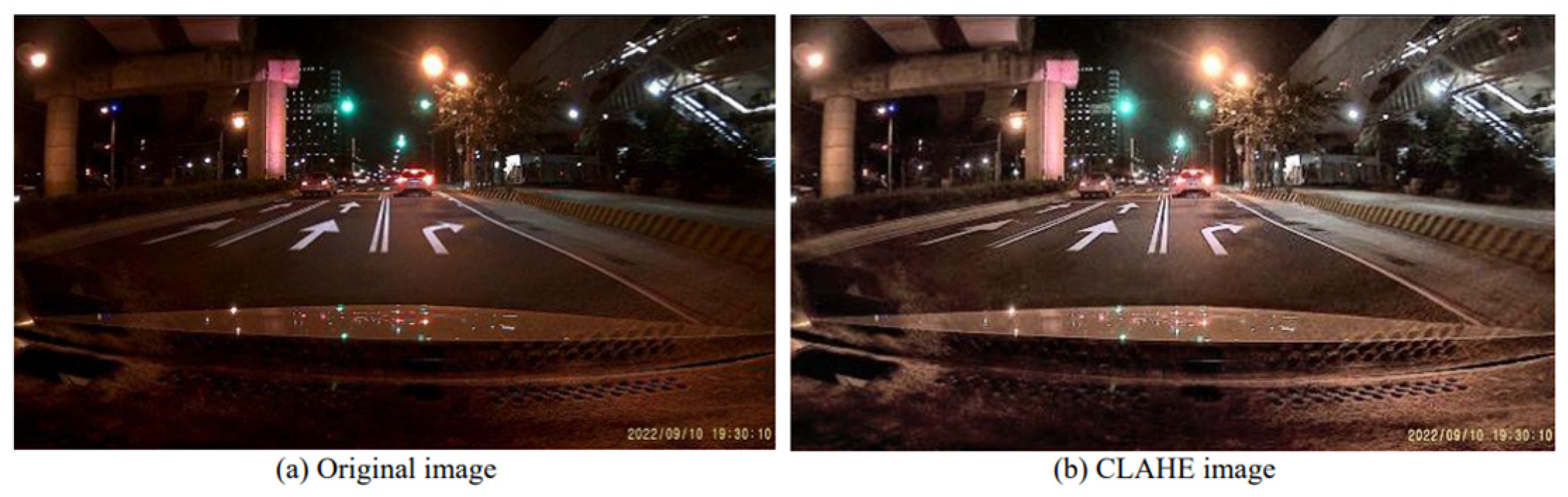

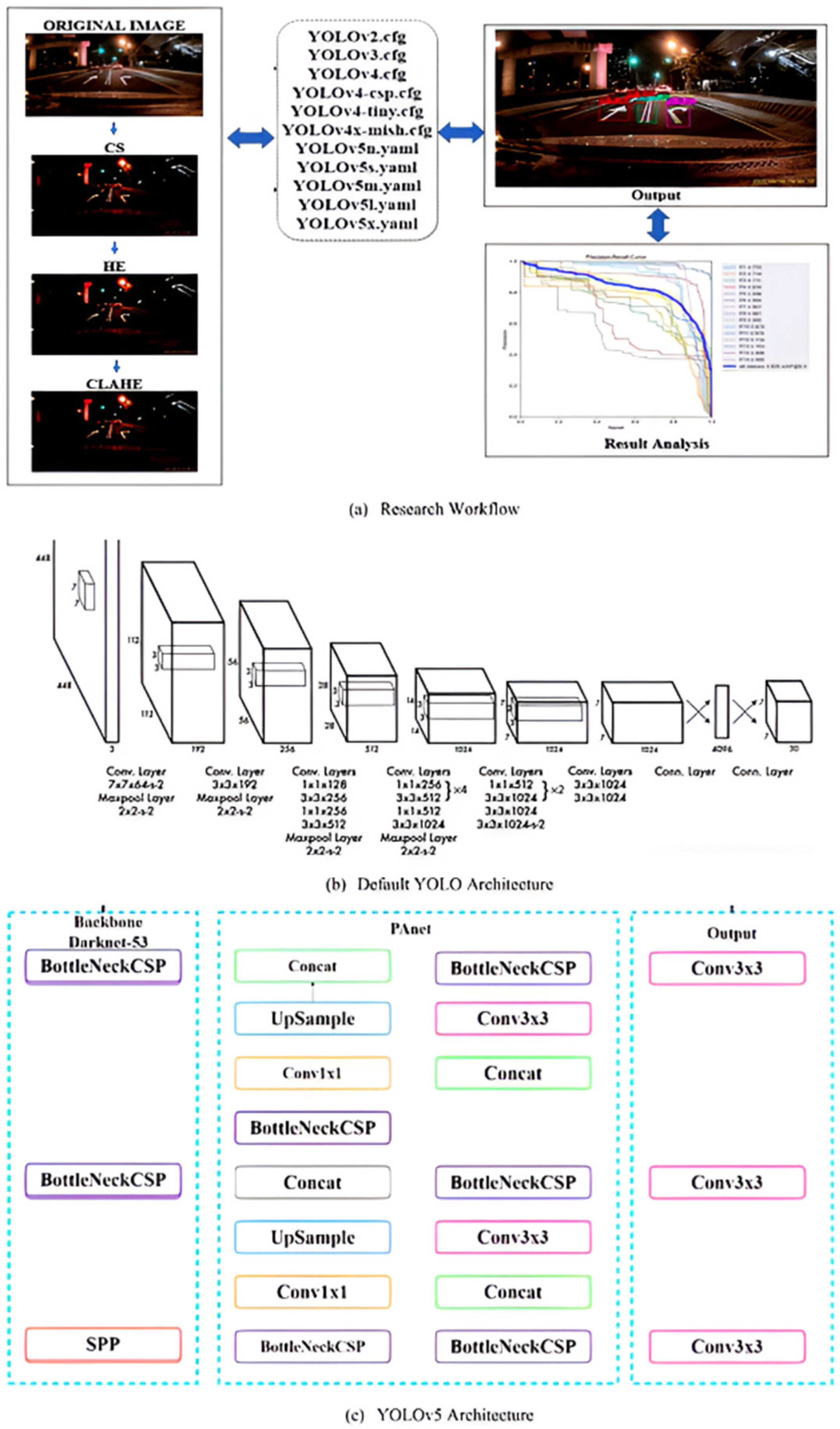

Identifying and recognizing traffic signs as well as road lane markings are essential for modern intelligent transportation systems and autonomous vehicle operations. Various innovative techniques rooted in artificial intelligence (AI) and image processing have been developed to enhance precision and robustness in these tasks. Deep learning methods, including convolutional neural networks (CNNs), are commonly utilized for classifying traffic signs, while object detection frameworks such as YOLO (You Only Look Once) and the Faster R-CNN have shown success in detecting signs in real time. For lane marking detection, segmentation models such as U-Net and SegNet are frequently applied, providing accurate delineation of road boundaries. Traditional approaches like edge detection using Canny and Sobel filters, alongside Hough Transform techniques, also play a significant role in identifying lane structures. Recent studies have further incorporated temporal analysis using neural networks like RNNs and LSTM to address dynamic road environments. To optimize performance, preprocessing techniques such as noise reduction, contrast adjustment, and region-of-interest (ROI) selection are often implemented.

While vision-based techniques offer detailed spatial and semantic information, they remain sensitive to variations in lighting, weather, and occlusion. Visual sensors alone cannot guarantee consistent performance across all conditions. As a result, many systems now incorporate data from complementary sensors, such as LiDAR and radar, which provide depth and robustness in challenging environments. This fusion of modalities helps compensate for the weaknesses of camera-only perception, making the overall system more reliable and better suited for real-world deployment. While the reviewed approaches highlight the strengths and weaknesses of 2D vision-based detection, several recent studies stress that extending perception into 3D provides a way to mitigate these weaknesses. Depth-aware cues from LiDAR or stereo enhance stability under occlusions, night driving, or adverse weather, where 2D methods alone often fail [101]. This perspective confirms that 3D is not a substitute but a complementary extension, ensuring more reliable intelligent transportation systems [102].

4.1. Image Processing Methods

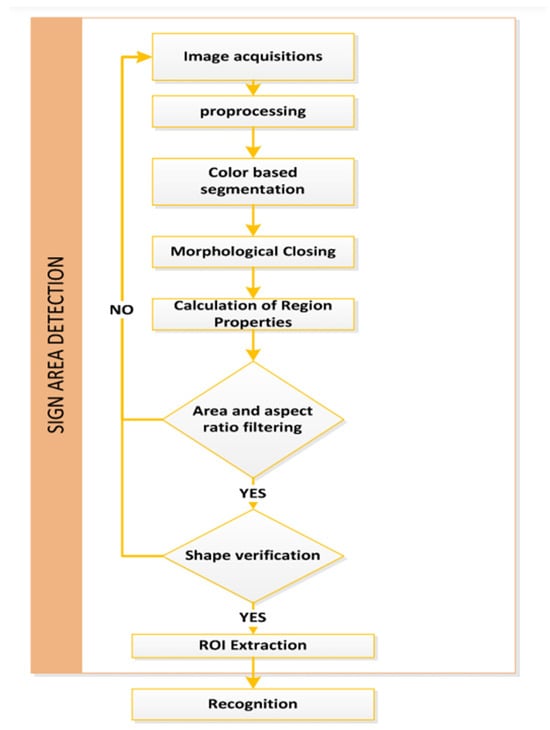

Detection approaches vary depending on the methodology or strategy adopted, but they generally fall into three main categories. Firstly, there is the detection of object geometry. Secondly, there is color-based detection for localizing objects through segmentation, which identifies regions of interest. Lastly, artificial intelligence, particularly deep learning techniques, is increasingly employed for object detection tasks.

4.2. The Color-Based Methods

Color-based segmentation stands out as a swift detection method. Utilizing specific filters, algorithms swiftly extract regions of interest, enabling the identification of distinct objects. Nonetheless, fluctuations in lighting conditions can introduce inaccuracies or unwanted outcomes. To mitigate this challenge, researchers have explored diverse segmentation techniques based on various color spaces. These approaches find utilities across a spectrum of applications, including lane recognition, traffic sign detection, and vehicle license plate recognition.

4.2.1. The Methods That Use the RGB Space

The majority of algorithms directly use the color space of vision, i.e., RGB, provided that the parameters are fixed. Ruta [20] applied a segmented color enhancement technique, specifically using the colors yellow, blue, and red, with the choice of the channel of the dominant color in relation to the others made according to the following transformation (Equation (26)):

For each pixel, S = r + b + g; x = [r, g, b].

One downside of employing RGB space is its vulnerability to variations in lighting conditions, as we can encounter scenes with insufficient or excessive light that can cause problems in segmentation [103].

An approach based on SVFs (Simple Vector Filters) [104] (Formula (27)) uses f(r, g, b) < 1 for achromatic color and f(r, g, b) ≥ 1 for chromatic color. R, g, and b represent the brightness of each color, respectively, and D is the degree of extraction.

The authors obtained good results in the separation of colors: red, blue, and yellow. For each pixel, x = [xr, xg, xb], the SVF will be calculated according to the following Equation (28):

The images are divided according to illumination changes into two classes: cloudy and sunny. The segmentation step takes 60 ms on average.

4.2.2. Methods That Use Non-Linear Color Spaces

Some algorithms use color enhancement techniques, which require color coordinate transforms, so Nnolim [8] applied color segmentation using non-linear color spaces. Studies using this solution have shown that the system is feasible. This includes the HSI and HSV color spaces. Equation (29) represents RGB transformation into HSV, as follows [105]:

In the HSV color space, hue represents the angle of rotation between the red plane and the origin, while saturation denotes the distance from any point in the color space to the color’s surface. The value (V) signifies the intensity or brightness attribute of the image’s channel.

Equations (30) and (31) for the conversion from RGB to HSI are given as follows:

such that

The HSI color space is grounded in human visual perception and color interpretation, characterized by a color hexagon, triangle, or circle model. Its attributes—hue, saturation, and intensity—bear resemblance to the HSV system, yet their mathematical formulations differ. In the hexagonal model, primary and secondary colors serve as vertices, while in the color triangle model, they are represented by line segments. The color wheel illustration demonstrates that primary and secondary colors are spaced apart by 120°.

Other authors [106] have used Otsu’s method with fuzzy logic in order to locate the regions of interest, applied exactly to extract the thresholds of the constituent elements (H, S, and V) of the HSV space, but the constraint that was posed is that the calculated distance between the colors is very close, because the HSV space is not symmetric. The method of Otsu applies automatic thresholding, compared to others that prefer to use manual thresholding [107], due to variations in the illumination of the RGB space. To limit the values of the optimal thresholds (Red, Blue) as is illustrated in Table 6, the pixel is considered red or blue if the H, S and V components meet the conditions. The advantage of this method is to eliminate noise.

Table 6.

The range of thresholds for the H, S, and V components of traffic signs.

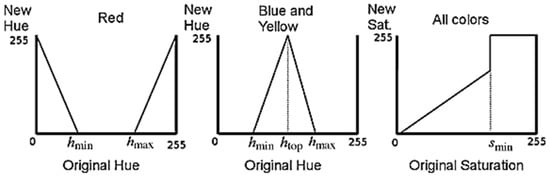

Liu [108] also used manual thresholding, which performed well across different lighting conditions. Additionally, he introduced a novel thresholding approach that leverages color information from neighboring pixels to obtain output masks for each color using hue/saturation thresholding (Formula (32)).

where H and S are the hue and saturation channels; and et are the fixed thresholds that can be found in [109].

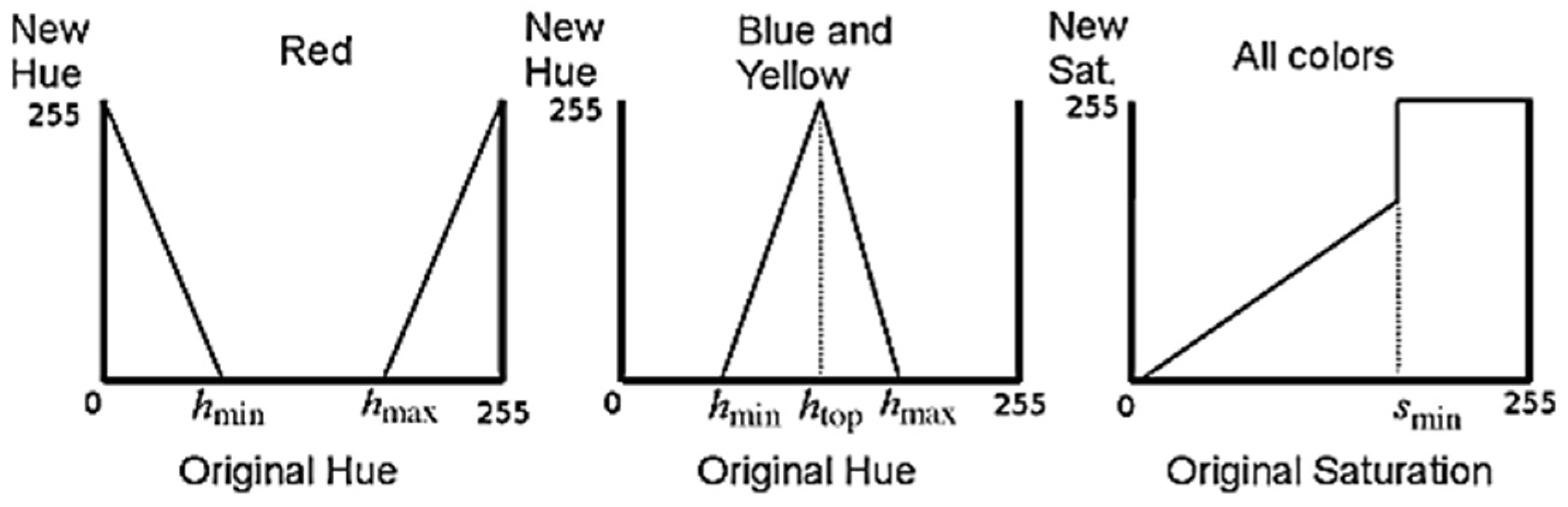

The HSI space has two color components, hue and saturation, which are closely related to human perception. Hue represents the dominant value of color. Saturation represents the purity of color. HSI components can be obtained from RGB [110]. Moreover, this space is unstable near achromatic colors and cannot be used directly on these pixels. So the authors applied the HST technique [109] for the colors red, yellow, and blue, as shown in Table 7. The obtained hue site H was in the range [0, 360], and the saturation S was between [0 and 255]. They also used the HEST method, based on the LUT technique, described by four parameters, hmin, htop, hmax and smin, shown in Figure 11, to improve the hue and saturation of colors that can be found in their paper [111]. It is in fact a flexible threshold, where different values are assigned using linear functions.

Table 7.

The range of thresholds for the HST space.

Figure 11.

HSET color LUTs for the HSET method [111].

4.2.3. The Methods That Use Color Spaces Linear

Specific lighting conditions profoundly affect the color perception of road signs. Most approaches are based on HSV or HSI color space, but the camera used for this application has a raw Bayer output, and a conversion would be too computationally expensive due to the introduced nonlinearity. L. Song [112] chose to use the color space YUV, where Y represents the intensity, and U/V represents the color difference. The linear transformation between RGB and YUV is given by the following Formula (33):

H. T. Manjunatha [113] used the YCbCr color space. The proposed strategy converts the traffic signs captured with RGB space to YCbCr space. The color thresholding technique is utilized to isolate the red color, which represents hazard signs, and subsequently eliminate any extraneous elements from the images. The transformation (Equation (34)) from RGB to YCbCr is shown below [114]:

Implementing the different methods led us to find the best method (color space), according to [115]:

- In individual images, optimal outcomes were achieved using the RGB method; however, in videos, this was not the case.

- The HSV space gives higher results, but on condition that the execution time constraint is eliminated.

4.3. Geometry-Based Methods

Detecting road signs through color segmentation faces numerous challenges, including adverse weather conditions like snow or rain, varying times of the day (morning, night), and fluctuations in object distance between the camera and the road sign. These factors significantly impact the signs’ appearance due to light reflections and other environmental factors. As a result, approaches relying solely on color-based methods may lack effectiveness and robustness in perceiving the environment accurately. To address this, some researchers are exploring more reliable detection techniques focusing on the signs’ geometry. This involves analyzing the contours of the objects in the image to detect signs, thus minimizing overlap with surrounding objects in the road environment.

4.3.1. Hough Transform

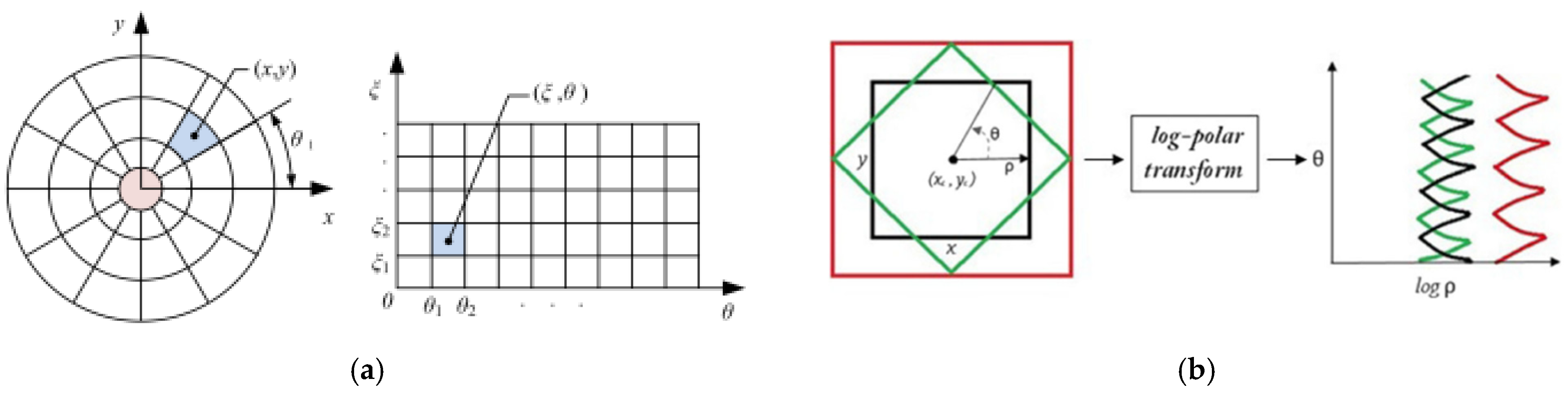

The Detection of the Roadway

During forward motion, a vehicle can be considered to undergo continuous movement. When searching for lane lines within a specific area, it is essential to streamline image processing. In cases where lane lines are typically distributed on both the left and right sides of the road, the Hough transform proves to be a valuable technique for feature extraction [116]. This method detects objects with distinct shapes, such as straight lines, circles, and ellipses. X. Wei [117] presented a road line detection approach primarily based on Hough line detection statistics. The core idea involves converting the Cartesian coordinate system of the image into a polar coordinate Hough space, transforming (Equation (35)) each pixel coordinate (P (x, y)) into (ρ, θ).

The point P (x, y) on the same line is satisfied:

t (Sx, Sy), and is the direction.

The discretization of and θ directly affects detection: coarse steps risk missing fine lane markings, while very fine steps increase computation and noise sensitivity. Selecting an adequate resolution is therefore a compromise between accuracy and real-time performance.

When utilizing an adjusted camera for lane line detection, there exists a substantial likelihood of the lane lines being obscured. Typically, these lines are situated on both the left and right sides of the roadway. To enhance the precision of the detection process, it is imperative to employ tracking technology, which augments both the velocity and accuracy of detection. This necessitates the application of the Hough transform to confine the scope of its voting space, as cited in [117]. Specifically, the parameters ρ and θ are adjusted to modulate the voting space’s range. The polar angle and polar radius are delimited by the boundary lines flanking the left and right sides, respectively.

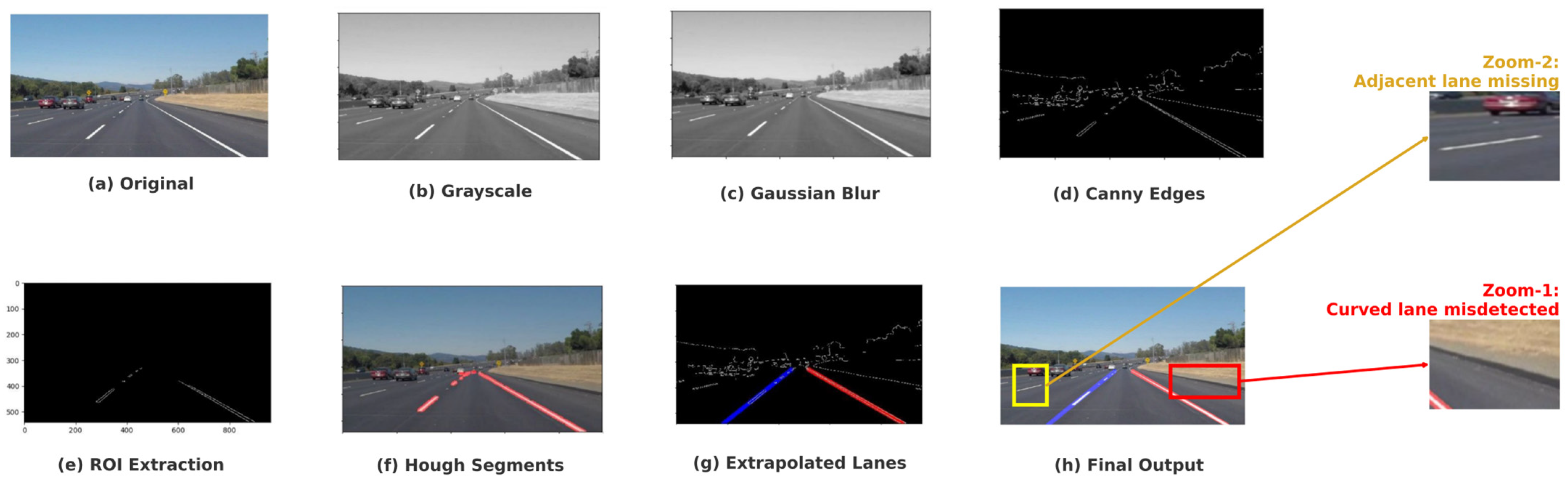

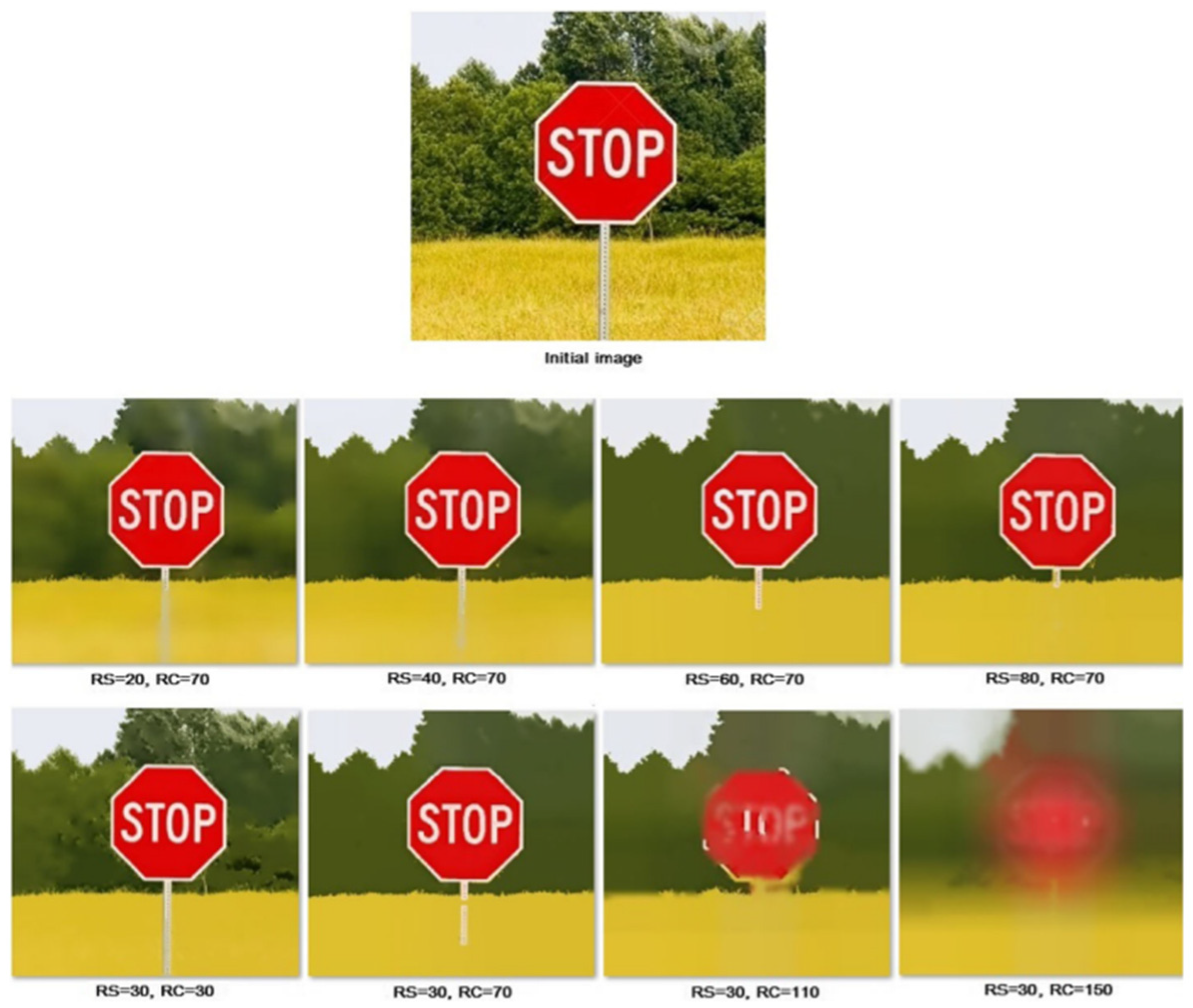

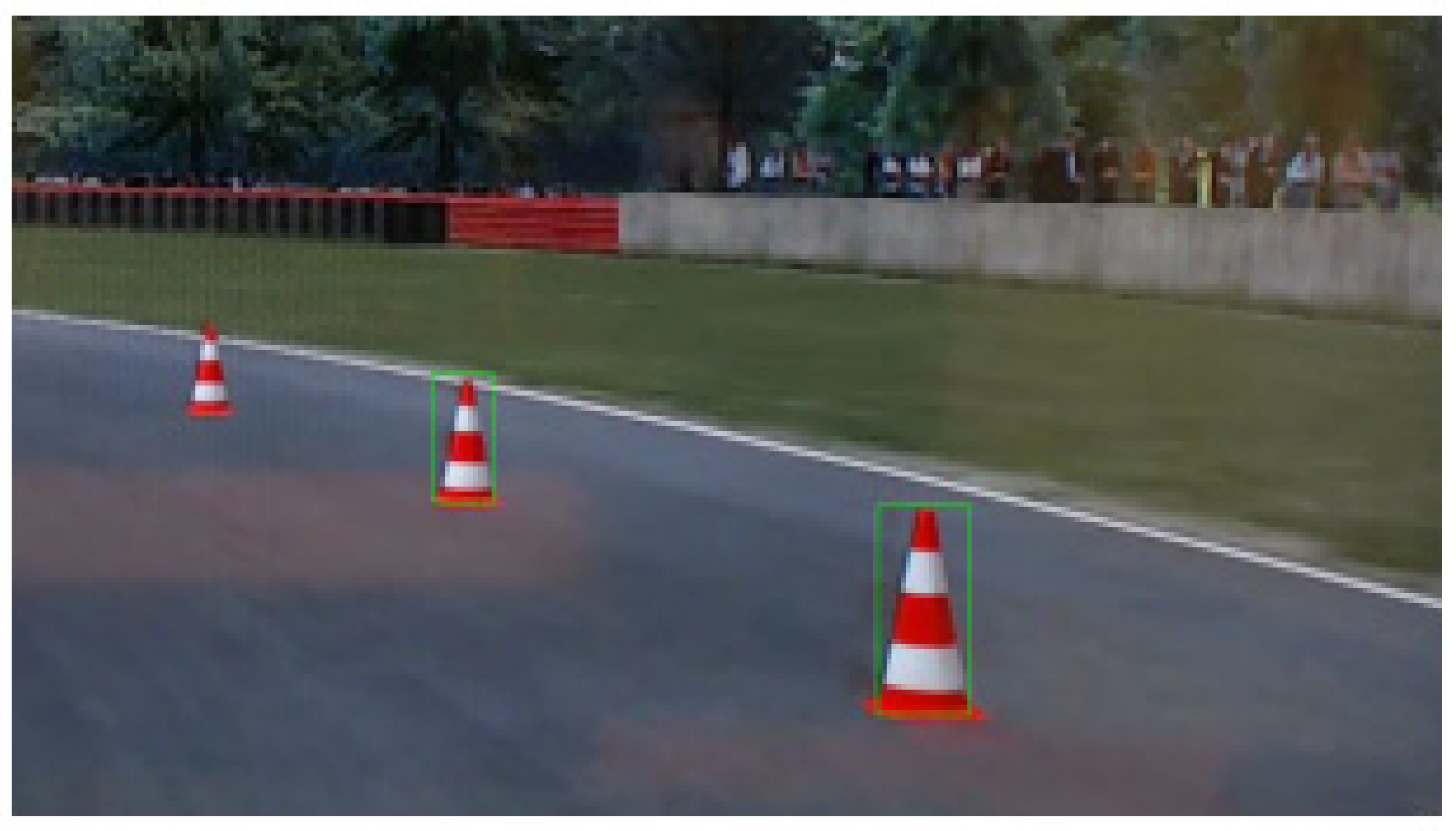

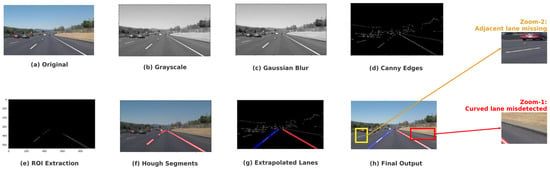

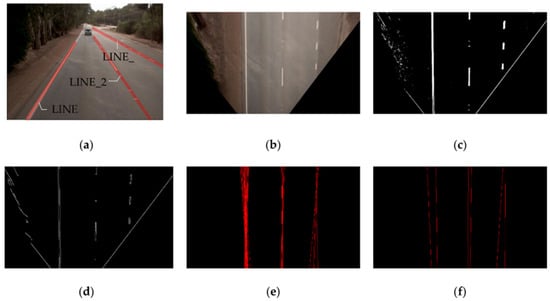

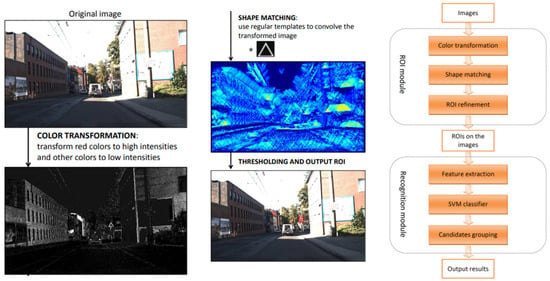

The paramount criteria for traffic lane detection and tracking methods encompass both its velocity and dependability. W. Farage [118] introduced a novel approach termed LaneRTD (Lane Real-Time Detection), which leverages raw RGB imagery to delineate road margins, as depicted in Figure 12, through the segmentation of lane lines. The principal innovation of this method lies in its meticulous equilibrium between rapid processing, minimal resource consumption (notably in terms of memory and CPU usage), consistent reliability, and robustness, thereby satisfying the operational demands of systems designed for advanced driver assistance (ADAS) or autonomous vehicles. Predicated on the Hough Transform technique, this methodology incorporates a smoothing phase to mitigate the impact of discernible noise on the edge detection process, achieved through the application of a Gaussian filter. The Formula (36) for a Gaussian filter kernel with dimensions (2k + 1) × (2k + 1) is as follows:

Figure 12.

LaneRTD pipeline for lane detection with annotated zooms highlighting failure cases (adjacent lane omission and misdetection of curved lanes). The red line represents the right lane boundary, and the blue line represents the left lane boundary.

The smoothing strength depends on σ and the kernel size k: larger values reduce noise but may blur lane edges, while smaller values preserve details but risk leaving residual noise. Proper tuning ensures a balance between robustness and efficiency.

For the purpose of edge detection and extraction, the Canny operator is employed. Given that an edge within an image may be oriented in various directions, the Canny method utilizes four distinct filters to accurately detect horizontal, vertical, and diagonal edges within the blurred image. The edge detection operator yields a value for the first derivative of both the horizontal direction (Gx) and the vertical direction (Gy). The gradient and direction of the edge can be ascertained as follows (Formula (37)):

The LaneRTD (Figure 12) method encounters important limitations: it mainly detects straight lane segments, which results in poor representation of curved lanes unless additional parameters are introduced to handle curvature. Experimental evaluation confirms this behavior: while detection accuracy on straight lanes remained above 90%, it dropped below 65% on curved lanes. Furthermore, adjacent lanes in multi-lane scenarios were frequently missed, with omission rates approaching 50% in our tests. Performance degradation was also observed under occlusions caused by vehicles and shadow patterns, where the false negative rate increased by nearly 40%. These findings highlight the need for more robust parameterization and feature modeling to improve stability under diverse driving conditions.

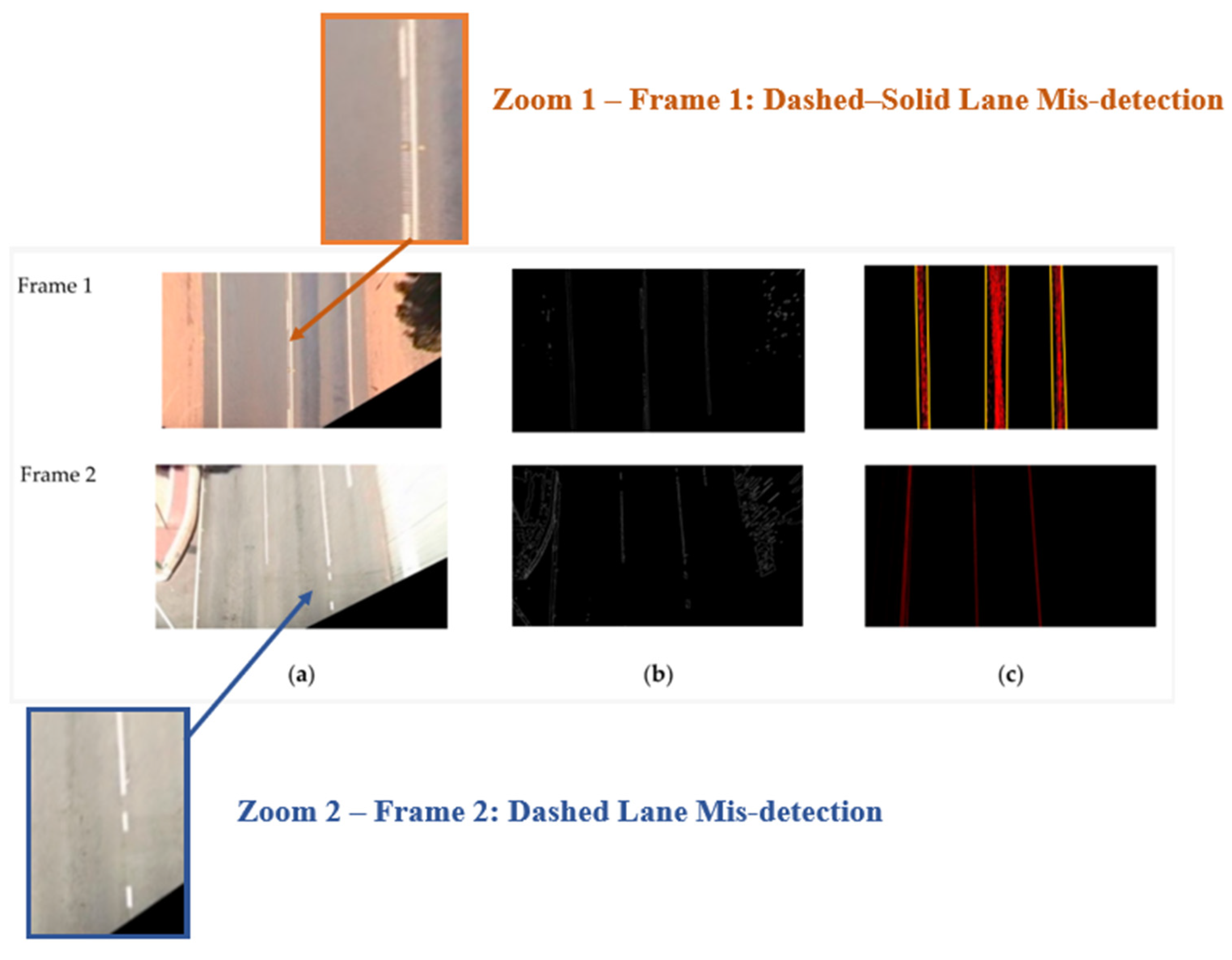

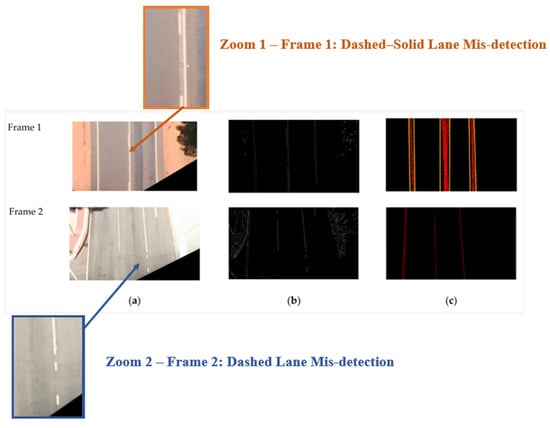

The segmentation method [119], utilizing the Hough Transform, was tested on sample pavement images (Figure 13), leveraging its intensive use for line detection by assessing connections between random point pairs and selecting those exceeding a user-defined voting threshold.

Figure 13.

Analysis of lane detection on pavement images highlighting misdetections: (a) input frames; (b) Canny edge maps; and (c) grouped Hough lines. Zoom 1—dashed–solid lane mis-detection; Zoom 2—dashed lane mis-detection. The red lines represent the detected lane boundaries, and the yellow lines represent the reference or fitted lanes.

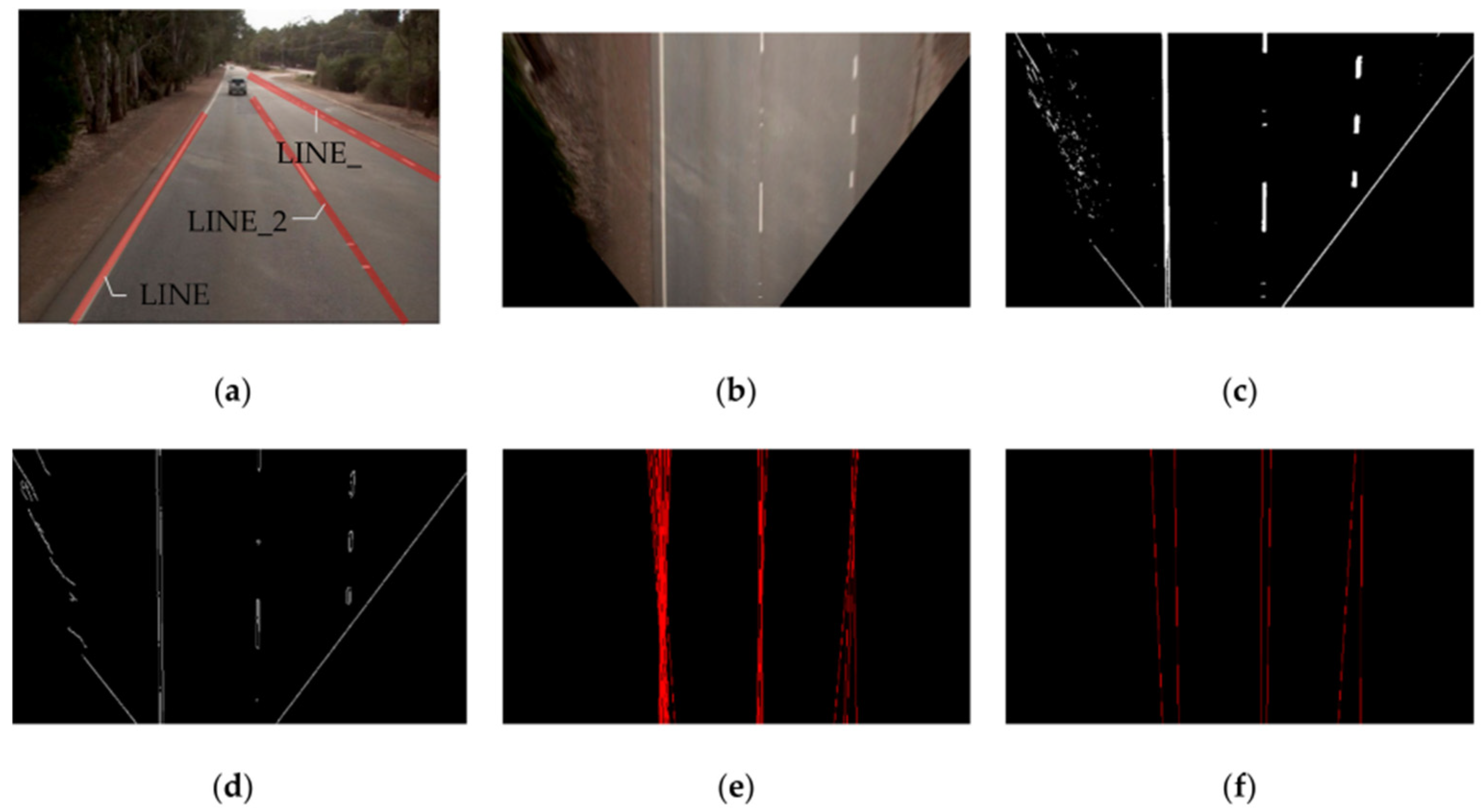

For curved roads, the assumption remains valid, as only a 20 m segment in the near field was focused on. The transformation matrix was calculated to ensure that the resulting images approximately encompass the widest part of the pavement along the route and span a length of 20 m, enabling significant reductions in roadside facilities and plants (Figure 14).

Figure 14.

Illustration of the image processing pipeline with segmentation results: (a) original input; (b) bird’s-eye perspective; (c) hybrid descriptor output; (d) Canny edge detection; (e) Hough transform output; and (f) segmentation stage.

The performance of lane detection methods depends strongly on the tuning of their parameters. Proper adjustment of discretization, smoothing, and thresholding ensures a balance between robustness, accuracy, and computational efficiency.

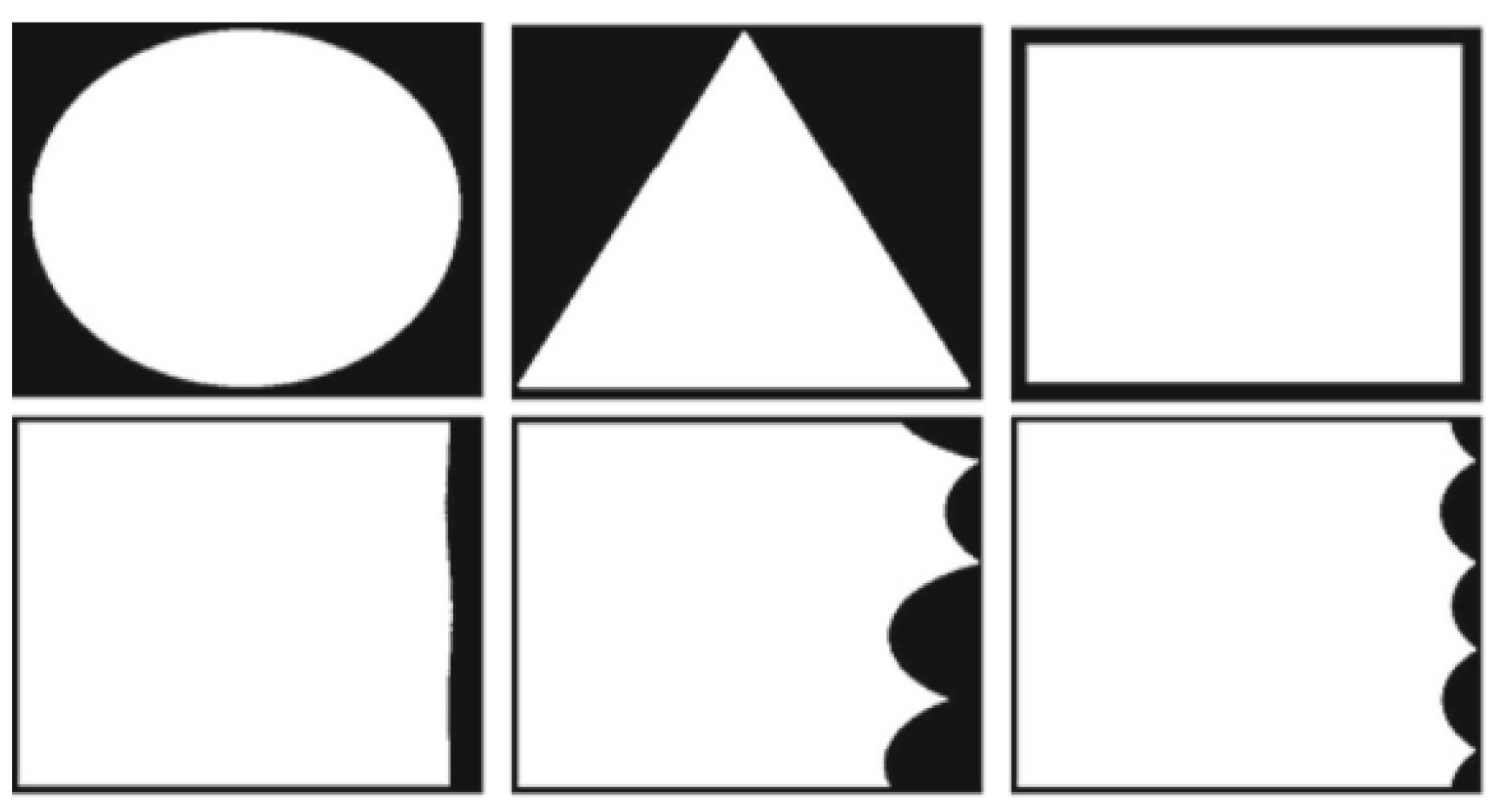

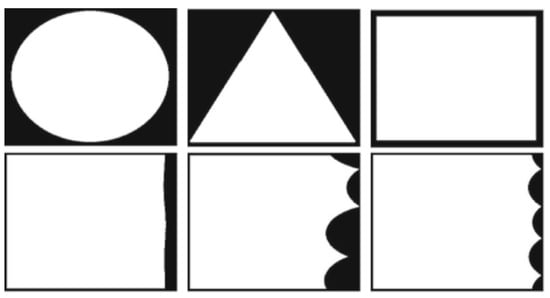

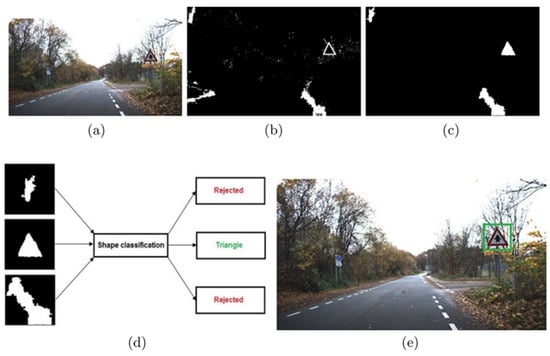

Detection of Traffic Signs

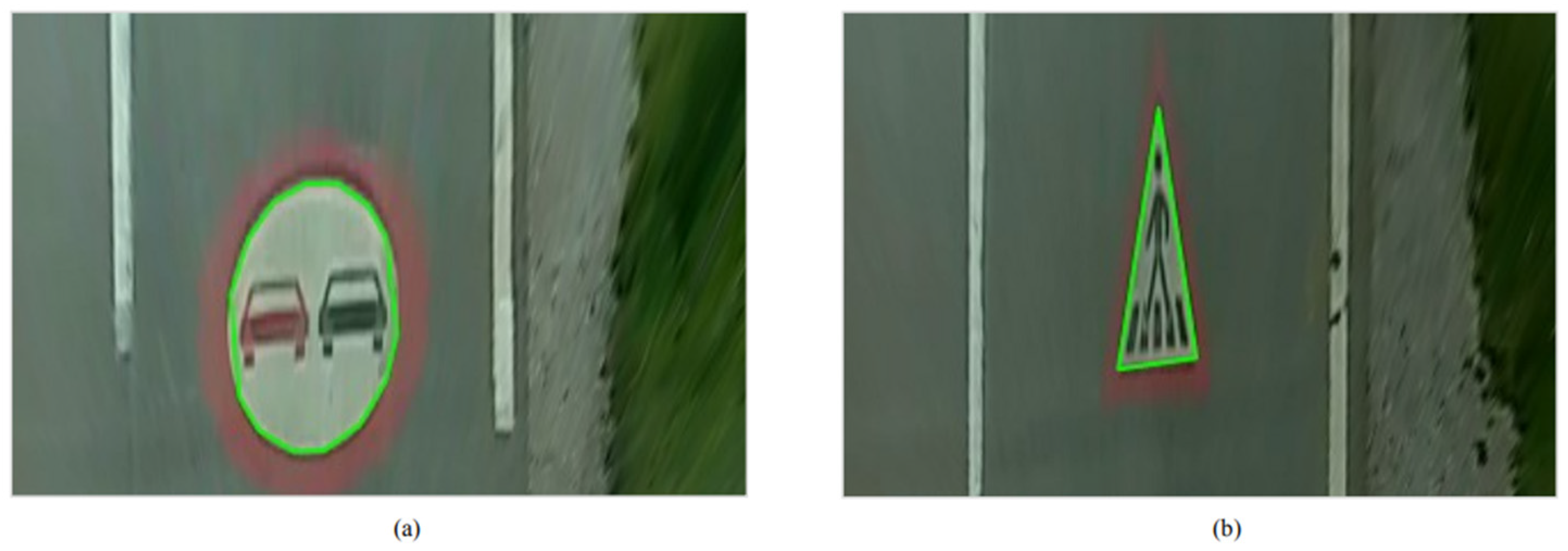

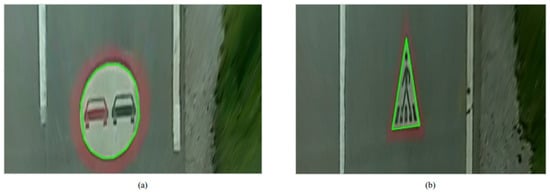

Traffic signs are categorized into three distinct groups based on geometric parameters that delineate their shapes: rectangles, circles, and triangles. The Hough transform, a dependable shape detection algorithm, is commonly employed due to its robustness against variations in illumination and partial obstructions. T. Bente [120] presented a driver assistance system in his paper, which is adept at identifying traffic signs through the use of an on-board camera. This system is also designed to alert drivers to potential lane deviations in videos recorded during daylight with moderately clear road markings. For the detection of traffic signs, the system utilizes an edge detection technique predicated on the Hough transform, as illustrated in Figure 15.

Figure 15.

Identifying various geometric shapes by HT: (a) Circular traffic sign forbidding overtaking.; (b) Triangular road sign alerting drivers to a pedestrian crossing.

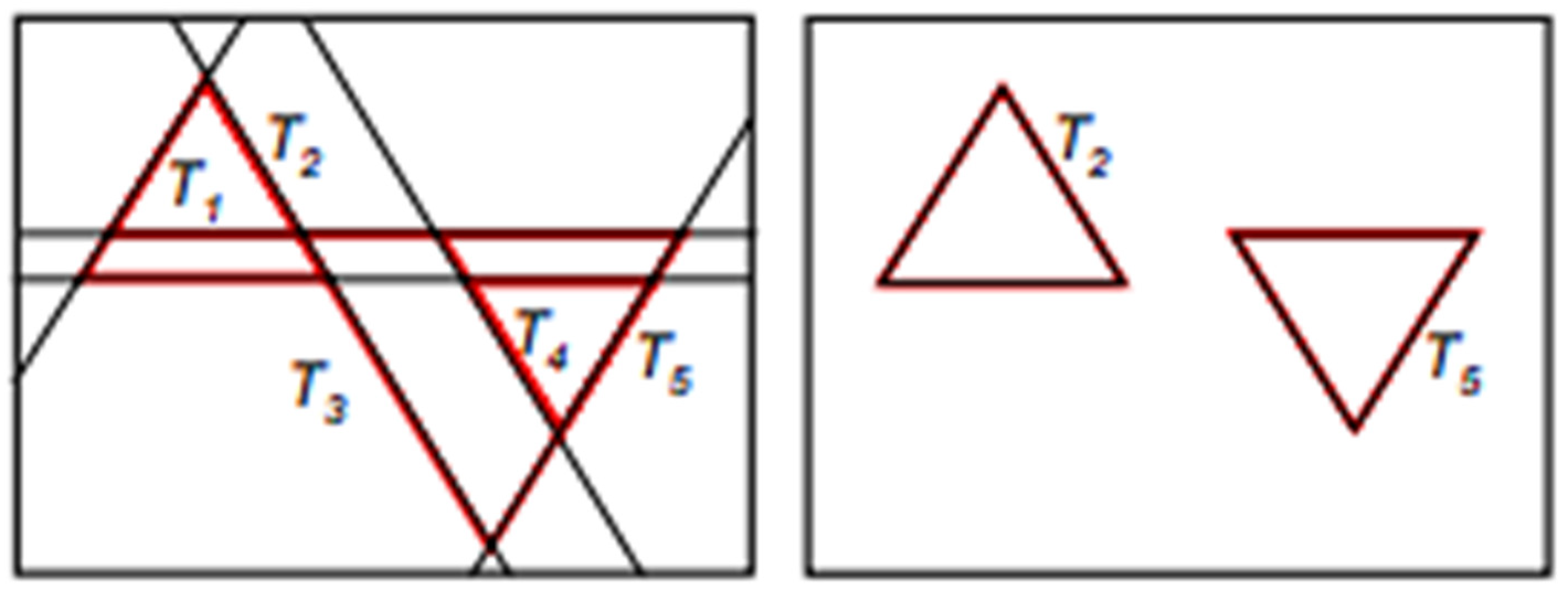

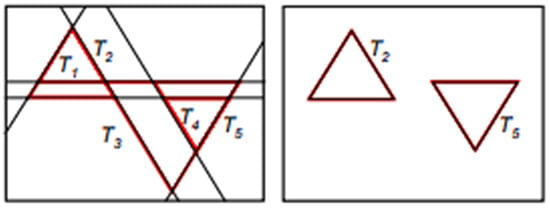

Compared to other authors, M. García-Garrido [121] proposed a method for detecting triangular-shaped traffic signs. The idea is to detect three intersecting straight lines forming an angle of 60 degrees. Note that, since the number of intersecting straight lines could be very large if the Hough transform were applied to the entire image, more lines than the actual triangles existing in Figure 16 would be detected in the real road, presented in Figure 16.

Figure 16.

Straight lines detected with the HT.

A limitation of this method is that we know neither the beginning nor the end of a straight line. This makes it necessary to apply the Hough transform to each contour, one after the other, which can increase the computation time in Figure 17.

Figure 17.

Showcasing the detection of circular and triangular traffic signs.

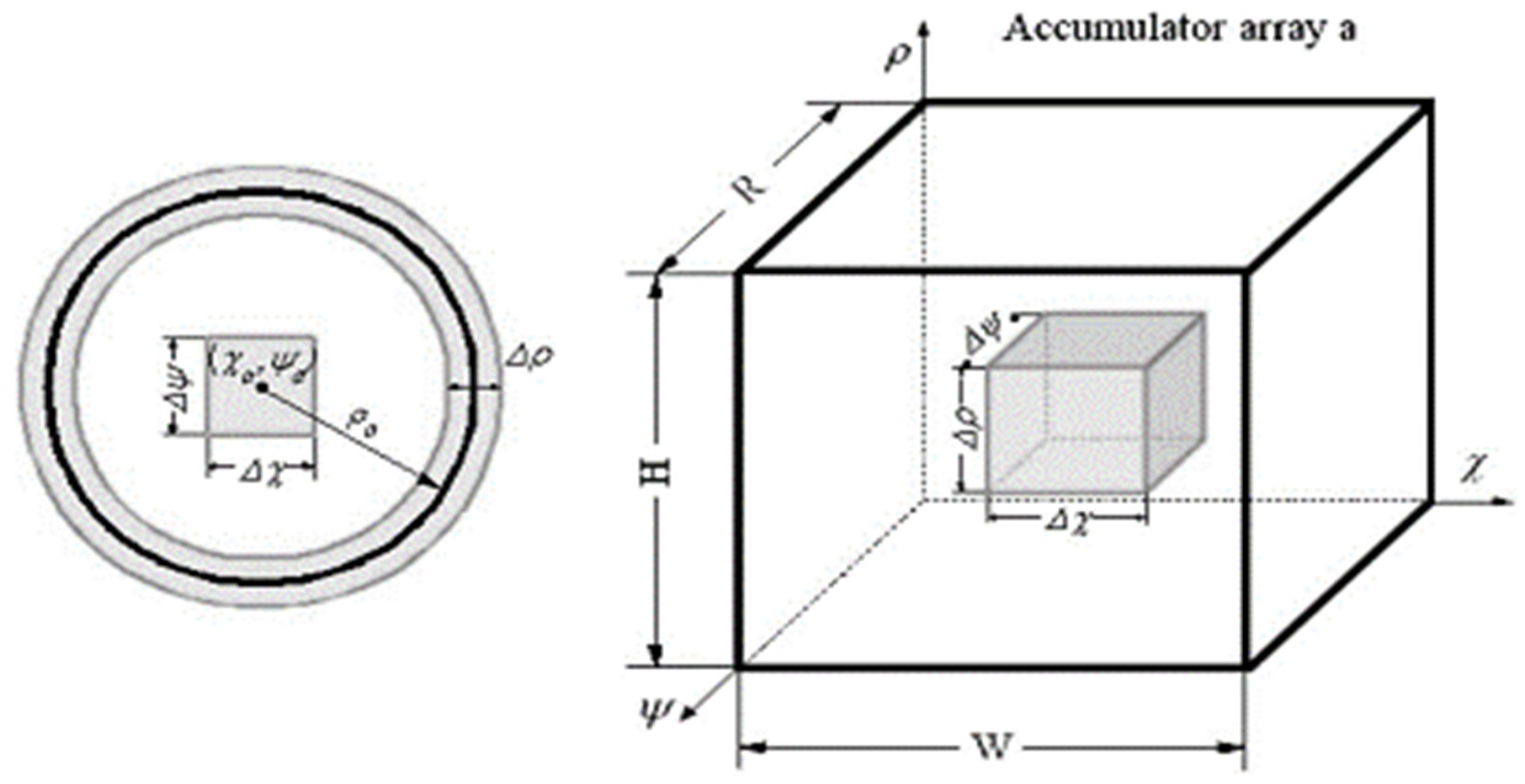

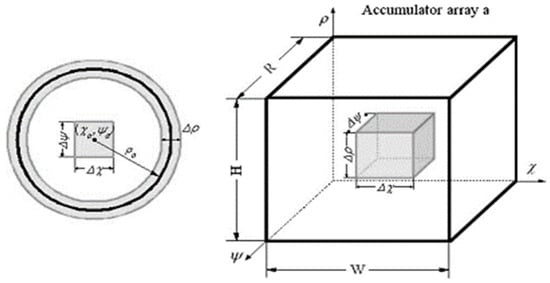

The authors applied the same strategy for the detection of circular signs (including the stop sign). The estimation of the circular shape parameter is computed using the direction of the gradient of the studied contour according to [122]. Although the stop sign is octagonal, the difference between octagonal and circular signs is very small, and the former are also accepted. A circle in the (x, y) plane with center (χ, ψ) and radius ρ can be expressed according to the following Formula (38):

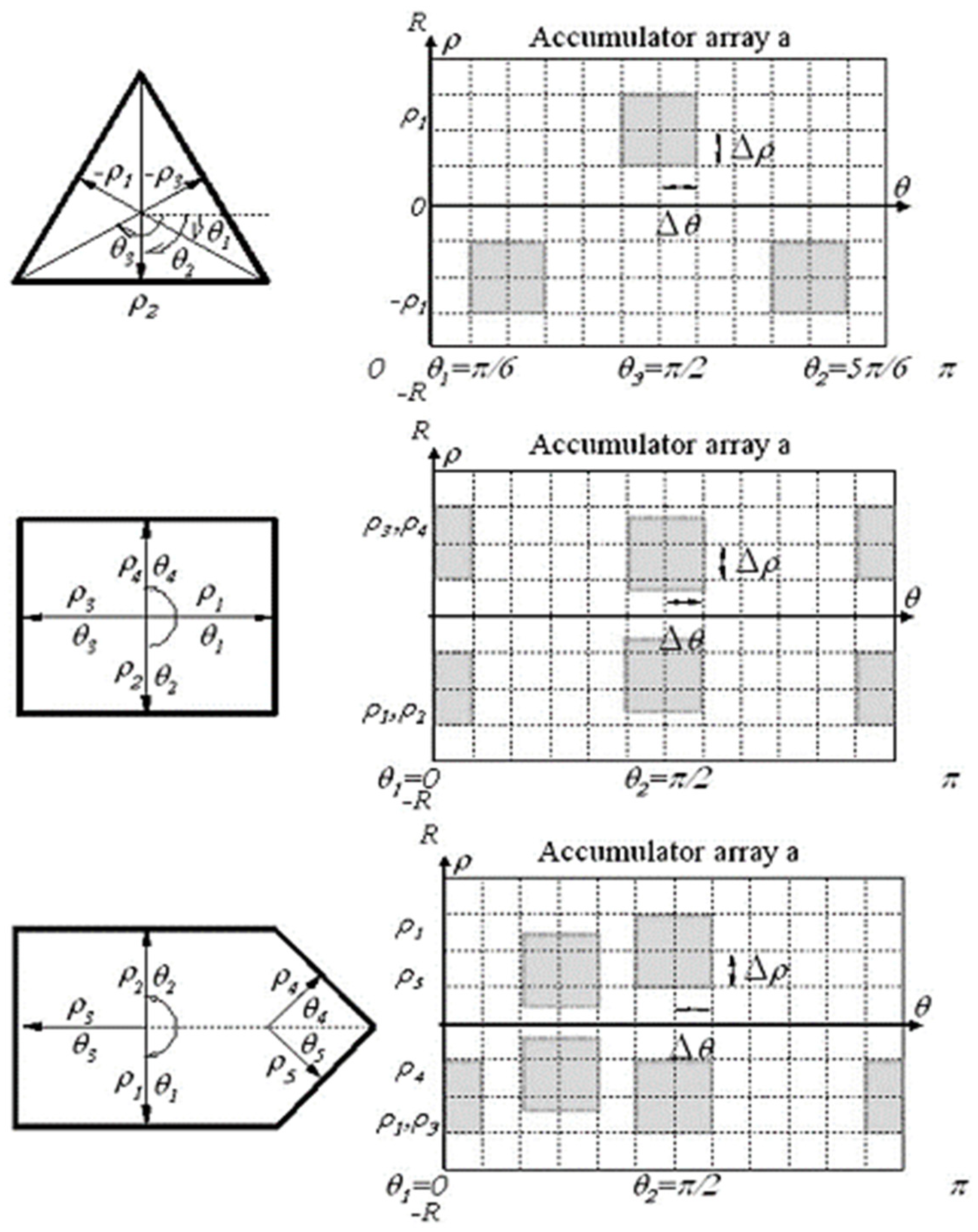

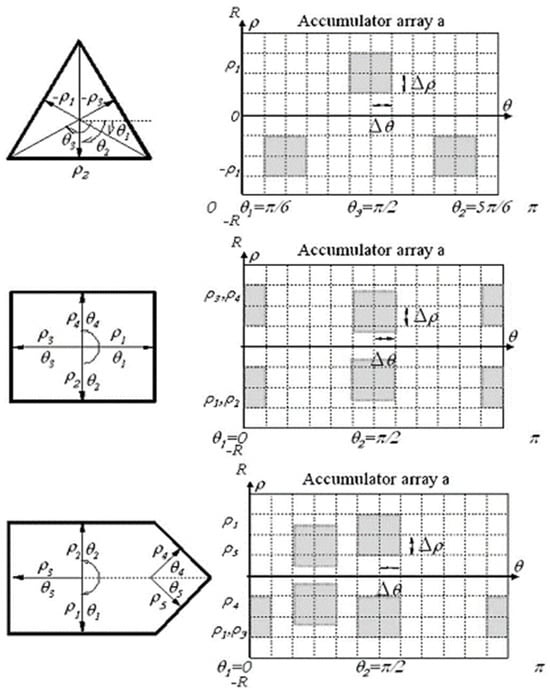

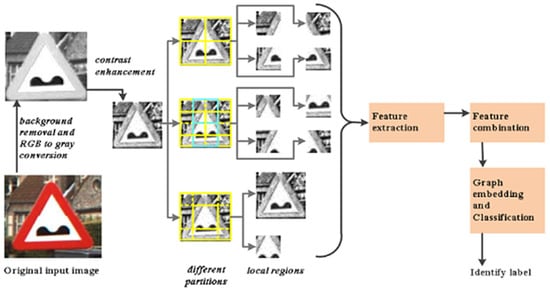

Á. González [123] conducted an extensive examination of the geometries present in traffic signs to enhance detection accuracy. The algorithm’s goal is to precisely locate signs within images. This is achieved through an analysis of shapes derived from edge images. The Hough transform is applied to detect arrow-shaped, triangular, and rectangular panels, as illustrated in Figure 18. Edge detection is performed using Canny’s method, preserving closed contours as an essential aspect of leveraging shape information in traffic sign detection. Encoded contours obtained through the Canny method include area and perimeter information, facilitating the identification of closed contours. Subsequently, only accepted contours meeting these criteria undergo Hough transform applications, leading to reduced CPU computation time.

Figure 18.

HT Applied to detect triangular and rectangular panels.

The parameters that define these shapes are used to reduce the search space using an accumulation matrix. Therefore, any estimate of the parameters of a straight line is calculated using the following Formula (39).

where and have points belonging to the studied contour. These estimated parameters determine the search area inside matrix a, i.e., the shaded area in Figure 19.

Figure 19.

HT applied to circular panel detection.

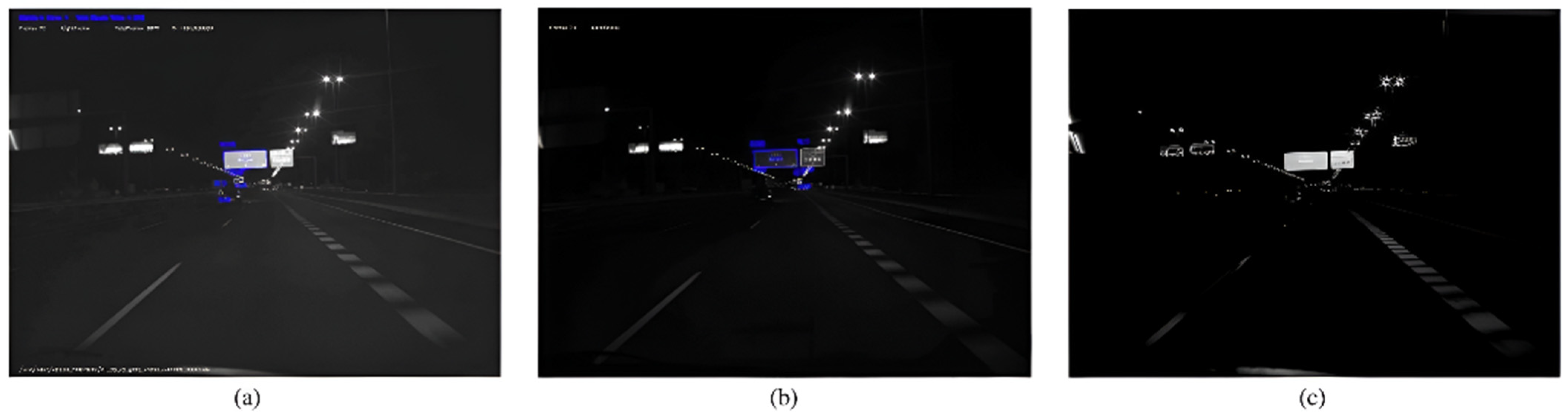

The search domain within the accumulator is confined; parameters pertaining to circular shapes are exclusively probed within designated shadow regions, as delineated in Figure 13. The pivotal advancement of this phase is the implementation of the Hough transform within constrained sectors of the accumulation matrix, maintaining precision. Employing this tactic enables the robust and real-time detection of diamonds, octagonal, and circular configurations, as evidenced in Figure 20.

Figure 20.

Nighttime detection in real road environments. (a) Illuminated frame; (b) non-illuminated frame; (c) difference result.

The authors performed an assessment of detection and classification performances, outlined in Table 8, which presents the Hough detection ratios categorized by panel shapes. Panels were differentiated between detected and validated, with the latter requiring at least ten accurate detections. The overall detection rate achieved 99.81%, with a validation ratio of 98.10% for the signs. Particularly high ratios were observed for triangular, circular (STOP), and rectangular signs.

Table 8.

Detection ratio for HT method.

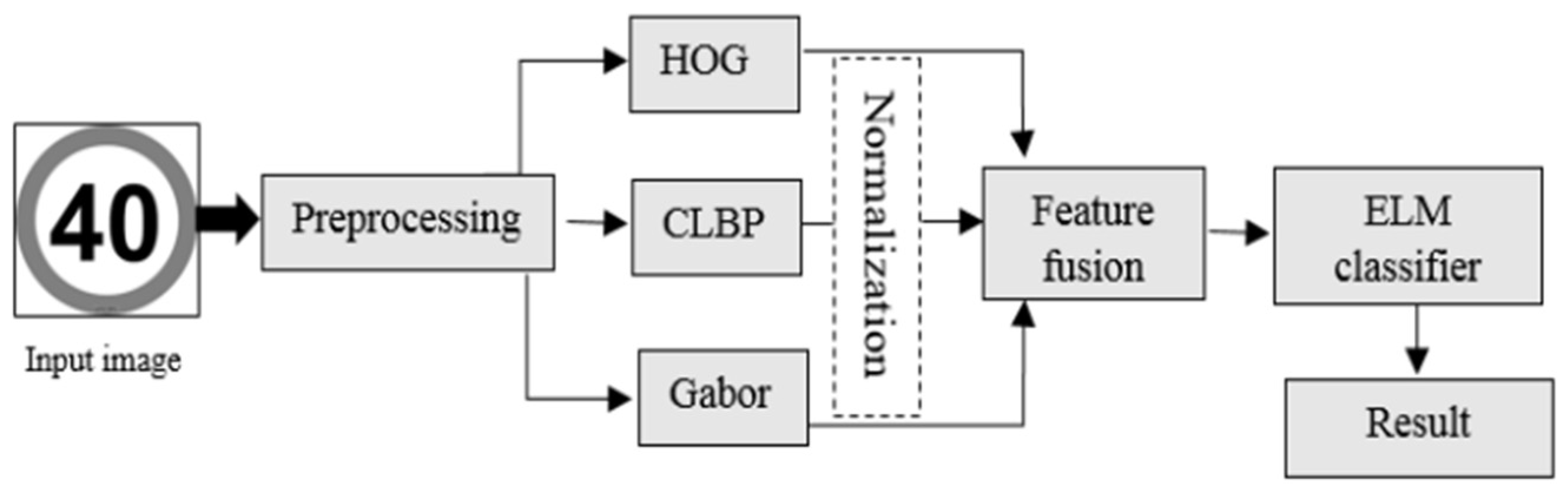

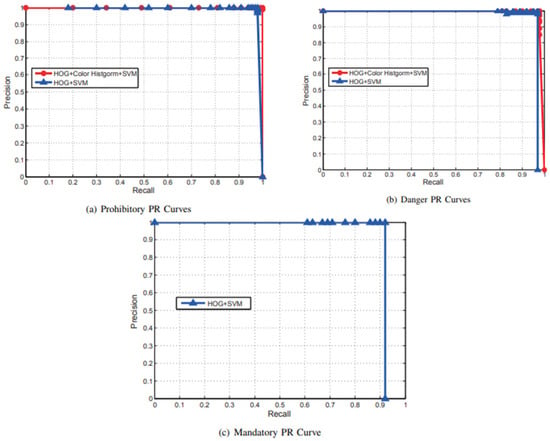

4.3.2. HOG Transforms

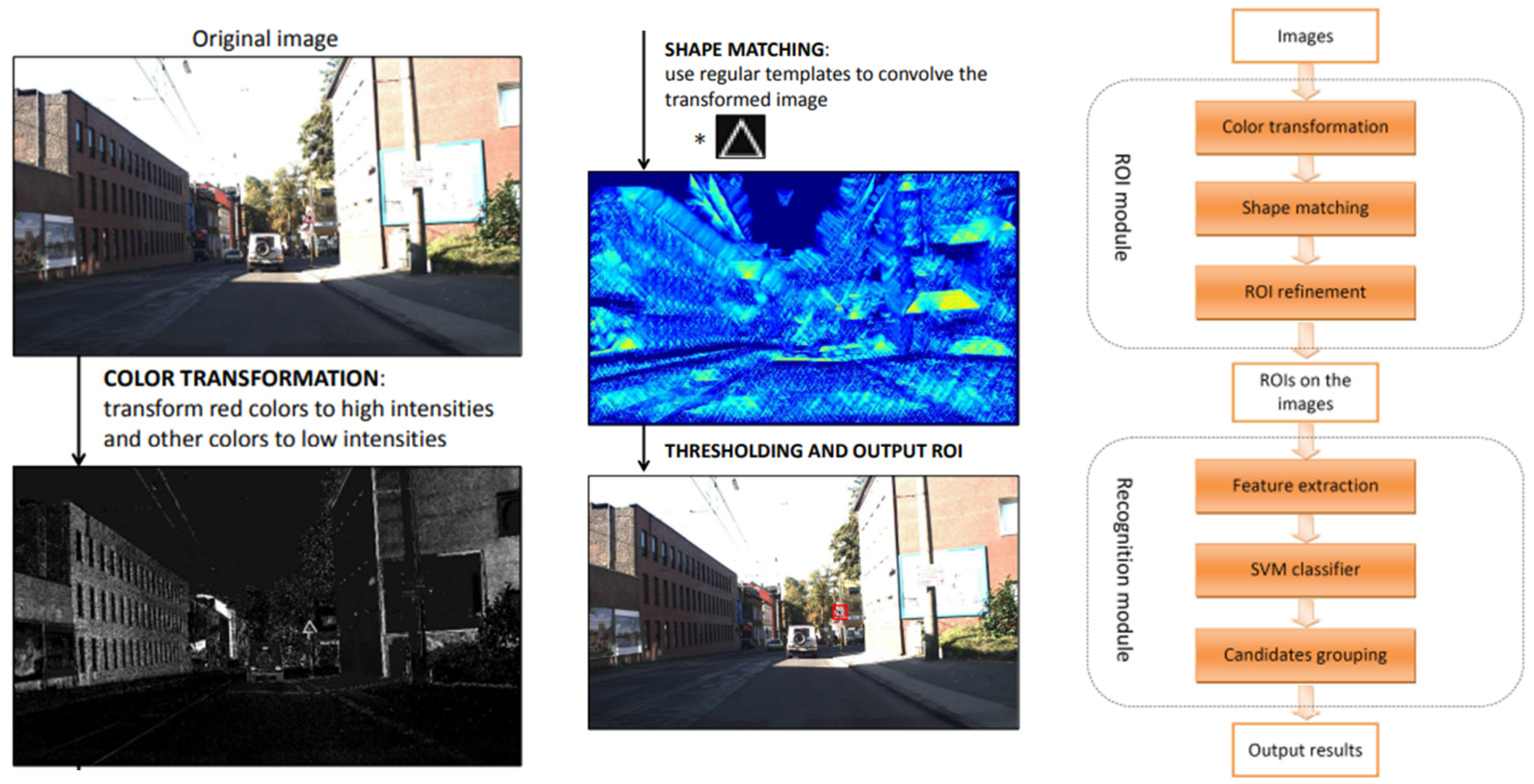

N. Romdhane [124] proposed a novel vision-based technique for detecting traffic signs. Initially, the method employs monocular segmentation, focusing on the color characteristics of candidate traffic signs. Subsequently, the Histogram of Oriented Gradients (HOG) operator is utilized to extract pertinent features. The results of this process are illustrated in Figure 21.

Figure 21.

Three-dimensional processing traffic sign distances.

In the proposed method, every potential traffic sign region is standardized to 32 × 32 pixels. This region is then subdivided into non-overlapping local regions of size 12 × 12. From each of these local regions, Histogram of Oriented Gradient (HOG) features are extracted. This process involves computing histograms of edge gradients with nine orientations from each of the 4 × 4 local cells. Each pixel’s gradient is discretized within one of the nine orientation bins. Ultimately, the primary aim of the HOG is to encode the detected traffic signs and generate the feature vector. This vector is subsequently utilized as input for a Support Vector Machine (SVM) classifier to determine the class of traffic signs.

N. Romdhane [124] performed tests with a sample of the images resized to 288 × 384 pixels and comparisons to the literature methods to prove the performance of the proposed detection method. Detection requires about 0.957 s per image, which demonstrates its applicability in real-time systems, demonstrated in Table 9. The HOG implementations were made on a GPU processor [125].

Table 9.

Treatment times.

The reported processing time of 0.957 s per image for traffic sign detection raises questions about its applicability in autonomous driving, where rapid and reliable decision-making is crucial. Although the authors optimized the method of resizing images to 288 × 384 pixels and implementing the HOG on a GPU, this processing speed may not meet the stringent real-time requirements of dynamic driving environments. While suitable for moderate computational scenarios, further improvements are necessary for high-speed applications.

To address this, incorporating lightweight models like MobileNet or YOLO-Tiny could help achieve faster processing times. Additionally, leveraging hardware accelerators such as FPGAs or TPUs could further enhance computational performance. Streamlining the current GPU implementation by reducing the complexity of feature extraction or introducing batch processing could also contribute to improved efficiency. Future research should evaluate this approach under more realistic and demanding conditions, including high-resolution images and diverse road scenarios. By refining these aspects, the method could better align with the performance expectations of autonomous driving systems, ensuring timely and accurate traffic sign recognition for safe navigation.

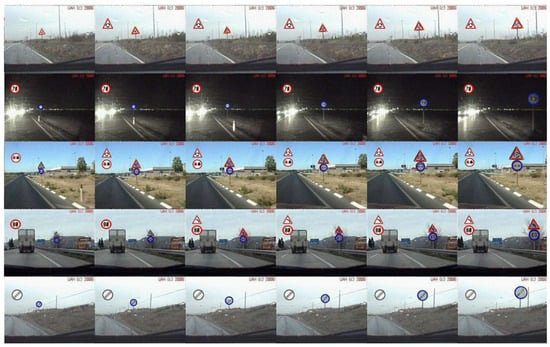

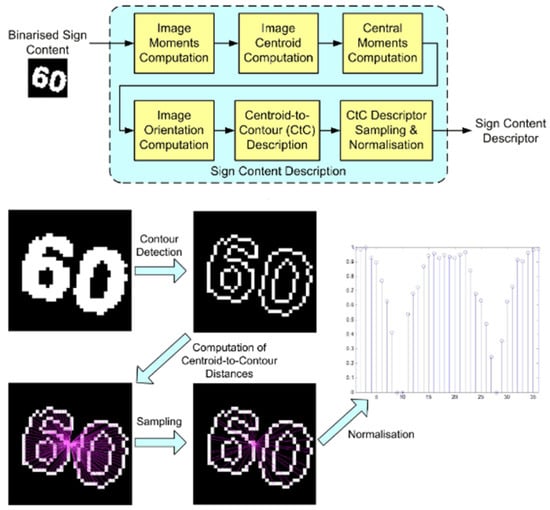

4.4. Centroids and Contours

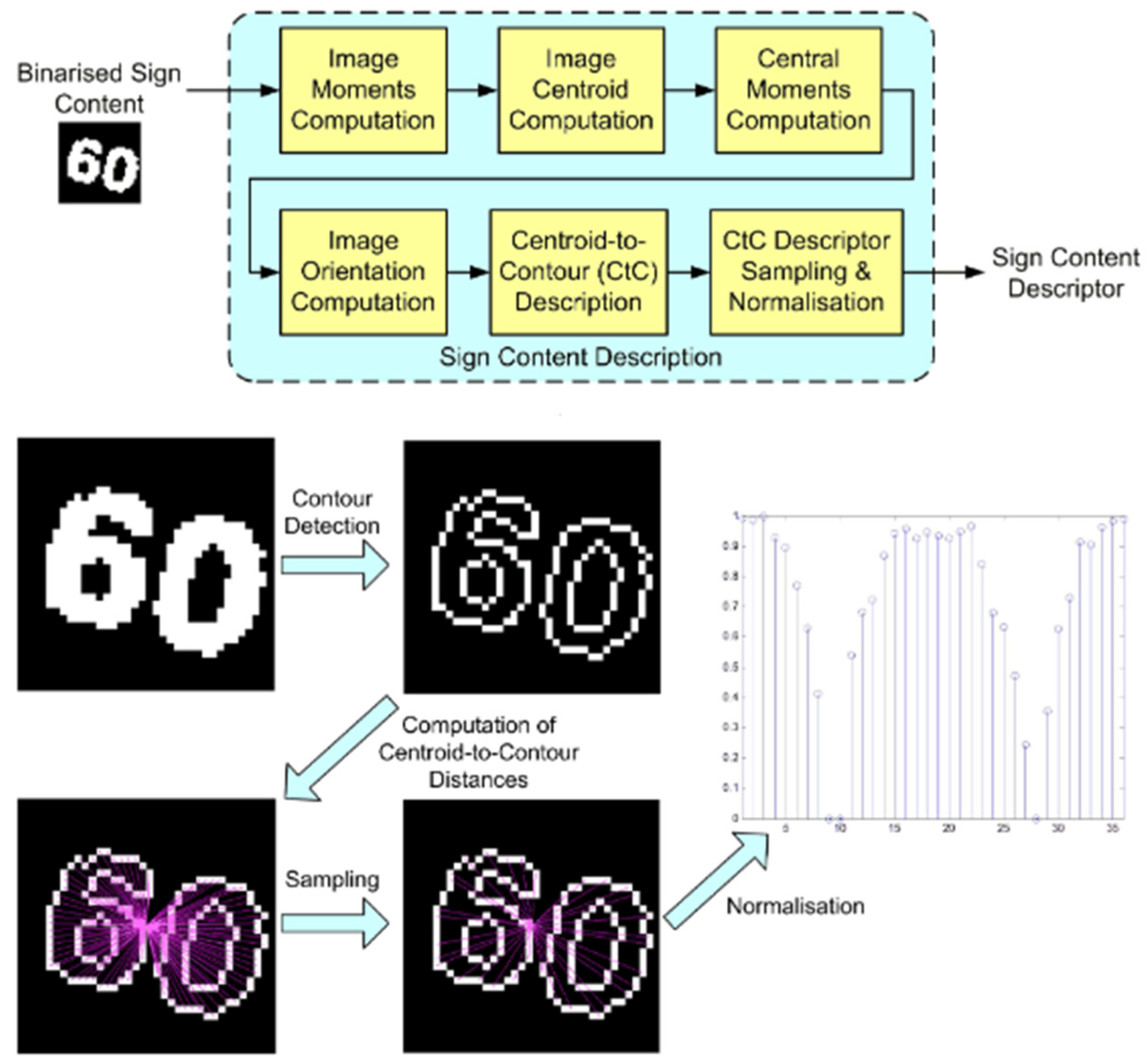

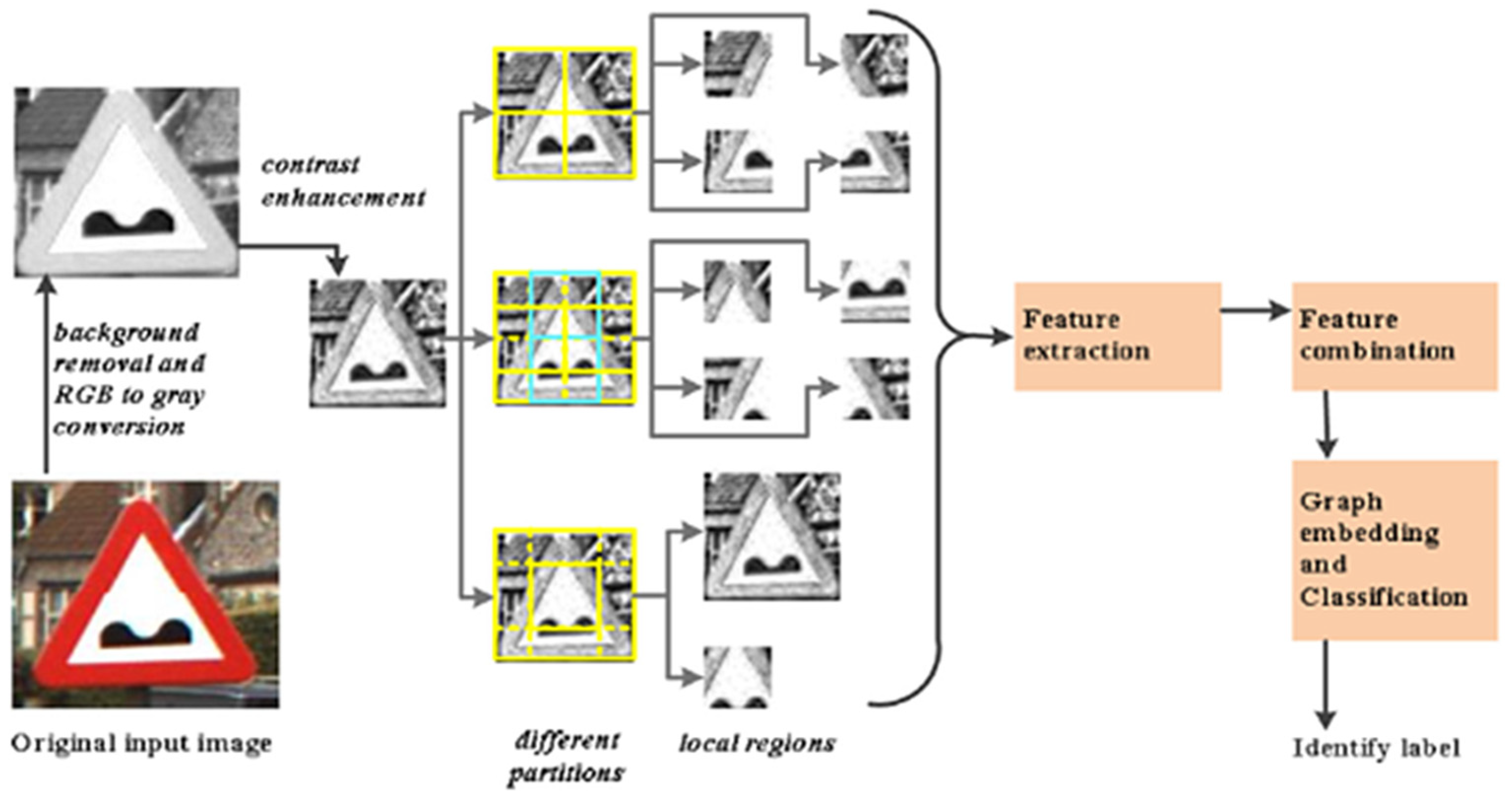

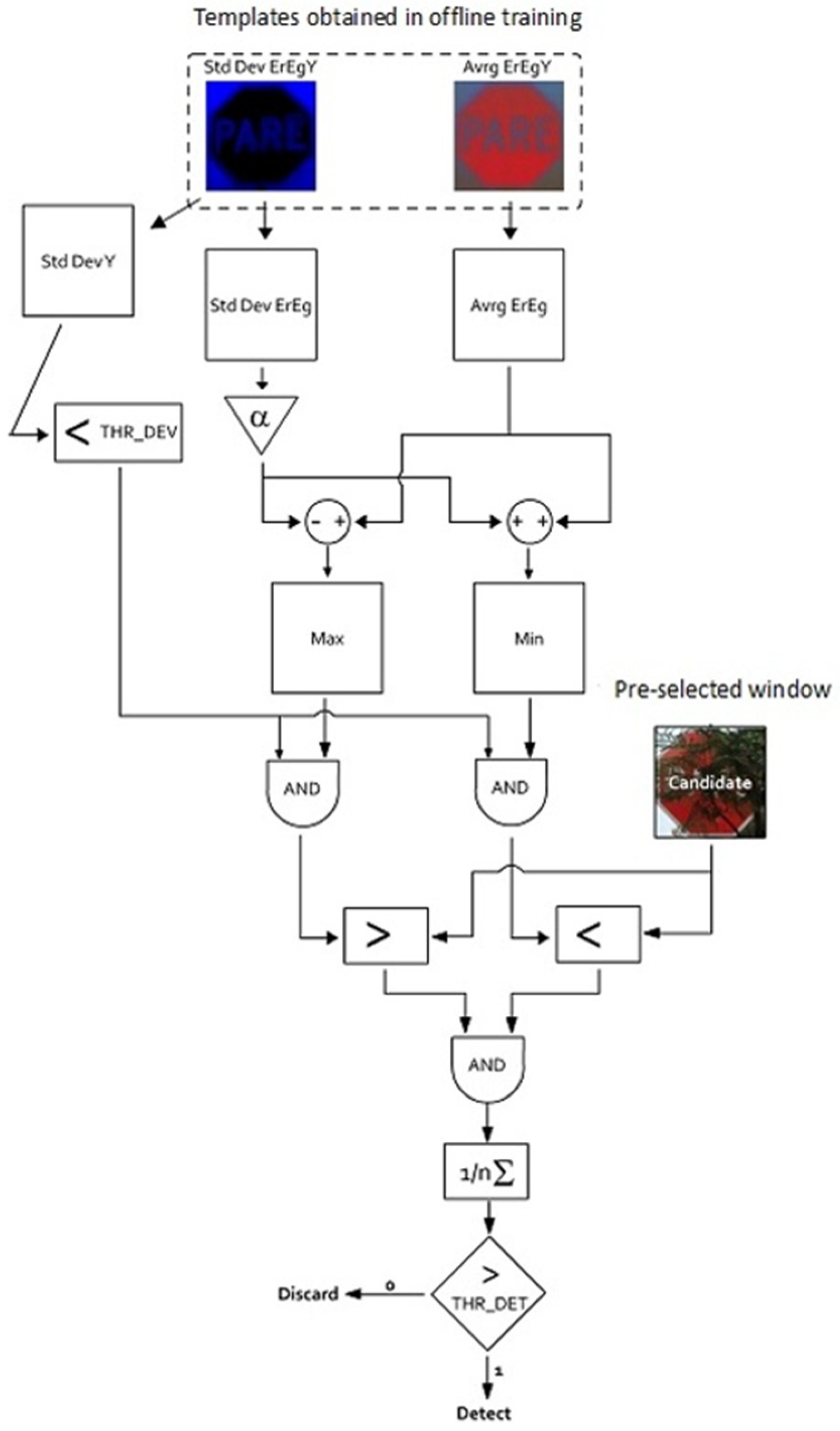

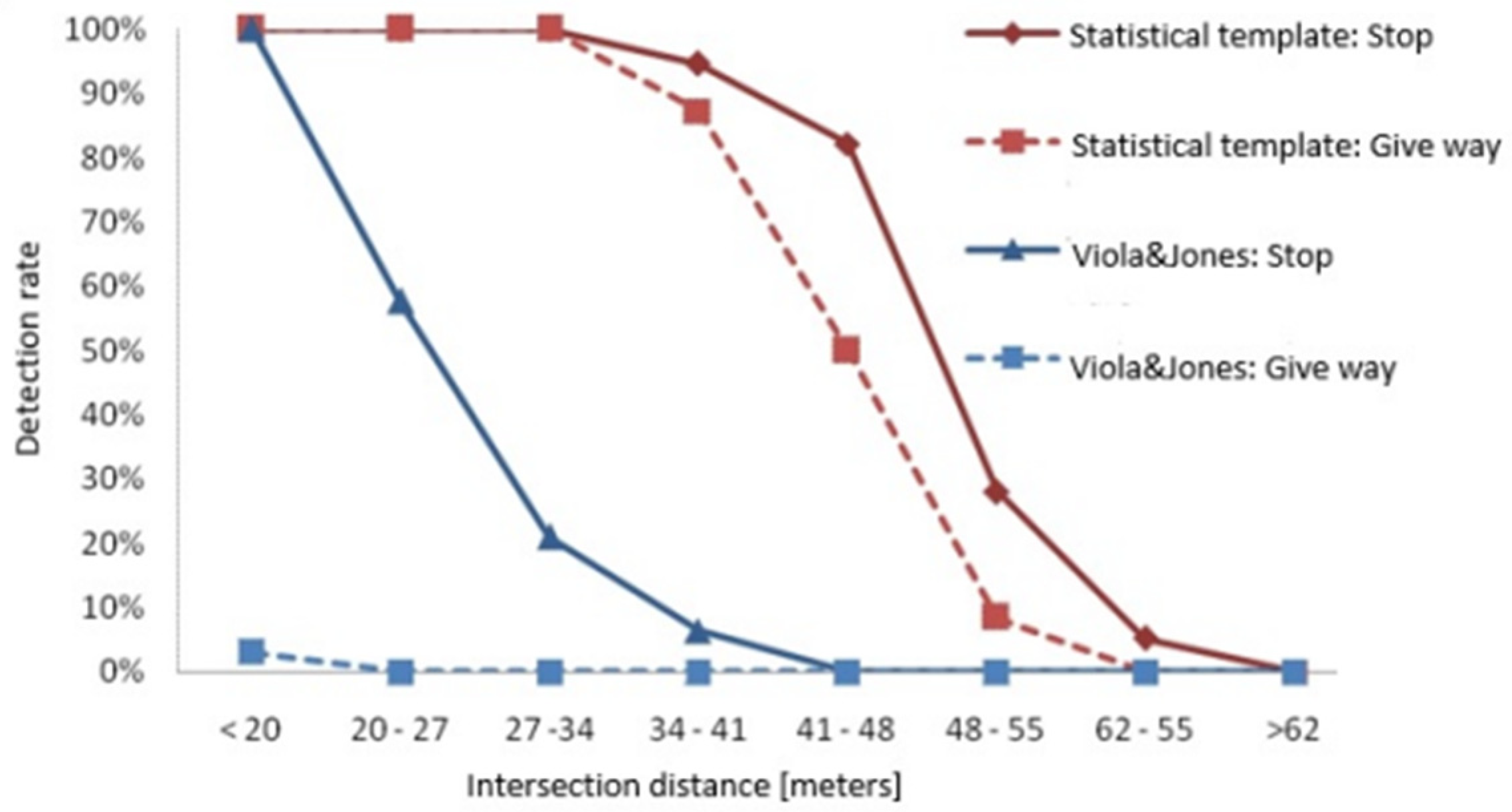

C. Tsai [128] dedicated their efforts to crafting a vision-based Advanced Driver Assistance System (ADAS) centered around the Speed Limit Sign (SLS) algorithm. This algorithm is engineered to automatically detect and identify speed limit signs on the road in real time, leveraging captured images. To enhance the SLS’s recognition capability across various orientations and sizes of signs within the images, the study introduced a novel algorithm. It delineates the recognized road sign by leveraging the distances between centroids and CtC (Center-to-Contour) contours of the extracted sign content. Known as the CtC description method, it utilizes CtC distances as shape features to describe a binarized image of the sign content. Figure 14 depicts the block function diagram of the proposed sign content method, named DeepL, which comprises six processing steps. Denoting the input binary image as Ib (x,y), the initial step involves calculating moments (Equation (40)) of the image of order {0, 1, 2}:

where p, q = 0, 1, 2. Then, the centroid of the binary image can be calculated using Equation (41):

where and are the components of the centroid. On the basis of (42) and (43), three central moments of the second order, , and can be determined by Equation (15):

Then, the orientation of the binarized sign content is computed using the second-order central moments, such that (Equation (39))

where is the orientation angle of the panel.

Figure 22 depicts the concluding pair of principles associated with the CtC descriptor technique. Let us define () as a contour trajectory encompassing (n) contour points, extending from coordinates () to , where consecutive points and are neighbors for 1 ≤ i ≤ n − 1. If we consider that (N) distinct contour trajectories have been extracted from the input binary image, for instance, N = 4 in the context of Figure 23, then for the jème contour trajectory , with (j) ranging from 1 to (N), the computation involves determining the distance (Equation (44)) from the centroid of the image to each specific point along :

Figure 22.

Method of Description of the Form of Panels.

Figure 23.

SLS recognition with dynamic scale adaptation. Experimental results. (a,b) Overcast-day sequences with continuous translation and scale variation; (c,d) rainy-day sequences with varying rotation poses.

In this context, the coordinates for 1 ≤ i ≤ nj specify the position of a contour point on . The term nj represents the count of contour points on , and denotes CtC distance for each contour point on .

The study introduces the CtC descriptor, which demonstrates robustness against translational, rotational, and scale variations observed in Speed Limit Signs (SLS) within images, as illustrated in Figure 23. This robustness substantially improves the accuracy of a support vector classifier that has been trained on a comprehensive traffic sign database. Upon deployment, the SLS recognition method functions efficiently on two separate embedded platforms, both powered by a Quad-Core ARM processor and operating under Android 4.4. The experimental results corroborate the efficacy of the proposed method, evidencing high recognition rates coupled with the capability for real-time processing. Moreover, the method attains real-time performance, achieving up to 30 frames per second while processing video streams at a resolution of 1280 × 720 on a commercially available ARM-based smartphone, the Asus PF500KL (Figure 23).

4.5. Comparative Analysis of Traffic Signs and Road Lane Detection Methods

Each author proposes a unique method for detecting road signs, underscoring the diversity within the field. Our paper’s primary focus lies in developing an efficient detector capable of real-time operation. To ensure the reliability and practicality of approaches, we will conduct an in-depth comparison of the geometric methods previously discussed. This comparison will evaluate their suitability for real-time applications concerning both traffic signs and roadways. The findings of this analysis will be meticulously documented in Table 10. From our extensive study, we aim to discern the most effective strategy for constructing a robust detector capable of precisely identifying regions pertinent to road signs and road lines, including both right and left lanes, crucial for accurate lane detection. We hypothesize that a synergistic integration of color-based and geometric methods will prove to be the most efficacious approach in achieving conditions for realization vision system applicable for automotive.

Table 10.

Detection method based on shape.

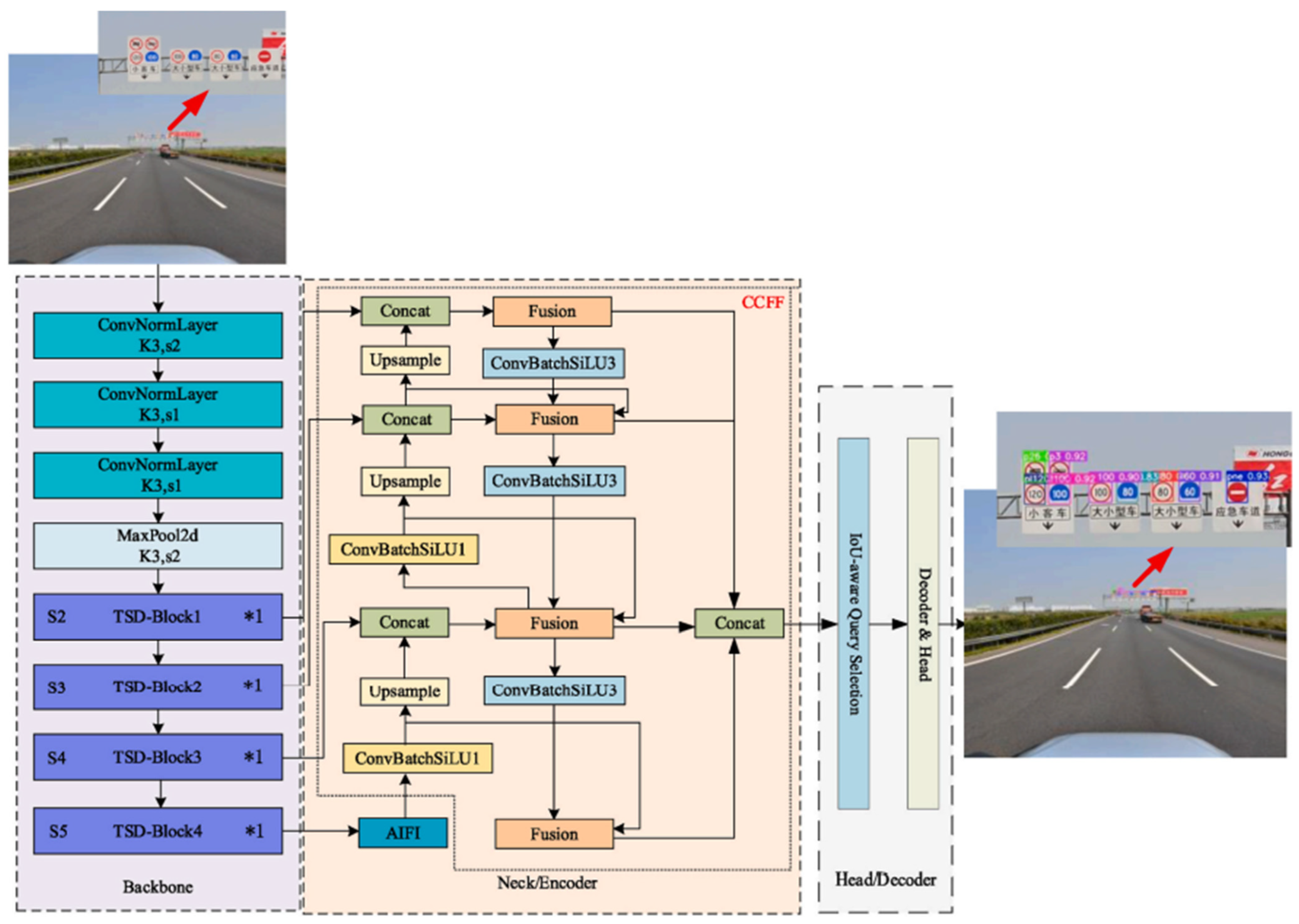

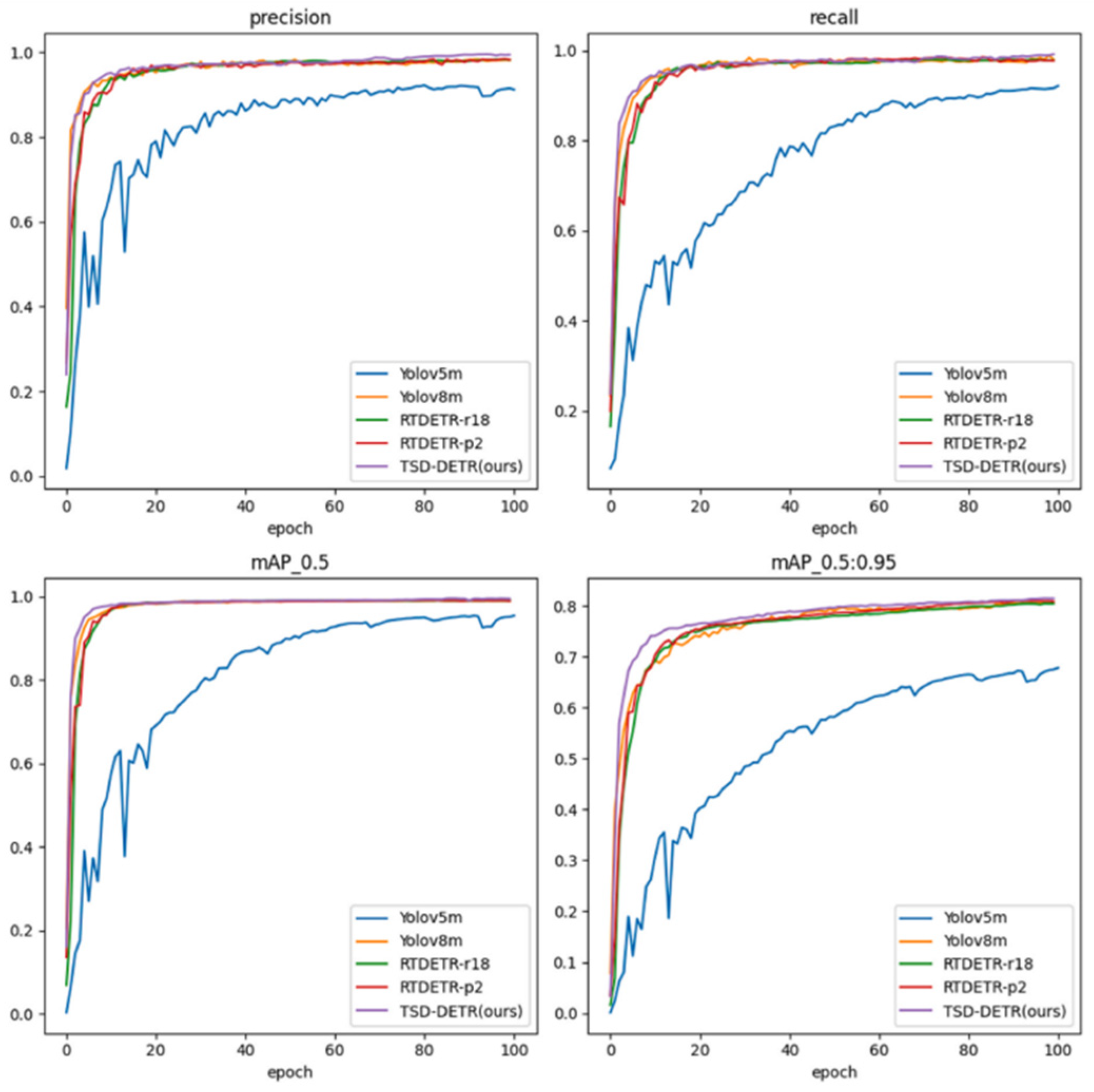

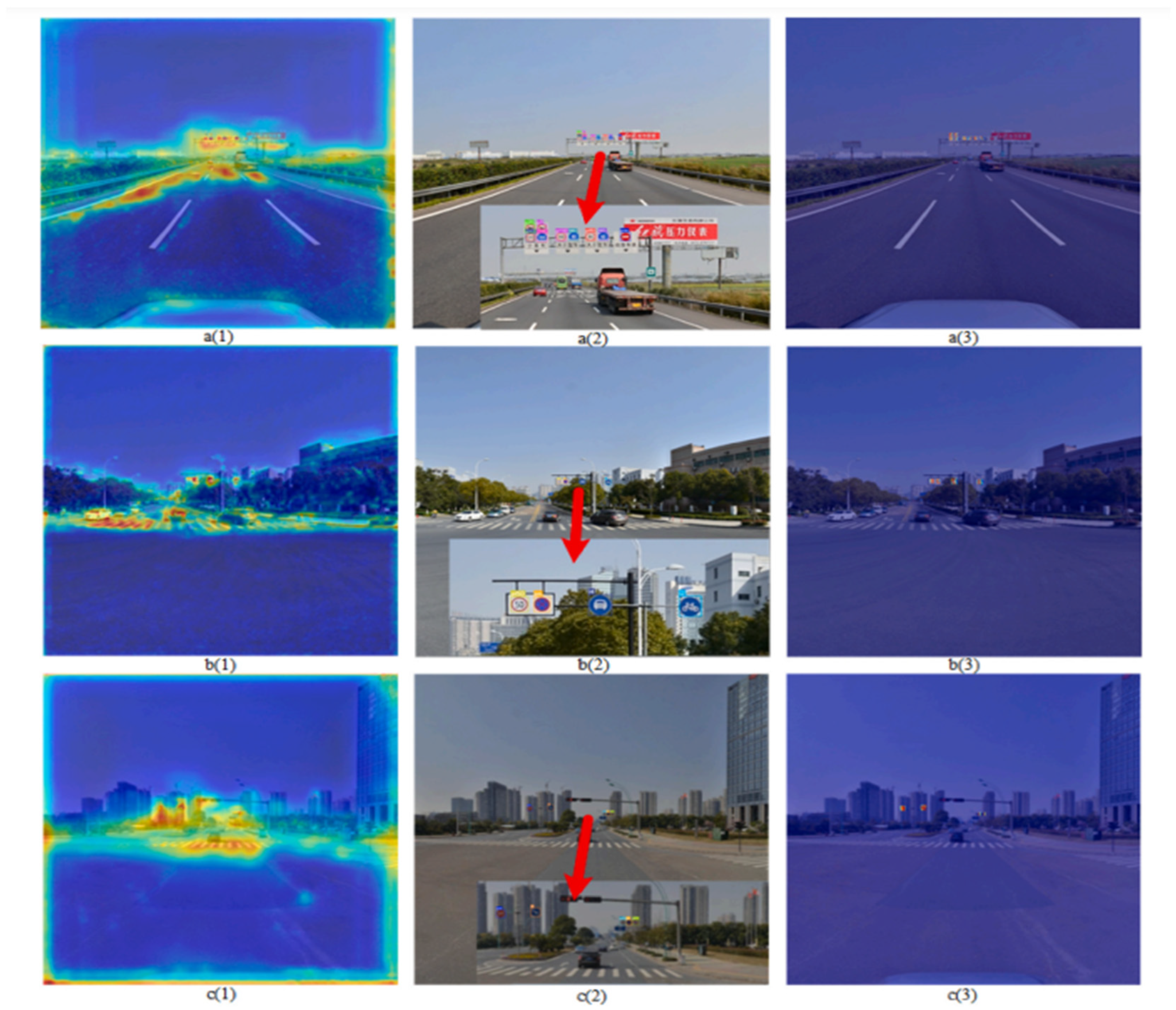

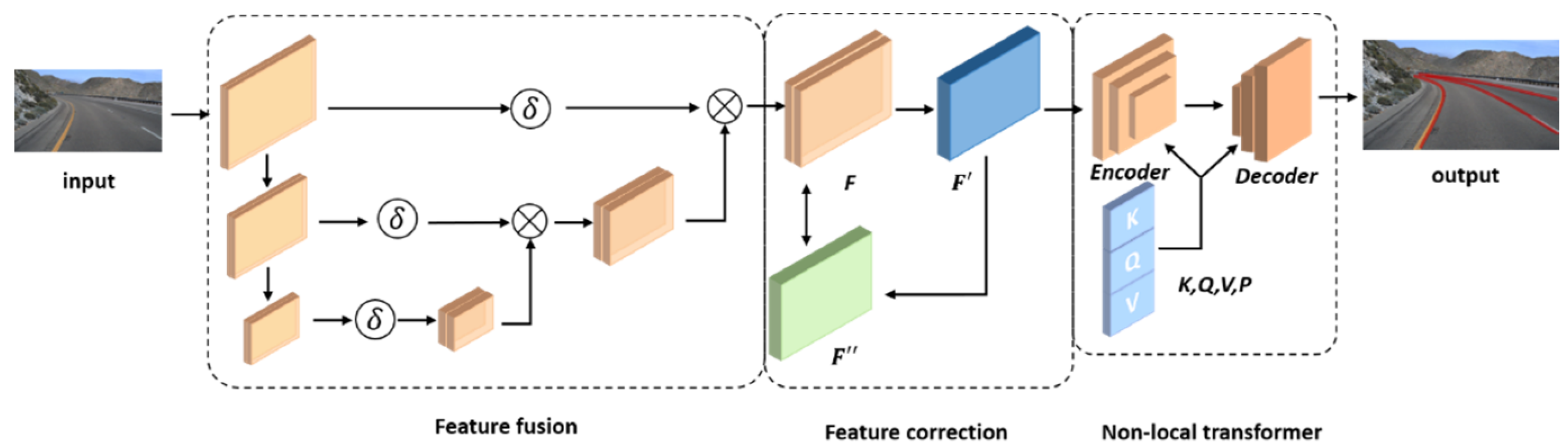

4.6. Artificial Intelligence Methods

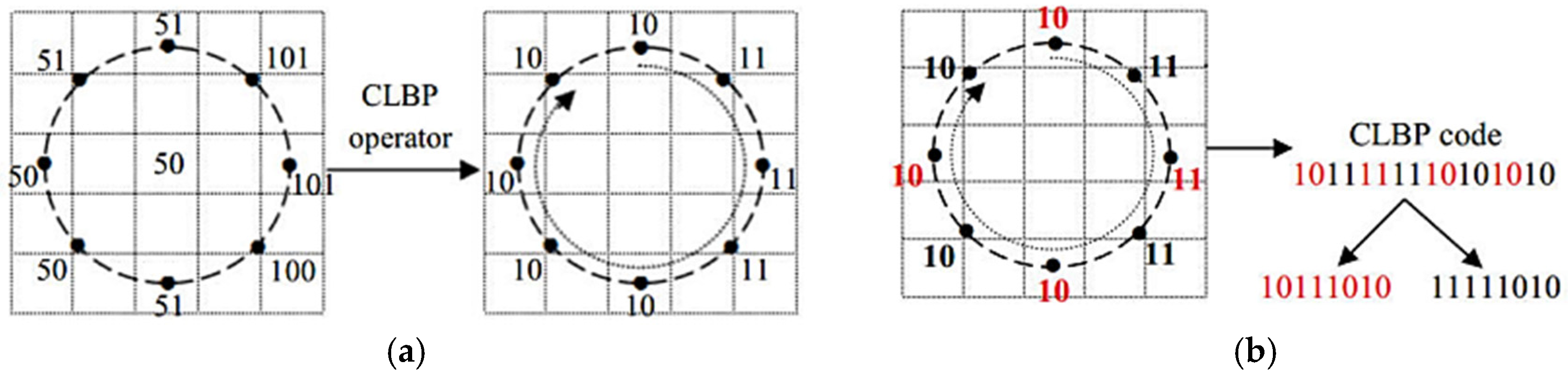

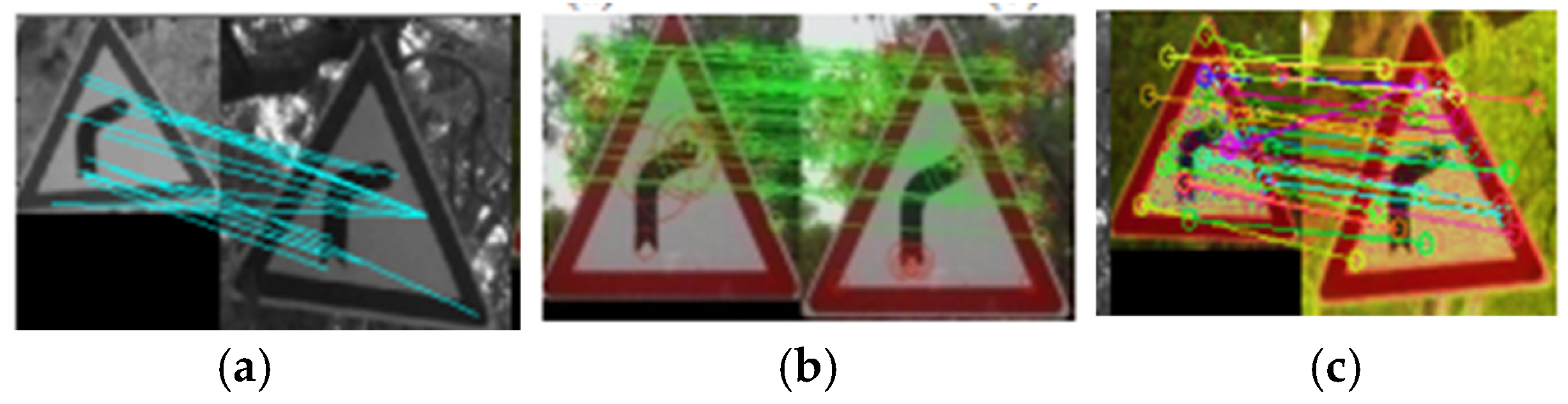

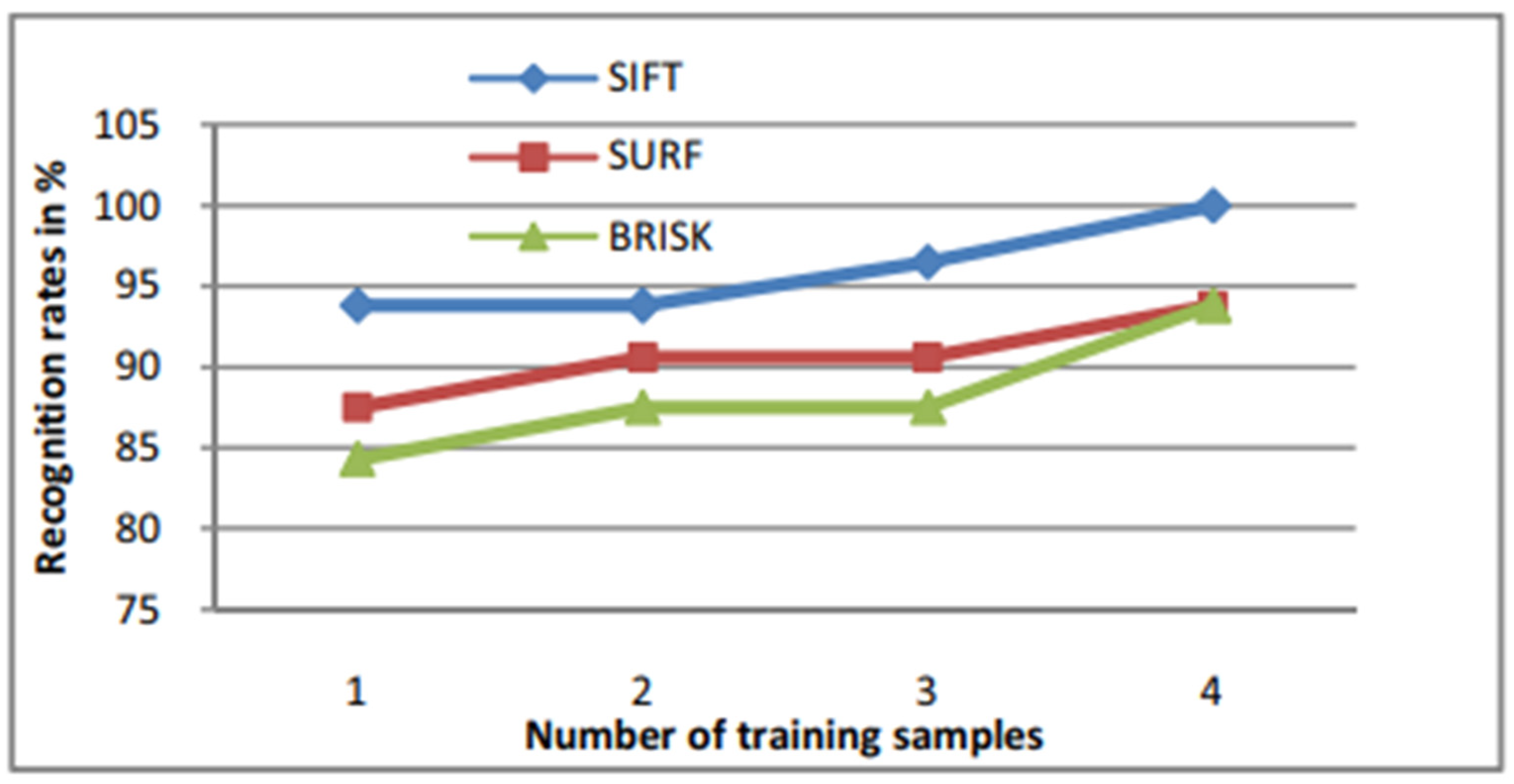

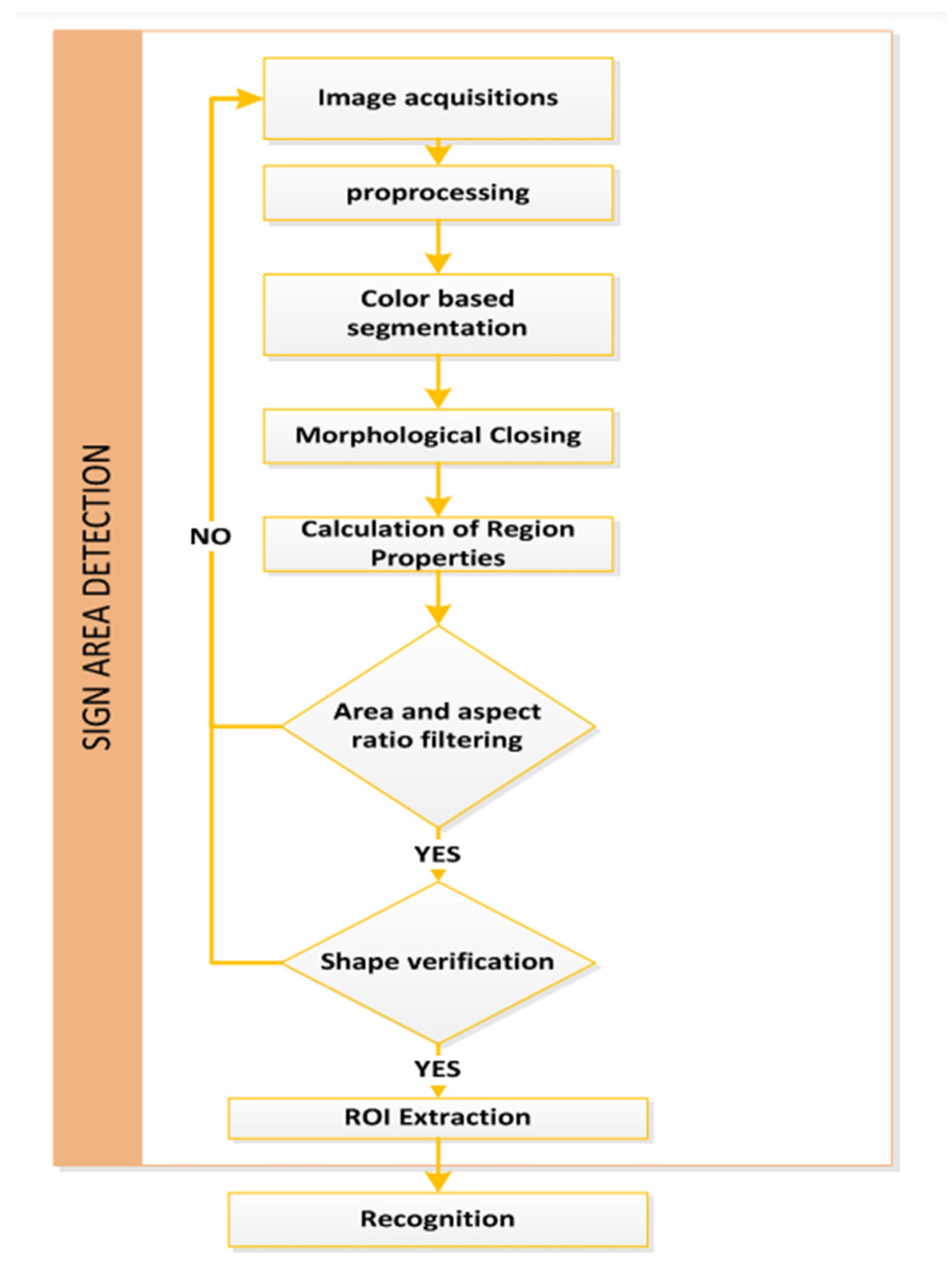

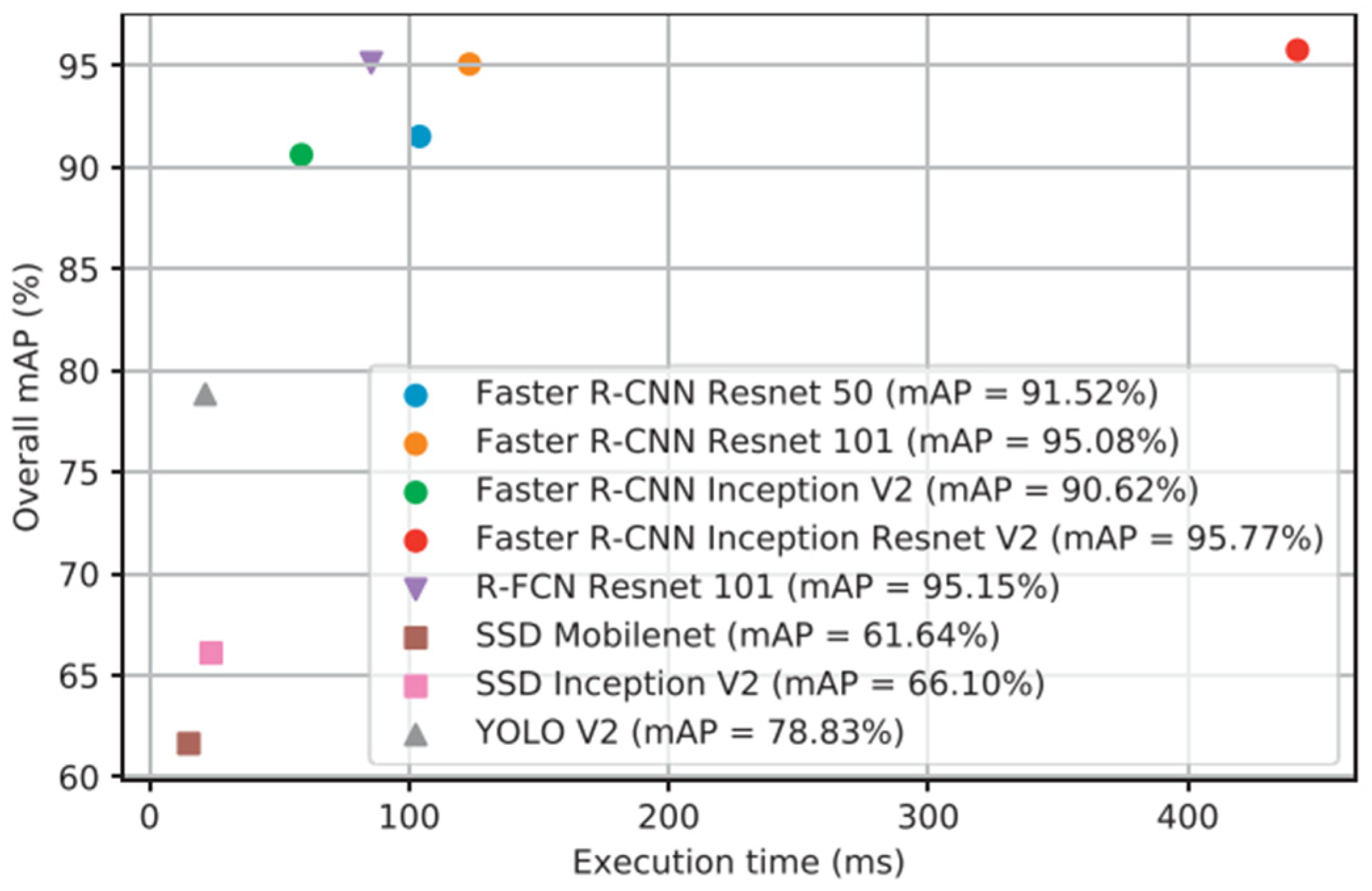

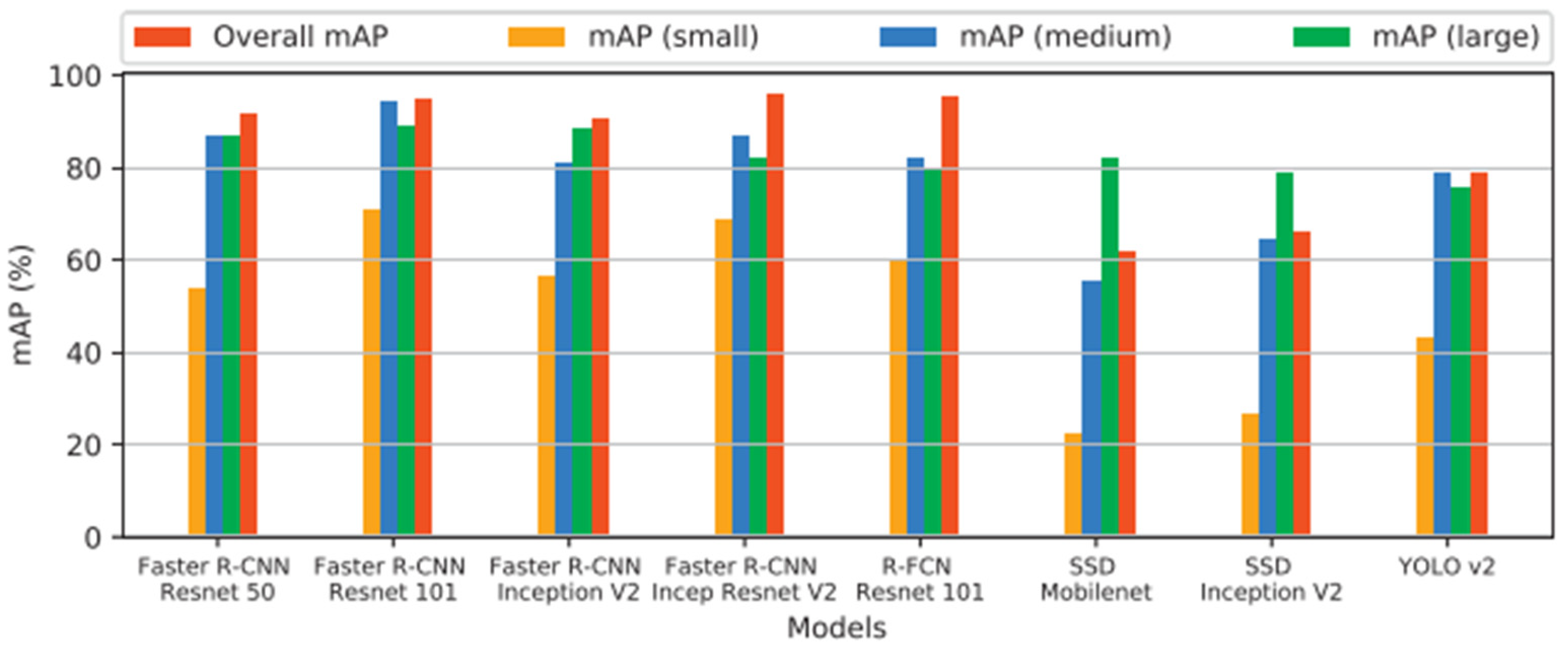

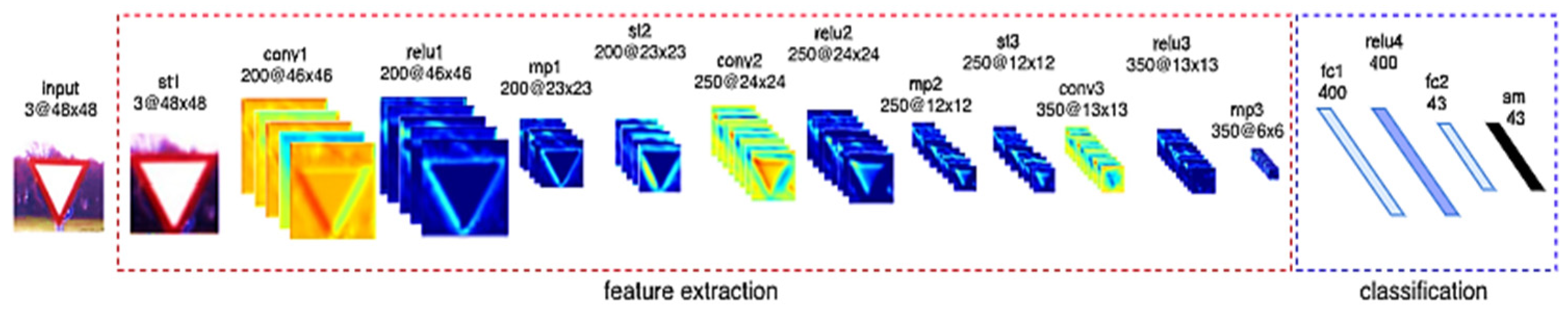

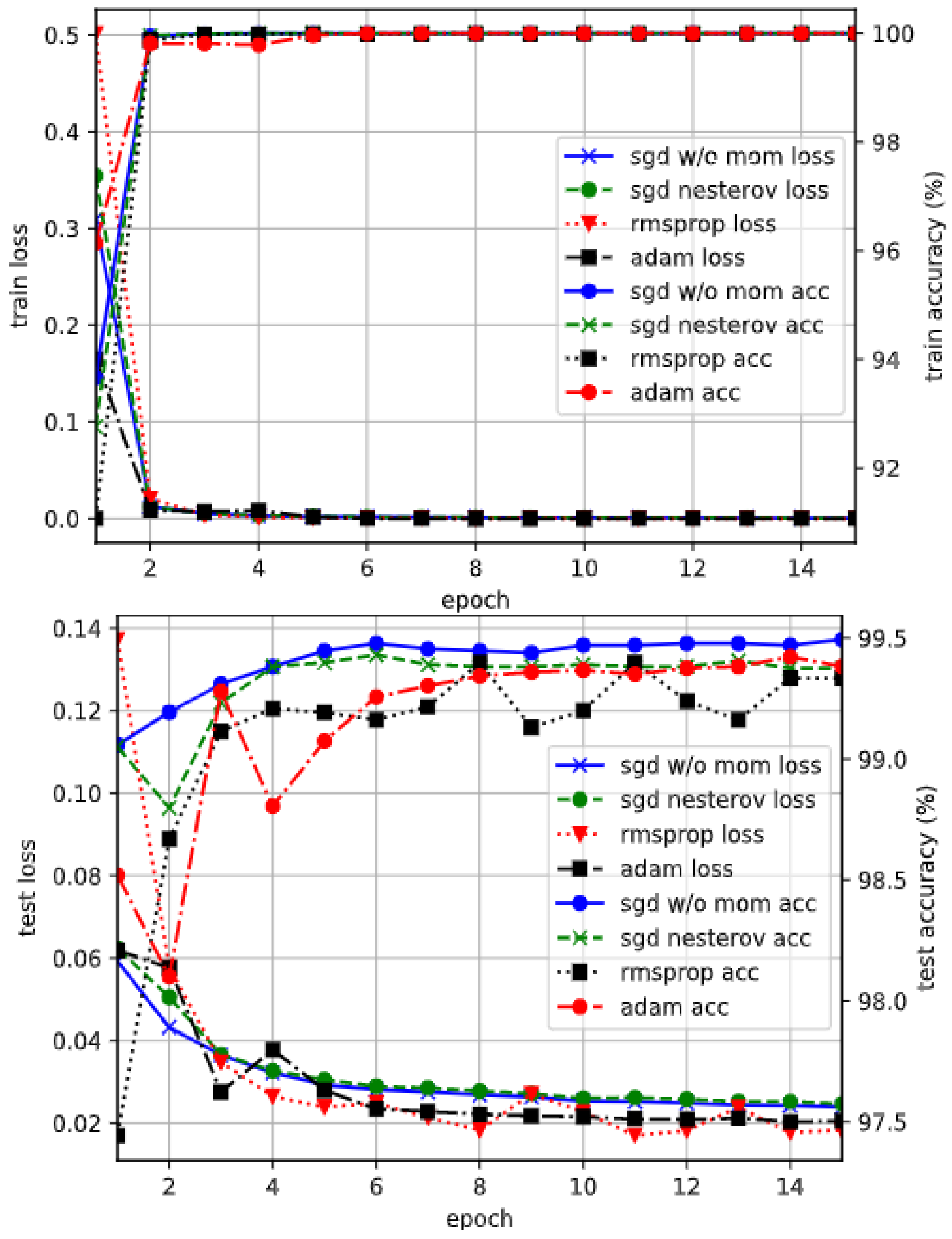

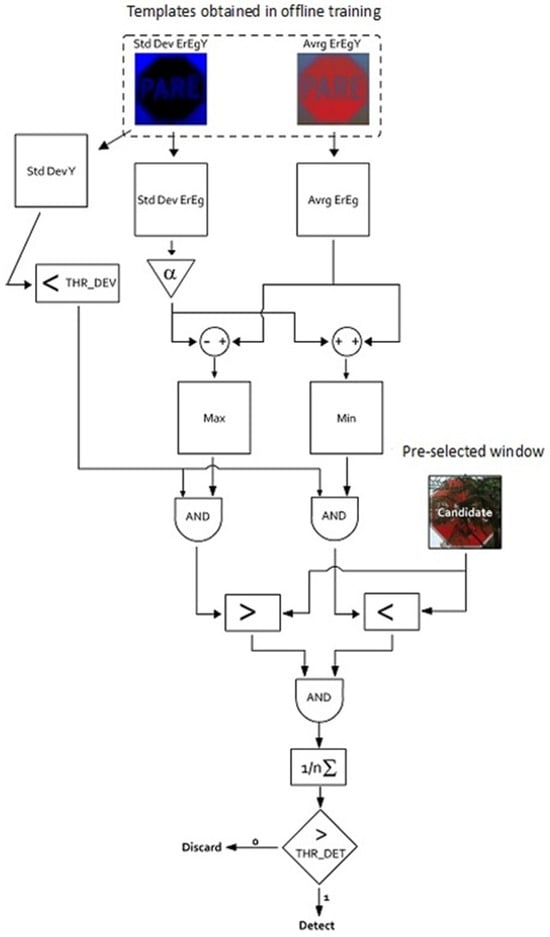

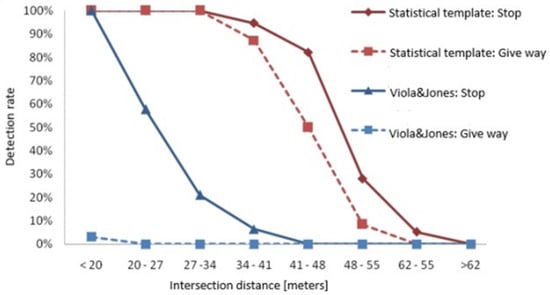

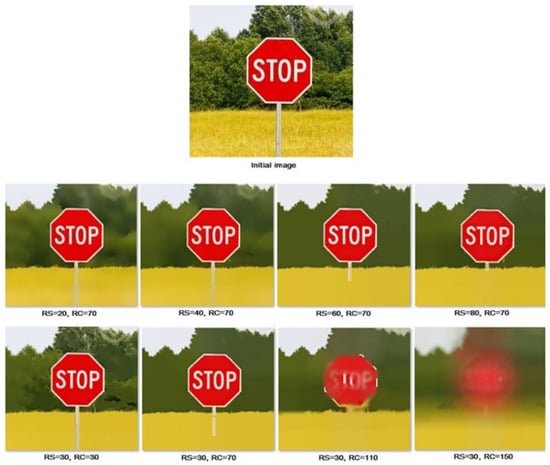

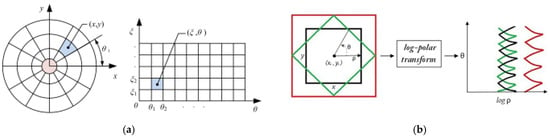

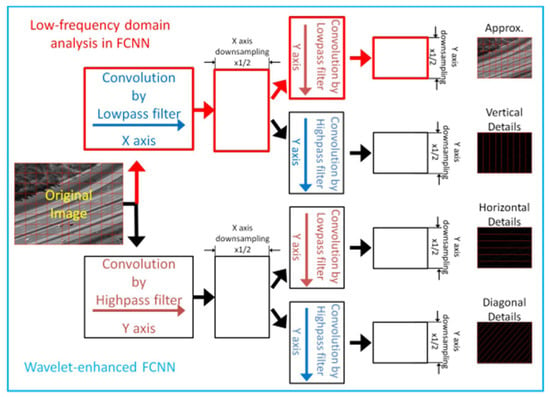

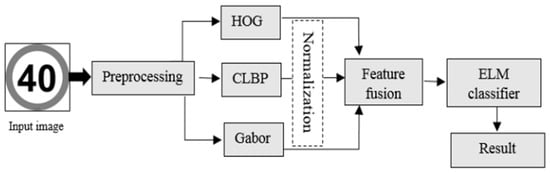

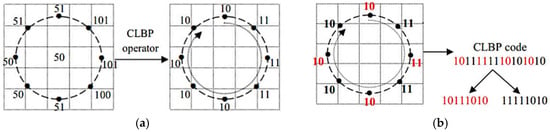

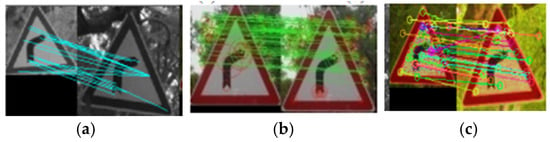

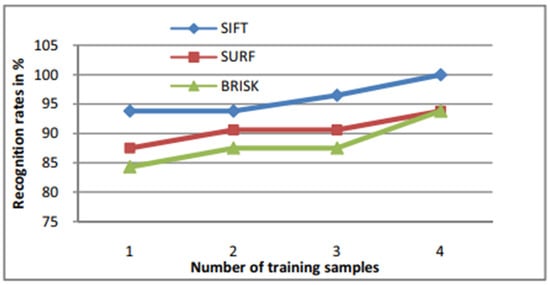

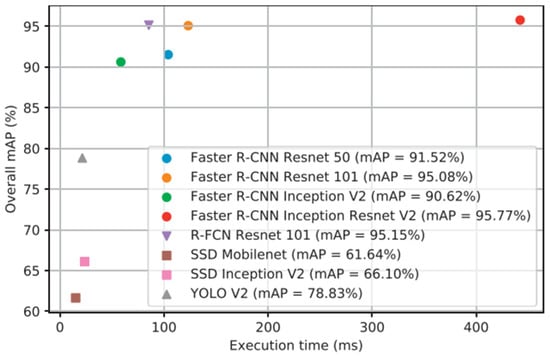

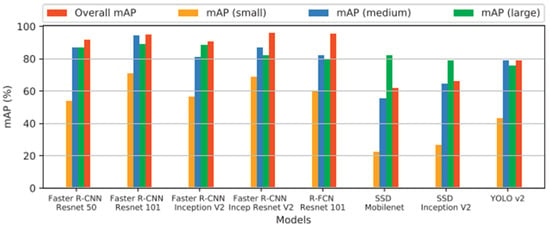

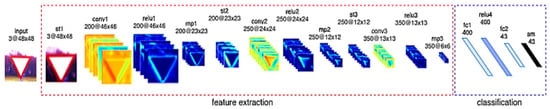

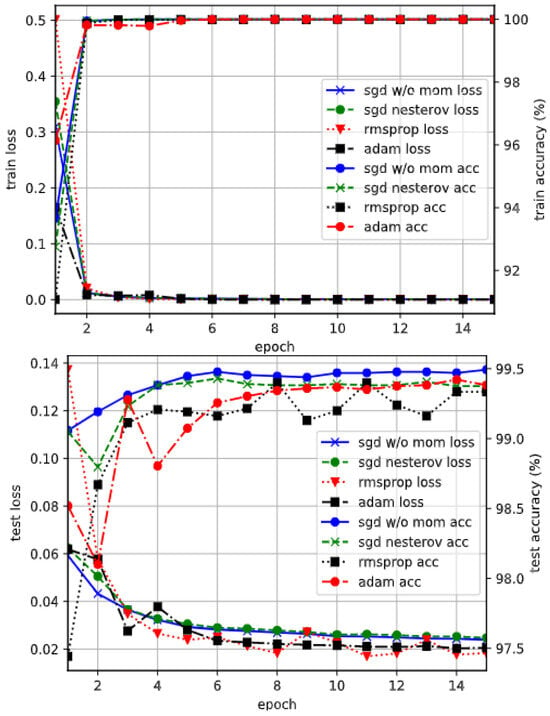

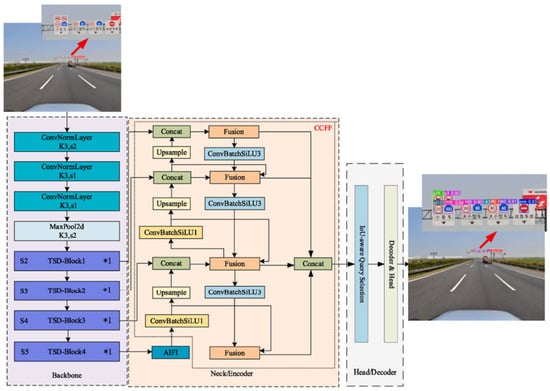

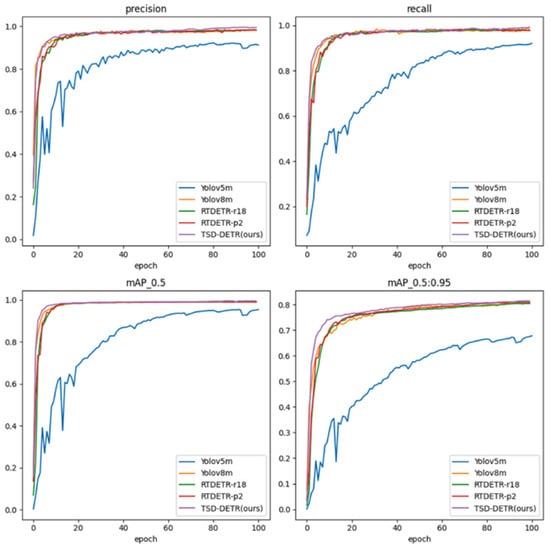

Learning methods, a category within machine learning techniques, have instigated a revolution across multiple industrial sectors, particularly within automotive embedded systems. Unlike traditional geometric and color-based approaches for object detection, learning methods offer substantial advancements. They mitigate challenges such as variations in brightness, temporal changes, scale adjustments, and object rotations. At the core of the effectiveness of learning methods lies the availability of extensive datasets and robust hardware infrastructure.