Abstract

This study developed identification models for five domestic rice varieties, namely Akitakomachi (Akita 31), Hitomebore (Tohoku 143), Hinohikari (Nankai 102), Koshihikari (Etsunan 17), and Nanatsuboshi (Soriku 163), using fluorescence spectroscopy, near-infrared (NIR) spectroscopy, and machine learning. Two-dimensional fluorescence images were generated from excitation emission matrix (EEM) spectra measured over 250–550 nm, and NIR spectra were recorded over 900–1700 nm. Four hybrid models, each combining convolutional neural network (CNN) feature extraction with a conventional classifier (k-nearest neighbor algorithm [KNN], random forest [RF], logistic regression [LR], or support vector machine [SVM]), were implemented in Python (ver. 3.13.2). KNN, RF, LR, and SVM models were also built from the NIR spectra for comparison. The NIR+KNN model achieved an accuracy of 0.9367, whereas the fluorescence fingerprint+CNN model reached 0.9717. The CNN+KNN model obtained the highest mean accuracy (0.9817), and all hybrid models outperformed the single-algorithm models in discrimination accuracy. The fluorescence images showed a strong peak at 280 nm excitation/340 nm emission, attributed to tryptophan, and a weaker peak at 340 nm excitation/440 nm emission, likely due to advanced glycation end products. Hence, combining fluorescence fingerprinting with deep learning enables accurate, reproducible rice variety identification and could prove useful for assessing the authenticity of other agricultural products.

1. Introduction

In most Asian countries, rice is a staple food with major agricultural, economic, and cultural importance, and this is especially true in Japan. Recent concerns about domestic rice shortages, stemming from climate change and global market fluctuations, have prompted government market interventions, including the release of stockpiled rice. Additionally, premium rice varieties are being traded at high prices, with added value attributed to their origin and variety []. While maintaining brand names provides economic incentives for producers, it also increases the risk of mislabeling and false origin claims.

Since September 2022, Japan has required all processed foods to be labeled with the country of origin of their key ingredients []. This highlights the urgent need for rapid, scientific methods to accurately verify the origin of ingredients. Accordingly, various studies have combined spectroscopic techniques with machine learning for food authentication. For example, Yang et al. [] used terahertz (THz) spectroscopy, whereas Quan et al. [] and Gu et al. [] employed UV-visible spectroscopy. Fluorescence spectroscopy has also been applied to olive oil, citrus fruits, honey, wine, and tea [,,,,,]. Owing to its speed and high sensitivity, fluorescence fingerprinting has become an effective method for evaluating the quality and authenticity of a variety of agricultural products. NIR spectroscopy has likewise been applied to tea [,] and peaches [], and several studies have demonstrated its applicability to rice using chemometrics and machine learning approaches [,,]. However, few studies have compared NIR spectroscopy with fluorescence fingerprinting or integrated deep learning–based models across multiple Japanese varieties; this study is intended to fill that gap.

Some progress has nevertheless been made using Raman spectroscopy and chemometrics for quality assessment and counterfeit detection [], EEM spectra to identify geographic origin [], and fluorescence spectroscopy combined with a support vector machine (SVM) for high-precision classification [,]. Fluorescence-based studies have also been reported for camellia oil and green tea [,,,] and, more recently, for oolong and blended teas [,], but very few have focused specifically on rice. DNA markers have proven highly reliable for identifying species and varieties of seaweed and other crops [] and are widely accepted for varietal identification. However, DNA analysis requires specialized equipment, involves higher costs and longer processing times, and destroys the sample, which limits its routine use for food authentication. In contrast, combining spectroscopy with machine learning offers a rapid, low-cost alternative that is better suited to routine industrial applications.

Fluorescence and NIR spectroscopy were selected because they provide complementary information: fluorescence reflects specific fluorophores such as amino acids and Maillard reaction products [,,,,,], whereas NIR captures bulk compositional features including water, carbohydrates, proteins, and lipids [,,]. Using both techniques allowed us to directly compare their discrimination ability and to explore the potential of multimodal approaches.

Previously, we identified the origins and varieties of dried kelp [] and green tea [] by combining fluorescence spectroscopy and a CNN. Building on this work, in the current study we collected fluorescence and NIR spectroscopic data from five polished rice varieties grown in Japan (Akitakomachi, Hitomebore, Hinohikari, Koshihikari, and Nanatsuboshi) and constructed identification models using machine learning techniques, namely CNN, KNN, RF, LR, and SVM. These varieties were selected because they are widely cultivated, economically important, and closely related, making accurate identification particularly relevant for authenticity assurance. To our knowledge, no previous study has combined both fluorescence and NIR spectroscopy with machine learning for rice variety authentication. We also developed hybrid models that integrate CNN features with traditional algorithms to improve identification accuracy, and we compared the identification performance of models based on NIR spectra with those based on fluorescence fingerprint data. Collectively, the findings of this study provide a practical tool for food authentication, brand protection, and counterfeit prevention.

2. Materials and Methods

2.1. Target Foods

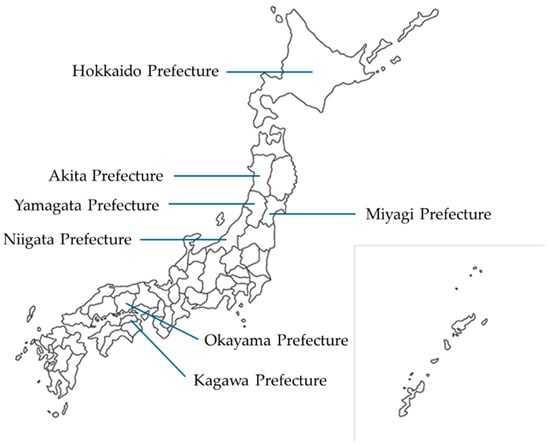

This study examined five polished rice varieties (Akitakomachi, Hinohikari, Hitomebore, Koshihikari, and Nanatsuboshi; Table 1) that belong to the Koshihikari lineage, are widely cultivated and distributed throughout Japan (Figure 1), and had the largest planting areas among the non-glutinous rice varieties harvested in 2023. Three samples of each variety were purchased in 2024 [].

Table 1.

Specimen variety and origin.

Figure 1.

Geographical location of the sample sources throughout Japan.

The genealogical background of each variety is as follows. Akitakomachi was developed by crossing Koshihikari with Ou 292 and is extensively cultivated throughout the Tohoku region []. Hitomebore originated from a cross between Koshihikari and Hatsuboshi (Aichi 26) and is predominantly grown in Miyagi Prefecture []. Hinohikari was developed by crossing Koganebare (Aichi 40) with Koshihikari and is widely cultivated across western Japan []. Nanatsuboshi was developed in Hokkaido by crossing Hitomebore with Ku-kei 90242A, followed by an additional cross with Akiho (Soriku 150), and is known for its stable quality under cold climate conditions []. Koshihikari, introduced in 1945, is regarded as a representative Japanese variety and continues to maintain high popularity and market value [].

Before spectrofluorometric and NIR spectrometric analysis, all samples were ground for approximately 1 min (2 × 30 s) in a DR MILLS mill (model DM-7452; Guangzhou, Guangdong, China). The ground samples were then stored for less than 1 month in airtight containers at room temperature (~25 °C) to limit degradation from exposure to oxygen and humidity. This preparation was intended to minimize variability during spectroscopic measurement and to improve reproducibility and analytical accuracy.

2.2. Acquisition of EEM Spectra

EEM spectra of the rice flour samples were acquired using a calibrated F-7100 fluorescence spectrophotometer (Hitachi High-Tech Science, Hitachi-shi, Ibaraki, Japan). For each variety, subsamples of three ground products from different manufacturers were measured, with 100 independent readings per product; across the 15 products, this yielded 1500 EEM spectra. Excitation and emission were both scanned over 250–550 nm, at intervals of 10 nm (excitation) and 5 nm (emission), with a photomultiplier voltage of 680 V.

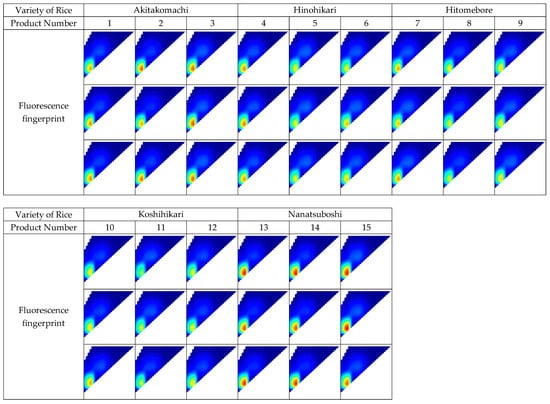

Each EEM was saved as a three-dimensional fluorescence data file (FD3), converted to text format (TXT) with FL Solution 4.2, and then converted to a PNG image using Python. During preprocessing, a ±30 nm band around each excitation wavelength was masked to eliminate scattered light, and the fluorescence intensities of all 2D images were mapped to a common range of 10 to 2630. The images were then cropped to 360 × 360 pixels using Python to ensure a common scale and to remove unnecessary white space (Figure 2). Four-digit sequential numbers (0001–1500) were assigned to the 2D image file names, and a CSV file was created to link each file number to its labels: the “No.” column contained the four-digit number, the “class” column the variety name, and the “class_maker” column the variety and manufacturer. The 2D images and the CSV file were used as training and evaluation data for the machine learning models.

Figure 2.

Representative example of a 2D image (cropped). Each row indicates a different product; x-axis: excitation wavelength (250–550 nm), y-axis: emission wavelength (250–550 nm).
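The masking and intensity-scaling steps of Section 2.2 can be sketched with NumPy as follows. This is a minimal illustration, not the authors' script: it assumes the EEM is stored as an emission × excitation array on the stated grids (31 excitation and 61 emission wavelengths), interprets the ±30 nm mask as the band where |emission − excitation| ≤ 30 nm, and treats the 10–2630 range as a clip applied before mapping onto a 0–1 color scale. The function name `preprocess_eem` is hypothetical.

```python
import numpy as np

# Wavelength grids from Section 2.2 (both axes span 250-550 nm).
EX = np.arange(250, 551, 10)  # 31 excitation wavelengths, 10 nm steps
EM = np.arange(250, 551, 5)   # 61 emission wavelengths, 5 nm steps


def preprocess_eem(eem, band_nm=30, vmin=10.0, vmax=2630.0):
    """Mask the scatter band and map intensities onto a common 0-1 scale.

    eem: array of shape (len(EM), len(EX)); rows are emission, columns are
    excitation (assumed layout). Masked cells are returned as NaN.
    """
    eem = np.asarray(eem, dtype=float)
    if eem.shape != (EM.size, EX.size):
        raise ValueError(f"expected shape {(EM.size, EX.size)}, got {eem.shape}")
    em_grid, ex_grid = np.meshgrid(EM, EX, indexing="ij")
    out = eem.copy()
    # Assumed reading of the +/-30 nm mask: cells whose emission wavelength
    # lies within band_nm of the excitation wavelength (scattered light).
    out[np.abs(em_grid - ex_grid) <= band_nm] = np.nan
    # Clip to the common intensity range so every image shares one color scale;
    # NaN cells stay NaN through clip and the affine rescaling.
    return (np.clip(out, vmin, vmax) - vmin) / (vmax - vmin)
```

Fixing `vmin` and `vmax` for every sample, rather than normalizing each image to its own maximum, keeps absolute intensity differences between varieties visible to the classifier.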

2.3. Acquisition of Near-Infrared Spectroscopic Data

NIR spectroscopy measurements were conducted using a compact spectrometer (NIR Meter, Spectra Co-op, Tokyo, Japan) operating within the 900–1700 nm wavelength range.

All NIR spectra were compiled into a single CSV file for subsequent model training and validation. The absorbance data were split 8:2 into training (1200 spectra) and test (300 spectra) sets, stratified by the “product” column of the CSV file. For reproducibility, the random seed was set to 42.

2.4. Developing a Machine Learning Model: Fluorescence Fingerprint Data

Rice varieties were identified using traditional machine learning algorithms (KNN, RF, LR, and SVM) and a deep learning algorithm (CNN). KNN, RF, LR, and SVM were selected as widely used benchmark algorithms in chemometrics and food authenticity research, and the CNN was employed because it can directly process fluorescence fingerprint images and extract high-dimensional features. In addition to single-algorithm models, hybrid models combining CNN feature extraction with the other classifiers were constructed. For non-CNN models, the 2D images were split 8:2 into training (1200) and test (300) sets, stratified by the class_maker column. The training set was further split into training (960 images) and validation (240 images) subsets. For both splits, the random seed was fixed at random_state = 42 for reproducibility, and stratified sampling was applied to avoid dataset bias.
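The two-stage stratified split described above can be sketched with scikit-learn's `train_test_split`. The labels are placeholders: the manufacturer codes `A`, `B`, and `C` and the `variety_maker` label format are hypothetical, and the sketch assumes 100 images per product as stated in Section 2.2.

```python
import numpy as np
from sklearn.model_selection import train_test_split

VARIETIES = ["Akitakomachi", "Hitomebore", "Hinohikari", "Koshihikari", "Nanatsuboshi"]
MAKERS = ["A", "B", "C"]  # hypothetical manufacturer codes

# One class_maker label per image: 5 varieties x 3 manufacturers x 100 readings.
class_maker = np.array([f"{v}_{m}" for v in VARIETIES for m in MAKERS for _ in range(100)])
# Four-digit file numbers "0001".."1500", as in the "No." column.
file_no = np.array([f"{i:04d}" for i in range(1, class_maker.size + 1)])

# Stage 1: 8:2 train/test split, stratified by class_maker, fixed seed.
train_no, test_no, train_cm, test_cm = train_test_split(
    file_no, class_maker, test_size=0.2, stratify=class_maker, random_state=42
)
# Stage 2: the 1200 training images are split 8:2 again into fit/validation subsets.
fit_no, val_no, fit_cm, val_cm = train_test_split(
    train_no, train_cm, test_size=0.2, stratify=train_cm, random_state=42
)

# The "class" (variety) label is the part of class_maker before the underscore.
test_class = np.array([cm.split("_")[0] for cm in test_cm])
```

Stratifying on the variety-and-manufacturer label, rather than on variety alone, keeps every product equally represented in each subset (20 test and 16 validation images per product here).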

2.4.1. Single-Algorithm Models

Fluorescent fingerprint images served as the input data to develop five classification models using distinct algorithms: CNN, KNN, RF, LR, and SVM. All models were implemented using Python.

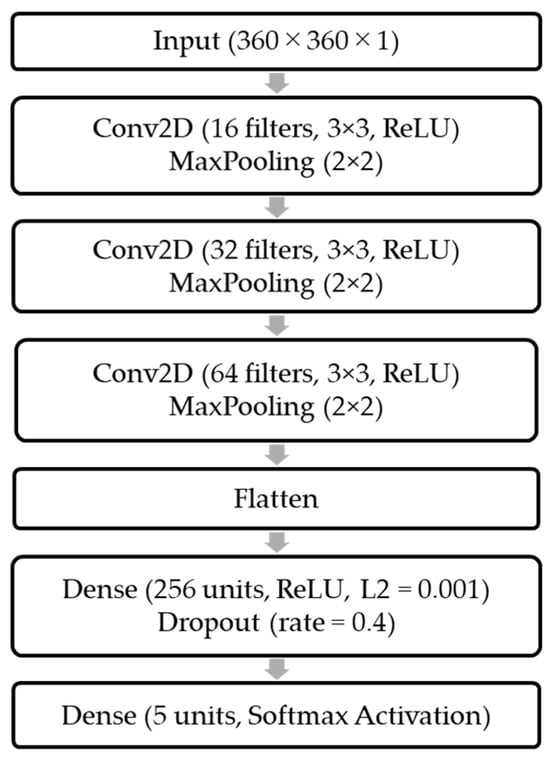

The CNN was constructed using TensorFlow (ver. 2.18.0) and Keras (ver. 3.9). It comprised three convolutional layers (Conv2D) with 16, 32, and 64 filters, each with a 3 × 3 kernel, ReLU activation, and L2 regularization (coefficient = 0.001); each convolutional layer was followed by a 2 × 2 max pooling layer (MaxPool2D). After the convolutional blocks, the model included a fully connected dense layer with 256 units (ReLU activation, L2 regularization), followed by a dropout layer (rate = 0.4) to prevent overfitting. The output layer consisted of five softmax units corresponding to the classification targets. Training used the Adam optimizer with an initial learning rate of 0.0001, categorical cross-entropy loss, accuracy as the evaluation metric, 100 epochs, and a batch size of 16. A ModelCheckpoint callback saved the network whenever validation accuracy reached a new maximum. The CNN-based identification program was run ten times, and the best model from each run was saved in .keras format, yielding ten sequentially numbered model files.
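Because the CNN configuration is specified layer by layer, its feature-map sizes and parameter counts can be worked out directly. The pure-Python sketch below does this under assumptions the text does not state: a 360 × 360 × 3 (RGB) input, Keras's default `'valid'` padding for Conv2D and MaxPool2D, and stride 1 for the convolutions. Under these assumptions the 256-unit dense layer holds more than 99% of the roughly 30.3 million trainable parameters.

```python
def conv_valid(size, kernel=3):
    """Output size of a stride-1 convolution with 'valid' padding."""
    return size - kernel + 1


def max_pool(size, pool=2):
    """Output size of 2x2 max pooling with stride 2 and 'valid' padding."""
    return size // pool


def cnn_summary(height=360, width=360, channels=3, filters=(16, 32, 64),
                dense_units=256, n_classes=5, kernel=3):
    """Return final feature-map shape, flattened size, and per-layer parameter counts."""
    h, w, c = height, width, channels
    params = {}
    for i, f in enumerate(filters, start=1):
        # Each filter has kernel*kernel*c weights plus one bias.
        params[f"conv{i}"] = (kernel * kernel * c + 1) * f
        h = max_pool(conv_valid(h, kernel))
        w = max_pool(conv_valid(w, kernel))
        c = f
    flat = h * w * c
    params["dense"] = (flat + 1) * dense_units        # 256-unit fully connected layer
    params["output"] = (dense_units + 1) * n_classes  # five-unit softmax layer
    # Pooling, dropout, and L2 penalties add no trainable parameters.
    return (h, w, c), flat, params


shape, flat, params = cnn_summary()
total = sum(params.values())
print(shape, flat, total)  # (43, 43, 64) 118336 30319141
```

Changing the assumed input size (for example, if images were resized before training) changes only the flattened size and the dense-layer count; the convolutional parameter counts stay the same.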

The KNN, RF, LR, and SVM models were implemented using scikit-learn with default parameter settings, except that key hyperparameters were systematically optimized to maximize test accuracy (n_neighbors for KNN, n_estimators for RF, and C for LR and SVM). For each algorithm, the smallest parameter value yielding the highest accuracy was adopted. The same optimization procedure was applied to all algorithms to enable a fair comparison with the CNN-based and hybrid models; to our knowledge, such a consistent comparison has not been explicitly reported in previous studies.
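The selection rule (the smallest parameter value that attains the highest accuracy) can be sketched with scikit-learn as below. The synthetic dataset from `make_classification` only stands in for the image data, and the helper names `sweep` and `smallest_best` are hypothetical; the same loop applies to `n_estimators` for RF or `C` for LR and SVM by changing the model factory.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier


def sweep(make_model, values, X_tr, y_tr, X_te, y_te):
    """Fit one model per parameter value and return {value: test accuracy}."""
    scores = {}
    for v in values:
        model = make_model(v).fit(X_tr, y_tr)
        scores[v] = accuracy_score(y_te, model.predict(X_te))
    return scores


def smallest_best(scores):
    """Smallest parameter value whose accuracy equals the maximum."""
    best = max(scores.values())
    return min(v for v, acc in scores.items() if acc == best)


# Synthetic 5-class data standing in for the flattened fluorescence images.
X, y = make_classification(n_samples=500, n_features=20, n_informative=10,
                           n_classes=5, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, stratify=y,
                                          random_state=42)
scores = sweep(lambda k: KNeighborsClassifier(n_neighbors=k), range(3, 21),
               X_tr, y_tr, X_te, y_te)
k_best = smallest_best(scores)
```

Preferring the smallest tied value is a simple tie-breaking convention; for KNN and RF it also picks the cheaper model when several settings score the same.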

The overall architecture of the CNN model is summarized in Figure 3: three convolution and pooling blocks (16, 32, and 64 filters), a 256-unit fully connected layer with L2 regularization (0.001) and dropout (rate = 0.4), and an output layer with five softmax units corresponding to the rice varieties.

Figure 3.

Architecture of the CNN model used for rice variety identification, consisting of three convolutional and pooling blocks, a fully connected layer with dropout, and a softmax output layer.

2.4.2. Hybrid Models

Rice variety identification follows a two-stage process, involving feature extraction and classification, which is typical in image-based deep learning applications. In this study, hybrid models were developed that integrated optimized algorithms from both stages. Specifically, feature extraction was performed using a CNN, while classification leveraged conventional machine learning algorithms (KNN, RF, LR, or SVM), resulting in four hybrid model combinations: CNN+KNN, CNN+RF, CNN+LR, and CNN+SVM.

A trained model (.keras format) with the highest validation accuracy among the CNN-only models was selected. The output vector of the fully connected layer (dense, units = 256, activation = ReLU, and kernel regularizer = L2[0.001]) of the CNN model generated a 256-dimensional feature vector for each image.

The conventional classifiers were configured as in their corresponding single-algorithm models, except that key hyperparameters were further optimized to maximize variety identification accuracy on the test dataset: n_neighbors for KNN, n_estimators for RF, and C (the inverse of the regularization strength) for LR and SVM. The best-performing parameters identified through this process were adopted.
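The classification stage of the hybrid models can be sketched as follows, assuming the 256-dimensional dense-layer outputs have already been computed for every image. In Keras, one common way to obtain them is a sub-model along the lines of `keras.Model(inputs=cnn.inputs, outputs=cnn.get_layer(name).output)`, where the layer name depends on how the model was built; that step is omitted so the sketch runs without TensorFlow. The synthetic features are hypothetical placeholders, and `hybrid_knn_predict` is an illustrative name.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier


def hybrid_knn_predict(train_features, train_labels, test_features, n_neighbors=3):
    """Classify CNN feature vectors (n_images x 256) with k-nearest neighbors."""
    knn = KNeighborsClassifier(n_neighbors=n_neighbors)
    knn.fit(train_features, train_labels)
    return knn.predict(test_features)


# Hypothetical stand-in for dense-layer outputs: five well-separated clusters in
# 256-D, passed through max(0, x) to mimic ReLU activations.
rng = np.random.default_rng(0)
centers = rng.normal(scale=5.0, size=(5, 256))
y_train = np.repeat(np.arange(5), 40)
y_test = np.repeat(np.arange(5), 10)
X_train = np.maximum(0, centers[y_train] + rng.normal(size=(y_train.size, 256)))
X_test = np.maximum(0, centers[y_test] + rng.normal(size=(y_test.size, 256)))

y_pred = hybrid_knn_predict(X_train, y_train, X_test, n_neighbors=3)
accuracy = float(np.mean(y_pred == y_test))
```

Swapping `KNeighborsClassifier` for `RandomForestClassifier`, `LogisticRegression`, or `SVC` gives the other three hybrid combinations without changing the feature-extraction step.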

2.5. Developing Machine Learning Models: Near-Infrared Spectroscopy Data

Identification models for the NIR spectra were constructed using KNN, LR, RF, and SVM. All algorithms were implemented in Python with the scikit-learn library, and the key hyperparameters of each algorithm were optimized by parameter search.

For KNN, n_neighbors ranged from 3 to 20; for LR and SVM, C ranged from 0.0001 to 10,000; and for RF, n_estimators ranged from 10 to 200. The parameters maximizing test accuracy were selected. In addition, max_iter (LR) was set to 1000, probability (SVM) to True, and random_state to 42 in all applicable cases.
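The NIR search space and fixed settings above can be written down as a small configuration, sketched below. Several details are assumptions: the C grid is taken as powers of ten between 0.0001 and 10,000, n_estimators is stepped by 1, and the SVM kernel is left at scikit-learn's default (RBF) because the text does not state it. `SEARCH_SPACE` and `make_model` are hypothetical names; each candidate would then be fitted on the training spectra and scored on the test spectra.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Search ranges from Section 2.5 (grid spacing is an assumption).
SEARCH_SPACE = {
    "knn": list(range(3, 21)),                           # n_neighbors: 3..20
    "rf": list(range(10, 201)),                          # n_estimators: 10..200
    "lr": [float(c) for c in np.logspace(-4, 4, 9)],     # C: 0.0001..10,000
    "svm": [float(c) for c in np.logspace(-4, 4, 9)],    # C: 0.0001..10,000
}


def make_model(name, value):
    """Build one candidate with the fixed settings stated in the text."""
    if name == "knn":
        return KNeighborsClassifier(n_neighbors=value)  # no random_state parameter
    if name == "rf":
        return RandomForestClassifier(n_estimators=value, random_state=42)
    if name == "lr":
        return LogisticRegression(C=value, max_iter=1000, random_state=42)
    if name == "svm":
        return SVC(C=value, probability=True, random_state=42)  # default RBF kernel
    raise ValueError(f"unknown model: {name}")
```

A log-spaced grid is the usual choice for C because its effect on the decision boundary scales multiplicatively; a finer grid within the best decade could be added if needed.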

3. Results

3.1. Recognition Accuracy of Machine Learning Models Using Fluorescence Fingerprint Data

Among the single-algorithm models, the CNN model achieved the highest mean accuracy (0.9717) over ten iterations (Table 2). The KNN model followed with a maximum accuracy of 0.9533 (n_neighbors = 6). The RF model reached 0.9267 (n_estimators = 41), the LR model achieved 0.9100 (C = 10), and the SVM model peaked at 0.9033 (C = 1000). Overall, the CNN model outperformed the other single-algorithm models in classification accuracy.

Table 2.

Classification accuracy of single-algorithm machine learning models.

In the hybrid models, features extracted by the CNN were classified using the KNN, RF, LR, and SVM algorithms. The CNN+KNN model achieved the highest average accuracy over 10 trials (0.9817; Table 3), closely followed by CNN+SVM (0.9813), whereas CNN+RF and CNN+LR reached 0.9770 and 0.9757, respectively. Because CNN+KNN and CNN+SVM performed comparably, CNN+KNN was selected as the representative hybrid model for subsequent analyses, as it pairs CNN feature extraction with a simple, highly interpretable classification algorithm. The optimal n_neighbors value varied among the CNN models (3 was selected five times, 4 three times, and 6 twice). The optimal hyperparameters of the other classifiers (n_estimators and C) likewise differed for each CNN model and were determined individually.

Table 3.

Comparison of classification accuracy between CNN and hybrid models.

All hybrid models achieved higher varietal identification accuracy than the single-algorithm models (Tables 2 and 3), with the CNN+KNN model performing best; its confusion matrix and precision and recall values are given in Tables 4 and 5. A paired t-test comparing the accuracies of the CNN and CNN+KNN models across ten runs revealed a statistically significant difference (t(9) = −5.20, p = 0.00056), indicating that the CNN+KNN model outperformed the CNN model.
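For reference, the paired t statistic compares the per-run differences d_i = a_i − b_i between two models evaluated on the same runs: t = mean(d) / (s_d / √n), with n − 1 degrees of freedom. A minimal pure-Python sketch follows; with the CNN accuracies passed as `a` and the CNN+KNN accuracies as `b`, a negative t means the hybrid model scored higher, consistent with the sign reported for this comparison. `scipy.stats.ttest_rel` computes the same statistic together with a p-value.

```python
import math


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("a and b must have the same length (at least 2 pairs)")
    d = [x - y for x, y in zip(a, b)]  # per-run differences
    n = len(d)
    mean_d = sum(d) / n
    # Sample standard deviation of the differences (n - 1 denominator).
    sd_d = math.sqrt(sum((x - mean_d) ** 2 for x in d) / (n - 1))
    if sd_d == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean_d / (sd_d / math.sqrt(n)), n - 1
```

The pairing matters here because each CNN+KNN run reuses the CNN model from the same run, so run-to-run variation is shared and cancels in the differences.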

Table 4.

Confusion matrix for the CNN+KNN model.

Table 5.

Precision and recall for the CNN+KNN model.

Across the ten runs, the CNN+KNN model produced 56 misclassifications involving nine distinct test images (Table 6). Several images were misclassified repeatedly across the ten iterations and were consistently assigned to the same incorrect variety (files 224, 275, 492, 674, 993, and 1111). This pattern suggests that the features of these particular samples closely resemble those of other varieties.

Table 6.

Number of misclassifications of test data using the CNN+KNN model.
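Tallying repeated misclassifications across runs, as summarized in Table 6, can be sketched with `collections.Counter`. The record format (one list of (file number, true label, predicted label) tuples per run) and the function name `repeated_errors` are hypothetical, and the toy data use placeholder labels rather than the study's results.

```python
from collections import Counter


def repeated_errors(runs, min_runs=2):
    """Return {file_no: (times misclassified, set of wrong labels)} for files
    misclassified in at least `min_runs` runs."""
    counts = Counter()
    predicted = {}
    for run in runs:
        for file_no, true_label, pred_label in run:
            if pred_label == true_label:
                continue  # ignore any correctly classified entries
            counts[file_no] += 1
            predicted.setdefault(file_no, set()).add(pred_label)
    return {f: (n, predicted[f]) for f, n in counts.items() if n >= min_runs}


# Toy example with placeholder labels "X", "Y", "Z".
runs = [
    [("0001", "X", "Y"), ("0002", "Z", "X")],
    [("0001", "X", "Y")],
    [("0001", "X", "Y"), ("0003", "Y", "Z")],
]
summary = repeated_errors(runs)
```

A file whose set of wrong labels contains a single variety, as in the toy case, corresponds to the "consistently assigned to the same incorrect variety" pattern described above.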

For all varieties, fluorescence intensity was markedly elevated in two wavelength regions. The first peak appeared at an excitation of 280 nm and an emission of 340 nm, with high intensity and a narrow base. The second, broader and weaker peak occurred at an excitation of 340 nm and an emission of 440 nm. Differences among varieties in these regions may help distinguish them.
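Locating such peaks in an EEM can be sketched as a windowed maximum search. In the example the EEM is synthetic (two Gaussian peaks placed at the excitation/emission positions reported here, with arbitrary heights and widths) and serves only to illustrate the function; `peak_location` is a hypothetical helper that assumes the emission × excitation layout and wavelength grids of Section 2.2.

```python
import numpy as np

EX = np.arange(250, 551, 10)  # excitation wavelengths (nm)
EM = np.arange(250, 551, 5)   # emission wavelengths (nm)


def peak_location(eem, ex_range=(250, 550), em_range=(250, 550)):
    """Return (excitation nm, emission nm, intensity) of the maximum in a window.

    eem has shape (len(EM), len(EX)); NaN cells such as masked scatter are ignored.
    """
    ex_ok = (EX >= ex_range[0]) & (EX <= ex_range[1])
    em_ok = (EM >= em_range[0]) & (EM <= em_range[1])
    window = np.where(np.outer(em_ok, ex_ok), eem, np.nan)
    i, j = np.unravel_index(np.nanargmax(window), window.shape)
    return int(EX[j]), int(EM[i]), float(window[i, j])


# Synthetic EEM: a narrow, intense peak and a broad, weak peak (illustrative only).
em_grid, ex_grid = np.meshgrid(EM, EX, indexing="ij")


def gaussian(ex0, em0, height, width):
    return height * np.exp(-((ex_grid - ex0) ** 2 + (em_grid - em0) ** 2) / (2 * width ** 2))


eem = gaussian(280, 340, 2000.0, 15.0) + gaussian(340, 440, 400.0, 30.0)

print(peak_location(eem)[:2])                          # (280, 340)
print(peak_location(eem, (320, 360), (420, 460))[:2])  # (340, 440)
```

Restricting the search window is what allows the weaker 340/440 nm feature to be reported separately; a global maximum alone would always return the dominant 280/340 nm peak.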

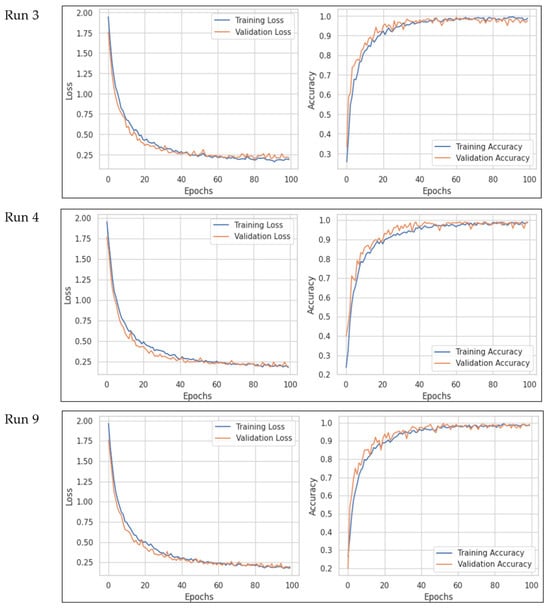

3.2. Learning Curves

Figure 4 shows the learning curves for the third run (accuracy = 0.9833), which had the highest accuracy, and for the fourth and ninth runs (accuracy = 0.9633), which had the lowest accuracy, among the ten independent runs of the CNN-only model. None of the learning curves showed marked discrepancies between the training and validation curves; the two converged smoothly without signs of overfitting, indicating that the model was trained appropriately. The model was trained for a maximum of 100 epochs. Across the ten runs, the CNN reached its best validation accuracy after an average of 70.40 epochs (standard deviation 16.70), and little improvement in accuracy was observed between 80 and 100 epochs. A training budget of 100 epochs therefore appears appropriate for ensuring that the model is properly trained.

Figure 4.

Learning curves of the CNN model for three representative runs (Run 3, Run 4, and Run 9). Training and validation accuracy and loss over 100 epochs show smooth convergence without divergence, confirming stable training and absence of overfitting.
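The best-epoch statistics above can be reproduced from per-run validation histories, as sketched below. The sketch assumes each history is the list Keras stores under `history.history["val_accuracy"]` when accuracy is the compiled metric and validation data are supplied, and that the saved checkpoint corresponds to the first epoch reaching the maximum, which is what a strict-improvement saving rule gives. Whether the reported standard deviation is a sample or population value is not stated; the sketch uses the sample standard deviation, and the function names are hypothetical.

```python
import statistics


def best_epoch(val_accuracy):
    """1-based epoch at which validation accuracy first reaches its maximum."""
    return val_accuracy.index(max(val_accuracy)) + 1


def best_epoch_stats(histories):
    """Mean and sample standard deviation of the best epoch over several runs."""
    epochs = [best_epoch(h) for h in histories]
    return statistics.mean(epochs), statistics.stdev(epochs)
```

Called on the ten runs' histories, `best_epoch_stats` would return the mean and spread of the checkpointed epochs; switching to `statistics.pstdev` gives the population version.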

3.3. Classification Accuracy of Machine Learning Models Using Near-Infrared Spectroscopy Data

Among the rice variety identification models using NIR spectral data, the KNN model achieved the highest discrimination performance, with a maximum accuracy of 0.9367 (n_neighbors = 3), followed by the RF model (0.9300, n_estimators = 72), and LR and SVM models (0.9267, C = 0.0001 for both; Table 7). Hence, the KNN algorithm was identified as the most suitable option for the identification models using NIR spectra.

Table 7.

Classification accuracy of machine learning models using near-infrared data.

To further examine the KNN results, the confusion matrix (Table 8) and per-variety precision and recall (Table 9) were computed. Hitomebore was most frequently misclassified as Akitakomachi, and vice versa, whereas few misclassifications were recorded for Hinohikari, Koshihikari, and Nanatsuboshi, indicating high discrimination accuracy for these varieties.

Table 8.

Confusion matrix for the KNN model.

Table 9.

Precision and recall for the KNN model.
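Per-variety confusion matrices and precision and recall values such as those in Tables 8 and 9 (and Tables 4 and 5) can be computed with scikit-learn's `confusion_matrix` and `precision_recall_fscore_support`; a minimal sketch follows. The helper name `per_variety_report` and the toy labels in the test are illustrative only.

```python
from sklearn.metrics import confusion_matrix, precision_recall_fscore_support

VARIETIES = ["Akitakomachi", "Hinohikari", "Hitomebore", "Koshihikari", "Nanatsuboshi"]


def per_variety_report(y_true, y_pred, labels=VARIETIES):
    """Confusion matrix (rows: true, columns: predicted) and {variety: (precision, recall)}."""
    cm = confusion_matrix(y_true, y_pred, labels=labels)
    # average=None (the default) returns one value per label; zero_division=0
    # reports 0 instead of warning when a variety is never predicted or present.
    precision, recall, _, _ = precision_recall_fscore_support(
        y_true, y_pred, labels=labels, zero_division=0
    )
    return cm, dict(zip(labels, zip(precision, recall)))
```

Passing `labels` explicitly fixes the row and column order, so matrices from different runs or models can be compared or summed cell by cell.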

4. Discussion

This study demonstrated that rice varieties can be identified with high accuracy (>0.9) using both fluorescence fingerprints and NIR spectra. Among the models employing NIR spectra, the KNN model attained the highest accuracy (0.9367), surpassing the LR, RF, and SVM models applied to fluorescence fingerprint data. Among the single-algorithm models, the highest accuracy was obtained with the CNN model (0.9717) using fluorescence fingerprints as input. Whereas NIR spectra primarily provide absorption information from bulk constituents, such as water, carbohydrates, lipids, and proteins [], fluorescence fingerprints offer a two-dimensional matrix of excitation and emission wavelengths [], potentially enabling clearer visualization of differences in the chemical properties and abundance of fluorescent compounds within the samples.

Hybrid models integrating a CNN trained on fluorescence fingerprints with conventional algorithms (KNN, RF, LR, and SVM) consistently achieved higher classification accuracy than the single-algorithm models. This improvement is likely attributable to the 256-dimensional feature vector extracted by the deep learning model (CNN), which the conventional classifiers could use effectively to discriminate subtle inter-varietal differences. The CNN+KNN model yielded the best performance, with an average classification accuracy of 0.9817.

Increased fluorescence intensity at an excitation of 280 nm and an emission of 340 nm was detected in the 2D images of all varieties. Nanatsuboshi exhibited particularly strong fluorescence in this region and achieved precision and recall values of 1.000; this pronounced peak likely contributed to its high classification accuracy. Akitakomachi also exhibited strong fluorescence in the same region and was classified with high accuracy (precision 1.000, recall 0.9933). In contrast, Hinohikari, Hitomebore, and Koshihikari had similar fluorescence profiles in this region, which likely contributed to their mutual misclassification.

The fluorescence peak at excitation 280 nm and emission 340 nm is likely attributable to tryptophan, a fluorescent amino acid known to emit strongly at these wavelengths []. Prior research has also confirmed the presence of tryptophan in rice flour [], supporting this attribution. The additional peak at excitation 340 nm and emission 440 nm may be ascribed to advanced glycation end products (AGEs), fluorescent compounds formed via the non-enzymatic Maillard reaction between proteins and sugars; the observed excitation and emission wavelengths are consistent with those reported for AGEs []. Because unheated polished rice was analyzed in this study, formation of AGEs through heat-induced Maillard reactions is presumed to be minimal; however, the presence of trace amounts of naturally occurring AGEs cannot be ruled out. Notably, AGEs are generally formed in smaller quantities in carbohydrate-based grains than in foods with higher fat and protein content [], which is consistent with the relatively weak fluorescence signal attributed to AGEs in the present study.

Furthermore, in this study, the CNN model using fluorescence fingerprinting consistently achieved higher discrimination accuracy than the model using NIR spectra. This is likely attributable to the CNN’s capability for extracting detailed patterns from two-dimensional image data using deep-learning algorithms optimized for image processing. The observed image variations are presumed to reflect differences in tryptophan content and other chemical constituents among rice varieties, indicating that fluorescence fingerprinting serves as an effective approach for variety discrimination.

Nevertheless, several limitations of this study should be noted. The dataset consisted of 15 products (three per variety) from a single harvest year (2023), which may limit the generalizability of the model. All samples were commercially sourced, with variability in harvest time, milling date, and storage conditions. These factors may cause compositional changes in rice, such as lipid oxidation and protein degradation during storage, potentially affecting the fluorescence and NIR spectral profiles. To control for these factors, future studies should include samples of identical variety and origin, with stringent control over harvest timing and storage periods. Furthermore, because harvest times vary across regions in Japan, seasonality may also affect discrimination accuracy, so validation studies that account for seasonal influences are warranted. Future studies will also extract wavelength-specific importance from NIR spectra (e.g., using RF feature importance) and apply visualization techniques such as Grad-CAM to identify influential regions in fluorescence images, thereby improving interpretability. Methods such as PCA or PLS may further aid interpretation of varietal discrimination and will be considered in future work.

5. Conclusions

Overall, the findings of this study indicate that integrating fluorescence fingerprint data with a CNN-based deep learning model provides an accurate and reproducible method for identifying rice varieties. The CNN+KNN hybrid model achieved the highest mean accuracy (0.9817), followed by the fluorescence fingerprint + CNN model (0.9717) and the NIR + KNN model (0.9367). As a rapid, low-cost, and highly accurate tool for authenticity assessment, this framework holds promise for other grains, food matrices, and agricultural products, and is anticipated to enhance food traceability and protect brand value. Future work will focus on extending this approach to multi-year datasets and additional food categories.

Author Contributions

Conceptualization, T.M.; methodology, R.A.; software, R.A. and T.M.; validation, T.M.; data curation, R.A.; writing—original draft preparation, R.A.; review, Y.L.; writing—review and editing, T.M.; project administration, T.M.; funding acquisition, T.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by JSPS KAKENHI (grant number JP25K05727); https://www.jsps.go.jp/j-grantsinaid/16_rule/rule.html (accessed on 28 July 2025).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AGEs | advanced glycation end products |
| CNN | convolutional neural network |
| EEM | excitation emission matrix |
| KNN | k-nearest neighbor algorithm |
| LR | logistic regression |
| NIR | near-infrared spectroscopy |
| RF | random forest |
| SVM | support vector machine |
| THz | terahertz |

References

- Ministry of Agriculture, Forestry and Fisheries. Monthly Report on Rice. Available online: https://www.maff.go.jp/j/seisan/keikaku/soukatu/mr.html (accessed on 28 July 2025). (In Japanese)

- Ministry of Agriculture, Forestry and Fisheries. About the System for Labeling the Origin of Ingredients in Processed Foods. Available online: https://www.maff.go.jp/j/syouan/hyoji/gengen_hyoji.html (accessed on 28 July 2025). (In Japanese)

- Yang, S.; Li, C.; Mei, Y.; Liu, W.; Liu, R.; Chen, W.; Han, D.; Xu, K. Determination of the Geographical Origin of Coffee Beans Using Terahertz Spectroscopy Combined with Machine Learning Methods. Front. Nutr. 2021, 8, 680627.

- Quan, N.M.; Phung, H.M.; Uyen, L.; Dat, L.Q.; Ngoc, L.M.; Hoang, N.M.; Tu, T.K.M.; Dung, N.H.; Ai, C.T.D.; Trinh, D. Species and geographical origin authenticity of green coffee beans using UV–VIS spectroscopy and PLS–DA prediction model. Food Chem. Adv. 2023, 2, 100281.

- Gu, H.-W.; Zhou, H.-H.; Lv, Y.; Wu, Q.; Pan, Y.; Peng, Z.-X.; Zhang, X.-H.; Yin, X.-L. Geographical origin identification of Chinese red wines using ultraviolet-visible spectroscopy coupled with machine learning techniques. J. Food Compos. Anal. 2023, 119, 105265.

- Poulli, K.I.; Mousdis, G.A.; Georgiou, C.A. Monitoring olive oil oxidation under thermal and UV stress through synchronous fluorescence spectroscopy and classical assays. Food Chem. 2009, 117, 499–503.

- Milori, D.M.B.P.; Raynaud, M.; Villas-Boas, P.R.; Venâncio, A.L.; Mounier, S.; Bassanezi, R.B.; Redon, R. Identification of citrus varieties using laser-induced fluorescence spectroscopy (LIFS). Comput. Electron. Agric. 2013, 95, 11–18.

- Ruoff, K.; Luginbühl, W.; Künzli, R.; Bogdanov, S.; Bosset, J.O.; von der Ohe, K.; von der Ohe, W.; Amadò, R. Authentication of the Botanical and Geographical Origin of Honey by Front-Face Fluorescence Spectroscopy. J. Agric. Food Chem. 2006, 54, 6858–6866.

- Dufour, E.; Letort, A.; Laguet, A.; Lebecque, A.; Serra, J.N. Investigation of variety, typicality and vintage of French and German wines using front-face fluorescence spectroscopy. Anal. Chim. Acta 2006, 563, 292–299. [Google Scholar] [CrossRef]

- Fang, H.; Wang, T.; Chen, L.; Wang, X.-Z.; Wu, H.-L.; Chen, Y.; Yu, R.-Q. Rapid authenticity identification of high-quality Wuyi Rock tea by multidimensional fluorescence spectroscopy coupled with chemometrics. J. Food Compos. Anal. 2024, 135, 106632. [Google Scholar] [CrossRef]

- Hu, Y.; Wu, Y.; Sun, J.; Geng, J.; Fan, R.; Kang, Z. Distinguishing Different Varieties of Oolong Tea by Fluorescence Hyperspectral Technology Combined with Chemometrics. Foods 2022, 11, 2344. [Google Scholar] [CrossRef]

- Chen, Q.; Zhao, J.; Fang, C.H.; Wang, D. Feasibility study on identification of green, black and Oolong teas using near-infrared reflectance spectroscopy based on support vector machine (SVM). Spectrochim. Acta A Mol. Biomol. Spectrosc. 2007, 66, 568–574. [Google Scholar] [CrossRef]

- Cardoso, V.G.; Poppi, R.J. Non-invasive identification of commercial green tea blends using NIR spectroscopy and support vector machine. Microchem. J. 2021, 164, 106052. [Google Scholar] [CrossRef]

- Yang, Q.; Tian, S.; Xu, H. Identification of the geographic origin of peaches by VIS-NIR spectroscopy, fluorescence spectroscopy and image processing technology. J. Food Compos. Anal. 2022, 114, 104843.

- Xie, L.H.; Shao, G.N.; Sheng, Z.H.; Hu, S.K.; Wei, X.J.; Jiao, G.A.; Wang, L.; Tang, S.Q.; Hu, P.S. Rapid identification of fragrant rice using starch flavor compound via NIR spectroscopy coupled with GC–MS and Badh2 genotyping. Int. J. Biol. Macromol. 2024, 281, 136547.

- Liu, Y.; Li, Y.; Peng, Y.; Yang, Y.; Wang, Q. Detection of fraud in high-quality rice by near-infrared spectroscopy. J. Food Sci. 2020, 85, 2773–2782.

- Rizwana, S.; Hazarika, M.K. Application of Near-Infrared Spectroscopy for Rice Characterization Using Machine Learning. J. Inst. Eng. India Ser. A 2020, 101, 579–587.

- Vafakhah, M.; Asadollahi-Baboli, M.; Hassaninejad-Darzi, S.K. Raman spectroscopy and chemometrics for rice quality control and fraud detection. J. Consum. Prot. Food Saf. 2023, 18, 403–413.

- Hu, L.; Zhang, Y.; Ju, Y.; Meng, X.; Yin, C. Rapid identification of rice geographical origin and adulteration by excitation-emission matrix fluorescence spectroscopy combined with chemometrics based on fluorescence probe. Food Control 2023, 146, 109547.

- Li, C.; Tan, Y.; Liu, C.; Guo, W. Rice Origin Tracing Technology Based on Fluorescence Spectroscopy and Stoichiometry. Sensors 2024, 24, 2994.

- Wang, S.; Yang, X.; Zhang, Y.; Phillips, P.; Yang, J.; Yuan, T.F. Identification of green, oolong and black teas in China via wavelet packet entropy and fuzzy support vector machine. Entropy 2015, 17, 6663–6682.

- Chen, A.-Q.; Wu, H.-L.; Wang, T.; Wang, X.-Z.; Sun, H.-B.; Yu, R.-Q. Intelligent analysis of excitation-emission matrix fluorescence fingerprint to identify and quantify adulteration in camellia oil based on machine learning. Talanta 2023, 251, 123733.

- Wu, M.; Li, M.; Fan, B.; Sun, Y.; Tong, L.; Wang, F.; Li, L. A rapid and low-cost method for detection of nine kinds of vegetable oil adulteration based on 3-D fluorescence spectroscopy. LWT 2023, 188, 115419.

- Hu, Y.; Wei, C.; Wang, X.; Wang, W.; Jiao, Y. Using three-dimensional fluorescence spectroscopy and machine learning for rapid detection of adulteration in camellia oil. Spectrochim. Acta A Mol. Biomol. Spectrosc. 2025, 329, 125524.

- Zou, Z.; Wu, Q.; Long, T.; Zou, B.; Zhou, M.; Wang, Y.; Liu, B.; Luo, J.; Yin, S.; Zhao, Y.; et al. Classification and adulteration of mengding mountain green tea varieties based on fluorescence hyperspectral image method. J. Food Compos. Anal. 2023, 117, 105141.

- Kawai, T.; Yotsukura, N. Current remarks of phylogeny and taxonomy on genus Laminaria. Rishiri Stud. 2005, 24, 37–47. Available online: https://riishiri.sakura.ne.jp/Sites/RS/archive/242005/2406.pdf (accessed on 28 July 2025). (In Japanese).

- Suzuki, K.; Akiyama, R.; Llave, Y.; Matsumoto, T. Origin and Variety Identification of Dried Kelp Based on Fluorescence Fingerprinting and Machine Learning Approaches. Appl. Sci. 2025, 15, 1803.

- Akiyama, R.; Suzuki, K.; Llave, Y.; Matsumoto, T. Fluorescence Spectroscopy and a Convolutional Neural Network for High-Accuracy Japanese Green Tea Origin Identification. AgriEngineering 2025, 7, 95.

- Rice Supply Stability Support Organization (Public Interest Incorporated Association). Planting Trends by Variety of Rice for the 2023 Harvest. 2023. Available online: https://www.komenet.jp/pdf/R05sakutuke.pdf (accessed on 28 July 2025). (In Japanese).

- National Agriculture and Food Research Organization. Next Generation Crop Development Research Center, Rice Variety Database Search System, Akitakomachi. Available online: https://ineweb.narcc.affrc.go.jp/search/ine.cgi?action=inedata_top&ineCode=AKI0000310 (accessed on 28 July 2025). (In Japanese).

- National Agriculture and Food Research Organization. Next Generation Crop Development Research Center, Rice Variety Database Search System, Hitomebore. Available online: https://ineweb.narcc.affrc.go.jp/search/ine.cgi?action=inedata_top&ineCode=TOH0001430 (accessed on 28 July 2025). (In Japanese).

- National Agriculture and Food Research Organization. Next Generation Crop Development Research Center, Rice Variety Database Search System, Hinohikari. Available online: https://ineweb.narcc.affrc.go.jp/search/ine.cgi?action=inedata_top&ineCode=NAN0001020 (accessed on 28 July 2025). (In Japanese).

- National Agriculture and Food Research Organization. Next Generation Crop Development Research Center, Rice Variety Database Search System, Nanatsuboshi. Available online: https://ineweb.narcc.affrc.go.jp/search/ine.cgi?action=inedata_top&ineCode=SOR0001630 (accessed on 28 July 2025). (In Japanese).

- National Agriculture and Food Research Organization. Next Generation Crop Development Research Center, Rice Variety Database Search System, Koshihikari. Available online: https://ineweb.narcc.affrc.go.jp/search/ine.cgi?action=inedata_top&ineCode=ETU0000170 (accessed on 28 July 2025). (In Japanese).

- Tsuta, M.; Sugiyama, J.; Sagara, Y. Development of Food Quality Measurement Methods Based on Near-infrared Imaging Spectroscopy-Applications to Visualization of Sugar Content Distribution in Fresh Fruits and Fruit Sorting. Jpn. Soc. Food Sci. Technol. 2011, 158, 73–80. (In Japanese).

- Kokawa, M.; Sugiyama, J.; Tsuta, M. Development of the Fluorescence Fingerprint Imaging Technique. Jpn. Soc. Food Sci. Technol. 2015, 62, 477–483.

- Ladokhin, A.S. Fluorescence Spectroscopy in Peptide and Protein Analysis. In Encyclopedia of Analytical Chemistry; Meyers, R.A., Ed.; Wiley: Hoboken, NJ, USA, 2000; pp. 5762–5779. Available online: https://cmb.i-learn.unito.it/pluginfile.php/24675/mod_folder/content/0/Fluorescence-advanced.pdf?forcedownload=1 (accessed on 28 July 2025).

- Amagliani, L.; O’Regan, J.; Kelly, A.L.; O’Mahony, J.A. Composition and protein profile analysis of rice protein ingredients. J. Food Compos. Anal. 2017, 59, 18–26.

- Wei, Q.; Liu, T.; Sun, D.-W. Advanced glycation end-products (AGEs) in foods and their detecting techniques and methods: A review. Trends Food Sci. Technol. 2018, 82, 32–45.

- Uribarri, J.; Woodruff, S.; Goodman, S.; Cai, W.; Chen, X.; Pyzik, R.; Yong, A.; Striker, G.E.; Vlassara, H. Advanced Glycation End Products in Foods and a Practical Guide to Their Reduction in the Diet. J. Am. Diet. Assoc. 2010, 110, 911–916.e12.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).